mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-18 02:00:18 +08:00

commit

d545c74aa5

@ -0,0 +1,56 @@

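A Week with GNOME: Weighing Its Pros and Cons Against KDE (Part 1: Introduction)
================================================================================

*Author's note: If, by some miracle, you clicked on this article without reading the title, let me restate a few things... This is an editorial. The views in it are my own and do not represent those of Phoronix or Michael. They are entirely my own thoughts.*

Also, yes... this may well be a flame-bait article. I want the KDE and GNOME communities to get better, which is why I want to start a discussion and give them feedback. To that end, whenever I point out what I see as a flaw, I will try to be as specific and direct as possible, so that the discussion can be equally specific and direct. For the record, an alternative title for this article was "Death by a Thousand [Paper Cuts][1]" (LCTT note: a "paper cut" is a defect that is easy to fix but annoying. Starting with 9.10, Ubuntu ran the [One Hundred Papercuts][1] project to fix small but annoying usability problems like these).

Now that that's out of the way... on with the article.

When I sent my [Fedora 22 KDE review][2] to Michael, it left a bad taste in my mouth. Not because I dislike KDE or Fedora; far from it. In fact, I started to switch my T450s over to Arch Linux but quickly changed my mind, because I enjoy the convenience Fedora offers in so many areas.

The reason it left a bad taste is that Fedora's developers put a great deal of time and effort into their "Workstation" product, and I didn't see any of it. I wasn't using Fedora the way its main developers intend it to be used, so I wasn't getting the "Fedora experience". It felt like reviewing Ubuntu by using Kubuntu, reviewing OS X by using a Hackintosh, or reviewing Gentoo by using Sabayon. As many forum readers have pointed out to Michael, distributions get reviewed with their default settings, and mine was no exception. Still, I believe reviews should be done with the "real" configuration, although I also know there is value in reviewing something as it ships, for better or worse.

It was in that frame of mind that I decided to jump into the GNOME puddle for a soak.

But I have to add one more disclaimer here... the KDE and GNOME I am looking at are the versions packaged in Fedora. OpenSUSE, Kubuntu, Arch and other distributions may implement each desktop differently, so the specific "pain points" I describe may not match your distribution. Also, despite the title, this is going to be a heavily "KDE-centric" article. I call it that because it was only after "using" GNOME that I realized just how many "paper cuts" KDE has.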

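### Login Screen ###

I generally don't mind distributions shipping their own special themes, because they usually make the desktop look better. Today, however, I found an exception.

First impressions matter, right? Well, GDM (LCTT note: GNOME Display Manager) absolutely nails it. Its login screen looks extremely clean, with one consistent design language applied to every part. Using generic icons instead of text boxes adds to that clean look.

That is not to say the login screen of Fedora 22 KDE (now SDDM rather than KDM) looks bad, but it certainly doesn't look as cohesive.

So where exactly is the problem? The top bar. Look at the GNOME screenshot: you pick a user, then use a tiny gear to choose which session to log into. The design is clean and stays out of the way; honestly, if you weren't paying attention you might not notice it at all. Now look at the blue (LCTT note: "blue" also means gloomy, a pun) KDE screenshot. The top bar doesn't even look like it was rendered by the same toolkit, and its placement looks as though someone thought, "Oh crap, we need to throw this option somewhere..." and settled on that.

The same goes for the reboot and shutdown options in the top-right corner. Why not a single power button that opens a drop-down menu with reboot, shutdown and suspend? Giving the buttons a different color from the background certainly makes them stand out... but I don't think that's an improvement. Again, this looks very much like an afterthought.

From a practical standpoint, GDM is also far more useful. Look at its top bar again: the time is shown, there is a volume control so you can even mute the machine before logging in if you want to keep things quiet, and there is an accessibility button for high contrast, zoom, text-to-speech and more, each available through a simple toggle switch.

Switch to the upstream (KDE's own) Breeze theme... and suddenly most of my complaints are fixed. Generic icons, everything centered on the screen, and the less important things moved off to the side. Because the top and bottom of the screen have the same amount of empty space, the middle gains a pleasant sense of balance. There is still a text box for switching sessions, but since the power buttons are now generic icons, that is forgivable. The current time is displayed nicely, with a battery indicator next to it. Sure, GNOME still has some nice extras such as the volume applet and the accessibility button, but Breeze is a clear step up from Fedora's KDE theme.

Go to Windows (before Windows 8 and 10) or OS X and you'll see something similar: very clean, "out of the way" lock and login screens with no text boxes or other visually distracting widgets. It is an effective design that doesn't pull your attention away. Fedora... please ship Breeze by default. The VDG did a great job designing the Breeze theme. Don't ruin it.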

--------------------------------------------------------------------------------

via: http://www.phoronix.com/scan.php?page=article&item=gnome-week-editorial&num=1

Author: Eric Griffith
Translator: [XLCYun](https://github.com/XLCYun)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[1]:https://wiki.ubuntu.com/One%20Hundred%20Papercuts
[2]:http://www.phoronix.com/scan.php?page=article&item=fedora-22-kde&num=1
[3]:https://launchpad.net/hundredpapercuts

@ -0,0 +1,32 @@

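A Week with GNOME: Weighing Its Pros and Cons Against KDE (Part 2: The GNOME Desktop)
================================================================================

### Desktop ###

For the first five days of my week, I logged into GNOME manually, without automatic login. On the evening of the fifth day, having to log in by hand every time got on my nerves, so I went into the user manager and turned on automatic login. The next time I logged in, I got a prompt: "Your keychain was not unlocked. Please enter your password to unlock it." That's when it dawned on me... GNOME had been automatically unlocking my keychain (called the wallet in KDE) every time I logged in through GDM! Only when I bypassed the GDM login did GNOME have to step in and make me unlock it manually.

Now, my humble opinion is that if you enable automatic login, your keychain should be unlocked automatically as well; otherwise, what's the point? Either way you still have to type your password, and at the GDM login screen you at least get the chance to pick a different session if you want to.

But setting that aside, it was at that moment that I realized how simple it is to make a desktop feel like it is working **with me**. And when I log into KDE through SDDM? Before the splash screen has even finished loading, a window pops up over the splash animation (ruining it in the process) and asks me to unlock my KDE wallet or GPG keyring.

If you don't have a wallet yet, you are prompted to create one. Couldn't it have created one for me when my user account was created? Then it asks you to choose between two encryption modes, and even hints that one of them (Blowfish) is insecure. If this is about security, why make me choose something insecure? Author's note: if you install the actual KDE spin rather than just adding KDE to an existing install, a wallet is created for you when the user is created. Unfortunately, it is not unlocked automatically, and it seems to use the older Blowfish mode rather than the newer and more secure GPG mode.

If you do choose the secure mode (GPG), it tries to load a GPG key... I hope you have already created one, because if you haven't, you'll get scolded. How do you create one? Well... it doesn't create one for you... nor does it tell you how... If you do figure out that you should use KGpg to create a key, you then face layer after layer of menus and prompts that only confuse newcomers. Why are you asking me where the GPG binary is? How would I know! If there is more than one, can't you just pick the newest for me? If there's only one, I ask again: why are you asking me?

Why are you asking me which key size and encryption algorithm to use? You have already selected 2048 and RSA/RSA by default, so why not just use them? If you want these options to be adjustable, put them behind an "Expert mode" button. This isn't about whether configuration should be available to the user; it's that there's no need to throw extra things in front of the user by default. This kind of problem will be one of the main themes of the rest of this article... KDE needs saner defaults. Configurability is good, and I love how configurable KDE is, but it also needs to know when to prompt the user and when not to. And it needs to understand that "well, it's configurable" is no excuse for bad defaults. The defaults are the first thing users encounter, and bad defaults are bound to lose users.

Let's leave the keychain issue behind, since I think I've made my point.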

--------------------------------------------------------------------------------

via: http://www.phoronix.com/scan.php?page=article&item=gnome-week-editorial&num=2

Author: Eric Griffith
Translator: [XLCYun](https://github.com/XLCYun)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -0,0 +1,120 @@

|

||||

如何更新 Linux 内核来提升系统性能

|

||||

================================================================================

|

||||

|

||||

|

||||

目前的 [Linux 内核][1]的开发速度是前所未有的,大概每2到3个月就会有一个主要的版本发布。每个发布都带来几个的新的功能和改进,可以让很多人的处理体验更快、更有效率、或者其它的方面更好。

|

||||

|

||||

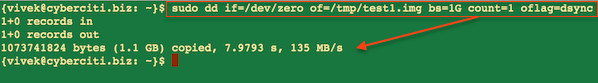

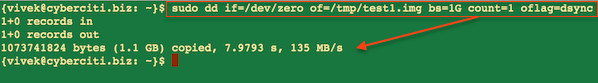

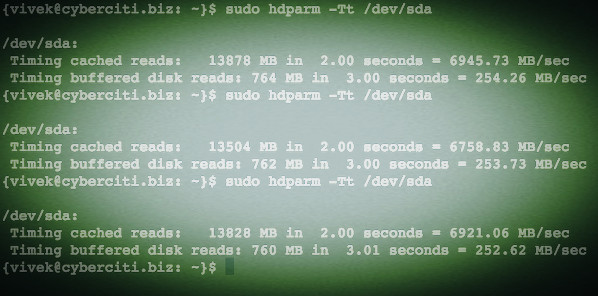

问题是,你不能在这些内核发布的时候就用它们,你要等到你的发行版带来新内核的发布。我们先前讲到[定期更新内核的好处][2],所以你不必等到那时。让我们来告诉你该怎么做。

|

||||

|

||||

> 免责声明: 我们先前的一些文章已经提到过,升级内核有(很小)的风险可能会破坏你系统。如果发生这种情况,通常可以通过使用旧内核来使系统保持工作,但是有时还是不行。因此我们对系统的任何损坏都不负责,你得自己承担风险!

|

||||

|

||||

### 预备工作 ###

|

||||

|

||||

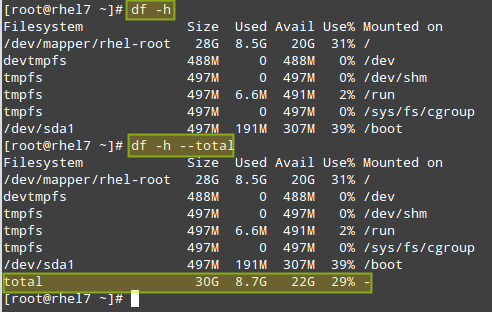

要更新你的内核,你首先要确定你使用的是32位还是64位的系统。打开终端并运行:

|

||||

|

||||

uname -a

|

||||

|

||||

检查一下输出的是 x86\_64 还是 i686。如果是 x86\_64,你就运行64位的版本,否则就运行32位的版本。千万记住这个,这很重要。
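
If you only need the machine type, `uname -m` prints just that field. The helper below is a minimal sketch (the function name `kernel_pkg_arch` is hypothetical) that turns it into the architecture label used in the package file names later in this article. It assumes only the two x86 architectures covered here.

```bash
#!/usr/bin/env bash
# Map a machine hardware name (as printed by `uname -m`) to the architecture
# label used in kernel package file names.
#   $1: package family: "deb" (Ubuntu mainline PPA) or "rpm" (Fedora Koji)
#   $2: machine name; defaults to `uname -m` on the current system
kernel_pkg_arch() {
  local family="$1"
  local machine="${2:-$(uname -m)}"
  case "$machine" in
    x86_64)
      if [ "$family" = "deb" ]; then echo "amd64"; else echo "x86_64"; fi
      ;;
    i?86)
      # Debian/Ubuntu call 32-bit x86 packages "i386"; Fedora uses "i686".
      if [ "$family" = "deb" ]; then echo "i386"; else echo "i686"; fi
      ;;
    *)
      echo "unsupported architecture: $machine" >&2
      return 1
      ;;
  esac
}
```

For example, `kernel_pkg_arch deb` prints `amd64` on a 64-bit Ubuntu system.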

|

||||

|

||||

|

||||

|

||||

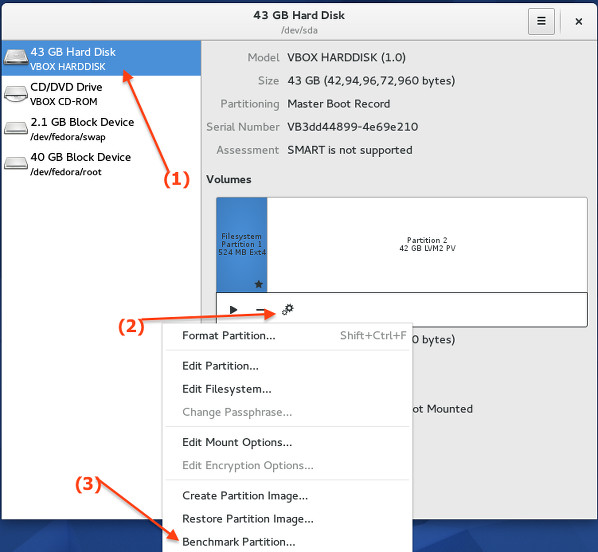

接下来,访问[官方的 Linux 内核网站][3],它会告诉你目前稳定内核的版本。愿意的话,你可以尝试下发布预选版(RC),但是这比稳定版少了很多测试。除非你确定想要需要发布预选版,否则就用稳定内核。
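
Once you have read the current stable version off kernel.org, you can compare it with the kernel you are running. This is a small sketch that assumes GNU `sort -V` is available. `version_lt` and `running_kernel_version` are illustrative names, and you still have to type in the stable version by hand.

```bash
#!/usr/bin/env bash
# Succeed if version $1 is strictly older than version $2.
# Uses GNU `sort -V` (version sort). Intended for stable versions such as
# "4.1.2"; release candidates like "4.2-rc1" are not ordered correctly.
version_lt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Drop the distribution suffix from `uname -r`,
# e.g. "3.19.0-25-generic" becomes "3.19.0".
running_kernel_version() {
  uname -r | cut -d- -f1
}
```

For example: `version_lt "$(running_kernel_version)" 4.1.2 && echo "a newer stable kernel is available"`.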

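### Ubuntu Instructions ###

Upgrading the kernel is very easy for users of Ubuntu and its derivatives, thanks to the Ubuntu Mainline Kernel PPA. Although it is officially called a PPA, you can't add it to your software sources like other PPAs and expect it to upgrade your kernel automatically. It is really just a simple web page that you browse to download the kernel you want.

Now visit the [kernel PPA page][4] and scroll to the bottom. The very bottom of the list holds the latest release candidates (you can see "rc" in their names), but just above them you will find the latest stable release (to be clear, at the time of writing the latest stable release was 4.1.2. LCTT note: although 4.1.2 was the stable release at the time, it had not yet been included in an Ubuntu release, so its folder name ends in "-unstable"). Click the folder name and you'll see several choices. You need to download 3 files and save them into a folder of their own (inside your Downloads folder, if you like) so that they are kept apart from other files:

1. The architecture-specific "generic" headers file (I'm on 64-bit, so for me that's "amd64")
2. The headers file in the middle of the list whose name ends in "all"
3. The architecture-specific "generic" kernel image file (again, I use "amd64"; on 32-bit you need "i386")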
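
Before installing, it can help to double-check the folder. The function below is a sketch that assumes the mainline PPA's file naming (for example `linux-image-4.1.2-040102-generic_<version>_amd64.deb`). The patterns and the name `check_mainline_debs` are illustrative and not part of any official tool.

```bash
#!/usr/bin/env bash
# Check that a download folder holds exactly the three "generic" mainline
# packages for one architecture, and no lowlatency ones.
#   $1: folder with the downloaded .deb files
#   $2: architecture label ("amd64" or "i386")
check_mainline_debs() {
  local dir="$1" arch="$2"
  local n_all n_hdr n_img

  # The lowlatency flavour should not be mixed in with the generic one.
  if find "$dir" -maxdepth 1 -name '*lowlatency*' | grep -q .; then
    echo "lowlatency package found in $dir" >&2
    return 1
  fi

  n_all=$(find "$dir" -maxdepth 1 -name 'linux-headers-*_all.deb' | wc -l)
  n_hdr=$(find "$dir" -maxdepth 1 -name "linux-headers-*-generic_*_${arch}.deb" | wc -l)
  n_img=$(find "$dir" -maxdepth 1 -name "linux-image-*-generic_*_${arch}.deb" | wc -l)

  # Exactly one file of each kind is expected.
  [ "$n_all" -eq 1 ] && [ "$n_hdr" -eq 1 ] && [ "$n_img" -eq 1 ]
}
```

For example: `check_mainline_debs ~/Downloads/Kernel amd64 && echo "ready to install"`.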

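Below those you will also see files containing "lowlatency". It is best to ignore them. They are less stable and are intended only for people who need lower latency than the generic files provide, for tasks such as audio recording. Again, prefer the generic version unless you have a specific task it can't handle well. Everyday gaming and web browsing are no reason to use the low-latency version.

You put them in their own folder, right? Now open a terminal and use the `cd` command to change into that folder, for example:

    cd /home/user/Downloads/Kernel

Then run:

    sudo dpkg -i *.deb

This command marks every ".deb" file in the folder for installation and then installs them. This is the recommended way to install them, because installing just one of the files on its own is not straightforward and always runs into dependency errors. Installing them all together avoids that problem. If you're not sure what `cd` and `sudo` are, take a quick look at our [essential Linux commands][5] article.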
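
The same step can be wrapped in a function. This sketch collects every `.deb` in the folder and passes them all to a single `dpkg -i` call, which is what lets dpkg resolve the dependencies between them. The function name and the `DRY_RUN` variable (which prints the command instead of running it) are hypothetical additions.

```bash
#!/usr/bin/env bash
# Install every .deb in one folder with a single dpkg call, so dpkg can
# satisfy dependencies between the packages in one run.
# Set DRY_RUN=1 to print the command instead of running it.
install_kernel_debs() {
  local dir="$1"
  local restore_nullglob
  local -a debs

  # With nullglob, an empty folder yields an empty array instead of "*.deb".
  restore_nullglob=$(shopt -p nullglob)
  shopt -s nullglob
  debs=("$dir"/*.deb)
  eval "$restore_nullglob"   # put the caller's nullglob setting back

  if [ "${#debs[@]}" -eq 0 ]; then
    echo "no .deb files found in $dir" >&2
    return 1
  fi

  if [ -n "${DRY_RUN:-}" ]; then
    echo sudo dpkg -i "${debs[@]}"
  else
    sudo dpkg -i "${debs[@]}"
  fi
}
```

`DRY_RUN=1 install_kernel_debs ~/Downloads/Kernel` shows the command. Drop `DRY_RUN=1` to run it.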

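Once the installation is complete, **reboot** your system, and it should be running the newly installed kernel! You can check by running `uname -a` on the command line and looking at the output.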
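
To make the post-reboot check less error-prone, the sketch below compares the expected version against `uname -r`, which prints only the kernel release. The function name is hypothetical. It assumes release strings of the form `<version>` or `<version>-<suffix>`.

```bash
#!/usr/bin/env bash
# Succeed if the running kernel release matches the version you installed.
#   $1: expected version, e.g. "4.1.2"
#   $2: kernel release string; defaults to `uname -r`
kernel_matches() {
  local want="$1"
  local have="${2:-$(uname -r)}"
  case "$have" in
    # Exact match, or the version followed by a "-suffix" such as
    # "4.1.2-040102-generic" (Ubuntu mainline) or "4.1.2-200.fc22.x86_64".
    "$want" | "$want"-*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example: `kernel_matches 4.1.2 && echo "the new kernel is running"`.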

|

||||

|

||||

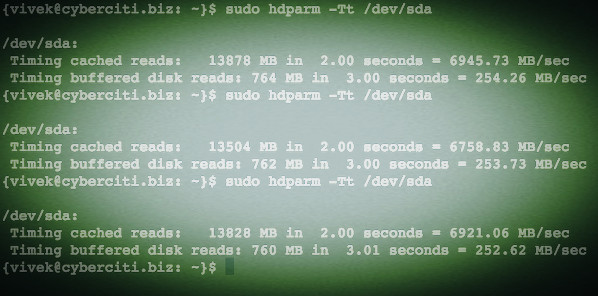

### Fedora 指导 ###

|

||||

|

||||

如果你使用的是 Fedora 或者它的衍生版,过程跟 Ubuntu 很类似。不同的是文件获取的位置不同,安装的命令也不同。

|

||||

|

||||

|

||||

|

||||

查看 [最新 Fedora 内核构建][6]列表。选取列表中最新的稳定版并翻页到下面选择 i686 或者 x86_64 版。这取决于你的系统架构。这时你需要下载下面这些文件并保存到它们对应的目录下(比如“Kernel”到下载目录下):

|

||||

|

||||

- kernel

|

||||

- kernel-core

|

||||

- kernel-headers

|

||||

- kernel-modules

|

||||

- kernel-modules-extra

|

||||

- kernel-tools

|

||||

- perf 和 python-perf (可选)

|

||||

|

||||

如果你的系统是 i686(32位)同时你有 4GB 或者更大的内存,你需要下载所有这些文件的 PAE 版本。PAE 是用于32位系统上的地址扩展技术,它允许你使用超过 3GB 的内存。
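
The sketch below is a quick way to confirm nothing is missing before you install. It assumes Koji's `<name>-<version>-<release>.<arch>.rpm` file naming, and the function name is hypothetical. It skips the optional perf packages and does not handle PAE builds.

```bash
#!/usr/bin/env bash
# Print the name of each required Fedora kernel package that has no
# matching .rpm in the folder (no output means nothing is missing).
#   $1: folder with the downloaded .rpm files
#   $2: architecture, "x86_64" or "i686"
missing_fedora_kernel_rpms() {
  local dir="$1" arch="$2" name
  local -a matches
  for name in kernel kernel-core kernel-headers kernel-modules \
              kernel-modules-extra kernel-tools; do
    # Versions start with a digit, so "kernel-[0-9]*" does not also match
    # "kernel-core-..." or "kernel-modules-extra-...".
    matches=("$dir/$name"-[0-9]*."$arch".rpm)
    # An unmatched glob stays literal (or is empty with nullglob), so test
    # whether the first entry really exists.
    [ -e "${matches[0]}" ] || echo "$name"
  done
}
```

For example, `missing_fedora_kernel_rpms ~/Downloads/Kernel x86_64` prints nothing when all required packages are present.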

Now use the `cd` command to enter the folder, like this:

    cd /home/user/Downloads/Kernel

Then run the following command to install all of the files:

    sudo yum --nogpgcheck localinstall *.rpm
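
Fedora 22 switched its default package manager from yum to dnf. The sketch below prefers dnf when it is installed and otherwise falls back to the yum command shown above. The function name and the `PKG_TOOL` and `DRY_RUN` variables are illustrative assumptions.

```bash
#!/usr/bin/env bash
# Install all downloaded kernel RPMs from one folder in a single transaction.
# PKG_TOOL can force "dnf" or "yum"; DRY_RUN=1 prints instead of running.
fedora_install_rpms() {
  local dir="$1" tool restore_nullglob
  local -a rpms cmd

  if command -v dnf >/dev/null 2>&1; then tool=dnf; else tool=yum; fi
  tool="${PKG_TOOL:-$tool}"

  # Collect the RPMs; nullglob turns "no match" into an empty array.
  restore_nullglob=$(shopt -p nullglob)
  shopt -s nullglob
  rpms=("$dir"/*.rpm)
  eval "$restore_nullglob"
  if [ "${#rpms[@]}" -eq 0 ]; then
    echo "no .rpm files found in $dir" >&2
    return 1
  fi

  case "$tool" in
    dnf) cmd=(sudo dnf install --nogpgcheck "${rpms[@]}") ;;
    yum) cmd=(sudo yum --nogpgcheck localinstall "${rpms[@]}") ;;
    *) echo "unknown package tool: $tool" >&2; return 1 ;;
  esac

  if [ -n "${DRY_RUN:-}" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Use `DRY_RUN=1 fedora_install_rpms ~/Downloads/Kernel` to check the command first.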

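Finally, **reboot** your system and you'll be running the new kernel!

#### Using Rawhide ####

Alternatively, Fedora users can [switch to Rawhide][7], which automatically updates every package to its latest version, including the kernel. However, Rawhide breaks things quite often (especially early in the development cycle), so it should **not** be used on a system you rely on every day.

### Arch Instructions ###

[Arch users][8] should always be running the latest and greatest stable kernel (or something very close to it). If you want to get even closer to the newest stable release, you can enable the testing repositories to receive major updates 2 to 3 weeks earlier.

To do so, open the following file with `sudo` privileges in [your favorite editor][9]:

    /etc/pacman.conf

Then uncomment the three lines that mention testing (delete the # at the start of each line). If you have the multilib repository enabled, do the same for multilib-testing. For more information, see [this Arch wiki page][10].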
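
Editing the file by hand is simple enough. As an alternative, the awk sketch below makes the same change on a copy so you can review it first. It assumes the default `pacman.conf` layout, where each commented-out `[...-testing]` header is immediately followed by its `#Include` line. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Enable [testing] and [community-testing] in a pacman.conf, and also
# [multilib-testing] when $1 is "yes". Both the commented-out section header
# and the Include line right after it are uncommented.
# Reads the file on stdin and writes the result on stdout.
enable_testing_repos() {
  local with_multilib="${1:-no}"
  awk -v ml="$with_multilib" '
    # The line after an enabled header is its "#Include = ..." line.
    pending { sub(/^#/, ""); pending = 0 }
    /^#\[(testing|community-testing)\]$/ ||
    (ml == "yes" && /^#\[multilib-testing\]$/) {
      sub(/^#/, "")
      pending = 1
    }
    { print }
  '
}
```

For example, run `enable_testing_repos yes < /etc/pacman.conf > /tmp/pacman.conf.new`, compare the two files with `diff`, then copy the new one into place with `sudo cp` and update with `sudo pacman -Syu`.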

Upgrading the kernel is not exactly simple (that is intentional), but it can bring you a lot of benefits. As long as the new kernel doesn't break anything, you can enjoy better performance, better efficiency, broader hardware support and potentially new features. It helps especially if you are using relatively new hardware.

**Did this article on upgrading the kernel help you? What do you think your favorite distribution's kernel release policy should be?** Let us know in the comments!

--------------------------------------------------------------------------------

via: http://www.makeuseof.com/tag/update-linux-kernel-improved-system-performance/

Author: [Danny Stieben][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.makeuseof.com/tag/author/danny/
[1]:http://www.makeuseof.com/tag/linux-kernel-explanation-laymans-terms/
[2]:http://www.makeuseof.com/tag/5-reasons-update-kernel-linux/
[3]:http://www.kernel.org/
[4]:http://kernel.ubuntu.com/~kernel-ppa/mainline/
[5]:http://www.makeuseof.com/tag/an-a-z-of-linux-40-essential-commands-you-should-know/
[6]:http://koji.fedoraproject.org/koji/packageinfo?packageID=8
[7]:http://www.makeuseof.com/tag/bleeding-edge-linux-fedora-rawhide/
[8]:http://www.makeuseof.com/tag/arch-linux-letting-you-build-your-linux-system-from-scratch/
[9]:http://www.makeuseof.com/tag/nano-vs-vim-terminal-text-editors-compared/
[10]:https://wiki.archlinux.org/index.php/Pacman#Repositories

@ -1,6 +1,7 @@

|

||||

Darkstat一个基于网络的流量分析器 - 在Linux中安装

|

||||

在 Linux 中安装 Darkstat:基于网页的流量分析器

|

||||

================================================================================

|

||||

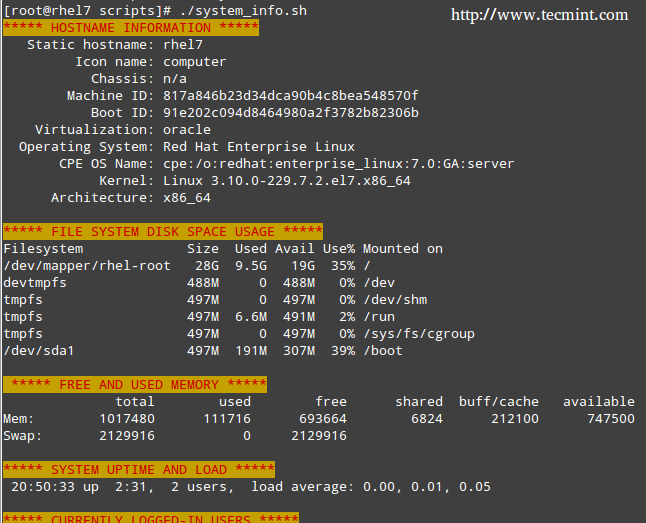

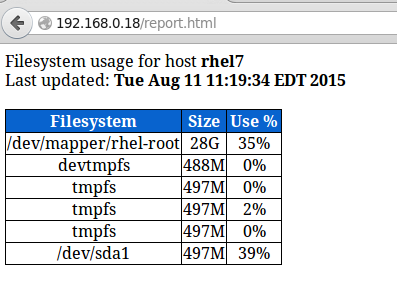

Darkstat是一个简易的,基于网络的流量分析程序。它可以在主流的操作系统如Linux、Solaris、MAC、AIX上工作。它以守护进程的形式持续工作在后台并不断地嗅探网络数据并以简单易懂的形式展现在网页上。它可以为主机生成流量报告,鉴别特定主机上哪些端口打开并且兼容IPv6。让我们看下如何在Linux中安装和配置它。

|

||||

|

||||

Darkstat是一个简易的,基于网页的流量分析程序。它可以在主流的操作系统如Linux、Solaris、MAC、AIX上工作。它以守护进程的形式持续工作在后台,不断地嗅探网络数据,以简单易懂的形式展现在它的网页上。它可以为主机生成流量报告,识别特定的主机上哪些端口是打开的,它兼容IPv6。让我们看下如何在Linux中安装和配置它。

|

||||

|

||||

### 在Linux中安装配置Darkstat ###

@ -20,14 +21,15 @@ Darkstat is a simple, network-based traffic analysis program. It can run on major

### Configuring Darkstat ###

To run the program correctly, we need to do some basic configuration. Run the following command to open the /etc/darkstat/init.cfg file in the gedit editor.

    sudo gedit /etc/darkstat/init.cfg

*Editing Darkstat*

Change the START_DARKSTAT parameter to yes, and set your network interface in "INTERFACE". Make sure the DIR, PORT, BINDIP and LOCAL parameters are uncommented. If you want to bind Darkstat to a specific IP address, specify it in the BINDIP parameter.
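
You can also script these edits. This is a sketch using `sed -E`. The sample lines in its test are modeled loosely on the Debian/Ubuntu `init.cfg` and are an assumption, so check your own file before relying on the patterns. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Apply the init.cfg edits described above: start the daemon, set the
# capture interface, and uncomment DIR, PORT, BINDIP and LOCAL.
# Reads the file on stdin and writes the result on stdout.
#   $1: network interface name, e.g. "eth0"
configure_darkstat() {
  local iface="$1"
  sed -E \
    -e 's/^START_DARKSTAT=.*/START_DARKSTAT=yes/' \
    -e "s/^#?INTERFACE=.*/INTERFACE=\"-i ${iface}\"/" \
    -e 's/^#(DIR|PORT|BINDIP|LOCAL)=/\1=/'
}
```

For example, run `configure_darkstat eth0 < /etc/darkstat/init.cfg > /tmp/init.cfg`, review the result, then copy it back with `sudo cp`.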

### Starting the Darkstat Daemon ###

@ -47,7 +49,7 @@ Darkstat is a simple, network-based traffic analysis program. It can run on major

### Conclusion ###

It is a lightweight tool that uses very little memory. It is popular because it is simple and easy to configure and use. It is a must-have program for system administrators.

--------------------------------------------------------------------------------

@ -55,7 +57,7 @@ via: http://linuxpitstop.com/install-darkstat-on-ubuntu-linux/

Author: [Aun][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

@ -1,14 +1,15 @@

|

||||

如何在 Linux 中从 Google Play 商店里下载 apk 文件

|

||||

如何在 Linux 上从 Google Play 商店里下载 apk 文件

|

||||

================================================================================

|

||||

假设你想在你的 Android 设备中安装一个 Android 应用,然而由于某些原因,你不能在 Andor 设备上访问 Google Play 商店。接着你该怎么做呢?在不访问 Google Play 商店的前提下安装应用的一种可能的方法是使用其他的手段下载该应用的 APK 文件,然后手动地在 Android 设备上 [安装 APK 文件][1]。

|

||||

|

||||

在非 Android 设备如常规的电脑和笔记本电脑上,有着几种方式来从 Google Play 商店下载到官方的 APK 文件。例如,使用浏览器插件(例如, 针对 [Chrome][2] 或针对 [Firefox][3] 的插件) 或利用允许你使用浏览器下载 APK 文件的在线的 APK 存档等。假如你不信任这些闭源的插件或第三方的 APK 仓库,这里有另一种手动下载官方 APK 文件的方法,它使用一个名为 [GooglePlayDownloader][4] 的开源 Linux 应用。

|

||||

假设你想在你的 Android 设备中安装一个 Android 应用,然而由于某些原因,你不能在 Andord 设备上访问 Google Play 商店(LCTT 译注:显然这对于我们来说是常态)。接着你该怎么做呢?在不访问 Google Play 商店的前提下安装应用的一种可能的方法是,使用其他的手段下载该应用的 APK 文件,然后手动地在 Android 设备上 [安装 APK 文件][1]。

|

||||

|

||||

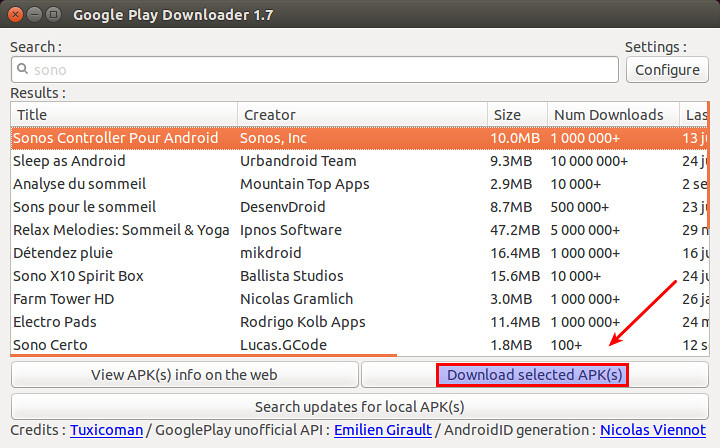

GooglePlayDownloader 是一个基于 Python 的 GUI 应用,使得你可以从 Google Play 商店上搜索和下载 APK 文件。由于它是完全开源的,你可以放心地使用它。在本篇教程中,我将展示如何在 Linux 环境下,使用 GooglePlayDownloader 来从 Google Play 商店下载 APK 文件。

|

||||

在非 Android 设备如常规的电脑和笔记本电脑上,有着几种方式来从 Google Play 商店下载到官方的 APK 文件。例如,使用浏览器插件(例如,针对 [Chrome][2] 或针对 [Firefox][3] 的插件) 或利用允许你使用浏览器下载 APK 文件的在线的 APK 存档等。假如你不信任这些闭源的插件或第三方的 APK 仓库,这里有另一种手动下载官方 APK 文件的方法,它使用一个名为 [GooglePlayDownloader][4] 的开源 Linux 应用。

|

||||

|

||||

GooglePlayDownloader 是一个基于 Python 的 GUI 应用,它可以让你从 Google Play 商店上搜索和下载 APK 文件。由于它是完全开源的,你可以放心地使用它。在本篇教程中,我将展示如何在 Linux 环境下,使用 GooglePlayDownloader 来从 Google Play 商店下载 APK 文件。

|

||||

|

||||

### Python 需求 ###

|

||||

|

||||

GooglePlayDownloader 需要使用 Python 中 SSL 模块的扩展 SNI(服务器名称指示) 来支持 SSL/TLS 通信,该功能由 Python 2.7.9 或更高版本带来。这使得一些旧的发行版本如 Debian 7 Wheezy 及早期版本,Ubuntu 14.04 及早期版本或 CentOS/RHEL 7 及早期版本均不能满足该要求。假设你已经有了一个带有 Python 2.7.9 或更高版本的发行版本,可以像下面这样接着安装 GooglePlayDownloader。

|

||||

GooglePlayDownloader 需要使用带有 SNI(Server Name Indication 服务器名称指示)的 Python 来支持 SSL/TLS 通信,该功能由 Python 2.7.9 或更高版本引入。这使得一些旧的发行版本如 Debian 7 Wheezy 及早期版本,Ubuntu 14.04 及早期版本或 CentOS/RHEL 7 及早期版本均不能满足该要求。这里假设你已经有了一个带有 Python 2.7.9 或更高版本的发行版本,可以像下面这样接着安装 GooglePlayDownloader。
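
To check whether your distribution qualifies, compare the version that `python --version` reports against 2.7.9. The sketch below assumes GNU `sort -V` and only accepts the Python 2 series discussed here. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Succeed if a "Python X.Y.Z" version string is Python 2.7.9 or newer,
# the release that brought SNI support to Python 2's ssl module.
# Only the Python 2 series is accepted, matching the requirement above.
py2_has_sni() {
  local v="${1#Python }"
  case "$v" in
    2.*) ;;
    *) return 1 ;;
  esac
  # v >= 2.7.9 exactly when 2.7.9 sorts first (GNU sort -V).
  [ "$(printf '%s\n%s\n' 2.7.9 "$v" | sort -V | head -n 1)" = "2.7.9" ]
}
```

Python 2 prints its version on standard error, hence the redirection: `py2_has_sni "$(python --version 2>&1)" && echo "SNI supported"`. On 2.7.9 and later, `python -c 'import ssl; print(ssl.HAS_SNI)'` should also print `True`.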

### Installing GooglePlayDownloader on Ubuntu ###

@ -16,7 +17,7 @@ GooglePlayDownloader requires the SSL module extension SNI (Server

#### On Ubuntu 14.10 ####

Download the [python-ndg-httpsclient][5] deb package, which is a missing dependency on older Ubuntu releases. Also download the official GooglePlayDownloader deb package.

    $ wget http://mirrors.kernel.org/ubuntu/pool/main/n/ndg-httpsclient/python-ndg-httpsclient_0.3.2-1ubuntu4_all.deb
    $ wget http://codingteam.net/project/googleplaydownloader/download/file/googleplaydownloader_1.7-1_all.deb

@ -64,7 +65,7 @@ GooglePlayDownloader requires the SSL module extension SNI (Server

### Downloading APK Files from the Google Play Store with GooglePlayDownloader ###

Once you have installed GooglePlayDownloader, you can download APK files from the Google Play Store as follows. (LCTT note: obviously, your Linux machine must be able to get around the firewall, for example through a proxy.)

First, launch the application by entering the following command:

@ -76,7 +77,7 @@ GooglePlayDownloader requires the SSL module extension SNI (Server

Once you have found the app in the search results, select it and click the "Download selected APK(s)" button. You will find the downloaded APK file in your home directory. Now you can transfer the APK file to the Android device of your choice and install it manually.

I hope this tutorial helps.

@ -86,7 +87,7 @@ via: http://xmodulo.com/download-apk-files-google-play-store.html

Author: [Dan Nanni][a]
Translator: [FSSlc](https://github.com/FSSlc)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -0,0 +1,63 @@

|

||||

Ubuntu 有望让你安装最新 Nvidia Linux 驱动更简单

|

||||

================================================================================

|

||||

|

||||

|

||||

*Ubuntu 上的游戏玩家在增长——因而需要最新版驱动*

|

||||

|

||||

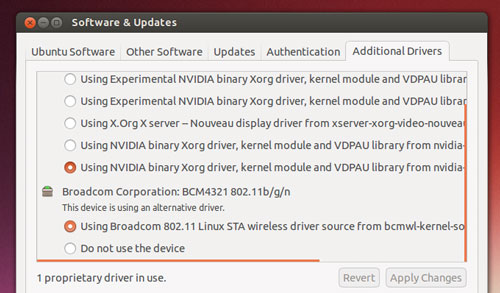

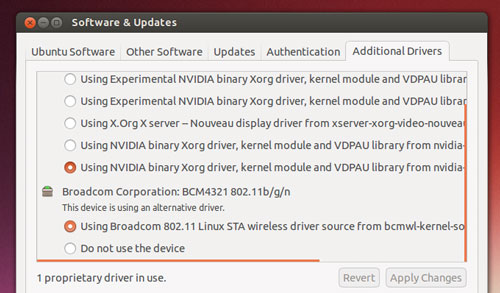

**在 Ubuntu 上安装上游的 NVIDIA 图形驱动即将变得更加容易。**

|

||||

|

||||

Ubuntu 开发者正在考虑构建一个全新的'官方' PPA,以便为桌面用户分发最新的闭源 NVIDIA 二进制驱动。

|

||||

|

||||

该项改变会让 Ubuntu 游戏玩家收益,并且*不会*给其它人造成 OS 稳定性方面的风险。

|

||||

|

||||

**仅**当用户明确选择它时,新的上游驱动将通过这个新 PPA 安装并更新。其他人将继续得到并使用更近的包含在 Ubuntu 归档中的稳定版 NVIDIA Linux 驱动快照。

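### Why Is This Needed? ###

*Ubuntu provides drivers, but they aren't the latest*

The closed-source NVIDIA graphics drivers that can be installed on Ubuntu from the archive (using the command line, Synaptic, or the Additional Drivers tool) work fine in most cases and can easily handle compositing for the Unity desktop shell.

For gaming needs, though, it's a different story.

If you want to squeeze the highest frame rates and HD textures out of the latest hit Steam games, you need the latest binary drivers.

The newer the driver, the more likely it is to support the latest features and technologies, or to come with game-specific optimizations and bug fixes baked in.

The problem is that installing the latest Nvidia Linux drivers on Ubuntu is neither easy nor guaranteed to be safe.

To fill the gap, many third-party PPAs maintained by enthusiasts have appeared. Because many of these PPAs also publish other experimental or bleeding-edge software, using them is **not without risk**. Adding a bleeding-edge PPA is often the fastest way to break your entire system!

One solution is to let Ubuntu users install the latest proprietary graphics drivers without the need for third-party PPAs, **but** with a safety mechanism that lets you roll back to the stable version if needed.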

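### ‘Demand for Fresh Drivers Is Hard to Ignore’ ###

> 'A solution that lets Ubuntu users get the latest hardware drivers safely is taking shape.'

‘In a fast-moving market, the demand for fresh drivers is becoming hard to ignore; users are going to want the latest upstream software,’ Castro explained in an email to the Ubuntu desktop mailing list.

‘[NVIDIA] can deliver a great experience to [Windows 10] users effortlessly. Until we can convince NVIDIA to do the same for Ubuntu, this is how we can get it all sorted out.’

Castro's “official” NVIDIA PPA proposal is the easiest way to achieve this.

Gamers would be able to opt in to receiving new drivers from the PPA through Ubuntu's default proprietary drivers tool, without having to copy and paste terminal commands from websites or wiki pages.

The drivers in the PPA would be packaged and maintained by a team of selected community members and would benefit from a semi-official process called **automated testing**.

As Castro himself put it: 'People want the latest shiny stuff, no matter what they want to do with it. We might as well put a framework around it so people can get what they want without breaking their computers.'

**Would you use this PPA? How would you rate the performance of the default Nvidia drivers on Ubuntu? Share your thoughts in the comments, folks!**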

--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2015/08/ubuntu-easy-install-latest-nvidia-linux-drivers

Author: [Joey-Elijah Sneddon][a]
Translator: [GOLinux](https://github.com/GOLinux)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://plus.google.com/117485690627814051450/?rel=author

@ -0,0 +1,66 @@

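The Ubuntu NVIDIA Graphics Drivers PPA Is Ready for Action
================================================================================

Speed up your frame rates!

**Hey, everyone, sit tight: it won't be long now.**

Shortly after the proposal to create a [new PPA][1] offering Ubuntu users the latest NVIDIA graphics drivers, the Ubuntu community rallied together to do exactly that.

As the name suggests, the ‘**Graphics Drivers PPA**’ contains the latest NVIDIA Linux graphics driver releases, packaged and ready for users to upgrade to, with no headache-inducing binary installer files!

The PPA is designed to make it as easy as possible for gamers to run the latest games on Ubuntu.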

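#### Almost Ready to Go ####

Jorge Castro's idea of creating a PPA with NVIDIA's latest graphics drivers was met with an enthusiastic response from Ubuntu users and game developers alike.

Even the people who port well-known Steam titles to Linux offered plenty of suggestions.

Edwin Smith, head of production at Feral Interactive (‘Shadow of Mordor’), said he was very pleased with the initiative to “make it easier for users to update their drivers”.

### How to Use the Latest Nvidia Drivers PPA ###

Although the new “graphics PPA” is up and running, it is still far from mature. The developers caution:

> “This PPA is still in testing; you should have some packaging experience before using it. Please be patient and wait a few more days.”

Jorge, who posted the PPA's trial release to the Ubuntu desktop mailing list, also stressed that gamers already using existing PPAs (such as xorg-edgers) may not notice any difference, because the current drivers were simply copied over from those existing PPAs.

“Things will get interesting when new drivers are released,” he said.

As of this writing, the PPA includes Nvidia drivers for every Ubuntu release from 12.04.1 to 15.10. Note that these drivers work on all of those releases.

> **It goes without saying: unless you know what you're doing and how to undo it if something goes wrong, don't carry out the steps below.**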

Open a new terminal window and run the following command to add the PPA:

    sudo add-apt-repository ppa:graphics-drivers/ppa

To install or update to the latest Nvidia graphics driver:

    sudo apt-get update && sudo apt-get install nvidia-355

Remember: if the PPA breaks your system, you're on your own. You have been warned. (Translator's note: seriously!)

To undo the changes made by the PPA, use the `ppa-purge` command.
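
Because the warning above is serious, it can help to write the exact commands down before running any of them. The sketch below only prints the install or rollback sequence, and the function name is hypothetical. The rollback assumes the `ppa-purge` package, which disables a PPA and reverts its packages to the versions in the official archive.

```bash
#!/usr/bin/env bash
# Print (never run) the command sequence for the Graphics Drivers PPA.
#   $1: "install" or "rollback"
#   $2: driver package (default nvidia-355, as in the article)
nvidia_ppa_plan() {
  local action="$1" driver="${2:-nvidia-355}"
  local ppa="ppa:graphics-drivers/ppa"
  case "$action" in
    install)
      printf '%s\n' \
        "sudo add-apt-repository $ppa" \
        "sudo apt-get update" \
        "sudo apt-get install $driver"
      ;;
    rollback)
      # ppa-purge disables the PPA and downgrades its packages to the
      # versions in the official Ubuntu archive.
      printf '%s\n' \
        "sudo apt-get install ppa-purge" \
        "sudo ppa-purge $ppa"
      ;;
    *)
      echo "usage: nvidia_ppa_plan install|rollback [driver]" >&2
      return 2
      ;;
  esac
}
```

Save the plan, read it, then run it: `nvidia_ppa_plan install > plan.sh && cat plan.sh && sh -e plan.sh`. Running it from a file rather than a pipe keeps the terminal free for the confirmation prompt of `add-apt-repository`.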

Have comments, thoughts, or corrections? Leave them in the comments below. (I don't have NVIDIA hardware to verify all of this myself, so if you can verify it, I'd be very grateful.)

--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2015/08/ubuntu-nvidia-graphics-drivers-ppa-is-ready-for-action

Author: [Joey-Elijah Sneddon][a]
Translator: [DongShuaike](https://github.com/DongShuaike)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

[a]:https://plus.google.com/117485690627814051450/?rel=author
[1]:https://linux.cn/article-6030-1.html

@ -1,97 +0,0 @@

translating by xiaoyu33

Tickr Is An Open-Source RSS News Ticker for Linux Desktops
================================================================================

**Latest! Latest! Read all about it!**

Alright, so the app we’re highlighting today isn’t quite the binary version of an old newspaper seller, but it is a great way to have the latest news brought to you, on your desktop.

Tickr is a GTK-based news ticker for the Linux desktop that scrolls the latest headlines and article titles from your favourite RSS feeds in a horizontal strip that you can place anywhere on your desktop.

Call me Joey Calamezzo; I put mine at the bottom, TV-news-station style.

“Over to you, sub-heading.”

### RSS: Remember That? ###

“Thanks paragraph ending.”

In an era of push notifications, social media, and clickbait cajoling us into reading the latest mind-blowing, humanity-saving listicle ASAP, RSS can seem a bit old hat.

For me? Well, RSS lives up to its name of Really Simple Syndication. It’s the easiest, most manageable way to have news come to me. I can manage and read stuff when I want; there’s no urgency to look before the tweet vanishes into the stream or the push notification disappears.

The beauty of Tickr is in its utility. You can have a constant stream of news trundling along the bottom of your screen, which you can passively glance at from time to time.

There’s no pressure to ‘read’ or ‘mark all read’ or any of that. When you see something you want to read, you just click it to open it in a web browser.

### Setting it Up ###

Although Tickr is available to install from the Ubuntu Software Centre, it hasn’t been updated for a long time. Nowhere is this sense of abandonment more keenly felt than when opening the unwieldy and unintuitive configuration panel.

To open it:

1. Right click on the Tickr bar
1. Go to Edit > Preferences
1. Adjust the various settings

Row after row of options and settings, few of which seem to make sense at first. But poke and prod around and you’ll find controls for pretty much everything, including:

- Set scrolling speed
- Choose behaviour when mousing over
- Feed update frequency
- Font, including font sizes and color
- Separator character (‘delineator’)
- Position of Tickr on screen
- Color and opacity of Tickr bar
- Choose how many articles each feed displays

One ‘quirk’ worth mentioning is that pressing ‘Apply’ only updates the on-screen Tickr to preview changes. For changes to take effect when you exit the Preferences window, you need to click ‘OK’.

Getting the bar to sit flush on your display can also take a fair bit of tweaking, especially on Unity.

Press the “full width button” to have the app auto-detect your screen width. By default, when placed at the top or bottom it leaves a 25px gap (the app was created back in the days of GNOME 2.x desktops). After hitting the top or bottom buttons, just add an extra 25 pixels to the input box to compensate for this.

Other options available include: choose which browser articles open in; whether Tickr appears within a regular window frame; whether a clock is shown; and how often the app checks feeds for new articles.

#### Adding Feeds ####

Tickr comes with a built-in list of over 30 different feeds, ranging from technology blogs to mainstream news services.

You can select as many of these as you like to show headlines in the on-screen ticker. If you want to add your own feeds, you can:

1. Right click on the Tickr bar
1. Go to File > Open Feed
1. Enter Feed URL
1. Click ‘Add/Upd’ button
1. Click ‘OK (select)’

To set how many items from each feed show in the ticker, change the “Read N items max per feed” setting in the other preferences window.

### Install Tickr in Ubuntu 14.04 LTS and Up ###

So that’s Tickr. It’s not going to change the world, but it will keep you abreast of what’s happening in it.

To install it in Ubuntu 14.04 LTS or later, head to the Ubuntu Software Centre by clicking the button below.

- [Click to install Tickr from the Ubuntu Software Center][1]

--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2015/06/tickr-open-source-desktop-rss-news-ticker

Author: [Joey-Elijah Sneddon][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://plus.google.com/117485690627814051450/?rel=author
[1]:apt://tickr

@ -0,0 +1,159 @@

translating by xiaoyu33

shellinabox – A Web based AJAX Terminal Emulator
================================================================================

### About shellinabox ###

Greetings Unixmen readers!

We usually access remote servers using well-known communication tools like OpenSSH and PuTTY. But there is one important limitation: we can’t use those tools to reach remote systems from behind a firewall, or behind firewalls that allow only HTTPS traffic. No worries! We still have some options for accessing remote systems even from behind a firewall. You don’t need to install any communication tools like OpenSSH or PuTTY, either. All you need is a modern browser with JavaScript and CSS enabled, and you don’t need to install any plugins or third-party software.

Meet **Shell In A Box**, pronounced **shellinabox**, a free, open-source, web-based AJAX terminal emulator developed by **Markus Gutschke**. It uses AJAX technology to provide the look and feel of a native shell via a web browser. The **shellinaboxd** daemon implements a web server that listens on the specified port. The web server publishes one or more services that are displayed in a VT100 emulator implemented as an AJAX web application. By default, the port is 4200. You can change the default port to any port number of your choice. After installing shellinabox on all the remote servers you want to access from your local system, open a web browser and navigate to: **https://IP-Address:4200/**. Enter your user name and password and start using your remote system’s shell. Seems interesting, doesn’t it? Indeed!

**Disclaimer**:

Shellinabox is not an SSH client or any sort of security software. It is just an application that emulates a remote system’s shell via a web browser. Also, it has nothing to do with SSH in any way. It is not a bulletproof, secure way to access your systems remotely; it is just one of the easiest methods so far. You should not run it on any public network for any reason.

### Install shellinabox ###

#### In Debian/Ubuntu based systems: ####

shellinabox is available in the default repositories, so you can install it using the command:

    $ sudo apt-get install shellinabox

#### In RHEL/CentOS systems: ####

First, install the EPEL repository using the command:

    # yum install epel-release

Then, install shellinabox using the command:

    # yum install shellinabox

Done!
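
The right install path can be picked automatically from `/etc/os-release`. This sketch assumes the `ID` and `ID_LIKE` fields identify the distribution family. It only prints the commands from this section, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Print the install commands from this section that fit the current distro,
# judged by the ID and ID_LIKE fields of an os-release file.
#   $1: os-release file (default /etc/os-release)
shellinabox_install_cmds() {
  local os_release="${1:-/etc/os-release}" ids
  # os-release uses shell-style KEY=value lines, so it can be sourced; doing
  # it inside $( ) keeps its variables out of the caller's environment.
  ids=$(unset ID ID_LIKE; . "$os_release" && echo "${ID:-} ${ID_LIKE:-}") || return 1
  case " $ids " in
    *" debian "* | *" ubuntu "*)
      echo "sudo apt-get install shellinabox"
      ;;
    *" rhel "* | *" centos "*)
      echo "sudo yum install epel-release"
      echo "sudo yum install shellinabox"
      ;;
    *)
      echo "unsupported distribution: $ids" >&2
      return 1
      ;;
  esac
}
```

Called with no argument, `shellinabox_install_cmds` reads `/etc/os-release`.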

### Configure shellinabox ###

As I mentioned before, shellinabox listens on port **4200** by default. You can change this to any random port number of your choice to make it harder for anyone to guess.

The shellinabox config file is located at **/etc/default/shellinabox** by default on Debian/Ubuntu systems. On RHEL/CentOS/Fedora, the default location of the config file is **/etc/sysconfig/shellinaboxd**.

If you want to change the default port, edit that file.

In Debian/Ubuntu:

    $ sudo vi /etc/default/shellinabox

In RHEL/CentOS/Fedora:

    # vi /etc/sysconfig/shellinaboxd

Change the port to any random number. Since I am testing it on my local network, I use the default values.

    # Shell in a box daemon configuration
    # For details see shellinaboxd man page

    # Basic options
    USER=shellinabox
    GROUP=shellinabox
    CERTDIR=/var/lib/shellinabox
    PORT=4200
    OPTS="--disable-ssl-menu -s /:LOGIN"

    # Additional examples with custom options:

    # Fancy configuration with right-click menu choice for black-on-white:
    # OPTS="--user-css Normal:+black-on-white.css,Reverse:-white-on-black.css --disable-ssl-menu -s /:LOGIN"

    # Simple configuration for running it as an SSH console with SSL disabled:
    # OPTS="-t -s /:SSH:host.example.com"
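
If you do pick a non-default port, you can script the edit. In this sketch the `PORT=` line matches the RHEL/CentOS file above, while the Debian/Ubuntu variable name `SHELLINABOX_PORT` is an assumption. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Rewrite the listening port in a shellinabox config read from stdin.
# Handles "PORT=" (RHEL/CentOS file above) and "SHELLINABOX_PORT="
# (assumed variable name in the Debian/Ubuntu file).
#   $1: new port number, 1-65535
set_shellinabox_port() {
  local port="$1"
  case "$port" in
    '' | *[!0-9]*)
      echo "not a number: $port" >&2
      return 1
      ;;
  esac
  if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
    echo "port out of range: $port" >&2
    return 1
  fi
  # \1 keeps the optional "SHELLINABOX_" prefix when it is present.
  sed -E "s/^(SHELLINABOX_)?PORT=[0-9]+/\\1PORT=${port}/"
}
```

`shuf -i 1025-65535 -n 1` is one way to pick a random port. Then run `set_shellinabox_port 7443 < /etc/sysconfig/shellinaboxd > /tmp/shellinaboxd`, review the output, and copy it back with `sudo cp`.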

Restart the shellinabox service.

**In Debian/Ubuntu:**

    $ sudo systemctl restart shellinabox

Or

    $ sudo service shellinabox restart

On RHEL/CentOS systems, run the following command to start the shellinaboxd service automatically on every reboot.

    # systemctl enable shellinaboxd

Or

    # chkconfig shellinaboxd on

Remember to open port **4200**, or whichever port you assigned, if you are running a firewall.

For example, on RHEL/CentOS systems you can allow the port as shown below.

    # firewall-cmd --permanent --add-port=4200/tcp
    # firewall-cmd --reload

|

||||

|

||||
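
Debian/Ubuntu systems often use ufw rather than firewalld. Below is a minimal sketch of a hypothetical helper, `open_port_cmds`, that prints the commands for whichever of the two front ends it finds (or the one you name), so you can review them before running them as root. It only covers firewalld and ufw; other setups (plain iptables rules, cloud security groups) need their own configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the commands that open a TCP port with
# firewalld or ufw. Nothing is executed; review the output, then run it as root.
# Usage: open_port_cmds <port> [firewalld|ufw]
open_port_cmds() {
    local port="$1" tool="${2:-}"
    # Auto-detect the firewall front end if none was given.
    if [[ -z "$tool" ]]; then
        if command -v firewall-cmd >/dev/null 2>&1; then
            tool=firewalld
        elif command -v ufw >/dev/null 2>&1; then
            tool=ufw
        else
            echo "no supported firewall front end found" >&2
            return 1
        fi
    fi
    case "$tool" in
        firewalld)
            echo "firewall-cmd --permanent --add-port=${port}/tcp"
            echo "firewall-cmd --reload"
            ;;
        ufw)
            echo "ufw allow ${port}/tcp"
            ;;
        *)
            echo "unsupported firewall tool: $tool" >&2
            return 1
            ;;
    esac
}
```

For example, `open_port_cmds 4200` shows the commands, and `open_port_cmds 4200 | sudo sh` runs them once you are happy with them.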

### Usage ###

Now, go to your client system, open a web browser and navigate to: **https://ip-address-of-remote-server:4200**.

**Note**: Use the correct port if you changed it.

You’ll get a certificate warning. Accept the certificate and continue.

Enter your remote system’s username and password. You can now access the remote system’s shell right from the browser.

Right-clicking on an empty area of the browser window gives you some additional menu options that might be useful.

From now on, you can do whatever you want on your remote server from your local system’s web browser.

Once you are done, type **exit** in the shell.

To connect to the remote system again, click the **Connect** button and then enter the username and password of your remote server.

For more details about shellinabox, type the following command in your Terminal:

    # man shellinaboxd

Or

    # shellinaboxd --help

Also, refer to the [shellinabox wiki page][1] for comprehensive usage details.

### Conclusion ###

As I mentioned before, web-based SSH tools are very useful if you’re running servers behind a firewall. There are many web-based SSH tools, but Shellinabox is a simple and useful tool for emulating a remote system’s shell from anywhere in your network. Since it is browser-based, you can access your remote server from any device, as long as it has a browser with JavaScript and CSS enabled.

That’s all for now. Have a good day!

#### Reference link: ####

- [shellinabox website][2]

--------------------------------------------------------------------------------

via: http://www.unixmen.com/shellinabox-a-web-based-ajax-terminal-emulator/

Author: [SK][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

[a]:http://www.unixmen.com/author/sk/
[1]:https://code.google.com/p/shellinabox/wiki/shellinaboxd_man
[2]:https://code.google.com/p/shellinabox/

@ -1,129 +0,0 @@

Translating by dingdongnigetou

How to Configure Nginx as a Reverse Proxy / Load Balancer with Weave and Docker
================================================================================

Hi everyone, today we'll learn how to configure Nginx as a reverse proxy / load balancer with Weave and Docker. Weave creates a virtual network that connects Docker containers with each other, deploys across multiple hosts and enables their automatic discovery. It allows us to focus on developing our application rather than our infrastructure: applications use the network as if all their containers were plugged into the same network, without any need to configure ports, mappings, links, etc. The services of the application containers on the network can easily be made accessible to the outside world, no matter where they are running. In this tutorial, we'll use Weave to quickly and easily deploy an Nginx web server as a load balancer for a simple PHP application running in Docker containers on multiple nodes in Amazon Web Services. We will also be introduced to WeaveDNS, which provides a simple way for containers to find each other by hostname without any code changes, so that containers can simply connect to each other by name.

In this tutorial, we will use Nginx to load balance requests to a set of containers running Apache. Here are the simple, easy-to-follow steps for using Weave to configure Nginx as a load balancer running in an Ubuntu Docker container.

### 1. Setting up AWS Instances ###

First of all, we need to set up Amazon Web Services instances so that we can run Docker containers with Weave, using Ubuntu as the operating system. We will use the [AWS CLI][1] to set up and configure two AWS EC2 instances. In this tutorial, we'll use the smallest available instance type, t1.micro. You will need a valid **Amazon Web Services account** with the AWS CLI set up and configured. First, clone the weave-gs repository from GitHub by running the following commands on the machine where the AWS CLI is configured:

    $ git clone http://github.com/fintanr/weave-gs
    $ cd weave-gs/aws-nginx-ubuntu-simple

After cloning the repository, run the script that deploys two t1.micro instances running Weave and Docker on Ubuntu:

    $ sudo ./demo-aws-setup.sh

We'll need the IP addresses of these instances later in this tutorial. They are stored in an environment file, weavedemo.env, which is created when demo-aws-setup.sh runs. To get them, run the following command, which gives output similar to this:

    $ cat weavedemo.env

    export WEAVE_AWS_DEMO_HOST1=52.26.175.175
    export WEAVE_AWS_DEMO_HOST2=52.26.83.141
    export WEAVE_AWS_DEMO_HOSTCOUNT=2
    export WEAVE_AWS_DEMO_HOSTS=(52.26.175.175 52.26.83.141)

Please note that your IP addresses will differ from these, as AWS allocates IP addresses to instances dynamically.

As we are using bash, we simply source this file into the current shell:

    . ./weavedemo.env
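
Because weavedemo.env defines the bash array `WEAVE_AWS_DEMO_HOSTS`, you can loop over it instead of typing one ssh command per host. This is a minimal sketch, assuming the key file `weavedemo-key.pem` and the `ubuntu` login used throughout this tutorial; `run_on_all_hosts` and its `DRY_RUN` switch are hypothetical names, not part of the weave-gs repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run one command on every demo host listed in the
# WEAVE_AWS_DEMO_HOSTS array from weavedemo.env.
# Set DRY_RUN=1 to print the ssh commands instead of running them.
run_on_all_hosts() {
    local host
    for host in "${WEAVE_AWS_DEMO_HOSTS[@]}"; do
        if [[ "${DRY_RUN:-0}" == 1 ]]; then
            echo "ssh -i weavedemo-key.pem ubuntu@${host} $*"
        else
            ssh -i weavedemo-key.pem "ubuntu@${host}" "$@"
        fi
    done
}
```

For example, after `. ./weavedemo.env`, running `run_on_all_hosts sudo weave status` shows the Weave status of both instances.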

### 2. Launching Weave and WeaveDNS ###

After deploying the instances, we launch weave and weavedns on each host. Weave and weavedns allow us to easily deploy our containers to new infrastructure and configurations without changing the code, and without having to understand concepts such as ambassador containers and links. Here are the commands to launch them on the first host:

    $ ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST1
    $ sudo weave launch
    $ sudo weave launch-dns 10.2.1.1/24

Next, we launch them on our second host as well, passing the first host's address to `weave launch` so that the second Weave router connects to the first:

    $ ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST2
    $ sudo weave launch $WEAVE_AWS_DEMO_HOST1
    $ sudo weave launch-dns 10.2.1.2/24

### 3. Launching Application Containers ###

Now we launch six containers across our two hosts, each running an Apache2 web server instance with our simple PHP site. The following commands start three Apache2 containers on our first instance:

    $ ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST1
    $ sudo weave run --with-dns 10.3.1.1/24 -h ws1.weave.local fintanr/weave-gs-nginx-apache
    $ sudo weave run --with-dns 10.3.1.2/24 -h ws2.weave.local fintanr/weave-gs-nginx-apache
    $ sudo weave run --with-dns 10.3.1.3/24 -h ws3.weave.local fintanr/weave-gs-nginx-apache

After that, we launch three more Apache2 containers on our second instance, as shown below:

    $ ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST2
    $ sudo weave run --with-dns 10.3.1.4/24 -h ws4.weave.local fintanr/weave-gs-nginx-apache
    $ sudo weave run --with-dns 10.3.1.5/24 -h ws5.weave.local fintanr/weave-gs-nginx-apache
    $ sudo weave run --with-dns 10.3.1.6/24 -h ws6.weave.local fintanr/weave-gs-nginx-apache

Note: the --with-dns option tells the container to use weavedns to resolve names, and -h x.weave.local sets the container's hostname so that it can be resolved through WeaveDNS.
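
The six `weave run` commands differ only in the container number, so they can be generated in a loop. This is a sketch with a hypothetical helper name; it only prints the commands, and it assumes the same IP scheme (10.3.1.N/24), hostnames (wsN.weave.local) and image as above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "weave run" commands for containers
# first..last, following the numbering scheme used above.
# Usage: print_weave_run_cmds <first> <last>
print_weave_run_cmds() {
    local first="$1" last="$2" i
    for ((i = first; i <= last; i++)); do
        echo "sudo weave run --with-dns 10.3.1.${i}/24 -h ws${i}.weave.local fintanr/weave-gs-nginx-apache"
    done
}

print_weave_run_cmds 1 3   # commands for $WEAVE_AWS_DEMO_HOST1
print_weave_run_cmds 4 6   # commands for $WEAVE_AWS_DEMO_HOST2
```

To execute them remotely, pipe the output into a shell on the right host, for example `print_weave_run_cmds 1 3 | ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST1 bash`; this assumes the `ubuntu` user has passwordless sudo, as is typical on stock Ubuntu AMIs.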

### 4. Launching Nginx Container ###

Once our application containers are running as expected, we launch an Nginx container whose configuration round-robins requests across the web servers, acting as a reverse proxy / load balancer. To run the Nginx container, use the following commands:

    $ ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST1
    $ sudo weave run --with-dns 10.3.1.7/24 -ti -h nginx.weave.local -d -p 80:80 fintanr/weave-gs-nginx-simple

Our Nginx container is now publicly exposed as an HTTP server on port 80 of $WEAVE_AWS_DEMO_HOST1.

### 5. Testing the Load Balancer ###

To test whether our load balancer is working, we run a script that makes HTTP requests to our Nginx container. It makes six requests, so we can see Nginx cycle through each of the web servers in round-robin order.

    $ ./access-aws-hosts.sh

    {
        "message" : "Hello Weave - nginx example",
        "hostname" : "ws1.weave.local",
        "date" : "2015-06-26 12:24:23"
    }
    {
        "message" : "Hello Weave - nginx example",
        "hostname" : "ws2.weave.local",
        "date" : "2015-06-26 12:24:23"
    }
    {
        "message" : "Hello Weave - nginx example",
        "hostname" : "ws3.weave.local",
        "date" : "2015-06-26 12:24:23"
    }
    {
        "message" : "Hello Weave - nginx example",
        "hostname" : "ws4.weave.local",
        "date" : "2015-06-26 12:24:23"
    }
    {
        "message" : "Hello Weave - nginx example",
        "hostname" : "ws5.weave.local",
        "date" : "2015-06-26 12:24:23"
    }
    {
        "message" : "Hello Weave - nginx example",
        "hostname" : "ws6.weave.local",
        "date" : "2015-06-26 12:24:23"
    }
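
The contents of access-aws-hosts.sh are not shown here, so the following is only a sketch of an equivalent check using a hypothetical helper, `count_backends`: it tallies which backend answered each request, based on the `"hostname"` field of JSON replies like the ones above. With round-robin balancing across six healthy containers and no other traffic, twelve requests should give each of ws1 to ws6 a count of 2.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read JSON replies like the ones above on stdin and
# print how many replies came from each backend hostname.
count_backends() {
    grep -o '"hostname" *: *"[^"]*"' | cut -d'"' -f4 | sort | uniq -c
}

# Example (assumes the Nginx container answers on port 80 of HOST1):
#   for i in $(seq 1 12); do curl -s "http://$WEAVE_AWS_DEMO_HOST1/"; done | count_backends
```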

### Conclusion ###

Finally, we've successfully configured Nginx as a reverse proxy / load balancer with Weave and Docker on Ubuntu servers in AWS (Amazon Web Services) EC2. The output in the previous step shows that the configuration works: requests are sent in round-robin order to the six application containers, each running a PHP app hosted in an Apache web server. Weave and WeaveDNS did a great job of deploying a containerised PHP application behind Nginx across multiple hosts on AWS EC2 without any code changes, connecting the containers to each other by hostname through WeaveDNS. If you have any questions, suggestions or feedback, please write them in the comment box below so that we can improve or update our content. Thank you! Enjoy :-)

--------------------------------------------------------------------------------

via: http://linoxide.com/linux-how-to/nginx-load-balancer-weave-docker/

Author: [Arun Pyasi][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://linoxide.com/author/arunp/
[1]:http://console.aws.amazon.com/

@ -1,419 +0,0 @@

wyangsun translating

Managing Linux Logs
================================================================================

A key best practice for logging is to centralize or aggregate your logs in one place, especially if you have multiple servers or tiers in your architecture. We’ll tell you why this is a good idea and give tips on how to do it easily.

### Benefits of Centralizing Logs ###

It can be cumbersome to look at individual log files if you have many servers. Modern web sites and services often include multiple tiers of servers, distributed behind load balancers, and more. It would take a long time to hunt down the right file, and even longer to correlate problems across servers. There’s nothing more frustrating than finding that the information you are looking for wasn’t captured, or that the log file that could have held the answer was lost after a restart.

Centralizing your logs makes them faster to search, which can help you solve production issues faster. You don’t have to guess which server had the issue, because all the logs are in one place. Additionally, you can use more powerful tools to analyze them, including log management solutions. These solutions can [transform plain text logs][1] into fields that can be easily searched and analyzed.

Centralizing your logs also makes them easier to manage:

- They are safer from accidental or intentional loss when they are backed up and archived in a separate location. If your servers go down or are unresponsive, you can use the centralized logs to debug the problem.
- You don’t have to ssh into troubled systems and run inefficient grep commands that consume even more of their resources.
- You don’t have to worry about full disks, which can crash your servers.
- You can keep your production servers secure without giving your entire team access just to look at logs. It’s much safer to give your team access to logs from the central location.

With centralized log management, you still have to deal with the risk of being unable to transfer logs to the central location because of poor network connectivity, or of using up a lot of network bandwidth. We’ll discuss how to address these issues intelligently in the sections below.

### Popular Tools for Centralizing Logs ###

The most common way of centralizing logs on Linux is to use syslog daemons or agents. A syslog daemon collects logs locally, then transports them through the syslog protocol to a central server. There are several popular daemons you can use to centralize your log files:

- [rsyslog][2] is a lightweight daemon installed on most common Linux distributions.
- [syslog-ng][3] is the second most popular syslog daemon for Linux.
- [logstash][4] is a heavier-weight agent that can do more advanced processing and parsing.
- [fluentd][5] is another agent with advanced processing capabilities.

Rsyslog is the most popular daemon for centralizing your log data because it’s installed by default in most common Linux distributions. You don’t need to download or install it, and it’s lightweight, so it won’t take up much of your system’s resources.

If you need more advanced filtering or custom parsing capabilities, Logstash is the next most popular choice, provided you don’t mind the extra system footprint.

### Configure Rsyslog.conf ###

Since rsyslog is the most widely used syslog daemon, we’ll show how to configure it to centralize logs. The global configuration file is located at /etc/rsyslog.conf. It loads modules, sets global directives, and includes application-specific files located in the directory /etc/rsyslog.d/. On Ubuntu, for example, this directory contains /etc/rsyslog.d/50-default.conf, which instructs rsyslog to write the system logs to files. You can read more about the configuration files in the [rsyslog documentation][6].

The configuration language for rsyslog is [RainerScript][7]. You set up specific inputs for logs, as well as actions to output them to another destination. Rsyslog already configures standard defaults for syslog input, so you usually just need to add an output to your log server. Here is an example configuration for rsyslog to output logs to an external server. In this example, **BEBOP** is the hostname of the server, so replace it with your own server name.

    action(type="omfwd" protocol="tcp" target="BEBOP" port="514")

You could send your logs to a log server with ample storage, keeping a copy for search, backup, and analysis. If you’re storing logs in the file system, you should set up [log rotation][8] to keep your disk from filling up.

Alternatively, you can send these logs to a log management solution. If your solution is installed locally, send them to the local host and port specified in its documentation. If you use a cloud-based provider, send them to the hostname and port specified by your provider.

### Log Directories ###

You can centralize all the files in a directory, or all the files matching a wildcard pattern. The nxlog and syslog-ng daemons support both directories and wildcards (*).

Common versions of rsyslog can’t monitor directories directly. As a workaround, you can set up a cron job to monitor the directory for new files, then configure rsyslog to send those files to a destination, such as your log management system. For example, the log management vendor Loggly has an open source version of a [script to monitor directories][9].
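
As a sketch of that cron-based workaround (not Loggly's script), the hypothetical helper below scans a directory and prints one rsyslog `imfile` input per `*.log` file. A cron job could write its output to a file under /etc/rsyslog.d/ and restart rsyslog when the list changes. The tag prefix and the `local6` facility are arbitrary choices, and the `module(load="imfile")` line must be dropped if imfile is already loaded elsewhere in your configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an rsyslog imfile input() stanza for every
# *.log file currently present in a directory.
# Usage: imfile_stanzas <dir> [tag-prefix]
imfile_stanzas() {
    local dir="$1" tag_prefix="${2:-app}" f name
    echo 'module(load="imfile")'
    for f in "$dir"/*.log; do
        [ -e "$f" ] || continue          # no *.log files: the glob stays literal
        name=$(basename "$f" .log)
        echo "input(type=\"imfile\" File=\"${f}\" Tag=\"${tag_prefix}-${name}:\" Facility=\"local6\")"
    done
}
```

For example, `imfile_stanzas /var/log/myapp myapp > /tmp/myapp-inputs.conf` lets you inspect the generated inputs before copying them into /etc/rsyslog.d/ (the paths here are placeholders).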

### Which Protocol: UDP, TCP, or RELP? ###

There are three main protocols you can choose from when you transmit data over the Internet. The most common choices are UDP on your local network and TCP over the Internet. If you cannot afford to lose logs, use the more advanced RELP protocol.

[UDP][10] sends a datagram packet, which is a single packet of information. It’s a one-way protocol, so the receiver doesn’t send back an acknowledgement of receipt (ACK), and each packet is sent only once. UDP can be used to degrade gracefully, dropping logs when the network gets congested. It’s most commonly used on reliable networks such as localhost.

[TCP][11] sends streaming information in multiple packets and returns an ACK. TCP makes multiple attempts to send each packet, but it is limited by the size of the [TCP buffer][12]. This is the most common protocol for sending logs over the Internet.

[RELP][13] is the most reliable of these three protocols, but it was created for rsyslog and has less industry adoption. It acknowledges receipt of data in the application layer and resends if there is an error. Make sure your destination also supports this protocol.
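
In rsyslog the choice of protocol comes down to which output action you use: `omfwd` with `protocol="udp"` or `protocol="tcp"`, or the separate `omrelp` module for RELP. The hypothetical helper below is a sketch that prints the matching action for a given host and port. 514 is the conventional syslog port; RELP has no single standard port, so use whatever your receiver listens on. `omrelp` is often packaged separately (for example as `rsyslog-relp`), so check that it is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an rsyslog forwarding action for the chosen
# transport (udp, tcp or relp).
# Usage: forward_action <udp|tcp|relp> <host> <port>
forward_action() {
    local proto="$1" host="$2" port="$3"
    case "$proto" in
        udp|tcp)
            echo "action(type=\"omfwd\" target=\"${host}\" port=\"${port}\" protocol=\"${proto}\")"
            ;;
        relp)
            # RELP is provided by the omrelp output module
            echo 'module(load="omrelp")'
            echo "action(type=\"omrelp\" target=\"${host}\" port=\"${port}\")"
            ;;
        *)
            echo "unsupported protocol: $proto" >&2
            return 1
            ;;
    esac
}

forward_action tcp BEBOP 514
# prints: action(type="omfwd" target="BEBOP" port="514" protocol="tcp")
```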

### Reliably Send with Disk Assisted Queues ###

If rsyslog encounters a problem when forwarding logs, such as an unavailable network connection, it can queue the logs until the connection is restored. Queued logs are stored in memory by default. However, memory is limited, and if the problem persists, the logs can exceed memory capacity.

**Warning: You can lose data if you store logs only in memory.**

Rsyslog can queue your logs to disk when memory is full. [Disk-assisted queues][14] make the transport of logs more reliable. Here is an example of how to configure rsyslog with a disk-assisted queue:

    $WorkDirectory /var/spool/rsyslog # where to place spool files
    $ActionQueueFileName fwdRule1 # unique name prefix for spool files
    $ActionQueueMaxDiskSpace 1g # 1gb space limit (use as much as possible)
    $ActionQueueSaveOnShutdown on # save messages to disk on shutdown
    $ActionQueueType LinkedList # run asynchronously
    $ActionResumeRetryCount -1 # infinite retries if host is down
    # the queue settings above apply to the next action, e.g. TCP forwarding to BEBOP:
    *.* @@BEBOP:514
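
Newer rsyslog versions also accept the same queue settings as parameters of the RainerScript `action()` shown earlier, which ties them to a single action instead of relying on directive order. The sketch below writes such a rule to a drop-in file; the file name `60-forward.conf` and the helper name are hypothetical, and it assumes the work directory is already set in the main configuration (for example by a `$WorkDirectory` line as above).

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a TCP forwarding rule with a disk-assisted
# queue in RainerScript form. The directory defaults to /etc/rsyslog.d;
# pass another directory to inspect the result before installing it.
# Usage: write_forward_conf [dir]
write_forward_conf() {
    local dir="${1:-/etc/rsyslog.d}"
    cat > "${dir}/60-forward.conf" <<'EOF'
# Forward everything to BEBOP over TCP, spilling the queue to disk if needed
action(type="omfwd" target="BEBOP" port="514" protocol="tcp"
       queue.type="LinkedList"
       queue.filename="fwdRule1"
       queue.maxDiskSpace="1g"
       queue.saveOnShutdown="on"
       action.resumeRetryCount="-1")
EOF
}
```

After installing the file, `sudo rsyslogd -N1` checks the configuration syntax before you restart rsyslog.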

### Encrypt Logs Using TLS ###

When the security and privacy of your data is a concern, you should consider encrypting your logs. Sniffers and middlemen could read your log data if you transmit it over the Internet in clear text. You should encrypt your logs if they contain private information, sensitive identification data, or government-regulated data. The rsyslog daemon can encrypt your logs using the TLS protocol, keeping your data safer.

To set up TLS encryption, you need to do the following tasks:

1. Generate a [certificate authority][15] (CA). There are sample certificates in /contrib/gnutls, but they are good only for testing; you need to create your own for production. If you’re using a log management service, it will have one ready for you.
1. Generate a [digital certificate][16] for your server to enable TLS operation, or use one from your log management service provider.
1. Configure your rsyslog daemon to send TLS-encrypted data to your log management system.
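
If you only want to try TLS forwarding in a lab, you can create a throwaway CA and server certificate yourself. The text refers to GnuTLS sample certificates; this minimal sketch swaps in the `openssl` command-line tool because it is widely installed. Keys are unencrypted and certificates expire after 30 days, so this is for testing only, never production. The helper name and file names are hypothetical, and the server certificate's CN should match the peer name your clients are configured to accept.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a throwaway CA plus a server certificate signed
# by it. TESTING ONLY: keys are unencrypted and certificates expire in 30 days.
# Usage: make_test_certs <output-dir> <server-common-name>
# The body runs in a subshell so that "cd" does not affect the caller.
make_test_certs() (
    dir="$1" cn="$2"
    mkdir -p "$dir" && cd "$dir" || exit 1
    # 1. Self-signed CA key and certificate
    openssl req -x509 -newkey rsa:2048 -nodes -days 30 \
        -keyout ca-key.pem -out ca.pem -subj "/CN=Test Log CA" 2>/dev/null || exit 1
    # 2. Server key and certificate signing request (CSR)
    openssl req -newkey rsa:2048 -nodes \
        -keyout server-key.pem -out server.csr -subj "/CN=${cn}" 2>/dev/null || exit 1
    # 3. Sign the server CSR with the CA
    openssl x509 -req -in server.csr -CA ca.pem -CAkey ca-key.pem \
        -CAcreateserial -days 30 -out server.pem 2>/dev/null
)
```

You can check the result with `openssl verify -CAfile ca.pem server.pem`, which should print `server.pem: OK`.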

Here’s an example rsyslog configuration with TLS encryption. Replace CERT and DOMAIN_NAME with your own server settings.

    $DefaultNetstreamDriverCAFile /etc/rsyslog.d/keys/ca.d/CERT.crt
    $ActionSendStreamDriver gtls
    $ActionSendStreamDriverMode 1
    $ActionSendStreamDriverAuthMode x509/name
    $ActionSendStreamDriverPermittedPeer *.DOMAIN_NAME.com

### Best Practices for Application Logging ###

In addition to the logs that Linux creates by default, it’s also a good idea to centralize logs from important applications. Almost all Linux-based server-class applications write their status information to separate, dedicated log files. This includes database products like PostgreSQL or MySQL, web servers like Nginx or Apache, firewalls, print and file sharing services, directory and DNS servers, and so on.

The first thing administrators do after installing an application is configure it. Linux applications typically have a .conf file somewhere within the /etc directory. It can be somewhere else too, but that’s the first place people look for configuration files.

Depending on how complex or large the application is, the number of settable parameters can range from a few to hundreds. As mentioned before, most applications write their status to some sort of log file, and the configuration file is where log settings are defined, among other things.

If you’re not sure where the configuration file is, you can use the locate command to find it:

    [root@localhost ~]# locate postgresql.conf
    /usr/pgsql-9.4/share/postgresql.conf.sample
    /var/lib/pgsql/9.4/data/postgresql.conf

#### Set a Standard Location for Log Files ####

Linux systems typically save their log files under the /var/log directory. This works fine, but check whether the application saves its logs in a specific directory under /var/log. If it does, great; if not, you may want to create a dedicated directory for the app under /var/log. Why? Because other applications save their log files under /var/log too, and if your app saves more than one log file (perhaps one every day or one after each service restart), it may be difficult to trawl through a large directory to find the file you want.

If you have more than one instance of the application running in your network, this approach also comes in handy. Think about a situation where you have a dozen web servers running in your network. When troubleshooting any one of the boxes, you would know exactly where to go.

#### Use A Standard Filename ####

Use a standard filename for the latest logs from your application. This makes things easy, because you can monitor and tail a single file. Many applications add some sort of date/time stamp to their log file names, which makes it much more difficult to find the latest file and to set up file monitoring with rsyslog. A better approach is to add timestamps to older log files using logrotate. This makes them easier to archive and search historically.

#### Append the Log File ####

Is the log file going to be overwritten after each application restart? If so, we recommend turning that off. After each restart, the app should append to the log file. That way, you can always go back to the last log line before the restart.

#### Appending vs. Rotation of Log File ####

Even if the application writes a new log file after each restart, how does it write to the current log? Does it append to one single, massive file? Linux systems are not known for frequent reboots or crashes: applications can run for very long periods without even blinking, but that can also make the log file very large. If you are trying to analyze the root cause of a connection failure that happened last week, you could easily be searching through tens of thousands of lines.

We recommend that you configure the application to rotate its log file once every day, say at midnight.

Why? For starters, it makes the logs manageable. It’s much easier to find a file name with a specific date/time pattern than to search through one file for that date’s entries. Files are also much smaller, so you won’t think vi has frozen when you open a log file. Second, if you are sending the log file over the wire to a different location (perhaps a nightly backup job copying it to a centralized log server), it doesn’t chew up your network’s bandwidth. Third, it helps with log retention. If you want to cull old log entries, it’s easier to delete files older than a particular date than to have an application parse one single large file.
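
With logrotate, daily rotation with date-stamped archives looks roughly like the policy below. This is a minimal sketch: the helper name and the /var/log/<app>/*.log path are hypothetical, and `rotate 30` keeps about a month of daily files. Note that logrotate's `daily` rotates whenever its daily cron job or timer runs, which may not be exactly midnight. If the application keeps its log file open and cannot be told to reopen it, you may also need logrotate's `copytruncate` option.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a logrotate policy for /var/log/<app>/*.log.
# Policy: rotate daily, keep 30 files, date suffix instead of .1/.2,
# gzip older files but leave the newest rotated file uncompressed,
# and skip missing or empty logs quietly.
# Usage: write_logrotate_conf <app> [destination-dir, default /etc/logrotate.d]
write_logrotate_conf() {
    local app="$1" dest_dir="${2:-/etc/logrotate.d}"
    cat > "${dest_dir}/${app}" <<EOF
/var/log/${app}/*.log {
    daily
    rotate 30
    dateext
    compress
    delaycompress
    missingok
    notifempty
}
EOF
}
```

After writing the real file, `sudo logrotate -d /etc/logrotate.d/myapp` performs a dry run that shows what logrotate would do without changing anything.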

#### Retention of Log File ####

How long do you keep a log file? That definitely comes down to business requirements. You could be asked to keep one week’s worth of logging information, or regulations may require you to keep ten years’ worth of data. Whatever the requirement, logs have to be moved off the server at some point.

In our opinion, unless otherwise required, keep at least a month’s worth of log files online, and also copy them to a secondary location such as a logging server. Anything older than that can be offloaded to separate media. For example, if you are on AWS, your older logs can be copied to Glacier.
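
Once older logs have been copied to secondary storage, removing them from the server can be automated with `find`. This is a minimal sketch, assuming rotated logs are gzip-compressed (`*.gz`). The helper name is hypothetical, and it only lists matching files unless you pass `--delete`, so you can review the list first. Make sure the copy to your logging server or archive has succeeded before deleting anything.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list rotated (*.gz) logs older than N days under a
# directory; with --delete, remove them as well (listing each one).
# Usage: prune_old_logs <dir> <days> [--delete]
prune_old_logs() {
    local dir="$1" days="$2"
    if [[ "${3:-}" == "--delete" ]]; then
        find "$dir" -type f -name '*.gz' -mtime +"$days" -print -delete
    else
        find "$dir" -type f -name '*.gz' -mtime +"$days" -print
    fi
}
```

For example, `prune_old_logs /var/log/myapp 30` shows which archives are more than a month old, and adding `--delete` removes them; either form can be run from cron.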

#### Separate Disk Location for Log Files ####

Linux best practice usually suggests mounting the /var directory on a separate file system, because of the high number of I/Os associated with this directory. We would recommend mounting the /var/log directory on a separate disk system. This reduces I/O contention with the main application’s data. Also, if the number of log files grows too large or a single log file becomes too big, it doesn’t fill up the entire disk.

#### Log Entries ####

What information should be captured in each log entry?

That depends on what you want to use the log for. Do you want to use it only for troubleshooting, or do you want to capture everything that’s happening? Is it a legal requirement to capture what each user is running or viewing?

If you are using logs for troubleshooting purposes, save only errors, warnings or fatal messages. There’s no reason to capture debug messages, for example. The app may log debug messages by default, or another administrator might have turned them on for an earlier troubleshooting exercise, but you should turn them off because they can fill up disk space very quickly. At a minimum, capture the date, time, client application name, source IP or client host name, the action performed and the message itself.

#### A Practical Example for PostgreSQL ####

As an example, let’s look at the main configuration file for a vanilla PostgreSQL 9.4 installation. It’s called postgresql.conf and, unlike most other config files on Linux systems, it’s not saved under the /etc directory. In the code snippet below, we can see it’s in the /var/lib/pgsql directory of our CentOS 7 server:

    [root@localhost ~]# vi /var/lib/pgsql/9.4/data/postgresql.conf
    ...
    #------------------------------------------------------------------------------
    # ERROR REPORTING AND LOGGING
    #------------------------------------------------------------------------------

    # - Where to Log -

    log_destination = 'stderr'              # Valid values are combinations of
                                            # stderr, csvlog, syslog, and eventlog,
                                            # depending on platform. csvlog
                                            # requires logging_collector to be on.

    # This is used when logging to stderr:
    logging_collector = on                  # Enable capturing of stderr and csvlog
                                            # into log files. Required to be on for
                                            # csvlogs.
                                            # (change requires restart)

    # These are only used if logging_collector is on:
    log_directory = 'pg_log'                # directory where log files are written,
                                            # can be absolute or relative to PGDATA
    log_filename = 'postgresql-%a.log'      # log file name pattern,
                                            # can include strftime() escapes
    #log_file_mode = 0600                   # creation mode for log files,
                                            # begin with 0 to use octal notation
    log_truncate_on_rotation = on           # If on, an existing log file with the
                                            # same name as the new log file will be
                                            # truncated rather than appended to.
                                            # But such truncation only occurs on
                                            # time-driven rotation, not on restarts
                                            # or size-driven rotation. Default is
                                            # off, meaning append to existing files
                                            # in all cases.
    log_rotation_age = 1d                   # Automatic rotation of logfiles will
                                            # happen after that time. 0 disables.
    log_rotation_size = 0                   # Automatic rotation of logfiles will
                                            # happen after that much log output.
                                            # 0 disables.

    # These are relevant when logging to syslog:
    #syslog_facility = 'LOCAL0'
    #syslog_ident = 'postgres'

    # This is only relevant when logging to eventlog (win32):
    #event_source = 'PostgreSQL'

    # - When to Log -

    #client_min_messages = notice           # values in order of decreasing detail:
                                            #   debug5
                                            #   debug4
                                            #   debug3
                                            #   debug2
                                            #   debug1
                                            #   log
                                            #   notice
                                            #   warning
                                            #   error

    #log_min_messages = warning             # values in order of decreasing detail:
                                            #   debug5
                                            #   debug4
                                            #   debug3
                                            #   debug2
                                            #   debug1
                                            #   info
                                            #   notice
                                            #   warning
                                            #   error
                                            #   log
                                            #   fatal
                                            #   panic

    #log_min_error_statement = error        # values in order of decreasing detail:
                                            #   debug5
                                            #   debug4
                                            #   debug3
                                            #   debug2
                                            #   debug1
                                            #   info
                                            #   notice
                                            #   warning
                                            #   error
                                            #   log
                                            #   fatal
                                            #   panic (effectively off)

    #log_min_duration_statement = -1        # -1 is disabled, 0 logs all statements
                                            # and their durations, > 0 logs only
                                            # statements running at least this number
                                            # of milliseconds

    # - What to Log -

    #debug_print_parse = off
    #debug_print_rewritten = off
    #debug_print_plan = off
    #debug_pretty_print = on
    #log_checkpoints = off
    #log_connections = off
    #log_disconnections = off
    #log_duration = off
    #log_error_verbosity = default          # terse, default, or verbose messages
    #log_hostname = off
    log_line_prefix = '< %m >'              # special values:
                                            #   %a = application name
                                            #   %u = user name
                                            #   %d = database name
                                            #   %r = remote host and port
                                            #   %h = remote host