mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-03 01:10:13 +08:00

commit

d50de2ff6d

@ -0,0 +1,67 @@

|

||||

Linux下优秀的音频编辑软件

|

||||

================================================================================

|

||||

|

||||

不论您是一个音乐爱好者或只是一个要记录您导师的讲话的学生,您都需要录制音频和编辑音频。长久以来这样的工作都要靠Macintosh,如今那个时代已经过去,现在Linux也可以胜任这些工作了。简而言之,这里有一份不完全的音频编辑器软件列表,适用于不同的任务和需求。

|

||||

|

||||

### 1. Audacity ###

|

||||

|

||||

|

||||

|

||||

让我们从我个人最喜欢的软件开始。[Audacity][1]可以运行在Windows、Mac和Linux上。它是开源的,且易于使用。你会觉得:Audacity几乎是完美的。它可以让您在干净的界面上操作音频波形。简单地说,您可以覆盖音轨、剪切和修改音轨、增加特效、执行高级的声音分析,然后将它们导出到一大堆可用的格式。我喜欢它的原因是它将基本的功能和复杂的功能结合在一起并且保持一个简单的学习曲线。然而,它并不是一个完全最优化的软件,尤其是对于音乐家和专业人员。

|

||||

|

||||

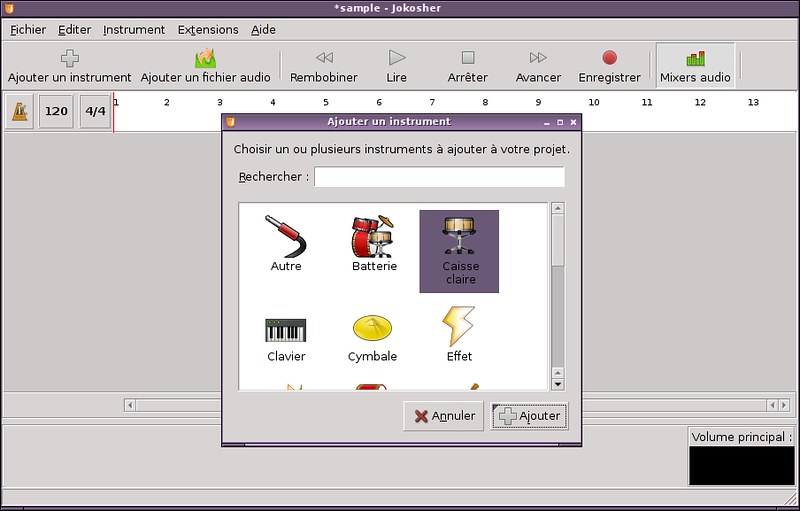

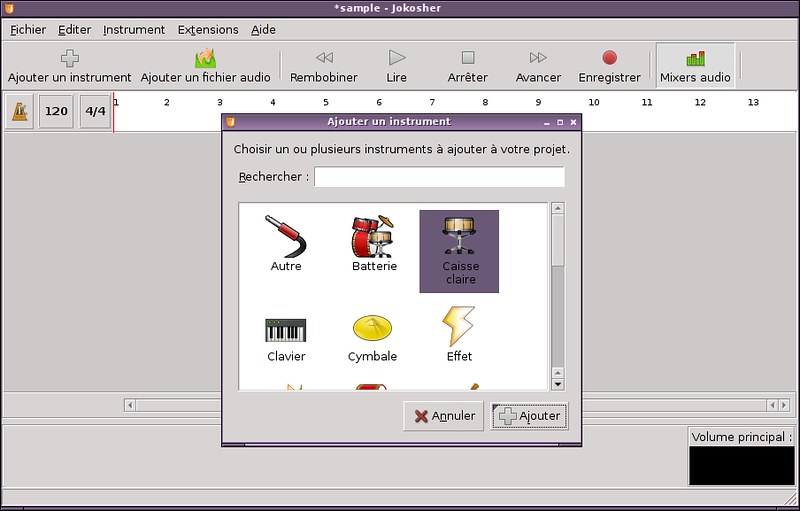

### 2. Jokosher ###

|

||||

|

||||

|

||||

|

||||

在不同的层次上,[Jokosher][2]更多的聚焦在多音轨方面。它使用Python和GTK+作为前端界面,并使用GStreamer作为音频后端。Jokosher那易用的界面和它的扩展性给我留下了深刻的印象。也许编辑的功能并不是最先进的,但它的提示十分清晰,适合音乐家。例如,我十分喜欢音轨和乐器的组合。简而言之,如果您是一个音乐家,那么它是在您转移到下一个软件前进行实践的好机会。

|

||||

|

||||

### 3. Ardour ###

|

||||

|

||||

|

||||

|

||||

接下来谈论一些复杂的工具,[Ardour][3]是一套完整的可以录制、编辑和混音的软件。这个设计吸引了所有的专业人员,Ardour在声音和插件方面超出我的想象。如果您在寻找一头野兽并且不惧怕驯服它,那么Ardour或许是一个不错的选择。再次,它的界面和丰富的文档,尤其是它首次启动时的配置工具都是它迷人魅力的一部分。

|

||||

|

||||

### 4. Kwave ###

|

||||

|

||||

|

||||

|

||||

对于所有KDE的热爱者,[KWave][4]绝对符合您对于设计和功能的想象。它有丰富的快捷键以及很多有趣的选项,例如内存管理。尽管很多特效很不错,但我们更应该关注那些用于音频剪切/粘贴的工具。可惜的是它无法与Audacity相比,而更重要的是,它的界面并没有那么吸引我。

|

||||

|

||||

### 5. Qtractor ###

|

||||

|

||||

|

||||

|

||||

如果Kwave对您来说过于简单,但基于Qt的程序却有些吸引力,那么对您来说,也许[Qtractor][5]是一个选项。它致力于做一个“对于家庭用户来说足够简单,并且对专业人员来说足够强大的软件。”实际上它功能和选项的数量几乎是压倒性的。我最喜欢的当然是可定制的快捷键。除此之外,Qtractor可能是我最喜欢的一个处理MIDI文件的工具。

|

||||

|

||||

### 6. LMMS ###

|

||||

|

||||

|

||||

|

||||

作为Linux多媒体工作室,LMMS的直接目标是音乐制作。如果您之前没有什么经验并且不想浪费太多的时间,那么请去别处吧。LMMS是其中一个复杂但强大的软件,只有少数的人真正的掌握了它。它有太多的功能和特效以至于无法一一列出,但如果我必须找一个,我会说用来模拟Game Boy声音系统的Freeboy插件简直像魔术一样。然后,去看看它那惊人的文档吧。

|

||||

|

||||

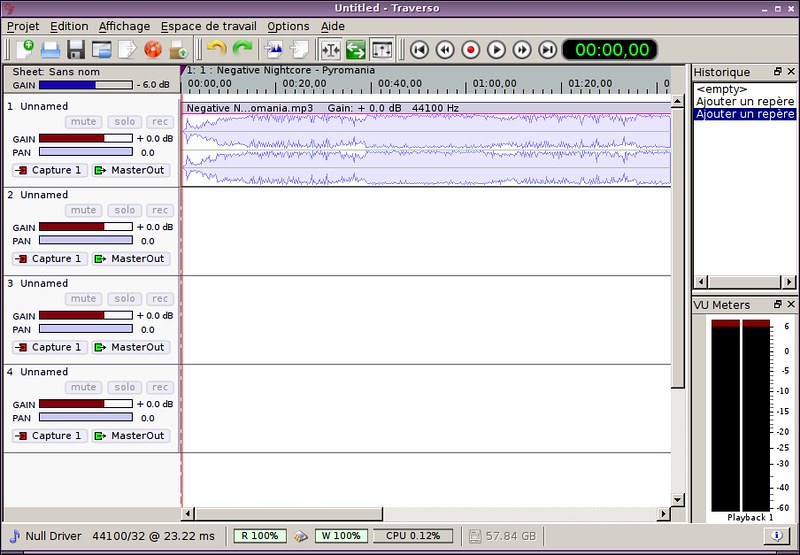

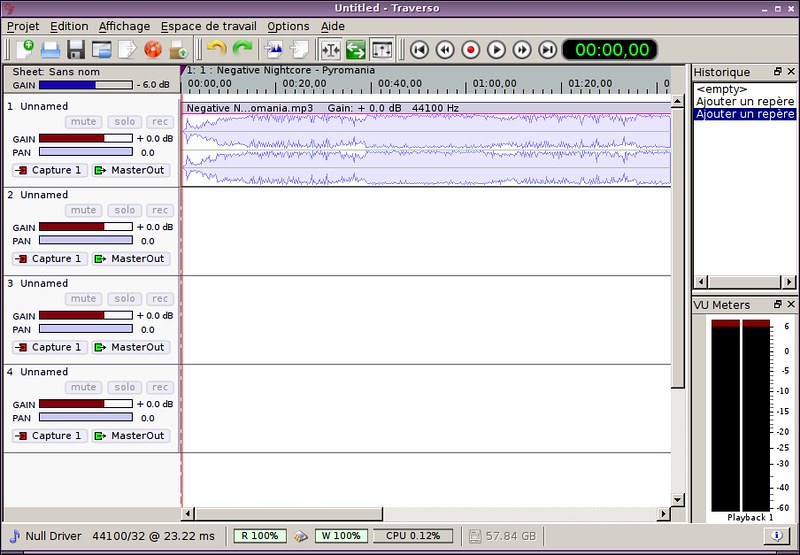

### 7. Traverso ###

|

||||

|

||||

|

||||

|

||||

最后站在我面前的是Traverso,它支持无限制的音轨计数,并直接整合了CD烧录的功能。另外,它对我来说是介于简单的软件和专业的软件之间的程序。它的界面是KDE样式的,其键盘配置很简单。更有趣的是,Traverso会监视您的系统资源以确定不会超过您的CPU或者硬件的能力。

|

||||

|

||||

总而言之,能在Linux系统上看到这么多不同的应用程序是一件开心的事。它使得您永远可以找到最适合自己的那一款。虽然我最喜欢的应用是Audacity,但我非常震惊于LMMS和Jokosher的设计。

|

||||

|

||||

我们有漏掉什么么?您在Linux下使用哪一款软件呢?原因是什么呢?请留言让我们知道。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/good-audio-editing-software-linux.html

|

||||

|

||||

作者:[Adrien Brochard][a]

|

||||

译者:[wwy-hust](https://github.com/wwy-hust)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/adrien

|

||||

[1]:http://audacity.sourceforge.net/

|

||||

[2]:https://launchpad.net/jokosher/

|

||||

[3]:http://ardour.org/

|

||||

[4]:http://kwave.sourceforge.net/

|

||||

[5]:http://qtractor.sourceforge.net/qtractor-index.html

|

||||

154

published/20150128 The top 10 rookie open source projects.md

Normal file

154

published/20150128 The top 10 rookie open source projects.md

Normal file

@ -0,0 +1,154 @@

|

||||

2015 年度开源项目新秀榜

|

||||

================================================================================

|

||||

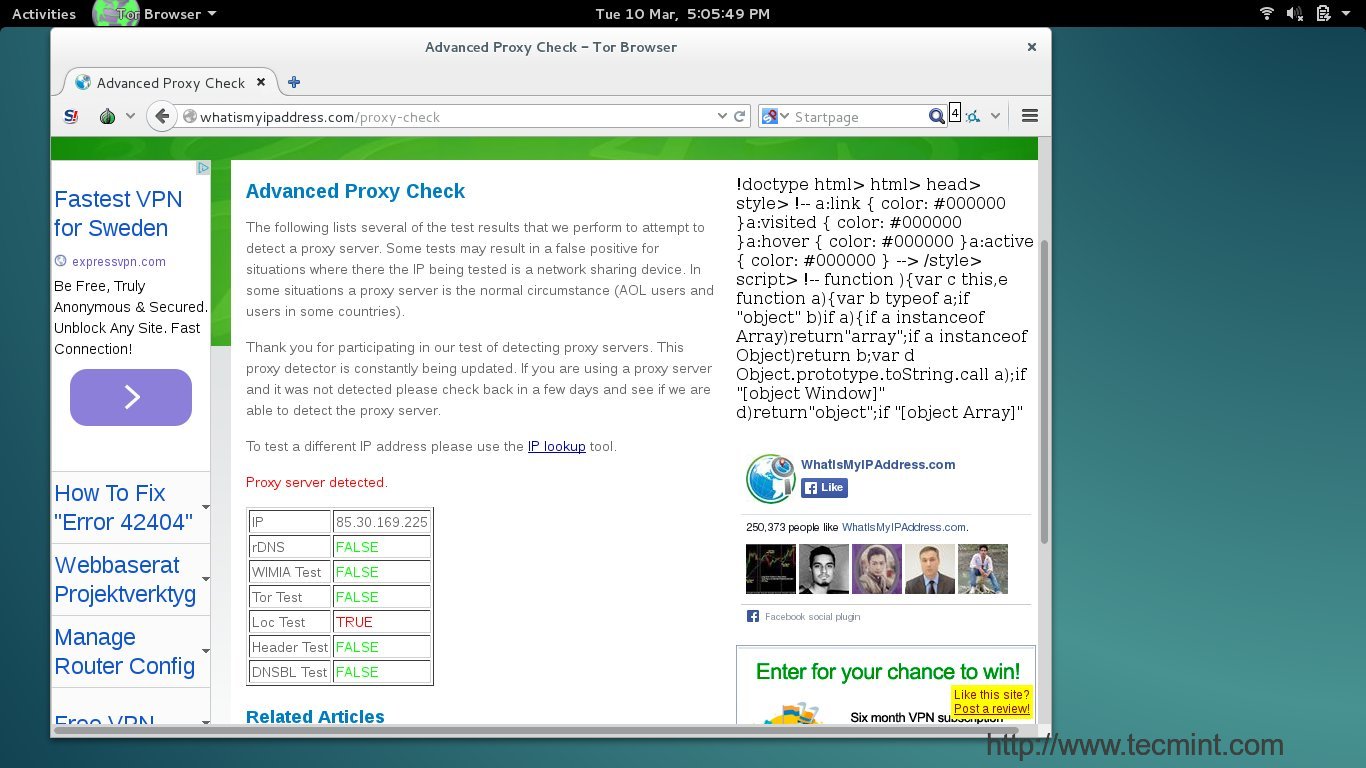

黑鸭(Black Duck)软件公布了一份名叫“年度开源项目新秀”的报告,介绍了由全球开源协会发起的10个最有趣、最活跃的新项目。

|

||||

|

||||

|

||||

|

||||

### 年度开源项目新秀 ###

|

||||

|

||||

每年都有上千新的开源项目问世,但只有少数能够真正的吸引我们的关注。一些项目因为利用了当前比较流行的技术而发展壮大,有一些则真正地开启了一个新的领域。很多开源项目建立的初衷是为了解决一些生产上的问题,还有一些项目则是世界各地志同道合的开发者们共同发起的一个宏伟项目。

|

||||

|

||||

从2009年起,开源软件管理公司黑鸭便发起了[年度开源项目新秀][1]这一活动,它的评选根据[Open Hub][2]网站(即以前的Ohloh)上的活跃度。今年,我们很荣幸能够报道2015年10大开源项目新秀的得主和2名荣誉奖得主,它们是从上千个开源项目中脱颖而出的。评选采用了加权评分系统,得分标准基于项目的活跃度,交付速度和几个其它因数。

|

||||

|

||||

开源俨然成为了产业创新的引擎,就拿今年来说,和Docker容器相关的开源项目在全球各地兴起,这也不恰巧反映了企业最感兴趣的技术领域吗?最后,我们接下来介绍的项目,将会让你了解到全球开源项目的开发者们的在思考什么,这很快将会成为一个指引我们发展的领头羊。

|

||||

|

||||

### 2015年度开源项目新秀: DebOps ###

|

||||

|

||||

|

||||

|

||||

[DebOps][3]收集打包了一套[Ansible][4]方案和规则(Ansible是一种自动化运维工具),可以从1个容器扩展到一个完整的数据中心。它的创始人Maciej Delmanowski将DebOps开源来保证项目长久进行,从而更好的通过外部贡献者的帮助发展下去。

|

||||

|

||||

DebOps始创于波兰的一个不起眼大学校园里,他们运营自己的数据中心,一切工作都采用手工配置。有时系统崩溃而导致几天的宕机,这时Delmanowski意识到一个配置管理系统是很有必要的。以Debian作为基础开始,DebOps是一组配置一整个数据基础设施的Ansible方案。此项目已经在许多不同的工作环境下实现,而创始者们则打算继续支持和改进这个项目。

|

||||

|

||||

###2015年度开源项目新秀: Code Combat ###

|

||||

|

||||

|

||||

|

||||

传统的纸笔学习方法已近不能满足技术学科了。然而游戏却有很多人都爱玩,这也就是为什么[CodeCombat][5]的创始人会去开发一款多人协同编程游戏来教人们如何编码。

|

||||

|

||||

刚开始CodeCombat是一个创业想法,但其创始人决定取而代之创建一个开源项目。此想法在社区传播开来,很快不少贡献者加入到项目中来。项目发起仅仅两个月后,这款游戏就被接纳到Google’s Summer of Code活动中。这款游戏吸引了大量玩家,并被翻译成45种语言。CodeCombat希望成为那些想要一边学习代码同时获得乐趣的同学的风向标。

|

||||

|

||||

### 2015年度开源项目新秀: Storj ###

|

||||

|

||||

|

||||

|

||||

[Storj][6]是一个点对点的云存储网络,可实现端到端加密,保证用户不用依赖第三方即可传输和共享数据。基于比特币block chain技术和点对点协议,Storj提供安全、私密、加密的云存储。

|

||||

|

||||

云数据存储的反对者担心成本开销和漏洞攻击。针对这两个担忧,Storj提供了一个私有云存储市场,用户可以通过Storjcoin X(SJCX) 购买交易存储空间。上传到Storj的文件会被粉碎、加密和存储到整个社区。只有文件所有者拥有密钥加密的信息。

|

||||

|

||||

在2014年举办的Texas Bitcoin Conference Hackathon会议上,去中心化的云存储市场概念首次被提出并证明可行。在第一次赢得黑客马拉松活动后,项目创始人们和领导团队利用开放论坛、Reddit、比特币论坛和社交媒体增长成了一个活跃的社区,如今,它们已成为影响Storj发展方向的一个重要组成部分。

|

||||

|

||||

### 2015年度开源项目新秀: Neovim ###

|

||||

|

||||

|

||||

|

||||

自1991年出现以来,Vim已经成为数以百万计软件开发人员所钟爱的文本编辑器。 而[Neovim][6]就是它的下一个版本。

|

||||

|

||||

在过去的23年里,软件开发生态系统经历了无数增长和创新。Neovim创始人Thiago de Arruda认为Vim缺乏当代元素,跟不上时代的发展。在保留Vim的招牌功能的前提下,Neovim团队同样在寻求改进和发展这个最受欢迎的文本编辑器的技术。早期众筹让Thiago de Arruda可以连续6个月时间投入到此项目。他相信Neovim社区会支持这个项目,激励他继续开发Neovim。

|

||||

|

||||

### 2015年度开源项目新秀: CockroachDB ###

|

||||

|

||||

|

||||

|

||||

前谷歌员工开发了一个开源的大型企业数据存储项目[CockroachDB][8],它是一个可扩展的、跨地域复制且支持事务的数据存储的解决方案。

|

||||

|

||||

为了保证在线的百万兆字节流量业务的质量,Google开发了Spanner系统,这是一个可扩展的,稳定的,支持事务的系统。许多参与开发CockroachDB的团队现在都服务于开源社区。就像真正的蟑螂(cockroach)一样,CockroachDB可以在没有数据头、任意节点失效的情况下正常运行。这个开源项目有很多富有经验的贡献者,创始人们通过社交媒体、Github、网络、会议和聚会结识他们并鼓励他们参与其中。

|

||||

|

||||

### 2015年度开源项目新秀: Kubernetes ###

|

||||

|

||||

|

||||

|

||||

在将容器化软件到引入开源社区发展时,[Docker][9]是一匹黑马,它创新了一套技术和工具。去年6月谷歌推出了[Kubernetes][10],这是一款开源的容器管理工具,用来加快开发和简化操作。

|

||||

|

||||

谷歌在它的内部运营上使用容器技术多年了。在2014年夏天的DockerCon上大会上,谷歌这个互联网巨头开源了Kubernetes,Kubernetes的开发是为了满足迅速增长的Docker生态系统的需要。通过和其它的组织、项目合作,比如Red Hat和CoreOS,Kubernetes项目的管理者们推动它登上了Docker Hub的工具下载榜榜首。Kubernetes的开发团队希望扩大这个项目,发展它的社区,这样的话软件开发者就能花更少的时间在管理基础设施上,而更多的去开发他们自己的APP。

|

||||

|

||||

### 2015年度开源项目新秀: Open Bazaar ###

|

||||

|

||||

|

||||

|

||||

OpenBazaar是一个使用比特币与其他人交易的去中心化的市场。OpenBazaar这一概念最早在编程马拉松(hackathon)活动中被提出,它的创始人结合了BitTorent、比特币和传统的金融服务方式,创造了一个不受审查的交易平台。OpenBazaar的开发团队在寻求新的成员,而且不久以后他们将极度扩大Open Bazaar社区。Open Bazaar的核心是透明度,其创始人和贡献者的共同目标是在商务交易中掀起一场革命,让他们向着一个真实的、一个无控制的,去中心化的市场奋进。

|

||||

|

||||

### 2015年度开源项目新秀: IPFS ###

|

||||

|

||||

|

||||

|

||||

IPFS 是一个面向全球的、点对点的分布式版本文件系统。它综合了Git,BitTorrent,HTTP的思想,开启了一个新的数据和数据结构传输协议。

|

||||

|

||||

人们所知的开源,它的本意用简单的方法解决复杂的问题,这样产生许多新颖的想法,但是那些强大的项目仅仅是开源社区的冰山一角。IFPS有一个非常激进的团队,这个概念的提出是大胆的,令人惊讶的,有点甚至高不可攀。看起来,一个点对点的分布式文件系统是在寻求将所有的计算设备连在一起。这个可能的 HTTP 替换品通过多种渠道维护着一个社区,包括Git社区和超过100名贡献者的IRC。这个疯狂的想法将在2015年进行软件内部测试。

|

||||

|

||||

### 2015年度开源项目新秀: cAdvisor ###

|

||||

|

||||

|

||||

|

||||

[cAdvisor (Container Advisor)][13] 是一个针对在运行中的容器进行收集,统计,处理和输出信息的工具,它可以给容器的使用者提供资源的使用情况和工作特性。对于每一个容器,cAdvisor记录着资源的隔离参数,资源使用历史,资源使用历史对比框图,网络状态。这些从容器输出的数据跨越主机传递。

|

||||

|

||||

cAdvisor可以在绝大多数的Linux发行版上运行,并且支持包括Docker在内的多种容器类型。事实上它成为了一种容器的代理,并被集成在了很多系统中。cAdvisor在DockerHub下载量也是位居前茅。cAdvisor的开发团队希望把cAdvisor改进到能够更深入地理解应用性能,并且集成到集群系统。

|

||||

|

||||

### 2015年度开源项目新秀: Terraform ###

|

||||

|

||||

|

||||

|

||||

[Terraform][14]提供了一些常见设置来创建一个基础设施,从物理机到虚拟机,以及email服务器、DNS服务器等。这个想法包括从家庭个人机解决方案到公共云平台提供的服务。一旦建立好了以后,Terraform可以让运维人员安全又高效地改变你的基础设施,就如同配置一样。

|

||||

|

||||

Terraform.io的创始者工作在一个Devops模式的公司,他找到了一个窍门把建立一个完整的数据中心所需的知识结合在一起,可以从添加服务器到支持网络服务的功能齐备的数据中心。基础设施的描述采用高级的配置语法,允许你把数据中心的蓝图按版本管理,并且转换成多种代码。著名开源公司HashiCorp赞助开发这个项目。

|

||||

|

||||

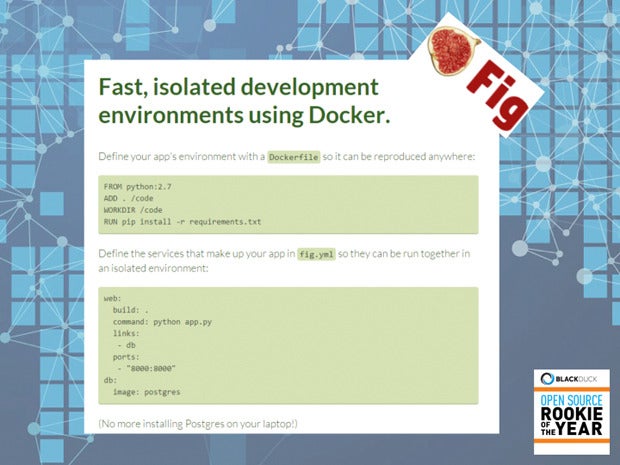

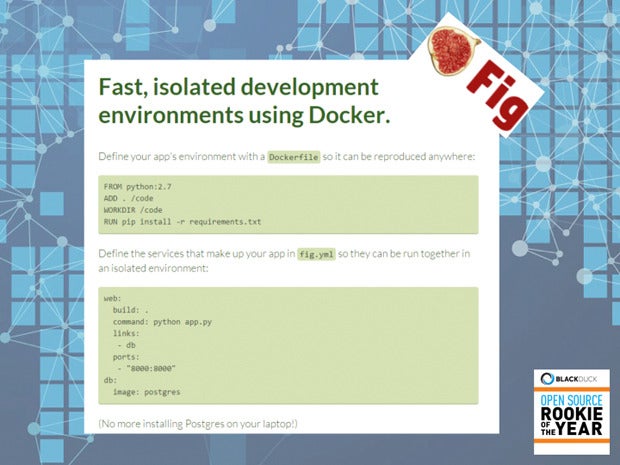

### 荣誉奖: Docker Fig ###

|

||||

|

||||

|

||||

|

||||

[Fig][15]为[Docker][16]的使用提供了一个快速的,分离的开发环境。Docker的移植只需要将配置信息放到一个简单的 fig.yml文件里。它会处理所有工作,包括构建、运行,端口转发,分享磁盘和容器链接。

|

||||

|

||||

Orchard去年发起了Fig,来创造一个使Docker工作起来的系统工具。它的开发像是为Docker设置开发环境,为了确保用户能够为他们的APP准确定义环境,在Docker中会运行数据库和缓存。Fig解决了开发者的一个难题。Docker全面支持这个开源项目,最近[将买下][17]Orchard来扩张这个项目。

|

||||

|

||||

### 荣誉奖: Drone ###

|

||||

|

||||

|

||||

|

||||

[Drone][18]是一个基于Docker的持续集成平台,而且它是用Go语言写的。Drone项目不满于现存的设置开发环境的技术和流程。

|

||||

|

||||

Drone提供了一个简单的自动测试和持续交付的方法:简单选择一个Docker镜像来满足你的需求,连接并提交至GitHub即可。Drone使用Docker容器来提供隔离的测试环境,让每个项目完全自主控制它的环境,没有传统的服务器管理的负担。Drone背后的100位社区贡献者强烈希望把这个项目带到企业和移动应用程序开发中。

|

||||

|

||||

### 开源新秀 ###

|

||||

|

||||

|

||||

|

||||

参见:

|

||||

|

||||

- [InfoWorld2015年年度技术奖][21]

|

||||

- [Bossies: 开源软件最高荣誉][22]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.infoworld.com/article/2875439/open-source-software/the-top-10-rookie-open-source-projects.html

|

||||

|

||||

作者:[Black Duck Software][a]

|

||||

译者:[sevenot](https://github.com/sevenot)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.infoworld.com/author/Black-Duck-Software/

|

||||

[1]:https://www.blackducksoftware.com/open-source-rookies

|

||||

[2]:https://www.openhub.net/

|

||||

[3]:https://github.com/debops/debops

|

||||

[4]:http://www.infoworld.com/article/2612397/data-center/review--ansible-orchestration-is-a-veteran-unix-admin-s-dream.html

|

||||

[5]:https://codecombat.com/

|

||||

[6]:http://storj.io/

|

||||

[7]:http://neovim.org/

|

||||

[8]:https://github.com/cockroachdb/cockroach

|

||||

[9]:http://www.infoworld.com/resources/16373/application-virtualization/the-beginners-guide-to-docker

|

||||

[10]:http://kubernetes.io/

|

||||

[11]:https://openbazaar.org/

|

||||

[12]:http://ipfs.io/

|

||||

[13]:https://github.com/google/cadvisor

|

||||

[14]:https://www.terraform.io/

|

||||

[15]:http://www.fig.sh/

|

||||

[16]:http://www.infoworld.com/resources/16373/application-virtualization/the-beginners-guide-to-docker

|

||||

[17]:http://www.infoworld.com/article/2608546/application-virtualization/docker-acquires-orchard-in-a-sign-of-rising-ambitions.html

|

||||

[18]:https://drone.io/

|

||||

[19]:http://www.infoworld.com/article/2683845/google-go/164121-Fast-guide-to-Go-programming.html

|

||||

[20]:https://www.blackducksoftware.com/open-source-rookies

|

||||

[21]:http://www.infoworld.com/article/2871935/application-development/infoworlds-2015-technology-of-the-year-award-winners.html

|

||||

[22]:http://www.infoworld.com/article/2688104/open-source-software/article.html

|

||||

[23]:http://www.infoworld.com/article/2854954/microsoft-windows/15-essential-open-source-tools-for-windows-admins.html

|

||||

|

||||

@ -0,0 +1,138 @@

|

||||

如何在 Linux 上使用 x2go 设置远程桌面

|

||||

================================================================================

|

||||

由于一切都迁移到了云上,作为提高职员生产力的一种方式,虚拟远程桌面在工业中越来越流行。尤其对于那些需要在多个地方和设备之间不停漫游的人,远程桌面可以让他们和工作环境保持无缝连接。远程桌面对于雇主同样有吸引力,可以在工作环境中提高敏捷性和灵活性,由于硬件整合、桌面安全加固等原因降低 IT 花费。

|

||||

|

||||

在 Linux 世界中,理所当然设置远程桌面有很多选择,支持许多协议(例如 RDP、RFB、NX) 和服务器/客户端实现(例如 [TigerVNC][1]、RealVNC、FreeNX、x2go、X11vnc、TeamViewer 等等)。

|

||||

|

||||

这当中有个出色的产品叫做 [X2Go][2],它是一个基于 NX(译者注:通过计算机网络显示远程桌面环境的一种技术,可参考 [Wiki][9])的远程桌面服务器和客户端的开源(GPLv2)实现。在这个教程中,我会介绍 **如何为 Linux VPS 使用 X2Go 设置远程桌面环境**。

|

||||

|

||||

### X2Go 是什么? ###

|

||||

|

||||

X2Go 的历史要追溯到 NoMachine 的 NX 技术。NX 远程桌面协议的设计目的是通过利用主动压缩和缓存解决低带宽和高延迟的网络连接问题。后来,NX 转为闭源,但 NX 库还是采用 GPL 协议。这导致出现了多种基于 NX 的远程桌面解决方案开源实现,X2Go 就是其中之一。

|

||||

|

||||

和其它解决方案例如 VNC 相比,X2Go 有哪些好处呢? X2Go 继承了 NX 技术的所有高级功能,很自然能在慢速网络连接上良好工作。另外,由于它内置的基于 SSH 的加密技术,X2Go 保持了确保安全的良好业绩记录。不再需要[手动设置 SSH 隧道][4] 。X2Go 默认支持音频,这意味着远程桌面的音乐播放可以通过网络传送,并进入本地扬声器。在易用性方面,远程桌面上运行的应用程序可以在你的本地桌面中以一个独立窗口无缝呈现,会给你造成一种应用程序实际上在你本地桌面运行的错觉。正如你看到的,这些都是一些基于 VNC 的解决方案所缺少的[强大功能][5]。

|

||||

|

||||

### X2GO 的桌面环境兼容性 ###

|

||||

|

||||

和其它远程桌面服务器一样,X2Go 服务器也有一些[已知的兼容性问题][6]。像 KDE 3/4、Xfce、MATE 和 LXDE 是对 X2Go 服务器最友好的桌面环境。但是,用其它桌面管理器效果可能有所不同。例如,已知 GNOME 3 之后的版本、KDE 5、Unity 和 X2Go 并不兼容。如果你的远程主机的桌面管理器和 X2Go 兼容,你可以继续以下的教程。

|

||||

|

||||

### 在 Linux 上安装 X2Go 服务器 ###

|

||||

|

||||

X2Go 由远程桌面服务器和客户端组件组成。让我们首先安装 X2Go 服务器。我假设你已经有一个和 X2Go 兼容的桌面管理器并且在远程主机上运行,我们会安装 X2Go 服务器到该远程主机。

|

||||

|

||||

注意系统启动后 X2Go 服务器组件没有需要单独启动的服务。你只需要保证开启了 SSH 服务并在正常运行。

|

||||

|

||||

#### Ubuntu 或 Linux Mint: ####

|

||||

|

||||

配置 X2Go PPA 库。对于 Ubuntu 14.04 以及更高版本,有可用的 X2Go PPA。

|

||||

|

||||

$ sudo add-apt-repository ppa:x2go/stable

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install x2goserver x2goserver-xsession

|

||||

|

||||

#### Debian (Wheezy): ####

|

||||

|

||||

$ sudo apt-key adv --recv-keys --keyserver keys.gnupg.net E1F958385BFE2B6E

|

||||

$ sudo sh -c "echo deb http://packages.x2go.org/debian wheezy main > /etc/apt/sources.list.d/x2go.list"

|

||||

$ sudo sh -c "echo deb-src http://packages.x2go.org/debian wheezy main >> /etc/apt/sources.list.d/x2go.list"

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install x2goserver x2goserver-xsession

|

||||

|

||||

#### Fedora: ####

|

||||

|

||||

$ sudo yum install x2goserver x2goserver-xsession

|

||||

|

||||

#### CentOS/RHEL: ####

|

||||

|

||||

首先启用 [EPEL 库][7] 然后运行:

|

||||

|

||||

$ sudo yum install x2goserver x2goserver-xsession

|

||||

|

||||

### 在 Linux 上安装 X2Go 客户端 ###

|

||||

|

||||

在将会连接到远程桌面的本地主机上,安装以下命令安装 X2Go 客户端。

|

||||

|

||||

#### Ubuntu 或 Linux Mint: ####

|

||||

|

||||

配置 X2Go PPA 库。对于 Ubuntu 14.04 以及更高版本,有可用的 X2Go PPA。

|

||||

|

||||

$ sudo add-apt-repository ppa:x2go/stable

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install x2goclient

|

||||

|

||||

Debian (Wheezy):

|

||||

|

||||

$ sudo apt-key adv --recv-keys --keyserver keys.gnupg.net E1F958385BFE2B6E

|

||||

$ sudo sh -c "echo deb http://packages.x2go.org/debian wheezy main > /etc/apt/sources.list.d/x2go.list"

|

||||

$ sudo sh -c "echo deb-src http://packages.x2go.org/debian wheezy main >> /etc/apt/sources.list.d/x2go.list"

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install x2goclient

|

||||

|

||||

#### Fedora: ####

|

||||

|

||||

$ sudo yum install x2goclient

|

||||

|

||||

CentOS/RHEL:

|

||||

|

||||

首先启用 [EPEL 库][7] ,然后运行:

|

||||

|

||||

$ sudo yum install x2goclient

|

||||

|

||||

### 用 X2Go 客户端连接到远程桌面 ###

|

||||

|

||||

现在可以连接到远程桌面了。在本地主机上,只需运行以下命令或者使用桌面启动器启动 X2Go 客户端。

|

||||

|

||||

$ x2goclient

|

||||

|

||||

输入远程主机的 IP 地址和 SSH 用户名称。同时,指定会话类型(例如,远程主机的桌面管理器)。

|

||||

|

||||

|

||||

|

||||

如果需要的话,你可以自定义其它东西(通过点击其它的标签),例如连接速度、压缩、屏幕分辨率等等。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

当你初始化一个远程桌面连接的时候,会要求你登录。输入你的 SSH 登录名和密码。

|

||||

|

||||

|

||||

|

||||

成功登陆后,你会看到远程桌面屏幕。

|

||||

|

||||

|

||||

|

||||

如果你想测试 X2Go 的无缝窗口功能,选择 "Single application" 会话类型,然后指定远处主机上可执行文件的路径。在该例子中,我选择远程 KDE 主机上的 Dolphin 文件管理器。

|

||||

|

||||

|

||||

|

||||

你成功连接后,你会在本地桌面上看到一个远程应用窗口,而不是完整的远程桌面屏幕。

|

||||

|

||||

|

||||

|

||||

### 总结 ###

|

||||

|

||||

在这篇教程中,我介绍了如何在 [Linux VPS][8] 实例上设置 X2Go 远程桌面。正如你所看到的,整个设置过程都非常简单(如果你使用一个合适的桌面环境的话)。尽管对于特定桌面仍有问题,X2Go 是一个安全、功能丰富、快速并且免费的远程桌面解决方案。

|

||||

|

||||

X2Go 的什么功能最吸引你?欢迎分享你的观点。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/x2go-remote-desktop-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/nanni

|

||||

[1]:http://ask.xmodulo.com/centos-remote-desktop-vps.html

|

||||

[2]:http://wiki.x2go.org/

|

||||

[3]:http://xmodulo.com/go/digitalocean

|

||||

[4]:http://xmodulo.com/how-to-set-up-vnc-over-ssh.html

|

||||

[5]:http://wiki.x2go.org/doku.php/doc:newtox2go

|

||||

[6]:http://wiki.x2go.org/doku.php/doc:de-compat

|

||||

[7]:https://linux.cn/article-2324-1.html

|

||||

[8]:http://xmodulo.com/go/digitalocean

|

||||

[9]:https://en.wikipedia.org/wiki/NX_technology

|

||||

111

published/20150429 What are good command line HTTP clients.md

Normal file

111

published/20150429 What are good command line HTTP clients.md

Normal file

@ -0,0 +1,111 @@

|

||||

有哪些不错的命令行HTTP客户端?

|

||||

==============================================================================

|

||||

|

||||

“整体大于它的各部分之和”,这是引自希腊哲学家和科学家的亚里士多德的名言。这句话特别切中Linux。在我看来,Linux最强大的地方之一就是它的协作性。Linux的实用性并不仅仅源自大量的开源程序(命令行)。相反,其协作性来自于这些程序的综合利用,有时是结合成更大型的应用。

|

||||

|

||||

Unix哲学引发了一场“软件工具”的运动,关注开发简洁,基础,干净,模块化和扩展性好的代码,并可以运用于其他的项目。这种哲学成为了许多的Linux项目的一个重要的元素。

|

||||

|

||||

好的开源开发者写程序为了确保该程序尽可能运行得好,并且同时能与其他程序很好地协作。目标就是使用者拥有一堆方便的工具,每一个力求干好一件事。许多程序能独立工作得很好。

|

||||

|

||||

这篇文章讨论3个开源命令行HTTP客户端。这些客户端可以让你使用命令行从互联网上下载文件。但同时,他们也可以用于许多有意思的地方,如测试,调式和与HTTP服务器或web应用交互。对于HTTP架构师和API设计人员来说,使用命令行操作HTTP是一个值得花时间学习的技能。如果你需要经常使用API,HTTPie和cURL就非常有价值。

|

||||

|

||||

###HTTPie###

|

||||

|

||||

|

||||

|

||||

HTTPie(发音 aych-tee-tee-pie)是一款开源的命令行HTTP客户端。它是一个命令行界面,便于手工操作的类cURL工具。

|

||||

|

||||

该软件的目标是使得与Web服务器的交互尽可能的人性化。其提供了一个简单的http命令,允许使用简单且自然的语句发送任意的HTTP请求,并显示不同颜色的输出。HTTPie可以用于测试,调试,以及与HTTP服务器的常规交互。

|

||||

|

||||

#### 功能包括:####

|

||||

|

||||

- 生动而直观的语法格式

|

||||

- 经过格式化的彩色终端输出

|

||||

- 内建JSON支持

|

||||

- 支持表单和文件上传

|

||||

- 支持HTTPS,代理和认证

|

||||

- 任意数据请求

|

||||

- 自定义请求头

|

||||

- 持久会话

|

||||

- 类Wget的下载

|

||||

- 支持Python 2.6,2.7和3.x

|

||||

- 支持Linux,Mac OS X 和 Windows

|

||||

- 支持插件

|

||||

- 文档

|

||||

- 单元测试覆盖

|

||||

|

||||

---

|

||||

|

||||

- 网站:[httpie.org][1]

|

||||

- 开发者: Jakub Roztočil

|

||||

- 证书: 开源

|

||||

- 版本号: 0.9.2

|

||||

|

||||

###cURL###

|

||||

|

||||

|

||||

|

||||

cURL是一个开源的命令行工具,用于使用URL语句传输数据,支持DICT, FILE, FTP, FTPS, GOPHER, HTTP, HTTPS,IMAP, IMAPS, LDAP, LDAPS, POP3, POP3S, RTMP, RTSP, SCP, SFTP, SMTP, SMTPS, TELNET和TFTP等协议。

|

||||

|

||||

cURL支持SSL证书,HTTP POST,HTTP PUT,FTP上传,基于表单的HTTP上传,代理,缓存,用户名+密码的认证方式(Basic, Digest, NTLM, Negotiate, kerberos...),文件续传,代理通道和一些其他的有用功能。

|

||||

|

||||

#### 功能包括:####

|

||||

|

||||

- 支持配置文件

|

||||

- 一个单独命令行请求多个URL

|

||||

- 支持匹配: [0-13],{one, two, three}

|

||||

- 一个命令上传多个文件

|

||||

- 自定义最大传输速度

|

||||

- 重定向了标准错误输出

|

||||

- 支持Metalink

|

||||

|

||||

---

|

||||

|

||||

- 网站: [curl.haxx.se][2]

|

||||

- 开发者: Daniel Stenberg

|

||||

- 证书: MIT/X derivate license

|

||||

- 版本号: 7.42.0

|

||||

|

||||

###Wget###

|

||||

|

||||

|

||||

|

||||

Wget是一个从网络服务器获取信息的开源软件。其名字源于World Wide Web 和 get。Wget支持HTTP,HTTPS和FTP协议,同时也可以通过HTTP代理获取信息。

|

||||

|

||||

Wget可以根据HTML页面的链接,创建远程网络站点的本地副本,完全重造源站点的目录结构。这种方式被称作“递归下载”。

|

||||

|

||||

Wget设计上增强了低速或者不稳定的网络连接。

|

||||

|

||||

功能包括:

|

||||

|

||||

- 使用REST和RANGE恢复中断的下载

|

||||

- 使用文件名通配符,支持递归地对目录镜像

|

||||

- 基于NLS的消息文件支持多语言

|

||||

- 可选的转换下载文档里地绝对链接为相对链接,使得下载文档可以在本地相互链接

|

||||

- 可以在大多数类UNIX操作系统和微软Windows上运行

|

||||

- 支持HTTP代理

|

||||

- 支持HTTP cookie

|

||||

- 支持HTTP持久连接

|

||||

- 无人照管/后台操作

|

||||

- 当对远程镜像时,使用本地文件时间戳来决定是否需要重新下载文档

|

||||

|

||||

---

|

||||

|

||||

- 站点: [www.gnu.org/software/wget/][3]

|

||||

- 开发者: Hrvoje Niksic, Gordon Matzigkeit, Junio Hamano, Dan Harkless, and many others

|

||||

- 证书: GNU GPL v3

|

||||

- 版本号: 1.16.3

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxlinks.com/article/20150425174537249/HTTPclients.html

|

||||

|

||||

作者:Frazer Kline

|

||||

译者:[wi-cuckoo](https://github.com/wi-cuckoo)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://httpie.org/

|

||||

[2]:http://curl.haxx.se/

|

||||

[3]:https://www.gnu.org/software/wget/

|

||||

@ -1,133 +1,99 @@

|

||||

|

||||

如何在CentOS 7上安装Percona Server

|

||||

|

||||

如何在 CentOS 7 上安装 Percona服务器

|

||||

================================================================================

|

||||

在这篇文章中我们将了解关于Percona Server,一个开源简易的MySQL,MariaDB的替代。InnoDB的数据库引擎使得Percona Server非常有吸引力,如果你需要的高性能,高可靠性和高性价比的解决方案,它将是一个很好的选择。

|

||||

|

||||

在下文中将介绍在CentOS 7上Percona的服务器的安装,以及备份当前数据,配置的步骤和如何恢复备份。

|

||||

|

||||

|

||||

###目录###

|

||||

|

||||

|

||||

1.什么是Percona,为什么使用它

|

||||

2.备份你的数据库

|

||||

3.删除之前的SQL服务器

|

||||

4.使用二进制包安装Percona

|

||||

5.配置Percona

|

||||

6.保护你的数据

|

||||

7.恢复你的备份

|

||||

在这篇文章中我们将了解关于 Percona 服务器,一个开源的MySQL,MariaDB的替代品。InnoDB的数据库引擎使得Percona 服务器非常有吸引力,如果你需要的高性能,高可靠性和高性价比的解决方案,它将是一个很好的选择。

|

||||

|

||||

在下文中将介绍在CentOS 7上Percona 服务器的安装,以及备份当前数据,配置的步骤和如何恢复备份。

|

||||

|

||||

### 1.什么是Percona,为什么使用它 ###

|

||||

|

||||

|

||||

Percona是一个开源简易的MySQL,MariaDB数据库的替代,它是MYSQL的一个分支,相当多的改进和独特的功能使得它比MYSQL更可靠,性能更强,速度更快,它与MYSQL完全兼容,你甚至可以在Oracle的MYSQL与Percona之间使用复制命令。

|

||||

Percona是一个MySQL,MariaDB数据库的开源替代品,它是MySQL的一个分支,相当多的改进和独特的功能使得它比MYSQL更可靠,性能更强,速度更快,它与MYSQL完全兼容,你甚至可以在Oracle的MySQL与Percona之间使用复制。

|

||||

|

||||

#### 在Percona中独具特色的功能 ####

|

||||

|

||||

- 分区适应哈希搜索

|

||||

- 快速校验算法

|

||||

- 缓冲池预加载

|

||||

- 支持FlashCache

|

||||

|

||||

-分段自适应哈希搜索

|

||||

-快速校验算法

|

||||

-缓冲池预加载

|

||||

-支持FlashCache

|

||||

#### MySQL企业版和Percona中的特有功能 ####

|

||||

|

||||

#### MySQL企业版和Percona的特定功能 ####

|

||||

- 从不同的服务器导入表

|

||||

- PAM认证

|

||||

- 审计日志

|

||||

- 线程池

|

||||

|

||||

-从不同的服务器导入表

|

||||

-PAM认证

|

||||

-审计日志

|

||||

-线程池

|

||||

|

||||

|

||||

现在,你肯定很兴奋地看到这些好的东西整理在一起,我们将告诉你如何安装和做些的Percona Server的基本配置。

|

||||

现在,你肯定很兴奋地看到这些好的东西整合在一起,我们将告诉你如何安装和对Percona Server做基本配置。

|

||||

|

||||

### 2. 备份你的数据库 ###

|

||||

|

||||

|

||||

接下来,在命令行下使用SQL命令创建一个mydatabases.sql文件来重建/恢复salesdb和employeedb数据库,重命名数据库以便反映你的设置,如果没有安装MYSQL跳过此步

|

||||

接下来,在命令行下使用SQL命令创建一个mydatabases.sql文件,来重建或恢复salesdb和employeedb数据库,根据你的设置替换数据库名称,如果没有安装MySQL则跳过此步:

|

||||

|

||||

mysqldump -u root -p --databases employeedb salesdb > mydatabases.sql

|

||||

|

||||

复制当前的配置文件,如果你没有安装MYSQL也可跳过

|

||||

|

||||

复制当前的配置文件,如果你没有安装MYSQL也可跳过:

|

||||

|

||||

cp my.cnf my.cnf.bkp

|

||||

|

||||

### 3.删除之前的SQL服务器 ###

|

||||

|

||||

|

||||

停止MYSQL/MariaDB如果它们还在运行

|

||||

|

||||

停止MYSQL/MariaDB,如果它们还在运行:

|

||||

|

||||

systemctl stop mysql.service

|

||||

|

||||

卸载MariaDB和MYSQL

|

||||

|

||||

卸载MariaDB和MYSQL:

|

||||

|

||||

yum remove MariaDB-server MariaDB-client MariaDB-shared mysql mysql-server

|

||||

|

||||

移动重命名在/var/lib/mysql当中的MariaDB文件,这比仅仅只是移除更为安全快速,这就像2级即时备份。:)

|

||||

|

||||

移动重命名放在/var/lib/mysql当中的MariaDB文件。这比仅仅只是移除更为安全快速,这就像2级即时备份。:)

|

||||

|

||||

mv /var/lib/mysql /var/lib/mysql_mariadb

|

||||

|

||||

### 4.使用二进制包安装Percona ###

|

||||

|

||||

|

||||

你可以在众多Percona安装方法中选择,在CentOS中使用Yum或者RPM包安装通常是更好的主意,所以这些是本文介绍的方式,下载源文件编译后安装在本文中并没有介绍。

|

||||

|

||||

从Yum仓库中安装:

|

||||

|

||||

从Yum仓库中安装:

|

||||

|

||||

|

||||

首先,你需要设置的Percona的Yum库:

|

||||

|

||||

首先,你需要设置Percona的Yum库:

|

||||

|

||||

yum install http://www.percona.com/downloads/percona-release/redhat/0.1-3/percona-release-0.1-3.noarch.rpm

|

||||

|

||||

接下来安装Percona:

|

||||

|

||||

|

||||

yum install Percona-Server-client-56 Percona-Server-server-56

|

||||

|

||||

上面的命令安装Percona的服务器和客户端,共享库,可能需要Perl和Perl模块,以及其他依赖的需要。如DBI::MySQL的,如果这些尚未安装,

|

||||

|

||||

使用RPM包安装:

|

||||

上面的命令安装Percona的服务器和客户端、共享库,可能需要Perl和Perl模块,以及其他依赖的需要,如DBI::MySQL。如果这些尚未安装,可能需要安装更多的依赖包。

|

||||

|

||||

使用RPM包安装:

|

||||

|

||||

我们可以使用wget命令下载所有的rpm包:

|

||||

|

||||

|

||||

wget -r -l 1 -nd -A rpm -R "*devel*,*debuginfo*" \ http://www.percona.com/downloads/Percona-Server-5.5/Percona-Server-5.5.42-37.1/binary/redhat/7/x86_64/

|

||||

wget -r -l 1 -nd -A rpm -R "*devel*,*debuginfo*" \

|

||||

http://www.percona.com/downloads/Percona-Server-5.5/Percona-Server-5.5.42-37.1/binary/redhat/7/x86_64/

|

||||

|

||||

使用rpm工具,一次性安装所有的rpm包:

|

||||

|

||||

rpm -ivh Percona-Server-server-55-5.5.42-rel37.1.el7.x86_64.rpm \

|

||||

Percona-Server-client-55-5.5.42-rel37.1.el7.x86_64.rpm \

|

||||

Percona-Server-shared-55-5.5.42-rel37.1.el7.x86_64.rpm

|

||||

|

||||

rpm -ivh Percona-Server-server-55-5.5.42-rel37.1.el7.x86_64.rpm \ Percona-Server-client-55-5.5.42-rel37.1.el7.x86_64.rpm \ Percona-Server-shared-55-5.5.42-rel37.1.el7.x86_64.rpm

|

||||

|

||||

注意在上面命令语句中最后的反斜杠'\',如果您安装单独的软件包,记住要解决依赖关系,在安装客户端之前要先安装共享包,在安装服务器之前请先安装客户端。

|

||||

注意在上面命令语句中最后的反斜杠'\'(只是为了换行方便)。如果您安装单独的软件包,记住要解决依赖关系,在安装客户端之前要先安装共享包,在安装服务器之前请先安装客户端。

|

||||

|

||||

### 5.配置Percona服务器 ###

|

||||

|

||||

|

||||

|

||||

#### 恢复之前的配置 ####

|

||||

|

||||

|

||||

当我们从MariaDB迁移过来时,你可以将之前的my.cnf的备份文件恢复回来。

|

||||

|

||||

|

||||

cp /etc/my.cnf.bkp /etc/my.cnf

|

||||

|

||||

#### 创建一个新的my.cnf文件 ####

|

||||

|

||||

|

||||

如果你需要一个适合你需求的新的配置文件或者你并没有备份配置文件,你可以使用以下方法,通过简单的几步生成新的配置文件。

|

||||

|

||||

下面是Percona-server软件包自带的my.cnf文件

|

||||

|

||||

|

||||

# Percona Server template configuration

|

||||

|

||||

[mysqld]

|

||||

@ -158,33 +124,29 @@ Percona是一个开源简易的MySQL,MariaDB数据库的替代,它是MYSQL

|

||||

|

||||

根据你的需要配置好my.cnf后,就可以启动该服务了:

|

||||

|

||||

|

||||

systemctl restart mysql.service

|

||||

|

||||

如果一切顺利的话,它已经准备好执行SQL命令了,你可以用以下命令检查它是否已经正常启动:

|

||||

|

||||

|

||||

mysql -u root -p -e 'SHOW VARIABLES LIKE "version_comment"'

|

||||

|

||||

如果你不能够正常启动它,你可以在**/var/log/mysql/mysqld.log**中查找原因,该文件可在my.cnf的[mysql_safe]的log-error中设置。

|

||||

|

||||

tail /var/log/mysql/mysqld.log

|

||||

|

||||

你也可以在/var/lib/mysql/文件夹下查找格式为[hostname].err的文件,就像下面这个例子样:

|

||||

|

||||

你也可以在/var/lib/mysql/文件夹下查找格式为[主机名].err的文件,就像下面这个例子:

|

||||

|

||||

tail /var/lib/mysql/centos7.err

|

||||

|

||||

如果还是没找出原因,你可以试试strace:

|

||||

|

||||

|

||||

yum install strace && systemctl stop mysql.service && strace -f -f mysqld_safe

|

||||

|

||||

上面的命令挺长的,输出的结果也相对简单,但绝大多数时候你都能找到无法启动的原因。

|

||||

|

||||

### 6.保护你的数据 ###

|

||||

|

||||

好了,你的关系数据库管理系统已经准备好接收SQL查询,但是把你宝贵的数据放在没有最起码安全保护的服务器上并不可取,为了更为安全最好使用mysql_secure_instalation,这个工具可以帮助删除未使用的默认功能,还设置root的密码,并限制使用此用户进行访问。

|

||||

只需要在shell中执行,并参照屏幕上的说明。

|

||||

好了,你的关系数据库管理系统已经准备好接收SQL查询,但是把你宝贵的数据放在没有最起码安全保护的服务器上并不可取,为了更为安全最好使用mysql_secure_install来安装,这个工具可以帮助你删除未使用的默认功能,并设置root的密码,限制使用此用户进行访问。只需要在shell中执行该命令,并参照屏幕上的说明操作。

|

||||

|

||||

mysql_secure_install

|

||||

|

||||

@ -192,28 +154,27 @@ Percona是一个开源简易的MySQL,MariaDB数据库的替代,它是MYSQL

|

||||

|

||||

如果您参照之前的设置,现在你可以恢复数据库,只需再用mysqldump一次。

|

||||

|

||||

|

||||

mysqldump -u root -p < mydatabases.sql

|

||||

恭喜你,你刚刚已经在你的CentOS上成功安装了Percona,你的服务器已经可以正式投入使用;你可以像使用MYSQL一样使用它,你的服务器与他完全兼容。

|

||||

|

||||

恭喜你,你刚刚已经在你的CentOS上成功安装了Percona,你的服务器已经可以正式投入使用;你可以像使用MySQL一样使用它,你的服务器与它完全兼容。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

为了获得更强的性能你需要对配置文件做大量的修改,但这里也有一些简单的选项来提高机器的性能。当使用InnoDB引擎时,将innodb_file_per_table设置为on,它将在一个文件中为每个表创建索引表,这意味着每个表都有它自己的索引文件,它使系统更强大和更容易维修。

|

||||

为了获得更强的性能你需要对配置文件做大量的修改,但这里也有一些简单的选项来提高机器的性能。当使用InnoDB引擎时,将innodb_file_per_table设置为on,它将在一个文件中为每个表创建索引表,这意味着每个表都有它自己的索引文件,它使系统更强大和更容易维修。

|

||||

|

||||

可以修改innodb_buffer_pool_size选项,InnoDB应该有足够的缓存池来应对你的数据集,大小应该为当前可用内存的70%到80%。

|

||||

|

||||

过将innodb-flush-method设置为O_DIRECT,关闭写入高速缓存,如果你使用了RAID,这可以提升性能因为在底层已经完成了缓存操作。

|

||||

将innodb-flush-method设置为O_DIRECT,关闭写入高速缓存,如果你使用了RAID,这可以提升性能,因为在底层已经完成了缓存操作。

|

||||

|

||||

如果你的数据并不是十分关键并且并不需要对数据库事务正确执行的四个基本要素完全兼容,可以将innodb_flush_log_at_trx_commit设置为2,这也能提升系统的性能。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/linux-how-to/percona-server-centos-7/

|

||||

|

||||

作者:[Carlos Alberto][a]

|

||||

译者:[FatJoe123](https://github.com/FatJoe123)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

如何在云服务提供商的机器使用Docker Machine

|

||||

如何在云服务提供商的平台上使用Docker Machine

|

||||

================================================================================

|

||||

大家好,今天我们来学习如何使用Docker Machine在各种云服务提供商的平台部署Docker。Docker Machine是一个可以帮助我们在自己的电脑、云服务提供商的机器以及我们数据中心的机器上创建Docker机器的应用程序。它为创建服务器、在服务器中安装Docker、根据用户需求配置Docker客户端提供了简单的解决方案。驱动API对本地机器、数据中心的虚拟机或者公用云机器都适用。Docker Machine支持Windows、OSX和Linux,并且提供一个独立的二进制文件,可以直接使用。它让我们可以充分利用支持Docker的基础设施的生态环境合作伙伴,并且使用相同的接口进行访问。它让人们可以使用一个命令来简单而迅速地在不同的云平台部署Docker容器。

|

||||

|

||||

|

||||

大家好,今天我们来了解如何使用Docker Machine在各种云服务提供商的平台上部署Docker。Docker Machine是一个可以帮助我们在自己的电脑、云服务提供商的平台以及我们数据中心的机器上创建Docker机器的应用程序。它为创建服务器、在服务器中安装Docker、根据用户需求配置Docker客户端提供了简单的解决方案。驱动API对本地机器、数据中心的虚拟机或者公用云机器都适用。Docker Machine支持Windows、OSX和Linux,并且提供一个独立的二进制文件,可以直接使用。它让我们可以充分利用支持Docker的基础设施的生态环境合作伙伴,并且使用相同的接口进行访问。它让人们可以使用一个命令来简单而迅速地在不同的云平台部署Docker容器。

|

||||

|

||||

### 1. 安装Docker Machine ###

|

||||

|

||||

@ -25,14 +25,14 @@ Docker Machine可以很好地支持每一种Linux发行版。首先,我们需

|

||||

|

||||

|

||||

|

||||

另外机器上需要有docker命令,可以使用如下命令安装:

|

||||

要在我们的机器上启用docker命令,需要使用如下命令安装Docker客户端:

|

||||

|

||||

# curl -L https://get.docker.com/builds/linux/x86_64/docker-latest > /usr/local/bin/docker

|

||||

# chmod +x /usr/local/bin/docker

|

||||

|

||||

### 2. 创建机器 ###

|

||||

|

||||

在自己的Linux机器上安装好了Docker Machine之后,我们想要将一个docker虚拟机部署到云服务器上。Docker Machine支持几个流行的云平台,如igital Ocean、Amazon Web Services(AWS)、Microsoft Azure、Google Cloud Computing等等,所以我们可以在不同的平台使用相同的接口来部署Docker。本文中我们会使用digitalocean驱动在Digital Ocean的服务器上部署Docker,--driver选项指定digitalocean驱动,--digitalocean-access-token选项指定[Digital Ocean Control Panel][1]提供的API Token,命令最后的是我们创建的Docker虚拟机的机器名。运行如下命令:

|

||||

在自己的Linux机器上安装好了Docker Machine之后,我们想要将一个docker虚拟机部署到云服务器上。Docker Machine支持几个流行的云平台,如igital Ocean、Amazon Web Services(AWS)、Microsoft Azure、Google Cloud Computing及其它等等,所以我们可以在不同的平台使用相同的接口来部署Docker。本文中我们会使用digitalocean驱动在Digital Ocean的服务器上部署Docker,--driver选项指定digitalocean驱动,--digitalocean-access-token选项指定[Digital Ocean Control Panel][1]提供的API Token,命令最后的是我们创建的Docker虚拟机的机器名。运行如下命令:

|

||||

|

||||

# docker-machine create --driver digitalocean --digitalocean-access-token <API-Token> linux-dev

|

||||

|

||||

@ -40,7 +40,7 @@ Docker Machine可以很好地支持每一种Linux发行版。首先,我们需

|

||||

|

||||

|

||||

|

||||

**注意**: 这里linux-dev是我们将要创建的机器的名称。`<API-Token>`是一个安全key,可以在Digtal Ocean Control Panel生成。要找到这个key,我们只需要登录到我们的Digital Ocean Control Panel,然后点击API,再点击Generate New Token,填写一个名称,选上Read和Write。然后我们就会得到一串十六进制的key,那就是`<API-Token>`,简单地替换到上边的命令中即可。

|

||||

**注意**: 这里linux-dev是我们将要创建的机器的名称。`<API-Token>`是一个安全key,可以在Digtal Ocean Control Panel生成。要找到这个key,我们只需要登录到我们的Digital Ocean Control Panel,然后点击API,再点击 Generate New Token,填写一个名称,选上Read和Write。然后我们就会得到一串十六进制的key,那就是`<API-Token>`,简单地替换到上边的命令中即可。

|

||||

|

||||

运行如上命令后,我们可以在Digital Ocean Droplet Panel中看到一个具有默认配置的droplet已经被创建出来了。

|

||||

|

||||

@ -48,35 +48,35 @@ Docker Machine可以很好地支持每一种Linux发行版。首先,我们需

|

||||

|

||||

简便起见,docker-machine会使用默认配置来部署Droplet。我们可以通过增加选项来定制我们的Droplet。这里是一些digitalocean相关的选项,我们可以使用它们来覆盖Docker Machine所使用的默认配置。

|

||||

|

||||

--digitalocean-image "ubuntu-14-04-x64" 是选择Droplet的镜像

|

||||

--digitalocean-ipv6 enable 是启用IPv6网络支持

|

||||

--digitalocean-private-networking enable 是启用专用网络

|

||||

--digitalocean-region "nyc3" 是选择部署Droplet的区域

|

||||

--digitalocean-size "512mb" 是选择内存大小和部署的类型

|

||||

- --digitalocean-image "ubuntu-14-04-x64" 用于选择Droplet的镜像

|

||||

- --digitalocean-ipv6 enable 启用IPv6网络支持

|

||||

- --digitalocean-private-networking enable 启用专用网络

|

||||

- --digitalocean-region "nyc3" 选择部署Droplet的区域

|

||||

- --digitalocean-size "512mb" 选择内存大小和部署的类型

|

||||

|

||||

如果你想在其他云服务使用docker-machine,并且想覆盖默认的配置,可以运行如下命令来获取Docker Mackine默认支持的对每种平台适用的参数。

|

||||

|

||||

# docker-machine create -h

|

||||

|

||||

### 3. 选择活跃机器 ###

|

||||

### 3. 选择活跃主机 ###

|

||||

|

||||

部署Droplet后,我们想马上运行一个Docker容器,但在那之前,我们需要检查下活跃机器是否是我们需要的机器。可以运行如下命令查看。

|

||||

部署Droplet后,我们想马上运行一个Docker容器,但在那之前,我们需要检查下活跃主机是否是我们需要的机器。可以运行如下命令查看。

|

||||

|

||||

# docker-machine ls

|

||||

|

||||

|

||||

|

||||

ACTIVE一列有“*”标记的是活跃机器。

|

||||

ACTIVE一列有“*”标记的是活跃主机。

|

||||

|

||||

现在,如果我们想将活跃机器切换到需要的机器,运行如下命令:

|

||||

现在,如果我们想将活跃主机切换到需要的主机,运行如下命令:

|

||||

|

||||

# docker-machine active linux-dev

|

||||

|

||||

**注意**:这里,linux-dev是机器名,我们打算激活这个机器,并且在其中运行Docker容器。

|

||||

**注意**:这里,linux-dev是机器名,我们打算激活这个机器,并且在其上运行Docker容器。

|

||||

|

||||

### 4. 运行一个Docker容器 ###

|

||||

|

||||

现在,我们已经选择了活跃机器,就可以运行Docker容器了。可以测试一下,运行一个busybox容器来执行`echo hello word`命令,这样就可以得到输出:

|

||||

现在,我们已经选择了活跃主机,就可以运行Docker容器了。可以测试一下,运行一个busybox容器来执行`echo hello word`命令,这样就可以得到输出:

|

||||

|

||||

# docker run busybox echo hello world

|

||||

|

||||

@ -98,9 +98,9 @@ SSH到机器上之后,我们可以在上边运行任何Docker容器。这里

|

||||

|

||||

# exit

|

||||

|

||||

### 5. 删除机器 ###

|

||||

### 5. 删除主机 ###

|

||||

|

||||

删除在运行的机器以及它的所有镜像和容器,我们可以使用docker-machine rm命令:

|

||||

删除在运行的主机以及它的所有镜像和容器,我们可以使用docker-machine rm命令:

|

||||

|

||||

# docker-machine rm linux-dev

|

||||

|

||||

@ -112,15 +112,15 @@ SSH到机器上之后,我们可以在上边运行任何Docker容器。这里

|

||||

|

||||

|

||||

|

||||

### 6. 在不使用驱动的情况新增一个机器 ###

|

||||

### 6. 在不使用驱动的情况新增一个主机 ###

|

||||

|

||||

我们可以在不使用驱动的情况往Docker增加一台机器,只需要一个URL。它可以使用一个已有机器的别名,所以我们就不需要每次在运行docker命令时输入完整的URL了。

|

||||

我们可以在不使用驱动的情况往Docker增加一台主机,只需要一个URL。它可以使用一个已有机器的别名,所以我们就不需要每次在运行docker命令时输入完整的URL了。

|

||||

|

||||

$ docker-machine create --url=tcp://104.131.50.36:2376 custombox

|

||||

|

||||

### 7. 管理机器 ###

|

||||

### 7. 管理主机 ###

|

||||

|

||||

如果你已经让Docker运行起来了,可以使用简单的**docker-machine stop**命令来停止所有正在运行的机器,如果需要再启动的话可以运行**docker-machine start**:

|

||||

如果你已经让Docker运行起来了,可以使用简单的**docker-machine stop**命令来停止所有正在运行的主机,如果需要再启动的话可以运行**docker-machine start**:

|

||||

|

||||

# docker-machine stop

|

||||

# docker-machine start

|

||||

@ -140,7 +140,7 @@ via: http://linoxide.com/linux-how-to/use-docker-machine-cloud-provider/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[goreliu](https://github.com/goreliu)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

Shell脚本:使用rsync备份文件/目录

|

||||

================================================================================

|

||||

本文,我们带来了shell脚本,用来使用rsync命令将你本地Linux机器上的文件/目录备份到远程Linux服务器上。使用该脚本实施备份会是一个交互的方式,你需要提供远程备份服务器的主机名/ip地址和文件夹位置。我们保留了一个独立文件,在这个文件中你需要提供需要备份的文件/目录。我们添加了两个脚本,**第一个脚本**在每次拷贝完一个文件后询问密码(如果你启用了ssh验证密钥,那么就不会询问密码),而第二个脚本中,则只会提示一次输入密码。

|

||||

本文我们介绍一个shell脚本,用来使用rsync命令将你本地Linux机器上的文件/目录备份到远程Linux服务器上。使用该脚本会以交互的方式实施备份,你需要提供远程备份服务器的主机名/ip地址和文件夹位置。我们使用一个单独的列表文件,在这个文件中你需要列出要备份的文件/目录。我们添加了两个脚本,**第一个脚本**在每次拷贝完一个文件后询问密码(如果你启用了ssh密钥验证,那么就不会询问密码),而第二个脚本中,则只会提示一次输入密码。

|

||||

|

||||

我们打算备份bckup.txt,dataconfig.txt,docs和orcledb。

|

||||

|

||||

@ -12,7 +12,7 @@ Shell脚本:使用rsync备份文件/目录

|

||||

drwxr-xr-x. 2 root root 4096 May 15 10:45 docs

|

||||

drwxr-xr-x. 2 root root 4096 May 15 10:44 oracledb

|

||||

|

||||

该文件包含了备份文件/目录的详情

|

||||

bckup.txt文件包含了需要备份的文件/目录的详情

|

||||

|

||||

[root@Fedora21 tmp]# cat /tmp/bckup.txt

|

||||

/tmp/oracledb

|

||||

@ -24,46 +24,46 @@ Shell脚本:使用rsync备份文件/目录

|

||||

|

||||

#!/bin/bash

|

||||

|

||||

#We will save path to backup file in variable

|

||||

# 将备份列表文件的路径保存到变量中

|

||||

backupf='/tmp/bckup.txt'

|

||||

|

||||

#Next line just prints message

|

||||

# 输入一个提示信息

|

||||

echo "Shell Script Backup Your Files / Directories Using rsync"

|

||||

|

||||

#next line check if entered value is not null, and if null it will reask user to enter Destination Server

|

||||

# 检查是否输入了目标服务器,如果为空就再次提示用户输入

|

||||

while [ x$desthost = "x" ]; do

|

||||

|

||||

#next line prints what userd should enter, and stores entered value to variable with name desthost

|

||||

# 提示用户输入目标服务器地址并保存到变量

|

||||

read -p "Destination backup Server : " desthost

|

||||

|

||||

#next line finishes while loop

|

||||

# 结束循环

|

||||

done

|

||||

|

||||

#next line check if entered value is not null, and if null it will reask user to enter Destination Path

|

||||

# 检查是否输入了目标文件夹,如果为空就再次提示用户输入

|

||||

while [ x$destpath = "x" ]; do

|

||||

|

||||

#next line prints what userd should enter, and stores entered value to variable with name destpath

|

||||

# 提示用户输入目标文件夹并保存到变量

|

||||

read -p "Destination Folder : " destpath

|

||||

|

||||

#next line finishes while loop

|

||||

# 结束循环

|

||||

done

|

||||

|

||||

#Next line will start reading backup file line by line

|

||||

# 逐行读取备份列表文件

|

||||

for line in `cat $backupf`

|

||||

|

||||

#and on each line will execute next

|

||||

# 对每一行都进行处理

|

||||

do

|

||||

|

||||

#print message that file/dir will be copied

|

||||

# 显示要被复制的文件/文件夹名称

|

||||

echo "Copying $line ... "

|

||||

#copy via rsync file/dir to destination

|

||||

# 通过 rsync 复制文件/文件夹到目标位置

|

||||

|

||||

rsync -ar "$line" "$desthost":"$destpath"

|

||||

|

||||

#this line just print done

|

||||

# 显示完成

|

||||

echo "DONE"

|

||||

|

||||

#end of reading backup file

|

||||

# 结束

|

||||

done

|

||||

|

||||

#### 运行带有输出结果的脚本 ####

|

||||

@ -91,64 +91,65 @@ Shell脚本:使用rsync备份文件/目录

|

||||

|

||||

#!/bin/bash

|

||||

|

||||

#We will save path to backup file in variable

|

||||

# 将备份列表文件的路径保存到变量中

|

||||

backupf='/tmp/bckup.txt'

|

||||

|

||||

#Next line just prints message

|

||||

# 输入一个提示信息

|

||||

echo "Shell Script Backup Your Files / Directories Using rsync"

|

||||

|

||||

#next line check if entered value is not null, and if null it will reask user to enter Destination Server

|

||||

# 检查是否输入了目标服务器,如果为空就再次提示用户输入

|

||||

while [ x$desthost = "x" ]; do

|

||||

|

||||

#next line prints what userd should enter, and stores entered value to variable with name desthost

|

||||

# 提示用户输入目标服务器地址并保存到变量

|

||||

read -p "Destination backup Server : " desthost

|

||||

|

||||

#next line finishes while loop

|

||||

# 结束循环

|

||||

done

|

||||

|

||||

#next line check if entered value is not null, and if null it will reask user to enter Destination Path

|

||||

# 检查是否输入了目标文件夹,如果为空就再次提示用户输入

|

||||

while [ x$destpath = "x" ]; do

|

||||

|

||||

#next line prints what userd should enter, and stores entered value to variable with name destpath

|

||||

# 提示用户输入目标文件夹并保存到变量

|

||||

read -p "Destination Folder : " destpath

|

||||

|

||||

#next line finishes while loop

|

||||

# 结束循环

|

||||

done

|

||||

|

||||

#next line check if entered value is not null, and if null it will reask user to enter password

|

||||

# 检查是否输入了目标服务器密码,如果为空就再次提示用户输入

|

||||

while [ x$password = "x" ]; do

|

||||

#next line prints what userd should enter, and stores entered value to variable with name password. #To hide password we are using -s key

|

||||

# 提示用户输入密码并保存到变量

|

||||

# 使用 -s 选项不回显输入的密码

|

||||

read -sp "Password : " password

|

||||

#next line finishes while loop

|

||||

# 结束循环

|

||||

done

|

||||

|

||||

#Next line will start reading backup file line by line

|

||||

# 逐行读取备份列表文件

|

||||

for line in `cat $backupf`

|

||||

|

||||

#and on each line will execute next

|

||||

# 对每一行都进行处理

|

||||

do

|

||||

|

||||

#print message that file/dir will be copied

|

||||

# 显示要被复制的文件/文件夹名称

|

||||

echo "Copying $line ... "

|

||||

#we will use expect tool to enter password inside script

|

||||

# 使用 expect 来在脚本中输入密码

|

||||

/usr/bin/expect << EOD

|

||||

#next line set timeout to -1, recommended to use

|

||||

# 推荐设置超时为 -1

|

||||

set timeout -1

|

||||

#copy via rsync file/dir to destination, using part of expect — spawn command

|

||||

# 通过 rsync 复制文件/文件夹到目标位置,使用 expect 的组成部分 spawn 命令

|

||||

|

||||

spawn rsync -ar ${line} ${desthost}:${destpath}

|

||||

#as result of previous command we expect “password” promtp

|

||||

# 上一行命令会等待 “password” 提示

|

||||

expect "*?assword:*"

|

||||

#next command enters password from script

|

||||

# 在脚本中提供密码

|

||||

send "${password}\r"

|

||||

#next command tells that we expect end of file (everything finished on remote server)

|

||||

# 等待文件结束符(远程服务器处理完了所有事情)

|

||||

expect eof

|

||||

#end of expect pard

|

||||

# 结束 expect 脚本

|

||||

EOD

|

||||

#this line just print done

|

||||

# 显示结束

|

||||

echo "DONE"

|

||||

|

||||

#end of reading backup file

|

||||

# 完成

|

||||

done

|

||||

|

||||

#### 运行第二个带有输出结果的脚本的屏幕截图 ####

|

||||

@ -163,7 +164,7 @@ via: http://linoxide.com/linux-shell-script/shell-script-backup-files-directorie

|

||||

|

||||

作者:[Yevhen Duma][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,14 +1,14 @@

|

||||

Linux 有问必答--如何在桌面版 Ubuntu 中用命令行更改系统代理设置

|

||||

Linux 有问必答:如何在桌面版 Ubuntu 中用命令行更改系统代理设置

|

||||

================================================================================

|

||||

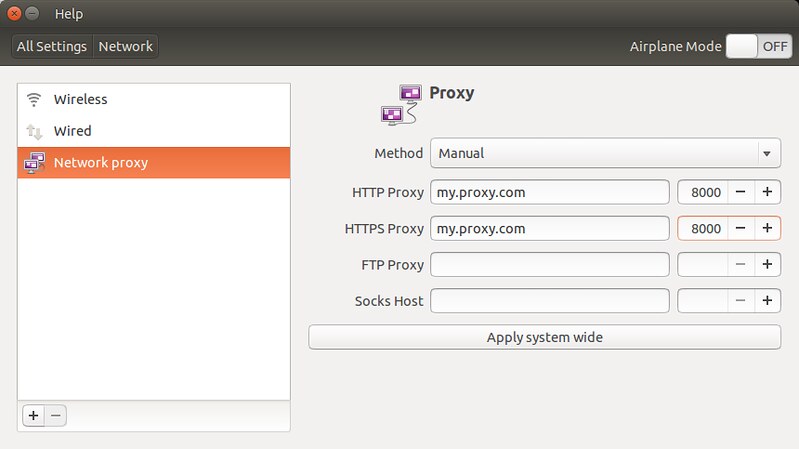

> **问题**: 我经常需要在桌面版 Ubuntu 中更改系统代理设置,但我不想通过繁琐的 GUI 菜单链:"系统设置" -> "网络" -> "网络代理"。在命令行中有更方便的方法更改桌面版的代理设置吗?

|

||||

> **问题**: 我经常需要在桌面版 Ubuntu 中更改系统代理设置,但我不想通过繁琐的 GUI 菜单点击:"系统设置" -> "网络" -> "网络代理"。在命令行中有更方便的方法更改桌面版的代理设置吗?

|

||||

|

||||

在桌面版 Ubuntu 中,它的桌面环境设置,包括系统代理设置,都存储在 DConf 数据库,这是简单的键值对存储。如果你想通过系统设置菜单修改桌面属性,更改会持久保存在后端的 DConf 数据库。在 Ubuntu 中更改 DConf 数据库有基于图像用户界面和非图形用户界面的两种方式。系统设置或者 dconf-editor 是访问 DConf 数据库的图形方法,而 gsettings 或 dconf 就是能更改数据库的命令行工具。

|

||||

在桌面版 Ubuntu 中,它的桌面环境设置,包括系统代理设置,都存储在 DConf 数据库,这是简单的键值对存储。如果你想通过系统设置菜单修改桌面属性,更改会持久保存在后端的 DConf 数据库。在 Ubuntu 中更改 DConf 数据库有基于图像用户界面和非图形用户界面的两种方式。系统设置或者 `dconf-editor` 是访问 DConf 数据库的图形方法,而 `gsettings` 或 `dconf` 就是能更改数据库的命令行工具。

|

||||

|

||||

下面介绍如何用 gsettings 从命令行更改系统代理设置。

|

||||

下面介绍如何用 `gsettings` 从命令行更改系统代理设置。

|

||||

|

||||

|

||||

|

||||

gsetting 读写特定 Dconf 设置的基本用法如下:

|

||||

`gsettings` 读写特定 Dconf 设置的基本用法如下:

|

||||

|

||||

更改 DConf 设置:

|

||||

|

||||

@ -53,7 +53,7 @@ gsetting 读写特定 Dconf 设置的基本用法如下:

|

||||

|

||||

### 在命令行中清除系统代理设置 ###

|

||||

|

||||

最后,清除所有 手动/自动 代理设置,还原为无代理设置:

|

||||

最后,清除所有“手动/自动”代理设置,还原为无代理设置:

|

||||

|

||||

$ gsettings set org.gnome.system.proxy mode 'none'

|

||||

|

||||

@ -63,7 +63,7 @@ via: http://ask.xmodulo.com/change-system-proxy-settings-command-line-ubuntu-des

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -2,9 +2,9 @@

|

||||

================================================================================

|

||||

|

||||

|

||||

笔记本过热是最近一个常见的问题。监控硬件温度或许可以帮助你诊断笔记本为什么会过热。本篇中,我们会**了解如何在Ubuntu中检查CPU的温度**。

|

||||

夏天到了,笔记本过热是最近一个常见的问题。监控硬件温度或许可以帮助你诊断笔记本为什么会过热。本篇中,我们会**了解如何在Ubuntu中检查CPU的温度**。

|

||||

|

||||

我们将使用一个GUI工具[Psensor][1],它允许你在Linux中监控硬件温度。用Psensor你可以:

|

||||

我们将使用一个GUI工具[Psensor][1],它允许你在Linux中监控硬件温度。用Psensor你可以:

|

||||

|

||||

- 监控cpu和主板的温度

|

||||

- 监控NVidia GPU的文档

|

||||

@ -17,7 +17,7 @@ Psensor最新的版本同样提供了Ubuntu中的指示小程序,这样使得

|

||||

|

||||

### 如何在Ubuntu 15.04 和 14.04中安装Psensor ###

|

||||

|

||||

在安装Psensor前,你需要安装和配置[lm-sensors][2],一个用于硬件监控的命令行工具。如果你想要测量磁盘温度,你还需要安装[hddtemp][3]。要安装这些工具,运行下面的这些命令:

|

||||

在安装Psensor前,你需要安装和配置[lm-sensors][2],这是一个用于硬件监控的命令行工具。如果你想要测量磁盘温度,你还需要安装[hddtemp][3]。要安装这些工具,运行下面的这些命令:

|

||||

|

||||

sudo apt-get install lm-sensors hddtemp

|

||||

|

||||

@ -45,7 +45,7 @@ Psensor最新的版本同样提供了Ubuntu中的指示小程序,这样使得

|

||||

|

||||

sudo apt-get install psensor

|

||||

|

||||

安装完成后,在Unity Dash中运行程序。第一次运行时,你应该配置Psensor该监控什么状态。

|

||||

安装完成后,在Unity Dash中运行程序。第一次运行时,你应该配置Psensor该监控什么状态。

|

||||

|

||||

|

||||

|

||||

@ -73,7 +73,7 @@ via: http://itsfoss.com/check-laptop-cpu-temperature-ubuntu/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,10 +1,10 @@

|

||||

Linux有问必答 -- 如何在Linux中挂载LVM分区

|

||||

Linux有问必答:如何在Linux中直接挂载LVM分区

|

||||

================================================================================

|

||||

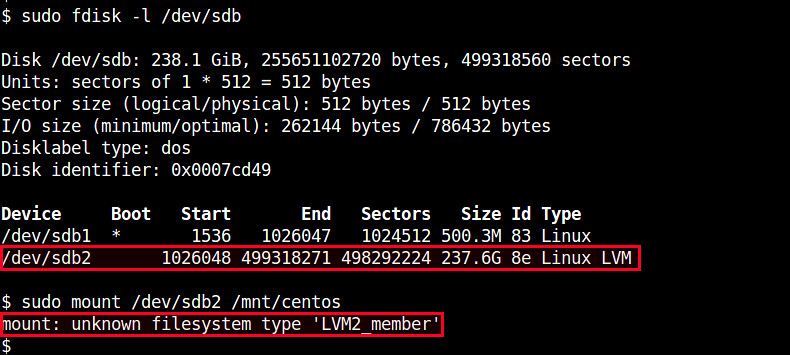

> **提问**: 我有一个USB盘包含了LVM分区。 我想要在Linux中访问这些LVM分区。我该如何在Linux中挂载LVM分区?

|

||||

|

||||

】LVM是逻辑卷管理工具,它允许你使用逻辑卷和卷组的概念来管理磁盘空间。使用LVM相比传统分区最大的好处是弹性地位用户和程序分配空间而不用考虑每个物理磁盘的大小。

|

||||

LVM是逻辑卷管理工具,它允许你使用逻辑卷和卷组的概念来管理磁盘空间。使用LVM相比传统分区最大的好处是弹性地为用户和程序分配空间而不用考虑每个物理磁盘的大小。

|

||||

|

||||

在LVM中,那些创建了逻辑分区的物理存储是传统的分区(比如:/dev/sda2,/dev/sdb1)。这些分区必须被初始化位“物理卷”并被标签(如,“Linux LVM”)来使它们可以在LVM中使用。一旦分区被标记被LVM分区,你不能直接用mount命令挂载。

|

||||

在LVM中,那些创建了逻辑分区的物理存储是传统的分区(比如:/dev/sda2,/dev/sdb1)。这些分区必须被初始化为“物理卷 PV”并加上卷标(如,“Linux LVM”)来使它们可以在LVM中使用。一旦分区被标记被LVM分区,你不能直接用mount命令挂载。

|

||||

|

||||

如果你尝试挂载一个LVM分区(比如/dev/sdb2), 你会得到下面的错误。

|

||||

|

||||

@ -16,9 +16,9 @@ Linux有问必答 -- 如何在Linux中挂载LVM分区

|

||||

|

||||

|

||||

|

||||

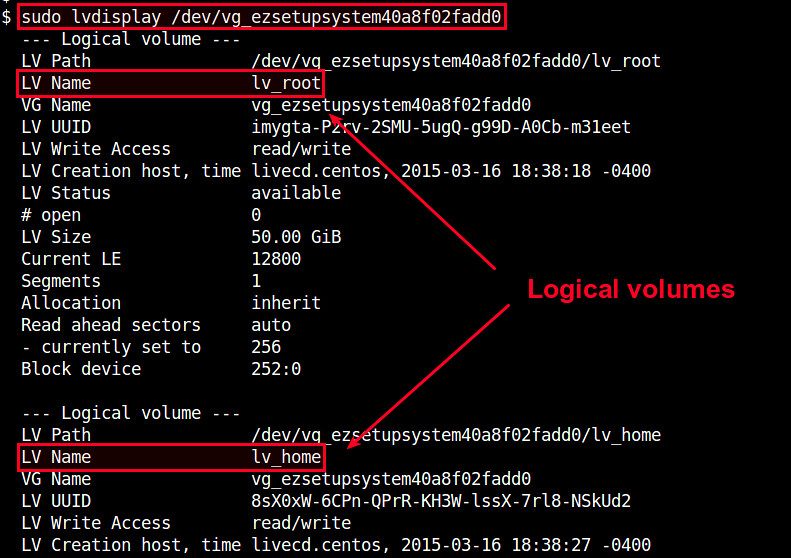

要正确地挂载LVM分区,你必须挂载分区创建的“逻辑分区”。下面就是如何做的。

|

||||

要正确地挂载LVM分区,你必须挂载分区中创建的“逻辑卷”。下面就是如何做的。

|

||||

|

||||

=首先,用下面的命令检查可用的卷组:

|

||||

首先,用下面的命令检查可用的卷组:

|

||||

|

||||

$ sudo pvs

|

||||

|

||||

@ -60,7 +60,9 @@ Linux有问必答 -- 如何在Linux中挂载LVM分区

|

||||

|

||||

|

||||

|

||||

如果你想要挂载一个特定的逻辑卷,使用“LV Path”下面的设备名(如:/dev/vg_ezsetupsystem40a8f02fadd0/lv_home)。

|

||||

*上图可以看到两个逻辑卷的名字:lv_root和lv_home*

|

||||

|

||||

如果你想要挂载一个特定的逻辑卷,使用如下的“LV Path”的设备名(如:/dev/vg_ezsetupsystem40a8f02fadd0/lv_home)。

|

||||

|

||||

$ sudo mount /dev/vg_ezsetupsystem40a8f02fadd0/lv_home /mnt

|

||||

|

||||

@ -82,7 +84,7 @@ via: http://ask.xmodulo.com/mount-lvm-partition-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

11个让人惊叹的Linux终端彩蛋

|

||||

11个无用而有趣的Linux终端彩蛋

|

||||

================================================================================

|

||||

这里有一些很酷的Linux终端彩蛋,其中的每一个看上去并没有实际用途,但很精彩。

|

||||

这里有一些很酷的Linux终端彩蛋,其中的每一个看上去并没有实际用途,但很有趣。

|

||||

|

||||

|

||||

|

||||

@ -8,7 +8,7 @@

|

||||

|

||||

当我们使用命令行工作时,Linux是功能和实用性最好的操作系统之一。想要执行一个特殊任务?可能一个程序或者脚本就可以帮你搞定。但就像一本书中说到的,只工作不玩耍聪明的孩子也会变傻。下边是我最喜欢的可以在终端做的没有实际用途的、傻傻的、恼人的、可笑的事情。

|

||||

|

||||

### 给终端一个态度 ###

|

||||

### 让终端成为一个有态度的人 ###

|

||||

|

||||

* 第一步)敲入`sudo visudo`

|

||||

* 第二步)在“Defaults”末尾(文件的前半部分)添加一行“Defaults insults”。

|

||||

@ -20,13 +20,13 @@

|

||||

|

||||

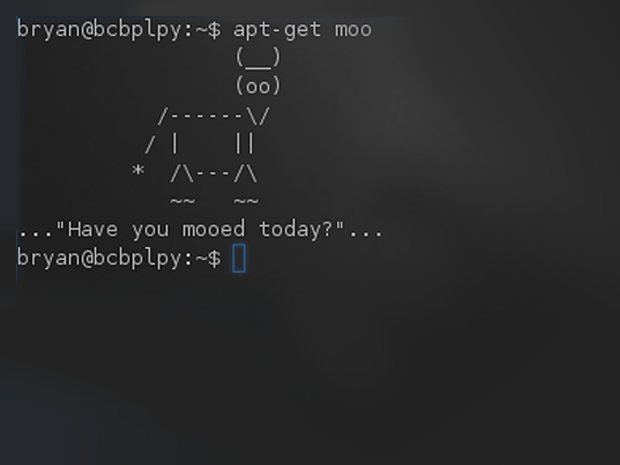

### apt-get moo ###

|

||||

|

||||

你看过这张截图?那就是运行`apt-get moo`(在基于Debian的系统)的结果。对,就是它了。不要对它抱太多幻想,你会失望的,我不骗你。但是这是Linux世界最被人熟知的彩蛋之一。所以我把它包含进来,并且放在前排,然后我也就不会收到5千封邮件,指责我把它遗漏了。

|

||||

|

||||

|

||||

|

||||

你看过这张截图?那就是运行`apt-get moo`(在基于Debian的系统)的结果。对,就是它了。不要对它抱太多幻想,你会失望的,我不骗你。但是这是Linux世界最被人熟知的彩蛋之一。所以我把它包含进来,并且放在前排,然后我也就不会收到5千封邮件,指责我把它遗漏了。

|

||||

|

||||

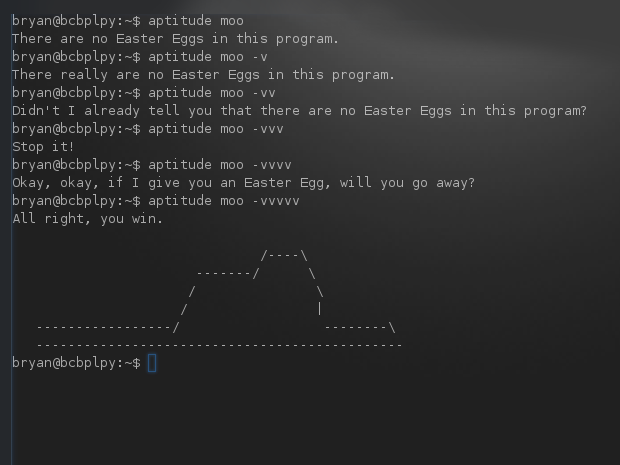

### aptitude moo ###

|

||||

|

||||

更有趣的是将moo应用到aptitude上。敲入`aptitude moo`(在Ubuntu及其衍生版),你对`moo`可以做什么事情的看法会有所变化。你还还会知道更多事情,尝试重新输入这条命令,但这次添加一个`-v`参数。这还没有结束,试着添加更多`v`,一次添加一个,直到aptitude给了你想要的东西。

|

||||

更有趣的是将moo应用到aptitude上。敲入`aptitude moo`(在Ubuntu及其衍生版),你对`moo`可以做什么事情的看法会有所变化。你还还会知道更多事情,尝试重新输入这条命令,但这次添加一个`-v`参数。这还没有结束,试着添加更多`v`,一次添加一个,直到抓狂的aptitude给了你想要的东西。

|

||||

|

||||

|

||||

|

||||

@ -38,25 +38,25 @@

|

||||

* 第二步)在“# Misc options”部分,去掉“Color”前的“#”。

|

||||

* 第三步)添加“ILoveCandy”。

|

||||

|

||||

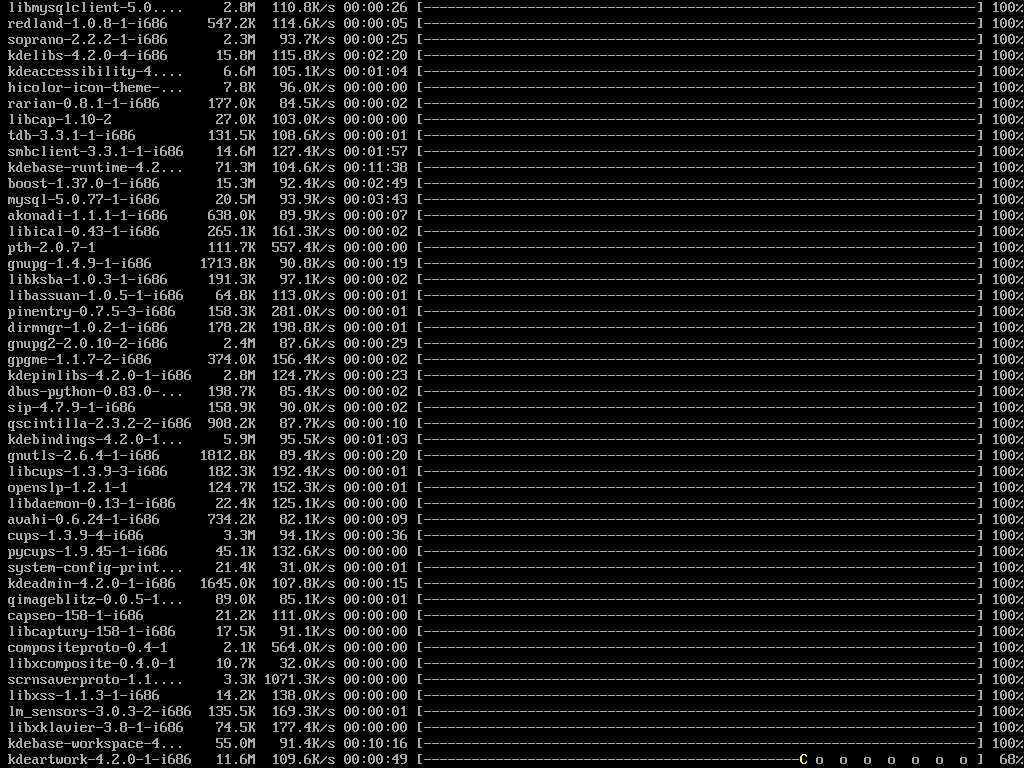

现在我们使用pacman安装新软件包时,进度条里会出现一个小吃豆人。真应该默认就是这样的。

|

||||

现在我们使用pacman安装新软件包时,进度条里会出现一个小吃豆人。真应该默认就这样的。

|

||||

|

||||

|

||||

|

||||

### Cowsay! ###

|

||||

|

||||

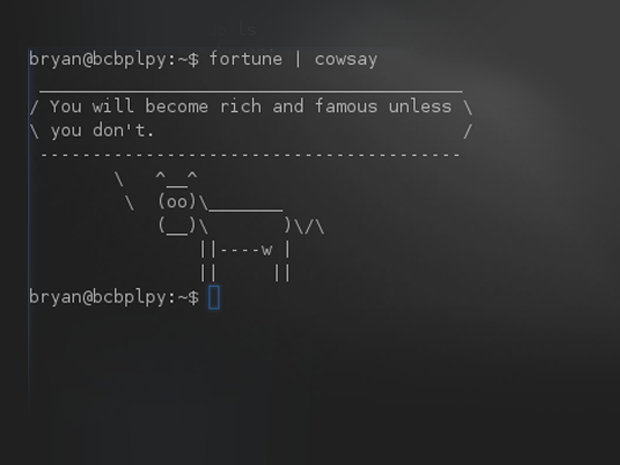

`aptitude moo`的输出格式很漂亮,但我想你苦于不能自由自在地使用。输入`cowsay`,它会做到你想做的事情。你可以让牛说任何你喜欢的东西。而且不只可以用牛,还可以用Calvin、Beavis和Ghostbusters的ASCII logo——输入`cowsay -l`可以得到所有可用的logo。它是Linux世界的强大工具。像很多其他命令一样,你可以使用管道把其他程序的输出输送给它,比如`fortune | cowsay`。

|

||||

`aptitude moo`的输出格式很漂亮,但我想你苦于不能自由自在地使用。输入`cowsay`,它会做到你想做的事情。你可以让牛说任何你喜欢的东西。而且不只可以用牛,还可以用Calvin、Beavis和Ghostbusters logo的ASCII的艺术,输入`cowsay -l`可以得到所有可用的参数。它是Linux世界的强大工具。像很多其他命令一样,你可以使用管道把其他程序的输出输送给它,比如`fortune | cowsay`,让这头牛变成哲学家。

|

||||

|

||||

|

||||

|

||||

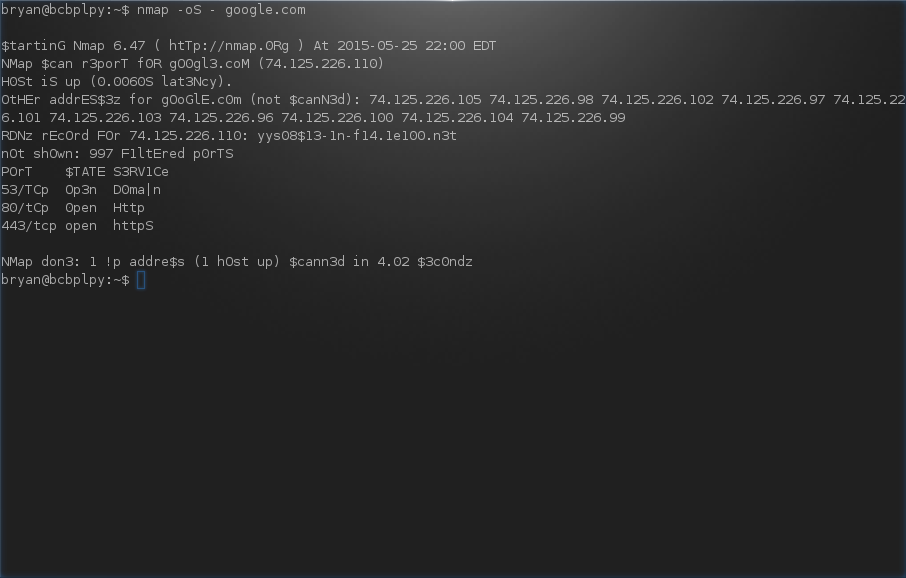

### 变成3l33t h@x0r ###

|

||||

|

||||

`nmap`并不是我们平时经常使用的基本命令。但如果你想蹂躏`nmap`的话,可能想在它的输出中看到l33t。在任何`nmap`命令(比如`nmap -oS - google.com`)后添加`-oS`。现在你的`nmap`已经处于官方名称是“[Script Kiddie Mode][1]”的模式了。Angelina Jolie和Keanu Reeves会为此骄傲的。

|

||||

`nmap`并不是我们平时经常使用的基本命令。但如果你想蹂躏`nmap`的话,比如像人一样看起来像l33t。在任何`nmap`命令后添加`-oS`(比如`nmap -oS - google.com`)。现在你的`nmap`已经处于标准叫法是“[脚本玩具模式][1]”的模式了。Angelina Jolie和Keanu Reeves会为此骄傲的。

|

||||

|

||||

|

||||

|

||||

### 获得所有的Discordian日期 ###

|

||||

|

||||

如果你们曾经坐在一起思考,“嗨!我想使用无用但异想天开的方式来书写今天的日期……”试试运行`ddate`。结果类似于“Today is Setting Orange, the 72nd day of Discord in the YOLD 3181”,这会让你的服务树日志平添不少香料。

|

||||

如果你们曾经坐在一起思考,“嗨!我想使用无用但异想天开的方式来书写今天的日期……”试试运行`ddate`。结果类似于“Today is Setting Orange, the 72nd day of Discord in the YOLD 3181”,这会让你的服务树日志平添不少趣味。

|

||||

|

||||

注意:在技术层面,确实有一个[Discordian Calendar][2],理论上被[Discordianism][3]追随者所使用。这意味着我可能得罪某些人。或者不会,我不确定。不管怎样,`ddate`是一个方便的工具。

|

||||

|

||||

@ -76,7 +76,7 @@

|

||||

|

||||

### 将任何文本逆序输出 ###

|

||||

|

||||

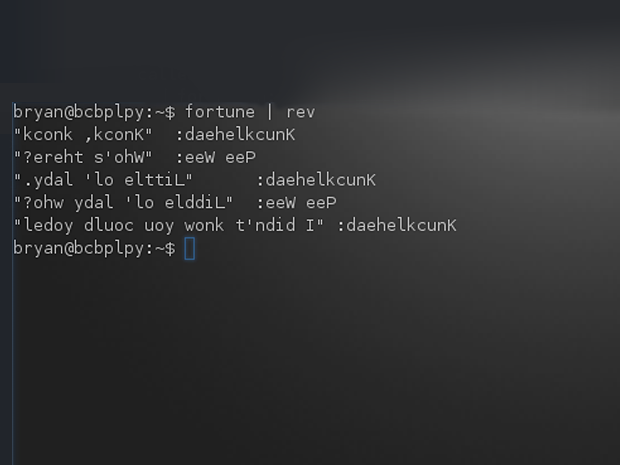

将任何文本使用管道输送给`rev`命令,它就会将文本内容逆序输出。`fortune | rev`会给你好运。当然,这不意味着rev会将幸运转换成不幸。

|

||||

将任何文本使用管道输送给`rev`命令,它就会将文本内容逆序输出。`fortune | rev`会给你好运。当然,这不意味着rev会将幸运(fortune)转换成不幸。

|

||||

|

||||

|

||||

|

||||

@ -94,7 +94,7 @@ via: http://www.networkworld.com/article/2926630/linux/11-pointless-but-awesome-

|

||||

|

||||

作者:[Bryan Lunduke][a]

|

||||

译者:[goreliu](https://github.com/goreliu)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,10 +1,15 @@

|

||||

如何在Linux中安装漂亮的扁平化Arc GTK+主题

|

||||

如何在 Linux 中安装漂亮的 GTK+ 扁平化主题 Arc

|

||||

================================================================================

|

||||

> 易于理解的分步教程

|

||||

|

||||

**今天我们将向你介绍最新发布的GTK+主题,它拥有透明和扁平元素,并且与多个桌面环境和Linux发行版兼容。[这个主题叫Arc][1]。**

|

||||

|

||||

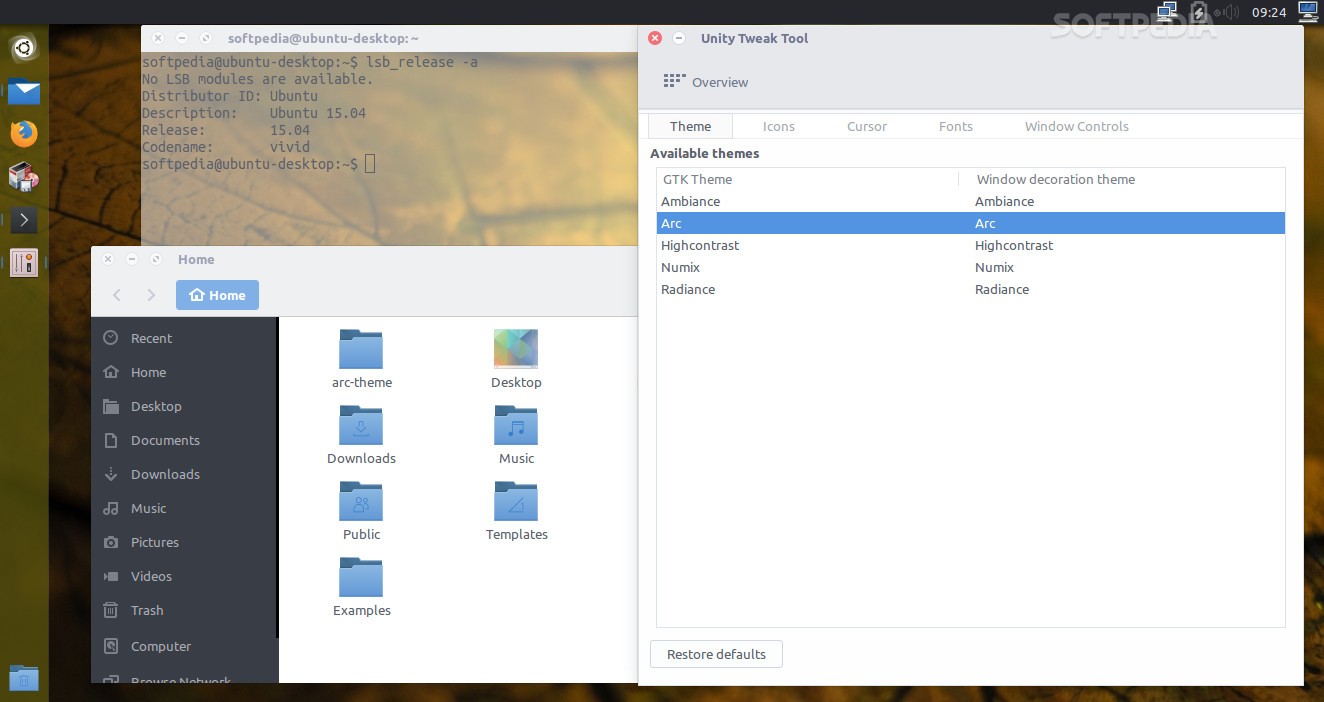

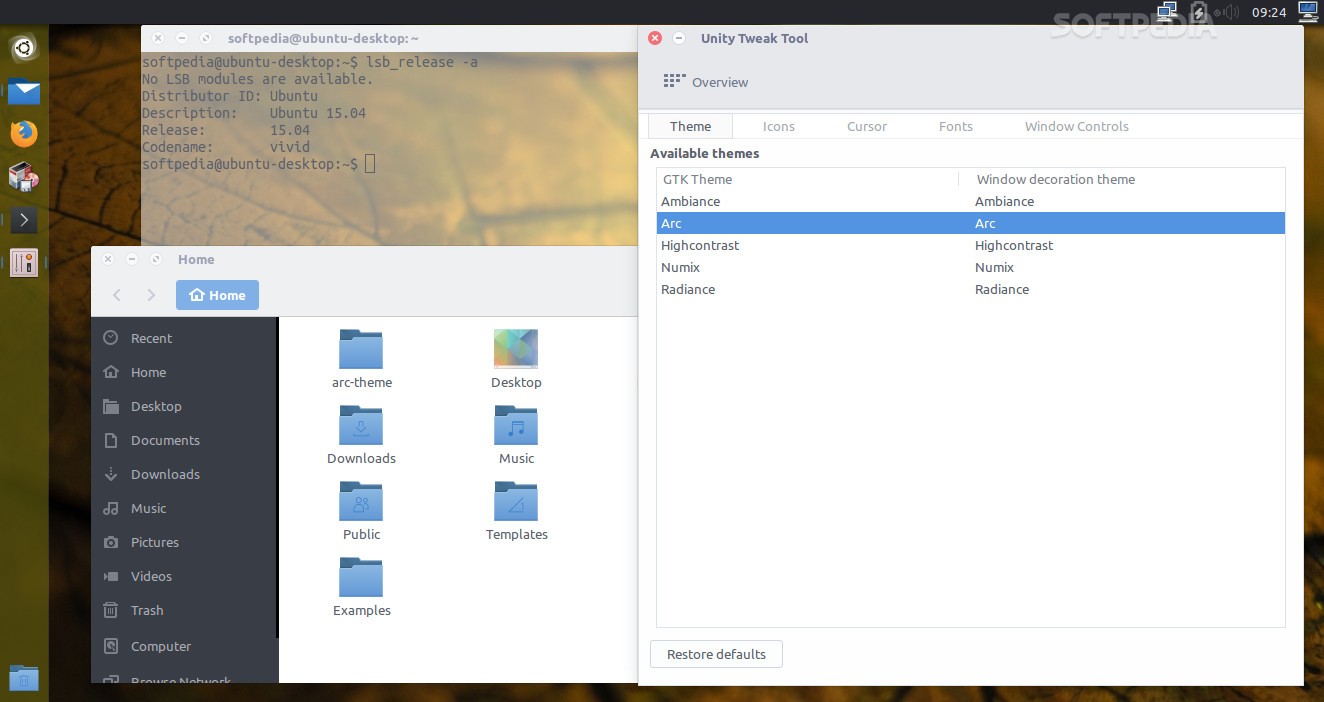

开始讲细节之前,我建议你快速浏览一下下面的图,这样你有会对这个主题就会有一个基本的概念了。同样你应该知道它目前可以工作在GTK+ 2.x、GTK+ 3.x、GNOME-Shell、 Budgie、 Unity和Pantheon用户界面,它们都使用了GNOME栈。

|

||||

开始讲细节之前,我建议你快速浏览一下下面的图,这样你有会对这个主题就会有一个基本的概念了。同样你应该知道它目前可以工作在GTK+ 2.x、GTK+ 3.x、GNOME-Shell、 Budgie、 Unity和Pantheon用户界面,它们都使用了GNOME 体系。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

同样、Arc主题的开发者告诉我们它已经成功地在Ubuntu 15.04(Vivid Vervet)、 Arch Linux、 elementary OS 0.3 Freya、 Fedora 21、 Fedora 22、 Debian GNU/Linux 8.0 (Jessie)、 Debian Testing、 Debian Unstable、 openSUSE 13.2、 openSUSE Tumbleweed和Gentoo测试过了。

|

||||

|

||||

@ -12,7 +17,7 @@

|

||||

|

||||

要构建Arc主题,你需要先安装一些包,比如autoconf、 automake、 pkg-config (对于Fedora则是pkgconfig)、基于Debian/Ubuntu-based发行版的libgtk-3-dev或者基于RPM的gtk3-devel、 git、 gtk2-engines-pixbuf和gtk-engine-murrine (对于Fedora则是gtk-murrine-engine)。

|

||||

|

||||

Arc主题还没有二进制包,因此你需要从git仓库中取下最新的源码并编译。这样,打开终端并运行下面的命令,一行行地,并在每行的末尾按下回车键并等待上一步完成来继续下一步。

|

||||

Arc主题还没有二进制包,因此你需要从git仓库中取下最新的源码并编译。这样,打开终端并运行下面的命令,一行行地输入,并在每行的末尾按下回车键并等待上一步完成来继续下一步。

|

||||

|

||||

git clone https://github.com/horst3180/arc-theme --depth 1 && cd arc-theme

|

||||

git fetch --tags

|

||||

@ -22,11 +27,7 @@ Arc主题还没有二进制包,因此你需要从git仓库中取下最新的

|

||||

|

||||

就是这样!此时你已经在你的GNU/Linux发行版中安装了Arc主题,如果你使用GNOME可以使用GONME Tweak工具,如果你使用Ubuntu的Unity可以使用Unity Tweak工具来激活主题。玩得开心但不要忘了在下面的评论栏里留下你的截图。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -1,37 +1,36 @@

|

||||

如何在Ubuntu 15.04(Vivid Vervet)中安装nginx和google pagespeed

|

||||

如何在 Ubuntu 15.04 中安装 nginx 和 google pagespeed

|

||||

================================================================================

|

||||

Nginx (engine-x)是一个开源高性能http、反向代理和IMAP/POP3代理服务器。nginx杰出的功能有:稳定、丰富的功能集、简单的配置和低资源消耗。nginx被用于一些高性能网站并在站长之间变得越来越流行。本教程会从源码构建一个带有google paespeed模块用于Ubuntu 15.04中的.deb包。

|

||||

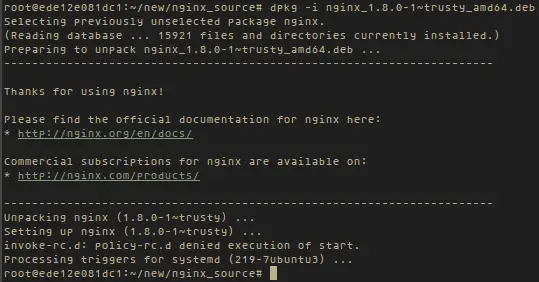

Nginx (engine-x)是一个开源的高性能 HTTP 服务器、反向代理和 IMAP/POP3 代理服务器。nginx 杰出的功能有:稳定、丰富的功能集、简单的配置和低资源消耗。nginx 被用于一些高性能网站并在站长之间变得越来越流行。本教程会从源码构建一个带有 google paespeed 模块的用于 Ubuntu 15.04 的 nginx .deb 安装包。

|

||||

|

||||

pagespeed 是一个由 google 开发的 web 服务器模块来加速网站响应时间、优化 html 和减少页面加载时间。ngx_pagespeed 的功能如下:

|

||||

|

||||

pagespeed是一个由google开发的web服务器模块来加速网站响应时间、优化html和减少页面加载时间。ngx_pagespeed的功能如下:

|

||||

|

||||

- 图像优化:去除meta数据、动态剪裁、重压缩。

|

||||

- CSS与JavaScript 放大、串联、内联、外联。

|

||||

- 图像优化:去除元数据、动态缩放、重压缩。

|

||||

- CSS 与 JavaScript 压缩、串联、内联、外联。

|

||||

- 小资源内联

|

||||

- 延迟图像与JavaScript加载

|

||||

- HTML重写。

|

||||

- 图像与 JavaScript 延迟加载

|

||||

- HTML 重写

|

||||

- 缓存生命期插件

|

||||

|

||||

更多请见 [https://developers.google.com/speed/pagespeed/module/][1].

|

||||

更多请见 [https://developers.google.com/speed/pagespeed/module/][1]。

|

||||

|

||||

### 预备要求 ###

|

||||

### 前置要求 ###

|

||||

|

||||

Ubuntu Server 15.04 64位

|

||||

root 权限

|

||||

- Ubuntu Server 15.04 64位

|

||||

- root 权限

|

||||

|

||||

本篇我们将要:

|

||||

|

||||

- 安装必备包

|

||||

- 安装带ngx_pagespeed的nginx

|

||||

- 安装必备软件包

|

||||

- 安装带 ngx_pagespeed 的 nginx

|

||||

- 测试

|

||||

|

||||

#### 安装必备包 ####

|

||||

|

||||

sudo apt-get install dpkg-dev build-essential zlib1g-dev libpcre3 libpcre3-dev

|

||||

sudo apt-get install dpkg-dev build-essential zlib1g-dev libpcre3 libpcre3-dev

|

||||

|

||||

#### 安装带ngx_pagespeed的nginx ####

|

||||

### 安装带 ngx_pagespeed 的 nginx ###

|

||||

|

||||

**第一步 - 添加nginx仓库**

|

||||

#### 第一步 - 添加nginx仓库####

|

||||

|

||||

vim /etc/apt/sources.list.d/nginx.list

|

||||

|

||||

@ -51,7 +50,7 @@ sudo apt-get install dpkg-dev build-essential zlib1g-dev libpcre3 libpcre3-dev

|

||||

sudo sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys KEYNUMBER

|

||||

sudo apt-get update

|

||||

|

||||

**第二步 - 从仓库下载nginx 1.8**

|

||||

####第二步 - 从仓库下载 nginx 1.8####

|

||||

|

||||

sudo su

|

||||

cd ~

|

||||

@ -60,7 +59,7 @@ sudo apt-get install dpkg-dev build-essential zlib1g-dev libpcre3 libpcre3-dev

|

||||

apt-get source nginx

|

||||

apt-get build-dep nginx

|

||||

|

||||

**第三步 - 下载Pagespeed**

|

||||

#### 第三步 - 下载 Pagespeed####

|

||||

|

||||

cd ~

|

||||

mkdir -p ~/new/ngx_pagespeed/

|

||||

@ -73,12 +72,12 @@ sudo apt-get install dpkg-dev build-essential zlib1g-dev libpcre3 libpcre3-dev

|

||||

wget https://dl.google.com/dl/page-speed/psol/${ngx_version}.tar.gz

|

||||

tar -xzf 1.9.32.3.tar.gz

|

||||

|

||||

**第三步 - 配置nginx来编译Pagespeed**

|

||||

####第四步 - 配置 nginx 来编译 Pagespeed####

|

||||

|

||||

cd ~/new/nginx_source/nginx-1.8.0/debin/

|

||||

vim rules

|

||||

|

||||

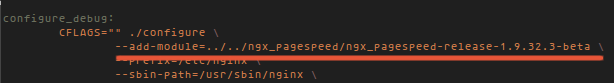

在CFLAGS `.configure`下添加模块:

|

||||

在两处 CFLAGS `.configure` 下添加模块:

|

||||

|

||||

--add-module=../../ngx_pagespeed/ngx_pagespeed-release-1.9.32.3-beta \

|

||||

|

||||

@ -86,27 +85,27 @@ sudo apt-get install dpkg-dev build-essential zlib1g-dev libpcre3 libpcre3-dev

|

||||

|

||||

|

||||

|

||||

**第五步 - 打包nginx包并安装**

|

||||

####第五步 - 打包 nginx 软件包并安装####

|

||||

|

||||

cd ~/new/nginx_source/nginx-1.8.0/

|

||||

dpkg-buildpackage -b

|

||||

|

||||

dpkg-buildpackage会编译 ~/new/ngix_source/成nginx.deb。打包完成后,看一下目录:

|

||||

dpkg-buildpackage 会编译 ~/new/ngix_source/ 为 nginx.deb。打包完成后,看一下目录:

|

||||

|

||||

cd ~/new/ngix_source/

|

||||

ls

|

||||

|

||||

|

||||

|

||||

接着安装nginx。

|

||||

接着安装 nginx。

|

||||

|

||||

dpkg -i nginx_1.8.0-1~trusty_amd64.deb

|

||||

|

||||

|

||||

|

||||

#### 测试 ####

|

||||

### 测试 ###

|

||||

|

||||

运行nginx -V测试nginx是否已经自带ngx_pagespeed。

|

||||

运行 nginx -V 测试 nginx 是否已经自带 ngx_pagespeed。

|

||||

|

||||

nginx -V

|

||||

|

||||

@ -114,15 +113,15 @@ dpkg-buildpackage会编译 ~/new/ngix_source/成nginx.deb。打包完成后,

|

||||

|

||||

### 总结 ###

|

||||

|

||||

稳定、快速、开源的nginx支持许多不同的优化模块。这其中之一是google开发的‘pagespeed’。不像apache,nginx模块不是动态加载的,因此你必须在编译之前就选择完需要的模块。

|

||||

稳定、快速、开源的 nginx 支持许多不同的优化模块。这其中之一是 google 开发的‘pagespeed’。不像 apache,nginx 模块不是动态加载的,因此你必须在编译之前就选择好需要的模块。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/tutorial/how-to-install-nginx-and-google-pagespeed-on-ubuntu-15-04/#step-build-nginx-package-and-install

|

||||

via: https://www.howtoforge.com/tutorial/how-to-install-nginx-and-google-pagespeed-on-ubuntu-15-04/

|

||||

|

||||

作者:Muhammad Arul

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,29 +1,27 @@

|

||||

|

||||

在Ubuntu 15.04下安装Android Studio

|

||||

PS 原MD文件有大段重复并且排版错误,译者已修复

|

||||

================================================================================

|

||||

|

||||

Android Studio是官方为了Android应用开发者而发布的IDE,它基于IntelliJ的IDEA。

|

||||

|

||||

|

||||

Android Studio是官方为了Android应用开发者而发布的IDE,它基于IntelliJ的IDEA。

|

||||

|

||||

### Android Studio的功能 ###

|

||||

|

||||

灵活的基于Gradle的建构系统

|

||||

- 灵活的基于Gradle的建构系统

|

||||

|

||||

针对不同手机编译多个版本的apk

|

||||

- 针对不同手机编译多个版本的apk

|

||||

|

||||

代码模板功能构建出各种常用的应用

|

||||

- 代码模板功能构建出各种常用的应用

|

||||

|

||||

支持拖动编辑主题的富布局编辑器

|

||||

- 支持拖动编辑主题的富布局编辑器

|

||||

|

||||

lint工具可以捕捉到应用的性能、可用性、版本冲突或者其他问题

|

||||

- lint工具可以捕捉到应用的性能、可用性、版本冲突或者其他问题

|

||||

|

||||

代码混淆和应用签名功能

|

||||

|

||||

内置 Google Cloud Platform 的支持,可以轻易的融入Google Cloud Messaging 和 App Engine支持

|

||||

|

||||

还有更多

|

||||

- 代码混淆和应用签名功能

|