mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

Reclaim overdue and expired articles

Cleanup of 2019 articles

This commit is contained in:

parent

9dff5fc8ef

commit

d45031f660

@ -1,91 +0,0 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Rejoice KDE Lovers! MX Linux Joins the KDE Bandwagon and Now You Can Download MX Linux KDE Edition)
[#]: via: (https://itsfoss.com/mx-linux-kde-edition/)
[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

Rejoice KDE Lovers! MX Linux Joins the KDE Bandwagon and Now You Can Download MX Linux KDE Edition
======

Debian-based [MX Linux][1] is already an impressive Linux distribution with the [Xfce desktop environment][2] as the default. Even though it works well and runs on minimal hardware, it still isn’t the best Linux distribution in terms of eye candy.

That’s where KDE comes to the rescue. Of late, KDE Plasma has shed a lot of weight and uses fewer system resources without compromising on its modern look. No wonder KDE Plasma is one of [the best desktop environments][3] out there.

![][4]

With [MX Linux 19.2][5], the MX Linux team began testing a KDE edition and has finally released its first KDE version.

Also, the KDE edition comes with Advanced Hardware Support (AHS) enabled. Here’s what the release notes say:

> MX-19.2 KDE is an **Advanced Hardware Support (AHS)** enabled **64-bit only** version of MX featuring the KDE/plasma desktop. Applications utilizing Qt library frameworks are given a preference for inclusion on the iso.

As I mentioned earlier, this is MX Linux’s first KDE edition ever, and the announcement sheds some light on that:

> This will be first officially supported MX/antiX family iso utilizing the KDE/plasma desktop since the halting of the predecessor MEPIS project in 2013.

Personally, I enjoyed the experience of using MX Linux until I started using [Pop!_OS 20.04][6]. So, I’ll give you some key highlights of the MX Linux 19.2 KDE edition along with my impressions from testing it.

### MX Linux 19.2 KDE: Overview

![][7]

Out of the box, MX Linux looks cleaner and more attractive with the KDE desktop on board. Unlike KDE Neon, it doesn’t feature the latest and greatest KDE stuff, but it does the job it is intended to do.

Of course, you will get the same options that you expect from a KDE-powered distro to customize the look and feel of your desktop. In addition to the obvious KDE perks, you will also get the usual MX tools, antiX-live-usb-system, and the snapshot feature that come baked into the Xfce edition.

It’s a great thing to have the best of both worlds here, as stated in their announcement:

> MX-19.2 KDE includes the usual MX tools, antiX-live-usb-system, and snapshot technology that our users have come to expect from our standard flagship Xfce releases. Adding KDE/plasma to the existing Xfce/MX-fluxbox desktops will provide for a wider range user needs and wants.

I haven’t run a great many tests, but I did have some issues with extracting archives (it didn’t work on the first try) and copy-pasting a file to a new location. I’m not sure if those are known bugs, but I thought I should let you know here.

![][8]

Other than that, it features every useful tool you’d want to have and works great. With KDE on board, it actually feels more polished and smooth in my case.

Along with KDE Plasma 5.14.5 on top of Debian 10 “buster”, it also comes with GIMP 2.10.12, Mesa, the Debian (AHS) 5.6 kernel, the Firefox browser, and a few other goodies like VLC, Thunderbird, LibreOffice, and the Clementine music player.

You can also look for more stuff in the MX repositories.

![][9]

There are some known issues with the release, such as the system clock settings not being adjustable via KDE settings. You can check their [announcement post][10] for more information or their [bug list][11] to make sure everything’s fine before trying it out on your production system.

### Wrapping Up

The MX Linux 19.2 KDE edition is definitely more impressive than its Xfce offering, in my opinion. It will take a while to iron out the bugs in this first KDE release, but it’s not a bad start.

Speaking of KDE, I recently tested out KDE Neon, the official KDE distribution, and shared my experience in a video. I’ll try to do a video on the MX Linux KDE flavor as well.

[Subscribe to our YouTube channel for more Linux videos][12]

Have you tried it yet? Let me know your thoughts in the comments below!

--------------------------------------------------------------------------------

via: https://itsfoss.com/mx-linux-kde-edition/

Author: [Ankush Das][a]
Topic selection: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]: https://itsfoss.com/author/ankush/
[b]: https://github.com/lujun9972
[1]: https://mxlinux.org/
[2]: https://www.xfce.org/
[3]: https://itsfoss.com/best-linux-desktop-environments/
[4]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/08/mx-linux-kde-edition.jpg?resize=800%2C450&ssl=1
[5]: https://mxlinux.org/blog/mx-19-2-now-available/
[6]: https://itsfoss.com/pop-os-20-04-review/
[7]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/08/mx-linux-19-2-kde.jpg?resize=800%2C452&ssl=1
[8]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/08/mx-linux-19-2-kde-filemanager.jpg?resize=800%2C452&ssl=1
[9]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/08/mx-linux-19-2-kde-info.jpg?resize=800%2C452&ssl=1
[10]: https://mxlinux.org/blog/mx-19-2-kde-now-available/
[11]: https://bugs.mxlinux.org/
[12]: https://www.youtube.com/c/itsfoss?sub_confirmation=1

@ -1,66 +0,0 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Cisco open-source code boosts performance of Kubernetes apps over SD-WAN)
[#]: via: (https://www.networkworld.com/article/3572310/cisco-open-source-code-boosts-performance-of-kubernetes-apps-over-sd-wan.html)
[#]: author: (Michael Cooney https://www.networkworld.com/author/Michael-Cooney/)

Cisco open-source code boosts performance of Kubernetes apps over SD-WAN
======

Cisco's Cloud-Native SD-WAN project marries SD-WANs to Kubernetes applications to cut down on the manual work needed to optimize latency and packet loss.

Cisco has introduced an open-source project that it says could go a long way toward reducing the manual work involved in optimizing the performance of Kubernetes applications across [SD-WANs][1].

Cisco said it launched the Cloud-Native SD-WAN (CN-WAN) project to show how Kubernetes applications can be automatically mapped to SD-WAN with the result that the applications perform better over the WAN.

**More about SD-WAN**: [How to buy SD-WAN technology: Key questions to consider when selecting a supplier][2] • [How to pick an off-site data-backup method][3] • [SD-Branch: What it is and why you’ll need it][4] • [What are the options for securing SD-WAN?][5]

“In many cases, enterprises deploy an SD-WAN to connect a Kubernetes cluster with users or workloads that consume cloud-native applications. In a typical enterprise, NetOps teams leverage their network expertise to program SD-WAN policies to optimize general connectivity to the Kubernetes hosted applications, with the goal to reduce latency, reduce packet loss, etc.,” wrote John Apostolopoulos, vice president and CTO of Cisco’s intent-based networking group, in a group [blog][6].

“The enterprise usually also has DevOps teams that maintain and optimize the Kubernetes infrastructure. However, despite the efforts of NetOps and DevOps teams, today Kubernetes and SD-WAN operate mostly like ships in the night, often unaware of each other. Integration between SD-WAN and Kubernetes typically involves time-consuming manual coordination between the two teams.”

Current SD-WAN offerings often have APIs that let customers programmatically influence how their traffic is handled over the WAN. This enables interesting and valuable opportunities for automation and application optimization, Apostolopoulos stated. “We believe there is an opportunity to pair the declarative nature of Kubernetes with the programmable nature of modern SD-WAN solutions,” he stated.

Enter CN-WAN, which defines a set of components that can be used to integrate an SD-WAN package, such as Cisco Viptela SD-WAN, with Kubernetes. The integration lets DevOps teams express the WAN needs of the microservices they deploy in a Kubernetes cluster, while simultaneously letting NetOps automatically render those needs to optimize application performance over the WAN, Apostolopoulos stated.

Apostolopoulos wrote that CN-WAN is composed of a Kubernetes Operator, a Reader, and an Adaptor. It works like this: The CN-WAN Operator runs in the Kubernetes cluster, actively monitoring the deployed services. DevOps teams can use standard Kubernetes annotations on the services to define WAN-specific metadata, such as the traffic profile of the application. The CN-WAN Operator then automatically registers the service along with the metadata in a service registry. In a demo at KubeCon EU this week, Cisco used Google Service Directory as the service registry.

Earlier this year [Cisco and Google][7] deepened their relationship with a turnkey package that lets customers mesh SD-WAN connectivity with applications running in a private [data center][8], Google Cloud or another cloud or SaaS application. That jointly developed platform, called Cisco SD-WAN Cloud Hub with Google Cloud, combines Cisco’s SD-WAN policy-, telemetry- and security-setting capabilities with Google's software-defined backbone to ensure that application service-level agreement, security and compliance policies are extended across the network.

Meanwhile, on the SD-WAN side, the CN-WAN Reader connects to the service registry to learn how Kubernetes is exposing the services and the associated WAN metadata extracted by the CN-WAN Operator, Cisco stated. When new or updated services or metadata are detected, the CN-WAN Reader sends a message to the CN-WAN Adaptor so SD-WAN policies can be updated.

Finally, the CN-WAN Adaptor maps the service-associated metadata into the detailed SD-WAN policies programmed by NetOps in the SD-WAN controller. The SD-WAN controller automatically renders the SD-WAN policies, specified by NetOps for each metadata type, into specific SD-WAN data-plane optimizations for the service, Cisco stated.
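
The Operator, Reader, and Adaptor flow described above can be sketched as a small in-memory simulation. This is only an illustration under stated assumptions: the annotation key `cnwan.example/traffic-profile`, the class and function names, and the policy names are hypothetical and are not taken from the CN-WAN code base, and a plain dictionary stands in for the service registry.

```python
from dataclasses import dataclass, field

# Hypothetical annotation key; the key used by the real project may differ.
TRAFFIC_PROFILE_KEY = "cnwan.example/traffic-profile"


@dataclass
class Service:
    name: str
    annotations: dict = field(default_factory=dict)


class Operator:
    """Watches services and copies WAN metadata into the registry."""

    def __init__(self, registry):
        self.registry = registry

    def sync(self, services):
        for svc in services:
            profile = svc.annotations.get(TRAFFIC_PROFILE_KEY)
            if profile is not None:
                self.registry[svc.name] = {"traffic-profile": profile}


class Reader:
    """Polls the registry and reports new or changed entries."""

    def __init__(self, registry):
        self.registry = registry
        self.seen = {}

    def poll(self):
        changes = {
            name: meta
            for name, meta in self.registry.items()
            if self.seen.get(name) != meta
        }
        # Copy the metadata so later registry edits are detected as changes.
        self.seen = {name: dict(meta) for name, meta in self.registry.items()}
        return changes


class Adaptor:
    """Maps metadata values to policies that NetOps defined in advance."""

    def __init__(self, policies):
        self.policies = policies

    def apply(self, changes):
        return {
            name: self.policies.get(meta["traffic-profile"], "default")
            for name, meta in changes.items()
        }


def run_pipeline(services, policies):
    registry = {}
    Operator(registry).sync(services)
    changes = Reader(registry).poll()
    return Adaptor(policies).apply(changes)


if __name__ == "__main__":
    services = [
        Service("video-call", {TRAFFIC_PROFILE_KEY: "video"}),
        Service("batch-report"),  # no WAN annotation, so it is not registered
    ]
    policies = {"video": "low-latency-path"}
    print(run_pipeline(services, policies))
```

In this toy version, only the annotated service reaches the registry, and the Adaptor falls back to a `default` policy when NetOps has not defined one for a given traffic profile. A real deployment would replace the dictionary with an external registry and the returned mapping with calls to the SD-WAN controller.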

“The SD-WAN may support multiple types of access at both sender and receiver (e.g., wired Internet, MPLS, wireless 4G or 5G), as well as multiple service options and prioritizations per access network, and of course multiple paths between source and destination,” Apostolopoulos stated.

The code for the CN-WAN project is available as open source on [GitHub][9].

Join the Network World communities on [Facebook][10] and [LinkedIn][11] to comment on topics that are top of mind.

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3572310/cisco-open-source-code-boosts-performance-of-kubernetes-apps-over-sd-wan.html

Author: [Michael Cooney][a]
Topic selection: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]: https://www.networkworld.com/author/Michael-Cooney/
[b]: https://github.com/lujun9972
[1]: https://www.networkworld.com/article/3031279/sd-wan-what-it-is-and-why-you-ll-use-it-one-day.html
[2]: https://www.networkworld.com/article/3323407/sd-wan/how-to-buy-sd-wan-technology-key-questions-to-consider-when-selecting-a-supplier.html
[3]: https://www.networkworld.com/article/3328488/backup-systems-and-services/how-to-pick-an-off-site-data-backup-method.html
[4]: https://www.networkworld.com/article/3250664/lan-wan/sd-branch-what-it-is-and-why-youll-need-it.html
[5]: https://www.networkworld.com/article/3285728/sd-wan/what-are-the-options-for-securing-sd-wan.html
[6]: https://blogs.cisco.com/networking/introducing-the-cloud-native-sd-wan-project
[7]: https://www.networkworld.com/article/3539252/cisco-integrates-sd-wan-connectivity-with-google-cloud.html
[8]: https://www.networkworld.com/article/3223692/what-is-a-data-centerhow-its-changed-and-what-you-need-to-know.html
[9]: https://github.com/CloudNativeSDWAN/cnwan-docs
[10]: https://www.facebook.com/NetworkWorld/
[11]: https://www.linkedin.com/company/network-world

@ -1,122 +0,0 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (KDE Plasma 5.20 is Here With Exciting Improvements)
[#]: via: (https://itsfoss.com/kde-plasma-5-20/)
[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

KDE Plasma 5.20 is Here With Exciting Improvements
======

KDE Plasma 5.20 is finally here and there are a lot of things to be excited about, including the new wallpaper ‘**Shell**’ by Lucas Andrade.

It is worth noting that this is not an LTS release, unlike [KDE Plasma 5.18][1], and will be maintained for the next 4 months or so. So, if you want the latest and greatest, you can surely go ahead and give it a try.

In this article, I shall mention the key highlights of KDE Plasma 5.20 from [my experience with it on KDE Neon][2] (Testing Edition).

![][3]

### Plasma 5.20 Features

If you like to see things in action, we made a feature overview video for you.

[Subscribe to our YouTube channel for more Linux videos][4]

#### Icon-only Taskbar

![][5]

You are probably already comfortable with a taskbar that shows the window title alongside the icon. However, that takes up a lot of space in the taskbar, which looks bad when you want a clean look with multiple applications or windows open.

Beyond that, if you launch several windows of the same application, it will group them together and let you cycle through them from a single icon on the taskbar.

So, with this update, you get an icon-only taskbar by default, which looks a lot cleaner and lets you see more items in the taskbar at a glance.

#### Digital Clock Applet with Date

![][6]

If you’ve used any KDE-powered distro, you must have noticed that the digital clock applet (in the bottom-right corner) displays the time but not the date by default.

It’s always a good choice to have the date alongside the time (at least I prefer that). So, with KDE Plasma 5.20, the applet will show both the time and the date.

#### Get Notified When Your System Almost Runs Out of Space

I know this is not a big addition, but it is a necessary one. Whether or not your home directory is on a different partition, you will be notified when you’re about to run out of space.

#### Set the Charge Limit Below 100%

You are in for a treat if you are a laptop user. To help you preserve the battery health, you can now set a charge limit below 100%. I couldn’t show it to you because I use a desktop.

#### Workspace Improvements

Working with workspaces on the KDE desktop was already an impressive experience. With the latest update, several tweaks have been made to take the user experience up a notch.

To start with, the system tray has been overhauled with a grid-like layout replacing the list view.

The default shortcut to move or resize windows has been reassigned from Alt+drag to Meta+drag to avoid conflicts with productivity apps that use Alt+drag. You can also use key bindings such as Meta + up/left/down arrow to corner-tile windows.

![][7]

It is also easier to list all the disks using the old “**Device Notifier**” applet, which has been renamed to “**Disks & Devices**”.

If that wasn’t enough, you will also find improvements to [KRunner][8], the essential application launcher and search utility. It now remembers the search text history, and you can also have it centered on the screen instead of at the top of the screen.

#### System Settings Improvements

The look and feel of System Settings is the same, but it is more useful now. You will notice a new “**Highlight changed settings**” option, which shows you which settings have been changed from their default values.

That way, you can spot any changes that you made accidentally or that someone else made.

![][9]

In addition to that, you also get S.M.A.R.T. monitoring and disk failure notifications.

#### Wayland Support Improvements

If you prefer to use a Wayland session, you will be happy to know that it now supports [Klipper][10] and you can also middle-click to paste (on KDE apps only for the time being).

The much-needed screencasting support has also been added.

#### Other Improvements

Of course, you will notice some subtle visual improvements or adjustments to the look and feel. You may notice a smooth transition effect when changing the brightness. Similarly, when changing the brightness or volume, the on-screen display that pops up is now less obtrusive.

Options like controlling the scroll speed of mouse/touchpad have been added to give you finer controls.

You can find the detailed list of changes in its [official changelog][11], if you’re curious.

### Wrapping Up

The changes are definitely impressive and should make the KDE experience better than ever before.

If you’re running KDE Neon, you should get the update soon. But, if you are on Kubuntu, you will have to try the 20.10 ISO to get your hands on Plasma 5.20.

What do you like the most among the list of changes? Have you tried it yet? Let me know your thoughts in the comments below.

--------------------------------------------------------------------------------

via: https://itsfoss.com/kde-plasma-5-20/

Author: [Ankush Das][a]
Topic selection: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]: https://itsfoss.com/author/ankush/
[b]: https://github.com/lujun9972
[1]: https://itsfoss.com/kde-plasma-5-18-release/
[2]: https://itsfoss.com/kde-neon-review/
[3]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/10/kde-plasma-5-20-feat.png?resize=800%2C394&ssl=1
[4]: https://www.youtube.com/c/itsfoss?sub_confirmation=1
[5]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/10/kde-plasma-5-20-taskbar.jpg?resize=472%2C290&ssl=1
[6]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/10/kde-plasma-5-20-clock.jpg?resize=372%2C224&ssl=1
[7]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/10/kde-plasma-5-20-notify.jpg?resize=800%2C692&ssl=1
[8]: https://docs.kde.org/trunk5/en/kde-workspace/plasma-desktop/krunner.html
[9]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/10/plasma-disks-smart.png?resize=800%2C539&ssl=1
[10]: https://userbase.kde.org/Klipper
[11]: https://kde.org/announcements/plasma-5.20.0

@ -1,95 +0,0 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (What metrics matter: A guide for open source projects)
[#]: via: (https://opensource.com/article/19/1/metrics-guide-open-source-projects)
[#]: author: (Gordon Haff https://opensource.com/users/ghaff)

What metrics matter: A guide for open source projects
======

5 principles for deciding what to measure.

"Without data, you're just a person with an opinion."

Those are the words of W. Edwards Deming, the champion of statistical process control, who was credited as one of the inspirations for what became known as the Japanese post-war economic miracle of 1950 to 1960. Ironically, Japanese manufacturers like Toyota were far more receptive to Deming’s ideas than General Motors and Ford were.

Community management is certainly an art. It’s about mentoring. It’s about having difficult conversations with people who are hurting the community. It’s about negotiation and compromise. It’s about interacting with other communities. It’s about making connections. In the words of Red Hat’s Diane Mueller, it’s about "nurturing conversations."

However, it’s also about metrics and data.

Some metrics have much in common with those used in software development projects more broadly. Others are more specific to the management of the community itself. I think of deciding what to measure and how as adhering to five principles.

### 1. Recognize that behaviors aren't independent of the measurements you choose to highlight.

In 2008, Daniel Ariely published Predictably Irrational, one of a number of books written around that time that introduced behavioral psychology and behavioral economics to the general public. One memorable quote from that book is the following: “Human beings adjust behavior based on the metrics they’re held against. Anything you measure will impel a person to optimize his score on that metric. What you measure is what you’ll get. Period.”

This shouldn’t be surprising. It’s a finding that’s been repeatedly confirmed by research. It should also be familiar to just about anyone with business experience. It’s certainly not news to anyone in sales management, for example. Base sales reps’ (or their managers’) bonuses solely on revenue, and they’ll try to discount whatever it takes to maximize revenue, even if it puts margin in the toilet. Conversely, want the sales force to push a new product line—which will probably take extra effort—but skip the spiffs? Probably not happening.

And lest you think I’m unfairly picking on sales, this behavior is pervasive, all the way up to the CEO, as Ariely describes in a 2010 Harvard Business Review article: “CEOs care about stock value because that’s how we measure them. If we want to change what they care about, we should change what we measure.”

Developers and other community members are not immune.

### 2. You need to choose relevant metrics.

There’s a lot of folk wisdom floating around about what’s relevant and important that’s not necessarily true. My colleague [Dave Neary offers an example from baseball][1]: “In the late '90s, the key measurements that were used to measure batter skill were RBI (runs batted in) and batting average (how often a player got on base with a hit, divided by the number of at-bats). The Oakland A’s were the first major league team to recruit based on a different measurement of player performance: on-base percentage. This measures how often they get to first base, regardless of how it happens.”

Indeed, the whole revolution of sabermetrics in baseball and elsewhere, which was popularized in Michael Lewis’ Moneyball, often gets talked about in terms of introducing data in a field that historically was more about gut feel and personal experience. But it was also about taking a game that had actually always been fairly numbers-obsessed and coming up with new metrics based on mostly existing data to better measure player value. (The data revolution going on in sports today is more about collecting much more data through video and other means than was previously available.)
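
To make the difference between the two measurements concrete, here is a minimal sketch using the standard definitions of batting average (hits divided by at-bats) and on-base percentage (times on base divided by the plate appearances that count toward it). The two players’ stat lines are invented for illustration and do not come from the article or from real players.

```python
def batting_average(hits, at_bats):
    """Hits divided by at-bats."""
    return hits / at_bats


def on_base_percentage(hits, walks, hit_by_pitch, at_bats, sacrifice_flies):
    """Times on base divided by the plate appearances counted for OBP."""
    reached_base = hits + walks + hit_by_pitch
    opportunities = at_bats + walks + hit_by_pitch + sacrifice_flies
    return reached_base / opportunities


# Invented stat lines: player A hits more often, player B walks far more often.
player_a = {"hits": 150, "walks": 20, "hit_by_pitch": 0, "at_bats": 500, "sacrifice_flies": 0}
player_b = {"hits": 135, "walks": 90, "hit_by_pitch": 0, "at_bats": 500, "sacrifice_flies": 0}

for name, p in (("A", player_a), ("B", player_b)):
    avg = batting_average(p["hits"], p["at_bats"])
    obp = on_base_percentage(**p)
    print(f"Player {name}: AVG {avg:.3f}, OBP {obp:.3f}")
```

With these made-up numbers, player A has the higher batting average (.300 versus .270), while player B reaches base more often (an on-base percentage of about .381 versus .327). Both metrics come from the same underlying data, yet they rank the players differently.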

### 3. Quantity may not lead to quality.

As a corollary, collecting lots of tangential but easy-to-capture data isn’t better than just selecting a few measurements you’ve determined are genuinely useful. In a world where online behavior can be tracked with great granularity and displayed in colorful dashboards, it’s tempting to be distracted by sheer data volume, even when it doesn’t deliver any great insight into community health and trajectory.

This may seem like an obvious point: Why measure something that isn’t relevant? In practice, metrics often get chosen because they’re easy to measure, not because they’re particularly useful. They tend to be more about inputs than outputs: The number of developers. The number of forum posts. The number of commits. Collectively, measures like this often get called vanity metrics. They’re ubiquitous, but most people involved with community management don’t think much of them.

The number of downloads may be the worst of the bunch. It’s true that, at some level, downloads are an indication of interest in a project. That’s something. But downloading is sufficiently distant from actively using the project, much less engaging with it deeply, that it’s hard to view downloads as a very useful number.

Is there any harm in these vanity metrics? Yes, to the degree that you start thinking that they’re something to base action on. Probably more seriously, stakeholders like company management or industry observers can come to see them as meaningful indicators of project health.

### 4. Understand what measurements really mean and how they relate to each other.

Neary makes this point to caution against myopia. “In one project I worked on,” he says, “some people were concerned about a recent spike in the number of bug reports coming in because it seemed like the project must have serious quality issues to resolve. However, when we looked at the numbers, it turned out that many of the bugs were coming in because a large company had recently started using the project. The increase in bug reports was actually a proxy for a big influx of new users, which was a good thing.”

In practice, you often have to measure through proxies. This isn’t an inherent problem, but the greater the distance between what you want to measure and what you’re actually measuring, the harder it is to connect the dots. It’s fine to track progress in closing bugs, writing code, and adding new features. However, those don’t necessarily correlate with how happy users are or whether the project is doing a good job of working towards its long-term objectives, whatever those may be.

### 5. Different measurements serve different purposes.

Some measurements may be non-obvious but useful for tracking the success of a project and community relative to internal goals. Others may be better suited for a press release or other external consumption. For example, as a community manager, you may really care about the number of meetups, mentoring sessions, and virtual briefings your community has held over the past three months. But it’s the number of contributions and contributors that are more likely to grab the headlines. You probably care about those too. But maybe not as much, depending upon your current priorities.

Still, other measurements may relate to the goals of any sponsoring organizations. The measurements most relevant for projects tied to commercial products are likely to be different from pure community efforts.

Because communities differ and goals differ, it’s not possible to simply compile a metrics checklist, but here are some ideas to think about:

Consider qualitative metrics in addition to quantitative ones. Conducting surveys and other studies can be time-consuming, especially if they’re rigorous enough to yield better-than-anecdotal data. It also requires rigor to construct studies so that they can be used to track changes over time. In other words, it’s a lot easier to measure quantitative contributor activity than it is to suss out if the community members are happier about their participation today than they were a year ago. However, given the importance of culture to the health of a community, measuring it in a systematic way can be a worthwhile exercise.

Breadth of community, including how many are unaffiliated with commercial entities, is important for many projects. The greater the breadth, the greater the potential leverage of the open source development process. It can also be instructive to see how companies and individuals are contributing. Projects can be explicitly designed to better accommodate casual contributors.

Are new contributors able to have an impact, or are they ignored? How long does it take for code contributions to get committed? How long does it take for a reported bug to be fixed or otherwise responded to? If they asked a question in a forum, did anyone answer them? In other words, are you letting contributors contribute?

Advancement within the project is also an important metric. [Mikeal Rogers of the Node.js community][2] explains: “The shift that we made was to create a support system and an education system to take a user and turn them into a contributor, first at a very low level, and educate them to bring them into the committer pool and eventually into the maintainer pool. The end result of this is that we have a wide range of skill sets. Rather than trying to attract phenomenal developers, we’re creating new phenomenal developers.”
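
The responsiveness questions raised above (how long a contribution waits before it is committed, or a bug report before someone responds) can be turned into numbers. The following is a minimal sketch, assuming each contribution is recorded with an `opened` timestamp and an optional `first_response` timestamp. The field names, the function names, and the sample data are all invented for illustration; a real project would pull these values from its issue tracker or forum.

```python
from datetime import datetime
from statistics import median


def hours_to_first_response(items):
    """Return hours between opening and first response for answered items."""
    return [
        (item["first_response"] - item["opened"]).total_seconds() / 3600
        for item in items
        if item["first_response"] is not None
    ]


def responsiveness(items):
    """Summarize how quickly, and how often, contributions get a response."""
    waits = hours_to_first_response(items)
    return {
        "median_hours": median(waits) if waits else None,
        "unanswered_share": 1 - len(waits) / len(items) if items else None,
    }


# Invented sample data: three answered items and one that was ignored.
sample = [
    {"opened": datetime(2019, 1, 7, 9), "first_response": datetime(2019, 1, 7, 13)},
    {"opened": datetime(2019, 1, 8, 9), "first_response": datetime(2019, 1, 9, 9)},
    {"opened": datetime(2019, 1, 9, 9), "first_response": datetime(2019, 1, 12, 9)},
    {"opened": datetime(2019, 1, 10, 9), "first_response": None},
]

print(responsiveness(sample))
```

Reporting the share of unanswered items next to the median wait matters here: a median computed only over answered items would look healthy even if many contributors were being ignored.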

Whatever metrics you choose, don’t forget why you made them metrics in the first place. I find a helpful question to ask is: “What am I going to do with this number?” If the answer is to just put it in a report or in a press release, that’s not a great answer. Metrics should be measurements that tell you either that you’re on the right path or that you need to take specific actions to course-correct.

|

||||

|

||||

For this reason, Stormy Peters, who handles community leads at Red Hat, [argues for keeping it simple][3]. She writes, “It’s much better to have one or two key metrics than to worry about all the possible metrics. You can capture all the possible metrics, but as a project, you should focus on moving one. It’s also better to have a simple metric that correlates directly to something in the real world than a metric that is a complicated formula or ratio between multiple things. As project members make decisions, you want them to be able to intuitively feel whether or not it will affect the project’s key metric in the right direction.”

|

||||

|

||||

The article is adapted from [How Open Source Ate Software][4] by Gordon Haff (Apress 2018).

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/1/metrics-guide-open-source-projects

|

||||

|

||||

作者:[Gordon Haff][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/ghaff

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://community.redhat.com/blog/2014/07/when-metrics-go-wrong/

|

||||

[2]: https://opensource.com/article/17/3/nodejs-community-casual-contributors

|

||||

[3]: https://medium.com/open-source-communities/3-important-things-to-consider-when-measuring-your-success-50e21ad82858

|

||||

[4]: https://www.apress.com/us/book/9781484238936

|

||||

@ -1,68 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (What happens when a veteran teacher goes to an open source conference)

|

||||

[#]: via: (https://opensource.com/open-organization/19/1/educator-at-open-source-conference)

|

||||

[#]: author: (Ben Owens https://opensource.com/users/engineerteacher)

|

||||

|

||||

What happens when a veteran teacher goes to an open source conference

|

||||

======

|

||||

Sometimes feeling like a fish out of water is precisely what educators need.

|

||||

|

||||

|

||||

|

||||

"Change is going to be continual, and today is the slowest day society will ever move."—[Tony Fadell][1]

|

||||

|

||||

If ever there was an experience that brought the above quotation home for me, it was my experience at the [All Things Open conference][2] in Raleigh, NC last October. Thousands of people from all over the world attended the conference, and many (if not most) worked as open source coders and developers. As one of the relatively few educators in attendance, I saw and heard things that were completely foreign to me: terms like Istio, Stack Overflow, Ubuntu, Sidecar, HyperLedger, and Kubernetes tossed around for days.

|

||||

|

||||

I felt like a fish out of water. But in the end, that was the perfect dose of reality I needed to truly understand how open principles can reshape our approach to education.

|

||||

|

||||

### Not-so-strange attractors

|

||||

|

||||

All Things Open attracted me to Raleigh for two reasons, both of which have to do with how our schools must do a better job of creating environments that truly prepare students for a rapidly changing world.

|

||||

|

||||

The first is my belief that schools should embrace the ideals of the [open source way][3]. The second is that educators have to periodically force themselves out of their relatively isolated worlds of "doing school" in order to get a glimpse of what the world is actually doing.

|

||||

|

||||

When I was an engineer for 20 years, I developed a deep sense of the power of an open exchange of ideas, of collaboration, and of the need for rapid prototyping of innovations. Although we didn't call these ideas "open source" at the time, my colleagues and I constantly worked together to identify and solve problems using tools such as [Design Thinking][4] so that our businesses remained competitive and met market demands. When I became a science and math teacher at a small [public school][5] in rural Appalachia, my goal was to adapt these ideas to my classrooms and to the school at large as a way to blur the lines between a traditional school environment and what routinely happens in the "real world."

|

||||

|

||||

Through several years of hard work and many iterations, my fellow teachers and I were eventually able to develop a comprehensive, school-wide project-based learning model, where students worked in collaborative teams on projects that [made real connections][6] between required curriculum and community-based applications. Doing so gave these students the ability to develop skills they can use for a lifetime, rather than just on the next test: skills such as problem solving, critical thinking, oral and written communication, perseverance through setbacks, and adapting to changing conditions, as well as how to have routine conversations with adult mentors from the community. Only after reading [The Open Organization][7] did I realize that what we had been doing essentially embodied what Jim Whitehurst had described. In our case, of course, we applied open principles to an educational context (that model, called Open Way Learning, is the subject of a [book][8] published in December).

|

||||

|

||||

As good as this model is in terms of pushing students into relevant, engaging, and often unpredictable learning environments, it can only go so far if we, as educators who facilitate this type of project-based learning, do not constantly stay abreast of changing technologies and their respective lexicon. Even this unconventional but proven approach will still leave students ill-prepared for a global, innovation economy if we aren't constantly pushing ourselves into areas outside our own comfort zones. My experience at the All Things Open conference was a perfect example. While humbling, it also forced me to confront what I didn't know so that I can learn from it to help the work I do with other teachers and schools.

|

||||

|

||||

### A critical decision

|

||||

|

||||

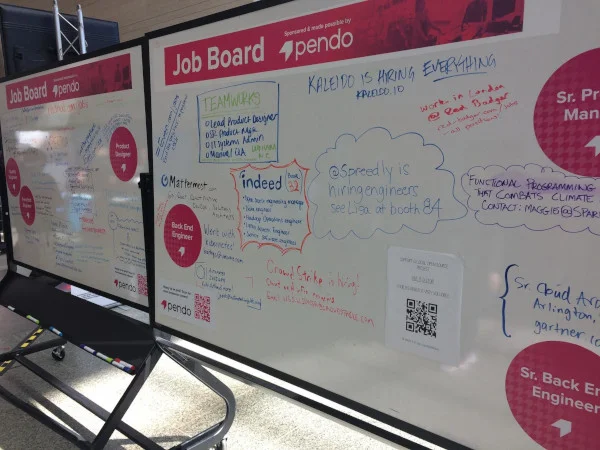

I made this point to others when I shared a picture of the All Things Open job board with dozens of colleagues all over the country. I shared it with the caption: "What did you do in your school today to prepare your students for this reality tomorrow?" The honest answer from many was, unfortunately, "not much." That has to change.

|

||||

|

||||

|

||||

|

||||

(Images courtesy of Ben Owens, CC BY-SA)

|

||||

|

||||

People in organizations everywhere have to make a critical decision: either embrace the rapid pace of change that is a fact of life in our world or face the hard reality of irrelevance. Our systems in education are at this same crossroads, even those that think of themselves as being innovative. Embracing change involves admitting to students, "I don't know, but I'm willing to learn." That's the kind of teaching and learning experience our students deserve.

|

||||

|

||||

It can happen, but it will take pioneering educators who are willing to move away from comfortable, back-of-the-book answers to help students as they work on difficult and messy challenges. You may very well be a veritable fish out of water.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/open-organization/19/1/educator-at-open-source-conference

|

||||

|

||||

作者:[Ben Owens][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/engineerteacher

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://en.wikipedia.org/wiki/Tony_Fadell

|

||||

[2]: https://allthingsopen.org/

|

||||

[3]: https://opensource.com/open-source-way

|

||||

[4]: https://dschool.stanford.edu/resources-collections/a-virtual-crash-course-in-design-thinking

|

||||

[5]: https://www.tricountyearlycollege.org/

|

||||

[6]: https://www.bie.org/about/what_pbl

|

||||

[7]: https://www.redhat.com/en/explore/the-open-organization-book

|

||||

[8]: https://www.amazon.com/Open-Up-Education-Learning-Transform/dp/1475842007/ref=tmm_pap_swatch_0?_encoding=UTF8&qid=&sr=

|

||||

@ -1,59 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (4 confusing open source license scenarios and how to navigate them)

|

||||

[#]: via: (https://opensource.com/article/19/1/open-source-license-scenarios)

|

||||

[#]: author: (P.Kevin Nelson https://opensource.com/users/pkn4645)

|

||||

|

||||

4 confusing open source license scenarios and how to navigate them

|

||||

======

|

||||

|

||||

Before you begin using a piece of software, make sure you fully understand the terms of its license.

|

||||

|

||||

|

||||

|

||||

As an attorney running an open source program office for a Fortune 500 corporation, I am often asked to look into a product or component where there seems to be confusion as to the licensing model. Under what terms can the code be used, and what obligations run with such use? This often happens when the code or the associated project community does not clearly indicate availability under a [commonly accepted open source license][1]. The confusion is understandable as copyright owners often evolve their products and services in different directions in response to market demands. Here are some of the scenarios I commonly discover and how you can approach each situation.

|

||||

|

||||

### Multiple licenses

|

||||

|

||||

The product is truly open source, released under a license approved by the [Open Source Initiative][2] (OSI), but it has changed licensing models at least once, if not multiple times, throughout its lifespan. This scenario is fairly easy to address; the user simply has to decide if the latest version with its attendant features and bug fixes is worth the conditions to be compliant with the current license. If so, great. If not, then the user can move back in time to a version released under a more palatable license and start from that fork, understanding that there may not be an active community for support and continued development.

|

||||

|

||||

### Old open source

|

||||

|

||||

This is a variation on the multiple licenses model with the twist that current licensing is proprietary only. You have to use an older version to take advantage of open source terms and conditions. Most often, the product was released under a valid open source license up to a certain point in its development, but then the copyright holder chose to evolve the code in a proprietary fashion and offer new releases only under proprietary commercial licensing terms. So, if you want the newest capabilities, you have to purchase a proprietary license, and you most likely will not get a copy of the underlying source code. Most often the open source community that grew up around the original code line falls away once the members understand there will be no further commitment from the copyright holder to the open source branch. While this scenario is understandable from the copyright holder's perspective, it can be seen as "burning a bridge" to the open source community. It would be very difficult to again leverage the benefits of the open source contribution models once a project owner follows this path.

|

||||

|

||||

### Open core

|

||||

|

||||

By far the most common discovery is that a product has both an open source-licensed "community edition" and a proprietary-licensed commercial offering, commonly referred to as open core. This is often encouraging to potential consumers, as it gives them a "try before you buy" option or even a chance to influence both versions of the product by becoming an active member of the community. I usually encourage clients to begin with the community version, get involved, and see what they can achieve. Then, if the product becomes a crucial part of their business plan, they have the option to upgrade to the proprietary level at any time.

|

||||

|

||||

### Freemium

|

||||

|

||||

The component is not open source at all, but instead it is released under some version of the "freemium" model. A version with restricted or time-limited functionality can be downloaded with no immediate purchase required. However, since the source code is usually not provided and its accompanying license does not allow perpetual use, the creation of derivative works, or further distribution, it is definitely not open source. In this scenario, it is usually best to pass unless you are prepared to purchase a proprietary license and accept all attendant terms and conditions of use. Users are often the most disappointed in this outcome as it has somewhat of a deceptive feel.

|

||||

|

||||

### OSI compliant

|

||||

|

||||

Of course, the happy path I haven't mentioned is to discover the project has a single, clear, OSI-compliant license. In those situations, open source software is as easy as downloading and going forward within appropriate use.

|

||||

|

||||

Each of the more complex scenarios described above can present problems to potential development projects, but consultation with skilled procurement or intellectual property professionals with regard to licensing lineage can reveal excellent opportunities.

|

||||

|

||||

An earlier version of this article was published on [OSS Law][3] and is republished with the author's permission.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/1/open-source-license-scenarios

|

||||

|

||||

作者:[P.Kevin Nelson][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/pkn4645

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.org/licenses

|

||||

[2]: https://opensource.org/licenses/category

|

||||

[3]: http://www.pknlaw.com/2017/06/i-thought-that-was-open-source.html

|

||||

@ -1,203 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (OOP Before OOP with Simula)

|

||||

[#]: via: (https://twobithistory.org/2019/01/31/simula.html)

|

||||

[#]: author: (Sinclair Target https://twobithistory.org)

|

||||

|

||||

OOP Before OOP with Simula

|

||||

======

|

||||

|

||||

Imagine that you are sitting on the grassy bank of a river. Ahead of you, the water flows past swiftly. The afternoon sun has put you in an idle, philosophical mood, and you begin to wonder whether the river in front of you really exists at all. Sure, large volumes of water are going by only a few feet away. But what is this thing that you are calling a “river”? After all, the water you see is here and then gone, to be replaced only by more and different water. It doesn’t seem like the word “river” refers to any fixed thing in front of you at all.

|

||||

|

||||

In 2009, Rich Hickey, the creator of Clojure, gave [an excellent talk][1] about why this philosophical quandary poses a problem for the object-oriented programming paradigm. He argues that we think of an object in a computer program the same way we think of a river—we imagine that the object has a fixed identity, even though many or all of the object’s properties will change over time. Doing this is a mistake, because we have no way of distinguishing between an object instance in one state and the same object instance in another state. We have no explicit notion of time in our programs. We just breezily use the same name everywhere and hope that the object is in the state we expect it to be in when we reference it. Inevitably, we write bugs.

|

||||

|

||||

The solution, Hickey concludes, is that we ought to model the world not as a collection of mutable objects but a collection of processes acting on immutable data. We should think of each object as a “river” of causally related states. In sum, you should use a functional language like Clojure.

|

||||

|

||||

![][2]

|

||||

The author, on a hike, pondering the ontological commitments

|

||||

of object-oriented programming.

|

||||

|

||||

Since Hickey gave his talk in 2009, interest in functional programming languages has grown, and functional programming idioms have found their way into the most popular object-oriented languages. Even so, most programmers continue to instantiate objects and mutate them in place every day. And they have been doing it for so long that it is hard to imagine that programming could ever look different.

|

||||

|

||||

I wanted to write an article about Simula and imagined that it would mostly be about when and how object-oriented constructs we are familiar with today were added to the language. But I think the more interesting story is about how Simula was originally so unlike modern object-oriented programming languages. This shouldn’t be a surprise, because the object-oriented paradigm we know now did not spring into existence fully formed. There were two major versions of Simula: Simula I and Simula 67. Simula 67 brought the world classes, class hierarchies, and virtual methods. But Simula I was a first draft that experimented with other ideas about how data and procedures could be bundled together. The Simula I model is not a functional model like the one Hickey proposes, but it does focus on processes that unfold over time rather than objects with hidden state that interact with each other. Had Simula 67 stuck with more of Simula I’s ideas, the object-oriented paradigm we know today might have looked very different indeed—and that contingency should teach us to be wary of assuming that the current paradigm will dominate forever.

|

||||

|

||||

### Simula 0 Through 67

|

||||

|

||||

Simula was created by two Norwegians, Kristen Nygaard and Ole-Johan Dahl.

|

||||

|

||||

In the late 1950s, Nygaard was employed by the Norwegian Defense Research Establishment (NDRE), a research institute affiliated with the Norwegian military. While there, he developed Monte Carlo simulations used for nuclear reactor design and operations research. These simulations were at first done by hand and then eventually programmed and run on a Ferranti Mercury. Nygaard soon found that he wanted a higher-level way to describe these simulations to a computer.

|

||||

|

||||

The kind of simulation that Nygaard commonly developed is known as a “discrete event model.” The simulation captures how a sequence of events changes the state of a system over time, but the important property here is that the simulation can jump from one event to the next, since the events are discrete and nothing changes in the system between events. This kind of modeling, according to a paper that Nygaard and Dahl presented about Simula in 1966, was increasingly being used to analyze “nerve networks, communication systems, traffic flow, production systems, administrative systems, social systems, etc.” So Nygaard thought that other people might want a higher-level way to describe these simulations too. He began looking for someone who could help him implement what he called his “Simulation Language” or “Monte Carlo Compiler.”
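To make the "jump from one event to the next" property concrete, here is a minimal Python sketch of a discrete event loop. It is only an illustration of the general idea, not anything Nygaard wrote; the function name and the sample events are made up.

```python
import heapq

def run_events(initial_events, until):
    """Process (time, name) events in time order, jumping between them."""
    queue = list(initial_events)
    heapq.heapify(queue)           # priority queue ordered by event time
    log = []
    while queue:
        time, name = heapq.heappop(queue)
        if time > until:
            break
        # The clock jumps straight to this event: nothing changes in between,
        # so there is no need to step through the intervening time.
        log.append((time, name))
    return log

# Hypothetical events, deliberately listed out of order.
arrivals = [(12.5, "order B arrives"), (3.0, "order A arrives"), (40.0, "machine breaks")]
for time, name in run_events(arrivals, until=30.0):
    print(f"{time:6.2f}: {name}")
```

The simulated clock never ticks; it is simply set to the timestamp of whichever event comes next, which is why such simulations can cover long stretches of simulated time cheaply.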

|

||||

|

||||

Dahl, who had also been employed by NDRE, where he had worked on language design, came aboard at this point to play Wozniak to Nygaard’s Jobs. Over the next year or so, Nygaard and Dahl worked to develop what has been called “Simula 0.” This early version of the language was going to be merely a modest extension to ALGOL 60, and the plan was to implement it as a preprocessor. The language was then much less abstract than what came later. The primary language constructs were “stations” and “customers.” These could be used to model certain discrete event networks; Nygaard and Dahl give an example simulating airport departures. But Nygaard and Dahl eventually came up with a more general language construct that could represent both “stations” and “customers” and also model a wider range of simulations. This was the first of two major generalizations that took Simula from being an application-specific ALGOL package to a general-purpose programming language.

|

||||

|

||||

In Simula I, there were no “stations” or “customers,” but these could be recreated using “processes.” A process was a bundle of data attributes associated with a single action known as the process’ operating rule. You might think of a process as an object with only a single method, called something like `run()`. This analogy is imperfect though, because each process’ operating rule could be suspended or resumed at any time; the operating rules were a kind of coroutine. A Simula I program would model a system as a set of processes that conceptually all ran in parallel. Only one process could actually be “current” at any time, but once a process suspended itself the next queued process would automatically take over. As the simulation ran, behind the scenes, Simula would keep a timeline of “event notices” that tracked when each process should be resumed. In order to resume a suspended process, Simula needed to keep track of multiple call stacks. This meant that Simula could no longer be an ALGOL preprocessor, because ALGOL had only one call stack. Nygaard and Dahl were committed to writing their own compiler.
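A rough modern analogue of this design can be sketched with Python generators standing in for operating rules and a heap standing in for the timeline of event notices. Everything here (the `Scheduler` class, `activate`, the `order` process) is a hypothetical illustration, not Simula's actual mechanism or API.

```python
import heapq
import itertools

class Scheduler:
    def __init__(self):
        self.now = 0.0
        self._notices = []             # heap of (resume_time, seq, process)
        self._seq = itertools.count()  # tie-breaker so generators are never compared

    def activate(self, process, delay=0.0):
        """File an event notice saying when `process` should next be resumed."""
        heapq.heappush(self._notices, (self.now + delay, next(self._seq), process))

    def run(self, until):
        while self._notices and self._notices[0][0] <= until:
            self.now, _, process = heapq.heappop(self._notices)
            try:
                delay = next(process)  # resume until the process suspends itself
            except StopIteration:
                continue               # the operating rule ran to completion
            self.activate(process, delay)

def order(name, work_time, sched, log):
    """A process: data (name, work_time) plus one suspendable operating rule."""
    log.append((sched.now, f"{name} starts"))
    yield work_time                    # suspend for work_time units of simulated time
    log.append((sched.now, f"{name} done"))

sched = Scheduler()
log = []
sched.activate(order("order-1", 5.0, sched, log))
sched.activate(order("order-2", 2.0, sched, log), delay=1.0)
sched.run(until=100.0)
for time, message in log:
    print(f"{time:5.1f}: {message}")
```

Each generator keeps its own suspended frame between resumptions, which is the role the multiple call stacks played in Simula I. Only one process runs at a time, and the scheduler alone decides which one is current.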

|

||||

|

||||

In their paper introducing this system, Nygaard and Dahl illustrate its use by implementing a simulation of a factory with a limited number of machines that can serve orders. The process here is the order, which starts by looking for an available machine, suspends itself to wait for one if none are available, and then runs to completion once a free machine is found. There is a definition of the order process that is then used to instantiate several different order instances, but no methods are ever called on these instances. The main part of the program just creates the processes and sets them running.

|

||||

|

||||

The first Simula I compiler was finished in 1965. The language grew popular at the Norwegian Computing Center, where Nygaard and Dahl had gone to work after leaving NDRE. Implementations of Simula I were made available to UNIVAC users and to Burroughs B5500 users. Nygaard and Dahl did a consulting deal with a Swedish company called ASEA that involved using Simula to run job shop simulations. But Nygaard and Dahl soon realized that Simula could be used to write programs that had nothing to do with simulation at all.

|

||||

|

||||

Stein Krogdahl, a professor at the University of Oslo who has written about the history of Simula, claims that “the spark that really made the development of a new general-purpose language take off” was [a paper called “Record Handling”][3] by the British computer scientist C.A.R. Hoare. If you read Hoare’s paper now, this is easy to believe. I’m surprised that you don’t hear Hoare’s name more often when people talk about the history of object-oriented languages. Consider this excerpt from his paper:

|

||||

|

||||

> The proposal envisages the existence inside the computer during the execution of the program, of an arbitrary number of records, each of which represents some object which is of past, present or future interest to the programmer. The program keeps dynamic control of the number of records in existence, and can create new records or destroy existing ones in accordance with the requirements of the task in hand.

|

||||

|

||||

> Each record in the computer must belong to one of a limited number of disjoint record classes; the programmer may declare as many record classes as he requires, and he associates with each class an identifier to name it. A record class name may be thought of as a common generic term like “cow,” “table,” or “house” and the records which belong to these classes represent the individual cows, tables, and houses.

|

||||

|

||||

Hoare does not mention subclasses in this particular paper, but Dahl credits him with introducing Nygaard and himself to the concept. Nygaard and Dahl had noticed that processes in Simula I often had common elements. Using a superclass to implement those common elements would be convenient. This also raised the possibility that the “process” idea itself could be implemented as a superclass, meaning that not every class had to be a process with a single operating rule. This then was the second great generalization that would make Simula 67 a truly general-purpose programming language. It was such a shift of focus that Nygaard and Dahl briefly considered changing the name of the language so that people would know it was not just for simulations. But “Simula” was too much of an established name for them to risk it.

|

||||

|

||||

In 1967, Nygaard and Dahl signed a contract with Control Data to implement this new version of Simula, to be known as Simula 67. A conference was held in June, where people from Control Data, the University of Oslo, and the Norwegian Computing Center met with Nygaard and Dahl to establish a specification for this new language. This conference eventually led to a document called the [“Simula 67 Common Base Language,”][4] which defined the language going forward.

|

||||

|

||||

Several different vendors would make Simula 67 compilers. The Association of Simula Users (ASU) was founded and began holding annual conferences. Simula 67 soon had users in more than 23 different countries.

|

||||

|

||||

### 21st Century Simula

|

||||

|

||||

Simula is remembered now because of its influence on the languages that have supplanted it. You would be hard-pressed to find anyone still using Simula to write application programs. But that doesn’t mean that Simula is an entirely dead language. You can still compile and run Simula programs on your computer today, thanks to [GNU cim][5].

|

||||

|

||||

The cim compiler implements the Simula standard as it was after a revision in 1986. But this is mostly the Simula 67 version of the language. You can write classes, subclasses, and virtual methods just as you would have with Simula 67. So you could create a small object-oriented program that looks a lot like something you could easily write in Python or Ruby:

|

||||

|

||||

```
! dogs.sim ;
Begin
    Class Dog;
        ! The cim compiler requires virtual procedures to be fully specified ;
        Virtual: Procedure bark Is Procedure bark;;
    Begin
        Procedure bark;
        Begin
            OutText("Woof!");
            OutImage;           ! Outputs a newline ;
        End;
    End;

    Dog Class Chihuahua;        ! Chihuahua is "prefixed" by Dog ;
    Begin
        Procedure bark;
        Begin
            OutText("Yap yap yap yap yap yap");
            OutImage;
        End;
    End;

    Ref (Dog) d;
    d :- new Chihuahua;         ! :- is the reference assignment operator ;
    d.bark;
End;
```

|

||||

|

||||

You would compile and run it as follows:

|

||||

|

||||

```
$ cim dogs.sim
Compiling dogs.sim:
gcc -g -O2 -c dogs.c
gcc -g -O2 -o dogs dogs.o -L/usr/local/lib -lcim
$ ./dogs
Yap yap yap yap yap yap
```

|

||||

|

||||

(You might notice that cim compiles Simula to C, then hands off to a C compiler.)

|

||||

|

||||

This was what object-oriented programming looked like in 1967, and I hope you agree that aside from syntactic differences this is also what object-oriented programming looks like in 2019. So you can see why Simula is considered a historically important language.
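For comparison, a Python rendering of the same program might look like the sketch below. It is a loose translation for illustration only: Python methods are always overridable, so there is no counterpart to the `Virtual:` declaration, and the methods here return strings rather than printing directly.

```python
class Dog:
    def bark(self):
        return "Woof!"

class Chihuahua(Dog):  # Chihuahua is "prefixed" by Dog, in Simula terms
    def bark(self):
        return "Yap yap yap yap yap yap"

d: Dog = Chihuahua()   # a Dog-typed reference to a Chihuahua instance
print(d.bark())        # dispatches to Chihuahua.bark
```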

|

||||

|

||||

But I’m more interested in showing you the process model that was central to Simula I. That process model is still available in Simula 67, but only when you use the `Process` class and a special `Simulation` block.

|

||||

|

||||

In order to show you how processes work, I’ve decided to simulate the following scenario. Imagine that there is a village full of villagers next to a river. The river has lots of fish, but between them the villagers only have one fishing rod. The villagers, who have voracious appetites, get hungry every 60 minutes or so. When they get hungry, they have to use the fishing rod to catch a fish. If a villager cannot use the fishing rod because another villager is waiting for it, then the villager queues up to use the fishing rod. If a villager has to wait more than five minutes to catch a fish, then the villager loses health. If a villager loses too much health, then that villager has starved to death.
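For readers who don't have a Simula compiler handy, the scenario can be approximated in Python with generators playing the role of processes. This is a sketch under stated assumptions, not a port of the Simula program: the `Sim` and `Rod` classes, the `("hold", ...)` and `("wait", ...)` commands, the uniform timing distributions, and the starting health of 3 are all invented for illustration.

```python
import heapq
import itertools
import random

class Sim:
    """Tiny time manager: a heap of (resume_time, seq, process) notices."""
    def __init__(self):
        self.now = 0.0
        self._heap = []
        self._seq = itertools.count()  # tie-breaker; generators are not comparable

    def activate(self, proc, delay=0.0):
        heapq.heappush(self._heap, (self.now + delay, next(self._seq), proc))

    def run(self, until):
        while self._heap and self._heap[0][0] <= until:
            self.now, _, proc = heapq.heappop(self._heap)
            try:
                kind, arg = next(proc)      # resume until the process suspends
            except StopIteration:
                continue                    # this villager's process has ended
            if kind == "hold":
                self.activate(proc, arg)    # wake up again after `arg` minutes
            else:                           # "wait": park in the rod's queue
                arg.waiting.append(proc)

class Rod:
    def __init__(self, sim):
        self.sim, self.busy, self.waiting = sim, False, []

    def release(self):
        self.busy = False
        if self.waiting:
            self.sim.activate(self.waiting.pop(0))  # wake the next in line

def villager(name, sim, rod, rng, log, health=3):
    while True:
        yield ("hold", rng.uniform(50, 70))         # hungry every 60 minutes or so
        asked_at = sim.now
        log.append((sim.now, f"{name} is hungry and requests the fishing rod."))
        while rod.busy:
            yield ("wait", rod)
        rod.busy = True
        if sim.now - asked_at > 5:                  # waited more than five minutes
            health -= 1
            log.append((sim.now, f"{name} went hungry waiting for the rod."))
            if health == 0:
                log.append((sim.now, f"{name} starved to death waiting for the rod."))
                rod.release()
                return
        log.append((sim.now, f"{name} is now fishing."))
        yield ("hold", rng.uniform(8, 14))          # time spent fishing
        log.append((sim.now, f"{name} has caught a fish."))
        rod.release()

sim, log, rng = Sim(), [], random.Random(1)
rod = Rod(sim)
for name in ["John", "Betty", "Jane", "Sam"]:
    sim.activate(villager(name, sim, rod, rng, log))  # main program only starts processes
sim.run(until=24 * 60)                                # one simulated day, in minutes
for time, message in log[-5:]:
    print(f"{time:8.2f}: {message}")
```

As in the Simula version, the top-level code only creates the villagers and hands control to the time manager. Each villager's `health` is a local variable of its own generator, so nothing outside the process can modify it.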

|

||||

|

||||

This is a somewhat strange example and I’m not sure why this is what first came to mind. But there you go. We will represent our villagers as Simula processes and see what happens over a day’s worth of simulated time in a village with four villagers.

|

||||

|

||||

The full program is [available here as a Gist][6].

|

||||

|

||||

The last lines of my output look like the following. Here we are seeing what happens in the last few hours of the day:

|

||||

|

||||

```
1299.45: John is hungry and requests the fishing rod.
1299.45: John is now fishing.
1311.39: John has caught a fish.
1328.96: Betty is hungry and requests the fishing rod.
1328.96: Betty is now fishing.
1331.25: Jane is hungry and requests the fishing rod.
1340.44: Betty has caught a fish.
1340.44: Jane went hungry waiting for the rod.
1340.44: Jane starved to death waiting for the rod.
1369.21: John is hungry and requests the fishing rod.
1369.21: John is now fishing.
1379.33: John has caught a fish.
1409.59: Betty is hungry and requests the fishing rod.
1409.59: Betty is now fishing.
1419.98: Betty has caught a fish.
1427.53: John is hungry and requests the fishing rod.
1427.53: John is now fishing.
1437.52: John has caught a fish.
```

|

||||

|

||||

Poor Jane starved to death. But she lasted longer than Sam, who didn’t even make it to 7am. Betty and John sure have it good now that only two of them need the fishing rod.

|

||||

|

||||

What I want you to see here is that the main, top-level part of the program does nothing but create the four villager processes and get them going. The processes manipulate the fishing rod object in the same way that we would manipulate an object today. But the main part of the program does not call any methods or modify any properties on the processes. The processes have internal state, but this internal state only gets modified by the process itself.

|

||||

|

||||

There are still fields that get mutated in place here, so this style of programming does not directly address the problems that pure functional programming would solve. But as Krogdahl observes, “this mechanism invites the programmer of a simulation to model the underlying system as a set of processes, each describing some natural sequence of events in that system.” Rather than thinking primarily in terms of nouns or actors—objects that do things to other objects—here we are thinking of ongoing processes. The benefit is that we can hand overall control of our program off to Simula’s event notice system, which Krogdahl calls a “time manager.” So even though we are still mutating processes in place, no process makes any assumptions about the state of another process. Each process interacts with other processes only indirectly.

It’s not obvious how this pattern could be used to build, say, a compiler or an HTTP server. (On the other hand, if you’ve ever programmed games in the Unity game engine, this should look familiar.) I also admit that even though we have a “time manager” now, this may not have been exactly what Hickey meant when he said that we need an explicit notion of time in our programs. (I think he’d want something like the superscript notation [that Ada Lovelace used][7] to distinguish between the different values a variable assumes through time.) All the same, I think it’s really interesting that right there at the beginning of object-oriented programming we can find a style of programming that is not at all like the object-oriented programming we are used to. We might take it for granted that object-oriented programming simply works one way: that a program is just a long list of the things that certain objects do to other objects in the exact order that they do them. Simula I’s process system shows that there are other approaches. Functional languages are probably a better thought-out alternative, but Simula I reminds us that the very notion of alternatives to modern object-oriented programming should come as no surprise.

If you enjoyed this post, more like it come out every four weeks! Follow [@TwoBitHistory][8] on Twitter or subscribe to the [RSS feed][9] to make sure you know when a new post is out.

Previously on TwoBitHistory…

> Hey everyone! I sadly haven't had time to do any new writing but I've just put up an updated version of my history of RSS. This version incorporates interviews I've since done with some of the key people behind RSS like Ramanathan Guha and Dan Libby.<https://t.co/WYPhvpTGqB>

>

> — TwoBitHistory (@TwoBitHistory) [December 18, 2018][10]

--------------------------------------------------------------------------------

1. Jan Rune Holmevik, “The History of Simula,” accessed January 31, 2019, http://campus.hesge.ch/daehne/2004-2005/langages/simula.htm. ↩

2. Ole-Johan Dahl and Kristen Nygaard, “SIMULA—An ALGOL-Based Simulation Language,” Communications of the ACM 9, no. 9 (September 1966): 671, accessed January 31, 2019, http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.95.384&rep=rep1&type=pdf. ↩

3. Stein Krogdahl, “The Birth of Simula,” 2, accessed January 31, 2019, http://heim.ifi.uio.no/~steinkr/papers/HiNC1-webversion-simula.pdf. ↩

4. ibid. ↩

5. Ole-Johan Dahl and Kristen Nygaard, “The Development of the Simula Languages,” ACM SIGPLAN Notices 13, no. 8 (August 1978): 248, accessed January 31, 2019, https://hannemyr.com/cache/knojd_acm78.pdf. ↩

6. Dahl and Nygaard (1966), 676. ↩

7. Dahl and Nygaard (1978), 257. ↩

8. Krogdahl, 3. ↩

9. Ole-Johan Dahl, “The Birth of Object-Orientation: The Simula Languages,” 3, accessed January 31, 2019, http://www.olejohandahl.info/old/birth-of-oo.pdf. ↩

10. Dahl and Nygaard (1978), 265. ↩

11. Holmevik. ↩

12. Krogdahl, 4. ↩

--------------------------------------------------------------------------------

via: https://twobithistory.org/2019/01/31/simula.html

Author: [Sinclair Target][a]

Topic selection: [lujun9972][b]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://twobithistory.org

[b]: https://github.com/lujun9972

[1]: https://www.infoq.com/presentations/Are-We-There-Yet-Rich-Hickey

[2]: /images/river.jpg

[3]: https://archive.computerhistory.org/resources/text/algol/ACM_Algol_bulletin/1061032/p39-hoare.pdf

[4]: http://web.eah-jena.de/~kleine/history/languages/Simula-CommonBaseLanguage.pdf

[5]: https://www.gnu.org/software/cim/

[6]: https://gist.github.com/sinclairtarget/6364cd521010d28ee24dd41ab3d61a96

[7]: https://twobithistory.org/2018/08/18/ada-lovelace-note-g.html

[8]: https://twitter.com/TwoBitHistory

[9]: https://twobithistory.org/feed.xml

[10]: https://twitter.com/TwoBitHistory/status/1075075139543449600?ref_src=twsrc%5Etfw

@ -1,118 +0,0 @@

[#]: collector: (lujun9972)

[#]: translator: ( )

[#]: reviewer: ( )

[#]: publisher: ( )

[#]: url: ( )