mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-28 23:20:10 +08:00

commit

d4033cb682

4

LCTT翻译规范.md

Normal file

4

LCTT翻译规范.md

Normal file

@ -0,0 +1,4 @@

|

||||

# Linux中国翻译规范

|

||||

1. 翻译中出现的专有名词,可参见Dict.md中的翻译。

|

||||

2. 英文人名,如无中文对应译名,一般不译。

|

||||

2. 缩写词,一般不须翻译,可考虑旁注中文全名。

|

||||

132

published/20150716 Interview--Larry Wall.md

Normal file

132

published/20150716 Interview--Larry Wall.md

Normal file

@ -0,0 +1,132 @@

|

||||

Larry Wall 专访——语言学、Perl 6 的设计和发布

|

||||

================================================================================

|

||||

|

||||

> 经历了15年的打造,Perl 6 终将在年底与大家见面。我们预先采访了它的作者了解一下新特性。

|

||||

|

||||

Larry Wall 是个相当有趣的人。他是编程语言 Perl 的创造者,这种语言被广泛的誉为将互联网粘在一起的胶水,也由于大量地在各种地方使用非字母的符号被嘲笑为‘只写’语言——以难以阅读著称。Larry 本人具有语言学背景,以其介绍 Perl 未来发展的演讲“[洋葱的状态][1](State of the Onion)”而闻名。(LCTT 译注:“洋葱的状态”是 Larry Wall 的年度演讲的主题,洋葱也是 Perl 基金会的标志。)

|

||||

|

||||

在2015年布鲁塞尔的 FOSDEM 上,我们赶上了 Larry,问了问他为什么 Perl 6 花了如此长的时间(Perl 5 的发布时间是1994年),了解当项目中的每个人都各执己见时是多么的难以管理,以及他的语言学背景自始至终究竟给 Perl 带来了怎样的影响。做好准备,让我们来领略其中的奥妙……

|

||||

|

||||

|

||||

|

||||

**Linux Voice:你曾经有过计划去寻找世界上某个地方的某种不见经传的语言,然后为它创造书写的文字,但你从未有机会去实现它。如果你能回到过去,你会去做么?**

|

||||

|

||||

Larry Wall:你首先得是个年轻人才能搞得定!做这些事需要投入很大的努力和人力,以至于已经不适合那些上了年纪的人了。健康、活力是其中的一部分,同样也因为人们在年轻的时候更容易学习一门新的语言,只有在你学会了语言之后你才能写呀。

|

||||

|

||||

我自学了日语十年,由于我的音系学和语音学的训练我能说的比较流利——但要理解别人的意思对我来说还十分困难。所以到了日本我会问路,但我听不懂他们的回答!

|

||||

|

||||

通常需要一门语言学习得足够好才能开发一个文字体系,并可以使用这种语言进行少量的交流。在你能够实际推广它和用本土人自己的文化教育他们前,那还需要一些年。最后才可以教授本土人如何以他们的文明书写。

|

||||

|

||||

当然如果在语言方面你有帮手 —— 经过别人的提醒我们不再使用“语言线人”来称呼他们了,那样显得我们像是在 CIA 工作的一样!—— 你可以通过他们的帮助来学习外语。他们不是老师,但他们会以另一种方式来启发你学习 —— 当然他们也能教你如何说。他们会拿着一根棍子,指着它说“这是一根棍子”,然后丢掉同时说“棒子掉下去了”。然后,你就可以记下一些东西并将其系统化。

|

||||

|

||||

大多数让人们有这样做的动力是翻译圣经。但是这只是其中的一方面;另一方面也是为了文化保护。传教士在这方面臭名昭著,因为人类学家认为人们应该基于自己的文明来做这件事。但有些人注定会改变他们的文化——他们可能是军队、或是商业侵入,如可口可乐或者缝纫机,或传教士。在这三者之间,传教士相对来讲伤害最小的了,如果他们恪守本职的话。

|

||||

|

||||

**LV:许多文字系统有本可依,相较而言你的发明就像是格林兰语…**

|

||||

|

||||

印第安人照搬字母就发明了他们自己的语言,而且没有在这些字母上施加太多我们给这些字母赋予的涵义,这种做法相当随性。它们只要能够表达出人们的所思所想,使交流顺畅就行。经常是有些声调语言(Tonal language)使用的是西方文字拼写,并尽可能的使用拉丁文的字符变化,然后用重音符或数字标注出音调。

|

||||

|

||||

在你开始学习如何使用语音和语调表示之后,你也开始变得迷糊——或者你的书写就不如从前准确。或者你对话的时候像在讲英文,但发音开始无法匹配拼写。

|

||||

|

||||

**LV:当你在开发 Perl 的时候,你的语言学背景会不会使你认为:“这对程序设计语言真的非常重要”?**

|

||||

|

||||

LW:我在人们是如何使用语言上想了很多。在现实的语言中,你有一套名词、动词和形容词的体系,并且你知道这些单词的词性。在现实的自然语言中,你时常将一个单词放到不同的位置。我所学的语言学理论也被称为法位学(phoenetic),它解释了这些在自然语言中工作的原理 —— 也就是有些你当做名词的东西,有时候你可以将它用作动词,并且人们总是这样做。

|

||||

|

||||

你能很好的将任何单词放在任何位置而进行沟通。我比较喜欢的例子是将一个整句用作为一个形容词。这句话会是这样的:“我不喜欢你的[我可以用任何东西来取代这个形容词的]态度”!

|

||||

|

||||

所以自然语言非常灵活,因为聆听者非常聪明 —— 至少,相对于电脑而言 —— 你相信他们会理解你最想表达的意思,即使存在歧义。当然对电脑而言,你必须保证歧义不大。

|

||||

|

||||

> “在 Perl 6 中,我们试图让电脑更准确的了解我们。”

|

||||

|

||||

可以说在 Perl 1到5上,我们针对歧义方面处理做得还不够。有时电脑会在不应该的时候迷惑。在 Perl 6上,我们找了许多方法,使得电脑对你所说的话能更准确的理解,就算用户并不清楚这底是字符串还是数字,电脑也能准确的知道它的类型。我们找到了内部以强类型存储,而仍然可以无视类型的“以此即彼”的方法。

|

||||

|

||||

|

||||

|

||||

**LV:Perl 被视作互联网上的“胶水(glue)”语言已久,能将点点滴滴组合在一起。在你看来 Perl 6 的发布是否符合当前用户的需要,或者旨在招揽更多新用户,能使它重获新生吗?**

|

||||

|

||||

LW:最初的设想是为 Perl 程序员带来更好的 Perl。但在看到了 Perl 5 上的不足后,很明显改掉这些不足会使 Perl 6更易用,就像我在讨论中提到过 —— 类似于 [托尔金(J. R. R. Tolkien) 在《指环王》前言中谈到的适用性一样][2]。

|

||||

|

||||

重点是“简单的东西应该简单,而困难的东西应该可以实现”。让我们回顾一下,在 Perl 2和3之间的那段时间。在 Perl 2上我们不能处理二进制数据或嵌入的 null 值 —— 只支持 C 语言风格的字符串。我曾说过“Perl 只是文本处理语言 —— 在文本处理语言里你并不需要这些功能”。

|

||||

|

||||

但当时发生了一大堆的问题,因为大多数的文本中会包含少量的二进制数据 —— 如网络地址(network addresses)及类似的东西。你使用二进制数据打开套接字,然后处理文本。所以通过支持二进制数据,语言的适用性(applicability)翻了一倍。

|

||||

|

||||

这让我们开始探讨在语言中什么应该简单。现在的 Perl 中有一条原则,是我们偷师了哈夫曼编码(Huffman coding)的做法,它在位编码系统中为字符采取了不同的尺寸,常用的字符占用的位数较少,不常用的字符占用的位数更多。

|

||||

|

||||

我们偷师了这种想法并将它作为 Perl 的一般原则,针对常用的或者说常输入的 —— 这些常用的东西必须简单或简洁。不过,另一方面,也显得更加的不规则(irregular)。在自然语言中也是这样的,最常用的动词实际上往往是最不规则的。

|

||||

|

||||

所以在这样的情况下需要更多的差异存在。我很喜欢一本书是 Umberto Eco 写的的《探寻完美的语言(The Search for the Perfect Language)》,说的并不是计算机语言;而是哲学语言,大体的意思是古代的语言也许是完美的,我们应该将它们带回来。

|

||||

|

||||

所有的这类语言错误的认为类似的事物其编码也应该总是类似的。但这并不是我们沟通的方式。如果你的农场中有许多动物,他们都有相近的名字,当你想杀一只鸡的时候说“走,去把 Blerfoo 宰了”,你的真实想法是宰了 Blerfee,但有可能最后死的是一头牛(LCTT 译注:这是杀鸡用牛刀的意思吗?哈哈)。

|

||||

|

||||

所以在这种时候我们其实更需要好好的将单词区分开,使沟通信道的冗余增加。常用的单词应该有更多的差异。为了达到更有效的通讯,还有一种自足(LCTT 译注:self-clocking ,自同步,[概念][3]来自电信和电子行业,此处译为“自足”更能体现涵义)编码。如果你在一个货物上看到过 UPC 标签(条形码),它就是一个自足编码,每对“条”和“空”总是以七个列宽为单位,据此你就知道“条”的宽度加起来总是这么宽。这就是自足。

|

||||

|

||||

在电子产品中还有另一种自足编码。在老式的串行传输协议中有停止和启动位,来保持同步。自然语言中也会包含这些。比如说,在写日语时,不用使用空格。由于书写方式的原因,他们会在每个词组的开头使用中文中的汉字字符,然后用音节表(syllabary)中的字符来结尾。

|

||||

|

||||

**LV:是平假名,对吗?**

|

||||

|

||||

LW: 是的,平假名。所以在这一系统,每个词组的开头就自然就很重要了。同样的,在古希腊,大多数的动词都是搭配好的(declined 或 conjugated),所以它们的标准结尾是一种自足机制。在他们的书写体系中空格也是可有可无的 —— 引入空格是更近代的发明。

|

||||

|

||||

所以在计算机语言上也要如此,有的值也可以自足编码。在 Perl 上我们重度依赖这种方法,而且在 Perl 6 上相较于前几代这种依赖更重。当你使用表达式时,你要么得到的是一个词,要么得到的是插值(infix)操作符。当你想要得到一个词,你有可能得到的是一个前缀操作符,它也在相同的位置;同样当你想要得到一个插值操作符,你也可能得到的是前一个词的后缀。

|

||||

|

||||

但是反过来。如果编译器准确的知道它想要什么,你可以稍微重载(overload)它们,其它的让 Perl 来完成。所以在斜线“/”后面是单词时它会当成正则表达式,而斜线“/”在字串中时视作除法。而我们并不会重载所有东西,因为那只会使你失去自足冗余。

|

||||

|

||||

多数情况下我们提示的比较好的语法错误消息,是出于发现了一行中出现了两个关键词,然后我们尝试找出为什么一行会出现两个关键字 —— “哦,你一定漏掉了上一行的分号”,所以我们相较于很多其他的按步照班的解析器可以生成更好的错误消息。

|

||||

|

||||

|

||||

|

||||

**LV:为什么 Perl 6 花了15年?当每个人对事物有不同看法时一定十分难于管理,而且正确和错误并不是绝对的。**

|

||||

|

||||

LW:这必须要非常小心地平衡。刚开始会有许多的好的想法 —— 好吧,我并不是说那些全是好的想法。也有很多令人烦恼的地方,就像有361条 RFC [功能建议文件],而我也许只想要20条。我们需要坐下来,将它们全部看完,并忽略其中的解决方案,因为它们通常流于表象、视野狭隘。几乎每一条只针对一样事物,如若我们将它们全部拼凑起来,那简直是一堆垃圾。

|

||||

|

||||

> “掌握平衡时需要格外小心。毕竟在刚开始的时候总会有许多的好主意。”

|

||||

|

||||

所以我们必须基于人们在使用 Perl 5 时的真实感受重新整理,寻找统一、深层的解决方案。这些 RFC 文档许多都提到了一个事实,就是类型系统的不足。通过引入更条理分明的类型系统,我们可以解决很多问题并且即聪明又紧凑。

|

||||

|

||||

同时我们开始关注其他方面:如何统一特征集并开始重用不同领域的想法,这并不需要它们在下层相同。我们有一种标准的书写配对(pair)的方式——好吧,在 Perl 里面有两种!但使用冒号书写配对的方法同样可以用于基数计数法或是任何进制的文本编号。同样也可以用于其他形式的引用(quoting)。在 Perl 里我们称它为“奇妙的一致”。

|

||||

|

||||

> “做了 Perl 6 的早期实现的朋友们,握着我的手说:“我们真的很需要一位语言的设计者。””

|

||||

|

||||

同样的想法涌现出来,你说“我已经熟悉了语法如何运作,但是我看见它也被用在别处”,所以说视角相同才能找出这种一致。那些提出各种想法和做了 Perl 6 的早期实现的人们回来看我,握着我的手说:“我们真的需要一位语言的设计者。您能作为我们的[仁慈独裁者][4](benevolent dictator)吗?”(LCTT 译注:Benevolent Dictator For Life,或 BDFL,指开源领袖,通常指对社区争议拥有最终裁决权的领袖,典故来自 Python 创始人 Guido van Rossum, 具体参考维基条目[解释][4])

|

||||

|

||||

所以我是语言的设计者,但总是听到:“不要管具体实现(implementation)!我们目睹了你对 Perl 5 做的那些,我们不想历史重演!”真是让我忍俊不禁,因为他们作为起步的核心和原先 Perl 5 的内部结构上几乎别无二致,也许这就是为什么一些早期的实现做的并不好的原因。

|

||||

|

||||

因为我们仍然在摸索我们的整个设计,其实现在做了许多 VM (虚拟机)该做什么和不该做什么的假设,所以最终这个东西就像面向对象的汇编语言一样。类似的问题在伊始阶段无处不在。然后 Pugs 这家伙走过来说:“用用看 Haskell 吧,它能让你们清醒的认识自己正在干什么,让我们用它来弄清楚下层的语义模型(semantic model)。”

|

||||

|

||||

因此,我们明确了其中的一些语义模型,但更重要的是,我们开始建立符合那些语义模型的测试套件。在这之后,Parrot VM 继续进行开发,并且出现了另一个实现 Niecza ,它基于 .Net,是由一个年轻的家伙搞出来的。他很聪明,实现了 Perl 6 的一个很大的子集。不过他还是一个人干,并没有找到什么好方法让别人介入他的项目。

|

||||

|

||||

同时 Parrot 项目变得过于庞大,以至于任何人都不能真正的深入掌控它,并且很难重构。同时,开发 Rakudo 的人们觉得我们可能需要在更多平台上运行它,而不只是在 Parrot VM 上。 于是他们发明了所谓的可移植层 NQP ,即 “Not Quite Perl”。他们一开始将它移植到 JVM(Java虚拟机)上运行,与此同时,他们还秘密的开发了一个叫做 MoarVM 的 VM ,它去年才刚刚为人知晓。

|

||||

|

||||

无论 MoarVM 还是 JVM 在回归测试(regression test)中表现得十分接近 —— 在许多方面 Parrot 算是尾随其后。这样不挑剔 VM 真的很棒,我们也能开始考虑将 NQP 发扬光大。谷歌夏季编码大赛(Google Summer of Code project)的目标就是针对 JavaScript 的 NQP,这应该靠谱,因为 MoarVM 也同样使用 Node.js 作为日常处理。

|

||||

|

||||

我们可能要将今年余下的时光投在 MoarVM 上,直到 6.0 发布,方可休息片刻。

|

||||

|

||||

**LV:去年英国,政府开展编程年活动(Year of Code),来激发年轻人对编程的兴趣。针对活动的建议五花八门——类似为了让人们准确的认识到内存的使用你是否应该从低阶语言开始讲授,或是一门高阶语言。你对此作何看法?**

|

||||

|

||||

LW:到现在为止,Python 社区在低阶方面的教学工作做得比我们要好。我们也很想在这一方面做点什么,这也是我们有蝴蝶 logo 的部分原因,以此来吸引七岁大小的女孩子!

|

||||

|

||||

|

||||

|

||||

> “到现在为止,Python 社区在低阶方面的教学工作做得比我们要好。”

|

||||

|

||||

我们认为将 Perl 6 作为第一门语言来学习是可行的。一大堆的将 Perl 5 作为第一门语言学习的人让我们吃惊。你知道,在 Perl 5 中有许多相当大的概念,如闭包,词法范围,和一些你通常在函数式编程中见到的特性。甚至在 Perl 6 中更是如此。

|

||||

|

||||

Perl 6 花了这么长时间的部分原因是我们尝试去坚持将近 50 种互不相同的原则,在设计语言的最后对于“哪点是最重要的规则”这个问题还是悬而未决。有太多的问题需要讨论。有时我们做出了决定,并已经工作了一段时间,才发现这个决定并不很正确。

|

||||

|

||||

之前我们并未针对并发程序设计或指定很多东西,直到 Jonathan Worthington 的出现,他非常巧妙的权衡了各个方面。他结合了一些其他语言诸如 Go 和 C# 的想法,将并发原语写的非常好。可组合性(Composability)是一个语言至关重要的一部分。

|

||||

|

||||

有很多的程序设计系统的并发和并行写的并不好 —— 比如线程和锁,不良的操作方式有很多。所以在我看来,额外花点时间看一下 Go 或者 C# 这种高阶原语的开发是很值得的 —— 那是一种关键字上的矛盾 —— 写的相当棒。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxvoice.com/interview-larry-wall/

|

||||

|

||||

作者:[Mike Saunders][a]

|

||||

译者:[martin2011qi](https://github.com/martin2011qi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linuxvoice.com/author/mike/

|

||||

[1]:https://en.wikipedia.org/wiki/Perl#State_of_the_Onion

|

||||

[2]:http://tinyurl.com/nhpr8g2

|

||||

[3]:http://en.wikipedia.org/wiki/Self-clocking_signal

|

||||

[4]:https://en.wikipedia.org/wiki/Benevolent_dictator_for_life

|

||||

@ -0,0 +1,28 @@

|

||||

Linux 4.3 内核增加了 MOST 驱动子系统

|

||||

================================================================================

|

||||

当 4.2 内核还没有正式发布的时候,Greg Kroah-Hartman 就为他维护的各种子系统模块打开了4.3 的合并窗口。

|

||||

|

||||

之前 Greg KH 发起的拉取请求(pull request)里包含了 linux 4.3 的合并窗口更新,内容涉及驱动核心、TTY/串口、USB 驱动、字符/杂项以及暂存区内容。这些拉取申请没有提供任何震撼性的改变,大部分都是改进/附加/修改bug。暂存区内容又是大量的修正和清理,但是还是有一个新的驱动子系统。

|

||||

|

||||

Greg 提到了[4.3 的暂存区改变][2],“这里的很多东西,几乎全部都是细小的修改和改变。通常的 IIO 更新和新驱动,以及我们已经添加了的 MOST 驱动子系统,已经在源码树里整理了。ozwpan 驱动最终还是被删掉,因为它很明显被废弃了而且也没有人关心它。”

|

||||

|

||||

MOST 驱动子系统是面向媒体的系统传输(Media Oriented Systems Transport)的简称。在 linux 4.3 新增的文档里面解释道,“MOST 驱动支持 LInux 应用程序访问 MOST 网络:汽车信息骨干网(Automotive Information Backbone),高速汽车多媒体网络的事实上的标准。MOST 定义了必要的协议、硬件和软件层,提供高效且低消耗的传输控制,实时的数据包传输,而只需要使用一个媒介(物理层)。目前使用的媒介是光线、非屏蔽双绞线(UTP)和同轴电缆。MOST 也支持多种传输速度,最高支持150Mbps。”如文档解释的,MOST 主要是关于 Linux 在汽车上的应用。

|

||||

|

||||

当 Greg KH 发出了他为 Linux 4.3 多个子系统做出的更新,但是他还没有打算提交 [KDBUS][5] 的内核代码。他之前已经放出了 [linux 4.3 的 KDBUS] 的开发计划,所以我们将需要等待官方的4.3 合并窗口,看看会发生什么。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.phoronix.com/scan.php?page=news_item&px=Linux-4.3-Staging-Pull

|

||||

|

||||

作者:[Michael Larabel][a]

|

||||

译者:[oska874](https://github.com/oska874)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.michaellarabel.com/

|

||||

[1]:http://www.phoronix.com/scan.php?page=search&q=Linux+4.2

|

||||

[2]:http://lkml.iu.edu/hypermail/linux/kernel/1508.2/02604.html

|

||||

[3]:http://www.phoronix.com/scan.php?page=news_item&px=KDBUS-Not-In-Linux-4.2

|

||||

[4]:http://www.phoronix.com/scan.php?page=news_item&px=Linux-4.2-rc7-Released

|

||||

[5]:http://www.phoronix.com/scan.php?page=search&q=KDBUS

|

||||

@ -1,14 +1,12 @@

|

||||

translation by strugglingyouth

|

||||

nstalling NGINX and NGINX Plus With Ansible

|

||||

使用 ansible 安装 NGINX 和 NGINX Plus

|

||||

================================================================================

|

||||

在生产环境中,我会更喜欢做与自动化相关的所有事情。如果计算机能完成你的任务,何必需要你亲自动手呢?但是,在不断变化并存在多种技术的环境中,创建和实施自动化是一项艰巨的任务。这就是为什么我喜欢[Ansible][1]。Ansible是免费的,开源的,对于 IT 配置管理,部署和业务流程,使用起来非常方便。

|

||||

在生产环境中,我会更喜欢做与自动化相关的所有事情。如果计算机能完成你的任务,何必需要你亲自动手呢?但是,在不断变化并存在多种技术的环境中,创建和实施自动化是一项艰巨的任务。这就是为什么我喜欢 [Ansible][1] 的原因。Ansible 是一个用于 IT 配置管理,部署和业务流程的开源工具,使用起来非常方便。

|

||||

|

||||

我最喜欢 Ansible 的一个特点是,它是完全无客户端的。要管理一个系统,通过 SSH 建立连接,它使用[Paramiko][2](一个 Python 库)或本地的 [OpenSSH][3]。Ansible 另一个吸引人的地方是它有许多可扩展的模块。这些模块可被系统管理员用于执行一些的常见任务。特别是,它们使用 Ansible 这个强有力的工具可以跨多个服务器、环境或操作系统安装和配置任何程序,只需要一个控制节点。

|

||||

|

||||

我最喜欢 Ansible 的一个特点是,它是完全无客户端。要管理一个系统,通过 SSH 建立连接,也使用了[Paramiko][2](一个 Python 库)或本地的 [OpenSSH][3]。Ansible 另一个吸引人的地方是它有许多可扩展的模块。这些模块可被系统管理员用于执行一些的相同任务。特别是,它们使用 Ansible 这个强有力的工具可以安装和配置任何程序在多个服务器上,环境或操作系统,只需要一个控制节点。

|

||||

在本教程中,我将带你使用 Ansible 完成安装和部署开源 [NGINX][4] 和我们的商业产品 [NGINX Plus][5]。我将在 [CentOS][6] 服务器上演示,但我也在下面的“在 Ubuntu 上创建 Ansible Playbook 来安装 NGINX 和 NGINX Plus”小节中包含了在 Ubuntu 服务器上部署的细节。

|

||||

|

||||

在本教程中,我将带你使用 Ansible 完成安装和部署开源[NGINX][4] 和 [NGINX Plus][5],我们的商业产品。我将在 [CentOS][6] 服务器上演示,但我也写了一个详细的教程关于在 Ubuntu 服务器上部署[在 Ubuntu 上创建一个 Ansible Playbook 来安装 NGINX 和 NGINX Plus][7] 。

|

||||

|

||||

在本教程中我将使用 Ansible 1.9.2 版本的,并在 CentOS 7.1 服务器上部署运行。

|

||||

在本教程中我将使用 Ansible 1.9.2 版本,并在 CentOS 7.1 服务器上部署运行。

|

||||

|

||||

$ ansible --version

|

||||

ansible 1.9.2

|

||||

@ -20,14 +18,13 @@ nstalling NGINX and NGINX Plus With Ansible

|

||||

|

||||

如果你使用的是 CentOS,安装 Ansible 十分简单,只要输入以下命令。如果你想使用源码编译安装或使用其他发行版,请参阅上面 Ansible 链接中的说明。

|

||||

|

||||

|

||||

$ sudo yum install -y epel-release && sudo yum install -y ansible

|

||||

|

||||

根据环境的不同,在本教程中的命令有的可能需要 sudo 权限。文件路径,用户名,目标服务器的值取决于你的环境中。

|

||||

根据环境的不同,在本教程中的命令有的可能需要 sudo 权限。文件路径,用户名和目标服务器取决于你的环境的情况。

|

||||

|

||||

### 创建一个 Ansible Playbook 来安装 NGINX (CentOS) ###

|

||||

|

||||

首先,我们为 NGINX 的部署创建一个工作目录,以及子目录和部署配置文件目录。我通常建议在主目录中创建目录,在文章的所有例子中都会有说明。

|

||||

首先,我们要为 NGINX 的部署创建一个工作目录,包括子目录和部署配置文件。我通常建议在你的主目录中创建该目录,在文章的所有例子中都会有说明。

|

||||

|

||||

$ cd $HOME

|

||||

$ mkdir -p ansible-nginx/tasks/

|

||||

@ -54,11 +51,11 @@ nstalling NGINX and NGINX Plus With Ansible

|

||||

|

||||

$ vim $HOME/ansible-nginx/deploy.yml

|

||||

|

||||

**deploy.yml** 文件是 Ansible 部署的主要文件,[ 在使用 Ansible 部署 NGINX][9] 时,我们将运行 ansible‑playbook 命令执行此文件。在这个文件中,我们指定运行时 Ansible 使用的库以及其它配置文件。

|

||||

**deploy.yml** 文件是 Ansible 部署的主要文件,在“使用 Ansible 部署 NGINX”小节中,我们运行 ansible‑playbook 命令时会使用此文件。在这个文件中,我们指定 Ansible 运行时使用的库以及其它配置文件。

|

||||

|

||||

在这个例子中,我使用 [include][10] 模块来指定配置文件一步一步来安装NGINX。虽然可以创建一个非常大的 playbook 文件,我建议你将其分割为小文件,以保证其可靠性。示例中的包括复制静态内容,复制配置文件,为更高级的部署使用逻辑配置设定变量。

|

||||

在这个例子中,我使用 [include][10] 模块来指定配置文件一步一步来安装NGINX。虽然可以创建一个非常大的 playbook 文件,我建议你将其分割为小文件,让它们更有条理。include 的示例中可以复制静态内容,复制配置文件,为更高级的部署使用逻辑配置设定变量。

|

||||

|

||||

在文件中输入以下行。包括顶部参考注释中的文件名。

|

||||

在文件中输入以下行。我在顶部的注释包含了文件名用于参考。

|

||||

|

||||

# ./ansible-nginx/deploy.yml

|

||||

|

||||

@ -66,21 +63,21 @@ nstalling NGINX and NGINX Plus With Ansible

|

||||

tasks:

|

||||

- include: 'tasks/install_nginx.yml'

|

||||

|

||||

hosts 语句说明 Ansible 部署 **nginx** 组的所有服务器,服务器在 **/etc/ansible/hosts** 中指定。我们将编辑此文件来 [创建 NGINX 服务器的列表][11]。

|

||||

hosts 语句说明 Ansible 部署 **nginx** 组的所有服务器,服务器在 **/etc/ansible/hosts** 中指定。我们会在下面的“创建 NGINX 服务器列表”小节编辑此文件。

|

||||

|

||||

include 语句说明 Ansible 在部署过程中从 **tasks** 目录下读取并执行 **install_nginx.yml** 文件中的内容。该文件包括以下几步:下载,安装,并启动 NGINX。我们将创建此文件在下一节。

|

||||

include 语句说明 Ansible 在部署过程中从 **tasks** 目录下读取并执行 **install\_nginx.yml** 文件中的内容。该文件包括以下几步:下载,安装,并启动 NGINX。我们将在下一节创建此文件。

|

||||

|

||||

#### 为 NGINX 创建部署文件 ####

|

||||

|

||||

现在,先保存 **deploy.yml** 文件,并在编辑器中打开 **install_nginx.yml** 。

|

||||

现在,先保存 **deploy.yml** 文件,并在编辑器中打开 **install\_nginx.yml** 。

|

||||

|

||||

$ vim $HOME/ansible-nginx/tasks/install_nginx.yml

|

||||

|

||||

该文件包含的说明有 - 以 [YAML][12] 格式写入 - 使用 Ansible 安装和配置 NGINX。每个部分(步骤中的过程)起始于一个 name 声明(前面连字符)描述此步骤。下面的 name 字符串:是 Ansible 部署过程中写到标准输出的,可以根据你的意愿来改变。YAML 文件中的下一个部分是在部署过程中将使用的模块。在下面的配置中,[yum][13] 和 [service][14] 模块使将被用。yum 模块用于在 CentOS 上安装软件包。service 模块用于管理 UNIX 的服务。在这部分的最后一行或几行指定了几个模块的参数(在本例中,这些行以 name 和 state 开始)。

|

||||

该文件包含有指令(使用 [YAML][12] 格式写的), Ansible 会按照指令安装和配置我们的 NGINX 部署过程。每个节(过程中的步骤)起始于一个描述此步骤的 `name` 语句(前面有连字符)。 `name` 后的字符串是 Ansible 部署过程中输出到标准输出的,可以根据你的意愿来修改。YAML 文件中的节的下一行是在部署过程中将使用的模块。在下面的配置中,使用了 [`yum`][13] 和 [`service`][14] 模块。`yum` 模块用于在 CentOS 上安装软件包。`service` 模块用于管理 UNIX 的服务。在这个节的最后一行或几行指定了几个模块的参数(在本例中,这些行以 `name` 和 `state` 开始)。

|

||||

|

||||

在文件中输入以下行。对于 **deploy.yml**,在我们文件的第一行是关于文件名的注释。第一部分说明 Ansible 从 NGINX 仓库安装 **.rpm** 文件在CentOS 7 上。这说明软件包管理器直接从 NGINX 仓库安装最新最稳定的版本。需要在你的 CentOS 版本上修改路径。可使用包的列表可以在 [开源 NGINX 网站][15] 上找到。接下来的两节说明 Ansible 使用 yum 模块安装最新的 NGINX 版本,然后使用 service 模块启动 NGINX。

|

||||

在文件中输入以下行。就像 **deploy.yml**,在我们文件的第一行是用于参考的文件名的注释。第一个节告诉 Ansible 在CentOS 7 上从 NGINX 仓库安装该 **.rpm** 文件。这让软件包管理器直接从 NGINX 仓库安装最新最稳定的版本。根据你的 CentOS 版本修改路径。所有可用的包的列表可以在 [开源 NGINX 网站][15] 上找到。接下来的两节告诉 Ansible 使用 `yum` 模块安装最新的 NGINX 版本,然后使用 `service` 模块启动 NGINX。

|

||||

|

||||

**注意:** 在第一部分中,CentOS 包中的路径名是连着的两行。在一行上输入其完整路径。

|

||||

**注意:** 在第一个节中,CentOS 包中的路径名可能由于宽度显示为连着的两行。请在一行上输入其完整路径。

|

||||

|

||||

# ./ansible-nginx/tasks/install_nginx.yml

|

||||

|

||||

@ -100,12 +97,12 @@ include 语句说明 Ansible 在部署过程中从 **tasks** 目录下读取并

|

||||

|

||||

#### 创建 NGINX 服务器列表 ####

|

||||

|

||||

现在,我们有 Ansible 部署所有配置的文件,我们需要告诉 Ansible 部署哪个服务器。我们需要在 Ansible 中指定 **hosts** 文件。先备份现有的文件,并新建一个新文件来部署。

|

||||

现在,我们设置好了 Ansible 部署的所有配置文件,我们需要告诉 Ansible 部署哪个服务器。我们需要在 Ansible 中指定 **hosts** 文件。先备份现有的文件,并新建一个新文件来部署。

|

||||

|

||||

$ sudo mv /etc/ansible/hosts /etc/ansible/hosts.backup

|

||||

$ sudo vim /etc/ansible/hosts

|

||||

|

||||

在文件中输入以下行来创建一个名为 **nginx** 的组并列出安装 NGINX 的服务器。你可以指定服务器通过主机名,IP 地址,或者在一个区域,例如 **server[1-3].domain.com**。在这里,我指定一台服务器通过 IP 地址。

|

||||

在文件中输入(或编辑)以下行来创建一个名为 **nginx** 的组并列出安装 NGINX 的服务器。你可以通过主机名、IP 地址、或者在一个范围,例如 **server[1-3].domain.com** 来指定服务器。在这里,我通过 IP 地址指定一台服务器。

|

||||

|

||||

# /etc/ansible/hosts

|

||||

|

||||

@ -114,20 +111,20 @@ include 语句说明 Ansible 在部署过程中从 **tasks** 目录下读取并

|

||||

|

||||

#### 设置安全性 ####

|

||||

|

||||

在部署之前,我们需要确保 Ansible 已通过 SSH 授权能访问我们的目标服务器。

|

||||

接近完成了,但在部署之前,我们需要确保 Ansible 已被授权通过 SSH 访问我们的目标服务器。

|

||||

|

||||

首选并且最安全的方法是添加 Ansible 所要部署服务器的 RSA SSH 密钥到目标服务器的 **authorized_keys** 文件中,这给 Ansible 在目标服务器上的 SSH 权限不受限制。要了解更多关于此配置,请参阅 [安全的 OpenSSH][16] 在 wiki.centos.org。这样,你就可以自动部署而无需用户交互。

|

||||

首选并且最安全的方法是添加 Ansible 所要部署服务器的 RSA SSH 密钥到目标服务器的 **authorized\_keys** 文件中,这给予 Ansible 在目标服务器上的不受限制 SSH 权限。要了解更多关于此配置,请参阅 wiki.centos.org 上 [安全加固 OpenSSH][16]。这样,你就可以自动部署而无需用户交互。

|

||||

|

||||

另外,你也可以在部署过程中需要输入密码。我强烈建议你只在测试过程中使用这种方法,因为它是不安全的,没有办法判断目标主机的身份。如果你想这样做,将每个目标主机 **/etc/ssh/ssh_config** 文件中 StrictHostKeyChecking 的默认值 yes 改为 no。然后在 ansible-playbook 命令中添加 --ask-pass参数来表示 Ansible 会提示输入 SSH 密码。

|

||||

另外,你也可以在部署过程中要求输入密码。我强烈建议你只在测试过程中使用这种方法,因为它是不安全的,没有办法跟踪目标主机的身份(fingerprint)变化。如果你想这样做,将每个目标主机 **/etc/ssh/ssh\_config** 文件中 StrictHostKeyChecking 的默认值 yes 改为 no。然后在 ansible-playbook 命令中添加 --ask-pass 参数来让 Ansible 提示输入 SSH 密码。

|

||||

|

||||

在这里,我将举例说明如何编辑 **ssh_config** 文件来禁用在目标服务器上严格的主机密钥检查。我们手动 SSH 到我们将部署 NGINX 的服务器并将StrictHostKeyChecking 的值更改为 no。

|

||||

在这里,我将举例说明如何编辑 **ssh\_config** 文件来禁用在目标服务器上严格的主机密钥检查。我们手动连接 SSH 到我们将部署 NGINX 的服务器,并将 StrictHostKeyChecking 的值更改为 no。

|

||||

|

||||

$ ssh kjones@172.16.239.140

|

||||

kjones@172.16.239.140's password:***********

|

||||

|

||||

[kjones@nginx ]$ sudo vim /etc/ssh/ssh_config

|

||||

|

||||

当你更改后,保存 **ssh_config**,并通过 SSH 连接到你的 Ansible 服务器。保存后的设置应该如下图所示。

|

||||

当你更改后,保存 **ssh\_config**,并通过 SSH 连接到你的 Ansible 服务器。保存后的设置应该如下所示。

|

||||

|

||||

# /etc/ssh/ssh_config

|

||||

|

||||

@ -135,7 +132,7 @@ include 语句说明 Ansible 在部署过程中从 **tasks** 目录下读取并

|

||||

|

||||

#### 运行 Ansible 部署 NGINX ####

|

||||

|

||||

如果你一直照本教程的步骤来做,你可以运行下面的命令来使用 Ansible 部署NGINX。(同样,如果你设置了 RSA SSH 密钥认证,那么--ask-pass 参数是不需要的。)在 Ansible 服务器运行命令,并使用我们上面创建的配置文件。

|

||||

如果你一直照本教程的步骤来做,你可以运行下面的命令来使用 Ansible 部署 NGINX。(再次提示,如果你设置了 RSA SSH 密钥认证,那么 --ask-pass 参数是不需要的。)在 Ansible 服务器运行命令,并使用我们上面创建的配置文件。

|

||||

|

||||

$ sudo ansible-playbook --ask-pass $HOME/ansible-nginx/deploy.yml

|

||||

|

||||

@ -163,7 +160,7 @@ Ansible 提示输入 SSH 密码,输出如下。recap 中显示 failed=0 这条

|

||||

|

||||

如果你没有得到一个成功的 play recap,你可以尝试用 -vvvv 参数(带连接调试的详细信息)再次运行 ansible-playbook 命令来解决部署过程的问题。

|

||||

|

||||

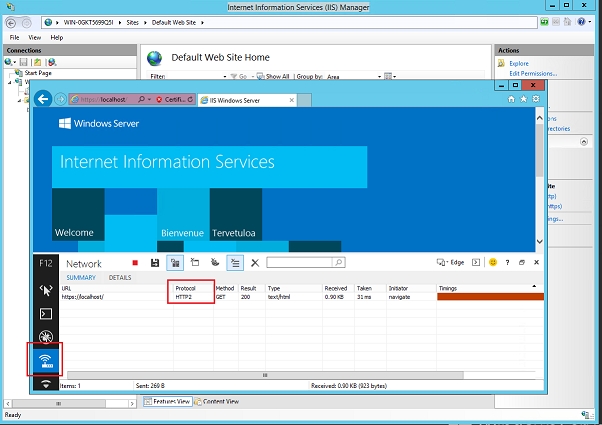

当部署成功(因为我们不是第一次部署)后,你可以验证 NGINX 在远程服务器上运行基本的 [cURL][17] 命令。在这里,它会返回 200 OK。Yes!我们使用Ansible 成功安装了 NGINX。

|

||||

当部署成功(假如我们是第一次部署)后,你可以在远程服务器上运行基本的 [cURL][17] 命令验证 NGINX 。在这里,它会返回 200 OK。Yes!我们使用 Ansible 成功安装了 NGINX。

|

||||

|

||||

$ curl -Is 172.16.239.140 | grep HTTP

|

||||

HTTP/1.1 200 OK

|

||||

@ -174,11 +171,11 @@ Ansible 提示输入 SSH 密码,输出如下。recap 中显示 failed=0 这条

|

||||

|

||||

#### 复制 NGINX Plus 上的证书和密钥到 Ansible 服务器 ####

|

||||

|

||||

使用 Ansible 安装和配置 NGINX Plus 时,首先我们需要将 [NGINX Plus Customer Portal][18] 的密钥和证书复制到部署 Ansible 服务器上的标准位置。

|

||||

使用 Ansible 安装和配置 NGINX Plus 时,首先我们需要将 [NGINX Plus Customer Portal][18] NGINX Plus 订阅的密钥和证书复制到 Ansible 部署服务器上的标准位置。

|

||||

|

||||

购买了 NGINX Plus 或正在试用的客户也可以访问 NGINX Plus Customer Portal。如果你有兴趣测试 NGINX Plus,你可以申请免费试用30天[点击这里][19]。在你注册后不久你将收到一个试用证书和密钥的链接。

|

||||

购买了 NGINX Plus 或正在试用的客户也可以访问 NGINX Plus Customer Portal。如果你有兴趣测试 NGINX Plus,你可以申请免费试用30天,[点击这里][19]。在你注册后不久你将收到一个试用证书和密钥的链接。

|

||||

|

||||

在 Mac 或 Linux 主机上,我在这里演示使用 [scp][20] 工具。在 Microsoft Windows 主机,可以使用 [WinSCP][21]。在本教程中,先下载文件到我的 Mac 笔记本电脑上,然后使用 scp 将其复制到 Ansible 服务器。密钥和证书的位置都在我的家目录下。

|

||||

在 Mac 或 Linux 主机上,我在这里使用 [scp][20] 工具演示。在 Microsoft Windows 主机,可以使用 [WinSCP][21]。在本教程中,先下载文件到我的 Mac 笔记本电脑上,然后使用 scp 将其复制到 Ansible 服务器。密钥和证书的位置都在我的家目录下。

|

||||

|

||||

$ cd /path/to/nginx-repo-files/

|

||||

$ scp nginx-repo.* user@destination-server:.

|

||||

@ -189,7 +186,7 @@ Ansible 提示输入 SSH 密码,输出如下。recap 中显示 failed=0 这条

|

||||

$ sudo mkdir -p /etc/ssl/nginx/

|

||||

$ sudo mv nginx-repo.* /etc/ssl/nginx/

|

||||

|

||||

验证你的 **/etc/ssl/nginx** 目录包含证书(**.crt**)和密钥(**.key**)文件。你可以使用 tree 命令检查。

|

||||

验证你的 **/etc/ssl/nginx** 目录包含了证书(**.crt**)和密钥(**.key**)文件。你可以使用 tree 命令检查。

|

||||

|

||||

$ tree /etc/ssl/nginx

|

||||

/etc/ssl/nginx

|

||||

@ -204,7 +201,7 @@ Ansible 提示输入 SSH 密码,输出如下。recap 中显示 failed=0 这条

|

||||

|

||||

#### 创建 Ansible 目录结构 ####

|

||||

|

||||

以下执行的步骤将和开源 NGINX 的非常相似在[创建安装 NGINX 的 Ansible Playbook 中(CentOS)][22]。首先,我们建一个工作目录为部署 NGINX Plus 使用。我喜欢将它创建为我主目录的子目录。

|

||||

以下执行的步骤和我们的“创建 Ansible Playbook 来安装 NGINX(CentOS)”小节中部署开源 NGINX 的非常相似。首先,我们建一个工作目录用于部署 NGINX Plus 使用。我喜欢将它创建为我主目录的子目录。

|

||||

|

||||

$ cd $HOME

|

||||

$ mkdir -p ansible-nginx-plus/tasks/

|

||||

@ -223,11 +220,11 @@ Ansible 提示输入 SSH 密码,输出如下。recap 中显示 failed=0 这条

|

||||

|

||||

#### 创建主部署文件 ####

|

||||

|

||||

接下来,我们使用 vim 为开源的 NGINX 创建 **deploy.yml** 文件。

|

||||

接下来,像开源的 NGINX 一样,我们使用 vim 创建 **deploy.yml** 文件。

|

||||

|

||||

$ vim ansible-nginx-plus/deploy.yml

|

||||

|

||||

和开源 NGINX 的部署唯一的区别是,我们将包含文件的名称修改为**install_nginx_plus.yml**。该文件告诉 Ansible 在 **nginx** 组中的所有服务器(**/etc/ansible/hosts** 中定义的)上部署 NGINX Plus ,然后在部署过程中从 **tasks** 目录读取并执行 **install_nginx_plus.yml** 的内容。

|

||||

和开源 NGINX 的部署唯一的区别是,我们将包含文件的名称修改为 **install\_nginx\_plus.yml**。该文件告诉 Ansible 在 **nginx** 组中的所有服务器(**/etc/ansible/hosts** 中定义的)上部署 NGINX Plus ,然后在部署过程中从 **tasks** 目录读取并执行 **install\_nginx\_plus.yml** 的内容。

|

||||

|

||||

# ./ansible-nginx-plus/deploy.yml

|

||||

|

||||

@ -235,22 +232,22 @@ Ansible 提示输入 SSH 密码,输出如下。recap 中显示 failed=0 这条

|

||||

tasks:

|

||||

- include: 'tasks/install_nginx_plus.yml'

|

||||

|

||||

如果你还没有这样做的话,你需要创建 hosts 文件,详细说明在上面的 [创建 NGINX 服务器的列表][23]。

|

||||

如果你之前没有安装过的话,你需要创建 hosts 文件,详细说明在上面的“创建 NGINX 服务器的列表”小节。

|

||||

|

||||

#### 为 NGINX Plus 创建部署文件 ####

|

||||

|

||||

在文本编辑器中打开 **install_nginx_plus.yml**。该文件在部署过程中使用 Ansible 来安装和配置 NGINX Plus。这些命令和模块仅针对 CentOS,有些是 NGINX Plus 独有的。

|

||||

在文本编辑器中打开 **install\_nginx\_plus.yml**。该文件包含了使用 Ansible 来安装和配置 NGINX Plus 部署过程中的指令。这些命令和模块仅针对 CentOS,有些是 NGINX Plus 独有的。

|

||||

|

||||

$ vim ansible-nginx-plus/tasks/install_nginx_plus.yml

|

||||

|

||||

第一部分使用 [文件][24] 模块,告诉 Ansible 使用指定的路径和状态参数为 NGINX Plus 创建特定的 SSL 目录,设置根目录的权限,将权限更改为0700。

|

||||

第一节使用 [`file`][24] 模块,告诉 Ansible 使用指定的`path`和`state`参数为 NGINX Plus 创建特定的 SSL 目录,设置属主为 root,将权限 `mode` 更改为0700。

|

||||

|

||||

# ./ansible-nginx-plus/tasks/install_nginx_plus.yml

|

||||

|

||||

- name: NGINX Plus | 创建 NGINX Plus ssl 证书目录

|

||||

file: path=/etc/ssl/nginx state=directory group=root mode=0700

|

||||

|

||||

接下来的两节使用 [copy][25] 模块从部署 Ansible 的服务器上将 NGINX Plus 的证书和密钥复制到 NGINX Plus 服务器上,再修改权根,将权限设置为0700。

|

||||

接下来的两节使用 [copy][25] 模块从 Ansible 部署服务器上将 NGINX Plus 的证书和密钥复制到 NGINX Plus 服务器上,再修改属主为 root,权限 `mode` 为0700。

|

||||

|

||||

- name: NGINX Plus | 复制 NGINX Plus repo 证书

|

||||

copy: src=/etc/ssl/nginx/nginx-repo.crt dest=/etc/ssl/nginx/nginx-repo.crt owner=root group=root mode=0700

|

||||

@ -258,17 +255,17 @@ Ansible 提示输入 SSH 密码,输出如下。recap 中显示 failed=0 这条

|

||||

- name: NGINX Plus | 复制 NGINX Plus 密钥

|

||||

copy: src=/etc/ssl/nginx/nginx-repo.key dest=/etc/ssl/nginx/nginx-repo.key owner=root group=root mode=0700

|

||||

|

||||

接下来,我们告诉 Ansible 使用 [get_url][26] 模块从 NGINX Plus 仓库下载 CA 证书在 url 参数指定的远程位置,通过 dest 参数把它放在指定的目录,并设置权限为 0700。

|

||||

接下来,我们告诉 Ansible 使用 [`get_url`][26] 模块在 url 参数指定的远程位置从 NGINX Plus 仓库下载 CA 证书,通过 `dest` 参数把它放在指定的目录 `dest` ,并设置权限 `mode` 为 0700。

|

||||

|

||||

- name: NGINX Plus | 下载 NGINX Plus CA 证书

|

||||

get_url: url=https://cs.nginx.com/static/files/CA.crt dest=/etc/ssl/nginx/CA.crt mode=0700

|

||||

|

||||

同样,我们告诉 Ansible 使用 get_url 模块下载 NGINX Plus repo 文件,并将其复制到 **/etc/yum.repos.d** 目录下在 NGINX Plus 服务器上。

|

||||

同样,我们告诉 Ansible 使用 `get_url` 模块下载 NGINX Plus repo 文件,并将其复制到 NGINX Plus 服务器上的 **/etc/yum.repos.d** 目录下。

|

||||

|

||||

- name: NGINX Plus | 下载 yum NGINX Plus 仓库

|

||||

get_url: url=https://cs.nginx.com/static/files/nginx-plus-7.repo dest=/etc/yum.repos.d/nginx-plus-7.repo mode=0700

|

||||

|

||||

最后两节的 name 告诉 Ansible 使用 yum 和 service 模块下载并启动 NGINX Plus。

|

||||

最后两节的 `name` 告诉 Ansible 使用 `yum` 和 `service` 模块下载并启动 NGINX Plus。

|

||||

|

||||

- name: NGINX Plus | 安装 NGINX Plus

|

||||

yum:

|

||||

@ -282,7 +279,7 @@ Ansible 提示输入 SSH 密码,输出如下。recap 中显示 failed=0 这条

|

||||

|

||||

#### 运行 Ansible 来部署 NGINX Plus ####

|

||||

|

||||

在保存 **install_nginx_plus.yml** 文件后,然后运行 ansible-playbook 命令来部署 NGINX Plus。同样在这里,我们使用 --ask-pass 参数使用 Ansible 提示输入 SSH 密码并把它传递给每个 NGINX Plus 服务器,指定路径在 **deploy.yml** 文件中。

|

||||

在保存 **install\_nginx\_plus.yml** 文件后,运行 ansible-playbook 命令来部署 NGINX Plus。同样在这里,我们使用 --ask-pass 参数使用 Ansible 提示输入 SSH 密码并把它传递给每个 NGINX Plus 服务器,并指定主配置文件路径 **deploy.yml** 文件。

|

||||

|

||||

$ sudo ansible-playbook --ask-pass $HOME/ansible-nginx-plus/deploy.yml

|

||||

|

||||

@ -315,18 +312,18 @@ Ansible 提示输入 SSH 密码,输出如下。recap 中显示 failed=0 这条

|

||||

PLAY RECAP ********************************************************************

|

||||

172.16.239.140 : ok=8 changed=7 unreachable=0 failed=0

|

||||

|

||||

playbook 的 recap 是成功的。现在,使用 curl 命令来验证 NGINX Plus 是否在运行。太好了,我们得到的是 200 OK!成功了!我们使用 Ansible 成功地安装了 NGINX Plus。

|

||||

playbook 的 recap 成功完成。现在,使用 curl 命令来验证 NGINX Plus 是否在运行。太好了,我们得到的是 200 OK!成功了!我们使用 Ansible 成功地安装了 NGINX Plus。

|

||||

|

||||

$ curl -Is http://172.16.239.140 | grep HTTP

|

||||

HTTP/1.1 200 OK

|

||||

|

||||

### 在 Ubuntu 上创建一个 Ansible Playbook 来安装 NGINX 和 NGINX Plus ###

|

||||

### 在 Ubuntu 上创建 Ansible Playbook 来安装 NGINX 和 NGINX Plus ###

|

||||

|

||||

此过程在 [Ubuntu 服务器][27] 上部署 NGINX 和 NGINX Plus 与 CentOS 很相似,我将一步一步的指导来完成整个部署文件,并指出和 CentOS 的细微差异。

|

||||

在 [Ubuntu 服务器][27] 上部署 NGINX 和 NGINX Plus 的过程与 CentOS 很相似,我将一步一步的指导来完成整个部署文件,并指出和 CentOS 的细微差异。

|

||||

|

||||

首先和 CentOS 一样,创建 Ansible 目录结构和主要的 Ansible 部署文件。也创建 **/etc/ansible/hosts** 文件来描述 [创建 NGINX 服务器的列表][28]。对于 NGINX Plus,你也需要复制证书和密钥在此步中 [复制 NGINX Plus 证书和密钥到 Ansible 服务器][29]。

|

||||

首先和 CentOS 一样,创建 Ansible 目录结构和 Ansible 主部署文件。也按“创建 NGINX 服务器的列表”小节的描述创建 **/etc/ansible/hosts** 文件。对于 NGINX Plus,你也需要安装“复制 NGINX Plus 证书和密钥到 Ansible 服务器”小节的描述复制证书和密钥。

|

||||

|

||||

下面是开源 NGINX 的 **install_nginx.yml** 部署文件。在第一部分,我们使用 [apt_key][30] 模块导入 Nginx 的签名密钥。接下来的两节使用[lineinfile][31] 模块来添加 URLs 到 **sources.list** 文件中。最后,我们使用 [apt][32] 模块来更新缓存并安装 NGINX(apt 取代了我们在 CentOS 中部署时的 yum 模块)。

|

||||

下面是开源 NGINX 的 **install\_nginx.yml** 部署文件。在第一节,我们使用 [`apt_key`][30] 模块导入 NGINX 的签名密钥。接下来的两节使用 [`lineinfile`][31] 模块来添加 Ubuntu 14.04 的软件包 URL 到 **sources.list** 文件中。最后,我们使用 [`apt`][32] 模块来更新缓存并安装 NGINX(`apt` 取代了我们在 CentOS 中部署时的 `yum` 模块)。

|

||||

|

||||

# ./ansible-nginx/tasks/install_nginx.yml

|

||||

|

||||

@ -352,7 +349,8 @@ playbook 的 recap 是成功的。现在,使用 curl 命令来验证 NGINX Plu

|

||||

service:

|

||||

name: nginx

|

||||

state: started

|

||||

下面是 NGINX Plus 的部署文件 **install_nginx.yml**。前四节设置了 NGINX Plus 密钥和证书。然后,我们用 apt_key 模块为开源的 NGINX 导入签名密钥,get_url 模块为 NGINX Plus 下载 apt 配置文件。[shell][33] 模块使用 printf 命令写下输出到 **nginx-plus.list** 文件中在**sources.list.d** 目录。最终的 name 模块是为开源 NGINX 的。

|

||||

|

||||

下面是 NGINX Plus 的部署文件 **install\_nginx.yml**。前四节设置了 NGINX Plus 密钥和证书。然后,我们像开源的 NGINX 一样用 `apt_key` 模块导入签名密钥,`get_url` 模块为 NGINX Plus 下载 `apt` 配置文件。[`shell`][33] 模块使用 `printf` 命令写下输出到 **sources.list.d** 目录中的 **nginx-plus.list** 文件。最终的 `name` 模块和开源 NGINX 一样。

|

||||

|

||||

# ./ansible-nginx-plus/tasks/install_nginx_plus.yml

|

||||

|

||||

@ -395,13 +393,12 @@ playbook 的 recap 是成功的。现在,使用 curl 命令来验证 NGINX Plu

|

||||

|

||||

$ sudo ansible-playbook --ask-pass $HOME/ansible-nginx-plus/deploy.yml

|

||||

|

||||

你应该得到一个成功的 play recap。如果你没有成功,你可以使用 verbose 参数,以帮助你解决在 [运行 Ansible 来部署 NGINX][34] 中出现的问题。

|

||||

你应该得到一个成功的 play recap。如果你没有成功,你可以使用冗余参数,以帮助你解决出现的问题。

|

||||

|

||||

### 小结 ###

|

||||

|

||||

我在这个教程中演示是什么是 Ansible,可以做些什么来帮助你自动部署 NGINX 或 NGINX Plus,这仅仅是个开始。还有许多有用的模块,用户账号管理,自定义配置模板等。如果你有兴趣了解更多关于这些,请访问 [Ansible 官方文档][35]。

|

||||

我在这个教程中演示是什么是 Ansible,可以做些什么来帮助你自动部署 NGINX 或 NGINX Plus,这仅仅是个开始。还有许多有用的模块,包括从用户账号管理到自定义配置模板等。如果你有兴趣了解关于这些的更多信息,请访问 [Ansible 官方文档][35]。

|

||||

|

||||

要了解更多关于 Ansible,来听我讲用 Ansible 部署 NGINX Plus 在[NGINX.conf 2015][36],9月22-24日在旧金山。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -409,7 +406,7 @@ via: https://www.nginx.com/blog/installing-nginx-nginx-plus-ansible/

|

||||

|

||||

作者:[Kevin Jones][a]

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,92 @@

|

||||

如何使用 GRUB 2 直接从硬盘运行 ISO 文件

|

||||

================================================================================

|

||||

|

||||

|

||||

大多数 Linux 发行版都会提供一个可以从 USB 启动的 live 环境,以便用户无需安装即可测试系统。我们可以用它来评测这个发行版或仅仅是当成一个一次性系统,并且很容易将这些文件复制到一个 U 盘上,在某些情况下,我们可能需要经常运行同一个或不同的 ISO 镜像。GRUB 2 可以配置成直接从启动菜单运行一个 live 环境,而不需要烧录这些 ISO 到硬盘或 USB 设备。

|

||||

|

||||

### 获取和检查可启动的 ISO 镜像 ###

|

||||

|

||||

为了获取 ISO 镜像,我们通常应该访问所需的发行版的网站下载与我们架构兼容的镜像文件。如果这个镜像可以从 U 盘启动,那它也应该可以从 GRUB 菜单启动。

|

||||

|

||||

当镜像下载完后,我们应该通过 MD5 校验检查它的完整性。这会输出一大串数字与字母合成的序列。

|

||||

|

||||

|

||||

|

||||

将这个序列与下载页提供的 MD5 校验码进行比较,两者应该完全相同。

|

||||

|

||||

### 配置 GRUB 2 ###

|

||||

|

||||

ISO 镜像文件包含了整个系统。我们要做的仅仅是告诉 GRUB 2 哪里可以找到 kernel 和 initramdisk 或 initram 文件系统(这取决于我们所使用的发行版)。

|

||||

|

||||

在下面的例子中,一个 Kubuntu 15.04 live 环境将被配置到 Ubuntu 14.04 机器的 Grub 启动菜单项。这应该能在大多数新的以 Ubuntu 为基础的系统上运行。如果你是其它系统并且想实现一些其它的东西,你可以从[这些文件][1]了解更多细节,但这会要求你拥有一点 GRUB 使用经验。

|

||||

|

||||

这个例子的文件 `kubuntu-15.04-desktop-amd64.iso` 放在位于 `/dev/sda1` 的 `/home/maketecheasier/TempISOs/` 上。

|

||||

|

||||

为了使 GRUB 2 能正确找到它,我们应该编辑

|

||||

|

||||

/etc/grub.d40-custom

|

||||

|

||||

|

||||

|

||||

menuentry "Kubuntu 15.04 ISO" {

|

||||

set isofile="/home/maketecheasier/TempISOs/kubuntu-15.04-desktop-amd64.iso"

|

||||

loopback loop (hd0,1)$isofile

|

||||

echo "Starting $isofile..."

|

||||

linux (loop)/casper/vmlinuz.efi boot=casper iso-scan/filename=${isofile} quiet splash

|

||||

initrd (loop)/casper/initrd.lz

|

||||

}

|

||||

|

||||

|

||||

|

||||

### 分析上述代码 ###

|

||||

|

||||

首先设置了一个变量名 `$menuentry` ,这是 ISO 文件的所在位置 。如果你想换一个 ISO ,你应该修改 `isofile="/path/to/file/name-of-iso-file-.iso"`.

|

||||

|

||||

下一行是指定回环设备,且必须给出正确的分区号码。

|

||||

|

||||

loopback loop (hd0,1)$isofile

|

||||

|

||||

注意 hd0,1 这里非常重要,它的意思是第一硬盘,第一分区 (`/dev/sda1`)。

|

||||

|

||||

GRUB 的命名在这里稍微有点困惑,对于硬盘来说,它从 “0” 开始计数,第一块硬盘为 #0 ,第二块为 #1 ,第三块为 #2 ,依此类推。但是对于分区来说,它从 “1” 开始计数,第一个分区为 #1 ,第二个分区为 #2 ,依此类推。也许这里有一个很好的原因,但肯定不是明智的(明显用户体验很糟糕)..

|

||||

|

||||

在 Linux 中第一块硬盘,第一个分区是 `/dev/sda1` ,但在 GRUB2 中则是 `hd0,1` 。第二块硬盘,第三个分区则是 `hd1,3`, 依此类推.

|

||||

|

||||

下一个重要的行是:

|

||||

|

||||

linux (loop)/casper/vmlinuz.efi boot=casper iso-scan/filename=${isofile} quiet splash

|

||||

|

||||

这会载入内核镜像,在新的 Ubuntu Live CD 中,内核被存放在 `/casper` 目录,并且命名为 `vmlinuz.efi` 。如果你使用的是其它系统,可能会没有 `.efi` 扩展名或内核被存放在其它地方 (可以使用归档管理器打开 ISO 文件在 `/casper` 中查找确认)。最后一个选项, `quiet splash` ,是一个常规的 GRUB 选项,改不改无所谓。

|

||||

|

||||

最后

|

||||

|

||||

initrd (loop)/casper/initrd.lz

|

||||

|

||||

这会载入 `initrd` ,它负责载入 RAMDisk 到内存用于启动。

|

||||

|

||||

### 启动 live 系统 ###

|

||||

|

||||

做完上面所有的步骤后,需要更新 GRUB2:

|

||||

|

||||

sudo update-grub

|

||||

|

||||

|

||||

|

||||

当重启系统后,应该可以看见一个新的、并且允许我们启动刚刚配置的 ISO 镜像的 GRUB 条目:

|

||||

|

||||

|

||||

|

||||

选择这个新条目就允许我们像从 DVD 或 U 盘中启动一个 live 环境一样。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.maketecheasier.com/run-iso-files-hdd-grub2/

|

||||

|

||||

作者:[Attila Orosz][a]

|

||||

译者:[Locez](https://github.com/locez)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.maketecheasier.com/author/attilaorosz/

|

||||

[1]:http://git.marmotte.net/git/glim/tree/grub2

|

||||

@ -1,17 +1,17 @@

|

||||

Linux 中管理文件类型和系统时间的 5 个有用命令 - 第三部分

|

||||

5 个在 Linux 中管理文件类型和系统时间的有用命令

|

||||

================================================================================

|

||||

对于想学习 Linux 的初学者来说要适应使用命令行或者终端可能非常困难。由于终端比图形用户界面程序更能帮助用户控制 Linux 系统,我们必须习惯在终端中运行命令。因此为了有效记忆 Linux 不同的命令,你应该每天使用终端并明白怎样将命令和不同选项以及参数一同使用。

|

||||

|

||||

|

||||

|

||||

在 Linux 中管理文件类型并设置时间 - 第三部分

|

||||

*在 Linux 中管理文件类型并设置时间*

|

||||

|

||||

请先查看我们 [Linux 小技巧][1]系列之前的文章。

|

||||

请先查看我们 Linux 小技巧系列之前的文章:

|

||||

|

||||

- [Linux 中 5 个有趣的命令行提示和技巧 - 第一部分][2]

|

||||

- [给新手的有用命令行技巧 - 第二部分][3]

|

||||

- [5 个有趣的 Linux 命令行技巧][2]

|

||||

- [给新手的 10 个有用 Linux 命令行技巧][3]

|

||||

|

||||

在这篇文章中,我们打算看看终端中 10 个和文件以及时间相关的提示和技巧。

|

||||

在这篇文章中,我们打算看看终端中 5 个和文件以及时间相关的提示和技巧。

|

||||

|

||||

### Linux 中的文件类型 ###

|

||||

|

||||

@ -22,10 +22,10 @@ Linux 系统中文件有不同的类型:

|

||||

- 普通文件:可能包含命令、文档、音频文件、视频、图像,归档文件等。

|

||||

- 设备文件:系统用于访问你硬件组件。

|

||||

|

||||

这里有两种表示存储设备的设备文件块文件,例如硬盘,它们以快读取数据,字符文件,以逐个字符读取数据。

|

||||

这里有两种表示存储设备的设备文件:块文件,例如硬盘,它们以块读取数据;字符文件,以逐个字符读取数据。

|

||||

|

||||

- 硬链接和软链接:用于在 Linux 文件系统的任意地方访问文件。

|

||||

- 命名管道和套接字:允许不同的进程彼此之间交互。

|

||||

- 命名管道和套接字:允许不同的进程之间进行交互。

|

||||

|

||||

#### 1. 用 ‘file’ 命令确定文件类型 ####

|

||||

|

||||

@ -219,7 +219,7 @@ which 命令用于定位文件系统中的命令。

|

||||

20 21 22 23 24 25 26

|

||||

27 28 29 30

|

||||

|

||||

使用 hwclock 命令查看硬件始终时间。

|

||||

使用 hwclock 命令查看硬件时钟时间。

|

||||

|

||||

tecmint@tecmint ~/Linux-Tricks $ sudo hwclock

|

||||

Wednesday 09 September 2015 06:02:58 PM IST -0.200081 seconds

|

||||

@ -231,7 +231,7 @@ which 命令用于定位文件系统中的命令。

|

||||

tecmint@tecmint ~/Linux-Tricks $ sudo hwclock

|

||||

Wednesday 09 September 2015 12:33:11 PM IST -0.891163 seconds

|

||||

|

||||

系统时间是由硬件始终时间在启动时设置的,系统关闭时,硬件时间被重置为系统时间。

|

||||

系统时间是由硬件时钟时间在启动时设置的,系统关闭时,硬件时间被重置为系统时间。

|

||||

|

||||

因此你查看系统时间和硬件时间时,它们是一样的,除非你更改了系统时间。当你的 CMOS 电量不足时,硬件时间可能不正确。

|

||||

|

||||

@ -256,7 +256,7 @@ which 命令用于定位文件系统中的命令。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

对于初学者来说理解 Linux 中的文件类型是一个好的尝试,同时时间管理也非常重要,尤其是在需要可靠有效地管理服务的服务器上。希望这篇指南能对你有所帮助。如果你有任何反馈,别忘了给我们写评论。和 Tecmint 保持联系。

|

||||

对于初学者来说理解 Linux 中的文件类型是一个好的尝试,同时时间管理也非常重要,尤其是在需要可靠有效地管理服务的服务器上。希望这篇指南能对你有所帮助。如果你有任何反馈,别忘了给我们写评论。和我们保持联系。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -264,16 +264,16 @@ via: http://www.tecmint.com/manage-file-types-and-set-system-time-in-linux/

|

||||

|

||||

作者:[Aaron Kili][a]

|

||||

译者:[ictlyh](http://www.mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/aaronkili/

|

||||

[1]:http://www.tecmint.com/tag/linux-tricks/

|

||||

[2]:http://www.tecmint.com/free-online-linux-learning-guide-for-beginners/

|

||||

[3]:http://www.tecmint.com/10-useful-linux-command-line-tricks-for-newbies/

|

||||

[2]:https://linux.cn/article-5485-1.html

|

||||

[3]:https://linux.cn/article-6314-1.html

|

||||

[4]:http://www.tecmint.com/linux-dir-command-usage-with-examples/

|

||||

[5]:http://www.tecmint.com/12-practical-examples-of-linux-grep-command/

|

||||

[5]:https://linux.cn/article-2250-1.html

|

||||

[6]:http://www.tecmint.com/wc-command-examples/

|

||||

[7]:http://www.tecmint.com/setup-samba-file-sharing-for-linux-windows-clients/

|

||||

[8]:http://www.tecmint.com/35-practical-examples-of-linux-find-command/

|

||||

@ -0,0 +1,102 @@

|

||||

在 Ubuntu 14.04/15.04 上配置 Node JS v4.0.0

|

||||

================================================================================

|

||||

大家好,Node.JS 4.0 发布了,这个流行的服务器端 JS 平台合并了 Node.js 和 io.js 的代码,4.0 版就是这两个项目结合的产物——现在合并为一个代码库。这次最主要的变化是 Node.js 封装了4.5 版本的 Google V8 JS 引擎,与当前的 Chrome 所带的一致。所以,紧跟 V8 的发布可以让 Node.js 运行的更快、更安全,同时更好的利用 ES6 的很多语言特性。

|

||||

|

||||

|

||||

|

||||

Node.js 4.0 发布的主要目标是为 io.js 用户提供一个简单的升级途径,所以这次并没有太多重要的 API 变更。下面的内容让我们来看看如何轻松的在 ubuntu server 上安装、配置 Node.js。

|

||||

|

||||

### 基础系统安装 ###

|

||||

|

||||

Node 在 Linux,Macintosh,Solaris 这几个系统上都可以完美的运行,linux 的发行版本当中使用 Ubuntu 相当适合。这也是我们为什么要尝试在 ubuntu 15.04 上安装 Node.js,当然了在 14.04 上也可以使用相同的步骤安装。

|

||||

|

||||

#### 1) 系统资源 ####

|

||||

|

||||

Node.js 所需的基本的系统资源取决于你的架构需要。本教程我们会在一台 1GB 内存、 1GHz 处理器和 10GB 磁盘空间的服务器上进行,最小安装即可,不需要安装 Web 服务器或数据库服务器。

|

||||

|

||||

#### 2) 系统更新 ####

|

||||

|

||||

在我们安装 Node.js 之前,推荐你将系统更新到最新的补丁和升级包,所以请登录到系统中使用超级用户运行如下命令:

|

||||

|

||||

# apt-get update

|

||||

|

||||

#### 3) 安装依赖 ####

|

||||

|

||||

Node.js 仅需要你的服务器上有一些基本系统和软件功能,比如 'make'、'gcc'和'wget' 之类的。如果你还没有安装它们,运行如下命令安装:

|

||||

|

||||

# apt-get install python gcc make g++ wget

|

||||

|

||||

### 下载最新版的Node JS v4.0.0 ###

|

||||

|

||||

访问链接 [Node JS Download Page][1] 下载源代码.

|

||||

|

||||

|

||||

|

||||

复制其中的最新的源代码的链接,然后用`wget` 下载,命令如下:

|

||||

|

||||

# wget https://nodejs.org/download/rc/v4.0.0-rc.1/node-v4.0.0-rc.1.tar.gz

|

||||

|

||||

下载完成后使用命令`tar` 解压缩:

|

||||

|

||||

# tar -zxvf node-v4.0.0-rc.1.tar.gz

|

||||

|

||||

|

||||

|

||||

### 安装 Node JS v4.0.0 ###

|

||||

|

||||

现在可以开始使用下载好的源代码编译 Node.js。在开始编译前,你需要在 ubuntu server 上切换到源代码解压缩后的目录,运行 configure 脚本来配置源代码。

|

||||

|

||||

root@ubuntu-15:~/node-v4.0.0-rc.1# ./configure

|

||||

|

||||

|

||||

|

||||

现在运行命令 'make install' 编译安装 Node.js:

|

||||

|

||||

root@ubuntu-15:~/node-v4.0.0-rc.1# make install

|

||||

|

||||

make 命令会花费几分钟完成编译,安静的等待一会。

|

||||

|

||||

### 验证 Node.js 安装 ###

|

||||

|

||||

一旦编译任务完成,我们就可以开始验证安装工作是否 OK。我们运行下列命令来确认 Node.js 的版本。

|

||||

|

||||

root@ubuntu-15:~# node -v

|

||||

v4.0.0-pre

|

||||

|

||||

在命令行下不带参数的运行`node` 就会进入 REPL(Read-Eval-Print-Loop,读-执行-输出-循环)模式,它有一个简化版的emacs 行编辑器,通过它你可以交互式的运行JS和查看运行结果。

|

||||

|

||||

|

||||

|

||||

### 编写测试程序 ###

|

||||

|

||||

我们也可以写一个很简单的终端程序来测试安装是否成功,并且工作正常。要做这个,我们将会创建一个“test.js” 文件,包含以下代码,操作如下:

|

||||

|

||||

root@ubuntu-15:~# vim test.js

|

||||

var util = require("util");

|

||||

console.log("Hello! This is a Node Test Program");

|

||||

:wq!

|

||||

|

||||

现在为了运行上面的程序,在命令行运行下面的命令。

|

||||

|

||||

root@ubuntu-15:~# node test.js

|

||||

|

||||

|

||||

|

||||

在一个成功安装了 Node JS 的环境下运行上面的程序就会在屏幕上得到上图所示的输出,这个程序加载类 “util” 到变量 “util” 中,接着用对象 “util” 运行终端任务,console.log 这个命令作用类似 C++ 里的cout

|

||||

|

||||

### 结论 ###

|

||||

|

||||

就是这些了。如果你刚刚开始使用 Node.js 开发应用程序,希望本文能够通过在 ubuntu 上安装、运行 Node.js 让你了解一下Node.js 的大概。最后,我们可以认为我们可以期待 Node JS v4.0.0 能够取得显著性能提升。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/ubuntu-how-to/setup-node-js-4-0-ubuntu-14-04-15-04/

|

||||

|

||||

作者:[Kashif Siddique][a]

|

||||

译者:[osk874](https://github.com/osk874)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linoxide.com/author/kashifs/

|

||||

[1]:https://nodejs.org/download/rc/v4.0.0-rc.1/

|

||||

@ -0,0 +1,72 @@

|

||||

Linux 有问必答:如何在 Linux 命令行下浏览天气预报

|

||||

================================================================================

|

||||

> **Q**: 我经常在 Linux 桌面查看天气预报。然而,是否有一种在终端环境下,不通过桌面小插件或者浏览器查询天气预报的方法?

|

||||

|

||||

对于 Linux 桌面用户来说,有很多办法获取天气预报,比如使用专门的天气应用、桌面小插件,或者面板小程序。但是如果你的工作环境是基于终端的,这里也有一些在命令行下获取天气的手段。

|

||||

|

||||

其中有一个就是 [wego][1],**一个终端下的小巧程序**。使用基于 ncurses 的接口,这个命令行程序允许你查看当前的天气情况和之后的预报。它也会通过一个天气预报的 API 收集接下来 5 天的天气预报。

|

||||

|

||||

### 在 Linux 下安装 wego ###

|

||||

|

||||

安装 wego 相当简单。wego 是用 Go 编写的,引起第一个步骤就是安装 [Go 语言][2]。然后再安装 wego。

|

||||

|

||||

$ go get github.com/schachmat/wego

|

||||

|

||||

wego 会被安装到 $GOPATH/bin,所以要将 $GOPATH/bin 添加到 $PATH 环境变量。

|

||||

|

||||

$ echo 'export PATH="$PATH:$GOPATH/bin"' >> ~/.bashrc

|

||||

$ source ~/.bashrc

|

||||

|

||||

现在就可与直接从命令行启动 wego 了。

|

||||

|

||||

$ wego

|

||||

|

||||

第一次运行 weg 会生成一个配置文件(`~/.wegorc`),你需要指定一个天气 API key。

|

||||

你可以从 [worldweatheronline.com][3] 获取一个免费的 API key。免费注册和使用。你只需要提供一个有效的邮箱地址。

|

||||

|

||||

|

||||

|

||||

你的 .wegorc 配置文件看起来会这样:

|

||||

|

||||

|

||||

|

||||

除了 API key,你还可以把你想要查询天气的地方、使用的城市/国家名称、语言配置在 `~/.wegorc` 中。

|

||||

注意,这个天气 API 的使用有限制:每秒最多 5 次查询,每天最多 250 次查询。

|

||||

当你重新执行 wego 命令,你将会看到最新的天气预报(当然是你的指定地方),如下显示。

|

||||

|

||||

|

||||

|

||||

显示出来的天气信息包括:(1)温度,(2)风速和风向,(3)可视距离,(4)降水量和降水概率

|

||||

默认情况下会显示3 天的天气预报。如果要进行修改,可以通过参数改变天气范围(最多5天),比如要查看 5 天的天气预报:

|

||||

|

||||

$ wego 5

|

||||

|

||||

如果你想检查另一个地方的天气,只需要提供城市名即可:

|

||||

|

||||

$ wego Seattle

|

||||

|

||||

### 问题解决 ###

|

||||

|

||||

1. 可能会遇到下面的错误:

|

||||

|

||||

user: Current not implemented on linux/amd64

|

||||

|

||||

当你在一个不支持原生 Go 编译器的环境下运行 wego 时就会出现这个错误。在这种情况下你只需要使用 gccgo ——一个 Go 的编译器前端来编译程序即可。这一步可以通过下面的命令完成。

|

||||

|

||||

$ sudo yum install gcc-go

|

||||

$ go get -compiler=gccgo github.com/schachmat/wego

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/weather-forecasts-command-line-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[oska874](https://github.com/oska874)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://ask.xmodulo.com/author/nanni

|

||||

[1]:https://github.com/schachmat/wego

|

||||

[2]:http://ask.xmodulo.com/install-go-language-linux.html

|

||||

[3]:https://developer.worldweatheronline.com/auth/register

|

||||

@ -1,27 +1,28 @@

|

||||

在 Ubuntu 和 Linux Mint 上安装 Terminator 0.98

|

||||

================================================================================

|

||||

[Terminator][1],在一个窗口中有多个终端。该项目的目标之一是为管理终端提供一个有用的工具。它的灵感来自于类似 gnome-multi-term,quankonsole 等程序,这些程序关注于在窗格中管理终端。 Terminator 0.98 带来了更完美的标签功能,更好的布局保存/恢复,改进了偏好用户界面和多出 bug 修复。

|

||||

[Terminator][1],它可以在一个窗口内打开多个终端。该项目的目标之一是为摆放终端提供一个有用的工具。它的灵感来自于类似 gnome-multi-term,quankonsole 等程序,这些程序关注于按网格摆放终端。 Terminator 0.98 带来了更完美的标签功能,更好的布局保存/恢复,改进了偏好用户界面和多处 bug 修复。

|

||||

|

||||

|

||||

|

||||

###TERMINATOR 0.98 的更改和新特性

|

||||

|

||||

- 添加了一个布局启动器,允许在不用布局之间简单切换(用 Alt + L 打开一个新的布局切换器);

|

||||

- 添加了一个新的手册(使用 F1 打开);

|

||||

- 保存的时候,布局现在会记住:

|

||||

- * 最大化和全屏状态

|

||||

- * 窗口标题

|

||||

- * 激活的标签

|

||||

- * 激活的终端

|

||||

- * 每个终端的工作目录

|

||||

- 添加选项用于启用/停用非同质标签和滚动箭头;

|

||||

- 最大化和全屏状态

|

||||

- 窗口标题

|

||||

- 激活的标签

|

||||

- 激活的终端

|

||||

- 每个终端的工作目录

|

||||

- 添加选项用于启用/停用非同类(non-homogenous)标签和滚动箭头;

|

||||

- 添加快捷键用于按行/半页/一页向上/下滚动;

|

||||

- 添加使用 Ctrl+鼠标滚轮放大/缩小,Shift+鼠标滚轮向上/下滚动页面;

|

||||

- 为下一个/上一个 profile 添加快捷键

|

||||

- 添加使用 Ctrl+鼠标滚轮来放大/缩小,Shift+鼠标滚轮向上/下滚动页面;

|

||||

- 为下一个/上一个配置文件(profile)添加快捷键

|

||||

- 改进自定义命令菜单的一致性

|

||||

- 新增快捷方式/代码来切换所有/标签分组;

|

||||

- 改进监视插件

|

||||

- 增加搜索栏切换;

|

||||

- 清理和重新组织窗口偏好,包括一个完整的全局便签更新

|

||||

- 清理和重新组织偏好(preferences)窗口,包括一个完整的全局便签更新

|

||||

- 添加选项用于设置 ActivityWatcher 插件静默时间

|

||||

- 其它一些改进和 bug 修复

|

||||

- [点击此处查看完整更新日志][2]

|

||||

@ -37,10 +38,6 @@ Terminator 0.98 有可用的 PPA,首先我们需要在 Ubuntu/Linux Mint 上

|

||||

如果你想要移除 Terminator,只需要在终端中运行下面的命令(可选)

|

||||

|

||||

$ sudo apt-get remove terminator

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -48,7 +45,7 @@ via: http://www.ewikitech.com/articles/linux/terminator-install-ubuntu-linux-min

|

||||

|

||||

作者:[admin][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,26 +1,26 @@

|

||||

如何在Ubuntu中添加和删除书签[新手技巧]

|

||||

[新手技巧] 如何在Ubuntu中添加和删除书签

|

||||

================================================================================

|

||||

|

||||

|

||||

这是一篇对完全是新手的一篇技巧,我将向你展示如何在Ubuntu文件管理器中添加书签。

|

||||

|

||||

现在如果你想知道为什么要这么做,答案很简单。它可以让你可以快速地在左边栏中访问。比如。我[在Ubuntu中安装了Copy][1]。现在它创建了/Home/Copy。先进入Home目录再进入Copy目录并不是一件大事,但是我想要更快地访问它。因此我添加了一个书签这样我就可以直接从侧边栏访问了。

|

||||

现在如果你想知道为什么要这么做,答案很简单。它可以让你可以快速地在左边栏中访问。比如,我[在Ubuntu中安装了Copy 云服务][1]。它创建在/Home/Copy。先进入Home目录再进入Copy目录并不是很麻烦,但是我想要更快地访问它。因此我添加了一个书签这样我就可以直接从侧边栏访问了。

|

||||

|

||||

### 在Ubuntu中添加书签 ###

|

||||

|

||||

打开Files。进入你想要保存快速访问的目录。你需要在标记书签的目录里面。

|

||||

|

||||

现在,你有两种方法。

|

||||

现在,你有两种方法:

|

||||

|

||||

#### 方法1: ####

|

||||

|

||||

当你在Files中时(Ubuntu中的文件管理器),查看顶部菜单。你会看到书签按钮。点击它你会看到将当前路径保存为书签的选项。

|

||||

当你在Files(Ubuntu中的文件管理器)中时,查看顶部菜单。你会看到书签按钮。点击它你会看到将当前路径保存为书签的选项。

|

||||

|

||||

|

||||

|

||||

#### 方法 2: ####

|

||||

|

||||

你可以直接按下Ctrl+D就可以将当前位置保存位书签。

|

||||

你可以直接按下Ctrl+D就可以将当前位置保存为书签。

|

||||

|

||||

如你所见,这里左边栏就有一个新添加的Copy目录:

|

||||

|

||||

@ -32,7 +32,7 @@

|

||||

|

||||

|

||||

|

||||

这就是在Ubuntu中管理书签需要做的。我知道这对于大多数用户而言很贱,但是这也许多Ubuntu的新手而言或许还有用。

|

||||

这就是在Ubuntu中管理书签需要做的。我知道这对于大多数用户而言很简单,但是这也许多Ubuntu的新手而言或许还有用。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -40,7 +40,7 @@ via: http://itsfoss.com/add-remove-bookmarks-ubuntu/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,28 +1,29 @@

|

||||

在 Ubuntu 15.04 上安装 Justniffer

|

||||

================================================================================

|

||||

### 简介 ###

|

||||

|

||||

|

||||

[Justniffer][1] 是一个可用于替换 Snort 的网络协议分析器。它非常流行,可交互式地跟踪/探测一个网络连接。它能从实时环境中抓取流量,支持 “lipcap” 和 “tcpdump” 文件格式。它可以帮助用户分析一个用 wireshark 难以抓包的复杂网络。尤其是它可以有效的帮助分析应用层流量,能提取类似图像、脚本、HTML 等 http 内容。Justniffer 有助于理解不同组件之间是如何通信的。

|

||||

[Justniffer][1] 是一个可用于替代 Snort 的网络协议分析器。它非常流行,可交互式地跟踪/探测一个网络连接。它能从实时环境中抓取流量,支持 “lipcap” 和 “tcpdump” 文件格式。它可以帮助用户分析一个用 wireshark 难以抓包的复杂网络。尤其是它可以有效的帮助你分析应用层流量,能提取类似图像、脚本、HTML 等 http 内容。Justniffer 有助于理解不同组件之间是如何通信的。

|

||||

|

||||

### 功能 ###

|

||||

|

||||

Justniffer 收集一个复杂网络的所有流量而不影响系统性能,这是 Justniffer 的一个优势,它还可以保存日志用于之后的分析,Justniffer 其它一些重要功能包括:

|

||||

Justniffer 可以收集一个复杂网络的所有流量而不影响系统性能,这是 Justniffer 的一个优势,它还可以保存日志用于之后的分析,Justniffer 其它一些重要功能包括:

|

||||

|

||||

#### 1. 可靠的 TCP 流重建 ####

|

||||

1. 可靠的 TCP 流重建

|

||||

|

||||

它可以使用主机 Linux 内核的一部分用于记录并重现 TCP 片段和 IP 片段。

|

||||

它可以使用主机 Linux 内核的一部分用于记录并重现 TCP 片段和 IP 片段。

|

||||

|

||||

#### 2. 日志 ####

|

||||

2. 日志

|

||||

|

||||

保存日志用于之后的分析,并能自定义保存内容和时间。

|

||||

保存日志用于之后的分析,并能自定义保存内容和时间。

|

||||

|

||||

#### 3. 可扩展 ####

|

||||

3. 可扩展

|

||||

|

||||

可以通过外部 python、 perl 和 bash 脚本扩展来从分析报告中获取一些额外的结果。

|

||||

可以通过外部的 python、 perl 和 bash 脚本扩展来从分析报告中获取一些额外的结果。

|

||||

|

||||

#### 4. 性能管理 ####

|

||||

4. 性能管理

|

||||

|

||||

基于连接时间、关闭时间、响应时间或请求时间等提取信息。

|

||||

基于连接时间、关闭时间、响应时间或请求时间等提取信息。

|

||||

|

||||

### 安装 ###

|

||||

|

||||

@ -44,41 +45,41 @@ make 的时候失败了,然后我运行下面的命令并尝试重新安装服

|

||||

|

||||

$ sudo apt-get -f install

|

||||

|

||||

### 事例 ###

|

||||

### 示例 ###

|

||||

|

||||

首先用 -v 选项验证安装的 Justniffer 版本,你需要用超级用户权限来使用这个工具。

|

||||

|

||||

$ sudo justniffer -V

|

||||

|

||||

事例输出:

|

||||

示例输出:

|

||||

|

||||

|

||||

|

||||

**1. 为 eth1 接口导出 apache 中的流量到终端**

|

||||

**1、 以类似 apache 的格式导出 eth1 接口流量,显示到终端**

|

||||

|

||||

$ sudo justniffer -i eth1

|

||||

|

||||

事例输出:

|

||||

示例输出:

|

||||

|

||||

|

||||

|

||||

**2. 可以永恒下面的选项跟踪正在运行的 tcp 流**

|

||||

**2、 可以用下面的选项跟踪正在运行的 tcp 流**

|

||||

|

||||

$ sudo justniffer -i eth1 -r

|

||||

|

||||

事例输出:

|

||||

示例输出:

|

||||

|

||||

|

||||

|

||||

**3. 获取 web 服务器的响应时间**

|

||||

**3、 获取 web 服务器的响应时长**

|

||||

|

||||

$ sudo justniffer -i eth1 -a " %response.time"

|

||||

|

||||

事例输出:

|

||||

示例输出:

|

||||

|

||||

|

||||

|

||||

**4. 使用 Justniffer 读取一个 tcpdump 抓取的文件**

|

||||

**4、 使用 Justniffer 读取一个 tcpdump 抓取的文件**

|

||||

|

||||

首先,用 tcpdump 抓取流量。

|

||||

|

||||

@ -88,33 +89,33 @@ make 的时候失败了,然后我运行下面的命令并尝试重新安装服

|

||||

|

||||

$ justniffer -f file.cap

|

||||

|

||||

事例输出:

|

||||

示例输出:

|

||||

|

||||

|

||||

|

||||

**5. 只抓取 http 数据**

|

||||

**5、 只抓取 http 数据**

|

||||

|

||||

$ sudo justniffer -i eth1 -r -p "port 80 or port 8080"

|

||||

|

||||

事例输出:

|

||||

示例输出:

|

||||

|

||||

|

||||

|

||||

**6. 从一个指定主机获取 http 数据**

|

||||

**6、 获取一个指定主机 http 数据**

|

||||

|

||||

$ justniffer -i eth1 -r -p "host 192.168.1.250 and tcp port 80"

|

||||

|

||||

事例输出:

|

||||

示例输出:

|

||||

|

||||

|

||||

|

||||

**7. 以更精确的格式抓取数据**

|

||||

**7、 以更精确的格式抓取数据**

|

||||

|

||||

当你输入 **justniffer -h** 的时候你可以看到很多用于以更精确的方式获取数据的格式关键字

|

||||

|

||||

$ justniffer -h

|

||||

|

||||

事例输出:

|

||||

示例输出:

|

||||

|

||||

|

||||

|

||||

@ -122,15 +123,15 @@ make 的时候失败了,然后我运行下面的命令并尝试重新安装服

|

||||

|

||||

$ justniffer -i eth1 -l "%request.timestamp %request.header.host %request.url %response.time"

|

||||

|

||||

事例输出:

|

||||

示例输出:

|

||||

|

||||

|

||||

|

||||

其中还有很多你可以探索的选项

|

||||

其中还有很多你可以探索的选项。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

Justniffer 是用于网络测试一个很好的工具。在我看来对于那些用 Snort 来进行网络探测的用户来说,Justniffer 是一个更简单的工具。它提供了很多 **格式关键字** 用于按照你的需要精确地提取数据。你可以用 .cap 文件格式记录网络信息,之后用于分析监视网络服务性能。

|

||||

Justniffer 是一个很好的用于网络测试的工具。在我看来对于那些用 Snort 来进行网络探测的用户来说,Justniffer 是一个更简单的工具。它提供了很多 **格式关键字** 用于按照你的需要精确地提取数据。你可以用 .cap 文件格式记录网络信息,之后用于分析监视网络服务性能。

|

||||

|

||||

**参考资料:**

|

||||

|

||||

@ -142,7 +143,7 @@ via: http://www.unixmen.com/install-justniffer-ubuntu-15-04/

|

||||

|

||||

作者:[Rajneesh Upadhyay][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,10 +1,10 @@

|

||||

如何在Ubuntu 14.04 / 15.04中设置IonCube Loaders

|

||||

================================================================================

|

||||

IonCube Loaders是PHP中用于辅助加速页面的加解密工具。它保护你的PHP代码不会被在未授权的计算机上查看。使用ionCube编码并加密PHP需要一个叫ionCube Loader的文件安装在web服务器上并提供给需要大量访问的PHP用。它在运行时处理并执行编码。PHP只需在‘php.ini’中添加一行就可以使用这个loader。

|

||||

IonCube Loaders是一个PHP中用于加解密的工具,并带有加速页面运行的功能。它也可以保护你的PHP代码不会查看和运行在未授权的计算机上。要使用ionCube编码、加密的PHP文件,需要在web服务器上安装一个叫ionCube Loader的文件,并需要让 PHP 可以访问到,很多 PHP 应用都在用它。它可以在运行时读取并执行编码过后的代码。PHP只需在‘php.ini’中添加一行就可以使用这个loader。

|

||||

|

||||

### 前提条件 ###

|

||||

|

||||

在这篇文章中,我们将在Ubuntu14.04/15.04安装Ioncube Loader ,以便它可以在所有PHP模式中使用。本教程的唯一要求就是你系统安装了LEMP,并有“的php.ini”文件。

|

||||

在这篇文章中,我们将在Ubuntu14.04/15.04安装Ioncube Loader ,以便它可以在所有PHP模式中使用。本教程的唯一要求就是你系统安装了LEMP,并有“php.ini”文件。

|

||||

|

||||

### 下载 IonCube Loader ###

|

||||

|

||||

@ -14,15 +14,15 @@ IonCube Loaders是PHP中用于辅助加速页面的加解密工具。它保护

|

||||

|

||||

|

||||

|

||||

下载完成后用下面的命令解压到"/usr/local/src/"。

|

||||

下载完成后用下面的命令解压到“/usr/local/src/"。

|

||||

|

||||

# tar -zxvf ioncube_loaders_lin_x86-64.tar.gz -C /usr/local/src/

|

||||

|

||||

|

||||

|

||||

解压完成后我们就可以看到所有的存在的模块。但是我们只需要我们安装的PHP版本的相关模块。

|

||||

解压完成后我们就可以看到所有提供的模块。但是我们只需要我们所安装的PHP版本的对应模块。

|

||||

|

||||

要检查PHP版本,你可以运行下面的命令来找出相关的模块。

|

||||

要检查PHP版本,你可以运行下面的命令来找出相应的模块。

|

||||

|

||||

# php -v

|

||||

|

||||

@ -30,14 +30,14 @@ IonCube Loaders是PHP中用于辅助加速页面的加解密工具。它保护

|

||||

|

||||

根据上面的命令我们知道我们安装的是PHP 5.6.4,因此我们需要拷贝合适的模块到PHP模块目录下。

|

||||

|

||||

首先我们在“/usr/local/”创建一个叫“ioncube”的目录并复制需要的ioncube loader到这里。

|

||||

首先我们在“/usr/local/”创建一个叫“ioncube”的目录并复制所需的ioncube loader到这里。

|

||||

|

||||

root@ubuntu-15:/usr/local/src/ioncube# mkdir /usr/local/ioncube

|

||||

root@ubuntu-15:/usr/local/src/ioncube# cp ioncube_loader_lin_5.6.so ioncube_loader_lin_5.6_ts.so /usr/local/ioncube/

|

||||

|

||||

### PHP 配置 ###

|

||||

|

||||

我们要在位于"/etc/php5/cli/"文件夹下的"php.ini"中加入下面的配置行并重启web服务和php模块。

|

||||

我们要在位于"/etc/php5/cli/"文件夹下的"php.ini"中加入如下的配置行并重启web服务和php模块。

|

||||

|

||||

# vim /etc/php5/cli/php.ini

|

||||

|

||||

@ -54,7 +54,6 @@ IonCube Loaders是PHP中用于辅助加速页面的加解密工具。它保护

|

||||

|

||||

要为我们的网站测试ioncube loader。用下面的内容创建一个"info.php"文件并放在网站的web目录下。

|

||||

|

||||

|

||||

# vim /usr/share/nginx/html/info.php

|

||||

|

||||

加入phpinfo的脚本后重启web服务后用域名或者ip地址访问“info.php”。

|

||||

@ -63,7 +62,6 @@ IonCube Loaders是PHP中用于辅助加速页面的加解密工具。它保护

|

||||

|

||||

|

||||

|

||||

From the terminal issue the following command to verify the php version that shows the ionCube PHP Loader is Enabled.

|

||||

在终端中运行下面的命令来验证php版本并显示PHP Loader已经启用了。

|

||||

|

||||

# php -v

|

||||

@ -74,7 +72,7 @@ From the terminal issue the following command to verify the php version that sho

|

||||

|

||||

### 总结 ###

|

||||

|

||||

教程的最后你已经了解了在安装有nginx的Ubuntu中安装和配置ionCube Loader,如果你正在使用其他的web服务,这与其他服务没有明显的差别。因此做完这些安装Loader是很简单的,并且在大多数服务器上的安装都不会有问题。然而并没有一个所谓的“标准PHP安装”,服务可以通过许多方式安装,并启用或者禁用功能。

|

||||

教程的最后你已经了解了如何在安装有nginx的Ubuntu中安装和配置ionCube Loader,如果你正在使用其他的web服务,这与其他服务没有明显的差别。因此安装Loader是很简单的,并且在大多数服务器上的安装都不会有问题。然而并没有一个所谓的“标准PHP安装”,服务可以通过许多方式安装,并启用或者禁用功能。

|

||||

|

||||

如果你是在共享服务器上,那么确保运行了ioncube-loader-helper.php脚本,并点击链接来测试运行时安装。如果安装时你仍然遇到了问题,欢迎联系我们及给我们留下评论。

|

||||

|

||||

@ -84,7 +82,7 @@ via: http://linoxide.com/ubuntu-how-to/setup-ioncube-loaders-ubuntu-14-04-15-04/

|

||||

|

||||

作者:[Kashif Siddique][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

与新的Ubuntu 15.10默认壁纸相遇

|

||||

看看新的 Ubuntu 15.10 默认壁纸

|

||||

================================================================================

|

||||

**全新的Ubuntu 15.10 Wily Werewolf默认壁纸已经亮相 **

|

||||

**全新的Ubuntu 15.10 Wily Werewolf默认壁纸已经亮相**

|

||||

|

||||

乍一看你几乎无法发现与今天4月发布的Ubuntu 15.04中收到折纸启发的‘Suru’设计有什么差别。但是仔细看你就会发现默认背景有一些细微差别。

|

||||

乍一看你几乎无法发现与今天4月发布的Ubuntu 15.04中受到折纸启发的‘Suru’设计有什么差别。但是仔细看你就会发现默认背景有一些细微差别。

|

||||

|

||||

其中一点是更淡,受到由左上角图片发出的橘黄色光的帮助。保持了角褶皱和色块,但是增加了块和矩形部分。

|

||||

|

||||

@ -10,25 +10,25 @@

|

||||

|

||||

|

||||

|

||||

Ubuntu 15.10 默认桌面背景

|

||||

*Ubuntu 15.10 默认桌面背景*

|

||||

|

||||

只是为了显示改变,这个是Ubuntu 15.04的默认壁纸作为比较:

|

||||

为了凸显变化,这个是Ubuntu 15.04的默认壁纸作为比较:

|

||||

|

||||

|

||||

|

||||

Ubuntu 15.04 默认壁纸

|

||||

*Ubuntu 15.04 默认壁纸*

|

||||

|

||||

### 下载Ubuntu 15.10 壁纸 ###

|

||||

|

||||

如果你正运行的是Ubuntu 15.10 Wily Werewolf每日编译版本,那么你无法看到这个默认壁纸:设计已经亮相但是还没有打包到Wily中。

|

||||

如果你正运行的是Ubuntu 15.10 Wily Werewolf每日构建版本,那么你无法看到这个默认壁纸:设计已经亮相但是还没有打包到Wily中。

|

||||

|

||||

你不必等到10月份来使用新的设计来作为你的桌面背景。你可以点击下面的按钮下载4096×2304高清壁纸。

|

||||

|

||||

- [下载Ubuntu 15.10新的默认壁纸][1]

|

||||

|

||||

最后,如我们每次在有新壁纸时说的,你不必在意发布版品牌和设计细节。如果壁纸不和你的口味或者不想永远用它,轻易地就换掉毕竟这不是Ubuntu Phone!

|

||||

最后,如我们每次在有新壁纸时说的,你不必在意发布版品牌和设计细节。如果壁纸不合你的口味或者不想永远用它,轻易地就换掉,毕竟这不是Ubuntu Phone!

|

||||

|

||||

**你是你版本的粉丝么?在评论中让我们知道 **

|

||||

**你是新版本的粉丝么?在评论中让我们知道**

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -36,7 +36,7 @@ via: http://www.omgubuntu.co.uk/2015/09/ubuntu-15-10-wily-werewolf-default-wallp

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,12 +1,12 @@

|

||||

Xenlism WildFire: 一个精美的 Linux 桌面版主题

|

||||

Xenlism WildFire: Linux 桌面的极简风格图标主题

|

||||

================================================================================

|

||||

|

||||

|

||||

有那么一段时间,我一直使用一个主题,没有更换过。可能是在最近的一段时间都没有一款主题能满足我的需求。有那么一些我认为是[Ubuntu 上最好的图标主题][1],比如 Numix 和 Moka,并且,我一直也对 Numix 比较满意。

|

||||

有那么一段时间我没更换主题了,可能最近的一段时间没有一款主题能让我眼前一亮了。我考虑过更换 [Ubuntu 上最好的图标主题][1],但是它们和 Numix 和 Moka 差不多,而且我觉得 Numix 也不错。

|

||||

|

||||

但是,一段时间后,我使用了[Xenslim WildFire][2],并且我必须承认,他看起来太好了。Minimail 是当前比较流行的设计趋势。并且 Xenlism 完美的表现了它。平滑和美观。Xenlism 收到了诺基亚的 Meego 和苹果图标的影响。

|

||||

但是前几天我试了试 [Xenslim WildFire][2],我必须承认,它看起来太棒了。极简风格是设计界当前的流行趋势,而 Xenlism 完美的表现了这种风格。平滑而美观,Xenlism 显然受到了诺基亚的 Meego 和苹果图标的影响。

|

||||

|

||||

让我们来看一下他的几个不同应用的图标:

|

||||

让我们来看一下它的几个不同应用的图标:

|

||||

|

||||

|

||||

|

||||

@ -14,15 +14,15 @@ Xenlism WildFire: 一个精美的 Linux 桌面版主题

|

||||

|

||||

|

||||

|

||||

主题开发者,[Nattapong Pullkhow][3], 说,这个图标主题最适合 GNOME,但是在 Unity 和 KDE,Mate 上也表现良好。

|

||||

主题开发者 [Nattapong Pullkhow][3] 说,这个图标主题最适合 GNOME,但是在 Unity 和 KDE,Mate 上也表现良好。

|

||||

|

||||

### 安装 Xenlism Wildfire ###

|

||||

|

||||

Xenlism Theme 大约有 230 MB, 对于一个主题来说确实很大,但是考虑到它支持的庞大的软件数量,这个大小,确实也不是那么令人吃惊。

|

||||

Xenlism Theme 大约有 230 MB, 对于一个主题来说确实很大,但是考虑到它所支持的庞大的软件数量,这个大小,确实也不是那么令人吃惊。

|

||||

|

||||

#### 在 Ubuntu/Debian 上安装 Xenlism ####

|

||||

|

||||

在 Ubuntu 的变种中安装前,用以下的命令添加 GPG 秘钥:

|

||||

在 Ubuntu 系列中安装之前,用以下的命令添加 GPG 秘钥:

|

||||

|

||||

sudo apt-key adv --keyserver keys.gnupg.net --recv-keys 90127F5B

|

||||

|

||||

@ -42,7 +42,7 @@ Xenlism Theme 大约有 230 MB, 对于一个主题来说确实很大,但是考

|

||||

|

||||

sudo nano /etc/pacman.conf

|

||||

|

||||

添加如下的代码块,在配置文件中:

|

||||

添加如下的代码块,在配置文件中:

|

||||

|

||||

[xenlism-arch]

|

||||

SigLevel = Never

|

||||

@ -55,17 +55,17 @@ Xenlism Theme 大约有 230 MB, 对于一个主题来说确实很大,但是考

|

||||

|

||||

#### 使用 Xenlism 主题 ####

|

||||

|

||||

在 Ubuntu Unity, [可以使用 Unity Tweak Tool 来改变主题][4]. In GNOME, [使用 Gnome Tweak Tool 改变主题][5]. 我确信你会接下来的步骤,如果你不会,请来信通知我,我会继续完善这篇文章。

|

||||

在 Ubuntu Unity, [可以使用 Unity Tweak Tool 来改变主题][4]。 在 GNOME 中,[使用 Gnome Tweak Tool 改变主题][5]。 我确信你会接下来的步骤,如果你不会,请来信通知我,我会继续完善这篇文章。

|

||||

|

||||

这就是 Xenlism 在 Ubuntu 15.04 Unity 中的截图。同时也使用了 Xenlism 桌面背景。

|

||||

|

||||

|

||||

|

||||

这看来真棒,不是吗?如果你试用了,并且喜欢他,你可以感谢他的开发者:

|

||||

这看来真棒,不是吗?如果你试用了,并且喜欢它,你可以感谢它的开发者:

|

||||

|

||||

> [Xenlism is a stunning minimal icon theme for Linux. Thanks @xenatt for this beautiful theme.][6]

|

||||

> [Xenlism 是一个用于 Linux 的、令人兴奋的极简风格的图标主题,感谢 @xenatt 做出这么漂亮的主题。][6]

|

||||

|

||||

我希望你喜欢他。同时也希望你分享你对这个主题的看法,或者你喜欢的主题。Xenlism 真的很棒,可能会替换掉你最喜欢的主题。

|

||||

我希望你喜欢它。同时也希望你分享你对这个主题的看法,或者你喜欢的主题。Xenlism 真的很棒,可能会替换掉你最喜欢的主题。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -73,7 +73,7 @@ via: http://itsfoss.com/xenlism-wildfire-theme/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[MikeCoder](https://github.com/MikeCoder)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||