From 1454d7851f2e7221ceedc44d223e5ebb33e9c110 Mon Sep 17 00:00:00 2001

From: Xingyu Wang <xingyu.wang@gmail.com>

Date: Fri, 31 Jan 2020 13:05:35 +0800

Subject: [PATCH 01/11] PRF

@robsean

---

...buntu- Important Things You Should Know.md | 83 +++++++++----------

1 file changed, 38 insertions(+), 45 deletions(-)

diff --git a/translated/tech/20200115 Root User in Ubuntu- Important Things You Should Know.md b/translated/tech/20200115 Root User in Ubuntu- Important Things You Should Know.md

index 6113f5aa4a..c09cc5b595 100644

--- a/translated/tech/20200115 Root User in Ubuntu- Important Things You Should Know.md

+++ b/translated/tech/20200115 Root User in Ubuntu- Important Things You Should Know.md

@@ -1,16 +1,18 @@

[#]: collector: (lujun9972)

[#]: translator: (robsean)

-[#]: reviewer: ( )

+[#]: reviewer: (wxy)

[#]: publisher: ( )

[#]: url: ( )

[#]: subject: (Root User in Ubuntu: Important Things You Should Know)

[#]: via: (https://itsfoss.com/root-user-ubuntu/)

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

-Ubuntu 的 root 用户:你应该知道的重要事情

+Ubuntu 中的 root 用户:你应该知道的重要事情

======

-当你已经开始使用 Linux 时,你将发现与 Windows 的很多不同。其中一个‘不同的东西’是 root 用户的概念。

+![][5]

+

+当你刚开始使用 Linux 时,你将发现与 Windows 的很多不同。其中一个“不同的东西”是 root 用户的概念。

在这个初学者系列中,我将解释几个关于 Ubuntu 的 root 用户的重要的东西。

@@ -18,38 +20,32 @@ Ubuntu 的 root 用户:你应该知道的重要事情

你将在这篇文章中学到下面的内容:

- * [为什么在 Ubuntu 中禁用 root 用户][1]

- * [像 root 用户一样使用命令][2]

- * [切换为 root 用户][3]

- * [解锁 root 用户][4]

-

-

+* 为什么在 Ubuntu 中禁用 root 用户

+* 像 root 用户一样使用命

+* 切换为 root 用户

+* 解锁 root 用户

### 什么是 root 用户?为什么它在 Ubuntu 中被锁定?

-![][5]

+在 Linux 中,有一个称为 [root][6] 的超级用户。这是超级管理员账号,它可以做任何事以及使用系统的一切东西。它可以在你的 Linux 系统上访问任何文件和运行任何命令。

-在 Linux 中,这里总是有一个称为 [root][6] 的超级用户。这是超级管理员账号,它可以做任何事以及使用系统的一切东西。它可以在你的 Linux 系统上访问任何文件和运行任何命令。

+能力越大,责任越大。root 用户给予你完全控制系统的能力,因此,它应该被谨慎地使用。root 用户可以访问系统文件,运行更改系统配置的命令。因此,一个错误的命令可能会破坏系统。

-能力越大,责任越大。root 用户给予你完全控制系统,因此,它应该被谨慎地使用。root 用户可以访问系统文件,运行更改系统配置的命令。因此,一个错误的命令可能会破坏系统。

+这就是为什么 [Ubuntu][7] 和其它基于 Ubuntu 的发行版默认锁定 root 用户,以从意外的灾难中挽救你的原因。

-这就是为什么 [Ubuntu][7] 和其它基于 Ubuntu 的发行版默认锁定 root 用户以从意外的灾难中挽救你的原因。

+对于你的日常任务,像移动你家目录中的文件,从互联网下载文件,创建文档等等,你不需要拥有 root 权限。

-对于你的日常任务,像移动你 home 目录中的文件,从因特网下载文件,创建文档等等,你不需要拥有 root 权限。

+**打个比方来更好地理解它。假设你想要切一个水果,你可以使用一把厨房用刀。假设你想要砍一颗树,你就得使用一把锯子。现在,你可以使用锯子来切水果,但是那不明智,不是吗?**_

-_**打个比方来更好地理解它。假设你不得不切一个水果,你使用一把厨房用刀。假设你不得不砍一颗树,你不得不使用一把锯子。现在,你可以使用锯子来切水果,但是那不明智,不是吗?**_

+这意味着,你不能是 Ubuntu 中 root 用户或者不能使用 root 权限来使用系统吗?不,你仍然可以在 `sudo` 的帮助下来拥有 root 权限来访问(在下一节中解释)。

-这意味着,你不能是 Ubuntu 中 root 用户,或者不能使用 root

-权限来使用系统?不,你仍然可以在 ‘sudo’ 的帮助下来拥有 root 权限来访问(在下一节中解释)。

-

-**底线:

-**使用于常规任务,root 用户权限太过强大。这就是为什么不建议一直使用 root 用户。你仍然可以使用 root 用户来运行特殊的命令。

+> **要点:** 使用于常规任务,root 用户权限太过强大。这就是为什么不建议一直使用 root 用户。你仍然可以使用 root 用户来运行特殊的命令。

### 如何在 Ubuntu 中像 root 用户一样运行命令?

![Image Credit: xkcd][8]

-对于一些系统的特殊任务来说,你将需要 root 权限。例如。如果你想[通过命令行更新 Ubuntu ][9],你不能作为一个常规用户运行该命令。它将给予你权限被拒绝的错误。

+对于一些系统的特殊任务来说,你将需要 root 权限。例如。如果你想[通过命令行更新 Ubuntu][9],你不能作为一个常规用户运行该命令。它将给出权限被拒绝的错误。

```

apt update

@@ -60,41 +56,38 @@ W: Problem unlinking the file /var/cache/apt/pkgcache.bin - RemoveCaches (13: Pe

W: Problem unlinking the file /var/cache/apt/srcpkgcache.bin - RemoveCaches (13: Permission denied)

```

-那么,你如何像 root 用户一样运行命令?简单的答案是,在命令前添加 sudo ,来像 root 用户一样运行。

+那么,你如何像 root 用户一样运行命令?简单的答案是,在命令前添加 `sudo`,来像 root 用户一样运行。

```

sudo apt update

```

-Ubuntu 和很多其它的 Linux 发行版使用一个被称为 sudo 的特殊程序机制。Sudo 是一个像 root 用户(或其它用户)一样来控制运行命令访问的程序。

+Ubuntu 和很多其它的 Linux 发行版使用一个被称为 `sudo` 的特殊程序机制。`sudo` 是一个以 root 用户(或其它用户)来控制运行命令访问的程序。

-实际上,Sudo 是一个非常多用途的工具。它可以配置为允许一个用户像 root 用户一样来运行所有的命令,或者仅仅一些命令。你也可以配置是否一些命令需要密码,或者不使用 sudo 去运行命令。它是一个广泛的主题,也许我将在另一篇文章中详细讨论它。

+实际上,`sudo` 是一个非常多用途的工具。它可以配置为允许一个用户像 root 用户一样来运行所有的命令,或者仅仅一些命令。你也可以配置为无需密码即可使用 sudo 运行命令。这个主题内容比较丰富,也许我将在另一篇文章中详细讨论它。

-就目前而言,你应该知道 [当你安装 Ubuntu 时][10] ,你必需创建一个用户账号。这个用户账号在你系统上作为管理员工作,在 Ubuntu 中作为一个默认的 sudo 策略,它可以在你的系统上使用 root 用户权限来运行任何命令。

+就目前而言,你应该知道[当你安装 Ubuntu 时][10],你必须创建一个用户账号。这个用户账号在你系统上以管理员身份来工作,并且按照 Ubuntu 中的默认 sudo 策略,它可以在你的系统上使用 root 用户权限来运行任何命令。

-sudo 的问题是,运行 **sudo 不需要 root 用户密码,而是需要用户自己的密码**。

+`sudo` 的问题是,运行 **sudo 不需要 root 用户密码,而是需要用户自己的密码**。

-并且这就是为什么当你使用 sudo 运行一个命令,会要求正在运行 sudo 命令的用户的密码的原因:

+并且这就是为什么当你使用 `sudo` 运行一个命令,会要求输入正在运行 `sudo` 命令的用户的密码的原因:

```

[email protected]:~$ sudo apt update

[sudo] password for abhishek:

```

-正如你在上面示例中所见 _abhishek_ 在尝试使用 _sudo_ 来运行 ‘apt update’ 命令,系统要求 _abhishek_ 的密码。

+正如你在上面示例中所见 `abhishek` 在尝试使用 `sudo` 来运行 `apt update` 命令,系统要求输入 `abhishek` 的密码。

-如果你对 Linux 完全不熟悉,当你在终端中开始输入密码时,你可能会惊讶,在屏幕上什么都没有发生。这是十分正常的,因为作为默认的安全功能,在屏幕上什么都不会显示。甚至星号(*)都没有。你输入你的密码并按 Enter 键。

+**如果你对 Linux 完全不熟悉,当你在终端中开始输入密码时,你可能会惊讶,在屏幕上什么都没有发生。这是十分正常的,因为作为默认的安全功能,在屏幕上什么都不会显示。甚至星号(`*`)都没有。输入你的密码并按回车键。**

-**底限:

-**为在 Ubuntu 中像 root 用户一样运行命令,在命令前添加 sudo 。

-当被要求输入密码时,输入你的账户的密码。

-当你在屏幕上输入密码时,什么都看不到。请保持输入密码,并按 Enter 键。

+> **要点:**为在 Ubuntu 中像 root 用户一样运行命令,在命令前添加 `sudo`。 当被要求输入密码时,输入你的账户的密码。当你在屏幕上输入密码时,什么都看不到。请继续输入密码,并按回车键。

### 如何在 Ubuntu 中成为 root 用户?

-你可以使用 sudo 来像 root 用户一样运行命令。但是,在某些情况下,你必需像 root 用户一样来运行一些命令,而你总是忘了在命令前添加 sudo ,那么你可以临时切换为 root 用户。

+你可以使用 `sudo` 来像 root 用户一样运行命令。但是,在某些情况下,你必须以 root 用户身份来运行一些命令,而你总是忘了在命令前添加 `sudo`,那么你可以临时切换为 root 用户。

-sudo 命令允许你来模拟一个 root 用户登录的 shell ,使用这个命令:

+`sudo` 命令允许你来模拟一个 root 用户登录的 shell ,使用这个命令:

```

sudo -i

@@ -108,19 +101,19 @@ root

[email protected]:~#

```

-你将注意到,当你切换为 root 用户时,shell 命令提示符从 $ (美元按键符号)更改为 # (英镑按键符号)。这使我开了一个(拙劣的)玩笑,英镑比美元强大。

+你将注意到,当你切换为 root 用户时,shell 命令提示符从 `$`(美元符号)更改为 `#`(英镑符号)。我开个(拙劣的)玩笑,英镑比美元强大。

-_**虽然我已经向你显示如何成为 root 用户,但是我必需警告你,你应该避免作为 root 用户使用系统。毕竟它有阻拦你使用 root 用户的原因。**_

+**虽然我已经向你显示如何成为 root 用户,但是我必须警告你,你应该避免作为 root 用户使用系统。毕竟它有阻拦你使用 root 用户的原因。**

-另外一种临时切换为 root 用户的方法是使用 su 命令:

+另外一种临时切换为 root 用户的方法是使用 `su` 命令:

```

sudo su

```

-如果你尝试使用不带有的 sudo 的 su 命令,你将遇到 ‘su authentication failure’ 错误。

+如果你尝试使用不带有的 `sudo` 的 `su` 命令,你将遇到 “su authentication failure” 错误。

-你可以使用 exit 命令来恢复为正常用户。

+你可以使用 `exit` 命令来恢复为正常用户。

```

exit

@@ -132,13 +125,13 @@ exit

Linux 给予你在系统上想做什么就做什么的自由。解锁 root 用户就是这些自由之一。

-如果,出于某些原因,你决定启用 root 用户,你可以通过为其设置一个密码来做到:

+如果出于某些原因,你决定启用 root 用户,你可以通过为其设置一个密码来做到:

```

sudo passwd root

```

-再强调一次,不建议使用 root 用户,并且我不支持你在你的桌面上也这样做。如果你忘记密码,你将不能再次 [在 Ubuntu 中更改 root 用户密码][11] 。

+再强调一次,不建议使用 root 用户,并且我也不鼓励你在桌面上这样做。如果你忘记了密码,你将不能再次[在 Ubuntu 中更改 root 用户密码][11]。(LCTT 译注:可以通过单用户模式修改。)

你可以通过移除密码来再次锁定 root 用户:

@@ -146,9 +139,9 @@ sudo passwd root

sudo passwd -dl root

```

-**最后…**

+### 最后…

-我希望你现在对 root 概念有稍微更好一点的理解。如果你仍然有些关于它的困惑和问题,请在评论中让我知道。我将尝试回答你的问题,并且也可能更新这篇文章。

+我希望你现在对 root 概念理解得更好一点。如果你仍然有些关于它的困惑和问题,请在评论中让我知道。我将尝试回答你的问题,并且也可能更新这篇文章。

--------------------------------------------------------------------------------

@@ -157,7 +150,7 @@ via: https://itsfoss.com/root-user-ubuntu/

作者:[Abhishek Prakash][a]

选题:[lujun9972][b]

译者:[robsean](https://github.com/robsean)

-校对:[校对者ID](https://github.com/校对者ID)

+校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

From a19775aa4ab437de30b3433f471144d12fd518a0 Mon Sep 17 00:00:00 2001

From: Xingyu Wang <xingyu.wang@gmail.com>

Date: Fri, 31 Jan 2020 13:06:09 +0800

Subject: [PATCH 02/11] PUB

@robsean

https://linux.cn/article-11837-1.html

---

...5 Root User in Ubuntu- Important Things You Should Know.md | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

rename {translated/tech => published}/20200115 Root User in Ubuntu- Important Things You Should Know.md (99%)

diff --git a/translated/tech/20200115 Root User in Ubuntu- Important Things You Should Know.md b/published/20200115 Root User in Ubuntu- Important Things You Should Know.md

similarity index 99%

rename from translated/tech/20200115 Root User in Ubuntu- Important Things You Should Know.md

rename to published/20200115 Root User in Ubuntu- Important Things You Should Know.md

index c09cc5b595..0abc566f4b 100644

--- a/translated/tech/20200115 Root User in Ubuntu- Important Things You Should Know.md

+++ b/published/20200115 Root User in Ubuntu- Important Things You Should Know.md

@@ -1,8 +1,8 @@

[#]: collector: (lujun9972)

[#]: translator: (robsean)

[#]: reviewer: (wxy)

-[#]: publisher: ( )

-[#]: url: ( )

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11837-1.html)

[#]: subject: (Root User in Ubuntu: Important Things You Should Know)

[#]: via: (https://itsfoss.com/root-user-ubuntu/)

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

From 6a5097dc4e770a30f3cdaf41757487aeaa83a6d5 Mon Sep 17 00:00:00 2001

From: LazyWolf Lin <LazyWolfLin@gmail.com>

Date: Fri, 31 Jan 2020 14:01:22 +0800

Subject: [PATCH 03/11] Translating.

---

.../tech/20200126 What-s your favorite Linux distribution.md | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

diff --git a/sources/tech/20200126 What-s your favorite Linux distribution.md b/sources/tech/20200126 What-s your favorite Linux distribution.md

index 029a4272e8..c1f4ab4688 100644

--- a/sources/tech/20200126 What-s your favorite Linux distribution.md

+++ b/sources/tech/20200126 What-s your favorite Linux distribution.md

@@ -1,5 +1,5 @@

[#]: collector: (lujun9972)

-[#]: translator: ( )

+[#]: translator: (LazyWolfLin)

[#]: reviewer: ( )

[#]: publisher: ( )

[#]: url: ( )

@@ -43,7 +43,7 @@ via: https://opensource.com/article/20/1/favorite-linux-distribution

作者:[Opensource.com][a]

选题:[lujun9972][b]

-译者:[译者ID](https://github.com/译者ID)

+译者:[LazyWolfLin](https://github.com/LazyWolfLin)

校对:[校对者ID](https://github.com/校对者ID)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

From d822d1f0e947d59c7248ded6c1b47b8892a39ffc Mon Sep 17 00:00:00 2001

From: =?UTF-8?q?=E8=90=8C=E6=96=B0=E9=98=BF=E5=B2=A9?=

<31788564+mengxinayan@users.noreply.github.com>

Date: Fri, 31 Jan 2020 06:31:15 -0800

Subject: [PATCH 04/11] Update & Translating

File name: 20200129 Showing memory usage in Linux by process and user.md

Translator: mengxinayan

---

...00129 Showing memory usage in Linux by process and user.md | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

diff --git a/sources/tech/20200129 Showing memory usage in Linux by process and user.md b/sources/tech/20200129 Showing memory usage in Linux by process and user.md

index 8e21baf042..85c6fcb4ea 100644

--- a/sources/tech/20200129 Showing memory usage in Linux by process and user.md

+++ b/sources/tech/20200129 Showing memory usage in Linux by process and user.md

@@ -1,5 +1,5 @@

[#]: collector: (lujun9972)

-[#]: translator: ( )

+[#]: translator: (mengxinayan)

[#]: reviewer: ( )

[#]: publisher: ( )

[#]: url: ( )

@@ -180,7 +180,7 @@ via: https://www.networkworld.com/article/3516319/showing-memory-usage-in-linux-

作者:[Sandra Henry-Stocker][a]

选题:[lujun9972][b]

-译者:[译者ID](https://github.com/译者ID)

+译者:[mengxinayan](https://github.com/mengxinayan)

校对:[校对者ID](https://github.com/校对者ID)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

From 4c90885ba1d4629c74a65cca4014d94e062d283d Mon Sep 17 00:00:00 2001

From: DarkSun <lujun9972@gmail.com>

Date: Sat, 1 Feb 2020 00:58:43 +0800

Subject: [PATCH 05/11] =?UTF-8?q?=E9=80=89=E9=A2=98:=2020200131=205=20ways?=

=?UTF-8?q?=20to=20use=20Emacs=20as=20your=20RPG=20dashboard?=

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

sources/tech/20200131 5 ways to use Emacs as your RPG dashboard.md

---

...ways to use Emacs as your RPG dashboard.md | 182 ++++++++++++++++++

1 file changed, 182 insertions(+)

create mode 100644 sources/tech/20200131 5 ways to use Emacs as your RPG dashboard.md

diff --git a/sources/tech/20200131 5 ways to use Emacs as your RPG dashboard.md b/sources/tech/20200131 5 ways to use Emacs as your RPG dashboard.md

new file mode 100644

index 0000000000..b1d7c6923d

--- /dev/null

+++ b/sources/tech/20200131 5 ways to use Emacs as your RPG dashboard.md

@@ -0,0 +1,182 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (5 ways to use Emacs as your RPG dashboard)

+[#]: via: (https://opensource.com/article/20/1/emacs-rpgs)

+[#]: author: (Seth Kenlon https://opensource.com/users/seth)

+

+5 ways to use Emacs as your RPG dashboard

+======

+Emacs is a flexible way to organize and manage your tabletop

+role-playing games.

+![Chess pieces on a chess board][1]

+

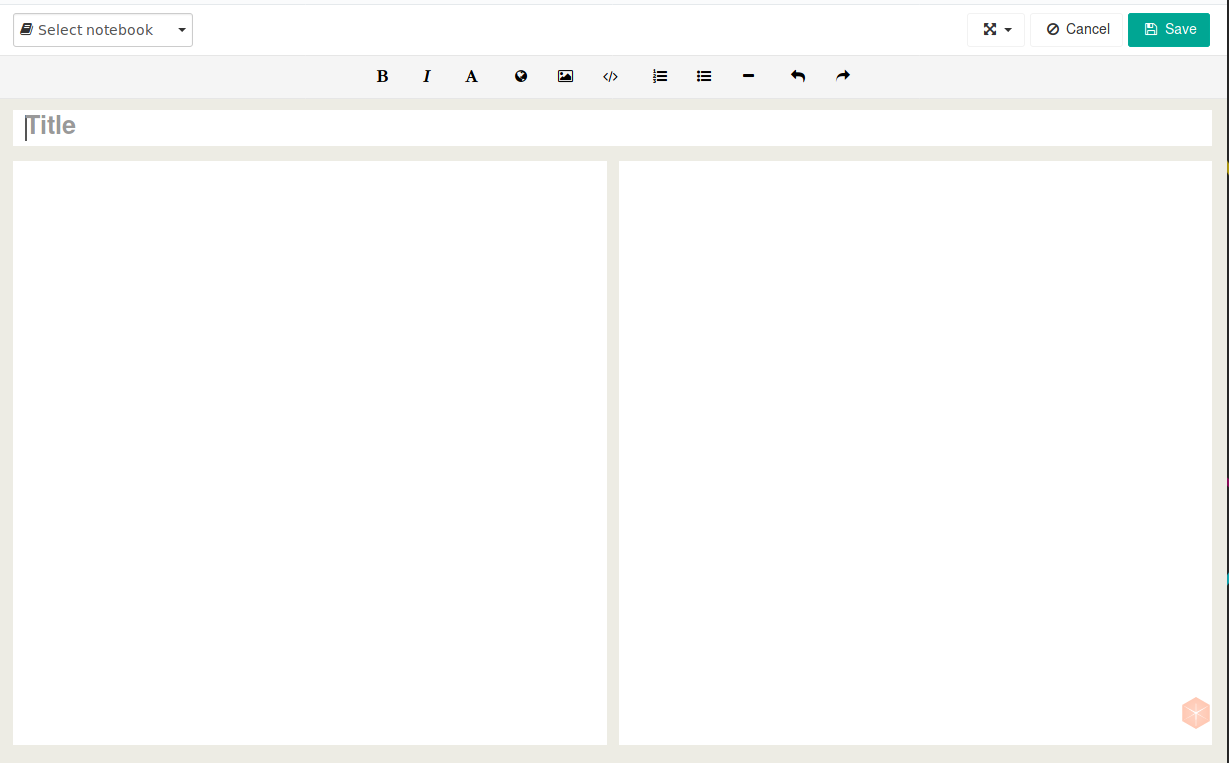

+There are two ways to play a tabletop role-playing game (RPG): You can play an adventure written by the game's publisher or an independent author, or you can play an adventure that is made up as you go. Regardless of which you choose, there's probably prep work to do. One player (generically called the _game master_) must gather monster or enemy stats, loot tables, and references for rules, and the other players must build characters and apportion (pretend) equipment. Nothing's going to eliminate prep work from a complex RPG, but if you're an [Emacs][2] user, you might find that Emacs makes a great dashboard to keep everything all straight.

+

+### Organize the rules

+

+Unfortunately, the digital editions of many RPGs are distributed as PDFs because that's what the RPG publisher sent to the printer for the physical edition. PDFs are good at preserving layout, but they're far from an ideal eBook format. If you play RPGs published under an open license, you can often obtain the rules in alternate formats (such as HTML), which gives you more control and flexibility. Even the world's first and most famous RPG, Dungeons & Dragons, provides its rules as a free download in digital format (which has been translated into HTML and Markdown by many a website).

+

+I open the rules as Markdown in Emacs so that I have a searchable reference at the ready. While opening the rules as a PDF in a PDF reader lets you search for embedded text, using a text file instead provides several benefits. First of all, a text file is much smaller than a PDF, so it's faster to load and to search. Second, text files are easily editable, so if you find a rule that sends you seeking clarification, you can add what you learn (or whatever you make up) directly into your master document. You can also add house rules and additional resources. My aim is to have a single file that contains all of the rules and resources I use in games I run, with everything a quick **Ctrl+s** (**C-s** in Emacs notation) away.

+

+### Manage initiatives

+

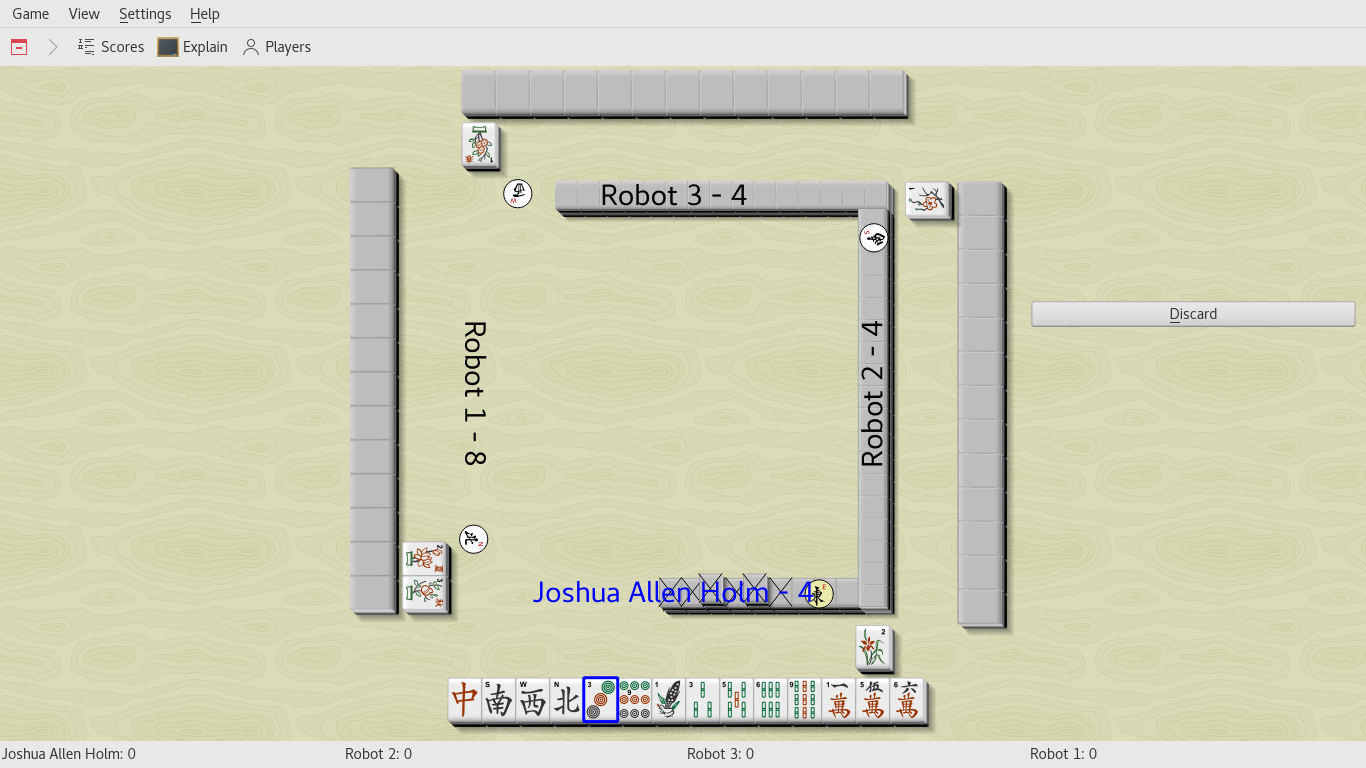

+Most RPG systems feature a method to determine the order of play during combat. This is commonly called _initiative_, and it comes up a lot since the source of conflict in games often involves combat or some kind of opposed competitive action. It's not that hard to keep track of combat with pencil and paper, but in games where I'm using digital assets anyway, I find it easier to stay digital for everything. Luckily, the venerable [Org mode][3] provides an excellent solution.

+

+When players roll for initiative, I type their names into Emacs' scratch buffer. Then I type each monster or enemy, along with the hit or health points (HP) of each, followed by two columns of 0:

+

+

+```

+brad

+emily

+zombie 22 0 0

+zombie 22 0 0

+flesh-golem 93 0 0

+```

+

+Then I select the block of player characters (PCs) and monsters and use the **org-table-create-or-convert-from-region** function to create an Org mode table around it. Using **Alt+Down arrow** (**M-down** in Emacs notation), I move each PC or monster into the correct initiative order.

+

+

+```

+| emily | | | |

+| flesh-golem | 93 | 0 | 0 |

+| zombie | 22 | 0 | 0 |

+| brad | | | |

+| zombie | 22 | 0 | 0 |

+```

+

+During combat, I only need to record damage for monsters, because the players manage their own HP. For the enemies I control in combat, the second column is its HP (its starting number is taken from the RPG system's rules), and the third is the damage dealt during the current round.

+

+Table formulas in Org mode are defined on a special **TBLFM** line at the end of the table. If you've used any computerized spreadsheet for anything, [Org table][4] will be fairly intuitive. For combat tracking, I want the third column to be subtracted from the second. Columns are indexed from left to right (**$1** for the first, **$2** for the second, and **$3** for the third), so to replace the contents of column $2 with the sum of columns $2 and $3, I add this line to the bottom of the table:

+

+

+```

+`#+TBLFM: $2=vsum($2 - $3)`

+```

+

+I don't actually type that into Emacs every time the game enters combat mode. Instead, I've defined an auto-completion trigger with Emacs' [abbrev mode][5], a system that allows you to type in a special string of your choosing, which Emacs expands into something more complex. I define my abbreviations in a file called **~/.emacs.d/abbrev_defs**, using **rpgi** followed by a **Space** as the trigger for Emacs to change the line to my initiative table formula:

+

+

+```

+(define-abbrev-table 'global-abbrev-table

+ '(

+ ("rpgi" "#+TBLFM: $2=vsum($2 - $3)" nil 0)

+ ))

+```

+

+Each time a player deals damage to a monster, I enter the amount of damage in the damage column. To trigger a table recalculation, I press **Ctrl+u Ctrl+c** (i.e., **C-u C-c** in Emacs) or **Ctrl+c Ctrl+c** (i.e., **C-c C-c**) if I happen to be on the formula line:

+

+

+```

+| brad | | |

+| emily | | |

+| zombie | 12 | 10 |

+| zombie | 15 | 7 |

+| flesh-golem | 91 | 2 |

+#+TBLFM: $2=vsum($2 - $3)

+```

+

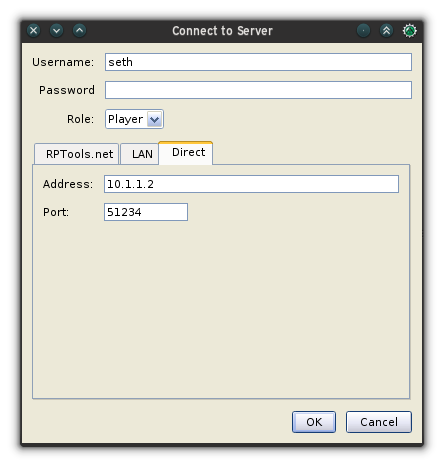

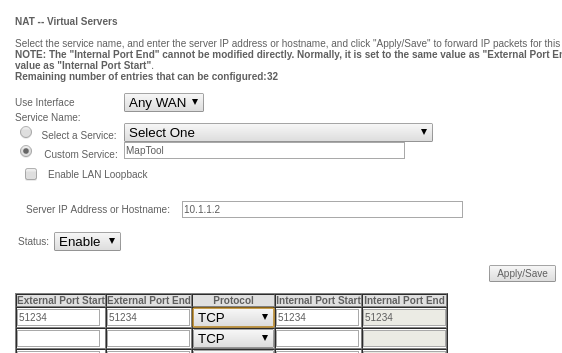

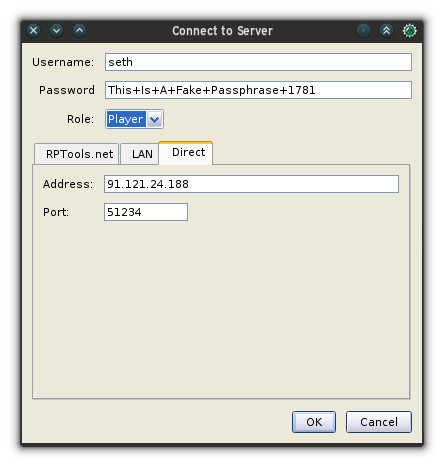

+This system isn't perfect. Character names can't contain any spaces because Org table splits cells by white space. It's relatively easy to forget that you processed one line and accidentally reprocess it at the end of a round. To add HP back to a creature's total, you have to use a negative number. (I think of it as negative damage, which suggests health.) Then again, many computerized initiative trackers suffer the same problems, so it's not a particularly bad solution. For me, it's one of the faster methods I've found (I'm happy to admit that [MapTool][6] is the best, but I use my Emacs workflow when I'm not using a digital shared map).

+

+### View PDFs in DocView

+

+Sometimes a PDF is unavoidable. Whether it's a d100 list of tavern names or a dungeon map, some resources exist only as a PDF with no extractable text data. In these cases, Emacs' [DocView][7] package can help. DocView is a mode that loads PDF data and generates a PNG file for you to view (Emacs can also view JPEG files). I've found that large PDFs are problematic and slow, but if it's a low-resolution PDF with just one or two pages, DocView is an easy way to reference a document without leaving Emacs.

+

+I use this mode exclusively for maps, tables, and lists. It's not useful for anything that might involve searching, because text data isn't accessible, but it's an amazingly useful feature for documents you only need to glance at.

+

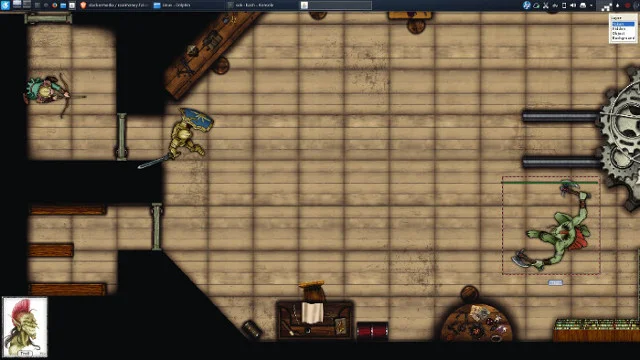

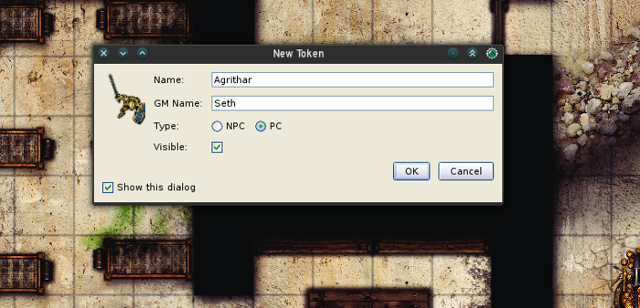

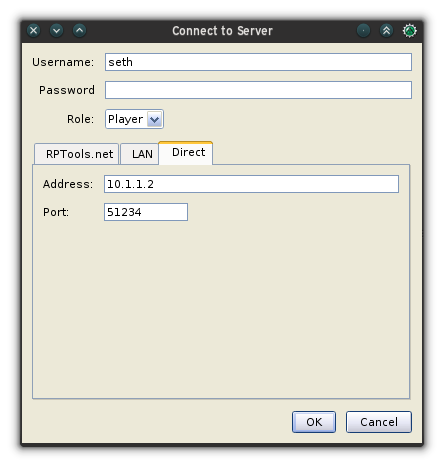

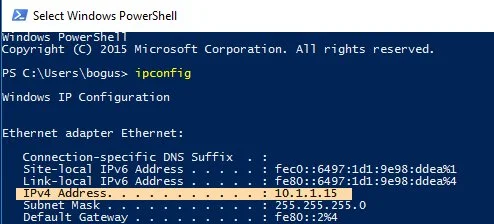

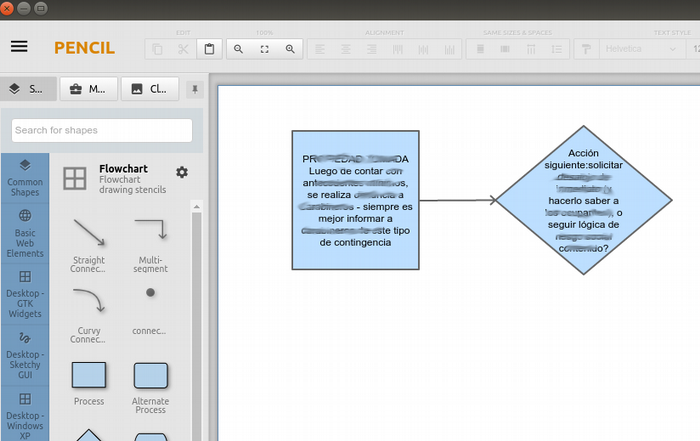

+![Emacs for RPG][8]

+

+The [Ghostscript][9] suite that ships with most Linux distributions (or certainly is available in your repository) allows you to process PDFs, drastically simplifying them by lowering the resolution of images from print quality to screen quality. The command contains mostly PostScript commands and attributes, but you don't need to become a PostScript expert to perform a quick down-res:

+

+

+```

+$ gs -sDEVICE=pdfwrite -dCompatibilityLevel=1.4 \

+-dPDFSETTINGS=/ebook -dNOPAUSE -dBATCH \

+-sOutputFile=adventure.pdf \

+-dDownsampleColorImages=true \

+-dColorImageResolution=72 big-adventure-module.pdf

+```

+

+Opening PDFs in Emacs isn't as exciting as it may sound. It's not by any means a first-class PDF viewer, but for select resources, it can be a convenient way to keep all your information on one screen.

+

+### Create adventure rap sheets

+

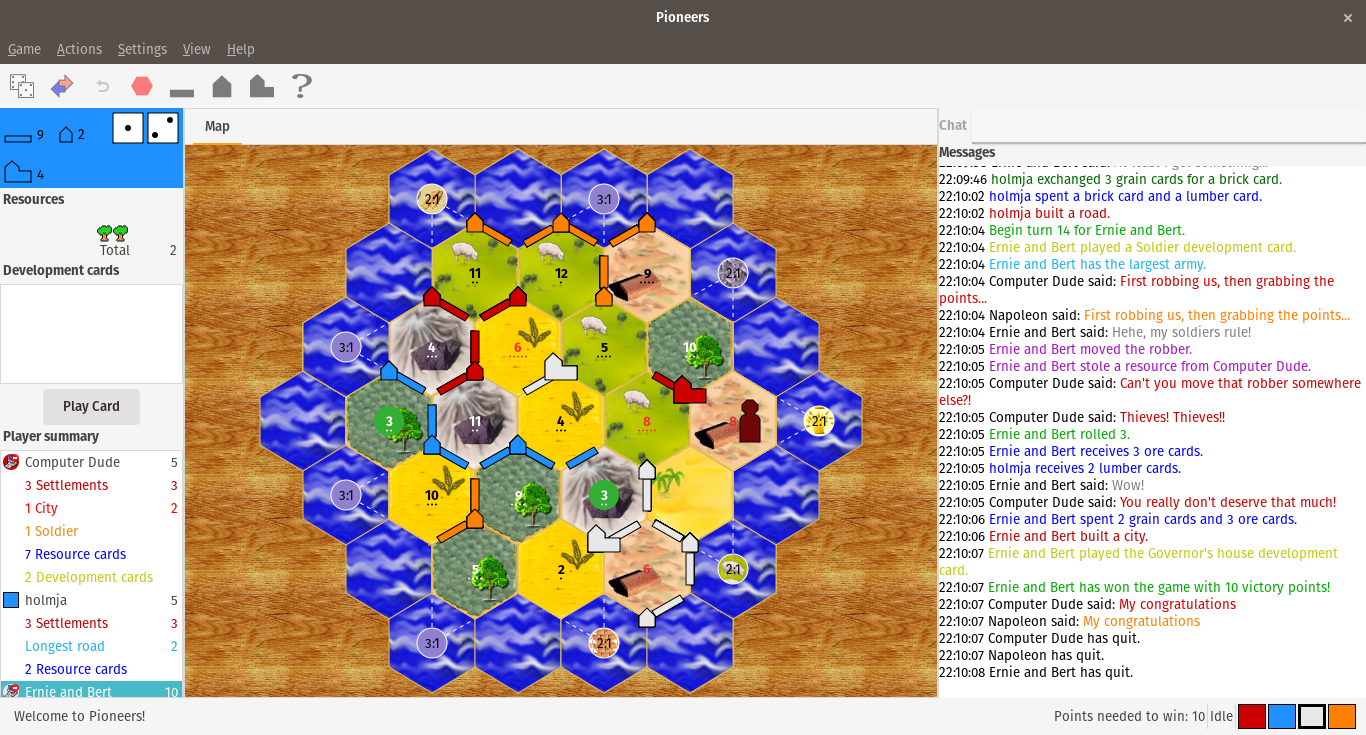

+Published adventures are often heavy on prose. The theory is that you've paid a lot of money for a prepared adventure, so you obviously want value for your purchase. I do value the lore and world-building that authors put into their adventures, but during a game, I like to have a quick reference to the information I need for the game mechanics to work as intended. In other words, I don't need to have the story of why a trap was placed in a dungeon when a rogue triggers it; I only need to know that the trap exists and what the rogue needs to roll in order to survive.

+

+I haven't found any modern adventure format that provides me with just that information, so I end up creating my own "rap sheets": a minimal outline for the adventure, with just the game mechanics information I need for each location. Once again, Org mode is the best way for me to keep this information handy.

+

+In Org mode, you create lists using asterisks for bullet points. For a sub-item, add an asterisk. Even better, press **C-c t** (that's **Ctrl+c** and then the **t** key) to mark the item as a **TODO** item. When your players clear an area in the game, press **C-c t** again to mark the location **DONE**.

+

+

+```

+* DONE 1 Entrance

+** Zombie

+AC 9 | HP 22

+* TODO 2 Necromancer's chambers

+** Flesh golem

+AC 16 | HP 93

+** Treasure

+\- Gold ring (200 gp)

+\- Rusty nail (1 cp)

+ Cursed (roll on curse table)

+** Secret door (DC 20)

+\- to area 11

+```

+

+Each asterisk is collapsible, so you can get a summary of a global area by collapsing your list down to just the top-level:

+

+

+```

+* DONE 1 Entrance

+* TODO 2 Necromancer's chambers

+* TODO 3 Wyrmling nursery

+* TODO 4 Caretaker's chambers

+* TODO 5 Caretaker's laboratory

+```

+

+An added bonus: I find that making my own rap sheets helps me internalize both the mechanics and the lore of the adventure I'm preparing, so the benefits to this method are numerous. Since I manage any adventure I run in Emacs with Git, once I do the prep work for an adventure, I have fresh copies of all my assets in case I run the adventure with another group or with a set of fresh characters.

+

+### Make your own adventure journal

+

+Generally, I let my players keep their own notes about the adventure because I want to encourage players to interpret the events happening in the adventure for themselves. However, a game master needs private notes to keep all of the improvised data in order. For example, if a published adventure doesn't feature a blacksmith shop, but players decide to visit a blacksmith, then a blacksmith needs to be invented in the moment. If the players revisit the blacksmith six weeks later, then they expect it to be the same blacksmith, and it's up to the game master to keep track of such additions to the published setting. I manage my personal notes about adventures in two different ways, depending on what's available to me.

+

+If I have the text of the adventure in an editable format (such as HTML or Markdown), I enter my additions into the adventure as if the publisher had included them from the start. This means there's always one source of truth for the setting and for significant events.

+

+If I haven't been able to get an editable copy of the adventure because it's a hard copy or a PDF that's not easily modified, then I write my additions into my rap sheets in Org mode. This functionally means that there's still one source of truth because my rap sheets are the first place I look for information, falling back on the published text only for details I've forgotten. Sometimes I like my additions enough to merge them back into my Git master for the adventure, but usually, I trust in improvisation and let additions happen dynamically for each group that plays the adventure.

+

+### Why Emacs is my favorite RPG dashboard

+

+I've fallen into using Emacs for RPGs because it serves as the heads-up display of my dreams. The "right" answer is probably a good [tiling window manager][10], but until I implement that, I'm happy with Emacs. Everything's bound to keyboard shortcuts designed for specificity and speed, and there's just enough easy customization that I can hack together good-enough solutions—sometimes even while players are arguing with one another about what to do next.

+

+I've tried juggling multiple desktops, several PDF reader windows, and a spreadsheet for initiatives; while it's a fine experience, nothing has equaled the fluidity of Emacs as my RPG dashboard.

+

+* * *

+

+Hey! do you love Emacs? [Write an article][11] about how you use an Emacs (GNU or otherwise) for inclusion in our forthcoming Emacs series!

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/20/1/emacs-rpgs

+

+作者:[Seth Kenlon][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/seth

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/life-chess-games.png?itok=U1lWMZ0y (Chess pieces on a chess board)

+[2]: https://opensource.com/life/16/2/intro-to-emacs

+[3]: https://orgmode.org/

+[4]: https://orgmode.org/manual/Tables.html

+[5]: https://www.gnu.org/software/emacs/manual/html_node/emacs/Abbrevs.html#Abbrevs

+[6]: https://opensource.com/article/19/6/how-use-maptools

+[7]: https://www.gnu.org/software/emacs/manual/html_node/emacs/Document-View.html

+[8]: https://opensource.com/sites/default/files/uploads/emacs-rpg.jpg (Emacs for RPG)

+[9]: https://www.ghostscript.com/

+[10]: https://opensource.com/article/19/12/ratpoison-linux-desktop

+[11]: https://opensource.com/how-submit-article

From 4e4f09fa7f2bd9c3e8d2ed648b9547271ea632fe Mon Sep 17 00:00:00 2001

From: DarkSun <lujun9972@gmail.com>

Date: Sat, 1 Feb 2020 01:39:09 +0800

Subject: [PATCH 06/11] =?UTF-8?q?=E9=80=89=E9=A2=98:=2020200131=20Intro=20?=

=?UTF-8?q?to=20the=20Linux=20command=20line?=

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

sources/tech/20200131 Intro to the Linux command line.md

---

...0200131 Intro to the Linux command line.md | 105 ++++++++++++++++++

1 file changed, 105 insertions(+)

create mode 100644 sources/tech/20200131 Intro to the Linux command line.md

diff --git a/sources/tech/20200131 Intro to the Linux command line.md b/sources/tech/20200131 Intro to the Linux command line.md

new file mode 100644

index 0000000000..572be7eee2

--- /dev/null

+++ b/sources/tech/20200131 Intro to the Linux command line.md

@@ -0,0 +1,105 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Intro to the Linux command line)

+[#]: via: (https://www.networkworld.com/article/3518440/intro-to-the-linux-command-line.html)

+[#]: author: (Sandra Henry-Stocker https://www.networkworld.com/author/Sandra-Henry_Stocker/)

+

+Intro to the Linux command line

+======

+Here are some warm-up exercises for anyone just starting to use the Linux command line. Warning: It can be addictive.

+[Sandra Henry-Stocker / Linux][1] [(CC0)][2]

+

+If you’re new to Linux or have simply never bothered to explore the command line, you may not understand why so many Linux enthusiasts get excited typing commands when they’re sitting at a comfortable desktop with plenty of tools and apps available to them. In this post, we’ll take a quick dive to explore the wonders of the command line and see if maybe we can get you hooked.

+

+First, to use the command line, you have to open up a command tool (also referred to as a “command prompt”). How to do this will depend on which version of Linux you’re running. On RedHat, for example, you might see an Activities tab at the top of your screen which will open a list of options and a small window for entering a command (like “cmd” which will open the window for you). On Ubuntu and some others, you might see a small terminal icon along the left-hand side of your screen. On many systems, you can open a command window by pressing the **Ctrl+Alt+t** keys at the same time.

+

+You will also find yourself on the command line if you log into a Linux system using a tool like PuTTY.

+

+[][3]

+

+BrandPost Sponsored by HPE

+

+[Take the Intelligent Route with Consumption-Based Storage][3]

+

+Combine the agility and economics of HPE storage with HPE GreenLake and run your IT department with efficiency.

+

+Once you get your command line window, you’ll find yourself sitting at a prompt. It could be just a **$** or something as elaborate as “**user@system:~$**” but it means that the system is ready to run commands for you.

+

+Once you get this far, it will be time to start entering commands. Below are some of the commands to try first, and [here is a PDF][4] of some particularly useful commands and a two-sided command cheatsheet suitable for printing out and laminating.

+

+```

+Command What it does

+pwd show me where I am in the file system (initially, this will be your

+ home directory)

+ls list my files

+ls -a list even more of my files (including those that start with a period)

+ls -al list my files with lots of details (including dates, file sizes and

+ permissions)

+who show me who is logged in (don’t be disappointed if it’s only you)

+date remind me what day today is (shows the time too)

+ps list my running processes (might just be your shell and the “ps”

+ command)

+```

+

+Once you’ve gotten used to your Linux home from the command line point of view, you can begin to explore. Maybe you’ll feel ready to wander around the file system with commands like these:

+

+```

+Command What it does

+cd /tmp move to another directory (in this case, /tmp)

+ls list files in that location

+cd go back home (with no arguments, cd always takes you back to your home

+ directory)

+cat .bashrc display the contents of a file (in this case, .bashrc)

+history show your recent commands

+echo hello say “hello” to yourself

+cal show a calendar for the current month

+```

+

+To get a feeling for why more advanced Linux users like the command line so much, you will want to try some other features – like redirection and pipes. Redirection is when you take the output of a command and drop it into a file instead of displaying it on your screen. Pipes are when you take the output of one command and send it to another command that will manipulate it in some way. Here are commands to try:

+

+[[Get regularly scheduled insights by signing up for Network World newsletters.]][5]

+

+```

+Command What it does

+echo “echo hello” > tryme create a new file and put the words “echo hello” into

+ it

+chmod 700 tryme make the new file executable

+tryme run the new file (it should run the command it

+ contains and display “hello”)

+ps aux show all running processes

+ps aux | grep $USER show all running processes, but limit the output to

+ lines containing your username

+echo $USER display your username using an environment variable

+whoami display your username with a command

+who | wc -l count how many users are currently logged in

+```

+

+### Wrap-Up

+

+Once you get used to the basic commands, you can explore other commands and try your hand at writing scripts. You might find that Linux is a lot more powerful and nice to use than you ever imagined.

+

+Join the Network World communities on [Facebook][6] and [LinkedIn][7] to comment on topics that are top of mind.

+

+--------------------------------------------------------------------------------

+

+via: https://www.networkworld.com/article/3518440/intro-to-the-linux-command-line.html

+

+作者:[Sandra Henry-Stocker][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/

+[b]: https://github.com/lujun9972

+[1]: https://commons.wikimedia.org/wiki/File:Tux.svg

+[2]: https://creativecommons.org/publicdomain/zero/1.0/

+[3]: https://www.networkworld.com/article/3440100/take-the-intelligent-route-with-consumption-based-storage.html?utm_source=IDG&utm_medium=promotions&utm_campaign=HPE21620&utm_content=sidebar ( Take the Intelligent Route with Consumption-Based Storage)

+[4]: https://www.networkworld.com/article/3391029/must-know-linux-commands.html

+[5]: https://www.networkworld.com/newsletters/signup.html

+[6]: https://www.facebook.com/NetworkWorld/

+[7]: https://www.linkedin.com/company/network-world

From 60b4b26f1606225edd395f943946dbd230f0ce75 Mon Sep 17 00:00:00 2001

From: geekpi <geekpi@users.noreply.github.com>

Date: Sat, 1 Feb 2020 10:31:17 +0800

Subject: [PATCH 07/11] translating

---

...tool to get your local weather forecast.md | 104 ------------------

...tool to get your local weather forecast.md | 101 +++++++++++++++++

2 files changed, 101 insertions(+), 104 deletions(-)

delete mode 100644 sources/tech/20200123 Use this open source tool to get your local weather forecast.md

create mode 100644 translated/tech/20200123 Use this open source tool to get your local weather forecast.md

diff --git a/sources/tech/20200123 Use this open source tool to get your local weather forecast.md b/sources/tech/20200123 Use this open source tool to get your local weather forecast.md

deleted file mode 100644

index df44d3b659..0000000000

--- a/sources/tech/20200123 Use this open source tool to get your local weather forecast.md

+++ /dev/null

@@ -1,104 +0,0 @@

-[#]: collector: (lujun9972)

-[#]: translator: (geekpi)

-[#]: reviewer: ( )

-[#]: publisher: ( )

-[#]: url: ( )

-[#]: subject: (Use this open source tool to get your local weather forecast)

-[#]: via: (https://opensource.com/article/20/1/open-source-weather-forecast)

-[#]: author: (Kevin Sonney https://opensource.com/users/ksonney)

-

-Use this open source tool to get your local weather forecast

-======

-Know whether you need a coat, an umbrella, or sunscreen before you go

-out with wego in the thirteenth in our series on 20 ways to be more

-productive with open source in 2020.

-![Sky with clouds and grass][1]

-

-Last year, I brought you 19 days of new (to you) productivity tools for 2019. This year, I'm taking a different approach: building an environment that will allow you to be more productive in the new year, using tools you may or may not already be using.

-

-### Check the weather with wego

-

-One of the things I love about the past decade of my employment is that it mostly has been remote. I can work anywhere I happen to be in the world, although the reality is that I spend a lot of time in my home office. The downside is that when I leave the house, I base a lot of decisions on what the conditions look like outside my window. And where I live, "sunny and clear" can mean anything from "scorchingly hot" to "below freezing" to "it will rain in an hour." Being able to check the actual conditions and forecast quickly is pretty useful.

-

-![Wego][2]

-

-[Wego][3] is a program written in Go that will fetch and display your local weather. It even renders it in shiny ASCII art if you wish.

-

-To install wego, you need to make sure [Go][4] is installed on your system. After that, you can fetch the latest version with the **go get** command. You'll probably want to add the **~/go/bin** directory to your path as well:

-

-

-```

-go get -u github.com/schachmat/wego

-export PATH=~/go/bin:$PATH

-wego

-```

-

-On its first run, wego will complain about missing API keys. Now you need to decide on a backend. The default backend is for [Forecast.io][5], which is part of [Dark Sky][6]. Wego also supports [OpenWeatherMap][7] and [WorldWeatherOnline][8]. I prefer OpenWeatherMap, so that's what I'll show you how to set up here.

-

-You'll need to [register for an API key][9] with OpenWeatherMap. Registration is free, although the free API key has a limit on how many queries you can make in a day; this should be fine for an average user. Once you have your API key, put it into the **~/.wegorc** file. Now is also a good time to fill in your location, language, and whether you use metric, imperial (US/UK), metric-ms, or International System of Units (SI). OpenWeatherMap supports locations by name, postal code, coordinates, and ID, which is one of the reasons I like it.

-

-

-```

-# wego configuration for OEM

-aat-coords=false

-aat-monochrome=false

-backend=openweathermap

-days=3

-forecast-lang=en

-frontend=ascii-art-table

-jsn-no-indent=false

-location=Pittsboro

-owm-api-key=XXXXXXXXXXXXXXXXXXXXX

-owm-debug=false

-owm-lang=en

-units=imperial

-```

-

-Now, running **wego** at the command line will show the local weather for the next three days.

-

-Wego can also show data as JSON output for consumption by programs and with emoji. You can choose a frontend with the **-f** command-line parameter or in the **.wegorc** file.

-

-![Wego at login][10]

-

-If you want to see the weather every time you open a new shell or log into a host, simply add wego to your **~/.bashrc** (or **~/.zshrc** in my case).

-

-The [wttr.in][11] project is a web-based wrapper around wego. It provides some additional display options and is available on the website of the same name. One cool thing about wttr.in is that you can fetch one-line information about the weather with **curl**. I have a little shell function called **get_wttr** that fetches the current forecast in a shortened form.

-

-

-```

-get_wttr() {

- curl -s "wttr.in/Pittsboro?format=3"

-}

-```

-

-![weather tool for productivity][12]

-

-Now, before I leave the house, I have a quick and easy way to find out if I need a coat, an umbrella, or sunscreen—directly from the command line where I spend most of my time.

-

-I began paragliding a few years ago. It’s maybe the most weather-dependent sport in the world. We...

-

---------------------------------------------------------------------------------

-

-via: https://opensource.com/article/20/1/open-source-weather-forecast

-

-作者:[Kevin Sonney][a]

-选题:[lujun9972][b]

-译者:[译者ID](https://github.com/译者ID)

-校对:[校对者ID](https://github.com/校对者ID)

-

-本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

-

-[a]: https://opensource.com/users/ksonney

-[b]: https://github.com/lujun9972

-[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/bus-cloud.png?itok=vz0PIDDS (Sky with clouds and grass)

-[2]: https://opensource.com/sites/default/files/uploads/productivity_13-1.png (Wego)

-[3]: https://github.com/schachmat/wego

-[4]: https://golang.org/doc/install

-[5]: https://forecast.io

-[6]: https://darksky.net

-[7]: https://openweathermap.org/

-[8]: https://www.worldweatheronline.com/

-[9]: https://openweathermap.org/api

-[10]: https://opensource.com/sites/default/files/uploads/productivity_13-2.png (Wego at login)

-[11]: https://github.com/chubin/wttr.in

-[12]: https://opensource.com/sites/default/files/uploads/day13-image3.png (weather tool for productivity)

diff --git a/translated/tech/20200123 Use this open source tool to get your local weather forecast.md b/translated/tech/20200123 Use this open source tool to get your local weather forecast.md

new file mode 100644

index 0000000000..e151e40d65

--- /dev/null

+++ b/translated/tech/20200123 Use this open source tool to get your local weather forecast.md

@@ -0,0 +1,101 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Use this open source tool to get your local weather forecast)

+[#]: via: (https://opensource.com/article/20/1/open-source-weather-forecast)

+[#]: author: (Kevin Sonney https://opensource.com/users/ksonney)

+

+使用这个开源工具获取本地天气预报

+======

+在我们的 20 个使用开源提升生产力的系列的第十三篇文章中使用 wego 来了解出门前你是否要需要外套、雨伞或者防晒霜。

+![Sky with clouds and grass][1]

+

+去年,我在 19 天里给你介绍了 19 个新(对你而言)的生产力工具。今年,我换了一种方式:使用你在使用或者还没使用的工具,构建一个使你可以在新一年更加高效的环境。

+

+### 使用 wego 了解天气

+

+过去十年我对我的职业最满意的地方之一是大多数时候是远程工作。尽管现实情况是我很多时候是在家里办公,但我可以在世界上任何地方工作。缺点是,离家时我会根据天气做出一些决定。在我居住的地方,”晴朗“可以表示从”酷热“、”低于零度“到”一小时内会小雨“。能够了解实际情况和快速预测非常有用。

+

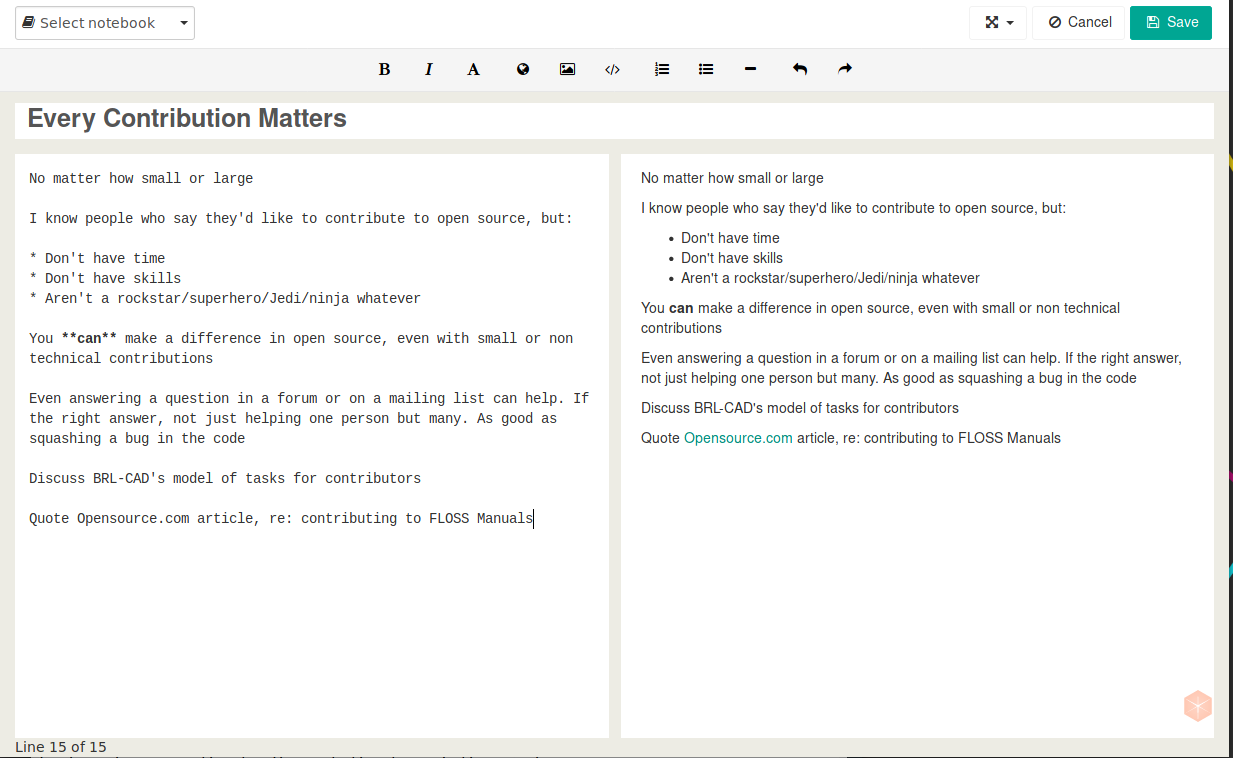

+![Wego][2]

+

+[Wego][3] 是用 Go 编写的程序,可以获取并显示你的当地天气。如果你愿意,它甚至可以用闪亮的 ASCII 艺术效果进行渲染。

+

+要安装 wego,你需要确保在系统上安装了[Go][4]。之后,你可以使用 **go get** 命令获取最新版本。你可能还想将 **~/go/bin** 目录添加到路径中:

+

+

+```

+go get -u github.com/schachmat/wego

+export PATH=~/go/bin:$PATH

+wego

+```

+

+首次运行时,wego 会报告缺失 API 密钥。现在你需要决定一个后端。默认后端是 [Forecast.io][5],它是 [Dark Sky][6]的一部分。Wego还支持 [OpenWeatherMap][7] 和 [WorldWeatherOnline][8]。我更喜欢 OpenWeatherMap,因此我将在此向你展示如何设置。

+

+你需要在 OpenWeatherMap 中[注册 API 密钥][9]。注册是免费的,尽管免费的 API 密钥限制了一天可以查询的数量,但这对于普通用户来说应该没问题。得到 API 密钥后,将它放到 **~/.wegorc** 文件中。现在可以填写你的位置、语言以及使用公制、英制(英国/美国)还是国际单位制(SI)。OpenWeatherMap 可通过名称、邮政编码、坐标和 ID 确定位置,这是我喜欢它的原因之一。

+

+

+

+```

+# wego configuration for OEM

+aat-coords=false

+aat-monochrome=false

+backend=openweathermap

+days=3

+forecast-lang=en

+frontend=ascii-art-table

+jsn-no-indent=false

+location=Pittsboro

+owm-api-key=XXXXXXXXXXXXXXXXXXXXX

+owm-debug=false

+owm-lang=en

+units=imperial

+```

+

+现在,在命令行运行 **wego** 将显示接下来三天的当地天气。

+

+Wego 还可以输出 JSON 以便程序使用,还可显示 emoji。你可以使用 **-f** 参数或在 **.wegorc** 文件中指定前端。

+

+![Wego at login][10]

+

+如果你想在每次打开 shell 或登录主机时查看天气,只需将 wego 添加到 **~/.bashrc**(我这里是 **~/.zshrc**)即可。

+

+[wttr.in][11] 项目是 wego 上的基于 Web 的封装。它提供了一些其他显示选项,并且可以在同名网站上看到。关于 wttr.in 的一件很酷的事情是,你可以使用 **curl** 获取一行天气信息。我有一个名为 **get_wttr** 的 shell 函数,用于获取当前简化的预报信息。

+

+

+```

+get_wttr() {

+ curl -s "wttr.in/Pittsboro?format=3"

+}

+```

+

+![weather tool for productivity][12]

+

+现在,在我离开家之前,我就可以通过命令行快速简单地获取我是否需要外套、雨伞或者防晒霜了。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/20/1/open-source-weather-forecast

+

+作者:[Kevin Sonney][a]

+选题:[lujun9972][b]

+译者:[geekpi](https://github.com/geekpi)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/ksonney

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/bus-cloud.png?itok=vz0PIDDS (Sky with clouds and grass)

+[2]: https://opensource.com/sites/default/files/uploads/productivity_13-1.png (Wego)

+[3]: https://github.com/schachmat/wego

+[4]: https://golang.org/doc/install

+[5]: https://forecast.io

+[6]: https://darksky.net

+[7]: https://openweathermap.org/

+[8]: https://www.worldweatheronline.com/

+[9]: https://openweathermap.org/api

+[10]: https://opensource.com/sites/default/files/uploads/productivity_13-2.png (Wego at login)

+[11]: https://github.com/chubin/wttr.in

+[12]: https://opensource.com/sites/default/files/uploads/day13-image3.png (weather tool for productivity)

From 2938813972673d0936258e1d64ce06af7932c2f7 Mon Sep 17 00:00:00 2001

From: geekpi <geekpi@users.noreply.github.com>

Date: Sat, 1 Feb 2020 10:35:42 +0800

Subject: [PATCH 08/11] translating

---

...eeds and podcasts in one place with this open source tool.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/sources/tech/20200122 Get your RSS feeds and podcasts in one place with this open source tool.md b/sources/tech/20200122 Get your RSS feeds and podcasts in one place with this open source tool.md

index 994523d830..d257520efa 100644

--- a/sources/tech/20200122 Get your RSS feeds and podcasts in one place with this open source tool.md

+++ b/sources/tech/20200122 Get your RSS feeds and podcasts in one place with this open source tool.md

@@ -1,5 +1,5 @@

[#]: collector: (lujun9972)

-[#]: translator: ( )

+[#]: translator: (geekpi)

[#]: reviewer: ( )

[#]: publisher: ( )

[#]: url: ( )

From be6b1cf405831fa52f2914ed889d220acd10d734 Mon Sep 17 00:00:00 2001

From: Xingyu Wang <xingyu.wang@gmail.com>

Date: Sat, 1 Feb 2020 11:16:42 +0800

Subject: [PATCH 09/11] =?UTF-8?q?=E5=BD=92=E6=A1=A3=20202001?=

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

... to create an e-book chapter template in LibreOffice Writer.md | 0

published/{ => 202001}/20190405 File sharing with Git.md | 0

published/{ => 202001}/20190406 Run a server with Git.md | 0

.../20190619 Getting started with OpenSSL- Cryptography basics.md | 0

.../20190724 How to make an old computer useful again.md | 0

... An advanced look at Python interfaces using zope.interface.md | 0

...n save your tasks - and your sanity - if SSH is interrupted.md | 0

published/{ => 202001}/20191015 How GNOME uses Git.md | 0

.../20191016 Open source interior design with Sweet Home 3D.md | 0

.../{ => 202001}/20191017 Intro to the Linux useradd command.md | 0

.../20191108 My Linux story- Learning Linux in the 90s.md | 0

.../20191113 How to cohost GitHub and GitLab with Ansible.md | 0

.../{ => 202001}/20191121 Simulate gravity in your Python game.md | 0

.../20191129 How to write a Python web API with Django.md | 0

.../{ => 202001}/20191130 7 maker gifts for kids and teens.md | 0

.../20191205 Add jumping to your Python platformer game.md | 0

.../20191208 What-s your favorite terminal emulator.md | 0

.../20191210 Lessons learned from programming in Go.md | 0

... Enable your Python game player to run forward and backward.md | 0

.../20191214 Make VLC More Awesome With These Simple Tips.md | 0

.../20191215 How to Add Border Around Text in GIMP.md | 0

...7 App Highlight- Open Source Disk Partitioning Tool GParted.md | 0

.../{ => 202001}/20191219 Kubernetes namespaces for beginners.md | 0

.../20191220 4 ways to volunteer this holiday season.md | 0

...220 Why Vim fans love the Herbstluftwm Linux window manager.md | 0

.../20191221 Pop-_OS vs Ubuntu- Which One is Better.md | 0

...191224 Chill out with the Linux Equinox Desktop Environment.md | 0

...91226 Darktable 3 Released With GUI Rework and New Features.md | 0

.../20191227 10 resources to boost your Git skills.md | 0

...hy Your Distribution Still Using an ‘Outdated- Linux Kernel.md | 0

.../20191229 The best resources for agile software development.md | 0

.../20191230 10 articles to enhance your security aptitude.md | 0

...230 Fixing -VLC is Unable to Open the MRL- Error -Quick Tip.md | 0

...1 10 Ansible resources to accelerate your automation skills.md | 0

.../20191231 12 programming resources for coders of all levels.md | 0

.../{ => 202001}/20200101 5 predictions for Kubernetes in 2020.md | 0

...00101 9 cheat sheets and guides to enhance your tech skills.md | 0

.../20200101 Signal- A Secure, Open Source Messaging App.md | 0

.../20200102 Put some loot in your Python platformer game.md | 0

...3 GNOME has a Secret- Screen Recorder. Here-s How to Use it.md | 0

...troducing the guide to inter-process communication in Linux.md | 0

...20200103 My Raspberry Pi retrospective- 6 projects and more.md | 0

.../20200105 PaperWM- tiled window management for GNOME.md | 0

...0106 How to write a Python web API with Pyramid and Cornice.md | 0

...107 Generating numeric sequences with the Linux seq command.md | 0

...0107 How piwheels will save Raspberry Pi users time in 2020.md | 0

.../20200108 How to setup multiple monitors in sway.md | 0

...0109 Huawei-s Linux Distribution openEuler is Available Now.md | 0

...end eMail With a List of User Accounts Expiring in -X- Days.md | 0

.../20200111 Sync files across multiple devices with Syncthing.md | 0

... Use Stow for configuration management of multiple machines.md | 0

.../20200113 Keep your email in sync with OfflineIMAP.md | 0

...tV- A Bash function to maintain Python virtual environments.md | 0

.../{ => 202001}/20200114 Organize your email with Notmuch.md | 0

published/{ => 202001}/20200115 6 handy Bash scripts for Git.md | 0

...15 Organize and sync your calendar with khal and vdirsyncer.md | 0

...00115 Root User in Ubuntu- Important Things You Should Know.md | 0

.../20200115 Why everyone is talking about WebAssembly.md | 0

.../20200116 3 open source tools to manage your contacts.md | 0

...Rust- Which to choose for programming hardware abstractions.md | 0

...200117 Get started with this open source to-do list manager.md | 0

.../20200117 Locking and unlocking accounts on Linux systems.md | 0

.../20200119 What-s your favorite Linux terminal trick.md | 0

...ting up passwordless Linux logins using public-private keys.md | 0

.../20200123 Wine 5.0 is Released- Here-s How to Install it.md | 0

65 files changed, 0 insertions(+), 0 deletions(-)

rename published/{ => 202001}/20171018 How to create an e-book chapter template in LibreOffice Writer.md (100%)

rename published/{ => 202001}/20190405 File sharing with Git.md (100%)

rename published/{ => 202001}/20190406 Run a server with Git.md (100%)

rename published/{ => 202001}/20190619 Getting started with OpenSSL- Cryptography basics.md (100%)

rename published/{ => 202001}/20190724 How to make an old computer useful again.md (100%)

rename published/{ => 202001}/20190924 An advanced look at Python interfaces using zope.interface.md (100%)

rename published/{ => 202001}/20190930 How the Linux screen tool can save your tasks - and your sanity - if SSH is interrupted.md (100%)

rename published/{ => 202001}/20191015 How GNOME uses Git.md (100%)

rename published/{ => 202001}/20191016 Open source interior design with Sweet Home 3D.md (100%)

rename published/{ => 202001}/20191017 Intro to the Linux useradd command.md (100%)

rename published/{ => 202001}/20191108 My Linux story- Learning Linux in the 90s.md (100%)

rename published/{ => 202001}/20191113 How to cohost GitHub and GitLab with Ansible.md (100%)

rename published/{ => 202001}/20191121 Simulate gravity in your Python game.md (100%)

rename published/{ => 202001}/20191129 How to write a Python web API with Django.md (100%)

rename published/{ => 202001}/20191130 7 maker gifts for kids and teens.md (100%)

rename published/{ => 202001}/20191205 Add jumping to your Python platformer game.md (100%)

rename published/{ => 202001}/20191208 What-s your favorite terminal emulator.md (100%)

rename published/{ => 202001}/20191210 Lessons learned from programming in Go.md (100%)

rename published/{ => 202001}/20191211 Enable your Python game player to run forward and backward.md (100%)

rename published/{ => 202001}/20191214 Make VLC More Awesome With These Simple Tips.md (100%)

rename published/{ => 202001}/20191215 How to Add Border Around Text in GIMP.md (100%)

rename published/{ => 202001}/20191217 App Highlight- Open Source Disk Partitioning Tool GParted.md (100%)

rename published/{ => 202001}/20191219 Kubernetes namespaces for beginners.md (100%)

rename published/{ => 202001}/20191220 4 ways to volunteer this holiday season.md (100%)

rename published/{ => 202001}/20191220 Why Vim fans love the Herbstluftwm Linux window manager.md (100%)

rename published/{ => 202001}/20191221 Pop-_OS vs Ubuntu- Which One is Better.md (100%)

rename published/{ => 202001}/20191224 Chill out with the Linux Equinox Desktop Environment.md (100%)

rename published/{ => 202001}/20191226 Darktable 3 Released With GUI Rework and New Features.md (100%)

rename published/{ => 202001}/20191227 10 resources to boost your Git skills.md (100%)

rename published/{ => 202001}/20191227 Explained- Why Your Distribution Still Using an ‘Outdated- Linux Kernel.md (100%)

rename published/{ => 202001}/20191229 The best resources for agile software development.md (100%)

rename published/{ => 202001}/20191230 10 articles to enhance your security aptitude.md (100%)

rename published/{ => 202001}/20191230 Fixing -VLC is Unable to Open the MRL- Error -Quick Tip.md (100%)

rename published/{ => 202001}/20191231 10 Ansible resources to accelerate your automation skills.md (100%)

rename published/{ => 202001}/20191231 12 programming resources for coders of all levels.md (100%)

rename published/{ => 202001}/20200101 5 predictions for Kubernetes in 2020.md (100%)

rename published/{ => 202001}/20200101 9 cheat sheets and guides to enhance your tech skills.md (100%)

rename published/{ => 202001}/20200101 Signal- A Secure, Open Source Messaging App.md (100%)

rename published/{ => 202001}/20200102 Put some loot in your Python platformer game.md (100%)

rename published/{ => 202001}/20200103 GNOME has a Secret- Screen Recorder. Here-s How to Use it.md (100%)

rename published/{ => 202001}/20200103 Introducing the guide to inter-process communication in Linux.md (100%)

rename published/{ => 202001}/20200103 My Raspberry Pi retrospective- 6 projects and more.md (100%)

rename published/{ => 202001}/20200105 PaperWM- tiled window management for GNOME.md (100%)

rename published/{ => 202001}/20200106 How to write a Python web API with Pyramid and Cornice.md (100%)

rename published/{ => 202001}/20200107 Generating numeric sequences with the Linux seq command.md (100%)

rename published/{ => 202001}/20200107 How piwheels will save Raspberry Pi users time in 2020.md (100%)

rename published/{ => 202001}/20200108 How to setup multiple monitors in sway.md (100%)

rename published/{ => 202001}/20200109 Huawei-s Linux Distribution openEuler is Available Now.md (100%)

rename published/{ => 202001}/20200110 Bash Script to Send eMail With a List of User Accounts Expiring in -X- Days.md (100%)

rename published/{ => 202001}/20200111 Sync files across multiple devices with Syncthing.md (100%)

rename published/{ => 202001}/20200112 Use Stow for configuration management of multiple machines.md (100%)

rename published/{ => 202001}/20200113 Keep your email in sync with OfflineIMAP.md (100%)

rename published/{ => 202001}/20200113 setV- A Bash function to maintain Python virtual environments.md (100%)

rename published/{ => 202001}/20200114 Organize your email with Notmuch.md (100%)

rename published/{ => 202001}/20200115 6 handy Bash scripts for Git.md (100%)

rename published/{ => 202001}/20200115 Organize and sync your calendar with khal and vdirsyncer.md (100%)

rename published/{ => 202001}/20200115 Root User in Ubuntu- Important Things You Should Know.md (100%)

rename published/{ => 202001}/20200115 Why everyone is talking about WebAssembly.md (100%)

rename published/{ => 202001}/20200116 3 open source tools to manage your contacts.md (100%)

rename published/{ => 202001}/20200117 C vs. Rust- Which to choose for programming hardware abstractions.md (100%)

rename published/{ => 202001}/20200117 Get started with this open source to-do list manager.md (100%)

rename published/{ => 202001}/20200117 Locking and unlocking accounts on Linux systems.md (100%)

rename published/{ => 202001}/20200119 What-s your favorite Linux terminal trick.md (100%)

rename published/{ => 202001}/20200122 Setting up passwordless Linux logins using public-private keys.md (100%)

rename published/{ => 202001}/20200123 Wine 5.0 is Released- Here-s How to Install it.md (100%)

diff --git a/published/20171018 How to create an e-book chapter template in LibreOffice Writer.md b/published/202001/20171018 How to create an e-book chapter template in LibreOffice Writer.md

similarity index 100%

rename from published/20171018 How to create an e-book chapter template in LibreOffice Writer.md

rename to published/202001/20171018 How to create an e-book chapter template in LibreOffice Writer.md

diff --git a/published/20190405 File sharing with Git.md b/published/202001/20190405 File sharing with Git.md

similarity index 100%

rename from published/20190405 File sharing with Git.md

rename to published/202001/20190405 File sharing with Git.md

diff --git a/published/20190406 Run a server with Git.md b/published/202001/20190406 Run a server with Git.md

similarity index 100%

rename from published/20190406 Run a server with Git.md

rename to published/202001/20190406 Run a server with Git.md

diff --git a/published/20190619 Getting started with OpenSSL- Cryptography basics.md b/published/202001/20190619 Getting started with OpenSSL- Cryptography basics.md

similarity index 100%

rename from published/20190619 Getting started with OpenSSL- Cryptography basics.md

rename to published/202001/20190619 Getting started with OpenSSL- Cryptography basics.md

diff --git a/published/20190724 How to make an old computer useful again.md b/published/202001/20190724 How to make an old computer useful again.md

similarity index 100%

rename from published/20190724 How to make an old computer useful again.md

rename to published/202001/20190724 How to make an old computer useful again.md

diff --git a/published/20190924 An advanced look at Python interfaces using zope.interface.md b/published/202001/20190924 An advanced look at Python interfaces using zope.interface.md

similarity index 100%

rename from published/20190924 An advanced look at Python interfaces using zope.interface.md

rename to published/202001/20190924 An advanced look at Python interfaces using zope.interface.md

diff --git a/published/20190930 How the Linux screen tool can save your tasks - and your sanity - if SSH is interrupted.md b/published/202001/20190930 How the Linux screen tool can save your tasks - and your sanity - if SSH is interrupted.md

similarity index 100%

rename from published/20190930 How the Linux screen tool can save your tasks - and your sanity - if SSH is interrupted.md

rename to published/202001/20190930 How the Linux screen tool can save your tasks - and your sanity - if SSH is interrupted.md

diff --git a/published/20191015 How GNOME uses Git.md b/published/202001/20191015 How GNOME uses Git.md

similarity index 100%

rename from published/20191015 How GNOME uses Git.md

rename to published/202001/20191015 How GNOME uses Git.md

diff --git a/published/20191016 Open source interior design with Sweet Home 3D.md b/published/202001/20191016 Open source interior design with Sweet Home 3D.md

similarity index 100%

rename from published/20191016 Open source interior design with Sweet Home 3D.md

rename to published/202001/20191016 Open source interior design with Sweet Home 3D.md

diff --git a/published/20191017 Intro to the Linux useradd command.md b/published/202001/20191017 Intro to the Linux useradd command.md

similarity index 100%

rename from published/20191017 Intro to the Linux useradd command.md

rename to published/202001/20191017 Intro to the Linux useradd command.md

diff --git a/published/20191108 My Linux story- Learning Linux in the 90s.md b/published/202001/20191108 My Linux story- Learning Linux in the 90s.md

similarity index 100%

rename from published/20191108 My Linux story- Learning Linux in the 90s.md

rename to published/202001/20191108 My Linux story- Learning Linux in the 90s.md

diff --git a/published/20191113 How to cohost GitHub and GitLab with Ansible.md b/published/202001/20191113 How to cohost GitHub and GitLab with Ansible.md

similarity index 100%

rename from published/20191113 How to cohost GitHub and GitLab with Ansible.md

rename to published/202001/20191113 How to cohost GitHub and GitLab with Ansible.md

diff --git a/published/20191121 Simulate gravity in your Python game.md b/published/202001/20191121 Simulate gravity in your Python game.md

similarity index 100%

rename from published/20191121 Simulate gravity in your Python game.md

rename to published/202001/20191121 Simulate gravity in your Python game.md

diff --git a/published/20191129 How to write a Python web API with Django.md b/published/202001/20191129 How to write a Python web API with Django.md

similarity index 100%

rename from published/20191129 How to write a Python web API with Django.md

rename to published/202001/20191129 How to write a Python web API with Django.md

diff --git a/published/20191130 7 maker gifts for kids and teens.md b/published/202001/20191130 7 maker gifts for kids and teens.md

similarity index 100%

rename from published/20191130 7 maker gifts for kids and teens.md

rename to published/202001/20191130 7 maker gifts for kids and teens.md

diff --git a/published/20191205 Add jumping to your Python platformer game.md b/published/202001/20191205 Add jumping to your Python platformer game.md

similarity index 100%

rename from published/20191205 Add jumping to your Python platformer game.md

rename to published/202001/20191205 Add jumping to your Python platformer game.md

diff --git a/published/20191208 What-s your favorite terminal emulator.md b/published/202001/20191208 What-s your favorite terminal emulator.md

similarity index 100%

rename from published/20191208 What-s your favorite terminal emulator.md

rename to published/202001/20191208 What-s your favorite terminal emulator.md

diff --git a/published/20191210 Lessons learned from programming in Go.md b/published/202001/20191210 Lessons learned from programming in Go.md

similarity index 100%

rename from published/20191210 Lessons learned from programming in Go.md

rename to published/202001/20191210 Lessons learned from programming in Go.md

diff --git a/published/20191211 Enable your Python game player to run forward and backward.md b/published/202001/20191211 Enable your Python game player to run forward and backward.md

similarity index 100%

rename from published/20191211 Enable your Python game player to run forward and backward.md

rename to published/202001/20191211 Enable your Python game player to run forward and backward.md

diff --git a/published/20191214 Make VLC More Awesome With These Simple Tips.md b/published/202001/20191214 Make VLC More Awesome With These Simple Tips.md

similarity index 100%

rename from published/20191214 Make VLC More Awesome With These Simple Tips.md

rename to published/202001/20191214 Make VLC More Awesome With These Simple Tips.md

diff --git a/published/20191215 How to Add Border Around Text in GIMP.md b/published/202001/20191215 How to Add Border Around Text in GIMP.md

similarity index 100%

rename from published/20191215 How to Add Border Around Text in GIMP.md

rename to published/202001/20191215 How to Add Border Around Text in GIMP.md

diff --git a/published/20191217 App Highlight- Open Source Disk Partitioning Tool GParted.md b/published/202001/20191217 App Highlight- Open Source Disk Partitioning Tool GParted.md

similarity index 100%

rename from published/20191217 App Highlight- Open Source Disk Partitioning Tool GParted.md

rename to published/202001/20191217 App Highlight- Open Source Disk Partitioning Tool GParted.md

diff --git a/published/20191219 Kubernetes namespaces for beginners.md b/published/202001/20191219 Kubernetes namespaces for beginners.md

similarity index 100%

rename from published/20191219 Kubernetes namespaces for beginners.md

rename to published/202001/20191219 Kubernetes namespaces for beginners.md

diff --git a/published/20191220 4 ways to volunteer this holiday season.md b/published/202001/20191220 4 ways to volunteer this holiday season.md

similarity index 100%

rename from published/20191220 4 ways to volunteer this holiday season.md

rename to published/202001/20191220 4 ways to volunteer this holiday season.md

diff --git a/published/20191220 Why Vim fans love the Herbstluftwm Linux window manager.md b/published/202001/20191220 Why Vim fans love the Herbstluftwm Linux window manager.md

similarity index 100%

rename from published/20191220 Why Vim fans love the Herbstluftwm Linux window manager.md

rename to published/202001/20191220 Why Vim fans love the Herbstluftwm Linux window manager.md

diff --git a/published/20191221 Pop-_OS vs Ubuntu- Which One is Better.md b/published/202001/20191221 Pop-_OS vs Ubuntu- Which One is Better.md

similarity index 100%

rename from published/20191221 Pop-_OS vs Ubuntu- Which One is Better.md

rename to published/202001/20191221 Pop-_OS vs Ubuntu- Which One is Better.md

diff --git a/published/20191224 Chill out with the Linux Equinox Desktop Environment.md b/published/202001/20191224 Chill out with the Linux Equinox Desktop Environment.md

similarity index 100%

rename from published/20191224 Chill out with the Linux Equinox Desktop Environment.md

rename to published/202001/20191224 Chill out with the Linux Equinox Desktop Environment.md

diff --git a/published/20191226 Darktable 3 Released With GUI Rework and New Features.md b/published/202001/20191226 Darktable 3 Released With GUI Rework and New Features.md

similarity index 100%

rename from published/20191226 Darktable 3 Released With GUI Rework and New Features.md

rename to published/202001/20191226 Darktable 3 Released With GUI Rework and New Features.md

diff --git a/published/20191227 10 resources to boost your Git skills.md b/published/202001/20191227 10 resources to boost your Git skills.md

similarity index 100%

rename from published/20191227 10 resources to boost your Git skills.md

rename to published/202001/20191227 10 resources to boost your Git skills.md

diff --git a/published/20191227 Explained- Why Your Distribution Still Using an ‘Outdated- Linux Kernel.md b/published/202001/20191227 Explained- Why Your Distribution Still Using an ‘Outdated- Linux Kernel.md

similarity index 100%

rename from published/20191227 Explained- Why Your Distribution Still Using an ‘Outdated- Linux Kernel.md

rename to published/202001/20191227 Explained- Why Your Distribution Still Using an ‘Outdated- Linux Kernel.md

diff --git a/published/20191229 The best resources for agile software development.md b/published/202001/20191229 The best resources for agile software development.md

similarity index 100%

rename from published/20191229 The best resources for agile software development.md

rename to published/202001/20191229 The best resources for agile software development.md

diff --git a/published/20191230 10 articles to enhance your security aptitude.md b/published/202001/20191230 10 articles to enhance your security aptitude.md

similarity index 100%

rename from published/20191230 10 articles to enhance your security aptitude.md

rename to published/202001/20191230 10 articles to enhance your security aptitude.md

diff --git a/published/20191230 Fixing -VLC is Unable to Open the MRL- Error -Quick Tip.md b/published/202001/20191230 Fixing -VLC is Unable to Open the MRL- Error -Quick Tip.md

similarity index 100%

rename from published/20191230 Fixing -VLC is Unable to Open the MRL- Error -Quick Tip.md

rename to published/202001/20191230 Fixing -VLC is Unable to Open the MRL- Error -Quick Tip.md

diff --git a/published/20191231 10 Ansible resources to accelerate your automation skills.md b/published/202001/20191231 10 Ansible resources to accelerate your automation skills.md

similarity index 100%

rename from published/20191231 10 Ansible resources to accelerate your automation skills.md

rename to published/202001/20191231 10 Ansible resources to accelerate your automation skills.md

diff --git a/published/20191231 12 programming resources for coders of all levels.md b/published/202001/20191231 12 programming resources for coders of all levels.md

similarity index 100%

rename from published/20191231 12 programming resources for coders of all levels.md

rename to published/202001/20191231 12 programming resources for coders of all levels.md

diff --git a/published/20200101 5 predictions for Kubernetes in 2020.md b/published/202001/20200101 5 predictions for Kubernetes in 2020.md

similarity index 100%

rename from published/20200101 5 predictions for Kubernetes in 2020.md

rename to published/202001/20200101 5 predictions for Kubernetes in 2020.md

diff --git a/published/20200101 9 cheat sheets and guides to enhance your tech skills.md b/published/202001/20200101 9 cheat sheets and guides to enhance your tech skills.md

similarity index 100%

rename from published/20200101 9 cheat sheets and guides to enhance your tech skills.md

rename to published/202001/20200101 9 cheat sheets and guides to enhance your tech skills.md

diff --git a/published/20200101 Signal- A Secure, Open Source Messaging App.md b/published/202001/20200101 Signal- A Secure, Open Source Messaging App.md

similarity index 100%

rename from published/20200101 Signal- A Secure, Open Source Messaging App.md

rename to published/202001/20200101 Signal- A Secure, Open Source Messaging App.md

diff --git a/published/20200102 Put some loot in your Python platformer game.md b/published/202001/20200102 Put some loot in your Python platformer game.md

similarity index 100%

rename from published/20200102 Put some loot in your Python platformer game.md

rename to published/202001/20200102 Put some loot in your Python platformer game.md

diff --git a/published/20200103 GNOME has a Secret- Screen Recorder. Here-s How to Use it.md b/published/202001/20200103 GNOME has a Secret- Screen Recorder. Here-s How to Use it.md

similarity index 100%

rename from published/20200103 GNOME has a Secret- Screen Recorder. Here-s How to Use it.md

rename to published/202001/20200103 GNOME has a Secret- Screen Recorder. Here-s How to Use it.md

diff --git a/published/20200103 Introducing the guide to inter-process communication in Linux.md b/published/202001/20200103 Introducing the guide to inter-process communication in Linux.md

similarity index 100%

rename from published/20200103 Introducing the guide to inter-process communication in Linux.md

rename to published/202001/20200103 Introducing the guide to inter-process communication in Linux.md

diff --git a/published/20200103 My Raspberry Pi retrospective- 6 projects and more.md b/published/202001/20200103 My Raspberry Pi retrospective- 6 projects and more.md

similarity index 100%

rename from published/20200103 My Raspberry Pi retrospective- 6 projects and more.md

rename to published/202001/20200103 My Raspberry Pi retrospective- 6 projects and more.md

diff --git a/published/20200105 PaperWM- tiled window management for GNOME.md b/published/202001/20200105 PaperWM- tiled window management for GNOME.md

similarity index 100%

rename from published/20200105 PaperWM- tiled window management for GNOME.md

rename to published/202001/20200105 PaperWM- tiled window management for GNOME.md

diff --git a/published/20200106 How to write a Python web API with Pyramid and Cornice.md b/published/202001/20200106 How to write a Python web API with Pyramid and Cornice.md

similarity index 100%

rename from published/20200106 How to write a Python web API with Pyramid and Cornice.md

rename to published/202001/20200106 How to write a Python web API with Pyramid and Cornice.md

diff --git a/published/20200107 Generating numeric sequences with the Linux seq command.md b/published/202001/20200107 Generating numeric sequences with the Linux seq command.md

similarity index 100%

rename from published/20200107 Generating numeric sequences with the Linux seq command.md

rename to published/202001/20200107 Generating numeric sequences with the Linux seq command.md

diff --git a/published/20200107 How piwheels will save Raspberry Pi users time in 2020.md b/published/202001/20200107 How piwheels will save Raspberry Pi users time in 2020.md

similarity index 100%

rename from published/20200107 How piwheels will save Raspberry Pi users time in 2020.md

rename to published/202001/20200107 How piwheels will save Raspberry Pi users time in 2020.md

diff --git a/published/20200108 How to setup multiple monitors in sway.md b/published/202001/20200108 How to setup multiple monitors in sway.md

similarity index 100%

rename from published/20200108 How to setup multiple monitors in sway.md

rename to published/202001/20200108 How to setup multiple monitors in sway.md

diff --git a/published/20200109 Huawei-s Linux Distribution openEuler is Available Now.md b/published/202001/20200109 Huawei-s Linux Distribution openEuler is Available Now.md

similarity index 100%

rename from published/20200109 Huawei-s Linux Distribution openEuler is Available Now.md

rename to published/202001/20200109 Huawei-s Linux Distribution openEuler is Available Now.md

diff --git a/published/20200110 Bash Script to Send eMail With a List of User Accounts Expiring in -X- Days.md b/published/202001/20200110 Bash Script to Send eMail With a List of User Accounts Expiring in -X- Days.md

similarity index 100%

rename from published/20200110 Bash Script to Send eMail With a List of User Accounts Expiring in -X- Days.md

rename to published/202001/20200110 Bash Script to Send eMail With a List of User Accounts Expiring in -X- Days.md

diff --git a/published/20200111 Sync files across multiple devices with Syncthing.md b/published/202001/20200111 Sync files across multiple devices with Syncthing.md

similarity index 100%

rename from published/20200111 Sync files across multiple devices with Syncthing.md

rename to published/202001/20200111 Sync files across multiple devices with Syncthing.md

diff --git a/published/20200112 Use Stow for configuration management of multiple machines.md b/published/202001/20200112 Use Stow for configuration management of multiple machines.md

similarity index 100%

rename from published/20200112 Use Stow for configuration management of multiple machines.md

rename to published/202001/20200112 Use Stow for configuration management of multiple machines.md

diff --git a/published/20200113 Keep your email in sync with OfflineIMAP.md b/published/202001/20200113 Keep your email in sync with OfflineIMAP.md

similarity index 100%

rename from published/20200113 Keep your email in sync with OfflineIMAP.md

rename to published/202001/20200113 Keep your email in sync with OfflineIMAP.md

diff --git a/published/20200113 setV- A Bash function to maintain Python virtual environments.md b/published/202001/20200113 setV- A Bash function to maintain Python virtual environments.md

similarity index 100%

rename from published/20200113 setV- A Bash function to maintain Python virtual environments.md

rename to published/202001/20200113 setV- A Bash function to maintain Python virtual environments.md

diff --git a/published/20200114 Organize your email with Notmuch.md b/published/202001/20200114 Organize your email with Notmuch.md

similarity index 100%