mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

Merge remote-tracking branch 'LCTT/master'

commit

d2b11bb93f

@ -1,37 +1,39 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (robsean)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10850-1.html)

|

||||

[#]: subject: (Build a game framework with Python using the module Pygame)

|

||||

[#]: via: (https://opensource.com/article/17/12/game-framework-python)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

Build a game framework with Python using the Pygame module

|

||||

======

|

||||

The first article in this series explored Python by creating a simple dice game. Now it is time to make your own game from scratch.

|

||||

|

||||

> The first article in this series explored Python by creating a simple dice game. Now it is time to make your own game from scratch.

|

||||

|

||||

|

||||

|

||||

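In my [first article in this series][1], I explained how to use Python to create a simple, text-based dice game. This time, I'll demonstrate how to use Python and the Pygame module to create a graphical game. It will take a few articles to get a game that actually accomplishes anything, but by the end of the series you will have a better understanding of how to find and learn new Python modules and how to build an application from the ground up.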

|

||||

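In my [first article in this series][1], I explained how to use Python to create a simple, text-based dice game. This time, I'll demonstrate how to use the Python module Pygame to create a graphical game. It will take several articles to get a game that actually does anything, but by the end of the series you will better understand how to find and learn new Python modules and how to build an application from the ground up.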

|

||||

|

||||

Before you start, you must install [Pygame][2].

|

||||

|

||||

### Installing new Python modules

|

||||

|

||||

There are some ways to install Python modules, but the two most common are:

|

||||

There are several ways to install Python modules, but the two most common are:

|

||||

|

||||

* From your distribution's software repository

|

||||

* Using the Python package manager, pip

|

||||

* Using the Python package manager `pip`

|

||||

|

||||

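Both methods work well, and each has its own set of advantages. If you're developing on Linux or BSD, relying on your distribution's software repository ensures automatic and timely updates.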

|

||||

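Both methods work well, and each has its own set of advantages. If you're developing on Linux or BSD, you can use your distribution's software repository to get automatic and timely updates.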

|

||||

|

||||

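However, using Python's built-in package manager gives you control over when modules are updated. Also, it is not tied to an explicit operating system, meaning you can use it even when you're not on your usual development machine. Another advantage of pip is that it allows local installs of modules, which is helpful if you don't have rights to the computer you're using.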

|

||||

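However, using Python's built-in package manager gives you control over when modules are updated. Also, it is not OS-specific, which means you can use it even when you're not on your usual development machine. Another advantage of `pip` is that it allows local installs of modules, which is helpful if you don't have administrative rights to the computer you're using.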

|

||||

|

||||

### Using pip

|

||||

|

||||

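If you have both Python and Python3 installed on your system, the command you want to use is probably `pip3`, which sets it apart from the `pip` command from Python 2.x. If you're unsure, try `pip3` first.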

|

||||

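If you have both Python and Python3 installed on your system, the command you want to use is probably `pip3`, which is used to distinguish it from the Python 2.x `pip` command. If you're unsure, try `pip3` first.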

|

||||

|

||||

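The `pip` command works somewhat like the operation of most Linux package managers. You can search for Python modules with `search`, then install them with `install`. If you don't have permission to install software on the computer you're using, you can use the `--user` option to install the module only into your home directory.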

|

||||

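The `pip` command works much like most Linux package managers. You can search for Python modules with `search`, then install them with `install`. If you don't have administrative permission to install software on the computer you're using, you can use the `--user` option to install the module only into your home directory.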

|

||||

|

||||

```

|

||||

$ pip3 search pygame

|

||||

@ -44,11 +46,11 @@ pygame_cffi (0.2.1) - A cffi-based SDL wrapper that copies the

|

||||

$ pip3 install Pygame --user

|

||||

```
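After the install step, it can help to confirm that Python can actually locate the module before writing any game code. The following is a minimal sketch that uses only the standard library; the helper name `module_available` is invented for this illustration and is not part of the article's script.

```python
import importlib.util


def module_available(name):
    """Return True if Python can locate a top-level module called `name`."""
    return importlib.util.find_spec(name) is not None


if __name__ == "__main__":
    # "pygame" is the import name of the module installed above;
    # "os" is included only as a module that always exists.
    for name in ("pygame", "os"):
        status = "found" if module_available(name) else "missing"
        print(f"{name}: {status}")
```

If `pygame` is reported as missing, the module was probably installed for a different Python version than the one running the check.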

|

||||

|

||||

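Pygame is a Python module, which means that it's just a set of libraries that can be used in your Python programs. In other words, it's not a program that you launch, like [IDLE][3] or [Ninja-IDE][4] are.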

|

||||

Pygame is a Python module, which means that it's just a set of libraries you can use in your Python programs. In other words, it's not a program you can launch, the way [IDLE][3] or [Ninja-IDE][4] is.

|

||||

|

||||

### Getting started with Pygame

|

||||

|

||||

A video game needs a setting; a place where it happens. In Python, there are two different ways to create your setting:

|

||||

A video game needs a setting: the place where the story happens. In Python, there are two different ways to create your setting:

|

||||

|

||||

* Set a background color

|

||||

* Set a background image

|

||||

@ -57,15 +59,15 @@ Pygame is a Python module, which means that it's just a set of libraries that can be used

|

||||

|

||||

### Setting up your Pygame script

|

||||

|

||||

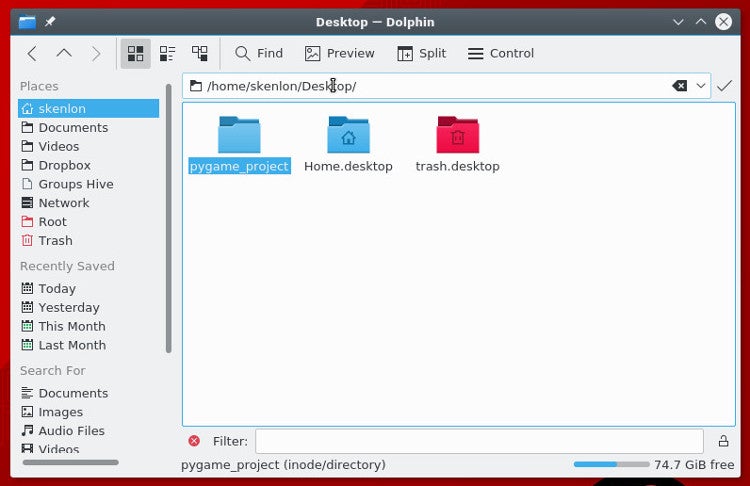

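To start a new Pygame script, create a folder on your computer. All of your game's files go into this directory. It is vitally important to keep all the files needed to run the game inside the project folder.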

|

||||

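To start a new Pygame project, first create a folder on your computer. All of your game's files go into this directory. It is vitally important to keep all the files needed to run the game inside your project folder.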

|

||||

|

||||

|

||||

|

||||

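A Python script starts with the file type, your name, and the license agreement you want to use. Use an open source license agreement so your friends can improve your game and share their changes with you: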

|

||||

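A Python script starts with the file type, your name, and the license you want to use. Use an open source license so your friends can improve your game and share their changes with you: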

|

||||

|

||||

```

|

||||

#!/usr/bin/env python3

|

||||

# written by Seth Kenlon

|

||||

# by Seth Kenlon

|

||||

|

||||

## GPLv3

|

||||

# This program is free software: you can redistribute it and/or

|

||||

@ -75,14 +77,14 @@ Pygame is a Python module, which means that it's just a set of libraries that can be used

|

||||

#

|

||||

# This program is distributed in the hope that it will be useful, but

|

||||

# WITHOUT ANY WARRANTY; without even the implied warranty of

|

||||

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

|

||||

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

|

||||

# General Public License for more details.

|

||||

#

|

||||

# You should have received a copy of the GNU General Public License

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

```

|

||||

|

||||

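Then you tell Python which modules you want to use. Some of the modules are common Python libraries, and of course, you want to include the one you just installed, Pygame.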

|

||||

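Then you tell Python which modules you want to use. Some of the modules are common Python libraries, and of course, you want to include the Pygame module you just installed.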

|

||||

|

||||

```

|

||||

import pygame # load pygame keywords

|

||||

@ -90,7 +92,7 @@ import sys # let python use your file system

|

||||

import os # help python identify your operating system

|

||||

```

|

||||

|

||||

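Since you'll be working a lot with this script file, it helps to make sections within the file so you know where to put stuff. Use block comments to do this; these comments are visible only when looking at your source code. Create three blocks in your code.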

|

||||

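Since you'll be doing a lot of work in this script file, it helps to divide the file into sections so you know where to put code. You can use block comments to do this; these comments are visible only when looking at your source code. Create three blocks in your code.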

|

||||

|

||||

```

|

||||

'''

|

||||

@ -114,7 +116,7 @@ Main Loop

|

||||

|

||||

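Next, set the window size for your game. Keep in mind that not everyone has a big computer screen, so it's best to use a screen size that fits on most people's computers.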

|

||||

|

||||

There is a way to toggle full-screen mode, the way many modern video games do, but since you're just starting out, keep it simple and set just one size.

|

||||

There is a way to toggle full-screen mode, as many modern video games do, but since you're just starting out, for simplicity just set one size.

|

||||

|

||||

```

|

||||

'''

|

||||

@ -124,7 +126,7 @@ worldx = 960

|

||||

worldy = 720

|

||||

```

|

||||

|

||||

Before you can use the Pygame engine in a script, you need some basic setup. You must set the frame rate, start its internal clock, and start (`init`) Pygame.

|

||||

Before you can use the Pygame engine in a script, you need some basic setup. You must set the frame rate, start its internal clock, and start (`init`) Pygame.

|

||||

|

||||

```

|

||||

fps = 40 # frame rate

|

||||

@ -137,17 +139,15 @@ pygame.init()

|

||||

|

||||

### Setting the background

|

||||

|

||||

Before you continue, open a graphics application and create a background for your game world. Save it as `stage.png` inside the `images` folder in your project directory.

|

||||

Before you continue, open a graphics application and create a background for your game world. Save it as `stage.png` inside the `images` folder in your project directory.

|

||||

|

||||

There are several free graphics applications you can use.

|

||||

|

||||

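* [Krita][5] is a professional-level paint materials emulator that can be used to create beautiful images. If you're very interested in creating art for video games, you can even purchase a series of [game art tutorials][6].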

|

||||

* [Pinta][7] is a basic, easy-to-learn paint application.

|

||||

* [Inkscape][8] is a vector graphics application. Use it to draw with shapes, lines, splines, and Bézier curves.

|

||||

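* [Krita][5] is a professional-level paint materials emulator that can be used to create beautiful images. If you're very interested in creating video game art, you can even purchase a series of [game art tutorials][6].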

|

||||

* [Pinta][7] is a basic, easy-to-learn paint application.

|

||||

* [Inkscape][8] is a vector graphics application. Use it to draw with shapes, lines, splines, and Bézier curves.

|

||||

|

||||

|

||||

|

||||

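Your graphic doesn't have to be complex, and you can always go back and change it later. Once you have it, add this code in the setup section of your file: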

|

||||

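Your graphic doesn't have to be complex, and you can always go back and change it later. Once you have it, add this code in the Setup section of your file: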

|

||||

|

||||

```

|

||||

world = pygame.display.set_mode([worldx,worldy])

|

||||

@ -155,13 +155,13 @@ backdrop = pygame.image.load(os.path.join('images','stage.png')).convert()

|

||||

backdropbox = world.get_rect()

|

||||

```
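The `os.path.join` call in the snippet above is what keeps the image path portable, since it inserts the correct separator for the platform the game runs on. A small sketch of that idea follows, using only the standard library; `asset_path` is a hypothetical helper, not something the article's script defines.

```python
import os


def asset_path(filename, folder="images"):
    """Build a path to a game asset relative to the project folder."""
    return os.path.join(folder, filename)


if __name__ == "__main__":
    path = asset_path("stage.png")
    print(path)
    # Checking first gives a clearer message than a failed image load.
    if not os.path.isfile(path):
        print("stage.png not found; create it before loading it with Pygame")
```

Running the script from inside the project folder matters here, because the path is relative to the current working directory.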

|

||||

|

||||

If you're just going to fill the background of your game with a color, everything you need to do is:

|

||||

If you're just going to fill the background of your game with a color, all you need to do is:

|

||||

|

||||

```

|

||||

world = pygame.display.set_mode([worldx,worldy])

|

||||

```

|

||||

|

||||

You must also define a color to use. In your setup section, create some color definitions using values for red, green, and blue (RGB).

|

||||

You must also define colors to use. In your Setup section, create some color definitions using values for red, green, and blue (RGB).

|

||||

|

||||

```

|

||||

'''

|

||||

@ -173,13 +173,13 @@ BLACK = (23,23,23 )

|

||||

WHITE = (254,254,254)

|

||||

```
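Each color definition is a tuple of three integers, one per channel, each in the range 0 to 255. As a rough sketch of what that constraint means in practice, the helpers below (both invented for this example) validate a tuple and print it in the `#rrggbb` form that most graphics applications show. The `BLUE` value is an assumption, because its definition is not visible in this excerpt.

```python
def is_valid_rgb(color):
    """True if color is a 3-tuple of integers in the range 0-255."""
    return (
        len(color) == 3
        and all(isinstance(c, int) and 0 <= c <= 255 for c in color)
    )


def to_hex(color):
    """Format an (R, G, B) tuple as a #rrggbb string."""
    return "#{:02x}{:02x}{:02x}".format(*color)


BLACK = (23, 23, 23)
WHITE = (254, 254, 254)
BLUE = (25, 25, 200)  # assumed value; the excerpt does not show it

if __name__ == "__main__":
    for name, color in (("BLACK", BLACK), ("WHITE", WHITE), ("BLUE", BLUE)):
        print(name, color, to_hex(color), is_valid_rgb(color))
```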

|

||||

|

||||

At this point, you could theoretically start your game. The problem is that it would last only a millisecond.

|

||||

By now, you can theoretically start your game. The problem is that it would last only a millisecond.

|

||||

|

||||

To prove this, save your file as `your-name_game.py` (replace `your-name` with your actual name). Then launch your game.

|

||||

To prove this, save your file as `your-name_game.py` (replace `your-name` with your actual name). Then launch your game.

|

||||

|

||||

If you are using IDLE, run your game by selecting `Run Module` from the Run menu.

|

||||

If you are using IDLE, run your game by selecting “Run Module” from the “Run” menu.

|

||||

|

||||

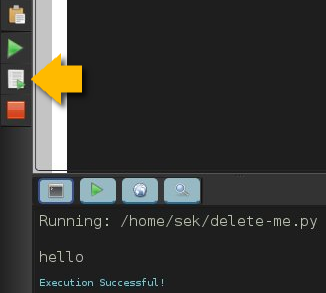

If you are using Ninja, click the `Run file` button in the left button bar.

|

||||

If you are using Ninja, click the “Run file” button in the left button bar.

|

||||

|

||||

|

||||

|

||||

@ -189,27 +189,27 @@ WHITE = (254,254,254)

|

||||

$ python3 ./your-name_game.py

|

||||

```

|

||||

|

||||

If you are using Windows, use this command:

|

||||

If you are using Windows, use this command:

|

||||

|

||||

```

|

||||

py.exe your-name_game.py

|

||||

```

|

||||

|

||||

You launch it, but don't expect much, because your game lasts only a few milliseconds right now. You can fix that in the next section.

|

||||

Launch it, but don't expect much, because your game lasts only a few milliseconds right now. You can fix that in the next section.

|

||||

|

||||

### Looping

|

||||

|

||||

Unless told otherwise, a Python script runs once and only once. Computers are very fast these days, so your Python script runs in less than a second.

|

||||

Unless told otherwise, a Python script runs once and only once. Computers are very fast these days, so your Python script will run in less than 1 second.

|

||||

|

||||

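To force your game to stay open and active long enough for someone to see it (let alone play it), use a `while` loop. To make your game remain open, you can set a variable to some value, then tell a `while` loop to keep looping for as long as the variable remains unchanged.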

|

||||

|

||||

This is often called a "main loop," and you can use the term `main` as your variable. Add this code anywhere in your setup section:

|

||||

This is often called a “main loop,” and you can use the term `main` as your variable. Add the code anywhere in your Setup section:

|

||||

|

||||

```

|

||||

main = True

|

||||

```

|

||||

|

||||

During the main loop, use Pygame keywords to detect whether keys on the keyboard have been pressed or released. Add this code to your main loop section:

|

||||

During the main loop, use Pygame keywords to check whether keys on the keyboard have been pressed or released. Add this code to your main loop section:

|

||||

|

||||

```

|

||||

'''

|

||||

@ -228,7 +228,7 @@ while main == True:

|

||||

main = False

|

||||

```
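The pattern in this section, a flag that keeps a `while` loop alive until some event flips it, can be tried out without Pygame at all. The sketch below is only an illustration of that control flow: the event strings and the `run_main_loop` function are stand-ins invented here, and a real game would read events from Pygame's own queue instead.

```python
from collections import deque


def run_main_loop(events):
    """Process queued events until a 'quit' event flips the flag."""
    pending = deque(events)
    main = True
    frames = 0
    while main:
        frames += 1
        # Stand-in for the real event queue: take one event per frame,
        # and treat an empty queue as a request to quit.
        event = pending.popleft() if pending else "quit"
        if event == "quit":
            main = False
    return frames


if __name__ == "__main__":
    print(run_main_loop(["left", "right", "quit"]))
```

Because the loop condition is checked only at the top of each pass, the frame in which the flag is cleared still runs to completion.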

|

||||

|

||||

Also in your loop, refresh your world's background.

|

||||

Also within your loop, refresh your world's background.

|

||||

|

||||

If you are using an image for the background:

|

||||

|

||||

@ -242,33 +242,33 @@ world.blit(backdrop, backdropbox)

|

||||

world.fill(BLUE)

|

||||

```

|

||||

|

||||

Finally, tell Pygame to refresh everything on the screen and advance the game's internal clock.

|

||||

Finally, tell Pygame to redraw everything on the screen and advance the game's internal clock.

|

||||

|

||||

```

|

||||

pygame.display.flip()

|

||||

clock.tick(fps)

|

||||

```
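Passing the frame rate to `clock.tick` makes Pygame delay each pass through the loop so the game runs at no more than that many frames per second. With `fps = 40`, that leaves a budget of 25 milliseconds per frame. The following standard-library sketch imitates the idea with `time.sleep`; the function names are made up for illustration, and this is not how Pygame implements its clock.

```python
import time


def frame_budget(fps):
    """Seconds available per frame at the given frame rate."""
    return 1.0 / fps


def run_frames(count, fps):
    """Run `count` empty frames, sleeping off the unused part of each budget."""
    budget = frame_budget(fps)
    start = time.monotonic()
    for _ in range(count):
        frame_start = time.monotonic()
        # ... updating and drawing the world would happen here ...
        elapsed = time.monotonic() - frame_start
        if elapsed < budget:
            time.sleep(budget - elapsed)
    return time.monotonic() - start


if __name__ == "__main__":
    print(f"budget per frame: {frame_budget(40) * 1000:.0f} ms")
    print(f"4 frames took about {run_frames(4, 40):.3f} s")
```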

|

||||

|

||||

Save your file, and run it again to see the most boring game ever created.

|

||||

Save your file, and run it again to see the most boring game you have ever created.

|

||||

|

||||

To quit the game, press `q` on your keyboard.

|

||||

|

||||

In the [next article][9] of this series, I'll show you how to add to your currently empty game world, so go ahead and start creating some graphics to use!

|

||||

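In the [next article][9] of this series, I'll show you how to add to your currently completely empty game world, so go ahead and start creating some graphics to use!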

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

through: https://opensource.com/article/17/12/game-framework-python

|

||||

via: https://opensource.com/article/17/12/game-framework-python

|

||||

|

||||

Author: [Seth Kenlon][a]

Topic selection: [lujun9972][b]

Translator: [robsean](https://github.com/robsean)

Proofreader: [proofreader ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/article/17/10/python-101

|

||||

[1]: https://linux.cn/article-9071-1.html

|

||||

[2]: http://www.pygame.org/wiki/about

|

||||

[3]: https://en.wikipedia.org/wiki/IDLE

|

||||

[4]: http://ninja-ide.org/

|

||||

@ -0,0 +1,56 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Server shipments to pick up in the second half of 2019)

|

||||

[#]: via: (https://www.networkworld.com/article/3393167/server-shipments-to-pick-up-in-the-second-half-of-2019.html#tk.rss_all)

|

||||

[#]: author: (Andy Patrizio https://www.networkworld.com/author/Andy-Patrizio/)

|

||||

|

||||

Server shipments to pick up in the second half of 2019

|

||||

======

|

||||

Server sales slowed in anticipation of the new Intel Xeon processors, but they are expected to start up again before the end of the year.

|

||||

![Thinkstock][1]

|

||||

|

||||

Global server shipments are not expected to return to growth momentum until the third quarter or even the fourth quarter of 2019, according to Taiwan-based tech news site DigiTimes, which cited unnamed server supply chain sources. The one bright spot remains cloud providers like Amazon, Google, and Facebook, which continue their buying binge.

|

||||

|

||||

Normally I’d be reluctant to cite such a questionable source, but given most of the OEMs and ODMs are based in Taiwan and DigiTimes (the article is behind a paywall so I cannot link) has shown it has connections to them, I’m inclined to believe them.

|

||||

|

||||

Quanta Computer chairman Barry Lam told the publication that Quanta's shipments of cloud servers have risen steadily, compared to sharp declines in shipments of enterprise servers. Lam added that enterprise servers account for only 1-2% of the firm's total server shipments.

|

||||

|

||||

**[ Also read:[Gartner: IT spending to drop due to falling equipment prices][2] ]**

|

||||

|

||||

[Server shipments began to slow down in the first quarter][3] thanks in part to the impending arrival of second-generation Xeon Scalable processors from Intel. And since it takes a while to get parts and qualify them, this quarter won’t be much better.

|

||||

|

||||

In its latest quarterly earnings, Intel's data center group (DCG) said sales declined 6% year over year, the first decline of its kind since the first quarter of 2012 and reversing an average growth of over 20% in the past.

|

||||

|

||||

[The Osborne Effect][4] wasn’t the sole reason. An economic slowdown in China and the trade war, which will add significant tariffs to Chinese-made products, are also hampering sales.

|

||||

|

||||

DigiTimes says Inventec, Intel's largest server motherboard supplier, expects shipments of enterprise server motherboards to lose further steam for the rest of the year, while sales of data center servers are expected to grow 10-15% year over year in 2019.

|

||||

|

||||

**[[Get certified as an Apple Technical Coordinator with this seven-part online course from PluralSight.][5] ]**

|

||||

|

||||

It went on to say server shipments may concentrate in the second half or even the fourth quarter of the year, while shipments of cloud-based data center servers for the cloud giants will remain positive as demand for edge computing and new artificial intelligence (AI) applications grows and the proliferation of 5G applications begins in 2020.

|

||||

|

||||

Join the Network World communities on [Facebook][6] and [LinkedIn][7] to comment on topics that are top of mind.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3393167/server-shipments-to-pick-up-in-the-second-half-of-2019.html#tk.rss_all

|

||||

|

||||

Author: [Andy Patrizio][a]

Topic selection: [lujun9972][b]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://www.networkworld.com/author/Andy-Patrizio/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://images.techhive.com/images/article/2017/04/2_data_center_servers-100718306-large.jpg

|

||||

[2]: https://www.networkworld.com/article/3391062/it-spending-to-drop-due-to-falling-equipment-prices-gartner-predicts.html

|

||||

[3]: https://www.networkworld.com/article/3332144/server-sales-projected-to-slow-while-memory-prices-drop.html

|

||||

[4]: https://en.wikipedia.org/wiki/Osborne_effect

|

||||

[5]: https://pluralsight.pxf.io/c/321564/424552/7490?u=https%3A%2F%2Fwww.pluralsight.com%2Fpaths%2Fapple-certified-technical-trainer-10-11

|

||||

[6]: https://www.facebook.com/NetworkWorld/

|

||||

[7]: https://www.linkedin.com/company/network-world

|

||||

@ -0,0 +1,74 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Cisco adds AMP to SD-WAN for ISR/ASR routers)

|

||||

[#]: via: (https://www.networkworld.com/article/3394597/cisco-adds-amp-to-sd-wan-for-israsr-routers.html#tk.rss_all)

|

||||

[#]: author: (Michael Cooney https://www.networkworld.com/author/Michael-Cooney/)

|

||||

|

||||

Cisco adds AMP to SD-WAN for ISR/ASR routers

|

||||

======

|

||||

Cisco SD-WAN now sports Advanced Malware Protection on its popular edge routers, adding to their routing, segmentation, security, policy and orchestration capabilities.

|

||||

![vuk8691 / Getty Images][1]

|

||||

|

||||

Cisco has added support for Advanced Malware Protection (AMP) to its million-plus ISR/ASR edge routers, in an effort to [reinforce branch and core network malware protection][2] across the SD-WAN.

|

||||

|

||||

Cisco last year added its Viptela SD-WAN technology to the IOS XE version 16.9.1 software that runs its core ISR/ASR routers such as the ISR models 1000, 4000 and ASR 5000, in use by organizations worldwide. Cisco bought Viptela in 2017.

|

||||

|

||||

**More about SD-WAN**

|

||||

|

||||

* [How to buy SD-WAN technology: Key questions to consider when selecting a supplier][3]

|

||||

* [How to pick an off-site data-backup method][4]

|

||||

* [SD-Branch: What it is and why you’ll need it][5]

|

||||

* [What are the options for securing SD-WAN?][6]

|

||||

|

||||

|

||||

|

||||

The release of Cisco IOS XE offered an instant upgrade path for creating cloud-controlled SD-WAN fabrics to connect distributed offices, people, devices and applications operating on the installed base, Cisco said. At the time Cisco said that Cisco SD-WAN on edge routers builds a secure virtual IP fabric by combining routing, segmentation, security, policy and orchestration.

|

||||

|

||||

With the recent release of [IOS-XE SD-WAN 16.11][7], Cisco has brought AMP and other enhancements to its SD-WAN.

|

||||

|

||||

“Together with Cisco Talos [Cisco’s security-intelligence arm], AMP imbues your SD-WAN branch, core and campuses locations with threat intelligence from millions of worldwide users, honeypots, sandboxes, and extensive industry partnerships,” wrote Patrick Vitalone, a Cisco product marketing manager, in a [blog][8] about the security portion of the new software. “In total, AMP identifies more than 1.1 million unique malware samples a day.” When AMP in Cisco SD-WAN spots malicious behavior, it automatically blocks it, he wrote.

|

||||

|

||||

The idea is to use integrated preventative engines, exploit prevention and intelligent signature-based antivirus to stop malicious attachments and fileless malware before they execute, Vitalone wrote.

|

||||

|

||||

AMP support is added to a menu of security features already included in the SD-WAN software including support for URL filtering, [Cisco Umbrella][9] DNS security, Snort Intrusion Prevention, the ability to segment users across the WAN and embedded platform security, including the [Cisco Trust Anchor][10] module.

|

||||

|

||||

**[[Prepare to become a Certified Information Security Systems Professional with this comprehensive online course from PluralSight. Now offering a 10-day free trial!][11] ]**

|

||||

|

||||

The software also supports [SD-WAN Cloud onRamp for CoLocation][12], which lets customers tie distributed multicloud applications back to a local branch office or local private data center. That way a cloud-to-branch link would be shorter, faster and possibly more secure than tying cloud-based applications directly to the data center.

|

||||

|

||||

“The idea that this kind of security technology is now integrated into Cisco’s SD-WAN offering is critical for Cisco and customers looking to evaluate SD-WAN offerings,” said Lee Doyle, principal analyst at Doyle Research.

|

||||

|

||||

IOS-XE SD-WAN 16.11 is available now.

|

||||

|

||||

Join the Network World communities on [Facebook][13] and [LinkedIn][14] to comment on topics that are top of mind.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3394597/cisco-adds-amp-to-sd-wan-for-israsr-routers.html#tk.rss_all

|

||||

|

||||

Author: [Michael Cooney][a]

Topic selection: [lujun9972][b]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://www.networkworld.com/author/Michael-Cooney/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://images.idgesg.net/images/article/2018/09/shimizu_island_el_nido_palawan_philippines_by_vuk8691_gettyimages-155385042_1200x800-100773533-large.jpg

|

||||

[2]: https://www.networkworld.com/article/3285728/what-are-the-options-for-securing-sd-wan.html

|

||||

[3]: https://www.networkworld.com/article/3323407/sd-wan/how-to-buy-sd-wan-technology-key-questions-to-consider-when-selecting-a-supplier.html

|

||||

[4]: https://www.networkworld.com/article/3328488/backup-systems-and-services/how-to-pick-an-off-site-data-backup-method.html

|

||||

[5]: https://www.networkworld.com/article/3250664/lan-wan/sd-branch-what-it-is-and-why-youll-need-it.html

|

||||

[6]: https://www.networkworld.com/article/3285728/sd-wan/what-are-the-options-for-securing-sd-wan.html?nsdr=true

|

||||

[7]: https://www.cisco.com/c/en/us/td/docs/routers/sdwan/release/notes/xe-16-11/sd-wan-rel-notes-19-1.html

|

||||

[8]: https://blogs.cisco.com/enterprise/enabling-amp-in-cisco-sd-wan

|

||||

[9]: https://www.networkworld.com/article/3167837/cisco-umbrella-cloud-service-shapes-security-for-cloud-mobile-resources.html

|

||||

[10]: https://www.cisco.com/c/dam/en_us/about/doing_business/trust-center/docs/trustworthy-technologies-datasheet.pdf

|

||||

[11]: https://pluralsight.pxf.io/c/321564/424552/7490?u=https%3A%2F%2Fwww.pluralsight.com%2Fpaths%2Fcertified-information-systems-security-professional-cisspr

|

||||

[12]: https://www.networkworld.com/article/3393232/cisco-boosts-sd-wan-with-multicloud-to-branch-access-system.html

|

||||

[13]: https://www.facebook.com/NetworkWorld/

|

||||

[14]: https://www.linkedin.com/company/network-world

|

||||

@ -0,0 +1,83 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (When it comes to uptime, not all cloud providers are created equal)

|

||||

[#]: via: (https://www.networkworld.com/article/3394341/when-it-comes-to-uptime-not-all-cloud-providers-are-created-equal.html#tk.rss_all)

|

||||

[#]: author: (Zeus Kerravala https://www.networkworld.com/author/Zeus-Kerravala/)

|

||||

|

||||

When it comes to uptime, not all cloud providers are created equal

|

||||

======

|

||||

Cloud uptime is critical today, but vendor-provided data can be confusing. Here's an analysis of how AWS, Google Cloud and Microsoft Azure compare.

|

||||

![Getty Images][1]

|

||||

|

||||

The cloud is not just important; it's mission-critical for many companies. More and more IT and business leaders I talk to look at public cloud as a core component of their digital transformation strategies — using it as part of their hybrid cloud or public cloud implementation.

|

||||

|

||||

That raises the bar on cloud reliability, as a cloud outage means important services are not available to the business. If this is a business-critical service, the company may not be able to operate while that key service is offline.

|

||||

|

||||

Because of the growing importance of the cloud, it’s critical that buyers have visibility into the reliability numbers for the cloud providers. The challenge is that the cloud providers don't disclose disruptions in a consistent manner. In fact, some reports are confusing to the point where it’s difficult to draw any kind of meaningful conclusion.

|

||||

|

||||

**[ RELATED:[What IT pros need to know about Azure Stack][2] and [Which cloud performs better, AWS, Azure or Google?][3] | Get regularly scheduled insights: [Sign up for Network World newsletters][4] ]**

|

||||

|

||||

### Reported cloud outage times don't always reflect actual downtime

|

||||

|

||||

Microsoft Azure and Google Cloud Platform (GCP) both typically provide information on date and time, but only high-level data on the services affected and sparse information on regional impact. The problem with that is it’s difficult to get a sense of overall reliability. For instance, if Azure reports a one-hour outage that impacts five services in three regions, the website might show just a single hour. In actuality, that’s 15 hours of total downtime.

|

||||

|

||||

Between Azure, GCP and Amazon Web Services (AWS), [Azure is the most obscure][5], as it provides the least amount of detail. [GCP does a better job of providing detail][6] at the service level but tends to be obscure with regional information. Sometimes it’s very clear as to what services are unavailable, and other times it’s not.

|

||||

|

||||

[AWS has the most granular reporting][7], as it shows every service in every region. If an incident occurs that impacts three services, all three of those services would light up red. If those were unavailable for one hour, AWS would record three hours of downtime.
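The arithmetic behind these examples is a simple product: outage duration times the number of services affected times the number of regions affected. Here is a minimal sketch of that calculation, assuming every affected service is down in every affected region for the full duration; the function name is invented for this illustration.

```python
def service_region_hours(duration_hours, services, regions=1):
    """Total downtime when each affected service in each affected
    region is counted separately."""
    return duration_hours * services * regions


if __name__ == "__main__":
    # One-hour outage, five services, three regions (the Azure example).
    print(service_region_hours(1, 5, 3))
    # One-hour outage affecting three services (the AWS example).
    print(service_region_hours(1, 3))
```

A status page that lists only the one-hour incident would understate the first case by a factor of 15.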

|

||||

|

||||

Another inconsistency between the cloud providers is the amount of historical downtime data that is available. At one time, all three of the cloud vendors provided a one-year view into outages. GCP and AWS still do this, but Azure moved to only a [90-day view][5] sometime over the past year.

|

||||

|

||||

### Azure has significantly higher downtime than GCP and AWS

|

||||

|

||||

The next obvious question is who has the most downtime? To answer that, I worked with a third-party firm that has continually collected downtime information directly from the vendor websites. I have personally reviewed the information and can validate its accuracy. Based on the vendors' own reported numbers, from the beginning of 2018 through May 3, 2019, AWS leads the pack with only 338 hours of downtime, followed closely by GCP at 361. Microsoft Azure has a whopping total of 1,934 hours of self-reported downtime.
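To put the three totals on a common scale, each can be divided by the lowest one. The sketch below does only that, using the self-reported hours quoted above; the dictionary and function names are made up for this example, and the ratios inherit every caveat that applies to the underlying numbers.

```python
reported_hours = {"AWS": 338, "GCP": 361, "Azure": 1934}


def relative_to(baseline, totals):
    """Express each provider's total as a multiple of the baseline's."""
    base = totals[baseline]
    return {name: round(hours / base, 2) for name, hours in totals.items()}


if __name__ == "__main__":
    for name, ratio in relative_to("AWS", reported_hours).items():
        print(f"{name}: {ratio}x the AWS total")
```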

|

||||

|

||||

![][8]

|

||||

|

||||

A few points on these numbers. First, this is an aggregation of the self-reported data from the vendors' websites, which isn’t the “true” number, as regional information or service granularity is sometimes obscured. If a service is unavailable for an hour and it’s reported as an hour on the website but the outage spanned five regions, five hours should properly have been counted. But for this calculation, we used only one hour because that is what was self-reported.

|

||||

|

||||

Because of this, the numbers are most favorable to Microsoft because they provide the least amount of regional information. The numbers are least favorable to AWS because they provide the most granularity. Also, I believe AWS has the most services in most regions, so they have more opportunities for an outage.

|

||||

|

||||

We had considered normalizing the data, but that would require a significant amount of work to deconstruct the downtime on a per-service, per-region basis. I may choose to do that in the future, but for now, the vendor-reported view is a good indicator of relative performance.

|

||||

|

||||

Another important point is that only infrastructure as a service (IaaS) services were used to calculate downtime. If Google Street View or Bing Maps went down, most businesses would not care, so it would have been unfair to roll those numbers in.

|

||||

|

||||

### SLAs do not correlate to reliability

|

||||

|

||||

Given the importance of cloud services today, I would like to see every cloud provider post a 12-month running total of downtime somewhere on their website so customers can do an “apples to apples” comparison. This obviously isn’t the only factor used in determining which cloud provider to use, but it is one of the more critical ones.

|

||||

|

||||

Also, buyers should be aware that there is a big difference between service-level agreements (SLAs) and downtime. A cloud operator can promise anything they want, even provide a 100% SLA, but that just means they need to reimburse the business when a service isn’t available. Most IT leaders I have talked to say the few bucks they get back when a service is out is a mere fraction of what the outage actually cost them.

|

||||

|

||||

### Measure twice and cut once to minimize business disruption

|

||||

|

||||

If you’re reading this and you’re researching cloud services, it’s important to not just make the easy decision of buying for convenience. Many companies look at Azure because Microsoft gives away Azure credits as part of the Enterprise Agreement (EA). I’ve interviewed several companies that took the path of least resistance, but they wound up disappointed with availability and then switched to AWS or GCP later, which can have a disruptive effect.

|

||||

|

||||

I’m certainly not saying to not buy Microsoft Azure, but it is important to do your homework to understand the historical performance of the services you’re considering in the regions you need them. The information on the vendor websites may not tell the full picture, so it’s important to do the necessary due diligence to ensure you understand what you’re buying before you buy it.

|

||||

|

||||

Join the Network World communities on [Facebook][9] and [LinkedIn][10] to comment on topics that are top of mind.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3394341/when-it-comes-to-uptime-not-all-cloud-providers-are-created-equal.html#tk.rss_all

|

||||

|

||||

Author: [Zeus Kerravala][a]

Topic selection: [lujun9972][b]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://www.networkworld.com/author/Zeus-Kerravala/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://images.idgesg.net/images/article/2019/02/cloud_comput_connect_blue-100787048-large.jpg

|

||||

[2]: https://www.networkworld.com/article/3208029/azure-stack-microsoft-s-private-cloud-platform-and-what-it-pros-need-to-know-about-it

|

||||

[3]: https://www.networkworld.com/article/3319776/the-network-matters-for-public-cloud-performance.html

|

||||

[4]: https://www.networkworld.com/newsletters/signup.html

|

||||

[5]: https://azure.microsoft.com/en-us/status/history/

|

||||

[6]: https://status.cloud.google.com/incident/appengine/19008

|

||||

[7]: https://status.aws.amazon.com/

|

||||

[8]: https://images.idgesg.net/images/article/2019/05/public-cloud-downtime-100795948-large.jpg

|

||||

[9]: https://www.facebook.com/NetworkWorld/

|

||||

[10]: https://www.linkedin.com/company/network-world

|

||||

@ -0,0 +1,58 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Supermicro moves production from China)

|

||||

[#]: via: (https://www.networkworld.com/article/3394404/supermicro-moves-production-from-china.html#tk.rss_all)

|

||||

[#]: author: (Andy Patrizio https://www.networkworld.com/author/Andy-Patrizio/)

|

||||

|

||||

Supermicro moves production from China

|

||||

======

|

||||

Supermicro was cleared of any activity related to the Chinese government and secret chips in its motherboards, but it is taking no chances and is moving its facilities.

|

||||

![Frank Schwichtenberg \(CC BY 4.0\)][1]

|

||||

|

||||

Server maker Supermicro, based in Fremont, California, is reportedly moving production out of China over customer concerns that the Chinese government had secretly inserted chips for spying into its motherboards.

|

||||

|

||||

The claims were made by Bloomberg late last year in a story that cited more than 100 sources in government and private industry, including Apple and Amazon Web Services (AWS). However, Apple CEO Tim Cook and AWS CEO Andy Jassy denied the claims and called for Bloomberg to retract the article. And a few months later, the third-party investigations firm Nardello & Co examined the claims and [cleared Supermicro][2] of any surreptitious activity.

|

||||

|

||||

At first it seemed like Supermicro was weathering the storm, but the story did have a negative impact. Server sales have fallen since the Bloomberg story, and the company is forecasting a decline of nearly 10% in total revenues for the March quarter compared to the previous three months.

|

||||

|

||||

**[ Also read:[Who's developing quantum computers][3] ]**

|

||||

|

||||

And now, Nikkei Asian Review reports that despite the strong rebuttals, some customers remain cautious about the company's products. To address those concerns, Nikkei says Supermicro has told suppliers to [move production out of China][4], citing industry sources familiar with the matter.

|

||||

|

||||

It also has the side benefit of mitigating the effects of the U.S.-China trade war, which is only getting worse. Because the tariffs are levied on the dollar amount of the product, they can quickly add up even for a low-end system, as Serve The Home noted in [this analysis][5].

|

||||

|

||||

Supermicro is the world's third-largest server maker by shipments, selling primarily to cloud providers like Amazon and Facebook. It does its own assembly in its Fremont facility but outsources motherboard production to numerous suppliers, mostly in China and Taiwan.

|

||||

|

||||

"We have to be more self-reliant [to build in-house manufacturing] without depending only on those outsourcing partners whose production previously has mostly been in China," an executive told Nikkei.

|

||||

|

||||

Nikkei notes that roughly 90% of the motherboards shipped worldwide in 2017 were made in China, but that percentage dropped to less than 50% in 2018, according to Digitimes Research, a tech supply chain specialist based in Taiwan.

|

||||

|

||||

Supermicro just held a groundbreaking ceremony in Taiwan for an 800,000-square-foot manufacturing plant and is expanding its San Jose, California, plant as well. So, it must be anxious to be free of China if it is willing to expand in one of the most expensive real estate markets in the world.

|

||||

|

||||

A Supermicro spokesperson said via email, “We have been expanding our manufacturing capacity for many years to meet increasing customer demand. We are currently constructing a new Green Computing Park building in Silicon Valley, where we are the only Tier 1 solutions vendor manufacturing in Silicon Valley, and we proudly broke ground this week on a new manufacturing facility in Taiwan. To support our continued global growth, we look forward to expanding in Europe as well.”

|

||||

|

||||

Join the Network World communities on [Facebook][6] and [LinkedIn][7] to comment on topics that are top of mind.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3394404/supermicro-moves-production-from-china.html#tk.rss_all

|

||||

|

||||

作者:[Andy Patrizio][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.networkworld.com/author/Andy-Patrizio/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://images.idgesg.net/images/article/2019/05/supermicro_-_x11sae__cebit_2016_01-100796121-large.jpg

|

||||

[2]: https://www.networkworld.com/article/3326828/investigator-finds-no-evidence-of-spy-chips-on-super-micro-motherboards.html

|

||||

[3]: https://www.networkworld.com/article/3275385/who-s-developing-quantum-computers.html

|

||||

[4]: https://asia.nikkei.com/Economy/Trade-war/Server-maker-Super-Micro-to-ditch-made-in-China-parts-on-spy-fears

|

||||

[5]: https://www.servethehome.com/how-tariffs-hurt-intel-xeon-d-atom-and-amd-epyc-3000/

|

||||

[6]: https://www.facebook.com/NetworkWorld/

|

||||

[7]: https://www.linkedin.com/company/network-world

|

||||

@ -0,0 +1,83 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Write faster C extensions for Python with Cython)

|

||||

[#]: via: (https://opensource.com/article/19/5/python-cython)

|

||||

[#]: author: (Moshe Zadka https://opensource.com/users/moshez/users/moshez/users/foundjem/users/jugmac00)

|

||||

|

||||

Write faster C extensions for Python with Cython

|

||||

======

|

||||

Learn more about solving common Python problems in our series covering

|

||||

seven PyPI libraries.

|

||||

![Hand drawing out the word "code"][1]

|

||||

|

||||

Python is one of the most [popular programming languages][2] in use today—and for good reasons: it's open source, it has a wide range of uses (such as web programming, business applications, games, scientific programming, and much more), and it has a vibrant and dedicated community supporting it. This community is the reason we have such a large, diverse range of software packages available in the [Python Package Index][3] (PyPI) to extend and improve Python and solve the inevitable glitches that crop up.

|

||||

|

||||

In this series, we'll look at seven PyPI libraries that can help you solve common Python problems. First up: **[Cython][4]**, a language that simplifies writing C extensions for Python.

|

||||

|

||||

### Cython

|

||||

|

||||

Python is fun to use, but sometimes, programs written in it can be slow. All the runtime dynamic dispatching comes with a steep price: sometimes it's up to 10 times slower than equivalent code written in a systems language like C or Rust.

|

||||

|

||||

Moving pieces of code to a completely new language can have a big cost in both effort and reliability: All that manual rewrite work will inevitably introduce bugs. Can we have our cake and eat it too?

|

||||

|

||||

To have something to optimize for this exercise, we need something slow. What can be slower than an accidentally exponential implementation of the Fibonacci sequence?

|

||||

|

||||

|

||||

```
def fib(n):
    if n < 2:
        return 1
    return fib(n-1) + fib(n-2)
```

|

||||

|

||||

Since a call to **fib** results in two calls, this beautifully inefficient algorithm takes a long time to execute. For example, on my new laptop, **fib(36)** takes about 4.5 seconds. These 4.5 seconds will be our baseline as we explore how Python's Cython extension can help.
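To see why the runtime grows so quickly, it can help to count how many times the function is entered. The sketch below is illustrative only: `count_calls` is a hypothetical helper that is not part of the article, and it simply mirrors the shape of the recursion.

```python
def fib(n):
    # Same naive recursion as in the article.
    if n < 2:
        return 1
    return fib(n - 1) + fib(n - 2)


def count_calls(n):
    """Return how many times fib() is entered while computing fib(n)."""
    if n < 2:
        return 1
    # One call for this frame, plus everything the two recursive calls do.
    return 1 + count_calls(n - 1) + count_calls(n - 2)


for n in (10, 20, 25):
    print(n, fib(n), count_calls(n))
```

With this definition the number of calls works out to `2 * fib(n) - 1`, so the work grows at the same exponential rate as the result itself.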

|

||||

|

||||

The proper way to use Cython is to integrate it into **setup.py**. However, a quick and easy way to try things out is with **pyximport**. Let's put the **fib** code above in **fib.pyx** and run it using Cython.

|

||||

|

||||

|

||||

```
>>> import pyximport; pyximport.install()
>>> import fib
>>> fib.fib(36)
```

|

||||

|

||||

Just using Cython with _no_ code changes reduced the time the algorithm takes on my laptop to around 2.5 seconds. That's a runtime reduction of almost 50% with almost no effort; certainly, a scrumptious cake to eat and have!

|

||||

|

||||

Putting in a little more effort, we can make things even faster.

|

||||

|

||||

|

||||

```
cpdef int fib(int n):
    if n < 2:
        return 1
    return fib(n - 1) + fib(n - 2)
```

|

||||

|

||||

We moved the code in **fib** to a function defined with **cpdef** and added a couple of type annotations: it takes an integer and returns an integer.

|

||||

|

||||

This makes it _much_ faster—around 0.05 seconds. It's so fast that I may start suspecting my measurement methods contain noise: previously, this noise was lost in the signal.
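When a call finishes in a few hundredths of a second, a single measurement is easily dominated by noise. One common way to reduce that is to repeat the measurement and keep the fastest run. The following is a minimal sketch using the standard library's `timeit`; the helper name `best_time` and the small input are assumptions for illustration, and it times the pure-Python `fib` rather than the compiled one.

```python
import timeit


def fib(n):
    # Pure-Python version, used here only as something to time.
    if n < 2:
        return 1
    return fib(n - 1) + fib(n - 2)


def best_time(func, arg, repeat=5):
    """Call func(arg) `repeat` times and return the fastest run in seconds."""
    timings = timeit.repeat(lambda: func(arg), number=1, repeat=repeat)
    # The minimum is the run least disturbed by other activity on the machine.
    return min(timings)


print(f"fib(20): {best_time(fib, 20):.4f} s")
```

The same helper could be pointed at the function imported through **pyximport** to compare the two versions under identical conditions.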

|

||||

|

||||

So, the next time some of your Python code spends too long on the CPU, maybe spinning up some fans in the process, why not see if Cython can fix things?

|

||||

|

||||

In the next article in this series, we'll look at **Black**, a project that automatically corrects format errors in your code.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/5/python-cython

|

||||

|

||||

作者:[Moshe Zadka ][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/moshez/users/moshez/users/foundjem/users/jugmac00

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/code_hand_draw.png?itok=dpAf--Db (Hand drawing out the word "code")

|

||||

[2]: https://opensource.com/article/18/5/numbers-python-community-trends

|

||||

[3]: https://pypi.org/

|

||||

[4]: https://pypi.org/project/Cython/

|

||||

@ -1,5 +1,5 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: translator: (MjSeven)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

|

||||

@ -0,0 +1,143 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (How to use advanced rsync for large Linux backups)

|

||||

[#]: via: (https://opensource.com/article/19/5/advanced-rsync)

|

||||

[#]: author: (Alan Formy-Duval https://opensource.com/users/alanfdoss/users/marcobravo)

|

||||

|

||||

How to use advanced rsync for large Linux backups

|

||||

======

|

||||

Basic rsync commands are usually enough to manage your Linux backups,

|

||||

but a few extra options add speed and power to large backup sets.

|

||||

![Filing papers and documents][1]

|

||||

|

||||

It seems clear that backups are always a hot topic in the Linux world. Back in 2017, David Both offered [Opensource.com][2] readers tips on "[Using rsync to back up your Linux system][3]," and earlier this year, he published a poll asking us, "[What's your primary backup strategy for the /home directory in Linux?][4]" In another poll this year, Don Watkins asked, "[Which open source backup solution do you use?][5]"

|

||||

|

||||

My response is [rsync][6]. I really like rsync! There are plenty of large and complex tools on the market that may be necessary for managing tape drives or storage library devices, but a simple open source command line tool may be all you need.

|

||||

|

||||

### Basic rsync

|

||||

|

||||

I managed the binary repository system for a global organization that had roughly 35,000 developers with multiple terabytes of files. I regularly moved or archived hundreds of gigabytes of data at a time, and rsync was the tool I used. This experience gave me confidence in this simple tool. (So, yes, I use it at home to back up my Linux systems.)

|

||||

|

||||

The basic rsync command is simple.

|

||||

|

||||

|

||||

```
rsync -av SRC DST
```

|

||||

|

||||

Indeed, the rsync commands taught in any tutorial will work fine for most general situations. However, suppose we need to back up a very large amount of data, such as a directory with 2,000 subdirectories, each holding anywhere from 50GB to 700GB of data. Running rsync on this directory could take a tremendous amount of time, particularly if you're using the checksum option, which I prefer.

|

||||

|

||||

Performance is likely to suffer if we try to sync large amounts of data or sync across slow network connections. Let me show you some methods I use to ensure good performance and reliability.

|

||||

|

||||

### Advanced rsync

|

||||

|

||||

One of the first lines that appears when rsync runs is: "sending incremental file list." If you do a search for this line, you'll see many questions asking things like: why is it taking forever? or why does it seem to hang up?

|

||||

|

||||

Here's an example based on this scenario. Let's say we have a directory called **/storage** that we want to back up to an external USB device mounted at **/media/WDPassport**.

|

||||

|

||||

If we want to back up **/storage** to a USB external drive, we could use this command:

|

||||

|

||||

|

||||

```
rsync -cav /storage /media/WDPassport
```

|

||||

|

||||

The **c** option tells rsync to use file checksums instead of timestamps to determine changed files, and this usually takes longer. In order to break down the **/storage** directory, I sync by subdirectory, using the **find** command. Here's an example:

|

||||

|

||||

|

||||

```
find /storage -type d -exec rsync -cav {} /media/WDPassport \;
```

|

||||

|

||||

This looks OK, but if there are any files in the **/storage** directory, they will not be copied. So, how can we sync the files in **/storage**? There is also a small nuance where certain options will cause rsync to sync the **.** directory, which is the root of the source directory; this means it will sync the subdirectories twice, and we don't want that.

|

||||

|

||||

Long story short, the solution I settled on is a "double-incremental" script. This allows me to break down a directory, for example, splitting **/home** into the individual users' home directories, or to handle cases where you have multiple large directories, such as music or family photos.

|

||||

|

||||

Here is an example of my script:

|

||||

|

||||

|

||||

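```
# Users whose home directories are backed up (space-separated)
HOMES="alan"
# Mount point of the backup drive
DRIVE="/media/WDPassport"

# Note: the loop variable shadows the shell's own $HOME while the script runs
for HOME in $HOMES; do
    # Skip this user if the directory is missing, so --delete never runs from the wrong place
    cd "/home/$HOME" || continue
    # Pass 1: top-level files and directory entries only (-d: no recursion)
    rsync -cdlptgov --delete . "/$DRIVE/$HOME"
    # Pass 2: one recursive rsync (-r) per top-level subdirectory
    find . -maxdepth 1 -type d -not -name "." -exec rsync -crlptgov --delete {} "/$DRIVE/$HOME" \;
done
```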

|

||||

|

||||

The first rsync command copies the files and directories that it finds in the source directory. However, it leaves the directories empty so we can iterate through them using the **find** command. This is done by passing the **d** argument, which tells rsync not to recurse the directory.

|

||||

|

||||

|

||||

```
-d, --dirs                  transfer directories without recursing
```

|

||||

|

||||

The **find** command then passes each directory to rsync individually. Rsync then copies the directories' contents. This is done by passing the **r** argument, which tells rsync to recurse the directory.

|

||||

|

||||

|

||||

```
-r, --recursive             recurse into directories
```

|

||||

|

||||

This keeps the incremental file list that rsync builds to a manageable size.
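The two passes described above can be sketched as a function that only builds the command lines without running anything. This is a rough illustration under stated assumptions: `plan_backup` is a hypothetical helper, the directory names are made up, and the flags are copied from the script in the article. The commands are meant to be run from inside the source directory.

```python
import os
import tempfile


def plan_backup(source, dest):
    """Build (but do not run) the rsync commands for a two-pass backup."""
    # Pass 1: top-level files and directory entries only (-d, no recursion).
    commands = [["rsync", "-cdlptgov", "--delete", ".", dest]]
    # Pass 2: one recursive rsync (-r) per top-level subdirectory.
    subdirs = sorted(
        entry.name
        for entry in os.scandir(source)
        if entry.is_dir(follow_symlinks=False)
    )
    for name in subdirs:
        commands.append(["rsync", "-crlptgov", "--delete", f"./{name}", dest])
    return commands


# Demonstrate on a throwaway directory tree.
with tempfile.TemporaryDirectory() as source:
    for name in ("Music", "Photos"):
        os.mkdir(os.path.join(source, name))
    open(os.path.join(source, "notes.txt"), "w").close()
    for command in plan_backup(source, "/media/WDPassport/alan"):
        print(" ".join(command))
```

Each command in the plan covers one subdirectory, so each rsync run builds a file list for only that part of the tree.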

|

||||

|

||||

Most rsync tutorials use the **a** (or **archive**) argument for convenience. This is actually a compound argument.

|

||||

|

||||

|

||||

```
-a, --archive               archive mode; equals -rlptgoD (no -H,-A,-X)
```

|

||||

|

||||

The other arguments that I pass would have been included in the **a**; those are **l**, **p**, **t**, **g**, and **o**.

|

||||

|

||||

|

||||

```
-l, --links                 copy symlinks as symlinks
-p, --perms                 preserve permissions
-t, --times                 preserve modification times
-g, --group                 preserve group
-o, --owner                 preserve owner (super-user only)
```
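Comparing the flag sets makes the relationship to **-a** explicit. The sketch below treats each short option as a single character, which is a simplification for illustration; the three flag strings are taken from the help text and the script in this article.

```python
ARCHIVE = set("rlptgoD")    # what -a expands to, per the rsync help text
PASS_ONE = set("cdlptgov")  # first rsync call in the script
PASS_TWO = set("crlptgov")  # rsync call run through find

for label, flags in (("pass one", PASS_ONE), ("pass two", PASS_TWO)):
    # Flags that -a would have supplied but the script leaves out.
    missing = "".join(sorted(ARCHIVE - flags))
    # Flags the script adds on top of what -a provides.
    extra = "".join(sorted(flags - ARCHIVE))
    print(f"{label}: left out of -a: {missing}; added beyond -a: {extra}")
```

The recursive pass differs from **-a** only by leaving out **D** (device and special files) and adding **c** and **v**; the first pass additionally swaps **r** for **d**.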

|

||||

|

||||

The **--delete** option tells rsync to remove any files on the destination that no longer exist on the source. This way, the result is an exact duplicate. You can also skip the **.Trash** directories or perhaps the **.DS_Store** files created by macOS by adding tests like these to the **find** command:

|

||||

|

||||

|

||||

```
-not -name ".Trash*" -not -name ".DS_Store"
```

|

||||

|

||||

### Be careful

|

||||

|

||||

One final recommendation: rsync can be a destructive command. Luckily, its thoughtful creators provided the ability to do "dry runs." If we include the **n** option, rsync will display the expected output without writing any data.

|

||||

|

||||

|

||||

```
rsync -cdlptgovn --delete . /$DRIVE/$HOME
```

|

||||

|

||||

This script scales to very large storage sizes and to high-latency or slow-link situations. I'm sure there is still room for improvement, as there always is. If you have suggestions, please share them in the comments.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/5/advanced-rsync

|

||||

|

||||

作者:[Alan Formy-Duval ][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/alanfdoss/users/marcobravo

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/documents_papers_file_storage_work.png?itok=YlXpAqAJ (Filing papers and documents)

|

||||

[2]: http://Opensource.com

|

||||

[3]: https://opensource.com/article/17/1/rsync-backup-linux

|

||||

[4]: https://opensource.com/poll/19/4/backup-strategy-home-directory-linux

|

||||

[5]: https://opensource.com/article/19/2/linux-backup-solutions

|

||||

[6]: https://en.wikipedia.org/wiki/Rsync

|

||||

@ -0,0 +1,140 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Innovations on the Linux desktop: A look at Fedora 30's new features)

|

||||

[#]: via: (https://opensource.com/article/19/5/fedora-30-features)

|

||||

[#]: author: (Anderson Silva https://opensource.com/users/ansilva/users/marcobravo/users/alanfdoss/users/ansilva)

|

||||

|

||||

Innovations on the Linux desktop: A look at Fedora 30's new features

|

||||

======

|

||||

Learn about some of the highlights in the latest version of Fedora

|

||||

Linux.

|

||||

![Fedora Linux distro on laptop][1]

|

||||

|

||||

The latest version of Fedora Linux was released at the end of April. As a full-time Fedora user since its original release back in 2003 and an active contributor since 2007, I always find it satisfying to see new features and advancements in the community.

|

||||

|

||||

If you want a TL;DR version of what has changed in [Fedora 30][2], feel free to ignore this article and jump straight to Fedora's [ChangeSet][3] wiki page. Otherwise, keep on reading to learn about some of the highlights in the new version.

|

||||

|

||||

### Upgrade vs. fresh install

|

||||

|

||||

I upgraded my Lenovo ThinkPad T series from Fedora 29 to 30 using the [DNF system upgrade instructions][4], and so far it is working great!

|

||||

|

||||

I also had the chance to do a fresh install on another ThinkPad, and it was a nice surprise to see a new boot screen on Fedora 30—it even picked up the Lenovo logo. I did not see this new and improved boot screen on the upgrade above; it was only on the fresh install.

|

||||

|

||||

![Fedora 30 boot screen][5]

|

||||

|

||||

### Desktop changes

|

||||

|

||||

If you are a GNOME user, you'll be happy to know that Fedora 30 comes with the latest version, [GNOME 3.32][6]. It has an improved on-screen keyboard (handy for touch-screen laptops), brand new icons for core applications, and a new "Applications" panel under Settings that gives users a bit more control over GNOME default handlers, access permissions, and notifications. Version 3.32 also improves Google Drive performance so that Google files and calendar appointments will be integrated with GNOME.

|

||||

|

||||

![Applications panel in GNOME Settings][7]

|

||||

|

||||

The new Applications panel in GNOME Settings

|

||||

|

||||

Fedora 30 also introduces two new desktop environments: Pantheon and Deepin. Pantheon is [ElementaryOS][8]'s default desktop environment and can be installed with a simple:

|

||||

|

||||

|

||||

```
$ sudo dnf groupinstall "Pantheon Desktop"
```

|

||||

|

||||

I haven't used Pantheon yet, but I do use [Deepin][9]. Installation is simple; just run:

|

||||

|

||||

|

||||

```
$ sudo dnf install deepin-desktop
```

|

||||

|

||||

then log out of GNOME and log back in, choosing "Deepin" by clicking on the gear icon on the login screen.

|

||||

|

||||

![Deepin desktop on Fedora 30][10]

|

||||

|

||||

Deepin desktop on Fedora 30

|

||||

|

||||

Deepin appears as a very polished, user-friendly desktop environment that allows you to control many aspects of your environment with a click of a button. So far, the only issue I've had is that it can take a few extra seconds to complete login and return control to your mouse pointer. Other than that, it is brilliant! It is the first desktop environment I've used that seems to do high dots per inch (HiDPI) properly—or at least close to correctly.

|

||||

|

||||

### Command line

|

||||

|

||||

Fedora 30 upgrades the Bourne Again Shell (aka Bash) to version 5.0.x. If you want to find out about every change since its last stable version (4.4), read this [description][11]. I do want to mention that three new shell variables have been introduced in Bash 5:

|

||||

|

||||

|

||||

```
$ echo $EPOCHSECONDS
1556636959
$ echo $EPOCHREALTIME
1556636968.012369
$ echo $BASH_ARGV0
bash
```
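The first two values are counts of seconds since the Unix epoch, the second one with microsecond precision. As a small illustration (the Python code here is only a way to decode the sample values shown above, not something Fedora or Bash provides), they can be converted into a readable date:

```python
from datetime import datetime, timezone

# Value printed by `echo $EPOCHSECONDS` in the example above.
sample = 1556636959
moment = datetime.fromtimestamp(sample, tz=timezone.utc)
print(moment.isoformat())  # 2019-04-30T15:09:19+00:00

# Value printed by `echo $EPOCHREALTIME`: whole seconds plus microseconds.
real = "1556636968.012369"
seconds, micros = real.split(".")
print(int(seconds) - sample, "seconds later,", int(micros), "microseconds into that second")
```

The decoded date falls at the end of April 2019, which matches the release timing mentioned at the start of the article.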

|

||||

|

||||

Fedora 30 also updates the [Fish shell][12], a colorful shell with auto-suggestion, which can be very helpful for beginners. Fedora 30 comes with [Fish version 3][13], and you can even [try it out in a browser][14] without having to install it on your machine.

|

||||

|

||||

(Note that Fish shell is not the same as guestfish for mounting virtual machine images, which comes with the libguestfs-tools package.)

|

||||

|

||||

### Development

|

||||

|

||||

Fedora 30 brings updates to the following languages: [C][15], [Boost (C++)][16], [Erlang][17], [Go][18], [Haskell][19], [Python][20], [Ruby][21], and [PHP][22].

|

||||

|

||||

Regarding these updates, the most important thing to know is that Python 2 is deprecated in Fedora 30. The community and Fedora leadership are requesting that all package maintainers that still depend on Python 2 port their packages to Python 3 as soon as possible, as the plan is to remove virtually all Python 2 packages in Fedora 31.

|

||||

|

||||

### Containers

|

||||

|

||||

If you would like to run Fedora as an immutable OS for a container, kiosk, or appliance-like environment, check out [Fedora Silverblue][23]. It brings you all of Fedora's technology managed by [rpm-ostree][24], which is a hybrid image/package system that allows automatic updates and easy rollbacks for developers. It is a great option for anyone who wants to learn more and play around with [Flatpak deployments][25].

|

||||

|

||||

Fedora Atomic is no longer available under Fedora 30, but you can still [download it][26]. If your jam is containers, don't despair: even though Fedora Atomic is gone, a brand new [Fedora CoreOS][27] is under development and should be going live soon!

|

||||

|

||||

### What else is new?

|

||||

|

||||

As of Fedora 30, **/usr/bin/gpg** points to [GnuPG][28] v2 by default, and [NFS][29] server configuration is now located at **/etc/nfs.conf** instead of **/etc/sysconfig/nfs**.

|

||||

|

||||

There have also been a [few changes][30] for installation and boot time.

|

||||

|

||||

Last but not least, check out [Fedora Spins][31] for a spin of Fedora that defaults to your favorite window manager and [Fedora Labs][32] for functionally curated software bundles built on Fedora 30 (e.g., astronomy, security, and gaming).

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/5/fedora-30-features

|

||||

|

||||

作者:[Anderson Silva ][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/ansilva/users/marcobravo/users/alanfdoss/users/ansilva

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/fedora_on_laptop_lead.jpg?itok=XMc5wo_e (Fedora Linux distro on laptop)

|

||||

[2]: https://getfedora.org/

|

||||

[3]: https://fedoraproject.org/wiki/Releases/30/ChangeSet

|

||||

[4]: https://fedoraproject.org/wiki/DNF_system_upgrade#How_do_I_use_it.3F

|

||||

[5]: https://opensource.com/sites/default/files/uploads/fedora30_fresh-boot.jpg (Fedora 30 boot screen)

|

||||

[6]: https://help.gnome.org/misc/release-notes/3.32/

|

||||

[7]: https://opensource.com/sites/default/files/uploads/fedora10_gnome.png (Applications panel in GNOME Settings)

|

||||

[8]: https://elementary.io/

|

||||

[9]: https://www.deepin.org/en/dde/

|

||||

[10]: https://opensource.com/sites/default/files/uploads/fedora10_deepin.png (Deepin desktop on Fedora 30)

|

||||

[11]: https://git.savannah.gnu.org/cgit/bash.git/tree/NEWS

|

||||

[12]: https://fishshell.com/

|

||||

[13]: https://fishshell.com/release_notes.html

|

||||

[14]: https://rootnroll.com/d/fish-shell/

|

||||

[15]: https://docs.fedoraproject.org/en-US/fedora/f30/release-notes/developers/Development_C/

|

||||

[16]: https://docs.fedoraproject.org/en-US/fedora/f30/release-notes/developers/Development_Boost/

|

||||

[17]: https://docs.fedoraproject.org/en-US/fedora/f30/release-notes/developers/Development_Erlang/

|

||||

[18]: https://docs.fedoraproject.org/en-US/fedora/f30/release-notes/developers/Development_Go/

|

||||

[19]: https://docs.fedoraproject.org/en-US/fedora/f30/release-notes/developers/Development_Haskell/

|

||||

[20]: https://docs.fedoraproject.org/en-US/fedora/f30/release-notes/developers/Development_Python/

|

||||

[21]: https://docs.fedoraproject.org/en-US/fedora/f30/release-notes/developers/Development_Ruby/

|

||||

[22]: https://docs.fedoraproject.org/en-US/fedora/f30/release-notes/developers/Development_Web/

|

||||

[23]: https://silverblue.fedoraproject.org/

|

||||

[24]: https://rpm-ostree.readthedocs.io/en/latest/

|

||||

[25]: https://flatpak.org/setup/Fedora/

|

||||

[26]: https://getfedora.org/en/atomic/

|

||||

[27]: https://coreos.fedoraproject.org/

|

||||

[28]: https://gnupg.org/index.html

|

||||

[29]: https://en.wikipedia.org/wiki/Network_File_System

|

||||

[30]: https://docs.fedoraproject.org/en-US/fedora/f30/release-notes/sysadmin/Installation/

|

||||

[31]: https://spins.fedoraproject.org

|

||||

[32]: https://labs.fedoraproject.org/

|

||||

@ -0,0 +1,79 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Why startups should release their code as open source)

|

||||

[#]: via: (https://opensource.com/article/19/5/startups-release-code)

|

||||

[#]: author: (Clément Flipo https://opensource.com/users/cl%C3%A9ment-flipo)

|

||||

|

||||

Why startups should release their code as open source

|

||||

======

|

||||

Dokit wondered whether giving away its knowledge as open source was a

|

||||

bad business decision, but that choice has been the foundation of its

|

||||

success.

|

||||

![open source button on keyboard][1]

|

||||

|

||||

It's always hard to recall exactly how a project started, but sometimes that can help you understand that project more clearly. When I think about it, our platform for creating user guides and documentation, [Dokit][2], came straight out of my childhood. Growing up in a house where my toys were Meccano and model airplane kits, the idea of making things, taking individual pieces and putting them together to create a new whole, was always a fundamental part of what it meant to play. My father worked for a DIY company, so there were always signs of building, repair, and instruction manuals around the house. When I was young, my parents sent me to join the Boy Scouts, where we made tables, tents and mud ovens, which helped foster my enjoyment of shared learning that I later found in the open source movement.

|

||||

|

||||

The art of repairing things and recycling products that I learned in childhood became part of what I did for a job. Then it became my ambition to take the reassuring feel of learning how to make and do and repair at home or in a group—but put it online. That inspired Dokit's creation.

|

||||

|

||||

### The first months

|

||||

|

||||

It hasn't always been easy, but since founding our company in 2017, I've realized that the biggest and most worthwhile goals are almost always difficult. If we were to achieve our plan to revolutionize the way [old-fashioned manuals and user guides are created and published][3], and maximize our impact in what we knew all along would be a niche market, we knew that a guiding mission was crucial to how we organized everything else. It was from there that we reached our first big decision: to [quickly launch a proof of concept using an existing open source framework][4], MediaWiki, and from there to release all of our code as open source.

|

||||

|

||||

In retrospect, this decision was made easier by the fact that [MediaWiki][5] was already up and running. With 15,000 developers already active around the world and on a platform that included 90% of the features we needed to meet our minimum viable product (MVP), things would have no doubt been harder without support from the engine that made its name by powering Wikipedia. Confluence, a documentation platform in use by many enterprises, offers some good features, but in the end, it was an easy choice between the two.

|

||||

|

||||

Placing our faith in the community, we put the first version of our platform straight onto GitHub. The excitement of watching the world's makers start using our platform, even before we'd done any real advertising, felt like an early indication that we were on the right track. Although the [maker and Fablab movements][6] encourage users to share instructions, and even set out this expectation in the [Fablab charter][7] (as stated by MIT), in reality, there is a lack of real documentation.

|

||||

|

||||

The first and most significant reason people like using our platform is that it responds to the very real problem of poor documentation inside an otherwise great movement—one that we knew could be even better. To us, it felt a bit like we were repairing a gap in the community of makers and DIY. Within a year of our launch, Fablabs, [Wikifab][8], [Open Source Ecology][9], [Les Petits Debrouillards][10], [Ademe][11], and [Low-Tech Lab][12] had installed our tool on their servers for creating step-by-step tutorials.

|

||||

|

||||

Before even putting out a press release, one of our users, Wikifab, began to get praise in national media as "[the Wikipedia of DIY][13]." In just two years, we've seen hundreds of communities launched on their own Dokits, ranging from the fun to the funny to the more formal product guides. Again, the power of the community is the force we want to harness, and it's constantly amazing to see projects—ranging from wind turbines to pet feeders—develop engaging product manuals using the platform we started.

|

||||

|

||||

### Opening up open source

|

||||

|

||||

Looking back at such a successful first two years, it's clear to us that our choice to use open source was fundamental to how we got where we are as fast as we did. The ability to gather feedback in open source is second-to-none. If a piece of code didn't work, [someone could tell us right away][14]. Why wait on appointments with consultants if you can learn along with those who are already using the service you created?

|

||||

|

||||

The level of engagement from the community also revealed the potential (including the potential interest) in our market. [Paris has a good and growing community of developers][15], but open source took us from a pool of a few thousand locally, and brought us to millions of developers all around the world who could become a part of what we were trying to make happen. The open availability of our code also proved reassuring to our users and customers who felt safe that, even if our company went away, the code wouldn't.

|

||||

|

||||