mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-03 23:40:14 +08:00

Merge remote-tracking branch 'LCTT/master'


commit

cff7b1f53b

@ -1,28 +1,25 @@

|

|||||||

使用 Kafka 和 MongoDB 进行 Go 异步处理

|

使用 Kafka 和 MongoDB 进行 Go 异步处理

|

||||||

============================================================

|

============================================================

|

||||||

|

|

||||||

在我前面的博客文章 ["使用 MongoDB 和 Docker 多阶段构建我的第一个 Go 微服务][9] 中,我创建了一个 Go 微服务示例,它发布一个 REST 式的 http 端点,并将从 HTTP POST 中接收到的数据保存到 MongoDB 数据库。

|

在我前面的博客文章 “[我的第一个 Go 微服务:使用 MongoDB 和 Docker 多阶段构建][9]” 中,我创建了一个 Go 微服务示例,它发布一个 REST 式的 http 端点,并将从 HTTP POST 中接收到的数据保存到 MongoDB 数据库。

|

||||||

|

|

||||||

在这个示例中,我将保存数据到 MongoDB 和创建另一个微服务去处理它解耦了。我还添加了 Kafka 为消息层服务,这样微服务就可以异步地处理它自己关心的东西了。

|

在这个示例中,我将数据的保存和 MongoDB 分离,并创建另一个微服务去处理它。我还添加了 Kafka 为消息层服务,这样微服务就可以异步处理它自己关心的东西了。

|

||||||

|

|

||||||

> 如果你有时间去看,我将这个博客文章的整个过程录制到 [这个视频中了][1] :)

|

> 如果你有时间去看,我将这个博客文章的整个过程录制到 [这个视频中了][1] :)

|

||||||

|

|

||||||

下面是这个使用了两个微服务的简单的异步处理示例的高级架构。

|

下面是这个使用了两个微服务的简单的异步处理示例的上层架构图。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

微服务 1 —— 是一个 REST 式微服务,它从一个 /POST http 调用中接收数据。接收到请求之后,它从 http 请求中检索数据,并将它保存到 Kafka。保存之后,它通过 /POST 发送相同的数据去响应调用者。

|

微服务 1 —— 是一个 REST 式微服务,它从一个 /POST http 调用中接收数据。接收到请求之后,它从 http 请求中检索数据,并将它保存到 Kafka。保存之后,它通过 /POST 发送相同的数据去响应调用者。

|

||||||

|

|

||||||

微服务 2 —— 是一个在 Kafka 中订阅一个主题的微服务,在这里就是微服务 1 保存的数据。一旦消息被微服务消费之后,它接着保存数据到 MongoDB 中。

|

微服务 2 —— 是一个订阅了 Kafka 中的一个主题的微服务,微服务 1 的数据保存在该主题。一旦消息被微服务消费之后,它接着保存数据到 MongoDB 中。

|

||||||
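
The two-service flow described above can be sketched without any external dependency. This is only an illustration under stated assumptions: a buffered Go channel stands in for the Kafka topic, an in-memory `Store` stands in for MongoDB, and the names `Job`, `publish`, `consume` and `runPipeline` are hypothetical, not taken from the author's repository.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sync"
)

// Job is a hypothetical payload; the field names are assumptions.
type Job struct {
	Title   string `json:"title"`
	Company string `json:"company"`
}

// Store stands in for MongoDB: an in-memory slice guarded by a mutex.
type Store struct {
	mu   sync.Mutex
	jobs []Job
}

// Save appends one job to the store.
func (s *Store) Save(j Job) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.jobs = append(s.jobs, j)
}

// Len reports how many jobs have been saved.
func (s *Store) Len() int {
	s.mu.Lock()
	defer s.mu.Unlock()
	return len(s.jobs)
}

// publish plays the role of microservice 1: serialize the job and
// hand it to the topic (a channel here, Kafka in the article).
func publish(topic chan<- []byte, j Job) error {
	b, err := json.Marshal(j)
	if err != nil {
		return err
	}
	topic <- b
	return nil
}

// consume plays the role of microservice 2: read messages until the
// topic is closed and save each decoded job.
func consume(topic <-chan []byte, s *Store, done *sync.WaitGroup) {
	defer done.Done()
	for msg := range topic {
		var j Job
		if err := json.Unmarshal(msg, &j); err != nil {
			continue // skip malformed messages
		}
		s.Save(j)
	}
}

// runPipeline wires both sides together and returns the number of stored jobs.
func runPipeline(jobs []Job) int {
	topic := make(chan []byte, 8)
	store := &Store{}
	var wg sync.WaitGroup
	wg.Add(1)
	go consume(topic, store, &wg)
	for _, j := range jobs {
		if err := publish(topic, j); err != nil {
			continue
		}
	}
	close(topic)
	wg.Wait()
	return store.Len()
}

func main() {
	n := runPipeline([]Job{
		{Title: "Go developer", Company: "ACME"},
		{Title: "SRE", Company: "ACME"},
	})
	fmt.Println("stored jobs:", n)
}
```

The point of the design is that the producer returns as soon as the message is handed off, while the consumer stores it at its own pace. Swapping the channel for a real Kafka topic changes the transport, not this shape.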

|

|

||||||

在你继续之前,我们需要能够去运行这些微服务的几件东西:

|

在你继续之前,我们需要能够去运行这些微服务的几件东西:

|

||||||

|

|

||||||

1. [下载 Kafka][2] —— 我使用的版本是 kafka_2.11-1.1.0

|

1. [下载 Kafka][2] —— 我使用的版本是 kafka_2.11-1.1.0

|

||||||

|

|

||||||

2. 安装 [librdkafka][3] —— 遗憾的是,目标系统上必须装有这个库

|

2. 安装 [librdkafka][3] —— 遗憾的是,目标系统上必须装有这个库

|

||||||

|

|

||||||

3. 安装 [Kafka Go 客户端][4]

|

3. 安装 [Kafka Go 客户端][4]

|

||||||

|

|

||||||

4. 运行 MongoDB。你可以去看我的 [以前的文章][5] 中关于这一块的内容,那篇文章中我使用了一个 MongoDB docker 镜像。

|

4. 运行 MongoDB。你可以去看我的 [以前的文章][5] 中关于这一块的内容,那篇文章中我使用了一个 MongoDB docker 镜像。

|

||||||

|

|

||||||

我们开始吧!

|

我们开始吧!

|

||||||

@ -32,14 +29,12 @@

|

|||||||

```

|

```

|

||||||

$ cd /<download path>/kafka_2.11-1.1.0

|

$ cd /<download path>/kafka_2.11-1.1.0

|

||||||

$ bin/zookeeper-server-start.sh config/zookeeper.properties

|

$ bin/zookeeper-server-start.sh config/zookeeper.properties

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

接着运行 Kafka —— 我使用 9092 端口连接到 Kafka。如果你需要改变端口,只需要在 `config/server.properties` 中配置即可。如果你像我一样是个新手,我建议你现在还是使用默认端口。

|

接着运行 Kafka —— 我使用 9092 端口连接到 Kafka。如果你需要改变端口,只需要在 `config/server.properties` 中配置即可。如果你像我一样是个新手,我建议你现在还是使用默认端口。

|

||||||

|

|

||||||

```

|

```

|

||||||

$ bin/kafka-server-start.sh config/server.properties

|

$ bin/kafka-server-start.sh config/server.properties

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

Kafka 跑起来之后,我们需要 MongoDB。它很简单,只需要使用这个 `docker-compose.yml` 即可。

|

Kafka 跑起来之后,我们需要 MongoDB。它很简单,只需要使用这个 `docker-compose.yml` 即可。

|

||||||

@ -61,17 +56,15 @@ volumes:

|

|||||||

|

|

||||||

networks:

|

networks:

|

||||||

network1:

|

network1:

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

使用 Docker Compose 去运行 MongoDB docker 容器。

|

使用 Docker Compose 去运行 MongoDB docker 容器。

|

||||||

|

|

||||||

```

|

```

|

||||||

docker-compose up

|

docker-compose up

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

这里是微服务 1 的相关代码。我只是修改了我前面的示例去保存到 Kafka 而不是 MongoDB。

|

这里是微服务 1 的相关代码。我只是修改了我前面的示例去保存到 Kafka 而不是 MongoDB:

|

||||||

|

|

||||||

[rest-to-kafka/rest-kafka-sample.go][10]

|

[rest-to-kafka/rest-kafka-sample.go][10]

|

||||||

|

|

||||||

@ -133,15 +126,13 @@ func saveJobToKafka(job Job) {

|

|||||||

}, nil)

|

}, nil)

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

这里是微服务 2 的代码。在这个代码中最重要的东西是从 Kafka 中消耗数据,保存部分我已经在前面的博客文章中讨论过了。这里代码的重点部分是从 Kafka 中消费数据。

|

这里是微服务 2 的代码。在这个代码中最重要的东西是从 Kafka 中消费数据,保存部分我已经在前面的博客文章中讨论过了。这里代码的重点部分是从 Kafka 中消费数据:

|

||||||

|

|

||||||

[kafka-to-mongo/kafka-mongo-sample.go][11]

|

[kafka-to-mongo/kafka-mongo-sample.go][11]

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

func main() {

|

func main() {

|

||||||

|

|

||||||

//Create MongoDB session

|

//Create MongoDB session

|

||||||

@ -206,14 +197,12 @@ func saveJobToMongo(jobString string) {

|

|||||||

fmt.Printf("Saved to MongoDB : %s", jobString)

|

fmt.Printf("Saved to MongoDB : %s", jobString)

|

||||||

|

|

||||||

}

|

}

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

我们来演示一下,运行微服务 1。确保 Kafka 已经运行了。

|

我们来演示一下,运行微服务 1。确保 Kafka 已经运行了。

|

||||||

|

|

||||||

```

|

```

|

||||||

$ go run rest-kafka-sample.go

|

$ go run rest-kafka-sample.go

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

我使用 Postman 向微服务 1 发送数据。

|

我使用 Postman 向微服务 1 发送数据。

|

||||||

@ -228,7 +217,6 @@ $ go run rest-kafka-sample.go

|

|||||||

|

|

||||||

```

|

```

|

||||||

$ go run kafka-mongo-sample.go

|

$ go run kafka-mongo-sample.go

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

现在,你将在微服务 2 上看到消费的数据,并将它保存到了 MongoDB。

|

现在,你将在微服务 2 上看到消费的数据,并将它保存到了 MongoDB。

|

||||||

@ -239,27 +227,26 @@ $ go run kafka-mongo-sample.go

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

完整的源代码可以在这里找到

|

完整的源代码可以在这里找到:

|

||||||

|

|

||||||

[https://github.com/donvito/learngo/tree/master/rest-kafka-mongo-microservice][12]

|

[https://github.com/donvito/learngo/tree/master/rest-kafka-mongo-microservice][12]

|

||||||

|

|

||||||

现在是广告时间:如果你喜欢这篇文章,请在 Twitter [@donvito][6] 上关注我。我的 Twitter 上有关于 Docker、Kubernetes、GoLang、Cloud、DevOps、Agile 和 Startups 的内容。欢迎你们在 [GitHub][7] 和 [LinkedIn][8] 关注我。

|

现在是广告时间:如果你喜欢这篇文章,请在 Twitter [@donvito][6] 上关注我。我的 Twitter 上有关于 Docker、Kubernetes、GoLang、Cloud、DevOps、Agile 和 Startups 的内容。欢迎你们在 [GitHub][7] 和 [LinkedIn][8] 关注我。

|

||||||

|

|

||||||

[视频](https://youtu.be/xa0Yia1jdu8)

|

|

||||||

|

|

||||||

开心地玩吧!

|

开心地玩吧!

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

via: https://www.melvinvivas.com/developing-microservices-using-kafka-and-mongodb/

|

via: https://www.melvinvivas.com/developing-microservices-using-kafka-and-mongodb/

|

||||||

|

|

||||||

作者:[Melvin Vivas ][a]

|

作者:[Melvin Vivas][a]

|

||||||

译者:[qhwdw](https://github.com/qhwdw)

|

译者:[qhwdw](https://github.com/qhwdw)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

[a]:https://www.melvinvivas.com/author/melvin/

|

[a]:https://www.melvinvivas.com/author/melvin/

|

||||||

[1]:https://www.melvinvivas.com/developing-microservices-using-kafka-and-mongodb/#video1

|

[1]:https://youtu.be/xa0Yia1jdu8

|

||||||

[2]:https://kafka.apache.org/downloads

|

[2]:https://kafka.apache.org/downloads

|

||||||

[3]:https://github.com/confluentinc/confluent-kafka-go

|

[3]:https://github.com/confluentinc/confluent-kafka-go

|

||||||

[4]:https://github.com/confluentinc/confluent-kafka-go

|

[4]:https://github.com/confluentinc/confluent-kafka-go

|

||||||

@ -1,52 +1,54 @@

|

|||||||

什么是 CI/CD?

|

什么是 CI/CD?

|

||||||

======

|

======

|

||||||

|

|

||||||

|

在软件开发中经常会提到<ruby>持续集成<rt>Continuous Integration</rt></ruby>(CI)和<ruby>持续交付<rt>Continuous Delivery</rt></ruby>(CD)这几个术语。但它们真正的意思是什么呢?

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

在谈论软件开发时,经常会提到<ruby>持续集成<rt>Continuous Integration</rt></ruby>(CI)和<ruby>持续交付<rt>Continuous Delivery</rt></ruby>(CD)这几个术语。但它们真正的意思是什么呢?在本文中,我将解释这些和相关术语背后的含义和意义,例如<ruby>持续测试<rt>Continuous Testing</rt></ruby>和<ruby>持续部署<rt>Continuous Deployment</rt></ruby>。

|

在谈论软件开发时,经常会提到<ruby>持续集成<rt>Continuous Integration</rt></ruby>(CI)和<ruby>持续交付<rt>Continuous Delivery</rt></ruby>(CD)这几个术语。但它们真正的意思是什么呢?在本文中,我将解释这些和相关术语背后的含义和意义,例如<ruby>持续测试<rt>Continuous Testing</rt></ruby>和<ruby>持续部署<rt>Continuous Deployment</rt></ruby>。

|

||||||

|

|

||||||

### 概览

|

### 概览

|

||||||

|

|

||||||

工厂里的装配线以快速、自动化、可重复的方式从原材料生产出消费品。同样,软件交付管道以快速,自动化和可重复的方式从源代码生成发布版本。如何完成这项工作的总体设计称为“持续交付”。启动装配线的过程称为“持续集成”。确保质量的过程称为“持续测试”,将最终产品提供给用户的过程称为“持续部署”。一些专家让这一切简单、顺畅、高效地运行,这些人被称为<ruby>运维开发<rt>DevOps</rt></ruby>。

|

工厂里的装配线以快速、自动化、可重复的方式从原材料生产出消费品。同样,软件交付管道以快速、自动化和可重复的方式从源代码生成发布版本。如何完成这项工作的总体设计称为“持续交付”(CD)。启动装配线的过程称为“持续集成”(CI)。确保质量的过程称为“持续测试”,将最终产品提供给用户的过程称为“持续部署”。一些专家让这一切简单、顺畅、高效地运行,这些人被称为<ruby>运维开发<rt>DevOps</rt></ruby>践行者。

|

||||||

|

|

||||||

### 持续(Continuous)是什么意思?

|

### “持续”是什么意思?

|

||||||

|

|

||||||

Continuous 用于描述遵循我在此提到的许多不同流程实践。这并不意味着“一直在运行”,而是“随时可运行”。在软件开发领域,它还包括几个核心概念/最佳实践。这些是:

|

“持续”用于描述遵循我在此提到的许多不同流程实践。这并不意味着“一直在运行”,而是“随时可运行”。在软件开发领域,它还包括几个核心概念/最佳实践。这些是:

|

||||||

|

|

||||||

* **频繁发布**:持续实践背后目标是能够频繁地交付高质量的软件。此处的交付频率是可变的,可由开发团队或公司定义。对于某些产品,一季度,一个月,一周或一天交付一次可能已经足够频繁了。对于另一些来说,一天可能需要多次交付也是可行的。所谓持续也有“偶尔,按需”的方面。最终目标是相同的:在可重复,可靠的过程中为最终用户提供高质量的软件更新。通常,这可以通过很少甚至没有用户的交互或知识来完成(想想设备更新)。

|

* **频繁发布**:持续实践背后的目标是能够频繁地交付高质量的软件。此处的交付频率是可变的,可由开发团队或公司定义。对于某些产品,一季度、一个月、一周或一天交付一次可能已经足够频繁了。对于另一些来说,一天可能需要多次交付也是可行的。所谓持续也有“偶尔、按需”的方面。最终目标是相同的:在可重复、可靠的过程中为最终用户提供高质量的软件更新。通常,这可以通过很少甚至无需用户的交互或掌握的知识来完成(想想设备更新)。

|

||||||

* **自动化流程**:实现此频率的关键是用自动化流程来处理软件生产中的方方面面。这包括构建、测试、分析、版本控制,以及在某些情况下的部署。

|

* **自动化流程**:实现此频率的关键是用自动化流程来处理软件生产中的方方面面。这包括构建、测试、分析、版本控制,以及在某些情况下的部署。

|

||||||

* **可重复**:如果我们使用的自动化流程在给定相同输入的情况下始终具有相同的行为,则这个过程应该是可重复的。也就是说,如果我们把某个历史版本的代码作为输入,我们应该得到对应相同的可交付产出。这也假设我们有相同版本的外部依赖项(即我们不创建该版本代码使用的其它交付物)。理想情况下,这也意味着可以对管道中的流程进行版本控制和重建(请参阅稍后的 DevOps 讨论)。

|

* **可重复**:如果我们使用的自动化流程在给定相同输入的情况下始终具有相同的行为,则这个过程应该是可重复的。也就是说,如果我们把某个历史版本的代码作为输入,我们应该得到对应相同的可交付产出。这也假设我们有相同版本的外部依赖项(即我们不创建该版本代码使用的其它交付物)。理想情况下,这也意味着可以对管道中的流程进行版本控制和重建(请参阅稍后的 DevOps 讨论)。

|

||||||

* **快速迭代**:“快速”在这里是个相对术语,但无论软件更新/发布的频率如何,预期的持续过程都会以高效的方式将源代码转换为交付物。自动化负责大部分工作,但自动化处理的过程可能仍然很慢。例如,对于每天需要多次发布候选版更新的产品来说,一轮<ruby>集成测试<rt>integrated testing</rt></ruby>下来耗时就要大半天可能就太慢了。

|

* **快速迭代**:“快速”在这里是个相对术语,但无论软件更新/发布的频率如何,预期的持续过程都会以高效的方式将源代码转换为交付物。自动化负责大部分工作,但自动化处理的过程可能仍然很慢。例如,对于每天需要多次发布候选版更新的产品来说,一轮<ruby>集成测试<rt>integrated testing</rt></ruby>下来耗时就要大半天可能就太慢了。

|

||||||

|

|

||||||

### 什么是持续交付管道(continuous delivery pipeline)?

|

### 什么是“持续交付管道”?

|

||||||

|

|

||||||

将源代码转换为可发布品的多个不同的<ruby>任务<rt>task</rt></ruby>和<ruby>作业<rt>job</rt></ruby>通常串联成一个软件“管道”,一个自动流程成功完成后会启动管道中的下一个流程。这些管道有许多不同的叫法,例如持续交付管道,部署管道和软件开发管道。大体上讲,程序管理者在管道执行时管理管道各部分的定义、运行、监控和报告。

|

将源代码转换为可发布产品的多个不同的<ruby>任务<rt>task</rt></ruby>和<ruby>作业<rt>job</rt></ruby>通常串联成一个软件“管道”,一个自动流程成功完成后会启动管道中的下一个流程。这些管道有许多不同的叫法,例如持续交付管道、部署管道和软件开发管道。大体上讲,程序管理者在管道执行时管理管道各部分的定义、运行、监控和报告。

|

||||||

|

|

||||||

### 持续交付管道是如何工作的?

|

### 持续交付管道是如何工作的?

|

||||||

|

|

||||||

软件交付管道的实际实现可以有很大不同。有许多程序可用在管道中,用于源跟踪、构建、测试、指标采集,版本管理等各个方面。但整体工作流程通常是相同的。单个业务流程/工作流应用程序管理整个管道,每个流程作为独立的作业运行或由该应用程序进行阶段管理。通常,在业务流程中,这些独立作业是应用程序可理解并可作为工作流程管理的语法和结构中定义的。

|

软件交付管道的实际实现可以有很大不同。有许多程序可用在管道中,用于源代码跟踪、构建、测试、指标采集,版本管理等各个方面。但整体工作流程通常是相同的。单个业务流程/工作流应用程序管理整个管道,每个流程作为独立的作业运行或由该应用程序进行阶段管理。通常,在业务流程中,这些独立作业是以应用程序可理解并可作为工作流程管理的语法和结构定义的。

|

||||||

|

|

||||||

这些作业被用于一个或多个功能(构建、测试、部署等)。每个作业可能使用不同的技术或多种技术。关键是作业是自动化的,高效的,并且可重复的。如果作业成功,则工作流管理器将触发管道中的下一个作业。如果作业失败,工作流管理器会向开发人员,测试人员和其他人发出警报,以便他们尽快纠正问题。这个过程是自动化的,所以比手动运行一组过程可更快地找到错误。这种快速排错称为<ruby>快速失败<rt>fail fast</rt></ruby>,并且在获取管道端点方面同样有价值。

|

这些作业被用于一个或多个功能(构建、测试、部署等)。每个作业可能使用不同的技术或多种技术。关键是作业是自动化的、高效的,并且可重复的。如果作业成功,则工作流管理器将触发管道中的下一个作业。如果作业失败,工作流管理器会向开发人员、测试人员和其他人发出警报,以便他们尽快纠正问题。这个过程是自动化的,所以比手动运行一组过程可更快地找到错误。这种快速排错称为<ruby>快速失败<rt>fail fast</rt></ruby>,并且在抵达管道端点方面同样有价值。

|

||||||

|

|

||||||

### 快速失败(fail fast)是什么意思?

|

### “快速失败”是什么意思?

|

||||||

|

|

||||||

管道的工作之一就是快速处理变更。另一个是监视创建发布的不同任务/作业。由于编译失败或测试未通过的代码可以阻止管道继续运行,因此快速通知用户此类情况非常重要。快速失败指的是在管道流程中尽快发现问题并快速通知用户的想法,这样可以及时修正问题并重新提交代码以便使管道再次运行。通常在管道流程中可通过查看历史记录来确定是谁做了那次修改并通知此人及其团队。

|

管道的工作之一就是快速处理变更。另一个是监视创建发布的不同任务/作业。由于编译失败或测试未通过的代码可以阻止管道继续运行,因此快速通知用户此类情况非常重要。快速失败指的是在管道流程中尽快发现问题并快速通知用户的方式,这样可以及时修正问题并重新提交代码以便使管道再次运行。通常在管道流程中可通过查看历史记录来确定是谁做了那次修改并通知此人及其团队。

|

||||||
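
A minimal sketch of the fail-fast idea, assuming a pipeline is just an ordered list of stages. The `Stage` type, `runPipeline` and the stage names are hypothetical; a real workflow manager adds scheduling, logging and notification on top of this.

```go
package main

import (
	"errors"
	"fmt"
)

// errTestsFailed is a sample failure used by the demo below.
var errTestsFailed = errors.New("2 tests failed")

// Stage is one job in the pipeline (build, test, package, ...).
type Stage struct {
	Name string
	Run  func() error
}

// runPipeline runs stages in order and stops at the first failure.
// It returns the names of the stages that completed, plus the error
// that stopped the run (nil if every stage succeeded).
func runPipeline(stages []Stage) ([]string, error) {
	var done []string
	for _, s := range stages {
		if err := s.Run(); err != nil {
			return done, fmt.Errorf("stage %q failed: %w", s.Name, err)
		}
		done = append(done, s.Name)
	}
	return done, nil
}

func main() {
	stages := []Stage{
		{Name: "build", Run: func() error { return nil }},
		{Name: "unit-test", Run: func() error { return errTestsFailed }},
		{Name: "package", Run: func() error { return nil }},
	}
	done, err := runPipeline(stages)
	fmt.Println("completed:", done)
	if err != nil {
		// In a real pipeline this is where the committer would be notified.
		fmt.Println("notify the team:", err)
	}
}
```

Later stages never start once an earlier one fails, so the error surfaces as early as possible instead of after a full run.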

|

|

||||||

### 所有持续交付管道都应该被自动化吗?

|

### 所有持续交付管道都应该被自动化吗?

|

||||||

|

|

||||||

管道的几乎所有部分都是应该自动化的。对于某些部分,有一些人为干预/互动的地方可能是有意义的。一个例子可能是<ruby>用户验收测试<rt>user-acceptance testing</rt></ruby>(让最终用户试用软件并确保它能达到他们想要/期望的水平)。另一种情况可能是部署到生产环境时用户希望拥有更多的人为控制。当然,如果代码不正确或不能运行,则需要人工干预。

|

管道的几乎所有部分都是应该自动化的。对于某些部分,有一些人为干预/互动的地方可能是有意义的。一个例子可能是<ruby>用户验收测试<rt>user-acceptance testing</rt></ruby>(让最终用户试用软件并确保它能达到他们想要/期望的水平)。另一种情况可能是部署到生产环境时用户希望拥有更多的人为控制。当然,如果代码不正确或不能运行,则需要人工干预。

|

||||||

|

|

||||||

有了对 continuous 理解的背景,让我们看看不同类型的持续流程以及它们在软件管道上下文中的含义。

|

有了对“持续”含义理解的背景,让我们看看不同类型的持续流程以及它们在软件管道上下文中的含义。

|

||||||

|

|

||||||

### 什么是持续集成(continuous integration)?

|

### 什么是“持续集成”?

|

||||||

|

|

||||||

持续集成(CI)是在源代码变更后自动检测、拉取、构建和(在大多数情况下)进行单元测试的过程。持续集成是启动管道的环节(尽管某些预验证 —— 通常称为<ruby>上线前检查<rt>pre-flight checks</rt></ruby> —— 有时会被归在持续集成之前)。

|

持续集成(CI)是在源代码变更后自动检测、拉取、构建和(在大多数情况下)进行单元测试的过程。持续集成是启动管道的环节(尽管某些预验证 —— 通常称为<ruby>上线前检查<rt>pre-flight checks</rt></ruby> —— 有时会被归在持续集成之前)。

|

||||||

|

|

||||||

持续信札的目标是快速确保开发人员新提交的变更是好的,并且适合在代码库中进一步使用。

|

持续集成的目标是快速确保开发人员新提交的变更是好的,并且适合在代码库中进一步使用。

|

||||||

|

|

||||||

### 持续集成是如何工作的?

|

### 持续集成是如何工作的?

|

||||||

|

|

||||||

持续集成的基本思想是让一个自动化过程监测一个或多个源代码仓库是否有变更。当变更被推送到仓库时,它会监测到更改,下载副本,构建并运行任何相关的单元测试。

|

持续集成的基本思想是让一个自动化过程监测一个或多个源代码仓库是否有变更。当变更被推送到仓库时,它会监测到更改、下载副本、构建并运行任何相关的单元测试。

|

||||||

|

|

||||||

### 持续集成如何监测变更?

|

### 持续集成如何监测变更?

|

||||||

|

|

||||||

@ -54,27 +56,27 @@ Continuous 用于描述遵循我在此提到的许多不同流程实践。这并

|

|||||||

|

|

||||||

* **轮询**:监测程序反复询问代码管理系统,“代码仓库里有什么我感兴趣的新东西吗?”当代码管理系统有新的变更时,监测程序会“唤醒”并完成其工作以获取新代码并构建/测试它。

|

* **轮询**:监测程序反复询问代码管理系统,“代码仓库里有什么我感兴趣的新东西吗?”当代码管理系统有新的变更时,监测程序会“唤醒”并完成其工作以获取新代码并构建/测试它。

|

||||||

* **定期**:监测程序配置为定期启动构建,无论源码是否有变更。理想情况下,如果没有变更,则不会构建任何新内容,因此这不会增加额外的成本。

|

* **定期**:监测程序配置为定期启动构建,无论源码是否有变更。理想情况下,如果没有变更,则不会构建任何新内容,因此这不会增加额外的成本。

|

||||||

* **推送**:这与用于代码管理系统检查的监测程序相反。在这种情况下,代码管理系统被配置为提交变更到仓库时将通知“推送”到监测程序。最常见的是,这可以以 webhook 的形式完成 —— 在新代码被推送时一个<ruby>挂勾<rt>hook</rt></ruby>的程序通过互联网向监测程序发送通知。为此,监测程序必须具有可以通过网络接收 webhook 信息的开放端口。

|

* **推送**:这与用于代码管理系统检查的监测程序相反。在这种情况下,代码管理系统被配置为提交变更到仓库时将“推送”一个通知到监测程序。最常见的是,这可以以 webhook 的形式完成 —— 在新代码被推送时一个<ruby>挂勾<rt>hook</rt></ruby>的程序通过互联网向监测程序发送通知。为此,监测程序必须具有可以通过网络接收 webhook 信息的开放端口。

|

||||||
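
The polling approach from the list above might look roughly like the following sketch. The `Watcher` type and its `head` and `build` hooks are assumptions made for illustration; a real monitor would query the source-control system and call `Poll` from a timer loop.

```go
package main

import "fmt"

// Watcher polls a revision source and triggers a build when the head changes.
type Watcher struct {
	lastSeen string
	head     func() string    // returns the current head revision (hypothetical hook)
	build    func(rev string) // starts a build for the given revision
}

// Poll performs one polling cycle and reports whether a build was triggered.
// The very first cycle always triggers a build, because no revision has been seen yet.
func (w *Watcher) Poll() bool {
	rev := w.head()
	if rev == w.lastSeen {
		return false // nothing new in the repository
	}
	w.lastSeen = rev
	w.build(rev)
	return true
}

func main() {
	// Simulated sequence of head revisions returned by the repository.
	revs := []string{"a1", "a1", "b2"}
	i := 0
	w := &Watcher{
		head:  func() string { r := revs[i]; i++; return r },
		build: func(rev string) { fmt.Println("building", rev) },
	}
	for range revs {
		w.Poll()
	}
}
```

A push-based setup inverts this: instead of the monitor asking repeatedly, the source-control system calls an HTTP endpoint on the monitor, which then runs the same build step.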

|

|

||||||

### 什么是预检查(pre-checks 又称上线前检查 pre-flight checks)?

|

### 什么是“预检查”(又称“上线前检查”)?

|

||||||

|

|

||||||

在将代码引入仓库并触发持续集成之前,可以进行其它验证。这遵循了最佳实践,例如<ruby>测试构建<rt>test builds</rt></ruby>和<ruby>代码审查<rt>code review</rt></ruby>。它们通常在代码引入管道之前构建到开发过程中。但是一些管道也可能将它们作为其监控流程或工作流的一部分。

|

在将代码引入仓库并触发持续集成之前,可以进行其它验证。这遵循了最佳实践,例如<ruby>测试构建<rt>test build</rt></ruby>和<ruby>代码审查<rt>code review</rt></ruby>。它们通常在代码引入管道之前构建到开发过程中。但是一些管道也可能将它们作为其监控流程或工作流的一部分。

|

||||||

|

|

||||||

例如,一个名为 [Gerrit][2] 的工具允许在开发人员推送代码之后但在允许进入([Git][3] 远程)仓库之前进行正式的代码审查,验证和测试构建。Gerrit 位于开发人员的工作区和 Git 远程仓库之间。它会“接收”来自开发人员的推送,并且可以执行通过/失败验证以确保它们在被允许进入仓库之前的检查是通过的。这可以包括检测新变更并启动构建测试(CI 的一种形式)。它还允许开发者在那时进行正式的代码审查。这种方式有一种额外的可信度评估机制,即当变更的代码被合并到代码库中时不会破坏任何内容。

|

例如,一个名为 [Gerrit][2] 的工具允许在开发人员推送代码之后但在允许进入([Git][3] 远程)仓库之前进行正式的代码审查、验证和测试构建。Gerrit 位于开发人员的工作区和 Git 远程仓库之间。它会“接收”来自开发人员的推送,并且可以执行通过/失败验证以确保它们在被允许进入仓库之前的检查是通过的。这可以包括检测新变更并启动构建测试(CI 的一种形式)。它还允许开发者在那时进行正式的代码审查。这种方式有一种额外的可信度评估机制,即当变更的代码被合并到代码库中时不会破坏任何内容。

|

||||||

|

|

||||||

### 什么是单元测试(unit test)?

|

### 什么是“单元测试”?

|

||||||

|

|

||||||

单元测试(也称为“提交测试”),是由开发人员编写的小型的专项测试,以确保新代码独立工作。“独立”这里意味着不依赖或调用其它不可直接访问的代码,也不依赖外部数据源或其它模块。如果运行代码需要这样的依赖关系,那么这些资源可以用<ruby>模拟<rt>mock</rt></ruby>来表示。模拟是指使用看起来像资源的<ruby>代码存根<rt>code stub</rt></ruby>,可以返回值但不实现任何功能。

|

单元测试(也称为“提交测试”),是由开发人员编写的小型的专项测试,以确保新代码独立工作。“独立”这里意味着不依赖或调用其它不可直接访问的代码,也不依赖外部数据源或其它模块。如果运行代码需要这样的依赖关系,那么这些资源可以用<ruby>模拟<rt>mock</rt></ruby>来表示。模拟是指使用看起来像资源的<ruby>代码存根<rt>code stub</rt></ruby>,可以返回值,但不实现任何功能。

|

||||||
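
To make the mock idea concrete, here is a small sketch. `RateSource`, `stubRates` and `Convert` are hypothetical names invented for this example: the stub looks like the external resource and returns canned values, so the code under test can be checked in isolation.

```go
package main

import "fmt"

// RateSource is the external dependency (for example a remote pricing service).
type RateSource interface {
	Rate(currency string) (float64, error)
}

// stubRates is the mock: it satisfies RateSource but only returns canned values.
type stubRates map[string]float64

// Rate returns the canned rate, or an error for an unknown currency.
func (s stubRates) Rate(currency string) (float64, error) {
	r, ok := s[currency]
	if !ok {
		return 0, fmt.Errorf("unknown currency %q", currency)
	}
	return r, nil
}

// Convert is the code under test; it depends only on the interface.
func Convert(src RateSource, amount float64, currency string) (float64, error) {
	r, err := src.Rate(currency)
	if err != nil {
		return 0, err
	}
	return amount * r, nil
}

func main() {
	rates := stubRates{"EUR": 2}

	// Normal path: a known currency is converted with the canned rate.
	got, err := Convert(rates, 10, "EUR")
	fmt.Println(got, err)

	// Error path: the unit test also checks that failures are reported.
	_, err = Convert(rates, 10, "XXX")
	fmt.Println(err)
}
```

Because `Convert` accepts an interface, the test never touches a network or a database, which keeps it fast enough to run on every commit.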

|

|

||||||

在大多数组织中,开发人员负责创建单元测试以证明其代码正确。事实上,一种称为<ruby>测试驱动开发<rt>test-driven develop</rt></ruby>(TDD)的模型要求将首先设计单元测试作为清楚地验证代码功能的基础。因为这样的代码更改速度快且改动量大,所以它们也必须执行很快。

|

在大多数组织中,开发人员负责创建单元测试以证明其代码正确。事实上,一种称为<ruby>测试驱动开发<rt>test-driven develop</rt></ruby>(TDD)的模型要求将首先设计单元测试作为清楚地验证代码功能的基础。因为这样的代码可以更改速度快且改动量大,所以它们也必须执行很快。

|

||||||

|

|

||||||

由于这与持续集成工作流有关,因此开发人员在本地工作环境中编写或更新代码,并通过单元测试来确保新开发的功能或方法正确。通常,这些测试采用断言形式,即函数或方法的给定输入集产生给定的输出集。它们通常进行测试以确保正确标记和处理出错条件。有很多单元测试框架都很有用,例如用于 Java 开发的 [JUnit][4]。

|

由于这与持续集成工作流有关,因此开发人员在本地工作环境中编写或更新代码,并通过单元测试来确保新开发的功能或方法正确。通常,这些测试采用断言形式,即函数或方法的给定输入集产生给定的输出集。它们通常进行测试以确保正确标记和处理出错条件。有很多单元测试框架都很有用,例如用于 Java 开发的 [JUnit][4]。

|

||||||

|

|

||||||

### 什么是持续测试(continuous testing)?

|

### 什么是“持续测试”?

|

||||||

|

|

||||||

持续测试是指在代码通过持续交付管道时运行扩展范围的自动化测试的实践。单元测试通常与构建过程集成,作为持续集成阶段的一部分,并专注于和其它与之交互的代码隔离的测试。

|

持续测试是指在代码通过持续交付管道时运行扩展范围的自动化测试的实践。单元测试通常与构建过程集成,作为持续集成阶段的一部分,并专注于和其它与之交互的代码隔离的测试。

|

||||||

|

|

||||||

除此之外,还有各种形式的测试可以或应该出现。这些可包括:

|

除此之外,可以有或者应该有各种形式的测试。这些可包括:

|

||||||

|

|

||||||

* **集成测试** 验证组件和服务组合在一起是否正常。

|

* **集成测试** 验证组件和服务组合在一起是否正常。

|

||||||

* **功能测试** 验证产品中执行功能的结果是否符合预期。

|

* **功能测试** 验证产品中执行功能的结果是否符合预期。

|

||||||

@ -86,27 +88,27 @@ Continuous 用于描述遵循我在此提到的许多不同流程实践。这并

|

|||||||

|

|

||||||

除了测试是否通过之外,还有一些应用程序可以告诉我们测试用例执行(覆盖)的源代码行数。这是一个可以衡量代码量指标的例子。这个指标称为<ruby>代码覆盖率<rt>code-coverage</rt></ruby>,可以通过工具(例如用于 Java 的 [JaCoCo][5])进行统计。

|

除了测试是否通过之外,还有一些应用程序可以告诉我们测试用例执行(覆盖)的源代码行数。这是一个可以衡量代码量指标的例子。这个指标称为<ruby>代码覆盖率<rt>code-coverage</rt></ruby>,可以通过工具(例如用于 Java 的 [JaCoCo][5])进行统计。

|

||||||

|

|

||||||

还有很多其它类型的指标统计,例如代码行数,复杂度以及代码结构对比分析等。诸如 [SonarQube][6] 之类的工具可以检查源代码并计算这些指标。此外,用户还可以为他们可接受的“合格”范围的指标设置阈值。然后可以在管道中针对这些阈值设置一个检查,如果结果不在可接受范围内,则流程终止。SonarQube 等应用程序具有很高的可配置性,可以设置仅检查团队感兴趣的内容。

|

还有很多其它类型的指标统计,例如代码行数、复杂度以及代码结构对比分析等。诸如 [SonarQube][6] 之类的工具可以检查源代码并计算这些指标。此外,用户还可以为他们可接受的“合格”范围的指标设置阈值。然后可以在管道中针对这些阈值设置一个检查,如果结果不在可接受范围内,则流程终止。SonarQube 等应用程序具有很高的可配置性,可以设置仅检查团队感兴趣的内容。

|

||||||

|

|

||||||

### 什么是持续交付(continuous delivery)?

|

### 什么是“持续交付”?

|

||||||

|

|

||||||

持续交付(CD)通常是指整个流程链(管道),它自动监测源代码变更并通过构建,测试,打包和相关操作运行它们以生成可部署的版本,基本上没有任何人为干预。

|

持续交付(CD)通常是指整个流程链(管道),它自动监测源代码变更并通过构建、测试、打包和相关操作运行它们以生成可部署的版本,基本上没有任何人为干预。

|

||||||

|

|

||||||

持续交付在软件开发过程中的目标是自动化、效率、可靠性、可重复性和质量保障(通过持续测试)。

|

持续交付在软件开发过程中的目标是自动化、效率、可靠性、可重复性和质量保障(通过持续测试)。

|

||||||

|

|

||||||

持续交付包含持续集成(自动检测源代码变更,执行构建过程,运行单元测试以验证变更),持续测试(对代码运行各种测试以保障代码质量),和(可选)持续部署(通过管道发布版本自动提供给用户)。

|

持续交付包含持续集成(自动检测源代码变更、执行构建过程、运行单元测试以验证变更),持续测试(对代码运行各种测试以保障代码质量),和(可选)持续部署(通过管道发布版本自动提供给用户)。

|

||||||

|

|

||||||

### 如何在管道中识别/跟踪多个版本?

|

### 如何在管道中识别/跟踪多个版本?

|

||||||

|

|

||||||

版本控制是持续交付和管道的关键概念。持续意味着能够经常集成新代码并提供更新版本。但这并不意味着每个人都想要“最新,最好的”。对于想要开发或测试已知的稳定版本的内部团队来说尤其如此。因此,管道创建并轻松存储和访问的这些版本化对象非常重要。

|

版本控制是持续交付和管道的关键概念。持续意味着能够经常集成新代码并提供更新版本。但这并不意味着每个人都想要“最新、最好的”。对于想要开发或测试已知的稳定版本的内部团队来说尤其如此。因此,管道创建并轻松存储和访问的这些版本化对象非常重要。

|

||||||

|

|

||||||

在管道中从源代码创建的对象通常可以称为<ruby>工件<rt>artifacts</rt></ruby>。工件在构建时应该有应用于它们的版本。将版本号分配给工件的推荐策略称为<ruby>语义化版本控制<rt>semantic versioning</rt></ruby>。(这也适用于从外部源引入的依赖工件的版本。)

|

在管道中从源代码创建的对象通常可以称为<ruby>工件<rt>artifact</rt></ruby>。工件在构建时应该有应用于它们的版本。将版本号分配给工件的推荐策略称为<ruby>语义化版本控制<rt>semantic versioning</rt></ruby>。(这也适用于从外部源引入的依赖工件的版本。)

|

||||||

|

|

||||||

语义版本号有三个部分:major,minor 和 patch。(例如,1.4.3 反映了主要版本 1,次要版本 4 和补丁版本 3。)这个想法是,其中一个部分的更改表示工件中的更新级别。主要版本仅针对不兼容的 API 更改而递增。当以<ruby>向后兼容<rt>backward-compatible</rt></ruby>的方式添加功能时,次要版本会增加。当进行向后兼容的版本 bug 修复时,补丁版本会增加。这些是建议的指导方针,但只要团队在整个组织内以一致且易于理解的方式这样做,团队就可以自由地改变这种方法。例如,每次为发布完成构建时增加的数字可以放在补丁字段中。

|

语义版本号有三个部分:<ruby>主要版本<rt>major</rt></ruby>、<ruby>次要版本<rt>minor</rt></ruby> 和 <ruby>补丁版本<rt>patch</rt></ruby>。(例如,1.4.3 反映了主要版本 1,次要版本 4 和补丁版本 3。)这个想法是,其中一个部分的更改表示工件中的更新级别。主要版本仅针对不兼容的 API 更改而递增。当以<ruby>向后兼容<rt>backward-compatible</rt></ruby>的方式添加功能时,次要版本会增加。当进行向后兼容的版本 bug 修复时,补丁版本会增加。这些是建议的指导方针,但只要团队在整个组织内以一致且易于理解的方式这样做,团队就可以自由地改变这种方法。例如,每次为发布完成构建时增加的数字可以放在补丁字段中。

|

||||||
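
The bumping rules described above can be sketched in a few lines. This is a simplified illustration, not a complete implementation of the semantic versioning scheme: it handles only the plain `MAJOR.MINOR.PATCH` form, and the `Version` type and its methods are names chosen for this example.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// Version holds the three numeric parts of a semantic version.
type Version struct{ Major, Minor, Patch int }

// Parse reads a plain MAJOR.MINOR.PATCH string such as "1.4.3".
func Parse(s string) (Version, error) {
	parts := strings.Split(s, ".")
	if len(parts) != 3 {
		return Version{}, fmt.Errorf("want MAJOR.MINOR.PATCH, got %q", s)
	}
	var n [3]int
	for i, p := range parts {
		v, err := strconv.Atoi(p)
		if err != nil || v < 0 {
			return Version{}, fmt.Errorf("invalid component %q in %q", p, s)
		}
		n[i] = v
	}
	return Version{n[0], n[1], n[2]}, nil
}

// String formats the version back into MAJOR.MINOR.PATCH.
func (v Version) String() string {
	return fmt.Sprintf("%d.%d.%d", v.Major, v.Minor, v.Patch)
}

// BumpMajor is for incompatible API changes; lower parts reset to zero.
func (v Version) BumpMajor() Version { return Version{v.Major + 1, 0, 0} }

// BumpMinor is for backward-compatible features; the patch part resets to zero.
func (v Version) BumpMinor() Version { return Version{v.Major, v.Minor + 1, 0} }

// BumpPatch is for backward-compatible bug fixes.
func (v Version) BumpPatch() Version { return Version{v.Major, v.Minor, v.Patch + 1} }

func main() {
	v, err := Parse("1.4.3")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(v.BumpMajor(), v.BumpMinor(), v.BumpPatch())
}
```

Starting from 1.4.3, the three bumps give 2.0.0, 1.5.0 and 1.4.4 respectively.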

|

|

||||||

### 如何“分销”(promote)工件?

|

### 如何“分销”工件?

|

||||||

|

|

||||||

团队可以为工件分配分销级别以指示适用于测试,生产等环境或用途。有很多方法。可以用 Jenkins 或 [Artifactory][7] 等应用程序进行分销。或者一个简单的方案可以在版本号字符串的末尾添加标签。例如,`-snapshot` 可以指示用于构建工件的代码的最新版本(快照)。可以使用各种分销策略或工具将工件“提升”到其它级别,例如 `-milestone` 或 `-production`,作为工件稳定性和完备性版本的标记。

|

团队可以为工件分配<ruby>分销<rt>promotion</rt></ruby>级别以指示适用于测试、生产等环境或用途。有很多方法。可以用 Jenkins 或 [Artifactory][7] 等应用程序进行分销。或者一个简单的方案可以在版本号字符串的末尾添加标签。例如,`-snapshot` 可以指示用于构建工件的代码的最新版本(快照)。可以使用各种分销策略或工具将工件“提升”到其它级别,例如 `-milestone` 或 `-production`,作为工件稳定性和完备性版本的标记。

|

||||||

|

|

||||||

### 如何存储和访问多个工件版本?

|

### 如何存储和访问多个工件版本?

|

||||||

|

|

||||||

@ -114,9 +116,9 @@ Continuous 用于描述遵循我在此提到的许多不同流程实践。这并

|

|||||||

|

|

||||||

管道用户可以指定他们想要使用的版本,并在这些版本中使用管道。

|

管道用户可以指定他们想要使用的版本,并在这些版本中使用管道。

|

||||||

|

|

||||||

### 什么是持续部署(continuous deployment)?

|

### 什么是“持续部署”?

|

||||||

|

|

||||||

持续部署(CD)是指能够自动提供持续交付管道中发布版本给最终用户使用的想法。根据用户的安装方式,可能是在云环境中自动部署,app 升级(如手机上的应用程序),更新网站或只更新可用版本列表。

|

持续部署(CD)是指能够自动提供持续交付管道中发布版本给最终用户使用的想法。根据用户的安装方式,可能是在云环境中自动部署、app 升级(如手机上的应用程序)、更新网站或只更新可用版本列表。

|

||||||

|

|

||||||

这里的一个重点是,仅仅因为可以进行持续部署并不意味着始终部署来自管道的每组可交付成果。它实际上指,通过管道每套可交付成果都被证明是“可部署的”。这在很大程度上是由持续测试的连续级别完成的(参见本文中的持续测试部分)。

|

这里的一个重点是,仅仅因为可以进行持续部署并不意味着始终部署来自管道的每组可交付成果。它实际上指,通过管道每套可交付成果都被证明是“可部署的”。这在很大程度上是由持续测试的连续级别完成的(参见本文中的持续测试部分)。

|

||||||

|

|

||||||

@ -126,9 +128,9 @@ Continuous 用于描述遵循我在此提到的许多不同流程实践。这并

|

|||||||

|

|

||||||

由于必须回滚/撤消对所有用户的部署可能是一种代价高昂的情况(无论是技术上还是用户的感知),已经有许多技术允许“尝试”部署新功能并在发现问题时轻松“撤消”它们。这些包括:

|

由于必须回滚/撤消对所有用户的部署可能是一种代价高昂的情况(无论是技术上还是用户的感知),已经有许多技术允许“尝试”部署新功能并在发现问题时轻松“撤消”它们。这些包括:

|

||||||

|

|

||||||

#### 蓝/绿测试/部署(blue/green testing/deployments)

|

#### 蓝/绿测试/部署

|

||||||

|

|

||||||

在这种部署软件的方法中,维护了两个相同的主机环境 —— 一个 _蓝色_ 和一个 _绿色_。(颜色并不重要,仅作为标识。)对应来说,其中一个是 _生产环境_,另一个是 _预发布环境_。

|

在这种部署软件的方法中,维护了两个相同的主机环境 —— 一个“蓝色” 和一个“绿色”。(颜色并不重要,仅作为标识。)对应来说,其中一个是“生产环境”,另一个是“预发布环境”。

|

||||||

|

|

||||||

在这些实例的前面是调度系统,它们充当产品或应用程序的客户“网关”。通过将调度系统指向蓝色或绿色实例,可以将客户流量引流到期望的部署环境。通过这种方式,切换指向哪个部署实例(蓝色或绿色)对用户来说是快速,简单和透明的。

|

在这些实例的前面是调度系统,它们充当产品或应用程序的客户“网关”。通过将调度系统指向蓝色或绿色实例,可以将客户流量引流到期望的部署环境。通过这种方式,切换指向哪个部署实例(蓝色或绿色)对用户来说是快速,简单和透明的。

|

||||||

|

|

||||||

@ -136,27 +138,27 @@ Continuous 用于描述遵循我在此提到的许多不同流程实践。这并

|

|||||||

|

|

||||||

同理,如果在最新部署中发现问题并且之前的生产实例仍然可用,则简单的更改可以将客户流量引流回到之前的生产实例 —— 有效地将问题实例“下线”并且回滚到以前的版本。然后有问题的新实例可以在其它区域中修复。

|

同理,如果在最新部署中发现问题并且之前的生产实例仍然可用,则简单的更改可以将客户流量引流回到之前的生产实例 —— 有效地将问题实例“下线”并且回滚到以前的版本。然后有问题的新实例可以在其它区域中修复。

|

||||||
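
The blue/green switch and rollback can be sketched as a router that remembers which environment is live. The `Router` type is a hypothetical stand-in for the dispatching layer; in practice this role is played by a load balancer or DNS entry rather than application code.

```go
package main

import (
	"fmt"
	"sync"
)

// Router records which of the two identical environments receives customer traffic.
type Router struct {
	mu   sync.RWMutex
	live string // environment currently serving customers
	idle string // environment used for staging the next release
}

// NewRouter starts with blue as production and green as staging.
func NewRouter() *Router { return &Router{live: "blue", idle: "green"} }

// Live returns the environment that currently receives traffic.
func (r *Router) Live() string {
	r.mu.RLock()
	defer r.mu.RUnlock()
	return r.live
}

// Switch swaps the live and idle environments.
// Calling it a second time is the rollback.
func (r *Router) Switch() {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.live, r.idle = r.idle, r.live
}

func main() {
	r := NewRouter()
	fmt.Println("serving from:", r.Live())

	r.Switch() // release: the staged environment becomes production
	fmt.Println("serving from:", r.Live())

	r.Switch() // problem found: point traffic back at the previous environment
	fmt.Println("serving from:", r.Live())
}
```

Because the previous environment is left untouched after a release, rolling back is the same cheap operation as releasing.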

|

|

||||||

#### 金丝雀测试/部署(canary testing/deployment)

|

#### 金丝雀测试/部署

|

||||||

|

|

||||||

在某些情况下,通过蓝/绿发布切换整个部署可能不可行或不是期望的那样。另一种方法是为 _金丝雀_ 测试/部署。在这种模型中,一部分客户流量被重新引流到新的版本部署中。例如,新版本的搜索服务可以与当前服务的生产版本一起部署。然后,可以将 10% 的搜索查询引流到新版本,以在生产环境中对其进行测试。

|

在某些情况下,通过蓝/绿发布切换整个部署可能不可行或不是期望的那样。另一种方法是为<ruby>金丝雀<rt>canary</rt></ruby>测试/部署。在这种模型中,一部分客户流量被重新引流到新的版本部署中。例如,新版本的搜索服务可以与当前服务的生产版本一起部署。然后,可以将 10% 的搜索查询引流到新版本,以在生产环境中对其进行测试。

|

||||||

|

|

||||||

如果服务那些流量的新版本没问题,那么可能会有更多的流量会被逐渐引流过去。如果仍然没有问题出现,那么随着时间的推移,可以对新版本增量部署,直到 100% 的流量都调度到新版本。这有效地“更替”了以前版本的服务,并让新版本对所有客户生效。

|

如果服务那些流量的新版本没问题,那么可能会有更多的流量会被逐渐引流过去。如果仍然没有问题出现,那么随着时间的推移,可以对新版本增量部署,直到 100% 的流量都调度到新版本。这有效地“更替”了以前版本的服务,并让新版本对所有客户生效。

|

||||||
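
One possible way to split traffic for a canary release is to hash a stable request attribute and compare it against a percentage. The sketch below assumes a user ID is available; `pickBackend` and the backend names are hypothetical, and real routing layers may use other criteria entirely.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// pickBackend sends roughly canaryPercent of users to the canary version.
// Hashing the user ID keeps each user on the same version between requests.
func pickBackend(userID string, canaryPercent uint32) string {
	h := fnv.New32a()
	h.Write([]byte(userID))
	if h.Sum32()%100 < canaryPercent {
		return "canary"
	}
	return "stable"
}

func main() {
	// Count how many of 1000 simulated users land on the canary at 10%.
	canary := 0
	for i := 0; i < 1000; i++ {
		if pickBackend(fmt.Sprintf("user-%d", i), 10) == "canary" {
			canary++
		}
	}
	fmt.Println("users on canary:", canary, "of 1000")
}
```

Raising `canaryPercent` step by step until it reaches 100 gives the incremental rollout described above; setting it back to 0 withdraws the new version.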

|

|

||||||

#### 功能开关(feature toggles)

|

#### 功能开关

|

||||||

|

|

||||||

对于可能需要轻松关掉的新功能(如果发现问题),开发人员可以添加功能开关。这是代码中的 `if-then` 软件功能开关,仅在设置数据值时才激活新代码。此数据值可以是全局可访问的位置,部署的应用程序将检查该位置是否应执行新代码。如果设置了数据值,则执行代码;如果没有,则不执行。

|

对于可能需要轻松关掉的新功能(如果发现问题),开发人员可以添加<ruby>功能开关<rt>feature toggles</rt></ruby>。这是代码中的 `if-then` 软件功能开关,仅在设置数据值时才激活新代码。此数据值可以是全局可访问的位置,部署的应用程序将检查该位置是否应执行新代码。如果设置了数据值,则执行代码;如果没有,则不执行。

|

||||||

|

|

||||||

这为开发人员提供了一个远程“终止开关”,以便在部署到生产环境后发现问题时关闭新功能。

|

这为开发人员提供了一个远程“终止开关”,以便在部署到生产环境后发现问题时关闭新功能。

|

||||||
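
A feature toggle in its simplest form might look like this sketch. Reading the flag from an environment variable is an assumption made for brevity; the flag name `FEATURE_NEW_SEARCH` and the `search` function are invented for the example, and a deployed system would more likely read the value from a shared configuration service.

```go
package main

import (
	"fmt"
	"os"
)

// lookup fetches a toggle value. It defaults to the process environment
// and is a variable so that tests can substitute their own source.
var lookup = os.Getenv

// enabled reports whether the named toggle is switched on.
func enabled(name string) bool {
	return lookup(name) == "on"
}

// search runs the new code path only when the toggle is set.
func search(query string) string {
	if enabled("FEATURE_NEW_SEARCH") {
		return "new-engine:" + query
	}
	return "old-engine:" + query
}

func main() {
	// With the variable unset this uses the old path; set
	// FEATURE_NEW_SEARCH=on to activate the new one without redeploying.
	fmt.Println(search("kafka"))
}
```

Clearing the value is the "kill switch": the new code stays deployed but stops executing.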

|

|

||||||

#### 暗箱发布(dark launch)

|

#### 暗箱发布

|

||||||

|

|

||||||

在这种实践中,代码被逐步测试/部署到生产环境中,但是用户不会看到更改(因此名称中有 dark 一词)。例如,在生产版本中,网页查询的某些部分可能会重定向到查询新数据源的服务。开发人员可收集此信息进行分析,而不会将有关接口,事务或结果的任何信息暴露给用户。

|

在<ruby>暗箱发布<rt>dark launch</rt></ruby>中,代码被逐步测试/部署到生产环境中,但是用户不会看到更改(因此名称中有<ruby>暗箱<rt>dark</rt></ruby>一词)。例如,在生产版本中,网页查询的某些部分可能会重定向到查询新数据源的服务。开发人员可收集此信息进行分析,而不会将有关接口,事务或结果的任何信息暴露给用户。

|

||||||

|

|

||||||

这个想法是想获取候选版本在生产环境负载下如何执行的真实信息,而不会影响用户或改变他们的经验。随着时间的推移,可以调度更多负载,直到遇到问题或认为新功能已准备好供所有人使用。实际上功能开关标志可用于这种暗箱发布机制。

|

这个想法是想获取候选版本在生产环境负载下如何执行的真实信息,而不会影响用户或改变他们的经验。随着时间的推移,可以调度更多负载,直到遇到问题或认为新功能已准备好供所有人使用。实际上功能开关标志可用于这种暗箱发布机制。

|

||||||

|

|

||||||

### 什么是运维开发(DevOps)?

|

### 什么是“运维开发”?

|

||||||

|

|

||||||

[DevOps][9] 是关于如何使开发和运维团队更容易合作开发和发布软件的一系列想法和推荐的实践。从历史上看,开发团队研发了产品,但没有像客户那样以常规,可重复的方式安装/部署它们。在整个周期中,这组安装/部署任务(以及其它支持任务)留给运维团队负责。这经常导致很多混乱和问题,因为运维团队在后期才开始介入,并且必须在短时间内完成他们的工作。同样,开发团队经常处于不利地位 —— 因为他们没有充分测试产品的安装/部署功能,他们可能会对该过程中出现的问题感到惊讶。

|

<ruby>[运维开发][9]<rt>DevOps</rt></ruby> 是关于如何使开发和运维团队更容易合作开发和发布软件的一系列想法和推荐的实践。从历史上看,开发团队研发了产品,但没有像客户那样以常规、可重复的方式安装/部署它们。在整个周期中,这组安装/部署任务(以及其它支持任务)留给运维团队负责。这经常导致很多混乱和问题,因为运维团队在后期才开始介入,并且必须在短时间内完成他们的工作。同样,开发团队经常处于不利地位 —— 因为他们没有充分测试产品的安装/部署功能,他们可能会对该过程中出现的问题感到惊讶。

|

||||||

|

|

||||||

这往往导致开发和运维团队之间严重脱节和缺乏合作。DevOps 理念主张是贯穿整个开发周期的开发和运维综合协作的工作方式,就像持续交付那样。

|

这往往导致开发和运维团队之间严重脱节和缺乏合作。DevOps 理念主张是贯穿整个开发周期的开发和运维综合协作的工作方式,就像持续交付那样。

|

||||||

|

|

||||||

@ -166,13 +168,13 @@ Continuous 用于描述遵循我在此提到的许多不同流程实践。这并

|

|||||||

|

|

||||||

说得更远一些,DevOps 建议实现管道的基础架构也会被视为代码。也就是说,它应该自动配置、可跟踪、易于修改,并在管道发生变化时触发新一轮运行。这可以通过将管道实现为代码来完成。

|

说得更远一些,DevOps 建议实现管道的基础架构也会被视为代码。也就是说,它应该自动配置、可跟踪、易于修改,并在管道发生变化时触发新一轮运行。这可以通过将管道实现为代码来完成。

|

||||||

|

|

||||||

### 什么是管道即代码(pipeline-as-code)?

|

### 什么是“管道即代码”?

|

||||||

|

|

||||||

<ruby>管道即代码<rt>pipeline-as-code</rt></ruby>是通过编写代码创建管道作业/任务的通用术语,就像开发人员编写代码一样。它的目标是将管道实现表示为代码,以便它可以与代码一起存储、评审、跟踪,如果出现问题并且必须终止管道,则可以轻松地重建。有几个工具允许这样做,如 [Jenkins 2][1]。

|

<ruby>管道即代码<rt>pipeline-as-code</rt></ruby>是通过编写代码创建管道作业/任务的通用术语,就像开发人员编写代码一样。它的目标是将管道实现表示为代码,以便它可以与代码一起存储、评审、跟踪,如果出现问题并且必须终止管道,则可以轻松地重建。有几个工具允许这样做,如 [Jenkins 2][1]。

|

||||||

|

|

||||||

### DevOps 如何影响生产软件的基础设施?

|

### DevOps 如何影响生产软件的基础设施?

|

||||||

|

|

||||||

传统意义上,管道中使用的各个硬件系统都有配套的软件(操作系统,应用程序,开发工具等)。在极端情况下,每个系统都是手工设置来定制的。这意味着当系统出现问题或需要更新时,这通常也是一项自定义任务。这种方法违背了持续交付的基本理念,即具有易于重现和可跟踪的环境。

|

传统意义上,管道中使用的各个硬件系统都有配套的软件(操作系统、应用程序、开发工具等)。在极端情况下,每个系统都是手工设置来定制的。这意味着当系统出现问题或需要更新时,这通常也是一项自定义任务。这种方法违背了持续交付的基本理念,即具有易于重现和可跟踪的环境。

|

||||||

|

|

||||||

多年来,很多应用被开发用于标准化交付(安装和配置)系统。同样,<ruby>虚拟机<rt>virtual machine</rt></ruby>被开发为模拟在其它计算机之上运行的计算机程序。这些 VM 要有管理程序才能在底层主机系统上运行,并且它们需要自己的操作系统副本才能运行。

|

多年来,很多应用被开发用于标准化交付(安装和配置)系统。同样,<ruby>虚拟机<rt>virtual machine</rt></ruby>被开发为模拟在其它计算机之上运行的计算机程序。这些 VM 要有管理程序才能在底层主机系统上运行,并且它们需要自己的操作系统副本才能运行。

|

||||||

|

|

||||||

@ -191,7 +193,7 @@ via: https://opensource.com/article/18/8/what-cicd

|

|||||||

作者:[Brent Laster][a]

|

作者:[Brent Laster][a]

|

||||||

选题:[lujun9972](https://github.com/lujun9972)

|

选题:[lujun9972](https://github.com/lujun9972)

|

||||||

译者:[pityonline](https://github.com/pityonline)

|

译者:[pityonline](https://github.com/pityonline)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

91

sources/talk/20180719 Finding Jobs in Software.md

Normal file


@ -0,0 +1,91 @@

|

|||||||

|

translating by lujun9972

|

||||||

|

Finding Jobs in Software

|

||||||

|

======

|

||||||

|

|

||||||

|

A [PDF of this article][1] is available.

|

||||||

|

|

||||||

|

I was back home in Lancaster last week, chatting with a [friend from grad school][2] who’s remained in academia, and naturally we got to talking about what advice he could give his computer science students to better prepare them for their probable future careers.

|

||||||

|

|

||||||

|

In some later follow-up emails we got to talking about how engineers find jobs. I’ve fielded this question about a dozen times over the last couple years, so I thought it was about time to crystallize it into a blog post for future linking.

|

||||||

|

|

||||||

|

Here are some strategies for finding jobs, ordered roughly from most to least useful:

|

||||||

|

|

||||||

|

### Friend-of-a-friend networking

|

||||||

|

|

||||||

|

Many of the best jobs never make it to the open market at all, and it’s all about who you know. This makes sense for employers, since good engineers are hard to find and a reliable reference can be invaluable.

|

||||||

|

|

||||||

|

In the case of my current job at Iterable, for example, a mutual colleague from thoughtbot (a previous employer) suggested that I should talk to Iterable’s VP of engineering, since he’d worked with both of us and thought we’d get along well. We did, and I liked the team, so I went through the interview process and took the job.

|

||||||

|

|

||||||

|

Like many companies, thoughtbot has an alumni Slack group with a `#job-board` channel. Those sorts of semi-formal corporate alumni networks can definitely be useful, but you’ll probably find yourself relying more on individual connections.

|

||||||

|

|

||||||

|

“Networking” isn’t a dirty word, and it’s not about handing out business cards at a hotel bar. It’s about getting to know people in a friendly and sincere way, being interested in them, and helping them out (by, say, writing a lengthy blog post about how their students might find jobs). I’m not the type to throw around words like karma, but if I were, I would.

|

||||||

|

|

||||||

|

Go to (and speak at!) [meetups][3], offer help and advice when you can, and keep in touch with friends and ex-colleagues. In a couple of years you’ll have a healthy network. Easy-peasy.

|

||||||

|

|

||||||

|

This strategy doesn’t usually work at the beginning of a career, of course, but new grads and students should know that it’s eventually how things happen.

|

||||||

|

|

||||||

|

### Applying directly to specific companies

|

||||||

|

|

||||||

|

I keep a text file of companies where I might want to work. As I come across companies that catch my eye, I add ‘em to the list. When I’m on the hunt for a new job I just consult my list.

|

||||||

|

|

||||||

|

Lots of things might convince me to add a company to the list. They might have an especially appealing mission or product, use some particular technology, or employ some specific people that I’d like to work with and learn from.

|

||||||

|

|

||||||

|

One shockingly good heuristic that identifies a great workplace is whether a company sponsors or organizes meetups, and specifically if they sponsor groups related to minorities in tech. Plenty of great companies don’t do that, and they still may be terrific, but if they do it’s an extremely good sign.

|

||||||

|

|

||||||

|

### Job boards

|

||||||

|

|

||||||

|

I generally don’t use job boards, myself, because I find networking and targeted applications to be more valuable.

|

||||||

|

|

||||||

|

The big sites like Indeed and Dice are rarely useful. While some genuinely great companies do cross-post jobs there, there are so many atrocious jobs mixed in that I don’t bother with them.

|

||||||

|

|

||||||

|

However, smaller and more targeted job boards can be really handy. Someone has created a job site for any given technology (language, framework, database, whatever). If you’re really interested in working with a specific tool or in a particular market niche, it might be worthwhile for you to track down the appropriate board.

|

||||||

|

|

||||||

|

Similarly, if you’re interested in remote work, there are a few boards that cater specifically to that. [We Work Remotely][4] is a prominent and reputable one.

|

||||||

|

|

||||||

|

The enormously popular tech news site [Hacker News][5] posts a monthly “Who’s Hiring?” thread ([an example][6]). HN focuses mainly on startups and is almost adorably obsessed with trends, tech-wise, so it’s a thoroughly biased sample, but it’s still a huge selection of relatively high-quality jobs. Browsing it can also give you an idea of what technologies are currently in vogue. Some folks have also built [sites that make it easier to filter][7] those listings.

|

||||||

|

|

||||||

|

### Recruiters

|

||||||

|

|

||||||

|

These are the folks that message you on LinkedIn. Recruiters fall into two categories: internal and external.

|

||||||

|

|

||||||

|

An internal recruiter is an employee of a specific company and hires engineers to work for that company. They’re almost invariably non-technical, but they often have a fairly clear idea of what technical skills they’re looking for. They have no idea who you are, or what your goals are, but they’re encouraged to find a good fit for the company and are generally harmless.

|

||||||

|

|

||||||

|

It’s normal to work with an internal recruiter as part of the application process at a software company, especially a larger one.

|

||||||

|

|

||||||

|

An external recruiter works independently or for an agency. They’re market makers; they have a stable of companies who have contracted with them to find employees, and they get a placement fee for every person that one of those companies hires. As such, they have incentives to make as many matches as possible as quickly as possible, and they rarely have to deal with the fallout if the match isn’t a good one.

|

||||||

|

|

||||||

|

In my experience they add nothing to the job search process and, at best, just gum up the works as unnecessary middlemen. Less reputable ones may edit your resume without your approval, forward it along to companies that you’d never want to work with, and otherwise mangle your reputation. I avoid them.

|

||||||

|

|

||||||

|

Helpful and ethical external recruiters are a bit like UFOs. I’m prepared to acknowledge that they might, possibly, exist, but I’ve never seen one myself or spoken directly with anyone who’s encountered one, and I’ve only heard about them through confusing and doubtful chains of testimonials (and such testimonials usually make me question the testifier more than my assumptions).

|

||||||

|

|

||||||

|

### University career services

|

||||||

|

|

||||||

|

I’ve never found these to be of any use. The software job market is extraordinarily specialized, and it’s virtually impossible for a career services employee (who needs to be able to place every sort of student in every sort of job) to be familiar with it.

|

||||||

|

|

||||||

|

A recruiter, whose purview is limited to the software world, will often try to estimate good matches by looking at resume keywords like “Python” or “natural language processing.” A university career services employee needs to rely on even more amorphous keywords like “software” or “programming.” It’s hard for a non-technical person to distinguish a job engineering compilers from one hooking up printers.

|

||||||

|

|

||||||

|

Exceptions exist, of course (MIT and Stanford, for example, have predictably excellent software-specific career services), but they’re thoroughly exceptional.

|

||||||

|

|

||||||

|

There are plenty of other ways to find jobs, of course (job fairs at good industrial conferences—like [PyCon][8] or [Strange Loop][9]—aren’t bad, for example, though I’ve never taken a job through one). But the avenues above are the most common ways that job-finding happens. Good luck!

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://harryrschwartz.com/2018/07/19/finding-jobs-in-software.html

|

||||||

|

|

||||||

|

Author: [Harry R. Schwartz][a]

|

||||||

|

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||||

|

Translator: [lujun9972](https://github.com/lujun9972)

|

||||||

|

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||||

|

|

||||||

|

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||||

|

|

||||||

|

[a]:https://harryrschwartz.com/

|

||||||

|

[1]:https://harryrschwartz.com/assets/documents/articles/finding-jobs-in-software.pdf

|

||||||

|

[2]:https://www.fandm.edu/ed-novak

|

||||||

|

[3]:https://meetup.com

|

||||||

|

[4]:https://weworkremotely.com

|

||||||

|

[5]:https://news.ycombinator.com

|

||||||

|

[6]:https://news.ycombinator.com/item?id=13764728

|

||||||

|

[7]:https://www.hnhiring.com

|

||||||

|

[8]:https://us.pycon.org

|

||||||

|

[9]:https://thestrangeloop.com

|

||||||

@ -0,0 +1,116 @@

|

|||||||

|

Debian Turns 25! Here are Some Interesting Facts About Debian Linux

|

||||||

|

======

|

||||||

|

One of the oldest Linux distributions still in development, Debian has just turned 25. Let’s have a look at some interesting facts about this awesome FOSS project.

|

||||||

|

|

||||||

|

### 10 Interesting facts about Debian Linux

|

||||||

|

|

||||||

|

![Interesting facts about Debian Linux][1]

|

||||||

|

|

||||||

|

The facts presented here have been collected from various sources available on the internet. They are true to the best of my knowledge, but if you spot an error, please let me know so I can update the article.

|

||||||

|

|

||||||

|

#### 1\. One of the oldest Linux distributions still under active development

|

||||||

|

|

||||||

|

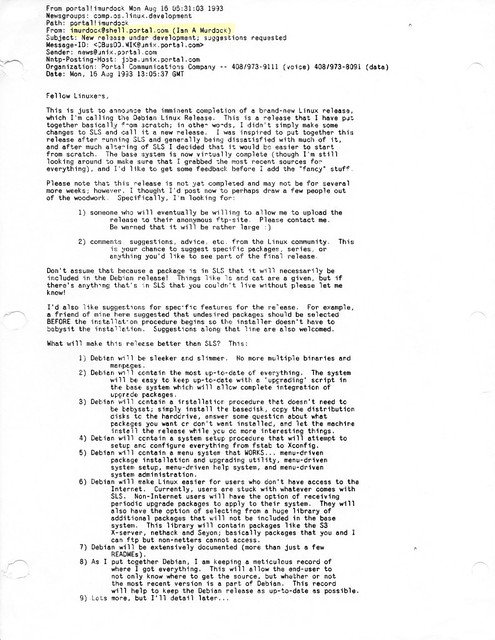

The [Debian project][2] was announced on 16th August 1993 by Ian Murdock, Debian’s founder. Like Linux creator [Linus Torvalds][3], Ian was a college student when he announced the Debian project.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

#### 2\. Some people get a tattoo, while others name their project after their girlfriend

|

||||||

|

|

||||||

|

The project was named by combining the names of Ian and his then-girlfriend, Debra Lynn. Ian and Debra later married and had three children. They divorced in 2008.

|

||||||

|

|

||||||

|

#### 3\. Ian Murdock: The Maverick behind the creation of Debian project

|

||||||

|

|

||||||

|

![Debian Founder Ian Murdock][4]

|

||||||

|

Ian Murdock

|

||||||

|

|

||||||

|

[Ian Murdock][5] led the Debian project from August 1993 until March 1996. He shaped Debian into a community project based on the principles of Free Software. The [Debian Manifesto][6] and the [Debian Social Contract][7] still govern the project.

|

||||||

|

|

||||||

|

He founded a commercial Linux company called [Progeny Linux Systems][8] and worked for a number of Linux-related companies and organizations, such as Sun Microsystems, the Linux Foundation and Docker.

|

||||||

|

|

||||||

|

Sadly, [Ian committed suicide in December 2015][9]. His contribution to Debian is certainly invaluable.

|

||||||

|

|

||||||

|

#### 4\. Debian is a community project in the true sense

|

||||||

|

|

||||||

|

Debian is a community-based project in the true sense. No one ‘owns’ Debian. It is developed by volunteers from all over the world. Unlike many other Linux distributions, it is not a commercial project backed by a corporation.

|

||||||

|

|

||||||

|

The Debian Linux distribution is composed of Free Software only. It’s one of the few Linux distributions that is true to the spirit of [Free Software][10] and takes pride in being called a GNU/Linux distribution.

|

||||||

|

|

||||||

|

Debian has its own non-profit organization called [Software in the Public Interest][11] (SPI). Along with Debian, SPI financially supports many other open source projects.

|

||||||

|

|

||||||

|

#### 5\. Debian and its 3 branches

|

||||||

|

|

||||||

|

Debian has three branches or versions: Debian Stable, Debian Unstable (Sid) and Debian Testing.

|

||||||

|

|

||||||

|

Debian Stable, as the name suggests, is the stable branch, in which all the software and packages are well tested to give you a rock-solid system. Since it takes time for well-tested software to land in the stable branch, Debian Stable often contains older versions of programs, and hence people joke that Debian Stable means stale.

|

||||||

|

|

||||||

|

[Debian Unstable][12], codenamed Sid, is the version where all the development of Debian takes place. This is where new packages first land or are developed. After that, these changes are propagated to the testing version.

|

||||||

|

|

||||||

|

[Debian Testing][13] is the next release after the current stable release. If the current stable release is N, Debian testing would be the N+1 release. The packages from Debian Unstable are tested in this version. After all the new changes are well tested, Debian Testing is then ‘promoted’ as the new Stable version.

|

||||||

|

|

||||||

|

There is no strict release schedule for Debian.

|

||||||

|

|

||||||

|

#### 7\. There was no Debian 1.0 release

|

||||||

|

|

||||||

|

Debian 1.0 was never released. In 1996, the CD vendor InfoMagic accidentally shipped a development release of Debian and labeled it 1.0. To prevent confusion between the CD version and the actual Debian release, the Debian Project renamed its next release to “Debian 1.1”.

|

||||||

|

|

||||||

|

#### 8\. Debian releases are codenamed after Toy Story characters

|

||||||

|

|

||||||

|

![Toy Story Characters][14]

|

||||||

|

|

||||||

|

Debian releases are codenamed after the characters from Pixar’s hit animation movie series [Toy Story][15].

|

||||||

|

|

||||||

|

Debian 1.1 was the first release with a codename. It was named Buzz after the Toy Story character Buzz Lightyear.

|

||||||

|

|

||||||

|

This was in 1996, when [Bruce Perens][16] had taken over leadership of the project from Ian Murdock. Bruce was working at Pixar at the time.

|

||||||

|

|

||||||

|

This trend continued, and all subsequent releases have been codenamed after Toy Story characters. For example, the current stable release is Stretch, while the upcoming release has been codenamed Buster.

|

||||||

|

|

||||||

|

The unstable Debian version is codenamed Sid. This character in Toy Story is a kid with emotional problems and he enjoys breaking toys. This is symbolic in the sense that Debian Unstable might break your system with untested packages.

|

||||||

|

|

||||||

|

#### 9\. Debian also has a BSD distribution

|

||||||

|

|

||||||

|

Debian is not limited to Linux. Debian also has a distribution based on the FreeBSD kernel. It is called [Debian GNU/kFreeBSD][17].

|

||||||

|

|

||||||

|

#### 10\. Google uses Debian

|

||||||

|

|

||||||

|

[Google uses Debian][18] as its in-house development platform. Earlier, Google used a customized version of Ubuntu as its development platform. Recently, it opted for the Debian-based gLinux.

|

||||||

|

|

||||||

|

#### Happy 25th birthday Debian

|

||||||

|

|

||||||

|

![Happy 25th birthday Debian][19]

|

||||||

|

|

||||||

|

I hope you liked these little facts about Debian. Things like these are among the reasons why people love Debian.

|

||||||

|

|

||||||

|

I wish a very happy 25th birthday to Debian. Please continue to be awesome. Cheers :)

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://itsfoss.com/debian-facts/

|

||||||

|

|

||||||

|

Author: [Abhishek Prakash][a]

|

||||||

|

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||||

|

Translator: [译者ID](https://github.com/译者ID)

|

||||||

|

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||||

|

|

||||||

|

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||||

|

|

||||||

|

[a]: https://itsfoss.com/author/abhishek/

|

||||||

|

[1]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/Interesting-facts-about-debian.jpeg

|

||||||

|

[2]:https://www.debian.org

|

||||||

|

[3]:https://itsfoss.com/linus-torvalds-facts/

|

||||||

|

[4]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/ian-murdock.jpg

|

||||||

|

[5]:https://en.wikipedia.org/wiki/Ian_Murdock

|

||||||

|

[6]:https://www.debian.org/doc/manuals/project-history/ap-manifesto.en.html

|

||||||

|

[7]:https://www.debian.org/social_contract

|

||||||

|

[8]:https://en.wikipedia.org/wiki/Progeny_Linux_Systems

|

||||||

|

[9]:https://itsfoss.com/ian-murdock-dies-mysteriously/

|

||||||

|

[10]:https://www.fsf.org/

|

||||||

|

[11]:https://www.spi-inc.org/

|

||||||

|

[12]:https://www.debian.org/releases/sid/

|

||||||

|

[13]:https://www.debian.org/releases/testing/

|

||||||

|

[14]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/toy-story-characters.jpeg

|

||||||

|

[15]:https://en.wikipedia.org/wiki/Toy_Story_(franchise)

|

||||||

|

[16]:https://perens.com/about-bruce-perens/

|

||||||

|

[17]:https://wiki.debian.org/Debian_GNU/kFreeBSD

|

||||||

|

[18]:https://itsfoss.com/goobuntu-glinux-google/

|

||||||

|

[19]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/happy-25th-birthday-Debian.jpeg

|

||||||

@ -1,601 +0,0 @@

|

|||||||

Translating by DavidChenLiang

|

|

||||||

|

|

||||||

The evolution of package managers

|

|

||||||

======

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Every computerized device uses some form of software to perform its intended tasks. In the early days of software, products were stringently tested for bugs and other defects. For the last decade or so, software has been released via the internet with the intent that any bugs would be fixed by applying new versions of the software. In some cases, each individual application has its own updater. In others, it is left up to the user to figure out how to obtain and upgrade software.

|

|

||||||

|

|

||||||

Linux adopted the practice of maintaining a centralized location where users could find and install software early on. In this article, I'll discuss the history of software installation on Linux and how modern operating systems are kept up to date against the never-ending torrent of [CVEs][1].

|

|

||||||

|

|

||||||

### How was software on Linux installed before package managers?

|

|

||||||

|

|

||||||

Historically, software was provided either via FTP or mailing lists (eventually this distribution would grow to include basic websites). Only a few small files contained the instructions to create a binary (normally in a tarfile). You would untar the files, read the readme, and as long as you had GCC or some other C compiler, you would then typically run a `./configure` script with a list of options, such as paths to library files and the location in which to create new binaries. In addition, the `configure` process would check your system for application dependencies. If any major requirements were missing, the configure script would exit and you could not proceed with the installation until all the dependencies were met. If the configure script completed successfully, a `Makefile` would be created.

|

|

||||||

|

|

||||||

Once a `Makefile` existed, you would then proceed to run the `make` command (a separate build tool that is typically installed alongside your compiler toolchain). The `make` command has a number of options called make flags, which help optimize the resulting binaries for your system. In the earlier days of computing, this was very important because hardware struggled to keep up with modern software demands. Today, compilation options can be much more generic, as most hardware is more than adequate for modern software.

|

|

||||||

|

|

||||||

Finally, after the `make` process had been completed, you would need to run `make install` (or `sudo make install`) in order to actually install the software. As you can imagine, doing this for every single piece of software was time-consuming and tedious—not to mention the fact that updating software was a complicated and potentially very involved process.
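The three steps described above can be sketched as a small dry-run helper. This is only an illustration: the tarball name `hello-1.0.tar.gz` and the function `build_steps` are hypothetical, and `--prefix` is assumed to be supported, as it usually is for Autoconf-generated `configure` scripts. The helper prints the commands instead of running them.

```shell
# Dry-run sketch: print the classic build-from-source steps for a tarball.
# Nothing is downloaded, compiled, or installed.
build_steps() {
    tarball="$1"                  # e.g. hello-1.0.tar.gz (hypothetical)
    prefix="${2:-/usr/local}"     # where `make install` would place files
    srcdir="${tarball%.tar.*}"    # strip the .tar.gz / .tar.xz suffix

    printf 'tar -xf %s\n' "$tarball"
    printf 'cd %s\n' "$srcdir"
    printf './configure --prefix=%s\n' "$prefix"
    printf 'make\n'
    printf 'sudo make install\n'
}

build_steps hello-1.0.tar.gz
```

Every package built this way has to be tracked, rebuilt, and updated by hand, which is the burden package formats were meant to remove.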

|

|

||||||

|

|

||||||

### What is a package?

|

|

||||||

|

|

||||||

Packages were invented to combat this complexity. Packages collect multiple data files together into a single archive file for easier portability and storage, or simply compress files to reduce storage space. The binaries included in a package are precompiled according to sane defaults chosen by the developer. Packages also contain metadata, such as the software's name, a description of its purpose, a version number, and a list of dependencies necessary for the software to run properly.

|

|

||||||

|

|

||||||

Several flavors of Linux have created their own package formats. Some of the most commonly used package formats include:

|

|

||||||

|

|

||||||

* .deb: This package format is used by Debian, Ubuntu, Linux Mint, and several other derivatives. It was the first package type to be created.

|

|

||||||

* .rpm: This package format was originally called Red Hat Package Manager. It is used by Red Hat, Fedora, SUSE, and several other smaller distributions.

|

|

||||||

* .tar.xz: While it is just a compressed tarball, this is the format that Arch Linux uses.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

While packages themselves don't manage dependencies directly, they represented a huge step forward in Linux software management.
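To make the idea of package metadata concrete, here is a minimal sketch. The field names follow the style of a Debian control file, but the package `hello-demo` and its dependency `libfoo1` are invented for illustration, and the `field` helper is a hypothetical convenience, not part of any packaging tool.

```shell
# Hypothetical metadata for an imaginary package called "hello-demo".
control='Package: hello-demo
Version: 1.0-1
Architecture: amd64
Depends: libc6 (>= 2.27), libfoo1
Description: Example package used only for illustration'

# Print the value of a single field from the metadata stanza.
field() {
    printf '%s\n' "$control" | sed -n "s/^$1: //p"
}

field Package
field Version
field Depends
```

The package only declares what it needs in a field such as `Depends`. Fetching and installing those dependencies is the job of the package manager and the repositories it talks to.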

|

|

||||||

|

|

||||||

### What is a software repository?

|

|

||||||

|

|

||||||

A few years ago, before the proliferation of smartphones, the idea of a software repository was difficult for many users to grasp if they were not involved in the Linux ecosystem. To this day, most Windows users still seem to be hardwired to open a web browser to search for and install new software. However, those with smartphones have gotten used to the idea of a software "store." The way smartphone users obtain software and the way package managers work are not dissimilar. While there have been several attempts at making an attractive UI for software repositories, the vast majority of Linux users still use the command line to install packages. A software repository is a centralized listing of all the software available from a source that the system has been configured to use. Below are some examples of searching a repository for a specific package (note that these have been truncated for brevity):

|

|

||||||

|

|

||||||

Arch Linux with aurman

|

|

||||||

```

|

|

||||||

user@arch ~ $ aurman -Ss kate

|

|

||||||

|

|

||||||

extra/kate 18.04.2-2 (kde-applications kdebase)

|

|

||||||

Advanced Text Editor

|

|

||||||

aur/kate-root 18.04.0-1 (11, 1.139399)

|

|

||||||

Advanced Text Editor, patched to be able to run as root

|

|

||||||

aur/kate-git r15288.15d26a7-1 (1, 1e-06)

|

|

||||||

An advanced editor component which is used in numerous KDE applications requiring a text editing component

|

|

||||||

```

|

|

||||||

|

|

||||||

CentOS 7 using YUM

|

|

||||||

```

|

|

||||||

[user@centos ~]$ yum search kate

|

|

||||||

|

|

||||||

kate-devel.x86_64 : Development files for kate

|

|

||||||

kate-libs.x86_64 : Runtime files for kate

|

|

||||||

kate-part.x86_64 : Kate kpart plugin

|

|

||||||

```

|

|

||||||

|

|

||||||

Ubuntu using APT

|

|

||||||

```

|

|

||||||

user@ubuntu ~ $ apt search kate

|

|

||||||

Sorting... Done

|

|

||||||

Full Text Search... Done

|

|

||||||

|

|

||||||

kate/xenial 4:15.12.3-0ubuntu2 amd64

|

|

||||||

powerful text editor

|

|

||||||

|

|

||||||

kate-data/xenial,xenial 4:4.14.3-0ubuntu4 all

|

|

||||||

shared data files for Kate text editor

|

|

||||||

|

|

||||||

kate-dbg/xenial 4:15.12.3-0ubuntu2 amd64

|

|

||||||

debugging symbols for Kate

|

|

||||||

|

|

||||||

kate5-data/xenial,xenial 4:15.12.3-0ubuntu2 all

|

|

||||||

shared data files for Kate text editor

|

|

||||||

```

|

|

||||||

|

|

||||||

### What are the most prominent package managers?

|

|

||||||

|

|

||||||

As suggested in the above output, package managers are used to interact with software repositories. The following is a brief overview of some of the most prominent package managers.

|

|

||||||

|

|

||||||

#### RPM-based package managers

|

|

||||||

|

|

||||||

Updating RPM-based systems, particularly those based on Red Hat technologies, has a very interesting and detailed history. In fact, the current versions of [yum][2] (for enterprise distributions) and [DNF][3] (for community) combine several open source projects to provide their current functionality.

|

|

||||||

|

|

||||||

Initially, Red Hat used a package manager called [RPM][4] (Red Hat Package Manager), which is still in use today. However, its primary use is to install RPMs that you already have locally, not to search software repositories. The package manager named `up2date` was created to inform users of updates to packages and enable them to search remote repositories and easily install dependencies. While it served its purpose, some community members felt that `up2date` had some significant shortcomings.

|

|

||||||

|

|

||||||

The current incarnation of yum came from several different community efforts. Yellowdog Updater (YUP) was developed in 1999-2001 by folks at Terra Soft Solutions as a back-end engine for a graphical installer of [Yellow Dog Linux][5]. Duke University liked the idea of YUP and decided to improve upon it. They created [Yellowdog Updater, Modified (yum)][6], which was eventually adapted to help manage the university's Red Hat Linux systems. Yum grew in popularity, and by 2005 it was estimated to be used by more than half of the Linux market. Today, almost every distribution of Linux that uses RPMs uses yum for package management (with a few notable exceptions).

|

|

||||||

|

|

||||||

#### Working with yum

|

|

||||||

|

|

||||||

In order for yum to download and install packages out of an internet repository, files must be located in `/etc/yum.repos.d/` and they must have the extension `.repo`. Here is an example repo file:

|

|

||||||

```

|

|

||||||

[local_base]

|

|

||||||

name=Base CentOS (local)

|

|

||||||

baseurl=http://7-repo.apps.home.local/yum-repo/7/

|

|

||||||

enabled=1

|

|

||||||

gpgcheck=0

|

|

||||||

```

|

|

||||||

|

|

||||||

This is for one of my local repositories, which explains why the GPG check is off. If this check was on, each package would need to be signed with a cryptographic key and a corresponding key would need to be imported into the system receiving the updates. Because I maintain this repository myself, I trust the packages and do not bother signing them.
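For comparison, here is a sketch of what the same repository definition might look like with signature checking turned on. The `gpgkey` option tells yum where to find the public key, but the key path used here is a made-up example, and a temporary directory stands in for `/etc/yum.repos.d/` so that nothing on the system is modified.

```shell
# Write a signed-repo variant into a scratch directory (a stand-in for
# /etc/yum.repos.d/, so no root access is needed and nothing is changed).
repo_dir="$(mktemp -d)"

cat > "$repo_dir/local_base.repo" <<'EOF'
[local_base]
name=Base CentOS (local, signed)
baseurl=http://7-repo.apps.home.local/yum-repo/7/
enabled=1
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-local-example
EOF

# Show the two lines that differ from the unsigned example.
grep -E '^(gpgcheck|gpgkey)=' "$repo_dir/local_base.repo"
```

With `gpgcheck=1`, yum would refuse packages from this repository that are not signed with the referenced key, which is the safer choice for any repository you do not fully control.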

|

|

||||||

|

|

||||||

Once a repository file is in place, you can start installing packages from the remote repository. The most basic command is `yum update`, which will update every package currently installed. This does not require a specific step to refresh the information about repositories; this is done automatically. A sample of the command is shown below:

|

|

||||||

```

|

|

||||||

[user@centos ~]$ sudo yum update

|

|

||||||

Loaded plugins: fastestmirror, product-id, search-disabled-repos, subscription-manager

|

|

||||||

local_base | 3.6 kB 00:00:00

|

|

||||||

local_epel | 2.9 kB 00:00:00

|

|

||||||

local_rpm_forge | 1.9 kB 00:00:00

|

|

||||||

local_updates | 3.4 kB 00:00:00

|

|

||||||

spideroak-one-stable | 2.9 kB 00:00:00

|

|

||||||

zfs | 2.9 kB 00:00:00

|

|

||||||

(1/6): local_base/group_gz | 166 kB 00:00:00

|

|

||||||

(2/6): local_updates/primary_db | 2.7 MB 00:00:00

|

|

||||||

(3/6): local_base/primary_db | 5.9 MB 00:00:00

|

|

||||||

(4/6): spideroak-one-stable/primary_db | 12 kB 00:00:00

|

|

||||||

(5/6): local_epel/primary_db | 6.3 MB 00:00:00

|

|

||||||

(6/6): zfs/x86_64/primary_db | 78 kB 00:00:00

|

|

||||||

local_rpm_forge/primary_db | 125 kB 00:00:00

|

|

||||||

Determining fastest mirrors

|

|

||||||

Resolving Dependencies

|

|

||||||

--> Running transaction check

|

|

||||||

```

|

|

||||||

|

|

||||||

If you are sure you want yum to execute any command without stopping for input, you can put the `-y` flag in the command, such as `yum update -y`.

|

|

||||||

|

|

||||||

Installing a new package is just as easy. First, search for the name of the package with `yum search`:

|

|

||||||

```

|

|

||||||

[user@centos ~]$ yum search kate

|

|

||||||

|

|

||||||

artwiz-aleczapka-kates-fonts.noarch : Kates font in Artwiz family

|

|

||||||

ghc-highlighting-kate-devel.x86_64 : Haskell highlighting-kate library development files

|

|

||||||

kate-devel.i686 : Development files for kate

|

|

||||||

kate-devel.x86_64 : Development files for kate

|

|

||||||

kate-libs.i686 : Runtime files for kate

|

|

||||||

kate-libs.x86_64 : Runtime files for kate

|

|

||||||

kate-part.i686 : Kate kpart plugin

|

|

||||||

```

|

|

||||||

|

|

||||||

Once you have the name of the package, you can simply install the package with `sudo yum install kate-devel -y`. If you installed a package you no longer need, you can remove it with `sudo yum remove kate-devel -y`. By default, yum will also remove any installed packages that depend on the one you are removing.

|

|

||||||

|

|

||||||

There may be times when you do not know the name of the package, but you know the name of the utility. For example, suppose you are looking for the utility `updatedb`, which creates/updates the database used by the `locate` command. Attempting to install `updatedb` returns the following results:

|

|

||||||

```

|

|

||||||

[user@centos ~]$ sudo yum install updatedb

|

|

||||||

Loaded plugins: fastestmirror, langpacks

|

|

||||||

Loading mirror speeds from cached hostfile

|

|

||||||

No package updatedb available.

|

|

||||||

Error: Nothing to do

|

|

||||||

```

|

|

||||||

|

|

||||||

You can find out what package the utility comes from by running:

|

|

||||||

```

|

|

||||||

[user@centos ~]$ yum whatprovides *updatedb

|

|

||||||

Loaded plugins: fastestmirror, langpacks

|

|

||||||

Loading mirror speeds from cached hostfile

|

|

||||||

|

|

||||||

bacula-director-5.2.13-23.1.el7.x86_64 : Bacula Director files

|

|

||||||

Repo : local_base

|

|

||||||

Matched from:

|

|

||||||

Filename : /usr/share/doc/bacula-director-5.2.13/updatedb

|

|

||||||

|

|

||||||

mlocate-0.26-8.el7.x86_64 : An utility for finding files by name

|

|

||||||

Repo : local_base

|

|

||||||

Matched from:

|

|

||||||

Filename : /usr/bin/updatedb

|

|

||||||

```

|

|

||||||

|

|

||||||

The reason I have used an asterisk `*` in front of the command is because `yum whatprovides` uses the path to the file in order to make a match. Since I was not sure where the file was located, I used an asterisk to indicate any path.
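One caveat about the asterisk, offered as a general shell behavior rather than anything specific to yum: if a file in the current directory happens to match the pattern, the shell expands the unquoted glob before the command ever sees it. The sketch below demonstrates this with `show_arg`, a hypothetical stand-in for `yum whatprovides`, and a made-up file name.

```shell
# Work in a scratch directory that contains one file matching the pattern.
demo_dir="$(mktemp -d)"
cd "$demo_dir" || exit 1
touch old-updatedb

# Hypothetical stand-in for `yum whatprovides`: prints what it was given.
show_arg() { printf 'argument received: %s\n' "$1"; }

show_arg *updatedb      # unquoted: the shell substitutes the matching file name
show_arg '*updatedb'    # quoted: the pattern reaches the command untouched
```

Quoting the pattern, as in `yum whatprovides '*updatedb'`, should therefore give the same result regardless of which directory you run the command from.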

|

|

||||||

|

|

||||||

There are, of course, many more options available to yum. I encourage you to view the man page for yum for additional options.

|

|

||||||

|

|

||||||

[Dandified Yum (DNF)][7] is a newer iteration on yum. Introduced in Fedora 18, it has not yet been adopted in the enterprise distributions, and as such is predominantly used in Fedora (and derivatives). Its usage is almost exactly the same as that of yum, but it was built to address poor performance, undocumented APIs, slow/broken dependency resolution, and occasional high memory usage. DNF is meant as a drop-in replacement for yum, and therefore I won't repeat the commands—wherever you would use `yum`, simply substitute `dnf`.
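Because the two tools accept largely the same commands, a script that has to run on both kinds of systems can pick whichever one is installed. The following is a minimal sketch under that assumption. The function name `pick_pkg_tool` is hypothetical, and the script only reports which tool it found without installing anything.

```shell
# Report which RPM front end is available, preferring dnf over yum.
pick_pkg_tool() {
    if command -v dnf >/dev/null 2>&1; then
        echo dnf
    elif command -v yum >/dev/null 2>&1; then
        echo yum
    else
        echo none
    fi
}

tool="$(pick_pkg_tool)"
if [ "$tool" = "none" ]; then
    echo "neither dnf nor yum was found on this system"
else
    # The same subcommand works with either tool.
    echo "would run: sudo $tool install kate"
fi
```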

|

|

||||||

|

|

||||||

#### Working with Zypper

|

|

||||||

|

|

||||||

[Zypper][8] is another package manager meant to help manage RPMs. This package manager is most commonly associated with [SUSE][9] (and [openSUSE][10]) but has also seen adoption by [MeeGo][11], [Sailfish OS][12], and [Tizen][13]. It was originally introduced in 2006 and has been iterated upon ever since. There is not a whole lot to say other than Zypper is used as the back end for the system administration tool [YaST][14] and some users find it to be faster than yum.

|

|

||||||

|

|

||||||

Zypper's usage is very similar to that of yum. To search for, update, install or remove a package, simply use the following:

|

|

||||||

```

|

|

||||||

zypper search kate

|

|

||||||

zypper update

|

|

||||||

zypper install kate

|

|

||||||

zypper remove kate

|

|

||||||

```

|

|

||||||

Some major differences come into play in how repositories are added to the system with `zypper`. Unlike the package managers discussed above, `zypper` adds repositories using the package manager itself. The most common way is via a URL, but `zypper` also supports importing from repo files.

|

|

||||||

```

|

|

||||||

suse:~ # zypper addrepo http://download.videolan.org/pub/vlc/SuSE/15.0 vlc

|

|

||||||

Adding repository 'vlc' [done]

|

|

||||||

Repository 'vlc' successfully added

|

|

||||||

|

|

||||||

Enabled : Yes

|

|

||||||

Autorefresh : No

|

|

||||||

GPG Check : Yes

|

|

||||||

URI : http://download.videolan.org/pub/vlc/SuSE/15.0

|

|

||||||

Priority : 99

|

|

||||||

```

|

|

||||||

|

|

||||||

You remove repositories in a similar manner:

|

|

||||||

```

|

|

||||||

suse:~ # zypper removerepo vlc

|

|

||||||

Removing repository 'vlc' ...................................[done]

|

|

||||||

Repository 'vlc' has been removed.

|

|

||||||

```

|

|

||||||

|

|

||||||

Use the `zypper repos` command to see the status of the repositories on your system:

|

|

||||||

```

|

|

||||||

suse:~ # zypper repos

|

|

||||||

Repository priorities are without effect. All enabled repositories share the same priority.

|

|

||||||

|

|

||||||

# | Alias | Name | Enabled | GPG Check | Refresh

|

|

||||||

---|---------------------------|-----------------------------------------|---------|-----------|--------

|

|

||||||

1 | repo-debug | openSUSE-Leap-15.0-Debug | No | ---- | ----

|

|

||||||

2 | repo-debug-non-oss | openSUSE-Leap-15.0-Debug-Non-Oss | No | ---- | ----

|

|

||||||

3 | repo-debug-update | openSUSE-Leap-15.0-Update-Debug | No | ---- | ----

|

|

||||||

4 | repo-debug-update-non-oss | openSUSE-Leap-15.0-Update-Debug-Non-Oss | No | ---- | ----

|

|

||||||

5 | repo-non-oss | openSUSE-Leap-15.0-Non-Oss | Yes | ( p) Yes | Yes

|

|

||||||

6 | repo-oss | openSUSE-Leap-15.0-Oss | Yes | ( p) Yes | Yes

|

|

||||||

```

|

|

||||||

|

|

||||||

`zypper` even has a similar ability to determine which package contains a given file or binary. Unlike YUM, it uses a hyphen in the command (although this method of searching is deprecated):

|

|

||||||

```

|

|

||||||

localhost:~ # zypper what-provides kate

|

|

||||||

Command 'what-provides' is replaced by 'search --provides --match-exact'.

|

|

||||||

See 'help search' for all available options.

|

|

||||||

Loading repository data...

|

|

||||||

Reading installed packages...

|

|

||||||

|

|

||||||

S | Name | Summary | Type

|

|

||||||

---|------|----------------------|------------

|

|

||||||

i+ | Kate | Advanced Text Editor | application

|

|

||||||

i | kate | Advanced Text Editor | package

|

|

||||||

```

|

|

||||||

|

|

||||||

As with YUM and DNF, Zypper has a much richer feature set than is covered here. Please consult the official documentation for more in-depth information.

|

|

||||||

|

|

||||||

#### Debian-based package managers

|

|

||||||