mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-03 01:10:13 +08:00

commit

cd4f70a3b1

183

published/20150522 Analyzing Linux Logs.md

Normal file

183

published/20150522 Analyzing Linux Logs.md

Normal file

@ -0,0 +1,183 @@

|

||||

如何分析 Linux 日志

|

||||

==============================================================================

|

||||

|

||||

|

||||

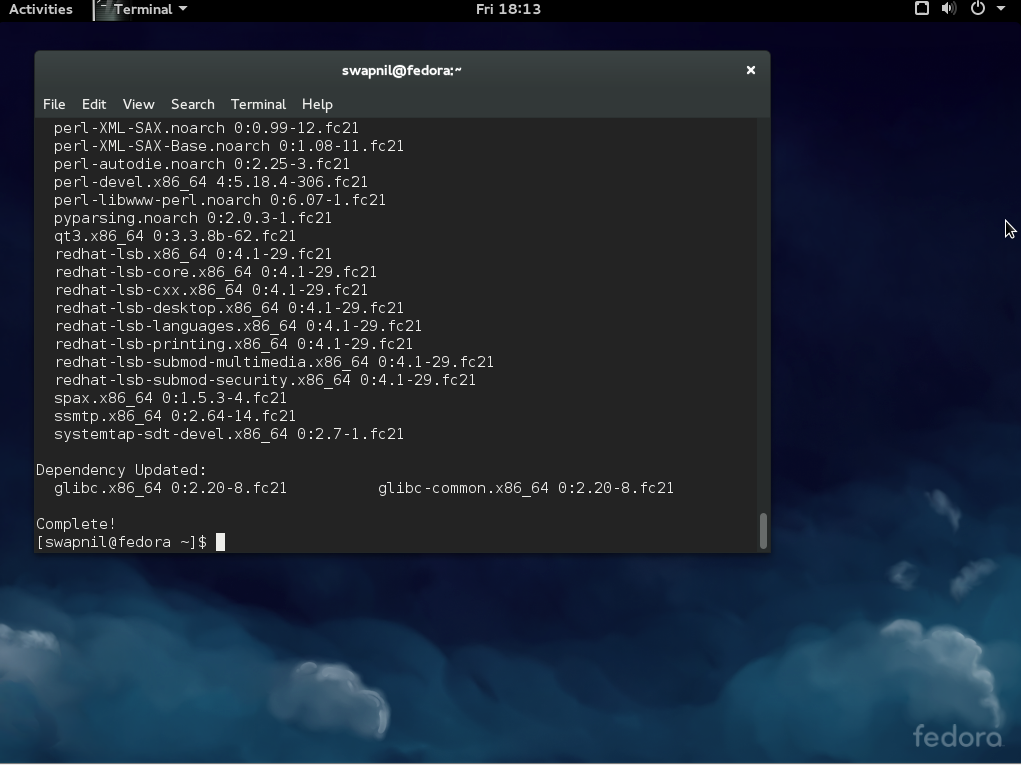

日志中有大量的信息需要你处理,尽管有时候想要提取并非想象中的容易。在这篇文章中我们会介绍一些你现在就能做的基本日志分析例子(只需要搜索即可)。我们还将涉及一些更高级的分析,但这些需要你前期努力做出适当的设置,后期就能节省很多时间。对数据进行高级分析的例子包括生成汇总计数、对有效值进行过滤,等等。

|

||||

|

||||

我们首先会向你展示如何在命令行中使用多个不同的工具,然后展示了一个日志管理工具如何能自动完成大部分繁重工作从而使得日志分析变得简单。

|

||||

|

||||

### 用 Grep 搜索 ###

|

||||

|

||||

搜索文本是查找信息最基本的方式。搜索文本最常用的工具是 [grep][1]。这个命令行工具在大部分 Linux 发行版中都有,它允许你用正则表达式搜索日志。正则表达式是一种用特殊的语言写的、能识别匹配文本的模式。最简单的模式就是用引号把你想要查找的字符串括起来。

|

||||

|

||||

#### 正则表达式 ####

|

||||

|

||||

这是一个在 Ubuntu 系统的认证日志中查找 “user hoover” 的例子:

|

||||

|

||||

$ grep "user hoover" /var/log/auth.log

|

||||

Accepted password for hoover from 10.0.2.2 port 4792 ssh2

|

||||

pam_unix(sshd:session): session opened for user hoover by (uid=0)

|

||||

pam_unix(sshd:session): session closed for user hoover

|

||||

|

||||

构建精确的正则表达式可能很难。例如,如果我们想要搜索一个类似端口 “4792” 的数字,它可能也会匹配时间戳、URL 以及其它不需要的数据。Ubuntu 中下面的例子,它匹配了一个我们不想要的 Apache 日志。

|

||||

|

||||

$ grep "4792" /var/log/auth.log

|

||||

Accepted password for hoover from 10.0.2.2 port 4792 ssh2

|

||||

74.91.21.46 - - [31/Mar/2015:19:44:32 +0000] "GET /scripts/samples/search?q=4972 HTTP/1.0" 404 545 "-" "-”

|

||||

|

||||

#### 环绕搜索 ####

|

||||

|

||||

另一个有用的小技巧是你可以用 grep 做环绕搜索。这会向你展示一个匹配前面或后面几行是什么。它能帮助你调试导致错误或问题的东西。`B` 选项展示前面几行,`A` 选项展示后面几行。举个例子,我们知道当一个人以管理员员身份登录失败时,同时他们的 IP 也没有反向解析,也就意味着他们可能没有有效的域名。这非常可疑!

|

||||

|

||||

$ grep -B 3 -A 2 'Invalid user' /var/log/auth.log

|

||||

Apr 28 17:06:20 ip-172-31-11-241 sshd[12545]: reverse mapping checking getaddrinfo for 216-19-2-8.commspeed.net [216.19.2.8] failed - POSSIBLE BREAK-IN ATTEMPT!

|

||||

Apr 28 17:06:20 ip-172-31-11-241 sshd[12545]: Received disconnect from 216.19.2.8: 11: Bye Bye [preauth]

|

||||

Apr 28 17:06:20 ip-172-31-11-241 sshd[12547]: Invalid user admin from 216.19.2.8

|

||||

Apr 28 17:06:20 ip-172-31-11-241 sshd[12547]: input_userauth_request: invalid user admin [preauth]

|

||||

Apr 28 17:06:20 ip-172-31-11-241 sshd[12547]: Received disconnect from 216.19.2.8: 11: Bye Bye [preauth]

|

||||

|

||||

#### Tail ####

|

||||

|

||||

你也可以把 grep 和 [tail][2] 结合使用来获取一个文件的最后几行,或者跟踪日志并实时打印。这在你做交互式更改的时候非常有用,例如启动服务器或者测试代码更改。

|

||||

|

||||

$ tail -f /var/log/auth.log | grep 'Invalid user'

|

||||

Apr 30 19:49:48 ip-172-31-11-241 sshd[6512]: Invalid user ubnt from 219.140.64.136

|

||||

Apr 30 19:49:49 ip-172-31-11-241 sshd[6514]: Invalid user admin from 219.140.64.136

|

||||

|

||||

关于 grep 和正则表达式的详细介绍并不在本指南的范围,但 [Ryan’s Tutorials][3] 有更深入的介绍。

|

||||

|

||||

日志管理系统有更高的性能和更强大的搜索能力。它们通常会索引数据并进行并行查询,因此你可以很快的在几秒内就能搜索 GB 或 TB 的日志。相比之下,grep 就需要几分钟,在极端情况下可能甚至几小时。日志管理系统也使用类似 [Lucene][4] 的查询语言,它提供更简单的语法来检索数字、域以及其它。

|

||||

|

||||

### 用 Cut、 AWK、 和 Grok 解析 ###

|

||||

|

||||

#### 命令行工具 ####

|

||||

|

||||

Linux 提供了多个命令行工具用于文本解析和分析。当你想要快速解析少量数据时非常有用,但处理大量数据时可能需要很长时间。

|

||||

|

||||

#### Cut ####

|

||||

|

||||

[cut][5] 命令允许你从有分隔符的日志解析字段。分隔符是指能分开字段或键值对的等号或逗号等。

|

||||

|

||||

假设我们想从下面的日志中解析出用户:

|

||||

|

||||

pam_unix(su:auth): authentication failure; logname=hoover uid=1000 euid=0 tty=/dev/pts/0 ruser=hoover rhost= user=root

|

||||

|

||||

我们可以像下面这样用 cut 命令获取用等号分割后的第八个字段的文本。这是一个 Ubuntu 系统上的例子:

|

||||

|

||||

$ grep "authentication failure" /var/log/auth.log | cut -d '=' -f 8

|

||||

root

|

||||

hoover

|

||||

root

|

||||

nagios

|

||||

nagios

|

||||

|

||||

#### AWK ####

|

||||

|

||||

另外,你也可以使用 [awk][6],它能提供更强大的解析字段功能。它提供了一个脚本语言,你可以过滤出几乎任何不相干的东西。

|

||||

|

||||

例如,假设在 Ubuntu 系统中我们有下面的一行日志,我们想要提取登录失败的用户名称:

|

||||

|

||||

Mar 24 08:28:18 ip-172-31-11-241 sshd[32701]: input_userauth_request: invalid user guest [preauth]

|

||||

|

||||

你可以像下面这样使用 awk 命令。首先,用一个正则表达式 /sshd.*invalid user/ 来匹配 sshd invalid user 行。然后用 { print $9 } 根据默认的分隔符空格打印第九个字段。这样就输出了用户名。

|

||||

|

||||

$ awk '/sshd.*invalid user/ { print $9 }' /var/log/auth.log

|

||||

guest

|

||||

admin

|

||||

info

|

||||

test

|

||||

ubnt

|

||||

|

||||

你可以在 [Awk 用户指南][7] 中阅读更多关于如何使用正则表达式和输出字段的信息。

|

||||

|

||||

#### 日志管理系统 ####

|

||||

|

||||

日志管理系统使得解析变得更加简单,使用户能快速的分析很多的日志文件。他们能自动解析标准的日志格式,比如常见的 Linux 日志和 Web 服务器日志。这能节省很多时间,因为当处理系统问题的时候你不需要考虑自己写解析逻辑。

|

||||

|

||||

下面是一个 sshd 日志消息的例子,解析出了每个 remoteHost 和 user。这是 Loggly 中的一张截图,它是一个基于云的日志管理服务。

|

||||

|

||||

|

||||

|

||||

你也可以对非标准格式自定义解析。一个常用的工具是 [Grok][8],它用一个常见正则表达式库,可以解析原始文本为结构化 JSON。下面是一个 Grok 在 Logstash 中解析内核日志文件的事例配置:

|

||||

|

||||

filter{

|

||||

grok {

|

||||

match => {"message" => "%{CISCOTIMESTAMP:timestamp} %{HOST:host} %{WORD:program}%{NOTSPACE} %{NOTSPACE}%{NUMBER:duration}%{NOTSPACE} %{GREEDYDATA:kernel_logs}"

|

||||

}

|

||||

}

|

||||

|

||||

下图是 Grok 解析后输出的结果:

|

||||

|

||||

|

||||

|

||||

### 用 Rsyslog 和 AWK 过滤 ###

|

||||

|

||||

过滤使得你能检索一个特定的字段值而不是进行全文检索。这使你的日志分析更加准确,因为它会忽略来自其它部分日志信息不需要的匹配。为了对一个字段值进行搜索,你首先需要解析日志或者至少有对事件结构进行检索的方式。

|

||||

|

||||

#### 如何对应用进行过滤 ####

|

||||

|

||||

通常,你可能只想看一个应用的日志。如果你的应用把记录都保存到一个文件中就会很容易。如果你需要在一个聚集或集中式日志中过滤一个应用就会比较复杂。下面有几种方法来实现:

|

||||

|

||||

1. 用 rsyslog 守护进程解析和过滤日志。下面的例子将 sshd 应用的日志写入一个名为 sshd-message 的文件,然后丢弃事件以便它不会在其它地方重复出现。你可以将它添加到你的 rsyslog.conf 文件中测试这个例子。

|

||||

|

||||

:programname, isequal, “sshd” /var/log/sshd-messages

|

||||

&~

|

||||

|

||||

2. 用类似 awk 的命令行工具提取特定字段的值,例如 sshd 用户名。下面是 Ubuntu 系统中的一个例子。

|

||||

|

||||

$ awk '/sshd.*invalid user/ { print $9 }' /var/log/auth.log

|

||||

guest

|

||||

admin

|

||||

info

|

||||

test

|

||||

ubnt

|

||||

|

||||

3. 用日志管理系统自动解析日志,然后在需要的应用名称上点击过滤。下面是在 Loggly 日志管理服务中提取 syslog 域的截图。我们对应用名称 “sshd” 进行过滤,如维恩图图标所示。

|

||||

|

||||

|

||||

|

||||

#### 如何过滤错误 ####

|

||||

|

||||

一个人最希望看到日志中的错误。不幸的是,默认的 syslog 配置不直接输出错误的严重性,也就使得难以过滤它们。

|

||||

|

||||

这里有两个解决该问题的方法。首先,你可以修改你的 rsyslog 配置,在日志文件中输出错误的严重性,使得便于查看和检索。在你的 rsyslog 配置中你可以用 pri-text 添加一个 [模板][9],像下面这样:

|

||||

|

||||

"<%pri-text%> : %timegenerated%,%HOSTNAME%,%syslogtag%,%msg%n"

|

||||

|

||||

这个例子会按照下面的格式输出。你可以看到该信息中指示错误的 err。

|

||||

|

||||

<authpriv.err> : Mar 11 18:18:00,hoover-VirtualBox,su[5026]:, pam_authenticate: Authentication failure

|

||||

|

||||

你可以用 awk 或者 grep 检索错误信息。在 Ubuntu 中,对这个例子,我们可以用一些语法特征,例如 . 和 >,它们只会匹配这个域。

|

||||

|

||||

$ grep '.err>' /var/log/auth.log

|

||||

<authpriv.err> : Mar 11 18:18:00,hoover-VirtualBox,su[5026]:, pam_authenticate: Authentication failure

|

||||

|

||||

你的第二个选择是使用日志管理系统。好的日志管理系统能自动解析 syslog 消息并抽取错误域。它们也允许你用简单的点击过滤日志消息中的特定错误。

|

||||

|

||||

下面是 Loggly 中一个截图,显示了高亮错误严重性的 syslog 域,表示我们正在过滤错误:

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.loggly.com/ultimate-guide/logging/analyzing-linux-logs/

|

||||

|

||||

作者:[Jason Skowronski][a],[Amy Echeverri][b],[ Sadequl Hussain][c]

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linkedin.com/in/jasonskowronski

|

||||

[b]:https://www.linkedin.com/in/amyecheverri

|

||||

[c]:https://www.linkedin.com/pub/sadequl-hussain/14/711/1a7

|

||||

[1]:http://linux.die.net/man/1/grep

|

||||

[2]:http://linux.die.net/man/1/tail

|

||||

[3]:http://ryanstutorials.net/linuxtutorial/grep.php

|

||||

[4]:https://lucene.apache.org/core/2_9_4/queryparsersyntax.html

|

||||

[5]:http://linux.die.net/man/1/cut

|

||||

[6]:http://linux.die.net/man/1/awk

|

||||

[7]:http://www.delorie.com/gnu/docs/gawk/gawk_26.html#IDX155

|

||||

[8]:http://logstash.net/docs/1.4.2/filters/grok

|

||||

[9]:http://www.rsyslog.com/doc/v8-stable/configuration/templates.html

|

||||

@ -1,18 +1,18 @@

|

||||

Ubuntu 15.04上配置OpenVPN服务器-客户端

|

||||

在 Ubuntu 15.04 上配置 OpenVPN 服务器和客户端

|

||||

================================================================================

|

||||

虚拟专用网(VPN)是几种用于建立与其它网络连接的网络技术中常见的一个名称。它被称为虚拟网,因为各个节点的连接不是通过物理线路实现的。而由于没有网络所有者的正确授权是不能通过公共线路访问到网络,所以它是专用的。

|

||||

虚拟专用网(VPN)常指几种通过其它网络建立连接技术。它之所以被称为“虚拟”,是因为各个节点间的连接不是通过物理线路实现的,而“专用”是指如果没有网络所有者的正确授权是不能被公开访问到。

|

||||

|

||||

|

||||

|

||||

[OpenVPN][1]软件通过TUN/TAP驱动的帮助,使用TCP和UDP协议来传输数据。UDP协议和TUN驱动允许NAT后的用户建立到OpenVPN服务器的连接。此外,OpenVPN允许指定自定义端口。它提额外提供了灵活的配置,可以帮助你避免防火墙限制。

|

||||

[OpenVPN][1]软件借助TUN/TAP驱动使用TCP和UDP协议来传输数据。UDP协议和TUN驱动允许NAT后的用户建立到OpenVPN服务器的连接。此外,OpenVPN允许指定自定义端口。它提供了更多的灵活配置,可以帮助你避免防火墙限制。

|

||||

|

||||

OpenVPN中,由OpenSSL库和传输层安全协议(TLS)提供了安全和加密。TLS是SSL协议的一个改进版本。

|

||||

|

||||

OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示了如何配置OpenVPN的服务器端,以及如何预备使用带有公共密钥非对称加密和TLS协议基础结构(PKI)。

|

||||

OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示了如何配置OpenVPN的服务器端,以及如何配置使用带有公共密钥基础结构(PKI)的非对称加密和TLS协议。

|

||||

|

||||

### 服务器端配置 ###

|

||||

|

||||

首先,我们必须安装OpenVPN。在Ubuntu 15.04和其它带有‘apt’报管理器的Unix系统中,可以通过如下命令安装:

|

||||

首先,我们必须安装OpenVPN软件。在Ubuntu 15.04和其它带有‘apt’包管理器的Unix系统中,可以通过如下命令安装:

|

||||

|

||||

sudo apt-get install openvpn

|

||||

|

||||

@ -20,7 +20,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

sudo apt-get unstall easy-rsa

|

||||

|

||||

**注意**: 所有接下来的命令要以超级用户权限执行,如在“sudo -i”命令后;此外,你可以使用“sudo -E”作为接下来所有命令的前缀。

|

||||

**注意**: 所有接下来的命令要以超级用户权限执行,如在使用`sudo -i`命令后执行,或者你可以使用`sudo -E`作为接下来所有命令的前缀。

|

||||

|

||||

开始之前,我们需要拷贝“easy-rsa”到openvpn文件夹。

|

||||

|

||||

@ -32,15 +32,15 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

cd /etc/openvpn/easy-rsa/2.0

|

||||

|

||||

这里,我们开启了一个密钥生成进程。

|

||||

这里,我们开始密钥生成进程。

|

||||

|

||||

首先,我们编辑一个“var”文件。为了简化生成过程,我们需要在里面指定数据。这里是“var”文件的一个样例:

|

||||

首先,我们编辑一个“vars”文件。为了简化生成过程,我们需要在里面指定数据。这里是“vars”文件的一个样例:

|

||||

|

||||

export KEY_COUNTRY="US"

|

||||

export KEY_PROVINCE="CA"

|

||||

export KEY_CITY="SanFrancisco"

|

||||

export KEY_ORG="Fort-Funston"

|

||||

export KEY_EMAIL="my@myhost.mydomain"

|

||||

export KEY_COUNTRY="CN"

|

||||

export KEY_PROVINCE="BJ"

|

||||

export KEY_CITY="Beijing"

|

||||

export KEY_ORG="Linux.CN"

|

||||

export KEY_EMAIL="open@vpn.linux.cn"

|

||||

export KEY_OU=server

|

||||

|

||||

希望这些字段名称对你而言已经很清楚,不需要进一步说明了。

|

||||

@ -61,7 +61,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

./build-ca

|

||||

|

||||

在对话中,我们可以看到默认的变量,这些变量是我们先前在“vars”中指定的。我们可以检查以下,如有必要进行编辑,然后按回车几次。对话如下

|

||||

在对话中,我们可以看到默认的变量,这些变量是我们先前在“vars”中指定的。我们可以检查一下,如有必要进行编辑,然后按回车几次。对话如下

|

||||

|

||||

Generating a 2048 bit RSA private key

|

||||

.............................................+++

|

||||

@ -75,14 +75,14 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

For some fields there will be a default value,

|

||||

If you enter '.', the field will be left blank.

|

||||

-----

|

||||

Country Name (2 letter code) [US]:

|

||||

State or Province Name (full name) [CA]:

|

||||

Locality Name (eg, city) [SanFrancisco]:

|

||||

Organization Name (eg, company) [Fort-Funston]:

|

||||

Organizational Unit Name (eg, section) [MyOrganizationalUnit]:

|

||||

Common Name (eg, your name or your server's hostname) [Fort-Funston CA]:

|

||||

Country Name (2 letter code) [CN]:

|

||||

State or Province Name (full name) [BJ]:

|

||||

Locality Name (eg, city) [Beijing]:

|

||||

Organization Name (eg, company) [Linux.CN]:

|

||||

Organizational Unit Name (eg, section) [Tech]:

|

||||

Common Name (eg, your name or your server's hostname) [Linux.CN CA]:

|

||||

Name [EasyRSA]:

|

||||

Email Address [me@myhost.mydomain]:

|

||||

Email Address [open@vpn.linux.cn]:

|

||||

|

||||

接下来,我们需要生成一个服务器密钥

|

||||

|

||||

@ -102,14 +102,14 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

For some fields there will be a default value,

|

||||

If you enter '.', the field will be left blank.

|

||||

-----

|

||||

Country Name (2 letter code) [US]:

|

||||

State or Province Name (full name) [CA]:

|

||||

Locality Name (eg, city) [SanFrancisco]:

|

||||

Organization Name (eg, company) [Fort-Funston]:

|

||||

Organizational Unit Name (eg, section) [MyOrganizationalUnit]:

|

||||

Common Name (eg, your name or your server's hostname) [server]:

|

||||

Country Name (2 letter code) [CN]:

|

||||

State or Province Name (full name) [BJ]:

|

||||

Locality Name (eg, city) [Beijing]:

|

||||

Organization Name (eg, company) [Linux.CN]:

|

||||

Organizational Unit Name (eg, section) [Tech]:

|

||||

Common Name (eg, your name or your server's hostname) [Linux.CN server]:

|

||||

Name [EasyRSA]:

|

||||

Email Address [me@myhost.mydomain]:

|

||||

Email Address [open@vpn.linux.cn]:

|

||||

|

||||

Please enter the following 'extra' attributes

|

||||

to be sent with your certificate request

|

||||

@ -119,14 +119,14 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

Check that the request matches the signature

|

||||

Signature ok

|

||||

The Subject's Distinguished Name is as follows

|

||||

countryName :PRINTABLE:'US'

|

||||

stateOrProvinceName :PRINTABLE:'CA'

|

||||

localityName :PRINTABLE:'SanFrancisco'

|

||||

organizationName :PRINTABLE:'Fort-Funston'

|

||||

organizationalUnitName:PRINTABLE:'MyOrganizationalUnit'

|

||||

commonName :PRINTABLE:'server'

|

||||

countryName :PRINTABLE:'CN'

|

||||

stateOrProvinceName :PRINTABLE:'BJ'

|

||||

localityName :PRINTABLE:'Beijing'

|

||||

organizationName :PRINTABLE:'Linux.CN'

|

||||

organizationalUnitName:PRINTABLE:'Tech'

|

||||

commonName :PRINTABLE:'Linux.CN server'

|

||||

name :PRINTABLE:'EasyRSA'

|

||||

emailAddress :IA5STRING:'me@myhost.mydomain'

|

||||

emailAddress :IA5STRING:'open@vpn.linux.cn'

|

||||

Certificate is to be certified until May 22 19:00:25 2025 GMT (3650 days)

|

||||

Sign the certificate? [y/n]:y

|

||||

1 out of 1 certificate requests certified, commit? [y/n]y

|

||||

@ -143,7 +143,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

Generating DH parameters, 2048 bit long safe prime, generator 2

|

||||

This is going to take a long time

|

||||

................................+................<and many many dots>

|

||||

................................+................<许多的点>

|

||||

|

||||

在漫长的等待之后,我们可以继续生成最后的密钥了,该密钥用于TLS验证。命令如下:

|

||||

|

||||

@ -176,7 +176,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

### Unix的客户端配置 ###

|

||||

|

||||

假定我们有一台装有类Unix操作系统的设备,比如Ubuntu 15.04,并安装有OpenVPN。我们想要从先前的部分连接到OpenVPN服务器。首先,我们需要为客户端生成密钥。为了生成该密钥,请转到服务器上的目录中:

|

||||

假定我们有一台装有类Unix操作系统的设备,比如Ubuntu 15.04,并安装有OpenVPN。我们想要连接到前面建立的OpenVPN服务器。首先,我们需要为客户端生成密钥。为了生成该密钥,请转到服务器上的对应目录中:

|

||||

|

||||

cd /etc/openvpn/easy-rsa/2.0

|

||||

|

||||

@ -211,7 +211,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

dev tun

|

||||

proto udp

|

||||

|

||||

# IP and Port of remote host with OpenVPN server

|

||||

# 远程 OpenVPN 服务器的 IP 和 端口号

|

||||

remote 111.222.333.444 1194

|

||||

|

||||

resolv-retry infinite

|

||||

@ -243,7 +243,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

安卓设备上的OpenVPN配置和Unix系统上的十分类似,我们需要一个含有配置文件、密钥和证书的包。文件列表如下:

|

||||

|

||||

- configuration file (.ovpn),

|

||||

- 配置文件 (扩展名 .ovpn),

|

||||

- ca.crt,

|

||||

- dh2048.pem,

|

||||

- client.crt,

|

||||

@ -257,7 +257,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

dev tun

|

||||

proto udp

|

||||

|

||||

# IP and Port of remote host with OpenVPN server

|

||||

# 远程 OpenVPN 服务器的 IP 和 端口号

|

||||

remote 111.222.333.444 1194

|

||||

|

||||

resolv-retry infinite

|

||||

@ -274,21 +274,21 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

所有这些文件我们必须移动我们设备的SD卡上。

|

||||

|

||||

然后,我们需要安装[OpenVPN连接][2]。

|

||||

然后,我们需要安装一个[OpenVPN Connect][2] 应用。

|

||||

|

||||

接下来,配置过程很是简单:

|

||||

|

||||

open setting of OpenVPN and select Import options

|

||||

select Import Profile from SD card option

|

||||

in opened window go to folder with prepared files and select .ovpn file

|

||||

application offered us to create a new profile

|

||||

tap on the Connect button and wait a second

|

||||

- 打开 OpenVPN 并选择“Import”选项

|

||||

- 选择“Import Profile from SD card”

|

||||

- 在打开的窗口中导航到我们放置好文件的目录,并选择那个 .ovpn 文件

|

||||

- 应用会要求我们创建一个新的配置文件

|

||||

- 点击“Connect”按钮并稍等一下

|

||||

|

||||

搞定。现在,我们的安卓设备已经通过安全的VPN连接连接到我们的专用网。

|

||||

|

||||

### 尾声 ###

|

||||

|

||||

虽然OpenVPN初始配置花费不少时间,但是简易客户端配置为我们弥补了时间上的损失,也提供了从任何设备连接的能力。此外,OpenVPN提供了一个很高的安全等级,以及从不同地方连接的能力,包括位于NAT后面的客户端。因此,OpenVPN可以同时在家和在企业中使用。

|

||||

虽然OpenVPN初始配置花费不少时间,但是简易的客户端配置为我们弥补了时间上的损失,也提供了从任何设备连接的能力。此外,OpenVPN提供了一个很高的安全等级,以及从不同地方连接的能力,包括位于NAT后面的客户端。因此,OpenVPN可以同时在家和企业中使用。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -296,7 +296,7 @@ via: http://linoxide.com/ubuntu-how-to/configure-openvpn-server-client-ubuntu-15

|

||||

|

||||

作者:[Ivan Zabrovskiy][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,126 @@

|

||||

如何使用Docker Machine部署Swarm集群

|

||||

================================================================================

|

||||

|

||||

大家好,今天我们来研究一下如何使用Docker Machine部署Swarm集群。Docker Machine提供了标准的Docker API 支持,所以任何可以与Docker守护进程进行交互的工具都可以使用Swarm来(透明地)扩增到多台主机上。Docker Machine可以用来在个人电脑、云端以及的数据中心里创建Docker主机。它为创建服务器,安装Docker以及根据用户设定来配置Docker客户端提供了便捷化的解决方案。我们可以使用任何驱动来部署swarm集群,并且swarm集群将由于使用了TLS加密具有极好的安全性。

|

||||

|

||||

下面是我提供的简便方法。

|

||||

|

||||

### 1. 安装Docker Machine ###

|

||||

|

||||

Docker Machine 在各种Linux系统上都支持的很好。首先,我们需要从Github上下载最新版本的Docker Machine。我们使用curl命令来下载最先版本Docker Machine ie 0.2.0。

|

||||

|

||||

64位操作系统:

|

||||

|

||||

# curl -L https://github.com/docker/machine/releases/download/v0.2.0/docker-machine_linux-amd64 > /usr/local/bin/docker-machine

|

||||

|

||||

32位操作系统:

|

||||

|

||||

# curl -L https://github.com/docker/machine/releases/download/v0.2.0/docker-machine_linux-i386 > /usr/local/bin/docker-machine

|

||||

|

||||

下载了最先版本的Docker Machine之后,我们需要对 /usr/local/bin/ 目录下的docker-machine文件的权限进行修改。命令如下:

|

||||

|

||||

# chmod +x /usr/local/bin/docker-machine

|

||||

|

||||

在做完上面的事情以后,我们要确保docker-machine已经安装正确。怎么检查呢?运行`docker-machine -v`指令,该指令将会给出我们系统上所安装的docker-machine版本。

|

||||

|

||||

# docker-machine -v

|

||||

|

||||

|

||||

|

||||

为了让Docker命令能够在我们的机器上运行,必须还要在机器上安装Docker客户端。命令如下。

|

||||

|

||||

# curl -L https://get.docker.com/builds/linux/x86_64/docker-latest > /usr/local/bin/docker

|

||||

# chmod +x /usr/local/bin/docker

|

||||

|

||||

### 2. 创建Machine ###

|

||||

|

||||

在将Docker Machine安装到我们的设备上之后,我们需要使用Docker Machine创建一个machine。在这篇文章中,我们会将其部署在Digital Ocean Platform上。所以我们将使用“digitalocean”作为它的Driver API,然后将docker swarm运行在其中。这个Droplet会被设置为Swarm主控节点,我们还要创建另外一个Droplet,并将其设定为Swarm节点代理。

|

||||

|

||||

创建machine的命令如下:

|

||||

|

||||

# docker-machine create --driver digitalocean --digitalocean-access-token <API-Token> linux-dev

|

||||

|

||||

**备注**: 假设我们要创建一个名为“linux-dev”的machine。<API-Token>是用户在Digital Ocean Cloud Platform的Digital Ocean控制面板中生成的密钥。为了获取这个密钥,我们需要登录我们的Digital Ocean控制面板,然后点击API选项,之后点击Generate New Token,起个名字,然后在Read和Write两个选项上打钩。之后我们将得到一个很长的十六进制密钥,这个就是<API-Token>了。用其替换上面那条命令中的API-Token字段。

|

||||

|

||||

现在,运行下面的指令,将Machine 的配置变量加载进shell里。

|

||||

|

||||

# eval "$(docker-machine env linux-dev)"

|

||||

|

||||

|

||||

|

||||

然后,我们使用如下命令将我们的machine标记为ACTIVE状态。

|

||||

|

||||

# docker-machine active linux-dev

|

||||

|

||||

现在,我们检查它(指machine)是否被标记为了 ACTIVE "*"。

|

||||

|

||||

# docker-machine ls

|

||||

|

||||

|

||||

|

||||

### 3. 运行Swarm Docker镜像 ###

|

||||

|

||||

现在,在我们创建完成了machine之后。我们需要将swarm docker镜像部署上去。这个machine将会运行这个docker镜像,并且控制Swarm主控节点和从节点。使用下面的指令运行镜像:

|

||||

|

||||

# docker run swarm create

|

||||

|

||||

|

||||

|

||||

如果你想要在**32位操作系统**上运行swarm docker镜像。你需要SSH登录到Droplet当中。

|

||||

|

||||

# docker-machine ssh

|

||||

#docker run swarm create

|

||||

#exit

|

||||

|

||||

### 4. 创建Swarm主控节点 ###

|

||||

|

||||

在我们的swarm image已经运行在machine当中之后,我们将要创建一个Swarm主控节点。使用下面的语句,添加一个主控节点。

|

||||

|

||||

# docker-machine create \

|

||||

-d digitalocean \

|

||||

--digitalocean-access-token <DIGITALOCEAN-TOKEN>

|

||||

--swarm \

|

||||

--swarm-master \

|

||||

--swarm-discovery token://<CLUSTER-ID> \

|

||||

swarm-master

|

||||

|

||||

|

||||

|

||||

### 5. 创建Swarm从节点 ###

|

||||

|

||||

现在,我们将要创建一个swarm从节点,此节点将与Swarm主控节点相连接。下面的指令将创建一个新的名为swarm-node的droplet,其与Swarm主控节点相连。到此,我们就拥有了一个两节点的swarm集群了。

|

||||

|

||||

# docker-machine create \

|

||||

-d digitalocean \

|

||||

--digitalocean-access-token <DIGITALOCEAN-TOKEN>

|

||||

--swarm \

|

||||

--swarm-discovery token://<TOKEN-FROM-ABOVE> \

|

||||

swarm-node

|

||||

|

||||

|

||||

|

||||

### 6. 与Swarm主控节点连接 ###

|

||||

|

||||

现在,我们连接Swarm主控节点以便我们可以依照需求和配置文件在节点间部署Docker容器。运行下列命令将Swarm主控节点的Machine配置文件加载到环境当中。

|

||||

|

||||

# eval "$(docker-machine env --swarm swarm-master)"

|

||||

|

||||

然后,我们就可以跨节点地运行我们所需的容器了。在这里,我们还要检查一下是否一切正常。所以,运行**docker info**命令来检查Swarm集群的信息。

|

||||

|

||||

# docker info

|

||||

|

||||

### 总结 ###

|

||||

|

||||

我们可以用Docker Machine轻而易举地创建Swarm集群。这种方法有非常高的效率,因为它极大地减少了系统管理员和用户的时间消耗。在这篇文章中,我们以Digital Ocean作为驱动,通过创建一个主控节点和一个从节点成功地部署了集群。其他类似的驱动还有VirtualBox,Google Cloud Computing,Amazon Web Service,Microsoft Azure等等。这些连接都是通过TLS进行加密的,具有很高的安全性。如果你有任何的疑问,建议,反馈,欢迎在下面的评论框中注明以便我们可以更好地提高文章的质量!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/linux-how-to/provision-swarm-clusters-using-docker-machine/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[DongShuaike](https://github.com/DongShuaike)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linoxide.com/author/arunp/

|

||||

@ -0,0 +1,101 @@

|

||||

FreeBSD 和 Linux 有什么不同?

|

||||

================================================================================

|

||||

|

||||

|

||||

|

||||

### 简介 ###

|

||||

|

||||

BSD最初从UNIX继承而来,目前,有许多的类Unix操作系统是基于BSD的。FreeBSD是使用最广泛的开源的伯克利软件发行版(即 BSD 发行版)。就像它隐含的意思一样,它是一个自由开源的类Unix操作系统,并且是公共服务器平台。FreeBSD源代码通常以宽松的BSD许可证发布。它与Linux有很多相似的地方,但我们得承认它们在很多方面仍有不同。

|

||||

|

||||

本文的其余部分组织如下:FreeBSD的描述在第一部分,FreeBSD和Linux的相似点在第二部分,它们的区别将在第三部分讨论,对他们功能的讨论和总结在最后一节。

|

||||

|

||||

### FreeBSD描述 ###

|

||||

|

||||

#### 历史 ####

|

||||

|

||||

- FreeBSD的第一个版本发布于1993年,它的第一张CD-ROM是FreeBSD1.0,发行于1993年12月。接下来,FreeBSD 2.1.0在1995年发布,并且获得了所有用户的青睐。实际上许多IT公司都使用FreeBSD并且很满意,我们可以列出其中的一些:IBM、Nokia、NetApp和Juniper Network。

|

||||

|

||||

#### 许可证 ####

|

||||

|

||||

- 关于它的许可证,FreeBSD以多种开源许可证进行发布,它的名为Kernel的最新代码以两句版BSD许可证进行了发布,给予使用和重新发布FreeBSD的绝对自由。其它的代码则以三句版或四句版BSD许可证进行发布,有些是以GPL和CDDL的许可证发布的。

|

||||

|

||||

(LCTT 译注:BSD 许可证与 GPL 许可证相比,相当简短,最初只有四句规则;1999年应 RMS 请求,删除了第三句,新的许可证称作“新 BSD”或三句版BSD;原来的 BSD 许可证称作“旧 BSD”、“修订的 BSD”或四句版BSD;也有一种删除了第三、第四两句的版本,称之为两句版 BSD,等价于 MIT 许可证。)

|

||||

|

||||

#### 用户 ####

|

||||

|

||||

- FreeBSD的重要特点之一就是它的用户多样性。实际上,FreeBSD可以作为邮件服务器、Web 服务器、FTP 服务器以及路由器等,您只需要在它上运行服务相关的软件即可。而且FreeBSD还支持ARM、PowerPC、MIPS、x86、x86-64架构。

|

||||

|

||||

### FreeBSD和Linux的相似处 ###

|

||||

|

||||

FreeBSD和Linux是两个自由开源的软件。实际上,它们的用户可以很容易的检查并修改源代码,用户拥有绝对的自由。而且,FreeBSD和Linux都是类Unix系统,它们的内核、内部组件、库程序都使用从历史上的AT&T Unix继承来的算法。FreeBSD从根基上更像Unix系统,而Linux是作为自由的类Unix系统发布的。许多工具应用都可以在FreeBSD和Linux中找到,实际上,他们几乎有同样的功能。

|

||||

|

||||

此外,FreeBSD能够运行大量的Linux应用。它可以安装一个Linux的兼容层,这个兼容层可以在编译FreeBSD时加入AAC Compact Linux得到,或通过下载已编译了Linux兼容层的FreeBSD系统,其中会包括兼容程序:aac_linux.ko。不同于FreeBSD的是,Linux无法运行FreeBSD的软件。

|

||||

|

||||

最后,我们注意到虽然二者有同样的目标,但二者还是有一些不同之处,我们在下一节中列出。

|

||||

|

||||

### FreeBSD和Linux的区别 ###

|

||||

|

||||

目前对于大多数用户来说并没有一个选择FreeBSD还是Linux的明确的准则。因为他们有着很多同样的应用程序,因为他们都被称作类Unix系统。

|

||||

|

||||

在这一章,我们将列出这两种系统的一些重要的不同之处。

|

||||

|

||||

#### 许可证 ####

|

||||

|

||||

- 两个系统的区别首先在于它们的许可证。Linux以GPL许可证发行,它为用户提供阅读、发行和修改源代码的自由,GPL许可证帮助用户避免仅仅发行二进制。而FreeBSD以BSD许可证发布,BSD许可证比GPL更宽容,因为其衍生著作不需要仍以该许可证发布。这意味着任何用户能够使用、发布、修改代码,并且不需要维持之前的许可证。

|

||||

- 您可以依据您的需求,在两种许可证中选择一种。首先是BSD许可证,由于其特殊的条款,它更受用户青睐。实际上,这个许可证使用户在保证源代码的封闭性的同时,可以售卖以该许可证发布的软件。再说说GPL,它需要每个使用以该许可证发布的软件的用户多加注意。

|

||||

- 如果想在以不同许可证发布的两种软件中做出选择,您需要了解他们各自的许可证,以及他们开发中的方法论,从而能了解他们特性的区别,来选择更适合自己需求的。

|

||||

|

||||

#### 控制 ####

|

||||

|

||||

- 由于FreeBSD和Linux是以不同的许可证发布的,Linus Torvalds控制着Linux的内核,而FreeBSD却与Linux不同,它并未被控制。我个人更倾向于使用FreeBSD而不是Linux,这是因为FreeBSD才是绝对自由的软件,没有任何控制许可证的存在。Linux和FreeBSD还有其他的不同之处,我建议您先不急着做出选择,等读完本文后再做出您的选择。

|

||||

|

||||

#### 操作系统 ####

|

||||

|

||||

- Linux主要指内核系统,这与FreeBSD不同,FreeBSD的整个系统都被维护着。FreeBSD的内核和一组由FreeBSD团队开发的软件被作为一个整体进行维护。实际上,FreeBSD开发人员能够远程且高效的管理核心操作系统。

|

||||

- 而Linux方面,在管理系统方面有一些困难。由于不同的组件由不同的源维护,Linux开发者需要将它们汇集起来,才能达到同样的功能。

|

||||

- FreeBSD和Linux都给了用户大量的可选软件和发行版,但他们管理的方式不同。FreeBSD是统一的管理方式,而Linux需要被分别维护。

|

||||

|

||||

#### 硬件支持 ####

|

||||

|

||||

- 说到硬件支持,Linux比FreeBSD做的更好。但这不意味着FreeBSD没有像Linux那样支持硬件的能力。他们只是在管理的方式不同,这通常还依赖于您的需求。因此,如果您在寻找最新的解决方案,FreeBSD更适应您;但如果您在寻找更多的普适性,那最好使用Linux。

|

||||

|

||||

#### 原生FreeBSD Vs 原生Linux ####

|

||||

|

||||

- 两者的原生系统的区别又有不同。就像我之前说的,Linux是一个Unix的替代系统,由Linux Torvalds编写,并由网络上的许多极客一起协助实现的。Linux有一个现代系统所需要的全部功能,诸如虚拟内存、共享库、动态加载、优秀的内存管理等。它以GPL许可证发布。

|

||||

- FreeBSD也继承了Unix的许多重要的特性。FreeBSD作为在加州大学开发的BSD的一种发行版。开发BSD的最重要的原因是用一个开源的系统来替代AT&T操作系统,从而给用户无需AT&T许可证便可使用的能力。

|

||||

- 许可证的问题是开发者们最关心的问题。他们试图提供一个最大化克隆Unix的开源系统。这影响了用户的选择,由于FreeBSD使用BSD许可证进行发布,因而相比Linux更加自由。

|

||||

|

||||

#### 支持的软件包 ####

|

||||

|

||||

- 从用户的角度来看,另一个二者不同的地方便是软件包以及从源码安装的软件的可用性和支持。Linux只提供了预编译的二进制包,这与FreeBSD不同,它不但提供预编译的包,而且还提供从源码编译和安装的构建系统。使用它的 ports 工具,FreeBSD给了您选择使用预编译的软件包(默认)和在编译时定制您软件的能力。(LCTT 译注:此处说明有误。Linux 也提供了源代码方式的包,并支持自己构建。)

|

||||

- 这些 ports 允许您构建所有支持FreeBSD的软件。而且,它们的管理还是层次化的,您可以在/usr/ports下找到源文件的地址以及一些正确使用FreeBSD的文档。

|

||||

- 这些提到的 ports给予你产生不同软件包版本的可能性。FreeBSD给了您通过源代码构建以及预编译的两种软件,而不是像Linux一样只有预编译的软件包。您可以使用两种安装方式管理您的系统。

|

||||

|

||||

#### FreeBSD 和 Linux 常用工具比较 ####

|

||||

|

||||

- 有大量的常用工具在FreeBSD上可用,并且有趣的是他们由FreeBSD的团队所拥有。相反的,Linux工具来自GNU,这就是为什么在使用中有一些限制。(LCTT 译注:这也是 Linux 正式的名称被称作“GNU/Linux”的原因,因为本质上 Linux 其实只是指内核。)

|

||||

- 实际上FreeBSD采用的BSD许可证非常有益且有用。因此,您有能力维护核心操作系统,控制这些应用程序的开发。有一些工具类似于它们的祖先 - BSD和Unix的工具,但不同于GNU的套件,GNU套件只想做到最小的向后兼容。

|

||||

|

||||

#### 标准 Shell ####

|

||||

|

||||

- FreeBSD默认使用tcsh。它是csh的评估版,由于FreeBSD以BSD许可证发行,因此不建议您在其中使用GNU的组件 bash shell。bash和tcsh的区别仅仅在于tcsh的脚本功能。实际上,我们更推荐在FreeBSD中使用sh shell,因为它更加可靠,可以避免一些使用tcsh和csh时出现的脚本问题。

|

||||

|

||||

#### 一个更加层次化的文件系统 ####

|

||||

|

||||

- 像之前提到的一样,使用FreeBSD时,基础操作系统以及可选组件可以被很容易的区别开来。这导致了一些管理它们的标准。在Linux下,/bin,/sbin,/usr/bin或者/usr/sbin都是存放可执行文件的目录。FreeBSD不同,它有一些附加的对其进行组织的规范。基础操作系统被放在/usr/local/bin或者/usr/local/sbin目录下。这种方法可以帮助管理和区分基础操作系统和可选组件。

|

||||

|

||||

### 结论 ###

|

||||

|

||||

FreeBSD和Linux都是自由且开源的系统,他们有相似点也有不同点。上面列出的内容并不能说哪个系统比另一个更好。实际上,FreeBSD和Linux都有自己的特点和技术规格,这使它们与别的系统区别开来。那么,您有什么看法呢?您已经有在使用它们中的某个系统了么?如果答案为是的话,请给我们您的反馈;如果答案是否的话,在读完我们的描述后,您怎么看?请在留言处发表您的观点。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.unixmen.com/comparative-introduction-freebsd-linux-users/

|

||||

|

||||

作者:[anismaj][a]

|

||||

译者:[wwy-hust](https://github.com/wwy-hust)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.unixmen.com/author/anis/

|

||||

@ -1,13 +1,14 @@

|

||||

在Linux中使用‘Systemctl’管理‘Systemd’服务和单元

|

||||

systemctl 完全指南

|

||||

================================================================================

|

||||

Systemctl是一个systemd工具,主要负责控制systemd系统和服务管理器。

|

||||

|

||||

Systemd是一个系统管理守护进程、工具和库的集合,用于取代System V初始进程。Systemd的功能是用于集中管理和配置类UNIX系统。

|

||||

|

||||

在Linux生态系统中,Systemd被部署到了大多数的标准Linux发行版中,只有位数不多的几个尚未部署。Systemd通常是所有其它守护进程的父进程,但并非总是如此。

|

||||

在Linux生态系统中,Systemd被部署到了大多数的标准Linux发行版中,只有为数不多的几个发行版尚未部署。Systemd通常是所有其它守护进程的父进程,但并非总是如此。

|

||||

|

||||

|

||||

使用Systemctl管理Linux服务

|

||||

|

||||

*使用Systemctl管理Linux服务*

|

||||

|

||||

本文旨在阐明在运行systemd的系统上“如何控制系统和服务”。

|

||||

|

||||

@ -41,11 +42,9 @@ Systemd是一个系统管理守护进程、工具和库的集合,用于取代S

|

||||

root 555 1 0 16:27 ? 00:00:00 /usr/lib/systemd/systemd-logind

|

||||

dbus 556 1 0 16:27 ? 00:00:00 /bin/dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation

|

||||

|

||||

**注意**:systemd是作为父进程(PID=1)运行的。在上面带(-e)参数的ps命令输出中,选择所有进程,(-

|

||||

**注意**:systemd是作为父进程(PID=1)运行的。在上面带(-e)参数的ps命令输出中,选择所有进程,(-a)选择除会话前导外的所有进程,并使用(-f)参数输出完整格式列表(即 -eaf)。

|

||||

|

||||

a)选择除会话前导外的所有进程,并使用(-f)参数输出完整格式列表(如 -eaf)。

|

||||

|

||||

也请注意上例中后随的方括号和样例剩余部分。方括号表达式是grep的字符类表达式的一部分。

|

||||

也请注意上例中后随的方括号和例子中剩余部分。方括号表达式是grep的字符类表达式的一部分。

|

||||

|

||||

#### 4. 分析systemd启动进程 ####

|

||||

|

||||

@ -147,7 +146,7 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

1 loaded units listed. Pass --all to see loaded but inactive units, too.

|

||||

To show all installed unit files use 'systemctl list-unit-files'.

|

||||

|

||||

#### 10. 检查某个单元(cron.service)是否启用 ####

|

||||

#### 10. 检查某个单元(如 cron.service)是否启用 ####

|

||||

|

||||

# systemctl is-enabled crond.service

|

||||

|

||||

@ -187,7 +186,7 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

dbus-org.fedoraproject.FirewallD1.service enabled

|

||||

....

|

||||

|

||||

#### 13. Linux中如何启动、重启、停止、重载服务以及检查服务(httpd.service)状态 ####

|

||||

#### 13. Linux中如何启动、重启、停止、重载服务以及检查服务(如 httpd.service)状态 ####

|

||||

|

||||

# systemctl start httpd.service

|

||||

# systemctl restart httpd.service

|

||||

@ -214,15 +213,15 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

Apr 28 17:21:30 tecmint systemd[1]: Started The Apache HTTP Server.

|

||||

Hint: Some lines were ellipsized, use -l to show in full.

|

||||

|

||||

**注意**:当我们使用systemctl的start,restart,stop和reload命令时,我们不会不会从终端获取到任何输出内容,只有status命令可以打印输出。

|

||||

**注意**:当我们使用systemctl的start,restart,stop和reload命令时,我们不会从终端获取到任何输出内容,只有status命令可以打印输出。

|

||||

|

||||

#### 14. 如何激活服务并在启动时启用或禁用服务(系统启动时自动启动服务) ####

|

||||

#### 14. 如何激活服务并在启动时启用或禁用服务(即系统启动时自动启动服务) ####

|

||||

|

||||

# systemctl is-active httpd.service

|

||||

# systemctl enable httpd.service

|

||||

# systemctl disable httpd.service

|

||||

|

||||

#### 15. 如何屏蔽(让它不能启动)或显示服务(httpd.service) ####

|

||||

#### 15. 如何屏蔽(让它不能启动)或显示服务(如 httpd.service) ####

|

||||

|

||||

# systemctl mask httpd.service

|

||||

ln -s '/dev/null' '/etc/systemd/system/httpd.service'

|

||||

@ -297,7 +296,7 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

# systemctl enable tmp.mount

|

||||

# systemctl disable tmp.mount

|

||||

|

||||

#### 20. 在Linux中屏蔽(让它不能启动)或显示挂载点 ####

|

||||

#### 20. 在Linux中屏蔽(让它不能启用)或可见挂载点 ####

|

||||

|

||||

# systemctl mask tmp.mount

|

||||

|

||||

@ -375,7 +374,7 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

|

||||

CPUShares=2000

|

||||

|

||||

**注意**:当你为某个服务设置CPUShares,会自动创建一个以服务名命名的目录(httpd.service),里面包含了一个名为90-CPUShares.conf的文件,该文件含有CPUShare限制信息,你可以通过以下方式查看该文件:

|

||||

**注意**:当你为某个服务设置CPUShares,会自动创建一个以服务名命名的目录(如 httpd.service),里面包含了一个名为90-CPUShares.conf的文件,该文件含有CPUShare限制信息,你可以通过以下方式查看该文件:

|

||||

|

||||

# vi /etc/systemd/system/httpd.service.d/90-CPUShares.conf

|

||||

|

||||

@ -528,13 +527,13 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

#### 35. 启动运行等级5,即图形模式 ####

|

||||

|

||||

# systemctl isolate runlevel5.target

|

||||

OR

|

||||

或

|

||||

# systemctl isolate graphical.target

|

||||

|

||||

#### 36. 启动运行等级3,即多用户模式(命令行) ####

|

||||

|

||||

# systemctl isolate runlevel3.target

|

||||

OR

|

||||

或

|

||||

# systemctl isolate multiuser.target

|

||||

|

||||

#### 36. 设置多用户模式或图形模式为默认运行等级 ####

|

||||

@ -572,7 +571,7 @@ via: http://www.tecmint.com/manage-services-using-systemd-and-systemctl-in-linux

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,34 +1,37 @@

|

||||

为Docker配置Swarm本地集群

|

||||

如何配置一个 Docker Swarm 原生集群

|

||||

================================================================================

|

||||

嗨,大家好。今天我们来学一学Swarm相关的内容吧,我们将学习通过Swarm来创建Docker本地集群。[Docker Swarm][1]是用于Docker的本地集群项目,它可以将Docker主机池转换成单个的虚拟主机。Swarm提供了标准的Docker API,所以任何可以和Docker守护进程通信的工具都可以使用Swarm来透明地规模化多个主机。Swarm遵循“包含电池并可拆卸”的原则,就像其它Docker项目一样。它附带有一个开箱即用的简单的后端调度程序,而且作为初始开发套件,也为其开发了一个可启用即插即用后端的API。其目标在于为一些简单的使用情况提供一个平滑的、开箱即用的体验,并且它允许在更强大的后端,如Mesos,中开启交换,以达到大量生产部署的目的。Swarm配置和使用极其简单。

|

||||

|

||||

嗨,大家好。今天我们来学一学Swarm相关的内容吧,我们将学习通过Swarm来创建Docker原生集群。[Docker Swarm][1]是用于Docker的原生集群项目,它可以将一个Docker主机池转换成单个的虚拟主机。Swarm工作于标准的Docker API,所以任何可以和Docker守护进程通信的工具都可以使用Swarm来透明地伸缩到多个主机上。就像其它Docker项目一样,Swarm遵循“内置电池,并可拆卸”的原则(LCTT 译注:batteries included,内置电池原来是 Python 圈里面对 Python 的一种赞誉,指自给自足,无需外求的丰富环境;but removable,并可拆卸应该指的是非强制耦合)。它附带有一个开箱即用的简单的后端调度程序,而且作为初始开发套件,也为其开发了一个可插拔不同后端的API。其目标在于为一些简单的使用情况提供一个平滑的、开箱即用的体验,并且它允许切换为更强大的后端,如Mesos,以用于大规模生产环境部署。Swarm配置和使用极其简单。

|

||||

|

||||

这里给大家提供Swarm 0.2开箱的即用一些特性。

|

||||

|

||||

1. Swarm 0.2.0大约85%与Docker引擎兼容。

|

||||

2. 它支持资源管理。

|

||||

3. 它具有一些带有限制器和类同器高级调度特性。

|

||||

3. 它具有一些带有限制和类同功能的高级调度特性。

|

||||

4. 它支持多个发现后端(hubs,consul,etcd,zookeeper)

|

||||

5. 它使用TLS加密方法进行安全通信和验证。

|

||||

|

||||

那么,我们来看一看Swarm的一些相当简单而简易的使用步骤吧。

|

||||

那么,我们来看一看Swarm的一些相当简单而简用的使用步骤吧。

|

||||

|

||||

### 1. 运行Swarm的先决条件 ###

|

||||

|

||||

我们必须在所有节点安装Docker 1.4.0或更高版本。虽然哥哥节点的IP地址不需要要公共地址,但是Swarm管理器必须可以通过网络访问各个节点。

|

||||

我们必须在所有节点安装Docker 1.4.0或更高版本。虽然各个节点的IP地址不需要要公共地址,但是Swarm管理器必须可以通过网络访问各个节点。

|

||||

|

||||

注意:Swarm当前还处于beta版本,因此功能特性等还有可能发生改变,我们不推荐你在生产环境中使用。

|

||||

**注意**:Swarm当前还处于beta版本,因此功能特性等还有可能发生改变,我们不推荐你在生产环境中使用。

|

||||

|

||||

### 2. 创建Swarm集群 ###

|

||||

|

||||

现在,我们将通过运行下面的命令来创建Swarm集群。各个节点都将运行一个swarm节点代理,该代理会注册、监控相关的Docker守护进程,并更新发现后端获取的节点状态。下面的命令会返回一个唯一的集群ID标记,在启动节点上的Swarm代理时会用到它。

|

||||

|

||||

在集群管理器中:

|

||||

|

||||

# docker run swarm create

|

||||

|

||||

|

||||

|

||||

### 3. 启动各个节点上的Docker守护进程 ###

|

||||

|

||||

我们需要使用-H标记登陆进我们将用来创建几圈和启动Docker守护进程的各个节点,它会保证Swarm管理器能够通过TCP访问到各个节点上的Docker远程API。要启动Docker守护进程,我们需要在各个节点内部运行以下命令。

|

||||

我们需要登录进我们将用来创建集群的每个节点,并在其上使用-H标记启动Docker守护进程。它会保证Swarm管理器能够通过TCP访问到各个节点上的Docker远程API。要启动Docker守护进程,我们需要在各个节点内部运行以下命令。

|

||||

|

||||

# docker -H tcp://0.0.0.0:2375 -d

|

||||

|

||||

@ -42,7 +45,7 @@

|

||||

|

||||

|

||||

|

||||

** 注意**:我们需要用步骤2中获取到的节点IP地址和集群ID替换这里的<node_ip>和<cluster_id>。

|

||||

**注意**:我们需要用步骤2中获取到的节点IP地址和集群ID替换这里的<node_ip>和<cluster_id>。

|

||||

|

||||

### 5. 开启Swarm管理器 ###

|

||||

|

||||

@ -60,7 +63,7 @@

|

||||

|

||||

|

||||

|

||||

** 注意**:我们需要替换<manager_ip:manager_port>为运行swarm管理器的主机的IP地址和端口。

|

||||

**注意**:我们需要替换<manager_ip:manager_port>为运行swarm管理器的主机的IP地址和端口。

|

||||

|

||||

### 7. 使用docker CLI来访问节点 ###

|

||||

|

||||

@ -79,7 +82,7 @@

|

||||

|

||||

### 尾声 ###

|

||||

|

||||

Swarm真的是一个有着相当不错的功能的docker,它可以用于创建和管理集群。它相当易于配置和使用,当我们在它上面使用限制器和类同器师它更为出色。高级调度程序是一个相当不错的特性,它可以应用过滤器来通过端口、标签、健康状况来排除节点,并且它使用策略来挑选最佳节点。那么,如果你有任何问题、评论、反馈,请在下面的评论框中写出来吧,好让我们知道哪些材料需要补充或改进。谢谢大家了!尽情享受吧 :-)

|

||||

Swarm真的是一个有着相当不错的功能的docker,它可以用于创建和管理集群。它相当易于配置和使用,当我们在它上面使用限制器和类同器时它更为出色。高级调度程序是一个相当不错的特性,它可以应用过滤器来通过端口、标签、健康状况来排除节点,并且它使用策略来挑选最佳节点。那么,如果你有任何问题、评论、反馈,请在下面的评论框中写出来吧,好让我们知道哪些材料需要补充或改进。谢谢大家了!尽情享受吧 :-)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -87,7 +90,7 @@ via: http://linoxide.com/linux-how-to/configure-swarm-clustering-docker/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,14 +1,12 @@

|

||||

每个Linux人应知应会的12个有用的PHP命令行用法

|

||||

在 Linux 命令行中使用和执行 PHP 代码(二):12 个 PHP 交互性 shell 的用法

|

||||

================================================================================

|

||||

在我上一篇文章“[在Linux命令行中使用并执行PHP代码][1]”中,我同时着重讨论了直接在Linux命令行中运行PHP代码以及在Linux终端中执行PHP脚本文件。

|

||||

在上一篇文章“[在 Linux 命令行中使用和执行 PHP 代码(一)][1]”中,我同时着重讨论了直接在Linux命令行中运行PHP代码以及在Linux终端中执行PHP脚本文件。

|

||||

|

||||

|

||||

|

||||

在Linux命令行运行PHP代码——第二部分

|

||||

本文旨在让你了解一些相当不错的Linux终端中的PHP交互性 shell 的用法特性。

|

||||

|

||||

本文旨在让你了解一些相当不错的Linux终端中的PHP用法特性。

|

||||

|

||||

让我们先在PHP交互shell中来对`php.ini`设置进行一些配置吧。

|

||||

让我们先在PHP 的交互shell中来对`php.ini`设置进行一些配置吧。

|

||||

|

||||

**6. 设置PHP命令行提示符**

|

||||

|

||||

@ -21,7 +19,8 @@

|

||||

php > #cli.prompt=Hi Tecmint ::

|

||||

|

||||

|

||||

启用PHP交互Shell

|

||||

|

||||

*启用PHP交互Shell*

|

||||

|

||||

同时,你也可以设置当前时间作为你的命令行提示符,操作如下:

|

||||

|

||||

@ -31,20 +30,22 @@

|

||||

|

||||

**7. 每次输出一屏**

|

||||

|

||||

在我们上一篇文章中,我们已经在原始命令中通过管道在很多地方使用了‘less‘命令。通过该操作,我们可以在那些不能一次满屏输出的地方获得每次一屏的输出。但是,我们可以通过配置php.ini文件,设置pager的值为less以每次输出一屏,操作如下:

|

||||

在我们上一篇文章中,我们已经在原始命令中通过管道在很多地方使用了`less`命令。通过该操作,我们可以在那些不能一屏全部输出的地方获得分屏显示。但是,我们可以通过配置php.ini文件,设置pager的值为less以每次输出一屏,操作如下:

|

||||

|

||||

$ php -a

|

||||

php > #cli.pager=less

|

||||

|

||||

|

||||

固定PHP屏幕输出

|

||||

|

||||

*限制PHP屏幕输出*

|

||||

|

||||

这样,下次当你运行一个命令(比如说条调试器`phpinfo();`)的时候,而该命令的输出内容又太过庞大而不能固定在一屏,它就会自动产生适合你当前屏幕的输出结果。

|

||||

|

||||

php > phpinfo();

|

||||

|

||||

|

||||

PHP信息输出

|

||||

|

||||

*PHP信息输出*

|

||||

|

||||

**8. 建议和TAB补全**

|

||||

|

||||

@ -58,50 +59,53 @@ PHP shell足够智能,它可以显示给你建议和进行TAB补全,你可

|

||||

|

||||

php > #cli.pager [TAB]

|

||||

|

||||

你可以一直按TAB键来获得选项,直到选项值满足要求。所有的行为都将记录到`~/.php-history`文件。

|

||||

你可以一直按TAB键来获得建议的补全,直到该值满足要求。所有的行为都将记录到`~/.php-history`文件。

|

||||

|

||||

要检查你的PHP交互shell活动日志,你可以执行:

|

||||

|

||||

$ nano ~/.php_history | less

|

||||

|

||||

|

||||

检查PHP交互Shell日志

|

||||

|

||||

*检查PHP交互Shell日志*

|

||||

|

||||

**9. 你可以在PHP交互shell中使用颜色,你所需要知道的仅仅是颜色代码。**

|

||||

|

||||

使用echo来打印各种颜色的输出结果,看我信手拈来:

|

||||

使用echo来打印各种颜色的输出结果,类似这样:

|

||||

|

||||

php > echo “color_code1 TEXT second_color_code”;

|

||||

php > echo "color_code1 TEXT second_color_code";

|

||||

|

||||

一个更能说明问题的例子是:

|

||||

具体来说是:

|

||||

|

||||

php > echo "\033[0;31m Hi Tecmint \x1B[0m";

|

||||

|

||||

|

||||

在PHP Shell中启用彩色

|

||||

|

||||

*在PHP Shell中启用彩色*

|

||||

|

||||

到目前为止,我们已经看到,按回车键意味着执行命令,然而PHP Shell中各个命令结尾的分号是必须的。

|

||||

|

||||

**10. PHP shell中的用以打印后续组件的路径名称**

|

||||

**10. 在PHP shell中用basename()输出路径中最后一部分**

|

||||

|

||||

PHP shell中的basename函数从给出的包含有到文件或目录路径的后续组件的路径名称。

|

||||

PHP shell中的basename函数可以从给出的包含有到文件或目录路径的最后部分。

|

||||

|

||||

basename()样例#1和#2。

|

||||

|

||||

php > echo basename("/var/www/html/wp/wp-content/plugins");

|

||||

php > echo basename("www.tecmint.com/contact-us.html");

|

||||

|

||||

上述两个样例都将输出:

|

||||

上述两个样例将输出:

|

||||

|

||||

plugins

|

||||

contact-us.html

|

||||

|

||||

|

||||

在PHP中打印基本名称

|

||||

|

||||

*在PHP中打印基本名称*

|

||||

|

||||

**11. 你可以使用PHP交互shell在你的桌面创建文件(比如说test1.txt),就像下面这么简单**

|

||||

|

||||

$ touch("/home/avi/Desktop/test1.txt");

|

||||

php> touch("/home/avi/Desktop/test1.txt");

|

||||

|

||||

我们已经见识了PHP交互shell在数学运算中有多优秀,这里还有更多一些例子会令你吃惊。

|

||||

|

||||

@ -112,7 +116,8 @@ strlen函数用于获取指定字符串的长度。

|

||||

php > echo strlen("tecmint.com");

|

||||

|

||||

|

||||

在PHP中打印字符串长度

|

||||

|

||||

*在PHP中打印字符串长度*

|

||||

|

||||

**13. PHP交互shell可以对数组排序,是的,你没听错**

|

||||

|

||||

@ -137,9 +142,10 @@ strlen函数用于获取指定字符串的长度。

|

||||

)

|

||||

|

||||

|

||||

在PHP中对数组排序

|

||||

|

||||

**14. 在PHP交互Shell中获取Pi的值**

|

||||

*在PHP中对数组排序*

|

||||

|

||||

**14. 在PHP交互Shell中获取π的值**

|

||||

|

||||

php > echo pi();

|

||||

|

||||

@ -151,14 +157,15 @@ strlen函数用于获取指定字符串的长度。

|

||||

|

||||

12.247448713916

|

||||

|

||||

**16. 从0-10的范围内回显一个随机数**

|

||||

**16. 从0-10的范围内挑选一个随机数**

|

||||

|

||||

php > echo rand(0, 10);

|

||||

|

||||

|

||||

在PHP中获取随机数

|

||||

|

||||

**17. 获取某个指定字符串的md5sum和sha1sum,例如,让我们在PHP Shell中检查某个字符串(比如说avi)的md5sum和sha1sum,并交叉检查这些带有bash shell生成的md5sum和sha1sum的结果。**

|

||||

*在PHP中获取随机数*

|

||||

|

||||

**17. 获取某个指定字符串的md5校验和sha1校验,例如,让我们在PHP Shell中检查某个字符串(比如说avi)的md5校验和sha1校验,并交叉校验bash shell生成的md5校验和sha1校验的结果。**

|

||||

|

||||

php > echo md5(avi);

|

||||

3fca379b3f0e322b7b7967bfcfb948ad

|

||||

@ -175,9 +182,10 @@ strlen函数用于获取指定字符串的长度。

|

||||

8f920f22884d6fea9df883843c4a8095a2e5ac6f -

|

||||

|

||||

|

||||

在PHP中检查md5sum和sha1sum

|

||||

|

||||

这里只是PHP Shell中所能获取的功能和PHP Shell的交互特性的惊鸿一瞥,这些就是到现在为止我所讨论的一切。保持和tecmint的连线,在评论中为我们提供你有价值的反馈吧。为我们点赞并分享,帮助我们扩散哦。

|

||||

*在PHP中检查md5校验和sha1校验*

|

||||

|

||||

这里只是PHP Shell中所能获取的功能和PHP Shell的交互特性的惊鸿一瞥,这些就是到现在为止我所讨论的一切。保持连线,在评论中为我们提供你有价值的反馈吧。为我们点赞并分享,帮助我们扩散哦。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -185,9 +193,9 @@ via: http://www.tecmint.com/execute-php-codes-functions-in-linux-commandline/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/avishek/

|

||||

[1]:http://www.tecmint.com/run-php-codes-from-linux-commandline/

|

||||

[1]:https://linux.cn/article-5906-1.html

|

||||

@ -1,20 +1,18 @@

|

||||

|

||||

如何在 Ubuntu/CentOS7.1/Fedora22 上安装 Plex Media Server ?

|

||||

如何在 Ubuntu/CentOS7.1/Fedora22 上安装 Plex Media Server

|

||||

================================================================================

|

||||

在本文中我们将会向你展示如何容易地在主流的最新发布的Linux发行版上安装Plex Home Media Server。在Plex安装成功后你将可以使用你的集中式家庭媒体播放系统,该系统能让多个Plex播放器App共享它的媒体资源,并且该系统允许你设置你的环境,通过增加你的设备以及设置一个可以一起使用Plex的用户组。让我们首先在Ubuntu15.04上开始Plex的安装。

|

||||

在本文中我们将会向你展示如何容易地在主流的最新Linux发行版上安装Plex Media Server。在Plex安装成功后你将可以使用你的中央式家庭媒体播放系统,该系统能让多个Plex播放器App共享它的媒体资源,并且该系统允许你设置你的环境,增加你的设备以及设置一个可以一起使用Plex的用户组。让我们首先在Ubuntu15.04上开始Plex的安装。

|

||||

|

||||

### 基本的系统资源 ###

|

||||

|

||||

系统资源主要取决于你打算用来连接服务的设备类型和数量, 所以根据我们的需求我们将会在一个单独的服务器上使用以下系统资源。

|

||||

|

||||

注:表格

|

||||

<table width="666" style="height: 181px;">

|

||||

<tbody>

|

||||

<tr>

|

||||

<td width="670" colspan="2"><b>Plex Home Media Server</b></td>

|

||||

<td width="670" colspan="2"><b>Plex Media Server</b></td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="236"><b>Base Operating System</b></td>

|

||||

<td width="236"><b>基础操作系统</b></td>

|

||||

<td width="425">Ubuntu 15.04 / CentOS 7.1 / Fedora 22 Work Station</td>

|

||||

</tr>

|

||||

<tr>

|

||||

@ -22,11 +20,11 @@

|

||||

<td width="425">Version 0.9.12.3.1173-937aac3</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="236"><b>RAM and CPU</b></td>

|

||||

<td width="236"><b>RAM 和 CPU</b></td>

|

||||

<td width="425">1 GB , 2.0 GHZ</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="236"><b>Hard Disk</b></td>

|

||||

<td width="236"><b>硬盘</b></td>

|

||||

<td width="425">30 GB</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

@ -38,13 +36,13 @@

|

||||

|

||||

#### 步骤 1: 系统更新 ####

|

||||

|

||||

用root权限登陆你的服务器。确保你的系统是最新的,如果不是就使用下面的命令。

|

||||

用root权限登录你的服务器。确保你的系统是最新的,如果不是就使用下面的命令。

|

||||

|

||||

root@ubuntu-15:~#apt-get update

|

||||

|

||||

#### 步骤 2: 下载最新的Plex Media Server包 ####

|

||||

|

||||

创建一个新目录,用wget命令从Plex官网下载为Ubuntu提供的.deb包并放入该目录中。

|

||||

创建一个新目录,用wget命令从[Plex官网](https://plex.tv/)下载为Ubuntu提供的.deb包并放入该目录中。

|

||||

|

||||

root@ubuntu-15:~# cd /plex/

|

||||

root@ubuntu-15:/plex#

|

||||

@ -52,7 +50,7 @@

|

||||

|

||||

#### 步骤 3: 安装Plex Media Server的Debian包 ####

|

||||

|

||||

现在在相同的目录下执行下面的命令来开始debian包的安装, 然后检查plexmediaserver(译者注: 原文plekmediaserver, 明显笔误)的状态。

|

||||

现在在相同的目录下执行下面的命令来开始debian包的安装, 然后检查plexmediaserver服务的状态。

|

||||

|

||||

root@ubuntu-15:/plex# dpkg -i plexmediaserver_0.9.12.3.1173-937aac3_amd64.deb

|

||||

|

||||

@ -62,41 +60,41 @@

|

||||

|

||||

|

||||

|

||||

### 在Ubuntu 15.04上设置Plex Home Media Web应用 ###

|

||||

### 在Ubuntu 15.04上设置Plex Media Web应用 ###

|

||||

|

||||

让我们在你的本地网络主机中打开web浏览器, 并用你的本地主机IP以及端口32400来打开Web界面并完成以下步骤来配置Plex。

|

||||

让我们在你的本地网络主机中打开web浏览器, 并用你的本地主机IP以及端口32400来打开Web界面,并完成以下步骤来配置Plex。

|

||||

|

||||

http://172.25.10.179:32400/web

|

||||

http://localhost:32400/web

|

||||

|

||||

#### 步骤 1: 登陆前先注册 ####

|

||||

#### 步骤 1: 登录前先注册 ####

|

||||

|

||||

在你访问到Plex Media Server的Web界面之后(译者注: 原文是Plesk, 应该是笔误), 确保注册并填上你的用户名(译者注: 原文username email ID感觉怪怪:))和密码来登陆。

|

||||

在你访问到Plex Media Server的Web界面之后, 确保注册并填上你的用户名和密码来登录。

|

||||

|

||||

|

||||

|

||||

#### 输入你的PIN码来保护你的Plex Home Media用户(译者注: 原文Plex Media Home, 个人觉得专业称谓应该保持一致) ####

|

||||

#### 输入你的PIN码来保护你的Plex Media用户####

|

||||

|

||||

|

||||

|

||||

现在你已经成功的在Plex Home Media下配置你的用户。

|

||||

现在你已经成功的在Plex Media下配置你的用户。

|

||||

|

||||

|

||||

|

||||

### 在设备上而不是本地服务器上打开Plex Web应用 ###

|

||||

|

||||

正如我们在Plex Media主页看到的表明"你没有权限访问这个服务"。 这是因为我们跟服务器计算机不在同个网络。

|

||||

如我们在Plex Media主页看到的提示“你没有权限访问这个服务”。 这说明我们跟服务器计算机不在同个网络。

|

||||

|

||||

|

||||

|

||||

现在我们需要解决这个权限问题以便我们通过设备访问服务器而不是通过托管服务器(Plex服务器), 通过完成下面的步骤。

|

||||

现在我们需要解决这个权限问题,以便我们通过设备访问服务器而不是只能在服务器上访问。通过完成下面的步骤完成。

|

||||

|

||||

### 设置SSH隧道使Windows系统访问到Linux服务器 ###

|

||||

### 设置SSH隧道使Windows系统可以访问到Linux服务器 ###

|

||||

|

||||

首先我们需要建立一条SSH隧道以便我们访问远程服务器资源,就好像资源在本地一样。 这仅仅是必要的初始设置。

|

||||

|

||||

如果你正在使用Windows作为你的本地系统,Linux作为服务器,那么我们可以参照下图通过Putty来设置SSH隧道。

|

||||

(译者注: 首先要在Putty的Session中用Plex服务器IP配置一个SSH的会话,才能进行下面的隧道转发规则配置。

|

||||

(LCTT译注: 首先要在Putty的Session中用Plex服务器IP配置一个SSH的会话,才能进行下面的隧道转发规则配置。

|

||||

然后点击“Open”,输入远端服务器用户名密码, 来保持SSH会话连接。)

|

||||

|

||||

|

||||

@ -111,13 +109,13 @@

|

||||

|

||||

|

||||

|

||||

现在一个功能齐全的Plex Home Media Server已经准备好添加新的媒体库、频道、播放列表等资源。

|

||||

现在一个功能齐全的Plex Media Server已经准备好添加新的媒体库、频道、播放列表等资源。

|

||||

|

||||

|

||||

|

||||

### 在CentOS 7.1上安装Plex Media Server 0.9.12.3 ###

|

||||

|

||||

我们将会按照上述在Ubuntu15.04上安装Plex Home Media Server的步骤来将Plex安装到CentOS 7.1上。

|

||||

我们将会按照上述在Ubuntu15.04上安装Plex Media Server的步骤来将Plex安装到CentOS 7.1上。

|

||||

|

||||

让我们从安装Plex Media Server开始。

|

||||

|

||||

@ -144,9 +142,9 @@

|

||||

[root@linux-tutorials plex]# systemctl enable plexmediaserver.service

|

||||

[root@linux-tutorials plex]# systemctl status plexmediaserver.service

|

||||

|

||||

### 在CentOS-7.1上设置Plex Home Media Web应用 ###

|

||||

### 在CentOS-7.1上设置Plex Media Web应用 ###

|

||||

|

||||

现在我们只需要重复在Ubuntu上设置Plex Web应用的所有步骤就可以了。 让我们在Web浏览器上打开一个新窗口并用localhost或者Plex服务器的IP(译者注: 原文为or your Plex server, 明显的笔误)来访问Plex Home Media Web应用(译者注:称谓一致)。

|

||||

现在我们只需要重复在Ubuntu上设置Plex Web应用的所有步骤就可以了。 让我们在Web浏览器上打开一个新窗口并用localhost或者Plex服务器的IP来访问Plex Media Web应用。

|

||||

|

||||

http://172.20.3.174:32400/web

|

||||

http://localhost:32400/web

|

||||

@ -157,25 +155,25 @@

|

||||

|

||||

### 在Fedora 22工作站上安装Plex Media Server 0.9.12.3 ###

|

||||

|

||||

基本的下载和安装Plex Media Server步骤跟在CentOS 7.1上安装的步骤一致。我们只需要下载对应的rpm包然后用rpm命令来安装它。

|

||||

下载和安装Plex Media Server步骤基本跟在CentOS 7.1上安装的步骤一致。我们只需要下载对应的rpm包然后用rpm命令来安装它。

|

||||

|

||||

|

||||

|

||||

### 在Fedora 22工作站上配置Plex Home Media Web应用 ###

|

||||

### 在Fedora 22工作站上配置Plex Media Web应用 ###

|

||||

|

||||

我们在(与Plex服务器)相同的主机上配置Plex Media Server,因此不需要设置SSH隧道。只要在你的Fedora 22工作站上用Plex Home Media Server的默认端口号32400打开Web浏览器并同意Plex的服务条款即可。

|

||||

我们在(与Plex服务器)相同的主机上配置Plex Media Server,因此不需要设置SSH隧道。只要在你的Fedora 22工作站上用Plex Media Server的默认端口号32400打开Web浏览器并同意Plex的服务条款即可。

|

||||

|

||||

|

||||

|

||||

**欢迎来到Fedora 22工作站上的Plex Home Media Server**

|

||||

*欢迎来到Fedora 22工作站上的Plex Media Server*

|

||||

|

||||

让我们用你的Plex账户登陆,并且开始将你喜欢的电影频道添加到媒体库、创建你的播放列表、添加你的图片以及享用更多其他的特性。

|

||||

让我们用你的Plex账户登录,并且开始将你喜欢的电影频道添加到媒体库、创建你的播放列表、添加你的图片以及享用更多其他的特性。

|

||||

|

||||

|

||||

|

||||

### 总结 ###

|

||||

|

||||

我们已经成功完成Plex Media Server在主流Linux发行版上安装和配置。Plex Home Media Server永远都是媒体管理的最佳选择。 它在跨平台上的设置是如此的简单,就像我们在Ubuntu,CentOS以及Fedora上的设置一样。它简化了你组织媒体内容的工作,并将媒体内容“流”向其他计算机以及设备以便你跟你的朋友分享媒体内容。

|

||||

我们已经成功完成Plex Media Server在主流Linux发行版上安装和配置。Plex Media Server永远都是媒体管理的最佳选择。 它在跨平台上的设置是如此的简单,就像我们在Ubuntu,CentOS以及Fedora上的设置一样。它简化了你组织媒体内容的工作,并将媒体内容“流”向其他计算机以及设备以便你跟你的朋友分享媒体内容。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -183,7 +181,7 @@ via: http://linoxide.com/tools/install-plex-media-server-ubuntu-centos-7-1-fedor

|

||||

|

||||

作者:[Kashif Siddique][a]

|

||||

译者:[dingdongnigetou](https://github.com/dingdongnigetou)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -6,17 +6,17 @@

|

||||

|

||||

每当你开机进入一个操作系统,一系列的应用将会自动启动。这些应用被称为‘开机启动应用’ 或‘开机启动程序’。随着时间的推移,当你在系统中安装了足够多的应用时,你将发现有太多的‘开机启动应用’在开机时自动地启动了,它们吃掉了很多的系统资源,并将你的系统拖慢。这可能会让你感觉卡顿,我想这种情况并不是你想要的。

|

||||

|

||||

让 Ubuntu 变得更快的方法之一是对这些开机启动应用进行控制。 Ubuntu 为你提供了一个 GUI 工具来让你发现这些开机启动应用,然后完全禁止或延迟它们的启动,这样就可以不让每个应用在开机时同时运行。

|

||||

让 Ubuntu 变得更快的方法之一是对这些开机启动应用进行控制。 Ubuntu 为你提供了一个 GUI 工具来让你找到这些开机启动应用,然后完全禁止或延迟它们的启动,这样就可以不让每个应用在开机时同时运行。

|

||||

|

||||

在这篇文章中,我们将看到 **在 Ubuntu 中,如何控制开机启动应用,如何让一个应用在开机时启动以及如何发现隐藏的开机启动应用。**这里提供的指导对所有的 Ubuntu 版本均适用,例如 Ubuntu 12.04, Ubuntu 14.04 和 Ubuntu 15.04。

|

||||

|

||||

### 在 Ubuntu 中管理开机启动应用 ###

|

||||

|

||||

默认情况下, Ubuntu 提供了一个`开机启动应用工具`来供你使用,你不必再进行安装。只需到 Unity 面板中就可以查找到该工具。

|

||||

默认情况下, Ubuntu 提供了一个`Startup Applications`工具来供你使用,你不必再进行安装。只需到 Unity 面板中就可以查找到该工具。

|

||||

|

||||

|

||||

|

||||

点击它来启动。下面是我的`开机启动应用`的样子:

|

||||

点击它来启动。下面是我的`Startup Applications`的样子:

|

||||

|

||||

|

||||

|

||||

@ -84,7 +84,7 @@

|

||||

|

||||

就这样,你将在下一次开机时看到这个程序会自动运行。这就是在 Ubuntu 中你能做的关于开机启动应用的所有事情。

|

||||

|

||||

到现在为止,我们已经讨论在开机时可见的应用,但仍有更多的服务,守护进程和程序并不在`开机启动应用工具`中可见。下一节中,我们将看到如何在 Ubuntu 中查看这些隐藏的开机启动程序。

|

||||

到现在为止,我们已经讨论在开机时可见到的应用,但仍有更多的服务,守护进程和程序并不在`开机启动应用工具`中可见。下一节中,我们将看到如何在 Ubuntu 中查看这些隐藏的开机启动程序。

|

||||

|

||||

### 在 Ubuntu 中查看隐藏的开机启动程序 ###

|

||||

|

||||

@ -97,13 +97,14 @@

|

||||

|

||||

|

||||

你可以像先前我们讨论的那样管理这些开机启动应用。我希望这篇教程可以帮助你在 Ubuntu 中控制开机启动程序。任何的问题或建议总是欢迎的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://itsfoss.com/manage-startup-applications-ubuntu/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,260 +0,0 @@

|

||||

translating...

|

||||

|

||||

How to set up IPv6 BGP peering and filtering in Quagga BGP router

|

||||

================================================================================

|

||||

In the previous tutorials, we demonstrated how we can set up a [full-fledged BGP router][1] and configure [prefix filtering][2] with Quagga. In this tutorial, we are going to show you how we can set up IPv6 BGP peering and advertise IPv6 prefixes through BGP. We will also demonstrate how we can filter IPv6 prefixes advertised or received by using prefix-list and route-map features.

|

||||

|

||||

### Topology ###

|

||||

|

||||

For this tutorial, we will be considering the following topology.

|

||||

|

||||

|

||||

|

||||

Service providers A and B want to establish an IPv6 BGP peering between them. Their IPv6 and AS information is as follows.

|

||||

|

||||

- Peering IP block: 2001:DB8:3::/64

|

||||

- Service provider A: AS 100, 2001:DB8:1::/48

|

||||

- Service provider B: AS 200, 2001:DB8:2::/48

|

||||

|

||||

### Installing Quagga on CentOS/RHEL ###

|

||||

|

||||

If Quagga has not already been installed, we can install it using yum.

|

||||

|

||||

# yum install quagga

|

||||

|

||||

On CentOS/RHEL 7, the default SELinux policy, which prevents /usr/sbin/zebra from writing to its configuration directory, can interfere with the setup procedure we are going to describe. Thus we want to disable this policy as follows. Skip this step if you are using CentOS/RHEL 6.

|

||||

|

||||

# setsebool -P zebra_write_config 1

|

||||

|

||||

### Creating Configuration Files ###

|

||||

|

||||

After installation, we start the configuration process by creating the zebra/bgpd configuration files.

|

||||

|

||||

# cp /usr/share/doc/quagga-XXXXX/zebra.conf.sample /etc/quagga/zebra.conf

|

||||

# cp /usr/share/doc/quagga-XXXXX/bgpd.conf.sample /etc/quagga/bgpd.conf

|

||||

|

||||

Next, enable auto-start of these services.

|

||||

|

||||

**On CentOS/RHEL 6:**

|

||||

|

||||

# service zebra start; service bgpd start

|

||||

# chkconfig zebra on; chkconfig bgpd on

|

||||

|

||||

**On CentOS/RHEL 7:**

|

||||

|

||||

# systemctl start zebra; systemctl start bgpd

|

||||

# systemctl enable zebra; systmectl enable bgpd

|

||||

|

||||

Quagga provides a built-in shell called vtysh, whose interface is similar to those of major router vendors such as Cisco or Juniper. Launch vtysh command shell:

|

||||

|

||||

# vtysh

|

||||

|

||||

The prompt will be changed to:

|

||||

|

||||

router-a#

|

||||

|

||||

or

|

||||

|

||||

router-b#

|

||||

|

||||

In the rest of the tutorials, these prompts indicate that you are inside vtysh shell of either router.

|

||||

|

||||

### Specifying Log File for Zebra ###

|

||||

|

||||

Let's configure the log file for Zebra, which will be helpful for debugging.

|

||||

|

||||

First, enter the global configuration mode by typing:

|

||||

|

||||

router-a# configure terminal

|

||||

|

||||

The prompt will be changed to:

|

||||

|

||||

router-a(config)#

|

||||

|

||||

Now specify log file location. Then exit the configuration mode:

|

||||

|

||||

router-a(config)# log file /var/log/quagga/quagga.log

|

||||

router-a(config)# exit

|

||||

|

||||

Save configuration permanently by:

|

||||

|

||||

router-a# write

|

||||

|

||||

### Configuring Interface IP Addresses ###

|

||||

|

||||

Let's now configure the IP addresses for Quagga's physical interfaces.

|

||||

|

||||

First, we check the available interfaces from inside vtysh.

|

||||

|

||||

router-a# show interfaces

|

||||

|

||||

----------

|

||||

|

||||

Interface eth0 is up, line protocol detection is disabled

|

||||

## OUTPUT TRUNCATED ###

|

||||

Interface eth1 is up, line protocol detection is disabled

|

||||

## OUTPUT TRUNCATED ##

|

||||

|

||||

Now we assign necessary IPv6 addresses.

|

||||

|

||||

router-a# conf terminal

|

||||

router-a(config)# interface eth0

|

||||

router-a(config-if)# ipv6 address 2001:db8:3::1/64

|

||||

router-a(config-if)# interface eth1

|

||||

router-a(config-if)# ipv6 address 2001:db8:1::1/64

|

||||

|

||||

We use the same method to assign IPv6 addresses to router-B. I am summarizing the configuration below.

|

||||

|

||||

router-b# show running-config

|

||||

|

||||

----------

|

||||

|

||||

interface eth0

|

||||

ipv6 address 2001:db8:3::2/64

|

||||

|

||||

interface eth1

|

||||

ipv6 address 2001:db8:2::1/64

|

||||

|

||||

Since the eth0 interface of both routers are in the same subnet, i.e., 2001:DB8:3::/64, you should be able to ping from one router to another. Make sure that you can ping successfully before moving on to the next step.

|

||||

|

||||

router-a# ping ipv6 2001:db8:3::2

|

||||

|

||||

----------

|

||||

|

||||

PING 2001:db8:3::2(2001:db8:3::2) 56 data bytes

|

||||

64 bytes from 2001:db8:3::2: icmp_seq=1 ttl=64 time=3.20 ms

|

||||

64 bytes from 2001:db8:3::2: icmp_seq=2 ttl=64 time=1.05 ms

|

||||

|

||||

### Phase 1: IPv6 BGP Peering ###

|

||||

|

||||

In this section, we will configure IPv6 BGP between the two routers. We start by specifying BGP neighbors in router-A.

|

||||

|

||||

router-a# conf t

|

||||

router-a(config)# router bgp 100

|

||||

router-a(config-router)# no auto-summary

|

||||

router-a(config-router)# no synchronization

|

||||

router-a(config-router)# neighbor 2001:DB8:3::2 remote-as 200

|

||||

|

||||

Next, we define the address family for IPv6. Within the address family section, we will define the network to be advertised, and activate the neighbors as well.

|

||||

|

||||

router-a(config-router)# address-family ipv6

|

||||

router-a(config-router-af)# network 2001:DB8:1::/48

|

||||

router-a(config-router-af)# neighbor 2001:DB8:3::2 activate

|

||||

|

||||

We will go through the same configuration for router-B. I'm providing the summary of the configuration.

|

||||

|

||||

router-b# conf t

|

||||

router-b(config)# router bgp 200

|

||||

router-b(config-router)# no auto-summary

|

||||

router-b(config-router)# no synchronization

|

||||

router-b(config-router)# neighbor 2001:DB8:3::1 remote-as 100

|

||||

router-b(config-router)# address-family ipv6

|

||||

router-b(config-router-af)# network 2001:DB8:2::/48

|

||||

router-b(config-router-af)# neighbor 2001:DB8:3::1 activate

|

||||

|

||||

If all goes well, an IPv6 BGP session should be up between the two routers. If not already done, please make sure that necessary ports (TCP 179) are [open in your firewall][3].

|

||||

|

||||

We can check IPv6 BGP session information using the following commands.

|

||||

|

||||

**For BGP summary:**

|

||||

|

||||

router-a# show bgp ipv6 unicast summary

|

||||

|

||||

**For BGP advertised routes:**

|

||||

|

||||

router-a# show bgp ipv6 neighbors <neighbor-IPv6-address> advertised-routes

|

||||

|

||||

**For BGP received routes:**

|

||||