mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

cc97a887b4

@ -1,3 +1,5 @@

|

||||

申请翻译 WangYueScream

|

||||

================================

|

||||

Best Websites to Download Linux Games

|

||||

======

|

||||

Brief: New to Linux gaming and wondering where to **download Linux games** from? We list the best resources from where you can **download free Linux games** as well as buy premium Linux games.

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

8 simple ways to promote team communication

|

||||

======

|

||||

|

||||

translating

|

||||

|

||||

|

||||

Image by : opensource.com

|

||||

|

||||

73

sources/talk/20180122 How to price cryptocurrencies.md

Normal file

73

sources/talk/20180122 How to price cryptocurrencies.md

Normal file

@ -0,0 +1,73 @@

|

||||

How to price cryptocurrencies

|

||||

======

|

||||

|

||||

|

||||

|

||||

Predicting cryptocurrency prices is a fool's game, yet this fool is about to try. The drivers of a single cryptocurrency's value are currently too varied and vague to make assessments based on any one point. News is trending up on Bitcoin? Maybe there's a hack or an API failure that is driving it down at the same time. Ethereum looking sluggish? Who knows: Maybe someone will build a new smarter DAO tomorrow that will draw in the big spenders.

|

||||

|

||||

So how do you invest? Or, more correctly, on which currency should you bet?

|

||||

|

||||

The key to understanding what to buy or sell and when to hold is to use the tools associated with assessing the value of open-source projects. This has been said again and again, but to understand the current crypto boom you have to go back to the quiet rise of Linux.

|

||||

|

||||

Linux appeared on most radars during the dot-com bubble. At that time, if you wanted to set up a web server, you had to physically ship a Windows server or Sun Sparc Station to a server farm where it would do the hard work of delivering Pets.com HTML. At the same time, Linux, like a freight train running on a parallel path to Microsoft and Sun, would consistently allow developers to build one-off projects very quickly and easily using an OS and toolset that were improving daily. In comparison, then, the massive hardware and software expenditures associated with the status quo solution providers were deeply inefficient, and very quickly all of the tech giants that made their money on software now made their money on services or, like Sun, folded.

|

||||

|

||||

From the acorn of Linux an open-source forest bloomed. But there was one clear problem: You couldn't make money from open source. You could consult and you could sell products that used open-source components, but early builders built primarily for the betterment of humanity and not the betterment of their bank accounts.

|

||||

|

||||

Cryptocurrencies have followed the Linux model almost exactly, but cryptocurrencies have cash value. Therefore, when you're working on a crypto project you're not doing it for the common good or for the joy of writing free software. You're writing it with the expectation of a big payout. This, therefore, clouds the value judgements of many programmers. The same folks that brought you Python, PHP, Django and Node.js are back… and now they're programming money.

|

||||

|

||||

### Check the codebase

|

||||

|

||||

This year will be the year of great reckoning in the token sale and cryptocurrency space. While many companies have been able to get away with poor or unusable codebases, I doubt developers will let future companies get away with so much smoke and mirrors. It's safe to say we can [expect posts like this one detailing Storj's anemic codebase to become the norm][1] and, more importantly, that these commentaries will sink many so-called ICOs. Though massive, the money trough that is flowing from ICO to ICO is finite and at some point there will be greater scrutiny paid to incomplete work.

|

||||

|

||||

What does this mean? It means to understand cryptocurrency you have to treat it like a startup. Does it have a good team? Does it have a good product? Does the product work? Would someone want to use it? It's far too early to assess the value of cryptocurrency as a whole, but if we assume that tokens or coins will become the way computers pay each other in the future, this lets us hand wave away a lot of doubt. After all, not many people knew in 2000 that Apache was going to beat nearly every other web server in a crowded market or that Ubuntu instances would be so common that you'd spin them up and destroy them in an instant.

|

||||

|

||||

The key to understanding cryptocurrency pricing is to ignore the froth, hype and FUD and instead focus on true utility. Do you think that some day your phone will pay another phone for, say, an in-game perk? Do you expect the credit card system to fold in the face of an Internet of Value? Do you expect that one day you'll move through life splashing out small bits of value in order to make yourself more comfortable? Then by all means, buy and hold or speculate on things that you think will make your life better. If you don't expect the Internet of Value to improve your life the way the TCP/IP internet did (or you do not understand enough to hold an opinion), then you're probably not cut out for this. NASDAQ is always open, at least during banker's hours.

|

||||

|

||||

Still will us? Good, here are my predictions.

|

||||

|

||||

### The rundown

|

||||

|

||||

Here is my assessment of what you should look at when considering an "investment" in cryptocurrencies. There are a number of caveats we must address before we begin:

|

||||

|

||||

* Crypto is not a monetary investment in a real currency, but an investment in a pie-in-the-sky technofuture. That's right: When you buy crypto you're basically assuming that we'll all be on the deck of the Starship Enterprise exchanging them like Galactic Credits one day. This is the only inevitable future for crypto bulls. While you can force crypto into various economic models and hope for the best, the entire platform is techno-utopianist and assumes all sorts of exciting and unlikely things will come to pass in the next few years. If you have spare cash lying around and you like Star Wars, then you're golden. If you bought bitcoin on a credit card because your cousin told you to, then you're probably going to have a bad time.

|

||||

* Don't trust anyone. There is no guarantee and, in addition to offering the disclaimer that this is not investment advice and that this is in no way an endorsement of any particular cryptocurrency or even the concept in general, we must understand that everything I write here could be wrong. In fact, everything ever written about crypto could be wrong, and anyone who is trying to sell you a token with exciting upside is almost certainly wrong. In short, everyone is wrong and everyone is out to get you, so be very, very careful.

|

||||

* You might as well hold. If you bought when BTC was $18,000 you'd best just hold on. Right now you're in Pascal's Wager territory. Yes, maybe you're angry at crypto for screwing you, but maybe you were just stupid and you got in too high and now you might as well keep believing because nothing is certain, or you can admit that you were a bit overeager and now you're being punished for it but that there is some sort of bitcoin god out there watching over you. Ultimately you need to take a deep breath, agree that all of this is pretty freaking weird, and hold on.

|

||||

|

||||

|

||||

|

||||

Now on with the assessments.

|

||||

|

||||

**Bitcoin** - Expect a rise over the next year that will surpass the current low. Also expect [bumps as the SEC and other federal agencies][2] around the world begin regulating the buying and selling of cryptocurrencies in very real ways. Now that banks are in on the joke they're going to want to reduce risk. Therefore, the bitcoin will become digital gold, a staid, boring and volatility proof safe haven for speculators. Although all but unusable as a real currency, it's good enough for what we need it to do and we also can expect quantum computing hardware to change the face of the oldest and most familiar cryptocurrency.

|

||||

|

||||

**Ethereum** - Ethereum could sustain another few thousand dollars on its price as long as Vitalik Buterin, the creator, doesn't throw too much cold water on it. Like a remorseful Victor Frankenstein, Buterin tends to make amazing things and then denigrate them online, a sort of self-flagellation that is actually quite useful in a space full of froth and outright lies. Ethereum is the closest we've come to a useful cryptocurrency, but it is still the Raspberry Pi of distributed computing -- it's a useful and clever hack that makes it easy to experiment but no one has quite replaced the old systems with new distributed data stores or applications. In short, it's a really exciting technology, but nobody knows what to do with it.

|

||||

|

||||

![][3]

|

||||

|

||||

Where will the price go? It will hover around $1,000 and possibly go as high as $1,500 this year, but this is a principled tech project and not a store of value.

|

||||

|

||||

**Altcoins** - One of the signs of a bubble is when average people make statements like "I couldn't afford a Bitcoin so I bought a Litecoin." This is exactly what I've heard multiple times from multiple people and it's akin to saying "I couldn't buy hamburger so I bought a pound of sawdust instead. I think the kids will eat it, right?" Play at your own risk. Altcoins are a very useful low-risk play for many, and if you create an algorithm -- say to sell when the asset hits a certain level -- then you could make a nice profit. Further, most altcoins will not disappear overnight. I would honestly recommend playing with Ethereum instead of altcoins, but if you're dead set on it, then by all means, enjoy.

|

||||

|

||||

**Tokens** - This is where cryptocurrency gets interesting. Tokens require research, education and a deep understanding of technology to truly assess. Many of the tokens I've seen are true crapshoots and are used primarily as pump and dump vehicles. I won't name names, but the rule of thumb is that if you're buying a token on an open market then you've probably already missed out. The value of the token sale as of January 2018 is to allow crypto whales to turn a few cent per token investment into a 100X return. While many founders talk about the magic of their product and the power of their team, token sales are quite simply vehicles to turn 4 cents into 20 cents into a dollar. Multiply that by millions of tokens and you see the draw.

|

||||

|

||||

The answer is simple: find a few projects you like and lurk in their message boards. Assess if the team is competent and figure out how to get in very, very early. Also expect your money to disappear into a rat hole in a few months or years. There are no sure things, and tokens are far too bleeding-edge a technology to assess sanely.

|

||||

|

||||

You are reading this post because you are looking to maintain confirmation bias in a confusing space. That's fine. I've spoken to enough crypto-heads to know that nobody knows anything right now and that collusion and dirty dealings are the rule of the day. Therefore, it's up to folks like us to slowly buy surely begin to understand just what's going on and, perhaps, profit from it. At the very least we'll all get a new Linux of Value when we're all done.

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://techcrunch.com/2018/01/22/how-to-price-cryptocurrencies/

|

||||

|

||||

作者:[John Biggs][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://techcrunch.com/author/john-biggs/

|

||||

[1]:https://shitcoin.com/storj-not-a-dropbox-killer-1a9f27983d70

|

||||

[2]:http://www.businessinsider.com/bitcoin-price-cryptocurrency-warning-from-sec-cftc-2018-1

|

||||

[3]:https://tctechcrunch2011.files.wordpress.com/2018/01/vitalik-twitter-1312.png?w=525&h=615

|

||||

[4]:https://unsplash.com/photos/pElSkGRA2NU?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText

|

||||

[5]:https://unsplash.com/search/photos/cash?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText

|

||||

@ -0,0 +1,170 @@

|

||||

Linux / Unix Bash Shell List All Builtin Commands

|

||||

======

|

||||

|

||||

Builtin commands contained within the bash shell itself. How do I list all built-in bash commands on Linux / Apple OS X / *BSD / Unix like operating systems without reading large size bash man page?

|

||||

|

||||

A shell builtin is nothing but command or a function, called from a shell, that is executed directly in the shell itself. The bash shell executes the command directly, without invoking another program. You can view information for Bash built-ins with help command. There are different types of built-in commands.

|

||||

|

||||

|

||||

### built-in command types

|

||||

|

||||

1. Bourne Shell Builtins: Builtin commands inherited from the Bourne Shell.

|

||||

2. Bash Builtins: Table of builtins specific to Bash.

|

||||

3. Modifying Shell Behavior: Builtins to modify shell attributes and optional behavior.

|

||||

4. Special Builtins: Builtin commands classified specially by POSIX.

|

||||

|

||||

|

||||

|

||||

### How to see all bash builtins

|

||||

|

||||

Type the following command:

|

||||

```

|

||||

$ help

|

||||

$ help | less

|

||||

$ help | grep read

|

||||

```

|

||||

|

||||

Sample outputs:

|

||||

```

|

||||

GNU bash, version 4.1.5(1)-release (x86_64-pc-linux-gnu)

|

||||

These shell commands are defined internally. Type `help' to see this list.

|

||||

Type `help name' to find out more about the function `name'.

|

||||

Use `info bash' to find out more about the shell in general.

|

||||

Use `man -k' or `info' to find out more about commands not in this list.

|

||||

|

||||

A star (*) next to a name means that the command is disabled.

|

||||

|

||||

job_spec [&] history [-c] [-d offset] [n] or hist>

|

||||

(( expression )) if COMMANDS; then COMMANDS; [ elif C>

|

||||

. filename [arguments] jobs [-lnprs] [jobspec ...] or jobs >

|

||||

: kill [-s sigspec | -n signum | -sigs>

|

||||

[ arg... ] let arg [arg ...]

|

||||

[[ expression ]] local [option] name[=value] ...

|

||||

alias [-p] [name[=value] ... ] logout [n]

|

||||

bg [job_spec ...] mapfile [-n count] [-O origin] [-s c>

|

||||

bind [-lpvsPVS] [-m keymap] [-f filen> popd [-n] [+N | -N]

|

||||

break [n] printf [-v var] format [arguments]

|

||||

builtin [shell-builtin [arg ...]] pushd [-n] [+N | -N | dir]

|

||||

caller [expr] pwd [-LP]

|

||||

case WORD in [PATTERN [| PATTERN]...)> read [-ers] [-a array] [-d delim] [->

|

||||

cd [-L|-P] [dir] readarray [-n count] [-O origin] [-s>

|

||||

command [-pVv] command [arg ...] readonly [-af] [name[=value] ...] or>

|

||||

compgen [-abcdefgjksuv] [-o option] > return [n]

|

||||

complete [-abcdefgjksuv] [-pr] [-DE] > select NAME [in WORDS ... ;] do COMM>

|

||||

compopt [-o|+o option] [-DE] [name ..> set [--abefhkmnptuvxBCHP] [-o option>

|

||||

continue [n] shift [n]

|

||||

coproc [NAME] command [redirections] shopt [-pqsu] [-o] [optname ...]

|

||||

declare [-aAfFilrtux] [-p] [name[=val> source filename [arguments]

|

||||

dirs [-clpv] [+N] [-N] suspend [-f]

|

||||

disown [-h] [-ar] [jobspec ...] test [expr]

|

||||

echo [-neE] [arg ...] time [-p] pipeline

|

||||

enable [-a] [-dnps] [-f filename] [na> times

|

||||

eval [arg ...] trap [-lp] [[arg] signal_spec ...]

|

||||

exec [-cl] [-a name] [command [argume> true

|

||||

exit [n] type [-afptP] name [name ...]

|

||||

export [-fn] [name[=value] ...] or ex> typeset [-aAfFilrtux] [-p] name[=val>

|

||||

false ulimit [-SHacdefilmnpqrstuvx] [limit>

|

||||

fc [-e ename] [-lnr] [first] [last] o> umask [-p] [-S] [mode]

|

||||

fg [job_spec] unalias [-a] name [name ...]

|

||||

for NAME [in WORDS ... ] ; do COMMAND> unset [-f] [-v] [name ...]

|

||||

for (( exp1; exp2; exp3 )); do COMMAN> until COMMANDS; do COMMANDS; done

|

||||

function name { COMMANDS ; } or name > variables - Names and meanings of so>

|

||||

getopts optstring name [arg] wait [id]

|

||||

hash [-lr] [-p pathname] [-dt] [name > while COMMANDS; do COMMANDS; done

|

||||

help [-dms] [pattern ...] { COMMANDS ; }

|

||||

```

|

||||

|

||||

### Viewing information for Bash built-ins

|

||||

|

||||

To get detailed info run:

|

||||

```

|

||||

help command

|

||||

help read

|

||||

```

|

||||

To just get a list of all built-ins with a short description, execute:

|

||||

|

||||

`$ help -d`

|

||||

|

||||

### Find syntax and other options for builtins

|

||||

|

||||

Use the following syntax ' to find out more about the builtins commands:

|

||||

```

|

||||

help name

|

||||

help cd

|

||||

help fg

|

||||

help for

|

||||

help read

|

||||

help :

|

||||

```

|

||||

|

||||

Sample outputs:

|

||||

```

|

||||

:: :

|

||||

Null command.

|

||||

|

||||

No effect; the command does nothing.

|

||||

|

||||

Exit Status:

|

||||

Always succeeds

|

||||

```

|

||||

|

||||

### Find out if a command is internal (builtin) or external

|

||||

|

||||

Use the type command or command command:

|

||||

```

|

||||

type -a command-name-here

|

||||

type -a cd

|

||||

type -a uname

|

||||

type -a :

|

||||

type -a ls

|

||||

```

|

||||

|

||||

|

||||

OR

|

||||

```

|

||||

type -a cd uname : ls uname

|

||||

```

|

||||

|

||||

Sample outputs:

|

||||

```

|

||||

cd is a shell builtin

|

||||

uname is /bin/uname

|

||||

: is a shell builtin

|

||||

ls is aliased to `ls --color=auto'

|

||||

ls is /bin/ls

|

||||

l is a function

|

||||

l ()

|

||||

{

|

||||

ls --color=auto

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

OR

|

||||

```

|

||||

command -V ls

|

||||

command -V cd

|

||||

command -V foo

|

||||

```

|

||||

|

||||

[![View list bash built-ins command info on Linux or Unix][1]][1]

|

||||

|

||||

### about the author

|

||||

|

||||

The author is the creator of nixCraft and a seasoned sysadmin and a trainer for the Linux operating system/Unix shell scripting. He has worked with global clients and in various industries, including IT, education, defense and space research, and the nonprofit sector. Follow him on [Twitter][2], [Facebook][3], [Google+][4].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.cyberciti.biz/faq/linux-unix-bash-shell-list-all-builtin-commands/

|

||||

|

||||

作者:[Vivek Gite][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.cyberciti.biz

|

||||

[1]:https://www.cyberciti.biz/media/new/faq/2013/03/View-list-bash-built-ins-command-info-on-Linux-or-Unix.jpg

|

||||

[2]:https://twitter.com/nixcraft

|

||||

[3]:https://facebook.com/nixcraft

|

||||

[4]:https://plus.google.com/+CybercitiBiz

|

||||

173

sources/tech/20140523 Tail Calls Optimization and ES6.md

Normal file

173

sources/tech/20140523 Tail Calls Optimization and ES6.md

Normal file

@ -0,0 +1,173 @@

|

||||

#Translating by qhwdw [Tail Calls, Optimization, and ES6][1]

|

||||

|

||||

|

||||

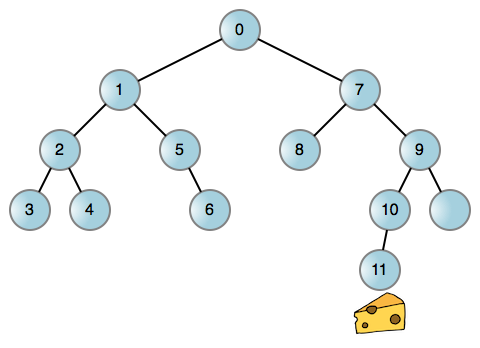

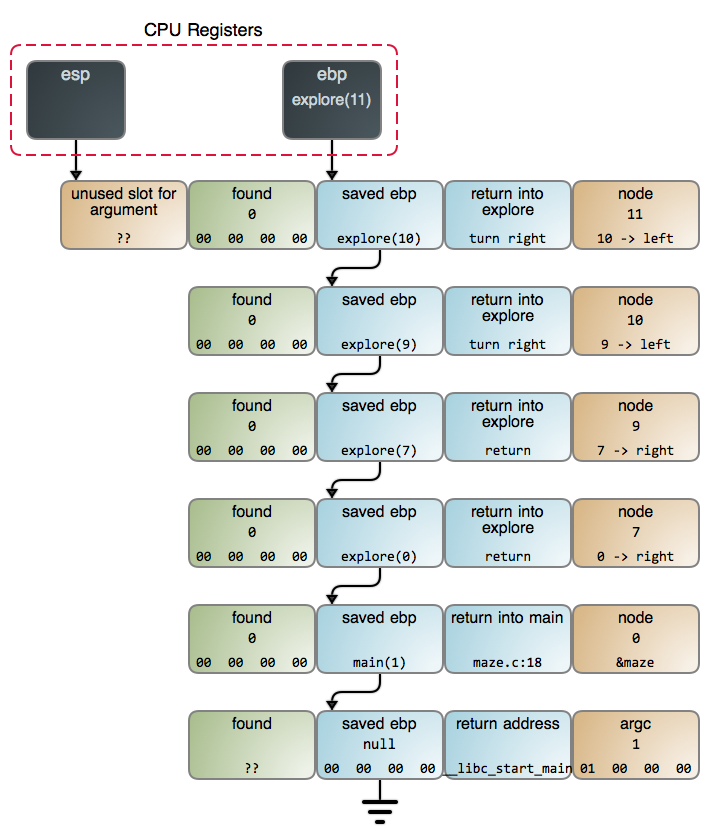

In this penultimate post about the stack, we take a quick look at tail calls, compiler optimizations, and the proper tail calls landing in the newest version of JavaScript.

|

||||

|

||||

A tail call happens when a function F makes a function call as its final action. At that point F will do absolutely no more work: it passes the ball to whatever function is being called and vanishes from the game. This is notable because it opens up the possibility of tail call optimization: instead of [creating a new stack frame][6] for the function call, we can simply reuse F's stack frame, thereby saving stack space and avoiding the work involved in setting up a new frame. Here are some examples in C and their results compiled with [mild optimization][7]:

|

||||

|

||||

Simple Tail Calls[download][2]

|

||||

|

||||

```

|

||||

int add5(int a)

|

||||

{

|

||||

return a + 5;

|

||||

}

|

||||

|

||||

int add10(int a)

|

||||

{

|

||||

int b = add5(a); // not tail

|

||||

return add5(b); // tail

|

||||

}

|

||||

|

||||

int add5AndTriple(int a){

|

||||

int b = add5(a); // not tail

|

||||

return 3 * add5(a); // not tail, doing work after the call

|

||||

}

|

||||

|

||||

int finicky(int a){

|

||||

if (a > 10){

|

||||

return add5AndTriple(a); // tail

|

||||

}

|

||||

|

||||

if (a > 5){

|

||||

int b = add5(a); // not tail

|

||||

return finicky(b); // tail

|

||||

}

|

||||

|

||||

return add10(a); // tail

|

||||

}

|

||||

```

|

||||

|

||||

You can normally spot tail call optimization (hereafter, TCO) in compiler output by seeing a [jump][8] instruction where a [call][9] would have been expected. At runtime TCO leads to a reduced call stack.

|

||||

|

||||

A common misconception is that tail calls are necessarily [recursive][10]. That's not the case: a tail call may be recursive, such as in finicky() above, but it need not be. As long as caller F is completely done at the call site, we've got ourselves a tail call. Whether it can be optimized is a different question whose answer depends on your programming environment.

|

||||

|

||||

"Yes, it can, always!" is the best answer we can hope for, which is famously the case for Scheme, as discussed in [SICP][11] (by the way, if when you program you don't feel like "a Sorcerer conjuring the spirits of the computer with your spells," I urge you to read that book). It's also the case for [Lua][12]. And most importantly, it is the case for the next version of JavaScript, ES6, whose spec does a good job defining [tail position][13] and clarifying the few conditions required for optimization, such as [strict mode][14]. When a language guarantees TCO, it supports proper tail calls.

|

||||

|

||||

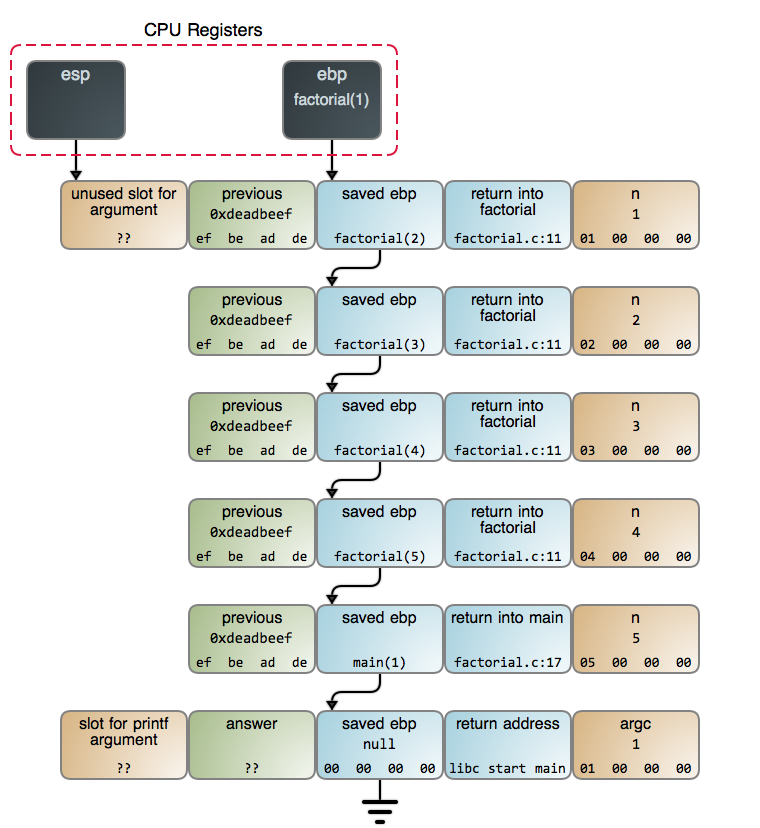

Now some of us can't kick that C habit, heart bleed and all, and the answer there is a more complicated "sometimes" that takes us into compiler optimization territory. We've seen the [simple examples][15] above; now let's resurrect our factorial from [last post][16]:

|

||||

|

||||

Recursive Factorial[download][3]

|

||||

|

||||

```

|

||||

#include <stdio.h>

|

||||

|

||||

int factorial(int n)

|

||||

{

|

||||

int previous = 0xdeadbeef;

|

||||

|

||||

if (n == 0 || n == 1) {

|

||||

return 1;

|

||||

}

|

||||

|

||||

previous = factorial(n-1);

|

||||

return n * previous;

|

||||

}

|

||||

|

||||

int main(int argc)

|

||||

{

|

||||

int answer = factorial(5);

|

||||

printf("%d\n", answer);

|

||||

}

|

||||

```

|

||||

|

||||

So, is line 11 a tail call? It's not, because of the multiplication by n afterwards. But if you're not used to optimizations, gcc's [result][17] with [O2 optimization][18] might shock you: not only it transforms factorial into a [recursion-free loop][19], but the factorial(5) call is eliminated entirely and replaced by a [compile-time constant][20] of 120 (5! == 120). This is why debugging optimized code can be hard sometimes. On the plus side, if you call this function it will use a single stack frame regardless of n's initial value. Compiler algorithms are pretty fun, and if you're interested I suggest you check out [Building an Optimizing Compiler][21] and [ACDI][22].

|

||||

|

||||

However, what happened here was not tail call optimization, since there was no tail call to begin with. gcc outsmarted us by analyzing what the function does and optimizing away the needless recursion. The task was made easier by the simple, deterministic nature of the operations being done. By adding a dash of chaos (e.g., getpid()) we can throw gcc off:

|

||||

|

||||

Recursive PID Factorial[download][4]

|

||||

|

||||

```

|

||||

#include <stdio.h>

|

||||

#include <sys/types.h>

|

||||

#include <unistd.h>

|

||||

|

||||

int pidFactorial(int n)

|

||||

{

|

||||

if (1 == n) {

|

||||

return getpid(); // tail

|

||||

}

|

||||

|

||||

return n * pidFactorial(n-1) * getpid(); // not tail

|

||||

}

|

||||

|

||||

int main(int argc)

|

||||

{

|

||||

int answer = pidFactorial(5);

|

||||

printf("%d\n", answer);

|

||||

}

|

||||

```

|

||||

|

||||

Optimize that, unix fairies! So now we have a regular [recursive call][23] and this function allocates O(n) stack frames to do its work. Heroically, gcc still does [TCO for getpid][24] in the recursion base case. If we now wished to make this function tail recursive, we'd need a slight change:

|

||||

|

||||

tailPidFactorial.c[download][5]

|

||||

|

||||

```

|

||||

#include <stdio.h>

|

||||

#include <sys/types.h>

|

||||

#include <unistd.h>

|

||||

|

||||

int tailPidFactorial(int n, int acc)

|

||||

{

|

||||

if (1 == n) {

|

||||

return acc * getpid(); // not tail

|

||||

}

|

||||

|

||||

acc = (acc * getpid() * n);

|

||||

return tailPidFactorial(n-1, acc); // tail

|

||||

}

|

||||

|

||||

int main(int argc)

|

||||

{

|

||||

int answer = tailPidFactorial(5, 1);

|

||||

printf("%d\n", answer);

|

||||

}

|

||||

```

|

||||

|

||||

The accumulation of the result is now [a loop][25] and we've achieved true TCO. But before you go out partying, what can we say about the general case in C? Sadly, while good C compilers do TCO in a number of cases, there are many situations where they cannot do it. For example, as we saw in our [function epilogues][26], the caller is responsible for cleaning up the stack after a function call using the standard C calling convention. So if function F takes two arguments, it can only make TCO calls to functions taking two or fewer arguments. This is one among many restrictions. Mark Probst wrote an excellent thesis discussing [Proper Tail Recursion in C][27] where he discusses these issues along with C stack behavior. He also does [insanely cool juggling][28].

|

||||

|

||||

"Sometimes" is a rocky foundation for any relationship, so you can't rely on TCO in C. It's a discrete optimization that may or may not take place, rather than a language feature like proper tail calls, though in practice the compiler will optimize the vast majority of cases. But if you must have it, say for transpiling Scheme into C, you will [suffer][29].

|

||||

|

||||

Since JavaScript is now the most popular transpilation target, proper tail calls become even more important there. So kudos to ES6 for delivering it along with many other significant improvements. It's like Christmas for JS programmers.

|

||||

|

||||

This concludes our brief tour of tail calls and compiler optimization. Thanks for reading and see you next time.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via:https://manybutfinite.com/post/tail-calls-optimization-es6/

|

||||

|

||||

作者:[Gustavo Duarte][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://duartes.org/gustavo/blog/about/

|

||||

[1]:https://manybutfinite.com/post/tail-calls-optimization-es6/

|

||||

[2]:https://manybutfinite.com/code/x86-stack/tail.c

|

||||

[3]:https://manybutfinite.com/code/x86-stack/factorial.c

|

||||

[4]:https://manybutfinite.com/code/x86-stack/pidFactorial.c

|

||||

[5]:https://manybutfinite.com/code/x86-stack/tailPidFactorial.c

|

||||

[6]:https://manybutfinite.com/post/journey-to-the-stack

|

||||

[7]:https://github.com/gduarte/blog/blob/master/code/x86-stack/asm-tco.sh

|

||||

[8]:https://github.com/gduarte/blog/blob/master/code/x86-stack/tail-tco.s#L27

|

||||

[9]:https://github.com/gduarte/blog/blob/master/code/x86-stack/tail.s#L37-L39

|

||||

[10]:https://manybutfinite.com/post/recursion/

|

||||

[11]:http://mitpress.mit.edu/sicp/full-text/book/book-Z-H-11.html

|

||||

[12]:http://www.lua.org/pil/6.3.html

|

||||

[13]:https://people.mozilla.org/~jorendorff/es6-draft.html#sec-tail-position-calls

|

||||

[14]:https://people.mozilla.org/~jorendorff/es6-draft.html#sec-strict-mode-code

|

||||

[15]:https://github.com/gduarte/blog/blob/master/code/x86-stack/tail.c

|

||||

[16]:https://manybutfinite.com/post/recursion/

|

||||

[17]:https://github.com/gduarte/blog/blob/master/code/x86-stack/factorial-o2.s

|

||||

[18]:https://gcc.gnu.org/onlinedocs/gcc/Optimize-Options.html

|

||||

[19]:https://github.com/gduarte/blog/blob/master/code/x86-stack/factorial-o2.s#L16-L19

|

||||

[20]:https://github.com/gduarte/blog/blob/master/code/x86-stack/factorial-o2.s#L38

|

||||

[21]:http://www.amazon.com/Building-Optimizing-Compiler-Bob-Morgan-ebook/dp/B008COCE9G/

|

||||

[22]:http://www.amazon.com/Advanced-Compiler-Design-Implementation-Muchnick-ebook/dp/B003VM7GGK/

|

||||

[23]:https://github.com/gduarte/blog/blob/master/code/x86-stack/pidFactorial-o2.s#L20

|

||||

[24]:https://github.com/gduarte/blog/blob/master/code/x86-stack/pidFactorial-o2.s#L43

|

||||

[25]:https://github.com/gduarte/blog/blob/master/code/x86-stack/tailPidFactorial-o2.s#L22-L27

|

||||

[26]:https://manybutfinite.com/post/epilogues-canaries-buffer-overflows/

|

||||

[27]:http://www.complang.tuwien.ac.at/schani/diplarb.ps

|

||||

[28]:http://www.complang.tuwien.ac.at/schani/jugglevids/index.html

|

||||

[29]:http://en.wikipedia.org/wiki/Tail_call#Through_trampolining

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

Ansible Tutorial: Intorduction to simple Ansible commands

|

||||

======

|

||||

In our earlier Ansible tutorial, we discussed [**the installation & configuration of Ansible**][1]. Now in this ansible tutorial, we will learn some basic examples of ansible commands that we will use to manage our infrastructure. So let us start by looking at the syntax of a complete ansible command,

|

||||

|

||||

@ -1,93 +0,0 @@

|

||||

Translated by name1e5s

|

||||

|

||||

The big break in computer languages

|

||||

============================================================

|

||||

|

||||

|

||||

My last post ([The long goodbye to C][3]) elicited a comment from a C++ expert I was friends with long ago, recommending C++ as the language to replace C. Which ain’t gonna happen; if that were a viable future, Go and Rust would never have been conceived.

|

||||

|

||||

But my readers deserve more than a bald assertion. So here, for the record, is the story of why I don’t touch C++ any more. This is a launch point for a disquisition on the economics of computer-language design, why some truly unfortunate choices got made and baked into our infrastructure, and how we’re probably going to fix them.

|

||||

|

||||

Along the way I will draw aside the veil from a rather basic mistake that people trying to see into the future of programming languages (including me) have been making since the 1980s. Only very recently do we have the field evidence to notice where we went wrong.

|

||||

|

||||

I think I first picked up C++ because I needed GNU eqn to be able to output MathXML, and eqn was written in C++. That project succeeded. Then I was a senior dev on Battle For Wesnoth for a number of years in the 2000s and got comfortable with the language.

|

||||

|

||||

Then came the day we discovered that a person we incautiously gave commit privileges to had fucked up the games’s AI core. It became apparent that I was the only dev on the team not too frightened of that code to go in. And I fixed it all right – took me two weeks of struggle. After which I swore a mighty oath never to go near C++ again.

|

||||

|

||||

My problem with the language, starkly revealed by that adventure, is that it piles complexity on complexity upon chrome upon gingerbread in an attempt to address problems that cannot actually be solved because the foundational abstractions are leaky. It’s all very well to say “well, don’t do that” about things like bare pointers, and for small-scale single-developer projects (like my eqn upgrade) it is realistic to expect the discipline can be enforced.

|

||||

|

||||

Not so on projects with larger scale or multiple devs at varying skill levels (the case I normally deal with). With probability asymptotically approaching one over time and increasing LOC, someone is inadvertently going to poke through one of the leaks. At which point you have a bug which, because of over-layers of gnarly complexity such as STL, is much more difficult to characterize and fix than the equivalent defect in C. My Battle For Wesnoth experience rubbed my nose in this problem pretty hard.

|

||||

|

||||

What works for a Steve Heller (my old friend and C++ advocate) doesn’t scale up when I’m dealing with multiple non-Steve-Hellers and might end up having to clean up their mess. So I just don’t go there any more. Not worth the aggravation. C is flawed, but it does have one immensely valuable property that C++ didn’t keep – if you can mentally model the hardware it’s running on, you can easily see all the way down. If C++ had actually eliminated C’s flaws (that it, been type-safe and memory-safe) giving away that transparency might be a trade worth making. As it is, nope.

|

||||

|

||||

One way we can tell that C++ is not sufficient is to imagine an alternate world in which it is. In that world, older C projects would routinely up-migrate to C++. Major OS kernels would be written in C++, and existing kernel implementations like Linux would be upgrading to it. In the real world, this ain’t happening. Not only has C++ failed to present enough of a value proposition to keep language designers uninterested in imagining languages like D, Go, and Rust, it has failed to displace its own ancestor. There’s no path forward from C++ without breaching its core assumptions; thus, the abstraction leaks won’t go away.

|

||||

|

||||

Since I’ve mentioned D, I suppose this is also the point at which I should explain why I don’t see it as a serious contender to replace C. Yes, it was spun up eight years before Rust and nine years before Go – props to Walter Bright for having the vision. But in 2001 the example of Perl and Python had already been set – the window when a proprietary language could compete seriously with open source was already closing. The wrestling match between the official D library/runtime and Tango hurt it, too. It has never recovered from those mistakes.

|

||||

|

||||

So now there’s Go (I’d say “…and Rust”, but for reasons I’ve discussed before I think it will be years before Rust is fully competitive). It _is_ type-safe and memory-safe (well, almost; you can partway escape using interfaces, but it’s not normal to have to go to the unsafe places). One of my regulars, Mark Atwood, has correctly pointed out that Go is a language made of grumpy-old-man rage, specifically rage by _one of the designers of C_ (Ken Thompson) at the bloated mess that C++ became.

|

||||

|

||||

I can relate to Ken’s grumpiness; I’ve been muttering for decades that C++ attacked the wrong problem. There were two directions a successor language to C might have gone. One was to do what C++ did – accept C’s leaky abstractions, bare pointers and all, for backward compatibility, than try to build a state-of-the-art language on top of them. The other would have been to attack C’s problems at their root – _fix_ the leaky abstractions. That would break backward compatibility, but it would foreclose the class of problems that dominate C/C++ defects.

|

||||

|

||||

The first serious attempt at the second path was Java in 1995\. It wasn’t a bad try, but the choice to build it over a j-code interpreter mode it unsuitable for systems programming. That left a huge hole in the options for systems programming that wouldn’t be properly addressed for another 15 years, until Rust and Go. In particular, it’s why software like my GPSD and NTPsec projects is still predominantly written in C in 2017 despite C’s manifest problems.

|

||||

|

||||

This is in many ways a bad situation. It was hard to really see this because of the lack of viable alternatives, but C/C++ has not scaled well. Most of us take for granted the escalating rate of defects and security compromises in infrastructure software without really thinking about how much of that is due to really fundamental language problems like buffer-overrun vulnerabilities.

|

||||

|

||||

So, why did it take so long to address that? It was 37 years from C (1972) to Go (2009); Rust only launched a year sooner. I think the underlying reasons are economic.

|

||||

|

||||

Ever since the very earliest computer languages it’s been understood that every language design embodies an assertion about the relative value of programmer time vs. machine resources. At one end of that spectrum you have languages like assembler and (later) C that are designed to extract maximum performance at the cost of also pessimizing developer time and costs; at the other, languages like Lisp and (later) Python that try to automate away as much housekeeping detail as possible, at the cost of pessimizing machine performance.

|

||||

|

||||

In broadest terms, the most important discriminator between the ends of this spectrum is the presence or absence of automatic memory management. This corresponds exactly to the empirical observation that memory-management bugs are by far the most common class of defects in machine-centric languages that require programmers to manage that resource by hand.

|

||||

|

||||

A language becomes economically viable where and when its relative-value assertion matches the actual cost drivers of some particular area of software development. Language designers respond to the conditions around them by inventing languages that are a better fit for present or near-future conditions than the languages they have available to use.

|

||||

|

||||

Over time, there’s been a gradual shift from languages that require manual memory management to languages with automatic memory management and garbage collection (GC). This shift corresponds to the Moore’s Law effect of decreasing hardware costs making programmer time relatively more expensive. But there are at least two other relevant dimensions.

|

||||

|

||||

One is distance from the bare metal. Inefficiency low in the software stack (kernels and service code) ripples multiplicatively up the stack. This, we see machine-centric languages down low and programmer-centric languages higher up, most often in user-facing software that only has to respond at human speed (time scale 0.1 sec).

|

||||

|

||||

Another is project scale. Every language also has an expected rate of induced defects per thousand lines of code due to programmers tripping over leaks and flaws in its abstractions. This rate runs higher in machine-centric languages, much lower in programmer-centric ones with GC. As project scale goes up, therefore, languages with GC become more and more important as a strategy against unacceptable defect rates.

|

||||

|

||||

When we view language deployments along these three dimensions, the observed pattern today – C down below, an increasing gallimaufry of languages with GC above – almost makes sense. Almost. But there is something else going on. C is stickier than it ought to be, and used way further up the stack than actually makes sense.

|

||||

|

||||

Why do I say this? Consider the classic Unix command-line utilities. These are generally pretty small programs that would run acceptably fast implemented in a scripting language with a full POSIX binding. Re-coded that way they would be vastly easier to debug, maintain and extend.

|

||||

|

||||

Why are these still in C (or, in unusual exceptions like eqn, in C++)? Transition costs. It’s difficult to translate even small, simple programs between languages and verify that you have faithfully preserved all non-error behaviors. More generally, any area of applications or systems programming can stay stuck to a language well after the tradeoff that language embodies is actually obsolete.

|

||||

|

||||

Here’s where I get to the big mistake I and other prognosticators made. We thought falling machine-resource costs – increasing the relative cost of programmer-hours – would be enough by themselves to displace C (and non-GC languages generally). In this we were not entirely or even mostly wrong – the rise of scripting languages, Java, and things like Node.js since the early 1990s was pretty obviously driven that way.

|

||||

|

||||

Not so the new wave of contending systems-programming languages, though. Rust and Go are both explicitly responses to _increasing project scale_ . Where scripting languages got started as an effective way to write small programs and gradually scaled up, Rust and Go were positioned from the start as ways to reduce defect rates in _really large_ projects. Like, Google’s search service and Facebook’s real-time-chat multiplexer.

|

||||

|

||||

I think this is the answer to the “why not sooner” question. Rust and Go aren’t actually late at all, they’re relatively prompt responses to a cost driver that was underweighted until recently.

|

||||

|

||||

OK, so much for theory. What predictions does this one generate? What does it tell us about what comes after C?

|

||||

|

||||

Here’s the big one. The largest trend driving development towards GC languages haven’t reversed, and there’s no reason to expect it will. Therefore: eventually we _will_ have GC techniques with low enough latency overhead to be usable in kernels and low-level firmware, and those will ship in language implementations. Those are the languages that will truly end C’s long reign.

|

||||

|

||||

There are broad hints in the working papers from the Go development group that they’re headed in this direction – references to academic work on concurrent garbage collectors that never have stop-the-world pauses. If Go itself doesn’t pick up this option, other language designers will. But I think they will – the business case for Google to push them there is obvious (can you say “Android development”?).

|

||||

|

||||

Well before we get to GC that good, I’m putting my bet on Go to replace C anywhere that the GC it has now is affordable – which means not just applications but most systems work outside of kernels and embedded. The reason is simple: there is no path out of C’s defect rates with lower transition costs.

|

||||

|

||||

I’ve been experimenting with moving C code to Go over the last week, and I’m noticing two things. One is that it’s easy to do – C’s idioms map over pretty well. The other is that the resulting code is much simpler. One would expect that, with GC in the language and maps as a first-class data type, but I’m seeing larger reductions in code volume than initially expected – about 2:1, similar to what I see when moving C code to Python.

|

||||

|

||||

Sorry, Rustaceans – you’ve got a plausible future in kernels and deep firmware, but too many strikes against you to beat Go over most of C’s range. No GC, plus Rust is a harder transition from C because of the borrow checker, plus the standardized part of the API is still seriously incomplete (where’s my select(2), again?).

|

||||

|

||||

The only consolation you get, if it is one, is that the C++ fans are screwed worse than you are. At least Rust has a real prospect of dramatically lowering downstream defect rates relative to C anywhere it’s not crowded out by Go; C++ doesn’t have that.

|

||||

|

||||

This entry was posted in [Software][4] by [Eric Raymond][5]. Bookmark the [permalink][6].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://esr.ibiblio.org/?p=7724

|

||||

|

||||

作者:[Eric Raymond][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://esr.ibiblio.org/?author=2

|

||||

[1]:http://esr.ibiblio.org/?author=2

|

||||

[2]:http://esr.ibiblio.org/?p=7724

|

||||

[3]:http://esr.ibiblio.org/?p=7711

|

||||

[4]:http://esr.ibiblio.org/?cat=13

|

||||

[5]:http://esr.ibiblio.org/?author=2

|

||||

[6]:http://esr.ibiblio.org/?p=7724

|

||||

319

sources/tech/20180122 A Simple Command-line Snippet Manager.md

Normal file

319

sources/tech/20180122 A Simple Command-line Snippet Manager.md

Normal file

@ -0,0 +1,319 @@

|

||||

A Simple Command-line Snippet Manager

|

||||

======

|

||||

|

||||

|

||||

|

||||

We can't remember all the commands, right? Yes. Except the frequently used commands, it is nearly impossible to remember some long commands that we rarely use. That's why we need to some external tools to help us to find the commands when we need them. In the past, we have reviewed two useful utilities named [**" Bashpast"**][1] and [**" Keep"**][2]. Using Bashpast, we can easily bookmark the Linux commands for easier repeated invocation. And, the Keep utility can be used to keep the some important and lengthy commands in your Terminal, so you can use them on demand. Today, we are going to see yet another tool in the series to help you remembering commands. Say hello to **" Pet"**, a simple command-line snippet manager written in **Go** language.

|

||||

|

||||

Using Pet, you can;

|

||||

|

||||

* Register/add your important, long and complex command snippets.

|

||||

* Search the saved command snippets interactively.

|

||||

* Run snippets directly without having to type over and over.

|

||||

* Edit the saved command snippets easily.

|

||||

* Sync the snippets via Gist.

|

||||

* Use variables in snippets.

|

||||

* And more yet to come.

|

||||

|

||||

|

||||

|

||||

#### Installing Pet CLI Snippet Manager

|

||||

|

||||

Since it is written in Go language, make sure you have installed Go in your system.

|

||||

|

||||

After Go language, grab the latest binaries from [**the releases page**][3].

|

||||

```

|

||||

wget https://github.com/knqyf263/pet/releases/download/v0.2.4/pet_0.2.4_linux_amd64.zip

|

||||

```

|

||||

|

||||

For 32 bit:

|

||||

```

|

||||

wget https://github.com/knqyf263/pet/releases/download/v0.2.4/pet_0.2.4_linux_386.zip

|

||||

```

|

||||

|

||||

Extract the downloaded archive:

|

||||

```

|

||||

unzip pet_0.2.4_linux_amd64.zip

|

||||

```

|

||||

|

||||

32 bit:

|

||||

```

|

||||

unzip pet_0.2.4_linux_386.zip

|

||||

```

|

||||

|

||||

Copy the pet binary file to your PATH (i.e **/usr/local/bin** or the like).

|

||||

```

|

||||

sudo cp pet /usr/local/bin/

|

||||

```

|

||||

|

||||

Finally, make it executable:

|

||||

```

|

||||

sudo chmod +x /usr/local/bin/pet

|

||||

```

|

||||

|

||||

If you're using Arch based systems, then you can install it from AUR using any AUR helper tools.

|

||||

|

||||

Using [**Pacaur**][4]:

|

||||

```

|

||||

pacaur -S pet-git

|

||||

```

|

||||

|

||||

Using [**Packer**][5]:

|

||||

```

|

||||

packer -S pet-git

|

||||

```

|

||||

|

||||

Using [**Yaourt**][6]:

|

||||

```

|

||||

yaourt -S pet-git

|

||||

```

|

||||

|

||||

Using [**Yay** :][7]

|

||||

```

|

||||

yay -S pet-git

|

||||

```

|

||||

|

||||

Also, you need to install **[fzf][8]** or [**peco**][9] tools to enable interactive search. Refer the official GitHub links to know how to install these tools.

|

||||

|

||||

#### Usage

|

||||

|

||||

Run 'pet' without any arguments to view the list of available commands and general options.

|

||||

```

|

||||

$ pet

|

||||

pet - Simple command-line snippet manager.

|

||||

|

||||

Usage:

|

||||

pet [command]

|

||||

|

||||

Available Commands:

|

||||

configure Edit config file

|

||||

edit Edit snippet file

|

||||

exec Run the selected commands

|

||||

help Help about any command

|

||||

list Show all snippets

|

||||

new Create a new snippet

|

||||

search Search snippets

|

||||

sync Sync snippets

|

||||

version Print the version number

|

||||

|

||||

Flags:

|

||||

--config string config file (default is $HOME/.config/pet/config.toml)

|

||||

--debug debug mode

|

||||

-h, --help help for pet

|

||||

|

||||

Use "pet [command] --help" for more information about a command.

|

||||

```

|

||||

|

||||

To view the help section of a specific command, run:

|

||||

```

|

||||

$ pet [command] --help

|

||||

```

|

||||

|

||||

**Configure Pet**

|

||||

|

||||

It just works fine with default values. However, you can change the default directory to save snippets, choose the selector (fzf or peco) to use, the default text editor to edit snippets, add GIST id details etc.

|

||||

|

||||

To configure Pet, run:

|

||||

```

|

||||

$ pet configure

|

||||

```

|

||||

|

||||

This command will open the default configuration in the default text editor (for example **vim** in my case). Change/edit the values as per your requirements.

|

||||

```

|

||||

[General]

|

||||

snippetfile = "/home/sk/.config/pet/snippet.toml"

|

||||

editor = "vim"

|

||||

column = 40

|

||||

selectcmd = "fzf"

|

||||

|

||||

[Gist]

|

||||

file_name = "pet-snippet.toml"

|

||||

access_token = ""

|

||||

gist_id = ""

|

||||

public = false

|

||||

~

|

||||

```

|

||||

|

||||

**Creating Snippets**

|

||||

|

||||

To create a new snippet, run:

|

||||

```

|

||||

$ pet new

|

||||

```

|

||||

|

||||

Add the command and the description and hit ENTER to save it.

|

||||

```

|

||||

Command> echo 'Hell1o, Welcome1 2to OSTechNix4' | tr -d '1-9'

|

||||

Description> Remove numbers from output.

|

||||

```

|

||||

|

||||

[![][10]][11]

|

||||

|

||||

This is a simple command to remove all numbers from the echo command output. You can easily remember it. But, if you rarely use it, you may forgot it completely after few days. Of course we can search the history using "CTRL+r", but "Pet" is much easier. Also, Pet can help you to add any number of entries.

|

||||

|

||||

Another cool feature is we can easily add the previous command. To do so, add the following lines in your **.bashrc** or **.zshrc** file.

|

||||

```

|

||||

function prev() {

|

||||

PREV=$(fc -lrn | head -n 1)

|

||||

sh -c "pet new `printf %q "$PREV"`"

|

||||

}

|

||||

```

|

||||

|

||||

Do the following command to take effect the saved changes.

|

||||

```

|

||||

source .bashrc

|

||||

```

|

||||

|

||||

Or,

|

||||

```

|

||||

source .zshrc

|

||||

```

|

||||

|

||||

Now, run any command, for example:

|

||||

```

|

||||

$ cat Documents/ostechnix.txt | tr '|' '\n' | sort | tr '\n' '|' | sed "s/.$/\\n/g"

|

||||

```

|

||||

|

||||

To add the above command, you don't have to use "pet new" command. just do:

|

||||

```

|

||||

$ prev

|

||||

```

|

||||

|

||||

Add the description to the command snippet and hit ENTER to save.

|

||||

|

||||

[![][10]][12]

|

||||

|

||||

**List snippets**

|

||||

|

||||

To view the saved snippets, run:

|

||||

```

|

||||

$ pet list

|

||||

```

|

||||

|

||||

[![][10]][13]

|

||||

|

||||

**Edit Snippets**

|

||||

|

||||

If you want to edit the description or the command of a snippet, run:

|

||||

```

|

||||

$ pet edit

|

||||

```

|

||||

|

||||

This will open all saved snippets in your default text editor. You can edit or change the snippets as you wish.

|

||||

```

|

||||

[[snippets]]

|

||||

description = "Remove numbers from output."

|

||||

command = "echo 'Hell1o, Welcome1 2to OSTechNix4' | tr -d '1-9'"

|

||||

output = ""

|

||||

|

||||

[[snippets]]

|

||||

description = "Alphabetically sort one line of text"

|

||||

command = "\t prev"

|

||||

output = ""

|

||||

```

|

||||

|

||||

**Use Tags in snippets**

|

||||

|

||||

To use tags to a snippet, use **-t** flag like below.

|

||||

```

|

||||

$ pet new -t

|

||||

Command> echo 'Hell1o, Welcome1 2to OSTechNix4' | tr -d '1-9

|

||||

Description> Remove numbers from output.

|

||||

Tag> tr command examples

|

||||

|

||||

```

|

||||

|

||||

**Execute Snippets**

|

||||

|

||||

To execute a saved snippet, run:

|

||||

```

|

||||

$ pet exec

|

||||

```

|

||||

|

||||

Choose the snippet you want to run from the list and hit ENTER to run it.

|

||||

|

||||

[![][10]][14]

|

||||

|

||||

Remember you need to install fzf or peco to use this feature.

|

||||

|

||||

**Search Snippets**

|

||||

|

||||

If you have plenty of saved snippets, you can easily search them using a string or key word like below.

|

||||

```

|

||||

$ pet search

|

||||

```

|

||||

|

||||

Enter the search term or keyword to narrow down the search results.

|

||||

|

||||

[![][10]][15]

|

||||

|

||||

**Sync Snippets**

|

||||

|

||||

First, you need to obtain the access token. Go to this link <https://github.com/settings/tokens/new> and create access token (only need "gist" scope).

|

||||

|

||||

Configure Pet using command:

|

||||

```

|

||||

$ pet configure

|

||||

```

|

||||

|

||||

Set that token to **access_token** in **[Gist]** field.

|

||||

|

||||

After setting, you can upload snippets to Gist like below.

|

||||

```

|

||||

$ pet sync -u

|

||||

Gist ID: 2dfeeeg5f17e1170bf0c5612fb31a869

|

||||

Upload success

|

||||

|

||||

```

|

||||

|

||||

You can also download snippets on another PC. To do so, edit configuration file and set **Gist ID** to **gist_id** in **[Gist]**.

|

||||

|

||||

Then, download the snippets using command:

|

||||

```

|

||||

$ pet sync

|

||||

Download success

|

||||

|

||||

```

|

||||

|

||||

For more details, refer the help section:

|

||||

```

|

||||

pet -h

|

||||

```

|

||||

|

||||

Or,

|

||||

```

|

||||

pet [command] -h

|

||||

```

|

||||

|

||||

And, that's all. Hope this helps. As you can see, Pet usage is fairly simple and easy to use! If you're having hard time remembering lengthy commands, Pet utility can definitely be useful.

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/pet-simple-command-line-snippet-manager/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://www.ostechnix.com/bookmark-linux-commands-easier-repeated-invocation/

|

||||

[2]:https://www.ostechnix.com/save-commands-terminal-use-demand/

|

||||

[3]:https://github.com/knqyf263/pet/releases

|

||||

[4]:https://www.ostechnix.com/install-pacaur-arch-linux/

|

||||

[5]:https://www.ostechnix.com/install-packer-arch-linux-2/

|

||||

[6]:https://www.ostechnix.com/install-yaourt-arch-linux/

|

||||

[7]:https://www.ostechnix.com/yay-found-yet-another-reliable-aur-helper/

|

||||

[8]:https://github.com/junegunn/fzf

|

||||

[9]:https://github.com/peco/peco

|

||||

[10]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[11]:http://www.ostechnix.com/wp-content/uploads/2018/01/pet-1.png ()

|

||||

[12]:http://www.ostechnix.com/wp-content/uploads/2018/01/pet-2.png ()

|

||||

[13]:http://www.ostechnix.com/wp-content/uploads/2018/01/pet-3.png ()

|

||||

[14]:http://www.ostechnix.com/wp-content/uploads/2018/01/pet-4.png ()

|

||||

[15]:http://www.ostechnix.com/wp-content/uploads/2018/01/pet-5.png ()

|

||||

@ -0,0 +1,95 @@

|

||||

Linux mkdir Command Explained for Beginners (with examples)

|

||||

======

|

||||

|

||||

At any given time on the command line, you are in a directory. So it speaks for itself how integral directories are to the command line. In Linux, while the rm command lets you delete directories, it's the **mkdir** command that allows you create them in the first place. In this tutorial, we will discuss the basics of this tool using some easy to understand examples.

|

||||

|

||||

But before we do that, it's worth mentioning that all examples in this tutorial have been tested on Ubuntu 16.04 LTS.

|

||||

|

||||

### Linux mkdir command

|

||||

|

||||

As already mentioned, the mkdir command allows the user to create directories. Following is its syntax:

|

||||

|

||||

```

|

||||

mkdir [OPTION]... DIRECTORY...

|

||||

```

|

||||

|

||||

And here's how the tool's man page describes it:

|

||||

```

|

||||

Create the DIRECTORY(ies), if they do not already exist.

|

||||

```

|

||||

|

||||

The following Q&A-styled examples should give you a better idea on how mkdir works.

|

||||

|

||||

### Q1. How to create directories using mkdir?

|

||||

|

||||

Creating directories is pretty simple, all you need to do is to pass the name of the directory you want to create to the mkdir command.

|

||||

|

||||

```

|

||||

mkdir [dir-name]

|

||||

```

|

||||

|

||||

Following is an example:

|

||||

|

||||

```

|

||||

mkdir test-dir

|

||||

```

|

||||

|

||||

### Q2. How to make sure parent directories (if non-existent) are created in process?

|

||||

|

||||

Sometimes the requirement is to create a complete directory structure with a single mkdir command. This is possible, but you'll have to use the **-p** command line option.

|

||||

|

||||

For example, if you want to create dir1/dir2/dir2 when none of these directories are already existing, then you can do this in the following way:

|

||||

|

||||

```

|

||||

mkdir -p dir1/dir2/dir3

|

||||

```

|

||||

|

||||

[![How to make sure parent directories \(if non-existent\) are created][1]][2]

|

||||

|

||||

### Q3. How to set permissions for directory being created?

|

||||

|

||||

By default, the mkdir command sets rwx, rwx, and r-x permissions for the directories created through it.

|

||||

|

||||

[![How to set permissions for directory being created][3]][4]

|

||||

|

||||

However, if you want, you can set custom permissions using the **-m** command line option.

|

||||

|

||||

[![mkdir -m command option][5]][6]

|

||||

|

||||

### Q4. How to make mkdir emit details of operation?

|

||||

|

||||

In case you want mkdir to display complete details of the operation it's performing, then this can be done through the **-v** command line option.

|

||||

|

||||

```

|

||||

mkdir -v [dir]

|

||||

```

|

||||

|

||||

Here's an example:

|

||||

|

||||

[![How to make mkdir emit details of operation][7]][8]

|

||||

|

||||

### Conclusion

|

||||

|

||||

So you can see mkdir is a pretty simple command to understand and use. It doesn't have any learning curve associated with it. We have covered almost all of its command line options here. Just practice them and you can start using the command in your day-to-day work. In case you want to know more about the tool, head to its [man page][9].

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/linux-mkdir-command/

|

||||

|

||||

作者:[Himanshu Arora][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.howtoforge.com

|

||||

[1]:https://www.howtoforge.com/images/command-tutorial/mkdir-p.png

|

||||

[2]:https://www.howtoforge.com/images/command-tutorial/big/mkdir-p.png

|

||||

[3]:https://www.howtoforge.com/images/command-tutorial/mkdir-def-perm.png

|

||||

[4]:https://www.howtoforge.com/images/command-tutorial/big/mkdir-def-perm.png

|

||||

[5]:https://www.howtoforge.com/images/command-tutorial/mkdir-custom-perm.png

|

||||

[6]:https://www.howtoforge.com/images/command-tutorial/big/mkdir-custom-perm.png

|

||||

[7]:https://www.howtoforge.com/images/command-tutorial/mkdir-verbose.png

|

||||

[8]:https://www.howtoforge.com/images/command-tutorial/big/mkdir-verbose.png

|

||||

[9]:https://linux.die.net/man/1/mkdir

|

||||

@ -0,0 +1,170 @@

|

||||

Never miss a Magazine's article, build your own RSS notification system

|

||||

======

|

||||

|

||||

|

||||

|

||||

Python is a great programming language to quickly build applications that make our life easier. In this article we will learn how to use Python to build a RSS notification system, the goal being to have fun learning Python using Fedora. If you are looking for a complete RSS notifier application, there are a few already packaged in Fedora.

|

||||

|

||||

### Fedora and Python - getting started

|

||||

|

||||

Python 3.6 is available by default in Fedora, that includes Python's extensive standard library. The standard library provides a collection of modules which make some tasks simpler for us. For example, in our case we will use the [**sqlite3**][1] module to create, add and read data from a database. In the case where a particular problem we are trying to solve is not covered by the standard library, the chance is that someone has already developed a module for everyone to use. The best place to search for such modules is the Python Package Index known as [PyPI][2]. In our example we are going to use the [**feedparser**][3] to parse an RSS feed.

|

||||

|

||||

Since **feedparser** is not in the standard library, we have to install it in our system. Luckily for us there is an rpm package in Fedora, so the installation of **feedparser** is as simple as:

|

||||

```

|

||||

$ sudo dnf install python3-feedparser

|

||||

```

|

||||

|

||||

We now have everything we need to start coding our application.

|

||||

|

||||

### Storing the feed data

|

||||

|

||||

We need to store data from the articles that have already been published so that we send a notification only for new articles. The data we want to store will give us a unique way to identify an article. Therefore we will store the **title** and the **publication date** of the article.

|

||||

|

||||

So let's create our database using python **sqlite3** module and a simple SQL query. We are also adding the modules we are going to use later ( **feedparser** , **smtplib** and **email** ).

|

||||

|

||||

#### Creating the Database

|

||||

```

|

||||

#!/usr/bin/python3

|

||||

import sqlite3

|

||||

import smtplib

|

||||

from email.mime.text import MIMEText

|

||||

|

||||

import feedparser

|

||||

|

||||

db_connection = sqlite3.connect('/var/tmp/magazine_rss.sqlite')

|

||||

db = db_connection.cursor()

|

||||

db.execute(' CREATE TABLE IF NOT EXISTS magazine (title TEXT, date TEXT)')

|

||||

|

||||

```

|

||||

|

||||

These few lines of code create a new sqlite database stored in a file called 'magazine_rss.sqlite', and then create a new table within the database called 'magazine'. This table has two columns - 'title' and 'date' - that can store data of the type TEXT, which means that the value of each column will be a text string.

|

||||

|

||||

#### Checking the Database for old articles

|

||||

|

||||