Mirror of https://github.com/LCTT/TranslateProject.git

Synced 2025-02-06 23:50:16 +08:00

Merge pull request #1 from LCTT/master

merge the repo from LCTT/TranslateProject

commit c8da1a51e3

@ -1,57 +1,27 @@

How to Dockerize a Python Django Application
======

### Table of Contents

1. [What are we going to do?][6]
2. [Step 1 - Install Docker-ce][7]
3. [Step 2 - Install Docker-compose][8]
4. [Step 3 - Configure the Project Environment][9]
    1. [Create a new requirements.txt file][1]
    2. [Create the Nginx virtual host file django.conf][2]
    3. [Create the Dockerfile][3]
    4. [Create the Docker-compose script][4]
    5. [Configure the Django project][5]
5. [Step 4 - Build and Run the Docker Image][10]
6. [Step 5 - Testing][11]
7. [Reference][12]

Docker is an open-source project that gives developers and system administrators an open platform for building and packaging applications as lightweight containers that can run anywhere. Docker automates the deployment of applications inside software containers.

Django is a web application framework written in Python that follows the MVC (Model-View-Controller) architecture. It is free and released under an open-source license. It is fast and designed to help developers get their applications online as quickly as possible.

In this tutorial, I will show you step by step how to create a Docker image for an existing Django application on Ubuntu 16.04. We will learn how to dockerize a Python Django application and then use a `docker-compose` script to deploy the application as containers in a Docker environment.

To deploy our Python Django application, we need additional Docker images: an nginx Docker image for the web server and a PostgreSQL image for the database.

### What are we going to do?

1. Install Docker-ce
2. Install Docker-compose
3. Configure the project environment
4. Build and run
5. Testing

### 步骤 1 - 安装 Docker-ce

|

||||

|

||||

在本教程中,我们将重 docker 仓库安装 docker-ce 社区版。我们将安装 docker-ce 社区版和 docker-compose,其支持 compose 文件版本 3(to 校正者:此处不太明白具体意思)。

|

||||

在本教程中,我们将从 docker 仓库安装 docker-ce 社区版。我们将安装 docker-ce 社区版和 `docker-compose`(其支持 compose 文件版本 3)。

|

||||

|

||||

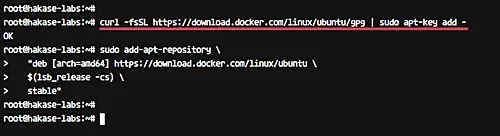

在安装 docker-ce 之前,先使用 apt 命令安装所需的 docker 依赖项。

|

||||

在安装 docker-ce 之前,先使用 `apt` 命令安装所需的 docker 依赖项。

|

||||

|

||||

```

|

||||

sudo apt install -y \

|

||||

@ -71,7 +41,7 @@ sudo add-apt-repository \

|

||||

stable"

|

||||

```

|

||||

|

||||

[][14]

|

||||

[][14]

|

||||

|

||||

更新仓库并安装 docker-ce。

|

||||

|

||||

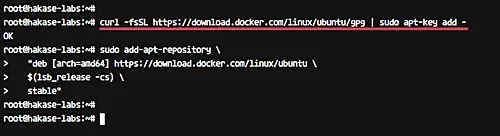

@ -87,16 +57,16 @@ systemctl start docker

|

||||

systemctl enable docker

|

||||

```

|

||||

|

||||

接着,我们将添加一个名为 'omar' 的新用户并将其添加到 docker 组。

|

||||

接着,我们将添加一个名为 `omar` 的新用户并将其添加到 `docker` 组。

|

||||

|

||||

```

|

||||

useradd -m -s /bin/bash omar

|

||||

usermod -a -G docker omar

|

||||

```

|

||||

|

||||

[][15]

|

||||

[][15]

|

||||

|

||||

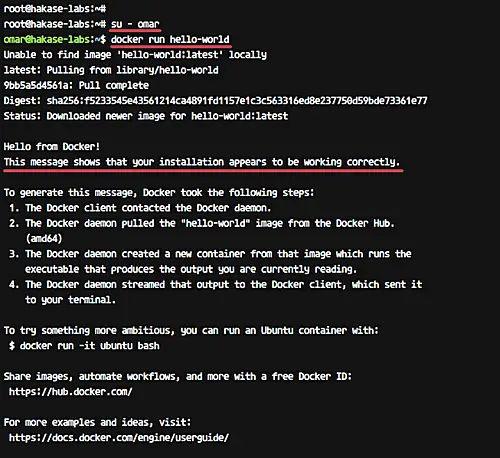

以 omar 用户身份登录并运行 docker 命令,如下所示。

|

||||

以 `omar` 用户身份登录并运行 `docker` 命令,如下所示。

|

||||

|

||||

```

|

||||

su - omar

|

||||

@ -107,13 +77,13 @@ docker run hello-world

|

||||

|

||||

[][16]

|

||||

|

||||

Docker-ce 安装已经完成。

|

||||

Docker-ce 安装已经完成。

|

||||

|

||||

### 步骤 2 - 安装 Docker-compose

|

||||

### 步骤 2 - 安装 Docker-compose

|

||||

|

||||

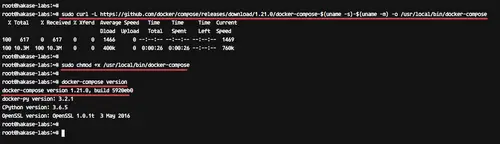

在本教程中,我们将使用最新的 docker-compose 支持 compose 文件版本 3。我们将手动安装 docker-compose。

|

||||

在本教程中,我们将使用支持 compose 文件版本 3 的最新 `docker-compose`。我们将手动安装 `docker-compose`。

|

||||

|

||||

使用 curl 命令将最新版本的 docker-compose 下载到 `/usr/local/bin` 目录,并使用 chmod 命令使其有执行权限。

|

||||

使用 `curl` 命令将最新版本的 `docker-compose` 下载到 `/usr/local/bin` 目录,并使用 `chmod` 命令使其有执行权限。

|

||||

|

||||

运行以下命令:

|

||||

|

||||

@ -122,7 +92,7 @@ sudo curl -L https://github.com/docker/compose/releases/download/1.21.0/docker-c

|

||||

sudo chmod +x /usr/local/bin/docker-compose

|

||||

```

|

||||

|

||||

现在检查 docker-compose 版本。

|

||||

现在检查 `docker-compose` 版本。

|

||||

|

||||

```

|

||||

docker-compose version

|

||||

@ -132,26 +102,26 @@ docker-compose version

|

||||

|

||||

[][17]

|

||||

|

||||

已安装支持 compose 文件版本 3 的 docker-compose 最新版本。

|

||||

已安装支持 compose 文件版本 3 的 `docker-compose` 最新版本。

|

||||

|

||||

### 步骤 3 - 配置项目环境

|

||||

|

||||

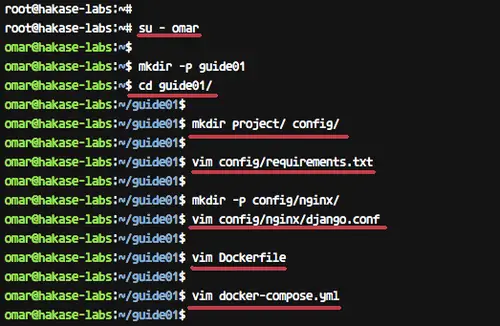

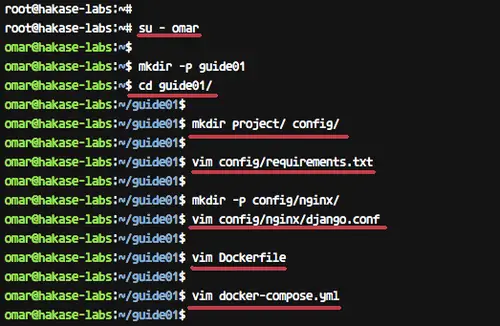

在这一步中,我们将配置 Python Django 项目环境。我们将创建新目录 'guide01',并使其成为我们项目文件的主目录,例如 Dockerfile,Django 项目,nginx 配置文件等。

|

||||

在这一步中,我们将配置 Python Django 项目环境。我们将创建新目录 `guide01`,并使其成为我们项目文件的主目录,例如包括 Dockerfile、Django 项目、nginx 配置文件等。

|

||||

|

||||

登录到 'omar' 用户。

|

||||

登录到 `omar` 用户。

|

||||

|

||||

```

|

||||

su - omar

|

||||

```

|

||||

|

||||

创建一个新目录 'guide01',并进入目录。

|

||||

创建一个新目录 `guide01`,并进入目录。

|

||||

|

||||

```

|

||||

mkdir -p guide01

|

||||

cd guide01/

|

||||

```

|

||||

|

||||

现在在 'guide01' 目录下,创建两个新目录 'project' 和 'config'。

|

||||

现在在 `guide01` 目录下,创建两个新目录 `project` 和 `config`。

|

||||

|

||||

```

|

||||

mkdir project/ config/

|

||||

@ -159,118 +129,151 @@ mkdir project/ config/

|

||||

|

||||

注意:

|

||||

|

||||

* 'project' 目录:我们所有的 python Django 项目文件都将放在该目录中。

|

||||

* `project` 目录:我们所有的 python Django 项目文件都将放在该目录中。

|

||||

* `config` 目录:项目配置文件的目录,包括 nginx 配置文件、python pip 的`requirements.txt` 文件等。

|

||||

|

||||

* 'config' 目录:项目配置文件的目录,包括 nginx 配置文件,python pip requirements 文件等。

|

||||

#### 创建一个新的 requirements.txt 文件

|

||||

|

||||

### 创建一个新的 requirements.txt 文件

|

||||

|

||||

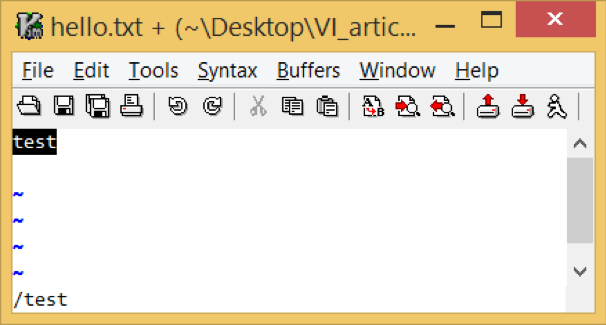

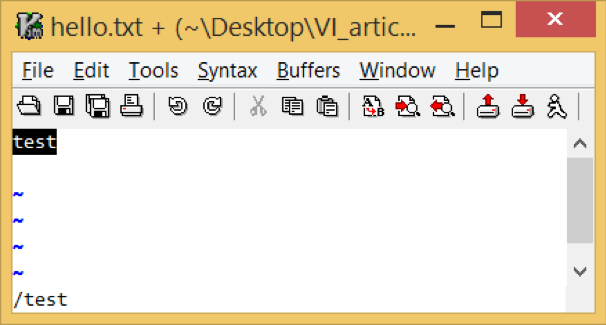

接下来,使用 vim 命令在 'config' 目录中创建一个新的 requirements.txt 文件

|

||||

接下来,使用 `vim` 命令在 `config` 目录中创建一个新的 `requirements.txt` 文件。

|

||||

|

||||

```

|

||||

vim config/requirements.txt

|

||||

```

|

||||

|

||||

粘贴下面的配置。

|

||||

粘贴下面的配置:

|

||||

|

||||

```

|

||||

Django==2.0.4

|

||||

gunicorn==19.7.0

|

||||

psycopg2==2.7.4

|

||||

gunicorn==19.7.0

|

||||

psycopg2==2.7.4

|

||||

```

|

||||

|

||||

保存并退出。

|

||||

|

||||

#### Create the Nginx virtual host file django.conf

In the `config` directory, create an nginx configuration directory and add the virtual host configuration file `django.conf`.

Run the following commands:

```
mkdir -p config/nginx/
vim config/nginx/django.conf
```

Paste the following configuration:

```
upstream web {
    ip_hash;
    server web:8000;
}

# portal
server {
    location / {
        proxy_pass http://web/;
    }
    listen 8000;
    server_name localhost;

    location /static {
        autoindex on;
        alias /src/static/;
    }
}
```

Save and exit.

#### Create the Dockerfile

Create a new file named `Dockerfile` in the `guide01` directory.

Run the following command:

```
vim Dockerfile
```

Now paste the following Dockerfile script:

```
FROM python:3.5-alpine
ENV PYTHONUNBUFFERED 1

RUN apk update && \
    apk add --virtual build-deps gcc python-dev musl-dev && \
    apk add postgresql-dev bash

RUN mkdir /config
ADD /config/requirements.txt /config/
RUN pip install -r /config/requirements.txt
RUN mkdir /src
WORKDIR /src
```

Save and exit.

Notes:

We want to build the Docker image for our Django project on Alpine Linux, a minimal Linux distribution. Our Django project will run on Alpine Linux with Python 3.5, with the postgresql-dev package added for PostgreSQL database support. We then use the Python `pip` command to install all the Python packages listed in `requirements.txt`, and create a new directory, `/src`, for our project.

### 创建 Docker-compose 脚本

|

||||

#### 创建 Docker-compose 脚本

|

||||

|

||||

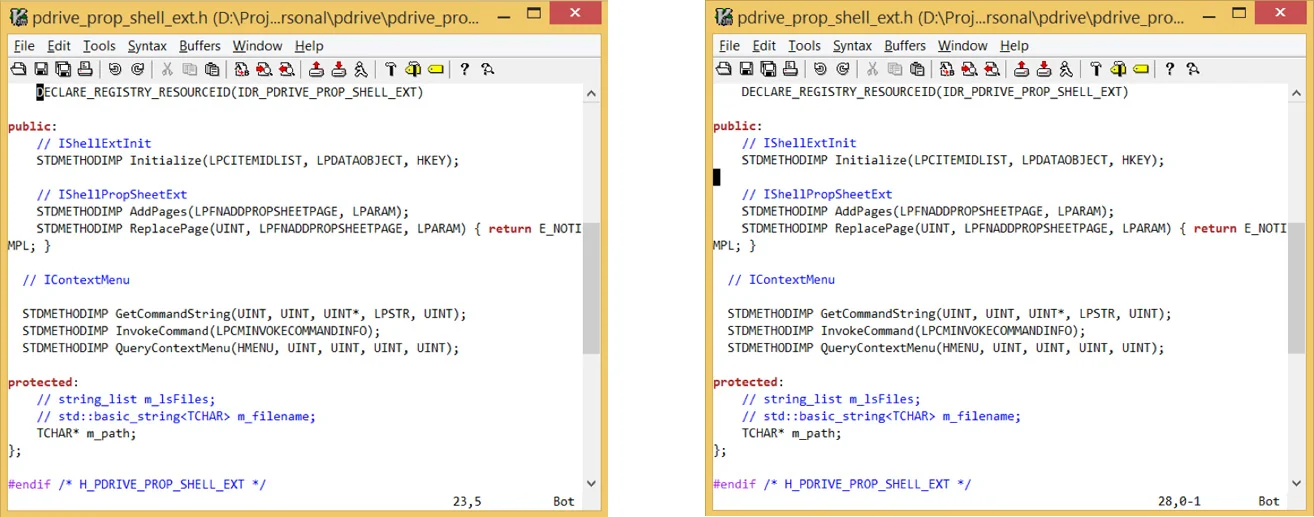

使用 [vim][18] 命令在 'guide01' 目录下创建 'docker-compose.yml' 文件。

|

||||

使用 [vim][18] 命令在 `guide01` 目录下创建 `docker-compose.yml` 文件。

|

||||

|

||||

```

|

||||

vim docker-compose.yml

|

||||

```

|

||||

|

||||

粘贴以下配置内容。

|

||||

粘贴以下配置内容:

|

||||

|

||||

```

|

||||

version: '3'

|

||||

services:

|

||||

db:

|

||||

image: postgres:10.3-alpine

|

||||

container_name: postgres01

|

||||

nginx:

|

||||

image: nginx:1.13-alpine

|

||||

container_name: nginx01

|

||||

ports:

|

||||

- "8000:8000"

|

||||

volumes:

|

||||

- ./project:/src

|

||||

- ./config/nginx:/etc/nginx/conf.d

|

||||

depends_on:

|

||||

- web

|

||||

web:

|

||||

build: .

|

||||

container_name: django01

|

||||

command: bash -c "python manage.py makemigrations && python manage.py migrate && python manage.py collectstatic --noinput && gunicorn hello_django.wsgi -b 0.0.0.0:8000"

|

||||

depends_on:

|

||||

- db

|

||||

volumes:

|

||||

- ./project:/src

|

||||

expose:

|

||||

- "8000"

|

||||

restart: always

|

||||

services:

|

||||

db:

|

||||

image: postgres:10.3-alpine

|

||||

container_name: postgres01

|

||||

nginx:

|

||||

image: nginx:1.13-alpine

|

||||

container_name: nginx01

|

||||

ports:

|

||||

- "8000:8000"

|

||||

volumes:

|

||||

- ./project:/src

|

||||

- ./config/nginx:/etc/nginx/conf.d

|

||||

depends_on:

|

||||

- web

|

||||

web:

|

||||

build: .

|

||||

container_name: django01

|

||||

command: bash -c "python manage.py makemigrations && python manage.py migrate && python manage.py collectstatic --noinput && gunicorn hello_django.wsgi -b 0.0.0.0:8000"

|

||||

depends_on:

|

||||

- db

|

||||

volumes:

|

||||

- ./project:/src

|

||||

expose:

|

||||

- "8000"

|

||||

restart: always

|

||||

```

|

||||

|

||||

保存并退出。

|

||||

|

||||

注意:

|

||||

|

||||

使用这个 docker-compose 文件脚本,我们将创建三个服务。使用 PostgreSQL alpine Linux 创建名为 'db' 的数据库服务,再次使用 Nginx alpine Linux 创建 'nginx' 服务,并使用从 Dockerfile 生成的自定义 docker 镜像创建我们的 python Django 容器。

|

||||

使用这个 `docker-compose` 文件脚本,我们将创建三个服务。使用 alpine Linux 版的 PostgreSQL 创建名为 `db` 的数据库服务,再次使用 alpine Linux 版的 Nginx 创建 `nginx` 服务,并使用从 Dockerfile 生成的自定义 docker 镜像创建我们的 python Django 容器。

|

||||

|

||||

[][19]

|

||||

[][19]

|

||||

|

||||

### 配置 Django 项目

|

||||

#### 配置 Django 项目

|

||||

|

||||

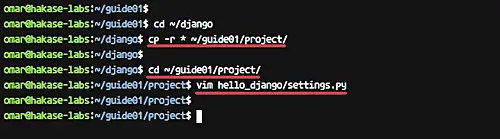

将 Django 项目文件复制到 'project' 目录。

|

||||

将 Django 项目文件复制到 `project` 目录。

|

||||

|

||||

```

|

||||

cd ~/django

|

||||

cp -r * ~/guide01/project/

|

||||

```

|

||||

|

||||

进入 'project' 目录并编辑应用程序设置 'settings.py'。

|

||||

进入 `project` 目录并编辑应用程序设置 `settings.py`。

|

||||

|

||||

```

|

||||

cd ~/guide01/project/

|

||||

@ -279,29 +282,29 @@ vim hello_django/settings.py

|

||||

|

||||

注意:

|

||||

|

||||

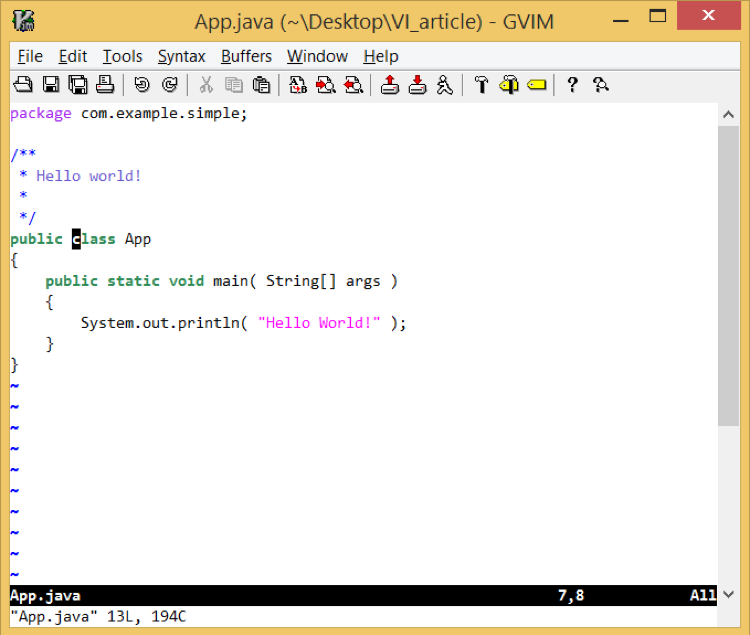

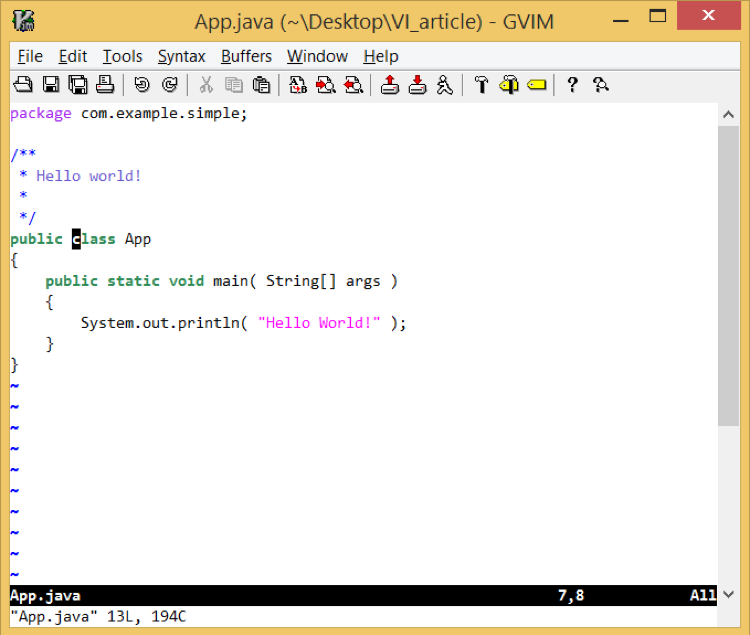

我们将部署名为 'hello_django' 的简单 Django 应用程序。

|

||||

我们将部署名为 “hello_django” 的简单 Django 应用程序。

|

||||

|

||||

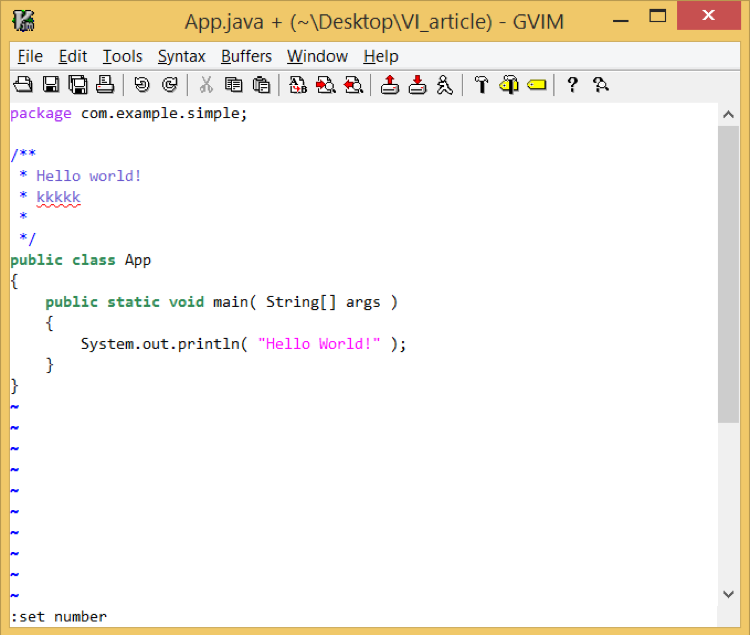

在 'ALLOW_HOSTS' 行中,添加服务名称 'web'。

|

||||

在 `ALLOW_HOSTS` 行中,添加服务名称 `web`。

|

||||

|

||||

```

|

||||

ALLOW_HOSTS = ['web']

|

||||

```
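
Why `web` and not the server's own name? nginx forwards every request to the `web` upstream. Unless `proxy_set_header Host` is configured (this `django.conf` doesn't configure it), nginx sends the upstream name `web` as the `Host` header, and Django checks that header against `ALLOWED_HOSTS`. The function below is a simplified, hypothetical model of that check: an exact match, a leading-dot subdomain match, or `*`. It only illustrates the rule. It is not Django's own implementation and ignores details such as IPv6 literals.

```python
from typing import Iterable


def host_allowed(host: str, allowed_hosts: Iterable[str]) -> bool:
    """Simplified model of an ALLOWED_HOSTS check (illustration only)."""
    # Strip an optional ":port" suffix and compare case-insensitively.
    domain = host.lower().rsplit(":", 1)[0]
    for pattern in (p.lower() for p in allowed_hosts):
        if pattern == "*":
            return True
        if pattern.startswith("."):
            # ".example.com" matches example.com and any of its subdomains.
            if domain == pattern[1:] or domain.endswith(pattern):
                return True
        elif domain == pattern:
            return True
    return False


if __name__ == "__main__":
    print(host_allowed("web", ["web"]))         # True: the Host value nginx sends upstream
    print(host_allowed("ovh01:8000", ["web"]))  # False: only "web" is listed
```

Because nginx always presents `web` upstream, browsers can reach the site under any server name while `ALLOWED_HOSTS` stays this short.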

|

||||

|

||||

现在更改数据库设置,我们将使用 PostgreSQL 数据库,'db' 数据库作为服务运行,使用默认用户和密码。

|

||||

现在更改数据库设置,我们将使用 PostgreSQL 数据库来运行名为 `db` 的服务,使用默认用户和密码。

|

||||

|

||||

```

|

||||

DATABASES = {

|

||||

'default': {

|

||||

'ENGINE': 'django.db.backends.postgresql_psycopg2',

|

||||

'NAME': 'postgres',

|

||||

'USER': 'postgres',

|

||||

'HOST': 'db',

|

||||

'PORT': 5432,

|

||||

}

|

||||

}

|

||||

'default': {

|

||||

'ENGINE': 'django.db.backends.postgresql_psycopg2',

|

||||

'NAME': 'postgres',

|

||||

'USER': 'postgres',

|

||||

'HOST': 'db',

|

||||

'PORT': 5432,

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

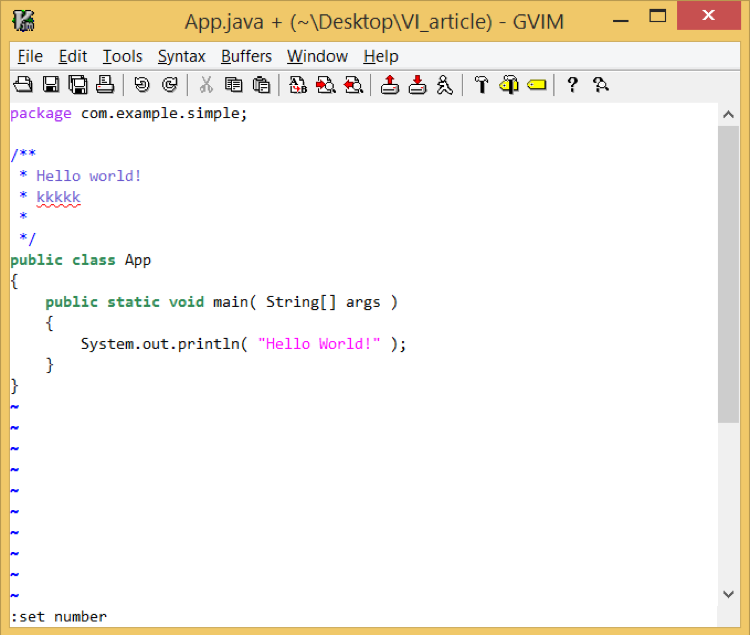

至于 'STATIC_ROOT' 配置目录,将此行添加到文件行的末尾。

|

||||

至于 `STATIC_ROOT` 配置目录,将此行添加到文件行的末尾。

|

||||

|

||||

```

|

||||

STATIC_ROOT = os.path.join(BASE_DIR, 'static/')

|

||||

@ -309,21 +312,21 @@ STATIC_ROOT = os.path.join(BASE_DIR, 'static/')

|

||||

|

||||

保存并退出。
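
Why these particular paths? The `web` and `nginx` services both mount `./project` at `/src`. As a result, `collectstatic` writes into a directory that nginx can serve through its `alias /src/static/`. Below is a quick sketch of the path arithmetic. It assumes the settings file sits at `/src/hello_django/settings.py` inside the container and that `BASE_DIR` is defined as in Django 2.0's default project template. The helper name `static_root_for` is hypothetical.

```python
import posixpath

# Value of the `alias` directive in the `location /static` block of django.conf.
NGINX_STATIC_ALIAS = "/src/static/"


def static_root_for(settings_path: str) -> str:
    # Django 2.0's project template defines
    # BASE_DIR = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))
    base_dir = posixpath.dirname(posixpath.dirname(posixpath.abspath(settings_path)))
    # Same expression the tutorial appends to settings.py.
    return posixpath.join(base_dir, "static/")


if __name__ == "__main__":
    root = static_root_for("/src/hello_django/settings.py")
    print(root)                        # /src/static/
    print(root == NGINX_STATIC_ALIAS)  # True
```

If you move the project to a different mount point, both the volume paths in `docker-compose.yml` and the nginx `alias` have to change together.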

|

||||

|

||||

[][20]

|

||||

[][20]

|

||||

|

||||

现在我们准备在 docker 容器下构建和运行 Django 项目。

|

||||

|

||||

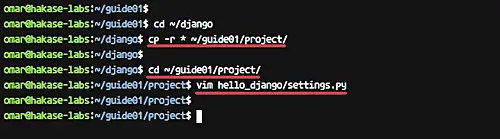

### 步骤 4 - 构建并运行 Docker 镜像

|

||||

|

||||

在这一步中,我们想要使用 'guide01' 目录中的配置为我们的 Django 项目构建一个 Docker 镜像。

|

||||

在这一步中,我们想要使用 `guide01` 目录中的配置为我们的 Django 项目构建一个 Docker 镜像。

|

||||

|

||||

进入 'guide01' 目录。

|

||||

进入 `guide01` 目录。

|

||||

|

||||

```

|

||||

cd ~/guide01/

|

||||

```

|

||||

|

||||

现在使用 docker-compose 命令构建 docker 镜像。

|

||||

现在使用 `docker-compose` 命令构建 docker 镜像。

|

||||

|

||||

```

|

||||

docker-compose build

|

||||

@ -331,7 +334,7 @@ docker-compose build

|

||||

|

||||

[][21]

|

||||

|

||||

启动 docker-compose 脚本中的所有服务。

|

||||

启动 `docker-compose` 脚本中的所有服务。

|

||||

|

||||

```

|

||||

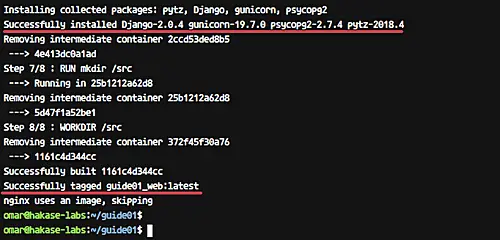

docker-compose up -d

|

||||

@ -348,7 +351,7 @@ docker-compose ps

|

||||

docker-compose images

|

||||

```

|

||||

|

||||

现在,你将在系统上运行三个容器并列出 Docker 镜像,如下所示。

|

||||

现在,你将在系统上运行三个容器,列出 Docker 镜像,如下所示。

|

||||

|

||||

[][23]

|

||||

|

||||

@ -356,17 +359,19 @@ docker-compose images

|

||||

|

||||

### 步骤 5 - 测试

|

||||

|

||||

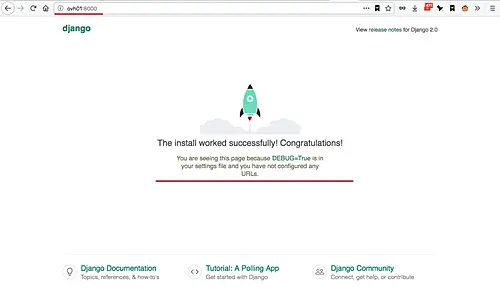

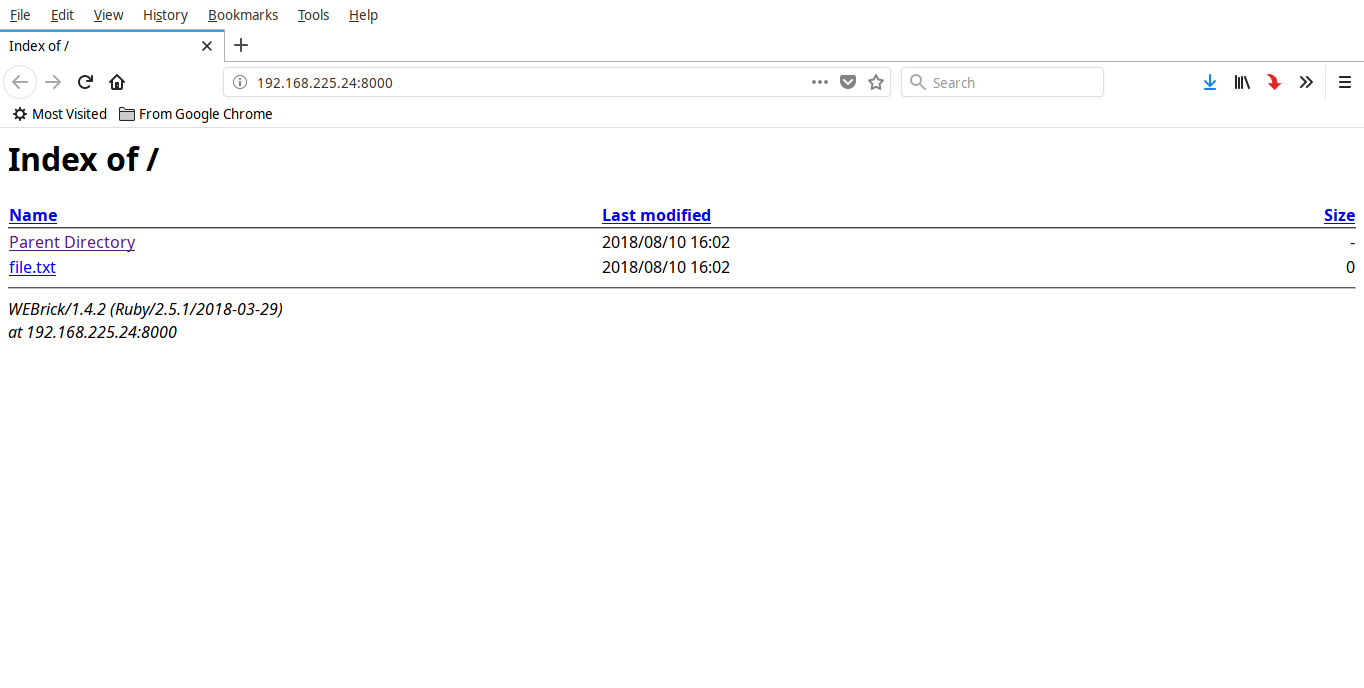

打开 Web 浏览器并使用端口 8000 键入服务器地址,我的是:http://ovh01:8000/

|

||||

打开 Web 浏览器并使用端口 8000 键入服务器地址,我的是:`http://ovh01:8000/`。

|

||||

|

||||

现在你将获得默认的 Django 主页。

|

||||

现在你将看到默认的 Django 主页。

|

||||

|

||||

[][24]

|

||||

|

||||

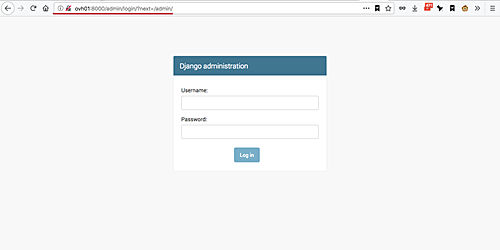

接下来,通过在 URL 上添加 “/admin” 路径来测试管理页面。

|

||||

接下来,通过在 URL 上添加 `/admin` 路径来测试管理页面。

|

||||

|

||||

```

|

||||

http://ovh01:8000/admin/

|

||||

```

|

||||

|

||||

然后你将会看到 Django admin 登录页面。

|

||||

然后你将会看到 Django 管理登录页面。
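
If you'd rather script these two browser checks, here is a minimal standard-library sketch. `ovh01` is the author's host name, so substitute your own. The `fetch` parameter is a hypothetical hook added so the check can be exercised without a running server. `urlopen` follows the redirect from `/admin/` to the login page, so both paths should report 200 once the stack is up.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable, Optional


def http_status(url: str, timeout: float = 5.0) -> Optional[int]:
    """Final HTTP status for `url` (redirects are followed), or None if unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a code
        return err.code
    except OSError:                        # URLError, refused connection, timeout
        return None


def check_pages(base_url: str, paths: Iterable[str],
                fetch: Callable[[str], Optional[int]] = http_status) -> Dict[str, bool]:
    """Map each path to True if it answered with HTTP 200."""
    return {path: fetch(base_url.rstrip("/") + path) == 200 for path in paths}


if __name__ == "__main__":
    print(check_pages("http://ovh01:8000", ["/", "/admin/"]))
```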

@ -383,7 +388,7 @@ via: https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-

Author: [Muhammad Arul][a]

Translator: [MjSeven](https://github.com/MjSeven)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,36 +1,30 @@

Kubernetes Network Operations
======

Recently I've been working on Kubernetes networking. One thing I've noticed is that there are plenty of well-written articles about how to set up Kubernetes networking. I haven't seen much about how to operate it, though, or about how to be confident it won't cause production incidents.

In this article, I'll try to convince you of three things (all of which I think are quite reasonable :)):

* Avoiding outages in your production network is important
* Operating networking software is hard
* It's worth thinking critically about major changes to your networking infrastructure and their impact on reliability, even when very cool Googlers say "this is what we do at Google". (Google engineers are doing great work on Kubernetes! But I think it's still important to study the architecture and make sure it makes sense for your organization.)

I'm definitely not a Kubernetes networking expert. However, I've run into a few problems while setting up Kubernetes networking, and I understand it much better than I used to.

### Operating networking software is hard

I'm not talking here about operating physical networks (I don't know anything about that). I mean keeping software such as DNS servers, load balancers, and proxies working correctly.

I spent a year on a team responsible for a lot of networking infrastructure, and I learned a few things about operating it! (Obviously I still have a lot to learn.) Three general observations before we start:

* Networking software often depends heavily on the Linux kernel. So besides configuring the software correctly, you also need to make sure a bunch of different kernel settings (`sysctl`) are correct. A single misconfigured sysctl can easily be the difference between "everything is fine" and "everything is broken".
* Networking requirements change over time. For example, maybe you're doing five times more DNS queries than last year! Or your DNS server suddenly starts returning DNS responses over TCP instead of UDP, which is a completely different kernel workload! This means software that used to work fine can suddenly start having problems.
* Fixing production networking problems takes a lot of experience. (For example, see this [post by Sophie Haskins on debugging a kube-dns issue][1].) I'm much better at debugging networking problems than I used to be, but only after spending a lot of time learning about Linux networking.

I'm still far from being a network operations expert, but I think the following points are important:

1. Make major changes to production networking infrastructure only rarely (they can be extremely disruptive)
2. When you do make a major change to networking infrastructure, think carefully about what you'll do if the new infrastructure fails
3. Have several people who understand your network setup

Switching to Kubernetes is obviously a pretty big change! So let's talk about some of the things that can go wrong!

@ -39,86 +33,72 @@

The Kubernetes networking components we'll discuss in this article are:

* Your overlay network backend (like flannel/calico/weave net/romana)
* `kube-dns`
* `kube-proxy`
* Ingress controllers / load balancers
* `kubelet`

If you're going to set up HTTP services, you'll probably use all of these. I don't use most of these components myself, but I try to understand them, so this article covers them too.

### The simplest approach: use host networking for all containers

Let's start with the simplest thing you can do. This doesn't let you run HTTP services in Kubernetes. I think it's very safe, because there are very few moving parts.

If you use host networking for all your containers, I think the only things you need to do are:

1. Configure the kubelet so that DNS is set up correctly inside containers
2. That's it!

If every pod uses host networking directly, you don't need kube-dns or kube-proxy. You don't even need an underlying overlay network.

In this setup, your pods can connect to the outside world (the same way any process on your hosts can), but the outside world can't connect to your pods.

This isn't that important (I think most people want to run HTTP services in Kubernetes and actually talk to them). Still, I find it interesting that networking complexity isn't always strictly required, and sometimes you can get what you need without it. If you can, avoid making your network more complex than necessary.

### Operating an overlay network

The first networking component we'll discuss is the overlay network. Kubernetes assumes that every pod has an IP address, so that you can communicate with the services in that pod. That's what I mean by "overlay network": the system that lets you reach a pod by its IP address.

All the other Kubernetes networking pieces depend on the overlay network working correctly. You can read more about it in [the Kubernetes networking model][10].

The approach Kelsey Hightower describes in [Kubernetes the hard way][11] seems good, but it doesn't actually work on AWS with more than 50 nodes, so I'm not going to discuss it.

There are many overlay network backends (calico, flannel, weaveworks, romana), and the landscape is quite confusing. In my view, an overlay network has 2 responsibilities:

1. Make sure your pods can send network requests outside the cluster
2. Maintain a stable mapping of nodes to subnets, keep every node in the cluster updated with that mapping, and react correctly when nodes are added or removed
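
To make the second responsibility concrete, here is a toy model of a node-to-subnet map. It assumes a hypothetical cluster CIDR of `10.244.0.0/16`, carved into one `/24` per node. It only illustrates the bookkeeping: assign a subnet on join, release it on leave, and keep the mapping stable. Real backends such as flannel keep this state in etcd or the Kubernetes API and differ in many details.

```python
import ipaddress
from typing import Dict


class SubnetMap:
    """Toy node -> pod-subnet allocator (hypothetical; not any real backend's code)."""

    def __init__(self, cluster_cidr: str = "10.244.0.0/16", prefix: int = 24):
        # Every /24 inside the cluster CIDR is a candidate node subnet.
        self._pool = list(ipaddress.ip_network(cluster_cidr).subnets(new_prefix=prefix))
        self.assignments: Dict[str, ipaddress.IPv4Network] = {}

    def add_node(self, node: str) -> ipaddress.IPv4Network:
        # Stable mapping: a node that is already known keeps its subnet.
        if node in self.assignments:
            return self.assignments[node]
        used = set(self.assignments.values())
        for subnet in self._pool:
            if subnet not in used:
                self.assignments[node] = subnet
                return subnet
        raise RuntimeError("cluster CIDR exhausted")

    def remove_node(self, node: str) -> None:
        # Forgetting this step leaks address space and leaves stale routes behind.
        self.assignments.pop(node, None)

    def routes(self) -> Dict[str, str]:
        """The table every node needs: which node owns which subnet."""
        return {str(net): node for node, net in self.assignments.items()}


# Example run: three nodes join, one leaves, a fourth joins.
cluster = SubnetMap()
for name in ("node-a", "node-b", "node-c"):
    cluster.add_node(name)
cluster.remove_node("node-b")
cluster.add_node("node-d")  # takes the /24 that node-b released
print(cluster.routes())
```

Every node then has to learn the new `routes()` table whenever it changes, and that propagation step is where node additions and deletions can go wrong.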

Okay! So! What can go wrong with your overlay network?

* The overlay network is responsible for setting up iptables rules (basically `iptables -t nat -A POSTROUTING -s $SUBNET -j MASQUERADE`) so that containers can make network requests outside Kubernetes. If something goes wrong with this rule, your containers can't connect to the outside network. It's not that hard (it's just a few iptables rules), but it is important. I opened a [pull request][2] because I wanted to make sure this was resilient.
* Something can go wrong when nodes are added or removed. We use the `flannel hostgw` backend, and when we started using it, node deletion [didn't work yet][3].
* Your overlay network probably depends on a distributed database (etcd). If something goes wrong with that database, your overlay network can break too. For example, [https://github.com/coreos/flannel/issues/610][4] says that if you lose data in your `flannel etcd` cluster, containers can end up losing network connectivity. (This has since been fixed.)
* You upgrade Docker or something else, and things break
* And many more possibilities!

I'm mostly talking about past problems in Flannel here, but I'm not saying you shouldn't use Flannel. I actually really like Flannel because it's simple (for example, the [vxlan backend][12] is only 500 lines of code), which makes it possible for me to track down problems by reading the code. It's also clearly improving all the time, and the maintainers have been great about reviewing pull requests.

So far, my approach to operating an overlay network has been:

* Learn in detail how it works and how to debug it (for example, Flannel's hostgw backend works by creating routes, so you mostly just need to run `sudo ip route list` to check that they're correct)
* Maintain an internal build if necessary, so it's easy to patch
* When there are problems, contribute patches upstream
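
For the hostgw case, "check that the routes are correct" can be scripted. The minimal sketch below assumes you already have the expected subnet-to-node-IP mapping from somewhere; the `expected` dict is hypothetical. It also assumes `ip route list` prints lines in its usual `<subnet> via <gateway> dev <iface> ...` form.

```python
import ipaddress
import subprocess
from typing import Dict, List


def parse_routes(text: str) -> Dict[str, str]:
    """Map destination subnet -> gateway for each `<subnet> via <gateway> ...` line."""
    routes: Dict[str, str] = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[1] == "via":
            try:
                dest = str(ipaddress.ip_network(fields[0]))
            except ValueError:
                continue  # e.g. "default via ...", which is not a subnet
            routes[dest] = fields[2]
    return routes


def bad_routes(expected: Dict[str, str], text: str) -> List[str]:
    """Subnets whose route is missing or points at the wrong node IP."""
    actual = parse_routes(text)
    return sorted(subnet for subnet, gw in expected.items() if actual.get(subnet) != gw)


if __name__ == "__main__":
    output = subprocess.run(["ip", "route", "list"], stdout=subprocess.PIPE,
                            universal_newlines=True, check=True).stdout
    # Hypothetical mapping: pod subnet -> IP of the node that owns it.
    expected = {"10.244.1.0/24": "172.31.0.11", "10.244.2.0/24": "172.31.0.12"}
    print(bad_routes(expected, output) or "all expected routes present")
```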

I think it's really useful to go through the list of merged pull requests and previously fixed bugs. It takes some time, but it's a great way to get a list of the kinds of problems other people have run into.

Other people's overlay networks may work well, but I don't have that experience, and I've heard other people report similar problems. Suppose your overlay network is set up like this: a) on AWS and b) running on more than 50-100 nodes. I'd like to know how confident you are about operating it.

### Operating kube-proxy and kube-dns?

Now that I've shared some thoughts about operating overlay networks, let's talk about kube-proxy and kube-dns.

There's a question mark at the end of this heading because I haven't actually operated these. I have more questions than answers here.

Here's how Kubernetes services work! A service is a group of pods, each with its own IP address (like 10.1.0.3, 10.2.3.5, 10.3.5.6):

1. Every Kubernetes service gets an IP address (like 10.23.1.2)
2. `kube-dns` resolves Kubernetes service DNS names to IP addresses (so my-svc.my-namespace.svc.cluster.local might map to 10.23.1.2)
3. `kube-proxy` sets up `iptables` rules that load balance randomly between the pods. kube-proxy also has a userspace round-robin load balancer, but my impression is that they don't recommend using it.

So when you make a request to `my-svc.my-namespace.svc.cluster.local`, it resolves to 10.23.1.2. The `iptables` rules on your local host (generated by kube-proxy) then randomly redirect it to one of 10.1.0.3, 10.2.3.5, or 10.3.5.6.
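
Those three steps can be modelled in a few lines. This is only a toy: hard-coded tables stand in for kube-dns records and kube-proxy's endpoint rules, using the example addresses above. It is not how either component is implemented.

```python
import random
from typing import Dict, List, Optional

# Stand-in for kube-dns: service DNS name -> cluster IP.
DNS: Dict[str, str] = {"my-svc.my-namespace.svc.cluster.local": "10.23.1.2"}

# Stand-in for kube-proxy's rules: cluster IP -> pod IPs behind the service.
ENDPOINTS: Dict[str, List[str]] = {"10.23.1.2": ["10.1.0.3", "10.2.3.5", "10.3.5.6"]}


def route_request(name: str, rng: Optional[random.Random] = None) -> str:
    """Return the pod IP a new connection to `name` would end up at."""
    rng = rng or random.Random()
    cluster_ip = DNS[name]                    # step 2: DNS lookup
    return rng.choice(ENDPOINTS[cluster_ip])  # step 3: random pick among pods


if __name__ == "__main__":
    rng = random.Random(0)
    print([route_request("my-svc.my-namespace.svc.cluster.local", rng) for _ in range(5)])
```

Each stage is a separate point of failure: a wrong DNS entry, or an endpoint list that stops being updated, breaks the request even if the pods themselves are healthy.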

@ -126,9 +106,7 @@ Okay! So! What can go wrong with your overlay network?

Here are the things I can imagine going wrong in this process:

* `kube-dns` is misconfigured
* `kube-proxy` dies, so your `iptables` rules stop being updated
* Problems related to maintaining a large number of `iptables` rules

Let's talk about the `iptables` rules, since creating huge numbers of `iptables` rules is something I'd never heard of before!

@ -141,7 +119,6 @@ kube-proxy creates an `iptables` rule for each destination host like this:

```
-A KUBE-SVC-LI77LBOOMGYET5US -m comment --comment "default/showreadiness:showreadiness" -m statistic --mode random --probability 0.33332999982 -j KUBE-SEP-RKIFTWKKG3OHTTMI
-A KUBE-SVC-LI77LBOOMGYET5US -m comment --comment "default/showreadiness:showreadiness" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-CGDKBCNM24SZWCMS
-A KUBE-SVC-LI77LBOOMGYET5US -m comment --comment "default/showreadiness:showreadiness" -j KUBE-SEP-RI4SRNQQXWSTGE2Y
```
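
The odd-looking probabilities are what make the split even. With *n* endpoints, rule *i* (counting from 0) is reached only if no earlier rule matched, so it is given probability 1/(n − i). The last rule has no `statistic` match and catches everything that is left. The `0.33332999982` in the listing is 1/3 up to rounding. Below is a short check with exact fractions, assuming three endpoints as in the rules above:

```python
from fractions import Fraction
from typing import List


def rule_probabilities(n: int) -> List[Fraction]:
    """Per-rule match probability for n endpoints; the last rule is unconditional (1)."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n: int) -> List[Fraction]:
    """Chance that a new connection ends up at each endpoint."""
    shares, not_yet_matched = [], Fraction(1)
    for p in rule_probabilities(n):
        shares.append(not_yet_matched * p)
        not_yet_matched *= 1 - p
    return shares


if __name__ == "__main__":
    print([float(p) for p in rule_probabilities(3)])  # [0.3333333333333333, 0.5, 1.0]
    print(effective_shares(3))  # [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]
```

This scheme also explains the rule count: every service gets one rule per endpoint, so the number of rules grows with services × endpoints.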

So kube-proxy creates a lot of `iptables` rules. What do they all mean? What impact do they have on my network? There's a great talk from Huawei called [Scale Kubernetes to Support 50,000 Services][14]. It says that with 5,000 services in your Kubernetes cluster, adding a new rule takes **11 minutes**. If that happened in a real cluster, I think it would be very bad.

@ -152,19 +129,16 @@ kube-proxy creates an `iptables` rule for each destination host like this:

But I'd feel more comfortable using HAProxy! Could it replace kube-proxy? I googled around and found this [thread on kubernetes-sig-network][15], which says:

> kube-proxy is hard to use. We have been using it in production for nearly a year. Most of the time it works well, but as we added more and more services to our cluster, it became harder to debug and maintain. There are no iptables experts on our team, only HAProxy & LVS experts, since we have used those for several years, so we decided to replace the distributed proxy with a centralized HAProxy. I think this might be useful to others who use HAProxy with Kubernetes, so we updated the project and open sourced it: [https://github.com/AdoHe/kube2haproxy][5]. If you find it useful, please take a look and give it a try.

So that's an interesting option! I definitely don't have answers here, but a few thoughts:

* Load balancers are complicated
* DNS is also complicated
* If you have experience operating one kind of load balancer (like HAProxy), it may make more sense to do some extra work to use it rather than adopt an entirely new kind of load balancer (like kube-proxy)
* I've been thinking about where we'd want to use kube-proxy or kube-dns at all. I think it might be better to invest only in Envoy and rely entirely on Envoy for load balancing & service discovery. Then you only have to operate Envoy well.

As you can see, my thinking about how to operate Kubernetes's internal proxies is still pretty muddled, and I don't have much experience with them. Overall, kube-proxy and kube-dns are fine and work well. Even so, I think it's worth considering the problems they might cause (for example, "you can't have more than 5,000 Kubernetes services").

### Ingress

@ -175,14 +149,12 @@ kube-proxy creates an `iptables` rule for each destination host like this:

Some useful links, to summarize:

* [The Kubernetes networking model][6]
* How GKE networking works: [https://www.youtube.com/watch?v=y2bhV81MfKQ][7]
* The talk on `kube-proxy` performance mentioned above: [https://www.youtube.com/watch?v=4-pawkiazEg][8]

### I think networking operations matter

My feeling about all this Kubernetes networking software is that it's still very new, and I'm not sure we (as a community) really know how to operate it well. That makes me nervous as an operator, because I really want my network to work well! :) I also think that running your own Kubernetes cluster takes a considerable investment from an organization. You need to make sure you understand all the pieces of code, so that you can fix them when something goes wrong. That's not a bad thing; it's just a thing.

My plan for now is to keep learning how everything works, so I can worry as little as possible about the parts I change.

@ -192,9 +164,9 @@ kube-proxy creates an `iptables` rule for each destination host like this:

via: https://jvns.ca/blog/2017/10/10/operating-a-kubernetes-network/

Author: [Julia Evans][a]

Translator: [qhwdw](https://github.com/qhwdw)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

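@ -0,0 +1,198 @@

How to Compress and Decompress Files in Linux
======

Compressing files is very useful when backing up important files or sending large files over a network. Note that compressing a file that is already compressed only adds overhead, so you'll end up with a slightly larger file. So don't compress files that are already compressed. GNU/Linux has many programs for compressing and decompressing files; in this tutorial we'll look at just two of them.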
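
You can see the overhead effect with Python's standard `gzip` module. It stands in here for the `gzip` command; both produce the same gzip format. Repetitive text shrinks dramatically. Random bytes, which behave like data that is already compressed, come out slightly larger than they went in:

```python
import gzip
import os

text = b"backup line\n" * 5000  # repetitive text: compresses very well
noise = os.urandom(100_000)     # random bytes: a stand-in for already-compressed data

print("text :", len(text), "->", len(gzip.compress(text)))
print("noise:", len(noise), "->", len(gzip.compress(noise)))  # a little larger than the input
```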

On Unix-like systems, the most common programs for compressing files are:

1. gzip
2. bzip2

### 1. Compress and decompress files with gzip

`gzip` is a utility that compresses and decompresses files using the Lempel-Ziv coding (LZ77) algorithm.

#### 1.1 Compress files

To compress a file named `ostechnix.txt` into a gzip-format compressed file, just run:

```
$ gzip ostechnix.txt
```

When the command finishes, a gzip-compressed file named `ostechnix.txt.gz` replaces the original `ostechnix.txt` file.

The `gzip` command has other uses too. One interesting example is piping the output of a command into `gzip` to create a compressed file. Look at the following command:

```
$ ls -l Downloads/ | gzip > ostechnix.txt.gz
```

The above command creates a gzip-compressed file whose contents are the directory listing of `Downloads`.
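
If you need the same behaviour from a script, Python's standard `gzip` module writes the same `.gz` format. The minimal sketch below mirrors the two commands above. `gzip_file` compresses a file to `<name>.gz` and removes the original, as `gzip` does by default. `gzip_command_output` compresses a command's output. Both function names are hypothetical. Python's default level is 9, so level 6 is passed explicitly to match the command's default.

```python
import gzip
import os
import shutil
import subprocess
from typing import List


def gzip_file(path: str) -> str:
    """Like `gzip path`: write path + '.gz', then remove the original."""
    target = path + ".gz"
    with open(path, "rb") as src, gzip.open(target, "wb", compresslevel=6) as dst:
        shutil.copyfileobj(src, dst)
    os.remove(path)
    return target


def gzip_command_output(cmd: List[str], target: str) -> None:
    """Like `cmd | gzip > target`."""
    data = subprocess.run(cmd, check=True, stdout=subprocess.PIPE).stdout
    with gzip.open(target, "wb", compresslevel=6) as dst:
        dst.write(data)


if __name__ == "__main__":
    print(gzip_file("ostechnix.txt"))  # ostechnix.txt.gz
```

The pipeline example corresponds to `gzip_command_output(["ls", "-l", "Downloads/"], "ostechnix.txt.gz")`.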

#### 1.2 Compress a file and write the output to a new file (without overwriting the original)

By default, `gzip` compresses the given file and replaces the original with the compressed file. However, you can keep the original and write the output to standard output instead. For example, the following command compresses `ostechnix.txt` and writes the output to the file `output.txt.gz`.

```
$ gzip -c ostechnix.txt > output.txt.gz
```

Similarly, to decompress a `gzip` file and choose the name of the output file, just run:

```
$ gzip -c -d output.txt.gz > ostechnix1.txt
```

The above command decompresses `output.txt.gz` and writes the output to `ostechnix1.txt`. In both examples, the original file is not deleted.
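
The `-c` behaviour (leave the input alone and write to a name you choose) looks like this with Python's `gzip` module. This is a sketch; the function names are illustrative and not part of any tool.

```python
import gzip
import shutil


def compress_to(src: str, dst: str) -> None:
    """Like `gzip -c src > dst`: the original file is kept."""
    with open(src, "rb") as fin, gzip.open(dst, "wb") as fout:
        shutil.copyfileobj(fin, fout)


def decompress_to(src: str, dst: str) -> None:
    """Like `gzip -c -d src > dst`: the .gz file is kept."""
    with gzip.open(src, "rb") as fin, open(dst, "wb") as fout:
        shutil.copyfileobj(fin, fout)


if __name__ == "__main__":
    compress_to("ostechnix.txt", "output.txt.gz")
    decompress_to("output.txt.gz", "ostechnix1.txt")
```

`shutil.copyfileobj` streams the data in chunks, so large files never have to fit in memory.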

#### 1.3 Decompress files

To decompress `ostechnix.txt.gz` and replace it with the original, uncompressed file, just run:

```
$ gzip -d ostechnix.txt.gz
```

We can also use the `gunzip` program to decompress files:

```
$ gunzip ostechnix.txt.gz
```

#### 1.4 View the contents of a compressed file without decompressing it

To view the contents of a compressed file without decompressing it, use the `-c` option as shown below:

```
$ gunzip -c ostechnix1.txt.gz
```

Alternatively, you can use the `zcat` program like this:

```
$ zcat ostechnix.txt.gz
```

You can also pipe the output to the `less` command to view it page by page, like this:

```
$ gunzip -c ostechnix1.txt.gz | less
$ zcat ostechnix.txt.gz | less
```

The `zless` program does the same thing as the pipelines above.

```
$ zless ostechnix1.txt.gz
```
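
Like `zcat` and `zless`, Python's `gzip.open` in text mode decompresses on the fly instead of writing an uncompressed file to disk. That makes it handy for scanning large `.gz` logs line by line. Below is a minimal sketch; the `head` helper is hypothetical.

```python
import gzip
from itertools import islice
from typing import List


def head(path: str, n: int = 10) -> List[str]:
    """Return the first n lines of a .gz file without writing a decompressed copy."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        return [line.rstrip("\n") for line in islice(f, n)]


if __name__ == "__main__":
    for line in head("ostechnix.txt.gz"):
        print(line)
```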

#### 1.5 Compress files with gzip at a specific compression level

Another notable advantage of `gzip` is that it supports compression levels from 1 to 9. The three reference points are:

* **1** – fastest (worst compression)
* **9** – slowest (best compression)
* **6** – the default level

To compress a file named `ostechnix.txt` into a gzip file at the "best" compression level, run:

```
$ gzip -9 ostechnix.txt
```
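
To see the speed/size trade-off on your own data, here is a small sketch using Python's `gzip.compress`. Its `compresslevel` parameter takes the same 1-9 range. Python's own default is 9, not the command's 6, so the levels are passed explicitly. The sample input is made up, and the timings and sizes depend entirely on your data.

```python
import gzip
import time
from typing import Iterable, List, Tuple


def compare_levels(data: bytes, levels: Iterable[int] = (1, 6, 9)) -> List[Tuple[int, int, float]]:
    """Return (level, compressed size in bytes, seconds taken) for each level."""
    results = []
    for level in levels:
        start = time.perf_counter()
        size = len(gzip.compress(data, compresslevel=level))
        results.append((level, size, time.perf_counter() - start))
    return results


if __name__ == "__main__":
    # Made-up CSV-like sample; real numbers depend entirely on your data.
    sample = b"".join(b"%d,sensor-%d,%.3f\n" % (i, i % 17, i * 0.37) for i in range(50_000))
    for level, size, secs in compare_levels(sample):
        print(f"level {level}: {size} bytes in {secs:.3f}s")
```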

#### 1.6 Concatenate multiple compressed files

We can also compress several files into a single compressed file. How? Look at the example below.

```
$ gzip -c ostechnix1.txt > output.txt.gz
$ gzip -c ostechnix2.txt >> output.txt.gz
```

The two commands above compress `ostechnix1.txt` and `ostechnix2.txt` and save the output in a single file, `output.txt.gz`.

You can view the contents of both `ostechnix1.txt` and `ostechnix2.txt` without decompressing, using any of the following commands:

```
$ gunzip -c output.txt.gz
$ gunzip -c output.txt
$ zcat output.txt.gz
$ zcat output.txt
```
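
This works because a gzip file may consist of several compressed "members" placed back to back, and decompressors output them one after another. Python's `gzip` module handles multi-member data the same way, which makes the behaviour easy to check:

```python
import gzip

part1 = gzip.compress(b"contents of ostechnix1.txt\n")
part2 = gzip.compress(b"contents of ostechnix2.txt\n")

# Equivalent of `gzip -c a > out.gz; gzip -c b >> out.gz`: the members are simply appended.
combined = part1 + part2

# Prints both files' contents, one after the other.
print(gzip.decompress(combined).decode(), end="")
```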

For more details about `gzip`, see its man page.

```
$ man gzip
```

### 2. Compress and decompress files with bzip2

`bzip2` is very similar to `gzip`, but it uses the Burrows-Wheeler block-sorting compression algorithm together with Huffman coding. Files compressed with `bzip2` end with the ".bz2" extension.

As mentioned above, `bzip2` is used almost exactly like `gzip`. Just replace `gzip` with `bzip2`, `gunzip` with `bunzip2`, and `zcat` with `bzcat` in the examples above.

To compress a file with `bzip2` and replace the original with the compressed file, just run:

```
$ bzip2 ostechnix.txt
```

If you don't want to replace the original file, use the `-c` option and write the output to a new file.

```
$ bzip2 -c ostechnix.txt > output.txt.bz2
```

To decompress a file, run:

```
$ bzip2 -d ostechnix.txt.bz2
```

Or:

```
$ bunzip2 ostechnix.txt.bz2
```

To view the contents of a compressed file without decompressing it, run:

```
$ bunzip2 -c ostechnix.txt.bz2
```

Or:

```
$ bzcat ostechnix.txt.bz2
```
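
Python's standard `bz2` module mirrors these commands the same way `gzip` mirrors the earlier ones. Below is a short sketch using the file names from the examples above; the helper names are hypothetical.

```python
import bz2
import shutil


def bzip2_to(src: str, dst: str) -> None:
    """Like `bzip2 -c src > dst`: the original file is kept."""
    with open(src, "rb") as fin, bz2.open(dst, "wb") as fout:
        shutil.copyfileobj(fin, fout)


def bzcat(path: str) -> bytes:
    """Like `bzcat path` or `bunzip2 -c path`: return the decompressed bytes."""
    with bz2.open(path, "rb") as f:
        return f.read()


if __name__ == "__main__":
    bzip2_to("ostechnix.txt", "output.txt.bz2")
    print(bzcat("output.txt.bz2").decode(), end="")
```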

For more details about `bzip2`, see its man page.

```
$ man bzip2
```

### Summary

In this tutorial, we learned what `gzip` and `bzip2` are and, through some examples on GNU/Linux, how to use them to compress and decompress files. Next, we'll learn how to archive files and directories in Linux.

Cheers!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/how-to-compress-and-decompress-files-in-linux/

Author: [SK][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [ucasFL](https://github.com/ucasFL)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.ostechnix.com/author/sk/

@ -0,0 +1,258 @@

|

||||

理解 ext4 等 Linux 文件系统

|

||||

======

|

||||

|

||||

> 了解 ext4 的历史,包括其与 ext3 和之前的其它文件系统之间的区别。

|

||||

|

||||

|

||||

|

||||

目前的大部分 Linux 文件系统都默认采用 ext4 文件系统,正如以前的 Linux 发行版默认使用 ext3、ext2 以及更久前的 ext。

|

||||

|

||||

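If you're new to Linux, or to filesystems, you might wonder what ext4 brings to the table that ext3 didn't. You might also wonder whether ext4 is still under active development at all, given the flood of news coverage of alternate filesystems such as Btrfs, XFS, and ZFS.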

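We can't cover everything about filesystems in a single article, but we'll try to bring you up to speed on the history of Linux's default filesystem, including where it came from and where it's going.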

I drew heavily on Wikipedia's various ext filesystem articles, the kernel.org wiki's entries on ext4, and my own experience while preparing this overview.

### A brief history of ext

#### MINIX filesystem

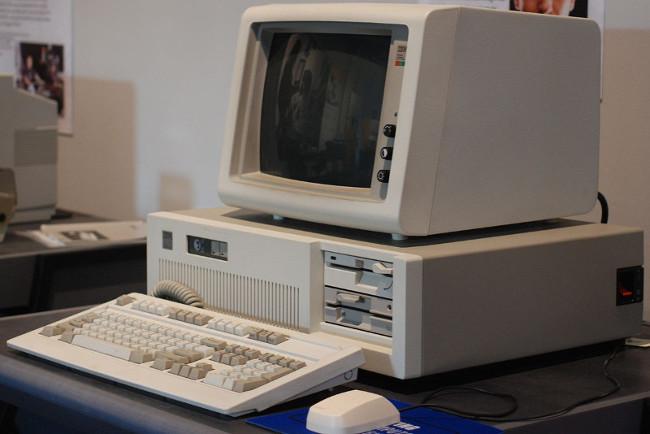

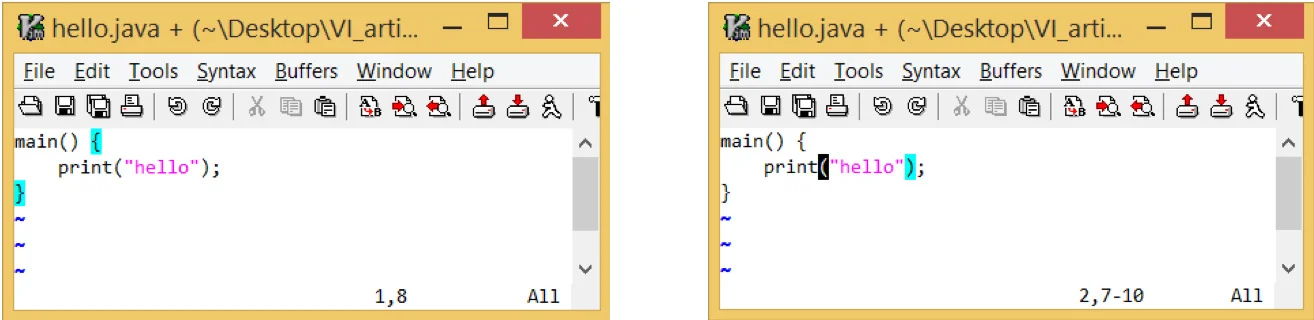

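Before there was ext, there was the MINIX filesystem. If you're not up on your Linux history, MINIX was a very small Unix-like operating system for IBM PC/AT microcomputers. Andrew Tanenbaum developed it for teaching purposes and released its source code (in print form!) in 1987.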

*IBM's mid-1980s PC/AT, [MBlairMartin](https://commons.wikimedia.org/wiki/File:IBM_PC_AT.jpg), [CC BY-SA 4.0](https://creativecommons.org/licenses/by-sa/4.0/deed.en)*

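Although you could peruse MINIX's source, it was not actually free and open source software (FOSS). The publishers of Tanenbaum's book required a $69 license fee to operate MINIX, which was included in the cost of the book. Still, this was incredibly inexpensive for the time, and MINIX usage took off rapidly, soon exceeding Tanenbaum's original intent of using it to teach operating system programming. Throughout the 1990s, MINIX installations were popular at universities worldwide. It was at this time that a young Linus Torvalds used MINIX to help develop the original Linux kernel, first announced in 1991 and then released under the GPL open source license in December 1992.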

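But wait, this is a *filesystem* article, right? Yes: MINIX had its own filesystem, and early versions of Linux relied on it. Like MINIX itself, the filesystem could charitably be called a "toy": the MINIX filesystem could handle filenames of only up to 14 characters and address only 64 MB of storage. By 1991, typical hard drives had already reached 40 to 140 MB. Linux clearly needed a better filesystem.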

#### ext


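While Linus developed the fledgling Linux kernel, Rémy Card worked on the first-generation ext filesystem. First implemented and released in 1992, only one year after the initial release of Linux itself, ext solved the worst problems of the MINIX filesystem.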

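The 1992 ext used the new virtual filesystem (VFS) abstraction layer in the Linux kernel. Unlike the MINIX filesystem before it, ext could address up to 2 GB of storage and handle filenames of up to 255 characters.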

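But ext didn't hold its position for long, mostly because of its primitive timestamping: only one timestamp per file, rather than the three separate timestamps we're familiar with today for inode change, last access, and last modification. Just one year later, ext2 replaced it.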

#### ext2


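Rémy clearly realized ext's limitations quickly, since he designed ext2 as its replacement a year later. While ext still had its roots in a "toy" operating system, ext2 was designed from the start as a commercial-grade filesystem, following the same principles as BSD's Berkeley Fast File System.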

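ext2 offered maximum file sizes in the gigabytes and filesystem sizes in the terabytes, placing it firmly in the big leagues of 1990s filesystems. It was quickly and widely adopted, both in the Linux kernel and eventually in MINIX, and third-party modules made it usable on MacOS and Windows as well.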

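There were still problems to solve, though: ext2 filesystems, like most filesystems of the 1990s, were prone to catastrophic corruption if the system crashed or lost power while data was being written to disk. Over time they also suffered significant performance losses due to fragmentation (a single file stored in multiple places, physically scattered around a spinning disk).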

Despite these problems, ext2 is still used in some niche cases today, most commonly as a format for portable USB drives.

#### ext3


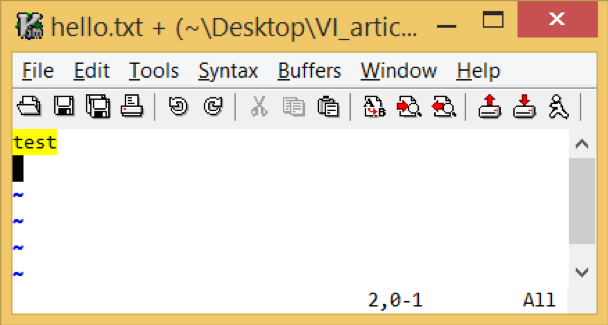

In 1998, six years after ext2's adoption, Stephen Tweedie announced he was working on significant improvements to it. This became ext3, which was adopted into mainline Linux with kernel version 2.4.15 in November 2001.

![Packard Bell computer][2]

*Mid-1990s Packard Bell computer, [Spacekid][3], [CC0][4]*

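ext2 did an admirable job for Linux distributions in most cases, but like FAT, FAT32, HFS, and other filesystems of the time, it was prone to catastrophic corruption during power loss. If power was lost while data was being written to the filesystem, it could be left in what's called an *inconsistent* state, with an operation half done and half not done. This could result in the loss or corruption of large numbers of files unrelated to the one being saved, or even leave the entire filesystem unmountable.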

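ext3, and other filesystems of the late 1990s such as Microsoft's NTFS, used *journaling* to solve this problem. The journal is a special allocation on disk where writes are stored in transactions; once a transaction has been fully written to disk, its data in the journal is committed to the filesystem itself. If the system crashes before that commit, the restarted system recognizes it as an incomplete transaction and rolls it back as though it had never happened. This means the file being worked on may still be lost, but the filesystem *itself* remains consistent, and all other data is safe.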

The Linux kernel's implementation of ext3 offers three levels of journaling: *journal*, *ordered*, and *writeback*.

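* **Journal** is the lowest-risk mode. It writes both data and metadata to the journal before committing them to the filesystem. This guarantees consistency of both the file being written and the filesystem as a whole, but it can significantly reduce performance.
* **Ordered** is the default mode in most Linux distributions. It writes metadata to the journal but commits data directly to the filesystem. As the name implies, the order of operations is fixed: data is first written to its final location in the filesystem, and only then is the associated metadata committed to the journal (and later written back into the filesystem proper). If a crash interrupts a write, the metadata describing it never reached a committed journal transaction, so replaying the journal cannot point files at blocks that were never written. In ordered mode, a crash may corrupt the file or files being actively written at the time, but the filesystem itself, and any files not being actively written, are guaranteed safe.
* **Writeback** is the third, and least safe, journaling mode. As in ordered mode, metadata is journaled but data is not. Unlike ordered mode, metadata and data may be written in whatever order gives the best performance. This can improve performance significantly, but it is much less safe. Although writeback mode still guarantees the safety of the filesystem itself, files written during or before the crash are vulnerable to loss or corruption.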

Like ext2 before it, ext3 uses 32-bit internal block addressing. With a 4K block size, this means ext3 can handle files of up to 2 TiB on a filesystem of at most 16 TiB.

#### ext4


Theodore Ts'o, then the principal developer of ext3, announced ext4 in 2006. It was added to mainline Linux two years later, in kernel version 2.6.28.

Ts'o described ext4 as a stopgap technology that significantly extends ext3 but still relies on old technology. He expected it to be supplanted eventually by a true next-generation filesystem.

*Dell Precision 380 workstation, [Lance Fisher](https://commons.wikimedia.org/wiki/File:Dell_Precision_380_Workstation.jpeg), [CC BY-SA 2.0](https://creativecommons.org/licenses/by-sa/2.0/deed.en)*

Functionally, ext4 is very similar to ext3, but it adds large filesystem support, improved resistance to fragmentation, higher performance, and better timestamps.

### ext4 vs ext3


ext3 and ext4 have some very specific differences, which I'll focus on here.

#### Backward compatibility

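ext4 was specifically designed to be as backward-compatible with ext3 as possible. Not only can ext3 filesystems be upgraded in place to ext4, but the ext4 driver can also automatically mount ext3 filesystems in ext3 mode, so there is no need to maintain two separate codebases.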

#### Large filesystems

ext3 filesystems used 32-bit addressing, limiting them to 2 TiB files and 16 TiB filesystems (assuming a 4 KiB block size; some ext3 filesystems use smaller block sizes and are therefore limited even further).

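ext4 uses 48-bit internal addressing, making it theoretically possible to allocate files of up to 16 TiB on filesystems of up to 1 EiB (roughly 1,000,000 TiB). Early implementations of ext4 were still limited to 16 TiB filesystems by some userland utilities, but as of 2011, e2fsprogs directly supports creating ext4 filesystems larger than 16 TiB. Even so, Red Hat Enterprise Linux, for example, contractually supports ext4 filesystems only up to 50 TiB and recommends ext4 volumes no larger than 100 TiB.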
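
The size limits above come from simple arithmetic: the number of addressable blocks (2 raised to the address width) multiplied by the block size. The C sketch below only reproduces that arithmetic under the stated assumptions (4 KiB blocks, 32-bit block numbers for ext3, 48-bit for ext4); it ignores other on-disk limits such as the per-file maximums, and the helper name `max_fs_bytes` is purely illustrative, not part of any ext4 tool.

```c
#include <assert.h>
#include <stdint.h>

#define KiB ((uint64_t)1 << 10)
#define TiB ((uint64_t)1 << 40)
#define EiB ((uint64_t)1 << 60)

/* Largest filesystem addressable with `addr_bits`-wide block numbers and
 * blocks of `block_size` bytes (illustrative helper, not an ext4 API). */
static uint64_t max_fs_bytes(unsigned addr_bits, uint64_t block_size)
{
    /* The product must stay within 64 bits: 2^48 blocks * 4 KiB = 2^60. */
    assert(addr_bits < 64);
    return ((uint64_t)1 << addr_bits) * block_size;
}
```

With 1 KiB blocks, the same 32-bit block numbers reach only 4 TiB, which is why smaller block sizes constrain ext3 even further.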

#### Allocation improvements

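ext4 introduces a lot of improvements in how storage blocks are allocated before they are written to disk, which can significantly increase both read and write performance.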

##### Extents

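An *extent* is a range of contiguous physical blocks (up to 128 MiB, assuming a 4 KiB block size) that can be reserved and addressed at once. Using extents reduces the amount of block-mapping metadata a file needs, significantly decreases fragmentation, and increases performance when writing large files.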
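
To see why extents save so much bookkeeping, compare the mapping entries needed for one large file that is perfectly contiguous on disk. The sketch assumes 4 KiB blocks and the 128 MiB (32,768-block) maximum extent length given above; real files are rarely perfectly contiguous, so real extent counts will be higher. The helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define BLOCK_SIZE        4096u   /* assumed 4 KiB blocks */
#define MAX_EXTENT_BLOCKS 32768u  /* 32768 * 4 KiB = 128 MiB per extent */

/* Blocks needed to hold `bytes`, rounding a partial last block up. */
static uint64_t blocks_for(uint64_t bytes)
{
    return (bytes + BLOCK_SIZE - 1) / BLOCK_SIZE;
}

/* Extents needed if all of a non-empty file's blocks are contiguous. */
static uint64_t extents_for(uint64_t bytes)
{
    assert(bytes > 0);
    return (blocks_for(bytes) + MAX_EXTENT_BLOCKS - 1) / MAX_EXTENT_BLOCKS;
}
```

Under these assumptions, a 1 GiB file needs 262,144 individual block pointers in a classic block map, but only 8 extents.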

##### Multiblock allocation

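ext3 called its block allocator once for each new block allocated. This could easily cause heavy fragmentation when multiple writers had the allocator open concurrently. ext4, however, uses delayed allocation, which lets it coalesce writes and make better decisions about how to allocate blocks for writes it has not yet committed.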

##### Persistent pre-allocation

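When pre-allocating disk space for a file, most filesystems must write zeroes to that file's blocks on creation. ext4 allows the use of `fallocate()` instead, which guarantees that the space is available (and attempts to find contiguous space for it) without first writing to it. This significantly improves performance for streaming and database applications, both when writing data and when later reading it back.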
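
As a rough sketch of what an application might do, the C code below reserves space with POSIX `posix_fallocate()`, which glibc implements on top of the Linux `fallocate()` system call when the filesystem supports it. The file path, size, and helper names (`preallocate`, `file_size`) are assumptions for illustration, and error handling is minimal.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <fcntl.h>      /* open(), posix_fallocate() */
#include <sys/stat.h>   /* stat() */
#include <sys/types.h>  /* off_t */
#include <unistd.h>     /* close(), unlink() */

/* Reserve `len` bytes at the start of `path`, creating it if needed.
 * Returns 0 on success or an errno value on failure. */
static int preallocate(const char *path, off_t len)
{
    assert(len > 0);
    int fd = open(path, O_WRONLY | O_CREAT, 0644);
    if (fd < 0)
        return errno;
    /* posix_fallocate() returns an error number rather than setting errno.
     * Where the filesystem lacks native support, glibc may fall back to
     * writing to each block, which loses the speed benefit. */
    int err = posix_fallocate(fd, 0, len);
    if (close(fd) != 0 && err == 0)
        err = errno;
    return err;
}

/* Apparent size of `path` in bytes, or -1 if it cannot be stat'ed. */
static off_t file_size(const char *path)
{
    struct stat st;
    return stat(path, &st) == 0 ? st.st_size : -1;
}
```

A download manager or database could call `preallocate()` once with the final file size before streaming data in, giving ext4 a chance to reserve one contiguous run of extents up front.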

##### Delayed allocation

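This is a chewy, and contentious, feature. Delayed allocation allows ext4 to wait until it is ready to commit data to disk before allocating the actual blocks that data will be written to. (By contrast, ext3 would allocate blocks immediately, even while the data was still flowing into a write cache.)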

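Delaying block allocation while data accumulates in cache lets the filesystem make saner choices about how to allocate those blocks, reducing fragmentation (for writes and, later, reads) and increasing performance significantly. Unfortunately, it *increases* the potential for data loss in programs that have not been written to call `fsync()` explicitly when the programmer wants to make sure data has been flushed entirely to disk.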

Suppose a program rewrites a file entirely:

```
fd = open("file", O_WRONLY | O_TRUNC); write(fd, data, len); close(fd);
```

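With legacy filesystems, `close(fd);` is sufficient to guarantee that the contents of `file` will be flushed to disk. Even though the write is not, strictly speaking, transactional, there is very little risk of losing the data if a crash occurs after the file is closed.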

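If the write does not succeed (because of program errors, disk errors, power loss, and so on), both the original and the newer version of the file may be lost or corrupted. Other processes that access the file while it is being written will see a corrupted version. And if other processes have the file open and do not expect its contents to change (for example, a shared library mapped into several running programs), they may crash.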

To avoid these issues, some programmers avoid `O_TRUNC` altogether. Instead, they write to a new file, close it, and then rename it over the old one:

```
fd = open("newfile", O_WRONLY | O_CREAT, 0644); write(fd, data, len); close(fd); rename("newfile", "file");
```

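Under filesystems *without* delayed allocation, this is enough to avoid the corruption and crash problems listed above: `rename()` is an atomic operation, so a crash cannot interrupt it, and running programs keep referring to the old, now unlinked, version of `file` for as long as they hold an open file handle to it. But because ext4's delayed allocation can cause writes to be delayed and reordered, `rename("newfile", "file")` may be carried out *before* the contents of `newfile` have actually been written to disk, which reopens the problem of processes getting a bad version of `file`.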

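To mitigate this, the Linux kernel (since version 2.6.30) attempts to detect these common code patterns and force the blocks to be allocated immediately. This reduces, but does not eliminate, the potential for data loss, and it does nothing for new files. If you're a developer, please take note: the only way to guarantee that data is written to disk immediately is to call `fsync()` appropriately.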
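
The write-then-rename pattern with an explicit `fsync()` might look like the following C sketch. It assumes a POSIX system and that the caller-supplied temporary path is on the same filesystem as the target (`rename()` fails across filesystems); the function name `replace_file` and the paths are hypothetical. For the rename itself to be durable, a program would typically also `fsync()` the containing directory, which this sketch omits.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <fcntl.h>   /* open() */
#include <stdio.h>   /* rename() */
#include <unistd.h>  /* write(), fsync(), close(), unlink() */

/* Replace `path` with `len` bytes from `data`, going through `tmp_path`.
 * Returns 0 on success, -1 on failure (the original file is untouched). */
static int replace_file(const char *path, const char *tmp_path,
                        const void *data, size_t len)
{
    assert(path != NULL && tmp_path != NULL);
    int fd = open(tmp_path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (fd < 0)
        return -1;
    const char *p = data;
    while (len > 0) {                 /* write() may write less than asked */
        ssize_t n = write(fd, p, len);
        if (n < 0) {
            if (errno == EINTR)
                continue;
            close(fd);
            unlink(tmp_path);
            return -1;
        }
        p += n;
        len -= (size_t)n;
    }
    /* Force the new contents to disk BEFORE the rename can expose them. */
    if (fsync(fd) != 0) {
        close(fd);
        unlink(tmp_path);
        return -1;
    }
    if (close(fd) != 0) {
        unlink(tmp_path);
        return -1;
    }
    /* Atomically point `path` at the fully written new file. */
    if (rename(tmp_path, path) != 0) {
        unlink(tmp_path);
        return -1;
    }
    return 0;
}
```

Compared with the one-line version above, the only essential addition is the `fsync()` between writing and renaming; the loop and cleanup simply keep a failed update from leaving a half-written temporary file behind.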

#### Unlimited subdirectories

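ext3 was limited to a total of 32,000 subdirectories; ext4 allows an unlimited number. Beginning with kernel 2.6.23, ext4 uses HTree indices to mitigate the performance loss that comes with huge numbers of subdirectories.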

#### Journal checksumming

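ext3 did not checksum its journals, which caused problems with disks or controllers that have their own caches outside the kernel's direct control. If a controller or a disk with its own cache performed writes out of order, it could break ext3's journal transaction ordering, potentially corrupting files being written during (or for some time before) a crash.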

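In theory, this problem is solved by write *barriers*: when mounting the filesystem, you set `barrier=1` in the mount options, and the device then honors `fsync` calls all the way down to the hardware. In practice, storage devices and controllers have frequently been found not to honor write barriers, which improves performance (and benchmark results against competitors) but reopens the very data-corruption risk barriers are meant to prevent.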

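Checksumming the journal lets the filesystem recognize, on the first mount after a crash, that some of its entries are invalid or out of order. It can therefore avoid the mistake of rolling back partial or out-of-order journal entries and further damaging the filesystem, even if the storage devices lie about, or ignore, write barriers.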

#### Fast filesystem checks

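Under ext3, invoking `fsck` checked the entire filesystem, including deleted and empty files. By contrast, ext4 marks unallocated blocks and sections of the inode table as such, allowing `fsck` to skip them entirely. This greatly reduces the time needed to run `fsck` on most filesystems, and it has been implemented since kernel 2.6.24.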

#### Improved timestamps

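ext3 offered timestamps with one-second granularity. While that is sufficient for most uses, mission-critical applications frequently need much tighter time control. ext4 makes itself suitable for those enterprise, scientific, and mission-critical applications by offering timestamps with nanosecond granularity.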

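ext3 filesystems also did not provide enough bits to store dates beyond January 19, 2038 (UTC). ext4 adds two more bits here, extending [the Unix epoch][5] by another 408 years. If you're reading this in AD 2446, you have hopefully already moved on to a better filesystem, though it will make me posthumously very happy if you're still measuring time since 00:00 UTC, January 1, 1970.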
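
The dates above can be checked with a little arithmetic. The sketch below assumes a signed 32-bit seconds counter for ext3 and, for ext4, the extra on-disk timestamp field whose two low bits extend the seconds counter by up to three more multiples of 2^32 (its remaining 30 bits hold nanoseconds). It converts seconds to years using the mean Gregorian year, so it yields the year only, not an exact calendar date; the macro and function names are illustrative.

```c
#include <assert.h>
#include <stdint.h>

#define SECONDS_PER_YEAR 31556952LL  /* mean Gregorian year: 365.2425 days */

/* ext3: the largest value a signed 32-bit seconds counter can hold. */
#define EXT3_MAX_SECONDS ((int64_t)INT32_MAX)

/* ext4: two extra epoch bits add up to 3 more spans of 2^32 seconds. */
#define EXT4_MAX_SECONDS ((int64_t)INT32_MAX + 3 * ((int64_t)1 << 32))

/* Calendar year reached `seconds` seconds after 1970-01-01 00:00 UTC. */
static int year_after_epoch(int64_t seconds)
{
    assert(seconds >= 0);
    return 1970 + (int)(seconds / SECONDS_PER_YEAR);
}
```

`year_after_epoch(EXT3_MAX_SECONDS)` evaluates to 2038 and `year_after_epoch(EXT4_MAX_SECONDS)` to 2446; the 408-year gain is simply three extra 2^32-second spans of roughly 136 years each.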

#### Online defragmentation

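Neither ext2 nor ext3 directly supported online defragmentation, that is, defragmenting the filesystem while it is mounted. ext2 came with a utility, `e2defrag`, that did what its name implies, but it had to be run offline while the filesystem was unmounted. (That is, obviously, especially problematic for a root filesystem.) The situation was even worse with ext3: although ext3 was much less prone to severe fragmentation than ext2, running `e2defrag` against an ext3 filesystem could cause catastrophic corruption and data loss.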

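Although ext3 was originally deemed "unaffected by fragmentation," processes that perform massively parallel writes to the same file (BitTorrent, for example) made it clear that this wasn't entirely the case. Several userspace hacks and workarounds, such as [Shake][6], addressed this in one way or another, but they were slower and in various ways less satisfactory than a true filesystem-aware, kernel-level defragmentation process.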

ext4 addresses this problem with `e4defrag`, an online, kernel-mode, filesystem-aware, block- and extent-level defragmentation utility.

### Ongoing ext4 development

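ext4 is, as the Monty Python plague victim once said, "not quite dead yet!" Although its [principal developer][7] regards it as a mere stopgap on the way to a true [next-generation filesystem][8], none of the likely candidates will be ready (for technical or licensing reasons) for deployment as a root filesystem for some time yet.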

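A few key features are still being developed for future versions of ext4, including metadata checksumming, first-class quota support, and large allocation blocks.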

#### Metadata checksumming

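Since ext4 has redundant superblocks, checksumming the metadata within them gives the filesystem a way to determine for itself whether the primary superblock is corrupt and an alternate should be used. It is possible to recover from a corrupt superblock without checksumming, but the user first has to realize it is corrupt and then manually mount the filesystem using an alternate. Since mounting a filesystem read-write with a corrupt primary superblock can, in some cases, cause further damage, even a sufficiently experienced user can't rely on that as a complete solution!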

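Compared with the extremely robust per-block checksumming offered by next-generation filesystems such as Btrfs or ZFS, ext4's metadata checksumming is a fairly weak feature. But it's much better than nothing. Checksumming **everything** sounds simple, but bolting checksums onto an existing filesystem actually poses some significant challenges; see [the design document][9] for the details.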
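
To illustrate the idea behind metadata checksums, the sketch below computes a standard CRC-32C (Castagnoli) checksum, the algorithm family ext4 uses for metadata checksums, over a made-up stand-in "superblock" buffer and shows that a single flipped bit is detected. It is a simple bitwise implementation chosen for clarity; it does not reproduce ext4's on-disk checksum layout or seeding, and the helper `block_is_intact` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-32C (Castagnoli), reflected polynomial 0x82F63B78. */
static uint32_t crc32c(const void *buf, size_t len)
{
    const uint8_t *p = buf;
    uint32_t crc = 0xFFFFFFFFu;
    while (len--) {
        crc ^= *p++;
        for (int k = 0; k < 8; k++)
            /* Shift right; XOR in the polynomial if the low bit was set. */
            crc = (crc >> 1) ^ (0x82F63B78u & (0u - (crc & 1u)));
    }
    return ~crc;
}

/* Returns 1 if `block` still matches the checksum stored for it earlier. */
static int block_is_intact(const void *block, size_t len, uint32_t stored)
{
    return crc32c(block, len) == stored;
}
```

A filesystem following this idea would store the checksum next to the metadata when writing it and recompute it on mount; a mismatch is the signal to fall back to a backup copy, such as an alternate superblock.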

#### First-class quota support

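Wait, quotas?! We've had those since the ext2 days! Yes, but they've always been an afterthought, and they've always kind of sucked. The gory details probably aren't worth covering here, but the [design document][10] lays out how quotas will move from userspace into the kernel and be enforced more correctly and efficiently.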

#### Large allocation blocks

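As time goes by, those pesky storage systems keep getting bigger and bigger. With some solid-state drives already using 8K hardware block sizes, ext4's current limit of 4K blocks becomes more and more restrictive. Larger storage blocks can significantly reduce fragmentation and increase performance, at the cost of more "slack" space (the space left over when you need only part of a block to store a file, or the last piece of a file).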
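
The slack-space trade-off can be quantified: a file occupies whole blocks, so the wasted tail is the file size rounded up to a multiple of the block size, minus the file size. The sketch below compares a hypothetical 10,000-byte file stored in 4 KiB blocks and in 64 KiB allocation units; both sizes are chosen only for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes allocated but unused when `file_size` bytes are stored in
 * whole blocks of `block_size` bytes. */
static uint64_t slack_bytes(uint64_t file_size, uint64_t block_size)
{
    assert(block_size > 0);
    uint64_t blocks = (file_size + block_size - 1) / block_size;
    return blocks * block_size - file_size;
}
```

Here `slack_bytes(10000, 4096)` is 2,288 bytes, while `slack_bytes(10000, 65536)` is 55,536 bytes: roughly 24 times as much waste for this one small file, which is why large allocation blocks pay off mainly on filesystems dominated by large files.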

You can read the details in the [design document][11].

### Practical limitations of ext4

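ext4 is a robust, stable filesystem, and it's what most people should probably be using as a root filesystem these days. But it can't handle everything. Let's talk briefly about some of the things you shouldn't expect from ext4, now or probably in the future.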

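Although ext4 can address up to 1 EiB (roughly 1,000,000 TiB) of data, you *really* shouldn't try to do so. There are problems of scale beyond merely being able to remember the addresses of many more blocks, and ext4 does not currently (and likely never will) scale well beyond 50 to 100 TiB of data.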

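ext4 also doesn't do enough to guarantee the integrity of your data. As big an advance as journaling was back in the ext3 days, it does not cover many of the common causes of data corruption. If data is [corrupted][12] while already on disk, whether by faulty hardware, the impact of cosmic rays (yes, really), or simple degradation of data over time, ext4 has no way of detecting or repairing that corruption.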

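Building on the last two points, ext4 is only a pure *filesystem*, not a storage volume manager. This means that even if you have multiple disks, and therefore parity or redundancy from which corrupt data could theoretically be recovered, ext4 has no way of knowing that or using it to your benefit. While it is theoretically possible to separate a filesystem and a storage volume management system into distinct layers without losing automatic corruption detection and repair, that isn't how current storage systems are designed, and it would pose significant challenges to new designs.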

### Alternate filesystems

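Before we get started, a word of warning: be *very* careful with any alternate filesystem that isn't built into, and directly supported as part of, your distribution's mainline kernel!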

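Even if a filesystem is *safe*, using it as the root filesystem can be absolutely terrifying if something hiccups during a kernel upgrade. If you aren't extremely comfortable booting from alternate media and patiently poking at kernel modules, grub configs, and DKMS from a chroot, don't stray from your distribution's default root filesystem on a system that matters to you.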

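There may well be good reasons to use a filesystem your distribution doesn't directly support, but if you do, I strongly recommend mounting it after the system is up and usable. (For example, you might have an ext4 root filesystem but store most of your data on a ZFS or Btrfs pool.)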

#### XFS


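XFS is about as mainline as a non-ext filesystem gets under Linux. It is a 64-bit journaling filesystem that has been built into the Linux kernel since 2001, and it offers high performance for large filesystems and high degrees of concurrency (that is, a very large number of processes all writing to the filesystem at once).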

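XFS became the default filesystem for Red Hat Enterprise Linux as of RHEL 7. It still has a few drawbacks for home or small business users, most notably that resizing an existing XFS filesystem is such a pain that it usually makes more sense to create a new one and copy your data over.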

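While XFS is stable and performant, there isn't enough of a concrete end-use difference between it and ext4 to recommend it anywhere it isn't the default (as on RHEL 7), unless it solves a specific problem you have with ext4, such as filesystems larger than 50 TiB.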

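XFS is in no way a "next-generation" filesystem in the sense that ZFS, Btrfs, or even WAFL (a proprietary SAN filesystem) are. Like ext4, it should most likely be considered a stopgap on the way to something better.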

#### ZFS


ZFS was developed by Sun Microsystems and named after the zettabyte (equivalent to 1 trillion gigabytes), since it could theoretically address storage systems that large.

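A true next-generation filesystem, ZFS offers volume management (the ability to address multiple individual storage devices in a single filesystem), block-level cryptographic checksumming (allowing data corruption to be detected with extremely high accuracy), [automatic corruption repair][12] (where redundant or parity storage is available), [rapid asynchronous incremental replication][13], inline compression, and [much more][14].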

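From a Linux user's perspective, the biggest problem with ZFS is licensing. ZFS is licensed under the CDDL, a semi-permissive license that conflicts with the GPL. There is a lot of controversy over the implications of using ZFS with the Linux kernel, with opinions ranging from "it's a GPL violation" to "it's a CDDL violation" to "it's perfectly fine, it just hasn't been tested in court." Most notably, Canonical has included ZFS code inline in its default kernels since 2016, and so far there has been no legal challenge.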

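At this time, even as a very avid ZFS user myself, I would not recommend ZFS as a root Linux filesystem. If you want to take advantage of ZFS on Linux, set up a small root filesystem on ext4, then put ZFS on your remaining storage and keep your data, applications, and whatever else you like on it. But keep root on ext4 until your distribution explicitly supports a ZFS root.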

#### Btrfs


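Btrfs, short for B-Tree Filesystem and usually pronounced "butter", was announced by Chris Mason in 2007 during his tenure at Oracle. Btrfs aims at most of the same goals as ZFS, offering multiple-device management, per-block checksumming, asynchronous replication, inline compression, and [more][8].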

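As of 2018, Btrfs is reasonably stable and usable as a standard single-disk filesystem, but it should probably not be relied on as a volume manager. It suffers from significant performance problems compared with ext4, XFS, or ZFS in many common use cases, and its next-generation features (replication, multiple-disk topologies, and snapshot management) can be quite buggy, with results ranging from catastrophically reduced performance to actual data loss.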

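The ongoing status of Btrfs is controversial: SUSE Enterprise Linux adopted it as its default filesystem in 2015, whereas Red Hat announced in 2017 that it would no longer support Btrfs as of RHEL 7.4. It is probably worth noting that production-supported Btrfs deployments use it as a single-disk filesystem, not as a multiple-disk volume manager in the way ZFS is used. Even Synology, which uses Btrfs on its storage appliances, layers it on top of conventional Linux kernel RAID (mdraid) to manage the disks.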

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/4/ext4-filesystem

Author: [Jim Salter][a]
Translator: [HardworkFish](https://github.com/HardworkFish)
Proofreaders: [wxy](https://github.com/wxy), [pityonline](https://github.com/pityonline)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/jim-salter
[1]: https://opensource.com/file/391546
[2]: https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/packard_bell_pc.jpg?itok=VI8dzcwp (Packard Bell computer)
[3]: https://commons.wikimedia.org/wiki/File:Old_packard_bell_pc.jpg
[4]: https://creativecommons.org/publicdomain/zero/1.0/deed.en
[5]: https://en.wikipedia.org/wiki/Unix_time
[6]: https://vleu.net/shake/
[7]: http://www.linux-mag.com/id/7272/
[8]: https://arstechnica.com/information-technology/2014/01/bitrot-and-atomic-cows-inside-next-gen-filesystems/
[9]: https://ext4.wiki.kernel.org/index.php/Ext4_Metadata_Checksums
[10]: https://ext4.wiki.kernel.org/index.php/Design_For_1st_Class_Quota_in_Ext4
[11]: https://ext4.wiki.kernel.org/index.php/Design_for_Large_Allocation_Blocks
[12]: https://en.wikipedia.org/wiki/Data_degradation#Visual_example_of_data_degradation
[13]: https://arstechnica.com/information-technology/2015/12/rsync-net-zfs-replication-to-the-cloud-is-finally-here-and-its-fast/
[14]: https://arstechnica.com/information-technology/2014/02/ars-walkthrough-using-the-zfs-next-gen-filesystem-on-linux/

@ -0,0 +1,107 @@


How to enable hardware-accelerated video decoding in Chromium on Ubuntu or Linux Mint
======

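You may have noticed that watching HD videos on YouTube and similar websites in Google Chrome or Chromium on Linux considerably increases your CPU usage, and if you use a laptop, it gets quite hot and the battery drains very quickly. That's because Chrome/Chromium (and Firefox too, although there's no way to fix it there) doesn't support hardware-accelerated video decoding on Linux.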

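This article explains how to install a Chromium development build with the VA-API patch on Linux. It supports GPU-accelerated video decoding, which can significantly lower CPU usage when watching HD videos online. The instructions cover only Intel and Nvidia graphics, since I don't have an ATI/AMD graphics card to test with and have no experience with such cards.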

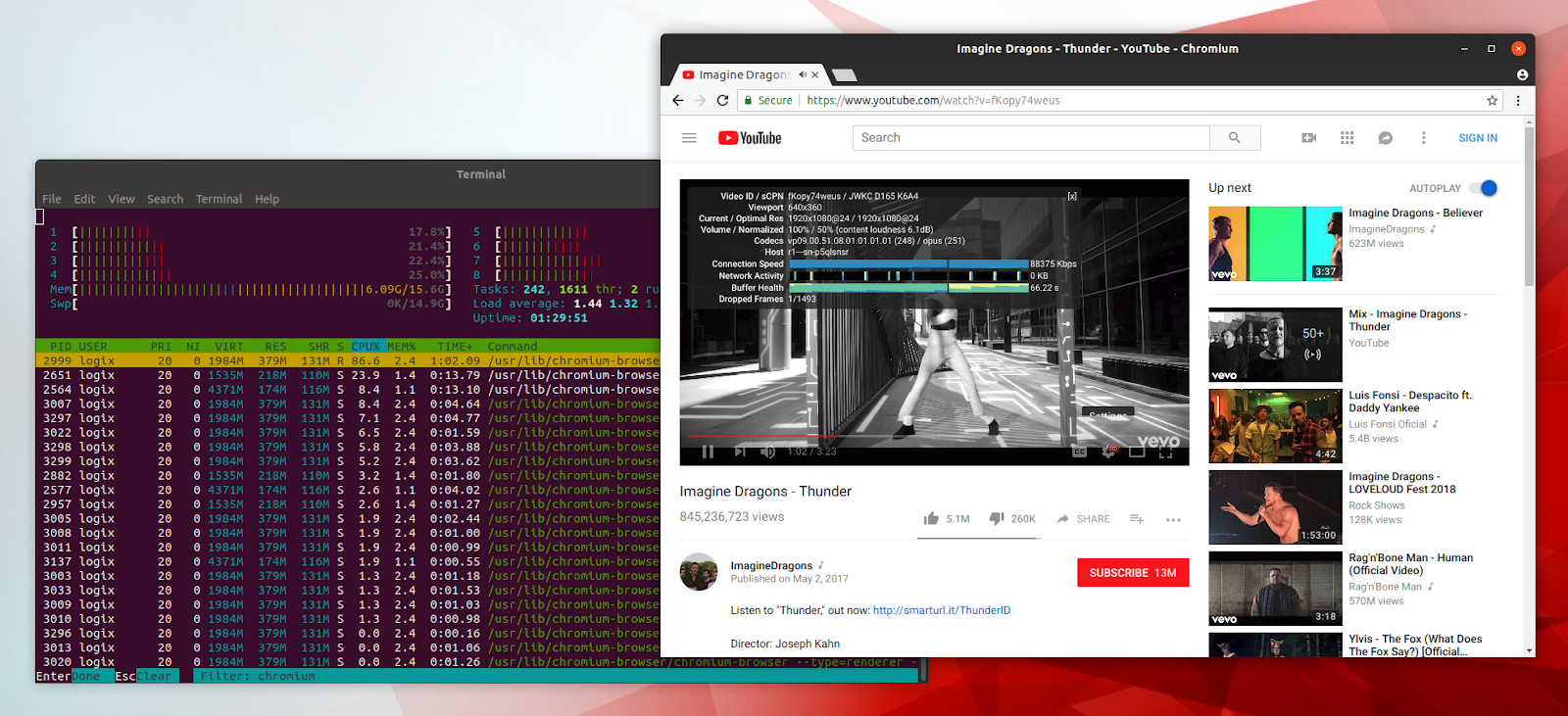

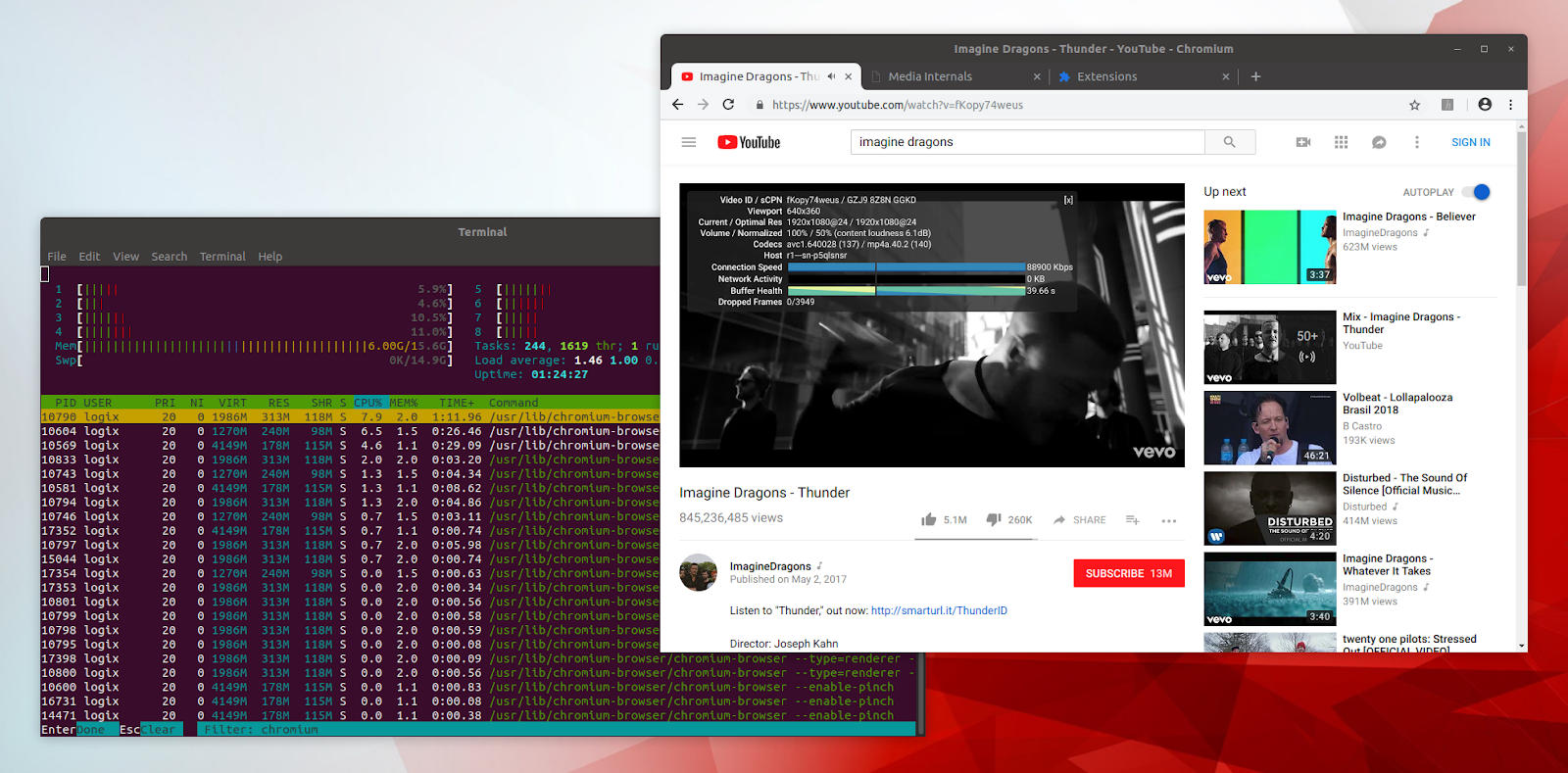

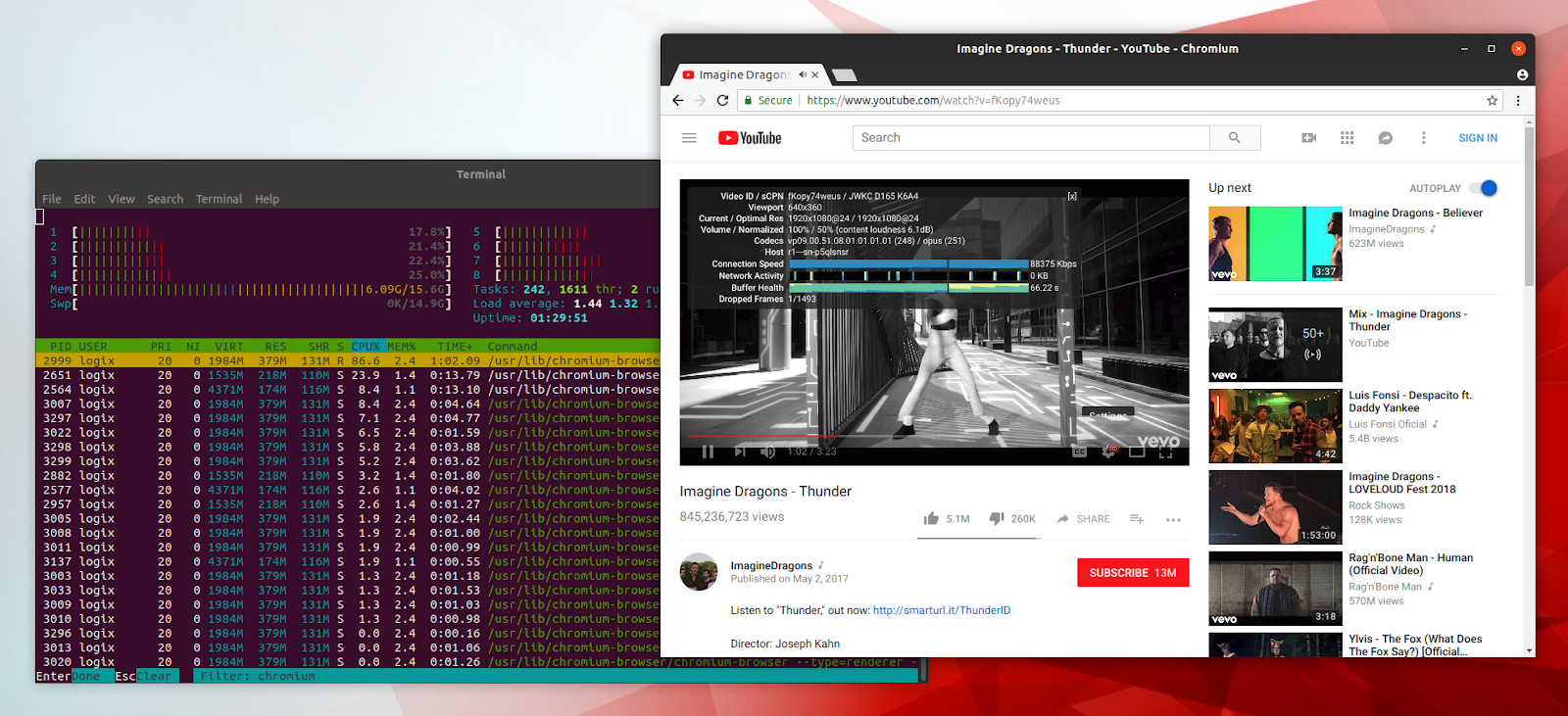

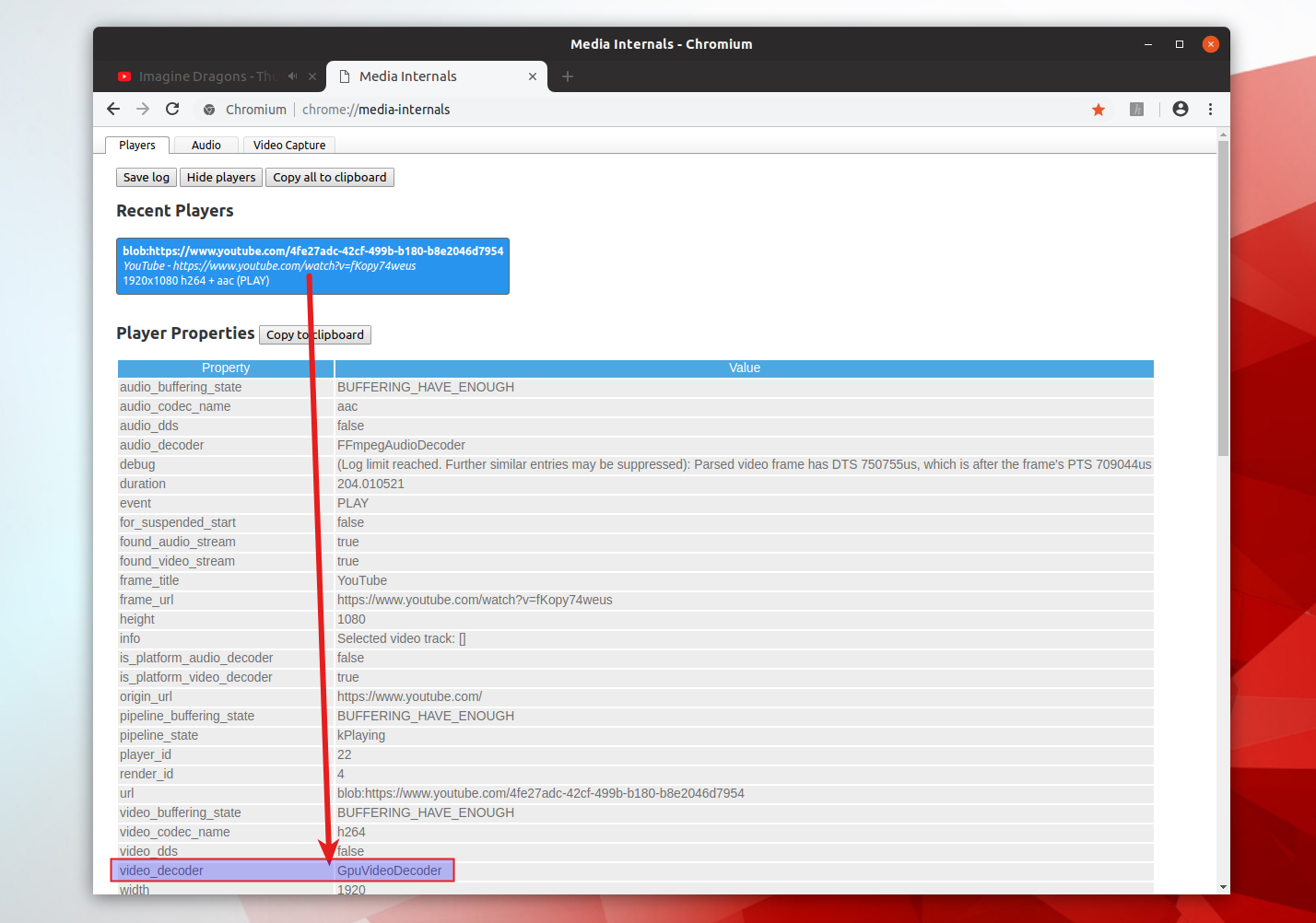

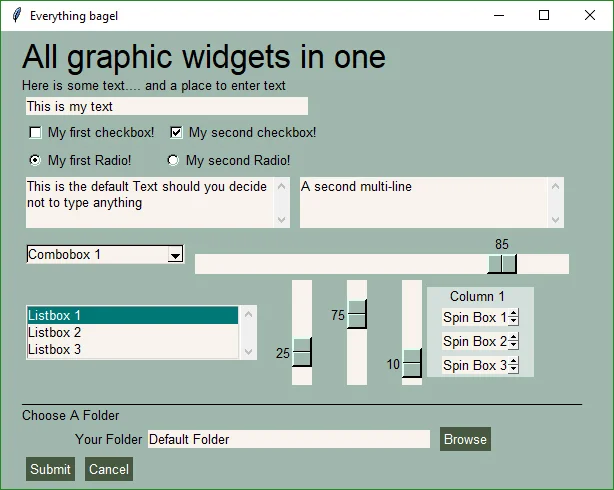

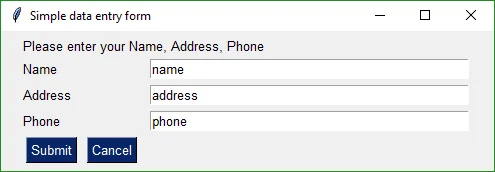

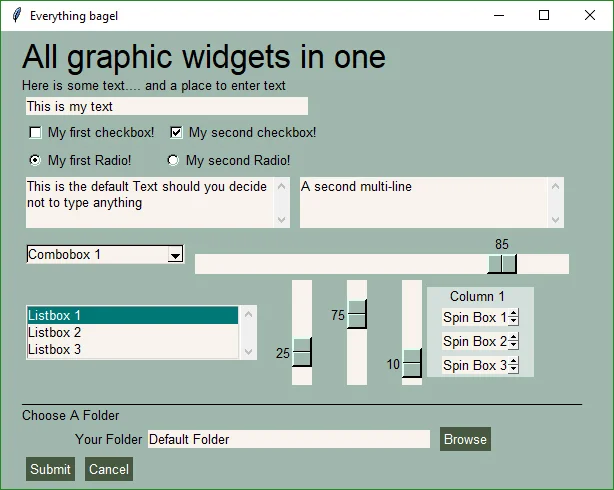

This is Chromium on Ubuntu 18.04 playing a 1080p YouTube video without GPU-accelerated video decoding:

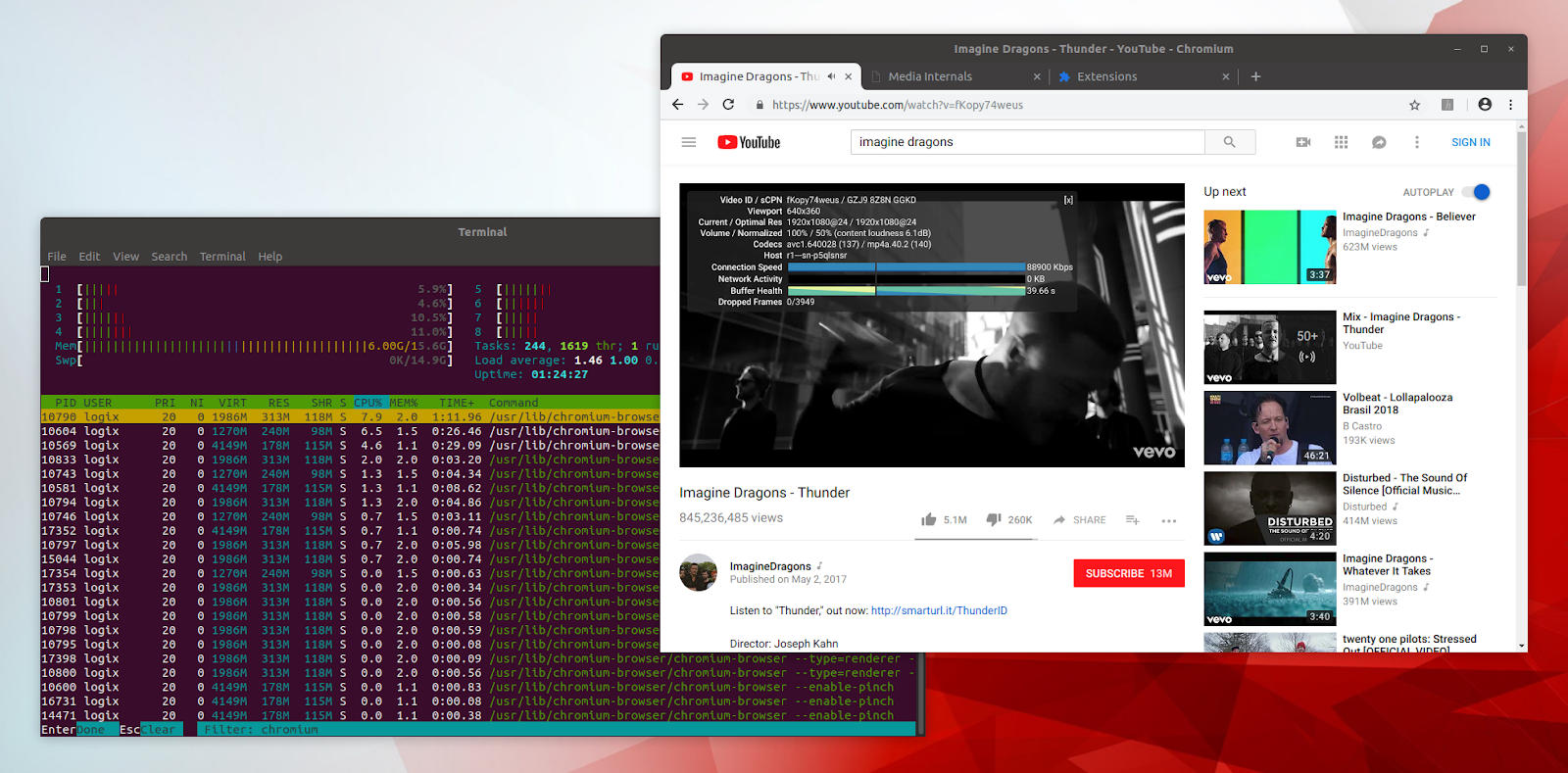

This is Chromium with the VA-API patch on Ubuntu 18.04 playing the same 1080p YouTube video with GPU-accelerated video decoding:

Notice the CPU usage in the screenshots. Both were taken on my old but still quite powerful desktop. On my laptop, Chromium's CPU usage without hardware acceleration goes much higher.

The "Enable VAVDA, VAVEA and VAJDA on linux with VAAPI only" [patch][3] was submitted to Chromium more than a year ago, but it has not been merged yet.

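Chrome has an option to override the software rendering list (`#ignore-gpu-blacklist`), but this option does not enable hardware-accelerated video decoding. After enabling it, you may see "_Video Decode: Hardware accelerated_" when visiting `chrome://gpu`, but that does not mean it actually works. Open an HD video on YouTube and check CPU usage with a tool such as `htop` (which is what I used for the screenshots above). Because GPU video decoding isn't really enabled, you should see high CPU usage. A section further down explains how to check whether you're really using hardware-accelerated video decoding.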

**The patches that enable VA-API in the Chromium Ubuntu builds used in this article are available [here][1].**

### Installing and using Chromium with VA-API support on Ubuntu and Linux Mint

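Everyone should be aware that the Chromium development builds are not considered stable. You may run into bugs, crashes, and so on. It may work fine now, but who knows what will happen after a few updates.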

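Also, the Chromium dev branch PPA requires some extra steps if you want to enable Widevine support (so you can watch Netflix and paid YouTube videos). The same goes for features such as Sync, which requires registering an API key and setting it up on your system. Instructions for these tasks are explained in detail on the [Chromium Dev Branch PPA][4].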

For Nvidia graphics, the vdpau video driver needs to be updated so that it exposes `vaQuerySurfaceAttributes`, so Nvidia users need a patched `vdpau-va-driver`. Thankfully, the Chromium dev PPA provides this patched package.

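Chromium with the VA-API patch is also available for some other Linux distributions through third-party repositories, for example [Arch Linux][5] (for Nvidia you'll need [this][6] patched libva-vdpau-driver). If you don't use Ubuntu or Linux Mint, you'll have to find those packages yourself.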

#### 1. Install Chromium with the VA-API patch

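There is also a Chromium Beta PPA with the VA-API patch, but it lacks vdpau-video for Ubuntu 18.04. If you prefer, you can use that [Beta PPA][7] instead of the [Dev PPA][8] I use in the steps below. However, if you use Nvidia graphics, you'll then need to download and install vdpau-va-driver from the Dev PPA and make sure Ubuntu/Linux Mint doesn't update that package. That is a bit complicated; if you follow the steps below with the Dev PPA, you don't need to do any of it manually.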

You can add the [Chromium Dev Branch PPA][4] and install the latest Chromium development build on Ubuntu or Linux Mint (and other Ubuntu-based distributions such as elementary, as well as Ubuntu or Linux Mint flavors such as Xubuntu, Kubuntu, Ubuntu MATE, Linux Mint MATE, and so on):

```
sudo add-apt-repository ppa:saiarcot895/chromium-dev
sudo apt-get update
sudo apt install chromium-browser
```

#### 2. Install the VA-API driver

For Intel graphics, you need to install the `i965-va-driver` package (it may already be installed):

```
sudo apt install i965-va-driver
```

For Nvidia graphics (it should work with both the open source Nouveau driver and the proprietary Nvidia driver), install `vdpau-va-driver`:

```
sudo apt install vdpau-va-driver
```

#### 3. Enable the hardware-accelerated video option in Chromium

Copy this address and paste it into Chromium's URL bar: `chrome://flags/#enable-accelerated-video` (or search for `Hardware-accelerated video` in `chrome://flags`). Enable the option, then restart Chromium.

This option is not available in the default Google Chrome / Chromium builds, but you can enable it in the VA-API-enabled Chromium build.

#### 4. Install the [h264ify][2] Chrome extension

YouTube (and probably some other websites as well) uses the VP8 or VP9 video codecs by default, and many GPUs don't support hardware decoding for them. h264ify forces YouTube to use H.264, which most GPUs support, instead of VP8/VP9.

The extension can also block 60fps videos, which is useful on low-end machines.

To see which codecs a YouTube video is using, right-click the video and select `Stats for nerds`. With the h264ify extension enabled, the codecs should be avc / mp4a. Without it, they should be vp09 / opus.

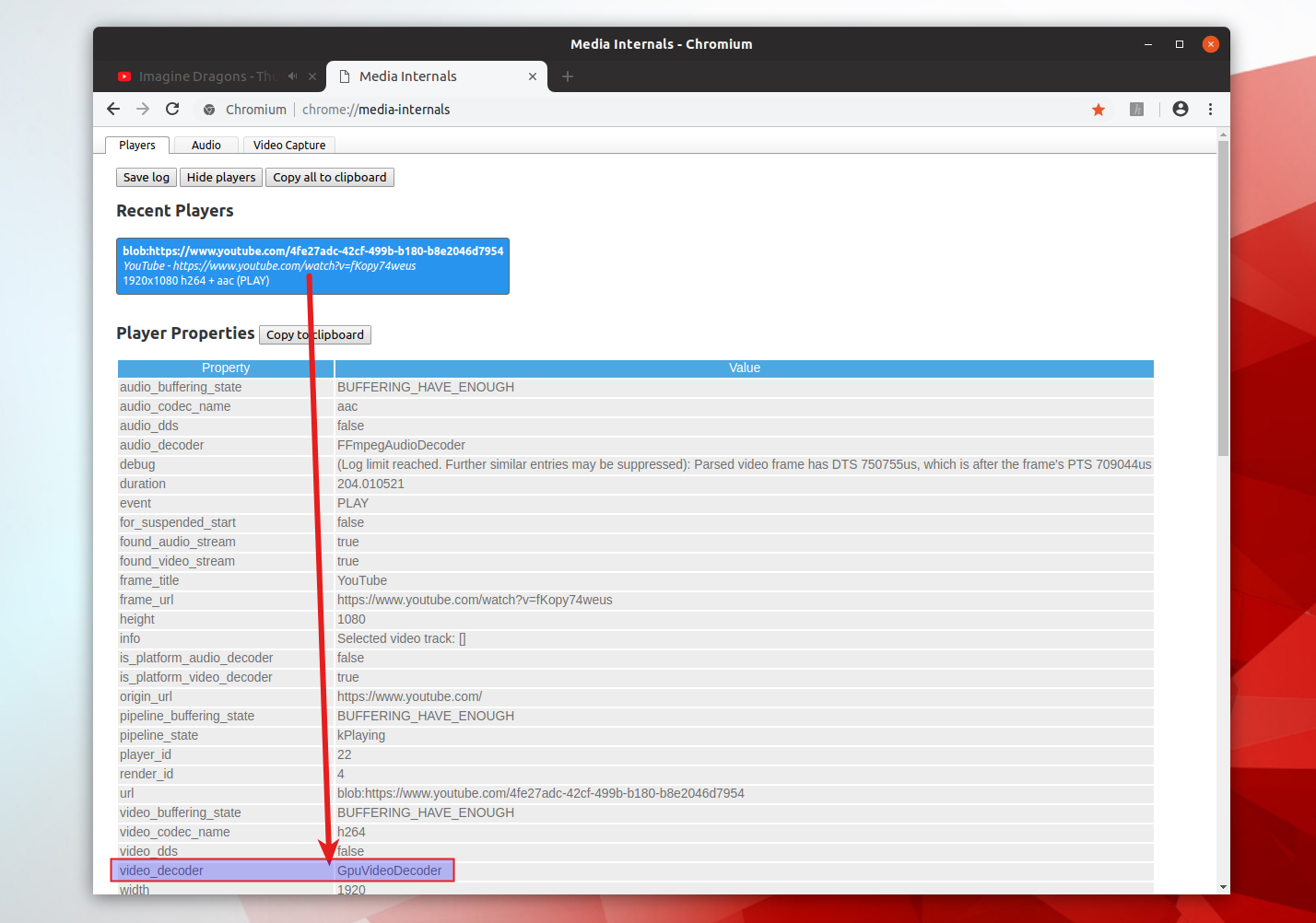

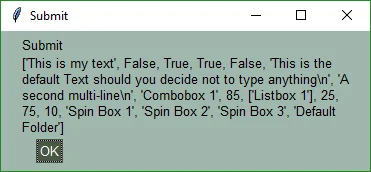

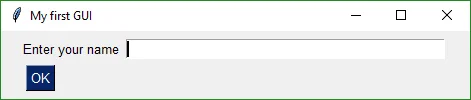

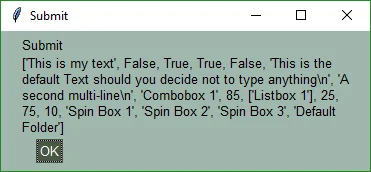

### How to check whether Chromium is using GPU video decoding

Open a video on YouTube. Then open a new tab in Chromium and enter the following in the URL bar: `chrome://media-internals`.

On the `chrome://media-internals` tab, click the video URL to expand it, then scroll down to `Player Properties`, where you should find the `video_decoder` property. If the value of `video_decoder` is `GpuVideoDecoder`, the YouTube video playing in the other tab is using hardware-accelerated video decoding.

If it shows `FFmpegVideoDecoder` or `VpxVideoDecoder`, accelerated video decoding is not working, or you forgot to install (or have disabled) the h264ify Chrome extension.

If it's not working, you can debug it by running `chromium-browser` from the command line and checking whether any VA-API-related errors are displayed. You can also run `vainfo` (install it on Ubuntu or Linux Mint with `sudo apt install vainfo`) and, for Nvidia, `vdpauinfo` (install it with `sudo apt install vdpauinfo`) and see whether they report any errors.

--------------------------------------------------------------------------------

via: https://www.linuxuprising.com/2018/08/how-to-enable-hardware-accelerated.html

Author: [Logix][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [GraveAccent](https://github.com/GraveAccent)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://plus.google.com/118280394805678839070
[1]: https://github.com/saiarcot895/chromium-ubuntu-build/tree/master/debian/patches
[2]: https://chrome.google.com/webstore/detail/h264ify/aleakchihdccplidncghkekgioiakgal
[3]: https://chromium-review.googlesource.com/c/chromium/src/+/532294
[4]: https://launchpad.net/~saiarcot895/+archive/ubuntu/chromium-dev
[5]: https://aur.archlinux.org/packages/?O=0&SeB=nd&K=chromium+vaapi&outdated=&SB=n&SO=a&PP=50&do_Search=Go
[6]: https://aur.archlinux.org/packages/libva-vdpau-driver-chromium/
[7]: https://launchpad.net/~saiarcot895/+archive/ubuntu/chromium-beta
[8]: https://launchpad.net/~saiarcot895/+archive/ubuntu/chromium-dev/+packages

321

published/20180822 What is a Makefile and how does it work.md

Normal file


@ -0,0 +1,321 @@


What is a Makefile and how does it work?
======

> Run and compile your programs more efficiently with this handy tool.

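If you want to run or update a task when certain source files change, the `make` utility comes in handy. `make` reads a file called `Makefile` (or `makefile`) that defines a set of tasks to be executed. You may have used `make` to compile a program from source code. Most open source projects use `make` to compile the final binary, which can then be installed with `make install`.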

In this article, we'll explore `make` and `Makefile` through basic and advanced examples. Before you start, make sure `make` is installed on your system.

### Basic examples

As usual, let's start by printing "Hello World". Create a directory named `myproject` and, inside it, a file named `Makefile` with this content (the recipe line must be indented with a tab):

```
say_hello:
	echo "Hello World"
```

Run `make` inside the `myproject` directory, and you'll see the following output:

```
$ make
echo "Hello World"
Hello World
```

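In the example above, `say_hello` behaves like a function name in other programming languages. This is called the *target*. The *prerequisites*, or dependencies, follow the target. For simplicity, we haven't defined any prerequisites in this example. The command `echo "Hello World"` is called the *recipe*. The recipe uses the prerequisites to make the target. Together, the target, prerequisites, and recipe make up a *rule*.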

To summarize, the syntax of a typical rule is:

```
target: prerequisites
<TAB> recipe
```

For example, a target might be a binary file built from prerequisites (source files). A prerequisite can in turn be a target that depends on other prerequisites:

```
final_target: sub_target final_target.c
	Recipe_to_create_final_target

sub_target: sub_target.c
	Recipe_to_create_sub_target
```

A target does not have to be a file; it can be just a name for a recipe, as in our example. These are called "phony targets."

Back in the example above, when `make` ran, the entire command `echo "Hello World"` was displayed before the actual output. If you don't want the command itself to be printed, prefix `echo` with `@`:

```
say_hello:
	@echo "Hello World"
```

Run `make` again, and the output is now only:

```
$ make
Hello World
```

Next, add the phony targets `generate` and `clean` to the `Makefile`:

```
say_hello:
	@echo "Hello World"

generate:
	@echo "Creating empty text files..."
	touch file-{1..10}.txt

clean:
	@echo "Cleaning up..."
	rm *.txt
```

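If we now run `make`, only the `say_hello` target is executed. That's because the first target in a `Makefile` is the default target, often called the *default goal*. This is why you'll see `all` as the first target in most projects: `all` is responsible for calling the other targets. We can override this behavior with the special variable `.DEFAULT_GOAL`.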

Add `.DEFAULT_GOAL` at the beginning of the `Makefile`:

```
.DEFAULT_GOAL := generate
```

Now `make` runs `generate` as the default target:

```
$ make
Creating empty text files...
touch file-{1..10}.txt
```

As the name suggests, `.DEFAULT_GOAL` can name only one target. This is why many `Makefile`s include an `all` target, which can call several targets.

Now remove `.DEFAULT_GOAL` and add an `all` target:

```
all: say_hello generate

say_hello:
	@echo "Hello World"

generate:
	@echo "Creating empty text files..."
	touch file-{1..10}.txt

clean:
	@echo "Cleaning up..."
	rm *.txt
```

Before running it, let's add another special target, `.PHONY`, which lists the targets that are not files. `make` will always run the recipes of these phony targets, without checking whether a file of that name exists or when it was last modified. Here is the complete `Makefile`:

```
.PHONY: all say_hello generate clean

all: say_hello generate

say_hello:
	@echo "Hello World"

generate:
	@echo "Creating empty text files..."
	touch file-{1..10}.txt

clean:
	@echo "Cleaning up..."
	rm *.txt
```

The `make` command now calls `say_hello` and `generate`:

```
$ make
Hello World
Creating empty text files...
touch file-{1..10}.txt
```

`clean` should not be included in `all` or placed as the first target. It should be called manually when cleaning is needed, by running `make clean`:

```
$ make clean
Cleaning up...
rm *.txt
```

Now that you have a basic understanding of `Makefile`s, let's look at some more advanced examples.

### Advanced examples

#### Variables

In the examples so far, most targets and prerequisites are hard-coded, but in real projects they are usually replaced with variables and patterns.

The simplest way to define a variable is with the `=` operator. For example, to assign the command `gcc` to the variable `CC`:

```
CC = gcc
```

This is called a *recursively expanded variable*, and it is used in a rule like this:

```
hello: hello.c
	${CC} hello.c -o hello
```

As you may have guessed, the recipe expands to the following when it is passed to the shell:

```
gcc hello.c -o hello
```

Both `${CC}` and `$(CC)` are valid references to `gcc`. But if a recursively expanded variable is assigned to itself, expanding it would loop forever; `make` detects this and stops with an error. Let's verify this:

```
CC = gcc
CC = ${CC}

all:
	@echo ${CC}
```

Running `make` now results in:

```
$ make
Makefile:8: *** Recursive variable 'CC' references itself (eventually). Stop.
```

To avoid this, use the `:=` operator instead (this creates a *simply expanded variable*). The following code does not have the problem above:

```
CC := gcc
CC := ${CC}

all:
	@echo ${CC}
```

#### Patterns and functions

The following `Makefile` uses variables, patterns, and functions to compile all the C programs in a directory. Let's go through it line by line:

```
# Usage:
# make        # compile all binary
# make clean  # remove ALL binaries and objects

.PHONY = all clean

CC = gcc                        # compiler to use

LINKERFLAG = -lm

SRCS := $(wildcard *.c)
BINS := $(SRCS:%.c=%)

all: ${BINS}

%: %.o
	@echo "Checking.."
	${CC} ${LINKERFLAG} $< -o $@

%.o: %.c