mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-30 02:40:11 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

This commit is contained in:

commit

c8522c58f9

published

20150824 How to Setup Zephyr Test Management Tool on CentOS 7.x.md20151020 how to h2 in apache.md20151104 How to Install Redis Server on CentOS 7.md20151105 Linus Torvalds Lambasts Open Source Programmers over Insecure Code.md20151204 How to Remove Banned IP from Fail2ban on CentOS 6 or CentOS 7.md

sources

news

share

20150824 Great Open Source Collaborative Editing Tools.md20150901 5 best open source board games to play online.md20151130 eSpeak--Text To Speech Tool For Linux.md

talk

tech

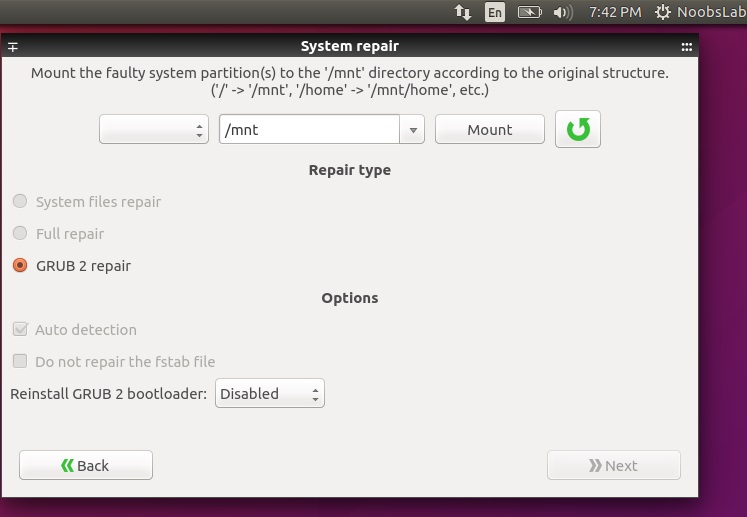

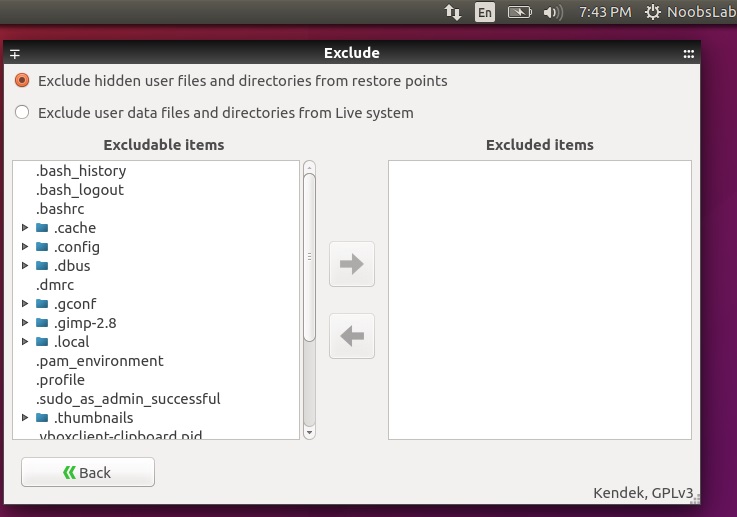

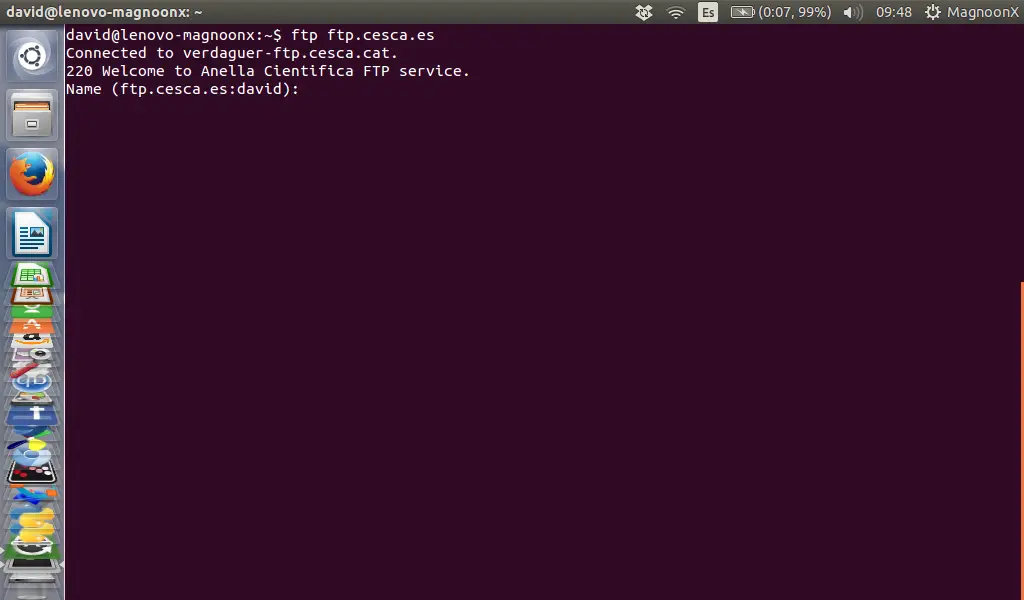

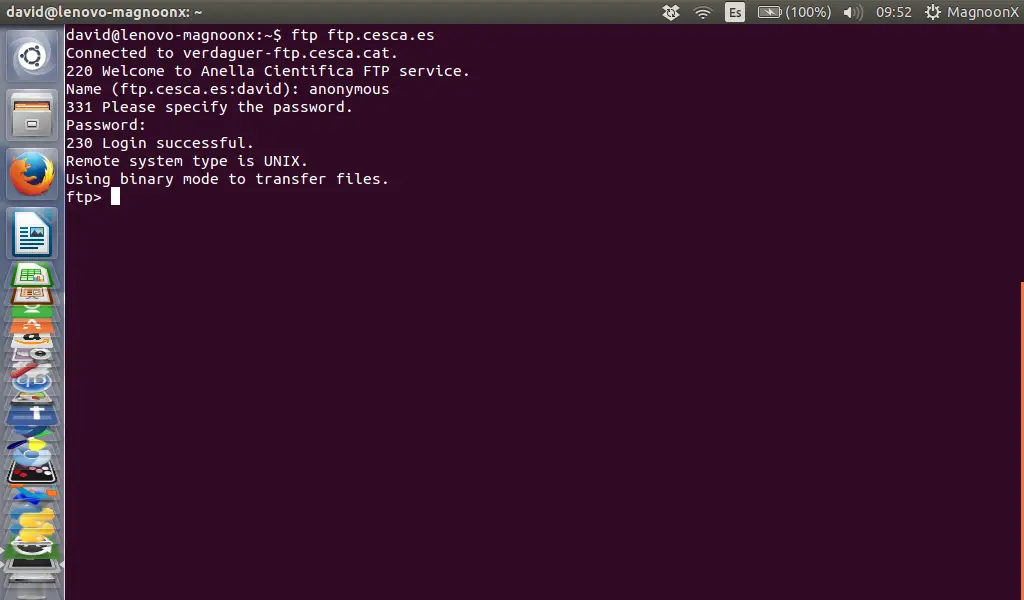

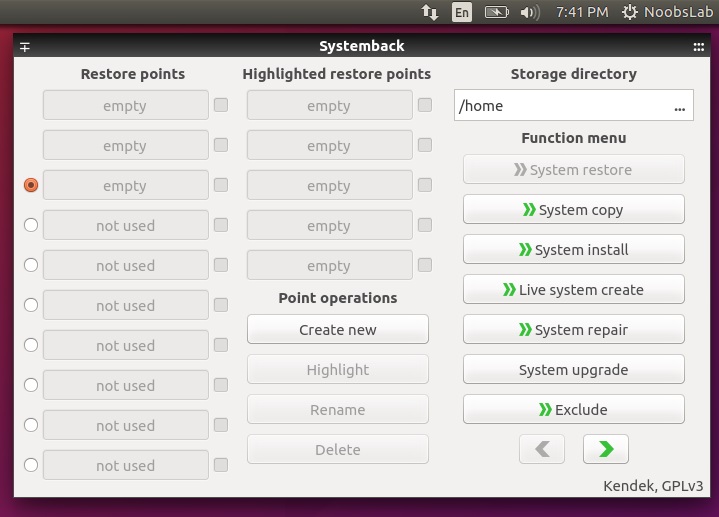

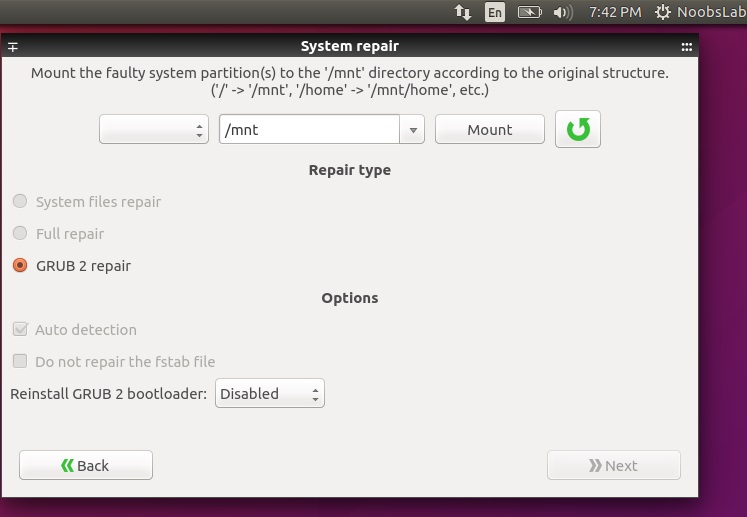

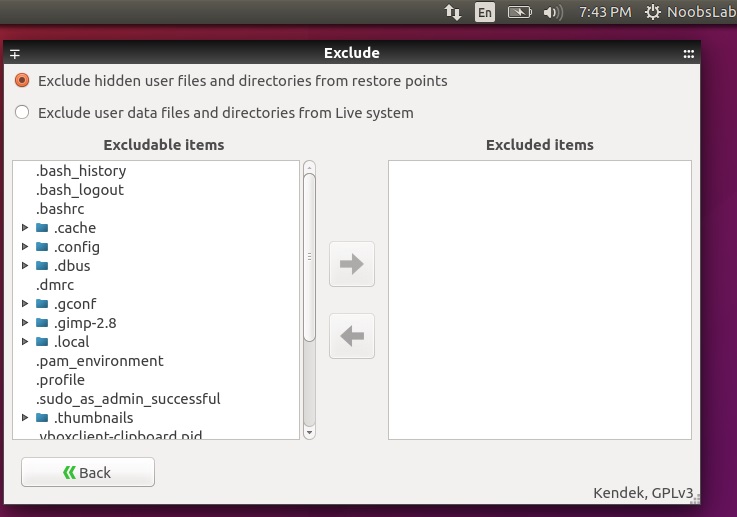

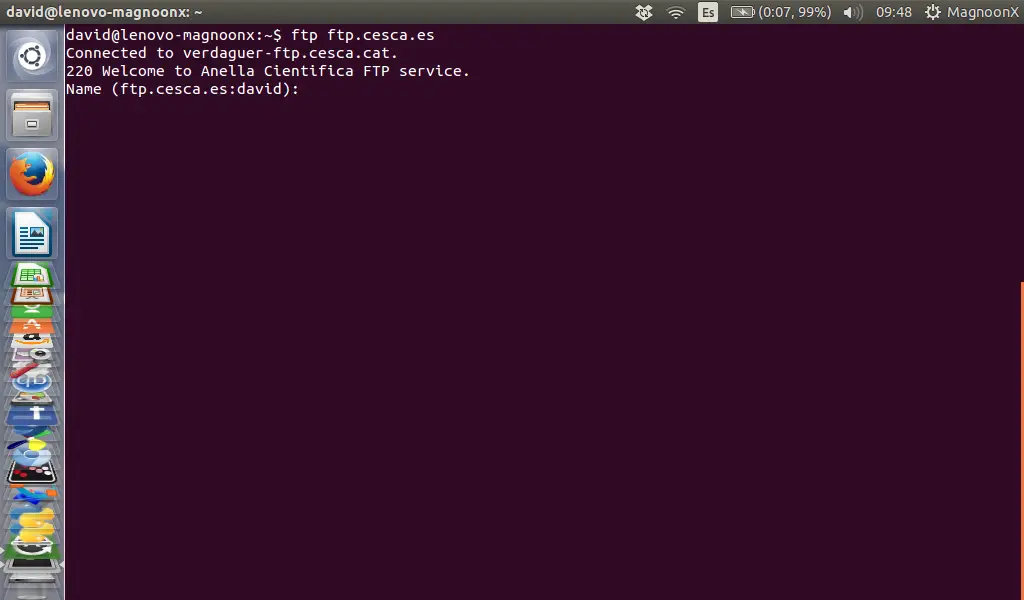

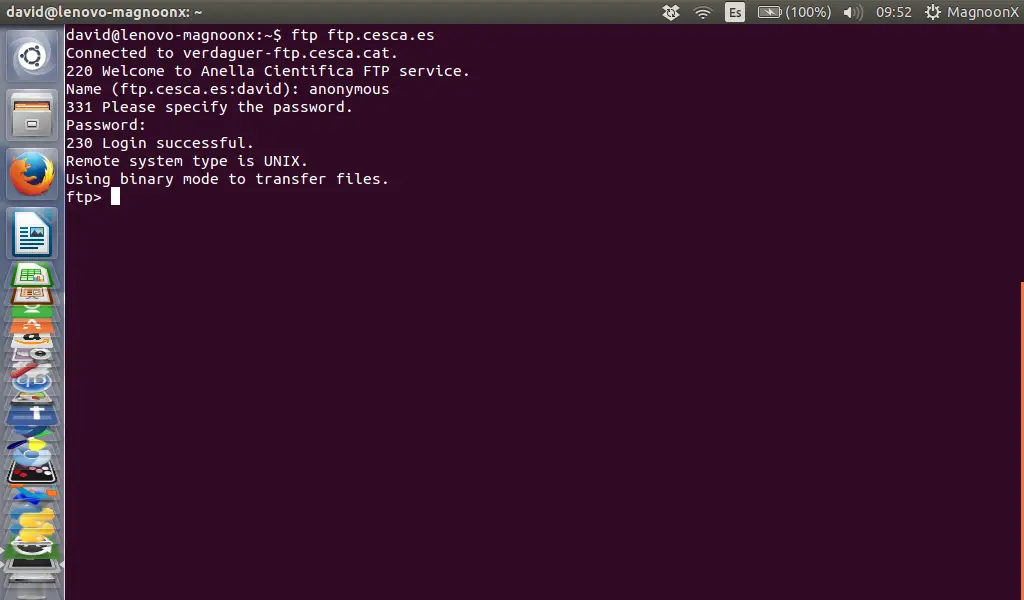

20150917 A Repository with 44 Years of Unix Evolution.md20151122 Doubly linked list in the Linux Kernel.md20151123 Data Structures in the Linux Kernel.md20151126 How to Install Nginx as Reverse Proxy for Apache on FreeBSD 10.2.md20151201 Backup (System Restore Point) your Ubuntu or Linux Mint with SystemBack.md20151202 How to use the Linux ftp command to up- and download files on the shell.md20151208 How to Customize Time and Date Format in Ubuntu Panel.md20151208 How to Install Bugzilla with Apache and SSL on FreeBSD 10.2.md20151210 Getting started with Docker by Dockerizing this Blog.md

Linux or UNIX grep Command Tutorial series

translated

news

share

talk

20151124 Review--5 memory debuggers for Linux coding.md

The history of Android

tech

20151104 How to Install Redis Server on CentOS 7.md20151122 Doubly linked list in the Linux Kernel.md20151123 Assign Multiple IP Addresses To One Interface On Ubuntu 15.10.md20151126 How to Install Nginx as Reverse Proxy for Apache on FreeBSD 10.2.md20151201 Backup (System Restore Point) your Ubuntu or Linux Mint with SystemBack.md20151202 How to use the Linux ftp command to up- and download files on the shell.md20151208 How to renew the ISPConfig 3 SSL Certificate.md20151208 Install Wetty on Centos or RHEL 6.X.md

Linux or UNIX grep Command Tutorial series

@ -1,6 +1,7 @@

|

||||

如何在 CentOS 7.x 上安装 Zephyr 测试管理工具

|

||||

================================================================================

|

||||

测试管理工具包括作为测试人员需要的任何东西。测试管理工具用来记录测试执行的结果、计划测试活动以及报告质量保证活动的情况。在这篇文章中我们会向你介绍如何配置 Zephyr 测试管理工具,它包括了管理测试活动需要的所有东西,不需要单独安装测试活动所需要的应用程序从而降低测试人员不必要的麻烦。一旦你安装完它,你就看可以用它跟踪 bug、缺陷,和你的团队成员协作项目任务,因为你可以轻松地共享和访问测试过程中多个项目团队的数据。

|

||||

|

||||

测试管理(Test Management)指测试人员所需要的任何的所有东西。测试管理工具用来记录测试执行的结果、计划测试活动以及汇报质量控制活动的情况。在这篇文章中我们会向你介绍如何配置 Zephyr 测试管理工具,它包括了管理测试活动需要的所有东西,不需要单独安装测试活动所需要的应用程序从而降低测试人员不必要的麻烦。一旦你安装完它,你就看可以用它跟踪 bug 和缺陷,和你的团队成员协作项目任务,因为你可以轻松地共享和访问测试过程中多个项目团队的数据。

|

||||

|

||||

### Zephyr 要求 ###

|

||||

|

||||

@ -19,21 +20,21 @@

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="140"><strong>Packages</strong></td>

|

||||

<td width="312">JDK 7 or above , Oracle JDK 6 update</td>

|

||||

<td width="209">No Prior Tomcat, MySQL installed</td>

|

||||

<td width="312">JDK 7 或更高 , Oracle JDK 6 update</td>

|

||||

<td width="209">没有事先安装的 Tomcat 和 MySQL</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="140"><strong>RAM</strong></td>

|

||||

<td width="312">4 GB</td>

|

||||

<td width="209">Preferred 8 GB</td>

|

||||

<td width="209">推荐 8 GB</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="140"><strong>CPU</strong></td>

|

||||

<td width="521" colspan="2">2.0 GHZ or Higher</td>

|

||||

<td width="521" colspan="2">2.0 GHZ 或更高</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="140"><strong>Hard Disk</strong></td>

|

||||

<td width="521" colspan="2">30 GB , Atleast 5GB must be free</td>

|

||||

<td width="521" colspan="2">30 GB , 至少 5GB </td>

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

@ -48,8 +49,6 @@

|

||||

|

||||

[root@centos-007 ~]# yum install java-1.7.0-openjdk-1.7.0.79-2.5.5.2.el7_1

|

||||

|

||||

----------

|

||||

|

||||

[root@centos-007 ~]# yum install java-1.7.0-openjdk-devel-1.7.0.85-2.6.1.2.el7_1.x86_64

|

||||

|

||||

安装完 java 和它的所有依赖后,运行下面的命令设置 JAVA_HOME 环境变量。

|

||||

@ -61,8 +60,6 @@

|

||||

|

||||

[root@centos-007 ~]# java –version

|

||||

|

||||

----------

|

||||

|

||||

java version "1.7.0_79"

|

||||

OpenJDK Runtime Environment (rhel-2.5.5.2.el7_1-x86_64 u79-b14)

|

||||

OpenJDK 64-Bit Server VM (build 24.79-b02, mixed mode)

|

||||

@ -71,7 +68,7 @@

|

||||

|

||||

### 安装 MySQL 5.6.x ###

|

||||

|

||||

如果的机器上有其它的 MySQL,建议你先卸载它们并安装这个版本,或者升级它们的模式到指定的版本。因为 Zephyr 前提要求这个指定的主要/最小 MySQL (5.6.x)版本要有 root 用户名。

|

||||

如果的机器上有其它的 MySQL,建议你先卸载它们并安装这个版本,或者升级它们的模式(schemas)到指定的版本。因为 Zephyr 前提要求这个指定的 5.6.x 版本的 MySQL ,要有 root 用户名。

|

||||

|

||||

可以按照下面的步骤在 CentOS-7.1 上安装 MySQL 5.6 :

|

||||

|

||||

@ -93,10 +90,7 @@

|

||||

[root@centos-007 ~]# service mysqld start

|

||||

[root@centos-007 ~]# service mysqld status

|

||||

|

||||

对于全新安装的 MySQL 服务器,MySQL root 用户的密码为空。

|

||||

为了安全起见,我们应该重置 MySQL root 用户的密码。

|

||||

|

||||

用自动生成的空密码连接到 MySQL 并更改 root 用户密码。

|

||||

对于全新安装的 MySQL 服务器,MySQL root 用户的密码为空。为了安全起见,我们应该重置 MySQL root 用户的密码。用自动生成的空密码连接到 MySQL 并更改 root 用户密码。

|

||||

|

||||

[root@centos-007 ~]# mysql

|

||||

mysql> SET PASSWORD FOR 'root'@'localhost' = PASSWORD('your_password');

|

||||

@ -224,7 +218,7 @@ via: http://linoxide.com/linux-how-to/setup-zephyr-tool-centos-7-x/

|

||||

|

||||

作者:[Kashif Siddique][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -8,45 +8,44 @@ Copyright (C) 2015 greenbytes GmbH

|

||||

|

||||

### 源码 ###

|

||||

|

||||

你可以从[这里][1]得到 Apache 发行版。Apache 2.4.17 及其更高版本都支持 HTTP/2。我不会再重复介绍如何构建服务器的指令。在很多地方有很好的指南,例如[这里][2]。

|

||||

你可以从[这里][1]得到 Apache 版本。Apache 2.4.17 及其更高版本都支持 HTTP/2。我不会再重复介绍如何构建该服务器的指令。在很多地方有很好的指南,例如[这里][2]。

|

||||

|

||||

(有任何试验的链接?在 Twitter 上告诉我吧 @icing)

|

||||

(有任何这个试验性软件包的相关链接?在 Twitter 上告诉我吧 @icing)

|

||||

|

||||

#### 编译支持 HTTP/2 ####

|

||||

#### 编译支持 HTTP/2 ####

|

||||

|

||||

在你编译发行版之前,你要进行一些**配置**。这里有成千上万的选项。和 HTTP/2 相关的是:

|

||||

在你编译版本之前,你要进行一些**配置**。这里有成千上万的选项。和 HTTP/2 相关的是:

|

||||

|

||||

- **--enable-http2**

|

||||

|

||||

启用在 Apache 服务器内部实现协议的 ‘http2’ 模块。

|

||||

启用在 Apache 服务器内部实现该协议的 ‘http2’ 模块。

|

||||

|

||||

- **--with-nghttp2=<dir>**

|

||||

- **--with-nghttp2=\<dir>**

|

||||

|

||||

指定 http2 模块需要的 libnghttp2 模块的非默认位置。如果 nghttp2 是在默认的位置,配置过程会自动采用。

|

||||

|

||||

- **--enable-nghttp2-staticlib-deps**

|

||||

|

||||

很少用到的选项,你可能用来静态链接 nghttp2 库到服务器。在大部分平台上,只有在找不到共享 nghttp2 库时才有效。

|

||||

很少用到的选项,你可能想将 nghttp2 库静态链接到服务器里。在大部分平台上,只有在找不到共享 nghttp2 库时才有用。

|

||||

|

||||

如果你想自己编译 nghttp2,你可以到 [nghttp2.org][3] 查看文档。最新的 Fedora 以及其它发行版已经附带了这个库。

|

||||

如果你想自己编译 nghttp2,你可以到 [nghttp2.org][3] 查看文档。最新的 Fedora 以及其它版本已经附带了这个库。

|

||||

|

||||

#### TLS 支持 ####

|

||||

|

||||

大部分人想在浏览器上使用 HTTP/2, 而浏览器只在 TLS 连接(**https:// 开头的 url)时支持它。你需要一些我下面介绍的配置。但首先你需要的是支持 ALPN 扩展的 TLS 库。

|

||||

大部分人想在浏览器上使用 HTTP/2, 而浏览器只在使用 TLS 连接(**https:// 开头的 url)时才支持 HTTP/2。你需要一些我下面介绍的配置。但首先你需要的是支持 ALPN 扩展的 TLS 库。

|

||||

|

||||

ALPN 用来协商(negotiate)服务器和客户端之间的协议。如果你服务器上 TLS 库还没有实现 ALPN,客户端只能通过 HTTP/1.1 通信。那么,可以和 Apache 链接并支持它的是什么库呢?

|

||||

|

||||

ALPN 用来屏蔽服务器和客户端之间的协议。如果你服务器上 TLS 库还没有实现 ALPN,客户端只能通过 HTTP/1.1 通信。那么,和 Apache 连接的到底是什么?又是什么支持它呢?

|

||||

- **OpenSSL 1.0.2** 及其以后。

|

||||

- ??? (别的我也不知道了)

|

||||

|

||||

- **OpenSSL 1.0.2** 即将到来。

|

||||

- ???

|

||||

|

||||

如果你的 OpenSSL 库是 Linux 发行版自带的,这里使用的版本号可能和官方 OpenSSL 发行版的不同。如果不确定的话检查一下你的 Linux 发行版吧。

|

||||

如果你的 OpenSSL 库是 Linux 版本自带的,这里使用的版本号可能和官方 OpenSSL 版本的不同。如果不确定的话检查一下你的 Linux 版本吧。

|

||||

|

||||

### 配置 ###

|

||||

|

||||

另一个给服务器的好建议是为 http2 模块设置合适的日志等级。添加下面的配置:

|

||||

|

||||

# 某个地方有这样一行

|

||||

# 放在某个地方的这样一行

|

||||

LoadModule http2_module modules/mod_http2.so

|

||||

|

||||

<IfModule http2_module>

|

||||

@ -62,38 +61,37 @@ ALPN 用来屏蔽服务器和客户端之间的协议。如果你服务器上 TL

|

||||

|

||||

那么,假设你已经编译部署好了服务器, TLS 库也是最新的,你启动了你的服务器,打开了浏览器。。。你怎么知道它在工作呢?

|

||||

|

||||

如果除此之外你没有添加其它到服务器配置,很可能它没有工作。

|

||||

如果除此之外你没有添加其它的服务器配置,很可能它没有工作。

|

||||

|

||||

你需要告诉服务器在哪里使用协议。默认情况下,你的服务器并没有启动 HTTP/2 协议。因为这是安全路由,你可能要有一套部署了才能继续。

|

||||

你需要告诉服务器在哪里使用该协议。默认情况下,你的服务器并没有启动 HTTP/2 协议。因为这样比较安全,也许才能让你已有的部署可以继续工作。

|

||||

|

||||

你用 **Protocols** 命令启用 HTTP/2 协议:

|

||||

你可以用新的 **Protocols** 指令启用 HTTP/2 协议:

|

||||

|

||||

# for a https server

|

||||

# 对于 https 服务器

|

||||

Protocols h2 http/1.1

|

||||

...

|

||||

|

||||

# for a http server

|

||||

# 对于 http 服务器

|

||||

Protocols h2c http/1.1

|

||||

|

||||

你可以给一般服务器或者指定的 **vhosts** 添加这个配置。

|

||||

你可以给整个服务器或者指定的 **vhosts** 添加这个配置。

|

||||

|

||||

#### SSL 参数 ####

|

||||

|

||||

对于 TLS (SSL),HTTP/2 有一些特殊的要求。阅读 [https:// 连接][4]了解更详细的信息。

|

||||

对于 TLS (SSL),HTTP/2 有一些特殊的要求。阅读下面的“ https:// 连接”一节了解更详细的信息。

|

||||

|

||||

### http:// 连接 (h2c) ###

|

||||

|

||||

尽管现在还没有浏览器支持 HTTP/2 协议, http:// 这样的 url 也能正常工作, 因为有 mod_h[ttp]2 的支持。启用它你只需要做的一件事是在 **httpd.conf** 配置 Protocols :

|

||||

尽管现在还没有浏览器支持,但是 HTTP/2 协议也工作在 http:// 这样的 url 上, 而且 mod_h[ttp]2 也支持。启用它你唯一所要做的是在 Protocols 配置中启用它:

|

||||

|

||||

# for a http server

|

||||

# 对于 http 服务器

|

||||

Protocols h2c http/1.1

|

||||

|

||||

|

||||

这里有一些支持 **h2c** 的客户端(和客户端库)。我会在下面介绍:

|

||||

|

||||

#### curl ####

|

||||

|

||||

Daniel Stenberg 维护的网络资源命令行客户端 curl 当然支持。如果你的系统上有 curl,有一个简单的方法检查它是否支持 http/2:

|

||||

Daniel Stenberg 维护的用于访问网络资源的命令行客户端 curl 当然支持。如果你的系统上有 curl,有一个简单的方法检查它是否支持 http/2:

|

||||

|

||||

sh> curl -V

|

||||

curl 7.43.0 (x86_64-apple-darwin15.0) libcurl/7.43.0 SecureTransport zlib/1.2.5

|

||||

@ -126,11 +124,11 @@ Daniel Stenberg 维护的网络资源命令行客户端 curl 当然支持。如

|

||||

|

||||

恭喜,如果看到了有 **...101 Switching...** 的行就表示它正在工作!

|

||||

|

||||

有一些情况不会发生到 HTTP/2 的 Upgrade 。如果你的第一个请求没有内容,例如你上传一个文件,就不会触发 Upgrade。[h2c 限制][5]部分有详细的解释。

|

||||

有一些情况不会发生 HTTP/2 的升级切换(Upgrade)。如果你的第一个请求有内容数据(body),例如你上传一个文件时,就不会触发升级切换。[h2c 限制][5]部分有详细的解释。

|

||||

|

||||

#### nghttp ####

|

||||

|

||||

nghttp2 有能一起编译的客户端和服务器。如果你的系统中有客户端,你可以简单地通过获取资源验证你的安装:

|

||||

nghttp2 可以一同编译它自己的客户端和服务器。如果你的系统中有该客户端,你可以简单地通过获取一个资源来验证你的安装:

|

||||

|

||||

sh> nghttp -uv http://<yourserver>/

|

||||

[ 0.001] Connected

|

||||

@ -151,7 +149,7 @@ nghttp2 有能一起编译的客户端和服务器。如果你的系统中有客

|

||||

|

||||

这和我们上面 **curl** 例子中看到的 Upgrade 输出很相似。

|

||||

|

||||

在命令行参数中隐藏着一种可以使用 **h2c**:的参数:**-u**。这会指示 **nghttp** 进行 HTTP/1 Upgrade 过程。但如果我们不使用呢?

|

||||

有另外一种在命令行参数中不用 **-u** 参数而使用 **h2c** 的方法。这个参数会指示 **nghttp** 进行 HTTP/1 升级切换过程。但如果我们不使用呢?

|

||||

|

||||

sh> nghttp -v http://<yourserver>/

|

||||

[ 0.002] Connected

|

||||

@ -166,36 +164,33 @@ nghttp2 有能一起编译的客户端和服务器。如果你的系统中有客

|

||||

:scheme: http

|

||||

...

|

||||

|

||||

连接马上显示出了 HTTP/2!这就是协议中所谓的直接模式,当客户端发送一些特殊的 24 字节到服务器时就会发生:

|

||||

连接马上使用了 HTTP/2!这就是协议中所谓的直接(direct)模式,当客户端发送一些特殊的 24 字节到服务器时就会发生:

|

||||

|

||||

0x505249202a20485454502f322e300d0a0d0a534d0d0a0d0a

|

||||

or in ASCII: PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n

|

||||

|

||||

用 ASCII 表示是:

|

||||

|

||||

PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n

|

||||

|

||||

支持 **h2c** 的服务器在一个新的连接中看到这些信息就会马上切换到 HTTP/2。HTTP/1.1 服务器则认为是一个可笑的请求,响应并关闭连接。

|

||||

|

||||

因此 **直接** 模式只适合于那些确定服务器支持 HTTP/2 的客户端。例如,前一个 Upgrade 过程是成功的。

|

||||

因此,**直接**模式只适合于那些确定服务器支持 HTTP/2 的客户端。例如,当前一个升级切换过程成功了的时候。

|

||||

|

||||

**直接** 模式的魅力是零开销,它支持所有请求,即使没有 body 部分(查看[h2c 限制][6])。任何支持 h2c 协议的服务器默认启用了直接模式。如果你想停用它,可以添加下面的配置指令到你的服务器:

|

||||

**直接**模式的魅力是零开销,它支持所有请求,即使带有请求数据部分(查看[h2c 限制][6])。

|

||||

|

||||

注:下面这行打删除线

|

||||

|

||||

H2Direct off

|

||||

|

||||

注:下面这行打删除线

|

||||

|

||||

对于 2.4.17 发行版,默认明文连接时启用 **H2Direct** 。但是有一些模块和这不兼容。因此,在下一发行版中,默认会设置为**off**,如果你希望你的服务器支持它,你需要设置它为:

|

||||

对于 2.4.17 版本,明文连接时默认启用 **H2Direct** 。但是有一些模块和这不兼容。因此,在下一版本中,默认会设置为**off**,如果你希望你的服务器支持它,你需要设置它为:

|

||||

|

||||

H2Direct on

|

||||

|

||||

### https:// 连接 (h2) ###

|

||||

|

||||

一旦你的 mod_h[ttp]2 支持 h2c 连接,就是时候一同启用 **h2**,因为现在的浏览器支持它和 **https:** 一同使用。

|

||||

当你的 mod_h[ttp]2 可以支持 h2c 连接时,那就可以一同启用 **h2** 兄弟了,现在的浏览器仅支持它和 **https:** 一同使用。

|

||||

|

||||

HTTP/2 标准对 https:(TLS)连接增加了一些额外的要求。上面已经提到了 ALNP 扩展。另外的一个要求是不会使用特定[黑名单][7]中的密码。

|

||||

HTTP/2 标准对 https:(TLS)连接增加了一些额外的要求。上面已经提到了 ALNP 扩展。另外的一个要求是不能使用特定[黑名单][7]中的加密算法。

|

||||

|

||||

尽管现在版本的 **mod_h[ttp]2** 不增强这些密码(以后可能会),大部分客户端会这么做。如果你用不切当的密码在浏览器中打开 **h2** 服务器,你会看到模糊警告**INADEQUATE_SECURITY**,浏览器会拒接连接。

|

||||

尽管现在版本的 **mod_h[ttp]2** 不增强这些算法(以后可能会),但大部分客户端会这么做。如果让你的浏览器使用不恰当的算法打开 **h2** 服务器,你会看到不明确的警告**INADEQUATE_SECURITY**,浏览器会拒接连接。

|

||||

|

||||

一个可接受的 Apache SSL 配置类似:

|

||||

一个可行的 Apache SSL 配置类似:

|

||||

|

||||

SSLCipherSuite ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!3DES:!MD5:!PSK

|

||||

SSLProtocol All -SSLv2 -SSLv3

|

||||

@ -203,11 +198,11 @@ HTTP/2 标准对 https:(TLS)连接增加了一些额外的要求。上面已

|

||||

|

||||

(是的,这确实很长。)

|

||||

|

||||

这里还有一些应该调整的 SSL 配置参数,但不是必须:**SSLSessionCache**, **SSLUseStapling** 等,其它地方也有介绍这些。例如 Ilya Grigorik 写的一篇博客 [高性能浏览器网络][8]。

|

||||

这里还有一些应该调整,但不是必须调整的 SSL 配置参数:**SSLSessionCache**, **SSLUseStapling** 等,其它地方也有介绍这些。例如 Ilya Grigorik 写的一篇超赞的博客: [高性能浏览器网络][8]。

|

||||

|

||||

#### curl ####

|

||||

|

||||

再次回到 shell 并使用 curl(查看 [curl h2c 章节][9] 了解要求)你也可以通过 curl 用简单的命令检测你的服务器:

|

||||

再次回到 shell 使用 curl(查看上面的“curl h2c”章节了解要求),你也可以通过 curl 用简单的命令检测你的服务器:

|

||||

|

||||

sh> curl -v --http2 https://<yourserver>/

|

||||

...

|

||||

@ -220,9 +215,9 @@ HTTP/2 标准对 https:(TLS)连接增加了一些额外的要求。上面已

|

||||

|

||||

恭喜你,能正常工作啦!如果还不能,可能原因是:

|

||||

|

||||

- 你的 curl 不支持 HTTP/2。查看[检测][10]。

|

||||

- 你的 curl 不支持 HTTP/2。查看上面的“检测 curl”一节。

|

||||

- 你的 openssl 版本太低不支持 ALPN。

|

||||

- 不能验证你的证书,或者不接受你的密码配置。尝试添加命令行选项 -k 停用 curl 中的检查。如果那能工作,还要重新配置你的 SSL 和证书。

|

||||

- 不能验证你的证书,或者不接受你的算法配置。尝试添加命令行选项 -k 停用 curl 中的这些检查。如果可以工作,就重新配置你的 SSL 和证书。

|

||||

|

||||

#### nghttp ####

|

||||

|

||||

@ -246,11 +241,11 @@ HTTP/2 标准对 https:(TLS)连接增加了一些额外的要求。上面已

|

||||

The negotiated protocol: http/1.1

|

||||

[ERROR] HTTP/2 protocol was not selected. (nghttp2 expects h2)

|

||||

|

||||

这表示 ALPN 能正常工作,但并没有用 h2 协议。你需要像上面介绍的那样在服务器上选中那个协议。如果一开始在 vhost 部分选中不能正常工作,试着在通用部分选中它。

|

||||

这表示 ALPN 能正常工作,但并没有用 h2 协议。你需要像上面介绍的那样检查你服务器上的 Protocols 配置。如果一开始在 vhost 部分设置不能正常工作,试着在通用部分设置它。

|

||||

|

||||

#### Firefox ####

|

||||

|

||||

Update: [Apache Lounge][11] 的 Steffen Land 告诉我 [Firefox HTTP/2 指示插件][12]。你可以看到有多少地方用到了 h2(提示:Apache Lounge 用 h2 已经有一段时间了。。。)

|

||||

更新: [Apache Lounge][11] 的 Steffen Land 告诉我 [Firefox 上有个 HTTP/2 指示插件][12]。你可以看到有多少地方用到了 h2(提示:Apache Lounge 用 h2 已经有一段时间了。。。)

|

||||

|

||||

你可以在 Firefox 浏览器中打开开发者工具,在那里的网络标签页查看 HTTP/2 连接。当你打开了 HTTP/2 并重新刷新 html 页面时,你会看到类似下面的东西:

|

||||

|

||||

@ -260,9 +255,9 @@ Update: [Apache Lounge][11] 的 Steffen Land 告诉我 [Firefox HTTP/2 指示

|

||||

|

||||

#### Google Chrome ####

|

||||

|

||||

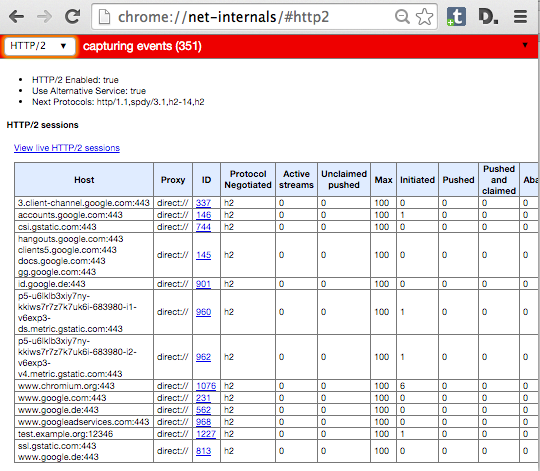

在 Google Chrome 中,你在开发者工具中看不到 HTTP/2 指示器。相反,Chrome 用特殊的地址 **chrome://net-internals/#http2** 给出了相关信息。

|

||||

在 Google Chrome 中,你在开发者工具中看不到 HTTP/2 指示器。相反,Chrome 用特殊的地址 **chrome://net-internals/#http2** 给出了相关信息。(LCTT 译注:Chrome 已经有一个 “HTTP/2 and SPDY indicator” 可以很好的在地址栏识别 HTTP/2 连接)

|

||||

|

||||

如果你在服务器中打开了一个页面并在 Chrome 那个页面查看,你可以看到类似下面这样:

|

||||

如果你打开了一个服务器的页面,可以在 Chrome 中查看那个 net-internals 页面,你可以看到类似下面这样:

|

||||

|

||||

|

||||

|

||||

@ -276,21 +271,21 @@ Windows 10 中 Internet Explorer 的继任者 Edge 也支持 HTTP/2。你也可

|

||||

|

||||

#### Safari ####

|

||||

|

||||

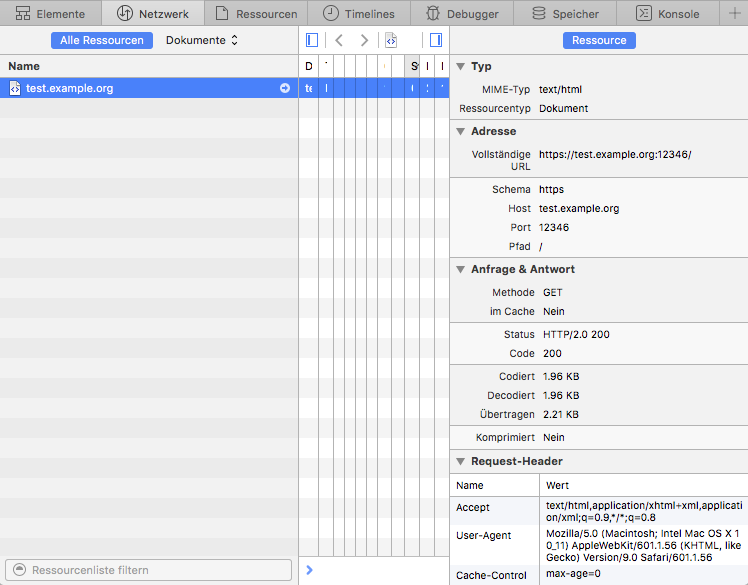

在 Apple 的 Safari 中,打开开发者工具,那里有个网络标签页。重新加载你的服务器页面并在开发者工具中选择显示了加载的行。如果你启用了在右边显示详细试图,看 **状态** 部分。那里显示了 **HTTP/2.0 200**,类似:

|

||||

在 Apple 的 Safari 中,打开开发者工具,那里有个网络标签页。重新加载你的服务器上的页面,并在开发者工具中选择显示了加载的那行。如果你启用了在右边显示详细视图,看 **Status** 部分。那里显示了 **HTTP/2.0 200**,像这样:

|

||||

|

||||

|

||||

|

||||

#### 重新协商 ####

|

||||

|

||||

https: 连接重新协商是指正在运行的连接中特定的 TLS 参数会发生变化。在 Apache httpd 中,你可以通过目录中的配置文件修改 TLS 参数。如果一个要获取特定位置资源的请求到来,配置的 TLS 参数会和当前的 TLS 参数进行对比。如果它们不相同,就会触发重新协商。

|

||||

https: 连接重新协商是指正在运行的连接中特定的 TLS 参数会发生变化。在 Apache httpd 中,你可以在 directory 配置中改变 TLS 参数。如果进来一个获取特定位置资源的请求,配置的 TLS 参数会和当前的 TLS 参数进行对比。如果它们不相同,就会触发重新协商。

|

||||

|

||||

这种最常见的情形是密码变化和客户端验证。你可以要求客户访问特定位置时需要通过验证,或者对于特定资源,你可以使用更安全的, CPU 敏感的密码。

|

||||

这种最常见的情形是算法变化和客户端证书。你可以要求客户访问特定位置时需要通过验证,或者对于特定资源,你可以使用更安全的、对 CPU 压力更大的算法。

|

||||

|

||||

不管你的想法有多么好,HTTP/2 中都**不可以**发生重新协商。如果有 100 多个请求到同一个地方,什么时候哪个会发生重新协商呢?

|

||||

但不管你的想法有多么好,HTTP/2 中都**不可以**发生重新协商。在同一个连接上会有 100 多个请求,那么重新协商该什么时候做呢?

|

||||

|

||||

对于这种配置,现有的 **mod_h[ttp]2** 还不能保证你的安全。如果你有一个站点使用了 TLS 重新协商,别在上面启用 h2!

|

||||

对于这种配置,现有的 **mod_h[ttp]2** 还没有办法。如果你有一个站点使用了 TLS 重新协商,别在上面启用 h2!

|

||||

|

||||

当然,我们会在后面的发行版中解决这个问题然后你就可以安全地启用了。

|

||||

当然,我们会在后面的版本中解决这个问题,然后你就可以安全地启用了。

|

||||

|

||||

### 限制 ###

|

||||

|

||||

@ -298,45 +293,45 @@ https: 连接重新协商是指正在运行的连接中特定的 TLS 参数会

|

||||

|

||||

实现除 HTTP 之外协议的模块可能和 **mod_http2** 不兼容。这在其它协议要求服务器首先发送数据时无疑会发生。

|

||||

|

||||

**NNTP** 就是这种协议的一个例子。如果你在服务器中配置了 **mod_nntp_like_ssl**,甚至都不要加载 mod_http2。等待下一个发行版。

|

||||

**NNTP** 就是这种协议的一个例子。如果你在服务器中配置了 **mod\_nntp\_like\_ssl**,那么就不要加载 mod_http2。等待下一个版本。

|

||||

|

||||

#### h2c 限制 ####

|

||||

|

||||

**h2c** 的实现还有一些限制,你应该注意:

|

||||

|

||||

#### 在虚拟主机中拒绝 h2c ####

|

||||

##### 在虚拟主机中拒绝 h2c #####

|

||||

|

||||

你不能对指定的虚拟主机拒绝 **h2c 直连**。连接建立而没有看到请求时会触发**直连**,这使得不可能预先知道 Apache 需要查找哪个虚拟主机。

|

||||

|

||||

#### 升级请求体 ####

|

||||

##### 有请求数据时的升级切换 #####

|

||||

|

||||

对于有 body 部分的请求,**h2c** 升级不能正常工作。那些是 PUT 和 POST 请求(用于提交和上传)。如果你写了一个客户端,你可能会用一个简单的 GET 去处理请求或者用选项 * 去触发升级。

|

||||

对于有数据的请求,**h2c** 升级切换不能正常工作。那些是 PUT 和 POST 请求(用于提交和上传)。如果你写了一个客户端,你可能会用一个简单的 GET 或者 OPTIONS * 来处理那些请求以触发升级切换。

|

||||

|

||||

原因从技术层面来看显而易见,但如果你想知道:升级过程中,连接处于半疯状态。请求按照 HTTP/1.1 的格式,而响应使用 HTTP/2。如果请求有一个 body 部分,服务器在发送响应之前需要读取整个 body。因为响应可能需要从客户端处得到应答用于流控制。但如果仍在发送 HTTP/1.1 请求,客户端就还不能处理 HTTP/2 连接。

|

||||

原因从技术层面来看显而易见,但如果你想知道:在升级切换过程中,连接处于半疯状态。请求按照 HTTP/1.1 的格式,而响应使用 HTTP/2 帧。如果请求有一个数据部分,服务器在发送响应之前需要读取整个数据。因为响应可能需要从客户端处得到应答用于流控制及其它东西。但如果仍在发送 HTTP/1.1 请求,客户端就仍然不能以 HTTP/2 连接。

|

||||

|

||||

为了使行为可预测,几个服务器实现商决定不要在任何请求体中进行升级,即使 body 很小。

|

||||

为了使行为可预测,几个服务器在实现上决定不在任何带有请求数据的请求中进行升级切换,即使请求数据很小。

|

||||

|

||||

#### 升级 302s ####

|

||||

##### 302 时的升级切换 #####

|

||||

|

||||

有重定向发生时当前 h2c 升级也不能工作。看起来 mod_http2 之前的重写有可能发生。这当然不会导致断路,但你测试这样的站点也许会让你迷惑。

|

||||

有重定向发生时,当前的 h2c 升级切换也不能工作。看起来 mod_http2 之前的重写有可能发生。这当然不会导致断路,但你测试这样的站点也许会让你迷惑。

|

||||

|

||||

#### h2 限制 ####

|

||||

|

||||

这里有一些你应该意识到的 h2 实现限制:

|

||||

|

||||

#### 连接重用 ####

|

||||

##### 连接重用 #####

|

||||

|

||||

HTTP/2 协议允许在特定条件下重用 TLS 连接:如果你有带通配符的证书或者多个 AltSubject 名称,浏览器可能会重用现有的连接。例如:

|

||||

|

||||

你有一个 **a.example.org** 的证书,它还有另外一个名称 **b.example.org**。你在浏览器中打开 url **https://a.example.org/**,用另一个标签页加载 **https://b.example.org/**。

|

||||

你有一个 **a.example.org** 的证书,它还有另外一个名称 **b.example.org**。你在浏览器中打开 URL **https://a.example.org/**,用另一个标签页加载 **https://b.example.org/**。

|

||||

|

||||

在重新打开一个新的连接之前,浏览器看到它有一个到 **a.example.org** 的连接并且证书对于 **b.example.org** 也可用。因此,它在第一个连接上面向第二个标签页发送请求。

|

||||

在重新打开一个新的连接之前,浏览器看到它有一个到 **a.example.org** 的连接并且证书对于 **b.example.org** 也可用。因此,它在第一个连接上面发送第二个标签页的请求。

|

||||

|

||||

这种连接重用是刻意设计的,它使得致力于 HTTP/1 切分效率的站点能够不需要太多变化就能利用 HTTP/2。

|

||||

这种连接重用是刻意设计的,它使得使用了 HTTP/1 切分(sharding)来提高效率的站点能够不需要太多变化就能利用 HTTP/2。

|

||||

|

||||

Apache **mod_h[ttp]2** 还没有完全实现这点。如果 **a.example.org** 和 **b.example.org** 是不同的虚拟主机, Apache 不会允许这样的连接重用,并会告知浏览器状态码**421 错误请求**。浏览器会意识到它需要重新打开一个到 **b.example.org** 的连接。这仍然能工作,只是会降低一些效率。

|

||||

Apache **mod_h[ttp]2** 还没有完全实现这点。如果 **a.example.org** 和 **b.example.org** 是不同的虚拟主机, Apache 不会允许这样的连接重用,并会告知浏览器状态码 **421 Misdirected Request**。浏览器会意识到它需要重新打开一个到 **b.example.org** 的连接。这仍然能工作,只是会降低一些效率。

|

||||

|

||||

我们期望下一次的发布中能有切当的检查。

|

||||

我们期望下一次的发布中能有合适的检查。

|

||||

|

||||

Münster, 12.10.2015,

|

||||

|

||||

@ -355,7 +350,7 @@ via: https://icing.github.io/mod_h2/howto.html

|

||||

|

||||

作者:[icing][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

239

published/20151104 How to Install Redis Server on CentOS 7.md

Normal file

239

published/20151104 How to Install Redis Server on CentOS 7.md

Normal file

@ -0,0 +1,239 @@

|

||||

如何在 CentOS 7 上安装 Redis 服务器

|

||||

================================================================================

|

||||

|

||||

大家好,本文的主题是 Redis,我们将要在 CentOS 7 上安装它。编译源代码,安装二进制文件,创建、安装文件。在安装了它的组件之后,我们还会配置 redis ,就像配置操作系统参数一样,目标就是让 redis 运行的更加可靠和快速。

|

||||

|

||||

|

||||

|

||||

*Redis 服务器*

|

||||

|

||||

Redis 是一个开源的多平台数据存储软件,使用 ANSI C 编写,直接在内存使用数据集,这使得它得以实现非常高的效率。Redis 支持多种编程语言,包括 Lua, C, Java, Python, Perl, PHP 和其他很多语言。redis 的代码量很小,只有约3万行,它只做“很少”的事,但是做的很好。尽管是在内存里工作,但是数据持久化的保存还是有的,而redis 的可靠性就很高,同时也支持集群,这些可以很好的保证你的数据安全。

|

||||

|

||||

### 构建 Redis ###

|

||||

|

||||

redis 目前没有官方 RPM 安装包,我们需要从源代码编译,而为了要编译就需要安装 Make 和 GCC。

|

||||

|

||||

如果没有安装过 GCC 和 Make,那么就使用 yum 安装。

|

||||

|

||||

yum install gcc make

|

||||

|

||||

从[官网][1]下载 tar 压缩包。

|

||||

|

||||

curl http://download.redis.io/releases/redis-3.0.4.tar.gz -o redis-3.0.4.tar.gz

|

||||

|

||||

解压缩。

|

||||

|

||||

tar zxvf redis-3.0.4.tar.gz

|

||||

|

||||

进入解压后的目录。

|

||||

|

||||

cd redis-3.0.4

|

||||

|

||||

使用Make 编译源文件。

|

||||

|

||||

make

|

||||

|

||||

### 安装 ###

|

||||

|

||||

进入源文件的目录。

|

||||

|

||||

cd src

|

||||

|

||||

复制 Redis 的服务器和客户端到 /usr/local/bin。

|

||||

|

||||

cp redis-server redis-cli /usr/local/bin

|

||||

|

||||

最好也把 sentinel,benchmark 和 check 复制过去。

|

||||

|

||||

cp redis-sentinel redis-benchmark redis-check-aof redis-check-dump /usr/local/bin

|

||||

|

||||

创建redis 配置文件夹。

|

||||

|

||||

mkdir /etc/redis

|

||||

|

||||

在`/var/lib/redis` 下创建有效的保存数据的目录

|

||||

|

||||

mkdir -p /var/lib/redis/6379

|

||||

|

||||

#### 系统参数 ####

|

||||

|

||||

为了让 redis 正常工作需要配置一些内核参数。

|

||||

|

||||

配置 `vm.overcommit_memory` 为1,这可以避免数据被截断,详情[见此][2]。

|

||||

|

||||

sysctl -w vm.overcommit_memory=1

|

||||

|

||||

修改 backlog 连接数的最大值超过 redis.conf 中的 `tcp-backlog` 值,即默认值511。你可以在[kernel.org][3] 找到更多有关基于 sysctl 的 ip 网络隧道的信息。

|

||||

|

||||

sysctl -w net.core.somaxconn=512

|

||||

|

||||

取消对透明巨页内存(transparent huge pages)的支持,因为这会造成 redis 使用过程产生延时和内存访问问题。

|

||||

|

||||

echo never > /sys/kernel/mm/transparent_hugepage/enabled

|

||||

|

||||

### redis.conf ###

|

||||

|

||||

redis.conf 是 redis 的配置文件,然而你会看到这个文件的名字是 6379.conf ,而这个数字就是 redis 监听的网络端口。如果你想要运行超过一个的 redis 实例,推荐用这样的名字。

|

||||

|

||||

复制示例的 redis.conf 到 **/etc/redis/6379.conf**。

|

||||

|

||||

cp redis.conf /etc/redis/6379.conf

|

||||

|

||||

现在编辑这个文件并且配置参数。

|

||||

|

||||

vi /etc/redis/6379.conf

|

||||

|

||||

#### daemonize ####

|

||||

|

||||

设置 `daemonize` 为 no,systemd 需要它运行在前台,否则 redis 会突然挂掉。

|

||||

|

||||

daemonize no

|

||||

|

||||

#### pidfile ####

|

||||

|

||||

设置 `pidfile` 为 /var/run/redis_6379.pid。

|

||||

|

||||

pidfile /var/run/redis_6379.pid

|

||||

|

||||

#### port ####

|

||||

|

||||

如果不准备用默认端口,可以修改。

|

||||

|

||||

port 6379

|

||||

|

||||

#### loglevel ####

|

||||

|

||||

设置日志级别。

|

||||

|

||||

loglevel notice

|

||||

|

||||

#### logfile ####

|

||||

|

||||

修改日志文件路径。

|

||||

|

||||

logfile /var/log/redis_6379.log

|

||||

|

||||

#### dir ####

|

||||

|

||||

设置目录为 /var/lib/redis/6379

|

||||

|

||||

dir /var/lib/redis/6379

|

||||

|

||||

### 安全 ###

|

||||

|

||||

下面有几个可以提高安全性的操作。

|

||||

|

||||

#### Unix sockets ####

|

||||

|

||||

在很多情况下,客户端程序和服务器端程序运行在同一个机器上,所以不需要监听网络上的 socket。如果这和你的使用情况类似,你就可以使用 unix socket 替代网络 socket,为此你需要配置 `port` 为0,然后配置下面的选项来启用 unix socket。

|

||||

|

||||

设置 unix socket 的套接字文件。

|

||||

|

||||

unixsocket /tmp/redis.sock

|

||||

|

||||

限制 socket 文件的权限。

|

||||

|

||||

unixsocketperm 700

|

||||

|

||||

现在为了让 redis-cli 可以访问,应该使用 -s 参数指向该 socket 文件。

|

||||

|

||||

redis-cli -s /tmp/redis.sock

|

||||

|

||||

#### requirepass ####

|

||||

|

||||

你可能需要远程访问,如果是,那么你应该设置密码,这样子每次操作之前要求输入密码。

|

||||

|

||||

requirepass "bTFBx1NYYWRMTUEyNHhsCg"

|

||||

|

||||

#### rename-command ####

|

||||

|

||||

想象一下如下指令的输出。是的,这会输出服务器的配置,所以你应该在任何可能的情况下拒绝这种访问。

|

||||

|

||||

CONFIG GET *

|

||||

|

||||

为了限制甚至禁止这条或者其他指令可以使用 `rename-command` 命令。你必须提供一个命令名和替代的名字。要禁止的话需要设置替代的名字为空字符串,这样禁止任何人猜测命令的名字会比较安全。

|

||||

|

||||

rename-command FLUSHDB "FLUSHDB_MY_SALT_G0ES_HERE09u09u"

|

||||

rename-command FLUSHALL ""

|

||||

rename-command CONFIG "CONFIG_MY_S4LT_GO3S_HERE09u09u"

|

||||

|

||||

|

||||

|

||||

*使用密码通过 unix socket 访问,和修改命令*

|

||||

|

||||

#### 快照 ####

|

||||

|

||||

默认情况下,redis 会周期性的将数据集转储到我们设置的目录下的 **dump.rdb** 文件。你可以使用 `save` 命令配置转储的频率,它的第一个参数是以秒为单位的时间帧,第二个参数是在数据文件上进行修改的数量。

|

||||

|

||||

每隔15分钟并且最少修改过一次键。

|

||||

|

||||

save 900 1

|

||||

|

||||

每隔5分钟并且最少修改过10次键。

|

||||

|

||||

save 300 10

|

||||

|

||||

每隔1分钟并且最少修改过10000次键。

|

||||

|

||||

save 60 10000

|

||||

|

||||

文件 `/var/lib/redis/6379/dump.rdb` 包含了从上次保存以来内存里数据集的转储数据。因为它先创建临时文件然后替换之前的转储文件,这里不存在数据破坏的问题,你不用担心,可以直接复制这个文件。

|

||||

|

||||

### 开机时启动 ###

|

||||

|

||||

你可以使用 systemd 将 redis 添加到系统开机启动列表。

|

||||

|

||||

复制示例的 init_script 文件到 `/etc/init.d`,注意脚本名所代表的端口号。

|

||||

|

||||

cp utils/redis_init_script /etc/init.d/redis_6379

|

||||

|

||||

现在我们要使用 systemd,所以在 `/etc/systems/system` 下创建一个单位文件名字为 `redis_6379.service`。

|

||||

|

||||

vi /etc/systemd/system/redis_6379.service

|

||||

|

||||

填写下面的内容,详情可见 systemd.service。

|

||||

|

||||

[Unit]

|

||||

Description=Redis on port 6379

|

||||

|

||||

[Service]

|

||||

Type=forking

|

||||

ExecStart=/etc/init.d/redis_6379 start

|

||||

ExecStop=/etc/init.d/redis_6379 stop

|

||||

|

||||

[Install]

|

||||

WantedBy=multi-user.target

|

||||

|

||||

现在添加我之前在 `/etc/sysctl.conf` 里面修改过的内存过量使用和 backlog 最大值的选项。

|

||||

|

||||

vm.overcommit_memory = 1

|

||||

|

||||

net.core.somaxconn=512

|

||||

|

||||

对于透明巨页内存支持,并没有直接 sysctl 命令可以控制,所以需要将下面的命令放到 `/etc/rc.local` 的结尾。

|

||||

|

||||

echo never > /sys/kernel/mm/transparent_hugepage/enabled

|

||||

|

||||

### 总结 ###

|

||||

|

||||

这样就可以启动了,通过设置这些选项你就可以部署 redis 服务到很多简单的场景,然而在 redis.conf 还有很多为复杂环境准备的 redis 选项。在一些情况下,你可以使用 [replication][4] 和 [Sentinel][5] 来提高可用性,或者[将数据分散][6]在多个服务器上,创建服务器集群。

|

||||

|

||||

谢谢阅读。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/storage/install-redis-server-centos-7/

|

||||

|

||||

作者:[Carlos Alberto][a]

|

||||

译者:[ezio](https://github.com/oska874)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linoxide.com/author/carlosal/

|

||||

[1]:http://redis.io/download

|

||||

[2]:https://www.kernel.org/doc/Documentation/vm/overcommit-accounting

|

||||

[3]:https://www.kernel.org/doc/Documentation/networking/ip-sysctl.txt

|

||||

[4]:http://redis.io/topics/replication

|

||||

[5]:http://redis.io/topics/sentinel

|

||||

[6]:http://redis.io/topics/partitioning

|

||||

@ -2,9 +2,9 @@

|

||||

================================================================================

|

||||

|

||||

|

||||

Linus 最近(LCTT 译注:其实是11月份,没有及时翻译出来,看官轻喷Orz)骂了一个 Linux 开发者,原因是他向 kernel 提交了一份不安全的代码。

|

||||

Linus 上个月骂了一个 Linux 开发者,原因是他向 kernel 提交了一份不安全的代码。

|

||||

|

||||

Linus 是个 Linux 内核项目非官方的“仁慈的独裁者(LCTT译注:英国《卫报》曾将乔布斯评价为‘仁慈的独裁者’)”,这意味着他有权决定将哪些代码合入内核,哪些代码直接丢掉。

|

||||

Linus 是个 Linux 内核项目非官方的“仁慈的独裁者(benevolent dictator)”(LCTT译注:英国《卫报》曾将乔布斯评价为‘仁慈的独裁者’),这意味着他有权决定将哪些代码合入内核,哪些代码直接丢掉。

|

||||

|

||||

在10月28号,一个开源开发者提交的代码未能符合 Torvalds 的要求,于是遭来了[一顿臭骂][1]。Torvalds 在他提交的代码下评论道:“你提交的是什么东西。”

|

||||

|

||||

@ -14,7 +14,7 @@ Torvalds 为什么会这么生气?他觉得那段代码可以写得更有效

|

||||

|

||||

Torvalds 重新写了一版代码将原来的那份替换掉,并建议所有开发者应该像他那种风格来写代码。

|

||||

|

||||

Torvalds 一直在嘲讽那些不符合他观点的人。早在1991年他就攻击过[Andrew Tanenbaum][2]——那个 Minix 操作系统的作者,而那个 Minix 操作系统被 Torvalds 描述为“脑残”。

|

||||

Torvalds 一直在嘲讽那些不符合他观点的人。早在1991年他就攻击过 [Andrew Tanenbaum][2]——那个 Minix 操作系统的作者,而那个 Minix 操作系统被 Torvalds 描述为“脑残”。

|

||||

|

||||

但是 Torvalds 在这次嘲讽中表现得更有战略性了:“我想让*每个人*都知道,像他这种代码是完全不能被接收的。”他说他的目的是提醒每个 Linux 开发者,而不是针对那个开发者。

|

||||

|

||||

@ -26,7 +26,7 @@ via: http://thevarguy.com/open-source-application-software-companies/110415/linu

|

||||

|

||||

作者:[Christopher Tozzi][a]

|

||||

译者:[bazz2](https://github.com/bazz2)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,12 +1,12 @@

|

||||

如何在CentOS 6/7 上移除被Fail2ban禁止的IP

|

||||

如何在 CentOS 6/7 上移除被 Fail2ban 禁止的 IP

|

||||

================================================================================

|

||||

|

||||

|

||||

[Fail2ban][1]是一款用于保护你的服务器免于暴力攻击的入侵保护软件。Fail2ban用python写成,并被广泛用户大多数服务器上。Fail2ban将扫描日志文件和IP黑名单来显示恶意软件、过多的密码失败、web服务器利用、Wordpress插件攻击和其他漏洞。如果你已经安装并使用了fail2ban来保护你的web服务器,你也许会想知道如何在CentOS 6、CentOS 7、RHEL 6、RHEL 7 和 Oracle Linux 6/7中找到被Fail2ban阻止的IP,或者你想将ip从fail2ban监狱中移除。

|

||||

[fail2ban][1] 是一款用于保护你的服务器免于暴力攻击的入侵保护软件。fail2ban 用 python 写成,并广泛用于很多服务器上。fail2ban 会扫描日志文件和 IP 黑名单来显示恶意软件、过多的密码失败尝试、web 服务器利用、wordpress 插件攻击和其他漏洞。如果你已经安装并使用了 fail2ban 来保护你的 web 服务器,你也许会想知道如何在 CentOS 6、CentOS 7、RHEL 6、RHEL 7 和 Oracle Linux 6/7 中找到被 fail2ban 阻止的 IP,或者你想将 ip 从 fail2ban 监狱中移除。

|

||||

|

||||

### 如何列出被禁止的IP ###

|

||||

### 如何列出被禁止的 IP ###

|

||||

|

||||

要查看所有被禁止的ip地址,运行下面的命令:

|

||||

要查看所有被禁止的 ip 地址,运行下面的命令:

|

||||

|

||||

# iptables -L

|

||||

Chain INPUT (policy ACCEPT)

|

||||

@ -40,19 +40,19 @@

|

||||

REJECT all -- 104.194.26.205 anywhere reject-with icmp-port-unreachable

|

||||

RETURN all -- anywhere anywhere

|

||||

|

||||

### 如何从Fail2ban中移除IP ###

|

||||

### 如何从 Fail2ban 中移除 IP ###

|

||||

|

||||

# iptables -D f2b-NoAuthFailures -s banned_ip -j REJECT

|

||||

|

||||

我希望这篇教程可以给你在CentOS 6、CentOS 7、RHEL 6、RHEL 7 和 Oracle Linux 6/7中移除被禁止的ip一些指导。

|

||||

我希望这篇教程可以给你在 CentOS 6、CentOS 7、RHEL 6、RHEL 7 和 Oracle Linux 6/7 中移除被禁止的 ip 一些指导。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.ehowstuff.com/how-to-remove-banned-ip-from-fail2ban-on-centos/

|

||||

|

||||

作者:[skytech][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,44 +0,0 @@

|

||||

Let's Encrypt:Entering Public Beta

|

||||

================================================================================

|

||||

We’re happy to announce that Let’s Encrypt has entered Public Beta. Invitations are no longer needed in order to get free

|

||||

certificates from Let’s Encrypt.

|

||||

|

||||

It’s time for the Web to take a big step forward in terms of security and privacy. We want to see HTTPS become the default.

|

||||

Let’s Encrypt was built to enable that by making it as easy as possible to get and manage certificates.

|

||||

|

||||

We’d like to thank everyone who participated in the Limited Beta. Let’s Encrypt issued over 26,000 certificates during the

|

||||

Limited Beta period. This allowed us to gain valuable insight into how our systems perform, and to be confident about moving

|

||||

to Public Beta.

|

||||

|

||||

We’d also like to thank all of our [sponsors][1] for their support. We’re happy to have announced earlier today that

|

||||

[Facebook is our newest Gold sponsor][2]/

|

||||

|

||||

We have more work to do before we’re comfortable dropping the beta label entirely, particularly on the client experience.

|

||||

Automation is a cornerstone of our strategy, and we need to make sure that the client works smoothly and reliably on a

|

||||

wide range of platforms. We’ll be monitoring feedback from users closely, and making improvements as quickly as possible.

|

||||

|

||||

Instructions for getting a certificate with the [Let’s Encrypt client][3] can be found [here][4].

|

||||

|

||||

[Let’s Encrypt Community Support][5] is an invaluable resource for our community, we strongly recommend making use of the

|

||||

site if you have any questions about Let’s Encrypt.

|

||||

|

||||

Let’s Encrypt depends on support from a wide variety of individuals and organizations. Please consider [getting involved][6]

|

||||

, and if your company or organization would like to sponsor Let’s Encrypt please email us at [sponsor@letsencrypt.org][7].

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://letsencrypt.org/2015/12/03/entering-public-beta.html

|

||||

|

||||

作者:[Josh Aas][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://letsencrypt.org/2015/12/03/entering-public-beta.html

|

||||

[1]:https://letsencrypt.org/sponsors/

|

||||

[2]:https://letsencrypt.org/2015/12/03/facebook-sponsorship.html

|

||||

[3]:https://github.com/letsencrypt/letsencrypt

|

||||

[4]:https://letsencrypt.readthedocs.org/en/latest/

|

||||

[5]:https://community.letsencrypt.org/

|

||||

[6]:https://letsencrypt.org/getinvolved/

|

||||

[7]:mailto:sponsor@letsencrypt.org

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by H-mudcup

|

||||

Great Open Source Collaborative Editing Tools

|

||||

================================================================================

|

||||

In a nutshell, collaborative writing is writing done by more than one person. There are benefits and risks of collaborative working. Some of the benefits include a more integrated / co-ordinated approach, better use of existing resources, and a stronger, united voice. For me, the greatest advantage is one of the most transparent. That's when I need to take colleagues' views. Sending files back and forth between colleagues is inefficient, causes unnecessary delays and leaves people (i.e. me) unhappy with the whole notion of collaboration. With good collaborative software, I can share notes, data and files, and use comments to share thoughts in real-time or asynchronously. Working together on documents, images, video, presentations, and tasks is made less of a chore.

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

translating by tastynoodle

|

||||

5 best open source board games to play online

|

||||

================================================================================

|

||||

I have always had a fascination with board games, in part because they are a device of social interaction, they challenge the mind and, most importantly, they are great fun to play. In my misspent youth, myself and a group of friends gathered together to escape the horrors of the classroom, and indulge in a little escapism. The time provided an outlet for tension and rivalry. Board games help teach diplomacy, how to make and break alliances, bring families and friends together, and learn valuable lessons.

|

||||

|

||||

@ -1,64 +0,0 @@

|

||||

eSpeak: Text To Speech Tool For Linux

|

||||

================================================================================

|

||||

|

||||

|

||||

[eSpeak][1] is a command line tool for Linux that converts text to speech. This is a compact speech synthesizer that provides support to English and many other languages. It is written in C.

|

||||

|

||||

eSpeak reads the text from the standard input or the input file. The voice generated, however, is nowhere close to a human voice. But it is still a compact and handy tool if you want to use it in your projects.

|

||||

|

||||

Some of the main features of eSpeak are:

|

||||

|

||||

- A command line tool for Linux and Windows

|

||||

- Speaks text from a file or from stdin

|

||||

- Shared library version for use by other programs

|

||||

- SAPI5 version for Windows, so it can be used with screen-readers and other programs that support the Windows SAPI5 interface.

|

||||

- Ported to other platforms, including Android, Mac OSX etc.

|

||||

- Several voice characteristics to choose from

|

||||

- speech output can be saved as [.WAV file][2]

|

||||

- SSML ([Speech Synthesis Markup Language][3]) is supported partially along with HTML

|

||||

- Tiny in size, the complete program with language support etc is under 2 MB.

|

||||

- Can translate text into phoneme codes, so it could be adapted as a front end for another speech synthesis engine.

|

||||

- Development tools available for producing and tuning phoneme data.

|

||||

|

||||

### Install eSpeak ###

|

||||

|

||||

To install eSpeak in Ubuntu based system, use the command below in a terminal:

|

||||

|

||||

sudo apt-get install espeak

|

||||

|

||||

eSpeak is an old tool and I presume that it should be available in the repositories of other Linux distributions such as Arch Linux, Fedora etc. You can install eSpeak easily using dnf, pacman etc.

|

||||

|

||||

To use eSpeak, just use it like: espeak and press enter to hear it aloud. Use Ctrl+C to close the running program.

|

||||

|

||||

|

||||

|

||||

There are several other options available. You can browse through them through the help section of the program.

|

||||

|

||||

### GUI version: Gespeaker ###

|

||||

|

||||

If you prefer the GUI version over the command line, you can install Gespeaker that provides a GTK front end to eSpeak.

|

||||

|

||||

Use the command below to install Gespeaker:

|

||||

|

||||

sudo apt-get install gespeaker

|

||||

|

||||

The interface is straightforward and easy to use. You can explore it all by yourself.

|

||||

|

||||

|

||||

|

||||

While such tools might not be useful for general computing need, it could be handy if you are working on some projects where text to speech conversion is required. I let you decide the usage of this speech synthesizer.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://itsfoss.com/espeak-text-speech-linux/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://itsfoss.com/author/abhishek/

|

||||

[1]:http://espeak.sourceforge.net/

|

||||

[2]:http://en.wikipedia.org/wiki/WAV

|

||||

[3]:http://en.wikipedia.org/wiki/Speech_Synthesis_Markup_Language

|

||||

@ -1,284 +0,0 @@

|

||||

Review: 5 memory debuggers for Linux coding

|

||||

================================================================================

|

||||

|

||||

Credit: [Moini][1]

|

||||

|

||||

As a programmer, I'm aware that I tend to make mistakes -- and why not? Even programmers are human. Some errors are detected during code compilation, while others get caught during software testing. However, a category of error exists that usually does not get detected at either of these stages and that may cause the software to behave unexpectedly -- or worse, terminate prematurely.

|

||||

|

||||

If you haven't already guessed it, I am talking about memory-related errors. Manually debugging these errors can be not only time-consuming but difficult to find and correct. Also, it's worth mentioning that these errors are surprisingly common, especially in software written in programming languages like C and C++, which were designed for use with [manual memory management][2].

|

||||

|

||||

Thankfully, several programming tools exist that can help you find memory errors in your software programs. In this roundup, I assess five popular, free and open-source memory debuggers that are available for Linux: Dmalloc, Electric Fence, Memcheck, Memwatch and Mtrace. I've used all five in my day-to-day programming, and so these reviews are based on practical experience.

|

||||

|

||||

eviews are based on practical experience.

|

||||

|

||||

### [Dmalloc][3] ###

|

||||

|

||||

**Developer**: Gray Watson

|

||||

**Reviewed version**: 5.5.2

|

||||

**Linux support**: All flavors

|

||||

**License**: Creative Commons Attribution-Share Alike 3.0 License

|

||||

|

||||

Dmalloc is a memory-debugging tool developed by Gray Watson. It is implemented as a library that provides wrappers around standard memory management functions like **malloc(), calloc(), free()** and more, enabling programmers to detect problematic code.

|

||||

|

||||

|

||||

Dmalloc

|

||||

|

||||

As listed on the tool's Web page, the debugging features it provides includes memory-leak tracking, [double free][4] error tracking and [fence-post write detection][5]. Other features include file/line number reporting, and general logging of statistics.

|

||||

|

||||

#### What's new ####

|

||||

|

||||

Version 5.5.2 is primarily a [bug-fix release][6] containing corrections for a couple of build and install problems.

|

||||

|

||||

#### What's good about it ####

|

||||

|

||||

The best part about Dmalloc is that it's extremely configurable. For example, you can configure it to include support for C++ programs as well as threaded applications. A useful functionality it provides is runtime configurability, which means that you can easily enable/disable the features the tool provides while it is being executed.

|

||||

|

||||

You can also use Dmalloc with the [GNU Project Debugger (GDB)][7] -- just add the contents of the dmalloc.gdb file (located in the contrib subdirectory in Dmalloc's source package) to the .gdbinit file in your home directory.

|

||||

|

||||

Another thing that I really like about Dmalloc is its extensive documentation. Just head to the [documentation section][8] on its official website, and you'll get everything from how to download, install, run and use the library to detailed descriptions of the features it provides and an explanation of the output file it produces. There's also a section containing solutions to some common problems.

|

||||

|

||||

#### Other considerations ####

|

||||

|

||||

Like Mtrace, Dmalloc requires programmers to make changes to their program's source code. In this case you may, at the very least, want to add the **dmalloc.h** header, because it allows the tool to report the file/line numbers of calls that generate problems, something that is very useful as it saves time while debugging.

|

||||

|

||||

In addition, the Dmalloc library, which is produced after the package is compiled, needs to be linked with your program while the program is being compiled.

|

||||

|

||||

However, complicating things somewhat is the fact that you also need to set an environment variable, dubbed **DMALLOC_OPTION**, that the debugging tool uses to configure the memory debugging features -- as well as the location of the output file -- at runtime. While you can manually assign a value to the environment variable, beginners may find that process a bit tough, given that the Dmalloc features you want to enable are listed as part of that value, and are actually represented as a sum of their respective hexadecimal values -- you can read more about it [here][9].

|

||||

|

||||

An easier way to set the environment variable is to use the [Dmalloc Utility Program][10], which was designed for just that purpose.

|

||||

|

||||

#### Bottom line ####

|

||||

|

||||

Dmalloc's real strength lies in the configurability options it provides. It is also highly portable, having being successfully ported to many OSes, including AIX, BSD/OS, DG/UX, Free/Net/OpenBSD, GNU/Hurd, HPUX, Irix, Linux, MS-DOG, NeXT, OSF, SCO, Solaris, SunOS, Ultrix, Unixware and even Unicos (on a Cray T3E). Although the tool has a bit of a learning curve associated with it, the features it provides are worth it.

|

||||

|

||||

### [Electric Fence][15] ###

|

||||

|

||||

**Developer**: Bruce Perens

|

||||

**Reviewed version**: 2.2.3

|

||||

**Linux support**: All flavors

|

||||

**License**: GNU GPL (version 2)

|

||||

|

||||

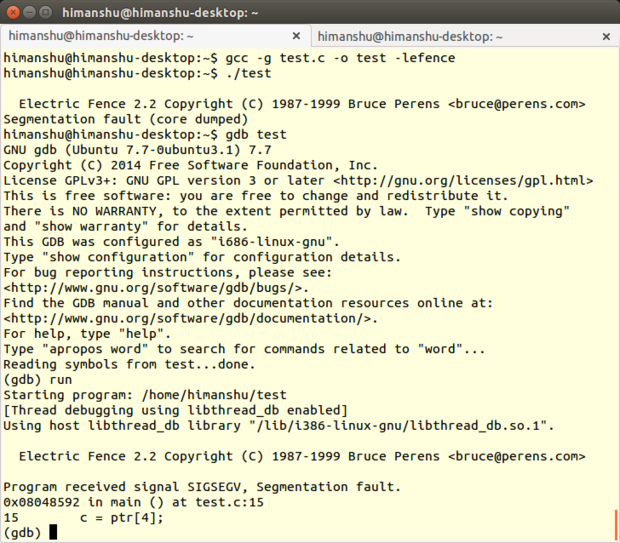

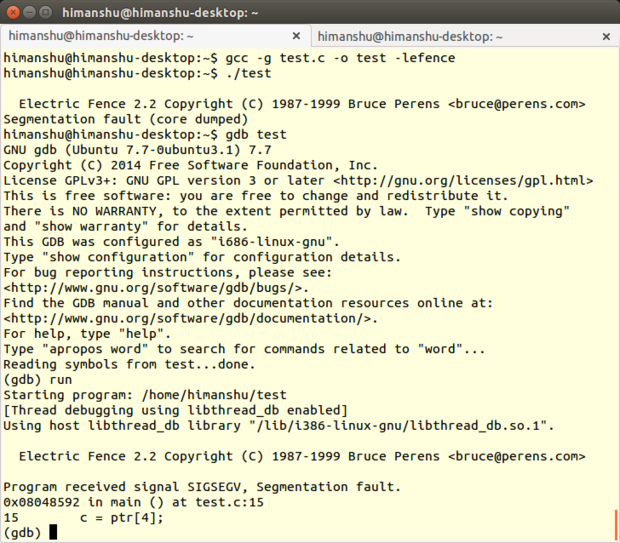

Electric Fence is a memory-debugging tool developed by Bruce Perens. It is implemented in the form of a library that your program needs to link to, and is capable of detecting overruns of memory allocated on a [heap][11] ) as well as memory accesses that have already been released.

|

||||

|

||||

|

||||

Electric Fence

|

||||

|

||||

As the name suggests, Electric Fence creates a virtual fence around each allocated buffer in a way that any illegal memory access results in a [segmentation fault][12]. The tool supports both C and C++ programs.

|

||||

|

||||

#### What's new ####

|

||||

|

||||

Version 2.2.3 contains a fix for the tool's build system, allowing it to actually pass the -fno-builtin-malloc option to the [GNU Compiler Collection (GCC)][13].

|

||||

|

||||

#### What's good about it ####

|

||||

|

||||

The first thing that I liked about Electric Fence is that -- unlike Memwatch, Dmalloc and Mtrace -- it doesn't require you to make any changes in the source code of your program. You just need to link your program with the tool's library during compilation.

|

||||

|

||||

Secondly, the way the debugging tool is implemented makes sure that a segmentation fault is generated on the very first instruction that causes a bounds violation, which is always better than having the problem detected at a later stage.

|

||||

|

||||

Electric Fence always produces a copyright message in output irrespective of whether an error was detected or not. This behavior is quite useful, as it also acts as a confirmation that you are actually running an Electric Fence-enabled version of your program.

|

||||

|

||||

#### Other considerations ####

|

||||

|

||||

On the other hand, what I really miss in Electric Fence is the ability to detect memory leaks, as it is one of the most common and potentially serious problems that software written in C/C++ has. In addition, the tool cannot detect overruns of memory allocated on the stack, and is not thread-safe.

|

||||

|

||||

Given that the tool allocates an inaccessible virtual memory page both before and after a user-allocated memory buffer, it ends up consuming a lot of extra memory if your program makes too many dynamic memory allocations.

|

||||

|

||||

Another limitation of the tool is that it cannot explicitly tell exactly where the problem lies in your programs' code -- all it does is produce a segmentation fault whenever it detects a memory-related error. To find out the exact line number, you'll have to debug your Electric Fence-enabled program with a tool like [The Gnu Project Debugger (GDB)][14], which in turn depends on the -g compiler option to produce line numbers in output.

|

||||

|

||||

Finally, although Electric Fence is capable of detecting most buffer overruns, an exception is the scenario where the allocated buffer size is not a multiple of the word size of the system -- in that case, an overrun (even if it's only a few bytes) won't be detected.

|

||||

|

||||

#### Bottom line ####

|

||||

|

||||

Despite all its limitations, where Electric Fence scores is the ease of use -- just link your program with the tool once, and it'll alert you every time it detects a memory issue it's capable of detecting. However, as already mentioned, the tool requires you to use a source-code debugger like GDB.

|

||||

|

||||

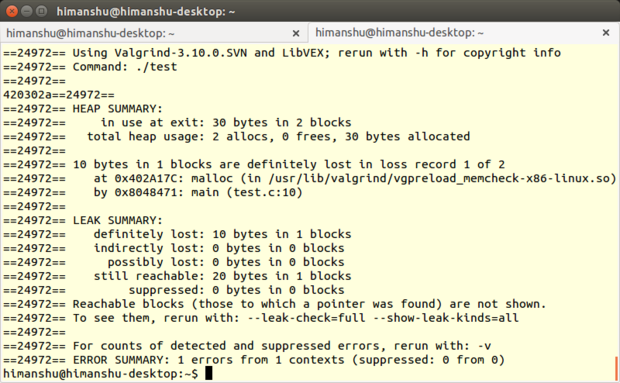

### [Memcheck][16] ###

|

||||

|

||||

**Developer**: [Valgrind Developers][17]

|

||||

**Reviewed version**: 3.10.1

|

||||

**Linux support**: All flavors

|

||||

**License**: GPL

|

||||

|

||||

[Valgrind][18] is a suite that provides several tools for debugging and profiling Linux programs. Although it works with programs written in many different languages -- such as Java, Perl, Python, Assembly code, Fortran, Ada and more -- the tools it provides are largely aimed at programs written in C and C++.

|

||||

|

||||

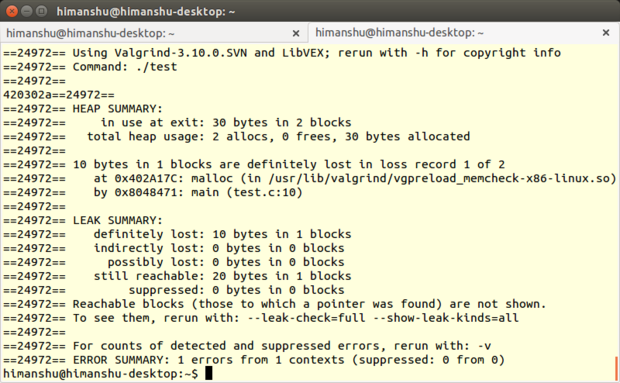

The most popular Valgrind tool is Memcheck, a memory-error detector that can detect issues such as memory leaks, invalid memory access, uses of undefined values and problems related to allocation and deallocation of heap memory.

|

||||

|

||||

#### What's new ####

|

||||

|

||||

This [release][19] of the suite (3.10.1) is a minor one that primarily contains fixes to bugs reported in version 3.10.0. In addition, it also "backports fixes for all reported missing AArch64 ARMv8 instructions and syscalls from the trunk."

|

||||

|

||||

#### What's good about it ####

|

||||

|

||||

Memcheck, like all other Valgrind tools, is basically a command line utility. It's very easy to use: If you normally run your program on the command line in a form such as prog arg1 arg2, you just need to add a few values, like this: valgrind --leak-check=full prog arg1 arg2.

|

||||

|

||||

|

||||

Memcheck

|

||||

|

||||

(Note: You don't need to mention Memcheck anywhere in the command line because it's the default Valgrind tool. However, you do need to initially compile your program with the -g option -- which adds debugging information -- so that Memcheck's error messages include exact line numbers.)

|

||||

|

||||

What I really like about Memcheck is that it provides a lot of command line options (such as the --leak-check option mentioned above), allowing you to not only control how the tool works but also how it produces the output.

|

||||

|

||||

For example, you can enable the --track-origins option to see information on the sources of uninitialized data in your program. Enabling the --show-mismatched-frees option will let Memcheck match the memory allocation and deallocation techniques. For code written in C language, Memcheck will make sure that only the free() function is used to deallocate memory allocated by malloc(), while for code written in C++, the tool will check whether or not the delete and delete[] operators are used to deallocate memory allocated by new and new[], respectively. If a mismatch is detected, an error is reported.

|

||||

|

||||

But the best part, especially for beginners, is that the tool even produces suggestions about which command line option the user should use to make the output more meaningful. For example, if you do not use the basic --leak-check option, it will produce an output suggesting: "Rerun with --leak-check=full to see details of leaked memory." And if there are uninitialized variables in the program, the tool will generate a message that says, "Use --track-origins=yes to see where uninitialized values come from."

|

||||

|

||||

Another useful feature of Memcheck is that it lets you [create suppression files][20], allowing you to suppress certain errors that you can't fix at the moment -- this way you won't be reminded of them every time the tool is run. It's worth mentioning that there already exists a default suppression file that Memcheck reads to suppress errors in the system libraries, such as the C library, that come pre-installed with your OS. You can either create a new suppression file for your use, or edit the existing one (usually /usr/lib/valgrind/default.supp).

|

||||

|

||||

For those seeking advanced functionality, it's worth knowing that Memcheck can also [detect memory errors][21] in programs that use [custom memory allocators][22]. In addition, it also provides [monitor commands][23] that can be used while working with Valgrind's built-in gdbserver, as well as a [client request mechanism][24] that allows you not only to tell the tool facts about the behavior of your program, but make queries as well.

|

||||

|

||||

#### Other considerations ####

|

||||

|

||||

While there's no denying that Memcheck can save you a lot of debugging time and frustration, the tool uses a lot of memory, and so can make your program execution significantly slower (around 20 to 30 times, [according to the documentation][25]).

|

||||

|

||||

Aside from this, there are some other limitations, too. According to some user comments, Memcheck apparently isn't [thread-safe][26]; it doesn't detect [static buffer overruns][27]). Also, there are some Linux programs, like [GNU Emacs][28], that currently do not work with Memcheck.

|

||||

|

||||

If you're interested in taking a look, an exhaustive list of Valgrind's limitations can be found [here][29].

|

||||

|

||||

#### Bottom line ####

|

||||

|

||||

Memcheck is a handy memory-debugging tool for both beginners as well as those looking for advanced features. While it's very easy to use if all you need is basic debugging and error checking, there's a bit of learning curve if you want to use features like suppression files or monitor commands.

|

||||

|

||||

Although it has a long list of limitations, Valgrind (and hence Memcheck) claims on its site that it is used by [thousands of programmers][30] across the world -- the team behind the tool says it's received feedback from users in over 30 countries, with some of them working on projects with up to a whopping 25 million lines of code.

|

||||

|

||||

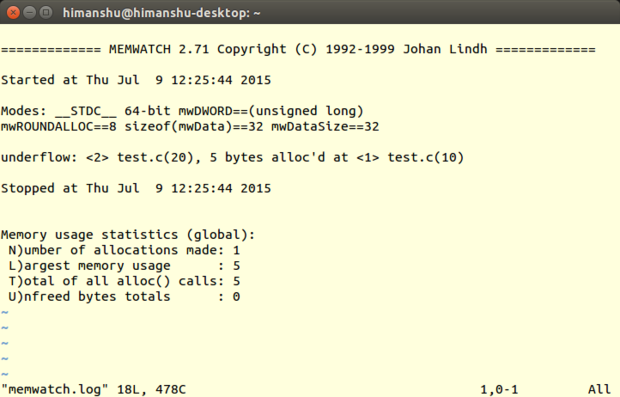

### [Memwatch][31] ###

|

||||

|

||||

**Developer**: Johan Lindh

|

||||

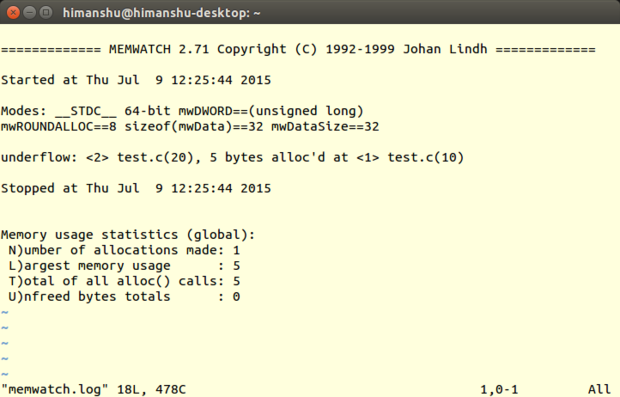

**Reviewed version**: 2.71

|

||||

**Linux support**: All flavors

|

||||

**License**: GNU GPL

|

||||

|

||||

Memwatch is a memory-debugging tool developed by Johan Lindh. Although it's primarily a memory-leak detector, it is also capable (according to its Web page) of detecting other memory-related issues like [double-free error tracking and erroneous frees][32], buffer overflow and underflow, [wild pointer][33] writes, and more.

|

||||

|

||||

The tool works with programs written in C. Although you can also use it with C++ programs, it's not recommended (according to the Q&A file that comes with the tool's source package).

|

||||

|

||||

#### What's new ####

|

||||

|

||||

This version adds ULONG_LONG_MAX to detect whether a program is 32-bit or 64-bit.

|

||||

|

||||

#### What's good about it ####

|

||||

|

||||

Like Dmalloc, Memwatch comes with good documentation. You can refer to the USING file if you want to learn things like how the tool works; how it performs initialization, cleanup and I/O operations; and more. Then there is a FAQ file that is aimed at helping users in case they face any common error while using Memcheck. Finally, there is a test.c file that contains a working example of the tool for your reference.

|

||||

|

||||

|

||||

Memwatch

|

||||

|

||||

Unlike Mtrace, the log file to which Memwatch writes the output (usually memwatch.log) is in human-readable form. Also, instead of truncating, Memwatch appends the memory-debugging output to the file each time the tool is run, allowing you to easily refer to the previous outputs should the need arise.

|

||||

|

||||

It's also worth mentioning that when you execute your program with Memwatch enabled, the tool produces a one-line output on [stdout][34] informing you that some errors were found -- you can then head to the log file for details. If no such error message is produced, you can rest assured that the log file won't contain any mistakes -- this actually saves time if you're running the tool several times.

|

||||

|

||||

Another thing that I liked about Memwatch is that it also provides a way through which you can capture the tool's output from within the code, and handle it the way you like (refer to the mwSetOutFunc() function in the Memwatch source code for more on this).

|

||||

|

||||

#### Other considerations ####

|

||||

|

||||

Like Mtrace and Dmalloc, Memwatch requires you to add extra code to your source file -- you have to include the memwatch.h header file in your code. Also, while compiling your program, you need to either compile memwatch.c along with your program's source files or include the object module from the compile of the file, as well as define the MEMWATCH and MW_STDIO variables on the command line. Needless to say, the -g compiler option is also required for your program if you want exact line numbers in the output.

|

||||

|

||||

There are some features that it doesn't contain. For example, the tool cannot detect attempts to write to an address that has already been freed or read data from outside the allocated memory. Also, it's not thread-safe. Finally, as I've already pointed out in the beginning, there is no guarantee on how the tool will behave if you use it with programs written in C++.

|

||||

|

||||

#### Bottom line ####

|

||||

|

||||

Memcheck can detect many memory-related problems, making it a handy debugging tool when dealing with projects written in C. Given that it has a very small source code, you can learn how the tool works, debug it if the need arises, and even extend or update its functionality as per your requirements.

|

||||

|

||||

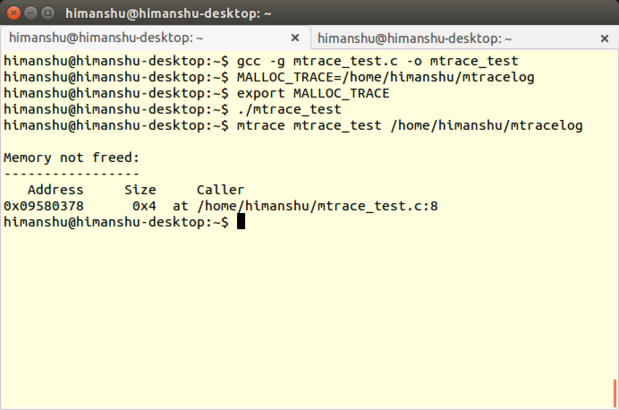

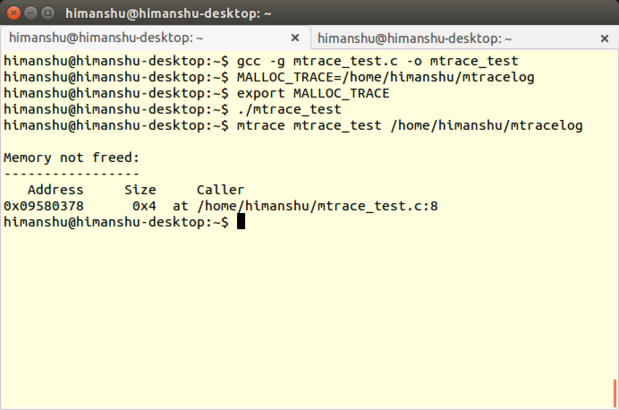

### [Mtrace][35] ###

|

||||

|

||||

**Developers**: Roland McGrath and Ulrich Drepper

|

||||

**Reviewed version**: 2.21

|

||||

**Linux support**: All flavors

|

||||

**License**: GNU LGPL

|

||||

|

||||

Mtrace is a memory-debugging tool included in [the GNU C library][36]. It works with both C and C++ programs on Linux, and detects memory leaks caused by unbalanced calls to the malloc() and free() functions.

|

||||

|

||||

|

||||

Mtrace

|

||||

|

||||

The tool is implemented in the form of a function called mtrace(), which traces all malloc/free calls made by a program and logs the information in a user-specified file. Because the file contains data in computer-readable format, a Perl script -- also named mtrace -- is used to convert and display it in human-readable form.

|

||||

|

||||

#### What's new ####

|

||||

|

||||

[The Mtrace source][37] and [the Perl file][38] that now come with the GNU C library (version 2.21) add nothing new to the tool aside from an update to the copyright dates.

|

||||

|

||||

#### What's good about it ####

|

||||

|

||||

The best part about Mtrace is that the learning curve for it isn't steep; all you need to understand is how and where to add the mtrace() -- and the corresponding muntrace() -- function in your code, and how to use the Mtrace Perl script. The latter is very straightforward -- all you have to do is run the mtrace() <program-executable> <log-file-generated-upon-program-execution> command. (For an example, see the last command in the screenshot above.)

|

||||

|

||||

Another thing that I like about Mtrace is that it's scalable -- which means that you can not only use it to debug a complete program, but can also use it to detect memory leaks in individual modules of the program. Just call the mtrace() and muntrace() functions within each module.

|

||||

|

||||

Finally, since the tool is triggered when the mtrace() function -- which you add in your program's source code -- is executed, you have the flexibility to enable the tool dynamically (during program execution) [using signals][39].

|

||||

|

||||

#### Other considerations ####

|

||||

|

||||

Because the calls to mtrace() and mauntrace() functions -- which are declared in the mcheck.h file that you need to include in your program's source -- are fundamental to Mtrace's operation (the mauntrace() function is not [always required][40]), the tool requires programmers to make changes in their code at least once.

|

||||

|

||||

Be aware that you need to compile your program with the -g option (provided by both the [GCC][41] and [G++][42] compilers), which enables the debugging tool to display exact line numbers in the output. In addition, some programs (depending on how big their source code is) can take a long time to compile. Finally, compiling with -g increases the size of the executable (because it produces extra information for debugging), so you have to remember that the program needs to be recompiled without -g after the testing has been completed.

|

||||

|

||||

To use Mtrace, you need to have some basic knowledge of environment variables in Linux, given that the path to the user-specified file -- which the mtrace() function uses to log all the information -- has to be set as a value for the MALLOC_TRACE environment variable before the program is executed.

|

||||

|

||||

Feature-wise, Mtrace is limited to detecting memory leaks and attempts to free up memory that was never allocated. It can't detect other memory-related issues such as illegal memory access or use of uninitialized memory. Also, [there have been complaints][43] that it's not [thread-safe][44].

|

||||

|

||||

### Conclusions ###

|

||||

|

||||

Needless to say, each memory debugger that I've discussed here has its own qualities and limitations. So, which one is best suited for you mostly depends on what features you require, although ease of setup and use might also be a deciding factor in some cases.

|

||||

|

||||

Mtrace is best suited for cases where you just want to catch memory leaks in your software program. It can save you some time, too, since the tool comes pre-installed on your Linux system, something which is also helpful in situations where the development machines aren't connected to the Internet or you aren't allowed to download a third party tool for any kind of debugging.

|

||||

|

||||

Dmalloc, on the other hand, can not only detect more error types compared to Mtrace, but also provides more features, such as runtime configurability and GDB integration. Also, unlike any other tool discussed here, Dmalloc is thread-safe. Not to mention that it comes with detailed documentation, making it ideal for beginners.

|

||||

|

||||

Although Memwatch comes with even more comprehensive documentation than Dmalloc, and can detect even more error types, you can only use it with software written in the C programming language. One of its features that stands out is that it lets you handle its output from within the code of your program, something that is helpful in case you want to customize the format of the output.

|

||||

|

||||

If making changes to your program's source code is not what you want, you can use Electric Fence. However, keep in mind that it can only detect a couple of error types, and that doesn't include memory leaks. Plus, you also need to know GDB basics to make the most out of this memory-debugging tool.

|

||||

|

||||