mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

commit

c7debee9fc

@ -1,4 +0,0 @@

|

||||

# Linux中国翻译规范

|

||||

1. 翻译中出现的专有名词,可参见Dict.md中的翻译。

|

||||

2. 英文人名,如无中文对应译名,一般不译。

|

||||

2. 缩写词,一般不须翻译,可考虑旁注中文全名。

|

||||

@ -1,42 +1,49 @@

|

||||

知识共享是怎样造福艺术家和大企业的

|

||||

你所不知道的知识共享(CC)

|

||||

======

|

||||

|

||||

> 知识共享为艺术家提供访问权限和原始素材。大公司也从中受益。

|

||||

|

||||

|

||||

|

||||

我毕业于电影学院,毕业后在一所电影学校教书,之后进入一家主流电影工作室,我一直在从事电影相关的工作。创造业的方方面面面临着同一个问题:创造者需要原材料。有趣的是,自由文化运动提出了解决方案,具体来说是在自由文化运动中出现的知识共享组织。

|

||||

我毕业于电影学院,毕业后在一所电影学校教书,之后进入一家主流电影工作室,我一直在从事电影相关的工作。创意产业的方方面面面临着同一个问题:创作者需要原材料。有趣的是,自由文化运动提出了解决方案,具体来说是在自由文化运动中出现的<ruby>知识共享<rt>Creative Commons</rt></ruby>组织。

|

||||

|

||||

###知识共享能够为我们提供展示片段和小样

|

||||

### 知识共享能够为我们提供展示片段和小样

|

||||

|

||||

和其他事情一样,创造力也需要反复练习。幸运的是,在我刚开始接触电脑时,就在一本渲染工场的专业杂志中接触到了开源这个存在。当时我并不理解所谓的“开源”是什么,但我知道只有开源工具能帮助我在领域内稳定发展。对我来说,知识共享也是如此。知识共享可以为艺术家们提供充满丰富艺术资源的工作室。

|

||||

和其他事情一样,创造力也需要反复练习。幸运的是,在我刚开始接触电脑时,就在一本关于渲染工场的专业杂志中接触到了开源这个存在。当时我并不理解所谓的“开源”是什么,但我知道只有开源工具能帮助我在领域内稳定发展。对我来说,知识共享也是如此。知识共享可以为艺术家们提供充满丰富艺术资源的工作室。

|

||||

|

||||

我在电影学院任教时,经常需要给学生们准备练习编辑、录音、拟音、分级、评分的脚本。在 Jim Munroe 的独立作品 [Infest Wisely][1] 中和 [Vimeo][2] 上的知识共享里我总能找到我想要的。这些写实的脚本覆盖内容十分广泛,从独立影院出品到昂贵的高质的升降镜头(一般都会用无人机代替)都有。

|

||||

我在电影学院任教时,经常需要给学生们准备练习编辑、录音、拟音、分级、评分的示例录像。在 Jim Munroe 的独立作品 [Infest Wisely][1] 中和 [Vimeo][2] 上的知识共享内容里我总能找到我想要的。这些逼真的镜头覆盖内容十分广泛,从独立制作到昂贵的高品质的升降镜头(一般都会用无人机代替)都有。

|

||||

|

||||

对实验艺术来说,确有无尽可能。知识共享提供了丰富的底片材料,这些材料可以用来整合,混剪等等,可以满足一位视觉先锋能够想到的任何用途。

|

||||

|

||||

|

||||

在接触知识共享之前,如果我想要使用写实脚本,我只能用之前的学生和老师拍摄的或者直接使用版权库里的脚本,但这些都有很多局限性。

|

||||

对实验主义艺术来说,确有无尽可能。知识共享提供了丰富的素材,这些材料可以用来整合、混剪等等,可以满足一位视觉先锋能够想到的任何用途。

|

||||

|

||||

###坚守版权的底线很重要

|

||||

在接触知识共享之前,如果我想要使用写实镜头,如果在大学,只能用之前的学生和老师拍摄的或者直接使用版权库里的镜头,或者使用有受限的版权保护的镜头。

|

||||

|

||||

知识共享同样能够创造经济效益。在某大型计算机公司的渲染工场工作时,我负责在某些硬件设施上测试渲染的运行情况,而这个测试时刻面临着被搁置的风险。做这些测试时,我用的都是[大雄兔][3]的资源,因为这个电影和它的组件都是可以免费使用和分享的。如果没有这个小短片,在接触写实资源之前我都没法完成我的实验,因为对于一个计算机公司来说,雇佣一只3D艺术家来应召布景是不太现实的。

|

||||

### 坚守版权的底线很重要

|

||||

|

||||

令我震惊的是,与开源类似,知识共享已经用我们难以想象的方式支撑起了大公司。知识共享的使用或有或无地影响着公司的日常程序,但它填补了不足,让工作流程顺利进行。我没见到谁在他们的书中将流畅工作归功于知识共享的应用,但它确实无处不在。

|

||||

知识共享同样能够创造经济效益。在某大型计算机公司的渲染工场工作时,我负责在某些硬件设施上测试渲染的运行情况,而这个测试时刻面临着被搁置的风险。做这些测试时,我用的都是[大雄兔][3]的资源,因为这个电影和它的组件都是可以免费使用和分享的。如果没有这个小短片,在接触写实资源之前我都没法完成我的实验,因为对于一个计算机公司来说,雇佣一支 3D 艺术家团队来按需布景是不太现实的。

|

||||

|

||||

我也见过一些开放版权的电影,比如[辛特尔][4],在最近的电视节目中播放了它的短片,那时的电视比现在的网络媒体要火得多。

|

||||

令我震惊的是,与开源类似,知识共享已经用我们难以想象的方式支撑起了大公司。知识共享的使用可能会也可能不会影响公司的日常流程,但它填补了不足,让工作流程顺利进行。我没见到谁在他们的书中将流畅工作归功于知识共享的应用,但它确实无处不在。

|

||||

|

||||

###知识共享可以提供大量原材料

|

||||

|

||||

|

||||

艺术家需要原材料。画家需要颜料,画笔和画布。雕塑家需要陶土和工具。数字内容编辑师需要数字内容,无论它是剪贴画还是音效或者是电子游戏里的成品精灵。

|

||||

我也见过一些开放版权的电影,比如[辛特尔][4],在最近的电视节目中播放了它的短片,电视的分辨率已经超过了标准媒体。

|

||||

|

||||

### 知识共享可以提供大量原材料

|

||||

|

||||

艺术家需要原材料。画家需要颜料、画笔和画布。雕塑家需要陶土和工具。数字内容编辑师需要数字内容,无论它是剪贴画还是音效或者是电子游戏里的现成的精灵。

|

||||

|

||||

数字媒介赋予了人们超能力,让一个人就能完成需要一组人员才能完成的工作。事实上,我们大部分都好高骛远。我们想做高大上的项目,想让我们的成果不论是视觉上还是听觉上都无与伦比。我们想塑造的是宏大的世界,紧张的情节,能引起共鸣的作品,但我们所拥有的时间精力和技能与之都不匹配,达不到想要的效果。

|

||||

|

||||

是知识共享再一次拯救了我们,用 [Freesound.org][5], [Openclipart.org][6], [OpenGameArt.org][7] 等等网站上那些细小的开放版权艺术材料。通过知识共享,艺术家可以使用各种他们自己没办法创造的原材料,来完成他们原本完不成的工作。

|

||||

是知识共享再一次拯救了我们,在 [Freesound.org][5]、 [Openclipart.org][6]、 [OpenGameArt.org][7] 等等网站上都有大量的开放版权艺术材料。通过知识共享,艺术家可以使用各种他们自己没办法创造的原材料,来完成他们原本完不成的工作。

|

||||

|

||||

最神奇的是,不用自己投资,你放在网上给大家使用的原材料就能变成精美的作品,而这是你从没想过的。我在知识共享上面分享了很多音乐素材,它们现在用于无数的专辑和电子游戏里。有些人用了我的材料会通知我,有些是我自己发现的,所以这些材料的应用可能比我知道的还有多得多。有时我会偶然看到我亲手画的标志出现在我从没听说过的软件里。我见到过我为[开源网站][8]写的文章在别处发表,有的是论文的参考文献,白皮书或者参考资料中。

|

||||

最神奇的是,不用自己投资,你放在网上给大家使用的原材料就能变成精美的作品,而这是你从没想过的。我在知识共享上面分享了很多音乐素材,它们现在用于无数的专辑和电子游戏里。有些人用了我的材料会通知我,有些是我自己发现的,所以这些材料的应用可能比我知道的还有多得多。有时我会偶然看到我亲手画的标志出现在我从没听说过的软件里。我见到过我为 [Opensource.com][8] 写的文章在别处发表,有的是论文的参考文献,白皮书或者参考资料中。

|

||||

|

||||

###知识共享所代表的自由文化也是一种文化

|

||||

### 知识共享所代表的自由文化也是一种文化

|

||||

|

||||

“自由文化”这个说法过于累赘,文化,从概念上来说,是一个有机的整体。在这种文化中社会逐渐成长发展,从一个人到另一个。它是人与人之间的互动和思想交流。自由文化是自由缺失的现代世界里的特殊产物。

|

||||

|

||||

如果你也想对这样的局限进行反抗,想把你的思想、作品,你自己的文化分享给全世界的人,那么就来和我们一起,使用知识共享吧!

|

||||

如果你也想对这样的局限进行反抗,想把你的思想、作品、你自己的文化分享给全世界的人,那么就来和我们一起,使用知识共享吧!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -44,7 +51,7 @@ via: https://opensource.com/article/18/1/creative-commons-real-world

|

||||

|

||||

作者:[Seth Kenlon][a]

|

||||

译者:[Valoniakim](https://github.com/Valoniakim)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,9 +1,11 @@

|

||||

使用开放决策框架收集项目需求

|

||||

降低项目失败率的三个原则

|

||||

======

|

||||

|

||||

> 透明和包容性的项目要求可以降低您的失败率。 以下是如何协作收集它们。

|

||||

|

||||

|

||||

|

||||

众所周知,明确、简洁和可衡量的需求会带来更多成功的项目。一项关于[麦肯锡与牛津大学][1]的大型项目的研究表明:“平均而言,大型 IT 项目超出预算 45%,时间每推移 7%,价值就比预期低 56% “。该研究还表明,造成这种失败的一些原因是“模糊的业务目标,不同步的利益相关者以及过度的返工”。

|

||||

众所周知,明确、简洁和可衡量的需求会带来更多成功的项目。一项[麦肯锡与牛津大学][1]的关于大型项目的研究表明:“平均而言,大型 IT 项目超出预算 45%,时间每推移 7%,价值就比预期低 56% 。”该研究还表明,造成这种失败的一些原因是“模糊的业务目标,不同步的利益相关者以及过度的返工。”

|

||||

|

||||

业务分析师经常发现自己通过持续对话来构建这些需求。为此,他们必须吸引多个利益相关方,并确保参与者提供明确的业务目标。这样可以减少返工,提高更多项目的成功率。

|

||||

|

||||

@ -29,7 +31,7 @@ via: https://opensource.com/open-organization/18/2/constructing-project-requirem

|

||||

|

||||

作者:[Tracy Buckner][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -37,4 +39,4 @@ via: https://opensource.com/open-organization/18/2/constructing-project-requirem

|

||||

[1]:http://calleam.com/WTPF/?page_id=1445

|

||||

[2]:https://opensource.com/open-organization/resources/open-decision-framework

|

||||

[3]:https://opensource.com/open-organization/resources/open-org-definition

|

||||

[4]:https://opensource.com/open-organization/16/6/introducing-open-decision-framework

|

||||

[4]:https://opensource.com/open-organization/16/6/introducing-open-decision-framework

|

||||

@ -1,10 +1,11 @@

|

||||

12 条实用的 zypper 命令范例

|

||||

======

|

||||

zypper 是 Suse Linux 系统的包和补丁管理器,你可以根据下面的 12 条附带输出示例的实用范例来学习 zypper 命令的使用。

|

||||

|

||||

`zypper` 是 Suse Linux 系统的包和补丁管理器,你可以根据下面的 12 条附带输出示例的实用范例来学习 `zypper` 命令的使用。

|

||||

|

||||

![zypper 命令示例][1]

|

||||

|

||||

Suse Linux 使用 zypper 进行包管理,其是一个由 [ZYpp 包管理引擎][2]提供技术支持的包管理系统。在此篇文章中我们将分享 12 条附带输出示例的实用 zypper 命令,能帮助你处理日常的系统管理任务。

|

||||

Suse Linux 使用 `zypper` 进行包管理,其是一个由 [ZYpp 包管理引擎][2]提供的包管理系统。在此篇文章中我们将分享 12 条附带输出示例的实用 `zypper` 命令,能帮助你处理日常的系统管理任务。

|

||||

|

||||

不带参数的 `zypper` 命令将列出所有可用的选项,这比参考详细的 man 手册要容易上手得多。

|

||||

|

||||

@ -18,12 +19,12 @@ root@kerneltalks # zypper

|

||||

--help, -h 帮助

|

||||

--version, -V 输出版本号

|

||||

--promptids 输出 zypper 用户提示符列表

|

||||

--config, -c <file> 使用制定的配置文件来替代默认的

|

||||

--config, -c <file> 使用指定的配置文件来替代默认的

|

||||

--userdata <string> 在历史和插件中使用的用户自定义事务 id

|

||||

--quiet, -q 忽略正常输出,只打印错误信息

|

||||

--verbose, -v 增加冗长程度

|

||||

--color

|

||||

--no-color 是否启用彩色模式如果 tty 支持

|

||||

--no-color 是否启用彩色模式,如果 tty 支持的话

|

||||

--no-abbrev, -A 表格中的文字不使用缩写

|

||||

--table-style, -s 表格样式(整型)

|

||||

--non-interactive, -n 不询问任何选项,自动使用默认答案

|

||||

@ -43,7 +44,7 @@ root@kerneltalks # zypper

|

||||

--gpg-auto-import-keys 自动信任并导入新仓库的签名密钥

|

||||

--plus-repo, -p <URI> 使用附加仓库

|

||||

--plus-content <tag> 另外使用禁用的仓库来提供特定的关键词

|

||||

尝试 '--plus-content debug' 选项来启用仓库

|

||||

尝试使用 '--plus-content debug' 选项来启用仓库

|

||||

--disable-repositories 不从仓库中读取元数据

|

||||

--no-refresh 不刷新仓库

|

||||

--no-cd 忽略 CD/DVD 中的仓库

|

||||

@ -55,11 +56,11 @@ root@kerneltalks # zypper

|

||||

--disable-system-resolvables

|

||||

不读取已安装包

|

||||

|

||||

命令:

|

||||

命令:

|

||||

help, ? 打印帮助

|

||||

shell, sh 允许多命令

|

||||

|

||||

仓库管理:

|

||||

仓库管理:

|

||||

repos, lr 列出所有自定义仓库

|

||||

addrepo, ar 添加一个新仓库

|

||||

removerepo, rr 移除指定仓库

|

||||

@ -68,14 +69,14 @@ root@kerneltalks # zypper

|

||||

refresh, ref 刷新所有仓库

|

||||

clean 清除本地缓存

|

||||

|

||||

服务管理:

|

||||

服务管理:

|

||||

services, ls 列出所有自定义服务

|

||||

addservice, as 添加一个新服务

|

||||

modifyservice, ms 修改指定服务

|

||||

removeservice, rs 移除指定服务

|

||||

refresh-services, refs 刷新所有服务

|

||||

|

||||

软件管理:

|

||||

软件管理:

|

||||

install, in 安装包

|

||||

remove, rm 移除包

|

||||

verify, ve 确认包依赖的完整性

|

||||

@ -83,7 +84,7 @@ root@kerneltalks # zypper

|

||||

install-new-recommends, inr

|

||||

安装由已安装包建议一并安装的新包

|

||||

|

||||

更新管理:

|

||||

更新管理:

|

||||

update, up 更新已安装包至更新版本

|

||||

list-updates, lu 列出可用更新

|

||||

patch 安装必要的补丁

|

||||

@ -91,7 +92,7 @@ root@kerneltalks # zypper

|

||||

dist-upgrade, dup 进行发行版更新

|

||||

patch-check, pchk 检查补丁

|

||||

|

||||

查询:

|

||||

查询:

|

||||

search, se 查找符合匹配模式的包

|

||||

info, if 展示特定包的完全信息

|

||||

patch-info 展示特定补丁的完全信息

|

||||

@ -103,27 +104,28 @@ root@kerneltalks # zypper

|

||||

products, pd 列出所有可用的产品

|

||||

what-provides, wp 列出提供特定功能的包

|

||||

|

||||

包锁定:

|

||||

包锁定:

|

||||

addlock, al 添加一个包锁定

|

||||

removelock, rl 移除一个包锁定

|

||||

locks, ll 列出当前的包锁定

|

||||

cleanlocks, cl 移除无用的锁定

|

||||

|

||||

其他命令:

|

||||

其他命令:

|

||||

versioncmp, vcmp 比较两个版本字符串

|

||||

targetos, tos 打印目标操作系统 ID 字符串

|

||||

licenses 打印已安装包的证书和 EULAs 报告

|

||||

download 使用命令行下载指定 rpm 包到本地目录

|

||||

source-download 下载所有已安装包的源码 rpm 包到本地目录

|

||||

|

||||

子命令:

|

||||

子命令:

|

||||

subcommand 列出可用子命令

|

||||

|

||||

输入 'zypper help <command>' 来获得特定命令的帮助。

|

||||

```

|

||||

##### 如何使用 zypper 安装包

|

||||

|

||||

`zypper` 通过 `in` 或 `install` 开关来在你的系统上安装包。它的用法与 [yum package installation][3] 相同。你只需要提供包名作为参数,包管理器(此处是 zypper)就会处理所有的依赖并与你指定的包一并安装。

|

||||

### 如何使用 zypper 安装包

|

||||

|

||||

`zypper` 通过 `in` 或 `install` 子命令来在你的系统上安装包。它的用法与 [yum 软件包安装][3] 相同。你只需要提供包名作为参数,包管理器(此处是 `zypper`)就会处理所有的依赖并与你指定的包一并安装。

|

||||

|

||||

```

|

||||

# zypper install telnet

|

||||

@ -147,11 +149,11 @@ Checking for file conflicts: ...................................................

|

||||

|

||||

以上是我们安装 `telnet` 包时的输出,供你参考。
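
For unattended installs, the `--non-interactive` (`-n`) global option from the help listing above can be combined with `install`. The wrapper below is only a sketch: `zinstall` and the `ZYPPER_DRY_RUN` switch are names made up for this example, not part of zypper.

```shell
#!/bin/sh
# Sketch: install packages without prompts, using zypper's --non-interactive option.
# ZYPPER_DRY_RUN is a hypothetical switch for this example; when set to 1 the
# command is printed instead of executed.
zinstall() {
    [ "$#" -gt 0 ] || { echo "usage: zinstall PACKAGE..." >&2; return 2; }
    if [ "${ZYPPER_DRY_RUN:-0}" = 1 ]; then
        echo "zypper --non-interactive install $*"
    else
        zypper --non-interactive install "$@"
    fi
}
```

Running `ZYPPER_DRY_RUN=1 zinstall telnet` shows the command that would be run, which is a cheap way to check a script before it touches the system.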

|

||||

|

||||

推荐阅读 : [在 YUM 和 APT 系统中安装包][3]

|

||||

推荐阅读:[在 YUM 和 APT 系统中安装包][3]

|

||||

|

||||

##### 如何使用 zypper 移除包

|

||||

### 如何使用 zypper 移除包

|

||||

|

||||

要在 Suse Linux 中擦除或移除包,使用 `zypper` 命令附带 `remove` 或 `rm` 开关。

|

||||

要在 Suse Linux 中擦除或移除包,使用 `zypper` 附带 `remove` 或 `rm` 子命令。

|

||||

|

||||

```

|

||||

root@kerneltalks # zypper rm telnet

|

||||

@ -167,13 +169,14 @@ After the operation, 113.3 KiB will be freed.

|

||||

Continue? [y/n/...? shows all options] (y): y

|

||||

(1/1) Removing telnet-1.2-165.63.x86_64 ..........................................................................................................................[done]

|

||||

```

|

||||

|

||||

我们在此处移除了先前安装的 telnet 包。

|

||||

|

||||

##### 使用 zypper 检查依赖或者认证已安装包的完整性

|

||||

### 使用 zypper 检查依赖或者认证已安装包的完整性

|

||||

|

||||

有时可以通过强制忽略依赖关系来安装软件包。`zypper` 使你能够扫描所有已安装的软件包并检查其依赖性。如果缺少任何依赖项,它将提供你安装或重新安装它的机会,从而保持已安装软件包的完整性。

|

||||

|

||||

使用附带 `verify` 或 `ve` 开关的 `zypper` 命令来检查已安装包的完整性。

|

||||

使用附带 `verify` 或 `ve` 子命令的 `zypper` 命令来检查已安装包的完整性。

|

||||

|

||||

```

|

||||

root@kerneltalks # zypper ve

|

||||

@ -184,9 +187,10 @@ Reading installed packages...

|

||||

|

||||

Dependencies of all installed packages are satisfied.

|

||||

```

|

||||

|

||||

在上面的输出中,你能够看到最后一行说明已安装包的所有依赖都已安装完全,并且无需更多操作。

|

||||

|

||||

##### 如何在 Suse Linux 中使用 zypper 下载包

|

||||

### 如何在 Suse Linux 中使用 zypper 下载包

|

||||

|

||||

`zypper` 提供了一种方法使得你能够将包下载到本地目录而不去安装它。你可以在其他具有同样配置的系统上使用这个已下载的软件包。包会被下载至 `/var/cache/zypp/packages/<repo>/<arch>/` 目录。

|

||||

|

||||

@ -206,13 +210,14 @@ total 52

|

||||

-rw-r--r-- 1 root root 53025 Feb 21 03:17 telnet-1.2-165.63.x86_64.rpm

|

||||

|

||||

```

|

||||

|

||||

你能看到我们使用 `zypper` 将 telnet 包下载到了本地。

|

||||

|

||||

推荐阅读 : [在 YUM 和 APT 系统中只下载包而不安装][4]

|

||||

推荐阅读:[在 YUM 和 APT 系统中只下载包而不安装][4]

|

||||

|

||||

##### 如何使用 zypper 列出可用包更新

|

||||

### 如何使用 zypper 列出可用包更新

|

||||

|

||||

`zypper` 允许你浏览已安装包的所有可用更新,以便你可以提前计划更新活动。使用 `list-updates` 或 `lu` 开关来显示已安装包的所有可用更新。

|

||||

`zypper` 允许你浏览已安装包的所有可用更新,以便你可以提前计划更新活动。使用 `list-updates` 或 `lu` 子命令来显示已安装包的所有可用更新。

|

||||

|

||||

```

|

||||

root@kerneltalks # zypper lu

|

||||

@ -229,11 +234,12 @@ v | SLE-Module-Containers12-Updates | containerd | 0.2.5+gitr6

|

||||

v | SLES12-SP3-Updates | crash | 7.1.8-4.3.1 | 7.1.8-4.6.2 | x86_64

|

||||

v | SLES12-SP3-Updates | rsync | 3.1.0-12.1 | 3.1.0-13.10.1 | x86_64

|

||||

```

|

||||

|

||||

输出特意被格式化以便于阅读。每一列分别代表包所属仓库名称、包名、已安装版本、可用的更新版本和架构。
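
Because the columns are separated by `|`, the listing is easy to post-process. The following is a minimal sketch, assuming the column order described above (status, repository, name, current version, available version, architecture); `pending_updates` is a made-up helper name, and the sample rows are copied from the output shown earlier.

```shell
#!/bin/sh
# Sketch: print "name current -> available" for each row of `zypper lu` style output.
pending_updates() {
    awk -F'|' '
        # Trim the padding around every column.
        { for (i = 1; i <= NF; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i) }
        # Data rows in the listing above carry "v" in the status column.
        $1 == "v" { print $3, $4, "->", $5 }
    '
}

# Sample rows taken from the listing; in real use, pipe `zypper lu` in instead.
pending_updates <<'EOF'
v | SLES12-SP3-Updates | crash | 7.1.8-4.3.1 | 7.1.8-4.6.2 | x86_64
v | SLES12-SP3-Updates | rsync | 3.1.0-12.1 | 3.1.0-13.10.1 | x86_64
EOF
```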

|

||||

|

||||

##### 在 Suse Linux 中列出和安装补丁

|

||||

### 在 Suse Linux 中列出和安装补丁

|

||||

|

||||

使用 `list-patches` 或 `lp` 开关来显示你的 Suse Linux 系统需要被应用的所有可用补丁。

|

||||

使用 `list-patches` 或 `lp` 子命令来显示你的 Suse Linux 系统需要被应用的所有可用补丁。

|

||||

|

||||

```

|

||||

root@kerneltalks # zypper lp

|

||||

@ -260,9 +266,9 @@ Found 37 applicable patches:

|

||||

|

||||

你可以通过发出 `zypper patch` 命令安装所有需要的补丁。

|

||||

|

||||

##### 如何使用 zypper 更新包

|

||||

### 如何使用 zypper 更新包

|

||||

|

||||

要使用 zypper 更新包,使用 `update` 或 `up` 开关后接包名。在上述列出的更新命令中,我们知道在我们的服务器上 `rsync` 包更新可用。让我们现在来更新它吧!

|

||||

要使用 `zypper` 更新包,使用 `update` 或 `up` 子命令后接包名。在上述列出的更新命令中,我们知道在我们的服务器上 `rsync` 包更新可用。让我们现在来更新它吧!

|

||||

|

||||

```

|

||||

root@kerneltalks # zypper update rsync

|

||||

@ -284,9 +290,9 @@ Checking for file conflicts: ...................................................

|

||||

(1/1) Installing: rsync-3.1.0-13.10.1.x86_64 .....................................................................................................................[done]

|

||||

```

|

||||

|

||||

##### 在 Suse Linux 上使用 zypper 查找包

|

||||

### 在 Suse Linux 上使用 zypper 查找包

|

||||

|

||||

如果你不确定包的全名也不要担心。你可以使用 zypper 附带 `se` 或 `search` 开关并提供查找字符串来查找包。

|

||||

如果你不确定包的全名也不要担心。你可以使用 `zypper` 附带的 `se` 或 `search` 子命令并提供查找字符串来查找包。

|

||||

|

||||

```

|

||||

root@kerneltalks # zypper se lvm

|

||||

@ -303,14 +309,15 @@ S | Name | Summary | Type

|

||||

| llvm-devel | Header Files for LLVM | package

|

||||

| lvm2 | Logical Volume Manager Tools | srcpackage

|

||||

i+ | lvm2 | Logical Volume Manager Tools | package

|

||||

| lvm2-devel | Development files for LVM2 | package

|

||||

|

||||

| lvm2-devel | Development files for LVM2 | package

|

||||

```

|

||||

在上述示例中我们查找了 `lvm` 字符串并得到了如上输出列表。你能在 zypper install/remove/update 命令中使用 `Name` 字段的名字。

|

||||

|

||||

##### 使用 zypper 检查已安装包信息

|

||||

在上述示例中我们查找了 `lvm` 字符串并得到了如上输出列表。你能在 `zypper install/remove/update` 命令中使用 `Name` 字段的名字。
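
To reuse the `Name` column in a script, the table can be filtered with standard tools. This is a sketch only: `installed_names` is a hypothetical helper, and it assumes the four-column layout (status, name, summary, type) shown in the search output above.

```shell
#!/bin/sh
# Sketch: list the names of installed binary packages from `zypper se` style output.
installed_names() {
    awk -F'|' '
        # Trim the padding around every column.
        { for (i = 1; i <= NF; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i) }
        # Installed packages have a status starting with "i" (for example "i+").
        $1 ~ /^i/ && $4 == "package" { print $2 }
    '
}

# Sample rows based on the search output above; in real use, pipe `zypper se lvm` in.
installed_names <<'EOF'
   | llvm-devel | Header Files for LLVM        | package
   | lvm2       | Logical Volume Manager Tools | srcpackage
i+ | lvm2       | Logical Volume Manager Tools | package
   | lvm2-devel | Development files for LVM2   | package
EOF
```

With the sample rows, only `lvm2` is printed, since it is the one row marked as installed.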

|

||||

|

||||

### 使用 zypper 检查已安装包信息

|

||||

|

||||

你能够使用 `zypper` 检查已安装包的详细信息。`info` 或 `if` 子命令将列出已安装包的信息。它也可以显示未安装包的详细信息,在该情况下,`Installed` 参数将返回 `No` 值。

|

||||

|

||||

你能够使用 zypper 检查已安装包的详细信息。`info` 或 `if` 开关将列出已安装包的信息。它也可以显示未安装包的详细信息,在该情况下,`Installed` 参数将返回 `No` 值。

|

||||

```

|

||||

root@kerneltalks # zypper info rsync

|

||||

Refreshing service 'SMT-http_smt-ec2_susecloud_net'.

|

||||

@ -343,9 +350,9 @@ Description :

|

||||

for backups and mirroring and as an improved copy command for everyday use.

|

||||

```

|

||||

|

||||

##### 使用 zypper 列出仓库

|

||||

### 使用 zypper 列出仓库

|

||||

|

||||

使用 zypper 命令附带 `lr` 或 `repos` 开关列出仓库。

|

||||

使用 `zypper` 命令附带 `lr` 或 `repos` 子命令列出仓库。

|

||||

|

||||

```

|

||||

root@kerneltalks # zypper lr

|

||||

@ -364,7 +371,7 @@ Repository priorities are without effect. All enabled repositories share the sam

|

||||

|

||||

此处你需要检查 `enabled` 列来确定哪些仓库是已被启用的而哪些没有。

|

||||

|

||||

##### 在 Suse Linux 中使用 zypper 添加或移除仓库

|

||||

### 在 Suse Linux 中使用 zypper 添加或移除仓库

|

||||

|

||||

要添加仓库你需要仓库或 .repo 文件的 URI,否则你会遇到如下错误。

|

||||

|

||||

@ -390,16 +397,17 @@ Priority : 99 (default priority)

|

||||

Repository priorities are without effect. All enabled repositories share the same priority.

|

||||

```

|

||||

|

||||

在 Suse 中使用附带 `addrepo` 或 `ar` 开关的 `zypper` 命令添加仓库,后接 URI 以及你需要提供一个别名。

|

||||

在 Suse 中使用附带 `addrepo` 或 `ar` 子命令的 `zypper` 命令添加仓库,后接 URI 以及你需要提供一个别名。

|

||||

|

||||

要在 Suse 中移除一个仓库,使用附带 `removerepo` 或 `rr` 子命令的 `zypper` 命令。

|

||||

|

||||

要在 Suse 中移除一个仓库,使用附带 `removerepo` 或 `rr` 开关的 `zypper` 命令。

|

||||

```

|

||||

root@kerneltalks # zypper removerepo nVidia-Driver-SLE12-SP3

|

||||

Removing repository 'nVidia-Driver-SLE12-SP3' ....................................................................................................................[done]

|

||||

Repository 'nVidia-Driver-SLE12-SP3' has been removed.

|

||||

```

|

||||

|

||||

##### 清除 zypper 本地缓存

|

||||

### 清除 zypper 本地缓存

|

||||

|

||||

使用 `zypper clean` 命令清除 zypper 本地缓存。

|

||||

|

||||

@ -414,7 +422,7 @@ via: https://kerneltalks.com/commands/12-useful-zypper-command-examples/

|

||||

|

||||

作者:[KernelTalks][a]

|

||||

译者:[cycoe](https://github.com/cycoe)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,138 @@

|

||||

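Time management at the Linux command line
======

> Learn how to organize your own to-do list from the command line with these approaches.

There is a lot of discussion about getting things done (GTD) at the command line. Countless articles cover obscure options to `ls`, magical regular expressions for Sed and Awk, and parsing piles of text with Perl. None of that is the point here.

This article is about "[getting to done][1]": using command-line tools to actually keep track of what needs doing, without a graphical desktop, a web browser, or an internet connection. To that end, we will look at four ways of tracking to-do items: plain text files, Todo.txt, Taskwarrior, and Org mode.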

|

||||

|

||||

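### Simple plain text

![Plain text][3]

*I like to use Vim, but you can use Nano too.*

The most direct way to manage your to-do list is to edit a plain text file. Open an empty file and add one task per line. When a task is done, delete its line. It is simple and effective, and it works no matter what you are tracking. The approach has two drawbacks, though. First, once you delete a line and save the file, it is gone for good, which becomes a problem if you want to know what you did this week or last week. Second, as convenient as a plain text file is, it can easily get cluttered.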
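
One way around the "gone for good" problem is to archive a finished line instead of deleting it. The snippet below is a minimal sketch of that idea; the file names and the `finish_task` helper are assumptions made for illustration, not part of any tool covered here.

```shell
#!/bin/sh
# Sketch: "complete" a task by moving it to a dated archive instead of deleting it.
# TODO_FILE and DONE_FILE are illustrative defaults, not an established convention.
TODO_FILE="${TODO_FILE:-$HOME/todo-plain.txt}"
DONE_FILE="${DONE_FILE:-$HOME/done-plain.txt}"

# finish_task N: archive line N of TODO_FILE with today's date, then drop it.
finish_task() {
    line_no="$1"
    task=$(sed -n "${line_no}p" "$TODO_FILE")
    [ -n "$task" ] || { echo "no task on line $line_no" >&2; return 1; }
    printf '%s %s\n' "$(date +%Y-%m-%d)" "$task" >> "$DONE_FILE"
    # Write the remaining lines to a temp file, then replace the original.
    sed "${line_no}d" "$TODO_FILE" > "$TODO_FILE.tmp" && mv "$TODO_FILE.tmp" "$TODO_FILE"
}
```

After `finish_task 2`, the second line of the to-do file is gone from the list but still searchable, with its completion date, in the archive file.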

|

||||

|

||||

### Todo.txt: plain text, upgraded

![todo.txt screenshot][5]

*Clean, organized, and easy to use*

That is where the [Todo.txt][6] file format and application come in. Installation is simple: [download][7] the latest release from GitHub, unpack it, and run `sudo make install`.

![Installing todo.txt][9]

*You can also clone it from Git.*

Todo.txt makes it easy to add new tasks, list tasks, and mark tasks as done:

| Command | Description |
| ------------- |:-------------|
| `todo.sh add "Some Task"` | Add "Some Task" to your to-do list |
| `todo.sh ls` | List all tasks |
| `todo.sh ls due:2018-02-15` | List all tasks due on 2018-02-15 |
| `todo.sh do 3` | Mark task 3 as done |

The list itself is still plain text, and you can edit it with your favorite editor as long as you follow the [correct format][10].
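
Since the list is plain text, ordinary shell tools can query it too. The sketch below writes a few made-up tasks in the Todo.txt format (optional priority, creation date, description, `+project`, `@context`, `key:value` tags, and a leading `x` plus completion date for finished tasks) and filters them with `grep`. The helper names are hypothetical and this does not replace `todo.sh`.

```shell
#!/bin/sh
# Sketch: write a few tasks in todo.txt format and query them with plain grep.
# The temporary file and the task contents are made up for illustration.
TODO_TXT=$(mktemp)

cat > "$TODO_TXT" <<'EOF'
(A) 2018-02-10 Call the venue +Conference @phone due:2018-02-15
(B) 2018-02-11 Draft slides +Conference @laptop
x 2018-02-12 2018-02-09 Book flights +Conference
EOF

# open_tasks: every line that does not start with the completion marker "x ".
open_tasks() { grep -v '^x ' "$TODO_TXT"; }

# tasks_due DATE: open tasks carrying a due:DATE key/value tag.
tasks_due() { open_tasks | grep "due:$1"; }
```

For example, `tasks_due 2018-02-15` prints only the first task, much like the `todo.sh ls due:2018-02-15` row in the table.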

|

||||

|

||||

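The application also has a powerful built-in help system.

![Syntax highlighting in todo.txt][12]

*You can even get syntax highlighting.*

There are also many add-ons to choose from, along with a specification for writing your own. There are even browser extensions, mobile apps, and desktop applications that support the Todo.txt format.

![GNOME extensions in todo.txt][14]

*A GNOME extension*

The biggest drawback of Todo.txt is the lack of an automatic or built-in synchronization mechanism. Most (but not all) of the browser extensions and mobile apps need Dropbox to synchronize data between the desktop and the app. If you want sync built in, we have...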

|

||||

|

||||

### Taskwarrior: now we're cooking with Python

![Taskwarrior][25]

*Fancy, isn't it?*

[Taskwarrior][15] is a Python application with many of the same features as Todo.txt. Unlike Todo.txt, it stores its data in a database and has built-in synchronization. It also keeps track of what is coming up next, lets you know when a task has been open too long, and reminds you about important things that should be done right away.

![][26]

*Looks good*

Taskwarrior can be [installed][16] from your distribution's package manager, with the Python `pip` tool, or built from source. Using it is much like using Todo.txt:

| Command | Description |
| ------------- |:-------------|
| `task add "Some Task"` | Add "Some Task" to the task list |
| `task list` | List all tasks |
| `task list due:today` | List tasks due today |
| `task do 3` | Mark task 3 as done |

|

||||

|

||||

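Taskwarrior also has some attractive text user interfaces.

![Taskwarrior in Vit][18]

*I like Vit, which was inspired by Vim.*

Unlike Todo.txt, Taskwarrior can synchronize with a local or remote server. A very basic server called `taskd` is available if you want to run your own sync server, and several hosted services exist if you do not.

Taskwarrior also boasts a thriving ecosystem of plugins and extensions, as well as mobile and desktop apps.

![Taskwarrior on GNOME][20]

*Taskwarrior looks really nice on GNOME.*

Taskwarrior's only drawback is that, unlike the other programs listed here, you cannot edit the to-do list directly. You have to export the task list to a file, edit the export, and import it again, which is a lot more work than simply editing tasks in a text editor.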
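
The export, edit, and import round trip described above could look roughly like this. It is a sketch under assumptions: `roundtrip_tasks` and the `tasks.json` file name are made up, and it relies on the `task export` and `task import` commands behaving as documented by Taskwarrior (JSON out, JSON back in).

```shell
#!/bin/sh
# Sketch of the export / edit / import round trip.
# tasks.json is an illustrative file name; ${EDITOR:-vi} is just a fallback editor.
roundtrip_tasks() {
    dump="${1:-tasks.json}"
    task export > "$dump" || return 1   # dump the tasks as JSON
    "${EDITOR:-vi}" "$dump"             # hand-edit the JSON
    task import "$dump"                 # feed the edited tasks back in
}
```

It is worth keeping a copy of the export before editing, since a malformed JSON file will not import cleanly.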

|

||||

|

||||

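So which tool holds the most promise of all...

### Emacs Org mode: the heavyweight of task tracking

![Org-mode][22]

*Emacs has everything*

Emacs [Org mode][23] is by far the most powerful and flexible open source to-do manager out there. It supports multiple files, uses plain text, is highly customizable, and understands dates, deadlines, and task schedules. It is also more complex to set up than the other tools covered here, but once configured it can do far more than they can. If you are familiar with, or a fan of, [Bullet Journals][24], Org mode is probably the closest thing to one on the desktop.

Org mode runs anywhere Emacs runs, and a few mobile apps interact with it well. Unfortunately, there are currently no desktop applications or browser extensions that support Org mode. Even so, Org mode remains one of the best applications for tracking to-do items because it is so powerful.

### Choose the tool that suits you

In the end, the purpose of all of these programs is to help you keep track of what needs to be done and make sure nothing gets forgotten. Their basic features are broadly the same, so which one is right for you depends on several factors. Some people need built-in sync, some need a mobile client, and some must have plugin support. Whatever you choose, remember that the program alone will not make you more organized, but it can help.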

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/2/getting-to-done-agile-linux-command-line

|

||||

|

||||

Author: [Kevin Sonney][a]
Translator: [guevaraya](https://github.com/guevaraya)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/ksonney (Kevin Sonney)

|

||||

[1]:https://www.scruminc.com/getting-done/

|

||||

[3]:https://opensource.com/sites/default/files/u128651/plain-text.png (plaintext)

|

||||

[5]:https://opensource.com/sites/default/files/u128651/todo-txt.png (todo.txt screen)

|

||||

[6]:http://todotxt.org/

|

||||

[7]:https://github.com/todotxt/todo.txt-cli/releases

|

||||

[9]:https://opensource.com/sites/default/files/u128651/todo-txt-install.png (Installing todo.txt)

|

||||

[10]:https://github.com/todotxt/todo.txt

|

||||

[12]:https://opensource.com/sites/default/files/u128651/todo-txt-vim.png (Syntax highlighting in todo.txt)

|

||||

[14]:https://opensource.com/sites/default/files/u128651/tod-txt-gnome.png (GNOME extensions in todo.txt)

|

||||

[15]:https://taskwarrior.org/

|

||||

[16]:https://taskwarrior.org/download/

|

||||

[18]:https://opensource.com/sites/default/files/u128651/taskwarrior-vit.png (Taskwarrior in Vit)

|

||||

[20]:https://opensource.com/sites/default/files/u128651/taskwarrior-gnome.png (Taskwarrior on GNOME)

|

||||

[22]:https://opensource.com/sites/default/files/u128651/emacs-org-mode.png (Org-mode)

|

||||

[23]:https://orgmode.org/

|

||||

[24]:http://bulletjournal.com/

|

||||

[25]:https://opensource.com/sites/default/files/u128651/taskwarrior.png

|

||||

[26]:https://opensource.com/sites/default/files/u128651/taskwarrior-complains.png

|

||||

@ -0,0 +1,122 @@

|

||||

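What NASA has been doing for open science
======

![][1]

We recently launched a new "[Science][2]" category of articles. The latest article published there is [How open source is impacting science][3]. In this article we focus on [NASA][4]'s proactive efforts, and in particular on how its open source practices help advance scientific research.

### How NASA uses open source practices to advance scientific research

NASA has opened its entire research library to the public domain, which is quite a [feat][5].

Yes! Everyone can access the entire research library and benefit from the research.

What NASA has opened up so far falls roughly into three categories:

* Open source NASA
* Open API
* Open data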

|

||||

|

||||

### 1. Open source NASA

Here is an interview with [Chris Wanstrath][6], co-founder and CEO of [GitHub][7], in which he explains how it all started many years ago.

- [Chris Wanstrath on NASA, open source and Github](https://youtu.be/dUFzMe8GM3M)

The project is called "[code.nasa.gov][8]", and as of the time of publication NASA had [open sourced through GitHub][9] 365 pieces of scientific software (LCTT translator's note: the original article was published on 2018/3/28; by the time this translation was published there were 454 projects). For a developer who loves code, studying one of these projects a day would take a full year to get through them all.

Even if you are not a developer, you can still browse this impressive software collection on the portal.

Among them is the source code for the guidance computer of [Apollo 11][10]. The Apollo 11 spacecraft [took the first two humans to the Moon][11]: [Neil Armstrong][12] and [Edwin Aldrin][13]. If you are interested in Edwin Aldrin, you can learn more [here][14].

#### Open source licenses used by NASA's open source initiative

The projects use several [open source licenses][15], as follows:

- [Apache License 2.0](https://www.apache.org/licenses/LICENSE-2.0)
- [NASA Open Source Agreement 1.3](https://opensource.org/licenses/NASA-1.3)
- [GPL v3](https://www.gnu.org/licenses/gpl.html)
- [MIT License](https://en.wikipedia.org/wiki/MIT_License)

|

||||

|

||||

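### 2. Open API

Open [APIs][16] play a major role in promoting open science. Much like the [Open Source Initiative][17], there is an [Open API Initiative][18] for APIs. The diagram below shows how an API connects an application with its developers.

![][19]

Be sure to check out this [link](https://sproutsocial.com/insights/what-is-an-api/). The article behind it explains APIs in a simple, approachable way and closes with five key takeaways.

![][20]

This will give you a sense of how different proprietary APIs and open APIs can be.

![][22]

[NASA's Open API][23] is aimed primarily at application developers and is intended to significantly improve the accessibility of data, including image content. The site has a live editor that lets you call the [Astronomy Picture of the Day (APOD)][24] API.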
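
Outside the live editor, the APOD endpoint can also be called from a shell. The following is a minimal sketch, assuming `curl` is installed and using NASA's rate-limited public `DEMO_KEY`; the helper names `apod_url` and `fetch_apod` are made up for this example.

```shell
#!/bin/sh
# Sketch: build an APOD request URL and, optionally, fetch it with curl.
# DEMO_KEY is NASA's rate-limited demo key; a personal key can be substituted.
NASA_API_KEY="${NASA_API_KEY:-DEMO_KEY}"

# apod_url [DATE]: URL for the picture of the day, optionally for a YYYY-MM-DD date.
apod_url() {
    url="https://api.nasa.gov/planetary/apod?api_key=${NASA_API_KEY}"
    if [ -n "${1:-}" ]; then
        url="${url}&date=$1"
    fi
    printf '%s\n' "$url"
}

# fetch_apod [DATE]: print the JSON response (needs curl and network access).
fetch_apod() { curl -fsS "$(apod_url "${1:-}")"; }
```

Calling `fetch_apod 2018-03-28` should return a JSON document describing that day's picture, including its title and image URL.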

|

||||

|

||||

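### 3. Open data

![][25]

In [our first article on open science][3], under the "Open science" section, we mentioned the various forms of open data in three countries: France, India, and the United States. NASA has similar ideas and practices. This important ideology has been adopted by [many countries][26].

[NASA's open data portal][27] is committed to openness and hosts a growing catalog of open data that the public can use freely. Including datasets in this catalog is both necessary and important for any research activity. NASA even solicits data requests on the portal so that they can be added to its catalog. This initiative is not only pioneering and innovative, it also fits the trends in [data science][28] and [AI and deep learning][29].

The video below shows how scholars and students arrive at a definition of data science through extensive research. The process is quite exciting. Toward the end of the video, [Professor Murtaza Haider][30] of the Ted Rogers School of Management at Ryerson University describes how the emergence of open source has changed data science, in particular by gradually opening up older closed source approaches. That has indeed become reality.

- [What is Data Science? Data Science 101](https://youtu.be/z1kPKBdYks4)

![][31]

Anyone can now suggest a dataset to NASA. As mentioned in the video above, NASA's initiative has a lot to do with collecting, analyzing, and refining data.

![][32]

All you need to do is register for free. Given the public discussion on the forum and the importance of datasets in every conceivable field of analysis, this initiative should have a very positive effect in the future, and statistical analysis of the data will surely advance considerably as well. We will discuss these details, and how they relate to the open source model, in future articles.

That wraps up our exploration of NASA's open science model. We hope you will stay tuned for our upcoming articles on the topic!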

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/nasa-open-science/

|

||||

|

||||

Author: [Avimanyu Bandyopadhyay][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [Valoniakim](https://github.com/Valoniakim)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://itsfoss.com/author/avimanyu/

|

||||

[1]:https://itsfoss.com/wp-content/uploads/2018/03/tux-in-space.jpg

|

||||

[2]:https://itsfoss.com/category/science/

|

||||

[3]:https://itsfoss.com/open-source-impact-on-science/

|

||||

[4]:https://www.nasa.gov/

|

||||

[5]:https://futurism.com/free-science-nasa-just-opened-its-entire-research-library-to-the-public/

|

||||

[6]:http://chriswanstrath.com/

|

||||

[7]:https://github.com/

|

||||

[8]:http://code.nasa.gov

|

||||

[9]:https://github.com/open-source

|

||||

[10]:https://www.nasa.gov/mission_pages/apollo/missions/apollo11.html

|

||||

[11]:https://www.space.com/16758-apollo-11-first-moon-landing.html

|

||||

[12]:https://www.jsc.nasa.gov/Bios/htmlbios/armstrong-na.html

|

||||

[13]:https://www.jsc.nasa.gov/Bios/htmlbios/aldrin-b.html

|

||||

[14]:https://buzzaldrin.com/the-man/

|

||||

[15]:https://itsfoss.com/open-source-licenses-explained/

|

||||

[16]:https://en.wikipedia.org/wiki/Application_programming_interface

|

||||

[17]:https://opensource.org/

|

||||

[18]:https://www.openapis.org/

|

||||

[19]:https://itsfoss.com/wp-content/uploads/2018/03/api-bridge.jpeg

|

||||

[20]:https://itsfoss.com/wp-content/uploads/2018/03/open-api-diagram.jpg

|

||||

[21]:http://www.apiacademy.co/resources/api-strategy-lesson-201-private-apis-vs-open-apis/

|

||||

[22]:https://itsfoss.com/wp-content/uploads/2018/03/nasa-open-api-live-example.jpg

|

||||

[23]:https://api.nasa.gov/

|

||||

[24]:https://apod.nasa.gov/apod/astropix.html

|

||||

[25]:https://itsfoss.com/wp-content/uploads/2018/03/nasa-open-data-portal.jpg

|

||||

[26]:https://www.xbrl.org/the-standard/why/ten-countries-with-open-data/

|

||||

[27]:https://data.nasa.gov/

|

||||

[28]:https://en.wikipedia.org/wiki/Data_science

|

||||

[29]:https://www.kdnuggets.com/2017/07/ai-deep-learning-explained-simply.html

|

||||

[30]:https://www.ryerson.ca/tedrogersschool/bm/programs/real-estate-management/murtaza-haider/

|

||||

[31]:https://itsfoss.com/wp-content/uploads/2018/03/suggest-dataset-nasa-1.jpg

|

||||

[32]:https://itsfoss.com/wp-content/uploads/2018/03/suggest-dataset-nasa-2-1.jpg

|

||||

@ -0,0 +1,72 @@

|

||||

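Emacs series (4): automated emails to Org mode and Org mode syncing
======

This is the fourth in [a series on Emacs and Org mode][1].

By now you have seen how powerful and efficient Org mode is. If you are like me, you might be thinking:

> "I'd really like to have this in sync across all my devices."

Or perhaps:

> "Can I forward emails into Org mode?"

The answer, of course, is yes, because this is Emacs.

### Syncing

Because Org mode just uses text files, syncing is easy to do with any tool. I use git with `git-remote-gcrypt`. Due to some limitations of `git-remote-gcrypt`, each machine tends to push to its own branch, and to master when told to. Each machine merges all the other branches first and then pushes the merged result to master. A cron job pushes each machine's branch, and a bit of elisp ties it all together: making sure buffers are saved before a sync, refreshing them from disk after a sync, and so on.

The code for this post is a bit extensive, so I will link to it on GitHub rather than write it all out here.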

|

||||

|

||||

|

||||

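I have a directory, `$HOME/org`, that holds all my Org mode files. In `~/org` there is a [Makefile][2] that handles the syncing. It defines these targets:

* `push`: adds, commits, and pushes to a branch named after the host
* `fetch`: a simple `git fetch`
* `sync`: adds, commits, and pulls remote changes, merges, and (assuming the merge succeeded) pushes to the branch named after the host and to master

Now, in my user crontab, I have this: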

|

||||

|

||||

```

|

||||

*/15 * * * * make -C $HOME/org push fetch 2>&1 | logger --tag 'orgsync'

|

||||

```

|

||||

|

||||

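[The accompanying elisp code][3] defines a shortcut (`C-c s`) to invoke a sync. Thanks to the cron job, as long as files were saved, they will be pulled in even if I did not explicitly sync on the other machine.

I have found this setup to work really well.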
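
To make the `push` step concrete, here is a rough shell sketch of what such a target might do. It is not the Makefile linked above: the `ORG_DIR` default, the remote name `origin`, the commit message, and the helper names are all assumptions for illustration.

```shell
#!/bin/sh
# Sketch of a "push" step: commit everything and push to a branch named after the host.
# ORG_DIR, the remote name "origin", and the commit message are assumptions.
ORG_DIR="${ORG_DIR:-$HOME/org}"

# host_branch: the per-machine branch name, derived from the short hostname.
host_branch() { hostname | cut -d. -f1; }

org_push() {
    cd "$ORG_DIR" || return 1
    git add -A
    # Commit only when something is staged, so quiet cron runs stay quiet.
    git diff --cached --quiet || git commit -q -m "Auto-commit from $(host_branch)"
    git push -q origin "HEAD:refs/heads/$(host_branch)"
}
```

Pushing to a per-host branch keeps unattended cron runs from ever needing a merge; the merging is left to an explicit sync step.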

|

||||

|

||||

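### Emailing to Org mode

Before going further, ask yourself first: do you really need this? I use Org mode with [mu4e][4], and the integration is excellent; any Org task can link to an email by `Message-id`, which is ideal. It lets you do things like remind yourself to reply to a message within a week.

However, Org mode is not just about reminders. It is also a knowledge base, an authoring system, and more, and not all of my mail clients use mu4e. (Note: there are apps such as MobileOrg for mobile devices.) I do not use this as often as I thought I would, but it has its uses, so I thought I should document it here too.

Now, I did not want to handle only plain-text email. I wanted to be able to handle attachments, HTML mail, and so on. That sounds like it could get problematic quickly, but with tools such as ripmime and pandoc, it is not so bad.

The first step is to set up some way to get mail delivered into a specific folder: an address extension, a special user, or the like. I then use a [fetchmail configuration][5] to pull it down and run my [insorgmail][6] script on it.

That script is where all the interesting bits happen. It starts by processing the message with ripmime and uses pandoc to convert the HTML parts to Org mode format. Org mode's hierarchy is used to represent the structure of the email as well as it can be. Emails can get pretty complex, what with HTML and other parts, but I have found this to be acceptable for my purposes.

### Up next

My last post on Org mode will discuss how to use it to write documents and prepare slides. I have found myself quite pleased with Org mode for that, though it takes a bit of tweaking.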

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://changelog.complete.org/archives/9898-emacs-4-automated-emails-to-org-mode-and-org-mode-syncing

|

||||

|

||||

Author: [John Goerzen][a]
Translator: [oneforalone](https://github.com/oneforalone)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://changelog.complete.org/

|

||||

[1]:https://changelog.complete.org/archives/tag/emacs2018

|

||||

[2]:https://github.com/jgoerzen/public-snippets/blob/master/emacs/org-tools/Makefile

|

||||

[3]:https://github.com/jgoerzen/public-snippets/blob/master/emacs/org-tools/emacs-config.org

|

||||

[4]:https://www.emacswiki.org/emacs/mu4e

|

||||

[5]:https://github.com/jgoerzen/public-snippets/blob/master/emacs/org-tools/fetchmailrc.orgmail

|

||||

[6]:https://github.com/jgoerzen/public-snippets/blob/master/emacs/org-tools/insorgmail

|

||||

@ -1,59 +1,58 @@

|

||||

Emacs #5: org-mode 之文档与 Presentations

|

||||

Emacs 系列(五):Org 模式之文档与演示稿

|

||||

======

|

||||

|

||||

### 1 org-mode 的输出

|

||||

这是 [Emacs 和 Org 模式系列][20]的第五篇。

|

||||

|

||||

这篇博文是由 Org 模式的源文件生成的,其有几种格式:[博客页面][21]、[演示稿][22] 和 [PDF 文档][23]。

|

||||

|

||||

### 1 Org 模式的输出

|

||||

|

||||

#### 1.1 背景

|

||||

|

||||

org-mode 不仅仅只是一个议程生成程序, 它也能输出许多不同的格式: LaTeX,PDF,Beamer,iCalendar(议程),HTML,Markdown,ODT,普通文本,帮助页面(man pages)和其它更多的复杂的格式,比如说网页文件。

|

||||

Org 模式不仅仅只是一个议程生成程序,它也能输出许多不同的格式: LaTeX、PDF、Beamer、iCalendar(议程)、HTML、Markdown、ODT、普通文本、手册页和其它更多的复杂的格式,比如说网页文件。

|

||||

|

||||

这也不只是一些事后的想法,这是 org-mode 的设计核心部分并且集成的很好。

|

||||

这也不只是一些事后的想法,这是 Org 模式的设计核心部分并且集成的很好。

|

||||

|

||||

一个文件可以同时是源代码,自动生成的输出,任务列表,文档和 presentation。

|

||||

这一个文件可以同时是源代码、自动生成的输出、任务列表、文档和展示。

|

||||

|

||||

有些人将 org-mode 作为他们首选的标记格式,甚至对于 LaTeX 文档也是如此。org-mode 手册中的 [section on exporting][13] 有更详细的介绍。

|

||||

有些人将 Org 模式作为他们首选的标记格式,甚至对于 LaTeX 文档也是如此。Org 模式手册中的 [输出一节][13] 有更详细的介绍。

|

||||

|

||||

#### 1.2 开始

|

||||

|

||||

对于任意的 org-mode 的文档,只要按下 C-c C-e键,就会弹出一个让你选择多种输出格式和选项的菜单。这些选项通常是次键选择,所以很容易设置和执行。例如:要输出一个 PDF 文档,按 C-c C-e l p,要输出 HMTL 格式的, 按 C-c C-e h h。

|

||||

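For any Org mode document, just press `C-c C-e` and a menu pops up that lets you choose among the export formats and options. These are generally single follow-up keystrokes, so they are easy to set and run. For example, to export a PDF, press `C-c C-e l p`; to export HTML, press `C-c C-e h h`.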

|

||||

|

||||

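There are many settings available for all of these export options; see the manual for details. It is in fact possible to use LaTeX-format equations in both the LaTeX and HTML export modes, and to insert arbitrary preambles and settings for different modes.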

|

||||

|

||||

#### 1.3 第三方插件

|

||||

|

||||

[ELPA][19] 中也包含了许多额外的输出格式,详情参见 [ELPA][19].

|

||||

[ELPA][19] 中也包含了许多额外的输出格式,详情参见 [ELPA][19]。

|

||||

|

||||

### 2 org-mode 的 Beamer 演示

|

||||

### 2 Org 模式的 Beamer 演示

|

||||

|

||||

#### 2.1 关于 Beamer

|

||||

|

||||

[Beamer][14] 是一个生成 presentation 的 LaTeX 环境. 它包括了一下特性:

|

||||

[Beamer][14] 是一个生成演示稿的 LaTeX 环境. 它包括了以下特性:

|

||||

|

||||

* 在 presentation 中自动生成结构化的元素(例如 [the Marburg theme][1])。 在 presentation 时,这个特性可以为观众提供了视觉参考。

|

||||

* 在演示稿中自动生成结构化的元素(例如 [Marburg 主题][1])。 在演示稿中,这个特性可以为观众提供了视觉参考。

|

||||

* 对组织演示稿有很大的帮助。

|

||||

* 主题

|

||||

* 完全支持 LaTeX

|

||||

|

||||

* 对组织 presentation 有很大的帮助。

|

||||

#### 2.2 Org 模式中 Beamer 的优点

|

||||

|

||||

* 主题

|

||||

|

||||

* 完全支持 LaTeX

|

||||

|

||||

#### 2.2 org-mode 中 Beamer 的优点

|

||||

|

||||

在 org-mode 中用 Beamer 有很多好处,总的来说:

|

||||

|

||||

* org-mode 很简单而且对可视化支持的很好,同时改变结构可以快速的重组你的材料。

|

||||

在 Org 模式中用 Beamer 有很多好处,总的来说:

|

||||

|

||||

* Org 模式很简单而且对可视化支持的很好,同时改变结构可以快速的重组你的材料。

|

||||

* 与 org-babel 绑定在一起,实时语法高亮源码和内嵌结果。

|

||||

|

||||

* 语法通常更容易使用。

|

||||

|

||||

我已经完全用 org-mode 和 beamer 替换掉 LibreOffice/Powerpoint/GoogleDocs 的使用。事实上,当我必须使用其中一种工具时,这是相当令人沮丧的,因为它们在可视化表示结构方面远远比不上 org-mode。

|

||||

我已经完全用 Org 模式和 beamer 替换掉使用 LibreOffice/Powerpoint/GoogleDocs。事实上,当我必须使用其中一种工具时,这是相当令人沮丧的,因为它们在可视化表示演讲稿结构方面远远比不上 Org 模式。

|

||||

|

||||

#### 2.3 标题层次

|

||||

|

||||

org-mode 的 Beamer 会将你文档中的部分(文中定义了标题的)转换成幻灯片。当然,问题是:哪些部分?这是由 H [export setting][15](org-export-headline-levels)决定的。

|

||||

Org 模式的 Beamer 会将你文档中的部分(文中定义了标题的)转换成幻灯片。当然,问题是:哪些部分?这是由 H [输出设置][15](`org-export-headline-levels`)决定的。

|

||||

|

||||

针对不同的人,有许多不同的方法。我比较喜欢我的 presentation 这样:

|

||||

针对不同的人,有许多不同的方法。我比较喜欢我的演示稿这样:

|

||||

|

||||

```

|

||||

#+OPTIONS: H:2

|

||||

@ -64,7 +63,7 @@ org-mode 的 Beamer 会将你文档中的部分(文中定义了标题的)转

|

||||

|

||||

#### 2.4 主题和配置

|

||||

|

||||

你可以在 org 文件的顶部来插入几行来配置 Beamer 和 LaTeX。在本文中,例如,你可以这样定义:

|

||||

你可以在 Org 模式的文件顶部来插入几行来配置 Beamer 和 LaTeX。在本文中,例如,你可以这样定义:

|

||||

|

||||

```

|

||||

#+TITLE: Documents and presentations with org-mode

|

||||

@ -96,13 +95,13 @@ org-mode 的 Beamer 会将你文档中的部分(文中定义了标题的)转

|

||||

#+BEAMER_HEADER: \setlength{\parskip}{\smallskipamount}

|

||||

```

|

||||

|

||||

在这里, aspectratio=169 将纵横比设为 16:9, 其它部分都是标准的 LaTeX/Beamer 配置。

|

||||

在这里,`aspectratio=169` 将纵横比设为 16:9, 其它部分都是标准的 LaTeX/Beamer 配置。

|

||||

|

||||

#### 2.6 缩小 (适应屏幕)

|

||||

|

||||

有时你会遇到一些非常大的代码示例,你可能更倾向与将幻灯片缩小以适应它们。

|

||||

|

||||

只要按下 C-c C-c p 将 BEAMER_opt属性设为 shrink=15\.(或者设为更大的 shrink 值)。上一张幻灯片就用到了这个。

|

||||

Just press `C-c C-x p` and set the `BEAMER_opt` property to `shrink=15` (or to a larger shrink value). The previous slide uses this.

|

||||

|

||||

#### 2.7 效果

|

||||

|

||||

@ -114,28 +113,24 @@ org-mode 的 Beamer 会将你文档中的部分(文中定义了标题的)转

|

||||

|

||||

#### 3.1 交互式的 Emacs 幻灯片

|

||||

|

||||

使用 [org-tree-slide package][17] 这个插件的话, 就可以在 Emacs 的右侧显示幻灯片了。 只要按下 M-x,然后输入 org-tree-slide-mode,回车,然后你就可以用 C-> 和 C-< 在幻灯片之间切换了。

|

||||

With the [org-tree-slide][17] package, you can display your slideshow right from within Emacs. Just run `M-x org-tree-slide-mode`, then use `C->` and `C-<` to move between slides.

|

||||

|

||||

你可能会发现 C-c C-x C-v (即 org-toggle-inline-images)有助于使系统显示内嵌的图像。

|

||||

你可能会发现 `C-c C-x C-v` (即 `org-toggle-inline-images`)有助于使系统显示内嵌的图像。

|

||||

|

||||

#### 3.2 HTML 幻灯片

|

||||

|

||||

有许多方式可以将 org-mode 的 presentation 导出为 HTML,并有不同级别的 JavaScript 集成。有关详细信息,请参见 org-mode 的 wiki 中的 [non-beamer presentations section][18]。

|

||||

有许多方式可以将 Org 模式的演讲稿导出为 HTML,并有不同级别的 JavaScript 集成。有关详细信息,请参见 Org 模式的 wiki 中的 [非 beamer 演讲稿一节][18]。

|

||||

|

||||

### 4 更多

|

||||

|

||||

#### 4.1 本文中的附加资源

|

||||

|

||||

* [orgmode.org beamer tutorial][2]

|

||||

|

||||

* [LaTeX wiki][3]

|

||||

|

||||

* [Generating section title slides][4]

|

||||

|

||||

* [Shrinking content to fit on slide][5]

|

||||

|

||||

* 很棒的资源: refcard-org-beamer 详情参见其 [Github repo][6] 中的 PDF 和 .org 文件。

|

||||

|

||||

* 很棒的资源: refcard-org-beamer

|

||||

* 详情参见其 [Github repo][6] 中的 PDF 和 .org 文件。

|

||||

* 很漂亮的主题: [Theme matrix][7]

|

||||

|

||||

#### 4.2 下一个 Emacs 系列

|

||||

@ -148,9 +143,9 @@ mu4e 邮件!

|

||||

via: http://changelog.complete.org/archives/9900-emacs-5-documents-and-presentations-with-org-mode

|

||||

|

||||

作者:[John Goerzen][a]

|

||||

译者:[oneforalone](https://github.com/oneforalone)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[oneforalone](https://github.com/oneforalone)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -174,3 +169,9 @@ via: http://changelog.complete.org/archives/9900-emacs-5-documents-and-presentat

|

||||

[17]:https://orgmode.org/worg/org-tutorials/non-beamer-presentations.html#org-tree-slide

|

||||

[18]:https://orgmode.org/worg/org-tutorials/non-beamer-presentations.html

|

||||

[19]:https://www.emacswiki.org/emacs/ELPA

|

||||

[20]:https://changelog.complete.org/archives/tag/emacs2018

|

||||

[21]:https://github.com/jgoerzen/public-snippets/blob/master/emacs/emacs-org-beamer/emacs-org-beamer.org

|

||||

[22]:http://changelog.complete.org/archives/9900-emacs-5-documents-and-presentations-with-org-mode

|

||||

[23]:https://github.com/jgoerzen/public-snippets/raw/master/emacs/emacs-org-beamer/emacs-org-beamer.pdf

|

||||

[24]:https://github.com/jgoerzen/public-snippets/raw/master/emacs/emacs-org-beamer/emacs-org-beamer-document.pdf

|

||||

|

||||

@ -1,11 +1,13 @@

|

||||

一种新的用于安全检测的方法

|

||||

一种新的安全检测的方法

|

||||

======

|

||||

|

||||

> 不要只测试已有系统,强安全要求更积极主动的策略。

|

||||

|

||||

|

||||

|

||||

我们当中有多少人曾说出过下面这句话:“我希望这能起到作用!”?

|

||||

|

||||

毫无疑问,我们中的大多数人可能都不止一次地说过这句话。这句话不是用来激发信心的,相反它揭示了我们对自身能力和当前正在测试功能的怀疑。不幸的是,这句话非常好地描述了我们传统的安全模型。我们的运营基于这样的假设,并希望我们实施的控制措施——从 web 应用的漏扫到终端上的杀毒软件——防止恶意的病毒和软件进入我们的系统,损坏或偷取我们的信息。

|

||||

毫无疑问,我们中的大多数人可能都不止一次地说过这句话。这句话不是用来激发信心的,相反它揭示了我们对自身能力和当前正在测试的功能的怀疑。不幸的是,这句话非常好地描述了我们传统的安全模型。我们的运营基于这样的假设,并希望我们实施的控制措施 —— 从 web 应用的漏扫到终端上的杀毒软件 —— 防止恶意的病毒和软件进入我们的系统,损坏或偷取我们的信息。

|

||||

|

||||

渗透测试通过积极地尝试侵入网络、向 web 应用注入恶意代码或者通过发送钓鱼邮件来传播病毒等等这些步骤来避免我们对假设的依赖。由于我们在不同的安全层面上来发现和渗透漏洞,手动测试无法解决漏洞被主动打开的情况。在安全实验中,我们故意在受控的情形下创造混乱,模拟事故的情形,来客观地检测我们检测、阻止这类问题的能力。

|

||||

|

||||

@ -13,15 +15,15 @@

|

||||

|

||||

在分布式系统的安全性和复杂性方面,需要反复地重申混沌工程界的一句名言,“希望不是一种有效的策略”。我们多久会主动测试一次我们设计或构建的系统,来确定我们是否已失去对它的控制?大多数组织都不会发现他们的安全控制措施失效了,直到安全事件的发生。我们相信“安全事件不是侦察措施”,而且“希望不要出事也不是一个有效的策略”应该是 IT 专业人士执行有效安全实践的口号。

|

||||

|

||||

工业在传统上强调预防性的安全措施和纵深防御,但我们的任务是通过侦探实验来驱动对安全工具链新的知识和见解。因为过于专注于预防机制,我们很少尝试一次以上地或者年度性地手动测试要求的安全措施,来验证这些控件是否按设计的那样执行。

|

||||

行业在传统上强调预防性的安全措施和纵深防御,但我们的任务是通过侦探实验来驱动对安全工具链的新知识和见解。因为过于专注于预防机制,我们很少尝试一次以上地或者年度性地手动测试要求的安全措施,来验证这些控件是否按设计的那样执行。

|

||||

|

||||

随着现代分布式系统中的无状态变量的不断改变,人们很难充分理解他们的系统的行为,因为会随时变化。解决这个问题的一种途径是通过强大的系统性的设备进行检测,对于安全性检测,你可以将这个问题分成两个主要方面,测试,和我们称之为实验的部分。测试是对我们已知部分的验证和评估,简单来说,就是我们在开始找之前,要先弄清楚我们在找什么。另一方面,实验是去寻找获得我们之前并不清楚的见解和知识。虽然测试对于一个成熟的安全团队来说是一项重要实践,但以下示例会有助于进一步地阐述两者之间的差异,并对实验的附加价值提供一个更为贴切的描述。

|

||||

随着现代分布式系统中的无状态变量的不断改变,人们很难充分理解他们的系统的行为,因为会随时变化。解决这个问题的一种途径是通过强大的系统性的设备进行检测,对于安全性检测,你可以将这个问题分成两个主要方面:**测试**,和我们称之为**实验**的部分。测试是对我们已知部分的验证和评估,简单来说,就是我们在开始找之前,要先弄清楚我们在找什么。另一方面,实验是去寻找获得我们之前并不清楚的见解和知识。虽然测试对于一个成熟的安全团队来说是一项重要实践,但以下示例会有助于进一步地阐述两者之间的差异,并对实验的附加价值提供一个更为贴切的描述。

|

||||

|

||||

### 示例场景:精酿啤酒

|

||||

|

||||

思考一个用于接收精酿啤酒订单的 web 服务或者 web 应用。

|

||||

|

||||

这是这家精酿啤酒运输公司的一项重要服务,这些订单来自客户的移动设备,网页,和通过为这家公司精酿啤酒提供服务的餐厅的 API。这项重要服务运行在 AWS EC2 环境上,并且公司认为它是安全的。这家公司去年成功地通过了 PCI 规则,并且每年都会请第三方进行渗透测试,所以公司认为这个系统是安全的。

|

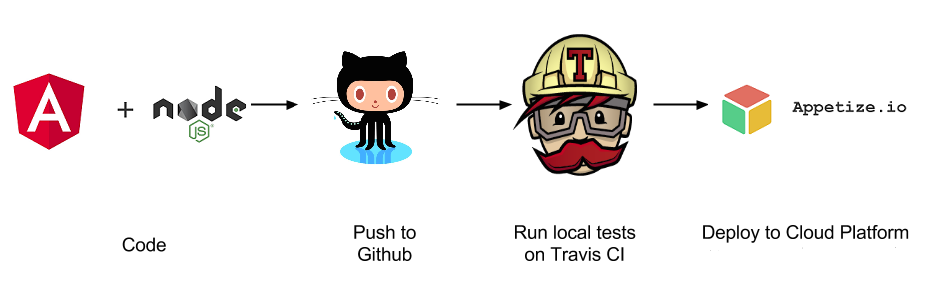

||||

这是这家精酿啤酒运输公司的一项重要服务,这些订单来自客户的移动设备、网页,和通过为这家公司精酿啤酒提供服务的餐厅的 API。这项重要服务运行在 AWS EC2 环境上,并且公司认为它是安全的。这家公司去年成功地通过了 PCI 规则,并且每年都会请第三方进行渗透测试,所以公司认为这个系统是安全的。

|

||||

|

||||

这家公司有时一天两次部署来进行 DevOps 和持续交付工作,公司为其感到自豪。

|

||||

|

||||

@ -35,10 +37,8 @@

|

||||

* 该配置会从已选择的目标中随机指定对象,同时端口的范围和数量也会被改变。

|

||||

* 团队还会设置进行实验的时间并缩小爆破攻击的范围,来确保对业务的影响最小。

|

||||

* 对于第一次测试,团队选择在他们的测试环境中运行实验并运行一个单独的测试。

|

||||

* 在真实的游戏日风格里,团队在预先计划好的两个小时的窗口期内,选择灾难大师来运行实验。在那段窗口期内,灾难大师会在 EC2 实例安全组中的一个上执行这次实验。

|

||||

* 一旦游戏日结束,团队就会开始进行一个彻底的、无可指责的事后练习。它的重点在于针对稳定状态和原始假设的实验结果。问题会类似于下面这些:

|

||||

|

||||

|

||||

* 在真实的<ruby>游戏日<rt>Game Day</rt></ruby>风格里,团队在预先计划好的两个小时的窗口期内,选择<ruby>灾难大师<rt>Master of Disaster</rt></ruby>来运行实验。在那段窗口期内,灾难大师会在 EC2 实例安全组中的一个实例上执行这次实验。

|

||||

* 一旦游戏日结束,团队就会开始进行一个彻底的、免于指责的事后练习。它的重点在于针对稳定状态和原始假设的实验结果。问题会类似于下面这些:

|

||||

|

||||

### 事后验证问题

|

||||

|

||||

@ -53,24 +53,22 @@

|

||||

* 获得警报的 SOC 分析师是否能对警报采取措施,还是缺少必要的信息?

|

||||

* 如果 SOC 确定警报是真实的,那么安全事件响应是否能简单地从数据中进行分类活动?

|

||||

|

||||

|

||||

|

||||

我们系统中对失败的承认和预期已经开始揭示我们对系统工作的假设。我们的使命是利用我们所学到的,并更加广泛地应用它。以此来真正主动地解决安全问题,来超越当前传统主流的被动处理问题的安全模型。

|

||||

|

||||

随着我们继续在这个新领域内进行探索,我们一定会发布我们的研究成果。如果您有兴趣想了解更多有关研究的信息或是想参与进来,请随时联系 Aaron Rinehart 或者 Grayson Brewer。

|

||||

|

||||

特别感谢 Samuel Roden 对本文提供的见解和想法。

|

||||

|

||||

**[看我们相关的文章,是否需要 DevSecOps 这个词?][3]]**

|

||||

- [看我们相关的文章:是否需要 DevSecOps 这个词?][3]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/4/new-approach-security-instrumentation

|

||||

|

||||

作者:[Aaron Rinehart][a]

|

||||

译者:[hopefully2333](https://github.com/hopefully2333)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[hopefully2333](https://github.com/hopefully2333)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,483 @@

|

||||

Command Line Tricks for Data Scientists

|

||||

======

|

||||

|

||||

|

||||

|

||||

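For many data scientists, data manipulation begins and ends with Pandas or the Tidyverse. In theory, there is nothing wrong with that; it is, after all, why those tools exist. Yet for simple tasks such as converting delimiters, they can be overkill.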

|

||||

|

||||

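Aspiring to master the command line should be on every developer's list, and especially every data scientist's. Learning the ins and outs of your shell will undeniably make you more productive. Beyond that, the command line is a great history lesson in computing. Take awk, a data-driven scripting language. It first appeared in 1977, with help from [Brian Kernighan][1], the K in the legendary [K&R book][2]. Today, some forty years later, awk remains relevant, with [new books][3] about it still being published. So it is safe to assume that an investment in command-line wizardry will not depreciate any time soon.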

|

||||

|

||||

### What we'll cover

|

||||

|

||||

* ICONV

|

||||

* HEAD

|

||||

* TR

|

||||

* WC

|

||||

* SPLIT

|

||||

* SORT & UNIQ

|

||||

* CUT

|

||||

* PASTE

|

||||

* JOIN

|

||||

* GREP

|

||||

* SED

|

||||

* AWK

|

||||

|

||||

### ICONV

|

||||

|

||||

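File encodings can be tricky. Most files these days are UTF-8 encoded; to understand some of the magic behind UTF-8, check out this excellent [video][4]. Nonetheless, there are times when we receive a file that is not in that encoding, which can lead to some wild attempts at swapping the encoding scheme. Here, `iconv` is a lifesaver: a simple program that takes text in one encoding and outputs it in another.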

|

||||

|

||||

```

|

||||

# Converting -f (from) latin1 (ISO-8859-1)

|

||||

# -t (to) standard UTF_8

|

||||

|

||||

iconv -f ISO-8859-1 -t UTF-8 < input.txt > output.txt

|

||||

```

|

||||

|

||||

Useful options:

* `iconv -l` lists all known encodings
* `iconv -c` silently discards characters that cannot be converted
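
One way to convince yourself that a conversion did what you expected is to compare the raw bytes before and after. The following is a minimal sketch, assuming `iconv` and `od` are installed; the scratch file names and the sample word are made up for illustration.

```bash
# Work in a scratch directory so nothing real is touched.
tmp=$(mktemp -d)

# "café" in ISO-8859-1: the é is the single byte 0xE9 (octal 351).
printf 'caf\351\n' > "$tmp/latin1.txt"

# Convert from Latin-1 to UTF-8.
iconv -f ISO-8859-1 -t UTF-8 "$tmp/latin1.txt" > "$tmp/utf8.txt"

# Dump both files as hex; in UTF-8 the é becomes the two bytes c3 a9.
before=$(od -An -tx1 "$tmp/latin1.txt" | tr -d ' \n')
after=$(od -An -tx1 "$tmp/utf8.txt" | tr -d ' \n')
echo "latin1: $before"
echo "utf8:   $after"
```

If the two dumps are identical, the input was probably plain ASCII, or already in the target encoding.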

|

||||

|

||||

### HEAD

|

||||

|

||||

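If you are a heavy Pandas user, `head` will feel familiar. When dealing with new data, the first thing we usually want is a sense of what is in it. That means firing up Pandas, reading in the data, and calling `df.head()`, which is strenuous to say the least. With no flags, `head` prints the first 10 lines of a file. Its real power lies in quickly testing out cleaning operations. For instance, to check a change of delimiter from commas to pipes, a quick test would be: `head mydata.csv | sed 's/,/|/g'`.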

|

||||

|

||||

```bash

|

||||

# Prints out first 10 lines

|

||||

head filename.csv

|

||||

|

||||

# Print first 3 lines

|

||||

head -n 3 filename.csv

|

||||

```

|

||||

|

||||

Useful options:

* `head -n` prints a specific number of lines
* `head -c` prints a specific number of bytes
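
The "test on a few lines first" habit described above can be sketched as follows. The sample rows are invented; in practice the input would be your own CSV.

```bash
# Four-line sample standing in for a real CSV.
sample=$(printf 'id,name\n1,ann\n2,bob\n3,cy\n')

# Try the delimiter change on the first two lines only,
# before committing to a run over the whole file.
preview=$(printf '%s\n' "$sample" | head -n 2 | sed 's/,/|/g')
echo "$preview"
```

Only once the preview looks right would you drop the `head` and redirect the full output to a new file.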

|

||||

|

||||

### TR

|

||||

|

||||

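`tr` is short for translate. This powerful utility is a workhorse for basic file cleaning. An ideal use case is swapping out the delimiters in a file.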

|

||||

|

||||

```bash

|

||||

# Converting a tab delimited file into commas

|

||||

cat tab_delimited.txt | tr "\t" "," > comma_delimited.csv

|

||||

```

|

||||

|

||||

Another feature of `tr` is the set of built-in `[:class:]` sets (POSIX character classes) at your disposal. These include:

|

||||

|

||||

- `[:alnum:]` all letters and digits
- `[:alpha:]` all letters
- `[:blank:]` all horizontal whitespace
- `[:cntrl:]` all control characters
- `[:digit:]` all digits
- `[:graph:]` all printable characters, not including space
- `[:lower:]` all lowercase letters
- `[:print:]` all printable characters, including space
- `[:punct:]` all punctuation characters
- `[:space:]` all horizontal or vertical whitespace
- `[:upper:]` all uppercase letters
- `[:xdigit:]` all hexadecimal digits

|

||||

|

||||

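You can chain these together to build powerful programs. The following is a basic word-frequency counter you could use to check your README for overused words.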

|

||||

|

||||

```

|

||||

cat README.md | tr "[:punct:][:space:]" "\n" | tr "[:upper:]" "[:lower:]" | grep . | sort | uniq -c | sort -nr

|

||||

```

|

||||

|

||||

Another example, this time using character ranges (`tr` works on sets of characters, not regular expressions):

|

||||

|

||||

```

|

||||

# Converting all upper case letters to lower case

|

||||

cat filename.csv | tr '[A-Z]' '[a-z]'

|

||||

```

|

||||

|

||||

Useful options:

* `tr -d` deletes characters
* `tr -s` squeezes runs of repeated characters
* `\b` backspace
* `\f` form feed
* `\v` vertical tab
* `\NNN` character with octal value NNN
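
The `-d` and `-s` options listed above combine well for basic cleanup. Here is a small sketch, using an invented input line with a Windows line ending and runs of spaces:

```bash
# Delete carriage returns, then squeeze each run of spaces down to one.
cleaned=$(printf 'alpha   beta  gamma\r\n' | tr -d '\r' | tr -s ' ')
echo "$cleaned"
```

Be careful about squeezing a real delimiter such as a comma: that would also collapse legitimately empty fields.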

|

||||

|

||||

### WC

|

||||

|

||||

Word count. Its value comes primarily from the `-l` flag, which gives you the line count.

|

||||

|

||||

```

|

||||

# Will return number of lines in CSV

|

||||

wc -l gigantic_comma.csv

|

||||

```

|

||||

|

||||

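This tool comes in handy for confirming the output of other commands. If we convert the delimiters in a file and then run `wc -l`, we expect the total number of lines to be unchanged; if it is not, we know something went wrong.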

|

||||

|

||||

Useful options:

* `wc -c` prints the byte count
* `wc -m` prints the character count
* `wc -L` prints the length of the longest line
* `wc -w` prints the word count
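
The line-count sanity check described in this section might look like the sketch below. The file names are placeholders, and the tiny two-row input is only there to make the example self-contained.

```bash
tmp=$(mktemp -d)

# A two-line tab-delimited stand-in for real data.
printf 'a\tb\nc\td\n' > "$tmp/in.tsv"

# Convert tabs to commas.
tr '\t' ',' < "$tmp/in.tsv" > "$tmp/out.csv"

# Reading from stdin keeps the file name out of wc's output.
before=$(wc -l < "$tmp/in.tsv")
after=$(wc -l < "$tmp/out.csv")

if [ "$before" -eq "$after" ]; then
    echo "line counts match: $after"
else
    echo "line count changed: $before -> $after" >&2
fi
```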

|

||||

|

||||

### SPLIT

|

||||

|

||||

Files come in all sizes. For some tasks it can help to break a file up, which is where `split` comes in. The basic syntax for `split` is:

|

||||

|

||||

```bash

|

||||

# We will split our CSV into new_filename every 500 lines

|

||||

split -l 500 filename.csv new_filename_

|

||||

# filename.csv

|

||||

# ls output

|

||||

# new_filename_aa
# new_filename_ab
# new_filename_ac

|

||||

```

|

||||

|

||||

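Two quirks are the naming convention and the lack of file extensions. The suffix convention can be made numeric with the `-d` flag. To add file extensions, you can run the following `find` command. Be careful: it renames every file under the current directory (subdirectories included) by appending `.csv`, so the original `filename.csv` gets renamed as well.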

|

||||

|

||||

```bash

|

||||

find . -type f -exec mv '{}' '{}'.csv \;

|

||||

# ls output

|

||||

# filename.csv.csv

|

||||

# new_filename_aa.csv
# new_filename_ab.csv
# new_filename_ac.csv

|

||||

```

|

||||

|

||||

Useful options:

* `split -b N` splits by a given byte size
* `split -a N` generates suffixes of length N
* `split -x` uses hexadecimal suffixes
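
Because the `find` command renames everything it finds, a narrower rename is often safer. The sketch below is one possible alternative, not the original author's method: it uses a shell glob that can only match the pieces produced by `split`. The 1,200-line input is generated just to keep the example self-contained.

```bash
tmp=$(mktemp -d)
cd "$tmp" || exit 1

# Build a 1,200-line stand-in for filename.csv.
awk 'BEGIN { for (i = 1; i <= 1200; i++) print i }' > filename.csv

# Split into 500-line pieces (default suffixes are two letters long).
split -l 500 filename.csv new_filename_

# Rename only the pieces; the glob cannot match filename.csv itself.
for f in new_filename_*; do
    mv "$f" "$f.csv"
done

pieces=$(ls new_filename_*.csv | wc -l)
echo "pieces: $pieces"
```

GNU `split` also has an `--additional-suffix` option that adds the extension in one step, but it is not available in every implementation.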

|

||||

|

||||

### SORT & UNIQ

|

||||

|

||||

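The names of these two commands say it all: they do exactly what they claim. Used together they pack the biggest punch (for example, counting unique words), because `uniq` only operates on adjacent duplicate lines, which is why the input is sorted before being piped to it. One interesting note is that `sort -u` achieves the same result as the typical `sort file.txt | uniq` pattern.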

|

||||

|

||||

`sort` does have one ability that is especially useful for data scientists: sorting an entire CSV by a particular column.

|

||||

|

||||

```bash

|

||||

# Sorting a CSV file by the second column alphabetically

|

||||

sort -t"," -k2,2 filename.csv

|

||||

|

||||

# Numerically

|

||||

sort -t"," -k2n,2 filename.csv

|

||||

|

||||

# Reverse order

|

||||

sort -t"," -k2nr,2 filename.csv

|

||||

```

|

||||

|

||||

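The `-t` option here specifies the comma as the delimiter; by default, fields are separated by blanks (spaces or tabs). The `-k` flag specifies the sort key. The syntax is `-km,n`, with `m` the starting field and `n` the ending field.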

|

||||

|

||||

Useful options:

* `sort -f` ignores case
* `sort -r` reverses the sort order
* `sort -R` shuffles the order
* `uniq -c` counts the number of occurrences
* `uniq -d` prints only duplicated lines
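
The difference between a lexical and a numeric key is easy to miss, so here is a small sketch on invented data. It also checks the claim that `sort -u` and `sort | uniq` agree. `LC_ALL=C` is set on the lexical sort only to make the byte-wise ordering explicit.

```bash
tmp=$(mktemp -d)
printf 'b,10\na,9\nc,100\nb,10\n' > "$tmp/data.csv"

# Lexical sort on column 2: compared character by character, so "100" < "9".
lexical=$(LC_ALL=C sort -t, -k2,2 "$tmp/data.csv" | cut -d, -f2 | paste -s -d ' ' -)

# Numeric sort on column 2.
numeric=$(sort -t, -k2,2n "$tmp/data.csv" | cut -d, -f2 | paste -s -d ' ' -)

# Two ways to get the distinct lines.
with_u=$(sort -u "$tmp/data.csv")
with_uniq=$(sort "$tmp/data.csv" | uniq)

echo "lexical: $lexical"
echo "numeric: $numeric"
```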

|

||||

|

||||

### CUT

|

||||

|

||||

`cut` extracts columns, cutting away the rest. To illustrate, suppose we only want to keep the first and third columns.

|

||||

|

||||

```bash

|

||||

cut -d, -f 1,3 filename.csv

|

||||

```

|

||||

|

||||

To select every column except the first:

|

||||

|

||||

```bash

|

||||

cut -d, -f 2- filename.csv

|

||||

```

|

||||

|

||||

In combination with other commands, use `cut` as a filter.

|

||||

|

||||

```bash

|

||||

# From the first 10 lines, print columns 1 and 3 of the lines containing "some_string_value"

|

||||

head filename.csv | grep "some_string_value" | cut -d, -f 1,3

|

||||

```

|

||||

|

||||

Find the number of unique values in the second column.

|

||||

|

||||

```bash

|

||||

cat filename.csv | cut -d, -f 2 | sort | uniq | wc -l

|

||||

|

||||

# Count occurrences of unique values, limiting to first 10 results

|

||||

cat filename.csv | cut -d, -f 2 | sort | uniq -c | head

|

||||

```
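
To make the selection behaviour of `-f` concrete, here is a short sketch on invented rows. The last command illustrates a limitation worth knowing: `cut` splits on every delimiter and has no notion of CSV quoting, so a quoted field that contains a comma is cut in two.

```bash
# Keep columns 1 and 3.
keep=$(printf 'id,name,city\n1,ann,oslo\n' | cut -d, -f1,3)

# Keep everything from column 2 onward (drops column 1).
rest=$(printf 'id,name,city\n1,ann,oslo\n' | cut -d, -f2-)

# A quoted field containing a comma is split in the middle.
row='1,"Doe, Jane",oslo'
broken=$(printf '%s\n' "$row" | cut -d, -f2)

echo "$keep"
echo "$rest"
echo "$broken"
```

For files with quoted fields, a CSV-aware tool is the safer choice.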

|

||||

|

||||

### PASTE

|

||||

|

||||

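`paste` is a niche command with an interesting function. If you have two files that need to be merged line by line, and their rows are already in matching order, `paste` has you covered.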

|

||||

|

||||

```bash

|

||||

# names.txt

|

||||

adam

|

||||

john

|

||||

zach

|

||||

|

||||

# jobs.txt

|

||||

lawyer

|

||||

youtuber

|

||||

developer

|

||||

|

||||

# Join the two into a CSV

|

||||

paste -d ',' names.txt jobs.txt > person_data.txt

|

||||

|

||||

# Output

|

||||

adam,lawyer

|

||||

john,youtuber

|

||||

zach,developer

|

||||

```

|

||||

|

||||

For a more SQL-flavored variant, see below.

|

||||

|

||||

### JOIN

|

||||

|

||||

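`join` is a simplistic, quasi-tangential cousin of SQL. The main differences are that `join` returns all columns and can only match on a single field. Both inputs must already be sorted on the join field. By default, `join` uses the first column of each file as the match key. For a different result, the following syntax is necessary: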

|

||||

|

||||

```bash

|

||||

# Join the first file (-1) by the second column

|

||||

# and the second file (-2) by the first

|

||||

join -t "," -1 2 -2 1 first_file.txt second_file.txt

|

||||

```

|

||||

|

||||

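The standard `join` is an inner join. Outer joins are possible as well, through the `-a` flag. Another noteworthy quirk is the `-e` flag, which substitutes a value when a field is missing.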

|

||||

|

||||

```bash

|

||||

# Outer join, replace blanks with NULL in columns 1 and 2

|

||||

# -o which fields to substitute - 0 is key, 1.1 is first column, etc...

|

||||

join -t"," -1 2 -a 1 -a2 -e ' NULL' -o '0,1.1,2.2' first_file.txt second_file.txt

|

||||

```

|

||||

|

||||

It is not the most user-friendly command, but desperate times call for desperate measures.

|

||||

|

||||

Useful options:

* `join -a` prints unpairable lines
* `join -e` replaces missing input fields
* `join -j` is equivalent to `-1 FIELD -2 FIELD`
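
The inner and outer variants are easier to compare side by side. The sketch below uses two tiny invented files keyed on their first column, both already sorted on that key as `join` requires.

```bash
tmp=$(mktemp -d)
printf '1,adam\n2,john\n3,zach\n' > "$tmp/names.csv"
printf '1,lawyer\n3,developer\n' > "$tmp/jobs.csv"

# Inner join: only keys present in both files survive.
inner=$(join -t, "$tmp/names.csv" "$tmp/jobs.csv")

# Left outer join: -a 1 keeps unpaired lines from the first file,
# -o fixes the output columns, -e fills the missing ones with NULL.
outer=$(join -t, -a 1 -e NULL -o 0,1.2,2.2 "$tmp/names.csv" "$tmp/jobs.csv")

echo "$inner"
echo "---"
echo "$outer"
```

The `-e` replacement only takes effect when an explicit `-o` output format is given, which is why the two flags appear together.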

|

||||

|

||||

### GREP

|

||||

|

||||

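`grep`, short for Global search for a Regular Expression and Print, may be the best-known command, and with good reason. `grep` is powerful, especially for finding your way around large codebases. In the realm of data science it acts as a refining mechanism for other commands, although its standard usage is valuable as well.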

|

||||

|

||||

```

|

||||

# Recursively search and list all files in directory containing 'word'

|

||||

|

||||

grep -lr 'word' .

|

||||

|

||||

# List number of files containing word

|

||||

|

||||

grep -lr 'word' . | wc -l

|

||||

|

||||

```

|

||||

|

||||

Count the total number of lines containing a word or pattern.

|

||||

|

||||

```

|

||||

grep -c 'some_value' filename.csv

|

||||

|

||||

# Same thing, but in all files in current directory by file name

|

||||

|

||||

grep -c 'some_value' *

|

||||

```

|

||||

|

||||

Match any of several values with the "or" operator: `\|`.

|

||||

|

||||

```

|

||||

grep "first_value\|second_value" filename.csv

|

||||

```

|

||||

|

||||

Useful options:

* `alias grep="grep --color=auto"` makes grep colorful
* `grep -E` uses extended regular expressions
* `grep -w` matches whole words only
* `grep -l` prints the names of files with matches
* `grep -v` inverts the match
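
A few of the options above, combined with `-c`, on an invented four-line file:

```bash
tmp=$(mktemp -d)
printf 'id,color\n1,red\n2,redwood\n3,blue\n' > "$tmp/colors.csv"

# -E: extended regex, so alternation is written as a plain |.
either=$(grep -cE 'red|blue' "$tmp/colors.csv")

# -w: whole-word match, so "redwood" does not count.
whole=$(grep -cw 'red' "$tmp/colors.csv")

# -v: lines that do NOT contain "red" (the header and the blue row).
others=$(grep -cv 'red' "$tmp/colors.csv")

echo "either=$either whole=$whole others=$others"
```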

|

||||

|

||||

### The big guns

|

||||

|

||||

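`sed` and `awk` are the two most powerful commands in this article. For brevity, I will not go into exhaustive detail about either. Instead, I will cover a variety of commands that demonstrate their impressive might. If you want to know more, [there is a book][5] just for that.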

|

||||

|

||||

### SED

|

||||

|

||||

At its core, `sed` is a stream editor. It excels at substitutions, but can also be leveraged for all-out restructuring of output.

|

||||

|

||||

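The most basic `sed` command consists of `s/old/new/g`, which means: search for `old` and replace it with `new` globally. Without the trailing `g`, only the first occurrence of `old` on each line is replaced.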

|

||||

|

||||

To get a quick feel for what it can do, let's dive into an example. In this scenario, you have been given the following file:

|

||||

|

||||

```

|

||||

balance,name

|

||||

$1,000,john

|

||||

$2,000,jack

|

||||

```

|

||||

|

||||

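The first thing we may want to do is remove the dollar signs. The `-i` flag means edit in place. The `''` is a zero-length backup suffix, so the original file is overwritten without a backup (this is the BSD/macOS form; GNU `sed` expects `-i` without the separate `''`). Ideally, you would test each of these steps individually and write the output to a new file.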

|

||||

|

||||

```

|

||||

sed -i '' 's/\$//g' data.txt

|

||||

# balance,name

|

||||

# 1,000,john

|

||||

# 2,000,jack

|

||||

```

|

||||

|

||||

Next, remove the commas within the `balance` column.

|

||||

|

||||

```

|

||||

sed -i '' 's/\([0-9]\),\([0-9]\)/\1\2/g' data.txt

|

||||

# balance,name

|

||||

# 1000,john

|

||||

# 2000,jack

|

||||

```

|

||||

|

||||

Finally, Jack up and quit one day. So, farewell, my friend.

|

||||

|

||||

```

|

||||

sed -i '' '/jack/d' data.txt

|

||||

# balance,name

|

||||

# 1000,john

|

||||

```

|

||||

|

||||

As you can see, `sed` packs quite a punch, but the fun doesn't stop there.
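
Since in-place editing differs between `sed` implementations, one portable way to run the same three steps is to chain them with `-e` and write to a new file instead. This is a sketch under that assumption; `clean.txt` is a made-up output name, and the input reproduces the sample file from this section.

```bash
tmp=$(mktemp -d)
printf 'balance,name\n$1,000,john\n$2,000,jack\n' > "$tmp/data.txt"

# 1. strip dollar signs, 2. drop commas between digits, 3. delete jack's row.
sed -e 's/\$//g' \
    -e 's/\([0-9]\),\([0-9]\)/\1\2/g' \
    -e '/jack/d' \
    "$tmp/data.txt" > "$tmp/clean.txt"

cat "$tmp/clean.txt"
```

The original `data.txt` is left untouched, so a wrong expression costs nothing.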

|

||||

|

||||

### AWK

|

||||

|

||||

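The best for last. `awk` is much more than a simple command: it is a full-blown language. Of everything covered in this article, `awk` is by far the coolest. If you find yourself impressed, there are loads of great resources: see [here][6], [here][7], and [here][8].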

|

||||

|

||||

Common use cases for `awk` include:

* Text processing
* Formatting text reports
* Performing arithmetic operations
* Performing string operations

|

||||

|

||||

In its simplest form, `awk` can stand in for `grep`.

|

||||

|

||||

```

|

||||

awk '/word/' filename.csv

|

||||

```

|

||||

|

||||

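Or, with a bit more magic, it can combine `grep` and `cut`. Here, for every line containing our pattern `word`, `awk` prints the third and fourth columns, separated by a tab. `-F,` sets the field separator to a comma.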

|

||||

|

||||

```bash

|

||||

awk -F, '/word/ { print $3 "\t" $4 }' filename.csv

|

||||

```

|

||||

|

||||

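`awk` comes with a number of nifty built-in variables, for instance `NF` (number of fields) and `NR` (number of records). To get the fifty-third record in a file: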

|

||||

|

||||

```bash

|

||||

awk -F, 'NR == 53' filename.csv

|

||||

```

|

||||

|

||||

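An added wrinkle is the ability to filter on one or more values. The first example below prints the line number and the full line for every row whose first column equals the given string.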

|

||||

|

||||

```bash

|

||||

awk -F, ' $1 == "string" { print NR, $0 } ' filename.csv

|

||||

|

||||

# Filter based off of numerical value in second column

|

||||

awk -F, ' $2 == 1000 { print NR, $0 } ' filename.csv

|

||||

```

|

||||

|

||||

Multiple numerical expressions:

|

||||

|

||||

```bash

|

||||

# Print line number and columns where column three is at least
# 2005 and column five is at most one thousand

|

||||

|

||||

awk -F, ' $3 >= 2005 && $5 <= 1000 { print NR, $0 } ' filename.csv

|

||||

```

|

||||

|

||||

Sum the third column:

|

||||

|

||||

```bash

|

||||

awk -F, '{ x+=$3 } END { print x }' filename.csv

|

||||

```

|

||||

|

||||

Sum the third column for the rows whose first column equals `something`:

|

||||

|

||||

```bash

|

||||

awk -F, '$1 == "something" { x+=$3 } END { print x }' filename.csv

|

||||

```
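
Real CSV files usually carry a header row, which a plain sum would try to add in as well. The variation below is a sketch on invented data that skips the header with `NR > 1`; the column names and values are hypothetical.

```bash
total=$(printf 'key,year,value\na,2001,10\nb,2002,5\na,2003,2.5\n' |
    awk -F, 'NR > 1 && $1 == "a" { x += $3 } END { print x + 0 }')
echo "$total"
```

The `x + 0` in the `END` block makes `awk` print `0` rather than an empty line when no row matches.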

|

||||

|

||||

Get the dimensions of a file (the column count is taken from the last record):

|

||||

|

||||

```bash

|

||||

awk -F, 'END { print NF, NR }' filename.csv

|

||||

|

||||

# Prettier version

|

||||

awk -F, 'BEGIN { print "COLUMNS", "ROWS" }; END { print NF, NR }' filename.csv

|

||||

```

|

||||

|

||||

Print each line that occurs more than once (it is emitted when it is seen for the second time):

|

||||

|

||||

```bash

|

||||

awk -F, '++seen[$0] == 2' filename.csv

|

||||

```

|

||||

|

||||

Remove duplicate lines:

|

||||

|

||||

```bash

|

||||

# Consecutive lines

|

||||

awk 'NR == 1 || a != $0; {a=$0}'

|

||||

|

||||

# Nonconsecutive lines

|

||||

awk '! a[$0]++' filename.csv

|

||||

|

||||

# More efficient

|

||||

awk '!($0 in a) {a[$0];print}'

|

||||

```

|

||||

|

||||

Substitute multiple values using the built-in function `gsub()`.

|

||||

|

||||

```bash

|

||||

awk '{gsub(/scarlet|ruby|puce/, "red"); print}'

|

||||

```

|

||||

|

||||

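This `awk` command combines multiple CSV files, keeping the header from the first file and skipping the header line of every file after it.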

|

||||

|

||||

```bash

|

||||

awk 'FNR==1 && NR!=1{next;}{print}' *.csv > final_file.csv

|

||||

```
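
A small, self-contained run of the same idea is sketched below. The `parts/` directory and the file names are invented. Writing the result outside the directory matched by the glob avoids a subtle trap: if the output file lives next to the inputs and also ends in `.csv`, a second run would pick up the previous output as one more input.

```bash
tmp=$(mktemp -d)
cd "$tmp" || exit 1
mkdir parts
printf 'id,v\n1,a\n' > parts/one.csv
printf 'id,v\n2,b\n' > parts/two.csv

# FNR restarts at 1 for each file, NR keeps counting across files,
# so "FNR == 1 && NR != 1" is the header of every file but the first.
awk 'FNR == 1 && NR != 1 { next } { print }' parts/*.csv > merged.csv

cat merged.csv
```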

|

||||

|

||||

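Need to downsize a massive file? `awk` can handle that with help from `sed`. Specifically, this command breaks one big file into multiple smaller ones based on a line count, and gives each piece a file extension as it goes. Note that `sed '1d;$d'` first drops the first and last lines of the input.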

|

||||

|

||||

```bash

|

||||

sed '1d;$d' filename.csv | awk 'NR%NUMBER_OF_LINES==1{x="filename-"++i".csv";}{print > x}'

|

||||

|

||||

# Example: splitting big_data.csv into data_(n).csv every 100,000 lines

|

||||

sed '1d;$d' big_data.csv | awk 'NR%100000==1{x="data_"++i".csv";}{print > x}'

|

||||

```
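
If each piece should remain a valid CSV, one possible variation (not from the original article) is to let `awk` remember the header and repeat it at the top of every chunk. This is a sketch only: `big.csv`, the `chunk_` prefix, and the chunk size `n` are placeholders chosen for illustration.

```bash
tmp=$(mktemp -d)
cd "$tmp" || exit 1
printf 'id,v\n1,a\n2,b\n3,c\n4,d\n5,e\n' > big.csv

awk -v n=2 '
    NR == 1 { header = $0; next }      # remember the header row
    (NR - 2) % n == 0 {                # start a new chunk every n data rows
        if (out) close(out)            # release the previous file handle
        out = "chunk_" (++i) ".csv"
        print header > out
    }
    { print > out }                    # append the data row to the current chunk
' big.csv

ls chunk_*.csv
```

Closing each finished chunk keeps the number of open files constant, which matters when a large input produces many pieces.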

|

||||

|

||||

### Closing thoughts

|

||||

|

||||

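The command line boasts endless power. The commands covered in this article are enough to elevate you from zero to hero. Beyond those covered, there are many utilities to consider for daily data operations; [Csvkit][9], [xsv][10], and [q][11] are three worth remembering. If you want to go deeper into command-line data science, take a look at [this book][12]. It is also available online for [free][13]!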

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://kadekillary.work/post/cli-4-ds/

|

||||

|

||||

Author: [Kade Killary][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [GraveAccent](https://github.com/graveaccent)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

[a]:http://kadekillary.work/authors/kadekillary

|

||||

[1]:https://en.wikipedia.org/wiki/Brian_Kernighan

|

||||

[2]:https://en.wikipedia.org/wiki/The_C_Programming_Language

|

||||

[3]:https://www.amazon.com/Learning-AWK-Programming-cutting-edge-text-processing-ebook/dp/B07BT98HDS

|

||||

[4]:https://www.youtube.com/watch?v=MijmeoH9LT4

|

||||

[5]:https://www.amazon.com/sed-awk-Dale-Dougherty/dp/1565922255/ref=sr_1_1?ie=UTF8&qid=1524381457&sr=8-1&keywords=sed+and+awk

|

||||

[6]:https://www.amazon.com/AWK-Programming-Language-Alfred-Aho/dp/020107981X/ref=sr_1_1?ie=UTF8&qid=1524388936&sr=8-1&keywords=awk

|

||||

[7]:http://www.grymoire.com/Unix/Awk.html

|

||||

[8]:https://www.tutorialspoint.com/awk/index.htm

|

||||

[9]:http://csvkit.readthedocs.io/en/1.0.3/

|

||||