diff --git a/published/20190312 When the web grew up- A browser story.md b/published/20190312 When the web grew up- A browser story.md

new file mode 100644

index 0000000000..a53a5babfb

--- /dev/null

+++ b/published/20190312 When the web grew up- A browser story.md

@@ -0,0 +1,59 @@

+[#]: collector: (lujun9972)

+[#]: translator: (XYenChi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13130-1.html)

+[#]: subject: (When the web grew up: A browser story)

+[#]: via: (https://opensource.com/article/19/3/when-web-grew)

+[#]: author: (Mike Bursell https://opensource.com/users/mikecamel)

+

+When the web grew up: A browser story

+======

+

+> A personal story from the early days of the internet.

+

+

+

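+Recently, I [shared][1] how, after leaving university in 1994 with a degree in English literature and theology, I managed to land a job running a web server in a world where people didn't yet know what a web server was. By "world," I don't just mean the organization I worked for; I mean everywhere. The web was genuinely new then, and people were still trying to make sense of it.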

+

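+That's not to say the place I worked (an academic publisher) particularly "got" the web. This was a world where most people reached the web over 28.8K modems. I still remember how excited I was to get a 33.6K modem. At least the days of asymmetric upload and download speeds were behind us,[^1] when bandwidth figures like 1200/300 had been common. It meant that the elaborate, colorful, finely detailed documents produced by the print people (in the same organization) simply could not go on the web. I wouldn't allow GIFs larger than 40k on the site's front page, and even that was hard going for many of our visitors. Anything over about 60k went up as a separate image, linked from a thumbnail.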
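
To put those size limits in perspective, here is a rough back-of-the-envelope sketch of how long an image took to arrive over the modems mentioned above. It assumes an ideal line with no protocol overhead or compression, treats "28.8K" as 28,800 bits per second, and treats "40k" as 40 × 1024 bytes; the function name `download_seconds` is made up for illustration.

```python
def download_seconds(size_kb, link_kbps):
    """Estimate the transfer time for a file over a dial-up link.

    Assumptions (not from the article): 1 KB = 1024 bytes, the modem
    rating is in thousands of bits per second, and the line is ideal.
    """
    bits = size_kb * 1024 * 8
    return bits / (link_kbps * 1000)


if __name__ == "__main__":
    for size_kb in (40, 60):
        for link_kbps in (28.8, 33.6):
            seconds = download_seconds(size_kb, link_kbps)
            print(f"{size_kb}k image at {link_kbps}K: about {seconds:.1f} s")
```

Under these assumptions, a 40k GIF needs roughly 11 seconds at 28.8K and a little under 10 seconds at 33.6K, before the HTML or any other image is counted.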

+

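+To say the marketing department disliked this would be an understatement. Layout was worse. "Browsers decide how to lay out documents," I explained, time after time. "You can use headings or paragraphs, but how the document appears on the page isn't defined by the document; it's defined by the renderer!" They wanted control. They wanted different-colored backgrounds. Eventually they learned they couldn't have them. I felt as if I had been at the first W3C meeting where Cascading Style Sheets (CSS) were discussed, and argued fiercely. The suggestion that document writers should control layout was anathema.[^2] CSS took a while to be adopted, and in the meantime, those who cared about such things jumped on the security-problem-riddled train that is PDF.

+

+How documents were rendered wasn't the only issue. For a publisher of physical books, the whole point of having a website, as far as marketing was concerned, was to let customers (or potential customers) know not only what a book was about but also how much it cost to buy. That was a problem. You see, the internet, including the rapidly growing World Wide Web, was open and free, a place where nobody cared about money; in fact, talking about money there was something to be shunned and avoided.

+

+I shared the mainstream "netizen" view that there was no need to put pricing information online. My boss, and a fair number of other people in the organization, took the opposite view. They felt that customers should be able to see how much books cost. They also felt that my bank manager would want to see how much money arrived in my account each month, and that if I didn't come around to their point of view, that income might be in doubt.

+

+Luckily, before I got myself fired, I had worked some of this out for myself. Perhaps a few weeks after I started on the web, it had already changed, and other people were publishing prices for their products. These newcomers were generally looked down on by the old hands who had been running web servers since the early days,[^3] but it was clear which way the wind was blowing. That didn't mean the battle was won for our website, however. As an academic publisher, we shared a domain (under "ac.uk") with the university. The university wasn't convinced that publishing prices was appropriate until some senior people at the press pointed out that Princeton University Press was doing it, and wouldn't we look a bit silly if we didn't?

+

+The fun didn't stop there. A few months into my tenure as site administrator ("webmaster@…"), we started to see a worrying trend, as did many other websites. Certain visitors could bring our web server to its knees with ease. These visitors were using a new web browser: Netscape. Netscape was so awful that it was, of all things, multithreaded.

+

+Why was that a problem? Before Netscape, all browsers were single-threaded. They made one connection at a time, so even if a page had five GIFs,[^4] the browser would first request the base HTML file, parse it, then download the first GIF, finish, then the second, finish, and so on. In fact, the GIFs often arrived in the wrong order, which made pages load very oddly, but that was the general idea. The rude people at Netscape decided that their browser could open multiple connections to the web server at once and, say, request all the GIFs at the same time! And why was that a problem? Well, most web servers were single-threaded. They weren't designed to handle more than one connection at a time. Certainly, the HTTP server software we ran (MacHTTP) was single-threaded. Even though we had paid for it (it was originally shareware), the version we had couldn't cope with multiple simultaneous requests.

+

+A great deal of discussion broke out across the internet. Who did these Netscape people think they were, changing how the world worked? How was it supposed to work? People split into camps, and as in all technical arguments, both sides hurled technical buzzwords at each other. The problem was that Netscape wasn't just multithreaded; it was also better than the other browsers. A great many web server maintainers, MacHTTP author Chuck Shotton among them, sat down and produced multithreaded beta versions of their existing code. Almost everyone moved to the betas straight away, the betas became stable, and in the end, browsers either adopted the technique and became multithreaded or, like all outmoded products, died out.[^6]

+

+For me, this was when the web really grew up. It wasn't prices appearing on web pages, and it wasn't designers being able to define what you saw on a page,[^8] but browsers becoming easier to use and the network effect of thousands of viewers turning into millions, tipping the balance toward the consumer rather than the producer. There are more stories from my journey, which I'll save for another time. But from this point on, my employer started looking at our monthly, then weekly, then daily reports, and realized this was going to be big and really did need attention.

+

+[^1]: How did they come back, again?

+[^2]: It may not surprise you that I'm still happiest at the command line.

+[^3]: About six months earlier.

+[^4]: Reckless, yes, but it did happen. [^5]

+[^5]: Oh, and it was GIF or BMP; JPEG was still a good idea that hadn't yet taken off.

+[^6]: It never went fully quiet: there were always a few who insisted that their preferred solution was technically superior and lamented that the rest of the internet was full of wicked diehards. [^7]

+[^7]: I'm not the only one who says, "I still use Lynx."

+[^8]: Creating, I would point out, serious and lasting problems for people with various accessibility needs.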

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/3/when-web-grew

+

+Author: [Mike Bursell][a]

+Selected by: [lujun9972][b]

+Translator: [XYenChi](https://github.com/XYenChi)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://opensource.com/users/mikecamel

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/article/18/11/how-web-was-won

diff --git a/published/20190404 Intel formally launches Optane for data center memory caching.md b/published/20190404 Intel formally launches Optane for data center memory caching.md

new file mode 100644

index 0000000000..f07eb56caa

--- /dev/null

+++ b/published/20190404 Intel formally launches Optane for data center memory caching.md

@@ -0,0 +1,69 @@

+[#]: collector: (lujun9972)

+[#]: translator: (ShuyRoy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13109-1.html)

+[#]: subject: (Intel formally launches Optane for data center memory caching)

+[#]: via: (https://www.networkworld.com/article/3387117/intel-formally-launches-optane-for-data-center-memory-caching.html#tk.rss_all)

+[#]: author: (Andy Patrizio https://www.networkworld.com/author/Andy-Patrizio/)

+

+Intel Optane: Memory caching for the data center

+======

+

+

+

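+> Intel has launched the Optane persistent memory product line, built on 3D Xpoint memory technology. Intel's solution sits between DRAM and NAND to improve performance.

+

+![Intel][1]

+

+Intel formally launched its Optane persistent memory product line at its [massive data center event][2] in April 2019. It had been around for a while, but the Xeon server processors available until then couldn't make full use of it. The new Xeon 8200 and 9200 series can take full advantage of Optane persistent memory.

+

+Because Optane is an Intel product (developed jointly with Micron), AMD and ARM server processors can't support it.

+

+As [I've said before][3], Optane DC persistent memory uses 3D Xpoint memory technology, developed jointly with Micron. 3D Xpoint is a non-volatile memory that is faster than an SSD, almost as fast as DRAM, and has the persistence of NAND flash.

+

+The first 3D Xpoint products were SSDs known as Intel's "ruler," because they were designed to be long and thin, much like a ruler. They were designed that way to fit into 1U server chassis. In its announcement, Intel introduced the new Intel SSD D5-P4325 [ruler][7] SSD, which uses quad-level cell (QLC) 3D NAND memory and can put 1PB of storage in a 1U server chassis.

+

+Optane DC persistent memory can initially reach a capacity of 512GB using 128GB DIMMs. "Optane DC persistent memory can reach two to four times the capacity of DRAM," said Navin Shenoy, executive vice president and general manager of Intel's Data Center Group.

+

+"We expect server system capacity to scale to 4.5TB per socket, or 36TB in an eight-socket system, which is three times what we had with the first generation of Xeon Scalable chips," he said.

+

+### Intel Optane memory usage and speed

+

+Optane runs in two different modes: Memory Mode and App Direct Mode. In Memory Mode, DRAM sits on top of Optane memory and acts as a cache for it. In App Direct Mode, DRAM and Optane DC persistent memory are used together as memory to maximize total capacity. Not every workload suits this configuration, so it should be used with applications that aren't latency-sensitive. As Intel promotes it, the main use case for Optane is Memory Mode.

+

+A few years ago, when 3D Xpoint was first announced, Intel claimed that Optane was 1,000 times faster than NAND, with 1,000 times the endurance, and 10 times the density potential of DRAM. That was a bit of an exaggeration, but the numbers are still compelling.

+

+Optane memory can reach read speeds of 8.3GB/sec and write speeds of 3.0GB/sec when accessing 256B in four contiguous cache lines. Compared with the read/write speeds of around 500MB/sec for a SATA SSD, that is a big jump in performance. Keep in mind that Optane acts as memory, so it caches frequently accessed content from the SSD.

+

+This is the key to understanding Optane DC. It keeps very large data sets very close to memory, and therefore to the CPU, at low latency, which minimizes trips to the slower storage subsystem, whether that is SSD or HDD. It now offers the possibility of putting multiple terabytes of data very close to the CPU for faster access.

+

+### One challenge with Optane memory

+

+The only real challenge is that Optane plugs into the DIMM slots where memory goes. Some motherboards now have as many as 16 DIMM slots per CPU, but that is still board real estate that customers and equipment makers have to balance: Optane or memory. Some Optane drives connect over PCIe, which can ease the crowding of memory on the motherboard.

+

+Because of the way it writes data, 3D Xpoint offers higher endurance than traditional NAND flash. Intel promises a five-year warranty for Optane, while many SSDs offer only three years.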

+

+--------------------------------------------------------------------------------

+

+via: https://www.networkworld.com/article/3387117/intel-formally-launches-optane-for-data-center-memory-caching.html#tk.rss_all

+

+Author: [Andy Patrizio][a]

+Selected by: [lujun9972][b]

+Translator: [RiaXu](https://github.com/ShuyRoy)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://www.networkworld.com/author/Andy-Patrizio/

+[b]: https://github.com/lujun9972

+[1]: https://images.idgesg.net/images/article/2018/06/intel-optane-persistent-memory-100760427-large.jpg

+[2]: https://www.networkworld.com/article/3386142/intel-unveils-an-epic-response-to-amds-server-push.html

+[3]: https://www.networkworld.com/article/3279271/intel-launches-optane-the-go-between-for-memory-and-storage.html

+[4]: https://www.networkworld.com/article/3290421/why-nvme-users-weigh-benefits-of-nvme-accelerated-flash-storage.html

+[5]: https://www.networkworld.com/article/3242807/data-center/top-10-data-center-predictions-idc.html#nww-fsb

+[6]: https://www.networkworld.com/newsletters/signup.html#nww-fsb

+[7]: https://www.theregister.co.uk/2018/02/02/ruler_and_miniruler_ssd_formats_look_to_banish_diskstyle_drives/

+[8]: https://pluralsight.pxf.io/c/321564/424552/7490?u=https%3A%2F%2Fwww.pluralsight.com%2Fpaths%2Fapple-certified-technical-trainer-10-11

+[9]: https://www.facebook.com/NetworkWorld/

+[10]: https://www.linkedin.com/company/network-world

diff --git a/published/20190610 Tmux Command Examples To Manage Multiple Terminal Sessions.md b/published/20190610 Tmux Command Examples To Manage Multiple Terminal Sessions.md

new file mode 100644

index 0000000000..c80af774f7

--- /dev/null

+++ b/published/20190610 Tmux Command Examples To Manage Multiple Terminal Sessions.md

@@ -0,0 +1,305 @@

+[#]: collector: (lujun9972)

+[#]: translator: (chensanle)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13107-1.html)

+[#]: subject: (Tmux Command Examples To Manage Multiple Terminal Sessions)

+[#]: via: (https://www.ostechnix.com/tmux-command-examples-to-manage-multiple-terminal-sessions/)

+[#]: author: (sk https://www.ostechnix.com/author/sk/)

+

+Tmux command examples to manage multiple terminal sessions

+======

+

+

+

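+We've already learned how to manage multiple sessions with [GNU Screen][2]. Today, we'll look at another well-known command-line utility for managing sessions: **Tmux**. Like GNU Screen, Tmux is a terminal multiplexer that lets us create multiple sessions in a single terminal window and run several applications or processes at the same time. Tmux is free, open source, and cross-platform, supporting Linux, OpenBSD, FreeBSD, NetBSD, and Mac OS X. This article covers the most common uses of Tmux on Linux.

+

+### Installing Tmux on Linux

+

+Tmux is available in the official repositories of most Linux distributions.

+

+On Arch Linux and its variants, run the following command to install it: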

+

+```

+$ sudo pacman -S tmux

+```

+

+On Debian, Ubuntu, or Linux Mint:

+

+```

+$ sudo apt-get install tmux

+```

+

+On Fedora:

+```

+$ sudo dnf install tmux

+```

+

+On RHEL and CentOS:

+

+```

+$ sudo yum install tmux

+```

+

+On SUSE/openSUSE:

+

+```

+$ sudo zypper install tmux

+```

+

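+That completes the Tmux installation. Let's move on to some Tmux examples.

+

+### Tmux command examples: managing multiple sessions

+

+By default, the prefix for all Tmux commands is `Ctrl+b`. Just keep this shortcut in mind before you start.

+

+> **Note**: the prefix for **Screen** commands is `Ctrl+a`.

+

+#### Creating a Tmux session

+

+To create a Tmux session and attach to it, run the following in a terminal: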

+

+```

+tmux

+```

+

+Or,

+

+```

+tmux new

+```

+

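+Once you're inside a Tmux session, you'll see a **green bar at the bottom**, as shown in the screenshot below.

+

+![][3]

+

+*Creating a Tmux session*

+

+The green bar makes it easy to tell whether you're currently inside a Tmux session.

+

+#### Detaching from a Tmux session

+

+To detach from the current Tmux session, just press `Ctrl+b` and then `d`. You don't need to press both at the same time; press `Ctrl+b` first, then `d`.

+

+Once you've detached from the session, you'll see output like this: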

+

+```

+[detached (from session 0)]

+```

+

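+#### Creating a named session

+

+If you use multiple sessions, you may lose track of which programs are running in which session. In that case, you can give sessions names. For example, if you want a session for web server work, create a Tmux session named "webserver" (or any other name you like).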

+

+```

+tmux new -s webserver

+```

+

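+Here is the new named Tmux session:

+

+![][4]

+

+*A Tmux session with a custom name*

+

+As you can see in the screenshot above, the Tmux session is labeled "webserver". This makes it easy to tell which program is running in which session.

+

+To detach, press `Ctrl+b` and then `d`.

+

+#### Listing Tmux sessions

+

+To list Tmux sessions, run: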

+

+```

+tmux ls

+```

+

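+Sample output:

+

+![][5]

+

+*Listing Tmux sessions*

+

+As you can see, we have two open Tmux sessions.

+

+#### Creating a detached session

+

+Sometimes you may want to create a session without attaching to it automatically.

+

+To create a detached session named "ostechnix", run: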

+

+```

+tmux new -s ostechnix -d

+```

+

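+The command above creates a session named "ostechnix" but doesn't attach to it.

+

+You can verify this with the `tmux ls` command:

+

+![][6]

+

+*Creating a detached session*

+

+#### Attaching to a Tmux session

+

+You can attach to the last created session with the following command: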

+

+```

+tmux attach

+```

+

+Or,

+

+```

+tmux a

+```

+

+To attach to a specific named session, for example "ostechnix", run:

+

+```

+tmux attach -t ostechnix

+```

+

+Or, in short form:

+

+```

+tmux a -t ostechnix

+```

+

+#### Killing Tmux sessions

+

+When you're done with a Tmux session or no longer need it, you can kill it with the following command:

+

+```

+tmux kill-session -t ostechnix

+```

+

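+While attached to the session, press `Ctrl+b` and then `x`, and press `y` to confirm. This closes the current pane; when it is the session's only pane, the session ends with it.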

+

+You can verify this with the `tmux ls` command.

+

+To kill the Tmux server and every session running under it, run:

+

+```

+tmux kill-server

+```

+

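+Be careful! This terminates all Tmux sessions without any warning, even if jobs are still running inside them.

+

+If there are no active Tmux sessions, you'll see the following output: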

+

+```

+$ tmux ls

+no server running on /tmp/tmux-1000/default

+```

+

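+#### Splitting Tmux windows

+

+A window can be split into several smaller windows, which Tmux calls "panes". You can run a different program in each pane and interact with all of them at the same time. Each pane can be resized, moved, and closed without affecting the others. We can split the screen horizontally, vertically, or both.

+

+##### Splitting panes horizontally

+

+To split a pane horizontally, press `Ctrl+b` and then `"` (the double-quote character).

+

+![][7]

+

+*Splitting Tmux panes horizontally*

+

+Use the same key combination to split the panes further.

+

+##### Splitting panes vertically

+

+To split a pane vertically, press `Ctrl+b` and then `%`.

+

+![][8]

+

+*Splitting Tmux panes vertically*

+

+##### Splitting panes both horizontally and vertically

+

+We can also split panes horizontally and vertically at the same time. Take a look at the following screenshot:

+

+![][9]

+

+*Splitting Tmux panes*

+

+First, I split horizontally with `Ctrl+b` `"`, then split the lower pane vertically with `Ctrl+b` `%`.

+

+As you can see, I'm running a different program in each pane.

+

+##### Switching between panes

+

+To switch between panes, press `Ctrl+b` and then an arrow key (up, down, left, or right).

+

+##### Sending commands to all panes

+

+In the previous example, we ran three different commands, one in each pane. It is also possible to send the same command to all panes at once.

+

+To do so, press `Ctrl+b`, type the following command, and press Enter: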

+

+```

+:setw synchronize-panes

+```

+

+Now type any command in any pane. You'll see the same command take effect in all panes.

+

+##### Swapping panes

+

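+Press `Ctrl+b` and then `Ctrl+o` to rotate the panes in the current window. (With Tmux's default key bindings, `Ctrl+b` followed by a plain `o` only moves the focus to the next pane.)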

+

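+##### Showing pane numbers

+

+Press `Ctrl+b` and then `q` to show the pane numbers.

+

+##### Killing panes

+

+To close a pane, just type `exit` and press Enter. Alternatively, press `Ctrl+b` and then `x`. You'll see a confirmation prompt; press `y` to close the pane.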

+

+![][10]

+

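+*Killing panes*

+

+##### Zooming Tmux panes in and out

+

+We can zoom a Tmux pane to the full size of the current terminal window to make text easier to read and to see more of its contents. This is useful when you need more room or want to focus on a particular task. When the task is done, you can zoom the pane back out (unzoom) to its normal position. See the following link for details.

+

+- [How to zoom Tmux panes for better text visibility](https://ostechnix.com/how-to-zoom-tmux-panes-for-better-text-visibility/)

+

+#### Autostarting Tmux sessions

+

+When working on remote systems over SSH, it's always good practice to run long-running processes inside a Tmux session, because that keeps you from losing control of the running process if the network connection suddenly drops. One way to make sure of this is to start a Tmux session automatically. See the following link for details.

+

+- [Autostart a Tmux session on a remote system when logging in via SSH](https://ostechnix.com/autostart-tmux-session-on-remote-system-when-logging-in-via-ssh/)

+

+### Conclusion

+

+At this point, you have the basic Tmux skills needed to manage multiple sessions. For more details, see the man page.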

+

+```

+$ man tmux

+```

+

+Both GNU Screen and Tmux are very handy for managing remote servers over SSH. Learn the Screen and Tmux commands, and you can manage your remote servers like a pro.

+

+--------------------------------------------------------------------------------

+

+via: https://www.ostechnix.com/tmux-command-examples-to-manage-multiple-terminal-sessions/

+

+Author: [sk][a]

+Selected by: [lujun9972][b]

+Translator: [chensanle](https://github.com/chensanle)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://www.ostechnix.com/author/sk/

+[b]: https://github.com/lujun9972

+[1]: https://www.ostechnix.com/wp-content/uploads/2019/06/Tmux-720x340.png

+[2]: https://www.ostechnix.com/screen-command-examples-to-manage-multiple-terminal-sessions/

+[3]: https://www.ostechnix.com/wp-content/uploads/2019/06/Tmux-session.png

+[4]: https://www.ostechnix.com/wp-content/uploads/2019/06/Named-Tmux-session.png

+[5]: https://www.ostechnix.com/wp-content/uploads/2019/06/List-Tmux-sessions.png

+[6]: https://www.ostechnix.com/wp-content/uploads/2019/06/Create-detached-sessions.png

+[7]: https://www.ostechnix.com/wp-content/uploads/2019/06/Horizontal-split.png

+[8]: https://www.ostechnix.com/wp-content/uploads/2019/06/Vertical-split.png

+[9]: https://www.ostechnix.com/wp-content/uploads/2019/06/Split-Panes.png

+[10]: https://www.ostechnix.com/wp-content/uploads/2019/06/Kill-panes.png

diff --git a/published/20190626 Where are all the IoT experts going to come from.md b/published/20190626 Where are all the IoT experts going to come from.md

new file mode 100644

index 0000000000..5b10af4a11

--- /dev/null

+++ b/published/20190626 Where are all the IoT experts going to come from.md

@@ -0,0 +1,72 @@

+[#]: collector: (lujun9972)

+[#]: translator: (scvoet)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13115-1.html)

+[#]: subject: (Where are all the IoT experts going to come from?)

+[#]: via: (https://www.networkworld.com/article/3404489/where-are-all-the-iot-experts-going-to-come-from.html)

+[#]: author: (Fredric Paul https://www.networkworld.com/author/Fredric-Paul/)

+

+Where are all the IoT experts going to come from?

+======

+

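+> The rapid growth of the Internet of Things (IoT) is creating a need to train cross-functional experts who can combine traditional networking and infrastructure expertise with database and reporting skills.

+

+![Kevin \(CC0\)][1]

+

+If the Internet of Things (IoT) is going to deliver on its grand promise, it will need legions of smart, skilled, **trained** workers to make it all happen. And right now, it's not clear where those people will come from.

+

+That's why I was interested in trading emails with Keith Flynn, senior director of product management and R&D at asset-optimization software company [AspenTech][2], who says that when dealing with the many new technologies that fall under the IoT umbrella, you need people who understand how to configure the technology and interpret the data. Flynn sees a growing demand for IoT-specific courses from existing educational institutions, which also creates an opening for new IoT-focused private colleges offering a more complete curriculum.

+

+Flynn told me that in the future, "IoT projects will differ tremendously from the general data management and automation projects of today. … The future requires a more holistic set of skills and cross-trading capabilities so that we're all speaking the same language."

+

+With IoT growing 30% a year, Flynn added, it will no longer rely on a few specific skills: "Everything from traditional deployment skills, like networking and infrastructure, to database and reporting skills and, frankly, even basic data science, will need to be understood and used together."

+

+### Calling all IoT consultants

+

+"The first big opportunity for IoT-educated people is going to be in the consulting field," Flynn predicted. "As consulting companies adapt or die to the industry trends … having IoT-trained staff on hand will help position them for IoT projects and let them stake a claim in a new line of business: IoT consulting."

+

+The problem is especially acute for startups and smaller companies. "The bigger the organization, the more likely they are to hire people in the different technology categories," Flynn said. "But for smaller organizations and smaller IoT projects, you need one person who can do both."

+

+Both? Or **everything?** IoT "requires a combination of all knowledge and skill sets," Flynn said. "Not all of the skills are brand new; they've just never been grouped together or taught together before."

+

+### The IoT expert of the future

+

+According to Flynn, true IoT expertise starts with foundational instrumentation and electrical skills, which can help workers invent new wireless transmitters or improve the technology for better battery life and power consumption.

+

+"IT skills, like networking, IP addressing, subnet masks, cellular, and satellite, are also pivotal needs for IoT," Flynn said. He also sees IoT needing database management skills along with cloud management and security expertise, "especially as things like advanced process control (APC) and sending sensor data directly to databases and data lakes become the norm."

+

+### Where will IoT experts come from?

+

+Flynn said standardized formal education courses would be the best way to make sure graduates or certificate holders have the right set of skills. He even laid out a sample curriculum: "Start in chronological order with the basics, like [electrical and instrumentation] and measurement. Then teach networking, and follow that with database administration and cloud courses. This degree could even be worked into an existing engineering course, and it might take two years … to complete the IoT component."

+

+Corporate training could also play a role, but in practice that is "easier said than done," Flynn warned. "Those trainings will need to be organization-specific efforts and pushes."

+

+Of course, there are already [plenty of online IoT training courses and certificate programs][5]. But ultimately, it all rests with the workers themselves.

+

+"Upskilling is incredibly important in this world as tech continues to transform industries," Flynn said. "If that upskilling push doesn't come from your employer, then online courses and certifications would be an excellent way to do that for yourself. We just need those courses to be created. … I could even see organizations partnering with higher-education institutions that offer these courses to give their employees a better start. Of course, the challenge with an IoT course is that it will need to constantly evolve to keep up with new advancements in tech."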

+

+--------------------------------------------------------------------------------

+

+via: https://www.networkworld.com/article/3404489/where-are-all-the-iot-experts-going-to-come-from.html

+

+Author: [Fredric Paul][a]

+Selected by: [lujun9972][b]

+Translator: [Percy (@scvoet)](https://github.com/scvoet)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://www.networkworld.com/author/Fredric-Paul/

+[b]: https://github.com/lujun9972

+[1]: https://images.idgesg.net/images/article/2018/07/programmer_certification-skills_code_devops_glasses_student_by-kevin-unsplash-100764315-large.jpg

+[2]: https://www.aspentech.com/

+[3]: https://www.networkworld.com/article/3276025/careers/20-hot-jobs-ambitious-it-pros-should-shoot-for.html

+[4]: https://pluralsight.pxf.io/c/321564/424552/7490?u=https%3A%2F%2Fwww.pluralsight.com%2Fpaths%2Fupgrading-your-technology-career

+[5]: https://www.google.com/search?client=firefox-b-1-d&q=iot+training

+[6]: https://www.networkworld.com/article/3254185/internet-of-things/tips-for-securing-iot-on-your-network.html#nww-fsb

+[7]: https://www.networkworld.com/article/2287045/internet-of-things/wireless-153629-10-most-powerful-internet-of-things-companies.html#nww-fsb

+[8]: https://www.networkworld.com/article/3243928/internet-of-things/what-is-the-industrial-iot-and-why-the-stakes-are-so-high.html#nww-fsb

+[9]: https://www.networkworld.com/newsletters/signup.html#nww-fsb

+[10]: https://www.facebook.com/NetworkWorld/

+[11]: https://www.linkedin.com/company/network-world

diff --git a/published/20190810 EndeavourOS Aims to Fill the Void Left by Antergos in Arch Linux World.md b/published/20190810 EndeavourOS Aims to Fill the Void Left by Antergos in Arch Linux World.md

new file mode 100644

index 0000000000..54c752a913

--- /dev/null

+++ b/published/20190810 EndeavourOS Aims to Fill the Void Left by Antergos in Arch Linux World.md

@@ -0,0 +1,98 @@

+[#]: collector: (lujun9972)

+[#]: translator: (Chao-zhi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13096-1.html)

+[#]: subject: (EndeavourOS Aims to Fill the Void Left by Antergos in Arch Linux World)

+[#]: via: (https://itsfoss.com/endeavouros/)

+[#]: author: (John Paul https://itsfoss.com/author/john/)

+

+EndeavourOS aims to fill the void left by Antergos in the Arch Linux world

+======

+

+

+

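+I'm sure most of our readers know about the [end of the Antergos project][2]. In the wake of that announcement, members of the Antergos community created several distributions to succeed it. Today, we'll look at one of Antergos's "spiritual successors": [EndeavourOS][3].

+

+### EndeavourOS is not a fork of Antergos

+

+Before we start, I want to make it very clear that EndeavourOS is not a fork of Antergos. The developers used Antergos as their inspiration to create a lightweight Arch-based distribution.

+

+![Endeavouros First Boot][4]

+

+According to [the project's website][5], EndeavourOS came into existence because people in the Antergos community wanted to keep the spirit of Antergos alive. Their goal was simple: "to have Arch with an easy-to-use installer and a friendly, helpful community to fall back on while mastering the system."

+

+Unlike many Arch-based distributions, EndeavourOS is intended to be used like [vanilla Arch][5], "so no one-click solutions to install your favorite apps, and no heap of preinstalled apps you'll end up not needing." For most people, especially those new to Linux and Arch, there will be a learning curve, but EndeavourOS aims to build a large, friendly community where people are encouraged to ask questions and learn about their systems.

+

+![Endeavouros Installing][6]

+

+### A work in progress

+

+EndeavourOS was [first announced on May 23, 2019][8], and its [first release followed on July 15][7]. Unfortunately, this meant the developers couldn't fit in all the features they had planned. (LCTT translator's note: the original article was published in 2019; at the time of translation, EndeavourOS was still actively developed.)

+

+For example, they wanted an online installer similar to the one Antergos had, but ran into [problems with the existing options][9]. "Cnchi causes serious problems when run outside the Antergos ecosystem and needs a complete rewrite to work. The Fenix installer from RebornOS isn't fully in shape yet and needs more time to function properly." So for now, EndeavourOS ships with the [Calamares installer][10].

+

+EndeavourOS will also offer [less than Antergos did][9]: its repository is smaller than Antergos's, though it does ship some AUR packages. The goal is to provide a system that is close to vanilla Arch without being vanilla Arch.

+

+![Endeavouros Updating With Kalu][12]

+

+The developers [further state][13]:

+

+> "Linux, and specifically Arch, is about freedom of choice. We provide a basic install that lets you explore those choices conveniently, at a fine-grained level. We will never decide for you by, for example, installing GUI apps like Pamac or even adopting sandbox solutions like Flatpak or Snaps. What you install is entirely up to you. This is the main difference between us and Antergos or Manjaro, but like Antergos, we will do our best to help you if you run into a problem with one of your installed packages."

+

+### Experiencing EndeavourOS

+

+I installed EndeavourOS in [VirtualBox][14] and took a look around. When I first booted, I was greeted by a window with links to the installation pages on the EndeavourOS website. It also had an install button and a manual partitioning tool. Installation with the Calamares installer went very smoothly.

+

+After I rebooted into my fresh EndeavourOS installation, I was greeted by a colorfully themed XFCE desktop. I also received a bunch of notifications. Most Arch-based distributions I've used ship with a GUI package manager, such as [pamac][15] or [octopi][16], to handle system updates. EndeavourOS comes with [kalu][17] (short for "Keeping Arch Linux Up-to-date"). It can update packages, show Arch Linux news, update AUR packages, and more. Whenever it finds updates, it displays a notification.

+

+I browsed through the menu to see what was installed by default. The answer is not much, not even an office suite. They intend EndeavourOS to be a blank canvas on which anyone can build the system they want. They're headed in the right direction.

+

+![Endeavouros Desktop][18]

+

+### Final thoughts

+

+EndeavourOS is still very young. Its first stable release came out only recently. It is missing a few things, most importantly an online installer. That said, there's no telling how far it could go. (LCTT translator's note: this article was published in 2019.)

+

+Although it's not an exact clone of Antergos, EndeavourOS wants to replicate the most important part of Antergos: its warm, friendly community. All too often, the Linux community can seem unwelcoming or even downright hostile to beginners. I've seen more and more people trying to fight that negativity and bring more people into Linux. With the EndeavourOS team focusing on community building, I'm sure a great distribution will be the result.

+

+If you're currently running Antergos, there is a way to [switch to EndeavourOS without reinstalling your system][20].

+

+If you want an exact clone of Antergos, I recommend checking out [RebornOS][21]. They are currently working on a replacement for the Cnchi installer, named Fenix.

+

+Have you tried EndeavourOS? What was your experience like?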

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/endeavouros/

+

+Author: [John Paul][a]

+Selected by: [lujun9972][b]

+Translator: [Chao-zhi](https://github.com/Chao-zhi)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://itsfoss.com/author/john/

+[b]: https://github.com/lujun9972

+[1]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/08/endeavouros-logo.png?ssl=1

+[2]: https://itsfoss.com/antergos-linux-discontinued/

+[3]: https://endeavouros.com/

+[4]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/08/endeavouros-first-boot.png?resize=800%2C600&ssl=1

+[5]: https://endeavouros.com/info-2/

+[6]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/08/endeavouros-installing.png?resize=800%2C600&ssl=1

+[7]: https://endeavouros.com/endeavouros-first-stable-release-has-arrived/

+[8]: https://forum.antergos.com/topic/11780/endeavour-antergos-community-s-next-stage

+[9]: https://endeavouros.com/what-to-expect-on-the-first-release/

+[10]: https://calamares.io/

+[11]: https://itsfoss.com/veltos-linux/

+[12]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/08/endeavouros-updating-with-kalu.png?resize=800%2C600&ssl=1

+[13]: https://endeavouros.com/second-week-after-the-stable-release/

+[14]: https://itsfoss.com/install-virtualbox-ubuntu/

+[15]: https://aur.archlinux.org/packages/pamac-aur/

+[16]: https://octopiproject.wordpress.com/

+[17]: https://github.com/jjk-jacky/kalu

+[18]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/08/endeavouros-desktop.png?resize=800%2C600&ssl=1

+[19]: https://itsfoss.com/clear-linux/

+[20]: https://forum.endeavouros.com/t/how-to-switch-from-antergos-to-endevouros/105/2

+[21]: https://rebornos.org/

diff --git a/published/20191227 The importance of consistency in your Python code.md b/published/20191227 The importance of consistency in your Python code.md

new file mode 100644

index 0000000000..5ff2d11caf

--- /dev/null

+++ b/published/20191227 The importance of consistency in your Python code.md

@@ -0,0 +1,72 @@

+[#]: collector: (lujun9972)

+[#]: translator: (stevenzdg988)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13082-1.html)

+[#]: subject: (The importance of consistency in your Python code)

+[#]: via: (https://opensource.com/article/19/12/zen-python-consistency)

+[#]: author: (Moshe Zadka https://opensource.com/users/moshez)

+

+The importance of consistency in your Python code

+======

+

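+> This is part of a special series on the Zen of Python, focusing on the 12th, 13th, and 14th principles: the roles of ambiguity and obviousness.

+

+

+

+The principle of least surprise is a [guideline][2] for designing user interfaces. It says that when a user performs an action, the program should do whatever surprises the user least. It's the same reason children love reading the same book over and over: nothing is more comforting than being able to predict something and having the prediction come true.

+

+A key insight in the development of the [ABC language][3], Python's inspiration, was that programming language designs are user interfaces and need to be designed with the same tools UI designers use. Thankfully, since then, more and more languages have adopted the concepts of affordance and ergonomics from UI design, even if they don't apply them strictly.

+

+This brings us to three principles in the [Zen of Python][4].

+

+### In the face of ambiguity, refuse the temptation to guess

+

+What should the result of `1 + "1"` be? Both `"11"` and `2` are guesses. The expression is *ambiguous*: whatever it does will surprise someone.

+

+Some languages choose to guess. In JavaScript, the result is `"11"`. In Perl, the result is `2`. In C, naturally, the result is the empty string. In the face of ambiguity, JavaScript, Perl, and C all guess.

+

+In Python, this raises a `TypeError`: an error that is not silent. It's atypical to catch a `TypeError`: it will usually terminate the program or at least the current task (for example, in most web frameworks, it terminates the handling of the current request).

+

+Python refuses to guess what `1 + "1"` means. The programmer has to write code with explicit intent: `1 + int("1")`, which is `2`; or `str(1) + "1"`, which is `"11"`; or `"1"[1:]`, which is an empty string. By refusing to guess, Python makes programs more predictable.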
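
The behavior described above can be checked directly. The following is a minimal sketch, not taken from the article: the helper name `add_explicitly` is made up for illustration, and it simply collects the failed guess alongside the three explicit spellings the text lists.

```python
def add_explicitly(number, text):
    """Return what happens when Python is asked to guess, plus the
    three explicit alternatives named in the article."""
    try:
        number + text  # ambiguous: Python raises instead of guessing
        guess = "no error"
    except TypeError as exc:
        guess = f"TypeError: {exc}"

    as_int = number + int(text)   # the arithmetic reading
    as_str = str(number) + text   # the concatenation reading
    empty = text[1:]              # the result the article attributes to C
    return guess, as_int, as_str, empty


if __name__ == "__main__":
    for label, value in zip(
        ("guess", "as_int", "as_str", "empty"), add_explicitly(1, "1")
    ):
        print(f"{label}: {value!r}")
```

Each explicit form states the intent in the code itself, so a reader never has to know which way the language would have guessed.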

+

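+### There should be one, and preferably only one, obvious way to do it

+

+Prediction also goes the other way. Given a task, can you predict the code that will be written to accomplish it? Perfect prediction is impossible, of course. Programming is, after all, a creative task.

+

+But there's no reason to intentionally provide multiple, redundant ways to achieve the same thing. There is a sense in which some solutions are "better" or "more Pythonic."

+

+Part of the appreciation of the Pythonic aesthetic is that it's OK to have healthy debates about which solution is better. It's even OK to disagree and keep programming. It's even OK to agree to disagree for the sake of harmony. But underneath it all, there has to be a sense that the right solution will eventually come to light. We must hope that, by agreeing on the best way to achieve a goal, we will eventually reach true agreement.

+

+### Although that way may not be obvious at first (unless you're Dutch)

+

+This is an important caveat: at first, the best way to accomplish a task is often *not* obvious. Ideas keep evolving. Python keeps evolving. The best way to read a file block by block probably had to wait until Python 3.8 and the [walrus operator][5] (`:=`).

+

+A task as common as reading a file block by block had no "one best way" for almost *30 years* of Python's existence.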
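
As an illustration of the point about block-by-block reading, here is a hedged sketch comparing one common older idiom with the Python 3.8+ walrus form. The function names and the tiny in-memory stream are assumptions made for the example; the article itself does not show code.

```python
import io


def read_blocks_walrus(stream, block_size=4):
    """Python 3.8+: assign and test the block in the loop condition."""
    blocks = []
    while block := stream.read(block_size):
        blocks.append(block)
    return blocks


def read_blocks_classic(stream, block_size=4):
    """One pre-3.8 idiom: loop forever and break on an empty read."""
    blocks = []
    while True:
        block = stream.read(block_size)
        if not block:
            break
        blocks.append(block)
    return blocks


if __name__ == "__main__":
    data = b"0123456789"
    print(read_blocks_walrus(io.BytesIO(data)))
    print(read_blocks_classic(io.BytesIO(data)))
```

Both functions return the same list of blocks; the walrus version just removes the `while True` and `break` scaffolding.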

+

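+When I started using Python in 1998 with Python 1.5.2, there was no single best way to read a file line by line. For many years, the best way to know whether a dictionary had a key was to call its `.has_key` method, until the `in` operator came along.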
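
For readers who never met the older spelling, this small sketch shows the membership test as it is written today. The dictionary contents and the helper name `has_item` are invented for the example; the final check relies on the fact that `dict.has_key` was removed in Python 3.

```python
def has_item(mapping, key):
    """The modern membership test: the `in` operator."""
    return key in mapping


# In Python 2 this was also spelled mapping.has_key(key);
# Python 3 dictionaries no longer provide that method.
LEGACY_SPELLING_AVAILABLE = hasattr(dict, "has_key")

if __name__ == "__main__":
    inventory = {"apples": 3}
    print(has_item(inventory, "apples"))
    print(has_item(inventory, "pears"))
    print(LEGACY_SPELLING_AVAILABLE)
```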

+

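+It just takes appreciating that finding the one (and only one) way to achieve a goal can take 30 years of trying out alternatives for Python to keep looking for those ways. This view of history, where 30 years is an acceptable amount of time for something to take, often feels foreign to people in the United States, a country that has existed for just over 200 years.

+

+From this part of the Zen of Python, the Dutch, whether Python's creator [Guido van Rossum][6] or the famous computer scientist [Edsger W. Dijkstra][7], see the world differently. A certain European sense of time is essential to appreciate this part.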

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/12/zen-python-consistency

+

+Author: [Moshe Zadka][a]

+Selected by: [lujun9972][b]

+Translator: [stevenzdg988](https://github.com/stevenzdg988)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://opensource.com/users/moshez

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/rh_003499_01_other11x_cc.png?itok=I_kCDYj0 (Two animated computers waving one missing an arm)

+[2]: https://www.uxpassion.com/blog/the-principle-of-least-surprise/

+[3]: https://en.wikipedia.org/wiki/ABC_(programming_language)

+[4]: https://www.python.org/dev/peps/pep-0020/

+[5]: https://www.python.org/dev/peps/pep-0572/#abstract

+[6]: https://en.wikipedia.org/wiki/Guido_van_Rossum

+[7]: http://en.wikipedia.org/wiki/Edsger_W._Dijkstra

diff --git a/published/20191228 The Zen of Python- Why timing is everything.md b/published/20191228 The Zen of Python- Why timing is everything.md

new file mode 100644

index 0000000000..6ebab5320a

--- /dev/null

+++ b/published/20191228 The Zen of Python- Why timing is everything.md

@@ -0,0 +1,45 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13103-1.html)

+[#]: subject: (The Zen of Python: Why timing is everything)

+[#]: via: (https://opensource.com/article/19/12/zen-python-timeliness)

+[#]: author: (Moshe Zadka https://opensource.com/users/moshez)

+

+The Zen of Python: Why timing is everything

+======

+

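+> This is part of a special series on the Zen of Python, focusing on the 15th and 16th principles: now vs. never.

+

+

+

+Python is always evolving. The Python community has an unending appetite for feature requests and is never satisfied with the status quo. As Python becomes more popular, changes to the language affect more people.

+

+Deciding when a change should happen is often hard, but the [Zen of Python][2] offers guidance.

+

+### Now is better than never

+

+There is always a temptation to delay things until they are perfect, even though they never will be. When they look "ready" enough, take the plunge and make the change. A change always happens at *some* now, in any case: the only thing delay does is move it to a future "now."

+

+### Although never is often better than right now

+

+This doesn't mean things should be rushed, though. Decide the criteria for release in terms of testing, documentation, user feedback, and so on. The "right now" before the change is ready is not a good time.

+

+This is a good lesson not just for popular languages like Python, but also for your own little open source project.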

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/12/zen-python-timeliness

+

+Author: [Moshe Zadka][a]

+Selected by: [lujun9972][b]

+Translator: [wxy](https://github.com/wxy)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://opensource.com/users/moshez

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/desk_clock_job_work.jpg?itok=Nj4fuhl6 (Clock, pen, and notepad on a desk)

+[2]: https://www.python.org/dev/peps/pep-0020/

diff --git a/published/20191229 How to tell if implementing your Python code is a good idea.md b/published/20191229 How to tell if implementing your Python code is a good idea.md

new file mode 100644

index 0000000000..1a2358a77c

--- /dev/null

+++ b/published/20191229 How to tell if implementing your Python code is a good idea.md

@@ -0,0 +1,47 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13116-1.html)

+[#]: subject: (How to tell if implementing your Python code is a good idea)

+[#]: via: (https://opensource.com/article/19/12/zen-python-implementation)

+[#]: author: (Moshe Zadka https://opensource.com/users/moshez)

+

+How to tell if implementing your Python code is a good idea

+======

+

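+> This is part of a special series on the Zen of Python, focusing on the 17th and 18th principles: hard vs. easy.

+

+

+

+A language does not exist in the abstract. Every language feature has to be implemented in code. It is easy to promise features, but implementing them can get hairy. A hairy implementation means more potential for bugs and, even worse, a day-in, day-out maintenance burden.

+

+The [Zen of Python][2] has answers to this conundrum.

+

+### If the implementation is hard to explain, it's a bad idea

+

+The most important thing about a programming language is predictability. Sometimes we explain the semantics of a construct in terms of abstract programming models that don't correspond exactly to the implementation. However, the best explanation of all is simply to *explain the implementation*.

+

+If the implementation is hard to explain, that route is a dead end.

+

+### If the implementation is easy to explain, it may be a good idea

+

+Just because something is easy doesn't mean it's worthwhile. However, once it's explained, it is much easier to judge whether it's a good idea.

+

+This is why the second half of this principle is intentionally vague: nothing is guaranteed to be a good idea, but it can always be discussed.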

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/12/zen-python-implementation

+

+Author: [Moshe Zadka][a]

+Selected by: [lujun9972][b]

+Translator: [wxy](https://github.com/wxy)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://opensource.com/users/moshez

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/devops_confusion_wall_questions.png?itok=zLS7K2JG (Brick wall between two people, a developer and an operations manager)

+[2]: https://www.python.org/dev/peps/pep-0020/

diff --git a/published/20191230 Namespaces are the shamash candle of the Zen of Python.md b/published/20191230 Namespaces are the shamash candle of the Zen of Python.md

new file mode 100644

index 0000000000..dc98e7afc0

--- /dev/null

+++ b/published/20191230 Namespaces are the shamash candle of the Zen of Python.md

@@ -0,0 +1,58 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13123-1.html)

+[#]: subject: (Namespaces are the shamash candle of the Zen of Python)

+[#]: via: (https://opensource.com/article/19/12/zen-python-namespaces)

+[#]: author: (Moshe Zadka https://opensource.com/users/moshez)

+

+Namespaces are the shamash candle of the Zen of Python

+======

+

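+> This is part of a special series on the Zen of Python, focusing on one bonus principle: namespaces.

+

+

+

+Hanukkah famously has eight nights of celebration. The Hanukkah menorah, however, has nine candles: eight regular candles and a ninth that is always offset. It is called the "shamash" or "shamos," which loosely translates to "servant" or "janitor."

+

+The shamos is the candle that lights all the others: it is the only candle whose fire may be used, not just looked at. As we wrap up our series on the Zen of Python, I see namespaces playing a similar role.

+

+### Namespaces in Python

+

+Python uses namespaces for everything. Though simple, they are sparse data structures, which is often the best way to achieve a goal.

+

+> A *namespace* is a mapping from names to objects.

+>

+> -- [Python.org][2]

+

+Modules are namespaces. This means that correctly predicting module semantics often just requires familiarity with how Python namespaces work. Classes are namespaces. Objects are namespaces. Functions have access to their local namespace, their parent namespace, and the global namespace.

+

+This simple model, in which the `.` operator accesses an object, which in turn will usually, but not always, do some sort of dictionary lookup, makes Python hard to optimize but easy to explain.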
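
The "dictionary lookup behind the dot" model can be seen in a few lines. This is a minimal sketch under stated assumptions: the module name `demo`, the class `Greeter`, and the attribute names are all invented for the example, and it only covers the common case where attributes live in a plain `__dict__`.

```python
import types

# A module is a namespace: its attributes live in module.__dict__.
demo = types.ModuleType("demo")  # hypothetical module, built in memory
demo.answer = 42


class Greeter:
    # A class is a namespace: class attributes live in Greeter.__dict__.
    greeting = "hello"

    def __init__(self, name):
        # An instance is a namespace: instance attributes live in vars(self).
        self.name = name


greeter = Greeter("world")

module_lookup = demo.__dict__["answer"]
class_lookup = Greeter.__dict__["greeting"]
instance_lookup = vars(greeter)["name"]

# "usually, but not always": greeting is not stored on the instance,
# so greeter.greeting falls back to the class namespace.
found_on_instance = "greeting" in vars(greeter)
via_dot = greeter.greeting

if __name__ == "__main__":
    print(module_lookup, class_lookup, instance_lookup)
    print(found_on_instance, via_dot)
```

The fallback from instance to class is one reason the lookup is described as "usually" a dictionary access rather than always a single one.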

+

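+In fact, some third-party modules have taken this guideline and run with it. For example, the [variants][3] package turns functions into namespaces of "related functionality." It's a good example of how the [Zen of Python][4] can inspire new abstractions.

+

+### In conclusion

+

+Thank you for joining me on this Hanukkah-inspired exploration of [my favorite language][5].

+

+Go forth and meditate on the Zen until you reach enlightenment.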

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/12/zen-python-namespaces

+

+Author: [Moshe Zadka][a]

+Selected by: [lujun9972][b]

+Translator: [wxy](https://github.com/wxy)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://opensource.com/users/moshez

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/computer_code_programming_laptop.jpg?itok=ormv35tV (Person programming on a laptop on a building)

+[2]: https://docs.python.org/3/tutorial/classes.html

+[3]: https://pypi.org/project/variants/

+[4]: https://www.python.org/dev/peps/pep-0020/

+[5]: https://opensource.com/article/19/10/why-love-python

diff --git a/published/20200419 Getting Started With Pacman Commands in Arch-based Linux Distributions.md b/published/20200419 Getting Started With Pacman Commands in Arch-based Linux Distributions.md

new file mode 100644

index 0000000000..8171a81ede

--- /dev/null

+++ b/published/20200419 Getting Started With Pacman Commands in Arch-based Linux Distributions.md

@@ -0,0 +1,244 @@

+[#]: collector: (lujun9972)

+[#]: translator: (Chao-zhi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13099-1.html)

+[#]: subject: (Getting Started With Pacman Commands in Arch-based Linux Distributions)

+[#]: via: (https://itsfoss.com/pacman-command/)

+[#]: author: (Dimitrios Savvopoulos https://itsfoss.com/author/dimitrios/)

+

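+Getting Started With Pacman Commands in Arch-based Linux Distributions

+======

+

+> This beginner's guide shows you what you can do with pacman commands in Linux: how to use them to find new packages, install and upgrade packages, and clean up your system.

+

+The [pacman][1] package manager is one of the main differences between [Arch Linux][2] and other major distributions such as Red Hat and Ubuntu/Debian. It combines a simple binary package format with an easy-to-use [build system][3]. The goal of `pacman` is to make it easy to manage packages, whether they come from the [official repositories][4] or from the user's own builds.

+

+If you have ever used Ubuntu or a Debian-based distribution, you may have used the `apt-get` or `apt` commands. `pacman` is the equivalent in Arch Linux. If you [just installed Arch Linux][5], one of the first [few things to do][6] afterwards is to learn to use `pacman` commands.

+

+In this beginner's guide, I'll explain some of the essential usages of the `pacman` command that you should know for managing your Arch-based system.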

+

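+### Essential pacman commands Arch Linux users should know

+

+

+

+Like other package managers, `pacman` can synchronize package lists with the software repositories, and it automatically resolves all required dependencies so that the user can download and install software with a single command.

+

+#### Install packages with pacman

+

+You can install a single package or multiple packages with a command of the following form:

+

+```

+pacman -S package_name1 package_name2 ...

+```

+

+![Installing a package][8]

+

+The `-S` option stands for synchronize: `pacman` installs the requested packages from the synchronized repository databases.

+

+The `pacman` database groups installed packages into two categories according to the reason they were installed:

+

+ * **explicitly installed**: packages installed directly by a `pacman -S` or `-U` command

+ * **dependencies**: packages installed automatically because another explicitly installed package [depends][9] on them.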

+

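+#### Remove an installed package

+

+Remove a single package, leaving all of its dependencies installed:

+

+```

+pacman -R package_name

+```

+

+![Removing a package][10]

+

+Remove a package together with those of its dependencies that are not required by any other installed package:

+

+```

+pacman -Rs package_name

+```

+

+To remove all dependencies that are no longer needed, for example because the package that required them has already been removed: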

+

+```

+pacman -Qdtq | pacman -Rs -

+```
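
To make the `-Qdtq` selection rule concrete, here is a toy model in Python. It is only a sketch of the idea ("installed as a dependency" and "required by nothing"), not how `pacman` is implemented; the package names and the dependency graph are invented for illustration.

```python
# Toy model of a local package database: name -> (install reason, dependencies).
# All package names and relationships below are made up for this sketch.
installed = {
    "editor": ("explicit", {"libtext"}),
    "libtext": ("dependency", set()),
    "libold": ("dependency", set()),  # nothing depends on this any more
}


def find_orphans(db):
    """Return packages installed as dependencies that no installed package requires."""
    required = set()
    for _reason, deps in db.values():
        required |= deps
    return sorted(
        name
        for name, (reason, _deps) in db.items()
        if reason == "dependency" and name not in required
    )


orphans = find_orphans(installed)
```

In this model `libold` is the only orphan: `libtext` is also a dependency, but `editor` still needs it, and `editor` itself is excluded because it was installed explicitly.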

+

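+#### Upgrade packages

+

+`pacman` provides an easy way to [upgrade Arch Linux][11]. You can update all installed packages with a single command. This may take a while, depending on how up to date the system is.

+

+The following command synchronizes the repository databases *and* updates all of the system's packages, excluding "local" packages that are not in the configured repositories: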

+

+```

+pacman -Syu

+```

+

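+ * `S` stands for sync

+ * `y` stands for refreshing the local package database

+ * `u` stands for system upgrade

+

+In other words, it synchronizes with the central repository (the master package database), refreshes the local copy of the master package database, and then performs the system upgrade (by updating every package that has a newer version available).

+

+![System update][12]

+

+> Attention!

+>

+> For Arch Linux users: before upgrading, it is advisable to visit the [Arch Linux home page][2] to check the latest news about out-of-the-ordinary updates. If an update requires manual intervention, a news item will be posted there. You can also subscribe to the [RSS feed][13] or the [Arch announcement mailing list][14].

+>

+> Before upgrading fundamental software (such as the kernel, xorg, systemd, or glibc), also check the relevant [forum][15] for any problems that have been reported.

+>

+> **Partial upgrades** are unsupported in rolling-release distributions such as Arch and Manjaro. This means that when a new library version is pushed to the repositories, all the packages in the repositories need to be upgraded against it. For example, if two packages depend on the same library, upgrading only one of them might break the other, which still depends on the older version of the library.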

+

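+#### Search for packages with pacman

+

+`pacman` queries the local package database with the `-Q` flag, the sync database with the `-S` flag, and the files database with the `-F` flag.

+

+`pacman` can search for packages in the database, matching both package names and descriptions:

+

+```

+pacman -Ss string1 string2 ...

+```

+

+![Searching for a package][16]

+

+To search among already installed packages:

+

+```

+pacman -Qs string1 string2 ...

+```

+

+To find which remote package a file name belongs to:

+

+```

+pacman -F string1 string2 ...

+```

+

+To view the dependency tree of a package:

+

+```

+pactree package_name

+```

+

+#### Clean the package cache

+

+`pacman` stores its downloaded packages in `/var/cache/pacman/pkg/` and does not remove old or uninstalled versions automatically. This has some advantages:

+

+ 1. It allows you to [downgrade][17] a package without having to retrieve the previous version from another source.

+ 2. A package that has been uninstalled can easily be reinstalled directly from the cache folder.

+

+However, it is necessary to clean up the cache periodically to prevent the folder from growing too large.

+

+The [paccache(8)][18] script, provided in the [pacman-contrib][19] package, by default deletes all cached versions of installed and uninstalled packages, except for the 3 most recent ones: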

+

+```

+paccache -r

+```
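
The "keep the 3 most recent versions" rule can be sketched in a few lines of Python. This is an illustration of the retention policy only, under a simplifying assumption: versions are represented by a plain integer rank (higher means newer), whereas real package versions need pacman's own version comparison. The function name and the sample cache contents are hypothetical.

```python
from collections import defaultdict


def select_for_removal(cached, keep=3):
    """Pick cache entries to delete, keeping the `keep` newest versions per package.

    `cached` is a list of (package_name, version_rank) pairs, where a higher
    rank means a newer version (a simplification made for this sketch).
    """
    by_package = defaultdict(list)
    for name, rank in cached:
        by_package[name].append(rank)

    removal = []
    for name, ranks in by_package.items():
        ranks.sort(reverse=True)  # newest first
        removal.extend((name, rank) for rank in ranks[keep:])
    return sorted(removal)


# Hypothetical cache contents: five cached versions of one package, one of another.
cache = [("vim", 1), ("vim", 2), ("vim", 3), ("vim", 4), ("vim", 5), ("htop", 1)]
to_remove = select_for_removal(cache)
```

With the sample data, only the two oldest `vim` entries are selected; `htop` has fewer than three cached versions, so nothing of it is removed.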

+

+![Clearing the cache][20]

+

+To remove all cached packages that are not currently installed, along with the unused sync databases, run:

+

+```

+pacman -Sc

+```

+

+To remove all files from the cache, use the clean switch twice. This is the most aggressive approach and leaves nothing in the cache folder:

+

+```

+pacman -Scc

+```

+

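+#### Install local or third-party packages

+

+Install a "local" package that does not come from a remote repository:

+

+```

+pacman -U path_to_file.pkg.tar.xz

+```

+

+Install a "remote" package that is not contained in an official repository: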

+

+```

+pacman -U http://www.example.com/repo/example.pkg.tar.xz

+```

+

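+### Bonus: troubleshooting common errors with pacman

+

+Here are some common errors you may encounter while managing packages with `pacman`.

+

+#### Failed to commit transaction (conflicting files)

+

+If you see the following error: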

+

+```

+error: could not prepare transaction

+error: failed to commit transaction (conflicting files)

+package: /path/to/file exists in filesystem

+Errors occurred, no packages were upgraded.

+```

+

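+This happens because `pacman` has detected a file conflict and will not overwrite files for you.

+

+A safe way to solve this is to first check whether another package owns the file (`pacman -Qo /path/to/file`). If the file is owned by another package, file a bug report. If the file is not owned by another package, rename the file that "exists in filesystem" and re-issue the update command. If all goes well, the renamed file may then be removed.

+

+Instead of manually renaming and later removing all the files that belong to the package in question, you can explicitly run `pacman -S --overwrite glob package` to force `pacman` to overwrite files that match the glob.

+

+#### Failed to commit transaction (invalid or corrupted package)

+

+Look for `.part` files (partially downloaded packages) in `/var/cache/pacman/pkg/` and remove them. This is often caused by using a custom `XferCommand` in `pacman.conf`.

+

+#### Failed to init transaction (unable to lock database)

+

+When `pacman` is about to alter the package database, for example when installing a package, it creates a lock file at `/var/lib/pacman/db.lck`. This prevents another instance of `pacman` from trying to alter the package database at the same time.

+

+If `pacman` is interrupted while changing the database, this stale lock file can remain. If you are certain that no instances of `pacman` are running, delete the lock file.

+

+Check whether a process is holding the lock file: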

+

+```

+lsof /var/lib/pacman/db.lck

+```

+

+If the above command doesn't return anything, you can remove the lock file:

+

+```

+rm /var/lib/pacman/db.lck

+```

+

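+If the `lsof` command outputs the PID of a process that is using the lock file, kill that process first and then remove the lock file.

+

+I hope you enjoyed my introduction to the basic `pacman` commands.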
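
The lock-file behavior described in this section (one process creates the file, a second one is refused, a leftover file blocks everyone until it is deleted) can be demonstrated with a generic sketch. This is not pacman's actual code; it only shows the common "create exclusively or fail" pattern, and it uses a temporary directory rather than `/var/lib/pacman/db.lck`. The helper names are hypothetical.

```python
import os
import tempfile


def acquire_lock(path):
    """Atomically create the lock file; return False if it already exists."""
    try:
        # O_EXCL makes creation fail if the file is already there.
        fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    os.close(fd)
    return True


def release_lock(path):
    """Delete the lock file so the next caller can acquire it."""
    os.remove(path)


# Use a throwaway directory so the sketch never touches a real database lock.
lock_path = os.path.join(tempfile.mkdtemp(), "db.lck")

first = acquire_lock(lock_path)   # the first "instance" gets the lock
second = acquire_lock(lock_path)  # a second one is refused while it exists
release_lock(lock_path)           # like deleting a stale lock file by hand
third = acquire_lock(lock_path)   # now acquisition works again
release_lock(lock_path)
```

If the first process had been interrupted before calling `release_lock`, the file would stay behind and every later attempt would fail, which is the stale-lock situation the text describes.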

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/pacman-command/

+

+Author: [Dimitrios Savvopoulos][a]

+Topic selection: [lujun9972][b]

+Translator: [Chao-zhi](https://github.com/Chao-zhi)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

+

+[a]: https://itsfoss.com/author/dimitrios/

+[b]: https://github.com/lujun9972

+[1]: https://www.archlinux.org/pacman/

+[2]: https://www.archlinux.org/

+[3]: https://wiki.archlinux.org/index.php/Arch_Build_System

+[4]: https://wiki.archlinux.org/index.php/Official_repositories

+[5]: https://itsfoss.com/install-arch-linux/

+[6]: https://itsfoss.com/things-to-do-after-installing-arch-linux/

+[7]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/04/essential-pacman-commands.jpg?ssl=1

+[8]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/sudo-pacman-S.png?ssl=1

+[9]: https://wiki.archlinux.org/index.php/Dependency

+[10]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/04/sudo-pacman-R.png?ssl=1

+[11]: https://itsfoss.com/update-arch-linux/

+[12]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/04/sudo-pacman-Syu.png?ssl=1

+[13]: https://www.archlinux.org/feeds/news/

+[14]: https://mailman.archlinux.org/mailman/listinfo/arch-announce/

+[15]: https://bbs.archlinux.org/

+[16]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/04/sudo-pacman-Ss.png?ssl=1

+[17]: https://wiki.archlinux.org/index.php/Downgrade

+[18]: https://jlk.fjfi.cvut.cz/arch/manpages/man/paccache.8

+[19]: https://www.archlinux.org/packages/?name=pacman-contrib

+[20]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/04/sudo-paccache-r.png?ssl=1

diff --git a/published/20200529 A new way to build cross-platform UIs for Linux ARM devices.md b/published/20200529 A new way to build cross-platform UIs for Linux ARM devices.md

new file mode 100644

index 0000000000..9a742f2eb2

--- /dev/null

+++ b/published/20200529 A new way to build cross-platform UIs for Linux ARM devices.md

@@ -0,0 +1,170 @@

+[#]: collector: (lujun9972)

+[#]: translator: (Chao-zhi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13129-1.html)

+[#]: subject: (A new way to build cross-platform UIs for Linux ARM devices)

+[#]: via: (https://opensource.com/article/20/5/linux-arm-ui)

+[#]: author: (Bruno Muniz https://opensource.com/users/brunoamuniz)

+

+A new way to build cross-platform UIs for Linux ARM devices

+======

+

+> Combining AndroidXML and TotalCross offers a simpler way to create UIs for the Raspberry Pi and other devices.

+

+

+

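+Creating a great user experience (UX) for your applications is a tough job, especially if you are developing embedded applications. Today, two kinds of graphical user interface (GUI) tools are generally available for developing embedded software: they either involve complex technologies or are extremely expensive.

+

+However, we have created a proof of concept (PoC) for a new way to use existing, well-established tools to build user interfaces (UIs) for applications that run on desktop, mobile, embedded devices, and low-power ARM devices. Our approach uses Android Studio to draw the UI; [TotalCross][2] to render the Android XML on the device; a new [TotalCross API][3] called [KnowCode][4]; and a [Raspberry Pi 4][5] to execute the application.

+

+### Choosing Android Studio

+

+It is possible to build a beautiful, responsive user experience for an application using the TotalCross API alone, but creating the UI in Android Studio shortens the time between prototyping and the real application.

+

+There are many tools available for building application UIs, but [Android Studio][6] is the one that developers worldwide use most often. In addition to its massive adoption, the tool is very intuitive to use, and it is powerful enough for creating both simple and complex applications. The only drawback, in my opinion, is the computing power required to use the tool, which is far heavier than other integrated development environments (IDEs) such as VSCode or its open source alternative, [VSCodium][7].

+

+Thinking through these issues, we created a proof of concept that uses Android Studio to draw the UI and TotalCross to run the Android XML directly on the device.

+

+### Building the UI

+

+For our PoC, we wanted to create a home-appliance application to control temperature and other things, running on a Linux ARM device.

+

+![Home appliance application to control thermostat][8]

+

+We wanted to develop our application for a Raspberry Pi, so we used Android's [ConstraintLayout][10] to build a fixed-screen-size UI of 848x480 (the Raspberry Pi's resolution), but you can build responsive UIs with other layouts.

+

+Android XML adds a lot of flexibility to UI creation, making it easy to build rich user experiences for applications. In the XML below, we used two main components: [ImageView][11] and [TextView][12].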

+

+```

+

+

+```

+

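+TextView elements are used to show some data to the user, such as the temperature inside a building. Most ImageViews are used as buttons for user interaction with the UI, but they also need to implement the events provided by the components on the screen.

+

+### Integrating with TotalCross

+

+The second technology in this PoC is TotalCross. We did not want to use anything from Android on the device because:

+

+ 1. Our goal is to provide a great UI for Linux ARM.

+ 2. We want to achieve a low footprint on the device.

+ 3. We want the application to run on low-end hardware devices with low computing power (e.g., no GPU, low RAM, etc.).

+

+To begin, we created an empty TotalCross project using the [VSCode plugin][13]. Next, we saved a copy of the images in the `drawable` folder and a copy of the Android XML file in the `xml` folder, both of which are located inside the `resources` folder:

+

+![Home Appliance file structure][14]

+

+To run the XML file with the TotalCross simulator, we added a new TotalCross API called KnowCode and a MainWindow to load the XML. The code below uses the API to load and render the XML: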

+

+```

+public void initUI() {

+ XmlScreenAbstractLayout xmlCont = XmlScreenFactory.create("xml/homeApplianceXML.xml");

+ swap(xmlCont);

+}

+```

+

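+That's it! With just two commands, we can run an Android XML file using TotalCross. Here is how the XML performs on TotalCross' simulator:

+

+![TotalCross simulator running temperature application][15]

+

+There are two things remaining to finish this PoC: adding some events to provide user interaction and running it on a Raspberry Pi.

+

+### Adding events

+

+The KnowCode API provides a way to get an XML element by its ID (`getControlByID`) and change its behavior to do things like adding events, changing visibility, and more.

+

+For example, to enable users to change the temperature in their home or other building, we put plus and minus buttons at the bottom of the UI and a "click" event that raises or lowers the temperature by one degree each time a button is clicked: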

+

+```

+Button plus = (Button) xmlCont.getControlByID("@+id/plus");

+Label insideTempLabel = (Label) xmlCont.getControlByID("@+id/insideTempLabel");

+plus.addPressListener(new PressListener() {

+ @Override

+ public void controlPressed(ControlEvent e) {

+ try {

+ String tempString = insideTempLabel.getText();

+ int temp;

+ temp = Convert.toInt(tempString);

+ insideTempLabel.setText(Convert.toString(++temp));

+ } catch (InvalidNumberException e1) {

+ e1.printStackTrace();

+ }

+ }

+});

+```

+

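+### Testing on a Raspberry Pi 4

+

+Finally, the last step! We ran the application on a device and checked the results. We just need to package the application and deploy and run it on the target device. [VNC][19] can also be used to check the application on the device.

+

+The entire application, including resources (images, etc.), the Android XML, TotalCross, and the KnowCode API, is about 8MB on Linux ARM.

+

+Here is a demo of the application:

+

+![Application demo][20]

+

+In this example, the application was packaged only for Linux ARM, but the same app can run as a Linux desktop app and on Android devices, Windows, Windows CE, and even iOS.

+

+All of the sample source code and the project are available in the [HomeApplianceXML GitHub][21] repository.

+

+### New possibilities for existing tools

+

+Creating GUIs for embedded applications doesn't need to be as hard as it is today. This proof of concept brings a new perspective on how to carry out this task easily, not only for embedded systems but for all major operating systems, all using the same code base.

+

+We are not aiming to create a new tool for designers or developers to build UI applications; our goal is to provide new possibilities for using the best tools that are already available.

+

+What is your opinion of this new way of building apps? Share your thoughts in the comments below.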

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/20/5/linux-arm-ui

+

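+Author: [Bruno Muniz][a]

+Topic selection: [lujun9972][b]

+Translator: [Chao-zhi](https://github.com/Chao-zhi)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)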

+

+[a]: https://opensource.com/users/brunoamuniz

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/computer_desk_home_laptop_browser.png?itok=Y3UVpY0l (Digital images of a computer desktop)

+[2]: https://totalcross.com/

+[3]: https://yourapp.totalcross.com/knowcode-app

+[4]: https://github.com/TotalCross/KnowCodeXML

+[5]: https://www.raspberrypi.org/

+[6]: https://developer.android.com/studio

+[7]: https://vscodium.com/

+[8]: https://opensource.com/sites/default/files/uploads/homeapplianceapp.png (Home appliance application to control thermostat)

+[9]: https://creativecommons.org/licenses/by-sa/4.0/

+[10]: https://codelabs.developers.google.com/codelabs/constraint-layout/index.html#0

+[11]: https://developer.android.com/reference/android/widget/ImageView

+[12]: https://developer.android.com/reference/android/widget/TextView

+[13]: https://medium.com/totalcross-community/totalcross-plugin-for-vscode-4f45da146a0a

+[14]: https://opensource.com/sites/default/files/uploads/homeappliancexml.png (Home Appliance file structure)

+[15]: https://opensource.com/sites/default/files/uploads/totalcross-simulator_0.png (TotalCross simulator running temperature application)

+[16]: http://www.google.com/search?hl=en&q=allinurl%3Adocs.oracle.com+javase+docs+api+button

+[17]: http://www.google.com/search?hl=en&q=allinurl%3Adocs.oracle.com+javase+docs+api+label

+[18]: http://www.google.com/search?hl=en&q=allinurl%3Adocs.oracle.com+javase+docs+api+string

+[19]: https://tigervnc.org/

+[20]: https://opensource.com/sites/default/files/uploads/application.gif (Application demo)

+[21]: https://github.com/TotalCross/HomeApplianceXML

diff --git a/published/20200607 Top Arch-based User Friendly Linux Distributions That are Easier to Install and Use Than Arch Linux Itself.md b/published/20200607 Top Arch-based User Friendly Linux Distributions That are Easier to Install and Use Than Arch Linux Itself.md

new file mode 100644

index 0000000000..11c43fd1f5

--- /dev/null

+++ b/published/20200607 Top Arch-based User Friendly Linux Distributions That are Easier to Install and Use Than Arch Linux Itself.md

@@ -0,0 +1,201 @@

+[#]: collector: (lujun9972)

+[#]: translator: (Chao-zhi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13104-1.html)

+[#]: subject: (Top Arch-based User Friendly Linux Distributions That are Easier to Install and Use Than Arch Linux Itself)

+[#]: via: (https://itsfoss.com/arch-based-linux-distros/)

+[#]: author: (Dimitrios Savvopoulos https://itsfoss.com/author/dimitrios/)

+

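+9 Easy-to-Use, User-Friendly Arch-based Linux Distributions

+======

+

+In the Linux community, [Arch Linux][1] has a cult following. This lightweight distribution provides bleeding-edge updates with a DIY (do it yourself) attitude.

+

+However, Arch is aimed at more experienced users. As such, it is generally considered to be beyond the reach of those who lack the technical expertise (or the patience) required to use it.

+

+In fact, the very first step, [installing Arch Linux, is enough to scare many people off][2]. Unlike most other distributions, Arch Linux doesn't have an easy-to-use graphical installer. Disk partitioning, connecting to the internet, mounting drives, and creating file systems during installation are all done using command-line tools alone.

+

+For those who do not want to go through a complicated installation and setup, there are a number of user-friendly Arch-based distributions.

+

+In this article, I'll show you some of these Arch alternative distributions. They come with a graphical installer, a graphical package manager, and other tools that are easier to use than their command-line counterparts.

+

+### Arch-based Linux distributions that are easier to set up and use

+

+

+

+Please note that this is not a ranking list. The numbers are only for counting purposes. The distribution at number two should not be considered better than the distribution at number seven.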

+

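+#### 1. Manjaro Linux

+

+![][4]

+

+[Manjaro][5] needs no introduction. It has been one of the most popular Linux distributions for several years, and it deserves it.

+

+Manjaro provides all the benefits of Arch Linux combined with a focus on user-friendliness and accessibility. Manjaro is suitable for both newcomers and experienced Linux users.

+

+**For newcomers**, it provides a user-friendly installer, and the system itself is designed to work fully "straight out of the box" with your [favorite desktop environment][6] (DE) or window manager.

+

+**For more experienced users**, Manjaro also offers versatility to suit every personal taste and preference. [Manjaro Architect][7] gives the option to install any Manjaro flavor and offers a choice of desktop environments, file systems ([recently introduced ZFS][8]), and bootloaders for those who want complete freedom to shape their system.

+

+Manjaro is also a rolling-release, cutting-edge distribution. However, unlike Arch, Manjaro tests the updates first and then provides them to its users. Stability is also given importance here.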

+

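+#### 2. ArcoLinux

+

+![][9]

+

+[ArcoLinux][10] (previously known as ArchMerge) is a distribution based on Arch Linux. The development team offers three variations: ArcoLinux, ArcoLinuxD, and ArcoLinuxB.

+

+ArcoLinux is a full-featured distribution that ships with the [Xfce desktop][11], [Openbox][12], and the [i3 window manager][13].

+

+**ArcoLinuxD** is a minimal distribution that includes scripts that enable power users to install any desktop and application.

+

+**ArcoLinuxB** is a project that gives users the power to build custom distributions, while also developing several community editions with pre-configured desktops, such as Awesome, bspwm, Budgie, Cinnamon, Deepin, GNOME, MATE, and KDE Plasma.

+

+ArcoLinux also provides various video tutorials, as it places a strong focus on learning and acquiring Linux skills.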

+

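+#### 3. ArchLabs Linux

+

+![][14]

+

+[ArchLabs Linux][15] is a lightweight rolling-release Linux distribution based on a minimal Arch Linux base with the [Openbox][16] window manager. [ArchLabs][17] is influenced and inspired by the look and feel of [BunsenLabs][18], with the intermediate to advanced user in mind.

+

+#### 4. Archman Linux

+

+![][19]

+

+[Archman][20] is an independent project. Arch Linux distributions are generally not ideal operating systems for users with little Linux experience. Considerable background knowledge is needed for things to make sense with minimal frustration. The developers of Archman Linux are trying to change that reputation.

+

+Archman's development is based on an understanding of development that includes user feedback and experience components. Drawing on the team's past experience, the feedback and requests from users are blended together, the roadmap is determined, and the build work is done.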

+

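+#### 5. EndeavourOS

+

+![][21]

+

+When the popular Arch-based distribution [Antergos was discontinued in 2019][22], it left behind a friendly and extremely helpful community. The Antergos project ended because the system was too hard for the developers to maintain.

+

+Within a matter of days after the announcement, a few experienced users set out to maintain the former community by creating a new distribution to fill the void left by Antergos. That is how [EndeavourOS][23] was born.

+

+[EndeavourOS][24] is lightweight and ships with a minimal number of preinstalled apps. It is an almost blank canvas, ready to personalize.

+

+#### 6. RebornOS

+

+![][25]

+

+The [RebornOS][26] developers' goal is to bring the true power of Linux to everyone, with one ISO that offers 15 desktop environments to choose from and unlimited opportunities for customization.

+

+RebornOS also claims to support [Anbox][27] for running Android applications on desktop Linux. It also offers a simple kernel manager GUI tool.

+

+Coupled with [Pacman][28], the [AUR][29], and a customized version of the Cnchi graphical installer, Arch Linux is finally available for even the least experienced users.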

+

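+#### 7. Chakra Linux

+

+![][30]

+

+A community-developed GNU/Linux distribution with an emphasis on KDE and Qt technologies. [Chakra Linux][31] does not schedule releases for specific dates but uses a "half-rolling release" system.

+

+This means that the core packages of Chakra Linux are frozen and only updated to fix security problems. These packages are updated after the latest versions have been thoroughly tested and are then moved to the permanent repository (about every six months).

+

+In addition to the official repositories, users can install packages from the Chakra Community Repository (CCR), which provides user-made PKGINFOs and [PKGBUILD][32] scripts for software that is not included in the official repositories and is inspired by the Arch User Repository (AUR).

+

+#### 8. Artix Linux

+

+![Artix Mate Edition][33]

+

+[Artix Linux][34] is also a rolling-release distribution based on Arch Linux. It uses [OpenRC][35], [runit][36], or [s6][37] as its init instead of [systemd][38].

+

+Artix Linux has its own package repositories but, as a `pacman`-based distribution, it can use packages from the Arch Linux repositories or any other derivative distribution, even packages that explicitly depend on systemd. The [Arch User Repository][29] (AUR) can also be used.

+

+#### 9. BlackArch Linux

+

+![][39]

+

+BlackArch is a [penetration testing distribution][40] based on Arch Linux that provides a large number of cyber security tools. It is created specifically for penetration testers and security researchers. The repository contains more than 2400 [hacking and pen-testing tools][41] that can be installed individually or in groups. BlackArch Linux is compatible with existing Arch Linux packages.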

+

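+### Want real vanilla Arch Linux? Simplify the installation with a graphical Arch installer

+

+If you want to use vanilla Arch Linux but are put off by its difficult installation, you are in luck: you can download an Arch Linux ISO that comes with a graphical installer.

+

+An Arch installer is basically an Arch Linux ISO with a relatively easy-to-use text-based installer. It is much easier than a bare-bones Arch installation.

+

+#### Anarchy Installer

+

+![][42]

+

+The [Anarchy installer][43] intends to provide both novice and experienced Linux users with a simple and pain-free way to install Arch Linux. Install when you want it, where you want it, and however you want it. That is the Anarchy philosophy.

+

+Once you boot up the installer, you'll be shown a simple [TUI menu][44] listing all the available installer options.

+

+#### Zen Installer

+

+![][45]

+

+The [Zen Installer][46] provides a full graphical (point-and-click) environment for installing Arch Linux. It supports installing multiple desktop environments, the AUR, and all of the power and flexibility of Arch Linux with the ease of a graphical installer.

+

+The ISO boots into a live environment and then downloads the most current stable version of the installer after you connect to the internet. So, you will always get the newest installer with updated features.

+

+### Conclusion

+

+An Arch-based distribution is an excellent no-hassle choice for many users, while a graphical installer like Anarchy takes you at least a step closer to vanilla Arch Linux.

+

+In my opinion, the [real beauty of Arch Linux is its installation process][2]; for a Linux enthusiast, it is a learning opportunity rather than a hassle. Arch Linux and its derivatives offer a lot for you to tinker with, and in the process of tinkering you enter the world of open source software, which is a magical new world. Until next time!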

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/arch-based-linux-distros/

+

+Author: [Dimitrios Savvopoulos][a]

+Topic selection: [lujun9972][b]

+Translator: [Chao-zhi](https://github.com/Chao-zhi)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

+

+[a]: https://itsfoss.com/author/dimitrios/

+[b]: https://github.com/lujun9972

+[1]: https://www.archlinux.org/

+[2]: https://itsfoss.com/install-arch-linux/

+[3]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/06/arch-based-linux-distributions.png?ssl=1

+[4]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/05/manjaro-20.jpg?ssl=1

+[5]: https://manjaro.org/

+[6]: https://itsfoss.com/best-linux-desktop-environments/

+[7]: https://itsfoss.com/manjaro-architect-review/

+[8]: https://itsfoss.com/manjaro-20-release/

+[9]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/05/arcolinux.png?ssl=1

+[10]: https://arcolinux.com/

+[11]: https://www.xfce.org/

+[12]: http://openbox.org/wiki/Main_Page

+[13]: https://i3wm.org/

+[14]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/06/Archlabs.jpg?ssl=1

+[15]: https://itsfoss.com/archlabs-review/

+[16]: https://en.wikipedia.org/wiki/Openbox

+[17]: https://archlabslinux.com/

+[18]: https://www.bunsenlabs.org/

+[19]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/06/Archman.png?ssl=1

+[20]: https://archman.org/en/

+[21]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/05/04_endeavouros_slide.jpg?ssl=1

+[22]: https://itsfoss.com/antergos-linux-discontinued/

+[23]: https://itsfoss.com/endeavouros/

+[24]: https://endeavouros.com/

+[25]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/06/RebornOS.png?ssl=1

+[26]: https://rebornos.org/

+[27]: https://anbox.io/

+[28]: https://itsfoss.com/pacman-command/

+[29]: https://itsfoss.com/aur-arch-linux/

+[30]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/06/Chakra_Goedel_Screenshot.png?ssl=1

+[31]: https://www.chakralinux.org/

+[32]: https://wiki.archlinux.org/index.php/PKGBUILD

+[33]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/06/Artix_MATE_edition.png?ssl=1

+[34]: https://artixlinux.org/

+[35]: https://en.wikipedia.org/wiki/OpenRC

+[36]: https://en.wikipedia.org/wiki/Runit

+[37]: https://en.wikipedia.org/wiki/S6_(software)

+[38]: https://en.wikipedia.org/wiki/Systemd

+[39]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/06/BlackArch.png?ssl=1

+[40]: https://itsfoss.com/linux-hacking-penetration-testing/

+[41]: https://itsfoss.com/best-kali-linux-tools/

+[42]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/05/anarchy.jpg?ssl=1

+[43]: https://anarchyinstaller.org/

+[44]: https://en.wikipedia.org/wiki/Text-based_user_interface

+[45]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/05/zen.jpg?ssl=1

+[46]: https://sourceforge.net/projects/revenge-installer/

diff --git a/published/20200615 LaTeX Typesetting - Part 1 (Lists).md b/published/20200615 LaTeX Typesetting - Part 1 (Lists).md

new file mode 100644

index 0000000000..b69d418b64

--- /dev/null

+++ b/published/20200615 LaTeX Typesetting - Part 1 (Lists).md

@@ -0,0 +1,260 @@

+[#]: collector: "lujun9972"

+[#]: translator: "rakino"

+[#]: reviewer: "wxy"

+[#]: publisher: "wxy"

+[#]: url: "https://linux.cn/article-13112-1.html"

+[#]: subject: "LaTeX Typesetting – Part 1 (Lists)"

+[#]: via: "https://fedoramagazine.org/latex-typesetting-part-1/"

+[#]: author: "Earl Ramirez https://fedoramagazine.org/author/earlramirez/"

+

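+LaTeX Typesetting, Part 1 (Lists)

+======

+

+![][1]

+

+This series builds on the previous articles "[Typeset your docs with LaTeX and TeXstudio on Fedora][2]" and "[LaTeX 101 for beginners][3]". This first part of the series is about LaTeX lists.

+

+### Types of lists

+

+LaTeX lists are enclosed environments, and each item in the list can take a line of text up to a full paragraph. There are three types of lists available in LaTeX:

+

+ * `itemize`: unordered/bullet list

+ * `enumerate`: ordered list

+ * `description`: descriptive list

+

+### Creating lists

+

+To create a list, prefix each list item with the `\item` control sequence, and precede and follow the list of items with the `\begin{<type>}` and `\end{<type>}` control sequences respectively (replacing `<type>` with the type of list to be used), as in the following examples: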

+

+#### itemize (unordered list)

+

+```

+\begin{itemize}

+ \item Fedora

+ \item Fedora Spin

+ \item Fedora Silverblue

+\end{itemize}

+```

+

+![][4]

+

+#### enumerate (ordered list)

+

+```

+\begin{enumerate}

+ \item Fedora CoreOS

+ \item Fedora Silverblue

+ \item Fedora Spin

+\end{enumerate}

+```

+

+![][5]

+

+#### description (descriptive list)

+

+```

+\begin{description}

+ \item[Fedora 6] Code name Zod

+ \item[Fedora 8] Code name Werewolf

+\end{description}

+```

+

+![][6]

+

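+### Spacing list items

+

+The default spacing can be customized by adding `\usepackage{enumitem}` to the preamble. The `enumitem` package enables the `noitemsep` option and the `\itemsep` control sequence, which you can use in your lists as shown below:

+

+#### Using the noitemsep option

+

+Enclose the `noitemsep` option in square brackets and place it after the `\begin` control sequence as shown below. This option removes the default spacing.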

+

+```

+\begin{itemize}[noitemsep]

+ \item Fedora

+ \item Fedora Spin

+ \item Fedora Silverblue

+\end{itemize}

+```

+

+![][7]

+

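+#### Using the \itemsep control sequence

+

+The `\itemsep` control sequence must be suffixed with a number to indicate how much space there should be between the list items.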

+

+```

+\begin{itemize} \itemsep0.75pt

+ \item Fedora Silverblue

+ \item Fedora CoreOS

+\end{itemize}

+```

+

+![][8]

+

+### Nesting lists

+

+LaTeX supports nested lists up to four levels deep, as in the examples below:

+

+#### Nested unordered lists

+

+```

+\begin{itemize}[noitemsep]

+ \item Fedora Versions

+ \begin{itemize}

+ \item Fedora 8

+ \item Fedora 9

+ \begin{itemize}

+ \item Werewolf

+ \item Sulphur

+ \begin{itemize}

+ \item 2007-05-31

+ \item 2008-05-13

+ \end{itemize}

+ \end{itemize}

+ \end{itemize}

+ \item Fedora Spin

+ \item Fedora Silverblue

+\end{itemize}

+```

+

+![][9]

+

+#### Nested ordered lists

+

+```

+\begin{enumerate}[noitemsep]

+ \item Fedora Versions

+ \begin{enumerate}

+ \item Fedora 8

+ \item Fedora 9

+ \begin{enumerate}

+ \item Werewolf

+ \item Sulphur

+ \begin{enumerate}

+ \item 2007-05-31

+ \item 2008-05-13

+ \end{enumerate}

+ \end{enumerate}

+ \end{enumerate}

+ \item Fedora Spin

+ \item Fedora Silverblue

+\end{enumerate}

+```

+

+![][10]

+

+### List style names for each list type

+

+**enumerate (ordered list)** | **itemize (unordered list)**

+---|---

+`\alph*` (lowercase letters) | `$\bullet$` (●)

+`\Alph*` (uppercase letters) | `$\cdot$` (•)

+`\arabic*` (Arabic numerals) | `$\diamond$` (◇)

+`\roman*` (lowercase Roman numerals) | `$\ast$` (✲)

+`\Roman*` (uppercase Roman numerals) | `$\circ$` (○)

+ | `$-$` (-)

+

+### Default style by list depth

+

+**Level** | **enumerate (ordered list)** | **itemize (unordered list)**

+---|---|---

+1 | Arabic numerals | (●)

+2 | lowercase letters | (-)

+3 | lowercase Roman numerals | (✲)

+4 | uppercase letters | (•)
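
As a cross-check of the `enumerate` column in the table above, the counter-to-label mapping can be modeled in Python. This is a sketch, not LaTeX itself: the function names are hypothetical, and the punctuation around each label (`1.`, `(a)`, `i.`, `A.`) follows the defaults of the standard LaTeX document classes, which other classes or packages may change.

```python
def to_roman(n):
    """Convert a positive integer to a lowercase Roman numeral."""
    numerals = [
        (1000, "m"), (900, "cm"), (500, "d"), (400, "cd"),
        (100, "c"), (90, "xc"), (50, "l"), (40, "xl"),
        (10, "x"), (9, "ix"), (5, "v"), (4, "iv"), (1, "i"),
    ]
    parts = []
    for value, symbol in numerals:
        while n >= value:
            parts.append(symbol)
            n -= value
    return "".join(parts)


def to_alph(n):
    """Convert 1..26 to a lowercase letter (letter counters stop at 26)."""
    if not 1 <= n <= 26:
        raise ValueError("letter counters only cover 1 to 26")
    return chr(ord("a") + n - 1)


def default_enumerate_label(depth, n):
    """Label of item `n` at nesting `depth` (1 to 4), standard-class defaults assumed."""
    if depth == 1:
        return f"{n}."                    # Arabic numerals
    if depth == 2:
        return f"({to_alph(n)})"          # lowercase letters
    if depth == 3:
        return f"{to_roman(n)}."          # lowercase Roman numerals
    if depth == 4:
        return f"{to_alph(n).upper()}."   # uppercase letters
    raise ValueError("lists nest at most four levels deep")
```

For example, the second item of a second-level list comes out as `(b)`, and a fifth nesting level raises an error, mirroring the four-level limit mentioned earlier.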

+

+### Setting list styles

+

+The example below lists the different styles for an unordered list.

+

+```

+% Unordered list styles

+\begin{itemize}

+ \item[$\ast$] Asterisk

+ \item[$\diamond$] Diamond

+ \item[$\circ$] Circle

+ \item[$\cdot$] Period

+ \item[$\bullet$] Bullet (default)

+ \item[--] Dash

+ \item[$-$] Another dash

+\end{itemize}

+```

+

+![][11]

+

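+There are three methods of setting list styles, illustrated below in order of priority from highest to lowest.

+

+#### Method one: per item

+

+Enclose the name of the desired style in square brackets and place it after the `\item` control sequence, as in the example below:

+

+```

+% Method one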

+\begin{itemize}

+ \item[$\ast$] Asterisk

+ \item[$\diamond$] Diamond

+ \item[$\circ$] Circle

+ \item[$\cdot$] period

+ \item[$\bullet$] Bullet (default)

+ \item[--] Dash

+ \item[$-$] Another dash

+\end{itemize}

+```

+

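+#### Method two: on the whole list

+

+Prefix the name of the desired style with `label=`, enclose it in square brackets, and place it after the `\begin` control sequence, as in the example below:

+

+```

+% Method two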

+\begin{enumerate}[label=\Alph*.]

+ \item Fedora 32

+ \item Fedora 31

+ \item Fedora 30

+\end{enumerate}

+```

+

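+#### Method three: on the whole document

+

+This method changes the default style for the entire document. Use `\renewcommand` to set the values of the item labels. The example below sets a different style for the item labels at each of the four nesting depths.

+

+```

+% Method three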

+\renewcommand{\labelitemi}{$\ast$}

+\renewcommand{\labelitemii}{$\diamond$}

+\renewcommand{\labelitemiii}{$\bullet$}

+\renewcommand{\labelitemiv}{$-$}

+```

+

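+### Summary

+

+LaTeX supports three types of lists, and the style and spacing of each list type can be customized. More LaTeX elements will be explained in future posts.

+

+Additional reading about LaTeX lists can be found here: [LaTeX List Structures][12]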

+

+--------------------------------------------------------------------------------

+

+via: https://fedoramagazine.org/latex-typesetting-part-1/

+

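+Author: [Earl Ramirez][a]

+Topic selection: [lujun9972][b]

+Translator: [rakino](https://github.com/rakino)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)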

+

+[a]: https://fedoramagazine.org/author/earlramirez/

+[b]: https://github.com/lujun9972

+[1]: https://fedoramagazine.org/wp-content/uploads/2020/06/latex-series-816x345.png

+[2]: https://fedoramagazine.org/typeset-latex-texstudio-fedora

+[3]: https://fedoramagazine.org/fedora-classroom-latex-101-beginners

+[4]: https://fedoramagazine.org/wp-content/uploads/2020/06/image-1.png

+[5]: https://fedoramagazine.org/wp-content/uploads/2020/06/image-2.png

+[6]: https://fedoramagazine.org/wp-content/uploads/2020/06/image-3.png

+[7]: https://fedoramagazine.org/wp-content/uploads/2020/06/image-4.png

+[8]: https://fedoramagazine.org/wp-content/uploads/2020/06/image-5.png

+[9]: https://fedoramagazine.org/wp-content/uploads/2020/06/image-7.png

+[10]: https://fedoramagazine.org/wp-content/uploads/2020/06/image-8.png

+[11]: https://fedoramagazine.org/wp-content/uploads/2020/06/image-9.png

+[12]: https://en.wikibooks.org/wiki/LaTeX/List_Structures

diff --git a/published/20200922 Give Your GNOME Desktop a Tiling Makeover With Material Shell GNOME Extension.md b/published/20200922 Give Your GNOME Desktop a Tiling Makeover With Material Shell GNOME Extension.md

new file mode 100644

index 0000000000..a645f460fb

--- /dev/null

+++ b/published/20200922 Give Your GNOME Desktop a Tiling Makeover With Material Shell GNOME Extension.md

@@ -0,0 +1,126 @@

+[#]: collector: (lujun9972)

+[#]: translator: (Chao-zhi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13110-1.html)

+[#]: subject: (Give Your GNOME Desktop a Tiling Makeover With Material Shell GNOME Extension)

+[#]: via: (https://itsfoss.com/material-shell/)

+[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

+

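+Give Your GNOME Desktop a Tiling Makeover With Material Shell GNOME Extension

+======

+

+There is something about tiling windows that attracts many people. Perhaps it is because it looks good, or perhaps it is because it saves time if you are a fan of [keyboard shortcuts in Linux][1]. Or maybe it's the challenge of using the uncommon tiling windows.

+

+![Tiling Windows in Linux | Image Source][2]

+

+From i3 to [Sway][3], there are many tiling window managers available for the Linux desktop. Configuring a tiling window manager involves a steep learning curve.

+

+This is why projects like the [Regolith desktop][4] exist: to give you a preconfigured tiling desktop so that you can get started with tiling windows with less effort.

+

+Let me introduce you to a similar project named Material Shell. It makes using a tiling desktop even easier than [Regolith][5].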

+

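+### Material Shell GNOME extension: turning the GNOME desktop into a tiling window manager

+

+[Material Shell][6] is a GNOME extension, and that is the best thing about it. This means you don't have to log out and log in to another desktop environment. You can switch your workflow at will just by enabling or disabling the extension.

+

+I'll list the features of Material Shell, but it may be easier to see it in a video:

+

+- [video](https://youtu.be/Wc5mbuKrGDE)

+

+The project is called Material Shell because it follows the [Material Design][8] guidelines and thus gives the application an aesthetically pleasing interface. This is one of its most important features.

+

+#### Intuitive interface

+

+Material Shell adds a left panel for quick access. On this panel, you can find the system tray at the bottom and the search and workspaces at the top.

+

+All new apps are added to the current workspace. You can create a new workspace and switch to it to organize your running apps into categories. This is the essential concept of workspaces, after all.

+

+In Material Shell, every workspace can be visualized as a row with several apps rather than a box with several apps in it.

+

+#### Tiling windows

+

+In a workspace, you can see all your opened applications at the top all the time. By default, applications are opened to take up the entire screen, as they do in the GNOME desktop. You can change the layout to split it into halves, multiple columns, or a grid of apps using the layout changer in the top right corner.

+

+This video shows all of the above features at a glance: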

+

+- [video](https://player.vimeo.com/video/460050750?dnt=1&app_id=122963)

+

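+#### Persistent layout and workspaces

+

+Material Shell remembers the workspaces and windows you have open, so you don't have to reorganize your layout. This is a good feature because it saves time if you are particular about which application goes where.

+

+#### Hotkeys/keyboard shortcuts

+

+Like any tiling window manager, you can use keyboard shortcuts to navigate between applications and workspaces.

+

+ * `Super+W` switches to the previous workspace;

+ * `Super+S` switches to the next workspace;

+ * `Super+A` switches to the window on the left;

+ * `Super+D` switches to the window on the right;

+ * `Super+1`, `Super+2` … `Super+0` switch to a specific workspace;

+ * `Super+Q` closes the current window;

+ * `Super+[mouse drag]` moves a window around;

+ * `Super+Shift+A` moves the current window to the left;

+ * `Super+Shift+D` moves the current window to the right;

+ * `Super+Shift+W` moves the current window to the previous workspace;

+ * `Super+Shift+S` moves the current window to the next workspace.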

+

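+### Installing Material Shell

+

+> Warning!

+>

+> Tiling windows could be confusing for most users. You should first be familiar with how to use GNOME extensions. Avoid this extension if you are completely new to Linux or if you are afraid of drastic changes to your system.

+

+Material Shell is a GNOME extension. So, please [check your desktop environment][9] to make sure you are running GNOME 3.34 or a later version.

+

+Apart from that, I noticed that after disabling Material Shell, the top bar of Firefox and the Ubuntu dock disappear. You can get the dock back by disabling and re-enabling the Ubuntu dock extension in the GNOME Extensions app. I think these problems should also go away after a system reboot, though I haven't tried it.

+

+I hope you know [how to use GNOME extensions][10]. The easiest way is to just [open this link in the browser][11], install the GNOME extension browser plugin, and then enable the Material Shell extension.

+

+![][12]

+

+If you don't like the extension, you can disable it from the same extension link, or disable it in the GNOME Extensions app.

+

+![][13]

+

+### To tile or not?

+

+I use multiple screens, and I found that Material Shell doesn't work well with multiple monitors. This is something the developers can improve in the future.

+

+Apart from that, Material Shell is a good way to get started with tiling windows. If you try Material Shell and like it, encourage the project by [giving it a star or sponsoring it on GitHub][14].

+

+For some reason, tiling windows are getting popular. The recently released [Pop OS 20.04][15] also added tiling window features. There is a similar project called PaperWM that does the same.

+

+But as I mentioned earlier, tiling layouts are not for everyone, and they could confuse many people.

+

+How about you? Do you prefer tiling windows or the classic desktop layout?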

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/material-shell/

+

+Author: [Abhishek Prakash][a]

+Topic selection: [lujun9972][b]

+Translator: [Chao-zhi](https://github.com/Chao-zhi)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

+

+[a]: https://itsfoss.com/author/abhishek/

+[b]: https://github.com/lujun9972

+[1]: https://itsfoss.com/ubuntu-shortcuts/

+[2]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/09/linux-ricing-example-800x450.jpg?resize=800%2C450&ssl=1

+[3]: https://itsfoss.com/sway-window-manager/

+[4]: https://itsfoss.com/regolith-linux-desktop/

+[5]: https://regolith-linux.org/

+[6]: https://material-shell.com

+[7]: https://www.youtube.com/c/itsfoss?sub_confirmation=1

+[8]: https://material.io/

+[9]: https://itsfoss.com/find-desktop-environment/

+[10]: https://itsfoss.com/gnome-shell-extensions/

+[11]: https://extensions.gnome.org/extension/3357/material-shell/

+[12]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/09/install-material-shell.png?resize=800%2C307&ssl=1

+[13]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/09/material-shell-gnome-extension.png?resize=799%2C497&ssl=1

+[14]: https://github.com/material-shell/material-shell

+[15]: https://itsfoss.com/pop-os-20-04-review/

diff --git a/published/20201013 My first day using Ansible.md b/published/20201013 My first day using Ansible.md

new file mode 100644

index 0000000000..990ddc1f24

--- /dev/null

+++ b/published/20201013 My first day using Ansible.md

@@ -0,0 +1,297 @@

+[#]: collector: "lujun9972"

+[#]: translator: "MjSeven"

+[#]: reviewer: "wxy"

+[#]: publisher: "wxy"

+[#]: url: "https://linux.cn/article-13079-1.html"

+[#]: subject: "My first day using Ansible"

+[#]: via: "https://opensource.com/article/20/10/first-day-ansible"

+[#]: author: "David Both https://opensource.com/users/dboth"

+

+My first day using Ansible

+======

+

+> A sysadmin shares information and advice on using Ansible to configure the computers on a network and putting it to real-world use.

+

+

+

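+Getting a new physical or virtual computer up and running is time-consuming and takes a good deal of work, whether it is your first time or your fiftieth. For many years, I have used a series of scripts and RPMs that I created to install the packages I need and to configure options for my favorite tools. This approach has worked well and simplified my work, and it has also reduced the time I spend typing commands.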

+

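+I am always looking for better ways of working. For several years now, I have been hearing and reading about [Ansible][2], a powerful tool for automating system configuration and management. Ansible lets a sysadmin specify a particular state for each host in one or more playbooks and then performs whatever tasks are needed to bring the host to that state. This includes installing or removing various resources, such as RPM or Apt packages, configuration and other files, users, groups, and more.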

+

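+I put off learning how to use it for a long time because other things got in the way. Then, recently, I ran into a problem that I thought Ansible could easily solve.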

+

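+This article is not a complete guide to getting started with Ansible. Rather, it is a record of the problems I ran into and of the information I found only in some hard-to-find places. Much of what I found about Ansible in various online discussions and Q&A groups was incorrect. The errors ranged from information that was clearly old (with no indication of its date or source) to information that was simply wrong.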

+

+What I describe in this article works, although there may be other ways to accomplish the same things. I am using Ansible 2.9.13 and [Python][3] 3.8.5.

+

+### My problem

+

+All of my best learning experiences have started with a problem I needed to solve, and this one was no exception.

+

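+I have been working on a small project to modify the configuration files for the [Midnight Commander][4] file manager and push them out to various systems on my network for testing. Although I have a script that automates this, it still requires a command-line loop to supply the names of the systems I want to push the new code to. I made a large number of changes to the configuration files, so I had to push new versions frequently. But just when I thought my new configuration was right, I would find a problem and need to push again after fixing it.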

+

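+This made it hard to keep track of which systems had the new files and which did not. I also have a few hosts that need to be treated differently. The little I knew about Ansible suggested that it could probably do all or most of what I needed.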

+

+### Getting started

+

+I had read a number of good articles and books about Ansible, but never in an "I have to get this working right now!" situation. And now was, well, now!

+

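+While rereading these documents, I found that they mostly discuss how to install Ansible from GitHub and use it, which is cool. But I really just wanted to get started as quickly as possible, so I installed it on my Fedora workstation using DNF and the version in the Fedora repository. Very easy.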

+

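+But then I started looking for file locations and trying to work out which configuration files I needed to modify, where to keep my playbooks, and even how a playbook is written and what it does. I had a whole pile of (so far) unanswered questions running around in my head.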

+

+So, without further description of my difficulties, here is what I discovered and what got me going.

+

+### Configuration

+

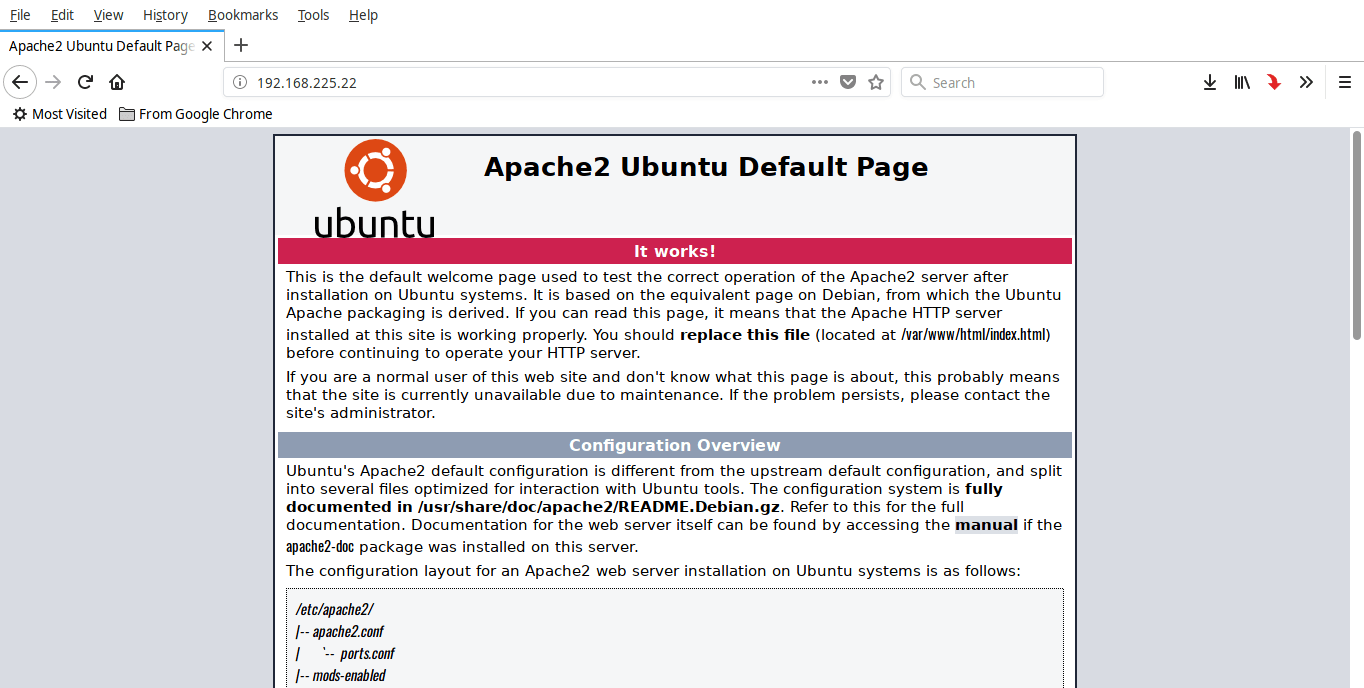

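+Ansible's configuration files are kept in `/etc/ansible`. That makes sense, since `/etc/` is where system programs are supposed to keep their configuration files. The two files I needed to work with are `ansible.cfg` and `hosts`.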

+

+#### ansible.cfg

+

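+After working through some practice exercises I found in the documentation and online, I began getting warning messages about the deprecation of certain older Python files. So I set `deprecation_warnings` to `False` in `ansible.cfg`, and those angry red warning messages stopped appearing: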

+

+```

+deprecation_warnings = False

+```

+

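+Those warnings are important, so I will revisit them later and figure out what I need to do. But for now, they no longer clutter the screen or obscure the errors I actually need to pay attention to.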
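+

+As a minimal sketch of where the setting lives: in a stock `ansible.cfg`, general options such as this one are grouped under the `[defaults]` section (an assumption about the file layout; the comment line is illustrative only):

+

+```ini

+[defaults]

+# Hide deprecation warnings for now; revisit them later.

+deprecation_warnings = False

+```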

+

+#### The hosts file

+

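+Not to be confused with `/etc/hosts`, this `hosts` file, also known as the inventory file, lists the hosts on your network. It allows hosts to be grouped into related sets, such as "servers," "workstations," and any other name you need. The file contains its own help and plenty of examples, so I won't go into detail here. However, there are some things you must know.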

+

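+Hosts can also be listed outside of any group, but groups are helpful for identifying hosts that share one or more characteristics. Groups use the INI format, so a server group looks like this: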

+

+```

+[servers]

+server1

+server2

+......

+```

+

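+A hostname must be present in this file for Ansible to work on it. Even though some subcommands let you specify a hostname, the command will fail unless that hostname is in the `hosts` file. A host can also be placed in more than one group. So, in addition to the `[servers]` group, `server1` might also be a member of the `[webservers]` group, as well as of the `[ubuntu]` group to distinguish it from Fedora servers.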

+

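+Ansible is smart. If the `all` argument is used as the hostname, Ansible scans the `hosts` file and performs the defined tasks on every host listed in it. Ansible only attempts to work on each host once, no matter how many groups it appears in. This also means there is no need to define an `all` group, because Ansible can determine all the hostnames in the file and build its own list of unique hostnames.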
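+

+To make the grouping concrete, here is a small illustrative inventory built from the group and host names mentioned above (the exact membership is an assumption for the sake of the example). `server1` appears in three groups, yet a run against `all` would be expected to touch `server1` and `server2` once each:

+

+```ini

+[servers]

+server1

+server2

+

+[webservers]

+server1

+

+[ubuntu]

+server1

+```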

+

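+Another thing to watch out for is multiple entries for a single host. I use `CNAME` records in my DNS files to create aliases that point to the [A records][5] of some hosts, so I can refer to a host as `host1` or `h1` or `myhost`. If you list multiple hostnames for the same host in the `hosts` file, Ansible will try to perform its tasks on all of those hostnames, because it has no way of knowing that they point to the same host. The good news is that this does not affect the overall result. It just takes a little longer, because Ansible works on the secondary hostnames and determines that all the operations have already been performed.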

+

+### Ansible facts

+

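+Most of the material I have read about Ansible talks about Ansible [facts][6], which are data about the remote system, including the operating system, IP addresses, filesystems, and more. This information is available in other ways, such as `lshw`, `dmidecode`, or the `/proc` filesystem, but Ansible generates a JSON file containing it. Ansible generates this facts data each time it runs. The data stream holds a large amount of information, all in the form of key-value pairs: `<"variable-name": "value">`. All of these variables can be used in an Ansible playbook, and the best way to appreciate how much information is available is to display it yourself: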

+

+```

+# ansible -m setup <hostname> | less

+```

+

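+See what I mean? Everything you ever wanted to know about your host's hardware and Linux distribution is there, and all of it can be used in a playbook. I have not yet reached the point where I need these variables, but I am sure I will in the next few days.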

+

+### Modules

+

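+The `ansible` command above uses the `-m` option to specify the `setup` module. Ansible has many modules built in, so you do not need `-m` for those. Many downloadable modules can also be installed, but the built-in ones do everything my current project needs.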

+

+### Playbooks

+

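+Playbooks can be located almost anywhere. Since I need to run them as root, I put mine in `/root/ansible`. As long as this directory is the present working directory (PWD) when I run Ansible, it can find my playbook. Ansible also has an option for specifying a different playbook and location at runtime.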

+

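+Playbooks can contain comments, although few of the articles or books I have seen mention this. As a sysadmin who believes in documenting everything, I find comments very helpful. The point is not to repeat in a comment what the task name already says, but to identify the purpose of a group of tasks and to record why I am doing things in a certain way or order. This can help with debugging later, when I may have forgotten my original thinking.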

+

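+A playbook is simply a collection of tasks that define the desired state of a host. A hostname or inventory group is specified at the beginning of the playbook and defines the hosts on which Ansible will run it.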

+

+Here is a sample of one of my playbooks:

+

+```

+################################################################################

+# This Ansible playbook updates Midnight commander configuration files. #

+################################################################################

+- name: Update midnight commander configuration files

+  hosts: all

+

+  tasks:

+  - name: ensure midnight commander is the latest version

+    dnf:

+      name: mc

+      state: present

+

+  - name: create ~/.config/mc directory for root

+    file:

+      path: /root/.config/mc

+      state: directory

+      mode: 0755

+      owner: root

+      group: root