Mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-02-28 01:01:09 +08:00, commit c1af3dfb7f

Build a Static Blog with Hugo in 30 Minutes
======

> Learn how Hugo makes building websites fun.

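Do you feel a strong urge to start a blog to share what you've learned while exploring software frameworks? Does a messy project with no documentation make you itch to fix it? Or, put another way, are you eager to build a personal website of your own?

Many people hesitate to start a blog for the same reasons: they feel they lack knowledge of content management systems (CMS), and they lack the time to learn. What if I told you that you could create a blog in 30 minutes, without spending lots of time learning a CMS, learning how to build a static website, or worrying about hardening the site against attackers? Believe it or not, Hugo makes all of that possible.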

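Hugo is a static site generator written in Go. Why choose it, you may ask?

* No database, no plugins that require various permissions, no underlying platform running on the server, and no extra security concerns.

* It's all static, so the site is lightweight and fast to serve. Moreover, all pages are rendered at deploy time, so the load on the server is small.

* Easy version control. Some CMS platforms use their own version control system (VCS) or integrate Git into their web interface. With Hugo, all your source files can be managed in the VCS of your choice.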

### 0-5 minutes: Download Hugo and generate a site

Put bluntly, Hugo makes writing a website fun again. Let's set a 30-minute timer and build a site.

To keep installation simple, we'll use the Hugo executable directly.

1. Download the Hugo [release][2] that matches your operating system;

2. Extract the archive into a directory, for example `C:\hugo_dir` on Windows or `~/hugo_dir` on Linux; below, `${HUGO_HOME}` refers to this installation directory;

3. Open a terminal and change into the installation directory: `cd ${HUGO_HOME}`;

4. Verify that Hugo runs:

    * Unix: `${HUGO_HOME}/hugo version`;

    * Windows: `${HUGO_HOME}\hugo.exe version`, for example, type `c:\hugo_dir\hugo version` at the cmd prompt.

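For brevity, `hugo` below means the hugo executable including its path; for example, the command `hugo version` means `c:\hugo_dir\hugo version`. (LCTT translator's note: you can add the directory containing the hugo executable to your system's PATH environment variable, so that you can type `hugo version` directly in the terminal.)

If `hugo version` reports an error, you may have downloaded the wrong version. There are, of course, many ways to install Hugo; see the [official documentation][3] for details. The most reliable approach is to put the Hugo executable in a directory of your choice and invoke it with its full path.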

5. Create a new site for your blog: `hugo new site awesome-blog`;

6. Change into the newly created directory: `cd awesome-blog`;

Congratulations! You've just created your new blog.

### 5-10 minutes: Theme your blog

With Hugo, you can build your own theme or use one of the many themes already available online. We'll use the [Kiera][4] theme here because it is simple and good-looking. To install it:

1. Change into the themes directory: `cd themes`;

2. Clone the theme: `git clone https://github.com/avianto/hugo-kiera kiera`. If you don't have Git installed:

    * Download the theme as a .zip file from [GitHub][5];

    * Unzip it into your blog's `themes` directory;

    * Rename `hugo-kiera-master` to `kiera`;

3. Return to the blog's root directory: `cd ..`;

4. Activate the theme. Themes (including Kiera) usually ship with an `exampleSite` folder containing sample content and configuration. To activate Kiera, copy the `config.toml` it provides into your blog:

    * Unix: `cp themes/kiera/exampleSite/config.toml .`;

    * Windows: `copy themes\kiera\exampleSite\config.toml .`;

    * If prompted, answer `Yes` to overwrite the existing `config.toml`;

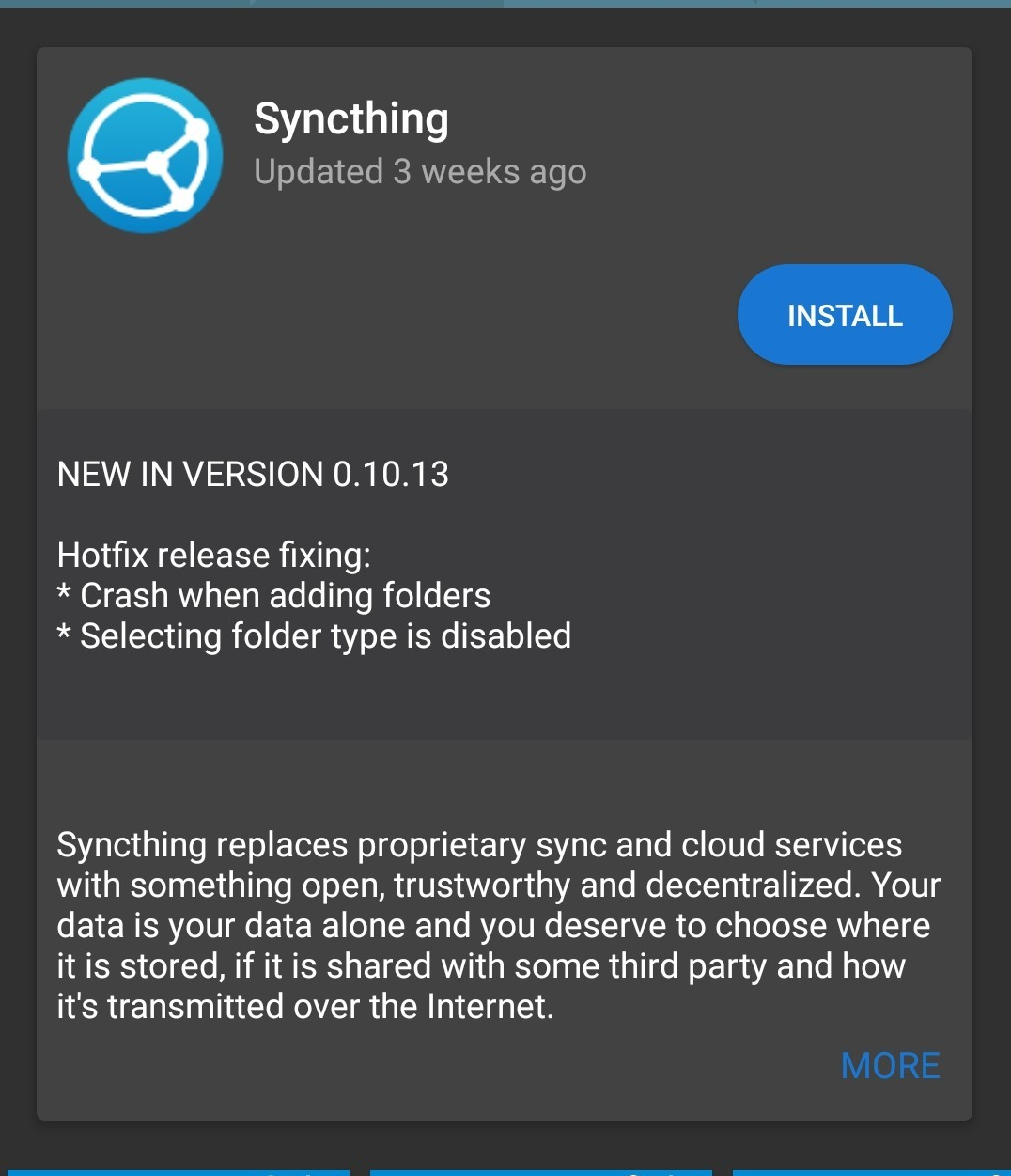

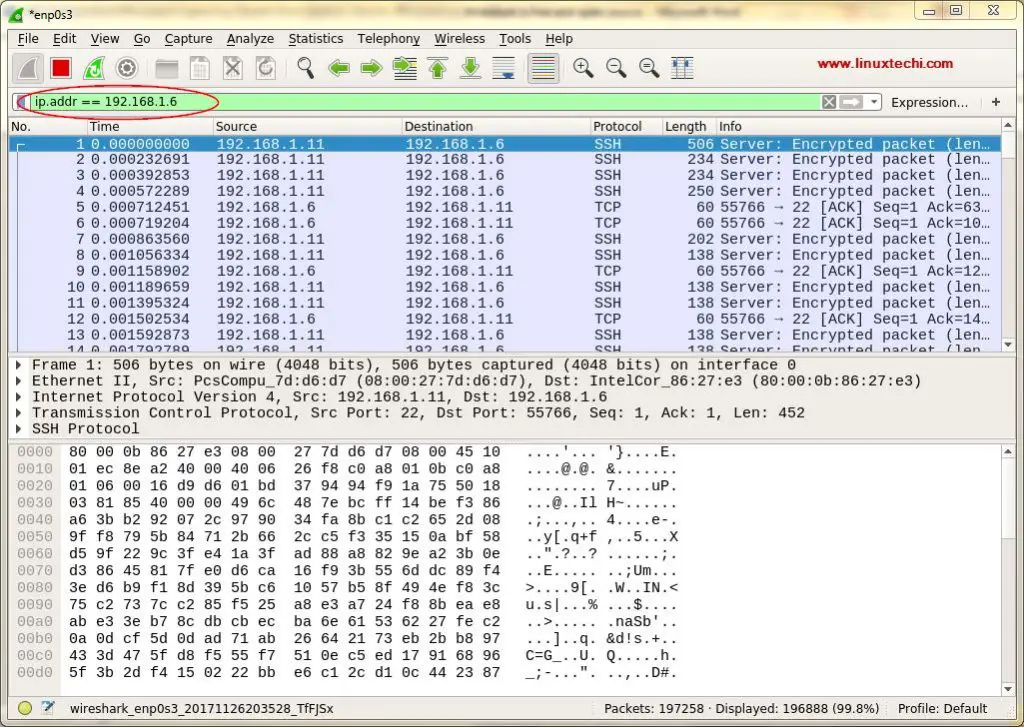

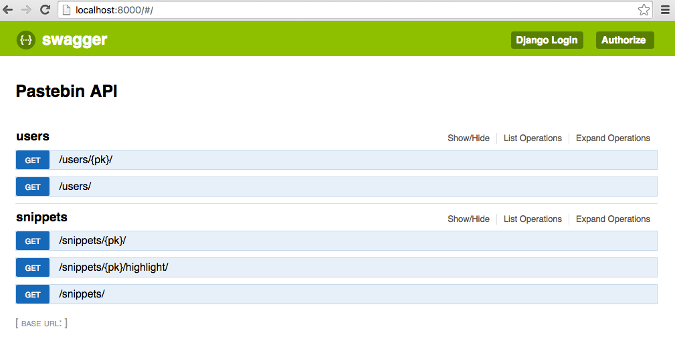

5. ( 可选操作 )你可以选择可视化的方式启动服务器来验证主题是否生效:`hugo server -D` 然后在浏览器中输入 `http://localhost:1313`。可用通过在终端中输入 `Crtl+C` 来停止服务器运行。现在你的博客还是空的,但这也给你留了写作的空间。它看起来如下所示:

|

||||

|

||||

|

||||

|

||||

You've successfully themed your blog! You can find hundreds of beautiful themes on the official [Hugo themes][4] site.

### 10-20 minutes: Add content to your blog

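A bowl is most useful when it is empty, because it can hold things; a blog is the opposite: an empty blog is almost useless. In this step, you'll add content to your blog. Hugo and the Kiera theme both make this easy. Follow these steps to write your first post: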

1. Archetypes will be your content templates.

2. Add the theme's archetypes to your blog:

    * Unix: `cp themes/kiera/archetypes/* archetypes/`

    * Windows: `copy themes\kiera\archetypes\* archetypes\`

    * If prompted, answer `Yes` to overwrite the original `default.md` archetype

3. Create a directory for blog posts:

    * Unix: `mkdir content/posts`

    * Windows: `mkdir content\posts`

4. Generate your post with Hugo:

    * Unix: `hugo new posts/first-post.md`;

    * Windows: `hugo new posts\first-post.md`;

5. Open the new post in a text editor:

    * Unix: `gedit content/posts/first-post.md`;

    * Windows: `notepad content\posts\first-post.md`;

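Now you can go wild. Notice that your post consists of two parts. The first part is delimited by `+++` lines and holds the post's metadata, such as its title and date; in Hugo this is called the front matter. The actual content of the post follows the front matter. Edit your first post as follows: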

```
+++
title = "First Post"
date = 2018-03-03T13:23:10+01:00
draft = false
tags = ["Getting started"]
categories = []
+++

Hello Hugo world! No more excuses for having no blog or documentation now!
```

|

||||

|

||||

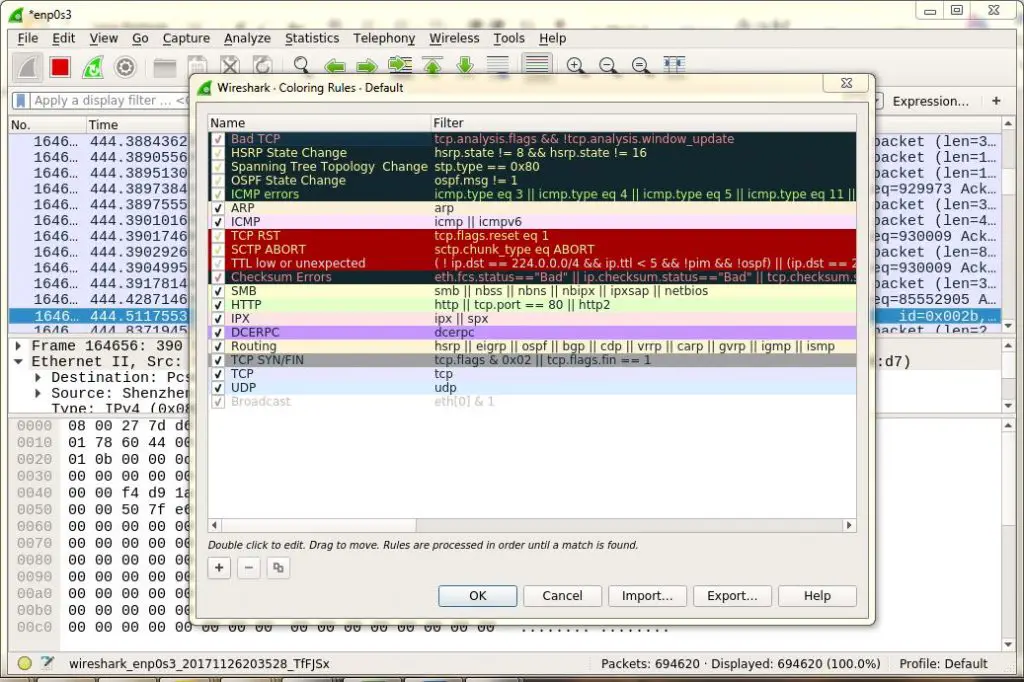

现在你要做的就是启动你的服务器:`hugo server -D`;然后打开浏览器,输入 `http://localhost:1313/`。

|

||||

|

||||

|

||||

|

||||

### 20-30 minutes: Tweak your site

What we have works, but a few things still need fixing. For example, give your site a proper name:

1. Stop the server by pressing `Ctrl+C` in the terminal.

2. Open `config.toml` and edit the blog's name, the copyright notice, your name, your social network links, and so on.

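When you start the server again, you'll see that the blog feels much more personal. One essential piece is still missing, though: a main menu. Let's fix that quickly. Go back to `config.toml` and insert the following at the end: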

```
[[menu.main]]
name = "Home" #Name in the navigation bar
weight = 10 #The larger the weight, the more on the right this item will be
url = "/" #URL address

[[menu.main]]
name = "Posts"
weight = 20
url = "/posts/"
```

This adds `Home` and `Posts` to the main menu. You still need an `About` page. This time, instead of editing `config.toml`, you'll create a `.md` file:

1. Create the `about.md` file: `hugo new about.md`. Note that it is `about.md`, not `posts/about.md`: the About page is not a blog post, so you don't want it to show up among your posts.

2. Open the file in a text editor and enter the following:

```
+++
title = "About"
date = 2018-03-03T13:50:49+01:00
menu = "main" #Display this page on the nav menu
weight = "30" #Right-most nav item
meta = "false" #Do not display tags or categories
+++

> Waves are the practice of the water. Shunryu Suzuki
```

When you start the server and open `http://localhost:1313/`, you'll see your blog. (See the [example][6] on my GitHub Pages site.) If you want the article menu bar to look like the one in my GitHub example, apply this [patch][7] to `themes/kiera/static/css/styles.css`.

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/3/start-blog-30-minutes-hugo

Author: [Marek Czernek][a]
Translator: [jrg](https://github.com/jrglinux)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/mczernek
[1]:https://gohugo.io/
[2]:https://github.com/gohugoio/hugo/releases
[3]:https://gohugo.io/getting-started/installing/
[4]:https://themes.gohugo.io/
[5]:https://github.com/avianto/hugo-kiera
[6]:https://m-czernek.github.io/awesome-blog/
[7]:https://github.com/avianto/hugo-kiera/pull/18/files

The Role of Private Keys in Public Key Infrastructure and Cryptography
======

> Learn how to verify that someone is who they claim to be.

In the [previous article][1], we gave an overview of cryptography and discussed its core concepts: confidentiality (keeping data secret), integrity (protecting data from tampering), and authentication (knowing the identity of the data's source). Because authentication has to work in a real world full of identity confusion, people have gradually built an elaborate technological ecosystem for proving that someone is who they claim to be. In this article, we'll give a broad overview of how these systems work.

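### A quick review of public key cryptography and digital signatures

Authentication on the internet relies on public key cryptography, in which a key has two parts: a private key that its owner keeps secret and a public key that can be shared openly. Data encrypted with the public key can only be decrypted with the corresponding private key. This property is very useful, for example, to a whistleblower who wants to make contact with a [journalist][2]. For the purposes of this article, however, the more important use of the private key is to create a digital signature over a message, which provides integrity and authentication.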

In practice, we don't sign the actual message; we sign a digest of it produced by a cryptographic hash function. To send a tarball of source code, the sender signs the 256-bit [SHA-256][3] digest of the tarball rather than the file itself, then sends the tarball (and the signature) in the clear. The receiver independently computes the SHA-256 digest of the file it received, then runs a signature verification algorithm on that digest, the received signature, and the sender's public key. The verification procedure depends on the signature algorithm used, and, subtly, signature verification [vulnerabilities][4] keep [cropping up][5]. If the signature verifies, the file was not modified in transit and it came from the sender, because only the sender holds the private key needed to create the signature.
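The sign-the-digest flow described above can be sketched in Python. This is a deliberately toy, insecure illustration under stated assumptions: it uses textbook RSA without padding and a tiny key built from the Mersenne primes 2**61 - 1 and 2**89 - 1, so it only shows the order of operations (hash, sign the digest, recompute the digest, verify with the public key). Real signature schemes such as RSA-PSS or ECDSA must come from a vetted cryptography library. Only `hashlib` and Python 3.8+ (for `pow(e, -1, m)`) are needed; the names `sign`, `verify`, and `digest` are our own.

```python
import hashlib

# Toy textbook RSA key from two Mersenne primes: far too small and unpadded.
# For illustration only, never for real use.
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (Python 3.8+)


def digest(data):
    """SHA-256 digest as an integer, reduced mod N so the toy key can sign it."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N


def sign(data):
    # The sender signs the digest of the file, not the file itself.
    return pow(digest(data), D, N)


def verify(data, signature):
    # The receiver recomputes the digest and checks it with the public key (N, E).
    return pow(signature, E, N) == digest(data)


tarball = b"...contents of a source tarball..."
sig = sign(tarball)
print(verify(tarball, sig))                # True
print(verify(tarball + b"tampered", sig))  # False
```

Changing even one byte of the file changes its digest, so the old signature no longer verifies; that is the integrity guarantee, while the fact that only the holder of `D` could have produced `sig` is the authentication guarantee.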

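### The missing piece

One important piece is missing from the scheme above: where do we get the sender's public key? The sender could send the public key along with the message, but then we have no proof of their identity beyond their own claim. Imagine you're a bank teller, and a customer walks up and says, "Hello, I'm Jane Doe, and I'd like to make a withdrawal." When you ask for proof of identity, she points to a name tag stuck on her shirt and says, "See, Jane Doe!" If I were the teller, I would politely decline her request.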

If you know the sender, you could meet in person and exchange public keys. If you don't know the sender, you could meet in person, examine their ID, and accept their public key once you're satisfied it's genuine. To make the process more efficient, you could throw a [party][6], invite a bunch of people, examine their IDs, and accept their public keys. Furthermore, if you know and trust Jane Doe (despite her unusual behavior at the bank), Jane could go to the party, collect everyone's public keys, and hand them to you. In fact, Jane could sign those public keys (and the associated identities) with her own private key; you could then fetch keys (and identities) from an [online keyserver][7] and trust the ones Jane has signed. If a person's public key has been signed by many people you trust (even if you don't know those people yourself), you might choose to trust that person too. In this way, you can build a [Web of Trust][8].

But now things get more complicated: we need a standard way to encode a public key and its associated identity into a digital bundle that can then be signed. More precisely, these digital bundles are called certificates. We also need tooling to create, use, and manage these certificates. The solutions that meet these and related needs make up the public key infrastructure (PKI).

### Beyond the Web of Trust

You can think of the Web of Trust as a network of personal relationships. If people trust one another widely, it is easy to find a short path of trust between two people: think of a social circle. [GPG][9]-encrypted email relies on a Web of Trust and ([in theory][10]) works only for communicating with a small group of friends, family, or coworkers.

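(LCTT translator's note: the author's "short path of trust" presumably alludes to the "six degrees of separation" theory, which holds that any two strangers are usually separated by no more than six intermediaries. The pessimism about GPG stems partly from the fact that key management has not become any less complex, and partly from Yahoo and Google both proposing more convenient end-to-end encryption schemes.)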
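Finding a "short path of trust" is a graph search problem: keys are nodes, and an edge from A to B means A has signed B's key. A breadth-first search returns the shortest such chain, and short chains matter because every extra link is another chance for a careless or malicious signature. The signing graph below is entirely made up for illustration, `trust_path` is our own helper name, and real OpenPGP tools apply their own trust rules on top of plain path-finding.

```python
from collections import deque

# Hypothetical signing graph: signer -> owners of the keys they have signed.
SIGNED = {
    "you": ["jane"],
    "jane": ["alice", "bob"],
    "alice": ["carol"],
    "bob": ["carol", "dave"],
    "carol": ["erin"],
}


def trust_path(graph, start, target):
    """Breadth-first search for the shortest chain of signatures from start to target.

    Returns the key owners along the path, or None if no chain exists.
    """
    previous = {start: None}  # also serves as the "visited" set
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:  # walk back to the start
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None


print(trust_path(SIGNED, "you", "erin"))  # ['you', 'jane', 'alice', 'carol', 'erin']
```

A missing result (`None`) corresponds to the case where no chain of trust exists and you would have to verify the key yourself.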

In practice, the Web of Trust has some [significant problems][11], mainly around scalability. As the network grows, or when people are less well connected, the Web of Trust gradually breaks down. The longer a chain of trust gets, the greater the chance that someone along it signs a certificate carelessly or maliciously. If no chain of trust exists, you have to build one yourself by contacting other organizations and verifying their keys to your own satisfaction. Consider this scenario: you and a friend want to shop at an online store you've never used before. You first need to verify that the public key the site uses really belongs to the company and not to an impostor, then establish a secure channel, and only then place your order. Verifying the key, whether by visiting a physical store or by making a phone call, is cumbersome. Online shopping would become less convenient (or less secure, since many people would cut corners and skip verifying keys).

What if a few exceptionally trustworthy people in the world specialized in verifying and signing website certificates? You could trust just them, and browsing the internet would become much easier. Broadly speaking, this is how the internet works today. Those "exceptionally trustworthy people" are companies called certificate authorities (CAs). When a website wants its public key signed, it submits a certificate signing request (CSR) to a CA.

A CSR is like a stub certificate containing a public key and identity information (in this case, the server's hostname), but the CA does not simply sign the CSR as-is. It performs some verification first. For some certificate types (LCTT translator's note: Domain Validated (DV) certificates), the CA only verifies that the applicant controls the domain of the hostname listed in the CSR (for example, through email verification or by having the applicant create a specified DNS record). [For other certificate types][12] (LCTT translator's note: the link mentions Extended Validation (EV) certificates; there are also Organization Validated (OV) certificates), the CA also checks legal documents, such as a company's business registration. Once verification is complete, the CA (usually after the applicant has paid) takes the data from the CSR (the public key and identity information), signs it with the CA's own private key to create a signed certificate, and sends it to the applicant. The applicant deploys the certificate on its web server, and the certificate is delivered to users whenever they communicate with the server over HTTPS (or another protocol secured with [TLS][13]).

When a user visits the site, the browser fetches the certificate, checks that the hostname in the certificate matches the site it is connecting to (more on this below), and verifies the CA's signature. If any of these checks fails, the browser shows a security warning and breaks off the connection. Otherwise, the browser uses the public key in the certificate to verify a signature sent by the server, confirming that the server holds the certificate's private key. Several algorithms exist for negotiating the shared secret key used for the rest of the communication, and one of them also makes use of the signature sent by the server. Key exchange algorithms are beyond the scope of this article; see this [video][14] for a careful walkthrough of one of them.

### Establishing trust

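You might ask, "If the CA signs certificates with its private key, we need the CA's public key to verify them. Where does the CA's public key come from, and who signs it?" The answer is that the CA signs it itself! A certificate can be signed with the private key corresponding to the certificate's own public key. Such certificates are called self-signed; in PKI terms, a self-signed certificate is essentially saying "trust me." (For convenience, people often say that a certificate signed something, even though the private key that actually does the signing is not in the certificate.)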

By following rules set by [browser][15] and [operating system][16] vendors, CAs demonstrate that they are reliable enough to be included in the set of self-signed certificates preinstalled in a browser or operating system. These certificates are called "trust anchors" or root CA certificates. They are kept in a root certificate store, and the certificates in that store are implicitly trusted.

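A CA can also issue a special kind of certificate that can itself act as a CA. In that case, they can build a certificate chain. To verify a chain, you start at the trust anchor (the root CA certificate) and use each certificate's public key to verify the signature (and some other information) of the next certificate down. You continue one level at a time until you reach the end of the chain. If every check passes, a chain of trust has been established. When you pay a CA to issue a certificate for your website, what you are actually buying is the right to place your certificate at the end of a certificate chain. The CA marks the certificates it sells as "not allowed to sign subordinate certificates," so that the chain of trust ends at an appropriate length and cannot be extended further.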
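Here is a toy Python model of the chain walk just described, written under our own assumptions: certificates are simple dataclasses rather than X.509 structures, signatures are insecure textbook RSA over a SHA-256 digest with tiny keys built from Mersenne primes, and the "may sign subordinate certificates" flag is a boolean we call `is_ca`. All names (`Cert`, `make_key`, `sign_cert`, `verify_chain`) are hypothetical. Real certificate validation also checks validity periods, path length limits, revocation, and the hostname.

```python
import hashlib
from dataclasses import dataclass, replace

E = 65537  # public exponent


def make_key(p, q):
    """Toy textbook RSA key pair from two primes: ((n, e), (n, d)). Insecure!"""
    n = p * q
    return (n, E), (n, pow(E, -1, (p - 1) * (q - 1)))


def digest(data, n):
    """SHA-256 digest as an integer, reduced mod n so the toy key can sign it."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


@dataclass
class Cert:
    subject: str
    issuer: str
    public_key: tuple  # (n, e)
    is_ca: bool        # may this certificate sign other certificates?
    signature: int = 0

    def tbs(self):
        """The "to be signed" bytes: every field except the signature."""
        return f"{self.subject}|{self.issuer}|{self.public_key}|{self.is_ca}".encode()


def sign_cert(cert, issuer_private):
    n, d = issuer_private
    cert.signature = pow(digest(cert.tbs(), n), d, n)
    return cert


def verify_chain(chain, anchors):
    """chain[0] is the leaf; chain[-1] must be issued by a trust anchor.

    Anchors are trusted implicitly, so their own self-signatures are not checked.
    """
    issuer = anchors.get(chain[-1].issuer)
    if issuer is None:
        return False
    for cert in reversed(chain):  # walk from the anchor down to the leaf
        if not issuer.is_ca or cert.issuer != issuer.subject:
            return False  # issuer may not sign, or the names don't link up
        n, e = issuer.public_key
        if pow(cert.signature, e, n) != digest(cert.tbs(), n):
            return False  # signature does not verify with the issuer's public key
        issuer = cert
    return True


# Hypothetical hierarchy: root CA -> intermediate CA -> website.
root_pub, root_priv = make_key(2**61 - 1, 2**89 - 1)
inter_pub, inter_priv = make_key(2**107 - 1, 2**127 - 1)
leaf_pub, leaf_priv = make_key(2**17 - 1, 2**19 - 1)

root = sign_cert(Cert("Root CA", "Root CA", root_pub, True), root_priv)  # self-signed
inter = sign_cert(Cert("Intermediate CA", "Root CA", inter_pub, True), root_priv)
leaf = sign_cert(Cert("www.example.com", "Intermediate CA", leaf_pub, False), inter_priv)
anchors = {"Root CA": root}

forged = replace(leaf, subject="evil.example.com")  # keeps the old signature
sub = sign_cert(Cert("evil.example.com", "www.example.com", leaf_pub, False), leaf_priv)

print(verify_chain([leaf, inter], anchors))       # True
print(verify_chain([forged, inter], anchors))     # False: signature no longer matches
print(verify_chain([sub, leaf, inter], anchors))  # False: the leaf may not sign certificates
```

The last check is the toy equivalent of the "not allowed to sign subordinate certificates" marking: even with a valid signature, a certificate issued by a non-CA certificate is rejected.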

Why use chains longer than two? After all, a website's certificate could be signed directly by a root CA certificate. In practice, many factors push CAs to create intermediate CA certificates, chief among them convenience. Because they are so valuable, the private keys of root CA certificates are usually kept in specialized hardware: a hardware security module (HSM) that takes several people to unlock, is kept completely offline, and is stored in a [basement][18] equipped with surveillance and alarm systems.

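The CA/Browser Forum (CAB Forum), which governs CAs, [requires][19] that any operation involving a root CA certificate (LCTT translator's note: as mentioned above, this means its corresponding private key) be performed manually by a person. Imagine that for every certificate request, an employee had to copy the request onto secure media, go down to the basement, unlock the HSM together with a colleague, sign the certificate (with the root CA's private key), and finally copy the signed certificate off the secure media; issuing certificates for large numbers of websites every day would be tedious, grueling work. So CAs create intermediate CAs for internal use, which let them automate certificate issuance.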

via: https://opensource.com/article/18/7/private-keys

Author: [Alex Wood][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [pinewall](https://github.com/pinewall)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/awood
[1]:https://linux.cn/article-9792-1.html
[2]:https://theintercept.com/2014/10/28/smuggling-snowden-secrets/
[3]:https://en.wikipedia.org/wiki/SHA-2
[4]:https://www.ietf.org/mail-archive/web/openpgp/current/msg00999.html

Anatomy of a Linux DNS Lookup (Part IV)
======

In Part IV, I'll look at how containers perform DNS lookups. Yes, you guessed it: that isn't simple either.

* * *

### 1) Docker and DNS

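In [Anatomy of a Linux DNS Lookup (Part III)][3], we looked at `dnsmasq`, which works like this: DNS lookups are pointed at the localhost address `127.0.0.1`, where a process listens on port `53` and handles the queries.

If you run a Docker container on a host whose DNS is set up this way, what will the container's `/etc/resolv.conf` look like?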


Docker's solution to this problem is to skip all the possible complications: whatever DNS server the host uses, the container uses Google's DNS servers `8.8.8.8` and `8.8.4.4` for its lookups.

_My experience: in 2013, the very first problem I ran into after I started using Docker was closely tied to this DNS approach. Our corporate network blocked `8.8.8.8` and `8.8.4.4`, so containers couldn't resolve domain names._
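Why can't the container simply reuse the host's setting? Inside the container, `127.0.0.1` refers to the container itself, where no `dnsmasq` is listening. The Python sketch below illustrates that reasoning with a hypothetical helper, `container_nameservers`: it drops loopback `nameserver` entries copied from the host's `/etc/resolv.conf` and, if none remain, falls back to the Google servers mentioned above. It is an illustration of the problem under those assumptions, not Docker's actual code.

```python
import ipaddress

GOOGLE_DNS = ["8.8.8.8", "8.8.4.4"]


def container_nameservers(host_resolv_conf):
    """Choose nameservers for a container from the host's resolv.conf text (illustrative)."""
    servers = []
    for line in host_resolv_conf.splitlines():
        fields = line.split()
        if len(fields) < 2 or fields[0] != "nameserver":
            continue  # skip comments, 'search', 'options', and blank lines
        try:
            addr = ipaddress.ip_address(fields[1])
        except ValueError:
            continue  # ignore malformed entries
        if not addr.is_loopback:  # 127.0.0.1 inside a container is the container itself
            servers.append(fields[1])
    return servers or list(GOOGLE_DNS)


print(container_nameservers("nameserver 127.0.0.1\nsearch example.com\n"))
# ['8.8.8.8', '8.8.4.4']
```

A hardcoded fallback like this is exactly what broke in the anecdote above: on a network that blocks those two addresses, the container ends up with no working resolver.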

That's the story for Docker containers, but things are different again for container orchestrators such as Kubernetes.

### 2) Kubernetes and DNS

In Kubernetes, the smallest unit of deployment is the pod: a group of cooperating containers that share an IP address (and other resources).

An extra challenge for Kubernetes is forwarding requests for Kubernetes services (for example, `myservice.kubernetes.io`) through the appropriate resolver to the private network address behind the service. These service addresses are said to belong to the "cluster domain". The cluster domain is configurable by the administrator and might be `cluster.local` or `myorg.badger`, for example.

In Kubernetes, you can choose among the following four ways for DNS lookups to be handled inside a pod.

**Default**

In this (misleadingly named) mode, the pod uses the same DNS lookup path as the host it runs on, i.e. the host DNS lookup described earlier. The name is misleading because this mode is not the default! `ClusterFirst` is the default.

If you want to override the entries in `/etc/resolv.conf`, you can do so in the `kubelet` configuration.

**ClusterFirst**

In `ClusterFirst` mode, DNS queries are forwarded selectively. Depending on the configuration, this works in one of two ways:

The first approach is older but simpler and applies a single rule: if the requested name is not within the cluster domain, the query is forwarded to the host the pod runs on.

The second, newer approach lets you configure selective forwarding in the internal DNS.

The `stubDomains` entry lets you assign specific DNS servers to specific domains, while the `upstreamNameservers` entry lists the DNS servers to use when the queried name is not within the cluster domain.

This is done by running our old friend `dnsmasq` in a pod.
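The forwarding decision described above can be modeled in a few lines of Python. Everything here is a hypothetical example rather than kube-dns code: the cluster domain `cluster.local`, a cluster DNS address of `10.96.0.10`, a `stubDomains` entry that sends `acme.local` to `1.2.3.4`, and `upstreamNameservers` of `8.8.8.8` and `8.8.4.4`. The order of checks (cluster domain, then stub domains, then upstream) is our own assumption about how the rules combine.

```python
CLUSTER_DOMAIN = "cluster.local"            # hypothetical, configurable by the admin
CLUSTER_DNS = ["10.96.0.10"]                # hypothetical in-cluster DNS address
STUB_DOMAINS = {"acme.local": ["1.2.3.4"]}  # hypothetical stubDomains entry
UPSTREAM = ["8.8.8.8", "8.8.4.4"]           # hypothetical upstreamNameservers entry


def in_domain(name, domain):
    """True if name equals domain or is one of its subdomains (case-insensitive)."""
    name, domain = name.rstrip(".").lower(), domain.rstrip(".").lower()
    return name == domain or name.endswith("." + domain)


def route(name):
    """Return the DNS servers a query for `name` would be sent to in this model."""
    if in_domain(name, CLUSTER_DOMAIN):
        return CLUSTER_DNS
    # Prefer the most specific (longest) matching stub domain.
    for domain in sorted(STUB_DOMAINS, key=len, reverse=True):
        if in_domain(name, domain):
            return STUB_DOMAINS[domain]
    return UPSTREAM


for q in ["myservice.default.svc.cluster.local", "db.acme.local", "opensource.com"]:
    print(q, "->", route(q))
```

Matching on a leading dot (`"." + domain`) keeps a name such as `notacme.local` from being mistaken for a subdomain of `acme.local`.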

The remaining two options are more niche:

**ClusterFirstWithHostNet**

This applies when the pod uses host networking, that is, when it bypasses Docker's network setup and uses the same network as the host it runs on.

**None**

`None` means Kubernetes does not set up DNS for the pod at all, and you are required to supply the DNS configuration in the `dnsConfig` entry of the pod specification.

### CoreDNS is coming

On top of all that, things will change again once `CoreDNS` replaces `kube-dns` in Kubernetes. Compared with `kube-dns`, `CoreDNS` is more configurable and more efficient, among other advantages.

To learn more, see [here][5].

If you're interested in OpenShift networking, I wrote an [article][6] about it that you may find useful. It covers OpenShift 3.6, though, so it may be somewhat out of date.

### Summary of Part IV

via: https://zwischenzugs.com/2018/08/06/anatomy-of-a-linux-dns-lookup-part-iv/

Author: [zwischenzugs][a]
Translator: [pinewall](https://github.com/pinewall)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://zwischenzugs.com/
[1]:https://linux.cn/article-9943-1.html
[2]:https://linux.cn/article-9949-1.html
[3]:https://linux.cn/article-9972-1.html
[4]:https://kubernetes.io/docs/tasks/administer-cluster/dns-custom-nameservers/#impacts-on-pods
[5]:https://coredns.io/
[6]:https://zwischenzugs.com/2017/10/21/openshift-3-6-dns-in-pictures/

Steam Makes It Easier to Play Windows Games on Linux
======

![Steam Wallpaper][1]

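As everyone knows, the [Linux gaming][2] library is only a fraction of the Windows one. In fact, many people won't even consider [switching to Linux][3], for the simple reason that most of the games they love don't run on it.

At the time of writing, more than 5,000 games on Steam run on Linux, while Steam's total catalog is approaching 27,000 games. Now, 5,000 games may seem like a lot, but it isn't 27,000. Not even close.

Although almost every new indie game launches on Linux, many [AAA titles][4] still can't be played on it. For me, although there are plenty of those games I'd love the chance to play, it has never been a make-or-break issue: I mostly play indie and [retro games][5], so nearly all the games I like run on Linux anyway.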

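### Meet Proton, Steam's fork of WINE

That may now be a thing of the past, because this week Valve [announced][6] an update to Steam Play that adds a fork of Wine, called Proton, to the Linux client. Yes, the tool is open source: Valve has published the source code on [GitHub][7]. The feature is still in beta, though, so you must use the beta Steam client to get it.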

#### With Proton, Steam can run more Windows games on Linux

What does this actually mean for us Linux users? Put simply, it means we can run all 27,000 games on our Linux computers without configuring something like [PlayOnLinux][8] or [Lutris][9]. And let me tell you, configuring those can sometimes be a real headache.

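The more complicated answer is that this sounds almost too good to be true. In theory, you can play any Windows game on Linux this way, but only a small number of games are officially supported at launch. These include DOOM, Final Fantasy VI, Tekken 7, Star Wars: Battlefront 2, and a few others.

#### You can play all Windows games on Linux (in theory)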

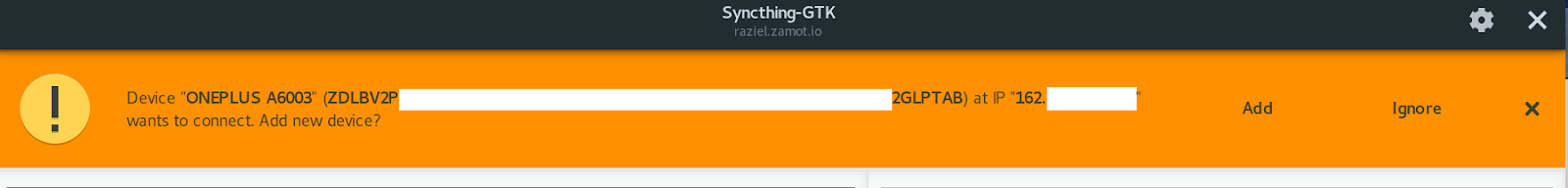

虽然目前该列表只有大约 30 个游戏,你可以点击“为所有游戏启用 Steam Play”复选框来强制使用 Steam 的 Proton 来安装和运行任意游戏。但你最好不要有太高的期待,它们的稳定性和性能表现不一定有你希望的那么好,所以请把期望值压低一点。

|

||||

|

||||

![Steam Play][10]

|

||||

|

||||

According to [this report][13], more than a thousand games can already be played on Linux. Follow [this guide][14] to learn how to enable the Steam Play beta.

#### Trying out Proton: not as bad as I expected

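For example, I used Proton to install a few moderately demanding games. One was The Elder Scrolls IV: Oblivion. In the two hours I played it, it crashed only once, almost immediately after an autosave point in the tutorial.

I have an Nvidia GTX 1050 Ti graphics card, so I could play the game at 1080p on high settings, and apart from that one crash I ran into no problems. The only thing that really bothered me was the frame rate, which wasn't as high as it should have been. Ninety percent of the time the game ran above 60 frames per second, but I know it could do better.

Every other game I installed and ran worked perfectly, although I haven't played any of them for very long yet. The games I installed include The Forest, Dead Rising 4, and Assassin's Creed II. (Can you tell I like horror games?)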

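#### Why is Steam (still) betting on Linux?

All of this is great, but why did it happen at all? Why would Valve spend time, money, and resources on something like this? I'd like to think it's because they value the Linux community, but if I'm honest, I don't believe we have anything to do with it.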

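If I had to bet, I'd say Valve developed Proton because they haven't given up on [Steam Machines][11] yet. Since [Steam OS][12] is a Linux-based distribution, investing in something like this is in their financial interest: the more games available on Steam OS, the more people will be willing to buy a Steam Machine.

Maybe I'm wrong, but I bet we'll see a new batch of Steam Machines in the not-too-distant future. Maybe within a year, maybe not for another five years; who knows!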

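Either way, all I know is that I'm finally excited to play games from my Steam library, which I've slowly built up over the years through charity bundles, promo codes, and games bought on a whim, just in case I ever wanted to try getting them running in Lutris.

#### Excited about more games on Linux?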

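What do you think? Are you excited about this? Or are you worried that fewer developers will make native Linux games, now that there's hardly any need to? Does Valve love the Linux community, or does it just love money? Let us know what you think in the comments below, and check back for more articles on open source software like this one.

--------------------------------------------------------------------------------

via: https://itsfoss.com/steam-play-proton/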

Author: [Phillip Prado][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [hopefully2333](https://github.com/hopefully2333)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://itsfoss.com/author/phillip/
[1]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/steam-wallpaper.jpeg
[2]:https://itsfoss.com/linux-gaming-guide/
[3]:https://itsfoss.com/reasons-switch-linux-windows-xp/
[4]:https://itsfoss.com/triplea-game-review/
[5]:https://itsfoss.com/play-retro-games-linux/
[6]:https://steamcommunity.com/games/221410
[7]:https://github.com/ValveSoftware/Proton/
[8]:https://www.playonlinux.com/en/
[9]:https://lutris.net/
[10]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/SteamProton.jpg
[11]:https://store.steampowered.com/sale/steam_machines
[12]:https://itsfoss.com/valve-annouces-linux-based-gaming-operating-system-steamos/
[13]:https://spcr.netlify.com/
[14]:https://itsfoss.com/steam-play/

How to Capture and Analyze Packets with the tcpdump Command on Linux
======

`tcpdump` is a well-known command-line **packet analyzer**. With `tcpdump` we can capture live TCP/IP packets, and these packets can also be saved to a file. The captured packets can later be analyzed with `tcpdump` as well. `tcpdump` comes in very handy for troubleshooting at the network level.

`tcpdump` is available in most Linux distributions. On Debian-based Linux, install it with the `apt` command:

```
# apt install tcpdump -y
```

On RPM-based Linux systems, install `tcpdump` with the following `yum` command:

```
# yum install tcpdump -y
```

When we run `tcpdump` without any options, it captures packets on a default interface that it picks itself (typically the first active, non-loopback interface). To stop or cancel `tcpdump`, press `ctrl+c`. In this tutorial, we'll walk through different examples of capturing and analyzing packets.

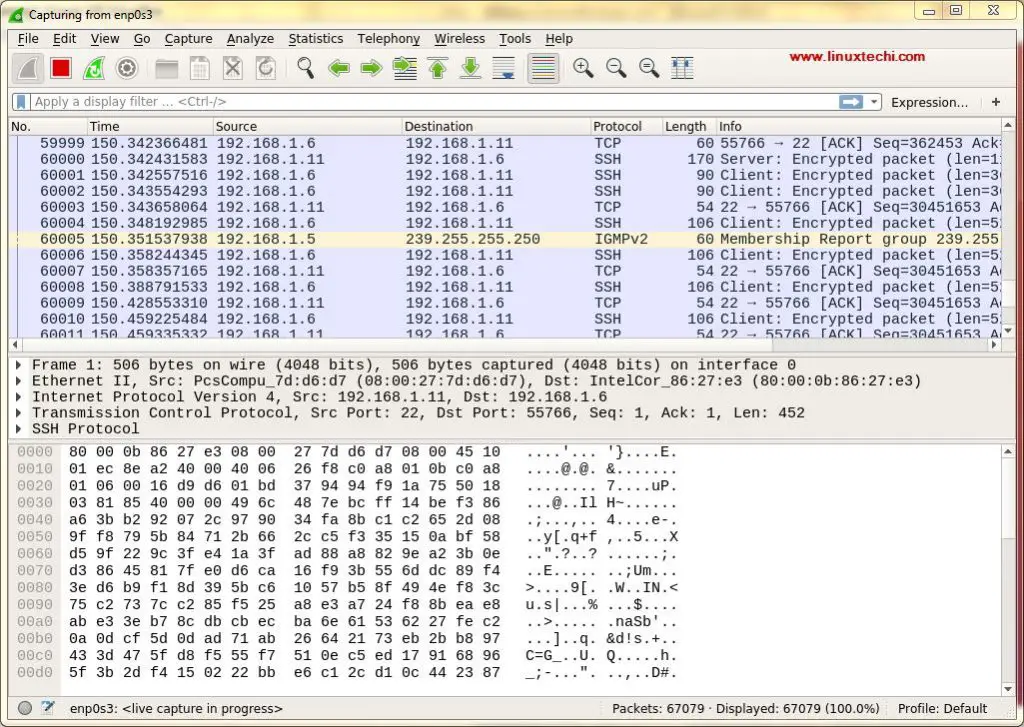

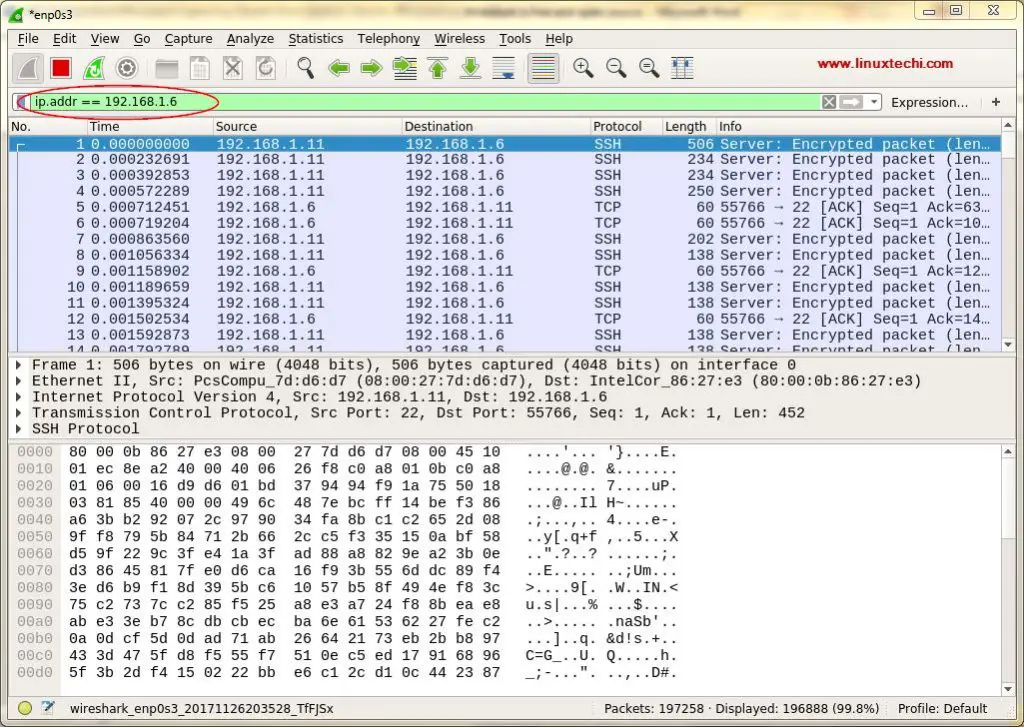

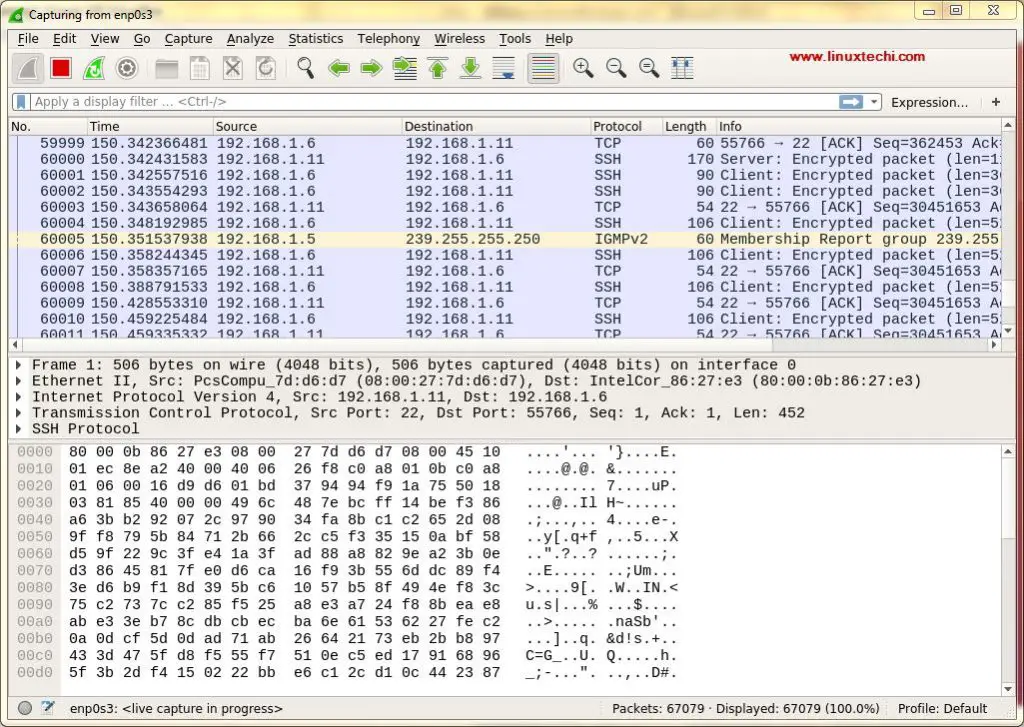

### 示例: 1) 从特定接口捕获数据包

|

||||

### 示例:1)从特定接口捕获数据包

|

||||

|

||||

Because `tcpdump` picks an interface by itself when run without options, use the `-i` option followed by the interface name to capture packets from a specific interface.

Syntax:

```
# tcpdump -i {interface_name}
```

Suppose I want to capture packets from the interface `enp0s3`. The output will look like this:

```
......
listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes
......
```

### Example 2) Capture a specific number of packets from a specific interface

Suppose we want to capture 12 packets from a specific interface (such as `enp0s3`). This is easy to do with the options `-c {count} -i {interface_name}`:

```
[root@compute-0-1 ~]# tcpdump -c 12 -i enp0s3
```

The command above produces output like the following:

[![N-Number-Packsets-tcpdump-interface][1]][2]

### Example 3) Display all interfaces available to tcpdump

Use the `-D` option to list all interfaces available to the `tcpdump` command:

```
[root@compute-0-1 ~]# tcpdump -D
......
[root@compute-0-1 ~]#
```

I'm running the `tcpdump` command on one of my OpenStack compute nodes, which is why the output shows numbered interfaces, tap interfaces, bridges, and vxlan interfaces.

### Example 4) Capture packets with human-readable timestamps (`-tttt` option)

By default, `tcpdump` prints only the time of day for each packet. If you want a full, human-readable date and time on every captured packet, use the `-tttt` option, as in this example:

```
[root@compute-0-1 ~]# tcpdump -c 8 -tttt -i enp0s3
......
listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes
......
134 packets received by filter
69 packets dropped by kernel
[root@compute-0-1 ~]#
```

### Example 5) Capture packets and save them to a file (`-w` option)

Use the `-w` option of `tcpdump` to save captured TCP/IP packets to a file, so that we can analyze them further later on.

Syntax:

```
# tcpdump -w file_name.pcap -i {interface_name}
```

Note: by convention, the file extension is `.pcap`.

Suppose I want to save the packets captured on the `enp0s3` interface to a file named `enp0s3-26082018.pcap`:

```
[root@compute-0-1 ~]# tcpdump -w enp0s3-26082018.pcap -i enp0s3
tcpdump: listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes
......
[root@compute-0-1 ~]# ls
anaconda-ks.cfg enp0s3-26082018.pcap
[root@compute-0-1 ~]#
```

Capture and save packets whose size is **greater than or equal to N bytes**:

```
[root@compute-0-1 ~]# tcpdump -w enp0s3-26082018-2.pcap greater 1024
```

Capture and save packets whose size is **less than or equal to N bytes**:

```
[root@compute-0-1 ~]# tcpdump -w enp0s3-26082018-3.pcap less 1024
```

### Example 6) Read packets from a saved file (`-r` option)

In the examples above, we saved captured packets to files. We can read those packets back from a file with the `-r` option, as shown below:

```
[root@compute-0-1 ~]# tcpdump -r enp0s3-26082018.pcap
......
2018-08-25 22:03:17.647502 IP controller0.example.com.amqp > compute-0-1.example.com.57788: Flags [.], ack 1956, win 1432, options [nop,nop,TS val 81352753 ecr 81359114], length 0
.........................................................................................................................
```
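Files written with `-w` can also be read outside `tcpdump`. The Python sketch below parses the classic libpcap file layout (a 24-byte global header, then a 16-byte header before each packet) using only the standard `struct` module. Assumptions: the file is classic pcap with microsecond timestamps (not pcapng or the nanosecond variant), and `at_least()` is our own helper that mimics the `greater` filter by keeping packets whose on-the-wire length is at least N bytes. The demo builds a tiny synthetic capture in memory instead of reading a real file.

```python
import struct
from typing import List, NamedTuple, Tuple


class Record(NamedTuple):
    ts: float      # capture time in seconds since the epoch
    incl_len: int  # bytes actually stored in the file
    orig_len: int  # packet length on the wire
    data: bytes


def read_pcap(blob: bytes) -> Tuple[int, List[Record]]:
    """Parse a classic (microsecond) pcap file; return (link-layer type, records)."""
    if blob[:4] == b"\xd4\xc3\xb2\xa1":
        endian = "<"  # file written in little-endian byte order
    elif blob[:4] == b"\xa1\xb2\xc3\xd4":
        endian = ">"  # file written in big-endian byte order
    else:
        raise ValueError("not a classic microsecond pcap file")
    # magic, version major/minor, timezone offset, sigfigs, snaplen, link type
    *_, linktype = struct.unpack(endian + "IHHiIII", blob[:24])
    records, offset = [], 24
    while offset + 16 <= len(blob):
        ts_sec, ts_usec, incl_len, orig_len = struct.unpack_from(endian + "IIII", blob, offset)
        offset += 16
        data = blob[offset:offset + incl_len]
        offset += incl_len
        records.append(Record(ts_sec + ts_usec / 1e6, incl_len, orig_len, data))
    return linktype, records


def at_least(records, n):
    """Keep packets whose original length is >= n bytes (like the `greater n` filter)."""
    return [r for r in records if r.orig_len >= n]


# Synthetic capture: global header (link type 1 = Ethernet) plus two fake packets.
demo = struct.pack("<IHHiIII", 0xA1B2C3D4, 2, 4, 0, 0, 262144, 1)
demo += struct.pack("<IIII", 1535234597, 647502, 4, 60) + b"abcd"
demo += struct.pack("<IIII", 1535234598, 0, 4, 1500) + b"wxyz"

linktype, records = read_pcap(demo)
print(linktype, [r.orig_len for r in records])        # 1 [60, 1500]
print([r.orig_len for r in at_least(records, 1024)])  # [1500]
```

Keeping `incl_len` and `orig_len` separate matters: when the capture size (snaplen) truncates a packet, the file holds fewer bytes than the packet had on the wire.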

### Example 7) Show numeric IP addresses instead of hostnames on a specific interface (`-n` option)

With the `-n` option, `tcpdump` does not convert addresses to hostnames, so packets on the interface are shown with numeric IP addresses, as in this example:

```
[root@compute-0-1 ~]# tcpdump -n -i enp0s3
......
listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes
......
22:22:28.539595 IP 169.144.0.1.39406 > 169.144.0.20.ssh: Flags [.], ack 1572, win 9086, options [nop,nop,TS val 20666614 ecr 82510006], length 0
22:22:28.539760 IP 169.144.0.20.ssh > 169.144.0.1.39406: Flags [P.], seq 1572:1912, ack 1, win 291, options [nop,nop,TS val 82510007 ecr 20666614], length 340
.........................................................................
```
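When post-processing `tcpdump` text output, a regular expression is often enough. The Python sketch below is our own helper (not part of `tcpdump`) that extracts the endpoints, TCP flags, and length from IPv4 TCP lines like the ones shown in this article, with or without the date prefix that `-tttt` adds. It assumes the default one-line output format; IPv6 addresses, other protocols, and verbose (`-v`) output would need different patterns.

```python
import re

LINE = re.compile(
    r"^(?P<time>(?:\d{4}-\d\d-\d\d )?\S+) IP (?P<src>\S+) > (?P<dst>[^:\s]+): "
    r"Flags \[(?P<flags>[^\]]*)\].*, length (?P<length>\d+)$"
)


def split_endpoint(endpoint):
    """'169.144.0.20.ssh' -> ('169.144.0.20', 'ssh'): the port follows the last dot."""
    host, _, port = endpoint.rpartition(".")
    return host, port


def parse_line(line):
    """Return a dict describing one IPv4 TCP line, or None if the line doesn't match."""
    m = LINE.match(line.strip())
    if m is None:
        return None
    src_host, src_port = split_endpoint(m["src"])
    dst_host, dst_port = split_endpoint(m["dst"])
    return {
        "time": m["time"],
        "src": src_host, "sport": src_port,
        "dst": dst_host, "dport": dst_port,
        "flags": m["flags"],
        "length": int(m["length"]),
    }


sample = ("22:22:28.539760 IP 169.144.0.20.ssh > 169.144.0.1.39406: Flags [P.], "
          "seq 1572:1912, ack 1, win 291, options [nop,nop,TS val 82510007 ecr 20666614], length 340")
print(parse_line(sample))
```

Note that with `-n` alone the port in this sample is still shown by service name (`ssh`), so the sketch keeps ports as strings rather than converting them to integers.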

You can also combine the `-c` and `-n` options of `tcpdump` to capture N packets shown with numeric IP addresses:

```
[root@compute-0-1 ~]# tcpdump -c 25 -n -i enp0s3
```

### Example 8) Capture only TCP packets on a specific interface

In `tcpdump`, we can use the `tcp` filter expression to capture only TCP packets:

```

|

||||

[root@compute-0-1 ~]# tcpdump -i enp0s3 tcp

|

||||

@ -241,9 +238,9 @@ listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes

|

||||

...................................................................................................................................................

|

||||

```

|

||||

|

||||

### 示例: 9) 从特定接口上的特定端口捕获数据包

|

||||

### 示例:9)从特定接口上的特定端口捕获数据包

|

||||

|

||||

使用 tcpdump 命令,我们可以从特定接口 enp0s3 上的特定端口(例如 22 )捕获数据包

|

||||

使用 `tcpdump` 命令,我们可以从特定接口 `enp0s3` 上的特定端口(例如 22)捕获数据包。

|

||||

|

||||

语法:

|

||||

|

||||

@ -262,13 +259,12 @@ listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes

|

||||

22:54:55.038564 IP 169.144.0.1.39406 > compute-0-1.example.com.ssh: Flags [.], ack 940, win 9177, options [nop,nop,TS val 21153238 ecr 84456505], length 0

|

||||

22:54:55.038708 IP compute-0-1.example.com.ssh > 169.144.0.1.39406: Flags [P.], seq 940:1304, ack 1, win 291, options [nop,nop,TS val 84456506 ecr 21153238], length 364

|

||||

............................................................................................................................

|

||||

[root@compute-0-1 ~]#

|

||||

```

|

||||

|

||||

|

||||

### 示例: 10) 在特定接口上捕获来自特定来源 IP 的数据包

|

||||

### 示例:10)在特定接口上捕获来自特定来源 IP 的数据包

|

||||

|

||||

在tcpdump命令中,使用 '**src**' 关键字后跟 '**IP 地址**',我们可以捕获来自特定来源 IP 的数据包,

|

||||

在 `tcpdump` 命令中,使用 `src` 关键字后跟 IP 地址,我们可以捕获来自特定来源 IP 的数据包,

|

||||

|

||||

语法:

|

||||

|

||||

@ -296,17 +292,16 @@ listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes

|

||||

10 packets captured

|

||||

12 packets received by filter

|

||||

0 packets dropped by kernel

|

||||

[root@compute-0-1 ~]#

|

||||

|

||||

```
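When tcpdump exits, it prints the summary shown above (packets captured, received by filter, and dropped by kernel) on standard error. For scripted captures, the following sketch extracts the kernel drop count so that a script can warn when packets were lost. The function name `kernel_drops` is hypothetical. The pattern accepts both "packet" and "packets".

```shell
# Hypothetical helper: read tcpdump's exit summary from stdin and print
# the number of packets dropped by the kernel (0 if the line is absent).
kernel_drops() {
  awk '/packets? dropped by kernel/ { d = $1 } END { print d + 0 }'
}
```

For example, a script could run `tcpdump -n -c 10 -i enp0s3 src 169.144.0.1 2> summary.txt` and then check `kernel_drops < summary.txt`. A non-zero result suggests that the capture missed packets.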

### Example 11) Capture packets sent to a specific destination IP on a specific interface

Syntax:

```
# tcpdump -n -i {interface-name} dst {IP-address}
```

```
[root@compute-0-1 ~]# tcpdump -n -i enp0s3 dst 169.144.0.1
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
@ -318,42 +313,39 @@ listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes
23:10:43.522157 IP 169.144.0.20.ssh > 169.144.0.1.39406: Flags [P.], seq 800:996, ack 1, win 291, options [nop,nop,TS val 85404989 ecr 21390359], length 196
23:10:43.522346 IP 169.144.0.20.ssh > 169.144.0.1.39406: Flags [P.], seq 996:1192, ack 1, win 291, options [nop,nop,TS val 85404989 ecr 21390359], length 196
.........................................................................................
```

### Example 12) Capture TCP traffic between two hosts

Suppose I want to capture the TCP packets exchanged between the hosts 169.144.0.1 and 169.144.0.20. Using `and` between the two `host` primitives keeps only the traffic between them:

```
[root@compute-0-1 ~]# tcpdump -w two-host-tcp-comm.pcap -i enp0s3 tcp and \(host 169.144.0.1 and host 169.144.0.20\)
```

To capture only the SSH packets that 169.144.0.1 sends to 169.144.0.20, use:

```
[root@compute-0-1 ~]# tcpdump -w ssh-comm-two-hosts.pcap -i enp0s3 src 169.144.0.1 and port 22 and dst 169.144.0.20 and port 22
```

### Example 13) Capture UDP packets between two hosts (in both directions)

Syntax:

```
# tcpdump -w {file-name.pcap} -s {snap-length} -i {interface-name} udp and \(host {IP-address-1} and host {IP-address-2}\)
```

```
[root@compute-0-1 ~]# tcpdump -w two-host-comm.pcap -s 1000 -i enp0s3 udp and \(host 169.144.0.10 and host 169.144.0.20\)
```

### Example 14) Capture packets in hexadecimal and ASCII format

With `tcpdump`, we can display captured TCP/IP packets in ASCII and in hexadecimal format.

To print packets in ASCII, use the `-A` option:

```
[root@compute-0-1 ~]# tcpdump -c 10 -A -i enp0s3
@ -376,7 +368,7 @@ root@compute-0-1 @..........
..................................................................................................................................................
```

To print packets in both hexadecimal and ASCII, use the `-XX` option:

```
[root@compute-0-1 ~]# tcpdump -c 10 -XX -i enp0s3
@ -406,10 +398,9 @@ listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes
0x0030: 3693 7c0e 0000 0101 080a 015a a734 0568 6.|........Z.4.h
0x0040: 39af
.......................................................................
```

That's all for this article. I hope you now know how to capture and analyze TCP/IP packets with the `tcpdump` command. Please share your feedback and comments.

--------------------------------------------------------------------------------

@ -418,7 +409,7 @@ via: https://www.linuxtechi.com/capture-analyze-packets-tcpdump-command-linux/

Author: [Pradeep Kumar][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [ypingcn](https://github.com/ypingcn)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,16 +1,17 @@

How to Create a Photo Slideshow in Ubuntu and Other Linux Distributions
======

Creating a photo slideshow takes just a few clicks. Here's how to make one in Ubuntu 18.04 and other Linux distributions.

![How to create slideshow of photos in Ubuntu Linux][1]

Imagine your friends and relatives are visiting and ask you to show them the photos from your latest event or trip.

Your photos are saved on your computer, neatly organized in separate folders. You invite everyone to gather around the computer, open the folder, click one of the pictures, and then press the arrow keys to show the photos one by one.

But that's tiring! It would be much better if the pictures changed automatically every few seconds.

That's called a slideshow, and I'm going to show you how to create a photo slideshow in Ubuntu. It lets you cycle through the pictures in a folder and display them in full-screen mode.

### Creating a photo slideshow in Ubuntu 18.04 and other Linux distributions

@ -20,19 +21,19 @@

If you use GNOME in Ubuntu 18.04 or any other distribution, you're in luck. GNOME's default image viewer, Eye of GNOME, can show a slideshow of the pictures in the current folder.

Just click one of the pictures, and you'll see the settings option in the menu at the top right of the application. It looks like three horizontal bars stacked on top of each other.

You'll see several options there. Check the Slideshow option, and the images will be displayed in full screen.

![How to create slideshow of photos in Ubuntu Linux][2]

By default, the image changes every 5 seconds. You can change the slideshow interval under "Preferences -> Slideshow".

![change slideshow interval in Ubuntu][3]

#### Method 2: Photo slideshow with Shotwell Photo Manager

[Shotwell][4] is a popular [Linux photo management application][5]. It's available for all major Linux distributions.

If it isn't installed yet, search for Shotwell in your distribution's software center and install it.

@ -55,7 +56,7 @@ via: https://itsfoss.com/photo-slideshow-ubuntu/

Author: [Abhishek Prakash][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,24 +1,24 @@

|

||||

用 zsh 提高生产力的5个 tips

|

||||

用 zsh 提高生产力的 5 个技巧

|

||||

======

|

||||

> zsh 提供了数之不尽的功能和特性,这里有五个可以让你在命令行暴增效率的方法。

|

||||

|

||||

|

||||

|

||||

Z shell (亦称 zsh) 是 *unx 系统中的命令解析器 。 它跟 `sh` (Bourne shell) 家族的其他解析器 ( 如 `bash` 和 `ksh` ) 有着相似的特点,但它还提供了大量的高级特性以及强大的命令行编辑功能(选项?),如增强版tab补全。

|

||||

Z shell([zsh][1])是 Linux 和类 Unix 系统中的一个[命令解析器][2]。 它跟 sh (Bourne shell) 家族的其它解析器(如 bash 和 ksh)有着相似的特点,但它还提供了大量的高级特性以及强大的命令行编辑功能,如增强版 Tab 补全。

|

||||

|

||||

由于 zsh 有好几百页的文档去描述他的特性,所以我无法在这里阐明 zsh 的所有功能。在本文,我会列出5个 tips,让你通过使用 zsh 来提高你的生产力。

|

||||

在这里不可能涉及到 zsh 的所有功能,[描述][3]它的特性需要好几百页。在本文中,我会列出 5 个技巧,让你通过在命令行使用 zsh 来提高你的生产力。

|

||||

|

||||

### 1\. 主题和插件

|

||||

### 1、主题和插件

|

||||

|

||||

多年来,开源社区已经为 zsh 开发了数不清的主题和插件。主题是预定义提示符的配置,而插件则是一组常用的别名命令和功能,让你更方便的使用一种特定的命令或者编程语言。

|

||||

多年来,开源社区已经为 zsh 开发了数不清的主题和插件。主题是一个预定义提示符的配置,而插件则是一组常用的别名命令和函数,可以让你更方便的使用一种特定的命令或者编程语言。

|

||||

|

||||

如果你现在想开始用 zsh 的主题和插件,那么使用 zsh 的配置框架 (configuiration framework) 是你最快的入门方式。在众多的配置框架中,最受欢迎的则是 [Oh My Zsh][4]。在默认配置中,他就已经为 zsh 启用了一些合理的配置,同时它也自带多个主题和插件。

|

||||

如果你现在想开始用 zsh 的主题和插件,那么使用一种 zsh 的配置框架是你最快的入门方式。在众多的配置框架中,最受欢迎的则是 [Oh My Zsh][4]。在默认配置中,它就已经为 zsh 启用了一些合理的配置,同时它也自带上百个主题和插件。

|

||||

|

||||

由于主题会在你的命令行提示符之前添加一些常用的信息,比如你 Git 仓库的状态,或者是当前使用的 Python 虚拟环境,所以它会让你的工作更高效。只需要看到这些信息,你就不用再敲命令去重新获取它们,而且这些提示也相当酷炫。

|

||||

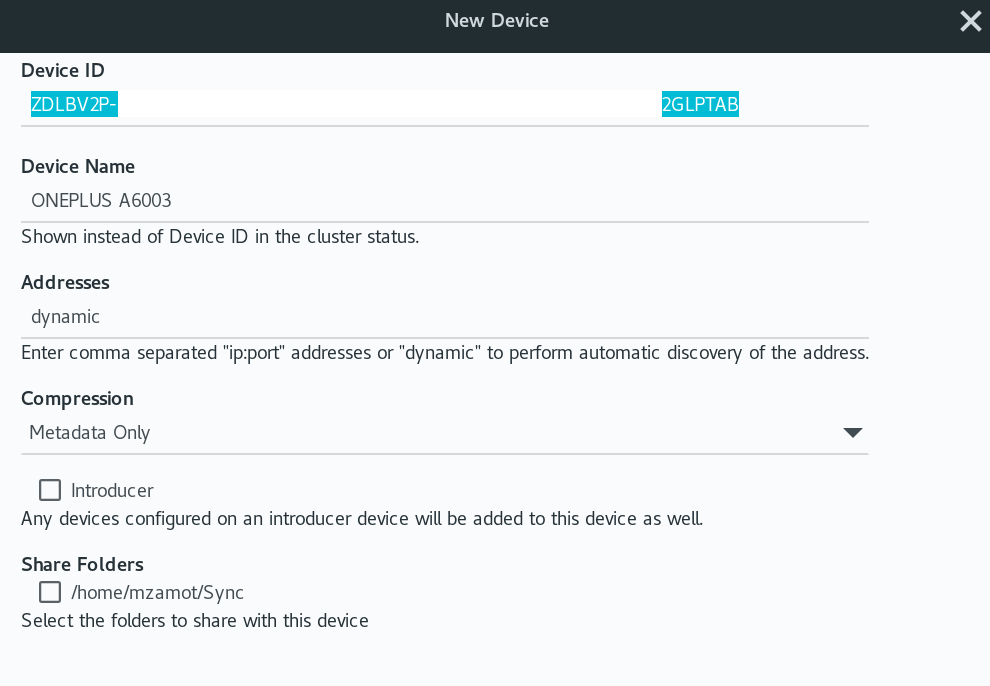

下图就是我(作者)选用的主题 [Powerlevel9k][5]

|

||||

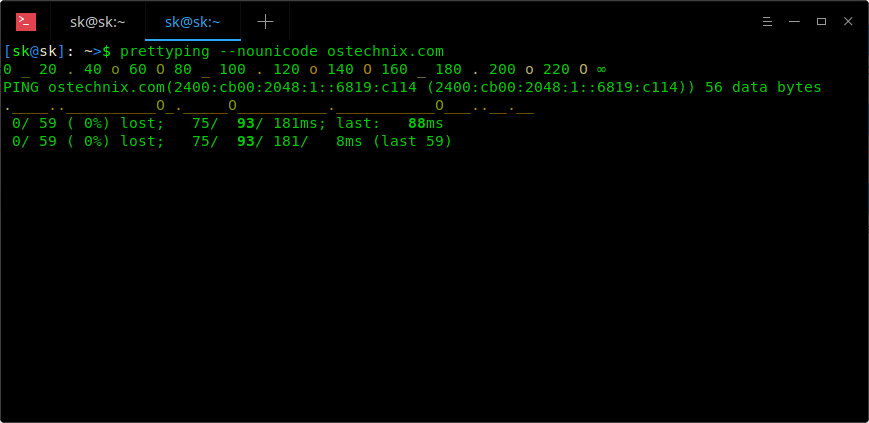

主题会在你的命令行提示符之前添加一些有用的信息,比如你 Git 仓库的状态,或者是当前使用的 Python 虚拟环境,所以它会让你的工作更高效。只需要看到这些信息,你就不用再敲命令去重新获取它们,而且这些提示也相当酷炫。下图就是我选用的主题 [Powerlevel9k][5]:

|

||||

|

||||

![zsh Powerlevel9K theme][7]

|

||||

|

||||

zsh 主题 Powerlevel9k

|

||||

*zsh 主题 Powerlevel9k*

|

||||

|

||||

除了主题,Oh my Zsh 还自带了大量常用的 zsh 插件。比如,通过启用 Git 插件,你可以用一组简便的命令别名操作 Git, 比如

|

||||

|

||||

@ -36,39 +36,37 @@ gcs='git commit -S'

|

||||

glg='git log --stat'

|

||||

```

|

||||

|

||||

zsh 还有许多插件是用于多种编程语言,打包系统和一些平时在命令行中常用的工具。

|

||||

以下是我(作者) Ferdora 工作站中用到的插件表:

|

||||

zsh 还有许多插件可以用于许多编程语言、打包系统和一些平时在命令行中常用的工具。以下是我 Ferdora 工作站中用到的插件表:

|

||||

|

||||

```

|

||||

git golang fedora docker oc sudo vi-mode virtualenvwrapper

|

||||

```
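With Oh My Zsh, you choose the theme and plugins in `~/.zshrc`. The following is a minimal sketch. It assumes Oh My Zsh is installed in its default location, `~/.oh-my-zsh`, and that Powerlevel9k was cloned into `$ZSH_CUSTOM/themes/powerlevel9k`. Both paths are assumptions, so adjust them to your setup.

```shell
# ~/.zshrc fragment (sketch; assumes a default Oh My Zsh install)
export ZSH="$HOME/.oh-my-zsh"

# "directory/theme-name" under $ZSH_CUSTOM/themes (assumed Powerlevel9k location)
ZSH_THEME="powerlevel9k/powerlevel9k"

# Plugins are listed by name, separated by spaces
plugins=(git golang fedora docker oc sudo vi-mode virtualenvwrapper)

# Load Oh My Zsh only after the variables above are set
source "$ZSH/oh-my-zsh.sh"
```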

### 2. Smart aliases

Aliases are very useful in zsh. Defining aliases for frequently used commands saves you a lot of typing. Oh My Zsh configures several useful aliases by default, including aliases for directory navigation and aliases that add extra options to common commands, such as:

```
ls='ls --color=tty'
grep='grep --color=auto --exclude-dir={.bzr,CVS,.git,.hg,.svn}'
```

In addition to command aliases, zsh provides two other useful alias types: suffix aliases and global aliases.

A suffix alias lets you open a file on the command line with a specific program, based on the file's extension. For example, to open YAML files with vim, define the following alias:

```
alias -s {yml,yaml}=vim
```

Now, if you type the name of any file ending in `yml` or `yaml` on the command line, zsh opens that file with vim:

```
$ playbook.yml
# Opens file playbook.yml using vim
```

A global alias is expanded anywhere on the command line, not only at the beginning of a command. This is useful for replacing common file names or pipelines. For example:

```
alias -g G='| grep -i'
@ -84,9 +82,9 @@ drwxr-xr-x. 6 rgerardi rgerardi 4096 Aug 24 14:51 Downloads
```

Next, let's look at how zsh helps you navigate the filesystem.

### 3. Easy directory navigation

When you use the command line, switching between directories is one of the most common tasks. zsh provides several useful directory-navigation features that make this easier. Oh My Zsh already enables them, and you can enable them yourself with the following command:

```
setopt autocd autopushd pushdignoredups
@ -104,7 +102,7 @@ $ pwd
```

To go back, just type `-`:

zsh keeps a record of the directories you've visited, so you can switch back to them quickly. To see this list, type `dirs -v`:

```
$ dirs -v
@ -168,7 +166,7 @@ $ pwd
/tmp
```

Finally, zsh can autocomplete directory names with Tab. Type the first letters of a directory name and press `TAB` to complete it:

```
$ pwd
@ -179,22 +177,22 @@ $ Projects/Opensource.com/zsh-5tips/
```

That's just one feature of zsh's powerful Tab completion system. Let's explore more of its features next.

### 4. Advanced Tab completion

zsh's powerful completion system is one of its selling points. For simplicity, I call it Tab completion, but under the hood it does several things, usually expansion and command completion. I'll discuss both together here. For more details, see the [User's Guide][8].

Oh My Zsh enables command completion by default. To enable it yourself, add the following lines to your `.zshrc` file:

```
autoload -U compinit
compinit
```
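Selecting a completion with the arrow keys, as described for `git` below, depends on zsh's completion menu selection. Oh My Zsh configures a similar setting for you. If you set up completion yourself, a commonly used `zstyle` line can be placed after `compinit`. This is a sketch, so tune the style to your preferences:

```shell
# ~/.zshrc fragment (sketch): initialize completion, then enable
# menu selection so that candidates can be chosen with the arrow keys.
autoload -U compinit
compinit
zstyle ':completion:*' menu select
```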

zsh's completion system is very smart. It tries to suggest only the items that make sense in the current context. For example, if you type `cd` and press `TAB`, zsh suggests only directory names, because it knows that nothing else is useful after `cd`.

Similarly, commands that deal with users suggest user names, while commands such as `ssh` or `ping` suggest host names.

zsh has a large and comprehensive completion library, so it recognizes many different commands. For example, if you use the `tar` command and press `TAB`, zsh lists the files in the archive that you can extract:

```
$ tar -xzvf test1.tar.gz test1/file1 (TAB)
@ -221,7 +219,7 @@ $ git add (TAB)
$ git add zsh-5tips.md
```

zsh also recognizes command-line options, and it suggests only the options that apply to the selected subcommand:

```
$ git commit - (TAB)
@ -243,27 +241,27 @@ $ git commit - (TAB)
... TRUNCATED ...
```

After pressing `TAB`, you can use the arrow keys to select the option you want. Now you don't need to memorize all the `git` options.

zsh has many more useful features. As you use it, you'll find out which ones are most useful to you.

### 5. Command-line editing and history

zsh's command-line editing is also very useful. By default, it emulates the emacs editor. If, like me, you prefer vi/vim, you can enable vi key bindings with the following command:

```
$ bindkey -v
```

If you use Oh My Zsh, the `vi-mode` plugin enables additional bindings and adds a vi mode indicator to your prompt, which is very helpful.

With vi bindings enabled, you can edit the command line using vi commands. For example, press `ESC+/` to search the command history. While searching, press `n` to find the next match and `N` to find the previous one. After pressing `ESC`, the usual vi commands work: `0` jumps to the beginning of the line, `$` jumps to the end of the line, `i` inserts text, `a` appends text, and so on. Commands that take a motion also work, such as `cw` to change a word.

In addition to command-line editing, zsh provides several useful history features for correcting or re-running previously used commands. For example, if you make a mistake in a command, type `fc` to open the last command in your preferred editor and fix it there. The editor is taken from the `$EDITOR` variable, and vi is used by default.

Another useful command is `r`, which re-runs the last command. `r <WORD>` re-runs the most recent command that starts with `WORD`.

Finally, typing two exclamation marks (`!!`) recalls the last command on the command line. This is very useful when, for example, you forget to use `sudo` for a command that requires privileges:

```
$ less /var/log/dnf.log
@ -274,19 +272,16 @@ $ sudo less /var/log/dnf.log
```

This feature makes it easier to find and re-run previous commands.

### What's next?

These are just a few of the zsh features that can make you more productive, and there are many more waiting for you to discover. For more information, check out these resources:

- [An Introduction to the Z Shell][9]
- [A User's Guide to ZSH][10]
- [Archlinux Wiki][11]
- [zsh-lovers][12]

Do you have any zsh productivity tips to share? I'd love to see them in the comments below.

--------------------------------------------------------------------------------

@ -295,7 +290,7 @@ via: https://opensource.com/article/18/9/tips-productivity-zsh

Author: [Ricardo Gerardi][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [tnuoccalanosrep](https://github.com/tnuoccalanosrep)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -3,48 +3,46 @@

What's a good way to promote the spirit of open source without writing code? Here's an idea: "open source cooking". That's what we've been doing in Munich for the past 8 years.

Open source cooking has become a regular part of our open source outreach, because we've found that open source and cooking have a lot in common.

### Cooking together

The [Munich Open Source Meetings][1] have organized many events since they started in July 2009 at [Café Netzwerk][2], usually on Friday evenings. The meetings give people who work on open source projects, and open source enthusiasts, a way to get to know each other. Our motto is: "Every fourth Friday for free software". On some weekends we also hold workshops. Over time we added many other activities, including white sausage breakfasts, sauna evenings, and cooking events.

Admittedly, the first open source cooking meetup was somewhat chaotic, but after 8 years and 15 events, we can now serve a delicious meal to 25-30 attendees.

Looking back at these evenings, we see more and more similarities between cooking together and collaborating in open source communities.

### Free and open source principles at work in the kitchen

Here are a few ways in which cooking resembles open source:

* We enjoy collaborating and working toward a common goal
* We form a community
* Because we share interests and hobbies, we learn more about ourselves and each other, and we can work together
* We make mistakes, but we learn from them and share what we learned for the common good, so that we all avoid repeating them
* Everyone contributes what they're good at, because everyone has their own skills
* We encourage others to contribute and join us
* Although collaboration is key, things can get a little chaotic
* Everyone benefits

### The open source flavor of cooking

Like many successful open source meetups, open source cooking requires some collaboration and structure. Before each event, all members vote on the menu. Rather than just handing everyone a slice of pizza, we want to create something truly delicious. So far we've cooked Japanese, Mexican, Hungarian, and Indian dishes, to name just a few.

As in life, cooking together requires mutual respect and understanding, so we also try to offer dishes for vegetarians, people with food allergies, and people with other dietary preferences. Running a small test at home before the event can be very helpful (and fun!).

Scalability matters too: buying the necessary ingredients at the grocery store can easily take 3 hours. So we use a spreadsheet tool (LibreOffice Calc, naturally) to work out the ingredients we need and their cost.

Each dinner has a volunteer "package maintainer" who makes sure the menu is ready on time and finds creative solutions when problems come up.

Not everyone is a chef, but with a little help and a sensible division of tasks and responsibilities, it's easy to get everyone involved. Handling 18 kg of tomatoes and 100 eggs turns out not to be a big deal, trust me! The only limitation is that a stove has only four burners, so it may be time to invest more in infrastructure.

Releases follow a schedule, though not a strict one: we usually serve the main course in a rather "flexible" window between 21:30 and 01:30. Even so, that window is our hard release deadline.

Finally, as in many open source projects, the cooking documentation has room for improvement. Cleanup tasks such as washing the dishes could also be optimized.

### Some new features for the future

@ -54,21 +52,18 @@

* Buy and cook a giant pumpkin worth 700 euros, and
* Find a store that will give us a discount on our purchases

Last but not least, and this is also a motivation behind open source software: always remember that some people live in the shadows and struggle without equal access to resources. How can we help them in the spirit of open source?

With that in mind, I'm looking forward to the next open source cooking meetup. If reading this has left you wanting more and you'd like to run a similar event yourself, we'd be happy for you to borrow our ideas, or even copy them outright. We'd also be glad to have you join us, perhaps even for a talk and Q&A.

Article originally appeared on [blog.effenberger.org][3]. Reprinted with permission.

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/9/open-source-cooking

Author: [Florian Effenberger][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [sd886393](https://github.com/sd886393)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

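@ -0,0 +1,173 @@

Keyboard Shortcuts Every Ubuntu 18.04 User Should Know
======

Knowing keyboard shortcuts boosts your productivity. Here are some useful Ubuntu keyboard shortcuts to help you use Ubuntu like a pro.

You can operate a system with a combination of keyboard and mouse.

> Note: The keyboard shortcuts in this article apply to the GNOME edition of Ubuntu 18.04. Most or all of them usually work in other Ubuntu versions too, but I can't guarantee it.

![Ubuntu keyboard shortcuts][1]

### Useful Ubuntu keyboard shortcuts

Let's look at the essential keyboard shortcuts for Ubuntu GNOME! Universal shortcuts such as `Ctrl+C` (copy), `Ctrl+V` (paste), and `Ctrl+S` (save) are not covered here.

Note: In Linux, the Super key is the key with the Windows logo on your keyboard. I've used capital letters in this article, but that doesn't mean you need to press the `Shift` key. For example, `T` means the 't' key on the keyboard, not `Shift+t`.

#### 1. Super key: open the Activities search

Press the `Super` key to open the Activities overview. If you could use only one keyboard shortcut in Ubuntu, it would have to be the `Super` key.

Want to open an application? Press the `Super` key and search for it. If the application you search for isn't installed, Ubuntu suggests matching applications from the software center.

Want to see which programs are running? Press the `Super` key, and all running GUI applications are shown on screen.

Want to use workspaces? Just press the `Super` key, and you'll see the workspace options on the right side of the screen.

#### 2. Ctrl+Alt+T: open an Ubuntu terminal window

![Ubuntu Terminal Shortcut][2]

*Use Ctrl+Alt+T to open a terminal window*

To open a new terminal, just press `Ctrl+Alt+T`. This is my favorite keyboard shortcut in Ubuntu. I even mention it in many of my FOSS tutorials whenever a terminal window is needed.

#### 3. Super+L or Ctrl+Alt+L: lock the screen

Locking the screen when you're away from your computer is one of the most basic security habits. Instead of clicking the top-right corner of the screen and choosing the lock screen option, you can use the `Super+L` shortcut.

Some systems also use `Ctrl+Alt+L` to lock the screen.

#### 4. Super+D or Ctrl+Alt+D: show the desktop

Press `Super+D` to minimize all running application windows and show the desktop.

Press `Super+D` again to restore all the running application windows as they were.

You can also use `Ctrl+Alt+D` for this.

#### 5. Super+A: show the application menu

You can open the application menu in Ubuntu 18.04 GNOME by clicking the 9 dots in the bottom-left corner of the screen, but the `Super+A` shortcut is quicker.

It shows the application menu, where you can view or search the applications installed on your system.

Press `Esc` to exit the application menu.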

#### 6. Super+Tab or Alt+Tab: switch between running applications

If you have more than one application running, you can switch between them with `Super+Tab` or `Alt+Tab`.

Hold the `Super` key and press `Tab` to show the application switcher. While still holding `Super`, keep pressing `Tab` to move between applications. When the application you want is highlighted, release `Super` and `Tab`.

By default, the application switcher moves from left to right. To move from right to left, use `Super+Shift+Tab`.

You can also use `Alt` instead of `Super` here.

> Tip: If an application has multiple instances open, you can switch between them with the `` Super+` `` shortcut.

#### 7. Super+arrow keys: move windows

<https://player.vimeo.com/video/289091549>

This shortcut also works in Windows. While using an application, press `Super+Left arrow`, and the application snaps to the left edge of the screen, taking up the left half.

Similarly, `Super+Right arrow` snaps the application to the right edge.

`Super+Up arrow` maximizes the application window, and `Super+Down arrow` restores it to its normal size.

#### 8. Super+M: toggle the notification tray

GNOME has a notification tray where you can see notifications about system and application activity. It also contains a calendar.

![Notification Tray Ubuntu 18.04 GNOME][3]

*Notification tray*

Press `Super+M` to open the notification tray. Press the same keys again to close it.

`Super+V` does the same thing.

#### 9. Super+Space: switch input methods (for multilingual setups)

If you use more than one language, you probably have multiple input methods installed. For example, I need to use both [Hindi][4] and English on Ubuntu, so I installed the Hindi (Devanagari) input method alongside the default English one.

If you also use a multilingual setup, you can switch input methods quickly with `Super+Space`.

#### 10. Alt+F2: open the run console

This is for advanced users. If you want to run a quick command without opening a terminal, press `Alt+F2` to open the run console.

![Alt+F2 to run commands in Ubuntu][5]

*Run console*

This is especially useful when you need to launch applications that can only be run from the terminal.

#### 11. Ctrl+Q: close an application window

If an application is running, you can close its window with `Ctrl+Q`. You can also use `Ctrl+W` for this.

`Alt+F4` is a more "universal" shortcut for closing application windows.

It doesn't work in a few applications, such as Ubuntu's default terminal.

#### 12. Ctrl+Alt+arrow keys: switch workspaces

![Workspace switching][6]

*Switching workspaces*

If you use workspaces heavily, you can switch between them with `Ctrl+Alt+Up arrow` and `Ctrl+Alt+Down arrow`.

#### 13. Ctrl+Alt+Del: log out

No! In Linux, the famous `Ctrl+Alt+Del` shortcut doesn't open a task manager the way it does in Windows (unless you define a custom shortcut).

![Log Out Ubuntu][7]

*Log out*

In the standard GNOME desktop environment, `Ctrl+Alt+Del` opens the power-off menu. Ubuntu doesn't always follow that convention, so in Ubuntu `Ctrl+Alt+Del` opens the log-out menu instead.

### Using custom keyboard shortcuts in Ubuntu

You're not limited to the default keyboard shortcuts. You can create your own custom shortcuts as needed.

Go to "Settings -> Devices -> Keyboard" to see all the keyboard shortcuts on your system. Scroll down to the bottom, and you'll see the "Custom Shortcuts" option.

![Add custom keyboard shortcut in Ubuntu][8]

You need to provide an easy-to-recognize name for the shortcut, the command to run when the shortcut is used, and the key combination you want.

### What's your favorite keyboard shortcut in Ubuntu?

There's no end to shortcuts. If you like, you can look through all the available [GNOME shortcuts][9] to see whether any of them would be useful to you.

It's worth learning the shortcuts for the applications you use most. For example, I use Kazam for [screen recording][10], and its keyboard shortcuts let me pause and resume recording easily.

Which Ubuntu shortcuts do you like best and can't live without?

--------------------------------------------------------------------------------

via: https://itsfoss.com/ubuntu-shortcuts/

Author: [Abhishek Prakash][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [XiatianSummer](https://github.com/XiatianSummer)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://itsfoss.com/author/abhishek/
[1]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/ubuntu-keyboard-shortcuts.jpeg
[2]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/ubuntu-terminal-shortcut.jpg
[3]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/notification-tray-ubuntu-gnome.jpeg
[4]: https://itsfoss.com/type-indian-languages-ubuntu/
[5]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/console-alt-f2-ubuntu-gnome.jpeg
[6]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/workspace-switcher-ubuntu.png
[7]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/log-out-ubuntu.jpeg
[8]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/custom-keyboard-shortcut.jpg
[9]: https://wiki.gnome.org/Design/OS/KeyboardShortcuts
[10]: https://itsfoss.com/best-linux-screen-recorders/

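published/20180910 3 open source log aggregation tools.md

@ -0,0 +1,110 @@

3 open source log aggregation tools
======

> Log aggregation systems can help with troubleshooting and other tasks. Here's an introduction to three leading tools.

How is metrics aggregation different from log aggregation? Can't logs include metrics? Can't a log aggregation system do the same things as a metrics aggregation system?

I hear these questions often. I've also seen vendors pitch their log aggregation systems as the solution to every observability problem. Log aggregation is a valuable tool, but it usually doesn't handle time-series data well.

Two valuable features of a time-series metrics aggregation system are the regular interval and a storage system designed specifically for time-series data. The regular interval lets users consistently derive meaningful results from the data. A log aggregation system can collect metrics at a regular interval if asked to. However, its storage system isn't optimized for the kinds of queries that are typical in a metrics aggregation system, so running those queries against a log aggregation tool's storage takes more resources and time.

So a log aggregation system may not be a good fit for time-series data, but what is it good for? A log aggregation system is a great place to collect event data: irregular activities that are significant. The best example is web service access logs. These matter because we want to know what is accessing our systems and when. Another example is application error records: because they fall outside normal operation, they can be very valuable during troubleshooting.

Some rules for logging:

* **DO** include a timestamp
* **DO** format logs as JSON
* **DON'T** log insignificant events
* **DO** log all application errors
* **MAYBE** log warnings
* **MAYBE** make logging toggleable
* **DO** write messages in a human-readable form
* **DON'T** log informational messages in production
* **DON'T** log anything a human can't read or act on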
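To make the first two rules concrete, the following minimal sketch shows a shell function that writes one JSON log record with a UTC timestamp. The function name `log_json` and the field names `timestamp`, `level`, and `message` are hypothetical. The escaping handles only backslashes and double quotes. A real application would normally rely on its logging library's JSON formatter instead.

```shell
# Hypothetical helper: print one JSON log record per call.
# Usage: log_json LEVEL MESSAGE
# LEVEL is assumed to be a plain word such as info, warning, or error.
log_json() {
  level=$1
  # Escape backslashes first, then double quotes. Control characters
  # such as newlines are NOT handled in this sketch.
  msg=$(printf '%s' "$2" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  # ISO 8601 timestamp in UTC, for example 2018-09-10T14:03:07Z
  ts=$(date -u +%Y-%m-%dT%H:%M:%SZ)
  printf '{"timestamp":"%s","level":"%s","message":"%s"}\n' "$ts" "$level" "$msg"
}
```

For example, `log_json error 'disk "data" is full'` prints a single line containing `"level":"error","message":"disk \"data\" is full"`, preceded by the current timestamp.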

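### The cost of the cloud

When you research log aggregation tools, cloud services can look like an attractive option. However, they can come with significant costs. Aggregated across hundreds or thousands of hosts and applications, log data is voluminous, and ingesting, storing, and retrieving it in a cloud-based system is expensive.

As a real-world reference, a collection of about 500 nodes and a few hundred applications produces 200 GB of log data per day. That system probably has room for improvement, but even if the volume were cut in half, many SaaS offerings would charge nearly $10,000 per month. That price usually includes only 30 days of retention, so looking at year-over-year trend data isn't possible.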
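These figures imply a rough per-gigabyte price. The following back-of-the-envelope calculation assumes a 30-day month and assumes that the quoted monthly cost covers ingesting the halved volume with 30-day retention. The result is derived from the article's numbers and is not a quoted vendor price:

$$
\begin{aligned}
\text{daily volume after halving} &= 200\ \text{GB/day} \times \tfrac{1}{2} = 100\ \text{GB/day} \\
\text{monthly volume} &\approx 100\ \text{GB/day} \times 30\ \text{days} = 3000\ \text{GB} \\
\text{implied price} &\approx \frac{\$10{,}000}{3000\ \text{GB}} \approx \$3.33\ \text{per GB}
\end{aligned}
$$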

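This isn't meant to discourage you from using these cloud-based systems; they can be very valuable, especially for smaller organizations. The point is that the costs can be significant, and it can be very discouraging when they add up. The rest of this article focuses on self-hosted open source and commercial solutions.

### Tool options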