mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

commit

bfcff006f5

@ -0,0 +1,216 @@

|

||||

8 个优秀的开源 Markdown 编辑器

|

||||

============================================================

|

||||

|

||||

### Markdown

|

||||

|

||||

首先,对 Markdown 进行一个简单的介绍。Markdown 是由 John Gruber 和 Aaron Swartz 共同创建的一种轻量级纯文本格式语法。Markdown 可以让用户“以易读、易写的纯文本格式来进行写作,然后可以将其转换为有效格式的 XHTML(或 HTML)“。Markdown 语法只包含一些非常容易记住的符号。其学习曲线平缓;你可以在炒蘑菇的同时一点点学习 Markdown 语法(大约 10 分钟)。通过使用尽可能简单的语法,错误率达到了最小化。除了拥有友好的语法,它还具有直接输出干净、有效的(X)HTML 文件的强大功能。如果你看过我的 HTML 文件,你就会知道这个功能是多么的重要。

|

||||

|

||||

Markdown 格式语法的主要目标是实现最大的可读性。用户能够以纯文本的形式发布一份 Markdown 格式的文件。用 Markdown 进行文本写作的一个优点是易于在计算机、智能手机和个人之间共享。几乎所有的内容管理系统都支持 Markdown 。它作为一种网络写作格式流行起来,其产生一些被许多服务采用的变种,比如 GitHub 和 Stack Exchange 。

|

||||

|

||||

你可以使用任何文本编辑器来写 Markdown 文件。但我建议使用一个专门为这种语法设计的编辑器。这篇文章中所讨论的软件允许你使用 Markdown 语法来写各种格式的专业文档,包括博客文章、演示文稿、报告、电子邮件以及幻灯片等。另外,所有的应用都是在开源许可证下发布的,在 Linux、OS X 和 Windows 操作系统下均可用。

|

||||

|

||||

|

||||

### Remarkable

|

||||

|

||||

|

||||

|

||||

让我们从 Remarkable 开始。Remarkable 是一个 apt 软件包的名字,它是一个相当有特色的 Markdown 编辑器 — 它并不支持 Markdown 的全部功能特性,但该有的功能特性都有。它使用和 GitHub Markdown 类似的语法。

|

||||

|

||||

你可以使用 Remarkable 来写 Markdown 文档,并在实时预览窗口查看更改。你可以把你的文件导出为 PDF 格式(带有目录)和 HTML 格式文件。它有强大的配置选项,从而具有许多样式,因此,你可以把它配置成你最满意的 Markdown 编辑器。

|

||||

|

||||

其他一些特性:

|

||||

|

||||

* 语法高亮

|

||||

* 支持 [GitHub 风味的 Markdown](https://linux.cn/article-8399-1.html)

|

||||

* 支持 MathJax - 通过高级格式呈现丰富文档

|

||||

* 键盘快捷键

|

||||

|

||||

在 Debian、Ubuntu、Fedora、SUSE 和 Arch 系统上均有 Remarkable 的可用的简易安装程序。

|

||||

|

||||

主页: [https://remarkableapp.github.io/][4]

|

||||

许可证: MIT 许可

|

||||

|

||||

### Atom

|

||||

|

||||

|

||||

|

||||

毫无疑问, Atom 是一个神话般的文本编辑器。超过 50 个开源包集合在一个微小的内核上,从而构成 Atom 。伴有 Node.js 的支持,以及全套功能特性,Atom 是我最喜欢用来写代码的编辑器。Atom 的特性在[杀手级开源应用][5]的文章中有更详细介绍,它是如此的强大。但是作为一个 Markdown 编辑器,Atom 还有许多不足之处,它的默认包不支持 Markdown 的特性。例如,正如上图所展示的,它不支持等价渲染。

|

||||

|

||||

但是,开源拥有强大的力量,这是我强烈提倡开源的一个重要原因。Atom 上有许多包以及一些复刻,从而添加了缺失的功能特性。比如,Markdown Preview Plus 提供了 Markdown 文件的实时预览,并伴有数学公式渲染和实时重加载。另外,你也可以尝试一下 [Markdown Preview Enhanced][6]。如果你需要自动滚动特性,那么 [markdown-scroll-sync][7] 可以满足你的需求。我是 [Markdown-Writer][8]和 [Markdown-pdf][9]的忠实拥趸,后者支持将 Markdown 快速转换为 PDF、PNG 以及 JPEG 文件。

|

||||

|

||||

这个方式体现了开源的理念:允许用户通过添加扩展来提供所需的特性。这让我想起了 Woolworths 的 n 种杂拌糖果的故事。虽然需要多付出一些努力,但能收获最好的回报。

|

||||

|

||||

主页: [https://atom.io/][10]

|

||||

许可证: MIT 许可

|

||||

|

||||

### Haroopad

|

||||

|

||||

|

||||

|

||||

Haroopad 是一个优秀的 Markdown 编辑器,是一个用于创建适宜 Web 的文档的处理器。使用 Haroopad 可以创作各种格式的文档,比如博客文章、幻灯片、演示文稿、报告和电子邮件等。Haroopad 在 Windows、Mac OS X 和 Linux 上均可用。它有 Debian/Ubuntu 的软件包,也有 Windows 和 Mac 的二进制文件。该应用程序使用 node-webkit、CodeMirror,marked,以及 Twitter 的 Bootstrap 。

|

||||

|

||||

Haroo 在韩语中的意思是“一天”。

|

||||

|

||||

它的功能列表非常可观。请看下面:

|

||||

|

||||

* 主题、皮肤和 UI 组件

|

||||

* 超过 30 种不同的编辑主题 - tomorrow-night-bright 和 zenburn 是近期刚添加的

|

||||

* 编辑器中的代码块的语法高亮

|

||||

* Ruby、Python、PHP、Javascript、C、HTML 和 CSS 的语法高亮支持

|

||||

* 基于 CodeMirror,这是一个在浏览器中使用 JavaScript 实现的通用文本编辑器

|

||||

* 实时预览主题

|

||||

* 基于 markdown-css 的 7 个主题

|

||||

* 语法高亮

|

||||

* 基于 hightlight.js 的 112 种语言以及 49 种样式

|

||||

* 定制主题

|

||||

* 基于 CSS (层叠样式表)的样式

|

||||

* 演示模式 - 对于现场演示非常有用

|

||||

* 绘图 - 流程图和序列图

|

||||

* 任务列表

|

||||

* 扩展 Markdown 语法,支持 TOC(目录)、 GitHub 风味 Markdown 以及数学表达式、脚注和任务列表等

|

||||

* 字体大小

|

||||

* 使用首选窗口和快捷键来设置编辑器和预览字体大小

|

||||

* 嵌入富媒体内容

|

||||

* 视频、音频、3D、文本、开放图形以及 oEmbed

|

||||

* 支持大约 100 种主要的网络服务(YouTude、SoundCloud、Flickr 等)

|

||||

* 支持拖放

|

||||

* 显示模式

|

||||

* 默认:编辑器|预览器,倒置:预览器|编辑器,仅编辑器,仅预览器(View -> Mode)

|

||||

* 插入当前日期和时间

|

||||

* 多种格式支持(Insert -> Data & Time)

|

||||

* HtML 到 Markdown

|

||||

* 拖放你在 Web 浏览器中选择好的文本

|

||||

* Markdown 解析选项

|

||||

* 大纲预览

|

||||

* 纯粹主义者的 Vim 键位绑定

|

||||

* Markdown 自动补全

|

||||

* 导出为 PDF 和 HTML

|

||||

* 带有样式的 HTML 复制到剪切板可用于所见即所得编辑器

|

||||

* 自动保存和恢复

|

||||

* 文件状态信息

|

||||

* 换行符或空格缩进

|

||||

* (一、二、三)列布局视图

|

||||

* Markdown 语法帮助对话框

|

||||

* 导入和导出设置

|

||||

* 通过 MathJax 支持 LaTex 数学表达式

|

||||

* 导出文件为 HTML 和 PDF

|

||||

* 创建扩展来构建自己的功能

|

||||

* 高效地将文件转换进博客系统:WordPress、Evernote 和 Tumblr 等

|

||||

* 全屏模式-尽管该模式不能隐藏顶部菜单栏和顶部工具栏

|

||||

* 国际化支持:英文、韩文、西班牙文、简体中文、德文、越南文、俄文、希腊文、葡萄牙文、日文、意大利文、印度尼西亚文土耳其文和法文

|

||||

|

||||

主页 [http://pad.haroopress.com/][11]

|

||||

许可证: GNU GPL v3 许可

|

||||

|

||||

### StackEdit

|

||||

|

||||

|

||||

|

||||

StackEdit 是一个功能齐全的 Markdown 编辑器,基于 PageDown(该 Markdown 库被 Stack Overflow 和其他一些 Stack 交流网站使用)。不同于在这个列表中的其他编辑器,StackEdit 是一个基于 Web 的编辑器。在 Chrome 浏览器上即可使用 StackEdit 。

|

||||

|

||||

特性包括:

|

||||

|

||||

* 实时预览 HTML,并通过绑定滚动连接特性来将编辑器和预览的滚动条相绑定

|

||||

* 支持 Markdown Extra 和 GitHub 风味 Markdown,Prettify/Highlight.js 语法高亮

|

||||

* 通过 MathJax 支持 LaTex 数学表达式

|

||||

* 所见即所得的控制按键

|

||||

* 布局配置

|

||||

* 不同风格的主题支持

|

||||

* la carte 扩展

|

||||

* 离线编辑

|

||||

* 可以与 Google 云端硬盘(多帐户)和 Dropbox 在线同步

|

||||

* 一键发布到 Blogger、Dropbox、Gist、GitHub、Google Drive、SSH 服务器、Tumblr 和 WordPress

|

||||

|

||||

主页: [https://stackedit.io/][12]

|

||||

许可证: Apache 许可

|

||||

|

||||

### MacDown

|

||||

|

||||

|

||||

|

||||

MacDown 是在这个列表中唯一一个只运行在 macOS 上的全特性编辑器。具体来说,它需要在 OX S 10.8 或更高的版本上才能使用。它在内部使用 Hoedown 将 Markdown 渲染成 HTML,这使得它的特性更加强大。Heodown 是 Sundown 的一个复活复刻。它完全符合标准,无依赖,具有良好的扩展支持和 UTF-8 感知。

|

||||

|

||||

MacDown 基于 Mou,这是专为 Web 开发人员设计的专用解决方案。

|

||||

|

||||

它提供了良好的 Markdown 渲染,通过 Prism 提供的语言识别渲染实现代码块级的语法高亮,MathML 和 LaTex 渲染,GTM 任务列表,Jekyll 前端以及可选的高级自动补全。更重要的是,它占用资源很少。想在 OS X 上写 Markdown?MacDown 是我针对 Web 开发者的开源推荐。

|

||||

|

||||

主页: [https://macdown.uranusjr.com/][13]

|

||||

许可证: MIT 许可

|

||||

|

||||

|

||||

### ghostwriter

|

||||

|

||||

|

||||

|

||||

ghostwriter 是一个跨平台的、具有美感的、无干扰的 Markdown 编辑器。它内建了 Sundown 处理器支持,还可以自动检测 pandoc、MultiMarkdown、Discount 和 cmark 处理器。它试图成为一个朴实的编辑器。

|

||||

|

||||

ghostwriter 有许多很好的功能设置,包括语法高亮、全屏模式、聚焦模式、主题、通过 Hunspell 进行拼写检查、实时字数统计、实时 HTML 预览、HTML 预览自定义 CSS 样式表、图片拖放支持以及国际化支持。Hemingway 模式按钮可以禁用退格键和删除键。一个新的 “Markdown cheat sheet” HUD 窗口是一个有用的新增功能。主题支持很基本,但在 [GitHub 仓库上][14]也有一些可用的试验性主题。

|

||||

|

||||

ghostwriter 的功能有限。我越来越欣赏这个应用的通用性,部分原因是其简洁的界面能够让写作者完全集中在策划内容上。这一应用非常值得推荐。

|

||||

|

||||

ghostwirter 在 Linux 和 Windows 系统上均可用。在 Windows 系统上还有一个便携式的版本可用。

|

||||

|

||||

主页: [https://github.com/wereturtle/ghostwriter][15]

|

||||

许可证: GNU GPL v3 许可

|

||||

|

||||

### Abricotine

|

||||

|

||||

|

||||

|

||||

Abricotine 是一个为桌面构建的、旨在跨平台且开源的 Markdown 编辑器。它在 Linux、OS X 和 Windows 上均可用。

|

||||

|

||||

该应用支持 Markdown 语法以及一些 GitHub 风味的 Markdown 增强(比如表格)。它允许用户直接在文本编辑器中预览文档,而不是在侧窗栏。

|

||||

|

||||

该应用有一系列有用的特性,包括拼写检查、以 HTML 格式保存文件或把富文本复制粘贴到邮件客户端。你也可以在侧窗中显示文档目录,展示语法高亮代码、以及助手、锚点和隐藏字符等。它目前正处于早期的开发阶段,因此还有一些很基本的 bug 需要修复,但它值得关注。它有两个主题可用,如果有能力,你也可以添加你自己的主题。

|

||||

|

||||

主页: [http://abricotine.brrd.fr/][16]

|

||||

许可证: GNU 通用公共许可证 v3 或更高许可

|

||||

|

||||

### ReText

|

||||

|

||||

|

||||

|

||||

ReText 是一个简单而强大的 Markdown 和 reStructureText 文本编辑器。用户可以控制所有输出的格式。它编辑的文件是纯文本文件,但可以导出为 PDF、HTML 和其他格式的文件。ReText 官方仅支持 Linux 系统。

|

||||

|

||||

特性包括:

|

||||

|

||||

* 全屏模式

|

||||

* 实时预览

|

||||

* 同步滚动(针对 Markdown)

|

||||

* 支持数学公式

|

||||

* 拼写检查

|

||||

* 分页符

|

||||

* 导出为 HTML、ODT 和 PDF 格式

|

||||

* 使用其他标记语言

|

||||

|

||||

主页: [https://github.com/retext-project/retext][17]

|

||||

许可证: GNU GPL v2 或更高许可

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ossblog.org/markdown-editors/

|

||||

|

||||

作者:[Steve Emms][a]

|

||||

译者:[ucasFL](https://github.com/ucasFL)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ossblog.org/author/steve/

|

||||

[1]:https://www.ossblog.org/author/steve/

|

||||

[2]:https://www.ossblog.org/markdown-editors/#comments

|

||||

[3]:https://www.ossblog.org/category/utilities/

|

||||

[4]:https://remarkableapp.github.io/

|

||||

[5]:https://www.ossblog.org/top-software/2/

|

||||

[6]:https://atom.io/packages/markdown-preview-enhanced

|

||||

[7]:https://atom.io/packages/markdown-scroll-sync

|

||||

[8]:https://atom.io/packages/markdown-writer

|

||||

[9]:https://atom.io/packages/markdown-pdf

|

||||

[10]:https://atom.io/

|

||||

[11]:http://pad.haroopress.com/

|

||||

[12]:https://stackedit.io/

|

||||

[13]:https://macdown.uranusjr.com/

|

||||

[14]:https://github.com/jggouvea/ghostwriter-themes

|

||||

[15]:https://github.com/wereturtle/ghostwriter

|

||||

[16]:http://abricotine.brrd.fr/

|

||||

[17]:https://github.com/retext-project/retext

|

||||

@ -0,0 +1,111 @@

|

||||

AI 正快速入侵我们生活的五个方面

|

||||

============================================================

|

||||

|

||||

> 让我们来看看我们已经被人工智能包围的五个真实存在的方面。

|

||||

|

||||

|

||||

|

||||

> 图片来源: opensource.com

|

||||

|

||||

开源项目[正在助推][2]人工智能(AI)进步,而且随着技术的成熟,我们将听到更多关于 AI 如何影响我们生活的消息。你有没有考虑过 AI 是如何改变你周围的世界的?让我们来看看我们日益被我们所改变的世界,以及大胆预测一下 AI 对未来影响。

|

||||

|

||||

### 1. AI 影响你的购买决定

|

||||

|

||||

最近 [VentureBeat][3] 上的一篇文章,[“AI 将如何帮助我们解读千禧一代”][4]吸引了我的注意。我承认我对人工智能没有思考太多,也没有费力尝试解读千禧一代,所以我很好奇,希望了解更多。事实证明,文章标题有点误导人,“如何卖东西给千禧一代”或许会是一个更准确的标题。

|

||||

|

||||

根据这篇文章,千禧一代是“一个令人垂涎的年龄阶段的人群,全世界的市场经理都在争抢他们”。通过分析网络行为 —— 无论是购物、社交媒体或其他活动 - 机器学习可以帮助预测行为模式,这将可以变成有针对性的广告。文章接着解释如何对物联网和社交媒体平台进行挖掘形成数据点。“使用机器学习挖掘社交媒体数据,可以让公司了解千禧一代如何谈论其产品,他们对一个产品类别的看法,他们对竞争对手的广告活动如何响应,还可获得很多数据,用于设计有针对性的广告,"这篇文章解释说。AI 和千禧一代成为营销的未来并不是什么很令人吃惊的事,但是 X 一代和婴儿潮一代,你们也逃不掉呢!(LCTT 译注:X 一代指出生于 20 世纪 60 年代中期至 70 年代末的美国人,婴儿潮是指二战结束后,1946 年初至 1964 年底出生的人)

|

||||

|

||||

> 人工智能根据行为变化,将包括城市人在内的整个人群设为目标群体。

|

||||

|

||||

例如, [Raconteur 上][23]的一篇文章 —— “AI 将怎样改变购买者的行为”中解释说,AI 在网上零售行业最大的力量是它能够迅速适应客户行为不断变化的形势。人工智能创业公司 [Fluid AI][25] 首席执行官 Abhinav Aggarwal 表示,他公司的软件被一个客户用来预测顾客行为,有一次系统注意到在暴风雪期间发生了一个变化。“那些通常会忽略在一天中发送的电子邮件或应用内通知的用户现在正在打开它们,因为他们在家里没有太多的事情可做。一个小时之内,AI 系统就适应了新的情况,并在工作时间开始发送更多的促销材料。”他解释说。

|

||||

|

||||

AI 正在改变我们怎样花钱和为什么花钱,但是 AI 是怎样改变我们挣钱的方式的呢?

|

||||

|

||||

### 2. 人工智能正在改变我们如何工作

|

||||

|

||||

[Fast 公司][5]最近的一篇文章“2017 年人工智能将如何改变我们的生活”中说道,求职者将会从人工智能中受益。作者解释说,除更新薪酬趋势之外,人工智能将被用来给求职者发送相关职位空缺信息。当你应该升职的时候,你很可能会得到一个升职的机会。

|

||||

|

||||

人工智能也可以被公司用来帮助新入职的员工。文章解释说:“许多新员工在刚入职的几天内会获得大量信息,其中大部分都留不下来。” 相反,机器人可能会随着时间的推移,当新员工需要相关信息时,再向他一点点“告知信息”。

|

||||

|

||||

[Inc.][7] 有一篇文章[“没有偏见的企业:人工智能将如何重塑招聘机制”][8],观察了人才管理解决方案提供商 [SAP SuccessFactors][9] 是怎样利用人工智能作为一个工作描述“偏见检查器”,以及检查员工赔偿金的偏见。

|

||||

|

||||

[《Deloitte 2017 人力资本趋势报告》][10]显示,AI 正在激励组织进行重组。Fast 公司的文章[“AI 是怎样改变公司组织的方式”][11]审查了这篇报告,该报告是基于全球 10,000 多名人力资源和商业领袖的调查结果。这篇文章解释说:"许多公司现在更注重文化和环境的适应性,而不是聘请最有资格的人来做某个具体任务,因为知道个人角色必须随 AI 的实施而发展。" 为了适应不断变化的技术,组织也从自上而下的结构转向多学科团队,文章说。

|

||||

|

||||

### 3. AI 正在改变教育

|

||||

|

||||

> AI 将使所有教育生态系统的利益相关者受益。

|

||||

|

||||

尽管教育预算正在缩减,但是教室的规模却正在增长。因此利用技术的进步有助于提高教育体系的生产率和效率,并在提高教育质量和负担能力方面发挥作用。根据 VentureBeat 上的一篇文章[“2017 年人工智能将怎样改变教育”][26],今年我们将看到 AI 对学生们的书面答案进行评分,机器人回答学生的问题,虚拟个人助理辅导学生等等。文章解释说:“AI 将惠及教育生态系统的所有利益相关者。学生将能够通过即时的反馈和指导学习地更好,教师将获得丰富的学习分析和对个性化教学的见解,父母将以更低的成本看到他们的孩子的更好的职业前景,学校能够规模化优质的教育,政府能够向所有人提供可负担得起的教育。"

|

||||

|

||||

### 4. 人工智能正在重塑医疗保健

|

||||

|

||||

2017 年 2 月 [CB Insights][12] 的一篇文章挑选了 106 个医疗保健领域的人工智能初创公司,它们中的很多在过去几年中提高了第一次股权融资。这篇文章说:“在 24 家成像和诊断公司中,19 家公司自 2015 年 1 月起就首次公开募股。”这份名单上有那些从事于远程病人监测,药物发现和肿瘤学方面人工智能的公司。”

|

||||

|

||||

3 月 16 日发表在 TechCrunch 上的一篇关于 AI 进步如何重塑医疗保健的文章解释说:“一旦对人类的 DNA 有了更好的理解,就有机会更进一步,并能根据他们特殊的生活习性为他们提供个性化的见解。这种趋势预示着‘个性化遗传学’的新纪元,人们能够通过获得关于自己身体的前所未有的信息来充分控制自己的健康。”

|

||||

|

||||

本文接着解释说,AI 和机器学习降低了研发新药的成本和时间。部分得益于广泛的测试,新药进入市场需要 12 年以上的时间。这篇文章说:“机器学习算法可以让计算机根据先前处理的数据来‘学习’如何做出预测,或者选择(在某些情况下,甚至是产品)需要做什么实验。类似的算法还可用于预测特定化合物对人体的副作用,这样可以加快审批速度。”这篇文章指出,2015 年旧金山的一个创业公司 [Atomwise][15] 一天内完成了可以减少埃博拉感染的两种新药物的分析,而不是花费数年时间。

|

||||

|

||||

> AI 正在帮助发现、诊断和治疗新疾病。

|

||||

|

||||

另外一个位于伦敦的初创公司 [BenevolentAI][27] 正在利用人工智能寻找科学文献中的模式。这篇文章说:“最近,这家公司找到了两种可能对 Alzheimer 起作用的化合物,引起了很多制药公司的关注。"

|

||||

|

||||

除了有助于研发新药,AI 正在帮助发现、诊断和治疗新疾病。TechCrunch 上的文章解释说,过去是根据显示的症状诊断疾病,但是现在 AI 正在被用于检测血液中的疾病特征,并利用对数十亿例临床病例分析进行深度学习获得经验来制定治疗计划。这篇文章说:“IBM 的 Watson 正在与纽约的 Memorial Sloan Kettering 合作,消化理解数十年来关于癌症患者和治疗方面的数据,为了向治疗疑难的癌症病例的医生提供和建议治疗方案。”

|

||||

|

||||

### 5. AI 正在改变我们的爱情生活

|

||||

|

||||

有 195 个国家的超过 5000 万活跃用户通过一个在 2012 年推出的约会应用程序 [Tinder][16] 找到潜在的伴侣。在一个 [Forbes 采访播客][17]中,Tinder 的创始人兼董事长 Sean Rad spoke 与 Steven Bertoni 对人工智能是如何正在改变人们约会进行过讨论。在[关于此次采访的文章][18]中,Bertoni 引用了 Rad 说的话,他说:“可能有这样一个时刻,Tinder 可以很好的推测你会感兴趣的人,在组织约会中还可能会做很多跑腿的工作”,所以,这个 app 会向用户推荐一些附近的同伴,并更进一步,协调彼此的时间安排一次约会,而不只是向用户显示一些有可能的同伴。

|

||||

|

||||

> 我们的后代真的可能会爱上人工智能。

|

||||

|

||||

你爱上了 AI 吗?我们的后代真的可能会爱上人工智能。Raya Bidshahri 发表在 [Singularity Hub][19] 的一篇文章“AI 将如何重新定义爱情”说,几十年的后,我们可能会认为爱情不再受生物学的限制。

|

||||

|

||||

Bidshahri 解释说:“我们的技术符合摩尔定律,正在以惊人的速度增长 —— 智能设备正在越来越多地融入我们的生活。”,他补充道:“到 2029 年,我们将会有和人类同等智慧的 AI,而到 21 世纪 40 年代,AI 将会比人类聪明无数倍。许多人预测,有一天我们会与强大的机器合并,我们自己可能会变成人工智能。”他认为在这样一个世界上那些是不可避免的,人们将会接受与完全的非生物相爱。

|

||||

|

||||

这听起来有点怪异,但是相比较于未来机器人将统治世界,爱上 AI 会是一个更乐观的结果。Bidshahri 说:“对 AI 进行编程,让他们能够感受到爱,这将使我们创造出更富有同情心的 AI,这可能也是避免很多人忧虑的 AI 大灾难的关键。”

|

||||

|

||||

这份 AI 正在入侵我们生活各领域的清单只是涉及到了我们身边的人工智能的表面。哪些 AI 创新是让你最兴奋的,或者是让你最烦恼的?大家可以在文章评论区写下你们的感受。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

Rikki Endsley - Rikki Endsley 是开源社区 Opensource.com 的管理员。在过去,她曾做过 Red Hat 开源和标准(OSAS)团队社区传播者;自由技术记者;USENIX 协会的社区管理员;linux 权威杂志 ADMIN 和 Ubuntu User 的合作出版者,还是杂志 Sys Admin 和 UnixReview.com 的主编。在 Twitter 上关注她:@rikkiends。

|

||||

|

||||

----

|

||||

|

||||

via: https://opensource.com/article/17/3/5-big-ways-ai-rapidly-invading-our-lives

|

||||

|

||||

作者:[Rikki Endsley][a]

|

||||

译者:[zhousiyu325](https://github.com/zhousiyu325)

|

||||

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/rikki-endsley

|

||||

[1]:https://opensource.com/article/17/3/5-big-ways-ai-rapidly-invading-our-lives?rate=ORfqhKFu9dpA9aFfg-5Za9ZWGcBcx-f0cUlf_VZNeQs

|

||||

[2]:https://www.linux.com/news/open-source-projects-are-transforming-machine-learning-and-ai

|

||||

[3]:https://twitter.com/venturebeat

|

||||

[4]:http://venturebeat.com/2017/03/16/how-ai-will-help-us-decipher-millennials/

|

||||

[5]:https://opensource.com/article/17/3/5-big-ways-ai-rapidly-invading-our-lives

|

||||

[6]:https://www.fastcompany.com/3066620/this-is-how-ai-will-change-your-work-in-2017

|

||||

[7]:https://twitter.com/Inc

|

||||

[8]:http://www.inc.com/bill-carmody/businesses-beyond-bias-how-ai-will-reshape-hiring-practices.html

|

||||

[9]:https://www.successfactors.com/en_us.html

|

||||

[10]:https://dupress.deloitte.com/dup-us-en/focus/human-capital-trends.html?id=us:2el:3pr:dup3575:awa:cons:022817:hct17

|

||||

[11]:https://www.fastcompany.com/3068492/how-ai-is-changing-the-way-companies-are-organized

|

||||

[12]:https://twitter.com/CBinsights

|

||||

[13]:https://www.cbinsights.com/blog/artificial-intelligence-startups-healthcare/

|

||||

[14]:https://techcrunch.com/2017/03/16/advances-in-ai-and-ml-are-reshaping-healthcare/

|

||||

[15]:http://www.atomwise.com/

|

||||

[16]:https://twitter.com/Tinder

|

||||

[17]:https://www.forbes.com/podcasts/the-forbes-interview/#5e962e5624e1

|

||||

[18]:https://www.forbes.com/sites/stevenbertoni/2017/02/14/tinders-sean-rad-on-how-technology-and-artificial-intelligence-will-change-dating/#4180fc2e5b99

|

||||

[19]:https://twitter.com/singularityhub

|

||||

[20]:https://singularityhub.com/2016/08/05/how-ai-will-redefine-love/

|

||||

[21]:https://opensource.com/user/23316/feed

|

||||

[22]:https://opensource.com/article/17/3/5-big-ways-ai-rapidly-invading-our-lives#comments

|

||||

[23]:https://twitter.com/raconteur

|

||||

[24]:https://www.raconteur.net/technology/how-ai-will-change-buyer-behaviour

|

||||

[25]:http://www.fluid.ai/

|

||||

[26]:http://venturebeat.com/2017/02/04/how-ai-will-transform-education-in-2017/

|

||||

[27]:https://twitter.com/benevolent_ai

|

||||

[28]:https://opensource.com/users/rikki-endsley

|

||||

|

||||

@ -0,0 +1,82 @@

|

||||

T-UI Launcher:将你的 Android 设备变成 Linux 命令行界面

|

||||

============================================================

|

||||

|

||||

不管你是一位命令行大师,还是只是不想让你的朋友和家人使用你的 Android 设备,那就看下 T-UI Launcher 这个程序。Unix/Linux 用户一定会喜欢这个。

|

||||

|

||||

T-UI Launcher 是一个免费的轻量级 Android 程序,具有类似 Linux 的命令行界面,它可将你的普通 Android 设备变成一个完整的命令行界面。对于喜欢使用基于文本的界面的人来说,这是一个简单、快速、智能的启动器。

|

||||

|

||||

#### T-UI Launcher 功能

|

||||

|

||||

下面是一些重要的功能:

|

||||

|

||||

* 第一次启动后展示快速使用指南。

|

||||

* 快速且可完全定制。

|

||||

* 提供自动补全菜单及快速、强大的别名系统。

|

||||

* 此外,提供预测建议,并提供有用的搜索功能。

|

||||

|

||||

它是免费的,你可以从 Google Play 商店[下载并安装它][1],接着在 Android 设备中运行。

|

||||

|

||||

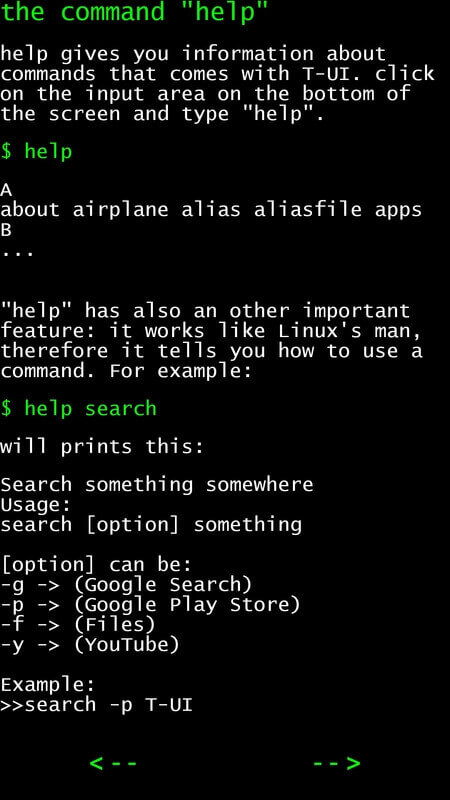

安装完成后,第一次启动时你会看到一个快速指南。阅读完成之后,你可以如下面那样使用简单的命令开始使用了。

|

||||

|

||||

[][2]

|

||||

|

||||

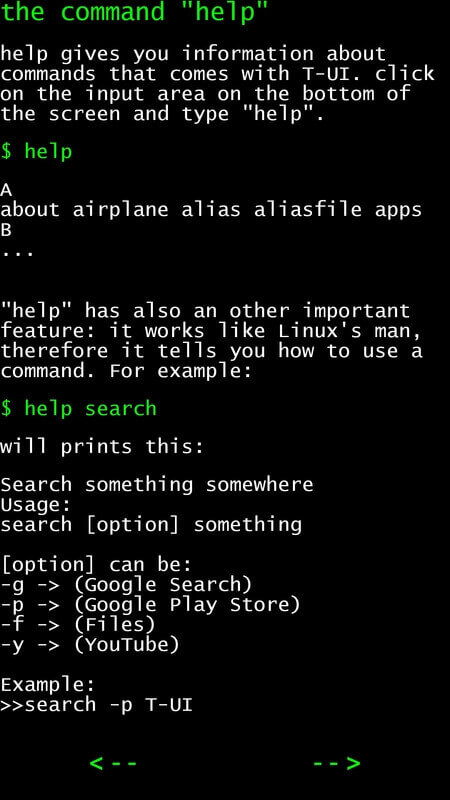

*T-UI 命令行帮助指南*

|

||||

|

||||

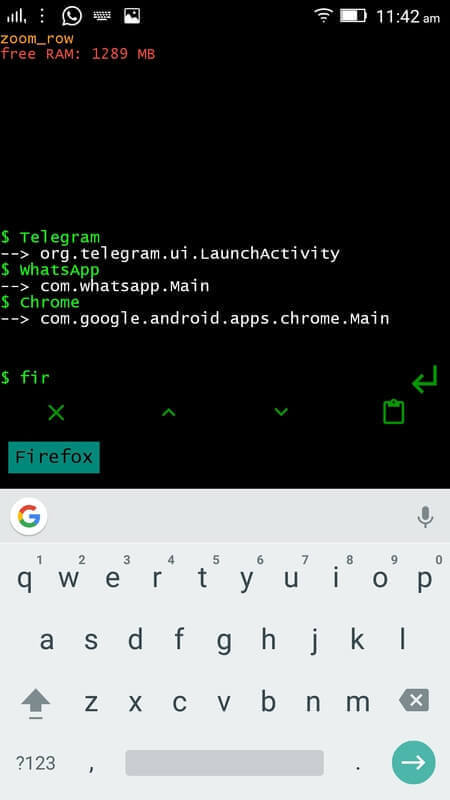

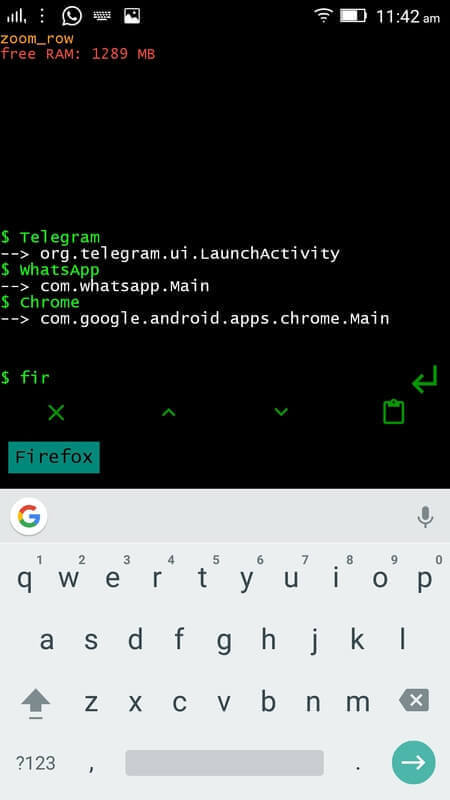

要启动一个 app,只要输入几个字母,自动补全功能会在屏幕中展示可用的 app。接着点击你想打开的程序。

|

||||

|

||||

```

|

||||

$ Telegram ### 启动 telegram

|

||||

$ WhatsApp ### 启动 whatsapp

|

||||

$ Chrome ### 启动 chrome

|

||||

```

|

||||

|

||||

[][3]

|

||||

|

||||

*T-UI 命令行使用*

|

||||

|

||||

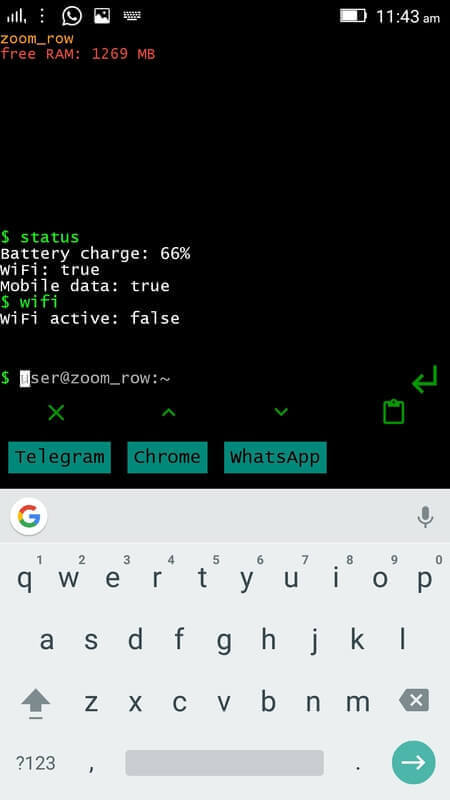

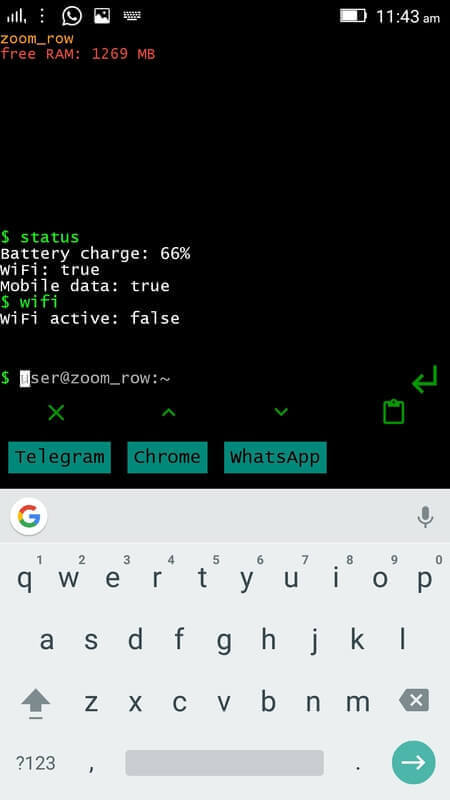

要浏览你的 Android 设备状态(电池电量、wifi、移动数据),输入:

|

||||

|

||||

```

|

||||

$ status

|

||||

```

|

||||

|

||||

[][4]

|

||||

|

||||

*Android 电话状态*

|

||||

|

||||

其它的有用命令。

|

||||

|

||||

```

|

||||

$ uninstall telegram ### 卸载 telegram

|

||||

$ search [google, playstore, youtube, files] ### 搜索在线应用或本地文件

|

||||

$ wifi ### 打开或关闭 WIFI

|

||||

$ cp Downloads/* Music ### 从 Download 文件夹复制所有文件到 Music 文件夹

|

||||

$ mv Downloads/* Music ### 从 Download 文件夹移动所有文件到 Music 文件夹

|

||||

```

|

||||

|

||||

就是这样了!在本篇中,我们展示了一个带有类似 Linux CLI(命令界面)的简单而有用的 Android 程序,它可以将你的常规 Android 设备变成一个完整的命令行界面。尝试一下并在下面的评论栏分享你的想法。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Aaron Kili 是 Linux 和 F.O.S.S 爱好者,将来的 Linux 系统管理员和网络开发人员,目前是 TecMint 的内容创作者,他喜欢用电脑工作,并坚信分享知识。

|

||||

|

||||

------------------

|

||||

|

||||

via: https://www.tecmint.com/t-ui-launcher-turns-android-device-into-linux-cli/

|

||||

|

||||

作者:[Aaron Kili][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.tecmint.com/author/aaronkili/

|

||||

[1]:https://play.google.com/store/apps/details?id=ohi.andre.consolelauncher

|

||||

[2]:https://www.tecmint.com/wp-content/uploads/2017/05/T-UI-Commandline-Help.jpg

|

||||

[3]:https://www.tecmint.com/wp-content/uploads/2017/05/T-UI-Commandline-Usage.jpg

|

||||

[4]:https://www.tecmint.com/wp-content/uploads/2017/05/T-UI-Commandline-Status.jpg

|

||||

[5]:https://www.tecmint.com/author/aaronkili/

|

||||

[6]:https://www.tecmint.com/10-useful-free-linux-ebooks-for-newbies-and-administrators/

|

||||

[7]:https://www.tecmint.com/free-linux-shell-scripting-books/

|

||||

101

sources/talk/20170421 A Window Into the Linux Desktop.md

Normal file

101

sources/talk/20170421 A Window Into the Linux Desktop.md

Normal file

@ -0,0 +1,101 @@

|

||||

A Window Into the Linux Desktop

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

"What can it do that Windows can't?"

|

||||

|

||||

That is the first question many people ask when considering Linux for their desktop. While the open source philosophy that underpins Linux is a good enough draw for some, others want to know just how different its look, feel and functionality can get. To a degree, that depends on whether you choose a desktop environment or a window manager.

|

||||

|

||||

If you want a desktop experience that is lightning fast and uncompromisingly efficient, foregoing the classic desktop environment for a window manager might be for you.

|

||||

|

||||

### What's What

|

||||

|

||||

"Desktop environment" is the technical term for a typical, full-featured desktop -- that is, the complete graphical layout of your system. Besides displaying your programs, the desktop environment includes accoutrements such as app launchers, menu panels and widgets.

|

||||

|

||||

In Microsoft Windows, the desktop environment consists of, among other things, the Start menu, the taskbar of open applications and notification center, all the Windows programs that come bundled with the OS, and the frames enclosing open applications (with a dash, square and X in the upper right corner).

|

||||

|

||||

There are many similarities in Linux.

|

||||

|

||||

The Linux [Gnome][3] desktop environment, for instance, has a slightly different design, but it shares all of the Microsoft Windows basics -- from an app menu to a panel showing open applications, to a notification bar, to the windows framing programs.

|

||||

|

||||

Window program frames rely on a component for drawing them and letting you move and resize them: It's called the "window manager." So, as they all have windows, every desktop environment includes a window manager.

|

||||

|

||||

However, not every window manager is part of a desktop environment. You can run window managers by themselves, and there are reasons to consider doing just that.

|

||||

|

||||

### Out of Your Environment

|

||||

|

||||

For the purpose of this column, references to "window manager" refer to those that can stand alone. If you install a window manager on an existing Linux system, you can log out without shutting down, choose the new window manager on your login screen, and log back in.

|

||||

|

||||

You might not want to do this without researching your window manager first, though, because you will be greeted by a blank screen and sparse status bar that may or may not be clickable.

|

||||

|

||||

There typically is a straightforward way to bring up a terminal in a window manager, because that's how you edit its configuration file. There you will find key- and mouse-bindings to launch programs, at which point you actually can use your new setup.

|

||||

|

||||

In the popular i3 window manager, for instance, you can launch a terminal by hitting the Super (i.e., Windows) key plus Enter -- or press Super plus D to bring up the app launcher. There you can type an app name and hit Enter to open it. All the existing apps can be found that way, and they will open to full screen once selected.

|

||||

|

||||

[][4] (Click Image to Enlarge)

|

||||

|

||||

i3 is also a tiling window manager, meaning it ensures that all windows expand to evenly fit the screen, neither overlapping nor wasting space. When a new window pops up, it reduces the existing windows, nudging them aside to make room. Users can toggle to open the next window either vertically or horizontally adjacent.

|

||||

|

||||

### Features Can Be Friends or Foes

|

||||

|

||||

Desktop environments have their advantages, of course. First and foremost, they provide a feature-rich, recognizable interface. Each has its signature style, but overall they provide unobtrusive default settings out of the box, which makes desktop environments ready to use right from the start.

|

||||

|

||||

Another strong point is that desktop environments come with a constellation of programs and media codecs, allowing users to accomplish simple tasks immediately. Further, they include handy features like battery monitors, wireless widgets and system notifications.

|

||||

|

||||

As comprehensive as desktop environments are, the large software base and user experience philosophy unique to each means there are limits on how far they can go. That means they are not always very configurable. With desktop environments that emphasize flashy looks, oftentimes what you see is what you get.

|

||||

|

||||

Many desktop environments are notoriously heavy on system resources, so they're not friendly to lower-end hardware. Because of the visual effects running on them, there are more things that can go wrong, too. I once tried tweaking networking settings that were unrelated to the desktop environment I was running, and the whole thing crashed. When I started a window manager, I was able to change the settings.

|

||||

|

||||

Those prioritizing security may want to avoid desktop environments, since more programs means greater attack surface -- that is, entry points where malicious actors can break in.

|

||||

|

||||

However, if you want to give a desktop environment a try, XFCE is a good place to start, as its smaller software base trims some bloat, leaving less clutter behind if you don't stick with it.

|

||||

|

||||

It's not the prettiest at first sight, but after downloading some GTK theme packs (every desktop environment serves up either these or Qt themes, and XFCE is in the GTK camp) and enabling them in the Appearance section of settings, you easily can touch it up. You can even shop around at this [centralized gallery][5] to find the theme you like best.

|

||||

|

||||

### You Can Save a Lot of Time... if You Take the Time First

|

||||

|

||||

If you'd like to see what you can do outside of a desktop environment, you'll find a window manager allows plenty of room to maneuver.

|

||||

|

||||

More than anything, window managers are about customization. In fact, their customizability has spawned numerous galleries hosting a vibrant community of users whose palette of choice is a window manager.

|

||||

|

||||

The modest resource needs of window managers make them ideal for lower specs, and since most window managers don't come with any programs, they allow users who appreciate modularity to add only those they want.

|

||||

|

||||

Perhaps the most noticeable distinction from desktop environments is that window managers generally focus on efficiency by emphasizing mouse movements and keyboard hotkeys to open programs or launchers.

|

||||

|

||||

Keyboard-driven window managers are especially streamlined, since you can bring up new windows, enter text or more keyboard commands, move them around, and close them again -- all without moving your hands from the home row. Once you acculturate to the design logic, you will be amazed at how quickly you can blaze through your tasks.

|

||||

|

||||

In spite of the freedom they provide, window managers have their drawbacks. Most significantly, they are extremely bare-bones out of the box. Before you can make much use of one, you'll have to spend time reading your window manager's documentation for configuration syntax, and probably some more time getting the hang of said syntax.

|

||||

|

||||

Although you will have some user programs if you switched from a desktop environment (the likeliest scenario), you also will start out missing familiar things like battery indicators and network widgets, and it will take some time to set up new ones.

|

||||

|

||||

If you want to dive into window managers, i3 has [thorough documentation][6] and straightforward configuration syntax. The configuration file doesn't use any programming language -- it simply defines a variable-value pair on each line. Creating a hotkey is as easy as writing "bindsym", the key combination, and the action for that combination to launch.

|

||||

|

||||

While window managers aren't for everyone, they offer a distinctive computing experience, and Linux is one of the few OSes that allows them. No matter which paradigm you ultimately go with, I hope this overview gives you enough information to feel confident about the choice you've made -- or confident enough to venture out of your familiar zone and see what else is available.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

**Jonathan Terrasi** has been an ECT News Network columnist since 2017\. His main interests are computer security (particularly with the Linux desktop), encryption, and analysis of politics and current affairs. He is a full-time freelance writer and musician. His background includes providing technical commentaries and analyses in articles published by the Chicago Committee to Defend the Bill of Rights.

|

||||

|

||||

|

||||

-----------

|

||||

|

||||

via: http://www.linuxinsider.com/story/84473.html?rss=1

|

||||

|

||||

作者:[ ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:

|

||||

[1]:http://www.linuxinsider.com/story/84473.html?rss=1#

|

||||

[2]:http://www.linuxinsider.com/perl/mailit/?id=84473

|

||||

[3]:http://en.wikipedia.org/wiki/GNOME

|

||||

[4]:http://www.linuxinsider.com/article_images/2017/84473_1200x750.jpg

|

||||

[5]:http://www.xfce-look.org/

|

||||

[6]:https://i3wm.org/docs/

|

||||

@ -0,0 +1,52 @@

|

||||

Faster machine learning is coming to the Linux kernel

|

||||

============================================================

|

||||

|

||||

### The addition of heterogenous memory management to the Linux kernel will unlock new ways to speed up GPUs, and potentially other kinds of machine learning hardware

|

||||

|

||||

|

||||

|

||||

>Credit: Thinkstock

|

||||

|

||||

It's been a long time in the works, but a memory management feature intended to give machine learning or other GPU-powered applications a major performance boost is close to making it into one of the next revisions of the kernel.

|

||||

|

||||

Heterogenous memory management (HMM) allows a device’s driver to mirror the address space for a process under its own memory management. As Red Hat developer Jérôme Glisse [explains][10], this makes it easier for hardware devices like GPUs to directly access the memory of a process without the extra overhead of copying anything. It also doesn't violate the memory protection features afforded by modern OSes.

|

||||

|

||||

|

||||

One class of application that stands to benefit most from HMM is GPU-based machine learning. Libraries like OpenCL and CUDA would be able to get a speed boost from HMM. HMM does this in much the same way as [speedups being done to GPU-based machine learning][11], namely by leaving data in place near the GPU, operating directly on it there, and moving it around as little as possible.

|

||||

|

||||

These kinds of speed-ups for CUDA, Nvidia’s library for GPU-based processing, would only benefit operations on Nvidia GPUs, but those GPUs currently constitute the vast majority of the hardware used to accelerate number crunching. However, OpenCL was devised to write code that could target multiple kinds of hardware—CPUs, GPUs, FPGAs, and so on—so HMM could provide much broader benefits as that hardware matures.

|

||||

|

||||

|

||||

There are a few obstacles to getting HMM into a usable state in Linux. First is kernel support, which has been under wraps for quite some time. HMM was first proposed as a Linux kernel patchset [back in 2014][12], with Red Hat and Nvidia both involved as key developers. The amount of work involved wasn’t trivial, but the developers believe code could be submitted for potential inclusion within the next couple of kernel releases.

|

||||

|

||||

The second obstacle is video driver support, which Nvidia has been working on separately. According to Glisse’s notes, AMD GPUs are likely to support HMM as well, so this particular optimization won’t be limited to Nvidia GPUs. AMD has been trying to ramp up its presence in the GPU market, potentially by [merging GPU and CPU processing][13] on the same die. However, the software ecosystem still plainly favors Nvidia; there would need to be a few more vendor-neutral projects like HMM, and OpenCL performance on a par with what CUDA can provide, to make real choice possible.

|

||||

|

||||

The third obstacle is hardware support, since HMM requires the presence of a replayable page faults hardware feature to work. Only Nvidia’s Pascal line of high-end GPUs supports this feature. In a way that’s good news, since it means Nvidia will only need to provide driver support for one piece of hardware—requiring less work on its part—to get HMM up and running.

|

||||

|

||||

Once HMM is in place, there will be pressure on public cloud providers with GPU instances to [support the latest-and-greatest generation of GPU][14]. Not just by swapping old-school Nvidia Kepler cards for bleeding-edge Pascal GPUs; as each succeeding generation of GPU pulls further away from the pack, support optimizations like HMM will provide strategic advantages.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.infoworld.com/article/3196884/linux/faster-machine-learning-is-coming-to-the-linux-kernel.html

|

||||

|

||||

作者:[Serdar Yegulalp][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.infoworld.com/author/Serdar-Yegulalp/

|

||||

[1]:https://twitter.com/intent/tweet?url=http%3A%2F%2Fwww.infoworld.com%2Farticle%2F3196884%2Flinux%2Ffaster-machine-learning-is-coming-to-the-linux-kernel.html&via=infoworld&text=Faster+machine+learning+is+coming+to+the+Linux+kernel

|

||||

[2]:https://www.facebook.com/sharer/sharer.php?u=http%3A%2F%2Fwww.infoworld.com%2Farticle%2F3196884%2Flinux%2Ffaster-machine-learning-is-coming-to-the-linux-kernel.html

|

||||

[3]:http://www.linkedin.com/shareArticle?url=http%3A%2F%2Fwww.infoworld.com%2Farticle%2F3196884%2Flinux%2Ffaster-machine-learning-is-coming-to-the-linux-kernel.html&title=Faster+machine+learning+is+coming+to+the+Linux+kernel

|

||||

[4]:https://plus.google.com/share?url=http%3A%2F%2Fwww.infoworld.com%2Farticle%2F3196884%2Flinux%2Ffaster-machine-learning-is-coming-to-the-linux-kernel.html

|

||||

[5]:http://reddit.com/submit?url=http%3A%2F%2Fwww.infoworld.com%2Farticle%2F3196884%2Flinux%2Ffaster-machine-learning-is-coming-to-the-linux-kernel.html&title=Faster+machine+learning+is+coming+to+the+Linux+kernel

|

||||

[6]:http://www.stumbleupon.com/submit?url=http%3A%2F%2Fwww.infoworld.com%2Farticle%2F3196884%2Flinux%2Ffaster-machine-learning-is-coming-to-the-linux-kernel.html

|

||||

[7]:http://www.infoworld.com/article/3196884/linux/faster-machine-learning-is-coming-to-the-linux-kernel.html#email

|

||||

[8]:http://www.infoworld.com/article/3152565/linux/5-rock-solid-linux-distros-for-developers.html#tk.ifw-infsb

|

||||

[9]:http://www.infoworld.com/newsletters/signup.html#tk.ifw-infsb

|

||||

[10]:https://lkml.org/lkml/2017/4/21/872

|

||||

[11]:http://www.infoworld.com/article/3195437/machine-learning-analytics-get-a-boost-from-gpu-data-frame-project.html

|

||||

[12]:https://lwn.net/Articles/597289/

|

||||

[13]:http://www.infoworld.com/article/3099204/hardware/amd-mulls-a-cpugpu-super-chip-in-a-server-reboot.html

|

||||

[14]:http://www.infoworld.com/article/3126076/artificial-intelligence/aws-machine-learning-vms-go-faster-but-not-forward.html

|

||||

136

sources/talk/20170515 How I got started with bash scripting.md

Normal file

136

sources/talk/20170515 How I got started with bash scripting.md

Normal file

@ -0,0 +1,136 @@

|

||||

How I got started with bash scripting

|

||||

============================================================

|

||||

|

||||

### With a few simple Google searches, a programming novice learned to write code that automates a previously tedious and time-consuming task.

|

||||

|

||||

|

||||

|

||||

|

||||

>Image by : opensource.com

|

||||

|

||||

I wrote a script the other day. For some of you, that sentence sounds like no big deal. For others, and I know you're out there, that sentence is significant. You see, I'm not a programmer. I'm a writer.

|

||||

|

||||

### What I needed to solve

|

||||

|

||||

My problem was fairly simple: I had to juggle files from engineering into our documentation. The files were available in a .zip format from a web URL. I was copying them to my desktop manually, then moving them into a different directory structure to match my documentation needs. A fellow writer gave me this advice: _"Why don't you just write a script to do this for you?"_

|

||||

|

||||

Programming and development

|

||||

|

||||

* [New Python content][1]

|

||||

|

||||

* [Our latest JavaScript articles][2]

|

||||

|

||||

* [Recent Perl posts][3]

|

||||

|

||||

* [Red Hat Developers Blog][4]

|

||||

|

||||

|

||||

|

||||

I thought _"just write a script?!?"_ —as if it was the easiest thing in the world to do.

|

||||

|

||||

### How Google came to the rescue

|

||||

|

||||

My colleague's question got me thinking, and as I thought, I googled.

|

||||

|

||||

**What scripting languages are on Linux?**

|

||||

|

||||

This was my first Google search criteria, and many of you are probably thinking, "She's pretty clueless." Well, I was, but it did set me on a path to solving my problem. The most common result was Bash. Hmm, I've seen Bash. Heck, one of the files I had to document had Bash in it, that ubiquitous line **#!/bin/bash**. I took another look at that file, and I knew what it was doing because I had to document it.

|

||||

|

||||

So that led me to my next Google search request.

|

||||

|

||||

**How to download a zip file from a URL?**

|

||||

|

||||

That was my basic task really. I had a URL with a .zip file containing all the files I needed to include in my documentation, so I asked the All Powerful Google to help me out. That search gem, and a few more, led me to Curl. But here's the best part: Not only did I find Curl, one of the top search hits showed me a Bash script that used Curl to download a .zip file and extract it. That was more than I asked for, but that's when I realized being specific in my Google search requests could give me the information I needed to write this script. So, momentum in my favor, I wrote the simplest of scripts:

|

||||

|

||||

```

|

||||

#!/bin/sh

|

||||

|

||||

curl http://rather.long.url | tar -xz -C my_directory --strip-components=1

|

||||

```

|

||||

|

||||

What a moment to see that thing run! But then I realized one gotcha: The URL can change, depending on which set of files I'm trying to access. I had another problem to solve, which led me to my next search.

|

||||

|

||||

**How to pass parameters into a Bash script?**

|

||||

|

||||

I needed to be able to run this script with different URLs and different end directories. Google showed me how to put in **$1**, **$2**, etc., to replace what I typed on the command line with my script. For example:

|

||||

|

||||

```

|

||||

bash myscript.sh http://rather.long.url my_directory

|

||||

```

|

||||

|

||||

That was much better. Everything was working as I needed it to, I had flexibility, I had a working script, and most of all, I had a short command to type and save myself 30 minutes of copy-paste grunt work. That was a morning well spent.

|

||||

|

||||

Then I realized I had one more problem. You see, my memory is short, and I knew I'd run this script only every couple of months. That left me with two issues:

|

||||

|

||||

* How would I remember what to type for my script (URL first? directory first?)?

|

||||

|

||||

* How would another writer know how to run my script if I got hit by a truck?

|

||||

|

||||

I needed a usage message—something the script would display if I didn't use it correctly. For example:

|

||||

|

||||

```

|

||||

usage: bash yaml-fetch.sh <'snapshot_url'> <directory>

|

||||

```

|

||||

|

||||

Otherwise, run the script. My next search was:

|

||||

|

||||

**How to write "if/then/else" in a Bash script?**

|

||||

|

||||

Fortunately I already knew **if/then/else** existed in programming. I just had to find out how to do that. Along the way, I also learned to print from a Bash script using **echo**. What I ended up with was something like this:

|

||||

|

||||

```

|

||||

#!/bin/sh

|

||||

|

||||

URL=$1

|

||||

DIRECTORY=$2

|

||||

|

||||

if [ $# -eq 0 ];

|

||||

then

|

||||

echo "usage: bash yaml-fetch.sh <'snapshot_url'> <directory>".

|

||||

else

|

||||

|

||||

# make the directory if it doesn't already exist

|

||||

echo 'create directory'

|

||||

|

||||

mkdir $DIRECTORY

|

||||

|

||||

# fetch and untar the yaml files

|

||||

echo 'fetch and untar the yaml files'

|

||||

|

||||

curl $URL | tar -xz -C $DIRECTORY --strip-components=1

|

||||

fi

|

||||

```

|

||||

|

||||

### How Google and scripting rocked my world

|

||||

|

||||

Okay, slight exaggeration there, but this being the 21st century, learning new things (especially somewhat simple things) is a whole lot easier than it used to be. What I learned (besides how to write a short, self-documented Bash script) is that if I have a question, there's a good chance someone else had the same or a similar question before. When I get stumped, I can ask the next question, and the next question. And in the end, not only do I have a script, I have the start of a new skill that I can hold onto and use to simplify other tasks I've been avoiding.

|

||||

|

||||

Don't let that first script (or programming step) get the best of you. It's a skill, like any other, and there's a wealth of information out there to help you along the way. You don't need to read a massive book or take a month-long course. You can do it a simpler way with baby steps and baby scripts that get you started, then build on that skill and your confidence. There will always be a need for folks to write those thousands-of-lines-of-code programs with all the branching and merging and bug-fixing.

|

||||

|

||||

But there is also a strong need for simple scripts and other ways to automate/simplify tasks. And that's where a little script and a little confidence can give you a kickstart.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Sandra McCann - Sandra McCann is a Linux and open source advocate. She's worked as a software developer, content architect for learning resources, and content creator. Sandra is currently a content creator for Red Hat in Westford, MA focusing on OpenStack and NFV techology.

|

||||

|

||||

----

|

||||

|

||||

via: https://opensource.com/article/17/5/how-i-learned-bash-scripting

|

||||

|

||||

作者:[ Sandra McCann ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/sandra-mccann

|

||||

[1]:https://opensource.com/tags/python?src=programming_resource_menu

|

||||

[2]:https://opensource.com/tags/javascript?src=programming_resource_menu

|

||||

[3]:https://opensource.com/tags/perl?src=programming_resource_menu

|

||||

[4]:https://developers.redhat.com/?intcmp=7016000000127cYAAQ&src=programming_resource_menu

|

||||

[5]:https://opensource.com/article/17/5/how-i-learned-bash-scripting?rate=s_R-jmOxcMvs9bi41yRwenl7GINDvbIFYrUMIJ8OBYk

|

||||

[6]:https://opensource.com/user/39771/feed

|

||||

[7]:https://opensource.com/article/17/5/how-i-learned-bash-scripting#comments

|

||||

[8]:https://opensource.com/users/sandra-mccann

|

||||

@ -0,0 +1,60 @@

|

||||

How Microsoft is becoming a Linux vendor

|

||||

=====================================

|

||||

|

||||

|

||||

>Microsoft is bridging the gap with Linux by baking it into its own products.

|

||||

|

||||

|

||||

|

||||

|

||||

Linux and open source technologies have become too dominant in data centers, cloud and IoT for Microsoft to ignore them.

|

||||

|

||||

On Microsoft’s own cloud, one in three machines run Linux. These are Microsoft customers who are running Linux. Microsoft needs to support the platform they use, or they will go somewhere else.

|

||||

|

||||

Here's how Microsoft's Linux strategy breaks down on its developer platform (Windows 10), on its cloud (Azure) and datacenter (Windows Server).

|

||||

|

||||

**Linux in Windows**: IT professionals managing Linux machines on public or private cloud need native UNIX tooling. Linux and macOS are the only two platforms that offer such native capabilities. No wonder all you see is MacBooks or a few Linux desktops at events like DockerCon, OpenStack Summit or CoreOS Fest.

|

||||

|

||||

To bridge the gap, Microsoft worked with Canonical to build a Linux subsystem within Windows that offers native Linux tooling. It’s a great compromise, where IT professionals can continue to use Windows 10 desktop while getting to run almost all Linux utilities to manage their Linux machines.

|

||||

|

||||

**Linux in Azure**: What good is a cloud that can’t run fully supported Linux machines? Microsoft has been working with Linux vendors that allow customers to run Linux applications and workloads on Azure.

|

||||

|

||||

Microsoft not only managed to sign deals with all three major Linux vendors Red Hat, SUSE and Canonical, it also worked with countless other companies to offer support for community-based distros like Debian.

|

||||

|

||||

**Linux in Windows Server**: This is the last missing piece of the puzzle. There is a massive ecosystem of Linux containers that are used by customers. There are over 900,000 Docker containers on Docker Hub, which can run only on Linux machines. Microsoft wanted to bring these containers to its own platform.

|

||||

|

||||

At DockerCon, Microsoft announced support for Linux containers on Windows Server bringing all those containers to Linux.

|

||||

|

||||

Things are about to get more interesting, after the success of Bash on Ubuntu on Windows 10, Microsoft is bringing Ubuntu bash to Windows Server. Yes, you heard it right. Windows Server will now have a Linux subsystem.

|

||||

|

||||

Rich Turner, Senior Program Manager at Microsoft told me, “WSL on the server provides admins with a preference for *NIX admin scripting & tools to have a more familiar environment in which to work.”

|

||||

|

||||

Microsoft said in an announcement that It will allow IT professionals “to use the same scripts, tools, procedures and container images they have been using for Linux containers on their Windows Server container host. These containers use our Hyper-V isolation technology combined with your choice of Linux kernel to host the workload while the management scripts and tools on the host use WSL.”

|

||||

|

||||

With all three bases covered, Microsoft has succeeded in creating an environment where its customers don't have to deal with any Linux vendor.

|

||||

|

||||

### What does it mean for Microsoft?

|

||||

|

||||

By baking Linux into its own products, Microsoft has become a Linux vendor. They are part of the Linux Foundation, they are one of the many contributors to the Linux kernel, and they now distribute Linux from their own store.

|

||||

|

||||

There is only one minor problem. Microsoft doesn’t own any Linux technologies. They are totally dependent on an external vendor, in this case Canonical, for their entire Linux layer. Too risky a proposition, if Canonical gets acquired by a fierce competitor.

|

||||

|

||||

It might make sense for Microsoft to attempt to acquire Canonical and bring the core technologies in house. It makes sense.

|

||||

|

||||

### What does it mean for Linux vendors

|

||||

|

||||

On the surface, it’s a clear victory for Microsoft as its customers can live within the Windows world. It will also contain the momentum of Linux in a datacenter. It might also affect Linux on the desktop as now IT professionals looking for *NIX tooling don’t have to run Linux desktop, they can do everything from within Windows.

|

||||

|

||||

Is Microsoft's victory a loss for traditional Linux vendors? To some degree, yes. Microsoft has become a direct competitor. But the clear winner here is Linux.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.cio.com/article/3197016/linux/how-microsoft-is-becoming-a-linux-vendor.html

|

||||

|

||||

作者:[ Swapnil Bhartiya ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.cio.com/author/Swapnil-Bhartiya/

|

||||

103

sources/talk/20170517 Security Debt is an Engineers Problem.md

Normal file

103

sources/talk/20170517 Security Debt is an Engineers Problem.md

Normal file

@ -0,0 +1,103 @@

|

||||

Security Debt is an Engineer’s Problem

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

|

||||

>Keziah Plattner of AirBnBSecurity.

|

||||

|

||||

Just like organizations can build up technical debt, so too can they also build up something called “security debt,” if they don’t plan accordingly, attendees learned at the [WomenWhoCode Connect ][5]event at Twitter headquarters in San Francisco last month.

|

||||

|

||||

Security has got to be integral to every step of the software development process, stressed [Mary Ann Davidson][6], Oracle’s Chief Security Officer, in a keynote talk with about security for developers with [Zassmin Montes de Oca][7] of [WomenWhoCode][8].

|

||||

|

||||

In the past, security used to be ignored by pretty much everyone, except banks. But security is more critical than it has ever been because there are so many access points. We’ve entered the era of [Internet of Things][9], where thieves can just hack your fridge to see that you’re not home.

|

||||

|

||||

Davidson is in charge of assurance at Oracle, “making sure we build security into everything we build, whether it’s an on-premise product, whether it’s a cloud service, even devices we have that support group builds at customer sites and reports data back to us, helping us do diagnostics — every single one of those things has to have security engineered into it.”

|

||||

|

||||

|

||||

|

||||

Plattner talking to a capacity crowd at #WWCConnect

|

||||

|

||||

AirBnB’s [Keziah Plattner][10] echoed that sentiment in her breakout session. “Most developers don’t see security as their job,” she said, “but this has to change.”

|

||||

|

||||

She shared four basic security principles for engineers. First, security debt is expensive. There’s a lot of talk about [technical debt ][11]and she thinks security debt should be included in those conversations.

|

||||

|

||||

“This historical attitude is ‘We’ll think about security later,’” Plattner said. As companies grab the low-hanging fruit of software efficiency and growth, they ignore security, but an initial insecure design can cause problems for years to come.

|

||||

|

||||

It’s very hard to add security to an existing vulnerable system, she said. Even when you know where the security holes are and have budgeted the time and resources to make the changes, it’s time-consuming and difficult to re-engineer a secure system.

|

||||

|

||||

So it’s key, she said, to build security into your design from the start. Think of security as part of the technical debt to avoid. And cover all possibilities.

|

||||

|

||||

Most importantly, according to Plattner, is the difficulty in getting to people to change their behavior. No one will change voluntarily, she said, even when you point out that the new behavior is more secure. We all nodded.

|

||||

|

||||

Davidson said engineers need to start thinking about how their code could be attacked, and design from that perspective. She said she only has two rules. The first is never trust any unvalidated data and rule two is see rule one.

|

||||

|

||||

“People do this all the time. They say ‘My client sent me the data so it will be fine.’ Nooooooooo,” she said, to laughs.

|

||||

|

||||

The second key to security, Plattner said, is “never trust users.”

|

||||

|

||||

Davidson put it another way: “My job is to be a professional paranoid.” She worries all the time about how someone might breach her systems even inadvertently. This is not academic, there has been recent denial of service attacks through IoT devices.

|

||||

|

||||

### Little Bobby Tables

|

||||

|

||||

If part of your security plan is trusting users to do the right thing, your system is inherently insecure regardless of whatever other security measures you have in place, said Plattner.

|

||||

|

||||

It’s important to properly sanitize all user input, she explained, showing the [XKCD cartoon][12] where a mom wiped out an entire school database because her son’s middle name was “DropTable Students.”

|

||||

|

||||

So sanitize all user input. Check.

|

||||

|

||||

She showed an example of JavaScript developers using Eval on open source. “A good ground rule is ‘Never use eval(),’” she cautioned. The [eval() ][13]function evaluates JavaScript code. “You’re opening your system to random users if you do.”

|

||||

|

||||

Davidson cautioned that her paranoia extends to including security testing your example code in documentation. “Because we all know no one ever copies sample code,” she said to laughter. She underscored the point that any code should be subject to security checks.

|

||||

|

||||

|

||||

|

||||

Make it easy

|

||||

|

||||

Plattner’s suggestion three: Make security easy. Take the path of least resistance, she suggested.

|

||||

|

||||

Externally, make users opt out of security instead of opting in, or, better yet, make it mandatory. Changing people’s behavior is the hardest problem in tech, she said. Once users get used to using your product in a non-secure way, getting them to change in the future is extremely difficult.

|

||||

|

||||

Internal to your company, she suggested make tools that standardize security so it’s not something individual developers need to think about. For example, encrypting data as a service so engineers can just call the service to encrypt or decrypt data.

|

||||

|

||||

Make sure that your company is focused on good security hygiene, she said. Switch to good security habits across the company.

|

||||

|

||||

You’re only secure as your weakest link, so it’s important that each individual also has good personal security hygiene as well as having good corporate security hygiene.

|

||||

|

||||

At Oracle, they’ve got this covered. Davidson said she got tired of explaining security to engineers who graduated college with absolutely no security training, so she wrote the first coding standards at Oracle. There are now hundreds of pages with lots of contributors, and there are classes that are mandatory. They have metrics for compliance to security requirements and measure it. The classes are not just for engineers, but for doc writers as well. “It’s a cultural thing,” she said.

|

||||

|

||||

And what discussion about security would be secure without a mention of passwords? While everyone should be using a good password manager, Plattner said, but they should be mandatory for work, along with two-factor authentication.

|

||||

|

||||

Basic password principles should be a part of every engineer’s waking life, she said. What matters most in passwords is their length and entropy — making the collection of keystrokes as random as possible. A robust password entropy checker is invaluable for this. She recommends [zxcvbn][14], the Dropbox open-source entropy checker.

|

||||

|

||||

Another trick is to use something intentionally slow like [bcrypt][15] when authenticating user input, said Plattner. The slowness doesn’t bother most legit users but irritates hackers who try to force password attempts.

|

||||

|

||||

All of this adds up to job security for anyone wanting to get into the security side of technology, said Davidson. We’re putting more code more places, she said, and that creates systemic risk. “I don’t think anybody is not going to have a job in security as long as we keep doing interesting things in technology.”

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://thenewstack.io/security-engineers-problem/

|

||||

|

||||

作者:[TC Currie][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://thenewstack.io/author/tc/

|

||||

[1]:http://twitter.com/share?url=https://thenewstack.io/security-engineers-problem/&text=Security+Debt+is+an+Engineer%E2%80%99s+Problem+

|

||||

[2]:http://www.facebook.com/sharer.php?u=https://thenewstack.io/security-engineers-problem/

|

||||

[3]:http://www.linkedin.com/shareArticle?mini=true&url=https://thenewstack.io/security-engineers-problem/

|

||||

[4]:https://thenewstack.io/security-engineers-problem/#disqus_thread

|

||||

[5]:http://connect2017.womenwhocode.com/

|

||||

[6]:https://www.linkedin.com/in/mary-ann-davidson-235ba/

|

||||

[7]:https://www.linkedin.com/in/zassmin/

|

||||

[8]:https://www.womenwhocode.com/

|

||||

[9]:https://www.thenewstack.io/tag/Internet-of-Things

|

||||

[10]:https://twitter.com/ittskeziah

|

||||

[11]:https://martinfowler.com/bliki/TechnicalDebt.html

|

||||

[12]:https://xkcd.com/327/

|

||||

[13]:https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/eval

|

||||

[14]:https://blogs.dropbox.com/tech/2012/04/zxcvbn-realistic-password-strength-estimation/

|

||||

[15]:https://en.wikipedia.org/wiki/Bcrypt

|

||||

[16]:https://thenewstack.io/author/tc/

|

||||

@ -1,79 +1,54 @@

|

||||

|

||||

朝鲜180局的网络战部门让西方国家忧虑。

|

||||

|

||||

Translating by hwlog

|

||||

North Korea's Unit 180, the cyber warfare cell that worries the West

|

||||

|

||||

============================================================

|

||||

[][13] [**PHOTO:** 脱北者说, 平壤的网络战攻击目的在于一个叫做“180局”的部门来筹集资金。(Reuters: Damir Sagolj, file)][14]

|

||||

据叛逃者,官方和网络安全专家称,朝鲜的情报机关有一个叫做180局的特殊部门, 这个部门已经发起过多起勇敢且成功的网络战。

|

||||

近几年朝鲜被美国,韩国,和周边几个国家指责对多数的金融网络发起过一系列在线袭击。

|

||||

网络安全技术人员称他们找到了这个月感染了150多个国家30多万台计算机的全球想哭勒索病毒"ransomware"和朝鲜网络战有关联的技术证据。

|

||||

平壤称该指控是“荒谬的”。

|

||||

对朝鲜的关键指控是指朝鲜与一个叫做拉撒路的黑客组织有联系,这个组织是在去年在孟加拉国中央银行网络抢劫8000万美元并在2014年攻击了索尼的好莱坞工作室的网路。

|

||||

美国政府指责朝鲜对索尼公司的黑客袭击,同时美国政府对平壤在孟加拉国银行的盗窃行为提起公诉并要求立案。

|

||||

由于没有确凿的证据,没有犯罪指控并不能够立案。朝鲜之后也否认了Sony公司和银行的袭击与其有关。

|

||||

朝鲜是世界上最封闭的国家之一,它秘密行动的一些细节很难获得。

|

||||

但研究这个封闭的国家和流落到韩国和一些西方国家的的叛逃者已经给出了或多或少的提示。

|

||||

|

||||

### 黑客们喜欢用雇员来作为掩护

|

||||

金恒光,朝鲜前计算机教授,2004叛逃到韩国,他仍然有着韩国内部的消息,他说平壤的网络战目的在于通过侦察总局下属的一个叫做180局来筹集资金,这个局主要是负责海外的情报机构。

|

||||

金教授称,“180局负责入侵金融机构通过漏洞从银行账户提取资金”。

|

||||

他之前也说过,他以前的一些学生已经加入了朝鲜的网络战略司令部-朝鲜的网络部队。

|

||||

|

||||

[][13] [**PHOTO:** Defectors say Pyongyang's cyberattacks aimed at raising cash are likely organised by the special cell — Unit 180. (Reuters: Damir Sagolj, file)][14]

|

||||

>"黑客们到海外寻找比朝鲜更好的互联网服务的地方,以免留下痕迹," 金教授补充说。

|

||||

他说他们经常用贸易公司,朝鲜的海外分公司和在中国和东南亚合资企业的雇员来作为掩护

|

||||

位于华盛顿的战略与国际研究中心的叫做James Lewis的朝鲜专家称,平壤首先用黑客作为间谍活动的工具然后对韩国和美国的目的进行政治干扰。

|

||||

索尼公司事件之后,他们改变方法,通过用黑客来支持犯罪活动来形成国内坚挺的货币经济政策。

|

||||

“目前为止,网上毒品,假冒伪劣,走私,都是他们惯用的伎俩”。

|

||||

Media player: 空格键播放,“M”键静音,“左击”和“右击”查看。

|

||||

|

||||

North Korea's main spy agency has a special cell called Unit 180 that is likely to have launched some of its most daring and successful cyberattacks, according to defectors, officials and internet security experts.

|

||||

[**VIDEO:** 你遇到过勒索病毒吗? (ABC News)][16]

|

||||

|

||||

### 韩国声称拥有大量的“证据”

|

||||

美国国防部称在去年提交给国会的一个报告中显示,朝鲜可能有作为有效成本的,不对称的,可拒绝的工具,它能够应付来自报复性袭击很小的风险,因为它的“网络”大部分是和因特网分离的。

|

||||

|

||||

> 报告中说," 它可能从第三方国家使用互联网基础设施"。

|

||||

韩国政府称,他们拥有朝鲜网络战行动的大量证据。

|

||||

“朝鲜进行网络战通过第三方国家来掩护网络袭击的来源,并且使用他们的信息和通讯技术设施”,Ahn Chong-ghee,韩国外交部副部长,在书面评论中告诉路透社。

|

||||

除了孟加拉银行抢劫案,他说平壤也被怀疑与菲律宾,越南和波兰的银行袭击有关。

|

||||

去年六月,警察称朝鲜袭击了160个韩国公司和政府机构,入侵了大约14万台计算机,暗中在他的对手的计算机中植入恶意代码作为长期计划的一部分来进行大规模网络攻击。

|

||||

朝鲜也被怀疑在2014年对韩国核反应堆操作系统进行阶段性网络攻击,尽管朝鲜否认与其无关。

|

||||

根据在一个韩国首尔的杀毒软件厂商“hauri”的高级安全研究员Simon Choi的说法,网络袭击是来自于他在中国的一个基地。

|

||||

Choi先生,一个有着对朝鲜的黑客能力进行了广泛的研究的人称,“他们在那里行动以至于不论他们做什么样的计划,他们拥有中国的ip地址”。

|

||||

|

||||

North Korea has been blamed in recent years for a series of online attacks, mostly on financial networks, in the United States, South Korea and over a dozen other countries.

|

||||

|

||||

Cyber security researchers have also said they found technical evidence that could l[ink North Korea with the global WannaCry "ransomware" cyberattack][15] that infected more than 300,000 computers in 150 countries this month.

|

||||

|

||||

Pyongyang has called the allegation "ridiculous".

|

||||

|

||||

The crux of the allegations against North Korea is its connection to a hacking group called Lazarus that is linked to last year's $US81 million cyber heist at the Bangladesh central bank and the 2014 attack on Sony's Hollywood studio.

|

||||

|

||||

The US Government has blamed North Korea for the Sony hack and some US officials have said prosecutors are building a case against Pyongyang in the Bangladesh Bank theft.

|

||||

|

||||

No conclusive proof has been provided and no criminal charges have yet been filed. North Korea has also denied being behind the Sony and banking attacks.

|

||||

|

||||

North Korea is one of the most closed countries in the world and any details of its clandestine operations are difficult to obtain.

|

||||

|

||||

But experts who study the reclusive country and defectors who have ended up in South Korea or the West have provided some clues.

|

||||

|

||||

### Hackers likely under cover as employees

|

||||

|

||||

Kim Heung-kwang, a former computer science professor in North Korea who defected to the South in 2004 and still has sources inside North Korea, said Pyongyang's cyberattacks aimed at raising cash are likely organised by Unit 180, a part of the Reconnaissance General Bureau (RGB), its main overseas intelligence agency.

|

||||

|

||||

"Unit 180 is engaged in hacking financial institutions (by) breaching and withdrawing money out of bank accounts," Mr Kim said.

|

||||

|

||||

|

||||

He has previously said that some of his former students have joined join North Korea's Strategic Cyber Command, its cyber-army.

|

||||

|

||||

> "The hackers go overseas to find somewhere with better internet services than North Korea so as not to leave a trace," Mr Kim added.

|

||||

|

||||

He said it was likely they went under the cover of being employees of trading firms, overseas branches of North Korean companies, or joint ventures in China or South-East Asia.

|

||||

|

||||

James Lewis, a North Korea expert at the Washington-based Centre for Strategic and International Studies, said Pyongyang first used hacking as a tool for espionage and then political harassment against South Korean and US targets.

|

||||

|

||||

"They changed after Sony by using hacking to support criminal activities to generate hard currency for the regime," he said.

|

||||

|

||||

"So far, it's worked as well or better as drugs, counterfeiting, smuggling — all their usual tricks."

|

||||

|

||||

Media player: "Space" to play, "M" to mute, "left" and "right" to seek.

|

||||

|

||||

[**VIDEO:** Have you been hit by ransomware? (ABC News)][16]

|

||||

|

||||

### South Korea purports to have 'considerable evidence'

|

||||

|

||||

The US Department of Defence said in a report submitted to Congress last year that North Korea likely "views cyber as a cost-effective, asymmetric, deniable tool that it can employ with little risk from reprisal attacks, in part because its networks are largely separated from the internet".

|

||||

|

||||

> "It is likely to use internet infrastructure from third-party nations," the report said.

|

||||

|

||||

South Korean officials said they had considerable evidence of North Korea's cyber warfare operations.

|

||||

|

||||

|

||||

"North Korea is carrying out cyberattacks through third countries to cover up the origin of the attacks and using their information and communication technology infrastructure," Ahn Chong-ghee, South Korea's Vice-Foreign Minister, told Reuters in written comments.

|

||||

|

||||

Besides the Bangladesh Bank heist, he said Pyongyang was also suspected in attacks on banks in the Philippines, Vietnam and Poland.

|

||||

|

||||