mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

bd2d472a23

@ -0,0 +1,230 @@

|

||||

Linux 包管理基础:apt、yum、dnf 和 pkg

|

||||

========================

|

||||

|

||||

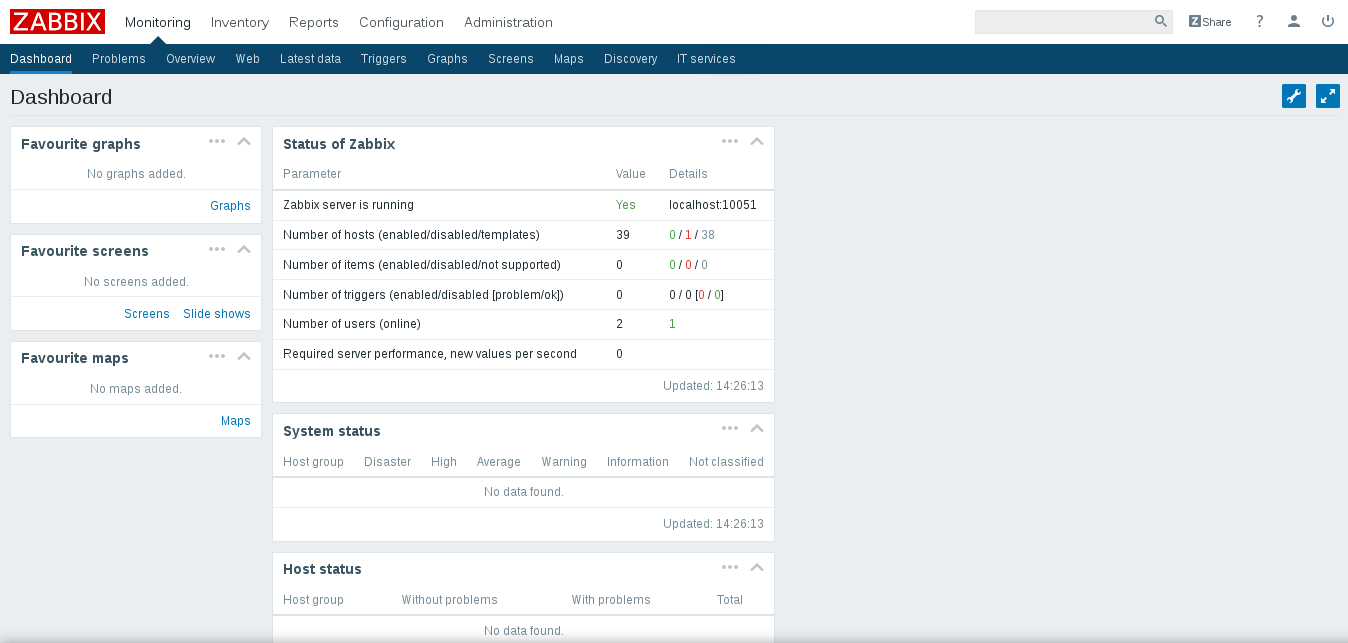

![Package_Management_tw_mostov.png-307.8kB][1]

|

||||

|

||||

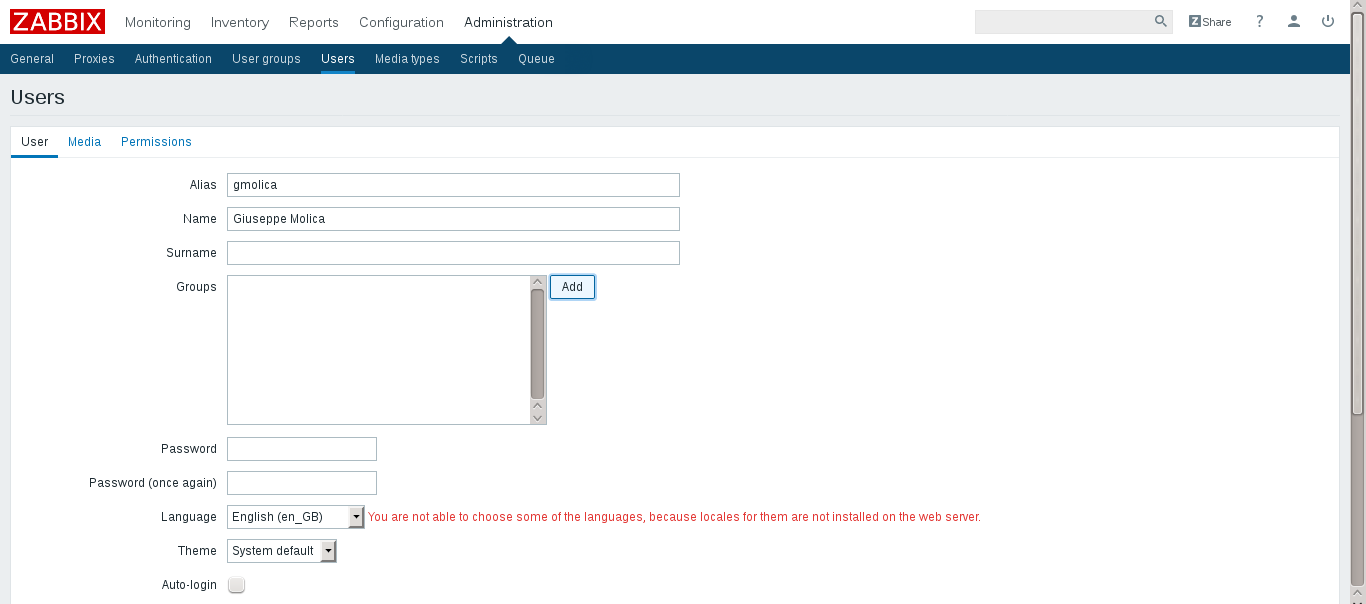

### 介绍

|

||||

|

||||

大多数现代的类 Unix 操作系统都提供了一种中心化的机制用来搜索和安装软件。软件通常都是存放在存储库中,并通过包的形式进行分发。处理包的工作被称为包管理。包提供了操作系统的基本组件,以及共享的库、应用程序、服务和文档。

|

||||

|

||||

包管理系统除了安装软件外,它还提供了工具来更新已经安装的包。包存储库有助于确保你的系统中使用的代码是经过审查的,并且软件的安装版本已经得到了开发人员和包维护人员的认可。

|

||||

|

||||

在配置服务器或开发环境时,我们最好了解下包在官方存储库之外的情况。某个发行版的稳定版本中的包有可能已经过时了,尤其是那些新的或者快速迭代的软件。然而,包管理无论对于系统管理员还是开发人员来说都是至关重要的技能,而已打包的软件对于主流 Linux 发行版来说也是一笔巨大的财富。

|

||||

|

||||

本指南旨在快速地介绍下在多种 Linux 发行版中查找、安装和升级软件包的基础知识,并帮助您将这些内容在多个系统之间进行交叉对比。

|

||||

|

||||

### 包管理系统:简要概述

|

||||

|

||||

大多数包系统都是围绕包文件的集合构建的。包文件通常是一个存档文件,它包含已编译的二进制文件和软件的其他资源,以及安装脚本。包文件同时也包含有价值的元数据,包括它们的依赖项,以及安装和运行它们所需的其他包的列表。

|

||||

|

||||

虽然这些包管理系统的功能和优点大致相同,但打包格式和工具却因平台而异:

|

||||

|

||||

| 操作系统 | 格式 | 工具 |

|

||||

| --- | --- | --- |

|

||||

| Debian | `.deb` | `apt`, `apt-cache`, `apt-get`, `dpkg` |

|

||||

| Ubuntu | `.deb` | `apt`, `apt-cache`, `apt-get`, `dpkg` |

|

||||

| CentOS | `.rpm` | `yum` |

|

||||

| Fedora | `.rpm` | `dnf` |

|

||||

| FreeBSD | Ports, `.txz` | `make`, `pkg` |

|

||||

|

||||

Debian 及其衍生版,如 Ubuntu、Linux Mint 和 Raspbian,它们的包格式是 `.deb`。APT 这款先进的包管理工具提供了大多数常见的操作命令:搜索存储库、安装软件包及其依赖项,并管理升级。在本地系统中,我们还可以使用 `dpkg` 程序来安装单个的 `deb` 文件,APT 命令作为底层 `dpkg` 的前端,有时也会直接调用它。

|

||||

|

||||

最近发布的 debian 衍生版大多数都包含了 `apt` 命令,它提供了一个简洁统一的接口,可用于通常由 `apt-get` 和 `apt-cache` 命令处理的常见操作。这个命令是可选的,但使用它可以简化一些任务。

|

||||

|

||||

CentOS、Fedora 和其它 Red Hat 家族成员使用 RPM 文件。在 CentOS 中,通过 `yum` 来与单独的包文件和存储库进行交互。

|

||||

|

||||

在最近的 Fedora 版本中,`yum` 已经被 `dnf` 取代,`dnf` 是它的一个现代化的分支,它保留了大部分 `yum` 的接口。

|

||||

|

||||

FreeBSD 的二进制包系统由 `pkg` 命令管理。FreeBSD 还提供了 `Ports` 集合,这是一个存在于本地的目录结构和工具,它允许用户获取源码后使用 Makefile 直接从源码编译和安装包。

|

||||

|

||||

### 更新包列表

|

||||

|

||||

大多数系统在本地都会有一个和远程存储库对应的包数据库,在安装或升级包之前最好更新一下这个数据库。另外,`yum` 和 `dnf` 在执行一些操作之前也会自动检查更新。当然你可以在任何时候对系统进行更新。

|

||||

|

||||

| 系统 | 命令 |

|

||||

| --- | --- |

|

||||

| Debian / Ubuntu | `sudo apt-get update` |

|

||||

| | `sudo apt update` |

|

||||

| CentOS | `yum check-update` |

|

||||

| Fedora | `dnf check-update` |

|

||||

| FreeBSD Packages | `sudo pkg update` |

|

||||

| FreeBSD Ports | `sudo portsnap fetch update` |

|

||||

|

||||

### 更新已安装的包

|

||||

|

||||

在没有包系统的情况下,想确保机器上所有已安装的软件都保持在最新的状态是一个很艰巨的任务。你将不得不跟踪数百个不同包的上游更改和安全警报。虽然包管理器并不能解决升级软件时遇到的所有问题,但它确实使你能够使用一些命令来维护大多数系统组件。

|

||||

|

||||

在 FreeBSD 上,升级已安装的 ports 可能会引入破坏性的改变,有些步骤还需要进行手动配置,所以在通过 `portmaster` 更新之前最好阅读下 `/usr/ports/UPDATING` 的内容。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `sudo apt-get upgrade` | 只更新已安装的包 |

|

||||

| | `sudo apt-get dist-upgrade` | 可能会增加或删除包以满足新的依赖项 |

|

||||

| | `sudo apt upgrade` | 和 `apt-get upgrade` 类似 |

|

||||

| | `sudo apt full-upgrade` | 和 `apt-get dist-upgrade` 类似 |

|

||||

| CentOS | `sudo yum update` | |

|

||||

| Fedora | `sudo dnf upgrade` | |

|

||||

| FreeBSD Packages | `sudo pkg upgrade` | |

|

||||

| FreeBSD Ports | `less /usr/ports/UPDATING` | 使用 `less` 来查看 ports 的更新提示(使用上下光标键滚动,按 q 退出)。 |

|

||||

| | `cd /usr/ports/ports-mgmt/portmaster && sudo make install && sudo portmaster -a` | 安装 `portmaster` 然后使用它更新已安装的 ports |

|

||||

|

||||

### 搜索某个包

|

||||

|

||||

大多数发行版都提供针对包集合的图形化或菜单驱动的工具,我们可以分类浏览软件,这也是一个发现新软件的好方法。然而,查找包最快和最有效的方法是使用命令行工具进行搜索。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `apt-cache search search_string` | |

|

||||

| | `apt search search_string` | |

|

||||

| CentOS | `yum search search_string` | |

|

||||

| | `yum search all search_string` | 搜索所有的字段,包括描述 |

|

||||

| Fedora | `dnf search search_string` | |

|

||||

| | `dnf search all search_string` | 搜索所有的字段,包括描述 |

|

||||

| FreeBSD Packages | `pkg search search_string` | 通过名字进行搜索 |

|

||||

| | `pkg search -f search_string` | 通过名字进行搜索并返回完整的描述 |

|

||||

| | `pkg search -D search_string` | 搜索描述 |

|

||||

| FreeBSD Ports | `cd /usr/ports && make search name=package` | 通过名字进行搜索 |

|

||||

| | `cd /usr/ports && make search key=search_string` | 搜索评论、描述和依赖 |

|

||||

|

||||

### 查看某个软件包的信息

|

||||

|

||||

在安装软件包之前,我们可以通过仔细阅读包的描述来获得很多有用的信息。除了人类可读的文本之外,这些内容通常包括像版本号这样的元数据和包的依赖项列表。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `apt-cache show package` | 显示有关包的本地缓存信息 |

|

||||

| | `apt show package` | |

|

||||

| | `dpkg -s package` | 显示包的当前安装状态 |

|

||||

| CentOS | `yum info package` | |

|

||||

| | `yum deplist package` | 列出包的依赖 |

|

||||

| Fedora | `dnf info package` | |

|

||||

| | `dnf repoquery --requires package` | 列出包的依赖 |

|

||||

| FreeBSD Packages | `pkg info package` | 显示已安装的包的信息 |

|

||||

| FreeBSD Ports | `cd /usr/ports/category/port && cat pkg-descr` | |

|

||||

|

||||

### 从存储库安装包

|

||||

|

||||

知道包名后,通常可以用一个命令来安装它及其依赖。你也可以一次性安装多个包,只需将它们全部列出来即可。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `sudo apt-get install package` | |

|

||||

| | `sudo apt-get install package1 package2 ...` | 安装所有列出来的包 |

|

||||

| | `sudo apt-get install -y package` | 在 `apt` 提示是否继续的地方直接默认 `yes` |

|

||||

| | `sudo apt install package` | 显示一个彩色的进度条 |

|

||||

| CentOS | `sudo yum install package` | |

|

||||

| | `sudo yum install package1 package2 ...` | 安装所有列出来的包 |

|

||||

| | `sudo yum install -y package` | 在 `yum` 提示是否继续的地方直接默认 `yes` |

|

||||

| Fedora | `sudo dnf install package` | |

|

||||

| | `sudo dnf install package1 package2 ...` | 安装所有列出来的包 |

|

||||

| | `sudo dnf install -y package` | 在 `dnf` 提示是否继续的地方直接默认 `yes` |

|

||||

| FreeBSD Packages | `sudo pkg install package` | |

|

||||

| | `sudo pkg install package1 package2 ...` | 安装所有列出来的包 |

|

||||

| FreeBSD Ports | `cd /usr/ports/category/port && sudo make install` | 从源码构建安装一个 port |

|

||||

|

||||

### 从本地文件系统安装一个包

|

||||

|

||||

对于一个给定的操作系统,有时有些软件官方并没有提供相应的包,那么开发人员或供应商将需要提供包文件的下载。你通常可以通过 web 浏览器检索这些包,或者通过命令行 `curl` 来检索这些信息。将包下载到目标系统后,我们通常可以通过单个命令来安装它。

|

||||

|

||||

在 Debian 派生的系统上,`dpkg` 用来处理单个的包文件。如果一个包有未满足的依赖项,那么我们可以使用 `gdebi` 从官方存储库中检索它们。

|

||||

|

||||

在 CentOS 和 Fedora 系统上,`yum` 和 `dnf` 用于安装单个的文件,并且会处理需要的依赖。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `sudo dpkg -i package.deb` | |

|

||||

| | `sudo apt-get install -y gdebi && sudo gdebi package.deb` | 安装 `gdebi`,然后使用 `gdebi` 安装 `package.deb` 并处理缺失的依赖|

|

||||

| CentOS | `sudo yum install package.rpm` | |

|

||||

| Fedora | `sudo dnf install package.rpm` | |

|

||||

| FreeBSD Packages | `sudo pkg add package.txz` | |

|

||||

| | `sudo pkg add -f package.txz` | 即使已经安装的包也会重新安装 |

|

||||

|

||||

### 删除一个或多个已安装的包

|

||||

|

||||

由于包管理器知道给定的软件包提供了哪些文件,因此如果某个软件不再需要了,它通常可以干净利落地从系统中清除这些文件。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `sudo apt-get remove package` | |

|

||||

| | `sudo apt remove package` | |

|

||||

| | `sudo apt-get autoremove` | 删除不需要的包 |

|

||||

| CentOS | `sudo yum remove package` | |

|

||||

| Fedora | `sudo dnf erase package` | |

|

||||

| FreeBSD Packages | `sudo pkg delete package` | |

|

||||

| | `sudo pkg autoremove` | 删除不需要的包 |

|

||||

| FreeBSD Ports | `sudo pkg delete package` | |

|

||||

| | `cd /usr/ports/path_to_port && make deinstall` | 卸载 port |

|

||||

|

||||

### `apt` 命令

|

||||

|

||||

Debian 家族发行版的管理员通常熟悉 `apt-get` 和 `apt-cache`。较少为人所知的是简化的 `apt` 接口,它是专为交互式使用而设计的。

|

||||

|

||||

| 传统命令 | 等价的 `apt` 命令 |

|

||||

| --- | --- |

|

||||

| `apt-get update` | `apt update` |

|

||||

| `apt-get dist-upgrade` | `apt full-upgrade` |

|

||||

| `apt-cache search string` | `apt search string` |

|

||||

| `apt-get install package` | `apt install package` |

|

||||

| `apt-get remove package` | `apt remove package` |

|

||||

| `apt-get purge package` | `apt purge package` |

|

||||

|

||||

虽然 `apt` 通常是一个特定操作的快捷方式,但它并不能完全替代传统的工具,它的接口可能会随着版本的不同而发生变化,以提高可用性。如果你在脚本或 shell 管道中使用包管理命令,那么最好还是坚持使用 `apt-get` 和 `apt-cache`。

|

||||

|

||||

### 获取帮助

|

||||

|

||||

除了基于 web 的文档,请记住我们可以通过 shell 从 Unix 手册页(通常称为 man 页面)中获得大多数的命令。比如要阅读某页,可以使用 `man`:

|

||||

|

||||

```

|

||||

man page

|

||||

|

||||

```

|

||||

|

||||

在 `man` 中,你可以用箭头键导航。按 `/` 搜索页面内的文本,使用 `q` 退出。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `man apt-get` | 更新本地包数据库以及与包一起工作 |

|

||||

| | `man apt-cache` | 在本地的包数据库中搜索 |

|

||||

| | `man dpkg` | 和单独的包文件一起工作以及能查询已安装的包 |

|

||||

| | `man apt` | 通过更简洁,用户友好的接口进行最基本的操作 |

|

||||

| CentOS | `man yum` | |

|

||||

| Fedora | `man dnf` | |

|

||||

| FreeBSD Packages | `man pkg` | 和预先编译的二进制包一起工作 |

|

||||

| FreeBSD Ports | `man ports` | 和 Ports 集合一起工作 |

|

||||

|

||||

### 结论和进一步的阅读

|

||||

|

||||

本指南通过对多个系统间进行交叉对比概述了一下包管理系统的基本操作,但只涉及了这个复杂主题的表面。对于特定系统更详细的信息,可以参考以下资源:

|

||||

|

||||

* [这份指南][2] 详细介绍了 Ubuntu 和 Debian 的软件包管理。

|

||||

* 这里有一份 CentOS 官方的指南 [使用 yum 管理软件][3]

|

||||

* 这里有一个有关 Fedora 的 `dnf` 的 [wifi 页面][4] 以及一份有关 `dnf` [官方的手册][5]

|

||||

* [这份指南][6] 讲述了如何使用 `pkg` 在 FreeBSD 上进行包管理

|

||||

* 这本 [FreeBSD Handbook][7] 有一节讲述了[如何使用 Ports 集合][8]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.digitalocean.com/community/tutorials/package-management-basics-apt-yum-dnf-pkg

|

||||

|

||||

译者后记:

|

||||

|

||||

从经典的 `configure` && `make` && `make install` 三部曲到 `dpkg`,从需要手处理依赖关系的 `dpkg` 到全自动化的 `apt-get`,恩~,你有没有想过接下来会是什么?译者只能说可能会是 `Snaps`,如果你还没有听过这个东东,你也许需要关注下这个公众号了:**Snapcraft**

|

||||

|

||||

作者:[Brennen Bearnes][a]

|

||||

译者:[Snapcrafter](https://github.com/Snapcrafter)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.digitalocean.com/community/users/bpb

|

||||

|

||||

|

||||

[1]: http://static.zybuluo.com/apollomoon/g9kiere2xuo1511ls1hi9w9w/Package_Management_tw_mostov.png

|

||||

[2]:https://www.digitalocean.com/community/tutorials/ubuntu-and-debian-package-management-essentials

|

||||

[3]: https://www.centos.org/docs/5/html/yum/

|

||||

[4]: https://fedoraproject.org/wiki/Dnf

|

||||

[5]: https://dnf.readthedocs.org/en/latest/index.html

|

||||

[6]: https://www.digitalocean.com/community/tutorials/how-to-manage-packages-on-freebsd-10-1-with-pkg

|

||||

[7]:https://www.freebsd.org/doc/handbook/

|

||||

[8]: https://www.freebsd.org/doc/handbook/ports-using.html

|

||||

[9]:https://www.freebsd.org/doc/handbook/ports-using.html

|

||||

@ -1,20 +1,20 @@

|

||||

响应式编程vs.响应式系统

|

||||

响应式编程与响应式系统

|

||||

============================================================

|

||||

|

||||

>在恒久的迷惑与过多期待的海洋中,登上一组简单响应式设计原则的小岛。

|

||||

> 在恒久的迷惑与过多期待的海洋中,登上一组简单响应式设计原则的小岛。

|

||||

|

||||

>

|

||||

|

||||

|

||||

|

||||

下载 Konrad Malawski 的免费电子书[《为什么选择响应式?企业应用中的基本原则》][5],深入了解更多响应式技术的知识与好处。

|

||||

> 下载 Konrad Malawski 的免费电子书[《为什么选择响应式?企业应用中的基本原则》][5],深入了解更多响应式技术的知识与好处。

|

||||

|

||||

自从2013年一起合作写了[《响应式宣言》][23]之后,我们看着响应式从一种几乎无人知晓的软件构建技术——当时只有少数几个公司的边缘项目使用了这一技术——最后成为中间件领域(middleware field)大佬们全平台战略中的一部分。本文旨在定义和澄清响应式各个方面的概念,方法是比较在_响应式编程_风格下,以及把_响应式系统_视作一个紧密整体的设计方法下写代码的不同。

|

||||

自从 2013 年一起合作写了[《响应式宣言》][23]之后,我们看着响应式从一种几乎无人知晓的软件构建技术——当时只有少数几个公司的边缘项目使用了这一技术——最后成为<ruby>中间件领域<rt>middleware field</rt></ruby>大佬们全平台战略中的一部分。本文旨在定义和澄清响应式各个方面的概念,方法是比较在_响应式编程_风格下和把_响应式系统_视作一个紧密整体的设计方法下编写代码的不同之处。

|

||||

|

||||

### 响应式是一组设计原则

|

||||

响应式技术目前成功的标志之一是“响应式”成为了一个热词,并且跟一些不同的事物与人联系在了一起——常常伴随着像“流(streaming)”,“轻量级(lightweight)”和“实时(real-time)”这样的词。

|

||||

|

||||

举个例子:当我们看到一支运动队时(像棒球队或者篮球队),我们一般会把他们看成一个个单独个体的组合,但是当他们之间碰撞不出火花,无法像一个团队一样高效地协作时,他们就会输给一个“更差劲”的队伍。从这篇文章的角度来看,响应式是一组设计原则,一种关于系统架构与设计的思考方式,一种关于在一个分布式环境下,当实现技术(implementation techniques),工具和设计模式都只是一个更大系统的一部分时如何设计的思考方式。

|

||||

响应式技术目前成功的标志之一是“<ruby>响应式<rt>reactive</rt></ruby>”成为了一个热词,并且跟一些不同的事物与人联系在了一起——常常伴随着像“<ruby>流<rt>streaming</rt></ruby>”、“<ruby>轻量级<rt>lightweight</rt></ruby>”和“<ruby>实时<rt>real-time</rt></ruby>”这样的词。

|

||||

|

||||

举个例子:当我们看到一支运动队时(像棒球队或者篮球队),我们一般会把他们看成一个个单独个体的组合,但是当他们之间碰撞不出火花,无法像一个团队一样高效地协作时,他们就会输给一个“更差劲”的队伍。从这篇文章的角度来看,响应式是一组设计原则,一种关于系统架构与设计的思考方式,一种关于在一个分布式环境下,当实现技术(implementation techniques)、工具和设计模式都只是一个更大系统的一部分时如何设计的思考方式。

|

||||

|

||||

这个例子展示了不经考虑地将一堆软件拼揍在一起——尽管单独来看,这些软件都很优秀——和响应式系统之间的不同。在一个响应式系统中,正是_不同组件(parts)间的相互作用_让响应式系统如此不同,它使得不同组件能够独立地运作,同时又一致协作从而达到最终想要的结果。

|

||||

|

||||

@ -28,62 +28,64 @@ _一个响应式系统_ 是一种架构风格(architectural style),它允许

|

||||

* 响应式编程(基于声明的事件的)

|

||||

* 函数响应式编程(FRP)

|

||||

|

||||

我们将检查这些做法与技术的意思,特别是前两个。更明确地说,我们会在使用它们的时候讨论它们,例如它们是怎么联系在一起的,从它们身上又能到什么样的好处——特别是在为多核、云或移动架构搭建系统的情境下。

|

||||

我们将调查这些做法与技术的意思,特别是前两个。更明确地说,我们会在使用它们的时候讨论它们,例如它们是怎么联系在一起的,从它们身上又能到什么样的好处——特别是在为多核、云或移动架构搭建系统的情境下。

|

||||

|

||||

让我们先来说一说函数响应式编程吧,以及我们在本文后面不再讨论它的原因。

|

||||

|

||||

### 函数响应式编程(FRP)

|

||||

|

||||

_函数响应式编程_,通常被称作_FRP_,是最常被误解的。FRP在二十年前就被Conal Elliott[精确地定义][24]了。但是最近这个术语却被错误地用来描述一些像Elm,Bacon.js的技术以及其它技术中的响应式插件(RxJava, Rx.NET, RxJS)。许多的库(libraries)声称他们支持FRP,事实上他们说的并非_响应式编程_,因此我们不会再进一步讨论它们。

|

||||

<ruby>函数响应式编程<rt>Functional reactive programming</rt></ruby>,通常被称作_FRP_,是最常被误解的。FRP在二十年前就被 Conal Elliott [精确地定义过了][24]了。但是最近这个术语却被错误地^脚注1 用来描述一些像 Elm、Bacon.js 的技术以及其它技术中的响应式插件(RxJava、Rx.NET、 RxJS)。许多的库(libraries)声称他们支持 FRP,事实上他们说的并非_响应式编程_,因此我们不会再进一步讨论它们。

|

||||

|

||||

### 响应式编程

|

||||

|

||||

_响应式编程_,不要把它跟_函数响应式编程_混淆了,它是异步编程下的一个子集,也是一种范式,在这种范式下,由新信息的有效性(availability)推动逻辑的前进,而不是让一条执行线程(a thread-of-execution)去推动控制流(control flow)。

|

||||

<ruby>响应式编程<rt>Reactive programming</rt></ruby>,不要把它跟_函数响应式编程_混淆了,它是异步编程下的一个子集,也是一种范式,在这种范式下,由新信息的有效性(availability)推动逻辑的前进,而不是让一条执行线程(a thread-of-execution)去推动控制流(control flow)。

|

||||

|

||||

它能够把问题分解为多个独立的步骤,这些独立的步骤可以以异步且非阻塞(non-blocking)的方式被执行,最后再组合在一起产生一条工作流(workflow)——它的输入和输出可能是非绑定的(unbounded)。

|

||||

|

||||

[“异步地(Asynchronous)”][25]被牛津词典定义为“不在同一时刻存在或发生”,在我们的语境下,它意味着一条消息或者一个事件可发生在任何时刻,有可能是在未来。这在响应式编程中是非常重要的一项技术,因为响应式编程允许[非阻塞式(non-blocking)]的执行方式——执行线程在竞争一块共享资源时不会因为阻塞(blocking)而陷入等待(防止执行线程在当前的工作完成之前执行任何其它操作),而是在共享资源被占用的期间转而去做其它工作。阿姆达尔定律(Amdahl's Law)[2][9]告诉我们,竞争是可伸缩性(scalability)最大的敌人,所以一个响应式系统应当在极少数的情况下才不得不做阻塞工作。

|

||||

[“异步地(Asynchronous)”][25]被牛津词典定义为“不在同一时刻存在或发生”,在我们的语境下,它意味着一条消息或者一个事件可发生在任何时刻,也有可能是在未来。这在响应式编程中是非常重要的一项技术,因为响应式编程允许[非阻塞式(non-blocking)]的执行方式——执行线程在竞争一块共享资源时不会因为阻塞(blocking)而陷入等待(为了防止执行线程在当前的工作完成之前执行任何其它操作),而是在共享资源被占用的期间转而去做其它工作。阿姆达尔定律(Amdahl's Law) ^脚注2 告诉我们,竞争是可伸缩性(scalability)最大的敌人,所以一个响应式系统应当在极少数的情况下才不得不做阻塞工作。

|

||||

|

||||

响应式编程一般是_事件驱动(event-driven)_ ,相比之下,响应式系统则是_消息驱动(message-driven)_ 的——事件驱动与消息驱动之间的差别会在文章后面阐明。

|

||||

|

||||

响应式编程库的应用程序接口(API)一般是以下二者之一:

|

||||

|

||||

* 基于回调的(Callback-based)——匿名的间接作用(side-effecting)回调函数被绑定在事件源(event sources)上,当事件被放入数据流(dataflow chain)中时,回调函数被调用。

|

||||

* 声明式的(Declarative)——通过函数的组合,通常是使用一些固定的函数,像 _map_, _filter_, _fold_ 等等。

|

||||

* 声明式的(Declarative)——通过函数的组合,通常是使用一些固定的函数,像 _map_、 _filter_、 _fold_ 等等。

|

||||

|

||||

大部分的库会混合这两种风格,一般还带有基于流(stream-based)的操作符(operators),像windowing, counts, triggers。

|

||||

大部分的库会混合这两种风格,一般还带有基于流(stream-based)的操作符(operators),像 windowing、 counts、 triggers。

|

||||

|

||||

说响应式编程跟[数据流编程(dataflow programming)][27]有关是很合理的,因为它强调的是_数据流_而不是_控制流_。

|

||||

|

||||

举几个为这种编程技术提供支持的的编程抽象概念:

|

||||

|

||||

* [Futures/Promises][10]——一个值的容器,具有读共享/写独占(many-read/single-write)的语义,即使变量尚不可用也能够添加异步的值转换操作。

|

||||

* 流(streams)-[响应式流][11]——无限制的数据处理流,支持异步,非阻塞式,支持多个源与目的的反压转换管道(back-pressured transformation pipelines)。

|

||||

* [数据流变量][12]——依赖于输入,过程(procedures)或者其它单元的单赋值变量(存储单元)(single assignment variables),它能够自动更新值的改变。其中一个应用例子是表格软件——一个单元的值的改变会像涟漪一样荡开,影响到所有依赖于它的函数,顺流而下地使它们产生新的值。

|

||||

* 流(streams) - [响应式流][11]——无限制的数据处理流,支持异步,非阻塞式,支持多个源与目的的反压转换管道(back-pressured transformation pipelines)。

|

||||

* [数据流变量][12]——依赖于输入、过程(procedures)或者其它单元的单赋值变量(single assignment variables)(存储单元),它能够自动更新值的改变。其中一个应用例子是表格软件——一个单元的值的改变会像涟漪一样荡开,影响到所有依赖于它的函数,顺流而下地使它们产生新的值。

|

||||

|

||||

在JVM中,支持响应式编程的流行库有Akka Streams、Ratpack、Reactor、RxJava和Vert.x等等。这些库实现了响应式编程的规范,成为JVM上响应式编程库之间的互通标准(standard for interoperability),并且根据它自身的叙述是“……一个为如何处理非阻塞式反压异步流提供标准的倡议”

|

||||

在 JVM 中,支持响应式编程的流行库有 Akka Streams、Ratpack、Reactor、RxJava 和 Vert.x 等等。这些库实现了响应式编程的规范,成为 JVM 上响应式编程库之间的互通标准(standard for interoperability),并且根据它自身的叙述是“……一个为如何处理非阻塞式反压异步流提供标准的倡议”。

|

||||

|

||||

响应式编程的基本好处是:提高多核和多CPU硬件的计算资源利用率;根据阿姆达尔定律以及引申的Günther的通用可伸缩性定律[3][13](Günther’s Universal Scalability Law),通过减少序列化点(serialization points)来提高性能。

|

||||

响应式编程的基本好处是:提高多核和多 CPU 硬件的计算资源利用率;根据阿姆达尔定律以及引申的 Günther 的通用可伸缩性定律(Günther’s Universal Scalability Law) ^脚注3 ,通过减少序列化点(serialization points)来提高性能。

|

||||

|

||||

另一个好处是开发者生产效率,传统的编程范式都尽力想提供一个简单直接的可持续的方法来处理异步非阻塞式计算和I/O。在响应式编程中,因活动(active)组件之间通常不需要明确的协作,从而也就解决了其中大部分的挑战。

|

||||

另一个好处是开发者生产效率,传统的编程范式都尽力想提供一个简单直接的可持续的方法来处理异步非阻塞式计算和 I/O。在响应式编程中,因活动(active)组件之间通常不需要明确的协作,从而也就解决了其中大部分的挑战。

|

||||

|

||||

响应式编程真正的发光点在于组件的创建跟工作流的组合。为了在异步执行上取得最大的优势,把[反压(back-pressure)][28]加进来是很重要,这样能避免过度使用,或者确切地说,无限度的消耗资源。

|

||||

响应式编程真正的发光点在于组件的创建跟工作流的组合。为了在异步执行上取得最大的优势,把[反压(back-pressure)][28]加进来是很重要,这样能避免过度使用,或者确切地说,避免无限度的消耗资源。

|

||||

|

||||

尽管如此,响应式编程在搭建现代软件上仍然非常有用,为了在更高层次上理解(reason about)一个系统,那么必须要使用到另一个工具:_响应式架构_——设计响应式系统的方法。此外,要记住编程范式有很多,而响应式编程仅仅只是其中一个,所以如同其它工具一样,响应式编程并不是万金油,它不意图适用于任何情况。

|

||||

尽管如此,响应式编程在搭建现代软件上仍然非常有用,为了在更高层次上理解(reason about)一个系统,那么必须要使用到另一个工具:<ruby>响应式架构<rt>reactive architecture</rt></ruby>——设计响应式系统的方法。此外,要记住编程范式有很多,而响应式编程仅仅只是其中一个,所以如同其它工具一样,响应式编程并不是万金油,它不意图适用于任何情况。

|

||||

|

||||

### 事件驱动 vs. 消息驱动

|

||||

如上面提到的,响应式编程——专注于短时间的数据流链条上的计算——因此倾向于_事件驱动_,而响应式系统——关注于通过分布式系统的通信和协作所得到的弹性和韧性——则是[_消息驱动的_][29][4][14](或者称之为 _消息式(messaging)_ 的)。

|

||||

|

||||

如上面提到的,响应式编程——专注于短时间的数据流链条上的计算——因此倾向于_事件驱动_,而响应式系统——关注于通过分布式系统的通信和协作所得到的弹性和韧性——则是[_消息驱动的_][29] ^脚注4(或者称之为 _消息式(messaging)_ 的)。

|

||||

|

||||

一个拥有长期存活的可寻址(long-lived addressable)组件的消息驱动系统跟一个事件驱动的数据流驱动模型的不同在于,消息具有固定的导向,而事件则没有。消息会有明确的(一个)去向,而事件则只是一段等着被观察(observe)的信息。另外,消息式(messaging)更适用于异步,因为消息的发送与接收和发送者和接收者是分离的。

|

||||

|

||||

响应式宣言中的术语表定义了两者之间[概念上的不同][30]:

|

||||

|

||||

> 一条消息就是一则被送往一个明确目的地的数据。一个事件则是达到某个给定状态的组件发出的一个信号。在一个消息驱动系统中,可寻址到的接收者等待消息的到来然后响应它,否则保持休眠状态。在一个事件驱动系统中,通知的监听者被绑定到消息源上,这样当消息被发出时它就会被调用。这意味着一个事件驱动系统专注于可寻址的事件源而消息驱动系统专注于可寻址的接收者。

|

||||

|

||||

分布式系统需要通过消息在网络上传输进行交流,以实现其沟通基础,与之相反,事件的发出则是本地的。在底层通过发送包裹着事件的消息来搭建跨网络的事件驱动系统的做法很常见。这样能够维持在分布式环境下事件驱动编程模型的相对简易性并且在某些特殊的和合理范围内的使用案例上工作得很好。

|

||||

分布式系统需要通过消息在网络上传输进行交流,以实现其沟通基础,与之相反,事件的发出则是本地的。在底层通过发送包裹着事件的消息来搭建跨网络的事件驱动系统的做法很常见。这样能够维持在分布式环境下事件驱动编程模型的相对简易性,并且在某些特殊的和合理的范围内的使用案例上工作得很好。

|

||||

|

||||

然而,这是有利有弊的:在编程模型的抽象性和简易性上得一分,在控制上就减一分。消息强迫我们去拥抱分布式系统的真实性和一致性——像局部错误(partial failures),错误侦测(failure detection),丢弃/复制/重排序 消息(dropped/duplicated/reordered messages),最后还有一致性,管理多个并发真实性等等——然后直面它们,去处理它们,而不是像过去无数次一样,藏在一个蹩脚的抽象面罩后——假装网络并不存在(例如EJB, [RPC][31], [CORBA][32], 和 [XA][33])。

|

||||

然而,这是有利有弊的:在编程模型的抽象性和简易性上得一分,在控制上就减一分。消息强迫我们去拥抱分布式系统的真实性和一致性——像局部错误(partial failures),错误侦测(failure detection),丢弃/复制/重排序 (dropped/duplicated/reordered )消息,最后还有一致性,管理多个并发真实性等等——然后直面它们,去处理它们,而不是像过去无数次一样,藏在一个蹩脚的抽象面罩后——假装网络并不存在(例如EJB、 [RPC][31]、 [CORBA][32] 和 [XA][33])。

|

||||

|

||||

这些在语义学和适用性上的不同在应用设计中有着深刻的含义,包括分布式系统的复杂性(complexity)中的 _弹性(resilience)_, _韧性(elasticity)_,_移动性(mobility)_,_位置透明性(location transparency)_ 和 _管理(management)_,这些在文章后面再进行介绍。

|

||||

这些在语义学和适用性上的不同在应用设计中有着深刻的含义,包括分布式系统的复杂性(complexity)中的 _弹性(resilience)_、 _韧性(elasticity)_、_移动性(mobility)_、_位置透明性(location transparency)_ 和 _管理(management)_,这些在文章后面再进行介绍。

|

||||

|

||||

在一个响应式系统中,特别是使用了响应式编程技术的,这样的系统中就即有事件也有消息——一个是用于沟通的强大工具(消息),而另一个则呈现现实(事件)。

|

||||

|

||||

@ -91,17 +93,17 @@ _响应式编程_,不要把它跟_函数响应式编程_混淆了,它是异

|

||||

|

||||

_响应式系统_ —— 如同在《响应式宣言》中定义的那样——是一组用于搭建现代系统——已充分准备好满足如今应用程序所面对的不断增长的需求的现代系统——的架构设计原则。

|

||||

|

||||

响应式系统的原则决对不是什么新东西,它可以被追溯到70和80年代Jim Gray和Pat Helland在[串级系统(Tandem System)][34]上和Joe aomstrong和Robert Virding在[Erland][35]上做出的重大工作。然而,这些人在当时都超越了时代,只有到了最近5-10年,技术行业才被不得不反思当前企业系统最好的开发实践活动并且学习如何将来之不易的响应式原则应用到今天这个多核、云计算和物联网的世界中。

|

||||

响应式系统的原则决对不是什么新东西,它可以被追溯到 70 和 80 年代 Jim Gray 和 Pat Helland 在[串级系统(Tandem System)][34] 上和 Joe aomstrong 和 Robert Virding 在 [Erland][35] 上做出的重大工作。然而,这些人在当时都超越了时代,只有到了最近 5 - 10 年,技术行业才被不得不反思当前企业系统最好的开发实践活动并且学习如何将来之不易的响应式原则应用到今天这个多核、云计算和物联网的世界中。

|

||||

|

||||

响应式系统的基石是_消息传递(message-passing)_ ,消息传递为两个组件之间创建一条暂时的边界,使得他们能够在 _时间_ 上分离——实现并发性——和 _空间(space)_ ——实现分布式(distribution)与移动性(mobility)。这种分离是两个组件完全[隔离(isolation)][36]以及实现 _弹性(resilience)_ 和 _韧性(elasticity)_ 基础的必需条件。

|

||||

响应式系统的基石是_消息传递(message-passing)_ ,消息传递为两个组件之间创建一条暂时的边界,使得它们能够在 _时间_ 上分离——实现并发性——和 _空间(space)_ ——实现分布式(distribution)与移动性(mobility)。这种分离是两个组件完全[隔离(isolation)][36]以及实现 _弹性(resilience)_ 和 _韧性(elasticity)_ 基础的必需条件。

|

||||

|

||||

### 从程序到系统

|

||||

|

||||

这个世界的连通性正在变得越来越高。我们构建 _程序_ ——为单个操作子计算某些东西的端到端逻辑——已经不如我们构建 _系统_ 来得多了。

|

||||

这个世界的连通性正在变得越来越高。我们不再构建 _程序_ ——为单个操作子来计算某些东西的端到端逻辑——而更多地在构建 _系统_ 了。

|

||||

|

||||

系统从定义上来说是复杂的——每一部分都包含多个组件,每个组件的自身或其子组件也可以是一个系统——这意味着软件要正常工作已经越来越依赖于其它软件。

|

||||

|

||||

我们今天构建的系统会在多个计算机上被操作,小型的或大型的,数量少的或数量多的,相近的或远隔半个地球的。同时,由于人们的生活正变得越来越依赖于系统顺畅运行的有效性,用户的期望也变得越得越来越难以满足。

|

||||

我们今天构建的系统会在多个计算机上操作,小型的或大型的,或少或多,相近的或远隔半个地球的。同时,由于人们的生活正变得越来越依赖于系统顺畅运行的有效性,用户的期望也变得越得越来越难以满足。

|

||||

|

||||

为了实现用户——和企业——能够依赖的系统,这些系统必须是 _灵敏的(responsive)_ ,这样无论是某个东西提供了一个正确的响应,还是当需要一个响应时响应无法使用,都不会有影响。为了达到这一点,我们必须保证在错误( _弹性_ )和欠载( _韧性_ )下,系统仍然能够保持灵敏性。为了实现这一点,我们把系统设计为 _消息驱动的_ ,我们称其为 _响应式系统_ 。

|

||||

|

||||

@ -109,36 +111,32 @@ _响应式系统_ —— 如同在《响应式宣言》中定义的那样——

|

||||

|

||||

弹性是与 _错误下_ 的灵敏性(responsiveness)有关的,它是系统内在的功能特性,是需要被设计的东西,而不是能够被动的加入系统中的东西。弹性是大于容错性的——弹性无关于故障退化(graceful degradation)——虽然故障退化对于系统来说是很有用的一种特性——与弹性相关的是与从错误中完全恢复达到 _自愈_ 的能力。这就需要组件的隔离以及组件对错误的包容,以免错误散播到其相邻组件中去——否则,通常会导致灾难性的连锁故障。

|

||||

|

||||

因此构建一个弹性的,自愈(self-healing)系统的关键是允许错误被:容纳,具体化为消息,发送给其他的(担当监管者的(supervisors))组件,从而在错误组件之外修复出一个安全环境。在这,消息驱动是其促成因素:远离高度耦合的、脆弱的深层嵌套的同步调用链,大家长期要么学会忍受其煎熬或直接忽略。解决的想法是将调用链中的错误管理分离,将客户端从处理服务端错误的责任中解放出来。

|

||||

因此构建一个弹性的、自愈(self-healing)系统的关键是允许错误被:容纳、具体化为消息,发送给其他的(担当监管者的(supervisors))组件,从而在错误组件之外修复出一个安全环境。在这,消息驱动是其促成因素:远离高度耦合的、脆弱的深层嵌套的同步调用链,大家长期要么学会忍受其煎熬或直接忽略。解决的想法是将调用链中的错误管理分离,将客户端从处理服务端错误的责任中解放出来。

|

||||

|

||||

### 响应式系统的韧性

|

||||

|

||||

[韧性(Elasticity)][37]是关于 _欠载下的灵敏性(responsiveness)_ 的——意味着一个系统的吞吐量在资源增加或减少时能够自动地相应增加或减少(scales up or down)(同样能够向内或外扩展(scales in or out))以满足不同的需求。这是利用云计算承诺的特性所必需的因素:使系统利用资源更加有效,成本效益更佳,对环境友好以及实现按次付费。

|

||||

|

||||

系统必须能够在不重写甚至不重新设置的情况下,适应性地——即无需介入自动伸缩——响应状态及行为,沟通负载均衡,故障转移(failover),以及升级。实现这些的就是 _位置透明性(location transparency)_ :使用同一个方法,同样的编程抽象,同样的语义,在所有向度中伸缩(scaling)系统的能力——从CPU核心到数据中心。

|

||||

系统必须能够在不重写甚至不重新设置的情况下,适应性地——即无需介入自动伸缩——响应状态及行为,沟通负载均衡,故障转移(failover),以及升级。实现这些的就是 _位置透明性(location transparency)_ :使用同一个方法,同样的编程抽象,同样的语义,在所有向度中伸缩(scaling)系统的能力——从 CPU 核心到数据中心。

|

||||

|

||||

如同《响应式宣言》所述:

|

||||

|

||||

> 一个极大地简化问题的关键洞见在于意识到我们都在使用分布式计算。无论我们的操作系统是运行在一个单一结点上(拥有多个独立的CPU,并通过QPI链接进行交流),还是在一个节点集群(cluster of nodes,独立的机器,通过网络进行交流)上。拥抱这个事实意味着在垂直方向上多核的伸缩与在水平方面上集群的伸缩并无概念上的差异。在空间上的解耦 [...],是通过异步消息传送以及运行时实例与其引用解耦从而实现的,这就是我们所说的位置透明性。

|

||||

> 一个极大地简化问题的关键洞见在于意识到我们都在使用分布式计算。无论我们的操作系统是运行在一个单一结点上(拥有多个独立的 CPU,并通过 QPI 链接进行交流),还是在一个节点集群(cluster of nodes)(独立的机器,通过网络进行交流)上。拥抱这个事实意味着在垂直方向上多核的伸缩与在水平方面上集群的伸缩并无概念上的差异。在空间上的解耦 [...],是通过异步消息传送以及运行时实例与其引用解耦从而实现的,这就是我们所说的位置透明性。

|

||||

|

||||

因此,不论接收者在哪里,我们都以同样的方式与它交流。唯一能够在语义上等同实现的方式是消息传送。

|

||||

|

||||

### 响应式系统的生产效率

|

||||

|

||||

既然大多数的系统生来即是复杂的,那么其中一个最重要的点即是保证一个系统架构在开发和维护组件时,最小程度地减低生产效率,同时将操作的 _偶发复杂性(accidental complexity_ 降到最低。

|

||||

既然大多数的系统生来即是复杂的,那么其中一个最重要的点即是保证一个系统架构在开发和维护组件时,最小程度地减低生产效率,同时将操作的 _偶发复杂性(accidental complexity)_ 降到最低。

|

||||

|

||||

这一点很重要,因为在一个系统的生命周期中——如果系统的设计不正确——系统的维护会变得越来越困难,理解、定位和解决问题所需要花费时间和精力会不断地上涨。

|

||||

|

||||

响应式系统是我们所知的最具 _生产效率_ 的系统架构(在多核、云及移动架构的背景下):

|

||||

|

||||

* 错误的隔离为组件与组件之间裹上[舱壁][15](译者注:当船遭到损坏进水时,舱壁能够防止水从损坏的船舱流入其他船舱),防止引发连锁错误,从而限制住错误的波及范围以及严重性。

|

||||

|

||||

* 错误的隔离为组件与组件之间裹上[舱壁][15](LCTT 译注:当船遭到损坏进水时,舱壁能够防止水从损坏的船舱流入其他船舱),防止引发连锁错误,从而限制住错误的波及范围以及严重性。

|

||||

* 监管者的层级制度提供了多个等级的防护,搭配以自我修复能力,避免了许多曾经在侦查(inverstigate)时引发的操作代价(cost)——大量的瞬时故障(transient failures)。

|

||||

|

||||

* 消息传送和位置透明性允许组件被卸载下线、代替或重新布线(rerouted)同时不影响终端用户的使用体验,并降低中断的代价、它们的相对紧迫性以及诊断和修正所需的资源。

|

||||

|

||||

* 复制减少了数据丢失的风险,减轻了数据检索(retrieval)和存储的有效性错误的影响。

|

||||

|

||||

* 韧性允许在使用率波动时保存资源,允许在负载很低时,最小化操作开销,并且允许在负载增加时,最小化运行中断(outgae)或紧急投入(urgent investment)伸缩性的风险。

|

||||

|

||||

因此,响应式系统使生成系统(creation systems)很好的应对错误、随时间变化的负载——同时还能保持低运营成本。

|

||||

@ -173,19 +171,19 @@ _响应式系统_ —— 如同在《响应式宣言》中定义的那样——

|

||||

|

||||

响应式编程在内部逻辑及数据流转换的组件层次上为开发者提高了生产率——通过性能与资源的有效利用实现。而响应式系统在构建 _原生云(cloud native)_ 和其它大型分布式系统的系统层次上为架构师及DevOps从业者提高了生产率——通过弹性与韧性。我们建议在响应式系统设计原则中结合响应式编程技术。

|

||||

|

||||

```

|

||||

1 参考Conal Elliott,FRP的发明者,见[这个演示][16][↩][17]

|

||||

2 [Amdahl 定律][18]揭示了系统理论上的加速会被一系列的子部件限制,这意味着系统在新的资源加入后会出现收益递减(diminishing returns)。 [↩][19]

|

||||

3 Neil Günter的[通用可伸缩性定律(Universal Scalability Law)][20]是理解并发与分布式系统的竞争与协作的重要工具,它揭示了当新资源加入到系统中时,保持一致性的开销会导致不好的结果。

|

||||

4 消息可以是同步的(要求发送者和接受者同时存在),也可以是异步的(允许他们在时间上解耦)。其语义上的区别超出本文的讨论范围。[↩][22]

|

||||

```

|

||||

|

||||

> 1. 参考Conal Elliott,FRP的发明者,见[这个演示][16]

|

||||

> 2. [Amdahl 定律][18]揭示了系统理论上的加速会被一系列的子部件限制,这意味着系统在新的资源加入后会出现收益递减(diminishing returns)。

|

||||

> 3. Neil Günter的[通用可伸缩性定律(Universal Scalability Law)][20]是理解并发与分布式系统的竞争与协作的重要工具,它揭示了当新资源加入到系统中时,保持一致性的开销会导致不好的结果。

|

||||

> 4. 消息可以是同步的(要求发送者和接受者同时存在),也可以是异步的(允许他们在时间上解耦)。其语义上的区别超出本文的讨论范围。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.oreilly.com/ideas/reactive-programming-vs-reactive-systems

|

||||

|

||||

作者:[Jonas Bonér][a] , [Viktor Klang][b]

|

||||

作者:[Jonas Bonér][a], [Viktor Klang][b]

|

||||

译者:[XLCYun](https://github.com/XLCYun)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 组织编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

399

published/20161220 TypeScript the missing introduction.md

Normal file

399

published/20161220 TypeScript the missing introduction.md

Normal file

@ -0,0 +1,399 @@

|

||||

一篇缺失的 TypeScript 介绍

|

||||

=============================================================

|

||||

|

||||

**下文是 James Henry([@MrJamesHenry][8])所提交的内容。我是 ESLint 核心团队的一员,也是 TypeScript 布道师。我正在和 Todd 在 [UltimateAngular][9] 平台上合作发布 Angular 和 TypeScript 的精品课程。**

|

||||

|

||||

> 本文的主旨是为了介绍我们是如何看待 TypeScript 的以及它在加强 JavaScript 开发中所起的作用。

|

||||

>

|

||||

> 我们也将尽可能地给出那些类型和编译方面的那些时髦词汇的准确定义。

|

||||

|

||||

TypeScript 强大之处远远不止这些,本篇文章无法涵盖,想要了解更多请阅读[官方文档][15],或者学习 [UltimateAngular 上的 TypeScript 课程][16] ,从初学者成为一位 TypeScript 高手。

|

||||

|

||||

### 背景

|

||||

|

||||

TypeScript 是个出乎意料强大的工具,而且它真的很容易掌握。

|

||||

|

||||

然而,TypeScript 可能比 JavaScript 要更为复杂一些,因为 TypeScript 可能向我们同时引入了一系列以前没有考虑过的 JavaScript 程序相关的技术概念。

|

||||

|

||||

每当我们谈论到类型、编译器等这些概念的时候,你会发现很快会变的不知所云起来。

|

||||

|

||||

这篇文章就是一篇为了解答你需要知道的许许多多不知所云的概念,来帮助你 TypeScript 快速入门的教程,可以让你轻松自如的应对这些概念。

|

||||

|

||||

### 关键知识的掌握

|

||||

|

||||

在 Web 浏览器中运行我们的代码这件事或许使我们对它是如何工作的产生一些误解,“它不用经过编译,是吗?”,“我敢肯定这里面是没有类型的...”

|

||||

|

||||

更有意思的是,上述的说法既是正确的也是不正确的,这取决于上下文环境和我们是如何定义这些概念的。

|

||||

|

||||

首先,我们要作的是明确这些。

|

||||

|

||||

#### JavaScript 是解释型语言还是编译型语言?

|

||||

|

||||

传统意义上,程序员经常将自己的程序编译之后运行出结果就认为这种语言是编译型语言。

|

||||

|

||||

> 从初学者的角度来说,编译的过程就是将我们自己编辑好的高级语言程序转换成机器实际运行的格式。

|

||||

|

||||

就像 Go 语言,可以使用 `go build` 的命令行工具编译 .go 的文件,将其编译成代码的低级形式,它可以直接执行、运行。

|

||||

|

||||

```

|

||||

# We manually compile our .go file into something we can run

|

||||

# using the command line tool "go build"

|

||||

go build ultimate-angular.go

|

||||

# ...then we execute it!

|

||||

./ultimate-angular

|

||||

```

|

||||

|

||||

作为一个 JavaScript 程序员(这一刻,请先忽略我们对新一代构建工具和模块加载程序的热爱),我们在日常的 JavaScript 开发中并没有编译的这一基本步骤,

|

||||

|

||||

我们写一些 JavaScript 代码,把它放在浏览器的 `<script>` 标签中,它就能运行了(或者在服务端环境运行,比如:node.js)。

|

||||

|

||||

**好吧,因此 JavaScript 没有进行过编译,那它一定是解释型语言了,是吗?**

|

||||

|

||||

实际上,我们能够确定的一点是,JavaScript 不是我们自己编译的,现在让我们简单的回顾一个简单的解释型语言的例子,再来谈 JavaScript 的编译问题。

|

||||

|

||||

> 解释型计算机语言的执行的过程就像人们看书一样,从上到下、一行一行的阅读。

|

||||

|

||||

我们所熟知的解释型语言的典型例子是 bash 脚本。我们终端中的 bash 解释器逐行读取我们的命令并且执行它。

|

||||

|

||||

现在我们回到 JavaScript 是解释执行还是编译执行的讨论中,我们要将逐行读取和逐行执行程序分开理解(对“解释型”的简单理解),不要混在一起。

|

||||

|

||||

以此代码为例:

|

||||

|

||||

```

|

||||

hello();

|

||||

function hello(){

|

||||

console.log("Hello")

|

||||

}

|

||||

```

|

||||

|

||||

这是真正意义上 JavaScript 输出 Hello 单词的程序代码,但是,在 `hello()` 在我们定义它之前就已经使用了这个函数,这是简单的逐行执行办不到的,因为 `hello()` 在第一行没有任何意义的,直到我们在第二行声明了它。

|

||||

|

||||

像这样在 JavaScript 是存在的,因为我们的代码实际上在执行之前就被所谓的“JavaScript 引擎”或者是“特定的编译环境”编译过,这个编译的过程取决于具体的实现(比如,使用 V8 引擎的 node.js 和 Chome 就和使用 SpiderMonkey 的 FireFox 就有所不同)。

|

||||

|

||||

在这里,我们不会在进一步的讲解编译型执行和解释型执行微妙之处(这里的定义已经很好了)。

|

||||

|

||||

> 请务必记住,我们编写的 JavaScript 代码已经不是我们的用户实际执行的代码了,即使是我们简单地将其放在 HTML 中的 `<script>` ,也是不一样的。

|

||||

|

||||

#### 运行时间 VS 编译时间

|

||||

|

||||

现在我们已经正确理解了编译和运行是两个不同的阶段,那“<ruby>运行阶段<rt>Run Time</rt></ruby>”和“<ruby>编译阶段<rt>Compile Time</rt></ruby>”理解起来也就容易多了。

|

||||

|

||||

编译阶段,就是我们在我们的编辑器或者 IDE 当中的代码转换成其它格式的代码的阶段。

|

||||

|

||||

运行阶段,就是我们程序实际执行的阶段,例如:上面的 `hello()` 函数就执行在“运行阶段”。

|

||||

|

||||

#### TypeScript 编译器

|

||||

|

||||

现在我们了解了程序的生命周期中的关键阶段,接下来我们可以介绍 TypeScript 编译器了。

|

||||

|

||||

TypeScript 编译器是帮助我们编写代码的关键。比如,我们不需将 JavaScript 代码包含到 `<script>` 标签当中,只需要通过 TypeScript 编译器传递它,就可以在运行程序之前得到改进程序的建议。

|

||||

|

||||

> 我们可以将这个新的步骤作为我们自己的个人“编译阶段”,这将在我们的程序抵达 JavaScript 主引擎之前,确保我们的程序是以我们预期的方式编写的。

|

||||

|

||||

它与上面 Go 语言的实例类似,但是 TypeScript 编译器只是基于我们编写程序的方式提供提示信息,并不会将其转换成低级的可执行文件,它只会生成纯 JavaScript 代码。

|

||||

|

||||

```

|

||||

# One option for passing our source .ts file through the TypeScript

|

||||

# compiler is to use the command line tool "tsc"

|

||||

tsc ultimate-angular.ts

|

||||

|

||||

# ...this will produce a .js file of the same name

|

||||

# i.e. ultimate-angular.js

|

||||

```

|

||||

|

||||

在[官方文档][23]中,有许多关于将 TypeScript 编译器以各种方式融入到你的现有工作流程中的文章。这些已经超出本文范围。

|

||||

|

||||

#### 动态类型与静态类型

|

||||

|

||||

就像对比编译程序与解释程序一样,动态类型与静态类型的对比在现有的资料中也是极其模棱两可的。

|

||||

|

||||

让我们先回顾一下我们在 JavaScript 中对于类型的理解。

|

||||

|

||||

我们的代码如下:

|

||||

|

||||

```

|

||||

var name = 'James';

|

||||

var sum = 1 + 2;

|

||||

```

|

||||

|

||||

我们如何给别人描述这段代码?

|

||||

|

||||

“我们声明了一个变量 `name`,它被分配了一个 “James” 的**字符串**,然后我们又申请了一个变量 `sum`,它被分配了一个**数字** 1 和**数字** 2 的求和的数值结果。”

|

||||

|

||||

即使在这样一个简单的程序中,我们也使用了两个 JavaScript 的基本类型:`String` 和 `Number`。

|

||||

|

||||

就像上面我们讲编译一样,我们不会陷入编程语言类型的学术细节当中,关键是要理解在 JavaScript 中类型表示的是什么,并扩展到 TypeScript 的类型的理解上。

|

||||

|

||||

从每夜拜读的最新 ECMAScript 规范中我们可以学到(LOL, JK - “wat’s an ECMA?”),它大量引用了 JavaScript 的类型及其用法。

|

||||

|

||||

直接引自官方规范:

|

||||

|

||||

> ECMAScript 语言的类型取决于使用 ECMAScript 语言的 ECMAScript 程序员所直接操作的值。

|

||||

>

|

||||

> ECMAScript 语言的类型有 Undefined、Null、Boolean、String、Symbol、Number 和 Object。

|

||||

|

||||

我们可以看到,JavaScript 语言有 7 种正式类型,其中我们在我们现在程序中使用了 6 种(Symbol 首次在 ES2015 中引入,也就是 ES6)。

|

||||

|

||||

现在我们来深入一点看上面的 JavaScript 代码中的 “name 和 sum”。

|

||||

|

||||

我们可以把我们当前被分配了字符串“James”的变量 `name` 重新赋值为我们的第二个变量 sum 的当前值,目前是数字 3。

|

||||

|

||||

```

|

||||

var name = 'James';

|

||||

var sum = 1 + 2;

|

||||

|

||||

name = sum;

|

||||

```

|

||||

|

||||

该 `name` 变量开始“存有”一个字符串,但现在它“存有”一个数字。这凸显了 JavaScript 中变量和类型的基本特性:

|

||||

|

||||

“James” 值一直是字符串类型,而 `name` 变量可以分配任何类型的值。和 `sum` 赋值的情况相同,1 是一个数字类型,`sum` 变量可以分配任何可能的值。

|

||||

|

||||

> 在 JavaScript 中,值是具有类型的,而变量是可以随时保存任何类型的值。

|

||||

|

||||

这也恰好是一个“动态类型语言”的定义。

|

||||

|

||||

相比之下,我们可以将“静态类型语言”视为我们可以(也必须)将类型信息与特定变量相关联的语言:

|

||||

|

||||

```

|

||||

var name: string = ‘James’;

|

||||

```

|

||||

|

||||

在这段代码中,我们能够更好地显式声明我们对变量 `name` 的意图,我们希望它总是用作一个字符串。

|

||||

|

||||

你猜怎么着?我们刚刚看到我们的第一个 TypeScript 程序。

|

||||

|

||||

当我们<ruby>反思</rt>reflect</rt></ruby>我们自己的代码(非编程方面的双关语“反射”)时,我们可以得出的结论,即使我们使用动态语言(如 JavaScript),在几乎所有的情况下,当我们初次定义变量和函数参数时,我们应该有非常明确的使用意图。如果这些变量和参数被重新赋值为与我们原先赋值不同类型的值,那么有可能某些东西并不是我们预期的那样工作的。

|

||||

|

||||

> 作为 JavaScript 开发者,TypeScript 的静态类型注释给我们的一个巨大的帮助,它能够清楚地表达我们对变量的意图。

|

||||

|

||||

> 这种改进不仅有益于 TypeScript 编译器,还可以让我们的同事和将来的自己明白我们的代码。代码是用来读的。

|

||||

|

||||

### TypeScript 在我们的 JavaScript 工作流程中的作用

|

||||

|

||||

我们已经开始看到“为什么经常说 TypeScript 只是 JavaScript + 静态类型”的说法了。`: string` 对于我们的 `name` 变量就是我们所谓的“类型注释”,在编译时被使用(换句话说,当我们让代码通过 TypeScript 编译器时),以确保其余的代码符合我们原来的意图。

|

||||

|

||||

我们再来看看我们的程序,并添加显式注释,这次是我们的 `sum` 变量:

|

||||

|

||||

```

|

||||

var name: string = 'James';

|

||||

var sum: number = 1 + 2;

|

||||

|

||||

name = sum;

|

||||

```

|

||||

|

||||

如果我们使用 TypeScript 编译器编译这个代码,我们现在就会收到一个在 `name = sum` 这行的错误: `Type 'number' is not assignable to type 'string'`,我们的这种“偷渡”被警告,我们执行的代码可能有问题。

|

||||

|

||||

> 重要的是,如果我们想要继续执行,我们可以选择忽略 TypeScript 编译器的错误,因为它只是在将 JavaScript 代码发送给我们的用户之前给我们反馈的工具。

|

||||

|

||||

TypeScript 编译器为我们输出的最终 JavaScript 代码将与上述原始源代码完全相同:

|

||||

|

||||

```

|

||||

var name = 'James';

|

||||

var sum = 1 + 2;

|

||||

|

||||

name = sum;

|

||||

```

|

||||

|

||||

类型注释全部为我们自动删除了,现在我们可以运行我们的代码了。

|

||||

|

||||

> 注意:在此示例中,即使我们没有提供显式类型注释的 `: string` 和 `: number` ,TypeScript 编译器也可以为我们提供完全相同的错误 。

|

||||

|

||||

> TypeScript 通常能够从我们使用它的方式推断变量的类型!

|

||||

|

||||

#### 我们的源文件是我们的文档,TypeScript 是我们的拼写检查

|

||||

|

||||

对于 TypeScript 与我们的源代码的关系来说,一个很好的类比,就是拼写检查与我们在 Microsoft Word 中写的文档的关系。

|

||||

|

||||

这两个例子有三个关键的共同点:

|

||||

|

||||

1. **它能告诉我们写的东西的客观的、直接的错误:**

|

||||

* _拼写检查_:“我们已经写了字典中不存在的字”

|

||||

* _TypeScript_:“我们引用了一个符号(例如一个变量),它没有在我们的程序中声明”

|

||||

2. **它可以提醒我们写的可能是错误的:**

|

||||

* _拼写检查_:“该工具无法完全推断特定语句的含义,并建议重写”

|

||||

* _TypeScript_:“该工具不能完全推断特定变量的类型,并警告不要这样使用它”

|

||||

3. **我们的来源可以用于其原始目的,无论工具是否存在错误:**

|

||||

* _拼写检查_:“即使您的文档有很多拼写错误,您仍然可以打印出来,并把它当成文档使用”

|

||||

* _TypeScript_:“即使您的源代码具有 TypeScript 错误,它仍然会生成您可以执行的 JavaScript 代码”

|

||||

|

||||

### TypeScript 是一种可以启用其它工具的工具

|

||||

|

||||

TypeScript 编译器由几个不同的部分或阶段组成。我们将通过查看这些部分之一 The Parser(语法分析程序)来结束这篇文章,除了 TypeScript 已经为我们做的以外,它为我们提供了在其上构建其它开发工具的机会。

|

||||

|

||||

编译过程的“解析器步骤”的结果是所谓的抽象语法树,简称为 AST。

|

||||

|

||||

#### 什么是抽象语法树(AST)?

|

||||

|

||||

我们以普通文本形式编写我们的程序,因为这是我们人类与计算机交互的最好方式,让它们能够做我们想要的东西。我们并不是很擅长于手工编写复杂的数据结构!

|

||||

|

||||

然而,不管在哪种情况下,普通文本在编译器里面实际上是一个非常棘手的事情。它可能包含程序运作不必要的东西,例如空格,或者可能存在有歧义的部分。

|

||||

|

||||

因此,我们希望将我们的程序转换成数据结构,将数据结构全部映射为我们所使用的所谓“标记”,并将其插入到我们的程序中。

|

||||

|

||||

这个数据结构正是 AST!

|

||||

|

||||

AST 可以通过多种不同的方式表示,我使用 JSON 来看一看。

|

||||

|

||||

我们从这个极其简单的基本源代码来看:

|

||||

|

||||

```

|

||||

var a = 1;

|

||||

```

|

||||

|

||||

TypeScript 编译器的 Parser(语法分析程序)阶段的(简化后的)输出将是以下 AST:

|

||||

|

||||

```

|

||||

{

|

||||

"pos": 0,

|

||||

"end": 10,

|

||||

"kind": 256,

|

||||

"text": "var a = 1;",

|

||||

"statements": [

|

||||

{

|

||||

"pos": 0,

|

||||

"end": 10,

|

||||

"kind": 200,

|

||||

"declarationList": {

|

||||

"pos": 0,

|

||||

"end": 9,

|

||||

"kind": 219,

|

||||

"declarations": [

|

||||

{

|

||||

"pos": 3,

|

||||

"end": 9,

|

||||

"kind": 218,

|

||||

"name": {

|

||||

"pos": 3,

|

||||

"end": 5,

|

||||

"text": "a"

|

||||

},

|

||||

"initializer": {

|

||||

"pos": 7,

|

||||

"end": 9,

|

||||

"kind": 8,

|

||||

"text": "1"

|

||||

}

|

||||

}

|

||||

]

|

||||

}

|

||||

}

|

||||

]

|

||||

}

|

||||

```

|

||||

|

||||

我们的 AST 中的对象称为节点。

|

||||

|

||||

#### 示例:在 VS Code 中重命名符号

|

||||

|

||||

在内部,TypeScript 编译器将使用 Parser 生成的 AST 来提供一些非常重要的事情,例如,发生在编译程序时的类型检查。

|

||||

|

||||

但它不止于此!

|

||||

|

||||

> 我们可以使用 AST 在 TypeScript 之上开发自己的工具,如代码美化工具、代码格式化工具和分析工具。

|

||||

|

||||

建立在这个 AST 代码之上的工具的一个很好的例子是:<ruby>语言服务器<rt>Language Server</rt></ruby>。

|

||||

|

||||

深入了解语言服务器的工作原理超出了本文的范围,但是当我们编写程序时,它能为我们提供一个绝对重量级别功能,就是“重命名符号”。

|

||||

|

||||

假设我们有以下源代码:

|

||||

|

||||

```

|

||||

// The name of the author is James

|

||||

var first_name = 'James';

|

||||

console.log(first_name);

|

||||

```

|

||||

|

||||

经过代码审查和对完美的适当追求,我们决定应该改换我们的变量命名惯例;使用驼峰式命名方式,而不是我们当前正在使用这种蛇式命名。

|

||||

|

||||

在我们的代码编辑器中,我们一直以来可以选择多个相同的文本,并使用多个光标来一次更改它们。

|

||||

|

||||

|

||||

|

||||

当我们把程序也视作文本这样继续操作时,我们已经陷入了一个典型的陷阱中。

|

||||

|

||||

那个注释中我们不想修改的“name”单词,在我们的手动匹配中却被误选中了。我们可以看到在现实世界的应用程序中这样更改代码是有多危险。

|

||||

|

||||

正如我们在上面学到的那样,像 TypeScript 这样的东西在幕后生成一个 AST 的时候,与我们的程序不再像普通文本那样可以交互,每个标记在 AST 中都有自己的位置,而且它有很清晰的映射关系。

|

||||

|

||||

当我们右键单击我们的 `first_name` 变量时,我们可以在 VS Code 中直接“重命名符号”(TypeScript 语言服务器插件也可用于其他编辑器)。

|

||||

|

||||

|

||||

|

||||

非常好!现在我们的 `first_name` 变量是唯一需要改变的东西,如果需要的话,这个改变甚至会发生在我们项目中的多个文件中(与导出和导入的值一样)!

|

||||

|

||||

### 总结

|

||||

|

||||

哦,我们在这篇文章中已经讲了很多的内容。

|

||||

|

||||

我们把有关学术方面的规避开,围绕编译器和类型还有很多专业术语给出了通俗的定义。

|

||||

|

||||

我们对比了编译语言与解释语言、运行阶段与编译阶段、动态类型与静态类型,以及抽象语法树(AST)如何为我们的程序构建工具提供了更为优化的方法。

|

||||

|

||||

重要的是,我们提供了 TypeScript 作为我们 JavaScript 开发工具的一种思路,以及如何在其上构建更棒的工具,比如说作为重构代码的一种方式的重命名符号。

|

||||

|

||||

快来 UltimateAngular 平台上学习从初学者到 TypeScript 高手的课程吧,开启你的学习之旅!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://toddmotto.com/typescript-the-missing-introduction

|

||||

|

||||

作者:James Henry

|

||||

译者:[MonkeyDEcho](https://github.com/MonkeyDEcho)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#javascript---interpreted-or-compiled

|

||||

[2]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#run-time-vs-compile-time

|

||||

[3]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#the-typescript-compiler

|

||||

[4]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#dynamic-vs-static-typing

|

||||

[5]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#our-source-file-is-our-document-typescript-is-our-spell-check

|

||||

[6]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#what-is-an-abstract-syntax-tree-ast

|

||||

[7]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#example-renaming-symbols-in-vs-code

|

||||

[8]:https://twitter.com/MrJamesHenry

|

||||

[9]:https://ultimateangular.com/courses

|

||||

[10]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#background

|

||||

[11]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#getting-to-grips-with-the-buzzwords

|

||||

[12]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#typescripts-role-in-our-javascript-workflow

|

||||

[13]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#typescript-is-a-tool-which-enables-other-tools

|

||||

[14]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#summary

|

||||

[15]:http://www.typescriptlang.org/docs

|

||||

[16]:https://ultimateangular.com/courses#typescript

|

||||

[17]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#table-of-contents

|

||||

[18]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#background

|

||||

[19]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#getting-to-grips-with-the-buzzwords

|

||||

[20]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#javascript---interpreted-or-compiled

|

||||

[21]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#run-time-vs-compile-time

|

||||

[22]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#the-typescript-compiler

|

||||

[23]:http://www.typescriptlang.org/docs

|

||||

[24]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#dynamic-vs-static-typing

|

||||

[25]:http://www.ecma-international.org/publications/files/ECMA-ST/Ecma-262.pdf

|

||||

[26]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#typescripts-role-in-our-javascript-workflow

|

||||

[27]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#our-source-file-is-our-document-typescript-is-our-spell-check

|

||||

[28]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#typescript-is-a-tool-which-enables-other-tools

|

||||

[29]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#what-is-an-abstract-syntax-tree-ast

|

||||

[30]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#example-renaming-symbols-in-vs-code

|

||||

[31]:https://toddmotto.com/typescript-the-missing-introduction?utm_source=javascriptweekly&utm_medium=email#summary

|

||||

[32]:https://ultimateangular.com/courses#typescript

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

145

published/20170113 3 open source music players.md

Normal file

145

published/20170113 3 open source music players.md

Normal file

@ -0,0 +1,145 @@

|

||||

3 个开源的音乐播放器:Aqulung、Lollypop 和 GogglesMM

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

|

||||

音乐是生活的一部分。[维基百科关于音乐发展历史的文章][4]有这样一段不错的描述说:“全世界所有的人们,包括哪怕是最孤立、与世隔绝的部落,都会有自己的特色音乐……”好吧,我们开源人就构成了一个部落。我建议我们的“音乐形式”应该包括开源音乐播放器。在过去几年里,我已经使用体验过不少我能接触到的音乐播放器;[2016 年 12 月份][5]我根据这六个标准来总结概括了我使用开源音乐播放器的感受:

|

||||

|

||||

1. 必须是能够通过设置让音乐一成不变地转换到 [ALSA][1]。(最高分 5分)

|

||||

2. 应该有一个不错的“智能播放列表”。(1 分)

|

||||

3. 不应该强迫用户只能通过播放列表来进行交互。(1 分)

|

||||

4. 应该能够提供一个简单的方法来显示歌曲的封面图片——使用内嵌的封面图或使用在音乐目录里面 cover.jpg(或者 .png)文件替代。

|

||||

5. 应该能够在音乐播放的时候显示信号级别和实际比特率。(1 分)

|

||||

6. 能够呈现出不错的整体组织,结构布局和执行性能。(1 分)

|

||||

|

||||

热心的读者让告诉我有三个播放器是在我的资源仓库里没有的:[Aqualung][6]、[Lollypop][7] 和 [GogglesMM][8]。我并不想在我办公用的电脑里面安装那些来自外面的软件,我承诺过我会配置一个“试验台”来测试这三个音乐播放器,并给出测试的细节。

|

||||

|

||||

### Aqualung

|

||||

|

||||

[Aqualung][9] 有一个写的清晰明了的网站来解释它众多的特点。其上提供的说明中我发现其中一点特别有趣:

|

||||

|

||||

“你能够(也应该)将你的所有音乐按照艺术家/档案/声轨这样组织成一个树型结构,这样比生成一个一体化的 Winamp/XMMS 播放列表更舒服。”

|

||||

|

||||

这点让我有些困惑,因为我总是把我的音乐按照艺术家、专辑和声轨这样组织成树状。但这就可能解释了为什么我有时发现 XMMS 流派的播放器在浏览音乐时有一点古怪。

|

||||

|

||||

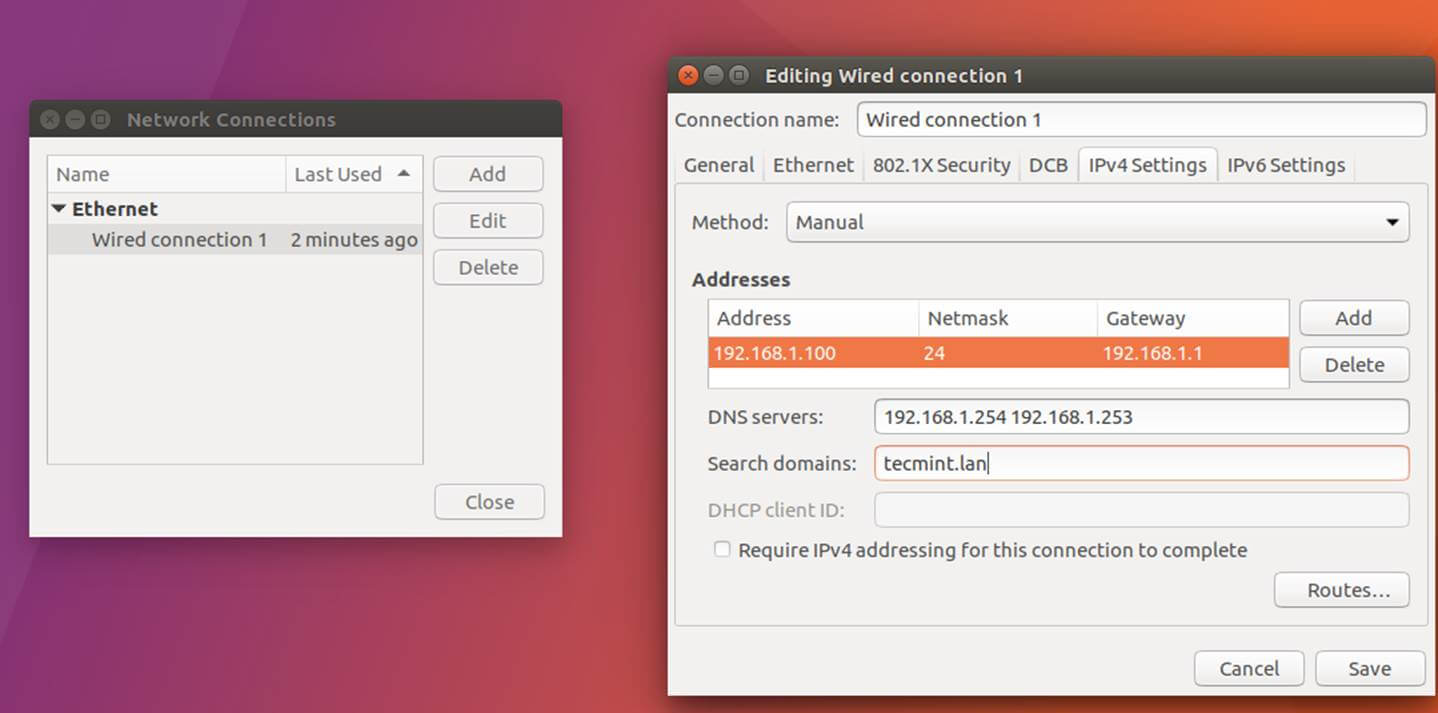

根据 Aqualung 官网的下载页面说明,官方发布的只有源代码。但是文档上的说明暗示了绝大多数主流的 Linux 发行版本都包括一份 Aqualung 的构建副本,但我当前用的办公电脑所使用的 Linux 发行版 Ubuntu 16.10 并不在此范围内。[Launchpad.net][10] 提供有 PPA,但那些软件看起来都有些过时了,所以为什么不试试编译源码安装软件呢?

|

||||

|

||||

我根据官网上编译文档的建议和配置脚本的提示安装了 **pkgconf** 以及 **libasound**、**libflac**、**libmp3lame**、**libvorbis**、**libxml2**、**libglib2.0** 和 **libgtk+-2.0** 的开发库。接下来,我就能够干净利索的进行 `configure` 然后进行 `make` 和 `make install`。最终我可以执行 `/usr/local/bin/aqualung` 了。

|

||||

|

||||

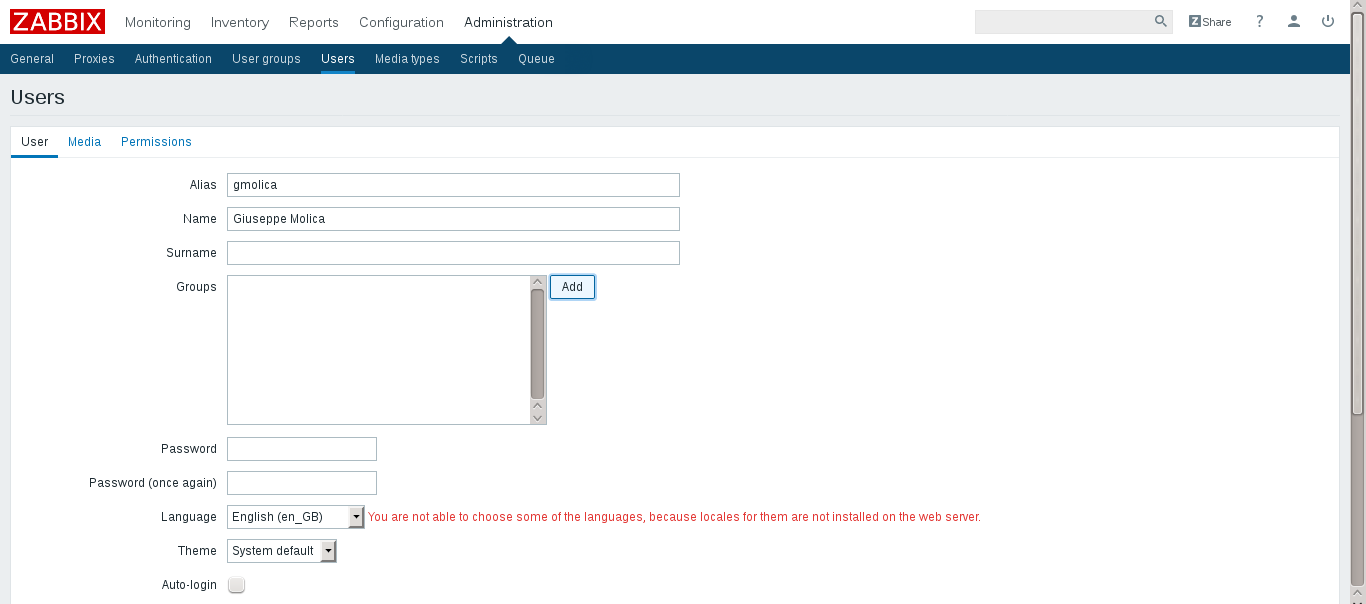

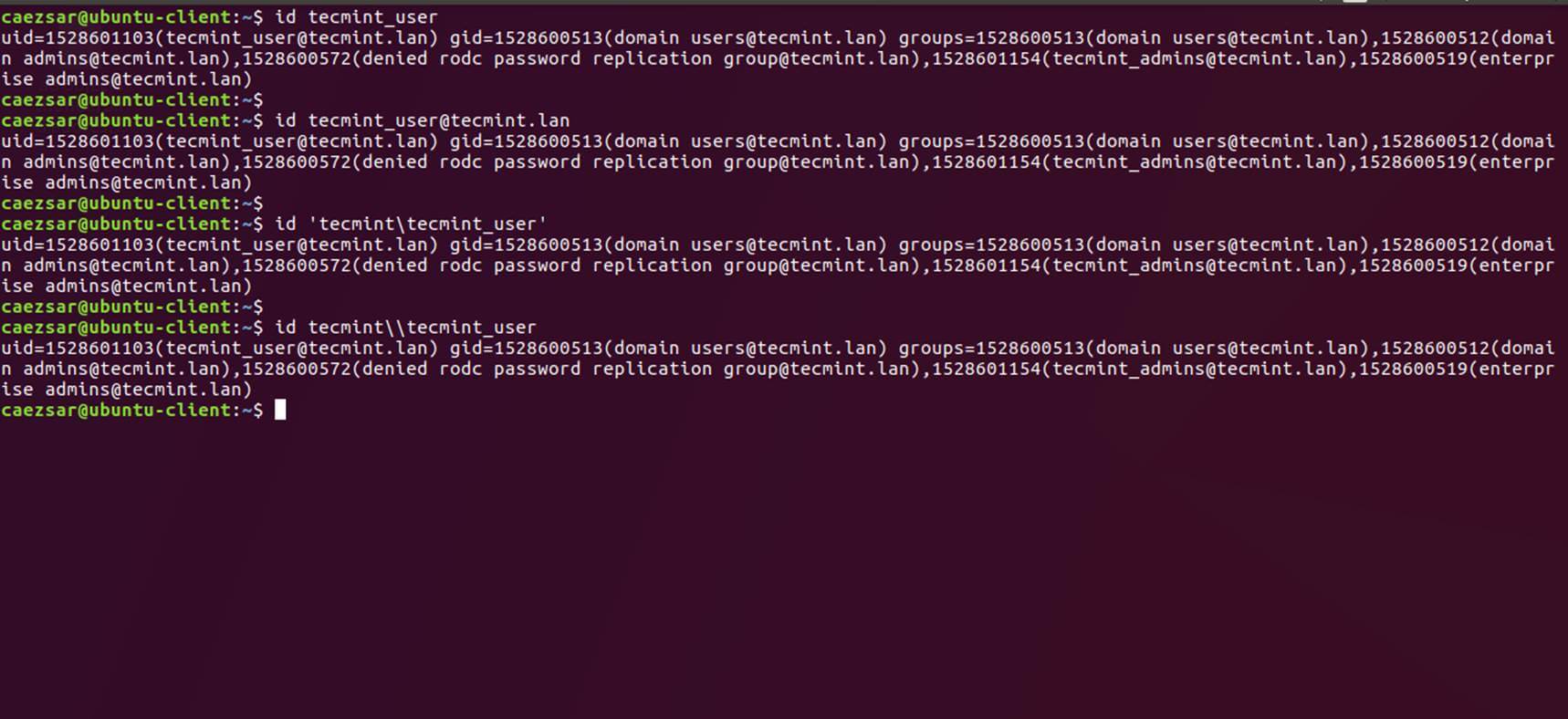

|

||||

|

||||

|

||||

*Aqualung,不能切换音乐播放的码率。*

|

||||

|

||||

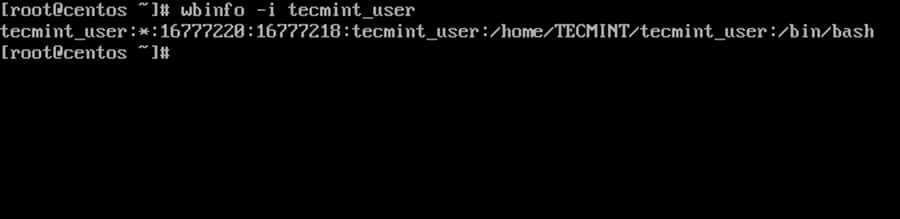

一旦 Aqualung 启动运行,我就能看到相当简洁直接的两窗口界面:播放器本身和“音乐商店”。我通过右键点击播放器的音乐面板打开参数设置查看这些可设置的参数,看是否能找到 AudioQuest DragonFly 这个数模转换器,但我没有找到任何相关的迹象。然而,站点上的说明指出可以通过命令行指定输出设备。最终我用 **plughw** 设备才让 Aqualung 启动起来。

|

||||

|

||||

在那个时候,真正让我对 Aqualung 感到失望的是 Aqualung 似乎是需要一个固定的输出采样频率。我能够用 Aqualung 播放器的默认设置来正常播放我的 44.1 Khz 文件,但是同样的采样频率播放 96 Khz 的音乐文件时,我不得不关闭软件并重新启动。也正是因为这一点,我不会再继续对 Aqualung 进行使用测评。

|

||||

|

||||

**无评分。**

|

||||

|

||||

### Lollypop

|

||||

|

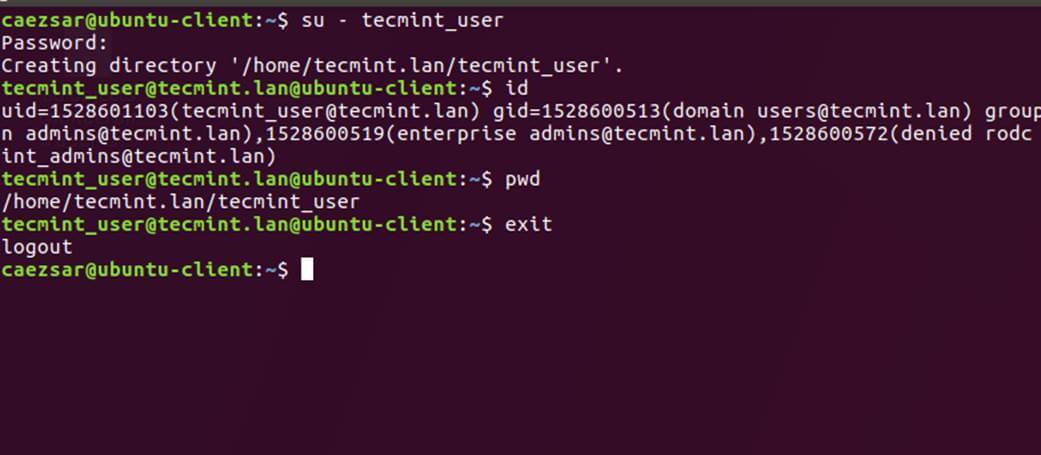

||||

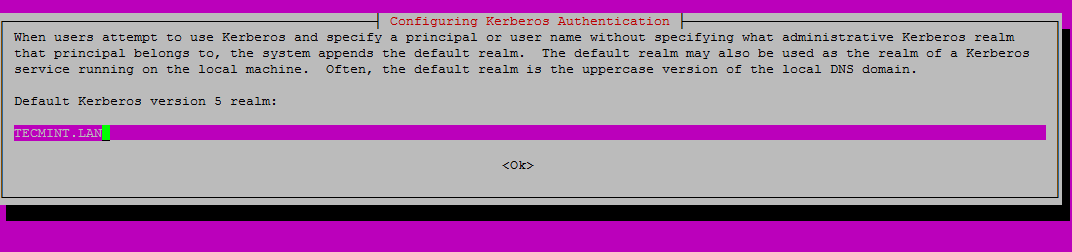

|

||||

|

||||

*优美的 Lollypop 用户界面。*

|

||||

|

||||

[Lollypop][11] 有一个华丽的网站。尽管它不在我办公专用的电脑的软件仓库里面,但是有一个“针对 Ubuntu/Debian 用户的下载”链接带你跳转到 [launchpad.net 站点提供的最新的 PPA][12]。这个站点还提供针对 Flatpak、Arch Linux、Fedora 和 OpenSUSE 这些系统的 Lollypop 软件包的下载。我看了下 [Fedora COPR 上针对各个 Fedora 版本的 Lollypop 下载链接][13],看起来 Lollypop 更新的比较及时而且从 Fedora 版本的 23 到 26 都有对应的软件包提供下载安装。

|

||||

|

||||

一天内做一次源码编译就足够了,所以我决定试试从 PPA 安装这款软件。我通过命令行来执行 Lollypop 软件。设置菜单能够在 Lollypop 界面的右上方很显眼地看见。更新完我的音乐后,我开始找电脑的输出设备设置,但是在一番查看后,我不知道该怎么选择合适的输出设备。即便我在命令行通过 **-help** 也找不到有用的帮助信息。

|

||||

|

||||

经过一番网上搜索后我找到一个 Lollypop 的开发者的提示才知道我需要 **gstreamer libav** 来让 Lollypop 工作。通过这个说明我决定停止,因为这可能需要一个 **gstreamer** 相关配置才有能工作,但是我不太想继续尝试了。

|

||||

|

||||

Lollypop 有一个优美的用户交互界面和它的优美的网站相得益彰,但是我现在不会进一步对它进行测评,否则我就又多了一个进一步去学习了解 **gstreamer** 的理由。

|

||||

|

||||

**无评分。**

|

||||

|

||||

### GogglesMM

|

||||

|

||||

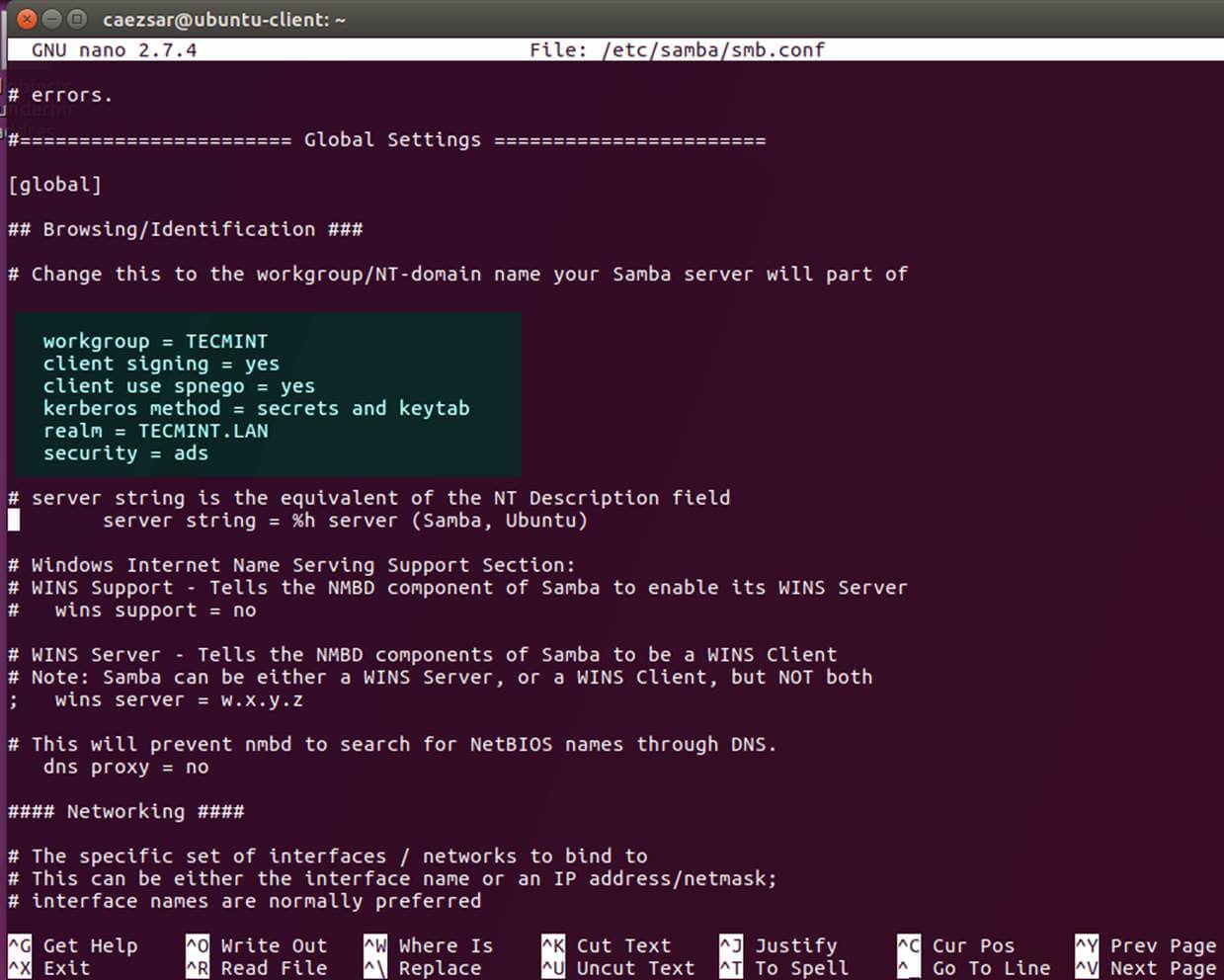

[Goggles Music Manager][14] 也有一个[在 launchpad.net 及时更新的 PPA][15];安装流程简单明了,我现在可以在命令行执行 **gogglesmm** 了。

|

||||

|

||||

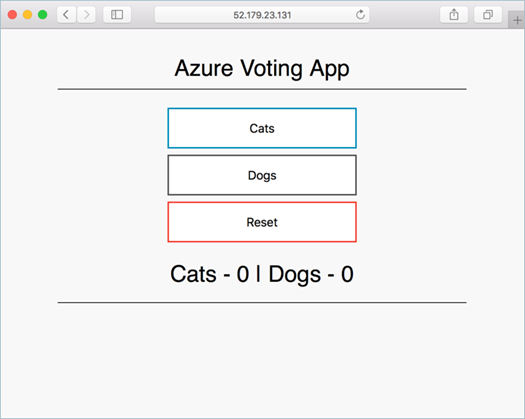

GogglesMM,非常容易上手使用,看上去和 Rhythmbox 有点像。我在 GogglesMM 的设置里面的参数设置中找到了音频选项设置,能够让我选择 ALSA 和设置音频输出设备。通过查看 **/proc/asound/DragonFly/stream0** 文件和 DragonFly 自己的 LED 颜色,我确定我能够用 GogglesMM 播放 44.1-KHz/21-bit 和 96-KHz/24-bit 这两种规格的 mp3;因此,就凭 “rate/depth passthrough” 我给 GogglesMM 打 5 分。

|

||||

|

||||

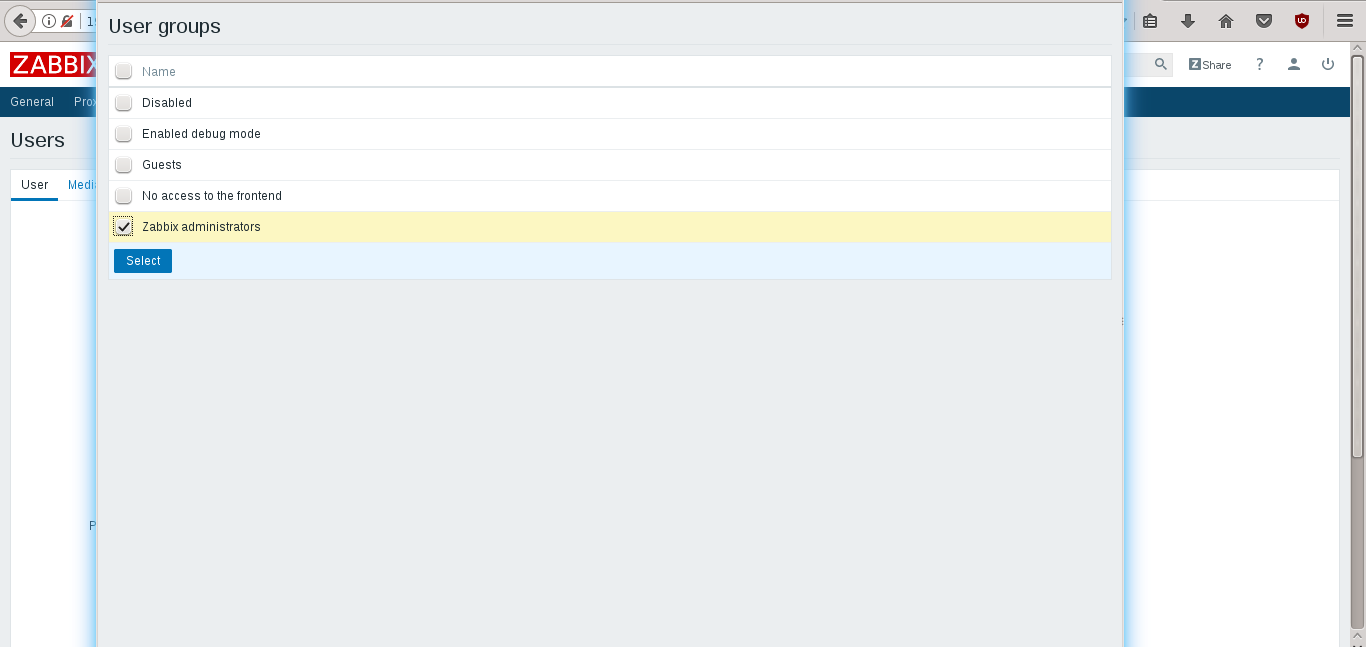

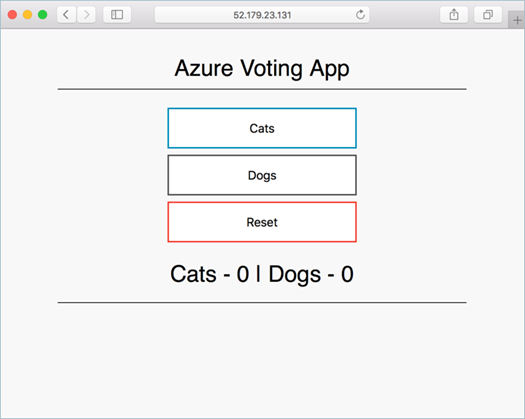

|

||||

|

||||

*GogglesMM 在播放 96/24 这种规格的音乐,显示音频输出设备选择。 *

|

||||

|

||||

GogglesMM 的说明文档并没有大量的细节介绍,但是我尽可能说明的是,开发者们使用了过滤器来实现类似“智能播放列表”的功能。我在我的测试环境下使用三张专辑来尽我所能检测过滤功能,当我使用“智能播放列表”功能的时候尽管我喜欢我看到的通过过滤筛选出来的歌曲(特别是能够基于广泛的标准来针对歌曲定义筛选条件),但这并不是我认为的“智能播放列表”,对我来说我认为“智能播放列表”应该是这样的,通过借助一些社区数据库来推荐提供和你近期播放的歌曲类似的曲目。或者我该把这个叫作“自动的 DJ”而不是“智能播放列表”,但是通过测试我最终能够确定的是,这个特性并不会在近期版本的 GogglesMM 中出现,所以我给它这个所谓的“智能播放列表”打 0 分。

|

||||

|

||||

至于播放列表队列的操作,这款应用能够支持播放你选中的音乐,也能够随机播放音乐或者把一些音乐整合到一个播放列表里面,所以我因为“播放列表的队列选项”给它打 1 分。

|

||||

|

||||

同样的,它看起来也能够很好地不需要额外的干预来管理我的音乐艺术封面(每个专辑都包含一张合适的艺术封面, GogglesMM 可以自动识别),所以为“内嵌的艺术封面或者封面图片”打 1 分。

|

||||

|

||||

我找不到任何方法来让 GogglesMM 显示信号级别或者实际的比特率。我也不能找到显示比特率和位深度的方法;尽管这款应用能够显示一个“格式”列,但是在我的音乐栏里面除了显示音乐格式不会显示其他的信息了,所以为 GogglesMM 的“信号级别和有效比特率”打 0 分。

|

||||

|

||||

至于 GogglesMM 的整体结构,它的所有按钮选项都正好完全符合我的使用习惯。我能够在播放队列里面看到歌曲的时间和歌曲当前已播放的时间所占歌曲总体时间的比例,专辑封面,歌曲名,专辑名和歌唱者。可用的播放栏列表看起来相当大而有用,比如也包括了作曲者。最后,一个真正让我眼前一亮的特点是,音量控制竟然包含了 ALSA 音量。也就是如果我启动 alsamixer 的话,然后不管是在 alsamixer 还是在 GogglesMM 里面调整音量,另一个音量控制也会做相应的音量调整。这个出乎我意外之外的功能相当的酷而且这个功能在其他的音乐播放器上也不常见,因此为它的整体架构给 GogglesMM 加 1 分。

|

||||

|

||||

最终 GogglesMM 的这些优点共计得分 8。所表现出来的特点确实很优秀。

|

||||

|

||||

**评分:8**

|

||||

|

||||

### 到目前为止所给出的评分

|

||||

|

||||

我之前所提到的这几个开源音乐播放器中,我最喜欢的还是 [Guayadeque][16],根据我制定的标准来进行排名的话,我给 Guayadeque 打满分 10 分。来看下我对这三个开源音乐播放器的评分总结吧(N/R 代表“无评分”,因为我不确定如何配置这些播放器来让它们以完美的码率和贯穿模式工作,以便我的数模信号转换器在相应源的码率和位深度接收 PCM 数据):

|

||||

|

||||

|

||||

|

||||

请注意下我用的这个排名方法并不适合每个人。特别是很多人并不清楚高品质音乐的价值,他们更喜欢专有格式的音乐能够给他们带来更好的音乐品质。

|

||||

|

||||

与此同时,我会继续评测一些之前向大家承诺的音乐播放器一些和评测评分无关的特性。我特别喜欢 Lollypop 的外观,我也觉得待揭秘的 **gstreamer** 有一种神秘的魅力,它能让基于 **gstreamer** 的音乐播放器不用通过转换就能传输它们的数据。

|

||||

|

||||

### 关于音乐的部分……

|

||||

|

||||

我还在保持继续购买唱片的习惯,对于唱片的购买我有些不错的推荐。

|

||||

|

||||

第一个就是 Nils Frahm 的专辑 [Felt][17],这是我女儿送我的一份非常贴心的礼物。我真的真的很喜欢这张专辑,它的绝大部分歌曲都是在深夜用电麦录制的非常接近钢琴的弦乐,而且也有不少有趣的钢琴演奏的背景音乐,真的是很棒的音乐。至于 Nils Frahm 其他的音乐,这些唱片提供的下载链接允许你下载质量高达 96-KHz,24-bit FLAC 格式的音乐。

|

||||

|

||||

第二个就是 Massive Attack 的专辑 Protection 的 [Mad Professor 的重混版][18],专辑名是 No Protection。你可以[在这里了解这份专辑][19],并且如果你想要尝试这份专辑最原始的版本,[这里是它的所有汇总信息][20]。该专辑最初发布于 20 世纪 90 年代,这份专辑刻录在唱片上面而且听起来非常奇幻。遗憾的是,不提供下载链接。

|

||||

|

||||

第三个就是 Bayonne 的 [Primitives][21]。[这是专辑要表达的想法][22]。Guardian 报社把这份专辑称作是“新式无聊”。那么这种类型的音乐到底怎么样呢?如果这些音乐真的是非常令人乏味的,或许是时候来换份工作了,无论如何你可以试试听这些音乐;或许你会觉得它确实很乏味或者你会像我一样喜欢上这份音乐。

|

||||

|

||||

(图片来源:[互联网档案馆][2]书中的图片;由 Opensource.com 编辑发布。遵循 [CC BY-SA 4.0][3] 协议。)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

|

||||

作者简介:

|

||||

|

||||

|

||||

|

||||

|

||||

Chris Hermansen - 自 1978 年毕业于 British Columbia 大学后一直从事计算机相关工作,2005 年之前是 Solaris、SunOS、UNIX System V 的忠实用户,之后是 Linux 的忠实用户。在技术方面,我的职业生涯大部分时间都是在做数据分析;特别是空间数据分析。拥有丰富的和数据分析相关的编程经验,用过的编程语言有 awk,Python、PostgreSQL、 PostGIS 和 最新的 Groovy。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/1/open-source-music-players

|

||||

|

||||

作者:[Chris Hermansen][a]

|

||||

译者:[WangYueScream](https://github.com/WangYueScream)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/clhermansen

|

||||

[1]:http://www.alsa-project.org/main/index.php/Main_Page

|

||||

[2]:https://www.flickr.com/photos/internetarchivebookimages/14565158187/in/photolist-ocoBRG-ocqdPM-ot9YYX-ovb7SE-oroqfj-ot8Sfi-of1HoD-oc5c28-otBk3B-foZxvq-ocoUvo-4TqEKE-otsG7t-oeYo4w-ornGMQ-orpD9y-wLDBUf-outZV7-oc26Ui-ortZpW-ocpWLH-ocoK6c-ocYDY1-od6ADb-xxAKyY-ocofDx-oc4Jr5-otyT2E-ocpUyu-xqTAb6-oc8gK1-otdsK5-ovhkz2-ocpcHj-oc8xwk-otgmZG-otr595-otnv4o-otvdRs-ovfYEt-ovDXUV-obUPJ6-oc2MuJ-oc4zLE-oruPbN-oc1P2H-ouRk93-otaGd3-otTmwB-oc5f62

|

||||

[3]:http://creativecommons.org/licenses/by-sa/4.0/

|

||||

[4]:https://en.wikipedia.org/wiki/History_of_music

|

||||

[5]:https://opensource.com/article/16/12/soundtrack-open-source-music-players

|

||||

[6]:http://aqualung.jeremyevans.net/

|

||||

[7]:https://gnumdk.github.io/lollypop-web/

|

||||

[8]:https://gogglesmm.github.io/

|

||||

[9]:http://aqualung.jeremyevans.net/

|

||||

[10]:https://launchpad.net/+search?field.text=aqualung+ppa

|

||||

[11]:https://gnumdk.github.io/lollypop-web/

|

||||

[12]:https://launchpad.net/~gnumdk/+archive/ubuntu/lollypop

|

||||

[13]:https://copr.fedorainfracloud.org/coprs/gnumdk/lollypop/

|

||||

[14]:https://gogglesmm.github.io/

|

||||

[15]:https://launchpad.net/~s.jansen/+archive/ubuntu/gogglesmm

|

||||

[16]:http://www.guayadeque.org/

|

||||

[17]:http://www.nilsfrahm.com/works/felt/

|

||||

[18]:https://en.wikipedia.org/wiki/No_Protection_(Massive_Attack_album)

|

||||

[19]:https://www.youtube.com/watch?v=9TvgRb4wiB0

|

||||

[20]:https://www.youtube.com/watch?v=LCUv-hLN71c

|

||||

[21]:https://musicglue.com/bayonne/products/primitives---vinyl--/

|

||||

[22]:https://www.youtube.com/watch?v=WZ6xl6CKITE

|

||||

@ -0,0 +1,113 @@

|

||||

10 个应当了解的 Unikernel 开源项目

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

> unikernel 实质上是一个缩减的操作系统,它可以与应用程序结合成为一个 unikernel 程序,它通常在虚拟机中运行。下载《开放云指南》了解更多。

|

||||

|

||||

当涉及到操作系统、容器技术和 unikernel,趋势是朝着微型化发展。什么是 unikernel?unikernel 实质上是一个缩减的操作系统(特指 “unikernel”),它可以与应用程序结合成为一个 unikernel 程序, 它通常在虚拟机中运行。它们有时被称为库操作系统,因为它包含了使应用程序能够将硬件和网络协议与一组访问控制和网络层隔离的策略相结合使用的库。

|

||||

|

||||

在讨论云计算和 Linux 时容器常常会被提及,而 unikernel 也在做一些变革。容器和 unikernel 都不是新事物。在 20 世纪 90 年代就有类似 unikernel 的系统,如 Exokernel,而如今流行的 unikernel 系统则有 MirageOS 和 OSv。 Unikernel 程序可以独立使用并在异构环境中部署。它们可以促进专业化和隔离化服务,并被广泛用于在微服务架构中开发应用程序。

|

||||

|

||||

作为 unikernel 如何引起关注的一个例子,你可以看看 Docker 收购了[基于 Cambridge 的 Unikernel 系统][3],并且已在许多情况下在使用 unikernel。

|

||||

|

||||

unikernel,就像容器技术一样, 它剥离了非必需的的部分,因此它们对应用程序的稳定性、可用性以及安全性有非常积极的影响。在开源领域,它们也吸引了许多顶级,最具创造力的开发人员。

|

||||

|

||||

Linux 基金会最近[宣布][4]发布了其 2016 年度报告[开放云指南:当前趋势和开源项目指南][5]。这份第三年度的报告全面介绍了开放云计算的状况,并包含了一节关于 unikernel 的内容。你现在可以[下载该报告][6]。它汇总并分析研究、描述了容器、unikernel 的发展趋势,已经它们如何重塑云计算的。该报告提供了对当今开放云环境中心的各类项目的描述和链接。

|

||||

|

||||

在本系列文章中,我们将按类别分析指南中提到的项目,为整体类别的演变提供了额外的见解。下面, 你将看到几个重要 unikernel 项目的列表及其影响,以及它们的 GitHub 仓库的链接, 这些都是从开放云指南中收集到的:

|

||||

|

||||

### [ClickOS][7]

|

||||

|

||||

ClickOS 是 NEC 的高性能虚拟化软件中间件平台,用于构建于 MiniOS/MirageOS 之上网络功能虚拟化(NFV)

|

||||

|

||||

- [ClickOS 的 GitHub][8]

|

||||

|

||||

### [Clive][9]

|

||||

|

||||

Clive 是用 Go 编写的一个操作系统,旨在工作于分布式和云计算环境中。

|

||||

|

||||

### [HaLVM][10]

|

||||

|

||||

Haskell 轻量级虚拟机(HaLVM)是 Glasgow Haskell 编译器工具包的移植,它使开发人员能够编写可以直接在 Xen 虚拟机管理程序上运行的高级轻量级虚拟机。

|

||||

|

||||

- [HaLVM 的 GitHub][11]

|

||||

|

||||

### [IncludeOS][12]

|

||||

|

||||

IncludeOS 是在云中运行 C++ 服务的 unikernel 操作系统。它提供了一个引导加载程序、标准库以及运行服务的构建和部署系统。在 VirtualBox 或 QEMU 中进行测试,并在 OpenStack 上部署服务。

|

||||

|

||||

- [IncludeOS 的 GitHub][13]

|

||||

|

||||

### [Ling][14]

|

||||

|

||||

Ling 是一个用于构建超级可扩展云的 Erlang 平台,可直接运行在 Xen 虚拟机管理程序之上。它只运行三个外部库 (没有 OpenSSL),并且文件系统是只读的,以避免大多数攻击。

|

||||

|

||||

- [Ling 的 GitHub][15]

|

||||

|

||||

### [MirageOS][16]

|

||||

|

||||

MirageOS 是在 Linux 基金会的 Xen 项目下孵化的库操作系统。它使用 OCaml 语言构建的 unikernel 可以用于各种云计算和移动平台上安全的高性能网络应用。代码可以在诸如 Linux 或 MacOS X 等普通的操作系统上开发,然后编译成在 Xen 虚拟机管理程序下运行的完全独立的专用 Unikernel。

|

||||

|

||||

- [MirageOS 的 GitHub][17]

|

||||

|

||||

### [OSv][18]

|

||||

|

||||

OSv 是 Cloudius Systems 为云设计的开源操作系统。它支持用 Java、Ruby(通过 JRuby)、JavaScript(通过 Rhino 和 Nashorn)、Scala 等编写程序。它运行在 VMware、VirtualBox、KVM 和 Xen 虚拟机管理程序上。

|

||||

|

||||

- [OSV 的 GitHub][19]

|

||||

|

||||

### [Rumprun][20]

|

||||

|

||||

Rumprun 是一个可用于生产环境的 unikernel,它使用 rump 内核提供的驱动程序,添加了 libc 和应用程序环境,并提供了一个工具链,用于将现有的 POSIX-y 程序构建为 Rumprun unikernel。它适用于 KVM 和 Xen 虚拟机管理程序和裸机,并支持用 C、C ++、Erlang、Go、Java、JavaScript(Node.js)、Python、Ruby、Rust 等编写的程序。

|

||||

|

||||

- [Rumprun 的 GitHub][21]

|

||||

|

||||

### [Runtime.js][22]

|

||||

|

||||

Runtime.js 是用于在云上运行 JavaScript 的开源库操作系统(unikernel),它可以与应用程序捆绑在一起,并部署为轻量级和不可变的 VM 镜像。它基于 V8 JavaScript 引擎,并使用受 Node.js 启发的事件驱动和非阻塞 I/O 模型。KVM 是唯一支持的虚拟机管理程序。

|

||||

|

||||

- [Runtime.js 的 GitHub] [23]

|

||||

|

||||

### [UNIK][24]

|

||||

|

||||

Unik 是 EMC 推出的工具,可以将应用程序源编译为 unikernel(轻量级可引导磁盘镜像)而不是二进制文件。它允许应用程序在各种云提供商、嵌入式设备(IoT) 以及开发人员的笔记本或工作站上安全地部署,资源占用很少。它支持多种 unikernel 类型、处理器架构、管理程序和编排工具,包括 Cloud Foundry、Docker 和 Kubernetes。[Unik 的 GitHub] [25]

|

||||

|

||||

(题图:Pixabay)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/news/open-cloud-report/2016/guide-open-cloud-age-unikernel

|

||||

|

||||

作者:[SAM DEAN][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/sam-dean

|

||||

[1]:https://www.linux.com/licenses/category/creative-commons-zero

|

||||

[2]:https://www.linux.com/files/images/unikernelsjpg-0

|

||||

[3]:http://www.infoworld.com/article/3024410/application-virtualization/docker-kicks-off-unikernel-revolution.html

|

||||

[4]:https://www.linux.com/blog/linux-foundation-issues-2016-guide-open-source-cloud-projects

|

||||

[5]:http://ctt.marketwire.com/?release=11G120876-001&id=10172077&type=0&url=http%3A%2F%2Fgo.linuxfoundation.org%2Frd-open-cloud-report-2016-pr

|

||||

[6]:http://go.linuxfoundation.org/l/6342/2016-10-31/3krbjr

|

||||

[7]:http://cnp.neclab.eu/clickos/

|

||||

[8]:https://github.com/cnplab/clickos

|

||||

[9]:http://lsub.org/ls/clive.html

|

||||

[10]:https://galois.com/project/halvm/

|

||||

[11]:https://github.com/GaloisInc/HaLVM

|

||||

[12]:http://www.includeos.org/

|

||||

[13]:https://github.com/hioa-cs/IncludeOS

|

||||

[14]:http://erlangonxen.org/

|

||||

[15]:https://github.com/cloudozer/ling

|

||||

[16]:https://mirage.io/

|

||||

[17]:https://github.com/mirage/mirage

|

||||

[18]:http://osv.io/

|

||||

[19]:https://github.com/cloudius-systems/osv

|

||||

[20]:http://rumpkernel.org/

|

||||

[21]:https://github.com/rumpkernel/rumprun

|

||||

[22]:http://runtimejs.org/

|

||||

[23]:https://github.com/runtimejs/runtime

|

||||

[24]:http://dojoblog.emc.com/unikernels/unik-build-run-unikernels-easy/

|

||||

[25]:https://github.com/emc-advanced-dev/unik

|

||||

103

published/20170120 lxc exec vs ssh.md

Normal file

103

published/20170120 lxc exec vs ssh.md

Normal file

@ -0,0 +1,103 @@

|

||||

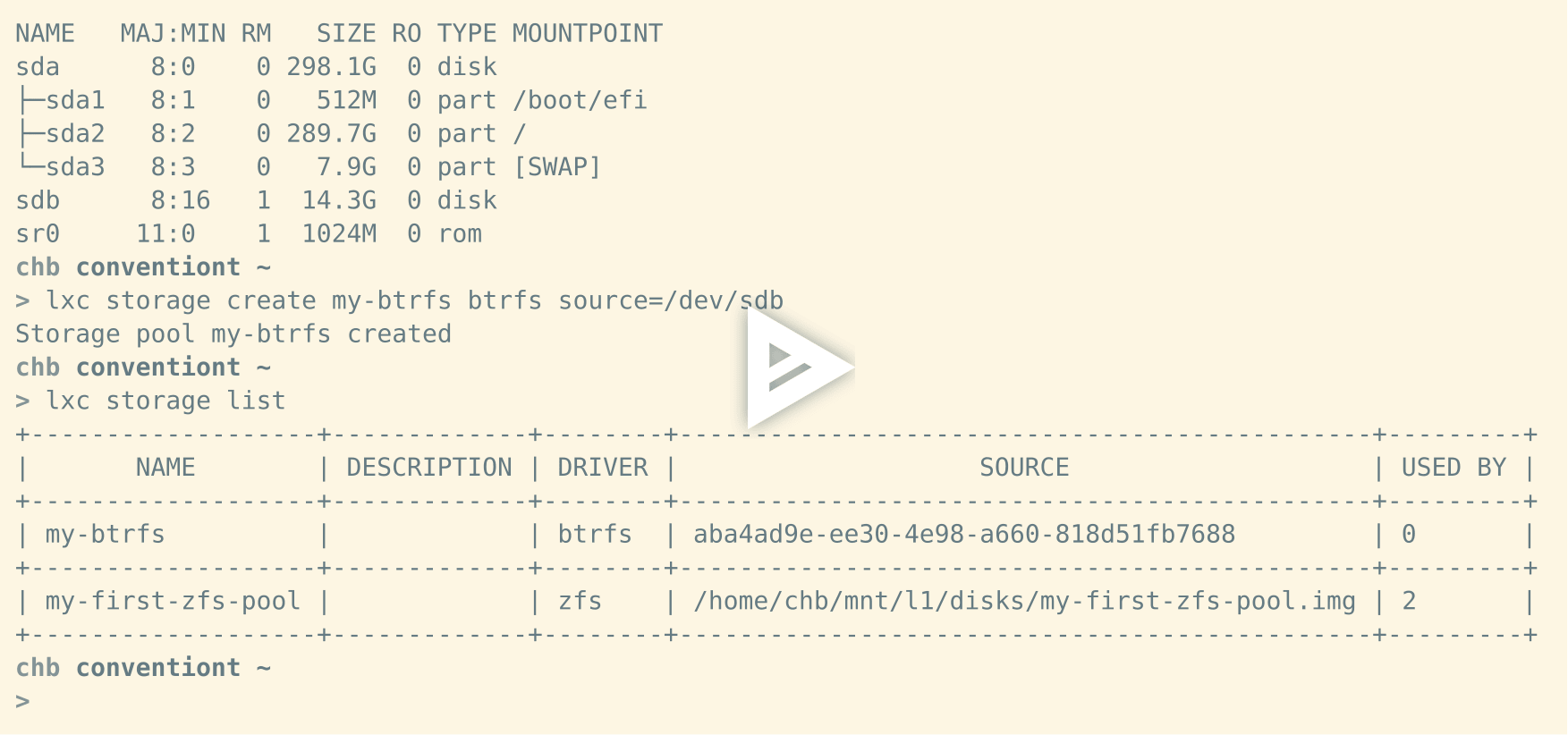

lxc exec 介绍

|

||||

============================================================

|

||||

|

||||

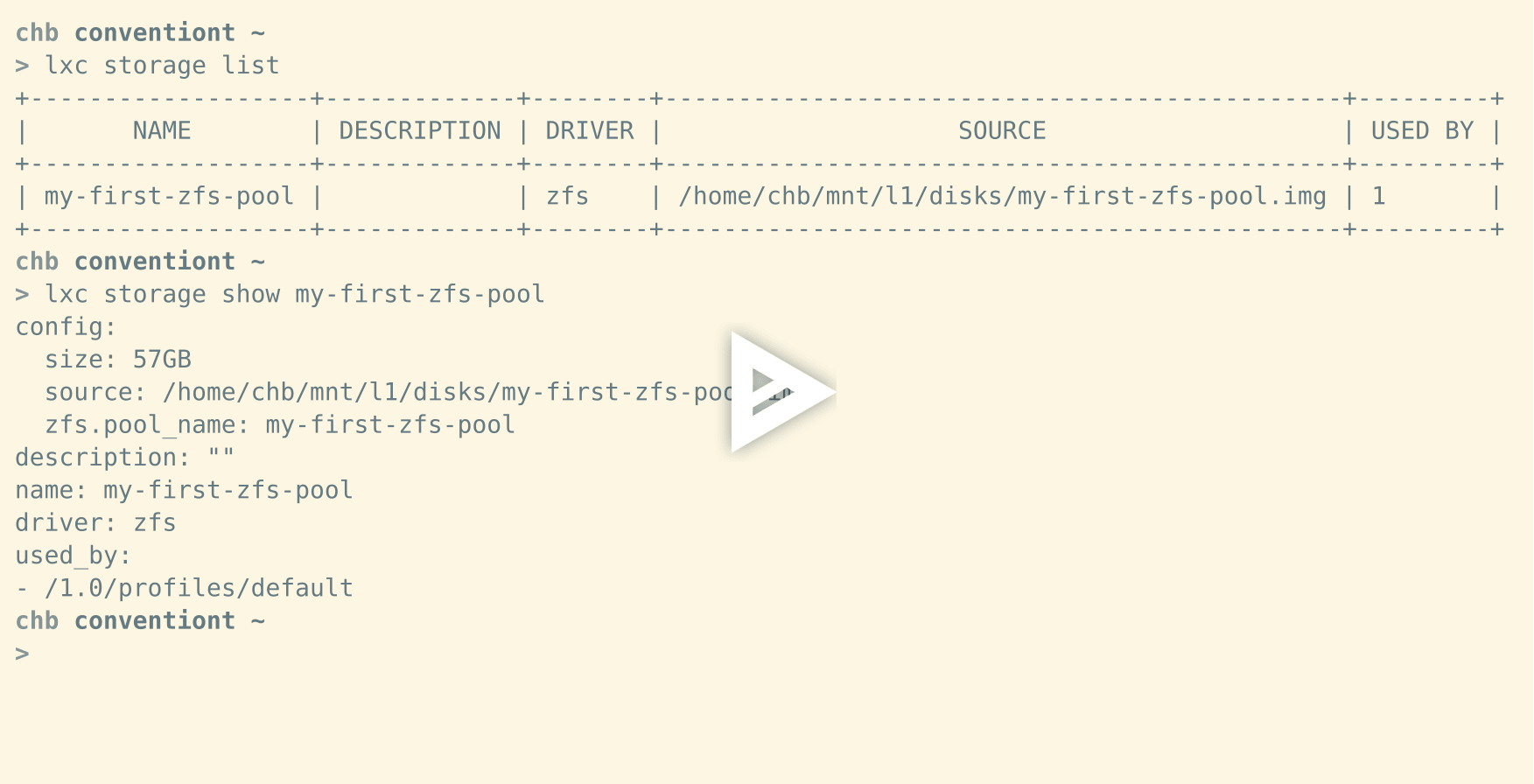

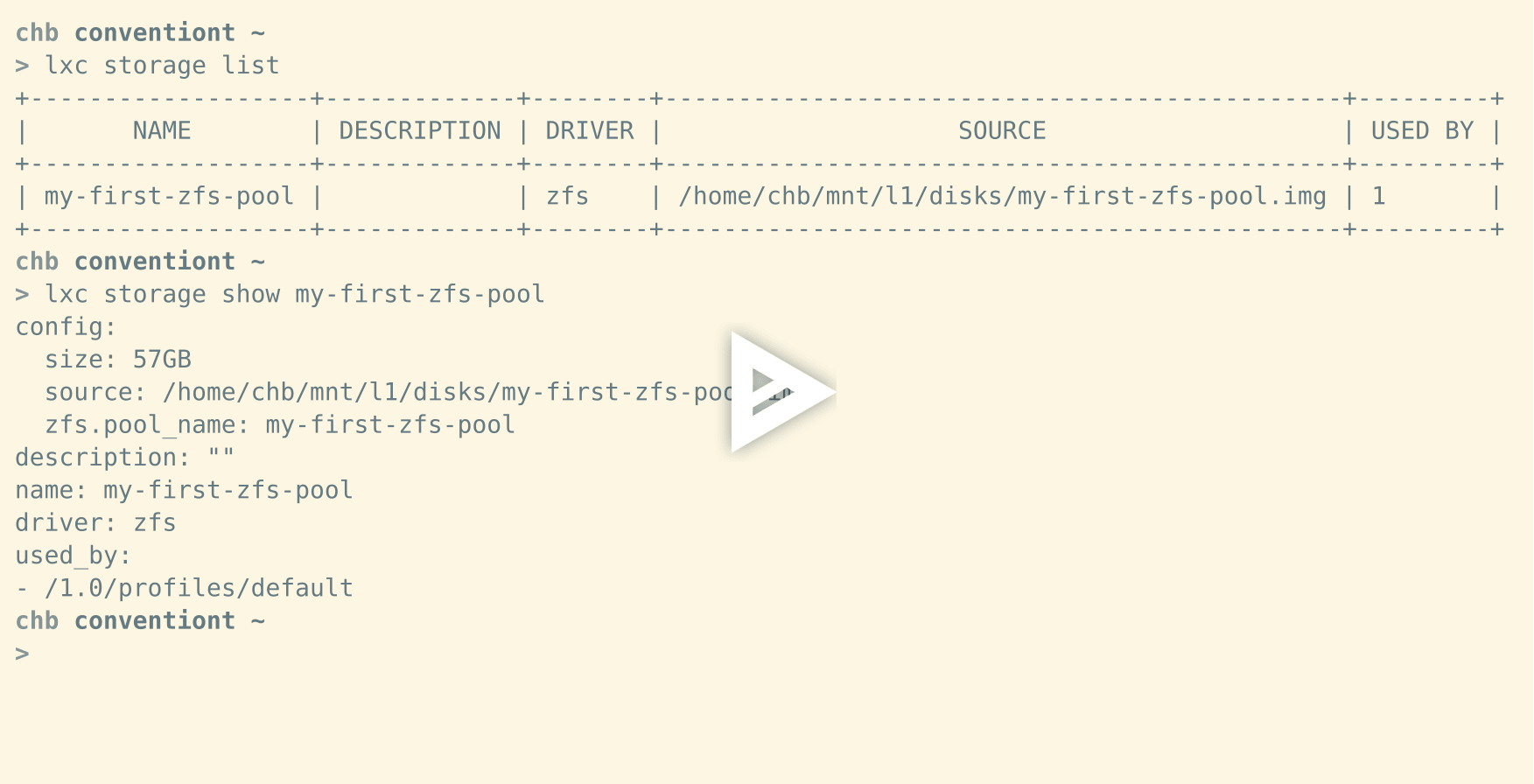

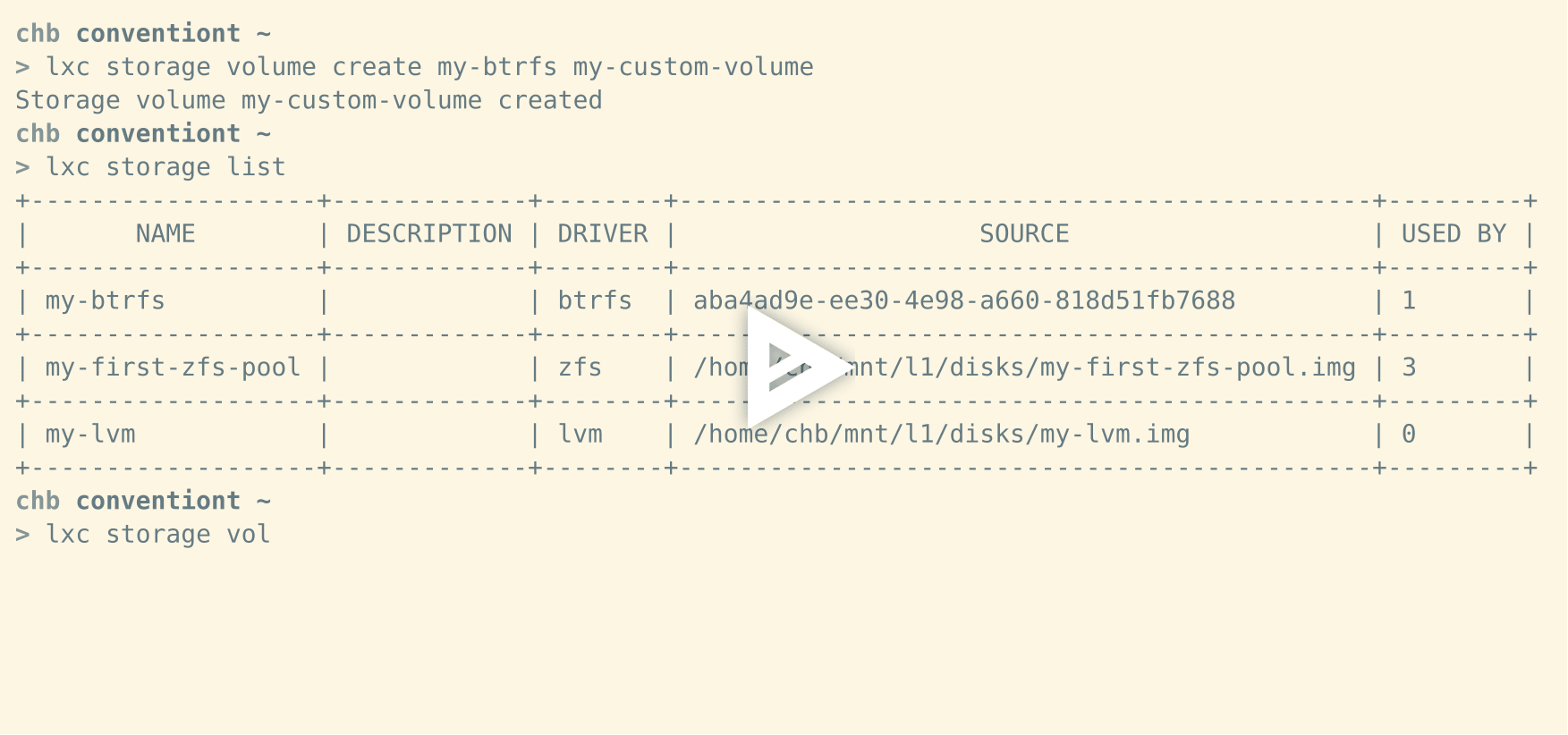

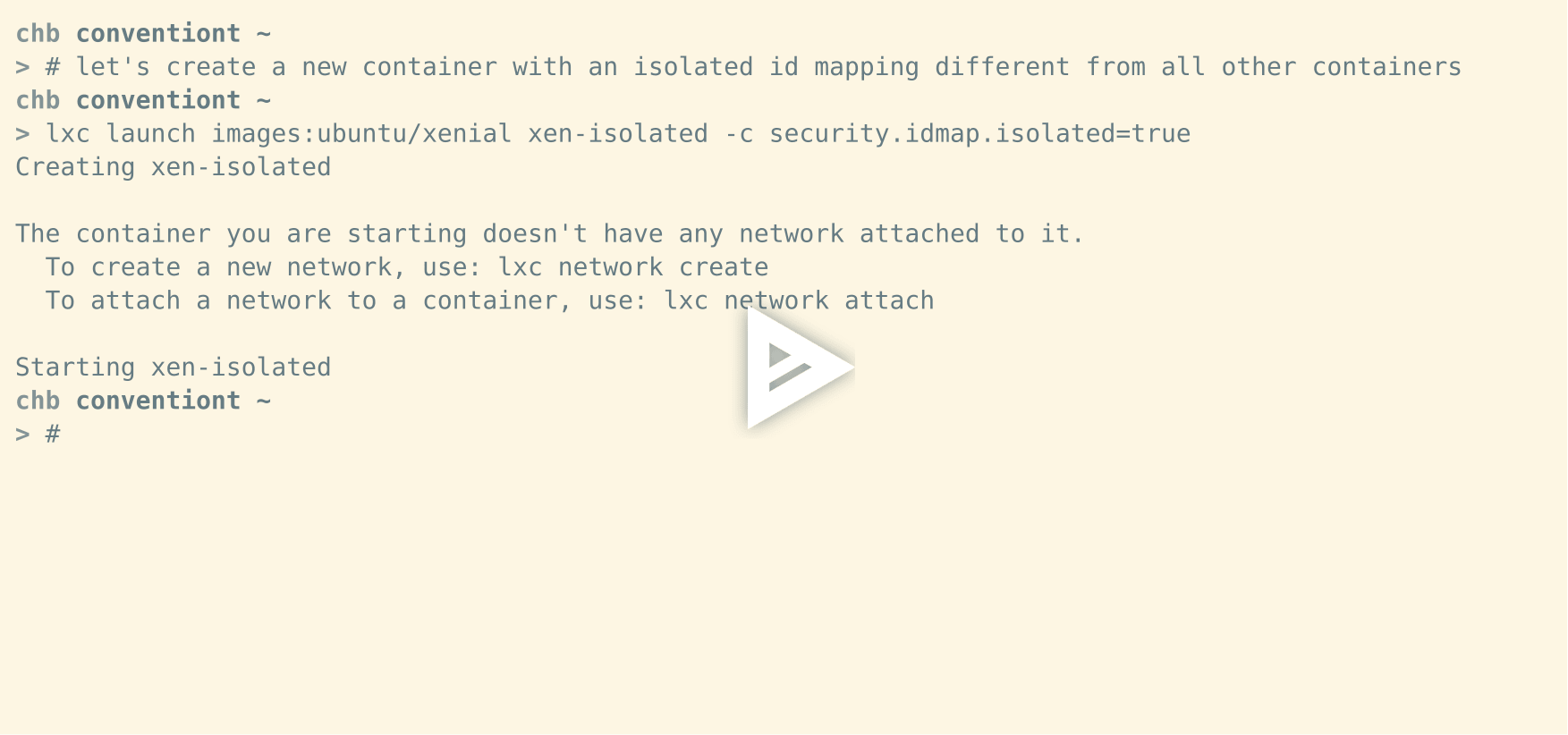

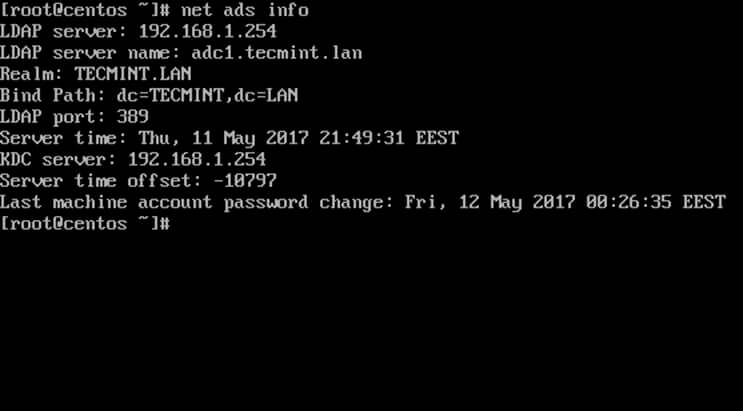

最近,我对 `lxc exec` 进行了几个改进。如果你不知道它的话我介绍一下,`lxc exec` 是 [LXD][1] 的客户端工具,使用 [LXD][2] [客户端 api][3] 与 LXD 守护程序通信,并执行用户想要执行的各种程序,以下是你可以使用的一个例子:

|

||||

|

||||

[][4]

|

||||

|

||||

我们的主要目标之一就是使 `lxc exec` 与 `ssh` 类似,因为它是交互式或非交互式远程运行命令的标准。这使得 把 `lxc exec` 做得很好变得有点棘手。

|

||||

|

||||

### 1、 处理后台任务

|

||||

|

||||

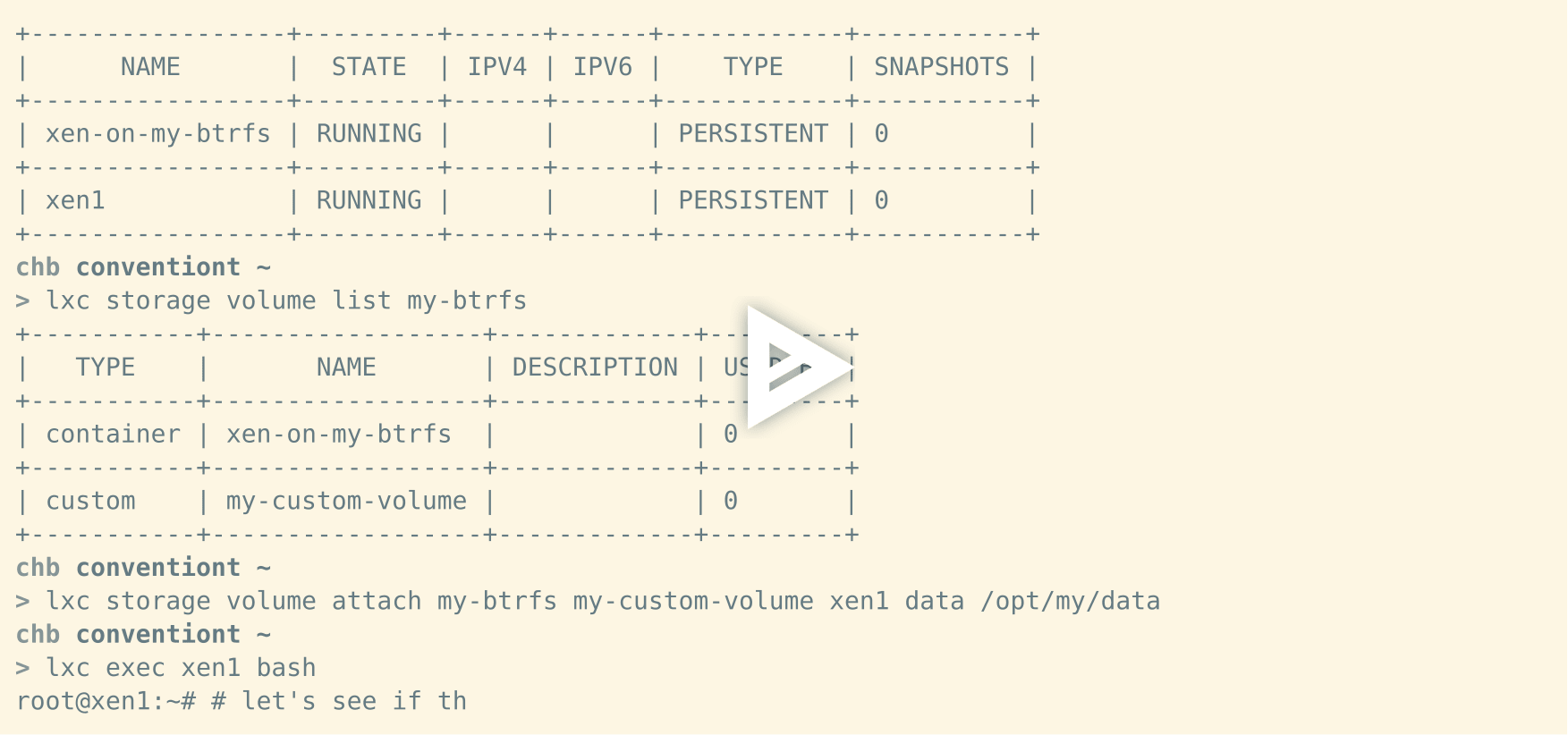

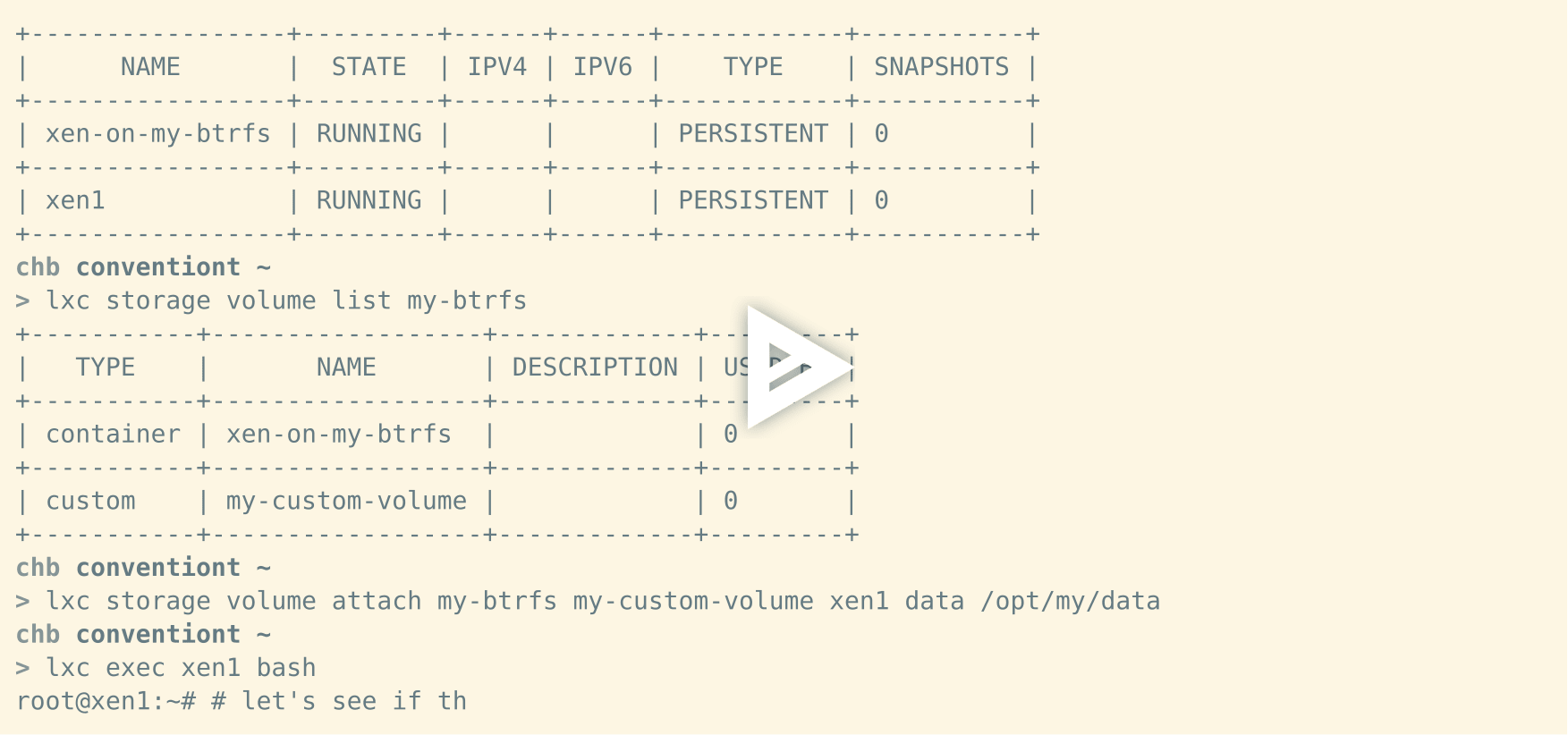

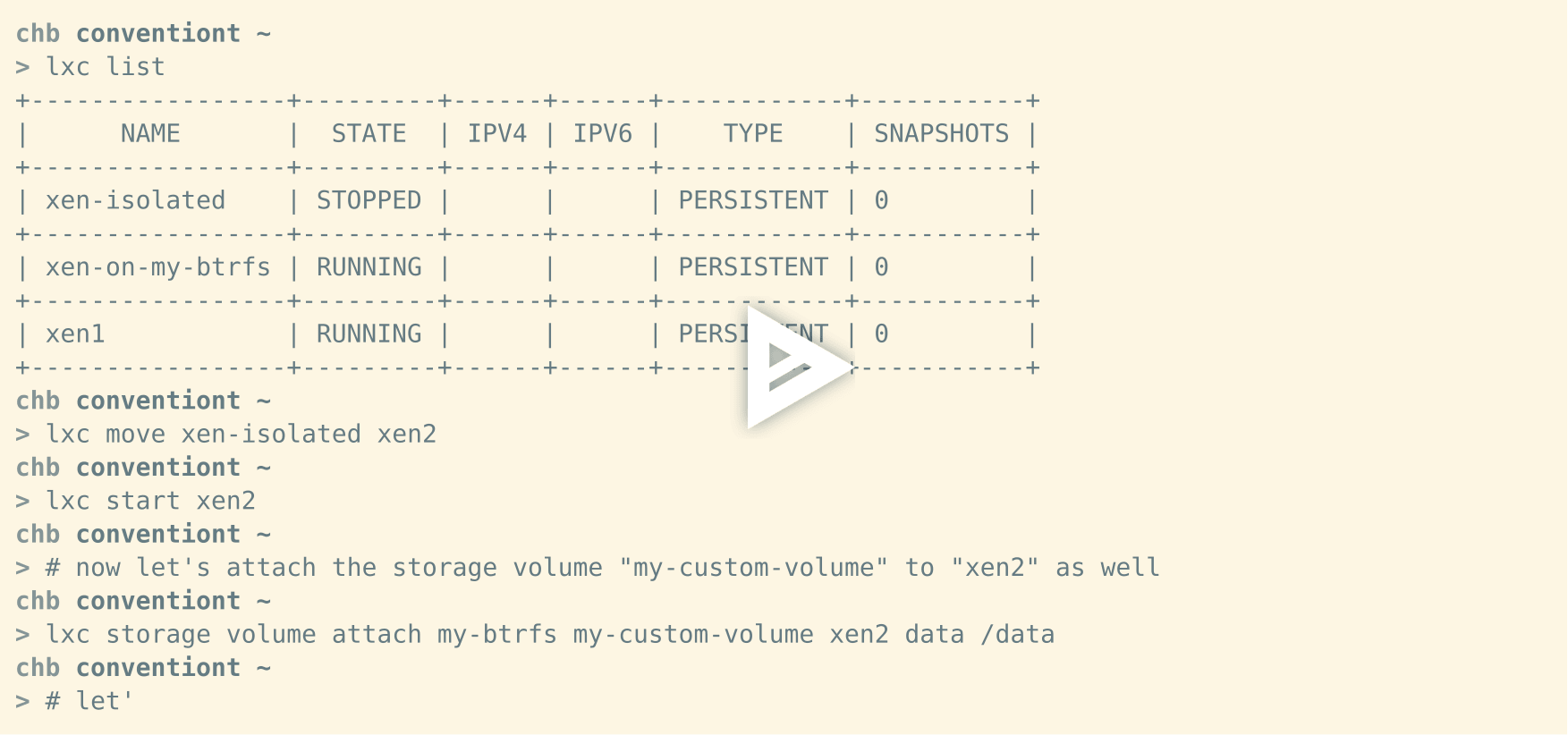

一个长期存在的问题当然是如何正确处理后台任务。这是一个关于 [LXD][5] 2.7 实例的问题的例子:

|

||||

|

||||

[][6]

|

||||

|

||||

你可以看到,在后台执行任务将导致 `lxc exec` 无法退出。许多命令可以触发此问题:

|

||||

|

||||

```

|

||||

chb@conventiont|~

|

||||

> lxc exec zest1 bash

|

||||

root@zest1:~# yes &

|

||||

y

|

||||

y

|

||||

y

|

||||

.

|

||||

.

|

||||

.

|

||||

```

|

||||

|

||||

现在没有什么能救你了。`yes` 将会永远直接写入`stdout`。

|

||||

|

||||

问题的根源在于 `stdout` 是一直打开着的,但这是必要的,因为它用以确保用户所启动的进程写入的任何数据实际上都是通过我们建立的 websocket 连接读取并发回的。

|

||||

|

||||

假如你想这样,运行一个 shell 会话,然后在后台运行一个进程,并马上退出 shell。对不起,它并不能如预期那样。

|

||||

|

||||

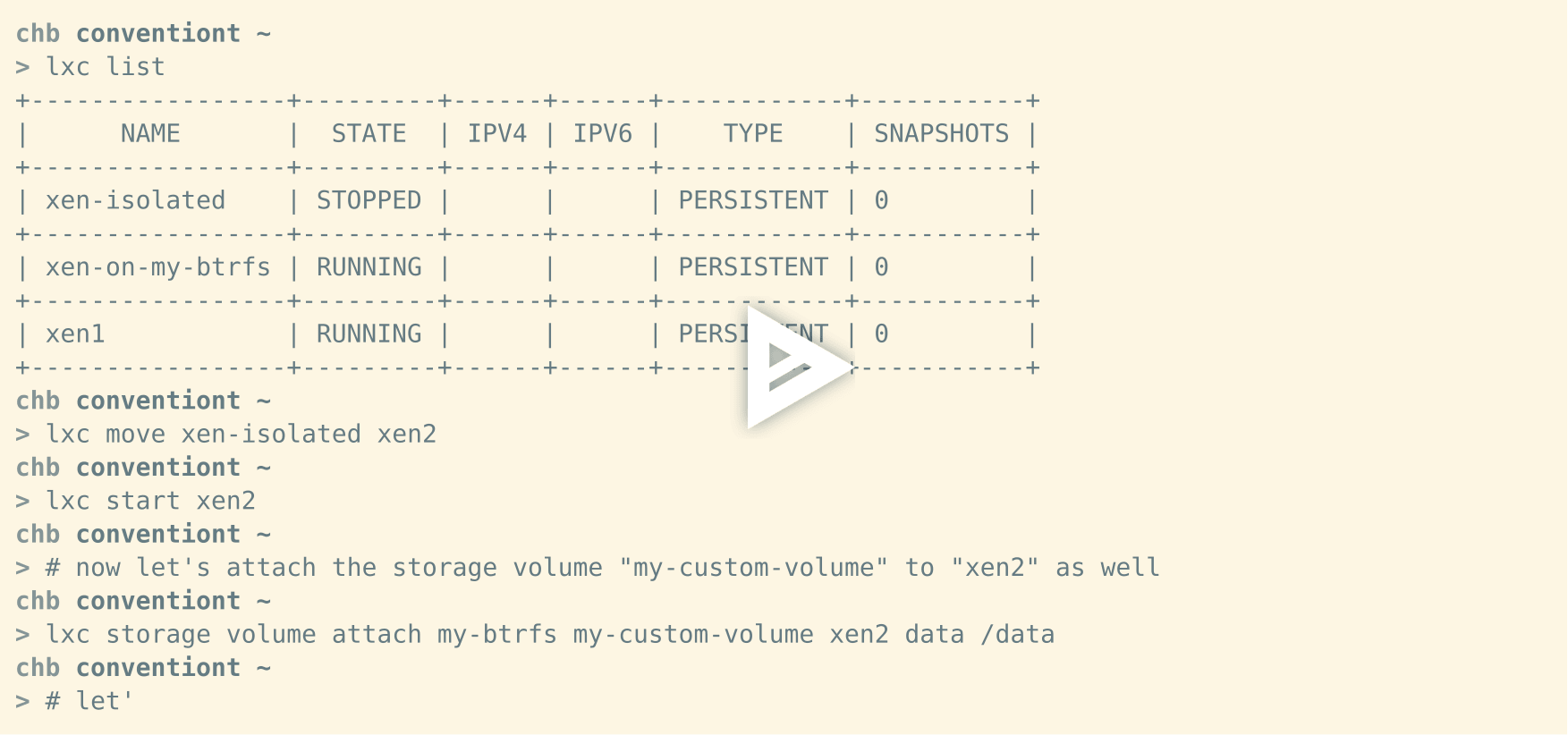

第一种并且原始的方法是一旦你检测到前台程序(例如 shell)已经退出就直接关闭 `stdout`。但这不像想得那么好,当你运行快速执行程序时,这个问题会变得明显,比如:

|

||||

|

||||

```

|

||||

lxc exec -- ls -al /usr/lib

|

||||

```

|

||||

|

||||

这里 `lxc exec` 进程(和相关的 `forkexec` 进程。但现在不要考虑它,只要记住 `Go` + `setns()` 不相往来就行了……)会在 `stdout` 中所有的_缓冲_数据被读取之前退出。这种情况下将会导致截断输出,没有人想要这样。在尝试使用几个方法来解决问题之后,包括禁用 pty 缓冲(我告诉你,这不太漂亮,也没有如预期工作。)和其他奇怪的思路,我设法通过几个 `poll()` “技巧”(在某种意义上说一个“技巧”)解决了这个问题。现在你终于可以运行后台任务,并且可以完全退出了。如图:

|

||||

|

||||

[][7]

|

||||

|

||||

### 2、 报告由信号引起的退出码

|

||||

|

||||

`ssh` 是一个很棒的工具。但有一件事,我一直以来不喜欢当 ssh 运行的命令接收到一个信号时, `ssh` 总是会报告 `-1`,也就是退出码 `255`。当你想要了解导致程序终止的信号时,这很烦人。这就是为什么我最近实施标准 shell 所使用的惯例 `128 + n` 来报告任何由信号导致的退出,其中 `n` 被定义为导致执行程序退出的信号量。例如,在 `SIGKILL` 信号上,你会看到 `128 + SIGKILL = 137`(计算其他致命信号的退出码作为读者的练习)。所以你可以这么做:

|

||||

|

||||

```

|

||||

chb@conventiont|~

|

||||

> lxc exec zest1 sleep 100

|

||||

```

|

||||

|

||||

现在,将 `SIGKILL` 发送到执行程序(不是 `lxc exec`本身,因为 `SIGKILL` 不可转发)。

|

||||

|

||||

```

|

||||

kill -KILL $(pidof sleep 100)

|

||||

```

|

||||

|

||||

最后检查你程序的退出码:

|

||||

|

||||

```

|

||||

chb@conventiont|~

|

||||

> echo $?

|

||||

137

|

||||

```

|

||||

|

||||

瞧。这显然只有当 a) 退出码没有超过 `8` 位计算壁垒,b)当执行程序不使用 `137` 来表示成功(这可真……有趣?!)。这两个论点似乎对我来说都不太有说服力。前者因为致命信号量不_应该_超过这个范围。后者因为(i)这是用户问题,(ii)这些退出代码实际上是保留的(我是_这样认为_。),(iii)你在本地或其他上面运行程序时会遇到同样的问题。

|

||||

|

||||

我看到的主要优点是这能够回报执行程序细粒度的退出状态。注意,我们不会报告_所有_被信号杀死的程序实例。比如说,当你的程序能够处理 `SIGTERM` 并且完全退出时,[LXD][8] 没有简单的方法来检测到这个情况并报告说这个程序被信号杀死了。你只会简单地收到退出码 `0`。

|

||||

|

||||

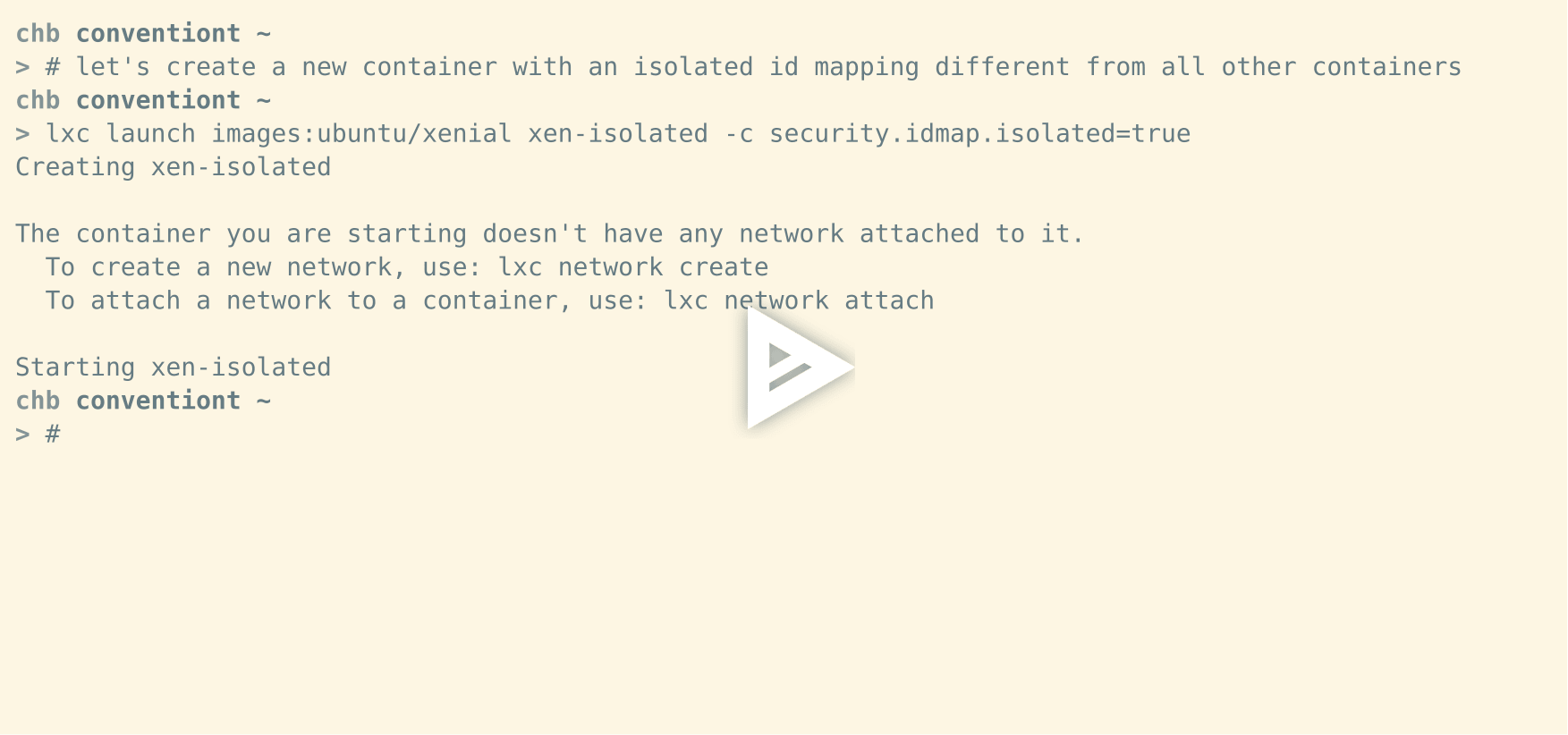

### 3、 转发信号

|

||||

|

||||

这可能不太有趣(或者也许不是,谁知道呢),但我发现它非常有用。正如你在 `SIGKILL` 案例中看到的那样,我明确地指出,必须将 `SIGKILL` 发送到执行程序,而不是 `lxc exec`命令本身。这是因为 `SIGKILL` 在程序中无法处理。程序可以做的唯一的事情就是去死,像现在这样……像这个例子……马上(你明白了了吧……)。但是程序可以处理很多其他信号 `SIGTERM`、`SIGHUP',当然也可以处理 `SIGUSR1` 和 `SIGUSR2`。因此,当你发送可以被 `lxc exec` 处理而不是被执行程序处理的信号时,较新版本的 [LXD][9] 会将信号转发到执行进程。这在脚本中非常方便。

|

||||

|

||||

无论如何,我希望你觉得这篇小小的 `lxc exec` 文章/胡言乱语有用。享受 [LXD][10] 吧,这是与一只疯狂的美丽的野兽玩耍。请试试在线实验:[https://linuxcontainers.org/lxd/try-it/][11],对于开发人员看看这里:[https://github.com/lxc/lxd][12] 并给我们补丁。

|

||||

|

||||

我们不要求签署任何 CLA,我们遵循内核风格,只要其中有 “Signed-off-by” 这行就好。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://cbrauner.wordpress.com/2017/01/20/lxc-exec-vs-ssh/

|

||||

|

||||

作者:[brauner][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://cbrauner.wordpress.com

|

||||

[1]:https://github.com/lxc/lxd

|

||||

[2]:https://github.com/lxc/lxd

|

||||

[3]:https://github.com/lxc/lxd/blob/master/client.go

|

||||

[4]:https://asciinema.org/a/100035?autoplay=1

|

||||

[5]:https://github.com/lxc/lxd

|

||||

[6]:https://asciinema.org/a/100040?autoplay=1

|

||||

[7]:https://asciinema.org/a/100043?autoplay=1

|

||||

[8]:https://github.com/lxc/lxd

|

||||

[9]:https://github.com/lxc/lxd

|

||||

[10]:https://github.com/lxc/lxd

|

||||

[11]:https://linuxcontainers.org/lxd/try-it/

|

||||

[12]:https://github.com/lxc/lxd

|

||||

@ -0,0 +1,161 @@

|

||||

用 R 收集和映射推特数据的初学者向导

|

||||

============================================================

|

||||

|

||||

> 学习使用 R 的 twitteR 和 leaflet 包, 你就可以把任何话题的推文定位画在地图上。

|

||||

|

||||

|

||||

|

||||

当我开始学习 R ,我也需要学习如何出于研究的目的地收集推特数据并对其进行映射。尽管网上关于这个话题的信息很多,但我发觉难以理解什么与收集并映射推特数据相关。我不仅是个 R 新手,而且对各种教程中技术名词不熟悉。但尽管困难重重,我成功了!在这个教程里,我将以一种新手程序员都能看懂的方式来攻略如何收集推特数据并将至展现在地图中。

|

||||

|

||||

### 创建应用程序

|

||||

|

||||

如果你没有推特帐号,首先你需要 [注册一个][19]。然后,到 [apps.twitter.com][20] 创建一个允许你收集推特数据的应用程序。别担心,创建应用程序极其简单。你创建的应用程序会与推特应用程序接口(API)相连。 想象 API 是一个多功能电子个人助手。你可以使用 API 让其它程序帮你做事。这样一来,你可以接入推特 API 令其收集数据。只需确保不要请求太多,因为推特数据请求次数是有[限制][21] 的。

|

||||

|

||||