Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-03-21 02:10:11 +08:00)

Merge remote-tracking branch 'LCTT/master'

Commit bb9a7f9808

@ -1,13 +1,13 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11916-1.html)

|

||||

[#]: subject: (What is WireGuard? Why Linux Users Going Crazy Over it?)

|

||||

[#]: via: (https://itsfoss.com/wireguard/)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

|

||||

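-What is WireGuard? Why Are Linux Users Going Crazy About It?
+What is WireGuard? Why Are Linux Users Going Crazy Over It?
======

From regular Linux users to Linux creator [Linus Torvalds][1], everyone is intrigued by WireGuard. What is WireGuard, and what makes it so special?

@@ -18,7 +18,6 @@

[WireGuard][3] is an easy-to-configure, fast, and secure open source [VPN][4] that uses state-of-the-art cryptography. Its aim is to provide a faster, simpler, and leaner general-purpose VPN that can be easily deployed on anything from low-end devices like the Raspberry Pi to high-end servers.

Most other solutions, such as [IPsec][5] and OpenVPN, were developed decades ago. Security researcher and kernel developer Jason Donenfeld realized that they were slow and difficult to configure and manage properly.

This led him to create a new open source VPN protocol and solution that is faster, more secure, and easier to deploy and manage.

@@ -31,31 +30,31 @@ WireGuard was originally developed for Linux, but is now available for Windows, macOS, BS

Apart from being cross-platform, one of WireGuard's biggest advantages is how easy it is to deploy. Configuring and deploying WireGuard is as easy as configuring and using SSH.

-Look at the [WireGuard setup guide][7]. You install WireGuard, generate public and private keys (just like SSH), set up firewall rules, and start the service. Now compare it with the [OpenVPN setup guide][8]. There is far too much to do.
+Look at the [WireGuard setup guide][7]. You install WireGuard, generate public and private keys (just like SSH), set up firewall rules, and start the service. Now compare it with the [OpenVPN setup guide][8]: there is far too much to do.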

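The key-generation step above produces a Curve25519 key pair; on a real system you would run `wg genkey` and pipe its output into `wg pubkey`. To show what those two commands compute, here is a minimal Python sketch of the X25519 function from RFC 7748, assuming WireGuard's usual convention of base64-encoded 32-byte keys. The helper names (`x25519`, `generate_keypair`) are illustrative and not part of any WireGuard tool, and the code is not constant-time, so treat it as a teaching aid only.

```python
import base64
import os

# Sketch only: a readable, NOT constant-time X25519 (RFC 7748). Never use it for real keys.
P = 2**255 - 19                          # the Curve25519 field prime
A24 = 121665                             # ladder constant (486662 - 2) / 4 from RFC 7748
BASE_POINT = (9).to_bytes(32, "little")  # u = 9, the standard base point


def clamp(scalar: bytes) -> int:
    """Clamp a 32-byte secret as RFC 7748 requires and decode it little-endian."""
    b = bytearray(scalar)
    b[0] &= 248   # clear the 3 low bits (a multiple of the cofactor 8)
    b[31] &= 127  # clear bit 255
    b[31] |= 64   # set bit 254
    return int.from_bytes(b, "little")


def x25519(scalar: bytes, u: bytes) -> bytes:
    """Montgomery-ladder scalar multiplication on Curve25519."""
    k = clamp(scalar)
    x1 = int.from_bytes(u, "little") & ((1 << 255) - 1)  # mask the top bit of u
    x2, z2, x3, z3 = 1, 0, x1, 1
    swap = 0
    for t in reversed(range(255)):
        bit = (k >> t) & 1
        swap ^= bit
        if swap:  # conditional swap, written as a branch for readability
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def generate_keypair() -> tuple[str, str]:
    """Return (private, public) as base64 strings, mimicking wg genkey / wg pubkey."""
    private = bytearray(os.urandom(32))
    private[0] &= 248
    private[31] = (private[31] & 127) | 64
    public = x25519(bytes(private), BASE_POINT)
    return base64.b64encode(private).decode(), base64.b64encode(public).decode()


if __name__ == "__main__":
    priv, pub = generate_keypair()
    print("PrivateKey =", priv)
    print("PublicKey  =", pub)
```

Running it prints a fresh key pair in the same base64 form that WireGuard configuration files use. Because `x25519(a, x25519(b, BASE_POINT))` equals `x25519(b, x25519(a, BASE_POINT))`, two peers only ever need to exchange public keys; for real deployments, use `wg genkey` and `wg pubkey`.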

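-Another benefit of WireGuard is its lean codebase of just 4,000 lines of code. Compare that with the 100,000 lines of code in [OpenVPN][9] (another popular open source VPN). Clearly, debugging W ireGuard is much easier.
+Another benefit of WireGuard is its lean codebase of just 4,000 lines of code. Compare that with the 100,000 lines of code in [OpenVPN][9] (another popular open source VPN). Clearly, debugging WireGuard is much easier.

-Don't underestimate its simplicity. WireGuard supports all the state-of-the-art cryptography, such as the [Noise protocol framework][10], [Curve25519][11], [ChaCha20][12], [Poly1305][13], [BLAKE2][14], [SipHash24][15], [HKDF][16], and secure trusted constructions.
+Don't underestimate it because of its simplicity. WireGuard supports all the state-of-the-art cryptography, such as the [Noise protocol framework][10], [Curve25519][11], [ChaCha20][12], [Poly1305][13], [BLAKE2][14], [SipHash24][15], [HKDF][16], and secure trusted constructions.

Since WireGuard runs in [kernel space][17], it provides secure networking at high speed.

These are some of the reasons why WireGuard is becoming increasingly popular. Linux creator Linus Torvalds likes WireGuard so much that he merged it into [Linux Kernel 5.6][18]:

-> Can I just once again state my love for it and hope it gets merged soon? Maybe the code isn't perfect, but I've ignored that, and compared to the horrors of OpenVPN and IPSec, it's a work of art.
+> Can I just once again state my love for it and hope it gets merged soon? Maybe the code isn't perfect, but I don't care, and compared to the horrors of OpenVPN and IPSec, it's a work of art.
>
> Linus Torvalds

### If WireGuard is already available, what's the fuss about including it in the Linux kernel?

-This might confuse new Linux users. You know that you can install and configure a WireGuard VPN server on Linux, but at the same time you see news that Linux Kernel 5.6 will include WireGuard. Let me explain.
+This might confuse new Linux users. You know that you can install and configure a WireGuard VPN server on Linux, but at the same time you also see news that Linux Kernel 5.6 will include WireGuard. Let me explain.

-Currently, you can install WireGuard on Linux as a [kernel module][19]. Regular applications such as VLC and GIMP are installed on top of the Linux kernel (in [user space][20]), not inside it.
+Currently, you can install WireGuard on Linux as a [kernel module][19], whereas regular applications such as VLC and GIMP are installed on top of the Linux kernel (in [user space][20]), not inside it.

-When you install WireGuard as a kernel module, you are basically modifying the Linux kernel yourself and adding code to it. Starting with the 5.6 kernel, you won't need to add the kernel module manually. It will be included in the kernel by default.
+When you install WireGuard as a kernel module, it basically requires you to modify the Linux kernel yourself and add code to it. Starting with the 5.6 kernel, you won't need to add the kernel module manually. It will be included in the kernel by default.

The inclusion of WireGuard in the 5.6 kernel will most likely [extend the adoption of WireGuard and thus change the current VPN scene][21].

-**Conclusion**
+### Conclusion

WireGuard is popular for good reasons. Some popular [privacy-focused VPNs][22] such as [Mullvad VPN][23] are already using WireGuard, and adoption is likely to grow in the near future.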

@ -68,7 +67,7 @@ via: https://itsfoss.com/wireguard/

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -96,4 +95,4 @@ via: https://itsfoss.com/wireguard/

|

||||

[20]: http://www.linfo.org/user_space.html

|

||||

[21]: https://www.zdnet.com/article/vpns-will-change-forever-with-the-arrival-of-wireguard-into-linux/

|

||||

[22]: https://itsfoss.com/best-vpn-linux/

|

||||

[23]: https://mullvad.net/en/

|

||||

[23]: https://mullvad.net/en/

|

||||

@ -1,32 +1,34 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11917-1.html)

|

||||

[#]: subject: (Dino is a Modern Looking Open Source XMPP Client)

|

||||

[#]: via: (https://itsfoss.com/dino-xmpp-client/)

|

||||

[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

|

||||

|

||||

Dino 是一个有着现代外观的开源 XMPP 客户端

|

||||

Dino:一个有着现代外观的开源 XMPP 客户端

|

||||

======

|

||||

|

||||

_**简介:Dino 是一个相对较新的开源 XMPP 客户端,它尝试提供良好的用户体验,同时鼓励注重隐私的用户使用 XMPP 发送消息。**_

|

||||

> Dino 是一个相对较新的开源 XMPP 客户端,它试图提供良好的用户体验,鼓励注重隐私的用户使用 XMPP 发送消息。

|

||||

|

||||

|

||||

|

||||

### Dino:一个开源 XMPP 客户端

|

||||

|

||||

![][1]

|

||||

|

||||

[XMPP][2] (可扩展通讯和表示协议) 是一个去中心化的网络模型,可促进即时消息传递和协作。去中心化意味着没有中央服务器可以访问你的数据。通信直接点对点。

|

||||

[XMPP][2](<ruby>可扩展通讯和表示协议<rt>eXtensible Messaging Presence Protocol</rt></ruby>) 是一个去中心化的网络模型,可促进即时消息传递和协作。去中心化意味着没有中央服务器可以访问你的数据。通信直接点对点。

|

||||

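To make the decentralized model a little more concrete: every XMPP address (JID) has the form `user@domain/resource`, and the domain part identifies which server is responsible for that user, so anyone can run a server for their own domain. The Python sketch below, using only the standard library, splits a JID and builds a minimal one-to-one `<message>` stanza of the kind specified in RFC 6120. The JIDs and function names are made up for illustration; a real client such as Dino also handles the XML stream, namespaces, TLS, authentication, and end-to-end encryption, none of which is shown here.

```python
import xml.etree.ElementTree as ET
from typing import NamedTuple, Optional


class JID(NamedTuple):
    local: str
    domain: str
    resource: Optional[str] = None


def parse_jid(jid: str) -> JID:
    """Split 'user@domain/resource' into its parts (no validation, sketch only)."""
    bare, _, resource = jid.partition("/")  # the first '/' starts the resource
    local, _, domain = bare.rpartition("@")
    return JID(local, domain, resource or None)


def chat_message(sender: str, recipient: str, text: str) -> str:
    """Build a minimal <message type='chat'> stanza; stream namespaces are omitted."""
    msg = ET.Element("message", {"from": sender, "to": recipient, "type": "chat"})
    ET.SubElement(msg, "body").text = text
    return ET.tostring(msg, encoding="unicode")


if __name__ == "__main__":
    recipient = "juliet@example.org/balcony"
    # The domain part tells the sender's server which server to contact.
    print(parse_jid(recipient).domain)  # example.org
    print(chat_message("romeo@example.net", recipient, "Hello from Dino!"))
```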

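-Some of us might call it "old-school" tech, perhaps because XMPP clients have usually had a very poor user experience, or simply because it takes time to get used to (or to set up).
+Some of us might call it “old-school” tech, perhaps because the user experience of XMPP clients is usually very poor, or simply because it takes time to get used to (or to set up).

-That's where [Dino[3] comes in as a modern XMPP client, offering a clean and crisp user experience without compromising your privacy.
+That's where [Dino][3] comes in as a modern XMPP client, offering a clean and crisp user experience without compromising your privacy.

### User Experience

![][4]

-Dino does try to improve the user experience of XMPP clients, but it is worth noting that its look and feel will depend to some extent on your Linux distribution. Your icon theme or GNOME theme may make your personal experience better or worse.
+Dino tries to improve the user experience of XMPP clients, but it is worth noting that its look and feel will depend to some extent on your Linux distribution. Your icon theme or GNOME theme may make your personal experience better or worse.

Technically, its user interface is very simple and easy to use. So, I suggest you take a look at some of the [best icon themes][5] and [GNOME themes][6] for Ubuntu to tweak Dino's look.

@@ -34,7 +36,7 @@ Dino does try to improve the user experience of XMPP clients, but it is worth noting that its

![Dino Screenshot][7]

-You can expect to use Dino as an alternative to Slack, [Signal][8], or [Wire][9] for your business or personal use.
+You can use Dino as an alternative to Slack, [Signal][8], or [Wire][9] for your business or personal use.

It offers all the essential features a messaging app needs. Let's take a look at what you get:

@@ -47,14 +49,10 @@ Dino does try to improve the user experience of XMPP clients, but it is worth noting that its
* Support for [OpenPGP][10] and [OMEMO][11] encryption
* Lightweight native desktop application

### Installing Dino on Linux

You may or may not find it listed in your software center. Dino provides ready-to-use binaries for Debian-based (deb) and Fedora-based (rpm) distributions.

**For Ubuntu:**

Dino is in Ubuntu's universe repository, and you can install it with the following command: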

```
sudo apt install dino-im
```

@@ -63,15 +61,15 @@ sudo apt install dino-im

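Similarly, you can find packages for other Linux distributions on its [GitHub distribution packages page][12].

-If you want the latest version, you can find Dino's **.deb** and **.rpm** packages (nightly builds) on [openSUSE's software page][13] to install on Linux.
+If you want the latest version, you can find Dino's **.deb** and **.rpm** packages (nightly builds) on [openSUSE's software page][13] to install on Linux.

In either case, head to its [GitHub page][14] or click the link below to visit the official website.

-[Download Dino][3]
+- [Download Dino][3]

-**Conclusion**
+### Conclusion

-It worked well without any issues (I tested it quickly while writing this article). I will try to explore more and hopefully cover XMPP in more articles to encourage users to use XMPP clients and servers for communication.
+I tested it quickly while writing this article, and it worked well without any issues. I will try to explore more and hopefully cover XMPP in more articles to encourage users to use XMPP clients and servers for communication.

What do you think of Dino? Would you recommend another open source XMPP client that might be better than Dino? Let me know your thoughts in the comments below.

@@ -82,7 +80,7 @@ via: https://itsfoss.com/dino-xmpp-client/
Author: [Ankush Das][a]
Selected by: [lujun9972][b]
Translated by: [geekpi](https://github.com/geekpi)
-Proofread by: [ReviewerID](https://github.com/校对者ID)
+Proofread by: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)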

@ -0,0 +1,64 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Google Cloud moves to aid mainframe migration)

|

||||

[#]: via: (https://www.networkworld.com/article/3528451/google-cloud-moves-to-aid-mainframe-migration.html)

|

||||

[#]: author: (Michael Cooney https://www.networkworld.com/author/Michael-Cooney/)

|

||||

|

||||

Google Cloud moves to aid mainframe migration
======
Google bought Cornerstone Technology, whose technology facilitates moving mainframe applications to the cloud.

Google Cloud this week bought Cornerstone Technology, a mainframe cloud-migration service firm, with an eye toward helping Big Iron customers move workloads to the private and public cloud.

Google said the Cornerstone technology – found in its [G4 platform][1] – will shape the foundation of its future mainframe-to-Google Cloud offerings and help mainframe customers modernize applications and infrastructure.

“Through the use of automated processes, Cornerstone’s tools can break down your Cobol, PL/1, or Assembler programs into services and then make them cloud native, such as within a managed, containerized environment,” wrote Howard Weale, Google’s director, Transformation Practice, in a [blog][3] about the buy.

“As the industry increasingly builds applications as a set of services, many customers want to break their mainframe monolith programs into either Java monoliths or Java microservices,” Weale stated.

Google Cloud’s Cornerstone service will:

* Develop a migration roadmap where Google will assess a customer’s mainframe environment and create a roadmap to a modern services architecture.
* Convert any language to any other language and any database to any other database to prepare applications for modern environments.
* Automate the migration of workloads to the Google Cloud.

“Easy mainframe migration will go a long way as Google attracts large enterprises to its cloud,” Matt Eastwood, senior vice president, Enterprise Infrastructure, Cloud, Developers and Alliances, at IDC, wrote in a statement.

The Cornerstone move is also part of Google’s effort to stay competitive in the face of mainframe-migration offerings from [Amazon Web Services][4], [IBM/RedHat][5] and [Microsoft][6].

While the idea of moving legacy applications off the mainframe might indeed be beneficial to a business, Gartner last year warned that such decisions should be taken very deliberately.

“The value gained by moving applications from the traditional enterprise platform onto the next ‘bright, shiny thing’ rarely provides an improvement in the business process or the company’s bottom line. A great deal of analysis must be performed and each cost accounted for,” Gartner stated in a report entitled [_Considering Leaving Legacy IBM Platforms? Beware, as Cost Savings May Disappoint, While Risking Quality_][7]. “Legacy platforms may seem old, outdated and due for replacement. Yet IBM and other vendors are continually integrating open-source tools to appeal to more developers while updating the hardware. Application leaders should reassess the capabilities and quality of these platforms before leaving them.”

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3528451/google-cloud-moves-to-aid-mainframe-migration.html

Author: [Michael Cooney][a]
Selected by: [lujun9972][b]
Translated by: [TranslatorID](https://github.com/译者ID)
Proofread by: [ReviewerID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.networkworld.com/author/Michael-Cooney/
[b]: https://github.com/lujun9972
[1]: https://www.cornerstone.nl/solutions/modernization
[2]: https://www.networkworld.com/newsletters/signup.html
[3]: https://cloud.google.com/blog/topics/inside-google-cloud/helping-customers-migrate-their-mainframe-workloads-to-google-cloud
[4]: https://aws.amazon.com/blogs/enterprise-strategy/yes-you-should-modernize-your-mainframe-with-the-cloud/
[5]: https://www.networkworld.com/article/3438542/ibm-z15-mainframe-amps-up-cloud-security-features.html
[6]: https://azure.microsoft.com/en-us/migration/mainframe/
[7]: https://www.gartner.com/doc/reprints?id=1-6L80XQJ&ct=190429&st=sb
[8]: https://www.facebook.com/NetworkWorld/
[9]: https://www.linkedin.com/company/network-world

@ -0,0 +1,57 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Japanese firm announces potential 80TB hard drives)

|

||||

[#]: via: (https://www.networkworld.com/article/3528211/japanese-firm-announces-potential-80tb-hard-drives.html)

|

||||

[#]: author: (Andy Patrizio https://www.networkworld.com/author/Andy-Patrizio/)

|

||||

|

||||

Japanese firm announces potential 80TB hard drives
======
Using some very fancy physics for stacking electrons, Showa Denko K.K. plans to quadruple the top end of proposed capacity.
[geralt][1] [(CC0)][2]

Hard drive makers are staving off being made obsolete by solid-state drives (SSDs) by offering capacities that are simply not feasible in an SSD. Seagate and Western Digital are both pushing to release 20TB hard disks in the next few years. A 20TB SSD might be doable but would also cost more than a new car.

But Showa Denko K.K. of Japan has gone one further with the announcement of its next generation of heat-assisted magnetic recording (HAMR) media for hard drives. The platters use all-new magnetic thin films to maximize their data density, with the goal of eventually enabling 70TB to 80TB hard drives in a 3.5-inch form factor.

Showa Denko is the world’s largest independent maker of platters for hard drives, selling them to basically anyone left making hard drives not named Seagate and Western Digital. Those two make their own platters and are working on their own next-generation drives for release in the coming years.

While similar in concept, Seagate and Western Digital have chosen different solutions to the same problem. HAMR, championed by Seagate and Showa, works by temporarily heating the disk material during the write process so data can be written to a much smaller space, thus increasing capacity.

Western Digital supports a different technology called microwave-assisted magnetic recording (MAMR). It works on a concept similar to HAMR's but uses microwaves instead of heat to alter the drive platter. Seagate hopes to get to 48TB by 2023, while Western Digital is planning on releasing 18TB and 20TB drives this year.

Heat is never good for a piece of electrical equipment, and Showa Denko’s platters for HAMR HDDs are made of a special composite alloy to tolerate high temperatures and reduce wear, not to mention increase density. A standard hard disk has an areal density of about 1.1 terabits (Tb) per square inch. Showa’s drive platters have a density of 5-6Tb per square inch.

The question is when they will be for sale, and who will use them. Fellow Japanese electronics giant Toshiba is expected to ship drives with Showa platters later this year. Seagate will be the first American company to adopt HAMR, with 20TB drives scheduled to ship in late 2020.

Know what’s scary? That still may not be enough. IDC predicts that our global datasphere – the total of all of the digital data we create, consume, or capture – will grow from a total of approximately 40 zettabytes of data in 2019 to 175 zettabytes total by 2025.

So even with the growth in hard-drive density, the growth in the global data pool – everything from Oracle databases to Instagram photos – may still mean deploying thousands upon thousands of hard drives across data centers.

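A quick back-of-the-envelope calculation puts IDC's forecast in perspective. The sketch below computes the compound annual growth rate implied by going from about 40 zettabytes in 2019 to 175 zettabytes in 2025, and, as a deliberately crude simplification that ignores redundancy, other storage media, and the fact that much of the datasphere is never stored at all, how many 80TB drives it would take to hold 175 zettabytes (decimal units, 1 ZB = 10^9 TB).

```python
# Sketch only: rough arithmetic on the figures quoted in the article.
start_zb, end_zb = 40, 175  # IDC's estimates, in zettabytes
years = 2025 - 2019

# Compound annual growth rate: (end / start) ** (1 / years) - 1
cagr = (end_zb / start_zb) ** (1 / years) - 1

TB_PER_ZB = 10**9  # decimal units: 1 ZB = 10**21 bytes, 1 TB = 10**12 bytes
drive_tb = 80      # the hypothetical 80TB HAMR drive

# Ceiling division in integers avoids any floating-point rounding.
drives_needed = (end_zb * TB_PER_ZB + drive_tb - 1) // drive_tb

print(f"Implied growth: {cagr:.1%} per year")
# prints: Implied growth: 27.9% per year
print(f"80TB drives to hold {end_zb} ZB: {drives_needed:,}")
# prints: 80TB drives to hold 175 ZB: 2,187,500,000
```

Roughly 28% growth a year is an upper-bound style illustration rather than a capacity plan, but it shows why density gains alone may struggle to keep pace.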

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3528211/japanese-firm-announces-potential-80tb-hard-drives.html

Author: [Andy Patrizio][a]
Selected by: [lujun9972][b]
Translated by: [TranslatorID](https://github.com/译者ID)
Proofread by: [ReviewerID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.networkworld.com/author/Andy-Patrizio/
[b]: https://github.com/lujun9972
[1]: https://pixabay.com/en/data-data-loss-missing-data-process-2764823/
[2]: https://creativecommons.org/publicdomain/zero/1.0/
[3]: https://www.networkworld.com/newsletters/signup.html
[4]: https://www.networkworld.com/article/3440100/take-the-intelligent-route-with-consumption-based-storage.html?utm_source=IDG&utm_medium=promotions&utm_campaign=HPE21620&utm_content=sidebar ( Take the Intelligent Route with Consumption-Based Storage)
[5]: https://www.facebook.com/NetworkWorld/
[6]: https://www.linkedin.com/company/network-world

@ -1,234 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (lujun9972)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Fun and Games in Emacs)

|

||||

[#]: via: (https://www.masteringemacs.org/article/fun-games-in-emacs)

|

||||

[#]: author: (Mickey Petersen https://www.masteringemacs.org/about)

|

||||

|

||||

Fun and Games in Emacs

|

||||

======

|

||||

|

||||

It’s yet another Monday and you’re hard at work on those [TPS reports][1] for your boss, Lumbergh. Why not play Emacs’s Zork-like text adventure game to take your mind off the tedium of work?

|

||||

|

||||

But seriously, yes, there are both games and quirky playthings in Emacs. Some you have probably heard of or played before. The only thing they have in common is that most of them were added a long time ago: some are rather odd inclusions (as you’ll see below) and others were clearly written by bored employees or graduate students. What they all have in common is a whimsy and a casualness that I rarely see in Emacs today. Emacs is Serious Business now in a way that it probably wasn’t back in the 1980s when some of these games were written.

|

||||

|

||||

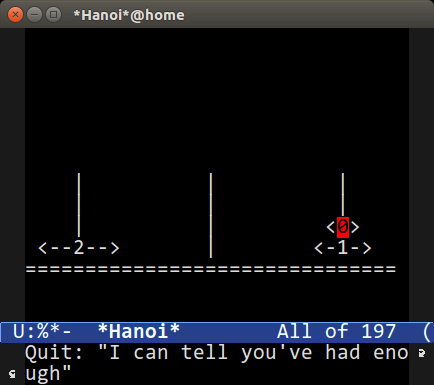

### Tower of Hanoi

The [Tower of Hanoi][2] is an ancient mathematical puzzle game and one that is probably familiar to some of us as it is often used in Computer Science as a teaching aid because of its recursive and iterative solutions.

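The recursive solution mentioned above fits in a few lines. This Python sketch is not the Emacs hanoi.el code (which is Emacs Lisp); it simply returns the list of moves needed to shift n discs from one peg to another, with arbitrary peg labels.

```python
# Sketch only: the textbook recursive solution, not a port of hanoi.el.
def hanoi(n: int, source: str = "A", target: str = "C", spare: str = "B") -> list[tuple[str, str]]:
    """Return the moves (from_peg, to_peg) that solve the puzzle for n discs."""
    if n == 0:
        return []
    # Park n-1 discs on the spare peg, move the largest disc, then restack the n-1 discs.
    return (
        hanoi(n - 1, source, spare, target)
        + [(source, target)]
        + hanoi(n - 1, spare, target, source)
    )


if __name__ == "__main__":
    moves = hanoi(3)  # M-x hanoi also defaults to 3 discs
    for i, (src, dst) in enumerate(moves, 1):
        print(f"{i}: {src} -> {dst}")
    print(f"{len(moves)} moves (always 2**n - 1)")
```

Each extra disc doubles the work plus one move, which is why the solution length is always 2^n - 1.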

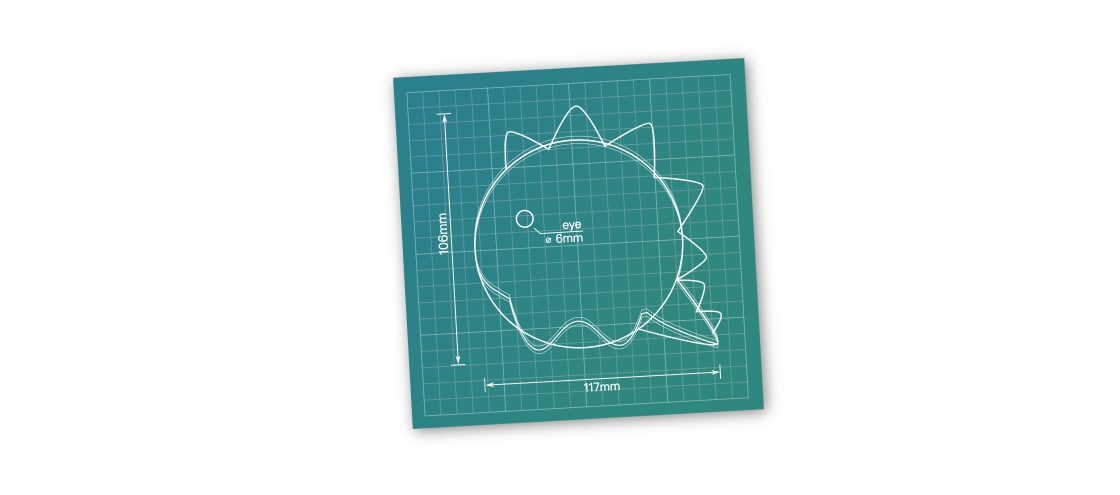

In Emacs there are three commands you can run to trigger the Tower of Hanoi puzzle: M-x hanoi, with a default of 3 discs, and M-x hanoi-unix and M-x hanoi-unix-64, which use the Unix timestamp to make a move each second in line with the clock, the latter pretending it uses a 64-bit clock.

The Tower of Hanoi implementation in Emacs dates from the mid 1980s, an awfully long time ago indeed. There are a few Customize options (M-x customize-group RET hanoi RET) such as enabling colorized discs. And when you exit the Hanoi buffer or type a character you are treated to a sarcastic goodbye message (see above).

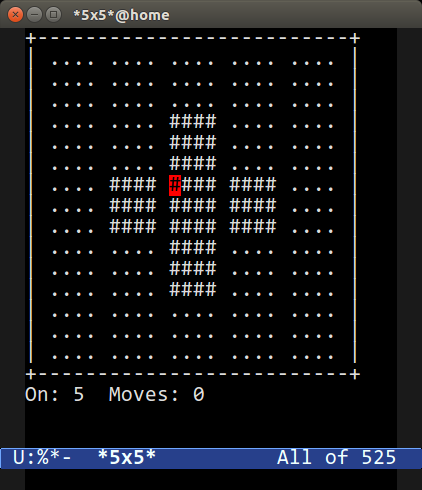

### 5x5

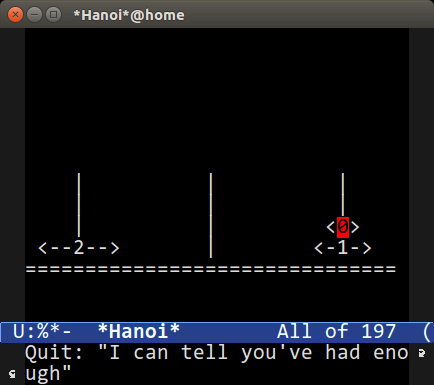

The 5x5 game is a logic puzzle: you are given a 5x5 grid with a central cross already filled in; your goal is to fill all the cells by toggling them on and off in the right order to win. It’s not as easy as it sounds!

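Assuming the classic Lights Out rule (a move toggles the chosen cell plus its four orthogonal neighbours; run M-x find-library RET 5x5 to check the exact rule 5x5.el uses), the whole grid fits in a single integer bitmask and every move is just an XOR. This is a hypothetical Python model, not the package's code, but it also makes sense of the XOR-based cracker listed further down.

```python
# Sketch only: assumes Lights Out-style moves; not taken from 5x5.el.
SIZE = 5


def cell_bit(row: int, col: int, size: int = SIZE) -> int:
    """Bit for cell (row, col) in a row-major bitmask."""
    return 1 << (row * size + col)


def move_mask(row: int, col: int, size: int = SIZE) -> int:
    """Cells toggled by a move: the cell itself plus its orthogonal neighbours."""
    mask = 0
    for r, c in ((row, col), (row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)):
        if 0 <= r < size and 0 <= c < size:
            mask |= cell_bit(r, c, size)
    return mask


def play(grid: int, row: int, col: int, size: int = SIZE) -> int:
    """Apply a move: toggling cells on and off is XOR with the move's mask."""
    return grid ^ move_mask(row, col, size)


def is_solved(grid: int, size: int = SIZE) -> bool:
    """The goal is to have every cell filled in."""
    return grid == (1 << (size * size)) - 1


def show(grid: int, size: int = SIZE) -> str:
    return "\n".join(
        "".join("#" if grid & cell_bit(r, c, size) else "." for c in range(size))
        for r in range(size)
    )


if __name__ == "__main__":
    print(show(play(0, 2, 2)))  # one move in the middle of an empty grid
```

Under this rule, a single move in the middle of an empty grid produces exactly the central cross described above, and playing the same move twice undoes it.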

To play, type M-x 5x5, and with an optional digit argument you can change the size of the grid. What makes this game interesting is its rather complex ability to suggest the next move and attempt to solve the game grid. It uses Emacs’s very own, and very cool, symbolic RPN calculator M-x calc (and in [Fun with Emacs Calc][3] I use it to solve a simple problem).

So what I like about this game is that it comes with a very complex solver – really, you should read the source code with M-x find-library RET 5x5 – and a “cracker” that attempts to brute force solutions to the game.

Try creating a bigger game grid, such as M-10 M-x 5x5, and then run one of the crack commands below. The crackers will attempt to iterate their way to the best solution. This runs in real time and is fun to watch:

* `M-x 5x5-crack-mutating-best`: Attempt to crack 5x5 by mutating the best solution.
* `M-x 5x5-crack-mutating-current`: Attempt to crack 5x5 by mutating the current solution.
* `M-x 5x5-crack-randomly`: Attempt to crack 5x5 using random solutions.
* `M-x 5x5-crack-xor-mutate`: Attempt to crack 5x5 by XORing the current and best solutions.

### Text Animation

You can display a fancy birthday present animation by running M-x animate-birthday-present and giving it your name. It looks rather cool!

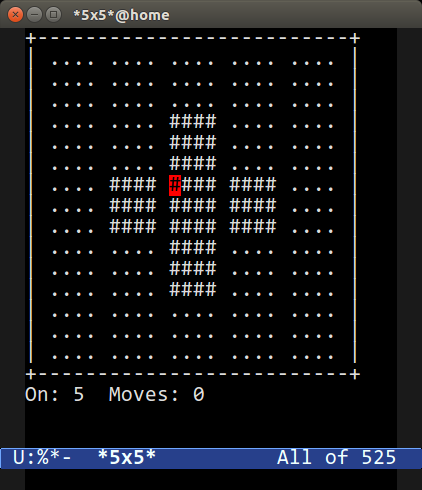

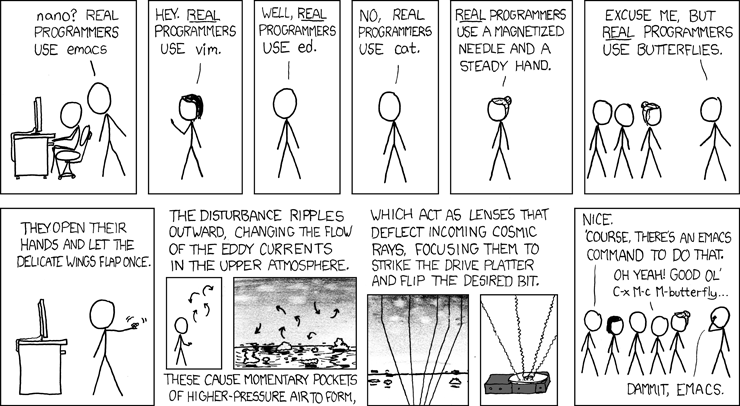

The animate package is also used by the M-x butterfly command, a command added to Emacs as an homage to the [XKCD][4] strip above. Of course the Emacs command in the strip is teeechnically not valid but the humor more than makes up for it.

### Blackbox

I am going to quote the objective of this game literally:

> The object of the game is to find four hidden balls by shooting rays into the black box. There are four possibilities: 1) the ray will pass thru the box undisturbed, 2) it will hit a ball and be absorbed, 3) it will be deflected and exit the box, or 4) be deflected immediately, not even being allowed entry into the box.

So, it’s a bit like the [Battleship][5] most of us played as kids but… for people with advanced degrees in physics?

It’s another game that was added back in the 1980s. I suggest you read the extensive documentation on how to play by typing C-h f blackbox.

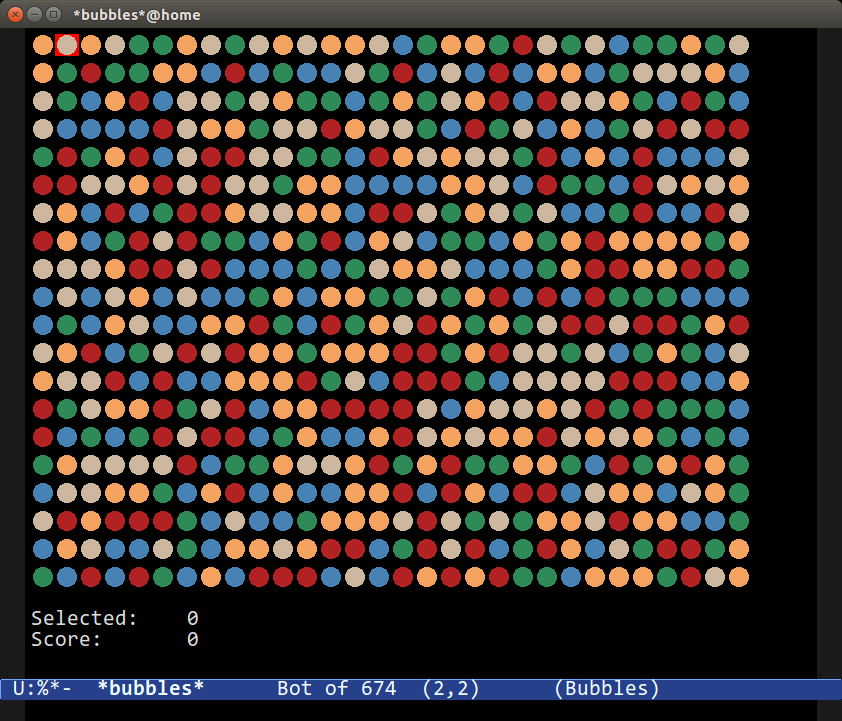

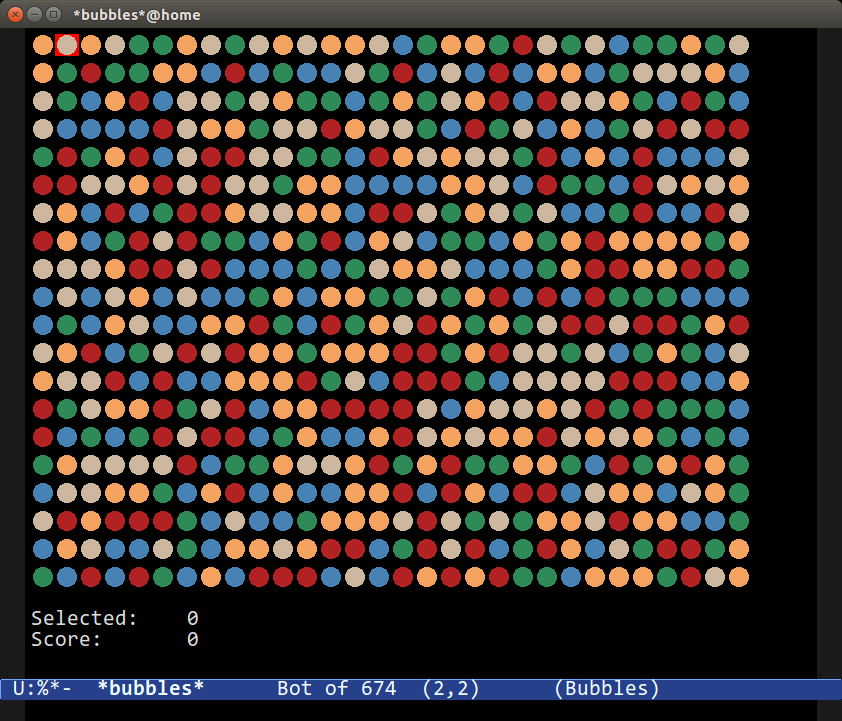

### Bubbles

The M-x bubbles game is rather simple: you must clear out as many “bubbles” as you can in as few moves as possible. When you remove bubbles the other bubbles drop and stick together. It’s a fun game that, as an added bonus, comes with graphics if you use Emacs’s GUI. It also works with your mouse.

You can configure the difficulty of the game by calling M-x bubbles-set-game-LEVEL, where LEVEL is one of: easy, medium, difficult, hard, or userdefined. Furthermore, you can alter the graphics, grid size and colors using Customize: M-x customize-group bubbles.

For its simplicity and fun factor, this ranks as one of my favorite games in Emacs.

### Fortune & Cookie

I like the fortune command. Snarky, unhelpful and often sarcastic “advice” mixed in with literature and riddles brightens up my day whenever I launch a new shell.

Rather confusingly there are two packages in Emacs that do more or less the same thing: fortune and cookie1. The former is geared towards putting fortune cookie messages in email signatures and the latter is just a simple reader for the fortune format.

Anyway, to use Emacs’s cookie1 package you must first tell it where to find the file by customizing the variable cookie-file with M-x customize-option RET cookie-file RET.

If you’re on Ubuntu you will have to install the fortune package first. The files are found in the /usr/share/games/fortunes/ directory.

You can then call M-x cookie or, should you want to do this, find all matching cookies with M-x cookie-apropos.

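The fortune format that cookie1 reads is simple: plain-text entries separated by lines containing only a `%`. A minimal Python reader in the same spirit might look like this; the sample text and function names are made up for illustration, and `cookie_apropos` uses a plain substring match, so it only loosely mirrors M-x cookie-apropos.

```python
import random
from typing import Optional

# Sketch only: a tiny fortune-file reader, not a port of cookie1.el.


def parse_fortunes(text: str) -> list[str]:
    """Split fortune-format text into entries separated by lines holding only '%'."""
    entries, current = [], []
    for line in text.splitlines() + ["%"]:  # trailing sentinel flushes the last entry
        if line.strip() == "%":
            entry = "\n".join(current).strip()
            if entry:
                entries.append(entry)
            current = []
        else:
            current.append(line)
    return entries


def read_fortunes(path: str) -> list[str]:
    """Load a plain-text fortune file (not its binary .dat index)."""
    with open(path, encoding="utf-8", errors="replace") as fh:
        return parse_fortunes(fh.read())


def cookie(entries: list[str], rng: Optional[random.Random] = None) -> str:
    """Return one random entry, in the spirit of M-x cookie."""
    return (rng or random).choice(entries)


def cookie_apropos(entries: list[str], word: str) -> list[str]:
    """Return every entry containing `word`, ignoring case."""
    needle = word.lower()
    return [entry for entry in entries if needle in entry.lower()]


if __name__ == "__main__":
    sample = "You will write clean code today.\n%\nBeware of\nmagic numbers.\n%\n"
    fortunes = parse_fortunes(sample)
    print(cookie(fortunes))
    print(cookie_apropos(fortunes, "magic"))
```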

### Decipher

This package perfectly captures the utilitarian nature of Emacs: it’s a package to help you break simple substitution ciphers (like cryptogram puzzles) using a helpful user interface. You just know that – more than twenty years ago – someone really had a dire need to break a lot of basic ciphers. It’s little things like this module that make me overjoyed to use Emacs: a module of scant importance to all but a few people and, yet, should you need it – there it is.

So how do you use it then? Well, let’s consider the “rot13” cipher: rotating characters by 13 places in a 26-character alphabet. It’s an easy thing to try out in Emacs with M-x ielm, Emacs’s REPL for [Evaluating Elisp][6]:

```
*** Welcome to IELM *** Type (describe-mode) for help.
ELISP> (rot13 "Hello, World")
"Uryyb, Jbeyq"
ELISP> (rot13 "Uryyb, Jbeyq")
"Hello, World"
ELISP>
```

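For comparison outside Emacs, Python's standard library ships a `rot13` text codec, so the same round trip is a one-liner there too; the explicit translation table below spells out what the cipher actually does (shift each letter 13 places within its case, leave everything else alone). The `rot13` function name is just chosen to mirror the Elisp one.

```python
import codecs
import string

# Sketch only: an explicit rot13 table next to Python's built-in codec.
_LOWER, _UPPER = string.ascii_lowercase, string.ascii_uppercase
ROT13_TABLE = str.maketrans(
    _LOWER + _UPPER,
    _LOWER[13:] + _LOWER[:13] + _UPPER[13:] + _UPPER[:13],
)


def rot13(text: str) -> str:
    """Rotate letters by 13; digits, spaces and punctuation pass through unchanged."""
    return text.translate(ROT13_TABLE)


if __name__ == "__main__":
    print(rot13("Hello, World"))                   # Uryyb, Jbeyq
    print(codecs.encode("Hello, World", "rot13"))  # Uryyb, Jbeyq (built-in codec)
    print(rot13(rot13("Hello, World")))            # Hello, World
```

Because 13 is half of 26, encoding and decoding are the same operation, which is exactly what the IELM session shows.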

So how can the decipher module help us here? Well, create a new buffer test-cipher, type in your cipher text (in my case Uryyb, Jbeyq), and then run M-x decipher.

You’re now presented with a rather complex interface. You can now place the point on any of the characters in the ciphertext on the purple line and guess what the character might be: Emacs will update the rest of the plaintext guess with your choices and tell you how the characters in the alphabet have been allocated thus far.

You can then start winnowing down the options using various helper commands to help infer which cipher characters might correspond to which plaintext character:

* `D`: Shows a list of digrams (two-character combinations from the cipher) and their frequency.
* `F`: Shows the frequency of each ciphertext letter.
* `N`: Shows adjacency of characters. I am not entirely sure how this works.
* `M` and `R`: Save and restore a checkpoint, allowing you to branch your work and explore different ways of cracking the cipher.

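The `F` and `D` views boil down to frequency counts, the classic first attack on a substitution cipher: in English plaintext, letters such as E and T are the most common, so the most frequent ciphertext letters are good first guesses for them. Here is a rough Python equivalent; it only approximates what decipher.el reports (for example, it counts digrams within words only, which may not match the package exactly).

```python
from collections import Counter

# Sketch only: approximates decipher's F and D views; not the package's algorithm.


def letter_frequencies(ciphertext: str) -> Counter:
    """Count each letter, case-insensitively (roughly the F view)."""
    return Counter(ch for ch in ciphertext.lower() if ch.isalpha())


def digram_frequencies(ciphertext: str) -> Counter:
    """Count adjacent letter pairs inside each word (roughly the D view)."""
    counts: Counter = Counter()
    for word in ciphertext.lower().split():
        letters = [ch for ch in word if ch.isalpha()]
        counts.update(a + b for a, b in zip(letters, letters[1:]))
    return counts


if __name__ == "__main__":
    text = "Uryyb, Jbeyq"
    print(letter_frequencies(text).most_common(3))
    print(digram_frequencies(text).most_common(3))
```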

All in all, for such an esoteric task, this package is rather impressive! If you regularly solve cryptograms maybe this package can help?

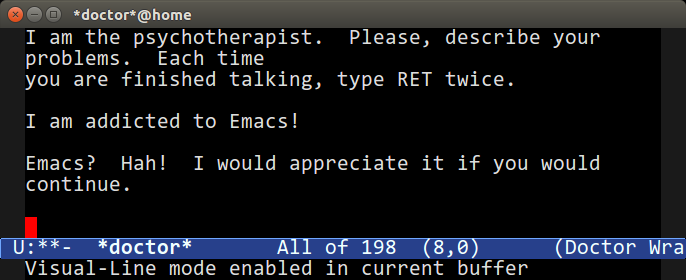

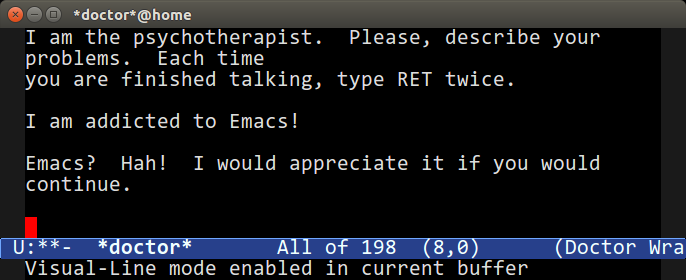

### Doctor

Ah, the Emacs doctor. Based on the original [ELIZA][7], the “Doctor” tries to psychoanalyze what you say and attempts to repeat the question back to you. Rather fun, for a few minutes, and one of the more famous Emacs oddities. You can run it with M-x doctor.

### Dunnet

Emacs’s very own Zork-like text adventure game. To play it, type M-x dunnet. It’s rather good, if short, but it’s another rather famous Emacs game that too few have actually played through to the end.

If you find yourself with time to kill between your TPS reports then it’s a great game with a built-in “boss screen” as it’s text-only.

Oh, and, don’t try to eat the CPU card :)

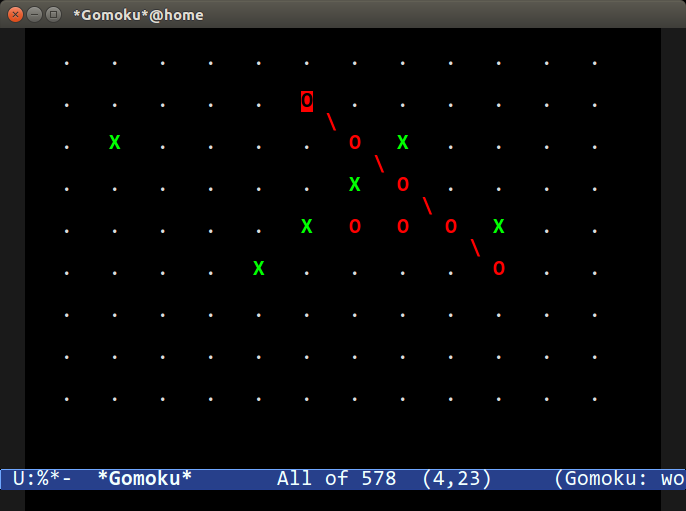

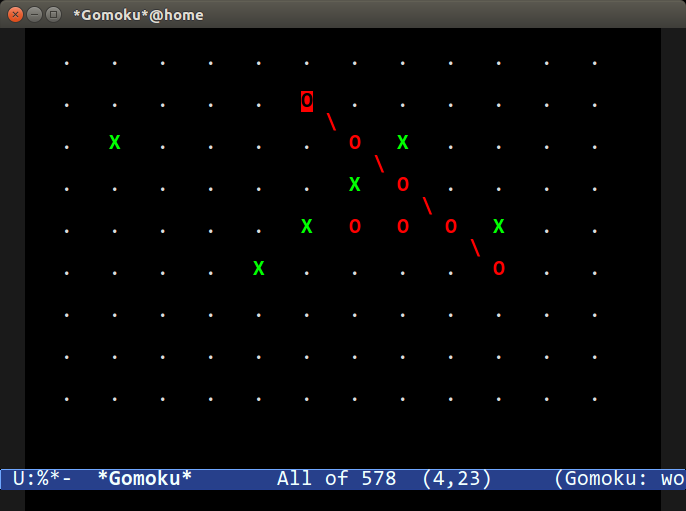

### Gomoku

Another game written in the 1980s. You have to connect 5 squares, tic-tac-toe style. You can play against Emacs with M-x gomoku. The game also supports the mouse, which is rather handy. You can customize the group gomoku to adjust the size of the grid.

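Checking for a win in Gomoku means looking for five stones in a row through the last move, in any of four directions: horizontal, vertical, and both diagonals. Here is a small Python sketch of that check; the board representation (a dict from `(row, col)` to `"X"` or `"O"`) is an assumption made for illustration, not how gomoku.el stores its board.

```python
# Sketch only: a win check for five in a row; the board layout is hypothetical.
Board = dict[tuple[int, int], str]  # (row, col) -> "X" or "O"; empty squares are absent

# Horizontal, vertical, and the two diagonals; each is scanned in both senses.
DIRECTIONS = ((0, 1), (1, 0), (1, 1), (1, -1))


def run_length(board: Board, row: int, col: int, dr: int, dc: int) -> int:
    """Count same-player stones in a line, starting next to (row, col)."""
    player = board.get((row, col))
    count = 0
    r, c = row + dr, col + dc
    while player is not None and board.get((r, c)) == player:
        count += 1
        r, c = r + dr, c + dc
    return count


def is_winning_move(board: Board, row: int, col: int, needed: int = 5) -> bool:
    """True if the stone at (row, col) is part of `needed` or more in a row."""
    if (row, col) not in board:
        return False
    return any(
        1 + run_length(board, row, col, dr, dc) + run_length(board, row, col, -dr, -dc) >= needed
        for dr, dc in DIRECTIONS
    )


if __name__ == "__main__":
    board: Board = {(7, c): "X" for c in range(3, 8)}  # five X stones in row 7
    print(is_winning_move(board, 7, 5))  # True
```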

### Game of Life

[Conway’s Game of Life][8] is a famous example of a cellular automaton. The Emacs version comes with a handful of starting patterns that you can (programmatically with elisp) alter by adjusting the life-patterns variable.

You can trigger a game of life with M-x life. The fact that the whole thing, display code, comments and all, comes in at less than 300 lines is also rather impressive.

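The rules behind M-x life are compact: a live cell survives with two or three live neighbours, and a dead cell comes alive with exactly three. A minimal Python sketch of one generation follows; storing only the live cells in a set (an unbounded grid) is a representation chosen for brevity, not taken from life.el.

```python
from collections import Counter

# Sketch only: one Game of Life generation on an unbounded grid.
Cell = tuple[int, int]


def step(live: set[Cell]) -> set[Cell]:
    """Advance Conway's Game of Life by one generation."""
    neighbour_counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


def render(live: set[Cell], rows: int, cols: int) -> str:
    return "\n".join(
        "".join("O" if (r, c) in live else "." for c in range(cols)) for r in range(rows)
    )


if __name__ == "__main__":
    blinker = {(1, 0), (1, 1), (1, 2)}  # a period-2 oscillator
    for _ in range(3):
        print(render(blinker, 3, 3), end="\n\n")
        blinker = step(blinker)
```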

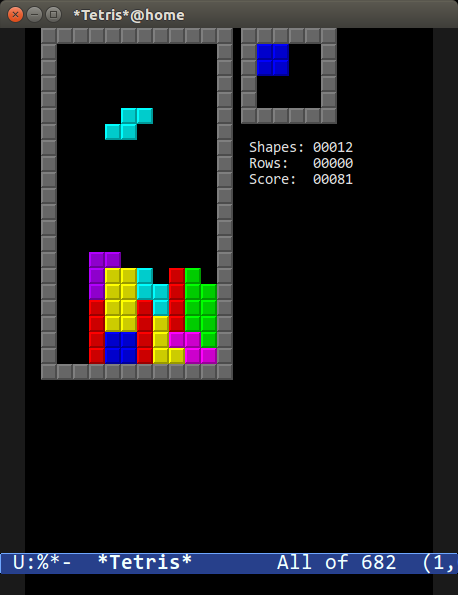

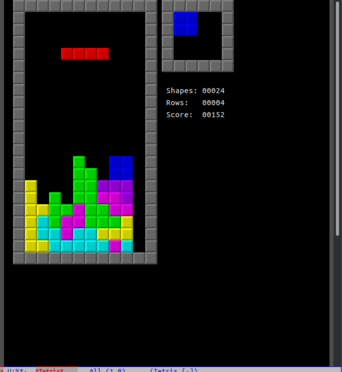

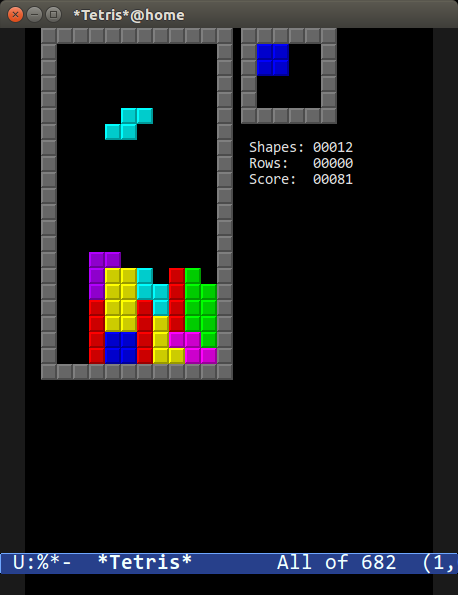

### Pong, Snake and Tetris

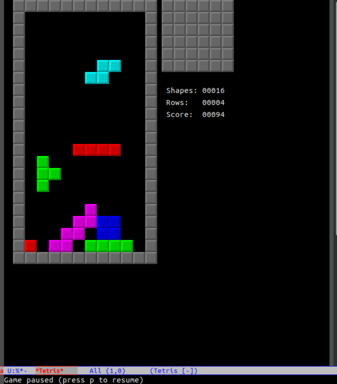

These classic games are all implemented using the Emacs package gamegrid, a generic framework for building grid-based games like Tetris and Snake. The great thing about the gamegrid package is its compatibility with both graphical and terminal Emacs: if you run Emacs in a GUI you get fancy graphics; if you don’t, you get simple ASCII art.

You can run the games by typing M-x pong, M-x snake, or M-x tetris.

The Tetris game in particular is rather faithfully implemented, having both gradual speed increase and the ability to slide blocks into place. And given you have the code to it, you can finally remove that annoying Z-shaped piece no one likes!

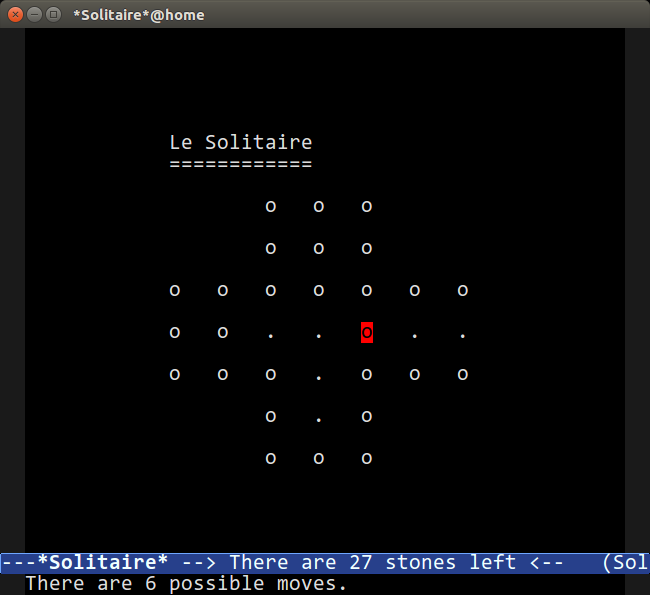

### Solitaire

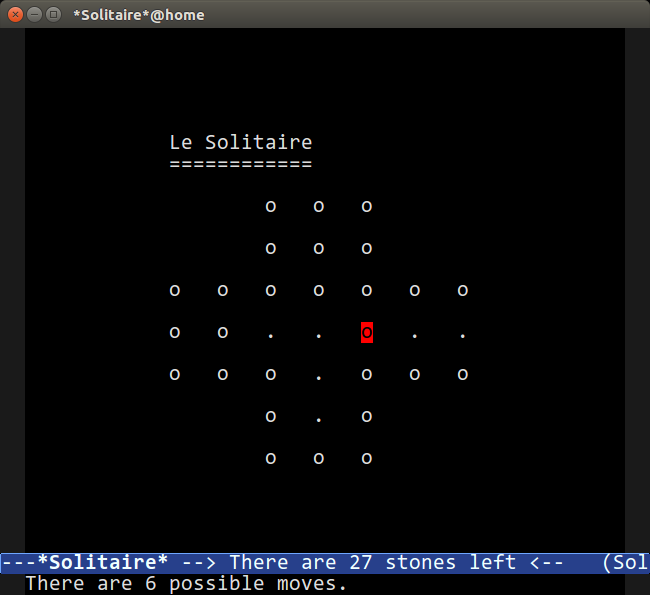

This is not the card game, unfortunately, but a peg-based game where you have to end up with just one stone on the board, by taking a stone (the o) and “jumping” over an adjacent stone into the hole (the .), removing the stone you jumped over in the process. Rinse and repeat until only one stone remains.

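A single jump, as described above, can be modelled in a few lines. In this hypothetical Python sketch the board is a dict mapping coordinates to `"o"` (stone) or `"."` (hole), echoing the characters M-x solitaire displays; off-board positions are simply missing, and jumps are assumed to be orthogonal, as in standard peg solitaire.

```python
# Sketch only: one peg-solitaire jump; the board layout is an illustrative assumption.
Pos = tuple[int, int]


def middle_of(src: Pos, dst: Pos) -> Pos:
    return ((src[0] + dst[0]) // 2, (src[1] + dst[1]) // 2)


def can_jump(board: dict[Pos, str], src: Pos, dst: Pos) -> bool:
    """A stone may jump two squares along a row or column, over a stone, into a hole."""
    dr, dc = abs(src[0] - dst[0]), abs(src[1] - dst[1])
    if sorted((dr, dc)) != [0, 2]:
        return False
    return (
        board.get(src) == "o"
        and board.get(middle_of(src, dst)) == "o"
        and board.get(dst) == "."
    )


def jump(board: dict[Pos, str], src: Pos, dst: Pos) -> dict[Pos, str]:
    """Return a new board with the jump applied and the jumped-over stone removed."""
    if not can_jump(board, src, dst):
        raise ValueError(f"illegal move {src} -> {dst}")
    new_board = dict(board)
    new_board[src] = new_board[middle_of(src, dst)] = "."
    new_board[dst] = "o"
    return new_board


def stones_left(board: dict[Pos, str]) -> int:
    return sum(1 for square in board.values() if square == "o")


if __name__ == "__main__":
    row = {(0, 0): "o", (0, 1): "o", (0, 2): "."}
    after = jump(row, (0, 0), (0, 2))
    print(after, stones_left(after))
```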

There is a handy solver built in called M-x solitaire-solve if you get stuck.

### Zone

Another of my favorites. This time it’s a screensaver, or rather, a series of screensavers.

Type M-x zone and watch what happens to your screen!

You can configure a screensaver idle time by running M-x zone-when-idle (or calling it from elisp) with an idle time in seconds. You can turn it off with M-x zone-leave-me-alone.

This one’s guaranteed to make your coworkers freak out if it kicks off while they are looking.

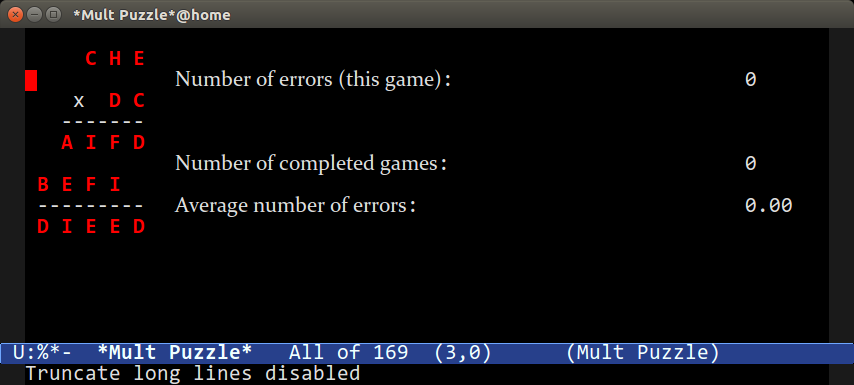

### Multiplication Puzzle

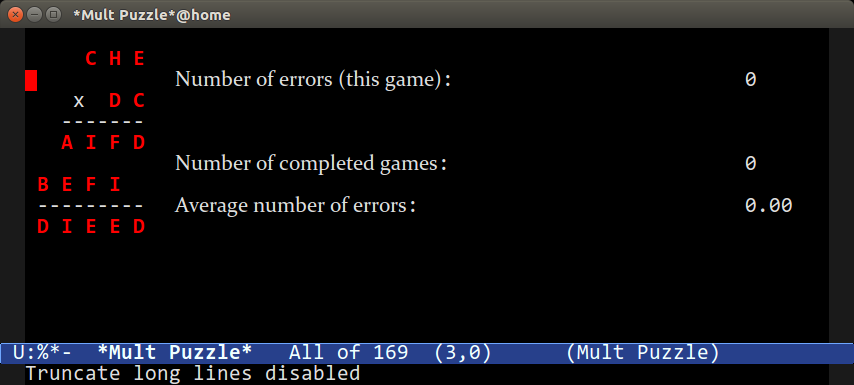

This is another brain-twisting puzzle game. When you run M-x mpuz you are given a multiplication puzzle where you have to replace the letters with numbers and ensure the numbers add (multiply?) up.

You can run M-x mpuz-show-solution to solve the puzzle if you get stuck.

### Miscellaneous

There are more, but they’re not the most useful or interesting:

* You can translate a region into Morse code with M-x morse-region and convert it back with M-x unmorse-region.

* The Dissociated Press is a very simple command that applies something like a random-walk Markov-chain generator to a body of text in a buffer and generates nonsensical text from the source body. Try it with M-x dissociated-press.

* The Gamegrid package is a generic framework for building grid-based games. So far only Tetris, Pong and Snake use it. It’s called gamegrid.

* The gametree package is a complex way of notating and tracking chess games played via email.

* The M-x spook command inserts random words (usually into emails) designed to confuse/overload the “NSA trunk trawler” – and keep in mind this module dates from the 1980s and 1990s – with various words the spooks are supposedly listening for. Of course, even ten years ago that would’ve seemed awfully paranoid and quaint but not so much any more…

|

||||

### Conclusion

|

||||

|

||||

I love the games and playthings that ship with Emacs. A lot of them date from, well, let’s just call a different era: an era where whimsy was allowed or perhaps even encouraged. Some are known classics (like Tetris and Tower of Hanoi) and some of the others are fun variations on classics (like blackbox) — and yet I love that they ship with Emacs after all these years. I wonder if any of these would make it into Emacs’s codebase today; well, they probably wouldn’t — they’d be relegated to the package manager where, in a clean and sterile world, they no doubt belong.

|

||||

|

||||

There’s a mandate in Emacs to move things not essential to the Emacs experience to ELPA, the package manager. I mean, as a developer myself, that does make sense, but… surely for every package removed and exiled to ELPA we chip away the essence of what defines Emacs?

|

||||

|

||||

--------------------------------------------------------------------------------

via: https://www.masteringemacs.org/article/fun-games-in-emacs

Author: [Mickey Petersen][a]

Topic selection: [lujun9972][b]

Translator: [lujun9972](https://github.com/lujun9972)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/) (Linux China).

[a]:https://www.masteringemacs.org/about
[b]:https://github.com/lujun9972
[1]:https://en.wikipedia.org/wiki/Office_Space
[2]:https://en.wikipedia.org/wiki/Tower_of_Hanoi
[3]:https://www.masteringemacs.org/article/fun-emacs-calc
[4]:http://www.xkcd.com
[5]:https://en.wikipedia.org/wiki/Battleship_(game)
[6]:https://www.masteringemacs.org/article/evaluating-elisp-emacs
[7]:https://en.wikipedia.org/wiki/ELIZA
[8]:https://en.wikipedia.org/wiki/Conway's_Game_of_Life

@ -0,0 +1,157 @@

[#]: collector: (lujun9972)
[#]: translator: (lujun9972)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Some Advice for How to Make Emacs Tetris Harder)
[#]: via: (https://nickdrozd.github.io/2019/01/14/tetris.html)
[#]: author: (nickdrozd https://nickdrozd.github.io)

Some Advice for How to Make Emacs Tetris Harder
======

Did you know that Emacs comes bundled with an implementation of Tetris? Just hit M-x tetris and there it is:

This is often mentioned by Emacs advocates in text editor discussions. “Yeah, but can that other editor run Tetris?” I wonder, is that supposed to convince anyone that Emacs is superior? Like, why would anyone care that they could play games in their text editor? “Yeah, but can that other vacuum play mp3s?”

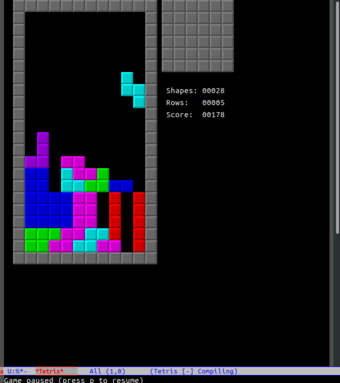

That said, Tetris is always fun. Like everything in Emacs, the source code is open for easy inspection and modification, so it’s possible to make it even more fun. And by more fun, I mean harder.

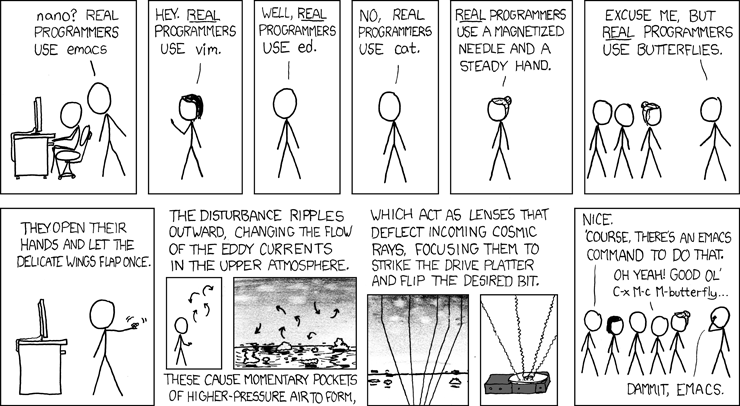

One of the simplest ways to make the game harder is to get rid of the next-block preview. No more setting that S/Z block in a precarious position knowing that you can fill in the space with the next piece: you have to chance it and hope for the best. Here’s what it looks like with no preview (as you can see, without the preview I made some choices that turned out to have dire consequences):

The preview box is set with a function called tetris-draw-next-shape[1][2]:

```
(defun tetris-draw-next-shape ()
  (dotimes (x 4)
    (dotimes (y 4)
      (gamegrid-set-cell (+ tetris-next-x x)
                         (+ tetris-next-y y)
                         tetris-blank)))
  (dotimes (i 4)
    (let ((tetris-shape tetris-next-shape)
          (tetris-rot 0))
      (gamegrid-set-cell (+ tetris-next-x
                            (aref (tetris-get-shape-cell i) 0))
                         (+ tetris-next-y
                            (aref (tetris-get-shape-cell i) 1))
                         tetris-shape))))
```

First, we’ll introduce a flag to allow configuring next-preview[2][3]:

```
(defvar tetris-preview-next-shape nil
  "When non-nil, show the next block in the preview box.")
```

Now the question is, how can we make tetris-draw-next-shape obey this flag? The obvious way would be to redefine it:

```
(defun tetris-draw-next-shape ()
  (when tetris-preview-next-shape
    ;; existing tetris-draw-next-shape logic
    ))
```

This is not an ideal solution. There will be two definitions of the same function floating around, which is confusing, and we’ll have to maintain our modified definition in case the upstream version changes.

A better approach is to use advice. Emacs advice is like a Python decorator, but even more flexible, since advice can be added to a function from anywhere. This means that we can modify the function without disturbing the original source file at all.

There are a lot of different ways to use Emacs advice ([check the manual][4]), but for now we’ll just stick with the advice-add function with the :around flag. The advising function takes the original function as an argument, and it might or might not execute it. In this case, we’ll say that the original should be executed only if the preview flag is non-nil:

```
(defun tetris-maybe-draw-next-shape (tetris-draw-next-shape)
  (when tetris-preview-next-shape
    (funcall tetris-draw-next-shape)))

(advice-add 'tetris-draw-next-shape :around #'tetris-maybe-draw-next-shape)
```

This code will modify the behavior of tetris-draw-next-shape, but it can be stored in your config files, safely away from the actual Tetris code.

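Emacs advice has no direct Java equivalent, but for readers coming from Java the :around idea can be pictured as a higher-order wrapper: a function that receives the original behaviour and decides whether to call it. The sketch below is only that analogy. It assumes a Runnable stands in for tetris-draw-next-shape and a static preview field stands in for tetris-preview-next-shape. aroundWhen is a hypothetical helper name, and none of this reflects how Emacs implements advice internally.

```java
import java.util.function.BooleanSupplier;

public class AroundAdviceSketch {
    // Hypothetical stand-in for tetris-preview-next-shape.
    static boolean preview = false;

    // Wrap `original` so it only runs while `condition` holds, roughly like
    // (when flag (funcall original)) inside an :around advice.
    static Runnable aroundWhen(BooleanSupplier condition, Runnable original) {
        return () -> {
            if (condition.getAsBoolean()) {
                original.run();
            }
        };
    }

    public static void main(String[] args) {
        int[] draws = {0};
        Runnable drawNextShape = () -> draws[0]++;   // stands in for the original function
        Runnable advised = aroundWhen(() -> preview, drawNextShape);

        advised.run();            // flag is false: the original is skipped
        preview = true;
        advised.run();            // flag is true: the original runs
        System.out.println(draws[0]); // prints 1
    }
}
```

The important difference is the one the article points out. advice-add changes the named function in place, so every existing caller gets the new behaviour. This Java wrapper only affects code that calls advised.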

Getting rid of the preview box is a simple change. A more drastic change is to make it so that blocks randomly stop in the air:

In that picture, the red I and green T pieces are not falling; they’re set in place. This can make the game almost unplayably hard, but it’s easy to implement.

As before, we’ll first define a flag:

```
(defvar tetris-stop-midair t
  "If non-nil, pieces will sometimes stop in the air.")
```

Now, the way Emacs Tetris works is something like this. The active piece has x- and y-coordinates. On each clock tick, the y-coordinate is incremented (the piece moves down one row), and then a check is made for collisions. If a collision is detected, the piece is backed out (its y-coordinate is decremented) and set in place. In order to make a piece stop in the air, all we have to do is hack the collision-detection function, tetris-test-shape.

It doesn’t matter what this function does internally; what matters is that it’s a function of no arguments that returns a boolean value. We need it to return true whenever it normally would (otherwise we risk weird collisions) but also at other times. I’m sure there are a variety of ways this could be done, but here is what I came up with:

```
(defun tetris-test-shape-random (tetris-test-shape)
  (or (and
       tetris-stop-midair
       ;; Don't stop on the first shape.
       (< 1 tetris-n-shapes)
       ;; Stop every INTERVAL pieces.
       (let ((interval 7))
         (zerop (mod tetris-n-shapes interval)))
       ;; Don't stop too early (it makes the game unplayable).
       (let ((upper-limit 8))
         (< upper-limit tetris-pos-y))
       ;; Don't stop at the same place every time.
       (zerop (mod (random 7) 10)))
      (funcall tetris-test-shape)))

(advice-add 'tetris-test-shape :around #'tetris-test-shape-random)
```

The hardcoded parameters here were chosen to make the game harder but still playable. I was drunk on an airplane when I decided on them though, so they might need some further tweaking.

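To make the control flow of tetris-test-shape-random explicit, here is a hypothetical Java transliteration of its predicate as a pure function. The random draw and the original collision test are passed in as parameters, so the result is deterministic. The parameter names randomDraw and collides are chosen for this sketch. The constants 7 and 8 are the ones hard-coded above.

The sketch also makes one detail visible. (random 7) returns an integer from 0 to 6, so taking it mod 10 leaves it unchanged, and the last clause passes about one time in seven.

```java
public class MidairStopSketch {
    // Hypothetical transliteration of the predicate in tetris-test-shape-random.
    // nShapes ~ tetris-n-shapes, posY ~ tetris-pos-y, randomDraw ~ (random 7),
    // collides ~ the result of the original tetris-test-shape.
    static boolean shouldStop(boolean stopMidair, int nShapes, int posY,
                              int randomDraw, boolean collides) {
        final int interval = 7;    // stop every INTERVAL pieces
        final int upperLimit = 8;  // don't stop too early (y grows as the piece falls)
        boolean forcedStop = stopMidair
                && 1 < nShapes                 // never on the first shape
                && nShapes % interval == 0
                && upperLimit < posY
                && randomDraw % 10 == 0;       // randomDraw is in [0, 7), so % 10 is a no-op
        // Like the elisp `or`, a genuine collision always stops the piece.
        return forcedStop || collides;
    }

    public static void main(String[] args) {
        System.out.println(shouldStop(true, 14, 10, 0, false));  // true: every condition met
        System.out.println(shouldStop(true, 14, 10, 3, false));  // false: random draw misses
        System.out.println(shouldStop(true, 13, 10, 0, false));  // false: not a multiple of 7
        System.out.println(shouldStop(false, 14, 10, 0, true));  // true: genuine collision
    }
}
```

There is one behavioural difference. The elisp or short-circuits, so the original tetris-test-shape is not called at all when the forced stop fires. Here, collides is computed before the call.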

By the way, according to my tetris-scores file, my top score is:

```
01389 Wed Dec 5 15:32:19 2018
```

The scores in that file are listed up to five digits by default, so that doesn’t seem very good.

Exercises for the reader

1. Using advice, modify Emacs Tetris so that it flashes the message “OH SHIT” under the scoreboard every time the block moves down. Make the size of the message proportional to the height of the block stack (when there are no blocks, the message should be small or nonexistent, and when the highest block is close to the ceiling, the message should be large).

2. The version of tetris-test-shape-random given here has every seventh piece stop midair. A player could potentially figure out the interval and use it to their advantage. Modify it to make the interval random in some reasonable range (say, every five to ten pieces).

3. For a different take on advising Tetris, try out [autotetris-mode][1].

4. Come up with an interesting way to mess with the piece-rotation mechanics and then implement it with advice.

Footnotes
============================================================

[1][5] Emacs has just one big global namespace, so function and variable names are typically prefixed with their package name in order to avoid collisions.

[2][6] A lot of people will tell you that you shouldn’t use an existing namespace prefix and that you should reserve a namespace prefix for anything you define yourself, e.g. my/tetris-preview-next-shape. This is ugly and usually pointless, so I don’t do it.

--------------------------------------------------------------------------------

via: https://nickdrozd.github.io/2019/01/14/tetris.html

Author: [nickdrozd][a]

Topic selection: [lujun9972][b]

Translator: [lujun9972](https://github.com/lujun9972)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/) (Linux China).

[a]: https://nickdrozd.github.io
[b]: https://github.com/lujun9972
[1]: https://nullprogram.com/blog/2014/10/19/
[2]: https://nickdrozd.github.io/2019/01/14/tetris.html#fn.1
[3]: https://nickdrozd.github.io/2019/01/14/tetris.html#fn.2
[4]: https://www.gnu.org/software/emacs/manual/html_node/elisp/Advising-Functions.html
[5]: https://nickdrozd.github.io/2019/01/14/tetris.html#fnr.1
[6]: https://nickdrozd.github.io/2019/01/14/tetris.html#fnr.2

@ -1,49 +0,0 @@

[#]: collector: (lujun9972)
[#]: translator: (heguangzhi)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (How Kubernetes Became the Standard for Compute Resources)
[#]: via: (https://www.linux.com/articles/how-kubernetes-became-the-standard-for-compute-resources/)
[#]: author: (Swapnil Bhartiya https://www.linux.com/author/swapnil/)

How Kubernetes Became the Standard for Compute Resources
======

<https://www.linux.com/wp-content/uploads/2019/08/elevator-1598431_1920.jpg>

2019 has been a game-changing year for the cloud-native ecosystem. There were [consolidations][1], acquisitions of powerhouses like Red Hat, Docker, and Pivotal, and the emergence of players like Rancher Labs and Mirantis.

“All these consolidation and M&A in this space is an indicator of how fast the market has matured,” said Sheng Liang, co-founder and CEO of Rancher Labs, a company that offers a complete software stack for teams adopting containers.

Traditionally, emerging technologies like Kubernetes and Docker appealed to tinkerers and mega-scalers such as Facebook and Google. There was very little interest outside of that group. However, both of these technologies experienced massive adoption at the enterprise level. Suddenly, there was a massive market with huge opportunities. Almost everyone jumped in. There were players who were bringing innovative solutions and then there were players who were trying to catch up with the rest. It became very crowded very quickly.

It also changed the way innovation was happening. [Early adopters were usually tech-savvy companies.][2] Now, almost everyone is using it, even in areas that were not considered Kubernetes turf. It changed the market dynamics as companies like Rancher Labs were witnessing unique use cases.

Liang adds, “I’ve never been in a market or technology evolution that’s happened as quickly and as dynamically as Kubernetes. When we started some five years ago, it was a very crowded space. Over time, most of our peers disappeared for one reason or the other. Either they weren’t able to adjust to the change or they chose not to adjust to some of the changes.”

In the early days of Kubernetes, the most obvious opportunity was to build Kubernetes distributions and offer Kubernetes operations. It’s new technology. It’s known to be reasonably complex to install, upgrade, and operate.

It all changed when Google, AWS, and Microsoft entered the market. At that point, there was a stampede of vendors rushing in to provide solutions for the platform. “As soon as cloud providers like Google decided to make Kubernetes as a service and offered it for free as loss-leader to drive infrastructure consumption, we knew that the business of actually operating and supporting Kubernetes, the upside of that would be very limited,” said Liang.

Not everything was bad for non-Google players. Since cloud vendors removed all the complexity that came with Kubernetes by offering it as a service, it meant wider adoption of the technology, even by those who refrained from using it due to the overhead of operating it. It meant that Kubernetes would become ubiquitous and would become an industry standard.

“Rancher Labs was one of the very few companies that saw this as an opportunity and looked one step further than everyone else. We realized that Kubernetes was going to become the new computing standard, just the way TCP/IP became the networking standard,” said Liang.

CNCF plays a critical role in building a vibrant ecosystem around Kubernetes, creating a massive community to build, nurture and commercialize cloud-native open source technologies.

--------------------------------------------------------------------------------

via: https://www.linux.com/articles/how-kubernetes-became-the-standard-for-compute-resources/

Author: [Swapnil Bhartiya][a]

Topic selection: [lujun9972][b]

Translator: [heguangzhi](https://github.com/heguangzhi)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/) (Linux China).

[a]: https://www.linux.com/author/swapnil/
[b]: https://github.com/lujun9972
[1]: https://www.cloudfoundry.org/blog/2019-is-the-year-of-consolidation-why-ibms-deal-with-red-hat-is-a-harbinger-of-things-to-come/
[2]: https://www.packet.com/blog/open-source-season-on-the-kubernetes-highway/

@ -1,5 +1,5 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: translator: (heguangzhi)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )

@ -656,7 +656,7 @@ via: https://opensource.com/article/20/2/python-gnu-octave-data-science

Author: [Cristiano L. Fontana][a]

Topic selection: [lujun9972][b]

Translator: [译者ID](https://github.com/译者ID)

Translator: [heguangzhi](https://github.com/heguangzhi)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/) (Linux China).

427

sources/tech/20200221 Don-t like loops- Try Java Streams.md

Normal file


@ -0,0 +1,427 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Don't like loops? Try Java Streams)
[#]: via: (https://opensource.com/article/20/2/java-streams)
[#]: author: (Chris Hermansen https://opensource.com/users/clhermansen)

Don't like loops? Try Java Streams
======

It's 2020 and time to learn about Java Streams.

![Person drinking a hot drink at the computer][1]

In this article, I will explain how to not write loops anymore.

What? Whaddaya mean, no more loops?

Yep, that's my 2020 resolution: no more loops in Java. Understand that it's not that loops have failed me, nor have they led me astray (well, at least, I can argue that point). Really, it is that I, a Java programmer of modest abilities since 1997 or so, must finally learn about all this new [Streams][2] stuff, saying "what" I want to do and not "how" I want to do it, maybe being able to parallelize some of my computations, and all that other good stuff.

I'm guessing that there are other Java programmers out there who also have been programming in Java for a decent amount of time and are in the same boat. Therefore, I'm offering my experiences as a guide to "how to not write loops in Java anymore."

### Find a problem worth solving

If you're like me, then the first show-stopper you run into is "right, cool stuff, but what am I solving for, and how do I apply this?" I realized that I can spot the perfect opportunity camouflaged as _Something I've Done Before_.

In my case, it's sampling land cover within a specific area and coming up with an estimate and a confidence interval around that estimate for the land cover across the whole area. The specific problem involves deciding whether an area is "forested" or not, given a specific legal definition: if at least 10% of the soil is covered over by tree crowns, then the area is considered to be forested; otherwise, it's something else.

![Image of land cover in an area][3]

It's a pretty esoteric example of a recurring problem, I'll grant you. But there it is. For the ecologists and foresters out there who are accustomed to cool temperate or tropical forests, 10% might sound kind of low, but in the case of dry areas with low-growing shrubs and trees, that's a reasonable number.

So the basic idea is: use images to stratify the area (i.e., areas completely devoid of trees, areas of predominantly small trees spaced quite far apart, areas of predominantly small trees spaced closer together, areas of somewhat larger trees), locate some samples in those strata, send the crew out to measure the samples, analyze the results, and calculate the proportion of soil covered by tree crowns across the area. Simple, right?

![Survey team assessing land cover][4]

### What the field data looks like

In the current project, the samples are rectangular areas 20 meters wide by 25 meters long, so 500 square meters each. On each patch, the field crew measured each tree: its species, its height, the maximum and minimum width of its crown, and the diameter of its trunk at trunk height (nominally 30cm above the ground). This information was collected, entered into a spreadsheet, and exported to a bar-separated values (BSV) file for me to analyze. It looks like this:

Stratum# | Sample# | Tree# | Species | Trunk diameter (cm) | Crown diameter 1 (m) | Crown diameter 2 (m) | Height (m)
---|---|---|---|---|---|---|---
1 | 1 | 1 | Ac | 6 | 3.6 | 4.6 | 2.4
1 | 1 | 2 | Ac | 6 | 2.2 | 2.3 | 2.5
1 | 1 | 3 | Ac | 16 | 2.5 | 1.7 | 2.4
1 | 1 | 4 | Ac | 6 | 1.5 | 2.1 | 1.8
1 | 1 | 5 | Ac | 5 | 0.9 | 1.7 | 1.7
1 | 1 | 6 | Ac | 6 | 1.7 | 1.3 | 1.6
1 | 1 | 7 | Ac | 5 | 1.82 | 1.32 | 1.8
1 | 1 | 1 | Ac | 1 | 0.3 | 0.25 | 0.9
1 | 1 | 2 | Ac | 2 | 1.2 | 1.2 | 1.7

The first column is the stratum number (where 1 is "predominantly small trees spaced quite far apart," 2 is "predominantly small trees spaced closer together," and 3 is "somewhat larger trees"; we didn't sample the areas "completely devoid of trees"). The second column is the sample number (there are 73 samples altogether, located in the three strata in proportion to the area of each stratum). The third column is the tree number within the sample. The fourth is the two-letter species code, the fifth the trunk diameter (in this case, 10cm above ground or exposed roots), the sixth the smallest distance across the crown, the seventh the largest distance, and the eighth the height of the tree.


For the purposes of this exercise, I'm only concerned with the total amount of ground covered by the tree crowns—not the species, nor the height, nor the diameter of the trunk.

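Because the file is bar-separated, each line is split on the `|` character. One detail is easy to trip over: **String.split()** takes a regular expression, and `|` is a regex metacharacter, so it must be escaped as `"\\|"`.

The sketch below parses the first data row of the table into just the fields this analysis cares about. It assumes the row is written without padding spaces, and it trims each field in case the file does pad them. **CrownRow** and **parse** are illustrative names, not part of the article's code. The record syntax needs Java 16 or later.

```java
import java.util.Arrays;

public class BsvRowSketch {
    // Hypothetical holder for the fields the crown-cover analysis needs.
    record CrownRow(int stratum, int sample, double crown1, double crown2) {}

    static CrownRow parse(String line) {
        // "\\|" is the regex for a literal bar; a bare "|" would match the
        // empty string and split the line into single characters.
        String[] f = Arrays.stream(line.split("\\|"))
                           .map(String::trim)          // tolerate padded fields
                           .toArray(String[]::new);
        return new CrownRow(Integer.parseInt(f[0]), Integer.parseInt(f[1]),
                            Double.parseDouble(f[5]), Double.parseDouble(f[6]));
    }

    public static void main(String[] args) {
        CrownRow r = parse("1|1|1|Ac|6|3.6|4.6|2.4");
        System.out.println(r); // CrownRow[stratum=1, sample=1, crown1=3.6, crown2=4.6]
    }
}
```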

In addition to the measurement information above, I also have the areas of the three strata, in a separate BSV file:

stratum | hectares
---|---
1 | 114.89
2 | 207.72
3 | 29.77

### What I want to do (not how I want to do it)

In keeping with one of the main design goals of Java Streams, here is "what" I want to do:

1. Read the stratum area BSV and save the data as a lookup table.
2. Read the measurements from the measurement BSV file.
3. Accumulate each measurement (tree) to calculate the total area of the sample covered by tree crowns.
4. Accumulate the sample tree crown area values and count the number of samples to estimate the mean tree crown area coverage and standard error of the mean for each stratum.
5. Summarize the stratum figures.
6. Weight the stratum means and standard errors by the stratum areas (looked up from the table created in step 1) and accumulate them to estimate the mean tree crown area coverage and standard error of the mean for the total area.
7. Summarize the weighted figures.

Generally speaking, the way to define "what" with Java Streams is by creating a stream processing pipeline of function calls that pass over the data. So, yes, there is actually a bit of "how" that ends up creeping in… in fact, quite a bit of "how." But it needs a very different knowledge base than the good old-fashioned loop.

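Step 6 in the list above amounts to a stratified-sampling estimate. As a reference point, here is the usual stratified estimator. It assumes stratum weights proportional to area and ignores any finite-population correction. The article's own code may combine the standard errors differently.

The estimator weights each stratum mean by that stratum's share of the total area. It weights each squared standard error by the square of that share. Here A_h is the area of stratum h, ȳ_h is its mean crown cover, and SE(ȳ_h) is its standard error:

$$
W_h = \frac{A_h}{\sum_k A_k},
\qquad
\bar{y}_{st} = \sum_h W_h\,\bar{y}_h,
\qquad
\mathrm{SE}(\bar{y}_{st}) = \sqrt{\sum_h W_h^2\,\mathrm{SE}(\bar{y}_h)^2}
$$

The stratum areas listed above total 114.89 + 207.72 + 29.77 = 352.38 ha. With those areas, stratum 2 would carry a weight of about 207.72 / 352.38 ≈ 0.59.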

I'll go through each of these steps in detail.

#### Build the stratum area table

The first job is to convert the stratum areas BSV file to a lookup table:

```
// Imports needed by this snippet
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Map;
import java.util.stream.Collectors;
import java.util.stream.Stream;

String fileName = "stratum_areas.bsv";
Stream<String> inputLineStream = Files.lines(Paths.get(fileName)); // (1)

final Map<Integer,Double> stratumAreas = // (2)
    inputLineStream // (3)
        .skip(1) // (4)
        .map(l -> l.split("\\|")) // (5)
        .collect( // (6)
            Collectors.toMap( // (7)
                a -> Integer.parseInt(a[0]), // (8)
                a -> Double.parseDouble(a[1]) // (9)
            )
        );
inputLineStream.close(); // (10)

System.out.println("stratumAreas = " + stratumAreas); // (11)
```

I'll take this a line or two at a time, where the numbers in comments following the lines above (e.g., _// (3)_) correspond to the numbers below:

1. java.nio.file.Files.lines() gives a stream of strings corresponding to lines in the file.
2. The goal is to create the lookup table, **stratumAreas**, which is a **Map<Integer,Double>**. Therefore, I can get the **double** value area for stratum 2 as **stratumAreas.get(2)**.
3. This is the beginning of the stream "pipeline."
4. Skip the first line in the pipeline since it's the header line containing the column names.
5. Use **map()** to split the **String** input line into an array of **String** fields, with the first field being the stratum # and the second being the stratum area. Because **split()** takes a regular expression and `|` is a regex metacharacter, the bar has to be escaped as `"\\|"`.
6. Use **collect()** to [materialize the results][9].
7. **Collectors.toMap()** materializes the result as a **Map**, with one entry per input line.
8. The key of each map entry is the first element of the array in the pipeline, the **int** stratum number. By the way, this is a _Java lambda_ expression, [an anonymous function][10] that takes an argument (the array of fields) and returns its first element converted to an **int**.
9. The value of each map entry is the second element of the array in the pipeline, the **double** stratum area.
10. Don't forget to close the stream (file).
11. Print out the result, which looks like:

    ```
    stratumAreas = {1=114.89, 2=207.72, 3=29.77}
    ```

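To try the pipeline without the data file, the sketch below feeds the three stratum rows shown earlier through the same steps, from an in-memory **Stream.of(...)**. It also replaces the explicit **close()** with try-with-resources, which closes the stream even if parsing throws. That is a stylistic choice of this sketch, not the article's code.

The header text `stratum|hectares` and the unpadded fields are assumptions about the file layout, although the sketch trims fields in case they are padded. **StratumAreaSketch** and **readAreas** are illustrative names.

```java
import java.util.Map;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class StratumAreaSketch {
    // Same steps as the article's pipeline, but reading from any Stream<String>.
    static Map<Integer, Double> readAreas(Stream<String> lines) {
        // try-with-resources closes the stream even if parsing throws.
        try (Stream<String> input = lines) {
            return input
                .skip(1)                                     // drop the header row
                .map(l -> l.split("\\|"))                    // split on a literal bar
                .collect(Collectors.toMap(
                    a -> Integer.parseInt(a[0].trim()),      // key: stratum number
                    a -> Double.parseDouble(a[1].trim())));  // value: hectares
        }
    }

    public static void main(String[] args) {
        Map<Integer, Double> areas = readAreas(Stream.of(
            "stratum|hectares", "1|114.89", "2|207.72", "3|29.77"));
        System.out.println("stratumAreas = " + areas);
    }
}
```

With a real file, `readAreas(Files.lines(Paths.get("stratum_areas.bsv")))` would do the same job. The caller would then have to handle the **IOException** that **Files.lines()** declares.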

#### Build the measurements table and accumulate the measurements into the sample totals

Now that I have the stratum areas, I can start processing the main body of data: the measurements. I combine the two tasks of building the measurements table and accumulating the measurements into the sample totals since I don't have any interest in the measurement data per se.

```
fileName = "sample_data_for_testing.bsv";
inputLineStream = Files.lines(Paths.get(fileName));

final Map<Integer,Map<Integer,Double>> sampleValues =
    inputLineStream
        .skip(1)
        .map(l -> l.split("\\|"))
        .collect( // (1)
            Collectors.groupingBy(a -> Integer.parseInt(a[0]), // (2)
                Collectors.groupingBy(b -> Integer.parseInt(b[1]), // (3)
                    Collectors.summingDouble( // (4)
                        c -> { // (5)
                            double rm = (Double.parseDouble(c[5]) +
                                    Double.parseDouble(c[6]))/4d; // (6)
                            return rm*rm * Math.PI / 500d; // (7)
                        })
                )
            )
        );
inputLineStream.close();

System.out.println("sampleValues = " + sampleValues); // (8)
```

Again, a line or two or so at a time:

1. The first seven lines are the same in this task and the previous, except the name of this lookup table is **sampleValues**; and it is a **Map** of **Map**s.
2. The measurement data is grouped into samples (by sample #), which are, in turn, grouped into strata (by stratum #), so I use **Collectors.groupingBy()** at the topmost level [to separate data][12] into strata, with **a[0]** here being the stratum number.
3. I use **Collectors.groupingBy()** once more to separate data into samples, with **b[1]** here being the sample number.
4. I use the handy **Collectors.summingDouble()** [to accumulate the data][13] for each measurement within the sample within the stratum.
5. Again, a Java lambda or anonymous function whose argument **c** is the array of fields, where this lambda has several lines of code that are surrounded by **{** and **}** with a **return** statement just before the **}**.
6. Calculate the mean crown radius of the measurement. The two crown diameters are averaged (divide by 2) and then halved to get a radius (divide by 2 again), hence **/4d**.
7. Calculate the crown area of the measurement (π times the radius squared) as a proportion of the 500-square-meter sample area and return that value as the result of the lambda.
8. Again, similar to the previous task. The result looks like (with some numbers elided):

   ```
   sampleValues = {1={1=0.09083231861452731, 66=0.06088002082602869, ... 28=0.0837823490804228}, 2={65=0.14738326403381743, 2=0.16961183847374103, ... 63=0.25083064794883453}, 3={64=0.3306323635177101, 32=0.25911911184680053, ... 30=0.2642668470291564}}
   ```


This output shows the **Map** of **Map**s structure clearly—there are three entries in the top level corresponding to the strata 1, 2, and 3, and each stratum has subentries corresponding to the proportional area of the sample covered by tree crowns.

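Items 6 and 7 can be checked by hand. The first tree in the sample table has crown diameters of 3.6 m and 4.6 m, so its mean radius is (3.6 + 4.6) / 4 = 2.05 m. Its crown therefore covers π × 2.05² ≈ 13.2 m², about 2.6% of a 500 m² plot.

The sketch below reproduces that arithmetic and the nested grouping on two rows taken from the table, using in-memory rows instead of the file. **CrownCoverSketch**, **crownProportion**, and the **sampleValues** method are illustrative names. The rows are assumed to be bar-separated without padding.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

public class CrownCoverSketch {
    static final double SAMPLE_AREA_M2 = 500d; // 20 m x 25 m sample plots

    // Share of one sample plot covered by a single crown, treating the crown as a
    // circle whose diameter is the mean of the two measured crown diameters.
    static double crownProportion(double d1, double d2) {
        double radius = (d1 + d2) / 4d;            // mean diameter / 2
        return radius * radius * Math.PI / SAMPLE_AREA_M2;
    }

    // Stratum -> sample -> summed crown proportion, from already-split rows.
    static Map<Integer, Map<Integer, Double>> sampleValues(List<String[]> rows) {
        return rows.stream().collect(
            Collectors.groupingBy(a -> Integer.parseInt(a[0]),
                Collectors.groupingBy(b -> Integer.parseInt(b[1]),
                    Collectors.summingDouble(c -> crownProportion(
                        Double.parseDouble(c[5]), Double.parseDouble(c[6]))))));
    }

    public static void main(String[] args) {
        List<String[]> rows = List.of(
            "1|1|1|Ac|6|3.6|4.6|2.4".split("\\|"),
            "1|1|2|Ac|6|2.2|2.3|2.5".split("\\|"));
        System.out.println(crownProportion(3.6, 4.6)); // about 0.0264
        System.out.println(sampleValues(rows));        // one stratum, one sample
    }
}
```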

#### Accumulate the sample totals into the stratum means and standard errors

At this point, the task becomes more complex; I need to count the number of samples, sum up the sample values in preparation for calculating the sample mean, and sum up the squares of the sample values in preparation for calculating the standard error of the mean. I may as well incorporate the stratum area into this grouping of data, since I'll need it shortly to weight the stratum results together.

So the first thing to do is create a class, **StratumAccumulator**, to handle the accumulation and provide the calculation of the interesting results. This class implements **java.util.function.DoubleConsumer**, and its **accept()** and **combine()** methods can be passed to **collect()** to handle accumulation:

```
import java.util.function.DoubleConsumer;

class StratumAccumulator implements DoubleConsumer {
    private double ha;
    private int n;
    private double sum;
    private double ssq;

    public StratumAccumulator(double ha) { // (1)
        this.ha = ha;
        this.n = 0;
        this.sum = 0d;
        this.ssq = 0d;
    }

    @Override
    public void accept(double d) { // (2)
        this.sum += d;
        this.ssq += d*d;
        this.n++;
    }

    public void combine(StratumAccumulator other) { // (3)
        this.sum += other.sum;
        this.ssq += other.ssq;
        this.n += other.n;
    }

    public double getHa() { // (4)
        return this.ha;
    }

    public int getN() { // (5)
        return this.n;
    }

    public double getMean() { // (6)
        return this.n > 0 ? this.sum / this.n : 0d;
    }

    public double getStandardError() { // (7)
        double mean = this.getMean();
        double variance = this.n > 1 ? (this.ssq - mean*mean*n)/(this.n - 1) : 0d;
        return this.n > 0 ? Math.sqrt(variance/this.n) : 0d;
    }
}
```

Line-by-line:

1. The constructor **StratumAccumulator(double ha)** takes an argument, the area of the stratum in hectares, which allows me to merge the stratum area lookup table into instances of this class.
2. The **accept(double d)** method is used to accumulate the stream of double values, and I use it to:

   a. Count the number of values.

   b. Sum the values in preparation for computing the sample mean.

   c. Sum the squares of the values in preparation for computing the standard error of the mean.
3. The **combine()** method is used to merge substreams of **StratumAccumulator**s (in case I want to process in parallel).
4. The getter for the area of the stratum.
5. The getter for the number of samples in the stratum.
6. The getter for the mean sample value in the stratum.
7. The getter for the standard error of the mean in the stratum.

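**getMean()** and **getStandardError()** compute the usual sample mean and standard error of the mean. The variance comes from the running sums (**sum** and **ssq**) rather than from a second pass over the data. With n for **n** and x_i for the accumulated values:

$$
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i,
\qquad
s^2 = \frac{\sum_{i=1}^{n} x_i^2 - n\bar{x}^2}{n-1},
\qquad
\mathrm{SE}(\bar{x}) = \sqrt{\frac{s^2}{n}}
$$

The numerator of s² relies on the identity Σ(x_i − x̄)² = Σx_i² − n·x̄². That is why keeping only **n**, **sum**, and **ssq** is enough, and why **combine()** can merge partial accumulators by simple addition.

This one-pass form can lose precision when the values are large relative to their spread. For proportions between 0 and 1 like these, that is unlikely to matter.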

Once I have this accumulator, I can use it to accumulate the sample values pertaining to each stratum:

```
final Map<Integer,StratumAccumulator> stratumValues = // (1)
    sampleValues.entrySet().stream() // (2)
        .collect( // (3)
            Collectors.toMap( // (4)
                e -> e.getKey(), // (5)
                e -> e.getValue().entrySet().stream() // (6)
                    .map(Map.Entry::getValue) // (7)
                    .collect( // (8)
                        () -> new StratumAccumulator(stratumAreas.get(e.getKey())), // (9)
                        StratumAccumulator::accept, // (10)
                        StratumAccumulator::combine) // (11)
            )
        );
```

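For a single stratum, the three-argument **collect()** in that pipeline comes down to three steps:

* create an accumulator holding the stratum's area,
* feed it each sample value,
* merge partial accumulators if the stream is split.

The self-contained sketch below repeats a condensed copy of **StratumAccumulator** so that it compiles on its own. It runs the same kind of call on a short list of made-up sample proportions, chosen only to keep the arithmetic easy. **StratumAccumulatorDemo** and **accumulate** are illustrative names, not part of the article's code.

```java
import java.util.List;
import java.util.function.DoubleConsumer;

public class StratumAccumulatorDemo {
    // Condensed copy of the article's StratumAccumulator so this file compiles alone.
    static class StratumAccumulator implements DoubleConsumer {
        private final double ha;
        private int n;
        private double sum;
        private double ssq;

        StratumAccumulator(double ha) { this.ha = ha; }

        @Override
        public void accept(double d) { sum += d; ssq += d * d; n++; }

        void combine(StratumAccumulator other) {
            sum += other.sum; ssq += other.ssq; n += other.n;
        }

        double getHa() { return ha; }
        int getN() { return n; }
        double getMean() { return n > 0 ? sum / n : 0d; }
        double getStandardError() {
            double mean = getMean();
            double variance = n > 1 ? (ssq - mean * mean * n) / (n - 1) : 0d;
            return n > 0 ? Math.sqrt(variance / n) : 0d;
        }
    }

    // Same three-argument collect() as the stratumValues pipeline, for one stratum.
    static StratumAccumulator accumulate(double ha, List<Double> sampleValues) {
        return sampleValues.stream().collect(
            () -> new StratumAccumulator(ha),   // supplier: a fresh accumulator
            StratumAccumulator::accept,         // fold in one Double (auto-unboxed)
            StratumAccumulator::combine);       // merge partial results (parallel streams)
    }

    public static void main(String[] args) {
        StratumAccumulator acc = accumulate(114.89, List.of(0.1, 0.2, 0.3));
        System.out.println(acc.getN());             // 3
        System.out.println(acc.getMean());          // approximately 0.2
        System.out.println(acc.getStandardError()); // approximately 0.0577
    }
}
```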