` 加上 `div` 标签,注意生成的段落是自动缩进的。

+

+```

+

+

Vim plugins are awesome !

+

+```

+

+Vim Surround 有很多其它选项,你可以参照 [GitHub][7] 上的说明尝试它们。

+

+### 4、Vim Gitgutter

+

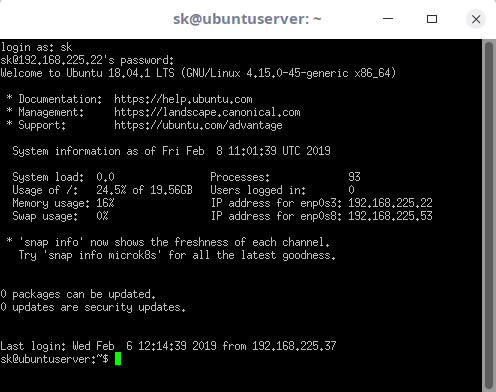

+[Vim Gitgutter][8] 插件对使用 Git 作为版本控制工具的人来说非常有用。它会在 Vim 的行号列旁显示 `git diff` 的差异标记。假设你有如下已提交过的代码:

+

+```

+ 1 package main

+ 2

+ 3 import "fmt"

+ 4

+ 5 func main() {

+ 6 x := true

+ 7 items := []string{"tv", "pc", "tablet"}

+ 8

+ 9 if x {

+ 10 for _, i := range items {

+ 11 fmt.Println(i)

+ 12 }

+ 13 }

+ 14 }

+```

+

+当你做出一些修改后,Vim Gitgutter 会显示如下标记:

+

+```

+ 1 package main

+ 2

+ 3 import "fmt"

+ 4

+_ 5 func main() {

+ 6 items := []string{"tv", "pc", "tablet"}

+ 7

+~ 8 if len(items) > 0 {

+ 9 for _, i := range items {

+ 10 fmt.Println(i)

++ 11 fmt.Println("------")

+ 12 }

+ 13 }

+ 14 }

+```

+

+`_` 标记表示在第 5 行和第 6 行之间删除了一行。`~` 表示第 8 行有修改,`+` 表示新增了第 11 行。

+

+另外,Vim Gitgutter 允许你用 `[c` 和 `]c` 在多个有修改的块之间跳转,甚至可以用 `Leader+hs` 来暂存某个变更集。

+

+这个插件提供了对变更的即时视觉反馈,如果你用 Git 的话,有了它简直是如虎添翼。

+

+### 5、VIM Fugitive

+

+[Vim Fugitive][9] 是另一个将 Git 工作流集成到 Vim 中的超棒插件。它对 Git 做了一些封装,可以让你在 Vim 里直接执行 Git 命令并将结果集成在 Vim 界面里。这个插件有超多的特性,更多信息请访问它的 [GitHub][10] 项目页面。

+

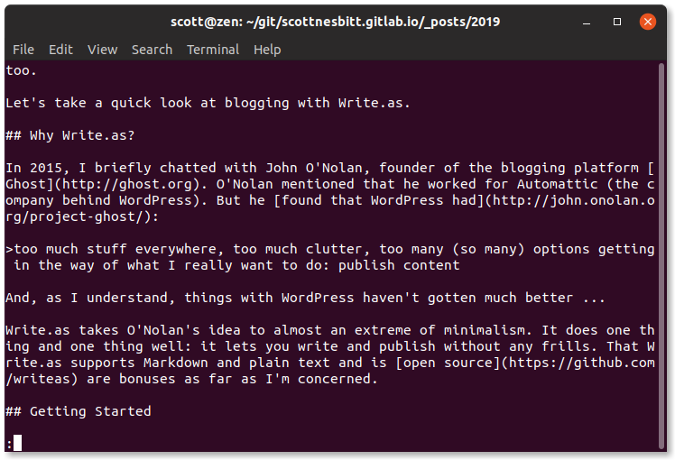

+这里有一个使用 Vim Fugitive 的基础 Git 工作流示例。设想我们已经对下面的 Go 代码做出修改,你可以用 `:Gblame` 调用 `git blame` 来查看每行最后的提交信息:

+

+```

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 1 package main

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 2

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 3 import "fmt"

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 4

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│_ 5 func main() {

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 6 items := []string{"tv", "pc", "tablet"}

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 7

+00000000 (Not Committed Yet 2018-12-05 18:55:00 -0500)│~ 8 if len(items) > 0 {

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 9 for _, i := range items {

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 10 fmt.Println(i)

+00000000 (Not Committed Yet 2018-12-05 18:55:00 -0500)│+ 11 fmt.Println("------")

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 12 }

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 13 }

+e9949066 (Ricardo Gerardi 2018-12-05 18:17:19 -0500)│ 14 }

+```

+

+可以看到第 8 行和第 11 行显示还未提交。用 `:Gstatus` 命令检查仓库当前的状态:

+

+```

+ 1 # On branch master

+ 2 # Your branch is up to date with 'origin/master'.

+ 3 #

+ 4 # Changes not staged for commit:

+ 5 # (use "git add

..." to update what will be committed)

+ 6 # (use "git checkout -- ..." to discard changes in working directory)

+ 7 #

+ 8 # modified: vim-5plugins/examples/test1.go

+ 9 #

+ 10 no changes added to commit (use "git add" and/or "git commit -a")

+--------------------------------------------------------------------------------------------------------

+ 1 package main

+ 2

+ 3 import "fmt"

+ 4

+_ 5 func main() {

+ 6 items := []string{"tv", "pc", "tablet"}

+ 7

+~ 8 if len(items) > 0 {

+ 9 for _, i := range items {

+ 10 fmt.Println(i)

++ 11 fmt.Println("------")

+ 12 }

+ 13 }

+ 14 }

+```

+

+Vim Fugitive 在分割的窗口里显示 `git status` 的输出结果。你可以在该行按下 `-` 键用该文件的名字暂存这个文件的提交,再按一次 `-` 可以取消暂存。这个信息会随着你的操作自动更新:

+

+```

+ 1 # On branch master

+ 2 # Your branch is up to date with 'origin/master'.

+ 3 #

+ 4 # Changes to be committed:

+ 5 # (use "git reset HEAD ..." to unstage)

+ 6 #

+ 7 # modified: vim-5plugins/examples/test1.go

+ 8 #

+--------------------------------------------------------------------------------------------------------

+ 1 package main

+ 2

+ 3 import "fmt"

+ 4

+_ 5 func main() {

+ 6 items := []string{"tv", "pc", "tablet"}

+ 7

+~ 8 if len(items) > 0 {

+ 9 for _, i := range items {

+ 10 fmt.Println(i)

++ 11 fmt.Println("------")

+ 12 }

+ 13 }

+ 14 }

+```

+

+现在你可以用 `:Gcommit` 来提交修改了。Vim Fugitive 会打开另一个分割窗口让你输入提交信息:

+

+```

+ 1 vim-5plugins: Updated test1.go example file

+ 2 # Please enter the commit message for your changes. Lines starting

+ 3 # with '#' will be ignored, and an empty message aborts the commit.

+ 4 #

+ 5 # On branch master

+ 6 # Your branch is up to date with 'origin/master'.

+ 7 #

+ 8 # Changes to be committed:

+ 9 # modified: vim-5plugins/examples/test1.go

+ 10 #

+```

+

+按 `:wq` 保存文件完成提交:

+

+```

+[master c3bf80f] vim-5plugins: Updated test1.go example file

+ 1 file changed, 2 insertions(+), 2 deletions(-)

+Press ENTER or type command to continue

+```

+

+然后你可以再用 `:Gstatus` 检查结果并用 `:Gpush` 把新的提交推送到远程。

+

+```

+ 1 # On branch master

+ 2 # Your branch is ahead of 'origin/master' by 1 commit.

+ 3 # (use "git push" to publish your local commits)

+ 4 #

+ 5 nothing to commit, working tree clean

+```

+

+Vim Fugitive 的 GitHub 项目主页有很多屏幕录像展示了它的更多功能和工作流,如果你喜欢它并想多学一些,快去看看吧。

+

+### 接下来?

+

+这些 Vim 插件都是程序开发者的神器!还有另外两类开发者常用的插件:自动完成插件和语法检查插件。它些大都是和具体的编程语言相关的,以后我会在一些文章中介绍它们。

+

+你在写代码时是否用到一些其它 Vim 插件?请在评论区留言分享。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/1/vim-plugins-developers

+

+作者:[Ricardo Gerardi][a]

+选题:[lujun9972][b]

+译者:[pityonline](https://github.com/pityonline)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/rgerardi

+[b]: https://github.com/lujun9972

+[1]: https://www.vim.org/

+[2]: https://www.vim.org/scripts/script.php?script_id=3599

+[3]: https://github.com/jiangmiao/auto-pairs

+[4]: https://github.com/scrooloose/nerdcommenter

+[5]: http://vim.wikia.com/wiki/Filetype.vim

+[6]: https://www.vim.org/scripts/script.php?script_id=1697

+[7]: https://github.com/tpope/vim-surround

+[8]: https://github.com/airblade/vim-gitgutter

+[9]: https://www.vim.org/scripts/script.php?script_id=2975

+[10]: https://github.com/tpope/vim-fugitive

diff --git a/translated/talk/20190110 Toyota Motors and its Linux Journey.md b/published/201902/20190110 Toyota Motors and its Linux Journey.md

similarity index 61%

rename from translated/talk/20190110 Toyota Motors and its Linux Journey.md

rename to published/201902/20190110 Toyota Motors and its Linux Journey.md

index b4ae8074a3..d89f4f2a29 100644

--- a/translated/talk/20190110 Toyota Motors and its Linux Journey.md

+++ b/published/201902/20190110 Toyota Motors and its Linux Journey.md

@@ -1,34 +1,32 @@

[#]: collector: (lujun9972)

[#]: translator: (jdh8383)

-[#]: reviewer: ( )

-[#]: publisher: ( )

-[#]: url: ( )

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10543-1.html)

[#]: subject: (Toyota Motors and its Linux Journey)

[#]: via: (https://itsfoss.com/toyota-motors-linux-journey)

-[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

+[#]: author: (Malcolm Dean https://itsfoss.com/toyota-motors-linux-journey)

-丰田汽车的Linux之旅

+丰田汽车的 Linux 之旅

======

-**这篇文章来自 It's FOSS 的读者 Malcolm Dean的投递。**

+我之前跟丰田汽车北美分公司的 Brian.R.Lyons(丰田发言人)聊了聊,话题是关于 Linux 在丰田和雷克萨斯汽车的信息娱乐系统上的实施方案。我了解到一些汽车制造商使用了 Automotive Grade Linux(AGL)。

-我之前跟丰田汽车北美分公司的 Brian.R.Lyons(丰田发言人)聊了聊,话题是关于Linux在丰田和雷克萨斯汽车的信息娱乐系统上的实施方案。我了解到一些汽车制造商使用了 Automotive Grade Linux (AGL)。

+然后我写了一篇短文,记录了我和 Brian 的讨论内容,谈及了丰田和 Linux 的一些渊源。希望 Linux 的狂热粉丝们能够喜欢这次对话。

-然后我写了一篇短文,记录了我和 Brian 的讨论内容,就是丰田和 Linux 的一些渊源。希望 Linux 的狂热粉丝们能够喜欢这次对话。

-

-全部[丰田和雷克萨斯汽车都将会使用 Automotive Grade Linux][1] (AGL),主要是用于车载信息娱乐系统。这项措施对于丰田集团来说是至关重要的,因为据 Lyons 先生所说:“作为技术的引领者之一,丰田认识到,赶上科技快速进步最好的方法就是拥抱开源理念”。

+全部的[丰田和雷克萨斯汽车都将会使用 Automotive Grade Linux(AGL)][1],主要是用于车载信息娱乐系统。这项措施对于丰田集团来说是至关重要的,因为据 Lyons 先生所说:“作为技术的引领者之一,丰田认识到,赶上科技快速进步最好的方法就是接受开源发展的理念。”

丰田和众多汽车制造公司都认为,与使用非自由软件相比,采用基于 Linux 的操作系统在更新和升级方面会更加廉价和快捷。

-这简直太棒了! Linux 终于跟汽车结合起来了。我每天都在电脑上使用 Linux;能看到这个优秀的软件在一个完全不同的产业领域里大展拳脚真是太好了。

+这简直太棒了!Linux 终于跟汽车结合起来了。我每天都在电脑上使用 Linux;能看到这个优秀的软件在一个完全不同的产业领域里大展拳脚真是太好了。

-我很好奇丰田是什么时候开始使用 [Automotive Grade Linux][2] (AGL) 的。按照 Lyons 先生的说法,这要追溯到 2011 年。

+我很好奇丰田是什么时候开始使用 [Automotive Grade Linux(AGL)][2]的。按照 Lyons 先生的说法,这要追溯到 2011 年。

->“自 AGL 项目在五年前启动之始,作为活跃的会员和贡献者,丰田与其他顶级制造商和供应商展开合作,着手开发一个基于 Linux 的强大平台,并不断地增强其功能和安全性。”

+> “自 AGL 项目在五年前启动之始,作为活跃的会员和贡献者,丰田与其他顶级制造商和供应商展开合作,着手开发一个基于 Linux 的强大平台,并不断地增强其功能和安全性。”

![丰田信息娱乐系统][3]

-[丰田于2011年加入了 Linux 基金会][4],与其他汽车制造商和软件公司就 IVI(车内信息娱乐系统)展开讨论,最终在 2012 年,Linux 基金会内部成立了 Automotive Grade Linux 工作组。

+[丰田于 2011 年加入了 Linux 基金会][4],与其他汽车制造商和软件公司就 IVI(车内信息娱乐系统)展开讨论,最终在 2012 年,Linux 基金会内部成立了 Automotive Grade Linux 工作组。

丰田在 AGL 工作组里首先提出了“代码优先”的策略,这在开源领域是很常见的做法。然后丰田和其他汽车制造商、IVI 一线厂家,软件公司等各方展开对话,根据各方的技术需求详细制定了初始方向。

@@ -48,14 +46,14 @@

via: https://itsfoss.com/toyota-motors-linux-journey

-作者:[Abhishek Prakash][a]

+作者:[Malcolm Dean][a]

选题:[lujun9972][b]

译者:[jdh8383](https://github.com/jdh8383)

-校对:[校对者ID](https://github.com/校对者ID)

+校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

-[a]: https://itsfoss.com/author/abhishek/

+[a]: https://itsfoss.com/toyota-motors-linux-journey

[b]: https://github.com/lujun9972

[1]: https://www.linuxfoundation.org/press-release/2018/01/automotive-grade-linux-hits-road-globally-toyota-amazon-alexa-joins-agl-support-voice-recognition/

[2]: https://www.automotivelinux.org/

diff --git a/published/20190114 Hegemon - A Modular System And Hardware Monitoring Tool For Linux.md b/published/201902/20190114 Hegemon - A Modular System And Hardware Monitoring Tool For Linux.md

similarity index 100%

rename from published/20190114 Hegemon - A Modular System And Hardware Monitoring Tool For Linux.md

rename to published/201902/20190114 Hegemon - A Modular System And Hardware Monitoring Tool For Linux.md

diff --git a/published/20190114 Remote Working Survival Guide.md b/published/201902/20190114 Remote Working Survival Guide.md

similarity index 100%

rename from published/20190114 Remote Working Survival Guide.md

rename to published/201902/20190114 Remote Working Survival Guide.md

diff --git a/published/20190115 Comparing 3 open source databases- PostgreSQL, MariaDB, and SQLite.md b/published/201902/20190115 Comparing 3 open source databases- PostgreSQL, MariaDB, and SQLite.md

similarity index 100%

rename from published/20190115 Comparing 3 open source databases- PostgreSQL, MariaDB, and SQLite.md

rename to published/201902/20190115 Comparing 3 open source databases- PostgreSQL, MariaDB, and SQLite.md

diff --git a/published/20190115 Getting started with Sandstorm, an open source web app platform.md b/published/201902/20190115 Getting started with Sandstorm, an open source web app platform.md

similarity index 100%

rename from published/20190115 Getting started with Sandstorm, an open source web app platform.md

rename to published/201902/20190115 Getting started with Sandstorm, an open source web app platform.md

diff --git a/published/201902/20190116 The Evil-Twin Framework- A tool for improving WiFi security.md b/published/201902/20190116 The Evil-Twin Framework- A tool for improving WiFi security.md

new file mode 100644

index 0000000000..760c2ed1cf

--- /dev/null

+++ b/published/201902/20190116 The Evil-Twin Framework- A tool for improving WiFi security.md

@@ -0,0 +1,230 @@

+[#]: collector: (lujun9972)

+[#]: translator: (hopefully2333)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10568-1.html)

+[#]: subject: (The Evil-Twin Framework: A tool for improving WiFi security)

+[#]: via: (https://opensource.com/article/19/1/evil-twin-framework)

+[#]: author: (André Esser https://opensource.com/users/andreesser)

+

+Evil-Twin 框架:一个用于提升 WiFi 安全性的工具

+======

+

+> 了解一款用于对 WiFi 接入点安全进行渗透测试的工具。

+

+

+

+越来越多的设备通过无线传输的方式连接到互联网,以及,大范围可用的 WiFi 接入点为攻击者攻击用户提供了很多机会。通过欺骗用户连接到[虚假的 WiFi 接入点][1],攻击者可以完全控制用户的网络连接,这将使得攻击者可以嗅探和篡改用户的数据包,将用户的连接重定向到一个恶意的网站,并通过网络发起其他的攻击。

+

+为了保护用户并告诉他们如何避免线上的危险操作,安全审计人员和安全研究员必须评估用户的安全实践能力,用户常常在没有确认该 WiFi 接入点为安全的情况下就连接上了该网络,安全审计人员和研究员需要去了解这背后的原因。有很多工具都可以对 WiFi 的安全性进行审计,但是没有一款工具可以测试大量不同的攻击场景,也没有能和其他工具集成得很好的工具。

+

+Evil-Twin Framework(ETF)用于解决 WiFi 审计过程中的这些问题。审计者能够使用 ETF 来集成多种工具并测试该 WiFi 在不同场景下的安全性。本文会介绍 ETF 的框架和功能,然后会提供一些案例来说明该如何使用这款工具。

+

+### ETF 的架构

+

+ETF 的框架是用 [Python][2] 写的,因为这门开发语言的代码非常易读,也方便其他开发者向这个项目贡献代码。除此之外,很多 ETF 的库,比如 [Scapy][3],都是为 Python 开发的,很容易就能将它们用于 ETF。

+

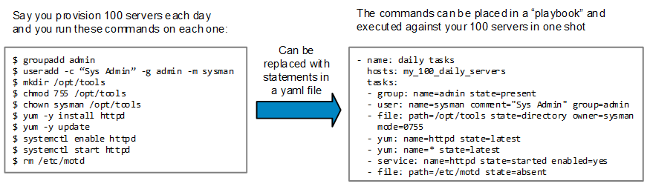

+ETF 的架构(图 1)分为不同的彼此交互的模块。该框架的设置都写在一个单独的配置文件里。用户可以通过 `ConfigurationManager` 类里的用户界面来验证并修改这些配置。其他模块只能读取这些设置并根据这些设置进行运行。

+

+![Evil-Twin Framework Architecture][5]

+

+*图 1:Evil-Twin 的框架架构*

+

+ETF 支持多种与框架交互的用户界面,当前的默认界面是一个交互式控制台界面,类似于 [Metasploit][6] 那种。正在开发用于桌面/浏览器使用的图形用户界面(GUI)和命令行界面(CLI),移动端界面也是未来的一个备选项。用户可以使用交互式控制台界面来修改配置文件里的设置(最终会使用 GUI)。用户界面可以与存在于这个框架里的每个模块进行交互。

+

+WiFi 模块(AirCommunicator)用于支持多种 WiFi 功能和攻击类型。该框架确定了 Wi-Fi 通信的三个基本支柱:数据包嗅探、自定义数据包注入和创建接入点。三个主要的 WiFi 通信模块 AirScanner、AirInjector,和 AirHost,分别用于数据包嗅探、数据包注入,和接入点创建。这三个类被封装在主 WiFi 模块 AirCommunicator 中,AirCommunicator 在启动这些服务之前会先读取这些服务的配置文件。使用这些核心功能的一个或多个就可以构造任意类型的 WiFi 攻击。

+

+要使用中间人(MITM)攻击(这是一种攻击 WiFi 客户端的常见手法),ETF 有一个叫做 ETFITM(Evil-Twin Framework-in-the-Middle)的集成模块,这个模块用于创建一个 web 代理,来拦截和修改经过的 HTTP/HTTPS 数据包。

+

+许多其他的工具也可以利用 ETF 创建的 MITM。通过它的可扩展性,ETF 能够支持它们,而不必单独地调用它们,你可以通过扩展 Spawner 类来将这些工具添加到框架里。这使得开发者和安全审计人员可以使用框架里预先配置好的参数字符来调用程序。

+

+扩展 ETF 的另一种方法就是通过插件。有两类插件:WiFi 插件和 MITM 插件。MITM 插件是在 MITM 代理运行时可以执行的脚本。代理会将 HTTP(s) 请求和响应传递给可以记录和处理它们的插件。WiFi 插件遵循一个更加复杂的执行流程,但仍然会给想参与开发并且使用自己插件的贡献者提供一个相对简单的 API。WiFi 插件还可以进一步地划分为三类,其中每个对应一个核心 WiFi 通信模块。

+

+每个核心模块都有一些特定事件能触发响应的插件的执行。举个例子,AirScanner 有三个已定义的事件,可以对其响应进行编程处理。事件通常对应于服务开始运行之前的设置阶段、服务正在运行时的中间执行阶段、服务完成后的卸载或清理阶段。因为 Python 允许多重继承,所以一个插件可以继承多个插件类。

+

+上面的图 1 是框架架构的摘要。从 ConfigurationManager 指出的箭头意味着模块会从中读取信息,指向它的箭头意味着模块会写入/修改配置。

+

+### 使用 ETF 的例子

+

+ETF 可以通过多种方式对 WiFi 的网络安全或者终端用户的 WiFi 安全意识进行渗透测试。下面的例子描述了这个框架的一些渗透测试功能,例如接入点和客户端检测、对使用 WPA 和 WEP 类型协议的接入点进行攻击,和创建 evil twin 接入点。

+

+这些例子是使用 ETF 和允许进行 WiFi 数据捕获的 WiFi 卡设计的。它们也在 ETF 设置命令中使用了下面这些缩写:

+

+ * **APS** Access Point SSID

+ * **APB** Access Point BSSID

+ * **APC** Access Point Channel

+ * **CM** Client MAC address

+

+在实际的测试场景中,确保你使用了正确的信息来替换这些缩写。

+

+#### 在解除认证攻击后捕获 WPA 四次握手的数据包。

+

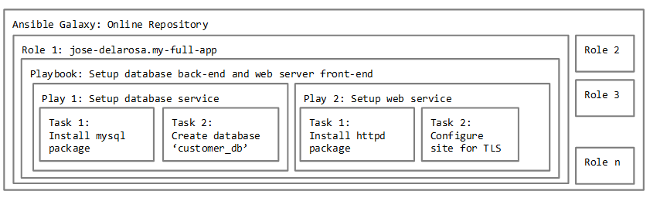

+这个场景(图 2)做了两个方面的考虑:解除认证攻击和捕获 WPA 四次握手数据包的可能性。这个场景从一个启用了 WPA/WPA2 的接入点开始,这个接入点有一个已经连上的客户端设备(在本例中是一台智能手机)。目的是通过常规的解除认证攻击(LCTT 译注:类似于 DoS 攻击)来让客户端断开和 WiFi 的网络,然后在客户端尝试重连的时候捕获 WPA 的握手包。重连会在断开连接后马上手动完成。

+

+![Scenario for capturing a WPA handshake after a de-authentication attack][8]

+

+*图 2:在解除认证攻击后捕获 WPA 握手包的场景*

+

+在这个例子中需要考虑的是 ETF 的可靠性。目的是确认工具是否一直都能捕获 WPA 的握手数据包。每个工具都会用来多次复现这个场景,以此来检查它们在捕获 WPA 握手数据包时的可靠性。

+

+使用 ETF 来捕获 WPA 握手数据包的方法不止一种。一种方法是使用 AirScanner 和 AirInjector 两个模块的组合;另一种方法是只使用 AirInjector。下面这个场景是使用了两个模块的组合。

+

+ETF 启用了 AirScanner 模块并分析 IEEE 802.11 数据帧来发现 WPA 握手包。然后 AirInjecto 就可以使用解除认证攻击来强制客户端断开连接,以进行重连。必须在 ETF 上执行下面这些步骤才能完成上面的目标:

+

+ 1. 进入 AirScanner 配置模式:`config airscanner`

+ 2. 设置 AirScanner 不跳信道:`config airscanner`

+ 3. 设置信道以嗅探经过 WiFi 接入点信道的数据(APC):`set fixed_sniffing_channel = `

+ 4. 使用 CredentialSniffer 插件来启动 AirScanner 模块:`start airscanner with credentialsniffer`

+ 5. 从已嗅探的接入点列表中添加目标接入点的 BSSID(APS):`add aps where ssid = `

+ 6. 启用 AirInjector 模块,在默认情况下,它会启用解除认证攻击:`start airinjector`

+

+这些简单的命令设置能让 ETF 在每次测试时执行成功且有效的解除认证攻击。ETF 也能在每次测试的时候捕获 WPA 的握手数据包。下面的代码能让我们看到 ETF 成功的执行情况。

+

+```

+███████╗████████╗███████╗

+██╔════╝╚══██╔══╝██╔════╝

+█████╗ ██║ █████╗

+██╔══╝ ██║ ██╔══╝

+███████╗ ██║ ██║

+╚══════╝ ╚═╝ ╚═╝

+

+

+[+] Do you want to load an older session? [Y/n]: n

+[+] Creating new temporary session on 02/08/2018

+[+] Enter the desired session name:

+ETF[etf/aircommunicator/]::> config airscanner

+ETF[etf/aircommunicator/airscanner]::> listargs

+ sniffing_interface = wlan1; (var)

+ probes = True; (var)

+ beacons = True; (var)

+ hop_channels = false; (var)

+fixed_sniffing_channel = 11; (var)

+ETF[etf/aircommunicator/airscanner]::> start airscanner with

+arpreplayer caffelatte credentialsniffer packetlogger selfishwifi

+ETF[etf/aircommunicator/airscanner]::> start airscanner with credentialsniffer

+[+] Successfully added credentialsniffer plugin.

+[+] Starting packet sniffer on interface 'wlan1'

+[+] Set fixed channel to 11

+ETF[etf/aircommunicator/airscanner]::> add aps where ssid = CrackWPA

+ETF[etf/aircommunicator/airscanner]::> start airinjector

+ETF[etf/aircommunicator/airscanner]::> [+] Starting deauthentication attack

+ - 1000 bursts of 1 packets

+ - 1 different packets

+[+] Injection attacks finished executing.

+[+] Starting post injection methods

+[+] Post injection methods finished

+[+] WPA Handshake found for client '70:3e:ac:bb:78:64' and network 'CrackWPA'

+```

+

+#### 使用 ARP 重放攻击并破解 WEP 无线网络

+

+下面这个场景(图 3)将关注[地址解析协议][9](ARP)重放攻击的效率和捕获包含初始化向量(IVs)的 WEP 数据包的速度。相同的网络可能需要破解不同数量的捕获的 IVs,所以这个场景的 IVs 上限是 50000。如果这个网络在首次测试期间,还未捕获到 50000 IVs 就崩溃了,那么实际捕获到的 IVs 数量会成为这个网络在接下来的测试里的新的上限。我们使用 `aircrack-ng` 对数据包进行破解。

+

+测试场景从一个使用 WEP 协议进行加密的 WiFi 接入点和一台知道其密钥的离线客户端设备开始 —— 为了测试方便,密钥使用了 12345,但它可以是更长且更复杂的密钥。一旦客户端连接到了 WEP 接入点,它会发送一个不必要的 ARP 数据包;这是要捕获和重放的数据包。一旦被捕获的包含 IVs 的数据包数量达到了设置的上限,测试就结束了。

+

+![Scenario for capturing a WPA handshake after a de-authentication attack][11]

+

+*图 3:在进行解除认证攻击后捕获 WPA 握手包的场景*

+

+ETF 使用 Python 的 Scapy 库来进行包嗅探和包注入。为了最大限度地解决 Scapy 里的已知的性能问题,ETF 微调了一些低级库,来大大加快包注入的速度。对于这个特定的场景,ETF 为了更有效率地嗅探,使用了 `tcpdump` 作为后台进程而不是 Scapy,Scapy 用于识别加密的 ARP 数据包。

+

+这个场景需要在 ETF 上执行下面这些命令和操作:

+

+ 1. 进入 AirScanner 设置模式:`config airscanner`

+ 2. 设置 AirScanner 不跳信道:`set hop_channels = false`

+ 3. 设置信道以嗅探经过接入点信道的数据(APC):`set fixed_sniffing_channel = `

+ 4. 进入 ARPReplayer 插件设置模式:`config arpreplayer`

+ 5. 设置 WEP 网络目标接入点的 BSSID(APB):`set target_ap_bssid `

+ 6. 使用 ARPReplayer 插件启动 AirScanner 模块:`start airscanner with arpreplayer`

+

+在执行完这些命令后,ETF 会正确地识别加密的 ARP 数据包,然后成功执行 ARP 重放攻击,以此破坏这个网络。

+

+#### 使用一款全能型蜜罐

+

+图 4 中的场景使用相同的 SSID 创建了多个接入点,对于那些可以探测到但是无法接入的 WiFi 网络,这个技术可以发现网络的加密类型。通过启动具有所有安全设置的多个接入点,客户端会自动连接和本地缓存的接入点信息相匹配的接入点。

+

+![Scenario for capturing a WPA handshake after a de-authentication attack][13]

+

+*图 4:在解除认证攻击后捕获 WPA 握手包数据。*

+

+使用 ETF,可以去设置 `hostapd` 配置文件,然后在后台启动该程序。`hostapd` 支持在一张无线网卡上通过设置虚拟接口开启多个接入点,并且因为它支持所有类型的安全设置,因此可以设置完整的全能蜜罐。对于使用 WEP 和 WPA(2)-PSK 的网络,使用默认密码,和对于使用 WPA(2)-EAP 的网络,配置“全部接受”策略。

+

+对于这个场景,必须在 ETF 上执行下面的命令和操作:

+

+ 1. 进入 APLauncher 设置模式:`config aplauncher`

+ 2. 设置目标接入点的 SSID(APS):`set ssid = `

+ 3. 设置 APLauncher 为全部接收的蜜罐:`set catch_all_honeypot = true`

+ 4. 启动 AirHost 模块:`start airhost`

+

+使用这些命令,ETF 可以启动一个包含所有类型安全配置的完整全能蜜罐。ETF 同样能自动启动 DHCP 和 DNS 服务器,从而让客户端能与互联网保持连接。ETF 提供了一个更好、更快、更完整的解决方案来创建全能蜜罐。下面的代码能够看到 ETF 的成功执行。

+

+```

+███████╗████████╗███████╗

+██╔════╝╚══██╔══╝██╔════╝

+█████╗ ██║ █████╗

+██╔══╝ ██║ ██╔══╝

+███████╗ ██║ ██║

+╚══════╝ ╚═╝ ╚═╝

+

+

+[+] Do you want to load an older session? [Y/n]: n

+[+] Creating ne´,cxzw temporary session on 03/08/2018

+[+] Enter the desired session name:

+ETF[etf/aircommunicator/]::> config aplauncher

+ETF[etf/aircommunicator/airhost/aplauncher]::> setconf ssid CatchMe

+ssid = CatchMe

+ETF[etf/aircommunicator/airhost/aplauncher]::> setconf catch_all_honeypot true

+catch_all_honeypot = true

+ETF[etf/aircommunicator/airhost/aplauncher]::> start airhost

+[+] Killing already started processes and restarting network services

+[+] Stopping dnsmasq and hostapd services

+[+] Access Point stopped...

+[+] Running airhost plugins pre_start

+[+] Starting hostapd background process

+[+] Starting dnsmasq service

+[+] Running airhost plugins post_start

+[+] Access Point launched successfully

+[+] Starting dnsmasq service

+```

+

+### 结论和以后的工作

+

+这些场景使用常见和众所周知的攻击方式来帮助验证 ETF 测试 WIFI 网络和客户端的能力。这个结果同样证明了该框架的架构能在平台现有功能的优势上开发新的攻击向量和功能。这会加快新的 WiFi 渗透测试工具的开发,因为很多的代码已经写好了。除此之外,将 WiFi 技术相关的东西都集成到一个单独的工具里,会使 WiFi 渗透测试更加简单高效。

+

+ETF 的目标不是取代现有的工具,而是为它们提供补充,并为安全审计人员在进行 WiFi 渗透测试和提升用户安全意识时,提供一个更好的选择。

+

+ETF 是 [GitHub][14] 上的一个开源项目,欢迎社区为它的开发做出贡献。下面是一些您可以提供帮助的方法。

+

+当前 WiFi 渗透测试的一个限制是无法在测试期间记录重要的事件。这使得报告已经识别到的漏洞更加困难且准确性更低。这个框架可以实现一个记录器,每个类都可以来访问它并创建一个渗透测试会话报告。

+

+ETF 工具的功能涵盖了 WiFi 渗透测试的方方面面。一方面,它让 WiFi 目标侦察、漏洞挖掘和攻击这些阶段变得更加容易。另一方面,它没有提供一个便于提交报告的功能。增加了会话的概念和会话报告的功能,比如在一个会话期间记录重要的事件,会极大地增加这个工具对于真实渗透测试场景的价值。

+

+另一个有价值的贡献是扩展该框架来促进 WiFi 模糊测试。IEEE 802.11 协议非常的复杂,考虑到它在客户端和接入点两方面都会有多种实现方式。可以假设这些实现都包含 bug 甚至是安全漏洞。这些 bug 可以通过对 IEEE 802.11 协议的数据帧进行模糊测试来进行发现。因为 Scapy 允许自定义的数据包创建和数据包注入,可以通过它实现一个模糊测试器。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/1/evil-twin-framework

+

+作者:[André Esser][a]

+选题:[lujun9972][b]

+译者:[hopefully2333](https://github.com/hopefully2333)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/andreesser

+[b]: https://github.com/lujun9972

+[1]: https://en.wikipedia.org/wiki/Rogue_access_point

+[2]: https://www.python.org/

+[3]: https://scapy.net

+[4]: /file/417776

+[5]: https://opensource.com/sites/default/files/uploads/pic1.png (Evil-Twin Framework Architecture)

+[6]: https://www.metasploit.com

+[7]: /file/417781

+[8]: https://opensource.com/sites/default/files/uploads/pic2.png (Scenario for capturing a WPA handshake after a de-authentication attack)

+[9]: https://en.wikipedia.org/wiki/Address_Resolution_Protocol

+[10]: /file/417786

+[11]: https://opensource.com/sites/default/files/uploads/pic3.png (Scenario for capturing a WPA handshake after a de-authentication attack)

+[12]: /file/417791

+[13]: https://opensource.com/sites/default/files/uploads/pic4.png (Scenario for capturing a WPA handshake after a de-authentication attack)

+[14]: https://github.com/Esser420/EvilTwinFramework

diff --git a/published/20190117 How to Update-Change Users Password in Linux Using Different Ways.md b/published/201902/20190117 How to Update-Change Users Password in Linux Using Different Ways.md

similarity index 100%

rename from published/20190117 How to Update-Change Users Password in Linux Using Different Ways.md

rename to published/201902/20190117 How to Update-Change Users Password in Linux Using Different Ways.md

diff --git a/published/20190119 Get started with Roland, a random selection tool for the command line.md b/published/201902/20190119 Get started with Roland, a random selection tool for the command line.md

similarity index 100%

rename from published/20190119 Get started with Roland, a random selection tool for the command line.md

rename to published/201902/20190119 Get started with Roland, a random selection tool for the command line.md

diff --git a/published/20190120 Get started with HomeBank, an open source personal finance app.md b/published/201902/20190120 Get started with HomeBank, an open source personal finance app.md

similarity index 100%

rename from published/20190120 Get started with HomeBank, an open source personal finance app.md

rename to published/201902/20190120 Get started with HomeBank, an open source personal finance app.md

diff --git a/translated/tech/20190121 Get started with TaskBoard, a lightweight kanban board.md b/published/201902/20190121 Get started with TaskBoard, a lightweight kanban board.md

similarity index 77%

rename from translated/tech/20190121 Get started with TaskBoard, a lightweight kanban board.md

rename to published/201902/20190121 Get started with TaskBoard, a lightweight kanban board.md

index d2490b03da..bff5c209c7 100644

--- a/translated/tech/20190121 Get started with TaskBoard, a lightweight kanban board.md

+++ b/published/201902/20190121 Get started with TaskBoard, a lightweight kanban board.md

@@ -1,25 +1,26 @@

[#]: collector: (lujun9972)

[#]: translator: (geekpi)

-[#]: reviewer: ( )

-[#]: publisher: ( )

-[#]: url: ( )

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10539-1.html)

[#]: subject: (Get started with TaskBoard, a lightweight kanban board)

[#]: via: (https://opensource.com/article/19/1/productivity-tool-taskboard)

[#]: author: (Kevin Sonney https://opensource.com/users/ksonney (Kevin Sonney))

-开始使用轻量级看板 TaskBoard

+开始使用 TaskBoard 吧,一款轻量级看板

======

-了解我们在开源工具系列中的第九个工具,它将帮助你在 2019 年提高工作效率。

+

+> 了解我们在开源工具系列中的第九个工具,它将帮助你在 2019 年提高工作效率。

-每年年初似乎都有疯狂的冲动,想方设法提高工作效率。新年的决议,开始一年的权利,当然,“与旧的,与新的”的态度都有助于实现这一目标。通常的一轮建议严重偏向封闭源和专有软件。它不一定是这样。

+每年年初似乎都有疯狂的冲动想提高工作效率。新年的决心,渴望开启新的一年,当然,“抛弃旧的,拥抱新的”的态度促成了这一切。通常这时的建议严重偏向闭源和专有软件,但事实上并不用这样。

这是我挑选出的 19 个新的(或者对你而言新的)开源工具中的第九个工具来帮助你在 2019 年更有效率。

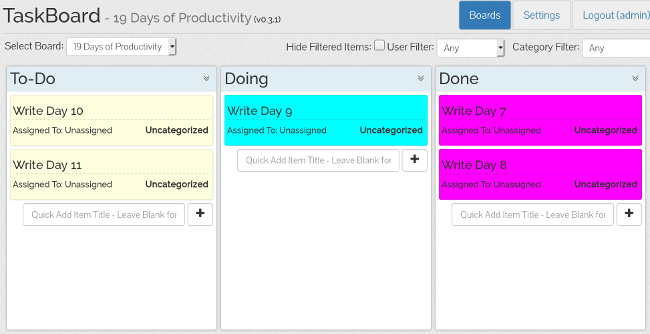

### TaskBoard

-正如我在本系列的[第二篇文章][1]中所写的那样,[看板][2]现在非常受欢迎。并非所有的看板都是相同的。[TaskBoard][3] 是一个易于在现有 Web 服务器上部署的 PHP 应用,它有一些易于使用和管理的功能。

+正如我在本系列的[第二篇文章][1]中所写的那样,[看板][2]现在非常受欢迎。但并非所有的看板都是相同的。[TaskBoard][3] 是一个易于在现有 Web 服务器上部署的 PHP 应用,它有一些易于使用和管理的功能。

@@ -29,15 +30,15 @@

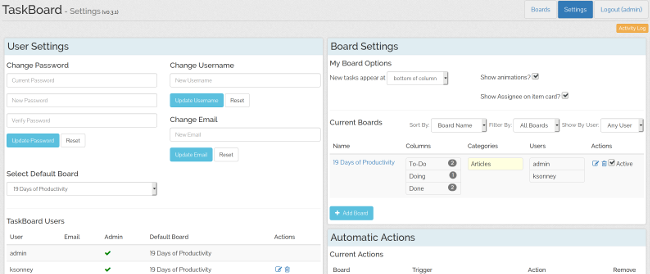

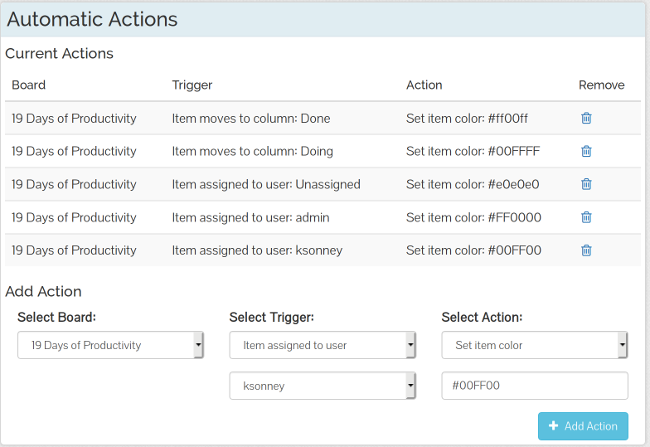

-TaskBoard 还允许你创建自动操作,包括更改用户分配、列或卡片类别这些操作。虽然 TaskBoard 不如其他一些看板应用那么强大,但你可以设置自动操作,使看板用户更容易看到卡片,清除截止日期,并根据需要自动为人们分配新卡片。例如,在下面的截图中,如果将卡片分配给 “admin” 用户,那么它的颜色将更改为红色,并且当将卡片分配给我的用户时,其颜色将更改为蓝绿色。如果项目已添加到“待办事项”列,我还添加了一个操作来清除项目的截止日期,并在发生这种情况时自动将卡片分配给我的用户。

+TaskBoard 还允许你创建自动操作,包括更改用户分配、列或卡片类别这些操作。虽然 TaskBoard 不如其他一些看板应用那么强大,但你可以设置自动操作,使看板用户更容易看到卡片、清除截止日期,并根据需要自动为人们分配新卡片。例如,在下面的截图中,如果将卡片分配给 “admin” 用户,那么它的颜色将更改为红色,并且当将卡片分配给我的用户时,其颜色将更改为蓝绿色。如果项目已添加到“待办事项”列,我还添加了一个操作来清除项目的截止日期,并在发生这种情况时自动将卡片分配给我的用户。

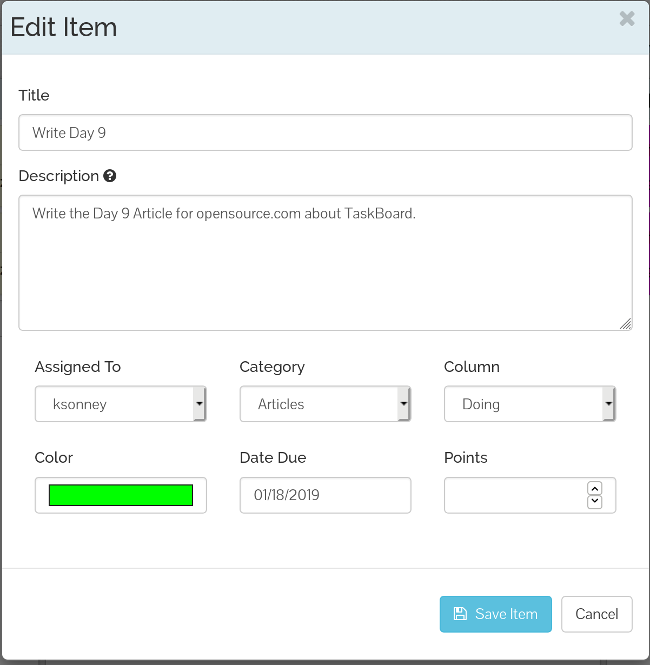

-卡片非常简单。虽然他们没有开始日期,但他们确实有结束日期和点数字段。点数可用于估计所需的时间、所需的工作量或仅是一般优先级。使用点数是可选的,但如果你使用 TaskBoard 进行 scrum 规划或其他敏捷技术,那么这是一个非常方便的功能。你还可以按用户和类别过滤视图。这对于正在进行多个工作流的团队非常有用,因为它允许团队负责人或经理了解进度状态或人员工作量。

+卡片非常简单。虽然它们没有开始日期,但它们确实有结束日期和点数字段。点数可用于估计所需的时间、所需的工作量或仅是一般优先级。使用点数是可选的,但如果你使用 TaskBoard 进行 scrum 规划或其他敏捷技术,那么这是一个非常方便的功能。你还可以按用户和类别过滤视图。这对于正在进行多个工作流的团队非常有用,因为它允许团队负责人或经理了解进度状态或人员工作量。

-如果你需要一个相当轻便的看板,请看下 TaskBoard。它安装快速,有一些很好的功能,且非常,非常容易使用。它还足够的灵活性,可用于开发团队,个人任务跟踪等等。

+如果你需要一个相当轻便的看板,请看下 TaskBoard。它安装快速,有一些很好的功能,且非常、非常容易使用。它还足够的灵活性,可用于开发团队,个人任务跟踪等等。

--------------------------------------------------------------------------------

@@ -46,13 +47,13 @@ via: https://opensource.com/article/19/1/productivity-tool-taskboard

作者:[Kevin Sonney][a]

选题:[lujun9972][b]

译者:[geekpi](https://github.com/geekpi)

-校对:[校对者ID](https://github.com/校对者ID)

+校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

[a]: https://opensource.com/users/ksonney (Kevin Sonney)

[b]: https://github.com/lujun9972

-[1]: https://opensource.com/article/19/1/productivity-tool-wekan

+[1]: https://linux.cn/article-10454-1.html

[2]: https://en.wikipedia.org/wiki/Kanban

[3]: https://taskboard.matthewross.me/

[4]: https://taskboard.matthewross.me/docs/

diff --git a/published/20190122 Dcp (Dat Copy) - Easy And Secure Way To Transfer Files Between Linux Systems.md b/published/201902/20190122 Dcp (Dat Copy) - Easy And Secure Way To Transfer Files Between Linux Systems.md

similarity index 100%

rename from published/20190122 Dcp (Dat Copy) - Easy And Secure Way To Transfer Files Between Linux Systems.md

rename to published/201902/20190122 Dcp (Dat Copy) - Easy And Secure Way To Transfer Files Between Linux Systems.md

diff --git a/published/201902/20190122 Get started with Go For It, a flexible to-do list application.md b/published/201902/20190122 Get started with Go For It, a flexible to-do list application.md

new file mode 100644

index 0000000000..e2602b216c

--- /dev/null

+++ b/published/201902/20190122 Get started with Go For It, a flexible to-do list application.md

@@ -0,0 +1,61 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10557-1.html)

+[#]: subject: (Get started with Go For It, a flexible to-do list application)

+[#]: via: (https://opensource.com/article/19/1/productivity-tool-go-for-it)

+[#]: author: (Kevin Sonney https://opensource.com/users/ksonney (Kevin Sonney))

+

+开始使用 Go For It 吧,一个灵活的待办事项列表程序

+======

+

+> Go For It,是我们开源工具系列中的第十个工具,它将使你在 2019 年更高效,它在 Todo.txt 系统的基础上构建,以帮助你完成更多工作。

+

+

+

+每年年初似乎都有疯狂的冲动想提高工作效率。新年的决心,渴望开启新的一年,当然,“抛弃旧的,拥抱新的”的态度促成了这一切。通常这时的建议严重偏向闭源和专有软件,但事实上并不用这样。

+

+这是我挑选出的 19 个新的(或者对你而言新的)开源工具中的第 10 个工具来帮助你在 2019 年更有效率。

+

+### Go For It

+

+有时,人们要高效率需要的不是一个花哨的看板或一组笔记,而是一个简单、直接的待办事项清单。像“将项目添加到列表中,在完成后检查”一样基本的东西。为此,[纯文本 Todo.txt 系统][1]可能是最容易使用的系统之一,几乎所有系统都支持它。

+

+

+

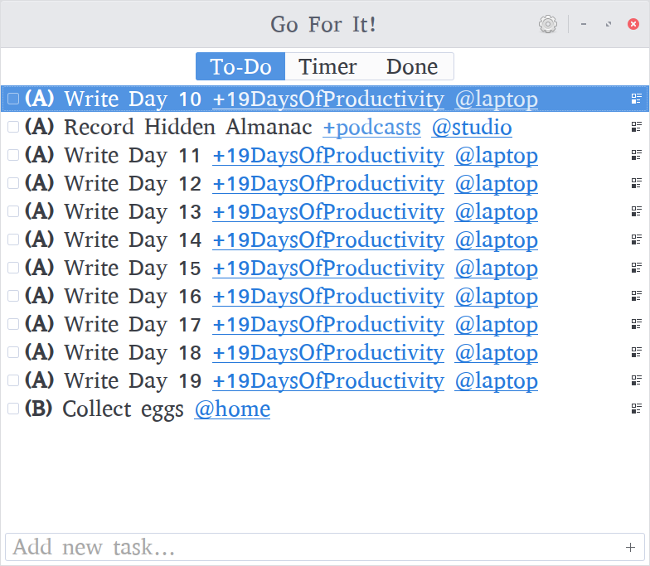

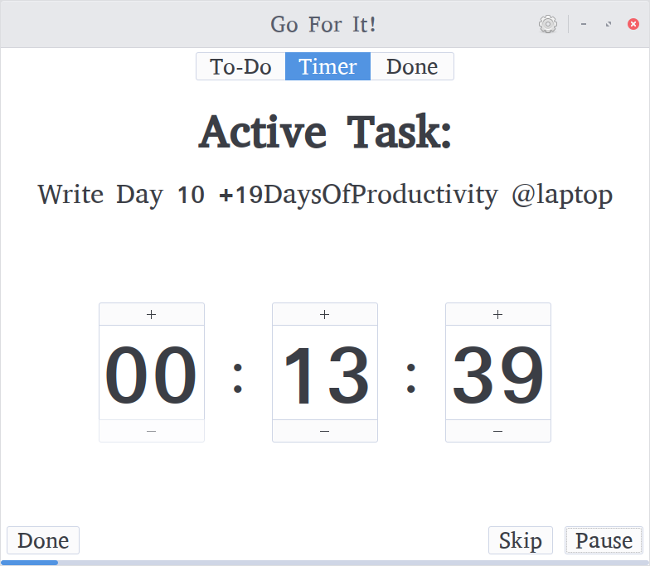

+[Go For It][2] 是一个简单易用的 Todo.txt 图形界面。如果你已经在使用 Todo.txt,它可以与现有文件一起使用,如果还没有,那么可以同时创建待办事项和完成事项。它允许拖放任务排序,允许用户按照他们想要执行的顺序组织待办事项。它还支持 [Todo.txt 格式指南][3]中所述的优先级、项目和上下文。而且,只需单击任务列表中的项目或者上下文就可通过它们过滤任务。

+

+

+

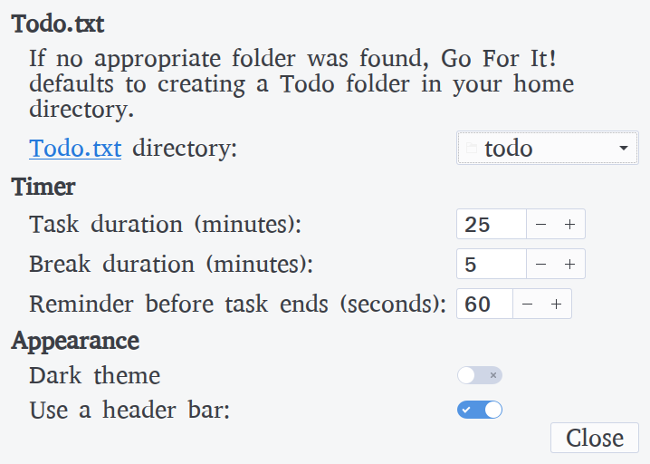

+一开始,Go For It 可能看起来与任何其他 Todo.txt 程序相同,但外观可能是骗人的。将 Go For It 与其他程序真正区分开的功能是它包含一个内置的[番茄工作法][4]计时器。选择要完成的任务,切换到“计时器”选项卡,然后单击“启动”。任务完成后,只需单击“完成”,它将自动重置计时器并选择列表中的下一个任务。你可以暂停并重新启动计时器,也可以单击“跳过”跳转到下一个任务(或中断)。在当前任务剩余 60 秒时,它会发出警告。任务的默认时间设置为 25 分钟,中断的默认时间设置为 5 分钟。你可以在“设置”页面中调整,同时还能调整 Todo.txt 和 done.txt 文件的目录的位置。

+

+

+

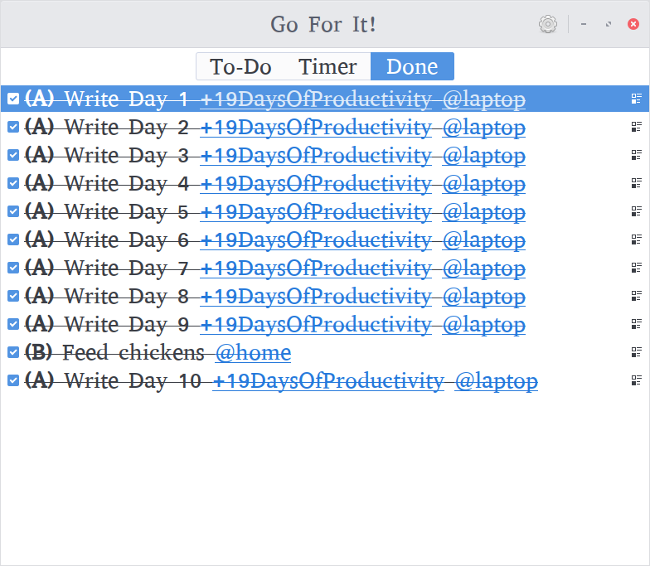

+Go For It 的第三个选项卡是“已完成”,允许你查看已完成的任务并在需要时将其清除。能够看到你已经完成的可能是非常激励的,也是一种了解你在更长的过程中进度的好方法。

+

+

+

+它还有 Todo.txt 的所有其他优点。Go For It 的列表可以被其他使用相同格式的程序访问,包括 [Todo.txt 的原始命令行工具][5]和任何已安装的[附加组件][6]。

+

+Go For It 旨在成为一个简单的工具来帮助管理你的待办事项列表并完成这些项目。如果你已经使用过 Todo.txt,那么 Go For It 是你的工具箱的绝佳补充,如果你还没有,这是一个尝试最简单、最灵活系统之一的好机会。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/1/productivity-tool-go-for-it

+

+作者:[Kevin Sonney][a]

+选题:[lujun9972][b]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/ksonney (Kevin Sonney)

+[b]: https://github.com/lujun9972

+[1]: http://todotxt.org/

+[2]: http://manuel-kehl.de/projects/go-for-it/

+[3]: https://github.com/todotxt/todo.txt

+[4]: https://en.wikipedia.org/wiki/Pomodoro_Technique

+[5]: https://github.com/todotxt/todo.txt-cli

+[6]: https://github.com/todotxt/todo.txt-cli/wiki/Todo.sh-Add-on-Directory

diff --git a/published/201902/20190122 How To Copy A File-Folder From A Local System To Remote System In Linux.md b/published/201902/20190122 How To Copy A File-Folder From A Local System To Remote System In Linux.md

new file mode 100644

index 0000000000..66407b0156

--- /dev/null

+++ b/published/201902/20190122 How To Copy A File-Folder From A Local System To Remote System In Linux.md

@@ -0,0 +1,393 @@

+[#]: collector: (lujun9972)

+[#]: translator: (luming)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10569-1.html)

+[#]: subject: (How To Copy A File/Folder From A Local System To Remote System In Linux?)

+[#]: via: (https://www.2daygeek.com/linux-scp-rsync-pscp-command-copy-files-folders-in-multiple-servers-using-shell-script/)

+[#]: author: (Prakash Subramanian https://www.2daygeek.com/author/prakash/)

+

+如何在 Linux 上复制文件/文件夹到远程系统?

+======

+

+从一个服务器复制文件到另一个服务器,或者从本地到远程复制是 Linux 管理员的日常任务之一。

+

+我觉得不会有人不同意,因为无论在哪里这都是你的日常操作之一。有很多办法都能处理这个任务,我们试着加以概括。你可以挑一个喜欢的方法。当然,看看其他命令也能在别的地方帮到你。

+

+我已经在自己的环境下测试过所有的命令和脚本了,因此你可以直接用到日常工作当中。

+

+通常大家都倾向 `scp`,因为它是文件复制的原生命令之一。但本文所列出的其它命令也很好用,建议你尝试一下。

+

+文件复制可以轻易地用以下四种方法。

+

+- `scp`:在网络上的两个主机之间复制文件,它使用 `ssh` 做文件传输,并使用相同的认证方式,具有相同的安全性。

+- `rsync`:是一个既快速又出众的多功能文件复制工具。它能本地复制、通过远程 shell 在其它主机之间复制,或者与远程的 `rsync` 守护进程 之间复制。

+- `pscp`:是一个并行复制文件到多个主机上的程序。它提供了诸多特性,例如为 `scp` 配置免密传输,保存输出到文件,以及超时控制。

+- `prsync`:也是一个并行复制文件到多个主机上的程序。它也提供了诸多特性,例如为 `ssh` 配置免密传输,保存输出到 文件,以及超时控制。

+

+### 方式 1:如何在 Linux 上使用 scp 命令从本地系统向远程系统复制文件/文件夹?

+

+`scp` 命令可以让我们从本地系统复制文件/文件夹到远程系统上。

+

+我会把 `output.txt` 文件从本地系统复制到 `2g.CentOS.com` 远程系统的 `/opt/backup` 文件夹下。

+

+```

+# scp output.txt root@2g.CentOS.com:/opt/backup

+

+output.txt 100% 2468 2.4KB/s 00:00

+```

+

+从本地系统复制两个文件 `output.txt` 和 `passwd-up.sh` 到远程系统 `2g.CentOs.com` 的 `/opt/backup` 文件夹下。

+

+```

+# scp output.txt passwd-up.sh root@2g.CentOS.com:/opt/backup

+

+output.txt 100% 2468 2.4KB/s 00:00

+passwd-up.sh 100% 877 0.9KB/s 00:00

+```

+

+从本地系统复制 `shell-script` 文件夹到远程系统 `2g.CentOs.com` 的 `/opt/back` 文件夹下。

+

+这会连同`shell-script` 文件夹下所有的文件一同复制到`/opt/back` 下。

+

+```

+# scp -r /home/daygeek/2g/shell-script/ root@:/opt/backup/

+

+output.txt 100% 2468 2.4KB/s 00:00

+ovh.sh 100% 76 0.1KB/s 00:00

+passwd-up.sh 100% 877 0.9KB/s 00:00

+passwd-up1.sh 100% 7 0.0KB/s 00:00

+server-list.txt 100% 23 0.0KB/s 00:00

+```

+

+### 方式 2:如何在 Linux 上使用 scp 命令和 Shell 脚本复制文件/文件夹到多个远程系统上?

+

+如果你想复制同一个文件到多个远程服务器上,那就需要创建一个如下面那样的小 shell 脚本。

+

+并且,需要将服务器添加进 `server-list.txt` 文件。确保添加成功后,每个服务器应当单独一行。

+

+最终,你想要的脚本就像下面这样:

+

+```

+# file-copy.sh

+

+#!/bin/sh

+for server in `more server-list.txt`

+do

+ scp /home/daygeek/2g/shell-script/output.txt root@$server:/opt/backup

+done

+```

+

+完成之后,给 `file-copy.sh` 文件设置可执行权限。

+

+```

+# chmod +x file-copy.sh

+```

+

+最后运行脚本完成复制。

+

+```

+# ./file-copy.sh

+

+output.txt 100% 2468 2.4KB/s 00:00

+output.txt 100% 2468 2.4KB/s 00:00

+```

+

+使用下面的脚本可以复制多个文件到多个远程服务器上。

+

+```

+# file-copy.sh

+

+#!/bin/sh

+for server in `more server-list.txt`

+do

+ scp /home/daygeek/2g/shell-script/output.txt passwd-up.sh root@$server:/opt/backup

+done

+```

+

+下面结果显示所有的两个文件都复制到两个服务器上。

+

+```

+# ./file-cp.sh

+

+output.txt 100% 2468 2.4KB/s 00:00

+passwd-up.sh 100% 877 0.9KB/s 00:00

+output.txt 100% 2468 2.4KB/s 00:00

+passwd-up.sh 100% 877 0.9KB/s 00:00

+```

+

+使用下面的脚本递归地复制文件夹到多个远程服务器上。

+

+```

+# file-copy.sh

+

+#!/bin/sh

+for server in `more server-list.txt`

+do

+ scp -r /home/daygeek/2g/shell-script/ root@$server:/opt/backup

+done

+```

+

+上述脚本的输出。

+

+```

+# ./file-cp.sh

+

+output.txt 100% 2468 2.4KB/s 00:00

+ovh.sh 100% 76 0.1KB/s 00:00

+passwd-up.sh 100% 877 0.9KB/s 00:00

+passwd-up1.sh 100% 7 0.0KB/s 00:00

+server-list.txt 100% 23 0.0KB/s 00:00

+

+output.txt 100% 2468 2.4KB/s 00:00

+ovh.sh 100% 76 0.1KB/s 00:00

+passwd-up.sh 100% 877 0.9KB/s 00:00

+passwd-up1.sh 100% 7 0.0KB/s 00:00

+server-list.txt 100% 23 0.0KB/s 00:00

+```

+

+### 方式 3:如何在 Linux 上使用 pscp 命令复制文件/文件夹到多个远程系统上?

+

+`pscp` 命令可以直接让我们复制文件到多个远程服务器上。

+

+使用下面的 `pscp` 命令复制单个文件到远程服务器。

+

+```

+# pscp.pssh -H 2g.CentOS.com /home/daygeek/2g/shell-script/output.txt /opt/backup

+

+[1] 18:46:11 [SUCCESS] 2g.CentOS.com

+```

+

+使用下面的 `pscp` 命令复制多个文件到远程服务器。

+

+```

+# pscp.pssh -H 2g.CentOS.com /home/daygeek/2g/shell-script/output.txt ovh.sh /opt/backup

+

+[1] 18:47:48 [SUCCESS] 2g.CentOS.com

+```

+

+使用下面的 `pscp` 命令递归地复制整个文件夹到远程服务器。

+

+```

+# pscp.pssh -H 2g.CentOS.com -r /home/daygeek/2g/shell-script/ /opt/backup

+

+[1] 18:48:46 [SUCCESS] 2g.CentOS.com

+```

+

+使用下面的 `pscp` 命令使用下面的命令复制单个文件到多个远程服务器。

+

+```

+# pscp.pssh -h server-list.txt /home/daygeek/2g/shell-script/output.txt /opt/backup

+

+[1] 18:49:48 [SUCCESS] 2g.CentOS.com

+[2] 18:49:48 [SUCCESS] 2g.Debian.com

+```

+

+使用下面的 `pscp` 命令复制多个文件到多个远程服务器。

+

+```

+# pscp.pssh -h server-list.txt /home/daygeek/2g/shell-script/output.txt passwd-up.sh /opt/backup

+

+[1] 18:50:30 [SUCCESS] 2g.Debian.com

+[2] 18:50:30 [SUCCESS] 2g.CentOS.com

+```

+

+使用下面的命令递归地复制文件夹到多个远程服务器。

+

+```

+# pscp.pssh -h server-list.txt -r /home/daygeek/2g/shell-script/ /opt/backup

+

+[1] 18:51:31 [SUCCESS] 2g.Debian.com

+[2] 18:51:31 [SUCCESS] 2g.CentOS.com

+```

+

+### 方式 4:如何在 Linux 上使用 rsync 命令复制文件/文件夹到多个远程系统上?

+

+`rsync` 是一个即快速又出众的多功能文件复制工具。它能本地复制、通过远程 shell 在其它主机之间复制,或者在远程 `rsync` 守护进程 之间复制。

+

+使用下面的 `rsync` 命令复制单个文件到远程服务器。

+

+```

+# rsync -avz /home/daygeek/2g/shell-script/output.txt root@2g.CentOS.com:/opt/backup

+

+sending incremental file list

+output.txt

+

+sent 598 bytes received 31 bytes 1258.00 bytes/sec

+total size is 2468 speedup is 3.92

+```

+

+使用下面的 `rsync` 命令复制多个文件到远程服务器。

+

+```

+# rsync -avz /home/daygeek/2g/shell-script/output.txt passwd-up.sh root@2g.CentOS.com:/opt/backup

+

+sending incremental file list

+output.txt

+passwd-up.sh

+

+sent 737 bytes received 50 bytes 1574.00 bytes/sec

+total size is 2537 speedup is 3.22

+```

+

+使用下面的 `rsync` 命令通过 `ssh` 复制单个文件到远程服务器。

+

+```

+# rsync -avzhe ssh /home/daygeek/2g/shell-script/output.txt root@2g.CentOS.com:/opt/backup

+

+sending incremental file list

+output.txt

+

+sent 598 bytes received 31 bytes 419.33 bytes/sec

+total size is 2.47K speedup is 3.92

+```

+

+使用下面的 `rsync` 命令通过 `ssh` 递归地复制文件夹到远程服务器。这种方式只复制文件不包括文件夹。

+

+```

+# rsync -avzhe ssh /home/daygeek/2g/shell-script/ root@2g.CentOS.com:/opt/backup

+

+sending incremental file list

+./

+output.txt

+ovh.sh

+passwd-up.sh

+passwd-up1.sh

+server-list.txt

+

+sent 3.85K bytes received 281 bytes 8.26K bytes/sec

+total size is 9.12K speedup is 2.21

+```

+

+### 方式 5:如何在 Linux 上使用 rsync 命令和 Shell 脚本复制文件/文件夹到多个远程系统上?

+

+如果你想复制同一个文件到多个远程服务器上,那也需要创建一个如下面那样的小 shell 脚本。

+

+```

+# file-copy.sh

+

+#!/bin/sh

+for server in `more server-list.txt`

+do

+ rsync -avzhe ssh /home/daygeek/2g/shell-script/ root@2g.CentOS.com$server:/opt/backup

+done

+```

+

+上面脚本的输出。

+

+```

+# ./file-copy.sh

+

+sending incremental file list

+./

+output.txt

+ovh.sh

+passwd-up.sh

+passwd-up1.sh

+server-list.txt

+

+sent 3.86K bytes received 281 bytes 8.28K bytes/sec

+total size is 9.13K speedup is 2.21

+

+sending incremental file list

+./

+output.txt

+ovh.sh

+passwd-up.sh

+passwd-up1.sh

+server-list.txt

+

+sent 3.86K bytes received 281 bytes 2.76K bytes/sec

+total size is 9.13K speedup is 2.21

+```

+

+### 方式 6:如何在 Linux 上使用 scp 命令和 Shell 脚本从本地系统向多个远程系统复制文件/文件夹?

+

+在上面两个 shell 脚本中,我们需要事先指定好文件和文件夹的路径,这儿我做了些小修改,让脚本可以接收文件或文件夹作为输入参数。当你每天需要多次执行复制时,这将会非常有用。

+

+```

+# file-copy.sh

+

+#!/bin/sh

+for server in `more server-list.txt`

+do

+scp -r $1 root@2g.CentOS.com$server:/opt/backup

+done

+```

+

+输入文件名并运行脚本。

+

+```

+# ./file-copy.sh output1.txt

+

+output1.txt 100% 3558 3.5KB/s 00:00

+output1.txt 100% 3558 3.5KB/s 00:00

+```

+

+### 方式 7:如何在 Linux 系统上用非标准端口复制文件/文件夹到远程系统?

+

+如果你想使用非标准端口,使用下面的 shell 脚本复制文件或文件夹。

+

+如果你使用了非标准端口,确保像下面 `scp` 命令那样指定好了端口号。

+

+```

+# file-copy-scp.sh

+

+#!/bin/sh

+for server in `more server-list.txt`

+do

+scp -P 2222 -r $1 root@2g.CentOS.com$server:/opt/backup

+done

+```

+

+运行脚本,输入文件名。

+

+```

+# ./file-copy.sh ovh.sh

+

+ovh.sh 100% 3558 3.5KB/s 00:00

+ovh.sh 100% 3558 3.5KB/s 00:00

+```

+

+如果你使用了非标准端口,确保像下面 `rsync` 命令那样指定好了端口号。

+

+```

+# file-copy-rsync.sh

+

+#!/bin/sh

+for server in `more server-list.txt`

+do

+rsync -avzhe 'ssh -p 2222' $1 root@2g.CentOS.com$server:/opt/backup

+done

+```

+

+运行脚本,输入文件名。

+

+```

+# ./file-copy-rsync.sh passwd-up.sh

+sending incremental file list

+passwd-up.sh

+

+sent 238 bytes received 35 bytes 26.00 bytes/sec

+total size is 159 speedup is 0.58

+

+sending incremental file list

+passwd-up.sh

+

+sent 238 bytes received 35 bytes 26.00 bytes/sec

+total size is 159 speedup is 0.58

+```

+

+--------------------------------------------------------------------------------

+

+via: https://www.2daygeek.com/linux-scp-rsync-pscp-command-copy-files-folders-in-multiple-servers-using-shell-script/

+

+作者:[Prakash Subramanian][a]

+选题:[lujun9972][b]

+译者:[LuuMing](https://github.com/LuuMing)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://www.2daygeek.com/author/prakash/

+[b]: https://github.com/lujun9972

diff --git a/published/201902/20190123 Book Review- Fundamentals of Linux.md b/published/201902/20190123 Book Review- Fundamentals of Linux.md

new file mode 100644

index 0000000000..bdde86d16e

--- /dev/null

+++ b/published/201902/20190123 Book Review- Fundamentals of Linux.md

@@ -0,0 +1,75 @@

+[#]: collector: (lujun9972)

+[#]: translator: (mySoul8012)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10565-1.html)

+[#]: subject: (Book Review: Fundamentals of Linux)

+[#]: via: (https://itsfoss.com/fundamentals-of-linux-book-review)

+[#]: author: (John Paul https://itsfoss.com/author/john/)

+

+书评:《Linux 基础》

+======

+

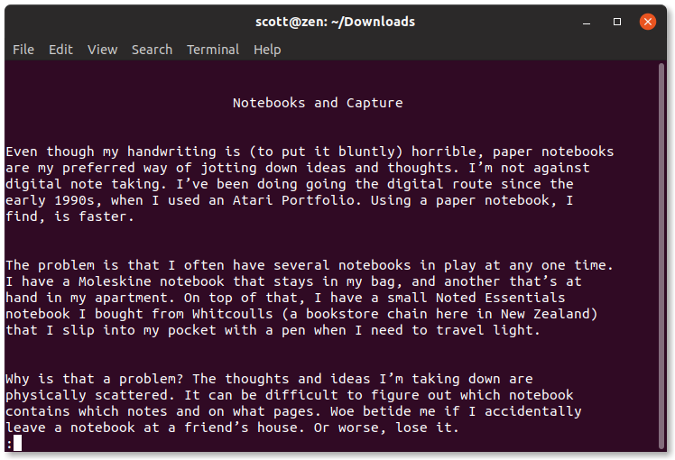

+介绍 Linux 的基础知识以及它的工作原理的书很多,今天,我们将会点评这样一本书。这次讨论的主题为 Oliver Pelz 所写的 《[Linux 基础][1]》,由 [PacktPub][2] 出版。

+

+[Oliver Pelz][3] 是一位拥有超过十年软件开发经验的开发者和系统管理员,拥有生物信息学学位证书。

+

+### 《Linux 基础》

+

+![Fundamental of Linux books][4]

+

+正如可以从书名中猜到那样,《Linux 基础》的目标是为读者打下一个从了解 Linux 到学习 Linux 命令行的坚实基础。这本书一共有两百多页,因此它专注于教给用户日常任务和解决经常遇到的问题。本书是为想要成为 Linux 管理员的读者而写的。

+

+第一章首先概述了虚拟化。本书作者指导了读者如何在 [VirtualBox][6] 中创建 [CentOS][5] 实例。如何克隆实例,如何使用快照。并且同时你也会学习到如何通过 SSH 命令连接到虚拟机。

+

+第二章介绍了 Linux 命令行的基础知识,包括 shell 通配符,shell 展开,如何使用包含空格和特殊字符的文件名称。如何来获取命令手册的帮助页面。如何使用 `sed`、`awk` 这两个命令。如何浏览 Linux 的文件系统。

+

+第三章更深入的介绍了 Linux 文件系统。你将了解如何在 Linux 中文件是如何链接的,以及如何搜索它们。你还将获得用户、组,以及文件权限的大概了解。由于本章的重点介绍了如何与文件进行交互。因此还将会介绍如何从命令行中读取文本文件,以及初步了解如何使用 vim 编辑器。

+

+第四章重点介绍了如何使用命令行。以及涵盖的重要命令。如 `cat`、`sort`、`awk`、`tee`、`tar`、`rsync`、`nmap`、`htop` 等。你还将会了解到进程,以及它们如何彼此通讯。这一章还介绍了 Bash shell 脚本编程。

+

+第五章同时也是本书的最后一章,将会介绍 Linux 和其他高级命令,以及网络的概念。本书的作者讨论了 Linux 是如何处理网络,并提供使用多个虚拟机的示例。同时还将会介绍如何安装新的程序,如何设置防火墙。

+

+### 关于这本书的思考

+

+Linux 的基础知识只有五章和少少的 200 来页可能看起来有些短,但是也涵盖了相当多的信息。同时也将会获得如何使用命令行所需要的知识的一切。

+

+使用本书的时候,需要注意一件事情,即,本书专注于对命令行的关注,没有任何关于如何使用图形化的用户界面的任何教程。这是因为在 Linux 中有太多不同的桌面环境,以及很多的类似的系统应用,因此很难编写一本可以涵盖所有变种的书。此外,还有部分原因还因为本书的面向的用户群体为潜在的 Linux 管理员。

+

+当我看到作者使用 Centos 教授 Linux 的时候有点惊讶。我原本以为他会使用更为常见的 Linux 的发行版本,例如 Ubuntu、Debian 或者 Fedora。原因在于 Centos 是为服务器设计的发行版本。随着时间的推移变化很小,能够为 Linux 的基础知识打下一个非常坚实的基础。

+

+我自己使用 Linux 已经操作五年了。我大部分时间都在使用桌面版本的 Linux。我有些时候会使用命令行操作。但我并没有花太多的时间在那里。我使用鼠标完成了本书中涉及到的很多操作。现在呢。我同时也知道了如何通过终端做到同样的事情。这种方式不会改变我完成任务的方式,但是会有助于自己理解幕后发生的事情。

+

+如果你刚刚使用 Linux,或者计划使用。我不会推荐你阅读这本书。这可能有点绝对化。但是如何你已经花了一些时间在 Linux 上。或者可以快速掌握某种技术语言。那么这本书很适合你。

+

+如果你认为本书适合你的学习需求。你可以从以下链接获取到该书:

+

+- [下载《Linux 基础》](https://www.packtpub.com/networking-and-servers/fundamentals-linux)

+

+我们将在未来几个月内尝试点评更多 Linux 书籍,敬请关注我们。

+

+你最喜欢的关于 Linux 的入门书籍是什么?请在下面的评论中告诉我们。

+

+如果你发现这篇文章很有趣,请花一点时间在社交媒体、Hacker News或 [Reddit][8] 上分享。

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/fundamentals-of-linux-book-review

+

+作者:[John Paul][a]

+选题:[lujun9972][b]

+译者:[mySoul8012](https://github.com/mySoul8012)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://itsfoss.com/author/john/

+[b]: https://github.com/lujun9972

+[1]: https://www.packtpub.com/networking-and-servers/fundamentals-linux

+[2]: https://www.packtpub.com/

+[3]: http://www.oliverpelz.de/index.html

+[4]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/01/fundamentals-of-linux-book-review.jpeg?resize=800%2C450&ssl=1

+[5]: https://centos.org/

+[6]: https://www.virtualbox.org/

+[7]: https://www.centos.org/

+[8]: http://reddit.com/r/linuxusersgroup

diff --git a/published/20190123 Commands to help you monitor activity on your Linux server.md b/published/201902/20190123 Commands to help you monitor activity on your Linux server.md

similarity index 100%

rename from published/20190123 Commands to help you monitor activity on your Linux server.md

rename to published/201902/20190123 Commands to help you monitor activity on your Linux server.md

diff --git a/published/201902/20190124 Get started with LogicalDOC, an open source document management system.md b/published/201902/20190124 Get started with LogicalDOC, an open source document management system.md

new file mode 100644

index 0000000000..35e90d4839

--- /dev/null

+++ b/published/201902/20190124 Get started with LogicalDOC, an open source document management system.md

@@ -0,0 +1,63 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10561-1.html)

+[#]: subject: (Get started with LogicalDOC, an open source document management system)

+[#]: via: (https://opensource.com/article/19/1/productivity-tool-logicaldoc)

+[#]: author: (Kevin Sonney https://opensource.com/users/ksonney)

+

+开始使用 LogicalDOC 吧,一个开源文档管理系统

+======

+

+> 使用 LogicalDOC 更好地跟踪文档版本,这是我们开源工具系列中的第 12 个工具,它将使你在 2019 年更高效。

+

+

+

+每年年初似乎都有疯狂的冲动想提高工作效率。新年的决心,渴望开启新的一年,当然,“抛弃旧的,拥抱新的”的态度促成了这一切。通常这时的建议严重偏向闭源和专有软件,但事实上并不用这样。

+

+这是我挑选出的 19 个新的(或者对你而言新的)开源工具中的第 12 个工具来帮助你在 2019 年更有效率。

+

+### LogicalDOC

+

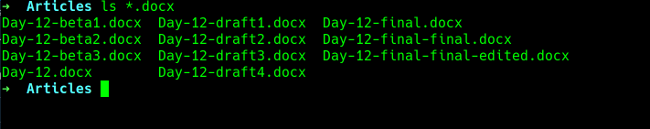

+高效部分表现在能够在你需要时找到你所需的东西。我们都看到过塞满名称类似的文件的目录,这是每次更改文档时为了跟踪所有版本而重命名这些文件而导致的。例如,我的妻子是一名作家,她在将文档发送给审稿人之前,她经常使用新名称保存文档修订版。

+

+

+

+程序员对此一个自然的解决方案是 Git 或者其他版本控制器,但这个不适用于文档作者,因为用于代码的系统通常不能很好地兼容商业文本编辑器使用的格式。之前有人说,“改变格式就行”,[这不是适合每个人的选择][1]。同样,许多版本控制工具对于非技术人员来说并不是非常友好。在大型组织中,有一些工具可以解决此问题,但它们还需要大型组织的资源来运行、管理和支持它们。

+

+

+

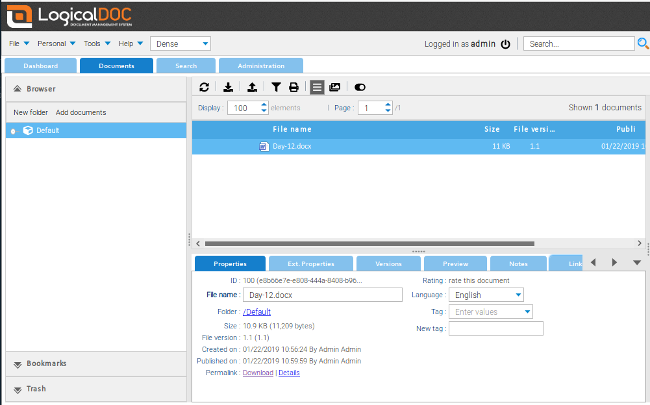

+[LogicalDOC CE][2] 是为解决此问题而编写的开源文档管理系统。它允许用户签入、签出、查看版本、搜索和锁定文档,并保留版本历史记录,类似于程序员使用的版本控制工具。

+

+LogicalDOC 可在 Linux、MacOS 和 Windows 上[安装][3],使用基于 Java 的安装程序。在安装时,系统将提示你提供数据库存储位置,并提供只在本地文件存储的选项。你将获得访问服务器的 URL 和默认用户名和密码,以及保存用于自动安装脚本选项。

+

+登录后,LogicalDOC 的默认页面会列出你已标记、签出的文档以及有关它们的最新说明。切换到“文档”选项卡将显示你有权访问的文件。你可以在界面中选择文件或使用拖放来上传文档。如果你上传 ZIP 文件,LogicalDOC 会解压它,并将其中的文件添加到仓库中。

+

+

+

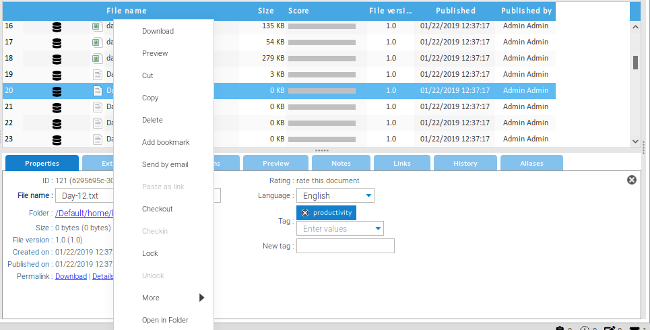

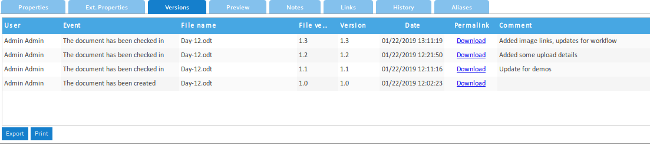

+右键单击文件将显示一个菜单选项,包括检出文件、锁定文件以防止更改,以及执行大量其他操作。签出文件会将其下载到本地计算机以便编辑。在重新签入之前,其他任何人都无法修改签出的文件。当重新签入文件时(使用相同的菜单),用户可以向版本添加标签,并且需要备注对其执行的操作。

+

+

+

+查看早期版本只需在“版本”页面下载就行。对于某些第三方服务,它还有导入和导出选项,内置 [Dropbox][4] 支持。

+

+文档管理不仅仅是能够负担得起昂贵解决方案的大公司才能有的。LogicalDOC 可帮助你追踪文档的版本历史,并为难以管理的文档提供了安全的仓库。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/1/productivity-tool-logicaldoc

+

+作者:[Kevin Sonney][a]

+选题:[lujun9972][b]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/ksonney (Kevin Sonney)

+[b]: https://github.com/lujun9972

+[1]: http://www.antipope.org/charlie/blog-static/2013/10/why-microsoft-word-must-die.html

+[2]: https://www.logicaldoc.com/download-logicaldoc-community

+[3]: https://docs.logicaldoc.com/en/installation

+[4]: https://dropbox.com

diff --git a/published/20190124 Understanding Angle Brackets in Bash.md b/published/201902/20190124 Understanding Angle Brackets in Bash.md

similarity index 100%

rename from published/20190124 Understanding Angle Brackets in Bash.md

rename to published/201902/20190124 Understanding Angle Brackets in Bash.md

diff --git a/published/20190125 PyGame Zero- Games without boilerplate.md b/published/201902/20190125 PyGame Zero- Games without boilerplate.md

similarity index 100%

rename from published/20190125 PyGame Zero- Games without boilerplate.md

rename to published/201902/20190125 PyGame Zero- Games without boilerplate.md

diff --git a/published/20190125 Top 5 Linux Distributions for Development in 2019.md b/published/201902/20190125 Top 5 Linux Distributions for Development in 2019.md

similarity index 100%

rename from published/20190125 Top 5 Linux Distributions for Development in 2019.md

rename to published/201902/20190125 Top 5 Linux Distributions for Development in 2019.md

diff --git a/published/20190126 Get started with Tint2, an open source taskbar for Linux.md b/published/201902/20190126 Get started with Tint2, an open source taskbar for Linux.md

similarity index 100%

rename from published/20190126 Get started with Tint2, an open source taskbar for Linux.md

rename to published/201902/20190126 Get started with Tint2, an open source taskbar for Linux.md

diff --git a/published/20190127 Get started with eDEX-UI, a Tron-influenced terminal program for tablets and desktops.md b/published/201902/20190127 Get started with eDEX-UI, a Tron-influenced terminal program for tablets and desktops.md

similarity index 100%

rename from published/20190127 Get started with eDEX-UI, a Tron-influenced terminal program for tablets and desktops.md

rename to published/201902/20190127 Get started with eDEX-UI, a Tron-influenced terminal program for tablets and desktops.md

diff --git a/published/20190128 3 simple and useful GNOME Shell extensions.md b/published/201902/20190128 3 simple and useful GNOME Shell extensions.md

similarity index 100%

rename from published/20190128 3 simple and useful GNOME Shell extensions.md

rename to published/201902/20190128 3 simple and useful GNOME Shell extensions.md

diff --git a/published/20190128 Using more to view text files at the Linux command line.md b/published/201902/20190128 Using more to view text files at the Linux command line.md

similarity index 100%

rename from published/20190128 Using more to view text files at the Linux command line.md

rename to published/201902/20190128 Using more to view text files at the Linux command line.md

diff --git a/published/20190128 fdisk - Easy Way To Manage Disk Partitions In Linux.md b/published/201902/20190128 fdisk - Easy Way To Manage Disk Partitions In Linux.md

similarity index 100%

rename from published/20190128 fdisk - Easy Way To Manage Disk Partitions In Linux.md

rename to published/201902/20190128 fdisk - Easy Way To Manage Disk Partitions In Linux.md

diff --git a/published/201902/20190129 Get started with gPodder, an open source podcast client.md b/published/201902/20190129 Get started with gPodder, an open source podcast client.md

new file mode 100644

index 0000000000..f5449a95de

--- /dev/null

+++ b/published/201902/20190129 Get started with gPodder, an open source podcast client.md

@@ -0,0 +1,65 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10567-1.html)

+[#]: subject: (Get started with gPodder, an open source podcast client)

+[#]: via: (https://opensource.com/article/19/1/productivity-tool-gpodder)

+[#]: author: (Kevin Sonney https://opensource.com/users/ksonney (Kevin Sonney))

+

+开始使用 gPodder 吧,一个开源播客客户端

+======

+

+> 使用 gPodder 将你的播客同步到你的设备上,gPodder 是我们开源工具系列中的第 17 个工具,它将在 2019 年提高你的工作效率。

+

+

+

+每年年初似乎都有疯狂的冲动想提高工作效率。新年的决心,渴望开启新的一年,当然,“抛弃旧的,拥抱新的”的态度促成了这一切。通常这时的建议严重偏向闭源和专有软件,但事实上并不用这样。

+

+这是我挑选出的 19 个新的(或者对你而言新的)开源工具中的第 17 个工具来帮助你在 2019 年更有效率。

+

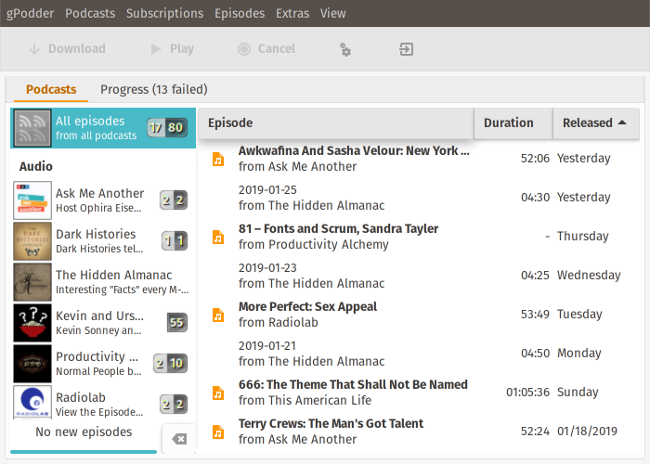

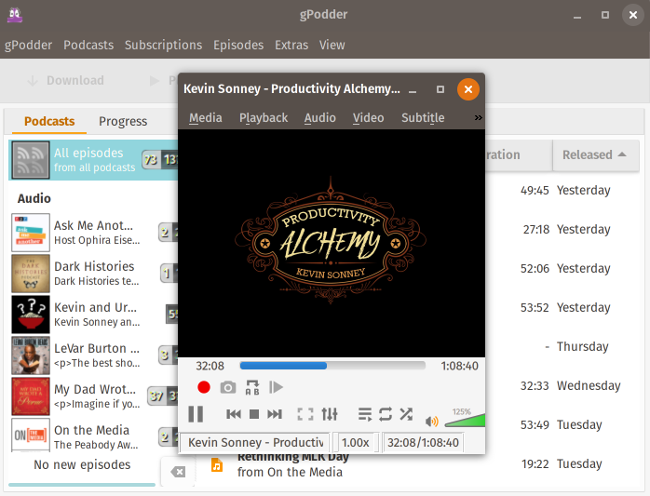

+### gPodder

+

+我喜欢播客。哎呀,我非常喜欢它们,因此我录制了其中的三个(你可以在[我的个人资料][1]中找到它们的链接)。我从播客那里学到了很多东西,并在我工作时在后台播放它们。但是,如何在多台桌面和移动设备之间保持同步可能会有一些挑战。

+

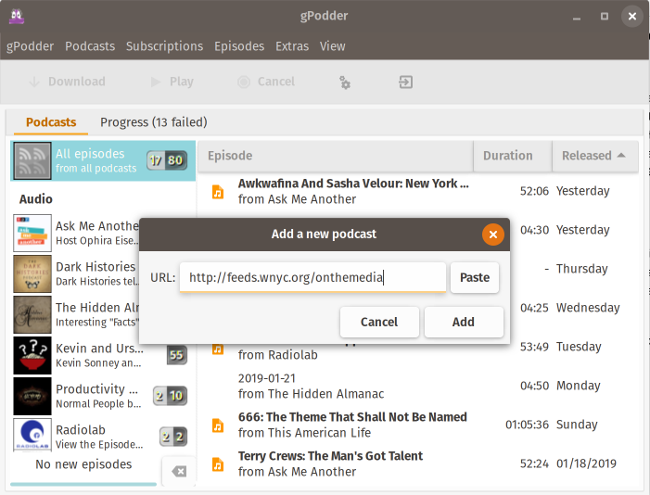

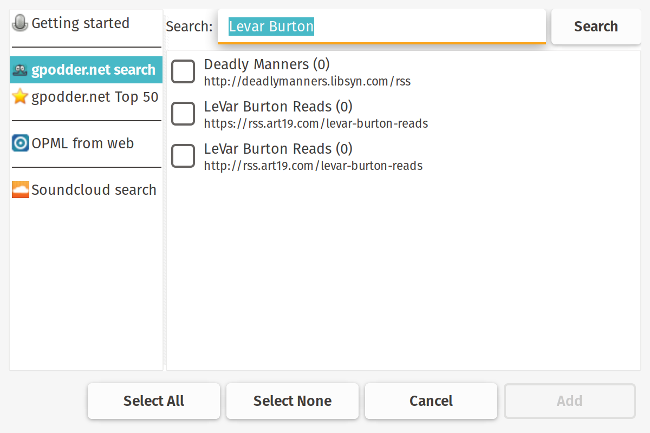

+[gPodder][2] 是一个简单的跨平台播客下载器、播放器和同步工具。它支持 RSS feed、[FeedBurner][3]、[YouTube][4] 和 [SoundCloud][5],它还有一个开源的同步服务,你可以根据需要运行它。gPodder 不直接播放播客。相反,它会使用你选择的音频或视频播放器。

+

+

+

+安装 gPodder 非常简单。安装程序适用于 Windows 和 MacOS,同时也有用于主要的 Linux 发行版的软件包。如果你的发行版中没有它,你可以直接从 Git 下载运行。通过 “Add Podcasts via URL” 菜单,你可以输入播客的 RSS 源 URL 或其他服务的 “特殊” URL。gPodder 将获取节目列表并显示一个对话框,你可以在其中选择要下载的节目或在列表上标记旧节目。

+

+

+

+它一个更好的功能是,如果 URL 已经在你的剪贴板中,gPodder 会自动将它放入播放 URL 中,这样你就可以很容易地将新的播客添加到列表中。如果你已有播客 feed 的 OPML 文件,那么可以上传并导入它。还有一个发现选项,让你可搜索 [gPodder.net][6] 上的播客,这是由编写和维护 gPodder 的人员提供的自由及开源的播客的列表网站。

+

+

+

+[mygpo][7] 服务器在设备之间同步播客。gPodder 默认使用 [gPodder.net][8] 的服务器,但是如果你想要运行自己的服务器,那么可以在配置文件中更改它(请注意,你需要直接修改配置文件)。同步能让你在桌面和移动设备之间保持列表一致。如果你在多个设备上收听播客(例如,我在我的工作电脑、家用电脑和手机上收听),这会非常有用,因为这意味着无论你身在何处,你都拥有最近的播客和节目列表而无需一次又一次地设置。

+

+

+

+单击播客节目将显示与其关联的文本,单击“播放”将启动设备的默认音频或视频播放器。如果要使用默认之外的其他播放器,可以在 gPodder 的配置设置中更改此设置。

+

+通过 gPodder,你可以轻松查找、下载和收听播客,在设备之间同步这些播客,在易于使用的界面中访问许多其他功能。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/1/productivity-tool-gpodder

+

+作者:[Kevin Sonney][a]

+选题:[lujun9972][b]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/ksonney (Kevin Sonney)

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/users/ksonney

+[2]: https://gpodder.github.io/

+[3]: https://feedburner.google.com/

+[4]: https://youtube.com

+[5]: https://soundcloud.com/

+[6]: http://gpodder.net

+[7]: https://github.com/gpodder/mygpo

+[8]: http://gPodder.net

diff --git a/published/20190129 More About Angle Brackets in Bash.md b/published/201902/20190129 More About Angle Brackets in Bash.md

similarity index 100%

rename from published/20190129 More About Angle Brackets in Bash.md

rename to published/201902/20190129 More About Angle Brackets in Bash.md

diff --git a/published/201902/20190130 Get started with Budgie Desktop, a Linux environment.md b/published/201902/20190130 Get started with Budgie Desktop, a Linux environment.md

new file mode 100644

index 0000000000..58a8a6505c

--- /dev/null

+++ b/published/201902/20190130 Get started with Budgie Desktop, a Linux environment.md

@@ -0,0 +1,61 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10547-1.html)

+[#]: subject: (Get started with Budgie Desktop, a Linux environment)

+[#]: via: (https://opensource.com/article/19/1/productivity-tool-budgie-desktop)

+[#]: author: (Kevin Sonney https://opensource.com/users/ksonney (Kevin Sonney))

+

+开始使用 Budgie 吧,一款 Linux 桌面环境

+======

+

+> 使用 Budgie 按需配置你的桌面,这是我们开源工具系列中的第 18 个工具,它将在 2019 年提高你的工作效率。

+

+

+

+每年年初似乎都有疯狂的冲动想提高工作效率。新年的决心,渴望开启新的一年,当然,“抛弃旧的,拥抱新的”的态度促成了这一切。通常这时的建议严重偏向闭源和专有软件,但事实上并不用这样。

+

+这是我挑选出的 19 个新的(或者对你而言新的)开源工具中的第 18 个工具来帮助你在 2019 年更有效率。

+

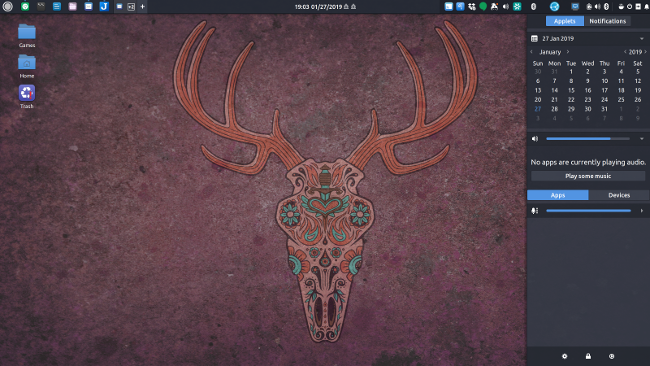

+### Budgie 桌面

+

+Linux 中有许多桌面环境。从易于使用并有令人惊叹图形界面的 [GNOME 桌面][1](在大多数主要 Linux 发行版上是默认桌面)和 [KDE][2],到极简主义的 [Openbox][3],再到高度可配置的平铺化的 [i3][4],有很多选择。我要寻找的桌面环境需要速度、不引人注目和干净的用户体验。当桌面不适合你时,很难会有高效率。

+

+

+

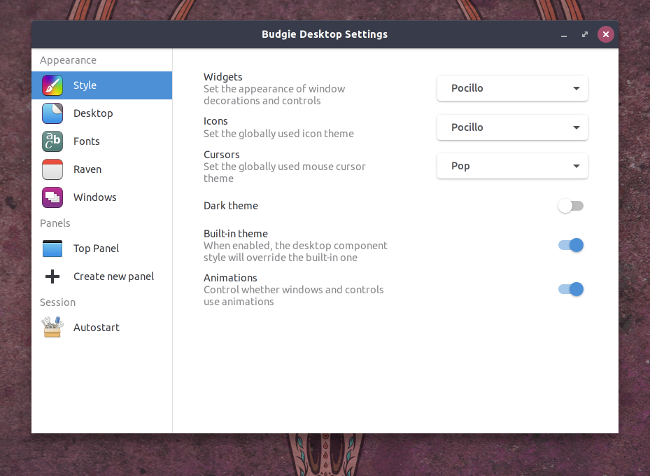

+[Budgie 桌面][5]是 [Solus][6] Linux 发行版的默认桌面,它在大多数主要 Linux 发行版的附加软件包中提供。它基于 GNOME,并使用了许多你可能已经在计算机上使用的相同工具和库。

+

+其默认桌面非常简约,只有面板和空白桌面。Budgie 包含一个集成的侧边栏(称为 Raven),通过它可以快速访问日历、音频控件和设置菜单。Raven 还包含一个集成的通知区域,其中包含与 MacOS 类似的统一系统消息显示。

+

+

+

+点击 Raven 中的齿轮图标会显示 Budgie 的控制面板及其配置。由于 Budgie 仍处于开发阶段,与 GNOME 或 KDE 相比,它的选项有点少,我希望随着时间的推移它会有更多的选项。顶部面板选项允许用户配置顶部面板的排序、位置和内容,这很不错。

+

+

+

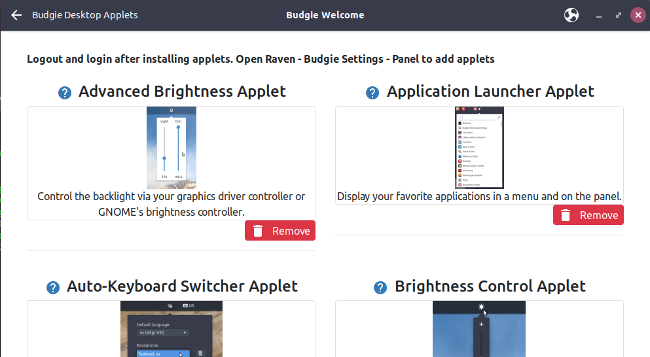

+Budgie 的 Welcome 应用(首次登录时展示)包含安装其他软件、面板小程序、截图和 Flatpack 软件包的选项。这些小程序有处理网络、截图、额外的时钟和计时器等等。

+

+

+

+Budgie 提供干净稳定的桌面。它响应迅速,有许多选项,允许你根据需要自定义它。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/1/productivity-tool-budgie-desktop

+

+作者:[Kevin Sonney][a]

+选题:[lujun9972][b]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/ksonney (Kevin Sonney)

+[b]: https://github.com/lujun9972

+[1]: https://www.gnome.org/

+[2]: https://www.kde.org/

+[3]: http://openbox.org/wiki/Main_Page

+[4]: https://i3wm.org/

+[5]: https://getsol.us/solus/experiences/

+[6]: https://getsol.us/home/

diff --git a/published/201902/20190130 Olive is a new Open Source Video Editor Aiming to Take On Biggies Like Final Cut Pro.md b/published/201902/20190130 Olive is a new Open Source Video Editor Aiming to Take On Biggies Like Final Cut Pro.md

new file mode 100644

index 0000000000..430b6210dd

--- /dev/null

+++ b/published/201902/20190130 Olive is a new Open Source Video Editor Aiming to Take On Biggies Like Final Cut Pro.md

@@ -0,0 +1,111 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10577-1.html)

+[#]: subject: (Olive is a new Open Source Video Editor Aiming to Take On Biggies Like Final Cut Pro)

+[#]: via: (https://itsfoss.com/olive-video-editor)

+[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

+

+Olive:一款以 Final Cut Pro 为目标的开源视频编辑器

+======

+

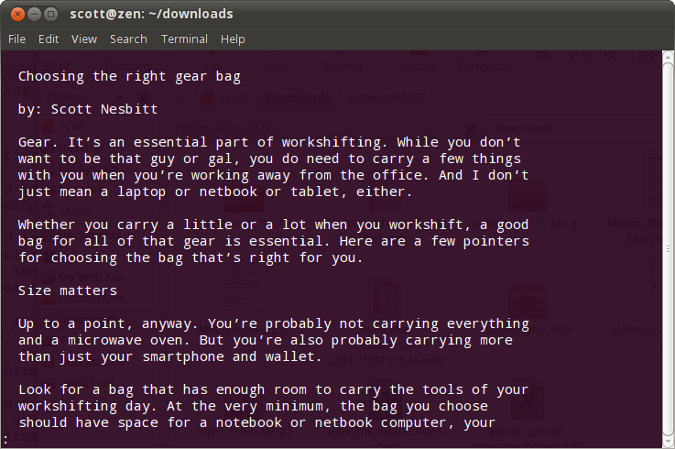

+[Olive][1] 是一个正在开发的新的开源视频编辑器。这个非线性视频编辑器旨在提供高端专业视频编辑软件的免费替代品。目标高么?我认为是的。

+

+如果你读过我们的 [Linux 中的最佳视频编辑器][2]这篇文章,你可能已经注意到大多数“专业级”视频编辑器(如 [Lightworks][3] 或 DaVinciResolve)既不免费也不开源。

+

+[Kdenlive][4] 和 Shotcut 也是此类,但它通常无法达到专业视频编辑的标准(这是许多 Linux 用户说的)。

+

+爱好者级和专业级的视频编辑之间的这种差距促使 Olive 的开发人员启动了这个项目。

+

+![Olive Video Editor][5]

+

+*Olive 视频编辑器界面*

+

+Libre Graphics World 中有一篇详细的[关于 Olive 的点评][6]。实际上,这是我第一次知道 Olive 的地方。如果你有兴趣了解更多信息,请阅读该文章。

+

+### 在 Linux 中安装 Olive 视频编辑器

+

+> 提醒你一下。Olive 正处于发展的早期阶段。你会发现很多 bug 和缺失/不完整的功能。你不应该把它当作你的主要视频编辑器。

+

+如果你想测试 Olive,有几种方法可以在 Linux 上安装它。

+

+#### 通过 PPA 在基于 Ubuntu 的发行版中安装 Olive

+

+你可以在 Ubuntu、Mint 和其他基于 Ubuntu 的发行版使用官方 PPA 安装 Olive。

+

+```

+sudo add-apt-repository ppa:olive-editor/olive-editor

+sudo apt-get update

+sudo apt-get install olive-editor

+```

+

+#### 通过 Snap 安装 Olive

+

+如果你的 Linux 发行版支持 Snap,则可以使用以下命令进行安装。

+

+```

+sudo snap install --edge olive-editor

+```

+

+#### 通过 Flatpak 安装 Olive

+

+如果你的 [Linux 发行版支持 Flatpak][7],你可以通过 Flatpak 安装 Olive 视频编辑器。

+

+- [Flatpak 地址](https://flathub.org/apps/details/org.olivevideoeditor.Olive)

+

+#### 通过 AppImage 使用 Olive

+

+不想安装吗?下载 [AppImage][8] 文件,将其设置为可执行文件并运行它。32 位和 64 位 AppImage 文件都有。你应该下载相应的文件。

+

+- [下载 Olive 的 AppImage](https://github.com/olive-editor/olive/releases/tag/continuous)

+

+Olive 也可用于 Windows 和 macOS。你可以从它的[下载页面][9]获得它。

+

+### 想要支持 Olive 视频编辑器的开发吗?

+

+如果你喜欢 Olive 尝试实现的功能,并且想要支持它,那么你可以通过以下几种方式。

+

+如果你在测试 Olive 时发现一些 bug,请到它们的 GitHub 仓库中报告。

+

+- [提交 bug 报告以帮助 Olive](https://github.com/olive-editor/olive/issues)

+

+如果你是程序员,请浏览 Olive 的源代码,看看你是否可以通过编码技巧帮助项目。

+

+- [Olive 的 GitHub 仓库](https://github.com/olive-editor/olive)

+

+在经济上为项目做贡献是另一种可以帮助开发开源软件的方法。你可以通过成为赞助人来支持 Olive。

+

+- [赞助 Olive](https://www.patreon.com/olivevideoeditor)

+

+如果你没有支持 Olive 的金钱或编码技能,你仍然可以帮助它。在社交媒体或你经常访问的 Linux/软件相关论坛和群组中分享这篇文章或 Olive 的网站。一点微小的口碑都能间接地帮助它。

+

+### 你如何看待 Olive?

+

+评判 Olive 还为时过早。我希望能够持续快速开发,并且在年底之前发布 Olive 的稳定版(如果我没有过于乐观的话)。

+

+你如何看待 Olive?你是否认同开发人员针对专业用户的目标?你希望 Olive 拥有哪些功能?

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/olive-video-editor

+

+作者:[Abhishek Prakash][a]

+选题:[lujun9972][b]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://itsfoss.com/author/abhishek/

+[b]: https://github.com/lujun9972

+[1]: https://www.olivevideoeditor.org/

+[2]: https://itsfoss.com/best-video-editing-software-linux/

+[3]: https://www.lwks.com/

+[4]: https://kdenlive.org/en/

+[5]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/01/olive-video-editor-interface.jpg?resize=800%2C450&ssl=1

+[6]: http://libregraphicsworld.org/blog/entry/introducing-olive-new-non-linear-video-editor

+[7]: https://itsfoss.com/flatpak-guide/

+[8]: https://itsfoss.com/use-appimage-linux/

+[9]: https://www.olivevideoeditor.org/download.php

+[10]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/01/olive-video-editor-interface.jpg?fit=800%2C450&ssl=1

diff --git a/published/201902/20190131 Will quantum computing break security.md b/published/201902/20190131 Will quantum computing break security.md

new file mode 100644

index 0000000000..33323796ce

--- /dev/null

+++ b/published/201902/20190131 Will quantum computing break security.md

@@ -0,0 +1,89 @@

+[#]: collector: (lujun9972)

+[#]: translator: (HankChow)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10566-1.html)

+[#]: subject: (Will quantum computing break security?)

+[#]: via: (https://opensource.com/article/19/1/will-quantum-computing-break-security)

+[#]: author: (Mike Bursell https://opensource.com/users/mikecamel)

+

+量子计算会打破现有的安全体系吗?

+======

+

+> 你会希望[某黑客][6]假冒你的银行吗?

+

+

+

+近年来,量子计算机已经出现在大众的视野当中。量子计算机被认为是第六类计算机,这六类计算机包括:

+

+1. 人力:在人造的计算工具出现之前,人类只能使用人力去进行计算。而承担计算工作的人,只能被称为“计算者”。

+2. 模拟计算工具:由人类制造的一些模拟计算过程的小工具,例如[安提凯希拉装置][1]、星盘、计算尺等等。

+3. 机械工具:在这一个类别中包括了运用到离散数学但未使用电子技术进行计算的工具,例如算盘、Charles Babbage 的差分机等等。

+4. 电子模拟计算工具:这一个类别的计算机多数用于军事方面的用途,例如炸弹瞄准器、枪炮瞄准装置等等。

+5. 电子计算机:我在这里会稍微冒险一点,我觉得 Colossus 是第一台电子计算机,[^1] :这一类几乎包含现代所有的电子设备,从移动电话到超级计算机,都在这个类别当中。

+6. 量子计算机:即将进入我们的生活,而且与之前的几类完全不同。

+

+### 什么是量子计算?

+

+量子计算的概念来源于量子力学,使用的计算方式和我们平常使用的普通计算非常不同。如果想要深入理解,建议从参考[维基百科上的定义][2]开始。对我们来说,最重要的是理解这一点:量子计算机使用量子位进行计算。在这样的前提下,对于很多数学算法和运算操作,量子计算机的计算速度会比普通计算机要快得多。

+

+这里的“快得多”是按数量级来说的“快得多”。在某些情况下,一个计算任务如果由普通计算机来执行,可能要耗费几年或者几十年才能完成,但如果由量子计算机来执行,就只需要几秒钟。这样的速度甚至令人感到可怕。因为量子计算机会非常擅长信息的加密解密计算,即使在没有密钥的情况下,也能快速完成繁重的计算任务。

+

+这意味着,如果拥有足够强大的量子计算机,那么你的所有信息都会被一览无遗,任何被加密的数据都可以被正确解密出来,甚至伪造数字签名也会成为可能。这确实是一个严重的问题。谁也不想被某个黑客冒充成自己在用的银行,更不希望自己在区块链上的交易被篡改得面目全非。

+

+### 好消息

+

+尽管上面的提到的问题非常可怕,但也不需要太担心。

+

+首先,如果要实现上面提到的能力,一台可以操作大量量子位的量子计算机是必不可少的,而这个硬件上的要求就是一个很高的门槛。[^4] 目前普遍认为,规模大得足以有效破解经典加密算法的量子计算机在最近几年还不可能出现。

+

+其次,除了攻击现有的加密算法需要大量的量子位以外,还需要很多量子位来保证容错性。

+

+还有,尽管确实有一些理论上的模型阐述了量子计算机如何对一些现有的算法作出攻击,但是要让这样的理论模型实际运作起来的难度会比我们[^5] 想象中大得多。事实上,有一些攻击手段也是未被完全确认是可行的,又或者这些攻击手段还需要继续耗费很多年的改进才能到达如斯恐怖的程度。

+

+最后,还有很多专业人士正在研究能够防御量子计算的算法(这样的算法也被称为“后量子算法”)。如果这些防御算法经过测试以后投入使用,我们就可以使用这些算法进行加密,来对抗量子计算了。

+

+总而言之,很多专家都认为,我们现有的加密方式在未来 5 年甚至未来 10 年内都是安全的,不需要过分担心。

+

+### 也有坏消息

+

+但我们也并不是高枕无忧了,以下两个问题就值得我们关注:

+

+1. 人们在设计应用系统的时候仍然没有对量子计算作出太多的考量。如果设计的系统可能会使用 10 年以上,又或者数据加密和签名的时间跨度在 10 年以上,那么就必须考虑量子计算在未来会不会对系统造成不利的影响。

+2. 新出现的防御量子计算的算法可能会是专有的。也就是说,如果基于这些防御量子计算的算法来设计系统,那么在系统落地的时候,可能会需要为此付费。尽管我是支持开源的,尤其是[开源密码学][3],但我最担心的就是无法开源这方面的内容。而且最糟糕的是,在建立新的协议标准时(不管是事实标准还是通过标准组织建立的标准),无论是故意的,还是无意忽略,或者是没有好的开源替代品,他们都很可能使用专有算法而排除使用开源算法。

+

+### 我们要怎样做?

+

+幸运的是,针对上述两个问题,我们还是有应对措施的。首先,在整个系统的设计阶段,就需要考虑到它是否会受到量子计算的影响,并作出相应的规划。当然了,不需要现在就立即采取行动,因为当前的技术水平也没法实现有效的方案,但至少也要[在加密方面保持敏捷性][4],以便在任何需要的时候为你的协议和系统更换更有效的加密算法。[^7]

+

+其次是参与开源运动。尽可能鼓励密码学方面的有识之士团结起来,支持开放标准,并投入对非专有的防御量子计算的算法研究当中去。这一点也算是当务之急,因为号召更多的人重视起来并加入研究,比研究本身更为重要。

+

+本文首发于《[Alice, Eve, and Bob][5]》,并在作者同意下重新发表。

+

+[^1]: 我认为把它称为第一台电子可编程计算机是公平的。我知道有早期的非可编程的,也有些人声称是 ENIAC,但我没有足够的空间或精力在这里争论这件事。

+[^2]: No。

+[^3]: See 2. Don't get me wrong, by the way—I grew up near Weston-super-Mare, and it's got things going for it, but it's not Mayfair.

+[^4]: 如果量子物理学家说很难,那么在我看来,就很难。

+[^5]: 而且我假设我们都不是量子物理学家或数学家。

+[^6]: I'm definitely not.

+[^7]: 而且不仅仅是出于量子计算的原因:我们现有的一些经典算法很可能会陷入其他非量子攻击,例如新的数学方法。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/1/will-quantum-computing-break-security

+

+作者:[Mike Bursell][a]

+选题:[lujun9972][b]

+译者:[HankChow](https://github.com/HankChow)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/mikecamel

+[b]: https://github.com/lujun9972

+[1]: https://en.wikipedia.org/wiki/Antikythera_mechanism

+[2]: https://en.wikipedia.org/wiki/Quantum_computing

+[3]: https://opensource.com/article/17/10/many-eyes

+[4]: https://aliceevebob.com/2017/04/04/disbelieving-the-many-eyes-hypothesis/

+[5]: https://aliceevebob.com/2019/01/08/will-quantum-computing-break-security/

+[6]: https://www.techopedia.com/definition/20225/j-random-hacker

diff --git a/published/201902/20190201 Top 5 Linux Distributions for New Users.md b/published/201902/20190201 Top 5 Linux Distributions for New Users.md

new file mode 100644

index 0000000000..5641dd8796

--- /dev/null

+++ b/published/201902/20190201 Top 5 Linux Distributions for New Users.md

@@ -0,0 +1,111 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10553-1.html)

+[#]: subject: (Top 5 Linux Distributions for New Users)

+[#]: via: (https://www.linux.com/blog/learn/2019/2/top-5-linux-distributions-new-users)

+[#]: author: (Jack Wallen https://www.linux.com/users/jlwallen)

+

+5 个面向新手的 Linux 发行版

+======

+

+> 5 个可使用新用户有如归家般感觉的发行版。

+

+

+

+从最初的 Linux 到现在,Linux 已经发展了很长一段路。但是,无论你曾经多少次听说过现在使用 Linux 有多容易,仍然会有表示怀疑的人。而要真的承担得其这份声明,桌面必须足够简单,以便不熟悉 Linux 的人也能够使用它。事实上大量的桌面发行版使这成为了现实。

+

+### 无需 Linux 知识

+

+将这个清单误解为又一个“最佳用户友好型 Linux 发行版”的清单可能很简单。但这不是我们要在这里看到的。这二者之间有什么不同?就我的目的而言,定义的界限是 Linux 是否真正起到了使用的作用。换句话说,你是否可以将这个桌面操作系统放在一个用户面前,并让他们应用自如而无需懂得 Linux 知识呢?

+

+不管你相信与否,有些发行版就能做到。这里我将介绍给你 5 个这样的发行版。这些或许你全都听说过。它们或许不是你所选择的发行版,但可以向你保证它们无需过多关注,而是将用户放在眼前的。

+

+我们来看看选中的几个。

+

+### Elementary OS

+

+[Elementary OS](https://elementary.io/) 的理念主要围绕人们如何实际使用他们的桌面。开发人员和设计人员不遗余力地创建尽可能简单的桌面。在这个过程中,他们致力于去 Linux 化的 Linux。这并不是说他们已经从这个等式中删除了 Linux。不,恰恰相反,他们所做的就是创建一个与你所发现的一样的中立的操作系统。Elementary OS 是如此流畅,以确保一切都完美合理。从单个 Dock 到每个人都清晰明了的应用程序菜单,这是一个桌面,而不用提醒用户说,“你正在使用 Linux!” 事实上,其布局本身就让人联想到 Mac,但附加了一个简单的应用程序菜单(图 1)。

+

+![Elementary OS Juno][2]

+

+*图 1:Elementary OS Juno 应用菜单*

+

+将 Elementary OS 放在此列表中的另一个重要原因是它不像其他桌面发行版那样灵活。当然,有些用户会对此不以为然,但是如果桌面没有向用户扔出各种花哨的定制诱惑,那么就会形成一个非常熟悉的环境:一个既不需要也不允许大量修修补补的环境。操作系统在让新用户熟悉该平台这一方面还有很长的路要走。

+

+与任何现代 Linux 桌面发行版一样,Elementary OS 包括了应用商店,称为 AppCenter,用户可以在其中安装所需的所有应用程序,而无需触及命令行。

+

+### 深度操作系统

+

+[深度操作系统](https://www.deepin.org/)不仅得到了市场上最漂亮的台式机之一的赞誉,它也像任何桌面操作系统一样容易上手。其桌面界面非常简单,对于毫无 Linux 经验的用户来说,它的上手速度非常快。事实上,你很难找到无法立即上手使用 Deepin 桌面的用户。而这里唯一可能的障碍可能是其侧边栏控制中心(图 2)。

+

+![][5]

+

+*图 2:Deepin 的侧边栏控制编码*

+

+但即使是侧边栏控制面板,也像市场上的任何其他配置工具一样直观。任何使用过移动设备的人对于这种布局都很熟悉。至于打开应用程序,Deepin 的启动器采用了 macOS Launchpad 的方式。此按钮位于桌面底座上通常最右侧的位置,因此用户立即就可以会意,知道它可能类似于标准的“开始”菜单。

+

+与 Elementary OS(以及市场上大多数 Linux 发行版)类似,深度操作系统也包含一个应用程序商店(简称为“商店”),可以轻松安装大量应用程序。

+

+### Ubuntu

+

+你知道肯定有它。[Ubuntu](https://www.ubuntu.com/) 通常在大多数用户友好的 Linux 列表中占据首位。因为它是少数几个不需要懂得 Linux 就能使用的桌面之一。但在采用 GNOME(和 Unity 谢幕)之前,情况并非如此。因为 Unity 经常需要进行一些调整才能达到一点 Linux 知识都不需要的程度(图 3)。现在 Ubuntu 已经采用了 GNOME,并将其调整到甚至不需要懂得 GNOME 的程度,这个桌面使得对 Linux 的简单性和可用性的要求不再是迫切问题。

+

+![Ubuntu 18.04][7]

+

+*图 3:Ubuntu 18.04 桌面可使用马上熟悉起来*

+

+与 Elementary OS 不同,Ubuntu 对用户毫无阻碍。因此,任何想从桌面上获得更多信息的人都可以拥有它。但是,其开箱即用的体验对于任何类型的用户都是足够的。任何一个让用户不知道他们触手可及的力量有多少的桌面,肯定不如 Ubuntu。

+

+### Linux Mint

+

+我需要首先声明,我从来都不是 [Linux Mint](https://linuxmint.com/) 的忠实粉丝。但这并不是说我不尊重开发者的工作,而更多的是一种审美观点。我更喜欢现代化的桌面环境。但是,旧式的学校计算机桌面的隐喻(可以在默认的 Cinnamon 桌面中找到)可以让几乎每个人使用它的人都格外熟悉。Linux Mint 使用任务栏、开始按钮、系统托盘和桌面图标(图 4),提供了一个需要零学习曲线的界面。事实上,一些用户最初可能会被愚弄,以为他们正在使用 Windows 7 的克隆版。甚至是它的更新警告图标也会让用户感到非常熟悉。

+

+![Linux Mint][9]

+

+*图 4:Linux Mint 的 Cinnamon 桌面非常像 Windows 7*

+

+因为 Linux Mint 受益于其所基于的 Ubuntu,它不仅会让你马上熟悉起来,而且具有很高的可用性。无论你是否对底层平台有所了解,用户都会立即感受到宾至如归的感觉。

+

+### Ubuntu Budgie

+

+我们的列表将以这样一个发行版做结:它也能让用户忘记他们正在使用 Linux,并且使用常用工具变得简单、美观。使 Ubuntu 融合 Budgie 桌面可以构成一个令人印象深刻的易用发行版。虽然其桌面布局(图 5)可能不太一样,但毫无疑问,适应这个环境并不需要浪费时间。实际上,除了 Dock 默认居于桌面的左侧,[Ubuntu Budgie](https://ubuntubudgie.org/) 确实看起来像 Elementary OS。

+

+![Budgie][11]

+

+*图 5:Budgie 桌面既漂亮又简单*

+

+Ubuntu Budgie 中的系统托盘/通知区域提供了一些不太多见的功能,比如:快速访问 Caffeine(一种保持桌面清醒的工具)、快速笔记工具(用于记录简单笔记)、Night Lite 开关、原地下拉菜单(用于快速访问文件夹),当然还有 Raven 小程序/通知侧边栏(与深度操作系统中的控制中心侧边栏类似,但不太优雅)。Budgie 还包括一个应用程序菜单(左上角),用户可以访问所有已安装的应用程序。打开一个应用程序,该图标将出现在 Dock 中。右键单击该应用程序图标,然后选择“保留在 Dock”以便更快地访问。

+

+Ubuntu Budgie 的一切都很直观,所以几乎没有学习曲线。这种发行版既优雅又易于使用,不能再好了。

+

+### 选择一个吧

+

+至此介绍了 5 个 Linux 发行版,它们各自以自己的方式提供了让任何用户都马上熟悉的桌面体验。虽然这些可能不是你对顶级发行版的选择,但对于那些不熟悉 Linux 的用户来说,却不能否定它们的价值。

+

+--------------------------------------------------------------------------------

+

+via: https://www.linux.com/blog/learn/2019/2/top-5-linux-distributions-new-users

+

+作者:[Jack Wallen][a]

+选题:[lujun9972][b]

+译者:[wxy](https://github.com/wxy)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://www.linux.com/users/jlwallen

+[b]: https://github.com/lujun9972

+[1]: https://www.linux.com/files/images/elementaryosjpg-2

+[2]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/elementaryos_0.jpg?itok=KxgNUvMW (Elementary OS Juno)

+[3]: https://www.linux.com/licenses/category/used-permission

+[4]: https://www.linux.com/files/images/deepinjpg

+[5]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/deepin.jpg?itok=VV381a9f

+[6]: https://www.linux.com/files/images/ubuntujpg-1

+[7]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ubuntu_1.jpg?itok=bax-_Tsg (Ubuntu 18.04)

+[8]: https://www.linux.com/files/images/linuxmintjpg

+[9]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/linuxmint.jpg?itok=8sPon0Cq (Linux Mint )

+[10]: https://www.linux.com/files/images/budgiejpg-0

+[11]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/budgie_0.jpg?itok=zcf-AHmj (Budgie)

+[12]: https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

diff --git a/published/20190205 DNS and Root Certificates.md b/published/201902/20190205 DNS and Root Certificates.md

similarity index 100%

rename from published/20190205 DNS and Root Certificates.md

rename to published/201902/20190205 DNS and Root Certificates.md

diff --git a/published/201902/20190205 Installing Kali Linux on VirtualBox- Quickest - Safest Way.md b/published/201902/20190205 Installing Kali Linux on VirtualBox- Quickest - Safest Way.md

new file mode 100644

index 0000000000..830ff13fd9

--- /dev/null

+++ b/published/201902/20190205 Installing Kali Linux on VirtualBox- Quickest - Safest Way.md

@@ -0,0 +1,142 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10550-1.html)

+[#]: subject: (Installing Kali Linux on VirtualBox: Quickest & Safest Way)

+[#]: via: (https://itsfoss.com/install-kali-linux-virtualbox)

+[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

+

+在 VirtualBox 上安装 Kali Linux 的最安全快捷的方式

+======

+

+> 本教程将向你展示如何以最快的方式在运行于 Windows 和 Linux 上的 VirtualBox 上安装 Kali Linux。

+

+[Kali Linux][1] 是最好的[黑客][2] 和安全爱好者的 Linux 发行版之一。

+

+由于它涉及像黑客这样的敏感话题,它就像一把双刃剑。我们过去在一篇详细的 [Kali Linux 点评](https://linux.cn/article-10198-1.html)中对此进行了讨论,所以我不会再次赘述。

+

+虽然你可以通过替换现有的操作系统来安装 Kali Linux,但通过虚拟机使用它将是一个更好、更安全的选择。

+

+使用 Virtual Box,你可以将 Kali Linux 当做 Windows / Linux 系统中的常规应用程序一样,几乎就和在系统中运行 VLC 或游戏一样简单。

+

+在虚拟机中使用 Kali Linux 也是安全的。无论你在 Kali Linux 中做什么都不会影响你的“宿主机系统”(即你原来的 Windows 或 Linux 操作系统)。你的实际操作系统将不会受到影响,宿主机系统中的数据将是安全的。

+

+![Kali Linux on Virtual Box][3]

+

+### 如何在 VirtualBox 上安装 Kali Linux

+

+我将在这里使用 [VirtualBox][4]。它是一个很棒的开源虚拟化解决方案,适用于任何人(无论是专业或个人用途)。它可以免费使用。

+

+在本教程中,我们将特指 Kali Linux 的安装,但你几乎可以安装任何其他已有 ISO 文件的操作系统或预先构建好的虚拟机存储文件。

+

+**注意:**这些相同的步骤适用于运行在 Windows / Linux 上的 VirtualBox。

+

+正如我已经提到的,你可以安装 Windows 或 Linux 作为宿主机。但是,在本文中,我安装了 Windows 10(不要讨厌我!),我会尝试在 VirtualBox 中逐步安装 Kali Linux。

+

+而且,最好的是,即使你碰巧使用 Linux 发行版作为主要操作系统,相同的步骤也完全适用!

+

+想知道怎么样做吗?让我们来看看…

+

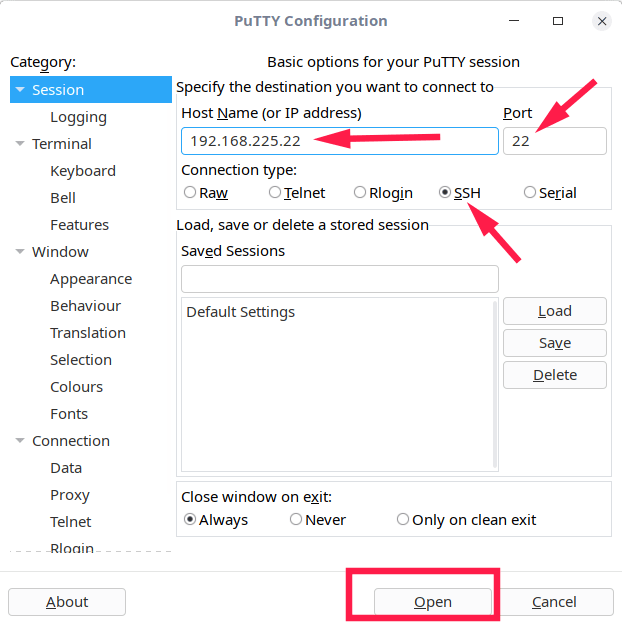

+### 在 VirtualBox 上安装 Kali Linux 的逐步指导

+

+我们将使用专为 VirtualBox 制作的定制 Kali Linux 镜像。当然,你还可以下载 Kali Linux 的 ISO 文件并创建一个新的虚拟机,但是为什么在你有一个简单的替代方案时还要这样做呢?

+

+#### 1、下载并安装 VirtualBox

+

+你需要做的第一件事是从 Oracle 官方网站下载并安装 VirtualBox。

+

+- [下载 VirtualBox](https://www.virtualbox.org/wiki/Downloads)

+

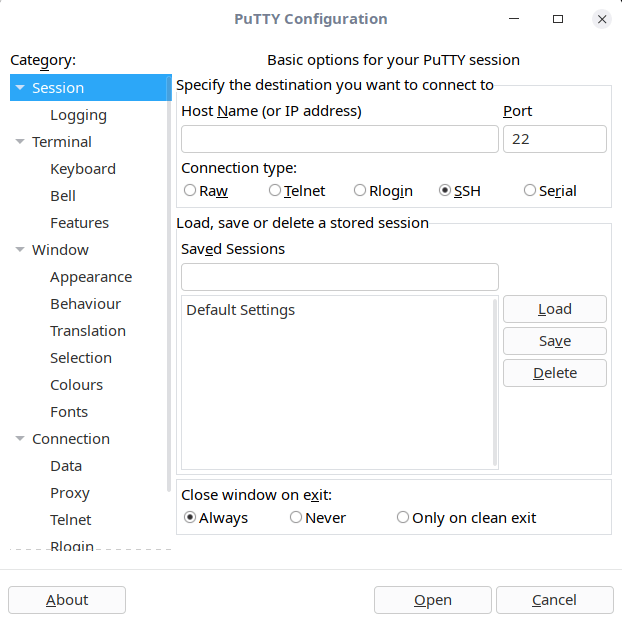

+下载了安装程序后,只需双击它即可安装 VirtualBox。在 Ubuntu / Fedora Linux 上安装 VirtualBox 也是一样的。

+

+#### 2、下载就绪的 Kali Linux 虚拟镜像

+

+VirtualBox 成功安装后,前往 [Offensive Security 的下载页面][5] 下载用于 VirtualBox 的虚拟机镜像。如果你改变主意想使用 [VMware][6],也有用于它的。

+

+![Kali Linux Virtual Box Image][7]

+

+如你所见,文件大小远远超过 3 GB,你应该使用 torrent 方式或使用 [下载管理器][8] 下载它。

+