mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-02-25 00:50:15 +08:00

Merge branch 'HEAD' of git@github.com:LCTT/TranslateProject.git

commit b6923d130c

84

README.md


@@ -1,16 +1,16 @@

|

||||

简介

|

||||

-------------------------------

|

||||

|

||||

LCTT是“Linux中国”([https://linux.cn/](https://linux.cn/))的翻译组,负责从国外优秀媒体翻译Linux相关的技术、资讯、杂文等内容。

|

||||

LCTT 是“Linux中国”([https://linux.cn/](https://linux.cn/))的翻译组,负责从国外优秀媒体翻译 Linux 相关的技术、资讯、杂文等内容。

|

||||

|

||||

LCTT已经拥有几百名活跃成员,并欢迎更多的Linux志愿者加入我们的团队。

|

||||

LCTT 已经拥有几百名活跃成员,并欢迎更多的Linux志愿者加入我们的团队。

|

||||

|

||||

|

||||

|

||||

LCTT的组成

|

||||

LCTT 的组成

|

||||

-------------------------------

|

||||

|

||||

**选题**,负责选择合适的内容,并将原文转换为markdown格式,提交到LCTT的[TranslateProject](https://github.com/LCTT/TranslateProject) 库中。

|

||||

**选题**,负责选择合适的内容,并将原文转换为 markdown 格式,提交到 LCTT 的 [TranslateProject](https://github.com/LCTT/TranslateProject) 库中。

|

||||

|

||||

**译者**,负责从选题中选择内容进行翻译。

|

||||

|

||||

@@ -21,38 +21,38 @@ LCTT的组成

|

||||

加入我们

|

||||

-------------------------------

|

||||

|

||||

请首先加入翻译组的QQ群,群号是:198889102,加群时请说明是“志愿者”。加入后记得修改您的群名片为您的github的ID。

|

||||

请首先加入翻译组的 QQ 群,群号是:198889102,加群时请说明是“志愿者”。加入后记得修改您的群名片为您的 GitHub 的 ID。

|

||||

|

||||

加入的成员,请先阅读[WIKI 如何开始](https://github.com/LCTT/TranslateProject/wiki/01-如何开始)。

|

||||

加入的成员,请先阅读 [WIKI 如何开始](https://github.com/LCTT/TranslateProject/wiki/01-如何开始)。

|

||||

|

||||

如何开始

|

||||

-------------------------------

|

||||

|

||||

请阅读[WIKI](https://github.com/LCTT/TranslateProject/wiki)。

|

||||

请阅读 [WIKI](https://github.com/LCTT/TranslateProject/wiki)。

|

||||

|

||||

历史

|

||||

-------------------------------

|

||||

|

||||

* 2013/09/10 倡议并得到了大家的积极响应,成立翻译组。

|

||||

* 2013/09/11 采用github进行翻译协作,并开始进行选题翻译。

|

||||

* 2013/09/11 采用 GitHub 进行翻译协作,并开始进行选题翻译。

|

||||

* 2013/09/16 公开发布了翻译组成立消息后,又有新的成员申请加入了。并从此建立见习成员制度。

|

||||

* 2013/09/24 鉴于大家使用Github的水平不一,容易导致主仓库的一些错误,因此换成了常规的fork+PR的模式来进行翻译流程。

|

||||

* 2013/10/11 根据对LCTT的贡献,划分了Core Translators组,最先的加入成员是vito-L和tinyeyeser。

|

||||

* 2013/10/12 取消对LINUX.CN注册用户的依赖,在QQ群内、文章内都采用github的注册ID。

|

||||

* 2013/10/18 正式启动man翻译计划。

|

||||

* 2013/09/24 鉴于大家使用 GitHub 的水平不一,容易导致主仓库的一些错误,因此换成了常规的 fork+PR 的模式来进行翻译流程。

|

||||

* 2013/10/11 根据对 LCTT 的贡献,划分了 Core Translators 组,最先的加入成员是 vito-L 和 tinyeyeser。

|

||||

* 2013/10/12 取消对 LINUX.CN 注册用户的依赖,在 QQ 群内、文章内都采用 GitHub 的注册 ID。

|

||||

* 2013/10/18 正式启动 man 翻译计划。

|

||||

* 2013/11/10 举行第一次北京线下聚会。

|

||||

* 2014/01/02 增加了Core Translators 成员: geekpi。

|

||||

* 2014/05/04 更换了新的QQ群:198889102

|

||||

* 2014/05/16 增加了Core Translators 成员: will.qian、vizv。

|

||||

* 2014/06/18 由于GOLinux令人惊叹的翻译速度和不错的翻译质量,升级为Core Translators成员。

|

||||

* 2014/01/02 增加了 Core Translators 成员: geekpi。

|

||||

* 2014/05/04 更换了新的 QQ 群:198889102

|

||||

* 2014/05/16 增加了 Core Translators 成员: will.qian、vizv。

|

||||

* 2014/06/18 由于 GOLinux 令人惊叹的翻译速度和不错的翻译质量,升级为 Core Translators 成员。

|

||||

* 2014/09/09 LCTT 一周年,做一年[总结](http://linux.cn/article-3784-1.html)。并将曾任 CORE 的成员分组为 Senior,以表彰他们的贡献。

|

||||

* 2014/10/08 提升bazz2为Core Translators成员。

|

||||

* 2014/11/04 提升zpl1025为Core Translators成员。

|

||||

* 2014/12/25 提升runningwater为Core Translators成员。

|

||||

* 2014/10/08 提升 bazz2 为 Core Translators 成员。

|

||||

* 2014/11/04 提升 zpl1025 为 Core Translators 成员。

|

||||

* 2014/12/25 提升 runningwater 为 Core Translators 成员。

|

||||

* 2015/04/19 发起 LFS-BOOK-7.7-systemd 项目。

|

||||

* 2015/06/09 提升ictlyh和dongfengweixiao为Core Translators成员。

|

||||

* 2015/11/10 提升strugglingyouth、FSSlc、Vic020、alim0x为Core Translators成员。

|

||||

* 2016/05/09 提升PurlingNayuki为校对。

|

||||

* 2015/06/09 提升 ictlyh 和 dongfengweixiao 为 Core Translators 成员。

|

||||

* 2015/11/10 提升 strugglingyouth、FSSlc、Vic020、alim0x 为 Core Translators 成员。

|

||||

* 2016/05/09 提升 PurlingNayuki 为校对。

|

||||

|

||||

活跃成员

|

||||

-------------------------------

|

||||

@@ -74,16 +74,16 @@ LCTT的组成

|

||||

- CORE @dongfengweixiao,

|

||||

- CORE @alim0x,

|

||||

- Senior @DeadFire,

|

||||

- Senior @reinoir,

|

||||

- Senior @reinoir222,

|

||||

- Senior @tinyeyeser,

|

||||

- Senior @vito-L,

|

||||

- Senior @jasminepeng,

|

||||

- Senior @willqian,

|

||||

- Senior @vizv,

|

||||

- ZTinoZ,

|

||||

- theo-l,

|

||||

- luoxcat,

|

||||

- martin2011qi,

|

||||

- theo-l,

|

||||

- Luoxcat,

|

||||

- wi-cuckoo,

|

||||

- disylee,

|

||||

- haimingfg,

|

||||

@@ -91,8 +91,8 @@ LCTT的组成

|

||||

- wwy-hust,

|

||||

- felixonmars,

|

||||

- su-kaiyao,

|

||||

- ivo-wang,

|

||||

- GHLandy,

|

||||

- ivo-wang,

|

||||

- cvsher,

|

||||

- wyangsun,

|

||||

- DongShuaike,

|

||||

@@ -119,6 +119,7 @@ LCTT的组成

|

||||

- blueabysm,

|

||||

- boredivan,

|

||||

- name1e5s,

|

||||

- StdioA,

|

||||

- yechunxiao19,

|

||||

- l3b2w1,

|

||||

- XLCYun,

|

||||

@@ -134,49 +135,34 @@ LCTT的组成

|

||||

- 1w2b3l,

|

||||

- JonathanKang,

|

||||

- crowner,

|

||||

- mtunique,

|

||||

- dingdongnigetou,

|

||||

- mtunique,

|

||||

- CNprober,

|

||||

- hyaocuk,

|

||||

- szrlee,

|

||||

- KnightJoker,

|

||||

- Xuanwo,

|

||||

- nd0104,

|

||||

- jerryling315,

|

||||

- Moelf,

|

||||

- xiaoyu33,

|

||||

- guodongxiaren,

|

||||

- ynmlml,

|

||||

- kylepeng93,

|

||||

- vim-kakali,

|

||||

- ggaaooppeenngg,

|

||||

- Ricky-Gong,

|

||||

- zky001,

|

||||

- Flowsnow,

|

||||

- lfzark,

|

||||

- 213edu,

|

||||

- Tanete,

|

||||

- liuaiping,

|

||||

- bestony,

|

||||

- mudongliang,

|

||||

- liuaiping,

|

||||

- Timeszoro,

|

||||

- rogetfan,

|

||||

- itsang,

|

||||

- JeffDing,

|

||||

- Yuking-net,

|

||||

- MikeCoder,

|

||||

- zhangboyue,

|

||||

- liaoishere,

|

||||

- yupmoon,

|

||||

- Medusar,

|

||||

- zzlyzq,

|

||||

- yujianxuechuan,

|

||||

- ailurus1991,

|

||||

- tomatoKiller,

|

||||

- stduolc,

|

||||

- shaohaolin,

|

||||

- FineFan,

|

||||

- kingname,

|

||||

- CHINAANSHE,

|

||||

|

||||

(按提交行数排名前百)

|

||||

|

||||

(按增加行数排名前百)

|

||||

|

||||

LFS 项目活跃成员有:

|

||||

|

||||

@@ -188,7 +174,7 @@ LFS 项目活跃成员有:

|

||||

- @KevinSJ

|

||||

- @Yuking-net

|

||||

|

||||

(更新于2016/05/09)

|

||||

(更新于2016/06/20)

|

||||

|

||||

谢谢大家的支持!

|

||||

|

||||

|

||||

@@ -19,7 +19,7 @@ LinuxQuestions 问卷调查揭晓最佳开源项目

|

||||

via: http://ostatic.com/blog/linuxquestions-survey-results-surface-top-open-source-projects

|

||||

|

||||

作者:[Sam Dean][a]

|

||||

译者:[jerryling315](https://github.com/jerryling315)

|

||||

译者:[Moelf](https://github.com/Moelf)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@@ -29,4 +29,4 @@ via: http://ostatic.com/blog/linuxquestions-survey-results-surface-top-open-sour

|

||||

[2]:http://www.linuxquestions.org/questions/linux-news-59/2014-linuxquestions-org-members-choice-award-winners-4175532948/

|

||||

[3]:http://www.linuxquestions.org/questions/2014mca.php

|

||||

[4]:http://ostatic.com/blog/lq-members-choice-award-winners-announced

|

||||

[5]:http://www.linuxquestions.org/questions/2014mca.php

|

||||

[5]:http://www.linuxquestions.org/questions/2014mca.php

|

||||

|

||||

@@ -98,7 +98,7 @@ Debian 在 Linux 生态环境中的贡献是难以用语言描述的。 如果 D

|

||||

via: http://www.tecmint.com/happy-birthday-to-debian-gnu-linux/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[jerryling315](http://moelf.xyz)

|

||||

译者:[Moelf](https://github.com/Moelf)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@@ -69,7 +69,7 @@ b、 一旦你保存了这个文件,你应该能在 Wifi 菜单里看到你刚

|

||||

via: http://www.linuxveda.com/2015/08/23/how-to-create-an-ap-in-ubuntu-15-04-to-connect-to-androidiphone/

|

||||

|

||||

作者:[Sayantan Das][a]

|

||||

译者:[jerryling315](https://github.com/jerryling315)

|

||||

译者:[Moelf](https://github.com/Moelf)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@@ -222,7 +222,7 @@ KDE Plasma 5 – 第五代 KDE。大幅改进了设计和系统,新的默认

|

||||

via: [https://tlhp.cf/kde-history/](https://tlhp.cf/kde-history/)

|

||||

|

||||

作者:[Pavlo Rudyi][a]

|

||||

译者:[jerryling315](https://github.com/jerryling315)

|

||||

译者:[Moelf](https://github.com/Moelf)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@@ -0,0 +1,86 @@

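Five Top Open Source Command-Line Shells for Linux
===============================================

There are two kinds of Linux users in this world: the adventurous and the cautious.

One kind instinctively tries every new option that scratches an itch. They have tried countless window managers, distributions, and nearly every desktop add-on they could find.

The other kind finds something they like and sticks with it. They tend to like their distribution's defaults. The first text editor they master becomes their favorite.

As a Linux user of fifteen years on both desktops and servers, I fall firmly into the second camp rather than the first. I prefer to use what comes out of the box, because it usually means I can easily find documentation and examples that match my use case. If I do decide to switch to something non-standard, the switch will be based on careful research, and usually on a strong recommendation from a good friend.

But that does not mean I dislike trying new things and seeing what I have been missing. So recently, after years of using the bash shell without a second thought, I decided to try four alternative shells: ksh, tcsh, zsh, and fish. All four install easily from the default repositories of Fedora, the distribution I use, and they may already be available in the distribution you use.

Here is a short introduction to each, along with the reasons it might suit you as your next Linux command-line interpreter.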
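Before installing anything, it can help to see which of the five shells are already present. The following is a minimal sketch, not part of the original article: it assumes the shells are installed under their usual command names and simply looks each one up on `PATH`. The helper name `installed_shells` is made up for this example.

```python
import shutil

# The five shells discussed in the article, by their usual command names.
SHELLS = ["bash", "ksh", "tcsh", "zsh", "fish"]


def installed_shells(candidates=SHELLS):
    """Map each shell name to its path on PATH, or None if it is not found."""
    return {name: shutil.which(name) for name in candidates}


if __name__ == "__main__":
    for name, path in installed_shells().items():
        print(f"{name:5} {path or 'not installed'}")
```

A shell that is reported as missing can usually be installed from the distribution's package manager, as the article notes for Fedora.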

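### bash

First, let's look back at the most familiar one. [GNU Bash][1], the Bourne Again Shell, has been the default on the many Linux distributions I have used over the years. First released in 1989, it easily grew into the most widely used shell in the Linux world, and it is common on other Unix-like systems as well.

Bash is a well-regarded shell, and when you search the internet for documentation on how to do something, you will find almost without exception that it assumes you are using bash. But bash has shortcomings too. If you have written Bash scripts, you will have noticed that they always seem to take a few more lines than they really should. That is not to say there is anything it cannot do; rather, it is not always intuitive, or at least not elegant, to read and write.

That said, given its enormous installed base, and given how many professional and amateur system administrators are already used to its ways and quirks, bash is probably here to stay, at least for the foreseeable future.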

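### ksh

[KornShell][4] may not be a familiar name, but you probably know the command that invokes it: ksh. This alternative shell originated at Bell Labs in the 1980s and was written by David Korn. It was originally proprietary software, but later versions were released under the [Eclipse Public License][5].

Proponents of ksh list many reasons they consider it superior, including a better loop syntax, cleaner exit codes from pipes, and simpler ways of handling repeated commands and associative arrays. It can emulate many of the behaviors of vi and emacs, so if you are a heavy user of one of those text editors, it is worth a try. In the end, I found that although it offers a different experience for advanced scripting, it is essentially the same as bash for basic input.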

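### tcsh

[tcsh][6] is derived from csh (the Berkeley Unix C shell) and traces its roots back to the early days of Unix and of computing itself.

The biggest selling point of tcsh is its scripting language, which looks very familiar to anyone who has programmed in C. People either love or hate tcsh scripting. But it has other technical features too, including aliases that accept arguments and a variety of default behaviors that may suit your preferences, such as tab autocompletion and a record of tab completions that can be consulted later.

tcsh is released under the [BSD license][7].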

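### zsh

[zsh][8] is another shell with similarities to bash and ksh. Created in the early 1990s, zsh supports many useful newer features, including spelling correction, theming, named directory shortcuts, sharing command history across multiple terminals, and various small tweaks relative to bash.

Although parts of it are covered by the GPL, the zsh code and binaries can be distributed under an MIT-like license; see the [actual license][9] for details.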

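### fish

When I visited the [fish][10] home page and saw the tongue-in-cheek tagline "Finally, a command line shell for the 90s" (fish was written in 2005), I knew I was going to love this friendly interactive shell.

The authors of fish offer a number of reasons to switch, all delivered with a bit of self-mocking humor, yet the reasons hold up. Features include autosuggestions ("Watch out, Netscape Navigator 4.0"; LCTT translator's note: NN4 was a milestone release) and support for the "astonishing" 256-color VGA palette, but there are genuinely useful features too, including command completion based on the man pages on your machine, clean scripting, and a web-based configuration interface.

fish is licensed mainly under GPLv2, though parts are under other licenses. Check the repository for the [complete information][11].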

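***

If you are looking for a detailed rundown of the exact differences between each option, [this site][12] should help.

So where do I stand? Well, ultimately I will probably go back to bash, because for someone who spends most of the time working interactively at the command line, the benefits that switching brings for advanced scripting are marginal, and I am already comfortable with bash.

But I am glad I decided to step outside and try some new options. I know there are many, many others out there. Which shells have you tried, and which do you prefer? Let us know in the comments.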

---

via: https://opensource.com/business/16/3/top-linux-shells

Author: [Jason Baker][a]
Translator: [mr-ping](https://github.com/mr-ping)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/jason-baker

[1]: https://www.gnu.org/software/bash/
[2]: http://mywiki.wooledge.org/BashPitfalls
[3]: http://www.gnu.org/licenses/gpl.html
[4]: http://www.kornshell.org/
[5]: https://www.eclipse.org/legal/epl-v10.html
[6]: http://www.tcsh.org/Welcome
[7]: https://en.wikipedia.org/wiki/BSD_licenses
[8]: http://www.zsh.org/
[9]: https://sourceforge.net/p/zsh/code/ci/master/tree/LICENCE
[10]: https://fishshell.com/
[11]: https://github.com/fish-shell/fish-shell/blob/master/COPYING
[12]: http://hyperpolyglot.org/unix-shells

63

published/20160531 Why Ubuntu-based Distros Are Leaders.md

Normal file


@@ -0,0 +1,63 @@

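Why Do Ubuntu-Based Distros Lead the Linux Distributions?
=========================================

Over the past several years I have tried a number of excellent Linux distributions. The ones that impressed me most were those maintained by strong communities, but popularity impressed me even more than a strong community did. Popular Linux distributions tend to attract new users, usually because their popularity makes them easier to use. That is not always true, but it generally holds.

With that in mind, the first distribution that comes to my mind is [Ubuntu][1]. Built on the robust [Debian][2] distribution, it has not only become a hugely popular Linux distribution but has also spawned countless derivatives, Linux Mint being one example. In this article I will explore why I believe Ubuntu will win the Linux distribution wars, and how it has influenced the Linux desktop as a whole.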

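### Ubuntu is easy to use

Before I first tried Ubuntu years ago, I preferred the KDE desktop. At that time, KDE was the desktop environment I was exposed to most. The main reason was that KDE was the most popular desktop among the Linux distributions that were easiest for newcomers to pick up. These newcomer-friendly distributions included Knoppix, Simply Mepis, Xandros, Linspire, and others, and all of them pointed their users toward the popular KDE.

KDE met my needs back then, and I had little reason to tinker with other desktop environments. Then one day my Debian installation failed (through my own mishandling), and I decided to try the Ubuntu release code-named Dapper Drake (LCTT translator's note: Ubuntu 6.06 Dapper Drake, released June 1, 2006), which everyone was raving about. At the time, my impression of it was limited to screenshots, but I thought it would be fun to try.

The strongest impression Ubuntu Dapper Drake made on me was how clear it was where everything lived. Remember, I was coming from the KDE world, where there were fifteen ways to change a menu setting! The GNOME implementation in Ubuntu, by contrast, was thoroughly minimalist.

Fast forward to 2016, when the latest version number is 16.04: we now have several Ubuntu flavors and a whole host of Ubuntu-based distributions. What all the Ubuntu flavors and derivatives share at their core is that they are designed for ease of use. When a distribution wants to grow its user base, this is what matters most.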

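### Ubuntu LTS

In the past, I have stuck almost exclusively to LTS (Long Term Support) releases for my main desktop. The October releases are fine for me to try out on a test hard drive, or even to run on an old laptop. My reason is simple: I have no interest in tinkering with short-term releases on a production PC. I am a busy guy, and I see it as a waste of my time.

For me, the fact that Ubuntu offers LTS releases is the biggest reason Ubuntu became popular. Put it this way: offering the general public a desktop Linux distribution with effective long-term support is an advantage in itself. In fact, Ubuntu is not alone here; other derivatives do this well too. A long-term support policy combined with a newcomer-friendly environment has, I believe, done a great deal for Ubuntu's adoption.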

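### Ubuntu Snap packages

In the past, users praised the ability to get newer software on their systems through PPAs (personal package archives). Unfortunately, the approach had drawbacks. It was common for the PPA behind a given software title to go missing.

Now we have [Snap packages][3]. Of course, this is not an entirely new concept; similar attempts have been made before. Snaps let users run the latest software on a long-term support release without having to move to the latest Ubuntu release. While I think Snap packages are still in their early days, I am looking forward to running brand-new software on a stable release.

The most obvious problem is that if you run a lot of software, Snap packages will take up a lot of disk space. Beyond that, most Ubuntu software still has to be officially converted from deb packages. The first problem can be solved with a bigger disk, while the second will simply take time.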

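### The Ubuntu community

First, I acknowledge that most major Linux distributions have strong communities. However, I firmly believe that the Ubuntu community is the most diverse, with members from all walks of life. For example, our forums cover categories ranging from Apple hardware support to gaming, and the topics within those specialized discussions span a wide range.

Beyond the forums, Ubuntu also offers a very formal community structure. It includes a council, a technical board, [local community teams][4], and a developer membership board. There is more, but these are the parts of the community organization that I know about.

We also have [Ask Ubuntu][5]. In my view, it can replace asking for help on the forums, and I find you are more likely to get useful information there. On top of that, the answers selected as most accurate also get written into the official documentation.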

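### The future of Ubuntu

I think the Unity interface in Ubuntu (LCTT translator's note: Unity is the graphical shell Canonical developed for the GNOME desktop environment on Ubuntu) has done little to increase desktop adoption. I understand the reasoning behind it; these days it mostly serves purposes such as making the development team's work easier. But in the end, I still believe Unity paved the way for the popularity of Ubuntu MATE and Linux Mint.

What I am most curious about is the future of Ubuntu's IRC channels and mailing lists (LCTT translator's note: you can ask questions about local teams and planned events in the Ubuntu LoCo Teams IRC chat, and talk with members of different teams). The fact is, neither documents itself as well as Ask Ubuntu does. As for mailing lists, I have always found them a painfully dated way to collaborate, but that is just my personal view; others may disagree and think they work well.

What do you think? Do you believe Ubuntu will hold a dominant share in the future? Or perhaps you think Arch, Linux Mint, or some other distribution will overtake Ubuntu in popularity? If so, speak up and name your favorite distribution. If it is an Ubuntu derivative, tell us why you prefer it to Ubuntu itself. If nothing else, Ubuntu will serve as the foundation on which other distributions are built, and I think many people would agree.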

--------------------------------------------------------------------------------

via: http://www.datamation.com/open-source/why-ubuntu-based-distros-are-leaders.html

Author: [Matt Hartley][a]
Translator: [vim-kakali](https://github.com/vim-kakali)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: http://www.datamation.com/author/Matt-Hartley-3080.html
[1]: http://www.ubuntu.com/
[2]: https://www.debian.org/
[3]: http://www.datamation.com/open-source/ubuntu-snap-packages-the-good-the-bad-the-ugly.html
[4]: http://loco.ubuntu.com/
[5]: http://askubuntu.com/

@@ -0,0 +1,129 @@

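Mark Shuttleworth – The Man Behind Ubuntu
================================================================================

**Mark Richard Shuttleworth** is the founder of Ubuntu and is also counted among the [people behind Debian][1] ([one of them][2]). He was born in 1973 in Welkom, South Africa. He is not only an entrepreneur but also a space tourist: he was the first citizen of an independent African country to travel to space.

Mark founded an internet commerce security company called **Thawte** in 1996, while he was still studying finance and information technology at the University of Cape Town.

In 2000, Mark founded HBD (short for "Here Be Dragons", hence its dragon mascot), an investment company. He also established the Shuttleworth Foundation, which supports innovative leaders in society through fellowships and investments.

> "Mobile is critical to the future of the personal computer. This month, for example, it became clear that the traditional PC business is shrinking relative to tablets. So if we want to be involved in personal computing, we have to be involved in mobile first. Mobile is interesting because there is no market for pirated Windows there. So if you win a device to your operating system, it stays on your operating system. In the traditional PC world we are constantly competing with "free" Windows, which is a subtle challenge. So our focus now is on Ubuntu and mobile devices, phones and tablets, as a way to build a deeper relationship with everyday users."
>
> -- Mark Shuttleworth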

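In 2002, he trained for a year in Star City, Russia, and then flew to the International Space Station as a member of the Soyuz TM-34 mission. Later, after running a campaign to promote science, programming, and mathematics among South African students with an interest in aerospace and related fields, Mark founded **Canonical Ltd**. He then led the development of the Ubuntu operating system until 2013.

Today, Shuttleworth holds dual citizenship of the United Kingdom and South Africa and lives in a garden on the Isle of Man with 18 lovely ducks, along with his lovely girlfriend Claire, two black female dogs, and the occasional passing flock of sheep.

> "The computer is no longer just an electronic device. It is now an extension of your mind and a gateway to other people."
>
> -- Mark Shuttleworth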

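### Mark Shuttleworth's early life ###

As mentioned above, Mark was born in Welkom, in the Orange Free State of South Africa. He is the son of a surgeon and a nursery-school teacher. He attended Western Province Preparatory School, where he became head boy in 1986, then spent one term at Rondebosch Boys' High School, and went on to Bishops Diocesan College, where he was again head boy in 1991.

Mark earned a Bachelor of Business Science degree in Finance and Information Systems from the University of Cape Town, where he lived in Smuts Hall. As a student, he also helped install the university's first residential internet connections.

> "Countless companies and countries have shown that adopting open source policies improves competitiveness and efficiency. Creating productivity at different levels is vital for companies and countries alike."
>
> -- Mark Shuttleworth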

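### Mark Shuttleworth's career ###

Mark founded Thawte in 1995, a company specializing in digital certificates and internet security, and sold it to VeriSign in 1999, earning about 575 million US dollars.

In 2000, Mark formed HBD Venture Capital, becoming a business investor and project incubator. In 2004, he founded Canonical Ltd. to support and promote the commercialization of free software projects, in particular the Ubuntu operating system. Mark remained CEO of Canonical until he stepped down in 2009.

> "In the early days of the [DCC](https://en.wikipedia.org/wiki/DCC_Alliance) (LCTT translator's note: an alliance of Debian GNU/Linux developers), I preferred to let the proponents get on with it and see what came of it."
>
> -- Mark Shuttleworth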

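### Linux, free and open source software, and Mark Shuttleworth ###

In the late 1990s, Mark participated in the Debian operating system project as a developer.

In 2001, Mark formed the Shuttleworth Foundation, a non-profit foundation based in South Africa that focuses on funding social innovation and free and open source software for educational use. It has funded projects such as the [Freedom Toaster][3] (LCTT translator's note: the Freedom Toaster is a public kiosk that burns free software onto CDs/DVDs that users bring along).

In 2004, Mark returned to the free software world by funding the development of Ubuntu, a Debian-based operating system, through his company Canonical.

In 2005, Mark funded the Ubuntu Foundation with an initial commitment of 10 million US dollars. Within the Ubuntu project, people often refer to him by a catchy title: "**SABDFL: Self-Appointed Benevolent Dictator for Life**". To find enough skilled people to develop this huge project, Mark spent six months combing through the Debian mailing lists, all while aboard the icebreaker Kapitan Khlebnikov in Antarctica. In the same year, Mark bought a 65% stake in Impi Linux.

> "I urge the powers that be in telecommunications to develop efficient intercontinental information delivery services as soon as possible."
>
> -- Mark Shuttleworth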

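In 2006, KDE announced that Shuttleworth had become its **first patron**, the highest level of sponsorship KDE offered at the time. The sponsorship ended in 2012, along with the financial support for Kubuntu, the Ubuntu variant that uses KDE as its default desktop environment.

In 2009, Shuttleworth announced that he would step down as CEO of Canonical to focus on partnerships, product design, and customers. Jane Silber, the company's COO since 2004, was promoted to CEO.

In 2010, Mark received an honorary degree from the Open University for his contributions.

In 2012, Mark and Kenneth Rogoff debated Peter Thiel and Garry Kasparov at Oxford University on **The Innovation Enigma**.

In 2013, Mark and Ubuntu were given the **Austrian anti-privacy Big Brother Award** because, by default, Ubuntu sent searches typed into the Unity desktop's search box to Canonical's servers (LCTT translator's note: thereby infringing on personal privacy). A year earlier, Mark had stated that this process was anonymized.

> "All the major PC manufacturers now offer Ubuntu pre-installed, so we already work very closely with the industry. But those PC makers are nervous about promoting something new to PC buyers. If we can get PC buyers used to the Ubuntu experience on tablets and phones, they should be more willing to buy a PC with Ubuntu pre-installed. No operating system has ever succeeded by imitation. Android is great, but if we want to succeed we have to bring something newer and better to the market (LCTT translator's note: rather than improving on or imitating Android). If none of us pursue the future, we risk stagnation. But if you do pursue the future, you have to accept that not everyone will see it the same way you do."
>
> -- Mark Shuttleworth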

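### Mark Shuttleworth's space travel ###

Mark became world famous in 2002 as the second self-funded space tourist and the first South African in space. He took part in the Russian Soyuz TM-34 mission as a crew member, paying about 20 million US dollars for the trip. Two days later, the Soyuz spacecraft arrived at the International Space Station, where Mark spent eight days and took part in experiments related to AIDS and genome research. Later that year, Mark returned to Earth aboard Soyuz TM-33. To take part in the flight, Mark spent a year in preparation and training, including seven months living in Star City, Russia.

While in space, Mark spoke by radio with Nelson Mandela and with Michelle Foster, a 14-year-old South African girl who asked Mark to marry her. Mark politely dodged the question, saying he was very honored before deftly changing the subject. Foster, who was terminally ill, was given the chance to talk with Mark and Nelson Mandela through the sponsorship of the Dream foundation.

After his return, Mark traveled around the world, speaking to students about his journey into space.

> "The rough statistics suggest that the actual number of Ubuntu users is still growing. And our partners, hardware makers such as Dell, HP, and Lenovo, along with game companies EA and Valve, are joining us, which makes me feel that we continue to lead in the areas that matter."
>
> -- Mark Shuttleworth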

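### Mark Shuttleworth's transport ###

Mark has his own private jet, a Bombardier Global Express. It is often referred to as Canonical One, but it is in fact registered to and owned through his HBD Venture Capital company. The dragon painted on the side of the plane is Norman, the HBD Venture Capital mascot.

### Legal clash with the South African Reserve Bank ###

When Mark transferred 2.5 billion South African rand from South Africa to the Isle of Man, the South African Reserve Bank levied a charge of 250 million rand. Mark appealed, and after a lengthy legal battle, the Reserve Bank was ordered to repay the 250 million rand plus interest. Mark announced that he would put the 250 million into a trust fund to help cases being brought before the Constitutional Court.

> "An exit charge does not conflict with the Constitution. But the main purpose of the exit charge is not to raise revenue; it is to protect the domestic economy by regulating the outflow of capital."
>
> -- Judge Dikgang Moseneke

In 2015, the Constitutional Court of South Africa overturned the lower court's ruling and announced the above interpretation of the exit charge.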

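### Things Mark Shuttleworth likes ###

Cesária Évora, mp3, spring, Chelsea, finally seeing something obvious for the first time, coming home, Sinatra, daydreaming, sundowners, flirting, Tess (d'Urberville), string theory, Linux, particle physics, Python, reincarnation, the MiG-29, snow, travel, Mozilla, lime marmalade, body shots, the African bush, leopards, Rajasthan, Russian saunas, snowboarding, weightlessness, Iain M. Banks, broadband, Alastair Reynolds, fancy dress, skinny-dipping, flashes of insight, the come-down after an adrenaline rush, the inexplicable, convertibles, Clifton, country roads, the International Space Station, machine learning, artificial intelligence, Wikipedia, Slashdot, kitesurfing, and Manx lanes.

### Things Mark Shuttleworth dislikes ###

Administration, pay raises, legalese, and public speaking.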

--------------------------------------------------------------------------------

via: http://www.unixmen.com/mark-shuttleworth-man-behind-ubuntu-operating-system/

Author: [M.el Khamlichi][a]
Translator: [Moelf](https://github.com/Moelf)
Proofreaders: [PurlingNayuki](https://github.com/PurlingNayuki), [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.unixmen.com/author/pirat9/
[1]:https://wiki.debian.org/PeopleBehindDebian
[2]:https://raphaelhertzog.com/2011/11/17/people-behind-debian-mark-shuttleworth-ubuntus-founder/
[3]:https://en.wikipedia.org/wiki/Freedom_Toaster

@@ -1,39 +1,41 @@

|

||||

修补 Linux 系统 glibc 严重漏洞

|

||||

=================================================

|

||||

|

||||

**谷歌揭露的一个严重漏洞影响主流的 Linux 发行版。glibc 的漏洞可能导致远程代码执行。**

|

||||

**谷歌披露的一个严重漏洞影响到了主流的 Linux 发行版。glibc 的漏洞可能导致远程代码执行。**

|

||||

|

||||

Linux 用户今天都竞相给一个可以使系统暴露在远程代码执行风险中的核心 glibc 开放源码库的严重漏洞打补丁。glibc 的漏洞被确定为 CVE-2015-7547,题为“getaddrinfo 基于堆栈的缓冲区溢出”。

|

||||

编者按:这个消息并不是一个新闻,基于技术的原因,我们还是分享给大家。

|

||||

|

||||

Linux 用户都在竞相给一个可以使系统暴露在远程代码执行风险中的核心 glibc 开放源码库的严重漏洞打补丁。这个 glibc 的漏洞编号被确定为 CVE-2015-7547,题为“getaddrinfo 基于堆栈的缓冲区溢出”。

|

||||

|

||||

glibc,或 GNU C 库,是一个开放源码的 C 和 C++ 编程语言库的实现,是每一个主流 Linux 发行版的一部分。谷歌工程师们在他们试图连接到某个主机系统时发生了一个段错误导致连接崩溃,偶然发现了 CVE-2015-7547 问题。进一步的研究表明, glibc 有缺陷而且该崩溃可能实现任意远程代码执行的条件。

|

||||

|

||||

谷歌在一篇博客文章中写道, “当 getaddrinfo() 库函数被使用时,glibc 的 DNS 客户端解析器易受基于堆栈缓冲区溢出的攻击,使用该功能的软件可能被利用为攻击者控制的域名,攻击者控制的 DNS[域名系统] 服务器,或通过中间人攻击。”

|

||||

谷歌在一篇博客文章中写道, “当 getaddrinfo() 库函数被使用时,glibc 的 DNS 客户端解析器易受基于堆栈缓冲区溢出的攻击,使用该功能的软件可能通过攻击者控制的域名、攻击者控制的 DNS [域名系统] 服务器,或通过中间人攻击方式(MITM)进行破坏。”
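The code path described in the quote, an application calling `getaddrinfo()`, is the same one many high-level programs go through. On Linux, CPython's `socket.getaddrinfo` is a thin wrapper over the C library's function, so the sketch below exercises that path and reports which C library the interpreter is linked against. It is illustrative only and not from the article: the helper names `resolve` and `libc_version` are made up here, and a version string alone does not tell you whether your distribution has backported the fix for CVE-2015-7547.

```python
import platform
import socket


def resolve(host: str, port: int = 443):
    """Resolve host through the C library's getaddrinfo() and return unique addresses."""
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the address.
    return sorted({info[4][0] for info in infos})


def libc_version():
    """Return (name, version) of the C library Python is linked against.

    On non-glibc systems both fields may be empty strings.
    """
    return platform.libc_ver()


if __name__ == "__main__":
    print("C library:", libc_version())
    print("localhost ->", resolve("localhost"))
```

Whether a system is actually patched is best confirmed through the distribution's security advisories and package changelog rather than the upstream version number.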

|

||||

|

||||

其实利用 CVE-2015-7547 问题并不简单,但它是可能的。为了证明这个问题能被利用,谷歌发布了论证一个终端用户或系统是否易受攻击的概念验证(POC)代码到 GitHub 上。

|

||||

|

||||

GitHub 上的 POC 网页声明“服务器代码触发漏洞,因此会使客户端代码崩溃”。

|

||||

GitHub 上的 POC 网页说“服务器代码会触发漏洞,因此会使客户端代码崩溃”。

|

||||

|

||||

Duo Security 公司的高级安全研究员 Mark Loveless 解释说 CVE-2015-7547 的主要风险在于 Linux 上依赖于 DNS 响应的基于客户端的应用程序。

|

||||

Duo Security 公司的高级安全研究员 Mark Loveless 解释说 CVE-2015-7547 的主要风险在于依赖于 DNS 响应的基于 Linux 客户端的应用程序。

|

||||

|

||||

Loveless 告诉 eWEEK “需要一些特定的条件,所以不是每个应用程序都会受到影响,但似乎一些命令行工具,包括流行的 SSH[安全 Shell] 客户端都可能触发该漏洞,我们认为这是严重的,主要是因为对 Linux 系统存在的风险,但也因为潜在的其他问题。”

|

||||

|

||||

其他问题可能包括一种触发调用易受攻击的 glibc 库 getaddrinfo() 的基于电子邮件攻击的风险。另外值得注意的是,该漏洞被发现之前已存在于代码之中多年。

|

||||

其他问题可能包括一种通过电子邮件触发调用易受攻击的 glibc 库 getaddrinfo() 攻击的风险。另外值得注意的是,该漏洞被发现之前已存在于代码之中多年。

|

||||

|

||||

谷歌的工程师不是第一或唯一发现 glibc 中的安全风险的团体。这个问题于 2015 年 7 月 13 日首先被报告给了 glibc 的 bug[跟踪系统](https://sourceware.org/bugzilla/show_bug.cgi?id=1866)。该缺陷的根源可以更进一步追溯到在 2008 五月发布的 glibc 2.9 的代码提交时首次引入缺陷。

|

||||

谷歌的工程师不是第一或唯一发现这个 glibc 安全风险的团体。这个问题于 2015 年 7 月 13 日首先被报告给了 glibc 的 bug[跟踪系统](https://sourceware.org/bugzilla/show_bug.cgi?id=1866)。该缺陷的根源可以更进一步追溯到在 2008 五月发布的 glibc 2.9 的代码提交时首次引入缺陷。

|

||||

|

||||

Linux 厂商红帽也独立找到了 glibc 中的这个 bug,而且在 2016 年 1 月 6 日,谷歌和红帽开发人员证实,他们作为最初与上游 glibc 的维护者私下讨论的部分人员,已经独立在为同一个漏洞工作。

|

||||

Linux 厂商红帽也独立找到了 glibc 中的这个 bug,而且是在 2016 年 1 月 6 日,谷歌和红帽开发人员证实,他们作为最初与上游 glibc 的维护者私下讨论的部分人员,已经独立在为同一个漏洞工作。

|

||||

|

||||

红帽产品安全首席软件工程师 Florian Weimer 告诉 eWEEK “一旦确认了两个团队都在为同一个漏洞工作,我们合作进行可能的修复,缓解措施和回归测试,我们还共同努力,使测试覆盖尽可能广,捕捉代码中的任何相关问题,以帮助避免今后更多问题。”

|

||||

红帽产品安全首席软件工程师 Florian Weimer 告诉 eWEEK “一旦确认了两个团队都在为同一个漏洞工作,我们会合作进行可能的修复,缓解措施和回归测试,我们还会共同努力,使测试覆盖尽可能广,捕捉代码中的任何相关问题,以帮助避免今后更多问题。”

|

||||

|

||||

由于缺陷不明显或不易立即显现,我们花了几年时间才发现 glibc 代码有一个安全问题。

|

||||

|

||||

Weimer 说“要诊断一个网络组件的漏洞,如 DNS 解析器,当遇到问题时通常要看被抓数据包的踪迹,在这种情况下这样的抓包不适用,所以需要一些实验来重现触发这个 bug 的确切场景。”

|

||||

Weimer 说“要诊断一个网络组件的漏洞,如 DNS 解析器,当遇到问题时通常要看抓到的数据包的踪迹,在这种情况下这样的抓包不适用,所以需要一些实验来重现触发这个 bug 的确切场景。”

|

||||

|

||||

Weimer 补充说,一旦可以抓取数据包,大量精力投入到验证修复程序中,最终导致回归测试套件一系列的改进,有助于上游 glibc 项目。

|

||||

Weimer 补充说,一旦可以抓取数据包,就会投入大量精力到验证修复程序中,最终完成回归测试套件一系列的改进,有助于上游 glibc 项目。

|

||||

|

||||

在许多情况下,安全增强式 Linux (SELinux) 的强制访问安全控制可以减少潜在漏洞风险,除了这个 glibc 的新问题。

|

||||

在许多情况下,安全增强式 Linux (SELinux) 的强制访问安全控制可以减少潜在漏洞风险,但是这个 glibc 的新问题例外。

|

||||

|

||||

Weimer 说“由于攻击者提供的任意代码的执行,风险是重要系统功能的一个妥协。一个合适的 SELinux 策略可以遏制一些攻击者可能会做的损害,并限制他们访问系统,但是 DNS 被许多应用程序和系统组件使用,所以 SELinux 策略只提供了针对此问题有限的遏制。”

|

||||

Weimer 说“由于攻击者提供的任意代码的执行,会对很多重要系统功能带来风险。一个合适的 SELinux 策略可以遏制一些攻击者可能会做的损害,并限制他们访问系统,但是 DNS 被许多应用程序和系统组件使用,所以 SELinux 策略只提供了针对此问题有限的遏制。”

|

||||

|

||||

在揭露漏洞的今天,现在有一个可用的补丁来减少 CVE-2015-7547 的潜在风险。

|

||||

|

||||

@@ -43,7 +45,7 @@ via: http://www.eweek.com/security/linux-systems-patched-for-critical-glibc-flaw

|

||||

|

||||

作者:[Michael Kerner][a]

|

||||

译者:[robot527](https://github.com/robot527)

|

||||

校对:[校对者 ID](https://github.com/校对者 ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux 中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@@ -0,0 +1,97 @@

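Two Outstanding All-in-One Linux Servers
================================================

Back in 2000, Microsoft released Small Business Server (SBS). The product changed how many people viewed technology in the business world. Instead of many machines handling different tasks, you could deploy a single server that handled email, calendaring, file sharing, directory services, VPN, and much more. For many small businesses this was a real boon, but for some companies Windows SBS was too expensive. For others, relying on a single server designed by Microsoft was simply out of the question.

For the latter, there are alternatives. In fact, within the world of Linux and open source, you can choose from several solid platforms that can serve your small business as a one-stop server shop. If your small business has between 10 and 50 employees, an all-in-one server may be the ideal solution for your needs.

Here, I will look at two Linux all-in-one servers, so you can see whether one of them is a good fit for your company.

Remember, these servers are not suited (in any way) to big business or enterprise. Larger companies cannot rely on an all-in-one server, because a single server cannot carry the load an enterprise expects. That said, Linux all-in-one servers are a good match for small businesses.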

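### ClearOS

[ClearOS][1] was first released in 2009 under the name ClarkConnect, as a router and gateway distribution. Since then, ClearOS has added all the features an all-in-one server needs. ClearOS offers more than just software: you can also purchase a [ClearBox 100][2] or [ClearBox 300][3]. These servers ship with a complete ClearOS and are sold as IT appliances. See the feature comparison and price matrix [here][4].

If you already have suitable hardware, you can download one of the following:

- [ClearOS Community][5]: the community (free) edition of ClearOS
- [ClearOS Home][6]: ideal for home offices (for detailed features and subscription costs, see [here][12])
- [ClearOS Business][7]: ideal for small businesses (for detailed features and subscription costs, see [here][13])

What do you get with ClearOS? A business-class server with a single, polished web interface. What sets ClearOS apart from the pile of features any standard server offers? Beyond the basics, you can add features from the [Clear Marketplace][8]. From the marketplace you can install free or paid apps that extend the feature set of your ClearOS server. There you will find add-ons for Windows Server Active Directory, OpenLDAP, Flexshares, antimalware, cloud, web access control, content filtering, and much more. You will even find some third-party components, such as Google Apps Synchronization, the Zarafa Collaboration Platform, and Kaspersky Anti-Virus.

ClearOS installs like any other Linux distribution (it uses Red Hat's Anaconda installer). Once the installation is complete, you will be prompted to set up the network interface, and you will be given the address to point your browser to (the browser must be on the same network as the ClearOS server). The address has the form:

https://IP_OF_CLEAROS_SERVER:81

where IP_OF_CLEAROS_SERVER is the actual IP address of the server. Note: the first time you point your browser to the server, you will receive a "Connection is not private" warning. Proceed past it so you can continue with the setup.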

|

||||

|

||||

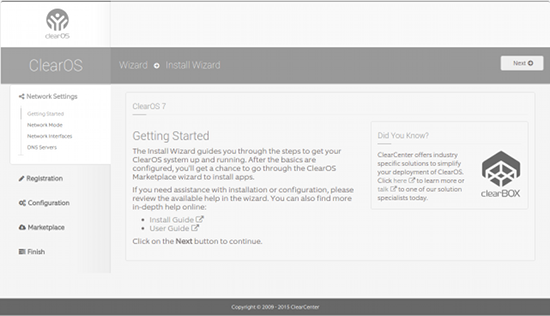

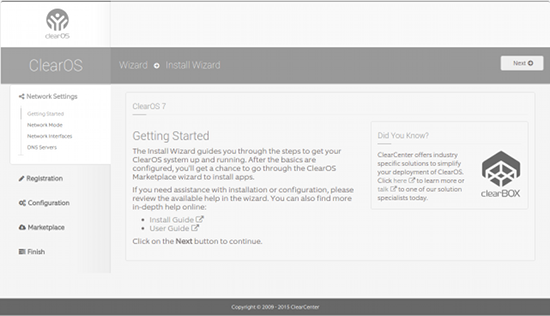

当浏览器最终连接上之后,就会提示你 root 用户认证(在初始化安装中你设置的 root 用户密码)。一通过认证,你将看到 ClearOS 的安装向导(图1)

|

||||

|

||||

|

||||

|

||||

*图1: ClearOS安装向导。*

|

||||

|

||||

点击下一步按钮,开始设置你的 ClearOS 服务器。这个向导无需加以说明,在最后还会问你想用那个版本的 ClearOS。点击“社区”,“家庭”,或者“商业”。选择之后,你就被要求注册一个账户。创建了一个账户并注册了你的服务器后,你可以开始更新服务器,配置服务器,从市场添加模块(图2)。

|

||||

|

||||

|

||||

|

||||

*图2: 从市场安装模块。*

|

||||

|

||||

此时,一切准备就绪,可以开始深入挖掘配置你的 ClearOS 小型商务服务器了。

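### Zentyal

[Zentyal][10] is an Ubuntu-based small business server that was at one time distributed under the name eBox. Zentyal offers a wide range of servers and services to fit your small business needs:

- Email: webmail; native Microsoft Exchange protocol and Active Directory support; calendars and contacts; mobile device email sync; antivirus/antispam; IMAP, POP, SMTP, CalDAV, and CardDAV support.
- Domain and directory: central domain directory management; multiple organizational units; single sign-on authentication; file sharing; ACLs; advanced domain management; printer management.
- Networking and firewall: static and DHCP interfaces; objects and services; packet filtering; port forwarding.
- Infrastructure: DNS; DHCP; NTP; certification authority; VPN.
- Firewall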

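Installing Zentyal is very much like installing Ubuntu Server: it is text-based and quite simple. Boot from the install image, make a few straightforward selections, and wait for the installation to complete. Once the initial text-based installation is finished, you are presented with the GUI desktop and a wizard for selecting packages. Select everything you want to install and let the installer finish the job.

Finally, you can access the Zentyal server through the web interface (point your browser to https://IP_OF_SERVER:8443, where IP_OF_SERVER is the LAN address of your Zentyal server) or use the standalone desktop GUI to administer the server (Zentyal includes an administration console that gives quick access to the administrator and user consoles). When the system has been saved and started, you will see the Zentyal dashboard (Figure 3).

*Figure 3: The Zentyal dashboard.*

The dashboard lets you control every aspect of the server, such as updating, managing servers and services, and getting a quick status update of the server. You can also go into the Components area and install components you did not select during deployment, or update the current list of packages. Click Software Management > System Updates, select what you want to update (Figure 4), and then click the Update button at the bottom of the screen.

*Figure 4: Updating your Zentyal server is simple.*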
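The two web consoles described above differ only in the port they listen on: 81 for ClearOS and 8443 for Zentyal, as quoted in the article. The following is a small sketch, not taken from either product's documentation, that builds the console URL from a server's LAN address; the function name `admin_url` and the `ADMIN_PORTS` table are made up for this example.

```python
import ipaddress

# Ports quoted in the article for each product's web console.
ADMIN_PORTS = {"clearos": 81, "zentyal": 8443}


def admin_url(product: str, server_ip: str) -> str:
    """Return the HTTPS admin-console URL for a server on the local network."""
    port = ADMIN_PORTS[product.lower()]
    ip = ipaddress.ip_address(server_ip)  # raises ValueError on a malformed address
    # IPv6 literals must be wrapped in brackets inside a URL.
    host = f"[{ip}]" if ip.version == 6 else str(ip)
    return f"https://{host}:{port}/"


if __name__ == "__main__":
    print(admin_url("clearos", "192.168.1.10"))
    print(admin_url("zentyal", "192.168.1.20"))
```

Both consoles present a self-signed certificate on first contact, which is why the browser shows the warning mentioned in the ClearOS section.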

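### Which server is right for you?

The answer depends on what you need. Zentyal is an incredible server that handles your small business network well. If you need more, such as groupware, I think ClearOS is worth a try. If you do not need groupware, either server will do a fine job.

I highly recommend installing both of these all-in-one servers to see which suits your small company better.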

------------------------------------------------------------------------------

via: http://www.linux.com/learn/tutorials/882146-two-outstanding-all-in-one-linux-servers

Author: [Jack Wallen][a]
Translator: [wyangsun](https://github.com/wyangsun)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: http://www.linux.com/community/forums/person/93
[1]: http://www.linux.com/learn/tutorials/882146-two-outstanding-all-in-one-linux-servers#clearfoundation-overview
[2]: https://www.clearos.com/products/hardware/clearbox-100-series
[3]: https://www.clearos.com/products/hardware/clearbox-300-series
[4]: https://www.clearos.com/products/hardware/clearbox-overview
[5]: http://mirror.clearos.com/clearos/7/iso/x86_64/ClearOS-DVD-x86_64.iso
[6]: http://mirror.clearos.com/clearos/7/iso/x86_64/ClearOS-DVD-x86_64.iso
[7]: http://mirror.clearos.com/clearos/7/iso/x86_64/ClearOS-DVD-x86_64.iso
[8]: https://www.clearos.com/products/purchase/clearos-marketplace-overview
[9]: https://ip_of_clearos_server:81/
[10]: http://www.zentyal.org/server/
[11]: https://ip_of_server:8443/
[12]: https://www.clearos.com/products/clearos-editions/clearos-7-home
[13]: https://www.clearos.com/products/clearos-editions/clearos-7-business

@ -0,0 +1,63 @@

|

||||

65% 的企业正致力于开源项目

|

||||

==========================================================

|

||||

|

||||

|

||||

|

||||

今年 Black Duck 和 North Bridge 发布了第十届年度开源软件前景调查,来调查开源软件的发展趋势。今年这份调查的亮点在于,当前主流社会对开源软件的接受程度以及过去的十年中人们对开源软件态度的变化。

|

||||

|

||||

[2016 年的开源软件前景调查][1],分析了来自约3400位专家的反馈。今年的调查中,开发者发表了他们的看法,大约 70% 的参与者是开发者。数据显示,安全专家的参与人数呈指数级增长,增长超过 450% 。他们的参与表明,开源社区开始逐渐关注开源软件中存在的安全问题,以及当新的技术出现时确保它们的安全性。

|

||||

|

||||

Black Duck 的[年度开源新秀奖][2] 涉及到一些新出现的技术,如容器方面的 Docker 和 Kontena。容器技术这一年有了巨大的发展 ———— 76% 的受访者表示,他们的企业有一些使用容器技术的规划。而 59% 的受访者正准备使用容器技术完成大量的部署,从开发与测试,到内部与外部的生产环境部署。开发者社区已经把容器技术作为一种简单快速开发的方法。

|

||||

|

||||

调查显示,几乎每个组织都有开发者致力于开源软件,这一点毫不惊讶。当像微软和苹果这样的大公司将它们的一些解决方案开源时,开发者就获得了更多的机会来参与开源项目。我非常希望这样的趋势会延续下去,让更多的软件开发者无论在工作中,还是工作之余都可以致力于开源项目。

|

||||

|

||||

### 2016 年调查结果中的一些要点

|

||||

|

||||

#### 商业价值

|

||||

|

||||

* 开源软件是发展战略中的一个重要元素,超过 65% 的受访者使用开源软件来加速软件开发的进度。

|

||||

* 超过 55% 的受访者在生产环境中使用开源软件。

|

||||

|

||||

#### 创新的原动力

|

||||

|

||||

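* Respondents reported that using open source makes software development faster and more agile, which drives innovation; it also shortens time to market and greatly improves interoperability.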

|

||||

* 开源软件的优质解决方案,富有竞争力的特性,技术能力,以及可定制化的能力,也促进了更多的创新。

|

||||

|

||||

#### 开源商业模式与投资的激增

|

||||

|

||||

* 更多不同商业模式的出现给开源企业带来了前所未有的价值。这些价值并不依赖于云服务和技术支持。

|

||||

* 开源的私募融资在过去的五年内,已增长了将近四倍。

|

||||

|

||||

#### 安全和管理

|

||||

|

||||

一流的开源安全与管理实践的发展,也没有跟上人们使用开源不断增长的步伐。尽管备受关注的开源项目近年来爆炸式地增长,调查结果却指出:

|

||||

|

||||

* 50% 的企业在选择和批准开源代码这方面没有出台正式的政策。

|

||||

* 47% 的企业没有正式的流程来跟踪开源代码,这就限制了它们对开源代码的了解,以及控制开源代码的能力。

|

||||

* 超过三分之一的企业没有用于识别、跟踪和修复重大开源安全漏洞的流程。

|

||||

|

||||

#### 不断增长的开源参与者

|

||||

|

||||

调查结果显示,一个活跃的企业开源社区,激励创新,提供价值,共享情谊:

|

||||

|

||||

* 67% 的受访者表示,它们积极鼓励开发者参与开源项目。

|

||||

* 65% 的企业正致力于开源项目。

|

||||

* 约三分之一的企业有专门为开源项目设置的全职岗位。

|

||||

* 59% 的受访者参与开源项目以获得竞争优势。

|

||||

|

||||

Black Duck 和 North Bridge 从今年的调查中了解到了很多,如安全,政策,商业模式等。我们很兴奋能够分享这些新发现。感谢我们的合作者,以及所有参与我们调查的受访者。这是一个伟大的十年,我很高兴我们可以肯定地说,开源的未来充满了无限可能。

|

||||

|

||||

想要了解更多内容,可以查看完整的[调查结果][3]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/business/16/5/2016-future-open-source-survey

|

||||

|

||||

作者:[Haidee LeClair][a]

|

||||

译者:[Cathon](https://github.com/Cathon)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

[a]: https://opensource.com/users/blackduck2016

|

||||

[1]: http://www.slideshare.net/blackducksoftware/2016-future-of-open-source-survey-results

|

||||

[2]: https://info.blackducksoftware.com/OpenSourceRookies2015.html

|

||||

[3]: http://www.slideshare.net/blackducksoftware/2016-future-of-open-source-survey-results

|

||||

@ -1,12 +1,11 @@

|

||||

如何为登录和 sudo 设置双重认证

|

||||

如何为登录和 sudo 设置双因子认证

|

||||

==========================================================

|

||||

|

||||

|

||||

>[Used with permission][1]

|

||||

|

||||

安全就是一切。我们生活的当今世界,数据具有令人难以置信的价值,而你也一直处于数据丢失的风险之中。因此,你必须想尽办法保证你桌面系统和服务器中东西的安全。结果,管理员和用户就会创建极其复杂的密码、使用密码管理器甚至其它更复杂的东西。但是,如果我告诉你你可以只需要一步-至多两步就能登录到你的 Linux 服务器或桌面系统中呢?多亏了 [Google Authenticator][2],现在你可以做到了。在这之上配置也极其简单。

|

||||

安全就是一切。我们生活的当今世界,数据具有令人难以置信的价值,而你也一直处于数据丢失的风险之中。因此,你必须想尽办法保证你桌面系统和服务器中数据的安全。结果,管理员和用户就会创建极其复杂的密码、使用密码管理器甚至其它更复杂的东西。但是,如果我告诉你你可以只需要一步,至多两步就能登录到你的 Linux 服务器或桌面系统中呢?多亏了 [Google 身份验证器][2],现在你可以做到了。并且,配置也极其简单。

|

||||

|

||||

我会给你简要介绍为登录和 sudo 设值双重认证的步骤。我基于 Ubuntu 16.04 桌面系统进行介绍,但这些步骤也适用于其它服务器。为了做到双重认证,我会使用 Google Authenticator。

|

||||

我会给你简要介绍为登录和 sudo 设置双因子认证的步骤。我基于 Ubuntu 16.04 桌面系统进行介绍,但这些步骤也适用于其它服务器。为了实现双因子认证,我会使用 Google 身份验证器。

|

||||

|

||||

这里有个非常重要的警告:一旦你设置了认证,没有一个从认证器中获得的由 6 个数字组成的验证码你就不可能登录账户(或者执行 sudo 命令)。这也给你增加了一步额外的操作,因此如果你不想每次登录到 Linux 服务器(或者使用 sudo)的时候都要拿出你的智能手机,这个方案就不适合你。但你也要记住,这额外的一个步骤也给你带来一层其它方法无法给予的保护。

|

||||

|

||||

@ -14,38 +13,28 @@

|

||||

|

||||

### 安装必要的组件

|

||||

|

||||

安装 Google 认证,首先要解决两个问题。一是安装智能机应用。下面是如何从 Google 应用商店安装的方法:

|

||||

安装 Google 身份验证器(Google Authenticator),首先要解决两个问题。一是安装智能机应用。下面是如何从 Google 应用商店安装的方法:

|

||||

|

||||

1. 在你的安卓设备中打开 Google 应用商店

|

||||

|

||||

2. 搜索 google 认证

|

||||

|

||||

3. 找到并点击有 Google 标识的应用

|

||||

|

||||

2. 搜索 google 身份验证器

|

||||

3. 找到并点击有 Google Inc. 标识的应用

|

||||

4. 点击安装

|

||||

|

||||

5. 点击 接受

|

||||

|

||||

5. 点击“接受”

|

||||

6. 等待安装完成

|

||||

|

||||

接下来,我们继续在你的 Linux 机器上安装认证。步骤如下:

|

||||

接下来,我们继续在你的 Linux 机器上安装这个认证器。步骤如下:

|

||||

|

||||

1. 打开一个终端窗口

|

||||

|

||||

2. 输入命令 sudo apt-get install google-authenticator

|

||||

|

||||

3. 输入你的 sudo 密码并敲击回车

|

||||

|

||||

4. 如果有弹窗提示,输入 y 并敲击回车

|

||||

|

||||

5. 等待安装完成

|

||||

|

||||

接下来配置使用 google-authenticator 进行登录。

|

||||

|

||||

### 配置

|

||||

|

||||

要为登录和 sudo 添加两阶段认证只需要编辑一个文件。也就是 /etc/pam.d/common-auth。打开并找到如下一行:

|

||||

Just one file must be edited to add two-step authentication for both login and sudo usage. The file is /etc/pam.d/common-auth. Open it and look for the line

|

||||

要为登录和 sudo 添加双因子认证只需要编辑一个文件,即 /etc/pam.d/common-auth。打开并找到如下一行:

|

||||

|

||||

```

|

||||

auth [success=1 default=ignore] pam_unix.so nullok_secure

|

||||

@ -59,57 +48,53 @@ auth required pam_google_authenticator.so

|

||||

|

||||

保存并关闭文件。

|

||||

|

||||

下一步就是为系统中的每个用户设置 google-authenticator(否则会不允许他们登录)。为了简单起见,我们假设你的系统中有两个用户:jack 和 olivia。首先为 jack 设置(我们假设这是我们一直使用的账户)。

|

||||

下一步就是为系统中的每个用户设置 google-authenticator(否则他们就不能登录了)。为了简单起见,我们假设你的系统中有两个用户:jack 和 olivia。首先为 jack 设置(我们假设这是我们一直使用的账户)。

|

||||

|

||||

打开一个终端窗口并输入命令 google-authenticator。之后会问你一系列的问题(每个问题你都应该用 y 回答)。问题包括:

|

||||

|

||||

* 是否允许更新你的 "/home/jlwallen/.google_authenticator" 文件 (y/n) y

|

||||

|

||||

* 是否禁止多个用户使用同一个认证令牌?这会限制你每 30 秒内只能登录一次,但能增加你注意到甚至防止中间人攻击的可能 (y/n)

|

||||

* 默认情况下令牌时长为 30 秒即可,为了补偿客户端和服务器之间可能出现的时间偏差,我们允许使用当前时间之前或之后的其它令牌。如果你无法进行时间同步,你可以把这个时间窗口由默认的 1:30 分钟增加到 4 分钟。是否希望如此 (y/n)

|

||||

* 如果你尝试登录的计算机没有针对暴力破解进行加固,你可以为验证模块启用速率限制。默认情况下,限制攻击者每 30 秒不能尝试登陆超过 3 次。是否启用速率限制 (y/n)

|

||||

|

||||

* 默认情况下令牌时长为 30 秒即可,为了补偿客户端和服务器之间可能出现的时间偏差,我们允许添加一个当前时间之前或之后的令牌。如果你无法进行时间同步,你可以把时间窗口由默认的 1:30 分钟增加到 4 分钟。是否希望如此 (y/n)

|

||||

|

||||

* 如果你尝试登陆的计算机没有针对蛮力登陆进行加固,你可以为验证模块启用速率限制。默认情况下,限制攻击者每 30 秒不能尝试登陆超过 3 次。是否启用速率限制 (y/n)

|

||||

|

||||

一旦完成了问题回答,你就会看到你的密钥、验证码以及 5 个紧急刮码。把刮码输出保存起来。你可以在无法使用手机的时候使用它们(每个刮码仅限使用一次)。密钥用于你在 Google Authenticator 上设置账户,验证码是你能立即使用(如果需要)的一次性验证码。

|

||||

一旦完成了问题回答,你就会看到你的密钥、验证码以及 5 个紧急刮码(emergency scratch code)。把这些刮码打印出来并保存。你可以在无法使用手机的时候使用它们(每个刮码仅限使用一次)。密钥用于你在 Google 身份验证器上设置账户,验证码是你能当下就能够立即使用(如果需要)的一次性验证码。

|

||||

|

||||

### 设置应用

|

||||

|

||||

现在你已经配置好了用户 jack。在设置用户 olivia 之前,你需要在 Google Authenticator 应用上为 jack 添加账户。在主屏幕上打开应用,点击 菜单 按钮(右上角三个竖排点)。点击添加账户然后输入提供的密钥。在下一个窗口(示意图1),你需要输入你运行 google-authenticator 应用时提供的 16 个数字的密钥。给账户取个名字(以便你记住这用于哪个账户),然后点击添加。

|

||||

现在你已经配置好了用户 jack。在设置用户 olivia 之前,你需要在 Google 身份验证器应用上为 jack 添加账户(LCTT 译注:实际操作情形中,是为 jack 的手机上安装的该应用创建一个账户)。在打开应用,点击“菜单”按钮(右上角三个竖排点)。点击“添加账户”然后点击“输入提供的密钥”。在下一个窗口(图1),你需要输入你运行 google-authenticator 应用时提供的 16 个数字的密钥。给账户取个名字(以便你记住这用于哪个账户),然后点击“添加”。

|

||||

|

||||

|

||||

>Figure 1: 在 Google Authenticator 应用上新建账户

|

||||

|

||||

*图1: 在 Google Authenticator 应用上新建账户*

|

||||

|

||||

(LCTT 译注:Google 身份验证器也可以扫描你在服务器上设置时显示的二维码,而不用手工输入密钥)

|

||||

|

||||

添加完账户之后,你就会看到一个 6 个数字的密码,你每次登录或者使用 sudo 的时候都会需要这个密码。
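For readers curious where the 6-digit code comes from: Google Authenticator generates time-based one-time passwords as specified in RFC 6238, by default using HMAC-SHA1 over a counter that advances every 30 seconds. The sketch below only illustrates that calculation; it is not the code of the app or of the PAM module, and the function name `totp` and its parameters are hypothetical names chosen for this example.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret_b32: str, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Return the time-based one-time code for a base32 secret (RFC 6238, HMAC-SHA1)."""
    # Secret keys are shown as base32 text; tolerate spaces, lowercase and missing padding.
    cleaned = secret_b32.replace(" ", "").upper()
    cleaned += "=" * (-len(cleaned) % 8)
    key = base64.b32decode(cleaned)

    # Number of whole time steps (30 s by default) since the Unix epoch.
    now = time.time() if at is None else at
    counter = int(now // step)

    # HOTP (RFC 4226): HMAC over the 8-byte big-endian counter, then dynamic truncation.
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    number = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(number % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # Demo secret only (the RFC 6238 test key); never hard-code a real secret.
    demo_secret = base64.b32encode(b"12345678901234567890").decode()
    print(totp(demo_secret))
```

Because the code depends only on the shared secret and the current time, the phone and the server can each compute it independently, which is also why the setup questions above ask about clock skew between the two.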

|

||||

|

||||

最后,在系统上设置其它账户。正如之前提到的,我们会设置一个叫 olivia 的账户。步骤如下:

|

||||

|

||||

1. 打开一个终端窗口

|

||||

|

||||

2. 输入命令 sudo su olivia

|

||||

|

||||

3. 在智能机上打开 Google Authenticator

|

||||

|

||||

4. 在终端窗口(示意图2)中输入(应用提供的) 6 位数字验证码并敲击回车

|

||||

|

||||

3. 在智能机上打开 Google 身份验证器

|

||||

4. 在终端窗口(图2)中输入(应用提供的) 6 位数字验证码并敲击回车

|

||||

5. 输入你的 sudo 密码并敲击回车

|

||||

|

||||

6. 以新用户输入命令 google-authenticator,回答问题并记录生成的密钥和验证码。

|

||||

|

||||

成功为 olivia 用户设置好之后,用 google-authenticator 命令,在 Google Authenticator 应用上根据用户信息(和之前为第一个用户添加账户相同)添加一个新的账户。现在你在 Google Authenticator 应用上就会有 jack 和 olivia 两个账户了。

|

||||

成功为 olivia 用户设置好之后,用 google-authenticator 命令,在 Google 身份验证器应用上根据用户信息(和之前为第一个用户添加账户相同)添加一个新的账户。现在你在 Google 身份验证器应用上就会有 jack 和 olivia 两个账户了。(LCTT 译注:在实际操作情形中,通常是为 jack 和 olivia 两个人的手机分别设置。)

|

||||

|

||||

|

||||

>Figure 2: 为 sudo 输入 6位数字验证码

|

||||

|

||||

好了,就是这些。每次你尝试登陆系统(或者使用 sudo) 的时候,在你输入用户密码之前,都会要求你输入提供的 6 位数字验证码。现在你的 Linux 机器就比添加双重认证之前安全多了。虽然有些人会认为这非常麻烦,我仍然推荐使用,尤其是那些保存了敏感数据的机器。

|

||||

*图2: 为 sudo 输入 6位数字验证码*

|

||||

|

||||

好了,就是这些。每次你尝试登录系统(或者使用 sudo) 的时候,在你输入用户密码之前,都会要求你输入提供的 6 位数字验证码。现在你的 Linux 机器就比添加双因子认证之前安全多了。虽然有些人会认为这非常麻烦,我仍然推荐使用,尤其是那些保存了敏感数据的机器。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/auth_b.png?itok=FH36V1r0

|

||||

via: https://www.linux.com/learn/how-set-2-factor-authentication-login-and-sudo

|

||||

|

||||

作者:[JACK WALLEN][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

[a]: https://www.linux.com/users/jlwallen

|

||||

[1]: https://www.linux.com/licenses/category/used-permission

|

||||

@ -3,23 +3,23 @@

|

||||

|

||||

谷歌安卓的一项新创新将可以让你无需安装即可在你的设备上使用应用程序。现在已经初具雏形。

|

||||

|

||||

还记得那时候吗,某人发给你了一个链接,要求你通过安装来查看应用。

|

||||

还记得那时候吗,某人发给你了一个链接,要求你通过安装一个应用才能查看。

|

||||

|

||||

是否要安装这个应用来查看一个一次性的链接,这种进退两难的选择一定让你感到很沮丧。而且,应用安装本身也会消耗你不少宝贵的时间。

|

||||

是否要安装这个应用就为了看一下链接,这种进退两难的选择一定让你感到很沮丧。而且,安装应用这个事也会消耗你不少宝贵的时间。

|

||||

|

||||

上述场景可能大多数人都经历过,或者说大多数现代科技用户都经历过。尽管如此,我们都接受这是正确且合理的过程。

|

||||

上述场景可能大多数人都经历过,或者说大多数现代科技用户都经历过。尽管如此,我们都接受,认为这是天经地义的事情。

|

||||

|

||||

事实真的如此吗?

|

||||

|

||||

针对这个问题谷歌的安卓部门给出了一个全新的,开箱即用的答案:

|

||||

针对这个问题谷歌的安卓部门给出了一个全新的、开箱即用的答案:

|

||||

|

||||

### Android Instant Apps

|

||||

### Android Instant Apps (AIA)

|

||||

|

||||

Android Instant Apps 声称第一时间帮你摆脱这样的两难境地,让你简单地点击链接(见打开链接的示例)然后直接开始使用这个应用。

|

||||

Android Instant Apps 声称可以从一开始就帮你摆脱这样的两难境地,让你简单地点击链接(见打开链接的示例)然后直接开始使用这个应用。

|

||||

|

||||

另一个真实生活场景的例子,如果你想停车但是没有停车码表的配对应用,有了 Instant Apps 在这种情况下就方便多了。

|

||||

另一个真实生活场景的例子,如果你想停车但是没有停车码表的相应应用,有了 Instant Apps 在这种情况下就方便多了。

|

||||

|

||||

根据谷歌的信息,你可以简单地将你的手机和码表触碰,停车应用就会直接显示在你的屏幕上,并且准备就绪可以使用。

|

||||

根据谷歌提供的信息,你可以简单地将你的手机和码表触碰,停车应用就会直接显示在你的屏幕上,并且准备就绪可以使用。

|

||||

|

||||

#### 它是怎么工作的?

|

||||

|

||||

@ -30,21 +30,25 @@ Instant Apps 和你已经熟悉的应用基本相同,只有一个不同——

|

||||

这样应用就可以快速打开,让你可以完成你的目标任务。

|

||||

|

||||

|

||||

>AIA 示例

|

||||

|

||||

*AIA 示例*

|

||||

|

||||

|

||||

>B&H 图片(通过谷歌搜索)

|

||||

|

||||

*B&H 图片(通过谷歌搜索)*

|

||||

|

||||

|

||||

>BuzzFeedVideo(通过一个共享链接)

|

||||

|

||||

*BuzzFeedVideo(通过一个共享链接)*

|

||||

|

||||

|

||||

>停车与支付(例)(通过 NFC)

|

||||

|

||||

*停车与支付(例)(通过 NFC)*

|

||||

|

||||

|

||||

听起来很棒,不是吗?但是其中还有很多技术方面的问题需要解决。

|

||||

|

||||

比如,从安全的观点来说:如果任何应用从理论上来说都能在你的设备上运行,甚至你都不用安装它——你要怎么保证设备远离恶意软件攻击?

|

||||

比如,从安全的观点来说:从理论上来说,如果任何应用都能在你的设备上运行,甚至你都不用安装它——你要怎么保证设备远离恶意软件攻击?

|

||||

|

||||

因此,为了消除这类威胁,谷歌还在这个项目上努力,目前只有少数合作伙伴,未来将逐步扩展。

|

||||

|

||||

@ -5,19 +5,19 @@ ORB:新一代 Linux 应用

|

||||

|

||||

我们之前讨论过[在 Ubuntu 上离线安装应用][1]。我们现在要再次讨论它。

|

||||

|

||||

[Orbital Apps][2] 给我们带来了新的软件包类型,**ORB**,它带有便携软件,交互式安装向导支持,以及离线使用的能力。

|

||||

[Orbital Apps][2] 给我们带来了一种新的软件包类型 **ORB**,它具有便携软件、交互式安装向导支持,以及离线使用的能力。

|

||||

|

||||

便携软件很方便。主要是因为它们能够无需任何管理员权限直接运行,也能够带着所有的设置和数据随U盘存储。而交互式的安装向导也能让我们轻松地安装应用。

|

||||

便携软件很方便。主要是因为它们能够无需任何管理员权限直接运行,也能够带着所有的设置和数据随 U 盘存储。而交互式的安装向导也能让我们轻松地安装应用。

|

||||

|

||||

### 开放可运行包 OPEN RUNNABLE BUNDLE (ORB)

|

||||

### 开放式可运行的打包(OPEN RUNNABLE BUNDLE) (ORB)

|

||||

|

||||

ORB 是一个免费和开源的包格式,它和其它包格式在很多方面有所不同。ORB 的一些特性:

|

||||

ORB 是一个自由开源的包格式,它和其它包格式在很多方面有所不同。ORB 的一些特性:

|

||||

|

||||

- **压缩**:所有的包经过压缩,使用 squashfs,体积最多减少 60%。

|

||||

- **便携模式**:如果一个便携 ORB 应用是从可移动设备运行的,它会把所有设置和数据存储在那之上。

|

||||

- **压缩**:所有的包都经过 squashfs 压缩,体积最多可减少 60%。

|

||||

- **便携模式**:如果一个便携 ORB 应用是在可移动设备上运行的,它会把所有设置和数据存储在那之上。

|

||||

- **安全**:所有的 ORB 包使用 PGP/RSA 签名,通过 TLS 1.2 分发。

|

||||

- **离线**:所有的依赖都打包进软件包,所以不再需要下载依赖。

|

||||

- **开放包**:ORB 包可以作为 ISO 镜像挂载。

|

||||

- **开放式软件包**:ORB 软件包可以作为 ISO 镜像挂载。

|

||||

|

||||

### 种类

|

||||

|

||||

@ -26,77 +26,69 @@ ORB 应用现在有两种类别:

|

||||

- 便携软件

|

||||

- SuperDEB

|

||||

|

||||

#### 1. 便携 ORB 软件

|

||||

### 1. 便携 ORB 软件

|

||||

|

||||

便携 ORB 软件可以立即运行而不需要任何的事先安装。那意味着它不需要管理员权限和依赖!你可以直接从 Orbital Apps 网站下载下来就能使用。

|

||||

便携 ORB 软件可以立即运行而不需要任何的事先安装。这意味着它不需要管理员权限,也没有依赖!你可以直接从 Orbital Apps 网站下载下来就能使用。

|

||||

|

||||

并且由于它支持便携模式,你可以将它拷贝到U盘携带。它所有的设置和数据会和它一起存储在U盘。只需将U盘连接到任何运行 Ubuntu 16.04 的机器上就行了。

|

||||

并且由于它支持便携模式,你可以将它拷贝到 U 盘携带。它所有的设置和数据会和它一起存储在 U 盘。只需将 U 盘连接到任何运行 Ubuntu 16.04 的机器上就行了。

|

||||

|

||||

##### 可用便携软件

|

||||

#### 可用便携软件

|

||||

|

||||

目前有超过 35 个软件以便携包的形式提供,包括一些十分流行的软件,比如:[Deluge][3],[Firefox][4],[GIMP][5],[Libreoffice][6],[uGet][7] 以及 [VLC][8]。

|

||||

|

||||

完整的可用包列表可以查阅 [便携 ORB 软件列表][9]。

|

||||

|

||||

##### 使用便携软件

|

||||

#### 使用便携软件

|

||||

|

||||

按照以下步骤使用便携 ORB 软件:

|

||||

|

||||

- 从 Orbital Apps 网站下载想要的软件包。

|

||||

- 将其移动到想要的位置(本地磁盘/U盘)。

|

||||

- 将其移动到想要的位置(本地磁盘/U 盘)。

|

||||

- 打开存储 ORB 包的目录。

|

||||

|

||||

|

||||

|

||||

|

||||

- 打开 ORB 包的属性。

|

||||

|

||||

|

||||

>给 ORB 包添加运行权限

|

||||

|

||||

|

||||

- 在权限标签页添加运行权限。

|

||||

- 双击打开它。

|

||||

|

||||

等待几秒,让它准备好运行。大功告成。

|

||||

|

||||

#### 2. SuperDEB

|

||||

### 2. SuperDEB

|

||||

|

||||

另一种类型的 ORB 软件是 SuperDEB。SuperDEB 很简单,交互式安装向导能够让软件安装过程顺利得多。如果你不喜欢从终端或软件中心安装软件,superDEB 就是你的菜。

|

||||

|

||||

最有趣的部分是你安装时不需要一个互联网连接,因为所有的依赖都由安装向导打包了。

|

||||

|

||||

##### 可用的 SuperDEB

|

||||

#### 可用的 SuperDEB

|

||||

|

||||

超过 60 款软件以 SuperDEB 的形式提供。其中一些流行的有:[Chromium][10],[Deluge][3],[Firefox][4],[GIMP][5],[Libreoffice][6],[uGet][7] 以及 [VLC][8]。

|

||||

|

||||

完整的可用 SuperDEB 列表,参阅 [SuperDEB 列表][11]。

|

||||

|

||||

##### 使用 SuperDEB 安装向导

|

||||

#### 使用 SuperDEB 安装向导

|

||||

|

||||

- 从 Orbital Apps 网站下载需要的 SuperDEB。

|

||||

- 像前面一样给它添加**运行权限**(属性 > 权限)。

|

||||

- 双击 SuperDEB 安装向导并按下列说明操作:

|

||||

|

||||

|

||||

>点击 OK

|

||||

|

||||

|

||||

|

||||

>输入你的密码并继续

|

||||

|

||||

|

||||

|

||||

>它会开始安装…

|

||||

|

||||

|

||||

|

||||

>一会儿他就完成了…

|

||||

|

||||

|

||||

- 完成安装之后,你就可以正常使用了。

|

||||

|

||||

### ORB 软件兼容性

|

||||

|

||||

从 Orbital Apps 可知,它们完全适配 Ubuntu 16.04 [64 bit]。

|

||||

从 Orbital Apps 可知,它们完全适配 Ubuntu 16.04 [64 位]。

|

||||

|

||||

>阅读建议:[如何在 Ubuntu 获知你的是电脑 32 位还是 64 位的][12]。

|

||||

|

||||

至于其它发行版兼容性不受保证。但我们可以说,它在所有 Ubuntu 16.04 衍生版(UbuntuMATE,UbuntuGNOME,Lubuntu,Xubuntu 等)以及基于 Ubuntu 16.04 的发行版(比如即将到来的 Linux Mint 18)上都适用。我们现在还不清楚 Orbital Apps 是否有计划拓展它的支持到其它版本 Ubuntu 或 Linux 发行版上。

|

||||

至于其它发行版兼容性则不受保证。但我们可以说,它在所有 Ubuntu 16.04 衍生版(UbuntuMATE,UbuntuGNOME,Lubuntu,Xubuntu 等)以及基于 Ubuntu 16.04 的发行版(比如即将到来的 Linux Mint 18)上都适用。我们现在还不清楚 Orbital Apps 是否有计划拓展它的支持到其它版本 Ubuntu 或 Linux 发行版上。

|

||||

|

||||

如果你在你的系统上经常使用便携 ORB 软件,你可以考虑安装 ORB 启动器。它不是必需的,但是推荐安装它以获取更佳的体验。最简短的 ORB 启动器安装流程是打开终端输入以下命令:

|

||||

|

||||

@ -116,11 +108,11 @@ wget -O - https://www.orbital-apps.com/orb.sh | bash

|

||||

|

||||

|

||||

----------------------------------

|

||||

via: http://itsfoss.com/orb-linux-apps/?utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+ItsFoss+%28Its+FOSS%21+An+Open+Source+Blog%29

|

||||

via: http://itsfoss.com/orb-linux-apps/

|

||||

|

||||

作者:[Munif Tanjim][a]

|

||||

译者:[alim0x](https://github.com/alim0x)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,106 @@

|

||||

与 Linux 一同驾车奔向未来

|

||||

===========================================

|

||||

|

||||

|

||||

|

||||

当我驾车的时候并没有这么想过,但是我肯定喜欢一个配有这样系统的车子,它可以让我按下几个按钮就能与我的妻子、母亲以及孩子们语音通话。这样的系统也可以让我选择是否从云端、卫星广播、以及更传统的 AM/FM 收音机收听音乐流媒体。我也会得到最新的天气情况,以及它可以引导我的车载 GPS 找到抵达下一个目的地的最快路线。[车载娱乐系统(In-vehicle infotainment)][1],业界常称作 IVI,它已经普及出现在最新的汽车上了。

|

||||

|

||||

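Some time ago, I flew several hundred miles and then rented a car. To my delight, the rental had IVI technology similar to what I have in my own car. Without hesitation, I uploaded my contacts to the system over a Bluetooth connection, called home to let my family know I had arrived safely, and then called my hosts to let them know I was on my way to their home.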

|

||||

|

||||

在最近的[新闻综述][2]中,Scott Nesbitt 引述了一篇文章,说福特汽车公司因其开源的[智能设备连接(Smart Device Link)][3](SDL)从竞争对手汽车制造商中得到了足够多的回报,这个中间件框架可以用于支持移动电话。 SDL 是 [GENIVI 联盟][4]的一个项目,这个联盟是一个非营利性组织,致力于建设支持开源车载娱乐系统的中间件。据 GENIVI 的执行董事 [Steven Crumb][5] 称,他们的[成员][6]有很多,包括戴姆勒集团、现代、沃尔沃、日产、本田等等 170 个企业。

|

||||

|

||||

为了在同行业间保持竞争力,汽车生产企业需要一个中间设备系统,以支持现代消费者所使用的各种人机界面技术。无论您使用的是 Android、iOS 还是其他设备,汽车 OEM 厂商都希望自己的产品能够支持这些。此外,这些的 IVI 系统必须有足够适应能力以支持日益变化的移动技术。OEM 厂商希望提供有价值的服务,并可以在他们的 IVI 之上增加服务,以满足他们客户的各种需求。

|

||||

|

||||

### 步入 Linux 和开源软件

|

||||

|

||||

除了 GENIVI 在努力之外,[Linux 基金会][7]也赞助支持了[车载 Linux(Automotive Grade Linux)][8](AGL)工作组,这是一个致力于为汽车应用寻求开源解决方案的软件基金会。虽然 AGL 初期将侧重于 IVI 系统,但是未来他们希望发展到不同的方向,包括[远程信息处理(telematics)][9]、抬头显示器(HUD)及其他控制系统等等。 现在 AGL 已经有超过 50 名成员,包括捷豹、丰田、日产,并在其[最近发布的一篇公告][10]中宣称福特、马自达、三菱、和斯巴鲁也加入了。

|

||||

|

||||

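To learn more, we interviewed two leaders in this emerging field. Specifically, we wanted to know how Linux and open source software are being used, and how they are in fact changing the face of the automotive industry. First, we talk with [Alison Chaiken][11], a software engineer at Peloton Technology and an expert on automotive Linux, cybersecurity, and transparency. She previously worked for Mentor Graphics, Nokia, and the Stanford Linear Accelerator. Then we talk with [Steven Crumb][12], executive director of GENIVI, who previously worked on open source in high-performance computing environments (supercomputers and early cloud computing). He says that although he is no longer a programmer, he enjoys helping organizations solve real business problems with open source software.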

|

||||

|

||||

### 采访 Alison Chaiken (by [Deb Nicholson][13])

|

||||

|

||||

#### 你是如何开始对汽车软件领域感兴趣的?

|

||||

|

||||

我曾在诺基亚从事于手机上的 [MeeGo][14] 产品,2009 年该项目被取消了。我想,我下一步怎么办?其时,我的一位同事正在从事于 [MeeGo-IVI][15],这是一个早期的车载 Linux 发行版。 “Linux 在汽车方面将有很大发展,” 我想,所以我就朝着这个方向努力。

|

||||

|

||||

#### 你能告诉我们你在这些日子里工作在哪些方面吗?

|

||||

|

||||

我目前正在启动一个高级巡航控制系统的项目,它用在大型卡车上,使用实时 Linux 以提升安全性和燃油经济性。我喜欢在这方面的工作,因为没有人会反对提升货运的能力。

|

||||

|

||||

#### 近几年有几则汽车被黑的消息。开源代码方案可以帮助解决这个问题吗?

|

||||

|

||||

我恰好针对这一话题准备了一次讲演,我会在南加州 Linux 2016 博览会上就 Linux 能否解决汽车上的安全问题做个讲演 ([讲演稿在此][16])。值得注意的是,GENIVI 和车载 Linux 项目已经公开了他们的代码,这两个项目可以通过 Git 提交补丁。(如果你有补丁的话),请给上游发送您的补丁!许多眼睛都盯着,bug 将无从遁形。

|

||||

|

||||

#### 执法机构和保险公司可以找到很多汽车上的数据的用途。他们获取这些信息很容易吗?

|

||||

|

||||

好问题。IEEE-1609 专用短程通信标准(Dedicated Short Range Communication Standard)就是为了让汽车的 WiFi 消息可以安全、匿名地传递。不过,如果你从你的车上发推,那可能就有人能够跟踪到你。

|

||||

|

||||

#### 开发人员和公民个人可以做些什么,以在汽车技术进步的同时确保公民自由得到保护?

|

||||

|

||||

电子前沿基金会( Electronic Frontier Foundation)(EFF)在关注汽车问题方面做了出色的工作,包括对哪些数据可以存储在汽车 “黑盒子”里通过官方渠道发表了看法,以及 DMCA 规定 1201 如何应用于汽车上。

|

||||

|

||||

#### 在未来几年,你觉得在汽车方面会发生哪些令人激动的发展?

|

||||

|

||||

可以拯救生命的自适应巡航控制系统和防撞系统将取得长足发展。当它们大量进入汽车里面时,我相信这会使得(因车祸而导致的)死亡人数下降。如果这都不令人激动,我不知道还有什么会更令人激动。此外,像自动化停车辅助功能,将会使汽车更容易驾驶,减少汽车磕碰事故。

|

||||

|

||||

#### 我们需要做什么?人们怎样才能参与?

|

||||

|

||||

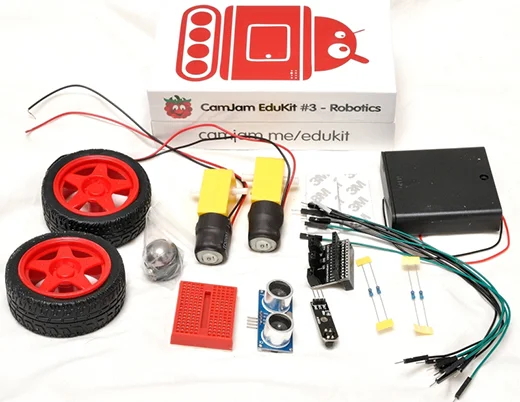

车载 Linux 开发是以开源的方式开发,它运行在每个人都能买得起的廉价硬件上(如树莓派 2 和中等价位的 Renesas Porter 主板)。 GENIVI 汽车 Linux 中间件联盟通过 Git 开源了很多软件。此外,还有很酷的 [OSVehicle 开源硬件][17]汽车平台。

|

||||

|

||||

只需要不太多的预算,人们就可以参与到 Linux 软件和开放硬件中。如果您感兴趣,请加入我们在 Freenode 上的IRC #automotive 吧。

|

||||

|

||||

### 采访 Steven Crumb (by Don Watkins)

|

||||

|

||||

#### GENIVI 在 IVI 方面做了哪些巨大贡献?

|

||||

|

||||

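GENIVI filled a huge gap in the automotive industry by pioneering the use of free and open source software, including Linux, for non-safety-critical automotive software such as in-vehicle infotainment (IVI) systems. As consumers came to expect the same functionality in their vehicles as on their smartphones, the amount of software needed to support IVI functions grew many times over. The growing amount of software also raised the cost of building IVI systems and thus slowed their time to market.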

|

||||

|

||||

GENIVI 使用开源软件和社区开发的模式为汽车制造商及其软件提供商节省了大量资金,从而显著地缩短了产品面市时间。我为 GENIVI 而感到激动,我们有幸引导了一场革命,在缓慢进步的汽车行业中,从高度结构化和专有的解决方案转换为以社区为基础的开发方式。我们还没有完全达成目标,但是我们很荣幸在这个可以带来实实在在好处的转型中成为其中的一份子。

|

||||

|

||||

#### 你们的主要成员怎样推动了 GENIVI 的发展方向?

|

||||

|

||||

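GENIVI has many members and non-members contributing to our work. As in many open source projects, any company can be influential through technical contribution, whether that is simply contributing code, patches, or time to test. That said, BMW, Mercedes-Benz, Hyundai Motor, Jaguar Land Rover, PSA Peugeot Citroën, Renault/Nissan, and Volvo are all active participants in and contributors to GENIVI, and many other OEMs have IVI solutions in their cars that make extensive use of GENIVI's software.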

|

||||

|

||||

#### 这些贡献的代码使用了什么许可证?

|

||||

|

||||

GENIVI 采用了一些许可证,包括从(L)GPLv2 到 MPLv2 和 Apache2.0。我们的一些工具使用的是 Eclipse 许可证。我们有一个[公开许可策略][18],详细地说明了我们的许可证偏好。

|

||||

|

||||

#### 个人或团体如何参与其中?社区的参与对于这个项目迈向成功有多重要?

|

||||

|

||||

GENIVI 的开发完全是开放的([projects.genivi.org][19]),因此,欢迎任何有兴趣在汽车中使用开源软件的人参加。也就是说,公司可以通过成员的方式[加入该联盟][20],联盟以开放的方式资助其不断进行开发。GENIVI 的成员可以享受各种各样的便利,在过去六年中,已经有多达 140 家公司参与到这个全球性的社区当中。

|

||||

|

||||

社区对于 GENIVI 是非常重要的,没有一个活跃的贡献者社区,我们不可能在这些年开发和维护了这么多有价值的软件。我们努力让参与到 GENIVI 更加简单,现在只要加入一个[邮件列表][21]就可以接触到各种软件项目中的人们。我们使用了许多开源项目采用的标准做法,并提供了高品质的工具和基础设施,以帮助开发人员宾至如归而富有成效。

|

||||

|

||||

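Whether or not you are familiar with automotive software, you are welcome to join our community. People have modified their cars for years, so for many people tinkering with a car comes naturally. Software is the new domain for cars, and GENIVI wants to be the open door for anyone interested in working with automotive open source software.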

|

||||

|

||||

-------------------------------

|

||||

via: https://opensource.com/business/16/5/interview-alison-chaiken-steven-crumb

|

||||

|

||||

作者:[Don Watkins][a]

|

||||

译者:[erlinux](https://github.com/erlinux)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/don-watkins

|

||||

[1]: https://en.wikipedia.org/wiki/In_car_entertainment

|

||||

[2]: https://opensource.com/life/16/1/weekly-news-jan-9

|

||||

[3]: http://projects.genivi.org/smartdevicelink/home

|

||||

[4]: http://www.genivi.org/

|

||||

[5]: https://www.linkedin.com/in/stevecrumb

|

||||

[6]: http://www.genivi.org/genivi-members

|

||||

[7]: http://www.linuxfoundation.org/

|

||||

[8]: https://www.automotivelinux.org/

|

||||

[9]: https://en.wikipedia.org/wiki/Telematics

|

||||

[10]: https://www.automotivelinux.org/news/announcement/2016/01/ford-mazda-mitsubishi-motors-and-subaru-join-linux-foundation-and

|

||||

[11]: https://www.linkedin.com/in/alison-chaiken-3ba456b3

|

||||

[12]: https://www.linkedin.com/in/stevecrumb

|

||||

[13]: https://opensource.com/users/eximious

|

||||

[14]: https://en.wikipedia.org/wiki/MeeGo

|

||||

[15]: http://webinos.org/deliverable-d026-target-platform-requirements-and-ipr/automotive/

|

||||

[16]: http://she-devel.com/Chaiken_automotive_cybersecurity.pdf

|

||||

[17]: https://www.osvehicle.com/

|

||||

[18]: http://projects.genivi.org/how

|

||||

[19]: http://projects.genivi.org/

|

||||

[20]: http://genivi.org/join

|

||||

[21]: http://lists.genivi.org/mailman/listinfo/genivi-projects

|

||||

@ -0,0 +1,212 @@

How to Add Cron Jobs on Linux and Unix
======================================

### Introduction

A cron job schedules commands that need to run periodically. With it, you can configure commands or scripts to run repeatedly at a set time. `cron` is one of the most useful tools on Linux and Unix-like systems. The cron service (daemon) runs in the background and continually checks the `/etc/crontab` file and the `/etc/cron.*/` directories. It also checks the `/var/spool/cron/` directory.

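### The crontab command

`crontab` is the command used to install, uninstall, or list the tables of scheduled jobs. The cron configuration file drives the [cron(8)][1] daemon in `Vixie Cron`. Each user can have their own crontab file; although these files live in `/var/spool/cron/crontabs`, they are not meant to be edited directly. Use the `crontab` command to edit or configure your own cron jobs.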

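### Types of cron configuration files

Configuration files come in the following types:

- **The UNIX or Linux system crontab**: Usually used by system services and critical jobs that require root-like privileges. The sixth field (see the field description below) is a user name that specifies which user the command runs as. This lets the system `crontab` run commands as any user.

- **User crontabs**: Users can install their own cron jobs with the `crontab` command. The sixth field is the command to run, and every command runs as the user who created the crontab.

**Note**: This FAQ covers the `cron` implementation written by Paul Vixie, which is included in many [Linux][2] distributions and Unix-like systems (such as the popular 4th BSD edition). Its syntax is [compatible][3] with various implementations of crond.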

How do I install, create, or edit my own cron jobs?

To edit your crontab file, type the following command at the Linux or Unix shell prompt:

```
$ crontab -e
```

`crontab` syntax (field description)

The syntax is:

```
1 2 3 4 5 /path/to/command arg1 arg2
```

Or:

```
1 2 3 4 5 /root/ntp_sync.sh
```

Where:

- Field 1: minute (0-59)
- Field 2: hour (0-23)
- Field 3: day of the month (1-31)
- Field 4: month (1-12 [12 is December])
- Field 5: day of the week (0-7 [7 or 0 is Sunday])
- /path/to/command - the name of the script or command to schedule

An easy-to-remember format:

```
* * * * * command to execute
----------------
| | | | |
| | | | ---- day of the week (0 - 7) (Sunday is 0 or 7)
| | | ------ month (1 - 12)
| | -------- day of the month (1 - 31)
| ---------- hour (0 - 23)
------------ minute (0 - 59)
```
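The field layout above can be pulled apart mechanically. The following is a minimal Python sketch, not part of cron itself: `CronEntry` and `parse_crontab_line` are hypothetical names introduced for this example, and the `system` flag assumes the system-crontab layout, in which a user name sits between the five time fields and the command.

```python
from typing import NamedTuple, Optional


class CronEntry(NamedTuple):
    minute: str
    hour: str
    day_of_month: str
    month: str
    day_of_week: str
    user: Optional[str]  # only present in system crontabs such as /etc/crontab
    command: str


def parse_crontab_line(line: str, system: bool = False) -> CronEntry:
    """Split one crontab line into its time fields, optional user, and command."""
    n_fixed = 6 if system else 5          # fields that come before the command
    parts = line.split(None, n_fixed)     # the command keeps its internal spaces
    if len(parts) <= n_fixed:
        raise ValueError(f"expected {n_fixed} fields followed by a command: {line!r}")
    user = parts[5] if system else None
    return CronEntry(*parts[:5], user, parts[n_fixed].strip())


if __name__ == "__main__":
    print(parse_crontab_line("1 2 3 4 5 /path/to/command arg1 arg2"))
    print(parse_crontab_line("0 1 * * * root /root/backupscript.sh", system=True))
```

The sketch only splits the line; it does not check that each field holds a legal value, and it does not handle comments, variable assignments such as `MAILTO`, or the `@` special strings.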

Simple `crontab` examples:

```
## Run backupscript every 5 minutes ##
*/5 * * * * /root/backupscript.sh

## Run backupscript daily at 1:00 am ##
0 1 * * * /root/backupscript.sh

## Run backupscript on the first day of each month at 3:15 am ##
15 3 1 * * /root/backupscript.sh
```

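### How to use operators

Operators let you specify multiple values in a field. There are four operators:

- **The asterisk (*)**: This operator specifies all possible values for a field. For example, an asterisk in the hour field is equivalent to every hour, and an asterisk in the month field is equivalent to every month.

- **The comma (,)**: This operator specifies a list of values, for example: `1,5,10,15,20,25`.

- **The dash (-)**: This operator specifies a range of values, for example: `5-15`, which is equivalent to typing `5,6,7,8,9,...,13,14,15` with the comma operator.

- **The slash (/)**: This operator specifies a step value, for example: `0-23/2` can be used in the hour field to run a command every other hour. Steps are also permitted after an asterisk, so to run a command every 2 hours you can use `*/2`.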
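To make the four operators concrete, here is a small Python sketch that expands a single field into the values it would match. It is an illustration under simplifying assumptions, not cron's own parser: `expand_field` is a hypothetical helper, it accepts numbers only (no month or weekday names), and the caller supplies the allowed range for the field.

```python
from typing import List


def expand_field(expr: str, low: int, high: int) -> List[int]:
    """Expand one crontab time field into the sorted list of values it matches."""
    values = set()
    for item in expr.split(","):                 # comma: list of values
        rng, _, step_text = item.partition("/")  # slash: step value
        step = int(step_text) if step_text else 1
        if step < 1:
            raise ValueError(f"step must be positive: {item!r}")
        if rng == "*":                           # asterisk: every allowed value
            start, end = low, high
        elif "-" in rng:                         # dash: inclusive range
            first, last = rng.split("-", 1)
            start, end = int(first), int(last)
        else:                                    # a single value
            start = end = int(rng)
        if not (low <= start <= end <= high):
            raise ValueError(f"{item!r} is outside {low}-{high}")
        values.update(range(start, end + 1, step))
    return sorted(values)


if __name__ == "__main__":
    print(expand_field("*/2", 0, 23))    # hour field, every 2 hours
    print(expand_field("5-15", 0, 59))   # minute field, a range
```

Calling it with the bounds of each field (0-59 for minutes, 0-23 for hours, and so on) is a quick way to check what an expression means before putting it into a crontab.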

### How to disable mail output

By default, the output of a command or script (if any) is emailed to your local mail account. To stop receiving mail from `crontab`, append `>/dev/null 2>&1` to the command, for example:

```
0 3 * * * /root/backup.sh >/dev/null 2>&1
```

To send the output to a specific email account, say vivek@nixcraft.in, define a MAILTO variable as follows and leave the output unredirected so that cron has something to mail:

```
MAILTO="vivek@nixcraft.in"
0 3 * * * /root/backup.sh
```

See "[Disable the mail alert by crontab command](http://www.cyberciti.biz/faq/disable-the-mail-alert-by-crontab-command/)" for more information.

### Task: list all your cron jobs

Type the following commands:

```
# crontab -l
# crontab -u username -l
```

To remove all of your cron jobs, use the following command:

```
# Delete the current cron jobs #
crontab -r
```

```
## Delete the jobs of a given user; this command must be run as root ##
crontab -r -u username
```

### Use special strings to save time

Instead of the first five fields, you can use any one of the following eight special strings. This not only saves time but also improves readability.

Special string | Meaning
|:-- |:--
@reboot | Run once at startup.
@yearly | Run once a year, same as "0 0 1 1 *".
@annually | (same as @yearly)
@monthly | Run once a month, same as "0 0 1 * *".
@weekly | Run once a week, same as "0 0 * * 0".
@daily | Run once a day, same as "0 0 * * *".
@midnight | (same as @daily)
@hourly | Run once an hour, same as "0 * * * *".
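The table maps directly onto a lookup. The Python sketch below rewrites a line that starts with a special string into its five-field form; `SPECIAL_STRINGS` and `expand_special` are hypothetical names used only for this illustration. `@reboot` is deliberately left out of the mapping because it has no five-field equivalent.

```python
# Five-field equivalents of the special strings, taken from the table above.
SPECIAL_STRINGS = {
    "@yearly": "0 0 1 1 *",
    "@annually": "0 0 1 1 *",
    "@monthly": "0 0 1 * *",
    "@weekly": "0 0 * * 0",
    "@daily": "0 0 * * *",
    "@midnight": "0 0 * * *",
    "@hourly": "0 * * * *",
}


def expand_special(line: str) -> str:
    """Rewrite a crontab line that starts with a special string into five-field form."""
    parts = line.split(None, 1)
    if not parts or parts[0] not in SPECIAL_STRINGS:
        return line  # ordinary lines and @reboot are returned unchanged
    rest = parts[1] if len(parts) > 1 else ""
    return f"{SPECIAL_STRINGS[parts[0]]} {rest}".rstrip()


if __name__ == "__main__":
    print(expand_special("@hourly /path/to/ntpdate"))
```

This is handy when comparing entries written in both styles, since equivalent schedules end up as identical strings.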

Example:

#### Run the ntpdate command every hour ####

```
@hourly /path/to/ntpdate
```

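### More about the `/etc/crontab` file and the `/etc/cron.d/*` directory

**/etc/crontab** is the system crontab file. Usually only the root user or daemons use it to configure system-wide jobs. Individual users must use the `crontab` command, as described above, to install and edit their own jobs. Per-user crontab files are stored in `/var/spool/cron/` or `/var/cron/tabs/`, and should be backed up along with users' home directories.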

|

||||