Mirror of https://github.com/LCTT/TranslateProject.git

Synced 2025-02-25 00:50:15 +08:00

Merge remote-tracking branch 'LCTT/master'

commit b5f423fca2

@ -0,0 +1,161 @@

[#]: collector: (lujun9972)
[#]: translator: (wenwensnow)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11504-1.html)
[#]: subject: (Use GameHub to Manage All Your Linux Games in One Place)
[#]: via: (https://itsfoss.com/gamehub/)
[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

Use GameHub to Manage All Your Linux Games in One Place
======

How do you [play games on Linux][1]? Let me guess: you either install games straight from the software center, or you use platforms like Steam, GOG, and Humble Bundle, right? But how do you keep track of everything when you have several game launchers and clients? For me, that was a real headache, which is why I was so pleased to discover [GameHub][2].

GameHub is a desktop application for Linux distributions that lets you "manage all your games in one place". Sounds interesting, doesn't it? Let me explain in more detail.

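![][3]

### GameHub: One Place to Manage Linux Games from Different Platforms

Let's look at the features that make GameHub one of the [essential Linux applications][4] for gamers.

#### Steam, GOG & Humble Bundle Support

![][5]

It supports Steam, [GOG][6], and [Humble Bundle][7] account integration. You can sign in to your accounts and manage all your games from GameHub's library manager.

Personally, I have a lot of games on Steam and a few on Humble Bundle. I can't guarantee that it supports every platform, but the major ones are definitely covered.

#### Native Game Support

![][8]

[Several websites list Linux games and let you download them][9]. You can manage native games by adding their downloaded installers or executables.

Unfortunately, you currently can't search for Linux games from within GameHub. As shown in the image above, you have to download games separately and then add them to GameHub.

#### Emulator Support

With emulators, you can [play retro games on Linux][10]. As shown in the image above, you can add emulators (and import emulated game images).

You can find ready-made emulators via [RetroArch][11], and you can also add custom emulators as needed.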

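#### User Interface

![GameHub interface options][12]

Of course, the user experience matters, so it's worth looking at what the user interface offers.

Personally, I find the application very easy to use, and the dark theme is a plus.

#### Controller Support

If you're used to playing games on Linux with a controller, you can easily add, enable, or disable it in the settings.

#### Multiple Data Providers

Since GameHub fetches information (metadata) about your games, it needs a data source. You can see all the available data sources in the image below.

![Data Providers Gamehub][13]

You don't need to do anything here. However, if you use platforms other than Steam, you need to [generate an API key for IGDB][14].

I recommend doing so only if GameHub shows a prompt or notification, or if some games have no description, images, or status in GameHub.

#### Compatibility Options

![][15]

Do you have games that don't run on Linux?

Don't worry: GameHub offers several compatibility tools, such as Wine/Proton, that you can use to play them.

We can't say which compatibility tool will work for you, so you'll have to test them yourself. Still, it's a genuinely useful feature for many gamers.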

### GameHub: How Do You Install It?

![][18]

To start with, you can simply search for it in your software center or app store. It is available in Pop!_Shop, and it can be found in most official repositories.

If you can't find it in any of these places, you can add the PPA manually (on Ubuntu-based distributions) and install it from the terminal with the following commands:

```
sudo add-apt-repository ppa:tkashkin/gamehub
sudo apt update
sudo apt install com.github.tkashkin.gamehub
```

If you get an "add-apt-repository command not found" error, see our article on the [add-apt-repository not found error][19], which will help you fix it.

AppImage and Flatpak versions are also available. The [official website][2] has installation instructions for other Linux distributions.

You can also download installer packages for older releases from its [GitHub page][20].

[GameHub][2]

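### How to Manage Your Games in GameHub?

After launching the application, you can add your Steam/GOG/Humble Bundle accounts.

For Steam, you need the Steam client installed on your Linux distribution. Once it is installed, you can easily import the games in your account into GameHub.

![][16]

For GOG & Humble Bundle, you can manage your games directly in GameHub as soon as you sign in.

If you want to add an emulator or a local installer, click the "+" button in the top-right corner of the window.

### How to Install Games?

For Steam games, GameHub automatically launches the Steam client to download/install the game. (I hope future versions will be able to install games without launching Steam!)

![][17]

For GOG/Humble Bundle, however, you can download and install games directly once you are signed in. If necessary, you can use the compatibility tools for games that don't run natively on Linux.

For both emulated and native games, just add the installer or import the emulator image. There's nothing else to do.

### Wrapping Up

GameHub is a fairly flexible application for managing all your games in one place. Its user interface and settings are also quite intuitive.

Have you used it before? If so, share your experience in the comments.

Also, if you know of any tools or apps with similar functionality, be sure to let us know.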

--------------------------------------------------------------------------------

via: https://itsfoss.com/gamehub/

Author: [Ankush Das][a]
Topic selection: [lujun9972][b]
Translator: [wenwensnow](https://github.com/wenwensnow)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly published by [Linux中国](https://linux.cn/) (Linux China).

[a]: https://itsfoss.com/author/ankush/
[b]: https://github.com/lujun9972
[1]: https://itsfoss.com/linux-gaming-guide/
[2]: https://tkashkin.tk/projects/gamehub/
[3]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-home-1.png?ssl=1
[4]: https://itsfoss.com/essential-linux-applications/
[5]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-platform-support.png?ssl=1
[6]: https://www.gog.com/
[7]: https://www.humblebundle.com/monthly?partner=itsfoss
[8]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-native-installers.png?ssl=1
[9]: https://itsfoss.com/download-linux-games/
[10]: https://itsfoss.com/play-retro-games-linux/
[11]: https://www.retroarch.com/
[12]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-appearance.png?ssl=1
[13]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/10/data-providers-gamehub.png?ssl=1
[14]: https://www.igdb.com/api
[15]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-windows-game.png?fit=800%2C569&ssl=1
[16]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-library.png?ssl=1
[17]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-compatibility-layer.png?ssl=1
[18]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-install.jpg?ssl=1
[19]: https://itsfoss.com/add-apt-repository-command-not-found/
[20]: https://github.com/tkashkin/GameHub/releases

@ -1,27 +1,27 @@

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11505-1.html)
[#]: subject: (How to Configure Rsyslog Server in CentOS 8 / RHEL 8)
[#]: via: (https://www.linuxtechi.com/configure-rsyslog-server-centos-8-rhel-8/)
[#]: author: (James Kiarie https://www.linuxtechi.com/author/james/)

How to Configure Rsyslog Server in CentOS 8 / RHEL 8
======

Rsyslog is a free and open source logging program that is available by default on CentOS 8 and RHEL 8 systems. It provides a simple and effective way to "centralize" logs from client nodes on a single central server. Centralizing logs has two benefits. First, it simplifies log viewing: a system administrator can view all the logs of remote servers from one central node, without logging in to each client system to check its logs. This is very useful when you need to monitor multiple servers. Second, if a remote client crashes, you don't need to worry about losing its logs, because all the logs are kept on the central Rsyslog server.

Rsyslog replaces syslog, which only supports the UDP protocol. It extends the basic syslog protocol with superior features, such as support for both UDP and TCP when transporting logs, enhanced filtering, and flexible configuration options. Let's explore how to configure an Rsyslog server on a CentOS 8 / RHEL 8 system.

![configure-rsyslog-centos8-rhel8][2]

### Prerequisites

We will use the following lab environment to test the centralized logging process:

* Rsyslog server: CentOS 8 Minimal, IP address 10.128.0.47
* Client system: RHEL 8 Minimal, IP address 10.128.0.48

Using the setup above, we will demonstrate how to set up the Rsyslog server and then configure the client system to send its logs to the Rsyslog server for monitoring.

@ -35,30 +35,30 @@

|

||||

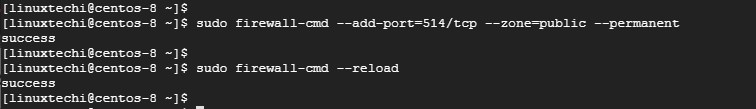

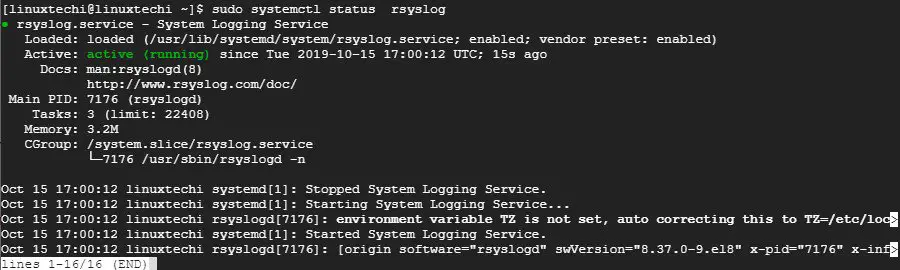

$ systemctl status rsyslog

|

||||

```

|

||||

|

||||

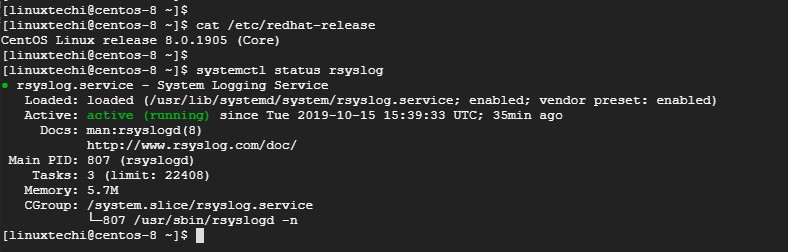

示例输出

|

||||

示例输出:

|

||||

|

||||

![rsyslog-service-status-centos8][1]

If for some reason Rsyslog is not present, you can install it with the following command:

```
$ sudo yum install rsyslog
```

Next, you need to modify some settings in the Rsyslog configuration file. Open the configuration file:

```
$ sudo vim /etc/rsyslog.conf
```

Scroll down and uncomment the following lines to allow receiving logs over the UDP protocol:

```
module(load="imudp") # needs to be done just once
input(type="imudp" port="514")
```
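With the `imudp` input loaded, rsyslog reads each incoming datagram from UDP port 514. A classic syslog message starts with a priority value in angle brackets, such as `<14>`, which encodes facility and severity as `facility * 8 + severity`.

The Python sketch below shows how that header decodes. It is illustration only: the `parse_pri` helper is hypothetical, and this is not how rsyslog itself parses messages.

```python
import re
from typing import Tuple

# A syslog message starts with "<PRI>", where PRI = facility * 8 + severity.
_PRI_RE = re.compile(r"<(\d{1,3})>(.*)", re.DOTALL)

SEVERITIES = ("emerg", "alert", "crit", "err", "warning", "notice", "info", "debug")


def parse_pri(datagram: bytes) -> Tuple[int, str, str]:
    """Split a raw syslog datagram into (facility number, severity name, message)."""
    # Drop a trailing NUL byte or newline that some senders append.
    text = datagram.decode("utf-8", errors="replace").rstrip("\x00\n")
    match = _PRI_RE.fullmatch(text)
    if match is None:
        raise ValueError("datagram does not start with a <PRI> header")
    pri = int(match.group(1))
    if pri > 191:  # highest valid value: facility 23 (local7) * 8 + severity 7
        raise ValueError(f"PRI value {pri} is out of range")
    facility, severity = divmod(pri, 8)
    return facility, SEVERITIES[severity], match.group(2)


if __name__ == "__main__":
    # <14> = facility 1 (user) * 8 + severity 6 (info)
    print(parse_pri(b"<14>Hello guys! This is our first log"))
    # (1, 'info', 'Hello guys! This is our first log')
```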

|

||||

|

||||

![rsyslog-conf-centos8-rhel8][1]

|

||||

|

||||

|

||||

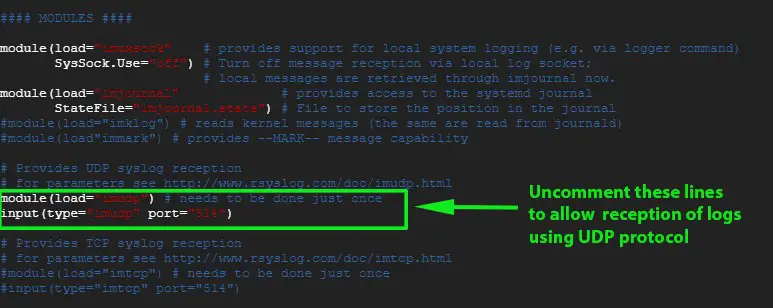

同样,如果你希望启用 TCP rsyslog 接收,请取消注释下面的行:

|

||||

|

||||

@ -67,47 +67,47 @@ module(load="imtcp") # needs to be done just once

|

||||

input(type="imtcp" port="514")

|

||||

```

|

||||

|

||||

![rsyslog-conf-tcp-centos8-rhel8][1]

|

||||

|

||||

|

||||

保存并退出配置文件。

|

||||

|

||||

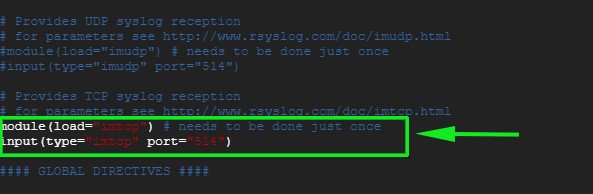

要从客户端系统接收日志,我们需要在防火墙上打开 Rsyslog 默认端口 514。为此,请运行

|

||||

要从客户端系统接收日志,我们需要在防火墙上打开 Rsyslog 默认端口 514。为此,请运行:

|

||||

|

||||

```

|

||||

# sudo firewall-cmd --add-port=514/tcp --zone=public --permanent

|

||||

```

|

||||

|

||||

接下来,重新加载防火墙保存更改

|

||||

接下来,重新加载防火墙保存更改:

|

||||

|

||||

```

|

||||

# sudo firewall-cmd --reload

|

||||

```

|

||||

|

||||

示例输出

|

||||

示例输出:

|

||||

|

||||

![firewall-ports-rsyslog-centos8][1]

|

||||

|

||||

|

||||

接下来,重启 Rsyslog 服务器

|

||||

接下来,重启 Rsyslog 服务器:

|

||||

|

||||

```

|

||||

$ sudo systemctl restart rsyslog

|

||||

```

|

||||

|

||||

要在启动时运行 Rsyslog,运行以下命令

|

||||

要在启动时运行 Rsyslog,运行以下命令:

|

||||

|

||||

```

|

||||

$ sudo systemctl enable rsyslog

|

||||

```

|

||||

|

||||

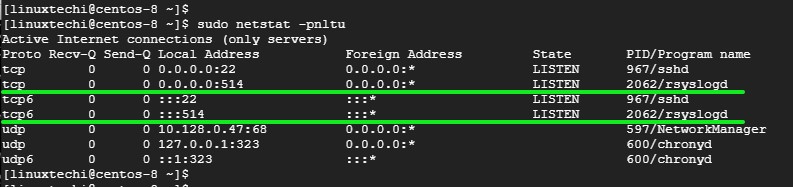

要确认 Rsyslog 服务器正在监听 514 端口,请使用 netstat 命令,如下所示:

|

||||

要确认 Rsyslog 服务器正在监听 514 端口,请使用 `netstat` 命令,如下所示:

|

||||

|

||||

```

|

||||

$ sudo netstat -pnltu

|

||||

```
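`netstat` belongs to the net-tools package, which may not be installed on a minimal system. In that case, `ss -tulpn` reports the same listening sockets.

To check reachability from another machine, you can also attempt a TCP connection. Below is a minimal Python sketch of that idea; the `tcp_port_open` helper is hypothetical. It can only test the TCP listener, because UDP is connectionless and an unanswered datagram proves nothing.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising on connection errors.
        return sock.connect_ex((host, port)) == 0
```

For example, `tcp_port_open("10.128.0.47", 514)` run from the client should return `True` once the TCP input is enabled and the firewall allows the port.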

Sample output:

![netstat-rsyslog-port-centos8][1]

Perfect! We have successfully configured the Rsyslog server to receive logs from client systems.

@ -127,42 +127,42 @@ $ tail -f /var/log/messages

```
$ sudo systemctl status rsyslog
```

Sample output:

![client-rsyslog-service-rhel8][1]

Next, open the rsyslog configuration file:

```
$ sudo vim /etc/rsyslog.conf
```

At the end of the file, add the following line. Use `@` to forward over UDP or `@@` to forward over TCP. You only need one of the two, since adding both would send every message twice:

```
*.* @10.128.0.47:514 # Use @ for UDP protocol
*.* @@10.128.0.47:514 # Use @@ for TCP protocol
```
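The two lines differ only in the number of `@` signs in the action. To make that concrete, here is a small Python sketch of how such a legacy forwarding rule breaks down into selector, protocol, host, and port.

The `parse_forward_rule` helper is hypothetical. It handles only the simple `selector @host:port` form used here, whereas rsyslog's own parser accepts many more options.

```python
from typing import NamedTuple


class ForwardRule(NamedTuple):
    selector: str  # e.g. "*.*" = every facility, every priority
    protocol: str  # "udp" for "@", "tcp" for "@@"
    host: str
    port: int


def parse_forward_rule(line: str, default_port: int = 514) -> ForwardRule:
    """Parse a line such as '*.* @@10.128.0.47:514' (illustration only)."""
    # This sketch simply ignores anything after '#'.
    line = line.split("#", 1)[0].strip()
    selector, action = line.split(None, 1)
    action = action.strip()
    if action.startswith("@@"):
        protocol, target = "tcp", action[2:]
    elif action.startswith("@"):
        protocol, target = "udp", action[1:]
    else:
        raise ValueError(f"not a forwarding action: {action!r}")
    host, sep, port = target.partition(":")
    return ForwardRule(selector, protocol, host, int(port) if sep else default_port)


if __name__ == "__main__":
    print(parse_forward_rule("*.* @10.128.0.47:514 # Use @ for UDP protocol"))
    print(parse_forward_rule("*.* @@10.128.0.47:514 # Use @@ for TCP protocol"))
```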

|

||||

|

||||

保存并退出配置文件。就像 Rsyslog 服务器一样,打开 514 端口,这是防火墙上的默认 Rsyslog 端口。

|

||||

保存并退出配置文件。就像 Rsyslog 服务器一样,打开 514 端口,这是防火墙上的默认 Rsyslog 端口:

|

||||

|

||||

```

|

||||

$ sudo firewall-cmd --add-port=514/tcp --zone=public --permanent

|

||||

```

|

||||

|

||||

接下来,重新加载防火墙以保存更改

|

||||

接下来,重新加载防火墙以保存更改:

|

||||

|

||||

```

|

||||

$ sudo firewall-cmd --reload

|

||||

```

|

||||

|

||||

接下来,重启 rsyslog 服务

|

||||

接下来,重启 rsyslog 服务:

|

||||

|

||||

```

|

||||

$ sudo systemctl restart rsyslog

|

||||

```

|

||||

|

||||

要在启动时运行 Rsyslog,请运行以下命令

|

||||

要在启动时运行 Rsyslog,请运行以下命令:

|

||||

|

||||

```

|

||||

$ sudo systemctl enable rsyslog

|

||||

@ -178,15 +178,15 @@ $ sudo systemctl enable rsyslog

|

||||

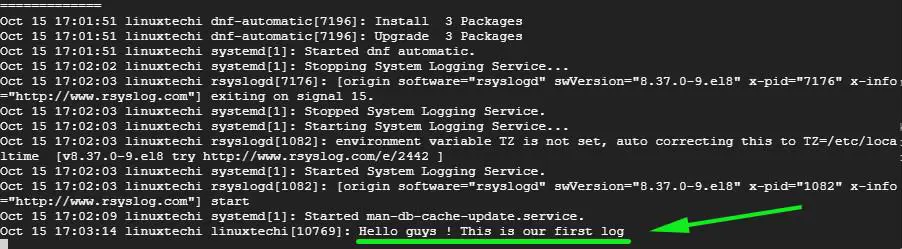

# logger "Hello guys! This is our first log"

|

||||

```
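The `logger` command hands a message to the local logging system, and rsyslog then forwards it according to the rule added to `/etc/rsyslog.conf`. For comparison, Python's standard `logging.handlers.SysLogHandler` can send a test message straight to a syslog server over UDP.

This minimal sketch is not part of the original setup. The `send_test_log` helper is hypothetical, and the demo sends to a throwaway local UDP socket so it can run anywhere. Against the lab server you would pass `"10.128.0.47"` and port `514` instead.

```python
import logging
import logging.handlers
import socket


def send_test_log(host: str, port: int, text: str) -> None:
    """Send a single INFO-level message to a syslog server over UDP."""
    logger = logging.getLogger("rsyslog-test")
    logger.setLevel(logging.INFO)
    # With a (host, port) tuple, SysLogHandler uses a UDP socket by default.
    handler = logging.handlers.SysLogHandler(address=(host, port))
    logger.addHandler(handler)
    try:
        logger.info(text)
    finally:
        logger.removeHandler(handler)
        handler.close()


if __name__ == "__main__":
    # Throwaway local "server" on an ephemeral UDP port.
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.bind(("127.0.0.1", 0))
    server.settimeout(2)
    send_test_log("127.0.0.1", server.getsockname()[1], "Hello guys! This is our first log")
    data, _addr = server.recvfrom(4096)
    print(data)  # b'<14>Hello guys! This is our first log\x00'
    server.close()
```

The leading `<14>` is the priority header (facility `user`, severity `info`). The trailing NUL byte is appended by `SysLogHandler` by default.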

Now log in to the Rsyslog server and run the following command to view log messages in real time:

```
# tail -f /var/log/messages
```

The output of the command run on the client system shows up in the Rsyslog server's log, which means the Rsyslog server is receiving logs from the client system:

![centralize-logs-rsyslogs-centos8][1]

That's it! We have successfully set up an Rsyslog server to receive log messages from a client system.

@ -197,11 +197,11 @@ via: https://www.linuxtechi.com/configure-rsyslog-server-centos-8-rhel-8/

Author: [James Kiarie][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly published by [Linux中国](https://linux.cn/) (Linux China).

[a]: https://www.linuxtechi.com/author/james/
[b]: https://github.com/lujun9972
[1]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7
[2]: https://www.linuxtechi.com/wp-content/uploads/2019/10/configure-rsyslog-centos8-rhel8.jpg

@ -1,82 +1,76 @@

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11502-1.html)
[#]: subject: (Pylint: Making your Python code consistent)
[#]: via: (https://opensource.com/article/19/10/python-pylint-introduction)
[#]: author: (Moshe Zadka https://opensource.com/users/moshez)

Pylint: Making Your Python Code Consistent
======

> Pylint is your friend when it comes to arguments about code complexity.

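![OpenStack source code \(Python\) in VIM][1]

Pylint is a higher-level Python style enforcer. Tools like [flake8][2] and [black][3], by contrast, check "local" style: where line breaks go, how comments are formatted, and issues such as commented-out code or bad practices in log formatting.

By default, Pylint is extremely aggressive. It offers strong opinions on everything, from checking whether declared interfaces are actually implemented to opportunities for refactoring duplicate code, which can be a lot for a new user. One gentle way to introduce it to a project or team is to turn *all* checkers off first and then enable them one by one. This is especially useful if you already use flake8, black, and [mypy][4]: quite a few of Pylint's checkers overlap with them in functionality.

However, one thing unique to Pylint is its ability to enforce higher-level concerns: for example, the number of lines in a function, or the number of methods in a class.

These numbers may vary from project to project and can depend on the development team's preferences. However, once the team agrees on the parameters, it is very useful to *enforce* them with an automated tool. This is where Pylint shines.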

### Configuring Pylint

To start with an empty configuration, set your `.pylintrc` to:

```
[MESSAGES CONTROL]

disable=all
```

This disables all Pylint messages. Since many of them are redundant, this makes sense. In Pylint, a `message` is a specific kind of warning.

You can confirm that all messages are turned off by running `pylint`:

```
$ pylint <my package>
```

In general, it is not a good idea to add parameters to the `pylint` command line: the best place to configure `pylint` is `.pylintrc`. To make it do *something* useful, we need to enable some messages.

To enable messages, add the following under `[MESSAGES CONTROL]` in `.pylintrc`:

```
enable=<message>,
...
```

List there every message that looks useful ("messages" being what Pylint calls its different kinds of warnings). Some of my favorites are `too-many-lines`, `too-many-arguments`, and `too-many-branches`. All of these limit the complexity of modules or functions, and they provide an objective measure of code complexity without any human judgment involved.
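If you prefer to generate this file, for example from a CI setup script, Python's standard `configparser` can write it. This is a minimal sketch, assuming you want exactly the three messages named above. Pylint does not require the file to be created this way, and `build_pylintrc` is a hypothetical helper.

```python
import configparser
import io
from typing import List

# Messages the article recommends starting with.
FAVORITE_MESSAGES = ["too-many-lines", "too-many-arguments", "too-many-branches"]


def build_pylintrc(enabled: List[str]) -> str:
    """Return .pylintrc text that disables every message except `enabled`."""
    config = configparser.ConfigParser()
    config["MESSAGES CONTROL"] = {
        "disable": "all",
        "enable": ",".join(enabled),
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    print(build_pylintrc(FAVORITE_MESSAGES), end="")
```

Redirecting the script's output to `.pylintrc` produces the file. Note that `configparser` writes `key = value` with spaces around the `=`, which is still standard INI syntax.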

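*Checkers* are the source of *messages*: every message belongs to exactly one checker. Many of the most useful messages are under the [design checker][5]. The default numbers are usually good, but tweaking the maximums is straightforward: we can add a section called `DESIGN` in `.pylintrc`.

```
[DESIGN]
max-args=7
max-locals=15
```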
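To see what a limit such as `max-args=7` measures, the rough Python sketch below uses the standard `ast` module (Python 3.8+). It counts each function's named parameters and reports the ones over the limit.

This only illustrates the idea behind `too-many-arguments`; it is not Pylint's implementation. Pylint's exact counting rules may differ, for example in how it treats ignored argument names. `too_many_args` is a hypothetical helper.

```python
import ast
from typing import List, Tuple, Union

FunctionNode = Union[ast.FunctionDef, ast.AsyncFunctionDef]


def count_params(func: FunctionNode) -> int:
    """Count named parameters: positional-only, regular and keyword-only."""
    args = func.args
    return len(args.posonlyargs) + len(args.args) + len(args.kwonlyargs)


def too_many_args(source: str, max_args: int = 7) -> List[Tuple[str, int]]:
    """Return (name, parameter count) for each function above `max_args`."""
    return [
        (node.name, count_params(node))
        for node in ast.walk(ast.parse(source))
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
        and count_params(node) > max_args
    ]


if __name__ == "__main__":
    sample = "def plot(x, y, color, size, label, alpha, marker, style):\n    pass\n"
    print(too_many_args(sample))  # [('plot', 8)]
```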

Another useful source of messages is the "refactoring" checker. Some of my favorite messages to enable there are `consider-using-dict-comprehension`, `stop-iteration-return` (which looks for generators that use `raise StopIteration` when `return` is the right way to stop the iteration), and `chained-comparison`, which suggests syntax like `1 <= x < 5` instead of the less obvious `1 <= x and x < 5`.
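The Python snippets below illustrate the kind of code these three messages point at, next to the rewrite each one suggests. They are hand-written examples, not Pylint output, and the function names are made up.

The `first_n_bad` generator also shows why `stop-iteration-return` matters. Since Python 3.7 (PEP 479), a `StopIteration` raised inside a generator is turned into a `RuntimeError`.

```python
# Illustrative before/after pairs for three refactoring messages.


def squares_old(n):
    # consider-using-dict-comprehension: dict() built from a list of pairs.
    return dict([(i, i * i) for i in range(n)])


def squares(n):
    # Suggested form: a dict comprehension.
    return {i: i * i for i in range(n)}


def first_n_bad(items, n):
    # stop-iteration-return: raising StopIteration inside a generator.
    # Since Python 3.7 (PEP 479) this is converted to RuntimeError.
    for index, item in enumerate(items):
        if index >= n:
            raise StopIteration
        yield item


def first_n(items, n):
    # Suggested form: a plain return ends the generator cleanly.
    for index, item in enumerate(items):
        if index >= n:
            return
        yield item


def in_range_old(x):
    # chained-comparison: two comparisons on the same operand joined with `and`.
    return 1 <= x and x < 5


def in_range(x):
    # Suggested form: one chained comparison.
    return 1 <= x < 5
```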

Finally, there is a checker that is expensive in terms of performance but very useful: `similarities`. It enforces "Don't Repeat Yourself" (the DRY principle) by explicitly looking for copy-pasted code across different parts of the codebase. It enables only one message: `duplicate-code`. The default "minimum similarity lines" value is 4. You can set it to a different value in `.pylintrc`:

```
[SIMILARITIES]
min-similarity-lines=3
```
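Conceptually, `duplicate-code` compares windows of consecutive lines between files. Below is a simplified Python sketch of that idea, using a hypothetical `shared_chunks` helper. It normalizes whitespace, drops blank lines, and reports every run of `min_lines` lines that appears in both inputs.

Pylint's real checker is more elaborate and has extra options, such as ignoring comments, docstrings, or imports.

```python
from typing import List, Set, Tuple


def normalized(lines: List[str]) -> List[str]:
    """Strip surrounding whitespace and drop blank lines before comparing."""
    return [line.strip() for line in lines if line.strip()]


def windows(lines: List[str], size: int) -> List[Tuple[str, ...]]:
    """All runs of `size` consecutive lines (empty if there are too few lines)."""
    return [tuple(lines[i:i + size]) for i in range(len(lines) - size + 1)]


def shared_chunks(a: List[str], b: List[str], min_lines: int = 4) -> Set[Tuple[str, ...]]:
    """Return every run of `min_lines` normalized lines that occurs in both inputs."""
    seen_in_a = set(windows(normalized(a), min_lines))
    return {chunk for chunk in windows(normalized(b), min_lines) if chunk in seen_in_a}
```

Lowering the threshold from 4 to 3, as in the `[SIMILARITIES]` example, makes shorter copied runs count as duplicates.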

### Pylint makes code reviews easy

If you are tired of code reviews in which you have to point out that a class is too complicated, or that two different functions are basically the same, add Pylint to your [continuous integration][6] configuration. That way, you only have to argue about your project's complexity guidelines once.

--------------------------------------------------------------------------------

@ -85,7 +79,7 @@ via: https://opensource.com/article/19/10/python-pylint-introduction

Author: [Moshe Zadka][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly published by [Linux中国](https://linux.cn/) (Linux China).

@ -1,8 +1,8 @@

[#]: collector: (lujun9972)
[#]: translator: (lnrCoder)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11503-1.html)
[#]: subject: (How to Get the Size of a Directory in Linux)
[#]: via: (https://www.2daygeek.com/find-get-size-of-directory-folder-linux-disk-usage-du-command/)
[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

@ -10,25 +10,19 @@

How to Get the Size of a Directory in Linux
======

You may have noticed that when you list a directory's contents with the [ls command][1] in Linux, directories are shown with a size of only 4KB. Is that size correct? If not, what does it represent, and how do you get the real size of a directory or folder in Linux? The 4KB is a default size: it is the space used on disk to store the directory's metadata.

There are several applications on Linux that can [get the actual size of a directory][2]. Among them, the disk usage (`du`) command is widely used by Linux administrators.

I will show you how to get the size of a folder using various options.

### What is the du command?

The [du command][3] stands for Disk Usage. It is a standard Unix program used to estimate file space usage in the current working directory.

It summarizes disk usage recursively to obtain the size of a directory and its subdirectories.
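The recursive summing that `du` performs can be sketched in a few lines of Python. This is an approximation for illustration, assuming Linux, because it relies on `st_blocks`, which counts 512-byte units there. The `disk_usage` helper is hypothetical, and its totals will not always match `du` byte for byte.

How the sketch behaves:

* It walks the tree without following symlinks.
* It counts each hard-linked inode once, as `du` does.
* With `apparent=True`, it sums file sizes instead of allocated blocks, similar in spirit to `du --apparent-size`.

```python
import os
from typing import Set, Tuple


def disk_usage(path: str, apparent: bool = False) -> int:
    """Approximate `du -s` for `path`, in bytes.

    apparent=False sums allocated blocks (st_blocks * 512, Linux semantics);
    apparent=True sums st_size instead.
    """
    seen: Set[Tuple[int, int]] = set()
    total = 0
    for root, dirs, files in os.walk(path):  # does not descend into symlinked dirs
        entries = [root] + [os.path.join(root, name) for name in dirs + files]
        for entry in entries:
            st = os.lstat(entry)  # lstat: measure symlinks themselves, not targets
            key = (st.st_dev, st.st_ino)
            if key in seen:  # hard links and already-visited directories count once
                continue
            seen.add(key)
            total += st.st_size if apparent else st.st_blocks * 512
    return total
```

For example, `disk_usage("/home/daygeek/Documents")` returns a byte count comparable to what `du -s` reports for that directory.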

As I said, the `ls` command shows a directory size of only 4KB. See the output below.

```
$ ls -lh | grep ^d
```

@ -42,29 +36,27 @@ drwxr-xr-x 15 daygeek daygeek 4.0K Sep 29 21:32 Thanu_Photos

### 1) How to get only the size of the parent directory in Linux

Use the following `du` command format to get the total size of a given directory. In this example, we will get the total size of the `/home/daygeek/Documents` directory.

```
$ du -hs /home/daygeek/Documents
or
$ du -h --max-depth=0 /home/daygeek/Documents/

20G /home/daygeek/Documents
```

Details:

* `du`: the command itself
* `-h`: print sizes in a human-readable format (e.g., 1K 234M 2G)
* `-s`: display only a total for each argument
* `--max-depth=N`: print the total for a directory only if it is N or fewer levels below the command-line argument
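The `-h` option scales sizes by powers of 1024 and appends a unit suffix. Below is a small Python approximation of that formatting, using a hypothetical `human_size` helper. It rounds up and shows one decimal place for values below 10, which is how GNU coreutils usually formats `-h` output. Results may still differ from `du` in edge cases near unit boundaries.

```python
import math

UNITS = ("", "K", "M", "G", "T", "P", "E")


def human_size(num_bytes: int) -> str:
    """Format a byte count roughly like `du -h` (powers of 1024, rounded up)."""
    value = float(num_bytes)
    index = 0
    while value >= 1024 and index < len(UNITS) - 1:
        value /= 1024
        index += 1
    if index == 0:
        return str(num_bytes)  # plain bytes, no suffix
    if value < 10:
        # One decimal place, rounded up: 1537 bytes -> 1.6K
        return f"{math.ceil(value * 10) / 10:.1f}{UNITS[index]}"
    return f"{math.ceil(value)}{UNITS[index]}"
```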

### 2) How to get the size of each directory in Linux

Use the following `du` command format to get the total size of each directory, including subdirectories.

In this example, we will get the total size of `/home/daygeek/Documents` and of each of its subdirectories.
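In the pipeline for this step, `sort -rh` orders the human-readable sizes printed by `du -h` from largest to smallest, and `head -20` keeps the first 20 lines.

As a rough illustration of what `sort -h` has to do, the Python sketch below converts suffixes such as `K`, `M`, and `G` back to numbers before sorting. The helpers `parse_human` and `top_entries` are hypothetical. The sketch assumes the tab-separated `SIZE<TAB>PATH` lines that `du` prints.

```python
from typing import List

_FACTORS = {"": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4, "P": 1024 ** 5}


def parse_human(size: str) -> float:
    """Convert a du -h size such as '4.0K' or '20G' back to bytes."""
    suffix = size[-1].upper() if size[-1].isalpha() else ""
    number = size[:-1] if suffix else size
    return float(number) * _FACTORS[suffix]


def top_entries(du_lines: List[str], limit: int = 20) -> List[str]:
    """Mimic `sort -rh | head -N` on lines of the form 'SIZE<TAB>PATH'."""
    ordered = sorted(
        du_lines,
        key=lambda line: parse_human(line.split("\t", 1)[0]),
        reverse=True,
    )
    return ordered[:limit]
```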

```
$ du -h /home/daygeek/Documents/ | sort -rh | head -20
```

@ -93,7 +85,7 @@ $ du -h /home/daygeek/Documents/ | sort -rh | head -20

### 3) How to get a summary of each directory in Linux

Use the following `du` command format to get only a summary of each directory.

```
$ du -hs /home/daygeek/Documents/* | sort -rh | head -10
```

@ -112,7 +104,7 @@ $ du -hs /home/daygeek/Documents/* | sort -rh | head -10

### 4) How to get the size of each directory excluding its subdirectories in Linux

Use the following `du` command format to display the total size of each directory, excluding subdirectories.
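With `-S` (`--separate-dirs`), each directory's figure leaves out the sizes of its subdirectories. Below is a rough Python sketch of that bookkeeping, using a hypothetical `separate_dir_sizes` helper. It walks the tree and, for every directory, adds up only the directory entry itself and the files directly inside it.

To keep it short, the sketch uses apparent sizes (`st_size`). Its numbers will therefore not match `du -hS`, which reports allocated disk space.

```python
import os
from typing import Dict


def separate_dir_sizes(path: str) -> Dict[str, int]:
    """Map each directory under `path` to the apparent size of its own files only."""
    sizes: Dict[str, int] = {}
    for root, _dirs, files in os.walk(path):
        # Start with the directory entry itself, then add files directly inside it.
        total = os.lstat(root).st_size
        for name in files:
            total += os.lstat(os.path.join(root, name)).st_size
        sizes[root] = total
    return sizes
```

Sorting the result with `sorted(sizes.items(), key=lambda item: item[1], reverse=True)[:20]` gives a list comparable to the `sort -rh | head -20` step.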

```
$ du -hS /home/daygeek/Documents/ | sort -rh | head -20
```

@ -156,7 +148,7 @@ $ du -h --max-depth=1 /home/daygeek/Documents/

### 6) How to get a grand total in the du command output

If you want a grand total in the `du` command output, use the following `du` command format.

```
$ du -hsc /home/daygeek/Documents/* | sort -rh | head -10
```

@ -180,7 +172,7 @@ via: https://www.2daygeek.com/find-get-size-of-directory-folder-linux-disk-usage

Author: [Magesh Maruthamuthu][a]
Topic selection: [lujun9972][b]
Translator: [lnrCoder](https://github.com/lnrCoder)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly published by [Linux中国](https://linux.cn/) (Linux China).

@ -0,0 +1,68 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Cisco issues critical security warning for IOS XE REST API container)
[#]: via: (https://www.networkworld.com/article/3447558/cisco-issues-critical-security-warning-for-ios-xe-rest-api-container.html)
[#]: author: (Michael Cooney https://www.networkworld.com/author/Michael-Cooney/)

Cisco issues critical security warning for IOS XE REST API container
======

This Cisco IOS XE REST API vulnerability could lead to attackers obtaining the token-id of an authenticated user.

This week Cisco said it had issued a software update to address a vulnerability in its [Cisco REST API virtual service container for Cisco IOS XE][1] software that received a critical score of 10 out of 10 on the Common Vulnerability Scoring System (CVSS).

By exploiting the vulnerability, an attacker could submit malicious HTTP requests to the targeted device and, if successful, obtain the _token-id_ of an authenticated user. This _token-id_ could be used to bypass authentication and execute privileged actions through the interface of the REST API virtual service container on the affected Cisco IOS XE device, the company said.

According to Cisco, the REST API is an application that runs in a virtual services container. A virtual services container is a virtualized environment on a device and is delivered as an open virtual application (OVA). The OVA package has to be installed and enabled on a device through the device virtualization manager (VMAN) CLI.

The Cisco REST API provides a set of RESTful APIs as an alternative to the Cisco IOS XE CLI for provisioning selected functions on Cisco devices.

Cisco said the vulnerability can be exploited under the following conditions:

* The device runs an affected Cisco IOS XE Software release.
* The device has installed and enabled an affected version of the Cisco REST API virtual service container.
* An authorized user with administrator credentials (level 15) is authenticated to the REST API interface.

The REST API interface is not enabled by default. To be vulnerable, the virtual services container must be installed and activated. Deleting the OVA package from the device storage memory removes the attack vector. If the Cisco REST API virtual service container is not enabled, this operation will not impact the device's normal operating conditions, Cisco stated.

This vulnerability affects Cisco devices that are configured to use a vulnerable version of the Cisco REST API virtual service container. The following products are affected:

* Cisco 4000 Series Integrated Services Routers
* Cisco ASR 1000 Series Aggregation Services Routers
* Cisco Cloud Services Router 1000V Series
* Cisco Integrated Services Virtual Router

Cisco said it has [released a fixed version of the REST API][4] virtual service container and a hardened IOS XE release that prevents installation or activation of a vulnerable container on a device. If the device was already configured with an active vulnerable container, the IOS XE software upgrade will deactivate the container, so the device is no longer vulnerable. In that case, to restore the REST API functionality, customers should upgrade the Cisco REST API virtual service container to a fixed software release, the company said.

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3447558/cisco-issues-critical-security-warning-for-ios-xe-rest-api-container.html

Author: [Michael Cooney][a]
Topic selection: [lujun9972][b]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly published by [Linux中国](https://linux.cn/) (Linux China).

[a]: https://www.networkworld.com/author/Michael-Cooney/
[b]: https://github.com/lujun9972
[1]: https://tools.cisco.com/security/center/content/CiscoSecurityAdvisory/cisco-sa-20190828-iosxe-rest-auth-bypass
[2]: https://www.networkworld.com/newsletters/signup.html
[3]: https://pluralsight.pxf.io/c/321564/424552/7490?u=https%3A%2F%2Fwww.pluralsight.com%2Fpaths%2Fcertified-information-systems-security-professional-cisspr
[4]: https://www.cisco.com/c/en/us/about/legal/cloud-and-software/end_user_license_agreement.html
[5]: https://www.facebook.com/NetworkWorld/
[6]: https://www.linkedin.com/company/network-world

@ -0,0 +1,68 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (IT-as-a-Service Simplifies Hybrid IT)
[#]: via: (https://www.networkworld.com/article/3447342/it-as-a-service-simplifies-hybrid-it.html)
[#]: author: (Anne Taylor https://www.networkworld.com/author/Anne-Taylor/)

IT-as-a-Service Simplifies Hybrid IT
======

Consumption-based model reduces complexity, improves IT infrastructure.

The data center must change rapidly. Companies are increasingly moving toward hybrid IT models, with some workloads in the cloud and others staying on premises. The burden of ever-growing apps and data is placing pressure on infrastructure in both worlds, but especially on the data center.

Organizations are struggling to achieve the required speed and flexibility, with the same economics as the public cloud, from their on-premises data centers. That's likely because they're dealing with legacy systems acquired over the years, possibly inherited through mergers and acquisitions.

These complex environments create headaches when IT tries to accommodate fluctuations in capacity. When extra storage is needed, for example, 67% of IT departments buy too much, according to [Futurum Research][1]. They lack visibility into their resources, as well as the ability to scale up and down effectively.

Meanwhile, lines of business need solutions fast, and if IT can't deliver, they'll go out and buy their own cloud-based services or solutions. IT must think strategically about how all this technology fits together: efficiently, securely, and cost-effectively.

Enter IT-as-a-Service (ITaaS).

**1) How does ITaaS work?**

Unlike other as-a-service models, ITaaS is not cloud based, although the concept can be applied to cloud environments. Rather, the focus is on shifting IT operations toward managed services on an as-needed, pay-as-you-go basis.¹

For example, HPE GreenLake delivers infrastructure capacity based on actual metered usage, so companies pay only for what they use. There are no upfront costs, lengthy purchasing and implementation timeframes, or overprovisioning headaches. Infrastructure capacity can be scaled up or down as needed.

**2) What are the benefits of ITaaS?**

Some of the most significant advantages include scalable infrastructure and resources, improved workload management, greater availability, and a reduced burden on IT, including network admins.

* _Infrastructure_. Resource needs are often in flux depending on business demands and market changes. Using ITaaS not only improves infrastructure usage but also helps network admins better plan for and manage bandwidth, switches, routers, and other network gear.
* _Workloads_. ITaaS can immediately tackle cloud bursting to better manage application flow. Companies might also, for example, choose the consumption-based model for workloads with unpredictable growth, such as big data, storage, and private cloud.
* _Availability_. Zero network downtime is critical. With a consumption-based IT model, companies can opt for services such as continuous network monitoring or on-call expertise from a 24/7 network help desk.
* _Reduced burden on IT_. All of the above benefits affect day-to-day operations. By simplifying network management, ITaaS frees personnel to apply their expertise where it is most valuable.

Furthermore, a consumption-based IT model helps organizations gain end-to-end visibility into storage resources, so that admins can ensure the highest levels of service, performance, and availability.

|

||||

|

||||

**HPE GreenLake: The Answer**

|

||||

|

||||

As hybrid IT takes hold, IT organizations must get a grip on their infrastructure resources to ensure agility and scalability for the business, while maintaining IT cost-effectiveness.

|

||||

|

||||

HPE GreenLake enables a simplified IT environment where companies pay only for the resources they actually use, while providing the business with the speed and agility it requires.

|

||||

|

||||

[Learn more at hpe.com/greenlake.][2]

|

||||

|

||||

Minimum commitment may apply

|

||||

|

||||

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3447342/it-as-a-service-simplifies-hybrid-it.html

Author: [Anne Taylor][a]
Selected by: [lujun9972][b]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]: https://www.networkworld.com/author/Anne-Taylor/
[b]: https://github.com/lujun9972
[1]: https://h20195.www2.hpe.com/v2/Getdocument.aspx?docname=a00079768enw
[2]: https://www.hpe.com/us/en/services/flexible-capacity.html

sources/talk/20191023 Psst- Wanna buy a data center.md

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Psst! Wanna buy a data center?)
[#]: via: (https://www.networkworld.com/article/3447657/psst-wanna-buy-a-data-center.html)
[#]: author: (Andy Patrizio https://www.networkworld.com/author/Andy-Patrizio/)

Psst! Wanna buy a data center?
======

Data centers are being bought and sold at an increasing rate, although since they are often private transactions, solid numbers can be hard to come by.

When investment bank Bear Stearns collapsed in 2008, there was nothing of value left to auction off except its [data centers][1]. JPMorgan bought the company's carcass for just $270 million, but the only things of value were Bear's NYC headquarters and two data centers.

Since then there have been numerous sales of data centers under better conditions. There are even websites ([Datacenters.com][2], [Five 9s Digital][3]) that list data centers for sale. You can buy an empty building, but in most cases you get the equipment, too.

There are several reasons for these sales, the most common being that companies want to get out of owning a data center. It's an expensive capex and opex investment, and if the cloud is a good alternative, that's where they go.

But there are other reasons, too, said Jon Lin, president of Equinix Americas. He said enterprises overbuilt because their initial long-term forecasts did not pan out, partly because of increased use of cloud. He also said a growing number of private equity and real estate investors are interested in diversifying into data centers.

But that doesn't mean Equinix takes every data center it is offered. Lin cited three reasons why Equinix would pass on an offer:

1) It is difficult to repurpose an enterprise data center designed around a single customer's very specific needs into a general-purpose, multi-tenant data center without significant investment to tailor it to the company's satisfaction.

2) Most of these sites were not built to Equinix standards, which diminishes their value.

3) Enterprise data centers are usually located near the company's HQ for convenience, not near the interconnection points or infrastructure locations Equinix would prefer for fiber and power.

Just how much buying and selling is going on is hard to tell. Most of these firms are privately held, so no disclosure is required. Kelly Morgan, research vice president at 451 Research, who tracks the data center market, put the dollar figure for data center sales in 2019 so far at $5.4 billion. That's way down from $19.5 billion just two years ago.

She says that back then there were very big deals. For example, Verizon sold its data centers to Equinix in 2017 for $3.6 billion, and AT&T sold its data centers for $1.1 billion to Brookfield Infrastructure Partners, which buys and manages infrastructure assets.

These days, she says, the main buyers are big real estate-oriented pension funds whose reasons for buying differ from those of traditional real estate investors. Pension funds like the steady income, even in a recession. Private equity firms were buying data centers to acquire the assets, group them, then sell them for a double-digit return, she said.

Enterprises do look to sell their data centers, but it's a more challenging process. She echoes what Lin said about the problem with specialty data centers. "They tend to be expensive and often in not great locations for multi-tenant situations. They are often at company headquarters or the town where the company is headquartered. So they are hard to sell," she said.

Enterprises want to sell their data centers to get out of data center ownership for obvious reasons. The facilities are often old: the average corporate data center is 10 to 25 years old. "When we ask enterprises why they are selling or closing their data centers, they say they are consolidating multiple data centers into one, plus moving half their stuff to the cloud," said Morgan.

There is still a good chunk of companies that build or acquire data centers, either because they are consolidating or because they are getting rid of older facilities. Some add space because they are moving into a new geography. However, Morgan said they almost never buy. "They lease one from someone else. Enterprise data centers for sale are not bought by other enterprises, they are bought by service providers who will lease it. Enterprises build a new one," she said.

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3447657/psst-wanna-buy-a-data-center.html

Author: [Andy Patrizio][a]
Selected by: [lujun9972][b]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]: https://www.networkworld.com/author/Andy-Patrizio/
[b]: https://github.com/lujun9972
[1]: https://www.networkworld.com/article/3223692/what-is-a-data-centerhow-its-changed-and-what-you-need-to-know.html
[2]: https://www.datacenters.com/real-estate/data-centers-for-sale
[3]: https://five9sdigital.com/data-centers/
[4]: https://www.networkworld.com/article/3440100/take-the-intelligent-route-with-consumption-based-storage.html?utm_source=IDG&utm_medium=promotions&utm_campaign=HPE20773&utm_content=sidebar (Take the Intelligent Route with Consumption-Based Storage)
[5]: https://www.networkworld.com/article/3209131/lan-wan/what-sdn-is-and-where-its-going.html
[6]: https://www.networkworld.com/article/3206709/lan-wan/what-s-the-difference-between-sdn-and-nfv.html
[7]: https://www.networkworld.com/newsletters/signup.html
[8]: https://www.facebook.com/NetworkWorld/
[9]: https://www.linkedin.com/company/network-world

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (The Protocols That Help Things to Communicate Over the Internet)
[#]: via: (https://opensourceforu.com/2019/10/the-protocols-that-help-things-to-communicate-over-the-internet-2/)
[#]: author: (Sapna Panchal https://opensourceforu.com/author/sapna-panchal/)

The Protocols That Help Things to Communicate Over the Internet
======

[![][1]][2]

_The Internet of Things is a system of connected, interrelated objects. These objects transmit data to servers for processing and, in turn, receive messages from the servers. These messages are sent and received using different protocols. This article discusses some of the protocols related to the IoT._

The Internet of Things (IoT) is beginning to pervade more and more aspects of our lives, and connected devices are increasingly common everywhere. Connected things use the Internet to collect information, to send information back, or to do both. IoT is an architecture that combines existing technologies, and it helps make our daily lives more pleasant and convenient.

![Figure 1: IoT architecture][3]

![Figure 2: Message Queuing Telemetry Transport protocol][4]

**IoT architecture**

IoT architecture has four basic components: sensors, an IoT gateway, a cloud server and a mobile app. In this article, we will look at each component to understand the architecture better.

**Sensors:** These are present everywhere. They collect data from their location and share it with the IoT gateway. For example, sensors can measure the temperature at different locations, which helps to gauge the weather conditions, and pass this information to the IoT gateway. This is a basic example of how the IoT operates.

**IoT gateway:** Once the information is collected from the sensors, it is passed on to the gateway. The gateway is a mediator between sensor nodes and the Internet: it processes the data collected from sensor nodes and then transmits it to the Internet infrastructure.

**Cloud server:** Once data is transmitted through the gateway, it is stored and processed in the cloud server.

**Mobile app:** Using a mobile application, the user can view and access the data processed in the cloud server.
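The four components can be sketched as a simple in-process pipeline. This is a hypothetical Python model with illustrative class names, in which plain function calls stand in for the network links. The gateway's "processing" is assumed to be nothing more than discarding implausible temperature readings before batching them for the cloud.

```python
import statistics
from dataclasses import dataclass, field


@dataclass
class Reading:
    """One measurement produced by a sensor node."""
    sensor_id: str
    location: str
    celsius: float


class Gateway:
    """Mediator between sensor nodes and the Internet: filters and batches readings."""

    def __init__(self, valid_range: tuple[float, float] = (-50.0, 60.0)) -> None:
        self.valid_range = valid_range  # assumed plausibility limits for a temperature sensor
        self.buffer: list[Reading] = []

    def receive(self, reading: Reading) -> None:
        low, high = self.valid_range
        if low <= reading.celsius <= high:  # drop obviously faulty readings at the edge
            self.buffer.append(reading)

    def flush(self) -> list[Reading]:
        """Hand the current batch upstream and start a new one."""
        batch, self.buffer = self.buffer, []
        return batch


@dataclass
class CloudServer:
    """Stores readings per location and processes them into summaries."""
    store: dict[str, list[float]] = field(default_factory=dict)

    def ingest(self, batch: list[Reading]) -> None:
        for r in batch:
            self.store.setdefault(r.location, []).append(r.celsius)

    def summary(self) -> dict[str, float]:
        return {loc: round(statistics.mean(vals), 2) for loc, vals in self.store.items()}


def mobile_app_view(cloud: CloudServer) -> list[str]:
    """What a user might see in the app: one line per location."""
    return [f"{loc}: {avg} °C" for loc, avg in sorted(cloud.summary().items())]


if __name__ == "__main__":
    gateway = Gateway()
    for reading in [Reading("s1", "roof", 21.5), Reading("s2", "roof", 22.5),
                    Reading("s3", "basement", 999.0)]:  # s3 is a faulty sensor
        gateway.receive(reading)
    cloud = CloudServer()
    cloud.ingest(gateway.flush())
    print(mobile_app_view(cloud))  # ['roof: 22.0 °C']
```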

This covers the basic idea of the IoT, its architecture and its components. We now move on to the basic ideas behind different IoT protocols.

![Figure 3: Advanced Message Queuing Protocol][5]

![Figure 4: CoAP][6]

**IoT protocols**

As mentioned earlier, connected things use the Internet to collect information, send information back, or both. This is the fundamental basis of the IoT. To send information, we need a protocol: a set of rules used to transmit data between electronic devices.

There are essentially two types of IoT protocols: IoT network protocols and IoT data protocols. This article discusses the IoT data protocols.

![Figure 5: Constrained Application Protocol architecture][7]

**MQTT**

The Message Queuing Telemetry Transport (MQTT) protocol was originally designed for low-bandwidth networks, but it is very popular today as an IoT protocol. It is a lightweight messaging protocol used to exchange data between clients and a server.

This protocol has many advantages:

* It is small in size and has low power usage.
* It is a lightweight protocol.
* It requires little network bandwidth.
* It supports near-real-time messaging.

Considering all the above reasons, MQTT is an excellent choice as an IoT data protocol.

**How MQTT works:** MQTT is based on a client-server relationship. The server, called the broker, manages the requests that come from different clients and sends the required information to them. MQTT is based on two operations.

i) _Publish:_ When a client sends data on a topic to the MQTT broker, this operation is known as ‘Publish’.

ii) _Subscribe:_ When a client registers its interest in a topic so that the broker delivers matching data to it, this operation is known as ‘Subscribe’.

The MQTT broker is the mediator that handles these operations, primarily taking messages and delivering them to the application or client.

Let’s look at the example of a device temperature sensor, which sends readings to the MQTT broker, and then information is delivered to desktop or mobile applications. As stated earlier, ‘Publish’ means sending readings to the MQTT broker and ‘Subscribe’ means delivering the information to the desktop/mobile application.
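The publish/subscribe flow can be sketched without a network. The Python model below is hypothetical and only mimics the routing role of a broker: topic strings, matched with MQTT's `+` and `#` wildcards, decide which subscribers receive a published payload. It omits everything else a real broker does, including QoS levels, retained messages, sessions, `$`-prefixed system topics and the wire format. In practice you would run a broker such as Eclipse Mosquitto and use a client library such as Eclipse Paho.

```python
from typing import Callable

# A subscriber callback receives the topic name and the raw payload.
Handler = Callable[[str, bytes], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT-style topic filter matches a topic name.

    '+' matches exactly one level; '#' (valid only as the last level)
    matches the parent level and any number of levels below it.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            return True
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


class Broker:
    """Toy in-memory broker: routes each published message to matching subscribers."""

    def __init__(self) -> None:
        self.subscriptions: dict[str, list[Handler]] = {}

    def subscribe(self, topic_filter: str, handler: Handler) -> None:
        self.subscriptions.setdefault(topic_filter, []).append(handler)

    def publish(self, topic: str, payload: bytes) -> int:
        """Deliver the payload to every matching subscriber; return how many got it."""
        delivered = 0
        for topic_filter, handlers in self.subscriptions.items():
            if topic_matches(topic_filter, topic):
                for handler in handlers:
                    handler(topic, payload)
                    delivered += 1
        return delivered


if __name__ == "__main__":
    broker = Broker()
    received: list[tuple[str, bytes]] = []
    # A mobile app subscribes to temperature readings from any room.
    broker.subscribe("home/+/temperature", lambda t, p: received.append((t, p)))
    # The sensor publishes; only the matching topic reaches the app.
    broker.publish("home/kitchen/temperature", b"21.5")
    broker.publish("home/kitchen/humidity", b"40")
    print(received)  # [('home/kitchen/temperature', b'21.5')]
```

The sensor and the app never address each other directly. They only agree on topic names, which is what lets the broker decouple publishers from subscribers.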

**AMQP**

The Advanced Message Queuing Protocol (AMQP) is a peer-to-peer protocol in which one peer plays the role of the client application and the other plays the role of the delivery service, or broker. It defines a fixed set of components that route and store messages within the broker.

The benefits of AMQP are:

* It helps ensure that messages are delivered without being lost.
* It helps to guarantee ‘one-time-only’ and secure delivery.
* It provides a secure connection.
* It supports acknowledgements for message delivery or failure.

**How AMQP works and its architecture:** The AMQP architecture is made up of the following parts.

_**Exchange**_ – Accepts messages that come from the publisher and routes them to message queues.

_**Message queue**_ – Stores messages until they are processed by a consuming application; a broker can host many queues.

_**Binding**_ – A rule that connects an exchange to a message queue and determines which messages the exchange routes to that queue.

The combination of Exchange and the message queues is known as the broker or AMQP broker.
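The exchange, queue and binding roles can be modeled in a few lines. This Python sketch is a hypothetical illustration, not a client for a real broker. It follows the direct and fanout exchange types of AMQP 0-9-1, the model popularized by RabbitMQ; AMQP 1.0 standardizes the wire protocol without mandating exchanges. A direct exchange delivers a message to every queue whose binding key equals the message's routing key. A fanout exchange copies it to every bound queue.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MessageQueue:
    """Holds messages until a consumer takes them."""
    name: str
    messages: deque = field(default_factory=deque)

    def consume(self) -> Optional[str]:
        return self.messages.popleft() if self.messages else None


@dataclass
class Binding:
    """Links an exchange to a queue; the key is ignored by fanout exchanges."""
    queue: MessageQueue
    binding_key: str = ""


class Exchange:
    """Accepts messages from publishers and routes them to bound queues."""

    def __init__(self, name: str, kind: str = "direct") -> None:
        if kind not in ("direct", "fanout"):
            raise ValueError(f"unsupported exchange type: {kind}")
        self.name = name
        self.kind = kind
        self.bindings: list[Binding] = []

    def bind(self, queue: MessageQueue, binding_key: str = "") -> None:
        self.bindings.append(Binding(queue, binding_key))

    def publish(self, routing_key: str, body: str) -> list[str]:
        """Route one message and return the names of the queues that stored it."""
        routed: list[str] = []
        seen: set[int] = set()
        for b in self.bindings:
            matches = self.kind == "fanout" or b.binding_key == routing_key
            # A queue gets at most one copy, even if it is bound more than once.
            if matches and id(b.queue) not in seen:
                seen.add(id(b.queue))
                b.queue.messages.append(body)
                routed.append(b.queue.name)
        return routed  # an empty list means the message was unroutable and dropped


if __name__ == "__main__":
    alerts, log = MessageQueue("alerts"), MessageQueue("log")
    events = Exchange("sensor-events", kind="direct")
    events.bind(alerts, "overheat")
    events.bind(log, "overheat")
    events.bind(log, "reading")
    print(events.publish("overheat", "boiler at 95 C"))  # ['alerts', 'log']
    print(events.publish("reading", "boiler at 60 C"))   # ['log']
    print(events.publish("unknown", "nobody listens"))   # []
    print(alerts.consume())                              # boiler at 95 C
```

In this sketch an unroutable message is simply dropped. Real AMQP 0-9-1 brokers offer options, such as the `mandatory` flag, to return such messages to the publisher instead.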

![Figure 6: Extensible Messaging and Presence Protocol][8]

**Constrained Application Protocol (CoAP)**

CoAP was initially used as a machine-to-machine (M2M) protocol and later began to be used as an IoT protocol. It is a Web transfer protocol for use with constrained nodes and constrained networks. Like HTTP, CoAP follows the RESTful architecture.

The advantages CoAP offers are:

* It brings the REST model to small devices.
* As it is similar to HTTP, it is easy for developers to work with.
* It is a one-to-one protocol for transferring information directly between the client and the server.
* It is very simple to parse.

**How CoAP works and its architecture:** Figure 4 shows that CoAP combines ‘Request/Response’ and ‘Message’. In other words, it has two layers: a ‘Request/Response’ layer and a ‘Message’ layer.

Figure 5 shows that the CoAP architecture is based on a client-server relationship, where:

* The client sends requests to the server.
* The server receives requests from the client and responds to them.

**Extensible Messaging and Presence Protocol (XMPP)**

This protocol is used to exchange messages in real time. It is used not only to communicate with others but also to get information about a user's status (away, offline, active). The protocol is widely used in practice, for example in WhatsApp.

The Extensible Messaging and Presence Protocol is worth using because:

* It is free, open and easy to understand, which has made it very popular.
* It supports secure authentication, and it is extensible and flexible.

**How XMPP works and its architecture:** In the XMPP architecture, each client has a unique address associated with it and communicates with other clients via an XMPP server. Clients may belong to the same domain or to different domains.

In Figure 6, both XMPP clients belong to the same domain. One client sends information to the XMPP server, which processes it and conveys the information to the other client.

Basically, this protocol is the backbone that provides universal connectivity between different endpoint protocols.
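The routing described above, for both the same-domain case in Figure 6 and the cross-domain case, can be sketched as follows. This hypothetical Python model is not an XMPP implementation: it has no XML stream, TLS or authentication, and the `XmppServer` class and its `route` method are invented for illustration. It does use XMPP's address format (`local@domain/resource`) and a `<message/>` stanza with `from`, `to` and `type` attributes and a `<body/>` child, built with the standard library's ElementTree.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass(frozen=True)
class JID:
    """An XMPP address of the form local@domain/resource (local and resource optional)."""
    local: str
    domain: str
    resource: str = ""

    @classmethod
    def parse(cls, text: str) -> "JID":
        bare, _, resource = text.partition("/")
        local, sep, domain = bare.partition("@")
        if not sep:  # no '@': the whole bare part is a domain, e.g. a server address
            local, domain = "", bare
        return cls(local, domain, resource)

    @property
    def bare(self) -> str:
        return f"{self.local}@{self.domain}" if self.local else self.domain

    def __str__(self) -> str:
        return f"{self.bare}/{self.resource}" if self.resource else self.bare


def make_message(sender: JID, recipient: JID, text: str) -> str:
    """Serialize a minimal chat <message/> stanza (stream namespaces omitted)."""
    stanza = ET.Element("message", {"from": str(sender), "to": str(recipient), "type": "chat"})
    ET.SubElement(stanza, "body").text = text
    return ET.tostring(stanza, encoding="unicode")


class XmppServer:
    """Toy server for one domain: delivers locally or hands off to another domain."""

    def __init__(self, domain: str) -> None:
        self.domain = domain
        self.sessions: dict[str, list[str]] = {}   # bare JID -> stanzas delivered
        self.outbound: list[tuple[str, str]] = []  # (remote domain, stanza)

    def connect(self, jid: JID) -> None:
        self.sessions.setdefault(jid.bare, [])

    def route(self, sender: JID, recipient: JID, text: str) -> str:
        stanza = make_message(sender, recipient, text)
        if recipient.domain != self.domain:
            # Different domain: a real server would relay this over a server-to-server link.
            self.outbound.append((recipient.domain, stanza))
            return "forwarded"
        if recipient.bare in self.sessions:
            self.sessions[recipient.bare].append(stanza)
            return "delivered"
        return "offline"  # a real server might store the message for later delivery


if __name__ == "__main__":
    server = XmppServer("example.com")
    alice, bob = JID.parse("alice@example.com/phone"), JID.parse("bob@example.com")
    server.connect(bob)
    print(server.route(alice, bob, "Temperature is 21.5 C"))         # delivered
    print(server.sessions["bob@example.com"][0])
    print(server.route(alice, JID.parse("carol@other.org"), "hi"))  # forwarded
```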

--------------------------------------------------------------------------------

via: https://opensourceforu.com/2019/10/the-protocols-that-help-things-to-communicate-over-the-internet-2/

Author: [Sapna Panchal][a]
Selected by: [lujun9972][b]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]: https://opensourceforu.com/author/sapna-panchal/
[b]: https://github.com/lujun9972
[1]: https://i2.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Internet-of-things-illustration.jpg?resize=696%2C439&ssl=1 (Internet of things illustration)
[2]: https://i2.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Internet-of-things-illustration.jpg?fit=1125%2C710&ssl=1
[3]: https://i1.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Figure-1-IoT-architecture.jpg?resize=350%2C133&ssl=1
[4]: https://i1.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Figure-2-Messaging-Queuing-Telemetry-Transmit-protocol.jpg?resize=350%2C206&ssl=1
[5]: https://i1.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Figure-3-Advance-Message-Queuing-Protocol.jpg?resize=350%2C160&ssl=1
[6]: https://i0.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Figure-4-CoAP.jpg?resize=350%2C84&ssl=1
[7]: https://i1.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Figure-5-Constrained-Application-Protocol-architecture.jpg?resize=350%2C224&ssl=1
[8]: https://i0.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Figure-6-Extensible-Messaging-and-Presence-Protocol.jpg?resize=350%2C46&ssl=1

[#]: collector: (lujun9972)
[#]: translator: (wenwensnow)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )

[#]: collector: (lujun9972)
[#]: translator: ( Morisun029)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (The Five Most Popular Operating Systems for the Internet of Things)
[#]: via: (https://opensourceforu.com/2019/10/the-five-most-popular-operating-systems-for-the-internet-of-things/)
[#]: author: (K S Kuppusamy https://opensourceforu.com/author/ks-kuppusamy/)

The Five Most Popular Operating Systems for the Internet of Things
======

[![][1]][2]

_Connecting every ‘thing’ that we see around us to the Internet is the fundamental idea of the Internet of Things (IoT). Many operating systems are available to help get the best out of the things that are connected to the Internet. This article explores five popular operating systems for IoT: Ubuntu Core, RIOT, Contiki, TinyOS and Zephyr._

It is no exaggeration to say that life runs on the Internet these days, given the number and variety of services that we consume on the Net. These services span multiple domains such as information, financial services, social networking and entertainment. As this list grows longer, it becomes imperative that we do not restrict the types of devices that can connect to the Internet. The Internet of Things (IoT) facilitates connecting various types of ‘things’ to the Internet infrastructure. Once connected, these things can interact not only with the user but also with each other. This ability of a variety of things to interact among themselves to assist users in a pervasive manner gives rise to an interesting phenomenon called ambient intelligence.

![Figure 1: IoT application domains][3]

IoT is becoming increasingly popular as the types of devices that can be connected to it become more diverse. The nature of applications is also evolving. Some of the popular domains in which IoT is increasingly used are listed below (Figure 1):

* Smart homes
* Smart cities
* Smart agriculture
* Connected automobiles
* Smart shopping
* Connected health

![Figure 2: IoT operating system features][4]

As the application domains become more diverse, managing the IoT infrastructure efficiently is becoming more important. The operating systems in normal computers perform primary functions such as resource management and user interaction. The requirements of IoT operating systems are specialised because of the nature and size of the devices involved. Some of the important characteristics/requirements of IoT operating systems are listed below (Figure 2):

* A tiny memory footprint
* Energy efficiency
* Connectivity features
* Hardware-agnostic operations
* Real-time processing requirements
* Security requirements
* Application development ecosystem

As of 2019, there is a spectrum of choices for selecting the operating system (OS) for the Internet of Things. Some of these OSs are shown in Figure 3.

![Figure 3: IoT operating systems][5]

**Ubuntu Core**

As Ubuntu is a popular Linux distribution, the Ubuntu Core IoT offering has also become popular. Ubuntu Core is a secure and lightweight OS for IoT, designed with a ‘security first’ philosophy. According to the official documentation, the entire system has been redesigned to focus on security from the first boot. A detailed white paper on Ubuntu Core’s security features is available at _<https://assets.ubuntu.com/v1/66fcd858-ubuntu-core-security-whitepaper.pdf?_ga=2.74563154.1977628533.1565098475-2022264852.1565098475>_.

Ubuntu Core has been made tamper-resistant. As applications may come from diverse sources, each is given privileges for its own data only, so that one poorly designed app does not make the entire system vulnerable. Ubuntu Core is ‘built for business’, which means that developers can focus directly on the application at hand, while the other requirements are supported by the default operating system.

Another important feature of Ubuntu Core is the availability of a secure app store, which you can learn more about at _<https://ubuntu.com/internet-of-things/appstore>_. A ready-to-go software ecosystem makes using Ubuntu Core simple.

The official documentation lists various case studies of successful Ubuntu Core deployments.

**RIOT**

RIOT is a user-friendly OS for the Internet of Things. This FOSS OS has been developed by a number of people from around the world.

RIOT supports many low-power IoT devices and various microcontroller architectures. The official documentation lists the following reasons for using the RIOT OS.

* _**It is developer friendly:**_ It supports standard environments and tools, so developers do not face a steep learning curve. Standard programming languages such as C and C++ are supported. Hardware-dependent code is kept to a minimum, so developers can write code once and run it on 8-bit, 16-bit and 32-bit platforms.
* _**RIOT is resource friendly:**_ One of the important features of RIOT is its ability to support lightweight devices. It enables maximum energy efficiency, and it supports multi-threading with very little threading overhead.
* _**RIOT is IoT friendly:**_ The common system support provided by RIOT makes it a very important choice for IoT. It supports CoAP, CBOR, high-resolution timers and long-term timers.

**Contiki**

Contiki is an important OS for IoT. It facilitates connecting tiny, low-cost and low-energy devices to the Internet.

The prominent reasons for choosing the Contiki OS are as follows.

* _**Internet standards:**_ The Contiki OS supports the IPv6 and IPv4 standards, in addition to the low-power 6LoWPAN, RPL and CoAP standards.
* _**Support for a variety of hardware:**_ Contiki can run on a variety of low-power devices, which are easily available online.
* _**Large community support:**_ One of the important advantages of using Contiki is its active community of developers. When you have technical issues to solve, community members make the problem-solving process simple and effective.

The major features of Contiki are listed below.

* _**Memory allocation:**_ Even tiny systems with only a few kilobytes of memory can use Contiki. Its memory efficiency is an important feature.
* _**Full IP networking:**_ The Contiki OS offers a full IP network stack, including major standard protocols such as UDP, TCP, HTTP, 6LoWPAN, RPL and CoAP.
* _**Power awareness:**_ An important feature of Contiki is its ability to assess power requirements and keep power use to a minimum.
* The Cooja network simulator makes developing and debugging software easier.
* The Coffee flash file system and the Contiki shell make file handling and command execution simpler and more effective.

**TinyOS**

TinyOS is an open source operating system designed for low-power wireless devices. It has a vibrant community of users from both academia and industry, spread across the world. Its popularity is reflected in the fact that it is downloaded more than 35,000 times a year.

TinyOS is used effectively in various scenarios such as sensor networks, smart buildings and smart meters. The main repository of TinyOS is available at <https://github.com/tinyos/tinyos-main>.

TinyOS is written in nesC, which is a dialect of C. A sample code snippet is shown below:

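```
// LedControl and Gpio are assumed to be interfaces declared in their own files, e.g.
//   interface LedControl { command void turnOn(); command void turnOff(); }
//   interface Gpio { command void set(); command void clear(); }
// Command bodies must live in a module; a configuration can only wire components together.
module Led {
  provides {
    interface LedControl;  // offered to components that want to switch the LED
  }
  uses {
    interface Gpio;        // pin driver this module depends on
  }
}
implementation {

  // Set the GPIO pin that drives the LED (assumes an active-high LED).
  command void LedControl.turnOn() {
    call Gpio.set();
  }

  // Clear the GPIO pin to switch the LED off.
  command void LedControl.turnOff() {
    call Gpio.clear();
  }

}
```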

**Zephyr**

Zephyr is a real-time OS that supports multiple architectures and is optimised for resource-constrained environments. Security is also given importance in the Zephyr design.

The prominent features of Zephyr are listed below:

* Support for 150+ boards.
* Complete flexibility and freedom of choice.
* Can handle small-footprint IoT devices.
* Can be used to develop products with built-in security features.

This article has introduced readers to five OSs for the IoT, from which they can select the ideal one based on their individual requirements.

--------------------------------------------------------------------------------

via: https://opensourceforu.com/2019/10/the-five-most-popular-operating-systems-for-the-internet-of-things/

Author: [K S Kuppusamy][a]
Selected by: [lujun9972][b]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]: https://opensourceforu.com/author/ks-kuppusamy/
[b]: https://github.com/lujun9972
[1]: https://i2.wp.com/opensourceforu.com/wp-content/uploads/2019/10/OS-for-IoT.jpg?resize=696%2C647&ssl=1 (OS for IoT)
[2]: https://i2.wp.com/opensourceforu.com/wp-content/uploads/2019/10/OS-for-IoT.jpg?fit=800%2C744&ssl=1
[3]: https://i2.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Figure-1-IoT-application-domains.jpg?resize=350%2C107&ssl=1
[4]: https://i1.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Figure-2-IoT-operating-system-features.jpg?resize=350%2C93&ssl=1
[5]: https://i1.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Figure-3-IoT-operating-systems.jpg?resize=350%2C155&ssl=1

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (4 cool new projects to try in COPR for October 2019)
[#]: via: (https://fedoramagazine.org/4-cool-new-projects-to-try-in-copr-for-october-2019/)
[#]: author: (Dominik Turecek https://fedoramagazine.org/author/dturecek/)

4 cool new projects to try in COPR for October 2019
======