Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-03-21 02:10:11 +08:00)

Commit b4e3fe9684

@ -7,7 +7,7 @@

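This is an area where the two are basically even. Each desktop environment has some very good applications and some mediocre ones. Once again, though, Gnome gets right the small details that KDE misses entirely. I am not saying that any particular KDE application is bad. They all work, but that is all. In other words: they pass, but they are nowhere near a perfect score.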

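Gnome on the left, KDE on the right. Dragon Player works well and clearly labels its buttons for playing a file, a URL, or a disc, just as Gnome Videos does… but when it comes to convenient access to your files and user-friendliness, Gnome goes one small step further. By default it shows every video file it has detected on your computer, without you having to do anything. KDE has [Baloo][] (and [Nepomuk][2] before it; LCTT note: these are KDE's file-indexing service frameworks), so why not use them? They could list the readable video files… but they are not used.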

Next up… music players

@ -0,0 +1,52 @@

A Week with GNOME: Weighing Its Pros and Cons Against KDE (Part 4: GNOME Settings)

================================================================================

### Settings ###

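Here I am going to pick on a few specific KDE control modules, mostly because, compared with their GNOME counterparts, they are laughably bad. Honestly, it is just sad.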

First up? Printers.

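GNOME on the left, KDE on the right. Do you know the difference between the printer tools on the left and on the right? When I opened "Printers" in the GNOME Control Center, the window popped up and was ready to use. When I opened "Printers" in KDE System Settings, I got a password prompt. Before I could even look at the printers, I had to hand over the ROOT password.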

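Let me repeat that. In this day and age of PolicyKit and Logind, I am still asked for the ROOT password for an operation that should only need sudo. I did not even set a root password when I installed the system. So I had to go to Konsole and run `sudo passwd root` to give root a password. Only then could I go back to the printer module in System Settings and hand over the root password, merely to see which printers were available. After that, clicking "Add Printer" prompted me for the ROOT password again, and once I got past that and selected a printer and driver, I was prompted for the ROOT password yet again. Three password prompts just to add one printer to the system!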

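When adding a printer under GNOME, by contrast, I was not prompted for a SUDO password until I clicked "Unlock" in the printer tool. I was asked only once during the whole process, and that was it. KDE, please… adopt GNOME's "Unlock" model. Do not prompt until it is actually necessary. Also, whatever library lets KDE applications bypass PolicyKit/Logind (where available) and ask directly for ROOT privileges… lock it away. If this were a multi-user system, I would either have to hand out the ROOT password or be on call at all times in case a user needed to update, change, or add a printer. Both options are completely unacceptable.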

One more thing…

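A question for everyone: which one looks cleaner? I realized this while writing this article: once additional printers are set up, the Gnome printer tool keeps things very clean by listing them in a vertical column on the left. When I added a second printer in KDE, it suddenly sprouted a left sidebar. Before adding it, I had a horrifying picture in my head of it simply inserting another icon into the interface, the way a pictures folder shows thumbnails. I was pleased and surprised to find I was wrong. But the fact is that it abruptly "grew" a vertical column that had never existed before and completely changed its layout, and that cannot be called "good" either. It is still a confusing, odd, and unintuitive design.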

Enough about printers… Which KDE System Settings module is next up for public stoning? Multimedia, a.k.a. Phonon.

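As always, GNOME on the left, KDE on the right. Let's look at the GNOME settings first… the eye moves left to right, top to bottom, right? So let's do that. First: the volume slider. The blue fill against the empty track removes any doubt about which direction means "volume up". Right after the volume slider is an On/Off switch for muting. Gnome scores again here: it remembers the volume level when you mute and returns to that level when you unmute by pressing the volume-up button. Kmixer, you forgetful piece of junk, I really wish I could talk about you more.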

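Moving on! Tabs for input, output, and applications? Per-application volume control at any time? Gnome, I love you more with every passing second. Balance options, sound profiles, and a clearly labeled "Test Microphone" option.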

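I doubt it could be done with a cleaner, simpler design. Yes, it is just a Gnome-ified Pavucontrol, but I think that is exactly what matters. Pavucontrol gets almost everything right here, and the "Sound" panel in the Gnome Control Center improves on it, taking it one step closer to perfection.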

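Phonon, you're up. But before we start, I have to say: what the hell am I looking at?! I know it is a priority list of audio devices, but the way it is presented is a real pain. And where are the things a user is likely to care about? A priority list is certainly nice, and it should exist, but it is the kind of thing a user fiddles with once or twice and then never touches again. It is not important enough, or used often enough, to deserve the center spot. Where is the volume slider? Where is per-application volume control? Where are the things users use most often? Well, they are in Kmix, a separate program with its own configuration options… not in System Settings… which really makes the name "System Settings" something of a misnomer.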

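Shown above are Gnome's network settings. KDE's are not shown, and the reason is what I am about to complain about. If you go into KDE's System Settings and click any of the three options in the "Network" section, you get a whole pile of options: Bluetooth settings, the default username and password for Samba shares (seriously, "Connectivity" has only two options under it: the SMB username and password. How the hell does that deserve a grand word like "Connectivity"?), browser authentication controls (which only work in Konqueror… a dead project), proxy settings, and so on… Where are my Wi-Fi settings? They are not there. So where are they? Well, they are in the network applet's own settings… not in the network settings…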

KDE, you are killing me. You have "System Settings" right there; use it!

--------------------------------------------------------------------------------

via: http://www.phoronix.com/scan.php?page=article&item=gnome-week-editorial&num=4

Author: Eric Griffith

Translator: [XLCYun](https://github.com/XLCYun)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@ -0,0 +1,40 @@

A Week with GNOME: Weighing Its Pros and Cons Against KDE (Part 5: Summary)

================================================================================

### User Experience and Final Thoughts ###

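When Gnome 2.x and KDE 4.x went head to head… I happily moved back and forth between them. I had a love-hate relationship with both, but on the whole they were a pleasure to use. Then Gnome 3.x arrived, bringing the whole Gnome Shell drama with it. At that point I gave up on Gnome and avoided it as much as I could. Back then it was user-unfriendly and unintuitive. It broke established design paradigms to prepare for a world dominated by tablets… a future that, judging by falling tablet sales, was never going to happen.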

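Eight releases after Gnome 3, a miracle happened. Gnome became user-friendly and intuitive. Is it perfect? Of course not. I still hate the design paradigm it is trying to push, and I hate how it keeps trying to force a workflow on me, but with time and patience both become acceptable. Once you can look past Gnome Shell's alien-looking interface and start interacting with the rest of Gnome (especially the Control Center), you will see what Gnome has absolutely gotten right: the details, the attention to detail!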

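People can adapt to new interface paradigms and new workflows (the iPhone and iPad proved that), but what always bothers them are the "paper cuts": the small, imperfect details.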

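This brings out one of the most important differences between KDE and Gnome. Gnome feels like a product, like a singular experience. When you use it, it feels complete, and everything you need is at your fingertips. It feels like a Linux desktop with the kind of experience Windows or OS X offers: everything you need is in there, and it feels as if it were written by the same people on one team working toward the same goal. Heck, even a sudo prompt from an application feels like a deliberately designed part of Gnome, just as it does on Windows. In KDE, it feels like some random-looking popup that any application could have created. It does not feel like the system itself stopping in an official capacity to say "Hey, something is requesting administrator rights! Do you want to grant them?"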

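KDE does not feel like a cohesive experience. It seems to wander without direction and never feels complete. It is like a bunch of projects moving in different directions that just happen to share a common toolkit. If the developers are happy with that, fine, good for them. But if they want to deliver the best possible experience, they need to pay more attention to the little things. User experience and intuitiveness should be at the center of every application's design, and there should be a vision of what KDE wants to offer and of what it should look like.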

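Is there anything stopping me from using Gnome Disks under KDE? Rhythmbox? Evolution? No, no, and no. But saying that misses the point. Gnome and KDE both call themselves "desktop environments". That means they should be complete environments: their parts should come together and fit tightly, and you should use the tools of their environment, because they are saying "we support everything you need in a complete desktop." Really? Only Gnome seems to live up to being complete. KDE feels half-finished when it comes to "coming together", let alone providing what you need for a "complete experience". Gnome Disks has no KDE counterpart, because kpartitionmanager requires ROOT privileges. KDE has no 'First Time User' run-through (translator's note: possibly meaning that KDE has no step for creating a new user during setup), and only now is a user manager being introduced in Kubuntu. Heck, Gnome even provides Maps, Notes, Calendar, and Clocks applications. Are these applications 100% essential? No, of course not. But it is precisely these applications that help Gnome push the idea that "Gnome is a complete, rich experience".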

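None of the KDE problems I am complaining about are impossible to fix. Absolutely not! But fixing them takes people who care. It takes developers who take pride in their work as a whole, not just in the features they implement; organization matters a great deal. Don't take away users' ability to configure things (GNOME 3.x's lack of configurability is exactly what I criticized it for), but don't use "well, you can set it up however you like" as an excuse for not providing sane defaults. The defaults are what users will see, and they are what users judge the software by from the moment they first open it. Make a good first impression.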

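I know the KDE developers know that design matters; that is why the VDG (Visual Design Group) exists. But it feels as though they do not let the VDG work to its full potential, and that points to an organizational flaw in KDE. It is not that KDE cannot be complete, or that it cannot come together and fix its decay; it is just that the developers have not done it. They aimed at the bullseye… but missed.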

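Also, before anyone says it… please don't say "patches welcome". I would happily submit a patch for someone, but as long as developers insist on doing things the way they like rather than the intuitive way, more of these annoyances will keep cropping up. This is not about whether Muon is center-aligned. It is not about Amarok's interface being ugly. It is not about the volume and brightness popups that take up a huge chunk of my screen real estate every time I press a hotkey (seriously, someone shrink those things).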

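It is about a mentality of apathy, about developers not thinking things through when they design the UI for their applications. Everything the KDE team builds works well. Amarok plays music. Dragon plays videos. KWin, or Qt and kdelibs, seems more powerful and more efficient than Mutter/GTK (judging only by my battery consumption; not a scientific test). All of that is good and important… but how it is presented matters too. Arguably, presentation matters most, because it is what users see and interact with.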

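KDE application developers… get the VDG involved. Have the VDG review and approve every "core" application, and have a VDG UI/UX expert design each application's usage patterns and flow to make sure it is intuitive. Heck, whatever application you are working on, simply posting a mockup on the VDG forums and asking for feedback would probably get you some very good pointers. You have a great resource right there; use it.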

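I don't want to sound ungrateful. I love KDE, I love the work and effort the volunteers put into giving Linux users a graphical desktop, and I love having Gnome as an alternative. It is precisely because I care that I am writing this. I want to see KDE get better; I want to see it go further than ever before. That takes continued work from everyone, and it takes people no longer shying away from criticism. It takes honesty about how people interact with the system and where it breaks down. If we cannot criticize directly, if we cannot say "This sucks!", things will never get better.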

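Will I keep using Gnome after this week? Probably not. No. Gnome is still trying to force its workflow on me, and I don't want to follow or adapt to it, because I feel less productive using it; it does not match the way I think. But when my friends ask me "Which desktop environment should I use?", I will probably recommend Gnome, especially to friends who are not very technical and just want something that "just works". Given the current state of KDE, that may be the most damning assessment I could make.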

--------------------------------------------------------------------------------

via: http://www.phoronix.com/scan.php?page=article&item=gnome-week-editorial&num=5

Author: Eric Griffith

Translator: [XLCYun](https://github.com/XLCYun)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@ -1,16 +1,17 @@

shellinabox: A Web-Based Terminal Emulator Using AJAX

================================================================================

### Introduction to shellinabox ###

Hello, unixmen readers!

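Usually, we access remote servers with common communication tools such as OpenSSH and PuTTY. But if we are behind a firewall, or the firewall only lets HTTPS traffic through, we can no longer use these tools to reach the remote system. Don't worry! Even behind such a firewall, there is still a way to access your remote system, and you don't need to install any communication tool like OpenSSH or PuTTY. All you need is a modern browser that supports JavaScript and CSS; no plugins or third-party applications are required.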

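**Shell In A Box**, pronounced **shellinabox**, is a free and open source, web-based AJAX terminal emulator developed by **Markus Gutschke**. It uses AJAX technology to provide the look and feel of a native shell through a web browser.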

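The **shellinaboxd** daemon implements a web server that listens on a specified port. The web server can publish one or more services, which are displayed in a VT100 emulator implemented as an AJAX web application. The default port is 4200, and you can change it to any port number you like. After installing shellinabox on your remote server, open a web browser on your local system and navigate to **http://IP-Address:4200/**. Enter your username and password, and you can start using your remote system's shell. Sounds interesting, doesn't it? It really is!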

**Disclaimer**:

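shellinabox is not an SSH client or any kind of security software. It is merely an application that emulates a remote system's shell through a web browser, and it has nothing to do with SSH. It is not a bulletproof, secure way to control your system remotely; it is just one of the simplest ways available. Whatever the reason, you should not run it on any public network.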

### Installing shellinabox ###

@ -48,7 +49,7 @@ shellinabox is available in the default repositories, so you can install it with the command

```
# vi /etc/sysconfig/shellinaboxd
```

Change the port to any number you like. Since I am testing it on my local network, I am using the default value.

```
# Shell in a box daemon configuration
# For details see shellinaboxd man page
```
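To change the port without opening an editor, a small shell function can rewrite the port line in place. This is a minimal sketch, not something shipped with shellinabox. The function name `set_shellinabox_port` is hypothetical, and the function assumes the file contains a line of the form `PORT=4200`; the excerpt above does not show that line, so check your own file first.

```shell
# Hypothetical helper, not part of shellinabox.
# Assumes the config file contains a line of the form PORT=4200.
set_shellinabox_port() {
    conf=$1
    port=$2
    # Accept only a plain decimal number...
    case $port in
        ''|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
    esac
    # ...within the valid TCP port range.
    if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
        echo "port out of range: $port" >&2
        return 1
    fi
    # Refuse to touch a file that has no PORT= line to change.
    if ! grep -q '^PORT=' "$conf"; then
        echo "no PORT= line in $conf" >&2
        return 1
    fi
    # Rewrite the value in place; sed keeps the previous file as "$conf.bak".
    sed -i.bak "s/^PORT=.*/PORT=$port/" "$conf"
}
```

For example, run `set_shellinabox_port /etc/sysconfig/shellinaboxd 8443` as root, then restart the daemon so it picks up the change (for instance with `systemctl restart shellinaboxd`, assuming that is the service name on your system).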

@ -98,7 +99,7 @@ shellinabox is available in the default repositories, so you can install it with the command

### Usage ###

Now, on your client system, open a web browser and navigate to **https://ip-address-of-remote-servers:4200**.

**Note**: If you changed the port, use the new port number instead.
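The address is just the server's IP plus the port, so a pair of small shell functions can build the URL and check that the daemon answers before you open a browser. This is a sketch under assumptions. The function names are hypothetical, the 4200 default mirrors the text, and `curl -k` skips certificate verification because shellinabox typically serves HTTPS with a self-signed certificate.

```shell
# Hypothetical helpers; names and defaults are illustrative only.
# Build the shellinabox URL for a host, defaulting to port 4200.
shellinabox_url() {
    host=$1
    port=${2:-4200}
    printf 'https://%s:%s/\n' "$host" "$port"
}

# Print the HTTP status code returned by the shellinabox web server.
# -k skips certificate verification (self-signed certificate),
# -s hides progress output, --max-time bounds the wait,
# -o /dev/null discards the page and -w prints only the status code.
check_shellinabox() {
    curl -k -s -o /dev/null --max-time 5 -w '%{http_code}\n' "$(shellinabox_url "$@")"
}
```

For instance, `check_shellinabox 192.168.1.10` should print a status code such as `200` if the login page is reachable. Pass the port as a second argument if you changed it.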

@ -110,13 +111,13 @@ shellinabox is available in the default repositories, so you can install it with the command

Right-click on an empty area of the browser window to get some very useful extra menu options.

From now on, you can work on your remote server however you like from your local system's web browser.

When you are done, remember to type `exit` to log out.

To connect to the remote system again, click the **Connect** button, then enter the remote server's username and password.

@ -134,7 +135,7 @@ shellinabox is available in the default repositories, so you can install it with the command

### Conclusion ###

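As I mentioned earlier, web-based SSH tools are very useful if your server runs behind a firewall. There are many web-based SSH tools, but shellinabox is a very simple and useful one that lets you emulate a remote system's shell from anywhere on the network. Because it is browser-based, you can access your remote server from any device, as long as it has a browser that supports JavaScript and CSS.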

That's all. Have a good day!

@ -148,7 +149,7 @@ via: http://www.unixmen.com/shellinabox-a-web-based-ajax-terminal-emulator/

Author: [SK][a]

Translator: [xiaoyu33](https://github.com/xiaoyu33)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

@ -0,0 +1,52 @@

Linux Without Limits: IBM Launch LinuxONE Mainframes

================================================================================

LinuxONE Emperor Mainframe

Good news for Ubuntu’s server team today as [IBM launch the LinuxONE][1], a Linux-only mainframe that is also able to run Ubuntu.

The largest of the LinuxONE systems launched by IBM is called ‘Emperor’ and can scale up to 8000 virtual machines or tens of thousands of containers – a possible record for any one single Linux system.

The LinuxONE is described by IBM as a ‘game changer’ that ‘unleashes the potential of Linux for business’.

IBM and Canonical are working together on the creation of an Ubuntu distribution for LinuxONE and other IBM z Systems. Ubuntu will join Red Hat and SUSE as ‘premier Linux distributions’ on IBM z.

Alongside the ‘Emperor’ IBM is also offering the LinuxONE Rockhopper, a smaller mainframe for medium-sized businesses and organisations.

IBM is the market leader in mainframes and commands over 90% of the mainframe market.

Note: YouTube video

<iframe width="750" height="422" frameborder="0" allowfullscreen="" src="https://www.youtube.com/embed/2ABfNrWs-ns?feature=oembed"></iframe>

### What Is a Mainframe Computer Used For? ###

The computer you’re reading this article on would be dwarfed by a ‘big iron’ mainframe. They are large, hulking great cabinets packed full of high-end components, custom designed technology and dizzying amounts of storage (that is data storage, not ample room for pens and rulers).

Mainframe computers are used by large organizations and businesses to process and store large amounts of data, crunch through statistics, and handle large-scale transaction processing.

### ‘World’s Fastest Processor’ ###

IBM has teamed up with Canonical Ltd to use Ubuntu on the LinuxONE and other IBM z Systems.

The LinuxONE Emperor uses the IBM z13 processor. The chip, announced back in January, is said to be the world’s fastest microprocessor. It is able to deliver transaction response times in the milliseconds.

But as well as being well equipped to handle high-volume mobile transactions, the z13 inside the LinuxONE is also an ideal cloud system.

It can handle more than 50 virtual servers per core, for a total of 8000 virtual servers, making it a cheaper, greener and more performant way to scale out to the cloud.

**You don’t have to be a CIO or mainframe spotter to appreciate this announcement. The possibilities LinuxONE provides are clear enough.**

Source: [Reuters (h/t @popey)][2]

--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2015/08/ibm-linuxone-mainframe-ubuntu-partnership

Author: [Joey-Elijah Sneddon][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://plus.google.com/117485690627814051450/?rel=author

[1]:http://www-03.ibm.com/systems/z/announcement.html

[2]:http://www.reuters.com/article/2015/08/17/us-ibm-linuxone-idUSKCN0QM09P20150817

@ -0,0 +1,46 @@

Ubuntu Linux is coming to IBM mainframes

================================================================================

SEATTLE -- It's finally happened. At [LinuxCon][1], IBM and [Canonical][2] announced that [Ubuntu Linux][3] will soon be running on IBM mainframes.

You'll soon be able to get your IBM mainframe in Ubuntu Linux orange

According to Ross Mauri, IBM's General Manager of System z, and Mark Shuttleworth, Canonical and Ubuntu's founder, this move came about because of customer demand. For over a decade, [Red Hat Enterprise Linux (RHEL)][4] and [SUSE Linux Enterprise Server (SLES)][5] were the only supported IBM mainframe Linux distributions.

As Ubuntu matured, more and more businesses turned to it for their enterprise Linux, and more and more of them wanted it on IBM big iron hardware. In particular, banks wanted Ubuntu there. Soon, financial CIOs will have their wish granted.

In an interview Shuttleworth said that Ubuntu Linux will be available on the mainframe by April 2016 in the next long-term support version of Ubuntu: Ubuntu 16.04. Canonical and IBM already took the first move in this direction in late 2014 by bringing [Ubuntu to IBM's POWER][6] architecture.

Before that, Canonical and IBM almost signed on the dotted line to bring [Ubuntu to IBM mainframes in 2011][7], but that deal was never finalized. This time, it's happening.

Jane Silber, Canonical's CEO, explained in a statement, "Our [expansion of Ubuntu platform][8] support to [IBM z Systems][9] is a recognition of the number of customers that count on z Systems to run their businesses, and the maturity the hybrid cloud is reaching in the marketplace."

**Silber continued:**

> With support of z Systems, including [LinuxONE][10], Canonical is also expanding our relationship with IBM, building on our support for the POWER architecture and OpenPOWER ecosystem. Just as Power Systems clients are now benefiting from the scaleout capabilities of Ubuntu, and our agile development process which results in first to market support of new technologies such as CAPI (Coherent Accelerator Processor Interface) on POWER8, z Systems clients can expect the same rapid rollout of technology advancements, and benefit from [Juju][11] and our other cloud tools to enable faster delivery of new services to end users. In addition, our collaboration with IBM includes the enablement of scale-out deployment of many IBM software solutions with Juju Charms. Mainframe clients will delight in having a wealth of 'charmed' IBM solutions, other software provider products, and open source solutions, deployable on mainframes via Juju.

Shuttleworth expects Ubuntu on z to be very successful. "It's blazingly fast, and with its support for OpenStack, people who want exceptional cloud region performance will be very happy."

--------------------------------------------------------------------------------

via: http://www.zdnet.com/article/ubuntu-linux-is-coming-to-the-mainframe/#ftag=RSSbaffb68

Author: [Steven J. Vaughan-Nichols][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.zdnet.com/meet-the-team/us/steven-j-vaughan-nichols/

[1]:http://events.linuxfoundation.org/events/linuxcon-north-america

[2]:http://www.canonical.com/

[3]:http://www.ubuntu.com/

[4]:http://www.redhat.com/en/technologies/linux-platforms/enterprise-linux

[5]:https://www.suse.com/products/server/

[6]:http://www.zdnet.com/article/ibm-doubles-down-on-linux/

[7]:http://www.zdnet.com/article/mainframe-ubuntu-linux/

[8]:https://insights.ubuntu.com/2015/08/17/ibm-and-canonical-plan-ubuntu-support-on-ibm-z-systems-mainframe/

[9]:http://www-03.ibm.com/systems/uk/z/

[10]:http://www.zdnet.com/article/linuxone-ibms-new-linux-mainframes/

[11]:https://jujucharms.com/

sources/share/20150817 Top 5 Torrent Clients For Ubuntu Linux.md (normal file, 117 lines)

@ -0,0 +1,117 @@

Top 5 Torrent Clients For Ubuntu Linux

================================================================================

Looking for the **best torrent client in Ubuntu**? Indeed there are a number of torrent clients available for desktop Linux. But which ones are the **best Ubuntu torrent clients** among them?

I am going to list the top 5 torrent clients for Linux that are lightweight, feature-rich, and have an impressive GUI. Ease of installation and use is also a factor.

### Best torrent programs for Ubuntu ###

Since Ubuntu comes with Transmission by default, I am going to exclude it from the list. This doesn’t mean that Transmission doesn’t deserve to be on the list. Transmission is a good torrent client to have, which is why it is the default torrent application in several Linux distributions, including Ubuntu.

----------

### Deluge ###

[Deluge][1] has been chosen as the best torrent client for Linux by Lifehacker, and that speaks for itself about the usefulness of Deluge. And it’s not just Lifehacker that is a fan of Deluge: check out any forum and you’ll find a number of people admitting that Deluge is their favorite.

Its fast, sleek, and intuitive interface makes Deluge a hot favorite among Linux users.

Deluge is available in Ubuntu repositories and you can install it in Ubuntu Software Center or by using the command below:

```
sudo apt-get install deluge
```

----------

### qBittorrent ###

As the name suggests, [qBittorrent][2] is a Qt-based counterpart of the famous [BitTorrent][3] application. If you have ever used the BitTorrent client on Windows, you’ll find the interface similar. Fairly lightweight and with all the standard features of a torrent program, qBittorrent is also available in the default Ubuntu repository.

It can be installed from the Ubuntu Software Center or with the command below:

```
sudo apt-get install qbittorrent
```

----------

### Tixati ###

[Tixati][4] is another nice torrent client to have on Ubuntu. It uses a dark theme by default, which many might prefer, though I don’t. It has all the standard features you would look for in a torrent client.

In addition, it offers data-analysis features: you can measure and analyze bandwidth and other statistics in nice charts.

- [Download Tixati][5]

----------

### Vuze ###

[Vuze][6] is the favorite torrent application of many Linux and Windows users. Apart from the standard features, you can search for torrents directly in the application. You can also subscribe to episodic content, so you won’t have to search for new episodes: they show up in your subscriptions in the sidebar.

It also comes with a video player that can play HD videos with subtitles and all, but I doubt you would want to use it over better video players such as VLC.

Vuze can be installed from Ubuntu Software Center or using the command below:

```
sudo apt-get install vuze
```

----------

### Frostwire ###

[Frostwire][7] is a torrent application you might want to try. It is more than just a simple torrent client. It is also available for Android, and you can use it to share files over Wi-Fi.

You can search for torrents from within the application and play them inside it. Besides downloaded files, it can browse your local media and organize it inside the player. The same applies to the Android version.

An additional feature is that Frostwire provides access to legal music by indie artists, which you can download and listen to for free, legally.

- [Download Frostwire][8]

----------

### Honorable mention ###

On Windows, uTorrent (pronounced mu torrent) is my favorite torrent application. While uTorrent may be available for Linux, I deliberately left it off the list because installing and using uTorrent on Linux is not easy, nor does it provide a complete application experience (it runs within a web browser).

You can read about uTorrent installation in Ubuntu [here][9].

#### Quick tip: ####

Most of the time, torrent applications do not start automatically when you log in. You might want to change this behavior. Read this post to learn [how to manage startup applications in Ubuntu][10].
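If you prefer the command line, you can usually get the same result by placing a desktop entry in the autostart directory defined by the freedesktop.org (XDG) Autostart specification: `$XDG_CONFIG_HOME/autostart`, which defaults to `~/.config/autostart`. Below is a minimal sketch. The function name `add_autostart` is hypothetical, and the command to launch (for example `deluge-gtk`) depends on which client you installed.

```shell
# Hypothetical helper: create an XDG autostart entry so an application
# starts when you log in. Entries live in $XDG_CONFIG_HOME/autostart,
# which falls back to ~/.config/autostart when the variable is unset.
add_autostart() {
    name=$1   # entry file name and display name, e.g. deluge
    cmd=$2    # command to run at login, e.g. deluge-gtk
    dir=${XDG_CONFIG_HOME:-$HOME/.config}/autostart
    mkdir -p "$dir" || return 1
    # Type, Name and Exec are the minimal keys for an application entry.
    cat > "$dir/$name.desktop" <<EOF
[Desktop Entry]
Type=Application
Name=$name
Exec=$cmd
EOF
    # Print the path of the entry that was written.
    echo "$dir/$name.desktop"
}
```

For example, `add_autostart deluge deluge-gtk` prints the path of the new entry, and Deluge should start at your next login. Deleting that file undoes the change.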

### What’s your favorite? ###

That was my opinion on the best Torrent clients in Ubuntu. What is your favorite one? Do leave a comment. You can also check the [best download managers for Ubuntu][11] in related posts. And if you use Popcorn Time, check these [Popcorn Time Tips][12].

--------------------------------------------------------------------------------

via: http://itsfoss.com/best-torrent-ubuntu/

Author: [Abhishek][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:http://itsfoss.com/author/abhishek/

[1]:http://deluge-torrent.org/

[2]:http://www.qbittorrent.org/

[3]:http://www.bittorrent.com/

[4]:http://www.tixati.com/

[5]:http://www.tixati.com/download/

[6]:http://www.vuze.com/

[7]:http://www.frostwire.com/

[8]:http://www.frostwire.com/downloads

[9]:http://sysads.co.uk/2014/05/install-utorrent-3-3-ubuntu-14-04-13-10/

[10]:http://itsfoss.com/manage-startup-applications-ubuntu/

[11]:http://itsfoss.com/4-best-download-managers-for-linux/

[12]:http://itsfoss.com/popcorn-time-tips/

@ -0,0 +1,344 @@

A Linux User Using ‘Windows 10’ After More than 8 Years – See Comparison

================================================================================

Windows 10 is the newest member of the Windows NT family; it became generally available on July 29, 2015. It is the successor of Windows 8.1. Windows 10 is supported on Intel Architecture 32-bit, AMD64 and ARMv7 processors.

Windows 10 and Linux Comparison

Having been a Linux user for more than 8 continuous years, I thought I would test Windows 10, as it is making a lot of news these days. This article is a breakdown of my observations. I will look at everything from the perspective of a Linux user, so you may find it a bit biased towards Linux, but with absolutely no false information.

1. I searched Google with the text “download windows 10” and clicked the first link.

Search Windows 10

You can also go directly to this link: [https://www.microsoft.com/en-us/software-download/windows10ISO][1]

2. I was asked to select an edition: ‘Windows 10’, ‘Windows 10 KN’, ‘Windows 10 N’ or ‘Windows 10 Single Language’.

Select Windows 10 Edition

For those who want to know how the editions of Windows 10 differ, here are brief details:

- Windows 10 – Contains everything offered by Microsoft for this OS.

- Windows 10 N – This edition comes without Windows Media Player and related media technologies.

- Windows 10 KN – The edition for the Korean market; like N, it comes without media-playback capabilities.

- Windows 10 Single Language – Only one Language Pre-installed.

3. I selected the first option, ‘Windows 10’, and clicked ‘Confirm’. Then I had to select a product language; I chose ‘English’.

I was given two download links, one for 32-bit and the other for 64-bit. I clicked 64-bit, to match my architecture.
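On Linux, you can pick the matching download from the output of `uname -m`. The sketch below uses a hypothetical helper name and covers only the common x86 machine names. Note that `uname -m` reports the architecture of the running kernel, so a 64-bit-capable CPU running a 32-bit Linux would still report `i686`.

```shell
# Hypothetical helper: map a machine name as printed by `uname -m` to the
# Windows 10 ISO variant to download. Defaults to the running kernel.
iso_arch() {
    machine=${1:-$(uname -m)}
    case $machine in
        x86_64|amd64) echo "64-bit" ;;
        i386|i486|i586|i686) echo "32-bit" ;;
        *) echo "no x86 ISO for machine type: $machine" >&2; return 1 ;;
    esac
}
```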

Download Windows 10

With my download speed (15Mbps), it took me 3 long hours to download it. Unfortunately there was no torrent file for the OS, which could otherwise have made the overall process smoother. The ISO image is 3.8 GB.
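For a sense of scale, here is the raw transfer time at the nominal line rate. This assumes “15Mbps” means 15 megabits per second and that the 3.8 GB image is 3.8 × 10^9 bytes:

$$
t = \frac{3.8 \times 10^{9}\,\text{B} \times 8\,\text{bit/B}}{15 \times 10^{6}\,\text{bit/s}} = \frac{3.04 \times 10^{10}\,\text{bit}}{1.5 \times 10^{7}\,\text{bit/s}} \approx 2027\,\text{s} \approx 34\,\text{min}
$$

The three hours actually observed correspond to an average throughput of roughly

$$
r_{\text{eff}} = \frac{3.04 \times 10^{10}\,\text{bit}}{3 \times 3600\,\text{s}} \approx 2.8 \times 10^{6}\,\text{bit/s} \approx 2.8\,\text{Mbps}
$$

So the connection itself was probably not the bottleneck, although these figures alone cannot say where the slowdown happened.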

I could not find a smaller image; the truth is that there is no such thing as a net-installer image for Windows. Also, there is no published hash value to check the ISO image against once it has been downloaded.

I wonder why Windows ignores such issues. To verify that the ISO downloaded correctly, I have to write the image to a disc or a USB flash drive, boot my system from it, and keep my fingers crossed until setup finishes.
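When a trusted checksum is available, you can check integrity on Linux before writing anything to a USB drive by comparing the image's SHA-256 digest with the published value. The helper below is a hypothetical sketch. It assumes GNU coreutils' `sha256sum`, and the expected digest must come from a source you trust; the author found none published for this ISO.

```shell
# Hypothetical helper: check a file against a known SHA-256 digest.
# Prints OK on a match; returns 1 on a mismatch, 2 if the file is unreadable.
verify_sha256() {
    file=$1
    expected=$2
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
    # sha256sum prints "<digest>  <file>"; keep only the digest field.
    actual=$(sha256sum "$file" | cut -d' ' -f1)
    # Published digests are sometimes upper-case, so normalize before comparing.
    expected=$(printf '%s' "$expected" | tr '[:upper:]' '[:lower:]')
    if [ "$actual" = "$expected" ]; then
        echo "OK"
    else
        echo "MISMATCH: expected $expected, got $actual" >&2
        return 1
    fi
}
```

Usage would look like `verify_sha256 ~/Downloads/Win10_English_x64.iso <published-digest>`. If the vendor ships a checksum file instead, `sha256sum -c` performs the same comparison directly.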

Let's start. I made my USB flash drive bootable with the Windows 10 ISO using the dd command:

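```
# dd if=/home/avi/Downloads/Win10_English_x64.iso of=/dev/sdb bs=512M; sync
```

(Here `/dev/sdb` is the whole USB device, the usual target for dd, rather than a partition such as `/dev/sdb1`; everything on it is overwritten.)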

It took a few minutes to complete. I then rebooted the system and chose to boot from the USB flash drive in my UEFI (BIOS) settings.
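dd itself gives no sign that the copy is intact. One way to check is to read back as many bytes as the image holds and compare them with `cmp`. The sketch below is hypothetical (the function name and the 4 MiB block size are illustrative) and assumes GNU coreutils and diffutils. With a real USB stick, run it as root against the whole device. Keep in mind that the read-back may be served from the page cache unless you drop caches or re-plug the stick first.

```shell
# Hypothetical helper: write an image with dd, then verify it by reading back.
# TARGET is normally a whole device such as /dev/sdb; its contents are destroyed.
write_and_verify() {
    img=$1
    target=$2
    # Size of the image in bytes (GNU stat syntax).
    size=$(stat -c %s "$img") || return 2
    # conv=fsync flushes the written data before dd exits; status=none silences dd.
    dd if="$img" of="$target" bs=4M conv=fsync status=none || return 1
    # Compare only the first $size bytes, since a device is usually larger than the image.
    if cmp -n "$size" "$img" "$target"; then
        echo "verified $size bytes"
    else
        return 1
    fi
}
```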

#### System Requirements ####

If you are upgrading:

- Upgrade supported only from Windows 7 SP1 or Windows 8.1

If you are doing a fresh install:

- Processor: 1GHz or faster

- RAM: 1 GB or more (32-bit), 2 GB or more (64-bit)

- HDD: 16 GB or more (32-bit), 20 GB or more (64-bit)

- Graphics card: DirectX 9 or later with a WDDM 1.0 driver

### Installation of Windows 10 ###

1. Windows 10 boots. Yet again, they have changed the logo. Also, there is no information on what's going on.

Windows 10 Logo

2. I selected the language to install, the time and currency format, and the keyboard and input method before clicking Next.

Select Language and Time

3. Then came the ‘Install Now’ screen.

Install Windows 10

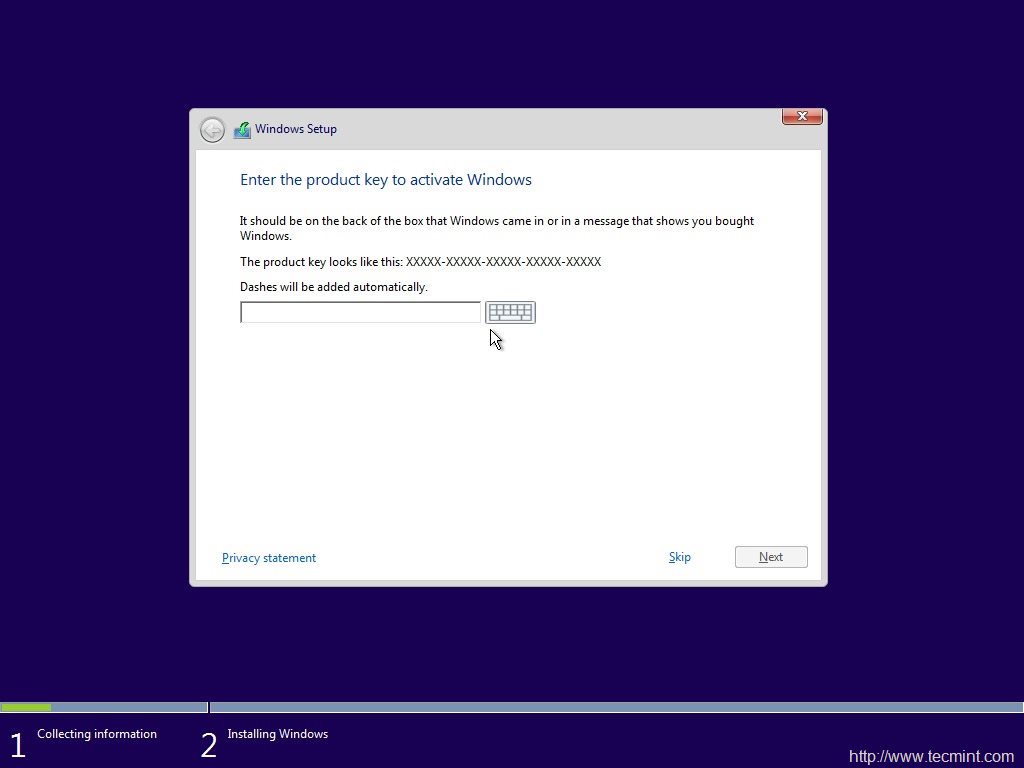

4. The next screen asked for a product key. I clicked ‘Skip’.

Windows 10 Product Key

5. Choose an OS from the list. I chose ‘Windows 10 Pro’.

Select Install Operating System

6. Oh yes, the license agreement. Put a check mark next to ‘I accept the license terms’ and click Next.

Accept License

7. Next came a choice between ‘Upgrade’ (to Windows 10 from a previous version of Windows) and ‘Custom: Install Windows only’. I don’t know why Windows labels the custom install-only option as advanced. Anyway, I chose to install Windows only.

Select Installation Type

8. I selected the installation drive and clicked ‘Next’.

Select Install Drive

9. The installer started copying files, getting files ready for installation, installing features, installing updates, and finishing up. It would have been better if the installer had shown verbose output about the actions it was taking.

Installing Windows

10. Then Windows restarted, saying a reboot was needed to continue.

Windows Installation Process

11. Then all I got was the screen below, which reads “Getting Ready”. It stayed there for more than 5 minutes, with no indication of what was going on and no output.

Windows Getting Ready

12. Yet again, it was time to “Enter Product Key”. I clicked “Do this later” and then used Express Settings.

Enter Product Key

Select Express Settings

|

||||

|

||||

14. Then came three more screens. As a Linux user, I expected the installer to tell me what it was doing, but all in vain.

Loading Windows

Getting Updates

Still Loading Windows

15. Next, the installer wanted to know who owns this machine: “My organization” or me. I chose “I own it” and clicked Next.

Select Organization

16. The installer prompted me to choose between “Join Azure AD” and “Join a domain” before I could click ‘Continue’. I chose the latter.

Connect Windows

17. The installer wanted me to create an account. I entered a user name and clicked ‘Next’, expecting an error message telling me that I must enter a password.

Create Account

18. To my surprise, Windows didn’t even show a warning that I should create a password. Such negligence. Anyway, I got my desktop.

Windows 10 Desktop

#### Experience of a Linux User (Myself) So Far ####

- No net-installer image
- Image size is too large
- No way to check the integrity of the downloaded ISO (no hash to check against)
- Booting and installation remain much the same as in XP, Windows 7 and perhaps 8.
- As usual, no output on what the Windows installer is doing: which files it is copying or which packages it is installing.
- Installation was straightforward and easy compared to installing a Linux distribution.

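Linux distributions usually publish a checksum file next to each image (often named SHA256SUMS), and users verify a download against it with `sha256sum -c`. The Python below is a minimal sketch of that check. It hashes the file in chunks and compares the result with a published value. The file name and checksum in the usage comment are hypothetical, and the check only helps if the vendor publishes a checksum in the first place.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, reading 1 MiB at a time
    so that a multi-gigabyte ISO never has to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_iso(path, published_hex):
    """Compare the file's digest with a published checksum (case-insensitive)."""
    return hmac.compare_digest(sha256_of_file(path), published_hex.strip().lower())

# Usage (hypothetical file name and checksum):
#   verify_iso("Win10_x64.iso", "<published SHA-256 hex string>")
```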
### Windows 10 Testing ###

19. The default desktop is clean, with a Recycle Bin icon on it. You can search the web directly from the desktop itself. There are also icons for Task View, Internet browsing, folder browsing and the Microsoft Store. As usual, the notification area sits at the bottom right to round off the desktop.

Desktop Shortcut Icons

20. Internet Explorer is replaced by Microsoft Edge. Windows 10 replaces the legacy web browser Internet Explorer, also known as IE, with Edge, aka Project Spartan.

Microsoft Edge Browser

It is fast, at least compared to IE (as it seemed in testing), and has a familiar user interface. The home screen contains news feed updates, and there is a search bar titled ‘Where to next?’. The browser’s load time is considerably low, which improves overall speed and performance. Edge’s memory usage seems normal.

Windows Performance

Edge comes with Cortana (an intelligent personal assistant), support for Chrome extensions, Web Note (take notes while browsing) and Share (share right from the tab without opening another tab).

#### Experience of a Linux User (Myself) on This Point ####

21. Microsoft has really improved web browsing. Let’s see how stable it remains. It doesn’t lag as of now.

22. Though Edge’s RAM usage was fine for me, a lot of users complain that Edge is notorious for excessive RAM usage.

23. It is difficult to say at this point whether Edge is ready to compete with Chrome and/or Firefox. Let’s see what the future holds.

#### A Few More Stops on the Virtual Tour ####

24. The Start menu has been redesigned and seems clear and effective. Metro (live) tiles make it lively. It is populated with the most commonly used applications, viz. Calendar, Mail, Edge, Photos, Contact, Temperature, Companion suite, OneNote, Store, Xbox, Music, Movies & TV, Money, News, etc.

Windows Look and Feel

On Linux with the GNOME desktop environment, I search for the applications I need simply by pressing the Windows key and typing the application’s name.

Search Within Desktop

25. File Explorer has a clean design with sharp edges. The left pane has links to Quick access folders.

Windows File Explorer

The file browser on the GNOME desktop environment on Linux is equally clear and effective. Removing unnecessary graphics and images from icons is a plus.

File Browser on Gnome

26. Settings: the settings are a bit more refined in Windows 10; compare them with the settings on a Linux box.

**Settings on Windows**

Windows 10 Settings

**Settings on Linux GNOME**

Gnome Settings

27. List of applications: the application list on Windows is better than what it used to be (based on my memory from when I was a regular Windows user), but it still falls short of how GNOME 3 lists applications.

**Applications Listed by Windows**

Application List on Windows 10

**Applications Listed by GNOME 3 on Linux**

Gnome Application List on Linux

28. Virtual desktops: the virtual desktop feature of Windows 10 is one of the most talked-about topics these days.

Here is the virtual desktop in Windows 10.

Windows Virtual Desktop

And here is the virtual desktop on Linux, which we have been using for more than two decades.

Virtual Desktop on Linux

#### A Few Other Features of Windows 10 ####

29. Windows 10 comes with Wi-Fi Sense, which shares your Wi-Fi password with others. Anyone who is within range of your Wi-Fi and connected to you over Skype, Outlook, Hotmail or Facebook can be granted access to your Wi-Fi network. And mind you, Microsoft added this deliberately, as a feature meant to save time and make connecting hassle-free.

In reply to a question raised by Tecmint, Microsoft said that the user has to agree to enable Wi-Fi Sense every time on a new network. Oh! What poor taste as far as security is concerned. I am not convinced.

30. Upgrading from Windows 7 and Windows 8.1 is free, though the retail prices of the Home and Pro editions are approximately $119 and $199 respectively.

31. Microsoft released the first cumulative update for Windows 10, which reportedly put some systems into an endless crash loop. Perhaps Microsoft doesn’t understand such problems, or doesn’t want to work on them; I don’t know why.

32. Microsoft’s built-in utility to block or hide unwanted updates didn’t work in my case. This means that if an update is there, there is no way to block or hide it. Sorry, Windows users!

#### A Few Features Native to Linux That Windows 10 Has ####

Windows 10 has a lot of features that were taken directly from Linux. If Linux had not been released under the GNU GPL, perhaps Microsoft would never have had the features below.

33. Command-line package management: yup, you heard it right. Windows 10 has built-in package management. It works only in Windows PowerShell. OneGet is the official package manager for Windows. Here is the Windows package manager in action.

Windows 10 Package Manager

- Borderless windows
- Flat icons
- Virtual desktops
- One search for both online and offline content
- Convergence of mobile and desktop OS

### Overall Conclusion ###

- Improved responsiveness
- Well-implemented animation
- Low on resources
- Improved battery life
- The Microsoft Edge web browser is rock solid
- Supported on Raspberry Pi 2
- It is good because Windows 8/8.1 was not up to the mark and really bad.
- It is the same old wine in a new bottle: almost the same things with brushed-up icons.

My testing suggests that Windows 10 has improved on a few things, like look and feel (as Windows always did), with a +1 for Project Spartan, virtual desktops, command-line package management, and one search for online and offline content. Overall it is an improved product, but those who think that Windows 10 will prove to be the last nail in the coffin of Linux are mistaken.

Linux is years ahead of Windows, and their approaches are different. In the near future, Windows won’t come anywhere close to Linux, and there is nothing for which a Linux user needs to go to Windows 10.

That’s all for now. I hope you liked the post. I will be back with another interesting post you will love to read. Please give us your valuable feedback in the comments below.

--------------------------------------------------------------------------------

via: http://www.tecmint.com/a-linux-user-using-windows-10-after-more-than-8-years-see-comparison/

Author: [Avishek Kumar][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.tecmint.com/author/avishek/
[1]:https://www.microsoft.com/en-us/software-download/windows10ISO

@ -0,0 +1,109 @@
Debian GNU/Linux Birthday: A 22-Year Journey and Still Counting…
================================================================================

On 16 August 2015, the Debian project celebrated its 22nd anniversary, making it one of the oldest popular distributions in the open source world. The Debian project was conceived and founded in 1993 by Ian Murdock. By that time, Slackware had already made a remarkable presence as one of the earliest Linux distributions.

Happy 22nd Birthday to Debian Linux

Ian Ashley Murdock, an American software engineer by profession, conceived the idea of the Debian project when he was a student at Purdue University. He named the project Debian by combining the name of his then-girlfriend, Debra Lynn (Deb), with his own (Ian). He later married her, and they divorced in January 2008.

Debian Creator: Ian Murdock

Ian is currently serving as Vice President of Platform and Development Community at ExactTarget.

Debian (like Slackware) was a response to the lack of a Linux distribution that was up to the mark at that time. In an interview, Ian said: “Providing a first-class product without profit would be the sole aim of the Debian project. Even Linux was not reliable and up to the mark at that time. I remember… moving files between file systems and dealing with voluminous files would often result in a kernel panic. However, the Linux project was promising. The source code was freely available, and the potential it showed was real.”

“I remember… like everyone else, I wanted to solve problems and run something like UNIX at home, but it was not possible, neither financially nor legally. Then I came to know about GNU kernel development and its freedom from any kind of legal issues,” he added. He was sponsored by the Free Software Foundation (FSF) in the early days of his work on Debian, which helped Debian take a giant step, though Ian needed to finish his degree and hence left the FSF roughly one year into the sponsorship.

### Debian Development History ###

- **Debian 0.01 – 0.09**: Released between August 1993 and December 1993.
- **Debian 0.91**: Released in January 1994 with a primitive package system and no dependencies.
- **Debian 0.93 rc5**: Released in March 1995. It was the first modern release of Debian; dpkg was used to install and maintain packages after the base system installation.
- **Debian 0.93 rc6**: Released in November 1995. It was the last a.out release, and dselect made its first appearance. 60 developers were maintaining packages at that time.
- **Debian 1.1**: Released in June 1996. Code name: Buzz, package count: 474, package manager: dpkg, kernel 2.0, ELF.
- **Debian 1.2**: Released in December 1996. Code name: Rex, package count: 848, developer count: 120.
- **Debian 1.3**: Released in July 1997. Code name: Bo, package count: 974, developer count: 200.
- **Debian 2.0**: Released in July 1998. Code name: Hamm, supported architectures: Intel i386 and Motorola 68000 series, package count: 1500+, developer count: 400+, glibc included.
- **Debian 2.1**: Released on March 9, 1999. Code name: Slink, new architectures: Alpha and SPARC, APT introduced, package count: 2250.
- **Debian 2.2**: Released on August 15, 2000. Code name: Potato, supported architectures: Intel i386, Motorola 68000 series, Alpha, Sun SPARC, PowerPC and ARM. Package count: 3900+ (binary) and 2600+ (source), developer count: 450. A group of people studied this release and wrote an article called Counting Potatoes, which shows how a free software effort could lead to a modern operating system despite all the issues around it.
- **Debian 3.0**: Released on July 19, 2002. Code name: Woody, supported architectures increased: HP PA-RISC, IA-64, MIPS and IBM S/390, first release on DVD, package count: 8500+, developer count: 900+, cryptography included.
- **Debian 3.1**: Released on June 6, 2005. Code name: Sarge, architecture support: same as Woody plus an unofficial AMD64 port, kernel 2.4 and 2.6 series, package count: 15000+, developer count: 1500+, packages such as the OpenOffice suite, Firefox browser, Thunderbird, GNOME 2.8 and KDE 3.3, advanced installation support: RAID, XFS, LVM, modular installer.
- **Debian 4.0**: Released on April 8, 2007. Code name: Etch, architecture support: same as Sarge, including AMD64. Package count: 18,200+, developer count: 1030+, graphical installer.
- **Debian 5.0**: Released on February 14, 2009. Code name: Lenny, architecture support: same as before plus ARM. Package count: 23000+, developer count: 1010+.
- **Debian 6.0**: Released on February 6, 2011. Code name: Squeeze, packages included: kernel 2.6.32, GNOME 2.30, Xorg 7.5, DKMS. Architectures: same as before plus kfreebsd-i386 and kfreebsd-amd64, dependency-based booting.
- **Debian 7.0**: Released on May 4, 2013. Code name: Wheezy, multiarch support, tools for private cloud, improved installer, full-featured multimedia codecs without the need for third-party repositories, kernel 3.2, Xen hypervisor 4.1.4, package count: 37400+.
- **Debian 8.0**: Released on April 25, 2015. Code name: Jessie, systemd as the default init system, powered by kernel 3.16, fast booting, cgroups for services, the possibility of isolating parts of services, 43000+ packages. The SysVinit init system is still available in Jessie.

**Note**: The Linux kernel's initial release was on October 5, 1991, and Debian's initial release was on September 15, 1993. So Debian has been around for 22 years, running the Linux kernel, which has been around for almost 24 years.

### Debian Facts ###

The year 1994 was spent organizing and managing the Debian project so that it would be easy for others to contribute. Hence no releases for users were made that year, though there were certain internal releases.

Debian 1.0 was never released. A CD-ROM manufacturer mistakenly labelled an unreleased version as Debian 1.0. Hence, to avoid confusion, the next release was numbered Debian 1.1, and only from then on did the concept of official CD-ROM images come into existence.

Each Debian release is named after a character from Toy Story.

Debian is always available as oldstable, stable, testing and experimental.

The Debian project continues to work on the unstable distribution (codenamed sid, after the evil kid from Toy Story). Sid is the permanent name of the unstable distribution, and it always remains ‘Still In Development’. The testing release is intended to become the next stable release and is currently codenamed stretch.

The official Debian distribution includes only free and open source software and nothing else. However, the contrib and non-free sections make it possible to install packages that are free but depend on non-free software (contrib), as well as packages licensed as non-free software (non-free).

Debian is the parent of a lot of Linux distributions. Some of these include:

- Damn Small Linux
- KNOPPIX
- Linux Advanced
- MEPIS
- Ubuntu
- 64 Studio (no longer active)
- LMDE

Debian is the world’s largest non-commercial Linux distribution. 32.1% of its code is written in C, and the rest is in 70 other programming languages.

Debian Contribution

Image Source: [Xmodulo][1]

The Debian project contains 68.5 million actual lines of code (LOC), plus 4.5 million lines of comments and whitespace.

The International Space Station dropped Windows and Red Hat in favour of Debian. The astronauts run one release back, now “squeeze”, for its stability and the strength of its community.

Thank God! Who would have heard the screams from space over the Windows Metro screen? :P

#### The Black Wednesday ####

On November 20, 2002, the University of Twente Network Operation Center (NOC) caught fire. The fire department gave up protecting the server area. The NOC hosted satie.debian.org, which included the security and non-US archives, New Maintainer, quality assurance and databases; everything was turned to ashes. Debian later rebuilt these services.

#### The Future Distro ####

Next in line is Debian 9, code name Stretch; what it will include is yet to be revealed. The best is yet to come. Just wait for it!

A lot of distributions appeared in the Linux distro world and then disappeared. In most cases, managing them as they grew bigger was the problem. That is certainly not the case with Debian, which has developers and maintainers all across the globe. It is one distro that has been there since the early days of Linux.

Debian's contribution to the Linux ecosystem can’t be measured in words. If there had been no Debian, Linux would not have been so rich and user-friendly. Debian is one of the distros considered highly reliable, secure and stable, and a perfect choice for web servers.

That’s the story of Debian so far. It has come a long way and is still going. The future is here! The world is here! If you have not used Debian until now, what are you waiting for? Just download your image and get started; we will be here if you get into trouble.

- [Debian Homepage][2]

--------------------------------------------------------------------------------

via: http://www.tecmint.com/happy-birthday-to-debian-gnu-linux/

Author: [Avishek Kumar][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.tecmint.com/author/avishek/
[1]:http://xmodulo.com/2013/08/interesting-facts-about-debian-linux.html
[2]:https://www.debian.org/

@ -0,0 +1,53 @@
Docker Working on Security Components, Live Container Migration
================================================================================

**Docker developers take the stage at Containercon and discuss their work on future container innovations for security and live migration.**

SEATTLE—Containers are one of the hottest topics in IT today, and at the Linuxcon USA event here there is a co-located event called Containercon, dedicated to this virtualization technology.

Docker Inc., the lead commercial sponsor of the open-source Docker effort, brought three of its top people to the keynote stage today, but not Docker founder Solomon Hykes.

Hykes, who delivered a Linuxcon keynote in 2014, was in the audience, though, as Senior Vice President of Engineering Marianna Tessel, Docker security chief Diogo Mónica and Docker chief maintainer Michael Crosby presented what's new and what's coming in Docker.

Tessel emphasized that Docker is very real today and used in production environments at some of the largest organizations on the planet, including the U.S. Government. Docker is also working in small environments, including the Raspberry Pi small-form-factor ARM computer, which can now support up to 2,300 containers on a single device.

"We're getting more powerful and at the same time Docker will also get simpler to use," Tessel said.

As a metaphor, Tessel said that the whole Docker experience is much like a cruise ship, where there is powerful and complex machinery that powers the ship, yet the experience for passengers is all smooth sailing.

One area that Docker is trying to make easier is security. Tessel said that security is mind-numbingly complex for most people as organizations constantly try to avoid network breaches.

That's where Docker Content Trust comes into play, a configurable feature in the recent Docker 1.8 release. Diogo Mónica, security lead for Docker, joined Tessel on stage and said that security is a hard topic, which is why Docker Content Trust is being developed.

With Docker Content Trust there is a verifiable way to make sure that a given Docker application image is authentic. There are also controls to limit fraud and potential malicious code injection by verifying application freshness.

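In Docker 1.8, Content Trust is switched on by setting the `DOCKER_CONTENT_TRUST=1` environment variable. Under the hood it builds on Notary and The Update Framework, which use public-key signatures. The stdlib-only sketch below is not that implementation. It swaps in an HMAC with a hypothetical shared key, purely to illustrate the two checks described above: authenticity (the image digest matches signed metadata) and freshness (the metadata has not expired, so a replayed old version is refused). All names here are illustrative.

```python
import hashlib
import hmac
import json
import time

# Hypothetical publisher key. Real Content Trust uses public-key signatures;
# HMAC is used here only to keep the sketch self-contained.
SIGNING_KEY = b"hypothetical-publisher-key"


def sign_metadata(image_bytes, ttl_seconds, now=None):
    """Publisher side: record the image digest and an expiry time, then sign both."""
    now = time.time() if now is None else now
    meta = {"digest": hashlib.sha256(image_bytes).hexdigest(), "expires": now + ttl_seconds}
    payload = json.dumps(meta, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return payload, signature


def verify_pull(image_bytes, payload, signature, now=None):
    """Client side: accept the image only if signature, freshness and digest all check out."""
    now = time.time() if now is None else now
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        return False  # metadata tampered with, or not signed by the publisher
    meta = json.loads(payload)
    if now > meta["expires"]:
        return False  # stale metadata: refuse a possibly replayed old version
    return hashlib.sha256(image_bytes).hexdigest() == meta["digest"]
```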
To prove his point, Mónica did a live demonstration of what could happen if Content Trust is not enabled. In one scenario, a website update was manipulated so that the demo web app was defaced. With Content Trust enabled, the attack didn't work and was blocked.

"Don't let the simple demo fool you," Tessel said. "You have seen the best security possible."

One area where containers haven't been put to use before is live migration, which for VMware virtual machines is provided by a technology called vMotion. It's an area that Docker is currently working on.

Docker chief maintainer Michael Crosby did an onstage demonstration of a live migration of Docker containers. Crosby referred to the approach as checkpoint and restore, where a running container gets a checkpoint snapshot and is then restored to another location.

A container also can be cloned and then run in another location. Crosby humorously referred to his cloned container as "Dolly," a reference to the world's first cloned animal, Dolly the sheep.

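On Linux, tools such as CRIU (Checkpoint/Restore In Userspace) capture the full state of running processes. The toy Python model below only illustrates the idea behind checkpoint, restore and cloning. A hypothetical `Workload` class stands in for a container, and its state is frozen into bytes. Restoring those bytes once gives a migrated copy that resumes where the original stopped; restoring them a second time gives a "Dolly"-style clone.

```python
import json


class Workload:
    """Hypothetical stand-in for a process running inside a container."""

    def __init__(self, name):
        self.name = name
        self.requests_served = 0

    def handle_request(self):
        self.requests_served += 1
        return self.requests_served


def checkpoint(workload):
    """Freeze the workload's in-memory state into bytes that could be shipped to another host."""
    return json.dumps(vars(workload)).encode()


def restore(snapshot):
    """Rebuild a workload from a snapshot; it resumes where the original stopped."""
    workload = Workload.__new__(Workload)  # bypass __init__ so the state is not reset
    workload.__dict__.update(json.loads(snapshot))
    return workload


if __name__ == "__main__":
    original = Workload("web")
    original.handle_request()
    original.handle_request()
    snapshot = checkpoint(original)
    migrated = restore(snapshot)  # live migration: carry on somewhere else
    dolly = restore(snapshot)     # a clone started from the same checkpoint
    print(migrated.handle_request(), dolly.handle_request())  # prints "3 3"
```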
Tessel also took time to talk about the runC component of containers, which is now being developed by the Open Container Initiative as a multi-stakeholder process. With runC, containers expand beyond Linux to multiple operating systems, including Windows and Solaris.

Overall, Tessel said that she can't predict the future of Docker, though she is very optimistic.

"I'm not sure what the future is, but I'm sure it'll be out of this world," Tessel said.

Sean Michael Kerner is a senior editor at eWEEK and InternetNews.com. Follow him on Twitter @TechJournalist.

--------------------------------------------------------------------------------

via: http://www.eweek.com/virtualization/docker-working-on-security-components-live-container-migration.html

Author: [Sean Michael Kerner][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.eweek.com/cp/bio/Sean-Michael-Kerner/

@ -0,0 +1,49 @@
Linuxcon: The Changing Role of the Server OS
================================================================================
SEATTLE - Containers might one day change the world, but it will take time and it will also change the role of the operating system. That's the message delivered during a Linuxcon keynote here today by Wim Coekaerts, SVP Linux and virtualization engineering at Oracle.

Coekaerts started his presentation by putting up a slide stating that it's the year of the desktop, which generated a few laughs from the audience. Truly, though, Coekaerts said it is now apparent that 2015 is the year of the container, and more importantly the year of the application, which is what containers are really all about.

"What do you need an operating system for?" Coekaerts asked. "It's really just there to run an application; an operating system is there to manage hardware and resources so your app can run."

Coekaerts added that with Docker containers, the focus is once again on the application. At Oracle, Coekaerts said, much of the focus is on how to make the app run better on the OS.

"Many people are used to installing apps, but many of the younger generation just click a button on their mobile device and it runs," Coekaerts said.

Coekaerts said that people now wonder why it's more complex to install software in the enterprise, and Docker helps to change that.

"The role of the operating system is changing," Coekaerts said.

The rise of Docker does not mean the demise of virtual machines (VMs), though. Coekaerts said it will take a very long time for things to mature in the containerization space and get used in the real world.

During that period VMs and containers will co-exist, and there will be a need for transition and migration tools between containers and VMs. For example, Coekaerts noted that Oracle's VirtualBox open-source technology is widely used on desktop systems today as a way to help users run Docker. The Docker Kitematic project makes use of VirtualBox to boot Docker on Macs today.

### The Open Container Initiative and Write Once, Deploy Anywhere for Containers ###

A key promise that needs to be fulfilled for containers to truly succeed is the concept of write once, deploy anywhere. That's an area where the Linux Foundation's Open Container Initiative (OCI) will play a key role in enabling interoperability across container runtimes.

"With OCI, it will make it easier to build once and run anywhere, so what you package locally you can run wherever you want," Coekaerts said.

Overall, though, Coekaerts said that while there is a lot of interest in moving to the container model, it's not quite ready yet. He noted that Oracle is working on certifying its products to run in containers, but it's a hard process.

"Running the database is easy; it's everything else around it that is complex," Coekaerts said. "Containers don't behave the same as VMs, and some applications depend on low-level system configuration items that are not exposed from the host to the container."

Additionally, Coekaerts commented that debugging problems inside a container is different from debugging in a VM, and there is currently a lack of mature tools for proper container app debugging.

Coekaerts emphasized that as containers mature, it's important not to forget about the existing technology that organizations use to run and deploy applications on servers today. He said enterprises don't typically throw out everything they have just to start with new technology.

"Deploying new technology is hard, and you need to be able to transition from what you have," Coekaerts said. "The technology that allows you to transition easily is the technology that wins."

--------------------------------------------------------------------------------

via: http://www.serverwatch.com/server-news/linuxcon-the-changing-role-of-the-server-os.html

Author: [Sean Michael Kerner][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.serverwatch.com/author/Sean-Michael-Kerner-101580.htm

@ -0,0 +1,49 @@
A Look at What's Next for the Linux Kernel
================================================================================

**The upcoming Linux 4.2 kernel will have more contributors than any other Linux kernel in history, according to Linux kernel developer Jonathan Corbet.**

SEATTLE—The Linux kernel continues to grow—both in lines of code and the number of developers that contribute to it—yet some challenges need to be addressed. That was one of the key messages from Linux kernel developer Jonathan Corbet during his annual Kernel Report session at the LinuxCon conference here.

The Linux 4.2 kernel is still under development, with general availability expected on Aug. 23. Corbet noted that 1,569 developers have contributed code for the Linux 4.2 kernel. Of those, 277 developers made their first contribution ever during the Linux 4.2 development cycle.

Even as more developers are coming to Linux, the pace of development and releases is very fast, Corbet said. He estimates that it now takes approximately 63 days for the community to build a new Linux kernel milestone.

Linux 4.2 will benefit from a number of improvements that have been evolving in Linux over the last several releases. One such improvement is OverlayFS, a union file system that stacks a writable layer on top of read-only layers. It is useful because it can enable many containers to be layered on top of each other, Corbet said.

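To make the layering idea concrete, here is a toy Python model of a union mount. It assumes the usual OverlayFS arrangement of one writable upper layer over read-only lower layers. Reads fall through from the top layer down. Writes go to the upper layer, with a simplified copy-up for modifications. Deletions are recorded as whiteout markers. Several mounts can share the same lower layer while each keeps its own upper layer, which is what makes the model useful for containers. It illustrates the concept only; the class and method names are hypothetical, and this is not how the kernel implements it.

```python
class Overlay:
    """Toy union mount: one writable upper layer over shared read-only lower layers.

    Each layer is a dict mapping a path to file contents (str).
    """

    _WHITEOUT = object()  # marks a file deleted in the upper layer

    def __init__(self, lowers):
        self.lowers = lowers  # read-only layers, topmost first; never modified
        self.upper = {}       # writable layer private to this mount

    def read(self, path):
        for layer in [self.upper, *self.lowers]:
            if path in layer:
                if layer[path] is self._WHITEOUT:
                    break  # deleted in the upper layer: hide lower copies
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, data):
        # All modifications land in the upper layer; lower layers stay untouched.
        self.upper[path] = data

    def append(self, path, data):
        # Simplified copy-up: read the (possibly lower-layer) file, then store the
        # modified copy in the upper layer.
        self.upper[path] = self.read(path) + data

    def delete(self, path):
        self.read(path)  # raises FileNotFoundError if the file is not visible
        self.upper[path] = self._WHITEOUT
```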
Linux networking is also set to improve small-packet performance, which is important for areas such as high-frequency financial trading. The improvements are aimed at reducing the amount of time and power needed to process each data packet, Corbet said.

New drivers are always being added to Linux. On average, there are 60 to 80 new or updated drivers added in every Linux kernel development cycle, Corbet said.

Another key area that continues to improve is live kernel patching, first introduced in the Linux 4.0 kernel. With live kernel patching, the promise is that a system administrator can patch a live running kernel without the need to reboot a running production system. While the basic elements of live kernel patching are in the kernel already, work is under way to make it all work with the right level of consistency and stability, Corbet explained.

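The kernel's live patching works by redirecting calls from an old function to its replacement (using the ftrace infrastructure) while the system keeps running. The Python sketch below models only that redirection step. It uses a hypothetical `PatchableFunction` wrapper and a hypothetical buggy `clamp` function. It deliberately ignores the consistency problem Corbet describes, which is making sure no task is midway through the old code when the switch happens.

```python
class PatchableFunction:
    """Hypothetical indirection point: callers always go through this object,
    so the implementation behind it can be swapped without restarting."""

    def __init__(self, impl):
        self.impl = impl
        self.patch_level = 0

    def __call__(self, *args, **kwargs):
        return self.impl(*args, **kwargs)

    def patch(self, new_impl):
        # Redirect all future calls; a call already in progress finishes on the old code.
        self.impl = new_impl
        self.patch_level += 1


def clamp_buggy(value):
    """Hypothetical buggy function: forgets to clamp to the 0-255 range."""
    return value


def clamp_fixed(value):
    """Hypothetical fixed version shipped as the live patch."""
    return max(0, min(value, 255))


clamp = PatchableFunction(clamp_buggy)  # callers hold this object, never the raw function
```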
**Linux Security, IoT and Other Concerns**

Security has been a hot topic in the open-source community in the past year due to high-profile issues, including Heartbleed and Shellshock.

"I don't doubt there are some unpleasant surprises in the neglected Linux code at this point," Corbet said.

He noted that there are more than 3 million lines of code in the Linux kernel today that have not been touched by developers in the last decade, and that the Shellshock vulnerability was a flaw in 20-year-old Bash code that hadn't been looked at in some time.

Another issue that concerns Corbet is the Unix 2038 issue, the Linux equivalent of the Y2K bug, which could have caused global havoc in the year 2000 if it hadn't been fixed. Systems that store time as a signed 32-bit count of seconds since January 1, 1970 will overflow in January 2038, which could bring down Linux and Unix machines. Corbet said that while 2038 is still 23 years away, there are systems being deployed now that will still be in use in 2038.

Some initial work took place to fix the 2038 flaw in Linux, but much more remains to be done, Corbet said. "The time to fix this is now, not 20 years from now in a panic when we're all trying to enjoy our retirement," Corbet said.

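The arithmetic behind the 2038 deadline is easy to check. The sketch below assumes the classic representation of time as a signed 32-bit `time_t` counting seconds since 1970-01-01 UTC. It computes the last representable moment, then shows that one more second wraps around to a date in 1901. The wraparound mimics the two's-complement overflow that typical hardware produces.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1  # largest value a signed 32-bit time_t can hold


def to_int32(n):
    """Wrap an integer into the signed 32-bit range, as two's-complement overflow does."""
    return (n + 2**31) % 2**32 - 2**31


def as_utc(seconds):
    """Convert seconds since the Unix epoch to a UTC datetime (negative values included)."""
    return EPOCH + timedelta(seconds=seconds)


print(as_utc(INT32_MAX))                # 2038-01-19 03:14:07+00:00
print(as_utc(to_int32(INT32_MAX + 1)))  # 1901-12-13 20:45:52+00:00
```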
The Internet of Things (IoT) is another area of Linux concern for Corbet. Today, Linux is a leading embedded operating system for IoT, but that might not always be the case. Corbet is concerned that the Linux kernel's growth is making its memory footprint too big to work in future IoT devices.

A Linux project is now under way to minimize the size of the Linux kernel, and it's important that it gets the support it needs, Corbet said.