mirror of

https://github.com/LCTT/TranslateProject.git

synced 2024-12-26 21:30:55 +08:00

commit

b2f7dc239d

347

published/20161012 Introduction to FirewallD on CentOS.md

Normal file

347

published/20161012 Introduction to FirewallD on CentOS.md

Normal file

@ -0,0 +1,347 @@

|

|||||||

|

CentOS 上的 FirewallD 简明指南

|

||||||

|

============================================================

|

||||||

|

|

||||||

|

[FirewallD][4] 是 iptables 的前端控制器,用于实现持久的网络流量规则。它提供命令行和图形界面,在大多数 Linux 发行版的仓库中都有。与直接控制 iptables 相比,使用 FirewallD 有两个主要区别:

|

||||||

|

|

||||||

|

1. FirewallD 使用区域和服务而不是链式规则。

|

||||||

|

2. 它动态管理规则集,允许更新规则而不破坏现有会话和连接。

|

||||||

|

|

||||||

|

> FirewallD 是 iptables 的一个封装,可以让你更容易地管理 iptables 规则 - 它并*不是* iptables 的替代品。虽然 iptables 命令仍可用于 FirewallD,但建议使用 FirewallD 时仅使用 FirewallD 命令。

|

||||||

|

|

||||||

|

本指南将向您介绍 FirewallD 的区域和服务的概念,以及一些基本的配置步骤。

|

||||||

|

|

||||||

|

### 安装与管理 FirewallD

|

||||||

|

|

||||||

|

CentOS 7 和 Fedora 20+ 已经包含了 FirewallD,但是默认没有激活。可以像其它的 systemd 单元那样控制它。

|

||||||

|

|

||||||

|

1、 启动服务,并在系统引导时启动该服务:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo systemctl start firewalld

|

||||||

|

sudo systemctl enable firewalld

|

||||||

|

```

|

||||||

|

|

||||||

|

要停止并禁用:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo systemctl stop firewalld

|

||||||

|

sudo systemctl disable firewalld

|

||||||

|

```

|

||||||

|

|

||||||

|

2、 检查防火墙状态。输出应该是 `running` 或者 `not running`。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --state

|

||||||

|

```

|

||||||

|

|

||||||

|

3、 要查看 FirewallD 守护进程的状态:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo systemctl status firewalld

|

||||||

|

```

|

||||||

|

|

||||||

|

示例输出

|

||||||

|

|

||||||

|

```

|

||||||

|

firewalld.service - firewalld - dynamic firewall daemon

|

||||||

|

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled)

|

||||||

|

Active: active (running) since Wed 2015-09-02 18:03:22 UTC; 1min 12s ago

|

||||||

|

Main PID: 11954 (firewalld)

|

||||||

|

CGroup: /system.slice/firewalld.service

|

||||||

|

└─11954 /usr/bin/python -Es /usr/sbin/firewalld --nofork --nopid

|

||||||

|

```

|

||||||

|

|

||||||

|

4、 重新加载 FirewallD 配置:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --reload

|

||||||

|

```

|

||||||

|

|

||||||

|

### 配置 FirewallD

|

||||||

|

|

||||||

|

FirewallD 使用 XML 进行配置。除非是非常特殊的配置,你不必处理它们,而应该使用 `firewall-cmd`。

|

||||||

|

|

||||||

|

配置文件位于两个目录中:

|

||||||

|

|

||||||

|

* `/usr/lib/FirewallD` 下保存默认配置,如默认区域和公用服务。 避免修改它们,因为每次 firewall 软件包更新时都会覆盖这些文件。

|

||||||

|

* `/etc/firewalld` 下保存系统配置文件。 这些文件将覆盖默认配置。

|

||||||

|

|

||||||

|

#### 配置集

|

||||||

|

|

||||||

|

FirewallD 使用两个_配置集_:“运行时”和“持久”。 在系统重新启动或重新启动 FirewallD 时,不会保留运行时的配置更改,而对持久配置集的更改不会应用于正在运行的系统。

|

||||||

|

|

||||||

|

默认情况下,`firewall-cmd` 命令适用于运行时配置,但使用 `--permanent` 标志将保存到持久配置中。要添加和激活持久性规则,你可以使用两种方法之一。

|

||||||

|

|

||||||

|

1、 将规则同时添加到持久规则集和运行时规则集中。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --add-service=http --permanent

|

||||||

|

sudo firewall-cmd --zone=public --add-service=http

|

||||||

|

```

|

||||||

|

|

||||||

|

2、 将规则添加到持久规则集中并重新加载 FirewallD。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --add-service=http --permanent

|

||||||

|

sudo firewall-cmd --reload

|

||||||

|

```

|

||||||

|

|

||||||

|

> `reload` 命令会删除所有运行时配置并应用永久配置。因为 firewalld 动态管理规则集,所以它不会破坏现有的连接和会话。

|

||||||

|

|

||||||

|

### 防火墙的区域

|

||||||

|

|

||||||

|

“区域”是针对给定位置或场景(例如家庭、公共、受信任等)可能具有的各种信任级别的预构建规则集。不同的区域允许不同的网络服务和入站流量类型,而拒绝其他任何流量。 首次启用 FirewallD 后,`public` 将是默认区域。

|

||||||

|

|

||||||

|

区域也可以用于不同的网络接口。例如,要分离内部网络和互联网的接口,你可以在 `internal` 区域上允许 DHCP,但在`external` 区域仅允许 HTTP 和 SSH。未明确设置为特定区域的任何接口将添加到默认区域。

|

||||||

|

|

||||||

|

要找到默认区域:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --get-default-zone

|

||||||

|

```

|

||||||

|

|

||||||

|

要修改默认区域:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --set-default-zone=internal

|

||||||

|

```

|

||||||

|

|

||||||

|

要查看你网络接口使用的区域:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --get-active-zones

|

||||||

|

```

|

||||||

|

|

||||||

|

示例输出:

|

||||||

|

|

||||||

|

```

|

||||||

|

public

|

||||||

|

interfaces: eth0

|

||||||

|

```

|

||||||

|

|

||||||

|

要得到特定区域的所有配置:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --list-all

|

||||||

|

```

|

||||||

|

|

||||||

|

示例输出:

|

||||||

|

|

||||||

|

```

|

||||||

|

public (default, active)

|

||||||

|

interfaces: ens160

|

||||||

|

sources:

|

||||||

|

services: dhcpv6-client http ssh

|

||||||

|

ports: 12345/tcp

|

||||||

|

masquerade: no

|

||||||

|

forward-ports:

|

||||||

|

icmp-blocks:

|

||||||

|

rich rules:

|

||||||

|

```

|

||||||

|

|

||||||

|

要得到所有区域的配置:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --list-all-zones

|

||||||

|

```

|

||||||

|

|

||||||

|

示例输出:

|

||||||

|

|

||||||

|

```

|

||||||

|

block

|

||||||

|

interfaces:

|

||||||

|

sources:

|

||||||

|

services:

|

||||||

|

ports:

|

||||||

|

masquerade: no

|

||||||

|

forward-ports:

|

||||||

|

icmp-blocks:

|

||||||

|

rich rules:

|

||||||

|

|

||||||

|

...

|

||||||

|

|

||||||

|

work

|

||||||

|

interfaces:

|

||||||

|

sources:

|

||||||

|

services: dhcpv6-client ipp-client ssh

|

||||||

|

ports:

|

||||||

|

masquerade: no

|

||||||

|

forward-ports:

|

||||||

|

icmp-blocks:

|

||||||

|

rich rules:

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

#### 与服务一起使用

|

||||||

|

|

||||||

|

FirewallD 可以根据特定网络服务的预定义规则来允许相关流量。你可以创建自己的自定义系统规则,并将它们添加到任何区域。 默认支持的服务的配置文件位于 `/usr/lib /firewalld/services`,用户创建的服务文件在 `/etc/firewalld/services` 中。

|

||||||

|

|

||||||

|

要查看默认的可用服务:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --get-services

|

||||||

|

```

|

||||||

|

|

||||||

|

比如,要启用或禁用 HTTP 服务:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --add-service=http --permanent

|

||||||

|

sudo firewall-cmd --zone=public --remove-service=http --permanent

|

||||||

|

```

|

||||||

|

|

||||||

|

#### 允许或者拒绝任意端口/协议

|

||||||

|

|

||||||

|

比如:允许或者禁用 12345 端口的 TCP 流量。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --add-port=12345/tcp --permanent

|

||||||

|

sudo firewall-cmd --zone=public --remove-port=12345/tcp --permanent

|

||||||

|

```

|

||||||

|

|

||||||

|

#### 端口转发

|

||||||

|

|

||||||

|

下面是**在同一台服务器上**将 80 端口的流量转发到 12345 端口。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone="public" --add-forward-port=port=80:proto=tcp:toport=12345

|

||||||

|

```

|

||||||

|

|

||||||

|

要将端口转发到**另外一台服务器上**:

|

||||||

|

|

||||||

|

1、 在需要的区域中激活 masquerade。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --add-masquerade

|

||||||

|

```

|

||||||

|

|

||||||

|

2、 添加转发规则。例子中是将 IP 地址为 :123.456.78.9 的_远程服务器上_ 80 端口的流量转发到 8080 上。

|

||||||

|

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone="public" --add-forward-port=port=80:proto=tcp:toport=8080:toaddr=123.456.78.9

|

||||||

|

```

|

||||||

|

|

||||||

|

要删除规则,用 `--remove` 替换 `--add`。比如:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --remove-masquerade

|

||||||

|

```

|

||||||

|

|

||||||

|

### 用 FirewallD 构建规则集

|

||||||

|

|

||||||

|

例如,以下是如何使用 FirewallD 为你的服务器配置基本规则(如果您正在运行 web 服务器)。

|

||||||

|

|

||||||

|

1. 将 `eth0` 的默认区域设置为 `dmz`。 在所提供的默认区域中,dmz(非军事区)是最适合于这个程序的,因为它只允许 SSH 和 ICMP。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --set-default-zone=dmz

|

||||||

|

sudo firewall-cmd --zone=dmz --add-interface=eth0

|

||||||

|

```

|

||||||

|

|

||||||

|

2、 把 HTTP 和 HTTPS 添加永久的服务规则到 dmz 区域中:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=dmz --add-service=http --permanent

|

||||||

|

sudo firewall-cmd --zone=dmz --add-service=https --permanent

|

||||||

|

```

|

||||||

|

|

||||||

|

3、 重新加载 FirewallD 让规则立即生效:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --reload

|

||||||

|

```

|

||||||

|

|

||||||

|

如果你运行 `firewall-cmd --zone=dmz --list-all`, 会有下面的输出:

|

||||||

|

|

||||||

|

```

|

||||||

|

dmz (default)

|

||||||

|

interfaces: eth0

|

||||||

|

sources:

|

||||||

|

services: http https ssh

|

||||||

|

ports:

|

||||||

|

masquerade: no

|

||||||

|

forward-ports:

|

||||||

|

icmp-blocks:

|

||||||

|

rich rules:

|

||||||

|

```

|

||||||

|

|

||||||

|

这告诉我们,`dmz` 区域是我们的**默认**区域,它被用于 `eth0` 接口**中所有网络的**源地址**和**端口**。 允许传入 HTTP(端口 80)、HTTPS(端口 443)和 SSH(端口 22)的流量,并且由于没有 IP 版本控制的限制,这些适用于 IPv4 和 IPv6。 不允许**IP 伪装**以及**端口转发**。 我们没有 **ICMP 块**,所以 ICMP 流量是完全允许的。没有**丰富(Rich)规则**,允许所有出站流量。

|

||||||

|

|

||||||

|

### 高级配置

|

||||||

|

|

||||||

|

服务和端口适用于基本配置,但对于高级情景可能会限制较多。 丰富(Rich)规则和直接(Direct)接口允许你为任何端口、协议、地址和操作向任何区域 添加完全自定义的防火墙规则。

|

||||||

|

|

||||||

|

#### 丰富规则

|

||||||

|

|

||||||

|

丰富规则的语法有很多,但都完整地记录在 [firewalld.richlanguage(5)][5] 的手册页中(或在终端中 `man firewalld.richlanguage`)。 使用 `--add-rich-rule`、`--list-rich-rules` 、 `--remove-rich-rule` 和 firewall-cmd 命令来管理它们。

|

||||||

|

|

||||||

|

这里有一些常见的例子:

|

||||||

|

|

||||||

|

允许来自主机 192.168.0.14 的所有 IPv4 流量。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --add-rich-rule 'rule family="ipv4" source address=192.168.0.14 accept'

|

||||||

|

```

|

||||||

|

|

||||||

|

拒绝来自主机 192.168.1.10 到 22 端口的 IPv4 的 TCP 流量。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --add-rich-rule 'rule family="ipv4" source address="192.168.1.10" port port=22 protocol=tcp reject'

|

||||||

|

```

|

||||||

|

|

||||||

|

允许来自主机 10.1.0.3 到 80 端口的 IPv4 的 TCP 流量,并将流量转发到 6532 端口上。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --add-rich-rule 'rule family=ipv4 source address=10.1.0.3 forward-port port=80 protocol=tcp to-port=6532'

|

||||||

|

```

|

||||||

|

|

||||||

|

将主机 172.31.4.2 上 80 端口的 IPv4 流量转发到 8080 端口(需要在区域上激活 masquerade)。

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --zone=public --add-rich-rule 'rule family=ipv4 forward-port port=80 protocol=tcp to-port=8080 to-addr=172.31.4.2'

|

||||||

|

```

|

||||||

|

|

||||||

|

列出你目前的丰富规则:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo firewall-cmd --list-rich-rules

|

||||||

|

```

|

||||||

|

|

||||||

|

### iptables 的直接接口

|

||||||

|

|

||||||

|

对于最高级的使用,或对于 iptables 专家,FirewallD 提供了一个直接(Direct)接口,允许你给它传递原始 iptables 命令。 直接接口规则不是持久的,除非使用 `--permanent`。

|

||||||

|

|

||||||

|

要查看添加到 FirewallD 的所有自定义链或规则:

|

||||||

|

|

||||||

|

```

|

||||||

|

firewall-cmd --direct --get-all-chains

|

||||||

|

firewall-cmd --direct --get-all-rules

|

||||||

|

```

|

||||||

|

|

||||||

|

讨论 iptables 的具体语法已经超出了这篇文章的范围。如果你想学习更多,你可以查看我们的 [iptables 指南][6]。

|

||||||

|

|

||||||

|

### 更多信息

|

||||||

|

|

||||||

|

你可以查阅以下资源以获取有关此主题的更多信息。虽然我们希望我们提供的是有效的,但是请注意,我们不能保证外部材料的准确性或及时性。

|

||||||

|

|

||||||

|

* [FirewallD 官方网站][1]

|

||||||

|

* [RHEL 7 安全指南:FirewallD 简介][2]

|

||||||

|

* [Fedora Wiki:FirewallD][3]

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://www.linode.com/docs/security/firewalls/introduction-to-firewalld-on-centos

|

||||||

|

|

||||||

|

作者:[Linode][a]

|

||||||

|

译者:[geekpi](https://github.com/geekpi)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://www.linode.com/docs/security/firewalls/introduction-to-firewalld-on-centos

|

||||||

|

[1]:http://www.firewalld.org/

|

||||||

|

[2]:https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Security_Guide/sec-Using_Firewalls.html#sec-Introduction_to_firewalld

|

||||||

|

[3]:https://fedoraproject.org/wiki/FirewallD

|

||||||

|

[4]:http://www.firewalld.org/

|

||||||

|

[5]:https://jpopelka.fedorapeople.org/firewalld/doc/firewalld.richlanguage.html

|

||||||

|

[6]:https://www.linode.com/docs/networking/firewalls/control-network-traffic-with-iptables

|

||||||

184

published/20161128 Managing devices in Linux.md

Normal file

184

published/20161128 Managing devices in Linux.md

Normal file

@ -0,0 +1,184 @@

|

|||||||

|

在 Linux 中管理设备

|

||||||

|

=============

|

||||||

|

|

||||||

|

探索 `/dev` 目录可以让您知道如何直接访问到 Linux 中的设备。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

*照片提供:Opensource.com*

|

||||||

|

|

||||||

|

Linux 目录结构中有很多有趣的功能,这次我会讲到 `/dev` 目录一些迷人之处。在继续阅读这篇文章之前,建议你看看我前面的文章。[Linux 文件系统][9],[一切皆为文件][8],这两篇文章介绍了一些有趣的 Linux 文件系统概念。请先看看 - 我会等你看完再回来。

|

||||||

|

|

||||||

|

……

|

||||||

|

|

||||||

|

太好了 !欢迎回来。现在我们可以继续更详尽地探讨 `/dev` 目录。

|

||||||

|

|

||||||

|

### 设备文件

|

||||||

|

|

||||||

|

设备文件也称为[设备特定文件][4]。设备文件用来为操作系统和用户提供它们代表的设备接口。所有的 Linux 设备文件均位于 `/dev` 目录下,是根 (`/`) 文件系统的一个组成部分,因为这些设备文件在操作系统启动过程中必须可以使用。

|

||||||

|

|

||||||

|

关于这些设备文件,要记住的一件重要的事情,就是它们大多不是设备驱动程序。更准确地描述来说,它们是设备驱动程序的门户。数据从应用程序或操作系统传递到设备文件,然后设备文件将它传递给设备驱动程序,驱动程序再将它发给物理设备。反向的数据通道也可以用,从物理设备通过设备驱动程序,再到设备文件,最后到达应用程序或其他设备。

|

||||||

|

|

||||||

|

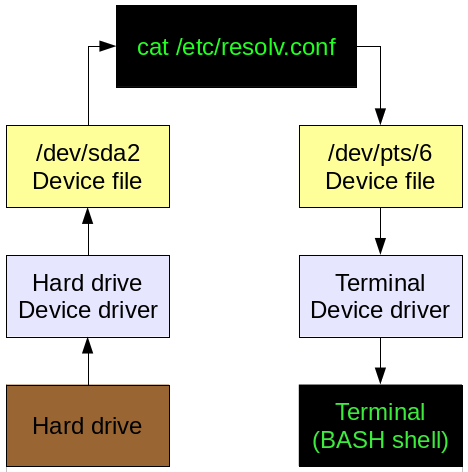

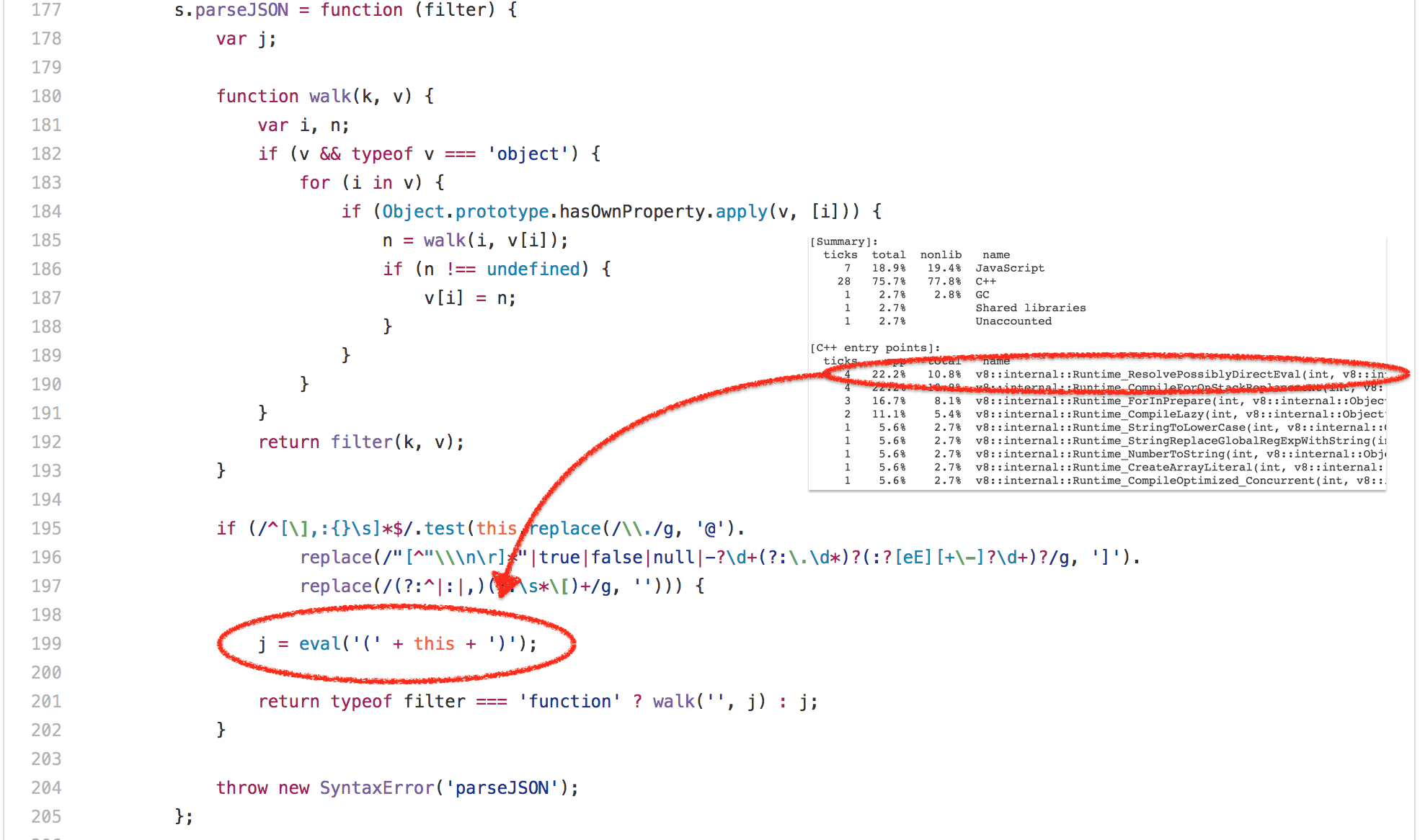

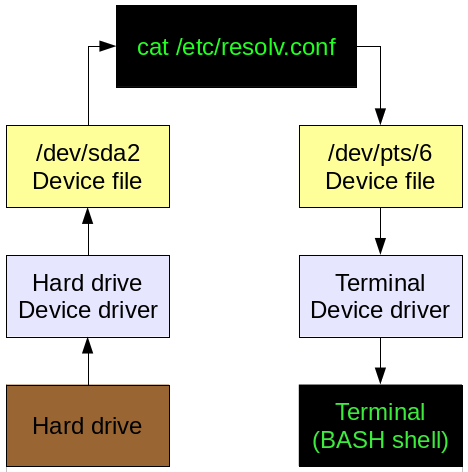

让我们以一个典型命令的数据流程来直观地看看。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

*图 1:一个典型命令的简单数据流程。*

|

||||||

|

|

||||||

|

在上面的图 1 中,显示一个简单命令的简化数据流程。从一个 GUI 终端仿真器,例如 Konsole 或 xterm 中发出 `cat /etc/resolv.conf` 命令,它会从磁盘中读取 `resolv.conf` 文件,磁盘设备驱动程序处理设备的具体功能,例如在硬盘驱动器上定位文件并读取它。数据通过设备文件传递,然后从命令到设备文件,然后到 6 号伪终端的设备驱动,然后在终端会话中显示。

|

||||||

|

|

||||||

|

当然, `cat` 命令的输出可以以下面的方式被重定向到一个文件, `cat /etc/resolv.conf > /etc/resolv.bak` ,这样会创建该文件的备份。在这种情况下,图 1 左侧的数据流量将保持不变,而右边的数据流量将通过 `/dev/sda2` 设备文件、硬盘设备驱动程序,然后到硬盘驱动器本身。

|

||||||

|

|

||||||

|

这些设备文件使得使用标准流 (STD/IO) 和重定向访问 Linux 或 Unix 计算机上的任何一个设备非常容易。只需将数据流定向到设备文件,即可将数据发送到该设备。

|

||||||

|

|

||||||

|

### 设备文件类别

|

||||||

|

|

||||||

|

设备文件至少可以按两种方式划分。第一种也是最常用的分类是根据与设备相关联的数据流进行划分。比如,tty (teletype) 和串行设备被认为是基于字符的,因为数据流的传送和处理是以一次一个字符或字节进行的;而块类型设备(如硬盘驱动器)是以块为单位传输数据,通常为 256 个字节的倍数。

|

||||||

|

|

||||||

|

您可以在终端上以一个非 root 用户,改变当前工作目录(`PWD`)到 `/dev` ,并显示长目录列表。 这将显示设备文件列表、文件权限及其主、次设备号。 例如,下面的设备文件只是我的 Fedora 24 工作站上 `/dev` 目录中的几个文件。 它们表示磁盘和 tty 设备类型。 注意输出中每行的最左边的字符。 `b` 代表是块类型设备,`c` 代表字符设备。

|

||||||

|

|

||||||

|

```

|

||||||

|

brw-rw---- 1 root disk 8, 0 Nov 7 07:06 sda

|

||||||

|

brw-rw---- 1 root disk 8, 1 Nov 7 07:06 sda1

|

||||||

|

brw-rw---- 1 root disk 8, 16 Nov 7 07:06 sdb

|

||||||

|

brw-rw---- 1 root disk 8, 17 Nov 7 07:06 sdb1

|

||||||

|

brw-rw---- 1 root disk 8, 18 Nov 7 07:06 sdb2

|

||||||

|

crw--w---- 1 root tty 4, 0 Nov 7 07:06 tty0

|

||||||

|

crw--w---- 1 root tty 4, 1 Nov 7 07:07 tty1

|

||||||

|

crw--w---- 1 root tty 4, 10 Nov 7 07:06 tty10

|

||||||

|

crw--w---- 1 root tty 4, 11 Nov 7 07:06 tty11

|

||||||

|

```

|

||||||

|

|

||||||

|

识别设备文件更详细和更明确的方法是使用设备主要以及次要号。 磁盘设备主设备号为 8,将它们指定为 SCSI 块设备。请注意,所有 PATA 和 SATA 硬盘驱动器都由 SCSI 子系统管理,因为旧的 ATA 子系统多年前就由于代码质量糟糕而被认为不可维护。造成的结果就是,以前被称为 “hd[a-z]” 的硬盘驱动器现在被称为 “sd[a-z]”。

|

||||||

|

|

||||||

|

你大概可以从上面的示例中推出磁盘驱动器次设备号的模式。次设备号 0、 16、 32 等等,直到 240,是整个磁盘的号。所以主/次 8/16 表示整个磁盘 `/dev/sdb` , 8/17 是第一个分区的设备文件,`/dev/sdb1`。数字 8/34 代表 `/dev/sdc2`。

|

||||||

|

|

||||||

|

在上面列表中的 tty 设备文件编号更简单一些,从 tty0 到 tty63 。

|

||||||

|

|

||||||

|

Kernel.org 上的 [Linux 下的已分配设备][5]文件是设备类型和主次编号分配的正式注册表。它可以帮助您了解所有当前定义的设备的主要/次要号码。

|

||||||

|

|

||||||

|

### 趣味设备文件

|

||||||

|

|

||||||

|

让我们花几分钟时间,执行几个有趣的实验,演示 Linux 设备文件的强大和灵活性。 大多数 Linux 发行版都有 1 到 7 个虚拟控制台,可用于使用 shell 接口登录到本地控制台会话。 可以使用 `Ctrl-Alt-F1`(控制台 1),`Ctrl-Alt-F2`(控制台 2)等键盘组合键来访问。

|

||||||

|

|

||||||

|

请按 `Ctrl-Alt-F2` 切换到控制台 2。在某些发行版,登录显示的信息包括了与此控制台关联的 tty 设备,但大多不包括。它应该是 tty2,因为你是在控制台 2 中。

|

||||||

|

|

||||||

|

以非 root 用户身份登录。 然后你可以使用 `who am i` 命令 — 是的,就是这个命令,带空格 — 来确定哪个 tty 设备连接到这个控制台。

|

||||||

|

|

||||||

|

在我们实际执行此实验之前,看看 `/dev` 中的 tty2 和 tty3 的设备列表。

|

||||||

|

|

||||||

|

```

|

||||||

|

ls -l /dev/tty[23]

|

||||||

|

```

|

||||||

|

|

||||||

|

有大量的 tty 设备,但我们不关心他们中的大多数,只注意 tty2 和 tty3 设备。 作为设备文件,它们没什么特别之处。它们都只是字符类型设备。我们将使用这些设备进行此实验。 tty2 设备连接到虚拟控制台 2,tty3 设备连接到虚拟控制台 3。

|

||||||

|

|

||||||

|

按 `Ctrl-Alt-F3` 切换到控制台 3。再次以同一非 root 用户身份登录。 现在在控制台 3 上输入以下命令。

|

||||||

|

|

||||||

|

```

|

||||||

|

echo "Hello world" > /dev/tty2

|

||||||

|

```

|

||||||

|

|

||||||

|

按 `Ctrl-Alt-f2` 键以返回到控制台 2。字符串 “Hello world”(没有引号)将显示在控制台 2。

|

||||||

|

|

||||||

|

该实验也可以使用 GUI 桌面上的终端仿真器来执行。 桌面上的终端会话使用 `/dev` 中的伪终端设备,如 `/dev/pts/1`。 使用 Konsole 或 Xterm 打开两个终端会话。 确定它们连接到哪些伪终端,并使用一个向另一个发送消息。

|

||||||

|

|

||||||

|

现在继续实验,使用 `cat` 命令,试试在不同的终端上显示 `/etc/fstab` 文件。

|

||||||

|

|

||||||

|

另一个有趣的实验是使用 `cat` 命令将文件直接打印到打印机。 假设您的打印机设备是 `/dev/usb/lp0`,并且您的打印机可以直接打印 PDF 文件,以下命令将在您的打印机上打印 `test.pdf` 文件。

|

||||||

|

|

||||||

|

```

|

||||||

|

cat test.pdf > /dev/usb/lp0

|

||||||

|

```

|

||||||

|

|

||||||

|

`/dev` 目录包含一些非常有趣的设备文件,这些文件是硬件的入口,人们通常不认为这是硬盘驱动器或显示器之类的设备。 例如,系统存储器 RAM 不是通常被认为是“设备”的东西,而 `/dev/mem` 是通过其可以实现对存储器的直接访问的入口。 下面的例子有一些有趣的结果。

|

||||||

|

|

||||||

|

```

|

||||||

|

dd if=/dev/mem bs=2048 count=100

|

||||||

|

```

|

||||||

|

|

||||||

|

上面的 `dd` 命令提供比简单地使用 `cat` 命令 dump 所有系统的内存提供了更多的控制。 它提供了指定从 `/dev/mem` 读取多少数据的能力,还允许指定从存储器哪里开始读取数据。虽然读取了一些内存,但内核响应了以下错误,在 `/var/log/messages` 中可以看到。

|

||||||

|

|

||||||

|

```

|

||||||

|

Nov 14 14:37:31 david kernel: usercopy: kernel memory exposure attempt detected from ffff9f78c0010000 (dma-kmalloc-512) (2048 bytes)

|

||||||

|

```

|

||||||

|

|

||||||

|

这个错误意味着内核正在通过保护属于其他进程的内存来完成它的工作,这正是它应该工作的方式。 所以,虽然可以使用 `/dev/mem` 来显示存储在 RAM 内存中的数据,但是访问的大多数内存空间是受保护的并且会导致错误。 只可以访问由内核内存管理器分配给运行 `dd` 命令的 BASH shell 的虚拟内存,而不会导致错误。 抱歉,但你不能窥视不属于你的内存,除非你发现了一个可利用的漏洞。

|

||||||

|

|

||||||

|

`/dev` 中还有一些非常有趣的设备文件。 设备文件 `null`,`zero`,`random` 和 `urandom` 不与任何物理设备相关联。

|

||||||

|

|

||||||

|

例如,空设备 `/dev/null` 可以用作来自 shell 命令或程序的输出重定向的目标,以便它们不显示在终端上。 我经常在我的 BASH 脚本中使用这个,以防止向用户展示可能会让他们感到困惑的输出。 `/dev/null` 设备可用于产生一个空字符串。 使用如下所示的 `dd` 命令查看 `/dev/null` 设备文件的一些输出。

|

||||||

|

|

||||||

|

```

|

||||||

|

# dd if=/dev/null bs=512 count=500 | od -c

|

||||||

|

0+0 records in

|

||||||

|

0+0 records out

|

||||||

|

0 bytes copied, 1.5885e-05 s, 0.0 kB/s

|

||||||

|

0000000

|

||||||

|

```

|

||||||

|

|

||||||

|

注意,因为空字符什么也没有所以确实没有可见的输出。 注意看看字节数。

|

||||||

|

|

||||||

|

`/dev/random` 和 `/dev/urandom` 设备也很有趣。 正如它们的名字所暗示的,它们都产生随机输出,不仅仅是数字,而是任何字节组合。 `/dev/urandom` 设备产生的是**确定性**的随机输出,并且非常快。 这意味着输出由算法确定,并使用种子字符串作为起点。 结果,如果原始种子是已知的,则黑客可以再现输出,尽管非常困难,但这是有可能的。 使用命令 `cat /dev/urandom` 可以查看典型的输出,使用 `Ctrl-c` 退出。

|

||||||

|

|

||||||

|

`/dev/random` 设备文件生成**非确定性**的随机输出,但它产生的输出更慢一些。 该输出不是由依赖于先前数字的算法确定的,而是由击键动作和鼠标移动而产生的。 这种方法使得复制特定系列的随机数要困难得多。使用 `cat` 命令去查看一些来自 `/dev/random` 设备文件输出。尝试移动鼠标以查看它如何影响输出。

|

||||||

|

|

||||||

|

正如其名字所暗示的,`/dev/zero` 设备文件产生一个无止境的零作为输出。 注意,这些是八进制零,而不是ASCII字符零(`0`)。 使用如下所示的 `dd` 查看 `/dev/zero` 设备文件中的一些输出

|

||||||

|

|

||||||

|

```

|

||||||

|

# dd if=/dev/zero bs=512 count=500 | od -c

|

||||||

|

0000000 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0

|

||||||

|

*

|

||||||

|

500+0 records in

|

||||||

|

500+0 records out

|

||||||

|

256000 bytes (256 kB, 250 KiB) copied, 0.00126996 s, 202 MB/s

|

||||||

|

0764000

|

||||||

|

```

|

||||||

|

|

||||||

|

请注意,此命令的字节数不为零。

|

||||||

|

|

||||||

|

### 创建设备文件

|

||||||

|

|

||||||

|

在过去,在 `/dev` 中的设备文件都是在安装时创建的,导致一个目录中有几乎所有的设备文件,尽管大多数文件永远不会用到。 在不常发生的情况,例如需要新的设备文件,或意外删除后需要重新创建设备文件,可以使用 `mknod` 程序手动创建设备文件。 前提是你必须知道设备的主要和次要号码。

|

||||||

|

|

||||||

|

CentOS 和 RHEL 6、7,以及 Fedora 的所有版本——可以追溯到至少 Fedora 15,使用较新的创建设备文件的方法。 所有设备文件都是在引导时创建的。 这是因为 udev 设备管理器在设备添加和删除发生时会进行检测。这可实现在主机启动和运行时的真正的动态即插即用功能。 它还在引导时执行相同的任务,通过在引导过程的很早的时期检测系统上安装的所有设备。 [Linux.com][6] 上有一篇很棒的对 [udev 的描述][7]。

|

||||||

|

|

||||||

|

回到 `/dev` 中的文件列表,注意文件的日期和时间。 所有文件都是在上次启动时创建的。 您可以使用 `uptime` 或者 `last` 命令来验证这一点。在上面我的设备列表中,所有这些文件都是在 11 月 7 日上午 7:06 创建的,这是我最后一次启动系统。

|

||||||

|

|

||||||

|

当然, `mknod` 命令仍然可用, 但新的 `MAKEDEV` (是的,所有字母大写,在我看来是违背 Linux 使用小写命令名的原则的) 命令提供了一个创建设备文件的更容易的界面。 在当前版本的 Fedora 或 CentOS 7 中,默认情况下不安装 `MAKEDEV` 命令;它安装在 CentOS 6。您可以使用 YUM 或 DNF 来安装 MAKEDEV 包。

|

||||||

|

|

||||||

|

### 结论

|

||||||

|

|

||||||

|

有趣的是,我很久没有创建一个设备文件的需要了。 然而,最近我遇到一个有趣的情况,其中一个我常使用的设备文件没有创建,我不得不创建它。 之后该设备再没出过问题。所以丢失设备文件的情况仍然可以发生,知道如何处理它可能很重要。

|

||||||

|

|

||||||

|

设备文件有无数种,您遇到的设备文件我可能没有涵盖到。 这些信息在所下面引用的资源中有大量的细节信息可用。 关于这些文件的功能和工具,我希望我已经给您一些基本的了解,下一步您自己可以探索更多。

|

||||||

|

|

||||||

|

资源

|

||||||

|

|

||||||

|

- [一切皆文件][1], David Both, Opensource.com

|

||||||

|

- [Linux 文件系统介绍][2], David Both, Opensource.com

|

||||||

|

- [文件系统层次结构][10], The Linux Documentation Project

|

||||||

|

- [设备文件][4], Wikipedia

|

||||||

|

- [Linux 下已分配设备][5], Kernel.org

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://opensource.com/article/16/11/managing-devices-linux

|

||||||

|

|

||||||

|

作者:[David Both][a]

|

||||||

|

译者:[erlinux](http://www.itxdm.me)

|

||||||

|

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 组织编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://opensource.com/users/dboth

|

||||||

|

[1]:https://opensource.com/life/15/9/everything-is-a-file

|

||||||

|

[2]:https://opensource.com/life/16/10/introduction-linux-filesystems

|

||||||

|

[4]:https://en.wikipedia.org/wiki/Device_file

|

||||||

|

[5]:https://www.kernel.org/doc/Documentation/devices.txt

|

||||||

|

[6]:https://www.linux.com/

|

||||||

|

[7]:https://www.linux.com/news/udev-introduction-device-management-modern-linux-system

|

||||||

|

[8]:https://opensource.com/life/15/9/everything-is-a-file

|

||||||

|

[9]:https://opensource.com/life/16/10/introduction-linux-filesystems

|

||||||

|

[10]:http://www.tldp.org/LDP/Linux-Filesystem-Hierarchy/html/dev.html

|

||||||

|

|

||||||

@ -0,0 +1,228 @@

|

|||||||

|

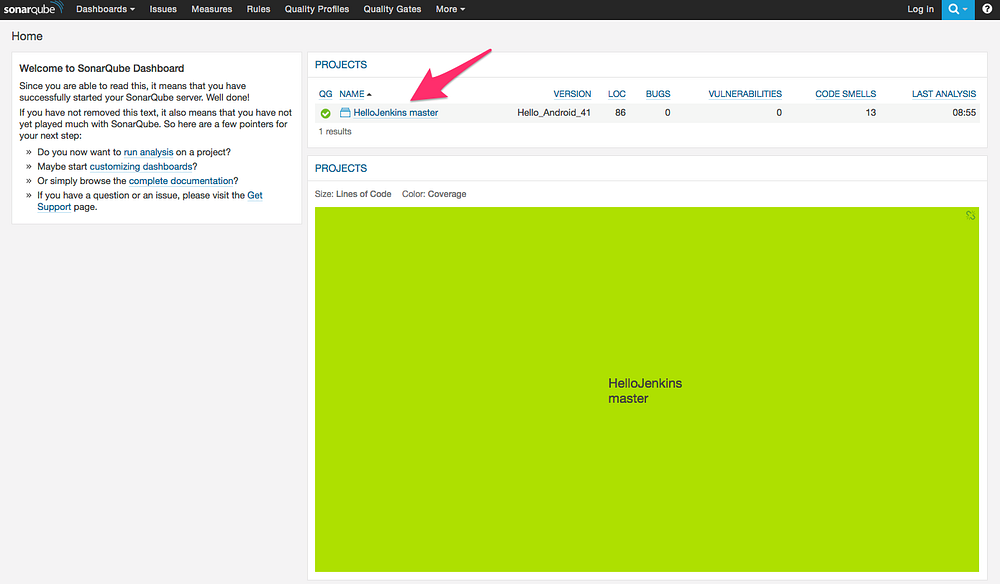

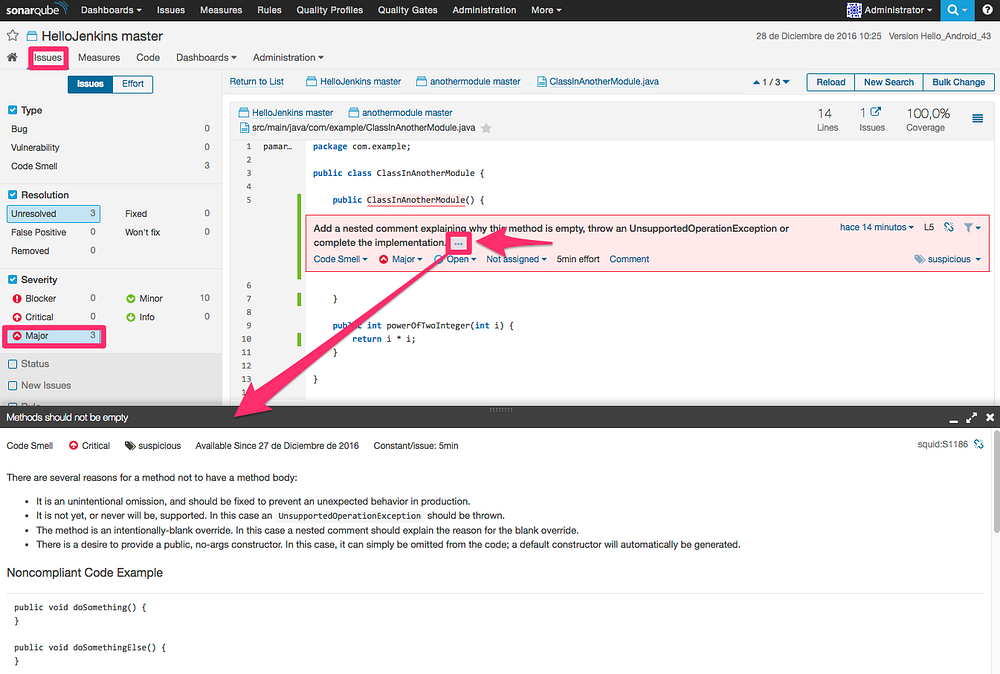

### Android 6.0 Marshmallow

|

||||||

|

|

||||||

|

In October 2015, Google brought Android 6.0 Marshmallow into the world. For the OS's launch, Google commissioned two new Nexus devices: the [Huawei Nexus 6P and LG Nexus 5X][39]. Rather than just the usual speed increase, the new phones also included a key piece of hardware: a fingerprint reader for Marshmallow's new fingerprint API. Marshmallow was also packing a crazy new search feature called "Google Now on Tap," user controlled app permissions, a new data backup system, and plenty of other refinements.

|

||||||

|

|

||||||

|

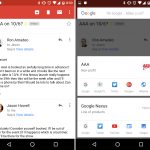

#### The new Google App

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

* [

|

||||||

|

|

||||||

|

][3]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][4]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][5]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][6]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][7]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][8]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][9]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][10]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][11]

|

||||||

|

|

||||||

|

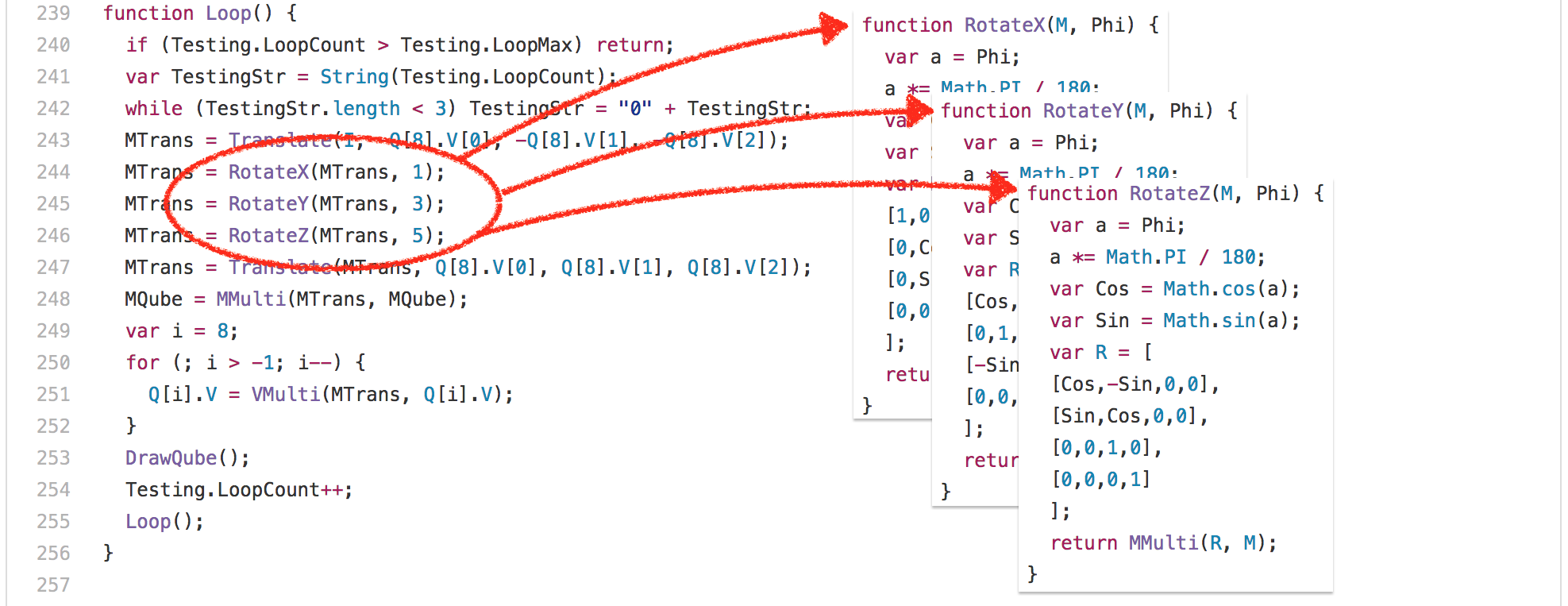

Marshmallow was the first version of Android after [Google's big logo redesign][40]. The OS was updated accordingly, mainly with a new Google app that added a colorful logo to the search widget, search page, and the app icon.

|

||||||

|

|

||||||

|

Google reverted the app drawer from a paginated horizontal layout back to the single, vertically scrolling sheet. The earliest versions of Android all had vertically scrolling sheets until Google changed to a horizontal page system in Honeycomb. The scrolling single sheet made finding things in a large selection of apps much faster. A "quick scroll" feature, which let you drag on the scroll bar to bring up letter indexing, helped too. This new app drawer layout also carried over to the widget drawer. Given that the old system could easily grow to 15+ pages, this was a big improvement.

|

||||||

|

|

||||||

|

The "suggested apps" bar at the top of Marshmallow's app drawer made finding apps faster, too.

|

||||||

|

This bar changed from time to time and tried to surface the apps you needed when you needed them. It used an algorithm that took into account app usage, apps that are normally launched together, and time of day.

|

||||||

|

|

||||||

|

#### Google Now on Tap—a feature that didn't quite work out

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

* [

|

||||||

|

|

||||||

|

][12]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][13]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][14]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][15]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][16]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][17]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][18]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][19]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][20]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][21]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][22]

|

||||||

|

|

||||||

|

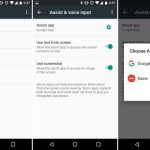

One of Marshmallow's headline features was "Google Now on Tap." With Now on Tap, you could hold down the home button on any screen and Android would send the entire screen to Google for processing. Google would then try to figure out what the screen was about, and a special list of search results would pop up from the bottom of the screen.

|

||||||

|

|

||||||

|

Results yielded by Now on Tap weren't the usual 10 blue links—though there was always a link to a Google Search. Now on Tap could also deep link into other apps using Google's App Indexing feature. The idea was you could call up Now on Tap for a YouTube music video and get a link to the Google Play or Amazon "buy" page. Now on Tapping (am I allowed to verb that?) a news article about an actor could link to his page inside the IMDb app.

|

||||||

|

|

||||||

|

Rather than make this a proprietary feature, Google built a whole new "Assistant API" into Android. The user could pick an "Assist App" which would be granted scads of information upon long-pressing the home button. The Assist app would get all the text that was currently loaded by the app—not just what was immediately on screen—along with all the images and any special metadata the developer wanted to include. This API powered Google Now on Tap, and it also allowed third parties to make Now on Tap rivals if they wished.

|

||||||

|

|

||||||

|

Google hyped Now on Tap during Marshmallow's initial presentation, but in practice, the feature wasn't very useful. Google Search is worthwhile because you're asking it an exact question—you type in whatever you want, and it scours the entire Internet looking for the answer or web page. Now on Tap made things infinitely harder because it didn't even know what question you were asking. You opened Now on Tap with a very specific intent, but you sent Google the very unspecific query of "everything on your screen." Google had to guess what your query was and then tried to deliver useful search results or actions based on that.

|

||||||

|

|

||||||

|

Behind the scenes, Google was probably processing like crazy to brute-force out the result you wanted from an entire page of text and images. But more often than not, Now on Tap yielded what felt like a list of search results for every proper noun on the page. Sifting through the list of results for multiple queries was like being trapped in one of those Bing "[Search Overload][41]" commercials. The lack of any kind of query targeting made Now on Tap feel like you were asking Google to read your mind, and it never could. Google eventually patched in an "Assist" button to the text selection menu, giving Now on Tap some of the query targeting that it desperately needed.

|

||||||

|

|

||||||

|

Calling Now on Tap anything other than a failure is hard. The shortcut to access Now on Tap—long pressing on the home button—basically made it a hidden, hard-to-discover feature that was easy to forget about. We speculate the feature had extremely low usage numbers. Even when users did discover Now on Tap, it failed to read your mind so often that, after a few attempts, most users probably gave up on it.

|

||||||

|

|

||||||

|

With the launch of the Google Pixels in 2016, the company seemingly admitted defeat. It renamed Now on Tap "Screen Search" and demoted it in favor of the Google Assistant. The Assistant—Google's new voice command system—took over On Tap's home button gesture and related it to a second gesture once the voice system was activated. Google also seems to have learned from Now on Tap's poor discoverability. With the Assistant, Google added a set of animated colored dots to the home button that helped users discover and be reminded about the feature.

|

||||||

|

|

||||||

|

#### Permissions

|

||||||

|

|

||||||

|

|

||||||

|

* [

|

||||||

|

|

||||||

|

][23]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][24]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][25]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][26]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][27]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][28]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][29]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][30]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][31]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][32]

|

||||||

|

|

||||||

|

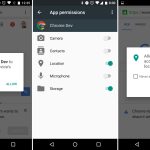

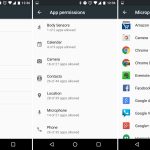

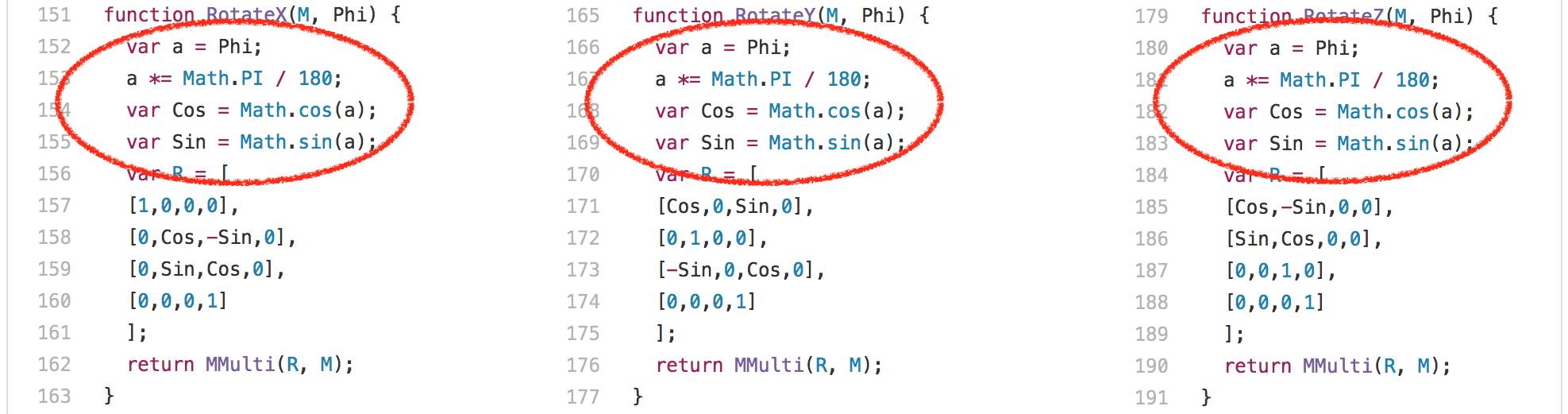

Android 6.0 finally introduced an app permissions system that gave users granular control over what data apps had access to.

|

||||||

|

|

||||||

|

Apps no longer gave you a huge list of permissions at install. With Marshmallow, apps installed without asking for any permissions at all. When apps needed a permission—like access to your location, camera, microphone, or contact list—they asked at the exact time they needed it. During your usage of an app, an "Allow or Deny" dialog popped up anytime the app wanted a new permission. Some app setup flow tackled this by asking for a few key permissions at startup, and everything else popped up as the app needed it. This better communicated to the user what the permissions are for—this app needs camera access because you just tapped on the camera button.

|

||||||

|

|

||||||

|

Besides the in-the-moment "Allow or Deny" dialogs, Marshmallow also added a permissions setting screen. This big list of checkboxes allowed data-conscious users to browse which apps have access to what permissions. They can browse not only by app, but also by permission. For instance, you could see every app that has access to the microphone.

|

||||||

|

|

||||||

|

Google had been experimenting with app permissions for some time, and these screens were basically the rebirth of the hidden "[App Ops][42]" system that was accidentally introduced in Android 4.3 and quickly removed.

|

||||||

|

|

||||||

|

While Google experimented in previous versions, the big difference with Marshmallow's permissions system was that it represented an orderly transition to a permission OS. Android 4.3's App Ops was never meant to be exposed to users, so developers didn't know about it. The result of denying an app a permission in 4.3 was often a weird error message or an outright crash. Marshmallow's system was opt-in for developers—the new permission system only applied to apps that were targeting the Marshmallow SDK, which Google used as a signal that the developer was ready for permission handling. The system also allowed for communication to users when a feature didn't work because of a denied permission. Apps were told when they were denied a permission, and they could instruct the user to turn the permission back on if you wanted to use a feature.

|

||||||

|

|

||||||

|

#### The Fingerprint API

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

* [

|

||||||

|

|

||||||

|

][33]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][34]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][35]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][36]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][37]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][38]

|

||||||

|

|

||||||

|

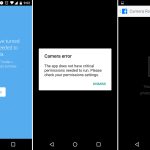

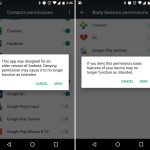

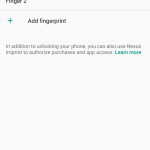

Before Marshmallow, few OEMs had come up with their own fingerprint solution in response to [Apple's Touch ID][43]. But with Marshmallow, Google finally came up with an ecosystem-wide API for fingerprint recognition. The new system included UI for registering fingerprints, a fingerprint-guarded lock screen, and APIs that allowed apps to protect content behind a fingerprint scan or lock-screen challenge.

|

||||||

|

|

||||||

|

The Play Store was one of the first apps to support the API. Instead of having to enter your password to purchase an app, you could just use your fingerprint. The Nexus 5X and 6P were the first phones to support the fingerprint API with an actual hardware fingerprint reader on the back.

|

||||||

|

|

||||||

|

Later the fingerprint API became one of the rare examples of the Android ecosystem actually cooperating and working together. Every phone with a fingerprint reader uses Google's API, and most banking and purchasing apps are pretty good about supporting it.

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

作者简介:

|

||||||

|

|

||||||

|

Ron is the Reviews Editor at Ars Technica, where he specializes in Android OS and Google products. He is always on the hunt for a new gadget and loves to rip things apart to see how they work.

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/

|

||||||

|

|

||||||

|

作者:[RON AMADEO][a]

|

||||||

|

译者:[译者ID](https://github.com/译者ID)

|

||||||

|

校对:[校对者ID](https://github.com/校对者ID)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:http://arstechnica.com/author/ronamadeo

|

||||||

|

[1]:https://www.youtube.com/watch?v=f17qe9vZ8RM

|

||||||

|

[2]:https://www.youtube.com/watch?v=VOn7VrTRlA4&list=PLOU2XLYxmsIJDPXCTt5TLDu67271PruEk&index=11

|

||||||

|

[3]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[4]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[5]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[6]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[7]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[8]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[9]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[10]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[11]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[12]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[13]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[14]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[15]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[16]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[17]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[18]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[19]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[20]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[21]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[22]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[23]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[24]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[25]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[26]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[27]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[28]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[29]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[30]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[31]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[32]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[33]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[34]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[35]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[36]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[37]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[38]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/31/#

|

||||||

|

[39]:http://arstechnica.com/gadgets/2015/10/nexus-5x-and-nexus-6p-review-the-true-flagships-of-the-android-ecosystem/

|

||||||

|

[40]:http://arstechnica.com/gadgets/2015/09/google-gets-a-new-logo/

|

||||||

|

[41]:https://www.youtube.com/watch?v=9yfMVbaehOE

|

||||||

|

[42]:http://www.androidpolice.com/2013/07/25/app-ops-android-4-3s-hidden-app-permission-manager-control-permissions-for-individual-apps/

|

||||||

|

[43]:http://arstechnica.com/apple/2014/09/ios-8-thoroughly-reviewed/10/#h3

|

||||||

@ -0,0 +1,171 @@

|

|||||||

|

# Behind-the-scenes changes

|

||||||

|

|

||||||

|

Marshmallow expanded on the power-saving JobScheduler APIs that were originally introduced in Lollipop. JobScheduler turned app background processing from a free-for-all that frequently woke up the device to an organized system. JobScheduler was basically a background-processing traffic cop.

|

||||||

|

|

||||||

|

In Marshmallow, Google added a "Doze" mode to save even more power when a device is left alone. If a device was stationary, unplugged, and had its screen off, it would slowly drift into a low-power, disconnected mode that locked down background processing. After a period of time, network access was disabled. Wake locks—an app's request to keep your phone awake so it can do background processing—got ignored. System Alarms (not user-set alarm clock alarms) and the [JobScheduler][25] shut down, too.

|

||||||

|

|

||||||

|

If you've ever put a device in airplane mode and noticed the battery lasts forever, Doze was like an automatic airplane mode that kicked in when you left your device alone—it really did boost battery life. It worked for phones that were left alone on a desk all day or all night, and it was great for tablets, which are often forgotten about on the coffee table.

|

||||||

|

|

||||||

|

The only notification that could punch through Doze mode was a "high priority message" from Google Cloud Messaging. This was meant for texting services so, even if a device is dozing, messages still came through.

|

||||||

|

|

||||||

|

|

||||||

|

* [

|

||||||

|

|

||||||

|

][1]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][2]

|

||||||

|

|

||||||

|

"App Standby" was another power saving feature that more-or-less worked quietly in the background. The idea behind it was simple: if you stopped interacting with an app for a period of time, Android deemed it unimportant and took away its internet access and background processing privileges.

|

||||||

|

|

||||||

|

For the purposes of App Standby, "interacting" with an app meant opening the app, starting a foreground service, or generating a notification. Any one of these actions would reset the Standby timer on an app. For every other edge case, Google added a cryptically-named "Battery Optimizations" screen in the settings. This let users whitelist apps to make them immune from app standby. As for developers, they had an option in Developer Settings called "Inactive apps" which let them manually put an app on standby for testing.

|

||||||

|

|

||||||

|

App Standby basically auto-disabled apps you weren't using, which was a great way to fight battery drain from crapware or forgotten-about apps. Because it was completely silent and automatically happened in the background, it helped even novice users have a well-tuned device.

|

||||||

|

|

||||||

|

* [

|

||||||

|

|

||||||

|

][3]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][4]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][5]

|

||||||

|

|

||||||

|

Google tried many app backup schemes over the years, and in Marshmallow it [took another swing][26]. Marshmallow's brute force app backup system aimed to dump the entire app data folder to the cloud. It was possible and technically worked, but app support for it was bad, even among Google apps. Setting up a new Android phone is still a huge hassle, with countless sign-ins and tutorial popups.

|

||||||

|

|

||||||

|

In terms of interface, Marshmallow's backup system used the Google Drive app. In the settings of Google Drive, there's now a "Manage Backups" screen, which showed app data not only from the new system, but also every other app backup scheme Google has tried over the years.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

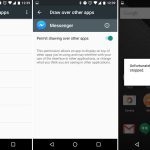

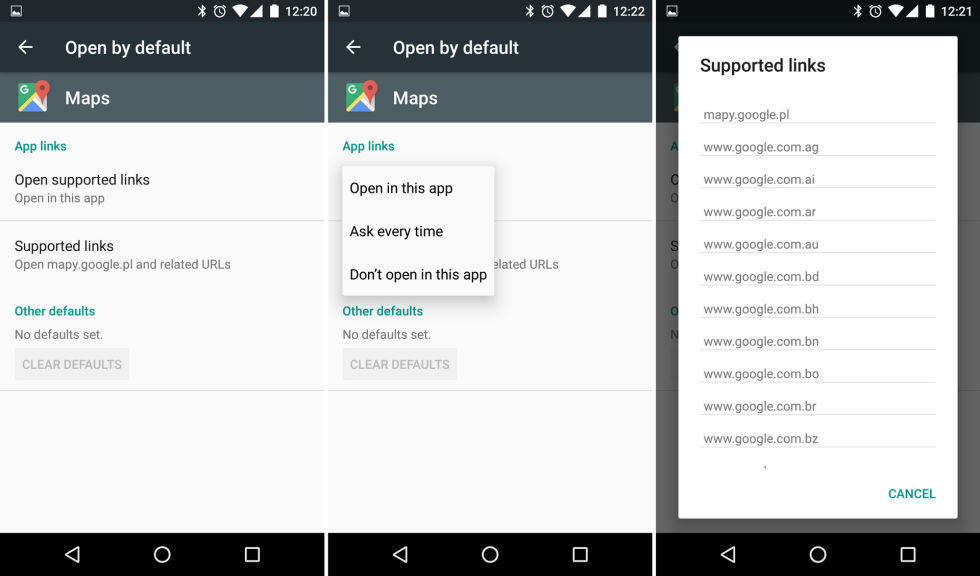

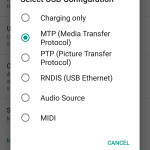

Buried in the settings was a new "App linking" feature, which could "link" an app to a website. Before app linking, opening up a Google Maps URL on a fresh install usually popped up an "Open With" dialog box that wanted to know if it should open the URL in a browser or in the Google Maps app.

|

||||||

|

|

||||||

|

This was a silly question, since of course you wanted to use the app instead of the website—that's why you had the app installed. App linking let website owners associate their app with their webpage. If users had the app installed, Android would suppress the "Open With" dialog and use that app instead. To activate app linking, developers just had to throw some JSON code on their website that Android would pick up.

|

||||||

|

|

||||||

|

App linking was great for sites with an obvious app client, like Google Maps, Instagram, and Facebook. For sites with an API and multiple clients, like Twitter, the App Linking settings screen gave users control over the default app association for any URL. Out-of-the-box app linking covered 90 percent of use cases though, which cut down on the annoying pop ups on a new phone.

|

||||||

|

|

||||||

|

|

||||||

|

* [

|

||||||

|

|

||||||

|

][6]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][7]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][8]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][9]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][10]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][11]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][12]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][13]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][14]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][15]

|

||||||

|

|

||||||

|

Adoptable storage was one of Marshmallow's best features. It turned SD cards from a janky secondary storage pool into a perfect merged-storage solution. Slide in an SD card, format it, and you instantly had more storage in your device that you never had to think about again.

|

||||||

|

|

||||||

|

Sliding in a SD card showed a setup notification, and users could choose to format the card as "portable" or "internal" storage. The "Internal" option was the new adoptable storage mode, and it paved over the card with an ext4 file system. The only downside? The card and the data were both "locked" to your phone. You couldn't pull the card out and plug it into anything without formatting it first. Google was going for a set-it-and-forget-it use case with internal storage.

|

||||||

|

|

||||||

|

If you did yank the card out, Android did its best to deal with things. It popped up a message along the lines of "You'd better put that back or else!" along with an option to "forget" the card. Of course "forgetting" the card would result in all sorts of data loss, and it was not recommended.

|

||||||

|

|

||||||

|

The sad part of adoptable storage is that devices that could actually use it didn't come for a long time. Neither Nexus device had an SD card, so for the review we rigged up a USB stick as our adoptable storage. OEMs initially resisted the feature, with [LG and Samsung][27] disabling it on their early 2016 flagships. Samsung stated that "We believe that our users want a microSD card to transfer files between their phone and other devices," which was not possible once the card was formatted to ext4.

|

||||||

|

|

||||||

|

Google's implementation let users choose between portable and internal formatting options. But rather than give users that choice, OEMs completely took the internal storage feature away. Advanced users were unhappy about this, and of course the Android modding scene quickly re-enabled adoptable storage. On the Galaxy S7, modders actually defeated Samsung's SD card lockdown [a day before][28] the device was even officially released!

|

||||||

|

|

||||||

|

#### Volume and Notifications

|

||||||

|

|

||||||

|

|

||||||

|

* [

|

||||||

|

|

||||||

|

][16]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][17]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][18]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][19]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][20]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][21]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][22]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][23]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][24]

|

||||||

|

|

||||||

|

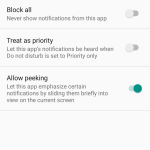

Google walked back the priority notification controls that were in the volume popup in favor of a simpler design. Hitting the volume key popped up a single slider for the current audio source, along with a drop down button that expanded the controls to show all three audio sliders: Notifications, media, and alarms. All the priority notification controls still existed—they just lived in a "do not disturb" quick-settings tile now.

|

||||||

|

|

||||||

|

One of the most relieving additions to the notification controls gave users control over Heads-Up notifications—now renamed "Peek" notifications. This feature let notifications pop up over the top portion of the screen, just like on iOS. The idea was that the most important notifications should be elevated over your normal, everyday notifications.

|

||||||

|

|

||||||

|

However, in Lollipop, when this feature was introduced, Google had the terrible idea of letting developers decide if their apps were "important" or not. Of course, every developer thinks its app is the most important thing in the world. So while the feature was originally envisioned for instant messages from your closest contacts, it ended up being hijacked by Facebook "Like" notifications. In Marshmallow, every app got a "treat as priority" checkbox in the notification settings, which gave users an easy ban hammer for unruly apps.

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

作者简介:

|

||||||

|

|

||||||

|

Ron is the Reviews Editor at Ars Technica, where he specializes in Android OS and Google products. He is always on the hunt for a new gadget and loves to rip things apart to see how they work.

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/

|

||||||

|

|

||||||

|

作者:[RON AMADEO][a]

|

||||||

|

译者:[译者ID](https://github.com/译者ID)

|

||||||

|

校对:[校对者ID](https://github.com/校对者ID)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:http://arstechnica.com/author/ronamadeo

|

||||||

|

[1]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[2]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[3]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[4]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[5]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[6]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[7]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[8]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[9]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[10]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[11]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[12]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[13]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[14]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[15]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[16]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[17]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[18]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[19]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[20]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[21]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[22]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[23]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[24]:http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/32/#

|

||||||

|

[25]:http://arstechnica.com/gadgets/2014/11/android-5-0-lollipop-thoroughly-reviewed/6/#h2

|

||||||

|

[26]:http://arstechnica.com/gadgets/2015/10/android-6-0-marshmallow-thoroughly-reviewed/6/#h2

|

||||||

|

[27]:http://arstechnica.com/gadgets/2016/02/the-lg-g5-and-galaxy-s7-wont-support-android-6-0s-adoptable-storage/

|

||||||

|

[28]:http://www.androidpolice.com/2016/03/10/modaco-manages-to-get-adoptable-sd-card-storage-working-on-the-galaxy-s7-and-galaxy-s7-edge-no-root-required/

|

||||||

@ -0,0 +1,185 @@

|

|||||||

|

# Monthly security updates

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][31]

|

||||||

|

|

||||||

|

|

||||||

|

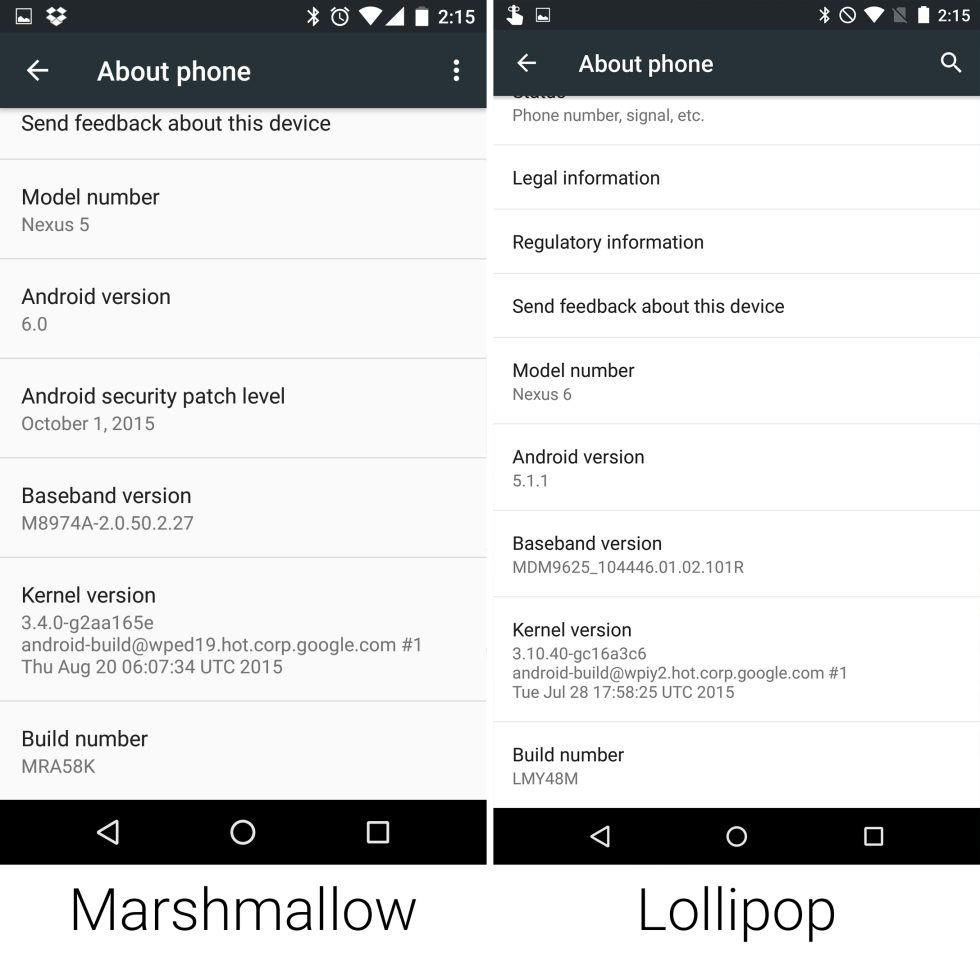

A few months before the release of Marshmallow, [vulnerabilities][32] in Android's "Stagefright" media server were disclosed to the public, which could allow for remote code execution on older versions of Android. Android took a beating in the press, with [a billion phones][33] affected by the newly discovered bugs.

|

||||||

|

|

||||||

|

Google responded by starting a monthly Android security update program. Every month it would round up bugs, fix them, and push out new code to AOSP and Nexus devices. OEMs—who were already struggling with updates (possibly due to apathy)—were basically told to "deal with it" and keep up. Every other major operating system has frequent security updates—it's just the cost of being such a huge platform. To accommodate OEMs, Google give them access to the updates a full month ahead of time. After 30 days, security bulletins are posted and Google devices get the updates.

|

||||||

|

|

||||||

|

The monthly update program started two months before the release of Marshmallow, but in this major OS update Google added an "Android Security Patch Level" field to the About Phone screen. Rather than use some arcane version number, this was just a date. This let anyone easily see how out of date their phone was, an acted as a nice way to shame slow OEMs.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

* [

|

||||||

|

|

||||||

|

][2]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][3]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][4]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][5]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][6]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][7]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][8]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][9]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][10]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][11]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][12]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][13]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][14]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][15]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][16]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][17]

|

||||||

|

* [

|

||||||

|

|

||||||

|

][18]

|

||||||

|

|

||||||

|

The text selection menu is now a floating toolbar that pops up right next to the text you're selecting. This wasn't just the regular "cut/copy/paste" commands, either. Apps could put special options on the toolbar, like the "add link" option in Google Docs.

|

||||||

|

|

||||||

|

After the standard text commands, an ellipsis button would expose a second menu, and it was here that apps could add extra features to the text selection menu. Using a new "text processing" API, it was now super easy to ship text directly to another app. If you had Google Translate installed, a "translate" option would show up in this menu. Eventually Google Search added an "Assist" option to this menu for Google Now on Tap.

|

||||||

|

|

||||||

|

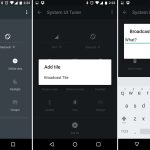

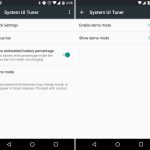

Marshmallow added a hidden settings section called the "System UI Tuner." This section would turn into a catch-all for power user features and experimental items. To access this you had to pull down the notification panel and hold down on the "settings" button for several seconds. The settings gear would spin, and eventually you'd see a message indicating that the System UI Tuner was unlocked. Once it was turned on, you could find it as the bottom of the system settings next to Developer Options.

|

||||||

|

|

||||||

|

In this first version of the System UI Tuner, users would add custom tiles to the Quick Settings panel, a feature that would later be refined into an API apps could use. For now the feature was very rough, basically allowing users to type a custom command into a text box. System status icons could be individually turned on and off, so if you really hated knowing you were connected to Wi-Fi, you could kill the icon. A popular power user addition was the option for embedding a percentage readout into the battery icon. There was also a "demo" mode for screenshots, which would replace the normal status bar with a fake, clean version.

|

||||||

|

|

||||||

|

### Android 7.0 Nougat, Pixel Phones, and the future

|

||||||

|

|

||||||

|