Mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-03-27 02:30:10 +08:00

Commit afbfacc555: Merge branch 'master' of https://github.com/LCTT/TranslateProject into new

.travis.ymlMakefile

published

20180105 The Best Linux Distributions for 2018.md20180919 How Writing Can Expand Your Skills and Grow Your Career.md20180926 CPU Power Manager - Control And Manage CPU Frequency In Linux.md20180930 Creator of the World Wide Web is Creating a New Decentralized Web.md20181012 Happy birthday, KDE- 11 applications you never knew existed.md

scripts

sources

talk

20170921 The Rise and Rise of JSON.md20171007 The Most Important Database You-ve Never Heard of.md20171119 The Ruby Story.md20171229 Important Papers- Codd and the Relational Model.md20180209 How writing can change your career for the better, even if you don-t identify as a writer.md20180527 Whatever Happened to the Semantic Web.md20180623 The IBM 029 Card Punch.md20180722 Dawn of the Microcomputer- The Altair 8800.md20180805 Where Vim Came From.md20180818 What Did Ada Lovelace-s Program Actually Do.md20180916 The Rise and Demise of RSS.md20181014 How Lisp Became God-s Own Programming Language.md20181019 What is an SRE and how does it relate to DevOps.md20181023 What MMORPGs can teach us about leveling up a heroic developer team.md

tech

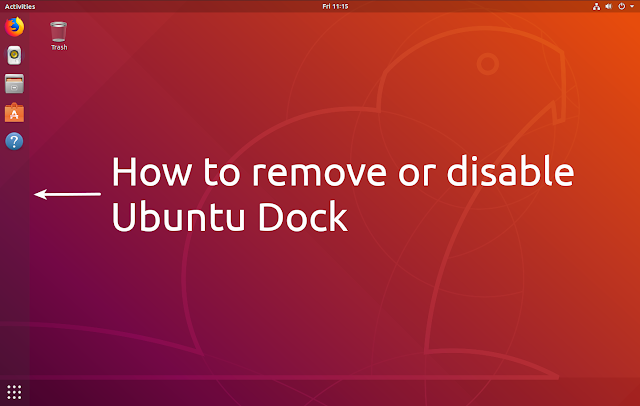

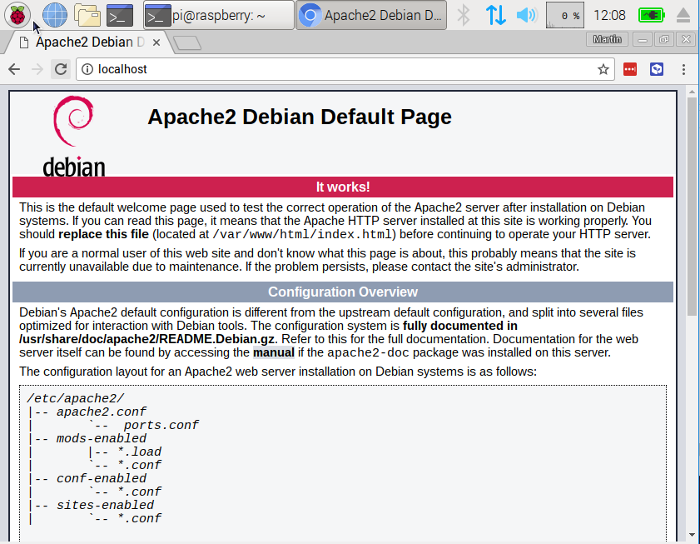

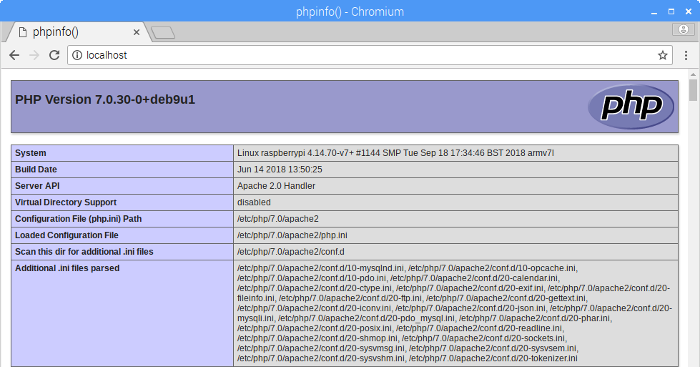

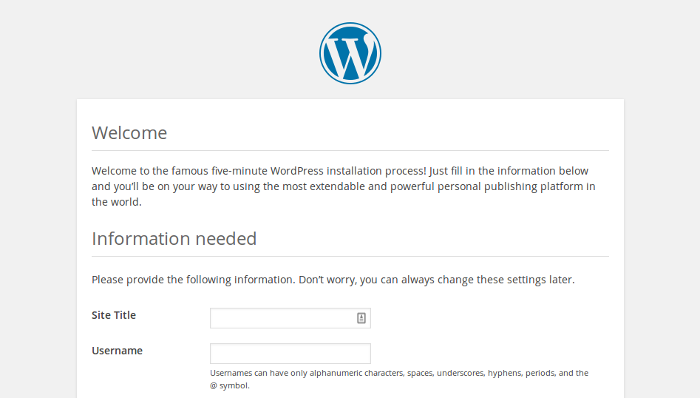

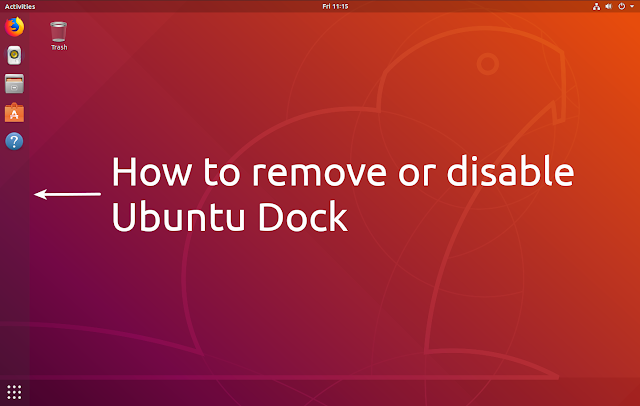

20160627 9 Best Free Video Editing Software for Linux In 2017.md20170928 The Lineage of Man.md20171202 Simulating the Altair.md20180101 Manage Your Games Using Lutris In Linux.md20180129 The 5 Best Linux Distributions for Development.md20180707 Version Control Before Git with CVS.md20180810 How To Remove Or Disable Ubuntu Dock.md20180902 Learning BASIC Like It-s 1983.md20180930 A Short History of Chaosnet.md20181004 Lab 3- User Environments.md20181018 MidnightBSD Hits 1.0- Checkout What-s New.md20181018 The case for open source classifiers in AI algorithms.md20181022 How to set up WordPress on a Raspberry Pi.md

translated/tech

20160627 9 Best Free Video Editing Software for Linux In 2017.md20180105 The Best Linux Distributions for 2018.md20180129 The 5 Best Linux Distributions for Development.md20180810 How To Remove Or Disable Ubuntu Dock.md20181004 Lab 3- User Environments.md20181022 How to set up WordPress on a Raspberry Pi.md

17

.travis.yml

@@ -1,3 +1,18 @@
 language: c
 script:
-  - make -s check
+  - sh ./scripts/check.sh
+  - ./scripts/badge.sh
+branches:
+  only:
+    - master
+  except:
+    - gh-pages
+git:
+  submodules: false
+deploy:
+  provider: pages
+  skip_cleanup: true
+  github_token: $GITHUB_TOKEN
+  local_dir: build
+  on:
+    branch: master

58

Makefile

@@ -1,58 +0,0 @@
DIR_PATTERN := (news|talk|tech)
NAME_PATTERN := [0-9]{8} [a-zA-Z0-9_.,() -]*\.md

RULES := rule-source-added \
         rule-translation-requested \
         rule-translation-completed \
         rule-translation-revised \
         rule-translation-published
.PHONY: check match $(RULES)

CHANGE_FILE := /tmp/changes

check: $(CHANGE_FILE)
	echo 'PR #$(TRAVIS_PULL_REQUEST) Changes:'
	cat $(CHANGE_FILE)
	echo
	echo 'Check for rules...'
	make -k $(RULES) 2>/dev/null | grep '^Rule Matched: '

$(CHANGE_FILE):
	git --no-pager diff $(TRAVIS_BRANCH) origin/master --no-renames --name-status > $@

rule-source-added:
	echo 'Unmatched Files:'
	egrep -v '^A\s*"?sources/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) || true
	echo '[End of Unmatched Files]'
	[ $(shell egrep '^A\s*"?sources/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) -ge 1 ]
	[ $(shell egrep -v '^A\s*"?sources/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 0 ]
	echo 'Rule Matched: $(@)'

rule-translation-requested:
	[ $(shell egrep '^M\s*"?sources/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell cat $(CHANGE_FILE) | wc -l) = 1 ]
	echo 'Rule Matched: $(@)'

rule-translation-completed:
	[ $(shell egrep '^D\s*"?sources/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell egrep '^A\s*"?translated/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell cat $(CHANGE_FILE) | wc -l) = 2 ]
	echo 'Rule Matched: $(@)'

rule-translation-revised:
	[ $(shell egrep '^M\s*"?translated/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell cat $(CHANGE_FILE) | wc -l) = 1 ]
	echo 'Rule Matched: $(@)'

rule-translation-published:
	[ $(shell egrep '^D\s*"?translated/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell egrep '^A\s*"?published/$(NAME_PATTERN)' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell cat $(CHANGE_FILE) | wc -l) = 2 ]
	echo 'Rule Matched: $(@)'

badge:
	mkdir -p build/badge
	./lctt-scripts/show_status.sh -s published >build/badge/published.svg
	./lctt-scripts/show_status.sh -s translated >build/badge/translated.svg
	./lctt-scripts/show_status.sh -s translating >build/badge/translating.svg
	./lctt-scripts/show_status.sh -s sources >build/badge/sources.svg

135

published/20180105 The Best Linux Distributions for 2018.md

Normal file

@ -0,0 +1,135 @@

|

||||

2018 年最好的 Linux 发行版

|

||||

======

|

||||

|

||||

|

||||

|

||||

> Jack Wallen 分享他挑选的 2018 年最好的 Linux 发行版。

|

||||

|

||||

这是新的一年,Linux 仍有无限可能。而且许多 Linux 发行版在 2017 年都带来了许多重大的改变,我相信在 2018 年它在服务器和桌面上将会带来更加稳定的系统和市场份额的增长。

|

||||

|

||||

对于那些期待迁移到开源平台(或是那些想要切换到)的人对于即将到来的一年,什么是最好的选择?如果你去 [Distrowatch][14] 找一下,你可能会因为众多的发行版而感到头晕,其中一些的排名在上升,而还有一些则恰恰相反。

|

||||

|

||||

因此,哪个 Linux 发行版将在 2018 年得到偏爱?我有我的看法。事实上,我现在就要和你们分享它。

|

||||

|

||||

跟我做的 [去年清单][15] 相似,我将会打破那张清单,使任务更加轻松。普通的 Linux 用户,至少包含以下几个类别:系统管理员,轻量级发行版,桌面,为物联网和服务器发行的版本。

|

||||

|

||||

根据这些,让我们开始 2018 年最好的 Linux 发行版清单吧。

|

||||

|

||||

### 对系统管理员最好的发行版

|

||||

|

||||

[Debian][16] 不常出现在“最好的”列表中。但它应该出现,为什么呢?如果了解到 Ubuntu 是基于 Debian 构建的(其实有很多的发行版都基于 Debian),你就很容易理解为什么这个发行版应该在许多“最好”清单中。但为什么是对管理员最好的呢?我想这是由于两个非常重要的原因:

|

||||

|

||||

* 容易使用

|

||||

* 非常稳定

|

||||

|

||||

因为 Debain 使用 dpkg 和 apt 包管理,它使得使用该环境非常简单。而且因为 Debian 提供了最稳定的 Linux 平台之一,它为许多事物提供了理想的环境:桌面、服务器、测试、开发。虽然 Debian 可能不包括去年本分类的优胜者 [Parrot Linux][17] 所带有的大量应用程序,但添加完成任务所需的任何或全部必要的应用程序都非常容易。而且因为 Debian 可以根据你的选择安装不同的桌面(Cinnamon、GNOME、KDE、LXDE、Mate 或者 Xfce),肯定可以满足你对桌面的需求。

|

||||

|

||||

|

||||

|

||||

*图 1:在 Debian 9.3 上运行的 GNOME 桌面。*

|

||||

|

||||

同时,Debain 在 Distrowatch 上名列第二。下载、安装,然后让它为你的工作而服务吧。Debain 尽管不那么华丽,但是对于管理员的工作来说十分有用。

|

||||

|

||||

### 最轻量级的发行版

|

||||

|

||||

轻量级的发行版有其特殊的用途:给予一些老旧或是性能低下的机器以新生。但是这不意味着这些特别的发行版仅仅只为了老旧的硬件机器而生。如果你想要的是运行速度,你可能会想知道在你的现代机器上这类发行版的运行速度能有多快。

|

||||

|

||||

在 2018 年上榜的最轻量级的发行版是 [Lubuntu][18]。尽管在这个类别里还有很多选择,而且尽管 Lubuntu 的资源占用与 Puppy Linux 一样小,但得益于它是 Ubuntu 家庭的一员,其易用性为它加了分。但是不要担心,Lubuntu 对于硬件的要求并不高:

|

||||

|

||||

+ CPU:奔腾 4 或者奔腾 M 或者 AMD K8 以上

|

||||

+ 对于本地应用,512 MB 的内存就可以了,对于网络使用(Youtube、Google+、Google Drive、Facebook),建议 1 GB 以上。

|

||||

|

||||

Lubuntu 使用的是 LXDE 桌面(图 2),这意味着新接触 Linux 的用户在使用这个发行版时不会有任何问题。这份简短清单中包含的应用(例如:Abiword、Gnumeric 和 Firefox)都是非常轻量的,且对用户友好的。

|

||||

|

||||

|

||||

|

||||

*图 2:LXDE桌面。*

|

||||

|

||||

Lubuntu 能让十年以上的电脑如获新生。

|

||||

|

||||

### 最好的桌面发行版

|

||||

|

||||

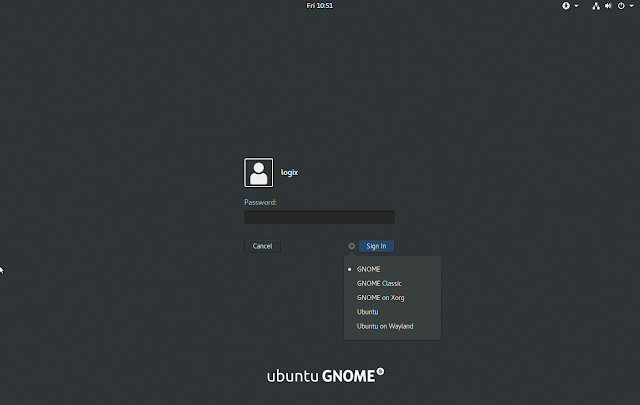

[Elementary OS][19] 连续两年都是我清单中最好的桌面发行版。对于许多人,[Linux Mint][20] (也是一个非常棒的分支)都是桌面发行版的领袖。但是,于我来说,它在易用性和稳定性上很难打败 Elementary OS。例如,我确信是 [Ubuntu][21] 17.10 的发布让我迁移回了 Canonical 的发行版。迁移到新的使用 GNOME 桌面的 Ubuntu 不久之后,我发现我缺少了 Elementary OS 外观、可用性和感觉(图 3)。在使用 Ubuntu 两周以后,我又换回了 Elementary OS。

|

||||

|

||||

|

||||

|

||||

*图 3:Pantheon 桌面是一件像艺术品一样的桌面。*

|

||||

|

||||

使用 Elementary OS 的任何一个人都会觉得宾至如归。Pantheon 桌面是将操作顺滑和用户友好结合的最完美的桌面。每次更新,它都会变得更好。

|

||||

|

||||

尽管 Elementary OS 在 Distrowatch 页面访问量中排名第六,但我预计到 2018 年末,它将至少上升至第三名。Elementary 开发人员非常关注用户的需求。他们倾听并且改进,这个发行版目前的状态是如此之好,似乎他们一切都可以做的更好。 如果您需要一个具有出色可靠性和易用性的桌面,Elementary OS 就是你的发行版。

|

||||

|

||||

### 能够证明自己的最好的发行版

|

||||

|

||||

很长一段时间内,[Gentoo][22] 都稳坐“展现你技能”的发行版的首座。但是,我认为现在 Gentoo 是时候让出“证明自己”的宝座给 [Linux From Scratch(LFS)][23]。你可能认为这不公平,因为 LFS 实际上不是一个发行版,而是一个帮助用户创建自己的 Linux 发行版的项目。但是,有什么能比你自己创建一个自己的发行版更能证明自己所学的 Linux 知识的呢?在 LFS 项目中,你可以从头开始构建自定义的 Linux 系统,而且是从源代码开始。 所以,如果你真的想证明些什么,请下载 [Linux From Scratch Book][24] 并开始构建。

|

||||

|

||||

### 对于物联网最好的发行版

|

||||

|

||||

[Ubuntu Core][25] 已经是第二年赢得了该项的冠军。Ubuntu Core 是 Ubuntu 的一个小型的、事务型版本,专为嵌入式和物联网设备而构建。使 Ubuntu Core 如此完美支持物联网的原因在于它将重点放在 snap 包上 —— 这种通用包可以安装到一个平台上而不会干扰其基本系统。这些 snap 包包含它们运行所需的所有内容(包括依赖项),因此不必担心安装它会破坏操作系统(或任何其他已安装的软件)。 此外,snap 包非常容易升级,并运行在隔离的沙箱中,这使它们成为物联网的理想解决方案。

|

||||

|

||||

Ubuntu Core 内置的另一个安全领域是登录机制。Ubuntu Core 使用Ubuntu One ssh密钥,这样登录系统的唯一方法是通过上传的 ssh 密钥到 [Ubuntu One帐户][26](图 4)。这为你的物联网设备提供了更高的安全性。

|

||||

|

||||

|

||||

|

||||

*图 4:Ubuntu Core屏幕指示通过Ubuntu One用户启用远程访问。*

|

||||

|

||||

### 最好的服务器发行版

|

||||

|

||||

这里有点意见不统一。主要原因是支持。如果你需要商业支持,乍一看,你最好的选择可能是 [Red Hat Enterprise Linux][27]。红帽年复一年地证明了自己不仅是全球最强大的企业服务器平台之一,而且是单一最赚钱的开源业务(年收入超过 20 亿美元)。

|

||||

|

||||

但是,Red Hat 并不是唯一的服务器发行版。 实际上,Red Hat 甚至并不能垄断企业服务器计算的各个方面。如果你关注亚马逊 Elastic Compute Cloud 上的云统计数据,Ubuntu 就会打败红帽企业 Linux。根据[云市场][28]的报告,EC2 统计数据显示 RHEL 的部署率低于 10 万,而 Ubuntu 的部署量超过 20 万。

|

||||

|

||||

最终的结果是,Ubuntu 几乎已经成为云计算的领导者。如果你将它与 Ubuntu 对容器的易用性和可管理性结合起来,就会发现 Ubuntu Server 是服务器类别的明显赢家。而且,如果你需要商业支持,Canonical 将为你提供 [Ubuntu Advantage][29]。

|

||||

|

||||

对使用 Ubuntu Server 的一个警告是它默认为纯文本界面(图 5)。如果需要,你可以安装 GUI,但使用 Ubuntu Server 命令行非常简单(每个 Linux 管理员都应该知道)。

|

||||

|

||||

|

||||

|

||||

*图 5:Ubuntu 服务器登录,通知更新。*

|

||||

|

||||

### 你怎么看

|

||||

|

||||

正如我之前所说,这些选择都非常主观,但如果你正在寻找一个好的开始,那就试试这些发行版。每一个都可以用于非常特定的目的,并且比大多数做得更好。虽然你可能不同意我的个别选择,但你可能会同意 Linux 在每个方面都提供了惊人的可能性。并且,请继续关注下周更多“最佳发行版”选秀。

|

||||

|

||||

通过 Linux 基金会和 edX 的免费[“Linux 简介”][13]课程了解有关Linux的更多信息。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/learn/intro-to-linux/2018/1/best-linux-distributions-2018

|

||||

|

||||

作者:[JACK WALLEN][a]

|

||||

译者:[dianbanjiu](https://github.com/dianbanjiu)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/jlwallen

|

||||

[1]:https://www.linux.com/licenses/category/used-permission

|

||||

[2]:https://www.linux.com/licenses/category/used-permission

|

||||

[3]:https://www.linux.com/licenses/category/used-permission

|

||||

[4]:https://www.linux.com/licenses/category/used-permission

|

||||

[5]:https://www.linux.com/licenses/category/used-permission

|

||||

[6]:https://www.linux.com/licenses/category/creative-commons-zero

|

||||

[7]:https://www.linux.com/files/images/debianjpg

|

||||

[8]:https://www.linux.com/files/images/lubuntujpg-2

|

||||

[9]:https://www.linux.com/files/images/elementarosjpg

|

||||

[10]:https://www.linux.com/files/images/ubuntucorejpg

|

||||

[11]:https://www.linux.com/files/images/ubuntuserverjpg-1

|

||||

[12]:https://www.linux.com/files/images/linux-distros-2018jpg

|

||||

[13]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

[14]:https://distrowatch.com/

|

||||

[15]:https://www.linux.com/news/learn/sysadmin/best-linux-distributions-2017

|

||||

[16]:https://www.debian.org/

|

||||

[17]:https://www.parrotsec.org/

|

||||

[18]:http://lubuntu.me/

|

||||

[19]:https://elementary.io/

|

||||

[20]:https://linuxmint.com/

|

||||

[21]:https://www.ubuntu.com/

|

||||

[22]:https://www.gentoo.org/

|

||||

[23]:http://www.linuxfromscratch.org/

|

||||

[24]:http://www.linuxfromscratch.org/lfs/download.html

|

||||

[25]:https://www.ubuntu.com/core

|

||||

[26]:https://login.ubuntu.com/

|

||||

[27]:https://www.redhat.com/en/technologies/linux-platforms/enterprise-linux

|

||||

[28]:http://thecloudmarket.com/stats#/by_platform_definition

|

||||

[29]:https://buy.ubuntu.com/?_ga=2.177313893.113132429.1514825043-1939188204.1510782993

|

||||

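published/20180919 How Writing Can Expand Your Skills and Grow Your Career.md

@@ -1,17 +1,21 @@
How Writing Can Expand Your Skills and Grow Your Career
======

> Learn why writing can help you learn new skills and grow your career.

Creative Commons Zero Pixabay

At the recent [Open Source Summit in Vancouver][1], I took part in a panel discussion called "How Writing Can Change Your Career for the Better (Even if You Don't Identify as a Writer)." The moderator was Rikki Endsley, community manager and editor for Opensource.com, and the panelists were open source strategy consultant VM (Vicky) Brasseur; Alex Williams, founder and editor in chief of The New Stack; and Dawn Foster, a consultant at The Scale Factory.

-In [this article][3], Rikki summarized some ways of writing that can delight you and improve your career in unexpected ways; my remarks at the summit were inspired by that article. Full disclosure: I have known Rikki for a long time. We worked at the same company for many years, raised our kids together, and are still close friends.
+In [this article][3], Rikki summarized some enjoyable ways of writing that can improve your career in unexpected ways; my remarks at the summit were inspired by that article. Full disclosure: I have known Rikki for a long time. We worked at the same company for many years, raised our kids together, and are still close friends.

### Writing and learning

As Rikki put it in the panel description, "Even if you don't consider yourself a 'writer,' you should consider writing about your open source contributions, your project, or your community." Writing is a great way to share your knowledge and get others involved in your work, and it has personal benefits too. Writing can help you meet new people, learn new skills, and improve your communication.

-I find that writing clarifies what I don't understand about a topic. The process of writing highlights the gaps in my knowledge, which motivates me to fill the gaps through further research, reading, and asking questions.
+I find that writing clarifies what I don't understand about a topic. The process of writing highlights the gaps in my knowledge, which motivates me to fill those gaps through further research, reading, and asking questions.

Rikki said: "Writing about what you don't know is harder and more time-consuming, but it is also more fulfilling and better for your career. I've found that writing about things I don't know helps me learn, because I have to research them thoroughly to explain them clearly to readers."

@@ -19,12 +23,11 @@ Rikki said: "Writing about what you don't know is harder and more time-consuming,

### Clearer communication

-Writing helps you practice thinking and speaking precisely, especially when writing (or speaking) for an international audience. For example, in [this article][5], Isabel Drost-Fromm offers tips for removing ambiguity for speakers whose native language is not English. Whether you are speaking at a conference or within your own team, writing can also help you organize your thoughts before presenting.
+Writing helps you train your thinking and express yourself precisely, especially when writing (or speaking) for an international audience. For example, in [this article][5], Isabel Drost-Fromm offers tips for removing ambiguity for speakers whose native language is not English. Whether you are speaking at a conference or within your own team, writing can also help you organize your thoughts before presenting your slides.

Rikki said: "The process of writing articles helps me organize my talks and presentations, and it is also a great way to provide notes for attendees and to share with a wider international audience that didn't attend the event."

-If you're interested, I encourage you to write. I strongly recommend looking at the articles mentioned here to start thinking about what you want to write. Unfortunately, there is no record of our discussion at Open Source Summit, but I hope we can do another one in the future and share more ideas.
+If you're interested, I encourage you to write. I strongly recommend looking at the articles mentioned here to start thinking about what you want to write. Unfortunately, our discussion at Open Source Summit was not recorded, but I hope we can do another one in the future and share more ideas.

--------------------------------------------------------------------------------

@@ -33,7 +36,7 @@ via: https://www.linux.com/blog/2018/9/how-writing-can-help-you-learn-new-skills
Author: [Amber Ankerholz][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [belitex](https://github.com/belitex)
-Proofreader: [校对者ID](https://github.com/校对者ID)
+Proofreader: [pityonline](https://github.com/pityonline)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)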

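published/20180926 CPU Power Manager - Control And Manage CPU Frequency In Linux.md

@@ -1,25 +1,25 @@
-CPU Power Management Tool - Control and Manage CPU Frequency in Linux
+CPU Power Manager: Control and Manage CPU Frequency in Linux
======

-If you use a laptop, you probably know that power management on Linux is not very good. Although tools such as **TLP**, [**Laptop Mode Tools** and **powertop**][1] help reduce power consumption, overall battery life still falls short of Windows and Mac OS. Another way to reduce power consumption is to limit the CPU frequency. This is doable, but it requires writing rather complicated terminal commands, so it is inconvenient. Fortunately, there is a GNOME extension called **CPU Power Manager** that makes it easy to set and manage your CPU frequency. On the GNOME desktop, CPU Power Manager uses the **intel_pstate** power driver (supported by almost all Intel CPUs) to control and manage CPU frequency.
+If you use a laptop, you probably know that power management on Linux is not very good. Although tools such as **TLP**, [**Laptop Mode Tools** and **powertop**][1] help reduce power consumption, overall battery life still falls short of Windows and Mac OS. Another way to reduce power consumption is to limit the CPU frequency. This is doable, but it requires writing rather complicated terminal commands, so it is inconvenient. Fortunately, there is a GNOME extension called **CPU Power Manager** that makes it easy to set and manage your CPU frequency. On the GNOME desktop, CPU Power Manager uses the **intel_pstate** frequency scaling driver (supported by almost all Intel CPUs) to control and manage CPU frequency.

Another reason to use this extension is to reduce heat, since many systems get uncomfortably hot in normal use, and limiting the CPU frequency reduces the heat generated. It can also reduce wear on the CPU and other components.

### Installing CPU Power Manager

-First, go to the [**extension's page**][2] and install the extension.
+First, go to the [extension's page][2] and install the extension.

Once the extension is installed, a CPU icon appears on the right side of the GNOME top bar. Click the icon and you'll see an option to install the extension, as shown below:

-Click the **"Attempt Installation"** button and a password prompt will appear. The extension needs root privileges to add a policykit rule that allows it to control CPU frequency. This is what the prompt looks like:
+Click the "Attempt Installation" button and a password prompt will appear. The extension needs root privileges to add a policykit rule that allows it to control CPU frequency. This is what the prompt looks like:

-Enter your password and click the **"Authenticate"** button to complete the installation. This last step adds a policykit file named **mko.cpupower.setcpufreq.policy** to the **/usr/share/polkit-1/actions** directory.
+Enter your password and click the "Authenticate" button to complete the installation. This last step adds a policykit file named `mko.cpupower.setcpufreq.policy` to the `/usr/share/polkit-1/actions` directory.

Once everything is set up, clicking the CPU icon in the top-right corner shows something like this:

@@ -27,12 +27,10 @@ CPU Power Management Tool - Control and Manage CPU Frequency in Linux

### Features

  * **View CPU frequency:** Obviously, you can use this window to see the frequency at which your CPU is currently running.
-  * **Set maximum minimum frequency:** With this extension, you can set the maximum and minimum frequency limits as percentages using the sliders provided. Once these limits are set, the CPU will operate strictly within this range.
+  * **Set maximum and minimum frequency:** With this extension, you can set the maximum and minimum frequency limits as percentages using the sliders provided. Once these limits are set, the CPU will operate strictly within this range.
  * **Turn Turbo Boost on and off:** This is my favorite feature. Most Intel CPUs have a "Turbo Boost" feature, whereby one of the cores is automatically overclocked for extra performance. While this can give the system higher performance, it also greatly increases power consumption. So if you aren't doing anything CPU-intensive, it's best to turn Turbo Boost off to save power. In fact, on my computer I keep Turbo Boost off most of the time.
  * **Create profiles:** You can create profiles with maximum and minimum frequencies that you can switch on and off easily, instead of adjusting the settings by hand every time.

### Preferences

@@ -40,24 +38,23 @@ CPU Power Management Tool - Control and Manage CPU Frequency in Linux

As you can see, you can choose whether the CPU frequency is displayed, and whether it is displayed in **Ghz** instead of **Mhz**.

-You can also edit, create, and delete configurations:
+You can also edit, create, and delete profiles:

-For each configuration, you can set the maximum and minimum frequency and turn Turbo Boost on or off.
+For each profile, you can set the maximum and minimum frequency and turn Turbo Boost on or off.

### Conclusion

As I said at the beginning, power management on Linux is not the best, and many people are always looking to squeeze a few more minutes out of their Linux laptop's battery. If you are one of them, give this extension a try. It is an unconventional way to save power, but it works. I really like this extension and have been using it for several months now.

-What do you think about this extension? Put your thoughts in the comments below!What do you think about this extension? Please leave your thoughts in the comments below.
+What do you think about this extension? Please leave your thoughts in the comments below.

Cheers!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/cpu-power-manager-control-and-manage-cpu-frequency-in-linux/

@@ -65,7 +62,7 @@ via: https://www.ostechnix.com/cpu-power-manager-control-and-manage-cpu-frequenc
Author: [EDITOR][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [runningwater](https://github.com/runningwater)
-Proofreader: [校对者ID](https://github.com/校对者ID)
+Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)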
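The article above notes that limiting CPU frequency by hand takes awkward terminal commands. As a rough illustration of what that involves, here is a minimal sketch that reads and writes the limits the kernel's `intel_pstate` driver exposes through sysfs. It is not taken from the extension, and whether the extension uses these files is not stated in the article. The helper names `show_limits` and `set_limit` and the `PSTATE_DIR` override are assumptions made for this example; the sysfs file names (`min_perf_pct`, `max_perf_pct`, `no_turbo`) are the ones the driver provides.

```shell
#!/bin/sh
# Sketch only: helper names and the PSTATE_DIR override are illustrative.
# On a real system the files live under /sys and writing them needs root.
PSTATE_DIR="${PSTATE_DIR:-/sys/devices/system/cpu/intel_pstate}"

# Print whichever limit files are readable, one name=value pair per line.
show_limits() {
  for f in min_perf_pct max_perf_pct no_turbo; do
    [ -r "$PSTATE_DIR/$f" ] && printf '%s=%s\n' "$f" "$(cat "$PSTATE_DIR/$f")"
  done
  return 0
}

# set_limit NAME VALUE
#   min_perf_pct / max_perf_pct take a percentage from 0 to 100;
#   no_turbo takes 1 (Turbo Boost off) or 0 (Turbo Boost on).
set_limit() {
  case "$1" in
    min_perf_pct|max_perf_pct)
      [ "$2" -ge 0 ] && [ "$2" -le 100 ] || return 1 ;;
    no_turbo)
      [ "$2" = 0 ] || [ "$2" = 1 ] || return 1 ;;
    *)
      return 1 ;;
  esac
  printf '%s\n' "$2" > "$PSTATE_DIR/$1"
}

show_limits
```

For example, `set_limit max_perf_pct 60` followed by `set_limit no_turbo 1` would cap the CPU at 60% of its maximum performance and disable Turbo Boost, assuming the driver is active and the script runs as root.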

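published/20180930 Creator of the World Wide Web is Creating a New Decentralized Web.md

@@ -1,27 +1,27 @@
-The Creator of the World Wide Web Is Creating a New Distributed Web
+The Creator of the World Wide Web Is Creating a New Decentralized Web
======

-**Tim Berners-Lee, creator of the World Wide Web, has announced his plan to create a new distributed web in which users control the data**
+> Tim Berners-Lee, creator of the World Wide Web (WWW), has announced his plan to create a new decentralized web in which users control the data.

-[Tim Berners-Lee] [1] is known for creating the World Wide Web, the internet as you know it today. More than two decades later, Tim is working to free the internet from the grip of corporate giants and give power back to the people through a distributed web.
+[Tim Berners-Lee][1] is known for creating the World Wide Web, the internet as you know it today. More than two decades later, Tim is working to free the internet from the grip of corporate giants and give power back to the people through a decentralized web.

-Berners-Lee is unhappy with the way the "powerful forces" of the internet handle users' data. So he has [started working on his own open source project][2], Solid, "to give power on the web back to the people"
+Berners-Lee is unhappy with the way the "powerful forces" of the internet handle users' data. So he has [started working on his own open source project][2], Solid, "to give power on the web back to the people."

> Solid changes the current model where users have to hand over personal data to digital giants in exchange for perceived value. As we've all discovered, this hasn't been in our best interests. Solid is how we evolve the web in order to restore balance, by giving every one of us complete control over data, personal or not, in a revolutionary way.

![Tim Berners-Lee is creating a decentralized web with open source project Solid][3]

-Basically, [Solid][4] is a platform built using the existing web, where you can create your own "pods" (personal data stores). You decide where these "pods" will be hosted, who can access which data elements, and how data is shared through the pod.
+Basically, [Solid][4] is a platform built using the existing web, where you can create your own "pod" (personal data store). You decide where this "pod" will be hosted, who can access which data elements, and how data is shared through the pod.

Berners-Lee believes Solid "will empower individuals, developers, and businesses with entirely new ways to conceive, build, and find innovative, trusted, and beneficial applications and services."

Developers need to integrate Solid into their applications and websites. Solid is still in its early stages, so there are no applications for it yet. But the project website claims that "the first wave of Solid apps are being created now."

-Berners-Lee has founded a startup called [Inrupt][5] and has taken leave from MIT to work on Solid full time and take it "from the vision of a few to the reality of many."
+Berners-Lee has founded a startup called [Inrupt][5] and has taken a sabbatical from MIT to work on Solid full time and take it "from the vision of a few to the reality of many."

-If you're interested in Solid, [learn how to build apps][6] or [contribute to the project][7] in your own way. Of course, building Solid and driving its broad adoption will take a lot of effort, so every bit of contribution counts toward the success of a distributed web.
+If you're interested in Solid, you can [learn how to build apps][6] or [contribute to the project][7] in your own way. Of course, building Solid and driving its broad adoption will take a lot of effort, so every bit of contribution counts toward the success of a decentralized web.

-Do you think a [distributed web][8] will become a reality? What do you think of the distributed web, and the Solid project in particular?
+Do you think a [decentralized web][8] will become a reality? What do you think of the decentralized web, and the Solid project in particular?

--------------------------------------------------------------------------------

@@ -30,7 +30,7 @@ via: https://itsfoss.com/solid-decentralized-web/
Author: [Abhishek Prakash][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [ypingcn](https://github.com/ypingcn)
-Proofreader: [校对者ID](https://github.com/校对者ID)
+Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)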

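published/20181012 Happy birthday, KDE- 11 applications you never knew existed.md

@@ -1,16 +1,16 @@
-Happy Birthday, KDE: 11 Applications You Never Knew Existed
+11 KDE Applications You Never Knew Existed
======
-Which fun or quirky app do you need today?
+> Which fun or quirky app do you need today?

-The Linux desktop environment KDE will celebrate its 22nd anniversary on October 14 this year. KDE community users have created a large number of applications, many of which provide fun and quirky services. We perused the list and picked out 11 applications you might like to know about.
-
-Not that many, but [quite a few][1].
+The Linux desktop environment KDE celebrated its 22nd anniversary on October 14 this year. KDE community users have created a gazillion applications (not really, but [there are quite a few][1]), many of which provide fun and quirky services. We perused the list and picked out 11 applications you might like to know about.

### 11 KDE applications you never knew existed

-1. [KTeaTime][2] is a timer for steeping tea. Choose the type of tea you are drinking - green, black, herbal, and so on - and the timer will ding when the tea is ready and the tea bag can be removed.
-2. [KTux][3] is just a screensaver program......or is it? Tux flies through outer space in his green spaceship.
+1. [KTeaTime][2] is a timer for steeping tea. Choose the type of tea you are drinking (green, black, herbal, and so on) and the timer will ding when the tea is ready and the tea bag can be removed.
+2. [KTux][3] is just a screensaver program... or is it? Tux flies through outer space in its green spaceship.
3. [Blinken][4] is a memory game based on Simon Says, an electronic game released in 1978. Players are challenged to remember sequences of increasing length.
4. [Tellico][5] is a collection manager for organizing your favorite hobby. Maybe you still collect baseball cards. Maybe you're part of a wine club. Maybe you're a serious bookworm. Maybe all three!
5. [KRecipes][6] is **not** a simple recipe manager. It has a lot more going on! Shopping lists, nutrient analysis, advanced search, recipe ratings, import/export of various formats, and more.

@@ -19,7 +19,7 @@ The Linux desktop environment KDE will celebrate its 22nd anniversary on October 14 this year. KDE
8. [KDiamond][9] is similar to Bejeweled and other single-player puzzle games in which the goal is to build lines of a certain number of the same type of gem or object. In this case, diamonds.
9. [KolourPaint][10] is a very simple image editing tool that can also be used to create simple vector graphics.
10. [Kiriki][11] is a dice game for 2-6 players, similar to Yahtzee.
-11. [RSIBreak][12] doesn't start with a K. What!? It starts with "RSI," which stands for "Repetitive Strain Injury," something that can occur after long hours of working with a mouse and keyboard day after day. This app reminds you to take breaks and can be personalized to meet your needs.
+11. [RSIBreak][12] doesn't even start with a K!? It starts with "RSI," which stands for "Repetitive Strain Injury," something that can occur after long hours of working with a mouse and keyboard day after day. This app reminds you to take breaks and can be customized to meet your needs.

--------------------------------------------------------------------------------

@@ -28,7 +28,7 @@ via: https://opensource.com/article/18/10/kde-applications
Author: [Opensource.com][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
-Proofreader: [校对者ID](https://github.com/校对者ID)
+Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)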

10

scripts/badge.sh

Executable file

@@ -0,0 +1,10 @@
#!/usr/bin/env bash
# Regenerate the badges
set -o errexit

SCRIPTS_DIR=$(cd "$(dirname "$0")" && pwd)
BUILD_DIR=$(cd "$SCRIPTS_DIR/.." && pwd)/build
mkdir -p "${BUILD_DIR}/badge"
for catalog in published translated translating sources;do
    "${SCRIPTS_DIR}/badge/show_status.sh" -s "${catalog}" > "${BUILD_DIR}/badge/${catalog}.svg"
done

92

scripts/badge/show_status.sh

Executable file

@@ -0,0 +1,92 @@
#!/usr/bin/env bash

set -e

# Help text (printed in Chinese): "Show the number of published, translated,
# in-translation, and untranslated articles"; "-s: output in SVG format".
function help()
{
    cat <<EOF
Usage: ${0##*/} [+-s] [published] [translated] [translating] [sources]

显示已发布、已翻译、正在翻译和待翻译的数量

-s 输出为svg格式
EOF
}

while getopts :s OPT; do
    case $OPT in
        s|+s)
            show_format="svg"
            ;;
        *)
            help
            exit 2
    esac
done
shift $(( OPTIND - 1 ))
OPTIND=1

# Badge labels: translated = awaiting proofreading, published = published,
# translating = in translation, sources = awaiting translation.
declare -A catalog_comment_dict
catalog_comment_dict=([translated]="待校对" [published]="已发布" [translating]="翻译中" [sources]="待翻译")

function count_files_under_dir()
{
    local dir=$1
    local pattern=$2
    find "${dir}" -name "${pattern}" -type f |wc -l
}

cd "$(dirname "$0")/../.." # change to the TranslateProject root

for catalog in "$@";do
    case "${catalog}" in
        published)
            num=$(count_files_under_dir "${catalog}" "[0-9]*.md")
            ;;
        translated)
            num=$(count_files_under_dir "${catalog}" "[0-9]*.md")
            ;;
        translating)
            # A source file counts as claimed when one of its first three lines mentions translation
            num=$(git grep -niE "translat|fanyi|翻译" sources/*.md |awk -F ":" '{if ($2<=3) print $1}' |wc -l)
            ;;
        sources)
            total=$(count_files_under_dir "${catalog}" "[0-9]*.md")
            translating_num=$(git grep -niE "translat|fanyi|翻译" sources/*.md |awk -F ":" '{if ($2<=3) print $1}' |wc -l)
            num=$((${total} - ${translating_num}))
            ;;
        *)
            help
            exit 2
    esac

    comment=${catalog_comment_dict[${catalog}]}
    if [[ "${show_format}" == "svg" ]];then
        cat <<EOF
<svg xmlns="http://www.w3.org/2000/svg" width="120" height="20">
  <linearGradient id="b" x2="0" y2="100%">
    <stop offset="0" stop-color="#bbb" stop-opacity=".1" />
    <stop offset="1" stop-opacity=".1" />
  </linearGradient>
  <mask id="a">
    <rect width="132.53125" height="20" rx="3" fill="#fff" />
  </mask>
  <g mask="url(#a)">
    <path fill="#555" d="M0 0 h70.53125 v20 H0 z" />
    <path fill="#97CA00" d="M70.53125 0 h62.0 v20 H70.53125 z" />
    <path fill="url(#b)" d="M0 0 h132.53125 v20 H0 z" />
  </g>
  <g fill="#fff" font-family="DejaVu Sans" font-size="11">
    <text x="6" y="15" fill="#010101" fill-opacity=".3">${comment}</text>
    <text x="6" y="14">${comment}</text>
    <text x="74.53125" y="15" fill="#010101" fill-opacity=".3">${num}</text>
    <text x="74.53125" y="14">${num}</text>
  </g>
</svg>
EOF
    else
        cat<<EOF
${comment}: ${num}
EOF
    fi

done
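The `translating` count in `show_status.sh` depends on the `path:line:text` layout of `git grep -n` output, so a short sketch may help when reviewing it. The version below works on made-up sample lines instead of a real repository, and the names `count_claimed` and `sample_grep_output` are assumptions for illustration. Unlike the script, it also removes duplicate paths with `sort -u`, so a file that matches on both line 1 and line 2 is counted once; that is a deliberate variation, not what the committed script does.

```shell
#!/bin/sh
# Sketch only: counts files that carry a translation claim in their first
# three lines, given `git grep -n` style input (path:line:matched text).
# Paths that themselves contain ':' would confuse the field split.
count_claimed() {
  awk -F ':' '$2 <= 3 { print $1 }' | sort -u | wc -l | tr -d ' '
}

# Made-up sample input standing in for real `git grep -niE ...` output.
sample_grep_output() {
  printf '%s\n' \
    'sources/tech/20181022 Example one.md:1:translating by alice' \
    'sources/tech/20181022 Example one.md:2:translating---alice' \
    'sources/talk/20181019 Example two.md:40:lost in translation' \
    'sources/tech/20181018 Example three.md:3:fanyi bob'
}

echo "claimed files: $(sample_grep_output | count_claimed)"
```

The match on line 40 of the second file is ignored because it is body text, not a claim marker near the top of the file.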

9

scripts/check.sh

Normal file

@@ -0,0 +1,9 @@
#!/bin/bash
# PR check script
set -e

CHECK_DIR="$(dirname "$0")/check"
# sh "${CHECK_DIR}/check.sh" # requires dependencies; temporarily disabled
sh "${CHECK_DIR}/collect.sh"
sh "${CHECK_DIR}/analyze.sh"
sh "${CHECK_DIR}/identify.sh"

43

scripts/check/analyze.sh

Normal file

@@ -0,0 +1,43 @@
#!/bin/sh
# PR file change analysis
set -e

# Load shared constants and functions
# shellcheck source=common.inc.sh
. "$(dirname "$0")/common.inc.sh"

################################################################################
# Reads:
# - /tmp/changes # list of file changes
# Writes:
# - /tmp/stats # file change statistics
################################################################################

# Run the analysis and print the statistics to standard output
do_analyze() {
  cat /dev/null > /tmp/stats
  OTHER_REGEX='^$'
  for TYPE in 'SRC' 'TSL' 'PUB'; do
    for STAT in 'A' 'M' 'D'; do
      # Count each operation in each category
      REGEX="$(get_operation_regex "$STAT" "$TYPE")"
      OTHER_REGEX="${OTHER_REGEX}|${REGEX}"
      eval "${TYPE}_${STAT}=\"\$(grep -Ec '$REGEX' /tmp/changes)\"" || true
      eval echo "${TYPE}_${STAT}=\$${TYPE}_${STAT}"
    done
  done

  # Count other operations
  OTHER="$(grep -Evc "$OTHER_REGEX" /tmp/changes)" || true
  echo "OTHER=$OTHER"

  # Count total changes
  TOTAL="$(wc -l < /tmp/changes )"
  echo "TOTAL=$TOTAL"
}


echo "[分析] 统计文件变更……"  # "[analyze] counting file changes..."
do_analyze > /tmp/stats
echo "[分析] 已写入统计结果:"  # "[analyze] statistics written:"
cat /tmp/stats
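For reviewers who find the nested `eval` calls in `analyze.sh` hard to follow, the same tally can be sketched as a single `awk` pass over a `--name-status` list. This is an alternative formulation for comparison, not the committed implementation: the function name `tally_changes` is an assumption, and it classifies by the first path component, where the script uses the regexes from `common.inc.sh`. It prints the same `NAME=value` lines that `analyze.sh` writes to `/tmp/stats`.

```shell
#!/bin/sh
# Sketch only: reads `git diff --name-status` lines (STATUS<TAB>PATH) on stdin
# and prints SRC_A ... PUB_D, OTHER and TOTAL as NAME=value lines.
tally_changes() {
  awk -F '\t' '
    BEGIN {
      split("SRC TSL PUB", types, " ")
      split("A M D", stats, " ")
      dir["sources"] = "SRC"; dir["translated"] = "TSL"; dir["published"] = "PUB"
    }
    {
      total++
      path = $2
      sub(/^"/, "", path)      # git quotes unusual file names; drop the quote
      top = path
      sub(/\/.*/, "", top)     # keep only the first path component
      if ((top in dir) && ($1 == "A" || $1 == "M" || $1 == "D") && path ~ /\//)
        count[dir[top] "_" $1]++
      else
        other++
    }
    END {
      for (t = 1; t <= 3; t++)
        for (s = 1; s <= 3; s++)
          printf "%s_%s=%d\n", types[t], stats[s], count[types[t] "_" stats[s]]
      printf "OTHER=%d\nTOTAL=%d\n", other, total
    }'
}

# Made-up change list: one translation submitted (source deleted, translation added).
printf 'D\tsources/tech/20180105 Example.md\nA\ttranslated/tech/20180105 Example.md\n' \
  | tally_changes
```

With that sample input, `SRC_D` and `TSL_A` are both 1 and `TOTAL` is 2, which is the shape the "translation completed" rule in `identify.sh` looks for.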

10

scripts/check/check.sh

Normal file

@@ -0,0 +1,10 @@
#!/bin/bash
# Check the status of the scripts
set -e

################################################################################
# For development use only, for now
################################################################################

# Prints "[check] ShellCheck passed" on success
shellcheck -e SC2034 -x mock/stats.sh "$(dirname "$0")"/*.sh \
  && echo '[检查] ShellCheck 通过'

37

scripts/check/collect.sh

Normal file

@@ -0,0 +1,37 @@
#!/bin/bash
# PR file change collection
set -e

################################################################################
# Reads: (none)
# Writes:
# - /tmp/changes # list of file changes
################################################################################


echo "[收集] 计算 PR 分支与目标分支的分叉点……"  # "[collect] finding the fork point of the PR branch and the target branch..."

TARGET_BRANCH="${TRAVIS_BRANCH:-master}"
echo "[收集] 目标分支设定为:${TARGET_BRANCH}"  # "[collect] target branch set to: ..."

MERGE_BASE='HEAD^'
[ "$TRAVIS_PULL_REQUEST" != 'false' ] \
  && MERGE_BASE="$(git merge-base "$TARGET_BRANCH" HEAD)"
echo "[收集] 找到分叉节点:${MERGE_BASE}"  # "[collect] found fork point: ..."

echo "[收集] 变更摘要:"  # "[collect] change summary:"
git --no-pager show --summary "${MERGE_BASE}..HEAD"

# A commit message containing 绕过检查 ("bypass check") flags the PR to skip the rules
{
  git --no-pager log --oneline "${MERGE_BASE}..HEAD" | grep -Eq '绕过检查' && {
    touch /tmp/bypass
    echo "[收集] 已标记为绕过检查项"  # "[collect] marked as bypassing the checks"
  }
} || true

echo "[收集] 写出文件变更列表……"  # "[collect] writing the list of file changes..."

git diff "$MERGE_BASE" HEAD --no-renames --name-status > /tmp/changes
echo "[收集] 已写出文件变更列表:"  # "[collect] list of file changes written:"
cat /tmp/changes
{ [ -z "$(cat /tmp/changes)" ] && echo "(无变更)"; } || true  # "(no changes)"
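One detail of `collect.sh` that is easy to miss is why the bypass detection is wrapped in `{ ...; } || true`. Under `set -e`, a `grep -q` that finds nothing returns a non-zero status, and without the wrapper that status could end the script on every ordinary PR. The sketch below isolates the pattern so it can be tried without a repository; the function name `mark_bypass` and the flag-file argument are assumptions for illustration, and the log lines are made up.

```shell
#!/bin/sh
set -e

# Sketch only: reads `git log --oneline` style lines on stdin and creates the
# flag file named in $1 when any line contains the bypass marker.
# The trailing `|| true` keeps a non-matching grep from aborting under `set -e`.
mark_bypass() {
  { grep -Eq '绕过检查' && { : > "$1"; echo 'bypass requested'; }; } || true
}

flag="${TMPDIR:-/tmp}/bypass_demo.$$"

# No marker: nothing is printed, no file is created, and the script continues.
printf 'abc1234 fix a typo\n' | mark_bypass "$flag"

# Marker present: the flag file is created.
printf 'def5678 绕过检查: bulk import\n' | mark_bypass "$flag"

if [ -f "$flag" ]; then echo 'flag file exists'; fi
rm -f "$flag"
```

A downstream rule can then test for the flag file, much as `rule_bypass_check` in `identify.sh` tests for `/tmp/bypass`.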

30

scripts/check/common.inc.sh

Normal file

@ -0,0 +1,30 @@

#!/bin/sh

################################################################################
# Shared constants and functions
################################################################################

# Category directories
export SRC_DIR='sources' # untranslated
export TSL_DIR='translated' # translated
export PUB_DIR='published' # published

# Matching patterns
export CATE_PATTERN='(news|talk|tech)' # category
export FILE_PATTERN='[0-9]{8} [a-zA-Z0-9_.,() -]*\.md' # file name

# Usage: get_operation_regex STATUS TYPE
#
# STATUS is one of:
# - A: added
# - M: modified
# - D: deleted
# TYPE is one of:
# - SRC: untranslated
# - TSL: translated
# - PUB: published
get_operation_regex() {
  STAT="$1"
  TYPE="$2"
  echo "^${STAT}\\s+\"?$(eval echo "\$${TYPE}_DIR")/"
}
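To make the output of `get_operation_regex` concrete, here is a minimal Python sketch (not part of the repository) that rebuilds the same pattern and applies it to a few lines in the format written by `git diff --name-status`. The helper name `operation_regex`, the `DIRS` table, and the sample change lines are assumptions made for illustration only.

```python
import re

# Mirrors the *_DIR constants exported by common.inc.sh.
DIRS = {"SRC": "sources", "TSL": "translated", "PUB": "published"}


def operation_regex(stat: str, kind: str) -> str:
    """Build the pattern that get_operation_regex echoes for a status/type pair."""
    return rf'^{stat}\s+"?{DIRS[kind]}/'


# Hypothetical name-status lines. The pattern tolerates an optional
# leading double quote because git may quote some paths.
changes = [
    "A\tsources/talk/20171119 The Ruby Story.md",
    'A\t"sources/talk/20171229 Important Papers- Codd and the Relational Model.md"',
    "M\tsources/tech/20171202 Simulating the Altair.md",
    "A\ttranslated/tech/20180810 How To Remove Or Disable Ubuntu Dock.md",
]

pattern = re.compile(operation_regex("A", "SRC"))
added_sources = [line for line in changes if pattern.search(line)]

print(operation_regex("A", "SRC"))
print(len(added_sources))
```

Only the two lines that add a file under `sources/` are counted. This is presumably how the per-category counters such as `SRC_A` are derived, although the script that writes `/tmp/stats` is not shown here.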

86

scripts/check/identify.sh

Normal file


@ -0,0 +1,86 @@

#!/bin/bash
# Match the PR against the rules
set -e

################################################################################
# Reads:
# - /tmp/stats
# Writes: (nothing)
################################################################################

# Load shared constants and functions
# shellcheck source=common.inc.sh
. "$(dirname "$0")/common.inc.sh"

echo "[identify] Loading the statistics..."
# Load the statistics
# shellcheck source=mock/stats.sh
. /tmp/stats

# PR rules

# Bypass checks: skip the PR checks
rule_bypass_check() {
  [ -f /tmp/bypass ] && echo "Matched rule: bypass checks"
}

# Add source: add at least one source article
rule_source_added() {
  [ "$SRC_A" -ge 1 ] \
    && [ "$TOTAL" -eq "$SRC_A" ] && echo "Matched rule: add ${SRC_A} source article(s)"
}

# Claim translation: only one source article may be claimed
rule_translation_requested() {
  [ "$SRC_M" -eq 1 ] \
    && [ "$TOTAL" -eq 1 ] && echo "Matched rule: claim translation"
}

# Submit translation: only one translation may be submitted
rule_translation_completed() {
  [ "$SRC_D" -eq 1 ] && [ "$TSL_A" -eq 1 ] \
    && [ "$TOTAL" -eq 2 ] && echo "Matched rule: submit translation"
}

# Proofread translation: only one translation may be proofread
rule_translation_revised() {
  [ "$TSL_M" -eq 1 ] \
    && [ "$TOTAL" -eq 1 ] && echo "Matched rule: proofread translation"
}

# Publish translation: one or more translations may be published
rule_translation_published() {
  [ "$TSL_D" -ge 1 ] && [ "$PUB_A" -ge 1 ] && [ "$TSL_D" -eq "$PUB_A" ] \
    && [ "$TOTAL" -eq "$((TSL_D + PUB_A))" ] \
    && echo "Matched rule: publish ${PUB_A} translation(s)"
}

# Common errors

# Unknown error
error_undefined() {
  echo "Unknown error: no rule matched; try operating on only one article"
}

# Multiple articles claimed
error_translation_requested_multiple() {
  [ "$SRC_M" -gt 1 ] \
    && echo "Matched error: multiple articles claimed; please claim only one at a time"
}

# Run the checks and print the matched item
do_check() {
  rule_bypass_check \
    || rule_source_added \
    || rule_translation_requested \
    || rule_translation_completed \
    || rule_translation_revised \
    || rule_translation_published \
    || {
      error_translation_requested_multiple \
        || error_undefined
      exit 1
    }
}

do_check
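The rule cascade in `do_check` is a first-match chain: each rule is tried in order, and the error handlers only run when none matches. The following Python sketch restates that logic so the conditions can be tried out against sample counts. It is an illustration, not the project's implementation. The `make_stats` helper is hypothetical, and its assumption that `TOTAL` is the sum of all counters (including `OTHER`) is a guess, since the script that writes `/tmp/stats` is not shown.

```python
from typing import Dict


def identify(stats: Dict[str, int], bypass: bool = False) -> str:
    """Return the first PR rule that matches, in the order do_check tries them."""
    s = stats
    if bypass:
        return "bypass checks"
    if s["SRC_A"] >= 1 and s["TOTAL"] == s["SRC_A"]:
        return "add source"
    if s["SRC_M"] == 1 and s["TOTAL"] == 1:
        return "claim translation"
    if s["SRC_D"] == 1 and s["TSL_A"] == 1 and s["TOTAL"] == 2:
        return "submit translation"
    if s["TSL_M"] == 1 and s["TOTAL"] == 1:
        return "proofread translation"
    if (s["TSL_D"] >= 1 and s["TSL_D"] == s["PUB_A"]
            and s["TOTAL"] == s["TSL_D"] + s["PUB_A"]):
        return "publish translation"
    # No rule matched: report the most specific error available.
    if s["SRC_M"] > 1:
        return "error: multiple claims"
    return "error: undefined"


def make_stats(**counts: int) -> Dict[str, int]:
    """Hypothetical helper: default every counter to 0, then sum TOTAL (assumed)."""
    keys = ["SRC_A", "SRC_M", "SRC_D", "TSL_A", "TSL_M", "TSL_D",
            "PUB_A", "PUB_M", "PUB_D", "OTHER"]
    stats = {k: counts.get(k, 0) for k in keys}
    stats["TOTAL"] = sum(stats.values())
    return stats


print(identify(make_stats(SRC_D=1, TSL_A=1)))
print(identify(make_stats(SRC_M=2)))
```

Because every rule also constrains `TOTAL`, a PR that touches any file outside the expected set falls through to the error branch.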

13

scripts/check/mock/stats.sh

Normal file


@ -0,0 +1,13 @@

#!/bin/sh
# Mock statistics for ShellCheck
SRC_A=0
SRC_M=0
SRC_D=0
TSL_A=0
TSL_M=0
TSL_D=0
PUB_A=0
PUB_M=0
PUB_D=0
OTHER=0
TOTAL=0

93

sources/talk/20170921 The Rise and Rise of JSON.md

Normal file


@ -0,0 +1,93 @@

The Rise and Rise of JSON
======

JSON has taken over the world. Today, when any two applications communicate with each other across the internet, odds are they do so using JSON. It has been adopted by all the big players: Of the ten most popular web APIs, a list consisting mostly of APIs offered by major companies like Google, Facebook, and Twitter, only one API exposes data in XML rather than JSON. Twitter, to take an illustrative example from that list, supported XML until 2013, when it released a new version of its API that dropped XML in favor of using JSON exclusively. JSON has also been widely adopted by the programming rank and file: According to Stack Overflow, a question and answer site for programmers, more questions are now asked about JSON than about any other data interchange format.


![][1]


XML still survives in many places. It is used across the web for SVGs and for RSS and Atom feeds. When Android developers want to declare that their app requires a permission from the user, they do so in their app’s manifest, which is written in XML. XML also isn’t the only alternative to JSON—some people now use technologies like YAML or Google’s Protocol Buffers. But these are nowhere near as popular as JSON. For the time being, JSON appears to be the go-to format for communicating with other programs over the internet.


JSON’s dominance is surprising when you consider that as recently as 2005 the web world was salivating over the potential of “Asynchronous JavaScript and XML” and not “Asynchronous JavaScript and JSON.” It is of course possible that this had nothing to do with the relative popularity of the two formats at the time and reflects only that “AJAX” must have seemed a more appealing acronym than “AJAJ.” But even if some people were already using JSON instead of XML in 2005 (and in fact not many people were yet), one still wonders how XML’s fortunes could have declined so precipitously that a mere decade or so later “Asynchronous JavaScript and XML” has become an ironic misnomer. What happened in that decade? How did JSON supersede XML in so many applications? And who came up with this data format now depended on by engineers and systems all over the world?


### The Birth of JSON


The first JSON message was sent in April of 2001. Since this was a historically significant moment in computing, the message was sent from a computer in a Bay-Area garage. Douglas Crockford and Chip Morningstar, co-founders of a technology consulting company called State Software, had gathered in Morningstar’s garage to test out an idea.


Crockford and Morningstar were trying to build AJAX applications well before the term “AJAX” had been coined. Browser support for what they were attempting was not good. They wanted to pass data to their application after the initial page load, but they had not found a way to do this that would work across all the browsers they were targeting.


Though it’s hard to believe today, Internet Explorer represented the bleeding edge of web browsing in 2001. As early as 1999, Internet Explorer 5 supported a primordial form of XMLHttpRequest, which programmers could access using a framework called ActiveX. Crockford and Morningstar could have used this technology to fetch data for their application, but they could not have used the same solution in Netscape 4, another browser that they sought to support. So Crockford and Morningstar had to use a different system that worked in both browsers.


The first JSON message looked like this:


```
<html><head><script>
    document.domain = 'fudco';
    parent.session.receive(
        { to: "session", do: "test",
          text: "Hello world" }
    )
</script></head></html>
```


Only a small part of the message resembles JSON as we know it today. The message itself is actually an HTML document containing some JavaScript. The part that resembles JSON is just a JavaScript object literal being passed to a function called `receive()`.


Crockford and Morningstar had decided that they could abuse an HTML frame to send themselves data. They could point a frame at a URL that would return an HTML document like the one above. When the HTML was received, the JavaScript would be run, passing the object literal back to the application. This worked as long as you were careful to sidestep browser protections preventing a sub-window from accessing its parent; you can see that Crockford and Morningstar did that by explicitly setting the document domain. (This frame-based technique, sometimes called the hidden frame technique, was commonly used in the late 90s before the widespread implementation of XMLHttpRequest.)


The amazing thing about the first JSON message is that it’s not obviously the first usage of a new kind of data format at all. It’s just JavaScript! In fact the idea of using JavaScript this way is so straightforward that Crockford himself has said that he wasn’t the first person to do it—he claims that somebody at Netscape was using JavaScript array literals to communicate information as early as 1996. Since the message is just JavaScript, it doesn’t require any kind of special parsing. The JavaScript interpreter can do it all.


The first ever JSON message actually ran afoul of the JavaScript interpreter. JavaScript reserves an enormous number of words—there are 64 reserved words as of ECMAScript 6—and Crockford and Morningstar had unwittingly used one in their message. They had used `do` as a key, but `do` is reserved. Since JavaScript has so many reserved words, Crockford decided that, rather than avoid using all those reserved words, he would just mandate that all JSON keys be quoted. A quoted key would be treated as a string by the JavaScript interpreter, meaning that reserved words could be used safely. This is why JSON keys are quoted to this day.
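The quoting rule is easy to check with any strict JSON parser. The sketch below uses Python's standard `json` module rather than a JavaScript interpreter, so it illustrates the rule as it ended up in the JSON grammar, not the original reserved-word failure. The message contents are borrowed from the first message shown earlier.

```python
import json

# Quoted keys parse fine, even when a key is a JavaScript reserved word like "do".
message = json.loads('{"to": "session", "do": "test", "text": "Hello world"}')
print(message["do"])

# The unquoted form used in the first message is not valid JSON.
try:
    json.loads('{to: "session", do: "test"}')
    unquoted_ok = True
except json.JSONDecodeError:
    unquoted_ok = False
print(unquoted_ok)
```

A strict parser rejects the unquoted form outright, so a JSON document never depends on which words a particular language happens to reserve.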


Crockford and Morningstar realized they had something that could be used in all sorts of applications. They wanted to name their format “JSML”, for JavaScript Markup Language, but found that the acronym was already being used for something called Java Speech Markup Language. So they decided to go with “JavaScript Object Notation”, or JSON. They began pitching it to clients but soon found that clients were unwilling to take a chance on an unknown technology that lacked an official specification. So Crockford decided he would write one.


In 2002, Crockford bought the domain [JSON.org][2] and put up the JSON grammar and an example implementation of a parser. The website is still up, though it now includes a prominent link to the JSON ECMA standard ratified in 2013. After putting up the website, Crockford did little more to promote JSON, but soon found that lots of people were submitting JSON parser implementations in all sorts of different programming languages. JSON’s lineage clearly tied it to JavaScript, but it became apparent that JSON was well-suited to data interchange between arbitrary pairs of languages.


### Doing AJAX Wrong


JSON got a big boost in 2005. That year, a web designer and developer named Jesse James Garrett coined the term “AJAX” in a blog post. He was careful to stress that AJAX wasn’t any one new technology, but rather “several technologies, each flourishing in its own right, coming together in powerful new ways.” AJAX was the name that Garrett was giving to a new approach to web application development that he had noticed gaining favor. His blog post went on to describe how developers could leverage JavaScript and XMLHttpRequest to build new kinds of applications that were more responsive and stateful than the typical web page. He pointed to Gmail and Flickr as examples of websites already relying on AJAX techniques.


The “X” in “AJAX” stood for XML, of course. But in a follow-up Q&A post, Garrett pointed to JSON as an entirely acceptable alternative to XML. He wrote that “XML is the most fully-developed means of getting data in and out of an AJAX client, but there’s no reason you couldn’t accomplish the same effects using a technology like JavaScript Object Notation or any similar means of structuring data.”


Developers indeed found that they could easily use JSON to build AJAX applications and many came to prefer it to XML. And so, ironically, the interest in AJAX led to an explosion in JSON’s popularity. It was around this time that JSON drew the attention of the blogosphere.


In 2006, Dave Winer, a prolific blogger and the engineer behind a number of XML-based technologies such as RSS and XML-RPC, complained that JSON was reinventing XML for no good reason. Though one might think that a contest between data interchange formats would be unlikely to engender death threats, Winer wrote:


> No doubt I can write a routine to parse [JSON], but look at how deep they went to re-invent, XML itself wasn’t good enough for them, for some reason (I’d love to hear the reason). Who did this travesty? Let’s find a tree and string them up. Now.


It’s easy to understand Winer’s frustration. XML has never been widely loved. Even Winer has said that he does not love XML. But XML was designed to be a system that could be used by everyone for almost anything imaginable. To that end, XML is actually a meta-language that allows you to define domain-specific languages for individual applications—RSS, the web feed technology, and SOAP (Simple Object Access Protocol) are examples. Winer felt that it was important to work toward consensus because of all the benefits a common interchange format could bring. He felt that XML’s flexibility should be able to accommodate everybody’s needs. And yet here was JSON, a format offering no benefits over XML except those enabled by throwing out the cruft that made XML so flexible.


Crockford saw Winer’s blog post and left a comment on it. In response to the charge that JSON was reinventing XML, Crockford wrote, “The good thing about reinventing the wheel is that you can get a round one.”


### JSON vs XML


By 2014, JSON had been officially specified by both an ECMA standard and an RFC. It had its own MIME type. JSON had made it to the big leagues.


Why did JSON become so much more popular than XML?


On [JSON.org][2], Crockford summarizes some of JSON’s advantages over XML. He writes that JSON is easier for both humans and machines to understand, since its syntax is minimal and its structure is predictable. Other bloggers have focused on XML’s verbosity and “the angle bracket tax.” Each opening tag in XML must be matched with a closing tag, meaning that an XML document contains a lot of redundant information. This can make an XML document much larger than an equivalent JSON document when uncompressed, but, perhaps more importantly, it also makes an XML document harder to read.


Crockford has also claimed that another enormous advantage for JSON is that JSON was designed as a data interchange format. It was meant to carry structured information between programs from the very beginning. XML, though it has been used for the same purpose, was originally designed as a document markup language. It evolved from SGML (Standard Generalized Markup Language), which in turn evolved from a markup language called Scribe, intended as a word processing system similar to LaTeX. In XML, a tag can contain what is called “mixed content,” or text with inline tags surrounding words or phrases. This recalls the image of an editor marking up a manuscript with a red or blue pen, which is arguably the central metaphor of a markup language. JSON, on the other hand, does not support a clear analogue to mixed content, but that means that its structure can be simpler. A document is best modeled as a tree, but by throwing out the document idea Crockford could limit JSON to dictionaries and arrays, the basic and familiar elements all programmers use to build their programs.
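To see what "mixed content" means in practice, the short Python sketch below parses a small XML fragment with the standard library and then writes one possible JSON rendering of the same sentence. The fragment and the JSON shape are invented for illustration. JSON has no canonical way to express mixed content, which is the point.

```python
import json
import xml.etree.ElementTree as ET

# In XML, text and inline tags interleave freely inside one element.
p = ET.fromstring("<p>JSON has <em>taken over</em> the world.</p>")
em = p[0]
xml_pieces = [p.text, em.text, em.tail]
print(xml_pieces)

# JSON only has objects, arrays, and scalars, so the interleaving has to be
# spelled out by hand, here as an array of strings and objects (one of many
# possible conventions).
as_json = json.dumps({"p": ["JSON has ", {"em": "taken over"}, " the world."]})
print(as_json)
```

The XML parser has to track text before, inside, and after each child element, while the JSON version is just a nested dictionary and list.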


Finally, my own hunch is that people disliked XML because it was confusing, and it was confusing because it seemed to come in so many different flavors. At first blush, it’s not obvious where the line is between XML proper and its sub-languages like RSS, ATOM, SOAP, or SVG. The first lines of a typical XML document establish the XML version and then the particular sub-language the XML document should conform to. That is a lot of variation to account for already, especially when compared to JSON, which is so straightforward that no new version of the JSON specification is ever expected to be written. The designers of XML, in their attempt to make XML the one data interchange format to rule them all, fell victim to that classic programmer’s pitfall: over-engineering. XML was so generalized that it was hard to use for something simple.


In 2000, a campaign was launched to get HTML to conform to the XML standard. A specification was published for XML-compliant HTML, thereafter known as XHTML. Some browser vendors immediately started supporting the new standard, but it quickly became obvious that the vast HTML-producing public were unwilling to revise their habits. The new standard called for stricter validation of XHTML than had been the norm for HTML, but too many websites depended on HTML’s forgiving rules. By 2009, an attempt to write a second version of the XHTML standard was aborted when it became clear that the future of HTML was going to be HTML5, a standard that did not insist on XML compliance.


If the XHTML effort had succeeded, then maybe XML would have become the common data format that its designers hoped it would be. Imagine a world in which HTML documents and API responses had the exact same structure. In such a world, JSON might not have become as ubiquitous as it is today. But I read the failure of XHTML as a kind of moral defeat for the XML camp. If XML wasn’t the best tool for HTML, then maybe there were better tools out there for other applications also. In that world, our world, it is easy to see how a format as simple and narrowly tailored as JSON could find great success.


If you enjoyed this post, more like it come out every two weeks! Follow [@TwoBitHistory][3] on Twitter or subscribe to the [RSS feed][4] to make sure you know when a new post is out.


--------------------------------------------------------------------------------


via: https://twobithistory.org/2017/09/21/the-rise-and-rise-of-json.html


Author: [Two-Bit History][a]
Topic selection: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)


This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).


[a]: https://twobithistory.org
[b]: https://github.com/lujun9972
[1]: https://twobithistory.org/images/json.svg
[2]: http://JSON.org
[3]: https://twitter.com/TwoBitHistory
[4]: https://twobithistory.org/feed.xml


@ -0,0 +1,50 @@

The Most Important Database You've Never Heard of
======

In 1962, JFK challenged Americans to send a man to the moon by the end of the decade, inspiring a heroic engineering effort that culminated in Neil Armstrong’s first steps on the lunar surface. Many of the fruits of this engineering effort were highly visible and sexy—there were new spacecraft, new spacesuits, and moon buggies. But the Apollo Program was so staggeringly complex that new technologies had to be invented even to do the mundane things. One of these technologies was IBM’s Information Management System (IMS).


IMS is a database management system. NASA needed one in order to keep track of all the parts that went into building a Saturn V rocket, which—because there were two million of them—was expected to be a challenge. Databases were a new idea in the 1960s and there weren’t any already available for NASA to use, so, in 1965, NASA asked IBM to work with North American Aviation and Caterpillar Tractor to create one. By 1968, IBM had installed a working version of IMS at NASA, though at the time it was called ICS/DL/I for “Informational Control System and Data Language/Interface.” (IBM seems to have gone through a brief, unfortunate infatuation with the slash; see [PL/I][1].) Two years later, IBM rebranded ICS/DL/I as “IMS” and began selling it to other customers. It was one of the first commercially available database management systems.


The incredible thing about IMS is that it is still in use today. And not just on a small scale: Banks, insurance companies, hospitals, and government agencies still use IMS for all sorts of critical tasks. Over 95% of Fortune 1000 companies use IMS in some capacity, as do all of the top five US banks. Whenever you withdraw cash from an ATM, the odds are exceedingly good that you are interacting with IMS at some point in the course of your transaction. In a world where the relational database is an old workhorse increasingly in competition with trendy new NoSQL databases, IMS is a freaking dinosaur. It is a relic from an era before the relational database was even invented, which didn’t happen until 1970. And yet it seems to be the database system in charge of all the important stuff.


I think this makes IMS pretty interesting. Depending on how you feel about relational databases, it either offers insight into how the relational model improved on its predecessors or else exemplifies an alternative model better suited to certain problems.


IMS works according to a hierarchical model, meaning that, instead of thinking about data as tables that can be brought together using JOIN operations, IMS thinks about data as trees. Each kind of record you store can have other kinds of records as children; these child record types represent additional information that you might be interested in given a record of the parent type.


To take an example, say that you want to store information about bank customers. You might have one type of record to represent customers and another type of record to represent accounts. As in a relational database, where each table has columns, these records will have different fields; we might want to have a first name field, a last name field, and a city field for each customer. We must then decide whether we are likely to first look up a customer and then information about that customer’s account, or whether we are likely to first look up an account and then information about that account’s owner. Assuming we decide that we will access customers first, then we will make our account record type a child of our customer record type. Diagrammed, our database model would look something like this:


![][2]


And an actual database might look like:


![][3]


By modeling our data this way, we are hewing close to the reality of how our data is stored. Each parent record includes pointers to its children, meaning that moving down our tree from the root node is efficient. (Actually, each parent basically stores just one pointer to the first of its children. The children in turn contain pointers to their siblings. This ensures that the size of a record does not vary with the number of children it has.) This efficiency can make data accesses very fast, provided that we are accessing our data in ways that we anticipated when we first structured our database. According to IBM, an IMS instance can process over 100,000 transactions a second, which is probably a large part of why IMS is still used, particularly at banks. But the downside is that we have lost a lot of flexibility. If we want to access our data in ways we did not anticipate, we will have a hard time.
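The pointer layout described in the parenthetical (one pointer to the first child, with children chained through sibling pointers) can be sketched in a few lines of Python. This is a toy in-memory model under stated assumptions, not how IMS is implemented: the `Record` class, its field names, and the sample customer are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Iterator, Optional


@dataclass
class Record:
    """A hypothetical record with exactly one child pointer and one sibling pointer."""
    kind: str
    data: dict
    first_child: Optional["Record"] = None
    next_sibling: Optional["Record"] = None

    def add_child(self, child: "Record") -> None:
        # Prepend, so the parent still stores a single child pointer
        # no matter how many children it has.
        child.next_sibling = self.first_child
        self.first_child = child

    def children(self) -> Iterator["Record"]:
        # Walk down once, then sideways along the sibling chain.
        node = self.first_child
        while node is not None:
            yield node
            node = node.next_sibling


customer = Record("customer", {"first": "Ada", "last": "Lovelace", "city": "London"})
customer.add_child(Record("account", {"number": "0001", "balance": 100}))
customer.add_child(Record("account", {"number": "0002", "balance": 250}))

numbers = [acct.data["number"] for acct in customer.children()]
print(numbers)
```

Every record has the same two pointer fields regardless of how many children it owns, which is the fixed-size property the paragraph describes. The cost is that reaching a particular child means walking the sibling chain.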


To illustrate this, consider what might happen if we decide that we would like to access accounts before customers. Perhaps customers are calling in to update their addresses, and we would like them to uniquely identify themselves using their account numbers. So we want to use an account number to find an account, and then from there find the account’s owner. But since all accesses start at the root of our tree, there’s no way for us to get to an account efficiently without first deciding on a customer. To fix this problem, we could introduce a second tree or hierarchy starting with account records; these account records would then have customer records as children. This would let us access accounts and then customers efficiently. But it would involve duplicating information that we already have stored in our database—we would have two trees storing the same information in different orders. Another option would be to establish an index of accounts that could point us to the right account record given an account number. That would work too, but it would entail extra work during insert and update operations in the future.
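The trade-off between scanning from the root and maintaining an index can be sketched with plain dictionaries. Everything below (the customer IDs, account numbers, and function names) is hypothetical and only meant to show why the index "entails extra work" on writes.

```python
# Hypothetical data: customers own accounts, as in the hierarchy described above.
customers = {
    "C1": {"name": "Grace", "accounts": {"A-100": 50, "A-101": 75}},
    "C2": {"name": "Edgar", "accounts": {"A-200": 20}},
}


def find_owner_by_scan(account_no: str):
    """Without an index, the search has to start at the root and visit customers."""
    for customer_id, customer in customers.items():
        if account_no in customer["accounts"]:
            return customer_id
    return None


# An index answers the same question in a single lookup...
account_index = {
    account_no: customer_id
    for customer_id, customer in customers.items()
    for account_no in customer["accounts"]
}


def open_account(customer_id: str, account_no: str, balance: int) -> None:
    """...but every insert now has to update two structures instead of one."""
    customers[customer_id]["accounts"][account_no] = balance
    account_index[account_no] = customer_id


open_account("C2", "A-201", 0)
print(find_owner_by_scan("A-201"), account_index["A-201"])
```

If `open_account` ever forgot the second assignment, the index and the tree would silently disagree, which is the maintenance burden the paragraph alludes to.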


It was precisely this inflexibility and the problem of duplicated information that pushed E. F. Codd to propose the relational model. In his 1970 paper, A Relational Model of Data for Large Shared Data Banks, he states at the outset that he intends to present a model for data storage that can protect users from having to know anything about how their data is stored. Looked at one way, the hierarchical model is entirely an artifact of how the designers of IMS chose to store data. It is a bottom-up model, the implication of a physical reality. The relational model, on the other hand, is an abstract model based on relational algebra, and is top-down in that the data storage scheme can be anything provided it accommodates the model. The relational model’s great advantage is that, just because you’ve made decisions that have caused the database to store your data in a particular way, you won’t find yourself effectively unable to make certain queries.


All that said, the relational model is an abstraction, and we all know abstractions aren’t free. Banks and large institutions have stuck with IMS partly because of the performance benefits, though it’s hard to say if those benefits would be enough to keep them from switching to a modern database if they weren’t also trying to avoid rewriting mission-critical legacy code. However, today’s popular NoSQL databases demonstrate that there are people willing to drop the conveniences of the relational model in return for better performance. Something like MongoDB, which encourages its users to store data in a denormalized form, isn’t all that different from IMS. If you choose to store some entity inside of another JSON record, then in effect you have created something like the IMS hierarchy, and you have constrained your ability to query for that data in the future. But perhaps that’s a tradeoff you’re willing to make. So, even if IMS hadn’t predated E. F. Codd’s relational model by several years, there are still reasons why IMS’ creators might not have adopted the relational model wholesale.


Unfortunately, IMS isn’t something that you can download and take for a spin on your own computer. First of all, IMS is not free, so you would have to buy it from IBM. But the bigger problem is that IMS only runs on IBM mainframes like the IBM z13. That’s a shame, because it would be a joy to play around with IMS and get a sense for exactly how it differs from something like MySQL. But even without that opportunity, it’s interesting to think about software systems that work in ways we don’t expect or aren’t used to. And it’s especially interesting when those systems, alien as they are, turn out to undergird your local hospital, the entire financial sector, and even the federal government.


If you enjoyed this post, more like it come out every two weeks! Follow [@TwoBitHistory][4] on Twitter or subscribe to the [RSS feed][5] to make sure you know when a new post is out.


--------------------------------------------------------------------------------


via: https://twobithistory.org/2017/10/07/the-most-important-database.html


Author: [Two-Bit History][a]
Topic selection: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)


This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).


[a]: https://twobithistory.org
[b]: https://github.com/lujun9972
[1]: https://en.wikipedia.org/wiki/PL/I
[2]: https://twobithistory.org/images/hierarchical-model.png
[3]: https://twobithistory.org/images/hierarchical-db.png
[4]: https://twitter.com/TwoBitHistory
[5]: https://twobithistory.org/feed.xml


84

sources/talk/20171119 The Ruby Story.md

Normal file


@ -0,0 +1,84 @@

The Ruby Story
======

Ruby has always been one of my favorite languages, though I’ve sometimes found it hard to express why that is. The best I’ve been able to do is this musical analogy: Whereas Python feels to me like punk rock—it’s simple, predictable, but rigid—Ruby feels like jazz. Ruby gives programmers a radical freedom to express themselves, though that comes at the cost of added complexity and can lead to programmers writing programs that don’t make immediate sense to other people.


I’ve always been aware that freedom of expression is a core value of the Ruby community. But what I didn’t appreciate is how deeply important it was to the development and popularization of Ruby in the first place. One might create a programming language in pursuit of better performance, or perhaps timesaving abstractions. The Ruby story is interesting because the goal was instead, from the very beginning, nothing more or less than the happiness of the programmer.


### Yukihiro Matsumoto


Yukihiro Matsumoto, also known as “Matz,” graduated from the University of Tsukuba in 1990. Tsukuba is a small town just northeast of Tokyo, known as a center for scientific research and technological development. The University of Tsukuba is particularly well-regarded for its STEM programs. Matsumoto studied Information Science, with a focus on programming languages. For a time he worked in a programming language lab run by Ikuo Nakata.


Matsumoto started working on Ruby in 1993, only a few years after graduating. He began working on Ruby because he was looking for a scripting language with features that no existing scripting language could provide. He was using Perl at the time, but felt that it was too much of a “toy language.” Python also fell short; in his own words:


> I knew Python then. But I didn’t like it, because I didn’t think it was a true object-oriented language—OO features appeared to be an add-on to the language. As a language maniac and OO fan for 15 years, I really wanted a genuine object-oriented, easy-to-use scripting language. I looked for one, but couldn’t find one.


So one way of understanding Matsumoto’s motivations in creating Ruby is that he was trying to create a better, object-oriented version of Perl.


But at other times, Matsumoto has said that his primary motivation in creating Ruby was simply to make himself and others happier. Toward the end of a Google tech talk that Matsumoto gave in 2008, he showed the following slide:


![][1]


He told his audience,


> I hope to see Ruby help every programmer in the world to be productive, and to enjoy programming, and to be happy. That is the primary purpose of the Ruby language.


Matsumoto goes on to joke that he created Ruby for selfish reasons, because he was so underwhelmed by other languages that he just wanted to create something that would make him happy.


The slide epitomizes Matsumoto’s humble style. Matsumoto, it turns out, is a practicing Mormon, and I’ve wondered whether his religious commitments have any bearing on his legendary kindness. In any case, this kindness is so well known that the Ruby community has a principle known as MINASWAN, or “Matz Is Nice And So We Are Nice.” The slide must have struck the audience at Google as an unusual one—I imagine that any random slide drawn from a Google tech talk is dense with code samples and metrics showing how one engineering solution is faster or more efficient than another. Few, I suspect, come close to stating nobler goals more simply.
