mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-03 23:40:14 +08:00

commit

af4e90cd13

@ -3,15 +3,15 @@ Linux 的实用性 VS 行动主义

|

||||

|

||||

*我们使用 Linux 是因为它比其他操作系统更实用,还是其他更高级的理由呢?*

|

||||

|

||||

运行 Linux 的最吸引人的事情之一就是它所提供的自由。 Linux 社区之间的划分在于我们如何看待这种自由。

|

||||

运行 Linux 的最吸引人的事情之一就是它所提供的自由。 Linux 社区之间的分野在于我们如何看待这种自由。

|

||||

|

||||

对一些人来说,使用 Linux 可以享受不受供应商或高软件成本限制的自由。大多数人会称这个是一个实用性的考虑。而其他用户会告诉你,他们享受的是自由软件的自由。那就意味着拥护支持<ruby> [开源软件运动][1]<rt> Free Software Movement</rt> </ruby>的 Linux 发行版,完全避免专有软件和所有相关的东西。

|

||||

对一些人来说,使用 Linux 可以享受到不受供应商限制或避免高昂的软件成本的自由。大多数人会称这个是一个实用性的考虑。而其它用户会告诉你,他们享受的是自由软件的自由。那就意味着拥护支持<ruby> [自由软件运动][1]<rt> Free Software Movement</rt> </ruby>的 Linux 发行版,完全避免专有软件和所有相关的东西。

|

||||

|

||||

在这篇文章中,我将带你比较这两种自由的区别,以及他们如何影响 Linux 的使用。

|

||||

在这篇文章中,我将带你比较这两种自由的区别,以及它们如何影响 Linux 的使用。

|

||||

|

||||

### 专有的问题

|

||||

|

||||

大多数 Linux 用户有一个共同点,就是他们尽量避免使用专有软件。对像我这样的实用主义的爱好者来说,这意味着我能够控制我的软件支出,避免过度依赖特定供应商。当然,我不是一个程序员……所以我对安装软件的调整是十分微小的。但也有一些个别情况,一个应用程序的小调整可以意味着它能否正常工作。

|

||||

大多数 Linux 用户有一个共同点,就是他们尽量避免使用专有软件。对像我这样的实用主义的爱好者来说,这意味着我能够控制我的软件支出,以及避免过度依赖特定供应商。当然,我不是一个程序员……所以我对安装软件的调整是十分微小的。但也有一些个别情况,对应用程序的小调整就意味着它要么能工作,要么不能。

|

||||

|

||||

还有一些 Linux 爱好者,倾向于避开专有软件,因为他们觉得使用它们是不道德的。通常这里主要的问题是使用专有软件会剥夺或者干脆阻碍你的个人自由。像这些用户更喜欢使用 Linux 发行版和软件来支持 [自由软件理念][2] 。虽然它与开源的概念相似并经常直接与之混淆,[但它们之间还是有些差异的][3] 。

|

||||

|

||||

@ -19,21 +19,21 @@ Linux 的实用性 VS 行动主义

|

||||

|

||||

这两种类型的 Linux 爱好者都喜欢使用非专有软件的解决方案。但是,自由软件倡导者根本不会去使用专有软件,而实用主义用户会选择具有最佳性能的工具。这意味着,在有些情况下,这些用户会在他们的非专有操作系统上运行专有应用或代码。

|

||||

|

||||

最终,这两种类型的用户都喜欢使用 Linux 所提供的。但是,我们这样做的原因往往会有所不同。有人认为那些不支持自由软件的人是无知的。我不同意,我认为它是实用方便性的问题。那些喜欢实用方便性的用户根本不关心他们软件的政治问题。

|

||||

最终,这两种类型的用户都喜欢使用 Linux 所提供的非专有解决方案。但是,我们这样做的原因往往会有所不同。有人认为那些不支持自由软件的人是无知的。我不同意这种看法,我认为它是实用方便性的问题。那些喜欢实用方便性的用户根本不关心他们软件的政治问题。

|

||||

|

||||

### 实用方便性

|

||||

|

||||

当你问起绝大多数的人为什么使用他们现在的操作系统,回答通常都集中于实用方便性。方便性可能体现在“它是我一直使用的系统”、“它运行了我需要的软件”。 其他人可能进一步解释说,软件对他们使用操作系统的偏好影响不大,而是对操作系统的熟悉程度,最后,还有硬件兼容性等问题也导致我们使用这个操作系统而不是另一个。

|

||||

当你问起绝大多数的人为什么使用他们现在的操作系统,回答通常都集中于实用方便性。方便性可能体现在“它是我一直使用的系统”,乃至于“它运行了我需要的软件”。 其他人可能进一步解释说,软件对他们使用操作系统的偏好影响不大,而是对操作系统的熟悉程度的问题,最后,还有一些特殊的“商业考虑”或硬件兼容性等问题也导致我们使用这个操作系统而不是另一个。

|

||||

|

||||

这可能会让你们中许多人很惊讶,不过我今天运行桌面 Linux 最大的一个原因是由于我熟悉它。即使我为别人提供 Windows 和 OS X 的支持,实际上我使用这些操作系统时感觉相当沮丧,因为它们根本就不是我习惯的用法。也因此我对那些 Linux 新手表示同情,因为我太懂得踏入陌生的领域是怎样的让人恼火了。我的观点是这样的 —— 熟悉具有价值,而且熟悉加强了实用方便性。

|

||||

这可能会让你们中许多人很惊讶,不过如今我运行桌面 Linux 最大的一个原因是由于我熟悉它。即使我能为别人提供 Windows 和 OS X 的支持,实际上我使用这些操作系统时感觉相当沮丧,因为它们根本就不是我习惯的用法。也因此我对那些 Linux 新手表示同情,因为我太懂得踏入陌生的领域是怎样的让人恼火了。我的观点是这样的 —— 熟悉具有价值,而且熟悉加强了实用方便性。

|

||||

|

||||

现在,如果我们把它和一个自由软件倡导者的需求来比较,你会发现那些人都愿意学习新的甚至更具挑战性的东西,以避免使用非自由软件。对这种用户,我最赞赏的地方,就是他们坚定的采取少数人选择的道路来坚持他们的原则,在我看来,这是十分值得赞赏的。

|

||||

现在,如果我们把它和一个自由软件倡导者的需求来比较,你会发现这种人都愿意学习新的甚至更具挑战性的东西,以避免使用非自由软件。对这种用户,我最赞赏的地方,就是他们坚定的采取少数人选择的道路来坚持他们的原则,在我看来,这是十分值得赞赏的。

|

||||

|

||||

### 自由的价值

|

||||

|

||||

我不羡慕那些自由软件倡导者的一个地方,就是根据<ruby> [自由软件基金会][4] <rt>Free Software Foundation</rt></ruby>规定的标准,为实现自由,他们要始终使用 Linux 发行版和硬件而带来的额外工作。这意味着 Linux 内核需要摆脱专有的驱动支持,而且硬件不需要任何专有代码。当然不是不可能的,但确实很难。

|

||||

我不羡慕那些自由软件倡导者的一个地方,就是根据<ruby> [自由软件基金会][4] <rt>Free Software Foundation</rt></ruby>规定的标准,为实现其自由,他们要始终使用 Linux 发行版和硬件而付出的额外工作。这意味着 Linux 内核需要摆脱专有的驱动支持,而且硬件不需要任何专有代码。当然不是不可能的,但确实很难。

|

||||

|

||||

一个自由软件倡导者可以达到的最好的情况是硬件是“自由兼容”的。有些供应商,可以满足这一需求,但大多提供依赖于 Linux 兼容专有固件的硬件。实用主义用户对自由软件倡导者来说是个搅局者。

|

||||

一个自由软件倡导者可以达到的最好的情况是硬件是“自由兼容”的。有些供应商,可以满足这一需求,但大多提供的硬件依赖于 Linux 兼容的专有固件。实用主义用户对自由软件倡导者来说是个搅局者。

|

||||

|

||||

那么这一切意味着的是,倡导者必须比实用主义的 Linux 爱好者,更加警惕。这本身并不一定是坏事,但如果是打算跳入自由软件的阵营那就要考虑下了。比较而言,实用主义的用户可以不假思索地使用与 Linux 兼容的任何软件或硬件。我不知道你是怎么想的,但在我眼中这样更轻松一点。

|

||||

|

||||

@ -53,7 +53,6 @@ Linux 的实用性 VS 行动主义

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.datamation.com/open-source/linux-practicality-vs-activism.html

|

||||

@ -0,0 +1,196 @@

|

||||

用数据科学搭建一个实时推荐引擎

|

||||

======================

|

||||

|

||||

编者注:本文是 2016 年 4 月 Nicole Whilte 在欧洲 [GraphConnect][1] 时所作。这儿我们快速回顾一下她所涉及的内容:

|

||||

- 图数据库推荐基础

|

||||

- 社会化推荐

|

||||

- 相似性推荐

|

||||

- 集群推荐

|

||||

|

||||

今天我们将要讨论的内容是数据科学和图推荐(graph recommendations):

|

||||

|

||||

我在 [Neo4j][2] 任职已经两年了,但实际上我已经使用 Neo4j 和 [Cypher][3] 工作三年了。当我首次发现这个特别的图数据库(graph database)的时候,我还是一个研究生,那时候我在奥斯丁的德克萨斯大学攻读关于社交网络的统计学硕士学位。

|

||||

|

||||

[实时推荐引擎][4]是 Neo4j 中最广泛的用途之一,也是使它如此强大并且容易使用的原因之一。为了探索这个东西,我将通过使用示例数据集来阐述如何将统计学方法并入这些引擎中。

|

||||

|

||||

第一个很简单 - 将 Cypher 用于社交推荐。接下来,我们将看一看相似性推荐,这涉及到可被计算的相似性度量,最后探索的是集群推荐。

|

||||

|

||||

### 图数据库推荐基础

|

||||

|

||||

下面的数据集包含所有达拉斯 Fort Worth 国际机场的餐饮场所,达拉斯 Fort Worth 国际机场是美国主要的机场枢纽之一:

|

||||

|

||||

|

||||

|

||||

我们把节点标记成黄色并按照出入口和航站楼给它们的位置建模。同时我们也按照食物和饮料的主类别将地点分类,其中一些包括墨西哥食物、三明治、酒吧和烤肉。

|

||||

|

||||

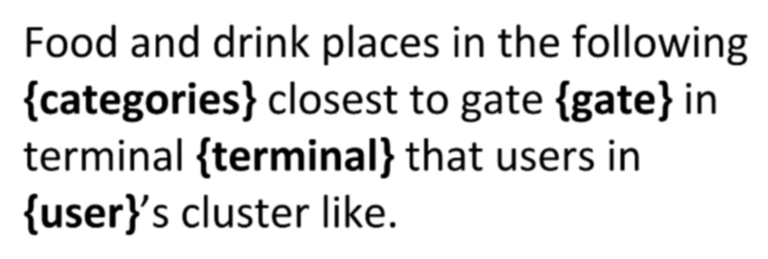

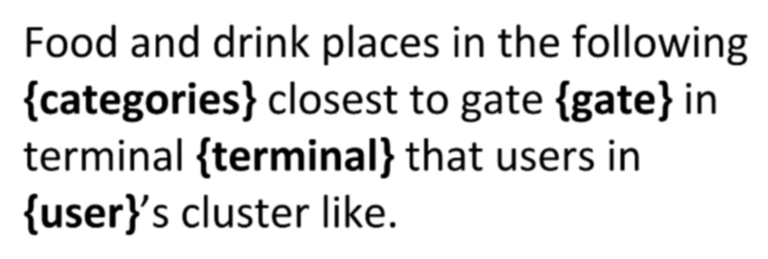

让我们做一个简单的推荐。我们想要在机场的某一确定地点找到一种特定食物,大括号中的内容表示是的用户输入,它将进入我们的假想应用程序中。

|

||||

|

||||

|

||||

|

||||

这个英文句子表示成 Cypher 查询:

|

||||

|

||||

|

||||

|

||||

这将提取出该类别中用户所请求的所有地点、航站楼和出入口。然后我们可以计算出用户所在位置到出入口的准确距离,并以升序返回结果。再次说明,这个非常简单的 Cypher 推荐仅仅依据的是用户在机场中的位置。

|

||||

|

||||

### 社交推荐(Social Recommendations)

|

||||

|

||||

让我们来看一下社交推荐。在我们的假想应用程序中,用户可以登录并且可以用和 Facebook 类似的方式标记自己“喜好”的地点,也可以在某地签到。

|

||||

|

||||

|

||||

|

||||

考虑位于我们所研究的第一个模型之上的数据模型,现在让我们在下面的分类中找到用户的朋友喜好的航站楼里面离出入口最近的餐饮场所:

|

||||

|

||||

|

||||

|

||||

MATCH 子句和我们第一次 Cypher 查询的 MATCH 子句相似,只是现在我们依据喜好和朋友来匹配:

|

||||

|

||||

|

||||

|

||||

前三行是完全一样的,但是现在要考虑的是那些登录的用户,我们想要通过 :FRIENDS_WITH 这一关系来找到他们的朋友。仅需通过在 Cypher 中增加一些行内容,我们现在已经把社交层面考虑到了我们的推荐引擎中。

|

||||

|

||||

再次说明,我们仅仅显示了用户明确请求的类别,并且这些类别中的地点与用户进入的地方是相同的航站楼。当然,我们希望按照登录并做出请求的用户来滤过这些目录,然后返回地点的名字、位置以及所在目录。我们也要显示出有多少朋友已经“喜好”那个地点以及那个地点到出入口的确切距离,然后在 RETURN 子句中同时返回所有这些内容。

|

||||

|

||||

### 相似性推荐(Similarity Recommendations)

|

||||

|

||||

现在,让我们看一看相似性推荐引擎:

|

||||

|

||||

|

||||

|

||||

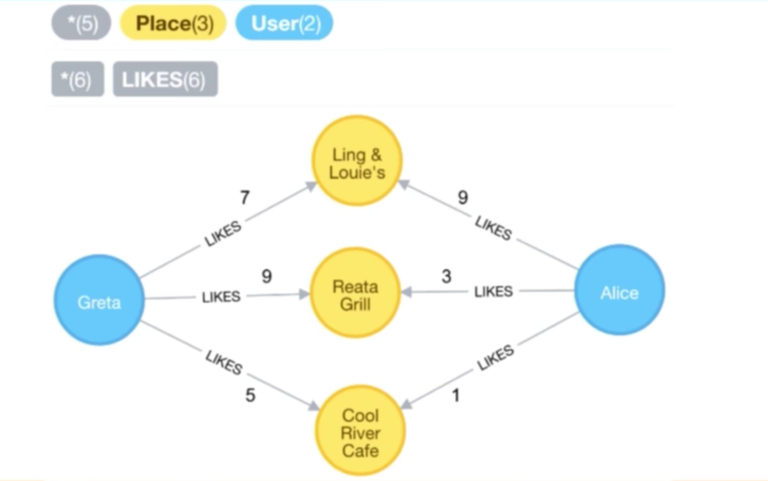

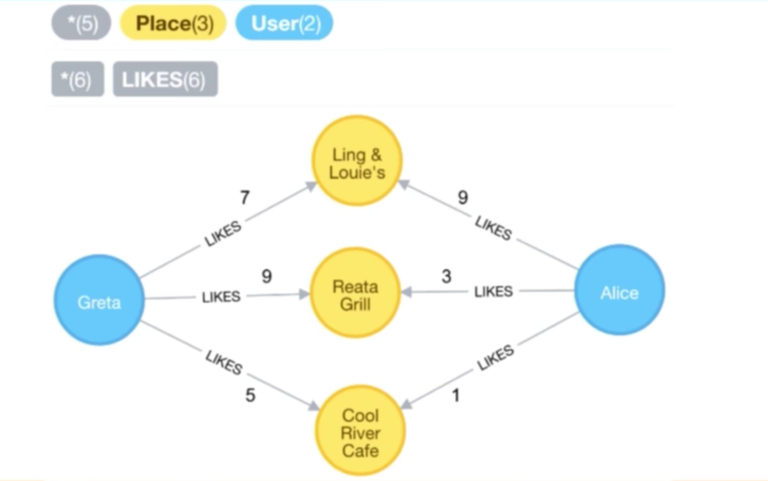

和前面的数据模型相似,用户可以标记“喜好”的地点,但是这一次他们可以用 1 到 10 的整数给地点评分。这是通过前期在 Neo4j 中增加一些属性到关系中建模实现的。

|

||||

|

||||

这将允许我们找到其他相似的用户,比如以上面的 Greta 和 Alice 为例,我们已经查询了他们共同喜好的地点,并且对于每一个地点,我们可以看到他们所设定的权重。大概地,我们可以通过他们的评分来确定他们之间的相似性大小。

|

||||

|

||||

|

||||

|

||||

现在我们有两个向量:

|

||||

|

||||

|

||||

|

||||

现在让我们按照欧几里得距离(Euclidean distance)的定义来计算这两个向量之间的距离:

|

||||

|

||||

|

||||

|

||||

我们把所有的数字带入公式中计算,然后得到下面的相似度,这就是两个用户之间的“距离”:

|

||||

|

||||

|

||||

|

||||

你可以很容易地在 Cypher 中计算两个特定用户的“距离”,特别是如果他们仅仅同时“喜好”一个很小的地点子集。再次说明,这儿我们依据两个用户 Alice 和 Greta 来进行匹配,并尝试去找到他们同时“喜好”的地点:

|

||||

|

||||

|

||||

|

||||

他们都有对最后找到的地点的 :LIKES 关系,然后我们可以在 Cypher 中很容易的计算出他们之间的欧几里得距离,计算方法为他们对各个地点评分差的平方求和再开平方根。

|

||||

|

||||

在两个特定用户的例子中上面这个方法或许能够工作。但是,在实时情况下,当你想要通过和实时数据库中的其他用户比较,从而由一架飞机上的一个用户推断相似用户时,这个方法就不一定能够工作。不用说,至少它不能够很好的工作。

|

||||

|

||||

为了找到解决这个问题的好方法,我们可以预先计算好距离并存入实际关系中:

|

||||

|

||||

|

||||

|

||||

当遇到一个很大的数据集时,我们需要成批处理这件事,在这个很小的示例数据集中,我们可以按照所有用户的迪卡尔乘积(Cartesian product)和他们共同“喜好”的地点来进行匹配。当我们使用 WHERE id(u1) < id(u2) 作为 Cypher 询问的一部分时,它只是来确定我们在左边和右边没有找到相同的对的一个技巧。

|

||||

|

||||

通过用户之间的欧几里得距离,我们创建了他们之间的一种关系,叫做 :DISTANCE,并且设置了一个叫做 euclidean 的欧几里得属性。理论上,我们可以也通过用户间的一些关系来存储其他相似度从而获取不同的相似度,因为在确定的环境下某些相似度可能比其他相似度更有用。

|

||||

|

||||

在 Neo4j 中,的确是对关系属性建模的能力使得完成像这样的事情无比简单。然而,实际上,你不会希望存储每一个可能存在的单一关系,因为你仅仅希望返回离他们“最近”的一些人。

|

||||

|

||||

因此你可以根据一些临界值来存入前几个,从而你不需要构建完整的连通图。这允许你完成一些像下面这样的实时的数据库查询,因为我们已经预先计算好了“距离”并存储在了关系中,在 Cypher 中,我们能够很快的攫取出数据。

|

||||

|

||||

|

||||

|

||||

在这个查询中,我们依据地点和类别来进行匹配:

|

||||

|

||||

|

||||

|

||||

再次说明,前三行是相同的,除了登录用户以外,我们找出了和他们有 :DISTANCE 关系的用户。这是我们前面查看的关系产生的作用 - 实际上,你只需要存储处于前几位的相似用户 :DISTANCE 关系,因此你不需要在 MATCH 子句中攫取大量用户。相反,我们只攫取和那些用户“喜好”的地方有 :DISTANCE 关系的用户。

|

||||

|

||||

这允许我们用少许几行内容表达较为复杂的模型。我们也可以攫取 :LIKES 关系并把它放入到变量中,因为后面我们将使用这些权重来评分。

|

||||

|

||||

在这儿重要的是,我们可以依据“距离”大小将用户按照升序进行排序,因为这是一个距离测度。同时,我们想要找到用户间的最小距离因为距离越小表明他们的相似度最大。

|

||||

|

||||

通过其他按照欧几里得距离大小排序好的用户,我们得到用户评分最高的三个地点并按照用户的平均评分高低来推荐这些地点。换句话说,我们先找出一个活跃用户,然后依据其他用户“喜好”的地点找出和他最相似的其他用户,接下来按照这些相似用户的平均评分把那些地点排序在结果的集合中。

|

||||

|

||||

本质上,我们通过把所有评分相加然后除以收集的用户数目来计算出平均分,然后按照平均评分的升序进行排序。其次,我们按照出入口距离排序。假想地,我猜测应该会有交接点,因此你可以按照出入口距离排序然后再返回名字、类别、出入口和航站楼。

|

||||

|

||||

### 集群推荐(Cluster Recommendations)

|

||||

|

||||

我们最后要讲的一个例子是集群推荐,在 Cypher 中,这可以被想像成一个作为临时解决方案的离线计算工作流。这可能完全基于在[欧洲 GraphConnect 上宣布的新方法][5],但是有时你必须进行一些 Cypher 2.3 版本所没有的算法逼近。

|

||||

|

||||

在这儿你可以使用一些统计软件,把数据从 Neo4j 取出然后放入像 Apache Spark、R 或者 Python 这样的软件中。下面是一段把数据从 Neo4j 中取出的 R 代码,运行该程序,如果正确,写下程序返回结果的给 Neo4j,可以是一个属性、节点、关系或者一个新的标签。

|

||||

|

||||

通过持续把程序运行结果放入到图表中,你可以在一个和我们刚刚看到的查询相似的实时查询中使用它:

|

||||

|

||||

|

||||

|

||||

下面是用 R 来完成这件事的一些示例代码,但是你可以使用任何你最喜欢的软件来做这件事,比如 Python 或 Spark。你需要做的只是登录并连接到图表。

|

||||

|

||||

在下面的例子中,我基于用户的相似性把他们聚合起来。每个用户作为一个观察点,然后得到他们对每一个目录评分的平均值。

|

||||

|

||||

|

||||

|

||||

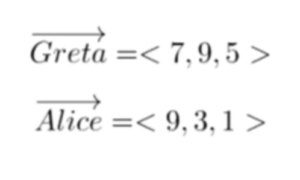

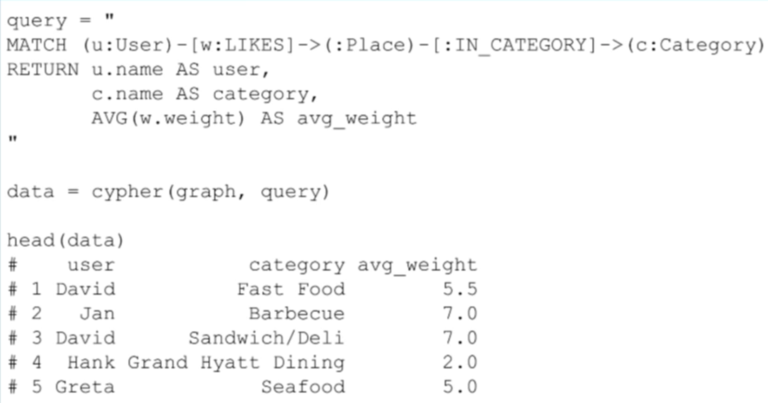

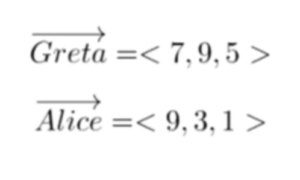

假定用户对酒吧类评分的方式和一般的评分方式相似。然后我攫取出喜欢相同类别中的地点的用户名、类别名、“喜好”关系的平均权重,比如平均权重这些信息,从而我可以得到下面这样一个表格:

|

||||

|

||||

|

||||

|

||||

因为我们把每一个用户都作为一个观察点,所以我们必须巧妙的处理每一个类别中的数据,这些数据的每一个特性都是用户对该类中餐厅评分的平均权重。接下来,我们将使用这些数据来确定用户的相似性,然后我将使用聚类(clustering)算法来确定在不同集群中的用户。

|

||||

|

||||

在 R 中这很直接:

|

||||

|

||||

|

||||

|

||||

在这个示例中我们使用 K-均值(k-means)聚类算法,这将使你很容易攫取集群分配。总之,我通过运行聚类算法然后分别得到每一个用户的集群分配。

|

||||

|

||||

Bob 和 David 在一个相同的集群中 - 他们在集群二中 - 现在我可以实时查看哪些用户被放在了相同的集群中。

|

||||

|

||||

接下来我把集群分配写入 CSV 文件中,然后存入图数据库:

|

||||

|

||||

|

||||

|

||||

我们只有用户和集群分配,因此 CSV 文件只有两列。 LOAD CSV 是 Cypher 中的内建语法,它允许你从一些其他文件路径或者 URL 调用 CSV ,并给它一个别名。接下来,我们将匹配图数据库中存在的用户,从 CSV 文件中攫取用户列然后合并到集群中。

|

||||

|

||||

我们在图表中创建了一个新的标签节点:Cluster ID, 这是由 K-平均聚类算法给出的。接下来我们创建用户和集群间的关系,通过创建这个关系,当我们想要找到在相同集群中的实际推荐用户时,就会很容易进行查询。

|

||||

|

||||

我们现在有了一个新的集群标签,在相同集群中的用户和那个集群存在关系。新的数据模型看起来像下面这样,它比我们前面探索的其他数据模型要更好:

|

||||

|

||||

|

||||

|

||||

现在让我们考虑下面的查询:

|

||||

|

||||

|

||||

|

||||

通过这个 Cypher 查询,我们在更远处找到了在同一个集群中的相似用户。由于这个原因,我们删除了“距离”关系:

|

||||

|

||||

|

||||

|

||||

在这个查询中,我们取出已经登录的用户,根据用户-集群关系找到他们所在的集群,找到他们附近和他们在相同集群中的用户。

|

||||

|

||||

我们把这些用户分配到变量 c1 中,然后我们得到其他被我取别名为 neighbor 变量的用户,这些用户和那个相同集群存在着用户-集群关系,最后我们得到这些附近用户“喜好”的地点。再次说明,我把“喜好”放入了变量 r 中,因为我们需要从关系中攫取权重来对结果进行排序。

|

||||

|

||||

在这个查询中,我们所做的改变是,不使用相似性距离,而是攫取在相同集群中的用户,然后对类别、航站楼以及我们所攫取的登录用户进行声明。我们收集所有的权重:来自附近用户“喜好”地点的“喜好”关系,得到的类别,确定的距离值,然后把它们按升序进行排序并返回结果。

|

||||

|

||||

在这些例子中,我们可以进行一个相当复杂的处理并且将其放到图数据库中,然后我们就可以使用实时算法结果-聚类算法和集群分配的结果。

|

||||

|

||||

我们更喜欢的工作流程是更新这些集群分配,更新频率适合你自己就可以,比如每晚一次或每小时一次。当然,你可以根据直觉来决定多久更新一次这些集群分配是可接受的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://neo4j.com/blog/real-time-recommendation-engine-data-science/

|

||||

|

||||

作者:[Nicole White][a]

|

||||

译者:[ucasFL](https://github.com/ucasFL)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://neo4j.com/blog/contributor/nicole-white/

|

||||

[1]: http://graphconnect.com/

|

||||

[2]: http://neo4j.com/product/

|

||||

[3]: http://neo4j.com/blog/why-database-query-language-matters/#cypher

|

||||

[4]: https://neo4j.com/use-cases/real-time-recommendation-engine/

|

||||

[5]: https://neo4j.com/blog/neo4j-3-0-massive-scale-developer-productivity/

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

@ -0,0 +1,97 @@

|

||||

监控微服务的五原则

|

||||

====

|

||||

|

||||

|

||||

|

||||

我们对微服务的需求可以归纳为一个词:速度。这种更快提供功能完善且可靠的软件的需求,彻底改变了软件开发模式。毫无疑问,这个改变对软件管理,包括系统监控的方式,都产生了影响。在这篇文章里,我们将重点关注放在有效地监控产品环境中的微服务所需做出的主要改变。我们将为这一新的软件架构拟定 5 条指导性原则来调整你的监控方法。

|

||||

|

||||

监控是微服务控制系统的关键部分,你的软件越复杂,那么你就越难了解其性能及问题排障。鉴于软件交付发生的巨大改变,监控系统同样需要进行彻底的改造,以便在微服务环境下表现更好。下面我们将介绍监控微服务的 5 条原则,如下:

|

||||

|

||||

1. 监控容器及其里面的东西。

|

||||

2. 在服务性能上做监控,而不是容器性能。

|

||||

3. 监控弹性和多地部署的服务。

|

||||

4. 监控 API。

|

||||

5. 将您的监控映射到您的组织结构。

|

||||

|

||||

利用这 5 条原则,你可以在向微服务前进的道路上,建立更有效的对微服务的监控。这些原则,可以让你应对随着微服务而来的技术变化和组织变化。

|

||||

|

||||

### 微服务监控的原则

|

||||

|

||||

#### 1、监控容器及其里面的东西

|

||||

|

||||

容器因构建微服务而凸显其重要性,容器的速度、可移植性和隔离特性让开发者很容易就爱上了微服务模型。容器的好处已经写的够多了,毋庸赘述。

|

||||

|

||||

容器对于其外围的系统来说就像是黑盒子。这对于开发来说大有裨益,从开发环境到生产环境,甚至从开发者的笔记本到云端,为它们带来高度的可移植性。但是当运行起来后,监控和解决服务问题时,这个黑盒子让常规的方法难以奏效了,我们会想:容器里到底在运行着什么?程序和代码运行性能如何?它有什么重要的输出指标吗?从 DevOps 的视角,你需要对容器有更深的了解而不是仅仅知道有一些容器的存在。

|

||||

|

||||

|

||||

|

||||

非容器环境下衡量的典型做法,是让一个代理程序运行在主机或者虚机上的用户空间里,但这并不适用于容器。因为容器的优点是小,将各种进程分离开来,并尽可能的减少依赖关系。

|

||||

|

||||

而且,从规模上看,成千上万的监测代理,对即使是一个中等大小的部署都是一个昂贵的资源浪费和管理的噩梦。对于容器有两个潜在的解决方案:1)要求你的开发人员直接监控他们的代码,或者2)利用一个通用的内核级的检测方法来查看主机上的所有应用程序和容器活动。这里我们不会深入说明,但每一种方法都有其优点和缺点。

|

||||

|

||||

#### 2、 利用业务流程系统提醒服务性能

|

||||

|

||||

理解容器容器中的运行数据并不容易,一个单一容器相比组成一个功能或服务的容器聚合,测量复杂度要低得多。

|

||||

|

||||

这特别适用于应用程序级别的信息,比如哪个请求拥有最短响应时间,或者哪些 URL 遇到最多的错误,但它同样也适用于架构级别的监测,比如哪个服务的容器使用 CPU 资源超过了事先分配的资源数。

|

||||

|

||||

越来越多的软件部署需要一个<ruby>编排系统<rt> orchestration system</rt></ruby>,将应用程序的逻辑规划转化到物理的容器中。常见的编排系统包括 Kubernetes、Mesosphere DC/OS 和 Docker Swarm。团队可以用一个编排系统来(1)定义微服务(2)理解部署的每个服务的当前状态。你可以认为编排系统甚至比容器还重要。容器是短暂的,只有满足你的服务需求才会存在。

|

||||

|

||||

DevOps 团队应该将告警重点放到运行特征上,以尽可能贴近监控服务的体验。如果应用受到了影响,这些告警是评估事态的第一道防线。但是获得这些告警并不容易,除非你的监控系统是基于原生于容器的。

|

||||

|

||||

<ruby>[原生容器][1]<rt>Container-native</rt></ruby>解决方案利用<ruby>编排元数据<rt>orchestration metadata</rt></ruby>来动态聚合容器和应用程序数据,并按每个服务计算监控度量。根据您的编排工具,您可能想在不同层次进行深入检测。比如,在 Kubernetes 里,你通常有 Namespace、ReplicaSet、Pod 和一些其他容器。聚合这些不同的层,对排除逻辑故障是很有必要的,与构成服务的容器的物理部署无关。

|

||||

|

||||

|

||||

|

||||

#### 3、 监控<ruby>弹性<rt>Elastic</rt></ruby>和<ruby>多地部署<rt>Multi-Location</rt></ruby>的服务

|

||||

|

||||

弹性服务不是一个新概念,但是它在原生容器环境中的变化速度比在虚拟环境中快的多。迅速的变化会严重影响检测系统的正常运行。

|

||||

|

||||

监测传统的系统经常需要根据软件部署,手动调整检查指标。这种调整可以是具体的,如定义要捕获的单个指标,或基于应用程序在一个特定的容器中的操作配置要收集的数据。在小规模上(比如几十个容器)我们可以接受,但是再大规模就难以承受了。微服务的集中监控必须能够自由的随弹性服务而增长和缩减,无需人工干预。

|

||||

|

||||

比如,如果 DevOps 团队必须手动定义容器包含哪个服务需要监控,他们毫无疑问会失手,因为 Kubernetes 或者 Mesos 每天都会定期创建新的容器。同样,如果代码发布并置于生产环境时要求运维团队安装一个<ruby>定制的状态端点<rt>custom stats endpoint</rt></ruby>,也给开发者从 Docker 仓库获取基础镜像带来更多的挑战。

|

||||

|

||||

在生产环境中,建立面向跨越多个数据中心或多个云的复杂部署的监控,比如,如果你的服务跨越私有数据中心和 AWS,那么亚马逊的 AWS CloudWatch 就很难做到这一点。这就要求我们建立一个跨不同地域的监控系统,并可在动态的原生容器环境下运行。

|

||||

|

||||

#### 4、 监控 API

|

||||

|

||||

在微服务环境中,API 接口是通用的。本质上,它们是将服务暴露给其它团队的唯一组件。事实上,API 的响应和一致性可以看作是“内部 SLA”,即使还没有定义一个正式的 SLA(服务等级协议)。

|

||||

|

||||

因此,API 接口的监控也是必要的。API 监控可以有不同的形式,但是很显然它绝对不是简单的二进制上下检查。例如,了解像时间函数这样的最常使用的<ruby>端点<rt>endpoint</rt></ruby>是有价值的。这使得团队可以看到服务使用的变化,无论是由于设计变更或用户的改变。

|

||||

|

||||

你也可以记录服务最缓慢的端点,这些可能揭示出重大的问题,或者至少指向需要在系统中做优化的区域。

|

||||

|

||||

最后,跟踪系统服务响应的能力是另一个很重要的能力,它主要是开发者使用,也能帮助你了解整体用户体验,同时将信息基于底层和应用程序视角分成两大部分。

|

||||

|

||||

#### 5、 将您的监控映射到您的组织结构

|

||||

|

||||

这篇文章着重在微服务和监控上,像其他科技文章一样,这是因为很多人都关注此层面。

|

||||

|

||||

对于那些熟悉<ruby>[康威定律][2]<rt> Conway’s law</rt></ruby>的人来说,系统的设计是基于开发团队的组织结构。创造更快,更敏捷的软件的压力推动了团队去思考重新调整他们的开发组织和管理它的规则。

|

||||

|

||||

|

||||

|

||||

所以,如果他们想从这个新的软件架构(微服务)上获益,他们的团队必须将微服务映射到团队自身中。也就是说,他们需要更小的更松散耦合的团队,可以选择自己的方向只要能够满足整个需求即可。在每一个团队中,对于开发语言的使用,bug 的提交甚至工作职责都会有更大的控制能力。

|

||||

|

||||

DevOps 团队对此可以启用一个监控平台:让每一个微服务团队可以有自己的警报,度量指标,和控制面板,同时也要给出整体系统的视图。

|

||||

|

||||

### 总结

|

||||

|

||||

让微服务流行起来的是快捷。开发组织要想更快的为客户提供更多的功能,然后微服务技术就来了,架构转向微服务并且容器的流行让快捷开发成为可能,所有相关的进程理所当然的搭上了这辆火车。

|

||||

|

||||

最后,基本的监控原则需要适应伴随微服务而来的技术和结构。越早认识到这种转变的开发团队,能更早更容易的适应微服务这一新的架构。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://thenewstack.io/five-principles-monitoring-microservices/

|

||||

|

||||

作者:[Apurva Dave][a] ,[Loris Degioanni][b]

|

||||

译者:[jiajia9linuxer](https://github.com/jiajia9linuxer)

|

||||

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://thenewstack.io/author/apurvadave/

|

||||

[b]: http://thenewstack.io/author/lorisdegioanni/

|

||||

[1]:https://techcrunch.com/2016/04/27/lets-define-container-native/

|

||||

[2]:https://en.wikipedia.org/wiki/Conway%27s_law

|

||||

@ -1,29 +1,29 @@

|

||||

如何在 LINUX 和 WINDOWS 之间共享 STEAM 的游戏文件

|

||||

如何在 Linux 和 Windows 之间共享 Steam 的游戏文件

|

||||

============

|

||||

|

||||

[][16]

|

||||

[][16]

|

||||

|

||||

简介:这篇详细的指南将向你展示如何在 Linux 和 Windows 之间共享 Steam 的游戏文件以节省下载的总用时和下载的数据量。

|

||||

简介:这篇详细的指南将向你展示如何在 Linux 和 Windows 之间共享 Steam 的游戏文件以节省下载的总用时和下载的数据量。我们将展示给你它是怎样为我们节约了 83 % 的数据下载量。

|

||||

|

||||

假如你决心成为一名 Linux 平台上的玩家,并且在 [Steam][15] 上拥有同时支持 Linux 和 Windows 平台的游戏,或者基于上面同样的原因,拥有双重启动的系统,则你可以考虑看看这篇文章。

|

||||

假如你决心成为一名 Linux 平台上的玩家,并且在 [Steam][15] 上拥有同时支持 Linux 和 Windows 平台的游戏,或者基于同样的原因,拥有双重启动的系统,则你可以考虑看看这篇文章。

|

||||

|

||||

我们中的许多玩家都拥有双重启动的 Linux 和 Windows。有些人只拥有 Linux 系统,但同时拥有当前还没有被 Linux 平台上的 Steam 支持的游戏。所以我们同时保留这两个系统以便我们可以在忽略平台的前提下玩我们喜爱的游戏。

|

||||

|

||||

幸运的是 [Linux gaming][13] 出现了,越来越多在 Windows 平台上受欢迎的 Steam 游戏在 Linux 平台上的 Steam 中被开发了出来。

|

||||

幸运的是 [Linux 游戏][13]社区应运而生,越来越多在 Windows 平台上受欢迎的 Steam 游戏也发布在 Linux 平台上的 Steam 中。

|

||||

|

||||

我们中的许多人喜欢备份我们的 Steam 游戏,使得我们不再苦苦等待游戏下载完成。这些游戏很大程度上是 Windows 平台下的 Steam 游戏。

|

||||

|

||||

现在,很多游戏也已经登陆了 [Linux 平台上的 Steam][12],例如奇异人生、古墓丽影 2013、中土世界:魔多阴影、幽浮:未知敌人、幽浮 2、与日赛跑、公路救赎、燥热 等等,并且[这份名单一直在增长][11]。甚至还有 [杀出重围:人类分裂][10] 和 [疯狂的麦克斯][9] !!!在一些游戏的 Windows 版发布之后,现在我们不必再等候多年,而只需等待几月左右,便可以听到类似的消息了,这可是大新闻啊!

|

||||

现在,很多游戏也已经登陆了 [Linux 平台上的 Steam][12],例如奇异人生(Life is Strange)、古墓丽影 2013(Tomb Raider 2013)、中土世界:魔多阴影(Shadow of Mordor)、幽浮:未知敌人(XCOM: Enemy Unknown)、幽浮 2、与日赛跑(Race The Sun)、公路救赎(Road Redemption)、燥热(SUPERHOT) 等等,并且[这份名单一直在增长][11]。甚至还有 [杀出重围:人类分裂(Deus Ex: Mankind Divided)][10] 和 [疯狂的麦克斯(Mad Max)][9] !!!在一些游戏的 Windows 版发布之后,现在我们不必再等候多年,而只需等待几月左右,便可以听到类似的消息了,这可是大新闻啊!

|

||||

|

||||

下面的实验性方法将向你展示如何使用你现存的任何平台上游戏文件来在 Steam 上恢复游戏的大部分数据。对于某些游戏,它们在两个平台下有很多相似的文件,利用下面例子中的方法,将减少你在享受这些游戏之前的漫长的等待时间。

|

||||

|

||||

在下面的方法中,我们将一步一步地尝试利用 Steam 自己的备份与恢复或者以手工的方式来达到我们的目的。当涉及到这些方法的时候,我们也将向你展示这两个平台上游戏文件的相同和不同之处,以便你也可以探索并做出你自己的调整。

|

||||

在下面的方法中,我们将一步一步地尝试利用 Steam 自身的备份与恢复功能或者以手工的方式来达到我们的目的。当涉及到这些方法的时候,我们也将向你展示这两个平台上游戏文件的相同和不同之处,以便你也可以探索并做出你自己的调整。

|

||||

|

||||

下面的方法中,我们将使用 Ubuntu 14.04 LTS 和 Windows 10 来执行备份与恢复 Steam 的测试。

|

||||

|

||||

### #1 : Steam 自己的备份与恢复

|

||||

### 1、Steam 自身的备份与恢复

|

||||

|

||||

当我们尝试使用 Windows 平台上 Steam 中《燥热》这个游戏的备份(这些加密文件是 .csd 格式)时,Linux 平台上的 Steam 不能识别这些文件,并重新开始下载整个游戏了!甚至在做了验证性检验后,仍然有很大一部分文件不能被 Steam 识别出来。我们在 Windows 上也做了类似的操作,但结果是一样的!

|

||||

当我们尝试使用 Windows 平台上 Steam 中《燥热(SUPERHOT)》这个游戏的备份(这些加密文件是 .csd 格式)时,Linux 平台上的 Steam 不能识别这些文件,并重新开始下载整个游戏了!甚至在做了验证性检验后,仍然有很大一部分文件不能被 Steam 识别出来。我们在 Windows 上也做了类似的操作,但结果是一样的!

|

||||

|

||||

|

||||

|

||||

@ -31,11 +31,11 @@

|

||||

|

||||

现在到了我们用某些手工的方法来共享 Windows 和 Linux 上的 Steam 游戏的时刻了!

|

||||

|

||||

### #2 : 手工方法

|

||||

### 2、手工方法

|

||||

|

||||

首先,让我们先看看 Linux 下这些游戏文件所处的位置(用户目录在 /home 中):

|

||||

|

||||

这是 Linux 平台上 Steam 游戏的默认安装位置。 `.local` 和 `.steam` 目录默认情况下是不可见的,你必须将它们显现出来。我们将推荐拥有一个自定义的 Steam 安装位置以便更容易地处理这些文件。这里 `SUPERHOT.x86_64` 是 Linux 下原生的可执行文件,与 Windows 中的 `.exe` 文件类似。

|

||||

这是 Linux 平台上 Steam 游戏的默认安装位置。 `.local` 和 `.steam` 目录默认情况下是不可见的,你必须将它们显现出来。我们将推荐使用一个自定义的 Steam 安装位置以便更容易地处理这些文件。这里 `SUPERHOT.x86_64` 是 Linux 下原生的可执行文件,与 Windows 中的 `.exe` 文件类似。

|

||||

|

||||

|

||||

|

||||

@ -43,11 +43,11 @@

|

||||

|

||||

|

||||

|

||||

下面我们来看看这些 `.acf` 格式的文件。“appmanifest_322500.acf”便是那个我们需要的文件。编辑并调整这个文件对 Steam 识别在 “common”这个目录下现存的非加密的原始文件备份大有裨益:

|

||||

下面我们来看看这些 `.acf` 格式的文件。`appmanifest_322500.acf` 便是那个我们需要的文件。编辑并调整这个文件有助于 Steam 识别在 `common` 这个目录下现存的非加密的原始文件备份:

|

||||

|

||||

|

||||

|

||||

为了确认这个文件的相似性,用编辑器打开这个文件并检查它。我们越多地了解这个文件越好。这个[链接是来自 Steam 论坛上的一个帖子][8],它展示了这个文件的主要意义。它类似于下面这样:

|

||||

为了确认这个文件是一样的,用编辑器打开这个文件并检查它。我们越多地了解这个文件越好。这个[链接是来自 Steam 论坛上的一个帖子][8],它展示了这个文件的主要意义。它类似于下面这样:

|

||||

|

||||

```

|

||||

“AppState”

|

||||

@ -80,7 +80,7 @@

|

||||

|

||||

|

||||

|

||||

我们复制了 “SUPERHOT”目录和 .acf 格式的文件(这个文件在 Windows 的 Steam 上格式是一样的)。在复制 .acf 文件和游戏目录到 Linux 中 Steam 它们对应的位置时,我们需要确保 Steam 没有在后台运行。

|

||||

我们复制了 `SUPERHOT` 目录和 `.acf` 格式的清单文件(这个文件在 Windows 的 Steam 上格式是一样的)。在复制 `.acf` 文件和游戏目录到 Linux 中 Steam 它们对应的位置时,我们需要确保 Steam 没有在后台运行。

|

||||

|

||||

在转移完成之后,我们运行 Steam 并看到了这个:

|

||||

|

||||

@ -90,14 +90,14 @@

|

||||

|

||||

我们还进行了其他几种尝试:

|

||||

|

||||

* 我们尝试使用 Linux 下原有的清单文件(.acf)和来自 Windows 的手工备份文件,但结果是 Steam 重新开始下载游戏。

|

||||

* 我们看到当我们将 “SUPERHOT_Data” 这个目录中的 “SH_Data” 更换为 Windows 中的对应目录时,同上面的一样,也重新开始下载整个游戏。

|

||||

* 我们尝试使用 Linux 下原有的清单文件(`.acf`)和来自 Windows 的手工备份文件,但结果是 Steam 重新开始下载游戏。

|

||||

* 我们看到当我们将 `SUPERHOT_Data` 这个目录中的 `SH_Data` 更换为 Windows 中的对应目录时,同上面的一样,也重新开始下载整个游戏。

|

||||

|

||||

### 理解清单目录的一个尝试

|

||||

|

||||

清单目录绝对可以被进一步地被编辑和修改以此来改善上面的结果,使得 Steam 检测出尽可能多的文件。

|

||||

|

||||

在 Github 上有一个[项目][7],包含一个可以生成这些清单文件的 python 脚本。任何 Steam 游戏的 AppID 可以从[SteamDB][6] 上获取到。知晓了游戏的 ID 号后,你便可以用你喜爱的编辑器以下面的格式创建你自己的清单文件 appmanifest_<AppID>.acf”。在上面手工方法中,我们可以看到 SUPERHOT 这个游戏的 AppID 是 322500,所以对应的清单文件名应该是 appmanifest_322500.acf。

|

||||

在 Github 上有一个[项目][7],包含一个可以生成这些清单文件的 python 脚本。任何 Steam 游戏的 AppID 可以从[SteamDB][6] 上获取到。知晓了游戏的 ID 号后,你便可以用你喜爱的编辑器以下面的格式创建你自己的清单文件 `appmanifest_<AppID>.acf`。在上面手工方法中,我们可以看到 SUPERHOT 这个游戏的 AppID 是 322500,所以对应的清单文件名应该是 `appmanifest_322500.acf`。

|

||||

|

||||

下面以我们知晓的信息来尝试对该文件进行一些解释:

|

||||

|

||||

@ -139,10 +139,8 @@

|

||||

via: https://itsfoss.com/share-steam-files-linux-windows/

|

||||

|

||||

作者:[Avimanyu Bandyopadhyay][a]

|

||||

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,63 @@

|

||||

我们大学机房使用的 Fedora 系统

|

||||

==========

|

||||

|

||||

|

||||

|

||||

在[塞尔维亚共和国诺维萨德大学的自然科学系和数学与信息学系][5],我们教学生很多东西。从编程语言的入门到机器学习,所有开设的课程最终目的是让我们的学生能够像专业的开发者和软件工程师一样思考。课程时间紧凑而且学生众多,所以我们必须对现有可利用的资源进行合理调整以满足正常的教学。最终我们决定将机房计算机系统换为 Fedora。

|

||||

|

||||

### 以前的设置

|

||||

|

||||

我们过去的解决方案是在 Ubuntu 系统上面安装 Windows [虚拟机][4]并在虚拟机下安装好教学所需的开发软件。这在当时看起来是一个很不错的主意。然而,这种方法有很多弊端。首先,运行虚拟机导致了严重的计算机性能的浪费,因此导致操作系统性能和运行速度降低。此外,虚拟机有时候会在另一个用户会话里面同时运行。这会导致计算机工作严重缓慢。我们不得不在启动电脑和启动虚拟机上花费宝贵的时间。最后,我们意识到我们的大部分教学所需软件都有对应的 Linux 版本。虚拟机不是必需的。我们需要寻找一个更好的解决办法。

|

||||

|

||||

### 进入 Fedora!

|

||||

|

||||

|

||||

|

||||

*默认运行 Fedora 工作站版本的一个机房的照片*

|

||||

|

||||

我们考虑使用一种简洁的安装替代以前的 Windows 虚拟机方案。我们最终决定使用 Fedora,这有很多原因。

|

||||

|

||||

#### 发展的前沿

|

||||

|

||||

在我们所教授的课程中,我们会用到很多各种各样的开发工具。因此,能够及时获取可用的最新、最好的开发工具很重要。在 Fedora 下,我们发现我们用到的开发工具有 95% 都能够在官方的软件仓库中找到!只有少量的一些工具,我们才需要手动安装。这在 Fedora 下很简单,因为你能获取到几乎所有的现成的开发工具。

|

||||

|

||||

在这个过程中我们意识到我们使用了大量自由、开源的软件和工具。保证这些软件总是能够及时更新通常需要做大量的工作,然而 Fedora 没有这个问题。

|

||||

|

||||

#### 硬件兼容性

|

||||

|

||||

我们机房选择 Fedora 的第二个原因是硬件兼容性。机房现在的电脑还是比较崭新的。过去比较低的内核版本总有些问题。在 Fedora 下,我们总能获得最新的内核版本。正如我们预期的那样,一切运行良好,没有任何问题。

|

||||

|

||||

我们决定使用带有 [GNOME 桌面环境][2]的 Fedora [工作站版本][3]。学生们发现它很容易、直观,可以快速上手。对我们来说,学生有一个简单的环境很重要,这样他们会更多的关注自己的任务和课程本身,而不是一个复杂的或者运行缓慢的用户界面。

|

||||

|

||||

#### 自主的技术支持

|

||||

|

||||

最后一个原因,我们院系高度赞赏自由、开放源代码的软件。使用这些软件,学生们即便在毕业后和工作的时候,仍然能够继续自由地使用它们。在这个过程中,他们通常也对 Fedora 和自由、开源的软件有了一定了解。

|

||||

|

||||

### 转换机房

|

||||

|

||||

我们找来其中的一台电脑,完全手动安装好。包括准备所有必要的脚本和软件,设置远程控制权限和一些其他的重要组成部分。我们也为每一门课程单独设置一个用户账号以方便学生存储他们的文件。

|

||||

|

||||

一台电脑安装配置好后,我们使用一个强大的、免费的、开源的叫做 [CloneZilla][1] 的工具。 CloneZilla 能够制作硬盘镜像以做恢复用。镜像大小约为 11 G。我们用一些带有高速 USB 3.0 接口的闪存来还原磁盘镜像到其余的电脑。我们仅仅利用若干个闪存设备花费了 75 分钟设置好其余的 24 台电脑。

|

||||

|

||||

### 将来的工作

|

||||

|

||||

我们机房现在所有的电脑都完全使用 Fedora (没有虚拟机)。剩下的工作是设置一些管理脚本方便远程安装软件,电脑的开关等等。

|

||||

|

||||

我们由衷地感谢所有 Fedora 的维护人员、软件包管理人员和其他贡献者。我们希望我们的工作能够鼓励其他的学校和大学像我们一样将机房电脑的操作系统转向 Fedora。我们很高兴地确认 Fedora 完全适合我们,同时我们也保证 Fedora 同样会适合您!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://fedoramagazine.org/fedora-computer-lab-university/

|

||||

|

||||

作者:[Nemanja Milošević][a]

|

||||

译者:[WangYueScream](https://github.com/WangYueScream),[LemonDemo](https://github.com/LemonDemo)

|

||||

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://fedoramagazine.org/author/nmilosev/

|

||||

[1]:http://clonezilla.org/

|

||||

[2]:https://www.gnome.org/

|

||||

[3]:https://getfedora.org/workstation/

|

||||

[4]:https://en.wikipedia.org/wiki/Virtual_machine

|

||||

[5]:http://www.dmi.rs/

|

||||

@ -1,22 +1,22 @@

|

||||

Linux 中的 DTrace

|

||||

Linux 中的 DTrace :BPF 进入 4.9 内核

|

||||

===========

|

||||

|

||||

|

||||

|

||||

随着 BPF 追踪系统(基于时间采样)最后一个主要功能被合并至 Linux 4.9-rc1 版本的内核中,现在 Linux 内核拥有类似 DTrace 的原生追踪功能。DTrace 是 Solaris 系统中的高级追踪器。对于长期使用 DTrace 的用户和专家,这将是一个振奋人心的里程碑!在 Linux 系统上,现在你可以使用用安全的、低负载的定制追踪系统,通过执行时间的柱状图和频率统计等信息,分析应用的性能以及内核。

|

||||

随着 BPF 追踪系统(基于时间采样)最后一个主要功能被合并至 Linux 4.9-rc1 版本的内核中,现在 Linux 内核拥有类似 DTrace 的原生追踪功能。DTrace 是 Solaris 系统中的高级追踪器。对于长期使用 DTrace 的用户和专家,这将是一个振奋人心的里程碑!现在在 Linux 系统上,你可以在生产环境中使用安全的、低负载的定制追踪系统,通过执行时间的柱状图和频率统计等信息,分析应用的性能以及内核。

|

||||

|

||||

用于 Linux 的追踪工程有很多,但是最终被合并进 Linux 内核的技术从一开始就根本不是一个追踪项目:它是最开始是用于 Berkeley Packet Filter(BPF)的补丁。这些补丁允许 BPF 将软件包重定向,创建软件定义的网络。久而久之,支持追踪事件就被添加进来了,使得程序追踪可用于 Linux 系统。

|

||||

用于 Linux 的追踪项目有很多,但是这个最终被合并进 Linux 内核的技术从一开始就根本不是一个追踪项目:它是最开始是用于伯克利包过滤器(Berkeley Packet Filter)(BPF)的增强功能。这些补丁允许 BPF 重定向数据包,从而创建软件定义网络(SDN)。久而久之,对事件追踪的支持就被添加进来了,使得程序追踪可用于 Linux 系统。

|

||||

|

||||

尽管目前 BPF 没有像 DTrace 一样的高级语言,它所提供的前端已经足够让我创建很多 BPF 工具了,其中有些是基于我以前的 [DTraceToolkit][37]。这个帖子将告诉你怎么去用这些工具,BPF 提供的前端,以及畅谈这项技术将会何去何从。

|

||||

尽管目前 BPF 没有像 DTrace 一样的高级语言,但它所提供的前端已经足够让我创建很多 BPF 工具了,其中有些是基于我以前的 [DTraceToolkit][37]。这个帖子将告诉你怎么去用这些 BPF 提供的前端工具,以及畅谈这项技术将会何去何从。

|

||||

|

||||

### 截图

|

||||

### 示例

|

||||

|

||||

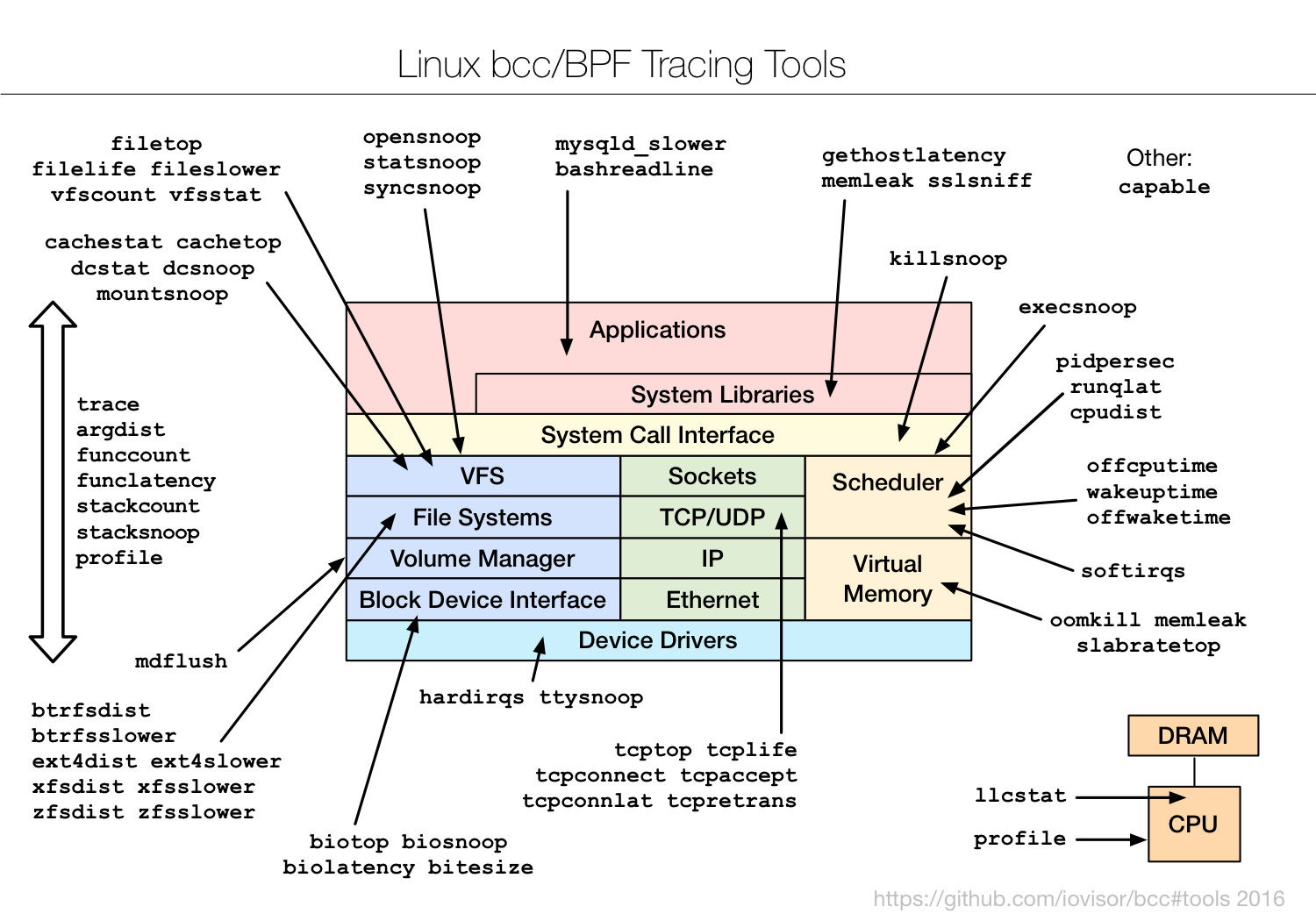

我已经将基于 BPF 的追踪工具添加到了开源的 [bcc][36] 项目里(感谢 PLUMgrid 公司的 Brenden Blanco 带领 bcc 项目的发展)。详见 [bcc 安装][35] 手册。它会在 /usr/share/bcc/tools 目录下添加一系列工具,包括接下来的那些工具。

|

||||

我已经将基于 BPF 的追踪工具添加到了开源的 [bcc][36] 项目里(感谢 PLUMgrid 公司的 Brenden Blanco 带领 bcc 项目的发展)。详见 [bcc 安装][35] 手册。它会在 `/usr/share/bcc/tools` 目录下添加一系列工具,包括接下来的那些工具。

|

||||

|

||||

捕获新进程:

|

||||

|

||||

```

|

||||

# **execsnoop**

|

||||

# execsnoop

|

||||

PCOMM PID RET ARGS

|

||||

bash 15887 0 /usr/bin/man ls

|

||||

preconv 15894 0 /usr/bin/preconv -e UTF-8

|

||||

@ -27,13 +27,12 @@ nroff 15900 0 /usr/bin/locale charmap

|

||||

nroff 15901 0 /usr/bin/groff -mtty-char -Tutf8 -mandoc -rLL=169n -rLT=169n

|

||||

groff 15902 0 /usr/bin/troff -mtty-char -mandoc -rLL=169n -rLT=169n -Tutf8

|

||||

groff 15903 0 /usr/bin/grotty

|

||||

|

||||

```

|

||||

|

||||

硬盘 I/O 延迟的柱状图:

|

||||

|

||||

```

|

||||

# **biolatency -m**

|

||||

# biolatency -m

|

||||

Tracing block device I/O... Hit Ctrl-C to end.

|

||||

^C

|

||||

msecs : count distribution

|

||||

@ -44,13 +43,12 @@ Tracing block device I/O... Hit Ctrl-C to end.

|

||||

16 -> 31 : 100 |**************************************|

|

||||

32 -> 63 : 62 |*********************** |

|

||||

64 -> 127 : 18 |****** |

|

||||

|

||||

```

|

||||

|

||||

追踪常见的 ext4 操作,稍慢于 5ms:

|

||||

追踪慢于 5 毫秒的 ext4 常见操作:

|

||||

|

||||

```

|

||||

# **ext4slower 5**

|

||||

# ext4slower 5

|

||||

Tracing ext4 operations slower than 5 ms

|

||||

TIME COMM PID T BYTES OFF_KB LAT(ms) FILENAME

|

||||

21:49:45 supervise 3570 W 18 0 5.48 status.new

|

||||

@ -67,26 +65,24 @@ TIME COMM PID T BYTES OFF_KB LAT(ms) FILENAME

|

||||

21:49:48 ps 12776 R 832 0 12.02 libprocps.so.4.0.0

|

||||

21:49:48 run 12779 R 128 0 13.21 cut

|

||||

[...]

|

||||

|

||||

```

|

||||

|

||||

追踪新建的 TCP 活跃连接(connect()):

|

||||

追踪新建的 TCP 活跃连接(`connect()`):

|

||||

|

||||

```

|

||||

# **tcpconnect**

|

||||

# tcpconnect

|

||||

PID COMM IP SADDR DADDR DPORT

|

||||

1479 telnet 4 127.0.0.1 127.0.0.1 23

|

||||

1469 curl 4 10.201.219.236 54.245.105.25 80

|

||||

1469 curl 4 10.201.219.236 54.67.101.145 80

|

||||

1991 telnet 6 ::1 ::1 23

|

||||

2015 ssh 6 fe80::2000:bff:fe82:3ac fe80::2000:bff:fe82:3ac 22

|

||||

|

||||

```

|

||||

|

||||

通过捕获 getaddrinfo()/gethostbyname() 库的调用来追踪 DNS 延迟:

|

||||

通过跟踪 `getaddrinfo()`/`gethostbyname()` 库的调用来追踪 DNS 延迟:

|

||||

|

||||

```

|

||||

# **gethostlatency**

|

||||

# gethostlatency

|

||||

TIME PID COMM LATms HOST

|

||||

06:10:24 28011 wget 90.00 www.iovisor.org

|

||||

06:10:28 28127 wget 0.00 www.iovisor.org

|

||||

@ -96,25 +92,23 @@ TIME PID COMM LATms HOST

|

||||

06:11:16 29195 curl 3.00 www.facebook.com

|

||||

06:11:25 29404 curl 72.00 foo

|

||||

06:11:28 29475 curl 1.00 foo

|

||||

|

||||

```

|

||||

|

||||

按类别划分 VFS 操作的时间间隔统计:

|

||||

|

||||

```

|

||||

# **vfsstat**

|

||||

# vfsstat

|

||||

TIME READ/s WRITE/s CREATE/s OPEN/s FSYNC/s

|

||||

18:35:32: 231 12 4 98 0

|

||||

18:35:33: 274 13 4 106 0

|

||||

18:35:34: 586 86 4 251 0

|

||||

18:35:35: 241 15 4 99 0

|

||||

|

||||

```

|

||||

|

||||

对一个给定的 PID,通过内核和用户堆栈轨迹来追踪 CPU 外的时间(由内核进行统计):

|

||||

对一个给定的 PID,通过内核和用户堆栈轨迹来追踪 CPU 处理之外的时间(由内核进行统计):

|

||||

|

||||

```

|

||||

# **offcputime -d -p 24347**

|

||||

# offcputime -d -p 24347

|

||||

Tracing off-CPU time (us) of PID 24347 by user + kernel stack... Hit Ctrl-C to end.

|

||||

^C

|

||||

[...]

|

||||

@ -142,13 +136,12 @@ Tracing off-CPU time (us) of PID 24347 by user + kernel stack... Hit Ctrl-C to e

|

||||

00007f6733a969b0 read

|

||||

- bash (24347)

|

||||

1450908

|

||||

|

||||

```

|

||||

|

||||

追踪 MySQL 查询延迟(通过 USDT 探针):

|

||||

|

||||

```

|

||||

# **mysqld_qslower `pgrep -n mysqld`**

|

||||

# mysqld_qslower `pgrep -n mysqld`

|

||||

Tracing MySQL server queries for PID 14371 slower than 1 ms...

|

||||

TIME(s) PID MS QUERY

|

||||

0.000000 18608 130.751 SELECT * FROM words WHERE word REGEXP '^bre.*n$'

|

||||

@ -156,101 +149,97 @@ TIME(s) PID MS QUERY

|

||||

4.603549 18608 24.164 SELECT COUNT(*) FROM words

|

||||

9.733847 18608 130.936 SELECT count(*) AS count FROM words WHERE word REGEXP '^bre.*n$'

|

||||

17.864776 18608 130.298 SELECT * FROM words WHERE word REGEXP '^bre.*n$' ORDER BY word

|

||||

|

||||

```

|

||||

|

||||

<!--Using the trace multi-tool to watch login requests, by instrumenting the pam library: -->

|

||||

检测 pam 库并使用多种追踪工具观察登陆请求:

|

||||

监测 pam 库并使用多种追踪工具观察登录请求:

|

||||

|

||||

```

|

||||

# **trace 'pam:pam_start "%s: %s", arg1, arg2'**

|

||||

# trace 'pam:pam_start "%s: %s", arg1, arg2'

|

||||

TIME PID COMM FUNC -

|

||||

17:49:45 5558 sshd pam_start sshd: root

|

||||

17:49:47 5662 sudo pam_start sudo: root

|

||||

17:49:49 5727 login pam_start login: bgregg

|

||||

|

||||

```

|

||||

|

||||

bcc 项目里的很多工具都有帮助信息(-h 选项),并且都应该包含有示例的 man 页面和文本文件。

|

||||

bcc 项目里的很多工具都有帮助信息(`-h` 选项),并且都应该包含有示例的 man 页面和文本文件。

|

||||

|

||||

### 必要的

|

||||

### 必要性

|

||||

|

||||

2014 年,Linux 追踪程序就有一些内核相关的特性(自 ftrace 和 pref_events),但是我们仍然要转储并报告进程数据,因为数十年的老技术会有很大规模的开销。你不能频繁地访问进程名,函数名,堆栈轨迹或内核中的其它任何数据。你不能在将变量保存到一个监测事件里,又在另一个事件里访问它们,这意味着你不能在自定义的地方计算延迟(或者说时间参数)。你也不能创建一个内核之内的延迟柱状图,也不能追踪 USDT 探针,甚至不能写自定义的程序。DTrace 可以做到这些,但仅限于 Solaris 或 BSD 系统。在 Linux 系统中,有些基于树的追踪器,像 SystemTap 就可以满足你的这些需求,但它也有自身的不足。(你可以写一个基于探针的内核模块来满足需求-但实际上没人这么做。)

|

||||

2014 年,Linux 追踪程序就有一些内核相关的特性(来自 `ftrace` 和 `pref_events`),但是我们仍然要转储并报告进程数据,这种几十年前的老技术有很多的限制。你不能频繁地访问进程名、函数名、堆栈轨迹或内核中的任意的其它数据。你不能在将变量保存到一个监测事件里,又在另一个事件里访问它们,这意味着你不能在你需要的地方计算延迟(或者说时间增量)。你也不能创建一个内核内部的延迟柱状图,也不能追踪 USDT 探针,甚至不能写个自定义的程序。DTrace 可以做到所有这些,但仅限于 Solaris 或 BSD 系统。在 Linux 系统中,有些不在主线内核的追踪器,比如 SystemTap 就可以满足你的这些需求,但它也有自身的不足。(理论上说,你可以写一个基于探针的内核模块来满足需求-但实际上没人这么做。)

|

||||

|

||||

2014 年我加入了 Netflix cloud performance 团队。做了这么久的 DTrace 方面的专家,转到 Linux 对我来说简直不可思议。但我确实这么做了,尤其是发现了严重的问题:Netflix cloud 会随着应用,微服务架构和分布式系统的快速变化,性能受到影响。有时要用到系统追踪,而我之前是用的 DTrace。在 Linux 系统上可没有 DTrace,我就开始用 Linux 内核内建的 ftrace 和 perf_events 工具,构建了一个追踪工具([perf-tools][34])。这些工具很有用,但有些工作还是没法完成,尤其是延迟柱状图图以及计算堆栈踪迹。我们需要的是内核追踪程序化。

|

||||

2014 年我加入了 Netflix cloud performance 团队。做了这么久的 DTrace 方面的专家,转到 Linux 对我来说简直不可思议。但我确实这么做了,而且遇到了巨大的挑战:在应用快速变化、采用微服务架构和分布式系统的情况下,调优 Netflix cloud。有时要用到系统追踪,而我之前是用的 DTrace。在 Linux 系统上可没有 DTrace,我就开始用 Linux 内核内建的 `ftrace` 和 `perf_events` 工具,构建了一个追踪工具([perf-tools][34])。这些工具很有用,但有些工作还是没法完成,尤其是延迟柱状图以及堆栈踪迹计数。我们需要的是内核追踪的可程序化。

|

||||

|

||||

### 发生了什么?

|

||||

|

||||

BPF 将程序化的功能添加到现有的内核追踪工具中(tracepoints, kprobes, uprobes)。在 Linux 4.x 系列的内核里,这些功能大大加强了。

|

||||

BPF 将程序化的功能添加到现有的内核追踪工具中(`tracepoints`、`kprobes`、`uprobes`)。在 Linux 4.x 系列的内核里,这些功能大大加强了。

|

||||

|

||||

时间采样是最主要的部分,它被 Linux 4.9-rc1 所采用([patchset][33])。十分感谢 Alexei Starovoitov(致力于 Facebook 中的 BPF 开发),改进 BPF 的主要开发者。

|

||||

时间采样是最主要的部分,它被 Linux 4.9-rc1 所采用([patchset][33])。十分感谢 Alexei Starovoitov(在 Facebook 致力于 BPF 的开发),他是这些 BPF 增强功能的主要开发者。

|

||||

|

||||

Linux 内核现在内建有以下这些特性(添加自 2.6 版本到 4.9 版本):

|

||||

Linux 内核现在内建有以下这些特性(自 2.6 版本到 4.9 版本之间增加):

|

||||

|

||||

* 内核级的动态追踪(BPF 对 kprobes 的支持)

|

||||

* 用户级的动态追踪(BPF 对 uprobes 的支持)

|

||||

* 内核级的静态追踪(BPF 对 tracepoints 的支持)

|

||||

* 时间采样事件(BPF 的 pref_event_open)

|

||||

* PMC 事件(BPF 的 pref_event_open)

|

||||

* 内核级的动态追踪(BPF 对 `kprobes` 的支持)

|

||||

* 用户级的动态追踪(BPF 对 `uprobes` 的支持)

|

||||

* 内核级的静态追踪(BPF 对 `tracepoints` 的支持)

|

||||

* 时间采样事件(BPF 的 `pref_event_open`)

|

||||

* PMC 事件(BPF 的 `pref_event_open`)

|

||||

* 过滤器(通过 BPF 程序)

|

||||

* 调试输出(bpf_trace_printk())

|

||||

* 事件输出(bpf_perf_event_output())

|

||||

* 调试输出(`bpf_trace_printk()`)

|

||||

* 按事件输出(`bpf_perf_event_output()`)

|

||||

* 基础变量(全局的和每个线程的变量,基于 BPF 映射)

|

||||

* 关联数组(通过 BPF 映射)

|

||||

* 频率计数(基于 BPF 映射)

|

||||

* 柱状图(power-of-2, 线性及自定义,基于 BPF 映射)

|

||||

* Timestamps and time deltas (bpf_ktime_get_ns(), and BPF programs)

|

||||

* 时间戳和时间参数(bpf_ktime_get_ns(),和 BPF 程序)

|

||||

* 内核态的堆栈轨迹(BPF stackmap 栈映射)

|

||||

* 用户态的堆栈轨迹 (BPF stackmap 栈映射)

|

||||

* 重写 ring 缓存(pref_event_attr.write_backward)

|

||||

* 柱状图(2 的冥次方、线性及自定义,基于 BPF 映射)

|

||||

* 时间戳和时间增量(`bpf_ktime_get_ns()`,和 BPF 程序)

|

||||

* 内核态的堆栈轨迹(BPF 栈映射)

|

||||

* 用户态的堆栈轨迹 (BPF 栈映射)

|

||||

* 重写 ring 缓存(`pref_event_attr.write_backward`)

|

||||

|

||||

我们采用的前端是 bcc,它同时提供 Python 和 lua 接口。bcc 添加了:

|

||||

|

||||

* 用户级静态追踪(基于 uprobes 的 USDT 探针)

|

||||

* 调试输出(调用 BPF.trace_pipe() 和 BPF.trace_fields() 函数的 Python)

|

||||

* 所有事件输出(BPF_PERF_OUTPUT 宏和 BPF.open_perf_buffer())

|

||||

* 间隔输出(BPF.get_table() 和 table.clear())

|

||||

* 打印柱状图(table.print_log2_hist())

|

||||

* 内核级的 C 结构体导航(bcc 重写 bpf_probe_read() 函数的映射)

|

||||

* 内核级的符号解析(ksym(), ksymaddr())

|

||||

* 用户级的符号解析(usymaddr())

|

||||

* BPF tracepoint 支持(通过 TRACEPOINT_PROBE)

|

||||

* BPF 堆栈轨迹支持(包括针对堆栈框架的 walk 方法)

|

||||

* 其它各种助手宏和方法

|

||||

* 例子(位于 /examples 目录)

|

||||

* 工具(位于 /tools 目录)

|

||||

* 教程(/docs/tutorial*.md)

|

||||

* 参考手册(/docs/reference_guide.md)

|

||||

* 用户级静态追踪(基于 `uprobes` 的 USDT 探针)

|

||||

* 调试输出(Python 中调用 `BPF.trace_pipe()` 和 `BPF.trace_fields()` 函数 )

|

||||

* 按事件输出(`BPF_PERF_OUTPUT` 宏和 `BPF.open_perf_buffer()`)

|

||||

* 间隔输出(`BPF.get_table()` 和 `table.clear()`)

|

||||

* 打印柱状图(`table.print_log2_hist()`)

|

||||

* 内核级的 C 结构体导航(bcc 重写器映射到 `bpf_probe_read()` 函数)

|

||||

* 内核级的符号解析(`ksym()`、 `ksymaddr()`)

|

||||

* 用户级的符号解析(`usymaddr()`)

|

||||

* BPF 跟踪点支持(通过 `TRACEPOINT_PROBE`)

|

||||

* BPF 堆栈轨迹支持(包括针对堆栈框架的 `walk` 方法)

|

||||

* 其它各种辅助宏和方法

|

||||

* 例子(位于 `/examples` 目录)

|

||||

* 工具(位于 `/tools` 目录)

|

||||

* 教程(`/docs/tutorial*.md`)

|

||||

* 参考手册(`/docs/reference_guide.md`)

|

||||

|

||||

直到最新也是最主要的特性被整合进来,我才开始写这篇文章,现在它在 4.9-rc1 内核中。我们还需要去完成一些次要的东西,还有另外一些事情要做,但是现在我们所拥有的已经值得欢呼了。现在 Linux 拥有内建的高级追踪能力。

|

||||

直到最新也是最主要的特性被整合进来,我才开始写这篇文章,现在它在 4.9-rc1 内核中。我们还需要去完成一些次要的东西,还有另外一些事情要做,但是现在我们所拥有的已经值得欢呼了。现在 Linux 拥有了内建的高级追踪能力。

|

||||

|

||||

### 安全性

|

||||

|

||||

设计 BPF 以及改进版时就考虑到产品安全,它被用在大范围的生产环境里。确信的话,你应该能找到一个挂起内核的方法。这个例子是偶然而不是必然,类似的漏洞会被快速修复,尤其是当 BPF 合并入了 Linux。因为 Linux 可是公众的焦点。

|

||||

设计 BPF 及其增强功能时就考虑到生产环境级安全,它被用在大范围的生产环境里。不过你想的话,你还是可以找到一个挂起内核的方法。这种情况是偶然的,而不是必然,类似的漏洞会被快速修复,尤其是当 BPF 合并入了 Linux。因为 Linux 可是公众的焦点。

|

||||

|

||||

在开发过程中我们碰到了一些非 BPF 的漏洞,它们需要被修复:rcu 不可重入,这可能导致内核由于 funccount 挂起,在 4.6 内核版本中这个漏洞被 “bpf: map pre-alloc” 所修复,旧版本内核的漏洞暂时由 bcc 处理。还有一个是 uprobe 的内存计算问题,这导致 uprobe 分配内存失败,在 4.8 内核版本这个漏洞由 “uprobes: Fix the memcg accounting” 补丁所修复,并且该补丁还将被移植到之前版本的内核中(例如,它现在被移植到了 4.4.27 和 4.4.0-45.66 版本中)。

|

||||

在开发过程中我们碰到了一些非 BPF 的漏洞,它们需要被修复:rcu 不可重入,这可能导致内核由于 funccount 挂起,在 4.6 内核版本中这个漏洞被 “bpf: map pre-alloc” 补丁集所修复,旧版本内核的漏洞 bcc 有个临时处理方案。还有一个是 uprobe 的内存计算问题,这导致 uprobe 分配内存失败,在 4.8 内核版本这个漏洞由 “uprobes: Fix the memcg accounting” 补丁所修复,并且该补丁还将被移植到之前版本的内核中(例如,它现在被移植到了 4.4.27 和 4.4.0-45.66 版本中)。

|

||||

|

||||

### 为什么 Linux 追踪很耗时?

|

||||

### 为什么 Linux 追踪用了这么久才加进来?

|

||||

|

||||

首要任务被分到了若干追踪器中间:只有联合使用这些追踪器才能有作用。想要了解更多关于这个或其它方面的问题,可以看一看我在 2014 年写的 [tracing summit talk][32]。我忽视了计数器在部分方案中的效率:有些公司发现其它追踪器(SystemTap 和 LTTng)能满足他们的需求,尽管他们乐于听到 BPF 的开发进程,考虑到他们现有的解决方案,帮助 BPF 的开发就不那么重要了。

|

||||

首要任务被分到了若干追踪器中间:这些不是某个追踪器单个的事情。想要了解更多关于这个或其它方面的问题,可以看一看我在 2014 年 [tracing summit 上的讲话][32]。我忽视了部分方案的反面影响:有些公司发现其它追踪器(SystemTap 和 LTTng)能满足他们的需求,尽管他们乐于听到 BPF 的开发进程,但考虑到他们现有的解决方案,帮助 BPF 的开发就不那么重要了。

|

||||

|

||||

近两年里 BPF 仅在追踪领域得到加强。这一过程原本可以更快的,但早期缺少全职工作于 BPF 追踪的工程师。Alexei Starovoitov (BPF 领导者),Brenden Blanco (bcc 领导者),我还有其它一些开发者,都有其它的事情要做。我在 Netflix 公司花了大量时间(自由工作地),大概有 7% 的时间是花在 BPF 和 bcc 上。某种程度上这不是我的首要任务,因为我还有自己的工作(包括我的 perf-tools,一个工作在旧版本内核上的程序)。

|

||||

BPF 仅在近两年里在追踪领域得到加强。这一过程原本可以更快的,但早期缺少全职从事于 BPF 追踪的工程师。Alexei Starovoitov (BPF 领导者),Brenden Blanco (bcc 领导者),我还有其它一些开发者,都有其它的事情要做。我在 Netflix 公司花了大量时间(志愿地),大概有 7% 的时间是花在 BPF 和 bcc 上。某种程度上这不是我的首要任务,因为我还有自己的工作(包括我的 perf-tools,一个可以工作在旧版本内核上的程序)。

|

||||

|

||||

BPF 追踪已经推出了,已经有科技公司开始关注 BPF 的特点了。但我还是推荐 [Netflix 公司][31]。(如果你为了 BPF 而要聘请我,那我还是十分乐于待在 Netflix 公司的!)

|

||||

现在BPF 追踪器已经推出了,已经有科技公司开始寻找会 BPF 的人了。但我还是推荐 [Netflix 公司][31]。(如果你为了 BPF 而要聘请我,那我还是十分乐于待在 Netflix 公司的!)

|

||||

|

||||

### 使用简单

|

||||

|

||||

DTrace 和 bcc/BPF 现在的最大区别就是哪个更好使用。这取决于你要用 BPF 追踪做什么了。如果你要

|

||||

|

||||

* **使用 BPF tools/metrics**:应该是没什么区别的。工具的表现都差不多,图形用户界面的访问也类似。大部分用户通过这种方式使用 BPF。

|

||||

* **开发 tools/metrics**:bcc 的开发可难多了。DTrace 有一套自己的简单语言,D 语音,和 awk 语言相似,而 bcc 使用已有的带有库的语言(C 语言,Python 和 lua)。一个用 C 和 Python 写的 bcc 工具与仅仅用 D 语言写出来的工具相比,可能要多十多倍行数的代码,或者更多。但是很多 DTrace 工具用 shell 包装来提供参数和差错,会让代码变得十分臃肿。编程的难处是不同的:重写 bcc 更需要巧妙性,这导致某些脚本更加难开发。(尤其是 bpf_probe_read() 这类的函数,需要了解更多 BPF 的内涵知识)。当计划改进 bcc 时,这一情形将得到改善。

|

||||

* **运行常见的命令**:十分相近。用 “dtrace” 命令,DTrace 能做很多事,但 bcc 有各种工具,trace,argdist,funccount,funclatency 等等。

|

||||

* **使用 BPF 工具/度量**:应该是没什么区别的。工具的表现都差不多,图形用户界面都能取得类似度量指标。大部分用户通过这种方式使用 BPF。

|

||||

* **开发工具/度量**:bcc 的开发可难多了。DTrace 有一套自己的简单语言,D 语音,和 awk 语言相似,而 bcc 使用已有的语言(C 语言,Python 和 lua)及其类库。一个用 C 和 Python 写的 bcc 工具与仅仅用 D 语言写出来的工具相比,可能要多十多倍行数的代码,或者更多。但是很多 DTrace 工具用 shell 封装来提供参数和差错检查,会让代码变得十分臃肿。编程的难处是不同的:重写 bcc 更需要巧妙性,这导致某些脚本更加难开发。(尤其是 `bpf_probe_read()` 这类的函数,需要了解更多 BPF 的内涵知识)。当计划改进 bcc 时,这一情形将得到改善。

|

||||

* **运行常见的命令**:十分相近。通过 `dtrace` 命令,DTrace 能做很多事,但 bcc 有各种工具,`trace`、`argdist`、`funccount`、`funclatency` 等等。

|

||||

* **编写自定义的特殊命令**:使用 DTrace 的话,这就没有必要了。允许定制消息快速传递和系统快速响应,DTrace 的高级分析很快。而 bcc 现在受限于它的多种工具以及它们的适用范围。

|

||||

|

||||

简单来说,如果你只使用 BPF 工具的话,就不必关注这些差异了。如果你经验丰富,是个开发者(像我一样),目前 bcc 的使用是比较困难的。

|

||||

简单来说,如果你只使用 BPF 工具的话,就不必关注这些差异了。如果你经验丰富,是个开发者(像我一样),目前 bcc 的使用更难一些。

|

||||

|

||||

举一个 bcc 的 Python 前端的例子,下面是追踪硬盘 I/O 和 打印 I/O 容量柱状图的代码:

|

||||

举一个 bcc 的 Python 前端的例子,下面是追踪硬盘 I/O 并打印出 I/O 大小的柱状图代码:

|

||||

|

||||

```

|

||||

from bcc import BPF

|

||||

@ -281,16 +270,15 @@ except KeyboardInterrupt:

|

||||

|

||||

# output

|

||||

b["dist"].print_log2_hist("kbytes")

|

||||

|

||||

```

|

||||

|

||||

注意 Python 代码中嵌入的 C 语句(text=)。

|

||||

注意 Python 代码中嵌入的 C 语句(`text=`)。

|

||||

|

||||

这就完成了任务,但仍有改进的空间。好在我们有时间去做:人们使用 Linux 4.9 并能用上 BPF 还得好几个月呢,所以我们有时间来制造工具和前端。

|

||||

|

||||

### 高级语言

|

||||

|

||||

前端越简单,比如高级语言,所改进的可能就越不如你所期望的。绝大多数人使用封装好的工具(和 GUI),仅有少部分人能写出这些工具。但我不反对使用高级语言,比如 SystemTap,毕竟已经开发出来了。

|

||||

前端越简单,比如高级语言,所改进的可能就越不如你所期望的。绝大多数人使用封装好的工具(和图形界面),仅有少部分人能写出这些工具。但我不反对使用高级语言,比如 SystemTap,毕竟已经开发出来了。

|

||||

|

||||

```

|

||||

#!/usr/bin/stap

|

||||

@ -307,12 +295,11 @@ probe syscall.open

|

||||

{

|

||||

printf("%6d %6d %16s %s\n", uid(), pid(), execname(), filename);

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

如果拥有整合了语言和脚本的 SystemTap 前端与高性能内核内建的 BPF 后端,会不会令人满意呢?RedHat 公司的 Richard Henderson 已经在进行相关工作了,并且发布了 [初代版本][30]!

|

||||

如果拥有整合了语言和脚本的 SystemTap 前端与高性能的内置在内核中的 BPF 后端,会不会令人满意呢?RedHat 公司的 Richard Henderson 已经在进行相关工作了,并且发布了 [初代版本][30]!

|

||||

|

||||

这是 [ply][29],一个完全新颖的 BPF 高级语言:

|

||||

这是 [ply][29],一个完全新颖的 BPF 高级语言:

|

||||

|

||||

```

|

||||

#!/usr/bin/env ply

|

||||

@ -321,7 +308,6 @@ kprobe:SyS_*

|

||||

{

|

||||

$syscalls[func].count()

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

这也是一份承诺。

|

||||

@ -330,30 +316,30 @@ kprobe:SyS_*

|

||||

|

||||

### 如何帮助我们

|

||||

|

||||

* **推广**:BPF 追踪目前还没有什么市场方面的进展。尽管有公司了解并在使用它(Facebook,Netflix,Github 和其它公司),但要广为人知尚需时日。分享关于 BPF 产业的文章和资源来帮助我们。

|

||||

* **推广**:BPF 追踪器目前还没有什么市场方面的进展。尽管有公司了解并在使用它(Facebook、Netflix、Github 和其它公司),但要广为人知尚需时日。你可以分享关于 BPF 的文章和资源给业内的其它公司来帮助我们。

|

||||

* **教育**:你可以撰写文章,发表演讲,甚至参与 bcc 文档的编写。分享 BPF 如何解决实际问题以及为公司带来收益的实例。

|

||||

* **解决 bcc 的问题**:参考 [bcc issue list][19],这包含了错误和需要的特性。

|

||||

* **提交错误**:使用 bcc/BPF,提交你发现的错误。

|

||||

* **创造工具**:有很多可视化的工具需要开发,请不要太草率,因为大家会先花几个小时学习使用你做的工具,所以请尽量把工具做的直观好用(参考我的 [文档][18])。就像 Mike Muuss 提及到他自己的 [ping][17] 程序:“要是我早知道这是我一生中最出名的成就,我就多开发一两天,添加更多选项。”

|

||||

* **高级语言**:如果现有的 bcc 前端语言让你很困扰,或者你能弄门更好的语言。要是你想将这门语言内建到 bcc 里面,你需要使用 libbcc。或者你可以帮助进行 SystemTap BPF 或 ply 的工作。

|

||||

* **整合图形界面**:除了 bcc 可以使用的 CLI 命令行工具,怎么让这些信息可视呢?延迟关系,火焰图等等。

|

||||

* **创造工具**:有很多可视化的工具需要开发,但请不要太草率,因为大家会先花几个小时学习使用你做的工具,所以请尽量把工具做的直观好用(参考我的[文档][18])。就像 Mike Muuss 提及到他自己的 [ping][17] 程序:“要是我早知道这是我一生中最出名的成就,我就多开发一两天,添加更多选项。”

|

||||

* **高级语言**:如果现有的 bcc 前端语言让你很困扰,或许你能弄门更好的语言。要是你想将这门语言内建到 bcc 里面,你需要使用 libbcc。或者你可以帮助 SystemTap BPF 或 ply 的工作。

|

||||

* **整合图形界面**:除了 bcc 可以使用的 CLI 命令行工具,怎么让这些信息可视呢?延迟热点图,火焰图等等。

|

||||

|

||||

### 其它追踪器

|

||||

|

||||

那么 SystemTap,ktap,sysdig,LTTng 等追踪器怎么样呢?它们有个共同点,要么使用了 BPF,要么在自己的领域做得更好。会有单独的文章介绍它们自己。

|

||||

那么 SystemTap、ktap、sysdig、LTTng 等追踪器怎么样呢?它们有个共同点,要么使用了 BPF,要么在自己的领域做得更好。会有单独的文章介绍它们自己。

|

||||

|

||||

至于 DTrace ?我们公司目前还在基于 FreeBSD 系统的 CDN 中使用它。

|

||||

|

||||

### 更多 bcc/BPF 的信息

|

||||

### 更多 bcc/BPF 的信息

|

||||

|

||||

我已经写了一篇 [bcc/BPF Tool End-User Tutorial][28],一篇 [bcc Python Developer's Tutorial][27],一篇 [bcc/BPF Reference Guide][26],和已经写好的有用的 [/tools][25],每一个工具都有一个 [example.txt][24] 文件和 [man page][23]。我之前写过的关于 bcc 和 BPF 的文章有:

|

||||

我已经写了一篇《[bcc/BPF 工具最终用户教程][28]》,一篇《[bcc Python 开发者教程][27]》,一篇《[bcc/BPF 参考手册][26]》,并提供了一些有用的[工具][25],每一个工具都有一个 [example.txt][24] 文件和 [man page][23]。我之前写过的关于 bcc 和 BPF 的文章有:

|

||||

|

||||

* [eBPF: One Small Step][16] (以后就叫做 BPF)

|

||||

* [eBPF: One Small Step][16] (后来就叫做 BPF)

|

||||

* [bcc: Taming Linux 4.3+ Tracing Superpowers][15]

|

||||

* [Linux eBPF Stack Trace Hack][14] (现在官方支持追踪堆栈了)

|

||||

* [Linux eBPF Off-CPU Flame Graph][13] (" " ")

|

||||

* [Linux Wakeup and Off-Wake Profiling][12] (" " ")

|

||||

* [Linux Chain Graph Prototype][11] (" " ")

|

||||

* [Linux eBPF Off-CPU Flame Graph][13]

|

||||

* [Linux Wakeup and Off-Wake Profiling][12]

|

||||

* [Linux Chain Graph Prototype][11]

|

||||

* [Linux eBPF/bcc uprobes][10]

|

||||

* [Linux BPF Superpowers][9]

|

||||

* [Ubuntu Xenial bcc/BPF][8]

|

||||

@ -369,22 +355,22 @@ kprobe:SyS_*

|

||||

|

||||

### 致谢

|

||||

|

||||

* Van Jacobson and Steve McCanne,他是最早将 BPF 应用到包过滤的。

|

||||

* Barton P. Miller,Jeffrey K. Hollingsworth,and Jon Cargille,发明了动态追踪,并发表文章《Dynamic Program Instrumentation for Scalable Performance Tools》,可扩展高性能计算协议 (SHPCC),于田纳西州诺克斯维尔市,1994 年 5 月发表。

|

||||

* kerninst (ParaDyn, UW-Madison), an early dynamic tracing tool that showed the value of dynamic tracing (late 1990's).(早期的能够显示动态追踪数值的动态追踪工具,稍晚于 1990 年)

|

||||

* Mathieu Desnoyers (of LTTng),内核的主要开发者,主导 tracepoints 项目。

|

||||

* Van Jacobson 和 Steve McCanne,他们创建了最初用作过滤器的 BPF 。

|

||||

* Barton P. Miller,Jeffrey K. Hollingsworth,and Jon Cargille,发明了动态追踪,并发表论文《Dynamic Program Instrumentation for Scalable Performance Tools》,可扩展高性能计算协议 (SHPCC),于田纳西州诺克斯维尔市,1994 年 5 月发表。

|

||||

* kerninst (ParaDyn, UW-Madison),展示了动态跟踪的价值的早期动态跟踪工具(上世纪 90 年代后期)

|

||||

* Mathieu Desnoyers (在 LTTng),内核的主要开发者,主导 tracepoints 项目。

|

||||

* IBM 开发的作为 DProbes 一部分的 kprobes,DProbes 在 2000 年时曾与 LTT 一起提供 Linux 动态追踪,但没有整合到一起。

|

||||

* Bryan Cantrill, Mike Shapiro, and Adam Leventhal (Sun Microsystems),DTrace 的核心成员,DTrace 是一款很棒的动态追踪工具,安全而且简单(2004 年)。考虑到动态追踪的技术,DTrace 是科技的重要转折点:它很安全,默认安装在 Solaris 以及其它以可靠性著称的系统里。

|

||||

* Bryan Cantrill, Mike Shapiro, and Adam Leventhal (Sun Microsystems),DTrace 的核心开发者,DTrace 是一款很棒的动态追踪工具,安全而且简单(2004 年)。对于动态追踪技术,DTrace 是科技的重要转折点:它很安全,默认安装在 Solaris 以及其它以可靠性著称的系统里。

|

||||

* 来自 Sun Microsystems 的各部门的许多员工,促进了 DTrace,为我们带来了高级系统追踪的意识。

|

||||

* Roland McGrath (at Red Hat),utrace 项目的主要开发者,utrace 变成了后来的 uprobes。

|

||||

* Alexei Starovoitov (PLUMgrid, then Facebook), 加强版 BPF(可编程内核容器)的主要开发者。

|

||||

* 那些帮助反馈,提交代码、测试以及针对增强版 BPF 补丁(搜索 BPF 的 lkml)的 Linux 内核工程师: Wang Nan, Daniel Borkmann, David S. Miller, Peter Zijlstra, 以及其它很多人。

|

||||

* Roland McGrath (在 Red Hat),utrace 项目的主要开发者,utrace 变成了后来的 uprobes。

|

||||

* Alexei Starovoitov (PLUMgrid, 后来是 Facebook),加强版 BPF(可编程内核部件)的主要开发者。

|

||||

* 那些帮助反馈、提交代码、测试以及针对增强版 BPF 补丁(请在 lkml 搜索 BPF)的 Linux 内核工程师: Wang Nan、 Daniel Borkmann、 David S. Miller、 Peter Zijlstra 以及其它很多人。

|

||||

* Brenden Blanco (PLUMgrid),bcc 的主要开发者。

|

||||

* Sasha Goldshtein (Sela) 开发了 bcc 中可用的 tracepoint,和功能最强大的 bcc 工具 trace 及 argdist,帮助 USDT 项目的开发。

|

||||

* Sasha Goldshtein (Sela) 开发了 bcc 中的跟踪点支持,和功能最强大的 bcc 工具 trace 及 argdist,帮助 USDT 项目的开发。

|

||||

* Vicent Martí 和其它 Github 上的工程师,为 bcc 编写了基于 lua 的前端,帮助 USDT 部分项目的开发。

|

||||

* Allan McAleavy, Mark Drayton,和其他的改进 bcc 的贡献者。

|

||||

* Allan McAleavy、 Mark Drayton,和其他的改进 bcc 的贡献者。

|

||||

|

||||

感觉 Netflix 提供环的境和支持,让我能够编写 BPF 和 bcc tracing 并完成它们。开发追踪工具(使用 TNF/prex, DTrace, SystemTap, ktap, ftrace, perf, and now bcc/BPF)和写书、博客以及评论,我已经编写了多年的追踪工具。

|

||||

感觉 Netflix 提供的环境和支持,让我能够编写 BPF 和 bcc 跟踪器并完成它们。我已经编写了多年的追踪工具(使用 TNF/prex、DTrace、SystemTap、ktap、ftrace、perf,现在是 bcc/BPF),并写书、博客以及评论,

|

||||

|

||||

最后,感谢 [Deirdré][20] 编辑了另外一篇文章。

|

||||

|

||||

@ -392,9 +378,9 @@ kprobe:SyS_*

|

||||

|

||||

Linux 没有 DTrace(语言),但它现在有了,或者说拥有了 DTraceTookit(工具)。

|

||||

|

||||

通过内核构建的 BPF 引擎补丁,Linux 4.9 内核有用来支持现代化追踪的最后一项功能。内核支持这一最难的部分已经做完了。今后的任务包括更多的命令行执行工具,可选的高级语言和图形用户界面。

|

||||

通过增强内置的 BPF 引擎,Linux 4.9 内核拥有了用来支持现代化追踪的最后一项能力。内核支持这一最难的部分已经做完了。今后的任务包括更多的命令行执行工具,以及高级语言和图形用户界面。

|

||||

|

||||

对于性能分析产品的客户,这也是一件好事:你能查看延迟柱状图和热点图,CPU 运行和休眠的火焰图,拥有更好的时延断点和更低耗的工具。我们现在用的追踪和处理程序是没有效率的方式。

|

||||

对于性能分析产品的客户,这也是一件好事:你能查看延迟柱状图和热点图,CPU 处理和 CPU 之外的火焰图,拥有更好的时延断点和更低耗的工具。在用户空间按包跟踪和处理是没有效率的方式。

|

||||

|

||||

那么你什么时候会升级到 Linux 4.9 呢?一旦官方发布,新的性能测试工具就来了:`apt-get install bcc-tools` 。

|

||||

|

||||

@ -407,10 +393,8 @@ Brendan

|

||||

via: http://www.brendangregg.com/blog/2016-10-27/dtrace-for-linux-2016.html

|

||||

|

||||

作者:[Brendan Gregg][a]

|

||||

|

||||

译者:[GitFuture](https://github.com/GitFuture)

|

||||

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,121 @@

|

||||

修复 Ubuntu 中“Unable to lock the administration directory (/var/lib/dpkg/)”的问题

|

||||

============================================================

|

||||

|

||||

在 Ubuntu 或者它的衍生版如 Linux Mint(我已经作为日常工作使用的系统)中使用 [apt-get 命令][1]或者其相对更新的[APT 管理工具][2]时,你可能会在命令行中看到一个 `unable to lock the administration directory (/var/lib/dpkg/) is another process using it` 的错误。

|

||||

|

||||

这个错误尤其对那些对这个错误原因不了解的 Linux(Ubuntu)新手而言更加恼人。

|

||||

|

||||

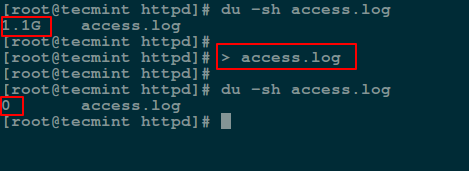

下面是一个例子,展示了出现在 Ubuntu 16.10 上的文件锁定错误:

|

||||

|

||||

```

|

||||

tecmint@TecMint:~$ sudo apt install neofetch

|

||||

[sudo] password for tecmint:

|

||||

E: Could not get lock /var/lib/dpkg/lock - open (11: Resource temporarily unavailable)

|

||||

E: Unable to lock the administration directory (/var/lib/dpkg), is another process using it?

|

||||

```

|

||||

|

||||

下面的输出是另外一个可能显示的错误:

|

||||

|

||||

```

|

||||

E: Could not get lock /var/lib/apt/lists/lock - open (11: Resource temporarily unavailable)

|

||||

E: Unable to lock directory /var/lib/apt/lists/

|

||||

E: Could not get lock /var/lib/dpkg/lock - open (11: Resource temporarily unavailable)

|

||||

E: Unable to lock the administration directory (/var/lib/dpkg/), is another process using it?

|

||||

```

|

||||

|

||||

你将来遇到这个错误该怎么去解决?一共有几种方法处理这个错误,但是本篇中我们会用两种或许是最简单和最有效的方法来解决它。

|

||||

|

||||

### 1、找出并杀掉所有 apt-get 或者 apt 进程

|

||||

|

||||

运行下面的命令来[生成所有含有 apt 的进程列表][3],你可以使用 `ps` 和 [grep 命令][4]并用管道组合来得到含有 apt 或者 apt-get 的进程。

|

||||

|

||||

```

|

||||

$ ps -A | grep apt

|

||||

```

|

||||

[

|

||||

|

||||

][5]

|

||||

|

||||

*找出 apt 以及 apt-get 进程*

|

||||

|

||||

你可以看到上面命令输出的每个 apt-get 或者 apt 进程,使用下面的命令[杀掉每个进程][6]。

|

||||

|

||||

上面截图中的第一列是进程 ID(PID)。

|

||||

|

||||

```

|

||||

$ sudo kill -9 processnumber

|

||||

或者

|

||||

$ sudo kill -SIGKILL processnumber

|

||||

```

|

||||

|

||||

比如,下面命令中的`9`是 `SIGKILL` 的信号数,它会杀掉第一个 apt 进程:

|

||||

|

||||

```

|

||||

$ sudo kill -9 13431

|

||||

或者

|

||||

$ sudo kill -SIGKILL 13431

|

||||

```

|

||||

|

||||

### 2、 删除锁定的文件

|

||||

|

||||

锁定的文件会阻止 Linux 系统中某些文件或者数据的访问,这个概念也存在于 Windows 或者其他的操作系统中。

|

||||

|

||||

一旦你运行了 apt-get 或者 apt 命令,锁定文件将会创建于 `/var/lib/apt/lists/`、`/var/lib/dpkg/`、`/var/cache/apt/archives/` 中。

|

||||

|

||||

这有助于运行中的 apt-get 或者 apt 进程能够避免被其它需要使用相同文件的用户或者系统进程所打断。当该进程执行完毕后,锁定文件将会删除。

|

||||

|

||||

重要提醒:万一你在没有看到 apt-get 或者 apt 进程的情况下在上面两个不同的文件夹中看到了锁定文件,这是因为进程由于某个原因被杀掉了,因此你需要删除锁定文件来避免该错误。

|

||||

|

||||

首先运行下面的命令来移除 `/var/lib/dpkg/` 文件夹下的锁定文件:

|

||||

|

||||

```

|

||||

$ sudo rm /var/lib/dpkg/lock

|

||||

```

|

||||

|

||||

之后像下面这样强制重新配置软件包:

|

||||

|

||||

```

|

||||

$ sudo dpkg --configure -a

|

||||

```

|

||||

|

||||

也可以删除 `/var/lib/apt/lists/` 以及缓存文件夹下的锁定文件:

|

||||

|

||||

```

|

||||

$ sudo rm /var/lib/apt/lists/lock

|

||||

$ sudo rm /var/cache/apt/archives/lock

|

||||

```

|

||||

|

||||

接下来,更新你的软件包源列表:

|

||||

|

||||

```

|

||||

$ sudo apt update

|

||||

或者

|

||||

$ sudo apt-get update

|

||||

```

|

||||

|

||||

总结一下,对于 Ubuntu(以及它的衍生版)用户在使用 apt-get 或者 apt 也叫 [aptitude 命令][7]时遇到的问题,我们已经用两种方法来解决了。

|

||||

|

||||

你有什么可以分享出来的有效的方法来处理这个错误么?在下面的评论区联系我们。

|

||||

|

||||

除此之外,你可能还希望了解[如何找出并杀掉运行的进程][8],你可以阅读这篇[用 kill、pkill、killall 来中止进程][9]指南来了解。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/fix-unable-to-lock-the-administration-directory-var-lib-dpkg-lock

|

||||

|

||||

作者:[Aaron Kili][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 组织编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/aaronkili/

|

||||

[1]:https://linux.cn/article-4933-1.html

|

||||

[2]:https://linux.cn/article-7364-1.html

|

||||

[3]:http://www.tecmint.com/find-linux-processes-memory-ram-cpu-usage/

|

||||

[4]:http://www.tecmint.com/linux-grep-commands-character-classes-bracket-expressions/

|

||||

[5]:http://www.tecmint.com/wp-content/uploads/2016/11/find-apt-processes.png

|

||||

[6]:http://www.tecmint.com/find-and-kill-running-processes-pid-in-linux/

|

||||

[7]:http://www.tecmint.com/difference-between-apt-and-aptitude/

|

||||

[8]:http://www.tecmint.com/find-and-kill-running-processes-pid-in-linux/

|

||||

[9]:http://www.tecmint.com/how-to-kill-a-process-in-linux/

|

||||

@ -0,0 +1,90 @@

|

||||

不常见但是很有用的 gcc 命令行选项(一)

|

||||

===================================================

|

||||

|

||||

在本文中,你可以学习到:

|

||||

|

||||

1. [在每个编译阶段查看中间代码的输出][1]

|

||||

2. [让你的代码可调试和可分析][2]

|

||||

3. [结论][3]

|

||||

|

||||

软件工具通常情况下会提供多个功能以供选择,但是如你所知的,不是所有的功能都能被每个人用到的。公正地讲,这并不是设计上的错误,因为每个用户都会有自己的需求,他们只在他们的领域内使用该工具。然而,深入了解你所使用的工具也是很有益处的,因为你永远不知道它的某个功能会在什么时候派上用场,从而节省下你宝贵的时间。

|

||||

|

||||

举一个例子:编译器。一个优秀的编程语言编译器总是会提供极多的选项,但是用户一般只知道和使用其中很有限的一部分功能。更具体点来说,比如你是 C 语言开发人员,并将 Linux 作为你的开发平台,那么你很有可能会用到 gcc 编译器,这个编译器提供了 (几乎) 数不清的命令行选项列表。

|

||||

|

||||

你知道,你可以让 gcc 保存每个编译阶段的输出吗?你知道用于生成警告的 `-Wall` 选项,它并不会包含一些特殊的警告吗?gcc 的很多命令行选项都不会经常用到,但是它们在某些特定的情况下会变得非常有用,例如,当你在调试代码的时候。

|

||||

|

||||

所以在本文中,我们会介绍这样的几个选项,提供所有必要的细节,并通过简单易懂的例子来解释它们。

|

||||

|

||||

但是在开始前,请注意本文中所有的例子所使用的环境:基于 Ubuntu 16.04 LTS 操作系统,gcc 版本为 5.4.0。

|

||||

|

||||

### 在每个编译阶段查看中间代码的输出

|

||||

|

||||

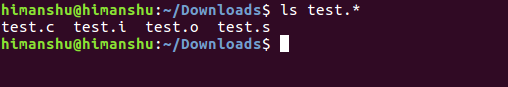

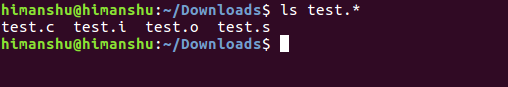

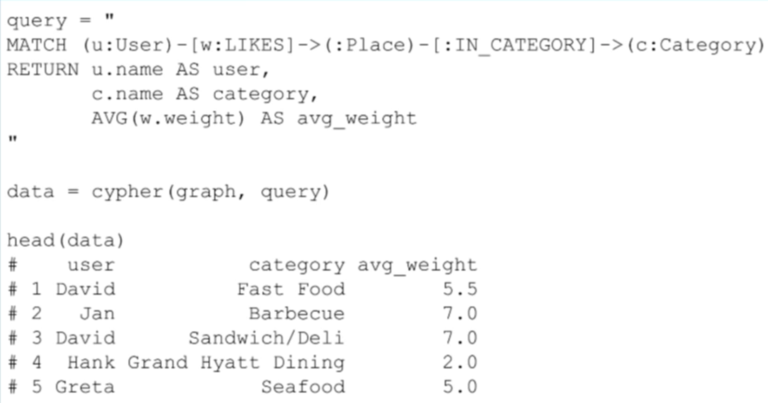

你知道在通过 gcc 编译 c 语言代码的时候大体上共分为四个阶段吗?分别为预处理 -> 编译 -> 汇编 -> 链接。在每个阶段之后,gcc 都会产生一个将移交给下一个阶段的临时输出文件。但是生成的都是临时文件,因此我们并不能看到它们——我们所看到的只是我们发起编译命令,然后它生成的我们可以直接运行的二进制文件或可执行文件。

|

||||

|

||||

但是比如说在预处理阶段,如果调试时需要查看代码是如何进行处理的,你要怎么做呢?好消息是 gcc 编译器提供了相应的命令行选项,你可以在标准编译命令中使用这些选项获得原本被编译器删除的中间文件。我们所说的选项就是`-sava-temps`。

|

||||

|

||||

以下是 [gcc 手册][4]中对该选项的介绍:

|

||||

|

||||

> 永久存储临时的中间文件,将它们放在当前的文件夹下并根据源文件名称为其命名。因此,用 `-c -save-temps` 命令编译 foo.c 文件时会生成 foo.i foo.s 和 foo.o 文件。即使现在编译器大多使用的是集成的预处理器,这命令也会生成预处理输出文件 foo.i。

|

||||

|

||||

> 当与 `-x` 命令行选项结合使用时,`-save-temps` 命令会避免覆写与中间文件有着相同扩展名的输入源文件。相应的中间文件可以通过在使用 `-save-temps` 命令之前重命名源文件获得。

|

||||

|

||||

以下是怎样使用这个选项的例子:

|

||||

|

||||

```

|

||||

gcc -Wall -save-temps test.c -o test-exec

|

||||

```

|

||||

|

||||

下图为该命令的执行结果,验证其确实产生了中间文件:

|

||||

|

||||

[

|

||||

|

||||

][5]

|

||||

|

||||

因此,在截图中你所看到的 test.i、test.s、 test.o 文件都是由 `-save-temps` 选项产生的。这些文件分别对应于预处理、编译和链接阶段。

|

||||

|

||||

### 让你的代码可调试和可分析

|

||||

|

||||

你可以使用专有的工具调试和分析代码。如 [gdb][6] 就是专用于调试的工具,而 [gprof][7] 则是热门的分析工具。但你知道 gcc 特定的命令行选项也可以让你的代码可调试和可分析吗?

|

||||

|

||||

让我们开始调试之路吧!为了能在代码调试中使用 gdb,你需要在编译代码的时候使用 gcc 编译器提供的 `-g` 选项。这个选项让 gcc 生成 gdb 需要的调试信息从而能成功地调试程序。

|

||||

|

||||

如果你想要使用此选项,建议您详细阅读 [gcc 手册][8]提供的有关此选项的详细信息——在某些情况下,其中的一些内容可能是至关重要的。 例如,以下是从手册页中摘录的内容:

|

||||

|

||||

> GCC 允许在使用 `-g` 选项的时候配合使用 `-O` 选项。优化代码采用的便捷方式有时可能会产生意想不到的结果:某些你声明的变量可能不复存在;控制流可能会突然跳转到你未曾预期的位置;一些语句也许不会执行,因为它们已经把常量结果计算了或值已经被保存;一些语句可能会在不同地方执行,因为它们已经被移出循环。

|

||||

|

||||

> 然而优化的输出也是可以调试的。这就使得让优化器可以合理地优化或许有 bug 的代码。

|

||||

|

||||

不只是 gdb,使用 `-g` 选项编译代码,还可以开启使用 Valgrind 内存检测工具,从而完全发挥出该选项的潜力。或许还有一些人不知道,mencheck 工具被程序员们用来检测代码中是否存在内存泄露。你可以在[这里][9]参见这个工具的用法。

|

||||

|

||||

继续往下,为了能够在代码分析中使用 gprof 工具,你需要使用 `-pg` 命令行选项来编译代码。这会让 gcc 生成额外的代码来写入分析信息,gprof 工具需要这些信息来进行代码分析。[gcc 手册][10] 中提到:当编译你需要数据的源文件时,你必须使用这个选项,当然链接时也需要使用它。为了能了解 gprof 分析代码时具体是如何工作的,你可以转到我们的网站[专用教程][11]进行了解。

|

||||

|

||||

**注意**:`-g` 和 `-pg` 选项的用法类似于上一节中使用 `-save-temps` 选项的方式。

|

||||

|

||||

### 结论

|

||||

|

||||

我相信除了 gcc 的专业人士,都可以在这篇文章中得到了一些启发。尝试一下这些选项,然后观察它们是如何工作的。同时,请期待本教程系列的[下一部分][12],我们将会讨论更多有趣和有用的 gcc 命令行选项。

|

||||

|

||||

--------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/tutorial/uncommon-but-useful-gcc-command-line-options/

|

||||

|

||||

作者:[Ansh][a]

|

||||

译者:[dongdongmian](https://github.com/dongdongmian)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://twitter.com/howtoforgecom

|

||||

[1]:https://www.howtoforge.com/tutorial/uncommon-but-useful-gcc-command-line-options/#see-intermediate-output-during-each-compilation-stage

|

||||

[2]:https://www.howtoforge.com/tutorial/uncommon-but-useful-gcc-command-line-options/#make-your-code-debugging-and-profiling-ready

|

||||

[3]:https://www.howtoforge.com/tutorial/uncommon-but-useful-gcc-command-line-options/#conclusion

|

||||

[4]:https://linux.die.net/man/1/gcc

|

||||

[5]:https://www.howtoforge.com/images/uncommon-but-useful-gcc-command-line-options/big/gcc-save-temps.png

|

||||

[6]:https://www.gnu.org/software/gdb/

|

||||

[7]:https://sourceware.org/binutils/docs/gprof/

|

||||

[8]:https://linux.die.net/man/1/gcc

|

||||

[9]:http://valgrind.org/docs/manual/mc-manual.html

|

||||

[10]:https://linux.die.net/man/1/gcc

|

||||

[11]:https://www.howtoforge.com/tutorial/how-to-install-and-use-profiling-tool-gprof/

|

||||

[12]:https://www.howtoforge.com/tutorial/uncommon-but-useful-gcc-command-line-options-2/

|

||||

@ -0,0 +1,145 @@

|

||||

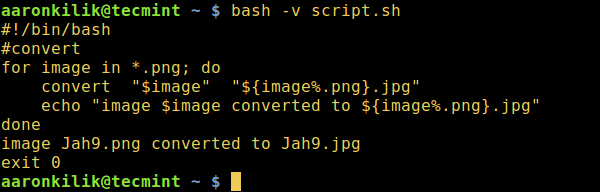

如何在 Linux 中启用 Shell 脚本的调试模式

|

||||

============================================================

|

||||

|

||||

|

||||

脚本是存储在一个文件的一系列命令。在终端上输入一个个命令,按顺序执行的方法太弱了,使用脚本,系统中的用户可以在一个文件中存储所有命令,反复调用该文件多次重新执行命令。

|

||||

|

||||

在 [学习脚本][1] 或写脚本的初期阶段,我们通常从写小脚本或者几行命令的短脚本开始,调试这样的脚本时我们通常无非就是通过观察它们的输出来确保其正常工作。

|

||||

|

||||

然而,当我们开始写非常长或上千行命令的高级脚本,例如改变系统设置的脚本,[在网络上执行关键备份][2] 等等,我们会意识到仅仅看脚本输出是不足以在脚本中找到 Bug 的!

|

||||

|

||||

因此,在 Linux 系列中这篇介绍 Shell 脚本调试, 我们将看看如何启用 Shell 脚本调试,然后在之后的系列中解释不同的 Shell 脚本调试模式以及如何使用它们。

|

||||

|

||||

### 如何开始写一个脚本

|

||||

|

||||

一个脚本与其它文件的区别是它的首行,它包含 `#!` (She-Bang - 释伴:定义文件类型)和路径名(解释器路径),通知系统该文件是一个命令集合,将被指定程序(解释器)解释。

|

||||

|

||||

下面是不同类型脚本 `首行` 示例:

|

||||

|

||||

```

|

||||

#!/bin/sh [sh 脚本]

|

||||

#!/bin/bash [bash 脚本]

|

||||

#!/usr/bin/perl [perl 程序]

|

||||

#!/bin/awk -f [awk 脚本]

|

||||

```

|

||||

|

||||

注意:如果脚本仅包含一组标准系统命令,没有任何内部 Shell 指令,首行或 `#!` 可以去掉。

|

||||

|

||||

### 如何在 Linux 操作系统执行 Shell 脚本

|

||||

|

||||

|

||||

调用一个脚本脚本的常规语法是:

|

||||

|

||||

```

|

||||

$ 脚本名 参数1 ... 参数N

|

||||

```

|

||||

|

||||

另一种可能的形式是明确指定将执行这个脚本的 Shell,如下:

|

||||

|

||||

```

|

||||

$ shell 脚本名 参数1 ... 参数N

|

||||

```

|

||||

|

||||

示例:

|

||||

|

||||

```

|

||||

$ /bin/bash 参数1 ... 参数N [bash 脚本]

|

||||

$ /bin/ksh 参数1 ... 参数N [ksh 脚本]

|

||||

$ /bin/sh 参数1 ... 参数N [sh 脚本]

|

||||

```

|

||||

|

||||

对于没有 `#!` 作为首行,仅包含基础系统命令的脚本,示例如下:

|

||||

|

||||

```

|

||||

### 脚本仅包含标准系统命令

|

||||

cd /home/$USER

|

||||

mkdir tmp

|

||||

echo "tmp directory created under /home/$USER"

|

||||

```

|

||||

|

||||

使它可执行并运行,如下:

|

||||

|

||||

```

|

||||

$ chmod +x 脚本名

|

||||

$ ./脚本名

|

||||

```

|

||||

|

||||

### 启用 Shell 脚本调试模式的方法

|

||||

|

||||

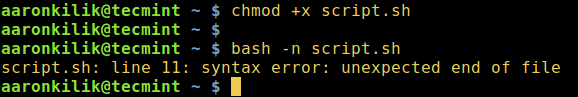

下面是主要的 Shell 脚本调试选项:

|

||||

|

||||

- `-v` (verbose 的简称) - 告诉 Shell 读取脚本时显示所有行,激活详细模式。

|

||||

- `-n` (noexec 或 no ecxecution 简称) - 指示 Shell 读取所有命令然而不执行它们,这个选项激活语法检查模式。

|

||||

- `-x` (xtrace 或 execution trace 简称) - 告诉 Shell 在终端显示所有执行的命令和它们的参数。 这个选项是启用 Shell 跟踪模式。

|

||||

|

||||

#### 1、 改变 Shell 脚本首行

|

||||

|

||||

第一个机制是改变 Shell 脚本首行,如下,这会启动脚本调试。

|

||||

|

||||

```

|

||||

#!/bin/sh 选项

|

||||

```

|

||||

|

||||

其中, 选项可以是上面提到的一个或多个调试选项。

|

||||

|

||||

#### 2、 调用 Shell 调试选项

|

||||

|

||||

第二个是使用如下调试选项启动 Shell,这个方法也会打开整个脚本调试。

|

||||

|

||||

```

|

||||

$ shell 选项 参数1 ... 参数N

|

||||

```

|

||||

|

||||

示例:

|

||||

|

||||

```

|

||||

$ /bin/bash 选项 参数1 ... 参数N

|

||||

```

|

||||

|

||||

#### 3、 使用 Shell 内置命令 set

|

||||

|

||||

第三个方法是使用内置命令 `set` 去调试一个给定的 Shell 脚本部分,如一个函数。这个机制是重要的,因为它让我们可以去调试任何一段 Shell 脚本。

|

||||

|

||||

我们可以如下使用 `set` 命令打开调试模式,其中选项是之前提到的所有调试选项。

|

||||

|

||||

```

|

||||

$ set 选项

|

||||

```

|

||||

|

||||

启用调试模式:

|

||||

|

||||

```

|

||||

$ set -选项

|

||||

```

|

||||

|

||||

禁用调试模式:

|

||||

|

||||

```

|

||||

$ set +选项

|

||||

```

|

||||

|

||||

此外,如果我们在 Shell 脚本不同部分启用了几个调试模式,我们可以一次禁用所有调试模式,如下:

|

||||

|

||||

```

|

||||

$ set -

|

||||

```

|

||||

|

||||

关于启用 Shell 脚本调试模式,先讲这些。正如我们看到的,我们可以调试一整个 Shell 脚本或者特定部分脚本。

|

||||

|

||||

在此系列下面的两篇文章中,我们会举例介绍如何使用 Shell 脚本调试选项,进一步了解 详细(verbose)、语法检查(syntax checking)、 跟踪(tracing)调试模式。

|

||||

|

||||

更重要的是,关于这个指南,欢迎通过下面评论提出任何问题或反馈。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/enable-shell-debug-mode-linux/

|

||||

|

||||

作者:[Aaron Kili][a]

|

||||