Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-03-09 01:30:10 +08:00)

Commit ad59536e87: Translated by liujing97

@ -1,25 +1,26 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (liujing97)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10698-1.html)

|

||||

[#]: subject: (How To Set Password Policies In Linux)

|

||||

[#]: via: (https://www.ostechnix.com/how-to-set-password-policies-in-linux/)

|

||||

[#]: author: (SK https://www.ostechnix.com/author/sk/)

|

||||

|

||||

如何在 Linux 系统中设置密码策略

|

||||

如何设置 Linux 系统的密码策略

|

||||

======

|

||||

|

||||

|

||||

|

||||

虽然 Linux 的设计是安全的,但还是存在许多安全漏洞的风险。弱密码就是其中之一。作为系统管理员,你必须为用户提供一个强密码。因为大部分的系统漏洞就是由于弱密码而引发的。本教程描述了在基于 DEB 系统的 Linux,比如 Debian, Ubuntu, Linux Mint 等和基于 RPM 系统的 Linux,比如 RHEL, CentOS, Scientific Linux 等的系统下设置像**密码长度**,**密码复杂度**,**密码有效期**等密码策略。

|

||||

虽然 Linux 的设计是安全的,但还是存在许多安全漏洞的风险,弱密码就是其中之一。作为系统管理员,你必须为用户提供一个强密码。因为大部分的系统漏洞就是由于弱密码而引发的。本教程描述了在基于 DEB 系统的 Linux,比如 Debian、Ubuntu、Linux Mint 等和基于 RPM 系统的 Linux,比如 RHEL、CentOS、Scientific Linux 等的系统下设置像**密码长度**、**密码复杂度**、**密码有效期**等密码策略。

|

||||

|

||||

### 在基于 DEB 的系统中设置密码长度

|

||||

|

||||

默认情况下,所有的 Linux 操作系统要求用户**密码长度最少6个字符**。我强烈建议不要低于这个限制。并且不要使用你的真实名称、父母、配偶、孩子的名字,或者你的生日作为密码。即便是一个黑客新手,也可以很快地破解这类密码。一个好的密码必须是至少 6 个字符,并且包含数字,大写字母和特殊符号。

|

||||

默认情况下,所有的 Linux 操作系统要求用户**密码长度最少 6 个字符**。我强烈建议不要低于这个限制。并且不要使用你的真实名称、父母、配偶、孩子的名字,或者你的生日作为密码。即便是一个黑客新手,也可以很快地破解这类密码。一个好的密码必须是至少 6 个字符,并且包含数字、大写字母和特殊符号。

|

||||

|

||||

通常地,在基于 DEB 的操作系统中,密码和身份认证相关的配置文件被存储在 **/etc/pam.d/** 目录中。

|

||||

通常地,在基于 DEB 的操作系统中,密码和身份认证相关的配置文件被存储在 `/etc/pam.d/` 目录中。

|

||||

|

||||

设置最小密码长度,编辑 **/etc/pam.d/common-password** 文件;

|

||||

设置最小密码长度,编辑 `/etc/pam.d/common-password` 文件;

|

||||

|

||||

```

|

||||

$ sudo nano /etc/pam.d/common-password

|

||||

@ -33,7 +34,7 @@ password [success=2 default=ignore] pam_unix.so obscure sha512

|

||||

|

||||

![][2]

|

||||

|

||||

在末尾添加额外的文字:**minlen=8**。在这里我设置的最小密码长度为 **8**。

|

||||

在末尾添加额外的文字:`minlen=8`。在这里我设置的最小密码长度为 `8`。

|

||||

|

||||

```

|

||||

password [success=2 default=ignore] pam_unix.so obscure sha512 minlen=8

|

||||

@ -43,15 +44,15 @@ password [success=2 default=ignore] pam_unix.so obscure sha512 minlen=8

|

||||

|

||||

保存并关闭该文件。这样一来,用户现在不能设置小于 8 个字符的密码。

|

||||

|

||||

### 在基于RPM的系统中设置密码长度

|

||||

### 在基于 RPM 的系统中设置密码长度

|

||||

|

||||

**在 RHEL, CentOS, Scientific Linux 7.x** 系统中, 以root身份执行下面的命令来设置密码长度。

|

||||

**在 RHEL、CentOS、Scientific Linux 7.x** 系统中, 以 root 身份执行下面的命令来设置密码长度。

|

||||

|

||||

```

|

||||

# authconfig --passminlen=8 --update

|

||||

```

|

||||

|

||||

查看最小密码长度, 执行:

|

||||

查看最小密码长度,执行:

|

||||

|

||||

```

|

||||

# grep "^minlen" /etc/security/pwquality.conf

|

||||

@ -63,7 +64,7 @@ password [success=2 default=ignore] pam_unix.so obscure sha512 minlen=8

|

||||

minlen = 8

|

||||

```

|

||||

|

||||

**在 RHEL, CentOS, Scientific Linux 6.x** 系统中, 编辑 **/etc/pam.d/system-auth** 文件:

|

||||

**在 RHEL、CentOS、Scientific Linux 6.x** 系统中,编辑 `/etc/pam.d/system-auth` 文件:

|

||||

|

||||

```

|

||||

# nano /etc/pam.d/system-auth

|

||||

@ -77,11 +78,11 @@ password requisite pam_cracklib.so try_first_pass retry=3 type= minlen=8

|

||||

|

||||

|

||||

|

||||

在以上所有设置中,最小密码长度是 **8** 个字符。

|

||||

如上设置中,最小密码长度是 `8` 个字符。

|

||||

|

||||

### 在基于DEB的系统中设置密码复杂度

|

||||

### 在基于 DEB 的系统中设置密码复杂度

|

||||

|

||||

此设置会强制要求密码中应该包含多少类型,比如大写字母,小写字母和其他字符。

|

||||

此设置会强制要求密码中应该包含多少类型,比如大写字母、小写字母和其他字符。

|

||||

|

||||

首先,用下面命令安装密码质量检测库:

|

||||

|

||||

@ -89,13 +90,13 @@ password requisite pam_cracklib.so try_first_pass retry=3 type= minlen=8

|

||||

$ sudo apt-get install libpam-pwquality

|

||||

```

|

||||

|

||||

之后,编辑 **/etc/pam.d/common-password** 文件:

|

||||

之后,编辑 `/etc/pam.d/common-password` 文件:

|

||||

|

||||

```

|

||||

$ sudo nano /etc/pam.d/common-password

|

||||

```

|

||||

|

||||

为了设置密码中至少有一个**大写字母**,则在下面这行的末尾添加文字 **‘ucredit=-1’**。

|

||||

为了设置密码中至少有一个**大写字母**,则在下面这行的末尾添加文字 `ucredit=-1`。

|

||||

|

||||

```

|

||||

password requisite pam_pwquality.so retry=3 ucredit=-1

|

||||

@ -115,9 +116,9 @@ password requisite pam_pwquality.so retry=3 dcredit=-1

|

||||

password requisite pam_pwquality.so retry=3 ocredit=-1

|

||||

```

|

||||

|

||||

正如你在上面样例中看到的一样,我们设置了密码中至少含有一个大写字母、一个小写字母和一个特殊字符。你可以设置被最大允许的任意数量的大写字母,小写字母和特殊字符。

|

||||

正如你在上面样例中看到的一样,我们设置了密码中至少含有一个大写字母、一个小写字母和一个特殊字符。你可以设置被最大允许的任意数量的大写字母、小写字母和特殊字符。

|

||||

|

||||

你还可以设置密码中被允许的最大或最小类型的数量。

|

||||

你还可以设置密码中被允许的字符类的最大或最小数量。

|

||||

|

||||

下面的例子展示了设置一个新密码中被要求的字符类的最小数量:

|

||||

|

||||

@ -125,7 +126,7 @@ password requisite pam_pwquality.so retry=3 ocredit=-1

|

||||

password requisite pam_pwquality.so retry=3 minclass=2

|

||||

```

|

||||

|

||||

### 在基于RPM的系统中设置密密码杂度

|

||||

### 在基于 RPM 的系统中设置密码复杂度

|

||||

|

||||

**在 RHEL 7.x / CentOS 7.x / Scientific Linux 7.x 中:**

|

||||

|

||||

@ -201,7 +202,7 @@ dcredit = -1

|

||||

ocredit = -1

|

||||

```

|

||||

|

||||

在 **RHEL 6.x / CentOS 6.x / Scientific Linux 6.x systems** 中,以root身份编辑 **/etc/pam.d/system-auth** 文件:

|

||||

在 **RHEL 6.x / CentOS 6.x / Scientific Linux 6.x systems** 中,以 root 身份编辑 `/etc/pam.d/system-auth` 文件:

|

||||

|

||||

```

|

||||

# nano /etc/pam.d/system-auth

|

||||

@ -212,17 +213,17 @@ ocredit = -1

|

||||

```

|

||||

password requisite pam_cracklib.so try_first_pass retry=3 type= minlen=8 dcredit=-1 ucredit=-1 lcredit=-1 ocredit=-1

|

||||

```

|

||||

在以上每个设置中,密码必须要至少包含 8 个字符。另外,密码必须至少包含一个大写字母、一个小写字母、一个数字和一个其他字符。

|

||||

|

||||

### 在基于DEB的系统中设置密码有效期

|

||||

如上设置中,密码必须要至少包含 `8` 个字符。另外,密码必须至少包含一个大写字母、一个小写字母、一个数字和一个其他字符。
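A rough way to read the rule set above: with `minlen=8` and each credit set to `-1`, a candidate password needs at least 8 characters and at least one character from every class. The sketch below only mirrors that rule in plain shell. `meets_policy` is a hypothetical helper, not part of PAM, and the real modules apply further checks (dictionary words, similarity to the old password) that are not modeled here.

```shell
# Hypothetical helper: mirrors minlen=8 with dcredit/ucredit/lcredit/ocredit=-1.
# It never talks to PAM; it only illustrates what the rule set demands.
meets_policy() {
    pw=$1
    [ "${#pw}" -ge 8 ] || return 1                          # minlen=8
    case $pw in *[[:digit:]]*) ;; *) return 1 ;; esac       # dcredit=-1: at least one digit
    case $pw in *[[:upper:]]*) ;; *) return 1 ;; esac       # ucredit=-1: at least one uppercase letter
    case $pw in *[[:lower:]]*) ;; *) return 1 ;; esac       # lcredit=-1: at least one lowercase letter
    case $pw in *[![:alnum:]]*) ;; *) return 1 ;; esac      # ocredit=-1: at least one other character
    return 0
}

meets_policy 'Passw0rd!' && echo accepted    # prints "accepted"
meets_policy 'password'  || echo rejected    # prints "rejected" (no digit)
```

A negative credit value is read as "at least that many characters of this class are required", which is why `-1` is used for each class.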

|

||||

|

||||

### 在基于 DEB 的系统中设置密码有效期

|

||||

|

||||

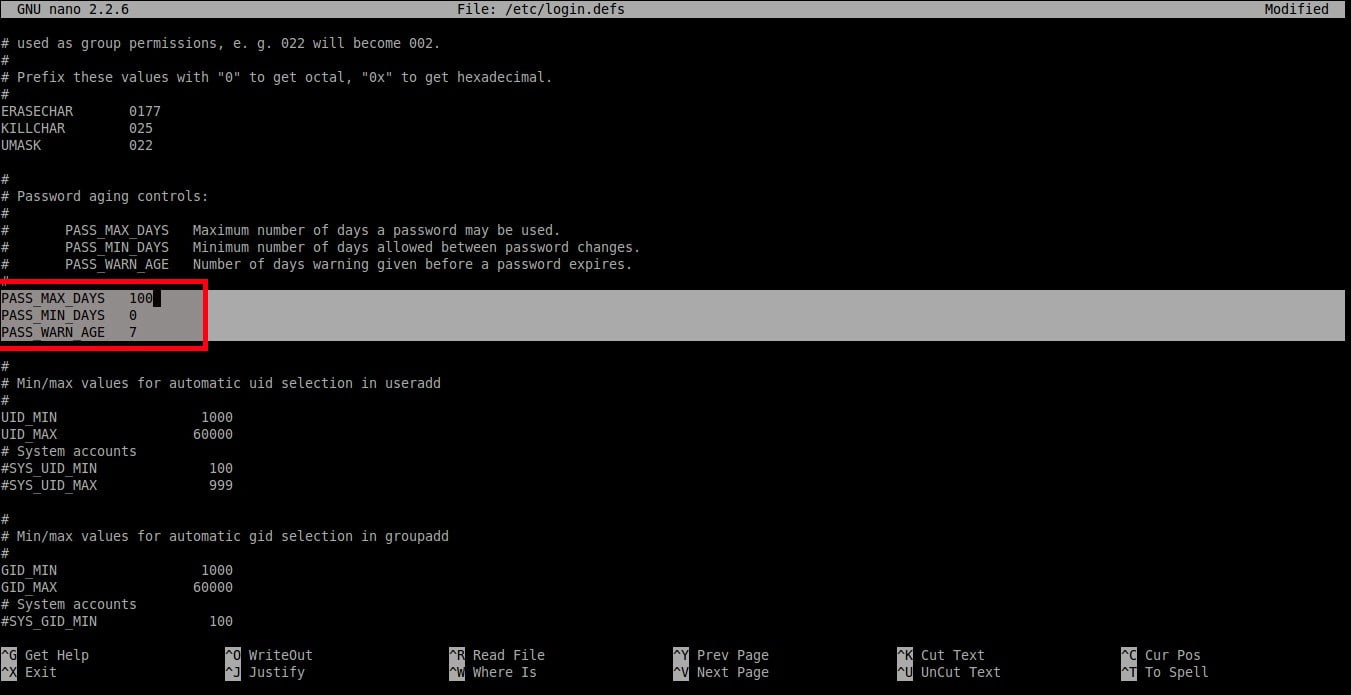

现在,我们将要设置下面的策略。

|

||||

|

||||

1. 密码被使用的最长天数。

|

||||

2. 密码更改允许的最小间隔天数。

|

||||

3. 密码到期之前发出警告的天数。

|

||||

|

||||

|

||||

|

||||

设置这些策略,编辑:

|

||||

|

||||

```

|

||||

@ -239,7 +240,7 @@ PASS_WARN_AGE 7

|

||||

|

||||

|

||||

|

||||

正如你在上面样例中看到的一样,用户应该每 **100** 天修改一次密码,并且密码到期之前的 **7** 天开始出现警告信息。

|

||||

正如你在上面样例中看到的一样,用户应该每 `100` 天修改一次密码,并且密码到期之前的 `7` 天开始出现警告信息。
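To confirm which aging values are in effect, the keys can be read back from the configuration file. The sketch below assumes the settings live in a `login.defs`-style file (on most distributions this is `/etc/login.defs`). `login_def` is a hypothetical helper, and the sample file only repeats the two values mentioned above.

```shell
# Hypothetical helper: print the value of one key from a login.defs-style file.
login_def() {
    key=$1 file=$2
    # Commented-out lines never match because their first field starts with '#'.
    awk -v k="$key" '$1 == k { print $2; exit }' "$file"
}

# Illustrative sample only; a real system keeps these settings in /etc/login.defs.
sample=$(mktemp)
printf '%s\n' '# sample values' 'PASS_MAX_DAYS 100' 'PASS_WARN_AGE 7' > "$sample"

login_def PASS_MAX_DAYS "$sample"    # prints 100
login_def PASS_WARN_AGE "$sample"    # prints 7
```

On a live system the same call would be `login_def PASS_MAX_DAYS /etc/login.defs`.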

|

||||

|

||||

请注意,这些设置将会在新创建的用户中有效。

|

||||

|

||||

@ -280,6 +281,7 @@ Minimum number of days between password change : 0

|

||||

Maximum number of days between password change : 99999

|

||||

Number of days of warning before password expires : 7

|

||||

```

|

||||

|

||||

正如你在上面看到的输出一样,该密码是无限期的。

|

||||

|

||||

修改已存在用户的密码有效期,

|

||||

@ -288,22 +290,23 @@ Number of days of warning before password expires : 7

|

||||

$ sudo chage -E 24/06/2018 -m 5 -M 90 -I 10 -W 10 sk

|

||||

```

|

||||

|

||||

上面的命令将会设置用户 **‘sk’** 的密码期限是 **24/06/2018**。并且修改密码的最小间隔时间为 5 天,最大间隔时间为 **90** 天。用户账号将会在 **10 天**后被自动锁定而且在到期之前的 **10 天**将会显示警告信息。

|

||||

上面的命令将会设置用户 `sk` 的密码期限是 `24/06/2018`。并且修改密码的最小间隔时间为 `5` 天,最大间隔时间为 `90` 天。用户账号将会在 `10` 天后被自动锁定,而且在到期之前的 `10` 天前显示警告信息。
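The interaction of `-M`, `-W` and `-I` is easier to see as a timeline counted in days since the last password change. The sketch below is plain arithmetic under that assumption. `password_timeline` is a hypothetical helper and does not call `chage`. Note that `-E` is separate: it sets the date on which the account itself expires, and `chage` documents `YYYY-MM-DD` as the expected date format.

```shell
# Hypothetical helper: day offsets (from the last password change) implied by
# chage -M <max_days> -W <warn_days> -I <inactive_days>.
password_timeline() {
    max_days=$1 warn_days=$2 inactive_days=$3
    echo "warn_from_day=$((max_days - warn_days))"           # warnings start here
    echo "expires_on_day=$max_days"                          # password must be changed
    echo "locked_after_day=$((max_days + inactive_days))"    # account locked if still unchanged
}

password_timeline 90 10 10
# warn_from_day=80
# expires_on_day=90
# locked_after_day=100
```

On a real system, `sudo chage -l sk` shows the values actually stored for the user.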

|

||||

|

||||

### 在基于 RPM 的系统中设置密码效期

|

||||

|

||||

这点和基于 DEB 的系统是相同的。

|

||||

|

||||

### 在基于 DEB 的系统中禁止使用近期使用过的密码

|

||||

|

||||

你可以限制用户去设置一个已经使用过的密码。通俗的讲,就是说用户不能再次使用相同的密码。

|

||||

|

||||

为设置这一点,编辑 **/etc/pam.d/common-password** 文件:

|

||||

为设置这一点,编辑 `/etc/pam.d/common-password` 文件:

|

||||

|

||||

```

|

||||

$ sudo nano /etc/pam.d/common-password

|

||||

```

|

||||

|

||||

找到下面这行并且在末尾添加文字 **‘remember=5’**:

|

||||

找到下面这行并且在末尾添加文字 `remember=5`:

|

||||

|

||||

```

|

||||

password [success=2 default=ignore] pam_unix.so obscure use_authtok try_first_pass sha512 remember=5

|

||||

@ -313,27 +316,23 @@ password [success=2 default=ignore] pam_unix.so obscure use_a

|

||||

|

||||

### 在基于 RPM 的系统中禁止使用近期使用过的密码

|

||||

|

||||

这点对于 RHEL 6.x 和 RHEL 7.x 是相同的。他们的克隆系统类似于 CentOS, Scientific Linux。

|

||||

这点对于 RHEL 6.x 和 RHEL 7.x 和它们的衍生系统 CentOS、Scientific Linux 是相同的。

|

||||

|

||||

以root身份编辑 **/etc/pam.d/system-auth** 文件,

|

||||

以 root 身份编辑 `/etc/pam.d/system-auth` 文件,

|

||||

|

||||

```

|

||||

# vi /etc/pam.d/system-auth

|

||||

```

|

||||

|

||||

找到下面这行,并且在末尾添加文字 **remember=5**。

|

||||

找到下面这行,并且在末尾添加文字 `remember=5`。

|

||||

|

||||

```

|

||||

password sufficient pam_unix.so sha512 shadow nullok try_first_pass use_authtok remember=5

|

||||

```

|

||||

|

||||

现在你知道了 Linux 中的密码策略是什么,以及如何在基于 DEB 和 RPM 的系统中设置不同的密码策略。

|

||||

|

||||

现在就这样,我很快会在这里发表另外一天有趣而且有用的文章。在此之前会与 OSTechNix 保持联系。如果您觉得本教程对你有帮助,请在您的社交,专业网络上分享并支持我们。

|

||||

|

||||

祝贺!

|

||||

|

||||

现在你了解了 Linux 中的密码策略,以及如何在基于 DEB 和 RPM 的系统中设置不同的密码策略。

|

||||

|

||||

就这样,我很快会在这里发表另外一天有趣而且有用的文章。在此之前请保持关注。如果您觉得本教程对你有帮助,请在您的社交,专业网络上分享并支持我们。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -342,7 +341,7 @@ via: https://www.ostechnix.com/how-to-set-password-policies-in-linux/

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[liujing97](https://github.com/liujing97)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,48 +1,48 @@

|

||||

[#]: collector: "lujun9972"

|

||||

[#]: translator: " "

|

||||

[#]: reviewer: " "

|

||||

[#]: publisher: " "

|

||||

[#]: translator: "Auk7F7"

|

||||

[#]: reviewer: "wxy"

|

||||

[#]: publisher: "wxy"

|

||||

[#]: subject: "Arch-Wiki-Man – A Tool to Browse The Arch Wiki Pages As Linux Man Page from Offline"

|

||||

[#]: via: "https://www.2daygeek.com/arch-wiki-man-a-tool-to-browse-the-arch-wiki-pages-as-linux-man-page-from-offline/"

|

||||

[#]: author: "[Prakash Subramanian](https://www.2daygeek.com/author/prakash/)"

|

||||

[#]: url: " "

|

||||

[#]: author: "Prakash Subramanian https://www.2daygeek.com/author/prakash/"

|

||||

[#]: url: "https://linux.cn/article-10694-1.html"

|

||||

|

||||

Arch-Wiki-Man – 一个以 Linux Man 手册样式离线浏览 Arch Wiki 的工具

|

||||

Arch-Wiki-Man:一个以 Linux Man 手册样式离线浏览 Arch Wiki 的工具

|

||||

======

|

||||

|

||||

现在上网已经很方便了,但技术上会有限制。

|

||||

现在上网已经很方便了,但技术上会有限制。看到技术的发展,我很惊讶,但与此同时,各种地方也都会出现衰退。

|

||||

|

||||

看到技术的发展,我很惊讶,但与此同时,各个地方都会出现衰退。

|

||||

|

||||

当你搜索有关其他 Linux 发型版本的某些东西时,大多数时候你会首先得到一个第三方的链接,但是对于 Arch Linux 来说,每次你都会得到 Arch Wiki 页面的结果。

|

||||

当你搜索有关其他 Linux 发行版的某些东西时,大多数时候你会得到的是一个第三方的链接,但是对于 Arch Linux 来说,每次你都会得到 Arch Wiki 页面的结果。

|

||||

|

||||

因为 Arch Wiki 提供了除第三方网站以外的大多数解决方案。

|

||||

|

||||

到目前为止,你也许可以使用 Web 浏览器为你的 Arch Linux 系统找到一个解决方案,但现在你可以不用这么做了。

|

||||

|

||||

一个名为 arch-wiki-man 的工具t提供了一个在命令行中更快地执行这个操作的方案。如果你是一个 Arch Linux 爱好者,我建议你阅读 **[Arch Linux 安装后指南][1]** ,它可以帮助你调整你的系统以供日常使用。

|

||||

一个名为 arch-wiki-man 的工具提供了一个在命令行中更快地执行这个操作的方案。如果你是一个 Arch Linux 爱好者,我建议你阅读 [Arch Linux 安装后指南][1],它可以帮助你调整你的系统以供日常使用。

|

||||

|

||||

### arch-wiki-man 是什么?

|

||||

|

||||

[arch-wiki-man][2] 工具允许用户在离线的时候从命令行(CLI)中搜索 Arch Wiki 页面。它允许用户以 Linux Man 手册样式访问和搜索整个 Wiki 页面。

|

||||

[arch-wiki-man][2] 工具允许用户从命令行(CLI)中离线搜索 Arch Wiki 页面。它允许用户以 Linux Man 手册样式访问和搜索整个 Wiki 页面。

|

||||

|

||||

而且,你无需切换到GUI。更新将每两天自动推送一次,因此,你的 Arch Wiki 本地副本页面将是最新的。这个工具的名字是`awman`, `awman` 是 Arch Wiki Man 的缩写。

|

||||

而且,你无需切换到 GUI。更新将每两天自动推送一次,因此,你的 Arch Wiki 本地副本页面将是最新的。这个工具的名字是 `awman`, `awman` 是 “Arch Wiki Man” 的缩写。

|

||||

|

||||

我们已经写出了名为 **[Arch Wiki 命令行实用程序][3]** (arch-wiki-cli)的类似工具。它允许用户从互联网上搜索 Arch Wiki。但确保你因该在线使用这个实用程序。

|

||||

我们之前写过一篇类似工具 [Arch Wiki 命令行实用程序][3](arch-wiki-cli)的文章。这个工具允许用户从互联网上搜索 Arch Wiki。但你需要在线使用这个实用程序。

|

||||

|

||||

### 如何安装 arch-wiki-man 工具?

|

||||

|

||||

arch-wiki-man 工具可以在 AUR 仓库(LCTT译者注:AUR 即 Arch 用户软件仓库(Archx User Repository))中获得,因此,我们需要使用 AUR 工具来安装它。有许多 AUR 工具可用,而且我们曾写了一篇有关非常著名的 AUR 工具: **[Yaourt AUR helper][4]** 和 **[Packer AUR helper][5]** 的文章,

|

||||

arch-wiki-man 工具可以在 AUR 仓库(LCTT 译注:AUR 即<ruby>Arch 用户软件仓库<rt>Arch User Repository</rt></ruby>)中获得,因此,我们需要使用 AUR 工具来安装它。有许多 AUR 工具可用,而且我们曾写了一篇关于流行的 AUR 辅助工具: [Yaourt AUR helper][4] 和 [Packer AUR helper][5] 的文章。

|

||||

|

||||

```

|

||||

$ yaourt -S arch-wiki-man

|

||||

```

|

||||

|

||||

or

|

||||

或

|

||||

|

||||

```

|

||||

$ packer -S arch-wiki-man

|

||||

```

|

||||

|

||||

或者,我们可以使用 npm 包管理器来安装它,确保你已经在你的系统上安装了 **[NodeJS][6]** 。然后运行以下命令来安装它。

|

||||

或者,我们可以使用 npm 包管理器来安装它,确保你已经在你的系统上安装了 [NodeJS][6]。然后运行以下命令来安装它。

|

||||

|

||||

```

|

||||

$ npm install -g arch-wiki-man

|

||||

@ -61,13 +61,15 @@ $ sudo awman-update

|

||||

arch-wiki-md-repo has been successfully updated or reinstalled.

|

||||

```

|

||||

|

||||

awman-update 是一种更快更方便的更新方法。但是,你也可以通过运行以下命令重新安装arch-wiki-man 来获取更新。

|

||||

`awman-update` 是一种更快、更方便的更新方法。但是,你也可以通过运行以下命令重新安装 arch-wiki-man 来获取更新。

|

||||

|

||||

```

|

||||

$ yaourt -S arch-wiki-man

|

||||

```

|

||||

|

||||

or

|

||||

或

|

||||

|

||||

```

|

||||

$ packer -S arch-wiki-man

|

||||

```

|

||||

|

||||

@ -81,7 +83,7 @@ $ awman Search-Term

|

||||

|

||||

### 如何搜索多个匹配项?

|

||||

|

||||

如果希望列出包含`installation`字符串的所有结果的标题,运行以下格式的命令,如果输出有多个结果,那么你将会获得一个选择菜单来浏览每个项目。

|

||||

如果希望列出包含 “installation” 字符串的所有结果的标题,运行以下格式的命令,如果输出有多个结果,那么你将会获得一个选择菜单来浏览每个项目。

|

||||

|

||||

```

|

||||

$ awman installation

|

||||

@ -89,35 +91,39 @@ $ awman installation

|

||||

|

||||

![][8]

|

||||

|

||||

详细页面的截屏

|

||||

详细页面的截屏:

|

||||

|

||||

![][9]

|

||||

|

||||

### 在标题和描述中搜索给定的字符串

|

||||

|

||||

`-d` 或 `--desc-search` 选项允许用户在标题和描述中搜索给定的字符串。

|

||||

`-d` 或 `--desc-search` 选项允许用户在标题和描述中搜索给定的字符串。

|

||||

|

||||

```

|

||||

$ awman -d mirrors

|

||||

```

|

||||

|

||||

or

|

||||

或

|

||||

|

||||

```

|

||||

$ awman --desc-search mirrors

|

||||

? Select an article: (Use arrow keys)

|

||||

❯ [1/3] Mirrors: Related articles

|

||||

[2/3] DeveloperWiki-NewMirrors: Contents

|

||||

[3/3] Powerpill: Powerpill is a pac

|

||||

[2/3] DeveloperWiki-NewMirrors: Contents

|

||||

[3/3] Powerpill: Powerpill is a pac

|

||||

```

|

||||

|

||||

### 在内容中搜索给定的字符串

|

||||

|

||||

`-k` 或 `--apropos` 选项也允许用户在内容中搜索给定的字符串。但须注意,此选项会显著降低搜索速度,因为此选项会扫描整个 Wiki 页面的内容。

|

||||

`-k` 或 `--apropos` 选项也允许用户在内容中搜索给定的字符串。但须注意,此选项会显著降低搜索速度,因为此选项会扫描整个 Wiki 页面的内容。

|

||||

|

||||

```

|

||||

$ awman -k openjdk

|

||||

```

|

||||

|

||||

or

|

||||

或

|

||||

|

||||

```

|

||||

$ awman --apropos openjdk

|

||||

? Select an article: (Use arrow keys)

|

||||

❯ [1/26] Hadoop: Related articles

|

||||

@ -132,13 +138,15 @@ $ awman --apropos openjdk

|

||||

|

||||

### 在浏览器中打开搜索结果

|

||||

|

||||

`-w` 或 `--web` 选项允许用户在 Web 浏览器中打开搜索结果。

|

||||

`-w` 或 `--web` 选项允许用户在 Web 浏览器中打开搜索结果。

|

||||

|

||||

```

|

||||

$ awman -w AUR helper

|

||||

```

|

||||

|

||||

or

|

||||

或

|

||||

|

||||

```

|

||||

$ awman --web AUR helper

|

||||

```

|

||||

|

||||

@ -146,7 +154,7 @@ $ awman --web AUR helper

|

||||

|

||||

### 以其他语言搜索

|

||||

|

||||

`-w` 或 `--web` 选项允许用户在 Web 浏览器中打开搜索结果。想要查看支持的语言列表,请运行以下命令。

|

||||

想要查看支持的语言列表,请运行以下命令。

|

||||

|

||||

```

|

||||

$ awman --list-languages

|

||||

@ -196,7 +204,7 @@ via: https://www.2daygeek.com/arch-wiki-man-a-tool-to-browse-the-arch-wiki-pages

|

||||

作者:[Prakash Subramanian][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[Auk7F7](https://github.com/Auk7F7)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (MjSeven)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10695-1.html)

|

||||

[#]: subject: (Quickly Go Back To A Specific Parent Directory Using bd Command In Linux)

|

||||

[#]: via: (https://www.2daygeek.com/bd-quickly-go-back-to-a-specific-parent-directory-in-linux/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

@ -10,45 +10,43 @@

|

||||

在 Linux 中使用 bd 命令快速返回到特定的父目录

|

||||

======

|

||||

|

||||

<to 校正:我在 ubuntu 上似乎没有按照这个教程成功使用 bd 命令,难道我的姿势不对?>

|

||||

|

||||

两天前我们写了一篇关于 `autocd` 的文章,它是一个内置的 `shell` 变量,可以帮助我们在**[没有 `cd` 命令的情况下导航到目录中][1]**.

|

||||

两天前我们写了一篇关于 `autocd` 的文章,它是一个内置的 shell 变量,可以帮助我们在[没有 cd 命令的情况下导航到目录中][1]。

|

||||

|

||||

如果你想回到上一级目录,那么你需要输入 `cd ..`。

|

||||

|

||||

如果你想回到上两级目录,那么你需要输入 `cd ../..`。

|

||||

|

||||

这在 Linux 中是正常的,但如果你想从第九个目录回到第三个目录,那么使用 cd 命令是很糟糕的。

|

||||

这在 Linux 中是正常的,但如果你想从第九级目录回到第三级目录,那么使用 `cd` 命令是很糟糕的。

|

||||

|

||||

有什么解决方案呢?

|

||||

|

||||

是的,在 Linux 中有一个解决方案。我们可以使用 bd 命令来轻松应对这种情况。

|

||||

是的,在 Linux 中有一个解决方案。我们可以使用 `bd` 命令来轻松应对这种情况。

|

||||

|

||||

### 什么是 bd 命令?

|

||||

|

||||

bd 命令允许用户快速返回 Linux 中的父目录,而不是反复输入 `cd ../../..`。

|

||||

`bd` 命令允许用户快速返回 Linux 中的父目录,而不是反复输入 `cd ../../..`。

|

||||

|

||||

你可以列出给定目录的内容,而不用提供完整路径 `ls `bd Directory_Name``。它支持以下其它命令,如 ls、ln、echo、zip、tar 等。

|

||||

你可以列出给定目录的内容,而不用提供完整路径 ls `bd Directory_Name`。它支持以下其它命令,如 `ls`、`ln`、`echo`、`zip`、`tar` 等。

|

||||

|

||||

另外,它还允许我们执行 shell 文件而不用提供完整路径 `bd p`/shell_file.sh``。

|

||||

另外,它还允许我们执行 shell 文件而不用提供完整路径 bd p`/shell_file.sh`。

|

||||

|

||||

### 如何在 Linux 中安装 bd 命令?

|

||||

|

||||

除了 Debian/Ubuntu 之外,bd 没有官方发行包。因此,我们需要手动执行方法。

|

||||

除了 Debian/Ubuntu 之外,`bd` 没有官方发行包。因此,我们需要手动执行方法。

|

||||

|

||||

对于 **`Debian/Ubuntu`** 系统,使用 **[APT-GET 命令][2]**或**[APT 命令][3]**来安装 bd。

|

||||

对于 Debian/Ubuntu 系统,使用 [APT-GET 命令][2]或[APT 命令][3]来安装 `bd`。

|

||||

|

||||

```

|

||||

$ sudo apt install bd

|

||||

```

|

||||

|

||||

对于其它 Linux 发行版,使用 **[wget 命令][4]**下载 bd 可执行二进制文件。

|

||||

对于其它 Linux 发行版,使用 [wget 命令][4]下载 `bd` 可执行二进制文件。

|

||||

|

||||

```

|

||||

$ sudo wget --no-check-certificate -O /usr/local/bin/bd https://raw.github.com/vigneshwaranr/bd/master/bd

|

||||

```

|

||||

|

||||

设置 bd 二进制文件的可执行权限。

|

||||

设置 `bd` 二进制文件的可执行权限。

|

||||

|

||||

```

|

||||

$ sudo chmod +rx /usr/local/bin/bd

|

||||

@ -61,17 +59,19 @@ $ echo 'alias bd=". bd -si"' >> ~/.bashrc

|

||||

```

|

||||

|

||||

运行以下命令以使更改生效。

|

||||

|

||||

```

|

||||

$ source ~/.bashrc

|

||||

```

|

||||

|

||||

要启用自动完成,执行以下两个步骤。

|

||||

|

||||

```

|

||||

$ sudo wget -O /etc/bash_completion.d/bd https://raw.github.com/vigneshwaranr/bd/master/bash_completion.d/bd

|

||||

$ source /etc/bash_completion.d/bd

|

||||

```

|

||||

|

||||

我们已经在系统上成功安装并配置了 bd 实用程序,现在是时候测试一下了。

|

||||

我们已经在系统上成功安装并配置了 `bd` 实用程序,现在是时候测试一下了。

|

||||

|

||||

我将使用下面的目录路径进行测试。

|

||||

|

||||

@ -79,7 +79,7 @@ $ sudo source /etc/bash_completion.d/bd

|

||||

|

||||

```

|

||||

daygeek@Ubuntu18:/usr/share/icons/Adwaita/256x256/apps$ pwd

|

||||

或者

|

||||

或

|

||||

daygeek@Ubuntu18:/usr/share/icons/Adwaita/256x256/apps$ dirs

|

||||

|

||||

/usr/share/icons/Adwaita/256x256/apps

|

||||

@ -94,19 +94,20 @@ daygeek@Ubuntu18:/usr/share/icons$

|

||||

```

|

||||

|

||||

甚至,你不需要输入完整的目录名称,也可以输入几个字母。

|

||||

|

||||

```

|

||||

daygeek@Ubuntu18:/usr/share/icons/Adwaita/256x256/apps$ bd i

|

||||

/usr/share/icons/

|

||||

daygeek@Ubuntu18:/usr/share/icons$

|

||||

```

|

||||

|

||||

`注意:` 如果层次结构中有多个同名的目录,bd 会将你带到最近的目录。(不考虑直接的父目录)

|

||||

注意:如果层次结构中有多个同名的目录,`bd` 会将你带到最近的目录。(不考虑直接的父目录)
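The lookup described in the note can be pictured as walking up the path one component at a time and stopping at the first ancestor whose name starts with the given letters. The sketch below is an illustration under that assumption. `nearest_parent` is a hypothetical function, it matches case-sensitively, and the real `bd` script may be implemented differently.

```shell
# Hypothetical re-implementation for illustration; not the actual bd script.
# Prints the closest ancestor of an absolute path whose name starts with the prefix.
nearest_parent() {
    path=$1 prefix=$2
    case $path in /*) ;; *) return 1 ;; esac    # only absolute paths are handled
    path=${path%/}                              # drop a trailing slash, if any
    path=${path%/*}                             # skip the current directory itself
    while [ -n "$path" ]; do
        name=${path##*/}                        # last component of the remaining path
        case $name in
            "$prefix"*) printf '%s/\n' "$path"; return 0 ;;
        esac
        path=${path%/*}                         # move one level up
    done
    return 1                                    # no ancestor matched
}

nearest_parent /usr/share/icons/Adwaita/256x256/apps i    # prints /usr/share/icons/
```

Because the walk starts at the closest ancestor, a duplicate name further up the tree is never reached.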

|

||||

|

||||

如果要列出给定的目录内容,使用以下格式。它会打印出 `/usr/share/icons/` 的内容。

|

||||

|

||||

```

|

||||

$ ls -lh `bd icons`

|

||||

or

|

||||

或

|

||||

daygeek@Ubuntu18:/usr/share/icons/Adwaita/256x256/apps$ ls -lh `bd i`

|

||||

total 64K

|

||||

drwxr-xr-x 12 root root 4.0K Jul 25 2018 Adwaita

|

||||

@ -132,7 +133,7 @@ drwxr-xr-x 3 root root 4.0K Jul 25 2018 whiteglass

|

||||

|

||||

```

|

||||

$ `bd i`/users-list.sh

|

||||

or

|

||||

或

|

||||

daygeek@Ubuntu18:/usr/share/icons/Adwaita/256x256/apps$ `bd icon`/users-list.sh

|

||||

daygeek

|

||||

thanu

|

||||

@ -151,7 +152,7 @@ user3

|

||||

|

||||

```

|

||||

$ cd `bd i`/gnome

|

||||

or

|

||||

或

|

||||

daygeek@Ubuntu18:/usr/share/icons/Adwaita/256x256/apps$ cd `bd icon`/gnome

|

||||

daygeek@Ubuntu18:/usr/share/icons/gnome$

|

||||

```

|

||||

@ -167,7 +168,7 @@ drwxr-xr-x 2 root root 4096 Mar 16 05:44 /usr/share/icons//2g

|

||||

|

||||

本教程允许你快速返回到特定的父目录,但没有快速前进的选项。

|

||||

|

||||

我们有另一个解决方案,很快就会提出新的解决方案,请跟我们保持联系。

|

||||

我们有另一个解决方案,很快就会提出,请保持关注。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -176,7 +177,7 @@ via: https://www.2daygeek.com/bd-quickly-go-back-to-a-specific-parent-directory-

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,115 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (A Look Back at the History of Firefox)

|

||||

[#]: via: (https://itsfoss.com/history-of-firefox)

|

||||

[#]: author: (John Paul https://itsfoss.com/author/john/)

|

||||

|

||||

A Look Back at the History of Firefox

|

||||

======

|

||||

|

||||

The Firefox browser has been a mainstay of the open-source community for a long time. For many years it was the default web browser on (almost) all Linux distros and the lone obstacle to Microsoft’s total dominance of the internet. This browser has roots that go back all the way to the very early days of the internet. Since this week marks the 30th anniversary of the World Wide Web, there is no better time to talk about how Firefox became the browser we all know and love.

|

||||

|

||||

### Early Roots

|

||||

|

||||

In the early 1990s, a young man named [Marc Andreessen][1] was working on his bachelor’s degree in computer science at the University of Illinois. While there, he started working for the [National Center for Supercomputing Applications][2]. During that time [Sir Tim Berners-Lee][3] released an early form of the web standards that we know today. Marc [was introduced][4] to a very primitive web browser named [ViolaWWW][5]. Seeing that the technology had potential, Marc and Eric Bina created an easy-to-install browser for Unix named [NCSA Mosaic][6]. The first alpha was released in June 1993. By September, there were ports to Windows and Macintosh. Mosaic became very popular because it was easier to use than other browsing software.

|

||||

|

||||

In 1994, Marc graduated and moved to California. He was approached by Jim Clark, who had made his money selling computer hardware and software. Clark had used Mosaic and saw the financial possibilities of the internet. Clark recruited Marc and Eric to start an internet software company. The company was originally named Mosaic Communications Corporation; however, the University of Illinois did not like [their use of the name Mosaic][7]. As a result, the company name was changed to Netscape Communications Corporation.

|

||||

|

||||

The company’s first project was an online gaming network for the Nintendo 64, but that fell through. The first product they released was a web browser named Mosaic Netscape 0.9, subsequently renamed Netscape Navigator. Internally, the browser project was codenamed mozilla, which stood for “Mosaic killer”. An employee created a cartoon of a [Godzilla-like creature][8]. They wanted to take out the competition.

|

||||

|

||||

![Early Firefox Mascot][9]Early Mozilla mascot at Netscape

|

||||

|

||||

They succeeded mightily. At the time, one of the biggest advantages that Netscape had was the fact that its browser looked and functioned the same on every operating system. Netscape described this as giving everyone a level playing field.

|

||||

|

||||

As usage of Netscape Navigator increased, the market share of NCSA Mosaic cratered. In 1995, Netscape went public. [On the first day][10], the stock started at $28, jumped to $75 and ended the day at $58. Netscape was without any rivals.

|

||||

|

||||

But that didn’t last for long. In the summer of 1995, Microsoft released Internet Explorer 1.0, which was based on Spyglass Mosaic, itself derived from NCSA Mosaic. The [browser wars][11] had begun.

|

||||

|

||||

Over the next few years, Netscape and Microsoft competed for dominance of the internet. Each added features to compete with the other. Unfortunately, Internet Explorer had an advantage because it came bundled with Windows. On top of that, Microsoft had more programmers and money to throw at the problem. Toward the end of 1997, Netscape started to run into financial problems.

|

||||

|

||||

### Going Open Source

|

||||

|

||||

![Mozilla Firefox][12]

|

||||

|

||||

In January 1998, Netscape open-sourced the code of the Netscape Communicator 4.0 suite. The [goal][13] was to “harness the creative power of thousands of programmers on the Internet by incorporating their best enhancements into future versions of Netscape’s software. This strategy is designed to accelerate development and free distribution by Netscape of future high-quality versions of Netscape Communicator to business customers and individuals.”

|

||||

|

||||

The project was to be shepherded by the newly created Mozilla Organization. However, the code from Netscape Communicator 4.0 proved to be very difficult to work with due to its size and complexity. On top of that, several parts could not be open sourced because of licensing agreements with third parties. In the end, it was decided to rewrite the browser from scratch using the new [Gecko][14] rendering engine.

|

||||

|

||||

In November 1998, Netscape was acquired by AOL in a [stock swap valued at $4.2 billion][15].

|

||||

|

||||

Starting from scratch was a major undertaking. Mozilla Firefox (initially nicknamed Phoenix) was created in June 2002 and it worked on multiple operating systems, such as Linux, Mac OS, Microsoft Windows, and Solaris.

|

||||

|

||||

The following year, AOL announced that they would be shutting down browser development. The Mozilla Foundation was subsequently created to handle the Mozilla trademarks and handle the financing of the project. Initially, the Mozilla Foundation received $2 million in donations from AOL, IBM, Sun Microsystems, and Red Hat.

|

||||

|

||||

In March 2003, Mozilla [announced plans][16] to separate the suite into stand-alone applications because of creeping software bloat. The stand-alone browser was initially named Phoenix. However, the name was changed due to a trademark dispute with the BIOS manufacturer Phoenix Technologies, which had a BIOS-based browser named Phoenix FirstWare Connect. Phoenix was renamed Firebird, only to run afoul of the Firebird database server people. The browser was once more renamed, this time to the Firefox that we all know.

|

||||

|

||||

At the time, [Mozilla said][17], “We’ve learned a lot about choosing names in the past year (more than we would have liked to). We have been very careful in researching the name to ensure that we will not have any problems down the road. We have begun the process of registering our new trademark with the US Patent and Trademark office.”

|

||||

|

||||

![Mozilla Firefox 1.0][18]Firefox 1.0 : [Picture Credit][19]

|

||||

|

||||

The first official release of Firefox was [0.8][20] on February 8, 2004. Version 1.0 followed on November 9, 2004. Versions 2.0 and 3.0 followed in October 2006 and June 2008 respectively. Each major release brought with it many new features and improvements. In many respects, Firefox pulled ahead of Internet Explorer in terms of features and technology, but IE still had more users.

|

||||

|

||||

That changed with the release of Google’s Chrome browser. In the months before the release of Chrome in September 2008, Firefox accounted for 30% of all [browser usage][21] and IE had over 60%. According to StatCounter’s [January 2019 report][22], Firefox accounts for less than 10% of all browser usage, while Chrome has over 70%.

|

||||

|

||||

Fun Fact

|

||||

|

||||

Contrary to popular belief, the logo of Firefox doesn’t feature a fox. It’s actually a [Red Panda][23]. In Chinese, “fire fox” is another name for the red panda.

|

||||

|

||||

### The Future

|

||||

|

||||

As noted above, Firefox currently has the lowest market share in its recent history. There was a time when a bunch of browsers were based on Firefox, such as the early version of the [Flock browser][24]. Now most browsers are based on Google technology, such as Opera and Vivaldi. Even Microsoft is giving up on browser development and [joining the Chromium bandwagon][25].

|

||||

|

||||

This might seem like quite a downer after the heights of the early Netscape years. But don’t forget what Firefox has accomplished. A group of developers from around the world has created the second most used browser in the world. They clawed 30% market share away from Microsoft’s monopoly; they can do it again. After all, they have us, the open source community, behind them.

|

||||

|

||||

The fight against the monopoly is one of several reasons [why I use Firefox][26]. Mozilla regained some of its lost market share with the revamped release of [Firefox Quantum][27], and I believe that it will continue on its upward path.

|

||||

|

||||

What event from Linux and open source history would you like us to write about next? Please let us know in the comments below.

|

||||

|

||||

If you found this article interesting, please take a minute to share it on social media, Hacker News or [Reddit][28].

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/history-of-firefox

|

||||

|

||||

作者:[John Paul][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/john/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://en.wikipedia.org/wiki/Marc_Andreessen

|

||||

[2]: https://en.wikipedia.org/wiki/National_Center_for_Supercomputing_Applications

|

||||

[3]: https://en.wikipedia.org/wiki/Tim_Berners-Lee

|

||||

[4]: https://www.w3.org/DesignIssues/TimBook-old/History.html

|

||||

[5]: http://viola.org/

|

||||

[6]: https://en.wikipedia.org/wiki/Mosaic_(web_browser)

|

||||

[7]: http://www.computinghistory.org.uk/det/1789/Marc-Andreessen/

|

||||

[8]: http://www.davetitus.com/mozilla/

|

||||

[9]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/03/Mozilla_boxing.jpg?ssl=1

|

||||

[10]: https://www.marketwatch.com/story/netscape-ipo-ignited-the-boom-taught-some-hard-lessons-20058518550

|

||||

[11]: https://en.wikipedia.org/wiki/Browser_wars

|

||||

[12]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/03/mozilla-firefox.jpg?resize=800%2C450&ssl=1

|

||||

[13]: https://web.archive.org/web/20021001071727/wp.netscape.com/newsref/pr/newsrelease558.html

|

||||

[14]: https://en.wikipedia.org/wiki/Gecko_(software)

|

||||

[15]: http://news.cnet.com/2100-1023-218360.html

|

||||

[16]: https://web.archive.org/web/20050618000315/http://www.mozilla.org/roadmap/roadmap-02-Apr-2003.html

|

||||

[17]: https://www-archive.mozilla.org/projects/firefox/firefox-name-faq.html

|

||||

[18]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/03/firefox-1.jpg?ssl=1

|

||||

[19]: https://www.iceni.com/blog/firefox-1-0-introduced-2004/

|

||||

[20]: https://en.wikipedia.org/wiki/Firefox_version_history

|

||||

[21]: https://en.wikipedia.org/wiki/Usage_share_of_web_browsers

|

||||

[22]: http://gs.statcounter.com/browser-market-share/desktop/worldwide/#monthly-201901-201901-bar

|

||||

[23]: https://en.wikipedia.org/wiki/Red_panda

|

||||

[24]: https://en.wikipedia.org/wiki/Flock_(web_browser)

|

||||

[25]: https://www.windowscentral.com/microsoft-building-chromium-powered-web-browser-windows-10

|

||||

[26]: https://itsfoss.com/why-firefox/

|

||||

[27]: https://itsfoss.com/firefox-quantum-ubuntu/

|

||||

[28]: http://reddit.com/r/linuxusersgroup

|

||||

[29]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/03/mozilla-firefox.jpg?fit=800%2C450&ssl=1

|

||||

@ -1,5 +1,5 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: translator: (zgj1024 )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

|

||||

@ -1,290 +0,0 @@

|

||||

Moelf translating

|

||||

Myths about /dev/urandom

|

||||

======

|

||||

|

||||

There are a few things about /dev/urandom and /dev/random that are repeated again and again. Still they are false.

|

||||

|

||||

I'm mostly talking about reasonably recent Linux systems, not other UNIX-like systems.

|

||||

|

||||

### /dev/urandom is insecure. Always use /dev/random for cryptographic purposes.

|

||||

|

||||

Fact: /dev/urandom is the preferred source of cryptographic randomness on UNIX-like systems.

|

||||

|

||||

### /dev/urandom is a pseudo random number generator, a PRNG, while /dev/random is a “true” random number generator.

|

||||

|

||||

Fact: Both /dev/urandom and /dev/random are using the exact same CSPRNG (a cryptographically secure pseudorandom number generator). They only differ in very few ways that have nothing to do with “true” randomness.

|

||||

|

||||

### /dev/random is unambiguously the better choice for cryptography. Even if /dev/urandom were comparably secure, there's no reason to choose the latter.

|

||||

|

||||

Fact: /dev/random has a very nasty problem: it blocks.

|

||||

|

||||

### But that's good! /dev/random gives out exactly as much randomness as it has entropy in its pool. /dev/urandom will give you insecure random numbers, even though it has long run out of entropy.

|

||||

|

||||

Fact: No. Even disregarding issues like availability and subsequent manipulation by users, the issue of entropy “running low” is a straw man. About 256 bits of entropy are enough to get computationally secure numbers for a long, long time.

|

||||

|

||||

And the fun only starts here: how does /dev/random know how much entropy there is available to give out? Stay tuned!

|

||||

|

||||

### But cryptographers always talk about constant re-seeding. Doesn't that contradict your last point?

|

||||

|

||||

Fact: You got me! Kind of. It is true, the random number generator is constantly re-seeded using whatever entropy the system can lay its hands on. But that has (partly) other reasons.

|

||||

|

||||

Look, I don't claim that injecting entropy is bad. It's good. I just claim that it's bad to block when the entropy estimate is low.

|

||||

|

||||

### That's all good and nice, but even the man page for /dev/(u)random contradicts you! Does anyone who knows about this stuff actually agree with you?

|

||||

|

||||

Fact: No, it really doesn't. It seems to imply that /dev/urandom is insecure for cryptographic use, unless you really understand all that cryptographic jargon.

|

||||

|

||||

The man page does recommend the use of /dev/random in some cases (it doesn't hurt, in my opinion, but is not strictly necessary), but it also recommends /dev/urandom as the device to use for “normal” cryptographic use.

|

||||

|

||||

And while appeal to authority is usually nothing to be proud of, in cryptographic issues you're generally right to be careful and try to get the opinion of a domain expert.

|

||||

|

||||

And yes, quite a few experts share my view that /dev/urandom is the go-to solution for your random number needs in a cryptography context on UNIX-like systems. Obviously, their opinions influenced mine, not the other way around.

|

||||

|

||||

Hard to believe, right? I must certainly be wrong! Well, read on and let me try to convince you.

|

||||

|

||||

I tried to keep it out, but I fear there are two preliminaries to be taken care of, before we can really tackle all those points.

|

||||

|

||||

Namely, what is randomness, or better: what kind of randomness am I talking about here?

|

||||

|

||||

And, even more important, I'm really not being condescending. I have written this document to have something to point to when this discussion comes up again: more than 140 characters, without repeating myself again and again, and with the chance to hone the writing and the arguments themselves, benefiting many discussions in many venues.

|

||||

|

||||

And I'm certainly willing to hear differing opinions. I'm just saying that it won't be enough to state that /dev/urandom is bad. You need to identify the points you're disagreeing with and engage them.

|

||||

|

||||

### You're saying I'm stupid!

|

||||

|

||||

Emphatically no!

|

||||

|

||||

Actually, I used to believe that /dev/urandom was insecure myself, a few years ago. And it's something you and I almost had to believe, because all those highly respected people on Usenet, in web forums and today on Twitter told us so. Even the man page seems to say so. Who were we to dismiss their convincing argument about “entropy running low”?

|

||||

|

||||

This misconception isn't so rampant because people are stupid; it is rampant because, with a little knowledge about cryptography (namely some vague idea of what entropy is), it's very easy to be convinced of it. Intuition almost forces us there. Unfortunately, intuition is often wrong in cryptography. So it is here.

|

||||

|

||||

### True randomness

|

||||

|

||||

What does it mean for random numbers to be “truly random”?

|

||||

|

||||

I don't want to dive into that issue too deeply, because it quickly gets philosophical. Discussions have been known to unravel fast, because everyone can wax lyrical about their favorite model of randomness without paying attention to anyone else, or even making themselves understood.

|

||||

|

||||

I believe that the “gold standard” for “true randomness” is quantum effects. Observe a photon pass through a semi-transparent mirror. Or not. Observe some radioactive material emit alpha particles. It's the best idea we have when it comes to randomness in the world. Other people might reasonably believe that those effects aren't truly random. Or even that there is no randomness in the world at all. Let a million flowers bloom.

|

||||

|

||||

Cryptographers often circumvent this philosophical debate by disregarding what it means for randomness to be “true”. They care about unpredictability. As long as nobody can get any information about the next random number, we're fine. And when you're talking about random numbers as a prerequisite in using cryptography, that's what you should aim for, in my opinion.

|

||||

|

||||

Anyway, I don't care much about those “philosophically secure” random numbers, as I like to think of your “true” random numbers.

|

||||

|

||||

### Two kinds of security, one that matters

|

||||

|

||||

But let's assume you've obtained those “true” random numbers. What are you going to do with them?

|

||||

|

||||

You print them out, frame them and hang them on your living-room wall, to revel in the beauty of a quantum universe? That's great, and I certainly understand.

|

||||

|

||||

Wait, what? You're using them? For cryptographic purposes? Well, that spoils everything, because now things get a bit ugly.

|

||||

|

||||

You see, your truly random, quantum-effect-blessed random numbers are put into some less respectable, real-world-tarnished algorithms.

|

||||

|

||||

Because almost all of the cryptographic algorithms we use do not hold up to **information-theoretic security**. They can “only” offer **computational security**. The two exceptions that come to my mind are Shamir's Secret Sharing and the One-time pad. And while the first one may be a valid counterpoint (if you actually intend to use it), the latter is utterly impractical.

|

||||

|

||||

But all those algorithms you know about, AES, RSA, Diffie-Hellman, Elliptic curves, and all those crypto packages you're using, OpenSSL, GnuTLS, Keyczar, your operating system's crypto API, these are only computationally secure.

|

||||

|

||||

What's the difference? While information-theoretically secure algorithms are secure, period, those other algorithms cannot guarantee security against an adversary with unlimited computational power who's trying all possibilities for keys. We still use them because it would take all the computers in the world taken together longer than the universe has existed, so far. That's the level of “insecurity” we're talking about here.

|

||||

|

||||

Unless some clever guy breaks the algorithm itself, using much less computational power. Even computational power achievable today. That's the big prize every cryptanalyst dreams about: breaking AES itself, breaking RSA itself and so on.

|

||||

|

||||

So now we're at the point where you don't trust the inner building blocks of the random number generator, insisting on “true randomness” instead of “pseudo randomness”. But then you're using those “true” random numbers in algorithms that you so despise that you didn't want them near your random number generator in the first place!

|

||||

|

||||

Truth is, when state-of-the-art hash algorithms are broken, or when state-of-the-art block ciphers are broken, it doesn't matter that you get “philosophically insecure” random numbers because of them. You've got nothing left to securely use them for anyway.

|

||||

|

||||

So just use those computationally-secure random numbers for your computationally-secure algorithms. In other words: use /dev/urandom.

|

||||

|

||||

### Structure of Linux's random number generator

|

||||

|

||||

#### An incorrect view

|

||||

|

||||

Chances are, your idea of the kernel's random number generator is something similar to this:

|

||||

|

||||

![image: mythical structure of the kernel's random number generator][1]

|

||||

|

||||

“True randomness”, albeit possibly skewed and biased, enters the system and its entropy is precisely counted and immediately added to an internal entropy counter. After de-biasing and whitening, it enters the kernel's entropy pool, from which both /dev/random and /dev/urandom get their random numbers.

|

||||

|

||||

The “true” random number generator, /dev/random, takes those random numbers straight out of the pool, if the entropy count is sufficient for the number of requested numbers, decreasing the entropy counter, of course. If not, it blocks until new entropy has entered the system.

|

||||

|

||||

The important thing in this narrative is that /dev/random basically yields the numbers that have been input by those randomness sources outside, after only the necessary whitening. Nothing more, just pure randomness.

|

||||

|

||||

/dev/urandom, so the story goes, is doing the same thing. Except when there isn't sufficient entropy in the system. In contrast to /dev/random, it does not block, but gets “low quality random” numbers from a pseudorandom number generator (conceded, a cryptographically secure one) that is running alongside the rest of the random number machinery. This CSPRNG is just seeded once (or maybe every now and then, it doesn't matter) with “true randomness” from the randomness pool, but you can't really trust it.

|

||||

|

||||

In this view, which seems to be in a lot of people's minds when they're talking about random numbers on Linux, avoiding /dev/urandom is plausible.

|

||||

|

||||

Either there is enough entropy left, in which case you get the same numbers you'd have gotten from /dev/random, or there isn't, in which case you get those low-quality random numbers from a CSPRNG that almost never saw high-entropy input.

|

||||

|

||||

Devilish, right? Unfortunately, also utterly wrong. In reality, the internal structure of the random number generator looks like this.

|

||||

|

||||

#### A better simplification

|

||||

|

||||

##### Before Linux 4.8

|

||||

|

||||

![image: actual structure of the kernel's random number generator before Linux 4.8][2]

This is a pretty rough simplification. In fact, there isn't just one, but three pools filled with entropy. One primary pool, and one for /dev/random and /dev/urandom each, feeding off the primary pool. Those three pools all have their own entropy counts, but the counts of the secondary pools (for /dev/random and /dev/urandom) are mostly close to zero, and “fresh” entropy flows from the primary pool when needed, decreasing its entropy count. Also there is a lot of mixing and re-injecting outputs back into the system going on. All of this is far more detail than is necessary for this document.

|

||||

|

||||

See the big difference? The CSPRNG is not running alongside the random number generator, filling in for those times when /dev/urandom wants to output something, but has nothing good to output. The CSPRNG is an integral part of the random number generation process. There is no /dev/random handing out “good and pure” random numbers straight from the whitener. Every randomness source's input is thoroughly mixed and hashed inside the CSPRNG, before it emerges as random numbers, either via /dev/urandom or /dev/random.

|

||||

|

||||

Another important difference is that there is no entropy counting going on here, but estimation. The amount of entropy some source is giving you isn't something obvious that you just get, along with the data. It has to be estimated. Please note that when your estimate is too optimistic, the dearly held property of /dev/random, that it's only giving out as many random numbers as available entropy allows, is gone. Unfortunately, it's hard to estimate the amount of entropy.

|

||||

|

||||

The Linux kernel uses only the arrival times of events to estimate their entropy. It does that by interpolating polynomials of those arrival times, to calculate “how surprising” the actual arrival time was, according to the model. Whether this polynomial interpolation model is the best way to estimate entropy is an interesting question. There is also the problem that internal hardware restrictions might influence those arrival times. The sampling rates of all kinds of hardware components may also play a role, because it directly influences the values and the granularity of those event arrival times.
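To make the idea of “how surprising was this arrival time” a little more concrete, here is a toy estimator based on first-, second- and third-order differences of event timestamps. It is only a sketch of the general approach, not the kernel's code: the names `TimingState` and `estimate_bits` and the cap of 11 bits per event are assumptions made for illustration.

```python
from dataclasses import dataclass

MAX_BITS_PER_EVENT = 11  # illustrative cap; an assumption of this sketch


@dataclass
class TimingState:
    """Remembers the previous timestamp and the previous differences."""
    last_time: int = 0
    last_delta: int = 0
    last_delta2: int = 0


def estimate_bits(state: TimingState, now: int) -> int:
    """Credit few bits when the arrival time was well predicted by the
    previous first-, second- or third-order differences."""
    delta = now - state.last_time
    delta2 = delta - state.last_delta
    delta3 = delta2 - state.last_delta2
    state.last_time, state.last_delta, state.last_delta2 = now, delta, delta2
    surprise = min(abs(delta), abs(delta2), abs(delta3))
    # bit_length() of (surprise >> 1) is a rough log2 of the smallest difference
    return min((surprise >> 1).bit_length(), MAX_BITS_PER_EVENT)


state = TimingState()
periodic = [estimate_bits(state, t) for t in (100, 200, 300, 400)]
print(periodic)  # perfectly regular events earn no credit after the first one
```

Note what this does and does not measure: a perfectly regular event stream is credited with nothing, but an irregular-looking stream that an attacker can still predict would be credited generously. That is why the result is an estimate and not a count.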

|

||||

|

||||

In the end, to the best of our knowledge, the kernel's entropy estimate is pretty good. Which means it's conservative. People argue about how good it really is, but that issue is far above my head. Still, if you insist on never handing out random numbers that are not “backed” by sufficient entropy, you might be nervous here. I sleep soundly because I don't care about the entropy estimate.

|

||||

|

||||

So to make one thing crystal clear: both /dev/random and /dev/urandom are fed by the same CSPRNG. Only the behavior when their respective pool runs out of entropy, according to some estimate, differs: /dev/random blocks, while /dev/urandom does not.

|

||||

|

||||

##### From Linux 4.8 onward

|

||||

|

||||

In Linux 4.8 the equivalency between /dev/urandom and /dev/random was given up. Now /dev/urandom output does not come from an entropy pool, but directly from a CSPRNG.

|

||||

|

||||

![image: actual structure of the kernel's random number generator from Linux 4.8 onward][3]

|

||||

|

||||

We will see shortly why that is not a security problem.

|

||||

|

||||

### What's wrong with blocking?

|

||||

|

||||

Have you ever waited for /dev/random to give you more random numbers? Generating a PGP key inside a virtual machine maybe? Connecting to a web server that's waiting for more random numbers to create an ephemeral session key?

|

||||

|

||||

That's the problem. It inherently runs counter to availability. So your system is not working. It's not doing what you built it to do. Obviously, that's bad. You wouldn't have built it if you didn't need it.

|

||||

|

||||

I'm working on safety-related systems in factory automation. Can you guess what the main reason for failures of safety systems is? Manipulation. Simple as that. Something about the safety measure bugged the worker. It took too much time, was too inconvenient, whatever. People are very resourceful when it comes to finding “unofficial solutions”.

|

||||

|

||||

But the problem runs even deeper: people don't like to be stopped in their ways. They will devise workarounds, concoct bizarre machinations to just get it running. People who don't know anything about cryptography. Normal people.

|

||||

|

||||

Why not patch out the call to `random()`? Why not have some guy in a web forum tell you how to use some strange ioctl to increase the entropy counter? Why not switch off SSL altogether?

|

||||

|

||||

In the end you just educate your users to do foolish things that compromise your system's security without you ever knowing about it.

|

||||

|

||||

It's easy to disregard availability, usability or other nice properties. Security trumps everything, right? So better be inconvenient, unavailable or unusable than feign security.

|

||||

|

||||

But that's a false dichotomy. Blocking is not necessary for security. As we saw, /dev/urandom gives you the same kind of random numbers as /dev/random, straight out of a CSPRNG. Use it!
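In application code, “use it” usually means asking the standard library for bytes from the operating system's CSPRNG. A minimal Python sketch follows; the function names are hypothetical, and the 32-byte sizes are just example choices.

```python
import os
import secrets


def new_session_key(num_bytes: int = 32) -> bytes:
    """Return num_bytes from the operating system's CSPRNG."""
    return os.urandom(num_bytes)


def new_token() -> str:
    """Return a URL-safe text token; secrets draws from the same OS source."""
    return secrets.token_urlsafe(32)


def read_device(num_bytes: int = 32, path: str = "/dev/urandom") -> bytes:
    """Read the character device directly, much as a shell script would."""
    with open(path, "rb") as dev:
        return dev.read(num_bytes)


key = new_session_key()
print(len(key), "bytes of key material;", "token:", new_token())
```

None of these calls wait for an entropy estimate to recover, so a web server creating session keys this way does not stall under load.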

|

||||

|

||||

### The CSPRNGs are alright

|

||||

|

||||

But now everything sounds really bleak. If even the high-quality random numbers from /dev/random are coming out of a CSPRNG, how can we use them for high-security purposes?

|

||||

|

||||

It turns out that “looking random” is the basic requirement for a lot of our cryptographic building blocks. If you take the output of a cryptographic hash, it has to be indistinguishable from a random string so that cryptographers will accept it. If you take a block cipher, its output (without knowing the key) must also be indistinguishable from random data.

|

||||

|

||||

If anyone could gain an advantage over brute force breaking of cryptographic building blocks, using some perceived weakness of those CSPRNGs over “true” randomness, then it's the same old story: you don't have anything left. Block ciphers, hashes, everything is based on the same mathematical foundation as CSPRNGs. So don't be afraid.

|

||||

|

||||

### What about entropy running low?

|

||||

|

||||

It doesn't matter.

|

||||

|

||||

The underlying cryptographic building blocks are designed such that an attacker cannot predict the outcome, as long as there was enough randomness (a.k.a. entropy) in the beginning. A usual lower limit for “enough” may be 256 bits. No more.
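As an illustration of why 256 bits can go a long way, here is a toy expander that stretches one 32-byte seed into as many output bytes as you like by hashing the seed together with a counter. This is a sketch, not the construction the kernel uses, and the function name `expand` is made up; real systems rely on vetted designs. The security argument is the one from the text: predicting the output without the seed would mean breaking the hash.

```python
import hashlib
import os


def expand(seed: bytes, num_bytes: int) -> bytes:
    """Stretch a 32-byte seed into num_bytes by hashing seed || counter."""
    if len(seed) != 32:
        raise ValueError("expected a 256-bit (32-byte) seed")
    out = bytearray()
    counter = 0
    while len(out) < num_bytes:
        # Each block depends on the secret seed and a public counter.
        out += hashlib.sha256(seed + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:num_bytes])


seed = os.urandom(32)               # 256 bits of starting randomness
stream = expand(seed, 1_000_000)    # a megabyte of output from that one seed
print(len(stream))
```

The output is fully determined by the seed, which is exactly the point: the unpredictability comes from the 256 secret bits at the start, not from a steady supply of new entropy.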

|

||||

|

||||

Considering that we were pretty hand-wavey about the term “entropy” in the first place, it feels right. As we saw, the kernel's random number generator cannot even precisely know the amount of entropy entering the system. Only an estimate. And whether the model that's the basis for the estimate is good enough is pretty unclear, too.

|

||||

|

||||

### Re-seeding

|

||||

|

||||

But if entropy is so unimportant, why is fresh entropy constantly being injected into the random number generator?

|

||||

|

||||

djb [remarked][4] that more entropy actually can hurt.

|

||||

|

||||

First, it cannot hurt. If you've got more randomness just lying around, by all means use it!

|

||||

|

||||

There is another reason why re-seeding the random number generator every now and then is important:

|

||||

|

||||

Imagine an attacker knows everything about your random number generator's internal state. That's the most severe security compromise you can imagine: the attacker has full access to the system.

|

||||

|

||||

You've totally lost now, because the attacker can compute all future outputs from this point on.

|

||||

|

||||

But over time, with more and more fresh entropy being mixed into it, the internal state gets more and more random again. So such a random number generator's design is kind of self-healing.

|

||||

|

||||

But this is injecting entropy into the generator's internal state; it has nothing to do with blocking its output.
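The self-healing idea can be sketched with a toy generator whose state is a hash value. Everything here (the class name `ToyGenerator`, the labels mixed into the hash) is invented for illustration and is not how the kernel is implemented. Reseeding changes the internal state, while reading never waits for anything.

```python
import hashlib
import os


class ToyGenerator:
    """Illustrative only; not the kernel's design and not for production use."""

    def __init__(self, seed: bytes) -> None:
        self._state = hashlib.sha256(b"init" + seed).digest()

    def reseed(self, fresh: bytes) -> None:
        """Mix fresh input into the state; knowing only the old state is
        no longer enough to compute the new one."""
        self._state = hashlib.sha256(b"reseed" + self._state + fresh).digest()

    def read(self, num_bytes: int) -> bytes:
        """Produce output and move the state forward; never blocks."""
        out = bytearray()
        while len(out) < num_bytes:
            out += hashlib.sha256(b"out" + self._state).digest()
            self._state = hashlib.sha256(b"next" + self._state).digest()
        return bytes(out[:num_bytes])


seed = os.urandom(32)
victim = ToyGenerator(seed)
attacker_copy = ToyGenerator(seed)         # the attacker learned the full state
assert victim.read(16) == attacker_copy.read(16)

victim.reseed(os.urandom(32))              # fresh entropy the attacker did not see
assert victim.read(16) != attacker_copy.read(16)
```

After the reseed, the attacker's copy no longer tracks the victim, provided the fresh input really was unknown to the attacker.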

|

||||

|

||||

### The random and urandom man page

|

||||

|

||||

The man page for /dev/random and /dev/urandom is pretty effective when it comes to instilling fear into the gullible programmer's mind:

|

||||

|

||||

> A read from the /dev/urandom device will not block waiting for more entropy. As a result, if there is not sufficient entropy in the entropy pool, the returned values are theoretically vulnerable to a cryptographic attack on the algorithms used by the driver. Knowledge of how to do this is not available in the current unclassified literature, but it is theoretically possible that such an attack may exist. If this is a concern in your application, use /dev/random instead.

|

||||

|

||||

Such an attack is not known in “unclassified literature”, but the NSA certainly has one in store, right? And if you're really concerned about this (you should!), please use /dev/random, and all your problems are solved.

|

||||

|

||||

The truth is, while there may be such an attack available to secret services, evil hackers or the Bogeyman, it's not rational to take it as a given.

|

||||

|

||||

And even if you need that peace of mind, let me tell you a secret: no practical attacks on AES, SHA-3 or other solid ciphers and hashes are known in the “unclassified” literature, either. Are you going to stop using those, as well? Of course not!

|

||||

|

||||

Now the fun part: “use /dev/random instead”. While /dev/urandom does not block, its random number output comes from the very same CSPRNG as /dev/random's.

|

||||

|

||||

If you really need information-theoretically secure random numbers (you don't!), and that's about the only reason why the entropy of the CSPRNG's input matters, you can't use /dev/random, either!

|

||||

|

||||

The man page is silly, that's all. At least it tries to redeem itself with this:

|

||||

|

||||

> If you are unsure about whether you should use /dev/random or /dev/urandom, then probably you want to use the latter. As a general rule, /dev/urandom should be used for everything except long-lived GPG/SSL/SSH keys.

|

||||

|

||||

Fine. I think it's unnecessary, but if you want to use /dev/random for your “long-lived keys”, by all means, do so! You'll be waiting a few seconds typing stuff on your keyboard, that's no problem.

|

||||

|

||||

But please don't make connections to a mail server hang forever, just because you “wanted to be safe”.

|

||||

|

||||

### Orthodoxy

|

||||

|

||||

The view espoused here is certainly a tiny minority's opinion on the Internet. But ask a real cryptographer, and you'll be hard pressed to find someone who sympathizes much with that blocking /dev/random.

|

||||

|

||||

Let's take [Daniel Bernstein][5], better known as djb:

|

||||

|

||||

> Cryptographers are certainly not responsible for this superstitious nonsense. Think about this for a moment: whoever wrote the /dev/random manual page seems to simultaneously believe that

|

||||

>

|

||||

> * (1) we can't figure out how to deterministically expand one 256-bit /dev/random output into an endless stream of unpredictable keys (this is what we need from urandom), but

|

||||

>

|

||||

> * (2) we _can_ figure out how to use a single key to safely encrypt many messages (this is what we need from SSL, PGP, etc.).

|

||||

>

|

||||

>

|

||||

|

||||

>

|

||||

> For a cryptographer this doesn't even pass the laugh test.

|

||||

|

||||

Or [Thomas Pornin][6], who is probably one of the most helpful people I've ever encountered on the Stack Exchange sites:

|

||||

|

||||

> The short answer is yes. The long answer is also yes. /dev/urandom yields data which is indistinguishable from true randomness, given existing technology. Getting "better" randomness than what /dev/urandom provides is meaningless, unless you are using one of the few "information theoretic" cryptographic algorithm, which is not your case (you would know it).

|

||||

>

|

||||

> The man page for urandom is somewhat misleading, arguably downright wrong, when it suggests that /dev/urandom may "run out of entropy" and /dev/random should be preferred;

|

||||

|

||||

Or maybe [Thomas Ptacek][7], who is not a real cryptographer in the sense of designing cryptographic algorithms or building cryptographic systems, but still the founder of a well-reputed security consultancy that's doing a lot of penetration testing and breaking bad cryptography:

|

||||

|

||||

> Use urandom. Use urandom. Use urandom. Use urandom. Use urandom. Use urandom.

|

||||

|

||||

### Not everything is perfect

|

||||

|

||||

/dev/urandom isn't perfect. The problems are twofold:

|

||||

|

||||

On Linux, unlike FreeBSD, /dev/urandom never blocks. Remember that the whole security rested on some starting randomness, a seed?

|

||||

|

||||

Linux's /dev/urandom happily gives you not-so-random numbers before the kernel even had the chance to gather entropy. When is that? At system start, booting the computer.

|

||||

|

||||

FreeBSD does the right thing: they don't have the distinction between /dev/random and /dev/urandom, both are the same device. At startup /dev/random blocks once until enough starting entropy has been gathered. Then it won't block ever again.

|

||||

|

||||

In the meantime, Linux has implemented a new syscall, originally introduced by OpenBSD as getentropy(2): getrandom(2). This syscall does the right thing: blocking until it has gathered enough initial entropy, and never blocking after that point. Of course, it is a syscall, not a character device, so it isn't as easily accessible from shell or script languages. It is available from Linux 3.17 onward.
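From a scripting language the syscall is often reachable through a wrapper. In Python, for example, `os.getrandom` exposes it on Linux. The sketch below uses hypothetical helper names and assumes small requests (a few dozen bytes); it falls back to `os.urandom` where the wrapper is missing.

```python
import os


def initial_seeded_bytes(num_bytes: int) -> bytes:
    """Wait only until the kernel CSPRNG is initialized, then return bytes."""
    if hasattr(os, "getrandom"):
        # flags=0: may block early at boot, but not once the pool is initialized.
        return os.getrandom(num_bytes, 0)
    # Fallback for platforms without a getrandom wrapper.
    return os.urandom(num_bytes)


def try_without_blocking(num_bytes: int):
    """Return bytes, or None if the CSPRNG has not been initialized yet."""
    if not hasattr(os, "getrandom"):
        return os.urandom(num_bytes)
    try:
        return os.getrandom(num_bytes, os.GRND_NONBLOCK)
    except BlockingIOError:
        return None


key = initial_seeded_bytes(32)
print(len(key))
```

The second helper is for early-boot code that would rather report “not ready yet” than wait; on a system that has been running for a while it should behave like the first.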

|

||||

|

||||

On Linux it isn't too bad, because Linux distributions save some random numbers when booting up the system (but after they have gathered some entropy, since the startup script doesn't run immediately after switching on the machine) into a seed file that is read next time the machine is booting. So you carry over the randomness from the last running of the machine.

|

||||

|

||||

Obviously that isn't as good as if you let the shutdown scripts write out the seed, because in that case there would have been much more time to gather entropy. The advantage is obviously that this does not depend on a proper shutdown with execution of the shutdown scripts (in case the computer crashes, for example).

|

||||

|

||||

And it doesn't help you the very first time a machine is running, but the Linux distributions usually do the same saving into a seed file when running the installer. So that's mostly okay.

|

||||

|

||||

Virtual machines are the other problem. Because people like to clone them, or rewind them to a previously saved check point, this seed file doesn't help you.

|

||||

|

||||

But the solution still isn't using /dev/random everywhere, but properly seeding each and every virtual machine after cloning, restoring a checkpoint, whatever.

|

||||

|

||||

### tl;dr

|

||||

|

||||

Just use /dev/urandom!

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2uo.de/myths-about-urandom/

|

||||

|

||||

Author: [Thomas Hühn][a]

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:https://www.2uo.de/

|

||||

[1]:https://www.2uo.de/myths-about-urandom/structure-no.png

|

||||

[2]:https://www.2uo.de/myths-about-urandom/structure-yes.png

|

||||

[3]:https://www.2uo.de/myths-about-urandom/structure-new.png

|

||||

[4]:http://blog.cr.yp.to/20140205-entropy.html

|

||||

[5]:http://www.mail-archive.com/cryptography@randombit.net/msg04763.html

|

||||

[6]:http://security.stackexchange.com/questions/3936/is-a-rand-from-dev-urandom-secure-for-a-login-key/3939#3939

|

||||

[7]:http://sockpuppet.org/blog/2014/02/25/safely-generate-random-numbers/

|

||||

@ -1,93 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Take to the virtual skies with FlightGear)

|

||||

[#]: via: (https://opensource.com/article/19/1/flightgear)

|

||||

[#]: author: (Don Watkins https://opensource.com/users/don-watkins)

|

||||

|

||||

Take to the virtual skies with FlightGear

|

||||

======

|

||||

Dreaming of piloting a plane? Try open source flight simulator FlightGear.

|

||||

|

||||

|

||||

If you've ever dreamed of piloting a plane, you'll love [FlightGear][1]. It's a full-featured, [open source][2] flight simulator that runs on Linux, macOS, and Windows.

|

||||

|

||||

The FlightGear project began in 1996 due to dissatisfaction with commercial flight simulation programs, which were not scalable. Its goal was to create a sophisticated, robust, extensible, and open flight simulator framework for use in academia and pilot training or by anyone who wants to play with a flight simulation scenario.

|

||||

|

||||

### Getting started

|

||||

|

||||

FlightGear's hardware requirements are fairly modest, including an accelerated 3D video card that supports OpenGL for smooth framerates. It runs well on my Linux laptop with an i5 processor and only 4GB of RAM. Its documentation includes an [online manual][3]; a [wiki][4] with portals for [users][5] and [developers][6]; and extensive tutorials (such as one for its default aircraft, the [Cessna 172p][7]) to teach you how to operate it.

|

||||

|

||||

It's easy to install on both [Fedora][8] and [Ubuntu][9] Linux. Fedora users can consult the [Fedora installation page][10] to get FlightGear running.

|

||||

|

||||

On Ubuntu 18.04, I had to install a repository:

|

||||

|

||||

```

|

||||

$ sudo add-apt-repository ppa:saiarcot895/flightgear

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install flightgear

|

||||

```

|

||||

|

||||

Once the installation finished, I launched it from the GUI, but you can also launch the application from a terminal by entering:

|

||||

|

||||

```

|

||||

$ fgfs

|

||||

```

|

||||

|

||||

### Configuring FlightGear

|

||||

|

||||

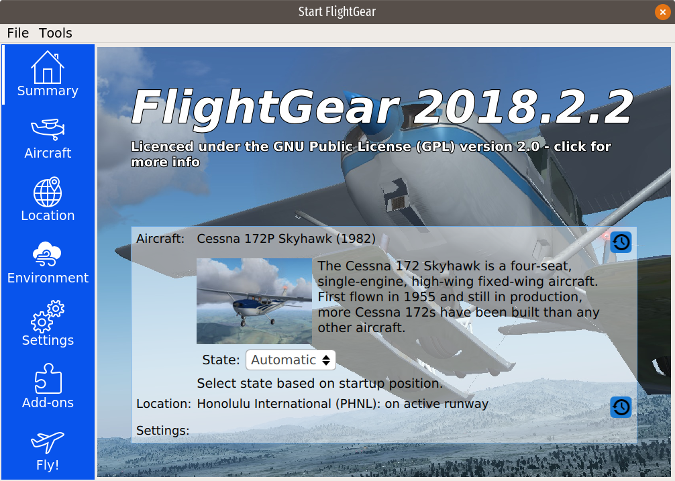

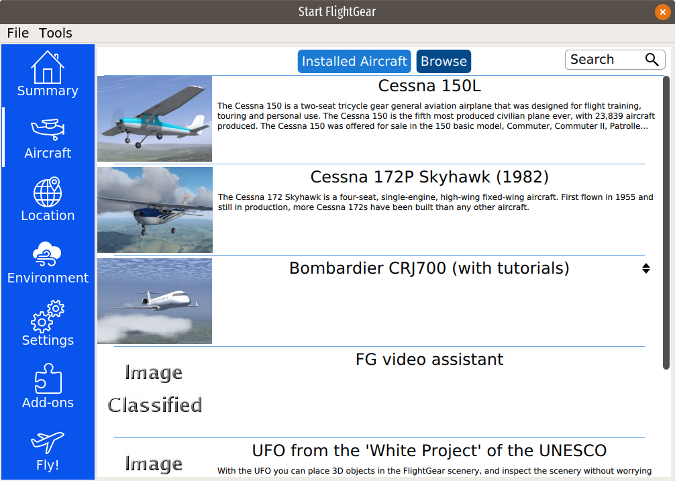

The menu on the left side of the application window provides configuration options.

|

||||

|

||||

|

||||

|

||||

**Summary** returns you to the application's home screen.

|

||||

|

||||

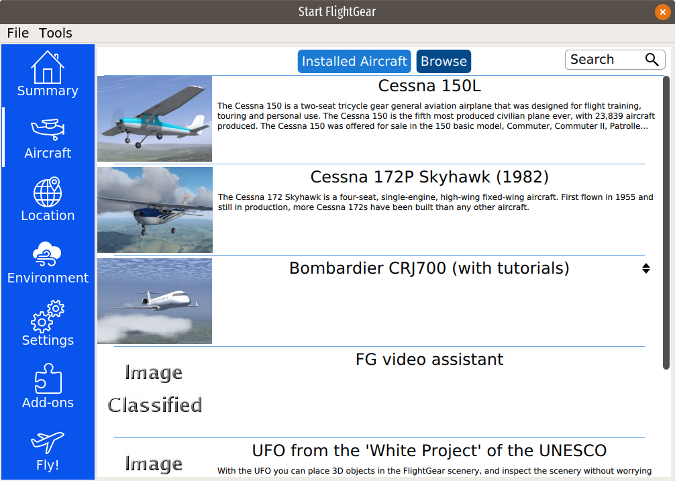

**Aircraft** shows the aircraft you have installed and offers the option to install up to 539 other aircraft available in FlightGear's default "hangar." I installed a Cessna 150L, a Piper J-3 Cub, and a Bombardier CRJ-700. Some of the aircraft (including the CRJ-700) have tutorials to teach you how to fly a commercial jet; I found the tutorials informative and accurate.

|

||||

|

||||

|

||||

|

||||

To select an aircraft to pilot, highlight it and click on **Fly!** at the bottom of the menu. I chose the default Cessna 172p and found the cockpit depiction extremely accurate.

|

||||

|

||||

|

||||

|

||||