mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-30 02:40:11 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

merge from LCTT.

This commit is contained in:

commit

ad1fd694dc

published

20140718 Linux Kernel Testing and Debugging 2.md20140718 Linux Kernel Testing and Debugging 3.md20140808 Lime Text--An Open Source Alternative Of Sublime Text.md20140811 10 More Tweaks To Make Ubuntu Feel Like Home.md20140812 'Ifconfig' Command Not Found In CentOS 7 Minimal Installation--A Quick Tip To Fix It.md20180813 Command Line Tuesdays--Part Eight.md

sources

talk

20140731 Top 10 Free Linux Games.md20140818 Can Ubuntu Do This--Answers to The 4 Questions New Users Ask Most.md20140818 Upstream and Downstream--why packaging takes time.md20140818 Where And How To Code--Choosing The Best Free Code Editor.md20140818 Why Your Company Needs To Write More Open Source Software.md20140818 Will Linux ever be able to give consumers what they want.md20140819 KDE Plasma 5--For those Linux users undecided on the kernel' s future.md20140819 Top 4 Linux download managers.md

tech

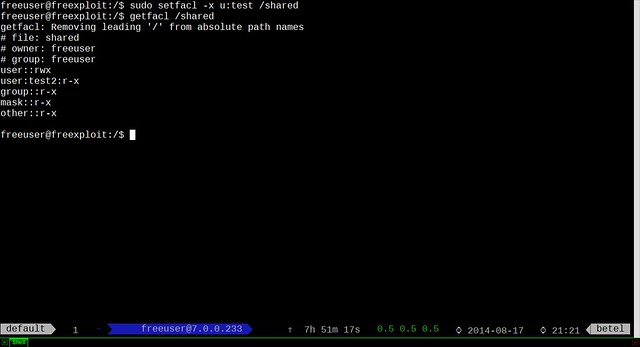

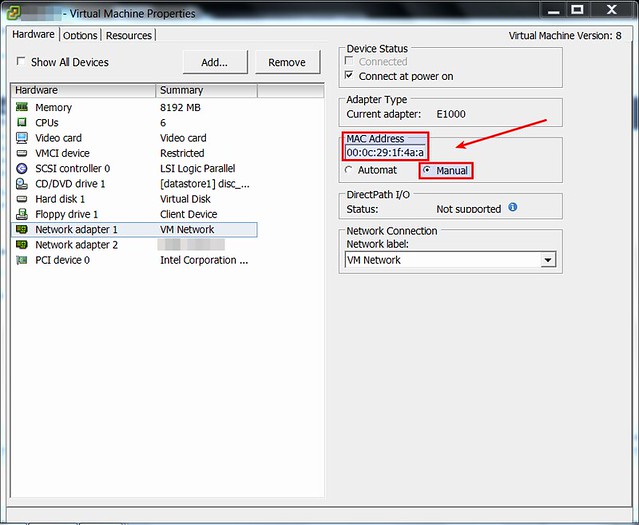

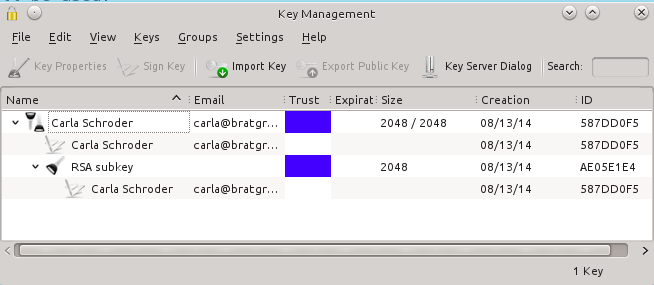

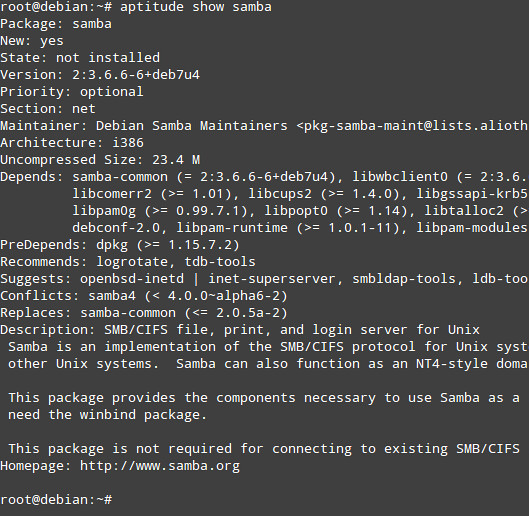

20140808 How to install Puppet server and client on CentOS and RHEL.md20140813 How to Extend or Reduce LVM' s (Logical Volume Management) in Linux--Part II.md20140813 Setup Flexible Disk Storage with Logical Volume Management (LVM) in Linux--PART 1.md20140818 Disable reboot using Ctrl-Alt-Del Keys in RHEL or CentOS.md20140818 How To Schedule A Shutdown In Ubuntu 14.04 [Quick Tip].md20140818 How to Take 'Snapshot of Logical Volume and Restore' in LVM--Part III.md20140818 How to configure Access Control Lists (ACLs) on Linux.md20140818 Install Atom Text Editor In Ubuntu 14.04 & Linux Mint 17.md20140818 Linux FAQs with Answers--How to fix 'X11 forwarding request failed on channel 0'.md20140818 Linux FAQs with Answers--How to set a static MAC address on VMware ESXi virtual machine.md20140818 What are useful CLI tools for Linux system admins.md20140819 8 Options to Trace or Debug Programs using Linux strace Command.md20140819 A Pocket Guide for Linux ssh Command with Examples.md20140819 Build a Raspberry Pi Arcade Machine.md20140819 How to Encrypt Email in Linux.md20140819 Linux Systemd--Start or Stop or Restart Services in RHEL or CentOS 7.md

translated

talk

tech

@ -1,4 +1,4 @@

|

||||

Linux 内核测试与调试 - 2

|

||||

Linux 内核测试与调试(2)

|

||||

================================================================================

|

||||

### 编译安装稳定版内核 ###

|

||||

|

||||

@ -79,7 +79,7 @@ linux-next 状态的内核源码:

|

||||

|

||||

### 打补丁 ###

|

||||

|

||||

Linux 内核的补丁是一个文本文件,包含新源码与老源码之间的改变量。每个补丁只包含自己依赖的源码的增量,除非它被特意包含进一系列补丁之中。打补丁方法如下:

|

||||

Linux 内核的补丁是一个文本文件,包含新源码与老源码之间的差异。每个补丁只包含自己所依赖的源码的改动,除非它被特意包含进一系列补丁之中。打补丁方法如下:

|

||||

|

||||

patch -p1 < file.patch

|

||||

|

||||

@ -101,6 +101,6 @@ Linux 内核的补丁是一个文本文件,包含新源码与老源码之间

|

||||

|

||||

via: http://www.linuxjournal.com/content/linux-kernel-testing-and-debugging?page=0,1

|

||||

|

||||

译者:[bazz2](https://github.com/bazz2) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[bazz2](https://github.com/bazz2) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -1,4 +1,4 @@

|

||||

Linux 内核测试与调试 - 3

|

||||

Linux 内核测试与调试(3)

|

||||

================================================================================

|

||||

### 基本测试 ###

|

||||

|

||||

@ -27,7 +27,7 @@ Linux 内核测试与调试 - 3

|

||||

- dmesg -t -k

|

||||

- dmesg -t

|

||||

|

||||

下面的脚本运行了上面的命令,并且将输出保存起来,以便与老的内核的 dmesg 输出作比较(LCTT:老内核的 dmesg 输出在本系列的第一篇文章中有介绍)。然后运行 diff 命令,查看新老内核 dmesg 日志之间的不同。脚本需要输入老内核版本号,如果不输入参数,它只会生成新内核的 dmesg 日志文件后直接退出,不再作比较(LCTT:话是这么说没错,但点开脚本一看,没输参数的话,这货会直接退出,连新内核的 dmesg 日志也不会保存的)。如果 dmesg 日志有新的警告信息,表示新发布的内核有漏网之鱼(LCTT:嗯,漏网之 bug 会更好理解些么?),这些 bug 逃过了自测和系统测试。你要看看,那些警告信息后面有没有栈跟踪信息?也许这里有很多问题需要你进一步调查分析。

|

||||

下面的脚本运行了上面的命令,并且将输出保存起来,以便与老的内核的 dmesg 输出作比较(LCTT:老内核的 dmesg 输出在本系列的[第二篇文章][3]中有介绍)。然后运行 diff 命令,查看新老内核 dmesg 日志之间的不同。这个脚本需要输入老内核版本号,如果不输入参数,它只会生成新内核的 dmesg 日志文件后直接退出,不再作比较(LCTT:话是这么说没错,但点开脚本一看,没输参数的话,这货会直接退出,连新内核的 dmesg 日志也不会保存的)。如果 dmesg 日志有新的警告信息,表示新发布的内核有漏网之“虫”,这些 bug 逃过了自测和系统测试。你要看看,那些警告信息后面有没有栈跟踪信息?也许这里有很多问题需要你进一步调查分析。

|

||||

|

||||

- [**dmesg 测试脚本**][1]

|

||||

|

||||

@ -67,40 +67,40 @@ ktest 是一个自动测试套件,它可以提供编译安装启动内核一

|

||||

|

||||

failslab (默认选项)

|

||||

|

||||

产生 slab 分配错误。作用于 kmalloc(), kmem_cache_alloc() 等函数(LCTT:产生的结果是调用这些函数就会返回失败,可以模拟程序分不到内存时是否还能稳定运行下去)。

|

||||

> 产生 slab 分配错误。作用于 kmalloc(), kmem_cache_alloc() 等函数(LCTT:产生的结果是调用这些函数就会返回失败,可以模拟程序分不到内存时是否还能稳定运行下去)。

|

||||

|

||||

fail_page_alloc

|

||||

fail\_page\_alloc

|

||||

|

||||

产生内存页分配的错误。作用于 alloc_pages(), get_free_pages() 等函数(LCTT:同上,调用这些函数,返回错误)。

|

||||

> 产生内存页分配的错误。作用于 alloc_pages(), get_free_pages() 等函数(LCTT:同上,调用这些函数,返回错误)。

|

||||

|

||||

fail_make_request

|

||||

fail\_make\_request

|

||||

|

||||

对满足条件(可以设置 /sys/block//make-it-fail 或 /sys/block///make-it-fail 文件)的磁盘产生 IO 错误,作用于 generic_make_request() 函数(LCTT:所有针对这块磁盘的读或写请求都会出错)。

|

||||

> 对满足条件(可以设置 /sys/block//make-it-fail 或 /sys/block///make-it-fail 文件)的磁盘产生 IO 错误,作用于 generic_make_request() 函数(LCTT:所有针对这块磁盘的读或写请求都会出错)。

|

||||

|

||||

fail_mmc_request

|

||||

fail\_mmc\_request

|

||||

|

||||

对满足条件(可以设置 /sys/kernel/debug/mmc0/fail_mmc_request 这个 debugfs 属性)的磁盘产生 MMC 数据错误。

|

||||

> 对满足条件(可以设置 /sys/kernel/debug/mmc0/fail\_mmc\_request 这个 debugfs 属性)的磁盘产生 MMC 数据错误。

|

||||

|

||||

你可以自己配置 fault-injection 套件的功能。fault-inject-debugfs 内核模块在系统运行时会在 debugfs 文件系统下面提供一些属性文件。你可以指定出错的概率,指定两个错误之间的时间间隔,当然本套件还能提供更多其他功能,具体请查看 Documentation/fault-injection/fault-injection.txt。 Boot 选项可以让你的系统在 debugfs 文件系统起来之前就可以产生错误,下面列出几个 boot 选项:

|

||||

|

||||

- failslab=

|

||||

- fail_page_alloc=

|

||||

- fail_make_request=

|

||||

- mmc_core.fail_request=[interval],[probability],[space],[times]

|

||||

- fail\_page_alloc=

|

||||

- fail\_make\_request=

|

||||

- mmc\_core.fail\_request=[interval],[probability],[space],[times]

|

||||

|

||||

fault-injection 套件提供接口,以便增加新的功能。下面简单介绍下增加新功能的步骤,详细信息请参考上面提到过的文档:

|

||||

|

||||

使用 DECLARE_FAULT_INJECTION(name) 定义默认属性;

|

||||

使用 DECLARE\_FAULT\_INJECTION(name) 定义默认属性;

|

||||

|

||||

> 详细信息可查看 fault-inject.h 中定义的 struct fault_attr 结构体。

|

||||

> 详细信息可查看 fault-inject.h 中定义的 struct fault\_attr 结构体。

|

||||

|

||||

配置 fault 属性,新建一个 boot 选项;

|

||||

|

||||

> 这步可以使用 setup_fault_attr(attr, str) 函数完成,为了能在系统启动的早期产生错误,添加一个 boot 选项这一步是必须要有的。

|

||||

> 这步可以使用 setup\_fault\_attr(attr, str) 函数完成,为了能在系统启动的早期产生错误,添加一个 boot 选项这一步是必须要有的。

|

||||

|

||||

添加 debugfs 属性;

|

||||

|

||||

> 使用 fault_create_debugfs_attr(name, parent, attr) 函数,为新功能添加新的 debugfs 属性。

|

||||

> 使用 fault\_create\_debugfs\_attr(name, parent, attr) 函数,为新功能添加新的 debugfs 属性。

|

||||

|

||||

为模块设置参数;

|

||||

|

||||

@ -108,7 +108,7 @@ fault-injection 套件提供接口,以便增加新的功能。下面简单介

|

||||

|

||||

添加一个钩子函数到错误测试的代码中。

|

||||

|

||||

> should_fail(attr, size) —— 当这个钩子函数返回 true 时,用户的代码就应该产生一个错误。

|

||||

> should\_fail(attr, size) —— 当这个钩子函数返回 true 时,用户的代码就应该产生一个错误。

|

||||

|

||||

应用程序使用这个 fault-injection 套件可以指定某个具体的内核模块产生 slab 和内存页分配的错误,这样就可以缩小性能测试的范围。

|

||||

|

||||

@ -116,9 +116,10 @@ fault-injection 套件提供接口,以便增加新的功能。下面简单介

|

||||

|

||||

via: http://www.linuxjournal.com/content/linux-kernel-testing-and-debugging?page=0,2

|

||||

|

||||

译者:[bazz2](https://github.com/bazz2) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[bazz2](https://github.com/bazz2) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://linuxdriverproject.org/mediawiki/index.php/Dmesg_regression_check_script

|

||||

[2]:http://elinux.org/Ktest#Git_Bisect_type

|

||||

[3]:http://linux.cn/article-3629-1.html

|

||||

@ -0,0 +1,38 @@

|

||||

Lime Text: 一款可以替代 Sublime Text 的开源项目

|

||||

================================================================================

|

||||

|

||||

|

||||

[Sublime Text][1] 是为程序员准备的最好的文本编辑器之一(尽管不是最最好的)。Sublime 囊括了众多特性并且拥有很棒的界面外观,在三大主流桌面操作系统上均能运行,即 Windows, Mac 还有 Linux 之上。

|

||||

|

||||

但这并不表示 Sublime Text 是完美的。它有不少 bug、会崩溃而且几乎没有任何技术支持。如果你有关注过 Sublime Text 的开发过程,你就会发现此时 Sublime Text beta 版已经公布超过一年了,却没有告知用户任何关于它的发行日期的确切信息。最重要的是,Sublime Text 既不免费也不[开源][2]。

|

||||

|

||||

这一系列问题也使 [Fredrik Ehnbom][3] 感到沮丧,因此他在 [Github][5] 上发起了一个开源项目 ——[Lime Text][4],希望能开发出一款新的、外观与工作方式完全与 Sublime Text 一致的文本编辑器。在被问到为什么他决定去“克隆”一款现有的文本编辑器这个问题时,Frederic 说道:

|

||||

|

||||

> 因为没有一款我试过的其他文本编辑器能达到我对 Sublime Text 的喜爱程度,我决定了我不得不开发出我自己的文本编辑器。

|

||||

|

||||

Lime Text 的后端采用 Go 实现,前端则使用了 ermbox,Qt (QML) 及 HTML/JavaScript。开发正根据完全明确可见的[目标][6]逐步进行中。你能够在它的 [Github 页面][7]中为项目贡献自己的力量。

|

||||

|

||||

|

||||

|

||||

如果你想要试用 beta 版本,你可以根据 [wiki][8] 中的介绍搭建 Lime Text。同时,如果你想找寻其他强大的文本编辑器的话,试一试 [SciTE][9] 吧。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://itsfoss.com/lime-text-open-source-alternative/

|

||||

|

||||

作者:[bhishek][a]

|

||||

译者:[SteveArcher](https://github.com/SteveArcher)

|

||||

校对:[ReiNoir](https://github.com/reinoir)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://itsfoss.com/author/Abhishek/

|

||||

[1]:http://www.sublimetext.com/

|

||||

[2]:http://itsfoss.com/category/open-source-software/

|

||||

[3]:https://github.com/quarnster

|

||||

[4]:http://limetext.org/

|

||||

[5]:https://github.com/

|

||||

[6]:https://github.com/limetext/lime/wiki/Goals

|

||||

[7]:https://github.com/limetext/lime/issues

|

||||

[8]:https://github.com/limetext/lime/wiki/Building

|

||||

[9]:http://itsfoss.com/scite-the-notepad-for-linux/

|

||||

@ -1,53 +1,54 @@

|

||||

10个调整让Ubuntu找到家的感觉

|

||||

10个调整让Ubuntu宾至如归

|

||||

================================================================================

|

||||

|

||||

|

||||

不久以前我提供给大家[12个调整Ubuntu的小建议][1]。 然而,从那以后多了一段时间了, 我们又提出了另外10个建议,能够使你的Ubuntu找到家的感觉。

|

||||

不久前我提供给大家[12个调整Ubuntu的小建议][1]。 然而,已经是一段时间以前的事情了,现在我们又提出了另外10个建议,能够使你的Ubuntu宾至如归。

|

||||

|

||||

这10个建议执行起来十分简单方便,那就让我们开始吧!

|

||||

|

||||

### 安装 TLP ###

|

||||

### 1. 安装 TLP ###

|

||||

|

||||

|

||||

|

||||

[We covered TLP a while back][2], 这是一款优化电源设置的软件,为了让你能享受到一个更长的电池寿命。之前我们深入的探讨过TLP, 并且我们也在列表中提到这真是一个好东西。要安装它,在终端运行以下命令:

|

||||

[我们不久前涉及到了TLP][2], 这是一款优化电源设置的软件,可以让你享受更长的电池寿命。之前我们深入的探讨过TLP, 并且我们也在列表中提到这软件真不错。要安装它,在终端运行以下命令:

|

||||

|

||||

sudo add-apt-repository -y ppa:linrunner/tlp && sudo apt-get update && sudo apt-get install -y tlp tlp-rdw tp-smapi-dkms acpi-call-tools && sudo tlp start

|

||||

|

||||

上面的命令将添加必要的仓库,更新包的列表以便它能包含被新仓库提供的包,安装TLP并且开启这个服务。

|

||||

|

||||

### 系统负载指示器 ###

|

||||

### 2. 系统负载指示器 ###

|

||||

|

||||

|

||||

|

||||

给你的Ubuntu桌面添加一个系统负载指示器能让你了解到你的系统资源被占用了多少。 如果你宁愿不在桌面上添加这个技术图表,那么可以不要添加它, 但是对于那些对这个感兴趣的人来说,这真是一个好的扩展。 你可以运行这个命令去安装它:

|

||||

给你的Ubuntu桌面添加一个系统负载指示器能让你快速了解到你的系统资源占用率。 如果你不想在桌面上添加这个技术图表,那么可以不要添加, 但是对于那些对它感兴趣的人来说,这个扩展真是很好。 你可以运行这个命令去安装它:

|

||||

|

||||

sudo apt-get install indicator-multiload

|

||||

|

||||

然后在Dash里面找到它并且打开。

|

||||

然后在Dash里面找到它并且打开。

|

||||

|

||||

### 天气指示器 ###

|

||||

### 3. 天气指示器 ###

|

||||

|

||||

|

||||

|

||||

Ubuntu过去提供内置的天气指示器, 但是自从它切换到Gnome 3以后,就不在默认提供了。代替地,你需要安装一个独立的指示器。 你可以通过以下命令安装它:

|

||||

Ubuntu过去提供内置的天气指示器,但是自从它切换到Gnome 3以后,就不再默认提供了。你需要安装一个独立的指示器来代替。 你可以通过以下命令安装它:

|

||||

|

||||

sudo add-apt-repository -y ppa:atareao/atareao && sudo apt-get update && sudo apt-get install -y my-weather-indicator

|

||||

|

||||

这将添加另外一个仓库,更新包的列表,并且安装这个指示器。然后在Dash里面找到并开启它。

|

||||

|

||||

### 安装 Dropbox 或其他云存储解决方案 ###

|

||||

### 4. 安装 Dropbox 或其他云存储解决方案 ###

|

||||

|

||||

|

||||

|

||||

我在我所有的Linux系统里面都安装过的一个东西就是Dropbox。没有它,真的就找不到一个家的感觉,主要是因为我所有经常使用的文件都储存在Dropbox中。安装Dropbox非常直截了当,但是要花点时间执行一个简单的命令。 在开始之前,你需要运行这个命令为了你能在系统托盘里看到Dropbox的图标:

|

||||

我在我所有的Linux系统里面都安装过的一个软件,那就是Dropbox。没有它,真的就找不到家的感觉,主要是因为我所有经常使用的文件都储存在Dropbox中。安装Dropbox非常直截了当,但是要花点时间执行一个简单的命令。 在开始之前,为了你能在系统托盘里看到Dropbox的图标,你需要运行这个命令:

|

||||

|

||||

sudo apt-get install libappindicator1

|

||||

|

||||

然后你需要去Dropbox的下载页面,接着安装你已下载的.deb文件。现在你的Dropbox应该已经运行了。

|

||||

|

||||

如果你有点讨厌Dropbox, 你也可以尝试使用Copy [或者OneDrive][3]。两者提供更多免费存储空间,这是考虑使用他们的很大一个原因。比起OneDrive我更推荐使用Copy因为Copy能工作在所有的Linux发行版上。

|

||||

如果你有点讨厌Dropbox, 你也可以尝试使用Copy [或者OneDrive][3]。两者提供更多免费存储空间,这是考虑使用它们的很大一个原因。比起OneDrive我更推荐使用Copy,因为Copy能工作在所有的Linux发行版上。

|

||||

|

||||

### 安装Pidgin和Skype ###

|

||||

### 5. 安装Pidgin和Skype ###

|

||||

|

||||

|

||||

|

||||

@ -57,14 +58,14 @@ Ubuntu过去提供内置的天气指示器, 但是自从它切换到Gnome 3以

|

||||

|

||||

安装Skype也很简单 — 你仅仅需要去Skype的下载页面并且下载你Ubuntu12.04对应架构的.deb文件就可以了。

|

||||

|

||||

###移除键盘指示器 ###

|

||||

### 6. 移除键盘指示器 ###

|

||||

|

||||

|

||||

|

||||

在桌面上显示键盘指示器可能让一些人很苦恼。对于讲英语的人来说,它仅仅显示一个“EN”,这可能是恼人的,因为很多人不需要改变键盘布局或者被提醒他们正则使用英语。要移除这个指示器,选择系统设置,然后文本输入,接着去掉“在菜单栏显示当前输入源”的勾。

|

||||

>[译注]:国人可能并不适合这个建议。

|

||||

在桌面上显示键盘指示器可能让一些人很苦恼。对于讲英语的人来说,它仅仅显示一个“EN”,这可能是恼人的,因为很多人不需要改变键盘布局或者被提醒他们正在使用英语。要移除这个指示器,选择系统设置,然后文本输入,接着去掉“在菜单栏显示当前输入源”的勾。

|

||||

(译注:国人可能并不适合这个建议。)

|

||||

|

||||

### 回归传统菜单###

|

||||

### 7. 回归传统菜单###

|

||||

|

||||

|

||||

|

||||

@ -72,23 +73,23 @@ Ubuntu过去提供内置的天气指示器, 但是自从它切换到Gnome 3以

|

||||

|

||||

sudo add-apt-repository -y ppa:diesch/testing && sudo apt-get update && sudo apt-get install -y classicmenu-indicator

|

||||

|

||||

### 安装Flash和Java ###

|

||||

### 8. 安装Flash和Java ###

|

||||

|

||||

在之前的文章中我提到了安装解码器和Silverlight,我应该也提到了Flash和Java是他们所需要的主要插件,虽然他们有时可能忘了它们。要安装它们只需运行这个命令:

|

||||

在之前的文章中我提到了安装解码器和Silverlight,我应该也提到了Flash和Java是它们所需要的主要插件,虽然有时可能它们可能被遗忘。要安装它们只需运行这个命令:

|

||||

|

||||

sudo add-apt-repository -y ppa:webupd8team/java && sudo apt-get update && sudo apt-get install oracle-java7-installer flashplugin-installer

|

||||

|

||||

安装Java需要新增仓库,因为Ubuntu不再包含它的专利版本(大多数人为了最好的性能推荐使用这个版本),而是使用开源的OpenJDK。

|

||||

|

||||

### 安装VLC ###

|

||||

### 9. 安装VLC ###

|

||||

|

||||

|

||||

|

||||

默认的媒体播放器, Totem,十分优秀但是它依赖很多独立安装的解码器才能很好的工作。我个人推荐你安装VLC媒体播放器, 因为它包含所有解码器并且实际上它支持世界上每一种媒体格式。要安装它,仅仅需要运行如下命令:

|

||||

默认的媒体播放器Totem十分优秀但是它依赖很多独立安装的解码器才能很好的工作。我个人推荐你安装VLC媒体播放器, 因为它包含所有解码器并且实际上它支持世界上每一种媒体格式。要安装它,仅仅需要运行如下命令:

|

||||

|

||||

sudo apt-get install vlc

|

||||

|

||||

### 安装PuTTY (或者不) ###

|

||||

### 10. 安装PuTTY (或者不) ###

|

||||

|

||||

|

||||

|

||||

@ -104,9 +105,9 @@ Ubuntu过去提供内置的天气指示器, 但是自从它切换到Gnome 3以

|

||||

|

||||

### 你推荐如何调整? ###

|

||||

|

||||

补充了这10个调整,你应该感觉你的Ubuntu真的找到了家的感觉,这很容易重建或击溃你的Linux体验。有许多不同的方式去定制你自己的体验去让它更适合你的需要;你只需要环顾自己并且找到你想要的东西

|

||||

补充了这10个调整,你应该感觉你的Ubuntu真的有家的感觉,这很容易建立起或击溃你的Linux体验。有许多不同的方式去定制你自己的体验去让它更适合你的需要;你只需自己寻找来发现你想要的东西。

|

||||

|

||||

**你有什么调整和建议想和读者分享?**在评论中让我们知道吧!

|

||||

**您有什么其它的调整和建议想和读者分享?**在评论中告诉我们吧!

|

||||

|

||||

*图片致谢: Home doormat Via Shutterstock*

|

||||

|

||||

@ -116,7 +117,7 @@ via: http://www.makeuseof.com/tag/10-tweaks-make-ubuntu-feel-like-home/

|

||||

|

||||

作者:[Danny Stieben][a]

|

||||

译者:[guodongxiaren](https://github.com/guodongxiaren)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -2,7 +2,7 @@ CentOS 7最小化安装后找不到‘ifconfig’命令——修复小提示

|

||||

================================================================================

|

||||

|

||||

|

||||

就像我们所知道的,“**ifconfig**”命令用于配置GNU/Linux系统的网络接口。它显示网络接口卡的详细信息,包括IP地址,MAC地址,以及网络接口卡状态之类。但是,该命令已经过时了,而且在最小化版本的RHEL 7以及它的克隆版本CentOS 7,Oracle Linux 7以和Scientific Linux 7中也找不到该命令。

|

||||

就像我们所知道的,“**ifconfig**”命令用于配置GNU/Linux系统的网络接口。它显示网络接口卡的详细信息,包括IP地址,MAC地址,以及网络接口卡状态之类。但是,该命令已经过时了,而且在最小化版本的RHEL 7以及它的克隆版本CentOS 7,Oracle Linux 7和Scientific Linux 7中也找不到该命令。

|

||||

|

||||

### 在CentOS最小化服务器版本中如何查找网卡IP和其它详细信息? ###

|

||||

|

||||

@ -24,7 +24,7 @@ CentOS 7最小化系统,使用“**ip addr**”和“**ip link**”命令来

|

||||

inet 127.0.0.1/32 scope host venet0

|

||||

inet 192.168.1.101/32 brd 192.168.1.101 scope global venet0:0

|

||||

|

||||

要查看网络接口统计数据,输入命令:

|

||||

要查看网络接口统计数据,输入命令:

|

||||

|

||||

ip link

|

||||

|

||||

@ -56,7 +56,7 @@ CentOS 7最小化系统,使用“**ip addr**”和“**ip link**”命令来

|

||||

|

||||

### 在CentOS 7最小化服务器版本中如何启用并使用“ifconfig”命令? ###

|

||||

|

||||

如果你不知道在哪里可以找到ifconfig命令,请按照以下简单的步骤来找到它。首先,让我们找出哪个包提供了ifconfig命令。要完成这事,输入以下命令:

|

||||

如果你不知道在哪里可以找到ifconfig命令,请按照以下简单的步骤来找到它。首先,让我们找出哪个包提供了ifconfig命令。要完成这项任务,输入以下命令:

|

||||

|

||||

yum provides ifconfig

|

||||

|

||||

@ -117,7 +117,7 @@ via: http://www.unixmen.com/ifconfig-command-found-centos-7-minimal-installation

|

||||

|

||||

作者:[Senthilkumar][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -8,7 +8,7 @@

|

||||

|

||||

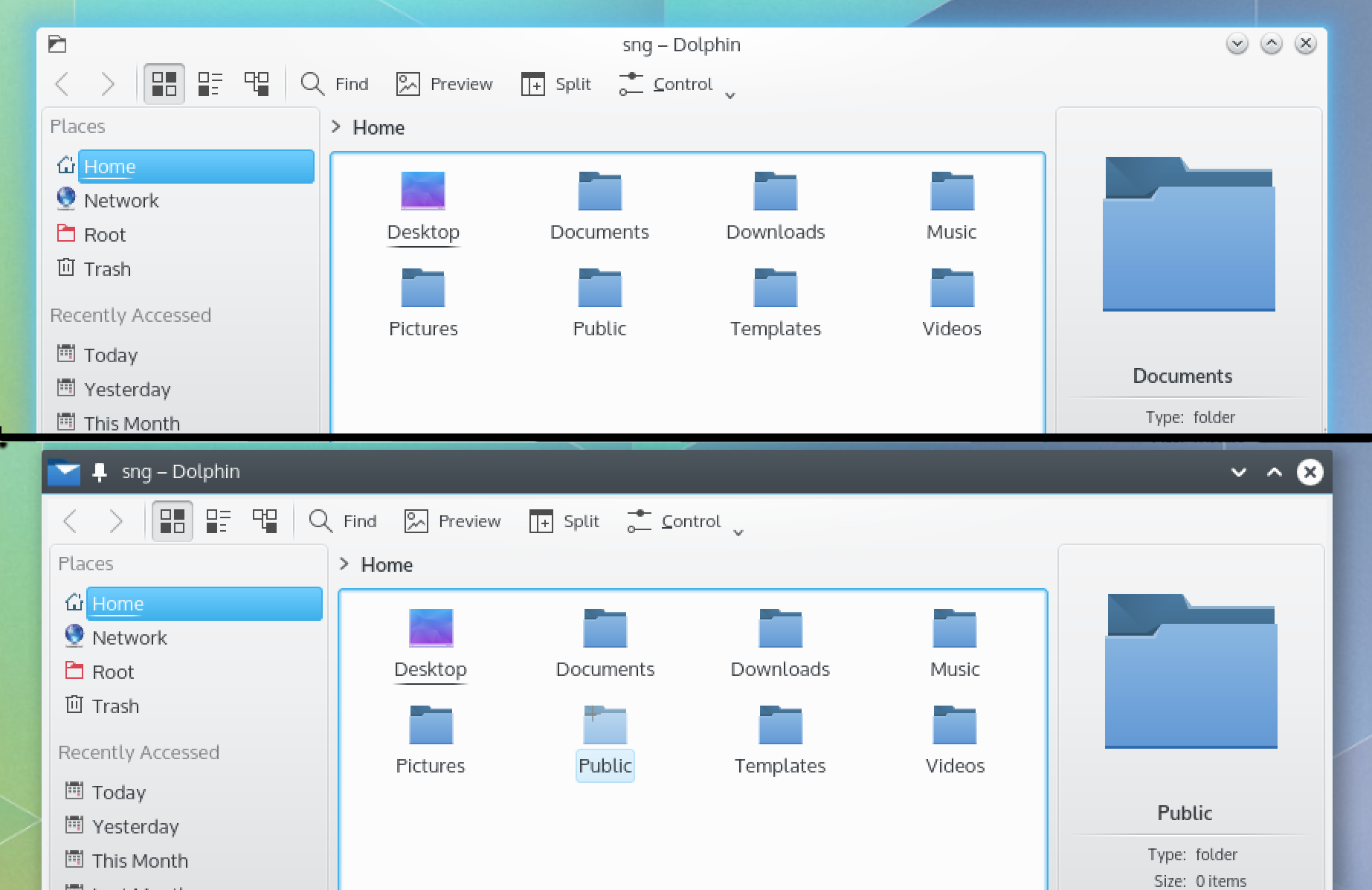

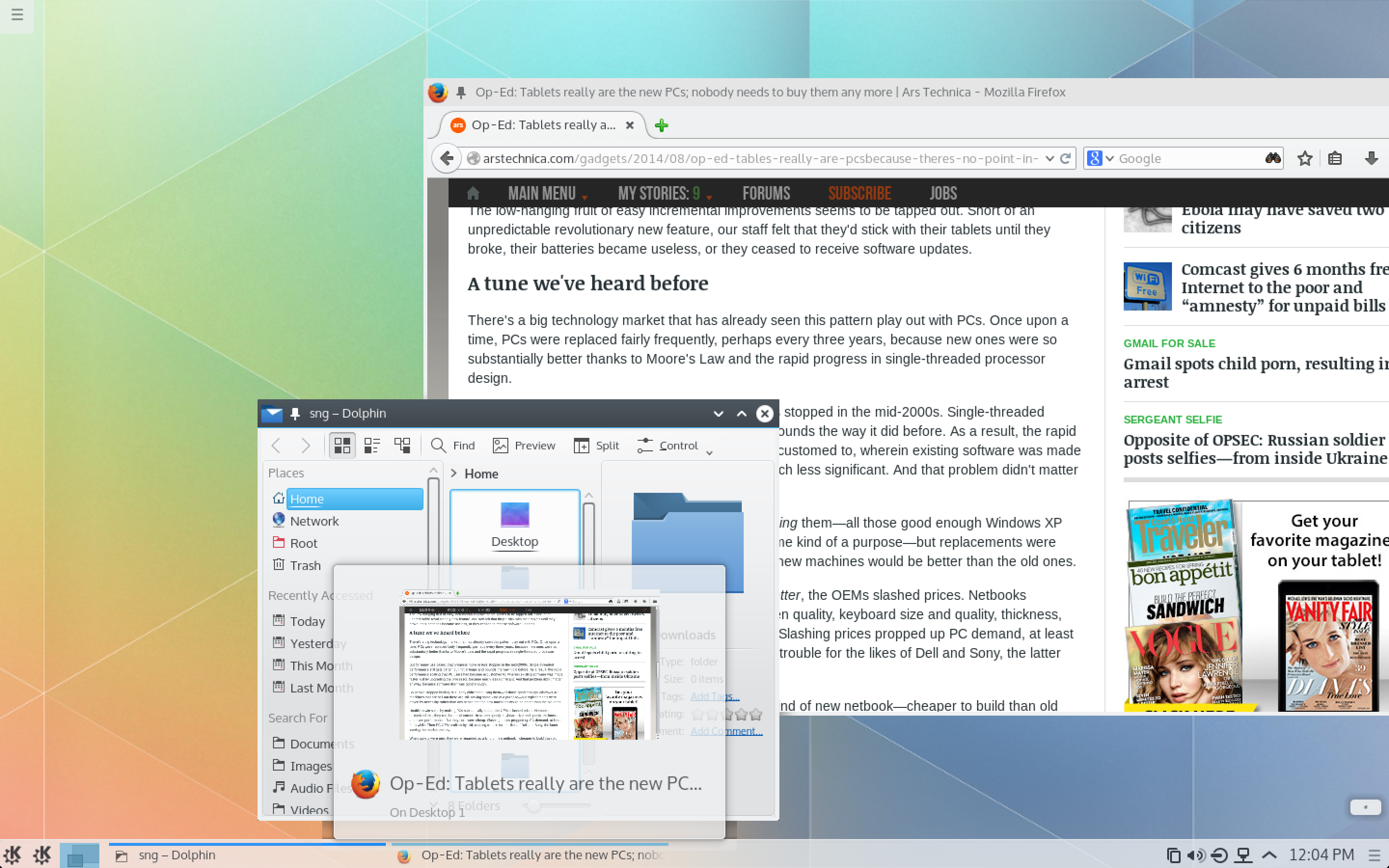

dolphin

|

||||

|

||||

……dolphin,这个文件管理器,就打开了。如果在进程打开时你查看终端,你就不能访问命令提示符了,而且你也不能在同一个窗口中写一个新命令进去了。如果你终止dolphin,提示符又会出现了,而你又能输入一个新命令到shell中去了。那么,我们怎么能在CLI运行一个程序时,同时又能获得提示符以便进一步发命令。

|

||||

……dolphin,这个文件管理器,就打开了。如果在这个进程打开时你查看终端,你会发现不能访问命令提示符了,而且你也不能在同一个窗口中写一个新命令进去了。如果你终止dolphin,提示符又会出现了,而你又能输入一个新命令到shell中去了。那么,我们怎么能在CLI运行一个程序时,同时又能获得提示符以便进一步发命令。

|

||||

|

||||

dolphin &

|

||||

|

||||

@ -22,7 +22,7 @@

|

||||

|

||||

### jobs, ps ###

|

||||

|

||||

由于我们在后台运行着进程,你可以使用jobs或者使用ps来来列出它们。试试吧,只要输入jobs或者输入ps就行了。下面是我得到的结果:

|

||||

由于我们在后台运行着进程,你可以使用jobs或者使用ps来列出它们。试试吧,只要输入jobs或者输入ps就行了。下面是我得到的结果:

|

||||

|

||||

nenad@linux-zr04:~> ps

|

||||

PID TTY TIME CMD

|

||||

@ -39,9 +39,9 @@

|

||||

|

||||

……那么,它就把dolphin给杀死了。

|

||||

|

||||

### Kill更多细节 ###

|

||||

### kill的更多细节 ###

|

||||

|

||||

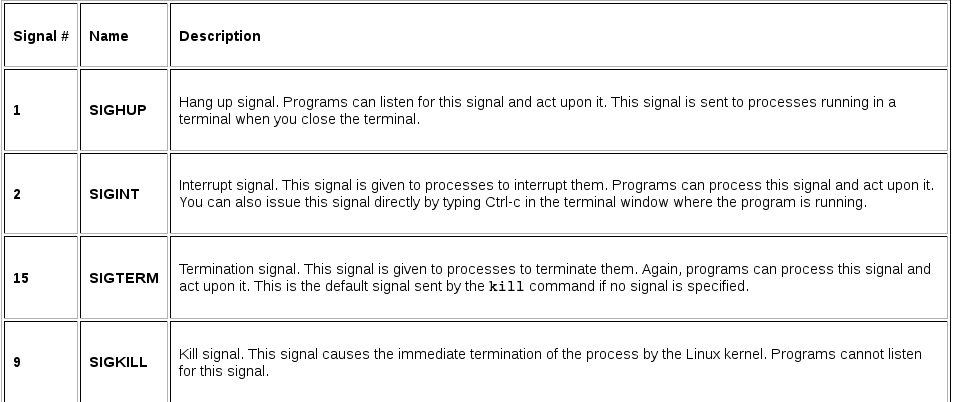

Kill的存在,不仅仅是为了终止进程,它最初是设计用来发送信号给进程。当然,有许多kill信号可以使用,根据你使用的应用程序不同而不同。请看下面的表:

|

||||

kill的存在,不仅仅是为了终止进程,它最初是设计用来发送信号给进程。当然,有许多kill信号可以使用,根据你使用的应用程序不同而不同。请看下面的表:

|

||||

|

||||

|

||||

|

||||

@ -49,9 +49,10 @@ Kill的存在,不仅仅是为了终止进程,它最初是设计用来发送

|

||||

|

||||

### 结尾 ###

|

||||

|

||||

我们以本节课来结束我们的CLT系列和周二必达,我希望其他像我这样的新手们能设法在他们的思想中摆脱控制台的神秘而学习掌握一些基本技能。现在对你们而言,所有剩下来要做的事,就是尽情摆弄它吧(只是别把/目录搞得太乱七八糟,因而你也不会诋毁什么东西了 :D)。

|

||||

We’ll be seeing a lot more of each other soon, as there’s more series of articles from where these came from. Stay tuned, and meanwhile…

|

||||

我们以本节课来结束我们的CLT系列和周二必达,我希望其他像我这样的新手们能设法在他们的思想中摆脱控制台的神秘而学习掌握一些基本技能。现在对你们而言,所有剩下来要做的事,就是尽情摆弄它吧(只是别把“/”目录搞得太乱七八糟,因而你也不会诋毁什么东西了 :D)。

|

||||

|

||||

我们将在不久的将来看到其它更多的东西,因为有更多的系列文章来自这些文章的出处。别走开,同时……

|

||||

|

||||

### ……尽情享受! ###

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -60,7 +61,7 @@ via: https://news.opensuse.org/2014/08/12/command-line-tuesdays-part-eight/

|

||||

|

||||

作者:[Nenad Latinović][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

[Translating by SteveArcher]

|

||||

Top 10 Free Linux Games

|

||||

================================================================================

|

||||

If the term “Can I game on it?” has been bothering you while thinking to switch on Linux from Windows platform, then here is an answer for that – “Go for it!”. Thanks to the Open source community who has been consistently developing different genre games for Linux OS and the online content distribution platform – Steam, there is no dearth of good commercial games which are as fun to play on Linux as on its other counterparts (like Windows).

|

||||

|

||||

@ -0,0 +1,78 @@

|

||||

Can Ubuntu Do This? — Answers to The 4 Questions New Users Ask Most

|

||||

================================================================================

|

||||

|

||||

|

||||

**Type ‘Can Ubuntu’ into Google and you’ll see a stream of auto suggested terms put before you, all based on the queries asked most often by curious searchers. **

|

||||

|

||||

For long-time Linux users these queries all have rather obvious answers. But for new users or those feeling out whether a distribution like Ubuntu is for them the answers are not quite so obvious; they’re pertinent, real and essential asks.

|

||||

|

||||

So, in this article, I’m going to answer the top four most searched for questions asking “*Can Ubuntu…?*”

|

||||

|

||||

### Can Ubuntu Replace Windows? ###

|

||||

|

||||

|

||||

Windows isn’t to everyones tastes — or needs

|

||||

|

||||

Yes. Ubuntu (and most other Linux distributions) can be installed on just about any computer capable of running Microsoft Windows.

|

||||

|

||||

Whether you **should** replace it will, invariably, depend on your own needs.

|

||||

|

||||

For example, if you’re attending a school or college that requires access to Windows-only software, you may want to hold off replacing it entirely. Same goes for businesses; if your work depends on Microsoft Office, Adobe Creative Suite or a specific AutoCAD application you may find it easier to stick with what you have.

|

||||

|

||||

But for most of us Ubuntu can replace Windows full-time. It offers a safe, dependable desktop experience that can run on and support a wide range of hardware. Software available covers everything from office suites to web browsers, video and music apps to games.

|

||||

|

||||

### Can Ubuntu Run .exe Files? ###

|

||||

|

||||

|

||||

You can run some Windows apps in Ubuntu

|

||||

|

||||

Yes, though not out of the box, and not with guaranteed success. This is because software distributed in .exe are meant to run on Windows. These are not natively compatible with any other desktop operating system, including Mac OS X or Android.

|

||||

|

||||

Software installers made for Ubuntu (and other Linux distributions) tend to come as ‘.deb’ files. These can be installed similarly to .exe — you just double-click and follow any on-screen prompts.

|

||||

|

||||

But Linux is versatile. Using a compatibility layer called ‘Wine’ (which technically is not an emulator, but for simplicity’s sake can be referred to as one for shorthand) that can run many popular apps. They won’t work quite as well as they do on Windows, nor look as pretty. But, for many, it works well enough to use on a daily basis.

|

||||

|

||||

Notable Windows software that can run on Ubuntu through Wine includes older versions of Photoshop and early versions of Microsoft Office . For a list of compatible software [refer to the Wine App Database][1].

|

||||

|

||||

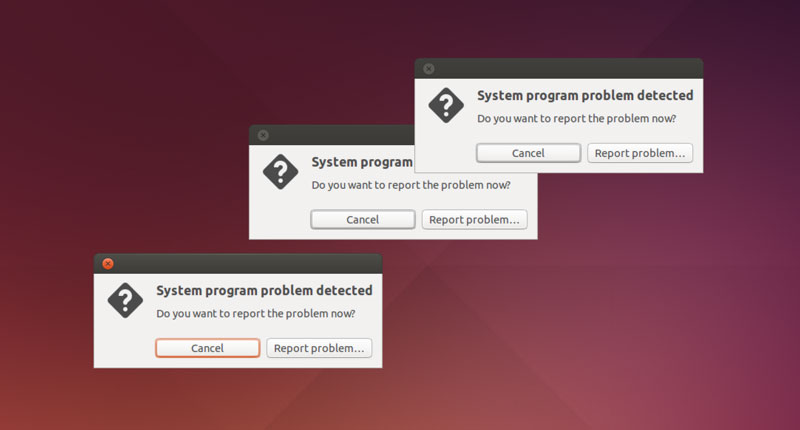

### Can Ubuntu Get Viruses? ###

|

||||

|

||||

|

||||

It may have errors, but it doesn’t have viruses

|

||||

|

||||

Theoretically, yes. But in reality, no.

|

||||

|

||||

Linux distributions are built in a way that makes it incredibly hard for viruses, malware and root kits to be installed, much less run and do any significant damage.

|

||||

|

||||

For example, most applications run as a ‘regular user’ with no special administrative privileges, required for a virus to access critical parts of the operating system. Most software is also installed from well maintained and centralised sources, like the Ubuntu Software Center, and not random websites. This makes the risk of installing something that is infected negligible.

|

||||

|

||||

Should you use anti-virus on Ubuntu? That’s up to you. For peace of mind, or if you’re regularly using Windows software through Wine or dual-booting, you can install a free and open-source virus scanner app like ClamAV, available from the Software Center.

|

||||

|

||||

You can learn more about the potential for viruses on Linux/Ubuntu [on the Ubuntu Wiki][2].

|

||||

|

||||

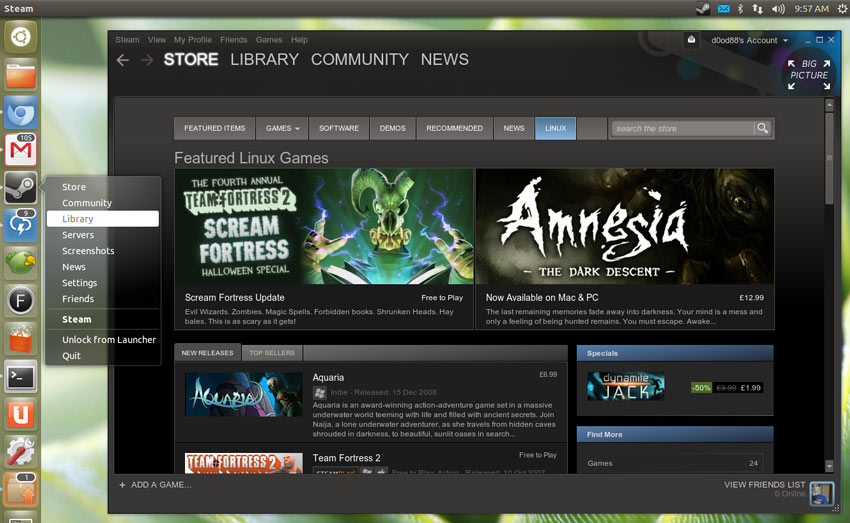

### Can Ubuntu Play Games? ###

|

||||

|

||||

|

||||

Steam has hundreds of high-quality games for Linux

|

||||

|

||||

Oh yes it can. From the traditionally simple distractions of 2D chess, word games and minesweeper to modern AAA titles requiring powerful graphics cards, Ubuntu has a diverse range of games available for it.

|

||||

|

||||

Your first port of call will be the **Ubuntu Software Center**. Here you’ll find a sizeable number of free, open-source and paid-for games, including acclaimed indie titles like World of Goo and Braid, as well as several sections filled with more traditional offerings, like PyChess, four-in-a-row and Scrabble clones.

|

||||

|

||||

For serious gaming you’ll want to grab **Steam for Linux**. This is where you’ll find some of the latest and greatest games available, spanning the full gamut of genres.

|

||||

|

||||

Also keep an eye on the [Humble Bundle][3]. These ‘pay what you want’ packages are held for two weeks every month or so. The folks at Humble have been fantastic supporters of Linux as a gaming platform, single-handily ensuring the Linux debut of many touted titles.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.omgubuntu.co.uk/2014/08/ubuntu-can-play-games-replace-windows-questions

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://plus.google.com/117485690627814051450/?rel=author

|

||||

[1]:https://appdb.winehq.org/

|

||||

[2]:https://help.ubuntu.com/community/Antivirus

|

||||

[3]:https://www.humblebundle.com/

|

||||

@ -0,0 +1,97 @@

|

||||

Upstream and Downstream: why packaging takes time

|

||||

================================================================================

|

||||

Here in the KDE office in Barcelona some people spend their time on purely upstream KDE projects and some of us are primarily interested in making distros work which mean our users can get all the stuff we make. I've been asked why we don't just automate the packaging and go and do more productive things. One view of making on a distro like Kubuntu is that its just a way to package up the hard work done by others to take all the credit. I don't deny that, but there's quite a lot to the packaging of all that hard work, for a start there's a lot of it these days.

|

||||

|

||||

"KDE" used to be released once every nine months or less frequently. But yesterday I released the [first bugfix update to Plasma][1], to make that happen I spent some time on Thursday with David making the [first update to Frameworks 5][2]. But Plasma 5 is still a work in progress for us distros, let's not forget about [KDE SC 4.13.3][3] which Philip has done his usual spectacular job of updating in the 14.04 LTS archive or [KDE SC 4.14 betas][4] which Scarlett has been packaging for utopic and backporting to 14.04 LTS. KDE SC used to be 20 tars, now it's 169 and over 50 langauge packs.

|

||||

|

||||

### Patches ###

|

||||

|

||||

If we were packaging it without any automation as used to be done it would take an age but of course we do automate the repetative tasks, the [KDE SC 4.13.97 status][5] page shows all the packages and highlights obvious problems. But with 169 tars even running the automated script takes a while, then you have to fix any patches that no longer apply. We have [policies][6] to disuade having patches, any patches should be upstream in KDE or on their way upstream, but sometimes it's unavoidable that we have some to maintain which often need small changes for each upstream release.

|

||||

|

||||

### Symbols ###

|

||||

|

||||

Much of what we package are libraries and if one small bit changes in the library, any applications which use that library will crash. This is ABI and the rules for [binary compatibility][7] in C++ are nuts. Not infrequently someone in KDE will alter a library ABI without realising. So we maintain symbol files to list all the symbols, these can often feel like more trouble than they're worth because they need updated when a new version of GCC produces different symbols or when symbols disappear and on investigation they turn out to be marked private and nobody will be using them anyway, but if you miss a change and apps start crashing as nearly happened in KDE PIM last week then people get grumpy.

|

||||

|

||||

### Copyright ###

|

||||

|

||||

Debian, and so Ubuntu, documents the copyright licence of every files in every package. This is a very slow and tedious job but it's important that it's done both upstream and downstream because it you don't people won't want to use your software in a commercial setting and at worst you could end up in court. So I maintain the [licensing policy][8] and not infrequently have to fix bits which are incorrectly or unclearly licenced and answer questions such as today I was reviewing whether a kcm in frameworks had to be LGPL licenced for Eike. We write a copyright file for every package and again this can feel like more trouble than its worth, there's no easy way to automate it but by some readings of the licence texts it's necessary to comply with them and it's just good practice. It also means that if someone starts making claims like requiring licencing for already distributed binary packages I'm in an informed position to correct such nonsense.

|

||||

|

||||

### Descriptions ###

|

||||

|

||||

When we were packaging KDE Frameworks from scratch we had to find a descirption of each Framework. Despite policies for metadata some were quite underdescribed so we had to go and search for a sensible descirption for them. Infact not infrequently we'll need to use a new library which doesn't even have a sensible paragraph describing what it does. We need to be able to make a package show something of a human face.

|

||||

|

||||

### Multiarch ###

|

||||

|

||||

A recent addition to the world of .deb packaging is [MultiArch][9] which allows i386 packages to be installed on amd64 computers as well as some even more obscure combinations (powerpc on ppcel64 anyone?). This lets you run Skype on your amd64 computer without messy cludges like the ia32-libs package. However it needs quite a lot of attention from packagers of libraries marking which packages are multiarch, which depend on other multiarch or arch independent packages and even after packaging KDE Frameworks I'm not entirely comfortable with doing it.

|

||||

|

||||

### Splitting up Packages ###

|

||||

|

||||

We spend lots of time splitting up packages. When say Calligra gets released it's all in one big tar but you don't want all of it on your system because you just want to write a letter in Calligra Words and Krita has lots of image and other data files which take up lots of space you don't care for. So for each new release we have to work out which of the installed files go into which .deb package. It takes time and even worse occationally we can get it wrong but if you don't want heaps of stuff on your computer you don't need then it needs to be done. It's also needed for library upgrades, if there's a new version of libfoo and not all the programs have been ported to it then you can install libfoo1 and libfoo2 on the same system without problems. That's not possible with distros which don't split up packages.

|

||||

|

||||

One messy side effect of this is that when a file moves from one .deb to another .deb made by the same sources, maybe Debian chose to split it another way and we want to follow them, then it needs a Breaks/Replaces/Conflicts added. This is a pretty messy part of .deb packaging, you need to specify which version it Breaks/Replaces/Conflicts and depending on the type of move you need to specify some combination of these three fields but even experienced packages seem to be unclear on which. And then if a backport (with files in original places) is released which has a newer version than the version you specify in the Breaks/Replaces/Conflicts it just refuses to install and stops half way through installing until a new upload is made which updates the Breaks/Replaces/Conflicts version in the packaging. I'd be interested in how this is solved in the RPM world.

|

||||

|

||||

### Debian Merges ###

|

||||

|

||||

Ubuntu is forked from Debian and to piggy back on their work (and add our own bugs while taking the credit) we merge in Debian's packaging at the start of each cycle. This is fiddly work involving going through the diff (and for patches that's often a diff of a diff) and changelog to work out why each alternation was made. Then we merge them together, it takes time and it's error prone but it's what allows Ubuntu to be one of the most up to date distros around even while much of the work gone into maintaining universe packages not part of some flavour has slowed down.

|

||||

|

||||

### Stable Release Updates ###

|

||||

|

||||

You have Kubuntu 14.04 LTS but you want more? You want bugfixes too? Oh but you want them without the possibility of regressions? Ubuntu has quite strict definition of what's allowed in after an Ubuntu release is made, this is because once upon a time someone uploaded a fix for X which had the side effect of breaking X on half the installs out there. So for any updates to get into the archive they can only be for certain packages with a track record of making bug fix releases without sneaking in new features or breaking bits. They need to be tested, have some time passed to allow for wider testing, be tested again using the versions compiled in Launchpad and then released. KDE makes bugfix releases of KDE SC every month and we update them in the latest stable and LTS releases as [4.13.3 was this week][10]. But it's not a process you can rush and will take a couple of weeks usually. That 4.13.3 update was even later then usual because we were busy with Plasma 5 and whatnot. And it's not perfect, a bug in Baloo did get through with 4.13.2. But it would be even worse if we did rush it.

|

||||

|

||||

### Backports ###

|

||||

|

||||

Ah but you want new features too? We don't allow in new features into the normal updates because they will have more chance of having regressions. That's why we make backports, either in the kubuntu-ppa/backports archive or in the ubuntu backports archive. This involves running the package through another automation script to change whever needs changed for the backport then compiling it all, testing it and releasing it. Maintaining and running that backport script is quite faffy so sending your thanks is always appreciated.

|

||||

|

||||

We have an allowance to upload new bugfix (micro releases) of KDE SC to the ubuntu archive because KDE SC has a good track record of fixing things and not breaking them. When we come to wanting to update Plasma we'll need to argue for another allowance. One controvertial issue in KDE Frameworks is that there's no bugfix releases, only monthly releases with new features. These are unlikely to get into the Ubuntu archive, we can try to argue the case that with automated tests and other processes the quality is high enough, but it'll be a hard sell.

|

||||

|

||||

### Crack of the Day ###

|

||||

|

||||

Project Neon provides packages of daily builds of parts of KDE from Git. And there's weekly ISOs that are made from this too. These guys rock. The packages are monolithic and install in /opt to be able to live alongside your normal KDE software.

|

||||

|

||||

### Co-installability ###

|

||||

|

||||

You should be able to run KDELibs 4 software on a Plasma 5 desktop. I spent quite a bit of time ensuring this is possible by having no overlapping files in kdelibs/kde-runtime and kde frameworks and some parts of Plasma. This wasn't done primarily for Kubuntu, many of the files could have been split out into .deb packages that could be shared between KDELibs 4 and Plasma 5, but other disros which just installs packages in a monolithic style benefitted. Some projects like Baloo didn't ensure they were co-installable, fine for Kubuntu as we can separate the libraries that need to be coinstalled from the binaries, but other distros won't be so happy.

|

||||

|

||||

### Automated Testing ###

|

||||

|

||||

Increasingly KDE software comes with its own test suite. Test suites are something that has been late coming to free software (and maybe software in general) but now it's here we can have higher confidence that the software is bug free. We run these test suites as part of the package compilation process and not infrequently find that the test suite doesn't run, I've been told that it's not expected for packagers to use it in the past. And of course tests fail.

|

||||

|

||||

### Obscure Architectures ###

|

||||

|

||||

In Ubuntu we have some obscure architectures. 64-bit Arm is likely to be a useful platform in the years to come. I'm not sure why we care about 64-bit powerpc, I can only assume someone has paid Canonical to care about it. Not infrequently we find software compiles fine on normal PCs but breaks on these obscure platforms and we need to debug why they is. This can be a slow process on ARM which takes an age to do anything, or very slow where I don't even have access to a machine to test on, but it's all part of being part of a distro with many use-cases.

|

||||

|

||||

### Future Changes ###

|

||||

|

||||

At Kubuntu we've never shared infrstructure with Debian despite having 99% the same packaging. This is because Ubuntu to an extent defines itself as being the technical awesomeness of Debian with smoother processes. But for some time Debian has used git while we've used the slower bzr (it was an early plan to make Ubuntu take over the world of distributed revision control with Bzr but then Git came along and turned out to be much faster even if harder to get your head around) and they've also moved to team maintainership so at last we're planning [shared repositories][11]. That'll mean many changes in our scripts but should remove much of the headache of merges each cycle.

|

||||

|

||||

There's also a proposal to [move our packaging to daily builds][12] so we won't have to spend a lot of time updating packaging at every release. I'm skeptical if the hassle of the infrastructure for this plus fixing packaging problems as they occur each day will be less work than doing it for each release but it's worth a try.

|

||||

|

||||

### ISO Testing ###

|

||||

|

||||

Every 6 months we make an Ubuntu release (which includes all the flavours of which Ubuntu [Unity] is the flagship and Kubuntu is the most handsome) and there's alphas and betas before that which all need to be tested to ensure they actually install and run. Some of the pain of this has reduced since we've done away with the alternative (text debian-installer) images but we're nowhere near where Ubuntu [Unity] or OpenSUSE is with OpenQA where there are automated installs running all the time in various setups and some magic detects problems. I'd love to have this set up.

|

||||

|

||||

I'd welcome comments on how any workflow here can be improved or how it compares to other distributions. It takes time but in Kubuntu we have a good track record of contributing fixes upstream and we all are part of KDE as well as Kubuntu. As well as the tasks I list above about checking copyright or co-installability I do Plasma releases currently, I just saw Harald do a Phonon release and Scott's just applied for a KDE account for fixes to PyKDE. And as ever we welcome more people to join us, we're in #kubuntu-devel where free hugs can be found, and we're having a whole day of Kubuntu love at Akademy.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://blogs.kde.org/2014/08/13/upstream-and-downstream-why-packaging-takes-time

|

||||

|

||||

作者:[Jonathan Riddell][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://blogs.kde.org/users/jriddell

|

||||

[1]:https://dot.kde.org/2014/08/12/first-bugfix-update-plasma-5

|

||||

[2]:https://dot.kde.org/2014/08/07/kde-frameworks-5.1

|

||||

[3]:http://www.kubuntu.org/news/kde-sc-4.13.3

|

||||

[4]:https://dot.kde.org/2014/07/18/kde-ships-july-updates-and-second-beta-applications-and-platform-414

|

||||

[5]:http://qa.kubuntu.co.uk/ninjas-status/build_status_4.13.97_utopic.html

|

||||

[6]:https://community.kde.org/Kubuntu/Policies

|

||||

[7]:https://techbase.kde.org/Policies/Binary_Compatibility_Issues_With_C++

|

||||

[8]:https://techbase.kde.org/Policies/Licensing_Policy

|

||||

[9]:https://help.ubuntu.com/community/MultiArch

|

||||

[10]:http://www.kubuntu.org/news/kde-sc-4.13.3

|

||||

[11]:http://lists.alioth.debian.org/pipermail/pkg-kde-talk/2014-August/001934.html

|

||||

[12]:https://lists.ubuntu.com/archives/kubuntu-devel/2014-August/008651.html

|

||||

@ -0,0 +1,104 @@

|

||||

Where And How To Code: Choosing The Best Free Code Editor

|

||||

================================================================================

|

||||

A close look at Cloud9, Koding and Nitrous.IO.

|

||||

|

||||

|

||||

|

||||

**Ready to start your first coding project? Great! Just configure** Terminal or Command Prompt, learn to use it and then install all the languages, add-on libraries and APIs you’ll need. When you're finally through with all that, you can get started with installing [Visual Studio][1] so you can preview your work.

|

||||

|

||||

At least that's how you used to have to do it.

|

||||

|

||||

No wonder beginning coders are increasingly turning to online integrated development environments (IDEs). An IDE is a code editor that comes ready to work with languages and all their dependencies, saving you the hassle of installing them on your computer.

|

||||

|

||||

I wanted to learn more about what constitutes the typical IDE, so I took a look at the free tier for three of the most popular integrated development environments out there: [Cloud9][2], [Koding][3], and [Nitrous.IO][4]. In the process, I learned a lot about the cases in which programmers would and would not want to use IDEs.

|

||||

|

||||

### Why Use An IDE? ###

|

||||

|

||||

If a text editor is like Microsoft Word, think of an IDE as Google Drive. You get similar functionality, but it's accessible from any computer and ready to share. As the Internet becomes an increasingly influential part of project workflow, IDEs make life easier.

|

||||

|

||||

I used Nitrous.IO for my last ReadWrite tutorial, the Python app in [Create Your Own Obnoxiously Simple Messaging App Just Like Yo][5]. When you use an IDE, you select the language you want to work in so you can test and preview how it looks on the IDE’s Virtual Machine (VM) designed to run programs written specifically in that language.

|

||||

|

||||

If you read the tutorial, you'll see there are only two API libraries that my app depended on—messaging service Twilio and Python microframework Flask. That would have been easy to build using a text editor and Terminal on my computer, but I chose an IDE for yet another convenience: when everyone is using the same developer environment, it’s easier to follow along with a tutorial.

|

||||

|

||||

### What An IDE Is Not ###

|

||||

|

||||

Still, an IDE is not a long term hosting solution.

|

||||

|

||||

When you’re working on an IDE, you’re able to build, test and preview your app in the cloud. You’re even able to share the final draft via link.

|

||||

|

||||

But you can’t use an IDE to store your project permanently. You wouldn't ditch your blog in favor of hosting your posts as Google Drive documents. Like Google Drive, IDEs allow you to link and share content, but neither are equipped to replace real hosting.

|

||||

|

||||

What's more, IDEs aren't designed for wide-spread sharing. Despite the increased functionality IDEs add to the preview capability of most text editors, stick with showing off your app preview to friends and coworkers, not with, say, the front page of Hacker News. In that case, your IDE would probably shut you down for excessive traffic.

|

||||

|

||||

Think of it this way: an IDE is a place to build and test your app; a host is a place for it to live. So once you’ve finalized your app, you’ll want to deploy it on a cloud-based service that lets you host apps long term, preferably one with a free hosting option like [Heroku][6].

|

||||

|

||||

### Choosing An IDE ###

|

||||

|

||||

|

||||

|

||||

As IDEs become more popular, more are popping up all the time. In my opinion, there’s no perfect IDE. However, some IDEs are better for certain work process priorities than others.

|

||||

|

||||

I took a look at the free tier for three of the most popular integrated development environments out there: Cloud9, Koding, and Nitrous.IO. Each has its benefits, depending on what you're working on. Here's what I found.

|

||||

|

||||

### Cloud9: Ready To Collaborate ###

|

||||

|

||||

When I signed up for Cloud9, one of the first things it prompted me to do was integrate my GitHub and BitBucket accounts. Instantly, all my GitHub projects, solo and collaborative, were ready to clone and work on in Cloud9’s development tool. Other IDEs have nowhere near this level of GitHub integration.

|

||||

|

||||

Out of the three IDEs I looked at, Cloud9 seemed most intent on ensuring an environment where I could work seamlessly with co-coders. Here, it’s not just a chat function in the corner. In fact, said CEO Ruben Daniels, Cloud9 collaborators can see each others coding in real time, just like co-authors are able to on Google Drive.

|

||||

|

||||

“Most services’ collaborative features only work on a single file,” said Daniels. “Ours work on multiples throughout the project. Collaboration is fully integrated within the IDE.”

|

||||

|

||||

### Koding: Help When You Need It ###

|

||||

|

||||

IDEs give you the tools you need to build and test applications in the gamut of open source languages. For a beginner, that can be a little bit intimidating. For example, if I’m working on a project with both Python and Ruby components, which VM do I use for testing?

|

||||

|

||||

The answer is both, though on a free account, you can only turn on one VM for testing at a time. I was able to find that out right on my Koding dashboard, which doubles as a place for users to give and get advice on their Koding projects. Of the three, it’s the most transparent when it comes to where you can ask for assistance and hear back in minutes.

|

||||

|

||||

“We have an active community built into the application,” said Nitin Gupta, Chief Business Officer at Koding. “We wanted to create an environment that is extremely attractive to people who need help and who want to help.”

|

||||

|

||||

### Nitrous.IO: An IDE Wherever You Want ###

|

||||

|

||||

The ultimate advantage of using an IDE over your own desktop environment is that it’s self-contained. You don’t have to install anything to use it. On the other hand, the ultimate advantage of using your own desktop environment is that you can work locally, even without Internet.

|

||||

|

||||

Nitrous.IO gives you the best of both worlds. You can use the IDE on the Web, or you can download it to your own computer, said cofounder AJ Solimine. The advantage is that you can merge the integrations of Nitrous with the familiarity of your preferred text editor.

|

||||

|

||||

“You can access Nitrous.IO from any modern web browser via our online Web IDE, but we also have handy desktop applications for Windows and Mac that let you edit with your favorite editor,” he said.

|

||||

|

||||

### The Bottom Line ###

|

||||

|

||||

The most surprising thing I learned from a week of [using][7] three different IDEs? How similar they are. [When it comes to the basics of coding][8], they’re all equally helpful.

|

||||

|

||||

Cloud9, Koding, [and Nitrous.IO all support][9] every major open source language, from Ruby to Python to PHP to HTML5. You can choose from any of those VMs.

|

||||

|

||||

Both Cloud9 and Nitrous.IO have built-in one-click GitHub integration. For Koding there are a [couple more steps][10], but it can be done.

|

||||

|

||||

Each integrated easily with the APIs I needed. Each let me install my preferred package installers, too (and Koding made me do it as a superuser). They all have a built in Terminal for easily testing and deploying projects. All three allow you to easily preview your project. And of course, they all hosted my project in the cloud so I could work on it anywhere.

|

||||

|

||||

On the downside, they all had the same negatives, which is reasonable when you consider they're free. You can only run one VM at a time to test a program written in a particular language. When you’re not using your VM for a while, the IDE preserves bandwidth by putting it into hibernation and you have to wait for it to reload next time you use it (and Cloud9 was especially laborious). None of them make a good permanent host for your finished projects.

|

||||

|

||||

So to answer those who ask me if there’s a perfect free IDE out there, probably not. But depending on your priorities, there might be one that’s perfect for your project.

|

||||

|

||||

Lead image courtesy of [Shutterstock][11]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://readwrite.com/2014/08/14/cloud9-koding-nitrousio-integrated-development-environment-ide-coding

|

||||

|

||||

作者:[Lauren Orsini][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://readwrite.com/author/lauren-orsini

|

||||

[1]:http://www.visualstudio.com/

|

||||

[2]:http://c9.io/

|

||||

[3]:https://koding.com/

|

||||

[4]:http://nitrous.io/

|

||||

[5]:http://readwrite.com/2014/07/11/one-click-messaging-app

|

||||

[6]:http://heroku.com/

|

||||

[7]:http://help.nitrous.io/ide-general/

|

||||

[8]:https://www.nitrous.io/desktop

|

||||

[9]:https://www.nitrous.io/desktop

|

||||

[10]:https://koding.com/Activity/steps-clone-projects-github-koding-1-create-account-github-2-open-your-terminal-3

|

||||

[11]:http://www.shutterstock.com/

|

||||

@ -0,0 +1,68 @@

|

||||

Why Your Company Needs To Write More Open Source Software - ReadWrite

|

||||

================================================================================

|

||||

> Real innovation doesn't happen behind closed doors.

|

||||

|

||||

|

||||

|

||||

**The Wall Street Journal [thinks][1] it's news that Zulily is developing** "more software in-house." It's not. At all. As [Eric Raymond wrote][2] years ago, 95% of the world's software is written for use, not for sale. The reasons are many, but one stands out: as Zulily CIO Luke Friang declares, it's "nearly impossible for a [off the shelf] solution to keep up with our pace."

|

||||

|

||||

True now, just as it was true 20 years ago.

|

||||

|

||||

But one thing is different, and it's something the WSJ completely missed. Historically software developed in-house was zealously kept proprietary because, the reasoning went, it was the source of a firm's competitive advantage. Today, however, companies increasingly realize the opposite: there is far more to be gained by open sourcing in-house software than keeping it closed.

|

||||

|

||||

Which is why your company needs to contribute more open-source code. Much more.

|

||||

|

||||

We've gone through an anomalous time these past 20 years. While most software continued to be written for internal use, most of the attention has been focused on vendors like SAP and Microsoft that build solutions that apply to a wide range of companies.

|

||||

|

||||

That's the theory, anyway.

|

||||

|

||||

In practice, buyers spent a small fortune on license fees, then a 5X multiple on top of that to make the software fit their requirements. For example, a company may spend $100,000 on an ERP system, but they're going to spend another $500,000 making it work.

|

||||

|

||||

One of the reasons open source took off, even in applications, was that companies could get a less functional product for free (or a relatively inexpensive fee) and then spend their implementation dollars tuning it to their needs. Either way, customization was necessary, but the open source approach was less costly and arguably more likely to result in a more tailored result.

|

||||

|

||||

Meanwhile, technology vendors doubled-down on "sameness," as Redmonk analyst [Stephen O'Grady describes][3]:

|

||||

|

||||

> The mainstream technology industry has, in recent years, eschewed specialization. Virtual appliances, each running a version of the operating system customized for an application or purpose, have entirely failed to dent the sales of general purpose alternatives such as RHEL or Windows. For better than twenty years, the answer to any application data persistence requirement has meant one thing: a relational database. If you were talking about enterprise application development, you were talking about Java. And so on.

|

||||

|

||||

Along the way, however, companies discovered that vendors weren't really meeting their needs, even for well-understood product categories like Content Management Systems. They needed different, not same.

|

||||

|

||||

So the customers went rogue. They became vendors. Sort of.

|

||||

|

||||

As is often the case, [O'Grady nails][4] this point. Writing in 2010, O'Grady uncovers an interesting trend: "Software vendors are facing a powerful new market competitor: their customers."

|

||||

|

||||

Think about the most visible technologies today. Most are open source, and nearly all of them were originally written for some company's internal use, or some developer's hobby. Linux, Git, Hadoop, Cassandra, MongoDB, Android, etc. None of these technologies were originally written to be sold as products.

|

||||

|

||||

Instead, they were developed by companies—usually Web companies—building software to "[scratch their own itches][5]," to use the open source phrase. And unlike previous generations of in-house software developed at banks, hospitals and other organizations, they open sourced the code.

|

||||

|

||||

While [some companies eschew developing custom software][6] because they don't want to maintain it, open source (somewhat) mitigates this by letting a community grow up to extend and maintain a project, thereby amortizing the costs of development for the code originators. Yahoo! started Hadoop, but its biggest contributors today are Cloudera and Hortonworks. Facebook kickstarted Cassandra, but DataStax primarily maintains it today. And so on.

|

||||

|

||||

Today real software innovation doesn't happen behind closed doors. Or, if it does, it doesn't stay there. It's open source, and it's upending decades of established software orthodoxy.

|

||||

|

||||

Not that it's for the faint of heart.

|

||||

|

||||

The best open-source projects [innovate very fast][7]. Which is not the same as saying anyone will care about your open-source code. There are [significant pros and cons to open sourcing your code][8]. But one massive "pro" is that the best developers want to work on open code: if you need to hire quality developers, you need to give them an open source outlet for their work. (Just [ask Netflix][9].)

|

||||

|

||||

But that's no excuse to sit on the sidelines. It's time to get involved, and not for the good of some ill-defined "community." No, the primary beneficiary of open-source software development is you and your company. Better get started.

|

||||

|

||||

Lead image courtesy of Shutterstock.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://readwrite.com/2014/08/16/open-source-software-business-zulily-erp-wall-street-journal

|

||||

|

||||

作者:[Matt Asay][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://readwrite.com/author/matt-asay

|

||||

[1]:http://blogs.wsj.com/cio/2014/08/08/zulily-calls-in-house-software-a-differentiator-for-competitive-advantage/

|

||||

[2]:http://oreilly.com/catalog/cathbazpaper/chapter/ch05.html

|

||||

[3]:http://redmonk.com/sogrady/2010/01/12/roll-your-own/#ixzz3ATBuZsef

|

||||

[4]:http://redmonk.com/sogrady/2010/01/12/roll-your-own/

|

||||

[5]:http://en.wikipedia.org/wiki/The_Cathedral_and_the_Bazaar

|

||||

[6]:http://www.abajournal.com/magazine/article/roll_your_own_software_hidden_dangers_on_the_road_less_traveled/

|

||||

[7]:http://readwrite.com/2013/12/12/open-source-innovation

|

||||

[8]:http://readwrite.com/2014/07/07/open-source-software-pros-cons

|

||||

[9]:http://techblog.netflix.com/2012/07/open-source-at-netflix-by-ruslan.html

|

||||

@ -0,0 +1,51 @@

|

||||

Will Linux ever be able to give consumers what they want?

|

||||

================================================================================

|

||||

> Jack Wallen offers up the novel idea that giving the consumers what they want might well be the key to boundless success.

|

||||

|

||||

|

||||

|

||||

In the world of consumer electronics, if you don't give the buyer what they want, they'll go elsewhere. We've recently witnessed this with the Firefox browser. The consumer wanted a faster, less-bloated piece of software, and the developers went in the other direction. In the end, the users migrated to Chrome or Chromium.

|

||||

|

||||

Linux needs to gaze deep into their crystal ball, watch carefully the final fallout of that browser war, and heed this bit of advice:

|

||||

|

||||

If you don't give them what they want, they'll leave.

|

||||

|

||||

Another great illustration of this backfiring is Windows 8. The consumer didn't want that interface. Microsoft, however, wanted it because it was necessary to begin the drive to all things Surface. This same scenario could have been applied to Canonical and Ubuntu Unity -- however, their goal wasn't geared singularly and specifically towards tablets (so, the interface was still highly functional and intuitive on the desktop).

|

||||

|

||||

For the longest time, it seemed like Linux developers and designers were gearing everything they did toward themselves. They took the "eat your own dog food" too far. In that, they forgot one very important thing:

|

||||

|

||||

Without new users, their "base" would only ever belong to them.

|

||||

|

||||

In other words, the choir had not only been preached to, it was the one doing the preaching. Let me give you three examples to hit this point home.

|

||||

|

||||

- For years, Linux has needed an equivalent of Active Directory. I would love to hand that title over to LDAP, but have you honestly tried to work with LDAP? It's a nightmare. Developers have tried to make LDAP easy, but none have succeeded. It amazes me that a platform that has thrived in multi-user situations still has nothing that can go toe-to-toe with AD. A team of developers needs to step up, start from scratch, and create the open-source equivalent to AD. This would be such a boon to mid-size companies looking to migrate away from Microsoft products. But until this product is created, the migration won't happen.

|

||||

- Another Microsoft-driven need -Exchange/Outlook. Yes, I realize that many are going to the cloud. But the truth is that mediumto large-scale businesses will continue relying on the Exchange/Outlook combo until something better comes along. This could very well happen within the open-source community. One piece of this puzzle is already there (though it needs some work) -the groupware client, Evolution. If someone could take, say, a fork of Zimbra and re-tool it such a way that it would work with Evolution (and even Thunderbird) to serve as a drop-in replacement for Exchange, the game would change, and the trickle-down to consumers would be massive.

|

||||

- Cheap, cheap, cheap. This one is a hard pill for most to swallow -but consumers (and businesses) want cheap. Look at the Chromebook sales over the last year. Now, do a search for a Linux laptop and see if you can find one for under $700.00 (USD). For a third of that cost, you can get a Chromebook (a platform running the Linux kernel) that will serve you well. But because Linux is still such a niche market, it's hard to get the cost down. A company like Red Hat Linux could change that. They already have the server hardware in place. Why not crank out a bunch of low-cost, mid-range laptops that work in similar fashion to the Chromebook but only run a full-blown Linux environment? (see "[Is the Cloudbook the future of Linux?][1]") The key is that these devices must be low-cost and meet the needs of the average consumer. Stop thinking with your gamer/developer hat on and remember what the average user really needs -a web browser and not much more. That's why the Chromebook is succeeding so handily. Google knew exactly what the consumer wanted, and they delivered. On the Linux front, companies still think the only way to attract buyers is to crank out high-end, expensive Linux hardware. There's a touch of irony there, considering one of the most-often shouted battle cries is that Linux runs on slower, older hardware.

|

||||

|

||||

Finally, Linux needs to take a page from the good ol' Book Of Jobs and figure out how to convince the consumer that what they truly need is Linux. In their businesses and in their homes -- everyone can benefit from using Linux. Honestly, how can the open-source community not pull that off? Linux already has the perfect built-in buzzwords: Stability, reliability, security, cloud, free -- plus Linux is already in the hands of an overwhelming amount of users (they just don't know it). It's now time to let them know. If you use Android or Chromebooks, you use (in one form or another) Linux.

|

||||

|

||||

Knowing just what the consumer wants has always been a bit of a stumbling block for the Linux community. And I get that -- so much of the development of Linux happens because a developer has a particular need. This means development is targeted to a "micro-niche." It's time, however, for the Linux development community to think globally. "What does the average user need, and how do we give it to them?" Let me offer up the most basic of primers.

|

||||

|

||||

The average user needs:

|

||||

|

||||

- Low cost

|

||||

- Seamless integration with devices and services

|

||||

- Intuitive and modern designs

|

||||

- A 100% solid browser experience

|

||||

|

||||

That's pretty much it. With those four points in mind, it should be easy to take a foundation of Linux and create exactly what the user wants. Google did it... certainly the Linux community can build on what Google has done and create something even better. Mix that in with AD integration, give it an Exchange/Outlook or cloud-based groupware set of tools, and something very special will happen -- people will buy it.

|

||||

|

||||

Do you think the Linux community will ever be able to give the consumer what they want? Share your opinion in the discussion thread below.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.techrepublic.com/article/will-linux-ever-be-able-to-give-consumers-what-they-want/

|

||||

|

||||

作者:[Jack Wallen][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.techrepublic.com/search/?a=jack+wallen

|

||||

[1]:http://www.techrepublic.com/article/is-the-cloudbook-the-future-of-linux/

|

||||

@ -0,0 +1,199 @@

|

||||

KDE Plasma 5—For those Linux users undecided on the kernel’s future

|

||||

================================================================================

|

||||

> Review—new release straddles traditional desktop needs, long term multi-device plans.

|

||||

|

||||

Finally, the KDE project has released KDE Plasma 5, a major new version of the venerable K Desktop Environment.

|

||||

|

||||

Plasma 5 arrives in the middle of an ongoing debate about the future of the Linux desktop. On one hand there are the brand new desktop paradigms represented by GNOME and Unity. Both break from the traditional desktop model in significant ways, and both attempt to create interfaces that will work on the desktop and the much-anticipated, tablet-based future (which [may or may not ever arrive][1]).

|

||||

|

||||

Linux desktops like KDE, XFCE, LXDE, Mate, and even Cinnamon are the other side of the fence. None has re-invented itself too much. They continue to offer users a traditional desktop experience, which is not to say these projects aren't growing and refining. All of them continue to turn out incremental releases that fine tune what is a well-proven desktop model.

|

||||

|

||||

|

||||

Ubuntu's Unity desktop

|

||||

|

||||

|

||||

GNOME 3 desktop

|

||||

|

||||

GNOME and Unity end up getting the lion's share of attention in this ongoing debate. They're both new and different. They're both opinionated and polarizing. For every Linux user that loves them, there's another that loves to hate them. It makes for, if nothing else, lively comments and forum posts in the Linux world. But the difference between these two Linux camps is about more than just how your desktop looks and behaves. It's about what the future of computing looks like.

|

||||

|

||||

GNOME and Unity believe that the future of computing consists of multiple devices all running the same software—the new desktop these two create only makes sense within this vision. These new versions aren't really built as desktops for the future, but they include a hybrid desktop fallback mode for now and appear to believe in devices going forward. The other side of the Linux schism largely seems to ignore those.

|

||||

|

||||

And unlike the world of closed source OSes—where changes are handed down, like them or leave them—the Linux world is in the middle of a conversation about these two opposite ideas.

|

||||

|

||||

For users, it can be frustrating. The last thing you need when you're trying to get work done is an update that completely changes your desktop, forcing you to learn new ways of working. Even the best case scenario, moving to another desktop when your old favorite suddenly veers off in a new direction, usually means jettisoning years of muscle memory and familiarity.

|

||||

|

||||

Luckily, there's a simple way to navigate this mess and find the right desktop for you. Here it is in a nutshell: do you want to bend your will to your desktop or do you want to bend your desktop to your will?

|

||||

|

||||