mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

commit

ac1e481ac0

115

published/20150817 Top 5 Torrent Clients For Ubuntu Linux.md

Normal file

115

published/20150817 Top 5 Torrent Clients For Ubuntu Linux.md

Normal file

@ -0,0 +1,115 @@

|

||||

Ubuntu 下五个最好的 BT 客户端

|

||||

================================================================================

|

||||

|

||||

|

||||

|

||||

在寻找 **Ubuntu 中最好的 BT 客户端**吗?事实上,Linux 桌面平台中有许多 BT 客户端,但是它们中的哪些才是**最好的 Ubuntu 客户端**呢?

|

||||

|

||||

我将会列出 Linux 上最好的五个 BT 客户端,它们都拥有着体积轻盈,功能强大的特点,而且还有令人印象深刻的用户界面。自然,易于安装和使用也是特性之一。

|

||||

|

||||

### Ubuntu 下最好的 BT 客户端 ###

|

||||

|

||||

考虑到 Ubuntu 默认安装了 Transmission,所以我将会从这个列表中排除了 Transmission。但是这并不意味着 Transmission 没有资格出现在这个列表中,事实上,Transmission 是一个非常好的BT客户端,这也正是它被包括 Ubuntu 在内的多个发行版默认安装的原因。

|

||||

|

||||

### Deluge ###

|

||||

|

||||

|

||||

|

||||

[Deluge][1] 被 Lifehacker 评选为 Linux 下最好的 BT 客户端,这说明了 Deluge 是多么的有用。而且,并不仅仅只有 Lifehacker 是 Deluge 的粉丝,纵观多个论坛,你都会发现不少 Deluge 的忠实拥趸。

|

||||

|

||||

快速,时尚而直观的界面使得 Deluge 成为 Linux 用户的挚爱。

|

||||

|

||||

Deluge 可在 Ubuntu 的仓库中获取,你能够在 Ubuntu 软件中心中安装它,或者使用下面的命令:

|

||||

|

||||

sudo apt-get install deluge

|

||||

|

||||

### qBittorrent ###

|

||||

|

||||

|

||||

|

||||

正如它的名字所暗示的,[qBittorrent][2] 是著名的 [Bittorrent][3] 应用的 Qt 版本。如果曾经使用过它,你将会看到和 Windows 下的 Bittorrent 相似的界面。同样轻巧并且有着 BT 客户端的所有标准功能, qBittorrent 也可以在 Ubuntu 的默认仓库中找到。

|

||||

|

||||

它可以通过 Ubuntu 软件仓库安装,或者使用下面的命令:

|

||||

|

||||

sudo apt-get install qbittorrent

|

||||

|

||||

|

||||

### Tixati ###

|

||||

|

||||

|

||||

|

||||

[Tixati][4] 是另一个不错的 Ubuntu 下的 BT 客户端。它有着一个默认的黑暗主题,尽管很多人喜欢,但是我例外。它拥有着一切你能在 BT 客户端中找到的功能。

|

||||

|

||||

除此之外,它还有着数据分析的额外功能。你可以在美观的图表中分析流量以及其它数据。

|

||||

|

||||

- [下载 Tixati][5]

|

||||

|

||||

|

||||

|

||||

### Vuze ###

|

||||

|

||||

|

||||

|

||||

[Vuze][6] 是许多 Linux 以及 Windows 用户最喜欢的 BT 客户端。除了标准的功能,你可以直接在应用程序中搜索种子,也可以订阅系列片源,这样就无需再去寻找新的片源了,因为你可以在侧边栏中的订阅看到它们。

|

||||

|

||||

它还配备了一个视频播放器,可以播放带有字幕的高清视频等等。但是我不认为你会用它来代替那些更好的视频播放器,比如 VLC。

|

||||

|

||||

Vuze 可以通过 Ubuntu 软件中心安装或者使用下列命令:

|

||||

|

||||

sudo apt-get install vuze

|

||||

|

||||

|

||||

|

||||

### Frostwire ###

|

||||

|

||||

|

||||

|

||||

[Frostwire][7] 是一个你应该试一下的应用。它不仅仅是一个简单的 BT 客户端,它还可以应用于安卓,你可以用它通过 Wifi 来共享文件。

|

||||

|

||||

你可以在应用中搜索种子并且播放他们。除了下载文件,它还可以浏览本地的影音文件,并且将它们有条理的呈现在播放器中。这同样适用于安卓版本。

|

||||

|

||||

还有一个特点是:Frostwire 提供了独立音乐人的[合法音乐下载][13]。你可以下载并且欣赏它们,免费而且合法。

|

||||

|

||||

- [下载 Frostwire][8]

|

||||

|

||||

|

||||

|

||||

### 荣誉奖 ###

|

||||

|

||||

在 Windows 中,uTorrent(发音:mu torrent)是我最喜欢的 BT 应用。尽管 uTorrent 可以在 Linux 下运行,但是我还是特意忽略了它。因为在 Linux 下使用 uTorrent 不仅困难,而且无法获得完整的应用体验(运行在浏览器中)。

|

||||

|

||||

可以[在这里][9]阅读 Ubuntu下uTorrent 的安装教程。

|

||||

|

||||

#### 快速提示: ####

|

||||

|

||||

大多数情况下,BT 应用不会默认自动启动。如果想改变这一行为,请阅读[如何管理 Ubuntu 下的自启动程序][10]来学习。

|

||||

|

||||

### 你最喜欢的是什么? ###

|

||||

|

||||

这些是我对于 Ubuntu 下最好的 BT 客户端的意见。你最喜欢的是什么呢?请发表评论。也可以查看与本主题相关的[Ubuntu 最好的下载管理器][11]。如果使用 Popcorn Time,试试 [Popcorn Time 技巧][12]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://itsfoss.com/best-torrent-ubuntu/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[Xuanwo](https://github.com/Xuanwo)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://itsfoss.com/author/abhishek/

|

||||

[1]:http://deluge-torrent.org/

|

||||

[2]:http://www.qbittorrent.org/

|

||||

[3]:http://www.bittorrent.com/

|

||||

[4]:http://www.tixati.com/

|

||||

[5]:http://www.tixati.com/download/

|

||||

[6]:http://www.vuze.com/

|

||||

[7]:http://www.frostwire.com/

|

||||

[8]:http://www.frostwire.com/downloads

|

||||

[9]:http://sysads.co.uk/2014/05/install-utorrent-3-3-ubuntu-14-04-13-10/

|

||||

[10]:http://itsfoss.com/manage-startup-applications-ubuntu/

|

||||

[11]:http://itsfoss.com/4-best-download-managers-for-linux/

|

||||

[12]:http://itsfoss.com/popcorn-time-tips/

|

||||

[13]:http://www.frostclick.com/wp/

|

||||

|

||||

@ -1,83 +1,82 @@

|

||||

在 Linux 中使用"两个磁盘"创建 RAID 1(镜像) - 第3部分

|

||||

在 Linux 下使用 RAID(三):用两块磁盘创建 RAID 1(镜像)

|

||||

================================================================================

|

||||

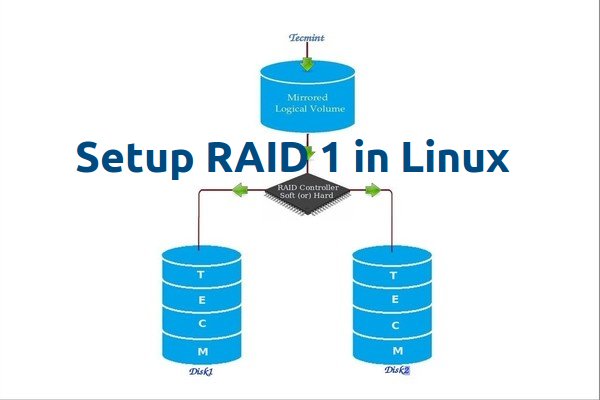

RAID 镜像意味着相同数据的完整克隆(或镜像)写入到两个磁盘中。创建 RAID1 至少需要两个磁盘,它的读取性能或者可靠性比数据存储容量更好。

|

||||

|

||||

**RAID 镜像**意味着相同数据的完整克隆(或镜像),分别写入到两个磁盘中。创建 RAID 1 至少需要两个磁盘,而且仅用于读取性能或者可靠性要比数据存储容量更重要的场合。

|

||||

|

||||

|

||||

|

||||

|

||||

在 Linux 中设置 RAID1

|

||||

*在 Linux 中设置 RAID 1*

|

||||

|

||||

创建镜像是为了防止因硬盘故障导致数据丢失。镜像中的每个磁盘包含数据的完整副本。当一个磁盘发生故障时,相同的数据可以从其它正常磁盘中读取。而后,可以从正在运行的计算机中直接更换发生故障的磁盘,无需任何中断。

|

||||

|

||||

### RAID 1 的特点 ###

|

||||

|

||||

-镜像具有良好的性能。

|

||||

- 镜像具有良好的性能。

|

||||

|

||||

-磁盘利用率为50%。也就是说,如果我们有两个磁盘每个500GB,总共是1TB,但在镜像中它只会显示500GB。

|

||||

- 磁盘利用率为50%。也就是说,如果我们有两个磁盘每个500GB,总共是1TB,但在镜像中它只会显示500GB。

|

||||

|

||||

-在镜像如果一个磁盘发生故障不会有数据丢失,因为两个磁盘中的内容相同。

|

||||

- 在镜像如果一个磁盘发生故障不会有数据丢失,因为两个磁盘中的内容相同。

|

||||

|

||||

-读取数据会比写入性能更好。

|

||||

- 读取性能会比写入性能更好。

|

||||

|

||||

#### 要求 ####

|

||||

|

||||

创建 RAID 1 至少要有两个磁盘,你也可以添加更多的磁盘,磁盘数需为2,4,6,8等偶数。要添加更多的磁盘,你的系统必须有 RAID 物理适配器(硬件卡)。

|

||||

|

||||

创建 RAID 1 至少要有两个磁盘,你也可以添加更多的磁盘,磁盘数需为2,4,6,8的两倍。为了能够添加更多的磁盘,你的系统必须有 RAID 物理适配器(硬件卡)。

|

||||

这里,我们使用软件 RAID 不是硬件 RAID,如果你的系统有一个内置的物理硬件 RAID 卡,你可以从它的功能界面或使用 Ctrl + I 键来访问它。

|

||||

|

||||

这里,我们使用软件 RAID 不是硬件 RAID,如果你的系统有一个内置的物理硬件 RAID 卡,你可以从它的 UI 组件或使用 Ctrl + I 键来访问它。

|

||||

|

||||

需要阅读: [Basic Concepts of RAID in Linux][1]

|

||||

需要阅读: [介绍 RAID 的级别和概念][1]

|

||||

|

||||

#### 在我的服务器安装 ####

|

||||

|

||||

Operating System : CentOS 6.5 Final

|

||||

IP Address : 192.168.0.226

|

||||

Hostname : rd1.tecmintlocal.com

|

||||

Disk 1 [20GB] : /dev/sdb

|

||||

Disk 2 [20GB] : /dev/sdc

|

||||

操作系统 : CentOS 6.5 Final

|

||||

IP 地址 : 192.168.0.226

|

||||

主机名 : rd1.tecmintlocal.com

|

||||

磁盘 1 [20GB] : /dev/sdb

|

||||

磁盘 2 [20GB] : /dev/sdc

|

||||

|

||||

本文将指导你使用 mdadm (创建和管理 RAID 的)一步一步的建立一个软件 RAID 1 或镜像在 Linux 平台上。但同样的做法也适用于其它 Linux 发行版如 RedHat,CentOS,Fedora 等等。

|

||||

本文将指导你在 Linux 平台上使用 mdadm (用于创建和管理 RAID )一步步的建立一个软件 RAID 1 (镜像)。同样的做法也适用于如 RedHat,CentOS,Fedora 等 Linux 发行版。

|

||||

|

||||

### 第1步:安装所需要的并且检查磁盘 ###

|

||||

### 第1步:安装所需软件并且检查磁盘 ###

|

||||

|

||||

1.正如我前面所说,在 Linux 中我们需要使用 mdadm 软件来创建和管理 RAID。所以,让我们用 yum 或 apt-get 的软件包管理工具在 Linux 上安装 mdadm 软件包。

|

||||

1、 正如我前面所说,在 Linux 中我们需要使用 mdadm 软件来创建和管理 RAID。所以,让我们用 yum 或 apt-get 的软件包管理工具在 Linux 上安装 mdadm 软件包。

|

||||

|

||||

# yum install mdadm [on RedHat systems]

|

||||

# apt-get install mdadm [on Debain systems]

|

||||

# yum install mdadm [在 RedHat 系统]

|

||||

# apt-get install mdadm [在 Debain 系统]

|

||||

|

||||

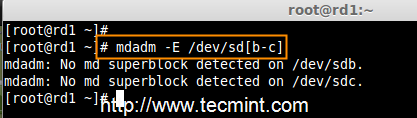

2. 一旦安装好‘mdadm‘包,我们需要使用下面的命令来检查磁盘是否已经配置好。

|

||||

2、 一旦安装好`mdadm`包,我们需要使用下面的命令来检查磁盘是否已经配置好。

|

||||

|

||||

# mdadm -E /dev/sd[b-c]

|

||||

|

||||

|

||||

|

||||

检查 RAID 的磁盘

|

||||

|

||||

*检查 RAID 的磁盘*

|

||||

|

||||

正如你从上面图片看到的,没有检测到任何超级块,这意味着还没有创建RAID。

|

||||

|

||||

### 第2步:为 RAID 创建分区 ###

|

||||

|

||||

3. 正如我提到的,我们最少使用两个分区 /dev/sdb 和 /dev/sdc 来创建 RAID1。我们首先使用‘fdisk‘命令来创建这两个分区并更改其类型为 raid。

|

||||

3、 正如我提到的,我们使用最少的两个分区 /dev/sdb 和 /dev/sdc 来创建 RAID 1。我们首先使用`fdisk`命令来创建这两个分区并更改其类型为 raid。

|

||||

|

||||

# fdisk /dev/sdb

|

||||

|

||||

按照下面的说明

|

||||

|

||||

- 按 ‘n’ 创建新的分区。

|

||||

- 然后按 ‘P’ 选择主分区。

|

||||

- 按 `n` 创建新的分区。

|

||||

- 然后按 `P` 选择主分区。

|

||||

- 接下来选择分区号为1。

|

||||

- 按两次回车键默认将整个容量分配给它。

|

||||

- 然后,按 ‘P’ 来打印创建好的分区。

|

||||

- 按 ‘L’,列出所有可用的类型。

|

||||

- 按 ‘t’ 修改分区类型。

|

||||

- 键入 ‘fd’ 设置为Linux 的 RAID 类型,然后按 Enter 确认。

|

||||

- 然后再次使用‘p’查看我们所做的更改。

|

||||

- 使用‘w’保存更改。

|

||||

- 然后,按 `P` 来打印创建好的分区。

|

||||

- 按 `L`,列出所有可用的类型。

|

||||

- 按 `t` 修改分区类型。

|

||||

- 键入 `fd` 设置为 Linux 的 RAID 类型,然后按 Enter 确认。

|

||||

- 然后再次使用`p`查看我们所做的更改。

|

||||

- 使用`w`保存更改。

|

||||

|

||||

|

||||

|

||||

创建磁盘分区

|

||||

*创建磁盘分区*

|

||||

|

||||

在创建“/dev/sdb”分区后,接下来按照同样的方法创建分区 /dev/sdc 。

|

||||

|

||||

@ -85,59 +84,59 @@ RAID 镜像意味着相同数据的完整克隆(或镜像)写入到两个磁

|

||||

|

||||

|

||||

|

||||

创建第二个分区

|

||||

*创建第二个分区*

|

||||

|

||||

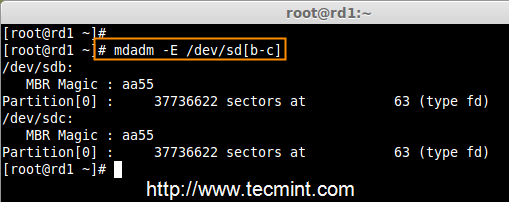

4. 一旦这两个分区创建成功后,使用相同的命令来检查 sdb & sdc 分区并确认 RAID 分区的类型如上图所示。

|

||||

4、 一旦这两个分区创建成功后,使用相同的命令来检查 sdb 和 sdc 分区并确认 RAID 分区的类型如上图所示。

|

||||

|

||||

# mdadm -E /dev/sd[b-c]

|

||||

|

||||

|

||||

|

||||

验证分区变化

|

||||

*验证分区变化*

|

||||

|

||||

|

||||

|

||||

检查 RAID 类型

|

||||

*检查 RAID 类型*

|

||||

|

||||

**注意**: 正如你在上图所看到的,在 sdb1 和 sdc1 中没有任何对 RAID 的定义,这就是我们没有检测到超级块的原因。

|

||||

|

||||

### 步骤3:创建 RAID1 设备 ###

|

||||

### 第3步:创建 RAID 1 设备 ###

|

||||

|

||||

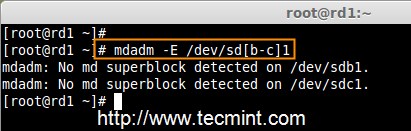

5.接下来使用以下命令来创建一个名为 /dev/md0 的“RAID1”设备并验证它

|

||||

5、 接下来使用以下命令来创建一个名为 /dev/md0 的“RAID 1”设备并验证它

|

||||

|

||||

# mdadm --create /dev/md0 --level=mirror --raid-devices=2 /dev/sd[b-c]1

|

||||

# cat /proc/mdstat

|

||||

|

||||

|

||||

|

||||

创建RAID设备

|

||||

*创建RAID设备*

|

||||

|

||||

6. 接下来使用如下命令来检查 RAID 设备类型和 RAID 阵列

|

||||

6、 接下来使用如下命令来检查 RAID 设备类型和 RAID 阵列

|

||||

|

||||

# mdadm -E /dev/sd[b-c]1

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

检查 RAID 设备类型

|

||||

*检查 RAID 设备类型*

|

||||

|

||||

|

||||

|

||||

检查 RAID 设备阵列

|

||||

*检查 RAID 设备阵列*

|

||||

|

||||

从上图中,人们很容易理解,RAID1 已经使用的 /dev/sdb1 和 /dev/sdc1 分区被创建,你也可以看到状态为 resyncing。

|

||||

从上图中,人们很容易理解,RAID 1 已经创建好了,使用了 /dev/sdb1 和 /dev/sdc1 分区,你也可以看到状态为 resyncing(重新同步中)。

|

||||

|

||||

### 第4步:在 RAID 设备上创建文件系统 ###

|

||||

|

||||

7. 使用 ext4 为 md0 创建文件系统并挂载到 /mnt/raid1 .

|

||||

7、 给 md0 上创建 ext4 文件系统

|

||||

|

||||

# mkfs.ext4 /dev/md0

|

||||

|

||||

|

||||

|

||||

创建 RAID 设备文件系统

|

||||

*创建 RAID 设备文件系统*

|

||||

|

||||

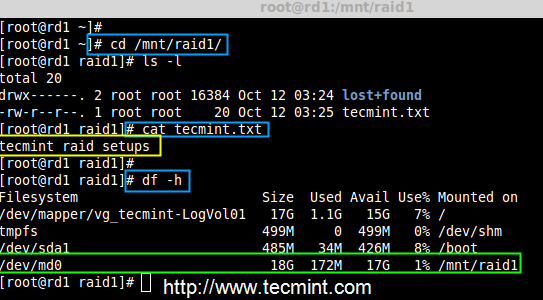

8. 接下来,挂载新创建的文件系统到“/mnt/raid1”,并创建一些文件,验证在挂载点的数据

|

||||

8、 接下来,挂载新创建的文件系统到“/mnt/raid1”,并创建一些文件,验证在挂载点的数据

|

||||

|

||||

# mkdir /mnt/raid1

|

||||

# mount /dev/md0 /mnt/raid1/

|

||||

@ -146,51 +145,52 @@ RAID 镜像意味着相同数据的完整克隆(或镜像)写入到两个磁

|

||||

|

||||

|

||||

|

||||

挂载 RAID 设备

|

||||

*挂载 RAID 设备*

|

||||

|

||||

9.为了在系统重新启动自动挂载 RAID1,需要在 fstab 文件中添加条目。打开“/etc/fstab”文件并添加以下行。

|

||||

9、为了在系统重新启动自动挂载 RAID 1,需要在 fstab 文件中添加条目。打开`/etc/fstab`文件并添加以下行:

|

||||

|

||||

/dev/md0 /mnt/raid1 ext4 defaults 0 0

|

||||

|

||||

|

||||

|

||||

自动挂载 Raid 设备

|

||||

*自动挂载 Raid 设备*

|

||||

|

||||

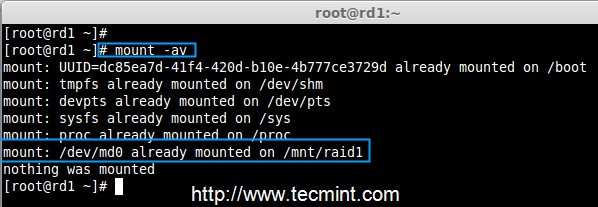

10、 运行`mount -av`,检查 fstab 中的条目是否有错误

|

||||

|

||||

10. 运行“mount -a”,检查 fstab 中的条目是否有错误

|

||||

# mount -av

|

||||

|

||||

|

||||

|

||||

检查 fstab 中的错误

|

||||

*检查 fstab 中的错误*

|

||||

|

||||

11. 接下来,使用下面的命令保存 raid 的配置到文件“mdadm.conf”中。

|

||||

11、 接下来,使用下面的命令保存 RAID 的配置到文件“mdadm.conf”中。

|

||||

|

||||

# mdadm --detail --scan --verbose >> /etc/mdadm.conf

|

||||

|

||||

|

||||

|

||||

保存 Raid 的配置

|

||||

*保存 Raid 的配置*

|

||||

|

||||

上述配置文件在系统重启时会读取并加载 RAID 设备。

|

||||

|

||||

### 第5步:在磁盘故障后检查数据 ###

|

||||

|

||||

12.我们的主要目的是,即使在任何磁盘故障或死机时必须保证数据是可用的。让我们来看看,当任何一个磁盘不可用时会发生什么。

|

||||

12、我们的主要目的是,即使在任何磁盘故障或死机时必须保证数据是可用的。让我们来看看,当任何一个磁盘不可用时会发生什么。

|

||||

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

验证 Raid 设备

|

||||

*验证 RAID 设备*

|

||||

|

||||

在上面的图片中,我们可以看到在 RAID 中有2个设备是可用的并且 Active Devices 是2.现在让我们看看,当一个磁盘拔出(移除 sdc 磁盘)或损坏后会发生什么。

|

||||

在上面的图片中,我们可以看到在 RAID 中有2个设备是可用的,并且 Active Devices 是2。现在让我们看看,当一个磁盘拔出(移除 sdc 磁盘)或损坏后会发生什么。

|

||||

|

||||

# ls -l /dev | grep sd

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

测试 RAID 设备

|

||||

*测试 RAID 设备*

|

||||

|

||||

现在,在上面的图片中你可以看到,一个磁盘不见了。我从虚拟机上删除了一个磁盘。此时让我们来检查我们宝贵的数据。

|

||||

|

||||

@ -199,9 +199,9 @@ RAID 镜像意味着相同数据的完整克隆(或镜像)写入到两个磁

|

||||

|

||||

|

||||

|

||||

验证 RAID 数据

|

||||

*验证 RAID 数据*

|

||||

|

||||

你有没有看到我们的数据仍然可用。由此,我们可以知道 RAID 1(镜像)的优势。在接下来的文章中,我们将看到如何设置一个 RAID 5 条带化分布式奇偶校验。希望这可以帮助你了解 RAID 1(镜像)是如何工作的。

|

||||

你可以看到我们的数据仍然可用。由此,我们可以了解 RAID 1(镜像)的优势。在接下来的文章中,我们将看到如何设置一个 RAID 5 条带化分布式奇偶校验。希望这可以帮助你了解 RAID 1(镜像)是如何工作的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -209,9 +209,9 @@ via: http://www.tecmint.com/create-raid1-in-linux/

|

||||

|

||||

作者:[Babin Lonston][a]

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/babinlonston/

|

||||

[1]:http://www.tecmint.com/understanding-raid-setup-in-linux/

|

||||

[1]:https://linux.cn/article-6085-1.html

|

||||

109

published/kde-plasma-5.4.md

Normal file

109

published/kde-plasma-5.4.md

Normal file

@ -0,0 +1,109 @@

|

||||

KDE Plasma 5.4.0 发布,八月特色版

|

||||

=============================

|

||||

|

||||

|

||||

|

||||

2015 年 8 月 25 ,星期二,KDE 发布了 Plasma 5 的一个特色新版本。

|

||||

|

||||

此版本为我们带来了许多非常棒的感受,如优化了对高分辨率的支持,KRunner 自动补全和一些新的 Breeze 漂亮图标。这还为不久以后的技术预览版的 Wayland 桌面奠定了基础。我们还带来了几个新组件,如声音音量部件,显示器校准工具和测试版的用户管理工具。

|

||||

|

||||

###新的音频音量程序

|

||||

|

||||

|

||||

|

||||

新的音频音量程序直接工作于 PulseAudio (Linux 上一个非常流行的音频服务) 之上 ,并且在一个漂亮的简约的界面提供一个完整的音量控制和输出设定。

|

||||

|

||||

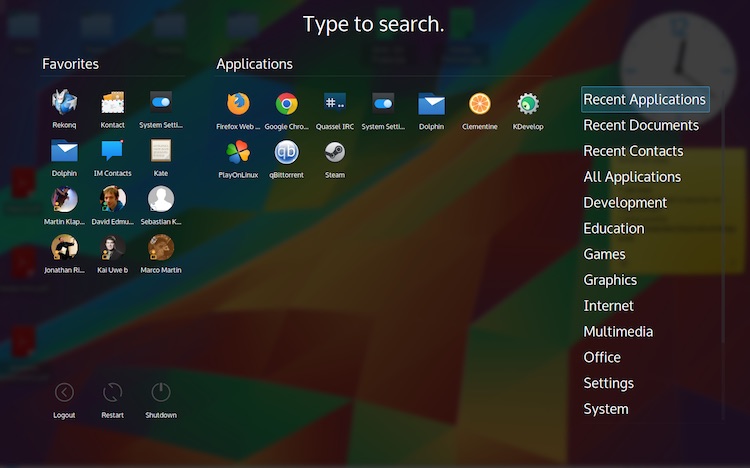

###替代的应用控制面板起动器

|

||||

|

||||

|

||||

|

||||

Plasma 5.4 在 kdeplasma-addons 软件包中提供了一个全新的全屏的应用控制面板,它具有应用菜单的所有功能,还支持缩放和全空间键盘导航。新的起动器可以像你目前所用的“最近使用的”或“收藏的文档和联系人”一样简单和快速地查找应用。

|

||||

|

||||

###丰富的艺术图标

|

||||

|

||||

|

||||

|

||||

Plasma 5.4 提供了超过 1400 个的新图标,其中不仅包含 KDE 程序的,而且还为 Inkscape, Firefox 和 Libreoffice 提供 Breeze 主题的艺术图标,可以体验到更加一致和本地化的感觉。

|

||||

|

||||

###KRunner 历史记录

|

||||

|

||||

|

||||

|

||||

KRunner 现在可以记住之前的搜索历史并根据历史记录进行自动补全。

|

||||

|

||||

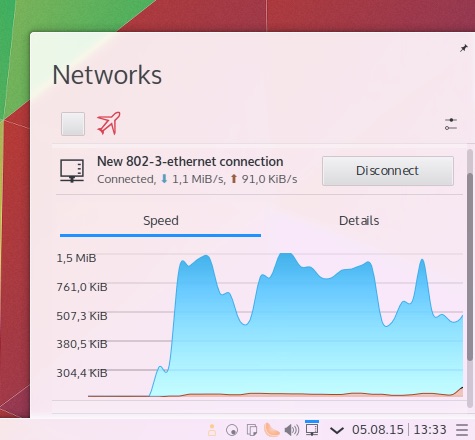

###Network 程序中实用的图形展示

|

||||

|

||||

|

||||

|

||||

Network 程序现在可以以图形形式显示网络流量了,同时也支持两个新的 VPN 插件:通过 SSH 连接或通过 SSTP 连接。

|

||||

|

||||

###Wayland 技术预览

|

||||

|

||||

随着 Plasma 5.4 ,Wayland 桌面发布了第一个技术预览版。在使用自由图形驱动(free graphics drivers)的系统上可以使用 KWin(Plasma 的 Wayland 合成器和 X11 窗口管理器)通过[内核模式设定][1]来运行 Plasma。现在已经支持的功能需求来自于[手机 Plasma 项目][2],更多的面向桌面的功能还未被完全实现。现在还不能作为替换那些基于 Xorg 的桌面,但可以轻松地对它测试和贡献,以及观看令人激动视频。有关如何在 Wayland 中使用 Plasma 的介绍请到:[KWin 维基页][3]。Wlayland 将随着我们构建的稳定版本而逐步得到改进。

|

||||

|

||||

###其他的改变和添加

|

||||

|

||||

- 优化对高 DPI 支持

|

||||

- 更少的内存占用

|

||||

- 桌面搜索使用了更快的新后端

|

||||

- 便笺增加拖拉支持和键盘导航

|

||||

- 回收站重新支持拖拉

|

||||

- 系统托盘获得更快的可配置性

|

||||

- 文档重新修订和更新

|

||||

- 优化了窄小面板上的数字时钟的布局

|

||||

- 数字时钟支持 ISO 日期

|

||||

- 切换数字时钟 12/24 格式更简单

|

||||

- 日历显示第几周

|

||||

- 任何项目都可以收藏进应用菜单(Kicker),支持收藏文档和 Telepathy 联系人

|

||||

- Telepathy 联系人收藏可以展示联系人的照片和实时状态

|

||||

- 优化程序与容器间的焦点和激活处理

|

||||

- 文件夹视图中各种小修复:更好的默认尺寸,鼠标交互问题以及文本标签换行

|

||||

- 任务管理器更好的呈现起动器默认的应用图标

|

||||

- 可再次通过将程序拖入任务管理器来添加启动器

|

||||

- 可配置中间键点击在任务管理器中的行为:无动作,关闭窗口,启动一个相同的程序的新实例

|

||||

- 任务管理器现在以列排序优先,无论用户是否更倾向于行优先;许多用户更喜欢这样排序是因为它会使更少的任务按钮像窗口一样移来移去

|

||||

- 优化任务管理器的图标和缩放边

|

||||

- 任务管理器中各种小修复:垂直下拉,触摸事件处理现在支持所有系统,组扩展箭头的视觉问题

|

||||

- 提供可用的目的框架技术预览版,可以使用 QuickShare Plasmoid,它可以让许多 web 服务分享文件更容易

|

||||

- 增加了显示器配置工具

|

||||

- 增加的 kwallet-pam 可以在登录时打开 wallet

|

||||

- 用户管理器现在会同步联系人到 KConfig 的设置中,用户账户模块被丢弃了

|

||||

- 应用程序菜单(Kicker)的性能得到改善

|

||||

- 应用程序菜单(Kicker)各种小修复:隐藏/显示程序更加可靠,顶部面板的对齐修复,文件夹视图中 “添加到桌面”更加可靠,在基于 KActivities 的最新模块中有更好的表现

|

||||

- 支持自定义菜单布局 (kmenuedit)和应用程序菜单(Kicker)支持菜单项目分隔

|

||||

- 当在面板中时,改进了文件夹视图,参见 [blog][4]

|

||||

- 将文件夹拖放到桌面容器现在会再次创建一个文件夹视图

|

||||

|

||||

[完整的 Plasma 5.4 变更日志在此](https://www.kde.org/announcements/plasma-5.3.2-5.4.0-changelog.php)

|

||||

|

||||

###Live 镜像

|

||||

|

||||

尝鲜的最简单的方式就是从 U 盘中启动,可以在 KDE 社区 Wiki 中找到 各种 [带有 Plasma 5 的 Live 镜像][5]。

|

||||

|

||||

###下载软件包

|

||||

|

||||

各发行版已经构建了软件包,或者正在构建,wiki 中的列出了各发行版的软件包名:[软件包下载维基页][6]。

|

||||

|

||||

###源码下载

|

||||

|

||||

可以直接从源码中安装 Plasma 5。KDE 社区 Wiki 已经介绍了[怎样编译][7]。

|

||||

|

||||

注意,Plasma 5 与 Plasma 4 不兼容,必须先卸载旧版本,或者安装到不同的前缀处。

|

||||

|

||||

|

||||

- [源代码信息页][8]

|

||||

|

||||

---

|

||||

|

||||

via: https://www.kde.org/announcements/plasma-5.4.0.php

|

||||

|

||||

译者:[Locez](http://locez.com) 校对:[wxy](http://github.com/wxy)

|

||||

|

||||

[1]:https://en.wikipedia.org/wiki/Direct_Rendering_Manager

|

||||

[2]:https://dot.kde.org/2015/07/25/plasma-mobile-free-mobile-platform

|

||||

[3]:https://community.kde.org/KWin/Wayland#Start_a_Plasma_session_on_Wayland

|

||||

[4]:https://blogs.kde.org/2015/06/04/folder-view-panel-popups-are-list-views-again

|

||||

[5]:https://community.kde.org/Plasma/LiveImages

|

||||

[6]:https://community.kde.org/Plasma/Packages

|

||||

[7]:http://community.kde.org/Frameworks/Building

|

||||

[8]:https://www.kde.org/info/plasma-5.4.0.php

|

||||

@ -0,0 +1,87 @@

|

||||

Plasma 5.4 Is Out And It’s Packed Full Of Features

|

||||

================================================================================

|

||||

KDE has [announced][1] a brand new feature release of Plasma 5 — and it’s a corker.

|

||||

|

||||

|

||||

|

||||

Better network details are among the changes

|

||||

|

||||

Plasma 5.4.0 builds on [April’s 5.3.0 milestone][2] in a number of ways, ranging from the inherently technical, Wayland preview session, ahoy, to lavish aesthetic touches, like **1,400 brand new icons**.

|

||||

|

||||

A handful of new components also feature in the release, including a new Plasma Widget for volume control, a monitor calibration tool and an improved user management tool.

|

||||

|

||||

The ‘Kicker’ application menu has been powered up to let you favourite all types of content, not just applications.

|

||||

|

||||

**KRunner now remembers searches** so that it can automatically offer suggestions based on your earlier queries as you type.

|

||||

|

||||

The **network applet displays a graph** to give you a better understanding of your network traffic. It also gains two new VPN plugins for SSH and SSTP connections.

|

||||

|

||||

Minor tweaks to the digital clock see it adapt better in slim panel mode, it gains ISO date support and makes it easier for you to toggle between 12 hour and 24 hour clock. Week numbers have been added to the calendar.

|

||||

|

||||

### Application Dashboard ###

|

||||

|

||||

|

||||

|

||||

The new ‘Application Dashboard’ in KDE Plasma 5.4.0

|

||||

|

||||

**A new full screen launcher, called ‘Application Dashboard’**, is also available.

|

||||

|

||||

This full-screen dash offers the same features as the traditional Application Menu but with “sophisticated scaling to screen size and full spatial keyboard navigation”.

|

||||

|

||||

Like the Unity launch, the new Plasma Application Dashboard helps you quickly find applications, sift through files and contacts based on your previous activity.

|

||||

|

||||

### Changes in KDE Plasma 5.4.0 at a glance ###

|

||||

|

||||

- Improved high DPI support

|

||||

- KRunner autocompletion

|

||||

- KRunner search history

|

||||

- Application Dashboard add on

|

||||

- 1,400 New icons

|

||||

- Wayland tech preview

|

||||

|

||||

For a full list of changes in Plasma 5.4 refer to [this changelog][3].

|

||||

|

||||

### Install Plasma 5.4 in Kubuntu 15.04 ###

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

To **install Plasma 5.4 in Kubuntu 15.04** you will need to add the KDE Backports PPA to your Software Sources.

|

||||

|

||||

Adding the Kubuntu backports PPA **is not strictly advised** as it may upgrade other parts of the KDE desktop, application suite, developer frameworks or Kubuntu specific config files.

|

||||

|

||||

If you like your desktop being stable, don’t proceed.

|

||||

|

||||

The quickest way to upgrade to Plasma 5.4 once it lands in the Kubuntu Backports PPA is to use the Terminal:

|

||||

|

||||

sudo add-apt-repository ppa:kubuntu-ppa/backports

|

||||

|

||||

sudo apt-get update && sudo apt-get dist-upgrade

|

||||

|

||||

Let the upgrade process complete. Assuming no errors emerge, reboot your computer for changes to take effect.

|

||||

|

||||

If you’re not already using Kubuntu, i.e. you’re using the Unity version of Ubuntu, you should first install the Kubuntu desktop package (you’ll find it in the Ubuntu Software Centre).

|

||||

|

||||

To undo the changes above and downgrade to the most recent version of Plasma available in the Ubuntu archives use the PPA-Purge tool:

|

||||

|

||||

sudo apt-get install ppa-purge

|

||||

|

||||

sudo ppa-purge ppa:kubuntu-ppa/backports

|

||||

|

||||

Let us know how your upgrade/testing goes in the comments below and don’t forget to mention the features you hope to see added to the Plasma 5 desktop next.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.omgubuntu.co.uk/2015/08/plasma-5-4-new-features

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://plus.google.com/117485690627814051450/?rel=author

|

||||

[1]:https://dot.kde.org/2015/08/25/kde-ships-plasma-540-feature-release-august

|

||||

[2]:http://www.omgubuntu.co.uk/2015/04/kde-plasma-5-3-released-heres-how-to-upgrade-in-kubuntu-15-04

|

||||

[3]:https://www.kde.org/announcements/plasma-5.3.2-5.4.0-changelog.php

|

||||

@ -1,119 +0,0 @@

|

||||

Translating by Xuanwo

|

||||

|

||||

Top 5 Torrent Clients For Ubuntu Linux

|

||||

================================================================================

|

||||

|

||||

|

||||

Looking for the **best torrent client in Ubuntu**? Indeed there are a number of torrent clients available for desktop Linux. But which ones are the **best Ubuntu torrent clients** among them?

|

||||

|

||||

I am going to list top 5 torrent clients for Linux, which are lightweight, feature rich and have impressive GUI. Ease of installation and using is also a factor.

|

||||

|

||||

### Best torrent programs for Ubuntu ###

|

||||

|

||||

Since Ubuntu comes by default with Transmission, I am going to exclude it from the list. This doesn’t mean that Transmission doesn’t deserve to be on the list. Transmission is a good to have torrent client for Ubuntu and this is the reason why it is the default Torrent application in several Linux distributions, including Ubuntu.

|

||||

|

||||

----------

|

||||

|

||||

### Deluge ###

|

||||

|

||||

|

||||

|

||||

[Deluge][1] has been chosen as the best torrent client for Linux by Lifehacker and that speaks itself of the usefulness of Deluge. And it’s not just Lifehacker who is fan of Deluge, check out any forum and you’ll find a number of people admitting that Deluge is their favorite.

|

||||

|

||||

Fast, sleek and intuitive interface makes Deluge a hot favorite among Linux users.

|

||||

|

||||

Deluge is available in Ubuntu repositories and you can install it in Ubuntu Software Center or by using the command below:

|

||||

|

||||

sudo apt-get install deluge

|

||||

|

||||

----------

|

||||

|

||||

### qBittorrent ###

|

||||

|

||||

|

||||

|

||||

As the name suggests, [qBittorrent][2] is the Qt version of famous [Bittorrent][3] application. You’ll see an interface similar to Bittorrent client in Windows, if you ever used it. Sort of lightweight and have all the standard features of a torrent program, qBittorrent is also available in default Ubuntu repository.

|

||||

|

||||

It could be installed from Ubuntu Software Center or using the command below:

|

||||

|

||||

sudo apt-get install qbittorrent

|

||||

|

||||

----------

|

||||

|

||||

### Tixati ###

|

||||

|

||||

|

||||

|

||||

[Tixati][4] is another nice to have torrent client for Ubuntu. It has a default dark theme which might be preferred by many but not me. It has all the standard features that you can seek in a torrent client.

|

||||

|

||||

In addition to that, there are additional feature of data analysis. You can measure and analyze bandwidth and other statistics in nice charts.

|

||||

|

||||

- [Download Tixati][5]

|

||||

|

||||

----------

|

||||

|

||||

### Vuze ###

|

||||

|

||||

|

||||

|

||||

[Vuze][6] is favorite torrent application of a number of Linux as well as Windows users. Apart from the standard features, you can search for torrents directly in the application. You can also subscribe to episodic content so that you won’t have to search for new contents as you can see it in your subscription in sidebar.

|

||||

|

||||

It also comes with a video player that can play HD videos with subtitles and all. But I don’t think you would like to use it over the better video players such as VLC.

|

||||

|

||||

Vuze can be installed from Ubuntu Software Center or using the command below:

|

||||

|

||||

sudo apt-get install vuze

|

||||

|

||||

----------

|

||||

|

||||

### Frostwire ###

|

||||

|

||||

|

||||

|

||||

[Frostwire][7] is the torrent application you might want to try. It is more than just a simple torrent client. Also available for Android, you can use it to share files over WiFi.

|

||||

|

||||

You can search for torrents from within the application and play them inside the application. In addition to the downloaded files, it can browse your local media and have them organized inside the player. The same is applicable for the Android version.

|

||||

|

||||

An additional feature is that Frostwire also provides access to legal music by indi artists. You can download them and listen to it, for free, for legal.

|

||||

|

||||

- [Download Frostwire][8]

|

||||

|

||||

----------

|

||||

|

||||

### Honorable mention ###

|

||||

|

||||

On Windows, uTorrent (pronounced mu torrent) is my favorite torrent application. While uTorrent may be available for Linux, I deliberately skipped it from the list because installing and using uTorrent in Linux is neither easy nor does it provide a complete application experience (runs with in web browser).

|

||||

|

||||

You can read about uTorrent installation in Ubuntu [here][9].

|

||||

|

||||

#### Quick tip: ####

|

||||

|

||||

Most of the time, torrent applications do not start by default. You might want to change this behavior. Read this post to learn [how to manage startup applications in Ubuntu][10].

|

||||

|

||||

### What’s your favorite? ###

|

||||

|

||||

That was my opinion on the best Torrent clients in Ubuntu. What is your favorite one? Do leave a comment. You can also check the [best download managers for Ubuntu][11] in related posts. And if you use Popcorn Time, check these [Popcorn Time Tips][12].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://itsfoss.com/best-torrent-ubuntu/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://itsfoss.com/author/abhishek/

|

||||

[1]:http://deluge-torrent.org/

|

||||

[2]:http://www.qbittorrent.org/

|

||||

[3]:http://www.bittorrent.com/

|

||||

[4]:http://www.tixati.com/

|

||||

[5]:http://www.tixati.com/download/

|

||||

[6]:http://www.vuze.com/

|

||||

[7]:http://www.frostwire.com/

|

||||

[8]:http://www.frostwire.com/downloads

|

||||

[9]:http://sysads.co.uk/2014/05/install-utorrent-3-3-ubuntu-14-04-13-10/

|

||||

[10]:http://itsfoss.com/manage-startup-applications-ubuntu/

|

||||

[11]:http://itsfoss.com/4-best-download-managers-for-linux/

|

||||

[12]:http://itsfoss.com/popcorn-time-tips/

|

||||

65

sources/share/20150826 Five Super Cool Open Source Games.md

Normal file

65

sources/share/20150826 Five Super Cool Open Source Games.md

Normal file

@ -0,0 +1,65 @@

|

||||

Five Super Cool Open Source Games

|

||||

================================================================================

|

||||

In 2014 and 2015, Linux became home to a list of popular commercial titles such as the popular Borderlands, Witcher, Dead Island, and Counter Strike series of games. While this is exciting news, what of the gamer on a budget? Commercial titles are good, but even better are free-to-play alternatives made by developers who know what players like.

|

||||

|

||||

Some time ago, I came across a three year old YouTube video with the ever optimistic title [5 Open Source Games that Don’t Suck][1]. Although the video praises some open source games, I’d prefer to approach the subject with a bit more enthusiasm, at least as far as the title goes. So, here’s my list of five super cool open source games.

|

||||

|

||||

### Tux Racer ###

|

||||

|

||||

|

||||

|

||||

Tux Racer

|

||||

|

||||

[Tux Racer][2] is the first game on this list because I’ve had plenty of experience with it. On a [recent trip to Mexico][3] that my brother and I took with [Kids on Computers][4], Tux Racer was one of the games that kids and teachers alike enjoyed. In this game, players use the Linux mascot, the penguin Tux, to race on downhill ski slopes in time trials in which players challenge their own personal bests. Currently there’s no multiplayer version available, but that could be subject to change. Available for Linux, OS X, Windows, and Android.

|

||||

|

||||

### Warsow ###

|

||||

|

||||

|

||||

|

||||

Warsow

|

||||

|

||||

The [Warsow][5] website explains: “Set in a futuristic cartoonish world, Warsow is a completely free fast-paced first-person shooter (FPS) for Windows, Linux and Mac OS X. Warsow is the Art of Respect and Sportsmanship Over the Web.” I was reluctant to include games from the FPS genre on this list, because many have played games in this genre, but I was amused by Warsow. It prioritizes lots of movement and the game is fast paced with a set of eight weapons to start with. The cartoonish style makes playing feel less serious and more casual, something for friends and family to play together. However, it boasts competitive play, and when I experienced the game I found there were, indeed, some expert players around. Available for Linux, Windows and OS X.

|

||||

|

||||

### M.A.R.S – A ridiculous shooter ###

|

||||

|

||||

|

||||

|

||||

M.A.R.S. – A ridiculous shooter

|

||||

|

||||

[M.A.R.S – A ridiculous shooter][6] is appealing because of it’s vibrant coloring and style. There is support for two players on the same keyboard, but an online multiplayer version is currently in the works — meaning plans to play with friends have to wait for now. Regardless, it’s an entertaining space shooter with a few different ships and weapons to play as. There are different shaped ships, ranging from shotguns, lasers, scattered shots and more (one of the random ships shot bubbles at my opponents, which was funny amid the chaotic gameplay). There are a few modes of play, such as the standard death match against opponents to score a certain limit or score high, along with other modes called Spaceball, Grave-itation Pit and Cannon Keep. Available for Linux, Windows and OS X.

|

||||

|

||||

### Valyria Tear ###

|

||||

|

||||

|

||||

|

||||

Valyria Tear

|

||||

|

||||

[Valyria Tear][7] resembles many fan favorite role-playing games (RPGs) spanning the years. The story is set in the usual era of fantasy games, full of knights, kingdoms and wizardry, and follows the main character Bronann. The design team did great work in designing the world and gives players everything expected from the genre: hidden chests, random monster encounters, non-player character (NPC) interaction, and something no RPG would be complete without: grinding for experience on lower level slime monsters until you’re ready for the big bosses. When I gave it a try, time didn’t permit me to play too far into the campaign, but for those interested there is a ‘[Let’s Play][8]‘ series by YouTube user Yohann Ferriera. Available for Linux, Windows and OS X.

|

||||

|

||||

### SuperTuxKart ###

|

||||

|

||||

|

||||

|

||||

SuperTuxKart

|

||||

|

||||

Last but not least is [SuperTuxKart][9], a clone of Mario Kart that is every bit as fun as the original. It started development around 2000-2004 as Tux Kart, but there were errors in its production which led to a cease in development for a few years. Since development picked up again in 2006, it’s been improving, with version 0.9 debuting four months ago. In the game, our old friend Tux starts in the role of Mario and a few other open source mascots. One recognizable face among them is Suzanne, the monkey mascot for Blender. The graphics are solid and gameplay is fluent. While online play is in the planning stages, split screen multiplayer action is available, with up to four players supported on a single computer. Available for Linux, Windows, OS X, AmigaOS 4, AROS and MorphOS.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://fossforce.com/2015/08/five-super-cool-open-source-games/

|

||||

|

||||

作者:Hunter Banks

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://www.youtube.com/watch?v=BEKVl-XtOP8

|

||||

[2]:http://tuxracer.sourceforge.net/download.html

|

||||

[3]:http://fossforce.com/2015/07/banks-family-values-texas-linux-fest/

|

||||

[4]:http://www.kidsoncomputers.org/an-amazing-week-in-oaxaca

|

||||

[5]:https://www.warsow.net/download

|

||||

[6]:http://mars-game.sourceforge.net/

|

||||

[7]:http://valyriatear.blogspot.com/

|

||||

[8]:https://www.youtube.com/channel/UCQ5KrSk9EqcT_JixWY2RyMA

|

||||

[9]:http://supertuxkart.sourceforge.net/

|

||||

@ -0,0 +1,110 @@

|

||||

Mosh Shell – A SSH Based Client for Connecting Remote Unix/Linux Systems

|

||||

================================================================================

|

||||

Mosh, which stands for Mobile Shell is a command-line application which is used for connecting to the server from a client computer, over the Internet. It can be used as SSH and contains more feature than Secure Shell. It is an application similar to SSH, but with additional features. The application is written originally by Keith Winstein for Unix like operating system and released under GNU GPL v3.

|

||||

|

||||

|

||||

|

||||

Mosh Shell SSH Client

|

||||

|

||||

#### Features of Mosh ####

|

||||

|

||||

- It is a remote terminal application that supports roaming.

|

||||

- Available for all major UNIX-like OS viz., Linux, FreeBSD, Solaris, Mac OS X and Android.

|

||||

- Intermittent Connectivity supported.

|

||||

- Provides intelligent local echo.

|

||||

- Line editing of user keystrokes supported.

|

||||

- Responsive design and Robust Nature over wifi, cellular and long-distance links.

|

||||

- Remain Connected even when IP changes. It usages UDP in place of TCP (used by SSH). TCP time out when connect is reset or new IP assigned but UDP keeps the connection open.

|

||||

- The Connection remains intact when you resume the session after a long time.

|

||||

- No network lag. Shows users typed key and deletions immediately without network lag.

|

||||

- Same old method to login as it was in SSH.

|

||||

- Mechanism to handle packet loss.

|

||||

|

||||

### Installation of Mosh Shell in Linux ###

|

||||

|

||||

On Debian, Ubuntu and Mint alike systems, you can easily install the Mosh package with the help of [apt-get package manager][1] as shown.

|

||||

|

||||

# apt-get update

|

||||

# apt-get install mosh

|

||||

|

||||

On RHEL/CentOS/Fedora based distributions, you need to turn on third party repository called [EPEL][2], in order to install mosh from this repository using [yum package manager][3] as shown.

|

||||

|

||||

# yum update

|

||||

# yum install mosh

|

||||

|

||||

On Fedora 22+ version, you need to use [dnf package manager][4] to install mosh as shown.

|

||||

|

||||

# dnf install mosh

|

||||

|

||||

### How do I use Mosh Shell? ###

|

||||

|

||||

1. Let’s try to login into remote Linux server using mosh shell.

|

||||

|

||||

$ mosh root@192.168.0.150

|

||||

|

||||

|

||||

|

||||

Mosh Shell Remote Connection

|

||||

|

||||

**Note**: Did you see I got an error in connecting since the port was not open in my remote CentOS 7 box. A quick but not recommended solution I performed was:

|

||||

|

||||

# systemctl stop firewalld [on Remote Server]

|

||||

|

||||

The preferred way is to open a port and update firewall rules. And then connect to mosh on a predefined port. For in-depth details on firewalld you may like to visit this post.

|

||||

|

||||

- [How to Configure Firewalld][5]

|

||||

|

||||

2. Let’s assume that the default SSH port 22 was changed to port 70, in this case you can define custom port with the help of ‘-p‘ switch with mosh.

|

||||

|

||||

$ mosh -p 70 root@192.168.0.150

|

||||

|

||||

3. Check the version of installed Mosh.

|

||||

|

||||

$ mosh --version

|

||||

|

||||

|

||||

|

||||

Check Mosh Version

|

||||

|

||||

4. You can close mosh session type ‘exit‘ on the prompt.

|

||||

|

||||

$ exit

|

||||

|

||||

5. Mosh supports a lot of options, which you may see as:

|

||||

|

||||

$ mosh --help

|

||||

|

||||

|

||||

|

||||

Mosh Shell Options

|

||||

|

||||

#### Cons of Mosh Shell ####

|

||||

|

||||

- Mosh requires additional prerequisite for example, allow direct connection via UDP, which was not required by SSH.

|

||||

- Dynamic port allocation in the range of 60000-61000. The first open fort is allocated. It requires one port per connection.

|

||||

- Default port allocation is a serious security concern, especially in production.

|

||||

- IPv6 connections supported, but roaming on IPv6 not supported.

|

||||

- Scrollback not supported.

|

||||

- No X11 forwarding supported.

|

||||

- No support for ssh-agent forwarding.

|

||||

|

||||

### Conclusion ###

|

||||

|

||||

Mosh is a nice small utility which is available for download in the repository of most of the Linux Distributions. Though it has a few discrepancies specially security concern and additional requirement it’s features like remaining connected even while roaming is its plus point. My recommendation is Every Linux-er who deals with SSH should try this application and mind it, Mosh is worth a try.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/install-mosh-shell-ssh-client-in-linux/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/avishek/

|

||||

[1]:http://www.tecmint.com/useful-basic-commands-of-apt-get-and-apt-cache-for-package-management/

|

||||

[2]:http://www.tecmint.com/how-to-enable-epel-repository-for-rhel-centos-6-5/

|

||||

[3]:http://www.tecmint.com/20-linux-yum-yellowdog-updater-modified-commands-for-package-mangement/

|

||||

[4]:http://www.tecmint.com/dnf-commands-for-fedora-rpm-package-management/

|

||||

[5]:http://www.tecmint.com/configure-firewalld-in-centos-7/

|

||||

@ -1,25 +0,0 @@

|

||||

Linux about to gain a new file system – bcachefs

|

||||

================================================================================

|

||||

A five year old file system built by Kent Overstreet, formerly of Google, is near feature complete with all critical components in place. Bcachefs boasts the performance and reliability of the widespread ext4 and xfs as well as the feature list similar to that of btrfs and zfs. Notable features include checksumming, compression, multiple devices, caching and eventually snapshots and other “nifty” features.

|

||||

|

||||

Bcachefs started out as **bcache** which was a block caching layer, the evolution from bcache to a fully featured [copy-on-write][1] file system has been described as a metamorphosis.

|

||||

|

||||

Responding to the self-imposed question “Yet another new filesystem? Why?” Kent Overstreet replies with the following “Well, years ago (going back to when I was still at Google), I and the other people working on bcache realized that what we were working on was, almost by accident, a good chunk of the functionality of a full blown filesystem – and there was a really clean and elegant design to be had there if we took it and ran with it. And a fast one – the main goal of bcachefs to match ext4 and xfs on performance and reliability, but with the features of btrfs/xfs.”

|

||||

|

||||

Overstreet has invited people to use and test bcachefs out on their own systems. To find instructions to use bcachefs on your system check out the mailing list [announcement][2].

|

||||

|

||||

The file system situation on Linux is a fairly drawn out one, Fedora 16 for instance aimed to use btrfs instead of ext4 as the default file system, this switch still has not happened. Currently all of the Debian based distros, including Ubuntu, Mint and elementary OS, still use ext4 as their default file systems and none have even whispered about switching to a new default file system yet.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxveda.com/2015/08/22/linux-gain-new-file-system-bcachefs/

|

||||

|

||||

作者:[Paul Hill][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linuxveda.com/author/paul_hill/

|

||||

[1]:https://en.wikipedia.org/wiki/Copy-on-write

|

||||

[2]:https://lkml.org/lkml/2015/8/21/22

|

||||

@ -1,676 +0,0 @@

|

||||

Translating by Ezio

|

||||

|

||||

Process of the Linux kernel building

|

||||

================================================================================

|

||||

Introduction

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

I will not tell you how to build and install custom Linux kernel on your machine, you can find many many [resources](https://encrypted.google.com/search?q=building+linux+kernel#q=building+linux+kernel+from+source+code) that will help you to do it. Instead, we will know what does occur when you are typed `make` in the directory with Linux kernel source code in this part. When I just started to learn source code of the Linux kernel, the [Makefile](https://github.com/torvalds/linux/blob/master/Makefile) file was a first file that I've opened. And it was scary :) This [makefile](https://en.wikipedia.org/wiki/Make_%28software%29) contains `1591` lines of code at the time when I wrote this part and it was [third](https://github.com/torvalds/linux/commit/52721d9d3334c1cb1f76219a161084094ec634dc) release candidate.

|

||||

|

||||

This makefile is the the top makefile in the Linux kernel source code and kernel build starts here. Yes, it is big, but moreover, if you've read the source code of the Linux kernel you can noted that all directories with a source code has an own makefile. Of course it is not real to describe how each source files compiled and linked. So, we will see compilation only for the standard case. You will not find here building of the kernel's documentation, cleaning of the kernel source code, [tags](https://en.wikipedia.org/wiki/Ctags) generation, [cross-compilation](https://en.wikipedia.org/wiki/Cross_compiler) related stuff and etc. We will start from the `make` execution with the standard kernel configuration file and will finish with the building of the [bzImage](https://en.wikipedia.org/wiki/Vmlinux#bzImage).

|

||||

|

||||

It would be good if you're already familiar with the [make](https://en.wikipedia.org/wiki/Make_%28software%29) util, but I will anyway try to describe all code that will be in this part.

|

||||

|

||||

So let's start.

|

||||

|

||||

Preparation before the kernel compilation

|

||||

---------------------------------------------------------------------------------

|

||||

|

||||

There are many things to preparate before the kernel compilation will be started. The main point here is to find and configure

|

||||

the type of compilation, to parse command line arguments that are passed to the `make` util and etc. So let's dive into the top `Makefile` of the Linux kernel.

|

||||

|

||||

The Linux kernel top `Makefile` is responsible for building two major products: [vmlinux](https://en.wikipedia.org/wiki/Vmlinux) (the resident kernel image) and the modules (any module files). The [Makefile](https://github.com/torvalds/linux/blob/master/Makefile) of the Linux kernel starts from the definition of the following variables:

|

||||

|

||||

```Makefile

|

||||

VERSION = 4

|

||||

PATCHLEVEL = 2

|

||||

SUBLEVEL = 0

|

||||

EXTRAVERSION = -rc3

|

||||

NAME = Hurr durr I'ma sheep

|

||||

```

|

||||

|

||||

These variables determine the current version of the Linux kernel and are used in the different places, for example in the forming of the `KERNELVERSION` variable:

|

||||

|

||||

```Makefile

|

||||

KERNELVERSION = $(VERSION)$(if $(PATCHLEVEL),.$(PATCHLEVEL)$(if $(SUBLEVEL),.$(SUBLEVEL)))$(EXTRAVERSION)

|

||||

```

|

||||

|

||||

After this we can see a couple of the `ifeq` condition that check some of the parameters passed to `make`. The Linux kernel `makefiles` provides a special `make help` target that prints all available targets and some of the command line arguments that can be passed to `make`. For example: `make V=1` - provides verbose builds. The first `ifeq` condition checks if the `V=n` option is passed to make:

|

||||

|

||||

```Makefile

|

||||

ifeq ("$(origin V)", "command line")

|

||||

KBUILD_VERBOSE = $(V)

|

||||

endif

|

||||

ifndef KBUILD_VERBOSE

|

||||

KBUILD_VERBOSE = 0

|

||||

endif

|

||||

|

||||

ifeq ($(KBUILD_VERBOSE),1)

|

||||

quiet =

|

||||

Q =

|

||||

else

|

||||

quiet=quiet_

|

||||

Q = @

|

||||

endif

|

||||

|

||||

export quiet Q KBUILD_VERBOSE

|

||||

```

|

||||

|

||||

If this option is passed to `make` we set the `KBUILD_VERBOSE` variable to the value of the `V` option. Otherwise we set the `KBUILD_VERBOSE` variable to zero. After this we check value of the `KBUILD_VERBOSE` variable and set values of the `quiet` and `Q` variables depends on the `KBUILD_VERBOSE` value. The `@` symbols suppress the output of the command and if it will be set before a command we will see something like this: `CC scripts/mod/empty.o` instead of the `Compiling .... scripts/mod/empty.o`. In the end we just export all of these variables. The next `ifeq` statement checks that `O=/dir` option was passed to the `make`. This option allows to locate all output files in the given `dir`:

|

||||

|

||||

```Makefile

|

||||

ifeq ($(KBUILD_SRC),)

|

||||

|

||||

ifeq ("$(origin O)", "command line")

|

||||

KBUILD_OUTPUT := $(O)

|

||||

endif

|

||||

|

||||

ifneq ($(KBUILD_OUTPUT),)

|

||||

saved-output := $(KBUILD_OUTPUT)

|

||||

KBUILD_OUTPUT := $(shell mkdir -p $(KBUILD_OUTPUT) && cd $(KBUILD_OUTPUT) \

|

||||

&& /bin/pwd)

|

||||

$(if $(KBUILD_OUTPUT),, \

|

||||

$(error failed to create output directory "$(saved-output)"))

|

||||

|

||||

sub-make: FORCE

|

||||

$(Q)$(MAKE) -C $(KBUILD_OUTPUT) KBUILD_SRC=$(CURDIR) \

|

||||

-f $(CURDIR)/Makefile $(filter-out _all sub-make,$(MAKECMDGOALS))

|

||||

|

||||

skip-makefile := 1

|

||||

endif # ifneq ($(KBUILD_OUTPUT),)

|

||||

endif # ifeq ($(KBUILD_SRC),)

|

||||

```

|

||||

|

||||

We check the `KBUILD_SRC` that represent top directory of the source code of the linux kernel and if it is empty (it is empty every time while makefile executes first time) and the set the `KBUILD_OUTPUT` variable to the value that passed with the `O` option (if this option was passed). In the next step we check this `KBUILD_OUTPUT` variable and if we set it, we do following things:

|

||||

|

||||

* Store value of the `KBUILD_OUTPUT` in the temp `saved-output` variable;

|

||||

* Try to create given output directory;

|

||||

* Check that directory created, in other way print error;

|

||||

* If custom output directory created sucessfully, execute `make` again with the new directory (see `-C` option).

|

||||

|

||||

The next `ifeq` statements checks that `C` or `M` options was passed to the make:

|

||||

|

||||

```Makefile

|

||||

ifeq ("$(origin C)", "command line")

|

||||

KBUILD_CHECKSRC = $(C)

|

||||

endif

|

||||

ifndef KBUILD_CHECKSRC

|

||||

KBUILD_CHECKSRC = 0

|

||||

endif

|

||||

|

||||

ifeq ("$(origin M)", "command line")

|

||||

KBUILD_EXTMOD := $(M)

|

||||

endif

|

||||

```

|

||||

|

||||

The first `C` option tells to the `makefile` that need to check all `c` source code with a tool provided by the `$CHECK` environment variable, by default it is [sparse](https://en.wikipedia.org/wiki/Sparse). The second `M` option provides build for the external modules (will not see this case in this part). As we set this variables we make a check of the `KBUILD_SRC` variable and if it is not set we set `srctree` variable to `.`:

|

||||

|

||||

```Makefile

|

||||

ifeq ($(KBUILD_SRC),)

|

||||

srctree := .

|

||||

endif

|

||||

|

||||

objtree := .

|

||||

src := $(srctree)

|

||||

obj := $(objtree)

|

||||

|

||||

export srctree objtree VPATH

|

||||

```

|

||||

|

||||

That tells to `Makefile` that source tree of the Linux kernel will be in the current directory where `make` command was executed. After this we set `objtree` and other variables to this directory and export these variables. The next step is the getting value for the `SUBARCH` variable that will represent tewhat the underlying archicecture is:

|

||||

|

||||

```Makefile

|

||||

SUBARCH := $(shell uname -m | sed -e s/i.86/x86/ -e s/x86_64/x86/ \

|

||||

-e s/sun4u/sparc64/ \

|

||||

-e s/arm.*/arm/ -e s/sa110/arm/ \

|

||||

-e s/s390x/s390/ -e s/parisc64/parisc/ \

|

||||

-e s/ppc.*/powerpc/ -e s/mips.*/mips/ \

|

||||

-e s/sh[234].*/sh/ -e s/aarch64.*/arm64/ )

|

||||

```

|

||||

|

||||

As you can see it executes [uname](https://en.wikipedia.org/wiki/Uname) utils that prints information about machine, operating system and architecture. As it will get output of the `uname` util, it will parse it and assign to the `SUBARCH` variable. As we got `SUBARCH`, we set the `SRCARCH` variable that provides directory of the certain architecture and `hfr-arch` that provides directory for the header files:

|

||||

|

||||

```Makefile

|

||||

ifeq ($(ARCH),i386)

|

||||

SRCARCH := x86

|

||||

endif

|

||||

ifeq ($(ARCH),x86_64)

|

||||

SRCARCH := x86

|

||||

endif

|

||||

|

||||

hdr-arch := $(SRCARCH)

|

||||

```

|

||||

|

||||

Note that `ARCH` is the alias for the `SUBARCH`. In the next step we set the `KCONFIG_CONFIG` variable that represents path to the kernel configuration file and if it was not set before, it will be `.config` by default:

|

||||

|

||||

```Makefile

|

||||

KCONFIG_CONFIG ?= .config

|

||||

export KCONFIG_CONFIG

|

||||

```

|

||||

|

||||

and the [shell](https://en.wikipedia.org/wiki/Shell_%28computing%29) that will be used during kernel compilation:

|

||||

|

||||

```Makefile

|

||||

CONFIG_SHELL := $(shell if [ -x "$$BASH" ]; then echo $$BASH; \

|

||||

else if [ -x /bin/bash ]; then echo /bin/bash; \

|

||||

else echo sh; fi ; fi)

|

||||

```

|

||||

|

||||

The next set of variables related to the compiler that will be used during Linux kernel compilation. We set the host compilers for the `c` and `c++` and flags for it:

|

||||

|

||||

```Makefile

|

||||

HOSTCC = gcc

|

||||

HOSTCXX = g++

|

||||

HOSTCFLAGS = -Wall -Wmissing-prototypes -Wstrict-prototypes -O2 -fomit-frame-pointer -std=gnu89

|

||||

HOSTCXXFLAGS = -O2

|

||||

```

|

||||

|

||||

Next we will meet the `CC` variable that represent compiler too, so why do we need in the `HOST*` variables? The `CC` is the target compiler that will be used during kernel compilation, but `HOSTCC` will be used during compilation of the set of the `host` programs (we will see it soon). After this we can see definition of the `KBUILD_MODULES` and `KBUILD_BUILTIN` variables that are used for the determination of the what to compile (kernel, modules or both):

|

||||

|

||||

```Makefile

|

||||

KBUILD_MODULES :=

|

||||

KBUILD_BUILTIN := 1

|

||||

|

||||

ifeq ($(MAKECMDGOALS),modules)

|

||||

KBUILD_BUILTIN := $(if $(CONFIG_MODVERSIONS),1)

|

||||

endif

|

||||

```

|

||||

|

||||

Here we can see definition of these variables and the value of the `KBUILD_BUILTIN` will depens on the `CONFIG_MODVERSIONS` kernel configuration parameter if we pass only `modules` to the `make`. The next step is including of the:

|

||||

|

||||

```Makefile

|

||||

include scripts/Kbuild.include

|

||||

```

|

||||

|

||||

`kbuild` file. The [Kbuild](https://github.com/torvalds/linux/blob/master/Documentation/kbuild/kbuild.txt) or `Kernel Build System` is the special infrastructure to manage building of the kernel and its modules. The `kbuild` files has the same syntax that makefiles. The [scripts/Kbuild.include](https://github.com/torvalds/linux/blob/master/scripts/Kbuild.include) file provides some generic definitions for the `kbuild` system. As we included this `kbuild` files we can see definition of the variables that are related to the different tools that will be used during kernel and modules compilation (like linker, compilers, utils from the [binutils](http://www.gnu.org/software/binutils/) and etc...):

|

||||

|

||||

```Makefile

|

||||

AS = $(CROSS_COMPILE)as

|

||||

LD = $(CROSS_COMPILE)ld

|

||||

CC = $(CROSS_COMPILE)gcc

|

||||

CPP = $(CC) -E

|

||||

AR = $(CROSS_COMPILE)ar

|

||||

NM = $(CROSS_COMPILE)nm

|

||||

STRIP = $(CROSS_COMPILE)strip

|

||||

OBJCOPY = $(CROSS_COMPILE)objcopy

|

||||

OBJDUMP = $(CROSS_COMPILE)objdump

|

||||

AWK = awk

|

||||

...

|

||||

...

|

||||

...

|

||||

```

|

||||

|

||||

After definition of these variables we define two variables: `USERINCLUDE` and `LINUXINCLUDE`. They will contain paths of the directories with headers (public for users in the first case and for kernel in the second case):

|

||||

|

||||

```Makefile

|

||||

USERINCLUDE := \

|

||||

-I$(srctree)/arch/$(hdr-arch)/include/uapi \

|

||||

-Iarch/$(hdr-arch)/include/generated/uapi \

|

||||

-I$(srctree)/include/uapi \

|

||||

-Iinclude/generated/uapi \

|

||||

-include $(srctree)/include/linux/kconfig.h

|

||||

|

||||

LINUXINCLUDE := \

|

||||

-I$(srctree)/arch/$(hdr-arch)/include \

|

||||

...

|

||||

```

|

||||

|

||||

And the standard flags for the C compiler:

|

||||

|

||||

```Makefile

|

||||

KBUILD_CFLAGS := -Wall -Wundef -Wstrict-prototypes -Wno-trigraphs \

|

||||

-fno-strict-aliasing -fno-common \

|

||||

-Werror-implicit-function-declaration \

|

||||

-Wno-format-security \

|

||||

-std=gnu89

|

||||

```

|

||||

|

||||

It is the not last compiler flags, they can be updated by the other makefiles (for example kbuilds from `arch/`). After all of these, all variables will be exported to be available in the other makefiles. The following two the `RCS_FIND_IGNORE` and the `RCS_TAR_IGNORE` variables will contain files that will be ignored in the version control system:

|

||||

|

||||

```Makefile

|

||||

export RCS_FIND_IGNORE := \( -name SCCS -o -name BitKeeper -o -name .svn -o \

|

||||

-name CVS -o -name .pc -o -name .hg -o -name .git \) \

|

||||

-prune -o

|

||||

export RCS_TAR_IGNORE := --exclude SCCS --exclude BitKeeper --exclude .svn \

|

||||

--exclude CVS --exclude .pc --exclude .hg --exclude .git

|

||||

```

|

||||

|

||||

That's all. We have finished with the all preparations, next point is the building of `vmlinux`.

|

||||

|

||||

Directly to the kernel build

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

As we have finished all preparations, next step in the root makefile is related to the kernel build. Before this moment we will not see in the our terminal after the execution of the `make` command. But now first steps of the compilation are started. In this moment we need to go on the [598](https://github.com/torvalds/linux/blob/master/Makefile#L598) line of the Linux kernel top makefile and we will see `vmlinux` target there:

|

||||

|

||||

```Makefile

|

||||

all: vmlinux

|

||||

include arch/$(SRCARCH)/Makefile

|

||||

```

|

||||

|

||||

Don't worry that we have missed many lines in Makefile that are placed after `export RCS_FIND_IGNORE.....` and before `all: vmlinux.....`. This part of the makefile is responsible for the `make *.config` targets and as I wrote in the beginning of this part we will see only building of the kernel in a general way.

|

||||

|

||||

The `all:` target is the default when no target is given on the command line. You can see here that we include architecture specific makefile there (in our case it will be [arch/x86/Makefile](https://github.com/torvalds/linux/blob/master/arch/x86/Makefile)). From this moment we will continue from this makefile. As we can see `all` target depends on the `vmlinux` target that defined a little lower in the top makefile:

|

||||

|

||||

```Makefile

|

||||

vmlinux: scripts/link-vmlinux.sh $(vmlinux-deps) FORCE

|

||||

```

|

||||

|

||||

The `vmlinux` is is the Linux kernel in an statically linked executable file format. The [scripts/link-vmlinux.sh](https://github.com/torvalds/linux/blob/master/scripts/link-vmlinux.sh) script links combines different compiled subsystems into vmlinux. The second target is the `vmlinux-deps` that defined as:

|

||||

|

||||

```Makefile

|

||||

vmlinux-deps := $(KBUILD_LDS) $(KBUILD_VMLINUX_INIT) $(KBUILD_VMLINUX_MAIN)

|

||||

```

|

||||

|

||||

and consists from the set of the `built-in.o` from the each top directory of the Linux kernel. Later, when we will go through all directories in the Linux kernel, the `Kbuild` will compile all the `$(obj-y)` files. It then calls `$(LD) -r` to merge these files into one `built-in.o` file. For this moment we have no `vmlinux-deps`, so the `vmlinux` target will not be executed now. For me `vmlinux-deps` contains following files:

|

||||

|

||||

```

|

||||

arch/x86/kernel/vmlinux.lds arch/x86/kernel/head_64.o

|

||||

arch/x86/kernel/head64.o arch/x86/kernel/head.o

|

||||

init/built-in.o usr/built-in.o

|

||||

arch/x86/built-in.o kernel/built-in.o

|

||||

mm/built-in.o fs/built-in.o

|

||||

ipc/built-in.o security/built-in.o

|

||||

crypto/built-in.o block/built-in.o

|

||||

lib/lib.a arch/x86/lib/lib.a

|

||||

lib/built-in.o arch/x86/lib/built-in.o

|

||||

drivers/built-in.o sound/built-in.o

|

||||

firmware/built-in.o arch/x86/pci/built-in.o

|

||||

arch/x86/power/built-in.o arch/x86/video/built-in.o

|

||||

net/built-in.o

|

||||

```

|

||||

|

||||

The next target that can be executed is following:

|

||||

|

||||

```Makefile

|

||||

$(sort $(vmlinux-deps)): $(vmlinux-dirs) ;

|

||||

$(vmlinux-dirs): prepare scripts

|

||||

$(Q)$(MAKE) $(build)=$@

|

||||

```

|

||||

|

||||

As we can see the `vmlinux-dirs` depends on the two targets: `prepare` and `scripts`. The first `prepare` defined in the top `Makefile` of the Linux kernel and executes three stages of preparations:

|

||||

|

||||

```Makefile

|

||||

prepare: prepare0

|

||||

prepare0: archprepare FORCE

|

||||

$(Q)$(MAKE) $(build)=.

|

||||

archprepare: archheaders archscripts prepare1 scripts_basic

|

||||

|

||||

prepare1: prepare2 $(version_h) include/generated/utsrelease.h \

|

||||

include/config/auto.conf

|

||||

$(cmd_crmodverdir)

|

||||

prepare2: prepare3 outputmakefile asm-generic

|

||||

```

|

||||

|

||||