mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

a7a9a6ee70

@ -1,39 +1,40 @@

|

||||

|

||||

How to Use Fio to Measure Disk Performance in Linux

|

||||

======

|

||||

|

||||

|

||||

|

||||

Fio (Flexible I/O Tester) is a [free-of-charge, open source][1] tool developed by Jens Axboe for benchmarking and for stress and hardware verification

|

||||

Fio (Flexible I/O Tester) is a [free (libre) and open source][1] tool developed by Jens Axboe for benchmarking and for stress and hardware verification.

|

||||

|

||||

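It supports 19 different types of I/O engines (sync, mmap, libaio, posixaio, SG v3, splice, null, network, syslet, guasi, solarisaio, and more), I/O priorities (for newer Linux kernels), rate-limited I/O, cloned or threaded jobs, and much more. It can work on block devices as well as files.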

|

||||

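It supports 19 different types of I/O engines (sync, mmap, libaio, posixaio, SG v3, splice, null, network, syslet, guasi, solarisaio, and more), I/O priorities (for newer Linux kernels), rate-limited I/O, forked or threaded jobs, and so on. It can work on block devices as well as files.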

|

||||

|

||||

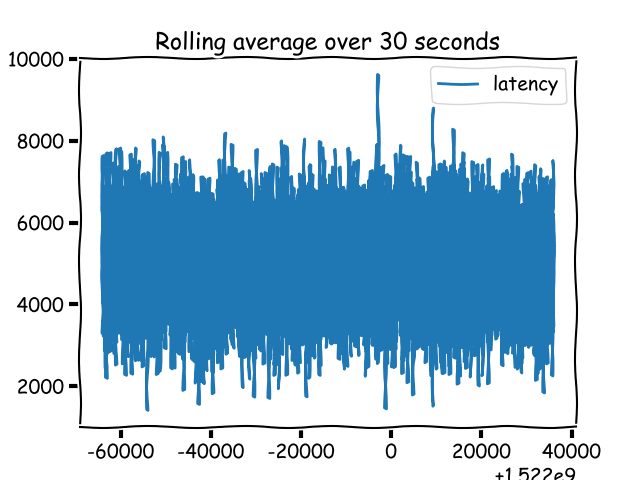

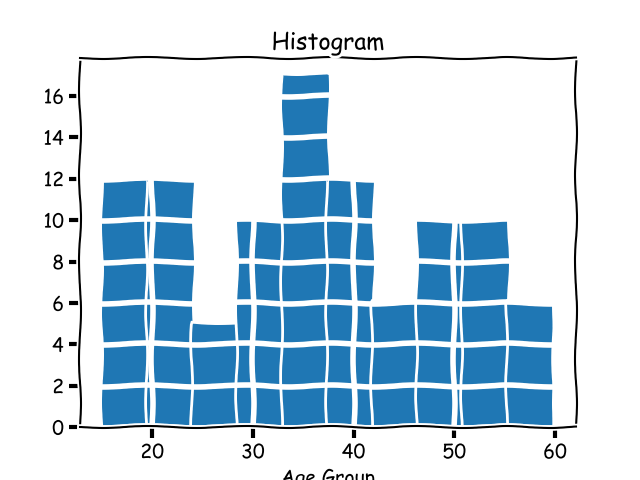

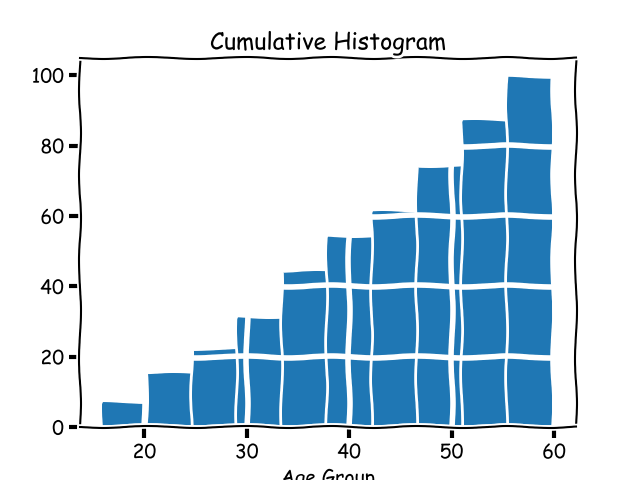

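Fio accepts job descriptions in a simple, easy-to-understand text format. The software ships with many example job files. Fio displays all sorts of I/O performance information, including complete I/O latencies and percentiles.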

|

||||

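Fio accepts job descriptions in a simple, easy-to-understand text format. The software ships with several example job files. Fio displays all sorts of I/O performance information, including complete I/O latencies and percentiles.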
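To make the job description format more concrete, here is a minimal sketch of a job file that mirrors the kind of random read test used later in this tutorial. The directory and file name (`fio-demo`, `randread.fio`) are placeholders invented for this example, not files shipped with Fio; the options themselves are the same ones that appear on the command line in this article.

```shell
#!/bin/sh
# Write a minimal fio job file (INI-style) into a scratch directory.
# The directory and file name are placeholders chosen for this sketch.
job_dir="${TMPDIR:-/tmp}/fio-demo"
mkdir -p "$job_dir"
job_file="$job_dir/randread.fio"

cat > "$job_file" <<'EOF'
; options shared by every job in this file
[global]
ioengine=libaio
direct=0
bs=4k
size=512M
runtime=240
group_reporting

; one job section: four processes doing random reads
[randread]
rw=randread
iodepth=16
numjobs=4
EOF

echo "wrote $job_file"
```

Assuming Fio is installed, the job could then be started with `fio "$job_file"`.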

|

||||

|

||||

It is in wide use in many places, for benchmarking, QA, and verification purposes alike. It supports Linux, FreeBSD, NetBSD, OpenBSD, OS X, OpenSolaris, AIX, HP-UX, Android, and Windows.

|

||||

It is in wide use in many places, for benchmarking, QA, and verification purposes alike. It supports Linux, FreeBSD, NetBSD, OpenBSD, OS X, OpenSolaris, AIX, HP-UX, Android, and Windows.

|

||||

|

||||

In this tutorial we will be using Ubuntu 16, and you will need sudo or root privileges on the machine. We will go through the installation and use of Fio in full.

|

||||

In this tutorial we will be using Ubuntu 16, and you will need `sudo` or root privileges on the machine. We will go through the installation and use of Fio in full.

|

||||

|

||||

### Installing Fio from source

|

||||

|

||||

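We are going to clone the repository on Github, install the required dependencies, and then build the application from source. First, make sure Git is installed.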

|

||||

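We are going to clone the repository on GitHub, install the required dependencies, and then build the application from source. First, make sure Git is installed.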

|

||||

|

||||

```

|

||||

sudo apt-get install git

|

||||

```

|

||||

|

||||

CentOS users can run the following command:

|

||||

|

||||

```

|

||||

sudo yum install git

|

||||

```

|

||||

|

||||

Now we change to the /opt directory and clone the repository from Github:

|

||||

Now we change to the `/opt` directory and clone the repository from Github:

|

||||

|

||||

```

|

||||

cd /opt

|

||||

git clone https://github.com/axboe/fio

|

||||

```

|

||||

|

||||

You should see output like the following

|

||||

You should see output like the following:

|

||||

|

||||

```

|

||||

Cloning into 'fio'...

|

||||

@ -45,7 +46,7 @@ Resolving deltas: 100% (16251/16251), done.

|

||||

Checking connectivity... done.

|

||||

```

|

||||

|

||||

Now we change into the Fio source directory by typing the command below in the opt directory:

|

||||

Now we change into the Fio source directory by typing the command below in the `/opt` directory:

|

||||

|

||||

```

|

||||

cd fio

|

||||

@ -61,7 +62,7 @@ cd fio

|

||||

|

||||

### Installing Fio on Ubuntu

|

||||

|

||||

For Ubuntu and Debian, Fio is available in the main repository. You can easily install Fio with a standard package manager such as yum or apt-get.

|

||||

For Ubuntu and Debian, Fio is available in the main repository. You can easily install Fio with a standard package manager such as `yum` or `apt-get`.

|

||||

|

||||

For Ubuntu and Debian, simply run the following command:

|

||||

|

||||

@ -69,7 +70,8 @@ cd fio

|

||||

sudo apt-get install fio

|

||||

```

|

||||

|

||||

For CentOS/Redhat, simply run the following command:

|

||||

For CentOS/Redhat, simply run the following command.

|

||||

|

||||

On CentOS, you may need to add the EPEL repository to your system before you can install Fio. You can install it by running the following command:

|

||||

|

||||

```

|

||||

@ -124,23 +126,20 @@ Run status group 0 (all jobs):

|

||||

|

||||

Disk stats (read/write):

|

||||

sda: ios=0/0, merge=0/0, ticks=0/0, in_queue=0, util=0.00%

|

||||

|

||||

|

||||

```

|

||||

|

||||

### Running a random read test

|

||||

|

||||

We are going to run a random read test, in which we try to read a random 2 GB file.

|

||||

|

||||

```

|

||||

|

||||

sudo fio --name=randread --ioengine=libaio --iodepth=16 --rw=randread --bs=4k --direct=0 --size=512M --numjobs=4 --runtime=240 --group_reporting

|

||||

|

||||

|

||||

```
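As a quick sanity check on the 2 GB figure: each of the `--numjobs=4` processes works on its own `--size=512M` file, so the total amount of data can be worked out with a little shell arithmetic. The variable names below are only for illustration.

```shell
#!/bin/sh
# Total data touched by the test = number of jobs x size per job.
numjobs=4      # from --numjobs=4
size_mb=512    # from --size=512M

total_mb=$((numjobs * size_mb))
echo "total: ${total_mb} MiB ($((total_mb / 1024)) GiB)"
```

The same calculation is handy when sizing a test so that the data set is larger than the page cache, which matters here because `--direct=0` leaves buffered I/O enabled.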

|

||||

|

||||

You should see output like the following

|

||||

```

|

||||

You should see output like the following:

|

||||

|

||||

```

|

||||

...

|

||||

fio-2.2.10

|

||||

Starting 4 processes

|

||||

@ -176,15 +175,13 @@ Run status group 0 (all jobs):

|

||||

|

||||

Disk stats (read/write):

|

||||

sda: ios=521587/871, merge=0/1142, ticks=96664/612, in_queue=97284, util=99.85%

|

||||

|

||||

|

||||

```

|

||||

|

||||

Finally, we want to show a simple random read-write test to see what kind of output Fio returns.

|

||||

|

||||

### Read/write performance test

|

||||

|

||||

The following command will test the random read/write performance of a USB pen drive (/dev/sdc1):

|

||||

The following command will test the random read/write performance of a USB pen drive (`/dev/sdc1`):

|

||||

|

||||

```

|

||||

sudo fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=random_read_write.fio --bs=4k --iodepth=64 --size=4G --readwrite=randrw --rwmixread=75

|

||||

@ -213,8 +210,6 @@ Disk stats (read/write):

|

||||

sda: ios=774141/258944, merge=1463/899, ticks=748800/150316, in_queue=900720, util=99.35%

|

||||

```
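The `Disk stats` line offers a rough way to check that the requested `--rwmixread=75` mix was honored. The sketch below assumes the `ios=reads/writes` layout shown in the output above and computes the read share from that line with `awk`. Device-level counters also include I/O from other processes, so the result is only an approximation.

```shell
#!/bin/sh
# Disk stats line copied from the output above.
line='sda: ios=774141/258944, merge=1463/899, ticks=748800/150316, in_queue=900720, util=99.35%'

# Split on "=", "/" and "," so that field 2 is the read count
# and field 3 is the write count of the ios= pair.
read_pct=$(printf '%s\n' "$line" |
    awk -F'[=/,]' '{ printf "%.1f", 100 * $2 / ($2 + $3) }')

echo "reads: ${read_pct}% of I/Os"
```

For the line above this comes out just under 75%, which is consistent with the mix requested on the command line.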

|

||||

|

||||

We hope you enjoyed this tutorial and enjoyed following along. Fio is a very useful tool, and we hope you can use it in your next debugging activity. If you enjoyed reading this post, feel free to leave a comment or questions. Go ahead and clone the repo and play around with the code.

|

||||

|

||||

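We hope you enjoyed this tutorial and had fun following along. Fio is a very useful tool, and we hope you can use it in your next debugging session. If you enjoyed this article, feel free to leave comments and questions.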

|

||||

|

||||

|

||||

@ -224,7 +219,7 @@ via: https://wpmojo.com/how-to-use-fio-to-measure-disk-performance-in-linux/

|

||||

|

||||

Author: [Alex Pearson][a]

|

||||

Translator: [Bestony](https://github.com/bestony)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

@ -1,58 +1,52 @@

|

||||

# [Google launches TensorFlow-based vision recognition kit for Raspberry Pi Zero W][26]

|

||||

|

||||

Google launches TensorFlow-based vision recognition kit for Raspberry Pi Zero W

|

||||

===============

|

||||

|

||||

|

||||

|

||||

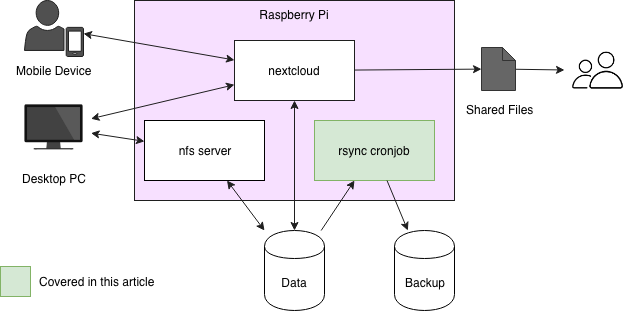

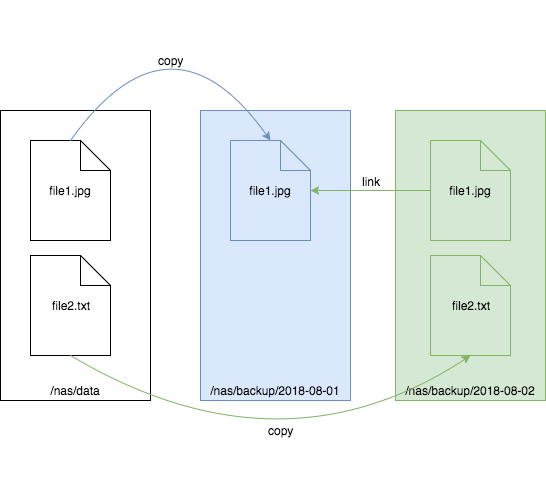

Google has released a $45 “AIY Vision Kit”, a TensorFlow-based vision recognition development kit that runs on the Raspberry Pi Zero W and uses a “VisionBonnet” board with a Movidius chip.

|

||||

|

||||

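Built to accelerate neural networks on devices, Google's AIY Vision Kit follows the earlier [AIY Projects][7] voice/AI kit for the Raspberry Pi, which was given away with the May issue of MagPi magazine. Like the voice kit and the older Google Cardboard VR viewer, the new AIY Vision Kit comes in a cardboard enclosure. The kit differs from the [Cloud Vision API][8], which was demonstrated in 2015 with a Raspberry Pi based GoPiGo robot: it runs entirely on local processing power and does not need a cloud connection. The AIY Vision Kit can be pre-ordered now for $45 and will ship in December.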

|

||||

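Built to accelerate neural networks on the device, Google's AIY Vision Kit follows the earlier [AIY Projects][7] voice/AI kit for the Raspberry Pi, which was given away with the May issue of MagPi magazine. Like the voice kit and the older Google Cardboard VR viewer, the new AIY Vision Kit comes in a cardboard enclosure. The kit differs from the [Cloud Vision API][8], which was demonstrated in 2015 with a Raspberry Pi based GoPiGo robot: it runs entirely on local processing power and does not need a cloud connection. The AIY Vision Kit can be pre-ordered now for $45 and will ship in December.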

|

||||

|

||||

[][9] [][10]

|

||||

|

||||

[][9] [][10]

|

||||

**AIY Vision Kit, fully assembled (left), and Raspberry Pi Zero W**

|

||||

(Click images to enlarge)

|

||||

*AIY Vision Kit, fully assembled (left), and Raspberry Pi Zero W*

|

||||

|

||||

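Apart from the required [Raspberry Pi Zero W][21] single-board computer, which is built on a 1 GHz ARM11-based Broadcom BCM2835 system-on-chip, the kit's main processing element is Google's new VisionBonnet RPi add-on board. The VisionBonnet pHAT board uses a Movidius MA2450, a version of the [Movidius Myriad 2 VPU][22]. On the VisionBonnet, the processor runs Google's open source machine learning library [TensorFlow][23] for neural networks. The chip enables vision processing at up to 30 frames per second.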

|

||||

|

||||

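Apart from the required [Raspberry Pi Zero W][21] single-board computer, which is built on a 1 GHz ARM11-based Broadcom BCM2835 system-on-chip, the kit's main processing element is Google's new VisionBonnet RPi add-on board. The VisionBonnet pHAT board uses a Movidius MA2450, a version of the [Movidius Myriad 2 VPU][22]. On the VisionBonnet, the processor runs Google's open source machine learning library [TensorFlow][23] for neural networks. The chip enables vision processing at up to 30 frames per second.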

|

||||

|

||||

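The AIY Vision Kit requires the user to supply a Raspberry Pi Zero W, a [Raspberry Pi Camera v2][11], and a 16GB micro SD card, which is used for downloading the Linux-based OS image. The kit itself includes the VisionBonnet, an RGB arcade-style button, a piezo speaker, a wide-angle lens kit, and the cardboard enclosure that houses them. It also comes with cables, standoffs, mounting nuts, and connecting parts.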

|

||||

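The AIY Vision Kit requires the user to supply a Raspberry Pi Zero W, a [Raspberry Pi Camera v2][11], and a 16GB micro SD card, which is used for downloading the Linux-based OS image. The kit itself includes the VisionBonnet, an RGB arcade-style button, a piezo speaker, a wide-angle lens kit, and the cardboard enclosure that houses them. It also comes with cables, standoffs, mounting nuts, and connecting parts.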

|

||||

|

||||

|

||||

[][12] [][13]

|

||||

**AIY Vision Kit components (left) and VisionBonnet add-on board**

|

||||

(Click images to enlarge)

|

||||

|

||||

*AIY Vision Kit components (left) and VisionBonnet add-on board*

|

||||

|

||||

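Three neural network models are available. One is a general-purpose model that can recognize 1,000 common objects, one is a face detection model that can also score “joy” on a scale from “sad” to “laughing”, and one is a model that can tell whether an image contains a dog, a cat, or a person. The 1,000-image model derives from Google's open source [MobileNets][24], a family of TensorFlow-based computer vision models designed for resource-constrained mobile and embedded devices.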

|

||||

|

||||

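MobileNet models are low-latency, low-power, and parameterized to meet the resource constraints of different use cases. According to Google, the models can be built upon for classification, detection, embeddings, and segmentation. Earlier this month, Google released a developer preview of [TensorFlow Lite][14], a library friendly to Android and iOS mobile devices that is compatible with MobileNets and the Android Neural Networks API.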

|

||||

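Three neural network models are available. One is a general-purpose model that can recognize 1000 common objects, one is a face detection model that can also score “joy” on a scale from “sad” to “laughing”, and one is a model that can tell whether an image contains a dog, a cat, or a person. The 1000-image model derives from Google's open source [MobileNets][24], a family of TensorFlow-based computer vision models designed for resource-constrained mobile and embedded devices.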

|

||||

|

||||

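MobileNet models are low-latency, low-power, and parameterized to meet the resource constraints of different usage scenarios. According to Google, the models can be built upon for classification, detection, embeddings, and segmentation. Earlier this month, Google released a developer preview of [TensorFlow Lite][14], a library friendly to Android and iOS mobile devices that is compatible with MobileNets and the Android Neural Networks API.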

|

||||

|

||||

[][15]

|

||||

**AIY Vision Kit packaging diagram**

|

||||

(Click image to enlarge)

|

||||

|

||||

*AIY Vision Kit packaging diagram*

|

||||

|

||||

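In addition to the three models, the AIY Vision Kit provides basic TensorFlow code and a compiler, so users can develop their own models. Python developers can also write new software to customize the RGB button colors, the piezo element sounds, and the four GPIO pins on the VisionBonnet, which can be used to add extra lights, buttons, or servos. Potential models include recognizing food items, opening a dog door based on visual input, sending a text message when your car leaves the driveway, or playing particular music based on the facial expression of a recognized person.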

|

||||

|

||||

|

||||

[][16] [][17]

|

||||

**Myriad 2 VPU block diagram (left) and reference board**

|

||||

(Click images to enlarge)

|

||||

|

||||

*Myriad 2 VPU block diagram (left) and reference board*

|

||||

|

||||

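The Movidius Myriad 2 processor delivers TeraFLOPS-level performance within a nominal 1 W power envelope. Before Movidius was acquired by Intel, the chip first appeared on the Project Tango reference platform, and it is built into the Ubuntu-driven USB [Fathom][25] neural network processing stick that Movidius debuted in May 2016. According to Movidius, the Myriad 2 is now used in “millions of devices on the market”.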

|

||||

|

||||

**Further information**

|

||||

|

||||

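The AIY Vision Kit is available for pre-order at Micro Center for $44.99, with shipments due in early December. More information can be found in the AIY Vision Kit [announcement][18], the [Google blog][19], and the [Micro Center shopping page][20].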

|

||||

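The AIY Vision Kit is available for pre-order at Micro Center for $44.99, with shipments due in early December (2017). More information can be found in the AIY Vision Kit [announcement][18], the [Google blog][19], and the [Micro Center shopping page][20].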

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linuxgizmos.com/google-launches-tensorflow-based-vision-recognition-kit-for-rpi-zero-w/

|

||||

|

||||

Author: [ Eric Brown][a]

|

||||

Author: [Eric Brown][a]

|

||||

Translator: [qhwdw](https://github.com/qhwdw)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

563

published/20180306 How To Check All Running Services In Linux.md

Normal file

@ -0,0 +1,563 @@

|

||||

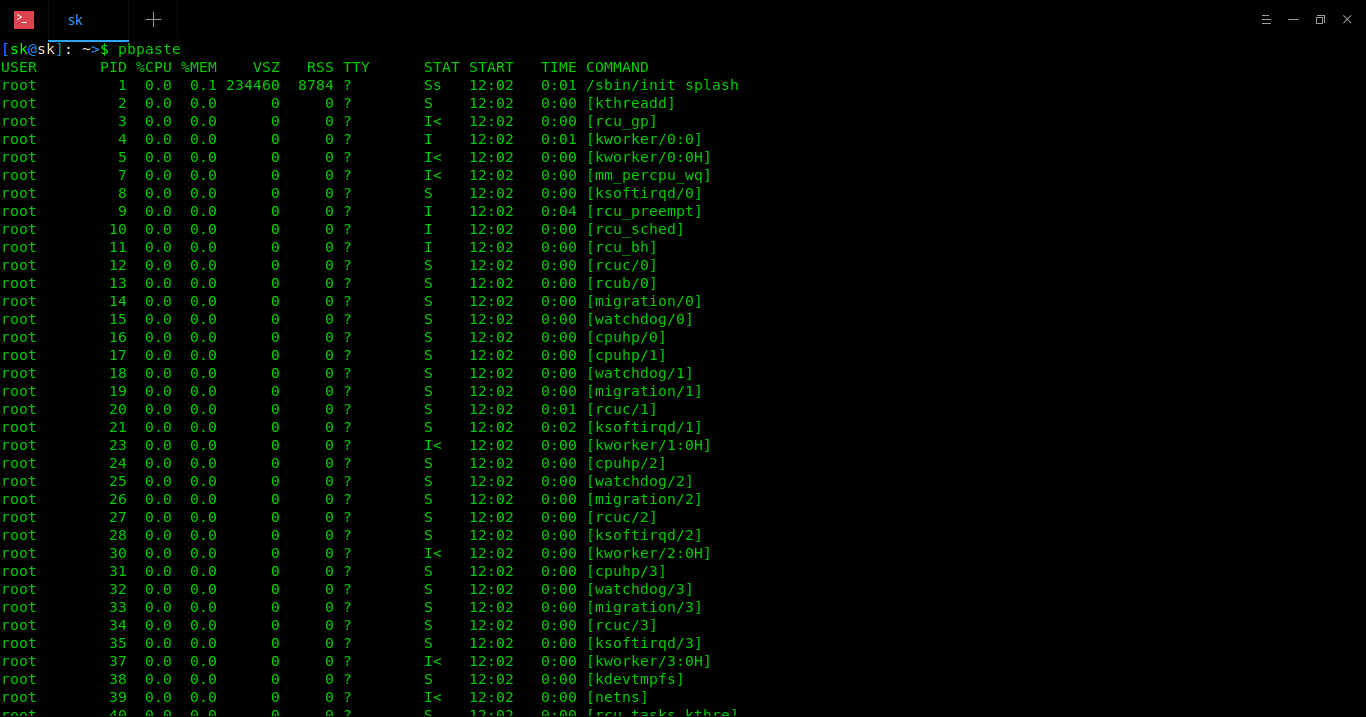

How to Check All Running Services in Linux

|

||||

======

|

||||

|

||||

There are many ways and tools to check all running services in Linux. Most administrators use `service service-name status` or `/etc/init.d/service-name status` on System V (SysV) init systems, and `systemctl status service-name` on systemd init systems.

|

||||

|

||||

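The commands above clearly show whether the given service is running on the server. They are simple, basic commands that every Linux administrator should know.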

|

||||

|

||||

But if you are not familiar with the system environment and do not know which services it is running, how would you check?

|

||||

|

||||

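Yes, we really do need to check this. It helps us understand which services are running on the system, which of them are necessary, and which should be disabled.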

|

||||

|

||||

init (short for initialization) is the first process started during system boot. `init` is a daemon process that keeps running until the system is shut down.

|

||||

|

||||

Most Linux distributions use one of the following init systems:

|

||||

|

||||

- System V is the older init system

|

||||

- Upstart is an event-based replacement for the traditional init system

|

||||

- systemd is the newer init system, which has been adopted by most of the latest Linux distributions
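Which of the methods in this article applies depends on the init system in use. A common heuristic, sketched here as a small shell function (the name `detect_init` is made up for this example), is to look for the `/run/systemd/system` directory, which exists when systemd is the running init, and otherwise to ask `initctl` whether it belongs to Upstart. Anything else is reported as `sysv-or-other`, since this check cannot tell SysV init apart from other init systems.

```shell
#!/bin/sh
# Guess the running init system. This is a heuristic, not a definitive test.
detect_init() {
    if [ -d /run/systemd/system ]; then
        # This directory is created by systemd at boot.
        echo systemd
    elif command -v initctl >/dev/null 2>&1 &&
         initctl version 2>/dev/null | grep -q upstart; then
        # Upstart's initctl reports "upstart" in its version string.
        echo upstart
    else
        # Could be SysV init, or something this sketch does not know about.
        echo sysv-or-other
    fi
}

init_system=$(detect_init)
echo "init system: $init_system"
```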

|

||||

|

||||

### What is System V (SysV)

|

||||

|

||||

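SysV (that is, System V) init is the early, traditional init system and system manager. Because of some long-standing unresolved problems with sysVinit, most of the latest distributions have moved to systemd.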

|

||||

|

||||

### What is the Upstart init system

|

||||

|

||||

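Upstart is an event-based replacement for /sbin/init. It handles starting tasks and services during boot, stopping them during shutdown, and supervising them while the system is running.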

|

||||

|

||||

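It was originally developed for the Ubuntu distribution, but it is intended to be suitable for deployment in all Linux distributions as a replacement for the outdated System V init.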

|

||||

|

||||

### What is systemd

|

||||

|

||||

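systemd is a new init system and system manager that has become very popular and has been widely adopted as the new standard init system by most Linux distributions. `systemctl` is the systemd management utility that helps us manage a systemd system.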

|

||||

|

||||

### Method 1: How to check running services on a System V (SysV) system

|

||||

|

||||

The following command helps us list all services on a System V (SysV) system, together with their status.

|

||||

|

||||

If there are many services, I suggest piping the output through a pager such as `less` or `more` to get clean results.

|

||||

|

||||

```

|

||||

# service --status-all

|

||||

or

|

||||

# service --status-all | more

|

||||

or

|

||||

# service --status-all | less

|

||||

```

|

||||

|

||||

```

|

||||

abrt-ccpp hook is installed

|

||||

abrtd (pid 2131) is running...

|

||||

abrt-dump-oops is stopped

|

||||

acpid (pid 1958) is running...

|

||||

atd (pid 2164) is running...

|

||||

auditd (pid 1731) is running...

|

||||

Frequency scaling enabled using ondemand governor

|

||||

crond (pid 2153) is running...

|

||||

hald (pid 1967) is running...

|

||||

htcacheclean is stopped

|

||||

httpd is stopped

|

||||

Table: filter

|

||||

Chain INPUT (policy ACCEPT)

|

||||

num target prot opt source destination

|

||||

1 ACCEPT all ::/0 ::/0 state RELATED,ESTABLISHED

|

||||

2 ACCEPT icmpv6 ::/0 ::/0

|

||||

3 ACCEPT all ::/0 ::/0

|

||||

4 ACCEPT tcp ::/0 ::/0 state NEW tcp dpt:80

|

||||

5 ACCEPT tcp ::/0 ::/0 state NEW tcp dpt:21

|

||||

6 ACCEPT tcp ::/0 ::/0 state NEW tcp dpt:22

|

||||

7 ACCEPT tcp ::/0 ::/0 state NEW tcp dpt:25

|

||||

8 ACCEPT tcp ::/0 ::/0 state NEW tcp dpt:2082

|

||||

9 ACCEPT tcp ::/0 ::/0 state NEW tcp dpt:2086

|

||||

10 ACCEPT tcp ::/0 ::/0 state NEW tcp dpt:2083

|

||||

11 ACCEPT tcp ::/0 ::/0 state NEW tcp dpt:2087

|

||||

12 ACCEPT tcp ::/0 ::/0 state NEW tcp dpt:10000

|

||||

13 REJECT all ::/0 ::/0 reject-with icmp6-adm-prohibited

|

||||

|

||||

Chain FORWARD (policy ACCEPT)

|

||||

num target prot opt source destination

|

||||

1 REJECT all ::/0 ::/0 reject-with icmp6-adm-prohibited

|

||||

|

||||

Chain OUTPUT (policy ACCEPT)

|

||||

num target prot opt source destination

|

||||

|

||||

iptables: Firewall is not running.

|

||||

irqbalance (pid 1826) is running...

|

||||

Kdump is operational

|

||||

lvmetad is stopped

|

||||

mdmonitor is stopped

|

||||

messagebus (pid 1929) is running...

|

||||

SUCCESS! MySQL running (24376)

|

||||

rndc: neither /etc/rndc.conf nor /etc/rndc.key was found

|

||||

named is stopped

|

||||

netconsole module not loaded

|

||||

Usage: startup.sh { start | stop }

|

||||

Configured devices:

|

||||

lo eth0 eth1

|

||||

Currently active devices:

|

||||

lo eth0

|

||||

ntpd is stopped

|

||||

portreserve (pid 1749) is running...

|

||||

master (pid 2107) is running...

|

||||

Process accounting is disabled.

|

||||

quota_nld is stopped

|

||||

rdisc is stopped

|

||||

rngd is stopped

|

||||

rpcbind (pid 1840) is running...

|

||||

rsyslogd (pid 1756) is running...

|

||||

sandbox is stopped

|

||||

saslauthd is stopped

|

||||

smartd is stopped

|

||||

openssh-daemon (pid 9859) is running...

|

||||

svnserve is stopped

|

||||

vsftpd (pid 4008) is running...

|

||||

xinetd (pid 2031) is running...

|

||||

zabbix_agentd (pid 2150 2149 2148 2147 2146 2140) is running...

|

||||

```

|

||||

|

||||

Run the following command to see only the running services:

|

||||

|

||||

```

|

||||

# service --status-all | grep running

|

||||

```

|

||||

|

||||

```

|

||||

crond (pid 535) is running...

|

||||

httpd (pid 627) is running...

|

||||

mysqld (pid 911) is running...

|

||||

rndc: neither /etc/rndc.conf nor /etc/rndc.key was found

|

||||

rsyslogd (pid 449) is running...

|

||||

saslauthd (pid 492) is running...

|

||||

sendmail (pid 509) is running...

|

||||

sm-client (pid 519) is running...

|

||||

openssh-daemon (pid 478) is running...

|

||||

xinetd (pid 485) is running...

|

||||

```
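The `rndc` line in the output above is probably printed on standard error, which would explain why it slips past `grep`. A slightly stricter filter, sketched below, keeps only lines of the form `name (pid N) is running...` and prints the service name. It runs on a few sample lines copied from the outputs in this article (the `named is stopped` line comes from the longer listing earlier), so it can be tried without a SysV system.

```shell
#!/bin/sh
# Sample lines in the format printed by `service --status-all`.
sample='crond (pid 535) is running...
httpd (pid 627) is running...
named is stopped
rsyslogd (pid 449) is running...'

# Keep only "name (pid ...) is running" lines and print the first field.
running=$(printf '%s\n' "$sample" |
    awk '/\(pid [0-9 ]+\) is running/ { print $1 }')

printf '%s\n' "$running"
```

On a real system the input would come from `service --status-all 2>/dev/null` instead of the `sample` variable.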

|

||||

|

||||

Run the following command to check the status of a particular service:

|

||||

|

||||

```

|

||||

# service --status-all | grep httpd

|

||||

httpd (pid 627) is running...

|

||||

```

|

||||

|

||||

Alternatively, use the following command to check the status of a particular service:

|

||||

|

||||

```

|

||||

# service httpd status

|

||||

httpd (pid 627) is running...

|

||||

```

|

||||

|

||||

Use the following command to see which services are enabled at system boot:

|

||||

|

||||

```

|

||||

# chkconfig --list

|

||||

```

|

||||

|

||||

```

|

||||

crond 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

htcacheclean 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

httpd 0:off 1:off 2:off 3:on 4:off 5:off 6:off

|

||||

ip6tables 0:off 1:off 2:on 3:off 4:on 5:on 6:off

|

||||

iptables 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

modules_dep 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

mysqld 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

named 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

netconsole 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

netfs 0:off 1:off 2:off 3:off 4:on 5:on 6:off

|

||||

network 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

nmb 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

nscd 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

portreserve 0:off 1:off 2:on 3:off 4:on 5:on 6:off

|

||||

quota_nld 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

rdisc 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

restorecond 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

rpcbind 0:off 1:off 2:on 3:off 4:on 5:on 6:off

|

||||

rsyslog 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

saslauthd 0:off 1:off 2:off 3:on 4:off 5:off 6:off

|

||||

sendmail 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

smb 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

snmpd 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

snmptrapd 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

sshd 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

udev-post 0:off 1:on 2:on 3:off 4:on 5:on 6:off

|

||||

winbind 0:off 1:off 2:off 3:off 4:off 5:off 6:off

|

||||

xinetd 0:off 1:off 2:off 3:on 4:on 5:on 6:off

|

||||

|

||||

xinetd based services:

|

||||

chargen-dgram: off

|

||||

chargen-stream: off

|

||||

daytime-dgram: off

|

||||

daytime-stream: off

|

||||

discard-dgram: off

|

||||

discard-stream: off

|

||||

echo-dgram: off

|

||||

echo-stream: off

|

||||

finger: off

|

||||

ntalk: off

|

||||

rsync: off

|

||||

talk: off

|

||||

tcpmux-server: off

|

||||

time-dgram: off

|

||||

time-stream: off

|

||||

```
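Each column in the `chkconfig --list` output is a runlevel. To see only the services switched on for one runlevel, the list can be filtered with `awk`. The sketch below uses four sample lines taken from the output above and runlevel 3 as an example; on a real system the input would come from `chkconfig --list` itself.

```shell
#!/bin/sh
# Sample lines in the format printed by `chkconfig --list`.
sample='crond 0:off 1:off 2:on 3:on 4:on 5:on 6:off
htcacheclean 0:off 1:off 2:off 3:off 4:off 5:off 6:off
httpd 0:off 1:off 2:off 3:on 4:off 5:off 6:off
ip6tables 0:off 1:off 2:on 3:off 4:on 5:on 6:off'

runlevel=3

# Print the service name when any column equals "<runlevel>:on".
enabled=$(printf '%s\n' "$sample" |
    awk -v rl="$runlevel" '{ for (i = 2; i <= NF; i++) if ($i == rl ":on") print $1 }')

printf '%s\n' "$enabled"
```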

|

||||

|

||||

### Method 2: How to check running services on a System V (SysV) system

|

||||

|

||||

Another way to list running services on a Linux system is the `initctl` command, which is provided by Upstart:

|

||||

|

||||

```

|

||||

# initctl list

|

||||

rc stop/waiting

|

||||

tty (/dev/tty3) start/running, process 1740

|

||||

tty (/dev/tty2) start/running, process 1738

|

||||

tty (/dev/tty1) start/running, process 1736

|

||||

tty (/dev/tty6) start/running, process 1746

|

||||

tty (/dev/tty5) start/running, process 1744

|

||||

tty (/dev/tty4) start/running, process 1742

|

||||

plymouth-shutdown stop/waiting

|

||||

control-alt-delete stop/waiting

|

||||

rcS-emergency stop/waiting

|

||||

readahead-collector stop/waiting

|

||||

kexec-disable stop/waiting

|

||||

quit-plymouth stop/waiting

|

||||

rcS stop/waiting

|

||||

prefdm stop/waiting

|

||||

init-system-dbus stop/waiting

|

||||

ck-log-system-restart stop/waiting

|

||||

readahead stop/waiting

|

||||

ck-log-system-start stop/waiting

|

||||

splash-manager stop/waiting

|

||||

start-ttys stop/waiting

|

||||

readahead-disable-services stop/waiting

|

||||

ck-log-system-stop stop/waiting

|

||||

rcS-sulogin stop/waiting

|

||||

serial stop/waiting

|

||||

```
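To pick out only the jobs that are actually running, the `start/running` state can be matched. The sketch below works on three sample lines from the output above, so it can be run anywhere; on an Upstart system the input would be `initctl list`.

```shell
#!/bin/sh
# Sample lines in the format printed by `initctl list`.
sample='rc stop/waiting
tty (/dev/tty3) start/running, process 1740
tty (/dev/tty2) start/running, process 1738'

# Count the jobs whose state is start/running.
running_jobs=$(printf '%s\n' "$sample" | grep -c 'start/running')

echo "running jobs: $running_jobs"
```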

|

||||

|

||||

### Method 3: How to check running services on a systemd system

|

||||

|

||||

The following command lists all active units, services included, on a systemd system:

|

||||

|

||||

```

|

||||

# systemctl

|

||||

UNIT LOAD ACTIVE SUB DESCRIPTION

|

||||

sys-devices-virtual-block-loop0.device loaded active plugged /sys/devices/virtual/block/loop0

|

||||

sys-devices-virtual-block-loop1.device loaded active plugged /sys/devices/virtual/block/loop1

|

||||

sys-devices-virtual-block-loop2.device loaded active plugged /sys/devices/virtual/block/loop2

|

||||

sys-devices-virtual-block-loop3.device loaded active plugged /sys/devices/virtual/block/loop3

|

||||

sys-devices-virtual-block-loop4.device loaded active plugged /sys/devices/virtual/block/loop4

|

||||

sys-devices-virtual-misc-rfkill.device loaded active plugged /sys/devices/virtual/misc/rfkill

|

||||

sys-devices-virtual-tty-ttyprintk.device loaded active plugged /sys/devices/virtual/tty/ttyprintk

|

||||

sys-module-fuse.device loaded active plugged /sys/module/fuse

|

||||

sys-subsystem-net-devices-enp0s3.device loaded active plugged 82540EM Gigabit Ethernet Controller (PRO/1000 MT Desktop Adapter)

|

||||

-.mount loaded active mounted Root Mount

|

||||

dev-hugepages.mount loaded active mounted Huge Pages File System

|

||||

dev-mqueue.mount loaded active mounted POSIX Message Queue File System

|

||||

run-user-1000-gvfs.mount loaded active mounted /run/user/1000/gvfs

|

||||

run-user-1000.mount loaded active mounted /run/user/1000

|

||||

snap-core-3887.mount loaded active mounted Mount unit for core

|

||||

snap-core-4017.mount loaded active mounted Mount unit for core

|

||||

snap-core-4110.mount loaded active mounted Mount unit for core

|

||||

snap-gping-13.mount loaded active mounted Mount unit for gping

|

||||

snap-termius\x2dapp-8.mount loaded active mounted Mount unit for termius-app

|

||||

sys-fs-fuse-connections.mount loaded active mounted FUSE Control File System

|

||||

sys-kernel-debug.mount loaded active mounted Debug File System

|

||||

acpid.path loaded active running ACPI Events Check

|

||||

cups.path loaded active running CUPS Scheduler

|

||||

systemd-ask-password-plymouth.path loaded active waiting Forward Password Requests to Plymouth Directory Watch

|

||||

systemd-ask-password-wall.path loaded active waiting Forward Password Requests to Wall Directory Watch

|

||||

init.scope loaded active running System and Service Manager

|

||||

session-c2.scope loaded active running Session c2 of user magi

|

||||

accounts-daemon.service loaded active running Accounts Service

|

||||

acpid.service loaded active running ACPI event daemon

|

||||

anacron.service loaded active running Run anacron jobs

|

||||

apache2.service loaded active running The Apache HTTP Server

|

||||

apparmor.service loaded active exited AppArmor initialization

|

||||

apport.service loaded active exited LSB: automatic crash report generation

|

||||

aptik-battery-monitor.service loaded active running LSB: start/stop the aptik battery monitor daemon

|

||||

atop.service loaded active running Atop advanced performance monitor

|

||||

atopacct.service loaded active running Atop process accounting daemon

|

||||

avahi-daemon.service loaded active running Avahi mDNS/DNS-SD Stack

|

||||

colord.service loaded active running Manage, Install and Generate Color Profiles

|

||||

console-setup.service loaded active exited Set console font and keymap

|

||||

cron.service loaded active running Regular background program processing daemon

|

||||

cups-browsed.service loaded active running Make remote CUPS printers available locally

|

||||

cups.service loaded active running CUPS Scheduler

|

||||

dbus.service loaded active running D-Bus System Message Bus

|

||||

postfix.service loaded active exited Postfix Mail Transport Agent

|

||||

```

|

||||

* `UNIT` The name of the systemd unit

|

||||

* `LOAD` Whether the unit has been loaded into memory

|

||||

* `ACTIVE` Whether the unit is active

|

||||

* `SUB` Whether the unit is running (LCTT translator's note: this is a more detailed state than ACTIVE, and the possible values depend on the unit type.)

|

||||

* `DESCRIPTION` A short description of the unit
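Because the columns are whitespace-separated, they are easy to filter. As a small sketch, the function below (its name, `running_units`, is made up for this example) prints the units whose `ACTIVE` column is `active` and whose `SUB` column is `running`. It is demonstrated on three sample lines from the output above.

```shell
#!/bin/sh
# Print unit names whose ACTIVE (3rd) and SUB (4th) columns
# are "active" and "running".
running_units() {
    awk '$3 == "active" && $4 == "running" { print $1 }'
}

# Sample lines in the UNIT LOAD ACTIVE SUB DESCRIPTION layout.
sample='accounts-daemon.service loaded active running Accounts Service
apparmor.service loaded active exited AppArmor initialization
cron.service loaded active running Regular background program processing daemon'

units=$(printf '%s\n' "$sample" | running_units)
printf '%s\n' "$units"
```

On a live systemd machine, the same function could be fed from `systemctl list-units --type=service --no-legend --no-pager --plain`, which drops the header, footer, and status markers that would otherwise shift the columns.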

|

||||

|

||||

The following option lists units by type:

|

||||

|

||||

```

|

||||

# systemctl list-units --type service

|

||||

UNIT LOAD ACTIVE SUB DESCRIPTION

|

||||

accounts-daemon.service loaded active running Accounts Service

|

||||

acpid.service loaded active running ACPI event daemon

|

||||

anacron.service loaded active running Run anacron jobs

|

||||

apache2.service loaded active running The Apache HTTP Server

|

||||

apparmor.service loaded active exited AppArmor initialization

|

||||

apport.service loaded active exited LSB: automatic crash report generation

|

||||

aptik-battery-monitor.service loaded active running LSB: start/stop the aptik battery monitor daemon

|

||||

atop.service loaded active running Atop advanced performance monitor

|

||||

atopacct.service loaded active running Atop process accounting daemon

|

||||

avahi-daemon.service loaded active running Avahi mDNS/DNS-SD Stack

|

||||

colord.service loaded active running Manage, Install and Generate Color Profiles

|

||||

console-setup.service loaded active exited Set console font and keymap

|

||||

cron.service loaded active running Regular background program processing daemon

|

||||

cups-browsed.service loaded active running Make remote CUPS printers available locally

|

||||

cups.service loaded active running CUPS Scheduler

|

||||

dbus.service loaded active running D-Bus System Message Bus

|

||||

fwupd.service loaded active running Firmware update daemon

|

||||

getty@tty1.service loaded active running Getty on tty1

|

||||

grub-common.service loaded active exited LSB: Record successful boot for GRUB

|

||||

irqbalance.service loaded active running LSB: daemon to balance interrupts for SMP systems

|

||||

keyboard-setup.service loaded active exited Set the console keyboard layout

|

||||

kmod-static-nodes.service loaded active exited Create list of required static device nodes for the current kernel

|

||||

```

|

||||

|

||||

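The following option helps you list unit files together with their state; the output is similar to the previous example but more straightforward: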

|

||||

|

||||

```

|

||||

# systemctl list-unit-files --type service

|

||||

|

||||

UNIT FILE STATE

|

||||

accounts-daemon.service enabled

|

||||

acpid.service disabled

|

||||

alsa-restore.service static

|

||||

alsa-state.service static

|

||||

alsa-utils.service masked

|

||||

anacron-resume.service enabled

|

||||

anacron.service enabled

|

||||

apache-htcacheclean.service disabled

|

||||

apache-htcacheclean@.service disabled

|

||||

apache2.service enabled

|

||||

apache2@.service disabled

|

||||

apparmor.service enabled

|

||||

apport-forward@.service static

|

||||

apport.service generated

|

||||

apt-daily-upgrade.service static

|

||||

apt-daily.service static

|

||||

aptik-battery-monitor.service generated

|

||||

atop.service enabled

|

||||

atopacct.service enabled

|

||||

autovt@.service enabled

|

||||

avahi-daemon.service enabled

|

||||

bluetooth.service enabled

|

||||

```
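A quick summary of how many unit files are in each state can be useful on a machine you do not know well. The sketch below counts the `STATE` column with `awk`, using six sample lines from the output above; on a real system the input would be `systemctl list-unit-files --type service --no-legend --no-pager`.

```shell
#!/bin/sh
# Sample lines in the "UNIT FILE  STATE" layout.
sample='accounts-daemon.service enabled
acpid.service disabled
alsa-restore.service static
alsa-state.service static
alsa-utils.service masked
anacron.service enabled'

# Count occurrences of each state (2nd column), then sort for stable output.
summary=$(printf '%s\n' "$sample" |
    awk '{ count[$2]++ } END { for (s in count) print s, count[s] }' |
    sort)

printf '%s\n' "$summary"
```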

|

||||

|

||||

Run the following command to check the status of a particular service:

|

||||

|

||||

```

|

||||

# systemctl | grep apache2

|

||||

apache2.service loaded active running The Apache HTTP Server

|

||||

```

|

||||

|

||||

Alternatively, use the following command to check the status of a particular service:

|

||||

|

||||

```

|

||||

# systemctl status apache2

|

||||

● apache2.service - The Apache HTTP Server

|

||||

Loaded: loaded (/lib/systemd/system/apache2.service; enabled; vendor preset: enabled)

|

||||

Drop-In: /lib/systemd/system/apache2.service.d

|

||||

└─apache2-systemd.conf

|

||||

Active: active (running) since Tue 2018-03-06 12:34:09 IST; 8min ago

|

||||

Process: 2786 ExecReload=/usr/sbin/apachectl graceful (code=exited, status=0/SUCCESS)

|

||||

Main PID: 1171 (apache2)

|

||||

Tasks: 55 (limit: 4915)

|

||||

CGroup: /system.slice/apache2.service

|

||||

├─1171 /usr/sbin/apache2 -k start

|

||||

├─2790 /usr/sbin/apache2 -k start

|

||||

└─2791 /usr/sbin/apache2 -k start

|

||||

|

||||

Mar 06 12:34:08 magi-VirtualBox systemd[1]: Starting The Apache HTTP Server...

|

||||

Mar 06 12:34:09 magi-VirtualBox apachectl[1089]: AH00558: apache2: Could not reliably determine the server's fully qualified domain name, using 10.0.2.15. Set the 'ServerName' directive globally to suppre

|

||||

Mar 06 12:34:09 magi-VirtualBox systemd[1]: Started The Apache HTTP Server.

|

||||

Mar 06 12:39:10 magi-VirtualBox systemd[1]: Reloading The Apache HTTP Server.

|

||||

Mar 06 12:39:10 magi-VirtualBox apachectl[2786]: AH00558: apache2: Could not reliably determine the server's fully qualified domain name, using fe80::7929:4ed1:279f:4d65. Set the 'ServerName' directive gl

|

||||

Mar 06 12:39:10 magi-VirtualBox systemd[1]: Reloaded The Apache HTTP Server.

|

||||

```
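In scripts it is often more convenient to rely on an exit status than to parse the `status` output. `systemctl is-active --quiet` returns success only when the unit is active. The sketch below wraps it in a small function (the name `check_service` is made up for this example) and falls back to `unknown` on machines without `systemctl`; `apache2` is simply the unit used in this article.

```shell
#!/bin/sh
# Report whether a systemd unit is active, based on the exit status
# of `systemctl is-active --quiet`.
check_service() {
    unit=$1
    if ! command -v systemctl >/dev/null 2>&1; then
        # Not a systemd machine (or systemctl is not on PATH).
        echo unknown
        return 0
    fi
    if systemctl is-active --quiet "$unit"; then
        echo active
    else
        echo inactive
    fi
}

state=$(check_service apache2)
echo "apache2: $state"
```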

|

||||

|

||||

Run the following command to see only the running services:

|

||||

```

|

||||

# systemctl | grep running

|

||||

acpid.path loaded active running ACPI Events Check

|

||||

cups.path loaded active running CUPS Scheduler

|

||||

init.scope loaded active running System and Service Manager

|

||||

session-c2.scope loaded active running Session c2 of user magi

|

||||

accounts-daemon.service loaded active running Accounts Service

|

||||

acpid.service loaded active running ACPI event daemon

|

||||

apache2.service loaded active running The Apache HTTP Server

|

||||

aptik-battery-monitor.service loaded active running LSB: start/stop the aptik battery monitor daemon

|

||||

atop.service loaded active running Atop advanced performance monitor

|

||||

atopacct.service loaded active running Atop process accounting daemon

|

||||

avahi-daemon.service loaded active running Avahi mDNS/DNS-SD Stack

|

||||

colord.service loaded active running Manage, Install and Generate Color Profiles

|

||||

cron.service loaded active running Regular background program processing daemon

|

||||

cups-browsed.service loaded active running Make remote CUPS printers available locally

|

||||

cups.service loaded active running CUPS Scheduler

|

||||

dbus.service loaded active running D-Bus System Message Bus

|

||||

fwupd.service loaded active running Firmware update daemon

|

||||

getty@tty1.service loaded active running Getty on tty1

|

||||

irqbalance.service loaded active running LSB: daemon to balance interrupts for SMP systems

|

||||

lightdm.service loaded active running Light Display Manager

|

||||

ModemManager.service loaded active running Modem Manager

|

||||

NetworkManager.service loaded active running Network Manager

|

||||

polkit.service loaded active running Authorization Manager

|

||||

```

|

||||

|

||||

Use the following command to list the services that are enabled at system boot:

|

||||

|

||||

```

|

||||

# systemctl list-unit-files | grep enabled

|

||||

acpid.path enabled

|

||||

cups.path enabled

|

||||

accounts-daemon.service enabled

|

||||

anacron-resume.service enabled

|

||||

anacron.service enabled

|

||||

apache2.service enabled

|

||||

apparmor.service enabled

|

||||

atop.service enabled

|

||||

atopacct.service enabled

|

||||

autovt@.service enabled

|

||||

avahi-daemon.service enabled

|

||||

bluetooth.service enabled

|

||||

console-setup.service enabled

|

||||

cron.service enabled

|

||||

cups-browsed.service enabled

|

||||

cups.service enabled

|

||||

display-manager.service enabled

|

||||

dns-clean.service enabled

|

||||

friendly-recovery.service enabled

|

||||

getty@.service enabled

|

||||

gpu-manager.service enabled

|

||||

keyboard-setup.service enabled

|

||||

lightdm.service enabled

|

||||

ModemManager.service enabled

|

||||

network-manager.service enabled

|

||||

networking.service enabled

|

||||

NetworkManager-dispatcher.service enabled

|

||||

NetworkManager-wait-online.service enabled

|

||||

NetworkManager.service enabled

|

||||

```

|

||||

|

||||

`systemd-cgtop` lists control groups by resource usage (tasks, CPU, memory, input, and output):

|

||||

|

||||

```

|

||||

# systemd-cgtop

|

||||

|

||||

Control Group Tasks %CPU Memory Input/s Output/s

|

||||

/ - - 1.5G - -

|

||||

/init.scope 1 - - - -

|

||||

/system.slice 153 - - - -

|

||||

/system.slice/ModemManager.service 3 - - - -

|

||||

/system.slice/NetworkManager.service 4 - - - -

|

||||

/system.slice/accounts-daemon.service 3 - - - -

|

||||

/system.slice/acpid.service 1 - - - -

|

||||

/system.slice/apache2.service 55 - - - -

|

||||

/system.slice/aptik-battery-monitor.service 1 - - - -

|

||||

/system.slice/atop.service 1 - - - -

|

||||

/system.slice/atopacct.service 1 - - - -

|

||||

/system.slice/avahi-daemon.service 2 - - - -

|

||||

/system.slice/colord.service 3 - - - -

|

||||

/system.slice/cron.service 1 - - - -

|

||||

/system.slice/cups-browsed.service 3 - - - -

|

||||

/system.slice/cups.service 2 - - - -

|

||||

/system.slice/dbus.service 6 - - - -

|

||||

/system.slice/fwupd.service 5 - - - -

|

||||

/system.slice/irqbalance.service 1 - - - -

|

||||

/system.slice/lightdm.service 7 - - - -

|

||||

/system.slice/polkit.service 3 - - - -

|

||||

/system.slice/repowerd.service 14 - - - -

|

||||

/system.slice/rsyslog.service 4 - - - -

|

||||

/system.slice/rtkit-daemon.service 3 - - - -

|

||||

/system.slice/snapd.service 8 - - - -

|

||||

/system.slice/system-getty.slice 1 - - - -

|

||||

```
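The listing above can be filtered like any other text table. The following is a minimal sketch, assuming output in the same column order (path, tasks, %CPU, memory, input, output). The helper names `sample_cgtop` and `top_by_tasks` are hypothetical, and the sample rows are copied from the listing.

```shell
# Sample rows copied from the systemd-cgtop listing above (header omitted).
sample_cgtop() {
  cat <<'EOF'
/system.slice/ModemManager.service 3 - - - -
/system.slice/apache2.service 55 - - - -
/system.slice/repowerd.service 14 - - - -
/system.slice/snapd.service 8 - - - -
EOF
}

# Print "tasks path" pairs for the N busiest control groups, largest first.
# Rows whose task column is not a number (such as "-") are skipped.
top_by_tasks() {
  awk '$2 ~ /^[0-9]+$/ { print $2, $1 }' | sort -rn | head -n "${1:-3}"
}

sample_cgtop | top_by_tasks 2
```

On a live system the same filter could probably be fed from `systemd-cgtop --batch --iterations=1` rather than from a sample, although the exact column layout may differ between systemd versions.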

|

||||

|

||||

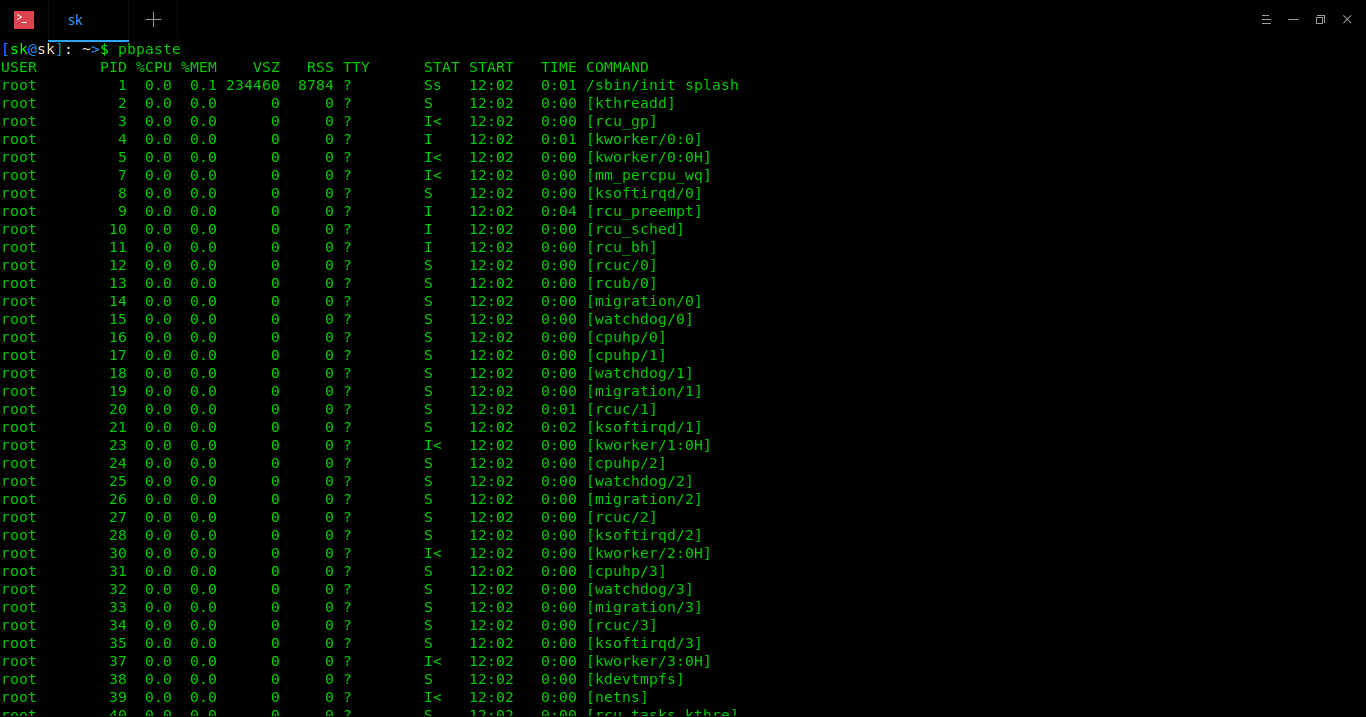

We can also use the `pstree` command to view running services (this output is from a SysVinit system):

|

||||

|

||||

```
# pstree
init-+-crond
     |-httpd---2*[httpd]
     |-kthreadd/99149---khelper/99149
     |-2*[mingetty]
     |-mysqld_safe---mysqld---9*[{mysqld}]
     |-rsyslogd---3*[{rsyslogd}]
     |-saslauthd---saslauthd
     |-2*[sendmail]
     |-sshd---sshd---bash---pstree
     |-udevd
     `-xinetd
```

|

||||

|

||||

The `pstree` command works on systemd systems as well (this output is from a systemd system):

|

||||

|

||||

```

|

||||

# pstree

|

||||

systemd─┬─ModemManager─┬─{gdbus}

|

||||

│ └─{gmain}

|

||||

├─NetworkManager─┬─dhclient

|

||||

│ ├─{gdbus}

|

||||

│ └─{gmain}

|

||||

├─accounts-daemon─┬─{gdbus}

|

||||

│ └─{gmain}

|

||||

├─acpid

|

||||

├─agetty

|

||||

├─anacron

|

||||

├─apache2───2*[apache2───26*[{apache2}]]

|

||||

├─aptd───{gmain}

|

||||

├─aptik-battery-m

|

||||

├─atop

|

||||

├─atopacctd

|

||||

├─avahi-daemon───avahi-daemon

|

||||

├─colord─┬─{gdbus}

|

||||

│ └─{gmain}

|

||||

├─cron

|

||||

├─cups-browsed─┬─{gdbus}

|

||||

│ └─{gmain}

|

||||

├─cupsd

|

||||

├─dbus-daemon

|

||||

├─fwupd─┬─{GUsbEventThread}

|

||||

│ ├─{fwupd}

|

||||

│ ├─{gdbus}

|

||||

│ └─{gmain}

|

||||

├─gnome-keyring-d─┬─{gdbus}

|

||||

│ ├─{gmain}

|

||||

│ └─{timer}

|

||||

```

|

||||

|

||||

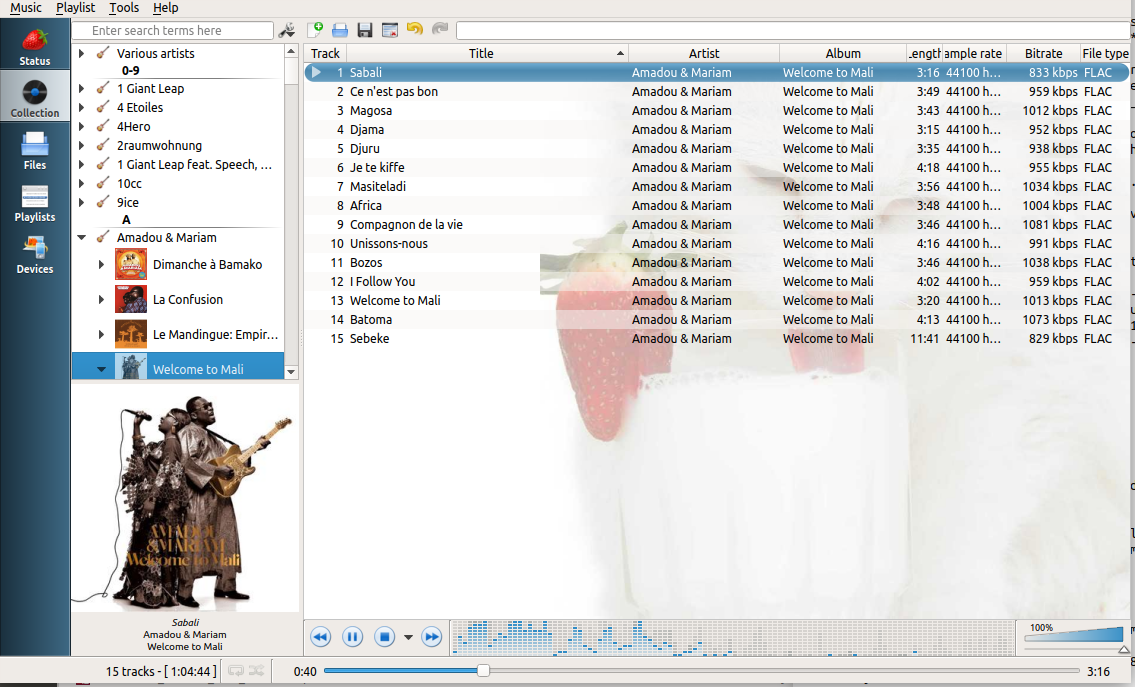

### Method 4: How to view running services on a systemd system with chkservice

|

||||

|

||||

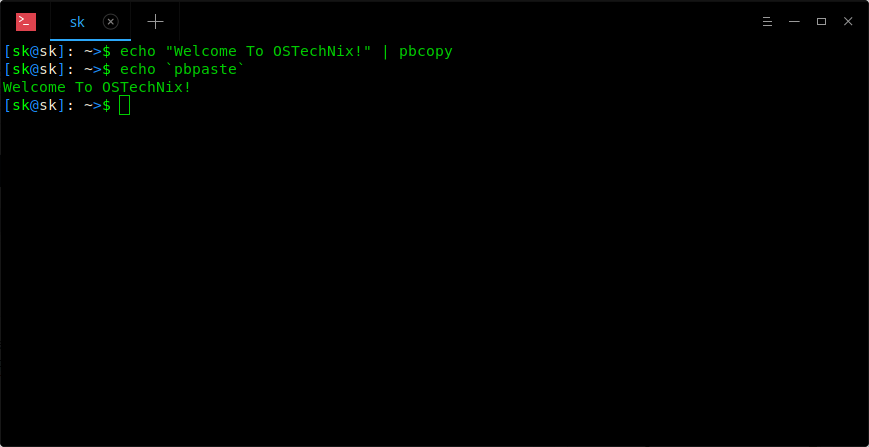

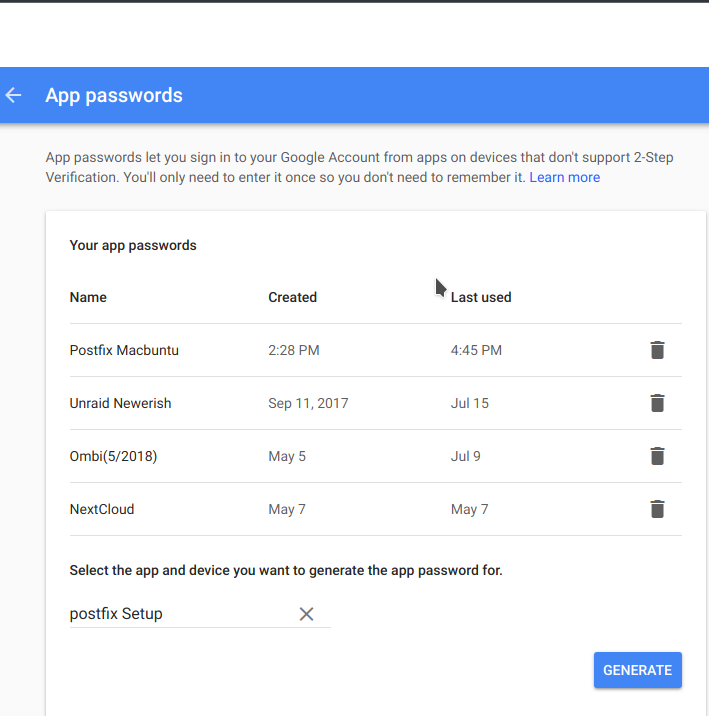

`chkservice` is a terminal tool for managing systemd units. It requires superuser privileges.

|

||||

|

||||

```

|

||||

# chkservice

|

||||

```

|

||||

|

||||

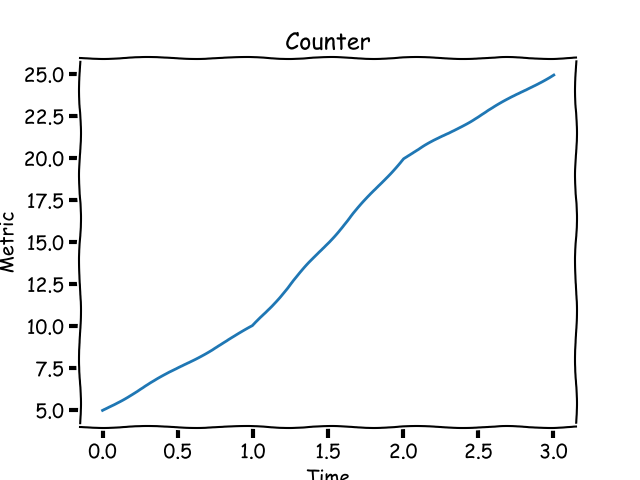

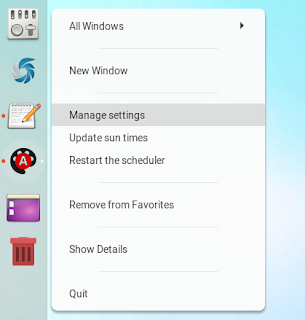

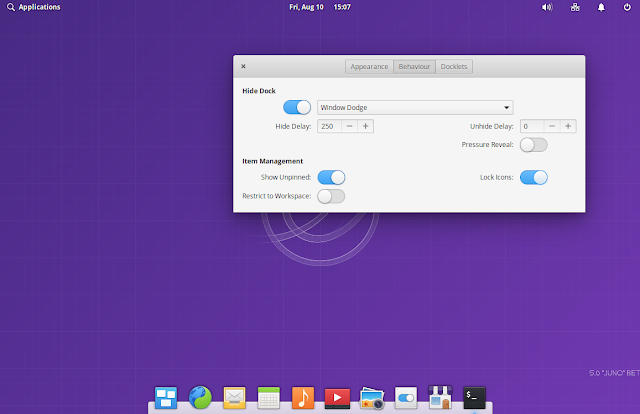

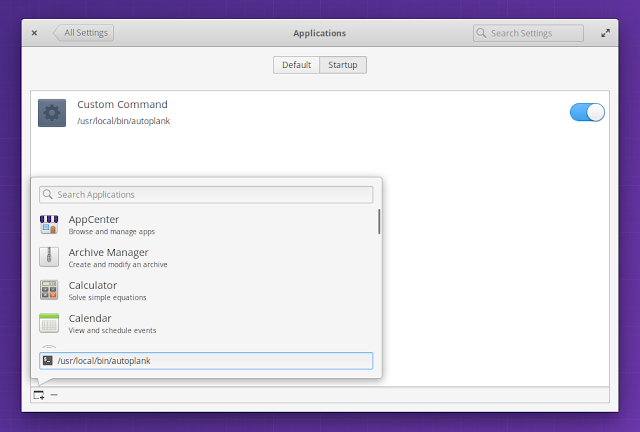

![][1]

|

||||

|

||||

To view the help page, press `?`. It shows the options available for managing systemd services.

|

||||

|

||||

![][2]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/how-to-check-all-running-services-in-linux/

|

||||

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

译者:[jessie-pang](https://github.com/jessie-pang)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.2daygeek.com/author/magesh/

|

||||

[1]:https://www.2daygeek.com/wp-content/uploads/2018/03/chkservice-1.png

|

||||

[2]:https://www.2daygeek.com/wp-content/uploads/2018/03/chkservice-2.png

|

||||

@ -0,0 +1,58 @@

|

||||

Everything old is new again: Microservices

|

||||

======

|

||||

|

||||

|

||||

|

||||

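If I told you about a software architecture in which the components of an application provide services to other components over a network-based communication protocol, I suspect you would say it is…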

|

||||

|

||||

Well, it depends on how long you have been programming. If your career started in the 1990s, you would surely call it [Service-Oriented Architecture][1] (SOA). But if you are younger and cut your teeth in the cloud, you would say: "Oh, you mean [microservices][2]."

|

||||

|

||||

You would both be right. To really understand how they differ, you need to dig deeper into the two architectures.

|

||||

|

||||

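In SOA, a service is a function that is well defined, self-contained, and independent of the context and state of other services. There are two kinds of service. A service consumer requests a service from the other kind, a service provider. A single SOA service can play both roles.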

|

||||

|

||||

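SOA services can exchange data with one another, and two or more services can coordinate with each other. These services carry out basic tasks such as creating a user account, providing login functionality, or validating a payment.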

|

||||

|

||||

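SOA is less about modularizing an application than about composing an application from distributed, separately maintained and deployed components. Those components then run on servers.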

|

||||

|

||||

Early versions of SOA used object-oriented protocols for communication between components, for example Microsoft's [Distributed Component Object Model][3] (DCOM) and [Object Request Brokers][4] (ORBs) that follow the [Common Object Request Broker Architecture][5] (CORBA) specification.

|

||||

|

||||

Later versions used messaging services such as the [Java Message Service][6] (JMS) or the [Advanced Message Queuing Protocol][7] (AMQP). These services are connected through an Enterprise Service Bus (ESB), over which data in Extensible Markup Language (XML) format is sent and received.

|

||||

|

||||

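[Microservices][2] is an architectural style in which an application is composed of loosely coupled services or modules. It lends itself to the Continuous Integration/Continuous Deployment (CI/CD) model of developing large, complex applications. An application is the sum of its modules.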

|

||||

|

||||

Each microservice provides an application programming interface (API) endpoint. They are connected by lightweight protocols such as [REpresentational State Transfer][8] (REST) or [gRPC][9]. Data tends to be represented in [JavaScript Object Notation][10] (JSON) or [Protobuf][11].

|

||||

|

||||

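Both architectures can replace the older monolithic architecture, in which an application is built as a single, autonomous unit. In a client-server model, for example, a typical Linux, Apache, MySQL, PHP/Python/Perl (LAMP) server-side application handles HTTP requests, runs subroutines, and retrieves or updates data in the underlying MySQL database. All of these pieces are bound together to deliver the service. Change any one of them and you have to build and deploy a new version.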

|

||||

|

||||

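With SOA, you may need to change only a few components rather than the whole application. With microservices, you can change one service at a time, which is what gives you a truly decoupled architecture.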

|

||||

|

||||

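Microservices are also lighter than SOA. Where SOA services are deployed to servers and virtual machines, microservices are deployed in containers. The protocols are lighter as well. This makes microservices more flexible than SOA, and therefore a better fit for e-commerce sites that demand agility.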

|

||||

|

||||

So what does all this mean? Microservices are a variant of SOA for containers and cloud computing.

|

||||

|

||||

Old-style SOA is not going away, but as we keep moving applications into containers, the microservice architecture will only grow more popular.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://blogs.dxc.technology/2018/05/08/everything-old-is-new-again-microservices/

|

||||

|

||||

作者:[Cloudy Weather][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://blogs.dxc.technology/author/steven-vaughan-nichols/

|

||||

[1]:https://www.service-architecture.com/articles/web-services/service-oriented_architecture_soa_definition.html

|

||||

[2]:http://microservices.io/

|

||||

[3]:https://technet.microsoft.com/en-us/library/cc958799.aspx

|

||||

[4]:https://searchmicroservices.techtarget.com/definition/Object-Request-Broker-ORB

|

||||

[5]:http://www.corba.org/

|

||||

[6]:https://docs.oracle.com/javaee/6/tutorial/doc/bncdq.html

|

||||

[7]:https://www.amqp.org/

|

||||

[8]:https://www.service-architecture.com/articles/web-services/representational_state_transfer_rest.html

|

||||

[9]:https://grpc.io/

|

||||

[10]:https://www.json.org/

|

||||

[11]:https://github.com/google/protobuf/

|

||||

@ -1,40 +1,41 @@

|

||||

UKTools - 安装最新 Linux 内核的简便方法

|

||||

UKTools: An easy way to install the latest Linux kernel

|

||||

======

|

||||

|

||||

Ubuntu 中有许多实用程序可以将 Linux 内核升级到最新的稳定版本。我们之前已经写过关于这些实用程序的文章,例如 Linux Kernel Utilities (LKU), Ubuntu Kernel Upgrade Utility (UKUU) 和 Ubunsys。

|

||||

Ubuntu 中有许多实用程序可以将 Linux 内核升级到最新的稳定版本。我们之前已经写过关于这些实用程序的文章,例如 Linux Kernel Utilities (LKU)、 Ubuntu Kernel Upgrade Utility (UKUU) 和 Ubunsys。

|

||||

|

||||

另外还有一些其它实用程序可供使用。我们计划在其它文章中包含这些,例如 `ubuntu-mainline-kernel.sh` 和从主线内核手动安装的方式。

|

||||

|

||||

另外还有一些其它实用程序可供使用。我们计划在其它文章中包含这些,例如 ubuntu-mainline-kernel.sh 和 manual method from mainline kernel.

|

||||

今天我们还会教你类似的使用工具 —— UKTools。你可以尝试使用这些实用程序中的任何一个来将 Linux 内核升级至最新版本。

|

||||

|

||||

今天我们还会教你类似的使用工具 -- UKTools。你可以尝试使用这些实用程序中的任何一个来将 Linux 内核升级至最新版本。

|

||||

|

||||

最新的内核版本附带了安全漏洞修复和一些改进,因此,最好保持最新的内核版本以获得可靠,安全和更好的硬件性能。

|

||||

最新的内核版本附带了安全漏洞修复和一些改进,因此,最好保持最新的内核版本以获得可靠、安全和更好的硬件性能。

|

||||

|

||||

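The latest kernel release can sometimes contain bugs that crash the system, and that risk is yours to carry. I recommend that you do not install it in a production environment.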

|

||||

|

||||

**建议阅读:**

|

||||

**(#)** [Linux 内核实用程序(LKU)- 在 Ubuntu/LinuxMint 中编译,安装和更新最新内核的一组 Shell 脚本][1]

|

||||

**(#)** [Ukuu - 在基于 Ubuntu 的系统中安装或升级 Linux 内核的简便方法][2]

|

||||

**(#)** [6 种检查系统上正在运行的 Linux 内核版本的方法][3]

|

||||

**建议阅读:**

|

||||

|

||||

- [Linux 内核实用程序(LKU)- 在 Ubuntu/LinuxMint 中编译,安装和更新最新内核的一组 Shell 脚本][1]

|

||||

- [Ukuu - 在基于 Ubuntu 的系统中安装或升级 Linux 内核的简便方法][2]

|

||||

- [6 种检查系统上正在运行的 Linux 内核版本的方法][3]

|

||||

|

||||

### What is UKTools

|

||||

|

||||

[UKTools][4] stands for Ubuntu Kernel Tools. It contains two shell scripts, `ukupgrade` and `ukpurge`.

|

||||

|

||||

ukupgrade 意思是 “Ubuntu Kernel Upgrade”,它允许用户将 Linux 内核升级到 Ubuntu/Mint 的最新稳定版本以及基于 [kernel.ubuntu.com][5] 的衍生版本。

|

||||

`ukupgrade` 意思是 “Ubuntu Kernel Upgrade”,它允许用户将 Linux 内核升级到 Ubuntu/Mint 的最新稳定版本以及基于 [kernel.ubuntu.com][5] 的衍生版本。

|

||||

|

||||

ukpurge 意思是 “Ubuntu Kernel Purge”,它允许用户在机器中删除旧的 Linux 内核镜像或头文件,用于 Ubuntu/Mint 和其衍生版本。它将只保留三个内核版本。

|

||||

`ukpurge` 意思是 “Ubuntu Kernel Purge”,它允许用户在机器中删除旧的 Linux 内核镜像或头文件,用于 Ubuntu/Mint 和其衍生版本。它将只保留三个内核版本。

|

||||

|

||||

The utility has no GUI, but it is simple and straightforward, so newcomers can upgrade without trouble.

|

||||

|

||||

I am running Ubuntu 17.10, and the current kernel version is as follows:

|

||||

|

||||

```

|

||||

$ uname -a

|

||||

Linux ubuntu 4.13.0-39-generic #44-Ubuntu SMP Thu Apr 5 14:25:01 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

|

||||

|

||||

```

|

||||

|

||||

Run the following command to list the kernels installed on the system (Ubuntu and its derivatives). I currently have `7` kernels.

|

||||

|

||||

```

|

||||

$ dpkg --list | grep linux-image

|

||||

ii linux-image-4.13.0-16-generic 4.13.0-16.19 amd64 Linux kernel image for version 4.13.0 on 64 bit x86 SMP

|

||||

@ -52,7 +53,6 @@ ii linux-image-extra-4.13.0-37-generic 4.13.0-37.42 amd64 Linux kernel extra mod

|

||||

ii linux-image-extra-4.13.0-38-generic 4.13.0-38.43 amd64 Linux kernel extra modules for version 4.13.0 on 64 bit x86 SMP

|

||||

ii linux-image-extra-4.13.0-39-generic 4.13.0-39.44 amd64 Linux kernel extra modules for version 4.13.0 on 64 bit x86 SMP

|

||||

ii linux-image-generic 4.13.0.39.42 amd64 Generic Linux kernel image

|

||||

|

||||

```
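Counting kernels by eye gets tedious because the listing mixes kernel images with `linux-image-extra-*` module packages and the `linux-image-generic` metapackage. Here is a minimal sketch of one way to count only the versioned images. The helper names are hypothetical, and the sample rows are a shortened excerpt in the shape of the output above.

```shell
# Shortened sample in the shape of `dpkg --list` output:
# status, package name, version, architecture, description.
sample_dpkg_list() {
  cat <<'EOF'
ii linux-image-4.13.0-38-generic 4.13.0-38.43 amd64 Linux kernel image for version 4.13.0 on 64 bit x86 SMP
ii linux-image-4.13.0-39-generic 4.13.0-39.44 amd64 Linux kernel image for version 4.13.0 on 64 bit x86 SMP
ii linux-image-extra-4.13.0-39-generic 4.13.0-39.44 amd64 Linux kernel extra modules for version 4.13.0 on 64 bit x86 SMP
ii linux-image-generic 4.13.0.39.42 amd64 Generic Linux kernel image
EOF
}

# Count installed ("ii") packages named linux-image-<digit>..., which leaves out
# the linux-image-extra-* packages and the linux-image-generic metapackage.
count_kernel_images() {
  awk '$1 == "ii" && $2 ~ /^linux-image-[0-9]/ { n++ } END { print n + 0 }'
}

sample_dpkg_list | count_kernel_images
```

On a real system you would pipe `dpkg --list` into `count_kernel_images` instead of the sample. Mainline builds named `linux-image-unsigned-*` would need a wider pattern.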

|

||||

|

||||

### How to install UKTools

|

||||

@ -60,18 +60,19 @@ ii linux-image-generic 4.13.0.39.42 amd64 Generic Linux kernel image

|

||||

On Ubuntu and its derivatives, just run the following commands to install UKTools.

|

||||

|

||||

Run the following command on your system to clone the UKTools repository:

|

||||

|

||||

```

|

||||

$ git clone https://github.com/usbkey9/uktools

|

||||

|

||||

```

|

||||

|

||||

Change into the uktools directory:

|

||||

|

||||

```

|

||||

$ cd uktools

|

||||

|

||||

```

|

||||

|

||||

运行 Makefile 以生成必要的文件。此外,这将自动安装最新的可用内核。只需重新启动系统即可使用最新的内核。

|

||||

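Run `make` to process the `Makefile` and generate the necessary files. This also installs the latest available kernel automatically. Just reboot the system to start using the latest kernel.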

|

||||

|

||||

```

|

||||

$ sudo make

|

||||

[sudo] password for daygeek:

|

||||

@ -188,30 +189,30 @@ done

|

||||

|

||||

Thanks for using this script! Hope it helped.

|

||||

Give it a star: https://github.com/MarauderXtreme/uktools

|

||||

|

||||

```

|

||||

|

||||

Reboot the system to activate the latest kernel.

|

||||

|

||||

```

|

||||

$ sudo shutdown -r now

|

||||

|

||||

```

|

||||

|

||||

Once the system has rebooted, check the kernel version again.

|

||||

|

||||

```

|

||||

$ uname -a

|

||||

Linux ubuntu 4.16.7-041607-generic #201805021131 SMP Wed May 2 15:34:55 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

|

||||

|

||||

```
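If you want to confirm from a script that the new kernel really is newer, plain string comparison is not enough, because it would rank 4.9 above 4.13. The sketch below assumes GNU `sort` with version ordering (`-V`). The function name `newest_version` is hypothetical.

```shell
# Print the highest of the given version strings, using GNU sort's version ordering.
newest_version() {
  printf '%s\n' "$@" | sort -V | tail -n 1
}

# The two kernel releases reported by `uname -a` before and after the upgrade.
newest_version 4.13.0-39-generic 4.16.7-041607-generic
```

A script could compare the result with `uname -r` to decide whether a reboot into the upgraded kernel has already happened.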

|

||||

|

||||

The `make` command places the following files in the `/usr/local/bin` directory.

|

||||

|

||||

```

|

||||

do-kernel-upgrade

|

||||

do-kernel-purge

|

||||

|

||||

```

|

||||

|

||||

To remove old kernels, run the following command:

|

||||

|

||||

```

|

||||

$ do-kernel-purge

|

||||

|

||||

@ -364,10 +365,10 @@ run-parts: executing /etc/kernel/postrm.d/initramfs-tools 4.13.0-37-generic /boo

|

||||

run-parts: executing /etc/kernel/postrm.d/zz-update-grub 4.13.0-37-generic /boot/vmlinuz-4.13.0-37-generic

|

||||

|

||||

Thanks for using this script!!!

|

||||

|

||||

```

|

||||

|

||||

Use the following command to recheck the list of installed kernels. Only three kernels are kept.

|

||||

|

||||

```

|

||||

$ dpkg --list | grep linux-image

|

||||

ii linux-image-4.13.0-38-generic 4.13.0-38.43 amd64 Linux kernel image for version 4.13.0 on 64 bit x86 SMP

|

||||

@ -376,14 +377,13 @@ ii linux-image-extra-4.13.0-38-generic 4.13.0-38.43 amd64 Linux kernel extra mod

|

||||

ii linux-image-extra-4.13.0-39-generic 4.13.0-39.44 amd64 Linux kernel extra modules for version 4.13.0 on 64 bit x86 SMP

|

||||

ii linux-image-generic 4.13.0.39.42 amd64 Generic Linux kernel image

|

||||

ii linux-image-unsigned-4.16.7-041607-generic 4.16.7-041607.201805021131 amd64 Linux kernel image for version 4.16.7 on 64 bit x86 SMP

|

||||

|

||||

```
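The "keep three" rule can be illustrated with a few lines of shell. This is only a sketch of the selection logic, not the way `do-kernel-purge` is actually implemented. It assumes GNU `sort -V` and GNU `head` (for the negative line count), and `versions_to_remove` is a hypothetical name.

```shell
# Read version strings from stdin, one per line, and print those that would be
# dropped if only the newest KEEP versions were retained (KEEP defaults to 3).
versions_to_remove() {
  keep="${1:-3}"
  sort -V | head -n "-${keep}"
}

# Kernel versions that appear in the listings in this article.
printf '%s\n' 4.13.0-16 4.13.0-37 4.13.0-38 4.13.0-39 4.16.7-041607 |
  versions_to_remove 3
```

With three or fewer versions on input the function prints nothing, which corresponds to the "Nothing to remove!" case.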

|

||||

|

||||

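Next time, you can call the `do-kernel-upgrade` utility to install a new kernel. If a newer kernel is available, it installs it. If not, it reports that no kernel update is currently available.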

|

||||

|

||||

```

|

||||

$ do-kernel-upgrade

|

||||

Kernel up to date. Finishing

|

||||

|

||||

```

|

||||

|

||||

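Run the `do-kernel-purge` command again to confirm. If it finds more than three kernels, it removes the extras. If not, it reports that there is nothing to remove.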

|

||||

@ -400,7 +400,6 @@ Linux Kernel 4.16.7-041607 Generic (linux-image-4.16.7-041607-generic)

|

||||

Nothing to remove!

|

||||

|

||||

Thanks for using this script!!!

|

||||

|

||||

```

|

||||

|

||||

|

||||

@ -411,7 +410,7 @@ via: https://www.2daygeek.com/uktools-easy-way-to-install-latest-stable-linux-ke

|

||||

作者:[Prakash Subramanian][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,51 +1,52 @@

|

||||

A set of useful utilities for Debian and Ubuntu users

|

||||

======

|

||||

|

||||

|

||||

|

||||

|

||||

你使用的是基于 Debian 的系统吗?如果是,太好了!我今天在这里给你带来了一个好消息。先向 **“Debian-goodies”** 打个招呼,这是一组基于 Debian 系统(比如:Ubuntu, Linux Mint)的有用工具。这些实用工具提供了一些额外的有用的命令,这些命令在基于 Debian 的系统中默认不可用。通过使用这些工具,用户可以找到哪些程序占用更多磁盘空间,更新系统后需要重新启动哪些服务,在一个包中搜索与模式匹配的文件,根据搜索字符串列出已安装的包等等。在这个简短的指南中,我们将讨论一些有用的 Debian 的好东西。

|

||||

你使用的是基于 Debian 的系统吗?如果是,太好了!我今天在这里给你带来了一个好消息。先向 “Debian-goodies” 打个招呼,这是一组基于 Debian 系统(比如:Ubuntu、Linux Mint)的有用工具。这些实用工具提供了一些额外的有用的命令,这些命令在基于 Debian 的系统中默认不可用。通过使用这些工具,用户可以找到哪些程序占用更多磁盘空间,更新系统后需要重新启动哪些服务,在一个软件包中搜索与模式匹配的文件,根据搜索字符串列出已安装的包等等。在这个简短的指南中,我们将讨论一些有用的 Debian 的好东西。

|

||||

|

||||

### Debian-goodies – Useful utilities for Debian and Ubuntu users

|

||||

|

||||

The debian-goodies package is available in the official repositories of Debian, its derivative Ubuntu, and other Ubuntu variants such as Linux Mint. To install debian-goodies, simply run:

|

||||

|

||||

```

|

||||

$ sudo apt-get install debian-goodies

|

||||

|

||||

```

|

||||

|

||||

Once debian-goodies is installed, let us look at some of its useful utilities.

|

||||

|

||||

#### **1. Checkrestart**

|

||||

#### 1、 checkrestart

|

||||

|

||||

让我从我最喜欢的 **“checkrestart”** 实用程序开始。安装某些安全更新时,某些正在运行的应用程序可能仍然会使用旧库。要彻底应用安全更新,你需要查找并重新启动所有这些更新。这就是 Checkrestart 派上用场的地方。该实用程序将查找哪些进程仍在使用旧版本的库,然后,你可以重新启动服务。

|

||||

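Let me start with my favorite, the `checkrestart` utility. When you install security updates, some running applications may still be using the old libraries. To apply the updates completely, you need to find and restart all of those applications. This is where `checkrestart` comes in handy: it finds the processes that are still using old versions of libraries so that you can restart the corresponding services.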

|

||||

|

||||

To check which daemons should be restarted after a library upgrade, run:

|

||||

|

||||

```

|

||||

$ sudo checkrestart

|

||||

[sudo] password for sk:

|

||||

Found 0 processes using old versions of upgraded files

|

||||

|

||||

```
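To make the idea of "processes still using old files" concrete: on Linux, a mapped file that has been replaced on disk shows up in `/proc/<pid>/maps` with a trailing `(deleted)` marker. The sketch below filters text in that shape. It illustrates the general technique only and is not necessarily how `checkrestart` works internally. The helper names and the sample lines are hypothetical.

```shell
# Hypothetical excerpt in the shape of /proc/<pid>/maps. The path is the mapped
# file, and "(deleted)" is appended when that file no longer exists on disk.
sample_maps() {
  cat <<'EOF'
7f1c2a000000-7f1c2a1e7000 r-xp 00000000 08:01 131 /lib/x86_64-linux-gnu/libc-2.27.so (deleted)
7f1c2a400000-7f1c2a427000 r-xp 00000000 08:01 140 /lib/x86_64-linux-gnu/ld-2.27.so
7f1c2a600000-7f1c2a61a000 r-xp 00000000 08:01 152 /lib/x86_64-linux-gnu/libz.so.1.2.11 (deleted)
EOF
}

# Print the unique paths of mapped files that are marked as deleted.
stale_libraries() {
  awk '$NF == "(deleted)" { print $(NF - 1) }' | sort -u
}

sample_maps | stale_libraries
```

A process that reports any such path is still running code from the old version of the file and is a candidate for a restart.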

|

||||

|

||||

Since I have not applied any security updates recently, nothing is listed.

|

||||

|

||||

请注意,Checkrestart 实用程序确实运行良好。但是,有一个名为 “needrestart” 的类似工具可用于最新的 Debian 系统。Needrestart 的灵感来自 checkrestart 实用程序,它完成了同样的工作。 Needrestart 得到了积极维护,并支持容器(LXC, Docker)等新技术。

|

||||

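Note that `checkrestart` does its job well. However, a similar, newer tool named `needrestart` is available on recent Debian systems. `needrestart` was inspired by `checkrestart` and does the same job. It is actively maintained and supports newer technologies such as containers (LXC, Docker).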

|

||||

|

||||

以下是 Needrestart 的特点:

|

||||

Here are the features of `needrestart`:

|

||||

|

||||

* 支持(当不要求)systemd

|

||||

* 二进制黑名单(即显示管理员)

|

||||

* 试图检测挂起的内核升级

|

||||

* 尝试检测基于解释器的守护进程所需的重启(支持 Perl, Python, Ruby)

|

||||

* supports (but does not require) systemd

|

||||

* blacklisting of binaries (for example, display managers for graphical sessions)

|

||||

* tries to detect pending kernel upgrades

|

||||

* tries to detect required restarts of interpreter-based daemons (supports Perl, Python, Ruby)

|

||||

* fully integrated into apt/dpkg using hooks

|

||||

|

||||

It is available in the default repositories too, so you can install it with:

|

||||

|

||||

```

|

||||

$ sudo apt-get install needrestart

|

||||

|

||||

```

|

||||

|

||||

Now you can check the list of daemons that need to be restarted after a system update with:

|

||||

|

||||

```

|

||||

$ sudo needrestart

|

||||

Scanning processes...

|

||||

@ -60,26 +61,26 @@ No services need to be restarted.

|

||||

No containers need to be restarted.

|

||||

|

||||

No user sessions are running outdated binaries.

|

||||

|

||||

```

|

||||

|

||||

The good news is that needrestart works on other Linux distributions as well. On Arch Linux and its derivatives, for example, you can install it from the AUR with any AUR helper, like this:

|

||||

|

||||

```

|

||||

$ yaourt -S needrestart

|

||||

|

||||

```

|

||||

|

||||

在 fedora:

|

||||

On Fedora:

|

||||

|

||||

```

|

||||

$ sudo dnf install needrestart

|

||||

|

||||

```

|

||||

|

||||

#### 2. Check-enhancements

|

||||

#### 2、 check-enhancements

|

||||

|

||||

Check-enhancements 实用程序用于查找那些用于增强已安装的包的软件包。此实用程序将列出增强其它包但不是必须运行它的包。你可以通过 “-ip” 或 “–installed-packages” 选项来查找增强单个包或所有已安装包的软件包。

|

||||

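The `check-enhancements` utility finds packages that enhance the packages you have installed. It lists packages that enhance other packages but are not strictly required to run them. You can look up enhancements for a single package, or for all installed packages with the `-ip` or `--installed-packages` option.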

|

||||

|

||||

For example, I will list the packages that enhance the gimp package:

|

||||

|

||||

```

|

||||

$ check-enhancements gimp

|

||||

gimp => gimp-data: Installed: (none) Candidate: 2.8.22-1

|

||||

@ -102,10 +103,10 @@ gimp => gimp-help-sl: Installed: (none) Candidate: 2.8.2-0.1

|

||||

gimp => gimp-help-sv: Installed: (none) Candidate: 2.8.2-0.1

|

||||

gimp => gimp-plugin-registry: Installed: (none) Candidate: 7.20140602ubuntu3

|

||||

gimp => xcftools: Installed: (none) Candidate: 1.0.7-6

|

||||

|

||||

```

|

||||

|

||||

To list enhancements for all installed packages, run:

|

||||

|

||||

```

|

||||

$ check-enhancements -ip

|

||||

autoconf => autoconf-archive: Installed: (none) Candidate: 20170928-2

|

||||

@ -114,12 +115,12 @@ ca-certificates => ca-cacert: Installed: (none) Candidate: 2011.0523-2

|

||||

cryptsetup => mandos-client: Installed: (none) Candidate: 1.7.19-1

|

||||

dpkg => debsig-verify: Installed: (none) Candidate: 0.18

|

||||

[...]

|

||||

|

||||

```

|

||||

|

||||

#### 3. dgrep

|

||||

#### 3、 dgrep

|

||||

|

||||

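As the name suggests, `dgrep` searches all files in the given packages for a regular expression. For example, I will search the Vim package for files that contain the regular expression "text".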

|

||||

|

||||

顾名思义,dgrep 用于根据给定的正则表达式搜索制指定包的所有文件。例如,我将在 Vim 包中搜索包含正则表达式 “text” 的文件。

|

||||

```

|

||||

$ sudo dgrep "text" vim

|

||||

Binary file /usr/bin/vim.tiny matches

|

||||

@ -131,44 +132,44 @@ Binary file /usr/bin/vim.tiny matches

|

||||

/usr/share/doc/vim-tiny/copyright: context diff will do. The e-mail address to be used is

|

||||

/usr/share/doc/vim-tiny/copyright: On Debian systems, the complete text of the GPL version 2 license can be

|

||||

[...]

|

||||

|

||||

```

|

||||

|

||||

dgrep 支持大多数 grep 的选项。参阅以下指南以了解 grep 命令。

|

||||

`dgrep` supports most of the options of `grep`. See the following guide to learn about the `grep` command.

|

||||

|

||||

* [The Grep command tutorial for beginners][2]

|

||||

|

||||

#### 4 dglob

|

||||

#### 4、 dglob

|

||||

|

||||

The `dglob` utility generates a list of package names that match a given pattern. For example, to find the packages that match the string "vim":

|

||||

|

||||

dglob 实用程序生成与给定模式匹配的包名称列表。例如,找到与字符串 “vim” 匹配的包列表。

|

||||

```

|

||||

$ sudo dglob vim

|

||||

vim-tiny:amd64

|

||||

vim:amd64

|

||||

vim-common:all

|

||||

vim-runtime:all

|

||||

|

||||

```

|

||||

|

||||

默认情况下,dglob 将仅显示已安装的软件包。如果要列出所有包(包括已安装的和未安装的),使用 **-a** 标志。

|

||||

By default, `dglob` shows only installed packages. To list all packages (installed and not installed), use the `-a` flag.

|

||||

|

||||

```

|

||||

$ sudo dglob vim -a

|

||||

|

||||

```
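For readers curious about what such a lookup amounts to, here is a rough approximation built on plain text filtering. It is not `dglob`'s real implementation. It assumes a list of `package:architecture` lines such as `dpkg-query -W -f='${Package}:${Architecture}\n'` prints, and the helper names and the sample list are hypothetical.

```shell
# Sample "package:architecture" lines standing in for real dpkg-query output.
sample_packages() {
  cat <<'EOF'
nano:amd64
vim-common:all
vim-tiny:amd64
vim:amd64
zsh:amd64
EOF
}

# Keep only entries whose package name (the part before the colon)
# contains the given string.
match_packages() {
  awk -F: -v pat="$1" 'index($1, pat) > 0'
}

sample_packages | match_packages vim
```

Matching on the name field alone avoids false hits on the architecture suffix.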

|

||||

|

||||

#### 5. debget

|

||||

#### 5、 debget

|

||||

|

||||

The `debget` utility downloads the .deb file for a package in APT's database. Note that it downloads only the given package, not its dependencies.

|

||||

|

||||

**debget** 实用程序将在 APT 的数据库中下载一个包的 .deb 文件。请注意,它只会下载给定的包,不包括依赖项。

|

||||

```

|

||||

$ debget nano

|

||||

Get:1 http://in.archive.ubuntu.com/ubuntu bionic/main amd64 nano amd64 2.9.3-2 [231 kB]

|

||||

Fetched 231 kB in 2s (113 kB/s)

|

||||

|

||||

```

|

||||

|

||||

#### 6. dpigs

|

||||

#### 6、 dpigs

|

||||

|

||||

Here is another useful utility in this collection. `dpigs` finds and shows the installed packages that take up the most disk space.

|

||||

|

||||

这是此次集合中另一个有用的实用程序。**dpigs** 实用程序将查找并显示那些占用磁盘空间最多的已安装包。

|

||||

```

|

||||

$ dpigs

|

||||

260644 linux-firmware

|

||||

@ -181,64 +182,66 @@ $ dpigs

|

||||

28420 vim-runtime

|

||||

25971 gcc-7

|

||||

24349 g++-7

|

||||

|

||||

```

|

||||

|

||||

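As you can see, the linux-firmware package takes up the most disk space. By default, it shows the **top 10** packages by disk usage. To show more packages, for example 20, run the following command: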

|

||||

|

||||

```

|

||||

$ dpigs -n 20

|

||||

|

||||

```
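The ranking itself is simple to reproduce. The following sketch assumes input in the form "installed size, tab, package name", which is the shape that `dpkg-query -W -f='${Installed-Size}\t${Package}\n'` produces. It is an illustration of the idea rather than `dpigs` itself. The helper names are hypothetical, and the sample sizes are taken from the output above.

```shell
# Sample "size<TAB>package" lines using figures from the dpigs output above.
sample_sizes() {
  printf '%s\t%s\n' 28420 vim-runtime 260644 linux-firmware 24349 g++-7 25971 gcc-7
}

# Print the N largest packages, biggest first (N defaults to 10, like dpigs).
largest_packages() {
  sort -rn | head -n "${1:-10}"
}

sample_sizes | largest_packages 2
```

Passing a different number as the first argument plays the same role as the `-n` option of `dpigs`.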

|

||||

|

||||

#### 7. debman

|

||||

|

||||

**debman** 实用程序允许你轻松查看二进制文件 **.deb** 中的手册页而不提取它。你甚至不需要安装 .deb 包。以下命令显示 nano 包的手册页。

|

||||

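The `debman` utility lets you easily view man pages from a binary .deb file without extracting it. You do not even need to install the .deb package. The following command displays the man page of the nano package.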

|

||||

|

||||

```

|

||||

$ debman -f nano_2.9.3-2_amd64.deb nano

|

||||

|

||||

```

|

||||

如果你没有 .deb 软件包的本地副本,使用 **-p** 标志下载并查看包的手册页。

|

||||

|

||||

If you do not have a local copy of the .deb package, use the `-p` flag to download it and view the man page.

|

||||

|

||||

```

|

||||

$ debman -p nano nano

|

||||

|

||||

```

|

||||

|

||||

**建议阅读:**

|

||||

[每个 Linux 用户都应该知道的 3 个 man 的替代品][3]

|

||||

|

||||

#### 8. debmany

|

||||

- [每个 Linux 用户都应该知道的 3 个 man 的替代品][3]

|

||||

|

||||

#### 8、 debmany

|

||||

|

||||

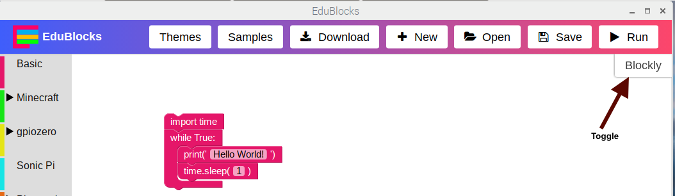

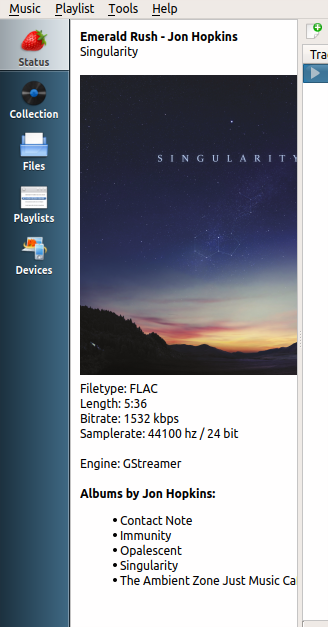

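An installed Debian package contains not only man pages but also other files such as acknowledgements, copyright notices, and README files. The `debmany` utility lets you view and read those files.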

|

||||

|

||||

安装的 Debian 包不仅包含手册页,还包括其它文件,如确认,版权和 read me (自述文件)等。**debmany** 实用程序允许你查看和读取那些文件。

|

||||

```

|

||||

$ debmany vim

|

||||

|

||||

```

|

||||

|

||||

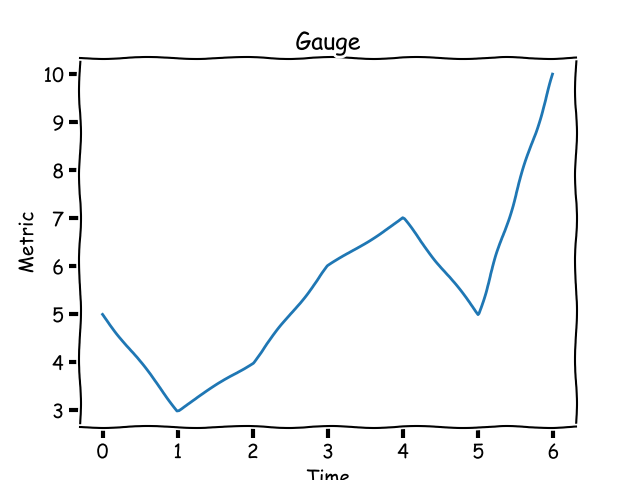

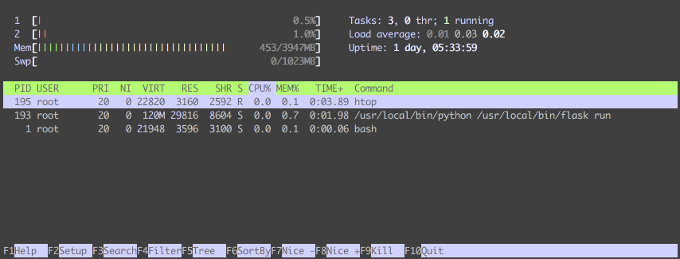

![][1]

|

||||

|

||||

使用方向键选择要查看的文件,然后按 ENTER 键查看所选文件。按 **q** 返回主菜单。

|

||||

Use the arrow keys to select the file you want to view, then press Enter to open it. Press `q` to return to the main menu.

|

||||

|

||||

如果未安装指定的软件包,debmany 将从 APT 数据库下载并显示手册页。应安装 **dialog** 包来阅读手册页。

|

||||

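If the specified package is not installed, `debmany` downloads it from the APT database and displays its man pages. The `dialog` package must be installed to read the man pages.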

|

||||

|

||||

#### 9. popbugs

|

||||

#### 9、 popbugs

|

||||

|

||||

如果你是开发人员,**popbugs** 实用程序将非常有用。它将根据你使用的包显示一个定制的发布关键 bug 列表(使用热门竞赛数据)。对于那些不关心的人,Popular-contest 包设置了一个 cron (定时)任务,它将定期匿名向 Debian 开发人员提交有关该系统上最常用的 Debian 软件包的统计信息。这些信息有助于 Debian 做出决定,例如哪些软件包应该放在第一张 CD 上。它还允许 Debian 改进未来的发行版本,以便为新用户自动安装最流行的软件包。

|

||||

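If you are a developer, the `popbugs` utility will be quite useful. It shows a customized list of release-critical bugs based on the packages you use (drawing on popularity-contest data). For those who are not familiar with it, the popularity-contest package sets up a cron job that periodically and anonymously submits statistics about the most used Debian packages on the system to the Debian developers. This information helps Debian make decisions such as which packages should go on the first CD. It also lets Debian improve future releases so that the most popular packages are installed automatically for new users.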

|

||||

|

||||

To generate a list of critical bugs and display the result in your default web browser, run:

|

||||

|

||||

```

|

||||

$ popbugs

|

||||

|

||||

```

|

||||

|

||||

You can also save the result to a file, as shown below.

|

||||

|

||||

```

|

||||

$ popbugs --output=bugs.txt

|

||||

|

||||

```

|

||||

|

||||

#### 10. which-pkg-broke

|

||||

#### 10、 which-pkg-broke

|

||||

|

||||

此命令将显示给定包的所有依赖项以及安装每个依赖项的时间。通过使用此信息,你可以在升级系统或软件包之后轻松找到哪个包可能会在什么时间损坏了另一个包。

|

||||

|

||||

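This command shows all the dependencies of the given package and when each dependency was installed. With this information, you can easily work out which package might have broken another one, and when, after upgrading your system or a package.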

|

||||

```

|

||||

$ which-pkg-broke vim

|

||||

Package <debconf-2.0> has no install time info

|

||||

@ -253,15 +256,14 @@ libgcc1:amd64 Wed Apr 25 08:08:42 2018

|

||||

liblzma5:amd64 Wed Apr 25 08:08:42 2018

|

||||

libdb5.3:amd64 Wed Apr 25 08:08:42 2018

|

||||

[...]

|

||||

|

||||

```

|

||||

|

||||

#### 11. dhomepage

|

||||

#### 11、 dhomepage

|

||||

|

||||

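The `dhomepage` utility opens the official website of the given package in your default web browser. For example, the following command opens the home page of the Vim editor.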

|

||||

|

||||

dhomepage 实用程序将在默认 Web 浏览器中显示给定包的官方网站。例如,以下命令将打开 Vim 编辑器的主页。

|

||||

```

|

||||

$ dhomepage vim

|

||||

|

||||

```

|

||||

|

||||

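And that is all. Debian-goodies is a must-have set of tools in your arsenal. Even if we do not use all of these utilities often, they are worth learning, and I am sure they will prove really helpful at times.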

|

||||

@ -278,7 +280,7 @@ via: https://www.ostechnix.com/debian-goodies-a-set-of-useful-utilities-for-debi

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -2,18 +2,16 @@

|

||||

============================================================

|

||||

|

||||

|

||||

>欧洲核子研究组织(简称 CERN)依靠开源技术处理大型强子对撞机生成的大量数据。ATLAS(超环面仪器,如图所示)是一种探测基本粒子的通用探测器。(图片来源:CERN)[经许可使用][2]

|

||||

|

||||

>欧洲核子研究组织(简称 CERN)依靠开源技术处理大型强子对撞机生成的大量数据。ATLAS(超环面仪器,如图所示)是一种探测基本粒子的通用探测器。(图片来源:CERN)

|

||||

|

||||

[CERN][3]

|

||||

|

||||

[CERN][6] 无需过多介绍了吧。CERN 创建了万维网和大型强子对撞机(LHC),这是世界上最大的粒子加速器,就是通过它发现了 [希格斯玻色子][7]。负责该组织 IT 操作系统和基础架构的 Tim Bell 表示,他的团队的目标是“为全球 13000 名物理学家提供计算设施,以分析这些碰撞、了解宇宙的构成以及是如何运转的。”

|

||||

[CERN][6] 无需过多介绍了吧。CERN 创建了<ruby>万维网<rt>World Wide Web</rt></ruby>(WWW)和<ruby>大型强子对撞机<rt>Large Hadron Collider</rt></ruby>(LHC),这是世界上最大的<ruby>粒子加速器<rt>particle accelerator</rt></ruby>,就是通过它发现了 <ruby>[希格斯玻色子][7]<rt>Higgs boson</rt></ruby>。负责该组织 IT 操作系统和基础架构的 Tim Bell 表示,他的团队的目标是“为全球 13000 名物理学家提供计算设施,以分析这些碰撞,了解宇宙的构成以及是如何运转的。”

|

||||

|

||||

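CERN does hardcore science, above all with the Large Hadron Collider, which [generates massive amounts of data][8] when it runs. "CERN currently stores about 200 petabytes of data, with over 10 petabytes of data coming in each month when the accelerator is running. This certainly poses extreme challenges for the computing infrastructure, including storing this large amount of data and being able to process it in a reasonable time frame. It puts pressure on the networking, storage technologies, and efficient compute architectures," Bell said.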

|

||||

|

||||

### [tim-bell-cern.png][4]

|

||||

|

||||

|

||||

|

||||

Tim Bell, CERN [经许可使用][1] Swapnil Bhartiya

|

||||

*Tim Bell, CERN*

|

||||

|

||||

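The scale at which the LHC operates and the amount of data it generates pose serious challenges, but CERN is no stranger to such problems. Founded in 1954, CERN has been around for more than 60 years. "We've always faced computing challenges that are difficult to solve, but we have been working with open source communities to solve them," Bell said. "Even in the '90s, when we invented the World Wide Web, we wanted to share it with people so that they could benefit from the research at CERN, and open source was the right vehicle for that."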

|

||||

|

||||

@ -29,19 +27,20 @@ CERN 帮助 CentOS 提供基础架构,他们还组织了 CentOS DoJo 活动(

|

||||

|

||||

Beyond OpenStack and CentOS, CERN is a heavy user of other open source projects, including Puppet for configuration management, Grafana and InfluxDB for monitoring, and more.

|

||||

|

||||

“我们与全球约 170 个实验室合作。因此,每当我们发现一个开源项目的可完善之处,其他实验室便可以很容易地采纳使用。“Bell 说,”与此同时,我们也向其他项目学习。当像 eBay 和 Rackspace 这样大规模的安装提高了解决方案的可扩展性时,我们也从中受益,也可以扩大规模。“

|

||||

“我们与全球约 170 个实验室合作。因此,每当我们发现一个开源项目的改进之处,其他实验室便可以很容易地采纳使用。”Bell 说,“与此同时,我们也向其他项目学习。当像 eBay 和 Rackspace 这样大规模的装机量提高了解决方案的可扩展性时,我们也从中受益,也可以扩大规模。“

|

||||

|

||||

### Solving real-world problems

|

||||

|

||||

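Around 2012, CERN was looking at how to scale computing for the LHC, but the challenge was people rather than technology. The number of staff that CERN employs is fixed. "We had to find ways to scale the compute without requiring a large number of additional people to administer it," Bell said. "OpenStack provided us with an automated, API-driven, software-defined infrastructure." OpenStack also helped CERN examine problems related to service delivery and then automate them without adding staff.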

|

||||

|

||||

“我们目前在日内瓦和布达佩斯的两个数据中心运行大约 280000 个核心(cores)和 7000 台服务器。我们正在使用软件定义的基础架构使一切自动化,这使我们能够在保持员工数量不变的同时继续添加更多的服务器。“Bell 说。

|

||||

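"We're currently running about 280,000 processor cores and 7,000 servers across two data centers in Geneva and Budapest. We are using software-defined infrastructure to automate everything, which allows us to keep adding servers while keeping the number of staff unchanged," Bell said.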

|

||||

|

||||

随着时间的推移,CERN 将面临更大的挑战。大型强子对撞机有一个到 2035 年的蓝图,包括一些重要的升级。“我们的加速器运转三到四年,然后会用 18 个月或两年的时间来升级基础架构。在这维护期间我们会做一些计算能力的规划。“Bell 说。CERN 还计划升级高亮度大型强子对撞机,会允许更高光度的光束。与目前的 CERN 的规模相比,升级意味着计算需求需增加约 60 倍。

|

||||

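Over time, CERN will face even bigger challenges. The LHC has a roadmap out to 2035 that includes a number of significant upgrades. "We run the accelerator for three to four years and then have a period of 18 months or two years in which we upgrade the infrastructure. During that maintenance period we do some compute planning," Bell said. CERN is also planning the High-Luminosity LHC upgrade, which will allow beams of higher luminosity. Compared with CERN's current scale, the upgrade means computing requirements will grow by about 60 times.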

|

||||

|

||||

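"With Moore's Law, we may get only a quarter of the way there, so we have to find ways to scale compute and storage infrastructure correspondingly, and finding automation and solutions such as OpenStack will help with that," Bell said.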

|

||||

|

||||

“当我们开始使用大型强子对撞机并观察我们如何提供计算能力时,很明显我们无法将所有内容都放入 CERN 的数据中心,因此我们设计了一个分布式网格结构:位于中心的 CERN 和围绕着它的级联结构。“Bell 说,“全世界约有 12 个大型一级数据中心,然后是 150 所小型大学和实验室。他们从大型强子对撞机的数据中收集样本,以帮助物理学家理解和分析数据。“

|

||||

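"When we started with the LHC and looked at how we would deliver the computing, it was clear that we couldn't put everything into the data center at CERN, so we devised a distributed grid structure, with CERN at the center and a cascading structure around it," Bell said. "There are around 12 large tier-1 centers around the world, and then 150 small universities and labs. They take samples of the data from the LHC to help physicists understand and analyze it."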

|

||||

|

||||

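This structure means CERN collaborates internationally, with hundreds of countries contributing to the analysis of that data. It comes down to a fundamental principle: open source is not just about sharing code, it is about people collaborating and sharing knowledge to achieve what no single individual, organization, or company could achieve alone. That is the Higgs boson of the open source world.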

|

||||

|

||||