mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

Merge remote-tracking branch 'refs/remotes/LCTT/master'

This commit is contained in:

commit

a67218133f

@ -0,0 +1,128 @@

|

||||

一位开发者的 Linux 容器之旅

|

||||

================================================================================

|

||||

|

||||

|

||||

我告诉你一个秘密:使得我的应用程序进入到全世界的 DevOps 云计算之类的东西对我来说仍然有一点神秘。但随着时间流逝,我意识到理解大规模的机器增减和应用程序部署的来龙去脉对一个开发者来说是非常重要的知识。这类似于成为一个专业的音乐家,当然你肯定需要知道如何使用你的乐器,但是,如果你不知道一个录音棚是如何工作的,或者如何适应一个交响乐团,那么你在这样的环境中工作会变得非常困难。

|

||||

|

||||

在软件开发的世界里,使你的代码进入我们的更大的世界如同把它编写出来一样重要。DevOps 重要,而且是很重要。

|

||||

|

||||

因此,为了弥合开发(Dev)和部署(Ops)之间的空隙,我会从头开始介绍容器技术。为什么是容器?因为有强力的证据表明,容器是机器抽象的下一步:使计算机成为场所而不再是一个东西。理解容器是我们共同的旅程。

|

||||

|

||||

在这篇文章中,我会介绍容器化(containerization)背后的概念。包括容器和虚拟机的区别,以及容器构建背后的逻辑以及它是如何适应应用程序架构的。我会探讨轻量级的 Linux 操作系统是如何适应容器生态系统。我还会讨论使用镜像创建可重用的容器。最后我会介绍容器集群如何使你的应用程序可以快速扩展。

|

||||

|

||||

在后面的文章中,我会一步一步向你介绍容器化一个示例应用程序的过程,以及如何为你的应用程序容器创建一个托管集群。同时,我会向你展示如何使用 Deis 将你的示例应用程序部署到你本地系统以及多种云供应商的虚拟机上。

|

||||

|

||||

让我们开始吧。

|

||||

|

||||

### 虚拟机的好处 ###

|

||||

|

||||

为了理解容器如何适应事物发展,你首先要了解容器的前任:虚拟机。

|

||||

|

||||

[虚拟机][1] (virtual machine (VM))是运行在物理宿主机上的软件抽象。配置一个虚拟机就像是购买一台计算机:你需要定义你想要的 CPU 数目、RAM 和磁盘存储容量。配置好了机器后,你为它加载操作系统,以及你想让虚拟机支持的任何服务器或者应用程序。

|

||||

|

||||

虚拟机允许你在一台硬件主机上运行多个模拟计算机。这是一个简单的示意图:

|

||||

|

||||

|

||||

|

||||

虚拟机可以让你能充分利用你的硬件资源。你可以购买一台巨大的、轰隆作响的机器,然后在上面运行多个虚拟机。你可以有一个数据库虚拟机以及很多运行相同版本的定制应用程序的虚拟机所构成的集群。你可以在有限的硬件资源获得很多的扩展能力。如果你觉得你需要更多的虚拟机而且你的宿主硬件还有容量,你可以添加任何你需要的虚拟机。或者,如果你不再需要一个虚拟机,你可以关闭该虚拟机并删除虚拟机镜像。

|

||||

|

||||

### 虚拟机的局限 ###

|

||||

|

||||

但是,虚拟机确实有局限。

|

||||

|

||||

如上面所示,假如你在一个主机上创建了三个虚拟机。主机有 12 个 CPU,48 GB 内存和 3TB 的存储空间。每个虚拟机配置为有 4 个 CPU,16 GB 内存和 1TB 存储空间。到现在为止,一切都还好。主机有这个容量。

|

||||

|

||||

但这里有个缺陷。所有分配给一个虚拟机的资源,无论是什么,都是专有的。每台机器都分配了 16 GB 的内存。但是,如果第一个虚拟机永不会使用超过 1GB 分配的内存,剩余的 15 GB 就会被浪费在那里。如果第三个虚拟机只使用分配的 1TB 存储空间中的 100GB,其余的 900GB 就成为浪费空间。

|

||||

|

||||

这里没有资源的流动。每台虚拟机拥有分配给它的所有资源。因此,在某种方式上我们又回到了虚拟机之前,把大部分金钱花费在未使用的资源上。

|

||||

|

||||

虚拟机还有*另一个*缺陷。让它们跑起来需要很长时间。如果你处于基础设施需要快速增长的情形,即使增加虚拟机是自动的,你仍然会发现你的很多时间都浪费在等待机器上线。

|

||||

|

||||

### 来到:容器 ###

|

||||

|

||||

概念上来说,容器是一个 Linux 进程,Linux 认为它只是一个运行中的进程。该进程只知道它被告知的东西。另外,在容器化方面,该容器进程也分配了它自己的 IP 地址。这点很重要,重要的事情讲三遍,这是第二遍。**在容器化方面,容器进程有它自己的 IP 地址。**一旦给予了一个 IP 地址,该进程就是宿主网络中可识别的资源。然后,你可以在容器管理器上运行命令,使容器 IP 映射到主机中能访问公网的 IP 地址。建立了该映射,无论出于什么意图和目的,容器就是网络上一个可访问的独立机器,从概念上类似于虚拟机。

|

||||

|

||||

这是第三遍,容器是拥有不同 IP 地址从而使其成为网络上可识别的独立 Linux 进程。下面是一个示意图:

|

||||

|

||||

|

||||

|

||||

容器/进程以动态、合作的方式共享主机上的资源。如果容器只需要 1GB 内存,它就只会使用 1GB。如果它需要 4GB,就会使用 4GB。CPU 和存储空间利用也是如此。CPU、内存和存储空间的分配是动态的,和典型虚拟机的静态方式不同。所有这些资源的共享都由容器管理器来管理。

|

||||

|

||||

最后,容器能非常快速地启动。

|

||||

|

||||

因此,容器的好处是:**你获得了虚拟机独立和封装的好处,而抛弃了静态资源专有的缺陷**。另外,由于容器能快速加载到内存,在扩展到多个容器时你能获得更好的性能。

|

||||

|

||||

### 容器托管、配置和管理 ###

|

||||

|

||||

托管容器的计算机运行着被剥离的只剩下主要部分的某个 Linux 版本。现在,宿主计算机流行的底层操作系统是之前提到的 [CoreOS][2]。当然还有其它,例如 [Red Hat Atomic Host][3] 和 [Ubuntu Snappy][4]。

|

||||

|

||||

该 Linux 操作系统被所有容器所共享,减少了容器足迹的重复和冗余。每个容器只包括该容器特有的部分。下面是一个示意图:

|

||||

|

||||

|

||||

|

||||

你可以用它所需的组件来配置容器。一个容器组件被称为**层(layer)**。层是一个容器镜像,(你会在后面的部分看到更多关于容器镜像的介绍)。你从一个基本层开始,这通常是你想在容器中使用的操作系统。(容器管理器只提供你所要的操作系统在宿主操作系统中不存在的部分。)当你构建你的容器配置时,你需要添加层,例如你想要添加网络服务器时这个层就是 Apache,如果容器要运行脚本,则需要添加 PHP 或 Python 运行时环境。

|

||||

|

||||

分层非常灵活。如果应用程序或者服务容器需要 PHP 5.2 版本,你相应地配置该容器即可。如果你有另一个应用程序或者服务需要 PHP 5.6 版本,没问题,你可以使用 PHP 5.6 配置该容器。不像虚拟机,更改一个版本的运行时依赖时你需要经过大量的配置和安装过程;对于容器你只需要在容器配置文件中重新定义层。

|

||||

|

||||

所有上面描述的容器的各种功能都由一个称为容器管理器(container manager)的软件控制。现在,最流行的容器管理器是 [Docker][5] 和 [Rocket][6]。上面的示意图展示了容器管理器是 Docker,宿主操作系统是 CentOS 的主机情景。

|

||||

|

||||

### 容器由镜像构成 ###

|

||||

|

||||

当你需要将我们的应用程序构建到容器时,你就要编译镜像。镜像代表了你的容器需要完成其工作的容器模板。(容器里可以在容器里面,如下图)。镜像存储在注册库(registry)中,注册库通过网络访问。

|

||||

|

||||

从概念上讲,注册库类似于一个使用 Java 的人眼中的 [Maven][7] 仓库、使用 .NET 的人眼中的 [NuGet][8] 服务器。你会创建一个列出了你应用程序所需镜像的容器配置文件。然后你使用容器管理器创建一个包括了你的应用程序代码以及从容器注册库中下载的部分资源。例如,如果你的应用程序包括了一些 PHP 文件,你的容器配置文件会声明你会从注册库中获取 PHP 运行时环境。另外,你还要使用容器配置文件声明需要复制到容器文件系统中的 .php 文件。容器管理器会封装你应用程序的所有东西为一个独立容器,该容器将会在容器管理器的管理下运行在宿主计算机上。

|

||||

|

||||

这是一个容器创建背后概念的示意图:

|

||||

|

||||

|

||||

|

||||

让我们仔细看看这个示意图。

|

||||

|

||||

(1)代表一个定义了你容器所需东西以及你容器如何构建的容器配置文件。当你在主机上运行容器时,容器管理器会读取该配置文件,从云上的注册库中获取你需要的容器镜像,(2)将镜像作为层添加到你的容器中。

|

||||

|

||||

另外,如果组成镜像需要其它镜像,容器管理器也会获取这些镜像并把它们作为层添加进来。(3)容器管理器会将需要的文件复制到容器中。

|

||||

|

||||

如果你使用了配置(provisioning)服务,例如 [Deis][9],你刚刚创建的应用程序容器做成镜像,(4)配置服务会将它部署到你选择的云供应商上,比如类似 AWS 和 Rackspace 云供应商。

|

||||

|

||||

### 集群中的容器 ###

|

||||

|

||||

好了。这里有一个很好的例子说明了容器比虚拟机提供了更好的配置灵活性和资源利用率。但是,这并不是全部。

|

||||

|

||||

容器真正的灵活是在集群中。记住,每个容器有一个独立的 IP 地址。因此,能把它放到负载均衡器后面。将容器放到负载均衡器后面,这就上升了一个层面。

|

||||

|

||||

你可以在一个负载均衡容器后运行容器集群以获得更高的性能和高可用计算。这是一个例子:

|

||||

|

||||

|

||||

|

||||

假如你开发了一个资源密集型的应用程序,例如图片处理。使用类似 [Deis][9] 的容器配置技术,你可以创建一个包括了你图片处理程序以及你图片处理程序需要的所有资源的容器镜像。然后,你可以部署一个或多个容器镜像到主机上的负载均衡器下。一旦创建了容器镜像,你可以随时使用它。当系统繁忙时可以添加更多的容器实例来满足手中的工作。

|

||||

|

||||

这里还有更多好消息。每次添加实例到环境中时,你不需要手动配置负载均衡器以便接受你的容器镜像。你可以使用服务发现技术让容器告知均衡器它可用。然后,一旦获知,均衡器就会将流量分发到新的结点。

|

||||

|

||||

### 全部放在一起 ###

|

||||

|

||||

容器技术完善了虚拟机缺失的部分。类似 CoreOS、RHEL Atomic、和 Ubuntu 的 Snappy 宿主操作系统,和类似 Docker 和 Rocket 的容器管理技术结合起来,使得容器变得日益流行。

|

||||

|

||||

尽管容器变得更加越来越普遍,掌握它们还是需要一段时间。但是,一旦你懂得了它们的窍门,你可以使用类似 [Deis][9] 这样的配置技术使容器创建和部署变得更加简单。

|

||||

|

||||

从概念上理解容器和进一步实际使用它们完成工作一样重要。但我认为不实际动手把想法付诸实践,概念也难以理解。因此,我们该系列的下一阶段就是:创建一些容器。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://deis.com/blog/2015/developer-journey-linux-containers

|

||||

|

||||

作者:[Bob Reselman][a]

|

||||

译者:[ictlyh](http://www.mutouxiaogui.cn/blog/)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://deis.com/blog

|

||||

[1]:https://en.wikipedia.org/wiki/Virtual_machine

|

||||

[2]:https://coreos.com/using-coreos/

|

||||

[3]:http://www.projectatomic.io/

|

||||

[4]:https://developer.ubuntu.com/en/snappy/

|

||||

[5]:https://www.docker.com/

|

||||

[6]:https://coreos.com/blog/rocket/

|

||||

[7]:https://en.wikipedia.org/wiki/Apache_Maven

|

||||

[8]:https://www.nuget.org/

|

||||

[9]:http://deis.com/learn

|

||||

@ -2,9 +2,9 @@ Linux 下如何安装 Retro Terminal

|

||||

================================================================================

|

||||

|

||||

|

||||

你有怀旧情节?那就试试 **安装 retro terminal 应用** [cool-retro-term][1] 来一瞥过去的时光吧。顾名思义,`cool-retro-term` 是一个兼具酷炫和怀旧的终端。

|

||||

你有怀旧情节?那就试试 **安装复古终端应用** [cool-retro-term][1] 来一瞥过去的时光吧。顾名思义,`cool-retro-term` 是一个兼具酷炫和怀旧的终端。

|

||||

|

||||

你还记得那段遍地都是 CRT 显示器、终端屏幕闪烁不停的时光吗?现在你并不需要穿越到过去来见证那段时光。假如你观看背景设置在上世纪 90 年代的电影,你就可以看到大量带有绿色或黑底白字的显像管显示器。再加上它们通常带有极客光环,这使得它们看起来更酷。

|

||||

你还记得那段遍地都是 CRT 显示器、终端屏幕闪烁不停的时光吗?现在你并不需要穿越到过去来见证那段时光。假如你观看背景设置在上世纪 90 年代的电影,你就可以看到大量带有绿色或黑底白字的显像管显示器。这种极客光环让它们看起来非常酷!

|

||||

|

||||

若你已经厌倦了你机器中终端的外表,正寻找某些炫酷且‘新奇’的东西,则 `cool-retro-term` 将会带给你一个复古的终端外表,使你可以重温过去。你也可以改变它的颜色、动画类型并添加一些额外的特效。

|

||||

|

||||

@ -48,7 +48,7 @@ Linux 下如何安装 Retro Terminal

|

||||

|

||||

./cool-retro-term

|

||||

|

||||

假如你想使得这个应用可在程序菜单中被快速获取到,以便你不用再每次手动地用命令来启动它,则你可以使用下面的命令:

|

||||

假如你想把这个应用放在程序菜单中以便快速找到,这样你就不用再每次手动地用命令来启动它,则你可以使用下面的命令:

|

||||

|

||||

sudo cp cool-retro-term.desktop /usr/share/applications

|

||||

|

||||

@ -60,13 +60,13 @@ Linux 下如何安装 Retro Terminal

|

||||

|

||||

via: http://itsfoss.com/cool-retro-term/

|

||||

|

||||

作者:[Hossein Heydari][a]

|

||||

作者:[Abhishek Prakash][a]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://itsfoss.com/author/hossein/

|

||||

[a]:http://itsfoss.com/author/abhishek/

|

||||

[1]:https://github.com/Swordfish90/cool-retro-term

|

||||

[2]:http://itsfoss.com/tag/antergos/

|

||||

[3]:https://manjaro.github.io/

|

||||

@ -1,6 +1,6 @@

|

||||

如何在 Linux 上使用 SSHfs 挂载一个远程文件系统

|

||||

================================================================================

|

||||

你有想通过安全 shell 挂载一个远程文件系统到本地的经历吗?如果有的话,SSHfs 也许就是你所需要的。它通过使用 SSH 和 Fuse(LCTT 译注:Filesystem in Userspace,用户态文件系统,是 Linux 中用于挂载某些网络空间,如 SSH,到本地文件系统的模块) 允许你挂载远程计算机(或者服务器)到本地。

|

||||

你曾经想过用安全 shell 挂载一个远程文件系统到本地吗?如果有的话,SSHfs 也许就是你所需要的。它通过使用 SSH 和 Fuse(LCTT 译注:Filesystem in Userspace,用户态文件系统,是 Linux 中用于挂载某些网络空间,如 SSH,到本地文件系统的模块) 允许你挂载远程计算机(或者服务器)到本地。

|

||||

|

||||

**注意**: 这篇文章假设你明白[SSH 如何工作并在你的系统中配置 SSH][1]。

|

||||

|

||||

@ -16,7 +16,7 @@

|

||||

|

||||

如果你使用的不是 Ubuntu,那就在你的发行版软件包管理器中搜索软件包名称。最好搜索和 fuse 或 SSHfs 相关的关键字,因为取决于你运行的系统,软件包名称可能稍微有些不同。

|

||||

|

||||

在你的系统上安装完软件包之后,就该创建 fuse 组了。在你安装 fuse 的时候,应该会在你的系统上创建一个组。如果没有的话,在终端窗口中输入以下命令以便在你的 Linux 系统中创建组:

|

||||

在你的系统上安装完软件包之后,就该创建好 fuse 组了。在你安装 fuse 的时候,应该会在你的系统上创建一个组。如果没有的话,在终端窗口中输入以下命令以便在你的 Linux 系统中创建组:

|

||||

|

||||

sudo groupadd fuse

|

||||

|

||||

@ -26,7 +26,7 @@

|

||||

|

||||

|

||||

|

||||

别担心上面命令的 `$USER`。shell 会自动用你自己的用户名替换。处理了和组相关的事之后,就是时候创建要挂载远程文件的目录了。

|

||||

别担心上面命令的 `$USER`。shell 会自动用你自己的用户名替换。处理了和组相关的工作之后,就是时候创建要挂载远程文件的目录了。

|

||||

|

||||

mkdir ~/remote_folder

|

||||

|

||||

@ -54,9 +54,9 @@

|

||||

|

||||

### 总结 ###

|

||||

|

||||

在 Linux 上有很多工具可以用于访问远程文件并挂载到本地。如之前所说,如果有的话,也只有很少的工具能充分利用 SSH 的强大功能。我希望在这篇指南的帮助下,也能认识到 SSHfs 是一个多么强大的工具。

|

||||

在 Linux 上有很多工具可以用于访问远程文件并挂载到本地。但是如之前所说,如果有的话,也只有很少的工具能充分利用 SSH 的强大功能。我希望在这篇指南的帮助下,也能认识到 SSHfs 是一个多么强大的工具。

|

||||

|

||||

你觉得 SSHfs 怎么样呢?在线的评论框里告诉我们吧!

|

||||

你觉得 SSHfs 怎么样呢?在下面的评论框里告诉我们吧!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -64,7 +64,7 @@ via: https://www.maketecheasier.com/sshfs-mount-remote-filesystem-linux/

|

||||

|

||||

作者:[Derrik Diener][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,15 +1,13 @@

|

||||

Linux又问必答-- 如何在Linux中改变默认的Java版本

|

||||

Linux 有问必答:如何在 Linux 中改变默认的 Java 版本

|

||||

================================================================================

|

||||

> **提问**:当我尝试在Linux中运行一个Java程序时,我遇到了一个错误。看上去像程序编译所使用的Javab版本与我本地的不同。我该如何在Linux上切换默认的Java版本?

|

||||

> **提问**:当我尝试在Linux中运行一个Java程序时,我遇到了一个错误。看上去像程序编译所使用的Java版本与我本地的不同。我该如何在Linux上切换默认的Java版本?

|

||||

|

||||

>

|

||||

> Exception in thread "main" java.lang.UnsupportedClassVersionError: com/xmodulo/hmon/gui/NetConf : Unsupported major.minor version 51.0

|

||||

|

||||

当Java程序编译时,编译环境会设置一个“target”变量来设置程序可以运行的最低Java版本。如果你Linux系统上运行的程序不满足最低的JRE版本要求,那么你会在运行的时候遇到下面的错误。

|

||||

当Java程序编译时,编译环境会设置一个“target”变量来设置程序可以运行的最低Java版本。如果你Linux系统上运行的程序不能满足最低的JRE版本要求,那么你会在运行的时候遇到下面的错误。

|

||||

|

||||

Exception in thread "main" java.lang.UnsupportedClassVersionError: com/xmodulo/hmon/gui/NetConf : Unsupported major.minor version 51.0

|

||||

|

||||

比如,这种情况下程序在Java JRE 1.7下编译,但是系统只有Java JRE 1.6。

|

||||

比如,程序在Java JRE 1.7下编译,但是系统只有Java JRE 1.6。

|

||||

|

||||

要解决这个问题,你需要改变默认的Java版本到Java JRE 1.7或者更高(假设JRE已经安装了)。

|

||||

|

||||

@ -21,7 +19,7 @@ Linux又问必答-- 如何在Linux中改变默认的Java版本

|

||||

|

||||

本例中,总共安装了4个不同的Java版本:OpenJDK JRE 1.6、Oracle Java JRE 1.6、OpenJDK JRE 1.7 和 Oracle Java JRE 1.7。现在默认的Java版本是OpenJDK JRE 1.6。

|

||||

|

||||

如果没有安装需要的Java JRE,你可以参考[这些指导][1]来完成安装。

|

||||

如果没有安装需要的Java JRE,你可以参考[这些指导][1]来完成安装。

|

||||

|

||||

现在有可用的候选版本,你可以用下面的命令在可用的Java JRE之间**切换默认的Java版本**:

|

||||

|

||||

@ -45,7 +43,7 @@ via: http://ask.xmodulo.com/change-default-java-version-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,12 +1,12 @@

|

||||

LastPass的开源替代品

|

||||

LastPass 的开源替代品

|

||||

================================================================================

|

||||

LastPass是一个跨平台的密码管理程序。在Linux平台中,它可作为Firefox, Chrome和Opera浏览器的插件使用。LastPass Sesame支持Ubuntu/Debian与Fedora系统。此外,LastPass还有安装在Firefox Portable的便携版,可将其安装在USB设备上。再加上适用于Ubuntu/Debian, Fedora和openSUSE的LastPass Pocket, 其具有良好的跨平台覆盖性。虽然LastPass备受好评,但它是一个专有软件。此外,LastPass最近被LogMeIn收购。如果你在找一个开源的替代品,这篇文章可能会对你有所帮助。

|

||||

|

||||

我们正面临着信息大爆炸。无论你是要在线经营生意,找工作,还是只为了休闲来进行阅读,互联网都是一个广大的信息源。在这种情况下,长期保留信息是很困难的。然而,及时地获取某些特定信息非常重要。密码就是这样的一个例子。

|

||||

我们正面临着信息大爆炸。无论你是要在线经营生意,找工作,还是只为了休闲来进行阅读,互联网都是一个海量的信息源。在这种情况下,长期保留信息是很困难的。然而,及时地获取某些特定信息非常重要。密码就是这样的一个例子。

|

||||

|

||||

作为一个电脑用户,你可能会面临在不同服务或网站使用相同或不同密码的困境。这个事情非常复杂,因为有些网站会限制你对密码的选择。比如,一个网站可能会限制密码的最小位数,大写字母,数字或者特殊字符,这使得在所有网站使用统一密码变得不可能。更重要的是,不在不同网站中使用同一密码有安全方面的原因。这样就不可避免地意味着人们经常会有很多密码要记。一个解决方案是将所有的密码写下来。然而,这种做法也极度的不安全。

|

||||

|

||||

为了解决需要记忆无穷多串密码的问题,目前比较流行的解决方案是使用密码管理软件。事实上,这类软件对于活跃的互联网用户来说极为实用。它使得你获取、管理和安全保存所有密码变得极为容易,而大多数密码都是被软件或文件系统加密过的。因此,用户只需要记住一个简单的密码就可以获取到其它所有密码。密码管理软件鼓励用户对于不同服务去采用独一无二的,非直观的强密码。

|

||||

为了解决需要记忆无穷多串密码的问题,目前比较流行的解决方案是使用密码管理软件。事实上,这类软件对于活跃的互联网用户来说极为实用。它使得你获取、管理和安全保存所有密码变得极为容易,而大多数密码都是用软件或文件系统加密过的。因此,用户只需要记住一个简单的密码就可以获取到其它所有密码。密码管理软件鼓励用户对于不同服务去采用独一无二的,非直观的高强度的密码。

|

||||

|

||||

为了让大家更深入地了解Linux软件的质量,我将介绍4款优秀的、可替代LastPass的开源软件。

|

||||

|

||||

@ -14,25 +14,27 @@ LastPass是一个跨平台的密码管理程序。在Linux平台中,它可作

|

||||

|

||||

|

||||

|

||||

KeePassX提供KeePass的多平台接口,是一款开源、跨平台的密码管理软件。这款软件可以帮助你以安全的方式保管密码。你可以将所有密码保存在一个数据库中,而这个数据库被一个主密码或密码盘来保管。

|

||||

KeePassX是KeePass的多平台移植,是一款开源、跨平台的密码管理软件。这款软件可以帮助你以安全的方式保管密码。你可以将所有密码保存在一个数据库中,而这个数据库被一个主密码或密码盘来保管。这使得用户只需要记住一个单一的主密码或插入密码盘即可解锁整个数据库。

|

||||

|

||||

密码数据库使用AES(即Rijndael)或者TwoFish算法进行加密,密钥长度为256位。

|

||||

|

||||

该软件功能包括:

|

||||

|

||||

- 多重管理模式 - 使每条密码更容易被识别

|

||||

- 管理模式丰富

|

||||

- 通过标题使每条密码更容易被识别

|

||||

- 可设置密码过期时间

|

||||

- 可插入附件

|

||||

- 可为不同分组或密码自定义标志

|

||||

- 在分组中对密码排序

|

||||

- 搜索函数:可在特定分组或整个数据库中搜索

|

||||

- Auto-Type: 这个功能允许你在登录网站时只需要按下几个键。KeePassX可以帮助你输入剩下的密码。Auto-Type通过读取当前窗口的标题,对密码数据库进行搜索来获取相应的密码

|

||||

- 数据库安全性强,用户可通过密码或一个密钥文件(可存储在CD或U盘中)访问数据库

|

||||

- 自动生成安全的密码

|

||||

- 具有预防措施,获取选中的密码并检查其安全性

|

||||

- 加密 - 用256位密钥,通过AES(高级加密标准)或TwoFish算法加密数据库

|

||||

- 搜索功能:可在特定分组或整个数据库中搜索

|

||||

- 自动键入: 这个功能允许你在登录网站时只需要按下几个键。KeePassX可以帮助你输入剩下的密码。自动键入通过读取当前窗口的标题,对密码数据库进行搜索来获取相应的密码

|

||||

- 数据库安全性强,用户可通过密码或一个密钥文件(可存储在CD或U盘中)访问数据库(或两者)

|

||||

- 安全密码自动生成

|

||||

- 具有预防措施,获取用星号隐藏的密码并检查其安全性

|

||||

- 加密 - 用256位密钥,通过AES(高级加密标准)或TwoFish算法加密数据库,

|

||||

- 密码可以导入或导出。可从PwManager文件(*.pwm)或KWallet文件(*.xml)中导入密码,可导出为文本(*.txt)格式。

|

||||

|

||||

---

|

||||

- 软件官网:[www.keepassx.org][1]

|

||||

- 开发者:KeepassX Team

|

||||

- 软件许可证:GNU GPL V2

|

||||

@ -42,21 +44,23 @@ KeePassX提供KeePass的多平台接口,是一款开源、跨平台的密码

|

||||

|

||||

|

||||

|

||||

Encryptr是一个开源的、零知晓的、基于云端的密码管理/电子钱包软件,以Crypton为基础开发。Crypton是一个Javascript库,允许开发者利用其开发应用,上传文件至服务器,而服务器无法知道用户所存储的文件内容。

|

||||

Encryptr是一个开源的、零知识(zero-knowledge)的、基于云端的密码管理/电子钱包软件,以Crypton为基础开发。Crypton是一个Javascript库,允许开发者利用其开发应用来上传文件至服务器,而服务器无法知道用户所存储的文件内容。

|

||||

|

||||

Encryptr可将你的敏感信息,比如密码、信用卡数据、PIN码、或认证码存储在云端。然而,由于它基于零知晓的Cypton框架开发,Encryptr可保证只有用户才拥有访问或读取秘密信息的权限。

|

||||

Encryptr可将你的敏感信息,比如密码、信用卡数据、PIN码、或认证码存储在云端。然而,由于它基于零知识的Cypton框架开发,Encryptr可保证只有用户才拥有访问或读取秘密信息的权限。

|

||||

|

||||

由于其跨平台的特性,Encryptr允许用户随时随地、安全地通过一个账户从云端获取机密信息。

|

||||

|

||||

软件特性包括:

|

||||

|

||||

- 使用极安全、零知晓的Crypton框架,软件只在本地加密/解密数据

|

||||

- 使用非常安全的零知识Crypton框架,只在你的本地加密/解密数据

|

||||

- 易于使用

|

||||

- 基于云端

|

||||

- 可存储三种类型的数据:密码、信用卡账号以及通用的键值对

|

||||

- 可对每条密码设置“备注”项

|

||||

- 对本地密码进行缓存加密,以节省上传时间

|

||||

- 过滤和搜索密码

|

||||

- 对密码进行本地加密缓存,以节省载入时间

|

||||

|

||||

---

|

||||

- 软件官网: [encryptr.org][2]

|

||||

- 开发者: Tommy Williams

|

||||

- 软件许可证: GNU GPL v3

|

||||

@ -74,7 +78,9 @@ RatticDB被设计为一个“密码生命周期管理工具”而不是单单一

|

||||

|

||||

- 简洁的ACL设计

|

||||

- 可改变队列功能,可让用户知晓何时需要更改某应用的密码

|

||||

- Ansible配置

|

||||

- 支持Ansible配置

|

||||

|

||||

---

|

||||

|

||||

- 软件官网: [rattic.org][3]

|

||||

- 开发者: Daniel Hall

|

||||

@ -85,9 +91,9 @@ RatticDB被设计为一个“密码生命周期管理工具”而不是单单一

|

||||

|

||||

|

||||

|

||||

Seahorse是一个于Gnome前端运行的GnuPG - GNU隐私保护软件。它的目标是提供一个易于使用密钥管理工具,一并提供一个易于使用的界面来控制加密操作。

|

||||

Seahorse是一个GnuPG(GNU隐私保护软件)的Gnome前端界面。它的目标是提供一个易于使用密钥管理工具,以及一个易于使用的界面来控制加密操作。

|

||||

|

||||

Seahorse是一个工具,用来提供安全沟通和数据存储服务。数据加密和数字密钥生成操作可以轻易通过GUI来演示,密钥管理操作也可以轻易通过直观的界面来进行。

|

||||

Seahorse是一个工具,用来提供安全传输和数据存储服务。数据加密和数字密钥生成操作可以轻易通过GUI来操作,密钥管理操作也可以轻易通过直观的界面来进行。

|

||||

|

||||

此外,Seahorse包含一个Gedit插件,可以使用鹦鹉螺文件管理器管理文件,一个管理剪贴板中事物的小程序,一个存储私密密码的代理,还有一个GnuPG和OpenSSH的密钥管理工具。

|

||||

|

||||

@ -95,7 +101,7 @@ Seahorse是一个工具,用来提供安全沟通和数据存储服务。数据

|

||||

|

||||

- 对文本进行加密/解密/签名

|

||||

- 管理密钥及密钥环

|

||||

- 将密钥及密钥环于密钥服务器同步

|

||||

- 将密钥及密钥环与密钥服务器同步

|

||||

- 密码签名及发布

|

||||

- 将密码缓存起来,无需多次重复键入

|

||||

- 对密钥及密钥环进行备份

|

||||

@ -103,6 +109,8 @@ Seahorse是一个工具,用来提供安全沟通和数据存储服务。数据

|

||||

- 生成SSH密钥,对其进行验证及储存

|

||||

- 多语言支持

|

||||

|

||||

---

|

||||

|

||||

- 软件官网: [www.gnome.org/projects/seahorse][4]

|

||||

- 开发者: Jacob Perkins, Jose Carlos, Garcia Sogo, Jean Schurger, Stef Walter, Adam Schreiber

|

||||

- 软件许可证: GNU GPL v2

|

||||

@ -113,7 +121,7 @@ Seahorse是一个工具,用来提供安全沟通和数据存储服务。数据

|

||||

via: http://www.linuxlinks.com/article/20151108125950773/LastPassAlternatives.html

|

||||

|

||||

译者:[StdioA](https://github.com/StdioA)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,48 @@

|

||||

Linux 有问必答:如何在 Linux 上自动设置 JAVA_HOME 环境变量

|

||||

================================================================================

|

||||

> **问题**:我需要在我的 Linux 机器上编译 Java 程序。为此我已经安装了 JDK (Java Development Kit),而现在我正试图设置 JAVA\_HOME 环境变量使其指向安装好的 JDK 。关于在 Linux 上设置 JAVA\_HOME 环境变量,最受推崇的办法是什么?

|

||||

|

||||

许多 Java 程序或基于 Java 的*集成开发环境* (IDE)都需要设置好 JAVA_HOME 环境变量。该变量应指向 *Java 开发工具包* (JDK)或 *Java 运行时环境* (JRE)的安装目录。JDK 不仅包含了 JRE 提供的一切,还带有用于编译 Java 程序的额外的二进制代码和库文件(例如编译器,调试器及 JavaDoc 文档生成器)。JDK 是用来构建 Java 程序的,如果只是运行已经构建好的 Java 程序,单独一份 JRE 就足够了。

|

||||

|

||||

当您正试图设置 JAVA\_HOME 环境变量时,麻烦的事情在于 JAVA\_HOME 变量需要根据以下几点而改变:(1) 您是否安装了 JDK 或 JRE;(2) 您安装了哪个版本;(3) 您安装的是 Oracle JDK 还是 Open JDK。

|

||||

|

||||

因此每当您的开发环境或运行时环境发生改变(例如为 JDK 更新版本)时,您需要根据实际情况调整 JAVA\_HOME 变量,而这种做法是繁重且缺乏效率的。

|

||||

|

||||

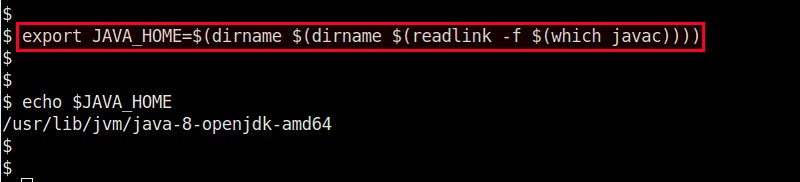

以下 export 命令能为您**自动设置** JAVA\_HOME 环境变量,而无须顾及上述的因素。

|

||||

|

||||

若您安装的是 JRE:

|

||||

|

||||

export JAVA_HOME=$(dirname $(dirname $(readlink -f $(which java))))

|

||||

|

||||

若您安装的是 JDK:

|

||||

|

||||

export JAVA_HOME=$(dirname $(dirname $(readlink -f $(which javac))))

|

||||

|

||||

根据您的情况,将上述命令中的一条写入 ~/.bashrc(或 /etc/profile)文件中,它就会永久地设置好 JAVA\_HOME 变量。

|

||||

|

||||

注意,由于 java 或 javac 可以建立起多个层次的符号链接,为此"readlink -f"命令是用来获取它们真正的执行路径的。

|

||||

|

||||

举个例子,假如您安装的是 Oracle JRE 7,那么上述的第一条 export 命令将自动设置 JAVA\_HOME 为:

|

||||

|

||||

/usr/lib/jvm/java-7-oracle/jre

|

||||

|

||||

若您安装的是 Open JDK 第8版,那么第二条 export 命令将设置 JAVA\_HOME 为:

|

||||

|

||||

/usr/lib/jvm/java-8-openjdk-amd64

|

||||

|

||||

|

||||

|

||||

简而言之,这些 export 命令会在您重装/升级您的JDK/JRE,或[更换默认 Java 版本][1]时自动更新 JAVA\_HOME 变量。您不再需要手动调整它。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/set-java_home-environment-variable-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[Ricky-Gong](https://github.com/Ricky-Gong)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://ask.xmodulo.com/author/nanni

|

||||

[1]:http://ask.xmodulo.com/change-default-java-version-linux.html

|

||||

@ -1,11 +1,10 @@

|

||||

第七部分 - 在 Linux 客户端配置基于 Kerberos 身份验证的 NFS 服务器

|

||||

RHCE 系列(七):在 Linux 客户端配置基于 Kerberos 身份验证的 NFS 服务器

|

||||

================================================================================

|

||||

在本系列的前一篇文章,我们回顾了[如何在可能包括多种类型操作系统的网络上配置 Samba 共享][1]。现在,如果你需要为一组类-Unix 客户端配置文件共享,很自然的你会想到网络文件系统,或简称 NFS。

|

||||

|

||||

在本系列的前一篇文章,我们回顾了[如何在可能包括多种类型操作系统的网络上配置 Samba 共享][1]。现在,如果你需要为一组类 Unix 客户端配置文件共享,很自然的你会想到网络文件系统,或简称 NFS。

|

||||

|

||||

|

||||

|

||||

RHCE 系列:第七部分 - 设置使用 Kerberos 进行身份验证的 NFS 服务器

|

||||

*RHCE 系列:第七部分 - 设置使用 Kerberos 进行身份验证的 NFS 服务器*

|

||||

|

||||

在这篇文章中我们会介绍配置基于 Kerberos 身份验证的 NFS 共享的整个流程。假设你已经配置好了一个 NFS 服务器和一个客户端。如果还没有,可以参考 [安装和配置 NFS 服务器][2] - 它列出了需要安装的依赖软件包并解释了在进行下一步之前如何在服务器上进行初始化配置。

|

||||

|

||||

@ -24,28 +23,26 @@ RHCE 系列:第七部分 - 设置使用 Kerberos 进行身份验证的 NFS 服

|

||||

|

||||

#### 创建 NFS 组并配置 NFS 共享目录 ####

|

||||

|

||||

1. 新建一个名为 nfs 的组并给它添加用户 nfsnobody,然后更改 /nfs 目录的权限为 0770,组属主为 nfs。于是,nfsnobody(对应请求用户)在共享目录有写的权限,你就不需要在 /etc/exports 文件中使用 no_root_squash(译者注:设为 root_squash 意味着在访问 NFS 服务器上的文件时,客户机上的 root 用户不会被当作 root 用户来对待)。

|

||||

1、 新建一个名为 nfs 的组并给它添加用户 nfsnobody,然后更改 /nfs 目录的权限为 0770,组属主为 nfs。于是,nfsnobody(对应请求用户)在共享目录有写的权限,你就不需要在 /etc/exports 文件中使用 no_root_squash(LCTT 译注:设为 root_squash 意味着在访问 NFS 服务器上的文件时,客户机上的 root 用户不会被当作 root 用户来对待)。

|

||||

|

||||

# groupadd nfs

|

||||

# usermod -a -G nfs nfsnobody

|

||||

# chmod 0770 /nfs

|

||||

# chgrp nfs /nfs

|

||||

|

||||

2. 像下面那样更改 export 文件(/etc/exports)只允许从 box1 使用 Kerberos 安全验证的访问(sec=krb5)。

|

||||

2、 像下面那样更改 export 文件(/etc/exports)只允许从 box1 使用 Kerberos 安全验证的访问(sec=krb5)。

|

||||

|

||||

**注意**:anongid 的值设置为之前新建的组 nfs 的 GID:

|

||||

|

||||

**exports – 添加 NFS 共享**

|

||||

|

||||

----------

|

||||

|

||||

/nfs box1(rw,sec=krb5,anongid=1004)

|

||||

|

||||

3. 再次 exprot(-r)所有(-a)NFS 共享。为输出添加详情(-v)是个好主意,因为它提供了发生错误时解决问题的有用信息:

|

||||

3、 再次 exprot(-r)所有(-a)NFS 共享。为输出添加详情(-v)是个好主意,因为它提供了发生错误时解决问题的有用信息:

|

||||

|

||||

# exportfs -arv

|

||||

|

||||

4. 重启并启用 NFS 服务器以及相关服务。注意你不需要启动 nfs-lock 和 nfs-idmapd,因为系统启动时其它服务会自动启动它们:

|

||||

4、 重启并启用 NFS 服务器以及相关服务。注意你不需要启动 nfs-lock 和 nfs-idmapd,因为系统启动时其它服务会自动启动它们:

|

||||

|

||||

# systemctl restart rpcbind nfs-server nfs-lock nfs-idmap

|

||||

# systemctl enable rpcbind nfs-server

|

||||

@ -61,14 +58,12 @@ RHCE 系列:第七部分 - 设置使用 Kerberos 进行身份验证的 NFS 服

|

||||

|

||||

正如你看到的,为了简便,NFS 服务器和 KDC 在同一台机器上,当然如果你有更多可用机器你也可以把它们安装在不同的机器上。两台机器都在 `mydomain.com` 域。

|

||||

|

||||

最后同样重要的是,Kerberos 要求客户端和服务器中至少有一个域名解析的基本模式和[网络时间协议][5]服务,因为 Kerberos 身份验证的安全一部分基于时间戳。

|

||||

最后同样重要的是,Kerberos 要求客户端和服务器中至少有一个域名解析的基本方式和[网络时间协议][5]服务,因为 Kerberos 身份验证的安全一部分基于时间戳。

|

||||

|

||||

为了配置域名解析,我们在客户端和服务器中编辑 /etc/hosts 文件:

|

||||

|

||||

**host 文件 – 为域添加 DNS**

|

||||

|

||||

----------

|

||||

|

||||

192.168.0.18 box1.mydomain.com box1

|

||||

192.168.0.20 box2.mydomain.com box2

|

||||

|

||||

@ -82,10 +77,9 @@ RHCE 系列:第七部分 - 设置使用 Kerberos 进行身份验证的 NFS 服

|

||||

|

||||

# chronyc tracking

|

||||

|

||||

|

||||

|

||||

|

||||

用 Chrony 同步服务器时间

|

||||

*用 Chrony 同步服务器时间*

|

||||

|

||||

### 安装和配置 Kerberos ###

|

||||

|

||||

@ -109,7 +103,7 @@ RHCE 系列:第七部分 - 设置使用 Kerberos 进行身份验证的 NFS 服

|

||||

|

||||

|

||||

|

||||

创建 Kerberos 数据库

|

||||

*创建 Kerberos 数据库*

|

||||

|

||||

下一步,使用 kadmin.local 工具为 root 创建管理权限:

|

||||

|

||||

@ -129,7 +123,7 @@ RHCE 系列:第七部分 - 设置使用 Kerberos 进行身份验证的 NFS 服

|

||||

|

||||

|

||||

|

||||

添加 Kerberos 到 NFS 服务器

|

||||

*添加 Kerberos 到 NFS 服务器*

|

||||

|

||||

为 root/admin 获取和缓存票据授权票据(ticket-granting ticket):

|

||||

|

||||

@ -138,7 +132,7 @@ RHCE 系列:第七部分 - 设置使用 Kerberos 进行身份验证的 NFS 服

|

||||

|

||||

|

||||

|

||||

缓存 Kerberos

|

||||

*缓存 Kerberos*

|

||||

|

||||

真正使用 Kerberos 之前的最后一步是保存被授权使用 Kerberos 身份验证的规则到一个密钥表文件(在服务器中):

|

||||

|

||||

@ -154,7 +148,7 @@ RHCE 系列:第七部分 - 设置使用 Kerberos 进行身份验证的 NFS 服

|

||||

|

||||

|

||||

|

||||

挂载 NFS 共享

|

||||

*挂载 NFS 共享*

|

||||

|

||||

现在让我们卸载共享,在客户端中重命名密钥表文件(模拟它不存在)然后试着再次挂载共享目录:

|

||||

|

||||

@ -163,7 +157,7 @@ RHCE 系列:第七部分 - 设置使用 Kerberos 进行身份验证的 NFS 服

|

||||

|

||||

|

||||

|

||||

挂载/卸载 Kerberos NFS 共享

|

||||

*挂载/卸载 Kerberos NFS 共享*

|

||||

|

||||

现在你可以使用基于 Kerberos 身份验证的 NFS 共享了。

|

||||

|

||||

@ -177,12 +171,12 @@ via: http://www.tecmint.com/setting-up-nfs-server-with-kerberos-based-authentica

|

||||

|

||||

作者:[Gabriel Cánepa][a]

|

||||

译者:[ictlyh](http://www.mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/gacanepa/

|

||||

[1]:http://www.tecmint.com/setup-samba-file-sharing-for-linux-windows-clients/

|

||||

[1]:https://linux.cn/article-6550-1.html

|

||||

[2]:http://www.tecmint.com/configure-nfs-server/

|

||||

[3]:http://www.tecmint.com/selinux-essentials-and-control-filesystem-access/

|

||||

[4]:http://www.tecmint.com/firewalld-rules-for-centos-7/

|

||||

@ -1,45 +0,0 @@

|

||||

sevenot translating

|

||||

Curious about Linux? Try Linux Desktop on the Cloud

|

||||

================================================================================

|

||||

Linux maintains a very small market share as a desktop operating system. Current surveys estimate its share to be a mere 2%; contrast that with the various strains (no pun intended) of Windows which total nearly 90% of the desktop market. For Linux to challenge Microsoft's monopoly on the desktop, there needs to be a simple way of learning about this different operating system. And it would be naive to believe a typical Windows user is going to buy a second machine, tinker with partitioning a hard disk to set up a multi-boot system, or just jump ship to Linux without an easy way back.

|

||||

|

||||

|

||||

|

||||

We have examined a number of risk-free ways users can experiment with Linux without dabbling with partition management. Various options include Live CD/DVDs, USB keys and desktop virtualization software. For the latter, I can strongly recommend VMWare (VMWare Player) or Oracle VirtualBox, two relatively easy and free ways of installing and running multiple operating systems on a desktop or laptop computer. Each virtual machine has its own share of CPU, memory, network interfaces etc which is isolated from other virtual machines. But virtual machines still require some effort to get Linux up and running, and a reasonably powerful machine. Too much effort for a mere inquisitive mind.

|

||||

|

||||

It can be difficult to break down preconceptions. Many Windows users will have experimented with free software that is available on Linux. But there are many facets to learn on Linux. And it takes time to become accustomed to the way things work in Linux.

|

||||

|

||||

Surely there should be an effortless way for a beginner to experiment with Linux for the first time? Indeed there is; step forward the online cloud lab.

|

||||

|

||||

### LabxNow ###

|

||||

|

||||

|

||||

|

||||

LabxNow provides a free service for general users offering Linux remote desktop over the browser. The developers promote the service as having a personal remote lab (to play around, develop, whatever!) that will be accessible from anywhere, with the internet of course.

|

||||

|

||||

The service currently offers a free virtual private server with 2 cores, 4GB RAM and 10GB SSD space. The service runs on a 4 AMD 6272 CPU with 128GB RAM.

|

||||

|

||||

#### Features include: ####

|

||||

|

||||

- Machine images: Ubuntu 14.04 with Xfce 4.10, RHEL 6.5, CentOS with Gnome, and Oracle

|

||||

- Hardware: CPU - 1 or 2 cores; RAM: 512MB, 1GB, 2GB or 4GB

|

||||

- Fast network for data transfers

|

||||

- Works with all popular browsers

|

||||

- Install anything, run anything - an excellent way to experiment and learn all about Linux without any risk

|

||||

- Easily add, delete, manage and customize VMs

|

||||

- Share VMs, Remote desktop support

|

||||

|

||||

All you need is a reasonable Internet connected device. Forget about high cost VPS, domain space or hardware support. LabxNow offers a great way of experimenting with Ubuntu, RHEL and CentOS. It gives Windows users an excellent environment to dip their toes into the wonderful world of Linux. Further, it allows users to do (programming) work from anywhere in the word without having the stress of installing Linux on each machine. Point your web browser at [www.labxnow.org/labxweb/][1].

|

||||

|

||||

There are other services (mostly paid services) that allow users to experiment with Linux. These include Cloudsigma which offers a free 7 day trial, and Icebergs.io (full root access via HTML5). But for now, LabxNow gets my recommendation.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxlinks.com/article/20151003095334682/LinuxCloud.html

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://www.labxnow.org/labxweb/

|

||||

@ -1,3 +1,4 @@

|

||||

sevenot translating

|

||||

A Linux User Using ‘Windows 10′ After More than 8 Years – See Comparison

|

||||

================================================================================

|

||||

Windows 10 is the newest member of windows NT family of which general availability was made on July 29, 2015. It is the successor of Windows 8.1. Windows 10 is supported on Intel Architecture 32 bit, AMD64 and ARMv7 processors.

|

||||

@ -341,4 +342,4 @@ via: http://www.tecmint.com/a-linux-user-using-windows-10-after-more-than-8-year

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/avishek/

|

||||

[1]:https://www.microsoft.com/en-us/software-download/windows10ISO

|

||||

[1]:https://www.microsoft.com/en-us/software-download/windows10ISO

|

||||

|

||||

77

sources/talk/20151117 How bad a boss is Linus Torvalds.md

Normal file

77

sources/talk/20151117 How bad a boss is Linus Torvalds.md

Normal file

@ -0,0 +1,77 @@

|

||||

How bad a boss is Linus Torvalds?

|

||||

================================================================================

|

||||

|

||||

|

||||

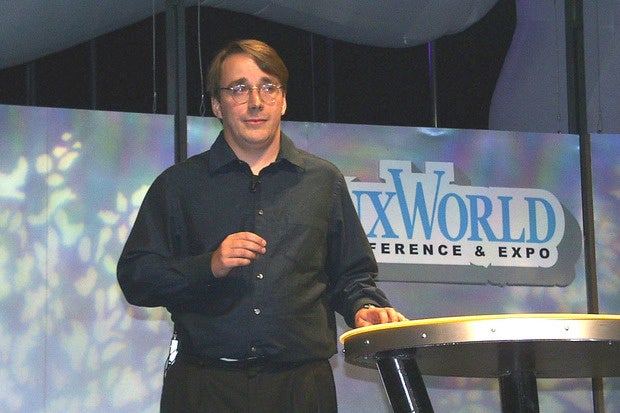

*Linus Torvalds addressed a packed auditorium of Linux enthusiasts during his speech at the LinuxWorld show in San Jose, California, on August 10, 1999. Credit: James Niccolai*

|

||||

|

||||

**It depends on context. In the world of software development, he’s what passes for normal. The question is whether that situation should be allowed to continue.**

|

||||

|

||||

I've known Linus Torvalds, Linux's inventor, for over 20 years. We're not chums, but we like each other.

|

||||

|

||||

Lately, Torvalds has been getting a lot of flack for his management style. Linus doesn't suffer fools gladly. He has one way of judging people in his business of developing the Linux kernel: How good is your code?

|

||||

|

||||

Nothing else matters. As Torvalds said earlier this year at the Linux.conf.au Conference, "I'm not a nice person, and I don't care about you. [I care about the technology and the kernel][1] -- that's what's important to me."

|

||||

|

||||

Now, I can deal with that kind of person. If you can't, you should avoid the Linux kernel community, where you'll find a lot of this kind of meritocratic thinking. Which is not to say that I think everything in Linuxland is hunky-dory and should be impervious to calls for change. A meritocracy I can live with; a bastion of male dominance where women are subjected to scorn and disrespect is a problem.

|

||||

|

||||

That's why I see the recent brouhaha about Torvalds' management style -- or more accurately, his total indifference to the personal side of management -- as nothing more than standard operating procedure in the world of software development. And at the same time, I see another instance that has come to light as evidence of a need for things to really change.

|

||||

|

||||

The first situation arose with the [release of Linux 4.3][2], when Torvalds used the Linux Kernel Mailing List to tear into a developer who had inserted some networking code that Torvalds thought was -- well, let's say "crappy." "[[A]nd it generates [crappy] code.][3] It looks bad, and there's no reason for it." He goes on in this vein for quite a while. Besides the word "crap" and its earthier synonym, he uses the word "idiotic" pretty often.

|

||||

|

||||

Here's the thing, though. He's right. I read the code. It's badly written and it does indeed seem to have been designed to use the new "overflow_usub()" function just for the sake of using it.

|

||||

|

||||

Now, some people see this diatribe as evidence that Torvalds is a bad-tempered bully. I see a perfectionist who, within his field, doesn't put up with crap.

|

||||

|

||||

Many people have told me that this is not how professional programmers should act. People, have you ever worked with top developers? That's exactly how they act, at Apple, Microsoft, Oracle and everywhere else I've known them.

|

||||

|

||||

I've heard Steve Jobs rip a developer to pieces. I've cringed while a senior Oracle developer lead tore into a room of new programmers like a piranha through goldfish.

|

||||

|

||||

In Accidental Empires, his classic book on the rise of PCs, Robert X. Cringely described Microsoft's software management style when Bill Gates was in charge as a system where "Each level, from Gates on down, screams at the next, goading and humiliating them." Ah, yes, that's the Microsoft I knew and hated.

|

||||

|

||||

The difference between the leaders at big proprietary software companies and Torvalds is that he says everything in the open for the whole world to see. The others do it in private conference rooms. I've heard people claim that Torvalds would be fired in their company. Nope. He'd be right where he is now: on top of his programming world.

|

||||

|

||||

Oh, and there's another difference. If you get, say, Larry Ellison mad at you, you can kiss your job goodbye. When you get Torvalds angry at your work, you'll get yelled at in an email. That's it.

|

||||

|

||||

You see, Torvalds isn't anyone's boss. He's the guy in charge of a project with about 10,000 contributors, but he has zero hiring and firing authority. He can hurt your feelings, but that's about it.

|

||||

|

||||

That said, there is a serious problem within both open-source and proprietary software development circles. No matter how good a programmer you are, if you're a woman, the cards are stacked against you.

|

||||

|

||||

No case shows this better than that of Sarah Sharp, an Intel developer and formerly a top Linux programmer. [In a post on her blog in October][4], she explained why she had stopped contributing to the Linux kernel more than a year earlier: "I finally realized that I could no longer contribute to a community where I was technically respected, but I could not ask for personal respect.... I did not want to work professionally with people who were allowed to get away with subtle sexist or homophobic jokes."

|

||||

|

||||

Who can blame her? I can't. Torvalds, like almost every software manager I've ever known, I'm sorry to say, has permitted a hostile work environment.

|

||||

|

||||

He would probably say that it's not his job to ensure that Linux contributors behave with professionalism and mutual respect. He's concerned with the code and nothing but the code.

|

||||

|

||||

As Sharp wrote:

|

||||

|

||||

> I have the utmost respect for the technical efforts of the Linux kernel community. They have scaled and grown a project that is focused on maintaining some of the highest coding standards out there. The focus on technical excellence, in combination with overloaded maintainers, and people with different cultural and social norms, means that Linux kernel maintainers are often blunt, rude, or brutal to get their job done. Top Linux kernel developers often yell at each other in order to correct each other's behavior.

|

||||

>

|

||||

> That's not a communication style that works for me. …

|

||||

>

|

||||

> Many senior Linux kernel developers stand by the right of maintainers to be technically and personally brutal. Even if they are very nice people in person, they do not want to see the Linux kernel communication style change.

|

||||

|

||||

She's right.

|

||||

|

||||

Where I differ from other observers is that I don't think that this problem is in any way unique to Linux or open-source communities. With five years of work in the technology business and 25 years as a technology journalist, I've seen this kind of immature boy behavior everywhere.

|

||||

|

||||

It's not Torvalds' fault. He's a technical leader with a vision, not a manager. The real problem is that there seems to be no one in the software development universe who can set a supportive tone for teams and communities.

|

||||

|

||||

Looking ahead, I hope that companies and organizations, such as the Linux Foundation, can find a way to empower community managers or other managers to encourage and enforce civil behavior.

|

||||

|

||||

We won't, unfortunately, find that kind of managerial finesse in our pure technical or business leaders. It's not in their DNA.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.computerworld.com/article/3004387/it-management/how-bad-a-boss-is-linus-torvalds.html

|

||||

|

||||

作者:[Steven J. Vaughan-Nichols][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.computerworld.com/author/Steven-J.-Vaughan_Nichols/

|

||||

[1]:http://www.computerworld.com/article/2874475/linus-torvalds-diversity-gaffe-brings-out-the-best-and-worst-of-the-open-source-world.html

|

||||

[2]:http://www.zdnet.com/article/linux-4-3-released-after-linus-torvalds-scraps-brain-damage-code/

|

||||

[3]:http://lkml.iu.edu/hypermail/linux/kernel/1510.3/02866.html

|

||||

[4]:http://sarah.thesharps.us/2015/10/05/closing-a-door/

|

||||

@ -1,801 +0,0 @@

|

||||

wyangsun translating

|

||||

Linux workstation security checklist

|

||||

================================================================================

|

||||

This is a set of recommendations used by the Linux Foundation for their systems

|

||||

administrators. All of LF employees are remote workers and we use this set of

|

||||

guidelines to ensure that a sysadmin's system passes core security requirements

|

||||

in order to reduce the risk of it becoming an attack vector against the rest

|

||||

of our infrastructure.

|

||||

|

||||

Even if your systems administrators are not remote workers, chances are that

|

||||

they perform a lot of their work either from a portable laptop in a work

|

||||

environment, or set up their home systems to access the work infrastructure

|

||||

for after-hours/emergency support. In either case, you can adapt this set of

|

||||

recommendations to suit your environment.

|

||||

|

||||

This, by no means, is an exhaustive "workstation hardening" document, but

|

||||

rather an attempt at a set of baseline recommendations to avoid most glaring

|

||||

security errors without introducing too much inconvenience. You may read this

|

||||

document and think it is way too paranoid, while someone else may think this

|

||||

barely scratches the surface. Security is just like driving on the highway --

|

||||

anyone going slower than you is an idiot, while anyone driving faster than you

|

||||

is a crazy person. These guidelines are merely a basic set of core safety

|

||||

rules that is neither exhaustive, nor a replacement for experience, vigilance,

|

||||

and common sense.

|

||||

|

||||

Each section is split into two areas:

|

||||

|

||||

- The checklist that can be adapted to your project's needs

|

||||

- Free-form list of considerations that explain what dictated these decisions

|

||||

|

||||

## Severity levels

|

||||

|

||||

The items in each checklist include the severity level, which we hope will help

|

||||

guide your decision:

|

||||

|

||||

- _(CRITICAL)_ items should definitely be high on the consideration list.

|

||||

If not implemented, they will introduce high risks to your workstation

|

||||

security.

|

||||

- _(MODERATE)_ items will improve your security posture, but are less

|

||||

important, especially if they interfere too much with your workflow.

|

||||

- _(LOW)_ items may improve the overall security, but may not be worth the

|

||||

convenience trade-offs.

|

||||

- _(PARANOID)_ is reserved for items we feel will dramatically improve your

|

||||

workstation security, but will probably require a lot of adjustment to the

|

||||

way you interact with your operating system.

|

||||

|

||||

Remember, these are only guidelines. If you feel these severity levels do not

|

||||

reflect your project's commitment to security, you should adjust them as you

|

||||

see fit.

|

||||

|

||||

## Choosing the right hardware

|

||||

|

||||

We do not mandate that our admins use a specific vendor or a specific model, so

|

||||

this section addresses core considerations when choosing a work system.

|

||||

|

||||

### Checklist

|

||||

|

||||

- [ ] System supports SecureBoot _(CRITICAL)_

|

||||

- [ ] System has no firewire, thunderbolt or ExpressCard ports _(MODERATE)_

|

||||

- [ ] System has a TPM chip _(LOW)_

|

||||

|

||||

### Considerations

|

||||

|

||||

#### SecureBoot

|

||||

|

||||

Despite its controversial nature, SecureBoot offers prevention against many

|

||||

attacks targeting workstations (Rootkits, "Evil Maid," etc), without

|

||||

introducing too much extra hassle. It will not stop a truly dedicated attacker,

|

||||

plus there is a pretty high degree of certainty that state security agencies

|

||||

have ways to defeat it (probably by design), but having SecureBoot is better

|

||||

than having nothing at all.

|

||||

|

||||

Alternatively, you may set up [Anti Evil Maid][1] which offers a more

|

||||

wholesome protection against the type of attacks that SecureBoot is supposed

|

||||

to prevent, but it will require more effort to set up and maintain.

|

||||

|

||||

#### Firewire, thunderbolt, and ExpressCard ports

|

||||

|

||||

Firewire is a standard that, by design, allows any connecting device full

|

||||

direct memory access to your system ([see Wikipedia][2]). Thunderbolt and

|

||||

ExpressCard are guilty of the same, though some later implementations of

|

||||

Thunderbolt attempt to limit the scope of memory access. It is best if the

|

||||

system you are getting has none of these ports, but it is not critical, as

|

||||

they usually can be turned off via UEFI or disabled in the kernel itself.

|

||||

|

||||

#### TPM Chip

|

||||

|

||||

Trusted Platform Module (TPM) is a crypto chip bundled with the motherboard

|

||||

separately from the core processor, which can be used for additional platform

|

||||

security (such as to store full-disk encryption keys), but is not normally used

|

||||

for day-to-day workstation operation. At best, this is a nice-to-have, unless

|

||||

you have a specific need to use TPM for your workstation security.

|

||||

|

||||

## Pre-boot environment

|

||||

|

||||

This is a set of recommendations for your workstation before you even start

|

||||

with OS installation.

|

||||

|

||||

### Checklist

|

||||

|

||||

- [ ] UEFI boot mode is used (not legacy BIOS) _(CRITICAL)_

|

||||

- [ ] Password is required to enter UEFI configuration _(CRITICAL)_

|

||||

- [ ] SecureBoot is enabled _(CRITICAL)_

|

||||

- [ ] UEFI-level password is required to boot the system _(LOW)_

|

||||

|

||||

### Considerations

|

||||

|

||||

#### UEFI and SecureBoot

|

||||

|

||||

UEFI, with all its warts, offers a lot of goodies that legacy BIOS doesn't,

|

||||

such as SecureBoot. Most modern systems come with UEFI mode on by default.

|

||||

|

||||

Make sure a strong password is required to enter UEFI configuration mode. Pay

|

||||

attention, as many manufacturers quietly limit the length of the password you

|

||||

are allowed to use, so you may need to choose high-entropy short passwords vs.

|

||||

long passphrases (see below for more on passphrases).

|

||||

|

||||

Depending on the Linux distribution you decide to use, you may or may not have

|

||||

to jump through additional hoops in order to import your distribution's

|

||||

SecureBoot key that would allow you to boot the distro. Many distributions have

|

||||

partnered with Microsoft to sign their released kernels with a key that is

|

||||

already recognized by most system manufacturers, therefore saving you the

|

||||

trouble of having to deal with key importing.

|

||||

|

||||

As an extra measure, before someone is allowed to even get to the boot

|

||||

partition and try some badness there, let's make them enter a password. This

|

||||

password should be different from your UEFI management password, in order to

|

||||

prevent shoulder-surfing. If you shut down and start a lot, you may choose to

|

||||

not bother with this, as you will already have to enter a LUKS passphrase and

|

||||

this will save you a few extra keystrokes.

|

||||

|

||||

## Distro choice considerations

|

||||

|

||||

Chances are you'll stick with a fairly widely-used distribution such as Fedora,

|

||||

Ubuntu, Arch, Debian, or one of their close spin-offs. In any case, this is

|

||||

what you should consider when picking a distribution to use.

|

||||

|

||||

### Checklist

|

||||

|

||||

- [ ] Has a robust MAC/RBAC implementation (SELinux/AppArmor/Grsecurity) _(CRITICAL)_

|

||||

- [ ] Publishes security bulletins _(CRITICAL)_

|

||||

- [ ] Provides timely security patches _(CRITICAL)_

|

||||

- [ ] Provides cryptographic verification of packages _(CRITICAL)_

|

||||

- [ ] Fully supports UEFI and SecureBoot _(CRITICAL)_

|

||||

- [ ] Has robust native full disk encryption support _(CRITICAL)_

|

||||

|

||||

### Considerations

|

||||

|

||||

#### SELinux, AppArmor, and GrSecurity/PaX

|

||||

|

||||

Mandatory Access Controls (MAC) or Role-Based Access Controls (RBAC) are an

|

||||

extension of the basic user/group security mechanism used in legacy POSIX

|

||||

systems. Most distributions these days either already come bundled with a

|

||||

MAC/RBAC implementation (Fedora, Ubuntu), or provide a mechanism to add it via

|

||||

an optional post-installation step (Gentoo, Arch, Debian). Obviously, it is

|

||||

highly advised that you pick a distribution that comes pre-configured with a

|

||||

MAC/RBAC system, but if you have strong feelings about a distribution that

|

||||

doesn't have one enabled by default, do plan to configure it

|

||||

post-installation.

|

||||

|

||||

Distributions that do not provide any MAC/RBAC mechanisms should be strongly

|

||||

avoided, as traditional POSIX user- and group-based security should be

|

||||

considered insufficient in this day and age. If you would like to start out

|

||||

with a MAC/RBAC workstation, AppArmor and PaX are generally considered easier

|

||||

to learn than SELinux. Furthermore, on a workstation, where there are few or

|

||||

no externally listening daemons, and where user-run applications pose the

|

||||

highest risk, GrSecurity/PaX will _probably_ offer more security benefits than

|

||||

SELinux.

|

||||

|

||||

#### Distro security bulletins

|

||||

|

||||

Most of the widely used distributions have a mechanism to deliver security

|

||||

bulletins to their users, but if you are fond of something esoteric, check

|

||||

whether the developers have a documented mechanism of alerting the users about

|

||||

security vulnerabilities and patches. Absence of such mechanism is a major

|

||||

warning sign that the distribution is not mature enough to be considered for a

|

||||

primary admin workstation.

|

||||

|

||||

#### Timely and trusted security updates

|

||||

|

||||

Most of the widely used distributions deliver regular security updates, but is

|

||||

worth checking to ensure that critical package updates are provided in a

|

||||

timely fashion. Avoid using spin-offs and "community rebuilds" for this

|

||||

reason, as they routinely delay security updates due to having to wait for the

|

||||

upstream distribution to release it first.

|

||||

|

||||

You'll be hard-pressed to find a distribution that does not use cryptographic

|

||||

signatures on packages, updates metadata, or both. That being said, fairly

|

||||

widely used distributions have been known to go for years before introducing

|

||||

this basic security measure (Arch, I'm looking at you), so this is a thing

|

||||

worth checking.

|

||||

|

||||

#### Distros supporting UEFI and SecureBoot

|

||||

|

||||

Check that the distribution supports UEFI and SecureBoot. Find out whether it

|

||||

requires importing an extra key or whether it signs its boot kernels with a key

|

||||

already trusted by systems manufacturers (e.g. via an agreement with

|

||||

Microsoft). Some distributions do not support UEFI/SecureBoot but offer

|

||||

alternatives to ensure tamper-proof or tamper-evident boot environments

|

||||

([Qubes-OS][3] uses Anti Evil Maid, mentioned earlier). If a distribution

|

||||

doesn't support SecureBoot and has no mechanisms to prevent boot-level attacks,

|

||||

look elsewhere.

|

||||

|

||||

#### Full disk encryption

|

||||

|

||||

Full disk encryption is a requirement for securing data at rest, and is

|

||||

supported by most distributions. As an alternative, systems with

|

||||

self-encrypting hard drives may be used (normally implemented via the on-board

|

||||

TPM chip) and offer comparable levels of security plus faster operation, but at

|

||||

a considerably higher cost.

|

||||

|

||||

## Distro installation guidelines

|

||||

|

||||

All distributions are different, but here are general guidelines:

|

||||

|

||||

### Checklist

|

||||

|

||||

- [ ] Use full disk encryption (LUKS) with a robust passphrase _(CRITICAL)_

|

||||

- [ ] Make sure swap is also encrypted _(CRITICAL)_

|

||||

- [ ] Require a password to edit bootloader (can be same as LUKS) _(CRITICAL)_

|

||||

- [ ] Set up a robust root password (can be same as LUKS) _(CRITICAL)_

|

||||

- [ ] Use an unprivileged account, part of administrators group _(CRITICAL)_

|

||||

- [ ] Set up a robust user-account password, different from root _(CRITICAL)_

|

||||

|

||||

### Considerations

|

||||

|

||||

#### Full disk encryption

|

||||

|

||||

Unless you are using self-encrypting hard drives, it is important to configure

|

||||

your installer to fully encrypt all the disks that will be used for storing

|

||||

your data and your system files. It is not sufficient to simply encrypt the

|

||||

user directory via auto-mounting cryptfs loop files (I'm looking at you, older

|

||||

versions of Ubuntu), as this offers no protection for system binaries or swap,

|

||||

which is likely to contain a slew of sensitive data. The recommended

|

||||

encryption strategy is to encrypt the LVM device, so only one passphrase is

|

||||

required during the boot process.

|

||||

|

||||

The `/boot` partition will always remain unencrypted, as the bootloader needs

|

||||

to be able to actually boot the kernel before invoking LUKS/dm-crypt. The

|

||||

kernel image itself should be protected against tampering with a cryptographic

|

||||

signature checked by SecureBoot.

|

||||

|

||||

In other words, `/boot` should always be the only unencrypted partition on your

|

||||

system.

|

||||

|

||||

#### Choosing good passphrases

|

||||

|

||||

Modern Linux systems have no limitation of password/passphrase length, so the

|

||||

only real limitation is your level of paranoia and your stubbornness. If you

|

||||

boot your system a lot, you will probably have to type at least two different

|

||||

passwords: one to unlock LUKS, and another one to log in, so having long

|

||||

passphrases will probably get old really fast. Pick passphrases that are 2-3

|

||||

words long, easy to type, and preferably from rich/mixed vocabularies.

|

||||

|

||||

Examples of good passphrases (yes, you can use spaces):

|

||||

- nature abhors roombas

|

||||

- 12 in-flight Jebediahs

|

||||

- perdon, tengo flatulence

|

||||

|

||||

You can also stick with non-vocabulary passwords that are at least 10-12

|

||||

characters long, if you prefer that to typing passphrases.

|

||||

|

||||

Unless you have concerns about physical security, it is fine to write down your

|

||||

passphrases and keep them in a safe place away from your work desk.

|

||||

|

||||

#### Root, user passwords and the admin group

|

||||

|

||||

We recommend that you use the same passphrase for your root password as you

|

||||

use for your LUKS encryption (unless you share your laptop with other trusted

|

||||

people who should be able to unlock the drives, but shouldn't be able to

|

||||

become root). If you are the sole user of the laptop, then having your root

|

||||

password be different from your LUKS password has no meaningful security

|

||||

advantages. Generally, you can use the same passphrase for your UEFI

|

||||

administration, disk encryption, and root account -- knowing any of these will

|

||||

give an attacker full control of your system anyway, so there is little

|

||||

security benefit to have them be different on a single-user workstation.

|

||||

|

||||

You should have a different, but equally strong password for your regular user

|

||||

account that you will be using for day-to-day tasks. This user should be member

|

||||

of the admin group (e.g. `wheel` or similar, depending on the distribution),

|

||||

allowing you to perform `sudo` to elevate privileges.

|

||||

|

||||

In other words, if you are the sole user on your workstation, you should have 2

|

||||

distinct, robust, equally strong passphrases you will need to remember:

|

||||

|

||||

**Admin-level**, used in the following locations:

|

||||

|

||||

- UEFI administration

|

||||

- Bootloader (GRUB)

|

||||

- Disk encryption (LUKS)

|

||||

- Workstation admin (root user)

|

||||

|

||||

**User-level**, used for the following:

|

||||

|

||||

- User account and sudo

|

||||

- Master password for the password manager

|

||||

|

||||

All of them, obviously, can be different if there is a compelling reason.

|

||||

|

||||

## Post-installation hardening

|

||||

|

||||

Post-installation security hardening will depend greatly on your distribution

|

||||

of choice, so it is futile to provide detailed instructions in a general

|

||||

document such as this one. However, here are some steps you should take:

|

||||

|

||||

### Checklist

|

||||

|

||||

- [ ] Globally disable firewire and thunderbolt modules _(CRITICAL)_

|

||||

- [ ] Check your firewalls to ensure all incoming ports are filtered _(CRITICAL)_

|

||||

- [ ] Make sure root mail is forwarded to an account you check _(CRITICAL)_

|

||||

- [ ] Check to ensure sshd service is disabled by default _(MODERATE)_

|

||||

- [ ] Set up an automatic OS update schedule, or update reminders _(MODERATE)_

|

||||

- [ ] Configure the screensaver to auto-lock after a period of inactivity _(MODERATE)_

|

||||

- [ ] Set up logwatch _(MODERATE)_

|

||||

- [ ] Install and use rkhunter _(LOW)_

|

||||

- [ ] Install an Intrusion Detection System _(PARANOID)_

|

||||

|

||||

### Considerations

|

||||

|

||||

#### Blacklisting modules

|

||||

|

||||

To blacklist a firewire and thunderbolt modules, add the following lines to a

|

||||

file in `/etc/modprobe.d/blacklist-dma.conf`:

|

||||

|

||||

blacklist firewire-core

|

||||

blacklist thunderbolt

|

||||

|

||||

The modules will be blacklisted upon reboot. It doesn't hurt doing this even if

|

||||

you don't have these ports (but it doesn't do anything either).

|

||||

|

||||

#### Root mail

|

||||

|

||||

By default, root mail is just saved on the system and tends to never be read.

|

||||

Make sure you set your `/etc/aliases` to forward root mail to a mailbox that

|

||||

you actually read, otherwise you may miss important system notifications and

|

||||

reports:

|

||||

|

||||

# Person who should get root's mail

|

||||

root: bob@example.com

|

||||

|

||||

Run `newaliases` after this edit and test it out to make sure that it actually

|

||||

gets delivered, as some email providers will reject email coming in from

|

||||

nonexistent or non-routable domain names. If that is the case, you will need to

|

||||

play with your mail forwarding configuration until this actually works.

|

||||

|

||||

#### Firewalls, sshd, and listening daemons

|

||||

|

||||

The default firewall settings will depend on your distribution, but many of

|

||||

them will allow incoming `sshd` ports. Unless you have a compelling legitimate

|

||||

reason to allow incoming ssh, you should filter that out and disable the `sshd`

|

||||

daemon.

|

||||

|

||||

systemctl disable sshd.service

|

||||

systemctl stop sshd.service

|

||||

|

||||

You can always start it temporarily if you need to use it.

|

||||

|

||||

In general, your system shouldn't have any listening ports apart from

|

||||

responding to ping. This will help safeguard you against network-level 0-day

|

||||

exploits.

|

||||

|

||||

#### Automatic updates or notifications

|

||||

|

||||

It is recommended to turn on automatic updates, unless you have a very good

|

||||

reason not to do so, such as fear that an automatic update would render your

|

||||

system unusable (it's happened in the past, so this fear is not unfounded). At

|

||||

the very least, you should enable automatic notifications of available updates.

|

||||

Most distributions already have this service automatically running for you, so

|

||||

chances are you don't have to do anything. Consult your distribution

|

||||

documentation to find out more.

|

||||

|

||||

You should apply all outstanding errata as soon as possible, even if something

|

||||

isn't specifically labeled as "security update" or has an associated CVE code.

|

||||

All bugs have the potential of being security bugs and erring on the side of

|

||||

newer, unknown bugs is _generally_ a safer strategy than sticking with old,

|

||||

known ones.

|

||||

|

||||

#### Watching logs

|

||||

|

||||

You should have a keen interest in what happens on your system. For this

|

||||

reason, you should install `logwatch` and configure it to send nightly activity

|

||||

reports of everything that happens on your system. This won't prevent a

|

||||

dedicated attacker, but is a good safety-net feature to have in place.

|

||||

|

||||

Note, that many systemd distros will no longer automatically install a syslog

|

||||

server that `logwatch` needs (due to systemd relying on its own journal), so

|

||||

you will need to install and enable `rsyslog` to make sure your `/var/log` is

|

||||

not empty before logwatch will be of any use.

|

||||

|

||||

#### Rkhunter and IDS

|

||||

|

||||

Installing `rkhunter` and an intrusion detection system (IDS) like `aide` or

|

||||

`tripwire` will not be that useful unless you actually understand how they work

|

||||

and take the necessary steps to set them up properly (such as, keeping the

|

||||

databases on external media, running checks from a trusted environment,

|

||||

remembering to refresh the hash databases after performing system updates and

|

||||

configuration changes, etc). If you are not willing to take these steps and

|

||||

adjust how you do things on your own workstation, these tools will introduce

|

||||

hassle without any tangible security benefit.

|

||||

|

||||

We do recommend that you install `rkhunter` and run it nightly. It's fairly

|

||||

easy to learn and use, and though it will not deter a sophisticated attacker,

|

||||

it may help you catch your own mistakes.

|

||||

|

||||

## Personal workstation backups

|

||||

|

||||

Workstation backups tend to be overlooked or done in a haphazard, often unsafe

|

||||

manner.

|

||||

|

||||

### Checklist

|

||||

|

||||

- [ ] Set up encrypted workstation backups to external storage _(CRITICAL)_

|

||||

- [ ] Use zero-knowledge backup tools for cloud backups _(MODERATE)_

|

||||

|

||||

### Considerations

|

||||

|

||||

#### Full encrypted backups to external storage

|

||||

|

||||

It is handy to have an external hard drive where one can dump full backups

|

||||

without having to worry about such things like bandwidth and upstream speeds

|

||||

(in this day and age most providers still offer dramatically asymmetric

|

||||

upload/download speeds). Needless to say, this hard drive needs to be in itself

|

||||

encrypted (again, via LUKS), or you should use a backup tool that creates

|

||||

encrypted backups, such as `duplicity` or its GUI companion, `deja-dup`. I

|

||||

recommend using the latter with a good randomly generated passphrase, stored in

|

||||

your password manager. If you travel with your laptop, leave this drive at home

|

||||

to have something to come back to in case your laptop is lost or stolen.

|

||||

|

||||