mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

commit

a330de140d

@ -1,4 +1,4 @@

|

||||

伴随Apple Watch的揭幕,下一个智能手表会是Ubuntu吗?

|

||||

Apple Watch之后,下一个智能手表会是Ubuntu吗?

|

||||

===

|

||||

|

||||

**苹果借助‘Apple Watch’的发布,证实了其进军穿戴式电子设备市场的长期传言**

|

||||

@ -0,0 +1,82 @@

|

||||

ChromeOS 对战 Linux : 孰优孰劣,仁者见仁,智者见智

|

||||

================================================================================

|

||||

> 在 ChromeOS 和 Linux 的斗争过程中,两个桌面环境都有强有弱,这两者到底怎样呢?

|

||||

|

||||

只要稍加留意,任何人都会相信,Google 在桌面领域绝不是“玩玩而已”。在近几年,我们见到的 [ChromeOS][1] 制造的 [Google Chromebook][2] 相当的轰动。和同期人气火爆的 Amazon 一样,ChromeOS 似乎势不可挡。

|

||||

|

||||

在本文中,我们要了解的是 ChromeOS 的概念市场,ChromeOS 怎么影响着Linux 的份额,整个 ChromeOS 对于Linux 社区来说,是好事还是坏事。另外,我将会谈到一些重大问题,以及为什么没人针对这些问题做点什么。

|

||||

|

||||

### ChromeOS 并非真正的Linux ###

|

||||

|

||||

每当有朋友问我说 ChromeOS 是否是 Linux 的一个发行版时,我都会这样回答:ChromeOS 之于 Linux 就如同 OS X 之于 BSD。换句话说,我认为,ChromeOS 是 Linux 的一个派生操作系统,运行于 Linux 内核的引擎之下。而这个操作系统的大部分由 Google 的专利代码及软件组成。

|

||||

|

||||

尽管 ChromeOS 是利用了 Linux 的内核引擎,但是和现在流行的 Linux 分支版本相比,它仍然有很大的不同。

|

||||

|

||||

其实,ChromeOS 的差异化越来越明显的原因,是在于它给终端用户提供的包括 Web 应用在内的 app。因为ChromeOS 的每一个操作都是开始于浏览器窗口,这对于 Linux 用户来说,可能会有很多不一样的感受,但是,对于没有 Linux 经验的用户来说,这与他们使用的旧电脑并没有什么不同。

|

||||

|

||||

比方说,每一个以“依赖 Google 产品”为生活方式的人来说,在 ChromeOS 上的感觉将会非常良好,就好像是回家一样,特别是这个人已经接受了 Chrome 浏览器、Google Drive 云存储和Gmail 的话。久而久之,他们使用ChromeOS 也就是很自然的事情了,因为他们很容易接受使用早已习惯的 Chrome 浏览器。

|

||||

|

||||

然而,对于 Linux 爱好者来说,这样的约束就立即带来了不适应。因为软件的选择是被限制、被禁锢的,再加上要想玩游戏和 VoIP 是完全不可能的。对不起,因为 [GooglePlus Hangouts][3] 是代替不了VoIP 软件的。甚至这种情况将持续很长一段时间。

|

||||

|

||||

### ChromeOS 还是 Linux 桌面 ###

|

||||

|

||||

有人断言,ChromeOS 要是想在桌面系统的浪潮中对 Linux 产生影响,只有在 Linux 停下来浮出水面喘气的时候,或者是满足某个非技术用户的时候。

|

||||

|

||||

是的,桌面 Linux 对于大多数休闲型的用户来说绝对是一个好东西。然而,它必须有专人帮助你安装操作系统,并且提供“维修”服务,就如同我们在 Windows 和 OS X 阵营看到的一样。但是,令人失望的是,在美国, Linux 恰恰在这个方面很缺乏。所以,我们看到,ChromeOS 正慢慢的走入我们的视线。

|

||||

|

||||

我发现 Linux 桌面系统最适合那些能够提供在线技术支持的环境中。比如说:可以在家里操作和处理更新的高级用户、政府和学校的 IT 部门等等。这些环境中,Linux 桌面系统可以被配置给任何技能水平和背景的人使用。

|

||||

|

||||

相比之下,ChromeOS 是建立在完全免维护的初衷之下的,因此,不需要第三者的帮忙,你只需要允许更新,然后让他静默完成即可。这在一定程度上可能是由于 ChromeOS 是为某些特定的硬件结构设计的,这与苹果开发自己的PC 电脑也有异曲同工之妙。因为 Google 的 ChromeOS 伴随着其硬件一起提供,大部分情况下都无需担心错误的驱动、适配什么的问题。对于某些人来说,这太好了。

|

||||

|

||||

然而有些人则认为这是一个很严重的问题,不过滑稽的是,对 ChomeOS 来说,这些人压根就不在它的目标市场里。简言之,这只是一些狂热的 Linux 爱好者在对 ChomeOS 鸡蛋里挑骨头罢了。要我说,还是停止这些没必要的批评吧。

|

||||

|

||||

问题的关键在于:ChromeOS 的市场份额和 Linux 桌面系统在很长的一段时间内是不同的。这个局面可能会在将来被打破,然而在现在,仍然会是两军对峙的局面。

|

||||

|

||||

### ChromeOS 的使用率正在增长 ###

|

||||

|

||||

不管你对ChromeOS 有怎么样的看法,事实是,ChromeOS 的使用率正在增长。专门针对 ChromeOS 的电脑也一直有发布。最近,戴尔(Dell)也发布了一款针对 ChromeOS 的电脑。命名为 [Dell Chromebox][5],这款 ChromeOS 设备将会是对传统设备的又一次冲击。它没有软件光驱,没有反病毒软件,能够提供无缝的幕后自动更新。对于一般的用户,Chromebox 和 Chromebook 正逐渐成为那些工作在 Web 浏览器上的人们的一个可靠选择。

|

||||

|

||||

尽管增长速度很快,ChromeOS 设备仍然面临着一个很严峻的问题 - 存储。受限于有限的硬盘大小和严重依赖于云存储,ChromeOS 对于那些需要使用基本的浏览器功能之外的人们来说还不够用。

|

||||

|

||||

### ChromeOS 和 Linux 的异同点 ###

|

||||

|

||||

以前,我注意到 ChromeOS 和 Linux 桌面系统分别占有着两个完全不同的市场。出现这样的情况是源于 Linux 社区在线下的桌面支持上一直都有着极其糟糕的表现。

|

||||

|

||||

是的,偶然的,有些人可能会第一时间发现这个“Linux特点”。但是,并没有一个人接着跟进这些问题,确保得到问题的答案,以让他们得到 Linux 方面更多的帮助。

|

||||

|

||||

事实上,线下问题的出现可能是这样的:

|

||||

|

||||

- 有些用户偶然的在当地的 Linux 活动中发现了 Linux。

|

||||

- 他们带回了 DVD/USB 设备,并尝试安装这个操作系统。

|

||||

- 当然,有些人很幸运的成功完成了安装过程,但是,据我所知大多数的人并没有那么幸运。

|

||||

- 令人失望的是,这些人只能寄希望于在网上论坛里搜索帮助。他们很难通过主流的计算机网络经验或视频教程解决这些问题。

|

||||

-于是这些人受够了。后来有很多失望的用户拿着他们的电脑到 Windows 商店来“维修”。除了重装一个 Windows 操作系统,他们很多时候都会听到一句话,“Linux 并不适合你们”,应该尽量避免。

|

||||

|

||||

有些人肯定会说,上面的举例肯定夸大其词了。让我来告诉你:这是发生在我身边的真事,而且是经常发生。醒醒吧,Linux 社区的人们,我们的推广模式早已过期无力了。

|

||||

|

||||

### 伟大的平台,糟糕的营销和最终结论 ###

|

||||

|

||||

如果非要找一个 ChromeOS 和 Linux 桌面系统的共同点,除了它们都使用了 Linux 内核,那就是它们都是伟大的产品却拥有极其差劲的市场营销。对此,Google 认为自己的优势是,它能投入大量的资金在网上构建大面积存储空间。

|

||||

|

||||

Google 相信他们拥有“网上的优势”,而线下的问题不是很重要。这真是一个让人难以置信的目光短浅,这也成了Google 最严重的失误之一。而当地的 Linux 零售商则坚信,对于不怎么上网的人,自然不必担心他们会受到 Google巨大的在线存储的诱惑。

|

||||

|

||||

我的建议是:Linux 可以通过线下的努力,提供桌面系统,渗透 ChromeOS 市场。这就意味着 Linux 社区需要在节假日筹集资金来出席博览会、商场展览,并且在社区中进行免费的教学课程。这会立即使 Linux 桌面系统走入人们的视线,否则,最终将会是一个 ChromeOS 设备出现在人们的面前。

|

||||

|

||||

如果说本地的线下市场并没有像我说的这样,别担心。Linux 桌面系统的市场仍然会像 ChromeOS 一样增长。最坏也能保持现在这种两军对峙的市场局面。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.datamation.com/open-source/chromeos-vs-linux-the-good-the-bad-and-the-ugly-1.html

|

||||

|

||||

作者:[Matt Hartley][a]

|

||||

译者:[barney-ro](https://github.com/barney-ro)

|

||||

校对:[Mr小眼儿](https://github.com/tinyeyeser)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.datamation.com/author/Matt-Hartley-3080.html

|

||||

[1]:http://en.wikipedia.org/wiki/Chrome_OS

|

||||

[2]:http://www.google.com/chrome/devices/features/

|

||||

[3]:https://plus.google.com/hangouts

|

||||

[4]:http://en.wikipedia.org/wiki/Voice_over_IP

|

||||

[5]:http://www.pcworld.com/article/2602845/dell-brings-googles-chrome-os-to-desktops.html

|

||||

66

published/20141108 When hackers grow old.md

Normal file

66

published/20141108 When hackers grow old.md

Normal file

@ -0,0 +1,66 @@

|

||||

ESR:黑客年暮

|

||||

================================================================================

|

||||

近来我一直在与某资深开源开发团队中的多个成员缠斗,尽管密切关注我的人们会在读完本文后猜到是哪个组织,但我不会在这里说出这个组织的名字。

|

||||

|

||||

怎么让某些人进入 21 世纪就这么难呢?真是的...

|

||||

|

||||

我快 56 岁了,也就是大部分年轻人会以为的我将时不时朝他们发出诸如“滚出我的草坪”之类歇斯底里咆哮的年龄。但事实并非如此 —— 我发现,尤其是在技术背景之下,我变得与我的年龄非常不相称。

|

||||

|

||||

在我这个年龄的大部分人确实变成了爱发牢骚、墨守成规的老顽固。并且,尴尬的是,偶尔我会成为那个打断谈话的人,我会指出他们某个在 1995 年(或者在某些特殊情况下,1985 年)时很适合的方法... 几十年后的今天就不再是好方法了。

|

||||

|

||||

为什么是我?因为年轻人在我的同龄人中很难有什么说服力。如果有人想让那帮老头改变主意,首先他得是自己同龄人中具有较高思想觉悟的佼佼者。即便如此,在与习惯做斗争的过程中,我也比看起来花费了更多的时间。

|

||||

|

||||

年轻人犯下无知的错误是可以被原谅的。他们还年轻。年轻意味着缺乏经验,缺乏经验通常会导致片面的判断。我很难原谅那些经历了足够多本该有经验的人,却被*长期的固化思维*蒙蔽,无法发觉近在咫尺的东西。

|

||||

|

||||

(补充一下:我真的不是保守党拥护者。那些和我争论政治的,无论保守党还是非保守党都没有注意到这点,我觉得这颇有点嘲讽的意味。)

|

||||

|

||||

那么,现在我们来讨论下 GNU 更新日志文件(ChangeLog)这件事。在 1985 年的时候,这是一个不错的主意,甚至可以说是必须的。当时的想法是用单独的更新日志条目来记录多个相关文件的变更情况。用这种方式来对那些存在版本缺失或者非常原始的版本进行版本控制确实不错。当时我也*在场*,所以我知道这些。

|

||||

|

||||

不过即使到了 1995 年,甚至 21 世纪早期,许多版本控制系统仍然没有太大改进。也就是说,这些版本控制系统并非对批量文件的变化进行分组再保存到一条记录上,而是对每个变化的文件分别进行记录并保存到不同的地方。CVS,当时被广泛使用的版本控制系统,仅仅是模拟日志变更 —— 并且在这方面表现得很糟糕,导致大多数人不再依赖这个功能。即便如此,更新日志文件的出现依然是必要的。

|

||||

|

||||

但随后,版本控制系统 Subversion 于 2003 年发布 beta 版,并于 2004 年发布 1.0 正式版,Subversion 真正实现了更新日志记录功能,得到了人们的广泛认可。它与一年后兴起的分布式版本控制系统(Distributed Version Control System,DVCS)共同引发了主流世界的激烈争论。因为如果你在项目上同时使用了分布式版本控制与更新日志文件记录的功能,它们将会因为争夺相同元数据的控制权而产生不可预料的冲突。

|

||||

|

||||

有几种不同的方法可以折衷解决这个问题。一种是继续将更新日志作为代码变更的授权记录。这样一来,你基本上只能得到简陋的、形式上的提交评论数据。

|

||||

|

||||

另一种方法是对提交的评论日志进行授权。如果你这样做了,不久后你就会开始思忖为什么自己仍然对所有的日志更新条目进行记录。提交元数据与变化的代码具有更好的相容性,毕竟这才是当初设计它的目的。

|

||||

|

||||

(现在,试想有这样一个项目,同样本着把项目做得最好的想法,但两拨人却做出了完全不同的选择。因此你必须同时阅读更新日志和评论日志以了解到底发生了什么。最好在矛盾激化前把问题解决....)

|

||||

|

||||

第三种办法是尝试同时使用以上两种方法 —— 在更新日志条目中,以稍微变化后的的格式复制一份评论数据,将其作为评论提交的一部分。这会导致各种你意想不到的问题,最具代表性的就是它不符合“真理的单点性(single point of truth)”原理;只要其中有拷贝文件损坏,或者日志文件条目被修改,这就不再是同步时数据匹配的问题,它将导致在其后参与进来的人试图搞清人们是怎么想的时候变得非常困惑。(LCTT 译注:《[程序员修炼之道][1]》(The Pragmatic Programmer):任何一个知识点在系统内都应当有一个唯一、明确、权威的表述。根据Brian Kernighan的建议,把这个原则称为“真理的单点性(Single Point of Truth)”或者SPOT原则。)

|

||||

|

||||

或者,正如这个*我就不说出具体名字的特定项目*所做的,它的高层开发人员在电子邮件中最近声明说,提交可以包含多个更新日志条目,并且提交的元数据与更新日志是无关的。这导致我们直到现在还得不断进行记录。

|

||||

|

||||

当时我读到邮件的时候都要吐了。什么样的傻瓜才会意识不到这是自找麻烦 —— 事实上,在 DVCS 中针对可靠的提交日志有很好的浏览工具,围绕更新日志文件的整个定制措施只会成为负担和拖累。

|

||||

|

||||

唉,这是比较特殊的笨蛋:变老的并且思维僵化了的黑客。所有的合理化改革他都会极力反对。他所遵循的行事方法在几十年前是有效的,但现在只能适得其反。如果你试图向他解释这些不仅仅和 git 的摘要信息有关,同时还为了正确适应当前的工具集,以便实现更新日志的去条目化... 呵呵,那你就准备好迎接无法忍受、无法想象的疯狂对话吧。

|

||||

|

||||

的确,它成功激怒了我。这样那样的胡言乱语使这个项目变成了很难完成的工作。而且,同样的糟糕还体现在他们吸引年轻开发者的过程中,我认为这是真正的问题。相关 Google+ 社区的人员数量已经达到了 4 位数,他们大部分都是孩子,还没有成长起来。显然外界已经接受了这样的信息:这个项目的开发者都是部落中地位根深蒂固的崇高首领,最好的崇拜方式就是远远的景仰着他们。

|

||||

|

||||

这件事给我的最大触动就是每当我要和这些部落首领较量时,我都会想:有一天我也会这样吗?或者更糟的是,我看到的只是如同镜子一般对我自己的真实写照,而我自己却浑然不觉?我的意思是,我所得到的印象来自于他的网站,这个特殊的笨蛋要比我年轻。年轻至少 15 岁呢。

|

||||

|

||||

我总是认为自己的思路很清晰。当我和那些比我聪明的人打交道时我不会受挫,我只会因为那些思路跟不上我、看不清事实的人而沮丧。但这种自信也许只是邓宁·克鲁格效应(Dunning-Krueger effect)在我身上的消极影响,我并不确定这意味着什么。很少有什么事情会让我感到害怕;而这件事在让我害怕的事情名单上是名列前茅的。

|

||||

|

||||

另一件让人不安的事是当我逐渐变老的时候,这样的矛盾发生得越来越频繁。不知怎的,我希望我的黑客同行们能以更加优雅的姿态老去,即使身体老去也应该保持一颗年轻的心灵。有些人确实是这样;但可惜绝大多数人都不是。真令人悲哀。

|

||||

|

||||

我不确定我的职业生涯会不会完美收场。假如我最后成功避免了思维僵化(注意我说的是假如),我想我一定知道其中的部分原因,但我不确定这种模式是否可以被复制 —— 为了达成目的也许得在你的头脑中发生一些复杂的化学反应。尽管如此,无论对错,请听听我给年轻黑客以及其他有志青年的建议。

|

||||

|

||||

你们——对的,也包括你——一定无法在你中年老年的时候保持不错的心灵,除非你能很好的控制这点。你必须不断地去磨练你的内心、在你还年轻的时候完成自己的种种心愿,你必须把这些行为养成一种习惯直到你老去。

|

||||

|

||||

有种说法是中年人锻炼身体的最佳时机是 30 岁以前。我以为同样的方法,坚持我以上所说的习惯能让你在 56 岁,甚至 65 岁的时候仍然保持灵活的头脑。挑战你的极限,使不断地挑战自己成为一种习惯。立刻离开安乐窝,由此当你以后真正需要它的时候你可以建立起自己的安乐窝。

|

||||

|

||||

你必须要清楚的了解这点;还有一个可选择的挑战是你选择一个可以实现的目标并且为了这个目标不断努力。这个月我要学习 Go 语言。不是指游戏,我早就玩儿过了(虽然玩儿的不是太好)。并不是因为工作需要,而是因为我觉得是时候来扩展下我自己了。

|

||||

|

||||

保持这个习惯。永远不要放弃。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://esr.ibiblio.org/?p=6485

|

||||

|

||||

作者:[Eric Raymond][a]

|

||||

译者:[Stevearzh](https://github.com/Stevearzh)

|

||||

校对:[Mr小眼儿](https://github.com/tinyeyeser)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://esr.ibiblio.org/?author=2

|

||||

[1]:http://book.51cto.com/art/200809/88490.htm

|

||||

@ -1,10 +1,10 @@

|

||||

Linux有问必答-- 如何在Linux上安装内核头文件

|

||||

Linux有问必答:如何在Linux上安装内核头文件

|

||||

================================================================================

|

||||

> **提问**:我在安装一个设备驱动前先要安装内核头文件。怎样安装合适的内核头文件?

|

||||

|

||||

当你在编译一个设备驱动模块时,你需要在系统中安装内核头文件。内核头文件同样在你编译与内核直接链接的用户空间程序时需要。当你在这些情况下安装内核头文件时,你必须确保内核头文件精确地与你当前内核版本匹配(比如:3.13.0-24-generic)。

|

||||

|

||||

如果你的内核发行版自带的内核版本,或者使用默认的包管理器的基础仓库升级的(比如:apt-ger、aptitude或者yum),你也可以使用包管理器来安装内核头文件。另一方面,如果下载的是[kernel源码][1]并且手动编译的,你可以使用[make命令][2]来安装匹配的内核头文件。

|

||||

如果你的内核是发行版自带的内核版本,或者使用默认的包管理器的基础仓库升级的(比如:apt-ger、aptitude或者yum),你也可以使用包管理器来安装内核头文件。另一方面,如果下载的是[kernel源码][1]并且手动编译的,你可以使用[make命令][2]来安装匹配的内核头文件。

|

||||

|

||||

现在我们假设你的内核是发行版自带的,让我们看下该如何安装匹配的头文件。

|

||||

|

||||

@ -41,7 +41,7 @@ Debian、Ubuntu、Linux Mint默认头文件在**/usr/src**下。

|

||||

|

||||

假设你没有手动编译内核,你可以使用yum命令来安装匹配的内核头文件。

|

||||

|

||||

首先,用下面的命令检查系统是否已经按炸ung了头文件。如果下面的命令没有任何输出,这就意味着还没有头文件。

|

||||

首先,用下面的命令检查系统是否已经安装了头文件。如果下面的命令没有任何输出,这就意味着还没有头文件。

|

||||

|

||||

$ rpm -qa | grep kernel-headers-$(uname -r)

|

||||

|

||||

@ -66,7 +66,7 @@ Fedora、CentOS 或者 RHEL上默认内核头文件的位置是**/usr/include/li

|

||||

via: http://ask.xmodulo.com/install-kernel-headers-linux.html

|

||||

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,25 +0,0 @@

|

||||

Git 2.2.1 Released To Fix Critical Security Issue

|

||||

================================================================================

|

||||

|

||||

|

||||

Git 2.2.1 was released this afternoon to fix a critical security vulnerability in Git clients. Fortunately, the vulnerability doesn't plague Unix/Linux users but rather OS X and Windows.

|

||||

|

||||

Today's Git vulnerability affects those using the Git client on case-insensitive file-systems. On case-insensitive platforms like Windows and OS X, committing to .Git/config could overwrite the user's .git/config and could lead to arbitrary code execution. Fortunately with most Phoronix readers out there running Linux, this isn't an issue thanks to case-sensitive file-systems.

|

||||

|

||||

Besides the attack vector from case insensitive file-systems, Windows and OS X's HFS+ would map some strings back to .git too if certain characters are present, which could lead to overwriting the Git config file. Git 2.2.1 addresses these issues.

|

||||

|

||||

More details via the [Git 2.2.1 release announcement][1] and [GitHub has additional details][2].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.phoronix.com/scan.php?page=news_item&px=MTg2ODA

|

||||

|

||||

作者:[Michael Larabel][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.michaellarabel.com/

|

||||

[1]:http://article.gmane.org/gmane.linux.kernel/1853266

|

||||

[2]:https://github.com/blog/1938-git-client-vulnerability-announced

|

||||

@ -1,168 +0,0 @@

|

||||

Translating By H-mudcup

|

||||

|

||||

Easy File Comparisons With These Great Free Diff Tools

|

||||

================================================================================

|

||||

by Frazer Kline

|

||||

|

||||

File comparison compares the contents of computer files, finding their common contents and their differences. The result of the comparison is often known as a diff.

|

||||

|

||||

diff is also the name of a famous console based file comparison utility that outputs the differences between two files. The diff utility was developed in the early 1970s on the Unix operating system. diff will output the parts of the files where they are different.

|

||||

|

||||

Linux has many good GUI tools that enable you to clearly see the difference between two files or two versions of the same file. This roundup selects 5 of my favourite GUI diff tools, with all but one released under an open source license.

|

||||

|

||||

These utilities are an essential software development tool, as they visualize the differences between files or directories, merge files with differences, resolve conflicts and save output to a new file or patch, and assist file changes reviewing and comment production (e.g. approving source code changes before they get merged into a source tree). They help developers work on a file, passing it back and forth between each other. The diff tools are not only useful for showing differences in source code files; they can be used on many text-based file types as well. The visualisations make it easier to compare files.

|

||||

|

||||

----------

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

Meld is an open source graphical diff viewer and merge application for the Gnome desktop. It supports 2 and 3-file diffs, recursive directory diffs, diffing of directories under version control (Bazaar, Codeville, CVS, Darcs, Fossil SCM, Git, Mercurial, Monotone, Subversion), as well as the ability to manually and automatically merge file differences.

|

||||

|

||||

Meld's focus is on helping developers compare and merge source files, and get a visual overview of changes in their favourite version control system.

|

||||

|

||||

Features include

|

||||

|

||||

- Edit files in-place, and your comparison updates on-the-fly

|

||||

- Perform twoand three-way diffs and merges

|

||||

- Easily navigate between differences and conflicts

|

||||

- Visualise global and local differences with insertions, changes and conflicts marked

|

||||

- Built-in regex text filtering to ignore uninteresting differences

|

||||

- Syntax highlighting (with optional gtksourceview)

|

||||

- Compare two or three directories file-by-file, showing new, missing, and altered files

|

||||

- Directly open file comparisons of any conflicting or differing files

|

||||

- Filter out files or directories to avoid seeing spurious differences

|

||||

- Auto-merge mode and actions on change blocks help make merges easier

|

||||

- Simple file management is also available

|

||||

- Supports many version control systems, including Git, Mercurial, Bazaar and SVN

|

||||

- Launch file comparisons to check what changes were made, before you commit

|

||||

- View file versioning statuses

|

||||

- Simple version control actions are also available (i.e., commit/update/add/remove/delete files)

|

||||

- Automatically merge two files using a common ancestor

|

||||

- Mark and display the base version of all conflicting changes in the middle pane

|

||||

- Visualise and merge independent modifications of the same file

|

||||

- Lock down read-only merge bases to avoid mistakes

|

||||

- Command line interface for easy integration with existing tools, including git mergetool

|

||||

- Internationalization support

|

||||

- Visualisations make it easier to compare your files

|

||||

|

||||

- Website: [meldmerge.org][1]

|

||||

- Developer: Kai Willadsen

|

||||

- License: GNU GPL v2

|

||||

- Version Number: 1.8.5

|

||||

|

||||

----------

|

||||

|

||||

|

||||

|

||||

|

||||

注:上面这个图访问不到,图的地址是原文地址的小图的链接地址,发布的时候在验证一下,如果还访问不到,不行先采用小图或者网上搜一下看有没有大图

|

||||

|

||||

DiffMerge is an application to visually compare and merge files on Linux, Windows, and OS X.

|

||||

|

||||

Features include:

|

||||

|

||||

- Graphically shows the changes between two files. Includes intra-line highlighting and full support for editing

|

||||

- Graphically shows the changes between 3 files. Allows automatic merging (when safe to do so) and full control over editing the resulting file

|

||||

- Performs a side-by-side comparison of 2 folders, showing which files are only present in one file or the other, as well as file pairs which are identical, equivalent or different

|

||||

- Rulesets and options provide for customized appearance and behavior

|

||||

- Unicode-based application and can import files in a wide range of character encodings

|

||||

- Cross-platform tool

|

||||

|

||||

- Website: [sourcegear.com/diffmerge][2]

|

||||

- Developer: SourceGear LLC

|

||||

- License: Licensed for use free of charge (not open source)

|

||||

- Version Number: 4.2

|

||||

|

||||

----------

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

xxdiff is an open source graphical file and directories comparator and merge tool.

|

||||

|

||||

xxdiff can be used for viewing the differences between two or three files, or two directories, and can be used to produce a merged version. The texts of the two or three files are presented side by side with their differences highlighted with colors for easy identification.

|

||||

|

||||

This program is an essential software development tool that can be used to visualize the differences between files or directories, merge files with differences, resolving conflicts and saving output to a new file or patch, and assist file changes reviewing and comment production (e.g. approving source code changes before they get merged into a source tree).

|

||||

|

||||

Features include:

|

||||

|

||||

- Compare two files, three files, or two directories (shallow and recursive)

|

||||

- Horizontal diffs highlighting

|

||||

- Files can be merged interactively and resulting output visualized and saved

|

||||

- Features to assist in performing merge reviews/policing

|

||||

- Unmerge CVS conflicts in automatically merged file and display them as two files, to help resolve conflicts

|

||||

- Uses external diff program to compute differences: works with GNU diff, SGI diff and ClearCase's cleardiff, and any other diff whose output is similar to those

|

||||

- Fully customizable with a resource file

|

||||

- Look-and-feel similar to Rudy Wortel's/SGI xdiff, it is desktop agnostic

|

||||

- Features and output that ease integration with scripts

|

||||

|

||||

- Website: [furius.ca/xxdiff][3]

|

||||

- Developer: Martin Blais

|

||||

- License: GNU GPL

|

||||

- Version Number: 4.0

|

||||

|

||||

----------

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

Diffuse is an open source graphical tool for merging and comparing text files. Diffuse is able to compare an arbitrary number of files side-by-side and offers the ability to manually adjust line-matching and directly edit files. Diffuse can also retrieve revisions of files from bazaar, CVS, darcs, git, mercurial, monotone, Subversion and GNU Revision Control System (RCS) repositories for comparison and merging.

|

||||

|

||||

Features include:

|

||||

|

||||

- Compare and merge an arbitrary number of files side-by-side (n-way merges)

|

||||

- Line matching can be manually corrected by the user

|

||||

- Directly edit files

|

||||

- Syntax highlighting

|

||||

- Bazaar, CVS, Darcs, Git, Mercurial, Monotone, RCS, Subversion, and SVK support

|

||||

- Unicode support

|

||||

- Unlimited undo

|

||||

- Easy keyboard navigation

|

||||

|

||||

- Website: [diffuse.sourceforge.net][]

|

||||

- Developer: Derrick Moser

|

||||

- License: GNU GPL v2

|

||||

- Version Number: 0.4.7

|

||||

|

||||

----------

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

Kompare is an open source GUI front-end program that enables differences between source files to be viewed and merged. Kompare can be used to compare differences on files or the contents of folders. Kompare supports a variety of diff formats and provide many options to customize the information level displayed.

|

||||

|

||||

Whether you are a developer comparing source code, or you just want to see the difference between that research paper draft and the final document, Kompare is a useful tool.

|

||||

|

||||

Kompare is part of the KDE desktop environment.

|

||||

|

||||

Features include:

|

||||

|

||||

- Compare two text files

|

||||

- Recursively compare directories

|

||||

- View patches generated by diff

|

||||

- Merge a patch into an existing directory

|

||||

- Entertain you during that boring compile

|

||||

|

||||

- Website: [www.caffeinated.me.uk/kompare/][5]

|

||||

- Developer: The Kompare Team

|

||||

- License: GNU GPL

|

||||

- Version Number: Part of KDE

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxlinks.com/article/2014062814400262/FileComparisons.html

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://meldmerge.org/

|

||||

[2]:https://sourcegear.com/diffmerge/

|

||||

[3]:http://furius.ca/xxdiff/

|

||||

[4]:http://diffuse.sourceforge.net/

|

||||

[5]:http://www.caffeinated.me.uk/kompare/

|

||||

@ -0,0 +1,111 @@

|

||||

Best GNOME Shell Themes For Ubuntu 14.04

|

||||

================================================================================

|

||||

|

||||

|

||||

Themes are the best way to customize your Linux desktop. If you [install GNOME on Ubuntu 14.04][1] or 14.10, you might want to change the default theme and give it a different look. To help you in this task, I have compiled here a **list of best GNOME shell themes for Ubuntu** or any other Linux OS that has GNOME shell installed on it. But before we see the list, let’s first see how to change install new themes in GNOME Shell.

|

||||

|

||||

### Install themes in GNOME Shell ###

|

||||

|

||||

To install new themes in GNOME with Ubuntu, you can use Gnome Tweak Tool which is available in software repository in Ubuntu. Open a terminal and use the following command:

|

||||

|

||||

sudo apt-get install gnome-tweak-tool

|

||||

|

||||

Alternatively, you can use themes by putting them in ~/.themes directory. I have written a detailed tutorial on [how to install and use themes in GNOME Shell][2], in case you need it.

|

||||

|

||||

### Best GNOME Shell themes ###

|

||||

|

||||

The themes listed here are tested on GNOME Shell 3.10.4 but it should work for all version of GNOME 3 and higher. For the sake of mentioning, the themes are not in any kind of priority order. Let’s have a look at the best GNOME themes:

|

||||

|

||||

#### Numix ####

|

||||

|

||||

|

||||

|

||||

No list can be completed without the mention of [Numix themes][3]. These themes got so popular that it encouraged [Numix team to work on a new Linux OS, Ozon][4]. Considering their design work with Numix theme, it won’t be exaggeration to call it one of the [most beautiful Linux OS][5] releasing in near future.

|

||||

|

||||

To install Numix theme in Ubuntu based distributions, use the following commands:

|

||||

|

||||

sudo apt-add-repository ppa:numix/ppa

|

||||

sudo apt-get update

|

||||

sudo apt-get install numix-icon-theme-circle

|

||||

|

||||

#### Elegance Colors ####

|

||||

|

||||

|

||||

|

||||

Another beautiful theme from Satyajit Sahoo, who is also a member of Numix team. [Elegance Colors][6] has its own PPA so that you can easily install it:

|

||||

|

||||

sudo add-apt-repository ppa:satyajit-happy/themes

|

||||

sudo apt-get update

|

||||

sudo apt-get install gnome-shell-theme-elegance-colors

|

||||

|

||||

#### Moka ####

|

||||

|

||||

|

||||

|

||||

[Moka][7] is another mesmerizing theme that is always included in the list of beautiful themes. Designed by the same developer who gave us Unity Tweak Tool, Moka is a must try:

|

||||

|

||||

sudo add-apt-repository ppa:moka/stable

|

||||

sudo apt-get update

|

||||

sudo apt-get install moka-gnome-shell-theme

|

||||

|

||||

#### Viva ####

|

||||

|

||||

|

||||

|

||||

Based on Gnome’s default Adwaita theme, Viva is a nice theme with shades of black and oranges. You can download Viva from the link below.

|

||||

|

||||

- [Download Viva GNOME Shell Theme][8]

|

||||

|

||||

#### Ciliora-Prima ####

|

||||

|

||||

|

||||

|

||||

Previously known as Zukitwo Dark, Ciliora-Prima has square icons theme. Theme is available in three versions that are slightly different from each other. You can download it from the link below.

|

||||

|

||||

- [Download Ciliora-Prima GNOME Shell Theme][9]

|

||||

|

||||

#### Faience ####

|

||||

|

||||

|

||||

|

||||

Faience has been a popular theme for quite some time and rightly so. You can install Faience using the PPA below for GNOME 3.10 and higher.

|

||||

|

||||

sudo add-apt-repository ppa:tiheum/equinox

|

||||

sudo apt-get update

|

||||

sudo apt-get install faience-theme

|

||||

|

||||

#### Paper [Incomplete] ####

|

||||

|

||||

|

||||

|

||||

Ever since Google talked about Material Design, people have been going gaga over it. Paper GTK theme, by Sam Hewitt (of Moka Project), is inspired by Google Material design and currently under development. Which means you will not have the best experience with Paper at the moment. But if your a bit experimental, like me, you can definitely give it a try.

|

||||

|

||||

sudo add-apt-repository ppa:snwh/pulp

|

||||

sudo apt-get update

|

||||

sudo apt-get install paper-gtk-theme

|

||||

|

||||

That concludes my list. If you are trying to give a different look to your Ubuntu, you should also try the list of [best icon themes for Ubuntu 14.04][10].

|

||||

|

||||

How do you find this list of **best GNOME Shell themes**? Which one is your favorite among the one listed here? And if it’s not listed here, do let us know which theme you think is the best GNOME Shell theme.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://itsfoss.com/gnome-shell-themes-ubuntu-1404/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://itsfoss.com/author/Abhishek/

|

||||

[1]:http://itsfoss.com/how-to-install-gnome-in-ubuntu-14-04/

|

||||

[2]:http://itsfoss.com/install-switch-themes-gnome-shell/

|

||||

[3]:https://numixproject.org/

|

||||

[4]:http://itsfoss.com/numix-linux-distribution/

|

||||

[5]:http://itsfoss.com/new-beautiful-linux-2015/

|

||||

[6]:http://satya164.deviantart.com/art/Gnome-Shell-Elegance-Colors-305966388

|

||||

[7]:http://mokaproject.com/

|

||||

[8]:https://github.com/vivaeltopo/gnome-shell-theme-viva

|

||||

[9]:http://zagortenay333.deviantart.com/art/Ciliora-Prima-Shell-451947568

|

||||

[10]:http://itsfoss.com/best-icon-themes-ubuntu-1404/

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by ZTinoZ

|

||||

20 Linux Commands Interview Questions & Answers

|

||||

================================================================================

|

||||

**Q:1 How to check current run level of a linux server ?**

|

||||

@ -140,4 +141,4 @@ via: http://www.linuxtechi.com/20-linux-commands-interview-questions-answers/

|

||||

[17]:

|

||||

[18]:

|

||||

[19]:

|

||||

[20]:

|

||||

[20]:

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

(translating by runningwater)

|

||||

2015: Open Source Has Won, But It Isn't Finished

|

||||

================================================================================

|

||||

> After the wins of 2014, what's next?

|

||||

@ -31,7 +32,7 @@ In other words, whatever amazing free software 2014 has already brought us, we c

|

||||

via: http://www.computerworlduk.com/blogs/open-enterprise/open-source-has-won-3592314/

|

||||

|

||||

作者:[lyn Moody][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

译者:[runningwater](https://github.com/runningwater)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -44,4 +45,4 @@ via: http://www.computerworlduk.com/blogs/open-enterprise/open-source-has-won-35

|

||||

[5]:http://timesofindia.indiatimes.com/tech/tech-news/Android-tablet-market-share-hits-70-in-Q2-iPads-slip-to-25-Survey/articleshow/38966512.cms

|

||||

[6]:http://linuxgizmos.com/embedded-developers-prefer-linux-love-android/

|

||||

[7]:http://www.computerworlduk.com/blogs/open-enterprise/allseen-3591023/

|

||||

[8]:http://peerproduction.net/issues/issue-3-free-software-epistemics/debate/there-is-no-free-software/

|

||||

[8]:http://peerproduction.net/issues/issue-3-free-software-epistemics/debate/there-is-no-free-software/

|

||||

|

||||

@ -0,0 +1,29 @@

|

||||

Linus Tells Wired Leap Second Irrelevant

|

||||

================================================================================

|

||||

|

||||

|

||||

Two larger publications today featured Linux and the effect of the upcoming leap second. The Register today said that the leap second effects of the past are no longer an issue. Coincidentally, Wired talked to Linus Torvalds about the same issue today as well.

|

||||

|

||||

**Linus Torvalds** spoke with Wired's Robert McMillan about the approaching leap second due to be added in June. The Register said the last leap second in 2012 took out Mozilla, StumbleUpon, Yelp, FourSquare, Reddit and LinkedIn as well as several major airlines and travel reservation services that ran Linux. Torvalds told Wired today that the kernel is patched and he doesn't expect too many issues this time around. [He said][1], "Just take the leap second as an excuse to have a small nonsensical party for your closest friends. Wear silly hats, get a banner printed, and get silly drunk. That’s exactly how relevant it should be to most people."

|

||||

|

||||

**However**, The Register said not everyone agrees with Torvalds' sentiments. They quote Daily Mail saying, "The year 2015 will have an extra second — which could wreak havoc on the infrastructure powering the Internet," then remind us of the Y2K scare that ended up being a non-event. The Register's Gavin [Clarke concluded][2]:

|

||||

|

||||

> No reason the Penguins were caught sans pants.

|

||||

|

||||

> Now they've gone belt and braces.

|

||||

|

||||

The take-away is: move along, nothing to see here.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ostatic.com/blog/linus-tells-wired-leap-second-irrelevant

|

||||

|

||||

作者:[Susan Linton][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://ostatic.com/member/susan-linton

|

||||

[1]:http://www.wired.com/2015/01/torvalds_leapsecond/

|

||||

[2]:http://www.theregister.co.uk/2015/01/09/leap_second_bug_linux_hysteria/

|

||||

@ -0,0 +1,36 @@

|

||||

diff -u: What's New in Kernel Development

|

||||

================================================================================

|

||||

**David Drysdale** wanted to add Capsicum security features to Linux after he noticed that FreeBSD already had Capsicum support. Capsicum defines fine-grained security privileges, not unlike filesystem capabilities. But as David discovered, Capsicum also has some controversy surrounding it.

|

||||

|

||||

Capsicum has been around for a while and was described in a USENIX paper in 2010: [http://www.cl.cam.ac.uk/research/security/capsicum/papers/2010usenix-security-capsicum-website.pdf][1].

|

||||

|

||||

Part of the controversy is just because of the similarity with capabilities. As Eric Biderman pointed out during the discussion, it would be possible to implement features approaching Capsicum's as an extension of capabilities, but implementing Capsicum directly would involve creating a whole new (and extensive) abstraction layer in the kernel. Although David argued that capabilities couldn't actually be extended far enough to match Capsicum's fine-grained security controls.

|

||||

|

||||

Capsicum also was controversial within its own developer community. For example, as Eric described, it lacked a specification for how to revoke privileges. And, David pointed out that this was because the community couldn't agree on how that could best be done. David quoted an e-mail sent by Ben Laurie to the cl-capsicum-discuss mailing list in 2011, where Ben said, "It would require additional book-keeping to find and revoke outstanding capabilities, which requires knowing how to reach capabilities, and then whether they are derived from the capability being revoked. It also requires an authorization model for revocation. The former two points mean additional overhead in terms of data structure operations and synchronisation."

|

||||

|

||||

Given the ongoing controversy within the Capsicum developer community and the corresponding lack of specification of key features, and given the existence of capabilities that already perform a similar function in the kernel and the invasiveness of Capsicum patches, Eric was opposed to David implementing Capsicum in Linux.

|

||||

|

||||

But, given the fact that capabilities are much coarser-grained than Capsicum's security features, to the point that capabilities can't really be extended far enough to mimic Capsicum's features, and given that FreeBSD already has Capsicum implemented in its kernel, showing that it can be done and that people might want it, it seems there will remain a lot of folks interested in getting Capsicum into the Linux kernel.

|

||||

|

||||

Sometimes it's unclear whether there's a bug in the code or just a bug in the written specification. Henrique de Moraes Holschuh noticed that the Intel Software Developer Manual (vol. 3A, section 9.11.6) said quite clearly that microcode updates required 16-byte alignment for the P6 family of CPUs, the Pentium 4 and the Xeon. But, the code in the kernel's microcode driver didn't enforce that alignment.

|

||||

|

||||

In fact, Henrique's investigation uncovered the fact that some Intel chips, like the Xeon X5550 and the second-generation i5 chips, needed only 4-byte alignment in practice, and not 16. However, to conform to the documented specification, he suggested fixing the kernel code to match the spec.

|

||||

|

||||

Borislav Petkov objected to this. He said Henrique was looking for problems where there weren't any. He said that Henrique simply had discovered a bug in Intel's documentation, because the alignment issue clearly wasn't a problem in the real world. He suggested alerting the Intel folks to the documentation problem and moving on. As he put it, "If the processor accepts the non-16-byte-aligned update, why do you care?"

|

||||

|

||||

But, as H. Peter Anvin remarked, the written spec was Intel's guarantee that certain behaviors would work. If the kernel ignored the spec, it could lead to subtle bugs later on. And, Bill Davidsen said that if the kernel ignored the alignment requirement, and "if the requirement is enforced in some future revision, and updates then fail in some insane way, the vendor is justified in claiming 'I told you so'."

|

||||

|

||||

The end result was that Henrique sent in some patches to make the microcode driver enforce the 16-byte alignment requirement.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxjournal.com/content/diff-u-whats-new-kernel-development-6

|

||||

|

||||

作者:[Zack Brown][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linuxjournal.com/user/801501

|

||||

[1]:http://www.cl.cam.ac.uk/research/security/capsicum/papers/2010usenix-security-capsicum-website.pdf

|

||||

@ -1,155 +0,0 @@

|

||||

How to Backup and Restore Your Apps and PPAs in Ubuntu Using Aptik

|

||||

================================================================================

|

||||

|

||||

|

||||

If you need to reinstall Ubuntu or if you just want to install a new version from scratch, wouldn’t it be useful to have an easy way to reinstall all your apps and settings? You can easily accomplish this using a free tool called Aptik.

|

||||

|

||||

Aptik (Automated Package Backup and Restore), an application available in Ubuntu, Linux Mint, and other Debian- and Ubuntu-based Linux distributions, allows you to backup a list of installed PPAs (Personal Package Archives), which are software repositories, downloaded packages, installed applications and themes, and application settings to an external USB drive, network drive, or a cloud service like Dropbox.

|

||||

|

||||

NOTE: When we say to type something in this article and there are quotes around the text, DO NOT type the quotes, unless we specify otherwise.

|

||||

|

||||

To install Aptik, you must add the PPA. To do so, press Ctrl + Alt + T to open a Terminal window. Type the following text at the prompt and press Enter.

|

||||

|

||||

sudo apt-add-repository –y ppa:teejee2008/ppa

|

||||

|

||||

Type your password when prompted and press Enter.

|

||||

|

||||

|

||||

|

||||

Type the following text at the prompt to make sure the repository is up-to-date.

|

||||

|

||||

sudo apt-get update

|

||||

|

||||

|

||||

|

||||

When the update is finished, you are ready to install Aptik. Type the following text at the prompt and press Enter.

|

||||

|

||||

sudo apt-get install aptik

|

||||

|

||||

NOTE: You may see some errors about packages that the update failed to fetch. If they are similar to the ones listed on the following image, you should have no problem installing Aptik.

|

||||

|

||||

|

||||

|

||||

The progress of the installation displays and then a message displays saying how much disk space will be used. When asked if you want to continue, type a “y” and press Enter.

|

||||

|

||||

|

||||

|

||||

When the installation if finished, close the Terminal window by typing “Exit” and pressing Enter, or by clicking the “X” button in the upper-left corner of the window.

|

||||

|

||||

|

||||

|

||||

Before running Aptik, you should set up a backup directory on a USB flash drive, a network drive, or on a cloud account, such as Dropbox or Google Drive. For this example, will will use Dropbox.

|

||||

|

||||

|

||||

|

||||

Once your backup directory is set up, click the “Search” button at the top of the Unity Launcher bar.

|

||||

|

||||

|

||||

|

||||

Type “aptik” in the search box. Results of the search display as you type. When the icon for Aptik displays, click on it to open the application.

|

||||

|

||||

|

||||

|

||||

A dialog box displays asking for your password. Enter your password in the edit box and click “OK.”

|

||||

|

||||

|

||||

|

||||

The main Aptik window displays. Select “Other…” from the “Backup Directory” drop-down list. This allows you to select the backup directory you created.

|

||||

|

||||

NOTE: The “Open” button to the right of the drop-down list opens the selected directory in a Files Manager window.

|

||||

|

||||

|

||||

|

||||

On the “Backup Directory” dialog box, navigate to your backup directory and then click “Open.”

|

||||

|

||||

NOTE: If you haven’t created a backup directory yet, or you want to add a subdirectory in the selected directory, use the “Create Folder” button to create a new directory.

|

||||

|

||||

|

||||

|

||||

To backup the list of installed PPAs, click “Backup” to the right of “Software Sources (PPAs).”

|

||||

|

||||

|

||||

|

||||

The “Backup Software Sources” dialog box displays. The list of installed packages and the associated PPA for each displays. Select the PPAs you want to backup, or use the “Select All” button to select all the PPAs in the list.

|

||||

|

||||

|

||||

|

||||

Click “Backup” to begin the backup process.

|

||||

|

||||

|

||||

|

||||

A dialog box displays when the backup is finished telling you the backup was created successfully. Click “OK” to close the dialog box.

|

||||

|

||||

A file named “ppa.list” will be created in the backup directory.

|

||||

|

||||

|

||||

|

||||

The next item, “Downloaded Packages (APT Cache)”, is only useful if you are re-installing the same version of Ubuntu. It backs up the packages in your system cache (/var/cache/apt/archives). If you are upgrading your system, you can skip this step because the packages for the new version of the system will be newer than the packages in the system cache.

|

||||

|

||||

Backing up downloaded packages and then restoring them on the re-installed Ubuntu system will save time and Internet bandwidth when the packages are reinstalled. Because the packages will be available in the system cache once you restore them, the download will be skipped and the installation of the packages will complete more quickly.

|

||||

|

||||

If you are reinstalling the same version of your Ubuntu system, click the “Backup” button to the right of “Downloaded Packages (APT Cache)” to backup the packages in the system cache.

|

||||

|

||||

NOTE: When you backup the downloaded packages, there is no secondary dialog box. The packages in your system cache (/var/cache/apt/archives) are copied to an “archives” directory in the backup directory and a dialog box displays when the backup is finished, indicating that the packages were copied successfully.

|

||||

|

||||

|

||||

|

||||

There are some packages that are part of your Ubuntu distribution. These are not checked, since they are automatically installed when you install the Ubuntu system. For example, Firefox is a package that is installed by default in Ubuntu and other similar Linux distributions. Therefore, it will not be selected by default.

|

||||

|

||||

Packages that you installed after installing the system, such as the [package for the Chrome web browser][1] or the package containing Aptik (yes, Aptik is automatically selected to back up), are selected by default. This allows you to easily back up the packages that are not included in the system when installed.

|

||||

|

||||

Select the packages you want to back up and de-select the packages you don’t want to backup. Click “Backup” to the right of “Software Selections” to back up the selected top-level packages.

|

||||

|

||||

NOTE: Dependency packages are not included in this backup.

|

||||

|

||||

|

||||

|

||||

Two files, named “packages.list” and “packages-installed.list”, are created in the backup directory and a dialog box displays indicating that the backup was created successfully. Click “OK” to close the dialog box.

|

||||

|

||||

NOTE: The “packages-installed.list” file lists all the packages. The “packages.list” file also lists all the packages, but indicates which ones were selected.

|

||||

|

||||

|

||||

|

||||

To backup settings for installed applications, click the “Backup” button to the right of “Application Settings” on the main Aptik window. Select the settings you want to back up and click “Backup”.

|

||||

|

||||

NOTE: Click the “Select All” button if you want to back up all application settings.

|

||||

|

||||

|

||||

|

||||

The selected settings files are zipped into a file called “app-settings.tar.gz”.

|

||||

|

||||

|

||||

|

||||

When the zipping is complete, the zipped file is copied to the backup directory and a dialog box displays telling you that the backups were created successfully. Click “OK” to close the dialog box.

|

||||

|

||||

|

||||

|

||||

Themes from the “/usr/share/themes” directory and icons from the “/usr/share/icons” directory can also be backed up. To do so, click the “Backup” button to the right of “Themes and Icons”. The “Backup Themes” dialog box displays with all the themes and icons selected by default. De-select any themes or icons you don’t want to back up and click “Backup.”

|

||||

|

||||

|

||||

|

||||

The themes are zipped and copied to a “themes” directory in the backup directory and the icons are zipped and copied to an “icons” directory in the backup directory. A dialog box displays telling you that the backups were created successfully. Click “OK” to close the dialog box.

|

||||

|

||||

|

||||

|

||||

Once you’ve completed the desired backups, close Aptik by clicking the “X” button in the upper-left corner of the main window.

|

||||

|

||||

|

||||

|

||||

Your backup files are available in the backup directory you chose.

|

||||

|

||||

|

||||

|

||||

When you re-install your Ubuntu system or install a new version of Ubuntu, install Aptik on the newly installed system and make the backup files you generated available to the system. Run Aptik and use the “Restore” button for each item to restore your PPAs, applications, packages, settings, themes, and icons.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.howtogeek.com/206454/how-to-backup-and-restore-your-apps-and-ppas-in-ubuntu-using-aptik/

|

||||

|

||||

作者:Lori Kaufman

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.howtogeek.com/203768

|

||||

@ -1,75 +0,0 @@

|

||||

(translating by runningwater)

|

||||

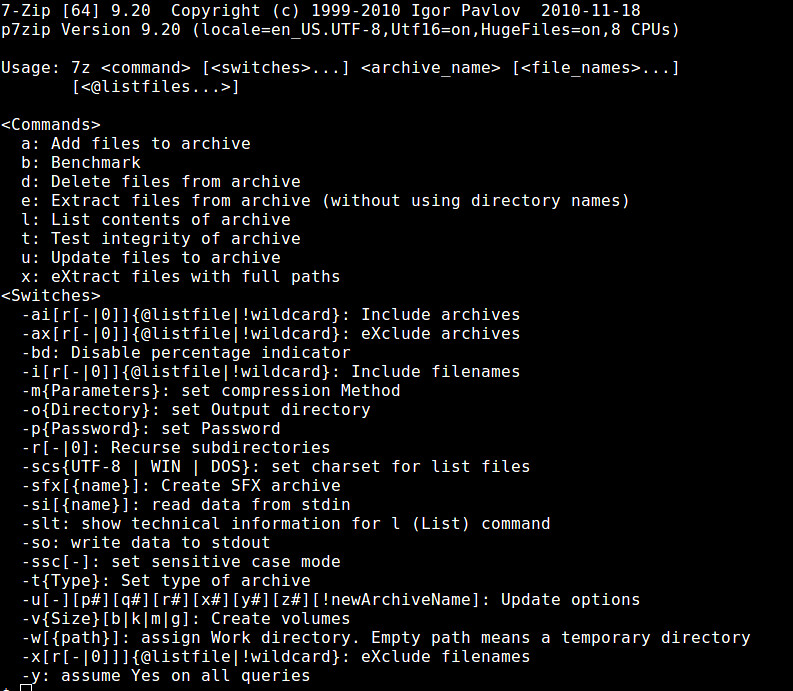

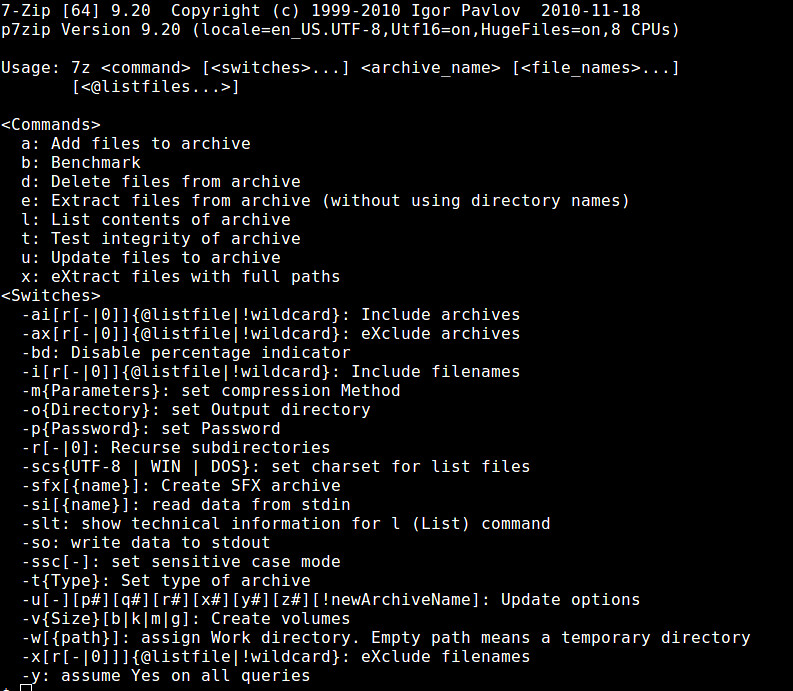

Linux FAQs with Answers--How to install 7zip on Linux

|

||||

================================================================================

|

||||

> **Question**: I need to extract files from an ISO image, and for that I want to use 7zip program. How can I install 7zip on [insert your Linux distro]?

|

||||

|

||||

7zip is an open-source archive program originally developed for Windows, which can pack or unpack a variety of archive formats including its native format 7z as well as XZ, GZIP, TAR, ZIP and BZIP2. 7zip is also popularly used to extract RAR, DEB, RPM and ISO files. Besides simple archiving, 7zip can support AES-256 encryption as well as self-extracting and multi-volume archiving. For POSIX systems (Linux, Unix, BSD), the original 7zip program has been ported as p7zip (short for "POSIX 7zip").

|

||||

|

||||

Here is how to install 7zip (or p7zip) on Linux.

|

||||

|

||||

### Install 7zip on Debian, Ubuntu or Linux Mint ###

|

||||

|

||||

Debian-based distributions come with three packages related to 7zip.

|

||||

|

||||

- **p7zip**: contains 7zr (a minimal 7zip archive tool) which can handle its native 7z format only.

|

||||

- **p7zip-full**: contains 7z which can support 7z, LZMA2, XZ, ZIP, CAB, GZIP, BZIP2, ARJ, TAR, CPIO, RPM, ISO and DEB.

|

||||

- **p7zip-rar**: contains a plugin for extracting RAR files.

|

||||

|

||||

It is recommended to install p7zip-full package (not p7zip) since this is the most complete 7zip package which supports many archive formats. In addition, if you want to extract RAR files, you also need to install p7zip-rar package as well. The reason for having a separate plugin package is because RAR is a proprietary format.

|

||||

|

||||

$ sudo apt-get install p7zip-full p7zip-rar

|

||||

|

||||

### Install 7zip on Fedora or CentOS/RHEL ###

|

||||

|

||||

Red Hat-based distributions offer two packages related to 7zip.

|

||||

|

||||

- **p7zip**: contains 7za command which can support 7z, ZIP, GZIP, CAB, ARJ, BZIP2, TAR, CPIO, RPM and DEB.

|

||||

- **p7zip-plugins**: contains 7z command and additional plugins to extend 7za command (e.g., ISO extraction).

|

||||

|

||||

On CentOS/RHEL, you need to enable [EPEL repository][1] before running yum command below. On Fedora, there is not need to set up additional repository.

|

||||

|

||||

$ sudo yum install p7zip p7zip-plugins

|

||||

|

||||

Note that unlike Debian based distributions, Red Hat based distributions do not offer a RAR plugin. Therefore you will not be able to extract RAR files using 7z command.

|

||||

|

||||

### Create or Extract an Archive with 7z ###

|

||||

|

||||

Once you installed 7zip, you can use 7z command to pack or unpack various types of archives. The 7z command uses other plugins to handle the archives.

|

||||

|

||||

|

||||

|

||||

To create an archive, use "a" option. Supported archive types for creation are 7z, XZ, GZIP, TAR, ZIP and BZIP2. If the specified archive file already exists, it will "add" the files to the existing archive, instead of overwriting it.

|

||||

|

||||

$ 7z a <archive-filename> <list-of-files>

|

||||

|

||||

To extract an archive, use "e" option. It will extract the archive in the current directory. Supported archive types for extraction are a lot more than those for creation. The list includes 7z, XZ, GZIP, TAR, ZIP, BZIP2, LZMA2, CAB, ARJ, CPIO, RPM, ISO and DEB.

|

||||

|

||||

$ 7z e <archive-filename>

|

||||

|

||||

Another way to unpack an archive is to use "x" option. Unlike "e" option, it will extract the content with full paths.

|

||||

|

||||

$ 7z x <archive-filename>

|

||||

|

||||

To see a list of files in an archive, use "l" option.

|

||||

|

||||

$ 7z l <archive-filename>

|

||||

|

||||

You can update or remove file(s) in an archive with "u" and "d" options, respectively.

|

||||

|

||||

$ 7z u <archive-filename> <list-of-files-to-update>

|

||||

$ 7z d <archive-filename> <list-of-files-to-delete>

|

||||

|

||||

To test the integrity of an archive:

|

||||

|

||||

$ 7z t <archive-filename>

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via:http://ask.xmodulo.com/install-7zip-linux.html

|

||||

|

||||

译者:[runningwater](https://github.com/runningwater)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://xmodulo.com/how-to-set-up-epel-repository-on-centos.html

|

||||

@ -1,134 +0,0 @@

|

||||

翻译中 by小眼儿

|

||||

|

||||

Docker Image Insecurity

|

||||

================================================================================

|

||||

Recently while downloading an “official” container image with Docker I saw this line:

|

||||

|

||||

ubuntu:14.04: The image you are pulling has been verified

|

||||

|

||||

I assumed this referenced Docker’s [heavily promoted][1] image signing system and didn’t investigate further at the time. Later, while researching the cryptographic digest system that Docker tries to secure images with, I had the opportunity to explore further. What I found was a total systemic failure of all logic related to image security.

|

||||

|

||||

Docker’s report that a downloaded image is “verified” is based solely on the presence of a signed manifest, and Docker never verifies the image checksum from the manifest. An attacker could provide any image alongside a signed manifest. This opens the door to a number of serious vulnerabilities.

|

||||

|

||||

Images are downloaded from an HTTPS server and go through an insecure streaming processing pipeline in the Docker daemon:

|

||||

|

||||

[decompress] -> [tarsum] -> [unpack]

|

||||

|

||||

This pipeline is performant but completely insecure. Untrusted input should not be processed before verifying its signature. Unfortunately Docker processes images three times before checksum verification is supposed to occur.

|

||||

|

||||

However, despite [Docker’s claims][2], image checksums are never actually checked. This is the only section[0][3] of Docker’s code related to verifying image checksums, and I was unable to trigger the warning even when presenting images with mismatched checksums.

|

||||

|

||||

if img.Checksum != "" && img.Checksum != checksum {

|

||||

log.Warnf("image layer checksum mismatch: computed %q,

|

||||

expected %q", checksum, img.Checksum)

|

||||

}

|

||||

|

||||

### Insecure processing pipeline ###

|

||||

|

||||

**Decompress**

|

||||

|

||||

Docker supports three compression algorithms: gzip, bzip2, and xz. The first two use the Go standard library implementations, which are [memory-safe][4], so the exploit types I’d expect to see here are denial of service attacks like crashes and excessive CPU and memory usage.

|

||||

|

||||

The third compression algorithm, xz, is more interesting. Since there is no native Go implementation, Docker [execs][5] the `xz` binary to do the decompression.

|

||||

|

||||

The xz binary comes from the [XZ Utils][6] project, and is built from approximately[1][7] twenty thousand lines of C code. C is not a memory-safe language. This means malicious input to a C program, in this case the Docker image XZ Utils is unpacking, could potentially execute arbitrary code.

|

||||

|

||||

Docker exacerbates this situation by *running* `xz` as root. This means that if there is a single vulnerability in `xz`, a call to `docker pull` could result in the complete compromise of your entire system.

|

||||

|

||||

**Tarsum**

|

||||

|

||||

The use of tarsum is well-meaning but completely flawed. In order to get a deterministic checksum of the contents of an arbitrarily encoded tar file, Docker decodes the tar and then hashes specific portions, while excluding others, in a [deterministic order][8].

|

||||

|

||||

Since this processing is done in order to generate the checksum, it is decoding untrusted data which could be designed to exploit the tarsum code[2][9]. Potential exploits here are denial of service as well as logic flaws that could cause files to be injected, skipped, processed differently, modified, appended to, etc. without the checksum changing.

|

||||

|

||||

**Unpacking**

|

||||

|

||||

Unpacking consists of decoding the tar and placing files on the disk. This is extraordinarily dangerous as there have been three other vulnerabilities reported[3][10] in the unpack stage at the time of writing.

|

||||

|

||||

There is no situation where data that has not been verified should be unpacked onto disk.

|

||||

|

||||

### libtrust ###

|

||||

|

||||

[libtrust][11] is a Docker package that claims to provide “authorization and access control through a distributed trust graph.” Unfortunately no specification appears to exist, however it looks like it implements some parts of the [Javascript Object Signing and Encryption][12] specifications along with other unspecified algorithms.

|

||||

|

||||

Downloading an image with a manifest signed and verified using libtrust is what triggers this inaccurate message (only the manifest is checked, not the actual image contents):

|

||||

|

||||

ubuntu:14.04: The image you are pulling has been verified

|

||||

|

||||

Currently only “official” image manifests published by Docker, Inc are signed using this system, but from discussions I participated in at the last Docker Governance Advisory Board meeting[4][13], my understanding is that Docker, Inc is planning on deploying this more widely in the future. The intended goal is centralization with Docker, Inc controlling a Certificate Authority that then signs images and/or client certificates.

|

||||

|

||||

I looked for the signing key in Docker’s code but was unable to find it. As it turns out the key is not embedded in the binary as one would expect. Instead the Docker daemon fetches it [over HTTPS from a CDN][14] before each image download. This is a terrible approach as a variety of attacks could lead to trusted keys being replaced with malicious ones. These attacks include but are not limited to: compromise of the CDN vendor, compromise of the CDN origin serving the key, and man in the middle attacks on clients downloading the keys.

|

||||

|

||||

### Remediation ###

|

||||

|

||||

I [reported][15] some of the issues I found with the tarsum system before I finished this research, but so far nothing I have reported has been fixed.

|

||||

|

||||

Some steps I believe should be taken to improve the security of the Docker image download system:

|

||||

Drop tarsum and actually verify image digests

|

||||

|

||||

Tarsum should not be used for security. Instead, images must be fully downloaded and their cryptographic signatures verified before any processing takes place.

|

||||

|

||||

**Add privilege isolation**

|

||||

|

||||

Image processing steps that involve decompression or unpacking should be run in isolated processes (containers?) that have only the bare minimum required privileges to operate. There is no scenario where a decompression tool like `xz` should be run as root.

|

||||

|

||||

**Replace libtrust**

|

||||

|

||||

Libtrust should be replaced with [The Update Framework][16] which is explicitly designed to solve the real problems around signing software binaries. The threat model is very comprehensive and addresses many things that have not been considered in libtrust. There is a complete specification as well as a reference implementation written in Python, and I have begun work on a [Go implementation][17] and welcome contributions.

|

||||

|

||||

As part of adding TUF to Docker, a local keystore should be added that maps root keys to registry URLs so that users can have their own signing keys that are not managed by Docker, Inc.

|

||||

|

||||

I would like to note that using non-Docker, Inc hosted registries is a very poor user experience in general. Docker, Inc seems content with relegating third party registries to second class status when there is no technical reason to do so. This is a problem both for the ecosystem in general and the security of end users. A comprehensive, decentralized security model for third party registries is both necessary and desirable. I encourage Docker, Inc to take this into consideration when redesigning their security model and image verification system.

|

||||

|

||||

### Conclusion ###

|

||||

|

||||

Docker users should be aware that the code responsible for downloading images is shockingly insecure. Users should only download images whose provenance is without question. At present, this does *not* include “trusted” images hosted by Docker, Inc including the official Ubuntu and other base images.

|

||||

|

||||

The best option is to block `index.docker.io` locally, and download and verify images manually before importing them into Docker using `docker load`. Red Hat’s security blog has [a good post about this][18].

|

||||

|

||||

Thanks to Lewis Marshall for pointing out the tarsums are never verified.

|

||||

|

||||

- [Checksum code context][19].

|

||||

- [cloc][20] says 18,141 non-blank, non-comment lines of C and 5,900 lines of headers in v5.2.0.

|

||||

- Very similar bugs been [found in Android][21], which allowed arbitrary files to be injected into signed packages, and [the Windows Authenticode][22] signature system, which allowed binary modification.

|

||||

- Specifically: [CVE-2014-6407][23], [CVE-2014-9356][24], and [CVE-2014-9357][25]. There were two Docker [security releases][26] in response.

|

||||

- See page 8 of the [notes from the 2014-10-28 DGAB meeting][27].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://titanous.com/posts/docker-insecurity

|

||||

|

||||

作者:[titanous][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://twitter.com/titanous

|

||||

[1]:https://blog.docker.com/2014/10/docker-1-3-signed-images-process-injection-security-options-mac-shared-directories/

|

||||

[2]:https://blog.docker.com/2014/10/docker-1-3-signed-images-process-injection-security-options-mac-shared-directories/

|

||||

[3]:https://titanous.com/posts/docker-insecurity#fn:0

|

||||

[4]:https://en.wikipedia.org/wiki/Memory_safety

|

||||

[5]:https://github.com/docker/docker/blob/0874f9ab77a7957633cd835241a76ee4406196d8/pkg/archive/archive.go#L91-L95

|

||||

[6]:http://tukaani.org/xz/

|

||||

[7]:https://titanous.com/posts/docker-insecurity#fn:1

|

||||

[8]:https://github.com/docker/docker/blob/0874f9ab77a7957633cd835241a76ee4406196d8/pkg/tarsum/tarsum_spec.md

|

||||

[9]:https://titanous.com/posts/docker-insecurity#fn:2

|

||||

[10]:https://titanous.com/posts/docker-insecurity#fn:3

|

||||

[11]:https://github.com/docker/libtrust

|

||||

[12]:https://tools.ietf.org/html/draft-ietf-jose-json-web-signature-11

|

||||

[13]:https://titanous.com/posts/docker-insecurity#fn:4

|

||||

[14]:https://github.com/docker/docker/blob/0874f9ab77a7957633cd835241a76ee4406196d8/trust/trusts.go#L38

|

||||

[15]:https://github.com/docker/docker/issues/9719

|

||||

[16]:http://theupdateframework.com/

|

||||

[17]:https://github.com/flynn/go-tuf

|

||||

[18]:https://securityblog.redhat.com/2014/12/18/before-you-initiate-a-docker-pull/

|

||||

[19]:https://github.com/docker/docker/blob/0874f9ab77a7957633cd835241a76ee4406196d8/image/image.go#L114-L116

|

||||

[20]:http://cloc.sourceforge.net/

|

||||

[21]:http://www.saurik.com/id/17

|

||||

[22]:http://blogs.technet.com/b/srd/archive/2013/12/10/ms13-098-update-to-enhance-the-security-of-authenticode.aspx

|

||||

[23]:https://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2014-6407

|

||||

[24]:https://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2014-9356

|

||||

[25]:https://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2014-9357

|

||||

[26]:https://groups.google.com/d/topic/docker-user/nFAz-B-n4Bw/discussion

|

||||

[27]:https://docs.google.com/document/d/1JfWNzfwptsMgSx82QyWH_Aj0DRKyZKxYQ1aursxNorg/edit?pli=1

|

||||

@ -1,207 +0,0 @@

|

||||

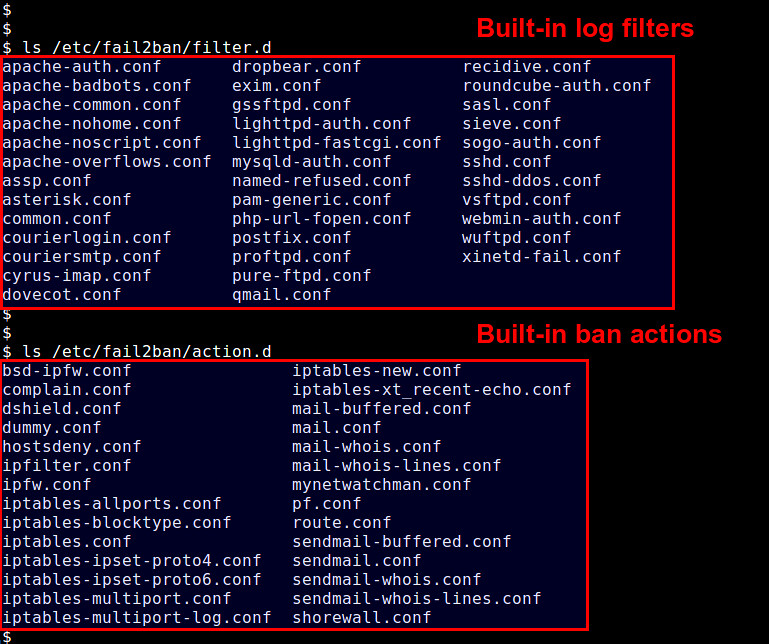

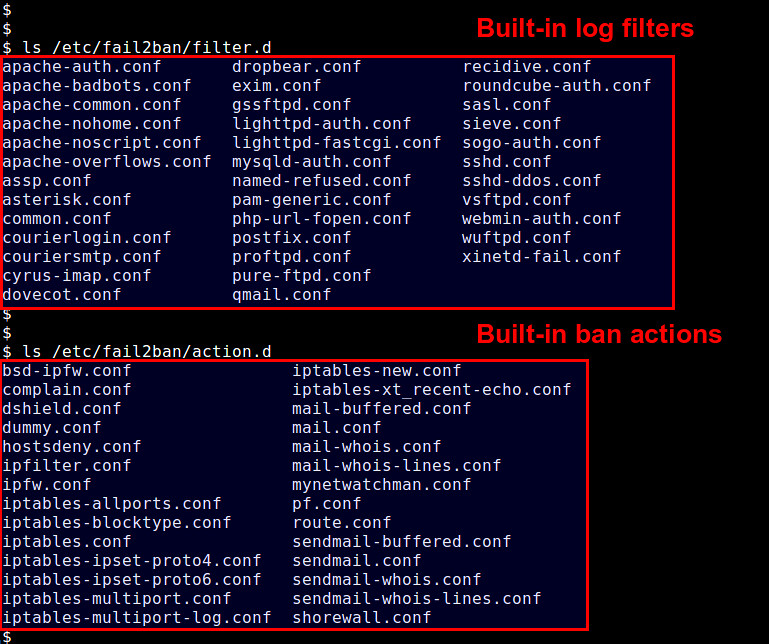

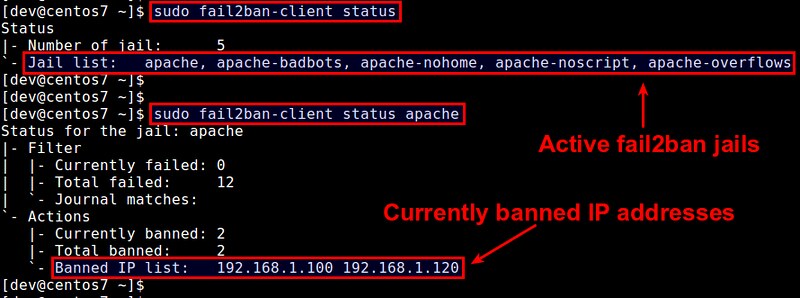

How to configure fail2ban to protect Apache HTTP server

|

||||

================================================================================

|

||||

An Apache HTTP server in production environments can be under attack in various different ways. Attackers may attempt to gain access to unauthorized or forbidden directories by using brute-force attacks or executing evil scripts. Some malicious bots may scan your websites for any security vulnerability, or collect email addresses or web forms to send spams to.

|

||||

|

||||

Apache HTTP server comes with comprehensive logging capabilities capturing various abnormal events indicative of such attacks. However, it is still non-trivial to systematically parse detailed Apache logs and react to potential attacks quickly (e.g., ban/unban offending IP addresses) as they are perpetrated in the wild. That is when `fail2ban` comes to the rescue, making a sysadmin's life easier.

|

||||

|

||||

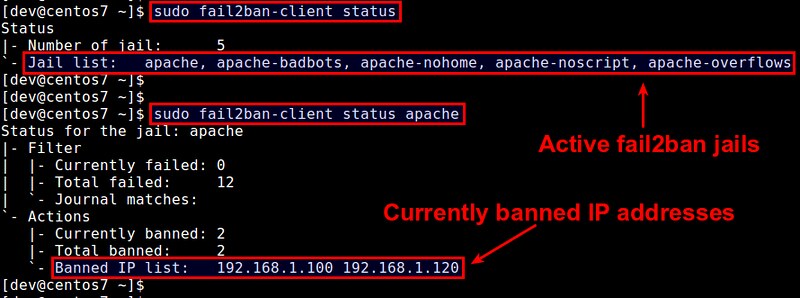

`fail2ban` is an open-source intrusion prevention tool which detects various attacks based on system logs and automatically initiates prevention actions e.g., banning IP addresses with `iptables`, blocking connections via /etc/hosts.deny, or notifying the events via emails. fail2ban comes with a set of predefined "jails" which use application-specific log filters to detect common attacks. You can also write custom jails to deter any specific attack on an arbitrary application.

|

||||

|

||||

In this tutorial, I am going to demonstrate how you can configure fail2ban to protect your Apache HTTP server. I assume that you have Apache HTTP server and fail2ban already installed. Refer to [another tutorial][1] for fail2ban installation.

|

||||

|

||||

### What is a Fail2ban Jail ###

|

||||

|