mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-28 01:01:09 +08:00

commit

a1bda05237

@ -2,9 +2,9 @@

|

||||

================================================================================

|

||||

|

||||

|

||||

当你想重装Ubuntu或者仅仅是想安装它的一个新版本的时候,寻到一个便捷的方法去重新安装之前的应用并且重置其设置是很有用的。此时 *Aptik* 粉墨登场,它可以帮助你轻松实现。

|

||||

当你想重装Ubuntu或者仅仅是想安装它的一个新版本的时候,如果有个便捷的方法来重新安装之前的应用并且重置其设置会很方便的。此时 *Aptik* 粉墨登场,它可以帮助你轻松实现。

|

||||

|

||||

Aptik(自动包备份和回复)是一个可以用在Ubuntu,Linux Mint, 和其他基于Debian以及Ubuntu的Linux发行版上的应用,它允许你将已经安装过的包括软件库、下载包、安装的应用及其主题和设置在内的PPAs(个人软件包存档)备份到外部的U盘、网络存储或者类似于Dropbox的云服务上。

|

||||

Aptik(自动包备份和恢复)是一个可以用在Ubuntu,Linux Mint 和其他基于Debian以及Ubuntu的Linux发行版上的应用,它允许你将已经安装过的包括软件库、下载包、安装的应用和主题、用户设置在内的PPAs(个人软件包存档)备份到外部的U盘、网络存储或者类似于Dropbox的云服务上。

|

||||

|

||||

注意:当我们在此文章中说到输入某些东西的时候,如果被输入的内容被引号包裹,请不要将引号一起输入进去,除非我们有特殊说明。

|

||||

|

||||

@ -16,7 +16,7 @@ Aptik(自动包备份和回复)是一个可以用在Ubuntu,Linux Mint, 和

|

||||

|

||||

|

||||

|

||||

输入下边的命令到提示符旁边,来确保资源库已经是最新版本。

|

||||

在命令行提示符输入下边的命令,来确保资源库已经是最新版本。

|

||||

|

||||

sudo apt-get update

|

||||

|

||||

@ -86,11 +86,11 @@ Aptik的主窗口显示出来了。从“Backup Directory”下拉列表中选

|

||||

|

||||

接下来,“Downloaded Packages (APT Cache)”的项目只对重装同样版本的Ubuntu有用处。它会备份下你系统缓存(/var/cache/apt/archives)中的包。如果你是升级系统的话,可以跳过这个条目,因为针对新系统的包会比现有系统缓存中的包更加新一些。

|

||||

|

||||

备份和回复下载过的包,这可以在重装Ubuntu,并且重装包的时候节省时间和网络带宽。因为一旦你把这些包恢复到系统缓存中之后,他们可以重新被利用起来,这样下载过程就免了,包的安装会更加快捷。

|

||||

备份和恢复下载过的包,这可以在重装Ubuntu,并且重装包的时候节省时间和网络带宽。因为一旦你把这些包恢复到系统缓存中之后,他们可以重新被利用起来,这样下载过程就免了,包的安装会更加快捷。

|

||||

|

||||

如果你是重装相同版本的Ubuntu系统的话,点击 “Downloaded Packages (APT Cache)” 右侧的 “Backup” 按钮来备份系统缓存中的包。

|

||||

|

||||

注意:当你备份下载过的包的时候是没有二级对话框出现。你系统缓存 (/var/cache/apt/archives) 中的包会被拷贝到备份目录下一个名叫 “archives” 的文件夹中,当整个过程完成后会出现一个对话框来告诉你备份已经完成。

|

||||

注意:当你备份下载过的包的时候是没有二级对话框出现的。你系统缓存 (/var/cache/apt/archives) 中的包会被拷贝到备份目录下一个名叫 “archives” 的文件夹中,当整个过程完成后会出现一个对话框来告诉你备份已经完成。

|

||||

|

||||

|

||||

|

||||

@ -104,7 +104,7 @@ Aptik的主窗口显示出来了。从“Backup Directory”下拉列表中选

|

||||

|

||||

|

||||

|

||||

名为 “packages.list” and “packages-installed.list” 的两个文件出现在了备份目录中,并且一个用来通知你备份完成的对话框出现。点击 ”OK“关闭它。

|

||||

备份目录中出现了两个名为 “packages.list” 和“packages-installed.list” 的文件,并且会弹出一个通知你备份完成的对话框。点击 ”OK“关闭它。

|

||||

|

||||

注意:“packages-installed.list”文件包含了所有的包,而 “packages.list” 在包含了所有包的前提下还指出了那些包被选择上了。

|

||||

|

||||

@ -120,27 +120,27 @@ Aptik的主窗口显示出来了。从“Backup Directory”下拉列表中选

|

||||

|

||||

|

||||

|

||||

当打包完成后,打包后的文件被拷贝到备份目录下,另外一个备份成功的对话框出现。点击”OK“,关掉。

|

||||

当打包完成后,打包后的文件被拷贝到备份目录下,另外一个备份成功的对话框出现。点击“OK”关掉。

|

||||

|

||||

|

||||

|

||||

来自 “/usr/share/themes” 目录的主题和来自 “/usr/share/icons” 目录的图标也可以备份。点击 “Themes and Icons” 右侧的 “Backup” 来进行此操作。“Backup Themes” 对话框默认选择了所有的主题和图标。你可以安装需要取消到一些然后点击 “Backup” 进行备份。

|

||||

放在 “/usr/share/themes” 目录的主题和放在 “/usr/share/icons” 目录的图标也可以备份。点击 “Themes and Icons” 右侧的 “Backup” 来进行此操作。“Backup Themes” 对话框默认选择了所有的主题和图标。你可以安装需要的、取消一些不要的,然后点击 “Backup” 进行备份。

|

||||

|

||||

|

||||

|

||||

主题被打包拷贝到备份目录下的 “themes” 文件夹中,图标被打包拷贝到备份目录下的 “icons” 文件夹中。然后成功提示对话框出现,点击”OK“关闭它。

|

||||

主题被打包拷贝到备份目录下的 “themes” 文件夹中,图标被打包拷贝到备份目录下的 “icons” 文件夹中。然后成功提示对话框出现,点击“OK”关闭它。

|

||||

|

||||

|

||||

|

||||

一旦你完成了需要的备份,点击主界面左上角的”X“关闭 Aptik 。

|

||||

一旦你完成了需要的备份,点击主界面左上角的“X”关闭 Aptik 。

|

||||

|

||||

|

||||

|

||||

备份过的文件已存在于你选择的备份目录中,可以随时取阅。

|

||||

备份过的文件已存在于你选择的备份目录中,可以随时查看。

|

||||

|

||||

|

||||

|

||||

当你重装Ubuntu或者安装新版本的Ubuntu后,在新的系统中安装 Aptik 并且将备份好的文件置于新系统中让其可被使用。运行 Aptik,并使用每个条目的 “Restore” 按钮来恢复你的软件源、应用、包、设置、主题以及图标。

|

||||

当你重装Ubuntu或者安装新版本的Ubuntu后,在新的系统中安装 Aptik 并且将备份好的文件置于新系统中使用。运行 Aptik,并使用每个条目的 “Restore” 按钮来恢复你的软件源、应用、包、设置、主题以及图标。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -148,7 +148,7 @@ via: http://www.howtogeek.com/206454/how-to-backup-and-restore-your-apps-and-ppa

|

||||

|

||||

作者:Lori Kaufman

|

||||

译者:[Ping](https://github.com/mr-ping)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,166 @@

|

||||

文件轻松比对,伟大而自由的比较软件们

|

||||

================================================================================

|

||||

|

||||

文件比较工具用于比较计算机上的文件的内容,找到他们之间相同与不同之处。比较的结果通常被称为diff。

|

||||

|

||||

diff同时也是一个基于控制台的、能输出两个文件之间不同之处的著名的文件比较程序的名字。diff是于二十世纪70年代早期,在Unix操作系统上被开发出来的。diff将会把两个文件之间不同之处的部分进行输出。

|

||||

|

||||

Linux拥有很多不错的GUI工具,能使你能清楚的看到两个文件或同一文件不同版本之间的不同之处。这次我从自己最喜欢的GUI比较工具中选出了五个推荐给大家。除了其中的一个,其他的都是开源的。

|

||||

|

||||

这些应用程序可以让你更清楚的看到文件或目录的差别,能合并有差异的文件,可以解决冲突并将其输出成一个新的文件或补丁,其也用于那些预览和备注文件改动的产品上(比如,在源代码合并到源文件树之前,要先接受源代码的改变)。因此它们是非常重要的软件开发工具。它们可以帮助开发人员们对文件进行处理,不停的把文件转来转去。这些比较工具不仅仅能用于显示源代码文件中的不同之处;他们还适用于很多种的文本文件。可视化的特性使文件比较变得容易、简单。

|

||||

|

||||

----------

|

||||

|

||||

###Meld

|

||||

|

||||

|

||||

|

||||

Meld是一个适用于Gnome桌面的、开源的、图形化的文件差异查看和合并的应用程序。它支持2到3个文件的同时比较、递归式的目录比较、处于版本控制(Bazaar, Codeville, CVS, Darcs, Fossil SCM, Git, Mercurial, Monotone, Subversion)之下的目录比较。还能够手动或自动合并文件差异。

|

||||

|

||||

Meld的重点在于帮助开发人员比较和合并多个源文件,并在他们最喜欢的版本控制系统下能直观的浏览改动过的地方。

|

||||

|

||||

功能包括

|

||||

|

||||

- 原地编辑文件,即时更新

|

||||

- 进行两到三个文件的比较及合并

|

||||

- 在显示的差异和冲突之间的导航

|

||||

- 使用插入、改变和冲突这几种标记可视化展示本地和全局的差异

|

||||

- 内置正则表达式文本过滤器,可以忽略不重要的差异

|

||||

- 语法高亮度显示(使用可选的gtksourceview)

|

||||

- 将两到三个目录中的文件逐个进行比较,显示新建,缺失和替换过的文件

|

||||

- 对任何有冲突或差异的文件直接打开比较界面

|

||||

- 可以过滤文件或目录以避免以忽略某些差异

|

||||

- 被改动区域的自动合并模式使合并更容易

|

||||

- 也有一个简单的文件管理

|

||||

- 支持多种版本控制系统,包括Git, Mercurial, Bazaar 和 SVN

|

||||

- 在提交前开启文件比较来检查改动的地方和内容

|

||||

- 查看文件版本状态

|

||||

- 还能进行简单的版本控制操作(例如,提交、更新、添加、移动或删除文件)

|

||||

- 继承自同一文件的两个文件进行自动合并

|

||||

- 标注并在中间的窗格显示所有有冲突的变更的基础版本

|

||||

- 显示并合并同一文件的无关的独立修改

|

||||

- 锁定只读性质的基础文件以避免出错

|

||||

- 可以整合到已有的命令行界面中,包括gitmergetool

|

||||

- 国际化支持

|

||||

- 可视化使文件比较更简单

|

||||

|

||||

- 网址: [meldmerge.org][1]

|

||||

- 开发人员: Kai Willadsen

|

||||

- 证书: GNU GPL v2

|

||||

- 版本号: 1.8.5

|

||||

|

||||

----------

|

||||

|

||||

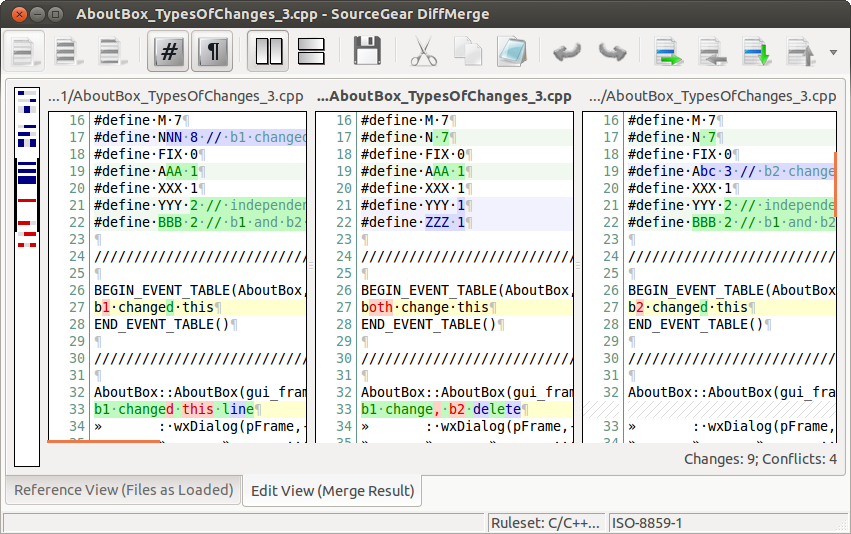

###DiffMerge

|

||||

|

||||

|

||||

|

||||

DiffMerge是一个可以在Linux、Windows和OS X上运行的,可以可视化文件的比较和合并的应用软件。

|

||||

|

||||

功能包括:

|

||||

|

||||

- 图形化显示两个文件之间的差别。包括插入行,高亮标注以及对编辑的全面支持

|

||||

- 图形化显示三个文件之间的差别。(安全的前提下)允许自动合并,并对最终文件可以随意编辑

|

||||

- 并排显示两个文件夹的比较,显示哪一个文件只存在于其中一个文件夹而不存在于另外的一个文件夹,还能一对一的将完全相同的、等价的或不同的文件配对

|

||||

- 规则设置和选项让你可以个性化它的外观和行为

|

||||

- 基于Unicode,可以导入多种编码的字符

|

||||

- 跨平台工具

|

||||

|

||||

- 网址: [sourcegear.com/diffmerge][2]

|

||||

- 开发人员: SourceGear LLC

|

||||

- 证书: Licensed for use free of charge (not open source)

|

||||

- 版本号: 4.2

|

||||

|

||||

----------

|

||||

|

||||

###xxdiff

|

||||

|

||||

|

||||

|

||||

xxdiff是个开源的图形化的可进行文件、目录比较及合并的工具。

|

||||

|

||||

xxdiff可以用于显示两到三个文件或两个目录的差别,还能产生一个合并后的版本。被比较的两到三个文件会并排显示,并将有区别的文字内容用不同颜色高亮显示以便于识别。

|

||||

|

||||

这个程序是个非常重要的软件开发工具。他可以图形化的显示两个文件或目录之间的差别,合并有差异的文件,其也用于那些预览和备注文件改动的产品上(比如,在源代码合并到源文件树之前,要先接受源代码的改变)

|

||||

|

||||

功能包括:

|

||||

|

||||

- 比较两到三个文件,或是两个目录(浅层或递归)

|

||||

- 横向高亮显示差异

|

||||

- 交互式的文件合并,可视化的输出和保存

|

||||

- 可以辅助合并的评论/监管

|

||||

- 自动合并文件中时不合并 CVS 冲突,并以两个文件显示以便于解决冲突

|

||||

- 可以用其它的比较程序计算差异:适用于GNU diff、SGI diff和ClearCase的cleardiff,以及所有与这些程序输出相似的文件比较程序。

|

||||

- 可以使用资源文件实现完全的个性化设置

|

||||

- 用起来感觉和Rudy Wortel或SGI的xdiff差不多,与桌面系统无关

|

||||

- 功能和输出可以和脚本轻松集成

|

||||

|

||||

- 网址: [furius.ca/xxdiff][3]

|

||||

- 开发人员: Martin Blais

|

||||

- 证书: GNU GPL

|

||||

- 版本号: 4.0

|

||||

|

||||

----------

|

||||

|

||||

###Diffuse

|

||||

|

||||

|

||||

|

||||

Diffuse是个开源的图形化工具,可用于合并和比较文本文件。Diffuse能够比较任意数量的文件,并排显示,并提供手动行匹配调整,能直接编辑文件。Diffuse还能从bazaar、CVS、darcs, git, mercurial, monotone, Subversion和GNU RCS 库中获取版本用于比较及合并。

|

||||

|

||||

功能包括:

|

||||

|

||||

- 比较任意数量的文件,并排显示(多方合并)

|

||||

- 行匹配可以被用户人工矫正

|

||||

- 直接编辑文件

|

||||

- 语法高亮

|

||||

- 支持Bazaar, CVS, Darcs, Git, Mercurial, Monotone, RCS, Subversion和SVK

|

||||

- 支持Unicode

|

||||

- 可无限撤销

|

||||

- 易用的键盘导航

|

||||

|

||||

- 网址: [diffuse.sourceforge.net][]

|

||||

- 开发人员: Derrick Moser

|

||||

- 证书: GNU GPL v2

|

||||

- 版本号: 0.4.7

|

||||

|

||||

----------

|

||||

|

||||

###Kompare

|

||||

|

||||

|

||||

|

||||

Kompare是个开源的GUI前端程序,可以对不同源文件之间差异的可视化和合并。Kompare可以比较文件或文件夹内容的差异。Kompare支持很多种diff格式,并提供各种选项来设置显示的信息级别。

|

||||

|

||||

不论你是个想比较源代码的开发人员,还是只想比较一下研究论文手稿与最终文档的差异,Kompare都是个有用的工具。

|

||||

|

||||

Kompare是KDE桌面环境的一部分。

|

||||

|

||||

功能包括:

|

||||

|

||||

- 比较两个文本文件

|

||||

- 递归式比较目录

|

||||

- 显示diff产生的补丁

|

||||

- 将补丁合并到一个已存在的目录

|

||||

- 可以让你在编译时更轻松

|

||||

|

||||

- 网址: [www.caffeinated.me.uk/kompare/][5]

|

||||

- 开发者: The Kompare Team

|

||||

- 证书: GNU GPL

|

||||

- 版本号: Part of KDE

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxlinks.com/article/2014062814400262/FileComparisons.html

|

||||

|

||||

作者:Frazer Kline

|

||||

译者:[H-mudcup](https://github.com/H-mudcup)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://meldmerge.org/

|

||||

[2]:https://sourcegear.com/diffmerge/

|

||||

[3]:http://furius.ca/xxdiff/

|

||||

[4]:http://diffuse.sourceforge.net/

|

||||

[5]:http://www.caffeinated.me.uk/kompare/

|

||||

@ -0,0 +1,64 @@

|

||||

Linux上几款好用的字幕编辑器

|

||||

================================================================================

|

||||

如果你经常看国外的大片,你应该会喜欢带字幕版本而不是有国语配音的版本。我在法国长大,童年的记忆里充满了迪斯尼电影。但是这些电影因为有了法语的配音而听起来很怪。如果现在有机会能看原始的版本,我想,对于大多数的人来说,字幕还是必须的。我很高兴能为家人制作字幕。给我带来希望的是,Linux 也不乏有很多花哨、开源的字幕编辑器。总之一句话,文中Linux上字幕编辑器的列表并不详尽,你可以告诉我哪一款是你认为最好的字幕编辑器。

|

||||

|

||||

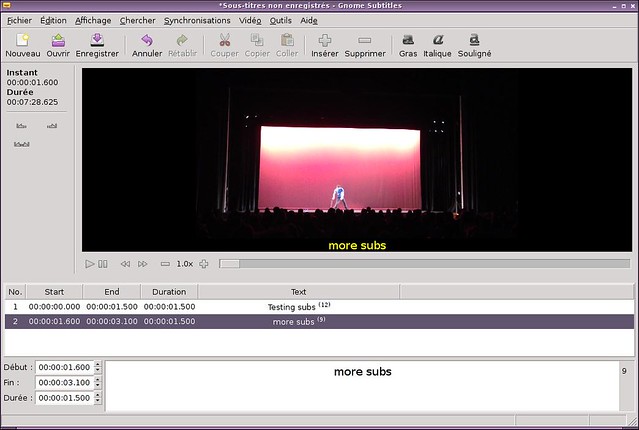

### 1. Gnome Subtitles ###

|

||||

|

||||

|

||||

|

||||

当有现有字幕需要快速编辑时,[Gnome Subtitles][1] 是我的一个选择。你可以载入视频,载入字幕文本,然后就可以即刻开始了。我很欣赏其对于易用性和高级特性之间的平衡。它带有一个同步工具以及一个拼写检查工具。最后但同样重要的的一点,这么好用最主要的是因为它的快捷键:当你编辑很多的台词的时候,你最好把你的手放在键盘上,使用其内置的快捷键来移动。

|

||||

|

||||

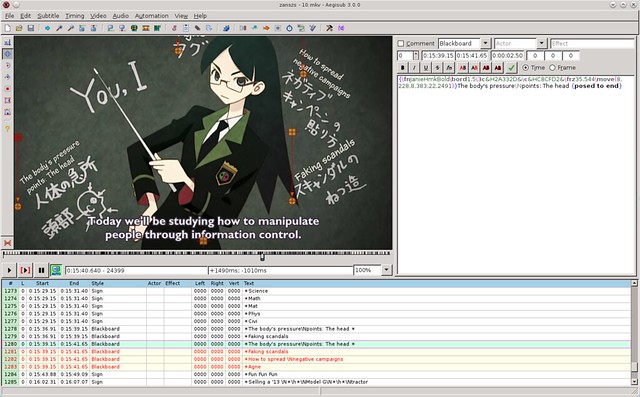

### 2. Aegisub ###

|

||||

|

||||

|

||||

|

||||

[Aegisub][2] 已经是一款高级别的复杂字幕编辑器。仅仅是界面就反映出了一定的学习曲线。但是,除了它吓人的样子以外,Aegisub 是一个非常完整的软件,提供的工具远远超出你能想象的。和Gnome Subtitles 一样,Aegisub也采用了所见即所得(WYSIWYG:what you see is what you get)的处理方式。但是是一个全新的高度:可以再屏幕上任意拖动字幕,也可以在另一边查看音频的频谱,并且可以利用快捷键做任何的事情。除此以外,它还带有一个汉字工具,有一个kalaok模式,并且你可以导入lua 脚本让它自动完成一些任务。我希望你在用之前,先去阅读下它的[指南][3]。

|

||||

|

||||

### 3. Gaupol ###

|

||||

|

||||

|

||||

|

||||

另一个操作复杂的软件是[Gaupol][4],不像Aegisub ,Gaupol 很容易上手而且采用了一个和Gnome Subtitles 很像的界面。但是在这些相对简单背后,它拥有很多很必要的工具:快捷键、第三方扩展、拼写检查,甚至是语音识别(由[CMU Sphinx][5]提供)。这里也提一个缺点,我注意到有时候在测试的时候也,软件会有消极怠工的表现,不是很严重,但是也足以让我更有理由喜欢Gnome Subtitles了。

|

||||

|

||||

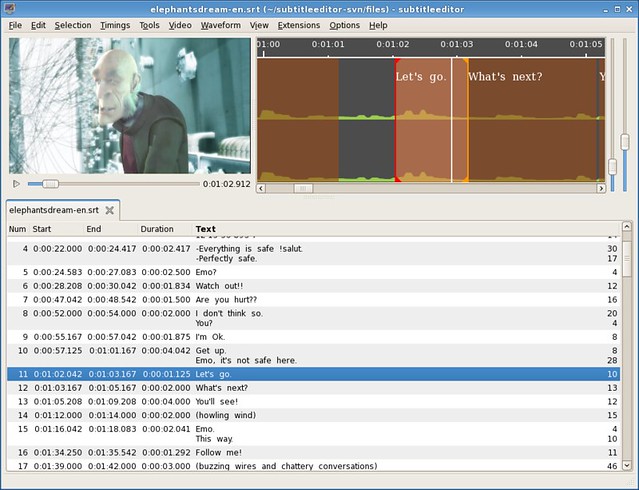

### 4. Subtitle Editor ###

|

||||

|

||||

|

||||

|

||||

[Subtitle Editor][6]和 Gaupol 很像,但是它的界面有点不太直观,特性也只是稍微的高级一点点。我很欣赏的一点是,它可以定义“关键帧”,而且提供所有的同步选项。然而,多一点的图标,或者是少一点的文字都能提供界面的特性。作为一个值得称赞的字幕编辑器,Subtitle Editor 可以模仿“作家”打字的效果,虽然我不确定它是否特别有用。最后但同样重要的一点,重定义快捷键的功能很实用。

|

||||

|

||||

### 5. Jubler ###

|

||||

|

||||

|

||||

|

||||

[Jubler][7]是一个用Java编写并有多平台支持的字幕编辑器。我对它的界面印象特别深刻。在上面我确实看出了Java特点的东西,但是,它仍然是经过精心的构造和构思的。像Aegisub 一样,你可以再屏幕上任意的拖动字幕,让你有愉快的体验而不单单是打字。它也可以为字幕自定义一个风格,在另外的一个轨道播放音频,翻译字幕,或者是是做拼写检查。不过,要注意的是,你需要事先安装好媒体播放器并且正确的配置,如果你想完整的使用Jubler。我把这些归功于在[官方页面][8]下载了脚本以后其简便的安装方式。

|

||||

|

||||

### 6. Subtitle Composer ###

|

||||

|

||||

|

||||

|

||||

[Subtitle Composer][9]被视为“KDE里的字幕作曲家”,它能够唤起对很多传统功能的回忆。伴随着KDE界面,我们充满了期待。我们自然会说到快捷键,我特别喜欢这个功能。除此之外,Subtitle Composer 与上面提到的编辑器最大的不同地方就在于,它可以执行用JavaScript,Python,甚至是Ruby写成的脚本。软件带有几个例子,肯定能够帮助你很好的学习使用这些特性的语法。

|

||||

|

||||

最后,不管你是否喜欢,都来为你的家庭编辑几个字幕吧,重新同步整个轨道,或者是一切从头开始,那么Linux 有很好的工具给你。对我来说,快捷键和易用性使得各个工具有差异,想要更高级别的使用体验,脚本和语音识别就成了很便利的一个功能。

|

||||

|

||||

你会使用哪个字幕编辑器,为什么?你认为还有没有更好用的字幕编辑器这里没有提到的?在评论里告诉我们吧。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/good-subtitle-editor-linux.html

|

||||

|

||||

作者:[Adrien Brochard][a]

|

||||

译者:[barney-ro](https://github.com/barney-ro)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/adrien

|

||||

[1]:http://gnomesubtitles.org/

|

||||

[2]:http://www.aegisub.org/

|

||||

[3]:http://docs.aegisub.org/3.2/Main_Page/

|

||||

[4]:http://home.gna.org/gaupol/

|

||||

[5]:http://cmusphinx.sourceforge.net/

|

||||

[6]:http://home.gna.org/subtitleeditor/

|

||||

[7]:http://www.jubler.org/

|

||||

[8]:http://www.jubler.org/download.html

|

||||

[9]:http://sourceforge.net/projects/subcomposer/

|

||||

@ -1,22 +1,22 @@

|

||||

Linux用户应该了解一下开源硬件

|

||||

Linux用户,你们真的了解开源硬件吗?

|

||||

================================================================================

|

||||

> Linux用户不了解一点开源硬件制造相关的事情,他们将会很失望。

|

||||

> Linux用户不了解一点开源硬件制造相关的事情,他们就会经常陷入失望的情绪中。

|

||||

|

||||

商业软件和免费软件已经互相纠缠很多年了,但是这俩经常误解对方。这并不奇怪 -- 对一方来说是生意,而另一方只是一种生活方式。但是,这种误解会给人带来痛苦,这也是为什么值得花精力去揭露这里面的内幕。

|

||||

|

||||

一个逐渐普遍的现象:对开源硬件的不断尝试,不管是Canonical,Jolla,MakePlayLive,或者其他几个。不管是评论员或终端用户,一般的免费软件用户会为新的硬件平台发布表现出过分的狂热,然后因为不断延期有所醒悟,最终放弃整个产品。

|

||||

一个逐渐普遍的现象:对开源硬件的不断尝试,不管是Canonical,Jolla,MakePlayLive,或者其他公司。无论是评论员或是终端用户,通常免费软件用户都会为新的硬件平台发布表现出过分的狂热,然后因为不断延期有所醒悟,直到最终放弃整个产品。

|

||||

|

||||

这是一个没有人获益的怪圈,而且滋生出不信任 - 都是因为一般的Linux用户根本不知道这些新闻背后发生的事情。

|

||||

这是一个没有人获益的怪圈,而且常常滋生出不信任 - 都是因为一般的Linux用户根本不知道这些新闻背后发生的事情。

|

||||

|

||||

我个人对于把产品推向市场的经验很有限。但是,我还不知道谁能有所突破。推出一个开源硬件或其他产品到市场仍然不仅仅是个残酷的生意,而且严重不利于新加入的厂商。

|

||||

我个人对于把产品推向市场的经验很有限。但是,我还没听说谁能有所突破。推出一个开源硬件或其他产品到市场仍然不仅仅是个残酷的生意,而且严重不利于新进厂商。

|

||||

|

||||

### 寻找合作伙伴 ###

|

||||

|

||||

不管是数码产品的生产还是分销都被相对较少的一些公司控制着,有时需要数月的预订。利润率也会很低,所以就像那些购买古老情景喜剧的电影工作室一样,生成商一般也希望复制当前热销产品的成功。像Aaron Seigo在谈到他花精力开发Vivaldi平板时告诉我的,生产商更希望能由其他人去承担开发新产品的风险。

|

||||

不管是数码产品的生产还是分销都被相对较少的一些公司控制着,有时需要数月的预订。利润率也会很低,所以就像那些购买古老情景喜剧的电影工作室一样,生产商一般也希望复制当前热销产品的成功。像Aaron Seigo在谈到他花精力开发Vivaldi平板时告诉我的,生产商更希望能由其他人去承担开发新产品的风险。

|

||||

|

||||

不仅如此,他们更希望和那些有现成销售记录的有可能带来可复制生意的人合作。

|

||||

不仅如此,他们更希望和那些有现成销售记录的有可能带来长期客户生意的人合作。

|

||||

|

||||

而且,一般新加入的厂商所关心的产品只有几千的量。芯片制造商更愿意和苹果或三星合作,因为它们的订单很可能是几百K。

|

||||

而且,一般新加入的厂商所关心的产品只有几千的量。芯片制造商更愿意和苹果或三星这样的公司合作,因为它们的订单很可能是几十上百万的量。

|

||||

|

||||

面对这种情形,开源硬件制造者们可能会发现他们在工厂的列表中被淹没了,除非能找到二线或三线厂愿意尝试一下小批量生产新产品。

|

||||

|

||||

@ -28,9 +28,9 @@ Linux用户应该了解一下开源硬件

|

||||

|

||||

这样必然会引起潜在用户的批评,但是开源硬件制造者没得选,只能折中他们的愿景。寻找其他生产商也不能解决问题,有一个原因是这样做意味着更多延迟,但是更多的是因为完全免授权费的硬件是不存在的。像三星这样的业内巨头对免费硬件没有任何兴趣,而作为新人,开源硬件制造者也没有影响力去要求什么。

|

||||

|

||||

更何况,就算有免费硬件,生产商也不能保证会用在下一批生产中。制造者们会轻易地发现他们每次需要生产的时候都要重打一样的仗。

|

||||

更何况,就算有免费硬件,生产商也不能保证会用在下一批生产中。制造者们会轻易地发现他们每次需要生产的时候都要重打一次一模一样的仗。

|

||||

|

||||

这些都还不够,这个时候开源硬件制造者们也许已经花了6-12个月时间来讨价还价。机会来了,产业标准已经变更,他们也许为了升级产品规格又要从头来过。

|

||||

这些都还不够,这个时候开源硬件制造者们也许已经花了6-12个月时间来讨价还价。等机会终于来了,产业标准却已经变更,于是他们可能为了升级产品规格又要从头来过。

|

||||

|

||||

### 短暂而且残忍的货架期 ###

|

||||

|

||||

@ -42,15 +42,15 @@ Linux用户应该了解一下开源硬件

|

||||

|

||||

### 衡量整件怪事 ###

|

||||

|

||||

在这里我只是粗略地概括了一下,但是任何涉足过制造的人会认出我形容成标准的东西。而更糟糕的是,开源硬件制造者们通常在这个过程中才会有所觉悟。不可避免,他们也会犯错,从而带来更多的延迟。

|

||||

在这里我只是粗略地概括了一下,但是任何涉足过制造的人会认同我形容为行业标准的东西。而更糟糕的是,开源硬件制造者们通常只有在亲身经历过后才会有所觉悟。不可避免,他们也会犯错,从而带来更多的延迟。

|

||||

|

||||

但重点是,一旦你对整个过程有所了解,你对另一个开源硬件进行尝试的消息的反应就会改变。这个过程意味着,除非哪家公司处于严格的保密模式,对于产品将于六个月内发布的声明会很快会被证实是过期的推测。很可能是12-18个月,而且面对之前提过的那些困难很可能意味着这个产品永远不会真正发布。

|

||||

但重点是,一旦你对整个过程有所了解,你对另一个开源硬件进行尝试的新闻的反应就会改变。这个过程意味着,除非哪家公司处于严格的保密模式,对于产品将于六个月内发布的声明会很快会被证实是过期的推测。很可能是12-18个月,而且面对之前提过的那些困难很可能意味着这个产品永远都不会真正发布。

|

||||

|

||||

举个例子,就像我写的,人们等待第一代Steam Machines面世,它是一台基于Linux的游戏主机。他们相信Steam Machines能彻底改变Linux和游戏。

|

||||

|

||||

作为一个市场分类,Steam Machines也许比其他新产品更有优势,因为参与开发的人员至少有开发软件产品的经验。然而,整整一年过去了Steam Machines的开发成果都还只有原型机,而且直到2015年中都不一定能买到。面对硬件生产的实际情况,就算有一半能见到阳光都是很幸运了。而实际上,能发布2-4台也许更实际。

|

||||

|

||||

我做出这个预测并没有考虑个体努力。但是,对硬件生产的理解,比起那些Linux和游戏的黄金年代之类的预言,我估计这个更靠谱。如果我错了也会很开心,但是事实不会改变:让人吃惊的不是如此多的Linux相关硬件产品失败了,而是那些即使是短暂的成功的产品。

|

||||

我做出这个预测并没有考虑个体努力。但是,对硬件生产的理解,比起那些Linux和游戏的黄金年代之类的预言,我估计这个更靠谱。如果我错了也会很开心,但是事实不会改变:让人吃惊的不是如此多的Linux相关硬件产品失败了,而是那些虽然短暂但却成功的产品。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -58,7 +58,7 @@ via: http://www.datamation.com/open-source/what-linux-users-should-know-about-op

|

||||

|

||||

作者:[Bruce Byfield][a]

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[Mr小眼儿](https://github.com/tinyeyeser)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,18 +1,17 @@

|

||||

如何在 Linux 上使用 HAProxy 配置 HTTP 负载均衡器

|

||||

使用 HAProxy 配置 HTTP 负载均衡器

|

||||

================================================================================

|

||||

随着基于 Web 的应用和服务的增多,IT 系统管理员肩上的责任也越来越重。当遇到不可预期的事件如流量达到高峰,流量增大或者内部的挑战比如硬件的损坏或紧急维修,无论如何,你的 Web 应用都必须要保持可用性。甚至现在流行的 devops 和持续交付也可能威胁到你的 Web 服务的可靠性和性能的一致性。

|

||||

随着基于 Web 的应用和服务的增多,IT 系统管理员肩上的责任也越来越重。当遇到不可预期的事件如流量达到高峰,流量增大或者内部的挑战比如硬件的损坏或紧急维修,无论如何,你的 Web 应用都必须要保持可用性。甚至现在流行的 devops 和持续交付(CD)也可能威胁到你的 Web 服务的可靠性和性能的一致性。

|

||||

|

||||

不可预测,不一直的性能表现是你无法接受的。但是我们怎样消除这些缺点呢?大多数情况下一个合适的负载均衡解决方案可以解决这个问题。今天我会给你们介绍如何使用 [HAProxy][1] 配置 HTTP 负载均衡器。

|

||||

不可预测,不一致的性能表现是你无法接受的。但是我们怎样消除这些缺点呢?大多数情况下一个合适的负载均衡解决方案可以解决这个问题。今天我会给你们介绍如何使用 [HAProxy][1] 配置 HTTP 负载均衡器。

|

||||

|

||||

###什么是 HTTP 负载均衡? ###

|

||||

|

||||

HTTP 负载均衡是一个网络解决方案,它将发入的 HTTP 或 HTTPs 请求分配至一组提供相同的 Web 应用内容的服务器用于响应。通过将请求在这样的多个服务器间进行均衡,负载均衡器可以防止服务器出现单点故障,可以提升整体的可用性和响应速度。它还可以让你能够简单的通过添加或者移除服务器来进行横向扩展或收缩,对工作负载进行调整。

|

||||

HTTP 负载均衡是一个网络解决方案,它将进入的 HTTP 或 HTTPs 请求分配至一组提供相同的 Web 应用内容的服务器用于响应。通过将请求在这样的多个服务器间进行均衡,负载均衡器可以防止服务器出现单点故障,可以提升整体的可用性和响应速度。它还可以让你能够简单的通过添加或者移除服务器来进行横向扩展或收缩,对工作负载进行调整。

|

||||

|

||||

### 什么时候,什么情况下需要使用负载均衡? ###

|

||||

|

||||

负载均衡可以提升服务器的使用性能和最大可用性,当你的服务器开始出现高负载时就可以使用负载均衡。或者你在为一个大型项目设计架构时,在前端使用负载均衡是一个很好的习惯。当你的环境需要扩展的时候它会很有用。

|

||||

|

||||

|

||||

### 什么是 HAProxy? ###

|

||||

|

||||

HAProxy 是一个流行的开源的 GNU/Linux 平台下的 TCP/HTTP 服务器的负载均衡和代理软件。HAProxy 是单线程,事件驱动架构,可以轻松的处理 [10 Gbps 速率][2] 的流量,在生产环境中被广泛的使用。它的功能包括自动健康状态检查,自定义负载均衡算法,HTTPS/SSL 支持,会话速率限制等等。

|

||||

@ -24,13 +23,13 @@ HAProxy 是一个流行的开源的 GNU/Linux 平台下的 TCP/HTTP 服务器的

|

||||

### 准备条件 ###

|

||||

|

||||

你至少要有一台,或者最好是两台 Web 服务器来验证你的负载均衡的功能。我们假设后端的 HTTP Web 服务器已经配置好并[可以运行][3]。

|

||||

You will need at least one, or preferably two web servers to verify functionality of your load balancer. We assume that backend HTTP web servers are already [up and running][3].

|

||||

|

||||

|

||||

### 在 Linux 中安装 HAProxy ###

|

||||

## 在 Linux 中安装 HAProxy ##

|

||||

|

||||

对于大多数的发行版,我们可以使用发行版的包管理器来安装 HAProxy。

|

||||

|

||||

#### 在 Debian 中安装 HAProxy ####

|

||||

### 在 Debian 中安装 HAProxy ###

|

||||

|

||||

在 Debian Wheezy 中我们需要添加源,在 /etc/apt/sources.list.d 下创建一个文件 "backports.list" ,写入下面的内容

|

||||

|

||||

@ -41,25 +40,25 @@ You will need at least one, or preferably two web servers to verify functionalit

|

||||

# apt get update

|

||||

# apt get install haproxy

|

||||

|

||||

#### 在 Ubuntu 中安装 HAProxy ####

|

||||

### 在 Ubuntu 中安装 HAProxy ###

|

||||

|

||||

# apt get install haproxy

|

||||

|

||||

#### 在 CentOS 和 RHEL 中安装 HAProxy ####

|

||||

### 在 CentOS 和 RHEL 中安装 HAProxy ###

|

||||

|

||||

# yum install haproxy

|

||||

|

||||

### 配置 HAProxy ###

|

||||

## 配置 HAProxy ##

|

||||

|

||||

本教程假设有两台运行的 HTTP Web 服务器,它们的 IP 地址是 192.168.100.2 和 192.168.100.3。我们将负载均衡配置在 192.168.100.4 的这台服务器上。

|

||||

|

||||

为了让 HAProxy 工作正常,你需要修改 /etc/haproxy/haproxy.cfg 中的一些选项。我们会在这一节中解释这些修改。一些配置可能因 GNU/Linux 发行版的不同而变化,这些会被标注出来。

|

||||

|

||||

#### 1. 配置日志功能 ####

|

||||

### 1. 配置日志功能 ###

|

||||

|

||||

你要做的第一件事是为 HAProxy 配置日志功能,在排错时日志将很有用。日志配置可以在 /etc/haproxy/haproxy.cfg 的 global 段中找到他们。下面是针对不同的 Linux 发型版的 HAProxy 日志配置。

|

||||

|

||||

**CentOS 或 RHEL:**

|

||||

#### CentOS 或 RHEL:####

|

||||

|

||||

在 CentOS/RHEL中启用日志,将下面的:

|

||||

|

||||

@ -82,7 +81,7 @@ You will need at least one, or preferably two web servers to verify functionalit

|

||||

|

||||

# service rsyslog restart

|

||||

|

||||

**Debian 或 Ubuntu:**

|

||||

####Debian 或 Ubuntu:####

|

||||

|

||||

在 Debian 或 Ubuntu 中启用日志,将下面的内容

|

||||

|

||||

@ -106,7 +105,7 @@ You will need at least one, or preferably two web servers to verify functionalit

|

||||

|

||||

# service rsyslog restart

|

||||

|

||||

#### 2. 设置默认选项 ####

|

||||

### 2. 设置默认选项 ###

|

||||

|

||||

下一步是设置 HAProxy 的默认选项。在 /etc/haproxy/haproxy.cfg 的 default 段中,替换为下面的配置:

|

||||

|

||||

@ -124,7 +123,7 @@ You will need at least one, or preferably two web servers to verify functionalit

|

||||

|

||||

上面的配置是当 HAProxy 为 HTTP 负载均衡时建议使用的,但是并不一定是你的环境的最优方案。你可以自己研究 HAProxy 的手册并配置它。

|

||||

|

||||

#### 3. Web 集群配置 ####

|

||||

### 3. Web 集群配置 ###

|

||||

|

||||

Web 集群配置定义了一组可用的 HTTP 服务器。我们的负载均衡中的大多数设置都在这里。现在我们会创建一些基本配置,定义我们的节点。将配置文件中从 frontend 段开始的内容全部替换为下面的:

|

||||

|

||||

@ -141,14 +140,14 @@ Web 集群配置定义了一组可用的 HTTP 服务器。我们的负载均衡

|

||||

server web01 192.168.100.2:80 cookie node1 check

|

||||

server web02 192.168.100.3:80 cookie node2 check

|

||||

|

||||

"listen webfarm *:80" 定义了负载均衡器监听的地址和端口。为了教程的需要,我设置为 "\*" 表示监听在所有接口上。在真实的场景汇总,这样设置可能不太合适,应该替换为可以从 internet 访问的那个网卡接口。

|

||||

"listen webfarm \*:80" 定义了负载均衡器监听的地址和端口。为了教程的需要,我设置为 "*" 表示监听在所有接口上。在真实的场景汇总,这样设置可能不太合适,应该替换为可以从 internet 访问的那个网卡接口。

|

||||

|

||||

stats enable

|

||||

stats uri /haproxy?stats

|

||||

stats realm Haproxy\ Statistics

|

||||

stats auth haproxy:stats

|

||||

|

||||

上面的设置定义了,负载均衡器的状态统计信息可以通过 http://<load-balancer-IP>/haproxy?stats 访问。访问需要简单的 HTTP 认证,用户名为 "haproxy" 密码为 "stats"。这些设置可以替换为你自己的认证方式。如果你不需要状态统计信息,可以完全禁用掉。

|

||||

上面的设置定义了,负载均衡器的状态统计信息可以通过 http://\<load-balancer-IP>/haproxy?stats 访问。访问需要简单的 HTTP 认证,用户名为 "haproxy" 密码为 "stats"。这些设置可以替换为你自己的认证方式。如果你不需要状态统计信息,可以完全禁用掉。

|

||||

|

||||

下面是一个 HAProxy 统计信息的例子

|

||||

|

||||

@ -160,7 +159,7 @@ Web 集群配置定义了一组可用的 HTTP 服务器。我们的负载均衡

|

||||

- **source**:对请求的客户端 IP 地址进行哈希计算,根据哈希值和服务器的权重将请求调度至后端服务器。

|

||||

- **uri**:对 URI 的左半部分(问号之前的部分)进行哈希,根据哈希结果和服务器的权重对请求进行调度

|

||||

- **url_param**:根据每个 HTTP GET 请求的 URL 查询参数进行调度,使用固定的请求参数将会被调度至指定的服务器上

|

||||

- **hdr(name**):根据 HTTP 首部中的 <name> 字段来进行调度

|

||||

- **hdr(name**):根据 HTTP 首部中的 \<name> 字段来进行调度

|

||||

|

||||

"cookie LBN insert indirect nocache" 这一行表示我们的负载均衡器会存储 cookie 信息,可以将后端服务器池中的节点与某个特定会话绑定。节点的 cookie 存储为一个自定义的名字。这里,我们使用的是 "LBN",你可以指定其他的名称。后端节点会保存这个 cookie 的会话。

|

||||

|

||||

@ -169,25 +168,25 @@ Web 集群配置定义了一组可用的 HTTP 服务器。我们的负载均衡

|

||||

|

||||

上面是我们的 Web 服务器节点的定义。服务器有由内部名称(如web01,web02),IP 地址和唯一的 cookie 字符串表示。cookie 字符串可以自定义,我这里使用的是简单的 node1,node2 ... node(n)

|

||||

|

||||

### 启动 HAProxy ###

|

||||

## 启动 HAProxy ##

|

||||

|

||||

如果你完成了配置,现在启动 HAProxy 并验证是否运行正常。

|

||||

|

||||

#### 在 Centos/RHEL 中启动 HAProxy ####

|

||||

### 在 Centos/RHEL 中启动 HAProxy ###

|

||||

|

||||

让 HAProxy 开机自启,使用下面的命令

|

||||

|

||||

# chkconfig haproxy on

|

||||

# service haproxy start

|

||||

|

||||

当然,防火墙需要开放 80 端口,想下面这样

|

||||

当然,防火墙需要开放 80 端口,像下面这样

|

||||

|

||||

**CentOS/RHEL 7 的防火墙**

|

||||

####CentOS/RHEL 7 的防火墙####

|

||||

|

||||

# firewallcmd permanent zone=public addport=80/tcp

|

||||

# firewallcmd reload

|

||||

|

||||

**CentOS/RHEL 6 的防火墙**

|

||||

####CentOS/RHEL 6 的防火墙####

|

||||

|

||||

把下面内容加至 /etc/sysconfig/iptables 中的 ":OUTPUT ACCEPT" 段中

|

||||

|

||||

@ -197,9 +196,9 @@ Web 集群配置定义了一组可用的 HTTP 服务器。我们的负载均衡

|

||||

|

||||

# service iptables restart

|

||||

|

||||

#### 在 Debian 中启动 HAProxy ####

|

||||

### 在 Debian 中启动 HAProxy ###

|

||||

|

||||

#### 启动 HAProxy ####

|

||||

启动 HAProxy

|

||||

|

||||

# service haproxy start

|

||||

|

||||

@ -207,7 +206,7 @@ Web 集群配置定义了一组可用的 HTTP 服务器。我们的负载均衡

|

||||

|

||||

A INPUT p tcp dport 80 j ACCEPT

|

||||

|

||||

#### 在 Ubuntu 中启动HAProxy ####

|

||||

### 在 Ubuntu 中启动HAProxy ###

|

||||

|

||||

让 HAProxy 开机自动启动在 /etc/default/haproxy 中配置

|

||||

|

||||

@ -221,7 +220,7 @@ Web 集群配置定义了一组可用的 HTTP 服务器。我们的负载均衡

|

||||

|

||||

# ufw allow 80

|

||||

|

||||

### 测试 HAProxy ###

|

||||

## 测试 HAProxy ##

|

||||

|

||||

检查 HAProxy 是否工作正常,我们可以这样做

|

||||

|

||||

@ -239,7 +238,7 @@ Web 集群配置定义了一组可用的 HTTP 服务器。我们的负载均衡

|

||||

|

||||

$ curl http://192.168.100.4/test.php

|

||||

|

||||

我们多次使用这个命令此时,会发现交替的输出下面的内容(因为使用了轮询算法):

|

||||

我们多次运行这个命令此时,会发现交替的输出下面的内容(因为使用了轮询算法):

|

||||

|

||||

Server IP: 192.168.100.2

|

||||

X-Forwarded-for: 192.168.100.4

|

||||

@ -251,13 +250,13 @@ Web 集群配置定义了一组可用的 HTTP 服务器。我们的负载均衡

|

||||

|

||||

如果我们停掉一台后端 Web 服务,curl 命令仍然正常工作,请求被分发至另一台可用的 Web 服务器。

|

||||

|

||||

### 总结 ###

|

||||

## 总结 ##

|

||||

|

||||

现在你有了一个完全可用的负载均衡器,以轮询的模式对你的 Web 节点进行负载均衡。还可以去实验其他的配置选项以适应你的环境。希望这个教程可以帮会组你们的 Web 项目有更好的可用性。

|

||||

现在你有了一个完全可用的负载均衡器,以轮询的模式对你的 Web 节点进行负载均衡。还可以去实验其他的配置选项以适应你的环境。希望这个教程可以帮助你们的 Web 项目有更好的可用性。

|

||||

|

||||

你可能已经发现了,这个教程只包含单台负载均衡的设置。这意味着我们仍然有单点故障的问题。在真实场景中,你应该至少部署 2 台或者 3 台负载均衡以防止意外发生,但这不是本教程的范围。

|

||||

|

||||

如果 你有任何问题或建议,请在评论中提出,我会尽我的努力回答。

|

||||

如果你有任何问题或建议,请在评论中提出,我会尽我的努力回答。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -265,11 +264,11 @@ via: http://xmodulo.com/haproxy-http-load-balancer-linux.html

|

||||

|

||||

作者:[Jaroslav Štěpánek][a]

|

||||

译者:[Liao](https://github.com/liaoishere)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/jaroslav

|

||||

[1]:http://www.haproxy.org/

|

||||

[2]:http://www.haproxy.org/10g.html

|

||||

[3]:http://xmodulo.com/how-to-install-lamp-server-on-ubuntu.html

|

||||

[3]:http://linux.cn/article-1567-1.html

|

||||

@ -0,0 +1,87 @@

|

||||

如何在Ubuntu上转换图像、音频和视频格式

|

||||

================================================================================

|

||||

|

||||

如果你的工作中需要接触到各种不同编码格式的图像、音频和视频,那么你很有可能正在使用多个工具来转换这些多种多样的媒体格式。如果存在一个能够处理所有图像/音频/视频格式的多合一转换工具,那就太好了。

|

||||

|

||||

[Format Junkie][1] 就是这样一个多合一的媒体转换工具,它有着极其友好的用户界面。更棒的是它是一个免费软件。你可以使用 Format Junkie 来转换几乎所有的流行格式的图像、音频、视频和归档文件(或称压缩文件),所有这些只需要简单地点击几下鼠标而已。

|

||||

|

||||

### 在Ubuntu 12.04, 12.10 和 13.04 上安装 Format Junkie ###

|

||||

|

||||

Format Junkie 可以通过 Ubuntu PPA format-junkie-team 进行安装。这个PPA支持Ubuntu 12.04, 12.10 和 13.04。在以上任意一种Ubuntu版本中安装Format Junkie的话,简单的执行以下命令即可:

|

||||

|

||||

$ sudo add-apt-repository ppa:format-junkie-team/release

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install formatjunkie

|

||||

$ sudo ln -s /opt/extras.ubuntu.com/formatjunkie/formatjunkie /usr/bin/formatjunkie

|

||||

|

||||

### 将 Format Junkie 安装到 Ubuntu 13.10 ###

|

||||

|

||||

如果你正在运行Ubuntu 13.10 (Saucy Salamander),你可以按照以下步骤下载 .deb 安装包来进行安装。由于Format Junkie 的 .deb 安装包只有很少的依赖包,所以使用 [gdebi deb installer][2] 来安装它。

|

||||

|

||||

在32位版Ubuntu 13.10上:

|

||||

|

||||

$ wget https://launchpad.net/~format-junkie-team/+archive/release/+files/formatjunkie_1.07-1~raring0.2_i386.deb

|

||||

$ sudo gdebi formatjunkie_1.07-1~raring0.2_i386.deb

|

||||

$ sudo ln -s /opt/extras.ubuntu.com/formatjunkie/formatjunkie /usr/bin/formatjunkie

|

||||

|

||||

在32位版Ubuntu 13.10上:

|

||||

|

||||

$ wget https://launchpad.net/~format-junkie-team/+archive/release/+files/formatjunkie_1.07-1~raring0.2_amd64.deb

|

||||

$ sudo gdebi formatjunkie_1.07-1~raring0.2_amd64.deb

|

||||

$ sudo ln -s /opt/extras.ubuntu.com/formatjunkie/formatjunkie /usr/bin/formatjunkie

|

||||

|

||||

### 将 Format Junkie 安装到 Ubuntu 14.04 或之后版本 ###

|

||||

|

||||

现有可供使用的官方 Format Junkie .deb 文件需要 libavcodec-extra-53,不过它从Ubuntu 14.04开始就已经过时了。所以如果你想在Ubuntu 14.04或之后版本上安装Format Junkie,可以使用以下的第三方PPA来代替。

|

||||

|

||||

$ sudo add-apt-repository ppa:jon-severinsson/ffmpeg

|

||||

$ sudo add-apt-repository ppa:noobslab/apps

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install formatjunkie

|

||||

|

||||

### 如何使用 Format Junkie ###

|

||||

|

||||

安装完成后,只需运行以下命令即可启动 Format Junkie:

|

||||

|

||||

$ formatjunkie

|

||||

|

||||

#### 使用 Format Junkie 来转换音频、视频、图像和归档格式 ####

|

||||

|

||||

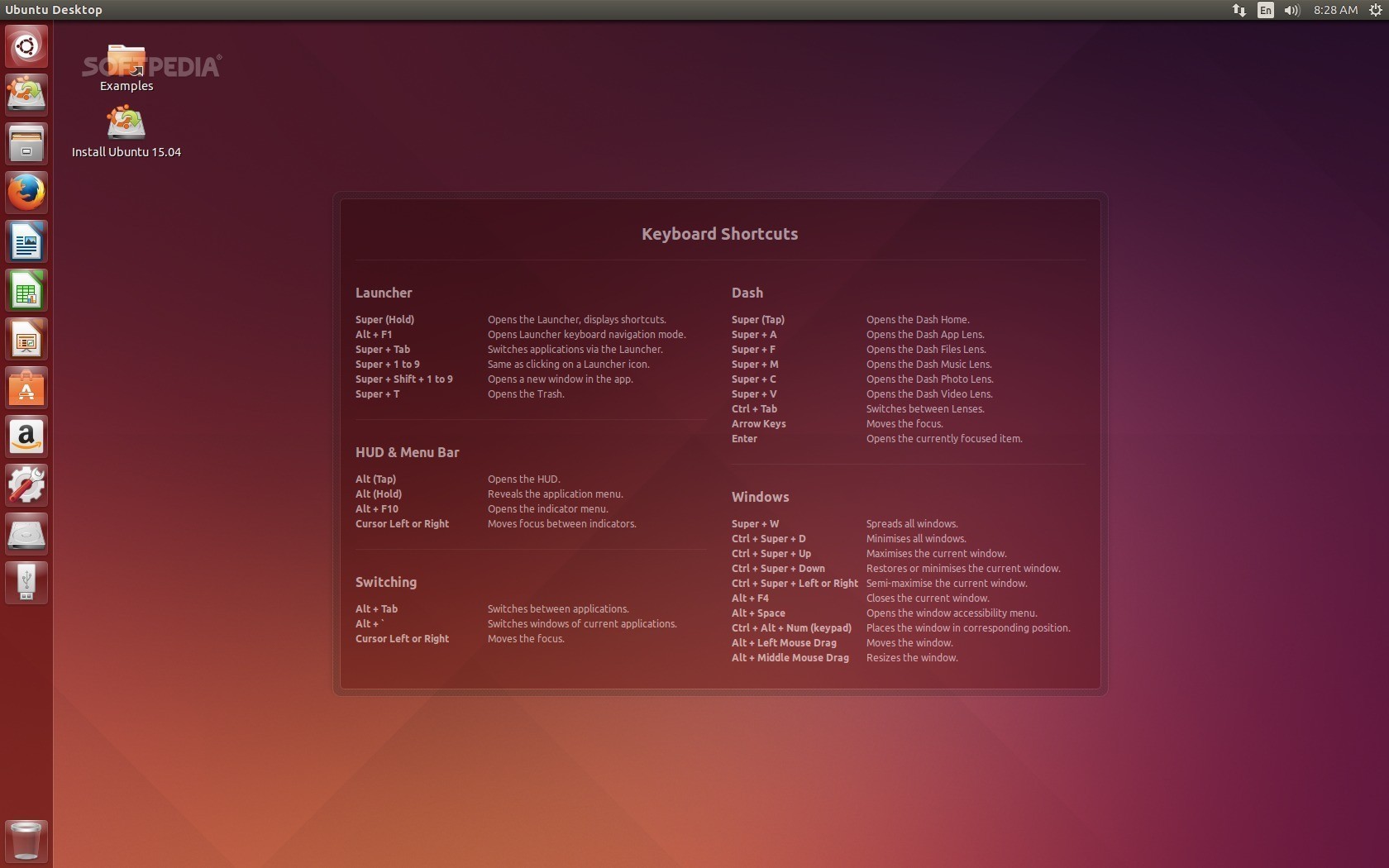

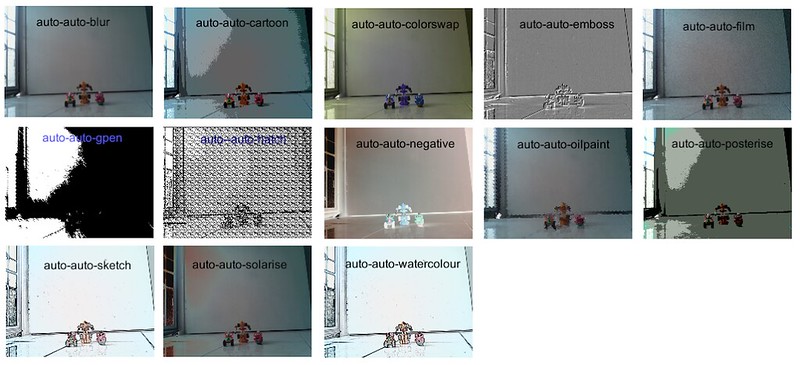

就像下方展示的一样,Format Junkie 的用户界面简单而且直观。在顶部的音频、视频、图像和iso媒体四个标签当中点击你需要的那个。你可以根据需要添加任意数量的文件用于批量转换。添加文件后,选择输出格式,直接点击 "Start Converting" 按钮进行转换。

|

||||

|

||||

|

||||

|

||||

Format Junkie支持以下媒体格式间的转换:

|

||||

|

||||

- **Audio**: mp3, wav, ogg, wma, flac, m4r, aac, m4a, mp2.

|

||||

- **Video**: avi, ogv, vob, mp4, 3gp, wmv, mkv, mpg, mov, flv, webm.

|

||||

- **Image**: jpg, png, ico, bmp, svg, tif, pcx, pdf, tga, pnm.

|

||||

- **Archive**: iso, cso.

|

||||

|

||||

#### 用 Format Junkie 进行字幕编码 ####

|

||||

|

||||

除了媒体转换,Format Junkie 可提供了字幕编码的图形界面。实际的字幕编码是由MEncoder来完成的。为了使用Format Junkie的字幕编码接口,首先你需要安装MEencoder。

|

||||

|

||||

$ sudo apt-get install mencoder

|

||||

|

||||

然后点击Format Junkie 中的 "Advanced"标签。选择 AVI/subtitle 文件来进行编码,如下所示:

|

||||

|

||||

|

||||

|

||||

总而言之,Format Junkie 是一个非常易于使用和多才多艺的媒体转换工具。但也有一个缺陷,它不允许对转换进行任何定制化(例如:比特率,帧率,采样频率,图像质量,尺寸)。所以这个工具推荐给正在寻找一个简单易用的媒体转换工具的新手使用。

|

||||

|

||||

喜欢这篇文章吗?请在下面发表评论吧。多谢!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/how-to-convert-image-audio-and-video-formats-on-ubuntu.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[Ping](https://github.com/mr-ping)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/nanni

|

||||

[1]:https://launchpad.net/format-junkie

|

||||

[2]:http://xmodulo.com/how-to-install-deb-file-with-dependencies.html

|

||||

@ -1,11 +1,10 @@

|

||||

“ntpq -p”命令输出详解

|

||||

网络时间的那些事情及 ntpq 详解

|

||||

================================================================================

|

||||

[Gentoo][1](也许其他发行版也是?)中 ["ntp -q" 的 man page][2] 只有简短的描述:“*打印出服务器已知的节点列表和它们的状态概要信息。*”

|

||||

[Gentoo][1](也许其他发行版也是?)中 ["ntpq -p" 的 man page][2] 只有简短的描述:“*打印出该服务器已知的节点列表和它们的状态概要信息。*”

|

||||

|

||||

我还没见到关于这个命令的说明文档,因此这里对此作一个总结,可以补充进 "[man ntpq][3]" man page 中。更多的细节见这里 “[ntpq – standard NTP query program][4]”(原作者),和 [其他关于 man ntpq 的例子][5].

|

||||

我还没见到关于这个命令的说明文档,因此这里对此作一个总结,可以补充进 "[man ntpq][3]" man page 中。更多的细节见这里 “[ntpq – 标准 NTP 请求程序][4]”(原作者),和 [其他关于 man ntpq 的例子][5].

|

||||

|

||||

[NTP][6] 是一个设计用于通过 [udp][9] 网络 ([WAN][7] 或者 [LAN][8]) 来同步计算机时钟的协议。引用 [Wikipedia – NTP][10]:

|

||||

[NTP][6] is a protocol designed to synchronize the clocks of computers over a ([WAN][7] or [LAN][8]) [udp][9] network. From [Wikipedia – NTP][10]:

|

||||

|

||||

> 网络时间协议(英语:Network Time Protocol,NTP)一种协议和软件实现,用于通过使用有网络延迟的报文交换网络同步计算机系统间的时钟。最初由美国特拉华大学的 David L. Mills 设计,现在仍然由他和志愿者小组维护,它于 1985 年之前开始使用,是因特网中最老的协议之一。

|

||||

|

||||

@ -28,10 +27,10 @@

|

||||

- **st** – 远程节点或服务器的 [Stratum][17](级别,NTP 时间同步是分层的)

|

||||

- **t** – 类型 (u: [unicast(单播)][18] 或 [manycast(选播)][19] 客户端, b: [broadcast(广播)][20] 或 [multicast(多播)][21] 客户端, l: 本地时钟, s: 对称节点(用于备份), A: 选播服务器, B: 广播服务器, M: 多播服务器, 参见“[Automatic Server Discovery][22]“)

|

||||

- **when** – 最后一次同步到现在的时间 (默认单位为秒, “h”表示小时,“d”表示天)

|

||||

- **poll** – 同步的频率:[rfc5905][23]建议在 NTPv4 中这个值的范围在 4 (16s) 至 17 (36h) 之间(2的指数次秒),然而观察发现这个值的实际大小在一个小的多的范围内 :64 (2的6次方)秒 至 1024 (2的10次方)秒

|

||||

- **poll** – 同步的频率:[rfc5905][23]建议在 NTPv4 中这个值的范围在 4 (16秒) 至 17 (36小时) 之间(即2的指数次秒),然而观察发现这个值的实际大小在一个小的多的范围内 :64 (2^6 )秒 至 1024 (2^10 )秒

|

||||

- **reach** – 一个8位的左移移位寄存器值,用来测试能否和服务器连接,每成功连接一次它的值就会增加,以 [8 进制][24]显示

|

||||

- **delay** – 从本地到远程节点或服务器通信的往返时间(毫秒)

|

||||

- **offset** – 主机与远程节点或服务器时间源的时间偏移量,offset 越接近于0,主机和 NTP 服务器的时间越接近([方均根][25]表示,单位为毫秒)

|

||||

- **offset** – 主机与远程节点或服务器时间源的时间偏移量,offset 越接近于0,主机和 NTP 服务器的时间越接近(以[方均根][25]表示,单位为毫秒)

|

||||

- **jitter** – 与远程节点同步的时间源的平均偏差(多个时间样本中的 offset 的偏差,单位是毫秒),这个数值的绝对值越小,主机的时间就越精确

|

||||

|

||||

#### 字段的统计代码 ####

|

||||

@ -47,7 +46,7 @@

|

||||

- “**-**” – 已不再使用

|

||||

- “**#**” – 良好的远程节点或服务器但是未被使用 (不在按同步距离排序的前六个节点中,作为备用节点使用)

|

||||

- “**+**” – 良好的且优先使用的远程节点或服务器(包含在组合算法中)

|

||||

- “*****” – 当前作为优先主同步对象的远程节点或服务器

|

||||

- “*” – 当前作为优先主同步对象的远程节点或服务器

|

||||

- “**o**” – PPS 节点 (当优先节点是有效时)。实际的系统同步是源于秒脉冲信号(pulse-per-second,PPS),可能通过PPS 时钟驱动或者通过内核接口。

|

||||

|

||||

参考 [Clock Select Algorithm][27].

|

||||

@ -74,9 +73,9 @@

|

||||

- **.WWV.** – [WWV][46] (HF, Ft. Collins, CO, America) 标准时间无线电接收器

|

||||

- **.WWVB.** – [WWVB][47] (LF, Ft. Collins, CO, America) 标准时间无线电接收器

|

||||

- **.WWVH.** – [WWVH][48] (HF, Kauai, HI, America) 标准时间无线电接收器

|

||||

- **.GOES.** – 美国 [静止环境观测卫星][49];

|

||||

- **.GOES.** – 美国[静止环境观测卫星][49];

|

||||

- **.GPS.** – 美国 [GPS][50];

|

||||

- **.GAL.** – [伽利略定位系统][51] 欧洲 [GNSS][52];

|

||||

- **.GAL.** – [伽利略定位系统][51]欧洲 [GNSS][52];

|

||||

- **.ACST.** – 选播服务器

|

||||

- **.AUTH.** – 认证错误

|

||||

- **.AUTO.** – Autokey (NTP 的一种认证机制)顺序错误

|

||||

@ -105,7 +104,7 @@ NTP 协议是高精度的,使用的精度小于纳秒(2的 -32 次方)。

|

||||

|

||||

#### “ntpq -c rl”输出参数 ####

|

||||

|

||||

- **precision** 为四舍五入值,且为 2 的幂数。因此精度为 2 的 *precision* 此幂(秒)

|

||||

- **precision** 为四舍五入值,且为 2 的幂数。因此精度为 2^precision (秒)

|

||||

- **rootdelay** – 与同步网络中主同步服务器的总往返延时。注意这个值可以是正数或者负数,取决于时钟的精度。

|

||||

- **rootdisp** – 相对于同步网络中主同步服务器的偏差(秒)

|

||||

- **tc** – NTP 算法 [PLL][59] (phase locked loop,锁相环路) 或 [FLL][60] (frequency locked loop,锁频回路) 时间常量

|

||||

@ -122,20 +121,20 @@ Jitter (也叫 timing jitter) 表示短期变化大于10HZ 的频率, wander

|

||||

|

||||

NTP 软件维护一系列连续更新的频率变化的校正值。对于设置正确的稳定系统,在非拥塞的网络中,现代硬件的 NTP 时钟同步通常与 UTC 标准时间相差在毫秒内。(在千兆 LAN 网络中可以达到何种精度?)

|

||||

|

||||

对于 UTC 时间,[闰秒][62] 可以每两年插入一次用于同步地球自传的变化。注意本地时间为[夏令时][63]时时间会有一小时的变化。在重同步之前客户端设备会使用独立的 UTC 时间,除非客户端使用了偏移校准。

|

||||

对于 UTC 时间,[闰秒 leap second ][62] 可以每两年插入一次用于同步地球自传的变化。注意本地时间为[夏令时][63]时时间会有一小时的变化。在重同步之前客户端设备会使用独立的 UTC 时间,除非客户端使用了偏移校准。

|

||||

|

||||

#### [闰秒发生时会怎样][64] ####

|

||||

|

||||

> 闰秒发生时,会对当天时间增加或减少一秒。闰秒的调整在 UTC 时间当天的最后一秒。如果增加一秒,UTC 时间会出现 23:59:60。即 23:59:59 到 0:00:00 之间实际上需要 2 秒钟。如果减少一秒,时间会从 23:59:58 跳至 0:00:00 。另见 [The Kernel Discipline][65].

|

||||

|

||||

好了… 间隔阈值(step threshold)的真实值是多少: 125ms 还是 128ms? PLL/FLL tc 的单位是什么 (log2 s? ms?)?在非拥塞的千兆 LAN 中时间节点间的精度能达到多少?

|

||||

那么… 间隔阈值(step threshold)的真实值是多少: 125ms 还是 128ms? PLL/FLL tc 的单位是什么 (log2 s? ms?)?在非拥塞的千兆 LAN 中时间节点间的精度能达到多少?

|

||||

|

||||

感谢 Camilo M 和 Chris B的评论。 欢迎校正错误和更多细节的探讨。

|

||||

|

||||

谢谢

|

||||

Martin

|

||||

|

||||

### 外传 ###

|

||||

### 附录 ###

|

||||

|

||||

- [NTP 的纪元][66] 从 1900 开始而 UNIX 的从 1970开始.

|

||||

- [时间校正][67] 是逐渐进行的,因此时间的完全同步可能会画上几个小时。

|

||||

@ -152,7 +151,7 @@ Martin

|

||||

|

||||

- [ntpq – 标准 NTP 查询程序][77]

|

||||

- [The Network Time Protocol (NTP) 分布][78]

|

||||

- NTP 的简明 [历史][79]

|

||||

- NTP 的简明[历史][79]

|

||||

- 一个更多细节的简明历史 “Mills, D.L., A brief history of NTP time: confessions of an Internet timekeeper. Submitted for publication; please do not cite or redistribute” ([pdf][80])

|

||||

- [NTP RFC][81] 标准文档

|

||||

- Network Time Protocol (Version 3) RFC – [txt][82], or [pdf][83]. Appendix E, The NTP Timescale and its Chronometry, p70, 包含了对过去 5000 年我们的计时系统的变化和关系的有趣解释。

|

||||

@ -165,7 +164,7 @@ Martin

|

||||

|

||||

### 其他 ###

|

||||

|

||||

SNTP (Simple Network Time Protocol, [RFC 4330][91],简单未落协议)基本上也是NTP,但是缺少一些基于 [RFC 1305][92] 实现的 NTP 的一些不再需要的内部算法。

|

||||

SNTP (Simple Network Time Protocol, [RFC 4330][91],简单网络协议)基本上也是NTP,但是少了一些基于 [RFC 1305][92] 实现的 NTP 的一些不再需要的内部算法。

|

||||

|

||||

Win32 时间 [Windows Time Service][93] 是 SNTP 的非标准实现,没有精度的保证,并假设精度几乎有 1-2 秒的范围。(因为没有系统时间变化校正)

|

||||

|

||||

@ -184,7 +183,7 @@ via: http://nlug.ml1.co.uk/2012/01/ntpq-p-output/831

|

||||

|

||||

作者:Martin L

|

||||

译者:[Liao](https://github.com/liaosishere)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

66

published/20141108 When hackers grow old.md

Normal file

66

published/20141108 When hackers grow old.md

Normal file

@ -0,0 +1,66 @@

|

||||

ESR:黑客年暮

|

||||

================================================================================

|

||||

近来我一直在与某资深开源开发团队中的多个成员缠斗,尽管密切关注我的人们会在读完本文后猜到是哪个组织,但我不会在这里说出这个组织的名字。

|

||||

|

||||

怎么让某些人进入 21 世纪就这么难呢?真是的...

|

||||

|

||||

我快 56 岁了,也就是大部分年轻人会以为的我将时不时朝他们发出诸如“滚出我的草坪”之类歇斯底里咆哮的年龄。但事实并非如此 —— 我发现,尤其是在技术背景之下,我变得与我的年龄非常不相称。

|

||||

|

||||

在我这个年龄的大部分人确实变成了爱发牢骚、墨守成规的老顽固。并且,尴尬的是,偶尔我会成为那个打断谈话的人,我会指出他们某个在 1995 年(或者在某些特殊情况下,1985 年)时很适合的方法... 几十年后的今天就不再是好方法了。

|

||||

|

||||

为什么是我?因为年轻人在我的同龄人中很难有什么说服力。如果有人想让那帮老头改变主意,首先他得是自己同龄人中具有较高思想觉悟的佼佼者。即便如此,在与习惯做斗争的过程中,我也比看起来花费了更多的时间。

|

||||

|

||||

年轻人犯下无知的错误是可以被原谅的。他们还年轻。年轻意味着缺乏经验,缺乏经验通常会导致片面的判断。我很难原谅那些经历了足够多本该有经验的人,却被*长期的固化思维*蒙蔽,无法发觉近在咫尺的东西。

|

||||

|

||||

(补充一下:我真的不是保守党拥护者。那些和我争论政治的,无论保守党还是非保守党都没有注意到这点,我觉得这颇有点嘲讽的意味。)

|

||||

|

||||

那么,现在我们来讨论下 GNU 更新日志文件(ChangeLog)这件事。在 1985 年的时候,这是一个不错的主意,甚至可以说是必须的。当时的想法是用单独的更新日志条目来记录多个相关文件的变更情况。用这种方式来对那些存在版本缺失或者非常原始的版本进行版本控制确实不错。当时我也*在场*,所以我知道这些。

|

||||

|

||||

不过即使到了 1995 年,甚至 21 世纪早期,许多版本控制系统仍然没有太大改进。也就是说,这些版本控制系统并非对批量文件的变化进行分组再保存到一条记录上,而是对每个变化的文件分别进行记录并保存到不同的地方。CVS,当时被广泛使用的版本控制系统,仅仅是模拟日志变更 —— 并且在这方面表现得很糟糕,导致大多数人不再依赖这个功能。即便如此,更新日志文件的出现依然是必要的。

|

||||

|

||||

但随后,版本控制系统 Subversion 于 2003 年发布 beta 版,并于 2004 年发布 1.0 正式版,Subversion 真正实现了更新日志记录功能,得到了人们的广泛认可。它与一年后兴起的分布式版本控制系统(Distributed Version Control System,DVCS)共同引发了主流世界的激烈争论。因为如果你在项目上同时使用了分布式版本控制与更新日志文件记录的功能,它们将会因为争夺相同元数据的控制权而产生不可预料的冲突。

|

||||

|

||||

有几种不同的方法可以折衷解决这个问题。一种是继续将更新日志作为代码变更的授权记录。这样一来,你基本上只能得到简陋的、形式上的提交评论数据。

|

||||

|

||||

另一种方法是对提交的评论日志进行授权。如果你这样做了,不久后你就会开始思忖为什么自己仍然对所有的日志更新条目进行记录。提交元数据与变化的代码具有更好的相容性,毕竟这才是当初设计它的目的。

|

||||

|

||||

(现在,试想有这样一个项目,同样本着把项目做得最好的想法,但两拨人却做出了完全不同的选择。因此你必须同时阅读更新日志和评论日志以了解到底发生了什么。最好在矛盾激化前把问题解决....)

|

||||

|

||||

第三种办法是尝试同时使用以上两种方法 —— 在更新日志条目中,以稍微变化后的的格式复制一份评论数据,将其作为评论提交的一部分。这会导致各种你意想不到的问题,最具代表性的就是它不符合“真理的单点性(single point of truth)”原理;只要其中有拷贝文件损坏,或者日志文件条目被修改,这就不再是同步时数据匹配的问题,它将导致在其后参与进来的人试图搞清人们是怎么想的时候变得非常困惑。(LCTT 译注:《[程序员修炼之道][1]》(The Pragmatic Programmer):任何一个知识点在系统内都应当有一个唯一、明确、权威的表述。根据Brian Kernighan的建议,把这个原则称为“真理的单点性(Single Point of Truth)”或者SPOT原则。)

|

||||

|

||||

或者,正如这个*我就不说出具体名字的特定项目*所做的,它的高层开发人员在电子邮件中最近声明说,提交可以包含多个更新日志条目,并且提交的元数据与更新日志是无关的。这导致我们直到现在还得不断进行记录。

|

||||

|

||||

当时我读到邮件的时候都要吐了。什么样的傻瓜才会意识不到这是自找麻烦 —— 事实上,在 DVCS 中针对可靠的提交日志有很好的浏览工具,围绕更新日志文件的整个定制措施只会成为负担和拖累。

|

||||

|

||||

唉,这是比较特殊的笨蛋:变老的并且思维僵化了的黑客。所有的合理化改革他都会极力反对。他所遵循的行事方法在几十年前是有效的,但现在只能适得其反。如果你试图向他解释这些不仅仅和 git 的摘要信息有关,同时还为了正确适应当前的工具集,以便实现更新日志的去条目化... 呵呵,那你就准备好迎接无法忍受、无法想象的疯狂对话吧。

|

||||

|

||||

的确,它成功激怒了我。这样那样的胡言乱语使这个项目变成了很难完成的工作。而且,同样的糟糕还体现在他们吸引年轻开发者的过程中,我认为这是真正的问题。相关 Google+ 社区的人员数量已经达到了 4 位数,他们大部分都是孩子,还没有成长起来。显然外界已经接受了这样的信息:这个项目的开发者都是部落中地位根深蒂固的崇高首领,最好的崇拜方式就是远远的景仰着他们。

|

||||

|

||||

这件事给我的最大触动就是每当我要和这些部落首领较量时,我都会想:有一天我也会这样吗?或者更糟的是,我看到的只是如同镜子一般对我自己的真实写照,而我自己却浑然不觉?我的意思是,我所得到的印象来自于他的网站,这个特殊的笨蛋要比我年轻。年轻至少 15 岁呢。

|

||||

|

||||

我总是认为自己的思路很清晰。当我和那些比我聪明的人打交道时我不会受挫,我只会因为那些思路跟不上我、看不清事实的人而沮丧。但这种自信也许只是邓宁·克鲁格效应(Dunning-Krueger effect)在我身上的消极影响,我并不确定这意味着什么。很少有什么事情会让我感到害怕;而这件事在让我害怕的事情名单上是名列前茅的。

|

||||

|

||||

另一件让人不安的事是当我逐渐变老的时候,这样的矛盾发生得越来越频繁。不知怎的,我希望我的黑客同行们能以更加优雅的姿态老去,即使身体老去也应该保持一颗年轻的心灵。有些人确实是这样;但可惜绝大多数人都不是。真令人悲哀。

|

||||

|

||||

我不确定我的职业生涯会不会完美收场。假如我最后成功避免了思维僵化(注意我说的是假如),我想我一定知道其中的部分原因,但我不确定这种模式是否可以被复制 —— 为了达成目的也许得在你的头脑中发生一些复杂的化学反应。尽管如此,无论对错,请听听我给年轻黑客以及其他有志青年的建议。

|

||||

|

||||

你们——对的,也包括你——一定无法在你中年老年的时候保持不错的心灵,除非你能很好的控制这点。你必须不断地去磨练你的内心、在你还年轻的时候完成自己的种种心愿,你必须把这些行为养成一种习惯直到你老去。

|

||||

|

||||

有种说法是中年人锻炼身体的最佳时机是 30 岁以前。我以为同样的方法,坚持我以上所说的习惯能让你在 56 岁,甚至 65 岁的时候仍然保持灵活的头脑。挑战你的极限,使不断地挑战自己成为一种习惯。立刻离开安乐窝,由此当你以后真正需要它的时候你可以建立起自己的安乐窝。

|

||||

|

||||

你必须要清楚的了解这点;还有一个可选择的挑战是你选择一个可以实现的目标并且为了这个目标不断努力。这个月我要学习 Go 语言。不是指游戏,我早就玩儿过了(虽然玩儿的不是太好)。并不是因为工作需要,而是因为我觉得是时候来扩展下我自己了。

|

||||

|

||||

保持这个习惯。永远不要放弃。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://esr.ibiblio.org/?p=6485

|

||||

|

||||

作者:[Eric Raymond][a]

|

||||

译者:[Stevearzh](https://github.com/Stevearzh)

|

||||

校对:[Mr小眼儿](https://github.com/tinyeyeser)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://esr.ibiblio.org/?author=2

|

||||

[1]:http://book.51cto.com/art/200809/88490.htm

|

||||

@ -2,7 +2,7 @@

|

||||

================================================================================

|

||||

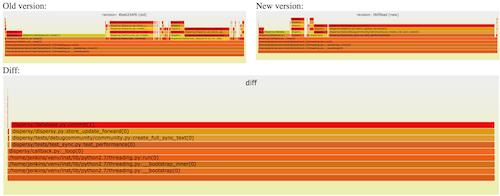

你能快速定位CPU性能回退的问题么? 如果你的工作环境非常复杂且变化快速,那么使用现有的工具是来定位这类问题是很具有挑战性的。当你花掉数周时间把根因找到时,代码已经又变更了好几轮,新的性能问题又冒了出来。

|

||||

|

||||

辛亏有了[CPU火焰图][1](flame graphs),CPU使用率的问题一般都比较好定位。但要处理性能回退问题,就要在修改前后的火焰图间,不断切换对比,来找出问题所在,这感觉就是像在太阳系中搜寻冥王星。虽然,这种方法可以解决问题,但我觉得应该会有更好的办法。

|

||||

幸亏有了[CPU火焰图][1](flame graphs),CPU使用率的问题一般都比较好定位。但要处理性能回退问题,就要在修改前后的火焰图之间,不断切换对比,来找出问题所在,这感觉就是像在太阳系中搜寻冥王星。虽然,这种方法可以解决问题,但我觉得应该会有更好的办法。

|

||||

|

||||

所以,下面就隆重介绍**红/蓝差分火焰图(red/blue differential flame graphs)**:

|

||||

|

||||

@ -14,7 +14,7 @@

|

||||

|

||||

这张火焰图中各火焰的形状和大小都是和第二次抓取的profile文件对应的CPU火焰图是相同的。(其中,y轴表示栈的深度,x轴表示样本的总数,栈帧的宽度表示了profile文件中该函数出现的比例,最顶层表示正在运行的函数,再往下就是调用它的栈)

|

||||

|

||||

在下面这个案例展示了,在系统升级后,一个工作负载的CPU使用率上升了。 下面是对应的CPU火焰图([SVG格式][4])

|

||||

在下面这个案例展示了,在系统升级后,一个工作载荷的CPU使用率上升了。 下面是对应的CPU火焰图([SVG格式][4])

|

||||

|

||||

<p><object data="http://www.brendangregg.com/blog/images/2014/zfs-flamegraph-after.svg" type="image/svg+xml" width=720 height=296>

|

||||

<img src="http://www.brendangregg.com/blog/images/2014/zfs-flamegraph-after.svg" width=720 />

|

||||

@ -22,7 +22,7 @@

|

||||

|

||||

通常,在标准的火焰图中栈帧和栈塔的颜色是随机选择的。 而在红/蓝差分火焰图中,使用不同的颜色来表示两个profile文件中的差异部分。

|

||||

|

||||

在第二个profile中deflate_slow()函数以及它后续调用的函数运行的次数要比前一次更多,所以在上图中这个栈帧被标为了红色。可以看出问题的原因是ZFS的压缩功能被使能了,而在系统升级前这项功能是关闭的。

|

||||

在第二个profile中deflate_slow()函数以及它后续调用的函数运行的次数要比前一次更多,所以在上图中这个栈帧被标为了红色。可以看出问题的原因是ZFS的压缩功能被启用了,而在系统升级前这项功能是关闭的。

|

||||

|

||||

这个例子过于简单,我甚至可以不用差分火焰图也能分析出来。但想象一下,如果是在分析一个微小的性能下降,比如说小于5%,而且代码也更加复杂的时候,问题就为那么好处理了。

|

||||

|

||||

@ -69,7 +69,9 @@ difffolded.p只能对“折叠”过的堆栈profile文件进行操作,折叠

|

||||

在上面的例子中"func_a()->func_b()->func_c()" 代表调用栈,这个调用栈在profile1文件中共出现了31次,在profile2文件中共出现了33次。然后,使用flamegraph.pl脚本处理这3列数据,会自动生成一张红/蓝差分火焰图。

|

||||

|

||||

### 其他选项 ###

|

||||

|

||||

再介绍一些有用的选项:

|

||||

|

||||

**difffolded.pl -n**:这个选项会把两个profile文件中的数据规范化,使其能相互匹配上。如果你不这样做,抓取到所有栈的统计值肯定会不相同,因为抓取的时间和CPU负载都不同。这样的话,看上去要么就是一片红(负载增加),要么就是一片蓝(负载下降)。-n选项对第一个profile文件进行了平衡,这样你就可以得到完整红/蓝图谱。

|

||||

|

||||

**difffolded.pl -x**: 这个选项会把16进制的地址删掉。 profiler时常会无法将地址转换为符号,这样的话栈里就会有16进制地址。如果这个地址在两个profile文件中不同,这两个栈就会认为是不同的栈,而实际上它们是相同的。遇到这样的问题就用-x选项搞定。

|

||||

@ -77,6 +79,7 @@ difffolded.p只能对“折叠”过的堆栈profile文件进行操作,折叠

|

||||

**flamegraph.pl --negate**: 用于颠倒红/蓝配色。 在下面的章节中,会用到这个功能。

|

||||

|

||||

### 不足之处 ###

|

||||

|

||||

虽然我的红/蓝差分火焰图很有用,但实际上还是有一个问题:如果一个代码执行路径完全消失了,那么在火焰图中就找不到地方来标注蓝色。你只能看到当前的CPU使用情况,而不知道为什么会变成这样。

|

||||

|

||||

一个办法是,将对比顺序颠倒,画一个相反的差分火焰图。例如:

|

||||

@ -95,12 +98,13 @@ difffolded.p只能对“折叠”过的堆栈profile文件进行操作,折叠

|

||||

|

||||

这样,把前面生成diff2.svg一并使用,我们就能得到:

|

||||

|

||||

- **diff1.svg**: 宽度是以修改前profile文件为基准, 颜色表明将要发生的情况

|

||||

- **diff2.svg**: 宽度以修改后的profile文件为基准,颜色表明已经发生的情况

|

||||

- **diff1.svg**: 宽度是以修改前profile文件为基准,颜色表明将要发生的情况

|

||||

- **diff2.svg**: 宽度是以修改后profile文件为基准,颜色表明已经发生的情况

|

||||

|

||||

如果是在做功能验证测试,我会同时生成这两张图。

|

||||

|

||||

### CPI 火焰图 ###

|

||||

|

||||

这些脚本开始是被使用在[CPI火焰图][8]的分析上。与比较修改前后的profile文件不同,在分析CPI火焰图时,可以分析CPU工作周期与停顿周期的差异变化,这样可以凸显出CPU的工作状态来。

|

||||

|

||||

### 其他的差分火焰图 ###

|

||||

@ -110,6 +114,7 @@ difffolded.p只能对“折叠”过的堆栈profile文件进行操作,折叠

|

||||

也有其他人做过类似的工作。[Robert Mustacchi][10]在不久前也做了一些尝试,他使用的方法类似于代码检视时的标色风格:只显示了差异的部分,红色表示新增(上升)的代码路径,蓝色表示删除(下降)的代码路径。一个关键的差别是栈帧的宽度只体现了差异的样本数。右边是一个例子。这个是个很好的主意,但在实际使用中会感觉有点奇怪,因为缺失了完整profile文件的上下文作为背景,这张图显得有些难以理解。

|

||||

|

||||

[][12]

|

||||

|

||||

Cor-Paul Bezemer也制作了一种差分显示方法[flamegraphdiff][13],他同时将3张火焰图放在同一张图中,修改前后的标准火焰图各一张,下面再补充了一张差分火焰图,但栈帧宽度也是差异的样本数。 上图是一个[例子][14]。在差分图中将鼠标移到栈帧上,3张图中同一栈帧都会被高亮显示。这种方法中补充了两张标准的火焰图,因此解决了上下文的问题。

|

||||

|

||||

我们3人的差分火焰图,都各有所长。三者可以结合起来使用:Cor-Paul方法中上方的两张图,可以用我的diff1.svg 和 diff2.svg。下方的火焰图可以用Robert的方式。为保持一致性,下方的火焰图可以用我的着色方式:蓝->白->红。

|

||||

@ -128,7 +133,7 @@ via: http://www.brendangregg.com/blog/2014-11-09/differential-flame-graphs.html

|

||||

|

||||

作者:[Brendan Gregg][a]

|

||||

译者:[coloka](https://github.com/coloka)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -24,11 +24,11 @@

|

||||

|

||||

|

||||

|

||||

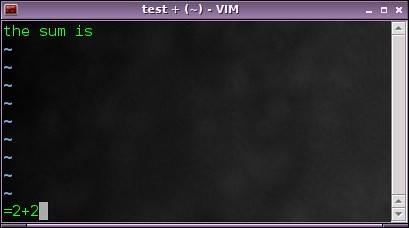

然后 4 会被插入到文件中。

|

||||

然后计算结果“4 ”会被插入到文件中。

|

||||

|

||||

### 查找重复的连续的单词 ###

|

||||

|

||||

当你很快地打字时,很有可能会连续输入同一个单词两次,就像 this this。这种错误可能骗过任何一个人,即使是你自己重新阅读一边也不可避免。幸运的是,有一个简单的正则表达式可以用来预防这个错误。使用搜索命令(默认时 `/`)然后输入:

|

||||

当你很快地打字时,很有可能会连续输入同一个单词两次,就像 this this。这种错误可能骗过任何一个人,即使是你自己重新阅读一遍也不可避免。幸运的是,有一个简单的正则表达式可以用来预防这个错误。使用搜索命令(默认时 `/`)然后输入:

|

||||

|

||||

\(\<\w\+\>\)\_s*\1

|

||||

|

||||

@ -72,7 +72,7 @@

|

||||

|

||||

`gg` 把光标移动到 Vim 缓冲区的第一行,`V` 进入可视模式,`G` 把光标移动到缓冲区的最后一行。因此,`ggVG` 使可视模式覆盖这个当前缓冲区。最后 `g?` 使用 ROT13 对整个区域进行编码。

|

||||

|

||||

注意它应该被映射到一个最长使用的键。它对字母符号也可以很好地工作。要对它进行撤销,最好的方法就是使用撤销命令:`u`。

|

||||

注意它可以被映射到一个最常使用的键。它对字母符号也可以很好地工作。要对它进行撤销,最好的方法就是使用撤销命令:`u`。

|

||||

|

||||

###自动补全 ###

|

||||

|

||||

@ -110,7 +110,7 @@

|

||||

|

||||

### 按时间回退文件 ###

|

||||

|

||||

Vim 会记录文件的更改,你很容易可以回退到之前某个时间。该命令时相当直观的。比如:

|

||||

Vim 会记录文件的更改,你很容易可以回退到之前某个时间。该命令是相当直观的。比如:

|

||||

|

||||

:earlier 1m

|

||||

|

||||

@ -122,7 +122,7 @@ Vim 会记录文件的更改,你很容易可以回退到之前某个时间。

|

||||

|

||||

### 删除标记内部的文字 ###

|

||||

|

||||

当我开始使用 Vim 时一件我总是想很方便做的事情是如何轻松的删除方括号或圆括号里的内容。转到开始的标记,然后使用下面的语法:

|

||||

当我开始使用 Vim 时,一件我总是想很方便做的事情是如何轻松的删除方括号或圆括号里的内容。转到开始的标记,然后使用下面的语法:

|

||||

|

||||

di[标记]

|

||||

|

||||

@ -164,11 +164,11 @@ Vim 会记录文件的更改,你很容易可以回退到之前某个时间。

|

||||

|

||||

### 把光标下的文字置于屏幕中央 ###

|

||||

|

||||

所有要做的事情都包含在标题中。如果你想强制滚动屏幕来把光标下的文字置于屏幕的中央,在可视模式中使用命令(译者注:在普通模式中也可以):

|

||||

我们所要做的事情如标题所示。如果你想强制滚动屏幕来把光标下的文字置于屏幕的中央,在可视模式中使用命令(译者注:在普通模式中也可以):

|

||||

|

||||

zz

|

||||

|

||||

### 跳到上一个/下一个 位置 ###

|

||||

### 跳到上一个/下一个位置 ###

|

||||

|

||||

当你编辑一个很大的文件时,经常要做的事是在某处进行修改,然后跳到另外一处。如果你想跳回之前修改的地方,使用命令:

|

||||

|

||||

@ -196,7 +196,7 @@ Vim 会记录文件的更改,你很容易可以回退到之前某个时间。

|

||||

|

||||

总的来说,这一系列命令是在我读了许多论坛主题和 [Vim Tips wiki][3](如果你想学习更多关于编辑器的知识,我非常推荐这篇文章) 之后收集起来的。

|

||||

|

||||

如果你还知道哪些非常有用但你认为大多数人并不知道的命令,可以随意在评论中分享出来。就像引言中所说的,一个“鲜为人知但很有用的”命令是很主观的,但分享出来总是好的。

|

||||

如果你还知道哪些非常有用但你认为大多数人并不知道的命令,可以随意在评论中分享出来。就像引言中所说的,一个“鲜为人知但很有用的”命令也许只是你自己的看法,但分享出来总是好的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -204,7 +204,7 @@ via: http://xmodulo.com/useful-vim-commands.html

|

||||

|

||||

作者:[Adrien Brochard][a]

|

||||

译者:[wangjiezhe](https://github.com/wangjiezhe)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,195 @@

|

||||

使用 nice、cpulimit 和 cgroups 限制 cpu 占用率

|

||||

================================================================================

|

||||

|

||||

|

||||

|

||||

Linux内核是一名了不起的马戏表演者,它在进程和系统资源间小心地玩着杂耍,并保持系统的能够正常运转。 同时,内核也很公正:它将资源公平地分配给各个进程。

|

||||

|

||||

但是,如果你需要给一个重要进程提高优先级时,该怎么做呢? 或者是,如何降低一个进程的优先级? 又或者,如何限制一组进程所使用的资源呢?

|

||||

|

||||

**答案是需要由用户来为内核指定进程的优先级**

|

||||

|

||||

大部分进程启动时的优先级是相同的,因此Linux内核会公平地进行调度。 如果想让一个CPU密集型的进程运行在较低优先级,那么你就得事先配置好调度器。

|

||||

|

||||

下面介绍3种控制进程运行时间的方法:

|

||||

|

||||

- 使用 nice 命令手动降低任务的优先级。

|

||||

- 使用 cpulimit 命令不断的暂停进程,以控制进程所占用处理能力不超过特定限制。

|

||||

- 使用linux内建的**control groups(控制组)**功能,它提供了限制进程资源消耗的机制。

|

||||

|

||||

我们来看一下这3个工具的工作原理和各自的优缺点。

|

||||

|

||||

### 模拟高cpu占用率 ###

|

||||

|

||||

在分析这3种技术前,我们要先安装一个工具来模拟高CPU占用率的场景。我们会用到CentOS作为测试系统,并使用[Mathomatic toolkit][1]中的质数生成器来模拟CPU负载。

|

||||

|

||||

很不幸,在CentOS上这个工具没有预编译好的版本,所以必须要从源码进行安装。先从 http://mathomatic.orgserve.de/mathomatic-16.0.5.tar.bz2 这个链接下载源码包并解压。然后进入 **mathomatic-16.0.5/primes** 文件夹,运行 **make** 和 **sudo make install** 进行编译和安装。这样,就把 **matho-primes** 程序安装到了 **/usr/local/bin** 目录中。

|

||||

|

||||

接下来,通过命令行运行:

|

||||

|

||||

/usr/local/bin/matho-primes 0 9999999999 > /dev/null &

|

||||

|

||||

程序运行后,将输出从0到9999999999之间的质数。因为我们并不需要这些输出结果,直接将输出重定向到/dev/null就好。

|

||||

|

||||

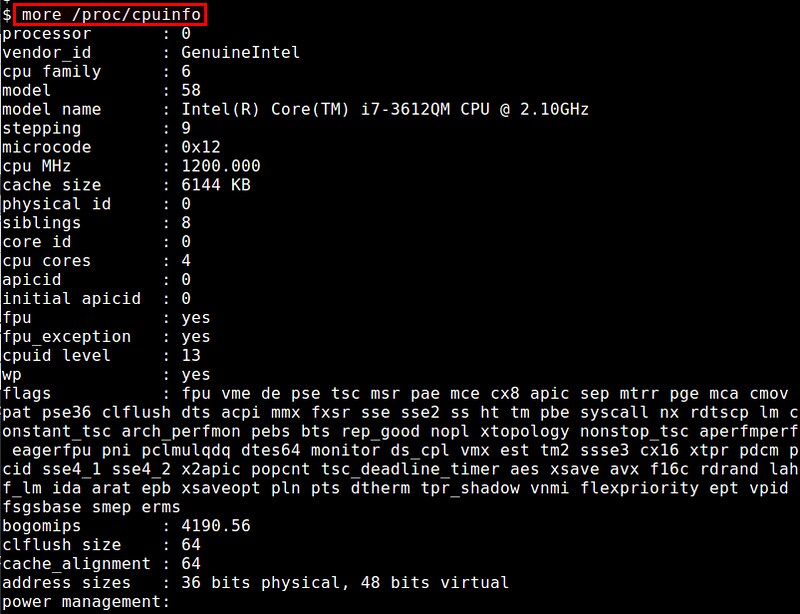

现在,使用top命令就可以看到matho-primes进程榨干了你所有的cpu资源。

|

||||

|

||||

|

||||

|

||||

好了,接下来(按q键)退出 top 并杀掉 matho-primes 进程(使用 fg 命令将进程切换到前台,再按 CTRL+C)

|

||||

|

||||

### nice命令 ###

|

||||

|

||||

下来介绍一下nice命令的使用方法,nice命令可以修改进程的优先级,这样就可以让进程运行得不那么频繁。 **这个功能在运行cpu密集型的后台进程或批处理作业时尤为有用。** nice值的取值范围是[-20,19],-20表示最高优先级,而19表示最低优先级。 Linux进程的默认nice值为0。使用nice命令(不带任何参数时)可以将进程的nice值设置为10。这样调度器就会将此进程视为较低优先级的进程,从而减少cpu资源的分配。

|

||||

|

||||

下面来看一个例子,我们同时运行两个 **matho-primes** 进程,一个使用nice命令来启动运行,而另一个正常启动运行:

|

||||

|

||||

nice matho-primes 0 9999999999 > /dev/null &

|

||||

matho-primes 0 9999999999 > /dev/null &

|

||||

|

||||

再运行top命令。

|

||||

|

||||

|

||||

|

||||

|

||||

看到没,正常运行的进程(nice值为0)获得了更多的cpu运行时间,相反的,用nice命令运行的进程占用的cpu时间会较少(nice值为10)。

|

||||

|

||||

在实际使用中,如果你要运行一个CPU密集型的程序,那么最好用nice命令来启动它,这样就可以保证其他进程获得更高的优先级。 也就是说,即使你的服务器或者台式机在重载的情况下,也可以快速响应。

|

||||

|

||||

nice 还有一个关联命令叫做 renice,它可以在运行时调整进程的 nice 值。使用 renice 命令时,要先找出进程的 PID。下面是一个例子:

|

||||

|

||||

renice +10 1234

|

||||

|

||||

其中,1234是进程的 PID。

|

||||

|

||||

测试完 **nice** 和 **renice** 命令后,记得要将 **matho-primes** 进程全部杀掉。

|

||||

|

||||

### cpulimit命令 ###

|

||||

|

||||

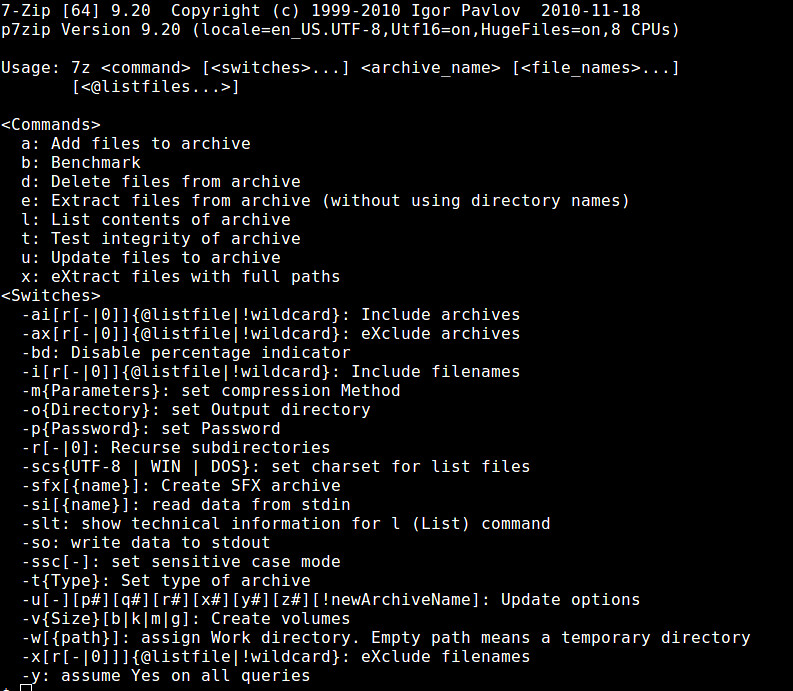

接下来介绍 **cpulimit** 命令的用法。 **cpulimit** 命令的工作原理是为进程预设一个 cpu 占用率门限,并实时监控进程是否超出此门限,若超出则让该进程暂停运行一段时间。cpulimit 使用 SIGSTOP 和 SIGCONT 这两个信号来控制进程。它不会修改进程的 nice 值,而是通过监控进程的 cpu 占用率来做出动态调整。

|

||||

|

||||

cpulimit 的优势是可以控制进程的cpu使用率的上限值。但与 nice 相比也有缺点,那就是即使 cpu 是空闲的,进程也不能完全使用整个 cpu 资源。

|

||||

|

||||

在 CentOS 上,可以用下面的方法来安装它:

|

||||

|

||||

wget -O cpulimit.zip https://github.com/opsengine/cpulimit/archive/master.zip

|

||||

unzip cpulimit.zip

|

||||

cd cpulimit-master

|

||||

make

|

||||

sudo cp src/cpulimit /usr/bin

|

||||

|

||||

上面的命令行,会先从从 GitHub 上将源码下载到本地,然后再解压、编译、并安装到 /usr/bin 目录下。

|

||||

|

||||

cpulimit 的使用方式和 nice 命令类似,但是需要用户使用 **-l** 选项显式地定义进程的 cpu 使用率上限值。举例说明:

|

||||

|

||||

cpulimit -l 50 matho-primes 0 9999999999 > /dev/null &

|

||||

|

||||

|

||||

|

||||

从上面的例子可以看出 matho-primes 只使用了50%的 cpu 资源,剩余的 cpu 时间都在 idle。

|

||||

|

||||

cpulimit 还可以在运行时对进程进行动态限制,使用 **-p** 选项来指定进程的 PID,下面是一个实例:

|

||||

|

||||

cpulimit -l 50 -p 1234

|

||||

|

||||

其中,1234是进程的 PID。

|

||||

|

||||

### cgroups 命令集 ###

|

||||

|

||||

最后介绍,功能最为强大的控制组(cgroups)的用法。cgroups 是 Linux 内核提供的一种机制,利用它可以指定一组进程的资源分配。 具体来说,使用 cgroups,用户能够限定一组进程的 cpu 占用率、系统内存消耗、网络带宽,以及这几种资源的组合。

|

||||

|

||||

对比nice和cpulimit,**cgroups 的优势**在于它可以控制一组进程,不像前者仅能控制单进程。同时,nice 和 cpulimit 只能限制 cpu 使用率,而 cgroups 则可以限制其他进程资源的使用。

|

||||

|

||||

对 cgroups 善加利用就可以控制好整个子系统的资源消耗。就拿 CoreOS 作为例子,这是一个专为大规模服务器部署而设计的最简化的 Linux 发行版本,它的 upgrade 进程就是使用 cgroups 来管控。这样,系统在下载和安装升级版本时也不会影响到系统的性能。

|

||||

|

||||

下面做一下演示,我们将创建两个控制组(cgroups),并对其分配不同的 cpu 资源。这两个控制组分别命名为“cpulimited”和“lesscpulimited”。

|

||||

|

||||

使用 cgcreate 命令来创建控制组,如下所示:

|

||||

|

||||

sudo cgcreate -g cpu:/cpulimited

|

||||

sudo cgcreate -g cpu:/lesscpulimited

|

||||

|

||||

其中“-g cpu”选项用于设定 cpu 的使用上限。除 cpu 外,cgroups 还提供 cpuset、memory、blkio 等控制器。cpuset 控制器与 cpu 控制器的不同在于,cpu 控制器只能限制一个 cpu 核的使用率,而 cpuset 可以控制多个 cpu 核。

|

||||

|

||||

cpu 控制器中的 cpu.shares 属性用于控制 cpu 使用率。它的默认值是 1024,我们将 lesscpulimited 控制组的 cpu.shares 设为1024(默认值),而 cpulimited 设为512,配置后内核就会按照2:1的比例为这两个控制组分配资源。

|

||||

|

||||

要设置cpulimited 组的 cpu.shares 为 512,输入以下命令:

|

||||

|

||||

sudo cgset -r cpu.shares=512 cpulimited

|

||||

|

||||

使用 cgexec 命令来启动控制组的运行,为了测试这两个控制组,我们先用cpulimited 控制组来启动 matho-primes 进程,命令行如下:

|

||||

|

||||

sudo cgexec -g cpu:cpulimited /usr/local/bin/matho-primes 0 9999999999 > /dev/null &

|

||||

|

||||

打开 top 可以看到,matho-primes 进程占用了所有的 cpu 资源。

|

||||

|

||||

|

||||

|

||||

因为只有一个进程在系统中运行,不管将其放到哪个控制组中启动,它都会尽可能多的使用cpu资源。cpu 资源限制只有在两个进程争夺cpu资源时才会生效。

|

||||

|

||||

那么,现在我们就启动第二个 matho-primes 进程,这一次我们在 lesscpulimited 控制组中来启动它:

|

||||

|

||||

sudo cgexec -g cpu:lesscpulimited /usr/local/bin/matho-primes 0 9999999999 > /dev/null &

|

||||

|

||||

再打开 top 就可以看到,cpu.shares 值大的控制组会得到更多的 cpu 运行时间。

|

||||

|

||||

|

||||

|

||||

现在,我们再在 cpulimited 控制组中增加一个 matho-primes 进程:

|

||||

|

||||

sudo cgexec -g cpu:cpulimited /usr/local/bin/matho-primes 0 9999999999 > /dev/null &

|

||||

|

||||

|

||||

|

||||

看到没,两个控制组的 cpu 的占用率比例仍然为2:1。其中,cpulimited 控制组中的两个 matho-primes 进程获得的cpu 时间基本相当,而另一组中的 matho-primes 进程显然获得了更多的运行时间。

|

||||

|

||||

更多的使用方法,可以在 Red Hat 上查看详细的 cgroups 使用[说明][2]。(当然CentOS 7也有)

|

||||

|

||||

### 使用Scout来监控cpu占用率 ###

|

||||

|

||||

监控cpu占用率最为简单的方法是什么?[Scout][3] 工具能够监控能够自动监控进程的cpu使用率和内存使用情况。

|

||||

|

||||

|

||||

|

||||

[Scout][3]的触发器(trigger)功能还可以设定 cpu 和内存的使用门限,超出门限时会自动产生报警。

|

||||

|

||||

从这里可以获取 [Scout][4] 的试用版。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

|

||||

|

||||

计算机的系统资源是非常宝贵的。上面介绍的这3个工具能够帮助大家有效地管理系统资源,特别是cpu资源:

|

||||

|

||||

- **nice**可以一次性调整进程的优先级。

|

||||

- **cpulimit**在运行cpu密集型任务且要保持系统的响应性时会很有用。

|

||||

- **cgroups**是资源管理的瑞士军刀,同时在使用上也很灵活。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://blog.scoutapp.com/articles/2014/11/04/restricting-process-cpu-usage-using-nice-cpulimit-and-cgroups

|

||||

|

||||

译者:[coloka](https://github.com/coloka)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.mathomatic.org/

|

||||

[2]:https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Resource_Management_and_Linux_Containers_Guide/chap-Introduction_to_Control_Groups.html

|

||||

[3]:https://scoutapp.com/

|

||||

[4]:https://scoutapp.com/

|

||||

[5]:

|

||||

[6]:

|

||||

[7]:

|

||||

[8]:

|

||||

[9]:

|

||||

[10]:

|

||||

[11]:

|

||||

[12]:

|

||||

[13]:

|

||||

[14]:

|

||||

[15]:

|

||||

[16]:

|

||||

[17]:

|

||||

[18]:

|

||||

[19]:

|

||||

[20]:

|

||||

@ -1,7 +1,6 @@

|

||||

用Grub启动ISO镜像

|

||||

================================================================================

|

||||

如果你需要使用多个Linux发行版,你没有那么多的选项。你可以安装到你的物理机或虚拟机中,也可以以live模式从ISO文件启动。第二个选择,如果对硬盘空间需求更少,就有点麻烦,因为你需要将ISO文件写入到USB棒或CD来启动。但是,这里有另外一个可选的折中方案:把ISO镜像放在硬盘中,然后以live模式来启动。该方案比完全安装更省空间,但是功能完备,这对于缓慢的虚拟机而言是个不错的替代方案。下面我将介绍怎样使用流行的Grub启动加载器来实现该方案。

|

||||

|

||||

如果你想要使用多个Linux发行版,你没有那么多的选择。你要么安装到你的物理机或虚拟机中,要么以live模式从ISO文件启动。第二个选择,对硬盘空间需求较小,只是有点麻烦,因为你需要将ISO文件写入到U盘或CD/DVD中来启动。不过,这里还有另外一个可选的折中方案:把ISO镜像放在硬盘中,然后以live模式来启动。该方案比完全安装更省空间,而且功能也完备,这对于缓慢的虚拟机而言是个不错的替代方案。下面我将介绍怎样使用流行的Grub启动加载器来实现该方案。

|

||||

|

||||

很明显,你将需要使用到Grub,这是几乎所有现代Linux发行版都使用的。你也需要你所想用的Linux版本的ISO文件,将它下载到本地磁盘。最后,你需要知道启动分区在哪里,并怎样在Grub中描述。对于此,请使用以下命令:

|

||||

|

||||

@ -31,7 +30,7 @@

|

||||

[some specific] arguments

|

||||

}

|

||||

|

||||

例如,如果你想要从ISO文件启动Ubuntu,那么你就是想要添加该行到40_custom文件:

|

||||

例如,如果你想要从ISO文件启动Ubuntu,那么你就是想要添加如下行到40_custom文件:

|

||||

|

||||

menuentry "Ubuntu 14.04 (LTS) Live Desktop amd64" {

|

||||

set isofile="/boot/ubuntu-14.04-desktop-amd64.iso"

|

||||

@ -62,7 +61,7 @@

|

||||

initrd (loop)/isolinux/initrd0.img

|

||||

}

|

||||

|

||||

注意,参数可根据发行版进行修改。有幸的是,有许多地方你可以查阅。我喜欢这一个,但是还有很多其它的。同时,请考虑你放置ISO文件的地方。如果你的家目录被加密或者无法被访问到,你可能更喜欢将这些文件放到像例子中的启动分区。但是,请首先确保有足够的空间。

|

||||

注意,参数可根据发行版进行修改。幸运的是,有许多地方你可以查阅到。我喜欢这个发行版,但是还有很多其它的发行版你可以启动。同时,请注意你放置ISO文件的地方。如果你的家目录被加密或者无法被访问到,你可能更喜欢将这些文件放到像例子中的启动分区。但是,请首先确保启动分区有足够的空间。

|

||||

|

||||

最后,不要忘了保存40_custom文件并使用以下命令来更新grub:

|

||||

|

||||

@ -92,7 +91,7 @@

|

||||

|

||||

可以显示DBAN选项,让你选择清除驱动器。**当心,因为它仍然十分危险**。

|

||||

|

||||

小结一下,对于ISO文件和Grub有很多事情可做:从快速live会话到用你的指尖来破坏一切,都可以满足你。下一步是启动一些关注隐私的发行版如[Tails][2]。

|

||||

小结一下,对于ISO文件和Grub有很多事情可做:从快速live会话到一键毁灭,都可以满足你。之后,你也可以试试启动一些针对隐私方面的发行版,如[Tails][2]。

|

||||

|

||||

你认为从Grub启动一个ISO这个主意怎样?这是不是你想要做的呢?为什么呢?请在下面留言。

|

||||

|

||||

@ -102,7 +101,7 @@ via: http://xmodulo.com/boot-iso-image-from-grub.html

|

||||

|

||||

作者:[Adrien Brochard][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,30 +1,34 @@

|

||||

硬盘监控和分析神器——Smartctl

|

||||

硬盘监控和分析工具——Smartctl

|

||||

================================================================================

|

||||

**Smartctl**(自监控,分析和报告技术)是类Unix系统下实施SMART任务命令行套件或工具,它用于打印SMART**自检**和**错误日志**,启用并禁用SMRAT**自动检测**,以及初始化设备自检。

|

||||

**Smartctl**(S.M.A.R.T 自监控,分析和报告技术)是类Unix系统下实施SMART任务命令行套件或工具,它用于打印SMART**自检**和**错误日志**,启用并禁用SMRAT**自动检测**,以及初始化设备自检。

|

||||

|

||||

Smartctl对于Linux物理服务器十分有用,在这些服务器上,可以对智能磁盘进行错误检查,并将与**硬件RAID**上相关的磁盘信息摘录下来。

|

||||

Smartctl对于Linux物理服务器十分有用,在这些服务器上,可以对智能磁盘进行错误检查,并将与**硬件RAID**相关的磁盘信息摘录下来。

|

||||

|

||||

在本帖中,我们将讨论smartctl命令的一些实用样例。如果你的Linux上海没有安装smartctl,请按以下步骤来安装。

|

||||

|

||||

### Ubuntu中smartctl的安装 ###

|

||||

### 安装 Smartctl ###

|

||||

|

||||

**对于 Ubuntu**

|

||||

|

||||

$ sudo apt-get install smartmontools

|

||||

|

||||

### Redhat / CentOS中smartctl的安装 ###

|

||||

**对于 CentOS & RHEL**

|

||||

|

||||

# yum install smartmontools

|

||||

|

||||

**启动Smartctl服务**

|

||||

###启动Smartctl服务###

|

||||

|

||||

**对于Ubuntu**

|

||||

**对于 Ubuntu**

|

||||

|

||||

$ sudo /etc/init.d/smartmontools start

|

||||

|

||||

**对于CentOS & RHEL**

|

||||

**对于 CentOS & RHEL**

|

||||

|

||||

# service smartd start ; chkconfig smartd on

|

||||

|

||||

**样例:1 检查针对磁盘的Smart负载量**

|

||||

### 样例 ###

|

||||

|

||||

#### 样例:1 检查磁盘的 Smart 功能是否启用

|

||||

|

||||

root@linuxtechi:~# smartctl -i /dev/sdb

|

||||

smartctl 6.2 2013-07-26 r3841 [x86_64-linux-3.13.0-32-generic] (local build)

|

||||

@ -46,9 +50,9 @@ Smartctl对于Linux物理服务器十分有用,在这些服务器上,可以

|

||||

SMART support is: Available - device has SMART capability.

|

||||

SMART support is: Enabled

|

||||

|

||||

这里‘/dev/sdb’是你的硬盘。上面输出中的最后两行显示了SMART负载量已启用。

|

||||

这里‘/dev/sdb’是你的硬盘。上面输出中的最后两行显示了SMART功能已启用。

|

||||

|

||||

**样例:2 为磁盘启用Smart负载量**

|

||||

#### 样例:2 启用磁盘的 Smart 功能

|

||||

|

||||

root@linuxtechi:~# smartctl -s on /dev/sdb

|

||||

smartctl 6.2 2013-07-26 r3841 [x86_64-linux-3.13.0-32-generic] (local build)

|

||||

@ -57,7 +61,7 @@ Smartctl对于Linux物理服务器十分有用,在这些服务器上,可以

|

||||

=== START OF ENABLE/DISABLE COMMANDS SECTION ===

|

||||

SMART Enabled.

|

||||

|

||||

**样例:3 为磁盘禁用Smart负载量**

|

||||

#### 样例:3 禁用磁盘的 Smart 功能

|

||||

|

||||

root@linuxtechi:~# smartctl -s off /dev/sdb

|

||||

smartctl 6.2 2013-07-26 r3841 [x86_64-linux-3.13.0-32-generic] (local build)

|

||||

@ -66,12 +70,12 @@ Smartctl对于Linux物理服务器十分有用,在这些服务器上,可以

|

||||

=== START OF ENABLE/DISABLE COMMANDS SECTION ===

|

||||