mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge remote-tracking branch 'upstream/master'

This commit is contained in:

commit

a17518469a

75

lctt2018.md

Normal file

75

lctt2018.md

Normal file

@ -0,0 +1,75 @@

|

||||

LCTT 2018:五周年纪念日

|

||||

======

|

||||

|

||||

我是老王,可能大家有不少人知道我,由于历史原因,我有好几个生日(;o),但是这些年来,我又多了一个生日,或者说纪念日——每过两年,我就要严肃认真地写一篇 [LCTT](https://linux.cn/lctt) 生日纪念文章。

|

||||

|

||||

喏,这一篇,就是今年的了,LCTT 如今已经五岁了!

|

||||

|

||||

或许如同小孩子过生日总是比较快乐,而随着年岁渐长,过生日往往有不少负担——比如说,每次写这篇纪念文章时,我就需要回忆、反思这两年的做了些什么,往往颇为汗颜。

|

||||

|

||||

不过不管怎么说,总要总结一下这两年我们做了什么,有什么不足,也发一些展望吧。

|

||||

|

||||

### 江山代有英豪出

|

||||

|

||||

LCTT,如同一般的开源贡献组织,总是有不断的新老传承。我们的翻译组,也有不少成员,由于工作学习的原因,慢慢淡出,但同时,也不断有新的成员加入并接过前辈手中的旗帜(就是没人接我的)。

|

||||

|

||||

> **加入方式**

|

||||

|

||||

> 请首先加入翻译组的 QQ 群,群号是:**198889102**,加群时请说明是“**志愿者**”。加入后记得修改您的群名片为您的 GitHub 的 ID。

|

||||

|

||||

> 加入的成员,请先阅读 [WIKI 如何开始](https://github.com/LCTT/TranslateProject/wiki/01-%E5%A6%82%E4%BD%95%E5%BC%80%E5%A7%8B)。

|

||||

|

||||

比如说,我们这两年来,oska874 承担了主要的选题工作,然后 lujun9972 适时的出现接过了不少选题工作;再比如说,qhwdw 出现后承担了大量繁难文章的翻译,pityonline 则专注于校对,甚至其校对的严谨程度让我都甘拜下风。还有 MjSeven 也同 qhwdw 一样,以极高的翻译频率从一星译者迅速登顶五星译者。当然,还有 Bestony、Locez、VizV 等人为 LCTT 提供了不少技术支持和开发工作。

|

||||

|

||||

### 硕果累累

|

||||

|

||||

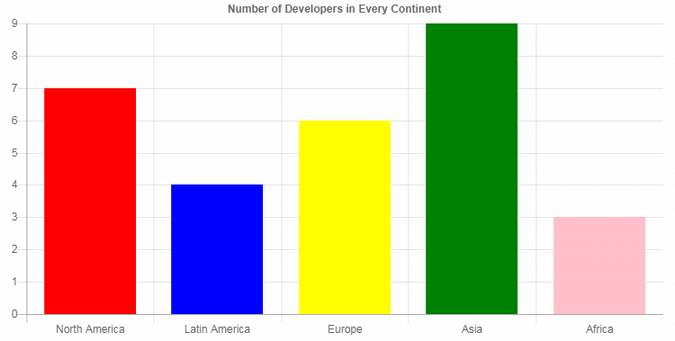

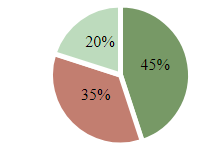

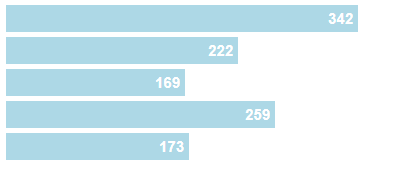

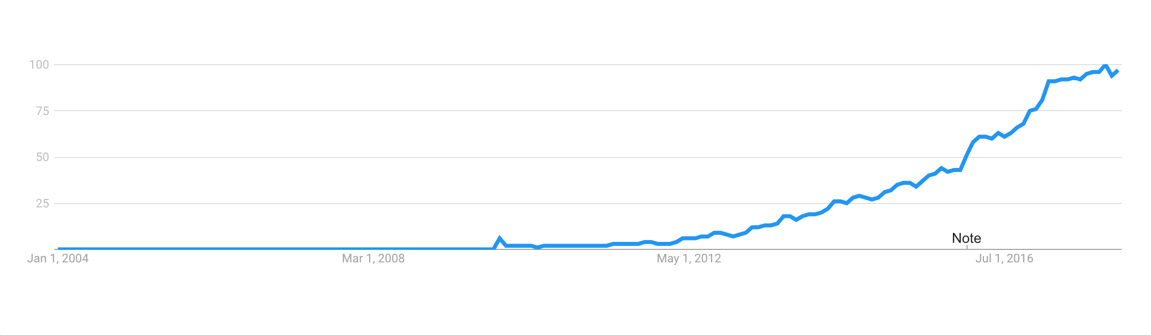

我们并没有特别的招新渠道,但是总是时不时会有新的成员慕名而来,到目前为止,我们已经有 [331](https://linux.cn/lctt-list) 位做过贡献的成员,已经翻译发布了 3885 篇译文,合计字节达 33MB 之多!

|

||||

|

||||

这两年,我们不但翻译了很多技术、新闻和评论类文章,也新增了新的翻译类型:[漫画](https://linux.cn/talk/comic/),其中一些漫画得到了很多好评。

|

||||

|

||||

我们发布的文章有一些达到了 100000+ 的访问量,这对于我们这种技术垂直内容可不容易。

|

||||

|

||||

而同时,[Linux 中国](https://linux.cn/)也发布了近万篇文章,而这一篇,应该就是第 [9999](https://linux.cn/article-9999-1.html) 篇文章,我们将在明天,进入新的篇章。

|

||||

|

||||

### 贡献者主页和贡献者证书

|

||||

|

||||

为了彰显诸位贡献者的贡献,我们为每位贡献者创立的自己的专页,并据此建立了[排行榜](https://linux.cn/lctt-list)。

|

||||

|

||||

同时,我们还特意请 Bestony 和“一一”设计开发和”贡献者证书”,大家可以在 [LCTT 贡献平台](https://lctt.linux.cn/)中领取。

|

||||

|

||||

### 规则进化

|

||||

|

||||

LCTT 最初创立时,甚至都没有采用 PR 模式。但是随着贡献者的增多,我们也逐渐在改善我们的流程、方法。

|

||||

|

||||

之前采用了很粗糙的 PR 模式,对 PR 中的文件、提交乃至于信息都没有进行硬性约束。后来在 VizV 的帮助下,建立了对 PR 的合规性检查;又在 pityonline 的督促下,采用了更为严格的 PR 审查机制。

|

||||

|

||||

LCTT 创立几年来,我们的一些流程和规范,已经成为其它一些翻译组的参考范本,我们也希望我们的这些经验,可以进一步帮助到其它的开源社区。

|

||||

|

||||

### 仓库重建和版权问题

|

||||

|

||||

今年还发生一次严重的事故,由于对选题来源把控不严和对版权问题没有引起足够的重视,我们引用的一篇文章违背了原文的版权规定,结果被原文作者投诉到 GitHub。而我并没有及时看到 GitHub 给我发的 DMCA 处理邮件,因此错过了处理窗口期,从而被 GitHub 将整个库予以删除。

|

||||

|

||||

出现这样的重大失误之后,经过大家的帮助,我们历经周折才将仓库基本恢复。这要特别感谢 VizV 的辛苦工作。

|

||||

|

||||

在此之后,我们对译文选文的规则进行了梳理,并全面清查了文章版权。这个教训对我们来说弥足沉重。

|

||||

|

||||

### 通证时代

|

||||

|

||||

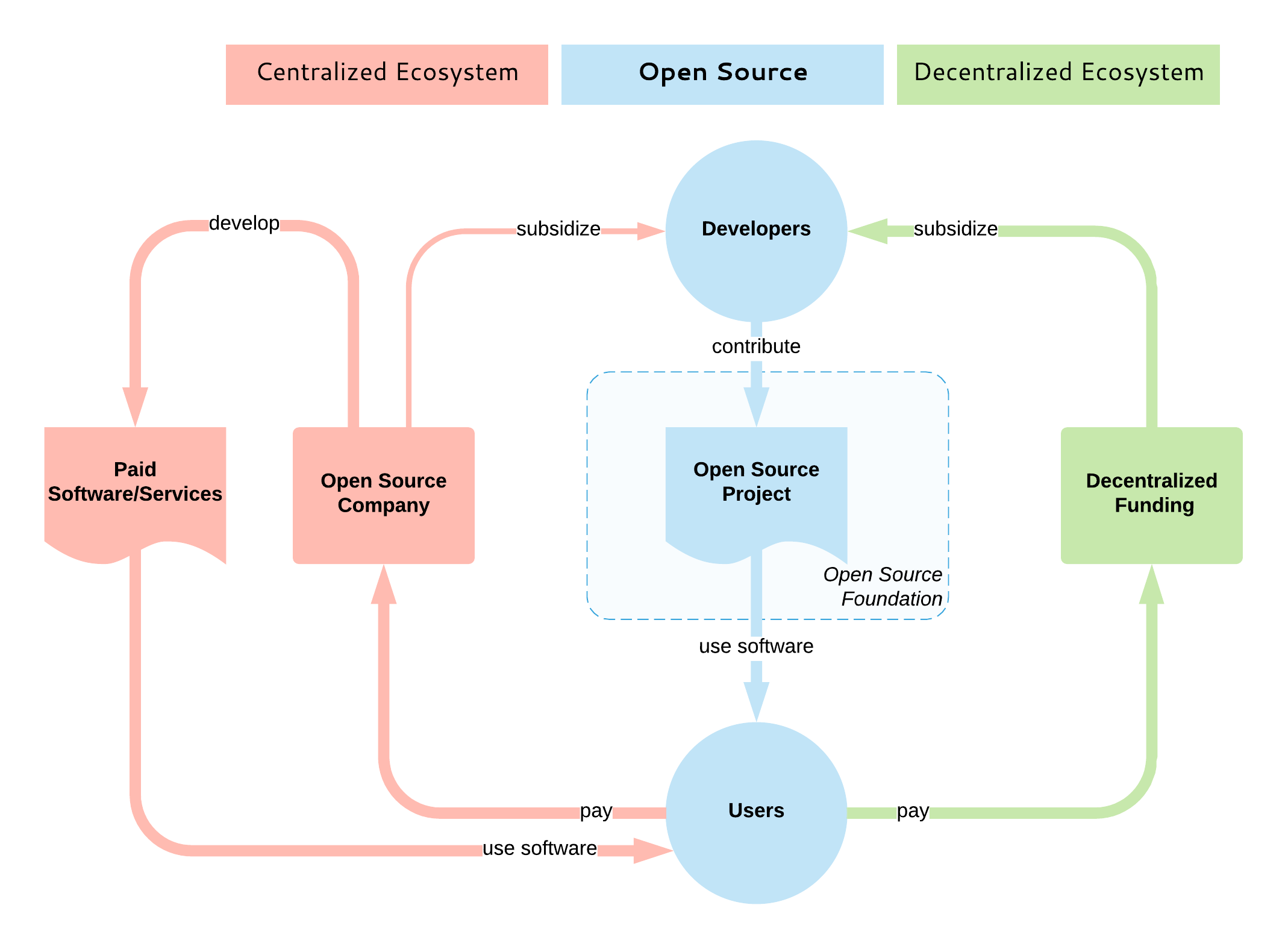

在 Linux 中国及 LCTT 发展过程中,我一直小心翼翼注意商业化的问题。严格来说,没有经济支持的开源组织如同无根之木,无源之水,是长久不了的。而商业化的技术社区又难免为了三斗米而折腰。所以往往很多技术社区要么渐渐凋零,要么就变成了商业机构。

|

||||

|

||||

从中国电信辞职后,我专职运营 Linux 中国这个开源社区已经近三年了,其间也有一些商业性收入,但是仅能勉强承担基本的运营费用。

|

||||

|

||||

这种尴尬的局面,使我,以及其它的开源社区同仁们纷纷寻求更好的发展之路。

|

||||

|

||||

去年参加中国开源年会时,在闭门会上,大家的讨论启发了我和诸位同仁,我们认为,开源社区结合通证经济,似乎是一条可行的开源社区发展之路。

|

||||

|

||||

今年 8 月 1 日,我们经过了半年的论证和实验,[发布了社区通证 LCCN](https://linux.cn/article-9886-1.html),并已经初步发放到了各位译者手中。我们还在继续建设通证生态各种工具,如合约、交易商城等。

|

||||

|

||||

我们希望能够通过通证为开源社区转入新的活力,也愿意将在探索道路上遇到的问题和解决的思路、工具链分享给更多的社区。

|

||||

|

||||

### 总结

|

||||

|

||||

从上一次总结以来,这又是七百多天,时光荏苒,而 LCTT 的创立也近两千天了。我希望,我们的翻译组以及更多的贡献者可以在通证经济的推动下,找到自洽、自治的发展道路;也希望能有更多的贡献者涌现出来接过我们的大旗,将开源发扬光大。

|

||||

|

||||

wxy

|

||||

2018/9/9 夜

|

||||

@ -1,22 +1,19 @@

|

||||

在 RxJS 中创建流的延伸教程

|

||||

============================================================

|

||||

全面教程:在 RxJS 中创建流

|

||||

================================

|

||||

|

||||

|

||||

|

||||

对大多数开发者来说,RxJS 是以库的形式与之接触,就像 Angular。一些函数会返回流,要使用它们就得把注意力放在操作符上。

|

||||

对大多数开发者来说,与 RxJS 的初次接触是通过库的形式,就像 Angular。一些函数会返回<ruby>流<rt>stream</rt></ruby>,要使用它们就得把注意力放在操作符上。

|

||||

|

||||

有些时候,混用响应式和非响应式代码似乎很有用。然后大家就开始热衷流的创造。不论是在编写异步代码或者是数据处理时,流都是一个不错的方案。

|

||||

|

||||

RxJS 提供很多方式来创建流。不管你遇到的是什么情况,都会有一个完美的创建流的方式。你可能根本用不上它们,但了解它们可以节省你的时间,让你少码一些代码。

|

||||

|

||||

我把所有可能的方法,按它们的主要目的,分放在四个目录中:

|

||||

我把所有可能的方法,按它们的主要目的,放在四个分类当中:

|

||||

|

||||

* 流式化现有数据

|

||||

|

||||

* 生成数据

|

||||

|

||||

* 使用现有 APIs 进行交互

|

||||

|

||||

* 使用现有 API 进行交互

|

||||

* 选择现有的流,并结合起来

|

||||

|

||||

注意:示例用的是 RxJS 6,可能会以前的版本有所不同。已知的区别是你导入函数的方式不同了。

|

||||

@ -25,9 +22,7 @@ RxJS 6

|

||||

|

||||

```

|

||||

import {of, from} from 'rxjs';

|

||||

```

|

||||

|

||||

```

|

||||

of(...);

|

||||

from(...);

|

||||

```

|

||||

@ -38,36 +33,24 @@ RxJS < 6

|

||||

import { Observable } from 'rxjs/Observable';

|

||||

import 'rxjs/add/observable/of';

|

||||

import 'rxjs/add/observable/from';

|

||||

```

|

||||

|

||||

```

|

||||

Observable.of(...);

|

||||

Observable.from(...);

|

||||

```

|

||||

|

||||

```

|

||||

//or

|

||||

```

|

||||

//或

|

||||

|

||||

```

|

||||

import { of } from 'rxjs/observable/of';

|

||||

import { from } from 'rxjs/observable/from';

|

||||

```

|

||||

|

||||

```

|

||||

of(...);

|

||||

from(...);

|

||||

```

|

||||

|

||||

流的图示中的标记:

|

||||

|

||||

* | 表示流结束了

|

||||

|

||||

* X 表示流出现错误并被终结

|

||||

|

||||

* … 表示流的走向不定

|

||||

|

||||

* * *

|

||||

* `|` 表示流结束了

|

||||

* `X` 表示流出现错误并被终结

|

||||

* `...` 表示流的走向不定

|

||||

|

||||

### 流式化已有数据

|

||||

|

||||

@ -75,7 +58,7 @@ from(...);

|

||||

|

||||

#### of

|

||||

|

||||

如果只有一个或者一些不同的元素,使用 _of_ :

|

||||

如果只有一个或者一些不同的元素,使用 `of`:

|

||||

|

||||

```

|

||||

of(1,2,3)

|

||||

@ -89,13 +72,11 @@ of(1,2,3)

|

||||

|

||||

#### from

|

||||

|

||||

如果有一个数组或者 _可迭代的_ 对象,而且你想要其中的所有元素发送到流中,使用 _from_。你也可以用它来把一个 promise 对象变成可观测的。

|

||||

如果有一个数组或者 _可迭代的对象_ ,而且你想要其中的所有元素发送到流中,使用 `from`。你也可以用它来把一个 promise 对象变成可观测的。

|

||||

|

||||

```

|

||||

const foo = [1,2,3];

|

||||

```

|

||||

|

||||

```

|

||||

from(foo)

|

||||

.subscribe();

|

||||

```

|

||||

@ -111,9 +92,7 @@ from(foo)

|

||||

|

||||

```

|

||||

const foo = { a: 1, b: 2};

|

||||

```

|

||||

|

||||

```

|

||||

pairs(foo)

|

||||

.subscribe();

|

||||

```

|

||||

@ -125,19 +104,16 @@ pairs(foo)

|

||||

|

||||

#### 那么其他的数据结构呢?

|

||||

|

||||

也许你的数据存储在自定义的结构中,而它又没有实现 _Iterable_ 接口,又或者说你的结构是递归的,树状的。也许下面某种选择适合这些情况:

|

||||

也许你的数据存储在自定义的结构中,而它又没有实现 _可迭代的对象_ 接口,又或者说你的结构是递归的、树状的。也许下面某种选择适合这些情况:

|

||||

|

||||

* 先将数据提取到数组里

|

||||

1. 先将数据提取到数组里

|

||||

2. 使用下一节将会讲到的 `generate` 函数,遍历所有数据

|

||||

3. 创建一个自定义流(见下一节)

|

||||

4. 创建一个迭代器

|

||||

|

||||

* 使用下一节将会讲到的 _generate_ 函数,遍历所有数据

|

||||

稍后会讲到选项 2 和 3 ,因此这里的重点是创建一个迭代器。我们可以对一个 _可迭代的对象_ 调用 `from` 创建一个流。 _可迭代的对象_ 是一个对象,可以产生一个迭代器(如果你对细节感兴趣,参考 [这篇 mdn 文章][6])。

|

||||

|

||||

* 创建一个自定义流(见下一节)

|

||||

|

||||

* 创建一个迭代器

|

||||

|

||||

稍后会讲到选项 2 和 3 ,因此这里的重点是创建一个迭代器。我们可以对一个 _iterable_ 对象调用 _from_ 创建一个流。 _iterable_ 是一个对象,可以产生一个迭代器(如果你对细节感兴趣,参考 [这篇 mdn 文章][6])。

|

||||

|

||||

创建一个迭代器的简单方式是 [generator function][7]。当你调用一个生成函数(generator function)时,它返回一个对象,该对象同时遵循 _iterable_ 接口和 _iterator_ 接口。

|

||||

创建一个迭代器的简单方式是 <ruby>[生成函数][7]<rt>generator function</rt></ruby>。当你调用一个生成函数时,它返回一个对象,该对象同时遵循 _可迭代的对象_ 接口和 _迭代器_ 接口。

|

||||

|

||||

```

|

||||

// 自定义的数据结构

|

||||

@ -147,23 +123,17 @@ class List {

|

||||

get size() ...

|

||||

...

|

||||

}

|

||||

```

|

||||

|

||||

```

|

||||

function* listIterator(list) {

|

||||

for (let i = 0; i<list.size; i++) {

|

||||

yield list.get(i);

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

```

|

||||

const myList = new List();

|

||||

myList.add(1);

|

||||

myList.add(3);

|

||||

```

|

||||

|

||||

```

|

||||

from(listIterator(myList))

|

||||

.subscribe(console.log);

|

||||

```

|

||||

@ -173,15 +143,13 @@ from(listIterator(myList))

|

||||

// 1 3 |

|

||||

```

|

||||

|

||||

调用 `listIterator` 函数时,返回值是一个 _iterable_ / _iterator_。函数里面的代码在调用 _subscribe_ 前不会执行。

|

||||

|

||||

* * *

|

||||

调用 `listIterator` 函数时,返回值是一个 _可迭代的对象_ / _迭代器_ 。函数里面的代码在调用 `subscribe` 前不会执行。

|

||||

|

||||

### 生成数据

|

||||

|

||||

你知道要发送哪些数据,但想(或者不得不)动态生成它。所有函数的最后一个参数都可以用来接收一个调度器。他们产生静态的流。

|

||||

你知道要发送哪些数据,但想(或者必须)动态生成它。所有函数的最后一个参数都可以用来接收一个调度器。他们产生静态的流。

|

||||

|

||||

#### range

|

||||

#### 范围(`range`)

|

||||

|

||||

从初始值开始,发送一系列数字,直到完成了指定次数的迭代。

|

||||

|

||||

@ -195,9 +163,9 @@ range(10, 2) // 从 10 开始,发送两个值

|

||||

// 10 11 |

|

||||

```

|

||||

|

||||

#### 间隔/定时器

|

||||

#### 间隔(`interval`) / 定时器(`timer`)

|

||||

|

||||

有点像 _range_,但定时器是周期性的发送累加的数字(就是说,不是立即发送)。两者的区别在于在于 _timer_ 允许你为第一个元素设定一个延迟。也可以只产生一个值,只要不指定周期。

|

||||

有点像范围,但定时器是周期性的发送累加的数字(就是说,不是立即发送)。两者的区别在于在于定时器允许你为第一个元素设定一个延迟。也可以只产生一个值,只要不指定周期。

|

||||

|

||||

```

|

||||

interval(1000) // 每 1000ms = 1 秒 发送数据

|

||||

@ -211,9 +179,7 @@ interval(1000) // 每 1000ms = 1 秒 发送数据

|

||||

|

||||

```

|

||||

delay(5000, 1000) // 和上面相同,在开始前先等待 5000ms

|

||||

```

|

||||

|

||||

```

|

||||

delay(5000)

|

||||

.subscribe(i => console.log("foo");

|

||||

// 5 秒后打印 foo

|

||||

@ -229,7 +195,7 @@ interval(10000).pipe(

|

||||

|

||||

这段代码每 10 秒获取一次数据,更新屏幕。

|

||||

|

||||

#### generate

|

||||

#### 生成(`generate `)

|

||||

|

||||

这是个更加复杂的函数,允许你发送一系列任意类型的对象。它有一些重载,这里你看到的是最有意思的部分:

|

||||

|

||||

@ -246,15 +212,13 @@ generate(

|

||||

// 1 2 4 8 |

|

||||

```

|

||||

|

||||

你也可以用它来迭代值,如果一个结构没有实现 _Iterable_ 接口。我们用前面的 list 例子来进行演示:

|

||||

你也可以用它来迭代值,如果一个结构没有实现 _可迭代的对象_ 接口。我们用前面的列表例子来进行演示:

|

||||

|

||||

```

|

||||

const myList = new List();

|

||||

myList.add(1);

|

||||

myList.add(3);

|

||||

```

|

||||

|

||||

```

|

||||

generate(

|

||||

0, // 从这个值开始

|

||||

i => i < list.size, // 条件:发送数据,直到遍历完整个列表

|

||||

@ -268,15 +232,13 @@ generate(

|

||||

// 1 3 |

|

||||

```

|

||||

|

||||

如你所见,我添加了另一个参数:选择器(selector)。它和 _map_ 操作符作用类似,将生成的值转换为更有用的东西。

|

||||

|

||||

* * *

|

||||

如你所见,我添加了另一个参数:选择器。它和 `map` 操作符作用类似,将生成的值转换为更有用的东西。

|

||||

|

||||

### 空的流

|

||||

|

||||

有时候你要传递或返回一个不用发送任何数据的流。有三个函数分别用于不同的情况。你可以给这三个函数传递调度器。_empty_ 和 _throwError_ 接收一个调度器参数。

|

||||

有时候你要传递或返回一个不用发送任何数据的流。有三个函数分别用于不同的情况。你可以给这三个函数传递调度器。`empty` 和 `throwError` 接收一个调度器参数。

|

||||

|

||||

#### empty

|

||||

#### `empty`

|

||||

|

||||

创建一个空的流,一个值也不发送。

|

||||

|

||||

@ -290,7 +252,7 @@ empty()

|

||||

// |

|

||||

```

|

||||

|

||||

#### never

|

||||

#### `never`

|

||||

|

||||

创建一个永远不会结束的流,仍然不发送值。

|

||||

|

||||

@ -304,7 +266,7 @@ never()

|

||||

// ...

|

||||

```

|

||||

|

||||

#### throwError

|

||||

#### `throwError`

|

||||

|

||||

创建一个流,流出现错误,不发送数据。

|

||||

|

||||

@ -318,15 +280,13 @@ throwError('error')

|

||||

// X

|

||||

```

|

||||

|

||||

* * *

|

||||

|

||||

### 挂钩已有的 API

|

||||

|

||||

不是所有的库和所有你之前写的代码使用或者支持流。幸运的是 RxJS 提供函数用来桥接非响应式和响应式代码。这一节仅仅讨论 RxJS 为桥接代码提供的模版。

|

||||

|

||||

你可能还对这篇出自 [Ben Lesh][9] 的 [延伸阅读][8] 感兴趣,这篇文章讲了几乎所有能与 promises 交互操作的方式。

|

||||

你可能还对这篇出自 [Ben Lesh][9] 的 [全面的文章][8] 感兴趣,这篇文章讲了几乎所有能与 promises 交互操作的方式。

|

||||

|

||||

#### from

|

||||

#### `from`

|

||||

|

||||

我们已经用过它,把它列在这里是因为,它可以封装一个含有 observable 对象的 promise 对象。

|

||||

|

||||

@ -346,9 +306,7 @@ fromEvent 为 DOM 元素添加一个事件监听器,我确定你知道这个

|

||||

|

||||

```

|

||||

const element = $('#fooButton'); // 从 DOM 元素中创建一个 jQuery 对象

|

||||

```

|

||||

|

||||

```

|

||||

from(element, 'click')

|

||||

.subscribe();

|

||||

```

|

||||

@ -367,31 +325,25 @@ from(document, 'click')

|

||||

.subscribe();

|

||||

```

|

||||

|

||||

这告诉 RxJS 我们想要监听 document 中的点击事件。在提交过程中,RxJS 发现 document 是一个 _EventTarget_ 类型,因此它可以调用它的 _addEventListener_ 方法。如果我们传入的是一个 jQuery 对象而非 document,那么 RxJs 知道它得调用 _on_ 方法。

|

||||

这告诉 RxJS 我们想要监听 document 中的点击事件。在提交过程中,RxJS 发现 document 是一个 _EventTarget_ 类型,因此它可以调用它的 `addEventListener` 方法。如果我们传入的是一个 jQuery 对象而非 document,那么 RxJs 知道它得调用 _on_ 方法。

|

||||

|

||||

这个例子用的是 _fromEventPattern_,和 _fromEvent_ 的工作基本上一样:

|

||||

这个例子用的是 _fromEventPattern_ ,和 _fromEvent_ 的工作基本上一样:

|

||||

|

||||

```

|

||||

function addClickHandler(handler) {

|

||||

document.addEventListener('click', handler);

|

||||

}

|

||||

```

|

||||

|

||||

```

|

||||

function removeClickHandler(handler) {

|

||||

document.removeEventListener('click', handler);

|

||||

}

|

||||

```

|

||||

|

||||

```

|

||||

fromEventPattern(

|

||||

addClickHandler,

|

||||

removeClickHandler,

|

||||

)

|

||||

.subscribe(console.log);

|

||||

```

|

||||

|

||||

```

|

||||

// 等效于

|

||||

fromEvent(document, 'click')

|

||||

```

|

||||

@ -402,49 +354,37 @@ RxJS 自动创建实际的监听器( _handler_ )你的工作是添加或者

|

||||

|

||||

```

|

||||

const listeners = [];

|

||||

```

|

||||

|

||||

```

|

||||

class Foo {

|

||||

registerListener(listener) {

|

||||

listeners.push(listener);

|

||||

}

|

||||

```

|

||||

|

||||

```

|

||||

emit(value) {

|

||||

listeners.forEach(listener => listener(value));

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

```

|

||||

const foo = new Foo();

|

||||

```

|

||||

|

||||

```

|

||||

fromEventPattern(listener => foo.registerListener(listener))

|

||||

.subscribe();

|

||||

```

|

||||

|

||||

```

|

||||

foo.emit(1);

|

||||

```

|

||||

|

||||

```

|

||||

// Produces

|

||||

// 结果

|

||||

// 1 ...

|

||||

```

|

||||

|

||||

当我们调用 foo.emit(1) 时,RxJS 中的监听器将被调用,然后它就能把值发送到流中。

|

||||

当我们调用 `foo.emit(1)` 时,RxJS 中的监听器将被调用,然后它就能把值发送到流中。

|

||||

|

||||

你也可以用它来监听多个事件类型,或者结合所有可以通过回调进行通讯的 API,例如,WebWorker API:

|

||||

|

||||

```

|

||||

const myWorker = new Worker('worker.js');

|

||||

```

|

||||

|

||||

```

|

||||

fromEventPattern(

|

||||

handler => { myWorker.onmessage = handler },

|

||||

handler => { myWorker.onmessage = undefined }

|

||||

@ -465,20 +405,14 @@ fromEventPattern(

|

||||

function foo(value, callback) {

|

||||

callback(value);

|

||||

}

|

||||

```

|

||||

|

||||

```

|

||||

// 没有流

|

||||

foo(1, console.log); //prints 1 in the console

|

||||

```

|

||||

|

||||

```

|

||||

// 有流

|

||||

const reactiveFoo = bindCallback(foo);

|

||||

// 当我们调用 reactiveFoo 时,它返回一个 observable 对象

|

||||

```

|

||||

|

||||

```

|

||||

reactiveFoo(1)

|

||||

.subscribe(console.log); // 在控制台打印 1

|

||||

```

|

||||

@ -494,51 +428,39 @@ reactiveFoo(1)

|

||||

|

||||

```

|

||||

import { webSocket } from 'rxjs/webSocket';

|

||||

```

|

||||

|

||||

```

|

||||

let socket$ = webSocket('ws://localhost:8081');

|

||||

```

|

||||

|

||||

```

|

||||

// 接收消息

|

||||

socket$.subscribe(

|

||||

(msg) => console.log('message received: ' + msg),

|

||||

(err) => console.log(err),

|

||||

() => console.log('complete') * );

|

||||

```

|

||||

|

||||

```

|

||||

// 发送消息

|

||||

socket$.next(JSON.stringify({ op: 'hello' }));

|

||||

```

|

||||

|

||||

把 websocket 功能添加到你的应用中真的很简单。_websocket_ 创建一个 subject。这意味着你可以订阅它,通过调用 _next_ 来获得消息和发送消息。

|

||||

把 websocket 功能添加到你的应用中真的很简单。_websocket_ 创建一个 subject。这意味着你可以订阅它,通过调用 `next` 来获得消息和发送消息。

|

||||

|

||||

#### ajax

|

||||

|

||||

如你所知:类似于 websocket,提供 AJAX 查询的功能。你可能用了一个带有 AJAX 功能的库或者框架。或者你没有用,那么我建议使用 fetch(或者必要的话用 polyfill),把返回的 promise 封装到一个 observable 对象中(参考稍后会讲到的 _defer_ 函数)。

|

||||

如你所知:类似于 websocket,提供 AJAX 查询的功能。你可能用了一个带有 AJAX 功能的库或者框架。或者你没有用,那么我建议使用 fetch(或者必要的话用 polyfill),把返回的 promise 封装到一个 observable 对象中(参考稍后会讲到的 `defer` 函数)。

|

||||

|

||||

* * *

|

||||

|

||||

### Custom Streams

|

||||

### 定制流

|

||||

|

||||

有时候已有的函数用起来并不是足够灵活。或者你需要对订阅有更强的控制。

|

||||

|

||||

#### Subject

|

||||

#### 主题(`Subject`)

|

||||

|

||||

subject 是一个特殊的对象,它使得你的能够把数据发送到流中,并且能够控制数据。subject 本身就是一个 observable 对象,但如果你想要把流暴露给其它代码,建议你使用 _asObservable_ 方法。这样你就不能意外调用原始方法。

|

||||

`Subject` 是一个特殊的对象,它使得你的能够把数据发送到流中,并且能够控制数据。`Subject` 本身就是一个可观察对象,但如果你想要把流暴露给其它代码,建议你使用 `asObservable` 方法。这样你就不能意外调用原始方法。

|

||||

|

||||

```

|

||||

const subject = new Subject();

|

||||

const observable = subject.asObservable();

|

||||

```

|

||||

|

||||

```

|

||||

observable.subscribe();

|

||||

```

|

||||

|

||||

```

|

||||

subject.next(1);

|

||||

subject.next(2);

|

||||

subject.complete();

|

||||

@ -554,17 +476,11 @@ subject.complete();

|

||||

```

|

||||

const subject = new Subject();

|

||||

const observable = subject.asObservable();

|

||||

```

|

||||

|

||||

```

|

||||

subject.next(1);

|

||||

```

|

||||

|

||||

```

|

||||

observable.subscribe(console.log);

|

||||

```

|

||||

|

||||

```

|

||||

subject.next(2);

|

||||

subject.complete();

|

||||

```

|

||||

@ -574,20 +490,16 @@ subject.complete();

|

||||

// 2

|

||||

```

|

||||

|

||||

除了常规的 subject,RxJS 还提供了三种特殊的版本。

|

||||

除了常规的 `Subject`,RxJS 还提供了三种特殊的版本。

|

||||

|

||||

_AsyncSubject_ 在结束后只发送最后的一个值。

|

||||

`AsyncSubject` 在结束后只发送最后的一个值。

|

||||

|

||||

```

|

||||

const subject = new AsyncSubject();

|

||||

const observable = subject.asObservable();

|

||||

```

|

||||

|

||||

```

|

||||

observable.subscribe(console.log);

|

||||

```

|

||||

|

||||

```

|

||||

subject.next(1);

|

||||

subject.next(2);

|

||||

subject.complete();

|

||||

@ -598,18 +510,14 @@ subject.complete();

|

||||

// 2

|

||||

```

|

||||

|

||||

_BehaviorSubject_ 使得你能够提供一个(默认的)值,如果当前没有其它值发送的话,这个值会被发送给每个订阅者。否则订阅者收到最后一个发送的值。

|

||||

`BehaviorSubject` 使得你能够提供一个(默认的)值,如果当前没有其它值发送的话,这个值会被发送给每个订阅者。否则订阅者收到最后一个发送的值。

|

||||

|

||||

```

|

||||

const subject = new BehaviorSubject(1);

|

||||

const observable = subject.asObservable();

|

||||

```

|

||||

|

||||

```

|

||||

const subscription1 = observable.subscribe(console.log);

|

||||

```

|

||||

|

||||

```

|

||||

subject.next(2);

|

||||

subscription1.unsubscribe();

|

||||

```

|

||||

@ -622,29 +530,21 @@ subscription1.unsubscribe();

|

||||

|

||||

```

|

||||

const subscription2 = observable.subscribe(console.log);

|

||||

```

|

||||

|

||||

```

|

||||

// 输出

|

||||

// 2

|

||||

```

|

||||

|

||||

The _ReplaySubject_ 存储一定数量、或一定时间或所有的发送过的值。所有新的订阅者将会获得所有存储了的值。

|

||||

`ReplaySubject` 存储一定数量、或一定时间或所有的发送过的值。所有新的订阅者将会获得所有存储了的值。

|

||||

|

||||

```

|

||||

const subject = new ReplaySubject();

|

||||

const observable = subject.asObservable();

|

||||

```

|

||||

|

||||

```

|

||||

subject.next(1);

|

||||

```

|

||||

|

||||

```

|

||||

observable.subscribe(console.log);

|

||||

```

|

||||

|

||||

```

|

||||

subject.next(2);

|

||||

subject.complete();

|

||||

```

|

||||

@ -655,11 +555,11 @@ subject.complete();

|

||||

// 2

|

||||

```

|

||||

|

||||

你可以在 [ReactiveX documentation][10](它提供了一些其它的连接) 里面找到更多关于 subjects 的信息。[Ben Lesh][11] 在 [On The Subject Of Subjects][12] 上面提供了一些关于 subjects 的理解,[Nicholas Jamieson][13] 在 [in RxJS: Understanding Subjects][14] 上也提供了一些理解。

|

||||

你可以在 [ReactiveX 文档][10](它提供了一些其它的连接) 里面找到更多关于 `Subject` 的信息。[Ben Lesh][11] 在 [On The Subject Of Subjects][12] 上面提供了一些关于 `Subject` 的理解,[Nicholas Jamieson][13] 在 [in RxJS: Understanding Subjects][14] 上也提供了一些理解。

|

||||

|

||||

#### Observable

|

||||

#### 可观察对象

|

||||

|

||||

你可以简单地用 new 操作符创建一个 observable 对象。通过你传入的函数,你可以控制流,只要有人订阅了或者它接收到一个可以当成 subject 使用的 observer,这个函数就会被调用,比如,调用 next,complet 和 error。

|

||||

你可以简单地用 new 操作符创建一个可观察对象。通过你传入的函数,你可以控制流,只要有人订阅了或者它接收到一个可以当成 `Subject` 使用的观察者,这个函数就会被调用,比如,调用 `next`、`complet` 和 `error`。

|

||||

|

||||

让我们回顾一下列表示例:

|

||||

|

||||

@ -667,16 +567,12 @@ subject.complete();

|

||||

const myList = new List();

|

||||

myList.add(1);

|

||||

myList.add(3);

|

||||

```

|

||||

|

||||

```

|

||||

new Observable(observer => {

|

||||

for (let i = 0; i<list.size; i++) {

|

||||

observer.next(list.get(i));

|

||||

}

|

||||

```

|

||||

|

||||

```

|

||||

observer.complete();

|

||||

})

|

||||

.subscribe();

|

||||

@ -687,14 +583,12 @@ new Observable(observer => {

|

||||

// 1 3 |

|

||||

```

|

||||

|

||||

这个函数可以返回一个 unsubcribe 函数,当有订阅者取消订阅时这个函数就会被调用。你可以用它来清楚或者执行一些收尾操作。

|

||||

这个函数可以返回一个 `unsubcribe` 函数,当有订阅者取消订阅时这个函数就会被调用。你可以用它来清楚或者执行一些收尾操作。

|

||||

|

||||

```

|

||||

new Observable(observer => {

|

||||

// 流式化

|

||||

```

|

||||

|

||||

```

|

||||

return () => {

|

||||

//clean up

|

||||

};

|

||||

@ -702,20 +596,18 @@ new Observable(observer => {

|

||||

.subscribe();

|

||||

```

|

||||

|

||||

#### 继承 Observable

|

||||

#### 继承可观察对象

|

||||

|

||||

在有可用的操作符前,这是一种实现自定义操作符的方式。RxJS 在内部扩展了 _Observable_。_Subject_ 就是一个例子,另一个是 _publisher_ 操作符。它返回一个 _ConnectableObservable_ 对象,该对象提供额外的方法 _connect_。

|

||||

在有可用的操作符前,这是一种实现自定义操作符的方式。RxJS 在内部扩展了 _可观察对象_ 。`Subject` 就是一个例子,另一个是 `publisher` 操作符。它返回一个 `ConnectableObservable` 对象,该对象提供额外的方法 `connect`。

|

||||

|

||||

#### 实现 Subscribable 接口

|

||||

#### 实现 `Subscribable` 接口

|

||||

|

||||

有时候你已经用一个对象来保存状态,并且能够发送值。如果你实现了 Subscribable 接口,你可以把它转换成一个 observable 对象。Subscribable 接口中只有一个 subscribe 方法。

|

||||

有时候你已经用一个对象来保存状态,并且能够发送值。如果你实现了 `Subscribable` 接口,你可以把它转换成一个可观察对象。`Subscribable` 接口中只有一个 `subscribe` 方法。

|

||||

|

||||

```

|

||||

interface Subscribable<T> { subscribe(observerOrNext?: PartialObserver<T> | ((value: T) => void), error?: (error: any) => void, complete?: () => void): Unsubscribable}

|

||||

```

|

||||

|

||||

* * *

|

||||

|

||||

### 结合和选择现有的流

|

||||

|

||||

知道怎么创建一个独立的流还不够。有时候你有好几个流但其实只需要一个。有些函数也可作为操作符,所以我不打算在这里深入展开。推荐看看 [Max NgWizard K][16] 所写的一篇 [文章][15],它还包含一些有趣的动画。

|

||||

@ -724,41 +616,34 @@ interface Subscribable<T> { subscribe(observerOrNext?: PartialObserver<T> | ((v

|

||||

|

||||

#### ObservableInput 类型

|

||||

|

||||

期望接收流的操作符和函数通常不单独和 observables 一起工作。相反,他们实际上期望的参数类型是 ObservableInput,定义如下:

|

||||

期望接收流的操作符和函数通常不单独和可观察对象一起工作。相反,它们实际上期望的参数类型是 ObservableInput,定义如下:

|

||||

|

||||

```

|

||||

type ObservableInput<T> = SubscribableOrPromise<T> | ArrayLike<T> | Iterable<T>;

|

||||

```

|

||||

|

||||

这意味着你可以传递一个 promises 或者数组却不需要事先把他们转换成 observables。

|

||||

这意味着你可以传递一个 promises 或者数组却不需要事先把他们转换成可观察对象。

|

||||

|

||||

#### defer

|

||||

|

||||

主要的目的是把一个 observable 对象的创建延迟(defer)到有人想要订阅的时间。在以下情况,这很有用:

|

||||

|

||||

* 创建 observable 对象的开销较大

|

||||

|

||||

* 你想要给每个订阅者新的 observable 对象

|

||||

|

||||

* 你想要在订阅时候选择不同的 observable 对象

|

||||

主要的目的是把一个 observable 对象的创建延迟(`defer`)到有人想要订阅的时间。在以下情况,这很有用:

|

||||

|

||||

* 创建可观察对象的开销较大

|

||||

* 你想要给每个订阅者新的可观察对象

|

||||

* 你想要在订阅时候选择不同的可观察对象

|

||||

* 有些代码必须在订阅之后执行

|

||||

|

||||

最后一点包含了一个并不起眼的用例:Promises(defer 也可以返回一个 promise 对象)。看看这个用到了 fetch API 的例子:

|

||||

最后一点包含了一个并不起眼的用例:Promises(`defer` 也可以返回一个 promise 对象)。看看这个用到了 fetch API 的例子:

|

||||

|

||||

```

|

||||

function getUser(id) {

|

||||

console.log("fetching data");

|

||||

return fetch(`https://server/user/${id}`);

|

||||

}

|

||||

```

|

||||

|

||||

```

|

||||

const userPromise = getUser(1);

|

||||

console.log("I don't want that request now");

|

||||

```

|

||||

|

||||

```

|

||||

// 其它地方

|

||||

userPromise.then(response => console.log("done");

|

||||

```

|

||||

@ -770,17 +655,13 @@ userPromise.then(response => console.log("done");

|

||||

// done

|

||||

```

|

||||

|

||||

只要流在你订阅的时候执行了,promise 就会立即执行。我们调用 getUser 的瞬间,就发送了一个请求,哪怕我们这个时候不想发送请求。当然,我们可以使用 from 来把一个 promise 对象转换成 observable 对象,但我们传递的 promise 对象已经创建或执行了。defer 让我们能够等到订阅才发送这个请求:

|

||||

只要流在你订阅的时候执行了,promise 就会立即执行。我们调用 `getUser` 的瞬间,就发送了一个请求,哪怕我们这个时候不想发送请求。当然,我们可以使用 `from` 来把一个 promise 对象转换成可观察对象,但我们传递的 promise 对象已经创建或执行了。`defer` 让我们能够等到订阅才发送这个请求:

|

||||

|

||||

```

|

||||

const user$ = defer(() => getUser(1));

|

||||

```

|

||||

|

||||

```

|

||||

console.log("I don't want that request now");

|

||||

```

|

||||

|

||||

```

|

||||

// 其它地方

|

||||

user$.subscribe(response => console.log("done");

|

||||

```

|

||||

@ -794,7 +675,7 @@ user$.subscribe(response => console.log("done");

|

||||

|

||||

#### iif

|

||||

|

||||

_iif 包含了一个关于 _defer_ 的特殊用例:在订阅时选择两个流中的一个:

|

||||

`iif` 包含了一个关于 `defer` 的特殊用例:在订阅时选择两个流中的一个:

|

||||

|

||||

```

|

||||

iif(

|

||||

@ -810,9 +691,9 @@ iif(

|

||||

// AM before noon, PM afterwards

|

||||

```

|

||||

|

||||

引用了文档:

|

||||

引用该文档:

|

||||

|

||||

> 实际上 `[iif][3]` 能够轻松地用 `[defer][4]` 实现,它仅仅是出于方便和可读性的目的。

|

||||

> 实际上 [iif][3] 能够轻松地用 [defer][4] 实现,它仅仅是出于方便和可读性的目的。

|

||||

|

||||

#### onErrorResumeNext

|

||||

|

||||

@ -822,13 +703,9 @@ iif(

|

||||

const stream1$ = of(1, 2).pipe(

|

||||

tap(i => { if(i>1) throw 'error'}) //fail after first element

|

||||

);

|

||||

```

|

||||

|

||||

```

|

||||

const stream2$ = of(3,4);

|

||||

```

|

||||

|

||||

```

|

||||

onErrorResumeNext(stream1$, stream2$)

|

||||

.subscribe(console.log);

|

||||

```

|

||||

@ -848,9 +725,7 @@ onErrorResumeNext(stream1$, stream2$)

|

||||

function handleResponses([user, account]) {

|

||||

// 执行某些任务

|

||||

}

|

||||

```

|

||||

|

||||

```

|

||||

forkJoin(

|

||||

fetch("https://server/user/1"),

|

||||

fetch("https://server/account/1")

|

||||

@ -860,9 +735,9 @@ forkJoin(

|

||||

|

||||

#### merge / concat

|

||||

|

||||

发送每一个从源 observables 对象中发出的值。

|

||||

发送每一个从可观察对象源中发出的值。

|

||||

|

||||

_merge_ 接收一个参数,让你定义有多少流能被同时订阅。默认是无限制的。设为 1 就意味着监听一个源流,在它结束的时候订阅下一个。由于这是一个常见的场景,RxJS 为你提供了一个显示的函数:_concat_。

|

||||

`merge` 接收一个参数,让你定义有多少流能被同时订阅。默认是无限制的。设为 1 就意味着监听一个源流,在它结束的时候订阅下一个。由于这是一个常见的场景,RxJS 为你提供了一个显示的函数:`concat`。

|

||||

|

||||

```

|

||||

merge(

|

||||

@ -872,31 +747,20 @@ merge(

|

||||

2 //two concurrent streams

|

||||

)

|

||||

.subscribe();

|

||||

```

|

||||

|

||||

```

|

||||

// 只订阅流 1 和流 2

|

||||

```

|

||||

|

||||

```

|

||||

// 输出

|

||||

// Stream 1 -> after 1000ms

|

||||

// Stream 2 -> after 1200ms

|

||||

// Stream 1 -> after 2000ms

|

||||

```

|

||||

|

||||

```

|

||||

// 流 1 结束后,开始订阅流 3

|

||||

```

|

||||

|

||||

```

|

||||

// 输出

|

||||

// Stream 3 -> after 0 ms

|

||||

// Stream 2 -> after 400 ms (2400ms from beginning)

|

||||

// Stream 3 -> after 1000ms

|

||||

```

|

||||

|

||||

```

|

||||

|

||||

merge(

|

||||

interval(1000).pipe(mapTo("Stream 1"), take(2)),

|

||||

@ -908,9 +772,7 @@ concat(

|

||||

interval(1000).pipe(mapTo("Stream 1"), take(2)),

|

||||

interval(1200).pipe(mapTo("Stream 2"), take(2))

|

||||

)

|

||||

```

|

||||

|

||||

```

|

||||

// 输出

|

||||

// Stream 1 -> after 1000ms

|

||||

// Stream 1 -> after 2000ms

|

||||

@ -920,7 +782,7 @@ concat(

|

||||

|

||||

#### zip / combineLatest

|

||||

|

||||

_merge_ 和 _concat_ 一个接一个的发送所有从源流中读到的值,而 zip 和 combineLatest 是把每个流中的一个值结合起来一起发送。_zip_ 结合所有源流中发送的第一个值。如果流的内容相关联,那么这就很有用。

|

||||

`merge` 和 `concat` 一个接一个的发送所有从源流中读到的值,而 `zip` 和 `combineLatest` 是把每个流中的一个值结合起来一起发送。`zip` 结合所有源流中发送的第一个值。如果流的内容相关联,那么这就很有用。

|

||||

|

||||

```

|

||||

zip(

|

||||

@ -935,7 +797,7 @@ zip(

|

||||

// [0, 0] [1, 1] [2, 2] ...

|

||||

```

|

||||

|

||||

_combineLatest_ 与之类似,但结合的是源流中发送的最后一个值。直到所有源流至少发送一个值之后才会触发事件。这之后每次源流发送一个值,它都会把这个值与其他流发送的最后一个值结合起来。

|

||||

`combineLatest` 与之类似,但结合的是源流中发送的最后一个值。直到所有源流至少发送一个值之后才会触发事件。这之后每次源流发送一个值,它都会把这个值与其他流发送的最后一个值结合起来。

|

||||

|

||||

```

|

||||

combineLatest(

|

||||

@ -983,15 +845,13 @@ race(

|

||||

// foo |

|

||||

```

|

||||

|

||||

由于 _of_ 立即产生一个值,因此它是最快的流,然而这个流就被选中了。

|

||||

|

||||

* * *

|

||||

由于 `of` 立即产生一个值,因此它是最快的流,然而这个流就被选中了。

|

||||

|

||||

### 总结

|

||||

|

||||

已经有很多创建 observables 对象的方式了。如果你想要创造响应式的 APIs 或者想用响应式的 API 结合传统 APIs,那么了解这些方法很重要。

|

||||

已经有很多创建可观察对象的方式了。如果你想要创造响应式的 API 或者想用响应式的 API 结合传统 API,那么了解这些方法很重要。

|

||||

|

||||

我已经向你展示了所有可用的方法,但它们其实还有很多内容可以讲。如果你想更加深入地了解,我极力推荐你查阅 [documentation][20] 或者阅读相关文章。

|

||||

我已经向你展示了所有可用的方法,但它们其实还有很多内容可以讲。如果你想更加深入地了解,我极力推荐你查阅 [文档][20] 或者阅读相关文章。

|

||||

|

||||

[RxViz][21] 是另一种值得了解的有意思的方式。你编写 RxJS 代码,产生的流可以用图形或动画进行显示。

|

||||

|

||||

@ -1001,7 +861,7 @@ via: https://blog.angularindepth.com/the-extensive-guide-to-creating-streams-in-

|

||||

|

||||

作者:[Oliver Flaggl][a]

|

||||

译者:[BriFuture](https://github.com/BriFuture)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,66 @@

|

||||

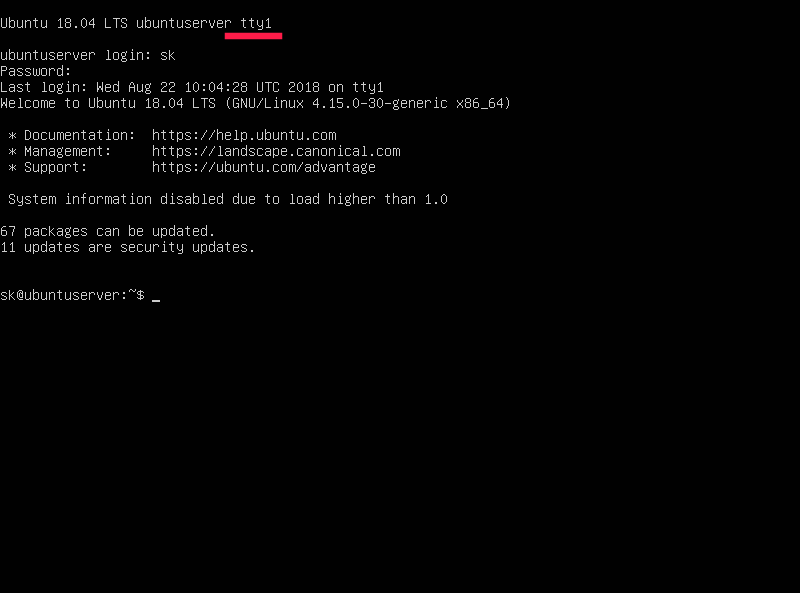

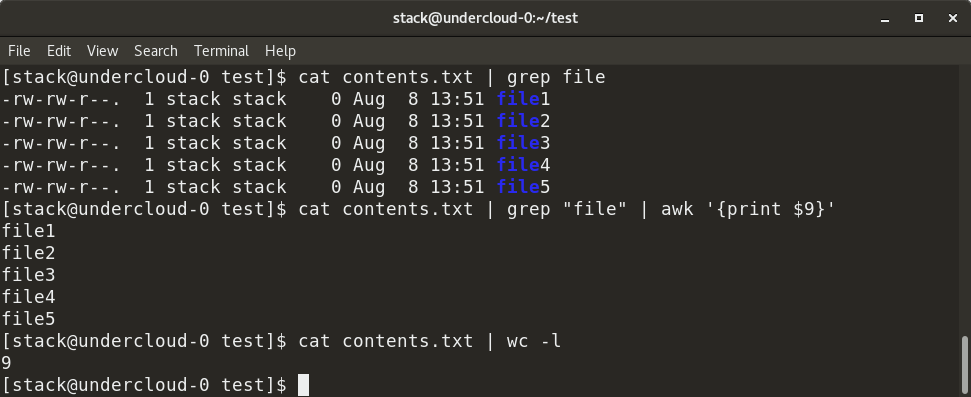

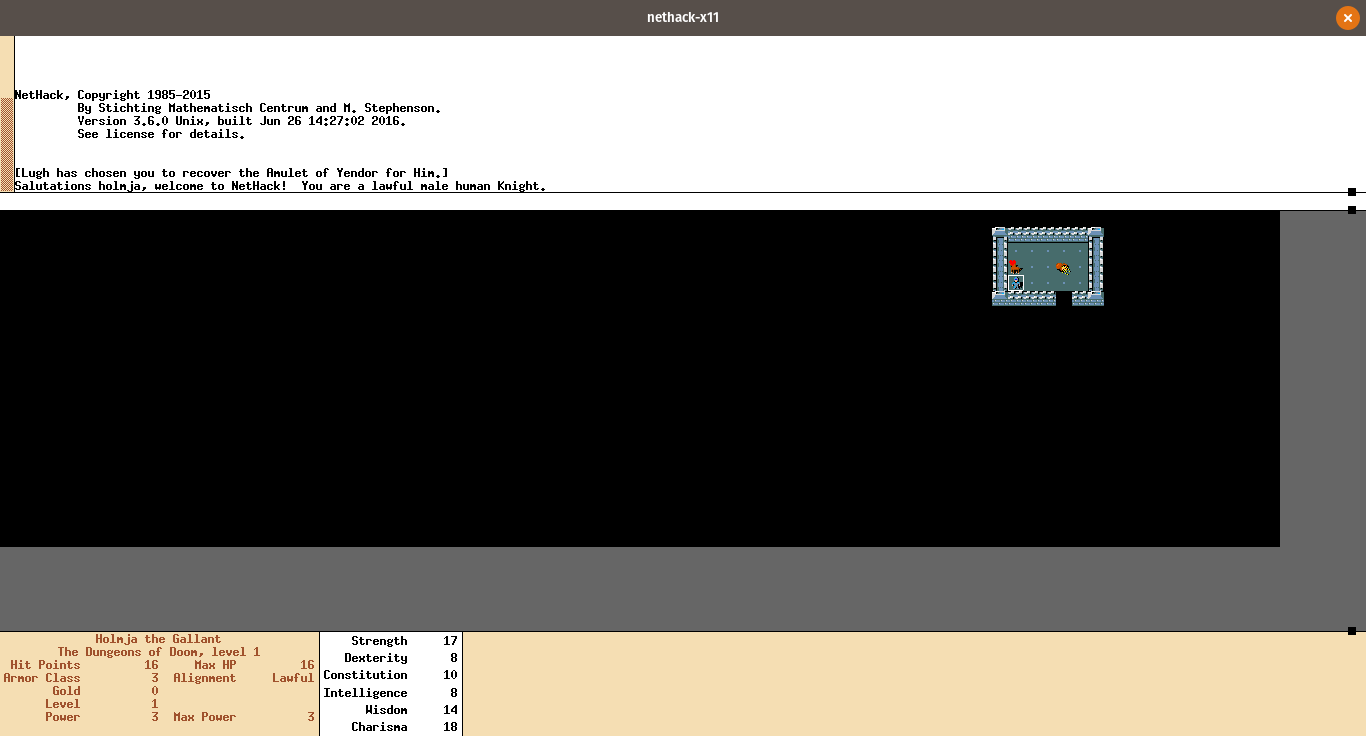

Scrot:让你在命令行中进行截屏更加简单

|

||||

======

|

||||

|

||||

> Scrot 是一个简单、灵活,并且提供了许多选项的 Linux 命令行截屏工具。

|

||||

|

||||

[][1]

|

||||

|

||||

|

||||

Linux 桌面上有许多用于截屏的优秀工具,比如 [Ksnapshot][1] 和 [Shutter][2] 。甚至 GNOME 桌面自带的简易截屏工具也能够很好的工作。但是,如果你很少截屏,或者你使用的 Linux 发行版没有内建截屏工具,或者你使用的是一台资源有限的老电脑,那么你该怎么办呢?

|

||||

|

||||

或许你可以转向命令行,使用一个叫做 [Scrot][4] 的实用工具。它能够完成简单的截屏工作,同时它所具有的一些特性也许会让你感到非常惊喜。

|

||||

|

||||

### 走近 Scrot

|

||||

|

||||

许多 Linux 发行版都会预先安装上 Scrot ,可以输入 `which scrot` 命令来查看系统中是否安装有 Scrot 。如果没有,那么可以使用你的 Linux 发行版的包管理器来安装。如果你想从源代码编译安装,那么也可以从 [GitHub][5] 上下载源代码。

|

||||

|

||||

如果要进行截屏,首先打开一个终端窗口,然后输入 `scrot [filename]` ,`[filename]` 是你想要保存的图片文件的名字(比如 `desktop.png`)。如果缺省了该参数,那么 scrot 会自动创建一个名字,比如 `2017-09-24-185009_1687x938_scrot.png` 。(这个名字缺乏了对图片内容的描述,这就是为什么最好在命令中指定一个名字作为参数。)

|

||||

|

||||

如果不带任何参数运行 Scrot,那么它将会对整个桌面进行截屏。如果不想这样,那么你也可以对屏幕中的一个小区域进行截图。

|

||||

|

||||

### 对单一窗口进行截屏

|

||||

|

||||

可以通过输入 `scrot -u [filename]` 命令来对一个窗口进行截屏。

|

||||

|

||||

`-u` 选项告诉 Scrot 对当前窗口进行截屏,这通常是我们正在工作的终端窗口,也许不是你想要的。

|

||||

|

||||

如果要对桌面上的另一个窗口进行截屏,需要输入 `scrot -s [filename]` 。

|

||||

|

||||

`-s` 选项可以让你做下面两件事的其中一件:

|

||||

|

||||

* 选择一个打开着的窗口

|

||||

* 在一个窗口的周围或一片区域画一个矩形进行捕获

|

||||

|

||||

你也可以设置一个时延,这样让你能够有时间来选择你想要捕获的窗口。可以通过 `scrot -u -d [num] [filename]` 来设置时延。

|

||||

|

||||

`-d` 选项告诉 Scrot 在捕获窗口前先等待一段时间,`[num]` 是需要等待的秒数。指定为 `-d 5` (等待 5 秒)应该能够让你有足够的时间来选择窗口。

|

||||

|

||||

### 更多有用的选项

|

||||

|

||||

Scrot 还提供了许多额外的特性(绝大多数我从来没有使用过)。下面是我发现的一些有用的选项:

|

||||

|

||||

* `-b` 捕获窗口的边界

|

||||

* `-t` 捕获窗口并创建一个缩略图。当你需要把截图张贴到网上的时候,这会非常有用

|

||||

* `-c` 当你同时使用了 `-d` 选项的时候,在终端中创建倒计时

|

||||

|

||||

如果你想了解 Scrot 的其他选项,可以在终端中输入 `man scrot` 来查看它的手册,或者[在线阅读][6]。然后开始使用 Scrot 进行截屏。

|

||||

|

||||

虽然 Scrot 很简单,但它的确能够工作得很好。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/11/taking-screen-captures-linux-command-line-scrot

|

||||

|

||||

作者:[Scott Nesbitt][a]

|

||||

译者:[ucasFL](https://github.com/ucasFL)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/scottnesbitt

|

||||

[1]:https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/community-penguins-osdc-lead.png?itok=BmqsAF4A

|

||||

[2]:https://www.kde.org/applications/graphics/ksnapshot/

|

||||

[3]:https://launchpad.net/shutter

|

||||

[4]:https://github.com/dreamer/scrot

|

||||

[5]:http://manpages.ubuntu.com/manpages/precise/man1/scrot.1.html

|

||||

[6]:https://github.com/dreamer/scrot

|

||||

@ -1,34 +1,35 @@

|

||||

API Star: Python 3 的 API 框架 – Polyglot.Ninja()

|

||||

API Star:一个 Python 3 的 API 框架

|

||||

======

|

||||

|

||||

为了在 Python 中快速构建 API,我主要依赖于 [Flask][1]。最近我遇到了一个名为 “API Star” 的基于 Python 3 的新 API 框架。由于几个原因,我对它很感兴趣。首先,该框架包含 Python 新特点,如类型提示和 asyncio。接着它再进一步并且为开发人员提供了很棒的开发体验。我们很快就会讲到这些功能,但在我们开始之前,我首先要感谢 Tom Christie,感谢他为 Django REST Framework 和 API Star 所做的所有工作。

|

||||

为了在 Python 中快速构建 API,我主要依赖于 [Flask][1]。最近我遇到了一个名为 “API Star” 的基于 Python 3 的新 API 框架。由于几个原因,我对它很感兴趣。首先,该框架包含 Python 新特点,如类型提示和 asyncio。而且它再进一步为开发人员提供了很棒的开发体验。我们很快就会讲到这些功能,但在我们开始之前,我首先要感谢 Tom Christie,感谢他为 Django REST Framework 和 API Star 所做的所有工作。

|

||||

|

||||

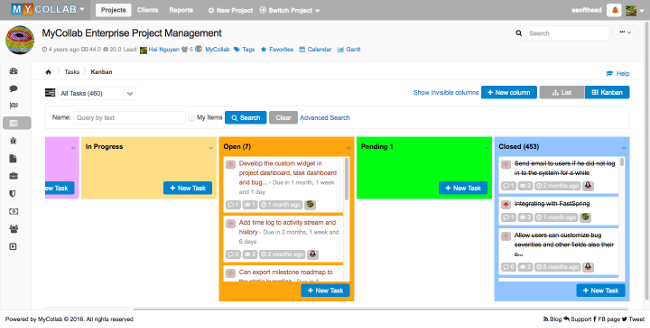

现在说回 API Star -- 我感觉这个框架很有成效。我可以选择基于 asyncio 编写异步代码,或者可以选择传统后端方式就像 WSGI 那样。它配备了一个命令行工具 - `apistar` 来帮助我们更快地完成工作。它支持 Django ORM 和 SQLAlchemy,这是可选的。它有一个出色类型系统,使我们能够定义输入和输出的约束,API Star 可以自动生成 api 模式(包括文档),提供验证和序列化功能等等。虽然 API Star 专注于构建 API,但你也可以非常轻松地在其上构建 Web 应用程序。在我们自己构建一些东西之前,所有这些可能都没有意义的。

|

||||

现在说回 API Star —— 我感觉这个框架很有成效。我可以选择基于 asyncio 编写异步代码,或者可以选择传统后端方式就像 WSGI 那样。它配备了一个命令行工具 —— `apistar` 来帮助我们更快地完成工作。它支持 Django ORM 和 SQLAlchemy,这是可选的。它有一个出色的类型系统,使我们能够定义输入和输出的约束,API Star 可以自动生成 API 的模式(包括文档),提供验证和序列化功能等等。虽然 API Star 专注于构建 API,但你也可以非常轻松地在其上构建 Web 应用程序。在我们自己构建一些东西之前,所有这些可能都没有意义的。

|

||||

|

||||

### 开始

|

||||

|

||||

我们将从安装 API Star 开始。为此实验创建一个虚拟环境是一个好主意。如果你不知道如何创建一个虚拟环境,不要担心,继续往下看。

|

||||

|

||||

```

|

||||

pip install apistar

|

||||

|

||||

```

|

||||

|

||||

(译注:上面的命令是在 Python 3 虚拟环境下使用的)

|

||||

|

||||

如果你没有使用虚拟环境或者 Python 3 的 `pip`,它被称为 `pip3`,那么使用 `pip3 install apistar` 代替。

|

||||

如果你没有使用虚拟环境或者你的 Python 3 的 `pip` 名为 `pip3`,那么使用 `pip3 install apistar` 代替。

|

||||

|

||||

一旦我们安装了这个包,我们就应该可以使用 `apistar` 命令行工具了。我们可以用它创建一个新项目,让我们在当前目录中创建一个新项目。

|

||||

|

||||

```

|

||||

apistar new .

|

||||

|

||||

```

|

||||

|

||||

现在我们应该创建两个文件:`app.py`,它包含主应用程序,然后是 `test.py`,它用于测试。让我们来看看 `app.py` 文件:

|

||||

|

||||

```

|

||||

from apistar import Include, Route

|

||||

from apistar.frameworks.wsgi import WSGIApp as App

|

||||

from apistar.handlers import docs_urls, static_urls

|

||||

|

||||

|

||||

def welcome(name=None):

|

||||

if name is None:

|

||||

return {'message': 'Welcome to API Star!'}

|

||||

@ -46,34 +47,34 @@ app = App(routes=routes)

|

||||

|

||||

if __name__ == '__main__':

|

||||

app.main()

|

||||

|

||||

```

|

||||

|

||||

在我们深入研究代码之前,让我们运行应用程序并查看它是否正常工作。我们在浏览器中输入 `http://127.0.0.1:8080/`,我们将得到以下响应:

|

||||

|

||||

```

|

||||

{"message": "Welcome to API Star!"}

|

||||

|

||||

```

|

||||

|

||||

如果我们输入:`http://127.0.0.1:8080/?name=masnun`

|

||||

|

||||

```

|

||||

{"message": "Welcome to API Star, masnun!"}

|

||||

|

||||

```

|

||||

|

||||

同样的,输入 `http://127.0.0.1:8080/docs/`,我们将看到自动生成的 API 文档。

|

||||

|

||||

现在让我们来看看代码。我们有一个 `welcome` 函数,它接收一个名为 `name` 的参数,其默认值为 `None`。API Star 是一个智能的 api 框架。它将尝试在 url 路径或者查询字符串中找到 `name` 键并将其传递给我们的函数,它还基于其生成 API 文档。这真是太好了,不是吗?

|

||||

现在让我们来看看代码。我们有一个 `welcome` 函数,它接收一个名为 `name` 的参数,其默认值为 `None`。API Star 是一个智能的 API 框架。它将尝试在 url 路径或者查询字符串中找到 `name` 键并将其传递给我们的函数,它还基于其生成 API 文档。这真是太好了,不是吗?

|

||||

|

||||

然后,我们创建一个 `Route` 和 `Include` 实例列表,并将列表传递给 `App` 实例。`Route` 对象用于定义用户自定义路由。顾名思义,`Include` 包含了在给定的路径下的其它 url 路径。

|

||||

然后,我们创建一个 `Route` 和 `Include` 实例的列表,并将列表传递给 `App` 实例。`Route` 对象用于定义用户自定义路由。顾名思义,`Include` 包含了在给定的路径下的其它 url 路径。

|

||||

|

||||

### 路由

|

||||

|

||||

路由很简单。当构造 `App` 实例时,我们需要传递一个列表作为 `routes` 参数,这个列表应该有我们刚才看到的 `Route` 或 `Include` 对象组成。对于 `Route`,我们传递一个 url 路径,http 方法和可调用的请求处理程序(函数或者其他)。对于 `Include` 实例,我们传递一个 url 路径和一个 `Routes` 实例列表。

|

||||

|

||||

##### 路径参数

|

||||

#### 路径参数

|

||||

|

||||

我们可以在花括号内添加一个名称来声明 url 路径参数。例如 `/user/{user_id}` 定义了一个 url,其中 `user_id` 是路径参数,或者说是一个将被注入到处理函数(实际上是可调用的)中的变量。这有一个简单的例子:

|

||||

|

||||

```

|

||||

from apistar import Route

|

||||

from apistar.frameworks.wsgi import WSGIApp as App

|

||||

@ -91,22 +92,22 @@ app = App(routes=routes)

|

||||

|

||||

if __name__ == '__main__':

|

||||

app.main()

|

||||

|

||||

```

|

||||

|

||||

如果我们访问 `http://127.0.0.1:8080/user/23`,我们将得到以下响应:

|

||||

|

||||

```

|

||||

{"message": "Your profile id is: 23"}

|

||||

|

||||

```

|

||||

|

||||

但如果我们尝试访问 `http://127.0.0.1:8080/user/some_string`,它将无法匹配。因为我们定义了 `user_profile` 函数,且为 `user_id` 参数添加了一个类型提示。如果它不是整数,则路径不匹配。但是如果我们继续删除类型提示,只使用 `user_profile(user_id)`,它将匹配此 url。这也展示了 API Star 的智能之处和利用类型和好处。

|

||||

|

||||

#### 包含/分组路由

|

||||

|

||||

有时候将某些 url 组合在一起是有意义的。假设我们有一个处理用户相关功能的 `user` 模块,将所有与用户相关的 url 分组在 `/user` 路径下可能会更好。例如 `/user/new`, `/user/1`, `/user/1/update` 等等。我们可以轻松地在单独的模块或包中创建我们的处理程序和路由,然后将它们包含在我们自己的路由中。

|

||||

有时候将某些 url 组合在一起是有意义的。假设我们有一个处理用户相关功能的 `user` 模块,将所有与用户相关的 url 分组在 `/user` 路径下可能会更好。例如 `/user/new`、`/user/1`、`/user/1/update` 等等。我们可以轻松地在单独的模块或包中创建我们的处理程序和路由,然后将它们包含在我们自己的路由中。

|

||||

|

||||

让我们创建一个名为 `user` 的新模块,文件名为 `user.py`。我们将以下代码放入这个文件:

|

||||

|

||||

```

|

||||

from apistar import Route

|

||||

|

||||

@ -128,10 +129,10 @@ user_routes = [

|

||||

Route("/{user_id}/update", "GET", user_update),

|

||||

Route("/{user_id}/profile", "GET", user_profile),

|

||||

]

|

||||

|

||||

```

|

||||

|

||||

现在我们可以从 app 主文件中导入 `user_routes`,并像这样使用它:

|

||||

|

||||

```

|

||||

from apistar import Include

|

||||

from apistar.frameworks.wsgi import WSGIApp as App

|

||||

@ -146,7 +147,6 @@ app = App(routes=routes)

|

||||

|

||||

if __name__ == '__main__':

|

||||

app.main()

|

||||

|

||||

```

|

||||

|

||||

现在 `/user/new` 将委托给 `user_new` 函数。

|

||||

@ -154,21 +154,22 @@ if __name__ == '__main__':

|

||||

### 访问查询字符串/查询参数

|

||||

|

||||

查询参数中传递的任何参数都可以直接注入到处理函数中。比如 url `/call?phone=1234`,处理函数可以定义一个 `phone` 参数,它将从查询字符串/查询参数中接收值。如果 url 查询字符串不包含 `phone` 的值,那么它将得到 `None`。我们还可以为参数设置一个默认值,如下所示:

|

||||

|

||||

```

|

||||

def welcome(name=None):

|

||||

if name is None:

|

||||

return {'message': 'Welcome to API Star!'}

|

||||

return {'message': 'Welcome to API Star, %s!' % name}

|

||||

|

||||

```

|

||||

|

||||

在上面的例子中,我们为 `name` 设置了一个默认值 `None`。

|

||||

|

||||

### 注入对象

|

||||

|

||||

通过给一个请求程序添加类型提示,我们可以将不同的对象注入到视图中。注入请求相关对象有助于处理程序直接从内部访问它们。API Star 内置的 `http` 包中有几个内置对象。我们也可以使用它的类型系统来创建我们自己的自定义对象并将它们注入到我们的函数中。API Star 还根据指定的约束进行数据验证。

|

||||

通过给一个请求程序添加类型提示,我们可以将不同的对象注入到视图中。注入请求相关的对象有助于处理程序直接从内部访问它们。API Star 内置的 `http` 包中有几个内置对象。我们也可以使用它的类型系统来创建我们自己的自定义对象并将它们注入到我们的函数中。API Star 还根据指定的约束进行数据验证。

|

||||

|

||||

让我们定义自己的 `User` 类型,并将其注入到我们的请求处理程序中:

|

||||

|

||||

```

|

||||

from apistar import Include, Route

|

||||

from apistar.frameworks.wsgi import WSGIApp as App

|

||||

@ -197,10 +198,10 @@ app = App(routes=routes)

|

||||

|

||||

if __name__ == '__main__':

|

||||

app.main()

|

||||

|

||||

```

|

||||

|

||||

现在如果我们发送这样的请求:

|

||||

|

||||

```

|

||||

curl -X POST \

|

||||

http://127.0.0.1:8080/ \

|

||||

@ -214,6 +215,7 @@ curl -X POST \

|

||||

### 发送响应

|

||||

|

||||

如果你已经注意到,到目前为止,我们只可以传递一个字典,它将被转换为 JSON 并作为默认返回。但是,我们可以使用 `apistar` 中的 `Response` 类来设置状态码和其它任意响应头。这有一个简单的例子:

|

||||

|

||||

```

|

||||

from apistar import Route, Response

|

||||

from apistar.frameworks.wsgi import WSGIApp as App

|

||||

@ -236,15 +238,13 @@ app = App(routes=routes)

|

||||

|

||||

if __name__ == '__main__':

|

||||

app.main()

|

||||

|

||||

```

|

||||

|

||||

它应该返回纯文本响应和一个自定义标响应头。请注意,`content` 应该是字节,而不是字符串。这就是我编码它的原因。

|

||||

|

||||

### 继续

|

||||

|

||||

我刚刚介绍了 API

|

||||

Star 的一些特性,API Star 中还有许多非常酷的东西,我建议通过 [Github Readme][2] 文件来了解这个优秀框架所提供的不同功能的更多信息。我还将尝试在未来几天内介绍关于 API Star 的更多简短的,集中的教程。

|

||||

我刚刚介绍了 API Star 的一些特性,API Star 中还有许多非常酷的东西,我建议通过 [Github Readme][2] 文件来了解这个优秀框架所提供的不同功能的更多信息。我还将尝试在未来几天内介绍关于 API Star 的更多简短的,集中的教程。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -253,7 +253,7 @@ via: http://polyglot.ninja/api-star-python-3-api-framework/

|

||||

|

||||

作者:[MASNUN][a]

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,61 @@

|

||||

Linux 虚拟机与 Linux 现场镜像版

|

||||

======

|

||||

|

||||

> Linux 虚拟机与 Linux 现场镜像版各有优势,也有不足。

|

||||

|

||||

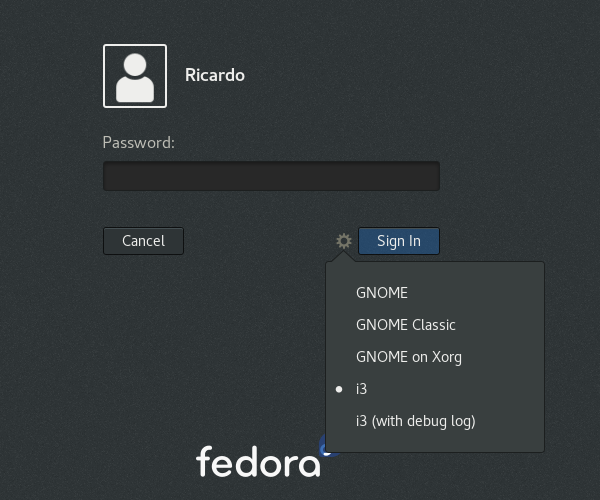

首先我得承认,我非常喜欢频繁尝试新的 [Linux 发行版本][1]。然而,我用来测试它们的方法根据每次目标而有所不同。在这篇文章中,我们来看看两种运行 Linux 的模式:虚拟机或<ruby>现场镜像版<rt>live image</rt></ruby>。每一种方式都存在优势,但是也有一些不足。

|

||||

|

||||

### 首次测试一个全新的 Linux 发行版

|

||||

|

||||

当我首次测试一个全新 Linux 发行版时,我使用的方法很大程度上依赖于我当前所拥有的 PC 资源。如果我使用台式机,我会在一台虚拟机中运行该发行版来测试。使用这种方法的原因是,我可以下载并测试该发行版,不只是在一个现场环境中,而且也可以作为一个带有持久存储的安装的系统。

|

||||

|

||||

另一方面,如果我的 PC 不具备强劲的硬件,那么通过 Linux 的虚拟机安装来测试发行版是适得其反的。我会将那台 PC 压榨到它的极限,诚然,更好的是使用现场版的 Linux 映像,而不是从闪存驱动器中运行。

|

||||

|

||||

### 体验新的 Linux 发行版本的软件

|

||||

|

||||

如果你有兴趣查看发行版本的桌面环境或可用的软件,那使用它的现场镜像版就没错了。一个现场版环境可以提供给你所预期的全局视角、其所提供的软件和用户体验的整体感受。

|

||||

|

||||

公平的说,你也可以在虚拟机上达到同样的效果,但是它有一点不好,如果这么做会让更多数据填满你的磁盘空间。毕竟这只是对发行版的一个简单体验。记得我在第一节说过:我喜欢在虚拟机上运行 Linux 来做测试。用这个方式我就能看到如何去安装它、分区是怎么样的等等,而使用现场镜像版时你就看不到这些。

|

||||

|

||||

这种体验方式通常表明你只想对该发行版本有个大致了解,所以在这种情况下,这种只需要付出最小的精力和时间的方式是一种不错的办法。

|

||||

|

||||

### 随身携带一个发行版

|

||||

|

||||

这种方式虽然不像几年前那样普遍,这种随身携带一个 Linux 发行版的能力也许是出于对某些用户的考虑。显然,虚拟机安装对于便携性并无太多帮助。不过,现场镜像版实际上是十分便携的。现场镜像版可以写入到 DVD 当中或复制到一个闪存盘中而便于携带。

|

||||

|

||||

从 Linux 的便携性这个概念上展开来说,当要在一个朋友的电脑上展示 Linux 如何工作,使用一个闪存盘上的现场镜像版也是很方便的。这可以使你能演示 Linux 如何丰富他们的生活,而不用必须在他们的 PC 上运行一个虚拟机。使用现场镜像版这就有点双赢的感觉了。

|

||||

|

||||

### 选择做双引导 Linux

|

||||

|

||||

这接下来的方式是个大工程。考虑一下,也许你是一个 Windows 用户。你喜欢玩 Linux,但又不愿意冒险。除了在某些情况下会出些状况或者识别个别分区时遇到问题,双引导方式就没啥挑剔的。无论如何,使用 Linux 虚拟机或现场镜像版都对于你来说是一个很好的选择。

|

||||

|

||||

现在,我在某些事情上采取了奇怪的立场。我认为长期在闪存盘上运行现场镜像版要比虚拟机更有价值。这有两个原因。首先,您将会习惯于真正运行 Linux,而不是在 Windows 之上的虚拟机中运行它。其次,您可以设置闪存盘以包含持久存储的用户数据。

|

||||

|

||||

我知道你会说用一个虚拟机运行 Linux 也是如此,然而,使用现场镜像版的方式,你绝不会因为更新而被破坏任何东西。为什么?因为你不会更新你的宿主系统或者客户系统。请记住,有整个 Linux 发行版本被设计为持久存储的 Linux 发行版。Puppy Linux 就是一个非常好的例子。它不仅能运行在要被回收或丢弃的个人 PC 上,它也可以让你永远不被频繁的系统升级所困扰,这要感谢该发行版处理安全更新的方式。这不是一个常规的 Linux 发行版,而是以这样的一种方式封闭了安全问题——即持久存储的现场镜像版中没有什么令人担心的坏东西。

|

||||

|

||||

### Linux 虚拟机绝对是一个最好的选择

|

||||

|

||||

在我结束这篇文章时,让我告诉你。有一种场景下,使用 Virtual Box 等虚拟机绝对比现场镜像版更好:记录 Linux 发行版的桌面环境。

|

||||

|

||||

例如,我制作了一个视频,里面介绍和点评了许多 Linux 发行版。使用现场镜像版进行此操作需要我用硬件设备捕获屏幕,或者从现场镜像版的软件仓库中安装捕获软件。显然,虚拟机比 Linux 发行版的现场镜像版更适合这项工作。

|

||||

|

||||

一旦你需要采集音频进行混音,毫无疑问,如果您要使用软件来捕获您的点评语音,那么您肯定希望拥有一个宿主操作系统,里面包含了一个起码的捕获环境的所有基本需求。同样,您可以使用硬件设备来完成所有这一切,但如果您只是做兼职的视频/音频捕获, 那么这可能要付出成本高昂的代价。

|

||||

|

||||

### Linux 虚拟机 VS. Linux 现场镜像版

|

||||

|

||||

你最喜欢尝试新发行版的方式是哪些?也许,你是那种可以很好地格式化磁盘、将风险置之脑后的人,所以这里说的这些都是没用的?

|

||||

|

||||

我在网上互动的大多数人都倾向于遵循我上面提及的方法,但是我很想知道哪种方式更加适合你。点击评论框,让我知道在体验 Linux 发行版世界最伟大和最新的版本时,您更喜欢哪种方法。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.datamation.com/open-source/linux-virtual-machines-vs-linux-live-images.html

|

||||

|

||||

作者:[Matt Hartley][a]

|

||||

译者:[sober-wang](https://github.com/sober-wang)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.datamation.com/author/Matt-Hartley-3080.html

|

||||

[1]:https://www.datamation.com/open-source/best-linux-distro.html

|

||||

@ -1,11 +1,12 @@

|

||||

Free DOS 的简单介绍

|

||||

FreeDOS 的简单介绍

|

||||

======

|

||||

> 学习如何穿行于 C:\ 提示符下,就像上世纪 90 年代的 DOS 高手一样。

|

||||

|

||||

|

||||

|

||||

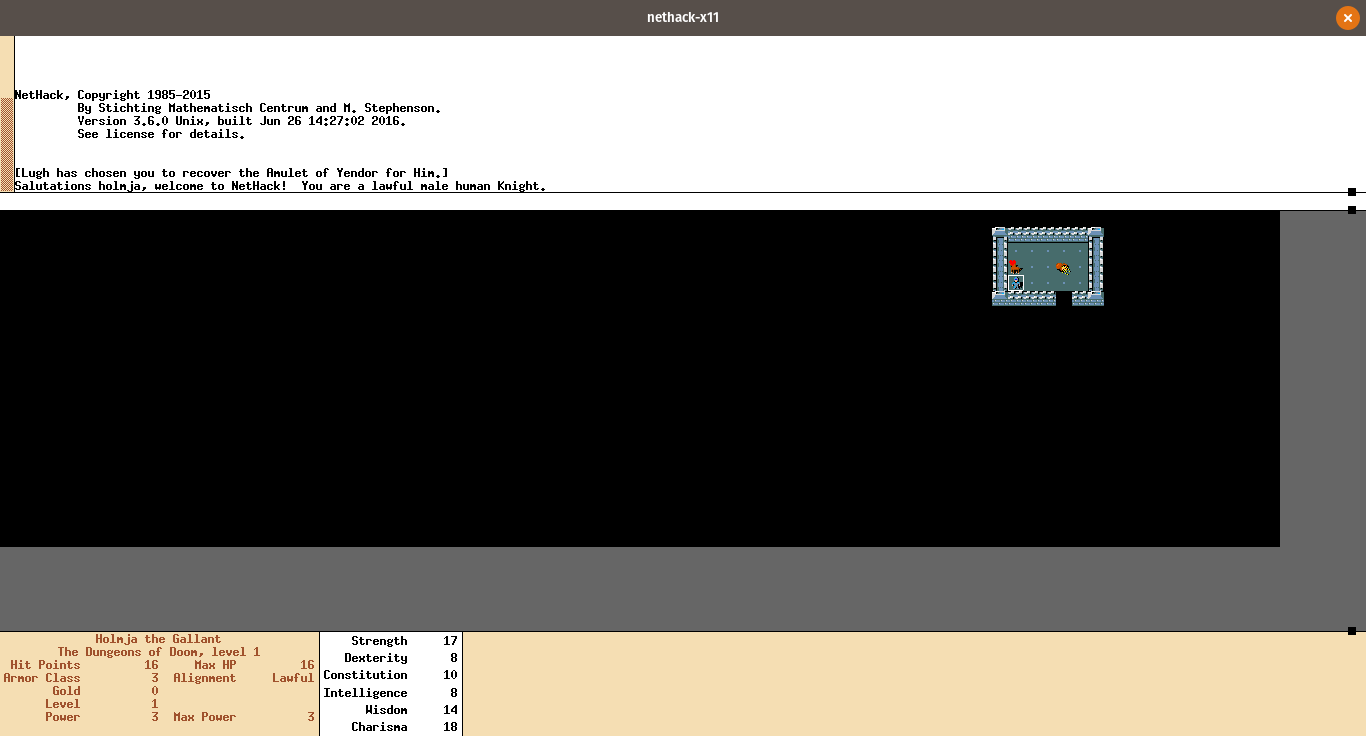

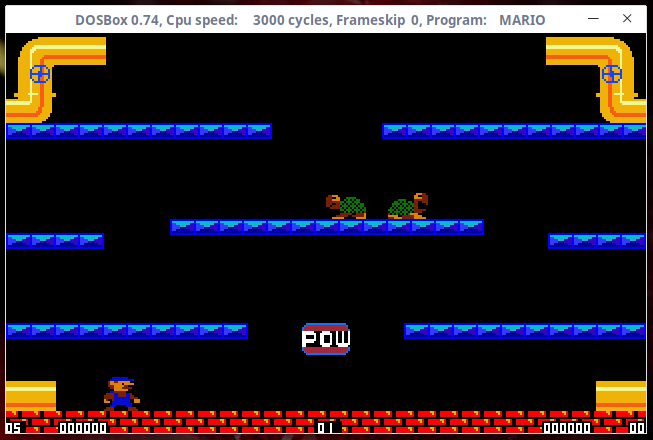

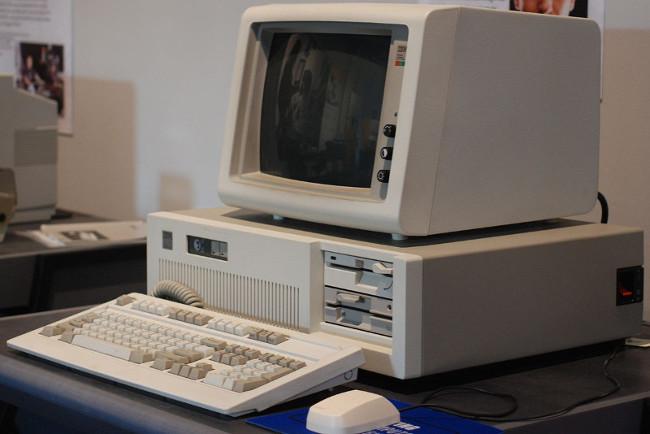

FreeDOS 是一个古老的操作系统,但是对于多数人而言它又是陌生的。在 1994 年,我和几个开发者一起 [开发 FreeDOS][1]--一个完整、自由、DOS 兼容的操作系统,你可以用它来玩经典的 DOS 游戏、运行遗留的商业软件或者开发嵌入式系统。任何在 MS-DOS 下工作的程序在 FreeDOS 下也可以运行。

|

||||

FreeDOS 是一个古老的操作系统,但是对于多数人而言它又是陌生的。在 1994 年,我和几个开发者一起 [开发了 FreeDOS][1] —— 这是一个完整、自由、兼容 DOS 的操作系统,你可以用它来玩经典的 DOS 游戏、运行过时的商业软件或者开发嵌入式系统。任何在 MS-DOS 下工作的程序在 FreeDOS 下也可以运行。

|

||||

|

||||

在 1994 年,任何一个曾经使用过微软专利的 MS-DOS 的人都会迅速地熟悉 FreeDOS。这是设计而为之的;FreeDOS 尽可能地去模仿 MS-DOS。结果,1990 年代的 DOS 用户能够直接转换到 FreeDOS。但是,时代变了。今天,开源的开发者们对于 Linux 命令行更熟悉或者他们可能倾向于像 [GNOME][2] 一样的图形桌面环境,这导致 FreeDOS 命令行界面最初看起来像个异类。

|

||||

在 1994 年,任何一个曾经使用过微软的商业版 MS-DOS 的人都会迅速地熟悉 FreeDOS。这是设计而为之的;FreeDOS 尽可能地去模仿 MS-DOS。结果,1990 年代的 DOS 用户能够直接转换到 FreeDOS。但是,时代变了。今天,开源的开发者们对于 Linux 命令行更熟悉,或者他们可能倾向于像 [GNOME][2] 一样的图形桌面环境,这导致 FreeDOS 命令行界面最初看起来像个异类。

|

||||

|

||||

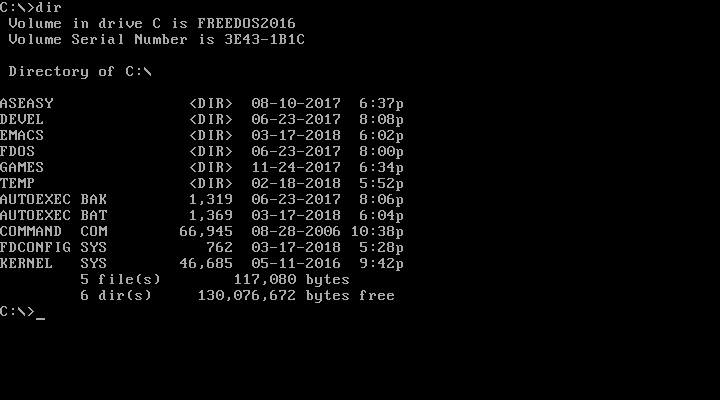

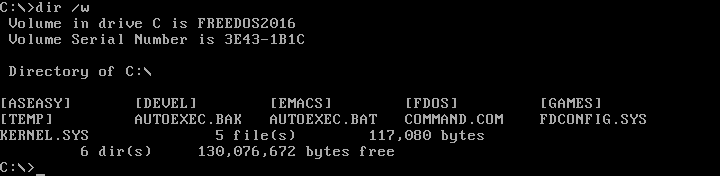

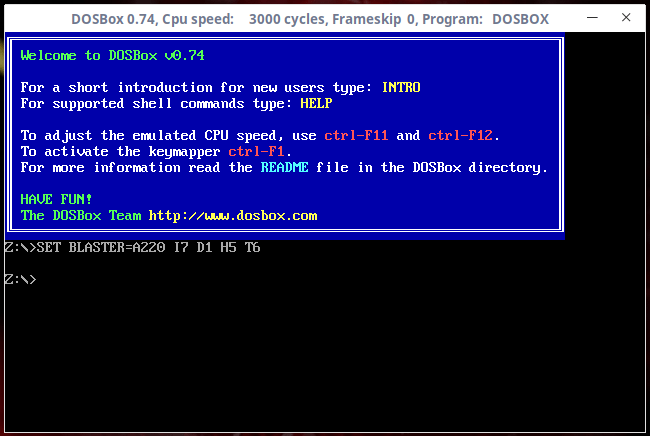

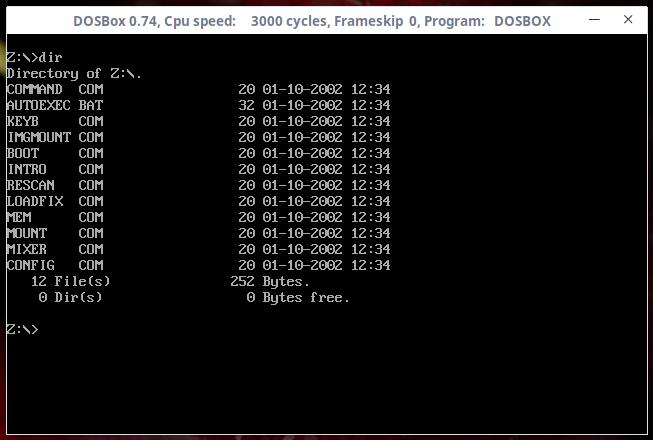

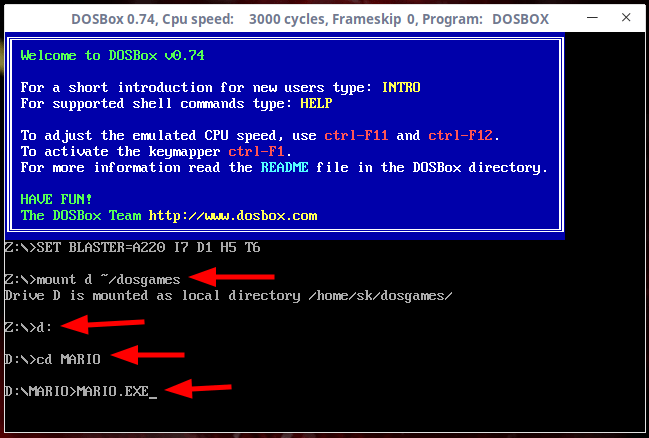

新的用户通常会问,“我已经安装了 [FreeDOS][3],但是如何使用呢?”。如果你之前并没有使用过 DOS,那么闪烁的 `C:\>` DOS 提示符看起来会有点不太友好,而且可能有点吓人。这份 FreeDOS 的简单介绍将带你起步。它只提供了基础:如何浏览以及如何查看文件。如果你想了解比这里提及的更多的知识,访问 [FreeDOS 维基][4]。

|

||||

|

||||

@ -15,13 +16,13 @@ FreeDOS 是一个古老的操作系统,但是对于多数人而言它又是陌

|

||||

|

||||

|

||||

|

||||

DOS 是在个人电脑从软盘运行时期创建的一个“磁盘操作系统”。甚至当电脑支持硬盘了,在 1980 年代和 1990 年代,频繁地在不同的驱动器之间切换也是很普遍的。举例来说,你可能想将最重要的文件都备份一份拷贝到软盘中。

|

||||

DOS 是在个人电脑从软盘运行时期创建的一个“<ruby>磁盘操作系统<rt>disk operating system</rt></ruby>”。甚至当电脑支持硬盘了,在 1980 年代和 1990 年代,频繁地在不同的驱动器之间切换也是很普遍的。举例来说,你可能想将最重要的文件都备份一份拷贝到软盘中。

|

||||

|

||||

DOS 使用一个字母来指代每个驱动器。早期的电脑仅拥有两个软盘驱动器,他们被分配了 `A:` 和 `B:` 盘符。硬盘上的第一个分区盘符是 `C:` ,然后其它的盘符依次这样分配下去。提示符中的 `C:` 表示你正在使用第一个硬盘的第一个分区。

|

||||

|

||||

从 1983 年的 PC-DOS 2.0 开始,DOS 也支持目录和子目录,非常类似 Linux 文件系统中的目录和子目录。但是跟 Linux 不一样的是,DOS 目录名由 `\` 分隔而不是 `/`。将这个与驱动器字母合起来看,提示符中的 `C:\` 表示你正在 `C:` 盘的顶端或者“根”目录。

|

||||

|

||||

`>` 修饰符提示你输入 DOS 命令的地方,就像众多 Linux shell 的 `$`。`>` 前面的部分告诉你当前的工作目录,然后你在 `>` 提示符这输入命令。

|

||||

`>` 符号是提示你输入 DOS 命令的地方,就像众多 Linux shell 的 `$`。`>` 前面的部分告诉你当前的工作目录,然后你在 `>` 提示符这输入命令。

|

||||

|

||||

### 在 DOS 中找到你的使用方式

|

||||

|

||||

@ -33,7 +34,7 @@ DOS 使用一个字母来指代每个驱动器。早期的电脑仅拥有两个

|

||||

|

||||

|

||||

|

||||

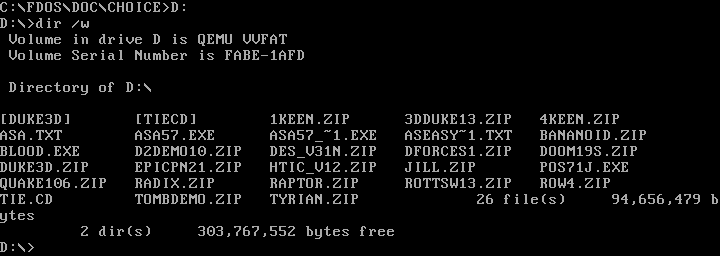

如果你不想显示单个文件大小的额外细节,你可以在 `DIR` 命令中使用 `/w` 选项来显示一个“宽泛”文件夹。注意,Linux 用户使用连字号(`-`)或者双连字号(`--`)来开启命令行选项,而 DOS 使用斜线字符(`/`)。

|

||||

如果你不想显示单个文件大小的额外细节,你可以在 `DIR` 命令中使用 `/w` 选项来显示一个“宽”的目录列表。注意,Linux 用户使用连字号(`-`)或者双连字号(`--`)来开始命令行选项,而 DOS 使用斜线字符(`/`)。

|

||||

|

||||

|

||||

|

||||

@ -64,7 +65,7 @@ FreeDOS 也从 Linux 那借鉴了一些特性:你可以使用 `CD -` 跳转回

|

||||

|

||||

|

||||

|

||||

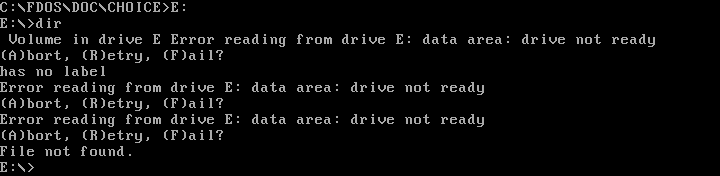

小心不要尝试切换到一个不存在的磁盘。DOS 可能会将它设置为工作磁盘,但是如果你尝试在那做任何事,你将会遇到略微臭名昭著的“退出、重试、失败” DOS 错误信息。

|

||||

小心不要尝试切换到一个不存在的磁盘。DOS 可能会将它设置为工作磁盘,但是如果你尝试在那做任何事,你将会遇到略微臭名昭著的“<ruby>退出、重试、失败<rt>Abort, Retry, Fail</rt></ruby>” DOS 错误信息。

|

||||

|

||||

|

||||

|

||||

@ -86,7 +87,7 @@ FreeDOS 也从 Linux 那借鉴了一些特性:你可以使用 `CD -` 跳转回

|

||||

|

||||

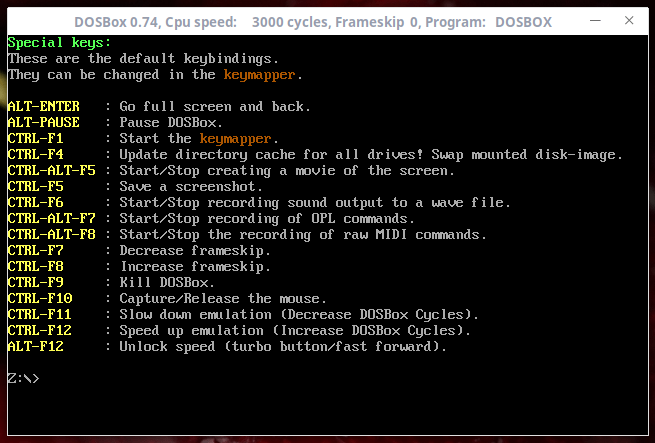

在 FreeDOS 下,针对每个命令你都能够使用 `/?` 参数来获取简要的说明。举例来说,`EDIT /?` 会告诉你编辑器的用法和选项。或者你可以输入 `HELP` 来使用交互式帮助系统。

|

||||

|

||||

像任何一个 DOS 一样,FreeDOS 被认为是一个简单的操作系统。仅使用一些基本命令就可以轻松浏览 DOS 文件系统。那么启动一个 QEMU 会话,安装 FreeDOS,然后尝试一下 DOS 命令行界面。也许它现在看起来就没那么吓人了。

|

||||

像任何一个 DOS 一样,FreeDOS 被认为是一个简单的操作系统。仅使用一些基本命令就可以轻松浏览 DOS 文件系统。那么,启动一个 QEMU 会话,安装 FreeDOS,然后尝试一下 DOS 命令行界面吧。也许它现在看起来就没那么吓人了。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -96,7 +97,7 @@ via: https://opensource.com/article/18/4/gentle-introduction-freedos

|

||||

作者:[Jim Hall][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[icecoobe](https://github.com/icecoobe)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,63 +1,59 @@

|

||||

Go 编译器介绍

|

||||

======

|

||||

|

||||

> Copyright 2018 The Go Authors. All rights reserved.

|

||||

> Use of this source code is governed by a BSD-style

|

||||

> license that can be found in the LICENSE file.

|

||||

|

||||

`cmd/compile` 包含构成 Go 编译器主要的包。编译器在逻辑上可以被分为四个阶段,我们将简要介绍这几个阶段以及包含相应代码的包的列表。

|

||||

|

||||

在谈到编译器时,有时可能会听到<ruby>前端<rt>front-end</rt></ruby>和<ruby>后端<rt>back-end</rt></ruby>这两个术语。粗略地说,这些对应于我们将在此列出的前两个和后两个阶段。第三个术语<ruby>中间端<rt>middle-end</rt></ruby>通常指的是第二阶段执行的大部分工作。

|

||||

|

||||

请注意,`go/parser` 和 `go/types` 等 `go/*` 系列的包与编译器无关。由于编译器最初是用 C 编写的,所以这些 `go/*` 包被开发出来以便于能够写出和 `Go` 代码一起工作的工具,例如 `gofmt` 和 `vet`。

|

||||

|

||||

需要澄清的是,名称 “gc” 代表 “Go 编译器”,与大写 GC 无关,后者代表<ruby>垃圾收集<rt>garbage collection</rt></ruby>。

|

||||

需要澄清的是,名称 “gc” 代表 “<ruby>Go 编译器<rt>Go compiler</rt></ruby>”,与大写 GC 无关,后者代表<ruby>垃圾收集<rt>garbage collection</rt></ruby>。

|

||||

|

||||

### 1. 解析

|

||||

### 1、解析

|

||||

|

||||

* `cmd/compile/internal/syntax`(<ruby>词法分析器<rt>lexer</rt></ruby>、<ruby>解析器<rt>parser</rt></ruby>、<ruby>语法树<rt>syntax tree</rt></ruby>)

|

||||

|

||||

在编译的第一阶段,源代码被标记化(词法分析),解析(语法分析),并为每个源文件构造语法树(译注:这里标记指 token,它是一组预定义的、能够识别的字符串,通常由名字和值构成,其中名字一般是词法的类别,如标识符、关键字、分隔符、操作符、文字和注释等;语法树,以及下文提到的<ruby>抽象语法树<rt>Abstract Syntax Tree</rt></ruby>(AST),是指用树来表达程序设计语言的语法结构,通常叶子节点是操作数,其它节点是操作码)。

|

||||

在编译的第一阶段,源代码被标记化(词法分析)、解析(语法分析),并为每个源文件构造语法树(LCTT 译注:这里标记指 token,它是一组预定义的、能够识别的字符串,通常由名字和值构成,其中名字一般是词法的类别,如标识符、关键字、分隔符、操作符、文字和注释等;语法树,以及下文提到的<ruby>抽象语法树<rt>Abstract Syntax Tree</rt></ruby>(AST),是指用树来表达程序设计语言的语法结构,通常叶子节点是操作数,其它节点是操作码)。

|

||||

|

||||

每个语法树都是相应源文件的确切表示,其中节点对应于源文件的各种元素,例如表达式、声明和语句。语法树还包括位置信息,用于错误报告和创建调试信息。

|

||||

|

||||

### 2. 类型检查和 AST 变形

|

||||

### 2、类型检查和 AST 变换

|

||||

|

||||

* `cmd/compile/internal/gc`(创建编译器 AST,<ruby>类型检查<rt>type-checking</rt></ruby>,<ruby>AST 变形<rt>AST transformation</rt></ruby>)

|

||||

* `cmd/compile/internal/gc`(创建编译器 AST,<ruby>类型检查<rt>type-checking</rt></ruby>,<ruby>AST 变换<rt>AST transformation</rt></ruby>)

|

||||

|

||||

gc 包中包含一个继承自(早期)C 语言实现的版本的 AST 定义。所有代码都是基于它编写的,所以 gc 包必须做的第一件事就是将 syntax 包(定义)的语法树转换为编译器的 AST 表示法。这个额外步骤可能会在将来重构。

|

||||

|

||||

然后对 AST 进行类型检查。第一步是名字解析和类型推断,它们确定哪个对象属于哪个标识符,以及每个表达式具有的类型。类型检查包括特定的额外检查,例如“声明但未使用”以及确定函数是否会终止。

|

||||

|

||||

特定转换也基于 AST 完成。一些节点被基于类型信息而细化,例如把字符串加法从算术加法的节点类型中拆分出来。其它一些例子是<ruby>死代码消除<rt>dead code elimination</rt></ruby>,<ruby>函数调用内联<rt>function call inlining</rt></ruby>和<ruby>逃逸分析<rt>escape analysis</rt></ruby>(译注:逃逸分析是一种分析指针有效范围的方法)。

|

||||

特定变换也基于 AST 完成。一些节点被基于类型信息而细化,例如把字符串加法从算术加法的节点类型中拆分出来。其它一些例子是<ruby>死代码消除<rt>dead code elimination</rt></ruby>,<ruby>函数调用内联<rt>function call inlining</rt></ruby>和<ruby>逃逸分析<rt>escape analysis</rt></ruby>(LCTT 译注:逃逸分析是一种分析指针有效范围的方法)。

|

||||

|

||||

### 3. 通用 SSA

|

||||

### 3、通用 SSA

|

||||

|

||||

* `cmd/compile/internal/gc`(转换成 SSA)

|

||||

* `cmd/compile/internal/ssa`(SSA 相关的 pass 和规则)

|

||||

* `cmd/compile/internal/ssa`(SSA 相关的<ruby>环节<rt>pass</rt></ruby>和规则)

|

||||

|

||||

(译注:许多常见高级语言的编译器无法通过一次扫描源代码或 AST 就完成所有编译工作,取而代之的做法是多次扫描,每次完成一部分工作,并将输出结果作为下次扫描的输入,直到最终产生目标代码。这里每次扫描称作一遍 pass;最后一遍 pass 之前所有的 pass 得到的结果都可称作中间表示法,本文中 AST、SSA 等都属于中间表示法。SSA,静态单赋值形式,是中间表示法的一种性质,它要求每个变量只被赋值一次且在使用前被定义)。

|

||||

(LCTT 译注:许多常见高级语言的编译器无法通过一次扫描源代码或 AST 就完成所有编译工作,取而代之的做法是多次扫描,每次完成一部分工作,并将输出结果作为下次扫描的输入,直到最终产生目标代码。这里每次扫描称作一个<ruby>环节<rt>pass</rt></ruby>;最后一个环节之前所有的环节得到的结果都可称作中间表示法,本文中 AST、SSA 等都属于中间表示法。SSA,静态单赋值形式,是中间表示法的一种性质,它要求每个变量只被赋值一次且在使用前被定义)。

|

||||

|

||||

在此阶段,AST 将被转换为<ruby>静态单赋值<rt>Static Single Assignment</rt></ruby>(SSA)形式,这是一种具有特定属性的低级<ruby>中间表示法<rt>intermediate representation</rt></ruby>,可以更轻松地实现优化并最终从它生成机器码。

|

||||

|

||||

在这个转换过程中,将完成<ruby>内置函数<rt>function intrinsics</rt></ruby>的处理。这些是特殊的函数,编译器被告知逐个分析这些函数并决定是否用深度优化的代码替换它们(译注:内置函数指由语言本身定义的函数,通常编译器的处理方式是使用相应实现函数的指令序列代替对函数的调用指令,有点类似内联函数)。

|

||||

在这个转换过程中,将完成<ruby>内置函数<rt>function intrinsics</rt></ruby>的处理。这些是特殊的函数,编译器被告知逐个分析这些函数并决定是否用深度优化的代码替换它们(LCTT 译注:内置函数指由语言本身定义的函数,通常编译器的处理方式是使用相应实现函数的指令序列代替对函数的调用指令,有点类似内联函数)。

|

||||

|

||||

在 AST 转化成 SSA 的过程中,特定节点也被低级化为更简单的组件,以便于剩余的编译阶段可以基于它们工作。例如,内建的拷贝被替换为内存移动,range 循环被改写为 for 循环。由于历史原因,目前这里面有些在转化到 SSA 之前发生,但长期计划则是把它们都移到这里(转化 SSA)。

|

||||

在 AST 转化成 SSA 的过程中,特定节点也被低级化为更简单的组件,以便于剩余的编译阶段可以基于它们工作。例如,内建的拷贝被替换为内存移动,`range` 循环被改写为 `for` 循环。由于历史原因,目前这里面有些在转化到 SSA 之前发生,但长期计划则是把它们都移到这里(转化 SSA)。

|

||||

|

||||

然后,一系列机器无关的规则和 pass 会被执行。这些并不考虑特定计算机体系结构,因此对所有 `GOARCH` 变量的值都会运行。

|

||||

然后,一系列机器无关的规则和编译环节会被执行。这些并不考虑特定计算机体系结构,因此对所有 `GOARCH` 变量的值都会运行。

|

||||

|

||||

这类通用 pass 的一些例子包括,死代码消除,移除不必要的空值检查,以及移除无用的分支等。通用改写规则主要考虑表达式,例如将一些表达式替换为常量,优化乘法和浮点操作。

|

||||

这类通用的编译环节的一些例子包括,死代码消除、移除不必要的空值检查,以及移除无用的分支等。通用改写规则主要考虑表达式,例如将一些表达式替换为常量,优化乘法和浮点操作。

|

||||

|

||||

### 4. 生成机器码

|

||||

### 4、生成机器码

|

||||

|

||||

* `cmd/compile/internal/ssa`(SSA 低级化和架构特定的 pass)

|

||||

* `cmd/compile/internal/ssa`(SSA 低级化和架构特定的环节)

|

||||

* `cmd/internal/obj`(机器码生成)

|

||||

|

||||

编译器中机器相关的阶段开始于“低级”的 pass,该阶段将通用变量改写为它们的特定的机器码形式。例如,在 amd64 架构中操作数可以在内存中操作,这样许多<ruby>加载-存储<rt>load-store</rt></ruby>操作就可以被合并。

|

||||

编译器中机器相关的阶段开始于“低级”的编译环节,该阶段将通用变量改写为它们的特定的机器码形式。例如,在 amd64 架构中操作数可以在内存中操作,这样许多<ruby>加载-存储<rt>load-store</rt></ruby>操作就可以被合并。

|

||||

|

||||

注意低级的 pass 运行所有机器特定的重写规则,因此当前它也应用了大量优化。

|

||||

注意低级的编译环节运行所有机器特定的重写规则,因此当前它也应用了大量优化。

|

||||

|

||||

一旦 SSA 被“低级化”并且更具体地针对目标体系结构,就要运行最终代码优化的 pass 了。这包含了另外一个死代码消除的 pass,它将变量移动到更靠近它们使用的地方,移除从来没有被读过的局部变量,以及<ruby>寄存器<rt>register</rt></ruby>分配。

|

||||

一旦 SSA 被“低级化”并且更具体地针对目标体系结构,就要运行最终代码优化的编译环节了。这包含了另外一个死代码消除的环节,它将变量移动到更靠近它们使用的地方,移除从来没有被读过的局部变量,以及<ruby>寄存器<rt>register</rt></ruby>分配。

|

||||

|

||||

本步骤中完成的其它重要工作包括<ruby>堆栈布局<rt>stack frame layout</rt></ruby>,它将堆栈偏移位置分配给局部变量,以及<ruby>指针活性分析<rt>pointer liveness analysis</rt></ruby>,后者计算每个垃圾收集安全点上的哪些堆栈上的指针仍然是活动的。

|

||||

|

||||

@ -65,7 +61,7 @@ gc 包中包含一个继承自(早期)C 语言实现的版本的 AST 定义

|

||||

|

||||

### 扩展阅读

|

||||

|

||||

要深入了解 SSA 包的工作方式,包括它的 pass 和规则,请转到 [cmd/compile/internal/ssa/README.md][1]。

|

||||

要深入了解 SSA 包的工作方式,包括它的环节和规则,请转到 [cmd/compile/internal/ssa/README.md][1]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -73,7 +69,7 @@ via: https://github.com/golang/go/blob/master/src/cmd/compile/README.md

|

||||

|

||||

作者:[mvdan][a]

|

||||

译者:[stephenxs](https://github.com/stephenxs)

|

||||

校对:[pityonline](https://github.com/pityonline)

|

||||

校对:[pityonline](https://github.com/pityonline), [wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,50 +1,59 @@

|

||||

显卡工作原理简介

|

||||

极致技术探索:显卡工作原理

|

||||

======

|

||||

|

||||

![AMD-Polaris][1]

|

||||

|

||||

自从 sdfx 推出最初的 Voodoo 加速器以来,不起眼的显卡对你的 PC 是否可以玩游戏起到决定性作用,PC 上任何其它设备都无法与其相比。其它组件当然也很重要,但对于一个拥有 32GB 内存、价值 500 美金的 CPU 和 基于 PCIe 的存储设备的高端 PC,如果使用 10 年前的显卡,都无法以最高分辨率和细节质量运行当前<ruby>最高品质的游戏<rt>AAA titles</rt></ruby>,会发生卡顿甚至无响应。显卡(也常被称为 GPU, 或<ruby>图形处理单元<rt>Graphic Processing Unit</rt></ruby>)对游戏性能影响极大,我们反复强调这一点;但我们通常并不会深入了解显卡的工作原理。

|

||||

自从 3dfx 推出最初的 Voodoo 加速器以来,不起眼的显卡对你的 PC 是否可以玩游戏起到决定性作用,PC 上任何其它设备都无法与其相比。其它组件当然也很重要,但对于一个拥有 32GB 内存、价值 500 美金的 CPU 和 基于 PCIe 的存储设备的高端 PC,如果使用 10 年前的显卡,都无法以最高分辨率和细节质量运行当前<ruby>最高品质的游戏<rt>AAA titles</rt></ruby>,会发生卡顿甚至无响应。显卡(也常被称为 GPU,即<ruby>图形处理单元<rt>Graphic Processing Unit</rt></ruby>),对游戏性能影响极大,我们反复强调这一点;但我们通常并不会深入了解显卡的工作原理。

|

||||

|

||||

出于实际考虑,本文将概述 GPU 的上层功能特性,内容包括 AMD 显卡、Nvidia 显卡、Intel 集成显卡以及 Intel 后续可能发布的独立显卡之间共同的部分。也应该适用于 Apple, Imagination Technologies, Qualcomm, ARM 和 其它显卡生产商发布的移动平台 GPU。

|

||||

出于实际考虑,本文将概述 GPU 的上层功能特性,内容包括 AMD 显卡、Nvidia 显卡、Intel 集成显卡以及 Intel 后续可能发布的独立显卡之间共同的部分。也应该适用于 Apple、Imagination Technologies、Qualcomm、ARM 和其它显卡生产商发布的移动平台 GPU。

|

||||

|

||||

### 我们为何不使用 CPU 进行渲染?

|

||||

|

||||

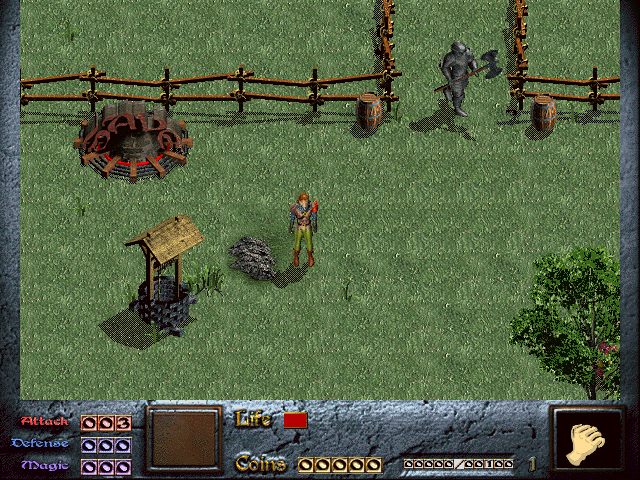

我要说明的第一点是我们为何不直接使用 CPU 完成游戏中的渲染工作。坦率的说,在理论上你确实可以直接使用 CPU 完成<ruby>渲染<rt>rendering</rt></ruby>工作。在显卡没有广泛普及之前,早期的 3D 游戏就是完全基于 CPU 运行的,例如 Ultima Underworld(LCTT 译注:中文名为 _地下创世纪_ ,下文中简称 UU)。UU 是一个很特别的例子,原因如下:与 Doom (LCTT 译注:中文名 _毁灭战士_)相比,UU 具有一个更高级的渲染引擎,全面支持<ruby>向上或向下查找<rt>looking up and down</rt></ruby>以及一些在当时比较高级的特性,例如<ruby>纹理映射<rt>texture mapping</rt></ruby>。但为支持这些高级特性,需要付出高昂的代价,很少有人可以拥有真正能运行起 UU 的 PC。

|

||||

我要说明的第一点是我们为何不直接使用 CPU 完成游戏中的渲染工作。坦率的说,在理论上你确实可以直接使用 CPU 完成<ruby>渲染<rt>rendering</rt></ruby>工作。在显卡没有广泛普及之前,早期的 3D 游戏就是完全基于 CPU 运行的,例如 《<ruby>地下创世纪<rt>Ultima Underworld</rt></ruby>(下文中简称 UU)。UU 是一个很特别的例子,原因如下:与《<ruby>毁灭战士<rt>Doom</rt></ruby>相比,UU 具有一个更高级的渲染引擎,全面支持“向上或向下看”以及一些在当时比较高级的特性,例如<ruby>纹理映射<rt>texture mapping</rt></ruby>。但为支持这些高级特性,需要付出高昂的代价,很少有人可以拥有真正能运行起 UU 的 PC。

|

||||

|

||||

|

||||

|

||||

对于早期的 3D 游戏,包括 Half Life 和 Quake II 在内的很多游戏,内部包含一个软件渲染器,让没有 3D 加速器的玩家也可以玩游戏。但现代游戏都弃用了这种方式,原因很简单:CPU 是设计用于通用任务的微处理器,意味着缺少 GPU 提供的<ruby>专用硬件<rt>specialized hardware</rt></ruby>和<ruby>功能<rt>capabilities</rt></ruby>。对于 18 年前使用软件渲染的那些游戏,当代 CPU 可以轻松胜任;但对于当代最高品质的游戏,除非明显降低<ruby>景象质量<rt>scene</rt></ruby>、分辨率和各种虚拟特效,否则现有的 CPU 都无法胜任。

|

||||

*地下创世纪,图片来自 [GOG](https://www.gog.com/game/ultima_underworld_1_2)*

|

||||

|

||||

对于早期的 3D 游戏,包括《<ruby>半条命<rt>Half Life</rt></ruby>》和《<ruby>雷神之锤 2<rt>Quake II</rt></ruby>》在内的很多游戏,内部包含一个软件渲染器,让没有 3D 加速器的玩家也可以玩游戏。但现代游戏都弃用了这种方式,原因很简单:CPU 是设计用于通用任务的微处理器,意味着缺少 GPU 提供的<ruby>专用硬件<rt>specialized hardware</rt></ruby>和<ruby>功能<rt>capabilities</rt></ruby>。对于 18 年前使用软件渲染的那些游戏,当代 CPU 可以轻松胜任;但对于当代最高品质的游戏,除非明显降低<ruby>景象质量<rt>scene</rt></ruby>、分辨率和各种虚拟特效,否则现有的 CPU 都无法胜任。

|

||||

|

||||

### 什么是 GPU ?

|

||||

|

||||

GPU 是一种包含一系列专用硬件特性的设备,其中这些特性可以让各种 3D 引擎更好地执行代码,包括<ruby>形状构建<rt>geometry setup</rt></ruby>,纹理映射,<ruby>访存<rt>memory access</rt></ruby>和<ruby>着色器<rt>shaders</rt></ruby>等。3D 引擎的功能特性影响着设计者如何设计 GPU。可能有人还记得,AMD HD5000 系列使用 VLIW5 <ruby>架构<rt>archtecture</rt></ruby>;但在更高端的 HD 6000 系列中使用了 VLIW4 架构。通过 GCN (LCTT 译注:GCN 是 Graphics Core Next 的缩写,字面意思是下一代图形核心,既是若干代微体系结构的代号,也是指令集的名称),AMD 改变了并行化的实现方法,提高了每个时钟周期的有效性能。

|

||||

GPU 是一种包含一系列专用硬件特性的设备,其中这些特性可以让各种 3D 引擎更好地执行代码,包括<ruby>形状构建<rt>geometry setup</rt></ruby>,纹理映射,<ruby>访存<rt>memory access</rt></ruby>和<ruby>着色器<rt>shaders</rt></ruby>等。3D 引擎的功能特性影响着设计者如何设计 GPU。可能有人还记得,AMD HD5000 系列使用 VLIW5 <ruby>架构<rt>archtecture</rt></ruby>;但在更高端的 HD 6000 系列中使用了 VLIW4 架构。通过 GCN (LCTT 译注:GCN 是 Graphics Core Next 的缩写,字面意思是“下一代图形核心”,既是若干代微体系结构的代号,也是指令集的名称),AMD 改变了并行化的实现方法,提高了每个时钟周期的有效性能。

|

||||

|

||||

|

||||

|

||||

*“GPU 革命”的前两块奠基石属于 AMD 和 NV;而“第三个时代”则独属于 AMD。*

|

||||

|

||||

Nvidia 在发布首款 GeForce 256 时(大致对应 Microsoft 推出 DirectX7 的时间点)提出了 GPU 这个术语,这款 GPU 支持在硬件上执行转换和<ruby>光照计算<rt>lighting calculation</rt></ruby>。将专用功能直接集成到硬件中是早期 GPU 的显著技术特点。很多专用功能还在(以一种极为不同的方式)使用,毕竟对于特定类型的工作任务,使用<ruby>片上<rt>on-chip</rt></ruby>专用计算资源明显比使用一组<ruby>可编程单元<rt>programmable cores</rt></ruby>要更加高效和快速。

|

||||

|

||||