Mirror of https://github.com/LCTT/TranslateProject.git

Synced 2025-03-27 02:30:10 +08:00

Commit a081a9497c

@ -0,0 +1,245 @@

[#]: collector: (lujun9972)
[#]: translator: (lujun9972)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10625-1.html)
[#]: subject: (Advanced Techniques for Reducing Emacs Startup Time)
[#]: via: (https://blog.d46.us/advanced-emacs-startup/)
[#]: author: (Joe Schafer https://blog.d46.us/)

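Advanced Techniques for Reducing Emacs Startup Time
======

> The author of [Emacs Start Up Profiler][1] shares six techniques for reducing Emacs startup time.

TL;DR: do the following:

1. Profile with Esup.
2. Adjust the garbage collection threshold.
3. Autoload (lazy-load) everything with use-package.
4. Avoid helper functions that cause eager loads.
5. See my [configuration][2] for examples.

### From .emacs.d bankruptcy to now

I recently declared my third .emacs.d bankruptcy and finished the fourth iteration of my Emacs configuration. The evolution was:

1. Copy and paste elisp snippets into `~/.emacs` and hope it works.
2. Manage dependencies in a more structured way with `el-get`.
3. Give up on configuring from scratch and build on Spacemacs.
4. Tire of Spacemacs' complexity and rewrite the configuration around `use-package`.

This article collects tips from those three rewrites and from creating the [Emacs Start Up Profiler][1]. Many thanks to the teams behind Spacemacs, use-package, and others. Without these dedicated volunteers, this task would have been much harder.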

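### But what about daemon mode?

Before we start, let me push back on a common refrain about optimizing Emacs: "Emacs is meant to run as a daemon, so you only need to start it once."

That's a nice idea, except:

- Fast things feel nicer.
- While customizing Emacs, you sometimes end up in a state you can only escape by restarting. For example, if you add a slow `lambda` function to `post-command-hook`, it can be hard to remove.
- Restarting Emacs helps verify that your configuration persists between sessions.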

### 1. Estimate the current and best possible startup times

The first step is to measure your current startup time. The easiest way is to display the timing at startup, which also lets you track your progress through the later steps.

```
;; Use a hook so the message doesn't get clobbered by other messages.
(add-hook 'emacs-startup-hook
          (lambda ()
            (message "Emacs ready in %s with %d garbage collections."
                     (format "%.2f seconds"
                             (float-time
                              (time-subtract after-init-time before-init-time)))
                     gcs-done)))
```
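
The same measurement idea, wall-clock time plus a count of garbage collections, can be sketched outside Emacs. The Python analog below is only an illustration. `run_startup` and the `startup` callable are hypothetical stand-ins for loading an init file, and the counters from Python's `gc.get_stats()` play the role of Emacs's `gcs-done`.

```python
import gc
import time
from typing import Callable


def total_collections() -> int:
    """Sum the collection counters of every GC generation."""
    return sum(gen["collections"] for gen in gc.get_stats())


def run_startup(startup: Callable[[], None]) -> str:
    """Run a (hypothetical) startup routine and report time and GC count.

    Mirrors the shape of the Emacs message: elapsed seconds with two
    decimals, plus how many collections happened while it ran.
    """
    gcs_before = total_collections()
    start = time.perf_counter()
    startup()
    elapsed = time.perf_counter() - start
    gcs_done = total_collections() - gcs_before
    return "Ready in %.2f seconds with %d garbage collections." % (elapsed, gcs_done)


if __name__ == "__main__":
    # A toy "init file" that allocates many small container objects.
    def fake_init() -> None:
        _ = [[i] for i in range(200_000)]

    print(run_startup(fake_init))
```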

Next, measure the best possible startup time to see what is achievable. Mine is 0.3 seconds.

```
# -q ignores personal Emacs files but loads the site files.
emacs -q --eval='(message "%s" (emacs-init-time))'

# For macOS users:
open -n /Applications/Emacs.app --args -q --eval='(message "%s" (emacs-init-time))'
```

### 2. Profile Emacs startup for easy wins

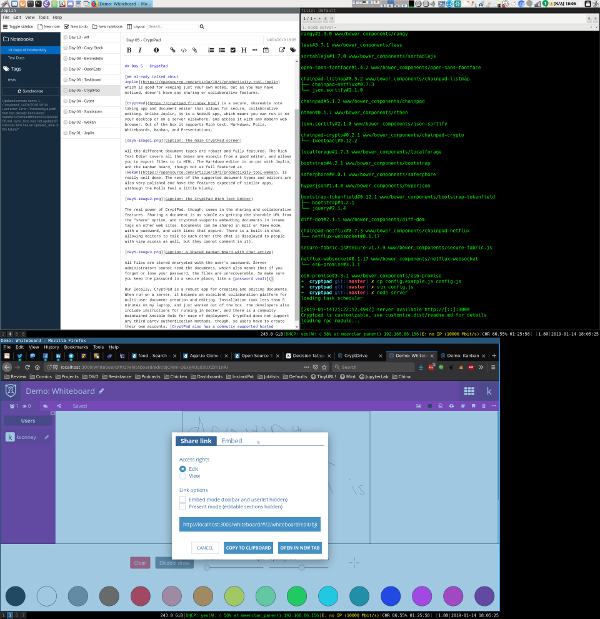

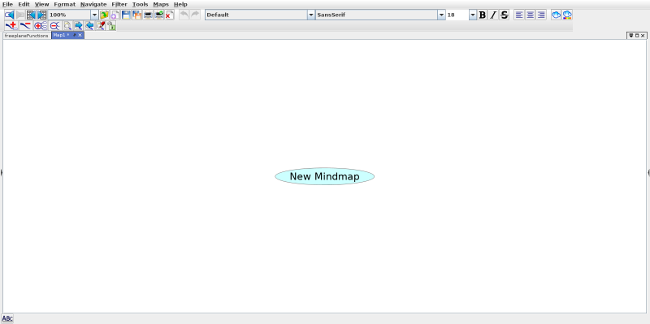

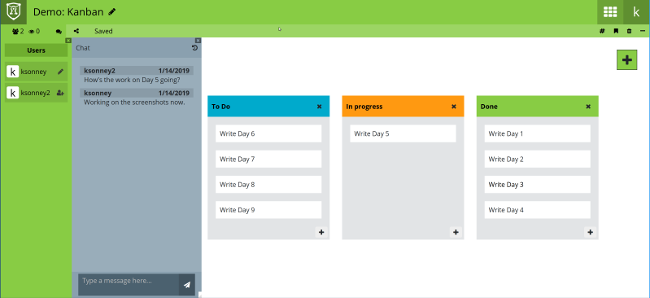

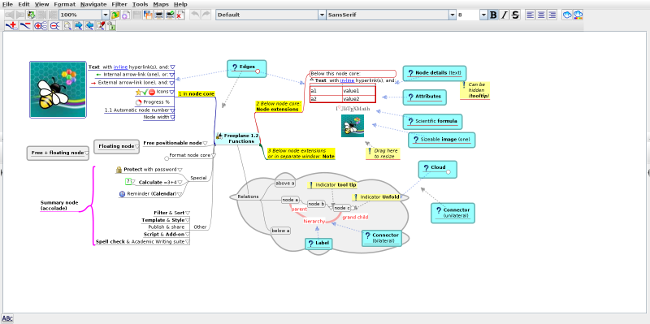

The [Emacs StartUp Profiler][1] (ESUP) gives you detailed metrics for every top-level form executed during startup.

![esup.png][3]

*Figure 1: Emacs Start Up Profiler screenshot*

> Warning: Spacemacs users should note that ESUP currently conflicts with the Spacemacs init.el file. Follow <https://github.com/jschaf/esup/issues/48> for updates.

### 3. Raise the garbage collection threshold during startup

This saved me **0.3 seconds**.

The Emacs default threshold is 800,000 bytes (about 800 kB) on 64-bit builds, which is extremely conservative for a modern machine. The real trick is to lower it back to a reasonable level once initialization finishes.

```
;; Make startup faster by reducing the frequency of garbage
;; collection. The default is 800 kilobytes. Measured in bytes.
(setq gc-cons-threshold (* 50 1000 1000))

;; The rest of the init file.

;; Make gc pauses faster by decreasing the threshold.
(setq gc-cons-threshold (* 2 1000 1000))
```

*~/.emacs.d/init.el*
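
Python's cyclic garbage collector has a comparable knob, so the "raise it during startup, lower it afterwards" pattern can be sketched as a context manager. This is an analog, not Emacs code. `gc.get_threshold()` and `gc.set_threshold()` are standard-library calls, while `relaxed_gc` and the factor of 50 are illustrative choices.

```python
import gc
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def relaxed_gc(factor: int = 50) -> Iterator[None]:
    """Temporarily raise the generation-0 GC threshold, then restore it.

    Like setting `gc-cons-threshold` high at the top of init.el and
    lowering it at the end, this trades fewer collections during a
    burst of allocation for normal pause behaviour afterwards.
    """
    original = gc.get_threshold()
    gen0, gen1, gen2 = original
    gc.set_threshold(gen0 * factor, gen1, gen2)
    try:
        yield
    finally:
        # Always restore, even if the "startup" code raised.
        gc.set_threshold(*original)


if __name__ == "__main__":
    before = gc.get_threshold()
    with relaxed_gc():
        print("during startup:", gc.get_threshold())
    print("after startup:", gc.get_threshold(), "restored:", gc.get_threshold() == before)
```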

### 4. Don't require anything; autoload with use-package instead

The best way to make Emacs faster is to make it do less. `require` loads the source file immediately, but you rarely need that functionality right at startup.

With [use-package][4], you declare which package you need and which of its features you use, and `use-package` does the right thing. It looks like this:

```
(use-package evil-lisp-state  ; the Melpa package name
  :defer t                    ; autoload this package
  :init                       ; Code to run immediately.
  (setq evil-lisp-state-global nil)
  :config                     ; Code to run after the package is loaded.
  (abn/define-leader-keys "k" evil-lisp-state-map))
```
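
Behind `:defer t` and autoloads is a simple idea: a cheap stub stands in for a function and loads the real definition the first time it is called. A minimal Python sketch of that mechanism follows. The `Autoload` class is hypothetical and only imitates the behaviour. It relies on `importlib.import_module` from the standard library.

```python
import importlib
from typing import Any, Callable, Optional


class Autoload:
    """A stand-in for MODULE.NAME that imports MODULE on first call.

    Registering the stub costs almost nothing; the import (the expensive
    part, like loading a package's .elc file) is deferred until the
    function is actually used.
    """

    def __init__(self, module: str, name: str) -> None:
        self.module = module
        self.name = name
        self._target: Optional[Callable[..., Any]] = None

    @property
    def loaded(self) -> bool:
        return self._target is not None

    def __call__(self, *args: Any, **kwargs: Any) -> Any:
        if self._target is None:
            # First use: import the module and cache the real function.
            self._target = getattr(importlib.import_module(self.module), self.name)
        return self._target(*args, **kwargs)


if __name__ == "__main__":
    median = Autoload("statistics", "median")
    print("loaded before call:", median.loaded)  # False
    print(median([3, 1, 2]))                     # 2
    print("loaded after call:", median.loaded)   # True
```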

You can see which packages Emacs has loaded by inspecting the `features` variable. For nicer output, try [lpkg explorer][5] or my variant in [abn-funcs-benchmark.el][6]. The output looks like this:

```
479 features currently loaded
- abn-funcs-benchmark: /Users/jschaf/.dotfiles/emacs/funcs/abn-funcs-benchmark.el
- evil-surround: /Users/jschaf/.emacs.d/elpa/evil-surround-20170910.1952/evil-surround.elc
- misearch: /Applications/Emacs.app/Contents/Resources/lisp/misearch.elc
- multi-isearch: nil
- <many more>
```
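
Python keeps a similar registry in `sys.modules`. As a rough sketch, the function below prints it in the same "name: path" layout. It is not a port of lpkg explorer or `abn-funcs-benchmark.el`. Modules without a source file appear as `None`, much as Emacs shows `nil`.

```python
import sys
from typing import List


def describe_loaded_modules(limit: int = 5) -> List[str]:
    """Return a report of loaded modules shaped like the Emacs output above.

    Built-in and frozen modules may have no __file__, so they are shown
    as None, the way Emacs shows `nil` for features without a file.
    """
    snapshot = dict(sys.modules)  # Copy: imports can mutate sys.modules.
    names = sorted(snapshot)
    lines = [f"{len(names)} modules currently loaded"]
    for name in names[:limit]:
        path = getattr(snapshot[name], "__file__", None)
        lines.append(f"- {name}: {path}")
    if len(names) > limit:
        lines.append("- <many more>")
    return lines


if __name__ == "__main__":
    print("\n".join(describe_loaded_modules()))
```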

### 5. Don't use helper functions to set up modes

Emacs packages often suggest running a helper function to set up key bindings. Some examples:

* `(evil-escape-mode)`
* `(windmove-default-keybindings) ; Sets up key bindings.`
* `(yas-global-mode 1) ; Complex snippet setup.`

You can restructure these with `use-package` to speed up startup. Calling these helper functions eagerly loads packages you don't need yet.

The following example shows how to autoload `evil-escape-mode`.

```
;; The definition of evil-escape-mode (simplified).
(define-minor-mode evil-escape-mode
  "Escape insert state and everything else with a key sequence."
  (if evil-escape-mode
      (add-hook 'pre-command-hook 'evil-escape-pre-command-hook)
    (remove-hook 'pre-command-hook 'evil-escape-pre-command-hook)))

;; Before:
(evil-escape-mode)

;; After:
(use-package evil-escape
  :defer t
  ;; Only needed for functions without an autoload comment (;;;###autoload).
  :commands (evil-escape-pre-command-hook)

  ;; Adding to a hook won't load the function until we invoke it.
  ;; With pre-command-hook, that means the first command we run will
  ;; load evil-escape.
  :init (add-hook 'pre-command-hook 'evil-escape-pre-command-hook))
```

Next comes a more complex example with `org-babel`. The usual configuration looks like this:

```
(org-babel-do-load-languages
 'org-babel-load-languages
 '((shell . t)
   (emacs-lisp . nil)))
```

This isn't a good setup. `org-babel-do-load-languages` is defined in `org.el`, which has more than 24,000 lines of code and takes about 0.2 seconds to load. The source shows that `org-babel-do-load-languages` merely requires the `ob-<lang>` packages, like this:

```
;; From org.el in the org-babel-do-load-languages function.
(require (intern (concat "ob-" lang)))
```

In `ob-<lang>.el`, we only care about two functions: `org-babel-execute:<lang>` and `org-babel-expand-body:<lang>`. We can lazy-load the org-babel functionality without calling `org-babel-do-load-languages`, like this:

```
;; Avoid `org-babel-do-load-languages' since it does an eager require.
(use-package ob-python
  :defer t
  :ensure org-plus-contrib
  :commands (org-babel-execute:python))

(use-package ob-shell
  :defer t
  :ensure org-plus-contrib
  :commands
  (org-babel-execute:sh
   org-babel-expand-body:sh

   org-babel-execute:bash
   org-babel-expand-body:bash))
```

### 6. Use idle timers to defer packages you don't need immediately

I deferred loading 9 packages this way, which saved me **0.4 seconds**.

Some packages are useful enough that you want them available soon, but Emacs doesn't need them to finish starting up. These include:

- `recentf`: Saves recently edited files.
- `saveplace`: Saves the cursor position in visited files.
- `server`: Starts the Emacs server.
- `autorevert`: Automatically reloads files modified on disk.
- `paren`: Highlights matching parentheses.
- `projectile`: Project management tool.
- `whitespace`: Highlights trailing whitespace.

Don't `require` these packages. Instead, **load them after N seconds of idle time**. I load the more important packages after 1 second and everything else after 2 seconds.

```
(use-package recentf
  ;; Loads after 1 second of idle time.
  :defer 1)

(use-package uniquify
  ;; Less important than recentf.
  :defer 2)
```
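
`:defer N` makes use-package load the package after N seconds of idle time. Python has no notion of editor idleness, so the sketch below uses plain delays from the standard `sched` module instead. `schedule_deferred_loads` and the package names are illustrative only, and each "load" just records its name.

```python
import sched
import time
from typing import Callable, Dict, List


def schedule_deferred_loads(delays: Dict[str, float],
                            load: Callable[[str], None]) -> None:
    """Run load(name) for each name after its delay, shortest delay first.

    This approximates use-package's `:defer N` with ordinary timers; real
    Emacs idle timers only fire once the user has stopped typing.
    """
    scheduler = sched.scheduler(time.monotonic, time.sleep)
    for name, delay in delays.items():
        scheduler.enter(delay, 1, load, argument=(name,))
    scheduler.run()  # Blocks until every scheduled load has run.


if __name__ == "__main__":
    loaded: List[str] = []
    # Tiny delays so the demo finishes quickly; the init.el uses 1 and 2 seconds.
    schedule_deferred_loads({"uniquify": 0.02, "recentf": 0.01}, loaded.append)
    print(loaded)  # ['recentf', 'uniquify']
```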

### Optimizations that aren't worth it

Don't bother byte-compiling your Emacs configuration. It saves only about 0.05 seconds. Compiled files can also drift out of sync with their sources, which makes bugs harder to reproduce and debug.

--------------------------------------------------------------------------------

via: https://blog.d46.us/advanced-emacs-startup/

Author: [Joe Schafer][a]

Topic selection: [lujun9972][b]

Translator: [lujun9972](https://github.com/lujun9972)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://blog.d46.us/
[b]: https://github.com/lujun9972
[1]: https://github.com/jschaf/esup
[2]: https://github.com/jschaf/dotfiles/blob/master/emacs/start.el
[3]: https://blog.d46.us/images/esup.png
[4]: https://github.com/jwiegley/use-package
[5]: https://gist.github.com/RockyRoad29/bd4ca6fdb41196a71662986f809e2b1c
[6]: https://github.com/jschaf/dotfiles/blob/master/emacs/funcs/abn-funcs-benchmark.el

@ -0,0 +1,227 @@

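toplip: A very strong file encryption and decryption CLI utility
======

There are many document encryption tools available to protect your files. We have already covered some of them, such as [Cryptomator][1], [Cryptkeeper][2], [CryptGo][3], [Cryptr][4], [Tomb][5], and [GnuPG][6]. Today we will discuss another command-line file encryption and decryption utility called "toplip". It is a free and open source tool that uses a very strong encryption method called [AES256][7], together with an XTS-AES design, to protect your private data. It also uses [Scrypt][8], a password-based key derivation function, to protect your passphrases against brute-force attacks.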
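
Here is what Scrypt does in concrete terms. A password-based key derivation function turns a passphrase plus a random salt into a fixed-length key. It deliberately costs CPU time and memory so that each brute-force guess is expensive. The Python sketch below uses the standard library's `hashlib.scrypt`, which is available when Python is built against OpenSSL 1.1 or later. The cost parameters are common illustrative values, not the ones toplip uses, and nothing here reproduces toplip's file format.

```python
import hashlib
import os


def derive_key(passphrase: str, salt: bytes, key_len: int = 32) -> bytes:
    """Derive a key_len-byte key from a passphrase with scrypt.

    n (CPU/memory cost), r (block size) and p (parallelism) are example
    values; 32 bytes is the size of a single AES-256 key.
    """
    return hashlib.scrypt(passphrase.encode("utf-8"), salt=salt,
                          n=2**14, r=8, p=1, dklen=key_len)


if __name__ == "__main__":
    salt = os.urandom(16)  # Stored alongside the ciphertext; need not be secret.
    key = derive_key("correct horse battery staple", salt)
    print(len(key), key.hex())
```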

### Notable features

Compared to other file encryption tools, toplip has the following unique and outstanding features:

* Very strong XTS-AES256 based encryption.
* Plausible deniability.
* Encrypts files and embeds them inside images (PNG/JPG).
* Multiple passphrase protection.
* Protection against direct brute-force attacks.
* No identifiable output markers.
* Open source (GPLv3).

### Installing toplip

There is nothing to install. `toplip` is a standalone executable binary. All you have to do is download the latest `toplip` from the [official product page][9] and make it executable. To do so, run:

```
chmod +x toplip
```

### Usage

If you run `toplip` without any arguments, you will see the help page.

```
./toplip
```

![][10]

Let me show you some examples.

For demonstration purposes, I created two files, `file1` and `file2`. I also have the `toplip` executable binary. All of them are in a directory called `test`.

![][12]

#### Encrypting/decrypting a single file

Now let's encrypt `file1`. To do so, run:

```
./toplip file1 > file1.encrypted
```

This command prompts you for a passphrase. Once you enter it, `toplip` encrypts the contents of `file1` and saves them in a file called `file1.encrypted` in your current working directory.

Sample output of the above command:

```
This is toplip v1.20 (C) 2015, 2016 2 Ton Digital. Author: Jeff Marrison A showcase piece for the HeavyThing library. Commercial support available Proudly made in Cooroy, Australia. More info: https://2ton.com.au/toplip
file1 Passphrase #1: generating keys...Done
Encrypting...Done
```

To verify that the file really is encrypted, try opening it. You will see only random characters.

To decrypt the encrypted file, use the `-d` flag as shown below:

```
./toplip -d file1.encrypted
```

This command decrypts the given file and displays its contents in the terminal window.

To save the decrypted contents to a file instead of writing them to standard output, run:

```
./toplip -d file1.encrypted > file1.decrypted
```

Enter the correct passphrase to decrypt the file. All the contents of `file1.encrypted` will be saved in a file called `file1.decrypted`.

Please don't use this naming scheme yourself; I use it here only to keep the examples easy to follow. Use names that are hard to predict.
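
One way to follow that advice is to let the system choose an unguessable output name. The short sketch below does this with Python's standard `secrets` module. `random_output_name` and the `.bin` suffix are illustrative choices, not anything toplip requires. It uses hexadecimal characters only, so a generated name can never begin with `-` and be mistaken for a command-line flag.

```python
import secrets


def random_output_name(suffix: str = ".bin", nbytes: int = 12) -> str:
    """Return a filename like '9f2c...e1.bin' that reveals nothing about its contents."""
    # token_hex yields 2 * nbytes lowercase hex characters.
    return secrets.token_hex(nbytes) + suffix


if __name__ == "__main__":
    name = random_output_name()
    print(f"./toplip file1 > {name}")
```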

#### Encrypting/decrypting multiple files

Now we will encrypt two files, each with its own passphrase.

```
./toplip -alt file1 file2 > file3.encrypted
```

You will be asked to enter a passphrase for each file. Use a different passphrase for each one.

Sample output of the above command:

```
This is toplip v1.20 (C) 2015, 2016 2 Ton Digital. Author: Jeff Marrison A showcase piece for the HeavyThing library. Commercial support available Proudly made in Cooroy, Australia. More info: https://2ton.com.au/toplip
file2 Passphrase #1 : generating keys...Done
file1 Passphrase #1 : generating keys...Done
Encrypting...Done
```

The above command encrypts the contents of both files and saves them into a single file called `file3.encrypted`, each protected by its own passphrase. If you provide the passphrase for `file1`, `toplip` restores `file1`. If you provide the passphrase for `file2`, `toplip` restores `file2`.

Each `toplip` encrypted output can contain up to four independent files, each protected by its own unique passphrase. Because of the way the encrypted output is assembled, there is no easy way to tell whether it contains more than one file. By default, random data is added even when `toplip` encrypts only one file. If you specify more than one file, each with its own passphrase, you can decrypt each file selectively and independently, and so deny that the other files exist at all. A user can therefore open an encrypted bundle with a controlled risk of exposure. An adversary has no computationally cheap way to confirm that additional secret data exists. This is called "plausible deniability", one of toplip's notable features.

To decrypt `file1` from `file3.encrypted`, just enter:

```
./toplip -d file3.encrypted > file1.decrypted
```

You will be prompted for the correct passphrase for `file1`.

To decrypt `file2` from `file3.encrypted`, enter:

```
./toplip -d file3.encrypted > file2.decrypted
```

Don't forget to enter the correct passphrase for `file2`.

#### Using multiple passphrase protection

This is another cool feature that I like. We can provide multiple passphrases for a single file when encrypting it, which protects the passphrases against brute-force attempts.

```
./toplip -c 2 file1 > file1.encrypted
```

Here, `-c 2` means two different passphrases. Sample output of the above command:

```
This is toplip v1.20 (C) 2015, 2016 2 Ton Digital. Author: Jeff Marrison A showcase piece for the HeavyThing library. Commercial support available Proudly made in Cooroy, Australia. More info: https://2ton.com.au/toplip
file1 Passphrase #1: generating keys...Done
file1 Passphrase #2: generating keys...Done
Encrypting...Done
```

As you can see in the above example, `toplip` asked me to enter two passphrases. Note that you must provide two different passphrases, not the same passphrase twice.

To decrypt this file, do:

```
$ ./toplip -c 2 -d file1.encrypted > file1.decrypted
This is toplip v1.20 (C) 2015, 2016 2 Ton Digital. Author: Jeff Marrison A showcase piece for the HeavyThing library. Commercial support available Proudly made in Cooroy, Australia. More info: https://2ton.com.au/toplip
file1.encrypted Passphrase #1: generating keys...Done
file1.encrypted Passphrase #2: generating keys...Done
Decrypting...Done
```

#### Hiding files inside an image

Hiding a file, message, image, or video inside another file is called steganography. Fortunately, `toplip` supports it out of the box.

To hide a file inside an image, use the `-m` flag as shown below.

```
$ ./toplip -m image.png file1 > image1.png
This is toplip v1.20 (C) 2015, 2016 2 Ton Digital. Author: Jeff Marrison A showcase piece for the HeavyThing library. Commercial support available Proudly made in Cooroy, Australia. More info: https://2ton.com.au/toplip
file1 Passphrase #1: generating keys...Done
Encrypting...Done
```

This command hides the contents of `file1` inside an image called `image1.png`.

To decrypt it, run:

```
$ ./toplip -d image1.png > file1.decrypted
This is toplip v1.20 (C) 2015, 2016 2 Ton Digital. Author: Jeff Marrison A showcase piece for the HeavyThing library. Commercial support available Proudly made in Cooroy, Australia. More info: https://2ton.com.au/toplip
image1.png Passphrase #1: generating keys...Done
Decrypting...Done
```

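#### Increasing the password complexity

To make the files even harder to crack, we can increase the password complexity as shown below:

```
./toplip -c 5 -i 0x8000 -alt file1 -c 10 -i 10 file2 > file3.encrypted
```

The above command asks you for five passphrases for `file1` and ten passphrases for `file2`, and stores both files in a single file called `file3.encrypted`. This example also uses another flag, `-i`, which sets the number of key derivation iterations. It overrides the default iteration count of 1 for the initial and final PBKDF2 stages of the scrypt function. Both hexadecimal and decimal values are accepted, for example `0x8000` or `10`. Note that this can greatly increase the amount of computation.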
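
Two details of the `-i` option can be illustrated in Python. The first is accepting either hexadecimal or decimal input. The second is how the iteration count of a PBKDF2 stage scales the work. The sketch below is hypothetical. `parse_iterations` is not toplip code, and `stretch` calls the standard `hashlib.pbkdf2_hmac` directly rather than scrypt's internal PBKDF2 stages.

```python
import hashlib


def parse_iterations(text: str) -> int:
    """Accept '0x8000'-style hexadecimal or plain decimal, as the -i option does."""
    value = int(text, 0)  # Base 0 honours the 0x prefix.
    if value < 1:
        raise ValueError("iteration count must be at least 1")
    return value


def stretch(passphrase: str, salt: bytes, iterations: int) -> bytes:
    """One PBKDF2-HMAC-SHA256 pass; cost grows linearly with iterations."""
    return hashlib.pbkdf2_hmac("sha256", passphrase.encode("utf-8"), salt, iterations)


if __name__ == "__main__":
    for arg in ("0x8000", "10"):
        n = parse_iterations(arg)
        print(arg, "->", n, stretch("pass", b"salt", n).hex()[:16])
```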

To decrypt `file1`, use:

```
./toplip -c 5 -i 0x8000 -d file3.encrypted > file1.decrypted
```

To decrypt `file2`, use:

```
./toplip -c 10 -i 10 -d file3.encrypted > file2.decrypted
```

See the `toplip` [official website](https://2ton.com.au/toplip/) for more technical details on how it works and the encryption methods it uses.

My personal advice to anyone who wants to protect their data is this: don't rely on a single method. Always use more than one tool or method to encrypt files. Don't write passphrases down on paper, and don't store them locally or in the cloud. Memorize them and destroy any notes. If you can't remember them, consider a trustworthy password manager, such as:

- [KeeWeb – An Open Source, Cross Platform Password Manager](https://www.ostechnix.com/keeweb-an-open-source-cross-platform-password-manager/)
- [Buttercup – A Free, Secure And Cross-platform Password Manager](https://www.ostechnix.com/buttercup-a-free-secure-and-cross-platform-password-manager/)
- [Titan – A Command line Password Manager For Linux](https://www.ostechnix.com/titan-command-line-password-manager-linux/)

That's all for today. More good stuff is on the way, so stay tuned!

Cheers!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/toplip-strong-file-encryption-decryption-cli-utility/

Author: [SK][a]

Translator: [tomjlw](https://github.com/tomjlw)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.ostechnix.com/author/sk/
[1]: https://www.ostechnix.com/cryptomator-open-source-client-side-encryption-tool-cloud/
[2]: https://www.ostechnix.com/how-to-encrypt-your-personal-foldersdirectories-in-linux-mint-ubuntu-distros/
[3]: https://www.ostechnix.com/cryptogo-easy-way-encrypt-password-protect-files/
[4]: https://www.ostechnix.com/cryptr-simple-cli-utility-encrypt-decrypt-files/
[5]: https://www.ostechnix.com/tomb-file-encryption-tool-protect-secret-files-linux/
[6]: https://www.ostechnix.com/an-easy-way-to-encrypt-and-decrypt-files-from-commandline-in-linux/
[7]: http://en.wikipedia.org/wiki/Advanced_Encryption_Standard
[8]: http://en.wikipedia.org/wiki/Scrypt
[9]: https://2ton.com.au/Products/
[10]: https://www.ostechnix.com/wp-content/uploads/2017/12/toplip-2.png
[12]: https://www.ostechnix.com/wp-content/uploads/2017/12/toplip-1.png

158

published/20180206 Power(Shell) to the people.md

Normal file


@ -0,0 +1,158 @@

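Power(Shell) to the people
======

> Cleaner code, clearer scripts, and cross-platform consistency are some of the reasons Linux and OS X users love PowerShell.

Earlier this year (2018), [PowerShell Core][1] was released as [generally available (GA)][2] under the [MIT][3] open source license. PowerShell is hardly new technology. Its first Windows release came in 2006. From the start, its creators [set out to combine][4] the power and flexibility of Unix shells while fixing what they saw as their shortcomings. The main one was the text manipulation needed to extract values from combined commands.

After five major releases, PowerShell now runs the same innovative shell and command environment natively on all major operating systems, including OS X and Linux. Some (read: almost everyone) may still scoff at the audacity of this Windows-born interloper. It is offering itself to platforms that have had powerful shell environments since time immemorial (at least as a millennial reckons it). In this post, I hope to show that PowerShell offers advantages even to seasoned users.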

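### Cross-platform consistency

If you plan to move your scripts from one execution environment to another, you need to make sure you use only commands and syntax that work on both. For example, on GNU systems you can get yesterday's date like this:

```
date --date="1 day ago"
```

On BSD systems (such as OS X), the above syntax won't work, because the BSD `date` utility requires the following syntax:

```
date -v -1d
```

Because PowerShell has a permissive license and is built for all platforms, you can ship it with your application. When your scripts run on the target system, they then run in the same shell, with the same command implementations, as in your test environment.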

### Objects and structured data

\*nix commands and utilities rely on your ability to consume and manipulate unstructured data. This may be a piece of cake for people who have lived with `sed`, `grep`, and `awk` for years, but there is a better way.

Let's redo the yesterday's-date example in PowerShell. To get the current date, run the `Get-Date` cmdlet (pronounced "commandlet"):

```
> Get-Date

Sunday, January 21, 2018 8:12:41 PM
```

The output you see isn't really a string of text. Rather, it is a string representation of a .Net Core object. Like an object in any other OOP environment, it has a type and methods you can call.

Let's prove this:

```
> $(Get-Date).GetType().FullName
System.DateTime
```

The `$(...)` syntax behaves just as you'd expect from POSIX shells: the command in parentheses is evaluated, and its result replaces the whole expression. In PowerShell, however, the `$` is optional in such expressions. Most importantly, the result is a .Net object, not text. So we can call the object's `GetType()` method to get its type object (similar to a `Class` object in Java). Its `FullName` [property][5] then gives the type's full name.

So how does this object orientation make your life easier?

First, you can pipe any object into the `Get-Member` cmdlet to see all the methods and properties it offers.

```
> (Get-Date) | Get-Member
PS /home/yevster/Documents/ArticlesInProgress> $(Get-Date) | Get-Member

   TypeName: System.DateTime

Name            MemberType Definition
----            ---------- ----------
Add             Method     datetime Add(timespan value)
AddDays         Method     datetime AddDays(double value)
AddHours        Method     datetime AddHours(double value)
AddMilliseconds Method     datetime AddMilliseconds(double value)
AddMinutes      Method     datetime AddMinutes(double value)
AddMonths       Method     datetime AddMonths(int months)
AddSeconds      Method     datetime AddSeconds(double value)
AddTicks        Method     datetime AddTicks(long value)
AddYears        Method     datetime AddYears(int value)
CompareTo       Method     int CompareTo(System.Object value), int ...
```
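
In Python, the closest everyday equivalent of `Get-Member` is introspecting an object's attributes. The sketch below is a loose comparison, not a PowerShell feature. It splits a `datetime` object's public members into methods and properties, roughly like the `MemberType` column above. `get_members` is an illustrative helper name.

```python
from datetime import datetime
from typing import Any, Dict, List


def get_members(obj: Any) -> Dict[str, List[str]]:
    """Group an object's public attributes into 'Method' and 'Property'."""
    members: Dict[str, List[str]] = {"Method": [], "Property": []}
    for name in sorted(dir(obj)):
        if name.startswith("_"):
            continue  # Skip private and dunder members.
        kind = "Method" if callable(getattr(obj, name)) else "Property"
        members[kind].append(name)
    return members


if __name__ == "__main__":
    info = get_members(datetime(2018, 1, 21, 20, 12, 41))
    print("Methods:", ", ".join(info["Method"][:5]), "...")
    print("Properties:", ", ".join(info["Property"]))
```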

You can quickly see that the DateTime object has an `AddDays` method, which gives you yesterday's date:

```
> (Get-Date).AddDays(-1)

Saturday, January 20, 2018 8:24:42 PM
```
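
Working with date objects also avoids the GNU/BSD `date` split in other languages. For comparison, here is a portable Python sketch that uses only the standard `datetime` module. `yesterday` is an illustrative helper name.

```python
from datetime import date, timedelta
from typing import Optional


def yesterday(today: Optional[date] = None) -> date:
    """Return the day before `today` (defaults to the current local date)."""
    if today is None:
        today = date.today()
    # Date arithmetic on objects: no parsing of `date` output required.
    return today - timedelta(days=1)


if __name__ == "__main__":
    print(yesterday(date(2018, 1, 21)))  # 2018-01-20
    print(yesterday(date(2018, 3, 1)))   # 2018-02-28
```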

For something a little more exciting, let's call Yahoo's weather service (because it doesn't require an API token) and get your local weather. Yahoo has since retired this public YQL endpoint, so the URL below may no longer return data.

```
$city="Boston"
$state="MA"
$url="https://query.yahooapis.com/v1/public/yql?q=select%20*%20from%20weather.forecast%20where%20woeid%20in%20(select%20woeid%20from%20geo.places(1)%20where%20text%3D%22${city}%2C%20${state}%22)&format=json&env=store%3A%2F%2Fdatatables.org%2Falltableswithkeys"
```

Now, we could do things the old-fashioned way and just run `curl $url` to get a blob of JSON, or...

```
$weather=(Invoke-RestMethod $url)
```

If you check the type of `$weather` (by running `echo $weather.GetType().FullName`), you will see that it's a `PSCustomObject`. This is a dynamic object that reflects the structure of the JSON.

PowerShell then helps you explore it with tab completion. Just type `$weather.` (making sure to include the `.`) and press `Tab`. You will see all the root-level JSON keys. Type one of them, followed by a `.`, and press `Tab` again to see its child keys (if any).

So you can easily navigate to the data you want:

```
> echo $weather.query.results.channel.atmosphere.pressure
1019.0

> echo $weather.query.results.channel.wind.chill
41
```

If you have raw JSON or CSV text (for example, returned by an external command), just pipe it into the `ConvertFrom-Json` or `ConvertFrom-Csv` cmdlet, respectively. You get nice, clean objects back.
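
Python's standard library offers something comparable. `json.loads` with `object_hook=SimpleNamespace` produces dot-accessible objects, and `csv.DictReader` turns CSV rows into dictionaries. The JSON below is a made-up fragment shaped like the weather response in the example above. It is not real API output, and the helper names are illustrative.

```python
import csv
import io
import json
from types import SimpleNamespace
from typing import Dict, List

# Hypothetical fragment mirroring the path used in the PowerShell example.
WEATHER_JSON = """
{"query": {"results": {"channel": {
    "atmosphere": {"pressure": "1019.0"},
    "wind": {"chill": "41"}}}}}
"""

CSV_TEXT = "city,state\nBoston,MA\nAustin,TX\n"


def json_to_object(text: str) -> SimpleNamespace:
    """Parse JSON so that nested keys become attributes, like PSCustomObject."""
    return json.loads(text, object_hook=lambda d: SimpleNamespace(**d))


def csv_to_rows(text: str) -> List[Dict[str, str]]:
    """Parse CSV text into a list of dicts keyed by the header row."""
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]


if __name__ == "__main__":
    weather = json_to_object(WEATHER_JSON)
    print(weather.query.results.channel.atmosphere.pressure)  # 1019.0
    print(weather.query.results.channel.wind.chill)           # 41
    print(csv_to_rows(CSV_TEXT)[0]["city"])                    # Boston
```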

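### Computation vs. automation

We use shells for two purposes. One is computation: running individual commands and responding to their output by hand. The other is automation: writing scripts that execute multiple commands and respond to their output programmatically.

Most of us have learned that these two purposes place different and conflicting demands on the shell. Computation calls for a terse shell. The fewer keystrokes the user needs, the better, and it hardly matters if what the user typed is barely legible to anyone else. Scripts, on the other hand, are code, and readability and maintainability are key. Here POSIX utilities often let us down. Some commands offer both terse and readable forms of their arguments (such as `-f` and `--force`). The command names themselves, however, favor brevity over readability.

PowerShell provides several mechanisms to escape this Faustian bargain.

First, tab completion removes the need to type out parameter names. For example, type `Get-Random -Mi` and press `Tab`, and PowerShell completes the parameter for you: `Get-Random -Minimum`. If you want to be even more terse, you don't even need to press `Tab`. PowerShell understands the following:

```
Get-Random -Mi 1 -Ma 10
```

because `Mi` and `Ma` each have a unique completion.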
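
The rule at work is unique-prefix matching: an abbreviation is accepted as long as exactly one parameter name starts with it. Here is a small Python sketch of that rule. `resolve_parameter` is hypothetical. The parameter list is a simplified subset and does not match `Get-Random`'s full signature, which also includes common parameters.

```python
from typing import Iterable


def resolve_parameter(prefix: str, names: Iterable[str]) -> str:
    """Expand an abbreviated parameter name, case-insensitively.

    An exact match always wins; otherwise exactly one name must start
    with the prefix, or the abbreviation is rejected as ambiguous/unknown.
    """
    candidates = list(names)
    wanted = prefix.lower()
    for name in candidates:
        if name.lower() == wanted:
            return name
    matches = [n for n in candidates if n.lower().startswith(wanted)]
    if len(matches) == 1:
        return matches[0]
    if not matches:
        raise KeyError(f"no parameter matches -{prefix}")
    raise KeyError(f"-{prefix} is ambiguous: {', '.join(matches)}")


if __name__ == "__main__":
    params = ["Minimum", "Maximum", "Count", "SetSeed", "InputObject"]
    print(resolve_parameter("Mi", params))  # Minimum
    print(resolve_parameter("Ma", params))  # Maximum
```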

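You may have noticed that all PowerShell cmdlet names follow a verb-noun structure. This helps script readability, but you probably don't want to type `Get-` over and over at the command line. So don't! If you type a noun without a verb, PowerShell looks for a `Get-` command with that noun.

> Caution: although PowerShell is case-insensitive, capitalizing the first letter of the noun is a good habit when you mean a PowerShell command. For example, typing `date` calls your system's `date` utility, while typing `Date` calls PowerShell's `Get-Date` cmdlet.

If that's not enough, PowerShell also offers aliases for short names. For example, if you type `alias -name cd`, you will discover that `cd` in PowerShell is actually an alias for the `Set-Location` command.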
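
Put together, the lookup described in the last few paragraphs can be modelled in four steps. First check aliases. Then check cmdlets, case-insensitively. Then check external programs, case-sensitively, as on a Linux file system. Only then retry with a `Get-` prefix. The Python model below is a simplification written for illustration. The tables are tiny hypothetical samples, and real PowerShell command discovery has more steps (functions, modules, and so on).

```python
from typing import Dict, Set

ALIASES: Dict[str, str] = {"cd": "Set-Location", "echo": "Write-Output"}
CMDLETS: Set[str] = {"Get-Date", "Get-Random", "Set-Location", "Write-Output"}
EXTERNALS: Set[str] = {"date", "grep"}  # Programs found on PATH.


def resolve_command(name: str) -> str:
    """Return what a typed command name would run in this toy model."""
    lowered = name.lower()
    if lowered in ALIASES:
        return ALIASES[lowered]
    for cmdlet in CMDLETS:
        if cmdlet.lower() == lowered:
            return cmdlet
    if name in EXTERNALS:  # Case-sensitive, like files on Linux.
        return f"external:{name}"
    for cmdlet in CMDLETS:  # Last resort: assume the verb was Get-.
        if cmdlet.lower() == "get-" + lowered:
            return cmdlet
    raise LookupError(f"command not found: {name}")


if __name__ == "__main__":
    for typed in ("cd", "date", "Date", "random"):
        print(typed, "->", resolve_command(typed))
```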

So, to recap: tab completion, aliases, and noun completion keep command names short. Automatic, consistent truncation of parameter names keeps arguments short. Meanwhile, you still enjoy a rich, readable syntax.

### So... what do you think?

These are just some of PowerShell's advantages. There are more features and cmdlets I haven't discussed (check out [Where-Object][6] or its alias `?` if you want to make `grep` cry). And if you're feeling nostalgic, PowerShell will happily launch your old native utilities for you. But give yourself enough time to get used to PowerShell's world of object-oriented cmdlets, and you may find yourself choosing to forget the way back.

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/2/powershell-people

Author: [Yev Bronshteyn][a]

Translator: [sanfusu](https://github.com/sanfusu)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/yevster
[1]: https://github.com/PowerShell/PowerShell/blob/master/README.md
[2]: https://blogs.msdn.microsoft.com/powershell/2018/01/10/powershell-core-6-0-generally-available-ga-and-supported/
[3]: https://spdx.org/licenses/MIT
[4]: http://www.jsnover.com/Docs/MonadManifesto.pdf
[5]: https://docs.microsoft.com/en-us/dotnet/csharp/programming-guide/classes-and-structs/properties
[6]: https://docs.microsoft.com/en-us/powershell/module/microsoft.powershell.core/where-object?view=powershell-6

@ -0,0 +1,204 @@

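Python ChatOps libraries: Opsdroid and Errbot
======

> Learn about the most widely used ChatOps libraries in the Python world: what each can do and how to use it.

ChatOps is conversation-driven development. The idea is that you write executable code that responds to certain input in a chat window. As a developer, you could use ChatOps to merge pull requests from Slack. You could also automatically assign a support ticket to someone based on an incoming Facebook message, or check development status through IRC.

In the Python world, the most widely used ChatOps libraries are Opsdroid and Errbot. In this month's Python column, let's talk about what using them is like, what each is good for, and how to get started with them.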

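### Opsdroid

[Opsdroid][2] is a relatively young (started in 2016) open source chatbot library written in Python. It has good developer documentation and a nice tutorial. It also includes plugins that help you connect to popular chat services.

#### What's built in

The library itself doesn't ship with everything you need to get started, but this is by design. The lightweight framework encourages you to use its existing connectors or write your own. Connectors are what Opsdroid calls the plugins that connect it to chat services. The framework doesn't weigh itself down by shipping connectors you may not need. You can easily use existing Opsdroid connectors for:

+ The command line
+ Cisco Spark
+ Facebook
+ GitHub
+ Matrix
+ Slack
+ Telegram
+ Twitter
+ Websocket

Opsdroid calls functions that let the chatbot show off its "skills". Skills are `async` Python functions that use Opsdroid's matching decorators, called "matchers". You can configure your Opsdroid project to use skills from the same codebase as your configuration file. You can also load skills from outside public or private repositories.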

|

||||

|

||||
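Opsdroid's real decorators live in `opsdroid.matchers` (the example later in this article uses `match_regex`). The sketch below does not use Opsdroid at all. It is a self-contained toy that mimics the same shape so you can see why skills are written as `async` functions: the bot can await replies for several matching skills concurrently. The `Message` class and the `toy_match_regex` decorator are hypothetical stand-ins.

```python
import asyncio
import re
from dataclasses import dataclass, field
from typing import Awaitable, Callable, List, Tuple


@dataclass
class Message:
    """Hypothetical stand-in for a chat message delivered by a connector."""
    text: str
    user: str
    replies: List[str] = field(default_factory=list)

    async def respond(self, text: str) -> None:
        # A real connector would send this to Slack, IRC, etc.
        await asyncio.sleep(0)  # yield to the event loop, as network I/O would
        self.replies.append(text)


Skill = Callable[[Message], Awaitable[None]]
SKILLS: List[Tuple[re.Pattern, Skill]] = []


def toy_match_regex(pattern: str) -> Callable[[Skill], Skill]:
    """Toy matcher decorator (not Opsdroid's): registers an async skill under a regex."""
    def register(skill: Skill) -> Skill:
        SKILLS.append((re.compile(pattern, re.IGNORECASE), skill))
        return skill
    return register


@toy_match_regex(r"\bping\b")
async def ping(message: Message) -> None:
    await message.respond(f"pong, {message.user}")


async def parse(message: Message) -> None:
    """Run every skill whose matcher fires on the message, concurrently."""
    matching = [skill(message) for pattern, skill in SKILLS if pattern.search(message.text)]
    await asyncio.gather(*matching)


msg = Message(text="ping", user="alice")
asyncio.run(parse(msg))
print(msg.replies)
```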

You can also enable some existing Opsdroid skills, including [seen][3], which tells you when the bot last saw a given user, and [weather][4], which reports the weather to the user.

Finally, Opsdroid lets you configure databases using its existing database modules. The databases Opsdroid currently supports include:

+ Mongo
+ Redis
+ SQLite

You configure databases, skills, and connectors in the `configuration.yaml` file of your Opsdroid project.

#### Opsdroid pros

**Docker support:** Opsdroid was designed from the start to work well in Docker. Docker instructions are part of its [installation documentation][5]. Using Opsdroid with Docker Compose is also simple: set up Opsdroid as a service, and when you run `docker-compose up`, your Opsdroid service will start and your chatbot will be ready.

```
version: "3"

services:
  opsdroid:
    container_name: opsdroid
    build:
      context: .
      dockerfile: Dockerfile
```

**Lots of connectors:** Opsdroid supports nine connectors to services like Slack and GitHub out of the box. All you need to do is enable those connectors in your configuration file and pass the necessary tokens or API keys. For example, to enable Opsdroid to post in a Slack channel named `#updates`, you would add the following to the `connectors` section of your configuration file:

```
- name: slack
  api-token: "this-is-my-token"
  default-room: "#updates"
```

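You need to [add a bot user][6] before you configure Opsdroid to connect to Slack.

If you need to connect to a service that Opsdroid does not support, the [docs][7] include instructions for adding your own connectors.

**Pretty good docs:** Especially for a young library in active development, Opsdroid's docs are very helpful. They include a [tutorial][8] that walks you through creating a couple of different basic skills. The Opsdroid documentation on [skills][9], [connectors][7], [databases][10], and [matchers][11] is also clear.

The repositories for its supported skills and connectors provide helpful example code.

**Natural language processing:** Opsdroid's skills can use regular expressions, but Opsdroid also integrates with several NLP APIs, including [Dialogflow][12], [luis.ai][13], [Recast.AI][14], and [wit.ai][15].

#### Possible Opsdroid concerns

Opsdroid doesn't yet enable the full features of some of its connectors. For example, the Slack API allows you to add color bars, images, and other "attachments" to your message. The Opsdroid Slack connector doesn't enable "attachments," so you would need to write a custom Slack connector if those features are important to you. If a connector is missing a feature you need, Opsdroid would welcome your [contribution][16]. The docs could also use more examples, especially of expected use cases.

#### Example usage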

```
from opsdroid.matchers import match_regex
import random


# Reply to any message that contains a greeting word.
@match_regex(r'hi|hello|hey|hallo')
async def hello(opsdroid, config, message):
    # Greet the sender by name with a randomly chosen phrase.
    text = random.choice(["Hi {}", "Hello {}", "Hey {}"]).format(message.user)
    await message.respond(text)
```

*hello/\_\_init\_\_.py*

```
connectors:
  - name: websocket

skills:
  - name: hello
    repo: "https://github.com/<user_id>/hello-skill"
```

*configuration.yaml*

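### Errbot

[Errbot][17] is a batteries-included open source chatbot. Errbot was released in 2012 and has everything you would expect from a mature project, including good documentation, a great tutorial, and plenty of plugins to help you connect to existing popular chat services.

#### What's built in

Unlike Opsdroid, which takes a more lightweight approach, Errbot ships with everything you need to build a customized bot safely.

Errbot includes native support for XMPP, IRC, Slack, Hipchat, and Telegram. It lists support for 10 other services through community-supported backends.

#### Errbot pros

**Good docs:** Errbot's docs are mature and easy to read.

**Dynamic plugin architecture:** Errbot lets you securely install, uninstall, update, enable, and disable plugins by chatting with the bot. This makes development and adding features easy. For the security conscious, all of this can be locked down thanks to Errbot's granular permission system.

When someone types `!help`, Errbot uses your plugins' docstrings to generate documentation for the available commands, which makes it easier to know what each command does.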
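The idea behind `!help` can be sketched with Python's standard `inspect` module: walk a plugin's methods, keep those marked as commands, and use the first line of each docstring as its summary. This is not Errbot's implementation. The `command` decorator below is a hypothetical stand-in for Errbot's `@botcmd`.

```python
import inspect


def command(func):
    """Hypothetical marker decorator, loosely modelled on Errbot's @botcmd."""
    func.is_command = True
    return func


class Hello:
    """A hypothetical plugin with two chat commands and one helper."""

    @command
    def hello(self, msg, args):
        """Say hello to whoever sent the message.

        Lines after the first one are not shown in the summary.
        """
        return "Hello!"

    @command
    def status(self, msg, args):
        """Report whether the bot is healthy."""
        return "All systems go."

    def _helper(self):
        """Not a command, so it never appears in the help text."""


def build_help(plugin, prefix="!"):
    """Return one 'prefix+name - summary' line per command, sorted by name."""
    lines = []
    for name, method in inspect.getmembers(plugin, inspect.ismethod):
        if getattr(method, "is_command", False):
            summary = (inspect.getdoc(method) or "(no description)").splitlines()[0]
            lines.append(f"{prefix}{name} - {summary}")
    return "\n".join(lines)


print(build_help(Hello()))
```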

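**Built-in administration and security:** Errbot lets you restrict which users have administrator rights, and it even offers fine-grained access control. For example, you can restrict which commands specific users or specific chat rooms can run.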
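Conceptually, that kind of access control is a lookup table from command names to allowed users and rooms. The sketch below shows the check in plain Python. The `ACL` table, user names, and room names are hypothetical, and Errbot configures access control through its own settings rather than through code like this.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class Rule:
    """Hypothetical per-command rule; an empty set means 'no restriction'."""
    allow_users: FrozenSet[str] = field(default_factory=frozenset)
    allow_rooms: FrozenSet[str] = field(default_factory=frozenset)


ADMINS = frozenset({"alice"})

ACL: Dict[str, Rule] = {
    "deploy": Rule(allow_users=frozenset({"alice", "bob"}), allow_rooms=frozenset({"#ops"})),
    "restart": Rule(allow_users=ADMINS),  # admin-only, from any room
}


def is_allowed(command: str, user: str, room: str) -> bool:
    """Return True if `user` may run `command` in `room`."""
    rule = ACL.get(command)
    if rule is None:
        return True  # commands without a rule are open to everyone
    if rule.allow_users and user not in rule.allow_users:
        return False
    if rule.allow_rooms and room not in rule.allow_rooms:
        return False
    return True


print(is_allowed("deploy", "bob", "#ops"))
print(is_allowed("deploy", "bob", "#random"))
print(is_allowed("restart", "bob", "#ops"))
```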

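**Extensive plugin framework:** Errbot supports hooks, callbacks, subcommands, webhooks, polling, and many [more features][18]. If those aren't enough, you can even write [dynamic plugins][19]. This feature is useful when you want to enable chat commands based on the commands available on a remote server.

**Ships with a testing framework:** Errbot supports [pytest][20] and ships with some useful utilities that make testing your plugins easy. Its "[testing your plugins][21]" docs are well thought out and provide enough material to get you started.

#### Possible Errbot concerns

**Leading "!":** By default, Errbot commands start with an exclamation mark (`!help` and `!hello`). Some people may like this, but others may find it annoying. Thankfully, it's easy to turn off.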
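Under the hood, a command prefix is just a rule that decides which chat lines count as commands. Here is a hypothetical parser that takes the prefix as a parameter, so passing `prefix=None` treats every line as a command. It only illustrates the trade-off and is not how Errbot implements its own prefix setting.

```python
from typing import Optional, Tuple


def parse_command(text: str, prefix: Optional[str] = "!") -> Optional[Tuple[str, str]]:
    """Split a chat line into (command, arguments), or return None.

    With a prefix, only lines that start with it count as commands;
    with prefix=None, every non-empty line is treated as a command.
    """
    text = text.strip()
    if prefix:
        if not text.startswith(prefix):
            return None
        text = text[len(prefix):]
    command, _, args = text.partition(" ")
    if not command:
        return None
    return command.lower(), args.strip()


print(parse_command("!hello world"))               # ('hello', 'world')
print(parse_command("hello world"))                # None
print(parse_command("hello world", prefix=None))   # ('hello', 'world')
```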

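**Plugin metadata:** At first, Errbot's [Hello World][22] plugin example seems easy to use. However, I couldn't get my plugin to load until I read further into the tutorial and discovered that I also needed a `.plug` file, which Errbot uses to load plugins. This may be a nitpick, but it wasn't obvious to me until I dug into the docs.

#### Example usage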

```
import random
from errbot import BotPlugin, botcmd


class Hello(BotPlugin):

    @botcmd
    def hello(self, msg, args):
        """Say hello to the user who sent the command."""
        # msg.frm identifies the sender of the message.
        text = random.choice(["Hi {}", "Hello {}", "Hey {}"]).format(msg.frm)
        return text
```

*hello.py*

```
[Core]
Name = Hello
Module = hello

[Python]
Version = 2+

[Documentation]
Description = Example "Hello" plugin
```

*hello.plug*
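The `.plug` file uses INI-style sections, so Python's standard `configparser` can read it. The sketch below inlines the `hello.plug` contents and extracts the fields that link the metadata to `hello.py`. It only illustrates the file's structure and makes no claim about how Errbot's plugin loader parses it internally.

```python
import configparser

# The hello.plug contents, inlined so the example is self-contained.
PLUG = """
[Core]
Name = Hello
Module = hello

[Python]
Version = 2+

[Documentation]
Description = Example "Hello" plugin
"""

parser = configparser.ConfigParser()
parser.read_string(PLUG)

# [Core] Module names the Python module (hello.py) that holds the plugin class.
name = parser["Core"]["Name"]
module = parser["Core"]["Module"]
description = parser["Documentation"]["Description"]
print(f"{name}: load {module}.py ({description})")
```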

Have you used Errbot or Opsdroid? If so, please leave a comment with your impressions of these tools.

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/3/python-chatops-libraries-opsdroid-and-errbot

Author: [Jeff Triplett][a], [Lacey Williams Henschel][1]
Translator: [tomjlw](https://github.com/tomjlw)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/laceynwilliams
[1]: https://opensource.com/users/laceynwilliams
[2]: https://opsdroid.github.io/
[3]: https://github.com/opsdroid/skill-seen
[4]: https://github.com/opsdroid/skill-weather
[5]: https://opsdroid.readthedocs.io/en/stable/#docker
[6]: https://api.slack.com/bot-users
[7]: https://opsdroid.readthedocs.io/en/stable/extending/connectors/
[8]: https://opsdroid.readthedocs.io/en/stable/tutorials/introduction/
[9]: https://opsdroid.readthedocs.io/en/stable/extending/skills/
[10]: https://opsdroid.readthedocs.io/en/stable/extending/databases/
[11]: https://opsdroid.readthedocs.io/en/stable/matchers/overview/
[12]: https://opsdroid.readthedocs.io/en/stable/matchers/dialogflow/
[13]: https://opsdroid.readthedocs.io/en/stable/matchers/luis.ai/
[14]: https://opsdroid.readthedocs.io/en/stable/matchers/recast.ai/
[15]: https://opsdroid.readthedocs.io/en/stable/matchers/wit.ai/
[16]: https://opsdroid.readthedocs.io/en/stable/contributing/
[17]: http://errbot.io/en/latest/
[18]: http://errbot.io/en/latest/features.html#extensive-plugin-framework
[19]: http://errbot.io/en/latest/user_guide/plugin_development/dynaplugs.html
[20]: http://pytest.org/
[21]: http://errbot.io/en/latest/user_guide/plugin_development/testing.html
[22]: http://errbot.io/en/latest/index.html#simple-to-build-upon
[#]: collector: (lujun9972)
[#]: translator: (lujun9972)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10639-1.html)
[#]: subject: (Asynchronous rsync with Emacs, dired and tramp.)
[#]: via: (https://vxlabs.com/2018/03/30/asynchronous-rsync-with-emacs-dired-and-tramp/)
[#]: author: (cpbotha https://vxlabs.com/author/cpbotha/)

Asynchronous rsync with Emacs, dired and tramp
======

[tmtxt-dired-async][1] by [Trần Xuân Trường][2] is a little-known Emacs package that extends dired (the file manager built into Emacs) so that it can run `rsync` and other commands (such as zip, unzip, and downloads) asynchronously.

This means you can copy gigabytes of directories around without blocking any of your other tasks in Emacs.
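tmtxt-dired-async does this in Emacs Lisp on top of Emacs's own asynchronous processes. As an analogy in another language, here is a Python `asyncio` sketch that starts a slow child process and keeps doing other work while it runs. The child process stands in for a long `rsync`, and the helper `run_in_background` is hypothetical; nothing here comes from the package itself.

```python
import asyncio
import sys


async def run_in_background(*argv: str) -> int:
    """Hypothetical helper: run a command without blocking the event loop."""
    proc = await asyncio.create_subprocess_exec(
        *argv,
        stdout=asyncio.subprocess.PIPE,
        stderr=asyncio.subprocess.STDOUT,
    )
    output, _ = await proc.communicate()
    print(output.decode().strip())
    return proc.returncode


async def main() -> None:
    # Stand-in for a long copy: a child Python process that sleeps briefly.
    copy = asyncio.create_task(run_in_background(
        sys.executable, "-c", "import time; time.sleep(0.2); print('copy finished')"
    ))
    # Meanwhile the "editor" keeps handling other work.
    for tick in range(3):
        print(f"still editing (tick {tick})")
        await asyncio.sleep(0.01)
    print("exit code:", await copy)


asyncio.run(main())
```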

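One of its features is that you can press `C-c C-a` to add any number of files from different locations to a wait list, then press `C-c C-v` to asynchronously `rsync` the whole wait list to a target directory. This feature alone makes it worth trying.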
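The wait list is a simple data structure: an ordered, de-duplicated list of paths that eventually becomes a single `rsync` invocation. The Python sketch below models the wait-list key bindings from the configuration in this article. The class name and the `-avh` flags are assumptions for illustration, not what tmtxt-dired-async actually passes to `rsync`. The sketch only builds the command and does not run it.

```python
import shlex
from typing import List


class RsyncWaitList:
    """Hypothetical analogue of the dired rsync wait list."""

    def __init__(self) -> None:
        self.items: List[str] = []

    def add(self, path: str) -> None:  # cf. C-c C-a
        if path not in self.items:
            self.items.append(path)

    def remove(self, path: str) -> None:  # cf. C-c C-d
        self.items.remove(path)

    def clear(self) -> None:  # cf. C-c C-e
        self.items.clear()

    def command(self, dest: str) -> List[str]:  # cf. C-c C-v
        # -a preserves permissions and times, -v is verbose, -h prints
        # human-readable sizes; the flag choice is an assumption.
        return ["rsync", "-avh", *self.items, dest]


wl = RsyncWaitList()
wl.add("/home/me/Downloads/arduino-1.9.0-beta.tar.xz")
wl.add("/tmp/notes")
print(shlex.join(wl.command("server:/srv/backup/")))
```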

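For example, here I am rsyncing the Arduino 1.9 beta archives to another directory:

![][4]

When the whole process is complete, the window at the bottom closes automatically after 5 seconds. Here is another session, right after asynchronously unzipping the Arduino archive above:

![][6]

This package makes my dired configuration even more useful.

I just contributed [a pull request that enables tmtxt-dired-async to rsync to remote tramp directories][7], and I immediately used this feature to move gigabytes of new photos to a Linux server.

To configure tmtxt-dired-async, download [tmtxt-async-tasks.el][8] (a required library) and [tmtxt-dired-async.el][9] (make sure my pull request is merged if you want tramp support) into your `~/.emacs.d/` directory, then add the following configuration: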

```
;; no MELPA packages of this, so we have to do a simple check here
(setq dired-async-el (expand-file-name "~/.emacs.d/tmtxt-dired-async.el"))
(when (file-exists-p dired-async-el)
  (load (expand-file-name "~/.emacs.d/tmtxt-async-tasks.el"))
  (load dired-async-el)
  (define-key dired-mode-map (kbd "C-c C-r") 'tda/rsync)
  (define-key dired-mode-map (kbd "C-c C-z") 'tda/zip)
  (define-key dired-mode-map (kbd "C-c C-u") 'tda/unzip)

  (define-key dired-mode-map (kbd "C-c C-a") 'tda/rsync-multiple-mark-file)
  (define-key dired-mode-map (kbd "C-c C-e") 'tda/rsync-multiple-empty-list)
  (define-key dired-mode-map (kbd "C-c C-d") 'tda/rsync-multiple-remove-item)
  (define-key dired-mode-map (kbd "C-c C-v") 'tda/rsync-multiple)

  (define-key dired-mode-map (kbd "C-c C-s") 'tda/get-files-size)

  (define-key dired-mode-map (kbd "C-c C-q") 'tda/download-to-current-dir))
```

Enjoy!

--------------------------------------------------------------------------------

via: https://vxlabs.com/2018/03/30/asynchronous-rsync-with-emacs-dired-and-tramp/

Author: [cpbotha][a]
Topic selection: [lujun9972][b]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://vxlabs.com/author/cpbotha/
[b]: https://github.com/lujun9972
[1]: https://truongtx.me/tmtxt-dired-async.html
[2]: https://truongtx.me/about.html
[3]: https://i0.wp.com/vxlabs.com/wp-content/uploads/2018/03/rsync-arduino-zip.png?resize=660%2C340&ssl=1
[4]: https://i0.wp.com/vxlabs.com/wp-content/uploads/2018/03/rsync-arduino-zip.png?ssl=1
[5]: https://i1.wp.com/vxlabs.com/wp-content/uploads/2018/03/progress-window-5s.png?resize=660%2C310&ssl=1
[6]: https://i1.wp.com/vxlabs.com/wp-content/uploads/2018/03/progress-window-5s.png?ssl=1
[7]: https://github.com/tmtxt/tmtxt-dired-async/pull/6
[8]: https://github.com/tmtxt/tmtxt-async-tasks
[9]: https://github.com/tmtxt/tmtxt-dired-async
[#]: collector: (lujun9972)

|

||||

[#]: translator: (lujun9972)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10634-1.html)

|

||||

[#]: subject: (Be productive with Org-mode)

|

||||

[#]: via: (https://www.badykov.com/emacs/2018/08/26/be-productive-with-org-mode/)

|

||||

[#]: author: (Ayrat Badykov https://www.badykov.com)

|

||||

|

||||

高效使用 Org 模式

|

||||

======

|

||||

|

||||

![org-mode-collage][1]

|

||||

|

||||

### 简介

|

||||

|

||||

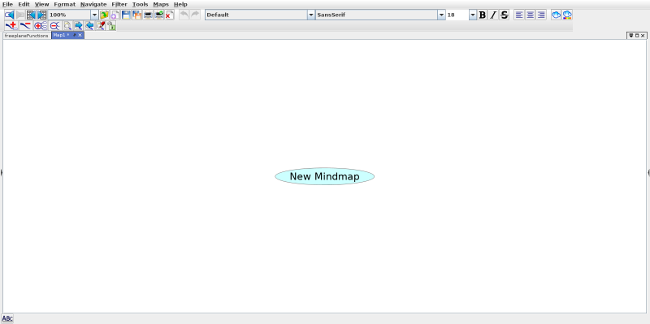

在我 [前一篇关于 Emacs 的文章中][2] 我提到了 <ruby>[Org 模式][3]<rt>Org-mode</rt></ruby>,这是一个笔记管理工具和组织工具。本文中,我将会描述一下我日常的 Org 模式使用案例。

|

||||

|

||||

### 笔记和代办列表

|

||||

|

||||

首先而且最重要的是,Org 模式是一个管理笔记和待办列表的工具,Org 模式的所有工具都聚焦于使用纯文本文件记录笔记。我使用 Org 模式管理多种笔记。

|

||||

|

||||

#### 一般性笔记

|

||||

|

||||

Org 模式最基本的应用场景就是以笔记的形式记录下你想记住的事情。比如,下面是我正在学习的笔记内容:

|

||||

|

||||

```
* Learn
** Emacs LISP
*** Plan

- [ ] Read best practices
- [ ] Finish reading Emacs Manual
- [ ] Finish Exercism Exercises
- [ ] Write a couple of simple plugins
  - Notification plugin

*** Resources

https://www.gnu.org/software/emacs/manual/html_node/elisp/index.html
http://exercism.io/languages/elisp/about
[[http://batsov.com/articles/2011/11/30/the-ultimate-collection-of-emacs-resources/][The Ultimate Collection of Emacs Resources]]

** Rust gamedev
*** Study [[https://github.com/SergiusIW/gate][gate]] 2d game engine with web assembly support
*** [[https://github.com/ggez/ggez][ggez]]
*** [[https://www.amethyst.rs/blog/release-0-8/][Amethyst 0.8 Released]]

** Upgrade Elixir/Erlang Skills
*** Read Erlang in Anger
```

|

||||

|

||||

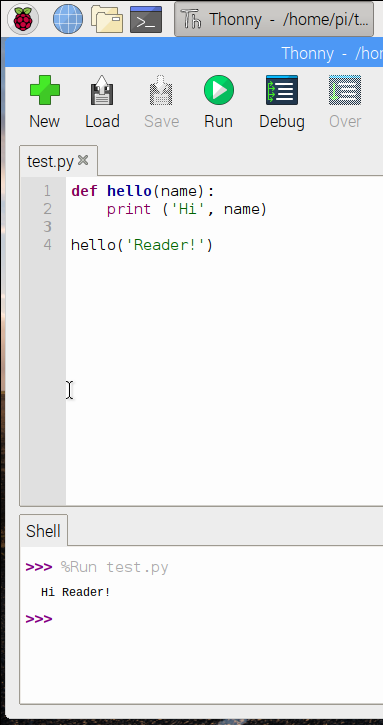

With [org-bullets][4], it looks like this:

![notes][5]

This simple example shows some of Org-mode's features:

- nested notes
- links
- lists with checkboxes
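To show how little structure those features need, here is a rough Python sketch that extracts the outline, checkbox progress, and links from Org text with regular expressions. It is a simplification for illustration only: it handles only `-` and `+` bullets and ignores many Org rules. It is not how Org-mode itself parses files.

```python
import re
from typing import List, Tuple

HEADLINE = re.compile(r"^(\*+)\s+(.*)$")                  # "** Title" -> level 2
CHECKBOX = re.compile(r"^\s*[-+]\s+\[( |X|-)\]\s+(.*)$")  # "- [ ] item", "- [X] item"
LINK = re.compile(r"\[\[([^\]]+)\](?:\[([^\]]+)\])?\]")   # [[target][description]]


def outline(org_text: str) -> List[Tuple[int, str]]:
    """Return (level, title) for every headline; the star count is the nesting level."""
    return [
        (len(m.group(1)), m.group(2))
        for m in map(HEADLINE.match, org_text.splitlines())
        if m
    ]


def checkbox_progress(org_text: str) -> Tuple[int, int]:
    """Count (checked, total) checkboxes, similar to Org's [n/m] statistics cookie."""
    states = [m.group(1) for m in map(CHECKBOX.match, org_text.splitlines()) if m]
    return sum(1 for s in states if s == "X"), len(states)


def links(org_text: str) -> List[Tuple[str, str]]:
    """Return (target, description) pairs; a bare [[target]] reuses the target."""
    return [(m.group(1), m.group(2) or m.group(1)) for m in LINK.finditer(org_text)]


SAMPLE = """\
* Learn
** Emacs LISP
*** Plan
- [X] Read best practices
- [ ] Finish reading Emacs Manual
*** Resources
[[https://orgmode.org/][Org-mode]]
"""

print(outline(SAMPLE))
print(checkbox_progress(SAMPLE))
print(links(SAMPLE))
```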

#### 项目待办

|

||||

|

||||

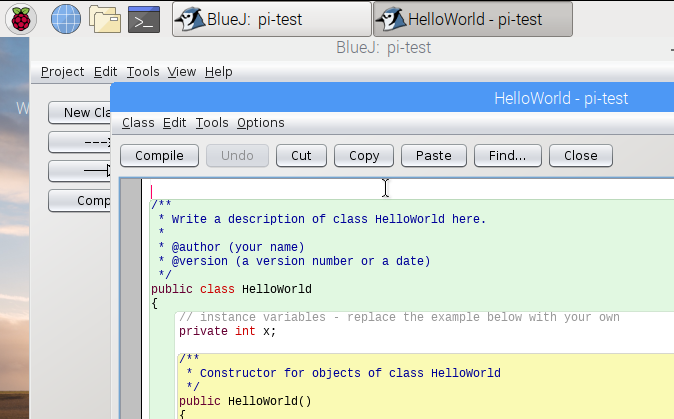

我在工作时时常会发现一些能够改进或修复的事情。我并不会在代码文件中留下 TODO 注释 (坏味道),相反我使用 [org-projectile][6] 来在另一个文件中记录一个 TODO 事项,并留下一个快捷方式。下面是一个该文件的例子:

|

||||

|

||||

```
* [[elisp:(org-projectile-open-project%20"mana")][mana]] [3/9]
  :PROPERTIES:
  :CATEGORY: mana
  :END:
** DONE [[file:~/Development/mana/apps/blockchain/lib/blockchain/contract/create_contract.ex::insufficient_gas_before_homestead%20=][fix this check using evm.configuration]]
   CLOSED: [2018-08-08 Ср 09:14]
   [[https://github.com/ethereum/EIPs/blob/master/EIPS/eip-2.md][eip2]]:
   If contract creation does not have enough gas to pay for the final gas fee for
   adding the contract code to the state, the contract creation fails (i.e. goes out-of-gas)
   rather than leaving an empty contract.
** DONE Upgrade Elixir to 1.7.
   CLOSED: [2018-08-08 Ср 09:14]
** TODO [#A] Difficulty tests
** TODO [#C] Upgrage to OTP 21
** DONE [#A] EIP150
   CLOSED: [2018-08-14 Вт 21:25]
*** DONE operation cost changes
    CLOSED: [2018-08-08 Ср 20:31]
*** DONE 1/64th for a call and create
    CLOSED: [2018-08-14 Вт 21:25]
** TODO [#C] Refactor interfaces
** TODO [#B] Caching for storage during execution
** TODO [#B] Removing old merkle trees
** TODO do not calculate cost twice
* [[elisp:(org-projectile-open-project%20".emacs.d")][.emacs.d]] [1/3]
  :PROPERTIES:
  :CATEGORY: .emacs.d
  :END:
** TODO fix flycheck issues (emacs config)
** TODO use-package for fetching dependencies
** DONE clean configuration
   CLOSED: [2018-08-26 Вс 11:48]
```

It looks like this:

![project-todos][7]

This example shows more Org-mode features:

- To-do items have two states, `TODO` and `DONE`. You can also define your own states (`WAITING`, etc.)
- Closed items have a `CLOSED` timestamp
- Some items have priorities: A, B, C
- Links can point into files (`[[file:~/...]`)
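Headlines such as `** TODO [#A] Difficulty tests` follow a regular shape: stars for the level, an optional to-do keyword, an optional priority cookie, then the title. The rough Python sketch below parses that shape and recomputes a `[done/total]` cookie like the `[3/9]` in the file above. `WAITING` stands in for a custom state, and the code is an illustration only, not Org-mode's parser.

```python
import re
from typing import List, NamedTuple, Optional

TODO_KEYWORDS = ("TODO", "WAITING", "DONE")  # WAITING is an example custom state
DONE_KEYWORDS = {"DONE"}

# stars, optional keyword, optional [#A]-style priority, then the title
HEADLINE = re.compile(
    r"^(?P<stars>\*+)\s+"
    r"(?:(?P<keyword>" + "|".join(TODO_KEYWORDS) + r")\s+)?"
    r"(?:\[#(?P<priority>[A-C])\]\s+)?"
    r"(?P<title>.*)$"
)


class Item(NamedTuple):
    level: int
    keyword: Optional[str]
    priority: Optional[str]
    title: str


def parse_items(org_text: str) -> List[Item]:
    """Parse every headline; body lines such as CLOSED: stamps are skipped."""
    items = []
    for line in org_text.splitlines():
        m = HEADLINE.match(line)
        if m:
            items.append(Item(len(m["stars"]), m["keyword"], m["priority"], m["title"]))
    return items


def progress_cookie(items: List[Item], level: int) -> str:
    """Build a [done/total] cookie from the to-do items at one outline level."""
    todos = [i for i in items if i.level == level and i.keyword]
    done = sum(1 for i in todos if i.keyword in DONE_KEYWORDS)
    return f"[{done}/{len(todos)}]"


SAMPLE = """\
* mana
** DONE Upgrade Elixir to 1.7.
   CLOSED: [2018-08-08 Wed 09:14]
** TODO [#A] Difficulty tests
** TODO [#C] Refactor interfaces
** DONE [#A] EIP150
*** DONE operation cost changes
"""

items = parse_items(SAMPLE)
print(progress_cookie(items, level=2))                # [2/4]
print([i.title for i in items if i.priority == "A"])  # ['Difficulty tests', 'EIP150']
```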

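#### Capture templates

As the Org-mode documentation describes, capture lets you quickly store notes with little interruption to your workflow.

I've configured many capture templates that help me quickly record things I want to remember.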

```
(setq org-capture-templates
      '(("t" "Todo" entry (file+headline "~/Dropbox/org/todo.org" "Todo soon")
         "* TODO %? \n %^t")
        ("i" "Idea" entry (file+headline "~/Dropbox/org/ideas.org" "Ideas")
         "* %? \n %U")
        ("e" "Tweak" entry (file+headline "~/Dropbox/org/tweaks.org" "Tweaks")
         "* %? \n %U")
        ("l" "Learn" entry (file+headline "~/Dropbox/org/learn.org" "Learn")
         "* %? \n")
        ("w" "Work note" entry (file+headline "~/Dropbox/org/work.org" "Work")
         "* %? \n")
        ("m" "Check movie" entry (file+headline "~/Dropbox/org/check.org" "Movies")
         "* %? %^g")
        ("n" "Check book" entry (file+headline "~/Dropbox/org/check.org" "Books")
         "* %^{book name} by %^{author} %^g")))
```

做书本记录时我需要记下它的名字和作者,做电影记录时我需要记下标签,等等。

|

||||

|

||||

### 规划

|

||||

|

||||

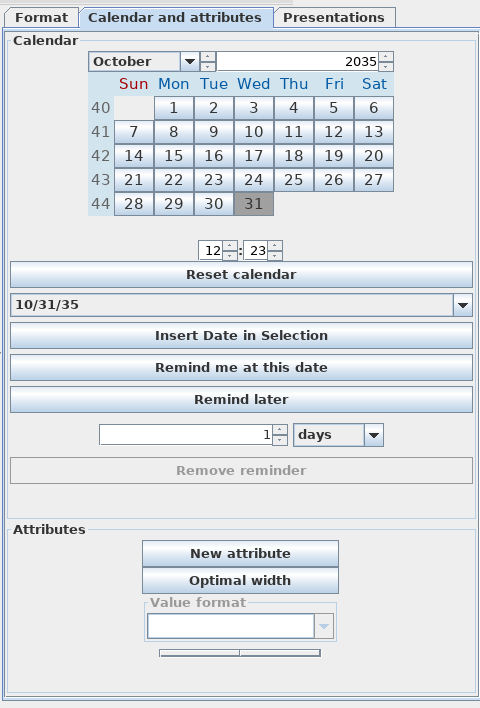

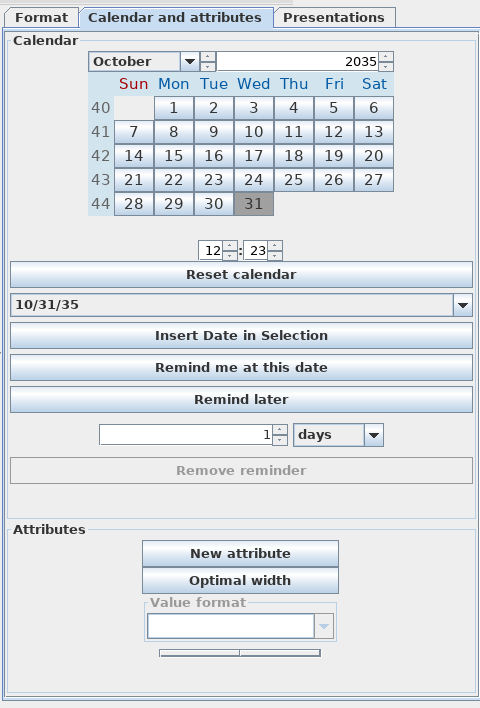

Org 模式的另一个超棒的功能是你可以用它来作日常规划。让我们来看一个例子:

|

||||

|

||||

![schedule][8]

|

||||

|

||||

我没有挖空心思虚构一个例子,这就是我现在真实文件的样子。它看起来内容并不多,但它有助于你花时间在在重要的事情上并且帮你对抗拖延症。

|

||||

|

||||

#### 习惯

|

||||

|

||||

根据 Org 模式的文档,Org 能够跟踪一种特殊的代办事情,称为 “习惯”。当我想养成新的习惯时,我会将该功能与日常规划功能一起连用:

|

||||

|

||||

![habits][9]

|

||||

|

||||

你可以看到,目前我在尝试每天早期并且每两天锻炼一次。另外,它也有助于让我每天阅读书籍。

|

||||

|

||||

#### 议事日程视图

|

||||

|

||||

最后,我还使用议事日程视图功能。待办事项可能分散在不同文件中(比如我就是日常规划和习惯分散在不同文件中),议事日程视图可以提供所有待办事项的总览:

|

||||

|

||||

![agenda][10]

|

||||

|

||||
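At its core, the agenda is a query over several files. In Emacs the file list comes from the `org-agenda-files` variable. The rough Python sketch below mimics the idea by collecting `TODO` headlines scheduled for a given day across a few in-memory files. The file names and entries are hypothetical. Real agenda views handle much more, including deadlines, repeaters such as `.+1d` (only the base date is matched here), and habits.

```python
import re
from datetime import date
from typing import Dict, List, Tuple

TODO_HEADLINE = re.compile(r"^\*+\s+TODO\s+(.*)$")
# Matches the date part of e.g. "SCHEDULED: <2018-08-26 Sun>" or "<2018-08-26 Sun .+1d>".
SCHEDULED = re.compile(r"SCHEDULED:\s*<(\d{4})-(\d{2})-(\d{2})")


def agenda(files: Dict[str, str], day: date) -> List[Tuple[str, str]]:
    """Collect (file name, title) for TODO items scheduled on `day`, across all files."""
    entries = []
    for name, text in files.items():
        current = None  # title of the TODO headline we are inside, if any
        for line in text.splitlines():
            if line.startswith("*"):
                heading = TODO_HEADLINE.match(line)
                current = heading.group(1) if heading else None
                continue
            stamp = SCHEDULED.search(line)
            if current and stamp and date(*map(int, stamp.groups())) == day:
                entries.append((name, current))
    return sorted(entries)


# Hypothetical files: daily planning and habits kept apart, as in the article.
FILES = {
    "plan.org": (
        "* TODO Write blog post\n  SCHEDULED: <2018-08-26 Sun>\n"
        "* TODO Review PR\n  SCHEDULED: <2018-08-27 Mon>\n"
    ),
    "habits.org": (
        "* TODO Read a book\n  SCHEDULED: <2018-08-26 Sun .+1d>\n"
        "* DONE Work out\n  SCHEDULED: <2018-08-26 Sun>\n"
    ),
}

for file_name, title in agenda(FILES, date(2018, 8, 26)):
    print(f"{file_name:<12} {title}")
```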

### More Org-mode features

+ Mobile apps ([Android](https://play.google.com/store/apps/details?id=com.orgzly&hl=en), [iOS](https://itunes.apple.com/app/id1238649962))
+ [Exporting Org-mode documents to other formats](https://orgmode.org/manual/Exporting.html) (HTML, Markdown, PDF, LaTeX, etc.)
+ [Tracking finances](https://orgmode.org/worg/org-tutorials/weaving-a-budget.html) with [ledger](https://github.com/ledger/ledger-mode)

### Conclusion

In this post, I described a small part of Org-mode's extensive functionality. I use it every day to be more productive and to spend my time on important things.

--------------------------------------------------------------------------------

via: https://www.badykov.com/emacs/2018/08/26/be-productive-with-org-mode/

Author: [Ayrat Badykov][a]
Topic selection: [lujun9972][b]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://www.badykov.com
[b]: https://github.com/lujun9972
[1]: https://i.imgur.com/hgqCyen.jpg
[2]: http://www.badykov.com/emacs/2018/07/31/why-emacs-is-a-great-editor/
[3]: https://orgmode.org/
[4]: https://github.com/sabof/org-bullets
[5]: https://i.imgur.com/lGi60Uw.png
[6]: https://github.com/IvanMalison/org-projectile
[7]: https://i.imgur.com/Hbu8ilX.png
[8]: https://i.imgur.com/z5HpuB0.png
[9]: https://i.imgur.com/YJIp3d0.png
[10]: https://i.imgur.com/CKX9BL9.png
[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10642-1.html)

|

||||

[#]: subject: (Get started with Cypht, an open source email client)

|

||||

[#]: via: (https://opensource.com/article/19/1/productivity-tool-cypht-email)

|

||||

[#]: author: (Kevin Sonney https://opensource.com/users/ksonney (Kevin Sonney))

|

||||

|

||||

开始使用 Cypht 吧,一个开源的电子邮件客户端

|

||||

======

|

||||

|

||||

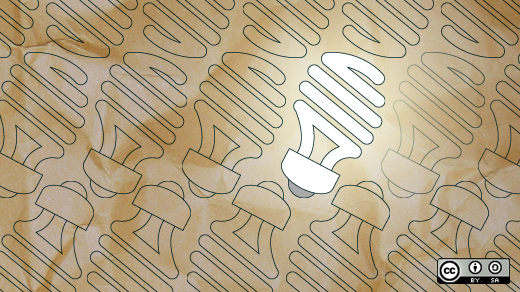

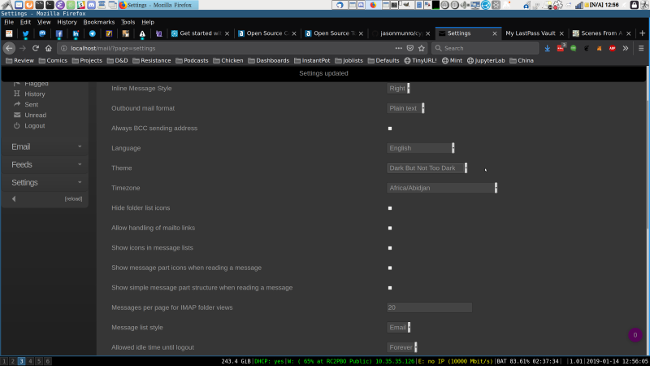

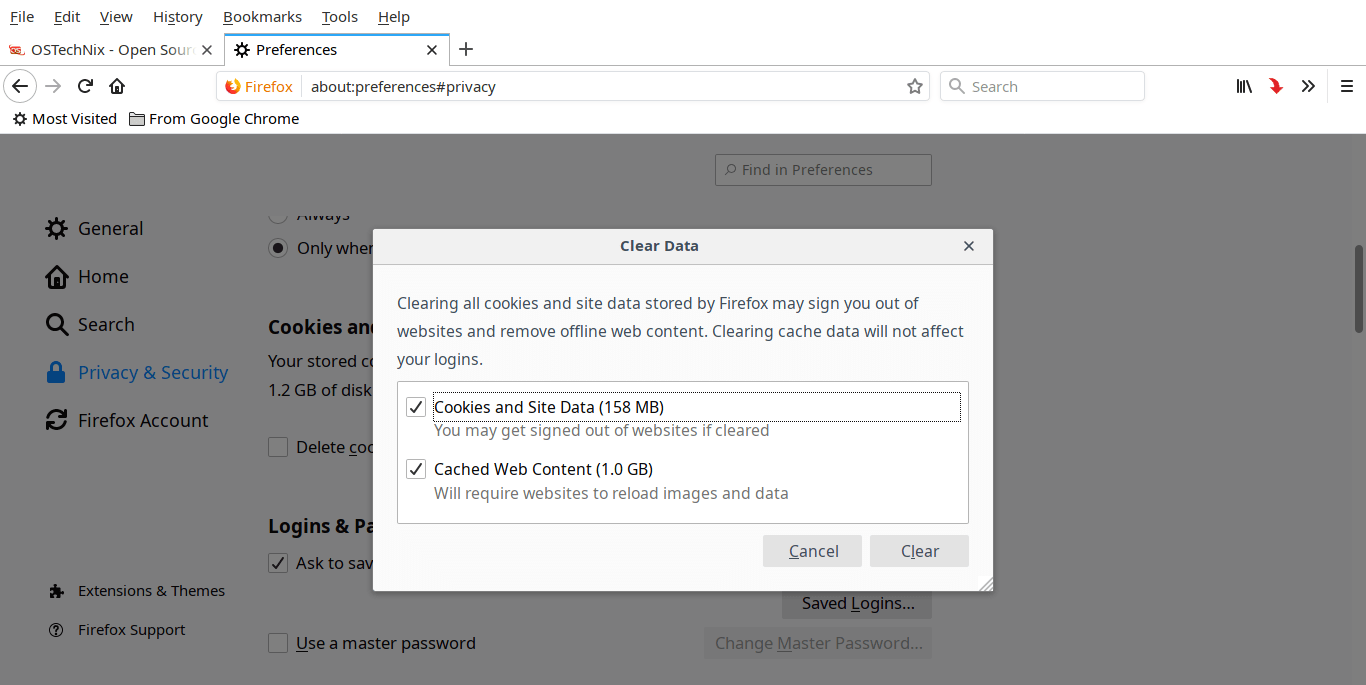

> 使用 Cypht 将你的电子邮件和新闻源集成到一个界面中,这是我们 19 个开源工具系列中的第 4 个,它将使你在 2019 年更高效。

|

||||

|

||||

|

||||

|

||||

每年年初似乎都有疯狂的冲动想提高工作效率。新年的决心,渴望开启新的一年,当然,“抛弃旧的,拥抱新的”的态度促成了这一切。通常这时的建议严重偏向闭源和专有软件,但事实上并不用这样。

|

||||

|

||||

这是我挑选出的 19 个新的(或者对你而言新的)开源工具中的第 4 个工具来帮助你在 2019 年更有效率。

|

||||

|

||||

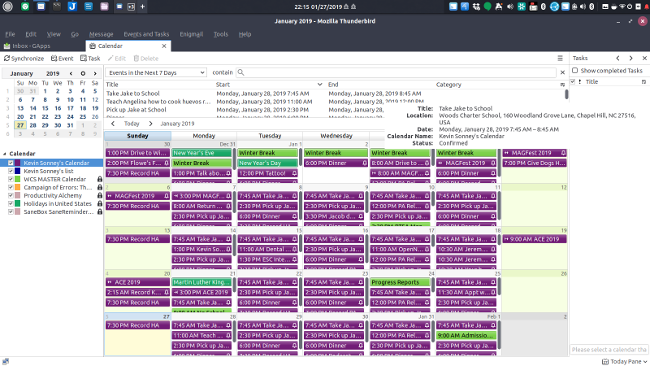

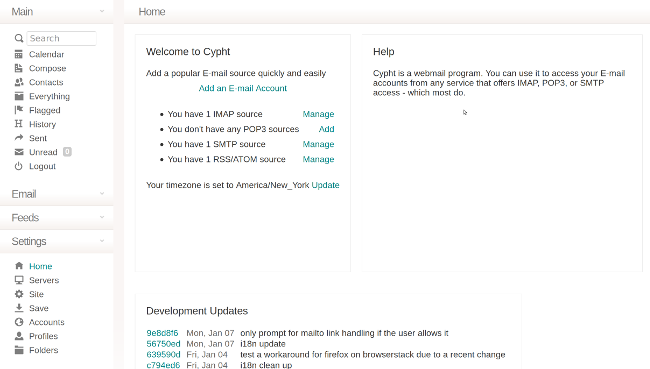

### Cypht

|

||||

|

||||

我们花了很多时间来处理电子邮件,有效地[管理你的电子邮件][1]可以对你的工作效率产生巨大影响。像 Thunderbird、Kontact/KMail 和 Evolution 这样的程序似乎都有一个共同点:它们试图复制 Microsoft Outlook 的功能,这在过去 10 年左右并没有真正改变。在过去十年中,甚至像 Mutt 和 Cone 这样的[著名控制台程序][2]也没有太大变化。

|

||||

|

||||

|

||||

|

||||

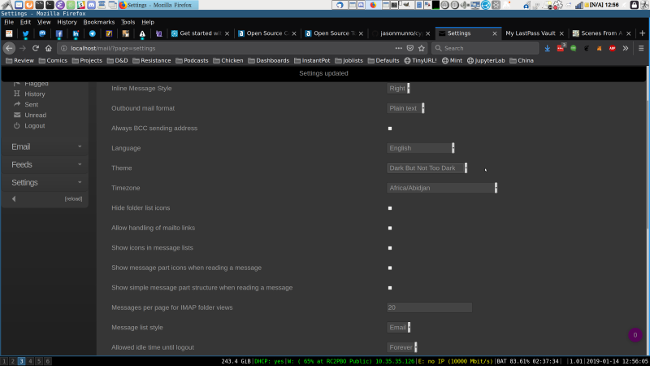

[Cypht][3] 是一个简单、轻量级和现代的 Webmail 客户端,它将多个帐户聚合到一个界面中。除了电子邮件帐户,它还包括 Atom/RSS 源。在 “Everything” 中,不仅可以显示收件箱中的邮件,还可以显示新闻源中的最新文章,从而使得阅读不同来源的内容变得简单。

|

||||

|

||||

|

||||

|

||||

它使用简化的 HTML 消息来显示邮件,或者你也可以将其设置为查看纯文本版本。由于 Cypht 不会加载远程图像(以帮助维护安全性),HTML 渲染可能有点粗糙,但它足以完成工作。你将看到包含大量富文本邮件的纯文本视图 —— 这意味着很多链接并且难以阅读。我不会说是 Cypht 的问题,因为这确实是发件人所做的,但它确实降低了阅读体验。阅读新闻源大致相同,但它们与你的电子邮件帐户集成,这意味着可以轻松获取最新的(我有时会遇到问题)。

|

||||

|

||||

|

||||

|

||||

用户可以使用预配置的邮件服务器并添加他们使用的任何其他服务器。Cypht 的自定义选项包括纯文本与 HTML 邮件显示,它支持多个配置文件以及更改主题(并自行创建)。你要记得单击左侧导航栏上的“保存”按钮,否则你的自定义设置将在该会话后消失。如果你在不保存的情况下注销并重新登录,那么所有更改都将丢失,你将获得开始时的设置。因此可以轻松地实验,如果你需要重置,只需在不保存的情况下注销,那么在再次登录时就会看到之前的配置。

|

||||

|

||||

|

||||

|

||||

本地[安装 Cypht][4] 非常容易。虽然它不使用容器或类似技术,但安装说明非常清晰且易于遵循,并且不需要我做任何更改。在我的笔记本上,从安装开始到首次登录大约需要 10 分钟。服务器上的共享安装使用相同的步骤,因此它应该大致相同。

|

||||

|

||||

最后,Cypht 是桌面和基于 Web 的电子邮件客户端的绝佳替代方案,它有简单的界面,可帮助你快速有效地处理电子邮件。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/1/productivity-tool-cypht-email

|

||||

|

||||

作者:[Kevin Sonney][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/ksonney (Kevin Sonney)

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/article/17/7/email-alternatives-thunderbird

|

||||

[2]: https://opensource.com/life/15/8/top-4-open-source-command-line-email-clients

|

||||

[3]: https://cypht.org/

|

||||

[4]: https://cypht.org/install.html

|

||||

@ -0,0 +1,60 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10636-1.html)

|

||||

[#]: subject: (Get started with CryptPad, an open source collaborative document editor)

|

||||

[#]: via: (https://opensource.com/article/19/1/productivity-tool-cryptpad)

|

||||

[#]: author: (Kevin Sonney https://opensource.com/users/ksonney (Kevin Sonney))

|

||||

|

||||

开始使用 CryptPad 吧,一个开源的协作文档编辑器

|

||||

======

|

||||

|

||||

> 使用 CryptPad 安全地共享你的笔记、文档、看板等,这是我们在开源工具系列中的第 5 个工具,它将使你在 2019 年更高效。

|

||||

|

||||

|

||||

|

||||

每年年初似乎都有疯狂的冲动想提高工作效率。新年的决心,渴望开启新的一年,当然,“抛弃旧的,拥抱新的”的态度促成了这一切。通常这时的建议严重偏向闭源和专有软件,但事实上并不用这样。

|

||||

|

||||

这是我挑选出的 19 个新的(或者对你而言新的)开源工具中的第 5 个工具来帮助你在 2019 年更有效率。

|

||||

|

||||

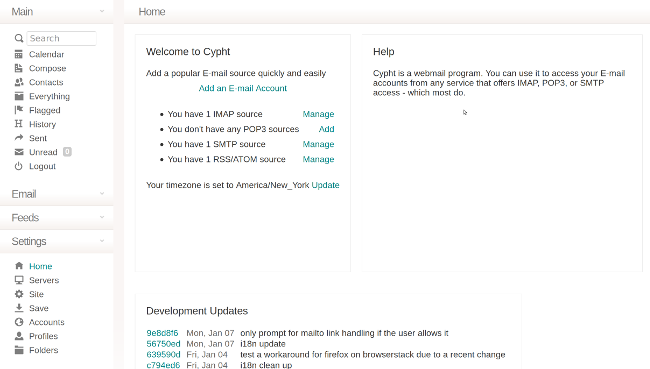

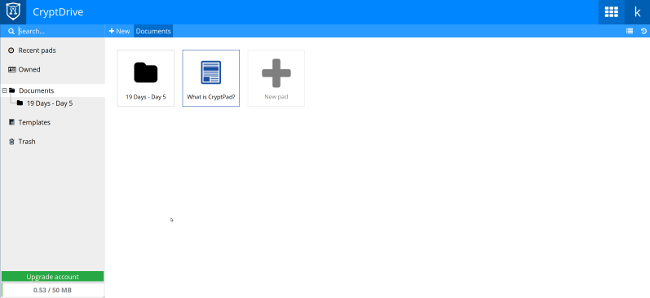

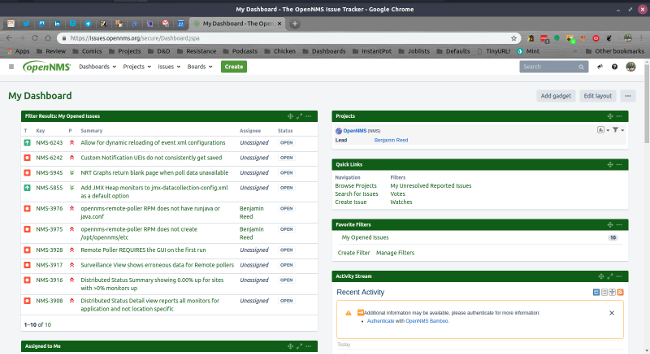

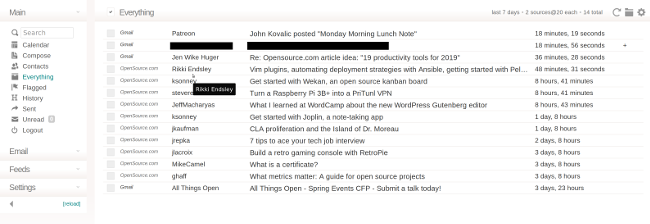

### CryptPad

|

||||

|

||||

我们已经介绍过 [Joplin][1],它能很好地保存自己的笔记,但是,你可能已经注意到,它没有任何共享或协作功能。

|

||||

|

||||

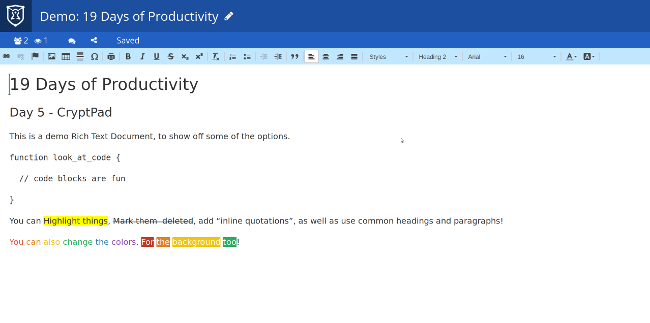

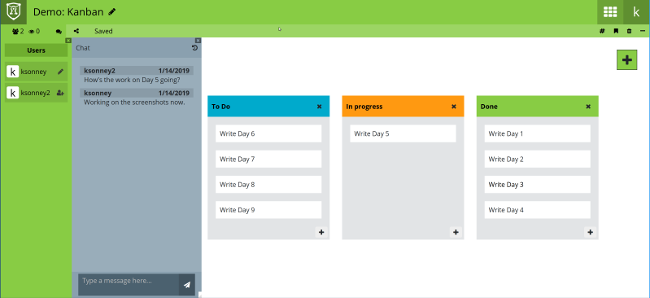

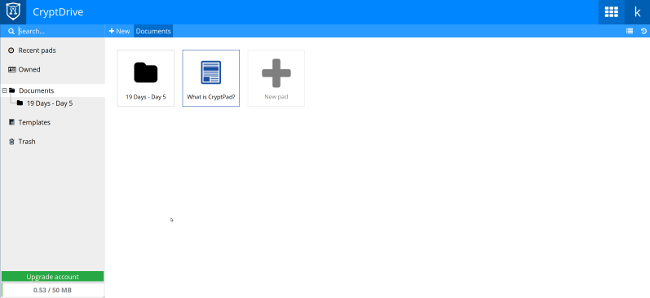

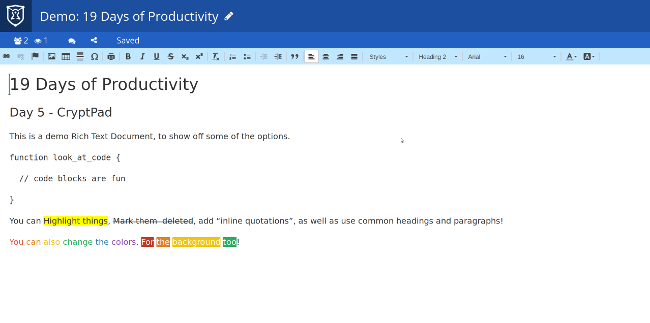

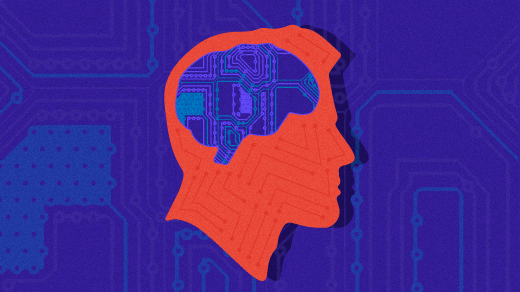

[CryptPad][2] 是一个安全、可共享的笔记应用和文档编辑器,它能够安全地协作编辑。与 Joplin 不同,它是一个 NodeJS 应用,这意味着你可以在桌面或其他服务器上运行它,并使用任何现代 Web 浏览器访问。它开箱即用,它支持富文本、Markdown、投票、白板,看板和 PPT。

|

||||

|

||||

|

||||

|

||||

它支持不同的文档类型且功能齐全。它的富文本编辑器涵盖了你所期望的所有基础功能,并允许你将文件导出为 HTML。它的 Markdown 的编辑能与 Joplin 相提并论,它的看板虽然不像 [Wekan][3] 那样功能齐全,但也做得不错。其他支持的文档类型和编辑器也很不错,并且有你希望在类似应用中看到的功能,尽管投票功能显得有些粗糙。

|

||||

|

||||

|

||||

|

||||

然而,CryptPad 的真正强大之处在于它的共享和协作功能。共享文档只需在“共享”选项中获取可共享 URL,CryptPad 支持使用 `<iframe>` 标签嵌入其他网站的文档。可以在“编辑”或“查看”模式下使用密码和会过期的链接共享文档。内置聊天能够让编辑者相互交谈(请注意,具有浏览权限的人也可以看到聊天但无法发表评论)。

|

||||

|

||||

|

||||

|

||||

所有文件都使用用户密码加密存储。服务器管理员无法读取文档,这也意味着如果你忘记或丢失了密码,文件将无法恢复。因此,请确保将密码保存在安全的地方,例如放在[密码保险箱][4]中。

|

||||

|

||||

|

||||

|

||||
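Why is a lost password unrecoverable? In password-based encryption, the key is derived from the password, so nothing stored on the server can reproduce it. The Python sketch below shows only that key-derivation step, using the standard library's PBKDF2. It is a generic illustration, not CryptPad's actual cryptographic scheme, and a real app would then use the key with an authenticated cipher. The password strings and iteration count are arbitrary examples.

```python
import hashlib
import hmac
import os


def derive_key(password: str, salt: bytes, iterations: int = 200_000) -> bytes:
    """Stretch a password into a 32-byte key with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)


# The salt is random but not secret; it can be stored next to the document.
salt = os.urandom(16)
key = derive_key("correct horse battery staple", salt)

# Only the same password (with the same salt) reproduces the same key.
again = derive_key("correct horse battery staple", salt)
typo = derive_key("correct horse battery stapler", salt)
print(hmac.compare_digest(key, again), hmac.compare_digest(key, typo))  # True False
```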

When run locally, CryptPad is a powerful app for creating and editing documents. When run on a server, it becomes an excellent collaboration platform for multi-user document creation and editing. Installing it on my laptop took less than five minutes, and it worked out of the box. The developers also include instructions for running CryptPad in Docker, and there is a community-maintained Ansible role for easy deployment. CryptPad doesn't support any third-party authentication, so users must create their own accounts. If you don't want to run your own server, there is also a community-supported hosted version of CryptPad.

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/1/productivity-tool-cryptpad

Author: [Kevin Sonney][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/ksonney (Kevin Sonney)
[b]: https://github.com/lujun9972
[1]: https://opensource.com/article/19/1/productivity-tool-joplin
[2]: https://cryptpad.fr/index.html
[3]: https://opensource.com/article/19/1/productivity-tool-wekan
[4]: https://opensource.com/article/18/4/3-password-managers-linux-command-line
[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10637-1.html)

|

||||

[#]: subject: (ODrive (Open Drive) – Google Drive GUI Client For Linux)

|

||||

[#]: via: (https://www.2daygeek.com/odrive-open-drive-google-drive-gui-client-for-linux/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

|

||||

ODrive:Linux 中的 Google 云端硬盘图形客户端

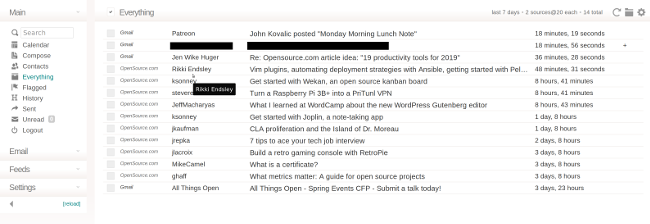

|

||||

======

|

||||

|

||||

这个我们已经多次讨论过。但是,我还要简要介绍一下它。截至目前,还没有官方的 Google 云端硬盘的 Linux 客户端,我们需要使用非官方客户端。Linux 中有许多集成 Google 云端硬盘的应用。每个应用都提供了一组功能。

|

||||

|

||||

我们过去在网站上写过一些此类文章。

|

||||

|

||||

这些文章是 [DriveSync][1] 、[Google Drive Ocamlfuse 客户端][2] 和 [在 Linux 中使用 Nautilus 文件管理器挂载 Google 云端硬盘][3]。

|

||||

|

||||

今天我们也将讨论相同的主题,程序名字是 ODrive。

|

||||

|

||||

### ODrive 是什么?

|

||||

|

||||

ODrive 意即 Open Drive。它是 Google 云端硬盘的图形客户端,它用 electron 框架编写。

|

||||

|

||||

它简单的图形界面能让用户几步就能集成 Google 云端硬盘。

|

||||

|

||||

### 如何在 Linux 上安装和设置 ODrive?

|

||||

|

||||

由于开发者提供了 AppImage 包,因此在 Linux 上安装 ODrive 没有任何困难。

|

||||

|

||||

只需使用 `wget` 命令从开发者的 GitHub 页面下载最新的 ODrive AppImage 包。

|

||||

|

||||

```

|

||||

$ wget https://github.com/liberodark/ODrive/releases/download/0.1.3/odrive-0.1.3-x86_64.AppImage

|

||||

```

|

||||

|

||||

你必须为 ODrive AppImage 文件设置可执行文件权限。

|

||||

|

||||

```

|

||||

$ chmod +x odrive-0.1.3-x86_64.AppImage

|

||||

```

|

||||

|

||||

只需运行 ODrive AppImage 文件以启动 ODrive GUI 以进行进一步设置。

|

||||

|

||||

```

|

||||

$ ./odrive-0.1.3-x86_64.AppImage

|

||||

```

|

||||

|

||||

运行上述命令时,可能会看到下面的窗口。只需按下“下一步”按钮即可进行进一步设置。

|

||||

|

||||

![][5]

|

||||

|

||||

点击“连接”链接添加 Google 云端硬盘帐户。

|

||||

|

||||

![][6]

|

||||

|

||||

输入你要设置 Google 云端硬盘帐户的电子邮箱。

|

||||

|

||||

![][7]

|

||||

|

||||

输入邮箱密码。

|

||||

|

||||

![][8]

|

||||

|

||||

允许 ODrive 访问你的 Google 帐户。

|

||||

|

||||

![][9]

|

||||

|

||||

默认情况下,它将选择文件夹位置。如果你要选择特定文件夹,则可以更改。

|

||||

|

||||

![][10]

|

||||

|

||||

最后点击“同步”按钮开始将文件从 Google 下载到本地系统。

|

||||

|

||||

![][11]

|

||||

|

||||

同步正在进行中。

|

||||

|

||||

![][12]

|

||||

|

||||

同步完成后。它会显示所有已下载的文件。

|

||||

|

||||

![][13]

|

||||

|

||||

我看到所有文件都下载到上述目录中。

|

||||

|

||||

![][14]

|

||||

|

||||

如果要将本地系统中的任何新文件同步到 Google 。只需从应用菜单启动 “ODrive”,但它不会实际启动应用。它将在后台运行,我们可以使用 `ps` 命令查看。

|

||||

|

||||

```

|

||||

$ ps -df | grep odrive

|

||||

```

|

||||

|

||||

![][15]

|

||||

|

||||

将新文件添加到 Google文件夹后,它会自动开始同步。从通知菜单中也可以看到。是的,我看到一个文件已同步到 Google 中。

|

||||

|

||||

![][16]

|

||||

|

||||

同步完成后图形界面没有加载,我不确定这个功能。我会向开发者反馈之后,根据他的反馈更新。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/odrive-open-drive-google-drive-gui-client-for-linux/

|

||||

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.2daygeek.com/author/magesh/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.2daygeek.com/drivesync-google-drive-sync-client-for-linux/

|

||||

[2]: https://linux.cn/article-10517-1.html

|

||||

[3]: https://www.2daygeek.com/mount-access-setup-google-drive-in-linux/

|

||||