mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

Merge branch 'master' of github.com:LCTT/TranslateProject

This commit is contained in:

commit

9fb9348519

@ -0,0 +1,111 @@

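JStock: A Good Stock Portfolio Management Tool for Linux
================================================================================

If you invest in the stock market, you probably know how important a portfolio management plan is. The goal of portfolio management is to build an investment plan tailored to your risk tolerance, time horizon, and financial goals. Given how important this is, there is no shortage of commercial apps and stock market monitoring software, each touting sophisticated portfolio management and tracking/reporting features.

For Linux fans, I have also found some **good open-source portfolio management tools** for managing and tracking stock portfolios on Linux. One I highly recommend is the Java-based [JStock][1]. If you are not a Java fan, you might be put off by the fact that JStock runs on a heavyweight JVM. On the other hand, it works right away in any environment with a JRE installed, and it runs smoothly on Linux.

The days when "open source" meant "free of charge" or "substandard" are gone. Considering that JStock is a one-man project, it is impressively packed with useful features for a portfolio management tool, and all the credit goes to its author, Yan Cheng Cheok! For example, JStock supports price monitoring via watchlists, multiple portfolios, custom/built-in stock indicators and scanners, 27 different stock markets, and cross-platform cloud backup/restore. JStock is available on multiple platforms (Linux, OS X, Android, and Windows), so you can save your JStock portfolios to the cloud and seamlessly back them up and restore them across platforms.

Now I will show you how to install JStock and walk through some of the details of using it.
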

### 在 Linux 上安装 JStock ###

|

||||

|

||||

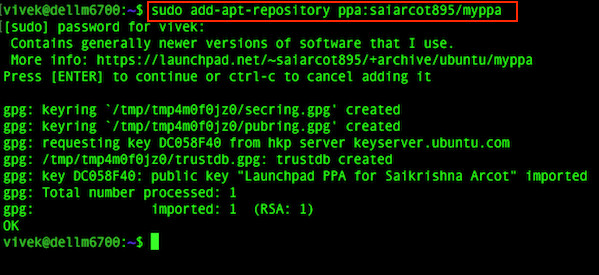

因为 JStock 使用Java编写,所以必须[安装 JRE][2]才能让它运行起来。小提示,JStock 需要 JRE1.7 或更高版本。如你的 JRE 版本不能满足这个需求,JStock 将会运行失败然后出现下面的报错。

|

||||

|

||||

Exception in thread "main" java.lang.UnsupportedClassVersionError: org/yccheok/jstock/gui/JStock : Unsupported major.minor version 51.0
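
The `51.0` in that message is the class-file major version, which corresponds to Java 7 (50 is Java 6, 52 is Java 8). As a rough sketch, the mapping can be computed as below. The function name `class_major_to_java` is made up for illustration and is not part of JStock or the JDK.

```shell
#!/bin/sh
# Hypothetical helper: map a class-file major version to the Java release
# that introduced it (major 45 = JDK 1.1, so release = major - 44).
class_major_to_java() {
    echo $(( $1 - 44 ))
}

class_major_to_java 51   # the version named in the error above; prints 7
```

You can compare the result with what `java -version` reports on your own machine.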


Once you have installed a JRE on Linux, download the latest JStock release from its official website and launch it.

    $ wget https://github.com/yccheok/jstock/releases/download/release_1-0-7-13/jstock-1.0.7.13-bin.zip
    $ unzip jstock-1.0.7.13-bin.zip
    $ cd jstock
    $ chmod +x jstock.sh
    $ ./jstock.sh


In the rest of this tutorial, let me demonstrate some of JStock's useful features.


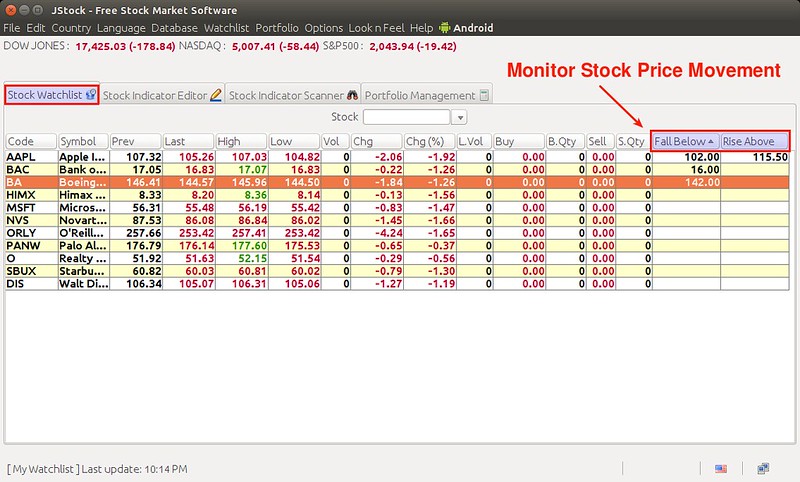

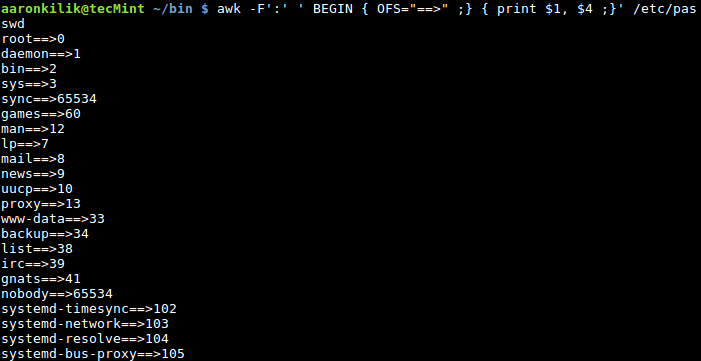

### Monitor Stock Price Movements via a Watchlist ###


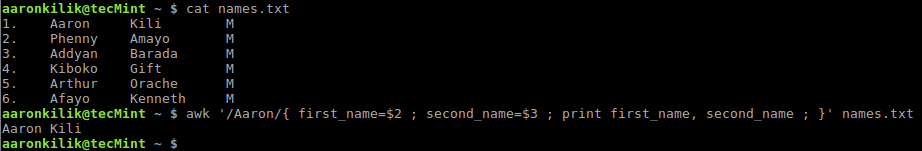

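With JStock you can create one or more watchlists, which automatically monitor stock price movements and send you notifications. You can add multiple stocks of interest to each watchlist. Then enter your alert thresholds in the "Fall Below" and "Rise Above" columns, which set the lower and upper price limits for a stock, respectively.

For example, if you set the lower/upper limits for AAPL to $102 and $115.50, you will get a desktop notification whenever the price falls below $102 or rises above $115.50.
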
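The threshold rule described above can be sketched in a few lines of shell. This is only an illustration of the logic, not JStock's actual code; the function name `alert_state` and its output labels are assumptions made for this example.

```shell
#!/bin/sh
# Hypothetical sketch of the watchlist rule; not taken from JStock.
# Prints which alert, if any, a given price would trigger.
alert_state() {
    price="$1"; fall_below="$2"; rise_above="$3"
    # awk is used because plain sh cannot compare decimal numbers.
    awk -v p="$price" -v lo="$fall_below" -v hi="$rise_above" 'BEGIN {
        if (p + 0 < lo + 0)      print "fall_below"
        else if (p + 0 > hi + 0) print "rise_above"
        else                     print "none"
    }'
}

alert_state 101.50 102 115.50   # below the lower limit
alert_state 110.00 102 115.50   # inside the range, no alert
```

A price exactly equal to either limit triggers nothing in this sketch, matching the "falls below" / "rises above" wording.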

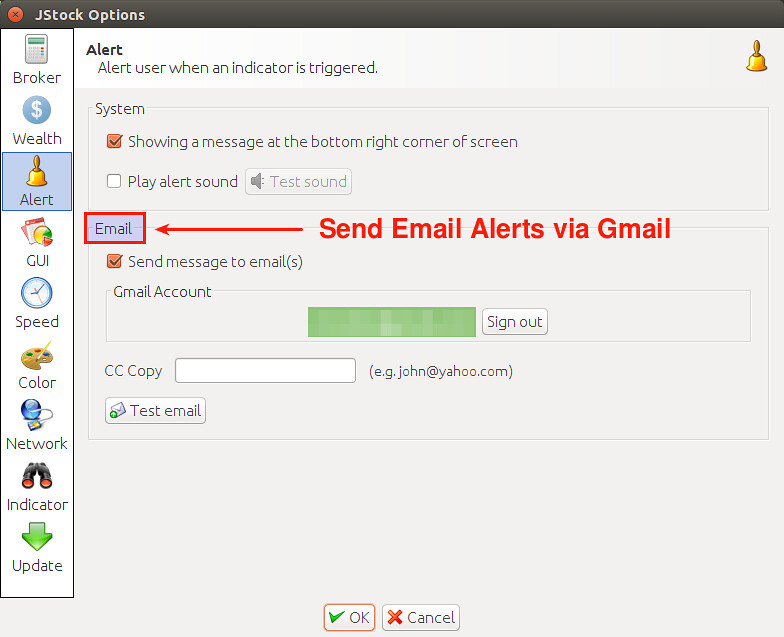

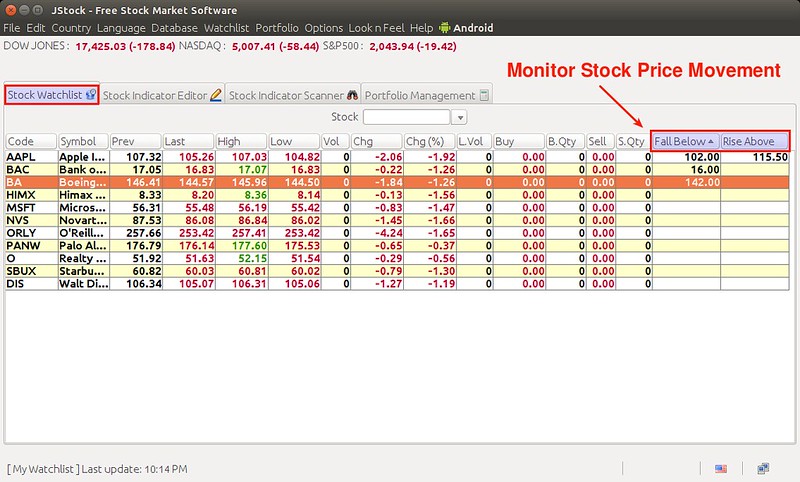

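You can also enable email alerts, so that you receive price notifications by email. To set this up, go to the "Options" menu, open the "Alert" tab, check "Send message to email(s)", and enter your Gmail account. Once you complete the Gmail authorization, JStock starts sending email alerts to your Gmail account (and, optionally, to other email addresses).
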

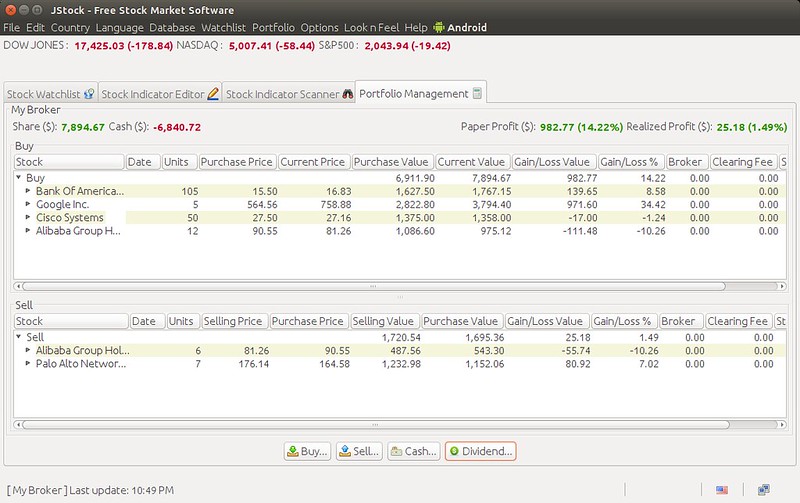

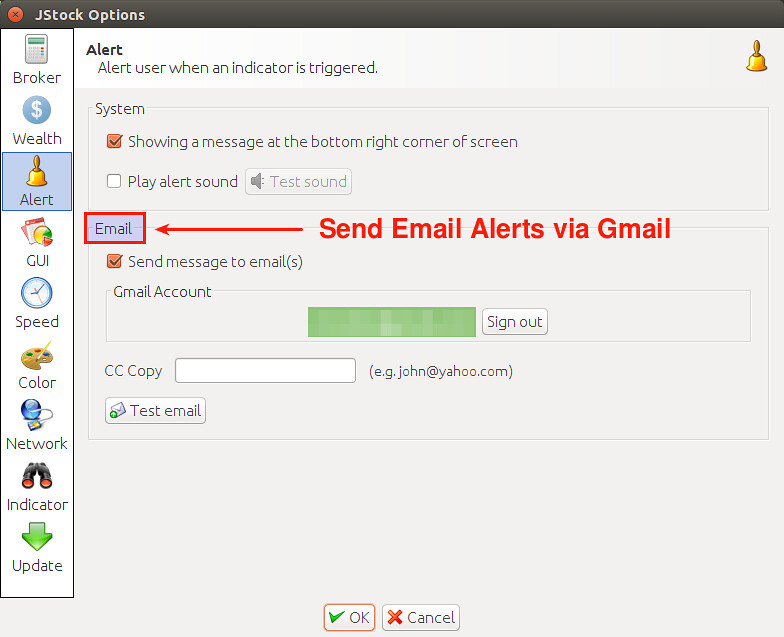

### Manage Multiple Portfolios ###


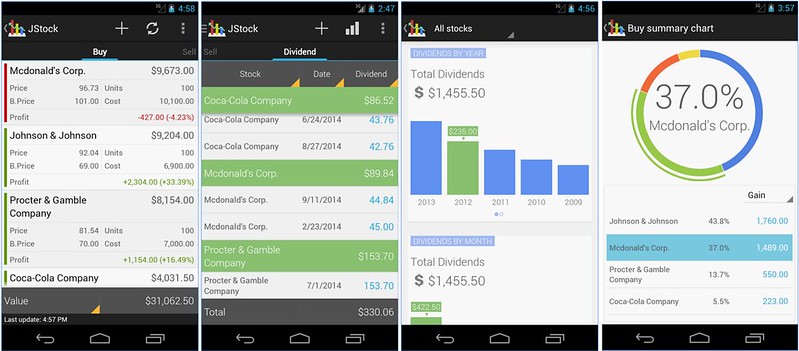

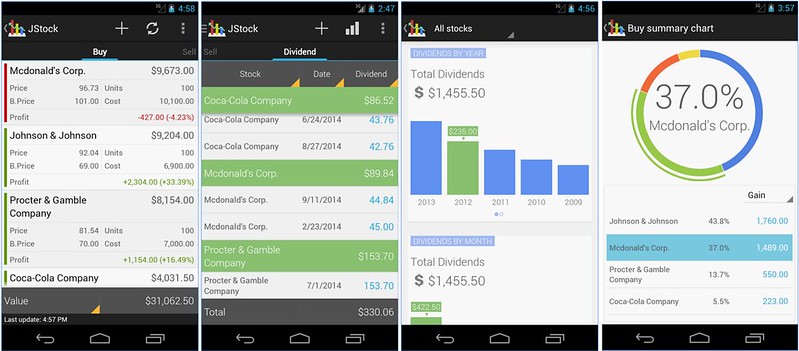

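JStock lets you manage multiple portfolios. This is useful if you work with more than one stock broker: you can create a separate portfolio for each broker and manage your buy/sell/dividend transactions per broker. You can switch between portfolios by choosing one from the "Portfolio" menu. The following screenshot shows a hypothetical portfolio.
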

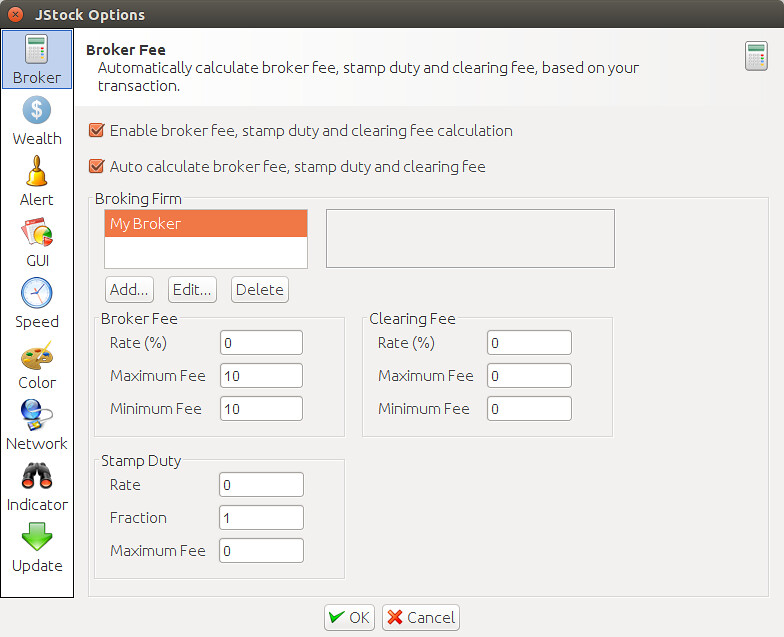

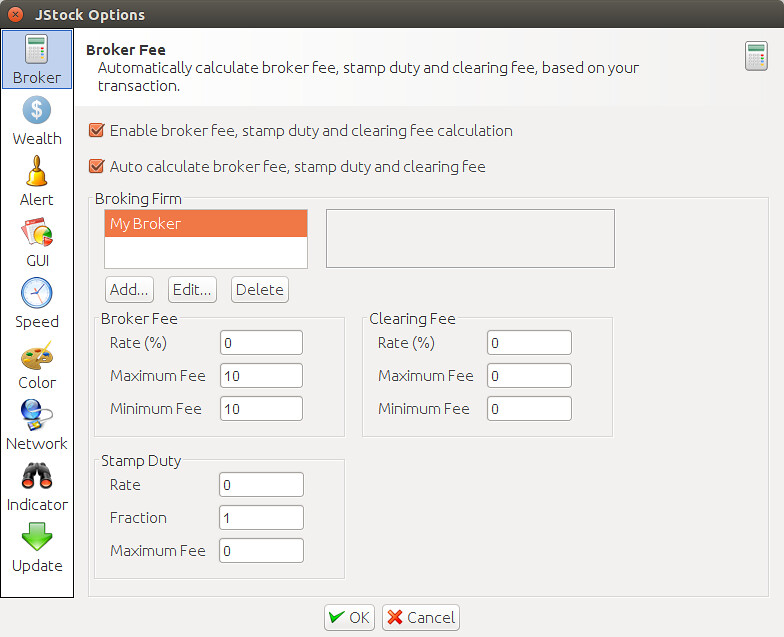

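You can also record broker fees: for each buy/sell transaction you can enter the broker fee, stamp duty, and clearing fee. If you are lazy, you can instead enable automatic fee calculation in the options menu and configure a fee schedule for each brokerage firm in advance. Then, when you add transactions to your portfolio, JStock calculates and records the fees automatically.
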

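### Screen Stocks with Built-in/Custom Indicators ###

If you do any technical analysis on stocks, you may want to screen stocks against various criteria (called "stock indicators" here). For stock screening, JStock offers several [pre-built technical indicators][3] that capture upward/downward/reversal trends of individual stocks. The following are some of the available indicators.

- Moving Average Convergence/Divergence (MACD)
- Relative Strength Index (RSI)
- Money Flow Index (MFI)
- Commodity Channel Index (CCI)
- Doji
- Golden Cross, Death Cross
- Top Gainers/Losers
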

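To install pre-built indicators, click the "Stock Indicator Editor" tab in JStock. Then click the "Install" button in the right-hand panel, choose the "Install from JStock server" option, and install the indicators you want.

Once one or more indicators are installed, you can use them to scan stocks. Go to the "Stock Indicator Scanner" tab, click the "Scan" button at the bottom, and choose an indicator.

Once you select the stocks to scan (e.g., NYSE, NASDAQ), JStock performs the scan and lists the stocks captured by the indicator.

Besides pre-built indicators, you can also define your own indicators with a graphical editor. The following example screens for stocks whose current price is less than or equal to their 60-day average price.
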

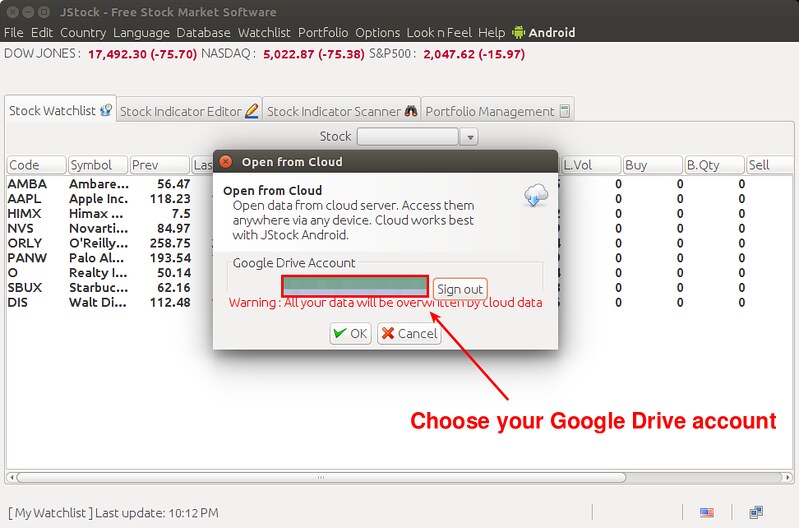
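
The custom indicator in this example boils down to one comparison: current price versus the average of recent prices. The sketch below shows that comparison in shell, under the assumption that you already have the closing prices as one number per line. It is not JStock's indicator engine, and the name `at_or_below_average` is hypothetical.

```shell
#!/bin/sh
# Hypothetical sketch, not JStock's indicator engine: read one closing price
# per line on stdin and report whether the given current price is at or
# below the average of those prices.
at_or_below_average() {
    awk -v current="$1" '
        { sum += $1; n++ }
        END {
            if (n == 0) { print "no data"; exit }
            if (current + 0 <= sum / n) print "match"
            else                        print "no match"
        }'
}

# Three sample prices with an average of 20, compared against 15.
printf '%s\n' 10 20 30 | at_or_below_average 15
```

For a real 60-day indicator you would feed it the last 60 closing prices instead of the three sample values used here.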

### Cloud Backup/Restore between Linux and Android JStock ###


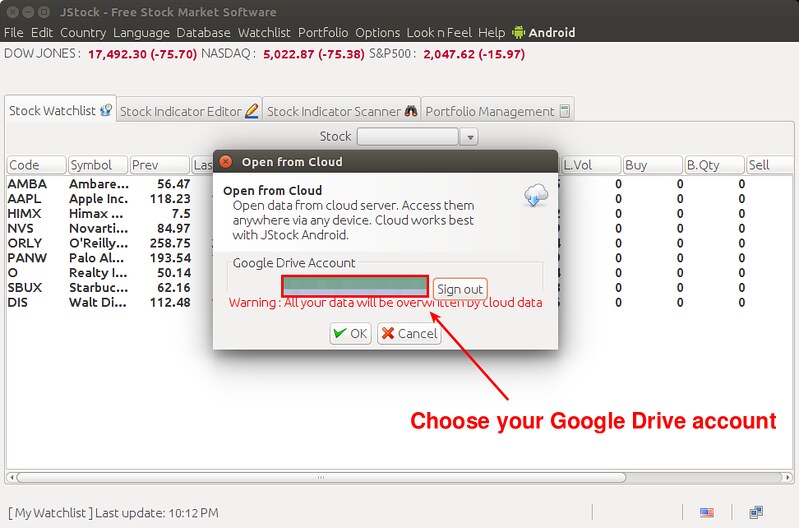

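Another nice feature of JStock is cloud backup and restore. JStock can back up your portfolios/watchlists to Google Drive and restore them from there, which lets you move seamlessly between platforms. This cross-platform backup/restore is handy if you switch back and forth between JStock on two different platforms. I tested my JStock portfolio on a Linux desktop and an Android phone, and it worked beautifully: I saved my portfolio from JStock on Android to Google Drive, and then restored it in JStock on Linux. It would be even better if JStock synced to the cloud automatically, without my having to trigger backup/restore manually; I hope this feature arrives.

If you cannot see your portfolios and watchlists after restoring from Google Drive, make sure that your country is set correctly in the "Country" menu.

The free version of JStock for Android is available from the [Google Play Store][4]. If you want the full feature set (e.g., cloud backup, alerts, charts), you need to upgrade to the premium version for a one-time fee. I think the premium version is well worth it.

Finally, I should mention the author, Yan Cheng Cheok. He is a very active developer, so if you find a bug, report it to him. All the credit goes to him!

What do you think of JStock as a portfolio tracking tool?
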

--------------------------------------------------------------------------------

via: http://xmodulo.com/stock-portfolio-management-software-Linux.html

Author: [Dan Nanni][a]
Translator: [ivo-wang](https://github.com/ivo-wang)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://Linux.cn/).

[a]:http://xmodulo.com/author/nanni
[1]:http://jstock.org/
[2]:http://ask.xmodulo.com/install-java-runtime-Linux.html
[3]:http://jstock.org/ma_indicator.html
[4]:https://play.google.com/store/apps/details?id=org.yccheok.jstock.gui

@ -0,0 +1,101 @@

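How to Install the Open-Source Discourse Forum on Ubuntu Linux 16.04
===============================================================================

Discourse is an open-source discussion platform that can work as a mailing list, a chat room, or a forum. It is a popular, modern forum tool. On the server side it is built with Ruby on Rails and Postgres, and it uses Redis caching to reduce load times; on the client side it runs in any JavaScript-enabled browser. It is easy to customize and well structured, and it provides converter plugins for migrating existing forums and bulletin boards such as vBulletin, phpBB, Drupal, and SMF. In this article we will learn how to install Discourse on Ubuntu.

It is designed with security in mind, so spammers and attackers cannot easily get their way. It works well on all kinds of modern devices and adjusts its display for phones and tablets.

### Install Discourse on Ubuntu 16.04
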

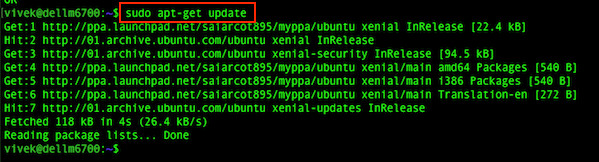

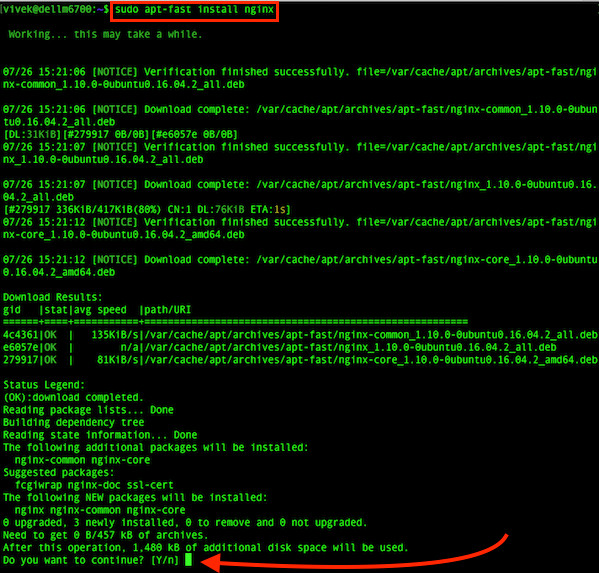

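Let's get started! You need at least 1 GB of RAM, and the officially supported installation method requires Docker to be installed. Along with Docker, Git needs to be installed too. To satisfy both requirements, run the following command:

```
wget -qO- https://get.docker.com/ | sh
```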
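
Before continuing, it can be worth confirming that both tools are actually on your `PATH`. The following is a minimal sketch of such a check; the helper name `missing_tools` is an assumption for this example and is not part of Docker or Discourse.

```shell
#!/bin/sh
# Hypothetical helper: print the name of every listed command that is not
# found on PATH. Prints nothing when all of them are available.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

missing_tools docker git
```

If the last line prints `git`, installing it from the Ubuntu repositories (for example with `sudo apt-get install git`) should fill the gap.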


It won't take long to install Docker and Git. Once the installation finishes, create a directory for Discourse under /var on your system (you can of course choose a different location).

```
mkdir /var/discourse
```

Now clone Discourse's GitHub repository into this newly created directory.

```
git clone https://github.com/discourse/discourse_docker.git /var/discourse
```

Change into the cloned directory.

```
cd /var/discourse
```

You will see the "discourse-setup" script there; run it to initialize Discourse.

```
./discourse-setup
```


**Note: make sure you have a mail server set up before installing Discourse.**

The setup wizard will ask you the following six questions:

```
Hostname for your Discourse?
Email address for admin account?
SMTP server address?
SMTP user name?
SMTP port [587]:
SMTP password? []:
```

Once you have entered this information, it will ask for confirmation. If everything looks right, press Enter and the installation begins.

Now sit back and relax: the installation takes a while to complete, so grab a cup of coffee and keep an eye out for any error messages.

When the installation succeeds, it should look like this.

Now open a web browser. If you have set up DNS for your domain, you can reach the Discourse page via your domain name; otherwise you will have to use the IP address. You will see the following:

That's it. Click "Sign Up" to create a new account, and then configure your Discourse site.


### Conclusion

It is easy to install and runs flawlessly. It has all the features a modern forum needs. It is released under the GPL and is fully open source. Simplicity, ease of use, and a rich feature set are its greatest strengths. I hope you enjoyed this article; if you have any questions, leave us a comment.

--------------------------------------------------------------------------------

via: http://linuxpitstop.com/install-discourse-on-ubuntu-linux-16-04/

Author: [Aun][a]
Translator: [kokialoves](https://github.com/kokialoves)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]: http://linuxpitstop.com/author/aun/

80

published/201607/20160718 OPEN SOURCE ACCOUNTING SOFTWARE.md

Normal file


@ -0,0 +1,80 @@

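GNU Khata: Open-Source Accounting Software
============================================

As an active Linux enthusiast, I often introduce my friends to Linux, help them pick the distribution that suits them best, and help them install open-source software for their work.

But this time I was stuck. My uncle is a freelance accountant, and he uses a whole suite of polished, mature paid software for his accounting work. I was not sure I could find an open-source replacement for it, until yesterday.

Abhishek recommended some [cool software][1] to me, and GNU Khata stood out.

[GNU Khata][2] is an accounting tool. Or should I say a collection of accounting tools? It is like the [Evernote][3] of financial management. Its scope is so broad that it can be used for anything from personal finance to the management of large companies, and from store inventory management to tax calculation.

A fun fact: the word "Khata" means "account" in Hindi and other Indian languages, which is why this accounting software is called GNU Khata.

### Installation
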

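There are many installation guides online for the older web version of Khata. Currently, GNU Khata is available only for Debian/Ubuntu and their derivatives. I recommend following the steps given on the official GNU Khata website; let's go through them quickly.

- Download the installer from [here][4].
- Open a terminal in the download directory.
- Copy and paste the following commands into the terminal and run them.

```
sudo chmod 755 GNUKhatasetup.run
sudo ./GNUKhatasetup.run
```

That's it. Launch GNU Khata from the Dash or the application menu.
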

### First Launch

GNU Khata opens in the browser and shows the following page.

Fill in the organization name, organization type, and financial year, then click the "proceed" button to go to the admin setup page.

Carefully fill in your user name, password, security question, and its answer, then click "create and login".

You are all set. Use the menu bar to start managing your finances with GNU Khata. It's that easy.

### Removing GNU Khata

If you no longer want GNU Khata, you can remove it with the following command:

```
sudo apt-get remove --auto-remove gnukhata-core-engine
```

You can also remove it with the Synaptic package manager.


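### Can GNU Khata Really Compete with Paid Accounting Software on the Market?

To begin with, GNU Khata is designed around simplicity. The menu bar at the top is conveniently organized and helps you work efficiently. You can manage different accounts and projects and switch between them easily. [Their website][5] states that GNU Khata is "as convenient as speaking an Indian language" (LCTT translator's note: forgive me; the software's author and this article's author are Indian...). Also, did you know that GNU Khata can be used in the cloud as well?

All the mainstream accounting tools, such as ledgers, project statements, and financial statements, are organized in a professional way, with support for custom formats and instant display. This makes accounting and inventory management look remarkably simple.

The project is under active development and is looking for real-world feedback to help improve the software. Considering its maturity, ease of use, and zero price tag, GNU Khata may well become your best bookkeeping assistant.

Let us know what you think of GNU Khata in the comments.
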

--------------------------------------------------------------------------------

via: https://itsfoss.com/using-gnu-khata/

Author: [Aquil Roshan][a]
Translator: [MikeCoder](https://github.com/MikeCoder)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]: https://itsfoss.com/author/aquil/
[1]: https://itsfoss.com/category/apps/
[2]: http://www.gnukhata.in/
[3]: https://evernote.com/
[4]: https://cloud.openmailbox.org/index.php/s/L8ppsxtsFq1345E/download
[5]: http://www.gnukhata.in/

@ -0,0 +1,36 @@

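Try Ubuntu in Your Browser
=====================================================

[Canonical][1], the company behind [Ubuntu][2], has done a lot to popularize Linux. No matter how much you may dislike Ubuntu, you have to acknowledge its impact on making Linux easy to use. Ubuntu and its derivatives are the most widely used Linux distributions.

To promote Ubuntu Linux further, Canonical has put it in the browser, so you can try this [Ubuntu demo][0] from anywhere. It gives you a better feel for Ubuntu and makes it easier for newcomers to decide whether to use it.

You may argue that a live USB of Linux is better. I agree, but keep in mind that you have to download an ISO, create a bootable USB drive, change some configuration, and only then can you use the USB drive to try it out. Not everyone is willing to go through something that tedious. An online tour is a better option.

So, what can you see in the Ubuntu online tour? Not much, actually.

You can browse files, use the Unity Dash, browse the Ubuntu Software Center, even install a few apps (they won't really be installed, of course), and look at the file browser and a few other things. That's all. But in my opinion that is good enough to show what Ubuntu is and give you a first-hand feel for this popular operating system.

If your friends or family are interested in trying Linux but want to experience it before installing, you can send them this link: [Ubuntu online tour][0].
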

--------------------------------------------------------------------------------

via: https://itsfoss.com/ubuntu-online-demo/

Author: [Abhishek Prakash][a]
Translator: [kokialoves](https://github.com/kokialoves)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]: https://itsfoss.com/author/abhishek/
[0]: http://tour.ubuntu.com/en/
[1]: http://www.canonical.com/
[2]: http://www.ubuntu.com/

@ -0,0 +1,90 @@

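Set a Real-Time Earth Photo as Your Linux Desktop Wallpaper
=================================================================

Tired of looking at the same desktop background? Here is (possibly) the coolest thing in the world.

'[Himawaripy][1]' is a small Python 3 script that fetches a near-real-time photo of Earth taken by the [Japanese Himawari 8 weather satellite][2] and sets it as your desktop background.

Once installed, you can set it up as a cron job that runs every 10 minutes (in the background, naturally), so that it keeps fetching the latest photo of Earth and setting it as your wallpaper.

Because Himawari-8 is a geostationary satellite, you only ever see the Earth as viewed from above Australia. Its real-time weather patterns, cloud formations, and lighting still make it spectacular, though for me it would be even better to see the view above the UK!

Advanced settings let you configure the quality of the image fetched from the satellite, but bear in mind that higher quality means larger files and longer downloads!

Finally, although this script is similar to others we have covered, it is still maintained and working.
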

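### Get Himawaripy

Himawaripy has been tested on a range of desktop environments, including Unity, LXDE, i3, MATE, and others. It is free, open-source software, but overall it is not entirely straightforward to install and configure.

You can find all the instructions for installing and setting up the app on the project's [GitHub page][0] (hint: there is no one-click install).

- [GitHub page of the real-time Earth wallpaper script][0]
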

### Installation and Usage

Some readers asked me to add step-by-step installation instructions to this article. All of the steps below are on the project's GitHub page; they are reproduced here.

1. Download and extract Himawaripy

This is the easiest step. Click the download link below to get the latest version, and extract it into your Downloads folder.

- [Download Himawaripy master (.zip)][3]

2. Install python3-setuptools

You need to install this package manually, because Ubuntu does not install it by default:

```
sudo apt install python3-setuptools
```

3. Install Himawaripy

In a terminal, change to the directory you extracted earlier and run the installation command:

```
cd ~/Downloads/himawaripy-master
sudo python3 setup.py install
```


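4. Check that it runs and downloads the latest real-time image:

```
himawaripy
```

5. Set up a cron job

Do this if you want the script to run and update automatically in the background (if you prefer to update manually, just run 'himawaripy').

In a terminal, run:

```
crontab -e
```

Add a new line (by default, it runs every 10 minutes):

```
*/10 * * * * /usr/local/bin/himawaripy
```

More information on [configuring cron jobs][4] is available on the Ubuntu Wiki.

Once the cron job is set up, you don't need to keep running the script yourself; it runs automatically in the background every ten minutes.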
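
If you would rather not edit the crontab by hand, the same line can be added non-interactively. The sketch below is one possible approach; the helper name `ensure_cron_line` is hypothetical, and it only filters text, so it is safe to try on sample input first.

```shell
#!/bin/sh
# Hypothetical helper: read an existing crontab on stdin and print it back,
# appending the given line only if an identical line is not already present.
ensure_cron_line() {
    line="$1"
    existing="$(cat)"
    if [ -n "$existing" ]; then
        printf '%s\n' "$existing"
    fi
    # -F: fixed string, -x: whole-line match, -q: quiet
    if ! printf '%s\n' "$existing" | grep -Fxq -- "$line"; then
        printf '%s\n' "$line"
    fi
}

# Demonstration on sample input; the first entry is a made-up existing job.
printf '%s\n' '0 3 * * * /usr/bin/true' |
    ensure_cron_line '*/10 * * * * /usr/local/bin/himawaripy'
```

On systems where `crontab -` reads a new crontab from standard input, this could be wired up as `crontab -l 2>/dev/null | ensure_cron_line '*/10 * * * * /usr/local/bin/himawaripy' | crontab -`. Running it twice should leave only one copy of the line.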


--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2016/07/set-real-time-earth-wallpaper-ubuntu-desktop

Author: [JOEY-ELIJAH SNEDDON][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]: https://plus.google.com/117485690627814051450/?rel=author
[1]: https://github.com/boramalper/himawaripy
[2]: https://en.wikipedia.org/wiki/Himawari_8
[0]: https://github.com/boramalper/himawaripy
[3]: https://github.com/boramalper/himawaripy/archive/master.zip
[4]: https://help.ubuntu.com/community/CronHowto

@ -1,29 +1,29 @@

|

||||

用 VeraCrypt 加密闪存盘

|

||||

============================================

|

||||

|

||||

很多安全专家偏好像 VeraCrypt 这类能够用来加密闪存盘的开源软件。因为获取它的源代码很简单。

|

||||

很多安全专家偏好像 VeraCrypt 这类能够用来加密闪存盘的开源软件,是因为可以获取到它的源代码。

|

||||

|

||||

保护 USB闪存盘里的数据,加密是一个聪明的方法,正如我们在使用 Microsoft 的 BitLocker [加密闪存盘][1] 一文中提到的。

|

||||

保护 USB 闪存盘里的数据,加密是一个聪明的方法,正如我们在使用 Microsoft 的 BitLocker [加密闪存盘][1] 一文中提到的。

|

||||

|

||||

但是如果你不想用 BitLocker 呢?

|

||||

|

||||

你可能有顾虑,因为你不能够查看 Microsoft 的程序源码,那么它容易被植入用于政府或其它用途的“后门”。由于开源软件的源码是公开的,很多安全专家认为开源软件很少藏有后门。

|

||||

你可能有顾虑,因为你不能够查看 Microsoft 的程序源码,那么它容易被植入用于政府或其它用途的“后门”。而由于开源软件的源码是公开的,很多安全专家认为开源软件很少藏有后门。

|

||||

|

||||

还好,有几个开源加密软件能作为 BitLocker 的替代。

|

||||

|

||||

要是你需要在 Windows 系统,苹果的 OS X 系统或者 Linux 系统上加密以及访问文件,开源软件 [VeraCrypt][2] 提供绝佳的选择。

|

||||

|

||||

VeraCrypt 源于 TrueCrypt。TrueCrypt是一个备受好评的开源加密软件,尽管它现在已经停止维护了。但是 TrueCrypt 的代码通过了审核,没有发现什么重要的安全漏洞。另外,它已经在 VeraCrypt 中进行了改善。

|

||||

VeraCrypt 源于 TrueCrypt。TrueCrypt 是一个备受好评的开源加密软件,尽管它现在已经停止维护了。但是 TrueCrypt 的代码通过了审核,没有发现什么重要的安全漏洞。另外,在 VeraCrypt 中对它进行了改善。

|

||||

|

||||

Windows,OS X 和 Linux 系统的版本都有。

|

||||

|

||||

用 VeraCrypt 加密 USB 闪存盘不像用 BitLocker 那么简单,但是它只要几分钟就好了。

|

||||

用 VeraCrypt 加密 USB 闪存盘不像用 BitLocker 那么简单,但是它也只要几分钟就好了。

|

||||

|

||||

### 用 VeraCrypt 加密闪存盘的 8 个步骤

|

||||

|

||||

对应操作系统 [下载 VeraCrypt][3] 之后:

|

||||

对应你的操作系统 [下载 VeraCrypt][3] 之后:

|

||||

|

||||

打开 VeraCrypt,点击 Create Volume,进入 VeraCrypt 的创建卷的向导程序(VeraCrypt Volume Creation Wizard)。(注:VeraCrypt Volume Creation Wizard 首字母全大写,不清楚是否需要翻译,之后有很多首字母大写的词,都以括号标出)

|

||||

打开 VeraCrypt,点击 Create Volume,进入 VeraCrypt 的创建卷的向导程序(VeraCrypt Volume Creation Wizard)。

|

||||

|

||||

|

||||

|

||||

@ -39,7 +39,7 @@ VeraCrypt 创建卷向导(VeraCrypt Volume Creation Wizard)允许你在闪

|

||||

|

||||

|

||||

|

||||

选择创建卷标模式(Volume Creation Mode)。如果你的闪存盘是空的,或者你想要删除它里面的所有东西,选第一个。要么你想保持所有现存的文件,选第二个就好了。

|

||||

选择创建卷模式(Volume Creation Mode)。如果你的闪存盘是空的,或者你想要删除它里面的所有东西,选第一个。要么你想保持所有现存的文件,选第二个就好了。

|

||||

|

||||

|

||||

|

||||

@ -47,7 +47,7 @@ VeraCrypt 创建卷向导(VeraCrypt Volume Creation Wizard)允许你在闪

|

||||

|

||||

|

||||

|

||||

确定了卷标容量后,输入并确认你想要用来加密数据密码。

|

||||

确定了卷容量后,输入并确认你想要用来加密数据密码。

|

||||

|

||||

|

||||

|

||||

@ -71,7 +71,7 @@ VeraCrypt 创建卷向导(VeraCrypt Volume Creation Wizard)允许你在闪

|

||||

|

||||

### VeraCrypt 移动硬盘安装步骤

|

||||

|

||||

如果你设置闪存盘的时候,选择的是加密过的容器而不是加密整个盘,你可以选择创建 VeraCrypt 称为移动盘(Traveler Disk)的设备。这会复制安装一个 VeraCrypt 在 USB 闪存盘。当你在别的 Windows 电脑上插入 U 盘时,就能从 U 盘自动运行 VeraCrypt;也就是说没必要在新电脑上安装 VeraCrypt。

|

||||

如果你设置闪存盘的时候,选择的是加密过的容器而不是加密整个盘,你可以选择创建 VeraCrypt 称为移动盘(Traveler Disk)的设备。这会复制安装一个 VeraCrypt 到 USB 闪存盘。当你在别的 Windows 电脑上插入 U 盘时,就能从 U 盘自动运行 VeraCrypt;也就是说没必要在新电脑上安装 VeraCrypt。

|

||||

|

||||

你可以设置闪存盘作为一个移动硬盘(Traveler Disk),在 VeraCrypt 的工具栏(Tools)菜单里选择 Traveler Disk SetUp 就行了。

|

||||

|

||||

@ -79,15 +79,15 @@ VeraCrypt 创建卷向导(VeraCrypt Volume Creation Wizard)允许你在闪

|

||||

|

||||

要从移动盘(Traveler Disk)上运行 VeraCrypt,你必须要有那台电脑的管理员权限,这不足为奇。尽管这看起来是个限制,机密文件无法在不受控制的电脑上安全打开,比如在一个商务中心的电脑上。

|

||||

|

||||

>Paul Rubens 从事技术行业已经超过 20 年。这期间他为英国和国际主要的出版社,包括 《The Economist》《The Times》《Financial Times》《The BBC》《Computing》和《ServerWatch》等出版社写过文章,

|

||||

> 本文作者 Paul Rubens 从事技术行业已经超过 20 年。这期间他为英国和国际主要的出版社,包括 《The Economist》《The Times》《Financial Times》《The BBC》《Computing》和《ServerWatch》等出版社写过文章,

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.esecurityplanet.com/open-source-security/how-to-encrypt-flash-drive-using-veracrypt.html

|

||||

|

||||

作者:[Paul Rubens ][a]

|

||||

作者:[Paul Rubens][a]

|

||||

译者:[GitFuture](https://github.com/GitFuture)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

118

published/20160706 What is Git.md

Normal file


@ -0,0 +1,118 @@

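Git Series (Part 1): What Is Git
===========

Welcome to this series of tutorials on how to use the Git version control system! In this introduction, you will learn what Git is for and who should use it.

If you are just stepping into the open-source world, you have probably come across software that hosts its code in Git or publishes its releases through it. In fact, whether you know it or not, you are already using software managed with Git: the Linux kernel (even if you don't run Linux on your phone or computer, the website you are visiting runs on it), Firefox, Chrome, and many other projects share their code with developers around the world through Git repositories.

On the other hand, can you share your own code with others using Git alone? Can you use Git privately at home or in your company? Do you need a GitHub account to use Git? Why use Git at all? What are its advantages? Is Git my only option? All these questions about Git can leave us thoroughly confused.

So forget what you think you know about Git, and let's start from the beginning.
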

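### What Is a Version Control System?

Git is, first and foremost, a version control system. There are many version control systems out there: CVS, SVN, Mercurial, Fossil, and, of course, Git.

Many services, such as GitHub and GitLab, are built on Git, but you can also use Git on its own without any additional service. This means you can use Git privately or publicly.

If you have ever collaborated with others on any kind of digital file, you know the traditional workflow. It starts out simple: you have an original version, you send it to your colleagues, and they make changes to the copy they received. Now there are two versions, and they send their modified version back to you. You merge their changes into your version, and the two versions become a single, up-to-date one again.

Then you modify your up-to-date version, while your colleagues keep modifying the pre-merge version they still have. Now there are three different versions: the merged version, your modified version, and your colleagues' further-modified version. From here on, managing versions gets more and more chaotic.

As Jason van Gumster points out in his article, [even artists need version control][1], and this trend has already been observed in individual cases. Whether you are an artist or a scientist, developing experimental versions of something is not unusual; in your project, one version may turn out to be a great success and take the project to a new level, while another may fail miserably. So inevitably you end up with a pile of files named project\_justTesting.kdenlive, project\_betterVersion.kdenlive, project\_best\_FINAL.kdenlive, project\_FINAL-alternateVersion.kdenlive, and so on.

Whether you are changing a for loop or doing some simple text editing, a good version control system makes life easier.
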

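### Git Snapshots

Git takes snapshots of a project and stores each snapshot as a unique version.

If you take your project in the wrong direction, you can roll back to the last good version and try another approach.

If you are collaborating, when someone sends you their changes you can merge them into your working branch, and your collaborators can then grab the merged, up-to-date version and continue working from there.

Git is not magic, so conflicts still happen ("You changed the last line of the file, but I deleted that whole line; how do we resolve that?"), but on the whole Git keeps the full history of every change and even allows parallel versions. That leaves you free to resolve conflicts however you see fit.
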

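### Distributed Git

Working on the same project from different machines is complicated. When you start working, you want the latest version of the project, then you make your changes on top of it, and finally you share those changes with your colleagues. The traditional approaches are clunky online file-sharing services or good old email attachments, but both are inefficient and error-prone.

Git was designed for distributed work from the start. If you want to take part in a project, you can clone the project's Git repository and then work on it as if your local copy were the only one in existence. Afterwards, with a few simple commands, you can pull in other developers' changes or push your own changes to others. There is no longer any need to worry about who has the latest version or where someone's version is stored. Everyone develops locally and then pushes to, or pulls from, a common target (or not a common one, depending on how the project is run).
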

### Git Interfaces


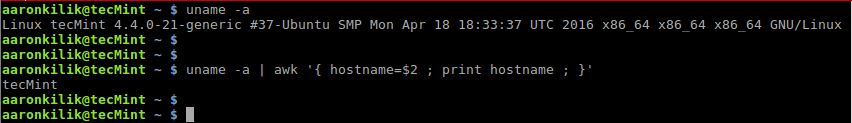

In its original form, Git is an application that runs in the Linux terminal. However, because Git is open source and well designed, developers all over the world have been able to build other interfaces to it.

Git is completely free and is packaged for Linux, BSD, Illumos, and other Unix-like systems. A Git command looks like this:

```
$ git --version
git version 2.5.3
```


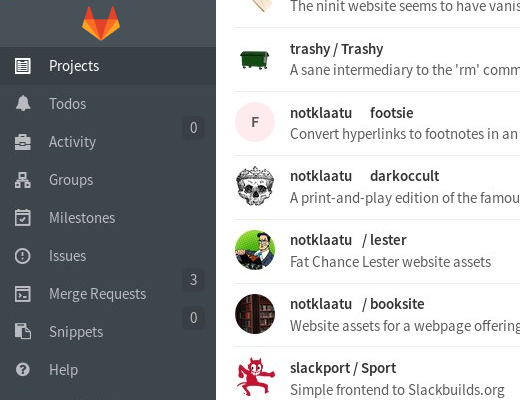

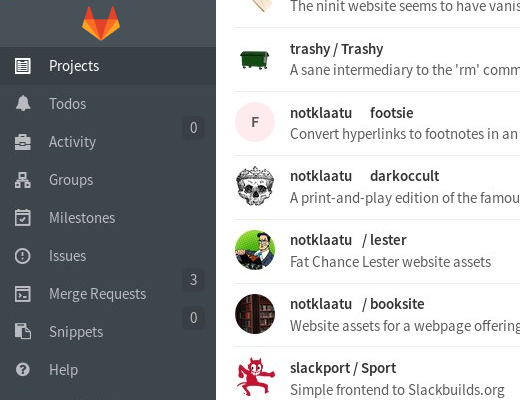

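Probably the best-known Git interfaces are web-based: sites such as GitHub, the open-source GitLab, Savannah, BitBucket, and SourceForge all provide web front ends to Git. These sites offer code hosting that maximizes the public and social side of open-source software. To some extent, a browser-based graphical interface (GUI) flattens Git's learning curve. Here is a screenshot of the GitLab interface:

Furthermore, third-party Git service providers or independent developers can build custom, non-HTML front ends on top of Git. Such interfaces let you use Git for version control without opening a browser. The most transparent approach is integration directly into the file manager: Dolphin, the KDE file manager, can show Git status right in a directory listing and even supports committing, pushing, and pulling.

[Sparkleshare][2] uses Git as the foundation for its Dropbox-style file-sharing interface.

To learn more, see the [Git wiki][3], which has a (long) page listing many Git GUI projects.
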

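### Who Should Use Git?

You should! The more interesting questions are when to use Git and what to use it for.

### When Should I Use Git, and What Should I Use It For?

To get the most out of Git, we need to think about file formats a little more than usual.

Git was designed to manage source code, which in most programming languages means lines of text. Of course, Git doesn't know whether you treat that text as source code or as the next Great American Novel. So as long as a file consists of text, Git is a good choice for tracking and managing its versions.

But what is text? If you write something in an office application like Libre Office, you are usually not producing plain text. Complex applications typically wrap the raw text in another layer, for example by marking it up in XML and then packing it into a Zip container. This wrapping ensures that when you send the file to someone else, they see what you wrote along with its formatting. Curiously, some files that you might expect to be complex, such as a [Kdenlive][4] project file or an SVG exported from [Inkscape][5], are in fact plain XML text, which is the easiest kind of content to manage with Git.

If you are on a Unix system, you can use the `file` command to check what a file is made of:

```
$ file ~/path/to/my-file.blah
my-file.blah: ASCII text
$ file ~/path/to/different-file.kra
different-file.kra: Zip data (MIME type "application/x-krita")
```

If you are still unsure, you can view the contents of a file with the `head` command:

```
$ head ~/path/to/my-file.blah
```

If the output is text you can mostly read, the file is probably a text file. If you see mostly garbage with only the occasional familiar character, it is probably not.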
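
The "can I read it?" test can also be approximated in a script. The sketch below treats a file as text when it contains no NUL bytes. This is only a rough heuristic chosen for illustration (it is not how the `file` command works), and the name `looks_like_text` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: treat a file as text if it contains no NUL bytes.
# This is only a rough heuristic, not what the `file` command does.
looks_like_text() {
    total=$(( $(wc -c < "$1") ))
    without_nul=$(( $(tr -d '\000' < "$1" | wc -c) ))
    [ "$total" -eq "$without_nul" ]
}

# Demonstration on a throwaway file.
tmp=$(mktemp)
printf 'plain text\n' > "$tmp"
if looks_like_text "$tmp"; then echo "text"; else echo "binary"; fi
rm -f "$tmp"
```

A Zip-based document or an image will normally contain NUL bytes and so be reported as binary, while XML project files and SVGs will pass as text.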

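
To be clear: Git can manage other file formats, but it treats them as blobs (binary large objects). The difference is that for a text file, Git can tell you exactly that, say, three lines changed between two snapshots (or commits). But if you edit a photo between two commits, how would Git express that change? It can't, really, because an image is not made of meaningful text that can be added or removed. I wish photo editing were as simple as changing the text "\<sky>ugly greenish-blue\</sky>" to "\<sky>sky-blue with puffy white clouds\</sky>", but it isn't.

People often check blobs such as PNG icons, spreadsheets, or flowcharts into Git. Managing such files in Git is not very elegant, but if you need to do it, you don't have to worry too much. If your project produces both text files and large binary blobs (a common scenario in video games, where image and audio assets matter as much as the source code), you have two options: come up with your own solution, such as using references to a shared network drive, or use a Git add-on such as Joey Hess's [git annex][6] or the [Git-Media][7] project.

As you can see, Git really is a tool anyone can use. It is a powerful and handy way to manage versions of your files, and it is not as scary as you first thought.
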

--------------------------------------------------------------------------------

via: https://opensource.com/resources/what-is-git

Author: [Seth Kenlon][a]
Translator: [cvsher](https://github.com/cvsher)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]: https://opensource.com/users/seth
[1]: https://opensource.com/life/16/2/version-control-isnt-just-programmers
[2]: http://sparkleshare.org/
[3]: https://git.wiki.kernel.org/index.php/InterfacesFrontendsAndTools#Graphical_Interfaces
[4]: https://opensource.com/life/11/11/introduction-kdenlive
[5]: http://inkscape.org/
[6]: https://git-annex.branchable.com/
[7]: https://github.com/alebedev/git-media

@ -2,20 +2,23 @@

|

||||

=========================

|

||||

|

||||

|

||||

> 图片来源:opensource.com

|

||||

|

||||

在这个系列的介绍中,我们学习到了谁应该使用 Git,以及 Git 是用来做什么的。今天,我们将学习如何克隆公共的 Git 仓库,以及如何提取出独立的文件而不用克隆整个仓库。

|

||||

*图片来源:opensource.com*

|

||||

|

||||

在这个系列的[介绍篇][4]中,我们学习到了谁应该使用 Git,以及 Git 是用来做什么的。今天,我们将学习如何克隆公共 Git 仓库,以及如何提取出独立的文件而不用克隆整个仓库。

|

||||

|

||||

由于 Git 如此流行,因而如果你能够至少熟悉一些基础的 Git 知识也能为你的生活带来很多便捷。如果你可以掌握 Git 基础(你可以的,我发誓!),那么你将能够下载任何你需要的东西,甚至还可能做一些贡献作为回馈。毕竟,那就是开源的精髓所在:你拥有获取你使用的软件代码的权利,拥有和他人分享的自由,以及只要你愿意就可以修改它的权利。只要你熟悉了 Git,它就可以让这一切都变得很容易。

|

||||

|

||||

那么,让我们一起来熟悉 Git 吧。

|

||||

|

||||

### 读和写

|

||||

|

||||

一般来说,有两种方法可以和 Git 仓库交互:你可以从仓库中读取,或者你也能够向仓库中写入。它就像一个文件:有时候你打开一个文档只是为了阅读它,而其它时候你打开文档是因为你需要做些改动。

|

||||

|

||||

本文仅讲解如何从 Git 仓库读取。我们将会在后面的一篇文章中讲解如何向 Git 仓库写回的主题。

|

||||

|

||||

### Git 还是 GitHub?

|

||||

|

||||

一句话澄清:Git 不同于 GitHub(或 GitLab,或 Bitbucket)。Git 是一个命令行程序,所以它就像下面这样:

|

||||

|

||||

```

|

||||

@ -31,29 +34,32 @@ usage: Git [--version] [--help] [-C <path>]

|

||||

我的文章系列将首先教你纯粹的 Git 知识,因为一旦你理解了 Git 在做什么,那么你就无需关心正在使用的前端工具是什么了。然而,我的文章系列也将涵盖通过流行的 Git 服务完成每项任务的常用方法,因为那些将可能是你首先会遇到的。

|

||||

|

||||

### 安装 Git

|

||||

|

||||

在 Linux 系统上,你可以从所使用的发行版软件仓库中获取并安装 Git。BSD 用户应当在 Ports 树的 devel 部分查找 Git。

|

||||

|

||||

对于闭源的操作系统,请前往 [项目网站][1] 并根据说明安装。一旦安装后,在 Linux、BSD 和 Mac OS X 上的命令应当没有任何差别。Windows 用户需要调整 Git 命令,从而和 Windows 文件系统相匹配,或者安装 Cygwin 以原生的方式运行 Git,而不受 Windows 文件系统转换问题的羁绊。

|

||||

对于闭源的操作系统,请前往其[项目官网][1],并根据说明安装。一旦安装后,在 Linux、BSD 和 Mac OS X 上的命令应当没有任何差别。Windows 用户需要调整 Git 命令,从而和 Windows 文件系统相匹配,或者安装 Cygwin 以原生的方式运行 Git,而不受 Windows 文件系统转换问题的羁绊。

|

||||

|

||||

### Git 下午茶

|

||||

|

||||

### 下午茶和 Git

|

||||

并非每个人都需要立刻将 Git 加入到我们的日常生活中。有些时候,你和 Git 最多的交互就是访问一个代码库,下载一两个文件,然后就不用它了。以这样的方式看待 Git,它更像是下午茶而非一次正式的宴会。你进行一些礼节性的交谈,获得了需要的信息,然后你就会离开,至少接下来的三个月你不再想这样说话。

|

||||

|

||||

当然,那是可以的。

|

||||

|

||||

一般来说,有两种方法访问 Git:使用命令行,或者使用一种神奇的因特网技术通过 web 浏览器快速轻松地访问。

|

||||

|

||||

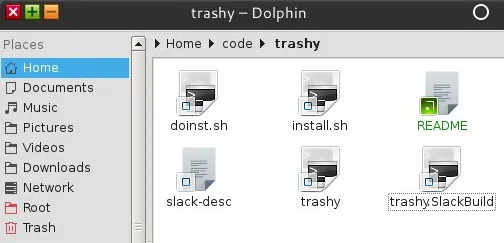

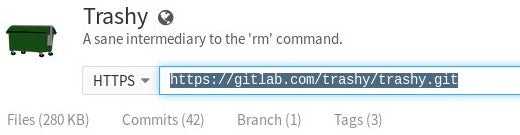

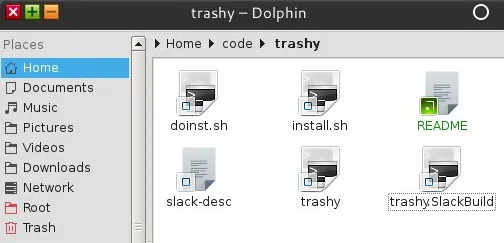

假设你想要在终端中安装并使用一个回收站,因为你已经被 rm 命令毁掉太多次了。你已经听说过 Trashy 了,它称自己为「理智的 rm 命令媒介」,并且你想在安装它之前阅读它的文档。幸运的是,[Trashy 公开地托管在 GitLab.com][2]。

|

||||

假设你想要给终端安装一个回收站,因为你已经被 rm 命令毁掉太多次了。你可能听说过 Trashy ,它称自己为「理智的 rm 命令中间人」,也许你想在安装它之前阅读它的文档。幸运的是,[Trashy 公开地托管在 GitLab.com][2]。

|

||||

|

||||

### Landgrab

|

||||

|

||||

我们工作的第一步是对这个 Git 仓库使用 landgrab 排序方法:我们会克隆这个完整的仓库,然后会根据内容排序。由于该仓库是托管在公共的 Git 服务平台上,所以有两种方式来完成工作:使用命令行,或者使用 web 界面。

|

||||

|

||||

要想使用 Git 获取整个仓库,就要使用 git clone 命令和 Git 仓库的 URL 作为参数。如果你不清楚正确的 URL 是什么,仓库应该会告诉你的。GitLab 为你提供了 [Trashy][3] 仓库的拷贝-粘贴 URL。

|

||||

要想使用 Git 获取整个仓库,就要使用 git clone 命令和 Git 仓库的 URL 作为参数。如果你不清楚正确的 URL 是什么,仓库应该会告诉你的。GitLab 为你提供了 [Trashy][3] 仓库的用于拷贝粘贴的 URL。

|

||||

|

||||

|

||||

|

||||

你也许注意到了,在某些服务平台上,会同时提供 SSH 和 HTTPS 链接。只有当你拥有仓库的写权限时,你才可以使用 SSH。否则的话,你必须使用 HTTPS URL。

|

||||

|

||||

一旦你获得了正确的 URL,克隆仓库是非常容易的。就是 git clone 这个 URL 即可,可选项是可以指定要克隆到的目录。默认情况下会将 git 目录克隆到你当前所在的位置;例如,'trashy.git' 表示将仓库克隆到你当前位置的 'trashy' 目录。我使用 .clone 扩展名标记那些只读的仓库,使用 .git 扩展名标记那些我可以读写的仓库,但那无论如何也不是官方要求的。

|

||||

一旦你获得了正确的 URL,克隆仓库是非常容易的。就是 git clone 该 URL 即可,以及一个可选的指定要克隆到的目录。默认情况下会将 git 目录克隆到你当前所在的目录;例如,'trashy.git' 将会克隆到你当前位置的 'trashy' 目录。我使用 .clone 扩展名标记那些只读的仓库,而使用 .git 扩展名标记那些我可以读写的仓库,不过这并不是官方要求的。

|

||||

|

||||

```

|

||||

$ git clone https://gitlab.com/trashy/trashy.git trashy.clone

|

||||

@ -68,30 +74,34 @@ Checking connectivity... done.

|

||||

|

||||

一旦成功地克隆了仓库,你就可以像对待你电脑上任何其它目录那样浏览仓库中的文件。
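
When no target directory is given, `git clone` derives one from the repository URL, which is why `trashy.git` ends up in a `trashy` directory. A rough approximation of that naming rule is sketched below; the helper name `default_clone_dir` is hypothetical, and real Git handles more edge cases (such as trailing slashes) than this one-liner.

```shell
#!/bin/sh
# Hypothetical helper: approximate the directory name `git clone` picks when
# no target directory is given (last path component, minus a ".git" suffix).
default_clone_dir() {
    basename "$1" .git
}

default_clone_dir https://gitlab.com/trashy/trashy.git
```

Passing an explicit directory, as in the `trashy.clone` example, overrides this default.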

|

||||

|

||||

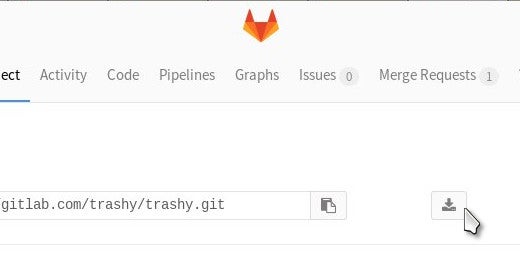

另外一种获得仓库拷贝的方式是使用 web 界面。GitLab 和 GitHub 都会提供一个 .zip 格式的仓库快照文件。GitHub 有一个大的绿色下载按钮,但是在 GitLab 中,可以浏览器的右侧找到并不显眼的下载按钮。

|

||||

另外一种获得仓库拷贝的方式是使用 web 界面。GitLab 和 GitHub 都会提供一个 .zip 格式的仓库快照文件。GitHub 有一个大大的绿色下载按钮,但是在 GitLab 中,可以在浏览器的右侧找到并不显眼的下载按钮。

|

||||

|

||||

|

||||

|

||||

### 仔细挑选

|

||||

另外一种从 Git 仓库中获取文件的方法是找到你想要的文件,然后把它从仓库中拽出来。只有 web 界面才提供这种方法,本质上来说,你看到的是别人仓库的克隆;你可以把它想象成一个 HTTP 共享目录。

|

||||

|

||||

另外一种从 Git 仓库中获取文件的方法是找到你想要的文件,然后把它从仓库中拽出来。只有 web 界面才提供这种方法,本质上来说,你看到的是别人的仓库克隆;你可以把它想象成一个 HTTP 共享目录。

|

||||

|

||||

使用这种方法的问题是,你也许会发现某些文件并不存在于原始仓库中,因为完整形式的文件可能只有在执行 make 命令后才能构建,那只有你下载了完整的仓库,阅读了 README 或者 INSTALL 文件,然后运行相关命令之后才会产生。不过,假如你确信文件存在,而你只想进入仓库,获取那个文件,然后离开的话,你就可以那样做。

|

||||

|

||||

在 GitLab 和 GitHub 中,单击文件链接,并在 Raw 模式下查看,然后使用你的 web 浏览器的保存功能,例如:在 Firefox 中,文件 > 保存页面为。在一个 GitWeb 仓库中(一些更喜欢自己托管 git 的人使用的私有 git 仓库 web 查看器),Raw 查看链接在文件列表视图中。

|

||||

在 GitLab 和 GitHub 中,单击文件链接,并在 Raw 模式下查看,然后使用你的 web 浏览器的保存功能,例如:在 Firefox 中,“文件” \> “保存页面为”。在一个 GitWeb 仓库中(这是一个某些更喜欢自己托管 git 的人使用的私有 git 仓库 web 查看器),Raw 查看链接在文件列表视图中。

|

||||

|

||||

|

||||

|

||||

### 最佳实践

|

||||

|

||||

通常认为,和 Git 交互的正确方式是克隆完整的 Git 仓库。这样认为是有几个原因的。首先,可以使用 git pull 命令轻松地使克隆仓库保持更新,这样你就不必在每次文件改变时就重回 web 站点获得一份全新的拷贝。第二,你碰巧需要做些改进,只要保持仓库整洁,那么你可以非常轻松地向原来的作者提交所做的变更。

|

||||

|

||||

现在,可能是时候练习查找感兴趣的 Git 仓库,然后将它们克隆到你的硬盘中了。只要你了解使用终端的基础知识,那就不会太难做到。还不知道终端使用基础吗?那再给多我 5 分钟时间吧。

|

||||

现在,可能是时候练习查找感兴趣的 Git 仓库,然后将它们克隆到你的硬盘中了。只要你了解使用终端的基础知识,那就不会太难做到。还不知道基本的终端使用方式吗?那再给多我 5 分钟时间吧。

|

||||

|

||||

### 终端使用基础

|

||||

|

||||

首先要知道的是,所有的文件都有一个路径。这是有道理的;如果我让你在常规的非终端环境下为我打开一个文件,你就要导航到文件在你硬盘的位置,并且直到你找到那个文件,你要浏览一大堆窗口。例如,你也许要点击你的家目录 > 图片 > InktoberSketches > monkey.kra。

|

||||

|

||||

在那样的场景下,我们可以说文件 monkeysketch.kra 的路径是:$HOME/图片/InktoberSketches/monkey.kra。

|

||||

在那样的场景下,文件 monkeysketch.kra 的路径是:$HOME/图片/InktoberSketches/monkey.kra。

|

||||

|

||||

在终端中,除非你正在处理一些特殊的系统管理员任务,你的文件路径通常是以 $HOME 开头的(或者,如果你很懒,就使用 ~ 字符),后面紧跟着一些列的文件夹直到文件名自身。

|

||||

|

||||

这就和你在 GUI 中点击各种图标直到找到相关的文件或文件夹类似。

|

||||

|

||||

如果你想把 Git 仓库克隆到你的文档目录,那么你可以打开一个终端然后运行下面的命令:

|

||||

@ -100,6 +110,7 @@ Checking connectivity... done.

|

||||

$ git clone https://gitlab.com/foo/bar.git

|

||||

$HOME/文档/bar.clone

|

||||

```

|

||||

|

||||

一旦克隆完成,你可以打开一个文件管理器窗口,导航到你的文档文件夹,然后你就会发现 bar.clone 目录正在等待着你访问。

|

||||

|

||||

如果你想要更高级点,你或许会在以后再次访问那个仓库,可以尝试使用 git pull 命令来查看项目有没有更新:

|

||||

@ -111,7 +122,7 @@ bar.clone

|

||||

$ git pull

|

||||

```

|

||||

|

||||

到目前为止,你需要初步了解的所有终端命令就是那些了,那就去探索吧。你实践得越多,Git 掌握得就越好(孰能生巧),那就是游戏的名称,至少给了或取了一个元音。

|

||||

到目前为止,你需要初步了解的所有终端命令就是那些了,那就去探索吧。你实践得越多,Git 掌握得就越好(熟能生巧),这是重点,也是事情的本质。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -119,7 +130,7 @@ via: https://opensource.com/life/16/7/stumbling-git

|

||||

|

||||

Author: [Seth Kenlon][a]

|

||||

Translator: [ChrisLeeGit](https://github.com/chrisleegit)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

@ -127,4 +138,4 @@ via: https://opensource.com/life/16/7/stumbling-git

|

||||

[1]: https://git-scm.com/download

|

||||

[2]: https://gitlab.com/trashy/trashy

|

||||

[3]: https://gitlab.com/trashy/trashy.git

|

||||

|

||||

[4]: https://linux.cn/article-7639-1.html

|

||||

86

sources/talk/20160509 Android vs. iPhone Pros and Cons.md

Normal file

@ -0,0 +1,86 @@

|

||||

Android vs. iPhone: Pros and Cons

|

||||

===================================

|

||||

|

||||

>When comparing Android vs. iPhone, clearly Android has certain advantages even as the iPhone is superior in some key ways. But ultimately, which is better?

|

||||

|

||||

The question of Android vs. iPhone is a personal one.

|

||||

|

||||

Take myself, for example. I'm someone who has used both Android and the iPhone iOS. I'm well aware of the strengths of both platforms along with their weaknesses. Because of this, I decided to share my perspective regarding these two mobile platforms. Additionally, we'll take a look at my impressions of the new Ubuntu mobile platform and where it stacks up.

|

||||

|

||||

### What iPhone gets right

|

||||

|

||||

Even though I'm a full time Android user these days, I do recognize the areas where the iPhone got it right. First, Apple has a better record in updating their devices. This is especially true for older devices running iOS. With Android, if it's not a “Google blessed” Nexus...it better be a higher end carrier supported phone. Otherwise, you're going to find updates are either sparse or non-existent.

|

||||

|

||||

Another area where the iPhone does well is app availability. Expanding on that: iPhone apps almost always have a cleaner look to them. This isn't to say that Android apps are ugly; rather, they may not have the expected flow and consistency found with iOS. Two examples of exclusivity and great iOS-only layout would have to be [Dark Sky][1] (weather) and [Facebook Paper][2].

|

||||

|

||||

Then there is the backup process. Android can, by default, back stuff up to Google. But that doesn't help much with application data! By contrast, iCloud can essentially make a full backup of your iOS device.

|

||||

|

||||

### Where iPhone loses me

|

||||

|

||||

The biggest indisputable issue I have with the iPhone is more of a hardware limitation than a software one. That issue is storage.

|

||||

|

||||

Look, with most Android phones, I can buy a smaller capacity phone and then add an SD card later. This does two things: First, I can use the SD card to store a lot of media files. Second, I can even use the SD card to store "some" of my apps. Apple has nothing that will touch this.

|

||||

|

||||

Another area where the iPhone loses me is in the lack of choice it provides. Backing up your device? Hope you like iTunes or iCloud. For someone like myself who uses Linux, this means my ONLY option would be to use iCloud.

|

||||

|

||||

To be ultimately fair, there are additional solutions for your iPhone if you're willing to jailbreak it. But that's not what this article is about. Same goes for rooting Android. This article is addressing a vanilla setup for both platforms.

|

||||

|

||||

Finally, let us not forget this little treat – [iTunes decides to delete a user's music][3] because it was seen as a duplication of Apple Music contents...or something along those lines. Not iPhone specific? I disagree, as that music would have very well ended up on the iPhone at some point. I can say with great certainty that in no universe would I ever put up with this kind of nonsense!

|

||||

|

||||

|

||||

>The Android vs. iPhone debate depends on what features matter the most to you.

|

||||

|

||||

### What Android gets right

|

||||

|

||||

The biggest thing Android gives me that the iPhone doesn't: choice. Choices in applications, devices and overall layout of how my phone works.

|

||||

|

||||

I love desktop widgets! To iPhone users, they may seem really silly. But I can tell you that they save me from opening up applications as I can see the desired data without the extra hassle. Another similar feature I love is being able to install custom launchers instead of my phone's default!

|

||||

|

||||

Finally, I can utilize tools like [Airdroid][4] and [Tasker][5] to add full computer-like functionality to my smart phone. Airdroid allows me to treat my Android phone like a computer with file management and SMS with anyone – this becomes a breeze to use with my mouse and keyboard. Tasker is awesome in that I can set up "recipes" to connect/disconnect, put my phone into meeting mode or even put itself into power saving mode when I set the parameters to do so. I can even set it to launch applications when I arrive at specific destinations.

|

||||

|

||||

### Where Android loses me

|

||||

|

||||

Backup options are limited to specific user data, not a full clone of your phone. Without rooting, you're either left out in the wind or you must look to the Android SDK for solutions. Expecting casual users to either root their phone or run the SDK for a complete (I mean everything) Android backup is a joke.

|

||||

|

||||

Yes, Google's backup service will back up Google app data, along with other related customizations. But it's nowhere near as complete as what we see with the iPhone. To accomplish something similar to what the iPhone enjoys, I've found you're going to either be rooting your Android phone or connecting it to a Windows PC to utilize some random program.

|

||||

|

||||

To be fair, however, I believe Nexus owners benefit from a [full backup service][6] that is device specific. Sorry, but Google's default backup is not cutting it. Same applies for adb backups via your PC – they don't always restore things as expected.

|

||||

|

||||

Wait, it gets better. After a lot of letdowns and frustration, I found one app that looked like it "might" offer a glimmer of hope: Helium. Unlike other applications I found to be misleading and frustrating with their limitations, [Helium][7] initially looked like it was the backup application Google should have been offering all along -- emphasis on "looked like." Sadly, it was a huge letdown. Not only did I need to connect it to my computer for a first run, it didn't even work using their provided Linux script. After removing their script, I settled for a good old fashioned adb backup...to my Linux PC. Fun facts: You will need to turn on a laundry list of stuff in developer tools, plus if you run the Twilight app, that needs to be turned off. It took me a bit to put this together when the backup option for adb on my phone wasn't responding.

|

||||

|

||||

At the end of the day, Android has ample options for non-rooted users to back up superficial stuff like contacts, SMS and other data easily. But in my experience, a deep down phone backup is best left to a wired connection and adb.

|

||||

|

||||

### Ubuntu will save us?

|

||||

|

||||

With the good and the bad examined between the two major players in the mobile space, there's a lot of hope that we're going to see good things from Ubuntu on the mobile front. Well, thus far, it's been pretty lackluster.

|

||||

|

||||

I like what the developers are doing with the OS and I certainly love the idea of a third option for mobile besides iPhone and Android. Unfortunately, though, it's not that popular on the phone and the tablet received a lot of bad press due to subpar hardware and a lousy demonstration that made its way onto YouTube.

|

||||

|

||||

To be fair, I've had subpar experiences with iPhone and Android, too, in the past. So this isn't a dig on Ubuntu. But until it starts showing up with a ready to go ecosystem of functionality that matches what Android and iOS offer, it's not something I'm terribly interested in yet. At a later date, perhaps, I'll feel like the Ubuntu phones are ready to meet my needs.

|

||||

|

||||

### Android vs. iPhone bottom line: Why Android wins long term

|

||||

|

||||

Despite its painful shortcomings, Android treats me like an adult. It doesn't lock me into only two methods for backing up my data. Yes, some of Android's limitations are due to the fact that it's focused on letting me choose how to handle my data. But I also get to choose my own device and add storage on a whim. Android enables me to do a lot of cool stuff that the iPhone simply isn't capable of doing.

|

||||

|

||||

At its core, Android gives non-root users greater access to the phone's functionality. For better or worse, it's a level of freedom that I think people are gravitating towards. Now there are going to be many of you who swear by the iPhone thanks to efforts like the [libimobiledevice][8] project. But take a long hard look at all the stuff Apple blocks Linux users from doing...then ask yourself – is it really worth it as a Linux user? Hit the Comments, share your thoughts on Android, iPhone or Ubuntu.

|

||||

|

||||

------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.datamation.com/mobile-wireless/android-vs.-iphone-pros-and-cons.html

|

||||

|

||||

Author: [Matt Hartley][a]

|

||||

Translator: [译者ID](https://github.com/译者ID)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: http://www.datamation.com/author/Matt-Hartley-3080.html

|

||||

[1]: http://darkskyapp.com/

|

||||

[2]: https://www.facebook.com/paper/

|

||||

[3]: https://blog.vellumatlanta.com/2016/05/04/apple-stole-my-music-no-seriously/

|

||||

[4]: https://www.airdroid.com/

|

||||

[5]: http://tasker.dinglisch.net/

|

||||

[6]: https://support.google.com/nexus/answer/2819582?hl=en

|

||||

[7]: https://play.google.com/store/apps/details?id=com.koushikdutta.backup&hl=en

|

||||

[8]: http://www.libimobiledevice.org/

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

MikeCoder Translating...

|

||||

|

||||

What containers and unikernels can learn from Arduino and Raspberry Pi

|

||||

==========================================================================

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

translating by maywanting

|

||||

|

||||

5 SSH Hardening Tips

|

||||

======================

|

||||

|

||||

|

||||

7

sources/team_test/README.md

Normal file

@ -0,0 +1,7 @@

|

||||

Team translation: "Building a data science portfolio: Machine learning project"

|

||||

|

||||

Organizer for this round: @选题-oska874

|

||||

|

||||

Participating translators: @译者-vim-kakali @译者-Noobfish @译者-zky001 @译者-kokialoves @译者-ideas4u @译者-cposture

|

||||

|

||||

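Assignment: the original text is split into 6 parts of roughly equal length; participants choose freely, first come, first served. Contact @选题-oska874 with any questions.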

|

||||

@ -0,0 +1,79 @@

|

||||

>This is the third in a series of posts on how to build a Data Science Portfolio. If you like this and want to know when the next post in the series is released, you can [subscribe at the bottom of the page][1].

|

||||

|

||||

Data science companies are increasingly looking at portfolios when making hiring decisions. One of the reasons for this is that a portfolio is the best way to judge someone’s real-world skills. The good news for you is that a portfolio is entirely within your control. If you put some work in, you can make a great portfolio that companies are impressed by.

|

||||

|

||||

The first step in making a high-quality portfolio is to know what skills to demonstrate. The primary skills that companies want in data scientists, and thus the primary skills they want a portfolio to demonstrate, are:

|

||||

|

||||

- Ability to communicate

|

||||

- Ability to collaborate with others

|

||||

- Technical competence

|

||||

- Ability to reason about data

|

||||

- Motivation and ability to take initiative

|

||||

|

||||

Any good portfolio will be composed of multiple projects, each of which may demonstrate 1-2 of the above points. This is the third post in a series that will cover how to make a well-rounded data science portfolio. In this post, we’ll cover how to make the second project in your portfolio, and how to build an end to end machine learning project. At the end, you’ll have a project that shows your ability to reason about data, and your technical competence. [Here’s][2] the completed project if you want to take a look.

|

||||

|

||||

### An end to end project

|

||||

|

||||

As a data scientist, there are times when you’ll be asked to take a dataset and figure out how to [tell a story with it][3]. In times like this, it’s important to communicate very well, and walk through your process. Tools like Jupyter notebook, which we used in a previous post, are very good at helping you do this. The expectation here is that the deliverable is a presentation or document summarizing your findings.

|

||||

|

||||

However, there are other times when you’ll be asked to create a project that has operational value. A project with operational value directly impacts the day-to-day operations of a company, and will be used more than once, and often by multiple people. A task like this might be “create an algorithm to forecast our churn rate”, or “create a model that can automatically tag our articles”. In cases like this, storytelling is less important than technical competence. You need to be able to take a dataset, understand it, then create a set of scripts that can process that data. It’s often important that these scripts run quickly, and use minimal system resources like memory. It’s very common that these scripts will be run several times, so the deliverable becomes the scripts themselves, not a presentation. The deliverable is often integrated into operational flows, and may even be user-facing.

|

||||

|

||||

The main components of building an end to end project are:

|

||||

|

||||

- Understanding the context

|

||||

- Exploring the data and figuring out the nuances

|

||||

- Creating a well-structured project, so it’s easy to integrate into operational flows

|

||||

- Writing high-performance code that runs quickly and uses minimal system resources

|

||||

- Documenting the installation and usage of your code well, so others can use it

|

||||

|

||||

In order to effectively create a project of this kind, we’ll need to work with multiple files. Using a text editor like [Atom][4], or an IDE like [PyCharm][5] is highly recommended. These tools will allow you to jump between files, and edit files of different types, like markdown files, Python files, and csv files. Structuring your project so it’s easy to version control and upload to collaborative coding tools like [Github][6] is also useful.

|

||||

|

||||

|

||||

>This project on Github.

|

||||

|

||||

We’ll use our editing tools along with libraries like [Pandas][7] and [scikit-learn][8] in this post. We’ll make extensive use of Pandas [DataFrames][9], which make it easy to read in and work with tabular data in Python.

|

||||

|

||||

### Finding good datasets

|

||||

|

||||

A good dataset for an end to end portfolio project can be hard to find. [The dataset][10] needs to be sufficiently large that memory and performance constraints come into play. It also needs to potentially be operationally useful. For instance, this dataset, which contains data on the admission criteria, graduation rates, and graduate future earnings for US colleges would be a great dataset to use to tell a story. However, as you think about the dataset, it becomes clear that there isn’t enough nuance to build a good end to end project with it. For example, you could tell someone their potential future earnings if they went to a specific college, but that would be a quick lookup without enough nuance to demonstrate technical competence. You could also figure out if colleges with higher admissions standards tend to have graduates who earn more, but that would be more storytelling than operational.

|

||||

|

||||

These memory and performance constraints tend to come into play when you have more than a gigabyte of data, and when you have some nuance to what you want to predict, which involves running algorithms over the dataset.

|

||||

|

||||

A good operational dataset enables you to build a set of scripts that transform the data, and answer dynamic questions. A good example would be a dataset of stock prices. You would be able to predict the prices for the next day, and keep feeding new data to the algorithm as the markets closed. This would enable you to make trades, and potentially even profit. This wouldn’t be telling a story – it would be adding direct value.

|

||||

|

||||

Some good places to find datasets like this are:

|

||||

|

||||

- [/r/datasets][11] – a subreddit that has hundreds of interesting datasets.

|

||||

- [Google Public Datasets][12] – public datasets available through Google BigQuery.

|

||||

- [Awesome datasets][13] – a list of datasets, hosted on Github.

|

||||

|

||||

As you look through these datasets, think about what questions someone might want answered with the dataset, and think if those questions are one-time (“how did housing prices correlate with the S&P 500?”), or ongoing (“can you predict the stock market?”). The key here is to find questions that are ongoing, and require the same code to be run multiple times with different inputs (different data).

|

||||

|

||||

For the purposes of this post, we’ll look at [Fannie Mae Loan Data][14]. Fannie Mae is a government-sponsored enterprise in the US that buys mortgage loans from other lenders. It then bundles these loans up into mortgage-backed securities and resells them. This enables lenders to make more mortgage loans, and creates more liquidity in the market. This theoretically leads to more homeownership, and better loan terms. From a borrower’s perspective, things stay largely the same, though.

|

||||

|

||||

Fannie Mae releases two types of data – data on loans it acquires, and data on how those loans perform over time. In the ideal case, someone borrows money from a lender, then repays the loan until the balance is zero. However, some borrowers miss multiple payments, which can cause foreclosure. Foreclosure is when the house is seized by the bank because mortgage payments cannot be made. Fannie Mae tracks which loans have missed payments on them, and which loans needed to be foreclosed on. This data is published quarterly, and lags the current date by 1 year. As of this writing, the most recent dataset that’s available is from the first quarter of 2015.

|

||||

|

||||

Acquisition data, which is published when the loan is acquired by Fannie Mae, contains information on the borrower, including credit score, and information on their loan and home. Performance data, which is published every quarter after the loan is acquired, contains information on the payments being made by the borrower, and the foreclosure status, if any. A loan that is acquired may have dozens of rows in the performance data. A good way to think of this is that the acquisition data tells you that Fannie Mae now controls the loan, and the performance data contains a series of status updates on the loan. One of the status updates may tell us that the loan was foreclosed on during a certain quarter.

|

||||

|

||||

|

||||

>A foreclosed home being sold.

|

||||

|

||||

### Picking an angle

|

||||

|

||||

There are a few directions we could go in with the Fannie Mae dataset. We could:

|

||||

|

||||

- Try to predict the sale price of a house after it’s foreclosed on.

|

||||

- Predict the payment history of a borrower.

|

||||

- Figure out a score for each loan at acquisition time.

|

||||

|

||||

The important thing is to stick to a single angle. Trying to focus on too many things at once will make it hard to make an effective project. It’s also important to pick an angle that has sufficient nuance. Here are examples of angles without much nuance:

|

||||

|

||||

- Figuring out which banks sold loans to Fannie Mae that were foreclosed on the most.

|

||||

- Figuring out trends in borrower credit scores.

|

||||

- Exploring which types of homes are foreclosed on most often.

|

||||

- Exploring the relationship between loan amounts and foreclosure sale prices

|

||||

|

||||

All of the above angles are interesting, and would be great if we were focused on storytelling, but aren’t great fits for an operational project.

|

||||

|

||||

With the Fannie Mae dataset, we’ll try to predict whether a loan will be foreclosed on in the future by only using information that was available when the loan was acquired. In effect, we’ll create a “score” for any mortgage that will tell us if Fannie Mae should buy it or not. This will give us a nice foundation to build on, and will be a great portfolio piece.

|

||||

|

||||

@ -0,0 +1,114 @@

|

||||

### Understanding the data

|

||||

|

||||

Let’s take a quick look at the raw data files. Here are the first few rows of the acquisition data from quarter 1 of 2012:

|

||||

|

||||

```

|

||||

100000853384|R|OTHER|4.625|280000|360|02/2012|04/2012|31|31|1|23|801|N|C|SF|1|I|CA|945||FRM|

|

||||

100003735682|R|SUNTRUST MORTGAGE INC.|3.99|466000|360|01/2012|03/2012|80|80|2|30|794|N|P|SF|1|P|MD|208||FRM|788

|

||||

100006367485|C|PHH MORTGAGE CORPORATION|4|229000|360|02/2012|04/2012|67|67|2|36|802|N|R|SF|1|P|CA|959||FRM|794

|

||||

```

|

||||

|

||||

Here are the first few rows of the performance data from quarter 1 of 2012:

|

||||

|

||||

```

|

||||

100000853384|03/01/2012|OTHER|4.625||0|360|359|03/2042|41860|0|N||||||||||||||||

|

||||

100000853384|04/01/2012||4.625||1|359|358|03/2042|41860|0|N||||||||||||||||

|

||||

100000853384|05/01/2012||4.625||2|358|357|03/2042|41860|0|N||||||||||||||||

|

||||

```

|

||||

|

||||

Before proceeding too far into coding, it’s useful to take some time and really understand the data. This is more critical in operational projects – because we aren’t interactively exploring the data, it can be harder to spot certain nuances unless we find them upfront. In this case, the first step is to read the materials on the Fannie Mae site:

|

||||

|

||||

- [Overview][15]

|

||||

- [Glossary of useful terms][16]

|

||||

- [FAQs][17]

|

||||

- [Columns in the Acquisition and Performance files][18]

|

||||

- [Sample Acquisition data file][19]

|

||||

- [Sample Performance data file][20]

|

||||

|

||||

After reading through these files, we know some key facts that will help us:

|

||||

|

||||

- There’s an Acquisition file and a Performance file for each quarter, starting from the year 2000 to present. There’s a 1 year lag in the data, so the most recent data is from 2015 as of this writing.

|

||||

- The files are in text format, with a pipe (|) as a delimiter.

|

||||

- The files don’t have headers, but we have a list of what each column is.

|

||||

- All together, the files contain data on 22 million loans.

|

||||

- Because the Performance files contain information on loans acquired in previous years, there will be more performance data for loans acquired in earlier years (i.e., loans acquired in 2014 won’t have much performance history).
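
The format facts above (pipe-delimited text, no header row) can be sketched with nothing but the standard library. This is only an illustration, not the approach the post goes on to take with Pandas: the two rows are copied from the performance sample shown earlier, while `COLUMN_NAMES` and `read_rows` are hypothetical names, and the four column labels are guesses rather than the official Fannie Mae headers.

```python
import csv
import io

# Two rows copied from the performance sample shown earlier in this post.
SAMPLE = (
    "100000853384|03/01/2012|OTHER|4.625||0|360|359|03/2042|41860|0|N||||||||||||||||\n"
    "100000853384|04/01/2012||4.625||1|359|358|03/2042|41860|0|N||||||||||||||||\n"
)

# Hypothetical labels for the first four columns only; the real names
# come from the Fannie Mae column list.
COLUMN_NAMES = ["id", "reporting_period", "servicer_name", "interest_rate"]


def read_rows(handle, names):
    """Yield one dict per line, keeping only the first len(names) fields."""
    reader = csv.reader(handle, delimiter="|")
    for fields in reader:
        # zip stops at the shorter sequence, so unnamed trailing fields are dropped.
        yield dict(zip(names, fields))


rows = list(read_rows(io.StringIO(SAMPLE), COLUMN_NAMES))
for row in rows:
    print(row["id"], row["reporting_period"], row["interest_rate"])
```

Because the files carry no headers, the names have to be supplied by the reader; dropping the unnamed trailing fields is one simple way to trim columns that aren't needed.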

|

||||

|

||||

These small bits of information will save us a ton of time as we figure out how to structure our project and work with the data.

|

||||

|

||||

### Structuring the project

|

||||

|

||||

Before we start downloading and exploring the data, it’s important to think about how we’ll structure the project. When building an end-to-end project, our primary goals are:

|

||||

|

||||

- Creating a solution that works

|

||||

- Having a solution that runs quickly and uses minimal resources

|

||||

- Enabling others to easily extend our work

|

||||

- Making it easy for others to understand our code

|

||||

- Writing as little code as possible

|

||||

|

||||

In order to achieve these goals, we’ll need to structure our project well. A well structured project follows a few principles:

|

||||

|

||||

- Separates data files and code files.

|

||||

- Separates raw data from generated data.

|

||||

- Has a README.md file that walks people through installing and using the project.

|

||||

- Has a requirements.txt file that contains all the packages needed to run the project.

|

||||

- Has a single settings.py file that contains any settings that are used in other files.

|

||||

- For example, if you are reading the same file from multiple Python scripts, it’s useful to have them all import settings and get the file name from a centralized place.

|

||||

- Has a .gitignore file that prevents large or secret files from being committed.

|

||||

- Breaks each step in our task into a separate file that can be executed separately.

|

||||

- For example, we may have one file for reading in the data, one for creating features, and one for making predictions.

|

||||

- Stores intermediate values. For example, one script may output a file that the next script can read.

|

||||

- This enables us to make changes in our data processing flow without recalculating everything.
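
As a rough sketch of the last two principles, the snippet below splits work into two steps that communicate through an intermediate file. The function names `assemble` and `count_rows` and the file name `assembled.txt` are made up for illustration; they are not the scripts this project ends up with.

```python
import os
import tempfile


def assemble(raw_lines, processed_dir):
    """Step 1: write cleaned lines to an intermediate file and return its path."""
    out_path = os.path.join(processed_dir, "assembled.txt")
    with open(out_path, "w") as f:
        for line in raw_lines:
            f.write(line.strip() + "\n")
    return out_path


def count_rows(processed_dir):
    """Step 2: read the intermediate file; step 1 does not need to run again."""
    in_path = os.path.join(processed_dir, "assembled.txt")
    with open(in_path) as f:
        return sum(1 for _ in f)


if __name__ == "__main__":
    # A temporary directory stands in for the project's processed folder.
    with tempfile.TemporaryDirectory() as processed:
        assemble(["  a|b  ", "c|d"], processed)
        print(count_rows(processed))
```

If step 2 changes, only step 2 has to be rerun, since its input is already sitting on disk.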

|

||||

|

||||

Our file structure will look something like this shortly:

|

||||

|

||||

```

|

||||

loan-prediction

|

||||

├── data

|

||||

├── processed

|

||||

├── .gitignore

|

||||

├── README.md

|

||||

├── requirements.txt

|

||||

├── settings.py

|

||||

```

|

||||

|

||||

### Creating the initial files

|

||||

|

||||

To start with, we’ll need to create a loan-prediction folder. Inside that folder, we’ll need to make a data folder and a processed folder. The first will store our raw data, and the second will store any intermediate calculated values.

|

||||

|

||||

Next, we’ll make a .gitignore file. A .gitignore file will make sure certain files are ignored by git and not pushed to Github. One good example of such a file is the .DS_Store file created by OSX in every folder. A good starting point for a .gitignore file is here. We’ll also want to ignore the data files because they are very large, and the Fannie Mae terms prevent us from redistributing them, so we should add two lines to the end of our file:

|

||||

|

||||

```

|

||||

data

|

||||

processed

|

||||

```

|

||||

|

||||

[Here’s][21] an example .gitignore file for this project.

|

||||

|

||||

Next, we’ll need to create README.md, which will help people understand the project. .md indicates that the file is in markdown format. Markdown enables you to write plain text, but also add some fancy formatting if you want. [Here’s][22] a guide on markdown. If you upload a file called README.md to Github, Github will automatically process the markdown, and show it to anyone who views the project. [Here’s][23] an example.

|

||||

|

||||

For now, we just need to put a simple description in README.md:

|

||||

|

||||

```

|

||||

Loan Prediction

|

||||

-----------------------

|

||||

|

||||

Predict whether or not loans acquired by Fannie Mae will go into foreclosure. Fannie Mae acquires loans from other lenders as a way of inducing them to lend more. Fannie Mae releases data on the loans it has acquired and their performance afterwards [here](http://www.fanniemae.com/portal/funding-the-market/data/loan-performance-data.html).

|

||||

```

|

||||

|

||||

Now, we can create a requirements.txt file. This will make it easy for other people to install our project. We don’t know exactly what libraries we’ll be using yet, but here’s a good starting point:

|

||||

|

||||

```

|

||||

pandas

|

||||

matplotlib

|

||||

scikit-learn

|

||||

numpy

|

||||

ipython

|

||||

scipy

|

||||

```

|

||||

|

||||

The above libraries are the most commonly used for data analysis tasks in Python, and it’s fair to assume that we’ll be using most of them. [Here’s][24] an example requirements file for this project.

|

||||

|

||||

After creating requirements.txt, you should install the packages. For this post, we’ll be using Python 3. If you don’t have Python installed, you should look into using [Anaconda][25], a Python installer that also installs all the packages listed above.

|

||||

|

||||

Finally, we can just make a blank settings.py file, since we don’t have any settings for our project yet.

|

||||

|

||||

@ -0,0 +1,194 @@

|

||||

### Acquiring the data

|

||||

|

||||

Once we have the skeleton of our project, we can get the raw data.

|

||||

|

||||

Fannie Mae has some restrictions around acquiring the data, so you’ll need to sign up for an account. You can find the download page [here][26]. After creating an account, you’ll be able to download as few or as many loan data files as you want. The files are in zip format, and are reasonably large after decompression.

|

||||

|

||||

For the purposes of this blog post, we’ll download everything from Q1 2012 to Q1 2015, inclusive. We’ll then need to unzip all of the files. After unzipping the files, remove the original .zip files. At the end, the loan-prediction folder should look something like this:

|

||||

|

||||

```

|

||||

loan-prediction

|

||||

├── data

|

||||

│ ├── Acquisition_2012Q1.txt

|

||||

│ ├── Acquisition_2012Q2.txt

|

||||

│ ├── Performance_2012Q1.txt

|

||||

│ ├── Performance_2012Q2.txt

|

||||

│ └── ...

|

||||

├── processed

|

||||

├── .gitignore

|

||||

├── README.md

|

||||

├── requirements.txt

|

||||

├── settings.py

|

||||

```
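
The unzip-then-delete step described above can be done by hand, but a small script saves some clicking. The sketch below assumes the downloaded archives sit directly in the data folder with a .zip extension; `extract_and_remove` is a hypothetical helper name, not part of the project.

```python
import glob
import os
import zipfile


def extract_and_remove(data_dir):
    """Extract every .zip in data_dir into data_dir, then delete the archive."""
    extracted = []
    for zip_path in sorted(glob.glob(os.path.join(data_dir, "*.zip"))):
        with zipfile.ZipFile(zip_path) as archive:
            archive.extractall(data_dir)
            extracted.extend(archive.namelist())
        # The archive is closed at this point, so it is safe to remove it.
        os.remove(zip_path)
    return extracted


if __name__ == "__main__":
    # Prints the names of the extracted files (an empty list if there are no archives).
    print(extract_and_remove("data"))
```

Deleting each archive only after it has been extracted means an interrupted run leaves the remaining .zip files in place to retry.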

|

||||

|

||||

After downloading the data, you can use the head and tail shell commands to look at the lines in the files. Do you see any columns that aren’t needed? It might be useful to consult the [pdf of column names][27] while doing this.
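
If the head and tail shell commands aren't available (on Windows, for instance), roughly the same peek can be done from Python. This is a minimal sketch; the helper names simply mirror the shell commands, and the path passed in is whatever data file you want to inspect.

```python
from collections import deque
from itertools import islice


def head(path, n=5):
    """Return the first n lines of a text file without reading the rest."""
    with open(path) as f:
        return [line.rstrip("\n") for line in islice(f, n)]


def tail(path, n=5):
    """Return the last n lines; the deque keeps only n lines in memory at a time."""
    with open(path) as f:
        return [line.rstrip("\n") for line in deque(f, maxlen=n)]
```

Both helpers stream the file line by line, which matters here because the performance files are large.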

|

||||

|

||||

### Reading in the data

|

||||

|

||||

There are two issues that make our data hard to work with right now:

|

||||

|

||||

- The acquisition and performance datasets are segmented across multiple files.

|

||||

- Each file is missing headers.

|

||||

|

||||

Before we can get started on working with the data, we’ll need to get to the point where we have one file for the acquisition data, and one file for the performance data. Each of the files will need to contain only the columns we care about, and have the proper headers. One wrinkle here is that the performance data is quite large, so we should try to trim some of the columns if we can.

|

||||

|

||||

The first step is to add some variables to settings.py, which will contain the paths to our raw data and our processed data. We’ll also add a few other settings that will be useful later on:

|

||||

|

||||

```

|

||||

DATA_DIR = "data"

|

||||

PROCESSED_DIR = "processed"

|

||||

MINIMUM_TRACKING_QUARTERS = 4

|

||||

TARGET = "foreclosure_status"

|

||||

NON_PREDICTORS = [TARGET, "id"]

|

||||

CV_FOLDS = 3

|

||||

```

|

||||

|

||||

Putting the paths in settings.py will put them in a centralized place and make them easy to change down the line. When referring to the same variables in multiple files, it’s easier to put them in a central place than edit them in every file when you want to change them. [Here’s][28] an example settings.py file for this project.
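
To make the benefit concrete, here is a sketch of how another script might build file paths from those settings. In the real project the two directory names would come from `import settings`; they are repeated here only so the snippet runs on its own, and `raw_path` and `processed_path` are hypothetical helper names.

```python
import os

# In the real project these two names would come from `import settings`;
# they are repeated here only so the sketch is self-contained.
DATA_DIR = "data"
PROCESSED_DIR = "processed"


def raw_path(filename):
    """Build the path to a raw data file from the centralized setting."""
    return os.path.join(DATA_DIR, filename)


def processed_path(filename):
    """Build the path to a generated file from the centralized setting."""
    return os.path.join(PROCESSED_DIR, filename)


print(raw_path("Acquisition_2012Q1.txt"))
```

If the raw data ever moves, only the single `DATA_DIR` value changes and every script that builds its paths this way follows along.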

|

||||

|

||||

The second step is to create a file called assemble.py that will assemble all the pieces into 2 files. When we run python assemble.py, we’ll get 2 data files in the processed directory.

|

||||

|

||||