mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-28 23:20:10 +08:00

commit

9e30f9b66e

146

published/20150717 How to monitor NGINX with Datadog - Part 3.md

Normal file

@ -0,0 +1,146 @@

|

||||

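How to Monitor NGINX with Datadog (Part 3)

================================================================================

If you have already read [How to monitor NGINX][1], you know how much insight a handful of metrics can give you into your web environment. You have also seen how easy it is to start collecting metrics from your particular NGINX setup. But to monitor NGINX comprehensively and continuously, you need a robust monitoring system that stores and visualizes your metrics and alerts you when anomalies occur. In this post, we show you how to set up NGINX monitoring with Datadog so that you can view these metrics on customizable dashboards.

Datadog lets you build graphs and alerts around individual hosts, services, processes, and metrics, or virtually any combination of them. For example, you can monitor all of your hosts, or all NGINX hosts in a certain availability zone, or you can monitor a single key metric across all hosts that carry a certain tag. This post shows you how to:

- Monitor NGINX metrics on Datadog dashboards, alongside all your other systems
- Set up automated alerts that notify you when a key metric changes dramatically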

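### Configuring NGINX ###

To collect NGINX metrics, you first need to make sure that NGINX has the status module enabled and a URL that reports its status metrics. Step-by-step instructions for [configuring open-source NGINX][2] and [NGINX Plus][3] are available in the earlier companion post.

### Integrating Datadog and NGINX ###

#### Install the Datadog Agent ####

The Datadog Agent is [open-source software][4] that collects and reports metrics from your hosts so that you can view and monitor them in Datadog. Installing the Agent usually takes [just a single command][5].

As soon as the Agent is up and running, you should see your host reporting metrics [in your Datadog account][6].

#### Configure the Agent ####

Next, you need to create a simple NGINX configuration file for the Agent. The location of the Agent's configuration directory on your system can be found [here][7].

Inside that directory, at conf.d/nginx.yaml.example, you will find [a sample configuration file][8] that you can edit to provide the status URL and optional tags for each of your NGINX instances:

    init_config:

    instances:

        -   nginx_status_url: http://localhost/nginx_status/
            tags:
                -   instance:foo

Once you have supplied the status URL and any tags, save the config file as conf.d/nginx.yaml.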
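
If you manage several NGINX hosts, the same file can be generated rather than edited by hand. The following is only a sketch under stated assumptions: `render_nginx_yaml` is a hypothetical helper (not part of the Datadog Agent), and it reproduces nothing more than the single-instance layout shown above.

```shell
# Sketch: print an Agent NGINX check config for a single instance.
# render_nginx_yaml is a hypothetical helper, not a Datadog command.
render_nginx_yaml() {
    status_url=$1   # e.g. http://localhost/nginx_status/
    tag=$2          # e.g. instance:foo
    cat <<EOF
init_config:

instances:
    -   nginx_status_url: ${status_url}
        tags:
            -   ${tag}
EOF
}

# Print the config for the example values used in this article.
render_nginx_yaml "http://localhost/nginx_status/" "instance:foo"
```

Redirecting the output into conf.d/nginx.yaml inside the Agent's configuration directory gives the same result as editing the example file manually.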


#### Restart the Agent ####

You must restart the Agent to load the new configuration file. The restart command varies by platform and is listed [here][9].

#### Verify the configuration ####

To check that Datadog and NGINX are properly integrated, run the Datadog info command. The command for each platform is available [here][10].

If the configuration is correct, you will see output like this:

    Checks
    ======

      [...]

      nginx
      -----
        - instance #0 [OK]
        - Collected 8 metrics & 0 events
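
The check above can also be automated. This is a minimal sketch that assumes the info output keeps the layout shown in this article; `nginx_check_ok` is a hypothetical helper name, and the info command itself is not invoked here because it differs per platform.

```shell
# Sketch: read Agent "info" output on stdin and succeed only if the
# nginx section reports instance #0 as [OK].
# Assumes the section layout shown in the article.
nginx_check_ok() {
    awk '
        /^[[:space:]]*nginx[[:space:]]*$/ { in_nginx = 1; next }  # section title
        in_nginx && /instance #0 \[OK\]/  { found = 1 }           # healthy instance
        in_nginx && /^[[:space:]]*$/      { in_nginx = 0 }        # blank line ends section
        END { exit (found ? 0 : 1) }
    '
}
```

Pipe the output of your platform's info command into `nginx_check_ok` and test its exit status, for example in a deployment script.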

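
#### Install the integration ####

Finally, switch on the NGINX integration inside your Datadog account. It is as simple as clicking the “Install Integration” button in the [NGINX integration settings][11].

### Metrics! ###

Once the Agent begins reporting NGINX metrics, you will see [an NGINX dashboard][12] in your list of available dashboards in Datadog.

The basic NGINX dashboard displays useful graphs covering several of the key metrics from [our introduction to NGINX monitoring][13]. (Some metrics, notably request processing time, require log analysis, which Datadog does not support.)

You can easily create a comprehensive dashboard for monitoring your entire web stack by adding graphs of important metrics from outside NGINX. For example, you might want to monitor host-level metrics on your NGINX hosts, such as system load. To start building a custom dashboard, clone the default NGINX dashboard by clicking the options button near the upper right of the dashboard and selecting “Clone Dash”.

You can also monitor your NGINX instances at a higher level using Datadog’s [Host Maps][14], for instance by color-coding all your NGINX hosts by CPU usage to identify potential hotspots.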

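### Alerting on NGINX Metrics ###

Once Datadog is capturing and visualizing your metrics, you will likely want to set up some monitors that automatically keep watch over them and alert you when there are problems. Below we walk through a representative example: a metric monitor that alerts you to sudden drops in NGINX throughput.

#### Monitor your NGINX throughput ####

Datadog metric alerts can be threshold-based (alert when the metric exceeds a set value) or change-based (alert when the metric changes by more than a certain amount). In this example we take the latter approach and alert when incoming requests per second drop sharply. Such drops often indicate a problem.

1. **Create a new metric monitor.** Select “New Monitor” from the “Monitors” dropdown in Datadog. Select “Metric” as the monitor type.

2. **Define your metric monitor.** We want to know when the total number of NGINX requests per second drops off, so we define the metric of interest as the sum of nginx.net.request_per_s across our infrastructure.

3. **Set metric alert conditions.** Since we want to alert on a change rather than on a fixed value, we select “Change Alert”. We set the monitor to alert whenever the request volume drops by 30 percent or more. Here we use a one-minute window of data to represent the metric's value “now” and compare the average over that interval with the metric's value 10 minutes earlier.

4. **Customize the notification.** If our NGINX request volume drops, we want to notify our team. In this example we post a notification in the ops team's chat room and send a text message to the on-call engineer. In “Say what’s happening”, we name the monitor and add a short message that accompanies the notification and suggests where to start investigating. We @mention the Slack channel used by the ops team and use @pagerduty to [deliver the alert as a text message][15].

5. **Save the integration monitor.** Click the “Save” button at the bottom of the page. You are now monitoring a key NGINX [work metric][16], and your on-call engineer will receive a text message whenever it drops rapidly.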
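
The arithmetic behind the change alert in step 3 can be written out as follows. This is an illustration only, not Datadog's implementation: the function names are hypothetical, and the two inputs stand for the metric's value 10 minutes ago and its average over the last minute.

```shell
# Sketch: percent change between two samples and the 30 percent drop
# rule from step 3. Names are hypothetical; prev must not be zero.
pct_change() {
    # $1 = value 10 minutes ago, $2 = average over the last minute
    awk -v prev="$1" -v cur="$2" 'BEGIN { printf "%.1f\n", (cur - prev) / prev * 100 }'
}

drop_alert() {
    # Succeeds (exit status 0) when the change is a drop of 30 percent or more.
    awk -v c="$(pct_change "$1" "$2")" 'BEGIN { exit (c <= -30) ? 0 : 1 }'
}

pct_change 200 130   # 200 req/s falling to 130 req/s prints -35.0
```

With these numbers `drop_alert 200 130` succeeds, so the monitor would fire, while a fall from 200 to 150 requests per second (a 25 percent drop) would not trigger it.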

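
### Conclusion ###

In this post we have walked through integrating NGINX with Datadog to visualize your key metrics and to notify your team when your web infrastructure has a problem.

If you have followed along using your own Datadog account, you should now have greatly improved visibility into your web environment, as well as the ability to create automated monitors tailored to your environment, your usage patterns, and the metrics that are most valuable to your organization.

If you do not yet have a Datadog account, you can sign up for [a free trial][17] and start monitoring your infrastructure, your applications, and your services today.

------------------------------------------------------------

via: https://www.datadoghq.com/blog/how-to-monitor-nginx-with-datadog/

Author: K Young

Translator: [strugglingyouth](https://github.com/strugglingyouth)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)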


[1]:https://linux.cn/article-5970-1.html
[2]:https://linux.cn/article-5985-1.html#open-source
[3]:https://linux.cn/article-5985-1.html#plus
[4]:https://github.com/DataDog/dd-agent
[5]:https://app.datadoghq.com/account/settings#agent
[6]:https://app.datadoghq.com/infrastructure
[7]:http://docs.datadoghq.com/guides/basic_agent_usage/
[8]:https://github.com/DataDog/dd-agent/blob/master/conf.d/nginx.yaml.example
[9]:http://docs.datadoghq.com/guides/basic_agent_usage/
[10]:http://docs.datadoghq.com/guides/basic_agent_usage/
[11]:https://app.datadoghq.com/account/settings#integrations/nginx
[12]:https://app.datadoghq.com/dash/integration/nginx
[13]:https://linux.cn/article-5970-1.html
[14]:https://www.datadoghq.com/blog/introducing-host-maps-know-thy-infrastructure/
[15]:https://www.datadoghq.com/blog/pagerduty/
[16]:https://www.datadoghq.com/blog/monitoring-101-collecting-data/#metrics
[17]:https://www.datadoghq.com/blog/how-to-monitor-nginx-with-datadog/#sign-up
[18]:https://github.com/DataDog/the-monitor/blob/master/nginx/how_to_monitor_nginx_with_datadog.md
[19]:https://github.com/DataDog/the-monitor/issues

@ -1,74 +0,0 @@

|

||||

How to create an AP in Ubuntu 15.04 to connect to Android/iPhone

================================================================================

I tried creating a wireless access point with GNOME Network Manager on Ubuntu 15.04 and succeeded, so I am sharing the steps with our readers. Please note: you need a Wi-Fi card that supports access point (AP) mode. To find out whether yours does, type `iw list` in a terminal.

If you don’t have iw installed, you can install it in Ubuntu with the command `sudo apt-get install iw`.

After you run `iw list`, look for the “Supported interface modes” section, which should contain an entry called AP like the one shown below:

    Supported interface modes:

        * IBSS
        * managed
        * AP
        * AP/VLAN
        * monitor
        * mesh point
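
The manual check of the `iw list` output can be scripted. The sketch below assumes the section layout shown above; `supports_ap_mode` is a hypothetical helper name and reads the `iw list` output from standard input.

```shell
# Sketch: succeed if "iw list" output on stdin lists AP among the
# supported interface modes. supports_ap_mode is a hypothetical helper.
supports_ap_mode() {
    awk '
        /Supported interface modes:/ { in_modes = 1; next }
        # An exact "* AP" entry; "* AP/VLAN" alone does not count.
        in_modes && /^[[:space:]]*\*[[:space:]]*AP[[:space:]]*$/ { found = 1 }
        # Any line that is neither a "*" entry nor blank ends the section.
        in_modes && !/^[[:space:]]*(\*|$)/ { in_modes = 0 }
        END { exit (found ? 0 : 1) }
    '
}
```

Usage: `iw list | supports_ap_mode && echo "AP mode is supported"`.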


Let’s go through the steps in detail:

1. Disconnect Wi-Fi. Plug an Ethernet cable into your laptop so that you are on a wired internet connection.
1. Click the network icon on the top panel -> Edit Connections, then click the Add button in the pop-up window.
1. Choose Wi-Fi from the drop-down menu.
1. Next:

    a. Type in a connection name, e.g. Hotspot

    b. Type in an SSID, e.g. Hotspot

    c. Select mode: Infrastructure

    d. Device MAC address: select your wireless card from the drop-down menu

1. Go to the Wi-Fi Security tab, select the security type WPA & WPA2 Personal, and set a password.
1. Go to the IPv4 Settings tab and, from the Method drop-down box, select Shared to other computers.
1. Go to the IPv6 tab and set Method to Ignore (do this only if you do not use IPv6).
1. Hit the “Save” button to save the configuration.
1. Open a terminal from the menu/dash.
1. Now edit the connection you just created via network settings.

    With Vim:

        sudo vim /etc/NetworkManager/system-connections/Hotspot

    With gedit:

        gksu gedit /etc/NetworkManager/system-connections/Hotspot

    Replace the name Hotspot with the connection name you chose in step 4.

1. Change the line mode=infrastructure to mode=ap and save the file.
1. Once you save the file, you should see the Wi-Fi network named Hotspot in the list of available Wi-Fi networks. (If the network does not show up, disable and re-enable Wi-Fi.)
1. You can now connect your Android phone. The connection was tested with a Xiaomi Mi4i running Android 5.0 (I downloaded 1GB to test speed and reliability).
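
The manual edit from the mode=infrastructure step above can also be done non-interactively. This is a sketch under stated assumptions: `set_ap_mode` is a hypothetical helper, it expects the `mode=infrastructure` line exactly as described in the article, and on a real system it has to run as root against the connection file.

```shell
# Sketch: switch a NetworkManager connection file from infrastructure
# to AP mode. set_ap_mode is a hypothetical helper; back up the file first.
set_ap_mode() {
    conn_file=$1
    tmp_file=$(mktemp) || return 1
    # Rewrite into a temp file, then copy the content back so the original
    # file keeps its owner and permissions.
    sed 's/^mode=infrastructure$/mode=ap/' "$conn_file" > "$tmp_file" &&
        cat "$tmp_file" > "$conn_file"
    status=$?
    rm -f "$tmp_file"
    return "$status"
}
```

After running it on /etc/NetworkManager/system-connections/Hotspot (or whatever connection name you chose), toggle Wi-Fi off and on as described in the steps above.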


--------------------------------------------------------------------------------

via: http://www.linuxveda.com/2015/08/23/how-to-create-an-ap-in-ubuntu-15-04-to-connect-to-androidiphone/

Author: [Sayantan Das][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.linuxveda.com/author/sayantan_das/


@ -1,321 +0,0 @@

|

||||

struggling 翻译中

|

||||

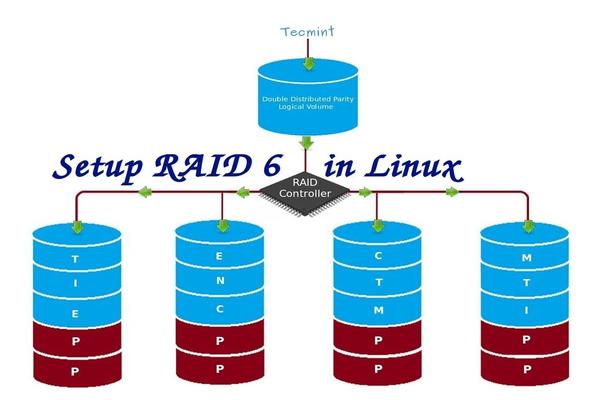

Setup RAID Level 6 (Striping with Double Distributed Parity) in Linux – Part 5

|

||||

================================================================================

|

||||

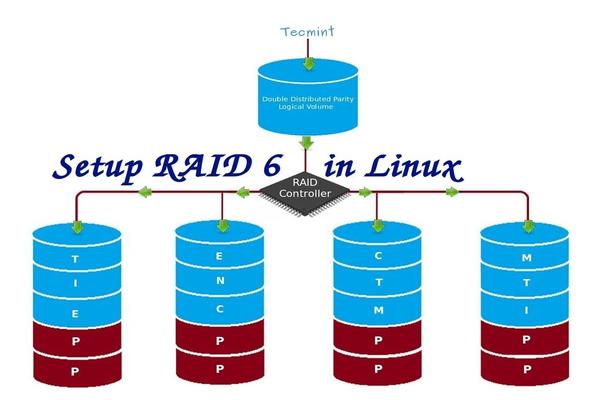

RAID 6 is upgraded version of RAID 5, where it has two distributed parity which provides fault tolerance even after two drives fails. Mission critical system still operational incase of two concurrent disks failures. It’s alike RAID 5, but provides more robust, because it uses one more disk for parity.

|

||||

|

||||

In our earlier article, we’ve seen distributed parity in RAID 5, but in this article we will going to see RAID 6 with double distributed parity. Don’t expect extra performance than any other RAID, if so we have to install a dedicated RAID Controller too. Here in RAID 6 even if we loose our 2 disks we can get the data back by replacing a spare drive and build it from parity.

|

||||

|

||||

|

||||

|

||||

Setup RAID 6 in Linux

|

||||

|

||||

To setup a RAID 6, minimum 4 numbers of disks or more in a set are required. RAID 6 have multiple disks even in some set it may be have some bunch of disks, while reading, it will read from all the drives, so reading would be faster whereas writing would be poor because it has to stripe over multiple disks.

|

||||

|

||||

Now, many of us comes to conclusion, why we need to use RAID 6, when it doesn’t perform like any other RAID. Hmm… those who raise this question need to know that, if they need high fault tolerance choose RAID 6. In every higher environments with high availability for database, they use RAID 6 because database is the most important and need to be safe in any cost, also it can be useful for video streaming environments.

|

||||

|

||||

#### Pros and Cons of RAID 6 ####

|

||||

|

||||

- Performance are good.

|

||||

- RAID 6 is expensive, as it requires two independent drives are used for parity functions.

|

||||

- Will loose a two disks capacity for using parity information (double parity).

|

||||

- No data loss, even after two disk fails. We can rebuilt from parity after replacing the failed disk.

|

||||

- Reading will be better than RAID 5, because it reads from multiple disk, But writing performance will be very poor without dedicated RAID Controller.

|

||||

|

||||

#### Requirements ####

|

||||

|

||||

Minimum 4 numbers of disks are required to create a RAID 6. If you want to add more disks, you can, but you must have dedicated raid controller. In software RAID, we will won’t get better performance in RAID 6. So we need a physical RAID controller.

|

||||

|

||||

Those who are new to RAID setup, we recommend to go through RAID articles below.

|

||||

|

||||

- [Basic Concepts of RAID in Linux – Part 1][1]

|

||||

- [Creating Software RAID 0 (Stripe) in Linux – Part 2][2]

|

||||

- [Setting up RAID 1 (Mirroring) in Linux – Part 3][3]

|

||||

|

||||

#### My Server Setup ####

|

||||

|

||||

Operating System : CentOS 6.5 Final

|

||||

IP Address : 192.168.0.228

|

||||

Hostname : rd6.tecmintlocal.com

|

||||

Disk 1 [20GB] : /dev/sdb

|

||||

Disk 2 [20GB] : /dev/sdc

|

||||

Disk 3 [20GB] : /dev/sdd

|

||||

Disk 4 [20GB] : /dev/sde

|

||||

|

||||

This article is a Part 5 of a 9-tutorial RAID series, here we are going to see how we can create and setup Software RAID 6 or Striping with Double Distributed Parity in Linux systems or servers using four 20GB disks named /dev/sdb, /dev/sdc, /dev/sdd and /dev/sde.
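
Because two disks' worth of space is used for parity, the usable capacity of this setup is easy to work out. The following sketch assumes equal-sized disks; `raid6_usable_gb` is a hypothetical helper name.

```shell
# Sketch: usable capacity of a RAID 6 set built from equal-sized disks.
# Two disks' worth of space goes to parity: usable = (n - 2) * size.
raid6_usable_gb() {
    disks=$1
    size_gb=$2
    if [ "$disks" -lt 4 ]; then
        echo "RAID 6 needs at least 4 disks" >&2
        return 1
    fi
    echo $(( (disks - 2) * size_gb ))
}

raid6_usable_gb 4 20   # the four 20GB disks used in this article: prints 40
```

So the four 20GB disks above yield roughly 40GB of usable space, before filesystem overhead.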

|

||||

|

||||

### Step 1: Installing mdadm Tool and Examine Drives ###

|

||||

|

||||

1. If you’re following our last two Raid articles (Part 2 and Part 3), where we’ve already shown how to install ‘mdadm‘ tool. If you’re new to this article, let me explain that ‘mdadm‘ is a tool to create and manage Raid in Linux systems, let’s install the tool using following command according to your Linux distribution.

|

||||

|

||||

# yum install mdadm [on RedHat systems]

|

||||

# apt-get install mdadm [on Debain systems]

|

||||

|

||||

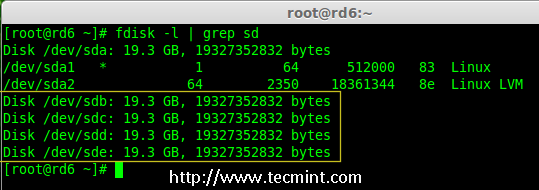

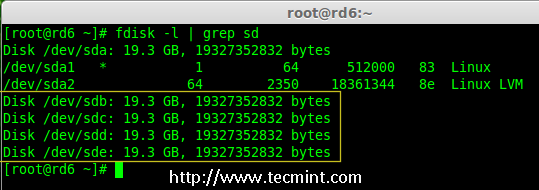

2. After installing the tool, now it’s time to verify the attached four drives that we are going to use for raid creation using the following ‘fdisk‘ command.

|

||||

|

||||

# fdisk -l | grep sd

|

||||

|

||||

|

||||

|

||||

Check Disks in Linux

|

||||

|

||||

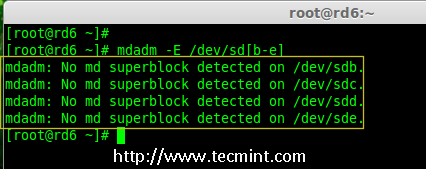

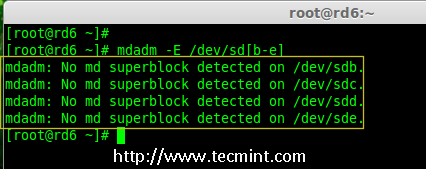

3. Before creating a RAID drives, always examine our disk drives whether there is any RAID is already created on the disks.

|

||||

|

||||

# mdadm -E /dev/sd[b-e]

|

||||

# mdadm --examine /dev/sdb /dev/sdc /dev/sdd /dev/sde

|

||||

|

||||

|

||||

|

||||

Check Raid on Disk

|

||||

|

||||

**Note**: In the above image depicts that there is no any super-block detected or no RAID is defined in four disk drives. We may move further to start creating RAID 6.

|

||||

|

||||

### Step 2: Drive Partitioning for RAID 6 ###

|

||||

|

||||

4. Now create partitions for raid on ‘/dev/sdb‘, ‘/dev/sdc‘, ‘/dev/sdd‘ and ‘/dev/sde‘ with the help of following fdisk command. Here, we will show how to create partition on sdb drive and later same steps to be followed for rest of the drives.

|

||||

|

||||

**Create /dev/sdb Partition**

|

||||

|

||||

# fdisk /dev/sdb

|

||||

|

||||

Please follow the instructions as shown below for creating partition.

|

||||

|

||||

- Press ‘n‘ for creating new partition.

|

||||

- Then choose ‘P‘ for Primary partition.

|

||||

- Next choose the partition number as 1.

|

||||

- Define the default value by just pressing two times Enter key.

|

||||

- Next press ‘P‘ to print the defined partition.

|

||||

- Press ‘L‘ to list all available types.

|

||||

- Type ‘t‘ to choose the partitions.

|

||||

- Choose ‘fd‘ for Linux raid auto and press Enter to apply.

|

||||

- Then again use ‘P‘ to print the changes what we have made.

|

||||

- Use ‘w‘ to write the changes.
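
Since the same answers are repeated for every disk, the keystrokes above can be collected into one string. This is a dry-run sketch only: `fdisk_raid_answers` is a hypothetical helper, feeding answers to fdisk through a pipe is an assumption that depends on the prompts of your fdisk version, and the loop below prints the commands instead of running them.

```shell
# Sketch: the interactive answers from the list above as one string,
# one answer per line (the print and list steps are left out).
fdisk_raid_answers() {
    # n = new partition, p = primary, 1 = partition number,
    # two empty lines accept the default first and last sector,
    # t = change type, fd = Linux raid autodetect, w = write changes
    printf 'n\np\n1\n\n\nt\nfd\nw\n'
}

# Dry run: show what would be executed, without touching any disk.
for disk in /dev/sdb /dev/sdc /dev/sdd /dev/sde; do
    echo "fdisk_raid_answers | fdisk $disk"
done
```

Check the resulting partition table with `fdisk -l` after a real run, because a mismatched prompt would shift every following answer.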

|

||||

|

||||

|

||||

|

||||

Create /dev/sdb Partition

|

||||

|

||||

**Create /dev/sdb Partition**

|

||||

|

||||

# fdisk /dev/sdc

|

||||

|

||||

|

||||

|

||||

Create /dev/sdc Partition

|

||||

|

||||

**Create /dev/sdd Partition**

|

||||

|

||||

# fdisk /dev/sdd

|

||||

|

||||

|

||||

|

||||

Create /dev/sdd Partition

|

||||

|

||||

**Create /dev/sde Partition**

|

||||

|

||||

# fdisk /dev/sde

|

||||

|

||||

|

||||

|

||||

Create /dev/sde Partition

|

||||

|

||||

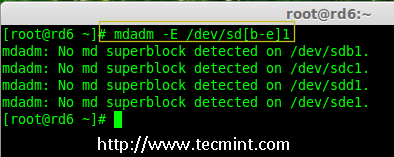

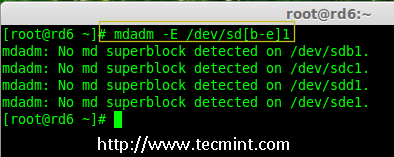

5. After creating partitions, it’s always good habit to examine the drives for super-blocks. If super-blocks does not exist than we can go head to create a new RAID setup.

|

||||

|

||||

# mdadm -E /dev/sd[b-e]1

|

||||

|

||||

|

||||

or

|

||||

|

||||

# mdadm --examine /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1

|

||||

|

||||

|

||||

|

||||

Check Raid on New Partitions

|

||||

|

||||

### Step 3: Creating md device (RAID) ###

|

||||

|

||||

6. Now it’s time to create Raid device ‘md0‘ (i.e. /dev/md0) and apply raid level on all newly created partitions and confirm the raid using following commands.

|

||||

|

||||

# mdadm --create /dev/md0 --level=6 --raid-devices=4 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1

|

||||

# cat /proc/mdstat

|

||||

|

||||

|

||||

|

||||

Create Raid 6 Device

|

||||

|

||||

7. You can also check the current process of raid using watch command as shown in the screen grab below.

|

||||

|

||||

# watch -n1 cat /proc/mdstat

|

||||

|

||||

|

||||

|

||||

Check Raid 6 Process
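
For scripts, the progress figure can be pulled out of /proc/mdstat instead of being watched by eye. This sketch assumes the usual progress line containing `resync = N%` or `recovery = N%`; `mdstat_progress` is a hypothetical helper name.

```shell
# Sketch: print the resync/recovery percentage found in /proc/mdstat
# style text on stdin. Prints nothing when no sync is in progress.
mdstat_progress() {
    sed -nE 's/.*(resync|recovery)[[:space:]]*=[[:space:]]*([0-9.]+)%.*/\2/p'
}
```

Usage: `mdstat_progress < /proc/mdstat`. An empty result means the array is not syncing at the moment.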

|

||||

|

||||

8. Verify the raid devices using the following command.

|

||||

|

||||

# mdadm -E /dev/sd[b-e]1

|

||||

|

||||

**Note**:: The above command will be display the information of the four disks, which is quite long so not possible to post the output or screen grab here.

|

||||

|

||||

9. Next, verify the RAID array to confirm that the re-syncing is started.

|

||||

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

Check Raid 6 Array

|

||||

|

||||

### Step 4: Creating FileSystem on Raid Device ###

|

||||

|

||||

10. Create a filesystem using ext4 for ‘/dev/md0‘ and mount it under /mnt/raid5. Here we’ve used ext4, but you can use any type of filesystem as per your choice.

|

||||

|

||||

# mkfs.ext4 /dev/md0

|

||||

|

||||

|

||||

|

||||

Create File System on Raid 6

|

||||

|

||||

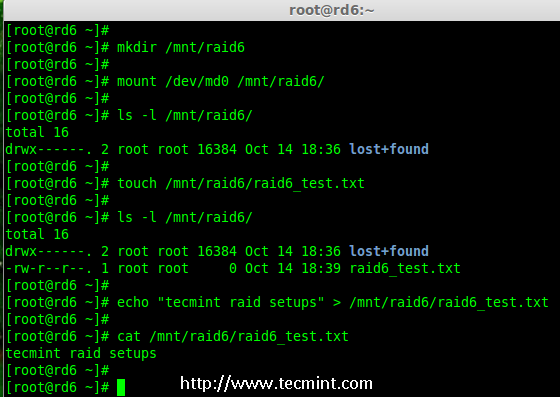

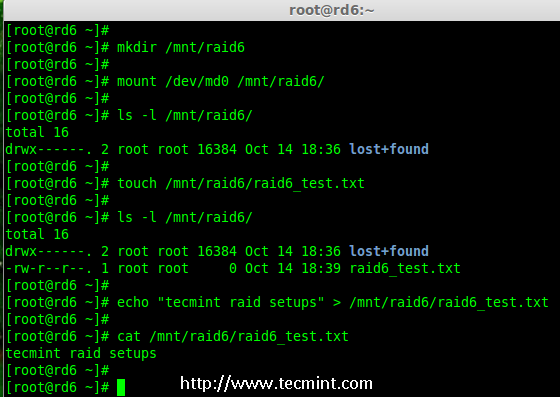

11. Mount the created filesystem under /mnt/raid6 and verify the files under mount point, we can see lost+found directory.

|

||||

|

||||

# mkdir /mnt/raid6

|

||||

# mount /dev/md0 /mnt/raid6/

|

||||

# ls -l /mnt/raid6/

|

||||

|

||||

12. Create some files under mount point and append some text in any one of the file to verify the content.

|

||||

|

||||

# touch /mnt/raid6/raid6_test.txt

|

||||

# ls -l /mnt/raid6/

|

||||

# echo "tecmint raid setups" > /mnt/raid6/raid6_test.txt

|

||||

# cat /mnt/raid6/raid6_test.txt

|

||||

|

||||

|

||||

|

||||

Verify Raid Content

|

||||

|

||||

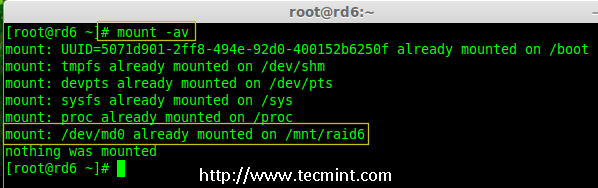

13. Add an entry in /etc/fstab to auto mount the device at the system startup and append the below entry, mount point may differ according to your environment.

|

||||

|

||||

# vim /etc/fstab

|

||||

|

||||

/dev/md0 /mnt/raid6 ext4 defaults 0 0
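
Before appending the entry, it can be worth checking that the mount point is not already listed, so that the line is not added twice. A minimal sketch, assuming the standard whitespace-separated fstab layout; `fstab_has_mount` is a hypothetical helper name.

```shell
# Sketch: succeed if an fstab-style file already has an entry for a
# mount point. Comment lines are ignored.
fstab_has_mount() {
    fstab_file=$1
    mount_point=$2
    awk -v mp="$mount_point" '
        /^[[:space:]]*#/ { next }        # skip comments
        $2 == mp         { found = 1 }   # second field is the mount point
        END { exit (found ? 0 : 1) }
    ' "$fstab_file"
}
```

Usage: `fstab_has_mount /etc/fstab /mnt/raid6 || echo "entry missing"`.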

|

||||

|

||||

|

||||

|

||||

Automount Raid 6 Device

|

||||

|

||||

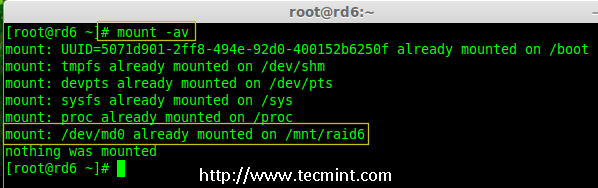

14. Next, execute ‘mount -a‘ command to verify whether there is any error in fstab entry.

|

||||

|

||||

# mount -av

|

||||

|

||||

|

||||

|

||||

Verify Raid Automount

|

||||

|

||||

### Step 5: Save RAID 6 Configuration ###

|

||||

|

||||

15. Please note by default RAID don’t have a config file. We have to save it by manually using below command and then verify the status of device ‘/dev/md0‘.

|

||||

|

||||

# mdadm --detail --scan --verbose >> /etc/mdadm.conf

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

Save Raid 6 Configuration

|

||||

|

||||

|

||||

|

||||

Check Raid 6 Status

|

||||

|

||||

### Step 6: Adding a Spare Drives ###

|

||||

|

||||

16. Now it has 4 disks and there are two parity information’s available. In some cases, if any one of the disk fails we can get the data, because there is double parity in RAID 6.

|

||||

|

||||

May be if the second disk fails, we can add a new one before loosing third disk. It is possible to add a spare drive while creating our RAID set, But I have not defined the spare drive while creating our raid set. But, we can add a spare drive after any drive failure or while creating the RAID set. Now we have already created the RAID set now let me add a spare drive for demonstration.

|

||||

|

||||

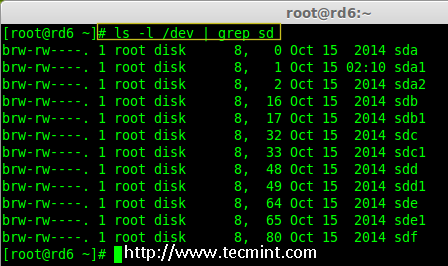

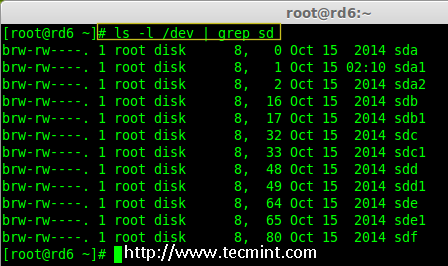

For the demonstration purpose, I’ve hot-plugged a new HDD disk (i.e. /dev/sdf), let’s verify the attached disk.

|

||||

|

||||

# ls -l /dev/ | grep sd

|

||||

|

||||

|

||||

|

||||

Check New Disk

|

||||

|

||||

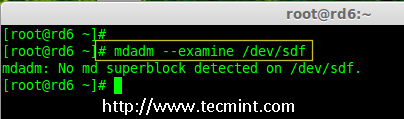

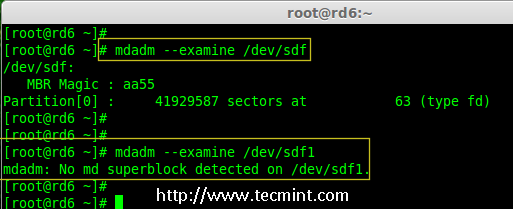

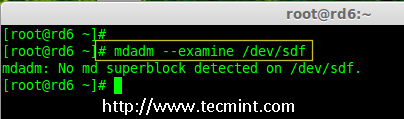

17. Now again confirm the new attached disk for any raid is already configured or not using the same mdadm command.

|

||||

|

||||

# mdadm --examine /dev/sdf

|

||||

|

||||

|

||||

|

||||

Check Raid on New Disk

|

||||

|

||||

**Note**: As usual, like we’ve created partitions for four disks earlier, similarly we’ve to create new partition on the new plugged disk using fdisk command.

|

||||

|

||||

# fdisk /dev/sdf

|

||||

|

||||

|

||||

|

||||

Create /dev/sdf Partition

|

||||

|

||||

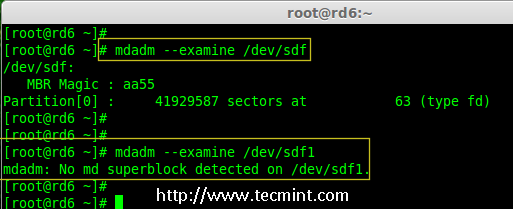

18. Again after creating new partition on /dev/sdf, confirm the raid on the partition, include the spare drive to the /dev/md0 raid device and verify the added device.

|

||||

|

||||

# mdadm --examine /dev/sdf

|

||||

# mdadm --examine /dev/sdf1

|

||||

# mdadm --add /dev/md0 /dev/sdf1

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

Verify Raid on sdf Partition

|

||||

|

||||

|

||||

|

||||

Add sdf Partition to Raid

|

||||

|

||||

|

||||

|

||||

Verify sdf Partition Details

|

||||

|

||||

### Step 7: Check Raid 6 Fault Tolerance ###

|

||||

|

||||

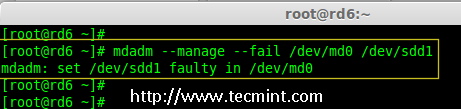

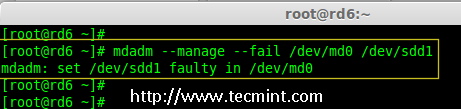

19. Now, let us check whether spare drive works automatically, if anyone of the disk fails in our Array. For testing, I’ve personally marked one of the drive is failed.

|

||||

|

||||

Here, we’re going to mark /dev/sdd1 as failed drive.

|

||||

|

||||

# mdadm --manage --fail /dev/md0 /dev/sdd1

|

||||

|

||||

|

||||

|

||||

Check Raid 6 Fault Tolerance

|

||||

|

||||

20. Let me get the details of RAID set now and check whether our spare started to sync.

|

||||

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

Check Auto Raid Syncing

|

||||

|

||||

**Hurray!** Here, we can see the spare got activated and started rebuilding process. At the bottom we can see the faulty drive /dev/sdd1 listed as faulty. We can monitor build process using following command.

|

||||

|

||||

# cat /proc/mdstat

|

||||

|

||||

|

||||

|

||||

Raid 6 Auto Syncing
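
The state of the array during the rebuild can also be read from the `mdadm --detail` output in a script. This sketch assumes the `State :` line that the command prints in its summary; `md_state` is a hypothetical helper name, and the exact state words may vary between mdadm versions.

```shell
# Sketch: print the value of the "State :" line from "mdadm --detail"
# output given on stdin.
md_state() {
    sed -n 's/^[[:space:]]*State[[:space:]]*:[[:space:]]*//p'
}
```

Usage: `mdadm --detail /dev/md0 | md_state`. While the spare is being synced the state typically includes the word `recovering`.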


### Conclusion: ###

Here we have seen how to set up RAID 6 using four disks. This RAID level is one of the more expensive setups, with high redundancy. We will see how to set up nested RAID 10 and much more in the next articles. Till then, stay connected with TECMINT.

--------------------------------------------------------------------------------

via: http://www.tecmint.com/create-raid-6-in-linux/

Author: [Babin Lonston][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.tecmint.com/author/babinlonston/
[1]:http://www.tecmint.com/understanding-raid-setup-in-linux/
[2]:http://www.tecmint.com/create-raid0-in-linux/
[3]:http://www.tecmint.com/create-raid1-in-linux/


@ -1,154 +0,0 @@

|

||||

|

||||

如何使用 Datadog 监控 NGINX - 第3部分

|

||||

================================================================================

|

||||

|

||||

|

||||

如果你已经阅读了[前面的如何监控 NGINX][1],你应该知道从你网络环境的几个指标中可以获取多少信息。而且你也看到了从 NGINX 特定的基础中收集指标是多么容易的。但要实现全面,持续的监控 NGINX,你需要一个强大的监控系统来存储并将指标可视化,当异常发生时能提醒你。在这篇文章中,我们将向你展示如何使用 Datadog 安装 NGINX 监控,以便你可以在定制的仪表盘中查看这些指标:

|

||||

|

||||

|

||||

|

||||

Datadog 允许你建立单个主机,服务,流程,度量,或者几乎任何它们的组合图形周围和警报。例如,你可以在一定的可用性区域监控所有NGINX主机,或所有主机,或者您可以监视被报道具有一定标签的所有主机的一个关键指标。本文将告诉您如何:

|

||||

|

||||

Datadog 允许你来建立图表并报告周围的主机,进程,指标或其他的。例如,你可以在特定的可用性区域监控所有 NGINX 主机,或所有主机,或者你可以监视一个关键指标并将它报告给周围所有标记的主机。本文将告诉你如何做:

|

||||

|

||||

- 在 Datadog 仪表盘上监控 NGINX 指标,对其他所有系统

|

||||

- 当一个关键指标急剧变化时设置自动警报来通知你

|

||||

|

||||

### 配置 NGINX ###

|

||||

|

||||

为了收集 NGINX 指标,首先需要确保 NGINX 已启用 status 模块和一个URL 来报告 status 指标。下面将一步一步展示[配置开源 NGINX ][2]和[NGINX Plus][3]。

|

||||

|

||||

### 整合 Datadog 和 NGINX ###

|

||||

|

||||

#### 安装 Datadog 代理 ####

|

||||

|

||||

Datadog 代理是 [一个开源软件][4] 能收集和报告你主机的指标,这样就可以使用 Datadog 查看和监控他们。安装代理通常 [仅需要一个命令][5]

|

||||

|

||||

只要你的代理启动并运行着,你会看到你主机的指标报告[在你 Datadog 账号下][6]。

|

||||

|

||||

|

||||

|

||||

#### 配置 Agent ####

|

||||

|

||||

|

||||

接下来,你需要为代理创建一个简单的 NGINX 配置文件。在你系统中代理的配置目录应该 [在这儿][7]。

|

||||

|

||||

在目录里面的 conf.d/nginx.yaml.example 中,你会发现[一个简单的配置文件][8],你可以编辑并提供 status URL 和可选的标签为每个NGINX 实例:

|

||||

|

||||

init_config:

|

||||

|

||||

instances:

|

||||

|

||||

- nginx_status_url: http://localhost/nginx_status/

|

||||

tags:

|

||||

- instance:foo

|

||||

|

||||

一旦你修改了 status URLs 和其他标签,将配置文件保存为 conf.d/nginx.yaml。

|

||||

|

||||

#### 重启代理 ####

|

||||

|

||||

|

||||

你必须重新启动代理程序来加载新的配置文件。重新启动命令 [在这里][9] 根据平台的不同而不同。

|

||||

|

||||

#### 检查配置文件 ####

|

||||

|

||||

要检查 Datadog 和 NGINX 是否正确整合,运行 Datadog 的信息命令。每个平台使用的命令[看这儿][10]。

|

||||

|

||||

如果配置是正确的,你会看到这样的输出:

|

||||

|

||||

Checks

|

||||

======

|

||||

|

||||

[...]

|

||||

|

||||

nginx

|

||||

-----

|

||||

- instance #0 [OK]

|

||||

- Collected 8 metrics & 0 events

|

||||

|

||||

#### 安装整合 ####

|

||||

|

||||

最后,在你的 Datadog 帐户里面整合 Nginx。这非常简单,你只要点击“Install Integration”按钮在 [NGINX 集成设置][11] 配置表中。

|

||||

|

||||

|

||||

|

||||

### 指标! ###

|

||||

|

||||

一旦代理开始报告 NGINX 指标,你会看到 [一个 NGINX 仪表盘][12] 在你 Datadog 可用仪表盘的列表中。

|

||||

|

||||

基本的 NGINX 仪表盘显示了几个关键指标 [在我们介绍的 NGINX 监控中][13] 的最大值。 (一些指标,特别是请求处理时间,日志分析,Datadog 不提供。)

|

||||

|

||||

你可以轻松创建一个全面的仪表盘来监控你的整个网站区域通过增加额外的图形与 NGINX 外部的重要指标。例如,你可能想监视你 NGINX 主机的host-level 指标,如系统负载。你需要构建一个自定义的仪表盘,只需点击靠近仪表盘的右上角的选项并选择“Clone Dash”来克隆一个默认的 NGINX 仪表盘。

|

||||

|

||||

|

||||

|

||||

你也可以更高级别的监控你的 NGINX 实例通过使用 Datadog 的 [Host Maps][14] -对于实例,color-coding 你所有的 NGINX 主机通过 CPU 使用率来辨别潜在热点。

|

||||

|

||||

|

||||

|

||||

### NGINX 指标 ###

|

||||

|

||||

一旦 Datadog 捕获并可视化你的指标,你可能会希望建立一些监控自动密切的关注你的指标,并当有问题提醒你。下面将介绍一个典型的例子:一个提醒你 NGINX 吞吐量突然下降时的指标监控器。

|

||||

|

||||

#### 监控 NGINX 吞吐量 ####

|

||||

|

||||

Datadog 指标警报可以是 threshold-based(当指标超过设定值会警报)或 change-based(当指标的变化超过一定范围会警报)。在这种情况下,我们会采取后一种方式,当每秒传入的请求急剧下降时会提醒我们。下降往往意味着有问题。

|

||||

|

||||

1.**创建一个新的指标监控**. 从 Datadog 的“Monitors”下拉列表中选择“New Monitor”。选择“Metric”作为监视器类型。

|

||||

|

||||

|

||||

|

||||

2.**定义你的指标监视器**. 我们想知道 NGINX 每秒总的请求量下降的数量。所以我们在基础设施中定义我们感兴趣的 nginx.net.request_per_s度量和。

|

||||

|

||||

|

||||

|

||||

3.**设置指标警报条件**.我们想要在变化时警报,而不是一个固定的值,所以我们选择“Change Alert”。我们设置监控为无论何时请求量下降了30%以上时警报。在这里,我们使用一个 one-minute 数据窗口来表示“now” 指标的值,警报横跨该间隔内的平均变化,和之前 10 分钟的指标值作比较。

|

||||

|

||||

|

||||

|

||||

4.**自定义通知**.如果 NGINX 的请求量下降,我们想要通知我们的团队。在这种情况下,我们将给 ops 队的聊天室发送通知,网页呼叫工程师。在“Say what’s happening”中,我们将其命名为监控器并添加一个短消息将伴随该通知并建议首先开始调查。我们使用 @mention 作为一般警告,使用 ops 并用 @pagerduty [专门给 PagerDuty 发警告][15]。

|

||||

|

||||

|

||||

|

||||

5.**保存集成监控**.点击页面底部的“Save”按钮。你现在监控的关键指标NGINX [work 指标][16],它边打电话给工程师并在它迅速下时随时分页。

|

||||

|

||||

### 结论 ###

|

||||

|

||||

在这篇文章中,我们已经通过整合 NGINX 与 Datadog 来可视化你的关键指标,并当你的网络基础架构有问题时会通知你的团队。

|

||||

|

||||

如果你一直使用你自己的 Datadog 账号,你现在应该在 web 环境中有了很大的可视化提高,也有能力根据你的环境创建自动监控,你所使用的模式,指标应该是最有价值的对你的组织。

|

||||

|

||||

如果你还没有 Datadog 帐户,你可以注册[免费试用][17],并开始监视你的基础架构,应用程序和现在的服务。

|

||||

|

||||

----------

|

||||

这篇文章的来源在 [on GitHub][18]. 问题,错误,补充等?请[联系我们][19].

|

||||

|

||||

------------------------------------------------------------

|

||||

|

||||

via: https://www.datadoghq.com/blog/how-to-monitor-nginx-with-datadog/

|

||||

|

||||

作者:K Young

|

||||

译者:[strugglingyouth](https://github.com/译者ID)

|

||||

校对:[strugglingyouth](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://www.datadoghq.com/blog/how-to-monitor-nginx/

|

||||

[2]:https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/#open-source

|

||||

[3]:https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/#plus

|

||||

[4]:https://github.com/DataDog/dd-agent

|

||||

[5]:https://app.datadoghq.com/account/settings#agent

|

||||

[6]:https://app.datadoghq.com/infrastructure

|

||||

[7]:http://docs.datadoghq.com/guides/basic_agent_usage/

|

||||

[8]:https://github.com/DataDog/dd-agent/blob/master/conf.d/nginx.yaml.example

|

||||

[9]:http://docs.datadoghq.com/guides/basic_agent_usage/

|

||||

[10]:http://docs.datadoghq.com/guides/basic_agent_usage/

|

||||

[11]:https://app.datadoghq.com/account/settings#integrations/nginx

|

||||

[12]:https://app.datadoghq.com/dash/integration/nginx

|

||||

[13]:https://www.datadoghq.com/blog/how-to-monitor-nginx/

|

||||

[14]:https://www.datadoghq.com/blog/introducing-host-maps-know-thy-infrastructure/

|

||||

[15]:https://www.datadoghq.com/blog/pagerduty/

|

||||

[16]:https://www.datadoghq.com/blog/monitoring-101-collecting-data/#metrics

|

||||

[17]:https://www.datadoghq.com/blog/how-to-monitor-nginx-with-datadog/#sign-up

|

||||

[18]:https://github.com/DataDog/the-monitor/blob/master/nginx/how_to_monitor_nginx_with_datadog.md

|

||||

[19]:https://github.com/DataDog/the-monitor/issues

|

||||

@ -0,0 +1,74 @@

|

||||

How to create an AP in Ubuntu 15.04 to connect to Android/iOS

================================================================================

I successfully created a wireless AP hotspot with GNOME Network Manager on Ubuntu 15.04, and I would like to share the steps. Please note: you must have a wireless card that can be used to create an AP hotspot. If you are not sure whether yours can, type `iw list` in a terminal.

If you don't have `iw` installed, you can install it on Ubuntu with `sudo apt-get install iw`.

After you run `iw list`, look for the supported interface modes. You should see entries like the following:

    Supported interface modes:

        * IBSS
        * managed
        * AP
        * AP/VLAN
        * monitor
        * mesh point

Let's go through it step by step:

1. Disconnect from Wi-Fi. Connect your laptop to a wired network.
1. Click the network icon on the top panel -> Edit Connections -> click the Add button in the pop-up window.
1. Choose Wi-Fi from the drop-down menu.
1. Next:

    a. Enter a connection name, e.g. Hotspot

    b. Enter an SSID, e.g. Hotspot

    c. Select mode: Infrastructure

    d. Device MAC address: select your wireless device from the drop-down menu

1. Go to the Wi-Fi Security tab, select WPA & WPA2 Personal, and enter a password.
1. Go to the IPv4 Settings tab and, in the Method drop-down menu, select Shared to other computers.
1. Go to the IPv6 tab and set Method to Ignore (do this only if you do not use IPv6).
1. Click the Save button to save the configuration.
1. Open a terminal from the menu/dash.
1. Edit the connection you just created via network settings.

    With Vim:

        sudo vim /etc/NetworkManager/system-connections/Hotspot

    With gedit:

        gksu gedit /etc/NetworkManager/system-connections/Hotspot

    Replace the name Hotspot with the connection name you chose in step 4.

1. Change `mode=infrastructure` to `mode=ap` and save the file.
1. Once you have saved the file, you should see the AP you just created in the Wi-Fi menu. (If not, turn Wi-Fi off and on again from the top panel.)
1. You can now connect your device to the Wi-Fi network. Tested with a Xiaomi Mi 4 running Android 5.0 (a 1GB file was downloaded to test speed and reliability).

--------------------------------------------------------------------------------

via: http://www.linuxveda.com/2015/08/23/how-to-create-an-ap-in-ubuntu-15-04-to-connect-to-androidiphone/

Author: [Sayantan Das][a]

Translator: [jerryling315](https://github.com/jerryling315)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.linuxveda.com/author/sayantan_das/


@ -0,0 +1,321 @@

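Setting Up RAID 6 (Striping with Double Distributed Parity) in Linux - Part 5
================================================================================

RAID 6 is an upgraded version of RAID 5 with two distributed parities, which provides fault tolerance even after two drives fail. Mission-critical systems stay operational even if two disks fail at the same time. It is similar to RAID 5 but more robust, because it uses one more disk's worth of capacity for parity.

In an earlier article we looked at distributed parity in RAID 5; in this article we look at RAID 6 with double distributed parity. Do not expect extra performance over other RAID levels; for that, we would still need a dedicated RAID controller. In RAID 6, even if we lose 2 disks, we can get the data back by replacing the disks and rebuilding from parity.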

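Set Up RAID 6 in Linux

To set up RAID 6, a set needs at least 4 disks. Some setups even have multiple sets of disks. Reads are fast because data is read from all disks simultaneously; writes are slower because data has to be striped across multiple disks.

Many people ask why we should use RAID 6 when it does not perform as well as other RAID levels. The first thing to understand is that if you need high fault tolerance, RAID 6 is the level to choose. Environments with high availability requirements for databases use RAID 6, because the database is the most important asset and must be protected at any cost. It is also useful in video streaming environments.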

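#### Pros and Cons of RAID 6 ####

- Performance is good.
- RAID 6 is expensive, because it requires the equivalent of two drives for parity.
- The capacity of two disks is lost to parity information (double parity).
- No data is lost even if two disks fail. We can rebuild the data from parity after replacing the failed disks.
- Read performance is better than RAID 5 because it reads from multiple disks, but write performance is very poor without a dedicated RAID controller.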

#### Requirements ####

Creating a RAID 6 requires at least 4 disks. You can add more disks, but you must have a dedicated RAID controller. With software RAID we will not get better performance from RAID 6, so we need a physical RAID controller.

If you are new to RAID setups, we recommend reading the following RAID articles first.

- [Basic Concepts of RAID in Linux - Part 1][1]
- [Creating Software RAID 0 (Stripe) in Linux - Part 2][2]
- [Creating Software RAID 1 (Mirror) in Linux - Part 3][3]

#### My Server Setup ####

    Operating System : CentOS 6.5 Final
    IP Address       : 192.168.0.228
    Hostname         : rd6.tecmintlocal.com
    Disk 1 [20GB]    : /dev/sdb
    Disk 2 [20GB]    : /dev/sdc
    Disk 3 [20GB]    : /dev/sdd
    Disk 4 [20GB]    : /dev/sde

This article is Part 5 of a 9-part RAID series. Here we will see how to create and set up software RAID 6, or striping with double distributed parity, on Linux systems or servers using four 20GB disks named /dev/sdb, /dev/sdc, /dev/sdd and /dev/sde.
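
As a quick sanity check on this layout: since RAID 6 gives up the capacity of two disks to parity, the usable space is roughly (number of disks - 2) × disk size. The helper below is a small illustrative sketch; `raid6_usable_gb` is a made-up name, and the result ignores metadata and file system overhead.

```shell
#!/bin/sh
# Hypothetical helper: usable capacity of a RAID 6 set in GB,
# given the number of disks ($1) and the size of each disk in GB ($2).
raid6_usable_gb() {
    disks=$1
    size_gb=$2
    if [ "$disks" -lt 4 ]; then
        echo "RAID 6 needs at least 4 disks" >&2
        return 1
    fi
    # Two disks' worth of capacity holds the double parity.
    echo $(( (disks - 2) * size_gb ))
}

raid6_usable_gb 4 20   # prints 40
```

For the four 20GB disks used in this article, that comes to about 40GB of usable space.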

### Step 1: Install the mdadm Tool and Examine the Drives ###

1. If you followed our last two RAID articles (Part 2 and Part 3), we have already shown how to install the 'mdadm' tool. If you came straight to this article: 'mdadm' is the tool used to create and manage RAID on Linux systems. Install it with the command for your Linux distribution.

    # yum install mdadm        [on RedHat systems]
    # apt-get install mdadm    [on Debian systems]

2. After installing the tool, verify the four attached disks that we are going to use for the RAID with the following 'fdisk' command.

    # fdisk -l | grep sd

Check Disks in Linux

3. Before creating the RAID, check whether any RAID is already defined on these disks.

    # mdadm -E /dev/sd[b-e]
    # mdadm --examine /dev/sdb /dev/sdc /dev/sdd /dev/sde

Check RAID on Disks

**Note**: In the image above, no super-block is detected, i.e. no RAID is defined on the four disks. We can now start creating RAID 6.

### Step 2: Create Disk Partitions for RAID 6 ###

4. Now create partitions for RAID on '/dev/sdb', '/dev/sdc', '/dev/sdd' and '/dev/sde' using the following fdisk command. Here we show how to create the partition on the sdb disk; the same steps apply to the remaining disks.

**Create the /dev/sdb partition**

    # fdisk /dev/sdb

Follow the instructions below to create the partition.

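- Press 'n' to create a new partition.
- Then press 'p' to choose a primary partition.
- Next, select partition number 1.
- Just press Enter twice to accept the default values.
- Then press 'p' to print the created partition.
- Press 't' to change the partition type.
- At the type prompt, press 'L' to list all available types.
- Type 'fd' to set the Linux RAID type, then press Enter to confirm.
- Then use 'p' again to review the changes.
- Use 'w' to save the changes.

Create /dev/sdb Partition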

**Create the /dev/sdc partition**

    # fdisk /dev/sdc

Create /dev/sdc Partition

**Create the /dev/sdd partition**

    # fdisk /dev/sdd

Create /dev/sdd Partition

**Create the /dev/sde partition**

    # fdisk /dev/sde

Create /dev/sde Partition
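
Because the same keystrokes are repeated for every disk, they can be written down once. The sketch below is an illustration only, not part of the original article: `fdisk_keys` and `plan_partitions` are hypothetical helper names, and it assumes empty disks and an fdisk version that asks its questions in the order described above. It is a dry run that only prints what would be executed, since actually running it overwrites the partition table.

```shell
#!/bin/sh
# Keystrokes matching the interactive steps above:
#   n (new), p (primary), 1 (partition number), two empty lines
#   (default first and last sector), t (change type), fd (Linux RAID),
#   w (write changes). The optional 'L' and 'p' review steps are left out.
fdisk_keys() {
    printf 'n\np\n1\n\n\nt\nfd\nw\n'
}

# Dry run: print the command for each disk instead of executing it.
plan_partitions() {
    for disk in "$@"; do
        echo "fdisk_keys | fdisk $disk"
    done
}

plan_partitions /dev/sdb /dev/sdc /dev/sdd /dev/sde
```

Reviewing the printed plan before piping anything into fdisk keeps the destructive step explicit.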

5. After creating the partitions, it is a good habit to check the disks for super-blocks. If no super-blocks exist, we can go ahead and create a new RAID.

    # mdadm -E /dev/sd[b-e]1

or

    # mdadm --examine /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1

Check RAID on New Partitions

### Step 3: Create the md Device (RAID) ###

6. Now it is time to create the RAID device 'md0' (i.e. /dev/md0), applying the RAID level across all newly created partitions, and then confirm the RAID with the following commands.

    # mdadm --create /dev/md0 --level=6 --raid-devices=4 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1
    # cat /proc/mdstat

Create RAID 6 Device

7. You can also use the watch command to follow the progress of the RAID build, as shown in the figure below.

    # watch -n1 cat /proc/mdstat

Check RAID 6 Progress
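
If only the percentage is of interest, it can be pulled out of the /proc/mdstat text. The following is a rough sketch: `sync_progress` is a hypothetical helper, and the sample below is illustrative text in the usual mdstat layout, not output captured from the author's server.

```shell
#!/bin/sh
# Hypothetical helper: print the resync/recovery percentage found in
# mdstat-style text on stdin (prints nothing when no sync is running).
sync_progress() {
    awk '/resync|recovery/ { for (i = 1; i <= NF; i++) if ($i ~ /%$/) print $i }'
}

# Illustrative sample in /proc/mdstat format (an assumption).
sync_progress <<'EOF'
Personalities : [raid6] [raid5] [raid4]
md0 : active raid6 sde1[3] sdd1[2] sdc1[1] sdb1[0]
      41909248 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]
      [==>..................]  resync = 12.6% (2644352/20954624) finish=1.6min speed=188882K/sec
EOF
```

On a live system the helper would presumably be fed with `sync_progress < /proc/mdstat`.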

8. Verify the RAID devices with the following command.

    # mdadm -E /dev/sd[b-e]1

**Note**: The command above displays information about all four disks, which is rather long, so the full output is not shown here.

9. Next, verify the RAID array to confirm that re-syncing has started.

    # mdadm --detail /dev/md0

Check RAID 6 Array

### Step 4: Create a File System on the RAID Device ###

10. Create an ext4 file system on '/dev/md0' and mount it under /mnt/raid6. We use ext4 here, but you can use any file system type you prefer.

    # mkfs.ext4 /dev/md0

Create File System on RAID 6

11. Mount the created file system under /mnt/raid6 and check the files under the mount point; we can see the lost+found directory.

    # mkdir /mnt/raid6
    # mount /dev/md0 /mnt/raid6/
    # ls -l /mnt/raid6/

12. Create some files under the mount point, add some text to one of them, and verify the content.

    # touch /mnt/raid6/raid6_test.txt
    # ls -l /mnt/raid6/
    # echo "tecmint raid setups" > /mnt/raid6/raid6_test.txt
    # cat /mnt/raid6/raid6_test.txt

Verify RAID Content

13. Add the following entry to /etc/fstab so that the device is mounted automatically at system startup. The mount point may differ depending on your environment.

    # vim /etc/fstab

    /dev/md0                /mnt/raid6              ext4    defaults        0 0

Automount RAID 6 Device

14. Next, run the 'mount -a' command (here with -v for verbose output) to check the fstab entry for errors.

    # mount -av

Verify RAID Automount

### Step 5: Save the RAID 6 Configuration ###

15. Note that by default RAID has no configuration file. We need to save it manually with the command below and then check the status of the device '/dev/md0'.

    # mdadm --detail --scan --verbose >> /etc/mdadm.conf
    # mdadm --detail /dev/md0

Save RAID 6 Configuration

Check RAID 6 Status

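### Step 6: Add a Spare Drive ###

16. The array now uses 4 disks, with the capacity of two of them used for parity information. If any one of the disks fails, we can still get the data, because RAID 6 uses double parity.

If a second disk also fails, we can add a new one before a third disk is lost. A spare drive can be added when the RAID set is created, but I did not define one when creating our set. We can, however, add a spare after a drive failure or while creating the RAID set. Since we have already created the RAID set, let me show how to add a spare drive.

For demonstration purposes, I have hot-plugged a new HDD (i.e. /dev/sdf); let's verify the attached disk.

    # ls -l /dev/ | grep sd

Check New Disk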

17. Now confirm once more that the newly attached disk has no RAID configured on it, using mdadm.

    # mdadm --examine /dev/sdf

Check RAID on New Disk

**Note**: As we did earlier for the four disks, create a new partition on the newly attached disk using the fdisk command.

    # fdisk /dev/sdf

Create /dev/sdf Partition

18. After creating the new partition on /dev/sdf, confirm there is no RAID on the partition, add it to the /dev/md0 RAID device as a spare drive, and verify the added device.

    # mdadm --examine /dev/sdf
    # mdadm --examine /dev/sdf1
    # mdadm --add /dev/md0 /dev/sdf1
    # mdadm --detail /dev/md0

Verify RAID on sdf Partition

Add sdf Partition to RAID

Verify sdf Partition Details

### Step 7: Check RAID 6 Fault Tolerance ###

19. Now let's check whether the spare drive takes over automatically when any disk in our array fails. For testing, I have manually marked one of the drives as failed.

Here, we mark /dev/sdd1 as the failed drive.

    # mdadm --manage --fail /dev/md0 /dev/sdd1

Check RAID 6 Fault Tolerance

20. Let's look at the RAID details and check whether the spare has started to sync.

    # mdadm --detail /dev/md0

Check RAID Auto Sync

**Hurray!** Here we can see that the spare has been activated and the rebuild has started. At the bottom we can see the failed drive /dev/sdd1 listed as faulty. The rebuild progress can be monitored with the following command.

    # cat /proc/mdstat

RAID 6 Auto Syncing
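
A degraded array also shows up in /proc/mdstat as an underscore in the member status, for example `[UU_U]`. The sketch below shows one way this could be checked from a script; `degraded_arrays` is a hypothetical helper, and the sample is illustrative mdstat-style text rather than output from the author's server.

```shell
#!/bin/sh
# Hypothetical helper: print the name of every md array whose member
# status (e.g. [UU_U]) contains an underscore, i.e. a missing member.
degraded_arrays() {
    awk '/^md[0-9]+ :/ { name = $1 }
         /\[[U_]*_[U_]*\]/ { print name }'
}

# Illustrative sample: sdd1 marked faulty (F), spare sdf1 rebuilding.
degraded_arrays <<'EOF'
Personalities : [raid6] [raid5] [raid4]
md0 : active raid6 sdf1[4] sde1[3] sdd1[2](F) sdc1[1] sdb1[0]
      41909248 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/3] [UU_U]
      [=>...................]  recovery =  8.4% (1762304/20954624) finish=2.1min speed=146858K/sec
EOF
```

For the sample above the helper prints `md0`; once the rebuild finishes and the status returns to `[UUUU]`, it prints nothing.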

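### Conclusion ###

Here we have seen how to set up RAID 6 using four disks. This RAID level is one of the more expensive setups, with high redundancy. In the next article we will see how to set up a nested RAID 10 and more. Until then, stay connected with TECMINT.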

--------------------------------------------------------------------------------

via: http://www.tecmint.com/create-raid-6-in-linux/

Author: [Babin Lonston][a]
Translator: [strugglingyouth](https://github.com/strugglingyouth)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.tecmint.com/author/babinlonston/
[1]:http://www.tecmint.com/understanding-raid-setup-in-linux/
[2]:http://www.tecmint.com/create-raid0-in-linux/
[3]:http://www.tecmint.com/create-raid1-in-linux/
