mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-09 01:30:10 +08:00

commit

9cfa383bfc

@ -1,6 +1,7 @@

|

||||

适用于Linux的在线工具

|

||||

16个 Linux 方面的在线工具类网站

|

||||

================================================================================

|

||||

众所周知,GNU Linux不仅仅只是一款操作系统。看起来通过互联网全球许多人都在致力于这款企鹅图标(即Linux)的操作系统。如果你读到这篇文章,你可能倾向于读到关于Linux联机的内容。在可以找到的所有关于这个主题的网页中,有一些网站是每个Linux爱好者都应该收藏起来的。这些网站不仅仅只是教程或回顾,更是可以随时随地访问并与他人共享的实用工具。所以,今天我会建议一份包含16个应该收藏的网址清单。它们中的一些对Windows或Mac用户同样有用:这是在他们的能力范围内可以做到的。(译者注:Windows和Mac一样可以很好地体验Linux)

|

||||

众所周知,GNU Linux不仅仅只是一款操作系统。看起来通过互联网全球许多人都在致力于这款以企鹅为吉祥物的操作系统。如果你读到这篇文章,你可能希望读一些关于Linux在线资源的内容。在可以找到的所有关于这个主题的网页中,有一些网站是每个Linux爱好者都应该收藏起来的。这些网站不仅仅只是教程或回顾,更是可以随时随地访问并与他人共享的实用工具。所以,今天我会建议一份包含16个应该收藏的网址清单。它们中的一些对Windows或Mac用户同样有用:这是在他们的能力范围内可以做到的。(译者注:Windows和Mac一样可以很好地体验Linux)

|

||||

|

||||

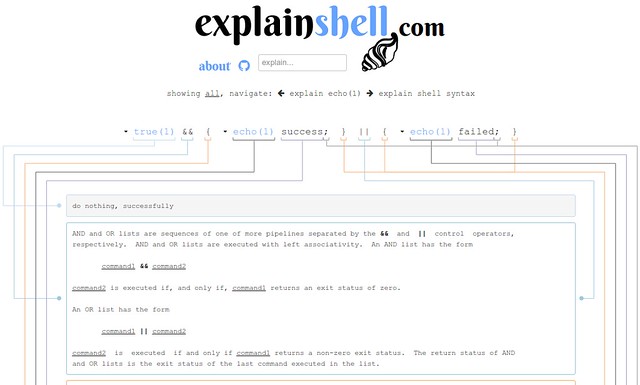

### 1. [ExplainShell.com][1] ###

|

||||

|

||||

[][2]

|

||||

@ -11,43 +12,43 @@

|

||||

|

||||

[][4]

|

||||

|

||||

如果你想开始学习Linux命令行,或者想快速地得到一个自定义的shell命令提示符,但不知道从何下手,这个网站会为你生成PS1提示代码,在家目录下放置.bashrc文件。你可以拖拽任何你想在提示符里看到的元素,譬如用户名和当前时间,这个网站都会为你编写易懂可读的代码。绝对是懒人必备!

|

||||

如果你想开始学习Linux命令行,或者想快速地生成一个自定义的shell命令提示符,但不知道从何下手,这个网站可以为你生成PS1提示的代码,将代码放到家目录下的.bashrc文件中即可。你可以拖拽任何你想在提示符里看到的元素,譬如用户名和当前时间,这个网站都会为你编写易懂可读的代码。绝对是懒人必备!

|

||||

|

||||

### 3. [Vim-adventures.com][5] ###

|

||||

|

||||

[][6]

|

||||

|

||||

我是最近才发现这个网站的,但我的生活已经深陷其中。简而言之:它就是一个使用Vim命令的RPG游戏。在等距的水平上使用‘h,j,k,l’四个键移动字母,获取新的命令/能力,收集关键词,非常快速地学习高效地使用Vim。

|

||||

我是最近才发现这个网站的,但我的生活已经深陷其中。简而言之:它就是一个使用Vim命令的RPG游戏。在地图的平面上使用‘h,j,k,l’四个键移动你的角色、得到新的命令/能力、收集钥匙,可以帮助你非常快速地学习如何高效使用Vim。

|

||||

|

||||

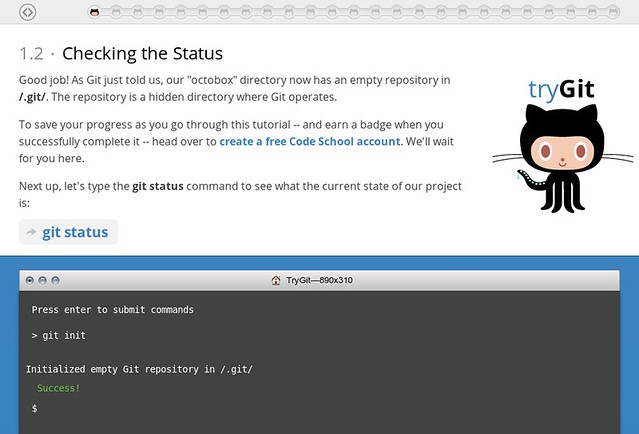

### 4. [Try Github][7] ###

|

||||

|

||||

[][8]

|

||||

|

||||

目标很简单:15分钟学会Git。这个网站模拟一个控制台,带你遍历这种协作编辑的每一步。界面非常时尚,目的十分有用。唯一不足的是对Git敏感,但Git绝对是一项不错的技能,这里也是学习Git的绝佳之处。

|

||||

目标很简单:15分钟学会Git。这个网站模拟一个控制台,带你遍历这种协作编辑的每一步。界面非常时尚,目的十分有用。唯一不足的是对Git感兴趣,但Git绝对是一项不错的技能,这里也是学习Git的绝佳之处。

|

||||

|

||||

### 5. [Shortcutfoo.com][9] ###

|

||||

|

||||

[][10]

|

||||

|

||||

又一个包含众多快捷键数据库的网站,shortcutfoo以更标准的方式将其内容呈现给用户,但绝对比有趣的迷你游戏更直截了当。这里有许多软件的快捷键,并按类别分组。虽然它不像Vim一类完全依赖快捷键的软件那么全面,但也足以提供快速的提示或一般性的概述。

|

||||

又一个包含众多快捷键数据库的网站,shortcutfoo以更标准的方式将其内容呈现给用户,但绝对比有趣的迷你游戏更直截了当。这里有许多软件的快捷键,并按类别分组。虽然像Vim一类的软件它没有给出超级完整的快捷键列表,但也足以提供快速的提示或一般性的概述。

|

||||

|

||||

### 6. [GitHub Free Programming Books][11] ###

|

||||

|

||||

[][12]

|

||||

|

||||

正如你从URL上猜到的一样,这个网站就是免费在线编程书籍的集合,使用Git协作方式编写。上面的内容非常好,作者们应该为做出这些工作受到表扬。它可能不是最容易阅读的,但一定是最有启发性的之一。我们只希望这项运动能持续进行。

|

||||

正如你从URL上猜到的一样,这个网站就是免费在线编程书籍的集合,使用Git协作方式编写。上面的内容非常好,作者们应该为他们做出的这些贡献受到表扬。它可能不是最容易阅读的,但一定是最有启发性的之一。我们只希望这项运动能持续进行。

|

||||

|

||||

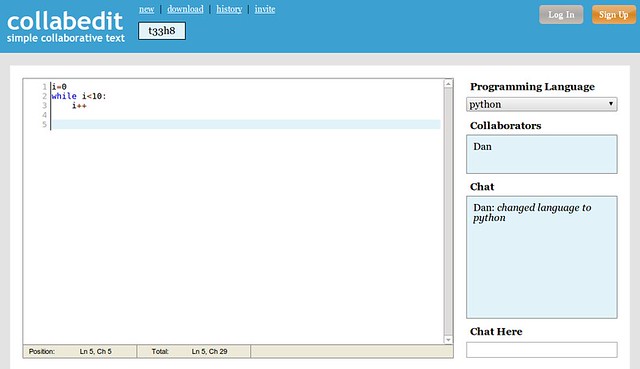

### 7. [Collabedit.com][13] ###

|

||||

|

||||

[][14]

|

||||

|

||||

如果你曾经准备过电话面试,你应该先试试collabedit。它让你创建文件,选择你想使用的编程语言,然后通过URL共享文档。打开链接的人可以免费地实时使用文本交互,使你可以评判他们的编程水平或只是交换一些程序片段。这里甚至还提供合适的语法高亮和聊天功能。换句话说,这就是程序员的即时Google文档。

|

||||

如果你曾经计划过电话面试,你应该先试试collabedit。它让你创建文件,选择你想使用的编程语言,然后通过URL共享文档。打开链接的人可以免费地实时使用文本交互,使你可以评判他们的编程水平或只是交换一些程序片段。这里甚至还提供合适的语法高亮和聊天功能。换句话说,这就是程序员的即时Google Doucment。

|

||||

|

||||

### 8. [Cpp.sh][15] ###

|

||||

|

||||

[][16]

|

||||

|

||||

尽管这个网站超出了Linux范围,但因为它非常有用,所以值得将它放在这里。简单地说,这是一个C++在线开发环境。只需在导航栏里编写程序,然后运行它。作为奖励,你可以使用自动补全、Ctrl+Z,以及和你的小伙伴共享URL。这些有趣的事情,你只需要通过一个简单的浏览器就能做到。

|

||||

尽管这个网站超出了Linux范围,但因为它非常有用,所以值得将它放在这里。简单地说,这是一个C++在线开发环境。只需在浏览器里编写程序,然后运行它。作为奖励,你可以使用自动补全、Ctrl+Z,以及和你的小伙伴分享你的作品的URL。这些有趣的事情,你只需要通过一个简单的浏览器就能做到。

|

||||

|

||||

### 9. [Copy.sh][17] ###

|

||||

|

||||

@ -59,13 +60,13 @@

|

||||

|

||||

[][21]

|

||||

|

||||

我们总是在自己的电脑上保存着一大段类似于“gems”的命令行【翻译得不准确,麻烦校正】,commandlinefu的目标是把这些片段释放给全世界。作为一个协作式数据库,它就像是命令行里的维基百科。每个人可以免费注册,把自己最钟爱的命令提交到这个网站上给其他人看。你将能够获取来自四面八方的知识并与人分享。如果你对精通shell饶有兴趣,commandlinefu也可以提供一些优秀的特性,比如随机命令和每天学习新知识的新闻订阅。

|

||||

我们总是在自己的电脑上保存着一大段命令行“宝石”,commandlinefu的目标是把这些片段释放给全世界。作为一个协作式数据库,它就像是命令行里的维基百科。每个人可以免费注册,把自己最钟爱的命令提交到这个网站上给其他人看。你将能够获取来自四面八方的知识并与人分享。如果你对精通shell饶有兴趣,commandlinefu也可以提供一些优秀的特性,比如随机命令和每天学习新知识的新闻订阅。

|

||||

|

||||

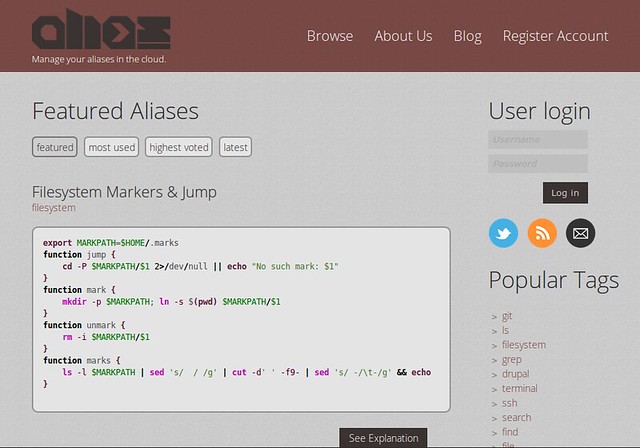

### 11. [Alias.sh][22] ###

|

||||

|

||||

[][23]

|

||||

|

||||

另一协作式数据库,alias.sh(我爱死这个URL了)有点像commandlinefu,但是为shell别名开发的。你可以共享和发现一些有用的别名,来使你的CLI(命令行界面)体验更加舒服。我个人喜欢这个获取图片维度的别名。

|

||||

另一协作式数据库,alias.sh(我爱死这个URL了)有点像commandlinefu,但是为shell别名开发的。你可以共享和发现一些有用的别名,来使你的CLI(命令行界面)体验更加舒服。我个人喜欢这个获取图片维度的别名命令。

|

||||

|

||||

function dim(){ sips $1 -g pixelWidth -g pixelHeight }

|

||||

|

||||

@ -75,40 +76,41 @@

|

||||

|

||||

[][25]

|

||||

|

||||

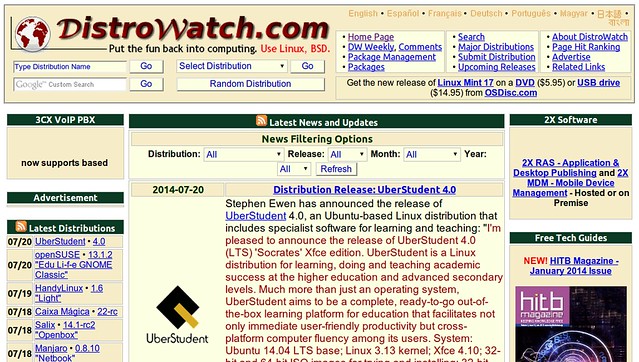

有谁不知道Distrowatch?除了基于这个网站流行度给出一个精确的Linux发行版排名,Distrowatch也是一个非常有用的数据库。无论你正苦苦寻找一个新的发行版,还是只是出于好奇,它都能为你能找到的每个Linux版本呈现一个详尽的描述,包含默认的桌面环境,包管理系统,默认应用程序等信息,还有所有的版本号,以及可用的下载链接。总而言之,这就是个Linux宝库。

|

||||

有谁不知道Distrowatch?除了基于这个网站流行度给出一个精确的Linux发行版排名,Distrowatch也是一个非常有用的数据库。无论你正苦苦寻找一个新的发行版,还是只是出于好奇,它都能为你能找到的每个Linux版本呈现一个详尽的描述,包含默认的桌面环境、包管理系统、默认应用程序等信息,还有所有的版本号,以及可用的下载链接。总而言之,这就是个Linux宝库。

|

||||

|

||||

### 13. [Linuxmanpages.com][26] ###

|

||||

|

||||

[][27]

|

||||

|

||||

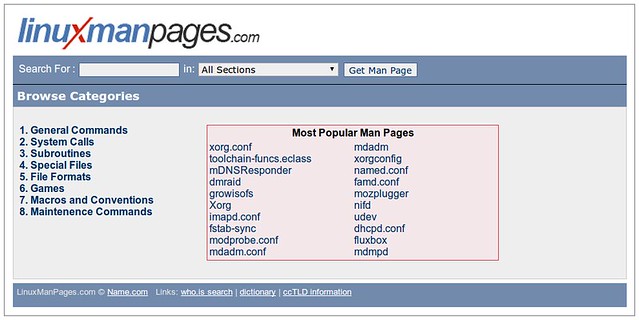

一切都在URL中:随时随地获取主流命令的手册页面。尽管不确信对于Linux用户是否真的有用,因为他们可以从真实的终端中获取这些信息,但这里的内容还是值得关注的。

|

||||

一切尽在URL中说明了:随时随地获取主流命令的手册页面。尽管不确信对于Linux用户是否真的有用,因为他们可以从真实的终端中获取这些信息,但这里的内容还是值得关注的。

|

||||

|

||||

### 14. [AwesomeCow.com][28] ###

|

||||

|

||||

[][29]

|

||||

|

||||

这里可能少一些核心的Linux内容,但肯定是有一些用的。Awesomecow是一个搜索引擎,来寻找Windows软件在Linux上的替代品。它对那些迁移到企鹅操作系统(Linux)或习惯Windows软件的人很有帮助。我认为这个网站代表一种能力,表明了在谈到软件质量时Linux也可以适用于专业领域。大家至少可以尝试一下。

|

||||

这可能对于骨灰级 Linux 没啥用,但是对于其他人也许有用。Awesomecow是一个搜索引擎,来寻找Windows软件在Linux上对应的替代品。它对那些迁移到企鹅操作系统(Linux)或习惯Windows软件的人很有帮助。我认为这个网站代表一种能力,表明了在谈到软件质量时Linux也可以适用于专业领域。大家至少可以尝试一下。

|

||||

|

||||

### 15. [PenguSpy.com][30] ###

|

||||

|

||||

[][31]

|

||||

|

||||

Steam在Linux上崭露头角之前,游戏性可能是Linux的软肋。但这个名为“pengsupy”的网站不遗余力地弥补这个软肋,通过使用漂亮的接口在数据库中收集所有兼容Linux的游戏。游戏按照类别、发行日期、评分等指标分类。我真心希望这一类的网站不会因为Steam的存在走向衰亡,毕竟这是我在这个列表里最喜爱的网站之一。

|

||||

Steam在Linux上崭露头角之前,可玩性可能是Linux的软肋。但这个名为“pengsupy”的网站不遗余力地弥补这个软肋,通过使用漂亮的界面展现了数据库中收集的所有兼容Linux的游戏。游戏按照类别、发行日期、评分等指标分类。我真心希望这一类的网站不会因为Steam的存在走向衰亡,毕竟这是我在这个列表里最喜爱的网站之一。

|

||||

|

||||

### 16. [Linux Cross Reference by Free Electrons][32] ###

|

||||

|

||||

[][33]

|

||||

|

||||

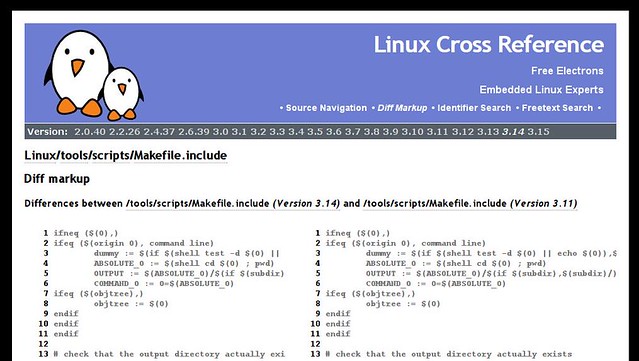

最后,对所有的专家和好奇的用户,lxr是源自Linux Cross Reference的回文构词法,使我们能交互地在线查看Linux内核代码。通过标识符可以很方便地使用导航栏,你可以使用标准的diff标记对比文件的不同版本。这个网站的界面看起来严肃直接,毕竟这只是一个希望完美阐述开源观点的网站。

|

||||

最后,对所有的专家和好奇的用户,lxr 是源于 Linux Cross Reference 的另外一种形式,使我们能交互地在线查看Linux内核代码。可以通过各种标识符在代码中很方便地导航,你可以使用标准的diff标记对比文件的不同版本。这个网站的界面看起来严肃直接,毕竟这只是一个希望完美阐述开源观点的网站。

|

||||

|

||||

总而言之,应该列出更多这一类的网站,作为这篇文章第二部分的主题。但这篇文章是一个好的开始,是一道为Linux用户寻找在线工具的开胃菜。如果你有其它任何想要分享的页面,而且是紧跟这个主题的,在评论里写出来。这将有助于续写这个列表。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/2014/07/useful-online-tools-linux.html

|

||||

|

||||

原文作者:[Adrien Brochard][a](我是一名来自法国的Linux狂热爱好者。在尝试过众多的发行版后,我最终选择了Archlinux。但我一直会通过叠加技巧和窍门来优化我的系统。)

|

||||

|

||||

译者:[KayGuoWhu](https://github.com/KayGuoWhu) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[KayGuoWhu](https://github.com/KayGuoWhu) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

如何在Linux中使用awk命令

|

||||

================================================================================

|

||||

文本处理是Unix的核心。从管道到/proc子系统,“一切都是文件”的理念贯穿于操作系统和所有基于它构造的工具。正因为如此,轻松地处理文本是一个期望成为Linux系统管理员甚至是资深用户的最重要的技能之一,awk是通用编程语言之外最强大的文本处理工具之一。

|

||||

文本处理是Unix的核心。从管道到/proc子系统,“一切都是文件”的理念贯穿于操作系统和所有基于它构造的工具。正因为如此,轻松地处理文本是一个期望成为Linux系统管理员甚至是资深用户的最重要的技能之一,而 awk是通用编程语言之外最强大的文本处理工具之一。

|

||||

|

||||

最简单的awk的任务是从标准输入中选择字段;如果你对awk除了这个没有学习过其他的,它还是会是你身边一个非常有用的工具。

|

||||

最简单的awk的任务是从标准输入中选择字段;如果你对awk除了这个用途之外,从来没了解过它的其他用途,你会发现它还是会是你身边一个非常有用的工具。

|

||||

|

||||

默认情况下,awk通过空格分隔输入。如果您想选择输入的第一个字段,你只需要告诉awk输出$ 1:

|

||||

|

||||

@ -30,13 +30,13 @@

|

||||

|

||||

> foo: three | bar: one

|

||||

|

||||

好吧,如果你的输入不是由空格分隔怎么办?只需用awk中的'-F'标志后带上你的分隔符:

|

||||

好吧,如果你的输入不是由空格分隔怎么办?只需用awk中的'-F'标志指定你的分隔符:

|

||||

|

||||

$ echo 'one mississippi,two mississippi,three mississippi,four mississippi' | awk -F , '{print $4}'

|

||||

|

||||

> four mississippi

|

||||

|

||||

偶尔间,你会发现自己正在处理拥有不同的字段数量的数据,但你只知道你想要的*最后*字段。 awk中内置的$NF变量代表*字段的数量*,这样你就可以用它来抓取最后一个元素:

|

||||

偶尔间,你会发现自己正在处理字段数量不同的数据,但你只知道你想要的*最后*字段。 awk中内置的$NF变量代表*字段的数量*,这样你就可以用它来抓取最后一个元素:

|

||||

|

||||

$ echo 'one two three four' | awk '{print $NF}'

|

||||

|

||||

@ -54,9 +54,9 @@

|

||||

|

||||

> three

|

||||

|

||||

而且这一切都非常有用,同样你可以摆脱强制使用sed,cut,和grep来得到这些结果(尽管有大量的工作)。

|

||||

而且这一切都非常有用,同样你可以摆脱强制使用sed,cut,和grep来得到这些结果(尽管要做更多的操作)。

|

||||

|

||||

因此,我将为你留下awk的最后介绍特性,维护跨行状态。

|

||||

因此,我将最后为你介绍awk的一个特性,维持跨行状态。

|

||||

|

||||

$ echo -e 'one 1\ntwo 2' | awk '{print $2}'

|

||||

|

||||

@ -68,7 +68,7 @@

|

||||

|

||||

> 3

|

||||

|

||||

(END代表的是我们在执行完每行的处理**之后**只处理下面的代码块

|

||||

(END代表的是我们在执行完每行的处理**之后**只处理下面的代码块)

|

||||

|

||||

这里我使用的例子是统计web服务器请求日志的字节大小。想象一下我们有如下这样的日志:

|

||||

|

||||

@ -104,7 +104,7 @@

|

||||

|

||||

> 31657

|

||||

|

||||

如果你正在寻找关于awk的更多资料,你可以在Amazon中在15美元内找到[原始awk手册][1]的副本。你同样可以使用Eric Pement的[单行awk命令收集][2]这本书

|

||||

如果你正在寻找关于awk的更多资料,你可以在Amazon中花费不到15美元买到[原始awk手册][1]的二手书。你也许还可以看看Eric Pement的[单行awk命令收集][2]这本书。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -112,7 +112,7 @@ via: http://xmodulo.com/2014/07/use-awk-command-linux.html

|

||||

|

||||

作者:[James Pearson][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -4,19 +4,20 @@ Nvidia Optimus是一款利用“双显卡切换”技术的混合GPU系统,但

|

||||

|

||||

### 背景知识 ###

|

||||

对那些不熟悉Nvidia Optimus的读者,在板载Intel图形芯片组和使用被称为“GPU切换”、对需求有着更强大处理能力的NVIDA显卡这两者之间的进行切换是很有必要的。这么做的主要目的是延长笔记本电池的使用寿命,以便在不需要Nvidia GPU的时候将其关闭。带来的好处是显而易见的,比如说你只是想简单地打打字,笔记本电池可以撑8个小时;如果看高清视频,可能就只能撑3个小时了。使用Windows时经常如此。

|

||||

|

||||

|

||||

|

||||

几年前,我买了一台上网本(Asus VX6),犯的最蠢的一个错误就是没有检查Linux驱动兼容性。因为在以前,特别是对于一台上网本大小的设备,这根本不会是问题。即便某些驱动不是现成可用的,我也可以找到其它的办法让它正常工作,比如安装专门模块或者使用反向移植。对我来说这是第一次——我的电脑预先配备了Nvidia ION2图形显卡。

|

||||

|

||||

在那时候,Nvidia的Optimus混合GPU硬件还是相当新的产品,而我也没有预见到在这台机器上运行Linux会遇到什么限制。如果你读到了这里,恰好对Linux系统有经验,而且也在几年前买过一台笔记本,你可能对这种痛苦感同身受。

|

||||

|

||||

[Bumblebee][4]项目直到最近因为得到Linux系统对混合图形方面的支持才变得好起来。事实上,如果配置正确的话,通过命令行接口(如“optirun vlc”)为想要的应用程序去利用Nvidia显卡的功能是可能的,但让HDMI一类的功能运转起来就很不同了。(译者注:Bumblebee 项目是把Nvidia的Optimus技术移到Linux上来。)

|

||||

[Bumblebee][4]项目直到最近因为得到Linux系统对混合图形方面的支持才变得好起来。事实上,如果配置正确的话,通过命令行接口(如“optirun vlc”)让你选定的应用程序能利用Nvidia显卡功能是可行的,但让HDMI一类的功能运转起来就很不同了。(译者注:Bumblebee 项目是把Nvidia的Optimus技术移到Linux上来。)

|

||||

|

||||

我之所以使用“如果配置正确的话”这个短语,是因为实际上为了让它发挥出性能往往不只是通过几次尝试去改变Xorg的配置就能做到的。如果你以前没有使用过ppa-purge或者运行过“dpkg-reconfigure -phigh xserver-xorg”这类命令,那么我可以向你保证修补Bumblebee的过程会让你受益匪浅。

|

||||

我之所以使用“如果配置正确的话”这个短语,是因为实际上为了让它发挥出性能来往往不只是通过几次尝试去改变Xorg的配置就能做到的。如果你以前没有使用过ppa-purge或者运行过“dpkg-reconfigure -phigh xserver-xorg”这类命令,那么我可以向你保证修补Bumblebee的过程会让你受益匪浅。

|

||||

|

||||

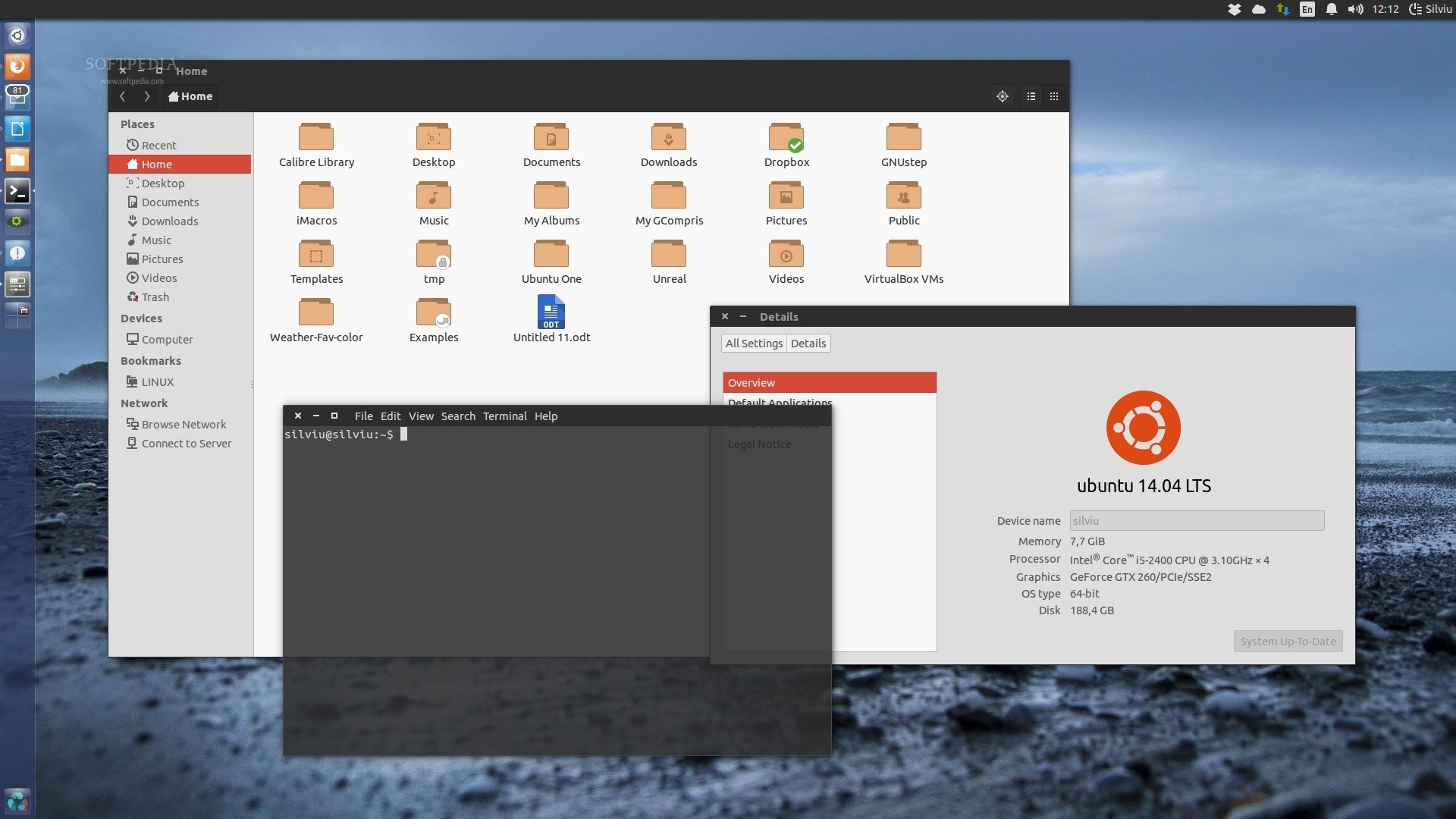

[][2]

|

||||

|

||||

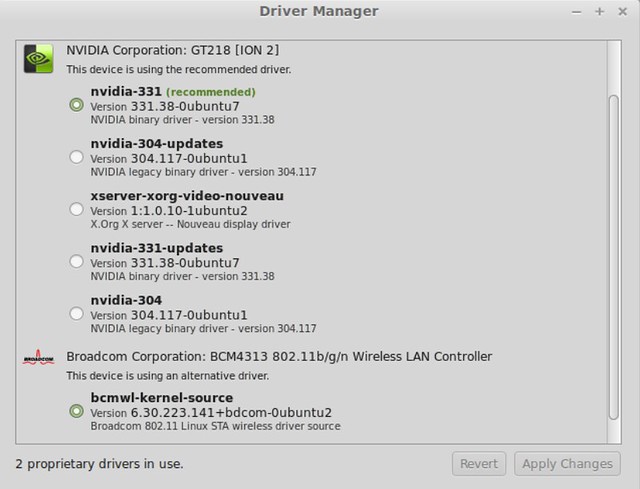

等待了很长一段时间,Nvidia才发布了支持Optimus的Linux驱动,但我们仍然没有获取对双显卡切换的真正支持。然而,现在有了Ubuntu 14.04、nvidia-prime和nvidia-331驱动,任何人都可以在Intel芯片和Nvidia显卡之间轻松切换。不幸的是,为了使切换生效,还是会受限于要重启X11视窗系统(通过注销登录实现)。

|

||||

在等待了很长一段时间后,Nvidia才发布了支持Optimus的Linux驱动,但我们仍然没有得到对双显卡切换的真正支持。然而,现在有了Ubuntu 14.04、nvidia-prime和nvidia-331驱动,任何人都可以在Intel芯片和Nvidia显卡之间轻松切换。不过不幸的是,为了使切换生效,还是会受限于需要重启X11视窗系统(通过注销登录实现)。

|

||||

|

||||

为了减轻这种不便,有一个小型程序用于快速切换,稍后我会给出。这个驱动程序的安装就此成为一件轻而易举的事了,HDMI也可以正常工作,这足以让我心满意足了。

|

||||

|

||||

@ -24,11 +25,11 @@ Nvidia Optimus是一款利用“双显卡切换”技术的混合GPU系统,但

|

||||

|

||||

为了更快地描述这个过程,我假设你已经安装好Ubuntu 14.04或者Mint 17。

|

||||

|

||||

作为一名系统管理员,最近我发现90%的Linux通过命令行执行起来更快,但这次我推荐使用“Additional Drivers”这个应用程序,你可能使用它安装过网卡或声卡驱动。

|

||||

作为一名系统管理员,最近我发现90%的Linux操作通过命令行执行起来更快,但这次我推荐使用“Additional Drivers”这个应用程序,你可能使用它安装过网卡或声卡驱动。

|

||||

|

||||

|

||||

|

||||

**注意:下面的所有命令都是在~#前执行的,需要root权限执行。在运行命令前,要么使用“sudo su”(切换到root权限),要么在每条命令的开头使用速冻运行。**

|

||||

**注意:下面的所有命令都是在~#提示符下执行的,需要root权限执行。在运行命令前,要么使用“sudo su”(切换到root权限),要么在每条命令的开头使用sudo运行。**

|

||||

|

||||

你也可以在命令行输入如下命令进行安装:

|

||||

|

||||

@ -44,19 +45,19 @@ Nvidia Optimus是一款利用“双显卡切换”技术的混合GPU系统,但

|

||||

|

||||

~$ nvidia-settings

|

||||

|

||||

#### 注意:~$表示不以root用户身份执行。 ####

|

||||

**注意:~$表示不以root用户身份执行。**

|

||||

|

||||

|

||||

|

||||

你也可以使用命令行设置默认使用哪一块显卡:

|

||||

|

||||

~# prime-select intel (or nvidia)

|

||||

~# prime-select intel (或 nvidia)

|

||||

|

||||

使用这个命令进行切换:

|

||||

|

||||

~# prime-switch intel (or nvidia)

|

||||

~# prime-switch intel (或 nvidia)

|

||||

|

||||

两个命令的生效都需要重启X11,可以通过注销和重新登录实现。重启电脑也行。

|

||||

两个命令的生效都需要重启X11,可以通过注销和重新登录实现。当然重启电脑也行。

|

||||

|

||||

对Ubuntu用户键入命令:

|

||||

|

||||

@ -70,7 +71,7 @@ Nvidia Optimus是一款利用“双显卡切换”技术的混合GPU系统,但

|

||||

|

||||

~# prime-select query

|

||||

|

||||

最后,你可以通过添加ppa:nilarimogard/webupd8来安装叫做prime-indicator的程序包,实现通过工具栏快速切换来重启Xserver会话。为了安装它,只需要运行:

|

||||

最后,你可以通过添加ppa:nilarimogard/webupd8来安装叫做prime-indicator的程序包,实现通过工具栏快速切换来重启Xserver会话。要安装它,只需要运行:

|

||||

|

||||

~# add-apt-repository ppa:nilarimogard/webupd8

|

||||

~# apt-get update

|

||||

@ -84,7 +85,7 @@ Nvidia Optimus是一款利用“双显卡切换”技术的混合GPU系统,但

|

||||

|

||||

也可以花时间查看一下这个我偶然发现的[脚本][3],用来方便地在Bumblebee和Nvidia-Prime之间进行切换,但我必须强调并没有亲自对此进行实验。

|

||||

|

||||

最后,我感到非常惭愧写了这么多才得以为Linux上的显卡提供了专门支持,但仍然不能实现双显卡切换,因为混合图形技术似乎是便携式设备的未来。一般情况下,AMD会发布Linux平台上的驱动支持,但我认为Optimus是目前为止我遇到过的最糟糕的硬件支持问题。

|

||||

最后,我感到非常惭愧,写了这么多才得以为Linux上的显卡提供了专门支持,但仍然不能实现双显卡切换,因为混合图形技术似乎是便携式设备的未来。一般情况下,AMD会发布Linux平台上的驱动支持,但我认为Optimus是目前为止我遇到过的最糟糕的硬件支持问题。

|

||||

|

||||

不管这篇教程对你的使用是否完美,但这确实是利用这块Nvidia显卡最容易的方法。你可以试着在Intel显卡上只运行最新的Unity,然后考虑2到3个小时的电池寿命是否值得权衡。

|

||||

|

||||

@ -94,7 +95,7 @@ via: http://xmodulo.com/2014/08/install-configure-nvidia-optimus-driver-ubuntu.h

|

||||

|

||||

作者:[Christopher Ward][a]

|

||||

译者:[KayGuoWhu](https://github.com/KayGuoWhu)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,15 +1,16 @@

|

||||

Linux中15个‘echo’ 实例

|

||||

Linux中的15个‘echo’ 命令实例

|

||||

================================================================================

|

||||

**echo**是一种最常用的与广泛使用的内置于Linux的bash和C shell的命令,通常用在脚本语言和批处理文件中来在标准输出或者文件中显示一行文本或者字符串。

|

||||

|

||||

|

||||

|

||||

echo命令例子

|

||||

|

||||

echo命令的语法是:

|

||||

|

||||

echo [选项] [字符串]

|

||||

|

||||

**1.** 输入一行文本并显示在标准输出上

|

||||

###**1.** 输入一行文本并显示在标准输出上

|

||||

|

||||

$ echo Tecmint is a community of Linux Nerds

|

||||

|

||||

@ -17,7 +18,9 @@ echo命令的语法是:

|

||||

|

||||

Tecmint is a community of Linux Nerds

|

||||

|

||||

**2.** 声明一个变量并输出它的值。比如,声明变量**x**并给它赋值为**10**。

|

||||

###**2.** 输出一个声明的变量值

|

||||

|

||||

比如,声明变量**x**并给它赋值为**10**。

|

||||

|

||||

$ x=10

|

||||

|

||||

@ -27,15 +30,20 @@ echo命令的语法是:

|

||||

|

||||

The value of variable x = 10

|

||||

|

||||

**注意:** Linux中的选项‘**-e**‘扮演了转义字符反斜线的翻译器。

|

||||

|

||||

**3.** 使用‘**\b**‘选项- ‘**-e**‘后带上'\b'会删除字符间的所有空格。

|

||||

###**3.** 使用‘**\b**‘选项

|

||||

|

||||

‘**-e**‘后带上'\b'会删除字符间的所有空格。

|

||||

|

||||

**注意:** Linux中的选项‘**-e**‘扮演了转义字符反斜线的翻译器。

|

||||

|

||||

$ echo -e "Tecmint \bis \ba \bcommunity \bof \bLinux \bNerds"

|

||||

|

||||

TecmintisacommunityofLinuxNerds

|

||||

|

||||

**4.** 使用‘**\n**‘选项- ‘**-e**‘后面的带上‘\n’行会在遇到的地方作为新的一行

|

||||

###**4.** 使用‘**\n**‘选项

|

||||

|

||||

‘**-e**‘后面的带上‘\n’行会在遇到的地方作为新的一行

|

||||

|

||||

$ echo -e "Tecmint \nis \na \ncommunity \nof \nLinux \nNerds"

|

||||

|

||||

@ -47,13 +55,15 @@ echo命令的语法是:

|

||||

Linux

|

||||

Nerds

|

||||

|

||||

**5.** 使用‘**\t**‘选项 - ‘**-e**‘后面跟上‘\t’会在空格间加上水平制表符。

|

||||

###**5.** 使用‘**\t**‘选项

|

||||

|

||||

‘**-e**‘后面跟上‘\t’会在空格间加上水平制表符。

|

||||

|

||||

$ echo -e "Tecmint \tis \ta \tcommunity \tof \tLinux \tNerds"

|

||||

|

||||

Tecmint is a community of Linux Nerds

|

||||

|

||||

**6.** 也可以同时使用换行‘**\n**‘与水平制表符‘**\t**‘。

|

||||

###**6.** 也可以同时使用换行‘**\n**‘与水平制表符‘**\t**‘

|

||||

|

||||

$ echo -e "\n\tTecmint \n\tis \n\ta \n\tcommunity \n\tof \n\tLinux \n\tNerds"

|

||||

|

||||

@ -65,7 +75,9 @@ echo命令的语法是:

|

||||

Linux

|

||||

Nerds

|

||||

|

||||

**7.** 使用‘**\v**‘选项 - ‘**-e**‘后面跟上‘\v’会加上垂直制表符。

|

||||

###**7.** 使用‘**\v**‘选项

|

||||

|

||||

‘**-e**‘后面跟上‘\v’会加上垂直制表符。

|

||||

|

||||

$ echo -e "\vTecmint \vis \va \vcommunity \vof \vLinux \vNerds"

|

||||

|

||||

@ -77,7 +89,7 @@ echo命令的语法是:

|

||||

Linux

|

||||

Nerds

|

||||

|

||||

**8.** 也可以同时使用换行‘**\n**‘与垂直制表符‘**\v**‘。

|

||||

###**8.** 也可以同时使用换行‘**\n**‘与垂直制表符‘**\v**‘

|

||||

|

||||

$ echo -e "\n\vTecmint \n\vis \n\va \n\vcommunity \n\vof \n\vLinux \n\vNerds"

|

||||

|

||||

@ -98,43 +110,51 @@ echo命令的语法是:

|

||||

|

||||

**注意:** 你可以按照你的需求连续使用两个或者多个垂直制表符,水平制表符与换行符。

|

||||

|

||||

**9.** 使用‘**\r**‘选项 - ‘**-e**‘后面跟上‘\r’来指定输出中的回车符。

|

||||

###**9.** 使用‘**\r**‘选项

|

||||

|

||||

‘**-e**‘后面跟上‘\r’来指定输出中的回车符。(LCTT 译注:会覆写行开头的字符)

|

||||

|

||||

$ echo -e "Tecmint \ris a community of Linux Nerds"

|

||||

|

||||

is a community of Linux Nerds

|

||||

|

||||

**10.** 使用‘**\c**‘选项 - ‘**-e**‘后面跟上‘\c’会抑制输出后面的字符并且最后不会换新行。

|

||||

###**10.** 使用‘**\c**‘选项

|

||||

|

||||

‘**-e**‘后面跟上‘\c’会抑制输出后面的字符并且最后不会换新行。

|

||||

|

||||

$ echo -e "Tecmint is a community \cof Linux Nerds"

|

||||

|

||||

Tecmint is a community @tecmint:~$

|

||||

|

||||

**11.** ‘**-n**‘会在echo完后不会输出新行。

|

||||

###**11.** ‘**-n**‘会在echo完后不会输出新行

|

||||

|

||||

$ echo -n "Tecmint is a community of Linux Nerds"

|

||||

Tecmint is a community of Linux Nerds@tecmint:~/Documents$

|

||||

|

||||

**12.** 使用‘**\c**‘选项 - ‘**-e**‘后面跟上‘\a’选项会听到声音警告。

|

||||

###**12.** 使用‘**\a**‘选项

|

||||

|

||||

‘**-e**‘后面跟上‘\a’选项会听到声音警告。

|

||||

|

||||

$ echo -e "Tecmint is a community of \aLinux Nerds"

|

||||

Tecmint is a community of Linux Nerds

|

||||

|

||||

**注意:** 在你开始前,请先检查你的音量键。

|

||||

**注意:** 在你开始前,请先检查你的音量设置。

|

||||

|

||||

**13.** 使用echo命令打印所有的文件和文件夹(ls命令的替代)。

|

||||

###**13.** 使用echo命令打印所有的文件和文件夹(ls命令的替代)

|

||||

|

||||

$ echo *

|

||||

|

||||

103.odt 103.pdf 104.odt 104.pdf 105.odt 105.pdf 106.odt 106.pdf 107.odt 107.pdf 108a.odt 108.odt 108.pdf 109.odt 109.pdf 110b.odt 110.odt 110.pdf 111.odt 111.pdf 112.odt 112.pdf 113.odt linux-headers-3.16.0-customkernel_1_amd64.deb linux-image-3.16.0-customkernel_1_amd64.deb network.jpeg

|

||||

|

||||

**14.** 打印制定的文件类型。比如,让我们假设你想要打印所有的‘**.jpeg**‘文件,使用下面的命令。

|

||||

###**14.** 打印制定的文件类型

|

||||

|

||||

比如,让我们假设你想要打印所有的‘**.jpeg**‘文件,使用下面的命令。

|

||||

|

||||

$ echo *.jpeg

|

||||

|

||||

network.jpeg

|

||||

|

||||

**15.** echo可以使用重定向符来输出到一个文件而不是标准输出。

|

||||

###**15.** echo可以使用重定向符来输出到一个文件而不是标准输出

|

||||

|

||||

$ echo "Test Page" > testpage

|

||||

|

||||

@ -142,7 +162,7 @@ echo命令的语法是:

|

||||

avi@tecmint:~$ cat testpage

|

||||

Test Page

|

||||

|

||||

### echo 选项 ###

|

||||

### echo 选项列表 ###

|

||||

|

||||

<table border="0" cellspacing="0">

|

||||

<colgroup width="85"></colgroup>

|

||||

@ -187,14 +207,15 @@ echo命令的语法是:

|

||||

</tbody>

|

||||

</table>

|

||||

|

||||

就是这些了,不要忘记在下面留下你有价值的反馈。

|

||||

就是这些了,不要忘记在下面留下你的反馈。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/echo-command-in-linux/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

Linux有问必答——如何显示Linux网桥的MAC学习表

|

||||

Linux有问必答:如何显示Linux网桥的MAC学习表

|

||||

================================================================================

|

||||

|

||||

> **问题**:我想要检查一下我用brctl工具创建的Linux网桥的MAC地址学习状态。请问,我要怎样才能查看Linux网桥的MAC学习表(或者转发表)?

|

||||

@ -18,6 +18,6 @@ Linux网桥是网桥的软件实现,这是Linux内核的内核部分。与硬

|

||||

via: http://ask.xmodulo.com/show-mac-learning-table-linux-bridge.html

|

||||

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -1,20 +1,20 @@

|

||||

Linus Torvalds推动Linux的桌面与嵌入式计算的发展

|

||||

Linus Torvalds 希望推动Linux在桌面和嵌入式计算方面共同发展

|

||||

================================================================================

|

||||

> Linux的内核开发者和开源领袖Linus Torvalds最近表达了关于Linux桌面和嵌入式设备中Linux的未来的看法。

|

||||

> Linux的内核开发者和开源领袖Linus Torvalds前一段时间表达了关于Linux桌面和嵌入式设备中Linux的未来的看法。

|

||||

|

||||

|

||||

|

||||

什么是Linux桌面和嵌入式设备中Linux的未来?这是个值得讨论的问题,不过Linux的创始人和开源巨人Linus Torvalds在最近一届 [Linux 基金会][1] 的LinuxCon大会上,在一次对话中表达了一些有趣的观点。

|

||||

|

||||

作为敲出第一版Linux内核代码并且在1991年将它们共享在互联网上的家伙,Torvalds毫无疑问是开源软件甚至是任何软件中最著名的开发者,如今他依然活跃在其中。在此期间,Torvalds是许多人和组织中唯一一个引领着Linux发展的个体,它的观点往往能影响着开源社区,而且,作为一个内核开发者的角色赋予了他能决定哪些特点和代码能被放进操作系统内部的强大权利。

|

||||

作为敲出第一版Linux内核代码并且在1991年将它们共享在互联网上的家伙,Torvalds毫无疑问是开源软件甚至是所有软件中最著名的开发者,如今他依然活跃在其中。在此期间,Torvalds是许多人和组织中唯一一个引领着Linux发展的个体,它的观点往往能影响着开源社区,而且,作为一个内核开发者的角色赋予了他能决定哪些特点和代码能被放进操作系统内部的强大权利。

|

||||

|

||||

所以说,关注Torvalds所说的话是很值得的, "我还是挺想要桌面的。" [上周他在LinuxCon大会上这样说道][2] 那标志着他仍然着眼于作为使个人机更加强大的操作系统Linux的未来,尽管十年来Linux桌面市场的分享一直很少,而且大部分围绕Linux的商业活动都去涉及服务器或者安卓手机硬件去了。

|

||||

所以说,关注Torvalds所说的话是很值得的, "我还是挺想要桌面的。" [他在上月的LinuxCon大会上这样说道][2] 那表明他仍然着眼于作为使PC更加强大的操作系统Linux的未来,尽管十年来Linux桌面市场的份额一直很少,而且大部分围绕Linux的商业活动都去涉及服务器或者安卓手机去了。

|

||||

|

||||

但是,Torvalds还说,确保Linux桌面能有个宏伟的未来意味着解决了受阻的 “基础设施问题”,好像庞大的开源软件生态系统和硬件世界让他充满信心。这不是Linux核心代码本身的问题,而是要让Linux桌面渠道友好,这可能是伟大的Torvalds和他开发同伴们所需要花精力去达到的目标。这取决于app的开发者、硬件制造商和其它有志于实现人们能方便使用基于Linux的计算平台的各方力量。

|

||||

但是,Torvalds还说,确保Linux桌面能有个宏伟的未来意味着解决了受阻的 “基础设施问题”,庞大的开源软件生态系统和硬件世界让他充满信心。这不是Linux核心代码本身的问题,而是要让Linux桌面渠道友好,这可能是伟大的Torvalds和他开发同伴们所需要花精力去达到的目标。这取决于app的开发者、硬件制造商和其它有志于实现人们能方便使用基于Linux的计算平台的各方力量。

|

||||

|

||||

另一方面,Torvalds也提到了他的憧憬,就是内核开发者们能简化嵌入式装置中的Linux代码——一个在让内核更加桌面友好化上会导致很多分歧的任务。但这也不一定,因为无论如何,Linux都是以模块化设计的,单内核代码库不能同时满足桌面用户和嵌入式开发者的需求,这是没有道理的,因为这取决于他们使用的模块。

|

||||

另一方面,Torvalds也提到了他的憧憬,就是内核开发者们能简化嵌入式装置中的Linux代码——这也许和让Linux内核更加桌面友好化的任务有所分歧。但这也不一定,因为无论如何,Linux都是以模块化设计的,单内核代码库不能同时满足桌面用户和嵌入式开发者的需求,这是没有道理的,因为这取决于他们使用的模块。

|

||||

|

||||

作为一个长时间想看到更多搭载Linux的嵌入式设备出现的Linux桌面用户,我希望Torvalds的所有愿望都可以实现,到那时我就能只用Liunx来做所有我想做的事情,无论是在电脑桌面上、手机上、车上,或者是任何其它的地方。

|

||||

作为一个一直想看到更多搭载Linux的嵌入式设备出现的Linux桌面用户,我希望Torvalds的所有愿望都可以实现,到那时我就可以只用Linux来做所有我想做的事情,无论是在电脑桌面上、手机上、车上,或者是任何其它的地方。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -22,7 +22,7 @@ via: http://thevarguy.com/open-source-application-software-companies/082514/linu

|

||||

|

||||

作者:[Christopher Tozzi][a]

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

92

published/20140904 Making MySQL Better at GitHub.md

Normal file

92

published/20140904 Making MySQL Better at GitHub.md

Normal file

@ -0,0 +1,92 @@

|

||||

GitHub 是如何迁移 MySQL 集群的

|

||||

================================================================================

|

||||

> 在 GitHub 我们总是说“如果网站响应速度不够快,我们就不应该让它上线运营”。我们之前在[前端的体验速度][1]这篇文章中介绍了一些提高网站响应速率的方法,但这只是故事的一部分。真正影响到 GitHub.com 性能的因素是 MySQL 数据库架构。让我们来瞧瞧我们的基础架构团队是如何无缝升级了 MySQL 架构吧,这事儿发生在去年8月份,成果就是大大提高了 GitHub 网站的速度。

|

||||

|

||||

### 任务 ###

|

||||

|

||||

去年我们把 GitHub 上的大部分数据移到了新的数据中心,这个中心有世界顶级的硬件资源和网络平台。自从使用了 MySQL 作为我们的后端系统的基础,我们一直期望着一些改进来大大提高数据库性能,但是在数据中心使用全新的硬件来部署一套全新的集群环境并不是一件简单的工作,所以我们制定了一套计划和测试工作,以便数据能平滑过渡到新环境。

|

||||

|

||||

### 准备工作 ###

|

||||

|

||||

像我们这种关于架构上的巨大改变,在执行的每一步都需要收集数据指标。新机器上安装好了基本的操作系统,接下来就是测试新配置下的各种性能。为了模拟真实的工作负载环境,我们使用 tcpdump 工具从旧的集群那里复制正在发生的 SELECT 请求,并在新集群上重新回放一遍。

|

||||

|

||||

MySQL 调优是个繁琐的细致活,像众所周知的 innodb_buffer_pool_size 这个参数往往能对 MySQL 性能产生巨大的影响。对于这类参数,我们必须考虑在内,所以我们列了一份参数清单,包括 innodb_thread_concurrency,innodb_io_capacity,和 innodb_buffer_pool_instances,还有其它的。

|

||||

|

||||

在每次测试中,我们都很小心地只改变一个参数,并且让一次测试至少运行12小时。我们会观察响应时间的变化曲线,每秒的响应次数,以及有可能会导致并发性降低的参数。我们使用 “SHOW ENGINE INNODB STATUS” 命令打印 InnoDB 性能信息,特别观察了 “SEMAPHORES” 一节的内容,它为我们提供了工作负载的状态信息。

|

||||

|

||||

当我们在设置参数后对运行结果感到满意,然后就开始将我们最大的数据表格之一迁移到一套独立的集群上,这个步骤作为整个迁移过程的早期测试,以保证我们的核心集群有更多的缓存池空间,并且为故障切换和存储功能提供更强的灵活性。这步初始迁移方案也引入了一个有趣的挑战:我们必须维持多条客户连接,并且要将这些连接指向到正确的集群上。

|

||||

|

||||

除了硬件性能的提升,还需要补充一点,我们同时也对处理进程和拓扑结构进行了改进:我们添加了延时拷贝技术,更快、更高频地备份数据,以及更多的读拷贝空间。这些功能已经准备上线。

|

||||

|

||||

### 列出任务清单,三思后行 ###

|

||||

|

||||

每天有上百万用户的使用 GitHub.com,我们不可能有机会等没有人用了才进行实际数据切换。我们有一个详细的[任务清单][2]来执行迁移:

|

||||

|

||||

|

||||

|

||||

我们还规划了一个维护期,并且[在我们的博客中通知了大家][3],让用户注意到这件事情。

|

||||

|

||||

### 迁移时间到 ###

|

||||

|

||||

太平洋时间星期六上午5点,我们的迁移团队上线集合对话,同时数据迁移正式开始:

|

||||

|

||||

|

||||

|

||||

我们将 GitHub 网站设置为维护模式,并在 Twitter 上发表声明,然后开始按上述任务清单的步骤开始工作:

|

||||

|

||||

|

||||

|

||||

**13 分钟**后,我们确保新的集群能正常工作:

|

||||

|

||||

|

||||

|

||||

然后我们让 GitHub.com 脱离维护模式,并且让全世界的用户都知道我们的最新状态:

|

||||

|

||||

|

||||

|

||||

大量前期的测试工作与准备工作,让我们将维护期缩到最短。

|

||||

|

||||

### 检验最终的成果 ###

|

||||

|

||||

在接下来的几周时间里,我们密切监视着 GitHub.com 的性能和响应时间。我们发现迁移后网站的平均加载时间减少一半,并且在99%的时间里,能减少*三分之二*:

|

||||

|

||||

|

||||

|

||||

### 我们学到了什么 ###

|

||||

|

||||

#### 功能划分 ####

|

||||

|

||||

在迁移过程中,我们采用了一个比较好的方法是:将大的数据表(主要记录了一些历史数据)先迁移过去,空出旧集群的磁盘空间和缓存池空间。这一步给我们留下了更多的资源用于“热”数据,将一些连接请求分离到多套集群里面。这步为我们之后的胜利奠定了基础,我们以后还会使用这种模式来进行迁移工作。

|

||||

|

||||

#### 测试测试测试 ####

|

||||

|

||||

为你的应用做验收测试和回归测试,越多越好,多多益善,不要嫌多。从老集群复制数据到新集群的过程中,如果进行验收测试和响应状态测试,得到的数据是不准的,如果数据不理想,这是正常的,不要惊讶,不要试图拿这些数据去分析原因。

|

||||

|

||||

#### 合作的力量 ####

|

||||

|

||||

对基础架构进行大的改变,通常需要涉及到很多人,我们要像一个团队一样为共同的目标而合作。我们的团队成员来自全球各地。

|

||||

|

||||

团队成员地图:

|

||||

|

||||

https://render.githubusercontent.com/view/geojson?url=https://gist.githubusercontent.com/anonymous/5fa29a7ccbd0101630da/raw/map.geojson

|

||||

|

||||

本次合作新创了一种工作流程:我们提交更改(pull request),获取实时反馈,查看修改了错误的 commit —— 全程没有电话交流或面对面的会议。当所有东西都可以通过 URL 提供信息,不同区域的人群之间的交流和反馈会变得非常简单。

|

||||

|

||||

### 一年后…… ###

|

||||

|

||||

整整一年时间过去了,我们很高兴地宣布这次数据迁移是很成功的 —— MySQL 性能和可靠性一直处于我们期望的状态。另外,新的集群还能让我们进一步去升级,提供更好的可靠性和响应时间。我将继续记录这些优化过程。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://github.com/blog/1880-making-mysql-better-at-github

|

||||

|

||||

作者:[samlambert][a]

|

||||

译者:[bazz2](https://github.com/bazz2)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://github.com/samlambert

|

||||

[1]:https://github.com/blog/1756-optimizing-large-selector-sets

|

||||

[2]:https://help.github.com/articles/writing-on-github#task-lists

|

||||

[3]:https://github.com/blog/1603-site-maintenance-august-31st-2013

|

||||

@ -1,8 +1,8 @@

|

||||

Linux有问必答——如何在CentOS或RHEL 7上修改主机名

|

||||

Linux有问必答:如何在CentOS或RHEL 7上修改主机名

|

||||

================================================================================

|

||||

> 问题:在CentOS/RHEL 7上修改主机名的正确方法是什么(永久或临时)?

|

||||

|

||||

在CentOS或RHEL中,有三种定义的主机名:(1)静态的(2)瞬态的,以及(3)灵活的。“静态”主机名也称为内核主机名,是系统在启动时从/etc/hostname自动初始化的主机名。“瞬态”主机名是在系统运行时临时分配的主机名,例如,通过DHCP或mDNS服务器分配。静态主机名和瞬态主机名都遵从作为互联网域名同样的字符限制规则。而另一方面,“灵活”主机名则允许使用自由形式(包括特殊/空白字符)的主机名,以展示给终端用户(如Dan's Computer)。

|

||||

在CentOS或RHEL中,有三种定义的主机名:a、静态的(static),b、瞬态的(transient),以及 c、灵活的(pretty)。“静态”主机名也称为内核主机名,是系统在启动时从/etc/hostname自动初始化的主机名。“瞬态”主机名是在系统运行时临时分配的主机名,例如,通过DHCP或mDNS服务器分配。静态主机名和瞬态主机名都遵从作为互联网域名同样的字符限制规则。而另一方面,“灵活”主机名则允许使用自由形式(包括特殊/空白字符)的主机名,以展示给终端用户(如Dan's Computer)。

|

||||

|

||||

在CentOS/RHEL 7中,有个叫hostnamectl的命令行工具,它允许你查看或修改与主机名相关的配置。

|

||||

|

||||

@ -22,7 +22,7 @@ Linux有问必答——如何在CentOS或RHEL 7上修改主机名

|

||||

|

||||

|

||||

|

||||

就像上面展示的那样,在修改静态/瞬态主机名时,任何特殊字符或空白字符会被移除,而提供的参数中的任何大写字母会自动转化为小写。一旦修改了静态主机名,/etc/hostname将被自动更新。然而,/etc/hosts不会更新来回应所做的修改,所以你需要手动更新/etc/hosts。

|

||||

就像上面展示的那样,在修改静态/瞬态主机名时,任何特殊字符或空白字符会被移除,而提供的参数中的任何大写字母会自动转化为小写。一旦修改了静态主机名,/etc/hostname 将被自动更新。然而,/etc/hosts 不会更新以保存所做的修改,所以你需要手动更新/etc/hosts。

|

||||

|

||||

如果你只想修改特定的主机名(静态,瞬态或灵活),你可以使用“--static”,“--transient”或“--pretty”选项。

|

||||

|

||||

@ -0,0 +1,30 @@

|

||||

Linux 有问必答:如何在Perl中捕捉并处理信号

|

||||

================================================================================

|

||||

> **提问**: 我需要通过使用Perl的自定义信号处理程序来处理一个中断信号。在一般情况下,我怎么在Perl程序中捕获并处理各种信号(如INT,TERM)?

|

||||

|

||||

作为POSIX标准的异步通知机制,信号由操作系统发送给进程某个事件来通知它。当产生信号时,操作系统会中断目标程序的执行,并且该信号被发送到该程序的信号处理函数。可以定义和注册自己的信号处理程序或使用默认的信号处理程序。

|

||||

|

||||

在Perl中,信号可以被捕获,并由一个全局的%SIG哈希变量指定处理函数。这个%SIG哈希变量的键名是信号值,键值是对应的信号处理程序的引用。因此,如果你想为特定的信号定义自己的信号处理程序,你可以直接在%SIG中设置信号的哈希值。

|

||||

|

||||

下面是一个代码段来处理使用自定义信号处理程序中断(INT)和终止(TERM)的信号。

|

||||

|

||||

$SIG{INT} = \&signal_handler;

|

||||

$SIG{TERM} = \&signal_handler;

|

||||

|

||||

sub signal_handler {

|

||||

print "This is a custom signal handler\n";

|

||||

die "Caught a signal $!";

|

||||

}

|

||||

|

||||

|

||||

|

||||

%SIG其他的可用的键值有'IGNORE'和'DEFAULT'。当所指定的键值是'IGNORE'(例如,$SIG{CHLD}='IGNORE')时,相应的信号将被忽略。指定'DEFAULT'的键值(例如,$SIG{HUP}='DEFAULT'),意味着我们将使用一个(系统)默认的信号处理程序。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/catch-handle-interrupt-signal-perl.html

|

||||

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -0,0 +1,51 @@

|

||||

Oracle Linux 5.11更新了其Unbreakable Linux内核

|

||||

================================================================================

|

||||

> 此版本更新了很多软件包

|

||||

|

||||

|

||||

|

||||

这是这个分支的最后一个版本更新(随同 RHEL 5.11的落幕,CentOS 和 Oracle Linux 的5.x 系列也纷纷释出该系列的最后版本)。

|

||||

|

||||

>**甲骨文公司宣布,Oracle Linux5.11版已提供下载,但是这是企业版,需要用户注册才能下载。**

|

||||

|

||||

这个新的Oracle Linux是这个系列的最后一次更新。该系统基于Red Hat和该公司最近推送的RHEL 5X分支更新,这意味着这也是Oracle此产品线的最后一次更新。

|

||||

|

||||

Oracle Linux还带来了一系列有趣的功能,就像一个名为Ksplice的零宕机内核更新,它最初是针对openSUSE,包括Oracle数据库和Oracle应用软件开发的,它们在基于x86的Oracle系统中使用。

|

||||

|

||||

### Oracle Linux有哪些特别的 ###

|

||||

|

||||

尽管Oracle Linux基于红帽,它的开发者曾经举出了很多你不应该使用RHEL的原因。理由有很多,但最主要的是,任何人都可以下载Oracle Linux(注册后),而RHEL实际上限制了非付费会员下载。

|

||||

|

||||

开发者在其网站上说:“为企业应用和系统提供先进的可扩展性和可靠性,Oracle Linux提供了极高的性能,并且在采用x86架构的Oracle工程系统中使用。Oracle Linux是免费使用,免费派发,免费更新,并可轻松下载。它是唯一带来生产中零宕机补丁Oracle Ksplice支持的Linux发行版,允许客户无需重启而部署安全或者其他更新,并且同时提供诊断功能来调试生产系统中的内核问题。”

|

||||

|

||||

Oracle Linux其中一个最有趣且独一无二的功能是其Unbreakable Kernel(坚不可摧的内核)。这是它的开发者实际使用的名称。它基于来自3.0.36分支的旧Linux内核。用户还可以使用红帽兼容内核(内核2.6.18-398.el5),这在发行版中默认提供。

|

||||

|

||||

此外,Oracle Linux Release 5.11企业版内核提供了对大量硬件和设备的支持,但这个最新的更新带来了更好的支持。

|

||||

|

||||

您可以查看Oracle Linux 5.11全部[发布通告][1],这可能需要花费一些时间去读。

|

||||

|

||||

你也可以从下面下载Oracle Linux 5.11:

|

||||

|

||||

- [Oracle Enterprise Linux 6.5 (ISO) 64-bit][2]

|

||||

- [Oracle Enterprise Linux 6.5 (ISO) 32-bit][3]

|

||||

- [Oracle Enterprise Linux 7.0 (ISO) 64-bit][4]

|

||||

- [Oracle Enterprise Linux 5.11 (ISO) 64-bit][5]

|

||||

- [Oracle Enterprise Linux 5.11 (ISO) 32-bit][6]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Oracle-Linux-5-11-Features-Updated-Unbreakable-Linux-Kernel-460129.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:https://oss.oracle.com/ol5/docs/RELEASE-NOTES-U11-en.html#Kernel_and_Driver_Updates

|

||||

[2]:http://mirrors.dotsrc.org/oracle-linux/OL6/U5/i386/OracleLinux-R6-U5-Server-i386-dvd.iso

|

||||

[3]:http://mirrors.dotsrc.org/oracle-linux/OL6/U5/x86_64/OracleLinux-R6-U5-Server-x86_64-dvd.iso

|

||||

[4]:https://edelivery.oracle.com/linux/

|

||||

[5]:http://ftp5.gwdg.de/pub/linux/oracle/EL5/U11/x86_64/Enterprise-R5-U11-Server-x86_64-dvd.iso

|

||||

[6]:http://ftp5.gwdg.de/pub/linux/oracle/EL5/U11/i386/Enterprise-R5-U11-Server-i386-dvd.iso

|

||||

@ -1,12 +1,12 @@

|

||||

从命令行访问Linux命令小抄

|

||||

================================================================================

|

||||

Linux命令行的强大在于其灵活及多样化,各个Linux命令都带有它自己那部分命令行选项和参数。混合并匹配它们,甚至还可以通过管道和重定向来联结不同的命令。理论上讲,你可以借助几个基本的命令来产生数以百计的使用案例。甚至对于浸淫多年的管理员而言,也难以完全使用它们。那正是命令行小抄成为我们救命稻草的一刻。

|

||||

Linux命令行的强大在于其灵活及多样化,各个Linux命令都带有它自己专属的命令行选项和参数。混合并匹配这些命令,甚至还可以通过管道和重定向来联结不同的命令。理论上讲,你可以借助几个基本的命令来产生数以百计的使用案例。甚至对于浸淫多年的管理员而言,也难以完全使用它们。那正是命令行小抄成为我们救命稻草的一刻。

|

||||

|

||||

[][1]

|

||||

|

||||

我知道联机手册页仍然是我们的良师益友,但我们想通过我们能自行支配的快速参考卡让这一切更为高效和有目的性。最终极的小抄可能被自豪地挂在你的办公室里,也可能作为PDF文件隐秘地存储在你的硬盘上,或者甚至设置成了你的桌面背景图。

|

||||

我知道联机手册页(man)仍然是我们的良师益友,但我们想通过我们能自行支配的快速参考卡让这一切更为高效和有目的性。最终极的小抄可能被自豪地挂在你的办公室里,也可能作为PDF文件隐秘地存储在你的硬盘上,或者甚至设置成了你的桌面背景图。

|

||||

|

||||

最为一个选择,也可以通过另外一个命令来访问你最爱的命令行小抄。那就是,使用[cheat][2]。这是一个命令行工具,它可以让你从命令行读取、创建或更新小抄。这个想法很简单,不过cheat经证明是十分有用的。本教程主要介绍Linux下cheat命令的使用方法。你不需要为cheat命令做个小抄了,它真的很简单。

|

||||

做为一个选择,也可以通过另外一个命令来访问你最爱的命令行小抄。那就是,使用[cheat][2]。这是一个命令行工具,它可以让你从命令行读取、创建或更新小抄。这个想法很简单,不过cheat经证明是十分有用的。本教程主要介绍Linux下cheat命令的使用方法。你不需要为cheat命令做个小抄了,它真的很简单。

|

||||

|

||||

### 安装Cheat到Linux ###

|

||||

|

||||

@ -59,9 +59,9 @@ cheat命令一个很酷的事是,它自带有超过90个的常用Linux命令

|

||||

|

||||

$ cheat -s <keyword>

|

||||

|

||||

在许多情况下,小抄适用于那些正派的人,而对其他某些人却没什么帮助。要想让内建的小抄更具个性化,cheat命令也允许你创建新的小抄,或者更新现存的那些。要这么做的话,cheat命令也会帮你在本地~/.cheat目录中保存一份小抄的副本。

|

||||

在许多情况下,小抄适用于某些人,而对另外一些人却没什么帮助。要想让内建的小抄更具个性化,cheat命令也允许你创建新的小抄,或者更新现存的那些。要这么做的话,cheat命令也会帮你在本地~/.cheat目录中保存一份小抄的副本。

|

||||

|

||||

要使用cheat的编辑功能,首先确保EDITOR环境变量设置为了你默认编辑器所在位置的完整路径。然后,复制(不可编辑)内建小抄到~/.cheat目录。你可以通过下面的命令找到内建小抄所在的位置。一旦你找到了它们的位置,只不过是将它们拷贝到~/.cheat目录。

|

||||

要使用cheat的编辑功能,首先确保EDITOR环境变量设置为你默认编辑器所在位置的完整路径。然后,复制(不可编辑)内建小抄到~/.cheat目录。你可以通过下面的命令找到内建小抄所在的位置。一旦你找到了它们的位置,只不过是将它们拷贝到~/.cheat目录。

|

||||

|

||||

$ cheat -d

|

||||

|

||||

@ -85,7 +85,7 @@ via: http://xmodulo.com/2014/07/access-linux-command-cheat-sheets-command-line.h

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,14 +1,14 @@

|

||||

在哪儿以及怎么写代码:选择最好的免费代码编辑器

|

||||

何处写,如何写:选择最好的免费在线代码编辑器

|

||||

================================================================================

|

||||

深入了解一下Cloud9,Koding和Nitrous.IO。

|

||||

> 深入了解一下Cloud9,Koding和Nitrous.IO。

|

||||

|

||||

|

||||

|

||||

**已经准备好开始你的第一个编程项目了吗?很好!只要配置一下**终端或命令行,学习如何使用并安装所有要用到的编程语言,插件库和API函数库。当最终准备好一切以后,再安装好[Visual Studio][1]就可以开始了,然后才可以预览自己的工作。

|

||||

已经准备好开始你的第一个编程项目了吗?很好!只要配置一下终端或命令行,学习如何使用它,然后安装所有要用到的编程语言,插件库和API函数库。当最终准备好一切以后,再安装好[Visual Studio][1]就可以开始了,然后才可以预览自己的工作。

|

||||

|

||||

至少这是大家过去已经熟悉的方式。

|

||||

|

||||

也难怪初学程序员们逐渐喜欢上在线集成开发环境(IDE)了。IDE是一个代码编辑器,不过已经准备好编程语言以及所有需要的依赖,可以让你避免把它们一一安装到电脑上的麻烦。

|

||||

也难怪初学程序员们逐渐喜欢上在线的集成开发环境(IDE)了。IDE是一个代码编辑器,不过已经准备好编程语言以及所有需要的依赖,可以让你避免把它们一一安装到电脑上的麻烦。

|

||||

|

||||

我想搞清楚到底是哪些因素能组成一个典型的IDE,所以我试用了一下免费级别的时下最受欢迎的三款集成开发环境:[Cloud9][2],[Koding][3]和[Nitrous.IO][4]。在这个过程中,我了解了许多程序员应该或不应该使用IDE的各种情形。

|

||||

|

||||

@ -16,7 +16,7 @@

|

||||

|

||||

假如有一个像Microsoft Word那样的文字编辑器,想想类似Google Drive那样的IDE吧。你可以拥有类似的功能,但是它还能支持从任意电脑上访问,还能随时共享。因为因特网在项目工作流中的影响已经越来越重要,IDE也让生活更轻松。

|

||||

|

||||

在我最近的一篇ReadWrite教程中我使用了Nitrous.IO,这是在文章[创建一个你自己的像Yo那样的极端简单的聊天应用][5]里的一个Python应用。当使用IDE的时候,你只要选择你要用的编程语言,然后通过IDE特别设计用来运行这种语言程序的虚拟机(VM),你就可以测试和预览你的应用了。

|

||||

在我最近的一篇ReadWrite教程中我使用了Nitrous.IO,这是在文章“[创建一个你自己的像Yo那样的极端简单的聊天应用][5]”里的一个Python应用。当使用IDE的时候,你只要选择你要用的编程语言,然后通过IDE特别为运行这种语言程序而设计的虚拟机(VM),你就可以测试和预览你的应用了。

|

||||

|

||||

如果你读过那篇教程,就会知道我的那个应用只用到了两个API库-信息服务Twilio和Python微框架Flask。在我的电脑上就算是使用文字编辑器和终端来做也是很简单的,不过我选择使用IDE还有一个方便的地方:如果大家都使用同样的开发环境,跟着教程一步步走下去就更简单了。

|

||||

|

||||

@ -28,7 +28,7 @@

|

||||

|

||||

但是不能用IDE来永久存储你的整个项目。把帖子保存在Google Drive文件中不会让你的博客丢失。类似Google Drive,IDE可以让你创建链接用于共享内容,但是任何一个都还不足以替代真正的托管服务器。

|

||||

|

||||

还有,IDE并不是设计成方便广泛共享。尽管各种IDE都在不断改善大多数文字编辑器的预览功能,还只能用来给你的朋友或同事展示一下应用预览,而不是,比如说,类似Hacker News的主页。那样的话,占用太多带宽的IDE也许会让你崩溃。

|

||||

还有,IDE并不是设计成方便广泛共享。尽管各种IDE都在不断改善大多数文字编辑器的预览功能,还只能用来给你的朋友或同事展示一下应用的预览,而不是像Hacker News一样的主页。那样的话,占用太多带宽的IDE也许会让你崩溃。

|

||||

|

||||

这样说吧:IDE只是构建和测试你的应用的地方,托管服务器才是它们生存的地方。所以一旦完成了你的应用,你会希望把它布置到能长期托管的云服务器上,最好是能免费托管的那种,例如[Heroku][6]。

|

||||

|

||||

@ -44,7 +44,7 @@

|

||||

|

||||

当我完成了Cloud9的注册后,它提示的第一件事情就是添加我的GitHub和BitBucket账号。马上,所有我的GitHub项目,个人的和协作的,都可以直接克隆到本地并使用Cloud9的开发工具开始工作。其他的IDE在和GitHub集成的方面都没有达到这种水准。

|

||||

|

||||

在我测试的这三款IDE中,Cloud9看起来更加侧重于一个可以让协同工作的人们无缝衔接工作的环境。在这里,它并不是角落里放个聊天窗口。实际上,按照CEO Ruben Daniels说的,试用Cloud9的协作者可以互相看到其他人实时的编码情况,就像Google Drive上的合作者那样。

|

||||

在我测试的这三款IDE中,Cloud9看起来更加侧重于一个可以让协同工作的人们无缝衔接工作的环境。在这里,它并不是角落里放个聊天窗口。实际上,按照其CEO Ruben Daniels说的,试用Cloud9的协作者可以互相看到其他人实时的编码情况,就像Google Drive上的合作者那样。

|

||||

|

||||

“大多数IDE服务的协同功能只能操作单一文件”,Daniels说,“而我们的产品可以支持整个项目中的不同文件。协同功能被完美集成到了我们的IDE中。”

|

||||

|

||||

@ -58,15 +58,15 @@ IDE可以提供你所需的工具来构建和测试所有开源编程语言的

|

||||

|

||||

### Nitrous.IO: An IDE Wherever You Want ###

|

||||

|

||||

相对于自己的桌面环境,使用IDE的最大优势是它是自包含的。你不需要安装任何其他的就可以使用。而另一方面,使用自己的桌面环境的最大优势就是你可以在本地工作,甚至在没有互联网的情况下。

|

||||

相对于自己的桌面环境,使用IDE的最大优势是它是自足的。你不需要安装任何其他的东西就可以使用。而另一方面,使用自己的桌面环境的最大优势就是你可以在本地工作,甚至在没有互联网的情况下。

|

||||

|

||||

Nitrous.IO结合了这两个优势。你可以在网站上在线使用这个IDE,你也可以把它下载到自己的饿电脑上,共同创始人AJ Solimine这样说。优点是你可以结合Nitrous的集成性和你最喜欢的文字编辑器的熟悉。

|

||||

Nitrous.IO结合了这两个优势。“你可以在网站上在线使用这个IDE,你也可以把它下载到自己的电脑上”,其共同创始人AJ Solimine这样说。优点是你可以结合Nitrous的集成性和你最喜欢的文字编辑器的熟悉。

|

||||

|

||||

他说:“你可以使用任意当代浏览器访问Nitrous.IO的在线IDE网站,但我们仍然提供了方便的Windows和Mac桌面应用,可以让你使用你最喜欢的编辑器来写代码。”

|

||||

他说:“你可以使用任意现代浏览器访问Nitrous.IO的在线IDE网站,但我们仍然提供了方便的Windows和Mac桌面应用,可以让你使用你最喜欢的编辑器来写代码。”

|

||||

|

||||

### 底线 ###

|

||||

|

||||

这一个星期的[使用][7]三个不同IDE的最让我意外的收获?它们是如此相似。[当用来做最基本的代码编辑的时候][8],它们都一样的好用。

|

||||

这一个星期[使用][7]三个不同IDE的最让我意外的收获是什么?它们是如此相似。[当用来做最基本的代码编辑的时候][8],它们都一样的好用。

|

||||

|

||||

Cloud9,Koding,[和Nitrous.IO都支持][9]所有主流的开源编程语言,从Ruby到Python到PHP到HTML5。你可以选择任何一种VM。

|

||||

|

||||

@ -76,7 +76,7 @@ Cloud9和Nitrous.IO都实现了GitHub的一键集成。Koding需要[多几个步

|

||||

|

||||

不好的一面,它们都有相同的缺陷,不过考虑到它们都是免费的也还合理。你每次只能同时运行一个VM来测试特定编程语言写出的程序。而当你一段时间没有使用VM之后,IDE会把VM切换成休眠模式以节省带宽,而下次要用的时候就得等它重新加载(Cloud9在这一点上更加费力)。它们中也没有任何一个为已完成的项目提供像样的永久托管服务。

|

||||

|

||||

所以,对咨询我是否有一个完美的免费IDE的人,答案是可能没有。但是这也要看你侧重的地方,对你的某个项目来说也许有一个完美的IDE。

|

||||

所以,对咨询我是否有一个完美的免费IDE的人来说,答案是可能没有。但是这也要看你侧重的地方,对你的某个项目来说也许有一个完美的IDE。

|

||||

|

||||

图片由[Shutterstock][11]友情提供

|

||||

|

||||

@ -86,7 +86,7 @@ via: http://readwrite.com/2014/08/14/cloud9-koding-nitrousio-integrated-developm

|

||||

|

||||

作者:[Lauren Orsini][a]

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,14 +1,16 @@

|

||||

8 Options to Trace/Debug Programs using Linux strace Command

|

||||

使用 Linux 的 strace 命令跟踪/调试程序的常用选项

|

||||

================================================================================

|

||||

|

||||

在调试的时候,strace能帮助你追踪到一个程序所执行的系统调用。当你想知道程序和操作系统如何交互的时候,这是极其方便的,比如你想知道执行了哪些系统调用,并且以何种顺序执行。

|

||||

|

||||

这个简单而又强大的工具几乎在所有的Linux操作系统上可用,并且可被用来调试大量的程序。

|

||||

|

||||

### 1. 命令用法 ###

|

||||

### 命令用法 ###

|

||||

|

||||

让我们看看strace命令如何追踪一个程序的执行情况。

|

||||

|

||||

最简单的形式,strace后面可以跟任何命令。它将列出许许多多的系统调用。一开始,我们并不能理解所有的输出,但是如果你正在寻找一些特殊的东西,那么你应该能从输出中发现它。

|

||||

|

||||

让我们来看看简单命令ls的系统调用跟踪情况。

|

||||

|

||||

raghu@raghu-Linoxide ~ $ strace ls

|

||||

@ -20,21 +22,22 @@

|

||||

|

||||

|

||||

上面的输出部分展示了write系统调用,它把当前目录的列表输出到标准输出。

|

||||

|

||||

下面的图片展示了使用ls命令列出的目录内容(没有使用strace)。

|

||||

|

||||

raghu@raghu-Linoxide ~ $ ls

|

||||

|

||||

|

||||

|

||||

#### 1.1 寻找被程序读取的配置文件 ####

|

||||

#### 选项1 寻找被程序读取的配置文件 ####

|

||||

|

||||

一个有用的跟踪(除了调试某些问题以外)是你能找到被一个程序读取的配置文件。例如,

|

||||

Strace 的用法之一(除了调试某些问题以外)是你能找到被一个程序读取的配置文件。例如,

|

||||

|

||||

raghu@raghu-Linoxide ~ $ strace php 2>&1 | grep php.ini

|

||||

|

||||

|

||||

|

||||

#### 1.2 跟踪指定的系统调用 ####

|

||||

#### 选项2 跟踪指定的系统调用 ####

|

||||

|

||||

strace命令的-e选项仅仅被用来展示特定的系统调用(例如,open,write等等)

|

||||

|

||||

@ -44,7 +47,7 @@ strace命令的-e选项仅仅被用来展示特定的系统调用(例如,ope

|

||||

|

||||

|

||||

|

||||

#### 1.3 用于进程 ####

|

||||

#### 选项3 跟踪进程 ####

|

||||

|

||||

strace不但能用在命令上,而且通过使用-p选项能用在运行的进程上。

|

||||

|

||||

@ -52,15 +55,15 @@ strace不但能用在命令上,而且通过使用-p选项能用在运行的进

|

||||

|

||||

|

||||

|

||||

#### 1.4 strace的统计概要 ####

|

||||

#### 选项4 strace的统计概要 ####

|

||||

|

||||

包括系统调用的概要,执行时间,错误等等。使用-c选项能够以一种整洁的方式展示:

|

||||

它包括系统调用的概要,执行时间,错误等等。使用-c选项能够以一种整洁的方式展示:

|

||||

|

||||

raghu@raghu-Linoxide ~ $ strace -c ls

|

||||

|

||||

|

||||

|

||||

#### 1.5 保存输出结果 ####

|

||||

#### 选项5 保存输出结果 ####

|

||||

|

||||

通过使用-o选项可以把strace命令的输出结果保存到一个文件中。

|

||||

|

||||

@ -70,7 +73,7 @@ strace不但能用在命令上,而且通过使用-p选项能用在运行的进

|

||||

|

||||

之所以以sudo来运行上面的命令,是为了防止用户ID与所查看进程的所有者ID不匹配的情况。

|

||||

|

||||

### 1.6 显示时间戳 ###

|

||||

### 选项6 显示时间戳 ###

|

||||

|

||||

使用-t选项,可以在每行的输出之前添加时间戳。

|

||||

|

||||

@ -78,7 +81,7 @@ strace不但能用在命令上,而且通过使用-p选项能用在运行的进

|

||||

|

||||

|

||||

|

||||

#### 1.7 更好的时间戳 ####

|

||||

#### 选项7 更精细的时间戳 ####

|

||||

|

||||

-tt选项可以展示微秒级别的时间戳。

|

||||

|

||||

@ -92,7 +95,7 @@ strace不但能用在命令上,而且通过使用-p选项能用在运行的进

|

||||

|

||||

|

||||

|

||||

#### 1.8 Relative Time ####

|

||||

#### 选项8 相对时间 ####

|

||||

|

||||

-r选项展示系统调用之间的相对时间戳。

|

||||

|

||||

@ -106,7 +109,7 @@ via: http://linoxide.com/linux-command/linux-strace-command-examples/

|

||||

|

||||

作者:[Raghu][a]

|

||||

译者:[guodongxiaren](https://github.com/guodongxiaren)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -6,13 +6,13 @@

|

||||

|

||||

<blockquote><em>通过入会声明,任何人都能轻易加入“匿名者”组织。某人类学家称,组织成员会“根据影响程度对重大事件保持着不同关注,特别是那些能挑起强烈争端的事件”。</em></blockquote>

|

||||

|

||||

<small>布景:Jeff Nishinaka / 摄影:Scott Dunbar</small>

|

||||

<small>纸雕作品:Jeff Nishinaka / 摄影:Scott Dunbar</small>

|

||||

|

||||

<h2>1</h2>

|

||||

|

||||

<p>上世纪七十年代中期,当 Christopher Doyon 还是一个生活在缅因州乡村的孩童时,就终日泡在 CB radio 上与各种陌生人聊天。他的昵称是“大红”,因为他有一头红色的头发。Christopher Doyon 把发射机挂在了卧室的墙壁上,并且说服了父亲在自家屋顶安装了两根天线。CB radio 主要用于卡车司机间的联络,但 Doyon 和一些人却将之用于不久后出现在 Internet 上的虚拟社交——自定义昵称、成员间才懂的笑话,以及施行变革的强烈愿望。</p>

|

||||

<p>上世纪七十年代中期,当 Christopher Doyon 还是一个生活在缅因州乡村的孩童时,就终日泡在 CB radio 上与各种陌生人聊天。他的昵称是“Big red”(大红),因为他有一头红色的头发。Christopher Doyon 把发射机挂在了卧室的墙壁上,并且说服了父亲在自家屋顶安装了两根天线。CB radio 主要用于卡车司机间的联络,但 Doyon 和一些人却将之用于不久后出现在 Internet 上的虚拟社交——自定义昵称、成员间才懂的笑话,以及施行变革的强烈愿望。</p>

|

||||

|

||||

<p>Doyon 很小的时候母亲就去世了,兄妹二人由父亲抚养长大,他俩都说受到过父亲的虐待。由此 Doyon 在 CB radio 社区中找到了慰藉和归属感。他和他的朋友们轮流监听当地紧急事件频道。其中一个朋友的父亲买了一个气泡灯并安装在了他的车顶上;每当这个孩子收听到来自孤立无援的乘车人的求助后,都会开车载着所有人到求助者所在的公路旁。除了拨打 911 外他们基本没有什么可做的,但这足以让他们感觉自己成为了英雄。</p>

|

||||

<p>Doyon 很小的时候母亲就去世了,兄妹二人由父亲抚养长大,他俩都说受到过父亲的虐待。由此 Doyon 在 CB radio 社区中找到了慰藉和目标感。他和他的朋友们轮流监听当地紧急事件频道。其中一个朋友的父亲买了一个气泡灯并安装在了他的车顶上;每当这个孩子收听到来自孤立无援的乘车人的求助后,都会开车载着所有人到求助者所在的公路旁。除了拨打 911 外他们基本没有什么可做的,但这足以让他们感觉自己成为了英雄。</p>

|

||||

|

||||

<p>短小精悍的 Doyon 有着一口浓厚的新英格兰口音,并且非常喜欢《星际迷航》和阿西莫夫的小说。当他在《大众机械》上看到一则“组装你的专属个人计算机”构件广告时,就央求祖父给他买一套,接下来 Doyon 花了数月的时间把计算机组装起来并连接到 Internet 上去。与鲜为人知的 CB 电波相比,在线聊天室确实不可同日而语。“我只需要点一下按钮,再选中某个家伙的名字,然后我就可以和他聊天了,” Doyon 在最近回忆时说道,“这真的很惊人。”</p>

|

||||

|

||||

@ -22,11 +22,11 @@

|

||||

|

||||

<p>Doyon 深深地沉溺于计算机中,虽然他并不是一位专业的程序员。在过去一年的几次谈话中,他告诉我他将自己视为激进主义分子,继承了 Abbie Hoffman 和 Eldridge Cleaver 的激进传统;技术不过是他抗议的工具。八十年代,哈佛大学和麻省理工学院的学生们举行集会,强烈抗议他们的学校从南非撤资。为了帮助抗议者通过安全渠道进行交流,PLF 制作了无线电套装:移动调频发射器、伸缩式天线,还有麦克风,所有部件都内置于背包内。Willard Johnson,麻省理工学院的一位激进分子和政治学家,表示黑客们出席集会并不意味着一次变革。“我们的大部分工作仍然是通过扩音器来完成的,”他解释道。</p>

|

||||

|

||||

<p>1992 年,在 Grateful Dead 的一场印第安纳的演唱会上,Doyon 秘密地向一位瘾君子出售了 300 粒药。由此他被判决在印第安纳州立监狱服役十二年,后来改为五年。服役期间,他对宗教和哲学产生了浓厚的兴趣,并于鲍尔州立大学学习了相应课程。</p>

|

||||

<p>1992 年,在印第安纳的一场 Grateful Dead 的演唱会上,Doyon 秘密地向一位瘾君子出售了 300 粒药。由此他被判决在印第安纳州立监狱服役十二年,后来改为五年。服役期间,他对宗教和哲学产生了浓厚的兴趣,并于鲍尔州立大学学习了相应课程。</p>

|

||||

|

||||

<p>1994 年,第一款商业 Web 浏览器网景领航员正式发布,同一年 Doyon 被捕入狱。当他出狱并再次回到剑桥后,PLF 依然活跃着,并且他们的工具有了实质性的飞跃。Doyon 回忆起和他入狱之前的变化,“非常巨大——好比是‘烽火狼烟’跟‘电报传信’之间那么大的差距。”黑客们入侵了一个印度的军事网站,并修改其首页文字为“拯救克什米尔”。在塞尔维亚,黑客们攻陷了一个阿尔巴尼亚网站。Stefan Wray,一位早期网络激进主义分子,为一次纽约“反哥伦布日”集会上的黑客行径辩护。“我们视之为电子形式的公众抗议,”他告诉大家。</p>

|

||||

<p>1994 年,第一款商业 Web 浏览器 Netscape Navigator(网景领航员)正式发布,同一年 Doyon 被捕入狱。当他出狱并再次回到剑桥后,PLF 依然活跃着,并且他们的工具有了实质性的飞跃。Doyon 回忆起他和入狱之前对比的变化,“非常巨大——好比是‘烽火狼烟’跟‘电报传信’之间那么大的差距。”黑客们入侵了一个印度的军事网站,并修改其首页文字为“拯救克什米尔”。在塞尔维亚,黑客们攻陷了一个阿尔巴尼亚网站。Stefan Wray,一位早期网络激进主义分子,为一次纽约“反哥伦布日”集会上的黑客行径辩护。“我们视之为电子形式的公众抗议,”他告诉大家。</p>

|

||||

|

||||

<p>1999 年,美国唱片业协会因为版权侵犯问题起诉了 Napster,一款文件共享软件。最终,Napster 于 2001 年关闭。Doyon 与其他黑客使用分布式拒绝服务(Distributed Denial of Service,DDoS,使大量数据涌入网站导致其响应速度减缓直至奔溃)的手段,攻击了美国唱片业协会的网站,使之停运时间长达一星期之久。Doyon为自己的行为进行了辩解,并高度赞扬了其他的“黑客主义者”。“我们很快意识到保卫 Napster 的战争象征着保卫 Internet 自由的战争,”他在后来写道。</p>

|

||||

<p>1999 年,美国唱片业协会因为版权侵犯问题起诉了 Napster,一款文件共享服务。最终,Napster 于 2001 年关闭。Doyon 与其他黑客使用分布式拒绝服务(Distributed Denial of Service,DDoS,使大量数据涌入网站导致其响应速度减缓直至奔溃)的手段,攻击了美国唱片业协会的网站,使之停运时间长达一星期之久。Doyon为自己的行为进行了辩解,并高度赞扬了其他的“黑客主义者”。“我们很快意识到保卫 Napster 的战争象征着保卫 Internet 自由的战争,”他在后来写道。</p>

|

||||

|

||||

<p>2008 年的一天,Doyon 和 “Commander Adama” 在剑桥的 PLE 地下公寓相遇。Adama 当着 Doyon 的面点击了癫痫基金会的一个链接,与意料中将要打开的论坛不同,出现的是一连串闪烁的彩光。有些癫痫病患者对闪光灯非常敏感——这完全是出于恶意,有人想要在无辜群众中诱发癫痫病。已经出现了至少一名受害者。</p>

|

||||

|

||||

@ -42,69 +42,69 @@

|

||||

|

||||

<center><small>“我得谈谈我的感受。”</small></center>

|

||||

|

||||

<p>Poole 希望匿名这一举措可以延续社区的尖锐性因素。“我们无意参与理智的涉外事件讨论,”他在网站上写道。4chan 社区里最具价值的事之一便是寻求“挑起强烈的争端”(lulz),这个词源自缩写 LOL。Lulz 经常是通过分享充满孩子气的笑话或图片来实现的,它们中的大部分不是色情的就是下流的。其中最令人震惊的部分被贴在了网站的“/b/”版块上,这里的用户们称呼自己为“/b/tards”。Doyon 知道 4chan 这个社区,但他认为那些用户是“一群愚昧无知的顽童”。2004 年前后,/b/ 上的部分用户开始把“匿名者”视为一个独立的实体。</p>

|

||||

<p>Poole 希望匿名这一举措可以延续社区的尖锐性因素。“我们无意参与理智的涉外事件讨论,”他在网站上写道。4chan 社区里最具价值的事之一便是寻求“挑起强烈的争端”(lulz),这个词源自缩写 LOL。Lulz 经常是通过分享幼稚的笑话或图片来实现的,其中大部分不是色情的就是下流的。其中最令人震惊的部分被贴在了网站的“/b/”版块上,这里的用户们称呼自己为“/b/tards”。Doyon 知道 4chan 这个社区,但他认为它的用户是“一群愚昧无知的顽童”。2004 年前后,/b/ 上的部分用户开始把“匿名者”视为一个独立的实体。</p>

|

||||

|

||||

<p>这是一个全新的黑客团体。“这不是一个传统意义上的组织,”一位领导计算机安全工作的研究员 Mikko Hypponen 告诉我——倒不如,视之为一个非传统的亚文化群体。Barrett Brown,德克萨斯州的一名记者,同时也是众所周知的“匿名者”高层领导,把“匿名者”描述为“一连串前仆后继的伟大友谊”。无需任何会费或者入会仪式。任何想要加入“匿名者”组织,成为一名匿名者(Anon)的人,都可以通过简短的象征性的宣誓加入。</p>

|

||||

|

||||

<p>尽管 4chan 的关注焦点是一些琐碎的话题,但许多匿名者认为自己就是“正义的十字军”。如果网上有不良迹象出现,他们就会发起具有针对性的治安维护行动。不止一次,他们以未成年少女的身份套取恋童癖的私人信息,然后把这些信息交给警察局。其他匿名者则是政治的厌恶者,为了挑起争端想方设法散布混乱的信息。他们中的一些人在 /b/ 上发布看着像是雷管炸弹的图片;另一些则叫嚣着要炸毁足球场并因此被联邦调查局逮捕。2007 年,一家洛杉矶当地的新闻联盟机构称呼“匿名者”组织为“互联网负能量制造机”。</p>

|

||||

<p>尽管 4chan 的关注焦点是一些琐碎的话题,但许多匿名者认为自己就是“正义的十字军”。如果网上有不良迹象出现,他们就会发起具有针对性的治安维护行动。不止一次,他们以未成年少女的身份使恋童癖陷入圈套,然后把他们的个人信息交给警察局。其他匿名者则是政治的厌恶者,为了挑起争端想方设法散布混乱的信息。他们中的一些人在 /b/ 上发布看着像是雷管炸弹的图片;另一些则叫嚣着要炸毁足球场并因此被联邦调查局逮捕。2007 年,一家洛杉矶当地的新闻联盟机构称呼“匿名者”组织为“互联网负能量制造机”。</p>

|

||||

|

||||

<p>2008 年 1 月,Gawker Media 上传了一段关于汤姆克鲁斯大力吹捧山达基优点的视频。这段视频是受版权保护的,山达基教会致信 Gawker,勒令其删除这段视频。“匿名者”组织认为教会企图控制网络信息。“是时候让 /b/ 来干票大的了,”有人在 4chan 上写道。“我说的是‘入侵’或者‘攻陷’山达基官方网站。”一位匿名者使用 YouTube 放出一段“新闻稿”,其中包括暴雨云视频和经过计算机处理的语音。“我们要立刻把你们从 Internet 上赶出去,并且在现有规模上逐渐瓦解山达基教会,”那个声音说,“你们无处可躲。”不到一个星期,这段 YouTube 视频的点击率就超过了两百万次。</p>

|

||||

|

||||

<p>“匿名者”组织已经不仅限于 4chan 社区。黑客们在专用的互联网中继聊天(Internet Relay Chat channels,IRC 聊天室)频道内进行交流,协商策略。通过 DDoS 攻击手段,他们使山达基的主网站间歇性崩溃了好几天。匿名者们制造了“谷歌炸弹”,由此导致 “dangerous cult” 的搜索结果中的第一条结果就是山达基主网站。其余的匿名者向山达基的欧洲总部寄送了数以百计的披萨,并用大量全黑的传真单耗干了洛杉矶教会总部的传真机墨盒。山达基教会,据报道拥有超过十亿美元资产的组织,当然能经得起墨盒耗尽的考验。但山达基教会的高层可不这么认为,他们还收到了严厉的恐吓,由此他们不得不向 FBI 申请逮捕“匿名者”组织的成员。</p>

|

||||

<p>“匿名者”组织已经不仅限于 4chan 社区。黑客们在专用的互联网中继聊天(Internet Relay Chat channels,IRC 聊天室)频道内进行交流,协商策略。通过 DDoS 攻击手段,他们使山达基的主网站间歇性崩溃了好几天。匿名者们制造了“谷歌炸弹”,由此导致 “dangerous cult” 的搜索结果中的第一条结果就是山达基主网站。其余的匿名者向山达基的欧洲总部寄送了数以百计的披萨,并用大量全黑的传真单耗干了洛杉矶教会总部的传真机墨盒。山达基教会,据报道是一个拥有超过十亿美元资产的组织,当然能经得起墨盒耗尽的考验。但山达基教会的高层可不这么认为,他们还收到了死亡恐吓,由此他们不得不向 FBI 申请调查“匿名者”组织的成员。</p>

|

||||

|

||||

<p>2008 年 3 月 15 日,在从伦敦到悉尼的一百多个城市里,数以千计匿名者们游行示威山达基教会。为了切合“匿名”这个主题,组织者下令所有的抗议者都应该佩戴相同的面具。深思熟虑过蝙蝠侠后,他们选定了 2005 年上映的反乌托邦电影《 V 字仇杀队》中 Guy Fawkes 的面具。“在每个大城市里都能以很便宜的价格大量购买,”广为人知的匿名者、游行组织者之一 Gregg Housh 告诉我说道。漫画式的面具上是一个的脸颊红润的男人,八字胡,有着灿烂的笑容。</p>

|

||||

|

||||

<p>匿名者们并未“瓦解”山达基教会。并且汤姆克鲁斯的那段视频任然保留在网络上。匿名者们证明了自己的顽强。组织选择了一个相当浮夸的口号:“我们是一体。绝不宽恕。永不遗忘。相信我们。”(We are Legion. We do not forgive. We do not forget. Expect us.)</p>

|

||||

<p>匿名者们并未“瓦解”山达基教会。并且汤姆克鲁斯的那段视频任然保留在网络上。匿名者们证明了自己的顽强。组织选择了一个相当浮夸的口号:“我们是军团。绝不宽恕。永不遗忘。等待我们。”(We are Legion. We do not forgive. We do not forget. Expect us.)</p>

|

||||

|

||||

<h2>3</h2>

|

||||

|

||||

<p>2010 年,Doyon 搬到了加利福尼亚州的圣克鲁斯,并加入了当地的“和平阵营”组织。利用从木材堆置场偷来的木头,他在山上盖起了一间简陋的小屋,“借用”附近住宅的 WiFi,使用太阳能电池板发电,并通过贩卖种植的大麻换取现金。</p>

|

||||

|

||||

<p>与此同时,“和平阵营”维权者们每天晚上开始在公共场所休息,以此抗议圣克鲁斯政府此前颁布的“流浪者管理法案”,他们认为这项法案严重侵犯了流浪者的生存权。Doyon 出席了“和平阵营”的会议,并在网上发起了抗议活动。他留着蓬乱的红色山羊胡,戴一顶米黄色软呢帽,像军人那样不知疲倦。因此维权者们送给了他“罪恶制裁克里斯”的称呼。</p>

|

||||

<p>与此同时,“和平阵营”维权者们每天晚上开始在公共场所休息,以此抗议圣克鲁斯政府此前颁布的“流浪者管理法案”,他们认为这项法案严重侵犯了流浪者的生存权。Doyon 出席了“和平阵营”的会议,并在网上发起了抗议活动。他留着蓬乱的红色山羊胡,戴一顶米黄色软呢帽,类似军服的服装。因此维权者们送给了他“罪恶制裁克里斯”的称呼。</p>

|

||||

|

||||

<p>“和平阵营”的成员之一 Kelley Landaker 曾几次和 Doyong 讨论入侵事宜。Doyon 有时会吹嘘自己的技术是多么的厉害,但作为一名资深程序员的 Landaker 却不为所动。“他说得很棒,但却不是行动派的,”Landaker 告诉我。不过在那种场合下,的确更需要一位富有激情的领导者,而不是埋头苦干的技术员。“他非常热情并且坦率,”另一位成员 Robert Norse 如是对我说。“他创造出了大量的能够吸引媒体眼球的话题。我从事这行已经二十年了,在这一点上他比我见过的任何人都要厉害。”</p>

|

||||

<p>“和平阵营”的成员之一 Kelley Landaker 曾几次和 Doyong 讨论入侵事宜。Doyon 有时会吹嘘自己的技术是多么的厉害,但作为一名资深程序员的 Landaker 却不为所动。“他说得很棒,但却不是行动派,”Landaker 告诉我。不过在那种场合下,的确更需要一位富有激情的领导者,而不是埋头苦干的技术员。“他非常热情并且坦率,”另一位成员 Robert Norse 如是对我说。“他创造出了大量的能够吸引媒体眼球的话题。我从事这行已经二十年了,在这一点上他比我见过的任何人都要厉害。”</p>

|

||||

|

||||

<p>Doyon 在 PLF 的上司,Commander Adama 仍然住在剑桥,并且通过电子邮件和 Doyon 保持着联络,他下令让 Doyon 潜入“匿名者”组织。以此获知其运作方式,并伺机为 PLF 招募新成员。因为癫痫基金会网站入侵事件的那段不愉快回忆,Doyon 拒绝了 Adama。Adama 给 Doyon 解释说,在“匿名者”组织里不怀好意的黑客只占极少数,与此相反,这个组织经常会有一些的轰动世界举动。Doyon 对这点表示怀疑。“4chan 怎么可能会轰动世界?”他质问道。但出于对 PLF 的忠诚,他还是答应了 Adama 的请求。</p>

|

||||

<p>Doyon 在 PLF 的上司,Commander Adama 仍然住在剑桥,并且通过电子邮件和 Doyon 保持着联络,他下令让 Doyon 监视“匿名者”组织,以此获知其运作方式,并伺机为 PLF 招募新成员。因为癫痫基金会网站入侵事件的那段不愉快回忆,Doyon 拒绝了 Adama。Adama 给 Doyon 解释说,在“匿名者”组织里不怀好意的黑客只占极少数,与此相反,这个组织经常会有一些的轰动世界举动。Doyon 对这点表示怀疑。“4chan 怎么可能会有轰动世界的大举动?”他质问道。但出于对 PLF 的忠诚,他还是答应了 Adama 的请求。</p>

|

||||

|

||||

<p>Doyon 经常带着一台宏基笔记本电脑出入于圣克鲁斯的一家名为 Coffee Roasting Company 的咖啡厅。“匿名者”组织的 IRC 聊天室主频道无需密码就能进入。Doyon 使用 PLF 的昵称进行登录并加入了聊天室。一段时间后,他发现了组织内大量的专用匿名者行动聊天频道,这些频道的规模更小,并相互重复。要想参与行动,你必须知道行动的专用聊天频道名称,并且聊天频道随时会因为陌生的闯入者而进行变更。这套交流系统并不具备较高的安全系数,但它的确很凑效。“这些专用行动聊天频道确保了行动机密的高度集中,”麦吉尔大学的人类学家 Gabriella Coleman 告诉我。</p>

|

||||

<p>Doyon 经常带着一台宏基笔记本电脑出入于圣克鲁斯的一家名为 Coffee Roasting Company 的咖啡厅。“匿名者”组织的 IRC 聊天室主频道无需密码就能进入。Doyon 使用 PLF 的昵称进行登录并加入了聊天室。一段时间后,他发现了组织内大量的专用匿名者行动聊天频道,这些频道的规模更小,更多专门的组内匿名者间对话相互重复。要想参与行动,你必须知道行动的专用聊天频道名称,并且聊天频道随时会因为陌生的闯入者而进行变更。这套交流系统并不具备较高的安全系数,但它的确很凑效。“这些专用行动聊天频道确保了行动机密的高度集中”麦吉尔大学的人类学家 Gabriella Coleman 告诉我。</p>

|

||||

|

||||

<p>有些匿名者提议了一项行动,名为“反击行动”。如同新闻记者 Parmy Olson 于 2012 年在书中写道的,“我们是匿名者,”这项行动成为了又一次支援文件共享网站,如 Napster 的后继者海盗湾(Pirate Bay),的行动的前奏,但随后其目标却扩展到了政治领域。2010 年末,在美国国务院的要求下,包括万事达、Visa、PayPal 在内的几家公司终止了对维基解密,一家公布了成百上千份外交文件的民间组织,的捐助。在一段网络视频中,“匿名者”组织扬言要进行报复,发誓会对那些阻碍维基解密发展的公司进行惩罚。Doyon 被这种抗议企业的精神所吸引,决定参加这次行动。</p>

|

||||

<p>有些匿名者提议了一项行动,名为“反击行动”。如同新闻记者 Parmy Olson 于 2012 年在书中写道的,“我们是匿名者,” 这项行动是以又一次支持文件共享的网站而创立,如同 Napster 的后继者海盗湾(Pirate Bay),但随后其目标却扩展到了政治领域。2010 年末,在美国国务院的要求下,包括万事达、Visa、PayPal 在内的几家公司终止了对维基解密的捐助,维基解密是一家公布了成百上千份外交文件的自发性组织。在一段在线视频中,“匿名者”组织扬言要进行报复,发誓会对那些阻碍维基解密发展的公司进行惩罚。Doyon 被这种抗议企业的精神所吸引,决定参加这次行动。</p>

|

||||

|

||||

<center><img src="http://www.newyorker.com/wp-content/uploads/2014/09/140908_a18473-600.jpg" /></center>

|

||||

|

||||

<center><small>潘多拉的魔盒</small></center>

|

||||

|

||||

<p>在十二月初的“反击行动”中,“匿名者”组织指导那些新成员,或者说新兵,关于“如何他【哔~】加入组织”,教程中提到“首先配置你【哔~】的网络,这他【哔~】的很重要。”同时他们被要求下载“低轨道离子炮”,一款易于使用的开源软件。Doyon 下载了软件并在聊天室内等待着下一步指示。当开始的指令发出后,数千名匿名者将同时发动进攻。Doyon 收到了含有目标网址的指令——目标是,www.visa.com——同时,在软件的右上角有个按钮,上面写着“IMMA CHARGIN MAH LAZER.”(“反击行动”同时也发动了大量的复杂精密的入侵进攻。)几天后,“反击行动”攻陷了万事达、Visa、PayPal 公司的主页。在法院的控告单上,PayPal 称这次攻击给公司造成了 550 万美元的损失。</p>

|

||||

<p>在十二月初的“反击行动”中,“匿名者”组织指导那些新成员,或者说新兵,去看标题为“如何加入那个【哔~】的Hive”,参与者被要求“首先配置他们【哔~】的网络,这【哔~】的很重要。”同时他们被要求下载“低轨道离子炮”,一款易于使用的开源软件。Doyon 下载了软件并在聊天室内等待着下一步指示。当开始的指令发出后,数千名匿名者将同时发动进攻。Doyon 进入了目标网址——www.visa.com——同时,在软件的右上角有个按钮,上面写着“IMMA CHARGIN MAH LAZER.”(“反击行动”同时也发动了大量的复杂精密的入侵进攻。)几天后,“反击行动”攻陷了万事达、Visa、PayPal 公司的主页。在法院的控告单上,PayPal 称这次攻击给公司造成了 550 万美元的损失。</p>

|

||||

|

||||

<p>但对 Doyon 来说,这是切实的激进主义体现。在剑桥反对种族隔离的行动中,他不能立即看到结果;而现在,只需指尖轻轻一点,就可以在攻陷大公司网站的行动中做出自己的贡献。隔天,赫芬顿邮报上出现了“万事达沦陷”的醒目标题。一位得意洋洋的匿名者发推特道:“有些事情维基解密是无能为力的。但这些事情却可以由‘反击行动’来完成。”</p>

|

||||

<p>但对 Doyon 来说,这是切实的激进主义体现。在剑桥反对种族隔离的行动中,他不能即可见效;而现在,只需指尖轻轻一点,就可以在攻陷大公司网站的行动中做出自己的贡献。隔天,赫芬顿邮报上出现了“万事达沦陷”的醒目标题。一位得意洋洋的匿名者发推特道:“有些事情维基解密是无能为力的。但这些事情却可以由‘反击行动’来完成。”</p>

|

||||

|

||||

<h2>4</h2>

|

||||

|

||||

<p>2010 年的秋天,“和平阵营”的抗议活动终止,政府只做出了轻微的让步,“流浪者管理法案”仍然有效。Doyon 希望通过借助“匿名者”组织的方略扭转局势。他回忆当时自己的想法,“也许我可以发动‘匿名者’组织来教训这种看似不堪一击的市政府网站,这些人绝对会【哔~】地赞同我的提议。最终我们将使得市政府永久性的废除‘流浪者管理法案’。”</p>

|

||||

<p>2010 年的秋天,“和平阵营”的抗议活动终止,政府只做出了略微让步,“流浪者管理法案”仍然有效。Doyon 希望通过借助“匿名者”组织的方略扭转局势。他回忆当时自己的想法,“也许我可以发动‘匿名者’组织来教训这种看似不堪一击的市政府网站,它们绝对会【哔~】地沦陷。最终我们使得市政府永久性废除‘流浪者管理法案’。”</p>

|

||||

|

||||

<p>Joshua Covelli 是一位 25 岁的匿名者,他的昵称是“Absolem”,他非常钦佩 Doyon 的果敢。“现在我们的组织完全是他【哔~】各种混乱的一盘散沙,”Covelli 告诉我道。在“Commander X”加入之后,“组织似乎开始变得有模有样了。”Covelli 的工作是俄亥俄州费尔伯恩的一所大学接待员,他从不了解任何有关圣克鲁斯的政治。但是当 Doyon 提及帮助“和平阵营”抗击活动的计划后,Covelli 立即回复了一封表示赞同的电子邮件:“我期待这样的行动很久了。”</p>

|

||||

<p>Joshua Covelli 是一位 25 岁的匿名者,他的昵称是“Absolem”,他非常钦佩 Doyon 的果敢。“过去我们的组织完全是各种混乱的一盘散沙,”Covelli 告诉我。在“Commander X”加入之后,“组织似乎开始变得有模有样了。”Covelli 的工作是俄亥俄州费尔伯恩的一所大学接待员,他从不了解任何有关圣克鲁斯的政治。但是当 Doyon 提及帮助“和平阵营”抗击活动的计划后,Covelli 立即回复了一封表示赞同的电子邮件:“我期待参加这样的行动已经很久了。”</p>

|

||||

|

||||

<p>Doyon 使用 PLF 的昵称邀请 Covelli 在 IRC 聊天室进行了一次秘密谈话:</p>

|

||||

|

||||

<blockquote>Absolem:抱歉,有个比较冒犯的问题...请问 PLF 也是组织的一员吗?</blockquote>

|

||||

<blockquote>Absolem:抱歉,有个比较冒犯的问题...请问 PLF 是组织的一部分还是分开的?</blockquote>

|

||||

|

||||

<blockquote>Absolem:我会这么问,是因为我在频道里看过你的聊天记录,你像是一名训练有素的黑客,不太像是来自组织里的成员。</blockquote>

|

||||

<blockquote>Absolem:我会这么问,是因为看你们聊天,觉得你们都是非常有组织的。</blockquote>

|

||||

|

||||

<blockquote>PLF:不不不,你的问题一点也不冒犯。很高兴遇到你。PLF 是一个来自波士顿的黑客组织,已经成立 22 年了。我在 1981 年就开始了我的黑客生涯,但那时我并没有使用计算机,而是使用的 PBX(Private Branch Exchange,电话交换机)。</blockquote>

|

||||

|

||||

<blockquote>PLF:我们组织内所有成员的年龄都超过了 40 岁。我们当中有退伍士兵和学者。并且我们的成员“Commander Adama”,正在躲避一大帮警察还有间谍的追捕。</blockquote>

|

||||

|

||||

<blockquote>Absolem:听起来很棒!我对这次行动很感兴趣,不知道我是否可以提供一些帮助,我们的组织实在是太混乱了。我的电脑技术还不错,但我在入侵技术上还完全是一个新手。我有一些小工具,但不知道怎么去使用它们。</blockquote>

|

||||

<blockquote>Absolem:听起来很棒!我对这次行动很感兴趣,不过“匿名者”组织看起来太混乱无序,不知道我是否可以提供一些帮助。我的电脑技术还不错,但我在入侵技术上还完全是一个新手。我有一些小工具,但不知道怎么去使用它们。</blockquote>

|

||||

|

||||

<p>庄重的入会仪式后,Doyon 正式接纳 Covelli 加入 PLF:</p>

|

||||

|

||||

<blockquote>PLF:把所有可能对你不利的【哔~】敏感文件加密。</blockquote>

|

||||

<blockquote>PLF:把所有可能使你受牵连的敏感文件加密。</blockquote>

|

||||

|

||||

<blockquote>PLF:还有,想要联系任何一位 PLF 成员的话,给我发消息就行。从现在起,请叫我... Commander X。</blockquote>

|

||||

|

||||

<p>2012 年,美联社称“匿名者”组织为“一伙训练有素的黑客”;Quinn Norton 在《连线》杂志上发文称“‘匿名者’组织可以入侵任何坚不可摧的网站”,并在文末赞扬他们为“一群卓越的民间黑客”。事实上,有些匿名者的确是很有天赋的程序员,但绝大部分成员根本不懂任何技术。人类学家 Coleman 告诉我只有大约五分之一的匿名者是真正的黑客——其他匿名者则是“极客与抗议者”。</p>

|

||||

<p>2012 年,美联社称“匿名者”组织为“一帮专家级的黑客”;Quinn Norton 在《连线》杂志上发文称“‘匿名者’组织可以入侵任何坚不可摧的网站”,并在文末赞扬他们为“一群卓越的民间黑客”。事实上,有些匿名者的确是很有天赋的程序员,但绝大部分成员根本不懂任何技术。人类学家 Coleman 告诉我只有大约五分之一的匿名者是真正的黑客——其他匿名者则是“极客与抗议者”。</p>

|

||||

|

||||

<p>2010 年 12 月 16 日,Doyon 以 Commander X 的身份向几名记者发送了电子邮件。“明天当地时间 12:00 的时候,‘人民解放阵线’组织与‘匿名者’组织将大举进攻圣克鲁斯政府网站”,他在邮件中写道,“12:30 之后我们将恢复网站的正常运行。”</p>

|

||||

<p>2010 年 12 月 16 日,Doyon 以 Commander X 的身份向几名记者发送了电子邮件。“明天当地时间 12:00 的时候,‘人民解放阵线’组织与‘匿名者’组织将从互联网中删除圣克鲁斯政府网站”,他在邮件中写道,“12:30 之后我们将恢复网站的正常运行。”</p>

|

||||

|

||||

<p>圣克鲁斯数据中心的工作人员收到了警告,匆忙地准备应对攻击。他们在服务器上运行起安全扫描软件,并向当地的互联网供应商 AT & T 求助,后者建议他们向 FBI 报警。</p>

|

||||

|

||||

@ -132,7 +132,7 @@

|

||||

|

||||

<center><small>“Zach 很聪明... 并且... 是一个天才... 但.. 你们... 不在一个班。”</small></center>

|

||||

|

||||

<p>Doyon 引用了一句电影台词。“拼命地跑,”他说。“我会躲起来,尽可能保持我的行动自由,用尽全力和这帮杂种们作斗争。”Frey 给了他两张 20 美元的钞票并祝他好运。</p>

|

||||

<p>Doyon 引用了一句电影台词。“拼命地跑,”他说。“我会躲起来,尽可能保持我的行动自由,用尽全力和这帮混蛋们作斗争。”Frey 给了他两张 20 美元的钞票并祝他好运。</p>

|

||||

|

||||

<h2>5</h2>

|

||||

|

||||

@ -142,35 +142,35 @@

|

||||

|

||||

<p>“突尼斯,” Brown 答道。</p>

|

||||

|

||||

<p>“我知道,那是中东地区的一个国家,” Doyon 继续问,“然后呢?”</p>

|

||||

<p>“我知道,那是中东地区的一个国家,” Doyon 继续问,“具体任务是什么呢?”</p>

|

||||

|

||||

<p>“我们准备打倒那里的独裁者,” Brown 再次答道。</p>

|

||||

|

||||

<p>“啊?!那里有一位独裁者吗?” Doyon 有点惊讶。</p>

|

||||

|

||||

<p>几天后,“突尼斯行动”正式展开。Doyon 作为参与者向突尼斯政府域名下的电子邮箱发送了大量的垃圾邮件,以此阻塞其服务器。“我会提前写好关于那次行动邮件,接着一次又一次地把它们发送出去,” Doyon 说,“有时候实在没有时间,我就只简短的写上一句问候对方母亲的的话,然后发送出去。”短短一天时间里,匿名者们就攻陷了包括突尼斯证券交易所、工业部、总统办公室、总理办公室在内的多个网站。他们把总统办公室网站的首页替换成了一艘海盗船的图片,并配以文字“‘报复’是个贱人,不是吗?”</p>

|

||||

<p>几天后,“突尼斯行动”正式展开。Doyon 作为参与者向突尼斯政府域名下的电子邮箱发送了大量的垃圾邮件,以此阻塞其服务器。“我会提前写好关于那次行动邮件,接着一次又一次地把它们发送出去,” Doyon 说,“有时候实在没有时间,我就只简短的写上一句‘问候对方母亲’的话,然后发送出去。”短短一天时间里,匿名者们就攻陷了包括突尼斯证券交易所、工业部、总统办公室、总统办公室在内的多个网站。他们把总统办公室网站的首页替换成了一艘海盗船的图片,并配以文字“恶有恶报,不是吗?”</p>

|

||||

|

||||

<p>Doyon 不时会谈起他的网上“战斗”经历,似乎他刚从弹坑里爬出来一样。“伙计,自从干了这行我就变黑了,”他向我诉苦道。“你看我的脸,全是抽烟的时候熏的——而且可能已经粘在我的脸上了。我仔细地照过镜子,毫不夸张地说我简直就是一头棕熊。”很多个夜晚,Doyon 都是在 Golden Gate 公园里露营过夜的。“我就那样干了四天,我看了看镜子里的‘我’,感觉还可以——但其实我觉得‘我’也许应该去吃点东西、洗个澡了。”</p>

|

||||

|

||||

<p>“匿名者”组织接着又在 YouTube 上声明了将要进行的一系列行动:“利比亚行动”、“巴林行动”、“摩洛哥行动”。作为解放广场事件的抗议者,Doyon 参与了“埃及行动”。在 Facebook 针对这次行动的宣传专页中,有一个为当地示威者准备的“行动套装”链接。“行动套装”通过文件共享网站 Megaupload 进行分发,其中含有一份加密软件以及应对瓦斯袭击的保护措施。并且在不久后,埃及政府关闭了埃及的所有互联网及子网络的时候,继续向当地抗议者们提供连接网络的方法。</p>

|

||||

<p>“匿名者”组织接着又在 YouTube 上声明了将要进行的一系列行动:“利比亚行动”、“巴林行动”、“摩洛哥行动”。作为解放广场事件的抗议者,Doyon 参与了“埃及行动”。在 Facebook 针对这次行动的宣传专页中,有一个为当地示威者准备的“行动套装”链接。“行动套装”通过文件共享网站 Megaupload 进行分发,其中含有一份加密软件以及应对瓦斯袭击的保护措施。在埃及政府关闭了埃及的所有互联网及子网络的时候不久后,“匿名者”组织继续向当地抗议者们提供连接网络的方法。</p>

|

||||

|

||||

<p>2011 年夏季,Doyon 接替 Adama 成为 PLF 的最高指挥官。Doyon 招募了六个新成员,并力图发展 PLF 成为“匿名者”组织的中坚力量。Covelli 成为了他的其中一技位术顾问。另一名黑客 Crypt0nymous 负责在 YouTube 上发布视频;其余的人负责研究以及组装电子设备。与松散的“匿名者”组织不同,PLF 内部有一套极其严格的管理体系。“Commander X 事必躬亲,”Covelli 说。“这是他的行事风格,也许不能称之为一种风格。”一位创立了 AnonInsiders 博客的黑客通过加密聊天告诉我,他认为 Doyon 总是一意孤行——这在“匿名者”组织中是很罕见的现象。“当我们策划发起一项行动时,他并不在乎其他人是否同意,”这位黑客补充道,“他会一个人列出行动方案,确定攻击目标,登录 IRC 聊天室,接着告诉所有人在哪里‘碰头’,然后发起 DDoS 攻击。”</p>

|

||||

<p>2011 年夏季,Doyon 接替 Adama 成为 PLF 的最高指挥官。Doyon 招募了六个新成员,并力图发展 PLF 成为“匿名者”组织的中坚力量。Covelli 成为了他的其中一位技术顾问。另一名黑客 Crypt0nymous 负责在 YouTube 上发布视频;其余的人负责研究以及组装电子设备。与松散的“匿名者”组织不同,PLF 内部有一套极其严格的管理体系。“Commander X 事必躬亲,”Covelli 说。“这是他的行事风格,要么不做,要么做好。”一位创立了 AnonInsiders 博客的黑客通过加密聊天告诉我,他认为 Doyon 总是一意孤行——这在“匿名者”组织中是很罕见的现象。“当我们策划发起一项行动时,他并不在乎其他人是否同意,”这位黑客补充道,“他会一个人列出行动方案,确定攻击目标,登录 IRC 聊天室,接着告诉所有人在哪里‘碰头’,然后发起 DDoS 攻击。”</p>

|

||||

|

||||

<p>一些匿名者把 PLF 视为可有可无的部分,认为 Doyon 的所作所为完全是个天大的笑柄。“他是因为吹牛出名的,”另一名昵称为 Tflow 的匿名者 Mustafa Al-Bassam 告诉我。不过,即使是那些极度反感 Doyon 的狂妄自大的人,也不得不承认他在“匿名者”组织发展过程中的重要性。“他所倡导的强硬路线有时很凑效,有时则完全不起作用,” Gregg Housh 说,并且补充道自己和其他优秀的匿名者都曾遇到过相同的问题。</p>

|

||||

<p>一些匿名者把 PLF 视为“面子项目”,认为 Doyon 的所作所为完全是个笑柄。“他是因为吹牛出名的,”另一名昵称为 Tflow 的匿名者 Mustafa Al-Bassam 告诉我。不过,即使是那些极度反感 Doyon 的狂妄自大的人,也不得不承认他在“匿名者”组织发展过程中的重要性。“他所倡导的强硬路线有时很凑效,有时则是碍事,” Gregg Housh 说,并且补充道自己和其他优秀的匿名者都曾遇到过相同的问题。</p>

|

||||

|

||||

<p>“匿名者”组织对外坚持声称自己是不分层次的平等组织。在由 Brian Knappenberger 制作的一部纪录片,《我们是一个团体》中,一名成员使用“一群鸟”来比喻组织,它们轮流领飞带动整个组织不断前行。Gabriella Coleman 告诉我,这个比喻不太切合实际,“匿名者”组织内实际上早就出现了一个非正式的领导阶层。“领导者非常重要,”她说。“有四五个人可以看做是我们的领头羊。”她把 Doyon 也算在了其中。但是匿名者们仍然倾向于反抗这种具有体系的组织结构。在一本即将出版的关于“匿名者”组织的书,《黑客、骗子、告密者、间谍》中,Coleman 这么写道,在匿名者中,“成员个体以及那些特立独行的人依然在一些重大事件上保持着服从的态度,优先考虑集体——特别是那些能引发强烈争端的事件。”</p>

|

||||

<p>“匿名者”组织对外坚持声称自己是不分层次的平等组织。在由 Brian Knappenberger 制作的一部纪录片,《我们是军团》中,一名成员使用“一群鸟”来比喻组织,它们轮流领飞带动整个组织不断前行。Gabriella Coleman 告诉我,这个比喻不太切合实际,“匿名者”组织内实际上早就出现了一个非正式的领导阶层。“领导者非常重要,”她说。“有四五个人可以看做是我们的领头羊。”她把 Doyon 也算在了其中。但是匿名者们仍然倾向于反抗这种体制结构。在一本即将出版的关于“匿名者”组织的书,《黑客、骗子、告密者、间谍》中,Coleman 这么写道,在匿名者中,“成员个体以及那些特立独行的人依然在一些重大事件上保持着服从的态度,优先考虑集体——特别是那些能引发强烈争端的事件。”</p>

|

||||

|

||||

<p>匿名者们谑称那些特立独行的成员为“自尊心超强的疯子”和“想让自己出名的疯子”。(不过许多匿名者已经不会再随便给他人取那种具有冒犯性的称号了。)“但还是有令人惊讶的极少数成员违反规则”打破传统上的看法,Coleman 说。“这么做的人,像 Commander X 这样的,都会在组织里受到排斥。”去年,在一家网络论坛上,有人写道,“当他开始把自己比作‘蝙蝠侠’的时候我就不想理他了。”</p>

|

||||

|

||||

<p>Peter Fein,是一位以 n0pants 为昵称而出名的网络激进分子,也是众多反对 Doyon 的浮夸行为的众多匿名者之一。Fein 浏览了 PLF 的网站,其封面上有一个徽章,还有关于组织的宣言——“为了解放众多人类的灵魂而不断战斗”。Fein 沮丧的发现 Doyon 早就使用真名为这家网站注册过了,使他这种,以及其他想要找事的匿名者们无机可乘。“如果有人要对我的网站进行 DDoS 攻击,那完全可以,” Fein 回想起通过私密聊天告诉 Doyon 时的情景,“但如果你要这么做了的话,我会揍扁你的屁股。”</p>

|

||||

|

||||

<p>2011 年 2 月 5 日,《金融时报》报道了在一家名为 HBGary Federal 的网络安全公司里,首席执行官 HBGary Federal 已经得到了“匿名者”组织骨干成员名单的消息。Barr 的调查结果表明,三位最高领导人其中之一就是‘ Commander X’,这位潜伏在加利福尼亚州的黑客有能力“策划一些大型网络攻击事件”。Barr 联系了 FBI 并提交了自己的调查结果。</p>

|

||||

<p>2011 年 2 月 5 日,《金融时报》报道了在一家名为 HBGary Federal 的网络安全公司,首席执行官 HBGary Federal 已经得到了“匿名者”组织骨干成员名单的消息。Barr 的调查结果表明,三位最高领导人其中之一就是‘ Commander X’,是一位潜伏在加利福尼亚州的黑客而且有能力“策划一些大型网络攻击事件”。Barr 联系了 FBI 并提交了自己的调查结果。</p>

|

||||

|

||||

<p>和 Fein 一样,Barr 也发现了 PLF 网站的注册法人名为 Christopher Doyon,地址是 Haight 大街。基于 Facebook 和 IRC 聊天室的调查,Barr 断定‘ Commander X’的真实身份是一名家庭住址在 Haight 大街附近的网络激进分子 Benjamin Spock de Vries。Barr 通过 Facebook 和 de Vries 取得了联系。“请告诉组织里的普通阶层,我并不是来抓你们的,” Barr 留言道,“只是想让‘领导阶层’知晓我的意图。”</p>

|

||||

<p>和 Fein 一样,Barr 也发现了 PLF 网站的注册法人名为 Christopher Doyon,地址是 Haight 大街。基于 Facebook 和 IRC 聊天室的调查,Barr 断定‘ Commander X’的真实身份是一名家庭住址在 Haight 大街附近的网络激进分子 Benjamin Spock de Vries。Barr 通过 Facebook 和 de Vries 取得了联系。“请告诉我组织里的其他人,我并不是来抓你们的,” Barr 留言道,“只是想让‘领导阶层’知晓我的意图。”</p>

|

||||

|

||||

<p>“‘领导阶层’? 2333,笑死我了,” de Vries 回复道。</p>

|

||||

|

||||

<p>《金融时报》发布报道的第二天,“匿名者”组织就进行了反击。HBGary Federal 的网站被进行了恶意篡改。Barr 的私人 Twitter 账户被盗取,他的上千封电子邮件被泄漏到了网上,同时匿名者们还公布了他的住址以及其他私人信息——这是一系列被称作“doxing”的惩罚。不到一个月后,Barr 就从 HBGary Federal 辞职了。</p>

|

||||

<p>《金融时报》发布报道的第二天,“匿名者”组织就进行了反击。HBGary Federal 的网站被进行了恶意篡改。Barr 的私人 Twitter 账户被盗取,他的上千封电子邮件被泄漏到了网上,同时匿名者们还公布了他的住址以及其他私人信息——这就是“冲动的惩罚”。不到一个月后,Barr 就从 HBGary Federal 辞职了。</p>

|

||||

|

||||

<h2>6</h2>

|

||||

|

||||

@ -180,17 +180,17 @@

|

||||

|

||||

<center><small>“这是我在 TED 夏令营里学到的东西。”</small></center>

|

||||

|

||||

<p>他时刻关注着“匿名者”组织的内部消息。那年春季,在 Barr 调查报告中提到的六位匿名者精锐成员,组建了“LulzSec 安全”组织(Lulz Security),简称 LulzSec。这个组织正如其名,这些成员认为“匿名者”组织已经变得太过严肃;他们的目标是重新引发起那些“能挑起强烈争端”的事件。当“匿名者”组织还在继续支持“阿拉伯之春”的抗议者的时候,LulzSec 入侵了公共电视网(Public Broadcasting Service,PBS)网站,并发布了一则虚假声明称已故说唱歌手 Tupac Shakur 仍然生活在新西兰。</p>

|

||||