mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

This commit is contained in:

commit

9c3742f781

@ -1,11 +1,13 @@

|

||||

全球化思考:怎样克服交流中的文化差异

|

||||

======

|

||||

这有一些建议帮助你的全球化开发团队能够更好地理解你们的讨论并能参与其中。

|

||||

|

||||

> 这有一些建议帮助你的全球化开发团队能够更好地理解你们的讨论并能参与其中。

|

||||

|

||||

|

||||

|

||||

几周前,我见证了两位同事之间一次有趣的互动,他们分别是 Jason,我们的一位美国员工,和 Raj,一位来自印度的访问工作人员。

|

||||

|

||||

Raj 在印度时,他一般会通过电话参加美国中部时间上午 9 点的每日立会,现在他到美国工作了,就可以和组员们坐在同一间会议室里开会了。Jason 拦下了 Raj,说:“ Raj 你要去哪?你不是一直和我们开电话会议吗?你突然出现在会议室里我还不太适应。” Raj 听了说,“是这样吗?没问题。”就回到自己工位前准备和以前一样参加电话会议了。

|

||||

Raj 在印度时,他一般会通过电话参加美国中部时间上午 9 点的每日立会,现在他到美国工作了,就可以和组员们坐在同一间会议室里开会了。Jason 拦下了 Raj,说:“Raj 你要去哪?你不是一直和我们开电话会议吗?你突然出现在会议室里我还不太适应。” Raj 听了说,“是这样吗?没问题。”就回到自己工位前准备和以前一样参加电话会议了。

|

||||

|

||||

我去找 Raj,问他为什么不去参加每日立会,Raj 说 Jason 让自己给组员们打电话参会,而与此同时,Jason 也在会议室等着 Raj 来参加立会。

|

||||

|

||||

@ -19,7 +21,7 @@ Jason 明显是在开玩笑,但 Raj 把它当真了。这就是在两人互相

|

||||

|

||||

现在是全球化时代,我们的同事很可能不跟我们面对面接触,甚至不在同一座城市,来自不同的国家。越来越多的技术公司拥有全球化的工作场所,和来自世界各地的员工,他们有着不同的背景和经历。这种多样性使得技术公司能够在这个快速发展的科技大环境下拥有更强的竞争力。

|

||||

|

||||

但是这种地域的多样性也会给团队带来挑战。管理和维持团高性能的团队发展对于同地协作的团队来说就有着很大难度,对于有着多样背景成员的全球化团队来说,无疑更加困难。成员之间的交流会发生延迟,误解时有发生,成员之间甚至会互相怀疑,这些都会影响着公司的成功。

|

||||

但是这种地域的多样性也会给团队带来挑战。管理和维持高性能的团队发展对于同地协作的团队来说就有着很大难度,对于有着多样背景成员的全球化团队来说,无疑更加困难。成员之间的交流会发生延迟,误解时有发生,成员之间甚至会互相怀疑,这些都会影响着公司的成功。

|

||||

|

||||

到底是什么因素让全球化交流间发生误解呢?我们可以参照 Erin Meyer 的书《[文化地图][2]》,她在书中将全球文化分为八个类型,其中美国文化被分为低语境文化,与之相对的,日本为高语境文化。

|

||||

|

||||

@ -71,7 +73,7 @@ Jason 明显是在开玩笑,但 Raj 把它当真了。这就是在两人互相

|

||||

|

||||

保持长久关系最好的方法是和你的组员们单独见面。如果你的公司可以报销这些费用,那么努力去和组员们见面吧。和一起工作了很长时间的组员们见面能够使你们的关系更加坚固。我所在的公司就有着周期性交换员工的传统,每隔一段时间,世界各地的员工就会来到美国工作,美国员工再到其他分部工作。

|

||||

|

||||

另一种聚齐组员们的机会的研讨会。研讨会创造的不仅是学习和培训的机会,你还可以挤出一些时间和组员们培养感情。

|

||||

另一种聚齐组员们的机会是研讨会。研讨会创造的不仅是学习和培训的机会,你还可以挤出一些时间和组员们培养感情。

|

||||

|

||||

在如今,全球化经济不断发展,拥有来自不同国家和地区的员工对维持一个公司的竞争力来说越来越重要。即使组员们来自世界各地,团队中会出现一些交流问题,但拥有一支国际化的高绩效团队不是问题。如果你在工作中有什么促进团队交流的小窍门,请在评论中告诉我们吧。

|

||||

|

||||

@ -83,7 +85,7 @@ via: https://opensource.com/article/18/10/think-global-communication-challenges

|

||||

作者:[Avindra Fernando][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[Valoniakim](https://github.com/Valoniakim)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11691-1.html)

|

||||

[#]: subject: (Easily Upload Text Snippets To Pastebin-like Services From Commandline)

|

||||

[#]: via: (https://www.ostechnix.com/how-to-easily-upload-text-snippets-to-pastebin-like-services-from-commandline/)

|

||||

[#]: author: (SK https://www.ostechnix.com/author/sk/)

|

||||

@ -12,7 +12,7 @@

|

||||

|

||||

|

||||

|

||||

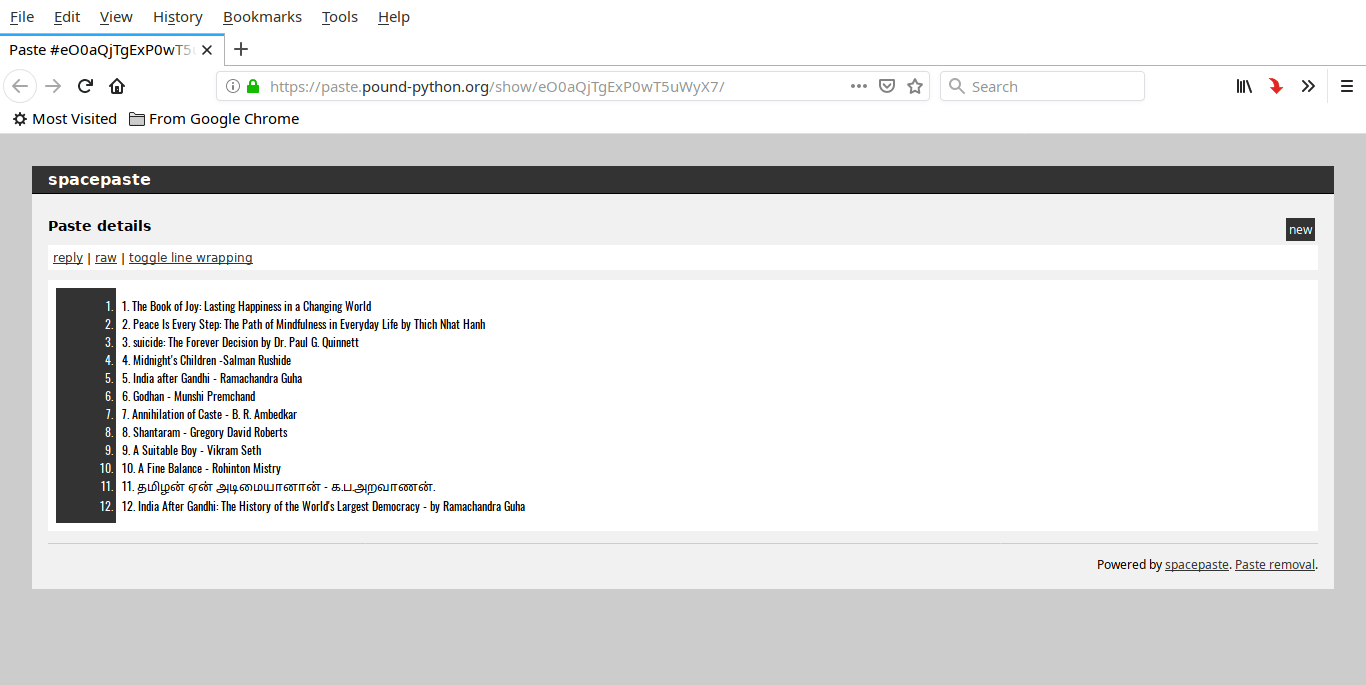

每当需要在线共享代码片段时,我们想到的第一个便是 Pastebin.com,这是 Paul Dixon 于 2002 年推出的在线文本共享网站。现在,有几种可供选择的文本共享服务可以上传和共享文本片段、错误日志、配置文件、命令输出或任何类型的文本文件。如果你碰巧经常使用各种类似于 Pastebin 的服务来共享代码,那么这对你来说确实是个好消息。向 Wgetpaste 打个招呼吧,它是一个命令行 BASH 实用程序,可轻松地将文本摘要上传到类似 pastebin 的服务中。使用 Wgetpaste 脚本,任何人都可以与自己的朋友、同事或想在类似 Unix 的系统中的命令行中查看/使用/查看代码的人快速共享文本片段。

|

||||

每当需要在线共享代码片段时,我们想到的第一个便是 Pastebin.com,这是 Paul Dixon 于 2002 年推出的在线文本共享网站。现在,有几种可供选择的文本共享服务可以上传和共享文本片段、错误日志、配置文件、命令输出或任何类型的文本文件。如果你碰巧经常使用各种类似于 Pastebin 的服务来共享代码,那么这对你来说确实是个好消息。向 Wgetpaste 打个招呼吧,它是一个命令行 BASH 实用程序,可轻松地将文本摘要上传到类似 Pastebin 的服务中。使用 Wgetpaste 脚本,任何人都可以与自己的朋友、同事或想在类似 Unix 的系统中的命令行中查看/使用/审查代码的人快速共享文本片段。

|

||||

|

||||

### 安装 Wgetpaste

|

||||

|

||||

@ -84,7 +84,7 @@ Your paste can be seen here: https://paste.pound-python.org/show/eO0aQjTgExP0wT5

|

||||

|

||||

|

||||

|

||||

你也可以使用 `tee` 命令显示粘贴的内容,而不是盲目地上传它们。

|

||||

你也可以使用 `tee` 命令显示粘贴的内容,而不是盲目地上传它们。

|

||||

|

||||

为此,请使用如下的 `-t` 选项。

|

||||

|

||||

@ -233,7 +233,7 @@ via: https://www.ostechnix.com/how-to-easily-upload-text-snippets-to-pastebin-li

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,57 +1,62 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( luming)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: translator: (LuuMing)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11693-1.html)

|

||||

[#]: subject: (Easy means easy to debug)

|

||||

[#]: via: (https://arp242.net/weblog/easy.html)

|

||||

[#]: author: (Martin Tournoij https://arp242.net/)

|

||||

|

||||

简单就是易于调试

|

||||

======

|

||||

对于框架、库或者工具来说,怎样做才算是“简单”?也许有很多的定义,但我的理解通常是易于调试。我经常见到人们宣传某个特定的程序、框架、库、文件格式是简单的,因为它们会说“看,我只需要这么一点工作量就能够完成某项工作,这太简单了”。非常好,但并不完善。

|

||||

|

||||

对于框架、库或者工具来说,怎样做才算是“简单”?也许有很多的定义,但我的理解通常是**易于调试**。我经常见到人们宣传某个特定的程序、框架、库、文件格式或者其它什么东西是简单的,因为他们会说“看,我只需要这么一点工作量就能够完成某项工作,这太简单了”。非常好,但并不完善。

|

||||

|

||||

你可能只编写一次软件,但几乎总要经历好几个调试周期。注意我说的调试周期并不意味着“代码里面有 bug 你需要修复”,而是说“我需要再看一下这份代码来修复 bug”。为了调试代码,你需要理解它,因此“易于调试”延伸来讲就是“易于理解”。

|

||||

|

||||

抽象使得程序易于编写,但往往是以难以理解为代价。有时候这是一个很好的折中,但通常不是。大体上,如果能使程序在日后易于理解和调试,我很乐意花更多的时间来写一些东西,因为这样可以省时间。

|

||||

抽象使得程序易于编写,但往往是以难以理解为代价。有时候这是一个很好的折中,但通常不是。大体上,如果能使程序在日后易于理解和调试,我很乐意花更多的时间来写一些东西,因为这样实际上更省时间。

|

||||

|

||||

简洁并不是让程序易于调试的唯一方法,但它也许是最重要的。良好的文档也是,但不幸的是它太少了。(注意,质量并不取决于字数!)

|

||||

简洁并不是让程序易于调试的**唯一**方法,但它也许是最重要的。良好的文档也是,但不幸的是好的文档太少了。(注意,质量并**不**取决于字数!)

|

||||

|

||||

这种影响是真是存在的。难以调试的程序会有更多的 bug,即使最初的 bug 数量与易于调试的程序完全相同,这简简单单是因为修复 bug 更加困难、更花时间。

|

||||

这种影响是真是存在的。难以调试的程序会有更多的 bug,即使最初的 bug 数量与易于调试的程序完全相同,而是因为修复 bug 更加困难、更花时间。

|

||||

|

||||

在公司的环境中,把时间花在难以修复的 bug 上通常被认为是不划算的投资。而在开源的环境下,人们花的时间会更少。(大多数项目都有一个或多个定期的维护者,但成百上千的贡献者提交的仅只是几个补丁)

|

||||

|

||||

这并不全是 1974 年由 Brian W. Kernighan 和 P. J. Plauger 合著的小说《编程风格的元素》中的观点:

|

||||

---

|

||||

|

||||

这并不全是 1974 年由 Brian W. Kernighan 和 P. J. Plauger 合著的《<ruby>编程风格的元素<rt>The Elements of Programming Style</rt></ruby>》中的观点:

|

||||

|

||||

> 每个人都知道调试比起编写程序困难两倍。当你写程序的时候耍小聪明,那么将来应该怎么去调试?

|

||||

|

||||

我见过许多写起来精妙,但却导致难以调试的代码。我会在下面列出几种样例。争论这些东西本身有多坏并不是我的本意,我仅想强调对于“易于使用”和“易于调试”之间的折中。

|

||||

* <ruby>ORM<rt>对象关系映射</rt></ruby> 库可以让数据库查询变得简单,代价是一旦你想解决某个问题,事情就变得难以理解。

|

||||

我见过许多看起来写起来“极尽精妙”,但却导致难以调试的代码。我会在下面列出几种样例。争论这些东西本身有多坏并不是我的本意,我仅想强调对于“易于使用”和“易于调试”之间的折中。

|

||||

|

||||

* 许多测试框架让调试变得困难。Ruby 的 rspec 就是一个很好的例子。有一次我不小心使用错了,结果花了很长时间搞清楚哪里出了问题(因为它给出错误提示非常含糊)。

|

||||

* <ruby>ORM<rt>对象关系映射</rt></ruby> 库可以让数据库查询变得简单,代价是一旦你想解决某个问题,事情就变得难以理解。

|

||||

* 许多测试框架让调试变得困难。Ruby 的 rspec 就是一个很好的例子。有一次我不小心使用错了,结果花了很长时间搞清楚**究竟**哪里出了问题(因为它给出错误提示非常含糊)。

|

||||

|

||||

我在《[测试并非万能][1]》这篇文章中写了更多关于以上的例子。

|

||||

|

||||

* 我用过的许多 JavaScript 框架都很难完全理解。Clever(LCTT 译注:一种 JS 框架)的声明语句一向很有逻辑,直到某条语句和你的预期不符,这时你就只能指望 Stack Overflow 上的某篇文章或 GitHub 上的某个回帖来帮助你了。

|

||||

|

||||

这些函数库确实让任务变得非常简单,使用它们也没有什么错。但通常人们都过于关注“易于使用”而忽视了“易于调试”这一点。

|

||||

我在《[测试并非万能][1]》这篇文章中写了更多关于以上的例子。

|

||||

* 我用过的许多 JavaScript 框架都很难完全理解。Clever(LCTT 译注:一种 JS 框架)的语句一向很有逻辑,直到某条语句不能如你预期的工作,这时你就只能指望 Stack Overflow 上的某篇文章或 GitHub 上的某个回帖来帮助你了。

|

||||

|

||||

这些函数库**确实**让任务变得非常简单,使用它们也没有什么错。但通常人们都过于关注“易于使用”而忽视了“易于调试”这一点。

|

||||

* Docker 非常棒,并且让许多事情变得非常简单,直到你看到了这条提示:

|

||||

```

|

||||

|

||||

```

|

||||

ERROR: for elasticsearch Cannot start service elasticsearch:

|

||||

oci runtime error: container_linux.go:247: starting container process caused "process_linux.go:258:

|

||||

applying cgroup configuration for process caused \"failed to write 898 to cgroup.procs: write

|

||||

/sys/fs/cgroup/cpu,cpuacct/docker/b13312efc203e518e3864fc3f9d00b4561168ebd4d9aad590cc56da610b8dd0e/cgroup.procs:

|

||||

invalid argument\""

|

||||

```

|

||||

或者这条:

|

||||

```

|

||||

|

||||

或者这条:

|

||||

|

||||

```

|

||||

ERROR: for elasticsearch Cannot start service elasticsearch: EOF

|

||||

```

|

||||

那么...现在看起来呢?

|

||||

|

||||

那么...你怎么看?

|

||||

* `Systemd` 比起 `SysV`、`init.d` 脚本更加简单,因为编写 `systemd` 单元文件比起编写 `shell` 脚本更加方便。这也是 Lennart Poetterin 在他的 [systemd 神话][2] 中解释 `systemd` 为何简单时使用的论点。

|

||||

|

||||

我非常赞同 Poettering 的观点——也可以看 [shell 脚本陷阱][3] 这篇文章。但是这种角度并不全面。单元文件简单的背后意味着 `systemd` 作为一个整体要复杂的多,并且用户确实会受到它的影响。看看我遇到的这个[问题][4]和为它所做的[修复][5]。看起来很简单吗?

|

||||

我非常赞同 Poettering 的观点——也可以看 [shell 脚本陷阱][3] 这篇文章。但是这种角度并不全面。单元文件简单的背后意味着 `systemd` 作为一个整体要复杂的多,并且用户确实会受到它的影响。看看我遇到的这个[问题][4]和为它所做的[修复][5]。看起来很简单吗?

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -61,7 +66,7 @@ via: https://arp242.net/weblog/easy.html

|

||||

作者:[Martin Tournoij][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[LuuMing](https://github.com/LuuMing)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -71,4 +76,4 @@ via: https://arp242.net/weblog/easy.html

|

||||

[2]: http://0pointer.de/blog/projects/the-biggest-myths.html

|

||||

[3]:https://www.arp242.net/shell-scripting-trap.html

|

||||

[4]:https://unix.stackexchange.com/q/185495/33645

|

||||

[5]:https://cgit.freedesktop.org/systemd/systemd/commit/?id=6e392c9c45643d106673c6643ac8bf4e65da13c1

|

||||

[5]:https://cgit.freedesktop.org/systemd/systemd/commit/?id=6e392c9c45643d106673c6643ac8bf4e65da13c1

|

||||

140

published/20191017 How to type emoji on Linux.md

Normal file

140

published/20191017 How to type emoji on Linux.md

Normal file

@ -0,0 +1,140 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (HankChow)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11702-1.html)

|

||||

[#]: subject: (How to type emoji on Linux)

|

||||

[#]: via: (https://opensource.com/article/19/10/how-type-emoji-linux)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

如何在 Linux 系统中输入 emoji

|

||||

======

|

||||

|

||||

> 使用 GNOME 桌面可以让你在文字中轻松加入 emoji。

|

||||

|

||||

|

||||

|

||||

emoji 是潜藏在 Unicode 字符空间里的有趣表情图,它们已经风靡于整个互联网。emoji 可以用来在社交媒体上表示自己的心情状态,也可以作为重要文件名的视觉标签,总之它们的各种用法层出不穷。在 Linux 系统中有很多种方式可以输入 Unicode 字符,但 GNOME 桌面能让你更轻松地查找和输入 emoji。

|

||||

|

||||

![Emoji in Emacs][2]

|

||||

|

||||

### 准备工作

|

||||

|

||||

首先,你需要一个运行 [GNOME][3] 桌面的 Linux 系统。

|

||||

|

||||

同时还需要安装一款支持 emoji 的字体。符合这个要求的字体有很多,使用你喜欢的软件包管理器直接搜索 `emoji` 并选择一款安装就可以了。

|

||||

|

||||

例如在 Fedora 上:

|

||||

|

||||

```

|

||||

$ sudo dnf search emoji

|

||||

emoji-picker.noarch : An emoji selection tool

|

||||

unicode-emoji.noarch : Unicode Emoji Data Files

|

||||

eosrei-emojione-fonts.noarch : A color emoji font

|

||||

twitter-twemoji-fonts.noarch : Twitter Emoji for everyone

|

||||

google-android-emoji-fonts.noarch : Android Emoji font released by Google

|

||||

google-noto-emoji-fonts.noarch : Google “Noto Emoji” Black-and-White emoji font

|

||||

google-noto-emoji-color-fonts.noarch : Google “Noto Color Emoji” colored emoji font

|

||||

[...]

|

||||

```

|

||||

|

||||

对于 Ubuntu 或者 Debian,需要使用 `apt search`。

|

||||

|

||||

在这篇文章中,我会使用 [Google Noto Color Emoji][4] 这款字体为例。

|

||||

|

||||

### 设置

|

||||

|

||||

要开始设置,首先打开 GNOME 的设置面板。

|

||||

|

||||

1、在左边侧栏中,选择“<ruby>地区与语言<rt>Region & Language</rt></ruby>”类别。

|

||||

|

||||

2、点击“<ruby>输入源<rt>Input Sources</rt></ruby>”选项下方的加号(+)打开“<ruby>添加输入源<rt>Add an Input Source</rt></ruby>”面板。

|

||||

|

||||

![Add a new input source][5]

|

||||

|

||||

3、在“<ruby>添加输入源<rt>Add an Input Source</rt></ruby>”面板中,点击底部的菜单按钮。

|

||||

|

||||

![Add an Input Source panel][6]

|

||||

|

||||

4、滑动到列表底部并选择“<ruby>其它<rt>Other</rt></ruby>”。

|

||||

|

||||

5、在“<ruby>其它<rt>Other</rt></ruby>”列表中,找到“<ruby>其它<rt>Other</rt></ruby>(<ruby>快速输入<rt>Typing Booster</rt></ruby>)”。

|

||||

|

||||

![Find Other \(Typing Booster\) in inputs][7]

|

||||

|

||||

6、点击右上角的“<ruby>添加<rt>Add</rt></ruby>”按钮,将输入源添加到 GNOME 桌面。

|

||||

|

||||

以上操作完成之后,就可以关闭设置面板了。

|

||||

|

||||

#### 切换到快速输入

|

||||

|

||||

现在 GNOME 桌面的右上角会出现一个新的图标,一般情况下是当前语言的双字母缩写(例如英语是 en,世界语是 eo,西班牙语是 es,等等)。如果你按下了<ruby>超级键<rt>Super key</rt></ruby>(也就是键盘上带有 Linux 企鹅/Windows 徽标/Mac Command 标志的键)+ 空格键的组合键,就会切换到输入列表中的下一个输入源。在这里,我们只有两个输入源,也就是默认语言和快速输入。

|

||||

|

||||

你可以尝试使用一下这个组合键,观察图标的变化。

|

||||

|

||||

#### 配置快速输入

|

||||

|

||||

在快速输入模式下,点击右上角的输入源图标,选择“<ruby>Unicode 符号和 emoji 联想<rt>Unicode symbols and emoji predictions</rt></ruby>”选项,设置为“<ruby>开<rt>On</rt></ruby>”。

|

||||

|

||||

![Set Unicode symbols and emoji predictions to On][8]

|

||||

|

||||

现在快速输入模式已经可以输入 emoji 了。这正是我们现在所需要的,当然快速输入模式的功能也并不止于此。

|

||||

|

||||

### 输入 emoji

|

||||

|

||||

在快速输入模式下,打开一个文本编辑器,或者网页浏览器,又或者是任意一种支持输入 Unicode 字符的软件,输入“thumbs up”,快速输入模式就会帮你迅速匹配的 emoji 了。

|

||||

|

||||

![Typing Booster searching for emojis][9]

|

||||

|

||||

要退出 emoji 模式,只需要再次使用超级键+空格键的组合键,输入源就会切换回你的默认输入语言。

|

||||

|

||||

### 使用其它切换方式

|

||||

|

||||

如果你觉得“超级键+空格键”这个组合用起来不顺手,你也可以换成其它键的组合。在 GNOME 设置面板中选择“<ruby>设备<rt>Device</rt></ruby>”→“<ruby>键盘<rt>Keyboard</rt></ruby>”。

|

||||

|

||||

在“<ruby>键盘<rt>Keyboard</rt></ruby>”页面中,将“<ruby>切换到下一个输入源<rt>Switch to next input source</rt></ruby>”更改为你喜欢的组合键。

|

||||

|

||||

![Changing keystroke combination in GNOME settings][10]

|

||||

|

||||

### 输入 Unicode

|

||||

|

||||

实际上,现代键盘的设计只是为了输入 26 个字母以及尽可能多的数字和符号。但 ASCII 字符的数量已经比键盘上能看到的字符多得多了,遑论上百万个 Unicode 字符。因此,如果你想要在 Linux 应用程序中输入 Unicode,但又不想使用快速输入,你可以尝试一下 Unicode 输入。

|

||||

|

||||

1. 打开任意一种支持输入 Unicode 字符的软件,但仍然使用你的默认输入语言

|

||||

2. 使用 `Ctrl+Shift+U` 组合键进入 Unicode 输入模式

|

||||

3. 在 Unicode 输入模式下,只需要输入某个 Unicode 字符的对应序号,就实现了对这个 Unicode 字符的输入。例如 `1F44D` 对应的是 👍,而 `2620` 则对应了 ☠。想要查看所有 Unicode 字符的对应序号,可以参考 [Unicode 规范][11]。

|

||||

|

||||

### emoji 的实用性

|

||||

|

||||

emoji 可以让你的文本变得与众不同,这就是它们有趣和富有表现力的体现。同时 emoji 也有很强的实用性,因为它们本质上是 Unicode 字符,在很多支持自定义字体的地方都可以用到它们,而且跟使用其它常规字符没有什么太大的差别。因此,你可以使用 emoji 来对不同的文件做标记,在搜索的时候就可以使用 emoji 把这些文件快速筛选出来。

|

||||

|

||||

![Labeling a file with emoji][12]

|

||||

|

||||

你可以在 Linux 中尽情地使用 emoji,因为 Linux 是一个对 Unicode 友好的环境,未来也会对 Unicode 有着越来越好的支持。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/10/how-type-emoji-linux

|

||||

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[HankChow](https://github.com/HankChow)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/osdc-lead_cat-keyboard.png?itok=fuNmiGV- "A cat under a keyboard."

|

||||

[2]: https://opensource.com/sites/default/files/uploads/emacs-emoji.jpg "Emoji in Emacs"

|

||||

[3]: https://www.gnome.org/

|

||||

[4]: https://www.google.com/get/noto/help/emoji/

|

||||

[5]: https://opensource.com/sites/default/files/uploads/gnome-setting-region-add.png "Add a new input source"

|

||||

[6]: https://opensource.com/sites/default/files/uploads/gnome-setting-input-list.png "Add an Input Source panel"

|

||||

[7]: https://opensource.com/sites/default/files/uploads/gnome-setting-input-other-typing-booster.png "Find Other (Typing Booster) in inputs"

|

||||

[8]: https://opensource.com/sites/default/files/uploads/emoji-input-on.jpg "Set Unicode symbols and emoji predictions to On"

|

||||

[9]: https://opensource.com/sites/default/files/uploads/emoji-input.jpg "Typing Booster searching for emojis"

|

||||

[10]: https://opensource.com/sites/default/files/uploads/gnome-setting-keyboard-switch-input.jpg "Changing keystroke combination in GNOME settings"

|

||||

[11]: http://unicode.org/emoji/charts/full-emoji-list.html

|

||||

[12]: https://opensource.com/sites/default/files/uploads/file-label.png "Labeling a file with emoji"

|

||||

|

||||

@ -1,24 +1,26 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (hopefully2333)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11699-1.html)

|

||||

[#]: subject: (How internet security works: TLS, SSL, and CA)

|

||||

[#]: via: (https://opensource.com/article/19/11/internet-security-tls-ssl-certificate-authority)

|

||||

[#]: author: (Bryant Son https://opensource.com/users/brson)

|

||||

|

||||

互联网的安全是如何保证的:TLS,SSL,和 CA

|

||||

互联网的安全是如何保证的:TLS、SSL 和 CA

|

||||

======

|

||||

你的浏览器里的锁的图标的后面是什么?

|

||||

|

||||

> 你的浏览器里的锁的图标的后面是什么?

|

||||

|

||||

![Lock][1]

|

||||

|

||||

每天你都会重复这件事很多次,你访问网站,这个网站需要你用用户名或者电子邮件地址,和你的密码来进行登录。银行网站,社交网站,电子邮件服务,电子商务网站,和新闻网站。这里只在使用了这种机制的网站中列举了其中一小部分。

|

||||

每天你都会重复这件事很多次,访问网站,网站需要你用你的用户名或者电子邮件地址和你的密码来进行登录。银行网站、社交网站、电子邮件服务、电子商务网站和新闻网站。这里只在使用了这种机制的网站中列举了其中一小部分。

|

||||

|

||||

每次你登陆进一个这种类型的网站时,你实际上是在说:“是的,我信任这个网站,所以我愿意把我的个人信息共享给它。”这些数据可能包含你的姓名,性别,实际地址,电子邮箱地址,有时候甚至会包括你的信用卡信息。

|

||||

每次你登录进一个这种类型的网站时,你实际上是在说:“是的,我信任这个网站,所以我愿意把我的个人信息共享给它。”这些数据可能包含你的姓名、性别、实际地址、电子邮箱地址,有时候甚至会包括你的信用卡信息。

|

||||

|

||||

但是你怎么知道你可以信任这个网站?换个方式问,为了让你可以信任它,网站应该如何保护你的交易?

|

||||

|

||||

本文旨在阐述使网站变得安全的机制。我会首先论述 web 协议 http 和 https,以及传输层安全(TLS)的概念,后者是互联网协议(IP)层中的加密协议之一。然后,我会解释证书颁发机构和自签名证书,以及它们如何帮助保护一个网站。最后,我会介绍一些开源的工具,你可以使用它们来创建和管理你的证书。

|

||||

本文旨在阐述使网站变得安全的机制。我会首先论述 web 协议 http 和 https,以及<ruby>传输层安全<rt>Transport Layer Security</rt></ruby>(TLS)的概念,后者是<ruby>互联网协议<rt>Internet Protocol</rt></ruby>(IP)层中的加密协议之一。然后,我会解释<ruby>证书颁发机构<rt>certificate authority</rt></ruby>和自签名证书,以及它们如何帮助保护一个网站。最后,我会介绍一些开源的工具,你可以使用它们来创建和管理你的证书。

|

||||

|

||||

### 通过 https 保护路由

|

||||

|

||||

@ -26,13 +28,13 @@

|

||||

|

||||

![Certificate information][2]

|

||||

|

||||

默认情况下,如果一个网站使用的是 http 协议,那么它是不安全的。通过网站主机配置一个证书并添加到路由,可以把这个网站从一个不安全的 http 网站变为一个安全的 https 网站。那个锁图标通常表示这个网站是受 https 保护的。

|

||||

默认情况下,如果一个网站使用的是 http 协议,那么它是不安全的。为通过网站主机的路由添加一个配置过的证书,可以把这个网站从一个不安全的 http 网站变为一个安全的 https 网站。那个锁图标通常表示这个网站是受 https 保护的。

|

||||

|

||||

点击证书来查看网站的 CA,根据你的浏览器,你可能需要下载证书来查看它。

|

||||

|

||||

![Certificate information][3]

|

||||

|

||||

点击证书来查看网站的 CA,根据你的浏览器,你可能需要下载证书来查看它。

|

||||

在这里,你可以了解有关 Opensource.com 证书的信息。例如,你可以看到 CA 是 DigiCert,并以 Opensource.com 的名称提供给 Red Hat。

|

||||

|

||||

这个证书信息可以让终端用户检查该网站是否可以安全访问。

|

||||

|

||||

@ -42,19 +44,19 @@

|

||||

|

||||

### 带有 TLS 和 SSL 的互联网协议

|

||||

|

||||

TLS 是旧版安全套接字层协议(SSL)的最新版本。理解这一点的最好方法就是仔细理解 IP 的不同协议层。

|

||||

TLS 是旧版<ruby>安全套接字层协议<rt>Secure Socket Layer</rt></ruby>(SSL)的最新版本。理解这一点的最好方法就是仔细理解互联网协议的不同协议层。

|

||||

|

||||

![IP layers][4]

|

||||

|

||||

我们知道当今的互联网是由6个层面组成的:物理层,数据链路层,网络层,传输层,安全层,应用层。物理层是基础,这一层是最接近实际的硬件设备的。应用层是最抽象的一层,是最接近终端用户的一层。安全层可以被认为是应用层的一部分,TLS 和 SSL,是被设计用来在一个计算机网络中提供通信安全的加密协议,它们位于安全层中。

|

||||

我们知道当今的互联网是由 6 个层面组成的:物理层、数据链路层、网络层、传输层、安全层、应用层。物理层是基础,这一层是最接近实际的硬件设备的。应用层是最抽象的一层,是最接近终端用户的一层。安全层可以被认为是应用层的一部分,TLS 和 SSL,是被设计用来在一个计算机网络中提供通信安全的加密协议,它们位于安全层中。

|

||||

|

||||

这个过程可以确保终端用户使用网络服务时,通信的安全性和保密性。

|

||||

|

||||

### 证书颁发机构和自签名证书

|

||||

|

||||

CA 是受信任的组织,它可以颁发数字证书。

|

||||

<ruby>证书颁发机构<rt>Certificate authority</rt></ruby>(CA)是受信任的组织,它可以颁发数字证书。

|

||||

|

||||

TLS 和 SSL 可以使连接更安全,但是这个加密机制需要一种方式来验证它;这就是 SSL/TLS 证书。TLS 使用了一种叫做非对称加密的加密机制,这个机制有一对称为私钥和公钥的安全密钥。(这是一个非常复杂的主题,超出了本文的讨论范围,但是如果你想去了解这方面的东西,你可以阅读“密码学和公钥密码基础体系简介”)你要知道的基础内容是,证书颁发机构们,比如 GlobalSign, DigiCert,和 GoDaddy,它们是受人们信任的可以颁发证书的供应商,它们颁发的证书可以用于验证网站使用的 TLS/SSL 证书。网站使用的证书是导入到主机服务器里的,用于保护网站。

|

||||

TLS 和 SSL 可以使连接更安全,但是这个加密机制需要一种方式来验证它;这就是 SSL/TLS 证书。TLS 使用了一种叫做非对称加密的加密机制,这个机制有一对称为私钥和公钥的安全密钥。(这是一个非常复杂的主题,超出了本文的讨论范围,但是如果你想去了解这方面的东西,你可以阅读“[密码学和公钥密码基础体系简介][5]”)你要知道的基础内容是,证书颁发机构们,比如 GlobalSign、DigiCert 和 GoDaddy,它们是受人们信任的可以颁发证书的供应商,它们颁发的证书可以用于验证网站使用的 TLS/SSL 证书。网站使用的证书是导入到主机服务器里的,用于保护网站。

|

||||

|

||||

然而,如果你只是要测试一下正在开发中的网站或服务,CA 证书可能对你而言太昂贵或者是太复杂了。你必须有一个用于生产目的的受信任的证书,但是开发者和网站管理员需要有一种更简单的方式来测试网站,然后他们才能将其部署到生产环境中;这就是自签名证书的来源。

|

||||

|

||||

@ -62,48 +64,50 @@ TLS 和 SSL 可以使连接更安全,但是这个加密机制需要一种方

|

||||

|

||||

### 生成证书的开源工具

|

||||

|

||||

有几种开源工具可以用来管理 TLS/SSL 证书。其中最著名的就是 openssl,这个工具包含在很多 Linux 发行版中和 macos 中。当然,你也可以使用其他开源工具。

|

||||

有几种开源工具可以用来管理 TLS/SSL 证书。其中最著名的就是 openssl,这个工具包含在很多 Linux 发行版中和 MacOS 中。当然,你也可以使用其他开源工具。

|

||||

|

||||

| Tool Name | Description | License |

|

||||

| 工具名 | 描述 | 许可证 |

|

||||

| --------- | ------------------------------------------------------------------------------ | --------------------------------- |

|

||||

| OpenSSL | 实现 TLS 和加密库的最著名的开源工具 | Apache License 2.0 |

|

||||

| EasyRSA | 用于构建 PKI CA 的命令行实用工具 | GPL v2 |

|

||||

| CFSSL | 来自 cloudflare 的 PKI/TLS 瑞士军刀 | BSD 2-Clause "Simplified" License |

|

||||

| Lemur | 来自网飞的 TLS创建工具 | Apache License 2.0 |

|

||||

| [OpenSSL][7] | 实现 TLS 和加密库的最著名的开源工具 | Apache License 2.0 |

|

||||

| [EasyRSA][8] | 用于构建 PKI CA 的命令行实用工具 | GPL v2 |

|

||||

| [CFSSL][9] | 来自 cloudflare 的 PKI/TLS 瑞士军刀 | BSD 2-Clause "Simplified" License |

|

||||

| [Lemur][10] | 来自<ruby>网飞<rt>Netflix</rt></ruby>的 TLS 创建工具 | Apache License 2.0 |

|

||||

|

||||

如果你的目的是扩展和对用户友好,网飞的 Lemur 是一个很有趣的选择。你在网飞的技术博客上可以查看更多有关它的信息。

|

||||

如果你的目的是扩展和对用户友好,网飞的 Lemur 是一个很有趣的选择。你在[网飞的技术博客][6]上可以查看更多有关它的信息。

|

||||

|

||||

### 如何创建一个 Openssl 证书

|

||||

|

||||

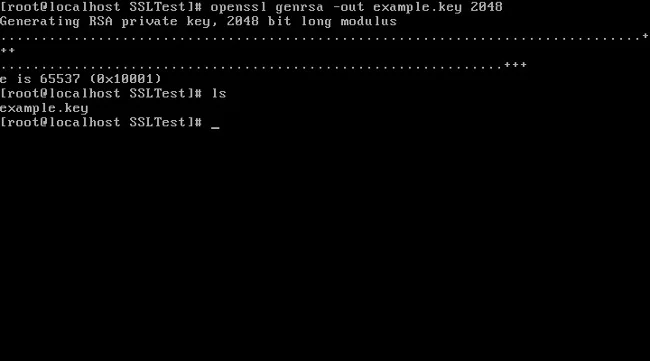

你可以靠自己来创建证书,下面这个案例就是使用 Openssl 生成一个自签名证书。

|

||||

|

||||

1. 使用 openssl 命令行生成一个私钥:

|

||||

1、使用 `openssl` 命令行生成一个私钥:

|

||||

|

||||

```

|

||||

openssl genrsa -out example.key 2048

|

||||

```

|

||||

|

||||

|

||||

|

||||

2. 使用在第一步中生成的私钥来创建一个证书签名请求(CSR):

|

||||

2、使用在第一步中生成的私钥来创建一个<ruby>证书签名请求<rt>certificate signing request</rt></ruby>(CSR):

|

||||

|

||||

```

|

||||

openssl req -new -key example.key -out example.csr \

|

||||

-subj "/C=US/ST=TX/L=Dallas/O=Red Hat/OU=IT/CN=test.example.com"

|

||||

openssl req -new -key example.key -out example.csr -subj "/C=US/ST=TX/L=Dallas/O=Red Hat/OU=IT/CN=test.example.com"

|

||||

```

|

||||

|

||||

|

||||

|

||||

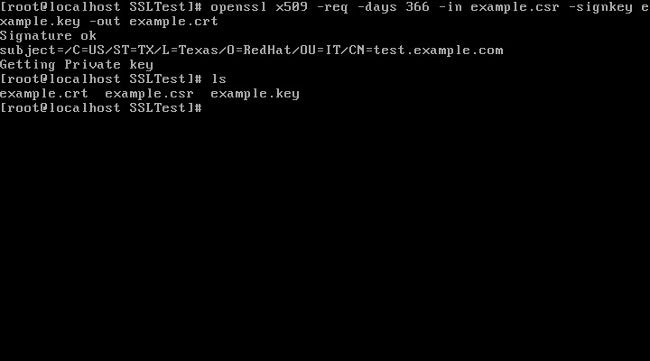

3. 使用你的 CSR 和私钥创建一个证书:

|

||||

3、使用你的 CSR 和私钥创建一个证书:

|

||||

|

||||

```

|

||||

openssl x509 -req -days 366 -in example.csr \

|

||||

-signkey example.key -out example.crt

|

||||

openssl x509 -req -days 366 -in example.csr -signkey example.key -out example.crt

|

||||

```

|

||||

|

||||

|

||||

|

||||

### 了解更多关于互联网安全的知识

|

||||

|

||||

如果你想要了解更多关于互联网安全和网站安全的知识,请看我为这篇文章一起制作的 Youtube 视频。

|

||||

|

||||

<https://youtu.be/r0F1Hlcmjsk>

|

||||

- <https://youtu.be/r0F1Hlcmjsk>

|

||||

|

||||

你有什么问题?发在评论里让我们知道。

|

||||

|

||||

@ -114,7 +118,7 @@ via: https://opensource.com/article/19/11/internet-security-tls-ssl-certificate-

|

||||

作者:[Bryant Son][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[hopefully2333](https://github.com/hopefully2333)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -126,3 +130,7 @@ via: https://opensource.com/article/19/11/internet-security-tls-ssl-certificate-

|

||||

[4]: https://opensource.com/sites/default/files/uploads/3_internetprotocol.jpg

|

||||

[5]: https://opensource.com/article/18/5/cryptography-pki

|

||||

[6]: https://medium.com/netflix-techblog/introducing-lemur-ceae8830f621

|

||||

[7]: https://www.openssl.org/

|

||||

[8]: https://github.com/OpenVPN/easy-rsa

|

||||

[9]: https://github.com/cloudflare/cfssl

|

||||

[10]: https://github.com/Netflix/lemur

|

||||

140

published/20191127 How to write a Python web API with Flask.md

Normal file

140

published/20191127 How to write a Python web API with Flask.md

Normal file

@ -0,0 +1,140 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (hj24)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11701-1.html)

|

||||

[#]: subject: (How to write a Python web API with Flask)

|

||||

[#]: via: (https://opensource.com/article/19/11/python-web-api-flask)

|

||||

[#]: author: (Rachel Waston https://opensource.com/users/rachelwaston)

|

||||

|

||||

如何使用 Flask 编写 Python Web API

|

||||

======

|

||||

|

||||

> 这是一个快速教程,用来展示如何通过 Flask(目前发展最迅速的 Python 框架之一)来从服务器获取数据。

|

||||

|

||||

![spiderweb diagram][1]

|

||||

|

||||

[Python][2] 是一个以语法简洁著称的高级的、面向对象的程序语言。它一直都是一个用来构建 RESTful API 的顶级编程语言。

|

||||

|

||||

[Flask][3] 是一个高度可定制化的 Python 框架,可以为开发人员提供用户访问数据方式的完全控制。Flask 是一个基于 Werkzeug 的 [WSGI][4] 工具包和 Jinja 2 模板引擎的”微框架“。它是一个被设计来开发 RESTful API 的 web 框架。

|

||||

|

||||

Flask 是 Python 发展最迅速的框架之一,很多知名网站如:Netflix、Pinterest 和 LinkedIn 都将 Flask 纳入了它们的开发技术栈。下面是一个简单的示例,展示了 Flask 是如何允许用户通过 HTTP GET 请求来从服务器获取数据的。

|

||||

|

||||

### 初始化一个 Flask 应用

|

||||

|

||||

首先,创建一个你的 Flask 项目的目录结构。你可以在你系统的任何地方来做这件事。

|

||||

|

||||

```

|

||||

$ mkdir tutorial

|

||||

$ cd tutorial

|

||||

$ touch main.py

|

||||

$ python3 -m venv env

|

||||

$ source env/bin/activate

|

||||

(env) $ pip3 install flask-restful

|

||||

Collecting flask-restful

|

||||

Downloading https://files.pythonhosted.org/packages/17/44/6e49...8da4/Flask_RESTful-0.3.7-py2.py3-none-any.whl

|

||||

Collecting Flask>=0.8 (from flask-restful)

|

||||

[...]

|

||||

```

|

||||

|

||||

### 导入 Flask 模块

|

||||

|

||||

然后,在你的 `main.py` 代码中导入 `flask` 模块和它的 `flask_restful` 库:

|

||||

|

||||

```

|

||||

from flask import Flask

|

||||

from flask_restful import Resource, Api

|

||||

|

||||

app = Flask(__name__)

|

||||

api = Api(app)

|

||||

|

||||

class Quotes(Resource):

|

||||

def get(self):

|

||||

return {

|

||||

'William Shakespeare': {

|

||||

'quote': ['Love all,trust a few,do wrong to none',

|

||||

'Some are born great, some achieve greatness, and some greatness thrust upon them.']

|

||||

},

|

||||

'Linus': {

|

||||

'quote': ['Talk is cheap. Show me the code.']

|

||||

}

|

||||

}

|

||||

|

||||

api.add_resource(Quotes, '/')

|

||||

|

||||

if __name__ == '__main__':

|

||||

app.run(debug=True)

|

||||

```

|

||||

|

||||

### 运行 app

|

||||

|

||||

Flask 包含一个内建的用于测试的 HTTP 服务器。来测试一下这个你创建的简单的 API:

|

||||

|

||||

```

|

||||

(env) $ python main.py

|

||||

* Serving Flask app "main" (lazy loading)

|

||||

* Environment: production

|

||||

WARNING: This is a development server. Do not use it in a production deployment.

|

||||

Use a production WSGI server instead.

|

||||

* Debug mode: on

|

||||

* Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)

|

||||

```

|

||||

|

||||

启动开发服务器时将启动 Flask 应用程序,该应用程序包含一个名为 `get` 的方法来响应简单的 HTTP GET 请求。你可以通过 `wget`、`curl` 命令或者任意的 web 浏览器来测试它。

|

||||

|

||||

```

|

||||

$ curl http://localhost:5000

|

||||

{

|

||||

"William Shakespeare": {

|

||||

"quote": [

|

||||

"Love all,trust a few,do wrong to none",

|

||||

"Some are born great, some achieve greatness, and some greatness thrust upon them."

|

||||

]

|

||||

},

|

||||

"Linus": {

|

||||

"quote": [

|

||||

"Talk is cheap. Show me the code."

|

||||

]

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

要查看使用 Python 和 Flask 的类似 Web API 的更复杂版本,请导航至美国国会图书馆的 [Chronicling America][5] 网站,该网站可提供有关这些信息的历史报纸和数字化报纸。

|

||||

|

||||

### 为什么使用 Flask?

|

||||

|

||||

Flask 有以下几个主要的优点:

|

||||

|

||||

1. Python 很流行并且广泛被应用,所以任何熟悉 Python 的人都可以使用 Flask 来开发。

|

||||

2. 它轻巧而简约。

|

||||

3. 考虑安全性而构建。

|

||||

4. 出色的文档,其中包含大量清晰,有效的示例代码。

|

||||

|

||||

还有一些潜在的缺点:

|

||||

|

||||

1. 它轻巧而简约。但如果你正在寻找具有大量捆绑库和预制组件的框架,那么这可能不是最佳选择。

|

||||

2. 如果必须围绕 Flask 构建自己的框架,则你可能会发现维护自定义项的成本可能会抵消使用 Flask 的好处。

|

||||

|

||||

|

||||

如果你要构建 Web 程序或 API,可以考虑选择 Flask。它功能强大且健壮,并且其优秀的项目文档使入门变得容易。试用一下,评估一下,看看它是否适合你的项目。

|

||||

|

||||

在本课中了解更多信息关于 Python 异常处理以及如何以安全的方式进行操作。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/11/python-web-api-flask

|

||||

|

||||

作者:[Rachel Waston][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[hj24](https://github.com/hj24)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/rachelwaston

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/web-cms-build-howto-tutorial.png?itok=bRbCJt1U (spiderweb diagram)

|

||||

[2]: https://www.python.org/

|

||||

[3]: https://palletsprojects.com/p/flask/

|

||||

[4]: https://en.wikipedia.org/wiki/Web_Server_Gateway_Interface

|

||||

[5]: https://chroniclingamerica.loc.gov/about/api

|

||||

@ -1,31 +1,31 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (HankChow)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11696-1.html)

|

||||

[#]: subject: (3 easy steps to update your apps to Python 3)

|

||||

[#]: via: (https://opensource.com/article/19/12/update-apps-python-3)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

将你的应用迁移到 Python 3 的三个步骤

|

||||

======

|

||||

Python 2 气数将尽,是时候将你的项目从 Python 2 迁移到 Python 3 了。

|

||||

|

||||

![Hands on a keyboard with a Python book ][1]

|

||||

> Python 2 气数将尽,是时候将你的项目从 Python 2 迁移到 Python 3 了。

|

||||

|

||||

Python 2.x 很快就要[失去官方支持][2]了,尽管如此,从 Python 2 迁移到 Python 3 却并没有想象中那么难。我在上周用了一个晚上的时间将一个 3D 渲染器及其对应的 [PySide][3] 迁移到 Python 3,回想起来,尽管在迁移过程中无可避免地会遇到一些牵一发而动全身的修改,但整个过程相比起痛苦的重构来说简直是出奇地简单。

|

||||

|

||||

|

||||

Python 2.x 很快就要[失去官方支持][2]了,尽管如此,从 Python 2 迁移到 Python 3 却并没有想象中那么难。我在上周用了一个晚上的时间将一个 3D 渲染器的前端代码及其对应的 [PySide][3] 迁移到 Python 3,回想起来,尽管在迁移过程中无可避免地会遇到一些牵一发而动全身的修改,但整个过程相比起痛苦的重构来说简直是出奇地简单。

|

||||

|

||||

每个人都别无选择地有各种必须迁移的原因:或许是觉得已经拖延太久了,或许是依赖了某个在 Python 2 下不再维护的模块。但如果你仅仅是想通过做一些事情来对开源做贡献,那么把一个 Python 2 应用迁移到 Python 3 就是一个简单而又有意义的做法。

|

||||

|

||||

无论你从 Python 2 迁移到 Python 3 的原因是什么,这都是一项重要的任务。按照以下三个步骤,可以让你把任务完成得更加清晰。

|

||||

|

||||

### 1\. 使用 2to3

|

||||

### 1、使用 2to3

|

||||

|

||||

从几年前开始,Python 在你或许还不知道的情况下就已经自带了一个名叫 [2to3][4] 的脚本,它可以帮助你实现大部分代码从 Python 2 到 Python 3 的自动转换。

|

||||

|

||||

下面是一段使用 Python 2.6 编写的代码:

|

||||

|

||||

|

||||

```

|

||||

#!/usr/bin/env python

|

||||

# -*- coding: utf-8 -*-

|

||||

@ -39,12 +39,12 @@ print ord(mystring[-1])

|

||||

```

|

||||

$ 2to3 example.py

|

||||

RefactoringTool: Refactored example.py

|

||||

\--- example.py (original)

|

||||

+++ example.py (refactored)

|

||||

--- example.py (original)

|

||||

+++ example.py (refactored)

|

||||

@@ -1,5 +1,5 @@

|

||||

#!/usr/bin/env python

|

||||

# -*- coding: utf-8 -*-

|

||||

|

||||

#!/usr/bin/env python

|

||||

# -*- coding: utf-8 -*-

|

||||

|

||||

-mystring = u'abcdé'

|

||||

-print ord(mystring[-1])

|

||||

+mystring = 'abcdé'

|

||||

@ -53,8 +53,7 @@ RefactoringTool: Files that need to be modified:

|

||||

RefactoringTool: example.py

|

||||

```

|

||||

|

||||

在默认情况下,2to3 只会对迁移到 Python 3 时必须作出修改的代码进行标示,在输出结果中显示的 Python 3 代码是直接可用的,但你可以在 2to3 加上 `-w` 或者 `--write` 参数,这样它就可以直接按照给出的方案修改你的 Python 2 代码文件了。

|

||||

|

||||

在默认情况下,`2to3` 只会对迁移到 Python 3 时必须作出修改的代码进行标示,在输出结果中显示的 Python 3 代码是直接可用的,但你可以在 2to3 加上 `-w` 或者 `--write` 参数,这样它就可以直接按照给出的方案修改你的 Python 2 代码文件了。

|

||||

|

||||

```

|

||||

$ 2to3 -w example.py

|

||||

@ -63,17 +62,16 @@ RefactoringTool: Files that were modified:

|

||||

RefactoringTool: example.py

|

||||

```

|

||||

|

||||

2to3 脚本不仅仅对单个文件有效,你还可以把它用于一个目录下的所有 Python 文件,同时它也会递归地对所有子目录下的 Python 文件都生效。

|

||||

`2to3` 脚本不仅仅对单个文件有效,你还可以把它用于一个目录下的所有 Python 文件,同时它也会递归地对所有子目录下的 Python 文件都生效。

|

||||

|

||||

### 2\. 使用 Pylint 或 Pyflakes

|

||||

### 2、使用 Pylint 或 Pyflakes

|

||||

|

||||

有一些不良的代码习惯在 Python 2 下运行是没有异常的,在 Python 3 下运行则会或多或少报出错误,并且这些代码无法通过语法转换来修复,所以 2to3 对它们没有效果,但一旦使用 Python 3 来运行就会产生报错。

|

||||

有一些不良的代码在 Python 2 下运行是没有异常的,在 Python 3 下运行则会或多或少报出错误,这种情况并不鲜见。因为这些不良代码无法通过语法转换来修复,所以 `2to3` 对它们没有效果,但一旦使用 Python 3 来运行就会产生报错。

|

||||

|

||||

对于这种情况,你需要使用 [Pylint][5]、[Pyflakes][6](或封装好的 [flake8][7])这类工具。其中我更喜欢 Pyflakes,它会忽略代码风格上的差异,在这一点上它和 Pylint 不同。尽管代码优美是 Python 的一大特点,但在代码迁移的层面上,“让代码功能保持一致”无疑比“让代码风格保持一致”重要得多/

|

||||

要找出这种问题,你需要使用 [Pylint][5]、[Pyflakes][6](或 [flake8][7] 封装器)这类工具。其中我更喜欢 Pyflakes,它会忽略代码风格上的差异,在这一点上它和 Pylint 不同。尽管代码优美是 Python 的一大特点,但在代码迁移的层面上,“让代码功能保持一致”无疑比“让代码风格保持一致”重要得多。

|

||||

|

||||

以下是 Pyflakes 的输出样例:

|

||||

|

||||

|

||||

```

|

||||

$ pyflakes example/maths

|

||||

example/maths/enum.py:19: undefined name 'cmp'

|

||||

@ -87,7 +85,6 @@ example/maths/enum.py:208: local variable 'e' is assigned to but never used

|

||||

|

||||

值得注意的是第 19 行这个容易产生误导的错误。从输出来看你可能会以为 `cmp` 是一个在使用前未定义的变量,实际上 `cmp` 是 Python 2 的一个内置函数,而它在 Python 3 中被移除了。而且这段代码被放在了 `try` 语句块中,除非认真检查这段代码的输出值,否则这个问题很容易被忽略掉。

|

||||

|

||||

|

||||

```

|

||||

try:

|

||||

result = cmp(self.index, other.index)

|

||||

@ -99,13 +96,12 @@ example/maths/enum.py:208: local variable 'e' is assigned to but never used

|

||||

|

||||

在代码迁移过程中,你会发现很多原本在 Python 2 中能正常运行的函数都发生了变化,甚至直接在 Python 3 中被移除了。例如 PySide 的绑定方式发生了变化、`importlib` 取代了 `imp` 等等。这样的问题只能见到一个解决一个,而涉及到的功能需要重构还是直接放弃,则需要你自己权衡。但目前来说,大多数问题都是已知的,并且有[完善的文档记录][8]。所以难的不是修复问题,而是找到问题,从这个角度来说,使用 Pyflake 是很有必要的。

|

||||

|

||||

### 3\. 修复被破坏的 Python 2 代码

|

||||

### 3、修复被破坏的 Python 2 代码

|

||||

|

||||

尽管 2to3 脚本能够帮助你把代码修改成兼容 Python 3 的形式,但对于一个完整的代码库,它就显得有点无能为力了,因为一些老旧的代码在 Python 3 中可能需要不同的结构来表示。在这样的情况下,只能人工进行修改。

|

||||

尽管 `2to3` 脚本能够帮助你把代码修改成兼容 Python 3 的形式,但对于一个完整的代码库,它就显得有点无能为力了,因为一些老旧的代码在 Python 3 中可能需要不同的结构来表示。在这样的情况下,只能人工进行修改。

|

||||

|

||||

例如以下代码在 Python 2.6 中可以正常运行:

|

||||

|

||||

|

||||

```

|

||||

class CLOCK_SPEED:

|

||||

TICKS_PER_SECOND = 16

|

||||

@ -116,8 +112,7 @@ class FPS:

|

||||

STATS_UPDATE_FREQUENCY = CLOCK_SPEED.TICKS_PER_SECOND

|

||||

```

|

||||

|

||||

类似 2to3 和 Pyflakes 这些自动化工具并不能发现其中的问题,但如果上述代码使用 Python 3 来运行,解释器会认为 `CLOCK_SPEED.TICKS_PER_SECOND` 是未被明确定义的。因此就需要把代码改成面向对象的结构:

|

||||

|

||||

类似 `2to3` 和 Pyflakes 这些自动化工具并不能发现其中的问题,但如果上述代码使用 Python 3 来运行,解释器会认为 `CLOCK_SPEED.TICKS_PER_SECOND` 是未被明确定义的。因此就需要把代码改成面向对象的结构:

|

||||

|

||||

```

|

||||

class CLOCK_SPEED:

|

||||

@ -131,7 +126,7 @@ class FPS:

|

||||

STATS_UPDATE_FREQUENCY = CLOCK_SPEED.TICKS_PER_SECOND()

|

||||

```

|

||||

|

||||

你也许会认为如果把 `TICKS_PER_SECOND()` 改写为一个构造函数能让代码看起来更加简洁,但这样就需要把这个方法的调用形式从 `CLOCK_SPEED.TICKS_PER_SECOND()` 改为 `CLOCK_SPEED()` 了,这样的改动或多或少会对整个库造成一些未知的影响。如果你对整个代码库的结构烂熟于心,那么你确实可以随心所欲地作出这样的修改。但我通常认为,只要我做出了修改,都可能会影响到其它代码中的至少三处地方,因此我更倾向于不使代码的结构发生改变。

|

||||

你也许会认为如果把 `TICKS_PER_SECOND()` 改写为一个构造函数(用 `__init__` 函数设置默认值)能让代码看起来更加简洁,但这样就需要把这个方法的调用形式从 `CLOCK_SPEED.TICKS_PER_SECOND()` 改为 `CLOCK_SPEED()` 了,这样的改动或多或少会对整个库造成一些未知的影响。如果你对整个代码库的结构烂熟于心,那么你确实可以随心所欲地作出这样的修改。但我通常认为,只要我做出了修改,都可能会影响到其它代码中的至少三处地方,因此我更倾向于不使代码的结构发生改变。

|

||||

|

||||

### 坚持信念

|

||||

|

||||

@ -146,14 +141,14 @@ via: https://opensource.com/article/19/12/update-apps-python-3

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[HankChow](https://github.com/HankChow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/python-programming-code-keyboard.png?itok=fxiSpmnd "Hands on a keyboard with a Python book "

|

||||

[2]: https://opensource.com/article/19/11/end-of-life-python-2

|

||||

[2]: https://linux.cn/article-11629-1.html

|

||||

[3]: https://pypi.org/project/PySide/

|

||||

[4]: https://docs.python.org/3.1/library/2to3.html

|

||||

[5]: https://opensource.com/article/19/10/python-pylint-introduction

|

||||

@ -1,18 +1,20 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11695-1.html)

|

||||

[#]: subject: (Annotate screenshots on Linux with Ksnip)

|

||||

[#]: via: (https://opensource.com/article/19/12/annotate-screenshots-linux-ksnip)

|

||||

[#]: author: (Clayton Dewey https://opensource.com/users/cedewey)

|

||||

|

||||

在 Linux 上使用 Ksnip 注释截图

|

||||

======

|

||||

Ksnip 让你能轻松地在 Linux 中创建和标记截图。

|

||||

|

||||

> Ksnip 让你能轻松地在 Linux 中创建和标记截图。

|

||||

|

||||

![a checklist for a team][1]

|

||||

|

||||

我最近从 MacOS 切换到了 [Elementary OS][2],这是一个专注于易用性和隐私性的 Linux 发行版。作为用户体验设计师和免费软件支持者,我会经常截图并进行注释。在尝试了几种不同的工具之后,到目前为止,我最喜欢的工具是 [Ksnip][3],它是 GPLv2 许可下的一种开源工具。

|

||||

我最近从 MacOS 切换到了 [Elementary OS][2],这是一个专注于易用性和隐私性的 Linux 发行版。作为用户体验设计师和自由软件支持者,我会经常截图并进行注释。在尝试了几种不同的工具之后,到目前为止,我最喜欢的工具是 [Ksnip][3],它是 GPLv2 许可下的一种开源工具。

|

||||

|

||||

![Ksnip screenshot][4]

|

||||

|

||||

@ -20,22 +22,19 @@ Ksnip 让你能轻松地在 Linux 中创建和标记截图。

|

||||

|

||||

使用你首选的包管理器安装 Ksnip。我通过 Apt 安装了它:

|

||||

|

||||

|

||||

```

|

||||

`sudo apt-get install ksnip`

|

||||

sudo apt-get install ksnip

|

||||

```

|

||||

|

||||

### 配置

|

||||

|

||||

Ksnip 有许多配置选项,包括:

|

||||

|

||||

* 保存截图的地方

|

||||

* 默认截图的文件名

|

||||

* 图像采集器行为

|

||||

* 光标颜色和宽度

|

||||

* 文字字体

|

||||

|

||||

|

||||

* 保存截图的地方

|

||||

* 默认截图的文件名

|

||||

* 图像采集器行为

|

||||

* 光标颜色和宽度

|

||||

* 文字字体

|

||||

|

||||

你也可以将其与你的 Imgur 帐户集成。

|

||||

|

||||

@ -45,7 +44,7 @@ Ksnip 有许多配置选项,包括:

|

||||

|

||||

Ksnip 提供了大量的[功能][6]。我最喜欢的 Ksnip 部分是它拥有我需要的所有注释工具(还有一个我没想到的工具!)。

|

||||

|

||||

您可以使用以下注释:

|

||||

你可以使用以下注释:

|

||||

|

||||

* 钢笔

|

||||

* 记号笔

|

||||

@ -53,8 +52,6 @@ Ksnip 提供了大量的[功能][6]。我最喜欢的 Ksnip 部分是它拥有

|

||||

* 椭圆

|

||||

* 文字

|

||||

|

||||

|

||||

|

||||

你还可以模糊区域来移除敏感信息。还有使用我最喜欢的新工具:用于在界面上表示步骤的带数字的点。

|

||||

|

||||

### 关于作者

|

||||

@ -63,7 +60,7 @@ Ksnip 提供了大量的[功能][6]。我最喜欢的 Ksnip 部分是它拥有

|

||||

|

||||

当我问到是什么启发了他编写 Ksnip 时,他说:

|

||||

|

||||

>“几年前我从 Windows 切换到 Linux,却没有了在 Windows 中常用的 Windows Snipping Tool。当时的所有其他截图工具要么很大(很多按钮和复杂功能),要么缺少诸如注释等关键功能,所以我决定编写一个简单的 Windows Snipping Tool 克隆版,但是随着时间的流逝,它开始有越来越多的功能。“

|

||||

> “几年前我从 Windows 切换到 Linux,却没有了在 Windows 中常用的 Windows Snipping Tool。当时的所有其他截图工具要么很大(很多按钮和复杂功能),要么缺少诸如注释等关键功能,所以我决定编写一个简单的 Windows Snipping Tool 克隆版,但是随着时间的流逝,它开始有越来越多的功能。“

|

||||

|

||||

这正是我在评估截图工具时发现的。他花时间构建解决方案并免费共享给他人使用,这真是太好了。

|

||||

|

||||

@ -77,7 +74,7 @@ Damir 最需要的是帮助开发 Ksnip。他和他的妻子很快就会有孩

|

||||

|

||||

* * *

|

||||

|

||||

_此文章最初发表在 [Agaric Tech Cooperative 的博客][9]上,并经允许重新发布。_

|

||||

> 此文章最初发表在 [Agaric Tech Cooperative 的博客][9]上,并经允许重新发布。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -86,7 +83,7 @@ via: https://opensource.com/article/19/12/annotate-screenshots-linux-ksnip

|

||||

作者:[Clayton Dewey][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,24 +1,26 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11698-1.html)

|

||||

[#]: subject: (How to configure Openbox for your Linux desktop)

|

||||

[#]: via: (https://opensource.com/article/19/12/openbox-linux-desktop)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

如何为 Linux 桌面配置 Openbox

|

||||

======

|

||||

本文是 24 天 Linux 桌面特别系列的一部分。Openbox 窗口管理器占用很小的系统资源、易于配置、使用愉快。

|

||||

![open with sky and grass][1]

|

||||

|

||||

你可能不知道使用过 [Openbox][2] 桌面:尽管 Openbox 本身是一个出色的窗口管理器,但它还是 LXDE 和 LXQT 等桌面环境的窗口管理器“引擎”,它甚至可以管理 KDE 和 GNOME。除了作为多个桌面的基础之外,Openbox 可以说是最简单的窗口管理器之一,可以为那些不想学习所有配置选项的人配置。通过使用基于菜单的 **obconf** 的配置应用,可以像在 GNOME 或 KDE 这样的完整桌面中一样轻松地设置所有常用首选项。

|

||||

> 本文是 24 天 Linux 桌面特别系列的一部分。Openbox 窗口管理器占用很小的系统资源、易于配置、使用愉快。

|

||||

|

||||

|

||||

|

||||

你可能不知道你使用过 [Openbox][2] 桌面:尽管 Openbox 本身是一个出色的窗口管理器,但它还是 LXDE 和 LXQT 等桌面环境的窗口管理器“引擎”,它甚至可以管理 KDE 和 GNOME。除了作为多个桌面的基础之外,Openbox 可以说是最简单的窗口管理器之一,可以为那些不想学习那么多配置选项的人配置。通过使用基于菜单的 obconf 的配置应用,可以像在 GNOME 或 KDE 这样的完整桌面中一样轻松地设置所有常用首选项。

|

||||

|

||||

### 安装 Openbox

|

||||

|

||||

你可能会在 Linux 发行版的软件仓库中找到 Openbox,但也可以在 [Openbox.org][3] 中找到它。如果你已经在运行其他桌面,那么可以安全地在同一系统上安装 Openbox,因为 Openbox 除了几个配置面板之外,不包括任何捆绑的应用。

|

||||

你可能会在 Linux 发行版的软件仓库中找到 Openbox,也可以在 [Openbox.org][3] 中找到它。如果你已经在运行其他桌面,那么可以安全地在同一系统上安装 Openbox,因为 Openbox 除了几个配置面板之外,不包括任何捆绑的应用。

|

||||

|

||||

安装后,退出当前桌面会话,以便你可以登录 Openbox 桌面。默认情况下,会话管理器(KDM、GDM、LightDM 或 XDM,这取决于你的设置)将继续登录到以前的桌面,因此你必须在登录之前覆盖该桌面。

|

||||

安装后,退出当前桌面会话,以便你可以登录 Openbox 桌面。默认情况下,会话管理器(KDM、GDM、LightDM 或 XDM,这取决于你的设置)将继续登录到以前的桌面,因此你必须在登录之前覆盖该选择。

|

||||

|

||||

要使用 GDM 覆盖它:

|

||||

|

||||

@ -30,17 +32,17 @@

|

||||

|

||||

### 配置 Openbox 桌面

|

||||

|

||||

默认情况下,Openbox 包含 **obconf** 应用,你可以使用它来选择和安装主题,修改鼠标行为,设置桌面首选项等。你可能会在仓库中发现其他配置应用,如 **obmenu** ,用于配置窗口管理器的其他部分。

|

||||

默认情况下,Openbox 包含 obconf 应用,你可以使用它来选择和安装主题、修改鼠标行为、设置桌面首选项等。你可能会在仓库中发现其他配置应用,如 obmenu,用于配置窗口管理器的其他部分。

|

||||

|

||||

![Openbox Obconf configuration application][6]

|

||||

|

||||

建立你自己的桌面体验相对容易。它有一些所有常见的桌面组件,例如系统托盘 [stalonetray][7]、任务栏 [Tint2][8] 或 [Xfce4-panel][9] 等几乎你能想到的。任意组合应用,直到拥有梦想的开源桌面为止。

|

||||

构建你自己的桌面环境相对容易。它有一些所有常见的桌面组件,例如系统托盘 [stalonetray][7]、任务栏 [Tint2][8] 或 [Xfce4-panel][9] 等几乎你能想到的。任意组合应用,直到拥有梦想的开源桌面为止。

|

||||

|

||||

![Openbox][10]

|

||||

|

||||

### 为何使用 Openbox

|

||||

|

||||

Openbox 占用很小的系统资源、易于配置、使用愉快。它基本不会弹出,因此会是一个容易熟悉的系统。你永远不会知道你面前的桌面秘密使用了 Openbox 作为窗口管理器(知道如何自定义它会不会很高兴?)。如果开源吸引你,那么试试看 Openbox。

|

||||

Openbox 占用的系统资源很小、易于配置、使用起来很愉悦。它基本不会让你感觉到阻碍,会是一个容易熟悉的系统。你永远不会知道你面前的桌面环境秘密使用了 Openbox 作为窗口管理器(知道如何自定义它会不会很高兴?)。如果开源吸引你,那么试试看 Openbox。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -49,7 +51,7 @@ via: https://opensource.com/article/19/12/openbox-linux-desktop

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,56 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (mayunmeiyouming)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11703-1.html)

|

||||

[#]: subject: (What GNOME 2 fans love about the Mate Linux desktop)

|

||||

[#]: via: (https://opensource.com/article/19/12/mate-linux-desktop)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

GNOME 2 粉丝喜欢 Mate Linux 桌面的什么?

|

||||

======

|

||||

|

||||

> 本文是 24 天 Linux 桌面特别系列的一部分。如果你还在怀念 GNOME 2,那么 Mate Linux 桌面将满足你的怀旧情怀。

|

||||

|

||||

|

||||

|

||||

如果你以前听过这个传闻:当 GNOME3 第一次发布时,很多 GNOME 用户还没有准备好放弃 GNOME 2。[Mate][2](以<ruby>马黛茶<rt>yerba mate</rt></ruby>植物命名)项目的开始是为了延续 GNOME 2 桌面,刚开始时它使用 GTK 2(GNOME 2 所基于的工具包),然后又合并了 GTK 3。由于 Linux Mint 的简单易用,使得该桌面变得非常流行,并且从那时起,它已经普遍用于 Fedora、Ubuntu、Slackware、Arch 和许多其他 Linux 发行版上。今天,Mate 继续提供一个传统的桌面环境,它的外观和感觉与 GNOME 2 完全一样,使用 GTK 3 工具包。

|

||||

|

||||

你可以在你的 Linux 发行版的软件仓库中找到 Mate,也可以下载并[安装][3]一个把 Mate 作为默认桌面的发行版。不过,在你这样做之前,请注意为了提供完整的桌面体验,所以许多 Mate 应用程序都是随该桌面一起安装的。如果你运行的是不同的桌面,你可能会发现自己有多余的应用程序(两个 PDF 阅读器、两个媒体播放器、两个文件管理器,等等)。所以如果你只想尝试 Mate 桌面,可以在虚拟机(例如 [GNOME box][4])中安装基于 Mate 的发行版。

|

||||

|

||||

### Mate 桌面之旅

|

||||

|

||||

Mate 项目不仅仅可以让你想起来 GNOME 2;它就是 GNOME 2。如果你是 00 年代中期 Linux 桌面的粉丝,至少,你会从中感受到 Mate 的怀旧情怀。我不是 GNOME 2 的粉丝,我更倾向于使用 KDE,但是有一个地方我无法想象没有 GNOME 2:[OpenSolaris][5]。OpenSolaris 项目并没有持续太久,在 Sun Microsystems 被并入 Oracle 之前,Ian Murdock 加入 Sun 时它就显得非常突出,我当时是一个初级的 Solaris 管理员,使用 OpenSolaris 来让自己更多学会那种 Unix 风格。这是我使用过 GNOME 2 的唯一平台(因为我一开始不知道如何更改桌面,后来习惯了它),而今天的 [OpenIndiana project][6] 是 OpenSolaris 的社区延续,它通过 Mate 桌面使用 GNOME 2。

|

||||

|

||||

![Mate on OpenIndiana][7]

|

||||

|

||||

Mate 的布局由左上角的三个菜单组成:应用程序、位置和系统。应用程序菜单提供对系统上安装的所有的应用程序启动器的快速访问。位置菜单提供对常用位置(如家目录、网络文件夹等)的快速访问。系统菜单包含全局选项,如关机和睡眠。右上角是一个系统托盘,屏幕底部有一个任务栏和一个虚拟桌面切换栏。

|

||||

|

||||

就桌面设计而言,这是一种稍微有点奇怪的配置。它从早期的 Linux 桌面、MacFinder 和 Windows 中借用了一些相同的部分,但是又创建了一个独特的配置,这种配置很直观而有些熟悉。Mate 执意保持这个模型,而这正是它的用户喜欢的地方。

|

||||

|

||||

### Mate 和开源

|

||||

|

||||

Mate 是一个最直接的例子,展示了开源如何使开发人员能够对抗项目生命的终结。从理论上讲,GNOME 2 会被 GNOME 3 所取代,但它依然存在,因为一个开发人员建立了该代码的一个分支并继续发展了下去。它的发展势头越来越庞大,更多的开发人员加入进来,并且这个让用户喜爱的桌面比以往任何时候都要更好。并不是所有的软件都有第二次机会,但是开源永远是一个机会,否则就永远没有机会。

|

||||

|

||||

使用和支持开源意味着支持用户和开发人员的自由。而且 Mate 桌面是他们的努力的有力证明。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/12/mate-linux-desktop

|

||||

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[mayunmeiyouming](https://github.com/mayunmeiyouming)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/linux_keyboard_desktop.png?itok=I2nGw78_ (Linux keys on the keyboard for a desktop computer)

|

||||

[2]: https://mate-desktop.org/

|

||||

[3]: https://mate-desktop.org/install/

|

||||

[4]: https://opensource.com/article/19/5/getting-started-gnome-boxes-virtualization

|

||||

[5]: https://en.wikipedia.org/wiki/OpenSolaris

|

||||

[6]: https://www.openindiana.org/documentation/faq/#what-is-openindiana

|

||||

[7]: https://opensource.com/sites/default/files/uploads/advent-mate-openindiana_675px.jpg (Mate on OpenIndiana)

|

||||

@ -1,20 +1,22 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11706-1.html)

|

||||

[#]: subject: (Get started with Lumina for your Linux desktop)

|

||||

[#]: via: (https://opensource.com/article/19/12/linux-lumina-desktop)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

在 Linux 桌面中开始使用 Lumina

|

||||

======

|

||||

本文是 24 天 Linux 桌面特别系列的一部分。Lumina 是快速、合理的基于 Fluxbox 的快捷方式桌面,它具有你无法缺少的所有功能。

|

||||

![Lightbulb][1]

|

||||

|

||||

多年来,有一个基于 FreeBSD 的桌面操作系统(OS),称为 PC-BSD。它旨在作为一般使用的系统,因此值得注意,因为 BSD 主要用于服务器。大多数时候,PC-BSD 默认带 KDE 桌面,但是 KDE 越来越依赖于 Linux 特定的技术,因此有越来越多的 PC-BSD 从中迁移出来。PC-BSD 变成了 [Trident][2],它的默认桌面是 [Lumina][3],它是一组小部件,它们使用与 KDE 相同的基于 Qt 的工具箱,运行在 Fluxbox 窗口管理器中。

|

||||

> 本文是 24 天 Linux 桌面特别系列的一部分。Lumina 桌面是让你使用快速、合理的基于 Fluxbox 桌面的捷径,它具有你无法缺少的所有功能。

|

||||

|

||||

你可以在 Linux 发行版的软件仓库或 BSD 的 port 树中找到 Lumina 桌面。如果你安装了 Lumina 并且已经在运行另一个桌面,那么你可能会发现有冗余的应用(两个 PDF 阅读器、两个文件管理器,等等),因为 Lumina 包含一些集成的应用。如果你只想尝试 Lumina 桌面,那么可以在虚拟机如 [GNOME Boxes][4] 中安装基于 Lumina 的 BSD 发行版。

|

||||

|

||||

|

||||

多年来,有一个名为 PC-BSD 的基于 FreeBSD 的桌面操作系统(OS)。它旨在作为一个常规使用的系统,因此值得注意,因为 BSD 主要用于服务器。大多数时候,PC-BSD 默认带 KDE 桌面,但是 KDE 越来越依赖于 Linux 特定的技术,因此 PC-BSD 越来越从 KDE 迁离。PC-BSD 变成了 [Trident][2],它的默认桌面是 [Lumina][3],它是一组小部件,它们使用与 KDE 相同的基于 Qt 的工具包,运行在 Fluxbox 窗口管理器上。

|

||||

|

||||

你可以在 Linux 发行版的软件仓库或 BSD 的 ports 树中找到 Lumina 桌面。如果你安装了 Lumina 并且已经在运行另一个桌面,那么你可能会发现有冗余的应用(两个 PDF 阅读器、两个文件管理器,等等),因为 Lumina 包含一些集成的应用。如果你只想尝试 Lumina 桌面,那么可以在虚拟机如 [GNOME Boxes][4] 中安装基于 Lumina 的 BSD 发行版。

|

||||

|

||||

如果在当前的操作系统上安装 Lumina,那么必须注销当前的桌面会话,才能登录到新的会话。默认情况下,会话管理器(SDDM、GDM、LightDM 或 XDM,取决于你的设置)将继续登录到以前的桌面,因此你必须在登录之前覆盖该桌面。

|

||||

|

||||

@ -34,13 +36,13 @@ Lumina 提供了一个简单而轻巧的桌面环境。屏幕底部有一个面

|

||||

|

||||

![Lumina desktop running on Project Trident][7]

|

||||

|

||||

Lumina 与几个 Linux 轻量级桌面非常相似,尤其是 LXQT,不同之处在于 Lumina 完全不依赖于基于 Linux 的桌面框架,例如 ConsoleKit、PolicyKit、D-Bus 或 systemd。对于你而言,这是否具有优势取决于所运行的操作系统。毕竟,如果你运行的是可以访问这些功能的 Linux,那么使用不使用这些特性的桌面可能就没有多大意义,还会减少功能。如果你运行的是 BSD,那么在 Fluxbox 中运行 Lumina 部件意味着你不必从 port 安装 Linux 兼容库。

|

||||

Lumina 与几个 Linux 轻量级桌面非常相似,尤其是 LXQT,不同之处在于 Lumina 完全不依赖于基于 Linux 的桌面框架(例如 ConsoleKit、PolicyKit、D-Bus 或 systemd)。对于你而言,这是否具有优势取决于所运行的操作系统。毕竟,如果你运行的是可以访问这些功能的 Linux,那么使用不使用这些特性的桌面可能就没有多大意义,还会减少功能。如果你运行的是 BSD,那么在 Fluxbox 中运行 Lumina 部件意味着你不必从 ports 安装 Linux 兼容库。

|

||||

|

||||

### 为什么要使用 Lumina

|

||||

|

||||

Lumina 设计简单,没有很多功能,你无法通过安装 Fluxbox 以及自己喜欢的组件来实现([PCManFM][8] 用于文件管理、各种 [LXQt 应用][9 ]、[Tint2][10] 面板等)。但它是开源的,开源用户喜欢寻找避免重复发明轮子的方法(几乎与我们喜欢重新发明轮子一样多)。

|

||||

Lumina 设计简单,它没有很多功能,但是你可以安装 Fluxbox 你喜欢的组件(用于文件管理的 [PCManFM][8]、各种 [LXQt 应用][9]、[Tint2][10] 面板等)。在开源中,开源用户喜欢寻找不要重复发明轮子的方法(几乎与我们喜欢重新发明轮子一样多)。

|

||||

|

||||

Lumina 桌面是快速而合理的基于 Fluxbox 的桌面快捷方式,它具有你无法缺少的所有功能,并且你很少需要调整细节。试一试 Lumina 桌面,看看它是否适合你。

|

||||

Lumina 桌面是让你使用快速、合理的基于 Fluxbox 桌面的捷径,它具有你无法缺少的所有功能,并且你很少需要调整细节。试一试 Lumina 桌面,看看它是否适合你。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -49,7 +51,7 @@ via: https://opensource.com/article/19/12/linux-lumina-desktop

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,63 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (2020 technology must haves, a guide to Kubernetes etcd, and more industry trends)

|

||||

[#]: via: (https://opensource.com/article/19/12/gartner-ectd-and-more-industry-trends)

|

||||

[#]: author: (Tim Hildred https://opensource.com/users/thildred)

|

||||

|

||||

2020 technology must haves, a guide to Kubernetes etcd, and more industry trends

|

||||

======

|

||||

A weekly look at open source community, market, and industry trends.

|

||||

![Person standing in front of a giant computer screen with numbers, data][1]

|

||||

|

||||

As part of my role as a senior product marketing manager at an enterprise software company with an open source development model, I publish a regular update about open source community, market, and industry trends for product marketers, managers, and other influencers. Here are five of my and their favorite articles from that update.

|

||||

|

||||

## [Gartner's top 10 infrastructure and operations trends for 2020][2]

|

||||

|

||||

> “The vast majority of organisations that do not adopt a shared self-service platform approach will find that their DevOps initiatives simply do not scale,” said Winser. "Adopting a shared platform approach enables product teams to draw from an I&O digital toolbox of possibilities, while benefiting from high standards of governance and efficiency needed for scale."

|

||||

|

||||

**The impact**: The breakneck change of technology development and adoption will not slow down next year, as the things you've been reading about for the last two years become things you have to figure out to deal with every day.

|

||||

|

||||

## [A guide to Kubernetes etcd: All you need to know to set up etcd clusters][3]

|

||||

|

||||

> Etcd is a distributed reliable key-value store which is simple, fast and secure. It acts like a backend service discovery and database, runs on different servers in Kubernetes clusters at the same time to monitor changes in clusters and to store state/configuration data that should to be accessed by a Kubernetes master or clusters. Additionally, etcd allows Kubernetes master to support discovery service so that deployed application can declare their availability for inclusion in service.

|

||||

|

||||

**The impact**: This is actually way more than I needed to know about setting up etcd clusters, but now I have a mental model of what that could look like, and you can too.

|

||||

|

||||

## [How the open source model could fuel the future of digital marketing][4]

|

||||

|

||||

> In other words, the broad adoption of open source culture has the power to completely invert the traditional marketing funnel. In the future, prospective customers could be first introduced to “late funnel” materials and then buy into the broader narrative — a complete reversal of how traditional marketing approaches decision-makers today.

|

||||

|

||||

**The impact**: The SEO on this cuts two ways: It can introduce uninitiated marketing people to open source and uninitiated technical people to the ways that technology actually gets adopted. Neat!

|

||||

|

||||

## [Kubernetes integrates interoperability, storage, waits on sidecars][5]

|

||||

|

||||

> In a [recent interview][6], Lachlan Evenson, and was also a lead on the Kubernetes 1.16 release, said sidecar containers was one of the features that team was a “little disappointed” it could not include in their release.

|

||||

>

|

||||

> Guinevere Saenger, software engineer at GitHub and lead for the 1.17 release team, explained that sidecar containers gained increased focus “about a month ago,” and that its implementation “changes the pod spec, so this is a change that affects a lot of areas and needs to be handled with care.” She noted that it did move closer to completion and “will again be prioritized for 1.18.”

|

||||

|

||||

**The impact**: You can read between the lines to understand a lot more about the Kubernetes sausage-making process. It's got governance, tradeoffs, themes, and timeframes; all the stuff that is often invisible to consumers of a project.

|

||||

|

||||

_I hope you enjoyed this list of what stood out to me from last week and come back next Monday for more open source community, market, and industry trends._

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/12/gartner-ectd-and-more-industry-trends

|

||||

|

||||

作者:[Tim Hildred][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/thildred

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/data_metrics_analytics_desktop_laptop.png?itok=9QXd7AUr (Person standing in front of a giant computer screen with numbers, data)

|

||||

[2]: https://www.information-age.com/gartner-top-10-infrastructure-and-operations-trends-2020-123486509/

|

||||

[3]: https://superuser.openstack.org/articles/a-guide-to-kubernetes-etcd-all-you-need-to-know-to-set-up-etcd-clusters/

|

||||

[4]: https://www.forbes.com/sites/forbescommunicationscouncil/2019/11/19/how-the-open-source-model-could-fuel-the-future-of-digital-marketing/#71b602fb20a5

|

||||

[5]: https://www.sdxcentral.com/articles/news/kubernetes-integrates-interoperability-storage-waits-on-sidecars/2019/12/

|

||||

[6]: https://kubernetes.io/blog/2019/12/06/when-youre-in-the-release-team-youre-family-the-kubernetes-1.16-release-interview/

|

||||

@ -0,0 +1,165 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Linux Mint 19.3 “Tricia” Released: Here’s What’s New and How to Get it)

|

||||

[#]: via: (https://itsfoss.com/linux-mint-19-3/)

|

||||

[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

|

||||

|

||||

Linux Mint 19.3 “Tricia” Released: Here’s What’s New and How to Get it

|

||||

======

|

||||

|

||||

_**Linux Mint 19.3 “Tricia” has been released. See what’s new in it and learn how to upgrade to Linux Mint 19.3.**_

|

||||

|

||||

The Linux Mint team finally announced the release of Linux Mint 19.3 codenamed ‘Tricia’ with useful feature additions along with a ton of improvements under-the-hood.

|

||||

|

||||

This is a point release based on the latest **Ubuntu 18.04.3** and it comes packed with the **Linux kernel 5.0**.

|

||||

|

||||

I downloaded and quickly tested the edition featuring the [Cinnamon 4.4][1] desktop environment. You may also try the Xfce or MATE edition of Linux Mint 19.3.

|

||||

|

||||

### Linux Mint 19.3: What’s New?

|

||||

|

||||

![Linux Mint 19 3 Desktop][2]

|

||||

|

||||

While being an LTS release that will be supported until 2023 – it brings in a couple of useful features and improvements. Let me highlight some of them for you.

|

||||

|

||||

#### System Reports

|

||||

|

||||

![][3]

|

||||

|

||||

Right after installing Linux Mint 19.3 (or upgrading it), you will notice a warning icon on the right side of the panel (taskbar).

|

||||

|

||||

When you click on it, you should be displayed a list of potential issues that you can take care of to ensure the best out of Linux Mint experience.

|

||||

|

||||

For starters, it will suggest that you should create a root password, install a language pack, or update software packages – in the form of a warning. This is particularly useful to make sure that you perform important actions even after following the first set of steps on the welcome screen.

|

||||

|

||||

#### Improved Language Settings

|

||||

|

||||

Along with the ability to install/set a language, you will also get the ability to change the time format.

|

||||

|

||||

So, the language settings are now more useful than ever before.

|

||||

|

||||

#### HiDPI Support

|

||||

|

||||

As a result of [HiDPI][4] support, the system tray icons will look crisp and overall, you should get a pleasant user experience on a high-res display.

|

||||

|

||||

#### New Applications

|

||||

|

||||

![Linux Mint Drawing App][5]

|

||||

|

||||

With the new release, you will n longer find “**GIMP**” pre-installed.

|

||||

|

||||

Even though GIMP is a powerful utility, they decided to add a simpler “**Drawing**” app to let users to easily crop/resize images while being able to tweak it a little.

|

||||

|

||||

Also, **Gnote** replaces **Tomboy** as the default note-taking application on Linux Mint 19.3

|

||||

|

||||

In addition to both these replacements, Celluloid video player has also been added instead of Xplayer. In case you did not know, Celluloid happens to be one of the [best open source video players][6] for Linux.

|

||||

|

||||

#### Cinnamon 4.4 Desktop

|

||||

|

||||

![Cinnamon 4 4 Desktop][7]

|

||||

|

||||

In my case, the new Cinnamon 4.4 desktop experience introduces a couple of new abilities like adjusting/tweaking the panel zones individually as you can see in the screenshot above.

|

||||

|

||||

#### Other Improvements

|

||||

|

||||

There are several other improvements including more customizability options in the file manager and so on.

|

||||

|

||||

You can read more about the detailed changes in the [official release notes][8].

|

||||

|

||||

[Subscribe to our YouTube channel for more Linux videos][9]

|

||||

|

||||

### Linux Mint 19 vs 19.1 vs 19.2 vs 19.3: What’s the difference?

|

||||

|

||||

You probably already know that Linux Mint releases are based on Ubuntu long term support releases. Linux Mint 19 series is based on Ubuntu 18.04 LTS.

|

||||

|

||||

Ubuntu LTS releases get ‘point releases’ on the interval of a few months. Point release basically consists of bug fixes and security updates that have been pushed since the last release of the LTS version. This is similar to the Service Pack concept in Windows XP if you remember it.

|

||||

|

||||

If you are going to download Ubuntu 18.04 which was released in April 2018 in 2019, you’ll get Ubuntu 18.04.2. The ISO image of 18.04.2 will consist of 18.04 and the bug fixes and security updates applied till 18.04.2. Imagine if there were no point releases, then right after [installing Ubuntu 18.04][10], you’ll have to install a few gigabytes of system updates. Not very convenient, right?

|