mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-04 22:00:34 +08:00

commit

97e9285ed7

@ -0,0 +1,74 @@

|

||||

为什么 Arch Linux 如此“难弄”又有何优劣?

|

||||

======

|

||||

|

||||

|

||||

|

||||

[Arch Linux][1] 于 **2002** 年发布,由 **Aaron Grifin** 领头,是当下最热门的 Linux 发行版之一。从设计上说,Arch Linux 试图给用户提供简单、最小化且优雅的体验,但它的目标用户群可不是怕事儿多的用户。Arch 鼓励参与社区建设,并且从设计上期待用户自己有学习操作系统的能力。

|

||||

|

||||

很多 Linux 老鸟对于 **Arch Linux** 会更了解,但电脑前的你可能只是刚开始打算把 Arch 当作日常操作系统来使用。虽然我也不是权威人士,但下面几点优劣是我认为你总会在使用中慢慢发现的。

|

||||

|

||||

### 1、优点: 定制属于你自己的 Linux 操作系统

|

||||

|

||||

大多数热门的 Linux 发行版(比如 **Ubuntu** 和 **Fedora**)很像一般我们会看到的预装系统,和 **Windows** 或者 **MacOS** 一样。但 Arch 则会更鼓励你去把操作系统配置的符合你的品味。如果你能顺利做到这点的话,你会得到一个每一个细节都如你所想的操作系统。

|

||||

|

||||

#### 缺点: 安装过程让人头疼

|

||||

|

||||

[Arch Linux 的安装 ][2] 别辟蹊径——因为你要花些时间来微调你的操作系统。你会在过程中了解到不少终端命令和组成你系统的各种软件模块——毕竟你要自己挑选安装什么。当然,你也知道这个过程少不了阅读一些文档/教程。

|

||||

|

||||

### 2、优点: 没有预装垃圾

|

||||

|

||||

鉴于 **Arch** 允许你在安装时选择你想要的系统部件,你再也不用烦恼怎么处理你不想要的一堆预装软件。作为对比,**Ubuntu** 会预装大量的软件和桌面应用——很多你不需要、甚至卸载之前都不知道它们存在的东西。

|

||||

|

||||

总而言之,**Arch Linux* 能省去大量的系统安装后时间。**Pacman**,是 Arch Linux 默认使用的优秀包管理组件。或者你也可以选择 [Pamac][3] 作为替代。

|

||||

|

||||

### 3、优点: 无需繁琐系统升级

|

||||

|

||||

**Arch Linux** 采用滚动升级模型,简直妙极了。这意味着你不需要操心升级了。一旦你用上了 Arch,持续的更新体验会让你和一会儿一个版本的升级说再见。只要你记得‘滚’更新(Arch 用语),你就一直会使用最新的软件包们。

|

||||

|

||||

#### 缺点: 一些升级可能会滚坏你的系统

|

||||

|

||||

虽然升级过程是完全连续的,你有时得留意一下你在更新什么。没人能知道所有软件的细节配置,也没人能替你来测试你的情况。所以如果你盲目更新,有时候你会滚坏你的系统。(LCTT 译注:别担心,你可以‘滚’回来 ;D )

|

||||

|

||||

### 4、优点: Arch 有一个社区基因

|

||||

|

||||

所有 Linux 用户通常有一个共同点:对独立自由的追求。虽然大多数 Linux 发行版和公司企业等挂钩极少,但也并非没有。比如 基于 **Ubuntu** 的衍生版本们不得不受到 Canonical 公司决策的影响。

|

||||

|

||||

如果你想让你的电脑更独立,那么 Arch Linux 是你的伙伴。不像大多数操作系统,Arch 完全没有商业集团的影响,完全由社区驱动。

|

||||

|

||||

### 5、优点: Arch Wiki 无敌

|

||||

|

||||

[Arch Wiki][4] 是一个无敌文档库,几乎涵盖了所有关于安装和维护 Arch 以及关于操作系统本身的知识。Arch Wiki 最厉害的一点可能是,不管你在用什么发行版,你多多少少可能都在 Arch Wiki 的页面里找到有用信息。这是因为 Arch 用户也会用别的发行版用户会用的东西,所以一些技巧和知识得以泛化。

|

||||

|

||||

### 6、优点: 别忘了 Arch 用户软件库 (AUR)

|

||||

|

||||

<ruby>[Arch 用户软件库][5]<rt>Arch User Repository</rt></ruby> (AUR)是一个来自社区的超大软件仓库。如果你找了一个还没有 Arch 的官方仓库里出现的软件,那你肯定能在 AUR 里找到社区为你准备好的包。

|

||||

|

||||

AUR 是由用户自发编译和维护的。Arch 用户也可以给每个包投票,这样后来者就能找到最有用的那些软件包了。

|

||||

|

||||

#### 最后: Arch Linux 适合你吗?

|

||||

|

||||

**Arch Linux** 优点多于缺点,也有很多优缺点我无法在此一一叙述。安装过程很长,对非 Linux 用户来说也可能偏有些技术,但只要你投入一些时间和善用 Wiki,你肯定能迈过这道坎。

|

||||

|

||||

**Arch Linux** 是一个非常优秀的发行版——尽管它有一些复杂性。同时它也很受那些知道自己想要什么的用户的欢迎——只要你肯做点功课,有些耐心。

|

||||

|

||||

当你从零开始搭建完 Arch 的时候,你会掌握很多 GNU/Linux 的内部细节,也再也不会对你的电脑内部运作方式一无所知了。

|

||||

|

||||

欢迎读者们在评论区讨论你使用 Arch Linux 的优缺点?以及你曾经遇到过的一些挑战。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.fossmint.com/why-is-arch-linux-so-challenging-what-are-pros-cons/

|

||||

|

||||

作者:[Martins D. Okoi][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[Moelf](https://github.com/Moelf)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.fossmint.com/author/dillivine/

|

||||

[1]:https://www.archlinux.org/

|

||||

[2]:https://www.tecmint.com/arch-linux-installation-and-configuration-guide/

|

||||

[3]:https://www.fossmint.com/pamac-arch-linux-gui-package-manager/

|

||||

[4]:https://wiki.archlinux.org/

|

||||

[5]:https://wiki.archlinux.org/index.php/Arch_User_Repository

|

||||

@ -1,6 +1,8 @@

|

||||

系统管理员的一个网络管理指南

|

||||

面向系统管理员的网络管理指南

|

||||

======

|

||||

|

||||

> 一个使管理服务器和网络更轻松的 Linux 工具和命令的参考列表。

|

||||

|

||||

|

||||

|

||||

如果你是一位系统管理员,那么你的日常工作应该包括管理服务器和数据中心的网络。以下的 Linux 实用工具和命令 —— 从基础的到高级的 —— 将帮你更轻松地管理你的网络。

|

||||

@ -16,8 +18,6 @@

|

||||

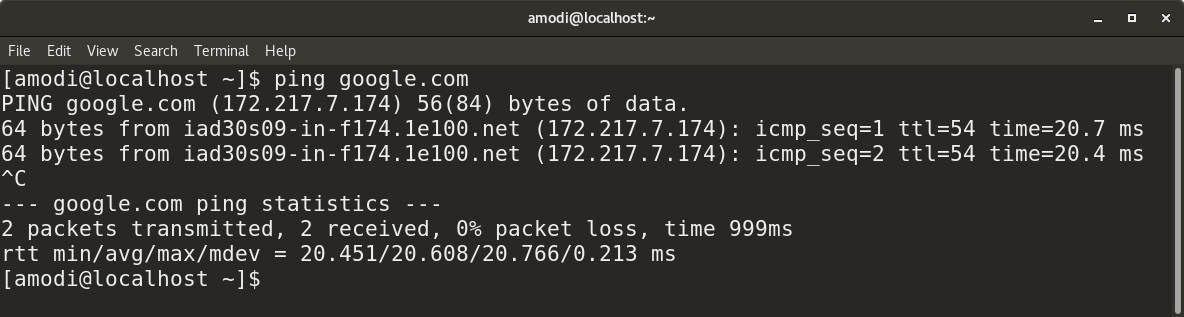

* IPv4: `ping <ip address>/<fqdn>`

|

||||

* IPv6: `ping6 <ip address>/<fqdn>`

|

||||

|

||||

|

||||

|

||||

你也可以使用 `ping` 去解析出网站所对应的 IP 地址,如下图所示:

|

||||

|

||||

|

||||

@ -32,16 +32,12 @@

|

||||

|

||||

* `traceroute <ip address>/<fqdn>`

|

||||

|

||||

|

||||

|

||||

### Telnet

|

||||

|

||||

**语法:**

|

||||

|

||||

* `telnet <ip address>/<fqdn>` 是用于 [telnet][3] 进入任何支持该协议的服务器。

|

||||

|

||||

|

||||

|

||||

### Netstat

|

||||

|

||||

这个网络统计(`netstat`)实用工具是用于去分析解决网络连接问题和检查接口/端口统计数据、路由表、协议状态等等的。它是任何管理员都应该必须掌握的工具。

|

||||

@ -69,20 +65,14 @@

|

||||

**语法:**

|

||||

|

||||

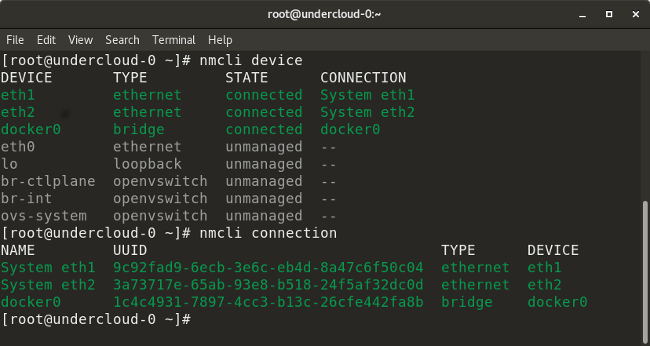

* `nmcli device` 列出网络上的所有设备。

|

||||

|

||||

* `nmcli device show <interface>` 显示指定接口的网络相关的详细情况。

|

||||

|

||||

* `nmcli connection` 检查设备的连接情况。

|

||||

|

||||

* `nmcli connection down <interface>` 关闭指定接口。

|

||||

|

||||

* `nmcli connection up <interface>` 打开指定接口。

|

||||

|

||||

* `nmcli con add type vlan con-name <connection-name> dev <interface> id <vlan-number> ipv4 <ip/cidr> gw4 <gateway-ip>` 在特定的接口上使用指定的 VLAN 号添加一个虚拟局域网(VLAN)接口、IP 地址、和网关。

|

||||

|

||||

|

||||

|

||||

|

||||

### 路由

|

||||

|

||||

检查和配置路由的命令很多。下面是其中一些比较有用的:

|

||||

@ -101,13 +91,13 @@

|

||||

|

||||

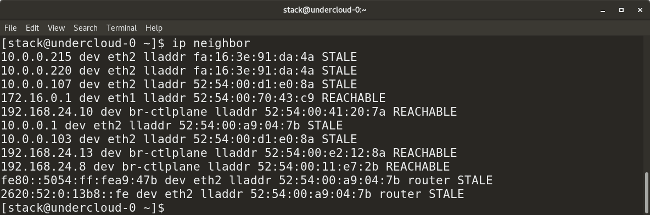

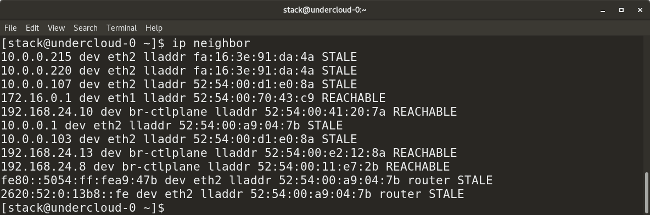

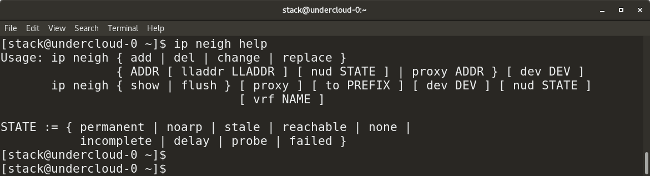

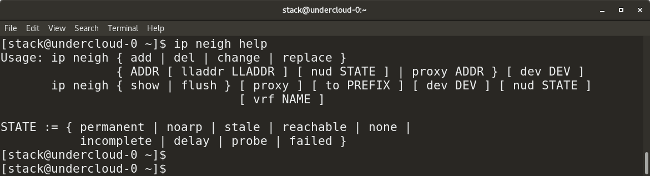

* `ip neighbor` 显示当前的邻接表和用于去添加、改变、或删除新的邻居。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

* `arp` (它的全称是 “地址解析协议”)类似于 `ip neighbor`。`arp` 映射一个系统的 IP 地址到它相应的 MAC(介质访问控制)地址。

|

||||

|

||||

|

||||

|

||||

|

||||

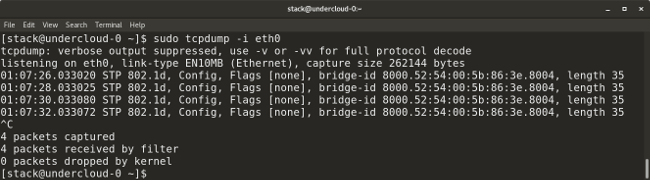

### Tcpdump 和 Wireshark

|

||||

|

||||

@ -117,7 +107,7 @@ Linux 提供了许多包捕获工具,比如 `tcpdump`、`wireshark`、`tshark`

|

||||

|

||||

* `tcpdump -i <interface-name>` 显示指定接口上实时通过的数据包。通过在命令中添加一个 `-w` 标志和输出文件的名字,可以将数据包保存到一个文件中。例如:`tcpdump -w <output-file.> -i <interface-name>`。

|

||||

|

||||

|

||||

|

||||

|

||||

* `tcpdump -i <interface> src <source-ip>` 从指定的源 IP 地址上捕获数据包。

|

||||

* `tcpdump -i <interface> dst <destination-ip>` 从指定的目标 IP 地址上捕获数据包。

|

||||

@ -135,22 +125,16 @@ Linux 提供了许多包捕获工具,比如 `tcpdump`、`wireshark`、`tshark`

|

||||

* `iptables -L` 列出所有已存在的 `iptables` 规则。

|

||||

* `iptables -F` 删除所有已存在的规则。

|

||||

|

||||

|

||||

|

||||

下列命令允许流量从指定端口到指定接口:

|

||||

|

||||

* `iptables -A INPUT -i <interface> -p tcp –dport <port-number> -m state –state NEW,ESTABLISHED -j ACCEPT`

|

||||

* `iptables -A OUTPUT -o <interface> -p tcp -sport <port-number> -m state – state ESTABLISHED -j ACCEPT`

|

||||

|

||||

|

||||

|

||||

下列命令允许<ruby>环回<rt>loopback</rt></ruby>接口访问系统:

|

||||

|

||||

* `iptables -A INPUT -i lo -j ACCEPT`

|

||||

* `iptables -A OUTPUT -o lo -j ACCEPT`

|

||||

|

||||

|

||||

|

||||

### Nslookup

|

||||

|

||||

`nslookup` 工具是用于去获得一个网站或域名所映射的 IP 地址。它也能用于去获得你的 DNS 服务器的信息,比如,一个网站的所有 DNS 记录(具体看下面的示例)。与 `nslookup` 类似的一个工具是 `dig`(Domain Information Groper)实用工具。

|

||||

@ -161,7 +145,6 @@ Linux 提供了许多包捕获工具,比如 `tcpdump`、`wireshark`、`tshark`

|

||||

* `nslookup -type=any <website-name.com>` 显示指定网站/域中所有可用记录。

|

||||

|

||||

|

||||

|

||||

### 网络/接口调试

|

||||

|

||||

下面是用于接口连通性或相关网络问题调试所需的命令和文件的汇总。

|

||||

@ -182,7 +165,6 @@ Linux 提供了许多包捕获工具,比如 `tcpdump`、`wireshark`、`tshark`

|

||||

* `/etc/ntp.conf` 指定 NTP 服务器域名。

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/sysadmin-guide-networking-commands

|

||||

@ -190,7 +172,7 @@ via: https://opensource.com/article/18/7/sysadmin-guide-networking-commands

|

||||

作者:[Archit Modi][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,23 +1,21 @@

|

||||

针对 Bash 的不完整路径展开(补全)

|

||||

针对 Bash 的不完整路径展开(补全)功能

|

||||

======

|

||||

|

||||

|

||||

|

||||

|

||||

[bash-complete-partial-path][1] 通过添加不完整的路径展开(类似于 Zsh)来增强 Bash(它在 Linux 上,macOS 使用 gnu-sed,Windows 使用 MSYS)中的路径补全。如果你想在 Bash 中使用这个省时特性,而不必切换到 Zsh,它将非常有用。

|

||||

|

||||

这是它如何工作的。当按下 `Tab` 键时,bash-complete-partial-path 假定每个部分都不完整并尝试展开它。假设你要进入 `/usr/share/applications` 。你可以输入 `cd /u/s/app`,按下 `Tab`,bash-complete-partial-path 应该把它展开成 `cd /usr/share/applications` 。如果存在冲突,那么按 `Tab` 仅补全没有冲突的路径。例如,Ubuntu 用户在 `/usr/share` 中应该有很多以 “app” 开头的文件夹,在这种情况下,输入 `cd /u/s/app` 只会展开 `/usr/share/` 部分。

|

||||

|

||||

这是更深层不完整文件路径展开的另一个例子。在Ubuntu系统上输入 `cd /u/s/f/t/u`,按下 `Tab`,它应该自动展开为 `cd /usr/share/fonts/truetype/ubuntu`。

|

||||

另一个更深层不完整文件路径展开的例子。在Ubuntu系统上输入 `cd /u/s/f/t/u`,按下 `Tab`,它应该自动展开为 `cd /usr/share/fonts/truetype/ubuntu`。

|

||||

|

||||

功能包括:

|

||||

|

||||

* 转义特殊字符

|

||||

|

||||

* 如果用户路径开头使用引号,则不转义字符转义,而是在展开路径后使用匹配字符结束引号

|

||||

|

||||

* 正确展开 ~ 表达式

|

||||

|

||||

* 如果 bash-completion 包正在使用,则此代码将安全地覆盖其 _filedir 函数。无需额外配置,只需确保在主 bash-completion 后 source 此项目。

|

||||

* 正确展开 `~` 表达式

|

||||

* 如果正在使用 bash-completion 包,则此代码将安全地覆盖其 `_filedir` 函数。无需额外配置,只需确保在主 bash-completion 后引入此项目。

|

||||

|

||||

查看[项目页面][2]以获取更多信息和演示截图。

|

||||

|

||||

@ -25,7 +23,7 @@

|

||||

|

||||

bash-complete-partial-path 安装说明指定直接下载 bash_completion 脚本。我更喜欢从 Git 仓库获取,这样我可以用一个简单的 `git pull` 来更新它,因此下面的说明将使用这种安装 bash-complete-partial-path。如果你喜欢,可以使用[官方][3]说明。

|

||||

|

||||

1. 安装 Git(需要克隆 bash-complete-partial-path 的 Git 仓库)。

|

||||

1、 安装 Git(需要克隆 bash-complete-partial-path 的 Git 仓库)。

|

||||

|

||||

在 Debian、Ubuntu、Linux Mint 等中,使用此命令安装 Git:

|

||||

|

||||

@ -33,13 +31,13 @@ bash-complete-partial-path 安装说明指定直接下载 bash_completion 脚本

|

||||

sudo apt install git

|

||||

```

|

||||

|

||||

2. 在 `~/.config/` 中克隆 bash-complete-partial-path 的 Git 仓库:

|

||||

2、 在 `~/.config/` 中克隆 bash-complete-partial-path 的 Git 仓库:

|

||||

|

||||

```

|

||||

cd ~/.config && git clone https://github.com/sio/bash-complete-partial-path

|

||||

```

|

||||

|

||||

3. 在 `~/.bashrc` 文件中 source `~/.config/bash-complete-partial-path/bash_completion`,

|

||||

3、 在 `~/.bashrc` 文件中 source `~/.config/bash-complete-partial-path/bash_completion`,

|

||||

|

||||

用文本编辑器打开 ~/.bashrc。例如你可以使用 Gedit:

|

||||

|

||||

@ -55,7 +53,7 @@ gedit ~/.bashrc

|

||||

|

||||

我提到在文件的末尾添加它,因为这需要包含在你的 `~/.bashrc` 文件的主 bash-completion 下面(之后)。因此,请确保不要将其添加到原始 bash-completion 之上,因为它会导致问题。

|

||||

|

||||

4\. Source `~/.bashrc`:

|

||||

4、 引入 `~/.bashrc`:

|

||||

|

||||

```

|

||||

source ~/.bashrc

|

||||

@ -63,8 +61,6 @@ source ~/.bashrc

|

||||

|

||||

这样就好了,现在应该安装完 bash-complete-partial-path 并可以使用了。

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linuxuprising.com/2018/07/incomplete-path-expansion-completion.html

|

||||

@ -72,7 +68,7 @@ via: https://www.linuxuprising.com/2018/07/incomplete-path-expansion-completion.

|

||||

作者:[Logix][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,185 @@

|

||||

How blockchain will influence open source

|

||||

======

|

||||

|

||||

|

||||

|

||||

What [Satoshi Nakamoto][1] started as Bitcoin a decade ago has found a lot of followers and turned into a movement for decentralization. For some, blockchain technology is a religion that will have the same impact on humanity as the Internet has had. For others, it is hype and technology suitable only for Ponzi schemes. While blockchain is still evolving and trying to find its place, one thing is for sure: It is a disruptive technology that will fundamentally transform certain industries. And I'm betting open source will be one of them.

|

||||

|

||||

### The open source model

|

||||

|

||||

Open source is a collaborative software development and distribution model that allows people with common interests to gather and produce something that no individual can create on their own. It allows the creation of value that is bigger than the sum of its parts. Open source is enabled by distributed collaboration tools (IRC, email, git, wiki, issue trackers, etc.), distributed and protected by an open source licensing model and often governed by software foundations such as the [Apache Software Foundation][2] (ASF), [Cloud Native Computing Foundation][3] (CNCF), etc.

|

||||

|

||||

One interesting aspect of the open source model is the lack of financial incentives in its core. Some people believe open source work should remain detached from money and remain a free and voluntary activity driven only by intrinsic motivators (such as "common purpose" and "for the greater good”). Others believe open source work should be rewarded directly or indirectly through extrinsic motivators (such as financial incentive). While the idea of open source projects prospering only through voluntary contributions is romantic, in reality, the majority of open source contributions are done through paid development. Yes, we have a lot of voluntary contributions, but these are on a temporary basis from contributors who come and go, or for exceptionally popular projects while they are at their peak. Creating and sustaining open source projects that are useful for enterprises requires developing, documenting, testing, and bug-fixing for prolonged periods, even when the software is no longer shiny and exciting. It is a boring activity that is best motivated through financial incentives.

|

||||

|

||||

### Commercial open source

|

||||

|

||||

Software foundations such as ASF survive on donations and other income streams such as sponsorships, conference fees, etc. But those funds are primarily used to run the foundations, to provide legal protection for the projects, and to ensure there are enough servers to run builds, issue trackers, mailing lists, etc.

|

||||

|

||||

Similarly, CNCF has member fees and other income streams, which are used to run the foundation and provide resources for the projects. These days, most software is not built on laptops; it is run and tested on hundreds of machines on the cloud, and that requires money. Creating marketing campaigns, brand designs, distributing stickers, etc. takes money, and some foundations can assist with that as well. At its core, foundations implement the right processes to interact with users, developers, and control mechanisms and ensure distribution of available financial resources to open source projects for the common good.

|

||||

|

||||

If users of open source projects can donate money and the foundations can distribute it in a fair way, what is missing?

|

||||

|

||||

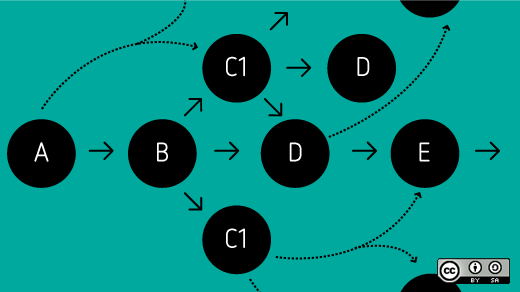

What is missing is a direct, transparent, trusted, decentralized, automated bidirectional link for transfer of value between the open source producers and the open source consumer. Currently, the link is either unidirectional or indirect:

|

||||

|

||||

* **Unidirectional** : A developer (think of a "developer" as any role that is involved in the production, maintenance, and distribution of software) can use their brain juice and devote time to do a contribution and share that value with all open source users. But there is no reverse link.

|

||||

|

||||

* **Indirect** : If there is a bug that affects a specific user/company, the options are:

|

||||

|

||||

* To have in-house developers to fix the bug and do a pull request. That is ideal, but it not always possible to hire in-house developers who are knowledgeable about hundreds of open source projects used daily.

|

||||

|

||||

* To hire a freelancer specializing in that specific open source project and pay for the services. Ideally, the freelancer is also a committer for the open source project and can directly change the project code quickly. Otherwise, the fix might not ever make it to the project.

|

||||

|

||||

* To approach a company providing services around the open source project. Such companies typically employ open source committers to influence and gain credibility in the community and offer products, expertise, and professional services.

|

||||

|

||||

|

||||

|

||||

|

||||

The third option has been a successful [model][4] for sustaining many open source projects. Whether they provide services (training, consulting, workshops), support, packaging, open core, or SaaS, there are companies that employ hundreds of staff members who work on open source full time. There is a long [list of companies][5] that have managed to build a successful open source business model over the years, and that list is growing steadily.

|

||||

|

||||

The companies that back open source projects play an important role in the ecosystem: They are the catalyst between the open source projects and the users. The ones that add real value do more than just package software nicely; they can identify user needs and technology trends, and they create a full stack and even an ecosystem of open source projects to address these needs. They can take a boring project and support it for years. If there is a missing piece in the stack, they can start an open source project from scratch and build a community around it. They can acquire a closed source software company and open source the projects (here I got a little carried away, but yes, I'm talking about my employer, [Red Hat][6]).

|

||||

|

||||

To summarize, with the commercial open source model, projects are officially or unofficially managed and controlled by a very few individuals or companies that monetize them and give back to the ecosystem by ensuring the project is successful. It is a win-win-win for open source developers, managing companies, and end users. The alternative is inactive projects and expensive closed source software.

|

||||

|

||||

### Self-sustaining, decentralized open source

|

||||

|

||||

For a project to become part of a reputable foundation, it must conform to certain criteria. For example, ASF and CNCF require incubation and graduation processes, respectively, where apart from all the technical and formal requirements, a project must have a healthy number of active committer and users. And that is the essence of forming a sustainable open source project. Having source code on GitHub is not the same thing as having an active open source project. The latter requires committers who write the code and users who use the code, with both groups enforcing each other continuously by exchanging value and forming an ecosystem where everybody benefits. Some project ecosystems might be tiny and short-lived, and some may consist of multiple projects and competing service providers, with very complex interactions lasting for many years. But as long as there is an exchange of value and everybody benefits from it, the project is developed, maintained, and sustained.

|

||||

|

||||

If you look at ASF [Attic][7], you will find projects that have reached their end of life. When a project is no longer technologically fit for its purpose, it is usually its natural end. Similarly, in the ASF [Incubator][8], you will find tons of projects that never graduated but were instead retired. Typically, these projects were not able to build a large enough community because they are too specialized or there are better alternatives available.

|

||||

|

||||

But there are also cases where projects with high potential and superior technology cannot sustain themselves because they cannot form or maintain a functioning ecosystem for the exchange of value. The open source model and the foundations do not provide a framework and mechanisms for developers to get paid for their work or for users to get their requests heard. There isn’t a common value commitment framework for either party. As a result, some projects can sustain themselves only in the context of commercial open source, where a company acts as an intermediary and value adder between developers and users. That adds another constraint and requires a service provider company to sustain some open source projects. Ideally, users should be able to express their interest in a project and developers should be able to show their commitment to the project in a transparent and measurable way, which forms a community with common interest and intent for the exchange of value.

|

||||

|

||||

Imagine there is a model with mechanisms and tools that enable direct interaction between open source users and developers. This includes not only code contributions through pull requests, questions over the mailing lists, GitHub stars, and stickers on laptops, but also other ways that allow users to influence projects' destinies in a richer, more self-controlled and transparent manner.

|

||||

|

||||

This model could include incentives for actions such as:

|

||||

|

||||

* Funding open source projects directly rather than through software foundations

|

||||

|

||||

* Influencing the direction of projects through voting (by token holders)

|

||||

|

||||

* Feature requests driven by user needs

|

||||

|

||||

* On-time pull request merges

|

||||

|

||||

* Bounties for bug hunts

|

||||

|

||||

* Better test coverage incentives

|

||||

|

||||

* Up-to-date documentation rewards

|

||||

|

||||

* Long-term support guarantees

|

||||

|

||||

* Timely security fixes

|

||||

|

||||

* Expert assistance, support, and services

|

||||

|

||||

* Budget for evangelism and promotion of the projects

|

||||

|

||||

* Budget for regular boring activities

|

||||

|

||||

* Fast email and chat assistance

|

||||

|

||||

* Full visibility of the overall project findings, etc.

|

||||

|

||||

|

||||

|

||||

|

||||

If you haven't guessed, I'm talking about using blockchain and [smart contracts][9] to allow such interactions between users and developers—smart contracts that will give power to the hand of token holders to influence projects.

|

||||

|

||||

![blockchain_in_open_source_ecosystem.png][11]

|

||||

|

||||

The usage of blockchain in the open source ecosystem

|

||||

|

||||

Existing channels in the open source ecosystem provide ways for users to influence projects through financial commitments to service providers or other limited means through the foundations. But the addition of blockchain-based technology to the open source ecosystem could open new channels for interaction between users and developers. I'm not saying this will replace the commercial open source model; most companies working with open source do many things that cannot be replaced by smart contracts. But smart contracts can spark a new way of bootstrapping new open source projects, giving a second life to commodity projects that are a burden to maintain. They can motivate developers to apply boring pull requests, write documentation, get tests to pass, etc., providing a direct value exchange channel between users and open source developers. Blockchain can add new channels to help open source projects grow and become self-sustaining in the long term, even when company backing is not feasible. It can create a new complementary model for self-sustaining open source projects—a win-win.

|

||||

|

||||

### Tokenizing open source

|

||||

|

||||

There are already a number of initiatives aiming to tokenize open source. Some focus only on an open source model, and some are more generic but apply to open source development as well:

|

||||

|

||||

* [Gitcoin][12] \- grow open source, one of the most promising ones in this area.

|

||||

|

||||

* [Oscoin][13] \- cryptocurrency for open source

|

||||

|

||||

* [Open collective][14] \- a platform for supporting open source projects.

|

||||

|

||||

* [FundYourselfNow][15] \- Kickstarter and ICOs for projects.

|

||||

|

||||

* [Kauri][16] \- support for open source project documentation.

|

||||

|

||||

* [Liberapay][17] \- a recurrent donations platform.

|

||||

|

||||

* [FundRequest][18] \- a decentralized marketplace for open source collaboration.

|

||||

|

||||

* [CanYa][19] \- recently acquired [Bountysource][20], now the world’s largest open source P2P bounty platform.

|

||||

|

||||

* [OpenGift][21] \- a new model for open source monetization.

|

||||

|

||||

* [Hacken][22] \- a white hat token for hackers.

|

||||

|

||||

* [Coinlancer][23] \- a decentralized job market.

|

||||

|

||||

* [CodeFund][24] \- an open source ad platform.

|

||||

|

||||

* [IssueHunt][25] \- a funding platform for open source maintainers and contributors.

|

||||

|

||||

* [District0x 1Hive][26] \- a crowdfunding and curation platform.

|

||||

|

||||

* [District0x Fixit][27] \- github bug bounties.

|

||||

|

||||

|

||||

|

||||

|

||||

This list is varied and growing rapidly. Some of these projects will disappear, others will pivot, but a few will emerge as the [SourceForge][28], the ASF, the GitHub of the future. That doesn't necessarily mean they'll replace these platforms, but they'll complement them with token models and create a richer open source ecosystem. Every project can pick its distribution model (license), governing model (foundation), and incentive model (token). In all cases, this will pump fresh blood to the open source world.

|

||||

|

||||

### The future is open and decentralized

|

||||

|

||||

* Software is eating the world.

|

||||

|

||||

* Every company is a software company.

|

||||

|

||||

* Open source is where innovation happens.

|

||||

|

||||

|

||||

|

||||

|

||||

Given that, it is clear that open source is too big to fail and too important to be controlled by a few or left to its own destiny. Open source is a shared-resource system that has value to all, and more importantly, it must be managed as such. It is only a matter of time until every company on earth will want to have a stake and a say in the open source world. Unfortunately, we don't have the tools and the habits to do it yet. Such tools would allow anybody to show their appreciation or ignorance of software projects. It would create a direct and faster feedback loop between producers and consumers, between developers and users. It will foster innovation—innovation driven by user needs and expressed through token metrics.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/8/open-source-tokenomics

|

||||

|

||||

作者:[Bilgin lbryam][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/bibryam

|

||||

[1]:https://en.wikipedia.org/wiki/Satoshi_Nakamoto

|

||||

[2]:https://www.apache.org/

|

||||

[3]:https://www.cncf.io/

|

||||

[4]:https://medium.com/open-consensus/3-oss-business-model-progressions-dafd5837f2d

|

||||

[5]:https://docs.google.com/spreadsheets/d/17nKMpi_Dh5slCqzLSFBoWMxNvWiwt2R-t4e_l7LPLhU/edit#gid=0

|

||||

[6]:http://jobs.redhat.com/

|

||||

[7]:https://attic.apache.org/

|

||||

[8]:http://incubator.apache.org/

|

||||

[9]:https://en.wikipedia.org/wiki/Smart_contract

|

||||

[10]:/file/404421

|

||||

[11]:https://opensource.com/sites/default/files/uploads/blockchain_in_open_source_ecosystem.png (blockchain_in_open_source_ecosystem.png)

|

||||

[12]:https://gitcoin.co/

|

||||

[13]:http://oscoin.io/

|

||||

[14]:https://opencollective.com/opensource

|

||||

[15]:https://www.fundyourselfnow.com/page/about

|

||||

[16]:https://kauri.io/

|

||||

[17]:https://liberapay.com/

|

||||

[18]:https://fundrequest.io/

|

||||

[19]:https://canya.io/

|

||||

[20]:https://www.bountysource.com/

|

||||

[21]:https://opengift.io/pub/

|

||||

[22]:https://hacken.io/

|

||||

[23]:https://www.coinlancer.com/home

|

||||

[24]:https://codefund.io/

|

||||

[25]:https://issuehunt.io/

|

||||

[26]:https://blog.district0x.io/district-proposal-spotlight-1hive-283957f57967

|

||||

[27]:https://github.com/district0x/district-proposals/issues/177

|

||||

[28]:https://sourceforge.net/

|

||||

@ -1,3 +1,5 @@

|

||||

fuowang 翻译中

|

||||

|

||||

Arch Linux Applications Automatic Installation Script

|

||||

======

|

||||

|

||||

|

||||

@ -0,0 +1,172 @@

|

||||

BootISO – A Simple Bash Script To Securely Create A Bootable USB Device From ISO File

|

||||

======

|

||||

Most of us (including me) very often create a bootable USB device from ISO file for OS installation.

|

||||

|

||||

There are many applications freely available in Linux for this purpose. Even we wrote few of the utility in the past.

|

||||

|

||||

Every one uses different application and each application has their own features and functionality.

|

||||

|

||||

In that few of applications are belongs to CLI and few of them associated with GUI.

|

||||

|

||||

Today we are going to discuss about similar kind of utility called BootISO. It’s a simple bash script, which allow users to create a USB device from ISO file.

|

||||

|

||||

Many of the Linux admin uses dd command to create bootable ISO, which is one of the native and famous method but the same time, it’s one of the very dangerous command. So, be careful, when you performing any action with dd command.

|

||||

|

||||

**Suggested Read :**

|

||||

**(#)** [Etcher – Easy way to Create a bootable USB drive & SD card from an ISO image][1]

|

||||

**(#)** [Create a bootable USB drive from an ISO image using dd command on Linux][2]

|

||||

|

||||

### What IS BootISO

|

||||

|

||||

[BootIOS][3] is a simple bash script, which allow users to securely create a bootable USB device from one ISO file. It’s written in bash.

|

||||

|

||||

It doesn’t offer any GUI but in the same time it has vast of options, which allow newbies to create a bootable USB device in Linux without any issues. Since it’s a intelligent tool that automatically choose if any USB device is connected on the system.

|

||||

|

||||

It will print the list when the system has more than one USB device connected. When you choose manually another hard disk manually instead of USB, this will safely exit without writing anything on it.

|

||||

|

||||

This script will also check for dependencies and prompt user for installation, it works with all package managers such as apt-get, yum, dnf, pacman and zypper.

|

||||

|

||||

### BootISO Features

|

||||

|

||||

* It checks whether the selected ISO has the correct mime-type or not. If no then it exit.

|

||||

* BootISO will exit automatically, if you selected any other disks (local hard drive) except USB drives.

|

||||

* BootISO allow users to select the desired USB drives when you have more than one.

|

||||

* BootISO prompts the user for confirmation before erasing and paritioning USB device.

|

||||

* BootISO will handle any failure from a command properly and exit.

|

||||

* BootISO will call a cleanup routine on exit with trap.

|

||||

|

||||

|

||||

|

||||

### How To Install BootISO In Linux

|

||||

|

||||

There are few ways are available to install BootISO in Linux but i would advise users to install using the following method.

|

||||

```

|

||||

$ curl -L https://git.io/bootiso -O

|

||||

$ chmod +x bootiso

|

||||

$ sudo mv bootiso /usr/local/bin/

|

||||

|

||||

```

|

||||

|

||||

Once BootISO installed, run the following command to list the available USB devices.

|

||||

```

|

||||

$ bootiso -l

|

||||

|

||||

Listing USB drives available in your system:

|

||||

NAME HOTPLUG SIZE STATE TYPE

|

||||

sdd 1 32G running disk

|

||||

|

||||

```

|

||||

|

||||

If you have only one USB device, then simple run the following command to create a bootable USB device from ISO file.

|

||||

```

|

||||

$ bootiso /path/to/iso file

|

||||

|

||||

$ bootiso /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

Granting root privileges for bootiso.

|

||||

Listing USB drives available in your system:

|

||||

NAME HOTPLUG SIZE STATE TYPE

|

||||

sdd 1 32G running disk

|

||||

Autoselecting `sdd' (only USB device candidate)

|

||||

The selected device `/dev/sdd' is connected through USB.

|

||||

Created ISO mount point at `/tmp/iso.vXo'

|

||||

`bootiso' is about to wipe out the content of device `/dev/sdd'.

|

||||

Are you sure you want to proceed? (y/n)>y

|

||||

Erasing contents of /dev/sdd...

|

||||

Creating FAT32 partition on `/dev/sdd1'...

|

||||

Created USB device mount point at `/tmp/usb.0j5'

|

||||

Copying files from ISO to USB device with `rsync'

|

||||

Synchronizing writes on device `/dev/sdd'

|

||||

`bootiso' took 250 seconds to write ISO to USB device with `rsync' method.

|

||||

ISO succesfully unmounted.

|

||||

USB device succesfully unmounted.

|

||||

USB device succesfully ejected.

|

||||

You can safely remove it !

|

||||

|

||||

```

|

||||

|

||||

Mention your device name, when you have more than one USB device using `--device` option.

|

||||

```

|

||||

$ bootiso -d /dev/sde /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

|

||||

```

|

||||

|

||||

By default bootios uses `rsync` command to perform all the action and if you want to use `dd` command instead of, use the following format.

|

||||

```

|

||||

$ bootiso --dd -d /dev/sde /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

|

||||

```

|

||||

|

||||

If you want to skip `mime-type` check, include the following option with bootios utility.

|

||||

```

|

||||

$ bootiso --no-mime-check -d /dev/sde /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

|

||||

```

|

||||

|

||||

Add the below option with bootios to skip user for confirmation before erasing and partitioning USB device.

|

||||

```

|

||||

$ bootiso -y -d /dev/sde /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

|

||||

```

|

||||

|

||||

Enable autoselecting USB devices in conjunction with -y option.

|

||||

```

|

||||

$ bootiso -y -a /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

|

||||

```

|

||||

|

||||

To know more all the available option for bootiso, run the following command.

|

||||

```

|

||||

$ bootiso -h

|

||||

Create a bootable USB from any ISO securely.

|

||||

Usage: bootiso [...]

|

||||

|

||||

Options

|

||||

|

||||

-h, --help, help Display this help message and exit.

|

||||

-v, --version Display version and exit.

|

||||

-d, --device Select block file as USB device.

|

||||

If is not connected through USB, `bootiso' will fail and exit.

|

||||

Device block files are usually situated in /dev/sXX or /dev/hXX.

|

||||

You will be prompted to select a device if you don't use this option.

|

||||

-b, --bootloader Install a bootloader with syslinux (safe mode) for non-hybrid ISOs. Does not work with `--dd' option.

|

||||

-y, --assume-yes `bootiso' won't prompt the user for confirmation before erasing and partitioning USB device.

|

||||

Use at your own risks.

|

||||

-a, --autoselect Enable autoselecting USB devices in conjunction with -y option.

|

||||

Autoselect will automatically select a USB drive device if there is exactly one connected to the system.

|

||||

Enabled by default when neither -d nor --no-usb-check options are given.

|

||||

-J, --no-eject Do not eject device after unmounting.

|

||||

-l, --list-usb-drives List available USB drives.

|

||||

-M, --no-mime-check `bootiso' won't assert that selected ISO file has the right mime-type.

|

||||

-s, --strict-mime-check Disallow loose application/octet-stream mime type in ISO file.

|

||||

-- POSIX end of options.

|

||||

--dd Use `dd' utility instead of mounting + `rsync'.

|

||||

Does not allow bootloader installation with syslinux.

|

||||

--no-usb-check `bootiso' won't assert that selected device is a USB (connected through USB bus).

|

||||

Use at your own risks.

|

||||

|

||||

Readme

|

||||

|

||||

Bootiso v2.5.2.

|

||||

Author: Jules Samuel Randolph

|

||||

Bugs and new features: https://github.com/jsamr/bootiso/issues

|

||||

If you like bootiso, please help the community by making it visible:

|

||||

* star the project at https://github.com/jsamr/bootiso

|

||||

* upvote those SE post: https://goo.gl/BNRmvm https://goo.gl/YDBvFe

|

||||

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/bootiso-a-simple-bash-script-to-securely-create-a-bootable-usb-device-in-linux-from-iso-file/

|

||||

|

||||

作者:[Prakash Subramanian][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.2daygeek.com/author/prakash/

|

||||

[1]:https://www.2daygeek.com/etcher-easy-way-to-create-a-bootable-usb-drive-sd-card-from-an-iso-image-on-linux/

|

||||

[2]:https://www.2daygeek.com/create-a-bootable-usb-drive-from-an-iso-image-using-dd-command-on-linux/

|

||||

[3]:https://github.com/jsamr/bootiso

|

||||

@ -1,3 +1,4 @@

|

||||

[Moelf](https://github.com/moelf/) Translating

|

||||

Don’t Install Yaourt! Use These Alternatives for AUR in Arch Linux

|

||||

======

|

||||

**Brief: Yaourt had been the most popular AUR helper, but it is not being developed anymore. In this article, we list out some of the best alternatives to Yaourt for Arch based Linux distributions. **

|

||||

|

||||

@ -1,322 +0,0 @@

|

||||

BriFuture is translating

|

||||

|

||||

|

||||

Testing Node.js in 2018

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

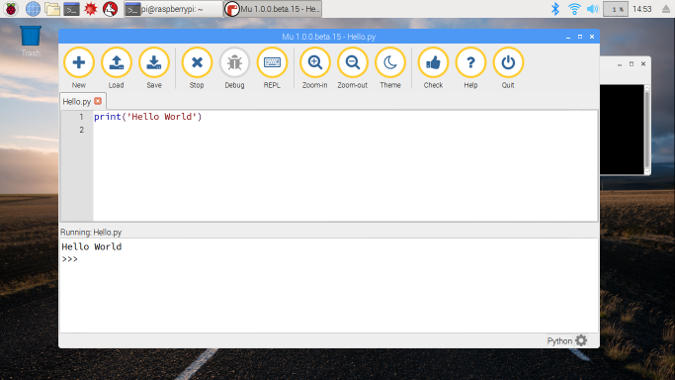

[Stream][4] powers feeds for over 300+ million end users. With all of those users relying on our infrastructure, we’re very good about testing everything that gets pushed into production. Our primary codebase is written in Go, with some remaining bits of Python.

|

||||

|

||||

Our recent showcase application, [Winds 2.0][5], is built with Node.js and we quickly learned that our usual testing methods in Go and Python didn’t quite fit. Furthermore, creating a proper test suite requires a bit of upfront work in Node.js as the frameworks we are using don’t offer any type of built-in test functionality.

|

||||

|

||||

Setting up a good test framework can be tricky regardless of what language you’re using. In this post, we’ll uncover the hard parts of testing with Node.js, the various tooling we decided to utilize in Winds 2.0, and point you in the right direction for when it comes time for you to write your next set of tests.

|

||||

|

||||

### Why Testing is so Important

|

||||

|

||||

We’ve all pushed a bad commit to production and faced the consequences. It’s not a fun thing to have happen. Writing a solid test suite is not only a good sanity check, but it allows you to completely refactor code and feel confident that your codebase is still functional. This is especially important if you’ve just launched.

|

||||

|

||||

If you’re working with a team, it’s extremely important that you have test coverage. Without it, it’s nearly impossible for other developers on the team to know if their contributions will result in a breaking change (ouch).

|

||||

|

||||

Writing tests also encourage you and your teammates to split up code into smaller pieces. This makes it much easier to understand your code, and fix bugs along the way. The productivity gains are even bigger, due to the fact that you catch bugs early on.

|

||||

|

||||

Finally, without tests, your codebase might as well be a house of cards. There is simply zero certainty that your code is stable.

|

||||

|

||||

### The Hard Parts

|

||||

|

||||

In my opinion, most of the testing problems we ran into with Winds were specific to Node.js. The ecosystem is always growing. For example, if you are on macOS and run “brew upgrade” (with homebrew installed), your chances of seeing a new version of Node.js are quite high. With Node.js moving quickly and libraries following close behind, keeping up to date with the latest libraries is difficult.

|

||||

|

||||

Below are a few pain points that immediately come to mind:

|

||||

|

||||

1. Testing in Node.js is very opinionated and un-opinionated at the same time. Many people have different views on how a test infrastructure should be built and measured for success. The sad part is that there is no golden standard (yet) for how you should approach testing.

|

||||

|

||||

2. There are a large number of frameworks available to use in your application. However, they are generally minimal with no well-defined configuration or boot process. This leads to side effects that are very common, and yet hard to diagnose; so, you’ll likely end up writing your own test runner from scratch.

|

||||

|

||||

3. It’s almost guaranteed that you will be _required_ to write your own test runner (we’ll get to this in a minute).

|

||||

|

||||

The situations listed above are not ideal and it’s something that the Node.js community needs to address sooner rather than later. If other languages have figured it out, I think it’s time for Node.js, a widely adopted language, to figure it out as well.

|

||||

|

||||

### Writing Your Own Test Runner

|

||||

|

||||

So… you’re probably wondering what a test runner _is_ . To be honest, it’s not that complicated. A test runner is the highest component in the test suite. It allows for you to specify global configurations and environments, as well as import fixtures. One would assume this would be simple and easy to do… Right? Not so fast…

|

||||

|

||||

What we learned is that, although there is a solid number of test frameworks out there, not a single one for Node.js provides a unified way to construct your test runner. Sadly, it’s up to the developer to do so. Here’s a quick breakdown of the requirements for a test runner:

|

||||

|

||||

* Ability to load different configurations (e.g. local, test, development) and ensure that you _NEVER_ load a production configuration — you can guess what goes wrong when that happens.

|

||||

|

||||

* Lift and seed a database with dummy data for testing. This must work for various databases, whether it be MySQL, PostgreSQL, MongoDB, or any other, for that matter.

|

||||

|

||||

* Ability to load fixtures (files with seed data for testing in a development environment).

|

||||

|

||||

With Winds, we chose to use Mocha as our test runner. Mocha provides an easy and programmatic way to run tests on an ES6 codebase via command-line tools (integrated with Babel).

|

||||

|

||||

To kick off the tests, we register the Babel module loader ourselves. This provides us with finer grain greater control over which modules are imported before Babel overrides Node.js module loading process, giving us the opportunity to mock modules before any tests are run.

|

||||

|

||||

Additionally, we also use Mocha’s test runner feature to pre-assign HTTP handlers to specific requests. We do this because the normal initialization code is not run during tests (server interactions are mocked by the Chai HTTP plugin) and run some safety check to ensure we are not connecting to production databases.

|

||||

|

||||

While this isn’t part of the test runner, having a fixture loader is an important part of our test suite. We examined existing solutions; however, we settled on writing our own helper so that it was tailored to our requirements. With our solution, we can load fixtures with complex data-dependencies by following an easy ad-hoc convention when generating or writing fixtures by hand.

|

||||

|

||||

### Tooling for Winds

|

||||

|

||||

Although the process was cumbersome, we were able to find the right balance of tools and frameworks to make proper testing become a reality for our backend API. Here’s what we chose to go with:

|

||||

|

||||

### Mocha ☕

|

||||

|

||||

[Mocha][6], described as a “feature-rich JavaScript test framework running on Node.js”, was our immediate choice of tooling for the job. With well over 15k stars, many backers, sponsors, and contributors, we knew it was the right framework for the job.

|

||||

|

||||

### Chai 🥃

|

||||

|

||||

Next up was our assertion library. We chose to go with the traditional approach, which is what works best with Mocha — [Chai][7]. Chai is a BDD and TDD assertion library for Node.js. With a simple API, Chai was easy to integrate into our application and allowed for us to easily assert what we should _expect_ tobe returned from the Winds API. Best of all, writing tests feel natural with Chai. Here’s a short example:

|

||||

|

||||

```

|

||||

describe('retrieve user', () => {

|

||||

let user;

|

||||

|

||||

before(async () => {

|

||||

await loadFixture('user');

|

||||

user = await User.findOne({email: authUser.email});

|

||||

expect(user).to.not.be.null;

|

||||

});

|

||||

|

||||

after(async () => {

|

||||

await User.remove().exec();

|

||||

});

|

||||

|

||||

describe('valid request', () => {

|

||||

it('should return 200 and the user resource, including the email field, when retrieving the authenticated user', async () => {

|

||||

const response = await withLogin(request(api).get(`/users/${user._id}`), authUser);

|

||||

|

||||

expect(response).to.have.status(200);

|

||||

expect(response.body._id).to.equal(user._id.toString());

|

||||

});

|

||||

|

||||

it('should return 200 and the user resource, excluding the email field, when retrieving another user', async () => {

|

||||

const anotherUser = await User.findOne({email: 'another_user@email.com'});

|

||||

|

||||

const response = await withLogin(request(api).get(`/users/${anotherUser.id}`), authUser);

|

||||

|

||||

expect(response).to.have.status(200);

|

||||

expect(response.body._id).to.equal(anotherUser._id.toString());

|

||||

expect(response.body).to.not.have.an('email');

|

||||

});

|

||||

|

||||

});

|

||||

|

||||

describe('invalid requests', () => {

|

||||

|

||||

it('should return 404 if requested user does not exist', async () => {

|

||||

const nonExistingId = '5b10e1c601e9b8702ccfb974';

|

||||

expect(await User.findOne({_id: nonExistingId})).to.be.null;

|

||||

|

||||

const response = await withLogin(request(api).get(`/users/${nonExistingId}`), authUser);

|

||||

expect(response).to.have.status(404);

|

||||

});

|

||||

});

|

||||

|

||||

});

|

||||

```

|

||||

|

||||

### Sinon 🧙

|

||||

|

||||

With the ability to work with any unit testing framework, [Sinon][8] was our first choice for a mocking library. Again, a super clean integration with minimal setup, Sinon turns mocking requests into a simple and easy process. Their website has an extremely friendly user experience and offers up easy steps to integrate Sinon with your test suite.

|

||||

|

||||

### Nock 🔮

|

||||

|

||||

For all external HTTP requests, we use [nock][9], a robust HTTP mocking library that really comes in handy when you have to communicate with a third party API (such as [Stream’s REST API][10]). There’s not much to say about this little library aside from the fact that it is awesome at what it does, and that’s why we like it. Here’s a quick example of us calling our [personalization][11] engine for Stream:

|

||||

|

||||

```

|

||||

nock(config.stream.baseUrl)

|

||||

.get(/winds_article_recommendations/)

|

||||

.reply(200, { results: [{foreign_id:`article:${article.id}`}] });

|

||||

```

|

||||

|

||||

### Mock-require 🎩

|

||||

|

||||

The library [mock-require][12] allows dependencies on external code. In a single line of code, you can replace a module and mock-require will step in when some code attempts to import that module. It’s a small and minimalistic, but robust library, and we’re big fans.

|

||||

|

||||

### Istanbul 🔭

|

||||

|

||||

[Istanbul][13] is a JavaScript code coverage tool that computes statement, line, function and branch coverage with module loader hooks to transparently add coverage when running tests. Although we have similar functionality with CodeCov (see next section), this is a nice tool to have when running tests locally.

|

||||

|

||||

### The End Result — Working Tests

|

||||

|

||||

_With all of the libraries, including the test runner mentioned above, let’s have a look at what a full test looks like (you can have a look at our entire test suite _ [_here_][14] _):_

|

||||

|

||||

```

|

||||

import nock from 'nock';

|

||||

import { expect, request } from 'chai';

|

||||

|

||||

import api from '../../src/server';

|

||||

import Article from '../../src/models/article';

|

||||

import config from '../../src/config';

|

||||

import { dropDBs, loadFixture, withLogin } from '../utils.js';

|

||||

|

||||

describe('Article controller', () => {

|

||||

let article;

|

||||

|

||||

before(async () => {

|

||||

await dropDBs();

|

||||

await loadFixture('initial-data', 'articles');

|

||||

article = await Article.findOne({});

|

||||

expect(article).to.not.be.null;

|

||||

expect(article.rss).to.not.be.null;

|

||||

});

|

||||

|

||||

describe('get', () => {

|

||||

it('should return the right article via /articles/:articleId', async () => {

|

||||

let response = await withLogin(request(api).get(`/articles/${article.id}`));

|

||||

expect(response).to.have.status(200);

|

||||

});

|

||||

});

|

||||

|

||||

describe('get parsed article', () => {

|

||||

it('should return the parsed version of the article', async () => {

|

||||

const response = await withLogin(

|

||||

request(api).get(`/articles/${article.id}`).query({ type: 'parsed' })

|

||||

);

|

||||

expect(response).to.have.status(200);

|

||||

});

|

||||

});

|

||||

|

||||

describe('list', () => {

|

||||

it('should return the list of articles', async () => {

|

||||

let response = await withLogin(request(api).get('/articles'));

|

||||

expect(response).to.have.status(200);

|

||||

});

|

||||

});

|

||||

|

||||

describe('list from personalization', () => {

|

||||

after(function () {

|

||||

nock.cleanAll();

|

||||

});

|

||||

|

||||

it('should return the list of articles', async () => {

|

||||

nock(config.stream.baseUrl)

|

||||

.get(/winds_article_recommendations/)

|

||||

.reply(200, { results: [{foreign_id:`article:${article.id}`}] });

|

||||

|

||||

const response = await withLogin(

|

||||

request(api).get('/articles').query({

|

||||

type: 'recommended',

|

||||

})

|

||||

);

|

||||

expect(response).to.have.status(200);

|

||||

expect(response.body.length).to.be.at.least(1);

|

||||

expect(response.body[0].url).to.eq(article.url);

|

||||

});

|

||||

});

|

||||

});

|

||||

```

|

||||

|

||||

### Continuous Integration

|

||||

|

||||

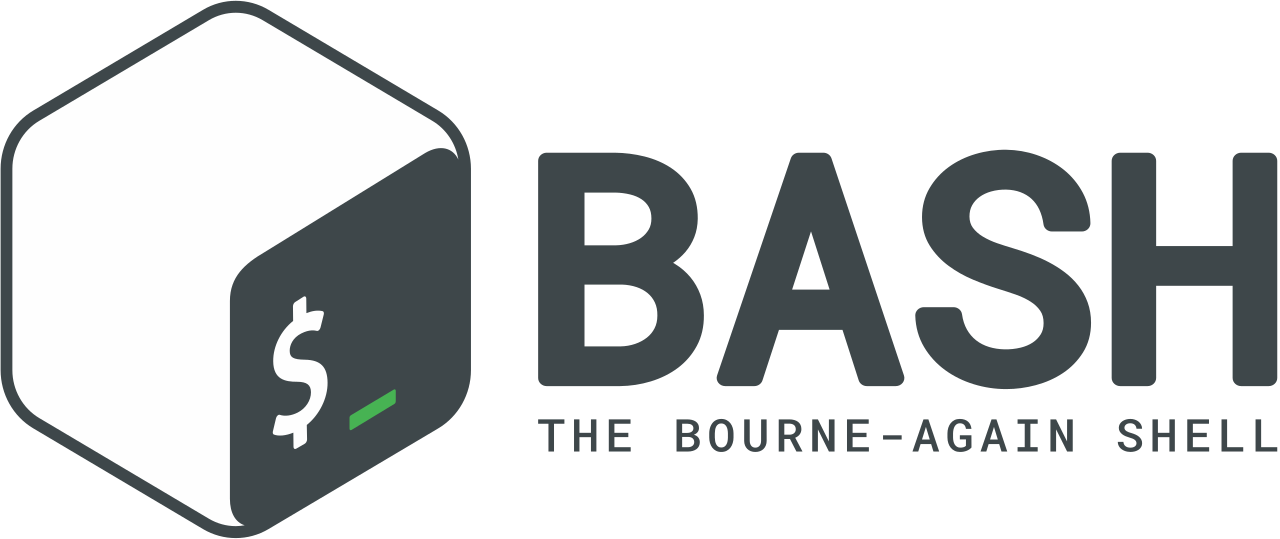

There are a lot of continuous integration services available, but we like to use [Travis CI][15] because they love the open-source environment just as much as we do. Given that Winds is open-source, it made for a perfect fit.

|

||||

|

||||

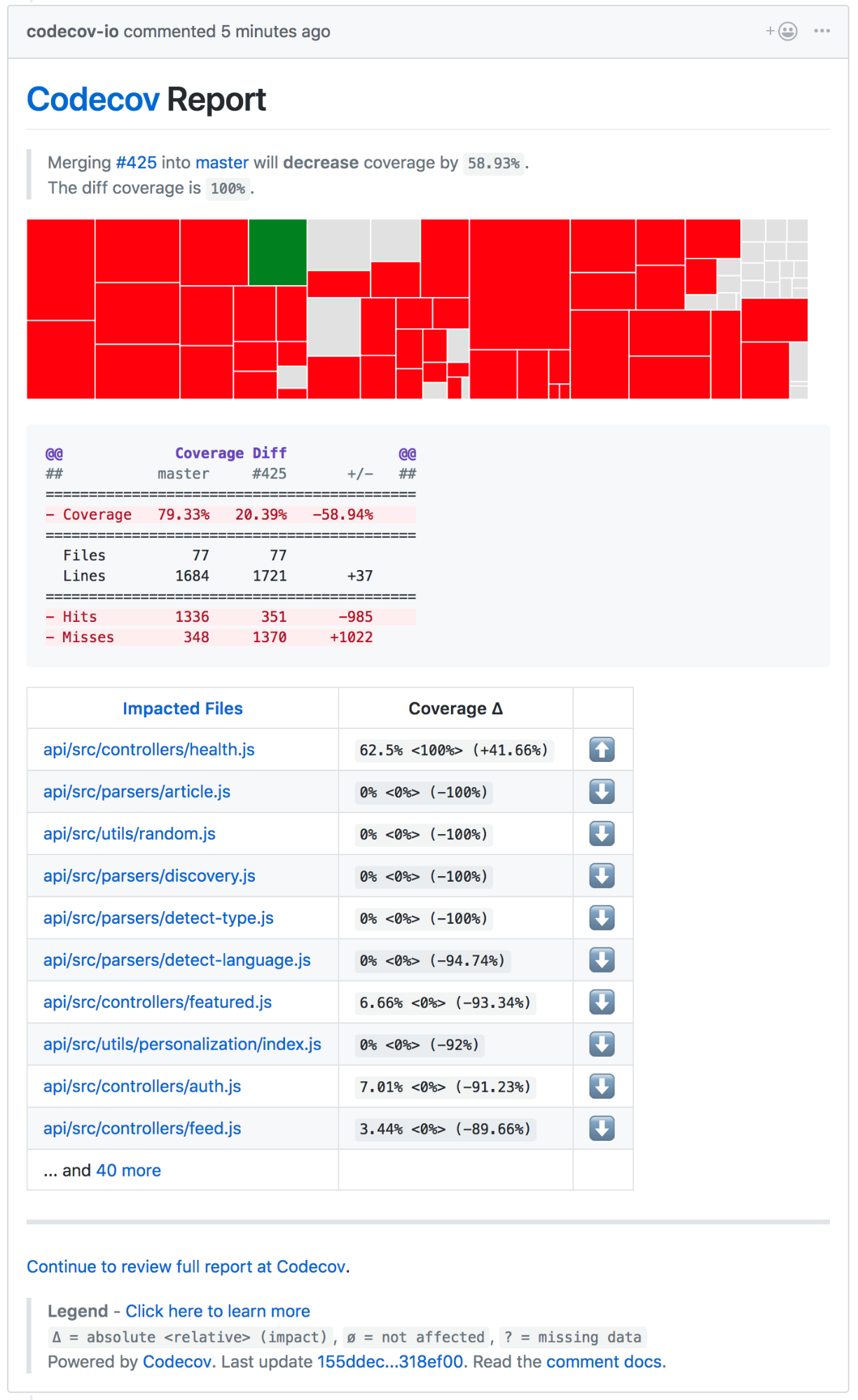

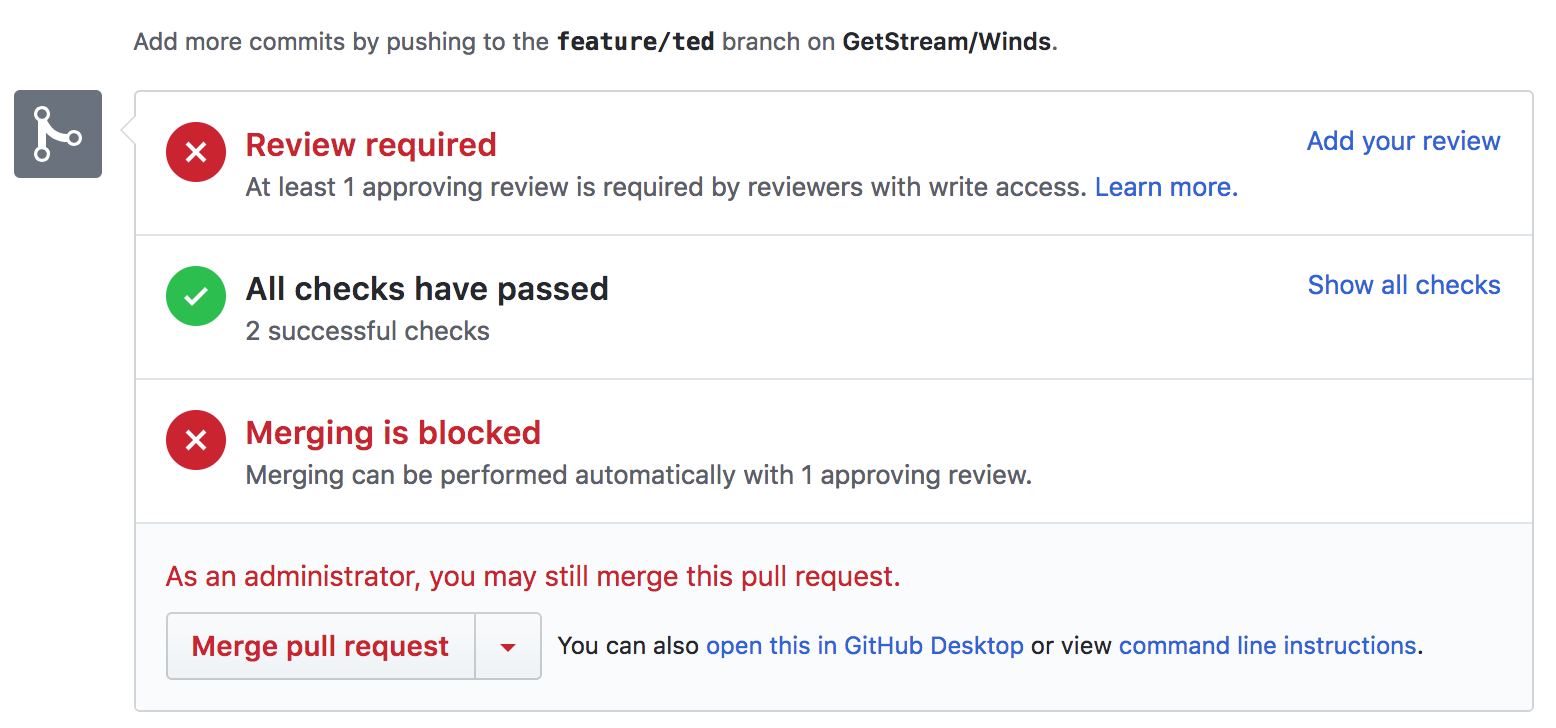

Our integration is rather simple — we have a [.travis.yml][16] file that sets up the environment and kicks off our tests via a simple [npm][17] command. The coverage reports back to GitHub, where we have a clear picture of whether or not our latest codebase or PR passes our tests. The GitHub integration is great, as it is visible without us having to go to Travis CI to look at the results. Below is a screenshot of GitHub when viewing the PR (after tests):

|

||||

|

||||

|

||||

|

||||

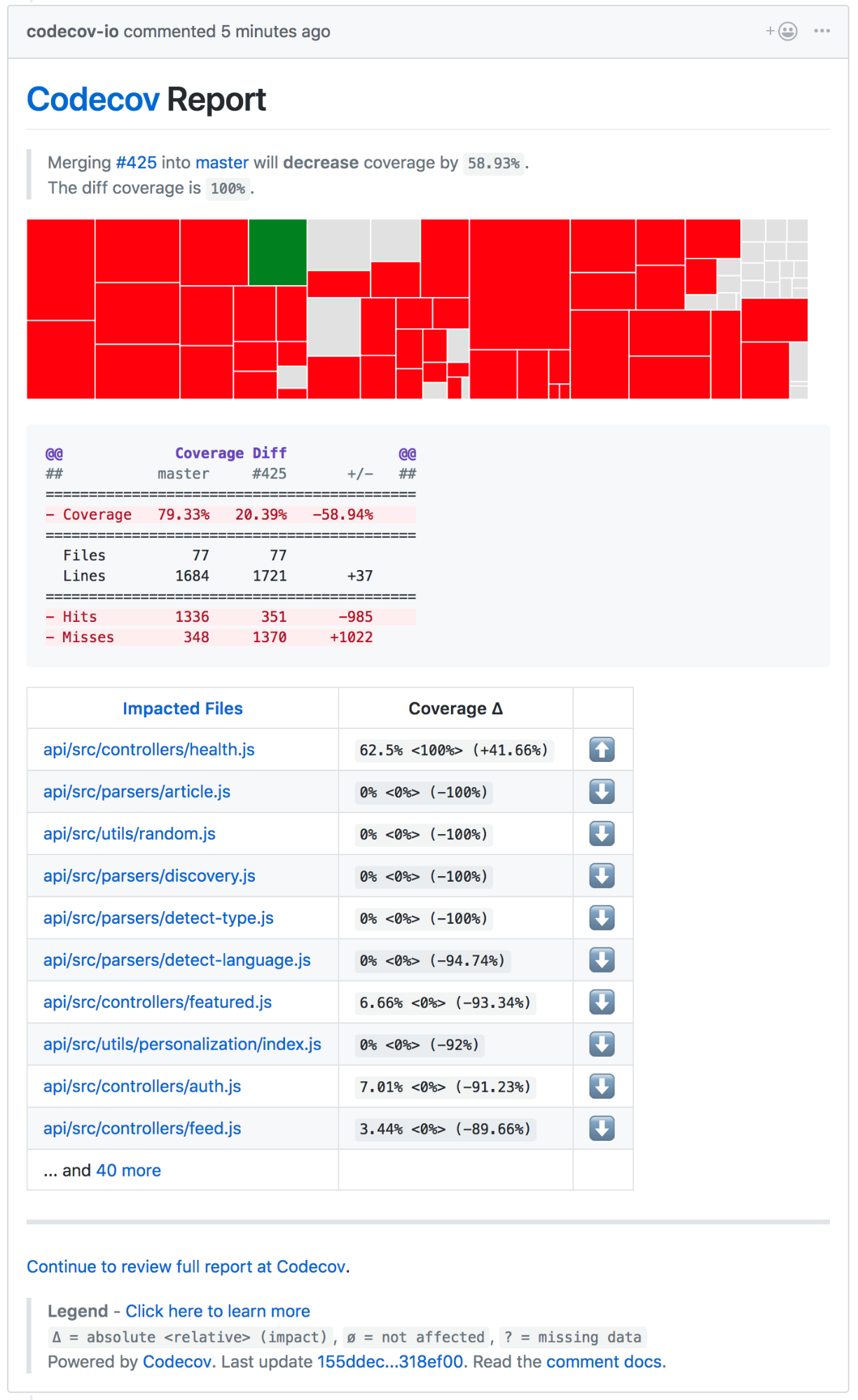

In addition to Travis CI, we use a tool called [CodeCov][18]. CodeCov is similar to [Istanbul][19], however, it’s a visualization tool that allows us to easily see code coverage, files changed, lines modified, and all sorts of other goodies. Though visualizing this data is possible without CodeCov, it’s nice to have everything in one spot.

|

||||

|

||||

### What We Learned

|

||||

|

||||

|

||||

|

||||

We learned a lot throughout the process of developing our test suite. With no “correct” way of doing things, we decided to set out and create our own test flow by sorting through the available libraries to find ones that were promising enough to add to our toolbox.

|

||||

|

||||

What we ultimately learned is that testing in Node.js is not as easy as it may sound. Hopefully, as Node.js continues to grow, the community will come together and build a rock solid library that handles everything test related in a “correct” manner.

|

||||

|

||||

Until then, we’ll continue to use our test suite, which is open-source on the [Winds GitHub repository][20].

|

||||

|

||||

### Limitations

|

||||

|

||||

#### No Easy Way to Create Fixtures

|

||||

|

||||

Frameworks and languages, such as Python’s Django, have easy ways to create fixtures. With Django, for example, you can use the following commands to automate the creation of fixtures by dumping data into a file:

|

||||

|

||||

The Following command will dump the whole database into a db.json file:

|

||||

./manage.py dumpdata > db.json

|

||||

|

||||

The Following command will dump only the content in django admin.logentry table:

|

||||

./manage.py dumpdata admin.logentry > logentry.json

|

||||

|

||||

The Following command will dump the content in django auth.user table: ./manage.py dumpdata auth.user > user.json

|

||||

|

||||

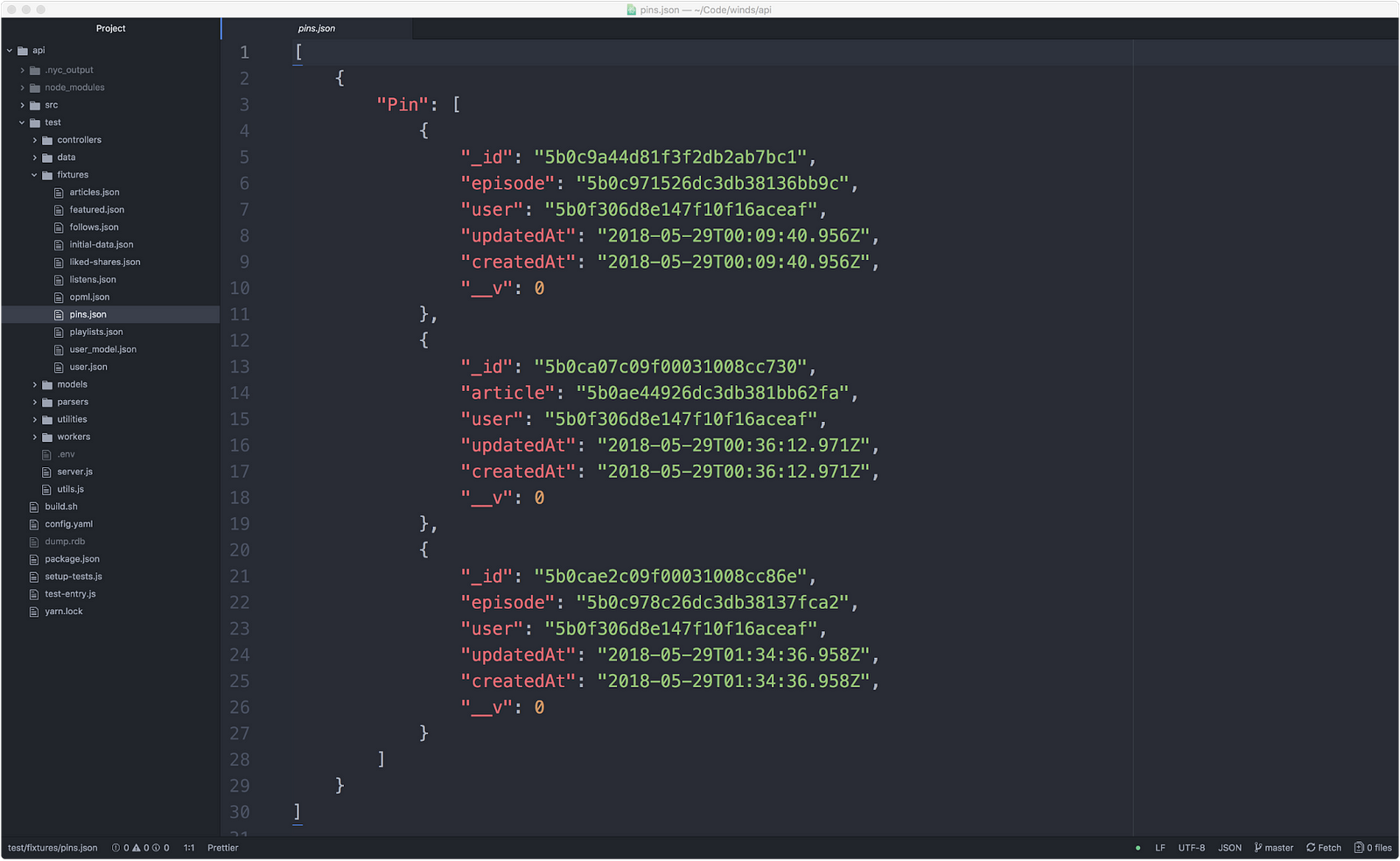

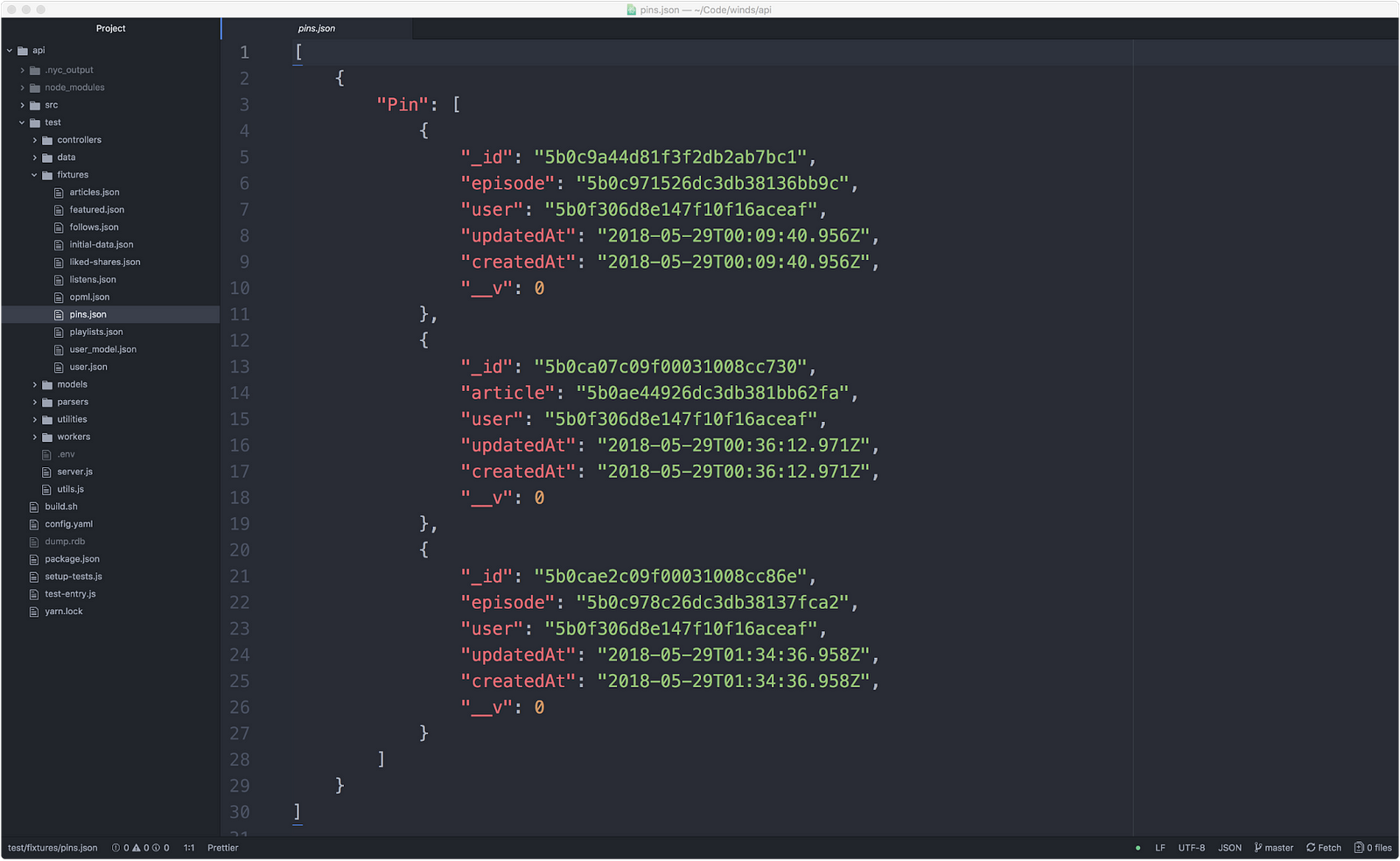

There’s no easy way to create a fixture in Node.js. What we ended up doing is using MongoDB Compass and exporting JSON from there. This resulted in a nice fixture, as shown below (however, it was a tedious process and prone to error):

|

||||

|

||||

|

||||

|

||||

|

||||

#### Unintuitive Module Loading When Using Babel, Mocked Modules, and Mocha Test-Runner

|

||||

|

||||

To support a broader variety of node versions and have access to latest additions to Javascript standard, we are using Babel to transpile our ES6 codebase to ES5\. Node.js module system is based on the CommonJS standard whereas the ES6 module system has different semantics.

|

||||

|

||||

Babel emulates ES6 module semantics on top of the Node.js module system, but because we are interfering with module loading by using mock-require, we are embarking on a journey through weird module loading corner cases, which seem unintuitive and can lead to multiple independent versions of the module imported and initialized and used throughout the codebase. This complicates mocking and global state management during testing.

|

||||

|

||||

#### Inability to Mock Functions Used Within the Module They Are Declared in When Using ES6 Modules

|

||||

|

||||

When a module exports multiple functions where one calls the other, it’s impossible to mock the function being used inside the module. The reason is that when you require an ES6 module you are presented with a separate set of references from the one used inside the module. Any attempt to rebind the references to point to new values does not really affect the code inside the module, which will continue to use the original function.

|

||||

|

||||

### Final Thoughts

|

||||

|

||||

Testing Node.js applications is a complicated process because the ecosystem is always evolving. It’s important to stay on top of the latest and greatest tools so you don’t fall behind.

|

||||

|

||||

There are so many outlets for JavaScript related news these days that it’s hard to keep up to date with all of them. Following email newsletters such as [JavaScript Weekly][21] and [Node Weekly][22] is a good start. Beyond that, joining a subreddit such as [/r/node][23] is a great idea. If you like to stay on top of the latest trends, [State of JS][24] does a great job at helping developers visualize trends in the testing world.

|

||||

|

||||

Lastly, here are a couple of my favorite blogs where articles often popup:

|

||||

|

||||

* [Hacker Noon][1]

|

||||

|

||||

* [Free Code Camp][2]

|

||||

|

||||

* [Bits and Pieces][3]

|

||||

|

||||

Think I missed something important? Let me know in the comments, or on Twitter – [@NickParsons][25].

|

||||

|

||||

Also, if you’d like to check out Stream, we have a great 5 minute tutorial on our website. Give it a shot [here][26].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Nick Parsons

|

||||

|

||||

Dreamer. Doer. Engineer. Developer Evangelist https://getstream.io.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://hackernoon.com/testing-node-js-in-2018-10a04dd77391

|

||||

|

||||

作者:[Nick Parsons][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://hackernoon.com/@nparsons08?source=post_header_lockup

|

||||

[1]:https://hackernoon.com/

|

||||

[2]:https://medium.freecodecamp.org/

|

||||

[3]:https://blog.bitsrc.io/

|

||||

[4]:https://getstream.io/

|

||||

[5]:https://getstream.io/winds

|

||||

[6]:https://github.com/mochajs/mocha

|

||||

[7]:http://www.chaijs.com/

|

||||

[8]:http://sinonjs.org/

|

||||

[9]:https://github.com/node-nock/nock

|

||||

[10]:https://getstream.io/docs_rest/

|

||||

[11]:https://getstream.io/personalization

|

||||

[12]:https://github.com/boblauer/mock-require

|

||||

[13]:https://github.com/gotwarlost/istanbul

|

||||

[14]:https://github.com/GetStream/Winds/tree/master/api/test

|

||||

[15]:https://travis-ci.org/

|

||||

[16]:https://github.com/GetStream/Winds/blob/master/.travis.yml

|

||||

[17]:https://www.npmjs.com/

|

||||

[18]:https://codecov.io/#features

|

||||

[19]:https://github.com/gotwarlost/istanbul

|

||||

[20]:https://github.com/GetStream/Winds/tree/master/api/test

|

||||

[21]:https://javascriptweekly.com/

|

||||

[22]:https://nodeweekly.com/

|

||||

[23]:https://www.reddit.com/r/node/

|

||||

[24]:https://stateofjs.com/2017/testing/results/

|

||||

[25]:https://twitter.com/@nickparsons

|

||||

[26]:https://getstream.io/try-the-api

|

||||

@ -1,90 +0,0 @@

|

||||

translating---geekpi

|

||||

|

||||

4 cool new projects to try in COPR for July 2018

|

||||

======

|

||||

|

||||

|

||||

|

||||

COPR is a [collection][1] of personal repositories for software that isn’t carried in Fedora. Some software doesn’t conform to standards that allow easy packaging. Or it may not meet other Fedora standards, despite being free and open source. COPR can offer these projects outside the Fedora set of packages. Software in COPR isn’t supported by Fedora infrastructure or signed by the project. However, it can be a neat way to try new or experimental software.

|

||||

|

||||

Here’s a set of new and interesting projects in COPR.

|

||||

|

||||

### Hledger

|

||||

|

||||

[Hledger][2] is a command-line program for tracking money or other commodities. It uses a simple, plain-text formatted journal for storing data and double-entry accounting. In addition to the command-line interface, hledger offers a terminal interface and a web client that can show graphs of balance on the accounts.

|

||||

![][3]

|

||||

|

||||

#### Installation instructions

|

||||

|

||||

The repo currently provides hledger for Fedora 27, 28, and Rawhide. To install hledger, use these commands:

|

||||

```

|

||||

sudo dnf copr enable kefah/HLedger

|

||||

sudo dnf install hledger

|

||||

|

||||

```

|

||||

|

||||

### Neofetch

|

||||

|

||||

[Neofetch][4] is a command-line tool that displays information about the operating system, software, and hardware. Its main purpose is to show the data in a compact way to take screenshots. You can configure Neofetch to display exactly the way you want, by using both command-line flags and a configuration file.

|

||||

![][5]

|

||||

|

||||

#### Installation instructions

|

||||

|

||||

The repo currently provides Neofetch for Fedora 28. To install Neofetch, use these commands:

|

||||

```

|

||||

sudo dnf copr enable sysek/neofetch

|

||||

sudo dnf install neofetch

|

||||

|

||||

```

|

||||

|

||||

### Remarkable

|

||||

|

||||

[Remarkable][6] is a Markdown text editor that uses the GitHub-like flavor of Markdown. It offers a preview of the document, as well as the option to export to PDF and HTML. There are several styles available for the Markdown, including an option to create your own styles using CSS. In addition, Remarkable supports LaTeX syntax for writing equations and syntax highlighting for source code.

|

||||

![][7]

|

||||

|

||||

#### Installation instructions

|

||||

|

||||

The repo currently provides Remarkable for Fedora 28 and Rawhide. To install Remarkable, use these commands:

|

||||

```

|

||||

sudo dnf copr enable neteler/remarkable

|

||||

sudo dnf install remarkable

|

||||

|

||||

```

|

||||

|

||||

### Aha

|

||||

|

||||

[Aha][8] (or ANSI HTML Adapter) is a command-line tool that converts terminal escape sequences to HTML code. This allows you to share, for example, output of git diff or htop as a static HTML page.

|

||||

![][9]

|

||||

|

||||

#### Installation instructions

|

||||

|

||||

The [repo][10] currently provides aha for Fedora 26, 27, 28, and Rawhide, EPEL 6 and 7, and other distributions. To install aha, use these commands:

|

||||

```

|

||||

sudo dnf copr enable scx/aha

|

||||

sudo dnf install aha

|

||||

|

||||

```

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://fedoramagazine.org/4-try-copr-july-2018/

|

||||

|

||||

作者:[Dominik Turecek][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://fedoramagazine.org

|

||||

[1]:https://copr.fedorainfracloud.org/

|

||||

[2]:http://hledger.org/

|

||||

[3]:https://fedoramagazine.org/wp-content/uploads/2018/07/hledger.png

|

||||

[4]:https://github.com/dylanaraps/neofetch

|

||||

[5]:https://fedoramagazine.org/wp-content/uploads/2018/07/neofetch.png

|

||||

[6]:https://remarkableapp.github.io/linux.html

|

||||

[7]:https://fedoramagazine.org/wp-content/uploads/2018/07/remarkable.png

|

||||

[8]:https://github.com/theZiz/aha

|

||||

[9]:https://fedoramagazine.org/wp-content/uploads/2018/07/aha.png

|

||||

[10]:https://copr.fedorainfracloud.org/coprs/scx/aha/

|

||||

@ -1,3 +1,5 @@

|

||||

Translating by DavidChenLiang

|

||||

|

||||

The evolution of package managers

|

||||

======

|

||||

|

||||

|

||||

@ -0,0 +1,136 @@

|

||||

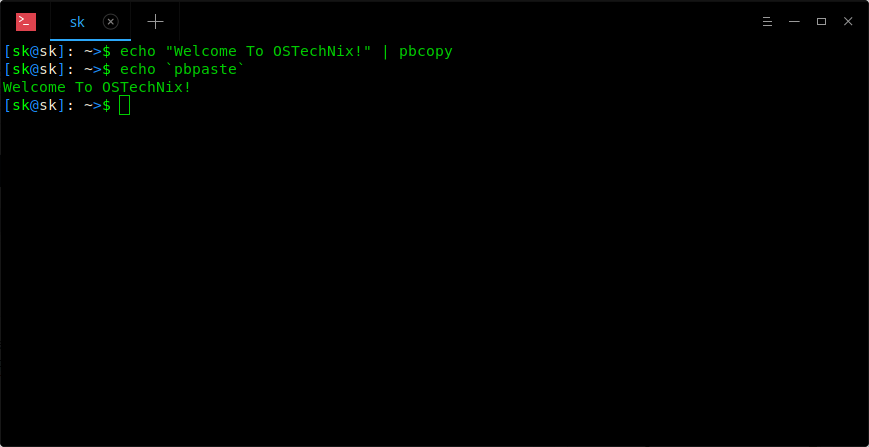

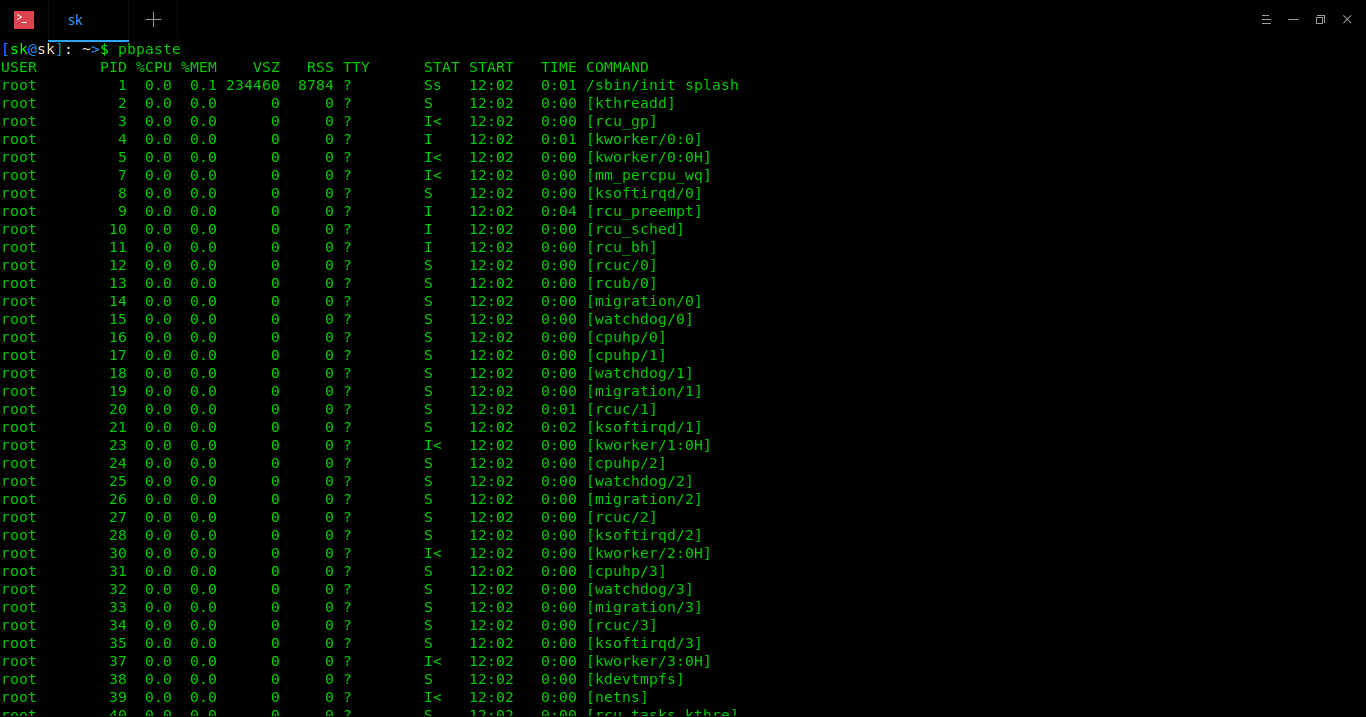

How To Use Pbcopy And Pbpaste Commands On Linux

|

||||

======

|

||||

|

||||

|

||||

|

||||