mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

commit

97d92d8e17

@ -0,0 +1,143 @@

|

||||

Cloud Commander:一个有控制台和编辑器的 Web 文件管理器

|

||||

======

|

||||

|

||||

|

||||

|

||||

**Cloud Commander** 是一个基于 web 的文件管理程序,它允许你通过任何计算机、移动端或平板电脑的浏览器查看、访问或管理系统文件或文件夹。它有两个简单而又经典的面板,并且会像你设备的显示尺寸一样自动转换大小。它也拥有两款内置的叫做 **Dword** 和 **Edward** 的文本编辑器,它们支持语法高亮,并带有一个支持系统命令行的控制台。因此,您可以随时随地编辑文件。Cloud Commander 服务器是一款在 Linux、Windows、Mac OS X 运行的跨平台应用,而且该应用客户端可以在任何一款浏览器上运行。它是用 **JavaScript/Node.Js** 写的,并使用 **MIT** 许可证。

|

||||

|

||||

在这个简易教程中,让我们看一看如何在 Ubuntu 18.04 LTS 服务器上安装 Cloud Commander。

|

||||

|

||||

### 前提条件

|

||||

|

||||

像我之前提到的,是用 Node.js 写的。所以为了安装 Cloud Commander,我们需要首先安装 Node.js。要执行安装,参考下面的指南。

|

||||

|

||||

- [如何在 Linux 上安装 Node.js](https://www.ostechnix.com/install-node-js-linux/)

|

||||

|

||||

### 安装 Cloud Commander

|

||||

|

||||

在安装 Node.js 之后,运行下列命令安装 Cloud Commander:

|

||||

|

||||

```

|

||||

$ npm i cloudcmd -g

|

||||

```

|

||||

|

||||

祝贺!Cloud Commander 已经安装好了。让我们往下继续看看 Cloud Commander 的基本使用。

|

||||

|

||||

### 开始使用 Cloud Commander

|

||||

|

||||

运行以下命令启动 Cloud Commander:

|

||||

|

||||

```

|

||||

$ cloudcmd

|

||||

```

|

||||

|

||||

**输出示例:**

|

||||

|

||||

```

|

||||

url: http://localhost:8000

|

||||

```

|

||||

|

||||

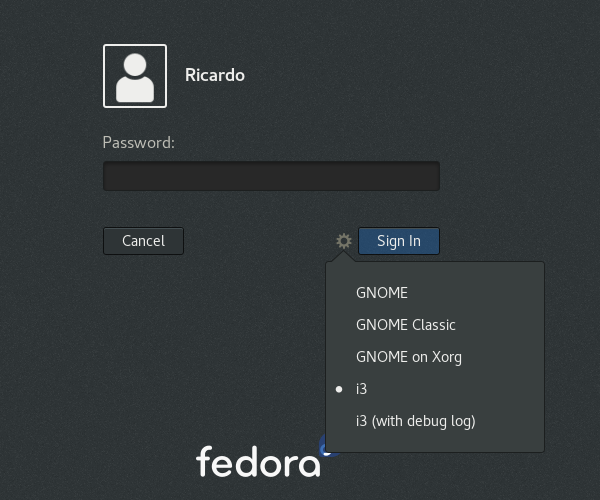

现在,打开你的浏览器并转到链接:`http://localhost:8000` 或 `http://IP-address:8000`。

|

||||

|

||||

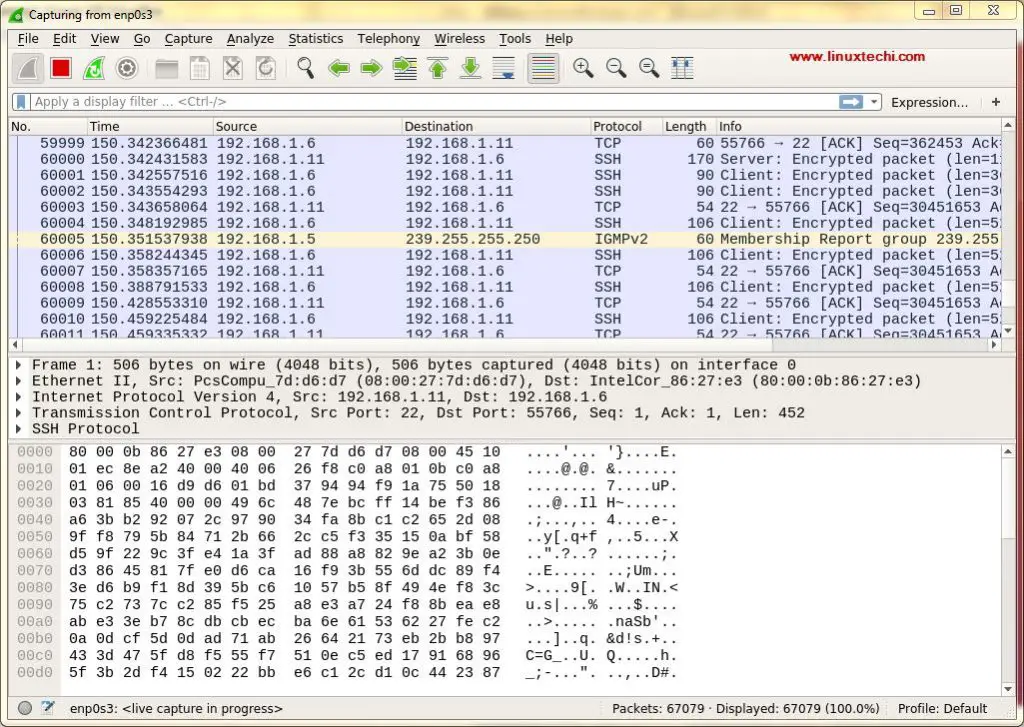

从现在开始,您可以直接在本地系统或远程系统或移动设备,平板电脑等Web浏览器中创建,删除,查看,管理文件或文件夹。

|

||||

|

||||

![][2]

|

||||

|

||||

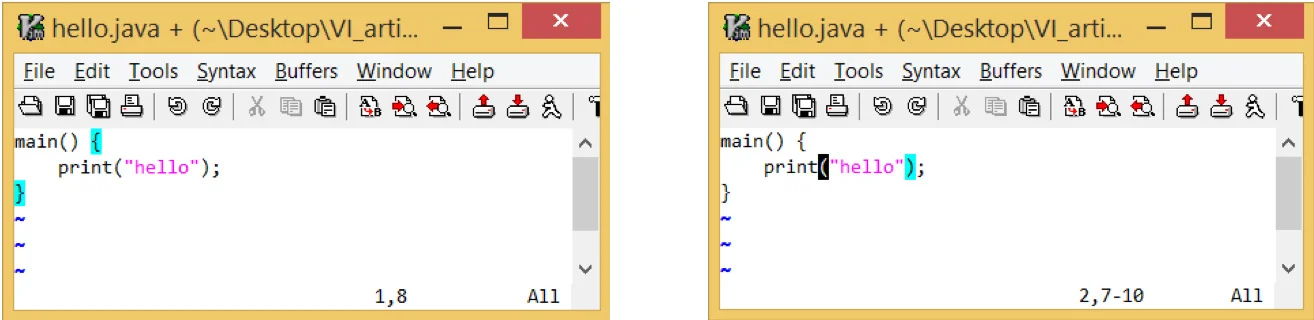

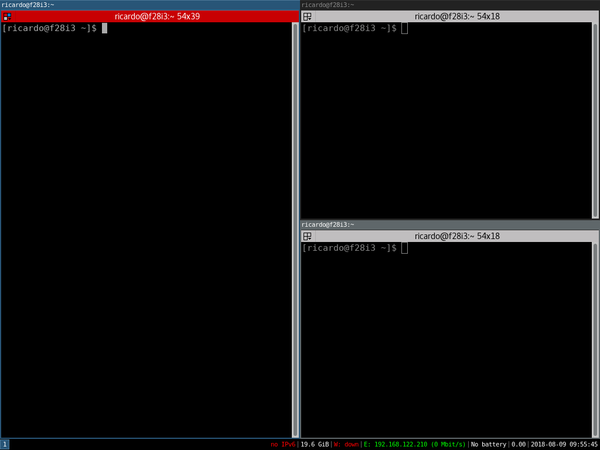

如你所见上面的截图,Clouder Commander 有两个面板,十个热键 (`F1` 到 `F10`),还有控制台。

|

||||

|

||||

每个热键执行的都是一个任务。

|

||||

|

||||

* `F1` – 帮助

|

||||

* `F2` – 重命名文件/文件夹

|

||||

* `F3` – 查看文件/文件夹

|

||||

* `F4` – 编辑文件

|

||||

* `F5` – 复制文件/文件夹

|

||||

* `F6` – 移动文件/文件夹

|

||||

* `F7` – 创建新目录

|

||||

* `F8` – 删除文件/文件夹

|

||||

* `F9` – 打开菜单

|

||||

* `F10` – 打开设置

|

||||

|

||||

#### Cloud Commmander 控制台

|

||||

|

||||

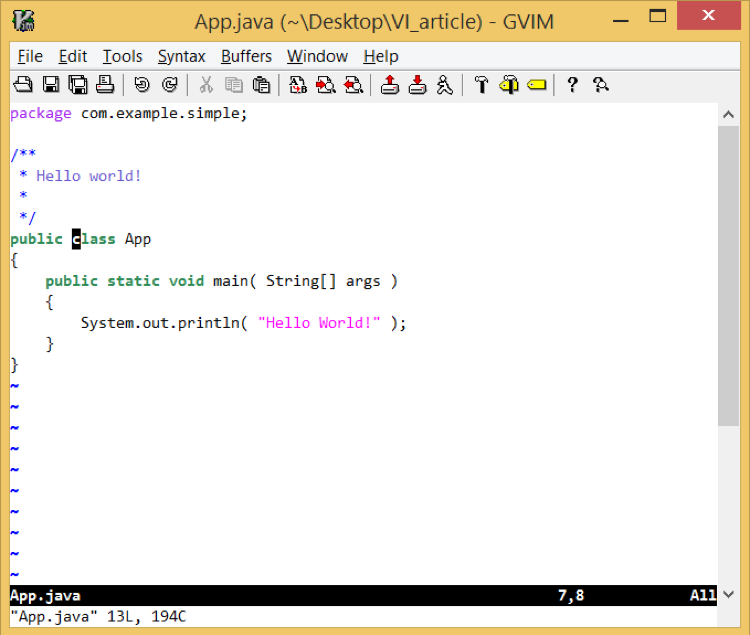

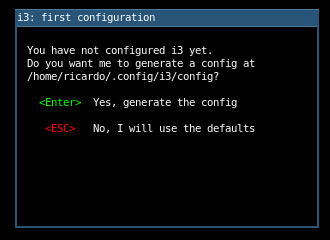

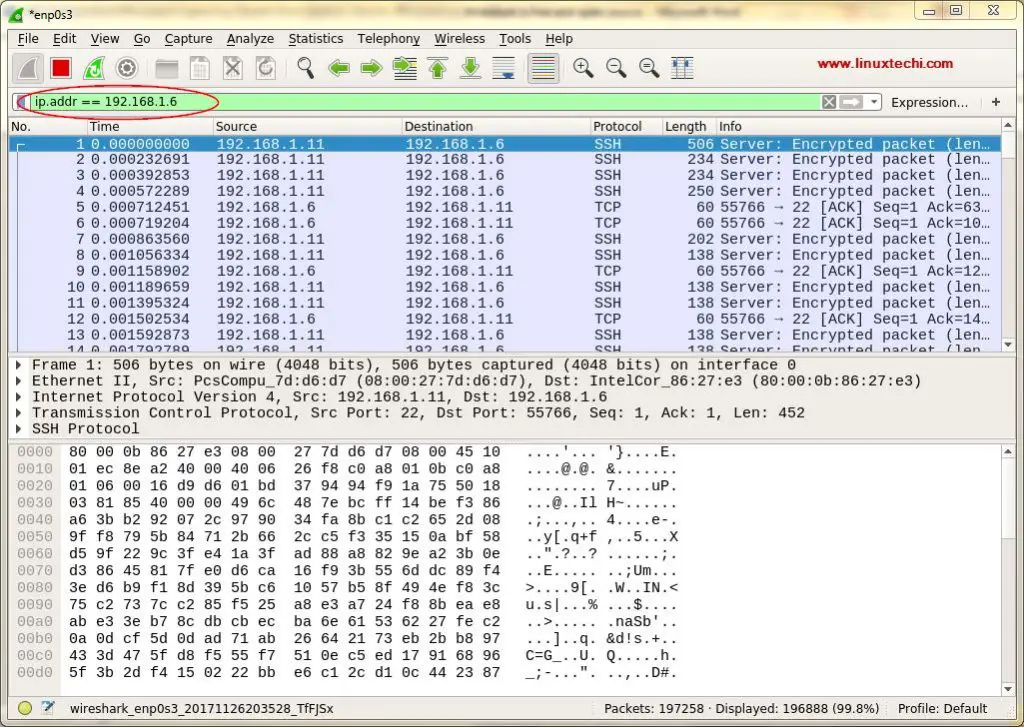

点击控制台图标。这即将打开系统默认的命令行界面。

|

||||

|

||||

![][3]

|

||||

|

||||

在此控制台中,您可以执行各种管理任务,例如安装软件包、删除软件包、更新系统等。您甚至可以关闭或重新引导系统。 因此,Cloud Commander 不仅仅是一个文件管理器,还具有远程管理工具的功能。

|

||||

|

||||

#### 创建文件/文件夹

|

||||

|

||||

要创建新的文件或文件夹就右键单击任意空位置并找到 “New - >File or Directory”。

|

||||

|

||||

![][4]

|

||||

|

||||

#### 查看文件

|

||||

|

||||

你可以查看图片,查看音视频文件。

|

||||

|

||||

![][5]

|

||||

|

||||

#### 上传文件

|

||||

|

||||

另一个很酷的特性是我们可以从任何系统或设备简单地上传一个文件到 Cloud Commander 系统。

|

||||

|

||||

要上传文件,右键单击 Cloud Commander 面板的任意空白处,并且单击“Upload”选项。

|

||||

|

||||

![][6]

|

||||

|

||||

选择你想要上传的文件。

|

||||

|

||||

另外,你也可以上传来自像 Google 云盘、Dropbox、Amazon 云盘、Facebook、Twitter、Gmail、GitHub、Picasa、Instagram 还有很多的云服务上的文件。

|

||||

|

||||

要从云端上传文件, 右键单击面板的任意空白处,并且右键单击面板任意空白处并选择“Upload from Cloud”。

|

||||

|

||||

![][7]

|

||||

|

||||

选择任意一个你选择的网络服务,例如谷歌云盘。点击“Connect to Google drive”按钮。

|

||||

|

||||

![][8]

|

||||

|

||||

下一步,用 Cloud Commander 验证你的谷歌云端硬盘,从谷歌云端硬盘选择文件并点击“Upload”。

|

||||

|

||||

![][9]

|

||||

|

||||

#### 更新 Cloud Commander

|

||||

|

||||

要更新到最新的可用版本,执行下面的命令:

|

||||

|

||||

```

|

||||

$ npm update cloudcmd -g

|

||||

```

|

||||

|

||||

#### 总结

|

||||

|

||||

据我测试,它运行很好。在我的 Ubuntu 服务器测试期间,我没有遇到任何问题。此外,Cloud Commander 不仅是基于 Web 的文件管理器,还可以充当执行大多数 Linux 管理任务的远程管理工具。 您可以创建文件/文件夹、重命名、删除、编辑和查看它们。此外,您可以像在终端中在本地系统中那样安装、更新、升级和删除任何软件包。当然,您甚至可以从 Cloud Commander 控制台本身关闭或重启系统。 还有什么需要的吗? 尝试一下,你会发现它很有用。

|

||||

|

||||

目前为止就这样吧。 我将很快在这里发表另一篇有趣的文章。 在此之前,请继续关注我们。

|

||||

|

||||

祝贺!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/cloud-commander-a-web-file-manager-with-console-and-editor/

|

||||

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[fuzheng1998](https://github.com/fuzheng1998)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[2]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-Commander-Google-Chrome_006-4.jpg

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-Commander-Google-Chrome_007-2.jpg

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-commander-file-folder-1.png

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-Commander-home-sk-Google-Chrome_008-1.jpg

|

||||

[6]:http://www.ostechnix.com/wp-content/uploads/2016/05/cloud-commander-upload-2.png

|

||||

[7]:http://www.ostechnix.com/wp-content/uploads/2016/05/upload-from-cloud-1.png

|

||||

[8]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-Commander-home-sk-Google-Chrome_009-2.jpg

|

||||

[9]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-Commander-home-sk-Google-Chrome_010-1.jpg

|

||||

@ -1,4 +1,4 @@

|

||||

Trash-Cli : Linux 上的命令行回收站工具

|

||||

Trash-Cli:Linux 上的命令行回收站工具

|

||||

======

|

||||

|

||||

相信每个人都对<ruby>回收站<rt>trashcan</rt></ruby>很熟悉,因为无论是对 Linux 用户,还是 Windows 用户,或者 Mac 用户来说,它都很常见。当你删除一个文件或目录的时候,该文件或目录会被移动到回收站中。

|

||||

@ -33,31 +33,27 @@ $ sudo apt install trash-cli

|

||||

|

||||

```

|

||||

$ sudo yum install trash-cli

|

||||

|

||||

```

|

||||

|

||||

对于 Fedora 用户,使用 [dnf][6] 命令来安装 Trash-Cli:

|

||||

|

||||

```

|

||||

$ sudo dnf install trash-cli

|

||||

|

||||

```

|

||||

|

||||

对于 Arch Linux 用户,使用 [pacman][7] 命令来安装 Trash-Cli:

|

||||

|

||||

```

|

||||

$ sudo pacman -S trash-cli

|

||||

|

||||

```

|

||||

|

||||

对于 openSUSE 用户,使用 [zypper][8] 命令来安装 Trash-Cli:

|

||||

|

||||

```

|

||||

$ sudo zypper in trash-cli

|

||||

|

||||

```

|

||||

|

||||

如果你的发行版中没有提供 Trash-Cli 的安装包,那么你也可以使用 pip 来安装。为了能够安装 python 包,你的系统中应该会有 pip 包管理器。

|

||||

如果你的发行版中没有提供 Trash-Cli 的安装包,那么你也可以使用 `pip` 来安装。为了能够安装 python 包,你的系统中应该会有 `pip` 包管理器。

|

||||

|

||||

```

|

||||

$ sudo pip install trash-cli

|

||||

@ -66,7 +62,6 @@ Collecting trash-cli

|

||||

Installing collected packages: trash-cli

|

||||

Running setup.py bdist_wheel for trash-cli ... done

|

||||

Successfully installed trash-cli-0.17.1.14

|

||||

|

||||

```

|

||||

|

||||

### 如何使用 Trash-Cli

|

||||

@ -81,7 +76,7 @@ Trash-Cli 的使用不难,因为它提供了一个很简单的语法。Trash-C

|

||||

|

||||

下面,让我们通过一些例子来试验一下。

|

||||

|

||||

1)删除文件和目录:在这个例子中,我们通过运行下面这个命令,将 2g.txt 这一文件和 magi 这一文件夹移动到回收站中。

|

||||

1) 删除文件和目录:在这个例子中,我们通过运行下面这个命令,将 `2g.txt` 这一文件和 `magi` 这一文件夹移动到回收站中。

|

||||

|

||||

```

|

||||

$ trash-put 2g.txt magi

|

||||

@ -89,7 +84,7 @@ $ trash-put 2g.txt magi

|

||||

|

||||

和你在文件管理器中看到的一样。

|

||||

|

||||

2)列出被删除了的文件和目录:为了查看被删除了的文件和目录,你需要运行下面这个命令。之后,你可以在输出中看到被删除文件和目录的详细信息,比如名字、删除日期和时间,以及文件路径。

|

||||

2) 列出被删除了的文件和目录:为了查看被删除了的文件和目录,你需要运行下面这个命令。之后,你可以在输出中看到被删除文件和目录的详细信息,比如名字、删除日期和时间,以及文件路径。

|

||||

|

||||

```

|

||||

$ trash-list

|

||||

@ -97,7 +92,7 @@ $ trash-list

|

||||

2017-10-01 01:40:50 /home/magi/magi/magi

|

||||

```

|

||||

|

||||

3)从回收站中恢复文件或目录:任何时候,你都可以通过运行下面这个命令来恢复文件和目录。它将会询问你来选择你想要恢复的文件或目录。在这个例子中,我打算恢复 2g.txt 文件,所以我的选择是 0 。

|

||||

3) 从回收站中恢复文件或目录:任何时候,你都可以通过运行下面这个命令来恢复文件和目录。它将会询问你来选择你想要恢复的文件或目录。在这个例子中,我打算恢复 `2g.txt` 文件,所以我的选择是 `0` 。

|

||||

|

||||

```

|

||||

$ trash-restore

|

||||

@ -106,7 +101,7 @@ $ trash-restore

|

||||

What file to restore [0..1]: 0

|

||||

```

|

||||

|

||||

4)从回收站中删除文件:如果你想删除回收站中的特定文件,那么可以运行下面这个命令。在这个例子中,我将删除 magi 目录。

|

||||

4) 从回收站中删除文件:如果你想删除回收站中的特定文件,那么可以运行下面这个命令。在这个例子中,我将删除 `magi` 目录。

|

||||

|

||||

```

|

||||

$ trash-rm magi

|

||||

@ -118,11 +113,10 @@ $ trash-rm magi

|

||||

$ trash-empty

|

||||

```

|

||||

|

||||

6)删除超过 X 天的垃圾文件:或者,你可以通过运行下面这个命令来删除回收站中超过 X 天的文件。在这个例子中,我将删除回收站中超过 10 天的项目。

|

||||

6)删除超过 X 天的垃圾文件:或者,你可以通过运行下面这个命令来删除回收站中超过 X 天的文件。在这个例子中,我将删除回收站中超过 `10` 天的项目。

|

||||

|

||||

```

|

||||

$ trash-empty 10

|

||||

|

||||

```

|

||||

|

||||

Trash-Cli 可以工作的很好,但是如果你想尝试它的一些替代品,那么你也可以试一试 [gvfs-trash][9] 和 [autotrash][10] 。

|

||||

@ -133,7 +127,7 @@ via: https://www.2daygeek.com/trash-cli-command-line-trashcan-linux-system/

|

||||

|

||||

作者:[2daygeek][a]

|

||||

译者:[ucasFL](https://github.com/ucasFL)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

132

published/20171124 How do groups work on Linux.md

Normal file

132

published/20171124 How do groups work on Linux.md

Normal file

@ -0,0 +1,132 @@

|

||||

“用户组”在 Linux 上到底是怎么工作的?

|

||||

========

|

||||

|

||||

嗨!就在上周,我还自认为对 Linux 上的用户和组的工作机制了如指掌。我认为它们的关系是这样的:

|

||||

|

||||

1. 每个进程都属于一个用户(比如用户 `julia`)

|

||||

2. 当这个进程试图读取一个被某个组所拥有的文件时, Linux 会

|

||||

a. 先检查用户`julia` 是否有权限访问文件。(LCTT 译注:此处应该是指检查文件的所有者是否就是 `julia`)

|

||||

b. 检查 `julia` 属于哪些组,并进一步检查在这些组里是否有某个组拥有这个文件或者有权限访问这个文件。

|

||||

3. 如果上述 a、b 任一为真(或者“其它”位设为有权限访问),那么这个进程就有权限访问这个文件。

|

||||

|

||||

比如说,如果一个进程被用户 `julia` 拥有并且 `julia` 在`awesome` 组,那么这个进程就能访问下面这个文件。

|

||||

|

||||

```

|

||||

r--r--r-- 1 root awesome 6872 Sep 24 11:09 file.txt

|

||||

```

|

||||

|

||||

然而上述的机制我并没有考虑得非常清楚,如果你硬要我阐述清楚,我会说进程可能会在**运行时**去检查 `/etc/group` 文件里是否有某些组拥有当前的用户。

|

||||

|

||||

### 然而这并不是 Linux 里“组”的工作机制

|

||||

|

||||

我在上个星期的工作中发现了一件有趣的事,事实证明我前面的理解错了,我对组的工作机制的描述并不准确。特别是 Linux **并不会**在进程每次试图访问一个文件时就去检查这个进程的用户属于哪些组。

|

||||

|

||||

我在读了《[Linux 编程接口][1]》这本书的第九章(“进程资格”)后才恍然大悟(这本书真是太棒了),这才是组真正的工作方式!我意识到之前我并没有真正理解用户和组是怎么工作的,我信心满满的尝试了下面的内容并且验证到底发生了什么,事实证明现在我的理解才是对的。

|

||||

|

||||

### 用户和组权限检查是怎么完成的

|

||||

|

||||

现在这些关键的知识在我看来非常简单! 这本书的第九章上来就告诉我如下事实:用户和组 ID 是**进程的属性**,它们是:

|

||||

|

||||

* 真实用户 ID 和组 ID;

|

||||

* 有效用户 ID 和组 ID;

|

||||

* 保存的 set-user-ID 和保存的 set-group-ID;

|

||||

* 文件系统用户 ID 和组 ID(特定于 Linux);

|

||||

* 补充的组 ID;

|

||||

|

||||

这说明 Linux **实际上**检查一个进程能否访问一个文件所做的组检查是这样的:

|

||||

|

||||

* 检查一个进程的组 ID 和补充组 ID(这些 ID 就在进程的属性里,**并不是**实时在 `/etc/group` 里查找这些 ID)

|

||||

* 检查要访问的文件的访问属性里的组设置

|

||||

* 确定进程对文件是否有权限访问(LCTT 译注:即文件的组是否是以上的组之一)

|

||||

|

||||

通常当访问控制的时候使用的是**有效**用户/组 ID,而不是**真实**用户/组 ID。技术上来说当访问一个文件时使用的是**文件系统**的 ID,它们通常和有效用户/组 ID 一样。(LCTT 译注:这句话针对 Linux 而言。)

|

||||

|

||||

### 将一个用户加入一个组并不会将一个已存在的进程(的用户)加入那个组

|

||||

|

||||

下面是一个有趣的例子:如果我创建了一个新的组:`panda` 组并且将我自己(`bork`)加入到这个组,然后运行 `groups` 来检查我是否在这个组里:结果是我(`bork`)竟然不在这个组?!

|

||||

|

||||

```

|

||||

bork@kiwi~> sudo addgroup panda

|

||||

Adding group `panda' (GID 1001) ...

|

||||

Done.

|

||||

bork@kiwi~> sudo adduser bork panda

|

||||

Adding user `bork' to group `panda' ...

|

||||

Adding user bork to group panda

|

||||

Done.

|

||||

bork@kiwi~> groups

|

||||

bork adm cdrom sudo dip plugdev lpadmin sambashare docker lxd

|

||||

|

||||

```

|

||||

|

||||

`panda` 并不在上面的组里!为了再次确定我们的发现,让我们建一个文件,这个文件被 `panda` 组拥有,看看我能否访问它。

|

||||

|

||||

```

|

||||

$ touch panda-file.txt

|

||||

$ sudo chown root:panda panda-file.txt

|

||||

$ sudo chmod 660 panda-file.txt

|

||||

$ cat panda-file.txt

|

||||

cat: panda-file.txt: Permission denied

|

||||

```

|

||||

|

||||

好吧,确定了,我(`bork`)无法访问 `panda-file.txt`。这一点都不让人吃惊,我的命令解释器并没有将 `panda` 组作为补充组 ID,运行 `adduser bork panda` 并不会改变这一点。

|

||||

|

||||

### 那进程一开始是怎么得到用户的组的呢?

|

||||

|

||||

这真是个非常令人困惑的问题,对吗?如果进程会将组的信息预置到进程的属性里面,进程在初始化的时候怎么取到组的呢?很明显你无法给你自己指定更多的组(否则就会和 Linux 访问控制的初衷相违背了……)

|

||||

|

||||

有一点还是很清楚的:一个新的进程是怎么从我的命令行解释器(`/bash/fish`)里被**执行**而得到它的组的。(新的)进程将拥有我的用户 ID(`bork`),并且进程属性里还有很多组 ID。从我的命令解释器里执行的所有进程是从这个命令解释器里 `fork()` 而来的,所以这个新进程得到了和命令解释器同样的组。

|

||||

|

||||

因此一定存在一个“第一个”进程来把你的组设置到进程属性里,而所有由此进程而衍生的进程将都设置这些组。而那个“第一个”进程就是你的<ruby>登录程序<rt>login shell</rt></ruby>,在我的笔记本电脑上,它是由 `login` 程序(`/bin/login`)实例化而来。登录程序以 root 身份运行,然后调用了一个 C 的库函数 —— `initgroups` 来设置你的进程的组(具体来说是通过读取 `/etc/group` 文件),因为登录程序是以 root 运行的,所以它能设置你的进程的组。

|

||||

|

||||

### 让我们再登录一次

|

||||

|

||||

好了!假如说我们正处于一个登录程序中,而我又想刷新我的进程的组设置,从我们前面所学到的进程是怎么初始化组 ID 的,我应该可以通过再次运行登录程序来刷新我的进程组并启动一个新的登录命令!

|

||||

|

||||

让我们试试下边的方法:

|

||||

|

||||

```

|

||||

$ sudo login bork

|

||||

$ groups

|

||||

bork adm cdrom sudo dip plugdev lpadmin sambashare docker lxd panda

|

||||

$ cat panda-file.txt # it works! I can access the file owned by `panda` now!

|

||||

```

|

||||

|

||||

当然,成功了!现在由登录程序衍生的程序的用户是组 `panda` 的一部分了!太棒了!这并不会影响我其他的已经在运行的登录程序(及其子进程),如果我真的希望“所有的”进程都能对 `panda` 组有访问权限。我必须完全的重启我的登录会话,这意味着我必须退出我的窗口管理器然后再重新登录。(LCTT 译注:即更新进程树的树根进程,这里是窗口管理器进程。)

|

||||

|

||||

### newgrp 命令

|

||||

|

||||

在 Twitter 上有人告诉我如果只是想启动一个刷新了组信息的命令解释器的话,你可以使用 `newgrp`(LCTT 译注:不启动新的命令解释器),如下:

|

||||

|

||||

```

|

||||

sudo addgroup panda

|

||||

sudo adduser bork panda

|

||||

newgrp panda # starts a new shell, and you don't have to be root to run it!

|

||||

```

|

||||

|

||||

你也可以用 `sg panda bash` 来完成同样的效果,这个命令能启动一个`bash` 登录程序,而这个程序就有 `panda` 组。

|

||||

|

||||

### seduid 将设置有效用户 ID

|

||||

|

||||

其实我一直对一个进程如何以 `setuid root` 的权限来运行意味着什么有点似是而非。现在我知道了,事实上所发生的是:`setuid` 设置了

|

||||

“有效用户 ID”! 如果我(`julia`)运行了一个 `setuid root` 的进程( 比如 `passwd`),那么进程的**真实**用户 ID 将为 `julia`,而**有效**用户 ID 将被设置为 `root`。

|

||||

|

||||

`passwd` 需要以 root 权限来运行,但是它能看到进程的真实用户 ID 是 `julia` ,是 `julia` 启动了这个进程,`passwd` 会阻止这个进程修改除了 `julia` 之外的用户密码。

|

||||

|

||||

### 就是这些了!

|

||||

|

||||

在《[Linux 编程接口][1]》这本书里有很多 Linux 上一些功能的罕见使用方法以及 Linux 上所有的事物到底是怎么运行的详细解释,这里我就不一一展开了。那本书棒极了,我上面所说的都在该书的第九章,这章在 1300 页的书里只占了 17 页。

|

||||

|

||||

我最爱这本书的一点是我只用读 17 页关于用户和组是怎么工作的内容,而这区区 17 页就能做到内容完备、详实有用。我不用读完所有的 1300 页书就能得到有用的东西,太棒了!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://jvns.ca/blog/2017/11/20/groups/

|

||||

|

||||

作者:[Julia Evans][a]

|

||||

译者:[DavidChen](https://github.com/DavidChenLiang)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://jvns.ca/

|

||||

[1]:http://man7.org/tlpi/

|

||||

@ -1,29 +1,28 @@

|

||||

|

||||

我从编程面试中学到的

|

||||

============================================================

|

||||

|

||||

======

|

||||

|

||||

|

||||

聊聊白板编程面试

|

||||

|

||||

在2017年,我参加了[Grace Hopper Celebration][1]‘计算机行业中的女性’这一活动。这个活动是这类科技活动中最大的一个。共有17,000名女性IT工作者参加。

|

||||

*聊聊白板编程面试*

|

||||

|

||||

这个会议有个大型的配套招聘会,会上有招聘公司来面试会议参加者。有些人甚至现场拿到offer。我在现场晃荡了一下,注意到一些应聘者看上去非常紧张忧虑。我还隐隐听到应聘者之间的谈话,其中一些人谈到在面试中做的并不好。

|

||||

在 2017 年,我参加了 ‘计算机行业中的女性’ 的[Grace Hopper 庆祝活动][1]。这个活动是这类科技活动中最大的一个。共有 17,000 名女性IT工作者参加。

|

||||

|

||||

我走近我听到谈话的那群人并和她们聊了起来并给了一些面试上的小建议。我想我的建议还是比较偏基本的,如“(在面试时)一开始给出个能工作的解决方案也还说的过去”之类的,但是当她们听到我的一些其他的建议时还是颇为吃惊。

|

||||

这个会议有个大型的配套招聘会,会上有招聘公司来面试会议参加者。有些人甚至现场拿到 offer。我在现场晃荡了一下,注意到一些应聘者看上去非常紧张忧虑。我还隐隐听到应聘者之间的谈话,其中一些人谈到在面试中做的并不好。

|

||||

|

||||

为了能更多的帮到像她们一样的白面面试者,我收集了一些过去对我有用的小点子,这些小点子我已经发表在了[prodcast episode][2]上。它们也是这篇文章的主题。

|

||||

我走近我听到谈话的那群人并和她们聊了起来并给了一些面试上的小建议。我想我的建议还是比较偏基本的,如“(在面试时)一开始给出个能工作的解决方案也还说的过去”之类的,但是当她们听到我的一些其他的建议时还是颇为吃惊。

|

||||

|

||||

为了能更多的帮到像她们一样的小白面试者,我收集了一些过去对我有用的小点子,这些小点子我已经发表在了 [prodcast episode][2] 上。它们也是这篇文章的主题。

|

||||

|

||||

为了实习生职位和全职工作,我做过很多次的面试。当我还在大学主修计算机科学时,学校每个秋季学期都有招聘会,第一轮招聘会在校园里举行。(我在第一和最后一轮都搞砸过。)不过,每次面试后,我都会反思哪些方面我能做的更好,我还会和朋友们做模拟面试,这样我就能从他们那儿得到更多的面试反馈。

|

||||

|

||||

不管我们怎么样找工作: 工作中介,网络,或者学校招聘,他们的招聘流程中都会涉及到技术面试:

|

||||

不管我们怎么样找工作: 工作中介、网络,或者学校招聘,他们的招聘流程中都会涉及到技术面试:

|

||||

|

||||

近年来,我注意到了一些新的不同的面试形式出现了:

|

||||

|

||||

* 与招聘方的一位工程师结对编程

|

||||

* 网络在线测试及在线编码

|

||||

* 白板编程(LCTT译者注: 这种形式应该不新了)

|

||||

|

||||

* 白板编程(LCTT 译注: 这种形式应该不新了)

|

||||

|

||||

我将重点谈谈白板面试,这种形式我经历的最多。我有过很多次面试,有些挺不错的,有些被我搞砸了。

|

||||

|

||||

@ -31,7 +30,7 @@

|

||||

|

||||

首先,我想回顾一下我做的不好的地方。知错能改,善莫大焉。

|

||||

|

||||

当面试者提出一个要我解决的问题时, 我立即马上立刻开始在白板上写代码,_什么都不问。_

|

||||

当面试者提出一个要我解决的问题时, 我立即马上立刻开始在白板上写代码,_什么都不问。_

|

||||

|

||||

这里我犯了两个错误:

|

||||

|

||||

@ -41,7 +40,7 @@

|

||||

|

||||

#### 只会默默思考,不去记录想法或和面试官沟通

|

||||

|

||||

在面试中,很多时候我也会傻傻站在那思考,什么都不写。我和一个朋友模拟面试的时候,他告诉我因为他曾经和我一起工作过所以他知道我在思考,但是如果他是个陌生的面试官的话,他会觉得要么我正站在那冥思苦想,毫无头绪。不要急匆匆的直奔解题而去是很重要的。花点时间多想想各种解题的可能性。有时候面试官会乐意和你一起探索解题的步骤。不管怎样,这就是在一家公司开工作会议的的普遍方式,大家各抒己见,一起讨论如何解决问题。

|

||||

在面试中,很多时候我也会傻傻站在那思考,什么都不写。我和一个朋友模拟面试的时候,他告诉我因为他曾经和我一起工作过所以他知道我在思考,但是如果他是个陌生的面试官的话,他会觉得我正站在那冥思苦想,毫无头绪。不要急匆匆的直奔解题而去是很重要的。花点时间多想想各种解题的可能性。有时候面试官会乐意和你一起探索解题的步骤。不管怎样,这就是在一家公司开工作会议的的普遍方式,大家各抒己见,一起讨论如何解决问题。

|

||||

|

||||

### 想到一个解题方法

|

||||

|

||||

@ -50,30 +49,27 @@

|

||||

这是对我管用的步骤:

|

||||

|

||||

1. 头脑风暴

|

||||

|

||||

2. 写代码

|

||||

|

||||

3. 处理错误路径

|

||||

|

||||

4. 测试

|

||||

|

||||

#### 1\. 头脑风暴

|

||||

#### 1、 头脑风暴

|

||||

|

||||

对我来说,我会首先通过一些例子来视觉化我要解决的问题。比如说如果这个问题和数据结构中的树有关,我就会从树底层的空节点开始思考,如何处理一个节点的情况呢?两个节点呢?三个节点呢?这能帮助你从具体例子里抽象出你的解决方案。

|

||||

|

||||

在白板上先写下你的算法要做的事情列表。这样做,你往往能在开始写代码前就发现bug和缺陷(不过你可得掌握好时间)。我犯过的一个错误是我花了过多的时间在澄清问题和头脑风暴上,最后几乎没有留下时间给我写代码。你的面试官可能没有机会看你在白板上写下代码,这可太糟了。你可以带块手表,或者房间有钟的话,你也可以抬头看看时间。有些时候面试者会提醒你你已经得到了所有的信息(这时你就不要再问别的了),'我想我们已经把所有需要的信息都澄清了,让我们写代码实现吧'

|

||||

在白板上先写下你的算法要做的事情列表。这样做,你往往能在开始写代码前就发现 bug 和缺陷(不过你可得掌握好时间)。我犯过的一个错误是我花了过多的时间在澄清问题和头脑风暴上,最后几乎没有留下时间给我写代码。你的面试官可能没有机会看你在白板上写下代码,这可太糟了。你可以带块手表,或者房间有钟的话,你也可以抬头看看时间。有些时候面试者会提醒你你已经得到了所有的信息(这时你就不要再问别的了),“我想我们已经把所有需要的信息都澄清了,让我们写代码实现吧”。

|

||||

|

||||

#### 2\. 开始写代码,一气呵成

|

||||

#### 2、 开始写代码,一气呵成

|

||||

|

||||

如果你还没有得到问题的完美解决方法,从最原始的解法开始总的可以的。当你在向面试官解释最显而易见的解法时,你要想想怎么去完善它,并指明这种做法是最原始,未加优化的。(请熟悉算法中的O()的概念,这对面试非常有用。)在向面试者提交前请仔细检查你的解决方案两三遍。面试者有时会给你些提示, ‘还有更好的方法吗?’,这句话的意思是面试官提示你有更优化的解决方案。

|

||||

如果你还没有得到问题的完美解决方法,从最原始的解法开始总是可以的。当你在向面试官解释最显而易见的解法时,你要想想怎么去完善它,并指明这种做法是最原始的,未加优化的。(请熟悉算法中的 `O()` 的概念,这对面试非常有用。)在向面试者提交前请仔细检查你的解决方案两三遍。面试者有时会给你些提示, “还有更好的方法吗?”,这句话的意思是面试官提示你有更优化的解决方案。

|

||||

|

||||

#### 3\. 错误处理

|

||||

#### 3、 错误处理

|

||||

|

||||

当你在编码时,对你想做错误处理的代码行做个注释。当面试者说,'很好,这里你想到了错误处理。你想怎么处理呢?抛出异常还是返回错误码?',这将给你个机会去引出关于代码质量的一番讨论。当然,这种地方提出几个就够了。有时,面试者为了节省编码的时间,会告诉你可以假设外界输入的参数都已经通过了校验。不管怎样,你都要展现你对错误处理和编码质量的重要性的认识。

|

||||

当你在编码时,对你想做错误处理的代码行做个注释。当面试者说,“很好,这里你想到了错误处理。你想怎么处理呢?抛出异常还是返回错误码?”,这将给你个机会去引出关于代码质量的一番讨论。当然,这种地方提出几个就够了。有时,面试者为了节省编码的时间,会告诉你可以假设外界输入的参数都已经通过了校验。不管怎样,你都要展现你对错误处理和编码质量的重要性的认识。

|

||||

|

||||

#### 4\. 测试

|

||||

#### 4、 测试

|

||||

|

||||

在编码完成后,用你在前面头脑风暴中写的用例来在你脑子里“跑”一下你的代码,确定万无一失。例如你可以说,‘让我用前面写下的树的例子来跑一下我的代码,如果是一个节点是什么结果,如果是两个节点是什么结果。。。’

|

||||

在编码完成后,用你在前面头脑风暴中写的用例来在你脑子里“跑”一下你的代码,确定万无一失。例如你可以说,“让我用前面写下的树的例子来跑一下我的代码,如果是一个节点是什么结果,如果是两个节点是什么结果……”

|

||||

|

||||

在你结束之后,面试者有时会问你你将会怎么测试你的代码,你会涉及什么样的测试用例。我建议你用下面不同的分类来组织你的错误用例:

|

||||

|

||||

@ -83,7 +79,7 @@

|

||||

2. 错误用例

|

||||

3. 期望的正常用例

|

||||

|

||||

对于性能测试,要考虑极端数量下的情况。例如,如果问题是关于列表的,你可以说你将会使用一个非常大的列表以及的非常小的列表来测试。如果和数字有关,你将会测试系统中的最大整数和最小整数。我建议读一些有关软件测试的书来得到更多的知识。在这个领域我最喜欢的书是[How We Test Software at Microsoft][3]。

|

||||

对于性能测试,要考虑极端数量下的情况。例如,如果问题是关于列表的,你可以说你将会使用一个非常大的列表以及的非常小的列表来测试。如果和数字有关,你将会测试系统中的最大整数和最小整数。我建议读一些有关软件测试的书来得到更多的知识。在这个领域我最喜欢的书是 《[我们在微软如何测试软件][3]》。

|

||||

|

||||

对于错误用例,想一下什么是期望的错误情况并一一写下。

|

||||

|

||||

@ -91,50 +87,45 @@

|

||||

|

||||

### “你还有什么要问我的吗?”

|

||||

|

||||

面试最后总是会留几分钟给你问问题。我建议你在面试前写下你想问的问题。千万别说,‘我没什么问题了’,就算你觉得面试砸了或者你对这间公司不怎么感兴趣,你总有些东西可以问问。你甚至可以问面试者他最喜欢自己的工作什么,最讨厌自己的工作什么。或者你可以问问面试官的工作具体是什么,在用什么技术和实践。不要因为觉得自己在面试中做的不好而心灰意冷,不想问什么问题。

|

||||

面试最后总是会留几分钟给你问问题。我建议你在面试前写下你想问的问题。千万别说,“我没什么问题了”,就算你觉得面试砸了或者你对这间公司不怎么感兴趣,你总有些东西可以问问。你甚至可以问面试者他最喜欢自己的工作什么,最讨厌自己的工作什么。或者你可以问问面试官的工作具体是什么,在用什么技术和实践。不要因为觉得自己在面试中做的不好而心灰意冷,不想问什么问题。

|

||||

|

||||

### 申请一份工作

|

||||

|

||||

|

||||

关于找工作申请工作,有人曾经告诉我,你应该去找你真正有激情工作的地方。去找一家你喜欢的公司,或者你喜欢使用的产品,看看你能不能去那儿工作。

|

||||

关于找工作和申请工作,有人曾经告诉我,你应该去找你真正有激情工作的地方。去找一家你喜欢的公司,或者你喜欢使用的产品,看看你能不能去那儿工作。

|

||||

|

||||

我个人并不推荐你用上述的方法去找工作。你会排除很多很好的公司,特别是你是在找实习工作或者入门级的职位时。

|

||||

|

||||

你也可以集中在其他的一些目标上。如:我想从这个工作里得到哪方面的更多经验?这个工作是关于云计算?Web开发?或是人工智能?当在招聘会上与招聘公司沟通是,看看他们的工作单位有没有在这些领域的。你可能会在一家并非在你的想去公司列表上的公司(或非盈利机构)里找到你想找的职位。

|

||||

你也可以集中在其他的一些目标上。如:我想从这个工作里得到哪方面的更多经验?这个工作是关于云计算?Web 开发?或是人工智能?当在招聘会上与招聘公司沟通时,看看他们的工作单位有没有在这些领域的。你可能会在一家并非在你的想去公司列表上的公司(或非盈利机构)里找到你想找的职位。

|

||||

|

||||

#### 换组

|

||||

|

||||

在这家公司里的第一个组里呆了一年半以后,我觉得是时候去探索一下不同的东西了。我找到了一个我喜欢的组并进行了4轮面试。结果我搞砸了。

|

||||

|

||||

|

||||

我什么都没有准备,甚至都没在白板上练练手。我当时的逻辑是,如果我都已经在一家公司干了快2年了,我还需要练什么?我完全错了,我在接下去的白板面试中跌跌撞撞。我的板书写得太小,而且因为没有从最左上角开始写代码,我的代码大大超出了一个白板的空间,这些都导致了白板面试失败。

|

||||

在这家公司里的第一个组里呆了一年半以后,我觉得是时候去探索一下不同的东西了。我找到了一个我喜欢的组并进行了 4 轮面试。结果我搞砸了。

|

||||

|

||||

我什么都没有准备,甚至都没在白板上练练手。我当时的逻辑是,如果我都已经在一家公司干了快 2 年了,我还需要练什么?我完全错了,我在接下去的白板面试中跌跌撞撞。我的板书写得太小,而且因为没有从最左上角开始写代码,我的代码大大超出了一个白板的空间,这些都导致了白板面试失败。

|

||||

|

||||

我在面试前也没有刷过数据结构和算法题。如果我做了的话,我将会在面试中更有信心。就算你已经在一家公司担任了软件工程师,在你去另外一个组面试前,我强烈建议你在一块白板上演练一下如何写代码。

|

||||

|

||||

对于换项目组这件事,如果你是在公司内部换组的话,事先能同那个组的人非正式聊聊会很有帮助。对于这一点,我发现几乎每个人都很乐于和你一起吃个午饭。人一般都会在中午有空,约不到人或者别人正好有会议冲突的风险会很低。这是一种非正式的途径来了解你想去的组正在干什么,以及这个组成员个性是怎么样的。相信我,你能从一次午餐中得到很多信息,这可会对你的正式面试帮助不小。

|

||||

|

||||

对于换项目组这件事,如果你是在公司内部换组的话,事先能同那个组的人非正式聊聊会很有帮助。对于这一点,我发现几乎每个人都很乐于和你一起吃个午饭。人一般都会在中午有空,约不到人或者别人正好有会议冲突的风险会很低。这是一种非正式的途径来了解你想去的组正在干什么,以及这个组成员个性是怎么样的。相信我, 你能从一次午餐中得到很多信息,这可会对你的正式面试帮助不小。

|

||||

非常重要的一点是,你在面试一个特定的组时,就算你在面试中做的很好,因为文化不契合的原因,你也很可能拿不到 offer。这也是为什么我一开始就想去见见组里不同的人的原因(有时这也不太可能),我希望你不要被一次拒绝所击倒,请保持开放的心态,选择新的机会,并多多练习。

|

||||

|

||||

|

||||

非常重要的一点是,你在面试一个特定的组时,就算你在面试中做的很好,因为文化不契合的原因,你也很可能拿不到offer。这也是为什么我一开始就想去见见组里不同的人的原因(有时这也不太可能),我希望你不要被一次拒绝所击倒,请保持开放的心态,选择新的机会,并多多练习。

|

||||

|

||||

|

||||

以上内容来自["Programming interviews"][4] 章节,选自 [The Women in Tech Show: Technical Interviews with Prominent Women in Tech][5]

|

||||

以上内容选自 《[The Women in Tech Show: Technical Interviews with Prominent Women in Tech][5]》的 “[编程面试][4]”章节,

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

|

||||

微软研究院Software Engineer II, www.thewomenintechshow.com站长,所有观点都只代表本人意见。

|

||||

微软研究院 Software Engineer II, www.thewomenintechshow.com 站长,所有观点都只代表本人意见。

|

||||

|

||||

------------

|

||||

|

||||

via: https://medium.freecodecamp.org/what-i-learned-from-programming-interviews-29ba49c9b851

|

||||

|

||||

作者:[Edaena Salinas ][a]

|

||||

译者:DavidChenLiang (https://github.com/DavidChenLiang)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

作者:[Edaena Salinas][a]

|

||||

译者:[DavidChenLiang](https://github.com/DavidChenLiang)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,65 +1,58 @@

|

||||

你没听说过的 Go 语言惊人优点

|

||||

============================================================

|

||||

=========

|

||||

|

||||

|

||||

|

||||

来自 [https://github.com/ashleymcnamara/gophers][1] 的图稿

|

||||

*来自 [https://github.com/ashleymcnamara/gophers][1] 的图稿*

|

||||

|

||||

在这篇文章中,我将讨论为什么你需要尝试一下 Go,以及应该从哪里学起。

|

||||

在这篇文章中,我将讨论为什么你需要尝试一下 Go 语言,以及应该从哪里学起。

|

||||

|

||||

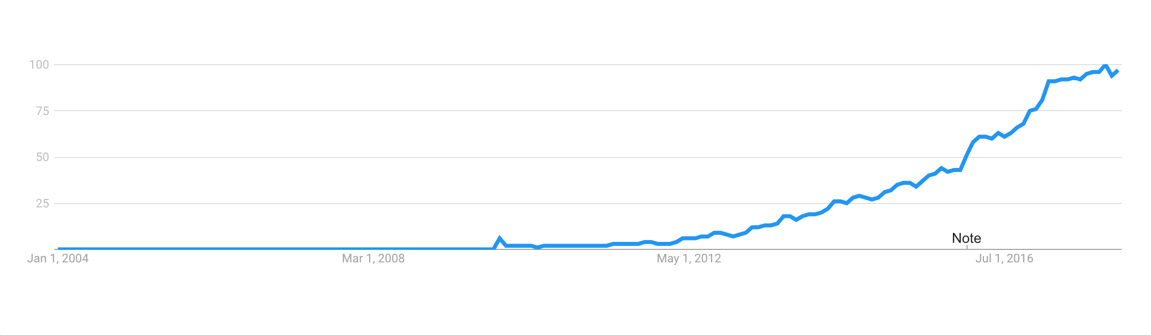

Golang 是可能是最近几年里你经常听人说起的编程语言。尽管它在 2009 年已经发布,但它最近才开始流行起来。

|

||||

Go 语言是可能是最近几年里你经常听人说起的编程语言。尽管它在 2009 年已经发布了,但它最近才开始流行起来。

|

||||

|

||||

|

||||

|

||||

根据 Google 趋势,Golang 语言非常流行。

|

||||

*根据 Google 趋势,Go 语言非常流行。*

|

||||

|

||||

这篇文章不会讨论一些你经常看到的 Golang 的主要特性。

|

||||

这篇文章不会讨论一些你经常看到的 Go 语言的主要特性。

|

||||

|

||||

相反,我想向您介绍一些相当小众但仍然很重要的功能。在您决定尝试Go后,您才会知道这些功能。

|

||||

相反,我想向您介绍一些相当小众但仍然很重要的功能。只有在您决定尝试 Go 语言后,您才会知道这些功能。

|

||||

|

||||

这些都是表面上没有体现出来的惊人特性,但它们可以为您节省数周或数月的工作量。而且这些特性还可以使软件开发更加愉快。

|

||||

|

||||

阅读本文不需要任何语言经验,所以不比担心 Golang 对你来说是新的事物。如果你想了解更多,可以看看我在底部列出的一些额外的链接,。

|

||||

阅读本文不需要任何语言经验,所以不必担心你还不了解 Go 语言。如果你想了解更多,可以看看我在底部列出的一些额外的链接。

|

||||

|

||||

我们将讨论以下主题:

|

||||

|

||||

* GoDoc

|

||||

|

||||

* 静态代码分析

|

||||

|

||||

* 内置的测试和分析框架

|

||||

|

||||

* 竞争条件检测

|

||||

|

||||

* 学习曲线

|

||||

|

||||

* 反射(Reflection)

|

||||

|

||||

* Opinionatedness(专制独裁的 Go)

|

||||

|

||||

* 反射

|

||||

* Opinionatedness

|

||||

* 文化

|

||||

|

||||

请注意,这个列表不遵循任何特定顺序来讨论。

|

||||

|

||||

### GoDoc

|

||||

|

||||

Golang 非常重视代码中的文档,简洁也是如此。

|

||||

Go 语言非常重视代码中的文档,所以也很简洁。

|

||||

|

||||

[GoDoc][4] 是一个静态代码分析工具,可以直接从代码中创建漂亮的文档页面。GoDoc 的一个显着特点是它不使用任何其他的语言,如 JavaDoc,PHPDoc 或 JSDoc 来注释代码中的结构,只需要用英语。

|

||||

[GoDoc][4] 是一个静态代码分析工具,可以直接从代码中创建漂亮的文档页面。GoDoc 的一个显著特点是它不使用任何其他的语言,如 JavaDoc、PHPDoc 或 JSDoc 来注释代码中的结构,只需要用英语。

|

||||

|

||||

它使用从代码中获取的尽可能多的信息来概述、构造和格式化文档。它有多而全的功能,比如:交叉引用,代码示例以及一个指向版本控制系统仓库的链接。

|

||||

它使用从代码中获取的尽可能多的信息来概述、构造和格式化文档。它有多而全的功能,比如:交叉引用、代码示例,并直接链接到你的版本控制系统仓库。

|

||||

|

||||

而你需要做的只有添加一些好的,像 `// MyFunc transforms Foo into Bar` 这样子的注释,而这些注释也会反映在的文档中。你甚至可以添加一些通过网络接口或者在本地可以实际运行的 [代码示例][5]。

|

||||

而你需要做的只有添加一些像 `// MyFunc transforms Foo into Bar` 这样子的老牌注释,而这些注释也会反映在的文档中。你甚至可以添加一些通过网络界面或者在本地可以实际运行的 [代码示例][5]。

|

||||

|

||||

GoDoc 是 Go 的唯一文档引擎,供整个社区使用。这意味着用 Go 编写的每个库或应用程序都具有相同的文档格式。从长远来看,它可以帮你在浏览这些文档时节省大量时间。

|

||||

GoDoc 是 Go 的唯一文档引擎,整个社区都在使用。这意味着用 Go 编写的每个库或应用程序都具有相同的文档格式。从长远来看,它可以帮你在浏览这些文档时节省大量时间。

|

||||

|

||||

例如,这是我最近一个小项目的 GoDoc 页面:[pullkee — GoDoc][6]。

|

||||

|

||||

### 静态代码分析

|

||||

|

||||

Go 严重依赖于静态代码分析。例子包括 godoc 文档,gofmt 代码格式化,golint 代码风格统一,等等。

|

||||

Go 严重依赖于静态代码分析。例如用于文档的 [godoc][7],用于代码格式化的 [gofmt][8],用于代码风格的 [golint][9],等等。

|

||||

|

||||

其中有很多甚至全部包含在类似 [gometalinter][10] 的项目中,这些将它们全部组合成一个实用程序。

|

||||

它们是如此之多,甚至有一个总揽了它们的项目 [gometalinter][10] ,将它们组合成了单一的实用程序。

|

||||

|

||||

这些工具通常作为独立的命令行应用程序实现,并可轻松与任何编码环境集成。

|

||||

|

||||

@ -67,21 +60,21 @@ Go 严重依赖于静态代码分析。例子包括 godoc 文档,gofmt 代码

|

||||

|

||||

创建自己的分析器非常简单,因为 Go 有专门的内置包来解析和加工 Go 源码。

|

||||

|

||||

你可以从这个链接中了解到更多相关内容: [GothamGo Kickoff Meetup: Go Static Analysis Tools by Alan Donovan][11].

|

||||

你可以从这个链接中了解到更多相关内容: [GothamGo Kickoff Meetup: Alan Donovan 的 Go 静态分析工具][11]。

|

||||

|

||||

### 内置的测试和分析框架

|

||||

|

||||

您是否曾尝试为一个从头开始的 Javascript 项目选择测试框架?如果是这样,你可能会明白经历这种分析瘫痪的斗争。您可能也意识到您没有使用其中 80% 的框架。

|

||||

您是否曾尝试为一个从头开始的 JavaScript 项目选择测试框架?如果是这样,你或许会理解经历这种<ruby>过度分析<rt>analysis paralysis</rt></ruby>的痛苦。您可能也意识到您没有使用其中 80% 的框架。

|

||||

|

||||

一旦您需要进行一些可靠的分析,问题就会重复出现。

|

||||

|

||||

Go 附带内置测试工具,旨在简化和提高效率。它为您提供了最简单的 API,并做出最小的假设。您可以将它用于不同类型的测试,分析,甚至可以提供可执行代码示例。

|

||||

Go 附带内置测试工具,旨在简化和提高效率。它为您提供了最简单的 API,并做出最小的假设。您可以将它用于不同类型的测试、分析,甚至可以提供可执行代码示例。

|

||||

|

||||

它可以开箱即用地生成持续集成友好的输出,而且它的用法很简单,只需运行 `go test`。当然,它还支持高级功能,如并行运行测试,跳过标记代码,以及其他更多功能。

|

||||

它可以开箱即用地生成便于持续集成的输出,而且它的用法很简单,只需运行 `go test`。当然,它还支持高级功能,如并行运行测试,跳过标记代码,以及其他更多功能。

|

||||

|

||||

### 竞争条件检测

|

||||

|

||||

您可能已经了解了 Goroutines,它们在 Go 中用于实现并发代码执行。如果你未曾了解过,[这里][12]有一个非常简短的解释。

|

||||

您可能已经听说了 Goroutine,它们在 Go 中用于实现并发代码执行。如果你未曾了解过,[这里][12]有一个非常简短的解释。

|

||||

|

||||

无论具体技术如何,复杂应用中的并发编程都不容易,部分原因在于竞争条件的可能性。

|

||||

|

||||

@ -93,13 +86,13 @@ Go 附带内置测试工具,旨在简化和提高效率。它为您提供了

|

||||

|

||||

### 学习曲线

|

||||

|

||||

您可以在一个晚上学习所有 Go 的语言功能。我是认真的。当然,还有标准库,以及不同,更具体领域的最佳实践。但是两个小时就足以让你自信地编写一个简单的 HTTP 服务器或命令行应用程序。

|

||||

您可以在一个晚上学习**所有**的 Go 语言功能。我是认真的。当然,还有标准库,以及不同的,更具体领域的最佳实践。但是两个小时就足以让你自信地编写一个简单的 HTTP 服务器或命令行应用程序。

|

||||

|

||||

Golang 拥有[出色的文档][14],大部分高级主题已经在博客上进行了介绍:[The Go Programming Language Blog][15]。

|

||||

Go 语言拥有[出色的文档][14],大部分高级主题已经在他们的博客上进行了介绍:[Go 编程语言博客][15]。

|

||||

|

||||

比起 Java(以及 Java 家族的语言),Javascript,Ruby,Python 甚至 PHP,你可以更轻松地把 Go 语言带到你的团队中。由于环境易于设置,您的团队在完成第一个生产代码之前需要进行的投资要小得多。

|

||||

比起 Java(以及 Java 家族的语言)、Javascript、Ruby、Python 甚至 PHP,你可以更轻松地把 Go 语言带到你的团队中。由于环境易于设置,您的团队在完成第一个生产代码之前需要进行的投资要小得多。

|

||||

|

||||

### 反射(Reflection)

|

||||

### 反射

|

||||

|

||||

代码反射本质上是一种隐藏在编译器下并访问有关语言结构的各种元信息的能力,例如变量或函数。

|

||||

|

||||

@ -107,19 +100,19 @@ Golang 拥有[出色的文档][14],大部分高级主题已经在博客上进

|

||||

|

||||

此外,Go [没有实现一个名为泛型的概念][16],这使得以抽象方式处理多种类型更具挑战性。然而,由于泛型带来的复杂程度,许多人认为不实现泛型对语言实际上是有益的。我完全同意。

|

||||

|

||||

根据 Go 的理念(这是一个单独的主题),您应该努力不要过度设计您的解决方案。这也适用于动态类型编程。尽可能坚持使用静态类型,并在确切知道要处理的类型时使用接口(interfaces)。接口在 Go 中非常强大且无处不在。

|

||||

根据 Go 的理念(这是一个单独的主题),您应该努力不要过度设计您的解决方案。这也适用于动态类型编程。尽可能坚持使用静态类型,并在确切知道要处理的类型时使用<ruby>接口<rt>interface</rt></ruby>。接口在 Go 中非常强大且无处不在。

|

||||

|

||||

但是,仍然存在一些情况,你无法知道你处理的数据类型。一个很好的例子是 JSON。您可以在应用程序中来回转换所有类型的数据。字符串,缓冲区,各种数字,嵌套结构等。

|

||||

但是,仍然存在一些情况,你无法知道你处理的数据类型。一个很好的例子是 JSON。您可以在应用程序中来回转换所有类型的数据。字符串、缓冲区、各种数字、嵌套结构等。

|

||||

|

||||

为了解决这个问题,您需要一个工具来检查运行时的数据并根据其类型和结构采取不同行为。反射(Reflect)可以帮到你。Go 拥有一流的反射包,使您的代码能够像 Javascript 这样的语言一样动态。

|

||||

为了解决这个问题,您需要一个工具来检查运行时的数据并根据其类型和结构采取不同行为。<ruby>反射<rt>Reflect</rt></ruby>可以帮到你。Go 拥有一流的反射包,使您的代码能够像 Javascript 这样的语言一样动态。

|

||||

|

||||

一个重要的警告是知道你使用它所带来的代价 - 并且只有知道在没有更简单的方法时才使用它。

|

||||

一个重要的警告是知道你使用它所带来的代价 —— 并且只有知道在没有更简单的方法时才使用它。

|

||||

|

||||

你可以在这里阅读更多相关信息: [反射的法则 — Go 博客][18].

|

||||

|

||||

您还可以在此处阅读 JSON 包源码中的一些实际代码: [src/encoding/json/encode.go — Source Code][19]

|

||||

|

||||

### Opinionatedness

|

||||

### Opinionatedness(专制独裁的 Go)

|

||||

|

||||

顺便问一下,有这样一个单词吗?

|

||||

|

||||

@ -127,9 +120,9 @@ Golang 拥有[出色的文档][14],大部分高级主题已经在博客上进

|

||||

|

||||

这有时候基本上让我卡住了。我需要花时间思考这些事情而不是编写代码并满足用户。

|

||||

|

||||

首先,我应该注意到我完全可以得到这些惯例的来源,它总是来源于你或者你的团队。无论如何,即使是一群经验丰富的 Javascript 开发人员也可以轻松地发现自己拥有完全不同的工具和范例的大部分经验,以实现相同的结果。

|

||||

首先,我应该注意到我完全知道这些惯例的来源,它总是来源于你或者你的团队。无论如何,即使是一群经验丰富的 Javascript 开发人员也很容易发现他们在实现相同的结果时,而大部分的经验却是在完全不同的工具和范例上。

|

||||

|

||||

这导致整个团队中分析的瘫痪,并且使得个体之间更难以相互协作。

|

||||

这导致整个团队中出现过度分析,并且使得个体之间更难以相互协作。

|

||||

|

||||

嗯,Go 是不同的。即使您对如何构建和维护代码有很多强烈的意见,例如:如何命名,要遵循哪些结构模式,如何更好地实现并发。但你只有一个每个人都遵循的风格指南。你只有一个内置在基本工具链中的测试框架。

|

||||

|

||||

@ -141,11 +134,11 @@ Golang 拥有[出色的文档][14],大部分高级主题已经在博客上进

|

||||

|

||||

人们说,每当你学习一门新的口语时,你也会沉浸在说这种语言的人的某些文化中。因此,您学习的语言越多,您可能会有更多的变化。

|

||||

|

||||

编程语言也是如此。无论您将来如何应用新的编程语言,它总能给的带来新的编程视角或某些特别的技术。

|

||||

编程语言也是如此。无论您将来如何应用新的编程语言,它总能给你带来新的编程视角或某些特别的技术。

|

||||

|

||||

无论是函数式编程,模式匹配(pattern matching)还是原型继承(prototypal inheritance)。一旦你学会了它们,你就可以随身携带这些编程思想,这扩展了你作为软件开发人员所拥有的问题解决工具集。它们也改变了你阅读高质量代码的方式。

|

||||

无论是函数式编程,<ruby>模式匹配<rt>pattern matching</rt></ruby>还是<ruby>原型继承<rt>prototypal inheritance</rt></ruby>。一旦你学会了它们,你就可以随身携带这些编程思想,这扩展了你作为软件开发人员所拥有的问题解决工具集。它们也改变了你阅读高质量代码的方式。

|

||||

|

||||

而 Go 在方面有一项了不起的财富。Go 文化的主要支柱是保持简单,脚踏实地的代码,而不会产生许多冗余的抽象概念,并将可维护性放在首位。大部分时间花费在代码的编写工作上,而不是在修补工具和环境或者选择不同的实现方式上,这也是 Go文化的一部分。

|

||||

而 Go 在这方面有一项了不起的财富。Go 文化的主要支柱是保持简单,脚踏实地的代码,而不会产生许多冗余的抽象概念,并将可维护性放在首位。大部分时间花费在代码的编写工作上,而不是在修补工具和环境或者选择不同的实现方式上,这也是 Go 文化的一部分。

|

||||

|

||||

Go 文化也可以总结为:“应当只用一种方法去做一件事”。

|

||||

|

||||

@ -161,12 +154,11 @@ Go 文化也可以总结为:“应当只用一种方法去做一件事”。

|

||||

|

||||

这不是 Go 的所有惊人的优点的完整列表,只是一些被人低估的特性。

|

||||

|

||||

请尝试一下从 [Go 之旅(A Tour of Go)][20]来开始学习 Go,这将是一个令人惊叹的开始。

|

||||

请尝试一下从 [Go 之旅][20] 来开始学习 Go,这将是一个令人惊叹的开始。

|

||||

|

||||

如果您想了解有关 Go 的优点的更多信息,可以查看以下链接:

|

||||

|

||||

* [你为什么要学习 Go? - Keval Patel][2]

|

||||

|

||||

* [告别Node.js - TJ Holowaychuk][3]

|

||||

|

||||

并在评论中分享您的阅读感悟!

|

||||

@ -175,30 +167,16 @@ Go 文化也可以总结为:“应当只用一种方法去做一件事”。

|

||||

|

||||

不断为您的工作寻找最好的工具!

|

||||

|

||||

* * *

|

||||

|

||||

If you like this article, please consider following me for more, and clicking on those funny green little hands right below this text for sharing. 👏👏👏

|

||||

|

||||

Check out my [Github][21] and follow me on [Twitter][22]!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Software Engineer and Traveler. Coding for fun. Javascript enthusiast. Tinkering with Golang. A lot into SOA and Docker. Architect at Velvica.

|

||||

|

||||

------------

|

||||

|

||||

|

||||

-------------------------------------------------------

|

||||

via: https://medium.freecodecamp.org/here-are-some-amazing-advantages-of-go-that-you-dont-hear-much-about-1af99de3b23a

|

||||

|

||||

作者:[Kirill Rogovoy][a]

|

||||

译者:[译者ID](https://github.com/imquanquan)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[imquanquan](https://github.com/imquanquan)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:

|

||||

[a]:https://twitter.com/krogovoy

|

||||

[1]:https://github.com/ashleymcnamara/gophers

|

||||

[2]:https://medium.com/@kevalpatel2106/why-should-you-learn-go-f607681fad65

|

||||

[3]:https://medium.com/@tjholowaychuk/farewell-node-js-4ba9e7f3e52b

|

||||

89

published/20180308 What is open source programming.md

Normal file

89

published/20180308 What is open source programming.md

Normal file

@ -0,0 +1,89 @@

|

||||

何谓开源编程?

|

||||

======

|

||||

|

||||

> 开源就是丢一些代码到 GitHub 上。了解一下它是什么,以及不是什么?

|

||||

|

||||

|

||||

|

||||

最简单的来说,开源编程就是编写一些大家可以随意取用、修改的代码。但你肯定听过关于 Go 语言的那个老笑话,说 Go 语言“简单到看一眼就可以明白规则,但需要一辈子去学会运用它”。其实写开源代码也是这样的。往 GitHub、Bitbucket、SourceForge 等网站或者是你自己的博客或网站上丢几行代码不是难事,但想要卓有成效,还需要个人的努力付出和高瞻远瞩。

|

||||

|

||||

|

||||

|

||||

### 我们对开源编程的误解

|

||||

|

||||

首先我要说清楚一点:把你的代码放在 GitHub 的公开仓库中并不意味着把你的代码开源了。在几乎全世界,根本不用创作者做什么,只要作品形成,版权就随之而生了。在创作者进行授权之前,只有作者可以行使版权相关的权力。未经创作者授权的代码,不论有多少人在使用,都是一颗定时炸弹,只有愚蠢的人才会去用它。

|

||||

|

||||

有些创作者很善良,认为“很明显我的代码是免费提供给大家使用的。”,他也并不想起诉那些用了他的代码的人,但这并不意味着这些代码可以放心使用。不论在你眼中创作者们多么善良,他们都 *有权力* 起诉任何使用、修改代码,或未经明确授权就将代码嵌入的人。

|

||||

|

||||

很明显,你不应该在没有指定开源许可证的情况下将你的源代码发布到网上然后期望别人使用它并为其做出贡献。我建议你也尽量避免使用这种代码,甚至疑似未授权的也不要使用。如果你开发了一个函数和例程,它和之前一个疑似未授权代码很像,源代码作者就可以对你就侵权提起诉讼。

|

||||

|

||||

举个例子,Jill Schmill 写了 AwesomeLib 然后未明确授权就把它放到了 GitHub 上,就算 Jill Schmill 不起诉任何人,只要她把 AwesomeLib 的完整版权都卖给 EvilCorp,EvilCorp 就会起诉之前违规使用这段代码的人。这种行为就好像是埋下了计算机安全隐患,总有一天会为人所用。

|

||||

|

||||

没有许可证的代码的危险的,切记。

|

||||

|

||||

### 选择恰当的开源许可证

|

||||

|

||||

假设你正要写一个新程序,而且打算让人们以开源的方式使用它,你需要做的就是选择最贴合你需求的[许可证][1]。和宣传中说的一样,你可以从 GitHub 所支持的 [choosealicense.com][2] 开始。这个网站设计得像个简单的问卷,特别方便快捷,点几下就能找到合适的许可证。

|

||||

|

||||

警示:在选择许可证时不要过于自负,如果你选的是 [Apache 许可证][3]或者 [GPLv3][4] 这种广为使用的许可证,人们很容易理解他们和你都有什么权利,你也不需要请律师来排查其中的漏洞。你选择的许可证使用的人越少,带来的麻烦就越多。

|

||||

|

||||

最重要的一点是: *千万不要试图自己制造许可证!* 自己制造许可证会给大家带来更多的困惑和困扰,不要这样做。如果在现有的许可证中确实找不到你需要的条款,你可以在现有的许可证中附加上你的要求,并且重点标注出来,提醒使用者们注意。

|

||||

|

||||

我知道有些人会站出来说:“我才懒得管什么许可证,我已经把代码发到<ruby>公开领域<rt>public domain</rt></ruby>了。”但问题是,公开领域的法律效力并不是受全世界认可的。在不同的国家,公开领域的效力和表现形式不同。在有些国家的政府管控下,你甚至不可以把自己的源代码发到公开领域。万幸,[Unlicense][5] 可以弥补这些漏洞,它语言简洁,使用几个词清楚地描述了“就把它放到公开领域”,但其效力为全世界认可。

|

||||

|

||||

### 怎样引入许可证

|

||||

|

||||

确定使用哪个许可证之后,你需要清晰而无疑义地指定它。如果你是在 GitHub、GitLab 或 BitBucket 这几个网站发布,你需要构建很多个文件夹,在根文件夹中,你应把许可证创建为一个以 `LICENSE.txt` 命名的明文文件。

|

||||

|

||||

创建 `LICENSE.txt` 这个文件之后还有其它事要做。你需要在每个重要文件的头部添加注释块来申明许可证。如果你使用的是一个现有的许可证,这一步对你来说十分简便。一个 `# 项目名 (c)2018 作者名,GPLv3 许可证,详情见 https://www.gnu.org/licenses/gpl-3.0.en.html` 这样的注释块比隐约指代的许可证的效力要强得多。

|

||||

|

||||

如果你是要发布在自己的网站上,步骤也差不多。先创建 `LICENSE.txt` 文件,放入许可证,再表明许可证出处。

|

||||

|

||||

### 开源代码的不同之处

|

||||

|

||||

开源代码和专有代码的一个主要区别是开源代码写出来就是为了给别人看的。我是个 40 多岁的系统管理员,已经写过许许多多的代码。最开始我写代码是为了工作,为了解决公司的问题,所以其中大部分代码都是专有代码。这种代码的目的很简单,只要能在特定场合通过特定方式发挥作用就行。

|

||||

|

||||

开源代码则大不相同。在写开源代码时,你知道它可能会被用于各种各样的环境中。也许你的用例的环境条件很局限,但你仍旧希望它能在各种环境下发挥理想的效果。不同的人使用这些代码时会出现各种用例,你会看到各类冲突,还有你没有考虑过的思路。虽然代码不一定要满足所有人,但至少应该得体地处理他们遇到的问题,就算解决不了,也可以转换回常见的逻辑,不会给使用者添麻烦。(例如“第 583 行出现零除错误”就不能作为错误地提供命令行参数的响应结果)

|

||||

|

||||

你的源代码也可能逼疯你,尤其是在你一遍又一遍地修改错误的函数或是子过程后,终于出现了你希望的结果,这时你不会叹口气就继续下一个任务,你会把过程清理干净,因为你不会愿意别人看出你一遍遍尝试的痕迹。比如你会把 `$variable`、`$lol` 全都换成有意义的 `$iterationcounter` 和 `$modelname`。这意味着你要认真专业地进行注释(尽管对于你所处的背景知识热度来说它并不难懂),因为你期望有更多的人可以使用你的代码。

|

||||

|

||||

这个过程难免有些痛苦沮丧,毕竟这不是你常做的事,会有些不习惯。但它会使你成为一位更好的程序员,也会让你的代码升华。即使你的项目只有你一位贡献者,清理代码也会节约你后期的很多工作,相信我一年后你再看你的 app 代码时,你会庆幸自己写下的是 `$modelname`,还有清晰的注释,而不是什么不知名的数列,甚至连 `$lol` 也不是。

|

||||

|

||||

### 你并不是为你一人而写

|

||||

|

||||

开源的真正核心并不是那些代码,而是社区。更大的社区的项目维持时间更长,也更容易为人们所接受。因此不仅要加入社区,还要多多为社区发展贡献思路,让自己的项目能够为社区所用。

|

||||

|

||||

蝙蝠侠为了完成目标暗中独自花了很大功夫,你用不着这样,你可以登录 Twitter、Reddit,或者给你项目的相关人士发邮件,发布你正在筹备新项目的消息,仔细聊聊项目的设计初衷和你的计划,让大家一起帮忙,向大家征集数据输入,类似的使用案例,把这些信息整合起来,用在你的代码里。你不用接受所有的建议和请求,但你要对它有个大概把握,这样在你之后完善时可以躲过一些陷阱。

|

||||

|

||||

发布了首次通告这个过程还不算完整。如果你希望大家能够接受你的作品并且使用它,你就要以此为初衷来设计。公众说不定可以帮到你,你不必对公开这件事如临大敌。所以不要闭门造车,既然你是为大家而写,那就开设一个真实、公开的项目,想象你在社区的帮助和监督下,认真地一步步完成它。

|

||||

|

||||

### 建立项目的方式

|

||||

|

||||

你可以在 GitHub、GitLab 或 BitBucket 上免费注册账号来管理你的项目。注册之后,创建知识库,建立 `README` 文件,分配一个许可证,一步步写入代码。这样可以帮你建立好习惯,让你之后和现实中的团队一起工作时,也能目的清晰地朝着目标稳妥地开展工作。这样你做得越久,就越有兴趣 —— 通常会有用户先对你的项目产生兴趣。

|

||||

|

||||

用户会开始提一些问题,这会让你开心也会让你不爽,你应该亲切礼貌地对待他们,就算他们很多人对项目有很多误解甚至根本不知道你的项目做的是什么,你也应该礼貌专业地对待。一方面,你可以引导他们,让他们了解你在干什么。另一方面,他们也会慢慢地将你带入更大的社区。

|

||||

|

||||

如果你的项目很受用户青睐,总会有高级开发者出现,并表示出兴趣。这也许是好事,也可能激怒你。最开始你可能只会做简单的问题修复,但总有一天你会收到拉取请求,有可能是硬编码或特殊用例(可能会让项目变得难以维护),它可能改变你项目的作用域,甚至改变你项目的初衷。你需要学会分辨哪个有贡献,根据这个决定合并哪个,婉拒哪个。

|

||||

|

||||

### 我们为什么要开源?

|

||||

|

||||

开源听起来任务繁重,它也确实是这样。但它对你也有很多好处。它可以在无形之中磨练你,让你写出纯净持久的代码,也教会你与人沟通,团队协作。对于一个志向远大的专业开发者来说,它是最好的简历素材。你的未来雇主很有可能点开你的仓库,了解你的能力范围;而社区项目的开发者也有可能给你带来工作。

|

||||

|

||||

最后,为开源工作,意味着个人的提升,因为你在做的事不是为了你一个人,这比养活自己重要得多。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/3/what-open-source-programming

|

||||

|

||||

作者:[Jim Salter][a]

|

||||

译者:[Valoniakim](https://github.com/Valoniakim)

|

||||

校对:[wxy](https://github.com/wxy)、[pityonline](https://github.com/pityonline)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/jim-salter

|

||||

[1]: https://opensource.com/tags/licensing

|

||||

[2]: https://choosealicense.com/

|

||||

[3]: https://choosealicense.com/licenses/apache-2.0/

|

||||

[4]: https://choosealicense.com/licenses/gpl-3.0/

|

||||

[5]: https://choosealicense.com/licenses/unlicense/

|

||||

@ -0,0 +1,155 @@

|

||||

用 Hugo 30 分钟搭建静态博客

|

||||

======

|

||||

> 了解 Hugo 如何使构建网站变得有趣。

|

||||

|

||||

|

||||

|

||||

你是不是强烈地想搭建博客来将自己对软件框架等的探索学习成果分享呢?你是不是面对缺乏指导文档而一团糟的项目就有一种想去改变它的冲动呢?或者换个角度,你是不是十分期待能创建一个属于自己的个人博客网站呢?

|

||||

|

||||

很多人在想搭建博客之前都有一些严重的迟疑顾虑:感觉自己缺乏内容管理系统(CMS)的相关知识,更缺乏时间去学习这些知识。现在,如果我说不用花费大把的时间去学习 CMS 系统、学习如何创建一个静态网站、更不用操心如何去强化网站以防止它受到黑客攻击的问题,你就可以在 30 分钟之内创建一个博客?你信不信?利用 Hugo 工具,就可以实现这一切。

|

||||

|

||||

|

||||

|

||||

Hugo 是一个基于 Go 语言开发的静态站点生成工具。也许你会问,为什么选择它?

|

||||

|

||||

* 无需数据库、无需需要各种权限的插件、无需跑在服务器上的底层平台,更没有额外的安全问题。

|

||||

* 都是静态站点,因此拥有轻量级、快速响应的服务性能。此外,所有的网页都是在部署的时候生成,所以服务器负载很小。

|

||||

* 极易操作的版本控制。一些 CMS 平台使用它们自己的版本控制软件(VCS)或者在网页上集成 Git 工具。而 Hugo,所有的源文件都可以用你所选的 VCS 软件来管理。

|

||||

|

||||

### 0-5 分钟:下载 Hugo,生成一个网站

|

||||

|

||||

直白的说,Hugo 使得写一个网站又一次变得有趣起来。让我们来个 30 分钟计时,搭建一个网站。

|

||||

|

||||

为了简化 Hugo 安装流程,这里直接使用 Hugo 可执行安装文件。

|

||||

|

||||

1. 下载和你操作系统匹配的 Hugo [版本][2];

|

||||

2. 压缩包解压到指定路径,例如 windows 系统的 `C:\hugo_dir` 或者 Linux 系统的 `~/hugo_dir` 目录;下文中的变量 `${HUGO_HOME}` 所指的路径就是这个安装目录;

|

||||

3. 打开命令行终端,进入安装目录:`cd ${HUGO_HOME}`;

|

||||

4. 确认 Hugo 已经启动:

|

||||

* Unix 系统:`${HUGO_HOME}/[hugo version]`;

|

||||

* Windows 系统:`${HUGO_HOME}\[hugo.exe version]`,例如:cmd 命令行中输入:`c:\hugo_dir\hugo version`。

|

||||

|

||||

为了书写上的简化,下文中的 `hugo` 就是指 hugo 可执行文件所在的路径(包括可执行文件),例如命令 `hugo version` 就是指命令 `c:\hugo_dir\hugo version` 。(LCTT 译注:可以把 hugo 可执行文件所在的路径添加到系统环境变量下,这样就可以直接在终端中输入 `hugo version`)

|

||||

|

||||

如果命令 `hugo version` 报错,你可能下载了错误的版本。当然,有很多种方法安装 Hugo,更多详细信息请查阅 [官方文档][3]。最稳妥的方法就是把 Hugo 可执行文件放在某个路径下,然后执行的时候带上路径名

|

||||

5. 创建一个新的站点来作为你的博客,输入命令:`hugo new site awesome-blog`;

|

||||

6. 进入新创建的路径下: `cd awesome-blog`;

|

||||

|

||||

恭喜你!你已经创建了自己的新博客。

|

||||

|

||||

### 5-10 分钟:为博客设置主题

|

||||

|

||||

Hugo 中你可以自己构建博客的主题或者使用网上已经有的一些主题。这里选择 [Kiera][4] 主题,因为它简洁漂亮。按以下步骤来安装该主题:

|

||||

|

||||

1. 进入主题所在目录:`cd themes`;

|

||||

2. 克隆主题:`git clone https://github.com/avianto/hugo-kiera kiera`。如果你没有安装 Git 工具:

|

||||

* 从 [Github][5] 上下载 hugo 的 .zip 格式的文件;

|

||||

* 解压该 .zip 文件到你的博客主题 `theme` 路径;

|

||||

* 重命名 `hugo-kiera-master` 为 `kiera`;

|

||||

3. 返回博客主路径:`cd awesome-blog`;

|

||||

4. 激活主题;通常来说,主题(包括 Kiera)都自带文件夹 `exampleSite`,里面存放了内容配置的示例文件。激活 Kiera 主题需要拷贝它提供的 `config.toml` 到你的博客下:

|

||||

* Unix 系统:`cp themes/kiera/exampleSite/config.toml .`;

|

||||

* Windows 系统:`copy themes\kiera\exampleSite\config.toml .`;

|

||||

* 选择 `Yes` 来覆盖原有的 `config.toml`;

|

||||

|

||||

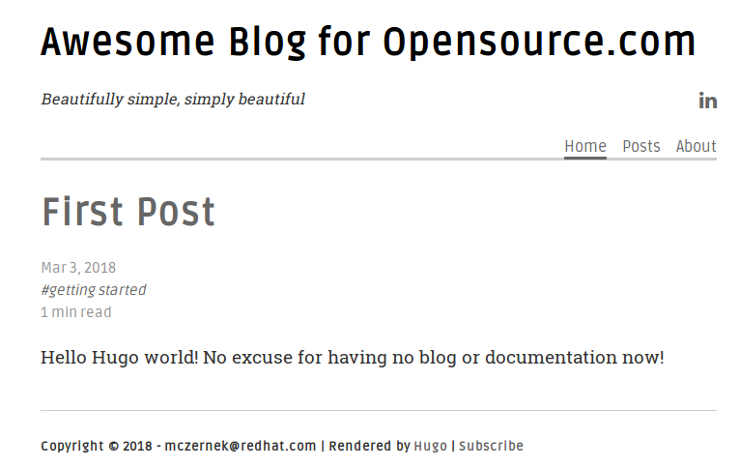

5. ( 可选操作 )你可以选择可视化的方式启动服务器来验证主题是否生效:`hugo server -D` 然后在浏览器中输入 `http://localhost:1313`。可用通过在终端中输入 `Crtl+C` 来停止服务器运行。现在你的博客还是空的,但这也给你留了写作的空间。它看起来如下所示:

|

||||

|

||||

|

||||

|

||||

你已经成功的给博客设置了主题!你可以在官方 [Hugo 主题][4] 网站上找到上百种漂亮的主题供你使用。

|

||||

|

||||

### 10-20 分钟:给博客添加内容

|

||||

|

||||

对于碗来说,它是空的时候用处最大,可以用来盛放东西;但对于博客来说不是这样,空博客几乎毫无用处。在这一步,你将会给博客添加内容。Hugo 和 Kiera 主题都为这个工作提供了方便性。按以下步骤来进行你的第一次提交:

|

||||

|

||||

1. archetypes 将会是你的内容模板。

|

||||

2. 添加主题中的 archtypes 至你的博客:

|

||||

* Unix 系统: `cp themes/kiera/archetypes/* archetypes/`

|

||||

* Windows 系统:`copy themes\kiera\archetypes\* archetypes\`

|

||||

* 选择 `Yes` 来覆盖原来的 `default.md` 内容架构类型

|

||||

|

||||

3. 创建博客 posts 目录:

|

||||

* Unix 系统: `mkdir content/posts`

|

||||

* Windows 系统: `mkdir content\posts`

|

||||

|

||||

4. 利用 Hugo 生成你的 post:

|

||||

* Unix 系统:`hugo nes posts/first-post.md`;

|

||||

* Windows 系统:`hugo new posts\first-post.md`;

|

||||

|

||||

5. 在文本编辑器中打开这个新建的 post 文件:

|

||||

* Unix 系统:`gedit content/posts/first-post.md`;

|

||||

* Windows 系统:`notepadd content\posts\first-post.md`;

|

||||

|

||||

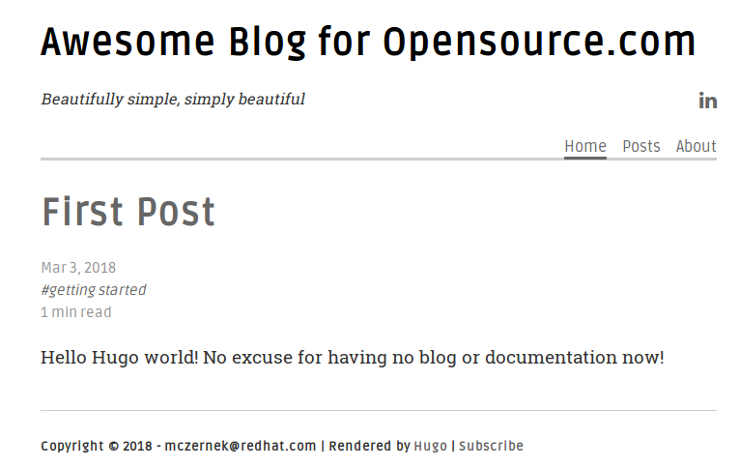

此刻,你可以疯狂起来了。注意到你的提交文件中包括两个部分。第一部分是以 `+++` 符号分隔开的。它包括了提交文档的主要数据,例如名称、时间等。在 Hugo 中,这叫做前缀。在前缀之后,才是正文。下面编辑第一个提交文件内容:

|

||||

|

||||

```

|

||||

+++

|

||||

title = "First Post"

|

||||

date = 2018-03-03T13:23:10+01:00

|

||||

draft = false

|

||||

tags = ["Getting started"]

|

||||

categories = []

|

||||

+++

|

||||

|

||||

Hello Hugo world! No more excuses for having no blog or documentation now!

|

||||

```

|

||||

|

||||

现在你要做的就是启动你的服务器:`hugo server -D`;然后打开浏览器,输入 `http://localhost:1313/`。

|

||||

|

||||

|

||||

|

||||

### 20-30 分钟:调整网站

|

||||

|

||||

前面的工作很完美,但还有一些问题需要解决。例如,简单地命名你的站点:

|

||||

|

||||

1. 终端中按下 `Ctrl+C` 以停止服务器。

|

||||

2. 打开 `config.toml`,编辑博客的名称,版权,你的姓名,社交网站等等。

|

||||

|

||||

当你再次启动服务器后,你会发现博客私人订制味道更浓了。不过,还少一个重要的基础内容:主菜单。快速的解决这个问题。返回 `config.toml` 文件,在末尾插入如下一段:

|

||||

|

||||

```

|

||||

[[menu.main]]

|

||||

name = "Home" #Name in the navigation bar

|

||||

weight = 10 #The larger the weight, the more on the right this item will be

|

||||

url = "/" #URL address

|

||||

[[menu.main]]

|

||||

name = "Posts"

|

||||

weight = 20

|

||||

url = "/posts/"

|

||||

```

|

||||

|

||||

上面这段代码添加了 `Home` 和 `Posts` 到主菜单中。你还需要一个 `About` 页面。这次是创建一个 `.md` 文件,而不是编辑 `config.toml` 文件:

|

||||

|

||||

1. 创建 `about.md` 文件:`hugo new about.md` 。注意它是 `about.md`,不是 `posts/about.md`。该页面不是博客提交内容,所以你不想它显示到博客内容提交当中吧。

|

||||

2. 用文本编辑器打开该文件,输入如下一段:

|

||||

|

||||

```

|

||||

+++

|

||||

title = "About"

|

||||

date = 2018-03-03T13:50:49+01:00

|

||||

menu = "main" #Display this page on the nav menu

|

||||

weight = "30" #Right-most nav item

|

||||

meta = "false" #Do not display tags or categories

|

||||

+++

|

||||

|

||||

> Waves are the practice of the water. Shunryu Suzuki

|

||||

```

|

||||

|

||||

当你启动你的服务器并输入:`http://localhost:1313/`,你将会看到你的博客。(访问我 Gihub 主页上的 [例子][6] )如果你想让文章的菜单栏和 Github 相似,给 `themes/kiera/static/css/styles.css` 打上这个 [补丁][7]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/3/start-blog-30-minutes-hugo

|

||||

|

||||

作者:[Marek Czernek][a]

译者:[jrg](https://github.com/jrglinux)

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/mczernek

|

||||

[1]:https://gohugo.io/

|

||||

[2]:https://github.com/gohugoio/hugo/releases

|

||||

[3]:https://gohugo.io/getting-started/installing/

|

||||

[4]:https://themes.gohugo.io/

|

||||

[5]:https://github.com/avianto/hugo-kiera

|

||||

[6]:https://m-czernek.github.io/awesome-blog/

|

||||

[7]:https://github.com/avianto/hugo-kiera/pull/18/files

|

||||

53

published/20180518 Mastering CI-CD at OpenDev.md

Normal file

53

published/20180518 Mastering CI-CD at OpenDev.md

Normal file

@ -0,0 +1,53 @@

|

||||

在 OpenDev 大会上学习 CI/CD

|

||||

======

|

||||

> 未来的开发工作需要非常精通 CI/CD 流程。

|

||||

|

||||

|

||||

|

||||

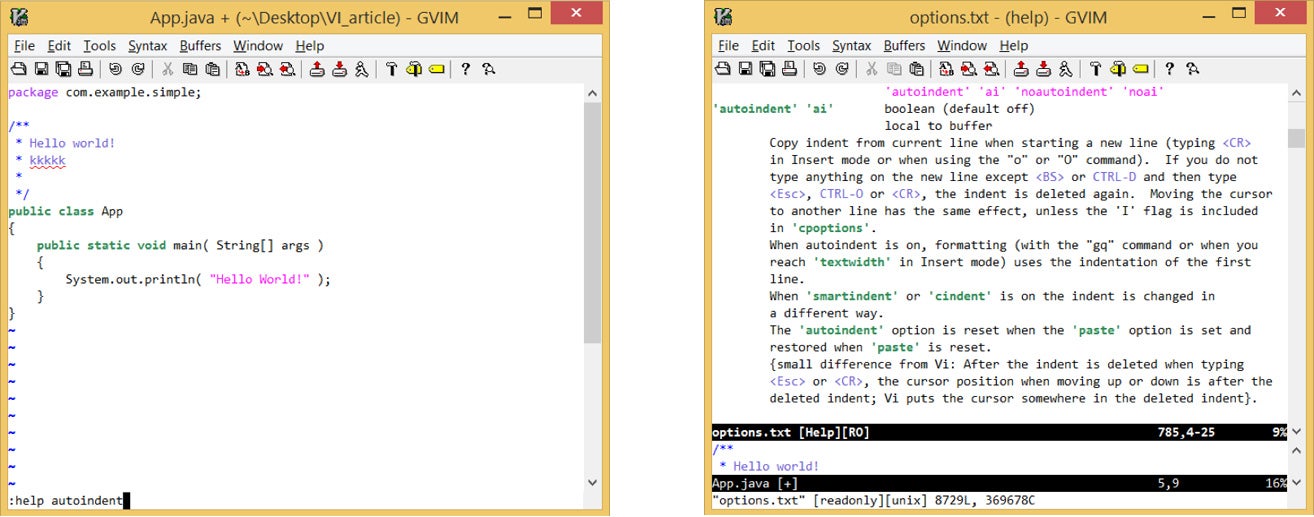

在 2017 年启动后,OpenDev 大会现在已是一个年度活动。在去年 9 月的首届活动上,会议的重点是边缘计算。今年的活动,于 5 月 22 - 23 日举行,会议的重点是持续集成和持续发布 (CI/CD),并与 OpenStack 峰会一起在温哥华举行。

|

||||

|

||||

基于我在 OpenStack 项目的 CI/CD 系统的技术背景和我近期进入容器下的 CI/CD 方面的经验,我被邀请加入 OpenDev CI/CD 计划委员会。今天我经常借助很多开源技术,例如 [Jenkins][3]、[GitLab][2]、[Spinnaker][4] 和 [Artifactory][5] 来讨论 CI/CD 流程。

|

||||

|

||||

这次活动对我来说是很激动人心的,因为我们将在这个活动中融合两个开源基础设施理念。首先,我们将讨论可以被任何组织使用的 CI/CD 工具。为此目的,在 [讲演][6] 中,我们将听到关于开源 CI/CD 工具的使用讲演,一场来自 Boris Renski 的关于 Spinnaker 的讲演,和一场来自 Jim Blair 的关于 [Zuul][7] 的讲演。同时,讲演会涉及关于开源技术的偏好的高级别话题,特别是那种跨社区的和本身就是开源项目的。从Fatih Degirmenci 和 Daniel Farrel 那里,我们将听到关于在不同社区分享持续发布实践经历,接着 Benjamin Mako Hill 会为我们带来一场关于为什么自由软件需要自由工具的分享。

|

||||

|

||||

在分享 CI/CD 相对新颖的特性后,接下来的活动是对话、研讨会和协作讨论的混合组合。当从人们所提交的讲座和研讨会中进行选择,并提出协作讨论主题时,我们希望确保有一个多样灵活的日程表,这样任何参与者都能在 CI/CD 活动进程中发现有趣的东西。

|

||||

|

||||

这些讲座会是标准的会议风格,选择涵盖关键主题,如制定 CI/CD 流程,在实践 DevOps 时提升安全性,以及更具体的解决方案,如基于容器关于 Kubernetes 的 [Aptomi][8] 和在 ETSI NFV 环境下 CI/CD。这些会话的大部分将会是作为给新接触 CI/CD 或这些特定技术的参与者关于这些话题和理念的简介。

|

||||

|

||||

交互式的研讨会会持续相对比较长的时间,参与者将会在思想上得到特定的体验。这些研讨会包括 “[在持续集成任务中的异常检测][9]”、“[如何安装 Zuul 和配置第一个任务][10]”,和“[Spinnake 101:快速可靠的软件发布][11]”。(注意这些研讨会空间是有限的,所以设立了一个 RSVP 系统。你们将会在会议的链接里找到一个 RSVP 的按钮。)

|

||||

|

||||

可能最让我最兴奋的是协作讨论,这些协作讨论占据了一半以上的活动安排。协作讨论的主题由计划委员会选取。计划委员会根据我们在社区里所看到来选取对应的主题。这是“鱼缸”风格式的会议,通常是几个人聚在一个房间里围绕着 CI/CD 讨论某一个主题。

|

||||

|

||||

这次会议风格的理念是来自于开发者峰会,最初是由 Ubuntu 社区提出,接着 OpenStack 社区也在活动上采纳。这些协作讨论的主题包含不同的会议,这些会议是关于 CI/CD 基础,可以鼓励跨社区协作的提升举措,在组织里推行 CI/CD 文化,和为什么开源 CI/CD 工具如此重要。采用共享文档来做会议笔记,以确保尽可能的在会议的过程中分享知识。在讨论过程中,提出行动项目也是很常见的,因此社区成员可以推动和所涉及的主题相关的倡议。

|

||||

|

||||

活动将以联合总结会议结束。联合总结会议将总结来自协同讨论的关键点和为即将在这个领域工作的参与者指出可选的职业范围。

|

||||

|

||||

可以在 [OpenStack 峰会注册页][13] 上注册参加活动。或者可以在温哥华唯一指定售票的会议中心购买活动的入场券,价格是 $199。更多关于票和全部的活动安排见官网 [OpenDev 网站][1]。

|

||||

|

||||

我希望你们能够加入我们,并在温哥华渡过令人激动的两天,并且在这两天的活动中学习,协作和在 CI/CD 取得进展。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/5/opendev

|

||||

|

||||

作者:[Elizabeth K.Joseph][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[jamelouis](https://github.com/jamelouis)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/pleia2

|

||||

[1]:http://2018.opendevconf.com/

|

||||

[2]:https://about.gitlab.com/

|

||||

[3]:https://jenkins.io/

|

||||

[4]:https://www.spinnaker.io/

|

||||

[5]:https://jfrog.com/artifactory/

|

||||

[6]:http://2018.opendevconf.com/schedule/

|

||||

[7]:https://zuul-ci.org/

|

||||

[8]:http://aptomi.io/

|

||||

[9]:https://www.openstack.org/summit/vancouver-2018/summit-schedule/events/21692/anomaly-detection-in-continuous-integration-jobs

|

||||

[10]:https://www.openstack.org/summit/vancouver-2018/summit-schedule/events/21693/how-to-install-zuul-and-configure-your-first-jobs

|

||||

[11]:https://www.openstack.org/summit/vancouver-2018/summit-schedule/events/21699/spinnaker-101-releasing-software-with-velocity-and-confidence

|

||||

[12]:https://www.openstack.org/summit/vancouver-2018/summit-schedule/events/21831/opendev-cicd-joint-collab-conclusion

|

||||

[13]:https://www.eventbrite.com/e/openstack-summit-may-2018-vancouver-tickets-40845826968?aff=VancouverSummit2018

|

||||

@ -1,152 +1,153 @@

|

||||

Git 使用简介

|

||||

======

|

||||

> 我将向你介绍让 Git 的启动、运行,并和 GitHub 一起使用的基础知识。

|

||||

|

||||

|

||||

|

||||

如果你是一个开发者,那你应该熟悉许多开发工具。你已经花了多年时间来学习一种或者多种编程语言并完善你的技巧。你可以熟练运用图形工具或者命令行工具开发。在你看来,没有任何事可以阻挡你。你的代码, 好像你的思想和你的手指一样,将会创建一个优雅的,完美评价的应用程序,并会风靡世界。

|

||||

如果你是一个开发者,那你应该熟悉许多开发工具。你已经花了多年时间来学习一种或者多种编程语言并打磨你的技巧。你可以熟练运用图形工具或者命令行工具开发。在你看来,没有任何事可以阻挡你。你的代码, 好像你的思想和你的手指一样,将会创建一个优雅的,完美评价的应用程序,并会风靡世界。

|

||||

|

||||

然而,如果你和其他人共同开发一个项目会发生什么呢?或者,你开发的应用程序变地越来越大,下一步你将如何去做?如果你想成功地和其他开发者合作,你定会想用一个分布式版本控制系统。使用这样一个系统,合作开发一个项目变得非常高效和可靠。这样的一个系统便是 [Git][1]。还有一个叫 [GitHub][2] 的方便的存储仓库,来存储你的项目代码,这样你的团队可以检查和修改代码。

|

||||

然而,如果你和其他人共同开发一个项目会发生什么呢?或者,你开发的应用程序变地越来越大,下一步你将如何去做?如果你想成功地和其他开发者合作,你定会想用一个分布式版本控制系统。使用这样一个系统,合作开发一个项目变得非常高效和可靠。这样的一个系统便是 [Git][1]。还有一个叫 [GitHub][2] 的方便的存储仓库,用来存储你的项目代码,这样你的团队可以检查和修改代码。

|

||||

|

||||

我将向你介绍让 Git 的启动、运行,并和 GitHub 一起使用的基础知识,可以让你的应用程序的开发可以提升到一个新的水平。我将在 Ubuntu 18.04 上进行演示,因此如果您选择的发行版本不同,您只需要修改 Git 安装命令以适合你的发行版的软件包管理器。

|

||||

|

||||

我将向你介绍让 Git 的启动、运行,并和 GitHub 一起使用的基础知识,可以让你的应用程序的开发可以提升到一个新的水平。 我将在 Ubuntu 18.04 上进行演示,因此如果您选择的发行版本不同,您只需要修改 Git 安装命令以适合你的发行版的软件包管理器。

|

||||

### Git 和 GitHub

|

||||

|

||||

第一件事就是创建一个免费的 GitHub 账号,打开 [GitHub 注册页面][3],然后填上需要的信息。完成这个之后,你就注备好开始安装 Git 了(这两件事谁先谁后都可以)。

|

||||

|

||||

安装 Git 非常简单,打开一个命令行终端,并输入命令:

|

||||

|

||||

```

|

||||

sudo apt install git-all

|

||||

|

||||

```

|

||||

|

||||

这将会安装大量依赖包,但是你将了解使用 Git 和 GitHub 所需的一切。

|

||||

|

||||

注意:我使用 Git 来下载程序的安装源码。有许多时候,内置的软件管理器不提供某个软件,除了去第三方库中下载源码,我经常去这个软件项目的 Git 主页,像这样克隆:

|

||||

附注:我使用 Git 来下载程序的安装源码。有许多时候,内置的软件管理器不提供某个软件,除了去第三方库中下载源码,我经常去这个软件项目的 Git 主页,像这样克隆:

|

||||

|

||||

```

|

||||

git clone ADDRESS

|

||||

|

||||

```

|

||||

ADDRESS就是那个软件项目的 Git 主页。这样我就可以确保自己安装那个软件的最新发行版了。

|

||||

|

||||

创建一个本地仓库并添加一个文件。

|

||||

下一步就是在你的电脑里创建一个本地仓库(本文称之为newproject,位于~/目录下),打开一个命令行终端,并输入下面的命令:

|

||||

“ADDRESS” 就是那个软件项目的 Git 主页。这样我就可以确保自己安装那个软件的最新发行版了。

|

||||

|

||||

### 创建一个本地仓库并添加一个文件

|

||||

|

||||

下一步就是在你的电脑里创建一个本地仓库(本文称之为 newproject,位于 `~/` 目录下),打开一个命令行终端,并输入下面的命令:

|

||||

|

||||

```

|

||||

cd ~/

|

||||

|

||||

mkdir newproject

|

||||

|

||||

cd newproject

|

||||

|

||||

```

|

||||

|

||||

现在你需要初始化这个仓库。在 ~/newproject 目录下,输入命令 git init,当命令运行完,你就可以看到一个刚刚创建的空的 Git 仓库了(图1)。

|

||||

现在你需要初始化这个仓库。在 `~/newproject` 目录下,输入命令 `git init`,当命令运行完,你就可以看到一个刚刚创建的空的 Git 仓库了(图1)。

|

||||

|

||||

![new repository][5]

|

||||

|

||||

图 1:初始化完成的新仓库

|

||||

*图 1: 初始化完成的新仓库*

|

||||

|

||||

[使用许可][6]

|

||||

|

||||

下一步就是往项目里添加文件。我们在项目根目录(~/newproject)输入下面的命令:

|

||||

下一步就是往项目里添加文件。我们在项目根目录(`~/newproject`)输入下面的命令:

|

||||

|

||||

```

|

||||

touch readme.txt

|

||||

|

||||

```

|

||||

|

||||

现在项目里多了个空文件。输入 git status 来验证 Git 已经检测到多了个新文件(图2)。

|

||||

现在项目里多了个空文件。输入 `git status` 来验证 Git 已经检测到多了个新文件(图2)。

|

||||

|

||||

![readme][8]

|

||||

|

||||

图 2: Git 检测到新文件readme.txt

|

||||

|

||||

[使用许可][6]

|

||||

*图 2: Git 检测到新文件readme.txt*

|

||||

|

||||

即使 Git 检测到新的文件,但它并没有被真正的加入这个项目仓库。为此,你要输入下面的命令:

|

||||

|

||||

```

|

||||

git add readme.txt

|

||||

|

||||

```

|

||||

|

||||

一旦完成这个命令,再输入 git status 命令,可以看到,readme.txt 已经是这个项目里的新文件了(图3)。

|

||||

一旦完成这个命令,再输入 `git status` 命令,可以看到,`readme.txt` 已经是这个项目里的新文件了(图3)。

|

||||

|

||||

![file added][10]

|

||||

|

||||

图 3: 我们的文件已经被添加进临时环境

|

||||

*图 3: 我们的文件已经被添加进临时环境*

|

||||

|

||||

[使用许可][6]

|

||||

### 第一次提交

|

||||

当新文件添加进临时环境之后,我们现在就准备好第一次提交了。什么是提交呢?它是很简单的,一次提交就是记录你更改的项目的文件。创建一次提交也是非常简单的。但是,为提交创建一个描述信息非常重要。通过这样做,你将添加有关提交包含的内容的注释,比如你对文件做出的修改。然而,在这样做之前,我们需要确认我们的 Git 账户,输入以下命令:

|

||||

|

||||

当新文件添加进临时环境之后,我们现在就准备好创建第一个<ruby>提交<rt>commit</rt></ruby>了。什么是提交呢?简单的说,一个提交就是你更改的项目的文件的记录。创建一个提交也是非常简单的。但是,为提交包含一个描述信息非常重要。通过这样做,你可以添加有关该提交包含的内容的注释,比如你对文件做出的何种修改。然而,在这样做之前,我们需要告知 Git 我们的账户,输入以下命令:

|

||||

|

||||

```

|

||||

git config --global user.email EMAIL

|

||||

|

||||

git config --global user.name “FULL NAME”

|

||||

|

||||

```

|

||||

EMAIL 即你的 email 地址,FULL NAME 则是你的姓名。现在你可以通过以下命令创建一个提交:

|

||||

|

||||

“EMAIL” 即你的 email 地址,“FULL NAME” 则是你的姓名。

|

||||

|

||||

现在你可以通过以下命令创建一个提交:

|

||||

|

||||

```

|

||||

git commit -m “Descriptive Message”

|

||||

|

||||

```

|

||||

Descriptive Message 即为你的提交的描述性信息。比如,当你第一次提交是提交一个 readme.txt 文件,你可以这样提交:

|

||||

|

||||

“Descriptive Message” 即为你的提交的描述性信息。比如,当你第一个提交是提交一个 `readme.txt` 文件,你可以这样提交:

|

||||

|

||||

```

|

||||

git commit -m “First draft of readme.txt file”

|

||||

|

||||

```

|

||||

|

||||

你可以看到输出显示一个文件已经修改,并且,为 readnme.txt 创建了一个新模式(图4)

|

||||

你可以看到输出表明一个文件已经修改,并且,为 `readme.txt` 创建了一个新的文件模式(图4)

|

||||

|

||||

![success][12]

|

||||

|

||||

图4:提交成功

|

||||

*图4:提交成功*

|

||||

|

||||

|

||||

### 创建分支并推送至 GitHub

|

||||

|

||||

分支是很重要的,它允许你在项目状态间中移动。假如,你想给你的应用创建一个新的特性。为了这样做,你创建了个新分支。一旦你完成你的新特性,你可以把这个新分支合并到你的主分支中去,使用以下命令创建一个新分支:

|

||||

|

||||

[使用许可][6]

|

||||

### 创建分支并推送至GitHub

|

||||

分支是很重要的,它允许你从项目状态间中移动。假如,你想给你的应用创建一个新的特性。为了这样做,你创建了个新分支。一旦你完成你的新特性,你可以把这个新分支合并到你的主分支中去,使用以下命令创建一个新分支:

|

||||

```

|

||||

git checkout -b BRANCH

|

||||

|

||||

```

|

||||

BRANCH 即为你新分支的名字,一旦执行完命令,输入 git branch 命令来查看是否创建了新分支(图5)

|

||||

|

||||

“BRANCH” 即为你新分支的名字,一旦执行完命令,输入 `git branch` 命令来查看是否创建了新分支(图5)

|

||||

|

||||

![featureX][14]

|

||||

|

||||

图5:名为 featureX 的新分支

|

||||

*图5:名为 featureX 的新分支*

|

||||

|

||||

[使用许可][6]

|

||||

|

||||

接下来,我们需要在GitHub上创建一个仓库。 登录GitHub帐户,请单击帐户主页上的“新建仓库”按钮。 填写必要的信息,然后单击Create repository(图6)。

|

||||

接下来,我们需要在 GitHub 上创建一个仓库。 登录 GitHub 帐户,请单击帐户主页上的“New Repository”按钮。 填写必要的信息,然后单击 “Create repository”(图6)。

|

||||

|

||||

![new repository][16]

|

||||

|

||||

图6:在 GitHub 上新建一个仓库

|

||||

*图6:在 GitHub 上新建一个仓库*

|

||||

|

||||

[使用许可][6]

|

||||

在创建完一个仓库之后,你可以看到一个用于推送本地仓库的地址。若要推送,返回命令行窗口(`~/newproject` 目录中),输入以下命令:

|

||||

|

||||

在创建完一个仓库之后,你可以看到一个用于推送本地仓库的地址。若要推送,返回命令行窗口( ~/newproject 目录中),输入以下命令:

|

||||

```

|

||||

git remote add origin URL

|

||||

|

||||

git push -u origin master

|

||||

|

||||

```

|

||||

URL 即为我们 GitHub 上新建的仓库地址。

|

||||

|

||||

“URL” 即为我们 GitHub 上新建的仓库地址。

|

||||

|

||||

系统会提示您,输入 GitHub 的用户名和密码,一旦授权成功,你的项目将会被推送到 GitHub 仓库中。

|

||||

|

||||

### 拉取项目

|

||||

|

||||

如果你的同事改变了你们 GitHub 上项目的代码,并且已经合并那些更改,你可以拉取那些项目文件到你的本地机器,这样,你系统中的文件就可以和远程用户的文件保持匹配。你可以输入以下命令来做这件事( ~/newproject 在目录中),

|

||||

如果你的同事改变了你们 GitHub 上项目的代码,并且已经合并那些更改,你可以拉取那些项目文件到你的本地机器,这样,你系统中的文件就可以和远程用户的文件保持匹配。你可以输入以下命令来做这件事(`~/newproject` 在目录中),

|

||||

|

||||

```

|

||||

git pull origin master

|

||||

|

||||

```

|

||||

|

||||

以上的命令可以拉取任何新文件或修改过的文件到你的本地仓库。

|

||||

|

||||

### 基础

|

||||

|

||||

这就是从命令行使用 Git 来处理存储在 GitHub 上的项目的基础知识。 还有很多东西需要学习,所以我强烈建议你使用 man git,man git-push 和 man git-pull 命令来更深入地了解 git 命令可以做什么。

|

||||

这就是从命令行使用 Git 来处理存储在 GitHub 上的项目的基础知识。 还有很多东西需要学习,所以我强烈建议你使用 `man git`,`man git-push` 和 `man git-pull` 命令来更深入地了解 `git` 命令可以做什么。

|

||||

|

||||

开发快乐!

|

||||

|

||||

了解更多关于 Linux的 内容,请访问来自 Linux 基金会和 edX 的免费的 ["Introduction to Linux" ][17]课程。

|

||||

了解更多关于 Linux 的 内容,请访问来自 Linux 基金会和 edX 的免费的 ["Introduction to Linux"][17]课程。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -155,7 +156,7 @@ via: https://www.linux.com/learn/intro-to-linux/2018/7/introduction-using-git

|

||||

作者:[Jack Wallen][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[distant1219](https://github.com/distant1219)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,77 @@

|

||||

如何使用 Apache 构建 URL 缩短服务

|

||||

======

|

||||

> 用 Apache HTTP 服务器的 mod_rewrite 功能创建你自己的短链接。

|

||||

|

||||

|

||||

|

||||

很久以前,人们开始在 Twitter 上分享链接。140 个字符的限制意味着 URL 可能消耗一条推文的大部分(或全部),因此人们使用 URL 缩短服务。最终,Twitter 加入了一个内置的 URL 缩短服务([t.co][1])。

|

||||

|

||||

字符数现在不重要了,但还有其他原因要缩短链接。首先,缩短服务可以提供分析功能 —— 你可以看到你分享的链接的受欢迎程度。它还简化了制作易于记忆的 URL。例如,[bit.ly/INtravel][2] 比<https://www.in.gov/ai/appfiles/dhs-countyMap/dhsCountyMap.html>更容易记住。如果你想预先共享一个链接,但还不知道最终地址,这时 URL 缩短服务可以派上用场。。

|

||||

|

||||

与任何技术一样,URL 缩短服务并非都是正面的。通过屏蔽最终地址,缩短的链接可用于指向恶意或冒犯性内容。但是,如果你仔细上网,URL 缩短服务是一个有用的工具。

|

||||

|

||||

我们之前在网站上[发布过缩短服务的文章][3],但也许你想要运行一些由简单的文本文件支持的缩短服务。在本文中,我们将展示如何使用 Apache HTTP 服务器的 mod_rewrite 功能来设置自己的 URL 缩短服务。如果你不熟悉 Apache HTTP 服务器,请查看 David Both 关于[安装和配置][4]它的文章。

|

||||

|

||||

### 创建一个 VirtualHost

|

||||

|

||||

在本教程中,我假设你购买了一个很酷的域名,你将它专门用于 URL 缩短服务。例如,我的网站是 [funnelfiasco.com][5],所以我买了 [funnelfias.co][6] 用于我的 URL 缩短服务(好吧,它不是很短,但它可以满足我的虚荣心)。如果你不将缩短服务作为单独的域运行,请跳到下一部分。

|

||||

|

||||

第一步是设置将用于 URL 缩短服务的 VirtualHost。有关 VirtualHost 的更多信息,请参阅 [David Both 的文章][7]。这步只需要几行:

|

||||

|

||||

```

|

||||

<VirtualHost *:80>

|

||||

ServerName funnelfias.co

|

||||

</VirtualHost>

|

||||

```

|

||||

|

||||

### 创建重写规则

|

||||

|

||||

此服务使用 HTTPD 的重写引擎来重写 URL。如果你在上面的部分中创建了 VirtualHost,则下面的配置跳到你的 VirtualHost 部分。否则跳到服务器的 VirtualHost 或主 HTTPD 配置。

|

||||

|

||||

```

|

||||

RewriteEngine on

|

||||

RewriteMap shortlinks txt:/data/web/shortlink/links.txt

|

||||

RewriteRule ^/(.+)$ ${shortlinks:$1} [R=temp,L]

|

||||

```

|

||||

|

||||

第一行只是启用重写引擎。第二行在文本文件构建短链接的映射。上面的路径只是一个例子。你需要使用系统上使用有效路径(确保它可由运行 HTTPD 的用户帐户读取)。最后一行重写 URL。在此例中,它接受任何字符并在重写映射中查找它们。你可能希望重写时使用特定的字符串。例如,如果你希望所有缩短的链接都是 “slX”(其中 X 是数字),则将上面的 `(.+)` 替换为 `(sl\d+)`。

|

||||

|

||||

我在这里使用了临时重定向(HTTP 302)。这能让我稍后更新目标 URL。如果希望短链接始终指向同一目标,则可以使用永久重定向(HTTP 301)。用 `permanent` 替换第三行的 `temp`。

|

||||

|

||||

### 构建你的映射

|

||||

|

||||

编辑配置文件 `RewriteMap` 行中的指定文件。格式是空格分隔的键值存储。在每一行上放一个链接:

|

||||

|

||||

```

|

||||

osdc https://opensource.com/users/bcotton

|

||||

twitter https://twitter.com/funnelfiasco

|

||||

swody1 https://www.spc.noaa.gov/products/outlook/day1otlk.html

|

||||

```

|

||||

|

||||

### 重启 HTTPD

|

||||

|

||||

最后一步是重启 HTTPD 进程。这是通过 `systemctl restart httpd` 或类似命令完成的(命令和守护进程名称可能因发行版而不同)。你的链接缩短服务现已启动并运行。当你准备编辑映射时,无需重新启动 Web 服务器。你所要做的就是保存文件,Web 服务器将获取到差异。

|

||||

|

||||

### 未来的工作

|

||||

|

||||

此示例为你提供了基本的 URL 缩短服务。如果你想将开发自己的管理接口作为学习项目,它可以作为一个很好的起点。或者你可以使用它分享容易记住的链接到那些容易忘记的 URL。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/apache-url-shortener

|

||||

|

||||

作者:[Ben Cotton][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/bcotton

|

||||

[1]:http://t.co

|

||||

[2]:http://bit.ly/INtravel

|

||||

[3]:https://opensource.com/article/17/3/url-link-shortener

|

||||

[4]:https://opensource.com/article/18/2/how-configure-apache-web-server

|

||||

[5]:http://funnelfiasco.com

|

||||

[6]:http://funnelfias.co

|

||||