mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject.git

This commit is contained in:

commit

96e1a1c928

65

README.md

65

README.md

@ -59,41 +59,42 @@ LCTT 的组成

|

||||

* 2016/12/24 拟定 LCTT [Core 规则](core.md),并增加新的 Core 成员: ucasFL、martin2011qi,及调整一些组。

|

||||

* 2017/03/13 制作了 LCTT 主页、成员列表和成员主页,LCTT 主页将移动至 https://linux.cn/lctt 。

|

||||

* 2017/03/16 提升 GHLandy、bestony、rusking 为新的 Core 成员。创建 Comic 小组。

|

||||

* 2017/04/11 启用头衔制,为各位重要成员颁发头衔。

|

||||

|

||||

活跃成员

|

||||

核心成员

|

||||

-------------------------------

|

||||

|

||||

目前 TP 活跃成员有:

|

||||

- Leader @wxy,

|

||||

- Source @oska874,

|

||||

- Proofreaders @jasminepeng,

|

||||

- CORE @geekpi,

|

||||

- CORE @GOLinux,

|

||||

- CORE @ictlyh,

|

||||

- CORE @strugglingyouth,

|

||||

- CORE @FSSlc,

|

||||

- CORE @zpl1025,

|

||||

- CORE @runningwater,

|

||||

- CORE @bazz2,

|

||||

- CORE @Vic020,

|

||||

- CORE @alim0x,

|

||||

- CORE @tinyeyeser,

|

||||

- CORE @Locez,

|

||||

- CORE @ucasFL,

|

||||

- CORE @martin2011qi,

|

||||

- CORE @GHLandy,

|

||||

- CORE @bestony,

|

||||

- CORE @rusking,

|

||||

- Senior @DeadFire,

|

||||

- Senior @reinoir222,

|

||||

- Senior @vito-L,

|

||||

- Senior @willqian,

|

||||

- Senior @vizv,

|

||||

- Senior @dongfengweixiao,

|

||||

- Senior @PurlingNayuki,

|

||||

- Senior @carolinewuyan,

|

||||

目前 LCTT 核心成员有:

|

||||

|

||||

- 组长 @wxy,

|

||||

- 选题 @oska874,

|

||||

- 校对 @jasminepeng,

|

||||

- 钻石译者 @geekpi,

|

||||

- 钻石译者 @GOLinux,

|

||||

- 钻石译者 @ictlyh,

|

||||

- 技术组长 @bestony,

|

||||

- 漫画组长 @GHLandy,

|

||||

- LFS 组长 @martin2011qi,

|

||||

- 核心成员 @strugglingyouth,

|

||||

- 核心成员 @FSSlc,

|

||||

- 核心成员 @zpl1025,

|

||||

- 核心成员 @runningwater,

|

||||

- 核心成员 @bazz2,

|

||||

- 核心成员 @Vic020,

|

||||

- 核心成员 @alim0x,

|

||||

- 核心成员 @tinyeyeser,

|

||||

- 核心成员 @Locez,

|

||||

- 核心成员 @ucasFL,

|

||||

- 核心成员 @rusking,

|

||||

- 前任选题 @DeadFire,

|

||||

- 前任校对 @reinoir222,

|

||||

- 前任校对 @PurlingNayuki,

|

||||

- 前任校对 @carolinewuyan,

|

||||

- 功勋成员 @vito-L,

|

||||

- 功勋成员 @willqian,

|

||||

- 功勋成员 @vizv,

|

||||

- 功勋成员 @dongfengweixiao,

|

||||

|

||||

全部成员列表请参见: https://linux.cn/lctt-list/ 。

|

||||

|

||||

谢谢大家的支持!

|

||||

|

||||

谢谢大家的支持!

|

||||

310

published/20110123 How debuggers work Part 1 - Basics.md

Normal file

310

published/20110123 How debuggers work Part 1 - Basics.md

Normal file

@ -0,0 +1,310 @@

|

||||

调试器的工作原理(一):基础篇

|

||||

============================================================

|

||||

|

||||

这是调试器工作原理系列文章的第一篇,我不确定这个系列会有多少篇文章,会涉及多少话题,但我仍会从这篇基础开始。

|

||||

|

||||

### 这一篇会讲什么

|

||||

|

||||

我将为大家展示 Linux 中调试器的主要构成模块 - `ptrace` 系统调用。这篇文章所有代码都是基于 32 位 Ubuntu 操作系统。值得注意的是,尽管这些代码是平台相关的,将它们移植到其它平台应该并不困难。

|

||||

|

||||

### 缘由

|

||||

|

||||

为了理解我们要做什么,让我们先考虑下调试器为了完成调试都需要什么资源。调试器可以开始一个进程并调试这个进程,又或者将自己同某个已经存在的进程关联起来。调试器能够单步执行代码,设定断点并且将程序执行到断点,检查变量的值并追踪堆栈。许多调试器有着更高级的特性,例如在调试器的地址空间内执行表达式或者调用函数,甚至可以在进程执行过程中改变代码并观察效果。

|

||||

|

||||

尽管现代的调试器都十分的复杂(我没有检查,但我确信 gdb 的代码行数至少有六位数),但它们的工作的原理却是十分的简单。调试器的基础是操作系统与编译器 / 链接器提供的一些基础服务,其余的部分只是[简单的编程][14]而已。

|

||||

|

||||

### Linux 的调试 - ptrace

|

||||

|

||||

Linux 调试器中的瑞士军刀便是 `ptrace` 系统调用(使用 man 2 ptrace 命令可以了解更多)。这是一种复杂却强大的工具,可以允许一个进程控制另外一个进程并从<ruby>内部替换<rt>Peek and poke</rt></ruby>被控制进程的内核镜像的值(Peek and poke 在系统编程中是很知名的叫法,指的是直接读写内存内容)。

|

||||

|

||||

接下来会深入分析。

|

||||

|

||||

### 执行进程的代码

|

||||

|

||||

我将编写一个示例,实现一个在“跟踪”模式下运行的进程。在这个模式下,我们将单步执行进程的代码,就像机器码(汇编代码)被 CPU 执行时一样。我将分段展示、讲解示例代码,在文章的末尾也有完整 c 文件的下载链接,你可以编译、执行或者随心所欲的更改。

|

||||

|

||||

更进一步的计划是实现一段代码,这段代码可以创建可执行用户自定义命令的子进程,同时父进程可以跟踪子进程。首先是主函数:

|

||||

|

||||

```

|

||||

int main(int argc, char** argv)

|

||||

{

|

||||

pid_t child_pid;

|

||||

|

||||

if (argc < 2) {

|

||||

fprintf(stderr, "Expected a program name as argument\n");

|

||||

return -1;

|

||||

}

|

||||

|

||||

child_pid = fork();

|

||||

if (child_pid == 0)

|

||||

run_target(argv[1]);

|

||||

else if (child_pid > 0)

|

||||

run_debugger(child_pid);

|

||||

else {

|

||||

perror("fork");

|

||||

return -1;

|

||||

}

|

||||

|

||||

return 0;

|

||||

}

|

||||

```

|

||||

|

||||

看起来相当的简单:我们用 `fork` 创建了一个新的子进程(这篇文章假定读者有一定的 Unix/Linux 编程经验。我假定你知道或至少了解 fork、exec 族函数与 Unix 信号)。if 语句的分支执行子进程(这里称之为 “target”),`else if` 的分支执行父进程(这里称之为 “debugger”)。

|

||||

|

||||

下面是 target 进程的代码:

|

||||

|

||||

```

|

||||

void run_target(const char* programname)

|

||||

{

|

||||

procmsg("target started. will run '%s'\n", programname);

|

||||

|

||||

/* Allow tracing of this process */

|

||||

if (ptrace(PTRACE_TRACEME, 0, 0, 0) < 0) {

|

||||

perror("ptrace");

|

||||

return;

|

||||

}

|

||||

|

||||

/* Replace this process's image with the given program */

|

||||

execl(programname, programname, 0);

|

||||

}

|

||||

```

|

||||

|

||||

这段代码中最值得注意的是 `ptrace` 调用。在 `sys/ptrace.h` 中,`ptrace` 是如下定义的:

|

||||

|

||||

```

|

||||

long ptrace(enum __ptrace_request request, pid_t pid,

|

||||

void *addr, void *data);

|

||||

```

|

||||

|

||||

第一个参数是 `_request_`,这是许多预定义的 `PTRACE_*` 常量中的一个。第二个参数为请求分配进程 ID。第三个与第四个参数是地址与数据指针,用于操作内存。上面代码段中的 `ptrace` 调用发起了 `PTRACE_TRACEME` 请求,这意味着该子进程请求系统内核让其父进程跟踪自己。帮助页面上对于 request 的描述很清楚:

|

||||

|

||||

> 意味着该进程被其父进程跟踪。任何传递给该进程的信号(除了 `SIGKILL`)都将通过 `wait()` 方法阻塞该进程并通知其父进程。**此外,该进程的之后所有调用 `exec()` 动作都将导致 `SIGTRAP` 信号发送到此进程上,使得父进程在新的程序执行前得到取得控制权的机会**。如果一个进程并不需要它的的父进程跟踪它,那么这个进程不应该发送这个请求。(pid、addr 与 data 暂且不提)

|

||||

|

||||

我高亮了这个例子中我们需要注意的部分。在 `ptrace` 调用后,`run_target` 接下来要做的就是通过 `execl` 传参并调用。如同高亮部分所说明,这将导致系统内核在 `execl` 创建进程前暂时停止,并向父进程发送信号。

|

||||

|

||||

是时候看看父进程做什么了。

|

||||

|

||||

```

|

||||

void run_debugger(pid_t child_pid)

|

||||

{

|

||||

int wait_status;

|

||||

unsigned icounter = 0;

|

||||

procmsg("debugger started\n");

|

||||

|

||||

/* Wait for child to stop on its first instruction */

|

||||

wait(&wait_status);

|

||||

|

||||

while (WIFSTOPPED(wait_status)) {

|

||||

icounter++;

|

||||

/* Make the child execute another instruction */

|

||||

if (ptrace(PTRACE_SINGLESTEP, child_pid, 0, 0) < 0) {

|

||||

perror("ptrace");

|

||||

return;

|

||||

}

|

||||

|

||||

/* Wait for child to stop on its next instruction */

|

||||

wait(&wait_status);

|

||||

}

|

||||

|

||||

procmsg("the child executed %u instructions\n", icounter);

|

||||

}

|

||||

```

|

||||

|

||||

如前文所述,一旦子进程调用了 `exec`,子进程会停止并被发送 `SIGTRAP` 信号。父进程会等待该过程的发生并在第一个 `wait()` 处等待。一旦上述事件发生了,`wait()` 便会返回,由于子进程停止了父进程便会收到信号(如果子进程由于信号的发送停止了,`WIFSTOPPED` 就会返回 `true`)。

|

||||

|

||||

父进程接下来的动作就是整篇文章最需要关注的部分了。父进程会将 `PTRACE_SINGLESTEP` 与子进程 ID 作为参数调用 `ptrace` 方法。这就会告诉操作系统,“请恢复子进程,但在它执行下一条指令前阻塞”。周而复始地,父进程等待子进程阻塞,循环继续。当 `wait()` 中传出的信号不再是子进程的停止信号时,循环终止。在跟踪器(父进程)运行期间,这将会是被跟踪进程(子进程)传递给跟踪器的终止信号(如果子进程终止 `WIFEXITED` 将返回 `true`)。

|

||||

|

||||

`icounter` 存储了子进程执行指令的次数。这么看来我们小小的例子也完成了些有用的事情 - 在命令行中指定程序,它将执行该程序并记录它从开始到结束所需要的 cpu 指令数量。接下来就让我们这么做吧。

|

||||

|

||||

### 测试

|

||||

|

||||

我编译了下面这个简单的程序并利用跟踪器运行它:

|

||||

|

||||

```

|

||||

#include <stdio.h>

|

||||

|

||||

int main()

|

||||

{

|

||||

printf("Hello, world!\n");

|

||||

return 0;

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

令我惊讶的是,跟踪器花了相当长的时间,并报告整个执行过程共有超过 100,000 条指令执行。仅仅是一条输出语句?什么造成了这种情况?答案很有趣(至少你同我一样痴迷与机器/汇编语言)。Linux 的 gcc 默认会动态的将程序与 c 的运行时库动态地链接。这就意味着任何程序运行前的第一件事是需要动态库加载器去查找程序运行所需要的共享库。这些代码的数量很大 - 别忘了我们的跟踪器要跟踪每一条指令,不仅仅是主函数的,而是“整个进程中的指令”。

|

||||

|

||||

所以当我将测试程序使用静态编译时(通过比较,可执行文件会多出 500 KB 左右的大小,这部分是 C 运行时库的静态链接),跟踪器提示只有大概 7000 条指令被执行。这个数目仍然不小,但是考虑到在主函数执行前 libc 的初始化以及主函数执行后的清除代码,这个数目已经是相当不错了。此外,`printf` 也是一个复杂的函数。

|

||||

|

||||

仍然不满意的话,我需要的是“可以测试”的东西 - 例如可以完整记录每一个指令运行的程序执行过程。这当然可以通过汇编代码完成。所以我找到了这个版本的 “Hello, world!” 并编译了它。

|

||||

|

||||

|

||||

```

|

||||

section .text

|

||||

; The _start symbol must be declared for the linker (ld)

|

||||

global _start

|

||||

|

||||

_start:

|

||||

|

||||

; Prepare arguments for the sys_write system call:

|

||||

; - eax: system call number (sys_write)

|

||||

; - ebx: file descriptor (stdout)

|

||||

; - ecx: pointer to string

|

||||

; - edx: string length

|

||||

mov edx, len

|

||||

mov ecx, msg

|

||||

mov ebx, 1

|

||||

mov eax, 4

|

||||

|

||||

; Execute the sys_write system call

|

||||

int 0x80

|

||||

|

||||

; Execute sys_exit

|

||||

mov eax, 1

|

||||

int 0x80

|

||||

|

||||

section .data

|

||||

msg db 'Hello, world!', 0xa

|

||||

len equ $ - msg

|

||||

```

|

||||

|

||||

|

||||

当然,现在跟踪器提示 7 条指令被执行了,这样一来很容易区分它们。

|

||||

|

||||

### 深入指令流

|

||||

|

||||

上面那个汇编语言编写的程序使得我可以向你介绍 `ptrace` 的另外一个强大的用途 - 详细显示被跟踪进程的状态。下面是 `run_debugger` 函数的另一个版本:

|

||||

|

||||

```

|

||||

void run_debugger(pid_t child_pid)

|

||||

{

|

||||

int wait_status;

|

||||

unsigned icounter = 0;

|

||||

procmsg("debugger started\n");

|

||||

|

||||

/* Wait for child to stop on its first instruction */

|

||||

wait(&wait_status);

|

||||

|

||||

while (WIFSTOPPED(wait_status)) {

|

||||

icounter++;

|

||||

struct user_regs_struct regs;

|

||||

ptrace(PTRACE_GETREGS, child_pid, 0, ®s);

|

||||

unsigned instr = ptrace(PTRACE_PEEKTEXT, child_pid, regs.eip, 0);

|

||||

|

||||

procmsg("icounter = %u. EIP = 0x%08x. instr = 0x%08x\n",

|

||||

icounter, regs.eip, instr);

|

||||

|

||||

/* Make the child execute another instruction */

|

||||

if (ptrace(PTRACE_SINGLESTEP, child_pid, 0, 0) < 0) {

|

||||

perror("ptrace");

|

||||

return;

|

||||

}

|

||||

|

||||

/* Wait for child to stop on its next instruction */

|

||||

wait(&wait_status);

|

||||

}

|

||||

|

||||

procmsg("the child executed %u instructions\n", icounter);

|

||||

}

|

||||

```

|

||||

|

||||

不同仅仅存在于 `while` 循环的开始几行。这个版本里增加了两个新的 `ptrace` 调用。第一条将进程的寄存器值读取进了一个结构体中。 `sys/user.h` 定义有 `user_regs_struct`。如果你查看头文件,头部的注释这么写到:

|

||||

|

||||

```

|

||||

/* 这个文件只为了 GDB 而创建

|

||||

不用详细的阅读.如果你不知道你在干嘛,

|

||||

不要在除了 GDB 以外的任何地方使用此文件 */

|

||||

```

|

||||

|

||||

|

||||

不知道你做何感想,但这让我觉得我们找对地方了。回到例子中,一旦我们在 `regs` 变量中取得了寄存器的值,我们就可以通过将 `PTRACE_PEEKTEXT` 作为参数、 `regs.eip`(x86 上的扩展指令指针)作为地址,调用 `ptrace` ,读取当前进程的当前指令(警告:如同我上面所说,文章很大程度上是平台相关的。我简化了一些设定 - 例如,x86 指令集不需要调整到 4 字节,我的32位 Ubuntu unsigned int 是 4 字节。事实上,许多平台都不需要。从内存中读取指令需要预先安装完整的反汇编器。我们这里没有,但实际的调试器是有的)。下面是新跟踪器所展示出的调试效果:

|

||||

|

||||

```

|

||||

$ simple_tracer traced_helloworld

|

||||

[5700] debugger started

|

||||

[5701] target started. will run 'traced_helloworld'

|

||||

[5700] icounter = 1\. EIP = 0x08048080\. instr = 0x00000eba

|

||||

[5700] icounter = 2\. EIP = 0x08048085\. instr = 0x0490a0b9

|

||||

[5700] icounter = 3\. EIP = 0x0804808a. instr = 0x000001bb

|

||||

[5700] icounter = 4\. EIP = 0x0804808f. instr = 0x000004b8

|

||||

[5700] icounter = 5\. EIP = 0x08048094\. instr = 0x01b880cd

|

||||

Hello, world!

|

||||

[5700] icounter = 6\. EIP = 0x08048096\. instr = 0x000001b8

|

||||

[5700] icounter = 7\. EIP = 0x0804809b. instr = 0x000080cd

|

||||

[5700] the child executed 7 instructions

|

||||

```

|

||||

|

||||

|

||||

现在,除了 `icounter`,我们也可以观察到指令指针与它每一步所指向的指令。怎么来判断这个结果对不对呢?使用 `objdump -d` 处理可执行文件:

|

||||

|

||||

```

|

||||

$ objdump -d traced_helloworld

|

||||

|

||||

traced_helloworld: file format elf32-i386

|

||||

|

||||

Disassembly of section .text:

|

||||

|

||||

08048080 <.text>:

|

||||

8048080: ba 0e 00 00 00 mov $0xe,%edx

|

||||

8048085: b9 a0 90 04 08 mov $0x80490a0,%ecx

|

||||

804808a: bb 01 00 00 00 mov $0x1,%ebx

|

||||

804808f: b8 04 00 00 00 mov $0x4,%eax

|

||||

8048094: cd 80 int $0x80

|

||||

8048096: b8 01 00 00 00 mov $0x1,%eax

|

||||

804809b: cd 80 int $0x80

|

||||

```

|

||||

|

||||

这个结果和我们跟踪器的结果就很容易比较了。

|

||||

|

||||

### 将跟踪器关联到正在运行的进程

|

||||

|

||||

如你所知,调试器也能关联到已经运行的进程。现在你应该不会惊讶,`ptrace` 通过以 `PTRACE_ATTACH` 为参数调用也可以完成这个过程。这里我不会展示示例代码,通过上文的示例代码应该很容易实现这个过程。出于学习目的,这里使用的方法更简便(因为我们在子进程刚开始就可以让它停止)。

|

||||

|

||||

### 代码

|

||||

|

||||

上文中的简单的跟踪器(更高级的,可以打印指令的版本)的完整c源代码可以在[这里][20]找到。它是通过 4.4 版本的 gcc 以 `-Wall -pedantic --std=c99` 编译的。

|

||||

|

||||

### 结论与计划

|

||||

|

||||

诚然,这篇文章并没有涉及很多内容 - 我们距离亲手完成一个实际的调试器还有很长的路要走。但我希望这篇文章至少可以使得调试这件事少一些神秘感。`ptrace` 是功能多样的系统调用,我们目前只展示了其中的一小部分。

|

||||

|

||||

单步调试代码很有用,但也只是在一定程度上有用。上面我通过 C 的 “Hello World!” 做了示例。为了执行主函数,可能需要上万行代码来初始化 C 的运行环境。这并不是很方便。最理想的是在 `main` 函数入口处放置断点并从断点处开始分步执行。为此,在这个系列的下一篇,我打算展示怎么实现断点。

|

||||

|

||||

### 参考

|

||||

|

||||

撰写此文时参考了如下文章

|

||||

|

||||

* [Playing with ptrace, Part I][11]

|

||||

* [How debugger works][12]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1

|

||||

|

||||

作者:[Eli Bendersky][a]

|

||||

译者:[YYforymj](https://github.com/YYforymj)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://eli.thegreenplace.net/

|

||||

[1]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id1

|

||||

[2]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id2

|

||||

[3]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id3

|

||||

[4]:http://www.jargon.net/jargonfile/p/peek.html

|

||||

[5]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id4

|

||||

[6]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id5

|

||||

[7]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id6

|

||||

[8]:http://eli.thegreenplace.net/tag/articles

|

||||

[9]:http://eli.thegreenplace.net/tag/debuggers

|

||||

[10]:http://eli.thegreenplace.net/tag/programming

|

||||

[11]:http://www.linuxjournal.com/article/6100?page=0,1

|

||||

[12]:http://www.alexonlinux.com/how-debugger-works

|

||||

[13]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id7

|

||||

[14]:http://en.wikipedia.org/wiki/Small_matter_of_programming

|

||||

[15]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id8

|

||||

[16]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id9

|

||||

[17]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id10

|

||||

[18]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id11

|

||||

[19]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1#id12

|

||||

[20]:https://github.com/eliben/code-for-blog/blob/master/2011/simple_tracer.c

|

||||

[21]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1

|

||||

165

published/20150112 Data-Oriented Hash Table.md

Normal file

165

published/20150112 Data-Oriented Hash Table.md

Normal file

@ -0,0 +1,165 @@

|

||||

深入解析面向数据的哈希表性能

|

||||

============================================================

|

||||

|

||||

最近几年中,面向数据的设计已经受到了很多的关注 —— 一种强调内存中数据布局的编程风格,包括如何访问以及将会引发多少的 cache 缺失。由于在内存读取操作中缺失所占的数量级要大于命中的数量级,所以缺失的数量通常是优化的关键标准。这不仅仅关乎那些对性能有要求的 code-data 结构设计的软件,由于缺乏对内存效益的重视而成为软件运行缓慢、膨胀的一个很大因素。

|

||||

|

||||

|

||||

高效缓存数据结构的中心原则是将事情变得平滑和线性。比如,在大部分情况下,存储一个序列元素更倾向于使用普通数组而不是链表 —— 每一次通过指针来查找数据都会为 cache 缺失增加一份风险;而普通数组则可以预先获取,并使得内存系统以最大的效率运行

|

||||

|

||||

如果你知道一点内存层级如何运作的知识,下面的内容会是想当然的结果——但是有时候即便它们相当明显,测试一下任不失为一个好主意。几年前 [Baptiste Wicht 测试过了 `std::vector` vs `std::list` vs `std::deque`][4],(后者通常使用分块数组来实现,比如:一个数组的数组)。结果大部分会和你预期的保持一致,但是会存在一些违反直觉的东西。作为实例:在序列链表的中间位置做插入或者移除操作被认为会比数组快,但如果该元素是一个 POD 类型,并且不大于 64 字节或者在 64 字节左右(在一个 cache 流水线内),通过对要操作的元素周围的数组元素进行移位操作要比从头遍历链表来的快。这是由于在遍历链表以及通过指针插入/删除元素的时候可能会导致不少的 cache 缺失,相对而言,数组移位则很少会发生。(对于更大的元素,非 POD 类型,或者你已经有了指向链表元素的指针,此时和预期的一样,链表胜出)

|

||||

|

||||

|

||||

多亏了类似 Baptiste 这样的数据,我们知道了内存布局如何影响序列容器。但是关联容器,比如 hash 表会怎么样呢?已经有了些权威推荐:[Chandler Carruth 推荐的带局部探测的开放寻址][5](此时,我们没必要追踪指针),以及[Mike Acton 推荐的在内存中将 value 和 key 隔离][6](这种情况下,我们可以在每一个 cache 流水线中得到更多的 key), 这可以在我们必须查找多个 key 时提高局部性能。这些想法很有意义,但再一次的说明:测试永远是好习惯,但由于我找不到任何数据,所以只好自己收集了。

|

||||

|

||||

### 测试

|

||||

|

||||

我测试了四个不同的 quick-and-dirty 哈希表实现,另外还包括 `std::unordered_map` 。这五个哈希表都使用了同一个哈希函数 —— Bob Jenkins 的 [SpookyHash][8](64 位哈希值)。(由于哈希函数在这里不是重点,所以我没有测试不同的哈希函数;我同样也没有检测我的分析中的总内存消耗。)实现会通过简短的代码在测试结果表中标注出来。

|

||||

|

||||

* **UM**: `std::unordered_map` 。在 VS2012 和 libstdc++-v3 (libstdc++-v3: gcc 和 clang 都会用到这东西)中,UM 是以链表的形式实现,所有的元素都在链表中,bucket 数组中存储了链表的迭代器。VS2012 中,则是一个双链表,每一个 bucket 存储了起始迭代器和结束迭代器;libstdc++ 中,是一个单链表,每一个 bucket 只存储了一个起始迭代器。这两种情况里,链表节点是独立申请和释放的。最大负载因子是 1 。

|

||||

* **Ch**:分离的、链状 buket 指向一个元素节点的单链表。为了避免分开申请每一个节点,元素节点存储在普通数组池中。未使用的节点保存在一个空闲链表中。最大负载因子是 1。

|

||||

* **OL**:开地址线性探测 —— 每一个 bucket 存储一个 62 bit 的 hash 值,一个 2 bit 的状态值(包括 empty,filled,removed 三个状态),key 和 vale 。最大负载因子是 2/3。

|

||||

* **DO1**:“data-oriented 1” —— 和 OL 相似,但是哈希值、状态值和 key、values 分离在两个隔离的平滑数组中。

|

||||

* **DO2**:“data-oriented 2” —— 与 OL 类似,但是哈希/状态,keys 和 values 被分离在 3 个相隔离的平滑数组中。

|

||||

|

||||

|

||||

在我的所有实现中,包括 VS2012 的 UM 实现,默认使用尺寸为 2 的 n 次方。如果超出了最大负载因子,则扩展两倍。在 libstdc++ 中,UM 默认尺寸是一个素数。如果超出了最大负载因子,则扩展为下一个素数大小。但是我不认为这些细节对性能很重要。素数是一种对低 bit 位上没有足够熵的低劣 hash 函数的挽救手段,但是我们正在用的是一个很好的 hash 函数。

|

||||

|

||||

OL,DO1 和 DO2 的实现将共同的被称为 OA(open addressing)——稍后我们将发现它们在性能特性上非常相似。在每一个实现中,单元数从 100 K 到 1 M,有效负载(比如:总的 key + value 大小)从 8 到 4 k 字节我为几个不同的操作记了时间。 keys 和 values 永远是 POD 类型,keys 永远是 8 个字节(除了 8 字节的有效负载,此时 key 和 value 都是 4 字节)因为我的目的是为了测试内存影响而不是哈希函数性能,所以我将 key 放在连续的尺寸空间中。每一个测试都会重复 5 遍,然后记录最小的耗时。

|

||||

|

||||

测试的操作在这里:

|

||||

|

||||

* **Fill**:将一个随机的 key 序列插入到表中(key 在序列中是唯一的)。

|

||||

* **Presized fill**:和 Fill 相似,但是在插入之间我们先为所有的 key 保留足够的内存空间,以防止在 fill 过程中 rehash 或者重申请。

|

||||

* **Lookup**:执行 100 k 次随机 key 查找,所有的 key 都在 table 中。

|

||||

* **Failed lookup**: 执行 100 k 次随机 key 查找,所有的 key 都不在 table 中。

|

||||

* **Remove**:从 table 中移除随机选择的半数元素。

|

||||

* **Destruct**:销毁 table 并释放内存。

|

||||

|

||||

你可以[在这里下载我的测试代码][9]。这些代码只能在 64 机器上编译(包括Windows和Linux)。在 `main()` 函数顶部附近有一些开关,你可把它们打开或者关掉——如果全开,可能会需要一两个小时才能结束运行。我收集的结果也放在了那个打包文件里的 Excel 表中。(注意: Windows 和 Linux 在不同的 CPU 上跑的,所以时间不具备可比较性)代码也跑了一些单元测试,用来验证所有的 hash 表实现都能运行正确。

|

||||

|

||||

我还顺带尝试了附加的两个实现:Ch 中第一个节点存放在 bucket 中而不是 pool 里,二次探测的开放寻址。

|

||||

这两个都不足以好到可以放在最终的数据里,但是它们的代码仍放在了打包文件里面。

|

||||

|

||||

### 结果

|

||||

|

||||

这里有成吨的数据!!

|

||||

这一节我将详细的讨论一下结果,但是如果你对此不感兴趣,可以直接跳到下一节的总结。

|

||||

|

||||

#### Windows

|

||||

|

||||

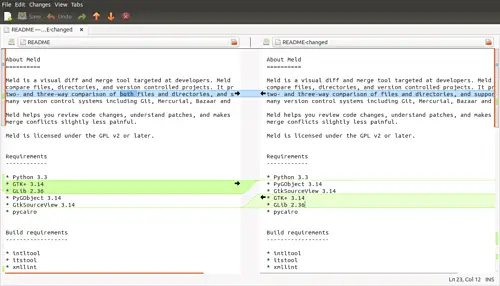

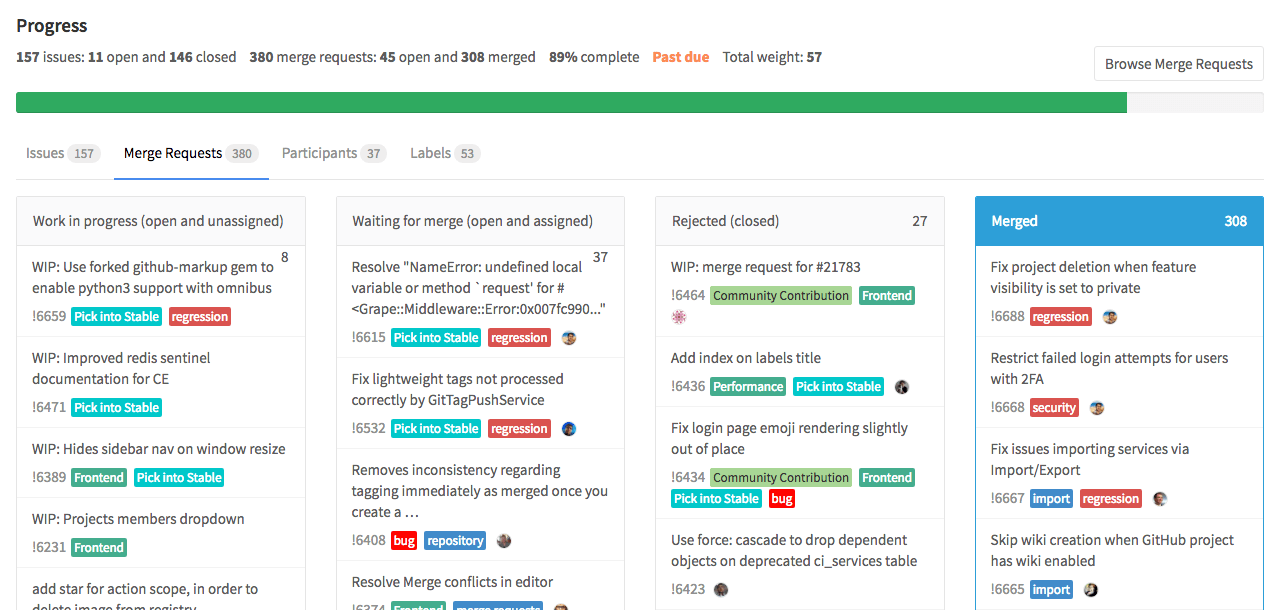

这是所有的测试的图表结果,使用 Visual Studio 2012 编译,运行于 Windows 8.1 和 Core i7-4710HQ 机器上。(点击可以放大。)

|

||||

|

||||

[

|

||||

|

||||

][12]

|

||||

|

||||

从左至右是不同的有效负载大小,从上往下是不同的操作(注意:不是所有的Y轴都是相同的比例!)我将为每一个操作总结一下主要趋向。

|

||||

|

||||

**Fill**:

|

||||

|

||||

在我的 hash 表中,Ch 稍比任何的 OA 变种要好。随着哈希表大小和有效负载的加大,差距也随之变大。我猜测这是由于 Ch 只需要从一个空闲链表中拉取一个元素,然后把它放在 bucket 前面,而 OA 不得不搜索一部分 bucket 来找到一个空位置。所有的 OA 变种的性能表现基本都很相似,当然 DO1 稍微有点优势。

|

||||

|

||||

在小负载的情况,UM 几乎是所有 hash 表中表现最差的 —— 因为 UM 为每一次的插入申请(内存)付出了沉重的代价。但是在 128 字节的时候,这些 hash 表基本相当,大负载的时候 UM 还赢了点。因为,我所有的实现都需要重新调整元素池的大小,并需要移动大量的元素到新池里面,这一点我几乎无能为力;而 UM 一旦为元素申请了内存后便不需要移动了。注意大负载中图表上夸张的跳步!这更确认了重新调整大小带来的问题。相反,UM 只是线性上升 —— 只需要重新调整 bucket 数组大小。由于没有太多隆起的地方,所以相对有效率。

|

||||

|

||||

**Presized fill**:

|

||||

|

||||

大致和 Fill 相似,但是图示结果更加的线性光滑,没有太大的跳步(因为不需要 rehash ),所有的实现差距在这一测试中要缩小了些。大负载时 UM 依然稍快于 Ch,问题还是在于重新调整大小上。Ch 仍是稳步少快于 OA 变种,但是 DO1 比其它的 OA 稍有优势。

|

||||

|

||||

**Lookup**:

|

||||

|

||||

所有的实现都相当的集中。除了最小负载时,DO1 和 OL 稍快,其余情况下 UM 和 DO2 都跑在了前面。(LCTT 译注: 你确定?)真的,我无法描述 UM 在这一步做的多么好。尽管需要遍历链表,但是 UM 还是坚守了面向数据的本性。

|

||||

|

||||

顺带一提,查找时间和 hash 表的大小有着很弱的关联,这真的很有意思。

|

||||

哈希表查找时间期望上是一个常量时间,所以在的渐进视图中,性能不应该依赖于表的大小。但是那是在忽视了 cache 影响的情况下!作为具体的例子,当我们在具有 10 k 条目的表中做 100 k 次查找时,速度会便变快,因为在第一次 10 k - 20 k 次查找后,大部分的表会处在 L3 中。

|

||||

|

||||

**Failed lookup**:

|

||||

|

||||

相对于成功查找,这里就有点更分散一些。DO1 和 DO2 跑在了前面,但 UM 并没有落下,OL 则是捉襟见肘啊。我猜测,这可能是因为 OL 整体上具有更长的搜索路径,尤其是在失败查询时;内存中,hash 值在 key 和 value 之飘来荡去的找不着出路,我也很受伤啊。DO1 和 DO2 具有相同的搜索长度,但是它们将所有的 hash 值打包在内存中,这使得问题有所缓解。

|

||||

|

||||

**Remove**:

|

||||

|

||||

DO2 很显然是赢家,但 DO1 也未落下。Ch 则落后,UM 则是差的不是一丁半点(主要是因为每次移除都要释放内存);差距随着负载的增加而拉大。移除操作是唯一不需要接触数据的操作,只需要 hash 值和 key 的帮助,这也是为什么 DO1 和 DO2 在移除操作中的表现大相径庭,而其它测试中却保持一致。(如果你的值不是 POD 类型的,并需要析构,这种差异应该是会消失的。)

|

||||

|

||||

**Destruct**:

|

||||

|

||||

Ch 除了最小负载,其它的情况都是最快的(最小负载时,约等于 OA 变种)。所有的 OA 变种基本都是相等的。注意,在我的 hash 表中所做的所有析构操作都是释放少量的内存 buffer 。但是 [在Windows中,释放内存的消耗和大小成比例关系][13]。(而且,这是一个很显著的开支 —— 申请 ~1 GB 的内存需要 ~100 ms 的时间去释放!)

|

||||

|

||||

UM 在析构时是最慢的一个(小负载时,慢的程度可以用数量级来衡量),大负载时依旧是稍慢些。对于 UM 来讲,释放每一个元素而不是释放一组数组真的是一个硬伤。

|

||||

|

||||

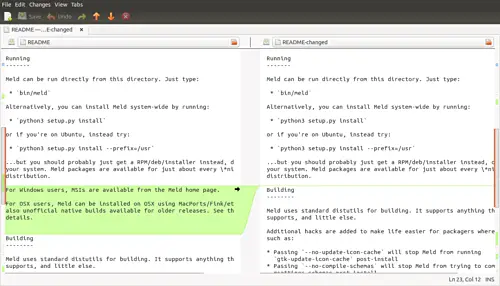

#### Linux

|

||||

|

||||

我还在装有 Linux Mint 17.1 的 Core i5-4570S 机器上使用 gcc 4.8 和 clang 3.5 来运行了测试。gcc 和 clang 的结果很相像,因此我只展示了 gcc 的;完整的结果集合包含在了代码下载打包文件中,链接在上面。(点击图来缩放)

|

||||

|

||||

[

|

||||

|

||||

][15]

|

||||

|

||||

大部分结果和 Windows 很相似,因此我只高亮了一些有趣的不同点。

|

||||

|

||||

**Lookup**:

|

||||

|

||||

这里 DO1 跑在前头,而在 Windows 中 DO2 更快些。(LCTT 译注: 这里原文写错了吧?)同样,UM 和 Ch 落后于其它所有的实现——过多的指针追踪,然而 OA 只需要在内存中线性的移动即可。至于 Windows 和 Linux 结果为何不同,则不是很清楚。UM 同样比 Ch 慢了不少,特别是大负载时,这很奇怪;我期望的是它们可以基本相同。

|

||||

|

||||

**Failed lookup**:

|

||||

|

||||

UM 再一次落后于其它实现,甚至比 OL 还要慢。我再一次无法理解为何 UM 比 Ch 慢这么多,Linux 和 Windows 的结果为何有着如此大的差距。

|

||||

|

||||

|

||||

**Destruct**:

|

||||

|

||||

在我的实现中,小负载的时候,析构的消耗太少了,以至于无法测量;在大负载中,线性增加的比例和创建的虚拟内存页数量相关,而不是申请到的数量?同样,要比 Windows 中的析构快上几个数量级。但是并不是所有的都和 hash 表有关;我们在这里可以看出不同系统和运行时内存系统的表现。貌似,Linux 释放大内存块是要比 Windows 快上不少(或者 Linux 很好的隐藏了开支,或许将释放工作推迟到了进程退出,又或者将工作推给了其它线程或者进程)。

|

||||

|

||||

UM 由于要释放每一个元素,所以在所有的负载中都比其它慢上几个数量级。事实上,我将图片做了剪裁,因为 UM 太慢了,以至于破坏了 Y 轴的比例。

|

||||

|

||||

### 总结

|

||||

|

||||

好,当我们凝视各种情况下的数据和矛盾的结果时,我们可以得出什么结果呢?我想直接了当的告诉你这些 hash 表变种中有一个打败了其它所有的 hash 表,但是这显然不那么简单。不过我们仍然可以学到一些东西。

|

||||

|

||||

首先,在大多数情况下我们“很容易”做的比 `std::unordered_map` 还要好。我为这些测试所写的所有实现(它们并不复杂;我只花了一两个小时就写完了)要么是符合 `unordered_map` 要么是在其基础上做的提高,除了大负载(超过128字节)中的插入性能, `unordered_map` 为每一个节点独立申请存储占了优势。(尽管我没有测试,我同样期望 `unordered_map` 能在非 POD 类型的负载上取得胜利。)具有指导意义的是,如果你非常关心性能,不要假设你的标准库中的数据结构是高度优化的。它们可能只是在 C++ 标准的一致性上做了优化,但不是性能。:P

|

||||

|

||||

其次,如果不管在小负载还是超负载中,若都只用 DO1 (开放寻址,线性探测,hashes/states 和 key/vaules分别处在隔离的普通数组中),那可能不会有啥好表现。这不是最快的插入,但也不坏(还比 `unordered_map` 快),并且在查找,移除,析构中也很快。你所知道的 —— “面向数据设计”完成了!

|

||||

|

||||

注意,我的为这些哈希表做的测试代码远未能用于生产环境——它们只支持 POD 类型,没有拷贝构造函数以及类似的东西,也未检测重复的 key,等等。我将可能尽快的构建一些实际的 hash 表,用于我的实用库中。为了覆盖基础部分,我想我将有两个变种:一个基于 DO1,用于小的,移动时不需要太大开支的负载;另一个用于链接并且避免重新申请和移动元素(就像 `unordered_map` ),用于大负载或者移动起来需要大开支的负载情况。这应该会给我带来最好的两个世界。

|

||||

|

||||

与此同时,我希望你们会有所启迪。最后记住,如果 Chandler Carruth 和 Mike Acton 在数据结构上给你提出些建议,你一定要听。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

|

||||

作者简介:

|

||||

|

||||

我是一名图形程序员,目前在西雅图做自由职业者。之前我在 NVIDIA 的 DevTech 软件团队中工作,并在美少女特工队工作室中为 PS3 和 PS4 的 Infamous 系列游戏开发渲染技术。

|

||||

|

||||

自 2002 年起,我对图形非常感兴趣,并且已经完成了一系列的工作,包括:雾、大气雾霾、体积照明、水、视觉效果、粒子系统、皮肤和头发阴影、后处理、镜面模型、线性空间渲染、和 GPU 性能测量和优化。

|

||||

|

||||

你可以在我的博客了解更多和我有关的事,处理图形,我还对理论物理和程序设计感兴趣。

|

||||

|

||||

你可以在 nathaniel.reed@gmail.com 或者在 Twitter(@Reedbeta)/Google+ 上关注我。我也会经常在 StackExchange 上回答计算机图形的问题。

|

||||

|

||||

--------------

|

||||

|

||||

via: http://reedbeta.com/blog/data-oriented-hash-table/

|

||||

|

||||

作者:[Nathan Reed][a]

|

||||

译者:[sanfusu](https://github.com/sanfusu)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://reedbeta.com/about/

|

||||

[1]:http://reedbeta.com/blog/data-oriented-hash-table/

|

||||

[2]:http://reedbeta.com/blog/category/coding/

|

||||

[3]:http://reedbeta.com/blog/data-oriented-hash-table/#comments

|

||||

[4]:http://baptiste-wicht.com/posts/2012/12/cpp-benchmark-vector-list-deque.html

|

||||

[5]:https://www.youtube.com/watch?v=fHNmRkzxHWs

|

||||

[6]:https://www.youtube.com/watch?v=rX0ItVEVjHc

|

||||

[7]:http://reedbeta.com/blog/data-oriented-hash-table/#the-tests

|

||||

[8]:http://burtleburtle.net/bob/hash/spooky.html

|

||||

[9]:http://reedbeta.com/blog/data-oriented-hash-table/hash-table-tests.zip

|

||||

[10]:http://reedbeta.com/blog/data-oriented-hash-table/#the-results

|

||||

[11]:http://reedbeta.com/blog/data-oriented-hash-table/#windows

|

||||

[12]:http://reedbeta.com/blog/data-oriented-hash-table/results-vs2012.png

|

||||

[13]:https://randomascii.wordpress.com/2014/12/10/hidden-costs-of-memory-allocation/

|

||||

[14]:http://reedbeta.com/blog/data-oriented-hash-table/#linux

|

||||

[15]:http://reedbeta.com/blog/data-oriented-hash-table/results-g++4.8.png

|

||||

[16]:http://reedbeta.com/blog/data-oriented-hash-table/#conclusions

|

||||

@ -1,14 +1,14 @@

|

||||

使用IBM Bluemix构建,部署和管理自定义应用程序

|

||||

使用 IBM Bluemix 构建,部署和管理自定义应用程序

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

|

||||

IBM Bluemix 为开发人员提供了构建,部署和管理自定义应用程序的机会。Bluemix 建立在 Cloud Foundry 上。它支持多种编程语言,包括 IBM 的 OpenWhisk ,还允许开发人员无需资源管理就调用任何函数。

|

||||

IBM Bluemix 为开发人员提供了构建、部署和管理自定义应用程序的机会。Bluemix 建立在 Cloud Foundry 上。它支持多种编程语言,包括 IBM 的 OpenWhisk ,还允许开发人员无需资源管理就调用任何函数。

|

||||

|

||||

Bluemix 是由 IBM 实现的开放标准的基于云的平台。它具有开放的架构,其允许组织能够在云上创建,开发和管理其应用程序。它基于 Cloud Foundry ,因此可以被视为平台即服务(PaaS)。使用 Bluemix,开发人员不必关心云端配置,可以专注于他们的应用程序。 云端配置将由 Bluemix 自动完成。

|

||||

Bluemix 是由 IBM 实现的基于开放标准的云平台。它具有开放的架构,其允许组织能够在云上创建、开发和管理其应用程序。它基于 Cloud Foundry ,因此可以被视为平台即服务(PaaS)。使用 Bluemix,开发人员不必关心云端配置,可以专注于他们的应用程序。 云端配置将由 Bluemix 自动完成。

|

||||

|

||||

Bluemix 还提供了一个仪表板,通过它,开发人员可以创建,管理和查看服务和应用程序,同时还可以监控资源使用情况。

|

||||

|

||||

它支持以下编程语言:

|

||||

|

||||

* Java

|

||||

@ -21,143 +21,132 @@ Bluemix 还提供了一个仪表板,通过它,开发人员可以创建,管

|

||||

|

||||

|

||||

|

||||

图1 IBM Bluemix 概述

|

||||

*图1 IBM Bluemix 概述*

|

||||

|

||||

|

||||

|

||||

图2 IBM Bluemix 体系结构

|

||||

*图2 IBM Bluemix 体系结构*

|

||||

|

||||

|

||||

|

||||

图3 在 IBM Bluemix 中创建组织

|

||||

*图3 在 IBM Bluemix 中创建组织*

|

||||

|

||||

**IBM Bluemix 如何工作**

|

||||

### IBM Bluemix 如何工作

|

||||

|

||||

Bluemix 构建在 IBM 的 SoftLayer IaaS(基础架构即服务)之上。它使用 Cloud Foundry 作为开源 PaaS 平台。一切起于通过 Cloud Foundry 来推送代码,它扮演着整合代码和根据编写应用所使用的编程语言所适配的运行时环境的角色。IBM 服务、第三方服务或社区构建的服务可用于不同的功能。安全连接器可用于连接本地系统到云。

|

||||

Bluemix 构建在 IBM 的 SoftLayer IaaS(基础架构即服务)之上。它使用 Cloud Foundry 作为开源 PaaS 平台。一切起于通过 Cloud Foundry 来推送代码,它扮演着将代码和编写应用所使用的编程语言运行时环境整合起来的角色。IBM 服务、第三方服务或社区构建的服务可用于不同的功能。安全连接器可用于将本地系统连接到云。

|

||||

|

||||

|

||||

|

||||

图4 在 IBM Bluemix 中设置空间

|

||||

*图4 在 IBM Bluemix 中设置空间*

|

||||

|

||||

|

||||

|

||||

图5 应用程序模板

|

||||

*图5 应用程序模板*

|

||||

|

||||

|

||||

|

||||

图6 IBM Bluemix 支持的编程语言

|

||||

*图6 IBM Bluemix 支持的编程语言*

|

||||

|

||||

### 在 Bluemix 中创建应用程序

|

||||

|

||||

**在 Bluemix 中创建应用程序**

|

||||

在本文中,我们将使用 Liberty for Java 的入门包在 IBM Bluemix 中创建一个示例“Hello World”应用程序,只需几个简单的步骤。

|

||||

|

||||

1. 打开 [_https://console.ng.bluemix.net/registration/_][2]

|

||||

1、 打开 [https://console.ng.bluemix.net/registration/][2]

|

||||

|

||||

2. 注册 Bluemix 帐户

|

||||

2、 注册 Bluemix 帐户

|

||||

|

||||

3. 点击邮件中的确认链接完成注册过程

|

||||

3、 点击邮件中的确认链接完成注册过程

|

||||

|

||||

4. 输入您的电子邮件 ID,然后点击 _Continue_ 进行登录

|

||||

4、 输入您的电子邮件 ID,然后点击 Continue 进行登录

|

||||

|

||||

5. 输入密码并点击 _Log in_

|

||||

5、 输入密码并点击 Log in

|

||||

|

||||

6. 进入 _Set up_ -> _Environment_ 设置特定区域中的资源共享

|

||||

6、 进入 Set up -> Environment 设置特定区域中的资源共享

|

||||

|

||||

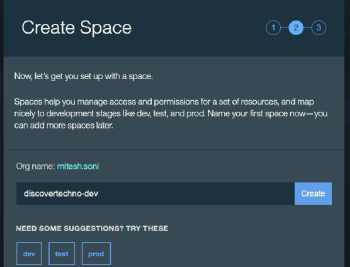

7. 创建空间方便管理访问控制和在 Bluemix 中回滚操作。 我们可以将空间映射到多个开发阶段,如 dev, test,uat,pre-prod 和 prod

|

||||

7、 创建空间方便管理访问控制和在 Bluemix 中回滚操作。 我们可以将空间映射到多个开发阶段,如 dev, test,uat,pre-prod 和 prod

|

||||

|

||||

|

||||

|

||||

图7 命名应用程序

|

||||

*图7 命名应用程序*

|

||||

|

||||

|

||||

|

||||

图8 了解应用程序何时准备就绪

|

||||

*图8 了解应用程序何时准备就绪*

|

||||

|

||||

|

||||

|

||||

图9 IBM Bluemix Java 应用程序

|

||||

*图9 IBM Bluemix Java 应用程序*

|

||||

|

||||

8. 完成初始配置后,单击 _I'm ready_ -> _Good to Go_ !

|

||||

8、 完成初始配置后,单击 I'm ready -> Good to Go !

|

||||

|

||||

9. 成功登录后,此时检查 IBM Bluemix 仪表板,特别是 Cloud Foundry Apps(其中2GB可用)和 Virtual Server(其中0个实例可用)的部分

|

||||

9、 成功登录后,此时检查 IBM Bluemix 仪表板,特别是 Cloud Foundry Apps(其中 2GB 可用)和 Virtual Server(其中 0 个实例可用)的部分

|

||||

|

||||

10. 点击 _Create app_,选择应用创建模板。在我们的例子中,我们将使用一个 Web 应用程序

|

||||

10、 点击 Create app,选择应用创建模板。在我们的例子中,我们将使用一个 Web 应用程序

|

||||

|

||||

11. 如何开始?单击 Liberty for Java ,然后查看其描述

|

||||

11、 如何开始?单击 Liberty for Java ,然后查看其描述

|

||||

|

||||

12. 单击 _Continue_

|

||||

12、 单击 Continue

|

||||

|

||||

13. 为新应用命名。对于本文,让我们使用 osfy-bluemix-tutorial 命名然后单击 _Finish_

|

||||

13、 为新应用命名。对于本文,让我们使用 osfy-bluemix-tutorial 命名然后单击 Finish

|

||||

|

||||

14. 在 Bluemix 上创建资源和托管应用程序需要等待一些时间。

|

||||

14、 在 Bluemix 上创建资源和托管应用程序需要等待一些时间

|

||||

|

||||

15. 几分钟后,应用程式就会开始运作。注意应用程序的URL。

|

||||

15、 几分钟后,应用程式就会开始运作。注意应用程序的URL

|

||||

|

||||

16. 访问应用程序的URL _http://osfy-bluemix-tutorial.au-syd.mybluemix.net/_, Bingo,我们的第一个在 IBM Bluemix 上的 Java 应用程序成功运行。

|

||||

16、 访问应用程序的URL http://osfy-bluemix-tutorial.au-syd.mybluemix.net/, 不错,我们的第一个在 IBM Bluemix 上的 Java 应用程序成功运行

|

||||

|

||||

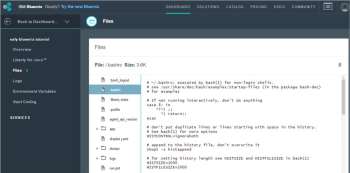

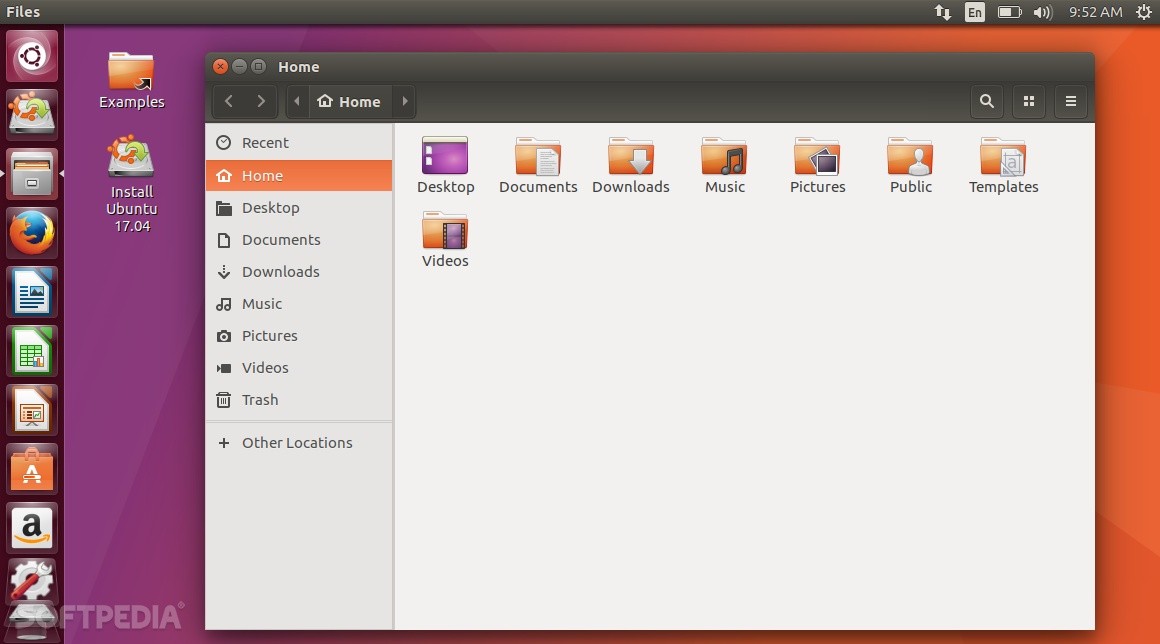

17. 为了检查源代码,请单击 _Files_ 并在门户中导航到不同文件和文件夹

|

||||

17、 为了检查源代码,请单击 Files 并在门户中导航到不同文件和文件夹

|

||||

|

||||

18. _Logs_ 部分提供包括从应用程序的创建时起的所有活动日志。

|

||||

18、 Logs 部分提供包括从应用程序的创建时起的所有活动日志。

|

||||

|

||||

19. _Environment Variables_ 部分提供关于 VCAP_Services 的所有环境变量以及用户定义的环境变量的详细信息

|

||||

19、 Environment Variables 部分提供关于 VCAP\_Services 的所有环境变量以及用户定义的环境变量的详细信息

|

||||

|

||||

20. 要检查应用程序的资源消耗,需要到 Liberty for Java 那一部分。

|

||||

20、 要检查应用程序的资源消耗,需要到 Liberty for Java 那一部分。

|

||||

|

||||

21. 默认情况下,每个应用程序的 _Overview_ 部分包含资源,应用程序的运行状况和活动日志的详细信息

|

||||

21、 默认情况下,每个应用程序的 Overview 部分包含资源,应用程序的运行状况和活动日志的详细信息

|

||||

|

||||

22. 打开 Eclipse,转到帮助菜单,然后单击 _Eclipse Marketplace_

|

||||

22、 打开 Eclipse,转到帮助菜单,然后单击 _Eclipse Marketplace_

|

||||

|

||||

23. 查找 _IBM Eclipse tools for Bluemix_ 并单击 _Install_

|

||||

23、 查找 IBM Eclipse tools for Bluemix 并单击 Install

|

||||

|

||||

24. 确认所选的功能并将其安装在 Eclipse 中

|

||||

24、 确认所选的功能并将其安装在 Eclipse 中

|

||||

|

||||

25. 下载应用程序启动器代码。点击 _File Menu_,将它导入到 Eclipse 中,选择 _Import Existing Projects_ -> _Workspace_, 然后开始修改代码

|

||||

25、 下载应用程序启动器代码。点击 File Menu,将它导入到 Eclipse 中,选择 Import Existing Projects -> Workspace, 然后开始修改代码

|

||||

|

||||

|

||||

|

||||

图10 Java 应用程序源文件

|

||||

*图10 Java 应用程序源文件*

|

||||

|

||||

|

||||

|

||||

图11 Java 应用程序日志

|

||||

*图11 Java 应用程序日志*

|

||||

|

||||

|

||||

|

||||

图12 Java 应用程序 - Liberty for Java

|

||||

*图12 Java 应用程序 - Liberty for Java*

|

||||

|

||||

### 为什么选择 IBM Bluemix?

|

||||

|

||||

**为什么选择 IBM Bluemix?**

|

||||

以下是使用 IBM Bluemix 的一些令人信服的理由:

|

||||

|

||||

* 支持多种语言和平台

|

||||

* 免费试用

|

||||

|

||||

1. 简化的注册过程

|

||||

|

||||

2. 不需要信用卡

|

||||

|

||||

3. 30 天试用期 - 配额 2GB 的运行时,支持 20 个服务,500 个 route

|

||||

|

||||

4. 无限制地访问标准支持

|

||||

|

||||

5. 没有生产使用限制

|

||||

|

||||

* 仅为每个使用的运行时和服务付费

|

||||

* 快速设置 - 从而加快上架时间

|

||||

* 持续交付新功能

|

||||

* 与本地资源的安全集成

|

||||

* 用例

|

||||

|

||||

1. Web 应用程序和移动后端

|

||||

|

||||

2. API 和内部集成

|

||||

|

||||

* DevOps 服务可部署在云上的 SaaS ,并支持持续交付:

|

||||

|

||||

1. Web IDE

|

||||

|

||||

2. SCM

|

||||

|

||||

3. 敏捷规划

|

||||

|

||||

4. 交货管道服务

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -1,38 +1,39 @@

|

||||

使用 Exercism 提升你的编程技巧

|

||||

============================================================

|

||||

|

||||

### 这些练习目前已经支持 33 种编程语言了。

|

||||

> 这些练习目前已经支持 33 种编程语言了。

|

||||

|

||||

|

||||

|

||||

>图片提供: opensource.com

|

||||

|

||||

我们中的很多人有 2017 年的目标,将提高编程能力或学习如何编程放在第一位。虽然我们有许多资源可以访问,但练习独立于特定职业的代码开发的艺术还是需要一些规划。[Exercism.io][1] 就是为此目的而设计的一种资源。

|

||||

我们中的很多人的 2017 年目标,将提高编程能力或学习如何编程放在第一位。虽然我们有许多资源可以访问,但练习独立于特定职业的代码开发的艺术还是需要一些规划。[Exercism.io][1] 就是为此目的而设计的一种资源。

|

||||

|

||||

Exercism 是一个 [开源][2] 项目和服务,通过发现和协作,帮助人们提高他们的编程技能。Exercism 提供了几十种不同编程语言的练习。实践者完成每个练习,并获得反馈,从而可以从他们的同行小组的经验中学习。

|

||||

Exercism 是一个 [开源][2] 的项目和服务,通过发现和协作,帮助人们提高他们的编程技能。Exercism 提供了几十种不同编程语言的练习。实践者完成每个练习,并获得反馈,从而可以从他们的同行小组的经验中学习。

|

||||

|

||||

这里有这么多同行! Exercism 在 2016 年留下了一些令人印象深刻的统计:

|

||||

|

||||

* 有来自201个不同国家的参与者

|

||||

* 有来自 201 个不同国家的参与者

|

||||

* 自 2013 年 6 月以来,29,000 名参与者提交了练习,其中仅在 2016 年就有 15,500 名参加者提交练习

|

||||

* 自 2013 年 6 月以来,15,000 名参与者就练习解决方案提供反馈,其中 2016 年有 5,500 人提供反馈

|

||||

* 每月 50,000 名访客,每周超过 12,000 名访客

|

||||

* 目前练习支持 33 种编程语言,另外 22 种语言在筹备工作中

|

||||

* 目前的练习已经支持 33 种编程语言,另外 22 种语言在筹备工作中

|

||||

|

||||

该项目为所有级别的参与者提供了一系列小小的胜利,使他们能够“即使在低水平也能发展到高度流利”,Exercism 的创始人 [Katrina Owen][3] 这样说到。Exercism 并不旨在教导学员成为一名职业程序员,但它的练习使他们对一种语言及其瑕疵有深刻的了解。这种熟悉性消除了学习者对语言的认知负担(流利),使他们能够专注于更困难的架构和最佳实践(熟练)的问题。

|

||||

该项目为各种级别的参与者提供了一系列小小的挑战,使他们能够“即使在低水平也能发展到高度谙熟”,Exercism 的创始人 [Katrina Owen][3] 这样说到。Exercism 并不旨在教导学员成为一名职业程序员,但它的练习使他们对一种语言及其瑕疵有深刻的了解。这种熟悉性消除了学习者对语言的认知负担(使之更谙熟),使他们能够专注于更困难的架构和最佳实践的问题。

|

||||

|

||||

Exercism 通过一系列练习(还有什么?)来做到这一点。程序员下载[命令行客户端][4],检索第一个练习,添加完成练习的代码,然后提交解决方案。提交解决方案后,程序员可以研究他人的解决方案,并学习到对同一个问题不同的解决方式。更重要的是,每个解决方案都会收到来自其他参与者的反馈。

|

||||

Exercism 通过一系列练习(或者还有别的?)来做到这一点。程序员下载[命令行客户端][4],检索第一个练习,添加完成练习的代码,然后提交解决方案。提交解决方案后,程序员可以研究他人的解决方案,并学习到对同一个问题不同的解决方式。更重要的是,每个解决方案都会收到来自其他参与者的反馈。

|

||||

|

||||

反馈是 Exercism 的超级力量。鼓励所有参与者不仅接收反馈而且提供反馈。根据 Owen 说的,Exercism 的社区成员提供反馈比完成练习学到更多。她说:“这是一个强大的学习经验,你被迫发表内心感受,并检查你的假设、习惯和偏见”。她还指出,反馈可以有多种形式。

|

||||

反馈是 Exercism 的超级力量。鼓励所有参与者不仅接收反馈而且提供反馈。根据 Owen 说的,Exercism 的社区成员提供反馈比完成练习学到更多。她说:“这是一个强大的学习经验,你需要发表内心感受,并检查你的假设、习惯和偏见”。她还指出,反馈可以有多种形式。

|

||||

|

||||

欧文说:“只需进入,观察并问问题”。

|

||||

欧文说:“只需进入,观察并发问”。

|

||||

|

||||

那些刚刚接触编程,甚至只是一种特定语言的人,可以通过质疑假设来提供有价值的反馈,同时通过协作和对话来学习。

|

||||

那些刚刚接触编程,甚至只是接触了一种特定语言的人,可以通过预设好的问题来提供有价值的反馈,同时通过协作和对话来学习。

|

||||

|

||||

除了对新语言的 <ruby>“微课”学习<rt>bite-sized learning</rt></ruby> 之外,Exercism 本身还强烈支持和鼓励项目的新贡献者。在 [SitePoint.com][5] 的一篇文章中,欧文强调:“如果你想为开源贡献代码,你所需要的技能水平只要‘够用’即可。” Exercism 不仅鼓励新的贡献者,它还尽可能地帮助新贡献者发布他们项目中的第一个补丁。到目前为止,有近 1000 人是[ Exercism 项目][6]的贡献者。

|

||||

除了对新语言的 <ruby>“微课”学习<rt>bite-sized learning</rt></ruby> 之外,Exercism 本身还强烈支持和鼓励项目的新贡献者。在 [SitePoint.com][5] 的一篇文章中,欧文强调:“如果你想为开源贡献代码,你所需要的技能水平只要‘够用’即可。” Exercism 不仅鼓励新的贡献者,它还尽可能地帮助新贡献者发布他们项目中的第一个补丁。到目前为止,有近 1000 人成为 [Exercism 项目][6]的贡献者。

|

||||

|

||||

新贡献者会有大量工作让他们忙碌。 Exercism 目前正在审查[其语言轨道的健康状况][7],目的是使所有轨道可持续并避免维护者的倦怠。它还在寻求[捐赠][8]和赞助,聘请设计师提高网站的可用性。

|

||||

新贡献者会有大量工作让他们忙碌。 Exercism 目前正在审查[其语言发展轨迹的健康状况][7],目的是使所有发展轨迹可持续并避免维护者的倦怠。它还在寻求[捐赠][8]和赞助,聘请设计师提高网站的可用性。

|

||||

|

||||

Owen 说:“这些改进对于网站的健康以及为了 Exercism 参与者的发展是有必要的,这些变化还鼓励新贡献者加入并简化了加入的途径。” 她说:“如果我们可以重新设计,产品方面将更加可维护。。。当用户体验一团糟,华丽的代码一点用也没有”。该项目有一个非常活跃的[讨论仓库][9],这里社区成员合作来发现最好的新方法和功能。

|

||||

Owen 说:“这些改进对于网站的健康以及为了 Exercism 参与者的发展是有必要的,这些变化还鼓励新贡献者加入并简化了加入的途径。” 她说:“如果我们可以重新设计,产品方面将更加可维护……当用户体验一团糟时,华丽的代码一点用也没有”。该项目有一个非常活跃的[讨论仓库][9],这里社区成员合作来发现最好的新方法和功能。

|

||||

|

||||

那些想关注项目但还没有参与的人可以关注[邮件列表][10]。

|

||||

|

||||

@ -42,10 +43,12 @@ Owen 说:“这些改进对于网站的健康以及为了 Exercism 参与者

|

||||

|

||||

|

||||

|

||||

VM(Vicky)Brasseur - VM(也称为 Vicky)是技术人员、项目、流程、产品和 p^Hbusinesses 的经理。在她超过 18 年的科技行业从业中,她曾是分析师、程序员、产品经理、软件工程经理和软件工程总监。 目前,她是 Hewlett Packard Enterprise 上游开源开发团队的高级工程经理。 VM 的博客在 anonymoushash.vmbrasseur.com,tweets 在 @vmbrasseur。

|

||||

VM(Vicky)Brasseur - VM(也称为 Vicky)是技术人员、项目、流程、产品和 p\^Hbusinesses 的经理。在她超过 18 年的科技行业从业中,她曾是分析师、程序员、产品经理、软件工程经理和软件工程总监。 目前,她是 Hewlett Packard Enterprise 上游开源开发团队的高级工程经理。 VM 的博客在 anonymoushash.vmbrasseur.com,tweets 在 @vmbrasseur。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/1/exercism-learning-programming

|

||||

|

||||

作者:[VM (Vicky) Brasseur][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||

@ -1,26 +1,24 @@

|

||||

|

||||

What engineers and marketers can learn from each other

|

||||

============================================================

|

||||

工程师和市场营销人员之间能够相互学习什么?

|

||||

============================================================

|

||||

|

||||

### 营销人员觉得工程师在工作中都太严谨了;而工程师则认为营销人员都很懒散。但是他们都错了。

|

||||

> 营销人员觉得工程师在工作中都太严谨了;而工程师则认为营销人员毫无用处。但是他们都错了。

|

||||

|

||||

|

||||

图片来源 :

|

||||

|

||||

opensource.com

|

||||

|

||||

图片来源:opensource.com

|

||||

|

||||

在 B2B 行业从事多年的销售实践过程中,我经常听到工程师对营销人员的各种误解。下面这些是比较常见的:

|

||||

|

||||

* ”搞市场营销真是浪费钱,还不如把更多的资金投入到实际的产品开发中来。“

|

||||

* ”那些营销人员只是一个劲儿往墙上贴各种广告,还祈祷着它们不要掉下来。这么做有啥科学依据啊?“

|

||||

* ”谁愿意去看哪些广告啊?“

|

||||

* ”对待一个营销人员最好的办法就是不订阅,不关注,也不理睬。“

|

||||

* “搞市场营销真是浪费钱,还不如把更多的资金投入到实际的产品开发中来。”

|

||||

* “那些营销人员只是一个劲儿往墙上贴各种广告,还祈祷着它们不要掉下来。这么做有啥科学依据啊?”

|

||||

* “谁愿意去看哪些广告啊?”

|

||||

* “对待一个营销人员最好的办法就是不听,不看,也不理睬。”

|

||||

|

||||

这是我最感兴趣的一点:

|

||||

_“营销人员都很懒散。”_

|

||||

|

||||

_“市场营销无足轻重。”_

|

||||

|

||||

最后一点说的不对,不够全面,懒散实际上是阻碍一个公司发展的巨大绊脚石。

|

||||

最后一点说的不对,而且不仅如此,它实际上是阻碍一个公司创新的巨大绊脚石。

|

||||

|

||||

我来跟大家解释一下原因吧。

|

||||

|

||||

@ -28,27 +26,27 @@ _“营销人员都很懒散。”_

|

||||

|

||||

这些工程师的的评论让我十分的苦恼,因为我从中看到了自己当年的身影。

|

||||

|

||||

你们知道吗?我曾经也跟你们一样是一位自豪的技术极客。我在 Rensselaer Polytechnic 学院的电气工程专业本科毕业后便在美国空军开始了我的职业生涯,而且美国空军在那段时间还发动了军事上的沙漠风暴行动。在那里我主要负责开发并部属一套智能的实时战况分析系统,用于根据各种各样的数据源来构建出战场上的画面。

|

||||

你们知道吗?我曾经也跟你们一样是一位自豪的技术极客。我在 Rensselaer Polytechnic 学院的电气工程专业本科毕业后便在美国空军担任军官开始了我的职业生涯,而且美国空军在那段时间还发动了沙漠风暴行动。在那里我主要负责开发并部属一套智能的实时战况分析系统,用于综合几个的数据源来构建出战场态势。

|

||||

|

||||

在我离开空军之后,我本打算去麻省理工学院攻读博士学位。但是上校强烈建议我去报读这个学校的商学院。“你真的想一辈子待实验室里吗?”他问我。“你想就这么去大学里当个教书匠吗? Jackie ,你在组织管理那些复杂的工作中比较有天赋。我觉得你非常有必要去了解下 MIT 的斯隆商学院。”

|

||||

在我离开空军之后,我本打算去麻省理工学院攻读博士学位。但是上校强烈建议我去报读这个学校的商学院。“你真的想一辈子待实验室里吗?”他问我。“你想就这么去大学里当个教书匠吗?Jackie ,你在组织管理那些复杂的工作中比较有天赋。我觉得你非常有必要去了解下 MIT 的斯隆商学院。”

|

||||

|

||||

我觉得自己也可以同时参加一些 MIT 技术方面的课程,因此我采纳了他的建议。但是,如果要参加市场营销管理方面的课程,我还有很长的路要走,这完全是在浪费时间。因此,在日常工作学习中,我始终是用自己所擅长的分析能力去解决一切问题。

|

||||

我觉得自己也可以同时参加一些 MIT 技术方面的课程,因此我采纳了他的建议。然而,如果要参加市场营销管理方面的课程,我还有很长的路要走,这完全是在浪费时间。因此,在日常工作学习中,我始终是用自己所擅长的分析能力去解决一切问题。

|

||||

|

||||

不久后,我在波士顿咨询集团公司做咨询顾问工作。在那六年的时间里,我经常听到大家对我的评论: Jackie ,你太没远见了。考虑问题也不够周全。你总是通过自己的分析数据去找答案。“

|

||||

不久后,我在波士顿咨询集团公司做咨询顾问工作。在那六年的时间里,我经常听到大家对我的评论: “Jackie ,你太没远见了。考虑问题也不够周全。你总是通过自己的分析去找答案。”

|

||||

|

||||

确实如此啊,我很赞同他们的想法——因为这个世界的工作方式本该如此,任何问题都要基于数据进行分析,不对吗?直到现在我才意识到(我多么希望自己早一些发现自己的问题)自己以前惯用的分析问题的方法遗漏了很多重要的东西:开放的心态,艺术修养,情感——人和创造性思维相关的因素。

|

||||

确实如此啊,我很赞同他们的想法——因为这个世界的工作方式本该如此,任何问题都要基于数据进行分析,不对吗?直到现在我才意识到(我多么希望自己早一些发现自己的问题)自己以前惯用的分析问题的方法遗漏了很多重要的东西:开放的心态、艺术修养、情感——人和创造性思维相关的因素。

|

||||

|

||||

我在 2001 年 9 月 11 日加入达美航空公司不久后,被调去管理消费者市场部门,之前我意识到的所有问题变得更加明显。这本来不是我的强项,但是在公司需要的情况下,我也愿意出手相肋。

|

||||

我在 2001 年 9 月 11 日加入达美航空公司不久后,被调去管理消费者市场部门,之前我意识到的所有问题变得更加明显。市场方面本来不是我的强项,但是在公司需要的情况下,我也愿意出手相肋。

|

||||

|

||||

但是突然之间,我一直惯用的方法获取到的常规数据分析结果却与实际情况完全相反。这个问题导致上千人(包括航线内外的人)受到影响。我忽略了一个很重要的人本身的情感因素。我所面临的问题需要各种各样的解决方案才能处理,而不是简单的从那些死板的分析数据中就能得到答案。

|

||||

但是突然之间,我一直惯用的方法获取到的分析结果却与实际情况完全相反。这个问题导致上千人(包括航线内外的人)受到影响。我忽略了一个很重要的人本身的情感因素。我所面临的问题需要各种各样的解决方案才能处理,而不是简单的从那些死板的数据中就能得到答案。

|

||||

|

||||

那段时间,我快速地学到了很多东西,因为如果我们想把达美航空公司恢复到正常状态,还需要做很多的工作——市场营销更像是一个以解决问题为导向,以用户为中心的充满挑战性的大工程,只是销售人员和工程师这两大阵营都没有迅速地意识到这个问题。

|

||||

那段时间,我快速地学到了很多东西,因为如果我们想把达美航空公司恢复到正常状态,还需要做很多的工作——市场营销更像是一个以解决问题为导向、以用户为中心的充满挑战性的大工程,只是销售人员和工程师这两大阵营都没有迅速地意识到这个问题。

|

||||

|

||||

### 两大文化差异

|

||||

|

||||

工程管理和市场营销之间的这个“巨大鸿沟”确实是根深蒂固的,这跟 C.P. Snow (英语物理化学家和小说家)提出的[“两大文化差异"问题][1]很相似。具有科学素质的工程师和具有艺术细胞的营销人员操着不同的语言,不同的文化观念导致他们不同的价值取向。

|

||||

工程管理和市场营销之间的这个“巨大鸿沟”确实是根深蒂固的,这跟(著名的科学家、小说家) C.P. Snow 提出的[“两大文化差异”问题][1]很相似。具有科学素质的工程师和具有艺术细胞的营销人员操着不同的语言,不同的文化观念导致他们不同的价值取向。

|

||||

|

||||

但是,事实上他们比想象中有更多的相似之处。华盛顿大学[最新研究][2](由微软、谷歌和美国国家科学基金会共同赞助)发现”一个伟大软件工程师必须具备哪些优秀的素质,“毫无疑问,一个伟大的销售人员同样也应该具备这些素质。例如,专家们给出的一些优秀品质如下:

|

||||

但是,事实上他们比想象中有更多的相似之处。一个由微软、谷歌和美国国家科学基金会共同赞助的华盛顿大学的[最新研究][2]发现了“一个伟大软件工程师必须具备哪些优秀的素质”,毫无疑问,一个伟大的销售人员同样也应该具备这些素质。例如,专家们给出的一些优秀品质如下:

|

||||

|

||||

* 充满激情

|

||||

* 性格开朗

|

||||

@ -56,29 +54,29 @@ _“营销人员都很懒散。”_

|

||||

* 技艺精湛

|

||||

* 解决复杂难题的能力

|

||||

|

||||

这些只是其中很小的一部分!当然,并不是所有的素质都适用于市场营销人员,但是如果用文氏图来表示这“两大文化“的交集,就很容易看出营销人员和工程师之间的关系要远比我们想象中密切得多。他们都是竭力去解决与用户或客户相关的难题,只是他们所采取的方式和角度不一致罢了。

|

||||

这些只是其中很小的一部分!当然,并不是所有的素质都适用于市场营销人员,但是如果用文氏图来表示这“两大文化”的交集,就很容易看出营销人员和工程师之间的关系要远比我们想象中密切得多。他们都是竭力去解决与用户或客户相关的难题,只是他们所采取的方式和角度不一致罢了。

|

||||

|

||||

看到上面的那几点后,我深深的陷入思考:_要是这两类员工彼此之间再多了解对方一些会怎样呢?这会给公司带来很强大的动力吧?_

|

||||

|

||||

确实如此。我在红帽公司就亲眼看到过样的情形,我身边都是一些“思想极端”的员工,要是之前,肯定早被我炒鱿鱼了。我相信公司里绝对发生过很多次类似这样的事情,一个销售人员看完工程师递交上来的分析报表后,心想,“这些书呆子,思想太局限了。真是一叶障目,不见泰山;两豆塞耳,不闻雷霆。”

|

||||

确实如此。我在红帽公司就亲眼看到过样的情形,我身边都是一些早些年肯定被我当成“想法疯狂”而无视的人。而且我猜销售人员看到工程师后(同时或某一次),心想,“这些数据呆瓜,真是只见树木不见森林。”

|

||||

|

||||

现在我才明白了公司里有这两种人才的重要性。在现实工作当中,工程师和营销人员都是围绕着客户、创新及数据分析来完成工作。如果他们能够懂得相互尊重、彼此理解、相辅相成,那么我们将会看到公司里所产生的那种积极强大的动力,这种超乎寻常的革新力量要远比两个独立的团队强大得多。

|

||||

|

||||

### 听一听他们的想法

|

||||

### 听一听疯子(和呆瓜)的想法

|

||||

|

||||

成功案例:_建立开放式组织_

|

||||

成功案例:《开放式组织》

|

||||

|

||||

在红帽任职期间,我的主要工作就是想办法提升公司的品牌影响力——但是我从未想过让公司的 CEO 去写一本书。我把公司多个部门的“想法极端”的同事召集在一起,希望他们帮我设计出一个新颖的解决方案来提升公司的影响力,结果他们提出让公司的 CEO 写书这样一个想法。

|

||||

在红帽任职期间,我的主要工作就是想办法提升公司的品牌影响力——但是就是给我一百万年我也不会想到让公司的 CEO 去写一本书。我把公司多个部门的“想法疯狂”的同事召集在一起,希望他们帮我设计出一个新颖的解决方案来提升公司的影响力,结果他们提出让公司的 CEO 写书这样一个想法。

|

||||

|

||||

当我听到这个想法的时候,我很快意识到应该把红帽公司一些经典的管理模式写入到这本书里:它将对整个开源社区的创业者带来很重要的参考价值,同时也有助于宣扬开源精神。通过优先考虑这两方面的作用,我们提升了红帽在整个开源软件世界中的品牌价值,红帽是一个可靠的随时准备着为客户在[数字化颠覆][3]年代指明方向的公司。

|

||||

当我听到这个想法的时候,我很快意识到这正是典型的红帽方式:它将对整个开源社区的从业者带来很重要的参考价值,同时也有助于宣扬开源精神。通过优先考虑这两方面的作用,我们提升了红帽在整个开源软件世界中的品牌价值——红帽是一个可靠的随时准备着为客户在[数字化颠覆][3]年代指明方向的公司。

|

||||

|

||||

这一点才是主要的:确切的说是指导红帽工程师解决代码问题的共同精神力量。 Red Hatters 小组一直在催着我赶紧把开放式组织的模式在全公司推广起来,以显示出内外部程序员共同推动整个开源社区发展的强大动力之一:那就是强烈的共享欲望。

|

||||

这一点才是主要的:确切的说是指导红帽工程师解决代码问题的共同精神力量。 Red Hatters 小组敦促我出版《开放式组织》,这显示出来自内部和外部社区的程序员共同推动整个开源社区发展的强大动力之一:那就是强烈的共享欲望。

|

||||

|

||||

最后,要把开放式组织的管理模式完全推广起来,还需要大家的共同能力,包括工程师们强大的数据分析能力和营销人员美好的艺术素养。这个项目让我更加坚定自己的想法,工程师和营销人员有更多的相似之处。

|

||||

最后,要把《开放式组织》这本书完成,还需要大家的共同能力,包括工程师们强大的数据分析能力和营销人员美好的艺术素养。这个项目让我更加坚定自己的想法,工程师和营销人员有更多的相似之处。

|

||||

|

||||

但是,有些东西我还得强调下:开放模式的实现,要求公司上下没有任何偏见,不能偏袒工程师和市场营销人员任何一方文化。一个更加理想的开放式环境能够促使员工之间和平共处,并在这个组织规定的范围内点燃大家的热情。

|

||||

|

||||

所以,这绝对不是我听到大家所说的懒散之意。

|

||||

这对我来说如春风拂面。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -94,7 +92,7 @@ via: https://opensource.com/open-organization/17/1/engineers-marketers-can-learn

|

||||

|

||||

作者:[Jackie Yeaney][a]

|

||||

译者:[rusking](https://github.com/rusking)

|

||||

校对:[Bestony](https://github.com/Bestony)

|

||||

校对:[Bestony](https://github.com/Bestony), [wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

678

published/20170111 Git in 2016.md

Normal file

678

published/20170111 Git in 2016.md

Normal file

@ -0,0 +1,678 @@

|

||||

2016 Git 新视界

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

2016 年 Git 发生了 _惊天动地_ 地变化,发布了五大新特性[¹][57] (从 _v2.7_ 到 _v2.11_ )和十六个补丁[²][58]。189 位作者[³][59]贡献了 3676 个提交[⁴][60]到 `master` 分支,比 2015 年多了 15%[⁵][61]!总计有 1545 个文件被修改,其中增加了 276799 行并移除了 100973 行。

|

||||

|

||||

但是,通过统计提交的数量和代码行数来衡量生产力是一种十分愚蠢的方法。除了深度研究过的开发者可以做到凭直觉来判断代码质量的地步,我们普通人来作仲裁难免会因我们常人的判断有失偏颇。

|

||||

|

||||

谨记这一条于心,我决定整理这一年里六个我最喜爱的 Git 特性涵盖的改进,来做一次分类回顾。这篇文章作为一篇中篇推文有点太过长了,所以我不介意你们直接跳到你们特别感兴趣的特性去。

|

||||

|

||||

* [完成][41] [`git worktree`][25] [命令][42]

|

||||

* [更多方便的][43] [`git rebase`][26] [选项][44]

|

||||

* [`git lfs`][27] [梦幻的性能加速][45]

|

||||

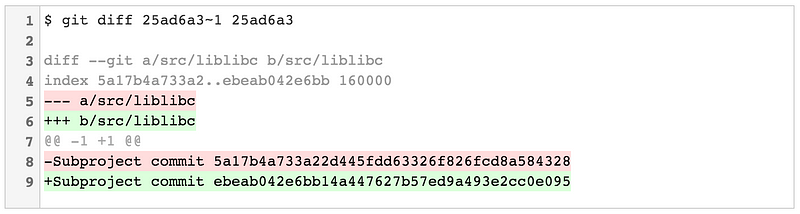

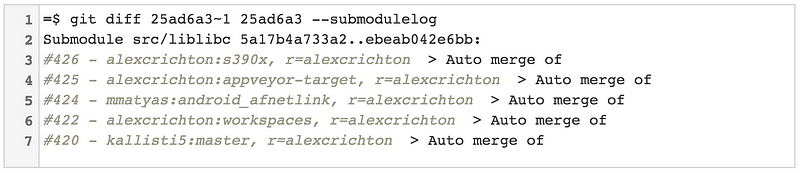

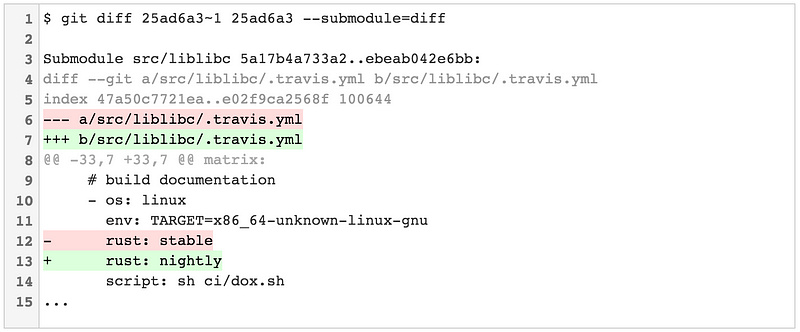

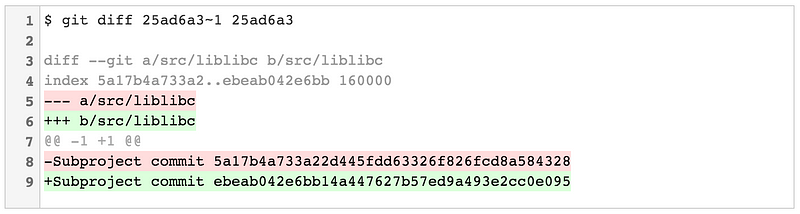

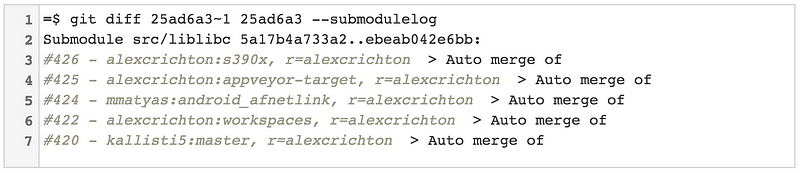

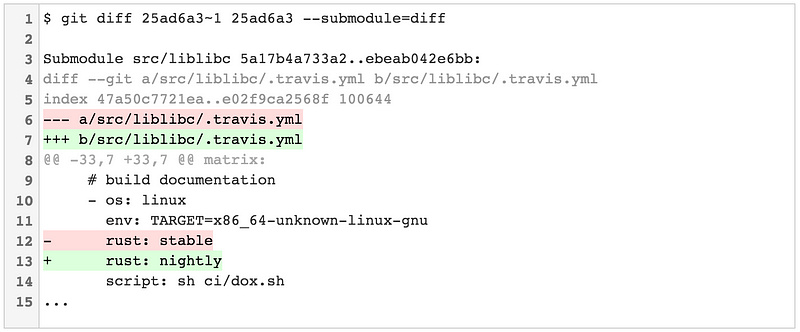

* [`git diff`][28] [实验性的算法和更好的默认结果][46]

|

||||

* [`git submodules`][29] [差强人意][47]

|

||||

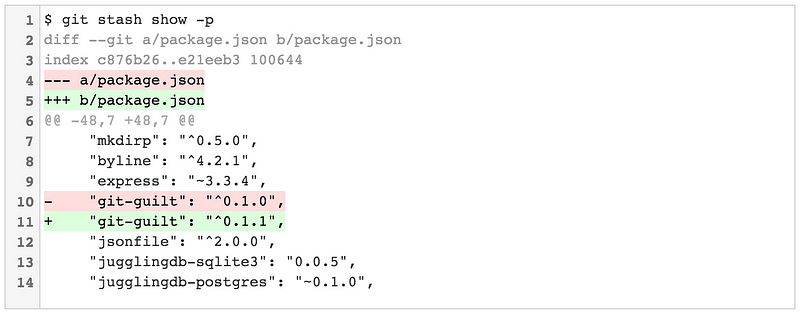

* [`git stash`][30] 的[90 个增强][48]

|

||||

|

||||

在我们开始之前,请注意在大多数操作系统上都自带有 Git 的旧版本,所以你需要检查你是否在使用最新并且最棒的版本。如果在终端运行 `git --version` 返回的结果小于 Git `v2.11.0`,请立刻跳转到 Atlassian 的快速指南 [更新或安装 Git][63] 并根据你的平台做出选择。

|

||||

|

||||

###[所需的“引用”]

|

||||

|

||||

在我们进入高质量内容之前还需要做一个短暂的停顿:我觉得我需要为你展示我是如何从公开文档生成统计信息(以及开篇的封面图片)的。你也可以使用下面的命令来对你自己的仓库做一个快速的 *年度回顾*!

|

||||

|

||||

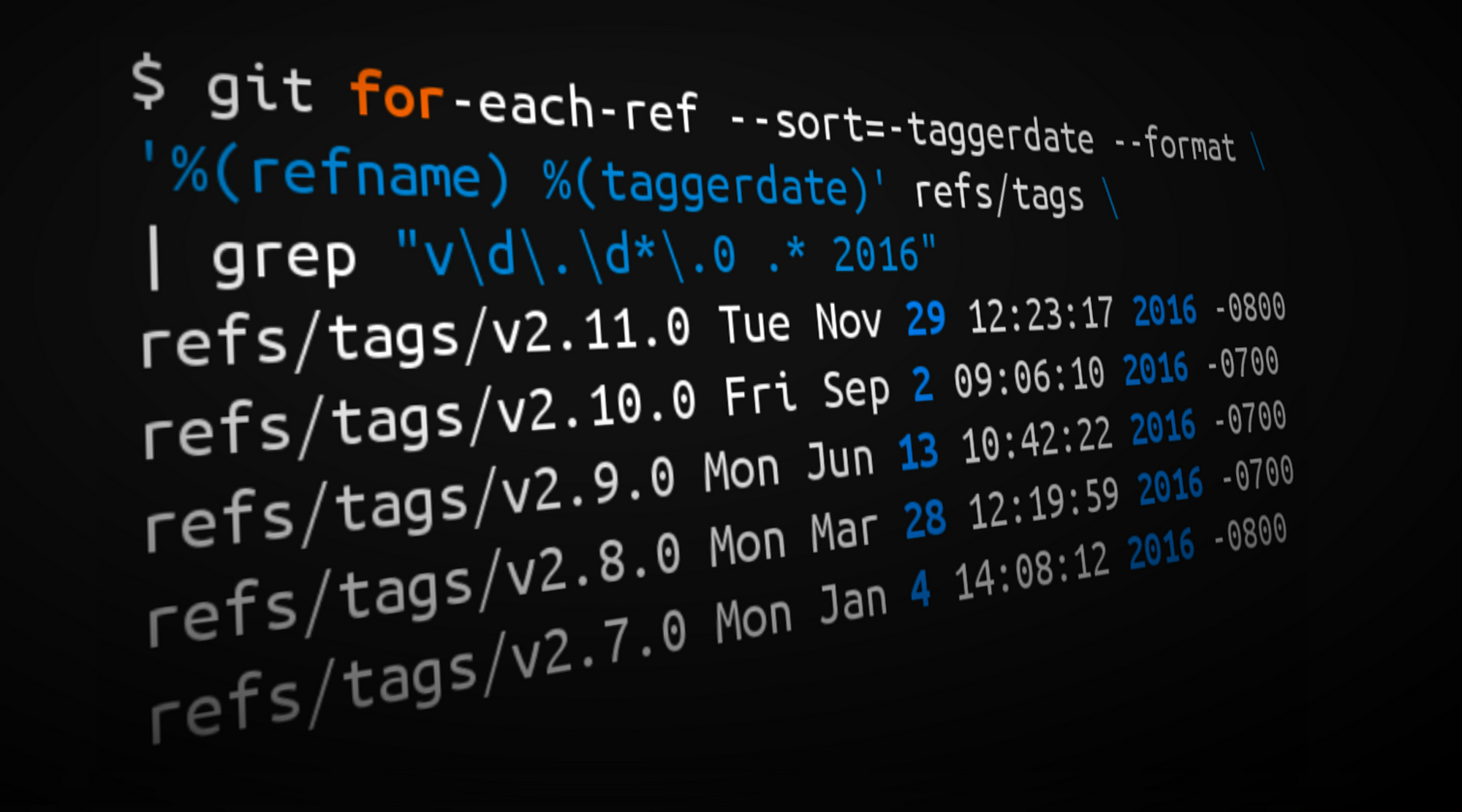

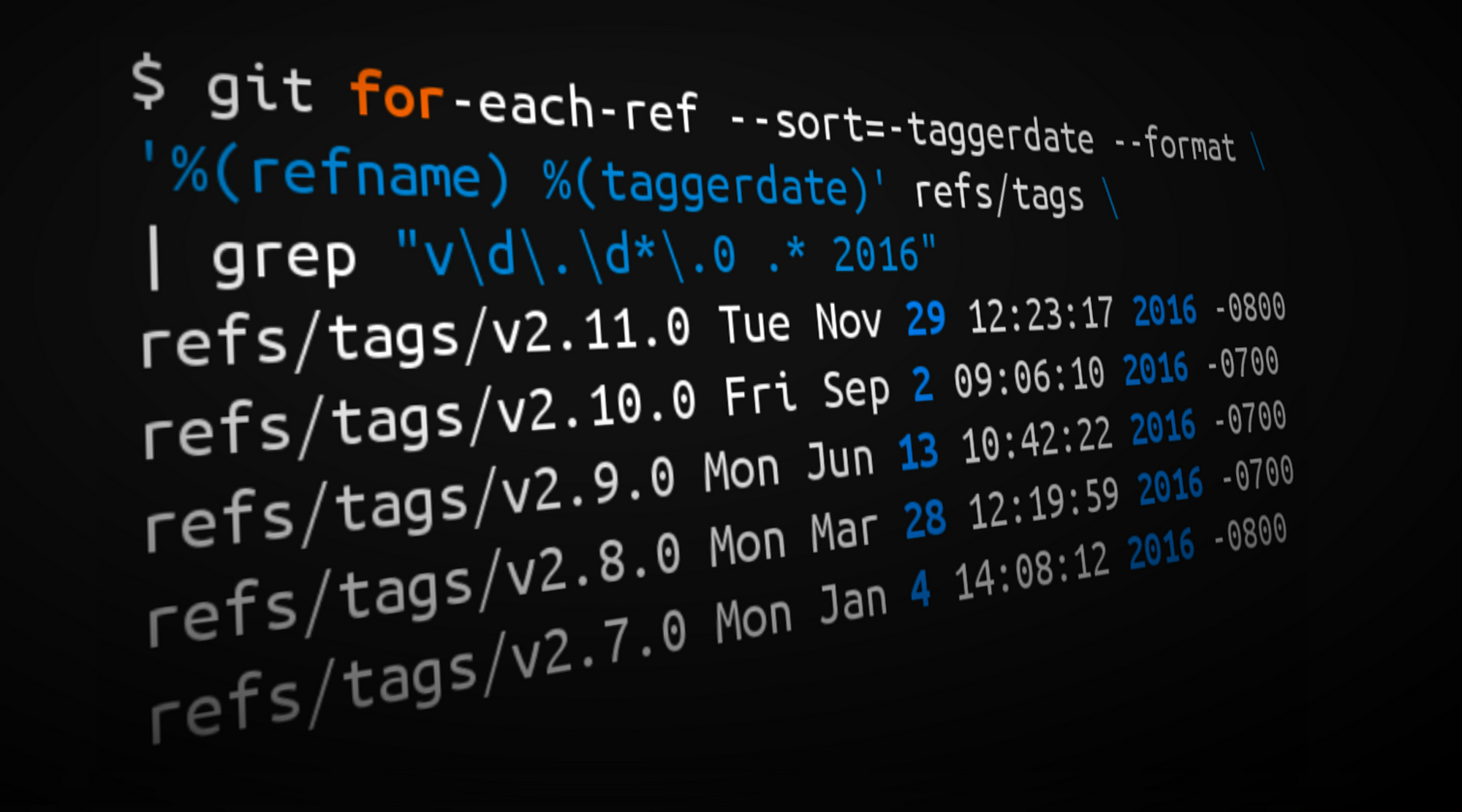

>¹ Tags from 2016 matching the form vX.Y.0

|

||||

|

||||

```

|

||||

$ git for-each-ref --sort=-taggerdate --format \

|

||||

'%(refname) %(taggerdate)' refs/tags | grep "v\d\.\d*\.0 .* 2016"

|

||||

```

|

||||

|

||||

> ² Tags from 2016 matching the form vX.Y.Z

|

||||

|

||||

```

|

||||

$ git for-each-ref --sort=-taggerdate --format '%(refname) %(taggerdate)' refs/tags | grep "v\d\.\d*\.[^0] .* 2016"

|

||||

```

|

||||

|

||||

> ³ Commits by author in 2016

|

||||

|

||||

```

|

||||

$ git shortlog -s -n --since=2016-01-01 --until=2017-01-01

|

||||

```

|

||||

|

||||

> ⁴ Count commits in 2016

|

||||

|

||||

```

|

||||

$ git log --oneline --since=2016-01-01 --until=2017-01-01 | wc -l

|

||||

```

|

||||

|

||||

> ⁵ ... and in 2015

|

||||

|

||||

```

|

||||

$ git log --oneline --since=2015-01-01 --until=2016-01-01 | wc -l

|

||||

```

|

||||

|

||||

> ⁶ Net LOC added/removed in 2016

|

||||

|

||||

```

|

||||

$ git diff --shortstat `git rev-list -1 --until=2016-01-01 master` \

|

||||

`git rev-list -1 --until=2017-01-01 master`

|

||||

```

|

||||

|

||||

以上的命令是在 Git 的 `master` 分支运行的,所以不会显示其他出色的分支上没有合并的工作。如果你使用这些命令,请记住提交的数量和代码行数不是应该值得信赖的度量方式。请不要使用它们来衡量你的团队成员的贡献。

|

||||

|

||||

现在,让我们开始说好的回顾……

|

||||

|

||||

### 完成 Git 工作树(worktree)

|

||||

|

||||

`git worktree` 命令首次出现于 Git v2.5 ,但是在 2016 年有了一些显著的增强。两个有价值的新特性在 v2.7 被引入:`list` 子命令,和为二分搜索增加了命令空间的 refs。而 `lock`/`unlock` 子命令则是在 v2.10 被引入。

|

||||

|

||||

#### 什么是工作树呢?

|

||||

|

||||

[`git worktree`][49] 命令允许你同步地检出和操作处于不同路径下的同一仓库的多个分支。例如,假如你需要做一次快速的修复工作但又不想扰乱你当前的工作区,你可以使用以下命令在一个新路径下检出一个新分支:

|

||||

|

||||

```

|

||||

$ git worktree add -b hotfix/BB-1234 ../hotfix/BB-1234

|

||||

Preparing ../hotfix/BB-1234 (identifier BB-1234)

|

||||

HEAD is now at 886e0ba Merged in bedwards/BB-13430-api-merge-pr (pull request #7822)

|

||||

```

|

||||

|

||||

工作树不仅仅是为分支工作。你可以检出多个里程碑(tags)作为不同的工作树来并行构建或测试它们。例如,我从 Git v2.6 和 v2.7 的里程碑中创建工作树来检验不同版本 Git 的行为特征。

|

||||

|

||||

```

|

||||

$ git worktree add ../git-v2.6.0 v2.6.0

|

||||

Preparing ../git-v2.6.0 (identifier git-v2.6.0)

|

||||

HEAD is now at be08dee Git 2.6

|

||||

|

||||

$ git worktree add ../git-v2.7.0 v2.7.0

|

||||

Preparing ../git-v2.7.0 (identifier git-v2.7.0)

|

||||

HEAD is now at 7548842 Git 2.7

|

||||

|

||||

$ git worktree list

|

||||

/Users/kannonboy/src/git 7548842 [master]

|

||||

/Users/kannonboy/src/git-v2.6.0 be08dee (detached HEAD)

|

||||

/Users/kannonboy/src/git-v2.7.0 7548842 (detached HEAD)

|

||||

|

||||

$ cd ../git-v2.7.0 && make

|

||||

```

|

||||

|

||||

你也使用同样的技术来并行构造和运行你自己应用程序的不同版本。

|

||||

|

||||

#### 列出工作树

|

||||

|

||||

`git worktree list` 子命令(于 Git v2.7 引入)显示所有与当前仓库有关的工作树。

|

||||

|

||||

```

|

||||

$ git worktree list

|

||||

/Users/kannonboy/src/bitbucket/bitbucket 37732bd [master]

|

||||

/Users/kannonboy/src/bitbucket/staging d5924bc [staging]

|

||||

/Users/kannonboy/src/bitbucket/hotfix-1234 37732bd [hotfix/1234]

|

||||

```

|

||||

|

||||

#### 二分查找工作树

|

||||

|

||||

[`gitbisect`][50] 是一个简洁的 Git 命令,可以让我们对提交记录执行一次二分搜索。通常用来找到哪一次提交引入了一个指定的退化。例如,如果在我的 `master` 分支最后的提交上有一个测试没有通过,我可以使用 `git bisect` 来贯穿仓库的历史来找寻第一次造成这个错误的提交。

|

||||

|

||||

```

|

||||

$ git bisect start

|

||||

|

||||

# 找到已知通过测试的最后提交

|

||||

# (例如最新的发布里程碑)

|

||||

$ git bisect good v2.0.0

|

||||

|

||||

# 找到已知的出问题的提交

|

||||

# (例如在 `master` 上的提示)

|

||||

$ git bisect bad master

|

||||

|

||||

# 告诉 git bisect 要运行的脚本/命令;

|

||||

# git bisect 会在 “good” 和 “bad”范围内

|

||||

# 找到导致脚本以非 0 状态退出的最旧的提交

|

||||

$ git bisect run npm test

|

||||

```

|

||||

|

||||

在后台,bisect 使用 refs 来跟踪 “good” 与 “bad” 的提交来作为二分搜索范围的上下界限。不幸的是,对工作树的粉丝来说,这些 refs 都存储在寻常的 `.git/refs/bisect` 命名空间,意味着 `git bisect` 操作如果运行在不同的工作树下可能会互相干扰。

|

||||

|

||||

到了 v2.7 版本,bisect 的 refs 移到了 `.git/worktrees/$worktree_name/refs/bisect`, 所以你可以并行运行 bisect 操作于多个工作树中。

|

||||

|

||||

#### 锁定工作树

|

||||

|

||||

当你完成了一颗工作树的工作,你可以直接删除它,然后通过运行 `git worktree prune` 等它被当做垃圾自动回收。但是,如果你在网络共享或者可移除媒介上存储了一颗工作树,如果工作树目录在删除期间不可访问,工作树会被完全清除——不管你喜不喜欢!Git v2.10 引入了 `git worktree lock` 和 `unlock` 子命令来防止这种情况发生。

|

||||

|

||||

```

|

||||

# 在我的 USB 盘上锁定 git-v2.7 工作树

|

||||

$ git worktree lock /Volumes/Flash_Gordon/git-v2.7 --reason \

|

||||

"In case I remove my removable media"

|

||||

```

|

||||

|

||||

```

|

||||

# 当我完成时,解锁(并删除)该工作树

|

||||

$ git worktree unlock /Volumes/Flash_Gordon/git-v2.7

|

||||

$ rm -rf /Volumes/Flash_Gordon/git-v2.7

|

||||

$ git worktree prune

|

||||

```

|

||||

|

||||

`--reason` 标签允许为未来的你留一个记号,描述为什么当初工作树被锁定。`git worktree unlock` 和 `lock` 都要求你指定工作树的路径。或者,你可以 `cd` 到工作树目录然后运行 `git worktree lock .` 来达到同样的效果。

|

||||

|

||||

|

||||

### 更多 Git 变基(rebase)选项

|

||||

|

||||

2016 年三月,Git v2.8 增加了在拉取过程中交互进行变基的命令 `git pull --rebase=interactive` 。对应地,六月份 Git v2.9 发布了通过 `git rebase -x` 命令对执行变基操作而不需要进入交互模式的支持。

|

||||

|

||||

#### Re-啥?

|

||||

|

||||

在我们继续深入前,我假设读者中有些并不是很熟悉或者没有完全习惯变基命令或者交互式变基。从概念上说,它很简单,但是与很多 Git 的强大特性一样,变基散发着听起来很复杂的专业术语的气息。所以,在我们深入前,先来快速的复习一下什么是变基(rebase)。

|

||||

|

||||

变基操作意味着将一个或多个提交在一个指定分支上重写。`git rebase` 命令是被深度重载了,但是 rebase 这个名字的来源事实上还是它经常被用来改变一个分支的基准提交(你基于此提交创建了这个分支)。

|

||||

|

||||

从概念上说,rebase 通过将你的分支上的提交临时存储为一系列补丁包,接着将这些补丁包按顺序依次打在目标提交之上。

|

||||

|

||||

|

||||

|

||||

对 master 分支的一个功能分支执行变基操作 (`git reabse master`)是一种通过将 master 分支上最新的改变合并到功能分支的“保鲜法”。对于长期存在的功能分支,规律的变基操作能够最大程度的减少开发过程中出现冲突的可能性和严重性。

|

||||

|

||||

有些团队会选择在合并他们的改动到 master 前立即执行变基操作以实现一次快速合并 (`git merge --ff <feature>`)。对 master 分支快速合并你的提交是通过简单的将 master ref 指向你的重写分支的顶点而不需要创建一个合并提交。

|

||||

|

||||

|

||||

|

||||

变基是如此方便和功能强大以致于它已经被嵌入其他常见的 Git 命令中,例如拉取操作 `git pull` 。如果你在本地 master 分支有未推送的提交,运行 `git pull` 命令从 origin 拉取你队友的改动会造成不必要的合并提交。

|

||||

|

||||

|

||||

|

||||

这有点混乱,而且在繁忙的团队,你会获得成堆的不必要的合并提交。`git pull --rebase` 将你本地的提交在你队友的提交上执行变基而不产生一个合并提交。

|

||||

|

||||

|

||||

|

||||

这很整洁吧!甚至更酷,Git v2.8 引入了一个新特性,允许你在拉取时 _交互地_ 变基。

|

||||

|

||||

#### 交互式变基

|

||||

|

||||

交互式变基是变基操作的一种更强大的形态。和标准变基操作相似,它可以重写提交,但它也可以向你提供一个机会让你能够交互式地修改这些将被重新运用在新基准上的提交。

|

||||

|

||||

当你运行 `git rebase --interactive` (或 `git pull --rebase=interactive`)时,你会在你的文本编辑器中得到一个可供选择的提交列表视图。

|

||||

|

||||

```

|

||||

$ git rebase master --interactive

|

||||

|

||||

pick 2fde787 ACE-1294: replaced miniamalCommit with string in test

|

||||

pick ed93626 ACE-1294: removed pull request service from test

|

||||

pick b02eb9a ACE-1294: moved fromHash, toHash and diffType to batch

|

||||

pick e68f710 ACE-1294: added testing data to batch email file

|

||||

|

||||

# Rebase f32fa9d..0ddde5f onto f32fa9d (4 commands)

|

||||

#

|

||||

# Commands:

|

||||

# p, pick = use commit

|

||||

# r, reword = use commit, but edit the commit message

|

||||

# e, edit = use commit, but stop for amending

|

||||

# s, squash = use commit, but meld into previous commit

|

||||

# f, fixup = like "squash", but discard this commit's log message

|

||||

# x, exec = run command (the rest of the line) using shell

|

||||

# d, drop = remove commit

|

||||

#

|

||||

# These lines can be re-ordered; they are executed from top to

|

||||

# bottom.

|

||||

#

|

||||

# If you remove a line here THAT COMMIT WILL BE LOST.

|

||||

```

|

||||

|

||||

注意到每一条提交旁都有一个 `pick`。这是对 rebase 而言,“照原样留下这个提交”。如果你现在就退出文本编辑器,它会执行一次如上文所述的普通变基操作。但是,如果你将 `pick` 改为 `edit` 或者其他 rebase 命令中的一个,变基操作会允许你在它被重新运用前改变它。有效的变基命令有如下几种:

|

||||

|

||||

* `reword`:编辑提交信息。

|

||||

* `edit`:编辑提交了的文件。

|

||||

* `squash`:将提交与之前的提交(同在文件中)合并,并将提交信息拼接。

|

||||

* `fixup`:将本提交与上一条提交合并,并且逐字使用上一条提交的提交信息(这很方便,如果你为一个很小的改动创建了第二个提交,而它本身就应该属于上一条提交,例如,你忘记暂存了一个文件)。

|

||||

* `exec`: 运行一条任意的 shell 命令(我们将会在下一节看到本例一次简洁的使用场景)。

|

||||

* `drop`: 这将丢弃这条提交。

|

||||

|

||||

你也可以在文件内重新整理提交,这样会改变它们被重新应用的顺序。当你对不同的主题创建了交错的提交时这会很顺手,你可以使用 `squash` 或者 `fixup` 来将其合并成符合逻辑的原子提交。

|

||||

|

||||

当你设置完命令并且保存这个文件后,Git 将递归每一条提交,在每个 `reword` 和 `edit` 命令处为你暂停来执行你设计好的改变,并且自动运行 `squash`, `fixup`,`exec` 和 `drop` 命令。

|

||||

|

||||

####非交互性式执行

|

||||

|

||||

当你执行变基操作时,本质上你是在通过将你每一条新提交应用于指定基址的头部来重写历史。`git pull --rebase` 可能会有一点危险,因为根据上游分支改动的事实,你的新建历史可能会由于特定的提交遭遇测试失败甚至编译问题。如果这些改动引起了合并冲突,变基过程将会暂停并且允许你来解决它们。但是,整洁的合并改动仍然有可能打断编译或测试过程,留下破败的提交弄乱你的提交历史。

|

||||

|

||||

但是,你可以指导 Git 为每一个重写的提交来运行你的项目测试套件。在 Git v2.9 之前,你可以通过绑定 `git rebase --interactive` 和 `exec` 命令来实现。例如这样:

|

||||

|

||||

```

|

||||

$ git rebase master −−interactive −−exec=”npm test”

|

||||

```

|

||||

|

||||

……这会生成一个交互式变基计划,在重写每条提交后执行 `npm test` ,保证你的测试仍然会通过:

|

||||

|

||||

```

|

||||

pick 2fde787 ACE-1294: replaced miniamalCommit with string in test

|

||||

exec npm test

|

||||

pick ed93626 ACE-1294: removed pull request service from test

|

||||

exec npm test

|

||||

pick b02eb9a ACE-1294: moved fromHash, toHash and diffType to batch

|

||||

exec npm test

|

||||

pick e68f710 ACE-1294: added testing data to batch email file

|

||||

exec npm test

|

||||

|

||||

# Rebase f32fa9d..0ddde5f onto f32fa9d (4 command(s))

|

||||

```

|

||||

|

||||

如果出现了测试失败的情况,变基会暂停并让你修复这些测试(并且将你的修改应用于相应提交):

|

||||

|

||||

```

|

||||

291 passing

|

||||

1 failing

|

||||

|

||||

1) Host request "after all" hook:

|

||||

Uncaught Error: connect ECONNRESET 127.0.0.1:3001

|

||||

…

|

||||

npm ERR! Test failed.

|

||||

Execution failed: npm test

|

||||

You can fix the problem, and then run

|

||||

git rebase −−continue

|

||||

```

|

||||

|

||||

这很方便,但是使用交互式变基有一点臃肿。到了 Git v2.9,你可以这样来实现非交互式变基:

|

||||

|

||||

```

|

||||

$ git rebase master -x "npm test"

|

||||

```

|

||||

|

||||

可以简单替换 `npm test` 为 `make`,`rake`,`mvn clean install`,或者任何你用来构建或测试你的项目的命令。

|

||||

|

||||

#### 小小警告

|

||||

|

||||

就像电影里一样,重写历史可是一个危险的行当。任何提交被重写为变基操作的一部分都将改变它的 SHA-1 ID,这意味着 Git 会把它当作一个全新的提交对待。如果重写的历史和原来的历史混杂,你将获得重复的提交,而这可能在你的团队中引起不少的疑惑。

|

||||

|

||||

为了避免这个问题,你仅仅需要遵照一条简单的规则:

|

||||

|

||||

> _永远不要变基一条你已经推送的提交!_

|

||||

|

||||

坚持这一点你会没事的。

|

||||

|

||||

### Git LFS 的性能提升

|

||||

|

||||

[Git 是一个分布式版本控制系统][64],意味着整个仓库的历史会在克隆阶段被传送到客户端。对包含大文件的项目——尤其是大文件经常被修改——初始克隆会非常耗时,因为每一个版本的每一个文件都必须下载到客户端。[Git LFS(Large File Storage 大文件存储)][65] 是一个 Git 拓展包,由 Atlassian、GitHub 和其他一些开源贡献者开发,通过需要时才下载大文件的相对版本来减少仓库中大文件的影响。更明确地说,大文件是在检出过程中按需下载的而不是在克隆或抓取过程中。

|

||||

|

||||

在 Git 2016 年的五大发布中,Git LFS 自身就有四个功能版本的发布:v1.2 到 v1.5。你可以仅对 Git LFS 这一项来写一系列回顾文章,但是就这篇文章而言,我将专注于 2016 年解决的一项最重要的主题:速度。一系列针对 Git 和 Git LFS 的改进极大程度地优化了将文件传入/传出服务器的性能。

|

||||

|

||||

#### 长期过滤进程

|

||||

|

||||

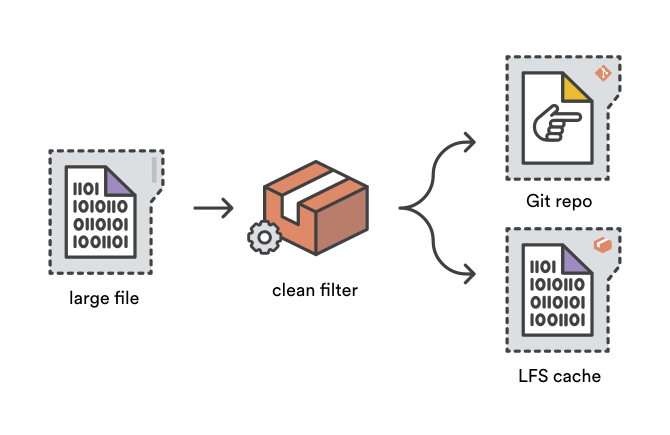

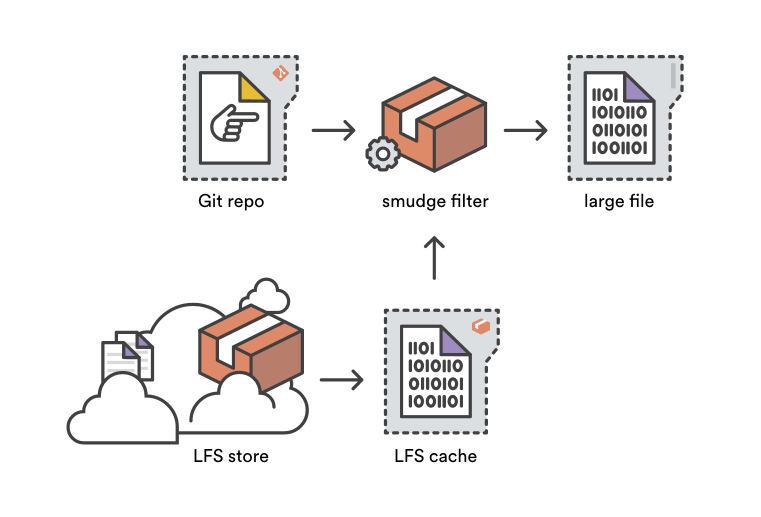

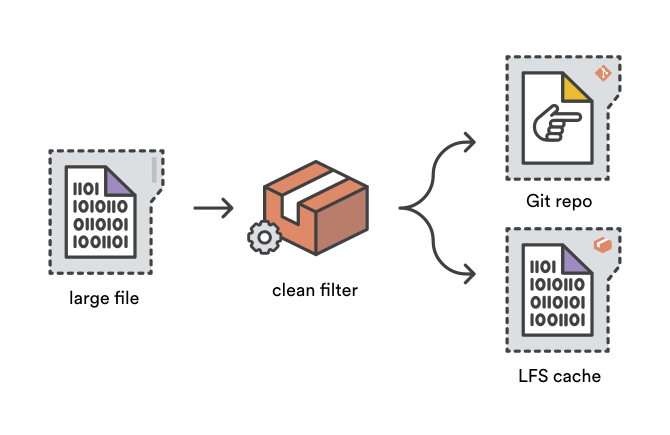

当你 `git add` 一个文件时,Git 的净化过滤系统会被用来在文件被写入 Git 目标存储之前转化文件的内容。Git LFS 通过使用净化过滤器(clean filter)将大文件内容存储到 LFS 缓存中以缩减仓库的大小,并且增加一个小“指针”文件到 Git 目标存储中作为替代。

|

||||

|

||||

|

||||

|

||||

污化过滤器(smudge filter)是净化过滤器的对立面——正如其名。在 `git checkout` 过程中从一个 Git 目标仓库读取文件内容时,污化过滤系统有机会在文件被写入用户的工作区前将其改写。Git LFS 污化过滤器通过将指针文件替代为对应的大文件将其转化,可以是从 LFS 缓存中获得或者通过读取存储在 Bitbucket 的 Git LFS。

|

||||

|

||||

|

||||

|

||||

传统上,污化和净化过滤进程在每个文件被增加和检出时只能被唤起一次。所以,一个项目如果有 1000 个文件在被 Git LFS 追踪 ,做一次全新的检出需要唤起 `git-lfs-smudge` 命令 1000 次。尽管单次操作相对很迅速,但是经常执行 1000 次独立的污化进程总耗费惊人。、

|

||||

|

||||

针对 Git v2.11(和 Git LFS v1.5),污化和净化过滤器可以被定义为长期进程,为第一个需要过滤的文件调用一次,然后为之后的文件持续提供污化或净化过滤直到父 Git 操作结束。[Lars Schneider][66],Git 的长期过滤系统的贡献者,简洁地总结了对 Git LFS 性能改变带来的影响。

|

||||

|

||||

> 使用 12k 个文件的测试仓库的过滤进程在 macOS 上快了 80 倍,在 Windows 上 快了 58 倍。在 Windows 上,这意味着测试运行了 57 秒而不是 55 分钟。

|

||||

|

||||

这真是一个让人印象深刻的性能增强!

|

||||

|

||||

#### LFS 专有克隆

|

||||

|

||||

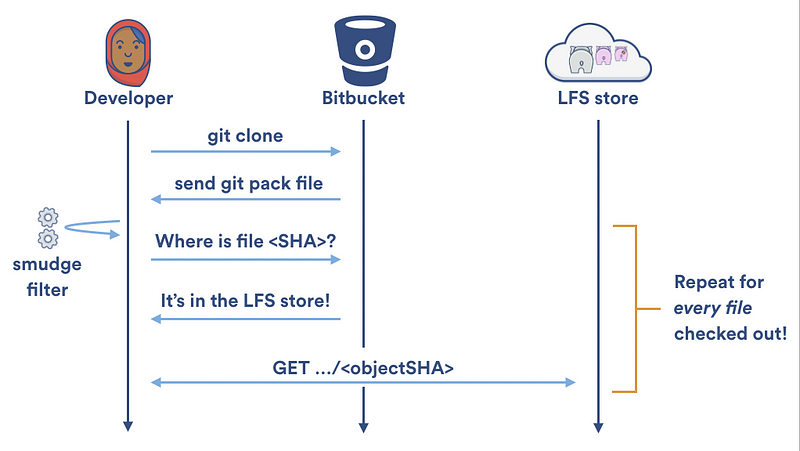

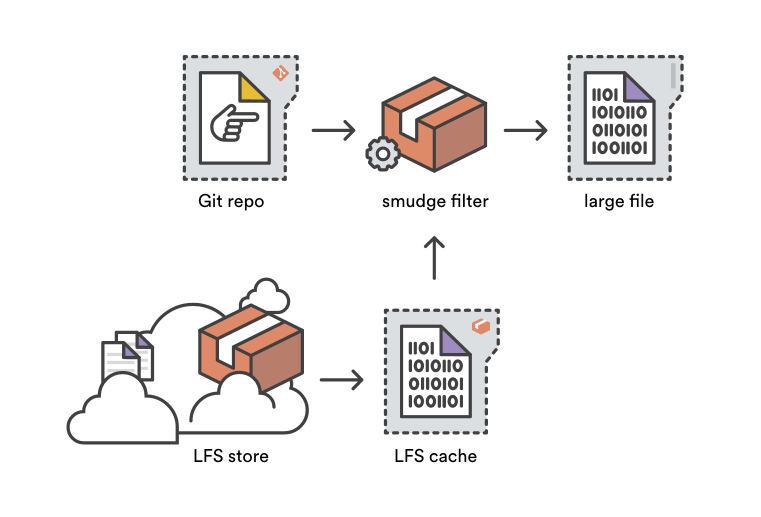

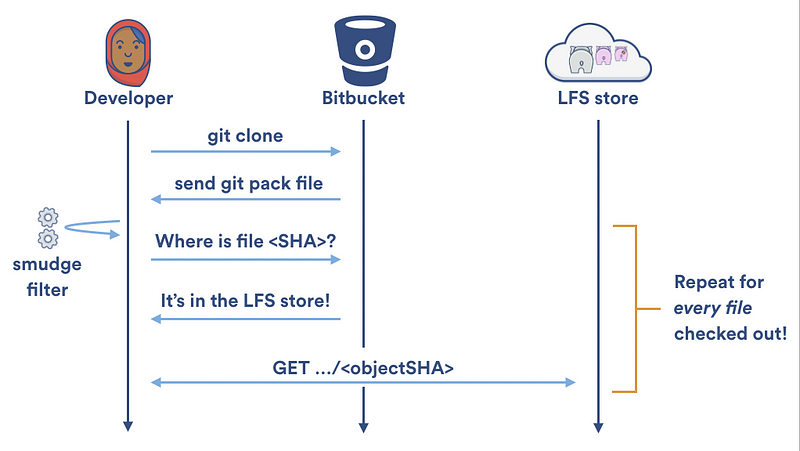

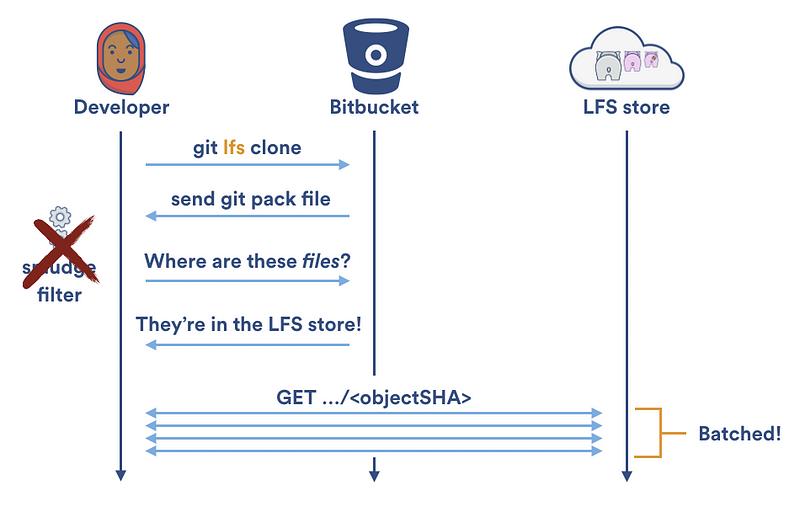

长期运行的污化和净化过滤器在对向本地缓存读写的加速做了很多贡献,但是对大目标传入/传出 Git LFS 服务器的速度提升贡献很少。 每次 Git LFS 污化过滤器在本地 LFS 缓存中无法找到一个文件时,它不得不使用两次 HTTP 请求来获得该文件:一个用来定位文件,另外一个用来下载它。在一次 `git clone` 过程中,你的本地 LFS 缓存是空的,所以 Git LFS 会天真地为你的仓库中每个 LFS 所追踪的文件创建两个 HTTP 请求:

|

||||

|

||||

|

||||

|

||||

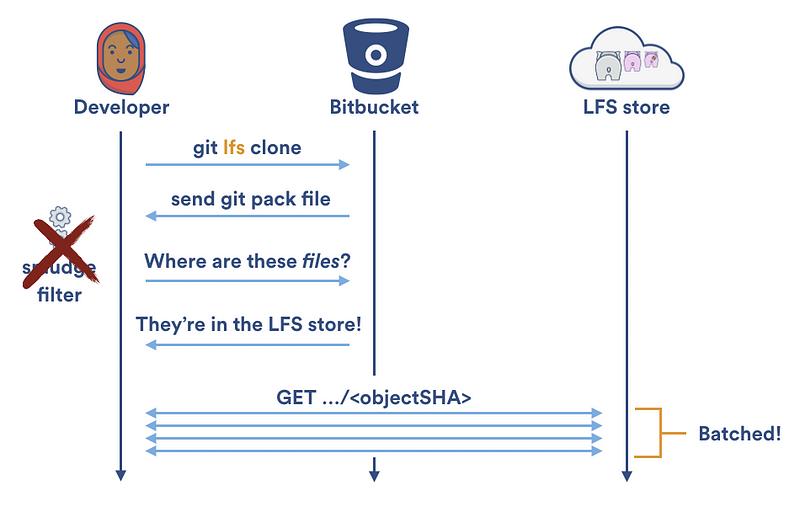

幸运的是,Git LFS v1.2 提供了专门的 [`git lfs clone`][51] 命令。不再是一次下载一个文件; `git lfs clone` 禁止 Git LFS 污化过滤器,等待检出结束,然后从 Git LFS 存储中按批下载任何需要的文件。这允许了并行下载并且将需要的 HTTP 请求数量减半。

|

||||

|

||||

|

||||

|

||||

### 自定义传输路由器(Transfer Adapter)

|

||||

|

||||

正如之前讨论过的,Git LFS 在 v1.5 中提供对长期过滤进程的支持。不过,对另外一种类型的可插入进程的支持早在今年年初就发布了。 Git LFS 1.3 包含了对可插拔传输路由器(pluggable transfer adapter)的支持,因此不同的 Git LFS 托管服务可以定义属于它们自己的协议来向或从 LFS 存储中传输文件。

|

||||

|

||||

直到 2016 年底,Bitbucket 是唯一一个执行专属 Git LFS 传输协议 [Bitbucket LFS Media Adapter][67] 的托管服务商。这是为了从 Bitbucket 的一个被称为 chunking 的 LFS 存储 API 独特特性中获益。Chunking 意味着在上传或下载过程中,大文件被分解成 4MB 的文件块(chunk)。

|

||||

|

||||

|

||||

|

||||

分块给予了 Bitbucket 支持的 Git LFS 三大优势:

|

||||

|

||||

1. 并行下载与上传。默认地,Git LFS 最多并行传输三个文件。但是,如果只有一个文件被单独传输(这也是 Git LFS 污化过滤器的默认行为),它会在一个单独的流中被传输。Bitbucket 的分块允许同一文件的多个文件块同时被上传或下载,经常能够神奇地提升传输速度。

|

||||

2. 可续传的文件块传输。文件块都在本地缓存,所以如果你的下载或上传被打断,Bitbucket 的自定义 LFS 流媒体路由器会在下一次你推送或拉取时仅为丢失的文件块恢复传输。

|

||||

3. 免重复。Git LFS,正如 Git 本身,是一种可定位的内容;每一个 LFS 文件都由它的内容生成的 SHA-256 哈希值认证。所以,哪怕你稍微修改了一位数据,整个文件的 SHA-256 就会修改而你不得不重新上传整个文件。分块允许你仅仅重新上传文件真正被修改的部分。举个例子,想想一下 Git LFS 在追踪一个 41M 的精灵表格(spritesheet)。如果我们增加在此精灵表格上增加 2MB 的新的部分并且提交它,传统上我们需要推送整个新的 43M 文件到服务器端。但是,使用 Bitbucket 的自定义传输路由,我们仅仅需要推送大约 7MB:先是 4MB 文件块(因为文件的信息头会改变)和我们刚刚添加的包含新的部分的 3MB 文件块!其余未改变的文件块在上传过程中被自动跳过,节省了巨大的带宽和时间消耗。

|

||||

|

||||

可自定义的传输路由器是 Git LFS 的一个伟大的特性,它们使得不同服务商在不重载核心项目的前提下体验适合其服务器的优化后的传输协议。

|

||||

|

||||

### 更佳的 git diff 算法与默认值

|

||||

|

||||

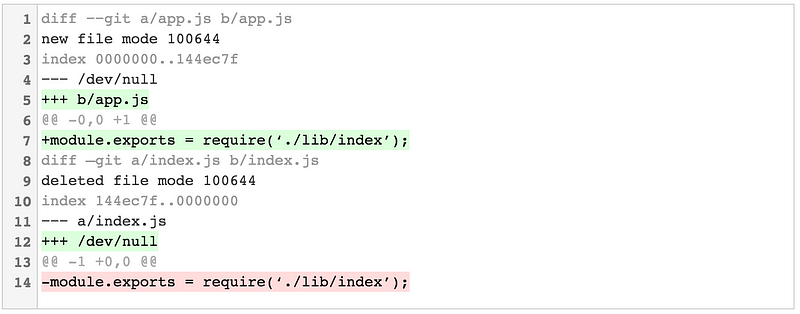

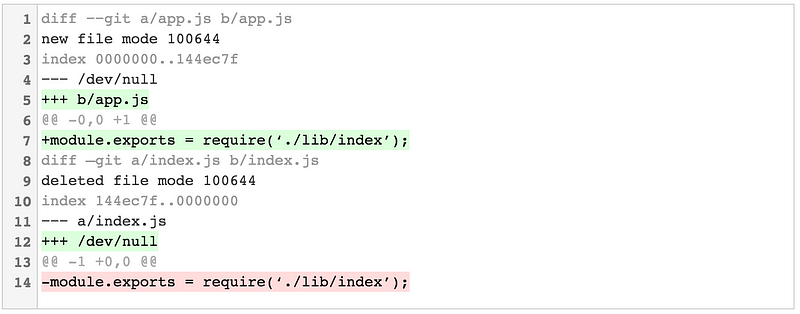

不像其他的版本控制系统,Git 不会明确地存储文件被重命名了的事实。例如,如果我编辑了一个简单的 Node.js 应用并且将 `index.js` 重命名为 `app.js`,然后运行 `git diff`,我会得到一个看起来像一个文件被删除另一个文件被新建的结果。

|

||||

|

||||

|

||||

|

||||

我猜测移动或重命名一个文件从技术上来讲是一次删除后跟着一次新建,但这不是对人类最友好的描述方式。其实,你可以使用 `-M` 标志来指示 Git 在计算差异时同时尝试检测是否是文件重命名。对之前的例子,`git diff -M` 给我们如下结果:

|

||||

|

||||

|

||||

|

||||

第二行显示的 similarity index 告诉我们文件内容经过比较后的相似程度。默认地,`-M` 会处理任意两个超过 50% 相似度的文件。这意味着,你需要编辑少于 50% 的行数来确保它们可以被识别成一个重命名后的文件。你可以通过加上一个百分比来选择你自己的 similarity index,如,`-M80%`。

|

||||

|

||||

到 Git v2.9 版本,无论你是否使用了 `-M` 标志, `git diff` 和 `git log` 命令都会默认检测重命名。如果不喜欢这种行为(或者,更现实的情况,你在通过一个脚本来解析 diff 输出),那么你可以通过显式的传递 `--no-renames` 标志来禁用这种行为。

|

||||

|

||||

#### 详细的提交

|

||||

|

||||

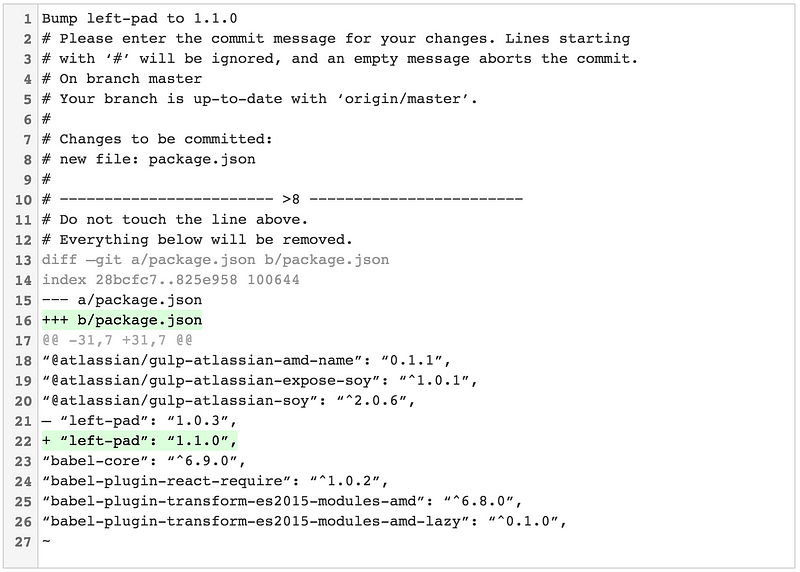

你经历过调用 `git commit` 然后盯着空白的 shell 试图想起你刚刚做过的所有改动吗?`verbose` 标志就为此而来!

|

||||

|

||||

不像这样:

|

||||

|

||||

```

|

||||

Ah crap, which dependency did I just rev?

|

||||

|

||||

# Please enter the commit message for your changes. Lines starting

|

||||

# with ‘#’ will be ignored, and an empty message aborts the commit.

|

||||

# On branch master

|

||||

# Your branch is up-to-date with ‘origin/master’.

|

||||

#

|

||||

# Changes to be committed:

|

||||

# new file: package.json

|

||||

#

|

||||

```

|

||||

|

||||

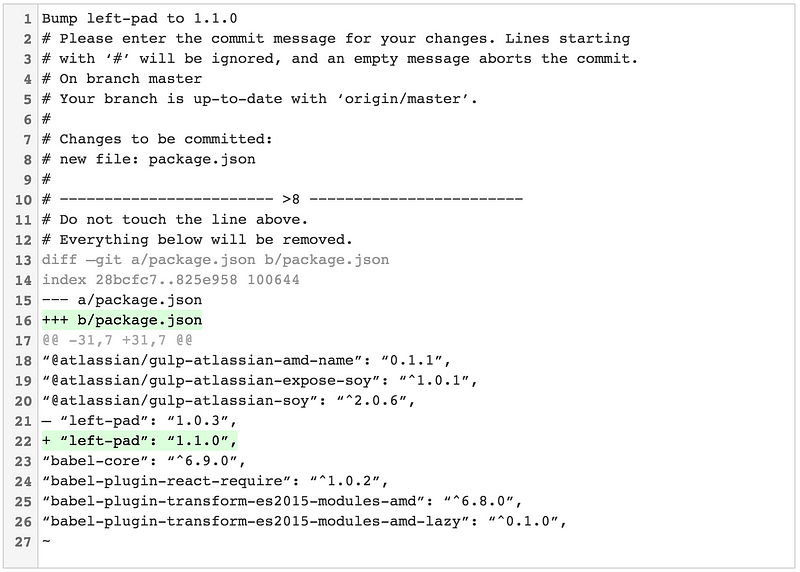

……你可以调用 `git commit --verbose` 来查看你改动造成的行内差异。不用担心,这不会包含在你的提交信息中:

|

||||

|

||||

|

||||

|

||||

`--verbose` 标志并不是新出现的,但是直到 Git v2.9 你才可以通过 `git config --global commit.verbose true` 永久的启用它。

|

||||

|

||||

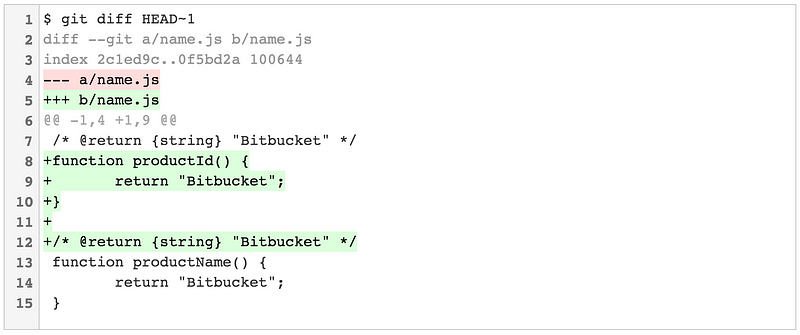

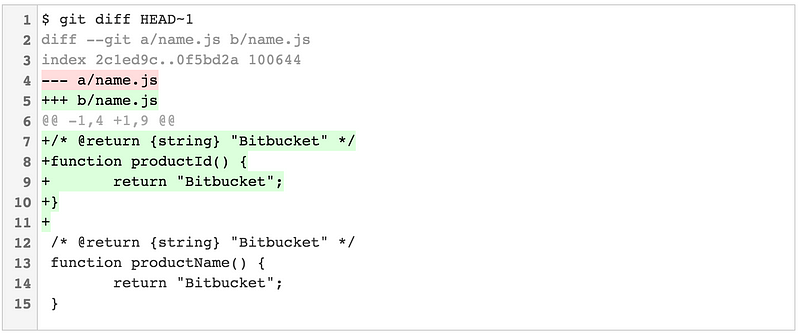

#### 实验性的 Diff 改进

|

||||

|

||||

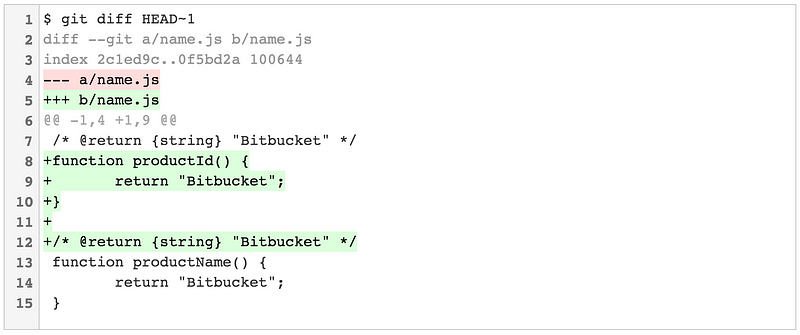

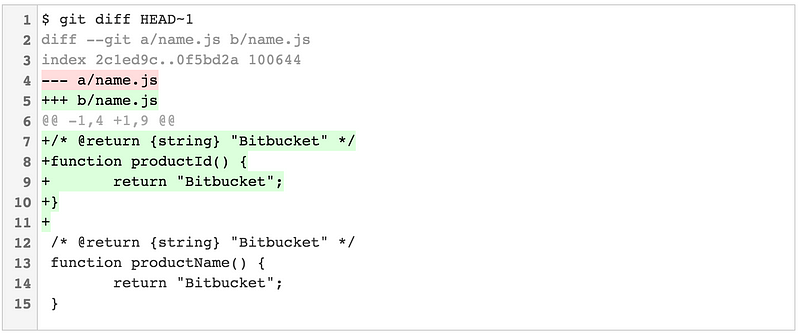

当一个被修改部分前后几行相同时,`git diff` 可能产生一些稍微令人迷惑的输出。如果在一个文件中有两个或者更多相似结构的函数时这可能发生。来看一个有些刻意人为的例子,想象我们有一个 JS 文件包含一个单独的函数:

|

||||

|

||||

```

|

||||

/* @return {string} "Bitbucket" */

|

||||

function productName() {

|

||||

return "Bitbucket";

|

||||

}

|

||||

```

|

||||

|

||||

现在想象一下我们刚提交的改动包含一个我们专门做的 _另一个_可以做相似事情的函数:

|

||||

|

||||

```

|

||||

/* @return {string} "Bitbucket" */

|

||||