mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-28 01:01:09 +08:00

commit

9611bac7d1

@ -0,0 +1,164 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (beamrolling)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11282-1.html)

|

||||

[#]: subject: (How to Install VirtualBox on Ubuntu [Beginner’s Tutorial])

|

||||

[#]: via: (https://itsfoss.com/install-virtualbox-ubuntu)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

|

||||

如何在 Ubuntu 上安装 VirtualBox

|

||||

======

|

||||

|

||||

> 本新手教程解释了在 Ubuntu 和其他基于 Debian 的 Linux 发行版上安装 VirtualBox 的各种方法。

|

||||

|

||||

|

||||

|

||||

Oracle 公司的自由开源产品 [VirtualBox][1] 是一款出色的虚拟化工具,专门用于桌面操作系统。与另一款虚拟化工具 [Linux 上的 VMWare Workstation][2] 相比起来,我更喜欢它。

|

||||

|

||||

你可以使用 VirtualBox 等虚拟化软件在虚拟机中安装和使用其他操作系统。

|

||||

|

||||

例如,你可以[在 Windows 上的 VirtualBox 中安装 Linux][3]。同样地,你也可以[用 VirtualBox 在 Linux 中安装 Windows][4]。

|

||||

|

||||

你也可以用 VirtualBox 在你当前的 Linux 系统中安装别的 Linux 发行版。事实上,这就是我用它的原因。如果我听说了一个不错的 Linux 发行版,我会在虚拟机上测试它,而不是安装在真实的系统上。当你想要在安装之前尝试一下别的发行版时,用虚拟机会很方便。

|

||||

|

||||

![Linux installed inside Linux using VirtualBox][5]

|

||||

|

||||

*安装在 Ubuntu 18.04 内的 Ubuntu 18.10*

|

||||

|

||||

在本新手教程中,我将向你展示在 Ubuntu 和其他基于 Debian 的 Linux 发行版上安装 VirtualBox 的各种方法。

|

||||

|

||||

### 在 Ubuntu 和基于 Debian 的 Linux 发行版上安装 VirtualBox

|

||||

|

||||

这里提出的安装方法也适用于其他基于 Debian 和 Ubuntu 的 Linux 发行版,如 Linux Mint、elementar OS 等。

|

||||

|

||||

#### 方法 1:从 Ubuntu 仓库安装 VirtualBox

|

||||

|

||||

**优点**:安装简便

|

||||

|

||||

**缺点**:较旧版本

|

||||

|

||||

在 Ubuntu 上下载 VirtualBox 最简单的方法可能是从软件中心查找并下载。

|

||||

|

||||

![VirtualBox in Ubuntu Software Center][6]

|

||||

|

||||

*VirtualBox 在 Ubuntu 软件中心提供*

|

||||

|

||||

你也可以使用这条命令从命令行安装:

|

||||

|

||||

```

|

||||

sudo apt install virtualbox

|

||||

```

|

||||

|

||||

然而,如果[在安装前检查软件包版本][7],你会看到 Ubuntu 仓库提供的 VirtualBox 版本已经很老了。

|

||||

|

||||

举个例子,在写下本教程时 VirtualBox 的最新版本是 6.0,但是在软件中心提供的是 5.2。这意味着你无法获得[最新版 VirtualBox ][8]中引入的新功能。

|

||||

|

||||

#### 方法 2:使用 Oracle 网站上的 Deb 文件安装 VirtualBox

|

||||

|

||||

**优点**:安装简便,最新版本

|

||||

|

||||

**缺点**:不能更新

|

||||

|

||||

如果你想要在 Ubuntu 上使用 VirtualBox 的最新版本,最简单的方法就是[使用 Deb 文件][9]。

|

||||

|

||||

Oracle 为 VirtiualBox 版本提供了开箱即用的二进制文件。如果查看其下载页面,你将看到为 Ubuntu 和其他发行版下载 deb 安装程序的选项。

|

||||

|

||||

![VirtualBox Linux Download][10]

|

||||

|

||||

你只需要下载 deb 文件并双击它即可安装。就是这么简单。

|

||||

|

||||

- [下载 virtualbox for Ubuntu](https://www.virtualbox.org/wiki/Linux_Downloads)

|

||||

|

||||

然而,这种方法的问题在于你不能自动更新到最新的 VirtualBox 版本。唯一的办法是移除现有版本,下载最新版本并再次安装。不太方便,是吧?

|

||||

|

||||

#### 方法 3:用 Oracle 的仓库安装 VirtualBox

|

||||

|

||||

**优点**:自动更新

|

||||

|

||||

**缺点**:安装略微复杂

|

||||

|

||||

现在介绍的是命令行安装方法,它看起来可能比较复杂,但与前两种方法相比,它更具有优势。你将获得 VirtualBox 的最新版本,并且未来它还将自动更新到更新的版本。我想那就是你想要的。

|

||||

|

||||

要通过命令行安装 VirtualBox,请在你的仓库列表中添加 Oracle VirtualBox 的仓库。添加 GPG 密钥以便你的系统信任此仓库。现在,当你安装 VirtualBox 时,它会从 Oracle 仓库而不是 Ubuntu 仓库安装。如果发布了新版本,本地 VirtualBox 将跟随一起更新。让我们看看怎么做到这一点:

|

||||

|

||||

首先,添加仓库的密钥。你可以通过这一条命令下载和添加密钥:

|

||||

|

||||

```

|

||||

wget -q https://www.virtualbox.org/download/oracle_vbox_2016.asc -O- | sudo apt-key add -

|

||||

```

|

||||

|

||||

> Mint 用户请注意:

|

||||

|

||||

> 下一步只适用于 Ubuntu。如果你使用的是 Linux Mint 或其他基于 Ubuntu 的发行版,请将命令行中的 `$(lsb_release -cs)` 替换成你当前版本所基于的 Ubuntu 版本。例如,Linux Mint 19 系列用户应该使用 bionic,Mint 18 系列用户应该使用 xenial,像这样:

|

||||

|

||||

> ```

|

||||

> sudo add-apt-repository “deb [arch=amd64] <http://download.virtualbox.org/virtualbox/debian> **bionic** contrib“`

|

||||

> ```

|

||||

|

||||

现在用以下命令来将 Oracle VirtualBox 仓库添加到仓库列表中:

|

||||

|

||||

```

|

||||

sudo add-apt-repository "deb [arch=amd64] http://download.virtualbox.org/virtualbox/debian $(lsb_release -cs) contrib"

|

||||

```

|

||||

|

||||

如果你有读过我的文章[检查 Ubuntu 版本][11],你大概知道 `lsb_release -cs` 将打印你的 Ubuntu 系统的代号。

|

||||

|

||||

**注**:如果你看到 “[add-apt-repository command not found][12]” 错误,你需要下载 `software-properties-common` 包。

|

||||

|

||||

现在你已经添加了正确的仓库,请通过此仓库刷新可用包列表并安装 VirtualBox:

|

||||

|

||||

```

|

||||

sudo apt update && sudo apt install virtualbox-6.0

|

||||

```

|

||||

|

||||

**提示**:一个好方法是输入 `sudo apt install virtualbox-` 并点击 `tab` 键以查看可用于安装的各种 VirtualBox 版本,然后通过补全命令来选择其中一个版本。

|

||||

|

||||

![Install VirtualBox via terminal][13]

|

||||

|

||||

### 如何从 Ubuntu 中删除 VirtualBox

|

||||

|

||||

现在你已经学会了如何安装 VirtualBox,我还想和你提一下删除它的步骤。

|

||||

|

||||

如果你是从软件中心安装的,那么删除它最简单的方法是从软件中心下手。你只需要在[已安装的应用程序列表][14]中找到它,然后单击“删除”按钮。

|

||||

|

||||

另一种方式是使用命令行:

|

||||

|

||||

```

|

||||

sudo apt remove virtualbox virtualbox-*

|

||||

```

|

||||

|

||||

请注意,这不会删除你用 VirtualBox 安装的操作系统关联的虚拟机和文件。这并不是一件坏事,因为你可能希望以后或在其他系统中使用它们是安全的。

|

||||

|

||||

### 最后…

|

||||

|

||||

我希望你能在以上方法中选择一种安装 VirtualBox。我还将在另一篇文章中写到如何有效地使用 VirtualBox。目前,如果你有点子、建议或任何问题,请随时在下面发表评论。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/install-virtualbox-ubuntu

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[beamrolling](https://github.com/beamrolling)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.virtualbox.org

|

||||

[2]: https://itsfoss.com/install-vmware-player-ubuntu-1310/

|

||||

[3]: https://itsfoss.com/install-linux-in-virtualbox/

|

||||

[4]: https://itsfoss.com/install-windows-10-virtualbox-linux/

|

||||

[5]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/02/linux-inside-linux-virtualbox.png?resize=800%2C450&ssl=1

|

||||

[6]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/02/virtualbox-ubuntu-software-center.jpg?ssl=1

|

||||

[7]: https://itsfoss.com/know-program-version-before-install-ubuntu/

|

||||

[8]: https://itsfoss.com/oracle-virtualbox-release/

|

||||

[9]: https://itsfoss.com/install-deb-files-ubuntu/

|

||||

[10]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/02/virtualbox-download.jpg?resize=800%2C433&ssl=1

|

||||

[11]: https://itsfoss.com/how-to-know-ubuntu-unity-version/

|

||||

[12]: https://itsfoss.com/add-apt-repository-command-not-found/

|

||||

[13]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/02/install-virtualbox-ubuntu-terminal.png?resize=800%2C165&ssl=1

|

||||

[14]: https://itsfoss.com/list-installed-packages-ubuntu/

|

||||

@ -0,0 +1,81 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (zionfuo)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11275-1.html)

|

||||

[#]: subject: (Blockchain 2.0 – An Introduction To Hyperledger Project (HLP) [Part 8])

|

||||

[#]: via: (https://www.ostechnix.com/blockchain-2-0-an-introduction-to-hyperledger-project-hlp/)

|

||||

[#]: author: (editor https://www.ostechnix.com/author/editor/)

|

||||

|

||||

区块链 2.0:Hyperledger 项目简介(八)

|

||||

======

|

||||

|

||||

![Introduction To Hyperledger Project][1]

|

||||

|

||||

一旦一个新技术平台在积极发展和商业利益方面达到了一定程度的受欢迎程度,全球的主要公司和小型的初创企业都急于抓住这块蛋糕。在当时 Linux 就是这样的一个平台。一旦实现了其应用程序的普及,个人、公司和机构就开始对其表现出兴趣,到 2000 年,Linux 基金会就成立了。

|

||||

|

||||

Linux 基金会旨在通过赞助他们的开发团队来将 Linux 作为一个平台来标准化和发展。Linux 基金会是一个由软件和 IT 巨头([如微软、甲骨文、三星、思科、 IBM 、英特尔等][7])支持的非营利组织。这不包括为改进该平台而提供服务的数百名个人开发者。多年来,Linux 基金会已经在旗下开展了许多项目。**Hyperledger** 项目是迄今为止发展最快的项目。

|

||||

|

||||

在将技术推进至可用且有用的方面上,这种联合主导的开发具有很多优势。为大型项目提供开发标准、库和所有后端协议既昂贵又耗费资源,而且不会从中产生丝毫收入。因此,对于公司来说,通过支持这些组织来汇集他们的资源来开发常见的那些 “烦人” 部分是有很意义的,以及随后完成这些标准部分的工作以简单地即插即用和定制他们的产品。除了这种模型的经济性之外,这种合作努力还产生了标准,使其容易使用和集成到优秀的产品和服务中。

|

||||

|

||||

上述联盟模式,在曾经或当下的创新包括 WiFi(Wi-Fi 联盟)、移动电话等标准。

|

||||

|

||||

### Hyperledger 项目(HLP)简介

|

||||

|

||||

Hyperledger 项目(HLP)于 2015 年 12 月由 Linux 基金会启动,目前是其孵化的增长最快的项目之一。它是一个<ruby>伞式组织<rt>umbrella organization</rt></ruby>,用于合作开发和推进基于[区块链][2]的分布式账本技术 (DLT) 的工具和标准。支持该项目的主要行业参与者包括 IBM、英特尔 和 SAP Ariba [等][3]。HLP 旨在为个人和公司创建框架,以便根据需要创建共享和封闭的区块链,以满足他们自己的需求。其设计原则是开发一个专注于隐私和未来可审计性的全球可部署、可扩展、强大的区块链平台。[^2] 同样要注意的是大多数提出的区块链及其框架。

|

||||

|

||||

### 开发目标和构造:即插即用

|

||||

|

||||

虽然面向企业的平台有以太坊联盟之类的产品,但根据定义,HLP 是面向企业的,并得到行业巨头的支持,他们在 HLP 旗下的许多模块中做出贡献并进一步发展。HLP 还孵化开发的周边项目,并这些创意项目推向公众。HLP 的成员贡献了他们自己的力量,例如 IBM 为如何协作开发贡献了他们的 Fabric 平台。该代码库由 IBM 在其项目组内部研发,并开源出来供所有成员使用。

|

||||

|

||||

这些过程使得 HLP 中的模块具有高度灵活的插件框架,这将支持企业环境中的快速开发和部署。此外,默认情况下,其他对比的平台是开放的<ruby>免许可链<rt>permission-less blockchain</rt></ruby>或是<ruby>公有链<rt>public blockchain</rt></ruby>,甚至可以将它们应用到特定应用当中。HLP 模块本身支持该功能。

|

||||

|

||||

有关公有链和私有链的差异和用例更多地涵盖在[这篇][4]比较文章当中。

|

||||

|

||||

根据该项目执行董事 Brian Behlendorf 的说法,Hyperledger 项目的使命有四个。

|

||||

|

||||

分别是:

|

||||

|

||||

1. 创建企业级 DLT 框架和标准,任何人都可以移植以满足其特定的行业或个人需求。

|

||||

2. 创建一个强大的开源社区来帮助生态系统发展。

|

||||

3. 促进所述的生态系统的行业成员(如成员公司)的参与。

|

||||

4. 为 HLP 社区提供中立且无偏见的基础设施,以收集和分享相关的更新和发展。

|

||||

|

||||

可以在这里访问[原始文档][5]。

|

||||

|

||||

### HLP 的架构

|

||||

|

||||

HLP 由 12 个项目组成,这些项目被归类为独立的模块,每个项目通常都是结构化的,可以独立开发其模块的。在孵化之前,首先对它们的能力和活力进行研究。该组织的任何成员都可以提出附加建议。在项目孵化后,就会进行积极开发,然后才会推出。这些模块之间的互操作性具有很高的优先级,因此这些组之间的定期通信由社区维护。目前,这些项目中有 4 个被归类为活跃项目。被标为活跃意味着它们已经准备好使用,但还没有准备好发布主要版本。这 4 个模块可以说是推动区块链革命的最重要或相当基本的模块。稍后,我们将详细介绍各个模块及其功能。然而,Hyperledger Fabric 平台的简要描述,可以说是其中最受欢迎的。

|

||||

|

||||

### Hyperledger Fabric

|

||||

|

||||

Hyperledger Fabric 是一个完全开源的、基于区块链的许可 (非公开) DLT 平台,设计时考虑了企业的使用。该平台提供了适合企业环境的功能和结构。它是高度模块化的,允许开发人员在不同的共识协议、链上代码协议([智能合约][6])或身份管理系统等中进行选择。这是一个基于区块链的许可平台,它利用身份管理系统,这意味着参与者将知道彼此在企业环境中的身份。Fabric 允许以各种主流编程语言 (包括 Java、Javascript、Go 等) 开发智能合约(“<ruby>链码<rt>chaincode</rt></ruby>”,是 Hyperledger 团队使用的术语)。这使得机构和企业可以利用他们在该领域的现有人才,而无需雇佣或重新培训开发人员来开发他们自己的智能合约。与标准订单验证系统相比,Fabric 还使用<ruby>执行顺序验证<rt>execute-order-validate</rt></ruby>系统来处理智能合约,以提供更好的可靠性,这些系统由提供智能合约功能的其他平台使用。与标准订单验证系统相比,Fabric还使用执行顺序验证系统来处理智能合约,以提供更好的可靠性,这些系统由提供智能合约功能的其他平台使用。Fabric 的其他功能还有可插拔性能、身份管理系统、数据库管理系统、共识平台等,这些功能使它在竞争中保持领先地位。

|

||||

|

||||

### 结论

|

||||

|

||||

诸如 Hyperledger Fabric 平台这样的项目能够在主流用例中更快地采用区块链技术。Hyperledger 社区结构本身支持开放治理原则,并且由于所有项目都是作为开源平台引导的,因此这提高了团队在履行承诺时表现出来的安全性和责任感。

|

||||

|

||||

由于此类项目的主要应用涉及与企业合作及进一步开发平台和标准,因此 Hyperledger 项目目前在其他类似项目前面处于有利地位。

|

||||

|

||||

[^2]: E. Androulaki et al., “Hyperledger Fabric: A Distributed Operating System for Permissioned Blockchains,” 2018.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/blockchain-2-0-an-introduction-to-hyperledger-project-hlp/

|

||||

|

||||

作者:[ostechnix][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[zionfuo](https://github.com/zionfuo)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.ostechnix.com/author/editor/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.ostechnix.com/wp-content/uploads/2019/04/Introduction-To-Hyperledger-Project-720x340.png

|

||||

[2]: https://linux.cn/article-10650-1.html

|

||||

[3]: https://www.hyperledger.org/members

|

||||

[4]: https://linux.cn/article-11080-1.html

|

||||

[5]: http://www.hitachi.com/rev/archive/2017/r2017_01/expert/index.html

|

||||

[6]: https://linux.cn/article-10956-1.html

|

||||

[7]: https://www.theinquirer.net/inquirer/news/2182438/samsung-takes-seat-intel-ibm-linux-foundation

|

||||

@ -0,0 +1,100 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (beamrolling)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11285-1.html)

|

||||

[#]: subject: (How to transition into a career as a DevOps engineer)

|

||||

[#]: via: (https://opensource.com/article/19/7/how-transition-career-devops-engineer)

|

||||

[#]: author: (Conor Delanbanque https://opensource.com/users/cdelanbanquehttps://opensource.com/users/daniel-ohhttps://opensource.com/users/herontheclihttps://opensource.com/users/marcobravohttps://opensource.com/users/cdelanbanque)

|

||||

|

||||

如何转职为 DevOps 工程师

|

||||

======

|

||||

|

||||

> 无论你是刚毕业的大学生,还是想在职业中寻求进步的经验丰富的 IT 专家,这些提示都可以帮你成为 DevOps 工程师。

|

||||

|

||||

|

||||

|

||||

DevOps 工程是一个备受称赞的热门职业。不管你是刚毕业正在找第一份工作,还是在利用之前的行业经验的同时寻求学习新技能的机会,本指南都能帮你通过正确的步骤成为 [DevOps 工程师][2]。

|

||||

|

||||

### 让自己沉浸其中

|

||||

|

||||

首先学习 [DevOps][3] 的基本原理、实践以及方法。在使用工具之前,先了解 DevOps 背后的“为什么”。DevOps 工程师的主要目标是在整个软件开发生命周期(SDLC)中提高速度并保持或提高质量,以提供最大的业务价值。阅读文章、观看 YouTube 视频、参加当地小组聚会或者会议 —— 成为热情的 DevOps 社区中的一员,在那里你将从先行者的错误和成功中学习。

|

||||

|

||||

### 考虑你的背景

|

||||

|

||||

如果你有从事技术工作的经历,例如软件开发人员、系统工程师、系统管理员、网络运营工程师或者数据库管理员,那么你已经拥有了广泛的见解和有用的经验,它们可以帮助你在未来成为 DevOps 工程师。如果你在完成计算机科学或任何其他 STEM(LCTT 译注:STEM 是<ruby>科学<rt>Science</rt></ruby>、<ruby>技术<rt>Technology</rt></ruby>、<ruby>工程<rt>Engineering</rt></ruby>和<ruby>数学<rt>Math</rt></ruby>四个学科的首字母缩略字)领域的学业后刚开始职业生涯,那么你将拥有在这个过渡期间需要的一些基本踏脚石。

|

||||

|

||||

DevOps 工程师的角色涵盖了广泛的职责。以下是企业最有可能使用他们的三种方向:

|

||||

|

||||

* **偏向于开发(Dev)的 DevOps 工程师**,在构建应用中扮演软件开发的角色。他们日常工作的一部分是利用持续集成 / 持续交付(CI/CD)、共享仓库、云和容器,但他们不一定负责构建或实施工具。他们了解基础架构,并且在成熟的环境中,能将自己的代码推向生产环境。

|

||||

* **偏向于运维技术(Ops)的 DevOps 工程师**,可以与系统工程师或系统管理员相比较。他们了解软件的开发,但并不会把一天的重心放在构建应用上。相反,他们更有可能支持软件开发团队实现手动流程的自动化,并提高人员和技术系统的效率。这可能意味着分解遗留代码,并用不太繁琐的自动化脚本来运行相同的命令,或者可能意味着安装、配置或维护基础结构和工具。他们确保为任何有需要的团队安装可使用的工具。他们也会通过教团队如何利用 CI / CD 和其他 DevOps 实践来帮助他们。

|

||||

* **网站可靠性工程师(SRE)**,就像解决运维和基础设施的软件工程师。SRE 专注于创建可扩展、高可用且可靠的软件系统。

|

||||

|

||||

在理想的世界中,DevOps 工程师将了解以上所有领域;这在成熟的科技公司中很常见。然而,顶级银行和许多财富 500 强企业的 DevOps 职位通常会偏向开发(Dev)或运营(Ops)。

|

||||

|

||||

### 要学习的技术

|

||||

|

||||

DevOps 工程师需要了解各种技术才能有效完成工作。无论你的背景如何,请从作为 DevOps 工程师需要使用和理解的基本技术开始。

|

||||

|

||||

#### 操作系统

|

||||

|

||||

操作系统是一切运行的地方,拥有相关的基础知识十分重要。[Linux][4] 是你最有可能每天使用的操作系统,尽管有的组织会使用 Windows 操作系统。要开始使用,你可以在家中安装 Linux,在那里你可以随心所欲地中断,并在此过程中学习。

|

||||

|

||||

#### 脚本

|

||||

|

||||

接下来,选择一门语言来学习脚本编程。有很多语言可供选择,包括 Python、Go、Java、Bash、PowerShell、Ruby 和 C / C++。我建议[从 Python 开始][5],因为它相对容易学习和解释,是最受欢迎的语言之一。Python 通常是遵循面向对象编程(OOP)的准则编写的,可用于 Web 开发、软件开发以及创建桌面 GUI 和业务应用程序。

|

||||

|

||||

#### 云

|

||||

|

||||

学习了 [Linux][4] 和 [Python][5] 之后,我认为下一个该学习的是云计算。基础设施不再只是“运维小哥”的事情了,因此你需要接触云平台,例如 AWS 云服务、Azure 或者谷歌云平台。我会从 AWS 开始,因为它有大量免费学习工具,可以帮助你降低作为开发人员、运维人员,甚至面向业务的部门的任何障碍。事实上,你可能会被它提供的东西所淹没。考虑从 EC2、S3 和 VPC 开始,然后看看你从其中想学到什么。

|

||||

|

||||

#### 编程语言

|

||||

|

||||

如果你对 DevOps 的软件开发充满热情,请继续提高你的编程技能。DevOps 中的一些优秀和常用的编程语言和你用于脚本编程的相同:Python、Go、Java、Bash、PowerShell、Ruby 和 C / C++。你还应该熟悉 Jenkins 和 Git / Github,你将会在 CI / CD 过程中经常使用到它们。

|

||||

|

||||

#### 容器

|

||||

|

||||

最后,使用 Docker 和编排平台(如 Kubernetes)等工具开始学习[容器化][6]。网上有大量的免费学习资源,大多数城市都有本地的线下小组,你可以在友好的环境中向有经验的人学习(还有披萨和啤酒哦!)。

|

||||

|

||||

#### 其他的呢?

|

||||

|

||||

如果你缺乏开发经验,你依然可以通过对自动化的热情,提高效率,与他人协作以及改进自己的工作来[参与 DevOps][3]。我仍然建议学习上述工具,但重点不要放在编程 / 脚本语言上。了解基础架构即服务、平台即服务、云平台和 Linux 会非常有用。你可能会设置工具并学习如何构建具有弹性和容错能力的系统,并在编写代码时利用它们。

|

||||

|

||||

### 找一份 DevOps 的工作

|

||||

|

||||

求职过程会有所不同,具体取决于你是否一直从事技术工作,是否正在进入 DevOps 领域,或者是刚开始职业生涯的毕业生。

|

||||

|

||||

#### 如果你已经从事技术工作

|

||||

|

||||

如果你正在从一个技术领域转入 DevOps 角色,首先尝试在你当前的公司寻找机会。你能通过和其他的团队一起工作来重新掌握技能吗?尝试跟随其他团队成员,寻求建议,并在不离开当前工作的情况下获得新技能。如果做不到这一点,你可能需要换另一家公司。如果你能从上面列出的一些实践、工具和技术中学习,你将能在面试时展示相关知识从而占据有利位置。关键是要诚实,不要担心失败。大多数招聘主管都明白你并不知道所有的答案;如果你能展示你一直在学习的东西,并解释你愿意学习更多,你应该有机会获得 DevOps 的工作。

|

||||

|

||||

#### 如果你刚开始职业生涯

|

||||

|

||||

申请雇用初级 DevOps 工程师的公司的空缺机会。不幸的是,许多公司表示他们希望寻找更富有经验的人,并建议你在获得经验后再申请该职位。这是“我们需要经验丰富的人”的典型,令人沮丧的场景,并且似乎没人愿意给你第一次机会。

|

||||

|

||||

然而,并不是所有求职经历都那么令人沮丧;一些公司专注于培训和提升刚从大学毕业的学生。例如,我工作的 [MThree][7] 会聘请应届毕业生并且对其进行 8 周的培训。当完成培训后,参与者们可以充分了解到整个 SDLC,并充分了解它在财富 500 强公司环境中的运用方式。毕业生被聘为 MThree 的客户公司的初级 DevOps 工程师 —— MThree 在前 18 - 24 个月内支付全职工资和福利,之后他们将作为直接雇员加入客户。这是弥合从大学到技术职业的间隙的好方法。

|

||||

|

||||

### 总结

|

||||

|

||||

转职成 DevOps 工程师的方法有很多种。这是一条非常有益的职业路线,可能会让你保持繁忙和挑战 — 并增加你的收入潜力。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/7/how-transition-career-devops-engineer

|

||||

|

||||

作者:[Conor Delanbanque][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[beamrolling](https://github.com/beamrolling)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/cdelanbanquehttps://opensource.com/users/daniel-ohhttps://opensource.com/users/herontheclihttps://opensource.com/users/marcobravohttps://opensource.com/users/cdelanbanque

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/hiring_talent_resume_job_career.png?itok=Ci_ulYAH (technical resume for hiring new talent)

|

||||

[2]: https://opensource.com/article/19/7/devops-vs-sysadmin

|

||||

[3]: https://opensource.com/resources/devops

|

||||

[4]: https://opensource.com/resources/linux

|

||||

[5]: https://opensource.com/resources/python

|

||||

[6]: https://opensource.com/article/18/8/sysadmins-guide-containers

|

||||

[7]: https://www.mthreealumni.com/

|

||||

228

published/20190812 How Hexdump works.md

Normal file

228

published/20190812 How Hexdump works.md

Normal file

@ -0,0 +1,228 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (0x996)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11288-1.html)

|

||||

[#]: subject: (How Hexdump works)

|

||||

[#]: via: (https://opensource.com/article/19/8/dig-binary-files-hexdump)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

Hexdump 如何工作

|

||||

======

|

||||

|

||||

> Hexdump 能帮助你查看二进制文件的内容。让我们来学习 Hexdump 如何工作。

|

||||

|

||||

|

||||

|

||||

Hexdump 是个用十六进制、十进制、八进制数或 ASCII 码显示二进制文件内容的工具。它是个用于检查的工具,也可用于[数据恢复][2]、逆向工程和编程。

|

||||

|

||||

### 学习基本用法

|

||||

|

||||

Hexdump 让你毫不费力地得到输出结果,依你所查看文件的尺寸,输出结果可能会非常多。本文中我们会创建一个 1x1 像素的 PNG 文件。你可以用图像处理应用如 [GIMP][3] 或 [Mtpaint][4] 来创建该文件,或者也可以在终端内用 [ImageMagick][5] 创建。

|

||||

|

||||

用 ImagiMagick 生成 1x1 像素 PNG 文件的命令如下:

|

||||

|

||||

```

|

||||

$ convert -size 1x1 canvas:black pixel.png

|

||||

```

|

||||

|

||||

你可以用 `file` 命令确认此文件是 PNG 格式:

|

||||

|

||||

```

|

||||

$ file pixel.png

|

||||

pixel.png: PNG image data, 1 x 1, 1-bit grayscale, non-interlaced

|

||||

```

|

||||

|

||||

你可能好奇 `file` 命令是如何判断文件是什么类型。巧的是,那正是 `hexdump` 将要揭示的原理。眼下你可以用你常用的图像查看软件来看看你的单一像素图片(它看上去就像这样:`.`),或者你可以用 `hexdump` 查看文件内部:

|

||||

|

||||

```

|

||||

$ hexdump pixel.png

|

||||

0000000 5089 474e 0a0d 0a1a 0000 0d00 4849 5244

|

||||

0000010 0000 0100 0000 0100 0001 0000 3700 f96e

|

||||

0000020 0024 0000 6704 4d41 0041 b100 0b8f 61fc

|

||||

0000030 0005 0000 6320 5248 004d 7a00 0026 8000

|

||||

0000040 0084 fa00 0000 8000 00e8 7500 0030 ea00

|

||||

0000050 0060 3a00 0098 1700 9c70 51ba 003c 0000

|

||||

0000060 6202 474b 0044 dd01 138a 00a4 0000 7407

|

||||

0000070 4d49 0745 07e3 081a 3539 a487 46b0 0000

|

||||

0000080 0a00 4449 5441 d708 6063 0000 0200 0100

|

||||

0000090 21e2 33bc 0000 2500 4574 7458 6164 6574

|

||||

00000a0 633a 6572 7461 0065 3032 3931 302d 2d37

|

||||

00000b0 3532 3254 3a30 3735 353a 2b33 3231 303a

|

||||

00000c0 ac30 5dcd 00c1 0000 7425 5845 6474 7461

|

||||

00000d0 3a65 6f6d 6964 7966 3200 3130 2d39 3730

|

||||

00000e0 322d 5435 3032 353a 3a37 3335 312b 3a32

|

||||

00000f0 3030 90dd 7de5 0000 0000 4549 444e 42ae

|

||||

0000100 8260

|

||||

0000102

|

||||

```

|

||||

|

||||

透过一个你以前可能从未用过的视角,你所见的是该示例 PNG 文件的内容。它和你在图像查看软件中看到的是完全一样的数据,只是用一种你或许不熟悉的方式编码。

|

||||

|

||||

### 提取熟悉的字符串

|

||||

|

||||

尽管默认的数据输出结果看上去毫无意义,那并不意味着其中没有有价值的信息。你可以用 `--canonical` 选项将输出结果,或至少是其中可翻译的部分,翻译成更加熟悉的字符集:

|

||||

|

||||

```

|

||||

$ hexdump --canonical foo.png

|

||||

00000000 89 50 4e 47 0d 0a 1a 0a 00 00 00 0d 49 48 44 52 |.PNG........IHDR|

|

||||

00000010 00 00 00 01 00 00 00 01 01 00 00 00 00 37 6e f9 |.............7n.|

|

||||

00000020 24 00 00 00 04 67 41 4d 41 00 00 b1 8f 0b fc 61 |$....gAMA......a|

|

||||

00000030 05 00 00 00 20 63 48 52 4d 00 00 7a 26 00 00 80 |.... cHRM..z&...|

|

||||

00000040 84 00 00 fa 00 00 00 80 e8 00 00 75 30 00 00 ea |...........u0...|

|

||||

00000050 60 00 00 3a 98 00 00 17 70 9c ba 51 3c 00 00 00 |`..:....p..Q<...|

|

||||

00000060 02 62 4b 47 44 00 01 dd 8a 13 a4 00 00 00 07 74 |.bKGD..........t|

|

||||

00000070 49 4d 45 07 e3 07 1a 08 39 35 87 a4 b0 46 00 00 |IME.....95...F..|

|

||||

00000080 00 0a 49 44 41 54 08 d7 63 60 00 00 00 02 00 01 |..IDAT..c`......|

|

||||

00000090 e2 21 bc 33 00 00 00 25 74 45 58 74 64 61 74 65 |.!.3...%tEXtdate|

|

||||

000000a0 3a 63 72 65 61 74 65 00 32 30 31 39 2d 30 37 2d |:create.2019-07-|

|

||||

000000b0 32 35 54 32 30 3a 35 37 3a 35 33 2b 31 32 3a 30 |25T20:57:53+12:0|

|

||||

000000c0 30 ac cd 5d c1 00 00 00 25 74 45 58 74 64 61 74 |0..]....%tEXtdat|

|

||||

000000d0 65 3a 6d 6f 64 69 66 79 00 32 30 31 39 2d 30 37 |e:modify.2019-07|

|

||||

000000e0 2d 32 35 54 32 30 3a 35 37 3a 35 33 2b 31 32 3a |-25T20:57:53+12:|

|

||||

000000f0 30 30 dd 90 e5 7d 00 00 00 00 49 45 4e 44 ae 42 |00...}....IEND.B|

|

||||

00000100 60 82 |`.|

|

||||

00000102

|

||||

```

|

||||

|

||||

在右侧的列中,你看到的是和左侧一样的数据,但是以 ASCII 码展现的。如果你仔细看,你可以从中挑选出一些有用的信息,如文件格式(PNG)以及文件创建、修改日期和时间(向文件底部寻找一下)。

|

||||

|

||||

`file` 命令通过头 8 个字节获取文件类型。程序员会参考 [libpng 规范][6] 来知晓需要查看什么。具体而言,那就是你能在该图像文件的头 8 个字节中看到的字符串 `PNG`。这个事实显而易见,因为它揭示了 `file` 命令是如何知道要报告的文件类型。

|

||||

|

||||

你也可以控制 `hexdump` 显示多少字节,这在处理大于一个像素的文件时很实用:

|

||||

|

||||

```

|

||||

$ hexdump --length 8 pixel.png

|

||||

0000000 5089 474e 0a0d 0a1a

|

||||

0000008

|

||||

```

|

||||

|

||||

`hexdump` 不只限于查看 PNG 或图像文件。你也可以用 `hexdump` 查看你日常使用的二进制文件,如 [ls][7]、[rsync][8],或你想检查的任何二进制文件。

|

||||

|

||||

### 用 hexdump 实现 cat 命令

|

||||

|

||||

阅读 PNG 规范的时候你可能会注意到头 8 个字节中的数据与 `hexdump` 提供的结果看上去不一样。实际上,那是一样的数据,但以一种不同的转换方式展现出来。所以 `hexdump` 的输出是正确的,但取决于你在寻找的信息,其输出结果对你而言不总是直接了当的。出于这个原因,`hexdump` 有一些选项可供用于定义格式和转化其转储的原始数据。

|

||||

|

||||

转换选项可以很复杂,所以用无关紧要的东西练习会比较实用。下面这个简易的介绍,通过重新实现 [cat][9] 命令来演示如何格式化 `hexdump` 的输出。首先,对一个文本文件运行 `hexdump` 来查看其原始数据。通常你可以在硬盘上某处找到 <ruby>[GNU 通用许可证][10]<rt>GNU General Public License</rt></ruby>(GPL)的一份拷贝,也可以用你手头的任何文本文件。你的输出结果可能不同,但下面是如何在你的系统中找到一份 GPL(或至少其部分)的拷贝:

|

||||

|

||||

```

|

||||

$ find /usr/share/doc/ -type f -name "COPYING" | tail -1

|

||||

/usr/share/doc/libblkid-devel/COPYING

|

||||

```

|

||||

|

||||

对其运行 `hexdump`:

|

||||

|

||||

```

|

||||

$ hexdump /usr/share/doc/libblkid-devel/COPYING

|

||||

0000000 6854 7369 6c20 6269 6172 7972 6920 2073

|

||||

0000010 7266 6565 7320 666f 7774 7261 3b65 7920

|

||||

0000020 756f 6320 6e61 7220 6465 7369 7274 6269

|

||||

0000030 7475 2065 7469 6120 646e 6f2f 0a72 6f6d

|

||||

0000040 6964 7966 6920 2074 6e75 6564 2072 6874

|

||||

0000050 2065 6574 6d72 2073 666f 7420 6568 4720

|

||||

0000060 554e 4c20 7365 6573 2072 6547 656e 6172

|

||||

0000070 206c 7550 6c62 6369 4c0a 6369 6e65 6573

|

||||

0000080 6120 2073 7570 6c62 7369 6568 2064 7962

|

||||

[...]

|

||||

```

|

||||

|

||||

如果该文件输出结果很长,用 `--length`(或短选项 `-n`)来控制输出长度使其易于管理。

|

||||

|

||||

原始数据对你而言可能没什么意义,但你已经知道如何将其转换为 ASCII 码:

|

||||

|

||||

```

|

||||

hexdump --canonical /usr/share/doc/libblkid-devel/COPYING

|

||||

00000000 54 68 69 73 20 6c 69 62 72 61 72 79 20 69 73 20 |This library is |

|

||||

00000010 66 72 65 65 20 73 6f 66 74 77 61 72 65 3b 20 79 |free software; y|

|

||||

00000020 6f 75 20 63 61 6e 20 72 65 64 69 73 74 72 69 62 |ou can redistrib|

|

||||

00000030 75 74 65 20 69 74 20 61 6e 64 2f 6f 72 0a 6d 6f |ute it and/or.mo|

|

||||

00000040 64 69 66 79 20 69 74 20 75 6e 64 65 72 20 74 68 |dify it under th|

|

||||

00000050 65 20 74 65 72 6d 73 20 6f 66 20 74 68 65 20 47 |e terms of the G|

|

||||

00000060 4e 55 20 4c 65 73 73 65 72 20 47 65 6e 65 72 61 |NU Lesser Genera|

|

||||

00000070 6c 20 50 75 62 6c 69 63 0a 4c 69 63 65 6e 73 65 |l Public.License|

|

||||

[...]

|

||||

```

|

||||

|

||||

这个输出结果有帮助但太累赘且难于阅读。要将 `hexdump` 的输出结果转换为其选项不支持的其他格式,可组合使用 `--format`(或 `-e`)和专门的格式代码。用来自定义格式的代码和 `printf` 命令使用的类似,所以如果你熟悉 `printf` 语句,你可能会觉得 `hexdump` 自定义格式不难学会。

|

||||

|

||||

在 `hexdump` 中,字符串 `%_p` 告诉 `hexdump` 用你系统的默认字符集输出字符。`--format` 选项的所有格式符号必须以*单引号*包括起来:

|

||||

|

||||

```

|

||||

$ hexdump -e'"%_p"' /usr/share/doc/libblkid-devel/COPYING

|

||||

This library is fre*

|

||||

software; you can redistribute it and/or.modify it under the terms of the GNU Les*

|

||||

er General Public.License as published by the Fre*

|

||||

Software Foundation; either.version 2.1 of the License, or (at your option) any later.version..*

|

||||

The complete text of the license is available in the..*

|

||||

/Documentation/licenses/COPYING.LGPL-2.1-or-later file..

|

||||

```

|

||||

|

||||

这次的输出好些了,但依然不方便阅读。传统上 UNIX 文本文件假定 80 个字符的输出宽度(因为很久以前显示器一行只能显示 80 个字符)。

|

||||

|

||||

尽管这个输出结果未被自定义格式限制输出宽度,你可以用附加选项强制 `hexdump` 一次处理 80 字节。具体而言,通过 80 除以 1 这种形式,你可以告诉 `hexdump` 将 80 字节作为一个单元对待:

|

||||

|

||||

```

|

||||

$ hexdump -e'80/1 "%_p"' /usr/share/doc/libblkid-devel/COPYING

|

||||

This library is free software; you can redistribute it and/or.modify it under the terms of the GNU Lesser General Public.License as published by the Free Software Foundation; either.version 2.1 of the License, or (at your option) any later.version...The complete text of the license is available in the.../Documentation/licenses/COPYING.LGPL-2.1-or-later file..

|

||||

```

|

||||

|

||||

现在该文件被分割成 80 字节的块处理,但没有任何换行。你可以用 `\n` 字符自行添加换行,在 UNIX 中它代表换行:

|

||||

|

||||

```

|

||||

$ hexdump -e'80/1 "%_p""\n"'

|

||||

This library is free software; you can redistribute it and/or.modify it under th

|

||||

e terms of the GNU Lesser General Public.License as published by the Free Softwa

|

||||

re Foundation; either.version 2.1 of the License, or (at your option) any later.

|

||||

version...The complete text of the license is available in the.../Documentation/

|

||||

licenses/COPYING.LGPL-2.1-or-later file..

|

||||

```

|

||||

|

||||

现在你已经(大致上)用 `hexdump` 自定义格式实现了 `cat` 命令。

|

||||

|

||||

### 控制输出结果

|

||||

|

||||

实际上自定义格式是让 `hexdump` 变得有用的方法。现在你已经(至少是原则上)熟悉 `hexdump` 自定义格式,你可以让 `hexdump -n 8` 的输出结果跟 `libpng` 官方规范中描述的 PNG 文件头相匹配了。

|

||||

|

||||

首先,你知道你希望 `hexdump` 以 8 字节的块来处理 PNG 文件。此外,你可能通过识别这些整数从而知道 PNG 格式规范是以十进制数表述的,根据 `hexdump` 文档,十进制用 `%d` 来表示:

|

||||

|

||||

```

|

||||

$ hexdump -n8 -e'8/1 "%d""\n"' pixel.png

|

||||

13780787113102610

|

||||

```

|

||||

|

||||

你可以在每个整数后面加个空格使输出结果变得完美:

|

||||

|

||||

```

|

||||

$ hexdump -n8 -e'8/1 "%d ""\n"' pixel.png

|

||||

137 80 78 71 13 10 26 10

|

||||

```

|

||||

|

||||

现在输出结果跟 PNG 规范完美匹配了。

|

||||

|

||||

### 好玩又有用

|

||||

|

||||

Hexdump 是个迷人的工具,不仅让你更多地领会计算机如何处理和转换信息,而且让你了解文件格式和编译的二进制文件如何工作。日常工作时你可以随机地试着对不同文件运行 `hexdump`。你永远不知道你会发现什么样的信息,或是什么时候具有这种洞察力会很实用。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/8/dig-binary-files-hexdump

|

||||

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[0x996](https://github.com/0x996)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/find-file-linux-code_magnifying_glass_zero.png?itok=E2HoPDg0 (Magnifying glass on code)

|

||||

[2]: https://www.redhat.com/sysadmin/find-lost-files-scalpel

|

||||

[3]: http://gimp.org

|

||||

[4]: https://opensource.com/article/17/2/mtpaint-pixel-art-animated-gifs

|

||||

[5]: https://opensource.com/article/17/8/imagemagick

|

||||

[6]: http://www.libpng.org/pub/png/spec/1.2/PNG-Structure.html

|

||||

[7]: https://opensource.com/article/19/7/master-ls-command

|

||||

[8]: https://opensource.com/article/19/5/advanced-rsync

|

||||

[9]: https://opensource.com/article/19/2/getting-started-cat-command

|

||||

[10]: https://en.wikipedia.org/wiki/GNU_General_Public_License

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11283-1.html)

|

||||

[#]: subject: (How To Fix “Kernel driver not installed (rc=-1908)” VirtualBox Error In Ubuntu)

|

||||

[#]: via: (https://www.ostechnix.com/how-to-fix-kernel-driver-not-installed-rc-1908-virtualbox-error-in-ubuntu/)

|

||||

[#]: author: (sk https://www.ostechnix.com/author/sk/)

|

||||

@ -26,7 +26,7 @@ where: suplibOsInit what: 3 VERR_VM_DRIVER_NOT_INSTALLED (-1908) - The support d

|

||||

|

||||

![][2]

|

||||

|

||||

Ubuntu 中的 “Kernel driver not installed (rc=-1908)” 错误

|

||||

*Ubuntu 中的 “Kernel driver not installed (rc=-1908)” 错误*

|

||||

|

||||

我点击了 OK 关闭消息框,然后在后台看到了另一条消息。

|

||||

|

||||

@ -45,7 +45,7 @@ IMachine {85cd948e-a71f-4289-281e-0ca7ad48cd89}

|

||||

|

||||

![][3]

|

||||

|

||||

启动期间虚拟机意外终止,退出代码为 1(0x1)

|

||||

*启动期间虚拟机意外终止,退出代码为 1(0x1)*

|

||||

|

||||

我不知道该先做什么。我运行以下命令来检查是否有用。

|

||||

|

||||

@ -61,7 +61,7 @@ modprobe: FATAL: Module vboxdrv not found in directory /lib/modules/5.0.0-23-gen

|

||||

|

||||

仔细阅读这两个错误消息后,我意识到我应该更新 Virtualbox 程序。

|

||||

|

||||

如果你在 Ubuntu 及其衍生版(如 Linux Mint)中遇到此错误,你只需使用以下命令重新安装或更新 **“virtualbox-dkms”** 包:

|

||||

如果你在 Ubuntu 及其衍生版(如 Linux Mint)中遇到此错误,你只需使用以下命令重新安装或更新 `virtualbox-dkms` 包:

|

||||

|

||||

```

|

||||

$ sudo apt install virtualbox-dkms

|

||||

@ -82,7 +82,7 @@ via: https://www.ostechnix.com/how-to-fix-kernel-driver-not-installed-rc-1908-vi

|

||||

作者:[sk][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (hello-wn)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11276-1.html)

|

||||

[#]: subject: (How to Delete Lines from a File Using the sed Command)

|

||||

[#]: via: (https://www.2daygeek.com/linux-remove-delete-lines-in-file-sed-command/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

@ -10,23 +10,21 @@

|

||||

如何使用 sed 命令删除文件中的行

|

||||

======

|

||||

|

||||

Sed 代表<ruby>流编辑器<rt>Stream Editor</rt></ruby>,常用于 Linux 中基本的文本处理。

|

||||

|

||||

|

||||

sed 命令是 Linux 中的重要命令之一,在文件处理方面有着重要作用。可用于删除或移动与给定模式匹配的特定行,还可以删除文件中的特定行。

|

||||

Sed 代表<ruby>流编辑器<rt>Stream Editor</rt></ruby>,常用于 Linux 中基本的文本处理。`sed` 命令是 Linux 中的重要命令之一,在文件处理方面有着重要作用。可用于删除或移动与给定模式匹配的特定行。

|

||||

|

||||

它还能够从文件中删除表达式,文件可以通过指定分隔符(例如逗号、制表符或空格)进行标识。

|

||||

它还可以删除文件中的特定行,它能够从文件中删除表达式,文件可以通过指定分隔符(例如逗号、制表符或空格)进行标识。

|

||||

|

||||

本文列出了 15 个使用范例,它们可以帮助你掌握 `sed` 命令。

|

||||

|

||||

如果你能理解并且记住这些命令,在你需要使用 `sed` 时,这些命令就能派上用场,帮你节约很多时间。

|

||||

|

||||

本文列出了 15 个使用范例,它们可以帮助你掌握 sed 命令。

|

||||

注意:为了方便演示,我在执行 `sed` 命令时,不使用 `-i` 选项(因为这个选项会直接修改文件内容),被移除了行的文件内容将打印到 Linux 终端。

|

||||

|

||||

如果你能理解并且记住这些命令,在你需要使用 sed 时,这些命令就能派上用场,帮你节约很多时间。

|

||||

但是,如果你想在实际环境中从源文件中删除行,请在 `sed` 命令中使用 `-i` 选项。

|

||||

|

||||

**`注意:`**` ` 为了方便演示,我在执行 sed 命令时,不使用 `-i` 选项,因为这个选项会直接修改文件内容。

|

||||

|

||||

但是,如果你想在实际环境中从源文件中删除行,请在 sed 命令中使用 `-i` 选项。

|

||||

|

||||

演示之前,我创建了 sed-demo.txt 文件,并添加了以下内容和相应行号以便更好地理解。

|

||||

演示之前,我创建了 `sed-demo.txt` 文件,并添加了以下内容和相应行号以便更好地理解。

|

||||

|

||||

```

|

||||

# cat sed-demo.txt

|

||||

@ -47,15 +45,15 @@ sed 命令是 Linux 中的重要命令之一,在文件处理方面有着重要

|

||||

|

||||

使用以下语法删除文件首行。

|

||||

|

||||

**`N`**` ` 表示文件中的第 N 行,`d`选项在 sed 命令中用于删除一行。

|

||||

`N` 表示文件中的第 N 行,`d` 选项在 `sed` 命令中用于删除一行。

|

||||

|

||||

**语法:**

|

||||

语法:

|

||||

|

||||

```

|

||||

sed 'Nd' file

|

||||

```

|

||||

|

||||

使用以下 sed 命令删除 sed-demo.txt 中的第一行。

|

||||

使用以下 `sed` 命令删除 `sed-demo.txt` 中的第一行。

|

||||

|

||||

```

|

||||

# sed '1d' sed-demo.txt

|

||||

@ -75,9 +73,9 @@ sed 'Nd' file

|

||||

|

||||

使用以下语法删除文件最后一行。

|

||||

|

||||

**`$`**` ` 符号表示文件的最后一行。

|

||||

`$` 符号表示文件的最后一行。

|

||||

|

||||

使用以下 sed 命令删除 sed-demo.txt 中的最后一行。

|

||||

使用以下 `sed` 命令删除 `sed-demo.txt` 中的最后一行。

|

||||

|

||||

```

|

||||

# sed '$d' sed-demo.txt

|

||||

@ -95,7 +93,7 @@ sed 'Nd' file

|

||||

|

||||

### 3) 如何删除指定行?

|

||||

|

||||

使用以下 sed 命令删除 sed-demo.txt 中的第 3 行。

|

||||

使用以下 `sed` 命令删除 `sed-demo.txt` 中的第 3 行。

|

||||

|

||||

```

|

||||

# sed '3d' sed-demo.txt

|

||||

@ -113,7 +111,7 @@ sed 'Nd' file

|

||||

|

||||

### 4) 如何删除指定范围内的行?

|

||||

|

||||

使用以下 sed 命令删除 sed-demo.txt 中的第 5 到 7 行。

|

||||

使用以下 `sed` 命令删除 `sed-demo.txt` 中的第 5 到 7 行。

|

||||

|

||||

```

|

||||

# sed '5,7d' sed-demo.txt

|

||||

@ -129,9 +127,9 @@ sed 'Nd' file

|

||||

|

||||

### 5) 如何删除多行内容?

|

||||

|

||||

sed 命令能够删除给定行的集合。

|

||||

`sed` 命令能够删除给定行的集合。

|

||||

|

||||

本例中,下面的 sed 命令删除了第 1 行、第 5 行、第 9 行和最后一行。

|

||||

本例中,下面的 `sed` 命令删除了第 1 行、第 5 行、第 9 行和最后一行。

|

||||

|

||||

```

|

||||

# sed '1d;5d;9d;$d' sed-demo.txt

|

||||

@ -146,7 +144,7 @@ sed 命令能够删除给定行的集合。

|

||||

|

||||

### 5a) 如何删除指定范围以外的行?

|

||||

|

||||

使用以下 sed 命令删除 sed-demo.txt 中第 3 到 6 行范围以外的所有行。

|

||||

使用以下 `sed` 命令删除 `sed-demo.txt` 中第 3 到 6 行范围以外的所有行。

|

||||

|

||||

```

|

||||

# sed '3,6!d' sed-demo.txt

|

||||

@ -159,7 +157,7 @@ sed 命令能够删除给定行的集合。

|

||||

|

||||

### 6) 如何删除空行?

|

||||

|

||||

使用以下 sed 命令删除 sed-demo.txt 中的空行。

|

||||

使用以下 `sed` 命令删除 `sed-demo.txt` 中的空行。

|

||||

|

||||

```

|

||||

# sed '/^$/d' sed-demo.txt

|

||||

@ -176,9 +174,9 @@ sed 命令能够删除给定行的集合。

|

||||

10 openSUSE

|

||||

```

|

||||

|

||||

### 7) 如何删除包含某个<ruby>表达式<rt>Pattern</rt></ruby>的行?

|

||||

### 7) 如何删除包含某个模式的行?

|

||||

|

||||

使用以下 sed 命令删除 sed-demo.txt 中匹配到 **`System`**` ` 表达式的行。

|

||||

使用以下 `sed` 命令删除 `sed-demo.txt` 中匹配到 `System` 模式的行。

|

||||

|

||||

```

|

||||

# sed '/System/d' sed-demo.txt

|

||||

@ -195,7 +193,7 @@ sed 命令能够删除给定行的集合。

|

||||

|

||||

### 8) 如何删除包含字符串集合中某个字符串的行?

|

||||

|

||||

使用以下 sed 命令删除 sed-demo.txt 中匹配到 **`System`**` ` 或 **`Linux`**` ` 表达式的行。

|

||||

使用以下 `sed` 命令删除 `sed-demo.txt` 中匹配到 `System` 或 `Linux` 表达式的行。

|

||||

|

||||

```

|

||||

# sed '/System\|Linux/d' sed-demo.txt

|

||||

@ -211,7 +209,7 @@ sed 命令能够删除给定行的集合。

|

||||

|

||||

### 9) 如何删除以指定字符开头的行?

|

||||

|

||||

为了测试,我创建了 sed-demo-1.txt 文件,并添加了以下内容。

|

||||

为了测试,我创建了 `sed-demo-1.txt` 文件,并添加了以下内容。

|

||||

|

||||

```

|

||||

# cat sed-demo-1.txt

|

||||

@ -228,7 +226,7 @@ Arch Linux - 1

|

||||

3 4 5 6

|

||||

```

|

||||

|

||||

使用以下 sed 命令删除以 **`R`**` ` 字符开头的所有行。

|

||||

使用以下 `sed` 命令删除以 `R` 字符开头的所有行。

|

||||

|

||||

```

|

||||

# sed '/^R/d' sed-demo-1.txt

|

||||

@ -243,7 +241,7 @@ Arch Linux - 1

|

||||

3 4 5 6

|

||||

```

|

||||

|

||||

使用以下 sed 命令删除 **`R`**` ` 或者 **`F`**` ` 字符开头的所有行。

|

||||

使用以下 `sed` 命令删除 `R` 或者 `F` 字符开头的所有行。

|

||||

|

||||

```

|

||||

# sed '/^[RF]/d' sed-demo-1.txt

|

||||

@ -259,7 +257,7 @@ Arch Linux - 1

|

||||

|

||||

### 10) 如何删除以指定字符结尾的行?

|

||||

|

||||

使用以下 sed 命令删除 **`m`**` ` 字符结尾的所有行。

|

||||

使用以下 `sed` 命令删除 `m` 字符结尾的所有行。

|

||||

|

||||

```

|

||||

# sed '/m$/d' sed-demo.txt

|

||||

@ -274,7 +272,7 @@ Arch Linux - 1

|

||||

10 openSUSE

|

||||

```

|

||||

|

||||

使用以下 sed 命令删除 **`x`**` ` 或者 **`m`**` ` 字符结尾的所有行。

|

||||

使用以下 `sed` 命令删除 `x` 或者 `m` 字符结尾的所有行。

|

||||

|

||||

```

|

||||

# sed '/[xm]$/d' sed-demo.txt

|

||||

@ -290,7 +288,7 @@ Arch Linux - 1

|

||||

|

||||

### 11) 如何删除所有大写字母开头的行?

|

||||

|

||||

使用以下 sed 命令删除所有大写字母开头的行。

|

||||

使用以下 `sed` 命令删除所有大写字母开头的行。

|

||||

|

||||

```

|

||||

# sed '/^[A-Z]/d' sed-demo-1.txt

|

||||

@ -301,9 +299,9 @@ ubuntu

|

||||

3 4 5 6

|

||||

```

|

||||

|

||||

### 12) 如何删除指定范围内匹配到<ruby>表达式<rt>Pattern</rt></ruby>的行?

|

||||

### 12) 如何删除指定范围内匹配模式的行?

|

||||

|

||||

使用以下 sed 命令删除第 1 到 6 行中包含 **`Linux`**` ` 表达式的行。

|

||||

使用以下 `sed` 命令删除第 1 到 6 行中包含 `Linux` 表达式的行。

|

||||

|

||||

```

|

||||

# sed '1,6{/Linux/d;}' sed-demo.txt

|

||||

@ -318,9 +316,9 @@ ubuntu

|

||||

10 openSUSE

|

||||

```

|

||||

|

||||

### 13) 如何删除匹配到<ruby>表达式<rt>Pattern</rt></ruby>的行及其下一行?

|

||||

### 13) 如何删除匹配模式的行及其下一行?

|

||||

|

||||

使用以下 sed 命令删除包含 `System` 表达式的行以及它的下一行。

|

||||

使用以下 `sed` 命令删除包含 `System` 表达式的行以及它的下一行。

|

||||

|

||||

```

|

||||

# sed '/System/{N;d;}' sed-demo.txt

|

||||

@ -337,7 +335,7 @@ ubuntu

|

||||

|

||||

### 14) 如何删除包含数字的行?

|

||||

|

||||

使用以下 sed 命令删除所有包含数字的行。

|

||||

使用以下 `sed` 命令删除所有包含数字的行。

|

||||

|

||||

```

|

||||

# sed '/[0-9]/d' sed-demo-1.txt

|

||||

@ -351,7 +349,7 @@ debian

|

||||

ubuntu

|

||||

```

|

||||

|

||||

使用以下 sed 命令删除所有以数字开头的行。

|

||||

使用以下 `sed` 命令删除所有以数字开头的行。

|

||||

|

||||

```

|

||||

# sed '/^[0-9]/d' sed-demo-1.txt

|

||||

@ -366,7 +364,7 @@ ubuntu

|

||||

Arch Linux - 1

|

||||

```

|

||||

|

||||

使用以下 sed 命令删除所有以数字结尾的行。

|

||||

使用以下 `sed` 命令删除所有以数字结尾的行。

|

||||

|

||||

```

|

||||

# sed '/[0-9]$/d' sed-demo-1.txt

|

||||

@ -383,13 +381,14 @@ ubuntu

|

||||

|

||||

### 15) 如何删除包含字母的行?

|

||||

|

||||

使用以下 sed 命令删除所有包含字母的行。

|

||||

使用以下 `sed` 命令删除所有包含字母的行。

|

||||

|

||||

```

|

||||

# sed '/[A-Za-z]/d' sed-demo-1.txt

|

||||

|

||||

3 4 5 6

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/linux-remove-delete-lines-in-file-sed-command/

|

||||

@ -397,7 +396,7 @@ via: https://www.2daygeek.com/linux-remove-delete-lines-in-file-sed-command/

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[hello-wn](https://github.com/hello-wn)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

116

published/20190823 Managing credentials with KeePassXC.md

Normal file

116

published/20190823 Managing credentials with KeePassXC.md

Normal file

@ -0,0 +1,116 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11278-1.html)

|

||||

[#]: subject: (Managing credentials with KeePassXC)

|

||||

[#]: via: (https://fedoramagazine.org/managing-credentials-with-keepassxc/)

|

||||

[#]: author: (Marco Sarti https://fedoramagazine.org/author/msarti/)

|

||||

|

||||

使用 KeePassXC 管理凭据

|

||||

======

|

||||

|

||||

![][1]

|

||||

|

||||

[上一篇文章][2]我们讨论了使用服务器端技术的密码管理工具。这些工具非常有趣而且适合云安装。在本文中,我们将讨论 KeePassXC,这是一个简单的多平台开源软件,它使用本地文件作为数据库。

|

||||

|

||||

这种密码管理软件的主要优点是简单。无需服务器端技术专业知识,因此可供任何类型的用户使用。

|

||||

|

||||

### 介绍 KeePassXC

|

||||

|

||||

KeePassXC 是一个开源的跨平台密码管理器:它是作为 KeePassX 的一个分支开始开发的,这是个不错的产品,但开发不是非常活跃。它使用 256 位密钥的 AES 算法将密钥保存在加密数据库中,这使得在云端设备(如 pCloud 或 Dropbox)中保存数据库相当安全。

|

||||

|

||||

除了密码,KeePassXC 还允许你在加密皮夹中保存各种信息和附件。它还有一个有效的密码生成器,可以帮助用户正确地管理他的凭据。

|

||||

|

||||

### 安装

|

||||

|

||||

这个程序在标准的 Fedora 仓库和 Flathub 仓库中都有。不幸的是,在沙箱中运行的程序无法使用浏览器集成,所以我建议通过 dnf 安装程序:

|

||||

|

||||

```

|

||||

sudo dnf install keepassxc

|

||||

```

|

||||

|

||||

### 创建你的皮夹

|

||||

|

||||

要创建新数据库,有两个重要步骤:

|

||||

|

||||

* 选择加密设置:默认设置相当安全,增加转换轮次也会增加解密时间。

|

||||

* 选择主密钥和额外保护:主密钥必须易于记忆(如果丢失它,你的皮夹就会丢失!)而足够强大,一个至少有 4 个随机单词的密码可能是一个不错的选择。作为额外保护,你可以选择密钥文件(请记住:你必须始终都有它,否则无法打开皮夹)和/或 YubiKey 硬件密钥。

|

||||

|

||||

![][3]

|

||||

|

||||

![][4]

|

||||

|

||||

数据库文件将保存到文件系统。如果你想与其他计算机/设备共享,可以将它保存在 U 盘或 pCloud 或 Dropbox 等云存储中。当然,如果你选择云存储,建议使用特别强大的主密码,如果有额外保护则更好。

|

||||

|

||||

### 创建你的第一个条目

|

||||

|

||||

创建数据库后,你可以开始创建第一个条目。对于 Web 登录,请在“条目”选项卡中输入用户名、密码和 URL。你可以根据个人策略指定凭据的到期日期,也可以通过按右侧的按钮下载网站的 favicon 并将其关联为条目的图标,这是一个很好的功能。

|

||||

|

||||

![][5]

|

||||

|

||||

![][6]

|

||||

|

||||

KeePassXC 还提供了一个很好的密码/口令生成器,你可以选择长度和复杂度,并检查对暴力攻击的抵抗程度:

|

||||

|

||||

![][7]

|

||||

|

||||

### 浏览器集成

|

||||

|

||||

KeePassXC 有一个适用于所有主流浏览器的扩展。该扩展允许你填写所有已指定 URL 条目的登录信息。

|

||||

|

||||

必须在 KeePassXC(工具菜单 -> 设置)上启用浏览器集成,指定你要使用的浏览器:

|

||||

|

||||

![][8]

|

||||

|

||||

安装扩展后,必须与数据库建立连接。要执行此操作,请按扩展按钮,然后按“连接”按钮:如果数据库已打开并解锁,那么扩展程序将创建关联密钥并将其保存在数据库中,该密钥对于浏览器是唯一的,因此我建议对它适当命名:

|

||||

|

||||

![][9]

|

||||

|

||||

当你打开 URL 字段中的登录页并且数据库是解锁的,那么这个扩展程序将为你提供与该页面关联的所有凭据:

|

||||

|

||||

![][10]

|

||||

|

||||

通过这种方式,你可以通过 KeePassXC 获取互联网凭据,而无需将其保存在浏览器中。

|

||||

|

||||

### SSH 代理集成

|

||||

|

||||

KeePassXC 的另一个有趣功能是与 SSH 集成。如果你使用 ssh 代理,KeePassXC 能够与之交互并添加你上传的 ssh 密钥到条目中。

|

||||

|

||||

首先,在常规设置(工具菜单 -> 设置)中,你必须启用 ssh 代理并重启程序:

|

||||

|

||||

![][11]

|

||||

|

||||

此时,你需要以附件方式上传你的 ssh 密钥对到条目中。然后在 “SSH 代理” 选项卡中选择附件下拉列表中的私钥,此时会自动填充公钥。不要忘记选择上面的两个复选框,以便在数据库打开/解锁时将密钥添加到代理,并在数据库关闭/锁定时删除:

|

||||

|

||||

![][12]

|

||||

|

||||

现在打开和解锁数据库,你可以使用皮夹中保存的密钥登录 ssh。

|

||||

|

||||

唯一的限制是可以添加到代理的最大密钥数:ssh 服务器默认不接受超过 5 次登录尝试,出于安全原因,建议不要增加此值。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://fedoramagazine.org/managing-credentials-with-keepassxc/

|

||||

|

||||

作者:[Marco Sarti][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://fedoramagazine.org/author/msarti/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://fedoramagazine.org/wp-content/uploads/2019/08/keepassxc-816x345.png

|

||||

[2]: https://linux.cn/article-11181-1.html

|

||||

[3]: https://fedoramagazine.org/wp-content/uploads/2019/08/Screenshot-from-2019-08-17-07-33-27.png

|

||||

[4]: https://fedoramagazine.org/wp-content/uploads/2019/08/Screenshot-from-2019-08-17-07-48-21.png

|

||||

[5]: https://fedoramagazine.org/wp-content/uploads/2019/08/Screenshot-from-2019-08-17-08-30-07.png

|

||||

[6]: https://fedoramagazine.org/wp-content/uploads/2019/08/Screenshot-from-2019-08-17-08-43-11.png

|

||||

[7]: https://fedoramagazine.org/wp-content/uploads/2019/08/Screenshot-from-2019-08-17-08-49-22.png

|

||||

[8]: https://fedoramagazine.org/wp-content/uploads/2019/08/Screenshot-from-2019-08-17-09-48-09.png

|

||||

[9]: https://fedoramagazine.org/wp-content/uploads/2019/08/Screenshot-from-2019-08-17-09-05-57.png

|

||||

[10]: https://fedoramagazine.org/wp-content/uploads/2019/08/Screenshot-from-2019-08-17-09-13-29.png

|

||||

[11]: https://fedoramagazine.org/wp-content/uploads/2019/08/Screenshot-from-2019-08-17-09-47-21.png

|

||||

[12]: https://fedoramagazine.org/wp-content/uploads/2019/08/Screenshot-from-2019-08-17-09-46-35.png

|

||||

@ -0,0 +1,194 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11286-1.html)

|

||||

[#]: subject: (How to Install Ansible (Automation Tool) on Debian 10 (Buster))

|

||||

[#]: via: (https://www.linuxtechi.com/install-ansible-automation-tool-debian10/)

|

||||

[#]: author: (Pradeep Kumar https://www.linuxtechi.com/author/pradeep/)

|

||||

|

||||

如何在 Debian 10 上安装 Ansible

|

||||

======

|

||||

|

||||

在如今的 IT 领域,自动化一个是热门话题,每个组织都开始采用自动化工具,像 Puppet、Ansible、Chef、CFEngine、Foreman 和 Katello。在这些工具中,Ansible 是几乎所有 IT 组织中管理 UNIX 和 Linux 系统的首选。在本文中,我们将演示如何在 Debian 10 Sever 上安装和使用 Ansible。

|

||||

|

||||

![Ansible-Install-Debian10][2]

|

||||

|

||||

我的实验室环境:

|

||||

|

||||

* Debian 10 – Ansible 服务器/ 控制节点 – 192.168.1.14

|

||||

* CentOS 7 – Ansible 主机 (Web 服务器)– 192.168.1.15

|

||||

* CentOS 7 – Ansible 主机(DB 服务器)– 192.169.1.17

|

||||

|

||||

我们还将演示如何使用 Ansible 服务器管理 Linux 服务器

|

||||

|

||||

### 在 Debian 10 Server 上安装 Ansible

|

||||

|

||||

我假设你的 Debian 10 中有一个拥有 root 或 sudo 权限的用户。在我这里,我有一个名为 `pkumar` 的本地用户,它拥有 sudo 权限。

|

||||

|

||||

Ansible 2.7 包存在于 Debian 10 的默认仓库中,在命令行中运行以下命令安装 Ansible,

|

||||

|

||||

```

|

||||

root@linuxtechi:~$ sudo apt update

|

||||

root@linuxtechi:~$ sudo apt install ansible -y

|

||||

```

|

||||

|

||||

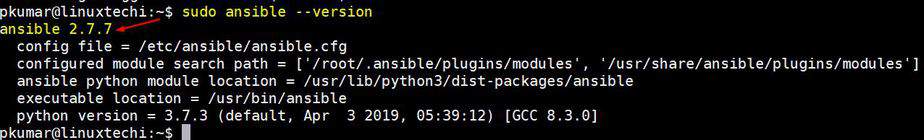

运行以下命令验证 Ansible 版本,

|

||||

|

||||

```

|

||||

root@linuxtechi:~$ sudo ansible --version

|

||||

```

|

||||

|

||||

|

||||

|

||||

要安装最新版本的 Ansible 2.8,首先我们必须设置 Ansible 仓库。

|

||||

|

||||

一个接一个地执行以下命令,

|

||||

|

||||

```

|

||||

root@linuxtechi:~$ echo "deb http://ppa.launchpad.net/ansible/ansible/ubuntu bionic main" | sudo tee -a /etc/apt/sources.list

|

||||

root@linuxtechi:~$ sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys 93C4A3FD7BB9C367

|

||||

root@linuxtechi:~$ sudo apt update

|

||||

root@linuxtechi:~$ sudo apt install ansible -y

|

||||

root@linuxtechi:~$ sudo ansible --version

|

||||

```

|

||||

|

||||

|

||||

|

||||

### 使用 Ansible 管理 Linux 服务器

|

||||

|

||||

请参考以下步骤,使用 Ansible 控制器节点管理 Linux 类的服务器,

|

||||

|

||||

#### 步骤 1:在 Ansible 服务器及其主机之间交换 SSH 密钥

|

||||

|

||||

在 Ansible 服务器生成 ssh 密钥并在 Ansible 主机之间共享密钥。

|

||||

|

||||

```

|

||||

root@linuxtechi:~$ sudo -i

|

||||

root@linuxtechi:~# ssh-keygen

|

||||

root@linuxtechi:~# ssh-copy-id root@linuxtechi

|

||||

root@linuxtechi:~# ssh-copy-id root@linuxtechi

|

||||

```

|

||||

|

||||

#### 步骤 2:创建 Ansible 主机清单

|

||||

|

||||

安装 Ansible 后会自动创建 `/etc/ansible/hosts`,在此文件中我们可以编辑 Ansible 主机或其客户端。我们还可以在家目录中创建自己的 Ansible 主机清单,

|

||||

|

||||

运行以下命令在我们的家目录中创建 Ansible 主机清单。

|

||||

|

||||

```

|

||||

root@linuxtechi:~$ vi $HOME/hosts

|

||||

[Web]

|

||||

192.168.1.15

|

||||

|

||||

[DB]

|

||||

192.168.1.17

|

||||

```

|

||||

|

||||

保存并退出文件。

|

||||

|

||||

注意:在上面的主机文件中,我们也可以使用主机名或 FQDN,但为此我们必须确保 Ansible 主机可以通过主机名或者 FQDN 访问。

|

||||

|

||||

#### 步骤 3:测试和使用默认的 Ansible 模块

|

||||

|

||||

Ansible 附带了许多可在 `ansible` 命令中使用的默认模块,示例如下所示。

|

||||

|

||||

语法:

|

||||

|

||||

```

|

||||

# ansible -i <host_file> -m <module> <host>

|

||||

```

|

||||

|

||||

这里:

|

||||

|

||||

* `-i ~/hosts`:包含 Ansible 主机列表

|

||||

* `-m`:在之后指定 Ansible 模块,如 ping 和 shell

|

||||

* `<host>`:我们要运行 Ansible 模块的 Ansible 主机

|

||||

|

||||

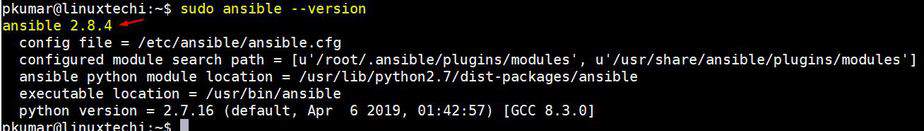

使用 Ansible ping 模块验证 ping 连接,

|

||||

|

||||

```

|

||||

root@linuxtechi:~$ sudo ansible -i ~/hosts -m ping all

|

||||

root@linuxtechi:~$ sudo ansible -i ~/hosts -m ping Web

|

||||

root@linuxtechi:~$ sudo ansible -i ~/hosts -m ping DB

|

||||

```

|

||||

|

||||

命令输出如下所示:

|

||||

|

||||

|

||||

|

||||

使用 shell 模块在 Ansible 主机上运行 shell 命令

|

||||

|

||||

语法:

|

||||

|

||||

```

|

||||

ansible -i <hosts_file> -m shell -a <shell_commands> <host>

|

||||

```

|

||||

|

||||

例子:

|

||||

|

||||

```

|

||||

root@linuxtechi:~$ sudo ansible -i ~/hosts -m shell -a "uptime" all

|

||||

192.168.1.17 | CHANGED | rc=0 >>

|

||||

01:48:34 up 1:07, 3 users, load average: 0.00, 0.01, 0.05

|

||||

|

||||

192.168.1.15 | CHANGED | rc=0 >>

|

||||

01:48:39 up 1:07, 3 users, load average: 0.00, 0.01, 0.04

|

||||

|

||||

root@linuxtechi:~$

|

||||

root@linuxtechi:~$ sudo ansible -i ~/hosts -m shell -a "uptime ; df -Th / ; uname -r" Web

|

||||

192.168.1.15 | CHANGED | rc=0 >>

|

||||

01:52:03 up 1:11, 3 users, load average: 0.12, 0.07, 0.06

|

||||

Filesystem Type Size Used Avail Use% Mounted on

|

||||

/dev/mapper/centos-root xfs 13G 1017M 12G 8% /

|

||||

3.10.0-327.el7.x86_64

|

||||

|

||||

root@linuxtechi:~$

|

||||

```

|

||||

|

||||

上面的命令输出表明我们已成功设置 Ansible 控制器节点。

|

||||

|

||||

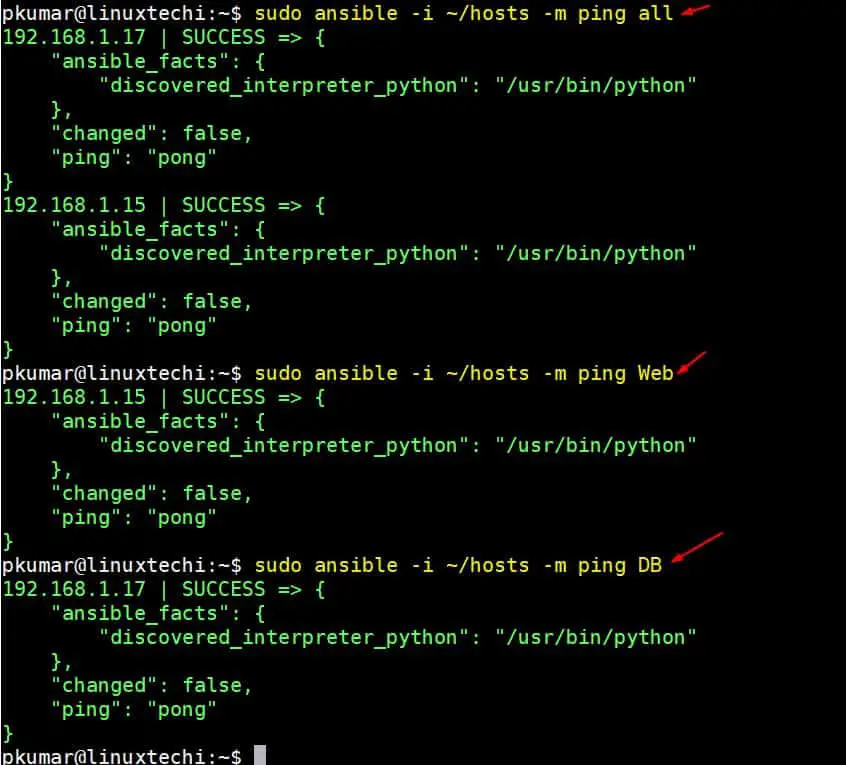

让我们创建一个安装 nginx 的示例剧本,下面的剧本将在所有服务器上安装 nginx,这些服务器是 Web 主机组的一部分,但在这里,我的主机组下只有一台 centos 7 机器。

|

||||

|

||||

```

|

||||

root@linuxtechi:~$ vi nginx.yaml

|

||||

---

|

||||

- hosts: Web

|

||||

tasks:

|

||||

- name: Install latest version of nginx on CentOS 7 Server

|

||||

yum: name=nginx state=latest

|

||||

- name: start nginx

|

||||

service:

|

||||

name: nginx

|

||||

state: started

|

||||

```

|

||||

|

||||

现在使用以下命令执行剧本。

|

||||

|

||||

```

|

||||

root@linuxtechi:~$ sudo ansible-playbook -i ~/hosts nginx.yaml

|

||||

```

|

||||

|

||||

上面命令的输出类似下面这样,

|

||||

|

||||

|

||||

|

||||

这表明 Ansible 剧本成功执行了。

|

||||

|

||||

本文就是这些了,请分享你的反馈和评论。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linuxtechi.com/install-ansible-automation-tool-debian10/

|

||||

|

||||

作者:[Pradeep Kumar][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.linuxtechi.com/author/pradeep/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[2]: https://www.linuxtechi.com/wp-content/uploads/2019/08/Ansible-Install-Debian10.jpg

|

||||

102

published/20190827 A dozen ways to learn Python.md

Normal file

102

published/20190827 A dozen ways to learn Python.md

Normal file

@ -0,0 +1,102 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11280-1.html)

|

||||

[#]: subject: (A dozen ways to learn Python)

|

||||

[#]: via: (https://opensource.com/article/19/8/dozen-ways-learn-python)

|

||||

[#]: author: (Don Watkins https://opensource.com/users/don-watkins)

|

||||

|

||||

学习 Python 的 12 个方式

|

||||

======

|

||||

|

||||

> 这些资源将帮助你入门并熟练掌握 Python。

|

||||

|

||||

|

||||

|

||||

Python 是世界上[最受欢迎的][2]编程语言之一,它受到了全世界各地的开发者和创客的欢迎。大多数 Linux 和 MacOS 计算机都预装了某个版本的 Python,现在甚至一些 Windows 计算机供应商也开始安装 Python 了。

|

||||

|

||||

也许你尚未学会它,想学习但又不知道在哪里入门。这里的 12 个资源将帮助你入门并熟练掌握 Python。

|

||||

|

||||

### 课程、书籍、文章和文档

|

||||

|

||||

1、[Python 软件基金会][3]提供了出色的信息和文档,可帮助你迈上编码之旅。请务必查看 [Python 入门指南][4]。它将帮助你得到最新版本的 Python,并提供有关编辑器和开发环境的有用提示。该组织还有可以来进一步指导你的[优秀文档][5]。

|

||||

|

||||

2、我的 Python 旅程始于[海龟模块][6]。我首先在 Bryson Payne 的《[教你的孩子编码][7]》中找到了关于 Python 和海龟的内容。这本书是一个很好的资源,购买这本书可以让你看到几十个示例程序,这将激发你的编程好奇心。Payne 博士还在 [Udemy][8] 上以相同的名称开设了一门便宜的课程。

|

||||

|

||||

3、Payne 博士的书激起了我的好奇心,我渴望了解更多。这时我发现了 Al Sweigart 的《[用 Python 自动化无聊的东西][9]》。你可以购买这本书,也可以使用它的在线版本,它与印刷版完全相同且可根据知识共享许可免费获得和分享。Al 的这本书让我学习到了 Python 的基础知识、函数、列表、字典和如何操作字符串等等。这是一本很棒的书,我已经购买了许多本捐赠给了当地图书馆。Al 还提供 [Udemy][10] 课程;使用他的网站上的优惠券代码,只需 10 美元即可参加。

|

||||

|

||||

4、Eric Matthes 撰写了《[Python 速成][11]》,这是由 No Starch Press 出版的 Python 的逐步介绍(如同上面的两本书)。Matthes 还有一个很棒的[伴侣网站][12],其中包括了如何在你的计算机上设置 Python 以及一个用以简化学习曲线的[速查表][13]。

|

||||

|

||||

5、[Python for Everybody][14] 是另一个很棒的 Python 学习资源。该网站可以免费访问 [Charles Severance][15] 的 Coursera 和 edX 认证课程的资料。该网站分为入门、课程和素材等部分,其中 17 个课程按从安装到数据可视化的主题进行分类组织。Severance([@drchuck on Twitter][16]),是密歇根大学信息学院的临床教授。

|

||||

|

||||

6、[Seth Kenlon][17],我们 Opensource.com 的 Python 大师,撰写了大量关于 Python 的文章。Seth 有很多很棒的文章,包括“[用 JSON 保存和加载 Python 数据][18]”,“[用 Python 学习面向对象编程][19]”,“[在 Python 游戏中用 Pygame 放置平台][20]”,等等。

|

||||

|

||||

### 在设备上使用 Python

|

||||

|

||||

7、最近我对 [Circuit Playground Express][21] 非常感兴趣,这是一个运行 [CircuitPython][22] 的设备,CircuitPython 是为微控制器设计的 Python 编程语言的子集。我发现 Circuit Playground Express 和 CircuitPython 是向学生介绍 Python(以及一般编程)的好方法。它的制造商 Adafruit 有一个很好的[系列教程][23],可以让你快速掌握 CircuitPython。

|

||||

|

||||

8、[BBC:Microbit][24] 是另一种入门 Python 的好方法。你可以学习如何使用 [MicroPython][25] 对其进行编程,这是另一种用于编程微控制器的 Python 实现。

|

||||

|

||||

9、学习 Python 的文章如果没有提到[树莓派][26]单板计算机那是不完整的。一旦你有了[舒适][27]而强大的树莓派,你就可以在 Opensource.com 上找到[成吨的][28]使用它的灵感,包括“[7 个值得探索的树莓派项目][29]”,“[在树莓派上复活 Amiga][30]”,和“[如何使用树莓派作为 VPN 服务器][31]”。

|

||||

|

||||

10、许多学校为学生提供了 iOS 设备以支持他们的教育。在尝试帮助这些学校的老师和学生学习用 Python 编写代码时,我发现了 [Trinket.io][32]。Trinket 允许你在浏览器中编写和执行 Python 3 代码。 Trinket 的 [Python 入门][33]教程将向你展示如何在 iOS 设备上使用 Python。

|

||||

|

||||

### 播客

|

||||

|

||||

11、我喜欢在开车的时候听播客,我在 Kelly Paredes 和 Sean Tibor 的 [Teaching Python][34] 播客上找到了大量的信息。他们的内容很适合教育领域。

|

||||

|

||||

12、如果你正在寻找一些更通用的东西,我推荐 Michael Kennedy 的 [Talk Python to Me][35] 播客。它提供了有关 Python 及相关技术的最佳信息。

|

||||

|

||||

你学习 Python 最喜欢的资源是什么?请在评论中分享。

|

||||

|

||||

计算机编程可能是一个有趣的爱好,正如我以前在 Apple II 计算机上编程时所学到的……

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/8/dozen-ways-learn-python

|

||||

|

||||

作者:[Don Watkins][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/don-watkins

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/programming_code_screen_display.jpg?itok=2HMTzqz0 (Code on a screen)

|

||||

[2]: https://insights.stackoverflow.com/survey/2019#most-popular-technologies

|

||||

[3]: https://www.python.org/

|

||||

[4]: https://www.python.org/about/gettingstarted/

|

||||

[5]: https://docs.python.org/3/

|

||||

[6]: https://opensource.com/life/15/8/python-turtle-graphics

|

||||

[7]: https://opensource.com/education/15/9/review-bryson-payne-teach-your-kids-code

|

||||

[8]: https://www.udemy.com/teach-your-kids-to-code/

|

||||

[9]: https://automatetheboringstuff.com/

|

||||

[10]: https://www.udemy.com/automate/?couponCode=PAY_10_DOLLARS

|

||||

[11]: https://nostarch.com/pythoncrashcourse2e

|

||||

[12]: https://ehmatthes.github.io/pcc/

|

||||

[13]: https://ehmatthes.github.io/pcc/cheatsheets/README.html

|

||||

[14]: https://www.py4e.com/

|

||||

[15]: http://www.dr-chuck.com/dr-chuck/resume/bio.htm

|

||||

[16]: https://twitter.com/drchuck/

|

||||

[17]: https://opensource.com/users/seth

|

||||

[18]: https://linux.cn/article-11133-1.html

|

||||

[19]: https://opensource.com/article/19/7/get-modular-python-classes

|

||||

[20]: https://linux.cn/article-10902-1.html

|

||||

[21]: https://opensource.com/article/19/7/circuit-playground-express

|

||||

[22]: https://circuitpython.org/

|

||||

[23]: https://learn.adafruit.com/welcome-to-circuitpython

|

||||

[24]: https://opensource.com/article/19/8/getting-started-bbc-microbit

|

||||

[25]: https://micropython.org/

|

||||

[26]: https://www.raspberrypi.org/

|

||||

[27]: https://projects.raspberrypi.org/en/pathways/getting-started-with-raspberry-pi

|

||||

[28]: https://opensource.com/sitewide-search?search_api_views_fulltext=Raspberry%20Pi

|

||||

[29]: https://opensource.com/article/19/3/raspberry-pi-projects

|

||||

[30]: https://opensource.com/article/19/3/amiga-raspberry-pi

|

||||

[31]: https://opensource.com/article/19/6/raspberry-pi-vpn-server

|

||||

[32]: https://trinket.io/

|

||||

[33]: https://docs.trinket.io/getting-started-with-python#/welcome/where-we-ll-go

|

||||

[34]: https://www.teachingpython.fm/

|

||||

[35]: https://talkpython.fm/

|

||||

@ -0,0 +1,74 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Mellanox introduces SmartNICs to eliminate network load on CPUs)

|

||||

[#]: via: (https://www.networkworld.com/article/3433924/mellanox-introduces-smartnics-to-eliminate-network-load-on-cpus.html)

|

||||

[#]: author: (Andy Patrizio https://www.networkworld.com/author/Andy-Patrizio/)

|

||||

|

||||

Mellanox introduces SmartNICs to eliminate network load on CPUs

|

||||

======

|

||||

Mellanox unveiled two processors designed to offload network workloads from the CPU -- ConnectX-6 Dx and BlueField-2 – freeing the CPU to do its processing job.

|

||||

![Natali Mis / Getty Images][1]

|

||||

|

||||

If you were wondering what prompted Nvidia to [shell out nearly $7 billion for Mellanox Technologies][2], here’s your answer: The networking hardware provider has introduced a pair of processors for offloading network workloads from the CPU.

|

||||

|

||||

ConnectX-6 Dx and BlueField-2 are cloud SmartNICs and I/O Processing Unit (IPU) solutions, respectively, designed to take the work of network processing off the CPU, freeing it to do its processing job.

|

||||

|

||||

**[ Learn more about SDN: Find out [where SDN is going][3] and learn the [difference between SDN and NFV][4]. | Get regularly scheduled insights: [Sign up for Network World newsletters][5]. ]**

|

||||

|

||||

The company promises up to 200Gbit/sec throughput with ConnectX and BlueField. It said the market for 25Gbit and faster Ethernet was 31% of the total market last year and will grow to 61% next year. With the internet of things (IoT) and artificial intelligence (AI), a lot of data needs to be moved around and Ethernet needs to get a lot faster.

|

||||

|

||||

“The whole vision of [software-defined networking] and NVMe-over-Fabric was a nice vision, but as soon as people tried it in the data center, performance ground to a halt because CPUs couldn’t handle all that data,” said Kevin Deierling, vice president of marketing for Mellanox. “As you do more complex networking, the CPUs are being asked to do all that work on top of running the apps and the hypervisor. It puts a big burden on CPUs if you don’t unload that workload.”

|

||||

|

||||

CPUs are getting larger, with AMD introducing a 64-core Epyc processor and Intel introducing a 56-core Xeon. But keeping those giant CPUs fed is a real challenge. You can’t use a 100Gbit link because the CPU has to look at all that traffic and it gets overwhelmed, argues Deierling.

|

||||

|

||||