Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-01-13 22:30:37 +08:00)

Commit 953626aa2b: Completed translation of HOW TO CREATE AN EBOOK WITH CALIBRE IN LINUX.md

published/20160429 Why and how I became a software engineer.md (Normal file, 101 lines)

@ -0,0 +1,101 @@

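Why and How I Became a Software Engineer
==========================================

Kampala, the capital of Uganda, 1989.

In their wisdom, my parents decided that rather than keep me at home making trouble, they would send me to my uncle's office to learn how to use a computer. A few days later, I found myself in a cramped room on the 21st floor with six or seven other kids and a brand-new computer sitting on a desk. It was made very clear that we were not yet qualified to touch it. After three dull weeks of learning DOS commands, the great moment finally came: it was my turn to type **copy doc.txt d:**.

The strange noise of the file being written onto the five-inch floppy disk sounded like beautiful music to me. For a while, that floppy disk was my most prized possession, and I copied everything I could onto it. However, in 1989 Ugandans tended to live very "seriously", and tinkering with computers, copying files and formatting disks did not count as "serious" by comparison. So I had to focus on my education, which led me away from computer science and into architectural engineering.

In the years that followed, like others my age, I held many jobs and picked up many skills. I taught kindergarten, taught adults how to use software, worked in a clothing store and served as an usher in a church. While earning my degree at the University of Kansas, I worked as a technical assistant to the technical administrator. That sounds impressive, but it really just meant working on the student database.

By the time I graduated in 2007, computer technology had become indispensable. Every aspect of architectural engineering was deeply intertwined with computer science, so we all picked up some simple programming along the way. I was always fascinated by that part. But I had to be a "serious" engineer, so I developed a secret hobby: writing science fiction.

In my stories, I lived through my heroines. They were scientists with outstanding programming skills who kept getting caught up in adventures and defeated the villains with their own technical inventions, sometimes inventing new methods on the spot. Some of the "new technology" I wrote about was based on real-world inventions, and some came from science fiction I had read. This meant I had to understand how the technology worked, and my research led me to many interesting subreddits and e-zines.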

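### Open source: a huge treasure trove

The weeks I spent on DOS commands had a huge influence on me. I poured effort into side projects that ate into precious study time. As soon as Geocities opened to all Yahoo! users, I built a website to publish personal photos taken with a tiny digital camera. I built several free websites, helped family and friends with their computer problems, and set up a library database for my church.

This meant I was always researching and looking for more information on how to make things better. The Internet gods blessed me, and open source came into view. Suddenly, 30-day trials and license restrictions were a thing of the past. Free of those restrictions, I could keep using GIMP, Inkscape and OpenOffice.

### Time to get serious

I was lucky to have a business partner who appreciated my experiences. She is also an imaginative person who dreams of a more efficient and convenient connected world. We designed solutions based on the weak points we had run into on our own paths to success, but execution was a problem. We both lacked the skills to bring our products to life, and this became especially obvious every time we tried to present our ideas to investors.

We needed to learn to program. So at the end of summer 2015, we came to Holberton School, a community-driven, project-based school in San Francisco.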

One morning my business partner came to me and started a conversation in her own particular way (the way she does whenever she has a crazy idea she wants to pull me into).

**Zee**: Gloria, I want to tell you something. Can you hear me out before you say "no"?

**Me**: No.

**Zee**: Let's apply to a school to become full-stack engineers.

**Me**: What?

**Zee**: Here, look! This is the school. We're going to apply to it to learn programming.

**Me**: I don't understand. Aren't we already learning Python online, and...

**Zee**: This is different. Trust me.

**Me**: But...

**Zee**: That's not trusting me.

**Me**: Fine... Show me.

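### Setting aside bias

What I read looked similar to what we had seen online. It seemed too good to be true, but we decided to try it anyway, go all in, and see what happened.

To become students, we had to pass a four-step selection process. The selection was based only on talent and motivation, not on academic degrees or programming experience. The selection process was also the start of the curriculum, and it was where we began learning and collaborating.

In my partner's experience and mine, Holberton School's application process was far more fun than any other. It was like a game: complete a challenge and you move on to the next level, where more interesting challenges are waiting. We created Twitter accounts, wrote blog posts on Medium, learned HTML and CSS to build a website, and built a lively online community, even though we didn't yet know who would end up coming.

The most striking thing about the online community was how varied everyone's computer experience was. Background and gender were not factors in the choices made by the community's founders (whom we privately called "The Trinity"). Everyone simply enjoyed getting together and talking. We were all on the same journey, improving our computer skills by learning to program.

Compared with other application processes, we didn't have to reveal much about our identities. Take my partner: her name gives no hint of her gender or race. Only at the last step, during a video chat, did The Trinity learn that she was a woman of color. Up to that point, only her passion and talent had carried her forward. Her skin color and gender neither hindered nor helped her. How cool is that?

The night we received our acceptance letters, we knew our lives were about to turn toward our dreams. On January 22, 2016, we went to 98 Battery Street to meet our classmates, the [Hippokampoiers][2], for the first time. It was clear that "The Trinity" had already put a great deal of work in before we met. They had brought together a diverse group of people full of passion and enthusiasm, all dedicated to becoming full-stack engineers.

The school is an experience unlike any other. Every day is an all-out charge into some area of programming. We are handed projects with little guidance and have to use every resource available to find a solution. [Holberton School][1] holds that sources of information are far richer than they used to be. MOOCs (massive open online courses), tutorials, freely available open source software and projects, online communities and more give us enough knowledge to complete our projects. Add an invaluable team of mentors who guide us toward solutions, and the school becomes more than a school: we have become a community of learners. I highly recommend this school to anyone interested in software engineering and in this way of learning. The experience can be bittersweet at times, but it is absolutely worth it.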

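### Open source matters

The first open source system I used was [Fedora][3], a project sponsored by [Red Hat][4]. An IRC member I was chatting with recommended this free operating system to me. I had never installed an operating system on my own before. Even so, it sparked my interest in open source and my everyday reliance on open source software. We advocate contributing code to open source, and creating and using open source projects. Our projects are on GitHub, where anyone can use them or contribute to them. We also use existing open source projects and contribute to them in our own way. At school, most of the tools we use are open source, such as Fedora, [Vagrant][5], [VirtualBox][6], [GCC][7] and [Discourse][8], to name a few.

On my way to becoming a software engineer, I still dream of one day contributing to the open source community and sharing what I have learned with others.

### Diversity matters

Standing in the classroom and exchanging ideas with 29 other learners was intoxicating. 40% of the students were women and 44% were people of color. These numbers matter a great deal when you are a woman of color in a field known for its lack of diversity. It was an oasis in the Mecca of high tech, and I had arrived.

Becoming a full-stack engineer is very hard, and it can be hard even to understand what the term means. It is a challenging journey, but a richly rewarding one. Technology is driving the future forward fast, and you are an important part of that bright future. The media keeps covering tech companies' diversity problems. But if you know yourself and your background, and know why you want to become a full-stack engineer, you can grow quickly in your chosen area.

Perhaps most important of all is reminding people how significant a role women have played in the history of computing. That helps more women return to the tech world, and it means job opportunities can be offered without hesitation over gender or similar factors. Women's talents will help shape the future of technology, and the future of the whole world.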

------------------------------------------------------------------------------

via: https://opensource.com/life/16/4/my-open-source-story-gloria-bwandungi

Author: [Gloria Bwandungi][a]

Translator: [martin2011qi](https://github.com/martin2011qi)

Proofreader: [jasminepeng](https://github.com/jasminepeng)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/nappybrain
[1]: https://www.holbertonschool.com/
[2]: https://twitter.com/hippokampoiers
[3]: https://en.wikipedia.org/wiki/Fedora_(operating_system)
[4]: https://www.redhat.com/
[5]: https://www.vagrantup.com/
[6]: https://www.virtualbox.org/
[7]: https://gcc.gnu.org/
[8]: https://www.discourse.org/

@ -0,0 +1,58 @@

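Train Existing Staff or Hire New People to Meet Today's and Tomorrow's IT Needs?
================================================================

In the digital era, IT tools keep changing and technology companies race to keep up, so the demand for IT skills keeps shifting. For businesses, finding and hiring innovative people with sought-after skills is far from easy. Meanwhile, training in-house staff to take on new skills and challenges takes time, and time is usually short.

[Sandy Hill][1] is familiar with the many technologies that IT involves. As IT director at [Pegasystems][2], she is responsible for multiple IT teams, whose work ranges from application deployment to data center operations. Beyond that, Pegasystems develops applications that help sales, marketing, service and operations teams streamline their operations and customer engagement. This means she has to know the best ways to use in-house IT resources while taking on the IT challenges the company's customers face.

**TEP (The Enterprisers Project): How have you adjusted your training focus over the years?**

**Hill**: We've seen explosive growth over the past few years, and we're now expanding globally. So the goal of training is to make sure everyone is on the same page.

Our main focus is training staff on new products and tools that drive innovation and improve efficiency. For example, we adopted an asset management system we didn't have before. So we trained all of our staff on it rather than hiring people who already knew the product. As we grow, we're also trying to keep a tight budget and a stable headcount. So we prefer to train internally rather than hire.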

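**TEP: Tell us about your training methods. How do you help your staff develop their skills?**

**Hill**: I ask every employee to set one technical and one non-technical training goal as part of their performance review. The technical goal has to match their job function. The non-technical goal is up to them, for example developing a soft skill or learning something outside their area of expertise. Once a year I assess the staff to identify gaps and weaknesses, so that the team stays well-rounded.

**TEP: How far does your training program reduce hiring and help you retain staff?**

**Hill**: Keeping our staff interested in new technologies lets them keep improving their skills. Showing staff that we value them, and letting them grow in the areas they are good at, keeps them motivated.

**TEP: Which kinds of training have you found most effective?**

**Hill**: We use several training methods that we think work well. For new or specialized projects, the vendor provides training as part of the project. If that isn't possible, we send people to off-site training. We also buy some online courses. And I encourage staff to attend at least one conference a year to keep up with industry trends.

**TEP: For which skill needs is it better to hire new people than to train existing staff?**

**Hill**: It depends on the project. A recent project required OpenStack, and we had no expertise in it at all. So we partnered with a consulting firm that works in this area. We used their experts to run the project and to train our internal team members on site. Having internal staff learn the skills they need while also doing their day jobs is a daunting task.

The consultants helped us work out how many people we needed. That let us assess our staff and see whether there were gaps. If there were staffing gaps, we needed extra training or new hires. We did also bring in some contractors. Another option would have been six to eight weeks of training for some full-time staff, but our project model didn't allow it.

**TEP: In your recent hires, which skills caught your attention most?**

**Hill**: In recent hiring I've put more weight on soft skills. Beyond solid technical ability, candidates need to communicate and work effectively in a team, and to be able to persuade, negotiate and resolve conflicts.

IT people often keep to themselves and aren't very social. But IT is now more and more tightly integrated with the rest of the organization. That makes it crucial to be able to give other business units useful updates and status reports, because this is how the IT department demonstrates its value.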

--------------------------------------------------------------------------------

via: https://enterprisersproject.com/article/2016/6/training-vs-hiring-meet-it-needs-today-and-tomorrow

Author: [Paul Desmond][a]

Translator: [Cathon](https://github.com/Cathon)

Proofreader: [jasminepeng](https://github.com/jasminepeng)

This article was translated and compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://enterprisersproject.com/user/paul-desmond
[1]: https://enterprisersproject.com/user/sandy-hill
[2]: https://www.pega.com/pega-can?&utm_source=google&utm_medium=cpc&utm_campaign=900.US.Evaluate&utm_term=pegasystems&gloc=9009726&utm_content=smAXuLA4U|pcrid|102822102849|pkw|pegasystems|pmt|e|pdv|c|

@ -1,9 +1,11 @@

The Cost of Small Modules in JavaScript
====

Update (2016/10/30): After I wrote this post, I found [a bug in this benchmark](https://github.com/nolanlawson/cost-of-small-modules/pull/8) that made Rollup look slightly better than it should have. However, the overall results are not significantly different (Rollup still beats Browserify and Webpack, although it's not quite as good as Closure), so I have simply updated the charts. The benchmark now also includes the [RequireJS and RequireJS Almond bundlers](https://github.com/nolanlawson/cost-of-small-modules/pull/5), so this post covers them too. To see the original post, check the [archived version](https://web.archive.org/web/20160822181421/https://nolanlawson.com/2016/08/15/the-cost-of-small-modules/).

About a year ago, while refactoring a large JavaScript codebase into smaller modules, I discovered a depressing fact about Browserify and Webpack:

> "The more I modularize my code, the bigger it gets. :<" - Nolan Lawson

Some time later, Sam Saccone published some excellent research on the page load performance of [Tumblr][1] and [Imgur][2], in which he pointed out:

@ -15,9 +17,9 @@

The more JavaScript a page contains, the slower it loads. Large JavaScript bundles make the browser spend more time downloading, parsing and executing code, and all of that lengthens load time.

Even if you split your code into multiple bundles with tools such as Webpack [code splitting][3] or Browserify [factor bundles][4], that cost is only deferred to later in the page's lifecycle. Sooner or later, you pay for your JavaScript.

Moreover, JavaScript is a dynamic language, and the popular [CommonJS][5] module format is dynamic too. This makes it extremely hard to strip unused code out of what finally ships to users. For example, you might only use jQuery's `$.ajax`, but by including jQuery you pay for the entire library.

The JavaScript community's answer to this problem has been to advocate [small modules][6]. Small modules have many [aesthetic and practical benefits][7], such as being easier to maintain, understand and integrate. They also address the jQuery problem above by encouraging the inclusion of small, focused functions instead of large libraries.

@ -66,7 +68,7 @@ $ browserify node_modules/qs | browserify-count-modules

Incidentally, the biggest open source site I have written, [Pokedex.org][21], contains 4 bundles with a total of 311 modules.

Ignoring for a moment the actual size of these JavaScript bundles, I think it would be interesting to explore the cost of a given number of modules in itself. Sam Saccone's post ["The cost of transpiling es2015 in 2016"][22] has been widely shared, but I don't think his conclusions went deep enough, so let's dig a little deeper.

### Benchmark time!

@ -86,13 +88,13 @@ console.log(total)

```
module.exports = 1
```

I tested five bundling methods: Browserify, Browserify with the [bundle-collapser][24] plugin, Webpack, Rollup and Closure Compiler. For Rollup and Closure Compiler I used ES6 modules, and for Browserify and Webpack I used CommonJS. I did this so that no bundler was unfairly penalized: otherwise some would need a transpilation step such as Babel, which would add to their runtime.

To better simulate a production environment, I ran Uglify with `--mangle` and `--compress` on all bundles, and served them gzipped over HTTPS from GitHub Pages. I downloaded and executed each bundle 15 times and took the average. I used `performance.now()` to record the load time (without cache) and the execution time.
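The full benchmark sources are not shown in this excerpt. The visible fragments only show modules of the form `module.exports = 1` and a `console.log(total)` line. As a rough illustration of that kind of setup, here is a hypothetical Bash generator. The function name `gen_modules`, the `module_N.js` file names and the `total += ...` entry layout are assumptions, not the author's actual harness. It writes N modules that each export 1, in either CommonJS form (as fed to Browserify/Webpack) or ES6 form (as fed to Rollup/Closure Compiler):

```shell
#!/usr/bin/env bash
# Hypothetical benchmark generator, NOT the author's actual harness.
# gen_modules COUNT OUTDIR FORMAT writes COUNT modules that each export
# the number 1, plus an index.js entry that loads them all and logs the sum.
#   FORMAT=cjs -> CommonJS (require / module.exports)
#   FORMAT=esm -> ES6 modules (import / export default)
gen_modules() {
  local count="$1" outdir="$2" format="${3:-cjs}"
  local imports="" body="var total = 0"$'\n'
  local i

  case "$format" in
    cjs|esm) ;;
    *) echo "gen_modules: FORMAT must be cjs or esm" >&2; return 1 ;;
  esac

  mkdir -p "$outdir"
  for ((i = 0; i < count; i++)); do
    if [[ "$format" == esm ]]; then
      echo 'export default 1' > "$outdir/module_$i.js"
      imports+="import m$i from './module_$i.js'"$'\n'
      body+="total += m$i"$'\n'
    else
      echo 'module.exports = 1' > "$outdir/module_$i.js"
      body+="total += require('./module_$i')"$'\n'
    fi
  done
  body+="console.log(total)"$'\n'

  # ES6 import declarations must precede other statements, so they go first.
  printf '%s%s' "$imports" "$body" > "$outdir/index.js"
}
```

For example, `gen_modules 1000 ./bench-1000 cjs` followed by `browserify ./bench-1000/index.js > bundle.js` would give a 1000-module CommonJS bundle to inspect. The minification, gzip and timing steps described above are not covered by this sketch.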

### Bundle size

Before we look at the benchmark results, it's worth looking at the bundle files under test. Here are the sizes of each bundle, minified but not gzipped (in bytes):

| | 100 modules | 1000 modules | 5000 modules |
| --- | --- | --- | --- |

@ -110,7 +112,7 @@ module.exports = 1

| rollup | 300 | 2145 | 11510 |
| closure | 302 | 2140 | 11789 |

Browserify and Webpack work by isolating each module into its own function scope and then declaring a top-level runtime loader. That loader locates the right module whenever `require()` is called. Here's what our Browserify bundle looks like:

```
(function e(t,n,r){function s(o,u){if(!n[o]){if(!t[o]){var a=typeof require=="function"&&require;if(!u&&a)return a(o,!0);if(i)return i(o,!0);var f=new Error("Cannot find module '"+o+"'");throw f.code="MODULE_NOT_FOUND",f}var l=n[o]={exports:{}};t[o][0].call(l.exports,function(e){var n=t[o][1][e];return s(n?n:e)},l,l.exports,e,t,n,r)}return n[o].exports}var i=typeof require=="function"&&require;for(var o=0;o
```

@ -144,7 +146,7 @@ Browserify and Webpack work by isolating each module into its own function

At 100 modules the differences between the bundles are negligible, but at 1000 or even 5000 modules they become enormous. On the iPod Touch the bundles differ only slightly, but on the older Nexus 5, Browserify and Webpack take noticeably longer.

Meanwhile, I find it interesting that the runtime cost of Rollup and Closure is almost negligible on the iPod and largely independent of the number of modules. On the Nexus 5 the cost is not entirely negligible, but Rollup/Closure are still far cheaper than Browserify/Webpack. When the latter take hundreds of milliseconds to load, they occupy the main thread for several frames. This means the UI freezes while it waits for the modules to finish loading.

Note that all of these tests were run on a gigabit connection, so in terms of network conditions they are a best case. Using Chrome DevTools, we can artificially throttle the Nexus 5 to 3G speeds and see how that affects the results ([see table][30]):

@ -152,13 +154,13 @@ Browserify 和 Webpack 的工作方式是隔离各个模块到各自的函数空

Once network speed is taken into account, the difference between Browserify/Webpack and Rollup/Closure becomes even more pronounced. At 1000 modules (close to Reddit's 1050), Browserify takes about 400 milliseconds longer than Rollup. And 400 milliseconds is no small number: as Google and Bing have shown, even sub-second delays have [a noticeable impact on user engagement][32].

One more thing to point out: this benchmark does not measure the precise per-module runtime cost of 100, 1000 or 5000 modules, because that also depends on how you use `require()`. In these bundles, I call `require()` once per module. But you will see significant performance degradation in two cases. One is calling `require()` several times per module, which is very common in real codebases. The other is calling `require()` dynamically many times, for example inside sub-functions.

Reddit's mobile site is a good example. It has 1050 modules, yet when I measured its real Browserify execution time, it was much worse than the "1000 modules" benchmark. On that Nexus 5 running Chrome, Reddit's Browserify `require()` function took 2.14 seconds, whereas the equivalent function in the "1000 modules" script took only 197 milliseconds. On desktop Chrome on a Surface Book with an i7 processor, I measured 559 milliseconds and 37 milliseconds respectively, which is rather surprising for a desktop platform.

This suggests it would be worth running another benchmark with multiple `require()` calls per module. However, I don't think that would be a fair test for Browserify and Webpack. Rollup and Closure both collapse duplicate ES6 imports into a single top-level variable declaration, and they also disallow imports anywhere except the top-level scope. So fundamentally, one import costs the same as many in Rollup and Closure, whereas in Browserify and Webpack the runtime cost grows linearly with the number of `require()` calls.

For the purposes of this analysis, I think it is best to assume that the number of modules is the performance bottleneck. In fact, "5000 modules" is also a better metric than "5000 `require()` calls".

### Conclusion

@ -168,11 +170,11 @@ Reddit's mobile site is a good example. Although the site has 1050

Given these results, I'm surprised that Closure Compiler and Rollup haven't received more attention in the JavaScript community. My guess is that the former depends on Java, while the latter is still fairly immature and doesn't quite work out of the box (see [Calvin Metcalf's comment][37] for a good summary).

Even if not enough JavaScript developers move to Rollup or Closure, I think npm package authors are already in a position to help solve this problem. If you `npm install lodash`, you'll find that its main export is one giant JavaScript module. That is not the hyper-modular form you might expect from Lodash (`require('lodash/uniq')`, `require('lodash.uniq')`, and so on). For PouchDB, we made a similar decision to [use Rollup as a prepublish step][38], which produces the smallest possible bundle for users.

Meanwhile, I created [rollupify][39] to try to make this process a little easier: you just drop it into an existing Browserify project. The basic idea is to use `import` and `export` within your own project (you can use [cjs-to-es6][40] to help migrate), and then use `require()` to load third-party packages. That way you still enjoy all the benefits of modularity within your own codebase, while shipping a single, appropriately sized module to your users. Unfortunately, you still pay some cost for third-party libraries, but I've found this to be a good compromise given the current npm ecosystem.

So, in conclusion: **one big JavaScript bundle is faster than a hundred small JavaScript modules**. Even so, I hope our community will eventually recognize the bind we are in: the small-module philosophy is good for developers but bad for users. I also hope we can improve our tools so that we get the best of both worlds.

### Bonus round! Three desktop browsers

@ -205,15 +207,15 @@ Firefox 48 ([see table][45])

[![Nexus 5 (3G) RequireJS results][53]](https://nolanwlawson.files.wordpress.com/2016/08/2016-08-20-14_45_29-small_modules3-xlsx-excel.png)

Update 3: I wrote [optimize-js](http://github.com/nolanlawson/optimize-js), which reduces some of the parsing cost of functions within functions.

--------------------------------------------------------------------------------

via: https://nolanlawson.com/2016/08/15/the-cost-of-small-modules/

Author: [Nolan][a]

Translator: [Yinr](https://github.com/Yinr)

Proofreader: [wxy](https://github.com/wxy)

This article was translated and compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

@ -0,0 +1,57 @@

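What the Rise of Permissive Open Source Licenses Means
====

Why restrictive licenses such as the GNU GPL are increasingly falling out of favor.

"If you use any open source software, then the rest of your software must also be open source." So said former Microsoft CEO Ballmer in 2001. The claim was wrong, but it still stirred up FUD (fear, uncertainty and doubt) about free software, which was probably his intention.

Most of this FUD concerns open source licensing. There are many different licenses, some more restrictive (some people say "more protective") than others. Restrictive licenses such as the GNU General Public License (GPL) use the concept of copyleft. Copyleft gives people the right to freely distribute copies and modified versions of software, as long as derivative works preserve the same rights. Open source projects such as bash and GIMP use the GPL (v3). There is also the AGPL (Affero GPL), which provides copyleft for software used over a network, such as web services.

This means that if you take code under such a license and add your own proprietary code to it, then in some cases the entire codebase, including your code, becomes subject to the restrictive license too. This is probably the kind of license Ballmer had in mind.

Permissive licenses are different. For example, the MIT license lets anyone use open source code in any way, including modifying and selling it, as long as the copyright and license notices are kept and the developers are not held liable. Another popular permissive license is the Apache License 2.0, which also includes terms under which contributors grant patent rights to users. Software under the MIT license includes jQuery, .NET Core and Rails, and software under the Apache License 2.0 includes Android, Apache and Swift.

Both kinds of license ultimately aim to make software more useful. Restrictive licenses promote the open source ideals of participation and sharing, so that everyone gets the most out of the software. Permissive licenses make sure people get the most out of software by letting them use it however they like. That includes taking the code, modifying it, keeping it for themselves, and even selling it as proprietary software, all without giving anything back.

According to data from Black Duck Software, an open source license management company, the most-used open source license last year was the restrictive GPL 2.0, with about a 25% share. The permissive MIT and Apache 2.0 licenses came next at 18% and 16% respectively, followed by GPL 3.0 at about 10%. That puts restrictive licenses at 35% and permissive licenses at 34%, almost a tie.

But this snapshot doesn't reveal the trend. Black Duck's data shows that in the six years from 2009 to 2015, the MIT license's share rose by 15.7% and Apache's by 12.4%. Over the same period, the combined share of GPL v2 and v3 fell by a striking 21.4%. In other words, a large amount of software moved from restrictive to permissive licenses during that time.

The trend continues. Black Duck's [latest data][1] shows MIT at 26%, GPL v2 at 21%, Apache 2 at 16% and GPL v3 at 9%. That is 30% restrictive and 42% permissive, a major shift from the previous year's 35% restrictive and 34% permissive. A [study][2] of license usage on GitHub confirms this shift. It shows MIT as by far the most popular license with 45%, compared with only 13% for GPL v2 and 11% for Apache.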

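### Leading the trend

What is behind such a large shift from restrictive to permissive licenses? Is it that companies fear that, as Ballmer claimed, using restrictively licensed software would cost them control of their proprietary software? In fact, that may well be it. Google, for example, has [banned Affero GPL software][3].

Jim Farmer, chairman of [Instructional Media + Magic][4], is a developer of open source technology for education. He believes many companies avoid restrictive licenses to stay clear of legal problems. "The problem is complexity. The more complex a license, the more likely someone is to sue you over something you did. Complexity makes litigation more likely," he says.

He adds that this fear of restrictive licenses is being driven by lawyers. Many of them advise their clients to use software under the MIT or Apache 2.0 licenses, and explicitly advise against software under the Affero license.

He says this has a knock-on effect on software developers. If companies avoid restrictively licensed software, developers who want their software to be used are more inclined to release new software under permissive licenses.

But Greg Soper, CEO of SalesAgility (the company behind the open source SuiteCRM), believes the shift to permissive licenses is also being driven by some developers. "Look at an application like Rocket.Chat. The developers could have chosen GPL 2.0 or the Affero license, but they chose a permissive license," he says. "That gives the application the best chance, because proprietary software vendors can use it without harming their products and without having to put their own products under an open source license. So if a developer wants third-party applications to use their application, they have good reason to choose a permissive license."

Soper points out that restrictive licenses help open source projects succeed by stopping developers from taking others' code, modifying it and not giving the results back to the community. "The Affero license is important to the healthy development of our product," he says. "If someone built on our code, did better than us and didn't contribute the code back, it would kill our product. Rocket.Chat is different, because if it used Affero, it would contaminate companies' intellectual property, so companies wouldn't use it. Different licenses have different use cases."

Michael Meeks has worked on GNOME and OpenOffice and is now an open source developer on LibreOffice. He agrees with Jim Farmer that many companies do choose permissively licensed software out of legal concerns. "Copyleft licenses carry risks, but they also bring huge benefits. Unfortunately, people listen to their lawyers, and lawyers only talk about the risks; they never tell you when something is safe."

Fifteen years have passed since Ballmer's mistaken remark, but the FUD it created still has an effect, even though the shift from restrictive to permissive licenses was not what he intended.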

--------------------------------------------------------------------------------

via: http://www.cio.com/article/3120235/open-source-tools/what-the-rise-of-permissive-open-source-licenses-means.html

Author: [Paul Rubens][a]

Translator: [willcoderwang](https://github.com/willcoderwang)

Proofreader: [jasminepeng](https://github.com/jasminepeng)

This article was translated and compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: http://www.cio.com/author/Paul-Rubens/
[1]: https://www.blackducksoftware.com/top-open-source-licenses
[2]: https://github.com/blog/1964-open-source-license-usage-on-github-com
[3]: http://www.theregister.co.uk/2011/03/31/google_on_open_source_licenses/
[4]: http://immagic.com/

@ -1,7 +1,7 @@

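How to Check a Hard Disk for Bad Sectors and Bad Blocks in Linux
===

Let's start by defining bad sectors and bad blocks. They are the parts of a disk or flash memory that can no longer be read from or written to. They are usually caused by permanent [physical damage][7] to the disk surface or by failed flash memory transistors.

As bad sectors keep accumulating, they can seriously degrade the usable capacity of your disk or flash memory, and may even lead to hardware failure.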

@ -13,7 +13,7 @@

|

||||

|

||||

### 在 Linux 上使用坏块工具检查坏道

|

||||

|

||||

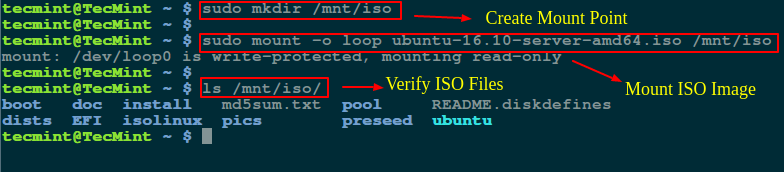

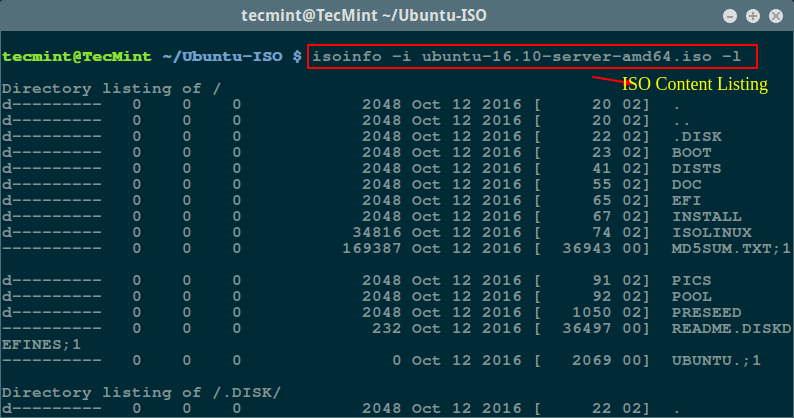

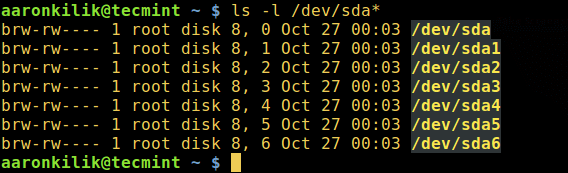

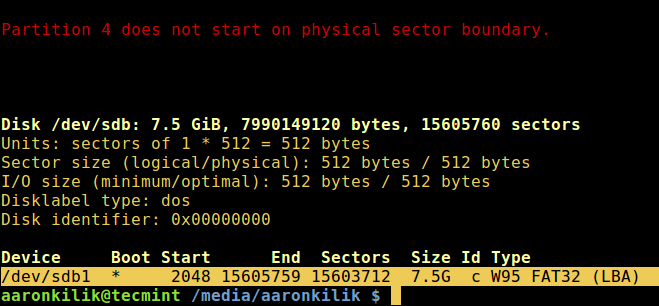

坏块工具可以让用户扫描设备检查坏道或坏块。设备可以是一个磁盘或外置磁盘,由一个如 /dev/sdc 这样的文件代表。

|

||||

坏块工具可以让用户扫描设备检查坏道或坏块。设备可以是一个磁盘或外置磁盘,由一个如 `/dev/sdc` 这样的文件代表。

|

||||

|

||||

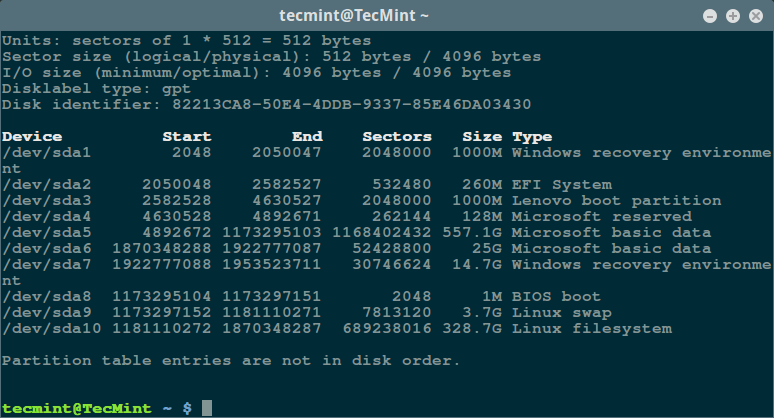

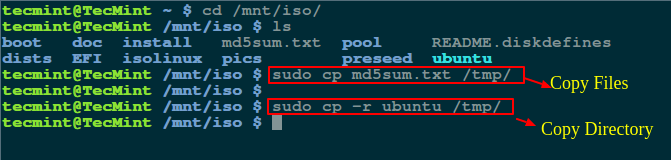

首先,通过超级用户权限执行 [fdisk 命令][5]来显示你的所有磁盘或闪存的信息以及它们的分区信息:

|

||||

|

||||

@ -24,9 +24,9 @@ $ sudo fdisk -l

|

||||

|

||||

[][4]

|

||||

|

||||

列出 Linux 文件系统分区

|

||||

*列出 Linux 文件系统分区*

|

||||

|

||||

然后用这个命令检查你的 Linux 硬盘上的坏道/坏块:

|

||||

然后用如下命令检查你的 Linux 硬盘上的坏道/坏块:

|

||||

|

||||

```

|

||||

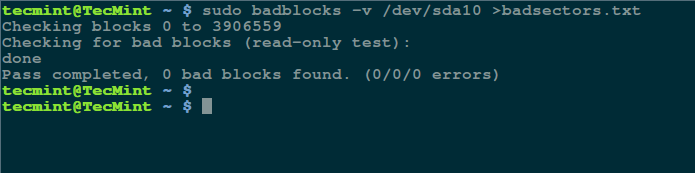

$ sudo badblocks -v /dev/sda10 > badsectors.txt

|

||||

@ -35,15 +35,15 @@ $ sudo badblocks -v /dev/sda10 > badsectors.txt

*Scanning a hard disk for bad sectors in Linux*

In the command above, badblocks scans the device `/dev/sda10` (remember to specify your actual device), and the `-v` option makes it print details of the operation. Output redirection also writes the results to the file `badsectors.txt`.

If you find any bad sectors on your disk, unmount the disk and tell the system not to write data to the reported sectors, as shown below.

To do this, run the `e2fsck` command (for ext2/ext3/ext4 file systems) or the `fsck` command, passing it the `badsectors.txt` file and the device file.

The `-l` option tells the command to add the block numbers listed in the specified file, `badsectors.txt`, to the bad block list.

```
------------ For ext2/ext3/ext4 file systems ------------
```
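One caveat is documented in e2fsck(8): the block numbers in the `-l` list are interpreted in the filesystem's block size. badblocks, however, uses 1024-byte blocks unless told otherwise with `-b`, and ext4 commonly uses 4096-byte blocks. The sketch below is a hypothetical helper; the names `fs_block_size` and `bad_block_commands` are made up for illustration. It reads the block size from `dumpe2fs -h` output and prints the unmount/scan/record sequence with a matching `-b`, so you can review it before running anything. It does not run the commands itself. Alternatively, `e2fsck -c` runs a read-only badblocks scan by itself.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for illustration; not part of badblocks or e2fsprogs.

# fs_block_size: read `dumpe2fs -h DEVICE` output on stdin and print the
# value of its "Block size:" line (for example 4096).
fs_block_size() {
  awk -F': *' '/^Block size:/ { print $2; exit }'
}

# bad_block_commands DEVICE BLOCKSIZE [LISTFILE]: print (not run) the
# unmount / scan / record sequence, passing the filesystem block size to
# badblocks with -b so that e2fsck -l interprets the block numbers correctly.
bad_block_commands() {
  local dev="$1" bs="$2" list="${3:-badsectors.txt}"
  if [[ -z "$dev" || ! "$bs" =~ ^[0-9]+$ ]]; then
    echo "usage: bad_block_commands DEVICE BLOCKSIZE [LISTFILE]" >&2
    return 1
  fi
  echo "sudo umount $dev"
  echo "sudo badblocks -v -b $bs -o $list $dev"
  echo "sudo e2fsck -l $list $dev"
}

# Example (needs root; /dev/sda10 is the article's example device):
#   bs=$(sudo dumpe2fs -h /dev/sda10 2>/dev/null | fs_block_size)
#   bad_block_commands /dev/sda10 "$bs"
```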

@ -60,7 +60,7 @@ $ sudo fsck -l badsectors.txt /dev/sda10

This method is more reliable and efficient for modern disks (ATA/SATA and SCSI/SAS hard drives, as well as solid-state drives) that ship with S.M.A.R.T. (Self-Monitoring, Analysis and Reporting Technology). S.M.A.R.T. helps detect, report and possibly log a disk's health status, so that you can spot impending hardware failures.

You can install `smartmontools` with the following commands:

```
------------ On Debian/Ubuntu based systems ------------
```

@ -71,7 +71,7 @@ $ sudo yum install smartmontools

Once the installation is complete, use `smartctl` to control the S.M.A.R.T. system built into the disk. You can view its man page or help like this:

```
$ man smartctl
```

@ -79,7 +79,7 @@ $ smartctl -h

|

||||

|

||||

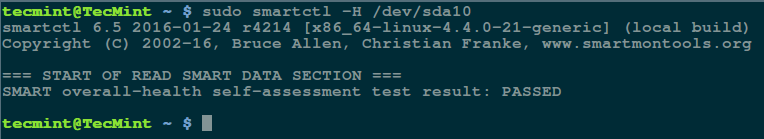

然后执行 smartctrl 命令并在命令中指定你的设备作为参数,以下命令包含了参数 `-H` 或 `--health` 以显示 SMART 整体健康自我评估测试结果。

|

||||

然后执行 `smartctrl` 命令并在命令中指定你的设备作为参数,以下命令包含了参数 `-H` 或 `--health` 以显示 SMART 整体健康自我评估测试结果。

|

||||

|

||||

```

|

||||

$ sudo smartctl -H /dev/sda10

|

||||

@ -88,7 +88,7 @@ $ sudo smartctl -H /dev/sda10

*Check Linux hard disk health*

The result above indicates that your hard disk is healthy and unlikely to fail in the near future.
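To run this check from a script (for example a cron job), one approach is to read the overall verdict from the `smartctl -H` output. The sketch below is a hypothetical helper: `disk_health_ok` is not part of smartmontools. It assumes the ATA/NVMe wording `SMART overall-health self-assessment test result: PASSED` and the SCSI/SAS wording `SMART Health Status: OK`. smartctl also reports problems through the bits of its exit status. The smartctl man page describes these, and they are worth checking if you need more than a pass/fail answer. The commented usage uses a whole-disk device name only as an example.

```shell
#!/usr/bin/env bash
# disk_health_ok (hypothetical helper): read `smartctl -H` output on stdin
# and succeed only if the overall verdict is PASSED (ATA/NVMe wording) or
# OK (SCSI/SAS wording). Any other verdict, or no verdict line, is a failure.
disk_health_ok() {
  local verdict
  verdict=$(grep -E 'overall-health self-assessment test result:|SMART Health Status:' \
              | head -n 1 \
              | awk -F': *' '{ sub(/[[:space:]]+$/, "", $2); print $2 }')
  [[ "$verdict" == "PASSED" || "$verdict" == "OK" ]]
}

# Example usage (as root):
#   if smartctl -H /dev/sda | disk_health_ok; then
#     echo "disk looks healthy"
#   else
#     echo "WARNING: review the full smartctl output for /dev/sda" >&2
#   fi
```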

@ -102,10 +102,8 @@ via: http://www.tecmint.com/check-linux-hard-disk-bad-sectors-bad-blocks/

Author: [Aaron Kili][a]

Translator: [alim0x](https://github.com/alim0x)

Proofreader: [wxy](https://github.com/wxy)

This article was translated and compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

@ -1,12 +1,13 @@

How to Convert Files to UTF-8 Encoding in Linux
===============

In this tutorial, we'll explain what character encoding means, then give some examples of converting files from one character encoding to another with command-line tools. Finally, we'll look at how to convert files in various character encodings to UTF-8 in Linux.

As you may already know, a computer does not understand or store characters, numbers or anything else humans can understand, except as binary data. A bit has only two possible values: `0` or `1`, `true` or `false`, `yes` or `no`. Everything else, such as characters, data and images, must be represented in binary form for a computer to process it.

Simply put, a character encoding tells the computer how to interpret raw 0s and 1s as actual characters. In these encodings, each character is represented by a sequence of numbers.

There are many character encoding schemes, such as ASCII, ANSI, Unicode and so on. Below is an example of ASCII encoding.

```
Character  Binary
```
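To see where binary values like those in the ASCII table come from, here is a small Bash sketch. The `char_to_binary` helper is hypothetical. It prints a character's code as an 8-bit binary string and is only meant for ASCII characters, whose codes fit in 8 bits.

```shell
#!/usr/bin/env bash
# char_to_binary CHAR (hypothetical helper): print CHAR followed by its
# character code as 8 binary digits, most significant bit first.
char_to_binary() {
  local code bits="" i
  # A leading single quote makes printf output the character's numeric code.
  code=$(printf '%d' "'$1")
  for ((i = 7; i >= 0; i--)); do
    bits+=$(( (code >> i) & 1 ))
  done
  printf '%s %s\n' "$1" "$bits"
}

for c in A B; do
  char_to_binary "$c"
done
# Prints:
# A 01000001
# B 01000010
```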

@ -22,11 +23,9 @@ B 01000010

|

||||

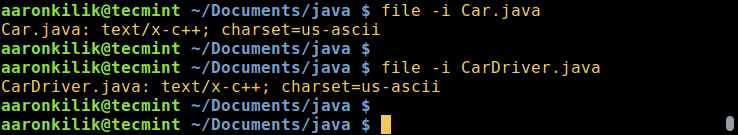

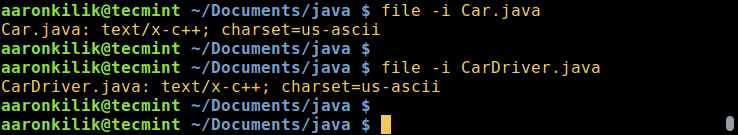

$ file -i Car.java

|

||||

$ file -i CarDriver.java

|

||||

```

|

||||

[

|

||||

|

||||

][3]

|

||||

|

||||

|

||||

在 Linux 中查看文件的编码

|

||||

*在 Linux 中查看文件的编码*

|

||||

|

||||

iconv 工具的使用方法如下:

|

||||

|

||||

@ -34,25 +33,21 @@ The iconv tool is used as follows:

|

||||

$ iconv option

|

||||

$ iconv options -f from-encoding -t to-encoding inputfile(s) -o outputfile

|

||||

```

|

||||

|

||||

在这里,`-f` 或 `--from-code` 标明了输入编码,而 `-t` 或 `--to-encoding` 指定了输出编码。

|

||||

在这里,`-f` 或 `--from-code` 表明了输入编码,而 `-t` 或 `--to-encoding` 指定了输出编码。

|

||||

|

||||

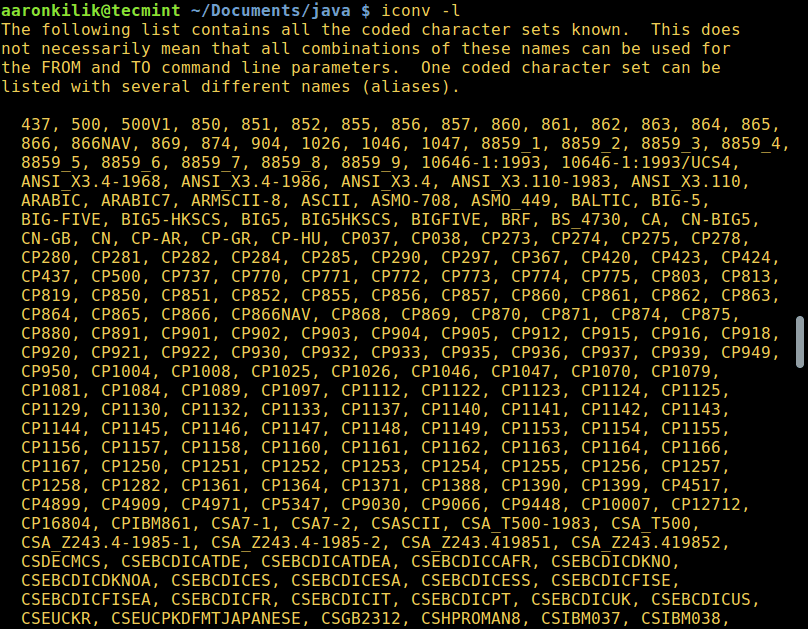

为了列出所有已有编码的字符集,你可以使用以下命令:

|

||||

|

||||

```

|

||||

$ iconv -l

|

||||

```

|

||||

[

|

||||

|

||||

][2]

|

||||

|

||||

|

||||

列出所有已有编码字符集

|

||||

*列出所有已有编码字符集*

|

||||

|

||||

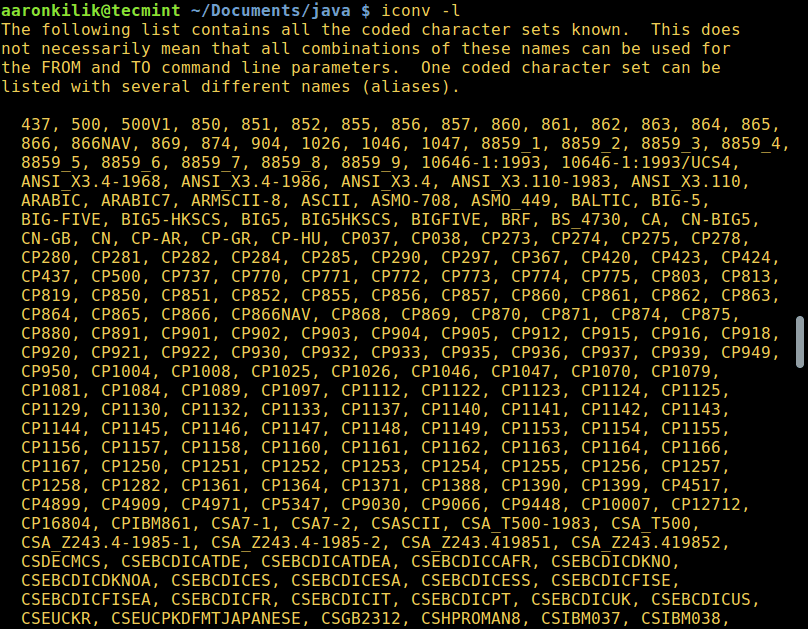

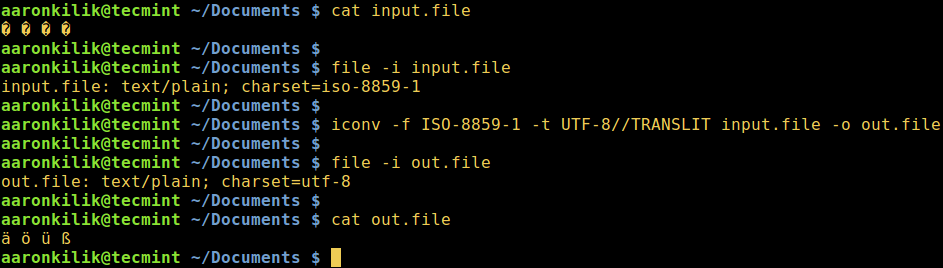

### 将文件从 ISO-8859-1 编码转换为 UTF-8 编码

|

||||

|

||||

下面,我们将学习如何将一种编码方案转换为另一种编码方案。下面的命令将会将 ISO-8859-1 编码转换为 UTF-8 编码。

Consider a file named `input.file` which contains the characters:

@ -70,17 +65,15 @@ $ iconv -f ISO-8859-1 -t UTF-8//TRANSLIT input.file -o out.file

```
$ cat out.file
$ file -i out.file
```

*Convert ISO-8859-1 to UTF-8 in Linux*

Note: if the string `//IGNORE` is appended to the output encoding, characters that cannot be converted are skipped, and the program prints an error message after the conversion.

If the string `//TRANSLIT` is appended to the output encoding, as in the example above (`UTF-8//TRANSLIT`), characters are transliterated where possible. This means that if a character cannot be represented in the target encoding, it is replaced with a similar-looking character.

Furthermore, if a character is not in the target encoding and cannot be transliterated, it is replaced with a question mark `?` in the output file.
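If you don't have an ISO-8859-1 file at hand, the following hypothetical Bash sketch creates one with `printf`, converts it, and compares the result byte by byte. Every ISO-8859-1 character exists in UTF-8, so no `//TRANSLIT` or `//IGNORE` suffix is needed in this direction. Those suffixes only matter when the target encoding lacks a character. The file names are made up for the example.

```shell
#!/usr/bin/env bash
# Hypothetical round trip for the ISO-8859-1 -> UTF-8 conversion.
# It writes "café" in ISO-8859-1 (é is the single byte 0xE9, octal 351),
# converts it, and checks that é became the UTF-8 byte pair 0xC3 0xA9.
set -eu
workdir=$(mktemp -d)

printf 'caf\351\n' > "$workdir/input.file"
# Output redirection is used here; GNU iconv's -o out.file does the same.
iconv -f ISO-8859-1 -t UTF-8 "$workdir/input.file" > "$workdir/out.file"

printf 'caf\303\251\n' > "$workdir/expected.file"   # octal 303 251 = 0xC3 0xA9
if cmp -s "$workdir/out.file" "$workdir/expected.file"; then
  echo "converted OK"
else
  echo "unexpected bytes:" >&2
  od -An -tx1 "$workdir/out.file" >&2
fi
```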

### Converting multiple files to UTF-8

@ -88,13 +81,13 @@ $ file -i out.file

```
#!/bin/bash
# Replace value_here with the input encoding
FROM_ENCODING="value_here"
# Output encoding (UTF-8)
TO_ENCODING="UTF-8"
# Conversion command (an array keeps the arguments correctly quoted)
CONVERT=(iconv -f "$FROM_ENCODING" -t "$TO_ENCODING")
# Convert multiple files with a loop
for file in *.txt; do
  "${CONVERT[@]}" "$file" -o "${file%.txt}.utf8.converted"
done
```
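The loop above converts every `*.txt` file, including ones that are already UTF-8. If the source encoding is, say, ISO-8859-1, converting such a file again would garble its non-ASCII characters. A common guard is to skip files that `iconv -f UTF-8 -t UTF-8` accepts, because that command only succeeds on valid UTF-8 input. The sketch below is a hypothetical variant; the `convert_dir_to_utf8` name is made up. Note that a file in a legacy encoding can occasionally be valid UTF-8 by coincidence, in which case it would be skipped.

```shell
#!/usr/bin/env bash
# convert_dir_to_utf8 DIR FROM_ENCODING (hypothetical helper): convert each
# DIR/*.txt file to UTF-8 as FILE.utf8.converted, skipping files that are
# already valid UTF-8 (plain ASCII counts as valid UTF-8 too).
convert_dir_to_utf8() {
  local dir="$1" from="$2" file
  for file in "$dir"/*.txt; do
    [[ -e "$file" ]] || continue    # the glob matched nothing
    # iconv exits non-zero on invalid input, so this works as a validator.
    if iconv -f UTF-8 -t UTF-8 "$file" > /dev/null 2>&1; then
      echo "skipped (already UTF-8): $file"
      continue
    fi
    if iconv -f "$from" -t UTF-8 "$file" > "${file%.txt}.utf8.converted"; then
      echo "converted: $file"
    else
      echo "failed: $file" >&2
    fi
  done
}

# Example: convert_dir_to_utf8 ./docs ISO-8859-1
```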

@ -122,13 +115,11 @@ $ man iconv

--------------------------------------------------------------------------------

via: http://www.tecmint.com/convert-files-to-utf-8-encoding-in-linux/

Author: [Aaron Kili][a]

Translator: [StdioA](https://github.com/StdioA)

Proofreader: [wxy](https://github.com/wxy)

This article was translated and compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

@ -0,0 +1,116 @@

How to Compress and Decompress .bz2 Files in Linux
============================================================

Compressing a file significantly reduces its size by encoding its data with fewer bytes. This is useful when [backing up and transferring files][1] over a network. Decompressing a file, on the other hand, means restoring its data to the original state.

Linux has several [file compression and decompression tools][2], such as gzip, 7-zip, Lrzip, [PeaZip][3] and more.

In this tutorial, we'll look at how to compress and decompress `.bz2` files in Linux with the bzip2 tool.

bzip2 is a well-known compression tool that is available on most major Linux distributions. You can install it with the appropriate command for your distribution:

```
$ sudo apt install bzip2     # On Debian/Ubuntu
$ sudo yum install bzip2     # On CentOS/RHEL
$ sudo dnf install bzip2     # On Fedora 22+
```

The general syntax for bzip2 is:

```
$ bzip2 option(s) filenames
```

### 如何在 Linux 中使用“bzip2”压缩文件

|

||||

|

||||

你可以如下压缩一个文件,使用`-z`标志启用压缩:

|

||||

|

||||

```

|

||||

$ bzip2 filename

|

||||

或者

|

||||

$ bzip2 -z filename

|

||||

```

|

||||

|

||||

要压缩一个`.tar`文件,使用的命令为:

|

||||

|

||||

```

|

||||

$ bzip2 -z backup.tar

|

||||

```

|

||||

|

||||

重要:bzip2 默认会在压缩及解压缩文件时删除输入文件(原文件),要保留输入文件,使用`-k`或者`--keep`选项。

|

||||

|

||||

此外,`-f`或者`--force`标志会强制让 bzip2 覆盖已有的输出文件。

|

||||

|

||||

```

|

||||

------ 要保留输入文件 ------

|

||||

$ bzip2 -zk filename

|

||||

$ bzip2 -zk backup.tar

|

||||

```

|

||||

|

||||

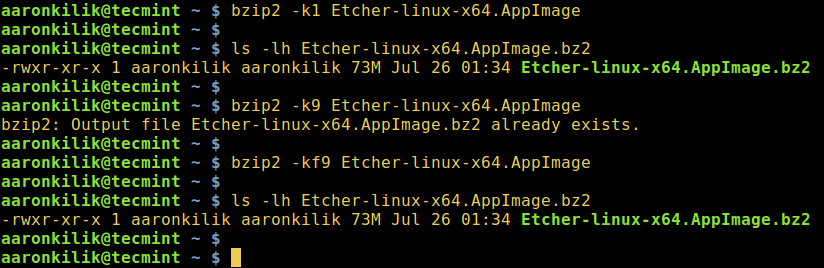

你也可以设置块的大小,从 100k 到 900k,分别使用`-1`或者`--fast`到`-9`或者`--best`:

|

||||

|

||||

```

|

||||

$ bzip2 -k1 Etcher-linux-x64.AppImage

|

||||

$ ls -lh Etcher-linux-x64.AppImage.bz2

|

||||

$ bzip2 -k9 Etcher-linux-x64.AppImage

|

||||

$ bzip2 -kf9 Etcher-linux-x64.AppImage

|

||||

$ ls -lh Etcher-linux-x64.AppImage.bz2

|

||||

```

|

||||

|

||||

下面的截屏展示了如何使用选项来保留输入文件,强制 bzip2 覆盖输出文件,并且在压缩中设置块的大小。

|

||||

|

||||

|

||||

|

||||

*在 Linux 中使用 bzip2 压缩文件*

|

||||

|

||||

### 如何在 Linux 中使用“bzip2”解压缩文件

|

||||

|

||||

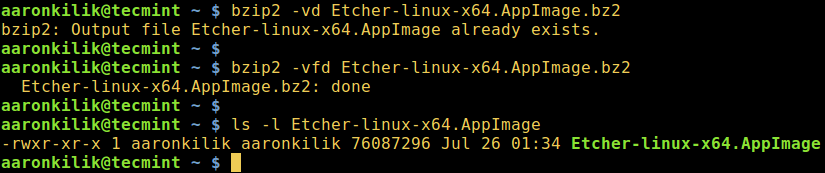

要解压缩`.bz2`文件,确保使用`-d`或者`--decompress`选项:

|

||||

|

||||

```

|

||||

$ bzip2 -d filename.bz2

|

||||

```

|

||||

|

||||

注意:这个文件必须是`.bz2`的扩展名,上面的命令才能使用。

|

||||

|

||||

```

|

||||

$ bzip2 -vd Etcher-linux-x64.AppImage.bz2

|

||||

$ bzip2 -vfd Etcher-linux-x64.AppImage.bz2

|

||||

$ ls -l Etcher-linux-x64.AppImage

|

||||

```

|

||||

|

||||

|

||||

|

||||

*在 Linux 中解压 bzip2 文件*
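The options above can be combined into a quick round trip. This hypothetical Bash sketch works on a generated sample file (the file names are made up for the example):

- It compresses the file with `-k9` (keep the input, use 900k blocks).
- It checks the archive with `-t`, which tests integrity without writing any output.
- It decompresses to standard output with `-dc`, so the original is neither deleted nor overwritten.

```shell
#!/usr/bin/env bash
# Hypothetical bzip2 round trip in a throwaway directory.
set -eu
workdir=$(mktemp -d)
seq 1 10000 > "$workdir/numbers.txt"        # sample input file

bzip2 -k9 "$workdir/numbers.txt"            # creates numbers.txt.bz2, keeps numbers.txt
bzip2 -t "$workdir/numbers.txt.bz2"         # non-zero exit if the archive is damaged

# -c writes to stdout, so decompressing does not touch the files on disk.
if bzip2 -dc "$workdir/numbers.txt.bz2" | cmp -s - "$workdir/numbers.txt"; then
  echo "round trip OK"
fi
ls -l "$workdir"
```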

|

||||

|

||||

要浏览 bzip2 的帮助及 man 页面,输入下面的命令:

|

||||

|

||||

```

|

||||

$ bzip2 -h

|

||||

$ man bzip2

|

||||

```

|

||||

|

||||

最后,通过上面简单的阐述,我相信你现在已经可以在 Linux 中压缩及解压缩`bz2`文件了。然而,有任何的问题和反馈,可以在评论区中留言。

Importantly, you may want to go over a few important [tar command examples][6] in Linux to learn how to use the tar command to [create compressed archive files][7].

--------------------------------------------------------------------------------

via: http://www.tecmint.com/linux-compress-decompress-bz2-files-using-bzip2

Author: [Aaron Kili][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.tecmint.com/author/aaronkili/
[1]:http://www.tecmint.com/rsync-local-remote-file-synchronization-commands/
[2]:http://www.tecmint.com/command-line-archive-tools-for-linux/
[3]:http://www.tecmint.com/peazip-linux-file-manager-and-file-archive-tool/
[4]:http://www.tecmint.com/wp-content/uploads/2016/11/Compress-Files-Using-bzip2-in-Linux.png
[5]:http://www.tecmint.com/wp-content/uploads/2016/11/Decompression-bzip2-File-in-Linux.png
[6]:http://www.tecmint.com/18-tar-command-examples-in-linux/
[7]:http://www.tecmint.com/compress-files-and-finding-files-in-linux/

@ -0,0 +1,241 @@

Putting Nmap (Network Security Scanner) into Practice on Kali Linux
========

In this second Kali Linux article, we will discuss the network tool known as '[nmap][30]'. While nmap is not a Kali-only tool, it is one of the most [useful network mapping tools][29] in Kali.

- [Part 1 - Kali Linux Installation Guide for Beginners][4]

Nmap, short for Network Mapper, is maintained by Gordon Lyon (more about Mr. Lyon here: [http://insecure.org/fyodor/][28]) and is used by many security professionals all over the world.

The utility works on both Linux and Windows and is command-line driven. However, for those a little timid of the command line, there is a wonderful graphical front end for nmap called zenmap.

It is strongly recommended that individuals learn the command-line version of nmap, as it provides much more flexibility than the zenmap graphical edition.

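What purpose does an nmap scan of a server serve? Great question. Nmap allows an administrator to quickly and thoroughly learn about the systems on a network, hence the name Network MAPper, or nmap.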

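Nmap can quickly find live hosts as well as the services associated with those hosts. Nmap's functionality can be extended even further with the Nmap Scripting Engine, often abbreviated as NSE.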

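This scripting engine allows administrators to quickly create scripts that can determine whether a newly discovered vulnerability exists on their network. Many scripts have already been developed and are included with most nmap installs.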

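A word of caution: nmap is commonly used by people with both good and bad intentions. Take great care to ensure that you are not using nmap against systems you do not have explicit written permission to scan. Please use nmap responsibly!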

#### System Requirements

1. [Kali Linux][3] (nmap is available for other operating systems, and its functions work much like they do in this guide).

2. Another computer, plus permission to scan that computer with nmap. This is often easily done with software such as [VirtualBox][2] by creating a virtual machine.

   1. For a good machine to practice with, have a look at Metasploitable 2.
   2. Download MS2: [Metasploitable2][1].

3. A valid working connection to a network or, if using virtual machines, a valid internal network connection between the two computers.

### Kali Linux – Working with Nmap

|

||||

|

||||

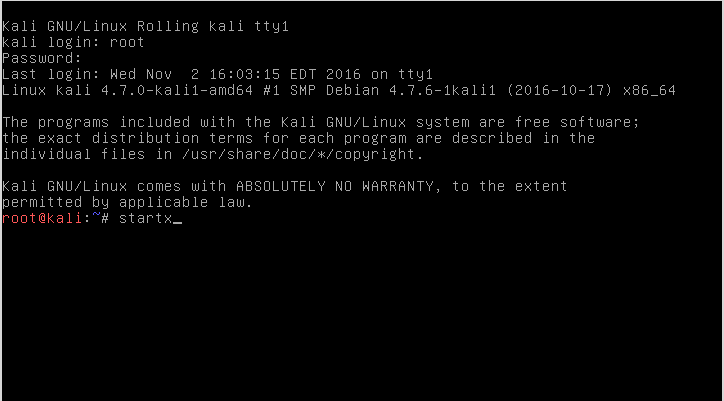

使用 nmap 的第一步是登录 Kali Linux,如果需要,就启动一个图形会话(本系列的第一篇文章安装了 [Kali Linux 的 Enlightenment 桌面环境] [27])。

|

||||

|

||||

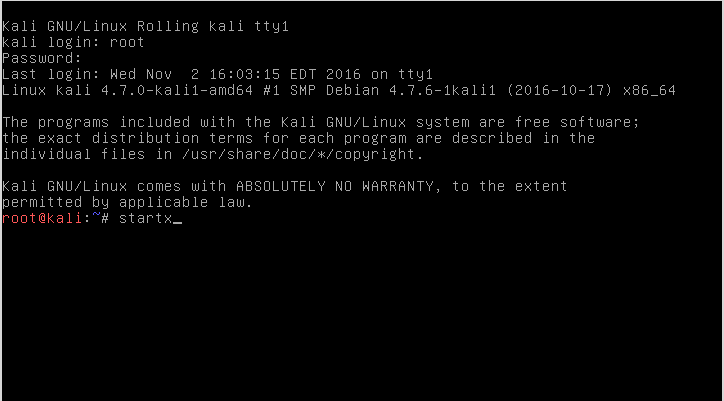

在安装过程中,安装程序将提示用户输入用来登录的“root”用户和密码。 一旦登录到 Kali Linux 机器,使用命令`startx`就可以启动 Enlightenment 桌面环境 - 值得注意的是 nmap 不需要运行桌面环境。

```
# startx
```

*Start Desktop Environment in Kali Linux*

|

||||

|

||||

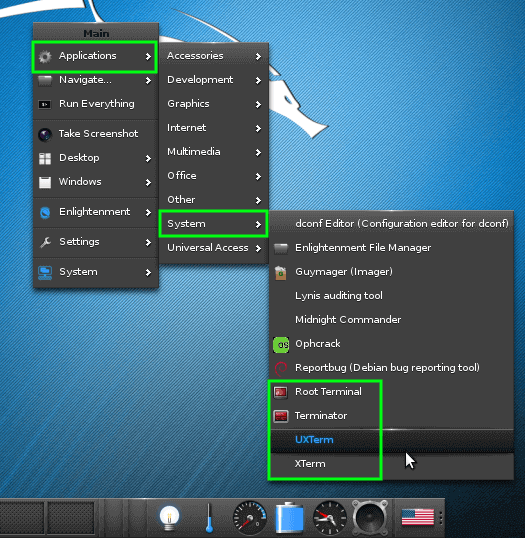

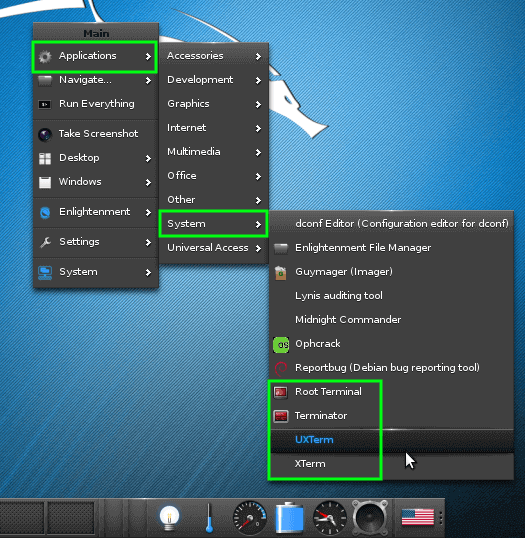

一旦登录到 Enlightenment,将需要打开终端窗口。通过点击桌面背景,将会出现一个菜单。导航到终端可以进行如下操作:应用程序 -> 系统 -> 'Xterm' 或 'UXterm' 或 '根终端'。

The author is a big fan of the terminal program called '[Terminator][25]', but it may not show up in a default install of Kali Linux. All of the terminal programs listed will work for using nmap.

*Launch Terminal in Kali Linux*

Once a terminal has been launched, the nmap fun can begin. For this particular tutorial, a private network was created between a Kali machine and a Metasploitable machine.

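This makes things easier and safer, since the private network range ensures that scans stay on safe machines and prevents the vulnerable Metasploitable machine from being compromised by someone else.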

### How to Find Live Hosts on My Network

|

||||

|

||||

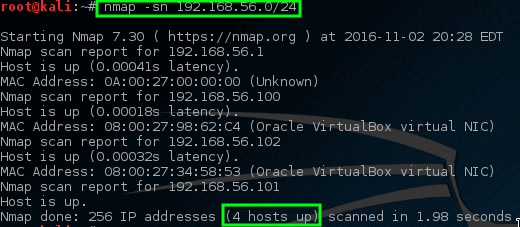

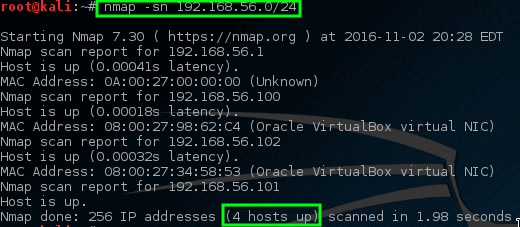

在此示例中,这两台计算机都位于专用的 192.168.56.0/24 网络上。 Kali 机器的 IP 地址为 192.168.56.101,要扫描的 Metasploitable 机器的 IP 地址为 192.168.56.102。

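Suppose, though, that the IP address information were not known. A quick nmap scan can help determine what is live on a particular network. The first attempt here is what nmap calls a "List Scan", requested by passing the `-sL` argument to the `nmap` command.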

```
# nmap -sL 192.168.56.0/24
```

*Nmap – Scan Network for Live Hosts*

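Sadly, this initial scan did not return any live hosts. That is expected: a list scan only enumerates the addresses in the target range (doing reverse-DNS lookups on them) and never sends probes to the hosts themselves, so it cannot tell which ones are up. Host discovery can also be affected by the way certain operating systems handle [port scan network traffic][22].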

### Find and Ping All Live Hosts on My Network

Not to worry, nmap has some tricks available to try to find these machines. This next trick tells nmap to try to ping all of the addresses in the 192.168.56.0/24 network.

```
# nmap -sn 192.168.56.0/24
```

*Nmap – Ping All Connected Live Network Hosts*

This time nmap returns some potential hosts for scanning! In this command, `-sn` disables nmap's default behavior of attempting to port scan each host and simply has nmap try to ping the host.
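When the goal is just a list of addresses to feed into later scans, nmap's grepable output (`-oG -`, written to standard output) is easy to post-process. The sketch below assumes ping-scan output lines of the form `Host: <ip> (<name>)<TAB>Status: Up`; the function names `live_hosts` and `sample_output` are hypothetical, and the sample text is illustrative, not captured from the article's network.

```shell
#!/bin/sh
# Sketch: extract live host addresses from nmap grepable (-oG) ping-scan output.
# Real usage (only on networks you are authorized to scan):
#   nmap -sn 192.168.56.0/24 -oG - | live_hosts

live_hosts() {
    # Field 2 of a "Host:" line is the IP address; keep only hosts reported Up.
    awk '/^Host:/ && /Status: Up/ { print $2 }'
}

# Illustrative input in the grepable format (hypothetical, not real scan output).
sample_output() {
    printf '# Nmap scan in grepable format (illustrative)\n'
    printf 'Host: 192.168.56.1 ()\tStatus: Up\n'
    printf 'Host: 192.168.56.100 ()\tStatus: Up\n'
    printf 'Host: 192.168.56.102 ()\tStatus: Up\n'
    printf '# Nmap done: 256 IP addresses (3 hosts up) scanned\n'
}

sample_output | live_hosts
```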

|

||||

|

||||

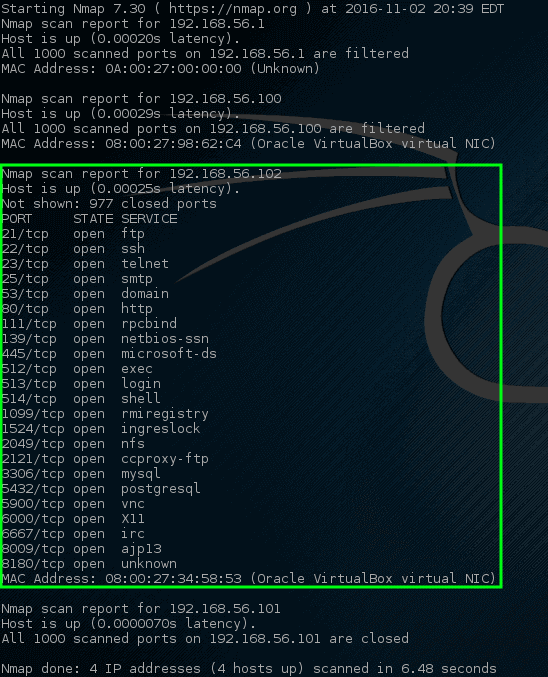

### 找到主机上的开放端口

Let's have nmap port scan these specific hosts and see what turns up.

```
# nmap 192.168.56.1,100-102
```

*Nmap – Scan for Ports on Hosts*

Whoa! This time nmap hit a gold mine. This particular host has quite a few [open network ports][19].

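These ports all indicate some sort of listening service on this particular machine. As mentioned earlier, the IP address 192.168.56.102 is assigned to the vulnerable Metasploitable machine, which is why there are so many [open ports][18] on this host.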

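Having this many ports open on most machines is highly abnormal, so it would be wise to investigate this machine a little closer. Administrators could track down the physical machine on the network and look at it locally, but that wouldn't be much fun, especially when nmap can do it for us much faster!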
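For hosts with many open ports, grepable output can also be reduced to a compact port/service list. In that format the `Ports:` field holds entries laid out as `port/state/protocol/owner/service/rpc-info/version/`, separated by `, `, with other fields separated by tabs. The sketch relies on that layout; the function names `open_ports` and `sample_ports` are hypothetical, and the sample line only mimics what a scan such as `nmap 192.168.56.102 -oG -` might print.

```shell
#!/bin/sh
# Sketch: list open ports from nmap grepable (-oG) "Ports:" fields.
# Real usage (authorized targets only):
#   nmap 192.168.56.102 -oG - | open_ports

open_ports() {
    awk -F'\t' '{
        for (i = 1; i <= NF; i++) {
            if ($i ~ /^Ports: /) {
                list = substr($i, 8)                 # strip the "Ports: " prefix
                n = split(list, entries, ", ")
                for (j = 1; j <= n; j++) {
                    split(entries[j], f, "/")        # port/state/proto/owner/service/rpc/version/
                    if (f[2] == "open")
                        print f[1] "/" f[3], f[5]
                }
            }
        }
    }'
}

# Hypothetical sample line in the grepable format (tab-separated fields).
sample_ports() {
    printf 'Host: 192.168.56.102 ()\tPorts: 21/open/tcp//ftp//vsftpd 2.3.4/, 22/open/tcp//ssh//OpenSSH 4.7p1/, 23/closed/tcp//telnet///\tIgnored State: closed (997)\n'
}

sample_ports | open_ports
```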

### Find Services Listening on Ports on Hosts

|

||||

|

||||

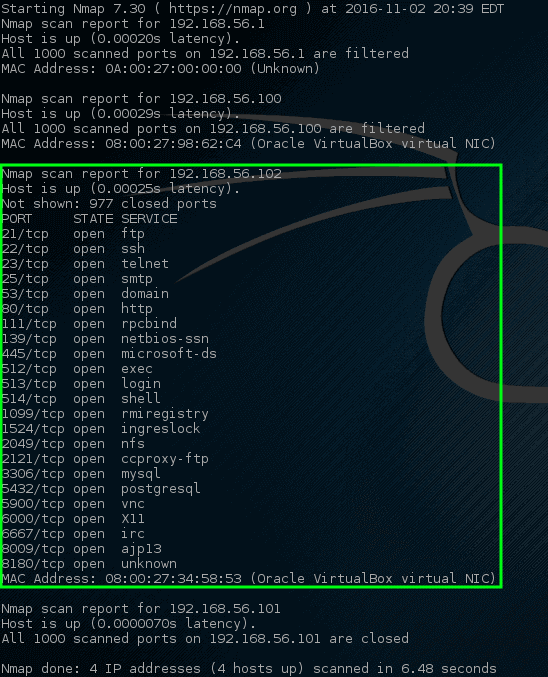

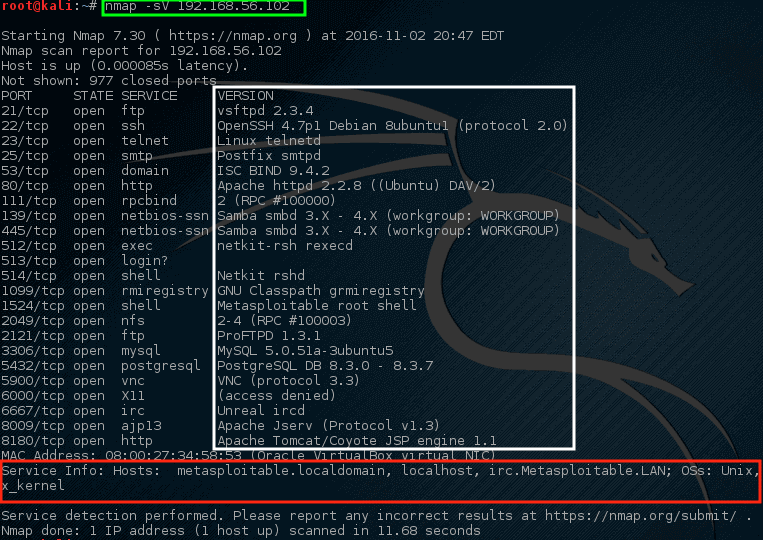

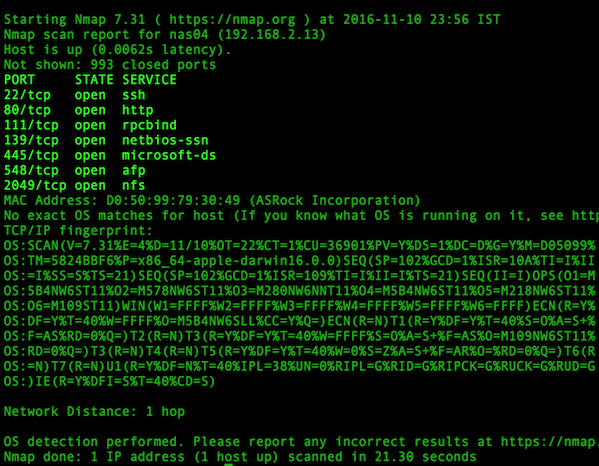

下一个扫描是服务扫描,通常用于尝试确定机器上什么[服务监听在特定的端口][17]。

Nmap will probe all of the open ports and attempt to grab information from the service running on each port.

|

||||

|

||||

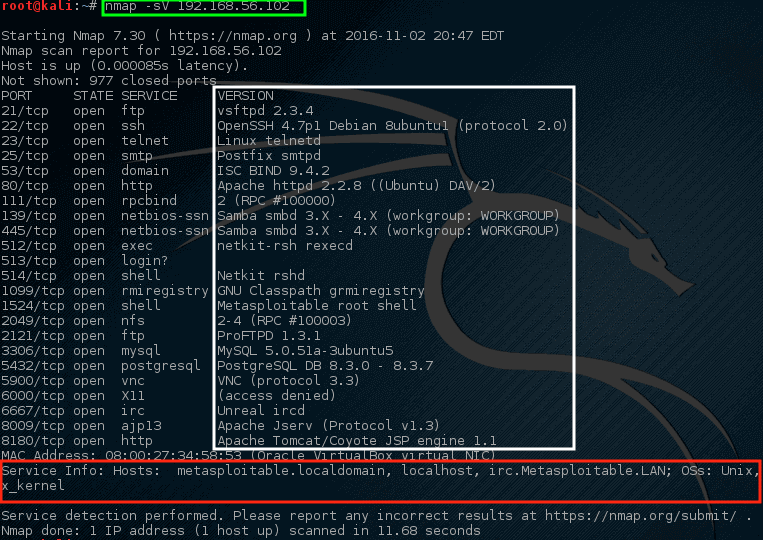

```

|

||||

# nmap -sV 192.168.56.102

|

||||

|

||||

```

*Nmap – Scan Network Services Listening on Ports*

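Notice that this time nmap offered some suggestions about what it thinks is running on each particular port (highlighted in the white box). Nmap also attempted to determine [information about the operating system][15] running on this machine as well as its hostname (with great success, too!).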

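Looking through this output should raise quite a few concerns for a network administrator. The very first line claims that VSftpd version 2.3.4 is running on this machine! That is a REALLY old version of VSftpd.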

Searching through ExploitDB shows that a very serious vulnerability was found in this particular version back in 2011 (ExploitDB ID – 17491).

|

||||

|

||||

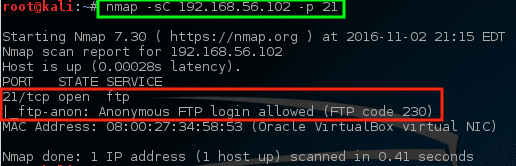

### 发现主机上上匿名 ftp 登录

|

||||

|

||||

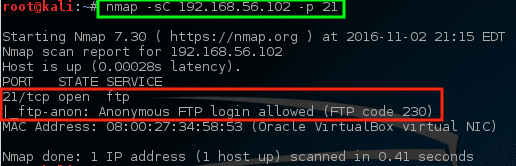

让我们使用 nmap 更加清楚的查看这个端口,并且看看可以确认什么。

```
# nmap -sC 192.168.56.102 -p 21
```

*Nmap – Scan Particular Port on Machine*

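With this command, nmap was instructed to run its default scripts (`-sC`) against the FTP port (`-p 21`) on the host. While it may or may not be an issue, nmap did discover that [anonymous FTP login is allowed][13] on this particular server.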

|

||||

|

||||

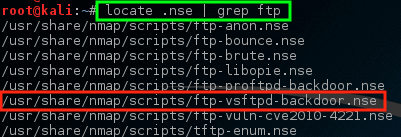

### 检查主机上的漏洞

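Paired with the earlier knowledge that VSftpd has an old vulnerability, this should raise some concern. Let's see whether nmap has any scripts that attempt to check for the VSftpd vulnerability.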

```
# locate .nse | grep ftp
```

*Nmap – Scan for VSftpd Vulnerability*

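Notice that nmap already has an NSE script built for the VSftpd backdoor problem! Let's try running this script against the host and see what happens, but first it may be important to know how to use the script.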

```
# nmap --script-help=ftp-vsftpd-backdoor.nse
```

*Learn Nmap NSE Script Usage*

|

||||

|

||||

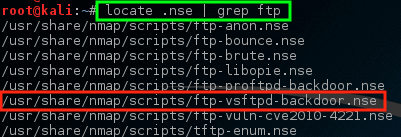

通过这个描述,很明显,这个脚本可以用来试图查看这个特定的机器是否容易受到先前识别的 ExploitDB 问题的影响。

Let's run the script and see what happens.

```
# nmap --script=ftp-vsftpd-backdoor.nse 192.168.56.102 -p 21
```

*Nmap – Scan Host for Vulnerabilities*

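Yikes! Nmap's script returned some dangerous news. This machine is likely a good candidate for a more detailed investigation. This does not necessarily mean that the machine is compromised and being used for horrible or terrible things, but it should bring some concerns to the network/security teams.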

|

||||

|

||||

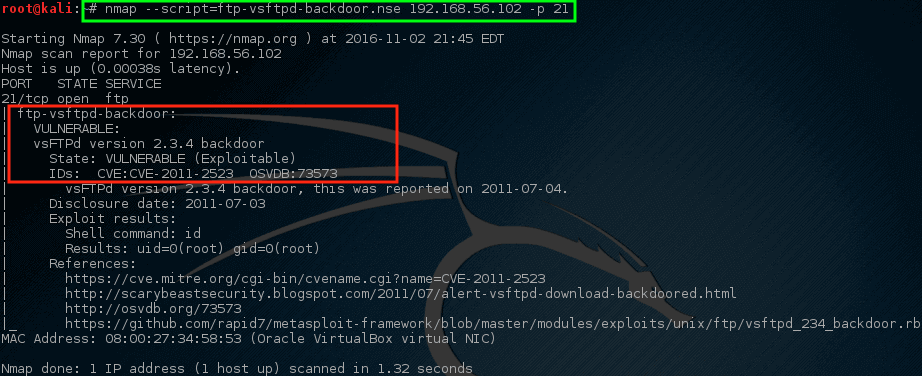

Nmap 具有极高的选择性,非常平稳。 到目前为止已经做的大多数扫描, nmap 的网络流量都保持适度平稳,然而以这种方式扫描对个人拥有的网络可能是非常耗时的。

|

||||

|

||||

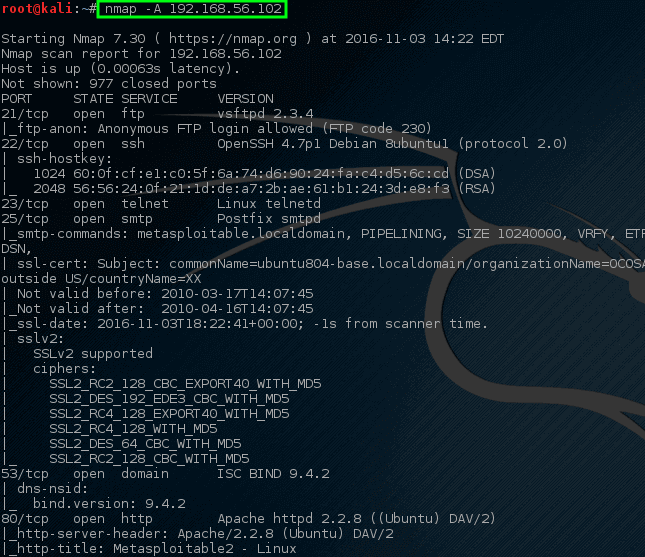

Nmap 有能力做一个更积极的扫描,往往一个命令就会产生之前几个命令一样的信息。 让我们来看看积极的扫描的输出(注意 - 积极的扫描会触发[入侵检测/预防系统][9]!)。

```
# nmap -A 192.168.56.102
```

*Nmap – Complete Network Scan on Host*

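Notice that this time, with one command, nmap has returned a lot of information about the open ports, services and configurations running on this particular machine. Much of this information can be used to help determine [how to protect this machine][7] as well as to evaluate what software may be on a network.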

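This was just a short list of the many useful things nmap can be used to find on a host or network segment. Individuals are strongly urged to continue to [experiment with nmap][6] on a network that they own. (Do not practice by scanning other people's hosts!)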

There is an official guide to Nmap network scanning by Gordon Lyon, available from [Amazon](http://amzn.to/2eFNYrD).

Please feel free to leave your comments or questions (or even more tips on using the nmap scanner).

--------------------------------------------------------------------------------

via: http://www.tecmint.com/nmap-network-security-scanner-in-kali-linux/

Author: [Rob Turner][a]

Translator: [DockerChen](https://github.com/DockerChen)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.tecmint.com/author/robturner/
[1]:https://sourceforge.net/projects/metasploitable/files/Metasploitable2/
[2]:http://www.tecmint.com/install-virtualbox-on-redhat-centos-fedora/
[3]:http://www.tecmint.com/kali-linux-installation-guide
[4]:http://www.tecmint.com/kali-linux-installation-guide
[5]:http://amzn.to/2eFNYrD
[6]:http://www.tecmint.com/nmap-command-examples/
[7]:http://www.tecmint.com/security-and-hardening-centos-7-guide/
[8]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Network-Host.png
[9]:http://www.tecmint.com/protect-apache-using-mod_security-and-mod_evasive-on-rhel-centos-fedora/
[10]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Host-for-Vulnerable.png
[11]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Learn-NSE-Script.png
[12]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Service-Vulnerability.png
[13]:http://www.tecmint.com/setup-ftp-anonymous-logins-in-linux/
[14]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Particular-Port-on-Host.png
[15]:http://www.tecmint.com/commands-to-collect-system-and-hardware-information-in-linux/
[16]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Network-Services-Ports.png
[17]:http://www.tecmint.com/find-linux-processes-memory-ram-cpu-usage/
[18]:http://www.tecmint.com/find-open-ports-in-linux/
[19]:http://www.tecmint.com/find-open-ports-in-linux/
[20]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-for-Ports-on-Hosts.png
[21]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Ping-All-Network-Live-Hosts.png
[22]:http://www.tecmint.com/audit-network-performance-security-and-troubleshooting-in-linux/
[23]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Network.png
[24]:http://www.tecmint.com/wp-content/uploads/2016/11/Launch-Terminal-in-Kali-Linux.png
[25]:http://www.tecmint.com/terminator-a-linux-terminal-emulator-to-manage-multiple-terminal-windows/
[26]:http://www.tecmint.com/wp-content/uploads/2016/11/Start-Desktop-Environment-in-Kali-Linux.png
[27]:http://www.tecmint.com/kali-linux-installation-guide
[28]:http://insecure.org/fyodor/
[29]:http://www.tecmint.com/bcc-best-linux-performance-monitoring-tools/
[30]:http://www.tecmint.com/nmap-command-examples/

@ -0,0 +1,84 @@

Make the KDE Plasma 5 Desktop Look and Feel Like Windows 10 by Installing Extensions
============================================================

In a few steps, I'll show you how to turn the KDE Plasma 5 desktop into a Windows 10 desktop.

Apart from the menu, many parts of the KDE Plasma desktop already look a lot like the Win 10 desktop, so only a few small changes are needed to make the two look nearly identical.

### Start Menu

The first, and perhaps most iconic, step in making the KDE Plasma desktop look like Win 10 is implementing the Win 10 'Start' menu.

This is easily done by installing [Zren's Tiled Menu][1].

#### Installation

1. Right-click on the KDE Plasma desktop -> Unlock Widgets

2. Right-click on the KDE Plasma desktop -> Add Widgets

3. Get New Widgets -> Download New Plasma Widgets

4. Search for "Tiled Menu" -> Install

#### Activation

1. Right-click on your current menu button -> Alternatives…

2. Select "Tiled Menu" -> click Switch

*KDE Tiled Menu extension*

### Theme

Once the menu is in place, the next thing you may want is a theme. Fortunately, [K10ne][3] provides a Win 10 theme experience.

#### Installation

1. From the Plasma desktop menu, open "System Settings" -> Workspace Theme

2. From the sidebar, select "Desktop Theme" -> Get new Theme

3. Search for "K10ne" -> Install

#### Activation

1. From the Plasma desktop menu, select "System Settings" -> Workspace Theme

2. From the sidebar, select "Desktop Theme" -> "K10ne"

3. Apply

### Taskbar

Finally, for a more complete experience, you may also want a more Win 10-style taskbar.

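This time, the package you need is called "Icons-only Task Manager", and it is usually installed by default in most Linux distributions. If it isn't, you'll need to get it through your system's appropriate channel.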

#### Activation

1. Right-click on the Plasma desktop -> Unlock Widgets

2. Right-click on the Plasma desktop -> Add Widgets

3. Drag and drop "Icons-only Task Manager" onto the appropriate spot on your desktop panel.

--------------------------------------------------------------------------------

via: https://iwf1.com/make-kde-plasma-5-desktop-look-feel-like-windows-10-using-these-extensions/

Author: [Liron][a]

Translator: [ucasFL](https://github.com/ucasFL)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://iwf1.com/tag/linux
[1]:https://github.com/Zren/plasma-applets/tree/master/tiledmenu
[2]:http://iwf1.com/wordpress/wp-content/uploads/2016/11/KDE-Tiled-Menu-extension.jpg
[3]:https://store.kde.org/p/1153465/

89

published/20161114 How to Check Timezone in Linux.md

Normal file

@ -0,0 +1,89 @@

How to Check Your Timezone in Linux
============================================================

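In this short article, we will walk you through a few simple ways of checking your system timezone in Linux. Time management on a Linux machine, especially a production server, is an extremely important aspect of system administration.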

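Linux offers a number of time management utilities, such as the `date` and `timedatectl` commands, which you can use to get the current timezone of your system and also to [synchronize the system time with an NTP server][1] for automatic and more accurate time management.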

Well, let's look at a few different ways of finding out the timezone of our Linux system.

### 1. We'll start with the traditional `date` command

Find out the current timezone by typing the following command:

```
$ date
```

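Alternatively, type the command below, where the `%Z` format prints the alphabetic timezone abbreviation and `%z` prints the numeric timezone offset (for example `-0400`):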

```
$ date +"%Z %z"
```
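Because `%Z` and `%z` are computed from the active timezone, you can see what they print for any zone by setting the `TZ` environment variable for a single command; the system setting itself is not changed. The zone names below (`UTC`, `Asia/Tokyo`) are only examples, and named zones such as `Asia/Tokyo` assume the system's tzdata files are installed.

```shell
#!/bin/sh
# Sketch: print the abbreviation (%Z) and numeric offset (%z) for a few zones.
# TZ only affects the single date command it prefixes.

for zone in UTC Asia/Tokyo; do
    printf '%-12s %s\n' "$zone" "$(TZ="$zone" date +"%Z %z")"
done
```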

*Find Linux Timezone*

Note: the `date` man page describes many output formats that you can use to change what the `date` command prints:

```
$ man date
```

### 2. Next, you can also use the `timedatectl` command

When run without any options, this command displays an overview of the system time settings, including the current timezone, as shown in the image below:

```
$ timedatectl
```

You can then pipe its output to the [grep command][3] to filter out only the timezone, as below:

```
$ timedatectl | grep "Time zone"
```

*Find Current Linux Timezone*

Likewise, you can learn how to [set the timezone in Linux][5] using timedatectl.

|

||||

|

||||

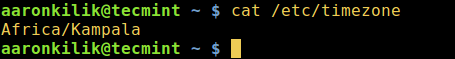

###3、进一步,显示文件 /etc/timezone 的内容

Use the [cat utility][6] to display the contents of the file `/etc/timezone` (present on Debian-based systems such as Ubuntu) to view your timezone:

```
$ cat /etc/timezone
```

*Check Timezone of Linux*

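For RHEL/CentOS/Fedora users, on releases that still keep the setting in `/etc/sysconfig/clock` (typically older ones), here is another command that does the same thing: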

```
$ grep ZONE /etc/sysconfig/clock
```
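Since distributions store this setting in different places, the methods above can be chained into one fallback. This is an illustrative sketch, not a standard tool: the function name `show_timezone` is hypothetical, `timedatectl show` only exists in newer systemd releases, and the `/etc/localtime` step assumes that file is a symlink into a `zoneinfo` directory, which is common but not universal.

```shell
#!/bin/sh
# Sketch: print the system timezone using the first method that works.
show_timezone() {
    # 1. systemd: "timedatectl show" (newer systemd only; fails without systemd).
    if tz=$(timedatectl show --property=Timezone --value 2>/dev/null) && [ -n "$tz" ]; then
        echo "$tz"; return
    fi
    # 2. Debian/Ubuntu: /etc/timezone holds the zone name.
    if [ -r /etc/timezone ]; then
        cat /etc/timezone; return
    fi
    # 3. Common layout: /etc/localtime -> .../zoneinfo/<Zone>.
    if [ -L /etc/localtime ]; then
        readlink /etc/localtime | sed 's|.*/zoneinfo/||'; return
    fi
    # 4. Last resort: the abbreviation and offset reported by date.
    date +"%Z %z"
}

show_timezone
```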

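That's it! Don't forget to share your thoughts about this article in the feedback section below. Importantly, you should also go through this Linux timezone management guide to learn more about managing system time, as it contains plenty of easy-to-follow examples.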

--------------------------------------------------------------------------------

via: http://www.tecmint.com/check-linux-timezone

Author: [Aaron Kili][a]

Translator: [StdioA](https://github.com/StdioA)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.tecmint.com/author/aaronkili/
[1]:http://www.tecmint.com/install-ntp-server-in-centos/
[2]:http://www.tecmint.com/wp-content/uploads/2016/10/Find-Linux-Timezone.png
[3]:http://www.tecmint.com/12-practical-examples-of-linux-grep-command/
[4]:http://www.tecmint.com/wp-content/uploads/2016/10/Find-Current-Linux-Timezone.png
[5]:http://www.tecmint.com/set-time-timezone-and-synchronize-time-using-timedatectl-command/
[6]:http://www.tecmint.com/13-basic-cat-command-examples-in-linux/
[7]:http://www.tecmint.com/wp-content/uploads/2016/10/Check-Timezone-of-Linux.png

85

published/ubuntu vs ubuntu on windows.md

Normal file

@ -0,0 +1,85 @@

Performance Comparison: Ubuntu 14.04/16.04 vs. Ubuntu Bash on the Windows 10 Anniversary Update
===========================

|

||||

|

||||

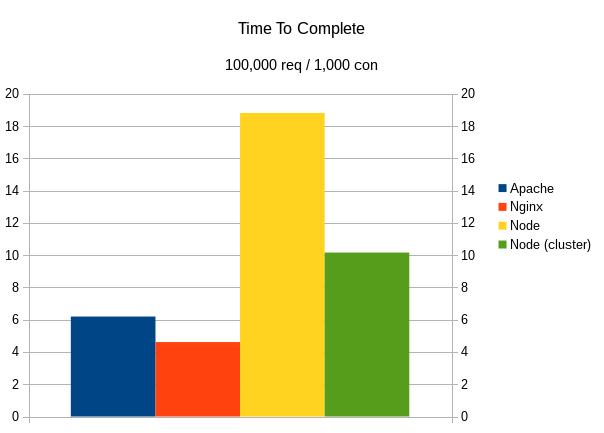

今年初,当 Microsoft 和 Canonical 发布 [Windows 10 Bash 和 Ubuntu 用户空间][1],我尝试做了一些初步性能测试 [Ubuntu on Windows 10 对比 原生 Ubuntu][2],这次我发布更多的,关于原生纯净的 Ubuntu 和基于 Windows 10 的基准对比。

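All of the Windows Subsystem for Linux testing was done on the Windows 10 Anniversary Update. The default Ubuntu user-space is still Ubuntu 14.04, but it can be upgraded to 16.04. So the tests were first run on 14.04; the environment was then upgraded to 16.04 and all of the tests were repeated. After finishing all the tests of the Ubuntu subsystem on Windows, I did clean installs of Ubuntu 14.04.5 and Ubuntu 16.04 LTS on the same system for the performance comparison.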

The test system had an Intel Core i5 6600K Skylake CPU, 16GB of RAM and a 256GB Toshiba SSD. During testing, each operating system used its stock/default configuration and packages.

All of these Ubuntu/Bash on Windows versus native Ubuntu benchmarks were carried out in a fully automated and reproducible manner using the open-source [Phoronix Test Suite](http://www.phoronix-test-suite.com/).

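First up is the SQLite embedded database benchmark. Out of the box, Ubuntu/Bash on Windows performs very slowly here, but it becomes much faster once the environment is upgraded from 14.04 to 16.04 LTS. Even so, for this disk-heavy task, native Ubuntu Linux is nearly twice as fast as the Windows Subsystem for Linux.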

The compile test, another disk-heavy benchmark, shows that the custom Windows subsystem really holds back Ubuntu's performance, by a factor of several.

Next up are some basic system memory speed tests using Stream:

Oddly, these Stream memory benchmarks show Ubuntu on Windows performing better than native Ubuntu! This happened with both the 14.04 and 16.04 LTS environments on the same Windows install.

Next up are some CPU-heavy tests.

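With the Dolfyn scientific test, performance between Ubuntu on Windows and native Ubuntu is actually quite close. With Ubuntu 16.04, performance is slower on both platforms because of a performance regression in the newer GCC compiler.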

The Fhourstones and John The Ripper tests show that Ubuntu running on the Windows Subsystem for Linux can perform very close to bare-metal Ubuntu Linux!

Similar to the Stream test, the x264 results are another odd case, where the best performance actually comes from Ubuntu on Windows running on the Linux subsystem!

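The timed compilation benchmarks strongly favor bare-metal Ubuntu Linux. This is most likely because compiling large programs involves a lot of disk reads and writes, which earlier tests had already identified as a major area where the Windows Subsystem for Linux is slow.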

Many other common open-source benchmarks show that, for strictly CPU-bound tests, Ubuntu on the Windows subsystem performs very close to, or even on par with, Ubuntu Linux installed natively on the actual hardware.

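With the latest Windows Subsystem for Linux, the results are actually quite impressive. The only letdown is the persistently slow disk/filesystem performance, but for CPU-bound workloads the results are very compelling. There are even rare cases, namely the x264 and Stream tests, where Ubuntu on Windows appears to clearly outperform Ubuntu Linux running on the actual hardware.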

Overall, the experience was quite pleasant, and I did not run into any other bugs with Ubuntu/Bash on Windows. If you are interested in more Windows versus Linux benchmarks, feel free to leave a comment.

--------------------------------------------------------------------------------

via: https://www.phoronix.com/scan.php?page=article&item=windows10-anv-wsl&num=1

Author: [Michael Larabel][a]

Translator: [VicYu/Vic020](http://vicyu.net)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: http://www.michaellarabel.com/
[1]: http://www.phoronix.com/scan.php?page=news_item&px=Ubuntu-User-Space-On-Win10
[2]: http://www.phoronix.com/scan.php?page=article&item=windows-10-lxcore&num=1

@ -1,57 +0,0 @@

willcoderwang translating

# Would You Consider Riding in a Driverless Car?

Technology goes through major movements. The last one we entered into was the wearables phase with the Apple Watch and clones, FitBits, and Google Glass. It seems like the next phase they've been working on for quite a while is the driverless car.

These cars, sometimes called autonomous cars, self-driving cars, or robotic cars, would literally drive themselves thanks to technology. They detect their surroundings, such as obstacles and signs, and use GPS to find their way. But would they be safe to drive in? We asked our technology-minded writers, "Would you consider riding in a driverless car?"

### Our Opinion

**Derrik** reports that he would ride in a driverless car because "_the technology is there and a lot of smart people have been working on it for a long time._" He admits there are issues with them, but for the most part he believes a lot of the accidents happen when a human does get involved. But if you take humans out of the equation, he thinks riding in a driverless car "_would be incredibly safe_."

For **Phil**, these cars give him "the willies," yet he admits that's only in the abstract, as he's never ridden in one. He agrees with Derrik that the tech is well developed and knows how it works, but then sees himself as "_a tough sell being a bit of a luddite at heart._" He admits to even rarely using cruise control. Yet he agrees that a driver relying on it too much would be what would make him feel unsafe.

**Robert** agrees that "_the concept is a little freaky,_" but in principle he doesn't see why cars shouldn't go in that direction. He notes that planes have gone that route and become much safer, and he believes the accidents we see are mainly "_caused by human error due to over-relying on the technology then not knowing what to do when it fails._"

He's a bit of an "anxious passenger" as it is, preferring to have control over the situation, so for him much of it would have to do with where the car is being driven. He'd be okay with it if it was driving in the city at slow speeds but definitely not on "motorways or weaving English country roads with barely enough room for two cars to drive past each other." He and Phil both see English roads as very different from American ones. He suggests letting others be the guinea pigs and joining in after it's known to be safe.

**Mahesh** would definitely ride in a driverless car, as he knows that the companies with these cars "_have robust technology and would never put their customers at risk._" He agrees that it depends on the roads that the cars are being driven on.

My opinion kind of floats in the middle of all the others. While I'm normally one to jump readily into new technology, putting my life at risk makes it different. I agree that the cars have been in development so long they're bound to be safe. And frankly there are many drivers on the road that are much more dangerous than driverless cars. But like Robert, I think I'll let others be the guinea pigs and will welcome the technology once it becomes a bit more commonplace.

### Your Opinion

Where do you sit with this issue? Do you trust this emerging technology? Or would you be a nervous nelly in one of these cars? Would you consider riding in a driverless car? Jump into the discussion below in the comments.

Image Credit: [Steve Jurvetson][4] and [Steve Jurvetson at Wikimedia Commons][3]

--------------------------------------------------------------------------------

via: https://www.maketecheasier.com/riding-driverless-car/?utm_medium=feed&utm_source=feedpress.me&utm_campaign=Feed%3A+maketecheasier

Author: [Laura Tucker][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.maketecheasier.com/author/lauratucker/
[1]:https://www.maketecheasier.com/riding-driverless-car/#comments
[2]:https://www.maketecheasier.com/author/lauratucker/
[3]:https://commons.m.wikimedia.org/wiki/File:Inside_the_Google_RoboCar_today_with_PlanetLabs.jpg
[4]:https://commons.m.wikimedia.org/wiki/File:Jurvetson_Google_driverless_car_trimmed.jpg
[5]:https://support.google.com/adsense/troubleshooter/1631343
[6]:https://www.maketecheasier.com/best-wordpress-video-plugins/
[7]:https://www.maketecheasier.com/hidden-google-games/
[8]:mailto:?subject=Would%20You%20Consider%20Riding%20in%20a%20Driverless%20Car?&body=https%3A%2F%2Fwww.maketecheasier.com%2Friding-driverless-car%2F
[9]:http://twitter.com/share?url=https%3A%2F%2Fwww.maketecheasier.com%2Friding-driverless-car%2F&text=Would+You+Consider+Riding+in+a+Driverless+Car%3F
[10]:http://www.facebook.com/sharer.php?u=https%3A%2F%2Fwww.maketecheasier.com%2Friding-driverless-car%2F
[11]:https://www.maketecheasier.com/category/opinion/

@ -1,58 +0,0 @@

Rusking Translating...

# Arch Linux: In a world of polish, DIY never felt so good

Dig through the annals of Linux journalism and you'll find a surprising amount of coverage of some pretty obscure distros. Flashy new distros like Elementary OS and Solus garner attention for their slick interfaces, and anything shipping with a MATE desktop gets coverage by simple virtue of using MATE.

Thanks to television shows like _Mr Robot_, I fully expect coverage of even Kali Linux to be on the uptick soon.

In all that coverage, though, there's one very widely used distro that's almost totally ignored: Arch Linux.

Arch gets very little coverage for several reasons, not the least of which is that it's somewhat difficult to install and requires you to feel comfortable with the command line to get it working. Worse, from the point of view of anyone trying to appeal to mainstream users, that difficulty is by design - nothing keeps the noobs out like a daunting install process.

It's a shame, though, because once the installation is complete, Arch is actually - in my experience - far easier to use than any other Linux distro I've tried.

But yes, installation is a pain. Hand-partitioning, hand-mounting and generating your own `fstab` files takes more time and effort than clicking "install" and merrily heading off to do something else. But the process of installing Arch teaches you a lot. It pulls back the curtain so you can see what's behind it. In fact it makes the curtain disappear entirely. In Arch, _you_ are the person behind the curtain.

In addition to its reputation for being difficult to install, Arch is justly revered for its customizability, though this is somewhat misunderstood. There is no "default" desktop in Arch. What you want installed on top of the base set of Arch packages is entirely up to you.

While you can see this as infinite customizability, you can also see it as totally lacking in customization. For example, unlike - say - Ubuntu there is almost no patching or customization happening in Arch. Arch developers simply pass on what upstream developers have released, end of story. For some this is good; you can run "pure" GNOME, for instance. But in other cases, some custom patching can take care of bugs that upstream devs might not prioritize.

The lack of a default set of applications and desktop system also does not make for tidy reviews - or reviews at all really, since what I install will no doubt be different to what you choose. I happened to select a very minimal setup of bare Openbox, tint2 and dmenu. You might prefer the latest release of GNOME. We'd both be running Arch, but our experiences of it would be totally different. This is of course true of any distro, but most others have a default desktop at least.

Still there are common elements that together can make the basis of an Arch review. There is, for example, the primary reason I switched - Arch is a rolling release distro. This means two things. First, the latest kernels are delivered as soon as they're available and reasonably stable. This means I can test things that are difficult to test with other distros. The other big win for a rolling distro is that all updates are delivered when they're ready. Not only does this mean newer software sooner, it means there are no massive system updates that might break things.

Many people feel that Arch is less stable because it's rolling, but in my experience over the last nine months I would argue the opposite.

I have yet to break anything with an update. I did once have to roll back because my /boot partition wasn't mounted when I updated and changes weren't written, but that was pure user error. Bugs that do surface (like some regressions related to the trackpad on a Dell XPS laptop I was testing) are fixed and updates are available much faster than they would be with a non-rolling distro. In short, I've found Arch's rolling release updates to be far more stable than anything else I've been using alongside it. The only caveat I have to add to that is: read the wiki and pay close attention to what you're updating.

This brings us to the main reason I suspect that Arch's appeal is limited - you have to pay attention to what you're doing. Blindly updating Arch is risky - but it's risky with any distro; you've just been conditioned to think it's not because you have no choice.
