mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

This commit is contained in:

commit

945e51173a

File diff suppressed because one or more lines are too long

@ -0,0 +1,56 @@

|

||||

如何像 NASA 顶级程序员一样编程 —— 10 条重要原则

|

||||

===

|

||||

|

||||

[][1]

|

||||

|

||||

> 引言: 你知道 NASA 顶级程序员如何编写关键任务代码么?为了确保代码更清楚、更安全、且更容易理解,NASA 的喷气推进实验室制定了 10 条编码规则。

|

||||

|

||||

NASA 的开发者是编程界最有挑战性的工作之一。他们编写代码并将开发安全的关键任务应用程序作为其主要关注点。

|

||||

|

||||

在这种情形下,遵守一些严格的编码规则是重要的。这些规则覆盖软件开发的多个方面,例如软件应该如何编码、应该使用哪些语言特性等。

|

||||

|

||||

尽管很难就一个好的编码标准达成共识,NASA 的喷气推进实验室(JPL)遵守一个[编码规则][2],其名为“十的次方:开发安全的关键代码的规则”。

|

||||

|

||||

由于 JPL 长期使用 C 语言,这个规则主要是针对于 C 程序语言编写。但是这些规则也可以很容地应用到其它的程序语言。

|

||||

|

||||

该规则由 JPL 的首席科学家 Gerard J. Holzmann 制定,这些严格的编码规则主要是聚焦于安全。

|

||||

|

||||

NASA 的 10 条编写关键任务代码的规则:

|

||||

|

||||

1. 限制所有代码为极为简单的控制流结构 — 不用 `goto` 语句、`setjmp` 或 `longjmp` 结构,不用间接或直接的递归调用。

|

||||

2. 所有循环必须有一个固定的上限值。必须可以被某个检测工具静态证实,该循环不能达到预置的迭代上限值。如果该上限值不能被静态证实,那么可以认为违背该原则。

|

||||

3. 在初始化后不要使用动态内存分配。

|

||||

4. 如果一个语句一行、一个声明一行的标准格式来参考,那么函数的长度不应该比超过一张纸。通常这意味着每个函数的代码行不能超过 60。

|

||||

5. 代码中断言的密度平均低至每个函数 2 个断言。断言被用于检测那些在实际执行中不可能发生的情况。断言必须没有副作用,并应该定义为布尔测试。当一个断言失败时,应该执行一个明确的恢复动作,例如,把错误情况返回给执行该断言失败的函数调用者。对于静态工具来说,任何能被静态工具证实其永远不会失败或永远不能触发的断言违反了该规则(例如,通过增加无用的 `assert(true)` 语句是不可能满足这个规则的)。

|

||||

6. 必须在最小的范围内声明数据对象。

|

||||

7. 非 void 函数的返回值在每次函数调用时都必须检查,且在每个函数内其参数的有效性必须进行检查。

|

||||

8. 预处理器的使用仅限制于包含头文件和简单的宏定义。符号拼接、可变参数列表(省略号)和递归宏调用都是不允许的。所有的宏必须能够扩展为完整的语法单元。条件编译指令的使用通常是晦涩的,但也不总是能够避免。这意味着即使在一个大的软件开发中超过一两个条件编译指令也要有充足的理由,这超出了避免多次包含头文件的标准做法。每次在代码中这样做的时候必须有基于工具的检查器进行标记,并有充足的理由。

|

||||

9. 应该限制指针的使用。特别是不应该有超过一级的解除指针引用。解除指针引用操作不可以隐含在宏定义或类型声明中。还有,不允许使用函数指针。

|

||||

10. 从开发的第一天起,必须在编译器开启最高级别警告选项的条件下对代码进行编译。在此设置之下,代码必须零警告编译通过。代码必须利用源代码静态分析工具每天至少检查一次或更多次,且零警告通过。

|

||||

|

||||

关于这些规则,NASA 是这么评价的:

|

||||

|

||||

> 这些规则就像汽车中的安全带一样,刚开始你可能感到有一点不适,但是一段时间后就会养成习惯,你会无法想象不使用它们的日子。

|

||||

|

||||

此文是否对你有帮助?不要忘了在下面的评论区写下你的反馈。

|

||||

|

||||

---

|

||||

作者简介:

|

||||

|

||||

Adarsh Verma 是 Fossbytes 的共同创始人,他是一个令人尊敬的企业家,他一直对开源、技术突破和完全保持密切关注。可以通过邮件联系他 — [adarsh.verma@fossbytes.com](mailto:adarsh.verma@fossbytes.com)

|

||||

|

||||

------------------

|

||||

|

||||

via: https://fossbytes.com/nasa-coding-programming-rules-critical/

|

||||

|

||||

作者:[Adarsh Verma][a]

|

||||

译者:[penghuster](https://github.com/penghuster)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://fossbytes.com/author/adarsh/

|

||||

[1]:http://fossbytes.com/wp-content/uploads/2016/06/rules-of-coding-nasa.jpg

|

||||

[2]:http://pixelscommander.com/wp-content/uploads/2014/12/P10.pdf

|

||||

[3]:https://fossbytes.com/wp-content/uploads/2016/12/learn-to-code-banner-ad-content-1.png

|

||||

[4]:http://pixelscommander.com/wp-content/uploads/2014/12/P10.pdf

|

||||

35

published/2017 Cloud Integrated Advanced Orchestrator.md

Normal file

35

published/2017 Cloud Integrated Advanced Orchestrator.md

Normal file

@ -0,0 +1,35 @@

|

||||

Ciao:云集成高级编排器

|

||||

============================================================

|

||||

|

||||

<ruby>云集成高级编排器<rt>Cloud Integrated Advanced Orchestrator</rt></ruby> (Ciao) 是一个新的负载调度程序,用来解决当前云操作系统项目的局限性。Ciao 提供了一个轻量级,完全基于 TLS 的最小配置。它是

|

||||

工作量无关的、易于更新、具有优化速度的调度程序,目前已针对 OpenStack 进行了优化。

|

||||

|

||||

其设计决策和创新方法在对安全性、可扩展性、可用性和可部署性的要求下进行:

|

||||

|

||||

- **可扩展性:** 初始设计目标是伸缩超过 5,000 个节点。因此,调度器架构用新的形式实现:

|

||||

- 在 ciao 中,决策制定是去中心化的。它基于拉取模型,允许计算节点从调度代理请求作业。调度程序总能知道启动器的容量,而不要求进行数据更新,并且将调度决策时间保持在最小。启动器异步向调度程序发送容量。

|

||||

- 持久化状态跟踪与调度程序决策制定相分离,它让调度程序保持轻量级。这种分离增加了可靠性、可扩展性和性能。结果是调度程序让出了权限并且这不是瓶颈。

|

||||

- **可用性:** 虚拟机、容器和裸机集成到一个调度器中。所有的负载都被视为平等公民。为了更易于使用,网络通过一个组件间最小化的异步协议进行简化,只需要最少的配置。Ciao 还包括一个新的、简单的 UI。所有的这些功能都集成到一起来简化安装、配置、维护和操作。

|

||||

- **轻松部署:** 升级应该是预期操作,而不是例外情况。这种新的去中心化状态的体系结构能够无缝升级。为了确保基础设施(例如 OpenStack)始终是最新的,它实现了持续集成/持续交付(CI/CD)模型。Ciao 的设计使得它可以立即杀死任何 Ciao 组件,更换它,并重新启动它,对可用性影响最小。

|

||||

- **安全性是必需的:** 与调度程序的连接总是加密的:默认情况下 SSL 是打开的,而不是关闭的。加密是从端到端:所有外部连接都需要 HTTPS,组件之间的内部通信是基于 TLS 的。网络支持的一体化保障了租户分离。

|

||||

|

||||

初步结果证明是显著的:在 65 秒内启动一万个 Docker 容器和五千个虚拟机。进一步优化还在进行。

|

||||

|

||||

- 文档:[https://clearlinux.org/documentation/ciao/ciao.html][3]

|

||||

- Github 链接: [https://github.com/01org/ciao(link is external)][1]

|

||||

- 邮件列表链接: [https://lists.clearlinux.org/mailman/listinfo/ciao-devel][2]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://clearlinux.org/ciao

|

||||

|

||||

作者:[ciao][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://clearlinux.org/ciao

|

||||

[1]:https://github.com/01org/ciao

|

||||

[2]:https://lists.clearlinux.org/mailman/listinfo/ciao-devel

|

||||

[3]:https://clearlinux.org/documentation/ciao/ciao.html

|

||||

124

published/20170903 Genymotion vs Android Emulator.md

Normal file

124

published/20170903 Genymotion vs Android Emulator.md

Normal file

@ -0,0 +1,124 @@

|

||||

Genymotion vs Android 模拟器

|

||||

============================================================

|

||||

|

||||

> Android 模拟器是否改善到足以取代 Genymotion

|

||||

|

||||

一直以来有关于选择 android 模拟器或者 Genymotion 的争论,我看到很多讨论最后以赞成 Genymotion 而告终。我根据我周围最常见的情况收集了一些数据,基于此,我将连同 Genymotion 全面评估 android 模拟器。

|

||||

|

||||

结论剧透:配置正确时,Android 模拟器比 Genymotion 快。

|

||||

|

||||

使用带 Google API 的 x86(32位)镜像、3GB RAM、四核CPU。

|

||||

|

||||

> - 哈,很高兴我们知道了最终结果

|

||||

> - 现在,让我们深入

|

||||

|

||||

免责声明:我已经测试了我看到的一般情况,即运行测试。所有的基准测试都是在 2015 年中期的 MacBook Pro 上完成的。无论何时我提及 Genymotion 指的都是 Genymotion Desktop。他们还有其他产品,如 Genymotion on Cloud&Genymotion on Demand,但这里没有考虑。我不是说 Genymotion 是不合适的,但运行测试比某些 Android 模拟器慢。

|

||||

|

||||

关于这个问题的一点背景,然后我们将转到具体内容上去。

|

||||

|

||||

_过去:我有一些基准测试,继续下去。_

|

||||

|

||||

很久以前,Android 模拟器是唯一的选择。但是它们太慢了,这是架构改变的原因。对于在 x86 机器上运行的 ARM 模拟器,你能期待什么?每个指令都必须从 ARM 转换为 x86 架构,这使得它的速度非常慢。

|

||||

|

||||

随之而来的是 Android 的 x86 镜像,随着它们摆脱了 ARM 到 x86 平台转化,速度更快了。现在,你可以在 x86 机器上运行 x86 Android 模拟器。

|

||||

|

||||

> - _问题解决了!!!_

|

||||

> - 没有!

|

||||

|

||||

Android 模拟器仍然比人们想要的慢。随后出现了 Genymotion,这是一个在 virtual box 中运行的 Android 虚拟机。与在 qemu 上运行的普通老式 android 模拟器相比,它相当稳定和快速。

|

||||

|

||||

我们来看看今天的情况。

|

||||

|

||||

我在持续集成的基础设施上和我的开发机器上使用 Genymotion。我手头的任务是摆脱持续集成基础设施和开发机器上使用 Genymotion。

|

||||

|

||||

> - 你问为什么?

|

||||

> - 授权费钱。

|

||||

|

||||

在快速看了一下以后,这似乎是一个愚蠢的举动,因为 Android 模拟器的速度很慢而且有 bug,它们看起来适得其反,但是当你深入的时候,你会发现 Android 模拟器是优越的。

|

||||

|

||||

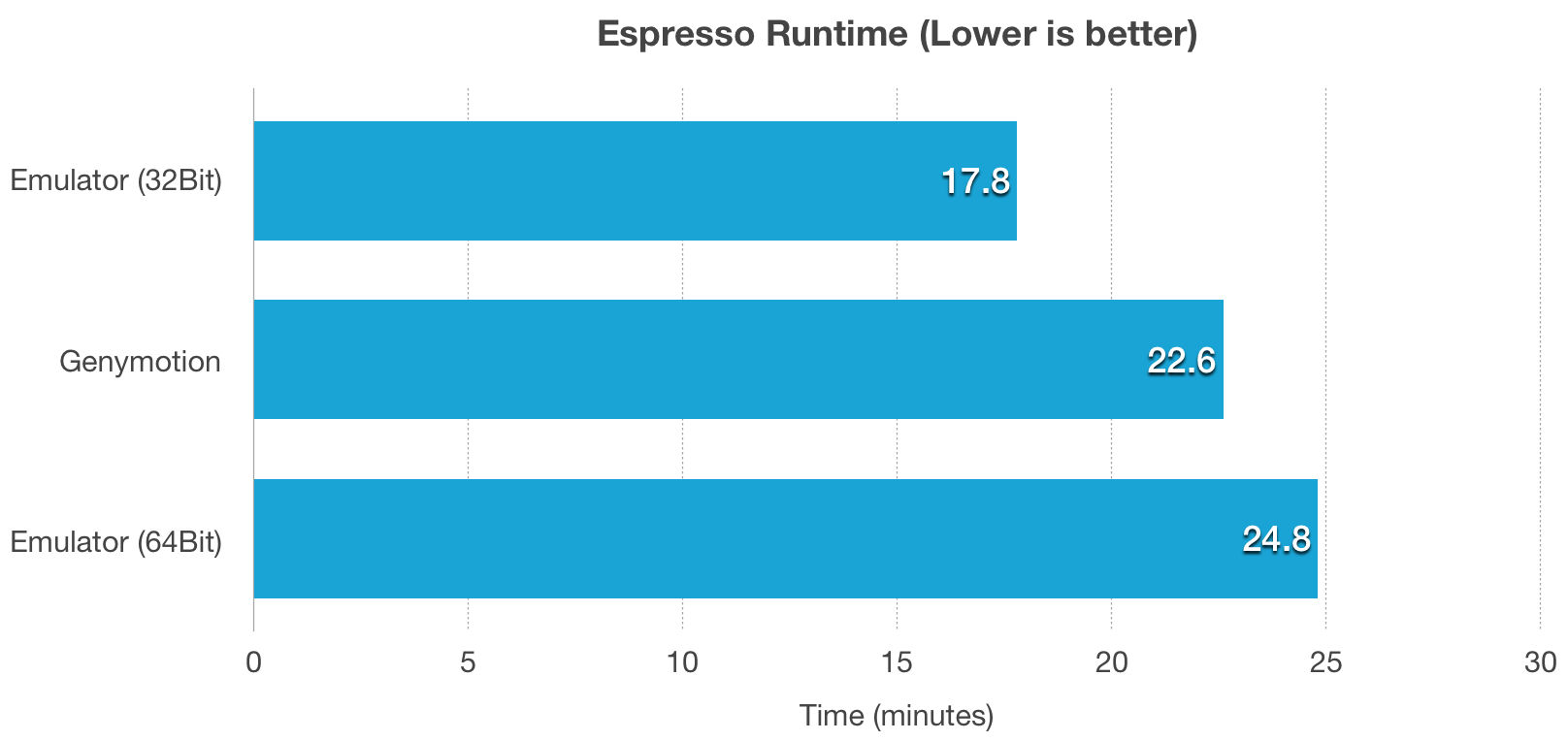

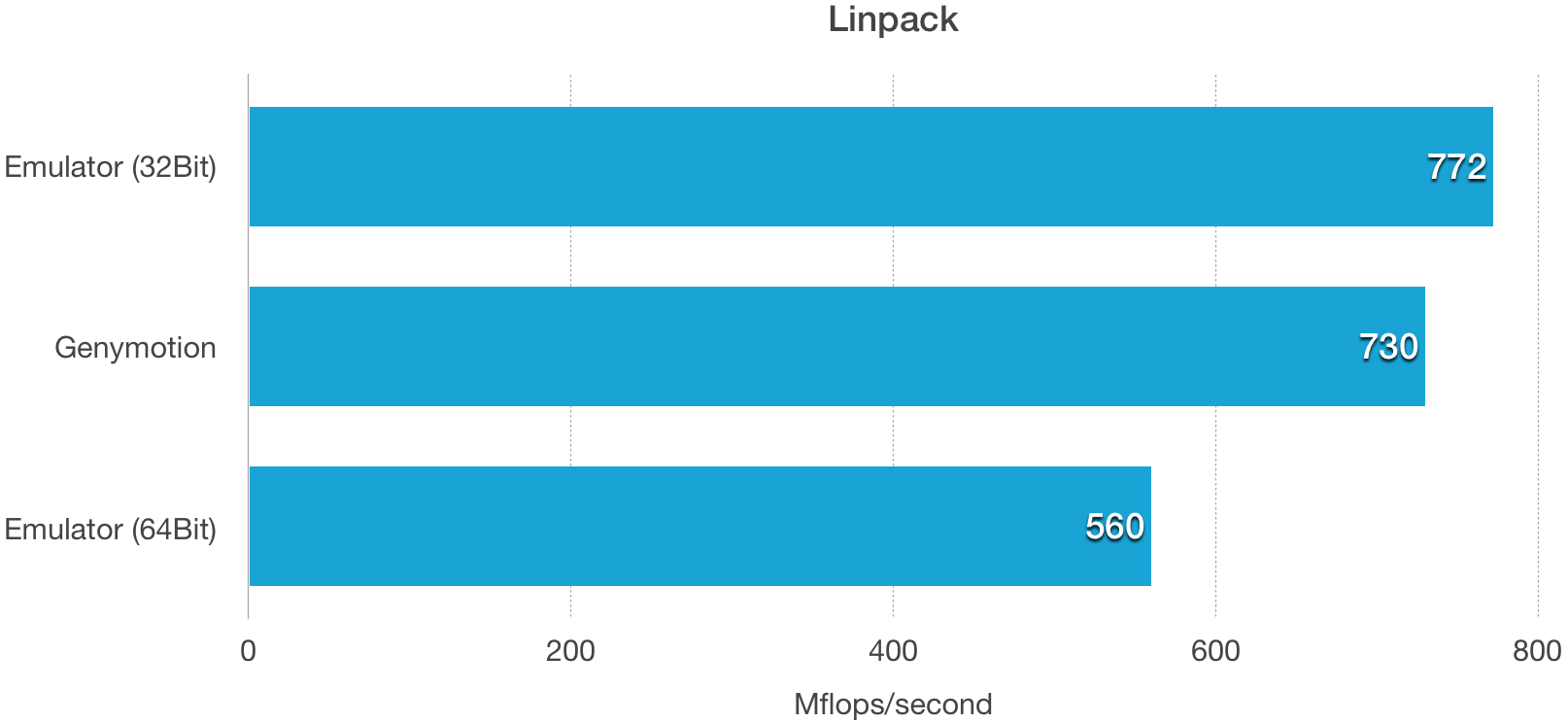

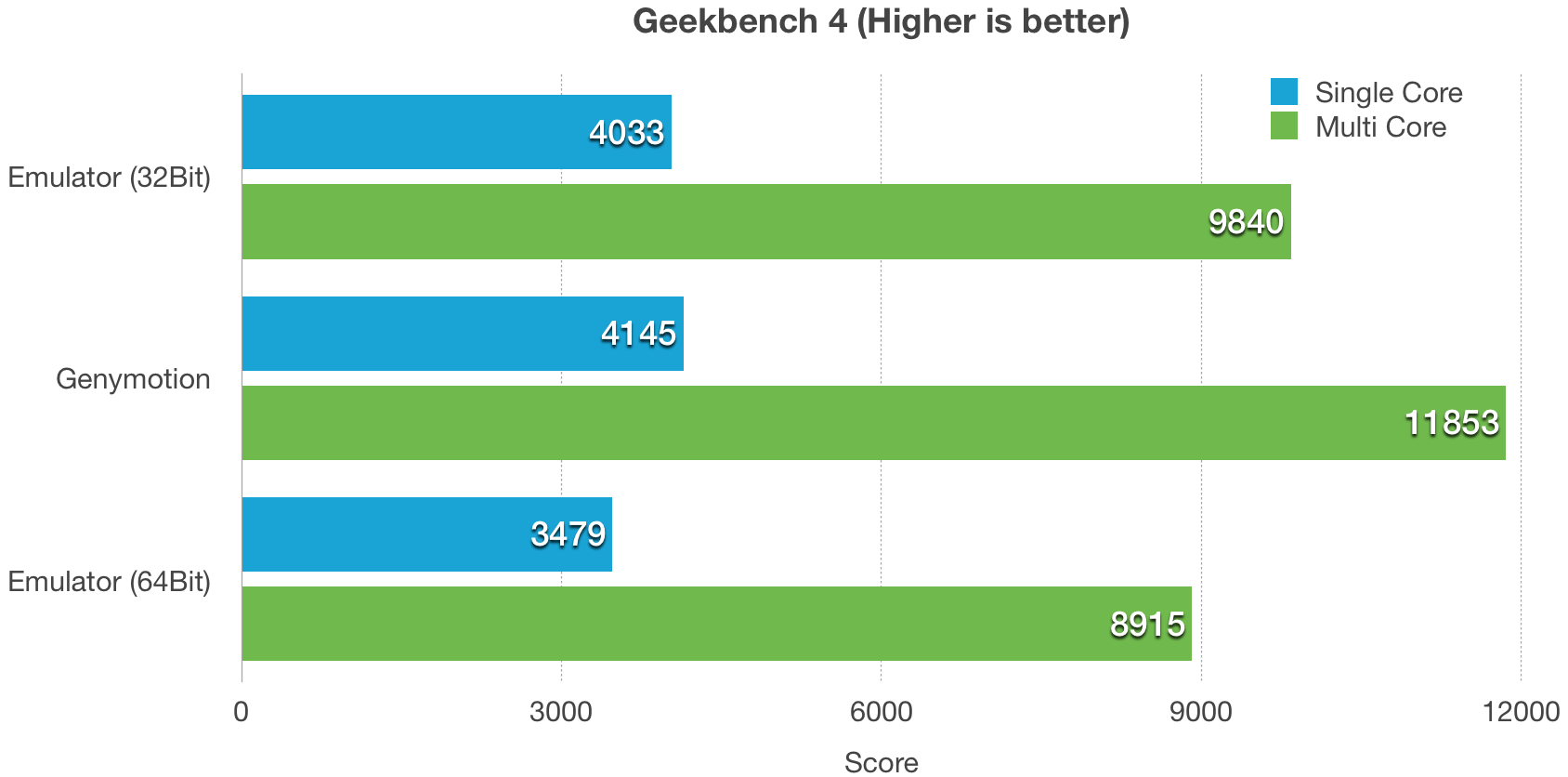

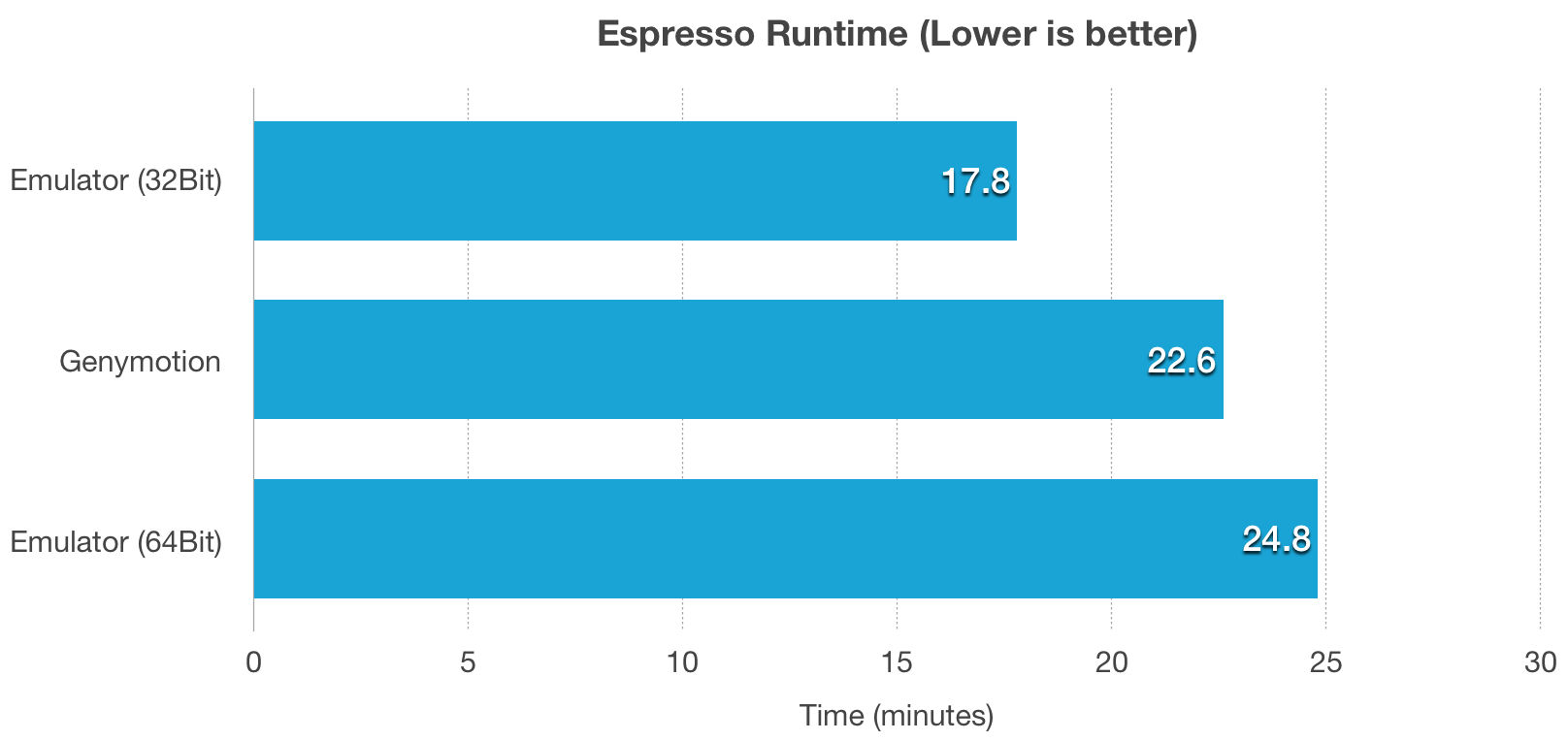

我们的情况是对它们进行集成测试(主要是 espresso)。我们的应用程序中只有 1100 多个测试,Genymotion 需要大约 23 分钟才能运行所有测试。

|

||||

|

||||

在 Genymotion 中我们面临的另一些问题是:

|

||||

|

||||

* 有限的命令行工具([GMTool][1])。

|

||||

* 由于内存问题,它们需要定期重新启动。这是一个手动任务,想象在配有许多机器的持续集成基础设施上进行这些会怎样。

|

||||

|

||||

**进入 Android 模拟器**

|

||||

|

||||

首先是尝试在它给你这么多的选择中设置一个,这会让你会觉得你在赛百味餐厅一样。最大的问题是 x86 或 x86_64 以及是否有 Google API。

|

||||

|

||||

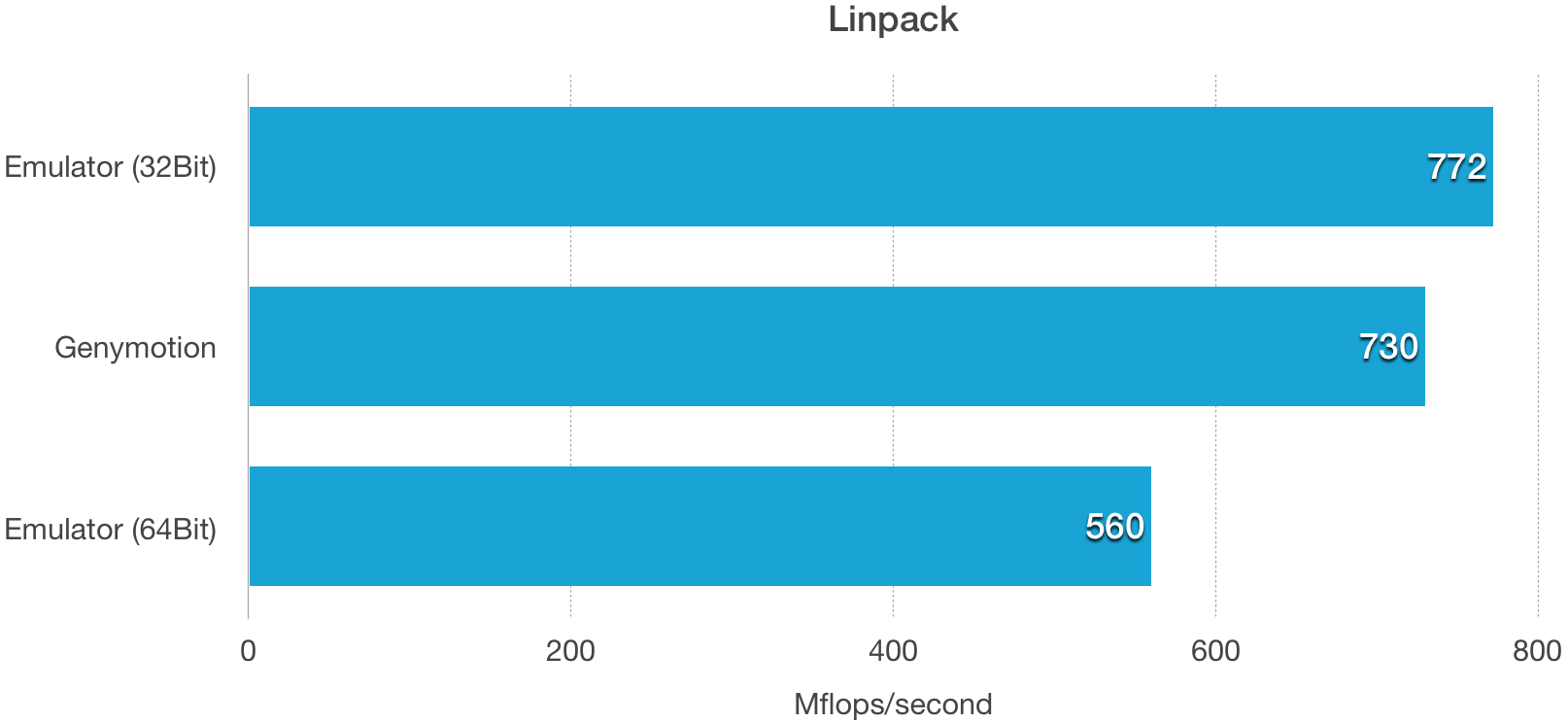

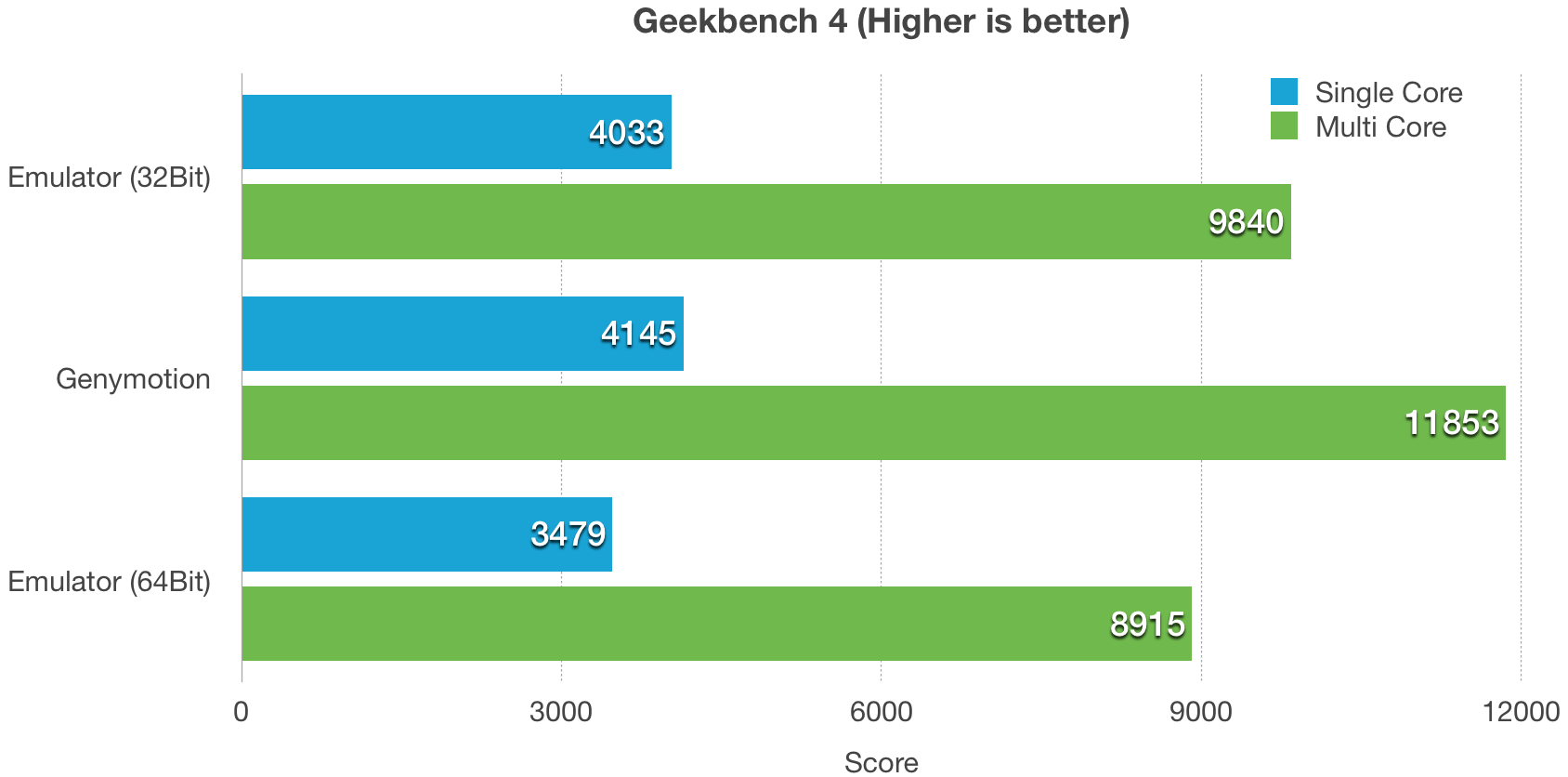

我用这些组合做了一些研究和基准测试,这是我们所想到的。

|

||||

|

||||

鼓声……

|

||||

|

||||

> - 比赛的获胜者是带 Google API 的 x86

|

||||

> - 但是如何胜利的?为什么?

|

||||

|

||||

嗯,我会告诉你每一个问题。

|

||||

|

||||

x86_64 比 x86 慢

|

||||

|

||||

> - 你问慢多少。

|

||||

> - 28.2% 多!!!

|

||||

|

||||

使用 Google API 的模拟器更加稳定,没有它们容易崩溃。

|

||||

|

||||

这使我们得出结论:最好的是带 Google API 的x86。

|

||||

|

||||

在我们抛弃 Genymotion 开始使用模拟器之前。有下面几点重要的细节。

|

||||

|

||||

* 我使用的是带 Google API 的 Nexus 5 镜像。

|

||||

* 我注意到,给模拟器较少的内存会造成了很多 Google API 崩溃。所以为模拟器设定了 3GB 的 RAM。

|

||||

* 模拟器有四核。

|

||||

* HAXM 安装在主机上。

|

||||

|

||||

**基准测试的时候到了**

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

从基准测试上你可以看到除了 Geekbench4,Android 模拟器都击败了 Genymotion,我感觉更像是virtual box 击败了 qemu。

|

||||

|

||||

> 欢呼模拟器之王

|

||||

|

||||

我们现在有更快的测试执行时间、更好的命令行工具。最新的 [Android 模拟器][2]创下的新的记录。更快的启动时间之类。

|

||||

|

||||

Goolgle 一直努力让

|

||||

|

||||

> Android 模拟器变得更好

|

||||

|

||||

如果你没有在使用 Android 模拟器。我建议你重新试下,可以节省一些钱。

|

||||

|

||||

我尝试的另一个但是没有成功的方案是在 AWS 上运行 [Android-x86][3] 镜像。我能够在 vSphere ESXi Hypervisor 中运行它,但不能在 AWS 或任何其他云平台上运行它。如果有人知道原因,请在下面评论。

|

||||

|

||||

PS:[VMWare 现在可以在 AWS 上使用][4],在 AWS 上使用 [Android-x86][5] 毕竟是有可能的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

嗨,我的名字是 Sumit Gupta。我是来自印度古尔冈的软件/应用/网页开发人员。我做这个是因为我喜欢技术,并且一直迷恋它。我已经工作了 3 年以上,但我还是有很多要学习。他们不是说如果你有知识,让别人点亮他们的蜡烛。

|

||||

|

||||

当在编译时,我阅读很多文章,或者听音乐。

|

||||

|

||||

如果你想联系,下面是我的社交信息和 [email][6]。

|

||||

|

||||

----

|

||||

via: https://www.plightofbyte.com/android/2017/09/03/genymotion-vs-android-emulator/

|

||||

|

||||

作者:[Sumit Gupta][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.plightofbyte.com/about-me

|

||||

[1]:https://docs.genymotion.com/Content/04_Tools/GMTool/GMTool.htm

|

||||

[2]:https://developer.android.com/studio/releases/emulator.html

|

||||

[3]:http://www.android-x86.org/

|

||||

[4]:https://aws.amazon.com/vmware/

|

||||

[5]:http://www.android-x86.org/

|

||||

[6]:thesumitgupta@outlook.com

|

||||

@ -0,0 +1,58 @@

|

||||

OpenMessaging:构建一个分布式消息分发开放标准

|

||||

============================================================

|

||||

|

||||

|

||||

通过在云计算、大数据和标准 API 上的企业及社区的协作,我很高兴 OpenMessaging 项目进入 Linux 基金会。OpenMessaging 社区的目标是为分布式消息分发创建全球采用的、供应商中立的和开放标准,可以部署在云端、内部和混合云情景中。

|

||||

|

||||

阿里巴巴、雅虎、滴滴和 Streamlio 是该项目的创始贡献者。Linux 基金会已与这个初始项目社区合作来建立一个治理模式和结构,以实现运作在消息 API 标准上的生态系统的长期受益。

|

||||

|

||||

由于越来越多的公司和开发者迈向<ruby>云原生应用<rt>cloud native application</rt></ruby>,消息式应用和流式应用的扩展面临的挑战也在不断发展。这包括平台之间的互操作性问题,<ruby>[线路级协议](https://en.wikipedia.org/wiki/Wire_protocol)<rt>wire-level protocol</rt></ruby>之间缺乏兼容性以及系统间缺乏标准的基准测试。

|

||||

|

||||

特别是当数据跨不同的消息平台和流平台进行传输时会出现兼容性问题,这意味着额外的工作和维护成本。现有解决方案缺乏负载平衡、容错、管理、安全性和流功能的标准化指南。目前的系统不能满足现代面向云的消息应用和流应用的需求。这可能导致开发人员额外的工作,并且难以或不可能满足物联网、边缘计算、智能城市等方面的尖端业务需求。

|

||||

|

||||

OpenMessaging 的贡献者正在寻求通过以下方式改进分布式消息分发:

|

||||

|

||||

* 为分布式消息分发创建一个面向全球、面向云、供应商中立的行业标准

|

||||

* 促进用于测试应用程序的标准基准发展

|

||||

* 支持平台独立

|

||||

* 以可伸缩性、灵活性、隔离和安全性为目标的云数据的流和消息分发要求

|

||||

* 培育不断发展的开发贡献者社区

|

||||

|

||||

你可以在这了解有关新项目的更多信息以及如何参与: [http://openmessaging.cloud][1]。

|

||||

|

||||

这些是支持 OpenMessaging 的一些组织:

|

||||

|

||||

“我们多年来一直专注于消息分发和流领域,在此期间,我们探索了 Corba 通知、JMS 和其它标准,来试图解决我们最严格的业务需求。阿里巴巴在评估了可用的替代品后,选择创建一个新的面向云的消息分发标准 OpenMessaging,这是一个供应商中立,且语言无关的标准,并为金融、电子商务、物联网和大数据等领域提供了行业指南。此外,它目地在于跨异构系统和平台间开发消息分发和流应用。我们希望它可以是开放、简单、可扩展和可互操作的。另外,我们要根据这个标准建立一个生态系统,如基准测试、计算和各种连接器。我们希望有新的贡献,并希望大家能够共同努力,推动 OpenMessaging 标准的发展。”

|

||||

|

||||

——阿里巴巴高级架构师 Von Gosling,Apache RocketMQ 的联合创始人,以及 OpenMessaging 的原始发起人

|

||||

|

||||

“随着应用程序消息的复杂性和规模的不断扩大,缺乏标准的接口为开发人员和组织带来了复杂性和灵活性的障碍。Streamlio 很高兴与其他领导者合作推出 OpenMessaging 标准倡议来给客户一个轻松使用高性能、低延迟的消息传递解决方案,如 Apache Pulsar,它提供了企业所需的耐用性、一致性和可用性。“

|

||||

|

||||

—— Streamlio 的软件工程师、Apache Pulsar 的联合创始人以及 Apache BookKeeper PMC 的成员 Matteo Merli

|

||||

|

||||

“Oath(Verizon 旗下领先的媒体和技术品牌,包括雅虎和 AOL)支持开放,协作的举措,并且很乐意加入 OpenMessaging 项目。”

|

||||

|

||||

—— Joe Francis,核心平台总监

|

||||

|

||||

“在滴滴中,我们定义了一组私有的生产者 API 和消费者 API 来隐藏开源的 MQ(如 Apache Kafka、Apache RocketMQ 等)之间的差异,并提供额外的自定义功能。我们计划将这些发布到开源社区。到目前为止,我们已经积累了很多关于 MQ 和 API 统一的经验,并愿意在 OpenMessaging 中与其它 API 一起构建 API 的共同标准。我们真诚地认为,统一和广泛接受的 API 标准可以使 MQ 技术和依赖于它的应用程序受益。”

|

||||

|

||||

—— 滴滴的架构师 Neil Qi_

|

||||

|

||||

“有许多不同的开源消息分发解决方案,包括 Apache ActiveMQ、Apache RocketMQ、Apache Pulsar 和 Apache Kafka。缺乏行业级的可扩展消息分发标准使得评估合适的解决方案变得困难。我们很高兴能够与多个开源项目共同努力,共同确定可扩展的开放消息规范。 Apache BookKeeper 已成功在雅虎(通过 Apache Pulsar)和 Twitter(通过 Apache DistributedLog)的生产环境中部署,它作为其企业级消息系统的持久化、高性能、低延迟存储基础。我们很高兴加入 OpenMessaging 帮助其它项目解决诸如低延迟持久化、一致性和可用性等在消息分发方案中的常见问题。”

|

||||

|

||||

—— Streamlio 的联合创始人、Apache BookKeeper 的 PMC 主席、Apache DistributedLog 的联合创造者,Sijie Guo

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linuxfoundation.org/blog/building-open-standard-distributed-messaging-introducing-openmessaging/

|

||||

|

||||

作者:[Mike Dolan][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linuxfoundation.org/author/mdolan/

|

||||

[1]:http://openmessaging.cloud/

|

||||

[2]:https://www.linuxfoundation.org/author/mdolan/

|

||||

[3]:https://www.linuxfoundation.org/category/blog/

|

||||

@ -1,34 +1,33 @@

|

||||

什么是 Grafeas?更好地审核容器

|

||||

Grafeas:旨在更好地审计容器

|

||||

============================================================

|

||||

|

||||

### Google 的 Grafeas 为容器的元数据提供了一个从镜像和构建细节到安全漏洞的通用 API。

|

||||

> Google 的 Grafeas 为容器的元数据提供了一个从镜像、构建细节到安全漏洞的通用 API。

|

||||

|

||||

|

||||

Thinkstock

|

||||

|

||||

我们运行的软件从来没有比今天更难保证安全。它分散在本地部署和云服务之间,并由不知数量的开源组件构建,而且以快速的时间表交付,因此保证安全和质量变成了一个挑战。

|

||||

我们运行的软件从来没有比今天更难获得。它分散在本地部署和云服务之间,由不知到有多少的开源组件构建而成,以快速的时间表交付,因此保证安全和质量变成了一个挑战。

|

||||

|

||||

最终的结果是软件难以审核,考虑,安全化和管理。困难的不只是知道 VM 或容器是用什么构建的, 而是由谁来添加、删除或更改的。[Grafeas][5] 最初由 Google 设计,旨在使这些问题更容易解决。

|

||||

最终的结果是软件难以审计、推断、安全化和管理。困难的不只是知道 VM 或容器是用什么构建的, 而是由谁来添加、删除或更改的。[Grafeas][5] 最初由 Google 设计,旨在使这些问题更容易解决。

|

||||

|

||||

### 什么是 Grafeas?

|

||||

|

||||

Grafeas 是一个定义软件组件的元数据 API 的开源项目。这旨在提供一个统一的元数据模式,允许 VM、容器、JAR 文件和其他软件工件描述自己的运行环境以及管理它们的用户。目标是允许像给定环境中使用的软件一样的审核,以及对该软件所做的更改的审核,以一致和可靠的方式进行。

|

||||

Grafeas 是一个定义软件组件的元数据 API 的开源项目。旨在提供一个统一的元数据模式,允许 VM、容器、JAR 文件和其他软件<ruby>工件<rt>artifact</rt></ruby>描述自己的运行环境以及管理它们的用户。目标是允许像在给定环境中使用的软件一样的审计,以及对该软件所做的更改的审计,并以一致和可靠的方式进行。

|

||||

|

||||

Grafeas提供两种格式的元数据 API,备注和事件:

|

||||

Grafeas提供两种格式的元数据 API —— 备注和事件:

|

||||

|

||||

* 备注是有关软件组件的某些方面的细节。可以是已知软件漏洞的描述,有关如何构建软件的详细信息(构建器版本,校验和等),部署历史等。

|

||||

* <ruby>备注<rt>note</rt></ruby>是有关软件工件的某些方面的细节。可以是已知软件漏洞的描述,有关如何构建软件的详细信息(构建器版本、校验和等),部署历史等。

|

||||

* <ruby>事件<rt>occurrence</rt></ruby>是备注的实例,包含了它们创建的地方和方式的细节。例如,已知软件漏洞的详细信息可能会有描述哪个漏洞扫描程序检测到它的情况、何时被检测到的事件信息,以及该漏洞是否被解决。

|

||||

|

||||

* 事件是备注的实例,包含了它们创建的地方和方式的细节。例如,已知软件漏洞的详细信息可能会有描述哪个漏洞扫描程序检测到它的情况、何时被检测到的事件信息,以及该漏洞是否被解决。

|

||||

备注和事件都存储在仓库中。每个备注和事件都使用标识符进行跟踪,该标识符区分它并使其唯一。

|

||||

|

||||

备注和事件都存储在仓库中。每个笔记和事件都使用标识符进行跟踪,该标识符区分它并使其唯一。

|

||||

|

||||

Grafeas 规范包括备注类型的几个基本模式。例如,软件包漏洞模式描述了如何存储 CVE 或漏洞描述的备注信息。现在没有正式的接受新模式类型的流程,但是[这已经在计划][6]创建这样一个流程。

|

||||

Grafeas 规范包括备注类型的几个基本模式。例如,软件包漏洞模式描述了如何存储 CVE 或漏洞描述的备注信息。现在没有接受新模式类型的正式流程,但是[这已经在计划][6]创建这样一个流程。

|

||||

|

||||

### Grafeas 客户端和第三方支持

|

||||

|

||||

现在,Grafeas 主要作为规范和参考形式存在,它有在[ GitHub 上提供][7]。 [Go][8]、[Python][9] 和 [Java][10] 的客户端都可以使用[由 Swagger 生成][11],所以其他语言的客户端也应该不难写出来。

|

||||

现在,Grafeas 主要作为规范和参考形式存在,它在 [GitHub 上提供][7]。 [Go][8]、[Python][9] 和 [Java][10] 的客户端都可以[用 Swagger 生成][11],所以其他语言的客户端也应该不难写出来。

|

||||

|

||||

Google 计划让 Grafeas 广泛使用的关键方案是通过 Kubernetes。 Kubernetes 的一个策略引擎,称为 Kritis,可以根据 Grafeas 元数据对容器采取措施。

|

||||

Google 计划让 Grafeas 广泛使用的主要方式是通过 Kubernetes。 Kubernetes 的一个名为 Kritis 的策略引擎,可以根据 Grafeas 元数据对容器采取措施。

|

||||

|

||||

除 Google 之外的几家公司已经宣布计划将 Grafeas 的支持添加到现有产品中。例如,CoreOS 正在考察 Grafeas 如何与 Tectonic 集成,[Red Hat][12] 和 [IBM][13] 都计划在其容器产品和服务中添加 Grafeas 集成。

|

||||

|

||||

@ -36,9 +35,9 @@ Google 计划让 Grafeas 广泛使用的关键方案是通过 Kubernetes。 Kube

|

||||

|

||||

via: https://www.infoworld.com/article/3230462/security/what-is-grafeas-better-auditing-for-containers.html

|

||||

|

||||

作者:[Serdar Yegulalp ][a]

|

||||

作者:[Serdar Yegulalp][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,71 +0,0 @@

|

||||

translating by penghuster

|

||||

|

||||

How To Code Like The Top Programmers At NASA — 10 Critical Rules

|

||||

============================================================

|

||||

|

||||

_**[][1] Short Bytes:** Do you know how top programmers write mission-critical code at NASA? To make such code clearer, safer, and easier to understand, NASA’s Jet Propulsion Laboratory has laid 10 rules for developing software._

|

||||

|

||||

The developers at NASA have one of the most challenging jobs in the programming world. They write code and develop mission-critical applications with safety as their primary concerns.

|

||||

|

||||

In such situations, it’s important to follow some serious coding guidelines. These rules cover different aspects of software development like how a software should be written, which language features should be used etc.

|

||||

|

||||

Even though it’s difficult to establish a consensus over a good coding standard, NASA’s Jet Propulsion Laboratory (JPL) follows a set of [guidelines of code][2] named “The Power of Ten–Rules for Developing Safety Critical Code”.

|

||||

|

||||

This guide focuses mainly on code written in C programming languages due to JPL’s long association with the language. But, these guidelines could be easily applied on other programming languages as well.

|

||||

|

||||

Laid by JPL lead scientist Gerard J. Holzmann, these strict coding rules focus on security.

|

||||

|

||||

[][3]

|

||||

|

||||

NASA’s 10 rules for writing mission-critical code:

|

||||

|

||||

1. _Restrict all code to very simple control flow constructs – do not use goto statements, setjmp or longjmp _ constructs _, and direct or indirect recursion._

|

||||

|

||||

2. _All loops must have a fixed_ _upper-bound. It must be trivially possible for a checking tool to prove statically that a preset upper-bound on the number of iterations of a loop cannot be exceeded. If the loop-bound cannot be proven statically, the rule is considered violated._

|

||||

|

||||

3. _Do not use dynamic memory allocation after initialization._

|

||||

|

||||

4. _No function should be longer than what can be printed on a single sheet of paper in a standard reference format with one line per statement and one line per declaration. Typically, this means no more than about 60 lines of code per function._

|

||||

|

||||

5. _The assertion density of the code should average to a minimum of two assertions per function. Assertions are used to check for anomalous conditions that should never happen in real-life executions. Assertions must always be side-effect free and should be defined as Boolean tests. When an assertion fails, an explicit recovery action must be taken, e.g., by returning an error condition to the caller of the function that executes the failing assertion. Any assertion for which a static checking tool can prove that it can never fail or never hold violates this rule (I.e., it is not possible to satisfy the rule by adding unhelpful “assert(true)” statements)._

|

||||

|

||||

6. _Data objects must be declared at the smallest possible level of scope._

|

||||

|

||||

7. _The return value of non-void functions must be checked by each calling function, and the validity of parameters must be checked inside each function._

|

||||

|

||||

8. _The use of the preprocessor must be limited to the inclusion of header files and simple macro definitions. Token pasting, variable argument lists (ellipses), and recursive macro calls are not allowed. All macros must expand into complete syntactic units. The use of conditional compilation directives is often also dubious, but cannot always be avoided. This means that there should rarely be justification for more than one or two conditional compilation directives even in large software development efforts, beyond the standard boilerplate that avoids multiple inclusion of the same header file. Each such use should be flagged by a tool-based checker and justified in the code._

|

||||

|

||||

9. _The use of pointers should be restricted. Specifically, no more than one level of dereferencing is allowed. Pointer dereference operations may not be hidden in macro definitions or inside typedef declarations. Function pointers are not permitted._

|

||||

|

||||

10. _All code must be compiled, from the first day of development, with all compiler warnings enabled at the compiler’s most pedantic setting. All code must compile with these setting without any warnings. All code must be checked daily with at least one, but preferably more than one, state-of-the-art static source code analyzer and should pass the analyses with zero warnings._

|

||||

|

||||

About these rules, here’s what NASA has to say:

|

||||

|

||||

The rules act like the seatbelt in your car: initially they are perhaps a little uncomfortable, but after a while their use becomes second-nature and not using them becomes unimaginable.

|

||||

|

||||

[Source][4]

|

||||

|

||||

Did you find this article helpful? Don’t forget to drop your feedback in the comments section below.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Adarsh Verma

|

||||

Fossbytes co-founder and an aspiring entrepreneur who keeps a close eye on open source, tech giants, and security. Get in touch with him by sending an email — adarsh.verma@fossbytes.com

|

||||

|

||||

------------------

|

||||

|

||||

via: https://fossbytes.com/nasa-coding-programming-rules-critical/

|

||||

|

||||

作者:[Adarsh Verma ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://fossbytes.com/author/adarsh/

|

||||

[1]:http://fossbytes.com/wp-content/uploads/2016/06/rules-of-coding-nasa.jpg

|

||||

[2]:http://pixelscommander.com/wp-content/uploads/2014/12/P10.pdf

|

||||

[3]:https://fossbytes.com/wp-content/uploads/2016/12/learn-to-code-banner-ad-content-1.png

|

||||

[4]:http://pixelscommander.com/wp-content/uploads/2014/12/P10.pdf

|

||||

@ -1,3 +1,5 @@

|

||||

translating by penghuster

|

||||

|

||||

Designing a Microservices Architecture for Failure

|

||||

============================================================

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

Translating by Chao-zhi

|

||||

|

||||

8 best languages to blog about

|

||||

============================================================

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

translating by firmianay

|

||||

|

||||

Examining network connections on Linux systems

|

||||

============================================================

|

||||

|

||||

|

||||

@ -0,0 +1,149 @@

|

||||

Getting Started Analyzing Twitter Data in Apache Kafka through KSQL

|

||||

============================================================

|

||||

|

||||

[KSQL][8] is the open source streaming SQL engine for Apache Kafka. It lets you do sophisticated stream processing on Kafka topics, easily, using a simple and interactive SQL interface. In this short article we’ll see how easy it is to get up and running with a sandbox for exploring it, using everyone’s favourite demo streaming data source: Twitter. We’ll go from ingesting the raw stream of tweets, through to filtering it with predicates in KSQL, to building aggregates such as counting the number of tweets per user per hour.

|

||||

|

||||

|

||||

|

||||

First up, [go grab a copy of Confluent Platform][9]. I’m using the RPM but you can use [tar, zip, etc][10] if you want to. Start the Confluent stack up:

|

||||

|

||||

`$ confluent start`

|

||||

|

||||

(Here’s a [quick tutorial on the confluent CLI][11] if you’re interested!)

|

||||

|

||||

We’ll use Kafka Connect to pull the data from Twitter. The Twitter Connector can be found [on GitHub here][12]. To install it, simply do the following:

|

||||

|

||||

`# Clone the git repo

|

||||

cd /home/rmoff

|

||||

git clone https://github.com/jcustenborder/kafka-connect-twitter.git`

|

||||

|

||||

`# Compile the code

|

||||

cd kafka-connect-twitter

|

||||

mvn clean package`

|

||||

|

||||

To get Kafka Connect [to pick up the connector][13] that we’ve built, you’ll have to modify the configuration file. Since we’re using the Confluent CLI, the configuration file is actually `etc/schema-registry/connect-avro-distributed.properties`, so go modify that and add to it:

|

||||

|

||||

`plugin.path=/home/rmoff/kafka-connect-twitter/target/kafka-connect-twitter-0.2-SNAPSHOT.tar.gz`

|

||||

|

||||

Restart Kafka Connect:

|

||||

`confluent stop connect

|

||||

confluent start connect`

|

||||

|

||||

Once you’ve installed the plugin, you can easily configure it. You can use the Kafka Connect REST API directly, or create your configuration file, which is what I’ll do here. You’ll need to head over to [Twitter to grab your API keys first][14].

|

||||

|

||||

Assuming you’ve written this to `/home/rmoff/twitter-source.json`, you can now run:

|

||||

|

||||

`$ confluent load twitter_source -d /home/rmoff/twitter-source.json`

|

||||

|

||||

And then tweets from everyone’s favourite internet meme star start [rick]-rolling in…

|

||||

|

||||

Now let’s fire up KSQL! First off, download and build it:

|

||||

|

||||

`cd /home/rmoff `

|

||||

`git clone https://github.com/confluentinc/ksql.git `

|

||||

`cd /home/rmoff/ksql `

|

||||

`mvn clean compile install -DskipTests`

|

||||

|

||||

Once it’s built, let’s run it!

|

||||

|

||||

`./bin/ksql-cli local --bootstrap-server localhost:9092`

|

||||

|

||||

Using KSQL, we can take our data that’s held in Kafka topics and query it. First, we need to tell KSQL what the schema of the data in the topic is. A twitter message is actually a pretty huge JSON object, but for brevity let’s just pick a couple of columns to start with:

|

||||

|

||||

`ksql> CREATE STREAM twitter_raw (CreatedAt BIGINT, Id BIGINT, Text VARCHAR) WITH (KAFKA_TOPIC='twitter_json_01', VALUE_FORMAT='JSON');`

|

||||

`Message `

|

||||

`----------------`

|

||||

`Stream created`

|

||||

|

||||

With the schema defined, we can query the stream. To get KSQL to show data from the start of the topic (rather than the current point in time, which is the default), run:

|

||||

|

||||

`ksql> SET 'auto.offset.reset' = 'earliest'; `

|

||||

`Successfully changed local property 'auto.offset.reset' from 'null' to 'earliest'`

|

||||

|

||||

And now let’s see the data. We’ll select just one row using the LIMIT clause:

|

||||

|

||||

Now let’s redefine the stream with all the contents of the tweet payload now defined and available to us:

|

||||

|

||||

Now we can manipulate and examine our data more closely, using normal SQL queries:

|

||||

|

||||

Note that there’s no LIMIT clause, so you’ll see on screen the results of the _continuous query_ . Unlike a query on a relational table that returns a definite number of results, a continuous query is running on unbounded streaming data, so it always has the potential to return more records. Hit Ctrl-C to cancel and return to the KSQL prompt. In the above query we’re doing a few things:

|

||||

|

||||

* TIMESTAMPTOSTRING to convert the timestamp from epoch to a human-readable format

|

||||

|

||||

* EXTRACTJSONFIELD to show one of the nested user fields from the source, which looks like:

|

||||

|

||||

* Applying predicates to what’s shown, using pattern matching against the hashtag, forced to lower case with LCASE.

|

||||

|

||||

For a list of supported functions, see [the KSQL documentation][15].

|

||||

|

||||

We can create a derived stream from this data:

|

||||

|

||||

and query the derived stream:

|

||||

|

||||

Before we finish, let’s see how to do some aggregation.

|

||||

|

||||

You’ll probably get a screenful of results; this is because KSQL is actually emitting the aggregation values for the given hourly window each time it updates. Since we’ve set KSQL to read all messages on the topic (`SET 'auto.offset.reset' = 'earliest';`) it’s reading all of these messages at once and calculating the aggregation updates as it goes. There’s actually a subtlety in what’s going on here that’s worth digging into. Our inbound stream of tweets is just that—a stream. But now that we are creating aggregates, we have actually created a table. A table is a snapshot of a given key’s values at a given point in time. KSQL aggregates data based on the event time of the message, and handles late arriving data by simply restating that relevant window if it updates. Confused? We hope not, but let’s see if we can illustrate this with an example. We’ll declare our aggregate as an actual table:

|

||||

|

||||

Looking at the columns in the table, there are two implicit ones in addition to those we asked for:

|

||||

|

||||

`ksql> DESCRIBE user_tweet_count;

|

||||

|

||||

Field | Type

|

||||

-----------------------------------

|

||||

ROWTIME | BIGINT

|

||||

ROWKEY | VARCHAR(STRING)

|

||||

USER_SCREENNAME | VARCHAR(STRING)

|

||||

TWEET_COUNT | BIGINT

|

||||

ksql>`

|

||||

|

||||

Let’s see what’s in these:

|

||||

|

||||

The `ROWTIME` is the window start time, the `ROWKEY` is a composite of the `GROUP BY`(`USER_SCREENNAME`) plus the window. So we can tidy this up a bit by creating an additional derived table:

|

||||

|

||||

Now it’s easy to query and see the data that we’re interested in:

|

||||

|

||||

### Conclusion

|

||||

|

||||

So there we have it! We’re taking data from Kafka, and easily exploring it using KSQL. Not only can we explore and transform the data, we can use KSQL to easily build stream processing from streams and tables.

|

||||

|

||||

|

||||

|

||||

If you’re interested in what KSQL can do, check out:

|

||||

|

||||

* The [KSQL announcement blog post][1]

|

||||

|

||||

* [Our recent KSQL webinar][2] and [Kafka Summit keynote][3]

|

||||

|

||||

* The [clickstream demo][4] that’s available as part of [KSQL’s GitHub repo][5]

|

||||

|

||||

* A [presentation that I did recently][6] showing how KSQL can underpin a streaming ETL based platform.

|

||||

|

||||

Remember that KSQL is currently in developer preview. Feel free to raise any issues on the KSQL github repo, or come along to the #ksql channel on our [community Slack group][16].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.confluent.io/blog/using-ksql-to-analyse-query-and-transform-data-in-kafka

|

||||

|

||||

作者:[Robin Moffatt ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.confluent.io/blog/author/robin/

|

||||

[1]:https://www.confluent.io/blog/ksql-open-source-streaming-sql-for-apache-kafka/

|

||||

[2]:https://www.confluent.io/online-talk/ksql-streaming-sql-for-apache-kafka/

|

||||

[3]:https://www.confluent.io/kafka-summit-sf17/Databases-and-Stream-Processing-1

|

||||

[4]:https://www.youtube.com/watch?v=A45uRzJiv7I

|

||||

[5]:https://github.com/confluentinc/ksql

|

||||

[6]:https://speakerdeck.com/rmoff/look-ma-no-code-building-streaming-data-pipelines-with-apache-kafka

|

||||

[7]:https://www.confluent.io/blog/author/robin/

|

||||

[8]:https://github.com/confluentinc/ksql/

|

||||

[9]:https://www.confluent.io/download/

|

||||

[10]:https://docs.confluent.io/current/installation.html?

|

||||

[11]:https://www.youtube.com/watch?v=ZKqBptBHZTg

|

||||

[12]:https://github.com/jcustenborder/kafka-connect-twitter

|

||||

[13]:https://docs.confluent.io/current/connect/userguide.html#connect-installing-plugins

|

||||

[14]:https://apps.twitter.com/

|

||||

[15]:https://github.com/confluentinc/ksql/blob/0.1.x/docs/syntax-reference.md

|

||||

[16]:https://slackpass.io/confluentcommunity

|

||||

@ -0,0 +1,277 @@

|

||||

How to set up a Postgres database on a Raspberry Pi

|

||||

============================================================

|

||||

|

||||

### Install and configure the popular open source database system PostgreSQL and use it in your next Raspberry Pi project.

|

||||

|

||||

|

||||

Image credits : Raspberry Pi Foundation. [CC BY-SA 4.0][12].

|

||||

|

||||

Databases are a great way to add data persistence to your project or application. You can write data in one session and it'll be there the next time you want to look. A well-designed database can be efficient at looking up data in large datasets, and you won't have to worry about how it looks, just what you want it to find. It's fairly simple to set up a database for basic [CRUD][13] (create, record, update, delete) applications, which is a common pattern, and it is useful in many projects.

|

||||

|

||||

Why [PostgreSQL][14], commonly known as Postgres? It's considered to be the best open source database in terms of features and performance. It'll feel familiar if you've used MySQL, but when you need more advanced usage, you'll find the optimization in Postgres is far superior. It's easy to install, easy to use, easy to secure, and runs well on the Raspberry Pi 3.

|

||||

|

||||

This tutorial explains how to install Postgres on a Raspberry Pi; create a table; write simple queries; use the pgAdmin GUI on a Raspberry Pi, a PC, or a Mac; and interact with the database from Python.

|

||||

|

||||

Once you've learned the basics, you can take your application a lot further with complex queries joining multiple tables, at which point you need to think about optimization, best design practices, using primary and foreign keys, and more.

|

||||

|

||||

### Installation

|

||||

|

||||

To get started, you'll need to install Postgres and some other packages. Open a terminal window and run the following command while connected to the internet:

|

||||

|

||||

```

|

||||

sudo apt install postgresql libpq-dev postgresql-client

|

||||

postgresql-client-common -y

|

||||

```

|

||||

|

||||

### [postgres-install.png][1]

|

||||

|

||||

|

||||

|

||||

When that's complete, switch to the Postgres user to configure the database:

|

||||

|

||||

```

|

||||

sudo su postgres

|

||||

```

|

||||

|

||||

Now you can create a database user. If you create a user with the same name as one of your Unix user accounts, that user will automatically be granted access to the database. So, for the sake of simplicity in this tutorial, I'll assume you're using the default pi user. Run the **createuser** command to continue:

|

||||

|

||||

```

|

||||

createuser pi -P --interactive

|

||||

```

|

||||

|

||||

When prompted, enter a password (and remember what it is), select **n** for superuser, and **y** for the next two questions.

|

||||

|

||||

### [postgres-createuser.png][2]

|

||||

|

||||

|

||||

|

||||

Now connect to Postgres using the shell and create a test database:

|

||||

|

||||

```

|

||||

$ psql

|

||||

> create database test;

|

||||

```

|

||||

|

||||

Exit from the psql shell and again from the Postgres user by pressing Ctrl+D twice, and you'll be logged in as the pi user again. Since you created a Postgres user called pi, you can access the Postgres shell from here with no credentials:

|

||||

|

||||

```

|

||||

$ psql test

|

||||

```

|

||||

|

||||

You're now connected to the "test" database. The database is currently empty and contains no tables. You can create a simple table from the psql shell:

|

||||

|

||||

```

|

||||

test=> create table people (name text, company text);

|

||||

```

|

||||

|

||||

Now you can insert data into the table:

|

||||

|

||||

```

|

||||

test=> insert into people values ('Ben Nuttall', 'Raspberry Pi Foundation');

|

||||

|

||||

test=> insert into people values ('Rikki Endsley', 'Red Hat');

|

||||

```

|

||||

|

||||

And try a select query:

|

||||

|

||||

```

|

||||

test=> select * from people;

|

||||

|

||||

name | company

|

||||

---------------+-------------------------

|

||||

Ben Nuttall | Raspberry Pi Foundation

|

||||

Rikki Endsley | Red Hat

|

||||

(2 rows)

|

||||

```

|

||||

|

||||

### [postgres-query.png][3]

|

||||

|

||||

|

||||

|

||||

```

|

||||

test=> select name from people where company = 'Red Hat';

|

||||

|

||||

name | company

|

||||

---------------+---------

|

||||

Rikki Endsley | Red Hat

|

||||

(1 row)

|

||||

```

|

||||

|

||||

### pgAdmin

|

||||

|

||||

You might find it useful to use a graphical tool to access the database. PgAdmin is a full-featured PostgreSQL GUI that allows you to create and manage databases and users, create and modify tables, write and execute queries, and browse results in a more familiar view, similar to a spreadsheet. The psql command-line tool is fine for simple queries, and you'll find many power users stick with it for speed (and because they don't need the assistance the GUI gives), but midlevel users may find pgAdmin a more approachable way to learn and do more with a database.

|

||||

|

||||

Another useful thing about pgAdmin is that you can either use it directly on the Pi or on another computer that's remotely connected to the database on the Pi.

|

||||

|

||||

If you want to access it on the Raspberry Pi itself, you can just install it with **apt**:

|

||||

|

||||

```

|

||||

sudo apt install pgadmin3

|

||||

```

|

||||

|

||||

It's exactly the same if you're on a Debian-based system like Ubuntu; if you're on another distribution, try the equivalent command for your system. Alternatively, or if you're on Windows or macOS, try downloading pgAdmin from [pgAdmin.org][15]. Note that the version available in **apt** is pgAdmin3 and a newer version, pgAdmin4, is available from the website.

|

||||

|

||||

To connect to your database with pgAdmin on the same Raspberry Pi, simply open pgAdmin3 from the main menu, click the **new connection** icon, and complete the registration fields. In this case, all you'll need is a name (you choose the connection name, e.g. test), change the username to "pi," and leave the rest of the fields blank (or as they were). Click OK and you'll find a new connection in the side panel on the left.

|

||||

|

||||

### [pgadmin-connect.png][4]

|

||||

|

||||

|

||||

|

||||

To connect to your Pi's database with pgAdmin from another computer, you'll first need to edit the PostgreSQL configuration to allow remote connections:

|

||||

|

||||

1\. Edit the PostgreSQL config file **/etc/postgresql/9.6/main/postgresql.conf** to uncomment the **listen_addresses** line and change its value from **localhost** to *****. Save and exit.

|

||||

|

||||

2\. Edit the **pg_hba** config file **/etc/postgresql/9.6/main/postgresql.conf** to change **127.0.0.1/32** to **0.0.0.0/0** for IPv4 and **::1/128** to **::/0** for IPv6\. Save and exit.

|

||||

|

||||

3\. Restart the PostgreSQL service: **sudo service postgresql restart**.

|

||||

|

||||

Note the version number may be different if you're using an older Raspbian image or another distribution.

|

||||

|

||||

### [postgres-config.png][5]

|

||||

|

||||

|

||||

|

||||

Once that's done, open pgAdmin on your other computer and create a new connection. This time, in addition to giving the connection a name, enter the Pi's IP address as the host (this can be found by hovering over the WiFi icon in the taskbar or by typing **hostname -I** in a terminal).

|

||||

|

||||

### [pgadmin-remote.png][6]

|

||||

|

||||

|

||||

|

||||

Whether you connected locally or remotely, click to open **Server Groups > Servers > test > Schemas > public > Tables**, right-click the **people** table and select **View Data > View top 100 Rows**. You'll now see the data you entered earlier.

|

||||

|

||||

### [pgadmin-view.png][7]

|

||||

|

||||

|

||||

|

||||

You can now create and modify databases and tables, manage users, and write your own custom queries using the GUI. You might find this visual method more manageable than using the command line.

|

||||

|

||||

### Python

|

||||

|

||||

To connect to your database from a Python script, you'll need the [Psycopg2][16]Python package. You can install it with [pip][17]:

|

||||

|

||||

```

|

||||

sudo pip3 install psycopg2

|

||||

```

|

||||

|

||||

Now open a Python editor and write some code to connect to your database:

|

||||

|

||||

```

|

||||

import psycopg2

|

||||

|

||||

conn = psycopg2.connect('dbname=test')

|

||||

cur = conn.cursor()

|

||||

|

||||

cur.execute('select * from people')

|

||||

|

||||

results = cur.fetchall()

|

||||

|

||||

for result in results:

|

||||

print(result)

|

||||

```

|

||||

|

||||

Run this code to see the results of the query. Note that if you're connecting remotely, you'll need to supply more credentials in the connection string, for example, adding the host IP, username, and database password:

|

||||

|

||||

```

|

||||

conn = psycopg2.connect('host=192.168.86.31 user=pi

|

||||

password=raspberry dbname=test')

|

||||

```

|

||||

|

||||

You could even create a function to look up this query specifically:

|

||||

|

||||

```

|

||||

def get_all_people():

|

||||

query = """

|

||||

SELECT

|

||||

*

|

||||

FROM

|

||||

people

|

||||

"""

|

||||

cur.execute(query)

|

||||

return cur.fetchall()

|

||||

```

|

||||

|

||||

And one including a lookup:

|

||||

|

||||

```

|

||||

def get_people_by_company(company):

|

||||

query = """

|

||||

SELECT

|

||||

*

|

||||

FROM

|

||||

people

|

||||

WHERE

|

||||

company = %s

|

||||

"""

|

||||

values = (company, )

|

||||

cur.execute(query, values)

|

||||

return cur.fetchall()

|

||||

```

|

||||

|

||||

Or even a function for adding records:

|

||||

|

||||

```

|

||||

def add_person(name, company):

|

||||

query = """

|

||||

INSERT INTO

|

||||

people

|

||||

VALUES

|

||||

(%s, %s)

|

||||

"""

|

||||

values = (name, company)

|

||||

cur.execute(query, values)

|

||||

```

|

||||

|

||||

Note this uses a safe method of injecting strings into queries. You don't want to get caught out by [little bobby tables][18]!

|

||||

|

||||

### [python-postgres.png][8]

|

||||

|

||||

|

||||

|

||||

Now you know the basics. If you want to take Postgres further, check out this article on [Full Stack Python][19].

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Ben Nuttall - Ben Nuttall is the Raspberry Pi Community Manager. In addition to his work for the Raspberry Pi Foundation, he's into free software, maths, kayaking, GitHub, Adventure Time, and Futurama. Follow Ben on Twitter [@ben_nuttall][10].

|

||||

|

||||

-------------

|

||||

|

||||

via: https://opensource.com/article/17/10/set-postgres-database-your-raspberry-pi

|

||||

|

||||

作者:[Ben Nuttall ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/bennuttall

|

||||

[1]:https://opensource.com/file/374246

|

||||

[2]:https://opensource.com/file/374241

|

||||

[3]:https://opensource.com/file/374251

|

||||

[4]:https://opensource.com/file/374221

|

||||

[5]:https://opensource.com/file/374236

|

||||

[6]:https://opensource.com/file/374226

|

||||

[7]:https://opensource.com/file/374231

|

||||

[8]:https://opensource.com/file/374256

|

||||

[9]:https://opensource.com/article/17/10/set-postgres-database-your-raspberry-pi?imm_mid=0f75d0&cmp=em-prog-na-na-newsltr_20171021&rate=t-XUFUPa6mURgML4cfL1mjxsmFBG-VQTG4R39QvFVQA

|

||||

[10]:http://www.twitter.com/ben_nuttall

|

||||

[11]:https://opensource.com/user/26767/feed

|

||||

[12]:https://creativecommons.org/licenses/by-sa/4.0/

|

||||

[13]:https://en.wikipedia.org/wiki/Create,_read,_update_and_delete

|

||||

[14]:https://www.postgresql.org/

|

||||

[15]:https://www.pgadmin.org/download/

|

||||

[16]:http://initd.org/psycopg/

|

||||

[17]:https://pypi.python.org/pypi/pip

|

||||

[18]:https://xkcd.com/327/

|

||||

[19]:https://www.fullstackpython.com/postgresql.html

|

||||

[20]:https://opensource.com/users/bennuttall

|

||||

[21]:https://opensource.com/users/bennuttall

|

||||

[22]:https://opensource.com/users/bennuttall

|

||||

[23]:https://opensource.com/article/17/10/set-postgres-database-your-raspberry-pi?imm_mid=0f75d0&cmp=em-prog-na-na-newsltr_20171021#comments

|

||||

[24]:https://opensource.com/tags/raspberry-pi

|

||||

[25]:https://opensource.com/tags/raspberry-pi-column

|

||||

[26]:https://opensource.com/tags/how-tos-and-tutorials

|

||||

[27]:https://opensource.com/tags/programming

|

||||

95

sources/tech/20171013 Best of PostgreSQL 10 for the DBA.md

Normal file

95

sources/tech/20171013 Best of PostgreSQL 10 for the DBA.md

Normal file

@ -0,0 +1,95 @@

|

||||

Best of PostgreSQL 10 for the DBA

|

||||

============================================================

|

||||

|

||||

|

||||

Last week a new PostgreSQL major version with the number 10 was released! Announcement, release notes and the „What’s new“ overview can be found from [here][3], [here][4]and [here][5] – it’s highly recommended reading, so check them out. As usual there have been already quite some blog postings covering all the new stuff, but I guess everyone has their own angle on what is important so as with version 9.6 I’m again throwing in my impressions on the most interesting/relevant features here.

|

||||

|

||||

As always, users who upgrade or initialize a fresh cluster, will enjoy huge performance wins (e.g. better parallelization with parallel index scans, merge joins and uncorrelated sub-queries, faster aggregations, smarter joins and aggregates on remote servers) out of the box without doing anything, but here I would like to look more at the things that you won’t get out of the box but you actually need to take some steps to start benefiting from them. List of below highlighted features is compiled from a DBA’s viewpoint here, soon a post on changes from a developer’s point of view will also follow.

|

||||

|

||||

### Upgrading considerations

|

||||

|

||||

First some hints on upgrading from an existing setup – this time there are some small things that could cause problems when migrating from 9.6 or even older versions, so before the real deal one should definitely test the upgrade on a separate replica and go through the full list of possible troublemakers from the release notes. Most likely pitfalls to watch out for:

|

||||

|

||||

* All functions containing „xlog“ have been renamed to use „wal“ instead of „xlog“

|

||||

|

||||

The latter naming could be confused with normal server logs so a „just in case“ change. If using any 3rd party backup/replication/HA tools check that they are all at latest versions.

|

||||

|

||||

* pg_log folder for server logs (error messages/warnings etc) has been renamed to just „log“

|

||||

|

||||

Make sure to verify that your log parsing/grepping scripts (if having any) work.

|

||||

|

||||

* By default queries will make use of up to 2 background processes

|

||||

|

||||

If using the default 10 postgresql.conf settings on a machine with low number of CPUs you may see resource usage spikes as parallel processing is enabled by default now – which is a good thing though as it should mean faster queries. Set max_parallel_workers_per_gather to 0 if old behaviour is needed.

|

||||

|

||||

* Replication connections from localhost are enabled now by default

|

||||

|

||||

To ease testing etc, localhost and local Unix socket replication connections are now enabled in „trust“ mode (without password) in pg_hba.conf! So if other non-DBA user also have access to real production machines, make sure you change the config.

|

||||

|

||||

### My favourites from a DBA’s point of view

|

||||

|

||||

* Logical replication

|

||||

|

||||

The long awaited feature enables easy setup and minimal performance penalties for application scenarios where you only want to replicate a single table or a subset of tables or all tables, meaning also zero downtime upgrades for following major versions! Historically (Postgres 9.4+ required) this could be achieved only by usage of a 3rd party extension or slowish trigger based solutions. The top feature of version 10 for me.

|

||||

|

||||

* Declarative partitioning

|

||||

|

||||

Old way of managing partitions via inheritance and creating triggers to re-route inserts to correct tables was bothersome to say the least, not to mention the performance impact. Currently supported are „range“ and „list“ partitioning schemes. If someone is missing „hash“ partitioning available in some DB engines, one could use „list“ partitioning with expressions to achieve the same.

|

||||

|

||||

* Usable Hash indexes

|

||||

|

||||

Hash indexes are now WAL-logged thus crash safe and received some performance improvements so that for simple searches they’re actually faster than standard B-tree indexes for bigger amounts of data. Bigger index size though too.

|

||||

|

||||

* Cross-column optimizer statistics

|

||||

|

||||

Such stats needs to be created manually on a set if columns of a table, to point out that the values are actually somehow dependent on each other. This will enable to counter slow query problems where the planner thinks there will be very little data returned (multiplication of probabilities yields very small numbers usually) and will choose for example a „nested loop“ join that does not perform well on bigger amounts of data.

|

||||

|

||||

* Parallel snapshots on replicas

|

||||

|

||||

Now one can use the pg_dump tool to speed up backups on standby servers enormously by using multiple processes (the –jobs flag).

|

||||

|

||||

* Better tuning of parallel processing worker behaviour

|

||||

|

||||

See max_parallel_workers and min_parallel_table_scan_size / min_parallel_index_scan_size parameters. The default values (8MB, 512KB) for the latter two I would recommend to increase a bit though.

|

||||

|

||||

* New built-in monitoring roles for easier tooling

|

||||

|

||||

New roles pg_monitor, pg_read_all_settings, pg_read_all_stats, and pg_stat_scan_tables make life a lot easier for all kinds of monitoring tasks – previously one had to use superuser accounts or some SECURITY DEFINER wrapper functions.

|

||||

|

||||

* Temporary (per session) replication slots for safer replica building

|

||||

|

||||

* A new Contrib extension for checking validity of B-tree indexes

|

||||

|

||||

Does couple of smart checks to discover structural inconsistencies and stuff not covered by page level checksums. Hope to check it out more deeply in nearer future.

|

||||

|

||||

* Psql query tool supports now basic branching (if/elif/else)

|

||||

|

||||

This would for example enable having a single maintenance/monitoring script with version specific branching (different column names for pg_stat* views etc) instead of many version specific scripts.

|

||||

|

||||

```

|

||||

SELECT :VERSION_NAME = '10.0' AS is_v10 \gset

|

||||

\if :is_v10

|

||||

SELECT 'yippee' AS msg;

|

||||

\else

|

||||

SELECT 'time to upgrade!' AS msg;

|

||||

\endif

|

||||

```

|

||||

|

||||

That’s it for this time! Lot of other stuff didn’t got listed of course, so for full time DBAs I’d definitely suggest to look at the notes more thoroughly. And a big thanks to those 300+ people who contributed their effort to this particularly exciting release!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.cybertec.at/best-of-postgresql-10-for-the-dba/

|

||||

|

||||

作者:[ Kaarel Moppel][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.cybertec.at/author/kaarel-moppel/

|

||||

[1]:http://www.cybertec.at/author/kaarel-moppel/

|

||||

[2]:http://www.cybertec.at/best-of-postgresql-10-for-the-dba/

|

||||

[3]:https://www.postgresql.org/about/news/1786/

|

||||

[4]:https://www.postgresql.org/docs/current/static/release-10.html

|

||||

[5]:https://wiki.postgresql.org/wiki/New_in_postgres_10

|

||||

@ -0,0 +1,87 @@

|

||||

Proxy Models in Container Environments

|

||||

============================================================

|

||||

|

||||

### Most of us are familiar with how proxies work, but is it any different in a container-based environment? See what's changed.

|

||||

|

||||

Inline, side-arm, reverse, and forward. These used to be the terms we used to describe the architectural placement of proxies in the network.

|

||||

|

||||

Today, containers use some of the same terminology, but they are introducing new ones. That’s an opportunity for me to extemporaneously expound* on my favorite of all topics: the proxy.

|

||||

|

||||

One of the primary drivers of cloud (once we all got past the pipedream of cost containment) has been scalability. Scale has challenged agility (and sometimes won) in various surveys over the past five years as the number one benefit organizations seek by deploying apps in cloud computing environments.

|

||||

|

||||

That’s in part because in a digital economy (in which we now operate), apps have become the digital equivalent of brick-and-mortar “open/closed” signs and the manifestation of digital customer assistance. Slow, unresponsive apps have the same effect as turning out the lights or understaffing the store.

|

||||

|

||||

Apps need to be available and responsive to meet demand. Scale is the technical response to achieving that business goal. Cloud not only provides the ability to scale, but offers the ability to scale _automatically_ . To do that requires a load balancer. Because that’s how we scale apps – with proxies that load balance traffic/requests.

|

||||

|

||||

Containers are no different with respect to expectations around scale. Containers must scale – and scale automatically – and that means the use of load balancers (proxies).

|

||||

|

||||

If you’re using native capabilities, you’re doing primitive load balancing based on TCP/UDP. Generally speaking, container-based proxy implementations aren’t fluent in HTTP or other application layer protocols and don’t offer capabilities beyond plain old load balancing ([POLB][1]). That’s often good enough, as container scale operates on a cloned, horizontal premise – to scale an app, add another copy and distribute requests across it. Layer 7 (HTTP) routing capabilities are found at the ingress (in [ingress controllers][2] and API gateways) and are used as much (or more) for app routing as they are to scale applications.

|

||||

|

||||

In some cases, however, this is not enough. If you want (or need) more application-centric scale or the ability to insert additional services, you’ll graduate to more robust offerings that can provide programmability or application-centric scalability or both.

|

||||

|

||||

To do that means [plugging-in proxies][3]. The container orchestration environment you’re working in largely determines the deployment model of the proxy in terms of whether it’s a reverse proxy or a forward proxy. Just to keep things interesting, there’s also a third model – sidecar – that is the foundation of scalability supported by emerging service mesh implementations.

|

||||

|

||||

### Reverse Proxy

|

||||

|

||||

[][4]

|

||||

|

||||

A reverse proxy is closest to a traditional model in which a virtual server accepts all incoming requests and distributes them across a pool (farm, cluster) of resources.

|

||||

|

||||

There is one proxy per ‘application’. Any client that wants to connect to the application is instead connected to the proxy, which then chooses and forwards the request to an appropriate instance. If the green app wants to communicate with the blue app, it sends a request to the blue proxy, which determines which of the two instances of the blue app should respond to the request.

|

||||

|

||||

In this model, the proxy is only concerned with the app it is managing. The blue proxy doesn’t care about the instances associated with the orange proxy, and vice-versa.

|

||||

|

||||

### Forward Proxy

|

||||

|

||||

[][5]

|

||||

|

||||

This mode more closely models that of a traditional outbound firewall.

|

||||

|

||||

In this model, each container **node** has an associated proxy. If a client wants to connect to a particular application or service, it is instead connected to the proxy local to the container node where the client is running. The proxy then chooses an appropriate instance of that application and forwards the client's request.

|

||||

|

||||

Both the orange and the blue app connect to the same proxy associated with its node. The proxy then determines which instance of the requested app instance should respond.

|

||||

|

||||

In this model, every proxy must know about every application to ensure it can forward requests to the appropriate instance.

|

||||

|

||||

### Sidecar Proxy

|

||||

|

||||

[][6]

|

||||

|

||||

This mode is also referred to as a service mesh router. In this model, each **container **has its own proxy.

|

||||

|

||||

If a client wants to connect to an application, it instead connects to the sidecar proxy, which chooses an appropriate instance of that application and forwards the client's request. This behavior is the same as a _forward proxy _ model.

|

||||

|

||||

The difference between a sidecar and forward proxy is that sidecar proxies do not need to modify the container orchestration environment. For example, in order to plug-in a forward proxy to k8s, you need both the proxy _and _ a replacement for kube-proxy. Sidecar proxies do not require this modification because it is the app that automatically connects to its “sidecar” proxy instead of being routed through the proxy.

|

||||

|

||||

### Summary

|

||||

|

||||

Each model has its advantages and disadvantages. All three share a reliance on environmental data (telemetry and changes in configuration) as well as the need to integrate into the ecosystem. Some models are pre-determined by the environment you choose, so careful consideration as to future needs – service insertion, security, networking complexity – need to be evaluated before settling on a model.

|

||||

|

||||

We’re still in early days with respect to containers and their growth in the enterprise. As they continue to stretch into production environments it’s important to understand the needs of the applications delivered by containerized environments and how their proxy models differ in implementation.

|

||||

|

||||

*It was extemporaneous when I wrote it down. Now, not so much.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://dzone.com/articles/proxy-models-in-container-environments

|

||||

|

||||

作者:[Lori MacVittie ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://dzone.com/users/307701/lmacvittie.html

|

||||

[1]:https://f5.com/about-us/blog/articles/go-beyond-polb-plain-old-load-balancing

|

||||

[2]:https://f5.com/about-us/blog/articles/ingress-controllers-new-name-familiar-function-27388

|

||||

[3]:http://clouddocs.f5.com/products/asp/v1.0/

|

||||

[4]:https://devcentral.f5.com/Portals/0/Users/038/38/38/unavailable_is_closed.png?ver=2017-09-12-082118-160

|

||||

[5]:https://devcentral.f5.com/Portals/0/Users/038/38/38/per-node_forward_proxy.jpg?ver=2017-09-14-072419-667

|

||||

[6]:https://devcentral.f5.com/Portals/0/Users/038/38/38/per-pod_sidecar_proxy.jpg?ver=2017-09-14-072424-073

|

||||

[7]:https://dzone.com/users/307701/lmacvittie.html

|

||||

[8]:https://dzone.com/users/307701/lmacvittie.html

|

||||

[9]:https://dzone.com/articles/proxy-models-in-container-environments#

|

||||

[10]:https://dzone.com/cloud-computing-tutorials-tools-news

|

||||

[11]:https://dzone.com/articles/proxy-models-in-container-environments#

|

||||

[12]:https://dzone.com/go?i=243221&u=https%3A%2F%2Fget.platform9.com%2Fjzlp-kubernetes-deployment-models-the-ultimate-guide%2F

|

||||

@ -0,0 +1,128 @@

|

||||

How to implement cloud-native computing with Kubernetes

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

Kubernetes and containers can speed up the development process while minimizing programmer and system administration costs, say representatives of the Open Container Initiative and the Cloud Native Computing Foundation. To take advantage of Kubernetes and its related tools to run a cloud-native architecture, start with unappreciated Kubernetes features like namespaces.

|

||||

|

||||

[Kubernetes][2] is far more than a cloud-container manager. As Steve Pousty, [Red Hat's][3] lead developer advocate for [OpenShift][4], explained in a presentation at [the Linux Foundation's][5] [Open Source Summit][6], Kubernetes serves as a "common operating plane for cloud-native computing using containers."

|

||||

|

||||

What does Pousty mean by that? Let's review the basics.

|

||||

|

||||

“Cloud-native computing uses an open source software stack to deploy applications as microservices, package each part into its own container, and dynamically orchestrate those containers to optimize resource utilization,” explains Chris Aniszczyk, executive director of the [Open Container Initiative][7] (OCI) and the [Cloud Native Computing Foundation][8] (CNCF). [Kubernetes takes care of that last element][9] of cloud-native computing. The result is part of a larger transition in IT, moving from servers to virtual machines to buildpacks—and now to [containers][10].

|

||||

|

||||

This data center evolution has netted significant cost savings, in part because it requires fewer dedicated staff, conference presenters say. For example, by using Kubernetes, Google needs only one site reliability engineer per 10,000 machines, according to Aniszczyk.

|

||||

|

||||

Practically speaking, however, system administrators can take advantage of new Kubernetes-related tools and exploit under-appreciated features.

|

||||

|

||||

### Building a native cloud platform

|

||||

|

||||

Pousty explained, "For Red Hat, Kubernetes is the cloud Linux kernel. It's this infrastructure that everybody's going to build on."

|

||||

|

||||