diff --git a/published/20190319 How to set up a homelab from hardware to firewall.md b/published/20190319 How to set up a homelab from hardware to firewall.md

new file mode 100644

index 0000000000..54c24e7a14

--- /dev/null

+++ b/published/20190319 How to set up a homelab from hardware to firewall.md

@@ -0,0 +1,107 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wyxplus)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13262-1.html)

+[#]: subject: (How to set up a homelab from hardware to firewall)

+[#]: via: (https://opensource.com/article/19/3/home-lab)

+[#]: author: (Michael Zamot https://opensource.com/users/mzamot)

+

+How to set up a homelab from hardware to firewall

+======

+

+> A look at the hardware and software options for building your own homelab.

+

+

+

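+Have you ever wanted to build a homelab? Maybe you want to experiment with different technologies, set up a development environment, or run your own private cloud. There are many reasons to have a homelab, and this guide aims to make getting started easier.

+

+There are three things to consider when planning a homelab: hardware, software, and maintenance. This article covers the first two; a later article will look at how to save time maintaining your lab.

+

+### Hardware

+

+When thinking about hardware, first consider how you will use the lab, along with your budget, noise, space, and power consumption.

+

+If new hardware is too expensive, look for used servers at local universities, in classified ads, and on sites such as eBay or Craigslist. They are usually cheap, and server-grade hardware lasts for many years. You will need three types of hardware: a virtualization server, storage, and a router/firewall.

+

+#### Virtualization server

+

+A virtualization server lets you run multiple virtual machines that share the physical machine's resources, while maximizing utilization and keeping those resources isolated. If you break one virtual machine, you don't have to rebuild the whole server; just create a new one. If you want to test or try something without risking the whole system, spin up a new virtual machine and run it there.

+

+The two most important factors for a virtualization server are the number and speed of its CPU cores and the amount of memory. If there aren't enough resources to share among all the virtual machines, they will be overallocated and will compete for each other's CPU cycles and memory.

+

+So consider a platform with a multi-core CPU. Make sure the CPU supports virtualization extensions (VT-x on Intel, AMD-V on AMD). Good consumer-grade processors that can handle virtualization include Intel's i5 and i7 and AMD's Ryzen. If you are considering server-grade hardware, Intel's Xeon series and AMD's EPYC are good choices. Memory can be expensive, especially recent DDR4 memory. When estimating how much memory you need, set aside at least 2 GB for the host operating system.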
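A quick way to confirm the virtualization extensions mentioned above on an existing Linux machine is to look at the CPU flags the kernel reports. This is only a sketch: it assumes an x86 Linux host that exposes `/proc/cpuinfo`, and the variable name `virt_cpus` is made up for illustration.

```shell
# Count logical CPUs whose "flags" line advertises hardware virtualization:
# "vmx" is the Intel VT-x flag, "svm" is the AMD-V flag.
# grep -c exits non-zero when the count is 0, hence "|| true".
virt_cpus=$(grep -E -c '^flags[[:space:]]*:.*[[:space:]](vmx|svm)([[:space:]]|$)' /proc/cpuinfo 2>/dev/null || true)

# Fall back to 0 when /proc/cpuinfo is missing (for example, on a non-Linux host).
virt_cpus=${virt_cpus:-0}

if [ "$virt_cpus" -gt 0 ]; then
  echo "Hardware virtualization flags found on $virt_cpus logical CPU(s)."
else
  echo "No vmx/svm flag found on this machine."
fi
```

A zero count does not always mean the CPU lacks the feature. Inside a virtual machine, for example, the flags are hidden unless nested virtualization is enabled.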

+

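+If you are worried about power bills or noise, solutions such as Intel's NUC devices offer a small form factor, low power draw, and low noise, but at the expense of expandability.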

+

+#### NAS

+

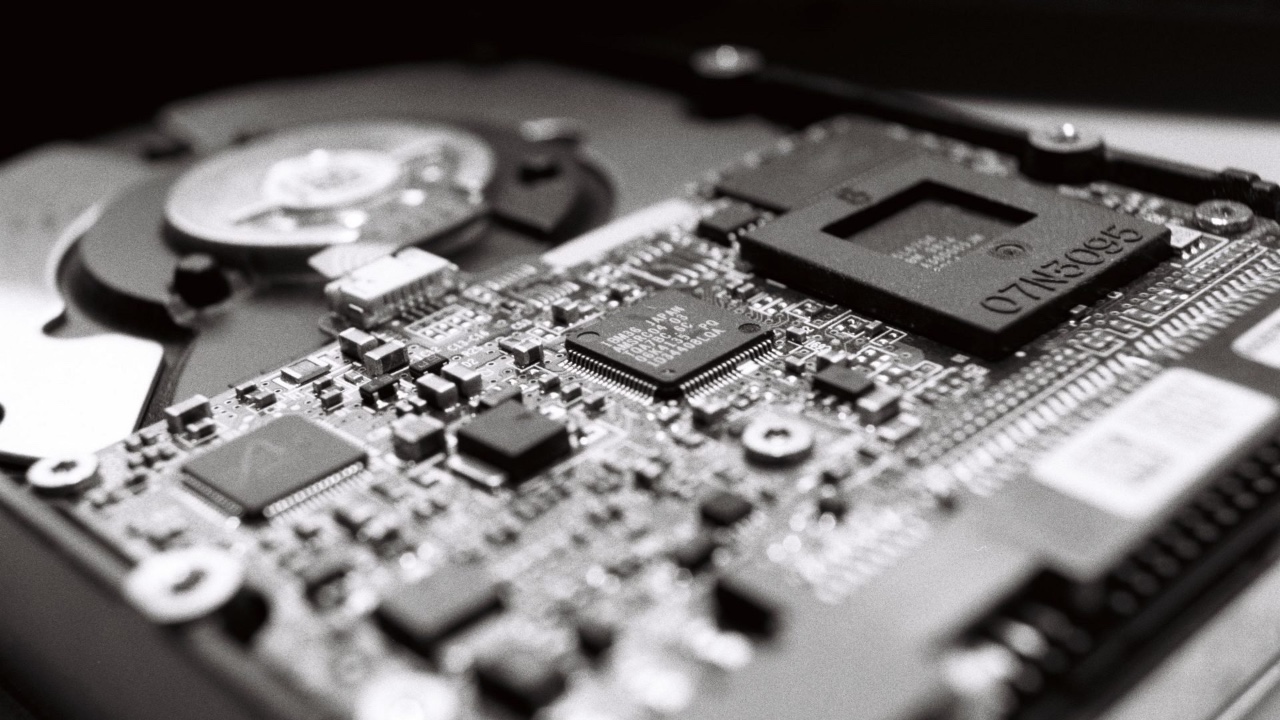

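+If you want a machine loaded with hard drives to store all your personal data, movies, pictures, and so on, and to provide storage for the virtualization server, you need network-attached storage (NAS).

+

+In most cases you won't need a powerful CPU; in fact, many commercial NAS products use low-power ARM CPUs. A motherboard that supports multiple SATA disks is a must. If your motherboard doesn't have enough ports, use a host bus adapter (HBA) SAS controller to add more.

+

+Network performance is critical for a NAS, so choose gigabit networking or faster.

+

+Memory requirements vary with the filesystem. ZFS is one of the most popular filesystems for NAS, and you will need more memory to use features such as caching or deduplication. Error-correcting code (ECC) memory is the best way to protect against data corruption (but make sure your motherboard supports it before you buy). Last but not least, don't forget an uninterruptible power supply (UPS), because losing power can corrupt data.

+

+#### Firewall and router

+

+Have you ever realized that a cheap router/firewall is usually the main thing protecting your home network from the outside world? These routers rarely receive timely security updates, if they receive any at all. Scared now? Well, [you should be][2]!

+

+Usually you don't need a powerful CPU or a lot of memory to build your own router/firewall, unless you need high throughput or want to run CPU-intensive tasks such as a VPN server or traffic filtering. In those cases, you will want a multi-core CPU with AES-NI support.

+

+You will probably want at least two Ethernet network interface cards (NICs) rated at 1 gigabit or faster. It isn't required, but I also recommend a managed switch to connect your DIY router, so you can create VLANs to further isolate and secure your network.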

+

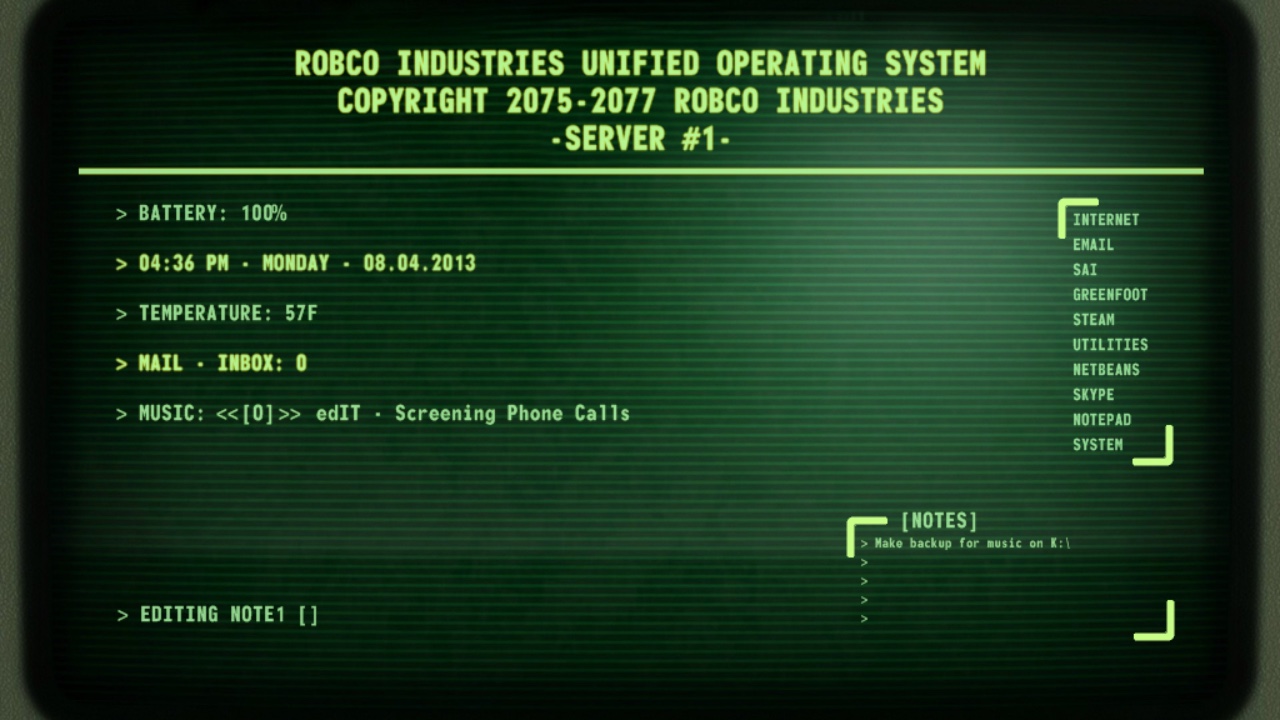

+![Home computer lab PfSense][4]

+

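+### Software

+

+After selecting your virtualization server, NAS, and firewall/router, the next step is to explore the operating systems and software that get the most out of them. You could use a regular Linux distribution such as CentOS, Debian, or Ubuntu, but these usually take more time to configure and administer than the following options.

+

+#### Virtualization software

+

+[KVM][5] (Kernel-based Virtual Machine) turns Linux into a hypervisor so you can run multiple virtual machines on the same box. Best of all, KVM is part of Linux, and it is the go-to option for many enterprises and home users. If you like, you can install [libvirt][6] and [virt-manager][7] to manage your virtualization platform.

+

+[Proxmox VE][8] is a robust, enterprise-grade solution and a fully open source virtualization and container platform. It is based on Debian and uses KVM as its hypervisor and LXC for containers. Proxmox offers a powerful web interface and an API, and it can scale out to many clustered nodes, which is helpful because you never know when your lab will run out of capacity.

+

+[oVirt][9], the upstream project of Red Hat Virtualization (RHV), is another enterprise-grade solution that uses KVM as its hypervisor. Just because it is enterprise-grade doesn't mean you can't use it at home. oVirt offers a powerful web interface and an API, and it can handle hundreds of nodes (if you are running that many servers, I don't want to be your neighbor!). The potential problem with oVirt for a homelab is its minimum set of nodes: you will need external storage, such as a NAS, and at least two additional virtualization nodes (you can run it on just one, but you will run into problems maintaining your environment).

+

+#### NAS software

+

+[FreeNAS][10] is the most popular open source NAS distribution, and it is based on the rock-solid FreeBSD operating system. One of its most robust features is support for the ZFS filesystem, which provides data-integrity checking, snapshots, replication, and several pool layouts (mirroring, striped mirrors, and plain striping, which on its own adds no redundancy). On top of that, everything is managed from a powerful and easy-to-use web interface. Before installing FreeNAS, check that your hardware is supported, because its hardware support is not as broad as that of Linux-based distributions.

+

+Another popular alternative is the Linux-based [OpenMediaVault][11]. One of its main features is its modularity, with plugins that extend it and add features. It includes a web-based administration interface; protocols such as CIFS, SFTP, NFS, and iSCSI; and volume management, including software RAID, quotas, access control lists (ACLs), and share management. Because it is Linux-based, it has extensive hardware support.

+

+#### Firewall/router software

+

+[pfSense][12] is an open source, enterprise-grade router and firewall distribution based on FreeBSD. It can be installed directly on a server or even inside a virtual machine (to manage your virtual or physical networks and save space). It has many features and can be extended with packages. It offers command-line access, but it can also be managed entirely through its web interface. It has all the features you would expect from a router and firewall, such as DHCP and DNS, as well as more advanced ones such as intrusion detection (IDS) and intrusion prevention (IPS) systems. You can create multiple networks that listen on different interfaces or use VLANs, and a secure VPN server can be set up in a few clicks. pfSense uses pf, a stateful packet filter that was developed for the OpenBSD operating system and uses a syntax similar to IPFilter. Many companies and organizations use pfSense.

+

+* * *

+

+With all this information in mind, it's time to get your hands dirty and start building your lab. In a future article, I will cover the third aspect of running a homelab: using automation to deploy and maintain it.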

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/3/home-lab

+

+Author: [Michael Zamot (Red Hat)][a]

+Topic selection: [lujun9972][b]

+Translator: [wyxplus](https://github.com/wyxplus)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://opensource.com/users/mzamot

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/computer_keyboard_laptop_development_code_woman.png?itok=vbYz6jjb

+[2]: https://opensource.com/article/18/5/how-insecure-your-router

+[3]: /file/427426

+[4]: https://opensource.com/sites/default/files/uploads/pfsense2.png (Home computer lab PfSense)

+[5]: https://www.linux-kvm.org/page/Main_Page

+[6]: https://libvirt.org/

+[7]: https://virt-manager.org/

+[8]: https://www.proxmox.com/en/proxmox-ve

+[9]: https://ovirt.org/

+[10]: https://freenas.org/

+[11]: https://www.openmediavault.org/

+[12]: https://www.pfsense.org/

diff --git a/published/20200423 4 open source chat applications you should use right now.md b/published/20200423 4 open source chat applications you should use right now.md

new file mode 100644

index 0000000000..5c445b9586

--- /dev/null

+++ b/published/20200423 4 open source chat applications you should use right now.md

@@ -0,0 +1,138 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wyxplus)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13271-1.html)

+[#]: subject: (4 open source chat applications you should use right now)

+[#]: via: (https://opensource.com/article/20/4/open-source-chat)

+[#]: author: (Sudeshna Sur https://opensource.com/users/sudeshna-sur)

+

+4 open source chat applications you should use right now

+======

+

+> Now that remote collaboration is an essential skill, make open source real-time chat an essential part of your toolbox.

+

+

+

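+The first thing we usually do after waking up in the morning is check our phone for important messages from colleagues and friends. Whether or not it's a good habit, this behavior has long been part of our daily lives.

+

+> Man is a rational animal. He can think up a reason for anything he wants to believe.

+> – Anatole France

+

+Whatever the reasoning, we all use a range of communication tools every day, such as email, phone calls, web-conferencing tools, or social networks. Even before COVID-19, working from home had already made these tools an important part of our lives. With the pandemic making remote work the new normal, nearly every aspect of how we communicate is changing in unprecedented ways, which makes these tools indispensable.

+

+### Why chat?

+

+When working remotely as part of a global team, we need a collaborative environment. Chat applications play a vital role in helping us stay connected. Compared with email, chat applications provide fast, real-time communication with colleagues around the globe.

+

+Many factors go into choosing a chat application. To help you pick the right one, this article looks at four open source chat applications, plus one open source video communication tool for when you need to be "face to face" with your colleagues, and then outlines some features to look for in an effective communication application.

+

+### 4 open source chat apps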

+

+#### Rocket.Chat

+

+![Rocket.Chat][2]

+

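+[Rocket.Chat][3] is a comprehensive communication platform that classifies channels as public rooms (open to anyone who joins) or private rooms (invitation-only). You can also send direct messages to people who are logged in. You can share documents, links, photos, videos, and GIFs, make video calls, and send audio messages without leaving the platform.

+

+Rocket.Chat is free and open source software, but what makes it unique is its self-hosted chat system. You can download it onto your own server, whether that is an on-premises server or a virtual private server on a public cloud.

+

+Rocket.Chat is completely free, and its [source code][4] is available on GitHub. Many open source projects use Rocket.Chat as their official communication platform. It is under constant development, with new features and improvements arriving regularly.

+

+What I like most about Rocket.Chat is that it can be customized to user requirements and that it uses machine learning to translate messages between users automatically and in real time. You can also download Rocket.Chat for your mobile device so you can use it on the go.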

+

+#### IRC

+

+![IRC on WeeChat 0.3.5][5]

+

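+IRC ([Internet Relay Chat][6]) is a real-time, text-based form of communication. Although it is one of the oldest forms of electronic communication, it remains popular among many well-known software projects.

+

+IRC channels are discrete chat rooms. They let you chat with multiple people in an open channel or privately one-on-one. If a channel name starts with `#`, you can assume it is an official chat room, whereas chat rooms that begin with `##` are usually unofficial.

+

+[Getting started with IRC][7] is easy. Your IRC nickname is what lets people find you, so it must be unique. Your choice of IRC client, however, is entirely up to you. If you want a more feature-rich application than a standard IRC client, you can connect to IRC with [Riot.im][8].

+

+Given its age, why should you still use IRC? One reason is that it remains home to many of the free and open source projects we depend on. If you want to take part in open source software development and communities, IRC is a good place to start.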

+

+#### Zulip

+

+![Zulip][9]

+

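+[Zulip][10] is a popular group-chat application that follows a topic-based threading model. In Zulip, you subscribe to streams, much like channels in IRC or Rocket.Chat. But each Zulip stream opens a unique topic, which helps you find conversations later and keeps things more organized.

+

+Like other platforms, it supports emojis, inline images, video, and tweet previews. It also supports LaTeX for sharing math formulas or equations, and Markdown with syntax highlighting for sharing code.

+

+Zulip is cross-platform and offers APIs for writing your own programs. What I especially like about Zulip is its integration with GitHub: if I'm working on an issue, I can use Zulip's markup to link back to the pull request ID.

+

+Zulip is open source (you can access its [source code][11] on GitHub) and free to use, but it has paid offerings for on-premises support, [LDAP][12] integration, and more storage.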

+

+#### Let's Chat

+

+![Let's Chat][13]

+

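+[Let's Chat][14] is a self-hosted chat solution for small teams. It runs on Node.js and MongoDB and can be deployed to a local server or a cloud server with a few clicks. It is free and open source, and its [source code][15] is available on GitHub.

+

+What differentiates Let's Chat from other open source chat tools is its enterprise features: it supports LDAP and [Kerberos][16] authentication. It also has all the features a new user would want: you can search past messages in the history and tag people with mentions such as @username.

+

+What I like about Let's Chat is that it has private, password-protected rooms, image uploads, GIPHY support, and code pasting. It is updated regularly and keeps adding features.

+

+### Bonus: open source video chat with Jitsi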

+

+![Jitsi][17]

+

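+Sometimes text chat isn't enough and you need to talk to someone face to face. When meeting in person isn't an option, video chat is the next best thing. [Jitsi][18] is a completely open source, multi-platform, WebRTC-compatible videoconferencing tool.

+

+Jitsi began as Jitsi Desktop and has evolved into multiple [projects][19], including Jitsi Meet, Jitsi Videobridge, jibri, and libjitsi, each with [source code][20] published on GitHub.

+

+Jitsi is secure and scalable, and it supports advanced video-routing concepts such as simulcast and bandwidth estimation, along with typical capabilities such as audio, recording, screen sharing, and dial-in. You can set a password to protect your video-chat room from intruders, and it also supports live streaming through YouTube. You can also build your own Jitsi server and host it on-premises or on a virtual private server, such as a Digital Ocean Droplet.

+

+What I like most about Jitsi is that it is free and frictionless. Anyone can start a meeting immediately by visiting [meet.jit.si][21], and users can join easily without registering or installing anything. (Registration does, however, give you calendar features.) This low barrier to entry has made Jitsi popular very quickly.

+

+### Tips for choosing a chat application

+

+The variety of open source chat applications can make it hard to pick one. Here are some general guidelines for choosing a chat app.

+

+ * Tools with an interactive interface and simple navigation are preferable.

+ * Look for a tool that is feature-rich and lets people use it in a variety of ways.

+ * Give extra weight to tools that integrate with what you already use. Some have good, seamless integration with GitHub, GitLab, and certain other applications, which is a very useful feature.

+ * Tools that can be hosted in the cloud are convenient.

+ * Consider the security of the chat service. The ability to host the service on a private server is a necessity for many organizations and individuals.

+ * Prefer communication tools with rich privacy settings and both private and public chat rooms.

+

+Because people depend on online services more than ever, it is wise to have a backup communication platform available. For example, if a project uses Rocket.Chat, it should also be able to fall back to IRC if necessary. Because these services are updated continuously, you may find yourself connected to multiple channels, and that is where integration becomes very valuable.

+

+Of the open source chat services available, which ones do you like and use? How do these tools help you work remotely? Please share your thoughts in the comments.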

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/20/4/open-source-chat

+

+Author: [Sudeshna Sur][a]

+Topic selection: [lujun9972][b]

+Translator: [wyxplus](https://github.com/wyxplus)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://opensource.com/users/sudeshna-sur

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/talk_chat_communication_team.png?itok=CYfZ_gE7 (Chat bubbles)

+[2]: https://opensource.com/sites/default/files/uploads/rocketchat.png (Rocket.Chat)

+[3]: https://rocket.chat/

+[4]: https://github.com/RocketChat/Rocket.Chat

+[5]: https://opensource.com/sites/default/files/uploads/irc.png (IRC on WeeChat 0.3.5)

+[6]: https://en.wikipedia.org/wiki/Internet_Relay_Chat

+[7]: https://opensource.com/article/16/6/getting-started-irc

+[8]: https://opensource.com/article/17/5/introducing-riot-IRC

+[9]: https://opensource.com/sites/default/files/uploads/zulip.png (Zulip)

+[10]: https://zulipchat.com/

+[11]: https://github.com/zulip/zulip

+[12]: https://en.wikipedia.org/wiki/Lightweight_Directory_Access_Protocol

+[13]: https://opensource.com/sites/default/files/uploads/lets-chat.png (Let's Chat)

+[14]: https://sdelements.github.io/lets-chat/

+[15]: https://github.com/sdelements/lets-chat

+[16]: https://en.wikipedia.org/wiki/Kerberos_(protocol)

+[17]: https://opensource.com/sites/default/files/uploads/jitsi_0_0.jpg (Jitsi)

+[18]: https://jitsi.org/

+[19]: https://jitsi.org/projects/

+[20]: https://github.com/jitsi

+[21]: http://meet.jit.si

diff --git a/published/20171025 Typeset your docs with LaTeX and TeXstudio on Fedora.md b/published/202102/20171025 Typeset your docs with LaTeX and TeXstudio on Fedora.md

similarity index 100%

rename from published/20171025 Typeset your docs with LaTeX and TeXstudio on Fedora.md

rename to published/202102/20171025 Typeset your docs with LaTeX and TeXstudio on Fedora.md

diff --git a/published/20190312 When the web grew up- A browser story.md b/published/202102/20190312 When the web grew up- A browser story.md

similarity index 100%

rename from published/20190312 When the web grew up- A browser story.md

rename to published/202102/20190312 When the web grew up- A browser story.md

diff --git a/published/20190404 Intel formally launches Optane for data center memory caching.md b/published/202102/20190404 Intel formally launches Optane for data center memory caching.md

similarity index 100%

rename from published/20190404 Intel formally launches Optane for data center memory caching.md

rename to published/202102/20190404 Intel formally launches Optane for data center memory caching.md

diff --git a/published/20190610 Tmux Command Examples To Manage Multiple Terminal Sessions.md b/published/202102/20190610 Tmux Command Examples To Manage Multiple Terminal Sessions.md

similarity index 100%

rename from published/20190610 Tmux Command Examples To Manage Multiple Terminal Sessions.md

rename to published/202102/20190610 Tmux Command Examples To Manage Multiple Terminal Sessions.md

diff --git a/published/20190626 Where are all the IoT experts going to come from.md b/published/202102/20190626 Where are all the IoT experts going to come from.md

similarity index 100%

rename from published/20190626 Where are all the IoT experts going to come from.md

rename to published/202102/20190626 Where are all the IoT experts going to come from.md

diff --git a/published/20190810 EndeavourOS Aims to Fill the Void Left by Antergos in Arch Linux World.md b/published/202102/20190810 EndeavourOS Aims to Fill the Void Left by Antergos in Arch Linux World.md

similarity index 100%

rename from published/20190810 EndeavourOS Aims to Fill the Void Left by Antergos in Arch Linux World.md

rename to published/202102/20190810 EndeavourOS Aims to Fill the Void Left by Antergos in Arch Linux World.md

diff --git a/published/20191227 The importance of consistency in your Python code.md b/published/202102/20191227 The importance of consistency in your Python code.md

similarity index 100%

rename from published/20191227 The importance of consistency in your Python code.md

rename to published/202102/20191227 The importance of consistency in your Python code.md

diff --git a/published/20191228 The Zen of Python- Why timing is everything.md b/published/202102/20191228 The Zen of Python- Why timing is everything.md

similarity index 100%

rename from published/20191228 The Zen of Python- Why timing is everything.md

rename to published/202102/20191228 The Zen of Python- Why timing is everything.md

diff --git a/published/20191229 How to tell if implementing your Python code is a good idea.md b/published/202102/20191229 How to tell if implementing your Python code is a good idea.md

similarity index 100%

rename from published/20191229 How to tell if implementing your Python code is a good idea.md

rename to published/202102/20191229 How to tell if implementing your Python code is a good idea.md

diff --git a/published/20191230 Namespaces are the shamash candle of the Zen of Python.md b/published/202102/20191230 Namespaces are the shamash candle of the Zen of Python.md

similarity index 100%

rename from published/20191230 Namespaces are the shamash candle of the Zen of Python.md

rename to published/202102/20191230 Namespaces are the shamash candle of the Zen of Python.md

diff --git a/published/20200121 Ansible Automation Tool Installation, Configuration and Quick Start Guide.md b/published/202102/20200121 Ansible Automation Tool Installation, Configuration and Quick Start Guide.md

similarity index 100%

rename from published/20200121 Ansible Automation Tool Installation, Configuration and Quick Start Guide.md

rename to published/202102/20200121 Ansible Automation Tool Installation, Configuration and Quick Start Guide.md

diff --git a/published/202102/20200124 Ansible Ad-hoc Command Quick Start Guide with Examples.md b/published/202102/20200124 Ansible Ad-hoc Command Quick Start Guide with Examples.md

new file mode 100644

index 0000000000..d1b5c335fe

--- /dev/null

+++ b/published/202102/20200124 Ansible Ad-hoc Command Quick Start Guide with Examples.md

@@ -0,0 +1,294 @@

+[#]: collector: "lujun9972"

+[#]: translator: "MjSeven"

+[#]: reviewer: "wxy"

+[#]: publisher: "wxy"

+[#]: url: "https://linux.cn/article-13163-1.html"

+[#]: subject: "Ansible Ad-hoc Command Quick Start Guide with Examples"

+[#]: via: "https://www.2daygeek.com/ansible-ad-hoc-command-quick-start-guide-with-examples/"

+[#]: author: "Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/"

+

+Ansible ad-hoc command quick start guide with examples

+======

+

+

+

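+We previously wrote an article about [installing and configuring Ansible][1], and that tutorial included only a few usage examples. If you are new to Ansible, I suggest reading it first. Once you are familiar with the basics, continue with this article.

+

+By default, Ansible uses only five parallel processes (forks). If you want to run a task on many hosts at once, set the number of forks manually with the `-f [number of forks]` option.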
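As a rough illustration of the `-f` option, the sketch below runs the `ping` module with ten forks. The fork count and the inline `localhost,` inventory are assumptions chosen so the snippet does not depend on any real hosts; the guard simply skips the run when Ansible is not installed.

```shell
# Hypothetical fork count for illustration; tune it to your control node.
FORKS=10

# -f / --forks sets how many hosts Ansible works on in parallel.
# "localhost," (note the trailing comma) is an inline one-host inventory,
# and -c local avoids SSH by running the module on this machine.
if command -v ansible >/dev/null 2>&1; then
  ansible all -i "localhost," -c local -m ping -f "$FORKS"
else
  echo "ansible is not installed; skipping the example run"
fi
```

With a single host the fork count makes no visible difference; it starts to matter once the pattern matches more hosts than the default of five.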

+

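+### What is an ad-hoc command?

+

+An ad-hoc command automates a task on one or more managed nodes. Ad-hoc commands are very simple, but they are not reusable. They use the `/usr/bin/ansible` binary to perform all actions.

+

+Ad-hoc commands are best suited to tasks you run once. For example, if you want to check whether a given user exists, you can use a one-liner instead of writing a playbook.

+

+#### Why should you learn ad-hoc commands?

+

+Ad-hoc commands demonstrate the simplicity and power of Ansible. As of version 2.9, Ansible supports 3,389 modules, so you need to know which modules you will use regularly and learn them.

+

+If you are new to Ansible, ad-hoc commands are an easy way to practice these modules and their parameters.

+

+The concepts you learn here carry over directly to playbooks.

+

+**General syntax of an ad-hoc command:**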

+

+```

+ansible [pattern] -m [module] -a "[module options]"

+```

+

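+An ad-hoc command has four parts, described below:

+

+| Part | Description |

+|----------|-----------------------------------|

+| `ansible`| The command |

+| Pattern | The inventory hosts or group to target |

+| Module | The name of the module to run |

+| Module options | The arguments passed to the module |

+

+#### How to use an Ansible inventory file

+

+If you use Ansible's default inventory file, `/etc/ansible/hosts`, you can call it directly. Otherwise, use the `-i` option to give the path to your inventory file.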
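To make the `-i` option concrete, here is a minimal sketch that writes a small INI-style inventory and asks Ansible which hosts a group resolves to. The file name `inventory.ini` is an assumption; the `web` group and host names mirror the ones used in the examples later in this article.

```shell
# Hypothetical inventory file; the group "web" and its two hosts
# are borrowed from this article's examples. Adjust them to your lab.
INVENTORY=./inventory.ini
cat > "$INVENTORY" <<'EOF'
[web]
node1.2g.lab
node2.2g.lab
EOF

# --list-hosts only prints the hosts a pattern matches; it does not connect.
if command -v ansible >/dev/null 2>&1; then
  ansible -i "$INVENTORY" web --list-hosts
fi
```

Listing hosts first is a cheap way to check an inventory path and group name before running a module against real machines.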

+

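+#### What is a pattern and how do you use it?

+

+An Ansible pattern can refer to a single host, an IP address, an inventory group, a set of groups, or all hosts in your inventory. It lets you run commands and playbooks against them. Patterns are very flexible, and you can use them however you need.

+

+For example, you can exclude hosts, use wildcards or regular expressions, and more.

+

+The table below describes common patterns and how they are used. If these don't meet your needs, you can use variables in a pattern by passing them to `ansible-playbook` with the `-e` argument.

+

+| Description | Pattern | Targets |

+|-----|------|-----|

+| All hosts | `all` (or `*`) | Every server in the inventory |

+| One host | `host1` | Only the given host |

+| Multiple hosts | `host1:host2` (or `host1,host2`) | The listed hosts |

+| One group | `webservers` | All hosts in the `webservers` group |

+| Multiple groups | `webservers:dbservers` | All hosts in `webservers` plus all hosts in `dbservers` |

+| Excluding a group | `webservers:!atlanta` | All hosts in `webservers` except those in `atlanta` |

+| Intersection of groups | `webservers:&staging` | Any hosts in `webservers` that are also in `staging` |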
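The exclusion and intersection patterns in the table can be tried safely with `--list-hosts`. The sketch below assumes a throwaway inventory, `patterns.ini`, with made-up `example.com` hosts; only the group names come from the table.

```shell
# Hypothetical inventory: web1 is also in "atlanta", web2 is also in "staging".
PATTERN_INVENTORY=./patterns.ini
cat > "$PATTERN_INVENTORY" <<'EOF'
[webservers]
web1.example.com
web2.example.com
web3.example.com

[atlanta]
web1.example.com

[staging]
web2.example.com
EOF

# Single quotes keep the shell from interpreting "!" and "&".
EXCLUDE='webservers:!atlanta'     # expected to match web2 and web3
INTERSECT='webservers:&staging'   # expected to match web2 only

if command -v ansible >/dev/null 2>&1; then
  ansible -i "$PATTERN_INVENTORY" "$EXCLUDE" --list-hosts
  ansible -i "$PATTERN_INVENTORY" "$INTERSECT" --list-hosts
fi
```

Quoting matters here: unquoted, `!` can trigger history expansion in an interactive shell and `&` would send the command to the background.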

+

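+#### What is an Ansible module and what does it do?

+

+A module, also called a "task plugin" or "library plugin", is a unit of code that performs a specific task on a remote host, either directly or through a playbook.

+

+Ansible executes the specified module on the remote target node and collects its return values.

+

+Each module supports multiple arguments so it can meet the user's needs. Almost all modules take `key=value` arguments, with a few exceptions. You can pass several arguments at once, separated by spaces, whereas the `command` and `shell` modules simply run the string you give them.

+

+We will add a table of the most commonly used "module options" arguments.

+

+To list all available modules, run: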

+

+```

+$ ansible-doc -l

+```

+

+To read the documentation for a specific module, run:

+

+```

+$ ansible-doc [module]

+```
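Two small variations on the `ansible-doc` commands above can help when exploring modules. This is a sketch only: the module name `copy` and the search term `user` are arbitrary choices for illustration, and the guard skips everything when `ansible-doc` is not installed.

```shell
# Hypothetical choices for illustration.
MODULE=copy
SEARCH=user

if command -v ansible-doc >/dev/null 2>&1; then
  # -s / --snippet prints a playbook-style skeleton of the module's options.
  ansible-doc -s "$MODULE"

  # Filter the full module list down to names or descriptions containing
  # the search term; "|| true" keeps an empty result from counting as an error.
  ansible-doc -l | grep -i "$SEARCH" || true
fi
```

The snippet view is a compact reminder of which `key=value` arguments a module accepts, which is what the `-a` option of an ad-hoc command expects.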

+

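+### 1) How to list the contents of a directory with Ansible on Linux

+

+You can do this with the Ansible `command` module, as shown below. Here we list the contents of the `daygeek` user's home directory on the remote servers `node1.2g.lab` and `node2.2g.lab`.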

+

+```

+$ ansible web -m command -a "ls -lh /home/daygeek"

+

+node1.2g.lab | CHANGED | rc=0 >>

+total 12K

+drwxr-xr-x. 2 daygeek daygeek 6 Feb 15 2019 Desktop

+drwxr-xr-x. 2 daygeek daygeek 6 Feb 15 2019 Documents

+drwxr-xr-x. 2 daygeek daygeek 6 Feb 15 2019 Downloads

+drwxr-xr-x. 2 daygeek daygeek 6 Feb 15 2019 Music

+-rwxr-xr-x. 1 daygeek daygeek 159 Mar 4 2019 passwd-up.sh

+drwxr-xr-x. 2 daygeek daygeek 6 Feb 15 2019 Pictures

+drwxr-xr-x. 2 daygeek daygeek 6 Feb 15 2019 Public

+drwxr-xr-x. 2 daygeek daygeek 6 Feb 15 2019 Templates

+-rwxrwxr-x. 1 daygeek daygeek 138 Mar 10 2019 user-add.sh

+-rw-rw-r--. 1 daygeek daygeek 18 Mar 10 2019 user-list1.txt

+drwxr-xr-x. 2 daygeek daygeek 6 Feb 15 2019 Videos

+

+node2.2g.lab | CHANGED | rc=0 >>

+total 0

+drwxr-xr-x. 2 daygeek daygeek 6 Nov 9 09:55 Desktop

+drwxr-xr-x. 2 daygeek daygeek 6 Nov 9 09:55 Documents

+drwxr-xr-x. 2 daygeek daygeek 6 Nov 9 09:55 Downloads

+drwxr-xr-x. 2 daygeek daygeek 6 Nov 9 09:55 Music

+drwxr-xr-x. 2 daygeek daygeek 6 Nov 9 09:55 Pictures

+drwxr-xr-x. 2 daygeek daygeek 6 Nov 9 09:55 Public

+drwxr-xr-x. 2 daygeek daygeek 6 Nov 9 09:55 Templates

+drwxr-xr-x. 2 daygeek daygeek 6 Nov 9 09:55 Videos

+```

+

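+### 2) How to manage files with Ansible on Linux

+

+Ansible's `copy` module copies a file from the local system to the remote systems. To move or copy files that are already on the remote machine, use the Ansible `command` module.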

+

+```

+$ ansible web -m copy -a "src=/home/daygeek/backup/CentOS7.2daygeek.com-20191025.tar dest=/home/u1" --become

+

+node1.2g.lab | CHANGED => {

+ "ansible_facts": {

+ "discovered_interpreter_python": "/usr/bin/python"

+ },

+ "changed": true,

+ "checksum": "ad8aadc0542028676b5fe34c94347829f0485a8c",

+ "dest": "/home/u1/CentOS7.2daygeek.com-20191025.tar",

+ "gid": 0,

+ "group": "root",

+ "md5sum": "ee8e778646e00456a4cedd5fd6458cf5",

+ "mode": "0644",

+ "owner": "root",

+ "secontext": "unconfined_u:object_r:user_home_t:s0",

+ "size": 30720,

+ "src": "/home/daygeek/.ansible/tmp/ansible-tmp-1579726582.474042-118186643704900/source",

+ "state": "file",

+ "uid": 0

+}

+

+node2.2g.lab | CHANGED => {

+ "ansible_facts": {

+ "discovered_interpreter_python": "/usr/libexec/platform-python"

+ },

+ "changed": true,

+ "checksum": "ad8aadc0542028676b5fe34c94347829f0485a8c",

+ "dest": "/home/u1/CentOS7.2daygeek.com-20191025.tar",

+ "gid": 0,

+ "group": "root",

+ "md5sum": "ee8e778646e00456a4cedd5fd6458cf5",

+ "mode": "0644",

+ "owner": "root",

+ "secontext": "unconfined_u:object_r:user_home_t:s0",

+ "size": 30720,

+ "src": "/home/daygeek/.ansible/tmp/ansible-tmp-1579726582.4793239-237229399335623/source",

+ "state": "file",

+ "uid": 0

+}

+```

+

+We can verify it by running the following command:

+

+```

+$ ansible web -m command -a "ls -lh /home/u1" --become

+

+node1.2g.lab | CHANGED | rc=0 >>

+total 36K

+-rw-r--r--. 1 root root 30K Jan 22 14:56 CentOS7.2daygeek.com-20191025.tar

+-rw-r--r--. 1 root root 25 Dec 9 03:31 user-add.sh

+

+node2.2g.lab | CHANGED | rc=0 >>

+total 36K

+-rw-r--r--. 1 root root 30K Jan 23 02:26 CentOS7.2daygeek.com-20191025.tar

+-rw-rw-r--. 1 u1 u1 18 Jan 23 02:21 magi.txt

+```

+

+To copy a file from one location to another on the remote machine, use the following command:

+

+```

+$ ansible web -m command -a "cp /home/u2/magi/ansible-1.txt /home/u2/magi/2g" --become

+```

+

+To move a file, use the following command:

+

+```

+$ ansible web -m command -a "mv /home/u2/magi/ansible.txt /home/u2/magi/2g" --become

+```

+

+To create a new file named `ansible.txt` in the `u1` user's home directory, run:

+

+```

+$ ansible web -m file -a "dest=/home/u1/ansible.txt owner=u1 group=u1 state=touch" --become

+```

+

+To create a new directory named `magi` in the `u1` user's home directory, run:

+

+```

+$ ansible web -m file -a "dest=/home/u1/magi mode=755 owner=u2 group=u2 state=directory" --become

+```

+

+To change the permissions of the `ansible.txt` file in the `u1` user's home directory to `777`, run:

+

+```

+$ ansible web -m file -a "dest=/home/u1/ansible.txt mode=777" --become

+```

+

+To delete the `ansible-1.txt` file under `/home/u2/magi`, run:

+

+```

+$ ansible web -m file -a "dest=/home/u2/magi/ansible-1.txt state=absent" --become

+```

+

+To delete a directory, use the following command; it removes the directory recursively:

+

+```

+$ ansible web -m file -a "dest=/home/u2/magi/2g state=absent" --become

+```

+

+### 3) Managing users

+

+You can easily perform user-management tasks with Ansible, such as creating users, deleting users, and adding users to a group.

+

+```

+$ ansible all -m user -a "name=foo password=[crypted password here]"

+```
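The `password=` argument above expects an already hashed value, not plain text. One possible way to produce such a value is sketched below; it assumes an OpenSSL build that supports `passwd -6` (version 1.1.1 or later), and the password and salt are made-up placeholders.

```shell
# Hypothetical password and salt, for illustration only.
PLAINTEXT='ChangeMe123'
SALT='examplesalt'

# "openssl passwd -6" prints a SHA-512 crypt hash of the form
# $6$<salt>$<hash>, which is what goes into password=.
CRYPTED=$(openssl passwd -6 -salt "$SALT" "$PLAINTEXT")
echo "$CRYPTED"
```

The resulting string takes the place of `[crypted password here]` in the command above. In real use, avoid putting the plain-text password in shell history, and let OpenSSL pick a random salt by omitting `-salt`.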

+

+Run the following command to delete a user:

+

+```

+$ ansible all -m user -a "name=foo state=absent"

+```

+

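+### 4) Managing packages

+

+Packages are easy to manage with the appropriate Ansible package-manager module. For example, we will use the `yum` module to manage packages on a CentOS system.

+

+Install the latest Apache (httpd):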

+

+```

+$ ansible web -m yum -a "name=httpd state=latest"

+```

+

+Remove the Apache (httpd) package:

+

+```

+$ ansible web -m yum -a "name=httpd state=absent"

+```

+

+### 5) Managing services

+

+You can manage any service on Linux with the following Ansible module commands.

+

+Stop the httpd service:

+

+```

+$ ansible web -m service -a "name=httpd state=stopped"

+```

+

+Start the httpd service:

+

+```

+$ ansible web -m service -a "name=httpd state=started"

+```

+

+Restart the httpd service:

+

+```

+$ ansible web -m service -a "name=httpd state=restarted"

+```

+

+--------------------------------------------------------------------------------

+

+via: https://www.2daygeek.com/ansible-ad-hoc-command-quick-start-guide-with-examples/

+

+Author: [Magesh Maruthamuthu][a]

+Topic selection: [lujun9972][b]

+Translator: [MjSeven](https://github.com/MjSeven)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://www.2daygeek.com/author/magesh/

+[b]: https://github.com/lujun9972

+[1]: https://linux.cn/article-13142-1.html

diff --git a/published/20200419 Getting Started With Pacman Commands in Arch-based Linux Distributions.md b/published/202102/20200419 Getting Started With Pacman Commands in Arch-based Linux Distributions.md

similarity index 100%

rename from published/20200419 Getting Started With Pacman Commands in Arch-based Linux Distributions.md

rename to published/202102/20200419 Getting Started With Pacman Commands in Arch-based Linux Distributions.md

diff --git a/published/20200529 A new way to build cross-platform UIs for Linux ARM devices.md b/published/202102/20200529 A new way to build cross-platform UIs for Linux ARM devices.md

similarity index 100%

rename from published/20200529 A new way to build cross-platform UIs for Linux ARM devices.md

rename to published/202102/20200529 A new way to build cross-platform UIs for Linux ARM devices.md

diff --git a/published/20200607 Top Arch-based User Friendly Linux Distributions That are Easier to Install and Use Than Arch Linux Itself.md b/published/202102/20200607 Top Arch-based User Friendly Linux Distributions That are Easier to Install and Use Than Arch Linux Itself.md

similarity index 100%

rename from published/20200607 Top Arch-based User Friendly Linux Distributions That are Easier to Install and Use Than Arch Linux Itself.md

rename to published/202102/20200607 Top Arch-based User Friendly Linux Distributions That are Easier to Install and Use Than Arch Linux Itself.md

diff --git a/published/20200615 LaTeX Typesetting - Part 1 (Lists).md b/published/202102/20200615 LaTeX Typesetting - Part 1 (Lists).md

similarity index 100%

rename from published/20200615 LaTeX Typesetting - Part 1 (Lists).md

rename to published/202102/20200615 LaTeX Typesetting - Part 1 (Lists).md

diff --git a/published/20200629 LaTeX typesetting part 2 (tables).md b/published/202102/20200629 LaTeX typesetting part 2 (tables).md

similarity index 100%

rename from published/20200629 LaTeX typesetting part 2 (tables).md

rename to published/202102/20200629 LaTeX typesetting part 2 (tables).md

diff --git a/translated/tech/20200724 LaTeX typesetting, Part 3- formatting.md b/published/202102/20200724 LaTeX typesetting, Part 3- formatting.md

similarity index 77%

rename from translated/tech/20200724 LaTeX typesetting, Part 3- formatting.md

rename to published/202102/20200724 LaTeX typesetting, Part 3- formatting.md

index 79d65a9eb1..2ae8a2991c 100644

--- a/translated/tech/20200724 LaTeX typesetting, Part 3- formatting.md

+++ b/published/202102/20200724 LaTeX typesetting, Part 3- formatting.md

@@ -1,55 +1,51 @@

[#]: collector: (Chao-zhi)

[#]: translator: (Chao-zhi)

-[#]: reviewer: ( )

-[#]: publisher: ( )

-[#]: url: ( )

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13154-1.html)

[#]: subject: (LaTeX typesetting,Part 3: formatting)

[#]: via: (https://fedoramagazine.org/latex-typesetting-part-3-formatting/)

[#]: author: (Earl Ramirez https://fedoramagazine.org/author/earlramirez/)

-

-LaTeX 排版 (3):排版

+LaTeX 排版(3):排版

======

-

+

-本[系列 ][1] 介绍了 LaTeX 中的基本格式。[第 1 部分 ][2] 介绍了列表。[第 2 部分 ][3] 阐述了表格。在第 3 部分中,您将了解 LaTeX 的另一个重要特性:细腻灵活的文档排版。本文介绍如何自定义页面布局、目录、标题部分和页面样式。

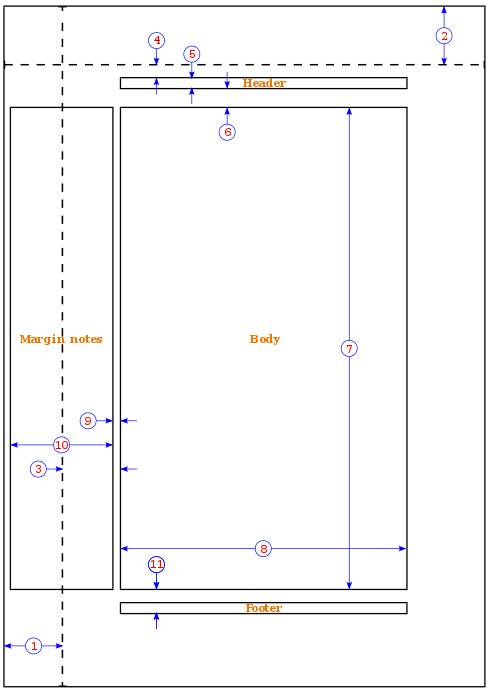

+本 [系列][1] 介绍了 LaTeX 中的基本格式。[第 1 部分][2] 介绍了列表。[第 2 部分][3] 阐述了表格。在第 3 部分中,你将了解 LaTeX 的另一个重要特性:细腻灵活的文档排版。本文介绍如何自定义页面布局、目录、标题部分和页面样式。

### 页面维度

-当您第一次编写 LaTeX 文档时,您可能已经注意到默认边距比您想象的要大一些。页边距与指定的纸张类型有关,例如 A4、letter 和 documentclass(article、book、report) 等等。要修改页边距,有几个选项,最简单的选项之一是使用 [fullpage][4] 包。

+当你第一次编写 LaTeX 文档时,你可能已经注意到默认边距比你想象的要大一些。页边距与指定的纸张类型有关,例如 A4、letter 和 documentclass(article、book、report) 等等。要修改页边距,有几个选项,最简单的选项之一是使用 [fullpage][4] 包。

> 该软件包设置页面的主体,可以使主体几乎占满整个页面。

>

-> ——FULLPAGE PACKAGE DOCUMENTATION

+> —— FULLPAGE PACKAGE DOCUMENTATION

-下图演示了使用 fullpage 包和没有使用的区别。

-<!-- 但是原文中并没有这个图 -->

-

-另一个选择是使用 [geometry][5] 包。在探索 geometry 包如何操纵页边距之前,请首先查看如下所示的页面尺寸。

+另一个选择是使用 [geometry][5] 包。在探索 `geometry` 包如何操纵页边距之前,请首先查看如下所示的页面尺寸。

-1。1 英寸 + \hoffset

-2。1 英寸 + \voffset

-3。\oddsidemargin = 31pt

-4。\topmargin = 20pt

-5。\headheight = 12pt

-6。\headsep = 25pt

-7。\textheight = 592pt

-8。\textwidth = 390pt

-9。\marginparsep = 35pt

-10。\marginparwidth = 35pt

-11。\footskip = 30pt

+1. 1 英寸 + `\hoffset`

+2. 1 英寸 + `\voffset`

+3. `\oddsidemargin` = 31pt

+4. `\topmargin` = 20pt

+5. `\headheight` = 12pt

+6. `\headsep` = 25pt

+7. `\textheight` = 592pt

+8. `\textwidth` = 390pt

+9. `\marginparsep` = 35pt

+10. `\marginparwidth` = 35pt

+11. `\footskip` = 30pt

-要使用 geometry 包将边距设置为 1 英寸,请使用以下示例

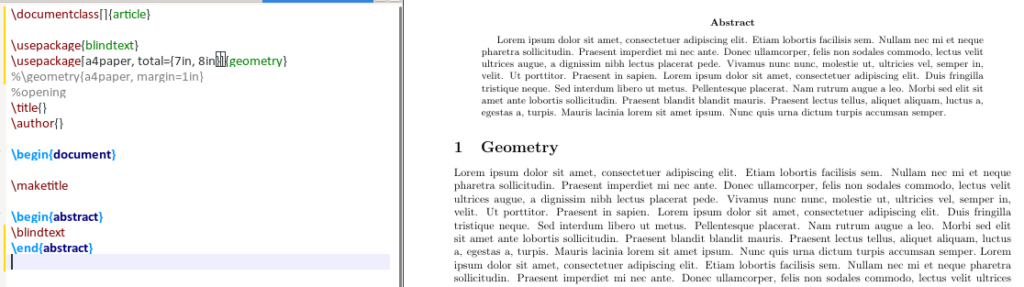

+要使用 `geometry` 包将边距设置为 1 英寸,请使用以下示例

```

\usepackage{geometry}

\geometry{a4paper, margin=1in}

```

-除上述示例外,geometry 命令还可以修改纸张尺寸和方向。要更改纸张尺寸,请使用以下示例:

+除上述示例外,`geometry` 命令还可以修改纸张尺寸和方向。要更改纸张尺寸,请使用以下示例:

```

\usepackage[a4paper, total={7in, 8in}]{geometry}

@@ -57,7 +53,7 @@ LaTeX 排版 (3):排版

-要更改页面方向,需要将横向添加到 geometery 选项中,如下所示:

+要更改页面方向,需要将横向(`landscape`)添加到 `geometry` 选项中,如下所示:

```

\usepackage{geometry}

@@ -68,9 +64,9 @@ LaTeX 排版 (3):排版

### 目录

-默认情况下,目录的标题为 “contents”。有时,您更想将标题改为 “Table of Content”,更改目录和章节第一节之间的垂直间距,或者只更改文本的颜色。

+默认情况下,目录的标题为 “contents”。有时,你想将标题更改为 “Table of Content”,更改目录和章节第一节之间的垂直间距,或者只更改文本的颜色。

-若要更改文本,请在导言区中添加以下行,用所需语言替换英语:

+若要更改文本,请在导言区中添加以下行,用所需语言替换英语(`english`):

```

\usepackage[english]{babel}

@@ -78,11 +74,12 @@ LaTeX 排版 (3):排版

\renewcommand{\contentsname}

{\bfseries{Table of Contents}}}

```

-要操纵目录与图,小节和章节列表之间的虚拟间距,请使用 tocloft 软件包。本文中使用的两个选项是 cftbeforesecskip 和 cftaftertoctitleskip。

+

+要操纵目录与图、小节和章节列表之间的虚拟间距,请使用 `tocloft` 软件包。本文中使用的两个选项是 `cftbeforesecskip` 和 `cftaftertoctitleskip`。

> tocloft 包提供了控制目录、图表列表和表格列表的排版方法。

>

-> ——TOCLOFT PACKAGE DOUCMENTATION

+> —— TOCLOFT PACKAGE DOUCMENTATION

```

\usepackage{tocloft}

@@ -91,10 +88,12 @@ LaTeX 排版 (3):排版

```

-默认目录

+

+*默认目录*

-定制目录

+

+*定制目录*

### 边框

@@ -102,14 +101,14 @@ LaTeX 排版 (3):排版

-要删除这些边框,请在导言区中包括以下内容,您将看到目录中没有任何边框。

+要删除这些边框,请在导言区中包括以下内容,你将看到目录中没有任何边框。

```

\usepackage{hyperref}

\hypersetup{ pdfborder = {0 0 0}}

```

-要修改标题部分的字体、样式或颜色,请使用程序包 [titlesec][7]。在本例中,您将更改节、子节和子节的字体大小、字体样式和字体颜色。首先,在导言区中增加以下内容。

+要修改标题部分的字体、样式或颜色,请使用程序包 [titlesec][7]。在本例中,你将更改节、子节和三级子节的字体大小、字体样式和字体颜色。首先,在导言区中增加以下内容。

```

\usepackage{titlesec}

@@ -118,7 +117,7 @@ LaTeX 排版 (3):排版

\titleformat*{\subsubsection}{\Large\bfseries\color{darkblue}}

```

-仔细看看代码,`\titleformat*{\section}` 指定要使用的节的深度。上面的示例最多使用第三个深度。`{\Huge\bfseries\color{darkblue}}` 部分指定字体大小、字体样式和字体颜色

+仔细看看代码,`\titleformat*{\section}` 指定要使用的节的深度。上面的示例最多使用第三个深度。`{\Huge\bfseries\color{darkblue}}` 部分指定字体大小、字体样式和字体颜色。

### 页面样式

@@ -138,13 +137,16 @@ LaTeX 排版 (3):排版

\renewcommand{\headrulewidth}{2pt} % add header horizontal line

\renewcommand{\footrulewidth}{1pt} % add footer horizontal line

```

-结果如下所示

+

+结果如下所示:

-页眉

+

+*页眉*

-页脚

+

+*页脚*

### 小贴士

@@ -231,13 +233,13 @@ $ cat article_structure.tex

%\pagenumbering{roman}

```

-在您的文章中,请参考以下示例中所示的方法引用 `structure.tex` 文件:

+在你的文章中,请参考以下示例中所示的方法引用 `structure.tex` 文件:

```

\documentclass[a4paper,11pt]{article}

\input{/path_to_structure.tex}

\begin{document}

-…...

+......

\end{document}

```

@@ -250,11 +252,12 @@ $ cat article_structure.tex

\SetWatermarkText{\color{red}Classified} %add watermark text

\SetWatermarkScale{4} %specify the size of the text

```

+

### 结论

-在本系列中,您了解了 LaTeX 提供的一些基本但丰富的功能,这些功能可用于自定义文档以满足您的需要或将文档呈现给的受众。LaTeX 海洋中,还有许多软件包需要大家自行去探索。

+在本系列中,你了解了 LaTeX 提供的一些基本但丰富的功能,这些功能可用于自定义文档以满足你的需要或将文档呈现给的受众。LaTeX 海洋中,还有许多软件包需要大家自行去探索。

--------------------------------------------------------------------------------

@@ -263,15 +266,15 @@ via: https://fedoramagazine.org/latex-typesetting-part-3-formatting/

作者:[Earl Ramirez][a]

选题:[Chao-zhi][b]

译者:[Chao-zhi](https://github.com/Chao-zhi)

-校对:[校对者ID](https://github.com/校对者ID)

+校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

[a]: https://fedoramagazine.org/author/earlramirez/

[b]: https://github.com/Chao-zhi

[1]:https://fedoramagazine.org/tag/latex/

-[2]:https://fedoramagazine.org/latex-typesetting-part-1/

-[3]:https://fedoramagazine.org/latex-typesetting-part-2-tables/

+[2]:https://linux.cn/article-13112-1.html

+[3]:https://linux.cn/article-13146-1.html

[4]:https://www.ctan.org/pkg/fullpage

[5]:https://www.ctan.org/geometry

[6]:https://www.ctan.org/pkg/hyperref

diff --git a/published/20200922 Give Your GNOME Desktop a Tiling Makeover With Material Shell GNOME Extension.md b/published/202102/20200922 Give Your GNOME Desktop a Tiling Makeover With Material Shell GNOME Extension.md

similarity index 100%

rename from published/20200922 Give Your GNOME Desktop a Tiling Makeover With Material Shell GNOME Extension.md

rename to published/202102/20200922 Give Your GNOME Desktop a Tiling Makeover With Material Shell GNOME Extension.md

diff --git a/published/20201001 Navigating your Linux files with ranger.md b/published/202102/20201001 Navigating your Linux files with ranger.md

similarity index 100%

rename from published/20201001 Navigating your Linux files with ranger.md

rename to published/202102/20201001 Navigating your Linux files with ranger.md

diff --git a/published/20201013 My first day using Ansible.md b/published/202102/20201013 My first day using Ansible.md

similarity index 100%

rename from published/20201013 My first day using Ansible.md

rename to published/202102/20201013 My first day using Ansible.md

diff --git a/published/202102/20201103 How the Kubernetes scheduler works.md b/published/202102/20201103 How the Kubernetes scheduler works.md

new file mode 100644

index 0000000000..e988199b45

--- /dev/null

+++ b/published/202102/20201103 How the Kubernetes scheduler works.md

@@ -0,0 +1,150 @@

+[#]: collector: (lujun9972)

+[#]: translator: (MZqk)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13155-1.html)

+[#]: subject: (How the Kubernetes scheduler works)

+[#]: via: (https://opensource.com/article/20/11/kubernetes-scheduler)

+[#]: author: (Mike Calizo https://opensource.com/users/mcalizo)

+

+How the Kubernetes scheduler works

+=====

+

+> Learn how the Kubernetes scheduler discovers new pods and assigns them to nodes.

+

+

+

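+[Kubernetes][2] has become the standard orchestration engine for containers and containerized workloads. It provides a common, open source abstraction layer that spans public and private cloud environments.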

+

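+For those already familiar with Kubernetes and its components, the conversation usually centers on how to get the most out of Kubernetes. But when you are just starting out, and before you try it in production, it is wise to begin by learning about the main Kubernetes components (including the [Kubernetes scheduler][3]), shown in this high-level view: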

+

+![][4]

+

+Kubernetes is divided into a control plane and worker nodes:

+

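+ 1. **Control plane:** Also known as the master, it makes global decisions about the cluster and detects and responds to cluster events. Control plane components include: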

+ * etcd

+ * kube-apiserver

+ * kube-controller-manager

+ * scheduler

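+ 2. **Worker nodes:** Also called nodes, these are where workloads run. A node stays in contact with the master to get the information its workloads need, and it handles communication and connectivity outside the cluster. Worker node components include: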

+ * kubelet

+ * kube-proxy

+ * CRI

+

+I hope this background helps you understand how the Kubernetes components fit together.

+

+### How the Kubernetes scheduler works

+

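+A Kubernetes [pod][5] consists of one or more containers that share storage and network resources. The Kubernetes scheduler's job is to make sure each pod is assigned to a node to run on.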

+

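+(LCTT translator's note: container technology borrows heavily from nautical metaphors. The word "pod" literally means a seed pod, and in nautical usage it corresponds to "吊舱"; both describe a container that holds several items. The term is usually left untranslated, but given the context it is rendered as "吊舱" in the Chinese text.)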

+

+At a high level, the Kubernetes scheduler works like this:

+

+ 1. Every pod that needs to be scheduled is added to a queue

+ 2. When new pods are created, they are also added to the queue

+ 3. The scheduler continuously takes pods off the queue and schedules them
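
The three steps above can be sketched as a small queue-driven loop. This is only an illustration in Python, not the scheduler's actual Go code: `Pod`, `schedule_all`, and the round-robin node choice are hypothetical names and simplifications introduced here.

```python
from collections import deque
from dataclasses import dataclass
from itertools import cycle
from typing import Optional


@dataclass
class Pod:
    name: str
    node: Optional[str] = None  # None means "not yet scheduled"


def schedule_all(queue, nodes):
    """Pop pods from the FIFO queue until it is empty and bind each to a node."""
    bindings = {}
    next_node = cycle(nodes)  # placeholder policy (assumption): plain round-robin
    while queue:
        pod = queue.popleft()  # step 3: take the oldest pending pod
        pod.node = next(next_node)
        bindings[pod.name] = pod.node
    return bindings


queue = deque([Pod("web"), Pod("db")])  # step 1: pending pods wait in the queue
queue.append(Pod("cache"))              # step 2: a newly created pod joins the queue
print(schedule_all(queue, ["node-a", "node-b"]))
```

A real scheduler never runs out of work in this way; it blocks on the queue and keeps waiting for new pods, as the `Run` excerpt later in the article suggests.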

+

+The [scheduler source code][6] (`scheduler.go`) is large, around 9,000 lines, and fairly complex, but it solves these important problems:

+

+#### Code that waits for and watches pod creation

+

+The code that watches for pod creation begins at line 8970 of `scheduler.go`; it waits continuously for new pods:

+

+```

+// Run begins watching and scheduling. It waits for cache to be synced, then starts a goroutine and returns immediately.

+

+func (sched *Scheduler) Run() {

+ if !sched.config.WaitForCacheSync() {

+ return

+ }

+

+ go wait.Until(sched.scheduleOne, 0, sched.config.StopEverything)

+```

+

+#### Code that queues pods

+

+The part responsible for queuing pods is this field:

+

+```

+// queue for pods that need scheduling

+ podQueue *cache.FIFO

+```

+

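+The code responsible for queuing pods begins at line 7360 of `scheduler.go`. When an event handler fires to indicate that a new pod is available, the pod is put in the queue; the excerpt below shows `getNextPod`, which pops the next pod from that queue: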

+

+```

+func (f *ConfigFactory) getNextPod() *v1.Pod {

+ for {

+ pod := cache.Pop(f.podQueue).(*v1.Pod)

+ if f.ResponsibleForPod(pod) {

+ glog.V(4).Infof("About to try and schedule pod %v", pod.Name)

+ return pod

+ }

+ }

+}

+```

+

+#### Error handling code

+

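+Scheduling errors are inevitable in pod scheduling. The following code shows how the scheduler handles them. It listens on `podInformer`, keeps only pods that are assigned and not terminated, and reports an error for any object it cannot handle: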

+

+```

+// scheduled pod cache

+ podInformer.Informer().AddEventHandler(

+ cache.FilteringResourceEventHandler{

+ FilterFunc: func(obj interface{}) bool {

+ switch t := obj.(type) {

+ case *v1.Pod:

+ return assignedNonTerminatedPod(t)

+ default:

+ runtime.HandleError(fmt.Errorf("unable to handle object in %T: %T", c, obj))

+ return false

+ }

+ },

+```

+

+In other words, the Kubernetes scheduler is responsible for:

+

+ * Scheduling newly created pods on nodes with enough room to satisfy the pods' resource requirements.

+ * Watching the kube-apiserver and controllers for newly created pods, then scheduling them onto an available node in the cluster.

+ * Watching for unscheduled pods and binding them to nodes using the `/binding` subresource API.

+

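+For example, suppose you are deploying an application that needs 1 GB of memory and two CPU cores. The node where the application's pods are created must therefore have enough resources available, and the scheduler keeps running, watching for pods that need to be scheduled.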
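
The resource example above amounts to a filter over candidate nodes. Here is a minimal sketch in Python under stated assumptions: `Node`, `feasible_nodes`, and the capacities are invented for illustration, and the step where a real scheduler ranks the feasible nodes is left out.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu: int       # free CPU cores (hypothetical figure)
    free_mem_gb: float  # free memory in GB (hypothetical figure)


def feasible_nodes(nodes, cpu, mem_gb):
    """Return the names of nodes with enough free CPU and memory for the request."""
    return [n.name for n in nodes if n.free_cpu >= cpu and n.free_mem_gb >= mem_gb]


nodes = [
    Node("node-a", free_cpu=1, free_mem_gb=4.0),  # too few cores
    Node("node-b", free_cpu=4, free_mem_gb=0.5),  # too little memory
    Node("node-c", free_cpu=2, free_mem_gb=2.0),  # fits the request
]
print(feasible_nodes(nodes, cpu=2, mem_gb=1.0))
```

If the returned list is empty, the pod would simply stay pending until some node frees up enough resources.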

+

+### Learn more

+

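+To have a working Kubernetes cluster, you need all of the components above running together in sync. The scheduler is a complex piece of code, but Kubernetes is great software, and it remains our first choice when discussing or adopting cloud-native applications.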

+

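+Learning Kubernetes takes effort and time, but having it among your professional skills can bring advantages and rewards to your career. There are many good learning resources available, and the [official documentation][7] is excellent. If you want to learn more, I suggest starting with: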

+

+ * [Kubernetes the hard way][8]

+ * [Kubernetes the hard way on bare metal][9]

+ * [Kubernetes the hard way on AWS][10]

+

+What is your favorite way to learn Kubernetes? Please share it in the comments.

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/20/11/kubernetes-scheduler

+

+Author: [Mike Calizo][a]

+Topic selection: [lujun9972][b]

+Translator: [MZqk](https://github.com/MZqk)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://opensource.com/users/mcalizo

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/containers_modules_networking_hardware_parts.png?itok=rPpVj92- (Parts, modules, containers for software)

+[2]: https://kubernetes.io/

+[3]: https://kubernetes.io/docs/concepts/scheduling-eviction/kube-scheduler/

+[4]: https://lh4.googleusercontent.com/egB0SSsAglwrZeWpIgX7MDF6u12oxujfoyY6uIPa8WLqeVHb8TYY_how57B4iqByELxvitaH6-zjAh795wxAB8zenOwoz2YSMIFRqHsMWD9ohvUTc3fNLCzo30r7lUynIHqcQIwmtRo

+[5]: https://kubernetes.io/docs/concepts/workloads/pods/

+[6]: https://github.com/kubernetes/kubernetes/blob/e4551d50e57c089aab6f67333412d3ca64bc09ae/plugin/pkg/scheduler/scheduler.go

+[7]: https://kubernetes.io/docs/home/

+[8]: https://github.com/kelseyhightower/kubernetes-the-hard-way

+[9]: https://github.com/Praqma/LearnKubernetes/blob/master/kamran/Kubernetes-The-Hard-Way-on-BareMetal.md

+[10]: https://github.com/Praqma/LearnKubernetes/blob/master/kamran/Kubernetes-The-Hard-Way-on-AWS.md

diff --git a/published/20210111 7 Bash tutorials to enhance your command line skills in 2021.md b/published/202102/20210111 7 Bash tutorials to enhance your command line skills in 2021.md

similarity index 100%

rename from published/20210111 7 Bash tutorials to enhance your command line skills in 2021.md

rename to published/202102/20210111 7 Bash tutorials to enhance your command line skills in 2021.md

diff --git a/published/20210115 How to Create and Manage Archive Files in Linux.md b/published/202102/20210115 How to Create and Manage Archive Files in Linux.md

similarity index 100%

rename from published/20210115 How to Create and Manage Archive Files in Linux.md

rename to published/202102/20210115 How to Create and Manage Archive Files in Linux.md

diff --git a/published/20210118 10 ways to get started with open source in 2021.md b/published/202102/20210118 10 ways to get started with open source in 2021.md

similarity index 100%

rename from published/20210118 10 ways to get started with open source in 2021.md

rename to published/202102/20210118 10 ways to get started with open source in 2021.md

diff --git a/published/202102/20210119 Set up a Linux cloud on bare metal.md b/published/202102/20210119 Set up a Linux cloud on bare metal.md

new file mode 100644

index 0000000000..a9f806b711

--- /dev/null

+++ b/published/202102/20210119 Set up a Linux cloud on bare metal.md

@@ -0,0 +1,119 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13161-1.html)

+[#]: subject: (Set up a Linux cloud on bare metal)

+[#]: via: (https://opensource.com/article/21/1/cloud-image-virt-install)

+[#]: author: (Sumantro Mukherjee https://opensource.com/users/sumantro)

+

+Set up a Linux cloud on bare metal

+======

+

+> Create cloud images with virt-install on Fedora.

+

+

+

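+Virtualization is one of the most widely used technologies. Fedora Linux uses [Cloud Base images][2] to create general-purpose virtual machines (VMs), but there are many ways to set up a Cloud Base image. Recently, `virt-install`, the command-line tool for provisioning VMs, added support for `cloud-init`, so it can now be used to configure and run cloud images locally.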

+

+This article describes how to set up a basic Fedora cloud instance on bare metal. The same steps work with any raw or Qcow2 Cloud Base image.

+

+### What is --cloud-init?

+

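+The `virt-install` command creates a KVM, Xen, or [LXC][3] guest using `libvirt`. The `--cloud-init` option uses a local file (called the "nocloud datasource"), so you do not need a network connection to create the image. On first boot, the `nocloud` method gets the guest's user data and metadata from an iso9660 filesystem (an `.iso` file). When you use this option, `virt-install` generates a random (temporary) password for the root user account, provides a serial console so you can log in and change the password, and then disables the `--cloud-init` option on subsequent boots.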

+

+### Set up the Fedora Cloud Base image

+

+First, [download a Fedora Cloud Base (for OpenStack) image][2].

+

+![Screenshot of the Fedora Cloud website][4]

+

+Then install the `virt-install` command:

+

+```

+$ sudo dnf install virt-install

+```

+

+Once `virt-install` is installed and the Fedora Cloud Base image is downloaded, create a small YAML file named `cloudinit-user-data.yaml` containing a few configuration lines for `virt-install` to use:

+

+```

+#cloud-config

+password: 'r00t'

+chpasswd: { expire: false }

+```

+

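+This simple cloud-config sets the password for the default `fedora` user. If you would rather use a password that expires, you can set it to expire after login.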

+

+Create and boot the VM:

+

+```

+$ virt-install --name local-cloud18012709 \

+--memory 2000 --noreboot \

+--os-variant detect=on,name=fedora-unknown \

+--cloud-init user-data="/home/r3zr/cloudinit-user-data.yaml" \

+--disk=size=10,backing_store="/home/r3zr/Downloads/Fedora-Cloud-Base-33-1.2.x86_64.qcow2"

+```

+

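+In this example, `local-cloud18012709` is the VM's name, memory is set to 2000 MiB, the disk (virtual hard drive) size is set to 10 GB, and `--cloud-init` and `backing_store` take the absolute paths of the YAML config file you created and the Qcow2 image you downloaded, respectively.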
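
If you create VMs like this often, it may help to assemble the command from named parameters. The sketch below uses only the options shown in the command above; the helper name `virt_install_args` and both `/path/to/...` values are placeholders invented for illustration, and the script only prints the command without running it.

```python
import shlex


def virt_install_args(name, memory_mib, disk_gb, user_data, backing_store):
    """Build the virt-install argument list used in this article's example."""
    return [
        "virt-install",
        "--name", name,
        "--memory", str(memory_mib),
        "--noreboot",
        "--os-variant", "detect=on,name=fedora-unknown",
        "--cloud-init", f"user-data={user_data}",
        f"--disk=size={disk_gb},backing_store={backing_store}",
    ]


args = virt_install_args(
    name="local-cloud",
    memory_mib=2000,
    disk_gb=10,
    user_data="/path/to/cloudinit-user-data.yaml",     # placeholder path
    backing_store="/path/to/Fedora-Cloud-Base.qcow2",  # placeholder path
)
print(shlex.join(args))  # printed for review only; nothing is executed
```

Keeping the arguments in a list rather than one long string avoids quoting problems if a path contains spaces.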

+

+### Log in

+

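+After the image is created, you can log in with the username `fedora` and the password set in the YAML file (in my example, the password is `r00t`, but you may have used something different). Change your password once you have logged in for the first time.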

+

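+To power off the VM, run `sudo poweroff`, or press `Ctrl+]` on your keyboard to leave the console (this detaches from the VM rather than shutting it down).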

+

+### Start, stop, and destroy VMs

+

+The `virsh` command is used to start, stop, and destroy VMs.

+

+To start any stopped VM:

+

+```

+$ virsh start local-cloud18012709

+```

+

+To stop any running VM:

+

+```

+$ virsh shutdown local-cloud18012709

+```

+

+To list all VMs in a running state:

+

+```

+$ virsh list

+```

+

+To destroy a VM (in `virsh` terms, force it to stop immediately):

+

+```

+$ virsh destroy local-cloud18012709

+```

+

+![Destroying a VM][6]

+

+### Fast and easy

+

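+The `virt-install` command combined with the `--cloud-init` option makes it quick and easy to create cloud-ready images without worrying about whether you have a cloud to run them on. Whether you are preparing for a major deployment or learning about containers, give `virt-install --cloud-init` a try.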

+

+Do you have a favorite tool for your cloud work? Tell us about it in the comments.

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/21/1/cloud-image-virt-install

+

+Author: [Sumantro Mukherjee][a]

+Topic selection: [lujun9972][b]

+Translator: [wxy](https://github.com/wxy)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://opensource.com/users/sumantro

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/bus-cloud.png?itok=vz0PIDDS (Sky with clouds and grass)

+[2]: https://alt.fedoraproject.org/cloud/

+[3]: https://www.redhat.com/sysadmin/exploring-containers-lxc

+[4]: https://opensource.com/sites/default/files/uploads/fedoracloud.png (Fedora Cloud website)

+[5]: https://creativecommons.org/licenses/by-sa/4.0/

+[6]: https://opensource.com/sites/default/files/uploads/destroyvm.png (Destroying a VM)

diff --git a/published/20210120 Learn JavaScript by writing a guessing game.md b/published/202102/20210120 Learn JavaScript by writing a guessing game.md

similarity index 100%

rename from published/20210120 Learn JavaScript by writing a guessing game.md

rename to published/202102/20210120 Learn JavaScript by writing a guessing game.md

diff --git a/published/20210121 How Nextcloud is the ultimate open source productivity suite.md b/published/202102/20210121 How Nextcloud is the ultimate open source productivity suite.md

similarity index 100%

rename from published/20210121 How Nextcloud is the ultimate open source productivity suite.md

rename to published/202102/20210121 How Nextcloud is the ultimate open source productivity suite.md

diff --git a/published/20210122 3 tips for automating your email filters.md b/published/202102/20210122 3 tips for automating your email filters.md

similarity index 100%

rename from published/20210122 3 tips for automating your email filters.md

rename to published/202102/20210122 3 tips for automating your email filters.md

diff --git a/published/20210125 Explore binaries using this full-featured Linux tool.md b/published/202102/20210125 Explore binaries using this full-featured Linux tool.md

similarity index 100%

rename from published/20210125 Explore binaries using this full-featured Linux tool.md

rename to published/202102/20210125 Explore binaries using this full-featured Linux tool.md

diff --git a/published/20210125 Use Joplin to find your notes faster.md b/published/202102/20210125 Use Joplin to find your notes faster.md

similarity index 100%

rename from published/20210125 Use Joplin to find your notes faster.md

rename to published/202102/20210125 Use Joplin to find your notes faster.md

diff --git a/published/20210125 Why you need to drop ifconfig for ip.md b/published/202102/20210125 Why you need to drop ifconfig for ip.md

similarity index 100%

rename from published/20210125 Why you need to drop ifconfig for ip.md

rename to published/202102/20210125 Why you need to drop ifconfig for ip.md

diff --git a/published/20210126 Use your Raspberry Pi as a productivity powerhouse.md b/published/202102/20210126 Use your Raspberry Pi as a productivity powerhouse.md

similarity index 100%

rename from published/20210126 Use your Raspberry Pi as a productivity powerhouse.md

rename to published/202102/20210126 Use your Raspberry Pi as a productivity powerhouse.md

diff --git a/published/20210126 Write GIMP scripts to make image processing faster.md b/published/202102/20210126 Write GIMP scripts to make image processing faster.md

similarity index 100%

rename from published/20210126 Write GIMP scripts to make image processing faster.md

rename to published/202102/20210126 Write GIMP scripts to make image processing faster.md

diff --git a/published/20210127 3 email mistakes and how to avoid them.md b/published/202102/20210127 3 email mistakes and how to avoid them.md

similarity index 100%

rename from published/20210127 3 email mistakes and how to avoid them.md

rename to published/202102/20210127 3 email mistakes and how to avoid them.md

diff --git a/published/20210127 Why I use the D programming language for scripting.md b/published/202102/20210127 Why I use the D programming language for scripting.md

similarity index 100%

rename from published/20210127 Why I use the D programming language for scripting.md

rename to published/202102/20210127 Why I use the D programming language for scripting.md

diff --git a/published/20210128 4 tips for preventing notification fatigue.md b/published/202102/20210128 4 tips for preventing notification fatigue.md

similarity index 100%

rename from published/20210128 4 tips for preventing notification fatigue.md

rename to published/202102/20210128 4 tips for preventing notification fatigue.md

diff --git a/published/20210128 How to Run a Shell Script in Linux -Essentials Explained for Beginners.md b/published/202102/20210128 How to Run a Shell Script in Linux -Essentials Explained for Beginners.md

similarity index 100%

rename from published/20210128 How to Run a Shell Script in Linux -Essentials Explained for Beginners.md

rename to published/202102/20210128 How to Run a Shell Script in Linux -Essentials Explained for Beginners.md

diff --git a/published/20210129 Manage containers with Podman Compose.md b/published/202102/20210129 Manage containers with Podman Compose.md

similarity index 100%

rename from published/20210129 Manage containers with Podman Compose.md

rename to published/202102/20210129 Manage containers with Podman Compose.md

diff --git a/published/20210131 3 wishes for open source productivity in 2021.md b/published/202102/20210131 3 wishes for open source productivity in 2021.md

similarity index 100%

rename from published/20210131 3 wishes for open source productivity in 2021.md

rename to published/202102/20210131 3 wishes for open source productivity in 2021.md

diff --git a/published/20210201 Generate QR codes with this open source tool.md b/published/202102/20210201 Generate QR codes with this open source tool.md

similarity index 100%

rename from published/20210201 Generate QR codes with this open source tool.md

rename to published/202102/20210201 Generate QR codes with this open source tool.md

diff --git a/published/20210202 Filmulator is a Simple, Open Source, Raw Image Editor for Linux Desktop.md b/published/202102/20210202 Filmulator is a Simple, Open Source, Raw Image Editor for Linux Desktop.md

similarity index 100%

rename from published/20210202 Filmulator is a Simple, Open Source, Raw Image Editor for Linux Desktop.md

rename to published/202102/20210202 Filmulator is a Simple, Open Source, Raw Image Editor for Linux Desktop.md

diff --git a/published/20210203 Paru - A New AUR Helper and Pacman Wrapper Based on Yay.md b/published/202102/20210203 Paru - A New AUR Helper and Pacman Wrapper Based on Yay.md

similarity index 100%

rename from published/20210203 Paru - A New AUR Helper and Pacman Wrapper Based on Yay.md

rename to published/202102/20210203 Paru - A New AUR Helper and Pacman Wrapper Based on Yay.md

diff --git a/published/20210204 A hands-on tutorial of SQLite3.md b/published/202102/20210204 A hands-on tutorial of SQLite3.md

similarity index 100%

rename from published/20210204 A hands-on tutorial of SQLite3.md

rename to published/202102/20210204 A hands-on tutorial of SQLite3.md

diff --git a/published/20210207 Why the success of open source depends on empathy.md b/published/202102/20210207 Why the success of open source depends on empathy.md

similarity index 100%

rename from published/20210207 Why the success of open source depends on empathy.md

rename to published/202102/20210207 Why the success of open source depends on empathy.md

diff --git a/published/20210208 3 open source tools that make Linux the ideal workstation.md b/published/202102/20210208 3 open source tools that make Linux the ideal workstation.md

similarity index 100%

rename from published/20210208 3 open source tools that make Linux the ideal workstation.md

rename to published/202102/20210208 3 open source tools that make Linux the ideal workstation.md

diff --git a/published/20210208 Why choose Plausible for an open source alternative to Google Analytics.md b/published/202102/20210208 Why choose Plausible for an open source alternative to Google Analytics.md

similarity index 100%

rename from published/20210208 Why choose Plausible for an open source alternative to Google Analytics.md

rename to published/202102/20210208 Why choose Plausible for an open source alternative to Google Analytics.md

diff --git a/published/20210209 Viper Browser- A Lightweight Qt5-based Web Browser With A Focus on Privacy and Minimalism.md b/published/202102/20210209 Viper Browser- A Lightweight Qt5-based Web Browser With A Focus on Privacy and Minimalism.md

similarity index 100%

rename from published/20210209 Viper Browser- A Lightweight Qt5-based Web Browser With A Focus on Privacy and Minimalism.md

rename to published/202102/20210209 Viper Browser- A Lightweight Qt5-based Web Browser With A Focus on Privacy and Minimalism.md

diff --git a/published/202102/20210212 4 reasons to choose Linux for art and design.md b/published/202102/20210212 4 reasons to choose Linux for art and design.md

new file mode 100644

index 0000000000..fbb040cf2e

--- /dev/null

+++ b/published/202102/20210212 4 reasons to choose Linux for art and design.md

@@ -0,0 +1,95 @@

+[#]: collector: (lujun9972)

+[#]: translator: (amorsu)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13157-1.html)

+[#]: subject: (4 reasons to choose Linux for art and design)

+[#]: via: (https://opensource.com/article/21/2/linux-art-design)

+[#]: author: (Seth Kenlon https://opensource.com/users/seth)

+

+选择 Linux 来做艺术设计的 4 个理由

+======

+

+> 开源会强化你的创造力。因为它把你带出专有的思维定势,开阔你的视野,从而带来更多的可能性。让我们探索一些开源的创意项目。

+

+

+

+2021 年,人们比以前的任何时候都更有理由来爱上 Linux。在这个系列,我会分享 21 个选择 Linux 的原因。今天,让我来解释一下,为什么 Linux 是艺术设计的绝佳选择。

+

+Linux 在服务器和云计算方面获得很多的赞誉。让不少人感到惊讶的是,Linux 刚好也有一系列的很棒的创意设计工具,并且这些工具在用户体验和质量方面可以媲美那些流行的创意设计工具。我第一次使用开源的设计工具时,并不是因为我没有其他工具可以选择。相反的,我是在接触了大量的这些领先的公司提供的专有设计工具后,才开始使用开源设计工具。我之所以最后选择开源设计工具是因为开源更有意义,而且我能获得更好的产出。这些都是一些笼统的说法,所以请允许我解释一下。

+

+### 高可用性意味着高生产力

+

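+The term "productivity" means different things to different people. When I think of productivity, I think of the sense of accomplishment you get when you sit down to do something and manage to complete every task you set for yourself. If you keep getting interrupted by things beyond your control, your productivity goes down.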

+

+计算机看起来是不可预测的,诚然有很多事情会出错。电脑是由很多的硬件组成的,它们任何一个都有可能在任何时间出问题。软件会有 bug,也有修复这些 bug 的更新,而更新后又会带来新的 bug。如果你对电脑不了解,它可能就像一个定时炸弹,等着爆发。带着数字世界里的这么多的潜在问题,去接受一个当某些条件不满足(比如许可证,或者订阅费)就会不工作的软件,对我来说就显得很不理智。

+

+![Inkscape 应用][2]

+

+开源的创意设计应用不需要订阅费,也不需要许可证。在你需要的时候,它们都能获取得到,并且通常都是跨平台的。这就意味着,当你坐在工作的电脑面前,你就能确定你能用到那些必需的软件。而如果某天你很忙碌,却发现你面前的电脑不工作了,解决办法就是找到一个能工作的,安装你的创意设计软件,然后开始工作。

+

+例如,要找到一台无法运行 Inkscape 的电脑,比找到一台可以运行那些专有软件的电脑要难得多。这就叫做高可用。这是游戏规则的改变者。我从来不曾遇到因为软件用不了而不得不干等,浪费我数小时时间的事情。

+

+### 开放访问更有利于多样性

+

+我在设计行业工作的时候,我的很多同事都是通过自学的方式来学习艺术和技术方面的知识,这让我感到惊讶。有的通过使用那些最新的昂贵的“专业”软件来自学,但总有一大群人是通过使用自由和开源的软件来完善他们的数字化的职业技能。因为,对于孩子,或者没钱的大学生来说,这才是他们能负担的起,而且很容易就能获得的。

+

+这是一种不同的高可用性,但这对我和许多其他用户来说很重要,如果不是因为开源,他们就不会从事创意行业。即使那些有提供付费订阅的开源项目,比如 Ardour,都能确保他的用户在不需要支付任何费用的时候也能使用软件。

+

+![Ardour 界面][4]

+

+当你不限制别人用你的软件的时候,你其实拥有了更多的潜在用户。如果你这样做了,那么你就开放了一个接收多样的创意声音的窗口。艺术钟爱影响力,你可以借鉴的经验和想法越多就越好。这就是开源设计软件所带来的可能性。

+

+### 文件格式支持更具包容性

+

+我们都知道在几乎所有行业里面包容性的价值。在各种意义上,邀请更多的人到派对可以造就更壮观的场面。知道这一点,当看到有的项目或者创新公司只邀请某些人去合作,只接受某些文件格式,就让我感到很痛苦。这看起来很陈旧,就像某个远古时代的精英主义的遗迹,而这是即使在今天都在发生的真实问题。

+

+令人惊讶和不幸的是,这不是因为技术上的限制。专有软件可以访问开源的文件格式,因为这些格式是开源的,而且可以自由地集成到各种应用里面。集成这些格式不需要任何回报。而相比之下,专有的文件格式被笼罩在秘密之中,只被限制于提供给几个愿意付钱的人使用。这很糟糕,而且常常,你无法在没有这些专有软件的情况下打开一些文件来获取你的数据。令人惊喜的是,开源的设计软件却是尽力的支持更多的专有文件格式。以下是一些 Inkscape 所支持的令人难以置信的列表样本:

+

+![可用的 Inkscape 文件格式][5]

+

+而这大部分都是在没有这些专有格式厂商的支持下开发出来的。

+

+支持开放的文件格式可以更包容,对所有人都更好。

+

+### 对新的创意没有限制

+

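+One of the reasons I came to love open source is the sheer diversity of solutions to any given task. When you are surrounded by proprietary software, the world you see is based on what is available to you. For instance, if you intend to process some photos, you generally limit your intent to what you know to be possible. You pick three of the 4 or 10 applications on your shelf, because they are the only options available to you.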

+

+在开源领域,你通常会有好几个“显而易见的”必备解决方案,但同时你还有一打的角逐者在边缘转悠,供你选择。这些选项有时只是半成品,或者它们超级专注于某项任务,又或者它们学起来有点挑战性,但最主要的是,它们是独特的,而且充满创新的。有时候,它们是被某些不按“套路”出牌的人所开发的,因此处理的方法和市场上现有的产品截然不同。其他时候,它们是被那些熟悉做事情的“正确”方式,但还是在尝试不同策略的人所开发的。这就像是一个充满可能性的巨大的动态的头脑风暴。

+

+这种类型的日常创新能够引领出闪现的灵感、光辉时刻,或者影响广泛的通用性改进。比如说,著名的 GIMP 滤镜,(用于从图像中移除项目并自动替换背景)是如此的受欢迎以至于后来被专有图片编辑软件商拿去“借鉴”。这是成功的一步,但是对于一个艺术家而言,个人的影响才是最关键的。我常感叹于新的 Linux 用户的创意,而我只是在技术展会上展示给他们一个简单的音频,或者视频滤镜,或者绘图应用。没有任何的指导,或者应用场景,从简单的交互中喷发出来的关于新的工具的主意,是令人兴奋和充满启发的,通过实验中一些简单的工具,一个全新的艺术系列可以轻而易举的浮现出来。

+

+只要在适当的工具集都有的情况下,有很多方式来更有效的工作。虽然私有软件通常也不会反对更聪明的工作习惯的点子,专注于实现自动化任务让用户可以更轻松的工作,对他们也没有直接的收益。Linux 和开源软件就是很大程度专为 [自动化和编排][6] 而建的,而不只是服务器。像 [ImageMagick][7] 和 [GIMP 脚本][8] 这样的工具改变了我的处理图片的方式,包括批量处理方面和纯粹实验方面。

+

+你永远不知道你可以创造什么,如果你有一个你从来想象不到会存在的工具的话。

+

+### Linux 艺术家

+

+这里有 [使用开源的艺术家社区][9],从 [photography][10] 到 [makers][11] 到 [musicians][12],还有更多更多。如果你想要创新,试试 Linux 吧。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/21/2/linux-art-design

+

+作者:[Seth Kenlon][a]

+选题:[lujun9972][b]

+译者:[amorsu](https://github.com/amorsu)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/seth

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/painting_computer_screen_art_design_creative.png?itok=LVAeQx3_ (Painting art on a computer screen)

+[2]: https://opensource.com/sites/default/files/inkscape_0.jpg

+[3]: https://community.ardour.org/subscribe

+[4]: https://opensource.com/sites/default/files/ardour.jpg

+[5]: https://opensource.com/sites/default/files/formats.jpg

+[6]: https://opensource.com/article/20/11/orchestration-vs-automation

+[7]: https://opensource.com/life/16/6/fun-and-semi-useless-toys-linux#imagemagick

+[8]: https://opensource.com/article/21/1/gimp-scripting

+[9]: https://librearts.org

+[10]: https://pixls.us

+[11]: https://www.redhat.com/en/blog/channel/red-hat-open-studio

+[12]: https://linuxmusicians.com

diff --git a/published/20210215 A practical guide to JavaScript closures.md b/published/202102/20210215 A practical guide to JavaScript closures.md

similarity index 100%

rename from published/20210215 A practical guide to JavaScript closures.md

rename to published/202102/20210215 A practical guide to JavaScript closures.md

diff --git a/translated/tech/20210216 Meet Plots- A Mathematical Graph Plotting App for Linux Desktop.md b/published/202102/20210216 Meet Plots- A Mathematical Graph Plotting App for Linux Desktop.md

similarity index 78%

rename from translated/tech/20210216 Meet Plots- A Mathematical Graph Plotting App for Linux Desktop.md

rename to published/202102/20210216 Meet Plots- A Mathematical Graph Plotting App for Linux Desktop.md

index e732ddf738..1232812fab 100644

--- a/translated/tech/20210216 Meet Plots- A Mathematical Graph Plotting App for Linux Desktop.md

+++ b/published/202102/20210216 Meet Plots- A Mathematical Graph Plotting App for Linux Desktop.md

@@ -1,8 +1,8 @@

[#]: collector: (lujun9972)

[#]: translator: (geekpi)

-[#]: reviewer: ( )

-[#]: publisher: ( )

-[#]: url: ( )

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13151-1.html)

[#]: subject: (Meet Plots: A Mathematical Graph Plotting App for Linux Desktop)

[#]: via: (https://itsfoss.com/plots-graph-app/)

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

@@ -10,17 +10,19 @@

认识 Plots:一款适用于 Linux 桌面的数学图形绘图应用

======

-Plots 是一款图形绘图应用,它可以轻松实现数学公式的可视化。你可以用它来绘制任意三角函数、双曲函数、指数函数和对数函数的和和积。

+

+

+Plots 是一款图形绘图应用,它可以轻松实现数学公式的可视化。你可以用它来绘制任意三角函数、双曲函数、指数函数和对数函数的和与积。

### 在 Linux 上使用 Plots 绘制数学图形

-[Plots][1] 是一款简单的应用,它的灵感来自于像 [Desmos][2] 这样的网络图形绘图应用。它能让你绘制不同数学函数的图形,你可以交互式地输入这些函数,还可以自定义绘图的颜色。

+[Plots][1] 是一款简单的应用,它的灵感来自于像 [Desmos][2] 这样的 Web 图形绘图应用。它能让你绘制不同数学函数的图形,你可以交互式地输入这些函数,还可以自定义绘图的颜色。

Plots 是用 Python 编写的,它使用 [OpenGL][3] 来利用现代硬件。它使用 GTK 3,因此可以很好地与 GNOME 桌面集成。

![][4]

-使用 plots 是很直接的。要添加一个新的方程,点击加号。点击垃圾箱图标可以删除方程。还可以选择撤销和重做。你也可以放大和缩小。

+使用 Plots 非常直白。要添加一个新的方程,点击加号。点击垃圾箱图标可以删除方程。还可以选择撤销和重做。你也可以放大和缩小。

![][5]

@@ -28,7 +30,7 @@ Plots 是用 Python 编写的,它使用 [OpenGL][3] 来利用现代硬件。

![][6]

-在深色模式下,侧栏公式区域变成了深色,但主绘图区域仍然是白色。我相信这也许是设计好的。

+在深色模式下,侧栏公式区域变成了深色,但主绘图区域仍然是白色。我相信这也许是这样设计的。

你可以使用多个函数,并将它们全部绘制在一张图中:

@@ -36,7 +38,7 @@ Plots 是用 Python 编写的,它使用 [OpenGL][3] 来利用现代硬件。

我发现它在尝试粘贴一些它无法理解的方程时崩溃了。如果你写了一些它不能理解的东西,或者与现有的方程冲突,所有图形都会消失,去掉不正确的方程就会恢复图形。

-不幸的是,没有导出绘图或复制到剪贴板的选项。你可以随时[在 Linux 中截图][8],并在你要添加图像的文档中使用它。

+不幸的是,没有导出绘图或复制到剪贴板的选项。你可以随时 [在 Linux 中截图][8],并在你要添加图像的文档中使用它。

### 在 Linux 上安装 Plots

@@ -50,17 +52,17 @@ sudo apt update

sudo apt install plots

```

-对于其他基于 Debian 的发行版,你可以使用[这里][13]的 [deb 文件安装][12]。

+对于其他基于 Debian 的发行版,你可以使用 [这里][13] 的 [deb 文件安装][12]。

我没有在 AUR 软件包列表中找到它,但是作为 Arch Linux 用户,你可以使用 Flatpak 软件包或者使用 Python 安装它。

-[Plots Flatpak Package][14]

+- [Plots Flatpak 软件包][14]

如果你感兴趣,可以在它的 GitHub 仓库中查看源代码。如果你喜欢这款应用,请考虑在 GitHub 上给它 star。

-[GitHub 上的 Plots 源码][1]

+- [GitHub 上的 Plots 源码][1]

-**结论**

+### 结论

Plots 主要用于帮助学生学习数学或相关科目,但它在很多其他场景下也能发挥作用。我知道不是每个人都需要,但肯定会对学术界和学校的人有帮助。

@@ -75,7 +77,7 @@ via: https://itsfoss.com/plots-graph-app/

作者:[Abhishek Prakash][a]

选题:[lujun9972][b]

译者:[geekpi](https://github.com/geekpi)

-校对:[校对者ID](https://github.com/校对者ID)

+校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

diff --git a/published/202102/20210217 5 reasons to use Linux package managers.md b/published/202102/20210217 5 reasons to use Linux package managers.md

new file mode 100644

index 0000000000..76842524a3

--- /dev/null

+++ b/published/202102/20210217 5 reasons to use Linux package managers.md

@@ -0,0 +1,75 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13160-1.html)

+[#]: subject: (5 reasons to use Linux package managers)

+[#]: via: (https://opensource.com/article/21/2/linux-package-management)

+[#]: author: (Seth Kenlon https://opensource.com/users/seth)

+

+5 reasons to use Linux package managers

+======

+

+> Package managers track every component of the software you install, making updates, reinstalls, and troubleshooting much easier.

+

+

+

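+In 2021, there are more reasons why people love Linux than ever before. In this series, I'll share 21 different reasons to use Linux. Today, I'll talk about software repositories.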

+

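+Before I used Linux, I took the applications installed on my computer for granted. I would install applications as needed, and if I didn't end up using them, I'd forget about them and let them take up space on my hard drive. Eventually, space on my drive would become scarce, and I'd frantically remove applications to make room for more important data. Inevitably, though, the applications themselves freed up only so much space, so I would turn my attention to all of the other bits and pieces installed along with them, whether media assets or configuration files and documentation. It wasn't a great way to manage my computer. I knew that, but it didn't occur to me that there could be an alternative because, as they say, you don't know what you don't know.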

+

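+When I switched to Linux, I found that installing applications worked a little differently. On Linux, you are encouraged not to go out to websites for an application installer. Instead, you run a command, and the application is installed on the system, with every individual file, library, configuration file, documentation file, and media asset recorded.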

+

+### What is a software repository?

+

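+The default way to install applications on Linux is from a distribution's software repository. That might sound like an app store, and that's because modern app stores borrowed much from the concept of software repositories. [Linux has app stores, too][2], but software repositories are unique. You get an application from a software repository through a *package manager*, which enables your Linux system to record and track every component of what you've installed.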

+

+Here are five reasons why knowing exactly what is on your system can be surprisingly useful.

+

+#### 1. Removing old applications

+

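+When your computer knows every file that was installed with an application, it's really easy to uninstall the ones you no longer need. On Linux, there's no problem with installing [31 different text editors][3] and then uninstalling the 30 you don't like. When you uninstall on Linux, you really uninstall.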

+

+#### 2. Reinstalling like you mean it

+

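+Not only is an uninstall thorough, a *reinstall* is meaningful. On many platforms, should something go wrong with an application, you're sometimes advised to reinstall it. Usually, nobody can say why you should reinstall an application. Still, there's often a vague suspicion that a file somewhere has become corrupt (in other words, data got written incorrectly), and so the hope is that a reinstall might overwrite the bad files and make things work again. It's not bad advice, but it's frustrating for any technician not to know what went wrong. Worse still, without careful tracking there's no guarantee that all files will be refreshed during a reinstall, because there's often no way of knowing whether all the files installed with an application were removed in the first place. With a package manager, you can force a complete removal of old files to ensure a fresh installation of new files. Just as importantly, you can examine every file and probably find out which one is causing problems, but that's a feature of open source and Linux rather than of package management.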

+

+#### 3. Keeping your applications updated

+

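+Don't let anybody tell you that Linux is "more secure" than other operating systems. Computers are made of code, and we humans find ways to exploit that code in new and interesting ways every day. Because the vast majority of applications on Linux are open source, many exploits are filed publicly as Common Vulnerabilities and Exposures (CVE). A flood of incoming security bug reports may seem like a bad thing, but this is definitely a case where *knowing* is far better than *not knowing*. After all, just because nobody has told you there's a problem doesn't mean there isn't one. Bug reports are good. They benefit everyone. And when developers fix security bugs, it's important for you to get those fixes promptly, preferably without having to remember to do it yourself.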

+

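+A package manager is designed to do exactly that. When applications receive updates, whether to patch a potential security problem or to introduce an exciting new feature, your package manager application alerts you to the available update.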

+

+#### 4. Keeping it light

+

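+Say you have application A and application B, both of which require library C. On some operating systems, by getting A and B, you get two copies of C. That's obviously redundant, so imagine it happening several times per application. Redundant libraries add up quickly, and with no single source of "truth" for a given library, it's nearly impossible to ensure you're using the most up-to-date or even just a consistent version of it.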

+

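+I admit I don't sit around pondering software libraries all day, but I do remember the days when I did, even though I didn't know that was what was troubling me. Before I switched to Linux, it wasn't uncommon for me to hit errors when dealing with media files for work, or glitches when playing different games, or quirks when reading a PDF, and so on. I spent a lot of time investigating these errors back then. I still remember that two major applications on my system each bundled the same (but different) graphics backend technology. The mismatch caused errors when the output of one program was imported into the other. It was meant to work, but because of a bug in an older version of the same collection of library files, a hotfix for one application didn't benefit the other.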

+

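+A package manager knows which backends (referred to as *dependencies*) each application needs, and it refrains from reinstalling software that's already on your system.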
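
The A, B, and C example can be sketched as a small dependency walk. This is a toy illustration in Python, not how any particular package manager is implemented: the `DEPENDS` table and `install_plan` are hypothetical, and real tools also handle versions and conflicts.

```python
# Hypothetical dependency table: A and B both need library C.
DEPENDS = {"A": ["C"], "B": ["C"], "C": []}


def install_plan(requested, installed):
    """Return packages to install, dependencies first, skipping installed ones."""
    plan = []
    seen = set(installed)  # anything already on the system is never reinstalled

    def visit(pkg):
        if pkg in seen:
            return
        seen.add(pkg)
        for dep in DEPENDS[pkg]:
            visit(dep)
        plan.append(pkg)

    for pkg in requested:
        visit(pkg)
    return plan


print(install_plan(["A", "B"], installed=set()))  # C is planned only once
print(install_plan(["B"], installed={"A", "C"}))  # C is already present
```

Because the set of known packages is shared across requests, library C is installed a single time no matter how many applications depend on it.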

+

+#### 5. Keeping it simple

+

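+As a Linux user, I appreciate a good package manager because it helps make my life simple. I don't have to think about the software I install, what I need to update, or whether something has really been uninstalled when I'm finished with it. I try out software without hesitation. And when I'm setting up a new computer, I run [a simple Ansible script][4] to automate the installation of the latest versions of all the software I rely on. It's simple, smart, and uniquely liberating.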

+

+### Better package management

+

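+Linux takes a holistic view of applications and the operating system. After all, open source is built upon the work of other open source, so distribution maintainers understand the concept of a dependency *stack*. Package management on Linux is aware of your whole system, the libraries and support files on it, and the applications you install. These separate parts work together to provide you with an efficient, optimized, and robust set of applications.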

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/21/2/linux-package-management

+

+Author: [Seth Kenlon][a]

+Topic selection: [lujun9972][b]

+Translator: [geekpi](https://github.com/geekpi)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

+

+[a]: https://opensource.com/users/seth

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/OSDC_gift_giveaway_box_520x292.png?itok=w1YQhNH1 (Gift box opens with colors coming out)

+[2]: http://flathub.org

+[3]: https://opensource.com/article/21/1/text-editor-roundup

+[4]: https://opensource.com/article/20/9/install-packages-ansible

diff --git a/published/20210217 Use this bootable USB drive on Linux to rescue Windows users.md b/published/202102/20210217 Use this bootable USB drive on Linux to rescue Windows users.md

similarity index 100%

rename from published/20210217 Use this bootable USB drive on Linux to rescue Windows users.md

rename to published/202102/20210217 Use this bootable USB drive on Linux to rescue Windows users.md

diff --git a/published/202102/20210218 5 must-have Linux media players.md b/published/202102/20210218 5 must-have Linux media players.md

new file mode 100644

index 0000000000..1125465ae2

--- /dev/null

+++ b/published/202102/20210218 5 must-have Linux media players.md

@@ -0,0 +1,90 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13148-1.html)

+[#]: subject: (5 must-have Linux media players)

+[#]: via: (https://opensource.com/article/21/2/linux-media-players)

+[#]: author: (Seth Kenlon https://opensource.com/users/seth)

+

+5 must-have Linux media players

+======

+

+> Whether it's movies or music, Linux has some excellent media players to offer you.

+

+

+

+In 2021, there are more reasons than ever to love Linux. In this series, I'll share 21 different reasons to use Linux. Playing media is one of my favorite reasons to use Linux.

+

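+You may prefer vinyl and cassette tapes, or VHS and LaserDisc, but you most likely still play the majority of the media you enjoy on a digital device. Media on a computer has a convenience that can't be matched, largely because most of us are near a computer for most of the day. Many modern computer users don't give much thought to which applications are available for listening to music and watching movies, because most operating systems provide a media player by default, or because they subscribe to a streaming service and don't keep media files around themselves. But if your tastes go beyond the usual list of popular music and shows, or if you work with media for fun or profit, then you have local files you want to play. You probably also have opinions about the available user interfaces. On Linux, *choice* is a right, so you have countless ways to play media.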

+

+Here are my five must-have media players on Linux.

+

+### 1. mpv

+

+![mpv interface][2]

+

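+A modern, clean, and minimal media player. Thanks to its MPlayer, [ffmpeg][3], and `libmpv` backends, it can play any kind of media you're likely to throw at it. I say "throw at it" because the quickest and easiest way to play a file is to drag it onto the mpv window. If you drag in more than one file, mpv creates a playlist for you.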

+

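+It provides intuitive overlay controls when you hover the mouse over it, but it's best operated from the keyboard. For example, `Alt+1` makes the mpv window full-size, and `Alt+0` reduces it to half-size. You can use the `,` and `.` keys to step through a video frame by frame, `[` and `]` to adjust playback speed, `/` and `*` to adjust the volume, `m` to mute, and so on. Once you learn these controls, you can make an adjustment almost as soon as you think of it. Whether for work or for entertainment, mpv is my first choice for playing media.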

+

+### 2. Kaffeine and Rhythmbox

+

+![Kaffeine interface][4]

+

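+The KDE Plasma and GNOME desktops each provide a music application ([Kaffeine][5] and [Rhythmbox][15]) that can act as a frontend to your personal music library. They ask you to provide a standard location for your music files and then scan your collection so you can browse by album, artist, and so on. Both are great for those times when you can't quite decide what you want to listen to and want an easy way to browse what's available.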

+

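+[Kaffeine][5] is actually much more than a music player. It can play video files, DVDs, CDs, and even digital TV (assuming you have an incoming signal). I've gone whole days without closing Kaffeine, because whether I'm in the mood for music or movies, Kaffeine makes it easy to start something playing.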

+

+### 3. Audacious

+

+![Audacious interface][6]

+

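+The [Audacious][7] media player is a lightweight application that can play your music files (even MIDI files) or stream music from the Internet. For me, its main appeal is its modular architecture, which encourages the development of plugins. These plugins can play nearly every audio format you can think of, adjust the sound with a graphic equalizer, apply effects, and even reskin the entire application to change its interface.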

+

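+It's hard to think of Audacious as just one application, because it's so easy to turn it into the application you want it to be. Whether you were a fan of XMMS on Linux, Winamp on Windows, or any number of alternatives, you can probably approximate them with Audacious. Audacious also provides a terminal command, `audtool`, that lets you control a running Audacious instance from the command line, so it can even approximate a terminal media player!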

+

+### 4. VLC

+

+![vlc interface][8]

+

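+The [VLC][9] player is probably at the top of the list of applications that introduce users to open source. A tried and tested multimedia player, VLC can play music, video, and optical discs. It can also stream and record from a webcam or microphone, which makes it an easy way to capture a quick video or voice message. Like mpv, it can mostly be controlled with single-letter key presses, but it also has a helpful right-click menu. It can convert media from one format to another, create playlists, track your media library, and more. VLC is the best of the best, and most players don't even attempt to match its capabilities. It's a must-have application no matter what platform you're on.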

+

+### 5. Music player daemon

+

+![mpd with the ncmpc interface][10]

+

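+The [music player daemon (mpd)][11] is an especially useful player because it runs on a server. That means you can start it on a [Raspberry Pi][12] and leave it idling, ready for whenever you want to play a tune. There are many clients for mpd, but I use [ncmpc][13]. With ncmpc or a web client like [netjukebox][14], I can connect to mpd from the local host or a remote machine, select an album, and play it from anywhere.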
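To make the client and server split above concrete, here is a minimal Python sketch of a client speaking mpd's plain-text protocol over TCP. It is an illustration rather than how ncmpc or netjukebox work internally. It assumes mpd is listening on its default port, 6600, and the helper names `parse_response` and `mpd_command` are made up for this example.

```python
import socket
from typing import Dict, List


def parse_response(text: str) -> Dict[str, str]:
    """Parse the 'key: value' lines of an mpd reply that ends with 'OK'."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        if line == "OK":
            break  # end of a successful reply
        if line.startswith("ACK"):
            raise RuntimeError(line)  # the server reported an error
        key, _, value = line.partition(": ")
        fields[key] = value
    return fields


def mpd_command(command: str, host: str = "localhost", port: int = 6600) -> Dict[str, str]:
    """Send one command to an mpd server and return its parsed reply."""
    with socket.create_connection((host, port), timeout=5) as conn:
        reader = conn.makefile("r", encoding="utf-8", newline="\n")
        greeting = reader.readline()  # the server greets with "OK MPD <version>"
        if not greeting.startswith("OK MPD"):
            raise RuntimeError(f"unexpected greeting: {greeting!r}")
        conn.sendall((command + "\n").encode("utf-8"))
        lines: List[str] = []
        for line in reader:
            stripped = line.rstrip("\n")
            lines.append(stripped)
            if stripped == "OK" or stripped.startswith("ACK"):
                break
        return parse_response("\n".join(lines))


# Parsing a canned reply, so this part runs without a server.
sample = "volume: 80\nstate: play\nOK\n"
parsed = parse_response(sample)
print(parsed)
```

With a server running, a call such as `mpd_command("status", host="raspberrypi.local")` would query it from another machine. The host name here is hypothetical.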

+

+### Media playback on Linux

+

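+Playing media on Linux is easy, thanks to its excellent codec support and an amazing selection of players. I've mentioned only my five favorites, but there are many more for you to explore. Try them, find the best, and then sit back and relax.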

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/21/2/linux-media-players

+

+Author: [Seth Kenlon][a]

+Topic selection: [lujun9972][b]

+Translator: [geekpi](https://github.com/geekpi)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

+

+[a]: https://opensource.com/users/seth

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/LIFE_film.png?itok=aElrLLrw (An old-fashioned video camera)

+[2]: https://opensource.com/sites/default/files/mpv_0.png

+[3]: https://opensource.com/article/17/6/ffmpeg-convert-media-file-formats

+[4]: https://opensource.com/sites/default/files/kaffeine.png

+[5]: https://apps.kde.org/en/kaffeine

+[6]: https://opensource.com/sites/default/files/audacious.png

+[7]: https://audacious-media-player.org/

+[8]: https://opensource.com/sites/default/files/vlc_0.png

+[9]: http://videolan.org

+[10]: https://opensource.com/sites/default/files/mpd-ncmpc.png

+[11]: https://www.musicpd.org/

+[12]: https://opensource.com/article/21/1/raspberry-pi-hifi

+[13]: https://www.musicpd.org/clients/ncmpc/

+[14]: http://www.netjukebox.nl/

+[15]: https://wiki.gnome.org/Apps/Rhythmbox

\ No newline at end of file

diff --git a/published/20210219 7 Ways to Customize Cinnamon Desktop in Linux -Beginner-s Guide.md b/published/202102/20210219 7 Ways to Customize Cinnamon Desktop in Linux -Beginner-s Guide.md

similarity index 100%

rename from published/20210219 7 Ways to Customize Cinnamon Desktop in Linux -Beginner-s Guide.md

rename to published/202102/20210219 7 Ways to Customize Cinnamon Desktop in Linux -Beginner-s Guide.md

diff --git a/published/202102/20210219 Unlock your Chromebook-s hidden potential with Linux.md b/published/202102/20210219 Unlock your Chromebook-s hidden potential with Linux.md

new file mode 100644

index 0000000000..7612fa716f

--- /dev/null

+++ b/published/202102/20210219 Unlock your Chromebook-s hidden potential with Linux.md

@@ -0,0 +1,127 @@

+[#]: collector: (lujun9972)

+[#]: translator: (max27149)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-13149-1.html)

+[#]: subject: (Unlock your Chromebook's hidden potential with Linux)

+[#]: via: (https://opensource.com/article/21/2/chromebook-linux)

+[#]: author: (Seth Kenlon https://opensource.com/users/seth)

+

+Unlock your Chromebook's hidden potential with Linux

+======

+

+> Chromebooks are amazing tools, but by unlocking the Linux system inside them, you can make them even more impressive.

+

+

+

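+Google Chromebooks run on Linux, but normally the Linux they run isn't particularly accessible to the average user. Linux is used as a backend technology for an environment based on the open source [Chromium OS][2], which Google then turns into Chrome OS. The interface most users experience is a desktop that can run the Chrome browser and its apps. Beneath all that, however, there's a Linux system waiting to be discovered. If you know how, you can enable Linux on your Chromebook and turn a computer that was probably relatively cheap and basic into a serious laptop, with access to hundreds of applications and all the power you need to make it a general-purpose computer.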

+

+### What's a Chromebook?

+

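+A Chromebook is a laptop created especially for Chrome OS, which itself is designed for specific laptop models. Chrome OS isn't a general-purpose operating system like Linux or Windows; it has more in common with Android or iOS. If you decide to buy a Chromebook, you'll find models from many different manufacturers, including HP, Asus, and Lenovo. Some are designed for students, while others are intended for home or business users. The main differences usually come down to battery life or processing power, respectively.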

+

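+Whichever one you choose, a Chromebook runs Chrome OS and provides the basic functions you'd expect from a modern computer: a network manager for connecting to the Internet, Bluetooth, volume control, a file manager, a desktop, and so on.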

+

+![Chrome OS desktop][3]

+

+*Screenshot of the Chrome OS desktop*

+

+To get more out of this simple, easy-to-use operating system, though, all you need to do is activate Linux.

+

+### Enable developer mode on a Chromebook

+

+If I've made enabling Linux sound simple, that's because it is both simple and deceptive. It's deceptive because you *must* back up your data before enabling Linux.

+

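+The process is simple, but it does reset your computer to its factory defaults. You'll have to sign back in to your laptop, and if you have data stored in your Google Drive account, you'll have to let it resync to the computer. Enabling Linux also requires reserving disk space for Linux, so whatever storage your Chromebook has will be reduced by half or a quarter (your choice).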

+

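+Google still considers access to Linux on a Chromebook a beta feature, so you have to opt in to developer mode. The purpose of developer mode is to let software developers test new features, install new versions of the operating system, and so on, but what it does for you is unlock special features that are still in development.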

+

+To enable developer mode, first shut down your Chromebook. This assumes you have already backed up any important information on the device.

+

+Next, hold down `ESC` and `⟳` on the keyboard, then press the **power button** to start the Chromebook.

+

+![ESC and refresh buttons][4]

+

+*The ESC and ⟳ keys*

+

+When prompted to start recovery, press `Ctrl+D` on the keyboard.

+

+When recovery finishes, your Chromebook has been reset to factory settings, without the default usage restrictions.

+

+### Booting into developer mode

+

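+Running in developer mode means that every time you boot your Chromebook, you're reminded that you're in developer mode. You can press `Ctrl+D` to skip the boot delay. Some Chromebooks beep after a few seconds to remind you about developer mode, which makes pressing `Ctrl+D` almost mandatory. In theory this is annoying, but in practice I don't boot my Chromebook very often because I usually just wake it, so when I do need to boot, `Ctrl+D` is only a small step in the startup process.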

+

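+The first time you boot after enabling developer mode, you must set up your device again as if it were brand new. You only need to do this once (unless you deactivate developer mode at some point in the future).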

+

+### Enable Linux on a Chromebook

+

+Now that you're running in developer mode, you can activate the **Linux Beta** feature in Chrome OS. To do that, open **Settings** and click **Linux Beta** in the list on the left.

+

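+Activate **Linux Beta** and allot some disk space for your Linux system and applications. Even at its heaviest, Linux is fairly lightweight, so you don't need to allocate much space, but it obviously depends on how much you intend to do with Linux. 4 GB is plenty for Linux plus a few hundred terminal commands and two dozen graphical applications. My Chromebook has a 64 GB memory card, and I gave 30 GB to Linux because most of what I do on my Chromebook happens inside Linux.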

+