mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

9153d00805

published

20140510 Journey to the Stack Part I.md20170119 Be a force for good in your community.md20170213 Getting Started with Taskwarrior.md20171109 Testing IPv6 Networking in KVM- Part 2.md20171116 10 easy steps from proprietary to open source.md20171221 How to create mobile-friendly documentation.md20180115 2 scientific calculators for the Linux desktop.md20180116 Why building a community is worth the extra effort.md20180222 Linux LAN Routing for Beginners- Part 1.md20180307 Host your own email with projectx-os and a Raspberry Pi.md20180321 How to use Ansible to patch systems and install applications.md20180327 Reliable IoT event logging with syslog-ng.md20180328 Getting started with Jupyter Notebooks.md20180410 Bootiso Lets You Safely Create Bootable USB Drive.md20180412 Easily Run And Integrate AppImage Files With AppImageLauncher.md20180413 Useful Resources for Those Who Want to Know More About Linux.md20180419 6 Python datetime libraries - Opensource.com.md20180420 A Perl module for better debugging.md20180425 Enhance your Python with an interactive shell.md20180427 How to Compile a Linux Kernel.md20180427 How to use FIND in Linux.md20180430 Reset a lost root password in under 5 minutes.md20180503 How to build container images with Buildah.md20180507 How To Improve Application Startup Time In Linux.md20180512 How To Check Laptop Battery Status In Terminal In Linux.md

sources

talk

20180107 7 leadership rules for the DevOps age.md20180128 Being open about data privacy.md20180328 College student reflects on getting started in open source.md20180412 Easily Run And Integrate AppImage Files With AppImageLauncher.md20180514 A year as Android Engineer.md

tech

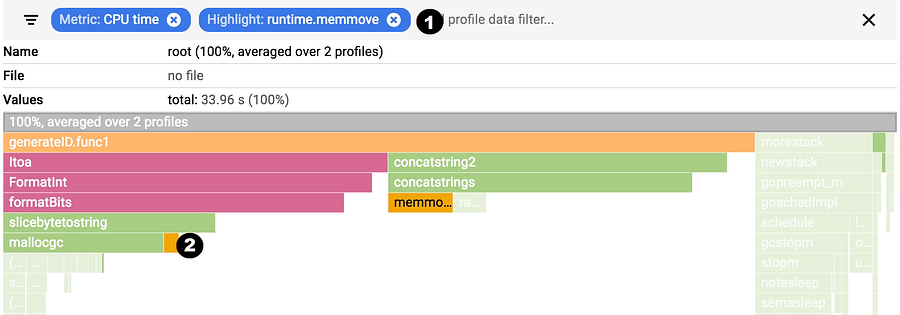

20160526 How To Downgrade A Package In Arch Linux.md20171103 3 ways robotics affects the CIO role.md20171109 Testing IPv6 Networking in KVM- Part 2.md20171121 How To Kill The Largest Process In An Unresponsive Linux System.md20171221 How to create mobile-friendly documentation.md20180103 5 ways open source can strengthen your job search.md20180131 A history of low-level Linux container runtimes.md20180302 5 open source software tools for supply chain management.md20180308 Dynamic Linux Routing with Quagga.md20180326 How To Archive Files And Directories In Linux.md20180404 Containerization, Atomic Distributions, and the Future of Linux.md20180406 How To Register The Oracle Linux System With The Unbreakable Linux Network (ULN).md20180410 Top 9 open source ERP systems to consider - Opensource.com.md20180415 Some Common Concurrent Programming Mistakes.md20180418 Passwordless Auth Server.md20180419 6 Python datetime libraries - Opensource.com.md20180423 An introduction to Python bytecode.md20180426 Continuous Profiling of Go programs.md20180502 Writing Systemd Services for Fun and Profit.md20180507 How To Improve Application Startup Time In Linux.md20180507 Systemd Services- Beyond Starting and Stopping.md20180508 Orbital Apps - A New Generation Of Linux applications.md20180509 How to kill a process or stop a program in Linux.md20180510 Creating small containers with Buildah.md20180510 Get more done at the Linux command line with GNU Parallel.md20180510 How To Display Images In The Terminal.md20180510 Splicing the Cloud Native Stack, One Floor at a Time.md20180511 3 useful things you can do with the IP tool in Linux.md20180515 Protect your Fedora system against this DHCP flaw.md20180515 Termux turns Android into a Linux development environment.md20180516 A guide to Git branching.md20180516 Manipulating Directories in Linux.md20180517 How to find your IP address in Linux.md20180518 How To Install Ncurses Library In Linux.md20180518 How to Manage Fonts in Linux.md20180518 
What-s a hero without a villain- How to add one to your Python game.md20180521 Starting user software in X.md

translated

talk

20180128 Being open about data privacy.md20180328 College student reflects on getting started in open source.md

tech

20140510 Journey to the Stack Part I.md20160325 Network automation with Ansible.md20171103 3 ways robotics affects the CIO role.md20171116 10 easy steps from proprietary to open source.md20180103 5 ways open source can strengthen your job search.md20180115 2 scientific calculators for the Linux desktop.md20180302 5 open source software tools for supply chain management.md20180308 Dynamic Linux Routing with Quagga.md20180326 How To Archive Files And Directories In Linux.md20180420 A Perl module for better debugging.md20180423 How to reset a root password on Fedora.md20180426 Continuous Profiling of Go programs.md20180502 Writing Systemd Services for Fun and Profit.md20180507 Systemd Services- Beyond Starting and Stopping.md20180508 Orbital Apps - A New Generation Of Linux applications.md20180509 3 Methods To Install Latest Python3 Package On CentOS 6 System.md20180514 LikeCoin, a cryptocurrency for creators of openly licensed content.md20180516 You-Get - A CLI Downloader To Download Media From 80- Websites.md

106

published/20140510 Journey to the Stack Part I.md

Normal file


@ -0,0 +1,106 @@

|

||||

Journey to the Stack, Part I

|

||||

==============

|

||||

|

||||

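Earlier we explored [the anatomy of a program in memory][2], the landscape of how our programs run in a computer. Today we turn to the *call stack*, the workhorse of most programming languages and virtual machines. Along the way we'll meet creatures that are rarely seen up close, such as closures, recursion, and buffer overflows. But the first step is a precise picture of how the stack operates.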

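The stack is so important because it keeps track of the *functions* running in a program, and functions are in turn the building blocks of software. In fact, the internal operation of programs is normally very simple. It consists mostly of functions pushing data onto and popping data off the stack as they call each other, while allocating memory on the heap for data that must survive across function calls. This is true for both low-level C software and VM-based languages like JavaScript and C#. A solid grasp of this behavior is invaluable for debugging, performance tuning, and generally knowing what is going on.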

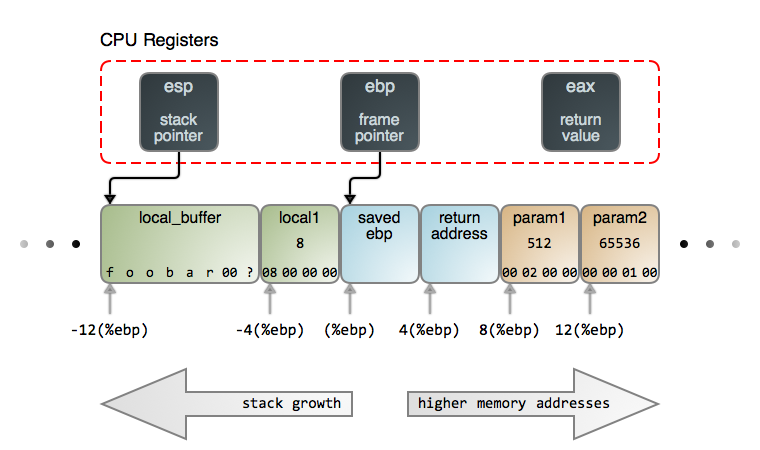

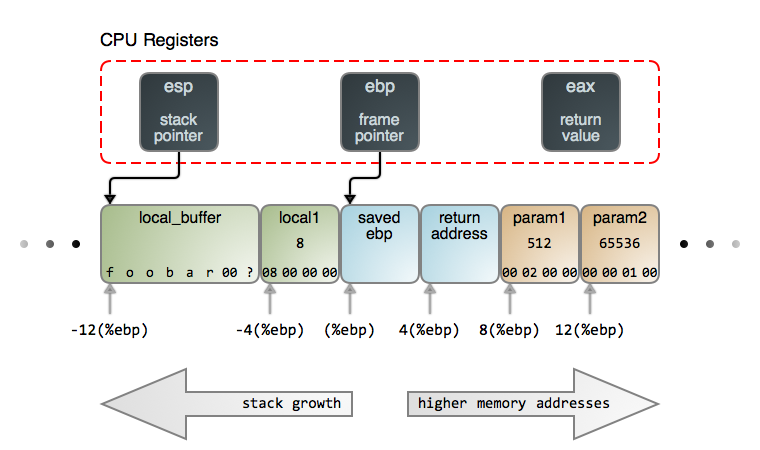

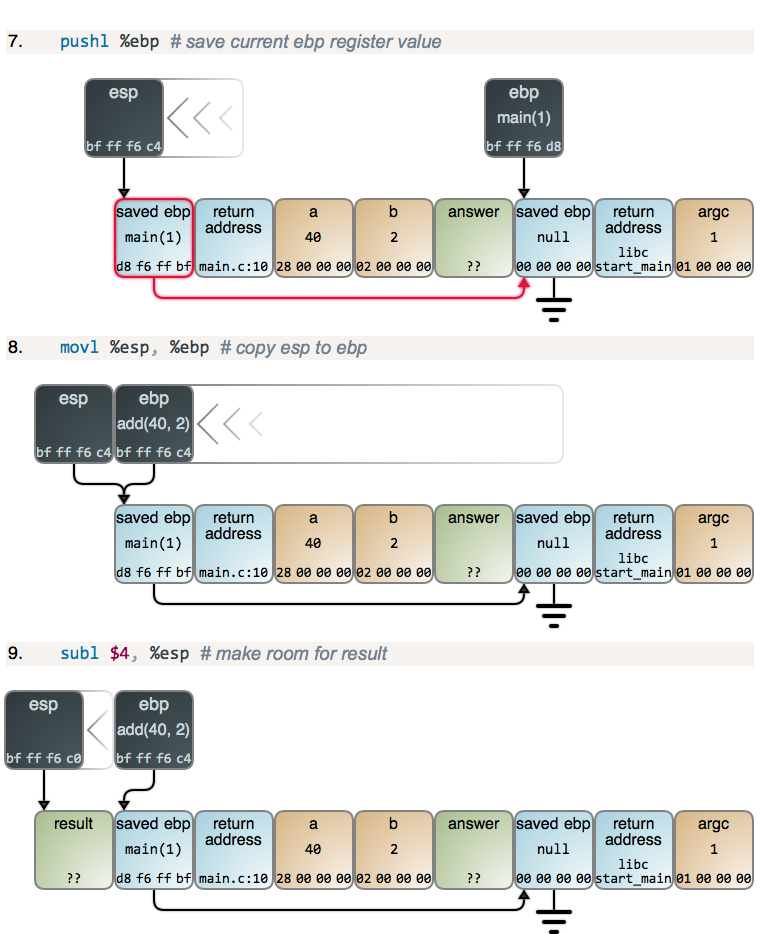

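When a function is called, a stack frame is created to support the function's execution. The stack frame contains the function's *local variables* and the *arguments* passed to the function by its caller. The frame also contains housekeeping information that allows the called function (the *callee*) to return to its caller safely. The exact contents and layout of a stack frame vary by processor architecture and function calling convention. In this post we look at Intel x86 stacks using C-style function calls (`cdecl`). The figure below shows a single stack frame sitting at the top of the stack: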

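Right away, three CPU registers enter the scene. The stack pointer, `esp` (LCTT translator's note: the extended stack pointer register), points to the top of the stack. The top is always occupied by the *last item that was pushed onto the stack but has not yet been popped off*, just as in a real-world stack of plates or $100 bills.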

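The address stored in `esp` constantly changes as stack items are pushed and popped, so that it always points to the last item pushed. Many CPU instructions automatically update `esp` as a side effect, and it is impractical to use the stack without this register.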

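In the Intel architecture, as in most, the stack grows towards *lower memory addresses*. So the "top" is the lowest memory address in the stack that contains live data: `local_buffer` in this case. Notice there is nothing vague about the arrow from `esp` to `local_buffer`. This arrow means business: it points *specifically* to the *first byte* occupied by `local_buffer`, because that is the exact address stored in `esp`.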

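The second register tracking the stack is `ebp` (LCTT translator's note: the extended base pointer register), which holds the base pointer, also called the frame pointer. It points to a fixed location within the stack frame of the function *currently running* and provides a stable reference point (base) for access to arguments and local variables. `ebp` changes only when a function call begins or ends. Thus we can easily address each item in the stack as an offset from `ebp`, as shown in the diagram.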

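Unlike `esp`, `ebp` is mostly maintained by program code with little CPU interference. Sometimes there are performance benefits in ditching `ebp` altogether, which can be done via [compiler flags][3]. The Linux kernel is one example where this is done.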

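Finally, the `eax` register (LCTT translator's note: the extended 32-bit general-purpose data register) is used by convention to transfer return values back to the caller for most C data types.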

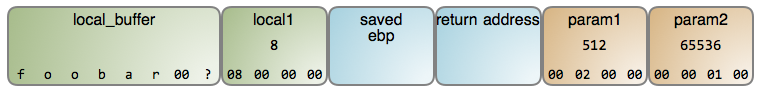

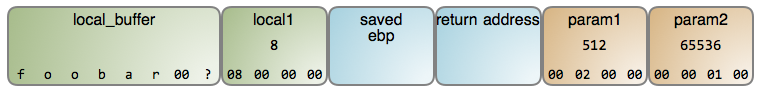

Now let's inspect the data in our stack frame. The diagram below shows the precise byte-for-byte contents as you would see them in a debugger, with memory growing left-to-right, top-to-bottom:

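The local variable `local_buffer` is a byte array containing a null-terminated ASCII string, a staple of C programs. The string could have been read from anywhere, for example keyboard input or a file, and it is 7 bytes long. Since `local_buffer` can hold 8 bytes, there is 1 free byte left. *The content of this byte is unknown* because, with the stack's endless pushes and pops, you never know what memory holds *unless you write to it*. The C compiler does not initialize the memory of a stack frame, so its contents are undetermined and somewhat random until written to. This has confused many people.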

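Moving on, `local1` is a 4-byte integer, and you can see the contents of each byte. It looks like a big number, with all those zeros following the 8, but here your intuition leads you astray.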

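Intel processors are little endian machines, meaning that numbers in memory start with the little end first. So the least significant byte of a multi-byte number sits at the lowest memory address. Since that address is normally shown leftmost, this departs from our usual way of writing numbers. This endian behavior reminds me of *Gulliver's Travels*: just as the people of Lilliput eat their eggs starting from the little end, Intel processors eat their numbers starting from the little byte.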

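So `local1` in fact holds the number 8, as in the legs of an octopus. `param1`, however, has a value of 2 in the second byte position, so its mathematical value is `2 * 256 = 512` (we multiply by 256 because each place value ranges from 0 to 255). Meanwhile, `param2` carries `1 * 256 * 256 = 65536`.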
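The place-value arithmetic for `local1`, `param1`, and `param2` can be checked with a few lines of C. This is a minimal sketch, not code from the original article: the helper name `le32_decode` is an assumption made for illustration, and the byte patterns it is meant for (`08 00 00 00`, `00 02 00 00`, `00 00 01 00`) are simply the values described in the text.

```c
#include <stdint.h>

/* Assemble a 32-bit value from four bytes stored in little-endian order:
 * bytes[0] sits at the lowest address and is the least significant byte.
 * (Hypothetical helper, named here only for illustration.) */
uint32_t le32_decode(const unsigned char bytes[4])
{
    return (uint32_t)bytes[0]
         | ((uint32_t)bytes[1] << 8)     /* place value 256         */
         | ((uint32_t)bytes[2] << 16)    /* place value 256 * 256   */
         | ((uint32_t)bytes[3] << 24);   /* place value 256^3       */
}
```

Because the value is built byte by byte rather than by reinterpreting memory, the function should give the same result regardless of the byte order of the machine that runs it.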

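The housekeeping data in this stack frame consists of two crucial pieces: the address of the *previous* stack frame (the saved `ebp` value) and the address of the instruction to be executed when the function exits (the return address). Together, they make it possible for the function to return sanely and for the program to keep running.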

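Now let's watch the birth of a stack frame to build a clear mental picture of how this all works together. Stack growth is puzzling at first because it is the opposite of what you would expect. For example, to allocate 8 bytes on the stack you subtract 8 from `esp`, and subtraction is an odd way to grow something.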

Let's take a simple C program:

```
/* Simple Add Program - add.c */

int add(int a, int b)
{
    int result = a + b;
    return result;
}

int main(int argc)
{
    int answer;
    answer = add(40, 2);
}
```

*Simple add program - add.c*

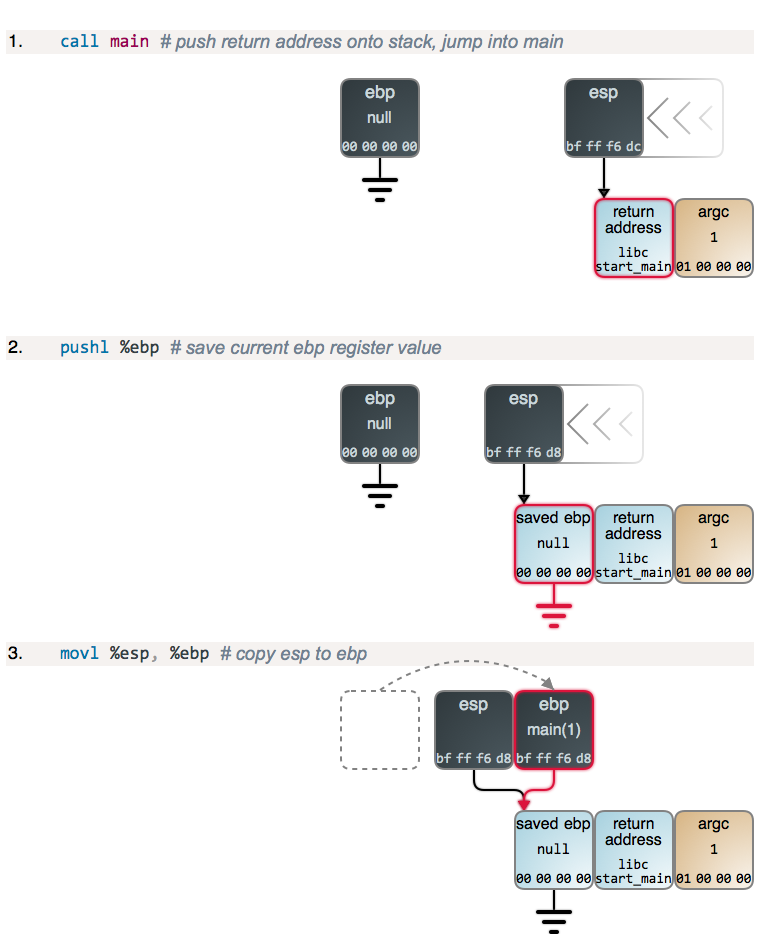

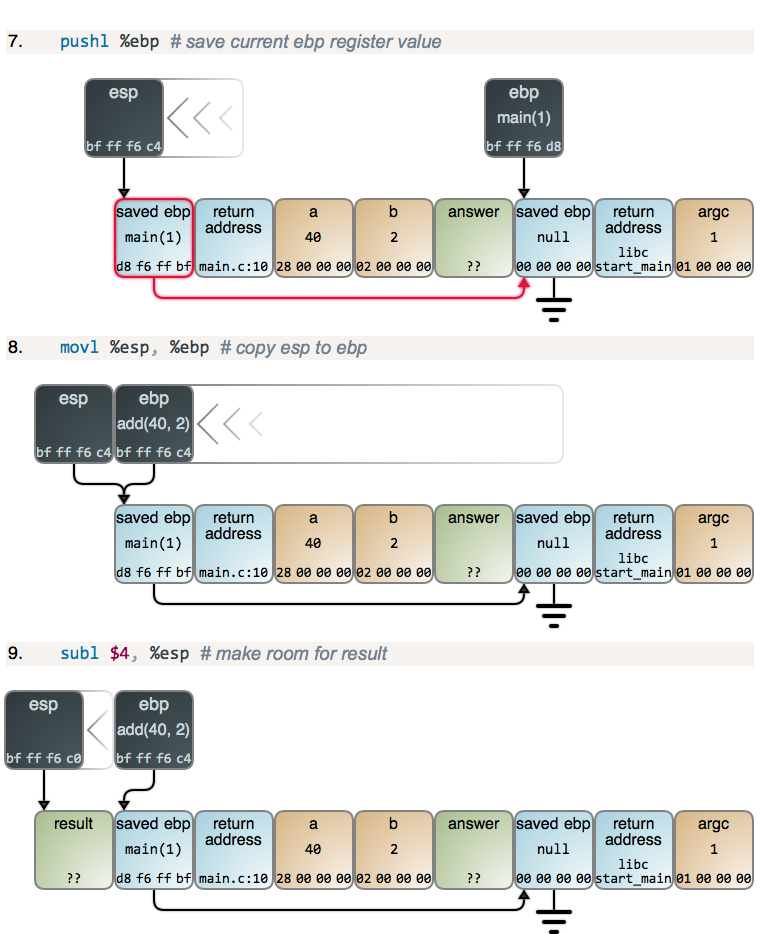

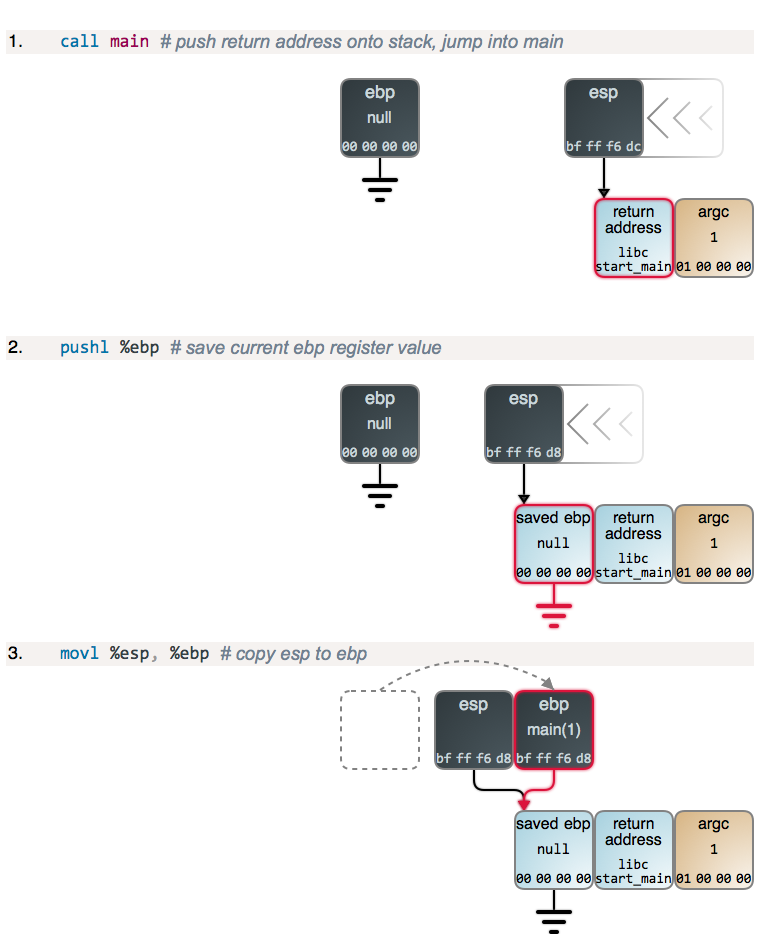

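Suppose we run this in Linux without command-line parameters. When you run a C program, the first code to actually execute is in the C runtime library, which then calls our `main` function. The diagrams below show, step by step, what happens as the program runs. Each diagram links to GDB output showing the state of memory and registers. You can also see the [GDB commands][4] used and the whole [GDB output][5]. Here we go: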

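Steps 2 and 3, along with step 4 below, are the function prologue, which is common to nearly all functions: the current value of `ebp` is saved to the top of the stack, and then `esp` is copied to `ebp`, establishing a new frame. `main`'s prologue is like any other, with the peculiarity that `ebp` is zeroed out when the program starts.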

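If you were to inspect the stack below the integer `argc` (to the right), you would find more data, including pointers to the program name and command-line parameters (the traditional C `argv`), plus pointers to Unix environment variables and their actual contents. But that is not important here, so we keep moving towards the `add()` call: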

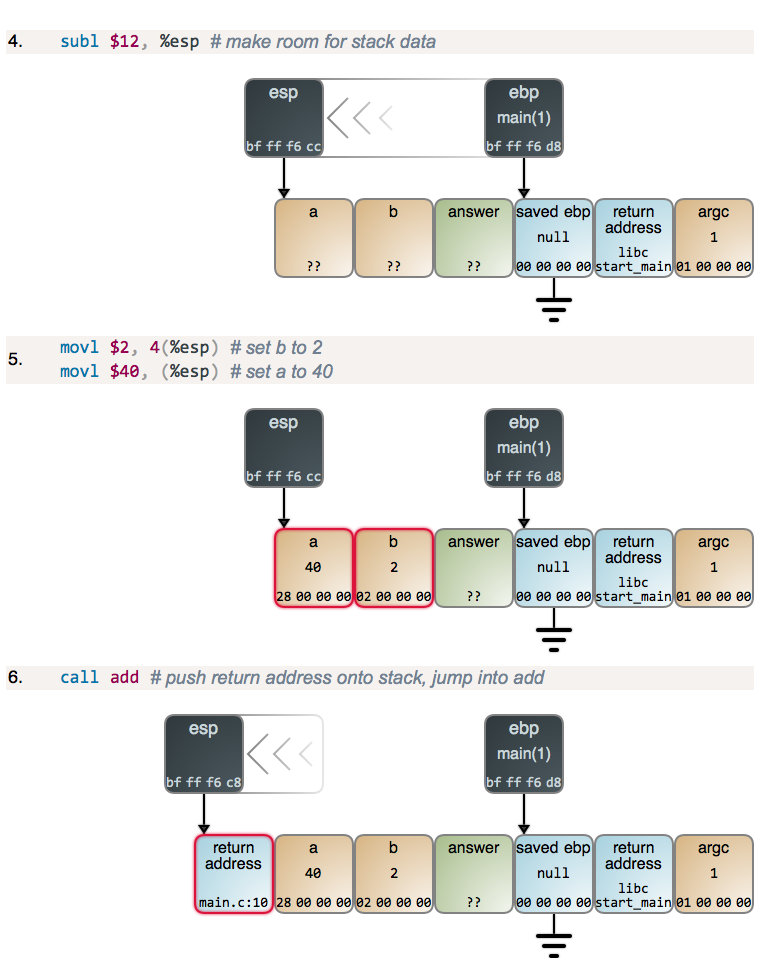

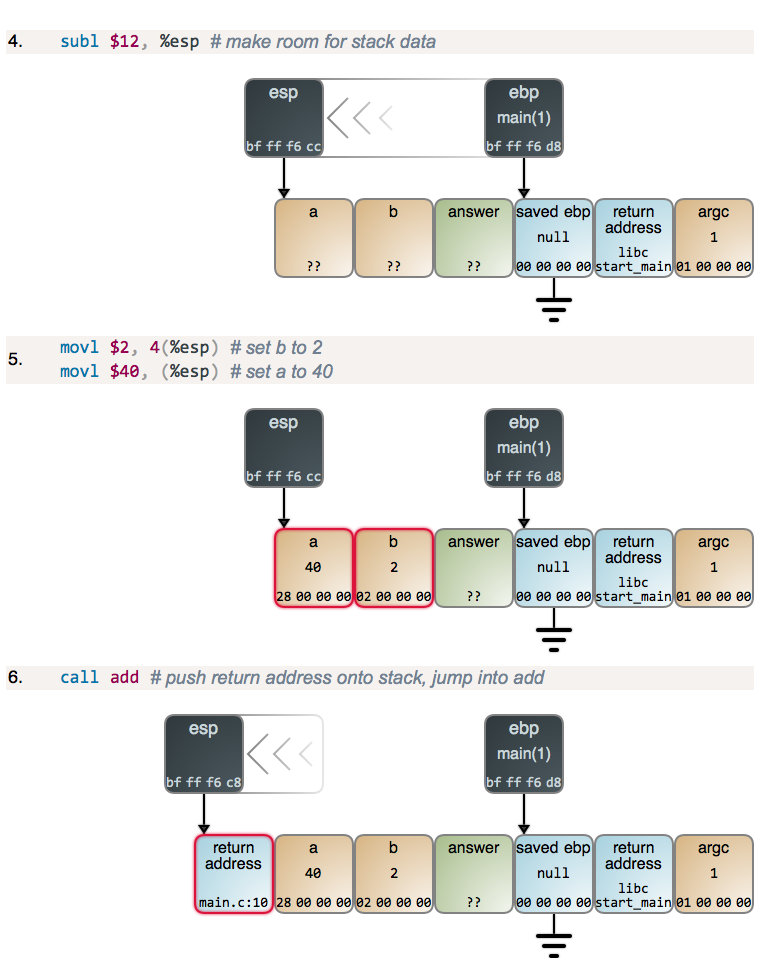

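After `main` subtracts 12 from `esp` to get the stack space it needs, it sets the values for `a` and `b`. Values in memory are shown in hex and in little-endian format, as you would see them in a debugger. Once the parameter values are set, `main` calls `add`, which starts running: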

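Now there is some excitement! We get another prologue, but this time you can clearly see how the stack frames form a linked list, starting at `ebp` and going down the stack. This is how debuggers and `Exception` objects in higher-level languages get their stack traces. You can also see the much more typical catching up of `ebp` to `esp` when a new frame is born. And again, we subtract from `esp` to get more stack space.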
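The prologue and the linked list of saved `ebp` values can be imitated with a toy model in C. This is only a sketch under stated assumptions: the "stack" is a plain byte array, `esp` and `ebp` are offsets into that array rather than real registers, and every name here (`toy_stack`, `toy_push`, `toy_prologue`, `toy_count_frames`) is hypothetical. It does not reproduce the article's GDB session; it only mirrors the sequence push `ebp`, copy `esp` to `ebp`, subtract from `esp`.

```c
#include <stdint.h>
#include <string.h>

#define STACK_BYTES 256

/* A toy model of a downward-growing stack. Addresses are offsets into
 * mem, not real machine addresses. */
struct toy_stack {
    unsigned char mem[STACK_BYTES];
    uint32_t esp;   /* offset of the last pushed item (the "top") */
    uint32_t ebp;   /* frame pointer of the current function      */
};

void toy_init(struct toy_stack *s)
{
    memset(s->mem, 0, sizeof s->mem);
    s->esp = STACK_BYTES;  /* empty stack: esp starts at the high end */
    s->ebp = 0;            /* ebp is zeroed when the program starts   */
}

/* push: esp moves DOWN by 4 bytes, then the value is stored at esp. */
void toy_push(struct toy_stack *s, uint32_t value)
{
    s->esp -= 4;
    memcpy(&s->mem[s->esp], &value, 4);
}

/* Read the 4-byte value stored at the given offset. */
uint32_t toy_load(const struct toy_stack *s, uint32_t addr)
{
    uint32_t value;
    memcpy(&value, &s->mem[addr], 4);
    return value;
}

/* Function prologue: push ebp; copy esp to ebp; subtract local space. */
void toy_prologue(struct toy_stack *s, uint32_t local_bytes)
{
    toy_push(s, s->ebp);     /* save the caller's frame pointer  */
    s->ebp = s->esp;         /* the new frame starts here        */
    s->esp -= local_bytes;   /* "grow" the stack by subtracting  */
}

/* Follow the chain of saved ebp values, the way a debugger builds a
 * backtrace. The chain ends at the zero saved by the first frame. */
int toy_count_frames(const struct toy_stack *s)
{
    int frames = 0;
    uint32_t fp = s->ebp;
    while (fp != 0) {
        frames++;
        fp = toy_load(s, fp);  /* the saved ebp links to the caller */
    }
    return frames;
}
```

A caller would run `toy_init`, then `toy_prologue` once per simulated function (pushing a placeholder return address between calls), and finally `toy_count_frames` to walk the chain. The model has no bounds checking and is meant only to make the pointer movements concrete.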

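There is also a slightly weird reversal of bytes when the `ebp` register value is copied to memory. What happens here is that registers do not really have endianness: there are no "growing addresses" inside a register as there are in memory. So, by convention, debuggers show register values in the format most natural to humans: most significant to least significant digits. As a result, on a little-endian machine the copy appears reversed in the usual left-to-right notation for memory. I want the diagrams to show what you will actually see, so there you have it.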

With the hard part behind us, we add:

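Temporary registers make guest appearances to help with the addition, but otherwise there are no alarms and no surprises. `add` has done its job, and at this point the stack action would go in reverse, but we will save that for next time.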

Anybody who has read this far deserves a souvenir, so I have made a large diagram showing [all the steps combined][6].

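It looks tame once it is all laid out. Those little boxes help a lot. In fact, little boxes are the chief tool of computer science. I hope the pictures and register movements provide an intuitive mental picture that integrates stack growth and memory contents. Up close, our software does not look too far from a simple Turing machine.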

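This concludes the first part of our stack tour. There is a bit more ground to cover, and then we will move on to the higher-level programming concepts built on this foundation. See you next week!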

--------------------------------------------------------------------------------

|

||||

|

||||

via:https://manybutfinite.com/post/journey-to-the-stack/

|

||||

|

||||

Author: [Gustavo Duarte][a]
Translator: [qhwdw](https://github.com/qhwdw)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

|

||||

|

||||

[a]:http://duartes.org/gustavo/blog/about/

|

||||

[1]:https://manybutfinite.com/post/journey-to-the-stack/

|

||||

[2]:https://linux.cn/article-9255-1.html

|

||||

[3]:http://stackoverflow.com/questions/14666665/trying-to-understand-gcc-option-fomit-frame-pointer

|

||||

[4]:https://github.com/gduarte/blog/blob/master/code/x86-stack/add-gdb-commands.txt

|

||||

[5]:https://github.com/gduarte/blog/blob/master/code/x86-stack/add-gdb-output.txt

|

||||

[6]:https://manybutfinite.com/img/stack/callSequence.png

|

||||

@ -1,31 +1,29 @@

|

||||

成为你所在社区的美好力量

|

||||

============================================================

|

||||

|

||||

>明白如何传递美好,了解积极意愿的力量,以及更多。

|

||||

> 明白如何传递美好,了解积极意愿的力量,以及更多。

|

||||

|

||||

|

||||

|

||||

|

||||

>图片来自:opensource.com

|

||||

|

||||

激烈的争论是开源社区和开放组织的标志特征之一。在我们最好的日子里,这些争论充满活力和建设性。他们面红耳赤的背后其实是幽默和善意。各方实事求是,共同解决问题,推动持续改进。对我们中的许多人来说,他们只是单纯的娱乐而已。

|

||||

激烈的争论是开源社区和开放组织的标志特征之一。在好的时候,这些争论充满活力和建设性。他们面红耳赤的背后其实是幽默和善意。各方实事求是,共同解决问题,推动持续改进。对我们中的许多人来说,他们只是单纯的喜欢而已。

|

||||

|

||||

然而在我们最糟糕的日子里,这些争论演变成了对旧话题的反复争吵。或者我们用各种方式来传递伤害和相互攻击,或是使用卑劣的手段,而这些侵蚀着我们社区的激情、信任和生产力。

|

||||

然而在那些不好的日子里,这些争论演变成了对旧话题的反复争吵。或者我们用各种方式来传递伤害和相互攻击,或是使用卑劣的手段,而这些侵蚀着我们社区的激情、信任和生产力。

|

||||

|

||||

我们茫然四顾,束手无策,因为社区的对话开始变得有毒。然而,正如 [DeLisa Alexander最近的分享][1],我们每个人都有很多方法可以成为我们社区的一种力量。

|

||||

我们茫然四顾,束手无策,因为社区的对话开始变得有毒。然而,正如 [DeLisa Alexander 最近的分享][1],我们每个人都有很多方法可以成为我们社区的一种力量。

|

||||

|

||||

在这个“开源文化”系列的第一篇文章中,我将分享一些策略,教你如何在这个关键时刻进行干预,引导每个人走向更积极、更有效率的方向。

|

||||

|

||||

### 不要将人推开,而是将人推向前方

|

||||

|

||||

最近,我和我的朋友和同事 [Mark Rumbles][2] 一起吃午饭。多年来,我们在许多支持开源文化和引领 Red Hat 的项目中合作。在这一天,马克问我是怎么坚持的,当我看到辩论变得越来越丑陋的时候,他看到我最近介入了一个邮件列表的对话。

|

||||

最近,我和我的朋友和同事 [Mark Rumbles][2] 一起吃午饭。多年来,我们在许多支持开源文化和引领 Red Hat 的项目中合作。在这一天,Mark 问我,他看到我最近介入了一个邮件列表的对话,当其中的辩论越来越过分时我是怎么坚持的。

|

||||

|

||||

幸运的是,这事早已尘埃落定,事实上我几乎忘记了谈话的内容。然而,它让我们开始讨论如何在一个拥有数千名成员的社区里,公开和坦率的辩论。

|

||||

|

||||

>在我们的社区里,我们成为一种美好力量的最好的方法之一就是:在回应冲突时,以一种迫使每个人提升他们的行为,而不是使冲突升级的方式。

|

||||

|

||||

Mark 说了一些让我印象深刻的话。他说:“你知道,作为一个社区,我们真的很擅长将人推开。但我想看到的是,我们更多的是互相扶持 _向前_ 。”

|

||||

|

||||

Mark 是绝对正确的。在我们的社区里,我们成为一种美好力量的最好的方法之一就是:在回应冲突时,以一种迫使每个人提升他们的行为,而不是使冲突升级的方式。

|

||||

Mark 是绝对正确的。在我们的社区里,我们成为一种美好力量的最好的方法之一就是:以一种迫使每个人提升他们的行为的方式回应冲突,而不是使冲突升级的方式。

|

||||

|

||||

### 积极意愿假想

|

||||

|

||||

@ -33,9 +31,9 @@ Mark 是绝对正确的。在我们的社区里,我们成为一种美好力量

|

||||

|

||||

诚然,这不是一件容易的事情。当我看到一场辩论正在变得肮脏的迹象时,我停下来问自己,史蒂芬·科维(Steven Covey)所说的人性化问题是什么:

|

||||

|

||||

“为什么一个理性、正直的人会做这样的事情?”

|

||||

> “为什么一个理性、正直的人会做这样的事情?”

|

||||

|

||||

现在,如果他是你的一个“普通的观察对象”——一个有消极行为倾向的社区成员——也许你的第一个想法是,“嗯,也许这个人是个不靠谱,不理智的人”

|

||||

现在,如果他是你的一个“普通的观察对象”—— 一个有消极行为倾向的社区成员——也许你的第一个想法是,“嗯,也许这个人是个不靠谱,不理智的人”

|

||||

|

||||

回过头来说。我并不是说你让你自欺欺人。这其实就是人性化的问题,不仅是因为它让你理解别人的立场,它还让你变得人性化。

|

||||

|

||||

@ -51,7 +49,7 @@ Mark 是绝对正确的。在我们的社区里,我们成为一种美好力量

|

||||

|

||||

一个简单的积极意愿假想,我们可以适用于几乎所有的不良行为,其实就是那个人想要被聆听,被尊重,或被理解。我想这是相当合理的。

|

||||

|

||||

通过站在这个更客观、更有同情心的角度,我们可以看到他们的行为几乎肯定 **_不_** 会帮助他们得到他们想要的东西,而社区也会因此而受到影响。如果没有我们的帮助的话。

|

||||

通过站在这个更客观、更有同情心的角度,我们可以看到他们的行为几乎肯定 **_不会_** 帮助他们得到他们想要的东西,而社区也会因此而受到影响。如果没有我们的帮助的话。

|

||||

|

||||

对我来说,这激发了一个愿望:帮助每个人从我们所处的这个丑陋的地方“摆脱困境”。

|

||||

|

||||

@ -60,7 +58,7 @@ Mark 是绝对正确的。在我们的社区里,我们成为一种美好力量

|

||||

容易想到的例子包括:

|

||||

|

||||

* 他们担心我们错过了一些重要的东西,或者我们犯了一个错误,没有人能看到它。

|

||||

* 他们想为自己的贡献感到有价值。

|

||||

* 他们想感受到自己的贡献的价值。

|

||||

* 他们精疲力竭,因为在社区里工作过度或者在他们的个人生活中发生了一些事情。

|

||||

* 他们讨厌一些东西被破坏,并感到沮丧,因为没有人能看到造成的伤害或不便。

|

||||

* ……诸如此类。

|

||||

@ -69,11 +67,11 @@ Mark 是绝对正确的。在我们的社区里,我们成为一种美好力量

|

||||

|

||||

### 传递美好,挣脱泥潭

|

||||

|

||||

什么是 an out?(类似与佛家“解脱法门”的意思)把它想象成一个逃跑的门。这是一种退出对话的方式,或者放弃不良的行为,恢复表现得像一个体面的人,而不是丢面子。是叫某人振作向上,而不是叫他走开。

|

||||

什么是 an out?(LCTT 译注:类似与佛家“解脱法门”的意思)把它想象成一个逃跑的门。这是一种退出对话的方式,或者放弃不良的行为,恢复表现得像一个体面的人,而不是丢面子。是叫某人振作向上,而不是叫他走开。

|

||||

|

||||

你可能经历过这样的事情,在你的生活中,当 _你_ 在一次谈话中表现不佳时,咆哮着,大喊大叫,对某事大惊小怪,而有人慷慨地给 _你_ 提供了一个台阶下。也许他们选择不去和你“抬杠”,相反,他们说了一些表明他们相信你是一个理性、正直的人,他们采用积极意愿假想,比如:

|

||||

|

||||

> _所以,嗯,我听到的是你真的很担心,你很沮丧,因为似乎没有人在听。或者你担心我们忽略了它的重要性。是这样对吧?_

|

||||

> 所以,嗯,我听到的是你真的很担心,你很沮丧,因为似乎没有人在听。或者你担心我们忽略了它的重要性。是这样对吧?

|

||||

|

||||

于是乎:即使这不是完全正确的(也许你的意图不那么高尚),在那一刻,你可能抓住了他们提供给你的台阶,并欣然接受了重新定义你的不良行为的机会。你几乎可以肯定地转向一个更富有成效的角度,甚至你自己可能都没有意识到。

|

||||

|

||||

@ -85,13 +83,13 @@ Mark 是绝对正确的。在我们的社区里,我们成为一种美好力量

|

||||

|

||||

### 坏行为还是坏人?

|

||||

|

||||

如果这个人特别激动,他们可能不会听到或者接受你给出的第一台阶。没关系。最可能的是,他们迟钝的大脑已经被史前曾经对人类生存至关重要的杏仁核接管了,他们需要更多的时间来认识到你并不是一个威胁。只是需要你保持温和的态度,坚定地对待他们,就好像他们 _曾经是_ 一个理性、正直的人,看看会发生什么。

|

||||

如果这个人特别激动,他们可能不会听到或者接受你给出的第一个台阶。没关系。最可能的是,他们迟钝的大脑已经被史前曾经对人类生存至关重要的杏仁体接管了,他们需要更多的时间来认识到你并不是一个威胁。只是需要你保持温和的态度,坚定地对待他们,就好像他们 _曾经是_ 一个理性、正直的人,看看会发生什么。

|

||||

|

||||

根据我的经验,这些社区干预以三种方式结束:

|

||||

|

||||

大多数情况下,这个人实际上 _是_ 一个理性的人,很快,他们就感激地接受了这个事实。在这个过程中,每个人都跳出了“黑与白”,“赢或输”的心态。人们开始思考创造性的选择和“双赢”的结果,每个人都将受益。

|

||||

|

||||

> 为什么一个理性、正直的人会做这样的事呢?

|

||||

> 为什么一个理性、正直的人会做这样的事呢?

|

||||

|

||||

有时候,这个人天生不是特别理性或正直的,但当他被你以如此一致的、不知疲倦的、耐心的慷慨和善良的对待的时候,他们就会羞愧地从谈话中撤退。这听起来像是,“嗯,我想我已经说了所有要说的了。谢谢你听我的意见”。或者,对于不那么开明的人来说,“嗯,我厌倦了这种谈话。让我们结束吧。”(好的,谢谢)。

|

||||

|

||||

@ -99,7 +97,7 @@ Mark 是绝对正确的。在我们的社区里,我们成为一种美好力量

|

||||

|

||||

这就是积极意愿假想的力量。通过对愤怒和充满敌意的言辞做出回应,优雅而有尊严地回应,你就能化解一场战争,理清混乱,解决棘手的问题,而且在这个过程中很有可能会交到一个新朋友。

|

||||

|

||||

我每次应用这个原则都成功吗?见鬼,不。但我从不后悔选择了积极意愿。但是我能生动的回想起,当我采用消极意愿假想时,将问题变得更糟糕的场景。

|

||||

我每次应用这个原则都成功吗?见鬼,不会。但我从不后悔选择了积极意愿。但是我能生动的回想起,当我采用消极意愿假想时,将问题变得更糟糕的场景。

|

||||

|

||||

现在轮到你了。我很乐意听到你提出的一些策略和原则,当你的社区里的对话变得激烈的时候,要成为一股好力量。在下面的评论中分享你的想法。

|

||||

|

||||

@ -111,7 +109,7 @@ Mark 是绝对正确的。在我们的社区里,我们成为一种美好力量

|

||||

|

||||

|

||||

|

||||

丽贝卡·费尔南德斯(Rebecca Fernandez)是红帽公司(Red Hat)的首席就业品牌 + 通讯专家,是《开源组织》书籍的贡献者,也是开源决策框架的维护者。她的兴趣是开源和业务管理模型的开源方式。Twitter:@ruhbehka

|

||||

丽贝卡·费尔南德斯(Rebecca Fernandez)是红帽公司(Red Hat)的首席就业品牌 + 通讯专家,是《开放组织》书籍的贡献者,也是开源决策框架的维护者。她的兴趣是开源和业务管理模型的开源方式。Twitter:@ruhbehka

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -119,7 +117,7 @@ via: https://opensource.com/open-organization/17/1/force-for-good-community

|

||||

|

||||

作者:[Rebecca Fernandez][a]

|

||||

译者:[chao-zhi](https://github.com/chao-zhi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,65 +1,67 @@

|

||||

Taskwarrior 入门

|

||||

基于命令行的任务管理器 Taskwarrior

|

||||

=====

|

||||

|

||||

Taskwarrior 是一个灵活的[命令行任务管理程序][1],用他们[自己的话说][2]:

|

||||

|

||||

Taskwarrior 是从你的命令行管理你的 TODO 列表。它灵活,快速,高效,不显眼,它默默做自己的事情让你避免自己管理。

|

||||

> Taskwarrior 在命令行里管理你的 TODO 列表。它灵活,快速,高效,不显眼,它默默做自己的事情让你避免自己管理。

|

||||

|

||||

Taskwarrior 是高度可定制的,但也可以“立即使用”。在本文中,我们将向你展示添加和完成任务的基本命令,然后我们将介绍几个更高级的命令。最后,我们将向你展示一些基本的配置设置,以开始自定义你的设置。

|

||||

|

||||

### 安装 Taskwarrior

|

||||

|

||||

Taskwarrior 在 Fedora 仓库中是可用的,所有安装它很容易:

|

||||

|

||||

```

|

||||

sudo dnf install task

|

||||

|

||||

```

|

||||

|

||||

一旦完成安装,运行 `task`。第一次运行将会创建一个 `~/.taskrc` 文件。

|

||||

一旦完成安装,运行 `task` 命令。第一次运行将会创建一个 `~/.taskrc` 文件。

|

||||

|

||||

```

|

||||

$ **task**

|

||||

$ task

|

||||

A configuration file could not be found in ~

|

||||

|

||||

Would you like a sample /home/link/.taskrc created, so Taskwarrior can proceed? (yes/no) yes

|

||||

[task next]

|

||||

No matches.

|

||||

|

||||

```

|

||||

|

||||

### 添加任务

|

||||

|

||||

添加任务快速而不显眼。

|

||||

|

||||

```

|

||||

$ **task add Plant the wheat**

|

||||

$ task add Plant the wheat

|

||||

Created task 1.

|

||||

|

||||

```

|

||||

|

||||

运行 `task` 或者 `task list` 来显示即将来临的任务。

|

||||

|

||||

```

|

||||

$ **task list**

|

||||

$ task list

|

||||

|

||||

ID Age Description Urg

|

||||

1 8s Plant the wheat 0

|

||||

|

||||

1 task

|

||||

|

||||

```

|

||||

|

||||

让我们添加一些任务来完成这个示例。

|

||||

```

|

||||

$ **task add Tend the wheat**

|

||||

Created task 2.

|

||||

$ **task add Cut the wheat**

|

||||

Created task 3.

|

||||

$ **task add Take the wheat to the mill to be ground into flour**

|

||||

Created task 4.

|

||||

$ **task add Bake a cake**

|

||||

Created task 5.

|

||||

|

||||

```

|

||||

$ task add Tend the wheat

|

||||

Created task 2.

|

||||

$ task add Cut the wheat

|

||||

Created task 3.

|

||||

$ task add Take the wheat to the mill to be ground into flour

|

||||

Created task 4.

|

||||

$ task add Bake a cake

|

||||

Created task 5.

|

||||

```

|

||||

|

||||

再次运行 `task` 来查看列表。

|

||||

|

||||

```

|

||||

[task next]

|

||||

|

||||

@ -71,84 +73,83 @@ ID Age Description Urg

|

||||

5 2s Bake a cake 0

|

||||

|

||||

5 tasks

|

||||

|

||||

```

|

||||

|

||||

### 完成任务

|

||||

|

||||

将一个任务标记为完成, 查找其 ID 并运行:

|

||||

|

||||

```

|

||||

$ **task 1 done**

|

||||

$ task 1 done

|

||||

Completed task 1 'Plant the wheat'.

|

||||

Completed 1 task.

|

||||

|

||||

```

|

||||

|

||||

你也可以用它的描述来标记一个任务已完成。

|

||||

|

||||

```

|

||||

$ **task 'Tend the wheat' done**

|

||||

$ task 'Tend the wheat' done

|

||||

Completed task 1 'Tend the wheat'.

|

||||

Completed 1 task.

|

||||

|

||||

```

|

||||

|

||||

通过使用 `add`, `list` 和 `done`,你可以说已经入门了。

|

||||

通过使用 `add`、`list` 和 `done`,你可以说已经入门了。

|

||||

|

||||

### 设定截止日期

|

||||

|

||||

很多任务不需要一个截止日期:

|

||||

|

||||

```

|

||||

task add Finish the article on Taskwarrior

|

||||

|

||||

```

|

||||

|

||||

但是有时候,设定一个截止日期正是你需要提高效率的动力。在添加任务时使用 `due` 修饰符来设置特定的截止日期。

|

||||

|

||||

```

|

||||

task add Finish the article on Taskwarrior due:tomorrow

|

||||

|

||||

```

|

||||

|

||||

`due` 非常灵活。它接受特定日期 ("2017-02-02") 或 ISO-8601 ("2017-02-02T20:53:00Z"),甚至相对时间 ("8hrs")。可以查看所有示例的 [Date & Time][3] 文档。

|

||||

`due` 非常灵活。它接受特定日期 (`2017-02-02`) 或 ISO-8601 (`2017-02-02T20:53:00Z`),甚至相对时间 (`8hrs`)。可以查看所有示例的 [Date & Time][3] 文档。

|

||||

|

||||

日期也不只有截止日期,Taskwarrior 有 `scheduled`, `wait` 和 `until` 选项。

|

||||

|

||||

日期也会超出截止日期,Taskwarrior 有 `scheduled`, `wait` 和 `until` 选项。

|

||||

```

|

||||

task add Proof the article on Taskwarrior scheduled:thurs

|

||||

|

||||

```

|

||||

|

||||

一旦日期(本例中的星期四)通过,该任务就会被标记为 `READY` 虚拟标记。它会显示在 `ready` 报告中。

|

||||

|

||||

```

|

||||

$ **task ready**

|

||||

$ task ready

|

||||

|

||||

ID Age S Description Urg

|

||||

1 2s 1d Proof the article on Taskwarrior 5

|

||||

|

||||

```

|

||||

|

||||

要移除一个日期,使用空白值来 `modify` 任务:

|

||||

|

||||

```

|

||||

$ task 1 modify scheduled:

|

||||

|

||||

```

|

||||

|

||||

### 查找任务

|

||||

|

||||

如果没有使用正则表达式搜索的能力,任务列表是不完整的,对吧?

|

||||

|

||||

```

|

||||

$ **task '/.* the wheat/' list**

|

||||

$ task '/.* the wheat/' list

|

||||

|

||||

ID Age Project Description Urg

|

||||

2 42min Take the wheat to the mill to be ground into flour 0

|

||||

1 42min Home Cut the wheat 1

|

||||

|

||||

2 tasks

|

||||

|

||||

```

|

||||

|

||||

### 自定义 Taskwarrior

|

||||

|

||||

记得我们在开头创建的文件 (`~/.taskrc`)吗?让我们来看看默认设置:

|

||||

|

||||

```

|

||||

# [Created by task 2.5.1 2/9/2017 16:39:14]

|

||||

# Taskwarrior program configuration file.

|

||||

@ -180,41 +181,40 @@ data.location=~/.task

|

||||

#include /usr//usr/share/task/solarized-dark-256.theme

|

||||

#include /usr//usr/share/task/solarized-light-256.theme

|

||||

#include /usr//usr/share/task/no-color.theme

|

||||

|

||||

|

||||

```

|

||||

|

||||

现在唯一有效的选项是 `data.location=~/.task`。要查看活动配置设置(包括内置的默认设置),运行 `show`。

|

||||

现在唯一生效的选项是 `data.location=~/.task`。要查看活动配置设置(包括内置的默认设置),运行 `show`。

|

||||

|

||||

```

|

||||

task show

|

||||

|

||||

```

|

||||

|

||||

改变设置,使用 `config`。

|

||||

要改变设置,使用 `config`。

|

||||

|

||||

```

|

||||

$ **task config displayweeknumber no**

|

||||

$ task config displayweeknumber no

|

||||

Are you sure you want to add 'displayweeknumber' with a value of 'no'? (yes/no) yes

|

||||

Config file /home/link/.taskrc modified.

|

||||

|

||||

```

|

||||

|

||||

### 示例

|

||||

|

||||

这些只是你可以用 Taskwarrior 做的一部分事情。

|

||||

|

||||

为你的任务分配一个项目:

|

||||

将你的任务分配到一个项目:

|

||||

|

||||

```

|

||||

task 'Fix leak in the roof' modify project:Home

|

||||

|

||||

```

|

||||

|

||||

使用 `start` 来标记你正在做的事情,这可以帮助你回忆起你周末后在做什么:

|

||||

|

||||

```

|

||||

task 'Fix bug #141291' start

|

||||

|

||||

```

|

||||

|

||||

使用相关的标签:

|

||||

|

||||

```

|

||||

task add 'Clean gutters' +weekend +house

|

||||

|

||||

@ -229,7 +229,7 @@ via: https://fedoramagazine.org/getting-started-taskwarrior/

|

||||

|

||||

作者:[Link Dupont][a]

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

120

published/20171109 Testing IPv6 Networking in KVM- Part 2.md

Normal file


@ -0,0 +1,120 @@

|

||||

Testing IPv6 Networking in KVM: Part 2

|

||||

======

|

||||

|

||||

|

||||

|

||||

When last we met, in [Testing IPv6 Networking in KVM: Part 1][1], we learned about IPv6 private addressing. Today, we are going to use KVM to create a network for testing what we learned about IPv6.

If you would like a refresher on using KVM, see [Creating Virtual Machines in KVM: Part 1][2] and [Creating Virtual Machines in KVM: Part 2 - Networking][3].

### Create a Network in KVM

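You need at least two virtual machines in KVM. Of course, you may create as many as you like. My setup has Fedora, Ubuntu, and openSUSE. To create a new IPv6 network, open "Edit > Connection Details > Virtual Networks" in the main Virtual Machine Manager window. Click the button with the green cross at the bottom left to create a new network (Figure 1).

*Figure 1: Create a network*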

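Give your new network a name, then click the "Forward" button. You may opt not to create an IPv4 network if you wish. When you create a new IPv4 network, Virtual Machine Manager will not let you create a duplicate network or one with an invalid address. On my Ubuntu host, a valid address is highlighted in green and an invalid address in a rosy shade. On my openSUSE machine there are no colored highlights. Enable DHCP or not, and create a static route or not, then move on to the next window.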

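Check "Enable IPv6 network address space definition" and enter your private address range. You may use any IPv6 address class you wish, taking care not to let your experimental network leak onto the public network. We shall use the handy IPv6 unique local addresses (ULA) and the online address generator at [Simple DNS Plus][4] to create our network address. Copy the "Combined/CID" address into the network box (Figure 2).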

![network address][6]

|

||||

|

||||

*Figure 2: Copy the "Combined/CID" address into the network box*

Virtual Machine Manager thinks my address is not valid, as shown by the rose highlight. Can it be right? Let us use `ipv6calc` to check:

```
$ ipv6calc -qi fd7d:844d:3e17:f3ae::/64
Address type: unicast, unique-local-unicast, iid, iid-local
Registry for address: reserved(RFC4193#3.1)
Address type has SLA: f3ae
Interface identifier: 0000:0000:0000:0000
Interface identifier is probably manual set
```

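`ipv6calc` thinks it is fine. Just for fun, change one of the digits to something invalid, like the letter `g`, and try again. (Asking "What if...?" and trial and error are the best ways to learn.)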
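The same "is this a valid unique local address?" question can be asked programmatically. The following is a minimal sketch, assuming a POSIX system that provides `inet_pton`; the function name `is_unique_local` is hypothetical. It checks that the text parses as an IPv6 address and that it falls inside `fc00::/7`, the block RFC 4193 reserves for ULAs. Note that `inet_pton` expects a bare address, so a prefix length such as `/64` has to be removed first.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdbool.h>
#include <sys/socket.h>

/* Return true if text is a valid IPv6 address inside fc00::/7, the
 * unique local address block of RFC 4193. (Hypothetical helper.) */
bool is_unique_local(const char *text)
{
    struct in6_addr addr;

    /* inet_pton returns 1 only when the string is a valid address. */
    if (inet_pton(AF_INET6, text, &addr) != 1)
        return false;

    /* fc00::/7 means the top seven bits of the first byte are 1111110. */
    return (addr.s6_addr[0] & 0xfe) == 0xfc;
}
```

With this sketch, `fd7d:844d:3e17:f3ae::` is accepted, while the same string with a digit swapped for `g` is rejected because it no longer parses as an address.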

Let us carry on and enable DHCPv6 (Figure 3). You can accept the default values or enter your own.

*Figure 3: Enable DHCPv6*

We shall skip the default route definition and move on to the next screen, where we enable "Isolated Virtual Network" and "Enable IPv6 internal routing/networking".

### VM Network Selection

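Now you can configure your virtual machines to use the new network. Open your VM, then click the "i" button at the top left to open the "Show virtual hardware details" screen. In the "Add Hardware" column, click the "NIC" button to open the network selector, and select your new IPv6 network. Click "Apply", then reboot. (Or use your favorite method for restarting networking or renewing your DHCP lease.)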

### Testing

What does `ifconfig` tell us?

```
$ ifconfig
ens3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
 inet 192.168.30.207 netmask 255.255.255.0
 broadcast 192.168.30.255
 inet6 fd7d:844d:3e17:f3ae::6314
 prefixlen 128 scopeid 0x0<global>
 inet6 fe80::4821:5ecb:e4b4:d5fc
 prefixlen 64 scopeid 0x20<link>
```

And there is our new ULA, `fd7d:844d:3e17:f3ae::6314`, along with the auto-generated link-local address. If you like, have some fun pinging the other VMs on the network:

```
vm1 ~$ ping6 -c2 fd7d:844d:3e17:f3ae::2c9f
PING fd7d:844d:3e17:f3ae::2c9f(fd7d:844d:3e17:f3ae::2c9f) 56 data bytes
64 bytes from fd7d:844d:3e17:f3ae::2c9f: icmp_seq=1 ttl=64 time=0.635 ms
64 bytes from fd7d:844d:3e17:f3ae::2c9f: icmp_seq=2 ttl=64 time=0.365 ms

vm2 ~$ ping6 -c2 fd7d:844d:3e17:f3ae:a:b:c:6314
PING fd7d:844d:3e17:f3ae:a:b:c:6314(fd7d:844d:3e17:f3ae:a:b:c:6314) 56 data bytes
64 bytes from fd7d:844d:3e17:f3ae:a:b:c:6314: icmp_seq=1 ttl=64 time=0.744 ms
64 bytes from fd7d:844d:3e17:f3ae:a:b:c:6314: icmp_seq=2 ttl=64 time=0.364 ms
```

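When you are struggling to understand subnetting, this is a fast and easy way to try different addresses and see whether they work. You can assign multiple IP addresses to a single interface and then ping them to see what happens. In a ULA, the interface, or host, portion is the last four quads of the address, so you can do anything you like there as long as the address stays in the same subnet, which in this example is `f3ae`. On one of my VMs I changed only the interface ID, to show that you really can do whatever you want with those four quads: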

```
vm1 ~$ sudo /sbin/ip -6 addr add fd7d:844d:3e17:f3ae:a:b:c:6314 dev ens3

vm2 ~$ ping6 -c2 fd7d:844d:3e17:f3ae:a:b:c:6314
PING fd7d:844d:3e17:f3ae:a:b:c:6314(fd7d:844d:3e17:f3ae:a:b:c:6314) 56 data bytes
64 bytes from fd7d:844d:3e17:f3ae:a:b:c:6314: icmp_seq=1 ttl=64 time=0.744 ms
64 bytes from fd7d:844d:3e17:f3ae:a:b:c:6314: icmp_seq=2 ttl=64 time=0.364 ms
```

Now try it with a different subnet, which in this example uses `f4ae` instead of `f3ae`:

```
$ ping6 -c2 fd7d:844d:3e17:f4ae:a:b:c:6314
PING fd7d:844d:3e17:f4ae:a:b:c:6314(fd7d:844d:3e17:f4ae:a:b:c:6314) 56 data bytes
From fd7d:844d:3e17:f3ae::1 icmp_seq=1 Destination unreachable: No route
From fd7d:844d:3e17:f3ae::1 icmp_seq=2 Destination unreachable: No route
```
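Why one ping succeeds and the other has no route comes down to whether the two addresses share the same first 64 bits. The sketch below illustrates that comparison, assuming a POSIX system with `inet_pton` and a `/64` prefix length as used for the network in this article; the function name `same_subnet64` is hypothetical and the code does not query any real routing table.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdbool.h>
#include <string.h>
#include <sys/socket.h>

/* Return true if both strings are valid IPv6 addresses whose first
 * 64 bits (routing prefix plus subnet ID) are identical.
 * (Hypothetical helper; assumes a /64 prefix length.) */
bool same_subnet64(const char *a, const char *b)
{
    struct in6_addr addr_a, addr_b;

    if (inet_pton(AF_INET6, a, &addr_a) != 1 ||
        inet_pton(AF_INET6, b, &addr_b) != 1)
        return false;   /* at least one string is not a valid address */

    /* Compare the first 8 bytes, i.e. the first four quads. */
    return memcmp(addr_a.s6_addr, addr_b.s6_addr, 8) == 0;
}
```

Under these assumptions, `fd7d:844d:3e17:f3ae::6314` and `fd7d:844d:3e17:f3ae:a:b:c:6314` compare as the same subnet, while the `f4ae` variant does not, which matches the "No route" replies above.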

This is also a great playground for practicing routing, which we will do in a future installment, along with setting up auto-addressing without DHCP.

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/learn/intro-to-linux/2017/11/testing-ipv6-networking-kvm-part-2

|

||||

|

||||

Author: [CARLA SCHRODER][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [qhwdw](https://github.com/qhwdw)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

|

||||

|

||||

[a]:https://www.linux.com/users/cschroder

|

||||

[1]:https://linux.cn/article-9594-1.html

|

||||

[2]:https://www.linux.com/learn/intro-to-linux/2017/5/creating-virtual-machines-kvm-part-1

|

||||

[3]:https://www.linux.com/learn/intro-to-linux/2017/5/creating-virtual-machines-kvm-part-2-networking

|

||||

[4]:http://simpledns.com/private-ipv6.aspx

|

||||

[5]:/files/images/kvm-fig-2png

|

||||

[6]:https://www.linux.com/sites/lcom/files/styles/floated_images/public/kvm-fig-2.png?itok=gncdPGj- "network address"

|

||||

[7]:https://www.linux.com/licenses/category/used-permission

|

||||

@ -0,0 +1,74 @@

|

||||

从专有到开源的十个简单步骤

|

||||

======

|

||||

|

||||

> 这个共同福利并不适用于专有软件:保持隐藏的东西是不能照亮或丰富这个世界的。

|

||||

|

||||

|

||||

|

||||

“开源软件肯定不太安全,因为每个人都能看到它的源代码,而且他们能重新编译它,用他们自己写的不好的东西进行替换。”举手示意:谁之前听说过这个说法?^注1

|

||||

|

||||

当我和客户讨论的时候(是的,他们有时候会让我和客户交谈),对于这个领域^注2 的人来说种问题是很常见的。在前一篇文章中,“[许多只眼睛的审查并不一定能防止错误代码]”,我谈论的是开源软件(尤其是安全软件)并不能神奇地比专有软件更安全,但是和专有软件比起来,我每次还是比较青睐开源软件。但我听到“关于开源软件不是很安全”这种问题表明了有时候只是说“开源需要参与”是不够的,我们也需要积极的参与辩护^注3 。

|

||||

|

||||

我并不期望能够达到牛顿或者维特根斯坦的逻辑水平,但是我会尽我所能,而且我会在结尾做个总结,如果你感兴趣的话可以去快速的浏览一下。

|

||||

|

||||

### 关键因素

|

||||

|

||||

首先,我们必须明白没有任何软件是完美的^注6 。无论是专有软件还是开源软件。第二,我们应该承认确实还是存在一些很不错的专有软件的,以及第三,也存在一些糟糕的开源软件。第四,有很多非常聪明的,很有天赋的,专业的架构师、设计师和软件工程师设计开发了专有软件。

|

||||

|

||||

但也有些问题:第五,从事专有软件的人员是有限的,而且你不可能总是能够雇佣到最好的员工。即使在政府部门或者公共组织 —— 他们拥有丰富的人才资源池,但在安全应用这块,他们的人才也是有限的。第六,可以查看、测试、改进、拆解、再次改进和发布开源软件的人总是无限的,而且还包含最好的人才。第七(也是我最喜欢的一条),这群人也包含很多编写专有软件的人才。第八,许多政府或者公共组织开发的软件也都逐渐开源了。

|

||||

|

||||

第九,如果你在担心你在运行的软件的不被支持或者来源不明,好消息是:有一批组织^注7 会来检查软件代码的来源,提供支持、维护和补丁更新。他们会按照专利软件模式那样去运行开源软件,你也可以确保你从他们那里得到的软件是正确的软件:他们的技术标准就是对软件包进行签名,以便你可以验证你正在运行的开源软件不是来源不明或者是恶意的软件。

|

||||

|

||||

第十(也是这篇文章的重点),当你运行开源软件,测试它,对问题进行反馈,发现问题并且报告的时候,你就是在为<ruby>共同福利<rt>commonwealth</rt></ruby>贡献知识、专业技能以及经验,这就是开源,其因为你的所做的这些而变得更好。如果你是通过个人或者提供支持开源软件的商业组织之一^注8 参与的,你已经成为了这个共同福利的一部分了。开源让软件变得越来越好,你可以看到它们的变化。没有什么是隐藏封闭的,它是完全开放的。事情会变坏吗?是的,但是我们能够及时发现问题并且修复。

|

||||

|

||||

这个共同福利并不适用于专有软件:保持隐藏的东西是不能照亮或丰富这个世界的。

|

||||

|

||||

我知道作为一个英国人在使用<ruby>共同福利<rt>commonwealth</rt></ruby>这个词的时候要小心谨慎的;它和帝国连接着的,但我所表达的不是这个意思。它不是克伦威尔^注9 在对这个词所表述的意思,无论如何,他是一个有争议的历史人物。我所表达的意思是这个词有“共同”和“福利”连接,福利不是指钱而是全人类都能拥有的福利。

|

||||

|

||||

我真的很相信这点的。如果i想从这篇文章中得到一些虔诚信息的话,那应该是第十条^注10 :共同福利是我们的遗产,我们的经验,我们的知识,我们的责任。共同福利是全人类都能拥有的。我们共同拥有它而且它是一笔无法估量的财富。

|

||||

|

||||

### 便利贴

|

||||

|

||||

1. (几乎)没有一款软件是完美无缺的。

|

||||

2. 有很好的专有软件。

|

||||

3. 有不好的专有软件。

|

||||

4. 有聪明,有才能,专注的人开发专有软件。

|

||||

5. 从事开发完善专有软件的人是有限的,即使在政府或者公共组织也是如此。

|

||||

6. 相对来说从事开源软件的人是无限的。

|

||||

7. …而且包括很多从事专有软件的人才。

|

||||

8. 政府和公共组织的人经常开源它们的软件。

|

||||

9. 有商业组织会为你的开源软件提供支持。

|

||||

10. 贡献--即使是使用--为开源软件贡献。

|

||||

|

||||

|

||||

### 脚注

|

||||

|

||||

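- Note 1: OK, you can put your hands down now.
- Note 2: Should this be capitalized? Is there a specific field, or how does that work? I'm not sure.
- Note 3: I have a degree in English literature and theology, which probably won't surprise regular readers of my articles^Note 4.
- Note 4: Not, I hope, because I talk about theology too much^Note 5, but because my writing is often full of long-winded, irrelevant humanities references.
- Note 5: Emacs. Every time.
- Note 6: Not even Emacs. And yes, I know there are techniques for proving the correctness of some software. (I suspect Emacs would not pass all of them...)
- Note 7: Full disclosure: I am employed by one of them, [Red Hat][3]. Go have a look: it's a fun place to work, and [we're usually hiring][4].
- Note 8: Assuming they fully comply with the licenses of the open source software they use.
- Note 9: The erstwhile "Lord Protector of England, Scotland and Ireland": that Cromwell.
- Note 10: Oh, and obviously choose Emacs over the Vi variants.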

|

||||

|

||||

This article originally appeared on [Alice, Eve, and Bob - a security blog][5] and is republished with permission.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/11/commonwealth-open-source

|

||||

|

||||

作者:[Mike Bursell][a]

|

||||

译者:[FelixYFZ](https://github.com/FelixYFZ)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/mikecamel

|

||||

[1]:https://opensource.com/article/17/10/many-eyes

|

||||

[2]:https://en.wikipedia.org/wiki/Apologetics

|

||||

[3]:https://www.redhat.com/

|

||||

[4]:https://www.redhat.com/en/jobs

|

||||

[5]:https://aliceevebob.com/2017/10/24/the-commonwealth-of-open-source/

|

||||

@ -0,0 +1,84 @@

|

||||

如何创建适合移动设备的文档

|

||||

=====

|

||||

|

||||

> 帮助用户在智能手机或平板上快速轻松地找到他们所需的信息。

|

||||

|

||||

|

||||

|

||||

我并不是完全相信[移动为先][1]的理念,但是我确实发现更多的人使用智能手机和平板电脑等移动设备来获取信息。这包括在线的软件和硬件文档,但它们大部分都是冗长的,不适合小屏幕。通常情况下,它的伸缩性不太好,而且很难导航。

|

||||

|

||||

当用户使用移动设备访问文档时,他们通常需要迅速获取信息以了解如何执行任务或解决问题,他们不想通过看似无尽的页面来寻找他们需要的特定信息。幸运的是,解决这个问题并不难。以下是一些技巧,可以帮助你构建文档以满足移动阅读器的需求。

|

||||

|

||||

### 简短一点

|

||||

|

||||

这意味着简短的句子,简短的段落和简短的流程。你不是在写一部长篇小说或一段长新闻。使你的文档简洁。尽可能使用少量的语言来获得想法和信息。

|

||||

|

||||

以广播新闻报道为示范:关注关键要素,用简单直接的语言对其进行解释。不要让你的读者在屏幕上看到冗长的文字。

|

||||

|

||||

另外,直接切入重点。关注读者需要的信息。在线发布的文档不应该像以前厚厚的手册一样。不要把所有东西都放在一个页面上,把你的信息分成更小的块。接下来是怎样做到这一点:

|

||||

|

||||

### 主题

|

||||

|

||||

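In the world of technical writing, a topic is a standalone, self-contained chunk of information. Each topic is a single page on your site. Readers should be able to get the information they need from a specific topic, and only that information. To make that happen, choose which topics to include in your documentation and decide how to organize them: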

|

||||

|

||||

### DITA

|

||||

|

||||

The [Darwin Information Typing Architecture][2] (DITA) is an XML model for writing and publishing. It is [widely adopted][3] in technical writing, especially for longer documentation sets.

|

||||

|

||||

我并不是建议你将文档转换为 XML(除非你真的想)。相反,考虑将 DITA 的不同类型主题的概念应用到你的文档中:

|

||||

|

||||

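* General: overview information
* Task: step-by-step procedures
* Concept: background or conceptual information
* Reference: specialized information such as API references or data dictionaries
* Glossary: definitions of terms
* Troubleshooting: information about problems users might encounter and how to fix them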

|

||||

|

||||

你会得到很多单独的页面。要连接这些页面:

|

||||

|

||||

### 链接

|

||||

|

||||

许多内容管理系统、维基和发布框架都包含某种形式的导航 —— 通常是目录或[面包屑导航][4],这是一种在移动设备上逐渐消失的导航。

|

||||

|

||||

为了加强导航,在主题之间添加明确的链接。将这些链接放在每个主题末尾的标题**另请参阅**或**相关主题**。每个部分应包含两到五个链接,指向与当前主题相关的概述、概念和参考主题。

|

||||

|

||||

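If you need to point to information outside your documentation set, make sure the link opens in a new browser tab. That sends the reader to the other site while still keeping your site open for them.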

|

||||

|

||||

这解决了文本问题,那么图片呢?

|

||||

|

||||

### 不使用图片

|

||||

|

||||

除少数情况之外,不应该加太多图片到文档中。仔细查看文档中的每个图片,然后问自己:

|

||||

|

||||

* 它有用吗?

|

||||

* 它是否增强了文档?

|

||||

* 如果删除它,读者会错过这张图片吗?

|

||||

|

||||

如果回答否,那么移除图片。

|

||||

|

||||

另一方面,如果你绝对不能没有图片,就让它变成[响应式的][5]。这样,图片就会自动调整以适应更小的屏幕。

|

||||

|

||||

如果你仍然不确定图片是否应该出现,Opensource.com 社区版主 Ben Cotton 提供了一个关于在文档中使用屏幕截图的[极好的解释][6]。

|

||||

|

||||

### 最后的想法

|

||||

|

||||

通过少量努力,你就可以构建适合移动设备用户的文档。此外,这些更改也改进了桌面计算机和笔记本电脑用户的文档体验。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/12/think-mobile

|

||||

|

||||

作者:[Scott Nesbitt][a]

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/chrisshort

|

||||

[1]:https://www.uxmatters.com/mt/archives/2012/03/mobile-first-what-does-it-mean.php

|

||||

[2]:https://en.wikipedia.org/wiki/Darwin_Information_Typing_Architecture

|

||||

[3]:http://dita.xml.org/book/list-of-organizations-using-dita

|

||||

[4]:https://en.wikipedia.org/wiki/Breadcrumb_(navigation)

|

||||

[5]:https://en.wikipedia.org/wiki/Responsive_web_design

|

||||

[6]:https://opensource.com/business/15/9/when-does-your-documentation-need-screenshots

|

||||

@ -0,0 +1,122 @@

|

||||

两款 Linux 桌面端可用的科学计算器

|

||||

======

|

||||

|

||||

> 如果你想找个高级的桌面计算器的话,你可以看看开源软件,以及一些其它有趣的工具。

|

||||

|

||||

|

||||

|

||||

每个 Linux 桌面环境都至少带有一个功能简单的桌面计算器,但大多数计算器只能进行一些简单的计算。

|

||||

|

||||

幸运的是,还是有例外的:不仅可以做得比开平方根和一些三角函数还多,而且还很简单。这里将介绍两款强大的计算器,外加一大堆额外的功能。

|

||||

|

||||

### SpeedCrunch

|

||||

|

||||

[SpeedCrunch][1] is a high-precision scientific calculator with a simple Qt5 graphical interface and a strong focus on the keyboard.

|

||||

|

||||

![SpeedCrunch graphical interface][3]

|

||||

|

||||

*SpeedCrunch 运行中*

|

||||

|

||||

它支持单位,并且可用在所有函数中。

|

||||

|

||||

例如,

|

||||

|

||||

```
2 * 10^6 newton / (meter^2)
```

|

||||

|

||||

你可以得到:

|

||||

|

||||

```
= 2000000 pascal
```

|

||||

|

||||

SpeedCrunch 会默认地将结果转化为国际标准单位,但还是可以用 `in` 命令转换:

|

||||

|

||||

例如:

|

||||

|

||||

```
3*10^8 meter / second in kilo meter / hour
```

|

||||

|

||||

结果是:

|

||||

|

||||

```
= 1080000000 kilo meter / hour
```

|

||||

|

||||

`F5` 键可以将所有结果转为科学计数法(`1.08e9 kilo meter / hour`),`F2` 键可以只将那些很大的数或很小的数转为科学计数法。更多选项可以在配置页面找到。
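The conversion above is easy to double-check by hand. The following Python sketch is not SpeedCrunch code; it only redoes the arithmetic, and the helper name `mps_to_kmh` and the two constants are names chosen for this illustration.

```python
# Redo the SpeedCrunch example `3*10^8 meter / second in kilo meter / hour`
# with plain arithmetic. Names here are illustrative, not part of SpeedCrunch.
METERS_PER_KILOMETER = 1000
SECONDS_PER_HOUR = 3600


def mps_to_kmh(speed_mps: float) -> float:
    """Convert a speed in metres per second to kilometres per hour."""
    return speed_mps * SECONDS_PER_HOUR / METERS_PER_KILOMETER


speed_kmh = mps_to_kmh(3 * 10**8)
print(f"{speed_kmh:.0f} km/h")  # plain notation: 1080000000 km/h
print(f"{speed_kmh:.2e} km/h")  # scientific notation: 1.08e+09 km/h
```

Python writes the exponent as `e+09` where SpeedCrunch shows `e9`; the value is the same.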

|

||||

|

||||

可用的函数的列表看上去非常壮观。它可以用在 Linux 、 Windows、macOS。许可证是 GPLv2,你可以在 [Bitbucket][4] 上得到它的源码。

|

||||

|

||||

### Qalculate!

|

||||

|

||||

[Qalculate!][5](有感叹号)有一段长而复杂的历史。

|

||||

|

||||

这个项目给了我们一个强大的库,而这个库可以被其它程序使用(在 Plasma 桌面中,krunner 可以用它来计算),以及一个用 GTK3 搭建的图形界面。它允许你转换单位,处理物理常量,创建图像,使用复数,矩阵以及向量,选择任意精度,等等。

|

||||

|

||||

![Qalculate! Interface][7]

|

||||

|

||||

*在 Qalculate! 中查看物理常量*

|

||||

|

||||

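When it comes to units, Qalculate! is more intuitive than SpeedCrunch and recognizes common prefixes. Have you ever heard of an exapascal of pressure? I hadn't (the center of the Sun is at roughly `~26 PPa`), but Qalculate! has no trouble understanding what `1 EPa` means. Qalculate! is also more forgiving about syntax, so you don't need to worry about parentheses: if there is no ambiguity, Qalculate! gives you the right answer directly.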

|

||||

|

||||

一段时间之后这个项目看上去被遗弃了。但在 2016 年,它又变得强大了,在一年里更新了 10 个版本。它的许可证是 GPLv2 (源码在 [GitHub][8] 上),提供Linux 、Windows 、macOS的版本。

|

||||

|

||||

### 更多计算器

|

||||

|

||||

#### ConvertAll

|

||||

|

||||

好吧,这不是“计算器”,但这个程序非常好用。

|

||||

|

||||

大部分单位转换器只是一个大的基本单位列表以及一大堆基本组合,但 [ConvertAll][9] 与它们不一样。有试过把天文单位每年转换为英寸每秒吗?不管它们说不说得通,只要你想转换任何种类的单位,ConvertAll 就是你要的工具。

|

||||

|

||||

只需要在相应的输入框内输入转换前和转换后的单位:如果单位相容,你会直接得到答案。

|

||||

|

||||

主程序是在 PyQt5 上搭建的,但也有 [JavaScript 的在线版本][10]。

|

||||

|

||||

#### 带有单位包的 (wx)Maxima

|

||||

|

||||

Sometimes (OK, many times) a desktop calculator is not enough and you need more raw power.

|

||||

|

||||

[Maxima][11] 是一款计算机代数系统(LCTT 译注:进行符号运算的软件。这种系统的要件是数学表示式的符号运算),你可以用它计算导数、积分、方程、特征值和特征向量、泰勒级数、拉普拉斯变换与傅立叶变换,以及任意精度的数字计算、二维或三维图像··· ···列出这些都够我们写几页纸的了。

|

||||

|

||||

[wxMaxima][12] 是一个设计精湛的 Maxima 的图形前端,它简化了许多 Maxima 的选项,但并不会影响其它。在 Maxima 的基础上,wxMaxima 还允许你创建 “笔记本”,你可以在上面写一些笔记,保存你的图像等。其中一项 (wx)Maxima 最惊艳的功能是它可以处理尺寸单位。

|

||||

|

||||

在提示符只需要输入:

|

||||

|

||||

```
load("unit")
```

|

||||

|

||||

按 `Shift+Enter`,等几秒钟的时间,然后你就可以开始了。

|

||||

|

||||

默认地,单位包可以用基本的 MKS 单位,但如果你喜欢,例如,你可以用 `N` 为单位而不是 `kg*m/s2`,你只需要输入:`setunits(N)`。

|

||||

|

||||

Maxima 的帮助(也可以在 wxMaxima 的帮助菜单中找到)会给你更多信息。

|

||||

|

||||

你使用这些程序吗?你知道还有其它好的科学、工程用途的桌面计算器或者其它相关的计算器吗?在评论区里告诉我们吧!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/1/scientific-calculators-linux

|

||||

|

||||

作者:[Ricardo Berlasso][a]

|

||||

译者:[zyk2290](https://github.com/zyk2290)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/rgb-es

|

||||

[1]:http://speedcrunch.org/index.html

|

||||

[2]:/file/382511

|

||||

[3]:https://opensource.com/sites/default/files/u128651/speedcrunch.png "SpeedCrunch graphical interface"

|

||||

[4]:https://bitbucket.org/heldercorreia/speedcrunch

|

||||

[5]:https://qalculate.github.io/

|

||||

[6]:/file/382506

|

||||

[7]:https://opensource.com/sites/default/files/u128651/qalculate-600.png "Qalculate! Interface"

|

||||

[8]:https://github.com/Qalculate

|

||||

[9]:http://convertall.bellz.org/

|

||||

[10]:http://convertall.bellz.org/js/

|

||||

[11]:http://maxima.sourceforge.net/

|

||||

[12]:https://andrejv.github.io/wxmaxima/

|

||||

@ -1,23 +1,25 @@

|

||||

为什么建设一个社区值得额外的努力

|

||||

======

|

||||

|

||||

> 建立 NethServer 社区是有风险的。但是我们从这些激情的人们所带来的力量当中学习到了很多。

|

||||

|

||||

|

||||

|

||||

当我们在 2003 年推出 [Nethesis][1] 时,我们还只是系统集成商。我们只使用现有的开源项目。我们的业务模式非常明确:为这些项目增加多种形式的价值:实践知识、针对意大利市场的文档、额外模块、专业支持和培训课程。我们还通过向上游贡献代码并参与其社区来回馈上游项目。

|

||||

当我们在 2003 年推出 [Nethesis][1] 时,我们还只是系统集成商。我们只使用已有的开源项目。我们的业务模式非常明确:为这些项目增加多种形式的价值:实践知识、针对意大利市场的文档、额外模块、专业支持和培训课程。我们还通过向上游贡献代码并参与其社区来回馈上游项目。

|

||||

|

||||

那时时代不同。我们不能太张扬地使用“开源”这个词。人们将它与诸如“书呆子”,“没有价值”而最糟糕的是“自由”这些词联系起来。这些不太适合生意。

|

||||

那时时代不同。我们不能太张扬地使用“开源”这个词。人们将它与诸如“书呆子”,“没有价值”以及最糟糕的“免费”这些词联系起来。这些不太适合生意。

|

||||

|

||||

在 2010 年的一个星期六,Nethesis 的工作人员,他们手中拿着馅饼和浓咖啡,正在讨论如何推进事情发展(嘿,我们喜欢在创新的同时吃喝东西!)。尽管势头对我们不利,但我们决定不改变方向。事实上,我们决定加大力度 - 去做开源和开放的工作方式,这是一个成功运营企业的模式。

|

||||

在 2010 年的一个星期六,Nethesis 的工作人员,他们手中拿着馅饼和浓咖啡,正在讨论如何推进事情发展(嘿,我们喜欢在创新的同时吃喝东西!)。尽管势头对我们不利,但我们决定不改变方向。事实上,我们决定加大力度 —— 去做开源和开放的工作方式,这是一个成功运营企业的模式。

|

||||

|

||||

多年来,我们已经证明了该模型的潜力。有一件事是我们成功的关键:社区。

|

||||

|

||||

在这个由三部分组成的系列文章中,我将解释社区在开放组织的存在中扮演的重要角色。我将探讨为什么一个组织希望建立一个社区,并讨论如何建立一个社区 - 因为我确实认为这是如今产生新创新的最佳方式。

|

||||

在这个由三部分组成的系列文章中,我将解释社区在开放组织的存在中扮演的重要角色。我将探讨为什么一个组织希望建立一个社区,并讨论如何建立一个社区 —— 因为我确实认为这是如今产生新创新的最佳方式。

|

||||

|

||||

### 这个疯狂的想法

|

||||

|

||||

与 Nethesis 伙伴一起,我们决定构建自己的开源项目:我们自己的操作系统,它建立在 CentOS 之上(因为我们不想重新发明轮子)。我们假设我们拥有实现它的经验、实践知识和人力。我们感到很勇敢。

|

||||

|

||||

我们非常希望构建一个名为 [NethServer][2] 的操作系统,其使命是:通过开源使系统管理员的生活更轻松。我们知道我们可以为服务器创建一个 Linux 发行版,与当前提供的任何东西相比,这些发行版更容易获取、更易于使用,并且更易于理解。

|

||||

我们非常希望构建一个名为 [NethServer][2] 的操作系统,其使命是:通过开源使系统管理员的生活更轻松。我们知道我们可以为服务器创建一个 Linux 发行版,与当前已有的相比,它更容易使用、更易于部署,并且更易于理解。

|

||||

|

||||

不过,最重要的是,我们决定创建一个真正的,100% 开放的项目,其主要规则有三条:

|

||||

|

||||

@ -25,13 +27,11 @@

|

||||

* 开发公开

|

||||

* 社区驱动

|

||||

|

||||

|

||||

|

||||

最后一个很重要。我们是一家公司。我们能够自己开发它。如果我们在内部完成这项工作,我们将会更有效(并且做出更快的决定)。与其他任何意大利公司一样,这将非常简单。

|

||||

|

||||

但是我们已经如此深入到开源文化中,所以我们选择了不同的路径。

|

||||

|

||||

我们确实希望有尽可能多的人围绕着我们、围绕着产品、围绕着公司周围。我们希望对工作有尽可能多的视角。我们意识到:独自一人,你可以走得快 - 但是如果你想走很远,你需要一起走。

|

||||

我们确实希望有尽可能多的人围绕着我们、围绕着产品、围绕着公司周围。我们希望对工作有尽可能多的视角。我们意识到:独自一人,你可以走得快 —— 但是如果你想走很远,你需要一起走。

|

||||

|

||||

所以我们决定建立一个社区。

|

||||

|

||||

@ -41,11 +41,11 @@

|

||||

|

||||

但是很快就出现了这样一个问题:我们如何建立一个社区?我们不知道如何实现这一点。我们参加了很多社区,但我们从未建立过一个社区。

|

||||

|

||||

我们擅长编码 - 而不是人。我们是一家公司,是一个有非常具体优先事项的组织。那么我们如何建立一个社区,并在公司和社区之间建立良好的关系呢?

|

||||

我们擅长编码 —— 而不是人。我们是一家公司,是一个有非常具体优先事项的组织。那么我们如何建立一个社区,并在公司和社区之间建立良好的关系呢?

|

||||

|

||||

我们做了你必须做的第一件事:学习。我们从专家、博客和许多书中学到了知识。我们进行了实验。我们失败了多次,从结果中收集数据,并再次进行测试。

|

||||

|

||||

最终我们学到了社区管理的黄金法则:没有社区管理的黄金法则。

|

||||

最终我们学到了社区管理的黄金法则:**没有社区管理的黄金法则。**

|

||||

|

||||

人们太复杂了,社区无法用一条规则来“统治他们”。

|

||||

|

||||

@ -57,7 +57,7 @@ via: https://opensource.com/open-organization/18/1/why-build-community-1

|

||||

|

||||

作者:[Alessio Fattorini][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -2,13 +2,14 @@ Linux 局域网路由新手指南:第 1 部分

|

||||

======

|

||||

|

||||

|

||||

|

||||

前面我们学习了 [IPv6 路由][1]。现在我们继续深入学习 Linux 中的 IPv4 路由的基础知识。我们从硬件概述、操作系统和 IPv4 地址的基础知识开始,下周我们将继续学习它们如何配置,以及测试路由。

|

||||

|

||||

### 局域网路由器硬件

|

||||

|

||||

Linux 实际上是一个网络操作系统,一直都是,从一开始它就有内置的网络功能。为将你的局域网连入因特网,构建一个局域网路由器比起构建网关路由器要简单的多。你不要太过于执念安全或者防火墙规则,对于处理 NAT 它还是比较复杂的,网络地址转换是 IPv4 的一个痛点。我们为什么不放弃 IPv4 去转到 IPv6 呢?这样将使网络管理员的工作更加简单。

|

||||

Linux 实际上是一个网络操作系统,一直都是,从一开始它就有内置的网络功能。要将你的局域网连入因特网,构建一个局域网路由器比起构建网关路由器要简单的多。你不要太过于执念安全或者防火墙规则,对于处理网络地址转换(NAT)它还是比较复杂的,NAT是 IPv4 的一个痛点。我们为什么不放弃 IPv4 去转到 IPv6 呢?这样将使网络管理员的工作更加简单。

|

||||

|

||||

有点跑题了。从理论上讲,你的 Linux 路由器是一个至少有两个网络接口的小型机器。Linux Gizmos 是一个单片机的综合体:[98 个开放规格的目录,黑客友好的 SBCs][2]。你能够使用一个很老的笔记本电脑或者台式计算机。你也可以使用一个精简版计算机,像 ZaReason Zini 或者 System76 Meerkat 一样,虽然这些有点贵,差不多要 $600。但是它们又结实又可靠,并且你不用在 Windows 许可证上浪费钱。

|

||||

有点跑题了。从理论上讲,你的 Linux 路由器是一个至少有两个网络接口的小型机器。Linux Gizmos 有一个很大的单板机名单:[98 个开放规格、适于黑客的 SBC 的目录][2]。你能够使用一个很老的笔记本电脑或者台式计算机。你也可以使用一个紧凑型计算机,像 ZaReason Zini 或者 System76 Meerkat 一样,虽然这些有点贵,差不多要 $600。但是它们又结实又可靠,并且你不用在 Windows 许可证上浪费钱。

|

||||

|

||||

如果对路由器的要求不高,使用树莓派 3 Model B 作为路由器是一个非常好的选择。它有一个 10/100 以太网端口,板载 2.4GHz 的 802.11n 无线网卡,并且它还有四个 USB 端口,因此你可以插入多个 USB 网卡。USB 2.0 和低速板载网卡可能会让树莓派变成你的网络上的瓶颈,但是,你不能对它期望太高(毕竟它只有 $35,既没有存储也没有电源)。它支持很多种风格的 Linux,因此你可以选择使用你喜欢的版本。基于 Debian 的树莓派是我的最爱。

|

||||

|

||||

@ -16,63 +17,63 @@ Linux 实际上是一个网络操作系统,一直都是,从一开始它就

|

||||

|

||||

你可以在你选择的硬件上安装将你喜欢的 Linux 的简化版,因为定制的路由器操作系统,比如 OpenWRT、 Tomato、DD-WRT、Smoothwall、Pfsense 等等,都有它们自己的非标准界面。我的观点是,没有必要这么麻烦,它们对你并没有什么帮助。尽量使用标准的 Linux 工具,因为你只需要学习它们一次就够了。

|

||||

|

||||

Debian 的网络安装镜像大约有 300MB 大小,并且支持多种架构,包括 ARM、i386、amd64、和 armhf。Ubuntu 的服务器网络安装镜像也小于 50MB,这样你就可以控制你要安装哪些包。Fedora、Mageia、和 openSUSE 都提供精简的网络安装镜像。如果你需要创意,你可以浏览 [Distrowatch][3]。

|

||||

Debian 的网络安装镜像大约有 300MB 大小,并且支持多种架构,包括 ARM、i386、amd64 和 armhf。Ubuntu 的服务器网络安装镜像也小于 50MB,这样你就可以控制你要安装哪些包。Fedora、Mageia、和 openSUSE 都提供精简的网络安装镜像。如果你需要创意,你可以浏览 [Distrowatch][3]。

|

||||

|

||||

### 路由器能做什么

|

||||

|

||||

我们需要网络路由器做什么?一个路由器连接不同的网络。如果没有路由,那么每个网络都是相互隔离的,所有的悲伤和孤独都没有人与你分享,所有节点只能孤独终老。假设你有一个 192.168.1.0/24 和一个 192.168.2.0/24 网络。如果没有路由器,你的两个网络之间不能相互沟通。这些都是 C 类的私有地址,它们每个都有 254 个可用网络地址。使用 ipcalc 可以非常容易地得到它们的这些信息:

|

||||

我们需要网络路由器做什么?一个路由器连接不同的网络。如果没有路由,那么每个网络都是相互隔离的,所有的悲伤和孤独都没有人与你分享,所有节点只能孤独终老。假设你有一个 192.168.1.0/24 和一个 192.168.2.0/24 网络。如果没有路由器,你的两个网络之间不能相互沟通。这些都是 C 类的私有地址,它们每个都有 254 个可用网络地址。使用 `ipcalc` 可以非常容易地得到它们的这些信息:

|

||||

|

||||

```
$ ipcalc 192.168.1.0/24
Address:   192.168.1.0          11000000.10101000.00000001. 00000000
Netmask:   255.255.255.0 = 24   11111111.11111111.11111111. 00000000
Wildcard:  0.0.0.255            00000000.00000000.00000000. 11111111
=>
Network:   192.168.1.0/24       11000000.10101000.00000001. 00000000
HostMin:   192.168.1.1          11000000.10101000.00000001. 00000001
HostMax:   192.168.1.254        11000000.10101000.00000001. 11111110
Broadcast: 192.168.1.255        11000000.10101000.00000001. 11111111
Hosts/Net: 254                   Class C, Private Internet
```

|

||||

|

||||

我喜欢 ipcalc 的二进制输出信息,它更加可视地表示了掩码是如何工作的。前三个八位组表示了网络地址,第四个八位组是主机地址,因此,当你分配主机地址时,你将 “掩盖” 掉网络地址部分,只使用剩余的主机部分。你的两个网络有不同的网络地址,而这就是如果两个网络之间没有路由器它们就不能互相通讯的原因。

|

||||

我喜欢 `ipcalc` 的二进制输出信息,它更加可视地表示了掩码是如何工作的。前三个八位组表示了网络地址,第四个八位组是主机地址,因此,当你分配主机地址时,你将 “掩盖” 掉网络地址部分,只使用剩余的主机部分。你的两个网络有不同的网络地址,而这就是如果两个网络之间没有路由器它们就不能互相通讯的原因。

|

||||

|

||||

每个八位组一共有 256 字节,但是它们并不能提供 256 个主机地址,因为第一个和最后一个值 ,也就是 0 和 255,是被保留的。0 是网络标识,而 255 是广播地址,因此,只有 254 个主机地址。ipcalc 可以帮助你很容易地计算出这些。

|

||||

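An octet can take 256 distinct values, but that does not give you 256 host addresses, because the first and last values, 0 and 255, are reserved. 0 is the network identifier and 255 is the broadcast address, so only 254 host addresses remain. `ipcalc` makes this easy to work out.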
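If `ipcalc` is not installed, the same numbers can be cross-checked with Python's standard `ipaddress` module. This is only a sketch for verification and not part of the router setup; the variable names are chosen for this example.

```python
import ipaddress

# The /24 network from the ipcalc example.
net = ipaddress.ip_network("192.168.1.0/24")

# Every address in the block, minus the network and broadcast addresses.
usable_hosts = net.num_addresses - 2

print(net.netmask)            # 255.255.255.0
print(net.network_address)    # 192.168.1.0
print(net.broadcast_address)  # 192.168.1.255
print(net[1], net[-2])        # first and last host: 192.168.1.1 192.168.1.254
print(usable_hosts)           # 254
print(net.is_private)         # True
```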

|

||||

|

||||

当然,这并不意味着你不能有一个结尾是 0 或者 255 的主机地址。假设你有一个 16 位的前缀:

|

||||

|

||||

```
$ ipcalc 192.168.0.0/16
Address:   192.168.0.0          11000000.10101000. 00000000.00000000
Netmask:   255.255.0.0 = 16     11111111.11111111. 00000000.00000000
Wildcard:  0.0.255.255          00000000.00000000. 11111111.11111111
=>
Network:   192.168.0.0/16       11000000.10101000. 00000000.00000000
HostMin:   192.168.0.1          11000000.10101000. 00000000.00000001
HostMax:   192.168.255.254      11000000.10101000. 11111111.11111110
Broadcast: 192.168.255.255      11000000.10101000. 11111111.11111111
Hosts/Net: 65534                 Class C, Private Internet
```

|

||||

|

||||

ipcalc 列出了你的第一个和最后一个主机地址,它们是 192.168.0.1 和 192.168.255.254。你是可以有以 0 或者 255 结尾的主机地址的,例如,192.168.1.0 和 192.168.0.255,因为它们都在最小主机地址和最大主机地址之间。

|

||||

`ipcalc` 列出了你的第一个和最后一个主机地址,它们是 192.168.0.1 和 192.168.255.254。你是可以有以 0 或者 255 结尾的主机地址的,例如,192.168.1.0 和 192.168.0.255,因为它们都在最小主机地址和最大主机地址之间。
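To see why addresses ending in 0 or 255 can be ordinary hosts in a /16, here is a small check using Python's standard `ipaddress` module. The helper `is_usable_host` is a name invented for this sketch.

```python
import ipaddress

net = ipaddress.ip_network("192.168.0.0/16")


def is_usable_host(address: str) -> bool:
    """True if the address lies inside the network and is neither the
    network identifier nor the broadcast address."""
    addr = ipaddress.ip_address(address)
    return (
        addr in net
        and addr != net.network_address
        and addr != net.broadcast_address
    )


for candidate in ("192.168.1.0", "192.168.0.255", "192.168.0.0", "192.168.255.255"):
    print(candidate, is_usable_host(candidate))
```

Only the very first and very last addresses of the whole block are reserved, so the first two candidates are usable and the last two are not.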

|

||||

|

||||

不论你的地址块是私有的还是公共的,这个原则同样都是适用的。不要羞于使用 ipcalc 来帮你计算地址。

|

||||

不论你的地址块是私有的还是公共的,这个原则同样都是适用的。不要羞于使用 `ipcalc` 来帮你计算地址。

|

||||

|

||||

### CIDR

|

||||

|

||||

CIDR(无类域间路由)就是通过可变长度的子网掩码来扩展 IPv4 的。CIDR 允许对网络空间进行更精细地分割。我们使用 ipcalc 来演示一下:

|

||||

CIDR(无类域间路由)就是通过可变长度的子网掩码来扩展 IPv4 的。CIDR 允许对网络空间进行更精细地分割。我们使用 `ipcalc` 来演示一下:

|

||||

|

||||

```
$ ipcalc 192.168.1.0/22
Address:   192.168.1.0          11000000.10101000.000000 01.00000000
Netmask:   255.255.252.0 = 22   11111111.11111111.111111 00.00000000
Wildcard:  0.0.3.255            00000000.00000000.000000 11.11111111
=>
Network:   192.168.0.0/22       11000000.10101000.000000 00.00000000
HostMin:   192.168.0.1          11000000.10101000.000000 00.00000001
HostMax:   192.168.3.254        11000000.10101000.000000 11.11111110
Broadcast: 192.168.3.255        11000000.10101000.000000 11.11111111
Hosts/Net: 1022                  Class C, Private Internet
```

|

||||

|

||||

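The netmask is not limited to whole octets: here the boundary between network and host bits falls inside the third octet, so the subnet portion of that octet ranges from 0 to 3 rather than from 0 to 255. The number of usable host addresses is therefore no longer tied to whole octets; with 10 host bits it is 2^10 - 2 = 1022.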
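The /22 arithmetic can be reproduced with Python's standard `ipaddress` module. This is only a cross-check of the `ipcalc` output; `strict=False` is needed because `192.168.1.0` is not the first address of its /22 block.

```python
import ipaddress

# strict=False lets us pass a host address; the module masks it down
# to the enclosing network, just as ipcalc does.
net = ipaddress.ip_network("192.168.1.0/22", strict=False)

host_bits = 32 - net.prefixlen     # 10 bits left for hosts
usable_hosts = 2 ** host_bits - 2  # minus network and broadcast addresses

print(net)           # 192.168.0.0/22
print(net.netmask)   # 255.255.252.0
print(usable_hosts)  # 1022

# The third octet ranges over 0..3: the /22 contains four /24 blocks.
for subnet in net.subnets(new_prefix=24):
    print(subnet)
```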

|

||||

@ -81,7 +82,7 @@ Hosts/Net: 1022 Class C, Private Internet

|

||||

|

||||

从 [理解 IP 地址和 CIDR 图表][4]、[IPv4 私有地址空间和过滤][5]、以及 [IANA IPv4 地址空间注册][6] 开始。接下来的我们将学习如何创建和管理路由器。

|

||||

|

||||

通过来自 Linux 基金会和 edX 的免费课程 ["Linux 入门" ][7]学习更多 Linux 知识。

|

||||

通过来自 Linux 基金会和 edX 的免费课程 [“Linux 入门”][7]学习更多 Linux 知识。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -89,7 +90,7 @@ via: https://www.linux.com/learn/intro-to-linux/2018/2/linux-lan-routing-beginne

|

||||

|

||||

作者:[Carla Schroder][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,24 +1,24 @@

|

||||

使用一个树莓派和 projectx/os 托管你自己的电子邮件

|

||||

使用树莓派和 projectx/os 托管你自己的电子邮件

|

||||

======

|

||||

|

||||

> 这个开源项目可以通过低成本的服务器设施帮助你保护你的数据隐私和所有权。

|

||||

|

||||

|

||||

|

||||

现在有大量的理由,不能再将存储你的数据的任务委以他人之手,也不能在第三方公司运行你的服务;隐私、所有权、以及防范任何人拿你的数据去“赚钱”。但是对于大多数人来说,自己去运行一个服务器,是件即费时间又需要太多的专业知识的事情。不得已,我们只能妥协。抛开这些顾虑,使用某些公司的云服务,随之而来的就是广告、数据挖掘和售卖、以及其它可能的任何东西。

|

||||

现在有大量的理由,不能再将存储你的数据的任务委以他人之手,也不能在第三方公司运行你的服务;隐私、所有权,以及防范任何人拿你的数据去“赚钱”。但是对于大多数人来说,自己去运行一个服务器,是件即费时间又需要太多的专业知识的事情。不得已,我们只能妥协。抛开这些顾虑,使用某些公司的云服务,随之而来的就是广告、数据挖掘和售卖、以及其它可能的任何东西。

|

||||

|

||||

[projectx/os][1] 项目就是要去除这种顾虑,它可以在家里毫不费力地做服务托管,并且可以很容易地创建一个类似于 Gmail 的帐户。实现上述目标,你只需一个 $35 的树莓派 3 和一个基于 Debian 的操作系统镜像 —— 并且不需要很多的专业知识。仅需要四步就可以实现:

|

||||

|

||||

1. 解压缩一个 ZIP 文件到 SD 存储卡中。

|

||||

2. 编辑 SD 上的一个文本文件以便于它连接你的 WiFi(如果你不使用有线网络的话)。

|

||||

2. 编辑 SD 卡上的一个文本文件以便于它连接你的 WiFi(如果你不使用有线网络的话)。

|

||||

3. 将这个 SD 卡插到树莓派 3 中。

|

||||

4. 使用你的智能手机在树莓派 3 上安装 "email 服务器“ 应用并选择一个二级域。

|

||||

4. 使用你的智能手机在树莓派 3 上安装 “email 服务器” 应用并选择一个二级域。

|

||||

|

||||

服务器应用程序(比如电子邮件服务器)被分解到多个容器中,它们中的每个都只能够使用指定的方式与外界通讯,它们使用了管理粒度非常细的隔离措施以提高安全性。例如,入站 SMTP、[SpamAssassin][2](反垃圾邮件平台)、[Dovecot][3] (安全的 IMAP 服务器),以及 webmail 都使用了独立的容器,它们之间相互不能看到对方的数据,因此,单个守护进程出现问题不会波及其它的进程。

|

||||

|

||||

另外,它们都是无状态容器,比如 SpamAssassin 和入站 SMTP,每次收到电子邮件之后,它们的容器都会被销毁并重建,因此,即便是有人找到了 bug 并利用了它,他们也不能访问以前的电子邮件或者接下来的电子邮件;他们只能访问他们自己挖掘出漏洞的那封电子邮件。幸运的是,大多数对外发布的、最容易受到攻击的服务都是隔离的和无状态的。

|

||||

|

||||

服务器应用程序(比如电子邮件服务器)被分解到多个容器中,它们中的每个都只能够使用指定的方式与外界通讯,它们使用了管理粒度非常细的隔离措施以提高安全性。例如,入站 SMTP,[SpamAssassin][2](防垃圾邮件平台),[Dovecot][3] (安全 IMAP 服务器),并且 webmail 都使用了独立的容器,它们之间相互不能看到对方的数据,因此,单个守护进程出现问题不会波及其它的进程。

|

||||

|

||||

另外,它们都是无状态容器,比如 SpamAssassin 和入站 SMTP,每次收到电子邮件之后,它们的连接都会被拆除并重建,因此,即便是有人找到了 bug 并利用了它,他们也不能访问以前的电子邮件或者接下来的电子邮件;他们只能访问他们自己挖掘出漏洞的那封电子邮件。幸运的是,大多数对外发布的、最容易受到攻击的服务都是隔离的和无状态的。

|

||||

|

||||

所有存储的数据都使用 [dm-crypt][4] 进行加密。非公开服务,比如 Dovecot(IMAP)或者 webmail,都是在内部监听,并使用 [ZeroTier One][5] 加密整个网络,因此只有你的设备(智能手机、笔记本电脑、平板等等)才能访问它们。

|

||||

所有存储的数据都使用 [dm-crypt][4] 进行加密。非公开的服务,比如 Dovecot(IMAP)或者 webmail,都是在内部监听,并使用 [ZeroTier One][5] 所提供的私有的加密层叠网络,因此只有你的设备(智能手机、笔记本电脑、平板等等)才能访问它们。

|

||||

|

||||

虽然电子邮件并不是端到端加密的(除非你使用了 [PGP][6]),但是非加密的电子邮件绝不会跨越网络,并且也不会存储在磁盘上。现在明文的电子邮件只存在于双方的私有邮件服务器上,它们都在他们的家中受到很好的安全保护并且只能通过他们的客户端访问(智能手机、笔记本电脑、平板等等)。

|

||||

|

||||

@ -26,9 +26,7 @@

|

||||

|

||||

### 展望

|

||||

|

||||

电子邮件是我使用 project/os 项目打包的第一个应用程序。想像一下,一个应用程序商店有全部的服务器软件,为易于安装和使用将它们打包到一起。想要一个博客?添加一个 WordPress 应用程序!想替换安全的 Dropbox ?添加一个 [Seafile][8] 应用程序或者一个 [Syncthing][9] 后端应用程序。 [IPFS][10] 节点? [Mastodon][11] 实例?GitLab 服务器?各种家庭自动化/物联网后端服务?这里有大量的非常好的开源服务器软件 ,它们都非常易于安装,并且可以使用它们来替换那些有专利的云服务。

|

||||

|

||||

Nolan Leake 的 [在每个家庭中都有一个云:0 系统管理员技能就可以在家里托管服务器][12] 将在三月 8 - 11日的 [Southern California Linux Expo][12] 进行。使用折扣代码 **OSDC** 去注册可以 50% 的价格得到门票。

|

||||

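Email is the first application I have packaged with projectx/os. Imagine an app store full of server software, packaged to be easy to install and use. Want a blog? Add a WordPress app! Want a secure replacement for Dropbox? Add a [Seafile][8] app or a [Syncthing][9] backend. An [IPFS][10] node? A [Mastodon][11] instance? A GitLab server? All kinds of home automation/IoT backends? There is a wealth of excellent open source server software that is easy to install this way and can replace proprietary cloud services.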

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -36,7 +34,7 @@ via: https://opensource.com/article/18/3/host-your-own-email

|

||||

|

||||

作者:[Nolan Leake][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,179 +1,116 @@

|

||||

如何使用 Ansible 打补丁以及安装应用

|

||||

======

|

||||

|

||||

> 使用 Ansible IT 自动化引擎节省更新的时间。

|

||||

|

||||

|

||||

|

||||

你有没有想过,如何打补丁、重启系统,然后继续工作?

|

||||

|

||||

如果你的回答是肯定的,那就需要了解一下 [Ansible][1] 了。它是一个配置管理工具,对于一些复杂的系统管理任务有时候需要几个小时才能完成,又或者对安全性有比较高要求的时候,使用 Ansible 能够大大简化工作流程。

|

||||

如果你的回答是肯定的,那就需要了解一下 [Ansible][1] 了。它是一个配置管理工具,对于一些复杂的有时候需要几个小时才能完成的系统管理任务,又或者对安全性有比较高要求的时候,使用 Ansible 能够大大简化工作流程。

|

||||

|

||||

以我作为系统管理员的经验,打补丁是一项最有难度的工作。每次遇到公共漏洞和暴露(CVE, Common Vulnearbilities and Exposure)通知或者信息安全漏洞预警(IAVA, Information Assurance Vulnerability Alert)时都必须要高度关注安全漏洞,否则安全部门将会严肃追究自己的责任。

|

||||

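In my experience as a system administrator, patching is one of the hardest jobs. Every time a Common Vulnerabilities and Exposures (CVE) notice or an Information Assurance Vulnerability Alert (IAVA) arrives, I have to pay close attention to the security vulnerability, or the security department will hold me strictly accountable.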

|

||||

|

||||

使用 Ansible 可以通过运行[封装模块][2]以缩短打补丁的时间,下面以 [yum 模块][3]更新系统为例,使用 Ansible 可以执行安装、更新、删除、从其它地方安装(例如持续集成/持续开发中的 `rpmbuild`)。以下是系统更新的任务:

|

||||

|

||||

使用 Ansible 可以通过运行[封装模块][2]以缩短打补丁的时间,下面以[yum模块][3]更新系统为例,使用 Ansible 可以执行安装、更新、删除、从其它地方安装(例如持续集成/持续开发中的 `rpmbuild`)。以下是系统更新的任务:

|

||||

```
- name: update the system
  yum:
    name: "*"
    state: latest
```

|

||||

|

||||

在第一行,我们给这个任务命名,这样可以清楚 Ansible 的工作内容。第二行表示使用 `yum` 模块在CentOS虚拟机中执行更新操作。第三行 `name: "*"` 表示更新所有程序。最后一行 `state: latest` 表示更新到最新的 RPM。

|

||||

|

||||

系统更新结束之后,需要重新启动并重新连接:

|

||||

|

||||

```
- name: restart system to reboot to newest kernel
  shell: "sleep 5 && reboot"
  async: 1
  poll: 0

- name: wait for 10 seconds
  pause:
    seconds: 10

- name: wait for the system to reboot
  wait_for_connection:
    connect_timeout: 20
    sleep: 5
    delay: 5
    timeout: 60

- name: install epel-release
  yum:
    name: epel-release
    state: latest
```

|

||||

|

||||

`shell` 字段中的命令让系统在5秒休眠之后重新启动,我们使用 `sleep` 来保持连接不断开,使用 `async` 设定最大等待时长以避免发生超时,`poll` 设置为0表示直接执行不需要等待执行结果。等待10秒钟,使用 `wait_for_connection` 在虚拟机恢复连接后尽快连接。随后由 `install epel-release` 任务检查 RPM 的安装情况。你可以对这个剧本执行多次来验证它的幂等性,唯一会显示造成影响的是重启操作,因为我们使用了 `shell` 模块。如果不想造成实际的影响,可以在使用 `shell` 模块的时候 `changed_when: False`。

|

||||

`shell` 模块中的命令让系统在 5 秒休眠之后重新启动,我们使用 `sleep` 来保持连接不断开,使用 `async` 设定最大等待时长以避免发生超时,`poll` 设置为 0 表示直接执行不需要等待执行结果。暂停 10 秒钟以等待虚拟机恢复,使用 `wait_for_connection` 在虚拟机恢复连接后尽快连接。随后由 `install epel-release` 任务检查 RPM 的安装情况。你可以对这个剧本执行多次来验证它的幂等性,唯一会显示造成影响的是重启操作,因为我们使用了 `shell` 模块。如果不想造成实际的影响,可以在使用 `shell` 模块的时候 `changed_when: False`。
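The waiting behaviour configured through `delay`, `sleep`, and `timeout` can be pictured as a simple polling loop. The Python sketch below only illustrates those semantics and is not Ansible's actual implementation; `probe`, `clock`, and `pause` are hypothetical hooks added so the loop can be exercised without a real host, and starting the timeout window after the initial delay is an assumption of this sketch.

```python
import time
from typing import Callable


def wait_for_connection(
    probe: Callable[[], bool],
    delay: float = 5,
    sleep: float = 5,
    timeout: float = 60,
    clock: Callable[[], float] = time.monotonic,
    pause: Callable[[float], None] = time.sleep,
) -> int:
    """Poll `probe` until it succeeds; return the number of attempts made.

    delay:   seconds to wait before the first check
    sleep:   seconds to wait between checks
    timeout: seconds after which we give up (assumed to start after `delay`)
    """
    pause(delay)
    deadline = clock() + timeout
    attempts = 0
    while clock() < deadline:
        attempts += 1
        if probe():
            return attempts
        pause(sleep)
    raise TimeoutError(f"host did not come back within {timeout} seconds")


# Demo with simulated time so the example finishes instantly.
now = [0.0]
answers = iter([False, False, True])  # the host answers on the third probe
attempts = wait_for_connection(
    probe=lambda: next(answers),
    clock=lambda: now[0],
    pause=lambda seconds: now.__setitem__(0, now[0] + seconds),
)
print(attempts, now[0])  # 3 15.0
```

With the values from the playbook, a host that never answers would be given up on roughly 60 seconds after the initial 5-second delay.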

|

||||

|

||||

现在我们已经知道如何对系统进行更新、重启虚拟机、重新连接、安装 RPM 包。下面我们通过 [Ansible Lightbulb][4] 来安装 NGINX:

|

||||

|

||||

```
- name: Ensure nginx packages are present
  yum:
    name: nginx, python-pip, python-devel, devel
    state: present
  notify: restart-nginx-service

- name: Ensure uwsgi package is present
  pip:
    name: uwsgi
    state: present
  notify: restart-nginx-service

- name: Ensure latest default.conf is present
  template:
    src: templates/nginx.conf.j2
    dest: /etc/nginx/nginx.conf
    backup: yes
  notify: restart-nginx-service

- name: Ensure latest index.html is present
  template:
    src: templates/index.html.j2
    dest: /usr/share/nginx/html/index.html

- name: Ensure nginx service is started and enabled
  service:
    name: nginx
    state: started
    enabled: yes

- name: Ensure proper response from localhost can be received
  uri:
    url: "http://localhost:80/"
    return_content: yes
  register: response
  until: 'nginx_test_message in response.content'
  retries: 10
  delay: 1
```

|

||||

|

||||

And the handler that restarts the nginx service:

|

||||

以及用来重启 nginx 服务的操作文件:

|

||||

|

||||

```
# handlers file for installing nginx

- name: restart-nginx-service
  service:
    name: nginx
    state: restarted
```

|

||||

|

||||

在这个角色里,我们使用 RPM 安装了 `nginx`、`python-pip`、`python-devel`、`devel`,用 PIP 安装了 `uwsgi`,接下来使用 `template` 模块复制 `nginx.conf` 和 `index.html` 以显示页面,并确保服务在系统启动时启动。然后就可以使用 `uri` 模块检查到页面的连接了。