mirror of https://github.com/LCTT/TranslateProject.git

synced 2025-03-09 01:30:10 +08:00

Translation complete

commit 8fcc27d79c

20171202 docker - Use multi-stage builds.md (normal file, 122 lines)
@ -0,0 +1,122 @@

Docker: Use multi-stage builds
============================================================

Multi-stage builds are a feature available in Docker 17.05 and later. They are useful to anyone who has struggled to optimize a Dockerfile while keeping it easy to read and maintain.

> Acknowledgment: Special thanks to [Alex Ellis][1] for granting permission to use his blog post [Builder pattern vs. Multi-stage builds in Docker][2] as the basis of the examples below.

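### Before multi-stage builds

One of the most challenging things about building images is keeping the image size down. Each instruction in the Dockerfile adds a layer to the image, and you need to remember to clean up any artifacts you don't need before moving on to the next layer. To write a really efficient Dockerfile, you have traditionally needed shell tricks and other logic to keep the layers as small as possible and to ensure that each layer has the artifacts it needs from the previous layer and nothing else.

In practice it was very common to have one Dockerfile for development (containing everything needed to build your application) and a slimmed-down one for production, which contained only your application and exactly what was needed to run it. This has been referred to as the "builder pattern". Maintaining two Dockerfiles is not ideal.

Here is an example of a `Dockerfile.build` and a `Dockerfile` that follow the builder pattern above:

`Dockerfile.build`: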

```
FROM golang:1.7.3
WORKDIR /go/src/github.com/alexellis/href-counter/
RUN go get -d -v golang.org/x/net/html
COPY app.go .
RUN go get -d -v golang.org/x/net/html \
  && CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o app .
```

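Notice that this example also artificially compresses two `RUN` commands together using the Bash `&&` operator, to avoid creating an additional layer in the image. This is failure-prone and hard to maintain. For example, it is easy to insert another command and forget to continue the line with the `\` character.

`Dockerfile`: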

```
FROM alpine:latest
RUN apk --no-cache add ca-certificates
WORKDIR /root/
COPY app .
CMD ["./app"]
```

`build.sh`:

```
#!/bin/sh
echo Building alexellis2/href-counter:build

docker build --build-arg https_proxy=$https_proxy --build-arg http_proxy=$http_proxy \
    -t alexellis2/href-counter:build . -f Dockerfile.build

docker create --name extract alexellis2/href-counter:build
docker cp extract:/go/src/github.com/alexellis/href-counter/app ./app
docker rm -f extract

echo Building alexellis2/href-counter:latest

docker build --no-cache -t alexellis2/href-counter:latest .
rm ./app
```

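When you run the `build.sh` script, it builds the first image, creates a container from it so that the artifact can be copied out, and then builds the second image. Both images take up room on your system, and you still have the `app` artifact on your local disk as well.

Multi-stage builds vastly simplify this situation!

### Use multi-stage builds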

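With multi-stage builds, you use multiple `FROM` statements in your Dockerfile. Each `FROM` instruction can use a different base image, and each one begins a new stage of the build. You can selectively copy artifacts from one stage to another, leaving behind everything you don't want in the final image. To show how this works, let's adapt the Dockerfile from the previous section to use multi-stage builds.

`Dockerfile`: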

```
FROM golang:1.7.3
WORKDIR /go/src/github.com/alexellis/href-counter/
RUN go get -d -v golang.org/x/net/html
COPY app.go .
RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o app .

FROM alpine:latest
RUN apk --no-cache add ca-certificates
WORKDIR /root/
COPY --from=0 /go/src/github.com/alexellis/href-counter/app .
CMD ["./app"]
```

You only need the single Dockerfile. You don't need a separate build script either. Just run `docker build`.

```
$ docker build -t alexellis2/href-counter:latest .
```

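The end result is the same tiny production image as before, with a significant reduction in complexity. You don't need to create any intermediate images, and you don't need to extract any artifacts to your local system.

How does it work? The second `FROM` instruction starts a new build stage with the `alpine:latest` image as its base. The `COPY --from=0` line copies just the built artifact from the previous stage into this new stage. The Go SDK and any intermediate artifacts are left behind and are not saved in the final image.

### Name your build stages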

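By default, the stages are not named, and you refer to them by their integer number, starting with 0 for the first `FROM` instruction. However, you can name a stage by adding `as <NAME>` to the `FROM` instruction. The following example improves the previous one by naming the stage and using the name in the `COPY` instruction. This means that even if the instructions in your `Dockerfile` are re-ordered later, the `COPY` doesn't break.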

```
FROM golang:1.7.3 as builder
WORKDIR /go/src/github.com/alexellis/href-counter/
RUN go get -d -v golang.org/x/net/html
COPY app.go .
RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o app .

FROM alpine:latest
RUN apk --no-cache add ca-certificates
WORKDIR /root/
COPY --from=builder /go/src/github.com/alexellis/href-counter/app .
CMD ["./app"]

```
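
To make the difference between numbered and named stages concrete, here is a minimal Python sketch that models how a `COPY --from=` reference could be resolved to a stage. It is only a toy model for illustration, not how Docker itself is implemented; the function name `resolve_copy_sources` and the sample Dockerfile strings are assumptions made up for this example.

```python
import re


def resolve_copy_sources(dockerfile_text):
    """Return (reference, stage_index) for every `COPY --from=...` line.

    Toy model: stages are numbered from 0 in the order their FROM lines
    appear, and `FROM image as NAME` additionally registers a name.
    """
    stage_names = {}   # stage name -> stage index
    stage_count = 0
    resolved = []
    for raw_line in dockerfile_text.splitlines():
        line = raw_line.strip()
        from_match = re.match(
            r"FROM\s+\S+(?:\s+AS\s+(\S+))?\s*$", line, re.IGNORECASE
        )
        if from_match:
            if from_match.group(1):
                stage_names[from_match.group(1)] = stage_count
            stage_count += 1
            continue
        copy_match = re.match(r"COPY\s+--from=(\S+)", line, re.IGNORECASE)
        if copy_match:
            ref = copy_match.group(1)
            # A purely numeric reference is a stage index; anything else is a name.
            index = int(ref) if ref.isdigit() else stage_names[ref]
            resolved.append((ref, index))
    return resolved


# Hypothetical, shortened Dockerfile modeled on the example above.
NAMED = """\
FROM golang:1.7.3 as builder
RUN echo build
FROM alpine:latest
COPY --from=builder /go/src/github.com/alexellis/href-counter/app .
"""

# The same file with an extra stage inserted in front of the builder stage.
REORDERED = "FROM busybox as tools\n" + NAMED

print(resolve_copy_sources(NAMED))
print(resolve_copy_sources(REORDERED))
```

In this model, `builder` resolves to stage 0 in the first file and to stage 1 once another stage is placed in front of it. A hard-coded `--from=0` would silently point at the new first stage instead, which is the breakage that naming stages avoids.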


--------------------------------------------------------------------------------

|

||||

|

||||

via: https://docs.docker.com/engine/userguide/eng-image/multistage-build/

Author: [docker][a]
Translator: [iron0x](https://github.com/iron0x)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:https://docs.docker.com/engine/userguide/eng-image/multistage-build/
[1]:https://twitter.com/alexellisuk
[2]:http://blog.alexellis.io/mutli-stage-docker-builds/

|

||||

@ -0,0 +1,219 @@

|

||||

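Containing System Services in Red Hat Enterprise Linux (Part 1)
====================

At the 2017 Red Hat Summit, several people asked me, "We normally use full virtual machines to isolate network services such as DNS and DHCP. Can we use containers instead?" The answer is yes, and below is an example of creating a system container on a current Red Hat Enterprise Linux 7 system.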

### 我们的目的

|

||||

|

||||

**创建一个可以独立于任何其它系统服务而更新的网络服务,并且可以从主机端容易地管理和更新。**

|

||||

|

||||

让我们来探究一下在容器中建立一个运行在 systemd 之下的 BIND 服务器。在这一部分,我们将了解到如何建立自己的容器以及管理 BIND 配置和数据文件。

|

||||

|

||||

在本系列的第二部分,我们将看到如何整合主机中的 systemd 和容器中的 systemd。我们将探究如何管理容器中的服务,并且使它作为一种主机中的服务。

|

||||

|

||||

### 创建 BIND 容器

|

||||

|

||||

为了使 systemd 在一个容器中轻松运行,我们首先需要在主机中增加两个包:`oci-register-machine` 和 `oci-systemd-hook`。`oci-systemd-hook` 这个钩子允许我们在一个容器中运行 systemd,而不需要使用特权容器或者手工配置 tmpfs 和 cgroups。`oci-register-machine` 这个钩子允许我们使用 systemd 工具如 `systemctl` 和 `machinectl` 来跟踪容器。

|

||||

|

||||

```

|

||||

[root@rhel7-host ~]# yum install oci-register-machine oci-systemd-hook

|

||||

```

|

||||

|

||||

回到创建我们的 BIND 容器上。[红帽企业版 Linux 7 基础镜像][6]包含了 systemd 作为其初始化系统。我们可以如我们在典型的系统中做的那样安装并激活 BIND。你可以从 [git 仓库中下载这份 Dockerfile][8]。

|

||||

|

||||

```

|

||||

[root@rhel7-host bind]# vi Dockerfile

|

||||

|

||||

# Dockerfile for BIND

|

||||

FROM registry.access.redhat.com/rhel7/rhel

|

||||

ENV container docker

|

||||

RUN yum -y install bind && \

|

||||

yum clean all && \

|

||||

systemctl enable named

|

||||

STOPSIGNAL SIGRTMIN+3

|

||||

EXPOSE 53

|

||||

EXPOSE 53/udp

|

||||

CMD [ "/sbin/init" ]

|

||||

```

|

||||

|

||||

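Because we are starting an init system as PID 1, we need to change the signal the docker CLI sends when we tell the container to stop. From the `kill` system call man page (`man 2 kill`):

> The only signals that can be sent to process ID 1, the init process, are those for which init has explicitly installed signal handlers. This is done to assure the system is not brought down accidentally.

For the systemd signal handlers, `SIGRTMIN+3` is the signal that corresponds to `systemctl start halt.target`. We also expose both the TCP and UDP ports for BIND, since both protocols may be in use.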

|

||||

|

||||
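
As a small aside on `STOPSIGNAL SIGRTMIN+3`: the real-time signal range starts at a number that depends on the platform and C library, so the offset form is used rather than a fixed number. The Python sketch below (an illustration only, assuming a Linux host where `signal.SIGRTMIN` is available) prints the number that the expression resolves to on the machine where it runs.

```python
import signal

# SIGRTMIN is the first real-time signal. Its numeric value depends on the
# platform, so compute the offset instead of hard-coding a number.
stop_signal = signal.SIGRTMIN + 3

print(f"SIGRTMIN+3 resolves to signal number {stop_signal} on this host")
```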

### 管理数据

|

||||

|

||||

有了一个可以工作的 BIND 服务,我们还需要一种管理配置文件和区域文件的方法。目前这些都放在容器里面,所以我们任何时候都可以进入容器去更新配置或者改变一个区域文件。从管理的角度来说,这并不是很理想。当要更新 BIND 时,我们将需要重建这个容器,所以镜像中的改变将会丢失。任何时候我们需要更新一个文件或者重启服务时,都需要进入这个容器,而这增加了步骤和时间。

|

||||

|

||||

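Instead, we will extract the configuration and data files from the container, copy them to the host, and mount them at run time. This way we can easily restart or rebuild the container without losing changes. We can also edit the configuration and zone files with an editor outside the container. Since this container's data looks like "site-specific data served by this system", let's follow the Filesystem Hierarchy Standard and create `/srv/named` on the local host to keep administrative separation.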

|

||||

|

||||

```

|

||||

[root@rhel7-host ~]# mkdir -p /srv/named/etc

|

||||

|

||||

[root@rhel7-host ~]# mkdir -p /srv/named/var/named

|

||||

```

|

||||

|

||||

*提示:如果你正在迁移一个已有的配置文件,你可以跳过下面的步骤并且将它直接拷贝到 `/srv/named` 目录下。你也许仍然要用一个临时容器来检查一下分配给这个容器的 GID。*

|

||||

|

||||

让我们建立并运行一个临时容器来检查 BIND。在将 init 进程以 PID 1 运行时,我们不能交互地运行这个容器来获取一个 shell。我们会在容器启动后执行 shell,并且使用 `rpm` 命令来检查重要文件。

|

||||

|

||||

```

|

||||

[root@rhel7-host ~]# docker build -t named .

|

||||

|

||||

[root@rhel7-host ~]# docker exec -it $( docker run -d named ) /bin/bash

|

||||

|

||||

[root@0e77ce00405e /]# rpm -ql bind

|

||||

```

|

||||

|

||||

对于这个例子来说,我们将需要 `/etc/named.conf` 和 `/var/named/` 目录下的任何文件。我们可以使用 `machinectl` 命令来提取它们。如果注册了一个以上的容器,我们可以在任一机器上使用 `machinectl status` 命令来查看运行的是什么。一旦有了这些配置,我们就可以终止这个临时容器了。

|

||||

|

||||

*如果你喜欢,资源库中也有一个[样例 `named.conf` 和针对 `example.com` 的区域文件][8]。*

|

||||

|

||||

```

|

||||

[root@rhel7-host bind]# machinectl list

|

||||

|

||||

MACHINE CLASS SERVICE

|

||||

8824c90294d5a36d396c8ab35167937f container docker

|

||||

|

||||

[root@rhel7-host ~]# machinectl copy-from 8824c90294d5a36d396c8ab35167937f /etc/named.conf /srv/named/etc/named.conf

|

||||

|

||||

[root@rhel7-host ~]# machinectl copy-from 8824c90294d5a36d396c8ab35167937f /var/named /srv/named/var/named

|

||||

|

||||

[root@rhel7-host ~]# docker stop infallible_wescoff

|

||||

```

|

||||

|

||||

### 最终的创建

|

||||

|

||||

为了创建和运行最终的容器,添加卷选项以挂载:

|

||||

|

||||

- 将文件 `/srv/named/etc/named.conf` 映射为 `/etc/named.conf`

|

||||

- 将目录 `/srv/named/var/named` 映射为 `/var/named`

|

||||

|

||||

因为这是我们最终的容器,我们将提供一个有意义的名字,以供我们以后引用。

|

||||

|

||||

```

|

||||

[root@rhel7-host ~]# docker run -d -p 53:53 -p 53:53/udp -v /srv/named/etc/named.conf:/etc/named.conf:Z -v /srv/named/var/named:/var/named:Z --name named-container named

|

||||

```

|

||||

|

||||

在最终容器运行时,我们可以更改本机配置来改变这个容器中 BIND 的行为。这个 BIND 服务器将需要在这个容器分配的任何 IP 上监听。请确保任何新文件的 GID 与来自这个容器中的其余的 BIND 文件相匹配。

|

||||

|

||||

```

|

||||

[root@rhel7-host bind]# cp named.conf /srv/named/etc/named.conf

|

||||

|

||||

[root@rhel7-host ~]# cp example.com.zone /srv/named/var/named/example.com.zone

|

||||

|

||||

[root@rhel7-host ~]# cp example.com.rr.zone /srv/named/var/named/example.com.rr.zone

|

||||

```

|

||||

|

||||

> 很好奇为什么我不需要在主机目录中改变 SELinux 上下文?^注1

|

||||

|

||||

我们将运行这个容器提供的 `rndc` 二进制文件重新加载配置。我们可以使用 `journald` 以同样的方式检查 BIND 日志。如果运行出现错误,你可以在主机中编辑该文件,并且重新加载配置。在主机中使用 `host` 或 `dig`,我们可以检查来自该容器化服务的 example.com 的响应。

|

||||

|

||||

```

|

||||

[root@rhel7-host ~]# docker exec -it named-container rndc reload

|

||||

server reload successful

|

||||

|

||||

[root@rhel7-host ~]# docker exec -it named-container journalctl -u named -n

|

||||

-- Logs begin at Fri 2017-05-12 19:15:18 UTC, end at Fri 2017-05-12 19:29:17 UTC. --

|

||||

May 12 19:29:17 ac1752c314a7 named[27]: automatic empty zone: 9.E.F.IP6.ARPA

|

||||

May 12 19:29:17 ac1752c314a7 named[27]: automatic empty zone: A.E.F.IP6.ARPA

|

||||

May 12 19:29:17 ac1752c314a7 named[27]: automatic empty zone: B.E.F.IP6.ARPA

|

||||

May 12 19:29:17 ac1752c314a7 named[27]: automatic empty zone: 8.B.D.0.1.0.0.2.IP6.ARPA

|

||||

May 12 19:29:17 ac1752c314a7 named[27]: reloading configuration succeeded

|

||||

May 12 19:29:17 ac1752c314a7 named[27]: reloading zones succeeded

|

||||

May 12 19:29:17 ac1752c314a7 named[27]: zone 1.0.10.in-addr.arpa/IN: loaded serial 2001062601

|

||||

May 12 19:29:17 ac1752c314a7 named[27]: zone 1.0.10.in-addr.arpa/IN: sending notifies (serial 2001062601)

|

||||

May 12 19:29:17 ac1752c314a7 named[27]: all zones loaded

|

||||

May 12 19:29:17 ac1752c314a7 named[27]: running

|

||||

|

||||

[root@rhel7-host bind]# host www.example.com localhost

|

||||

Using domain server:

|

||||

Name: localhost

|

||||

Address: ::1#53

|

||||

Aliases:

|

||||

www.example.com is an alias for server1.example.com.

|

||||

server1.example.com is an alias for mail

|

||||

```

|

||||

|

||||

> 你的区域文件没有更新吗?可能是因为你的编辑器,而不是序列号。^注2

|

||||

|

||||

### 终点线

|

||||

|

||||

我们已经达成了我们打算完成的目标,从容器中为 DNS 请求和区域文件提供服务。我们已经得到一个持久化的位置来管理更新和配置,并且更新后该配置不变。

|

||||

|

||||

在这个系列的第二部分,我们将看到怎样将一个容器看作为主机中的一个普通服务来运行。

|

||||

|

||||

---

|

||||

|

||||

[关注 RHEL 博客](http://redhatstackblog.wordpress.com/feed/),通过电子邮件来获得本系列第二部分和其它新文章的更新。

|

||||

|

||||

---

|

||||

|

||||

### 附加资源

|

||||

|

||||

- **所附带文件的 Github 仓库:** [https://github.com/nzwulfin/named-container](https://github.com/nzwulfin/named-container)

|

||||

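- **Note 1:** **SELinux context on local files accessed by a container**

You may have noticed that when I copied the files from the container to the local host, I didn't run `chcon` to change the files on the host to type `svirt_sandbox_file_t`. Why didn't it break? Copying a file into `/srv` labels it as type `var_t`. Did I run `setenforce 0` (which switches SELinux to permissive mode)?

Of course not; that would make [Dan Walsh cry](https://stopdisablingselinux.com/) (LCTT translator's note: he leads Red Hat's SELinux team and urges people not to disable SELinux). And yes, `machinectl` did label the file as expected. Take a look:

Before starting the container: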

```

|

||||

[root@rhel7-host ~]# ls -Z /srv/named/etc/named.conf

|

||||

-rw-r-----. unconfined_u:object_r:var_t:s0 /srv/named/etc/named.conf

|

||||

```

|

||||

|

||||

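However, when running the container I used a volume option that keeps Dan Walsh happy: `:Z`. This part of `-v /srv/named/etc/named.conf:/etc/named.conf:Z` does two things: first, it says the volume needs to be relabeled with a private-volume SELinux label; second, it says to mount it read-write.

After starting the container: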

```

|

||||

[root@rhel7-host ~]# ls -Z /srv/named/etc/named.conf

|

||||

-rw-r-----. root 25 system_u:object_r:svirt_sandbox_file_t:s0:c821,c956 /srv/named/etc/named.conf

|

||||

```

|

||||

|

||||

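- **Note 2:** **Vim backup behavior can change inodes**

If you edit the configuration file with `vim` on the local host and don't see the changes in the container, you may have inadvertently created a new file that the container isn't aware of. Three `vim` settings affect backup copies during editing: `backup`, `writebackup`, and `backupcopy`.

I've excerpted the defaults that apply on RHEL 7 from the official Vim [backup_table][9].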

```

|

||||

backup writebackup

|

||||

off on backup current file, deleted afterwards (default)

|

||||

```

|

||||

So we don't leave lingering `~` copies behind, but we do create backups. The other setting is `backupcopy`, where `auto` is the default:

|

||||

|

||||

```

|

||||

"yes" make a copy of the file and overwrite the original one

|

||||

"no" rename the file and write a new one

|

||||

"auto" one of the previous, what works best

|

||||

```

|

||||

|

||||

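With this combination of settings, when you edit a file, unless `vim` sees a reason not to (check the documentation for the logic), you end up with a new file containing your edits, which is renamed to the original filename when you save. This means the file gets a new inode. For most situations this isn't a problem, but here the container's bind mount is sensitive to inode changes. To solve this, you need to change the `backupcopy` behavior.

Either in the `vim` session or in your `.vimrc`, add `set backupcopy=yes`. This ensures the original file is truncated and overwritten, which preserves the inode and propagates the changes into the container.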

|

||||

|

||||
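
The inode effect described in Note 2 can be reproduced outside of Vim. The following Python sketch is an analogy under stated assumptions, not Vim's actual implementation: the helper names `save_by_rename` and `save_in_place` are made up for this example, and they stand in for the "write a new file and rename" and "overwrite the original" strategies respectively.

```python
import os
import tempfile


def save_by_rename(path, text):
    """Write a new file, then rename it over the original path."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as handle:
        handle.write(text)
    os.replace(tmp_path, path)  # the path now refers to a different inode


def save_in_place(path, text):
    """Truncate and rewrite the existing file, keeping its inode."""
    with open(path, "w") as handle:
        handle.write(text)


with tempfile.TemporaryDirectory() as workdir:
    conf = os.path.join(workdir, "named.conf")
    save_in_place(conf, "original\n")
    inode_before = os.stat(conf).st_ino

    save_in_place(conf, "edited in place\n")
    inode_after_in_place = os.stat(conf).st_ino

    save_by_rename(conf, "edited via rename\n")
    inode_after_rename = os.stat(conf).st_ino

print("in-place save keeps the inode:", inode_before == inode_after_in_place)
print("rename-based save changes the inode:", inode_before != inode_after_rename)
```

A bind mount of a single file keeps pointing at the inode that existed when the container started, which is why only the in-place strategy makes edits visible inside the container.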

------------

|

||||

|

||||

via: http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/

|

||||

|

||||

作者:[Matt Micene][a]

|

||||

译者:[liuxinyu123](https://github.com/liuxinyu123)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/

|

||||

[1]:http://rhelblog.redhat.com/author/mmicenerht/

|

||||

[2]:http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/#repo

|

||||

[3]:http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/#sidebar_1

|

||||

[4]:http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/#sidebar_2

|

||||

[5]:http://redhatstackblog.wordpress.com/feed/

|

||||

[6]:https://access.redhat.com/containers

|

||||

[7]:http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/#repo

|

||||

[8]:https://github.com/nzwulfin/named-container

|

||||

[9]:http://vimdoc.sourceforge.net/htmldoc/editing.html#backup-table

|

||||

@ -1,43 +1,50 @@

|

||||

translated by smartgrids

|

||||

Eclipse 如何助力 IoT 发展

|

||||

============================================================

|

||||

|

||||

### 开源组织的模块发开发方式非常适合物联网。

|

||||

|

||||

> 开源组织的模块化开发方式非常适合物联网。

|

||||

|

||||

|

||||

|

||||

图片来源: opensource.com

|

||||

|

||||

[Eclipse][3] 可能不是第一个去研究物联网的开源组织。但是,远在 IoT 家喻户晓之前,该基金会在 2001 年左右就开始支持开源软件发展商业化。九月 Eclipse 物联网日和 RedMonk 的 [ThingMonk 2017][4] 一块举行,着重强调了 Eclipse 在 [物联网发展][5] 中的重要作用。它现在已经包含了 28 个项目,覆盖了大部分物联网项目需求。会议过程中,我和负责 Eclipse 市场化运作的 [Ian Skerritt][6] 讨论了 Eclipse 的物联网项目以及如何拓展它。

|

||||

[Eclipse][3] 可能不是第一个去研究物联网的开源组织。但是,远在 IoT 家喻户晓之前,该基金会在 2001 年左右就开始支持开源软件发展商业化。

|

||||

|

||||

九月份的 Eclipse 物联网日和 RedMonk 的 [ThingMonk 2017][4] 一块举行,着重强调了 Eclipse 在 [物联网发展][5] 中的重要作用。它现在已经包含了 28 个项目,覆盖了大部分物联网项目需求。会议过程中,我和负责 Eclipse 市场化运作的 [Ian Skerritt][6] 讨论了 Eclipse 的物联网项目以及如何拓展它。

|

||||

|

||||

### 物联网的最新进展?

|

||||

|

||||

###物联网的最新进展?

|

||||

我问 Ian 物联网同传统工业自动化,也就是前几十年通过传感器和相应工具来实现工厂互联的方式有什么不同。 Ian 指出很多工厂是还没有互联的。

|

||||

另外,他说“ SCADA[监控和数据分析] 系统以及工厂底层技术都是私有、独立性的。我们很难去改变它,也很难去适配它们…… 现在,如果你想运行一套生产系统,你需要设计成百上千的单元。生产线想要的是满足用户需求,使制造过程更灵活,从而可以不断产出。” 这也就是物联网会带给制造业的一个很大的帮助。

|

||||

|

||||

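In addition, he said, "SCADA [supervisory control and data acquisition] systems and the underlying factory technology are very proprietary and siloed. They are hard to change and hard to adapt ... Right now, if you want to run a production system, you have to engineer hundreds or thousands of units. What production lines want is to meet customer demand and make the manufacturing process more flexible so that they can keep producing." This is one area where IoT can be a big help to manufacturing.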

|

||||

|

||||

###Eclipse 物联网方面的研究

|

||||

Ian 对于 Eclipse 在物联网的研究是这样描述的:“满足任何物联网解决方案的核心基础技术” ,通过使用开源技术,“每个人都可以使用从而可以获得更好的适配性。” 他说,Eclipse 将物联网视为包括三层互联的软件栈。从更高的层面上看,这些软件栈(按照大家常见的说法)将物联网描述为跨越三个层面的网络。特定的观念可能认为含有更多的层面,但是他们一直符合这个三层模型的功能的:

|

||||

### Eclipse 物联网方面的研究

|

||||

|

||||

Ian 对于 Eclipse 在物联网的研究是这样描述的:“满足任何物联网解决方案的核心基础技术” ,通过使用开源技术,“每个人都可以使用,从而可以获得更好的适配性。” 他说,Eclipse 将物联网视为包括三层互联的软件栈。从更高的层面上看,这些软件栈(按照大家常见的说法)将物联网描述为跨越三个层面的网络。特定的实现方式可能含有更多的层,但是它们一般都可以映射到这个三层模型的功能上:

|

||||

|

||||

* 一种可以装载设备(例如设备、终端、微控制器、传感器)用软件的堆栈。

|

||||

* 将不同的传感器采集到的数据信息聚合起来并传输到网上的一类网关。这一层也可能会针对传感器数据检测做出实时反映。

|

||||

* 将不同的传感器采集到的数据信息聚合起来并传输到网上的一类网关。这一层也可能会针对传感器数据检测做出实时反应。

|

||||

* 物联网平台后端的一个软件栈。这个后端云存储数据并能根据采集的数据比如历史趋势、预测分析提供服务。

|

||||

|

||||

这三个软件栈在 Eclipse 的白皮书 “ [The Three Software Stacks Required for IoT Architectures][7] ”中有更详细的描述。

|

||||

这三个软件栈在 Eclipse 的白皮书 “[The Three Software Stacks Required for IoT Architectures][7] ”中有更详细的描述。

|

||||

|

||||

Ian 说在这些架构中开发一种解决方案时,“需要开发一些特殊的东西,但是很多底层的技术是可以借用的,像通信协议、网关服务。需要一种模块化的方式来满足不用的需求场合。” Eclipse 关于物联网方面的研究可以概括为:开发模块化开源组件从而可以被用于开发大量的特定性商业服务和解决方案。

|

||||

Ian 说在这些架构中开发一种解决方案时,“需要开发一些特殊的东西,但是很多底层的技术是可以借用的,像通信协议、网关服务。需要一种模块化的方式来满足不同的需求场合。” Eclipse 关于物联网方面的研究可以概括为:开发模块化开源组件,从而可以被用于开发大量的特定性商业服务和解决方案。

|

||||

|

||||

###Eclipse 的物联网项目

|

||||

### Eclipse 的物联网项目

|

||||

|

||||

在众多一杯应用的 Eclipse 物联网应用中, Ian 举了两个和 [MQTT][8] 有关联的突出应用,一个设备与设备互联(M2M)的物联网协议。 Ian 把它描述成“一个专为重视电源管理工作的油气传输线监控系统的信息发布/订阅协议。MQTT 已经是众多物联网广泛应用标准中很成功的一个。” [Eclipse Mosquitto][9] 是 MQTT 的代理,[Eclipse Paho][10] 是他的客户端。

|

||||

[Eclipse Kura][11] 是一个物联网网关,引用 Ian 的话,“它连接了很多不同的协议间的联系”包括蓝牙、Modbus、CANbus 和 OPC 统一架构协议,以及一直在不断添加的协议。一个优势就是,他说,取代了你自己写你自己的协议, Kura 提供了这个功能并将你通过卫星、网络或其他设备连接到网络。”另外它也提供了防火墙配置、网络延时以及其它功能。Ian 也指出“如果网络不通时,它会存储信息直到网络恢复。”

|

||||

在众多已被应用的 Eclipse 物联网应用中, Ian 举了两个和 [MQTT][8] 有关联的突出应用,一个设备与设备互联(M2M)的物联网协议。 Ian 把它描述成“一个专为重视电源管理工作的油气传输线监控系统的信息发布/订阅协议。MQTT 已经是众多物联网广泛应用标准中很成功的一个。” [Eclipse Mosquitto][9] 是 MQTT 的代理,[Eclipse Paho][10] 是他的客户端。

|

||||

|

||||

[Eclipse Kura][11] 是一个物联网网关,引用 Ian 的话,“它连接了很多不同的协议间的联系”,包括蓝牙、Modbus、CANbus 和 OPC 统一架构协议,以及一直在不断添加的各种协议。他说,一个优势就是,取代了你自己写你自己的协议, Kura 提供了这个功能并将你通过卫星、网络或其他设备连接到网络。”另外它也提供了防火墙配置、网络延时以及其它功能。Ian 也指出“如果网络不通时,它会存储信息直到网络恢复。”

|

||||

|

||||

最新的一个项目中,[Eclipse Kapua][12] 正尝试通过微服务来为物联网云平台提供不同的服务。比如,它集成了通信、汇聚、管理、存储和分析功能。Ian 说“它正在不断前进,虽然还没被完全开发出来,但是 Eurotech 和 RedHat 在这个项目上非常积极。”

|

||||

Ian 说 [Eclipse hawkBit][13] ,软件更新管理的软件,是一项“非常有趣的项目。从安全的角度说,如果你不能更新你的设备,你将会面临巨大的安全漏洞。”很多物联网安全事故都和无法更新的设备有关,他说,“ HawkBit 可以基本负责通过物联网系统来完成扩展性更新的后端管理。”

|

||||

|

||||

物联网设备软件升级的难度一直被看作是难度最高的安全挑战之一。物联网设备不是一直连接的,而且数目众多,再加上首先设备的更新程序很难完全正常。正因为这个原因,关于无赖女王软件升级的项目一直是被当作重要内容往前推进。

|

||||

Ian 说 [Eclipse hawkBit][13] ,一个软件更新管理的软件,是一项“非常有趣的项目。从安全的角度说,如果你不能更新你的设备,你将会面临巨大的安全漏洞。”很多物联网安全事故都和无法更新的设备有关,他说,“HawkBit 可以基本负责通过物联网系统来完成扩展性更新的后端管理。”

|

||||

|

||||

###为什么物联网这么适合 Eclipse

|

||||

物联网设备软件升级的难度一直被看作是难度最高的安全挑战之一。物联网设备不是一直连接的,而且数目众多,再加上首先设备的更新程序很难完全正常。正因为这个原因,关于 IoT 软件升级的项目一直是被当作重要内容往前推进。

|

||||

|

||||

在物联网发展趋势中的一个方面就是关于构建模块来解决商业问题,而不是宽约工业和公司的大物联网平台。 Eclipse 关于物联网的研究放在一系列模块栈、提供特定和大众化需求功能的项目,还有就是指定目标所需的可捆绑式中间件、网关和协议组件上。

|

||||

### 为什么物联网这么适合 Eclipse

|

||||

|

||||

在物联网发展趋势中的一个方面就是关于构建模块来解决商业问题,而不是跨越行业和公司的大物联网平台。 Eclipse 关于物联网的研究放在一系列模块栈、提供特定和大众化需求功能的项目上,还有就是指定目标所需的可捆绑式中间件、网关和协议组件上。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -46,15 +53,15 @@ Ian 说 [Eclipse hawkBit][13] ,软件更新管理的软件,是一项“非

|

||||

|

||||

作者简介:

|

||||

|

||||

Gordon Haff - Gordon Haff 是红帽公司的云营销员,经常在消费者和工业会议上讲话,并且帮助发展红帽全办公云解决方案。他是 计算机前言:云如何如何打开众多出版社未来之门 的作者。在红帽之前, Gordon 写了成百上千的研究报告,经常被引用到公众刊物上,像纽约时报关于 IT 的议题和产品建议等……

|

||||

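Gordon Haff - Gordon Haff is a cloud expert at Red Hat who speaks frequently at customer and industry events and helps develop Red Hat's full portfolio of cloud solutions. He is the author of *Computing Next: How the Cloud Opens the Future*. Before Red Hat, Gordon wrote hundreds of research notes and was frequently quoted in publications such as The New York Times on IT topics and product advice...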

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

转自: https://opensource.com/article/17/10/eclipse-and-iot

|

||||

via: https://opensource.com/article/17/10/eclipse-and-iot

|

||||

|

||||

作者:[Gordon Haff ][a]

|

||||

作者:[Gordon Haff][a]

|

||||

译者:[smartgrids](https://github.com/smartgrids)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,20 +1,19 @@

|

||||

归档仓库

|

||||

如何归档 GitHub 仓库

|

||||

====================

|

||||

|

||||

|

||||

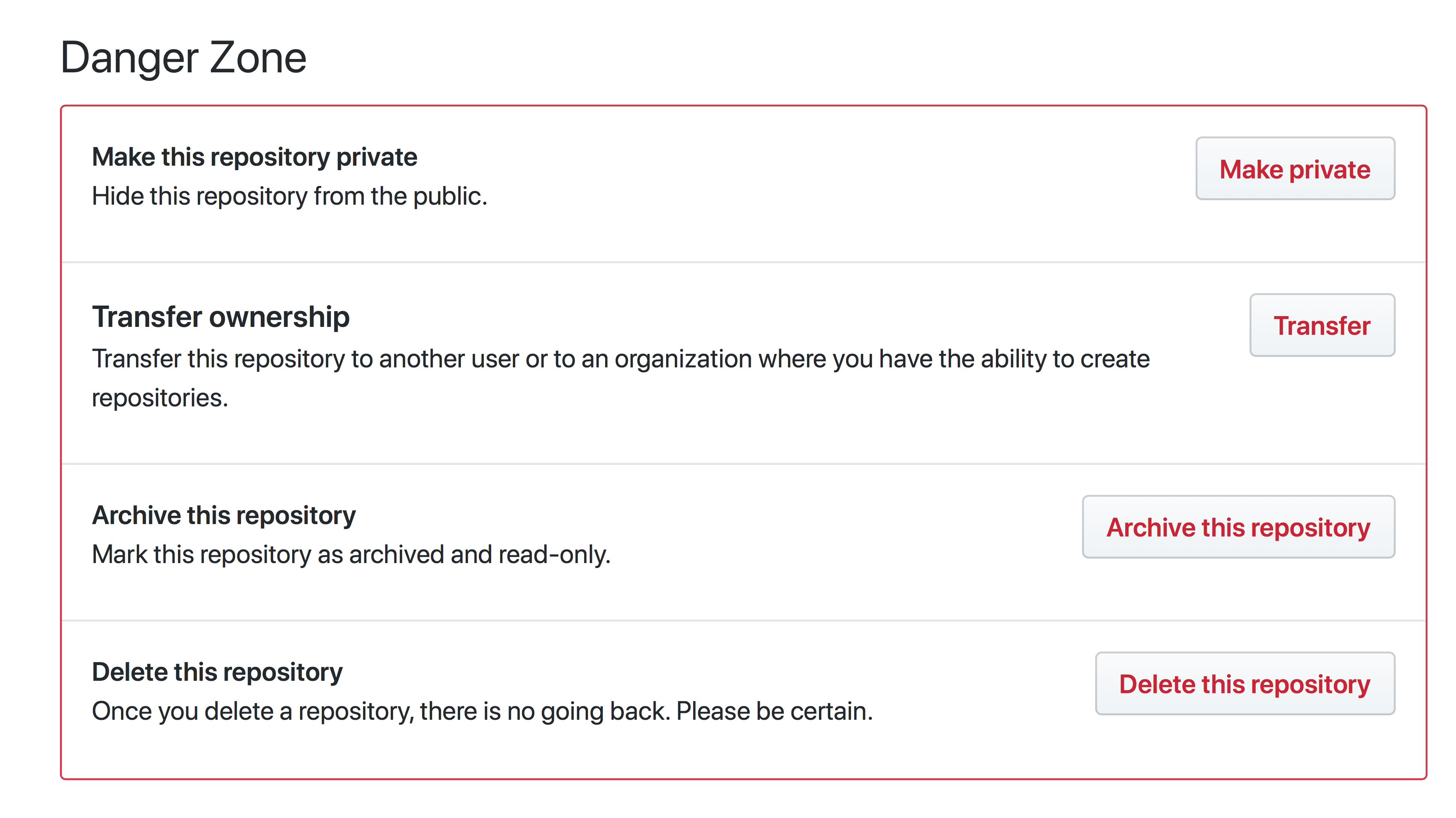

因为仓库不再活跃开发或者你不想接受额外的贡献并不意味着你想要删除它。现在在 Github 上归档仓库让它变成只读。

|

||||

如果仓库不再活跃开发或者你不想接受额外的贡献,但这并不意味着你想要删除它。现在可以在 Github 上归档仓库让它变成只读。

|

||||

|

||||

[][1]

|

||||

|

||||

归档一个仓库让它对所有人只读(包括仓库拥有者)。这包括编辑仓库、问题、合并请求、标记、里程碑、维基、发布、提交、标签、分支、反馈和评论。没有人可以在一个归档的仓库上创建新的问题、合并请求或者评论,但是你仍可以 fork 仓库-允许归档的仓库在其他地方继续开发。

|

||||

归档一个仓库会让它对所有人只读(包括仓库拥有者)。这包括对仓库的编辑、<ruby>问题<rt>issue</rt></ruby>、<ruby>合并请求<rt>pull request</rt></ruby>(PR)、标记、里程碑、项目、维基、发布、提交、标签、分支、反馈和评论。谁都不可以在一个归档的仓库上创建新的问题、合并请求或者评论,但是你仍可以 fork 仓库——以允许归档的仓库在其它地方继续开发。

|

||||

|

||||

要归档一个仓库,进入仓库设置页面并点在这个仓库上点击归档。

|

||||

To archive a repository, go to the repository's settings page and click "Archive this repository".

|

||||

|

||||

[][2]

|

||||

|

||||

在归档你的仓库前,确保你已经更改了它的设置并考虑关闭所有的开放问题和合并请求。你还应该更新你的 README 和描述来让它让访问者了解他不再能够贡献。

|

||||

在归档你的仓库前,确保你已经更改了它的设置并考虑关闭所有的开放问题和合并请求。你还应该更新你的 README 和描述来让它让访问者了解他不再能够对之贡献。

|

||||

|

||||

如果你改变了主意想要解除归档你的仓库,在相同的地方点击解除归档。请注意大多数归档仓库的设置是隐藏的,并且你需要解除归档来改变它们。

|

||||

如果你改变了主意想要解除归档你的仓库,在相同的地方点击“<ruby>解除归档该仓库<rt>Unarchive this repository</rt></ruby>”。请注意归档仓库的大多数设置是隐藏的,你需要解除归档才能改变它们。

|

||||

|

||||

[][3]

|

||||

|

||||

@ -24,9 +23,9 @@

|

||||

|

||||

via: https://github.com/blog/2460-archiving-repositories

|

||||

|

||||

作者:[MikeMcQuaid ][a]

|

||||

作者:[MikeMcQuaid][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,19 +1,18 @@

|

||||

Glitch:立即写出有趣的小型网站项目

|

||||

Glitch:可以让你立即写出有趣的小型网站

|

||||

============================================================

|

||||

|

||||

我刚写了一篇关于 Jupyter Notebooks 是一个有趣的交互式写 Python 代码的方式。这让我想起我最近学习了 Glitch,这个我同样喜爱!我构建了一个小的程序来用于[关闭转发 twitter][2]。因此有了这篇文章!

|

||||

我刚写了一篇关于 Jupyter Notebooks 的文章,它是一个有趣的交互式写 Python 代码的方式。这让我想起我最近学习了 Glitch,这个我同样喜爱!我构建了一个小的程序来用于[关闭转发 twitter][2]。因此有了这篇文章!

|

||||

|

||||

[Glitch][3] 是一个简单的构建 Javascript web 程序的方式(javascript 后端、javascript 前端)

|

||||

[Glitch][3] 是一个简单的构建 Javascript web 程序的方式(javascript 后端、javascript 前端)。

|

||||

|

||||

关于 glitch 有趣的事有:

|

||||

关于 glitch 有趣的地方有:

|

||||

|

||||

1. 你在他们的网站输入 Javascript 代码

|

||||

|

||||

2. 只要输入了任何代码,它会自动用你的新代码重载你的网站。你甚至不必保存!它会自动保存。

|

||||

|

||||

所以这就像 Heroku,但更神奇!像这样的编码(你输入代码,代码立即在公共网络上运行)对我而言感觉很**有趣**。

|

||||

|

||||

这有点像 ssh 登录服务器,编辑服务器上的 PHP/HTML 代码,并让它立即可用,这也是我所喜爱的。现在我们有了“更好的部署实践”,而不是“编辑代码,它立即出现在互联网上”,但我们并不是在谈论严肃的开发实践,而是在讨论编写微型程序的乐趣。

|

||||

这有点像用 ssh 登录服务器,编辑服务器上的 PHP/HTML 代码,它立即就可用了,而这也是我所喜爱的方式。虽然现在我们有了“更好的部署实践”,而不是“编辑代码,让它立即出现在互联网上”,但我们并不是在谈论严肃的开发实践,而是在讨论编写微型程序的乐趣。

|

||||

|

||||

### Glitch 有很棒的示例应用程序

|

||||

|

||||

@ -22,18 +21,16 @@ Glitch 似乎是学习编程的好方式!

|

||||

比如,这有一个太空侵略者游戏(由 [Mary Rose Cook][4] 编写):[https://space-invaders.glitch.me/][5]。我喜欢的是我只需要点击几下。

|

||||

|

||||

1. 点击 “remix this”

|

||||

|

||||

2. 开始编辑代码使箱子变成橘色而不是黑色

|

||||

|

||||

3. 制作我自己太空侵略者游戏!我的在这:[http://julias-space-invaders.glitch.me/][1]。(我只做了很小的更改使其变成橘色,没什么神奇的)

|

||||

|

||||

他们有大量的示例程序,你可以从中启动 - 例如[机器人][6]、[游戏][7]等等。

|

||||

|

||||

### 实际有用的非常好的程序:tweetstorms

|

||||

|

||||

我学习 Glitch 的方式是从这个程序:[https://tweetstorms.glitch.me/][8],它会向你展示给定用户的 tweetstorm。

|

||||

我学习 Glitch 的方式是从这个程序开始的:[https://tweetstorms.glitch.me/][8],它会向你展示给定用户的推特云。

|

||||

|

||||

比如,你可以在 [https://tweetstorms.glitch.me/sarahmei][10] 看到 [@sarahmei][9] 的 tweetstorm(她发布了很多好的 tweetstorm!)。

|

||||

比如,你可以在 [https://tweetstorms.glitch.me/sarahmei][10] 看到 [@sarahmei][9] 的推特云(她发布了很多好的 tweetstorm!)。

|

||||

|

||||

### 我的 Glitch 程序: 关闭转推

|

||||

|

||||

@ -41,11 +38,11 @@ Glitch 似乎是学习编程的好方式!

|

||||

|

||||

我喜欢我不必设置一个本地开发环境,我可以直接开始输入然后开始!

|

||||

|

||||

Glitch 只支持 Javascript,我不非常了解 Javascript(我之前从没写过一个 Node 程序),所以代码不是很好。但是编写它很愉快 - 能够输入并立即看到我的代码运行是令人愉快的。这是我的项目:[https://turn-off-retweets.glitch.me/][11]。

|

||||

Glitch 只支持 Javascript,我不是非常了解 Javascript(我之前从没写过一个 Node 程序),所以代码不是很好。但是编写它很愉快 - 能够输入并立即看到我的代码运行是令人愉快的。这是我的项目:[https://turn-off-retweets.glitch.me/][11]。

|

||||

|

||||

### 就是这些!

|

||||

|

||||

使用 Glitch 感觉真的很有趣和民主。通常情况下,如果我想 fork 某人的 Web 项目,并做出更改,我不会这样做 - 我必须 fork,找一个托管,设置本地开发环境或者 Heroku 或其他,安装依赖项等。我认为像安装 node.js 依赖关系这样的任务过去很有趣,就像“我正在学习新东西很酷”,现在我觉得它们很乏味。

|

||||

使用 Glitch 感觉真的很有趣和民主。通常情况下,如果我想 fork 某人的 Web 项目,并做出更改,我不会这样做 - 我必须 fork,找一个托管,设置本地开发环境或者 Heroku 或其他,安装依赖项等。我认为像安装 node.js 依赖关系这样的任务在过去很有趣,就像“我正在学习新东西很酷”,但现在我觉得它们很乏味。

|

||||

|

||||

所以我喜欢只需点击 “remix this!” 并立即在互联网上能有我的版本。

|

||||

|

||||

@ -53,9 +50,9 @@ Glitch 只支持 Javascript,我不非常了解 Javascript(我之前从没写

|

||||

|

||||

via: https://jvns.ca/blog/2017/11/13/glitch--write-small-web-projects-easily/

|

||||

|

||||

作者:[Julia Evans ][a]

|

||||

作者:[Julia Evans][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,27 +1,22 @@

|

||||

# LibreOffice 现在在 Flatpak 的 Flathub 应用商店提供

|

||||

LibreOffice 上架 Flathub 应用商店

|

||||

===============

|

||||

|

||||

|

||||

|

||||

LibreOffice 现在可以从集中化的 Flatpak 应用商店 [Flathub][3] 进行安装。

|

||||

> LibreOffice 现在可以从集中化的 Flatpak 应用商店 [Flathub][3] 进行安装。

|

||||

|

||||

它的到来使任何运行现代 Linux 发行版的人都能只点击一两次安装 LibreOffice 的最新稳定版本,而无需搜索 PPA,纠缠 tar 包或等待发行商将其打包。

|

||||

它的到来使任何运行现代 Linux 发行版的人都能只点击一两次即可安装 LibreOffice 的最新稳定版本,而无需搜索 PPA,纠缠于 tar 包或等待发行版将其打包。

|

||||

|

||||

自去年 8 月份以来,[LibreOffice Flatpak][5] 已经可供用户下载和安装 [LibreOffice 5.2][6]。

|

||||

自去年 8 月份 [LibreOffice 5.2][6] 发布以来,[LibreOffice Flatpak][5] 已经可供用户下载和安装。

|

||||

|

||||

这里“新”的是发行方法。文档基金会选择使用 Flathub 而不是专门的服务器来发布更新。

|

||||

这里“新”的是指发行方法。<ruby>文档基金会<rt>Document Foundation</rt></ruby>选择使用 Flathub 而不是专门的服务器来发布更新。

|

||||

|

||||

这对于终端用户来说是一个_很好_的消息,因为这意味着不需要在新安装时担心仓库,但对于 Flatpak 的倡议者来说也是一个好消息:LibreOffice 是开源软件最流行的生产力套件。它对格式和应用商店的支持肯定会受到热烈的欢迎。

|

||||

这对于终端用户来说是一个_很好_的消息,因为这意味着不需要在新安装时担心仓库,但对于 Flatpak 的倡议者来说也是一个好消息:LibreOffice 是开源软件里最流行的生产力套件。它对该格式和应用商店的支持肯定会受到热烈的欢迎。

|

||||

|

||||

在撰写本文时,你可以从 Flathub 安装 LibreOffice 5.4.2。新的稳定版本将在发布时添加。

|

||||

|

||||

### 在 Ubuntu 上启用 Flathub

|

||||

|

||||

|

||||

|

||||

Fedora、Arch 和 Linux Mint 18.3 用户已经安装了 Flatpak,随时可以开箱即用。Mint 甚至预启用了 Flathub remote。

|

||||

|

||||

[从 Flathub 安装 LibreOffice][7]

|

||||

|

||||

要在 Ubuntu 上启动并运行 Flatpak,首先必须安装它:

|

||||

|

||||

```

|

||||

@ -34,17 +29,25 @@ sudo apt install flatpak gnome-software-plugin-flatpak

|

||||

flatpak remote-add --if-not-exists flathub https://flathub.org/repo/flathub.flatpakrepo

|

||||

```

|

||||

|

||||

这就行了。只需注销并返回(以便 Ubuntu Software 刷新其缓存),之后你应该能够通过 Ubuntu Software 看到 Flathub 上的任何 Flatpak 程序了。

|

||||

这就行了。只需注销并重新登录(以便 Ubuntu Software 刷新其缓存),之后你应该能够通过 Ubuntu Software 看到 Flathub 上的任何 Flatpak 程序了。

|

||||

|

||||

|

||||

|

||||

*Fedora、Arch 和 Linux Mint 18.3 用户已经安装了 Flatpak,随时可以开箱即用。Mint 甚至预启用了 Flathub remote。*

|

||||

|

||||

在本例中,搜索 “LibreOffice” 并在结果中找到下面有 Flathub 提示的结果。(请记住,Ubuntu 已经调整了客户端,来将 Snap 程序显示在最上面,所以你可能需要向下滚动列表来查看它)。

|

||||

|

||||

### 从 Flathub 安装 LibreOffice

|

||||

|

||||

- [从 Flathub 安装 LibreOffice][7]

|

||||

|

||||

从 flatpakref 中[安装 Flatpak 程序有一个 bug][8],所以如果上面的方法不起作用,你也可以使用命令行从 Flathub 中安装 Flathub 程序。

|

||||

|

||||

Flathub 网站列出了安装每个程序所需的命令。切换到“命令行”选项卡来查看它们。

|

||||

|

||||

#### Flathub 上更多的应用

|

||||

### Flathub 上更多的应用

|

||||

|

||||

如果你经常看这个网站,你就会知道我喜欢 Flathub。这是我最喜欢的一些应用(Corebird、Parlatype、GNOME MPV、Peek、Audacity、GIMP 等)的家园。我无需折衷就能获得这些应用程序的最新,稳定版本(加上它们需要的所有依赖)。

|

||||

如果你经常看这个网站,你就会知道我喜欢 Flathub。这是我最喜欢的一些应用(Corebird、Parlatype、GNOME MPV、Peek、Audacity、GIMP 等)的家园。我无需等待就能获得这些应用程序的最新、稳定版本(加上它们需要的所有依赖)。

|

||||

|

||||

Also, a week or so after my post on Twitter, most Flatpak apps now look great with GTK themes; the [workarounds][9] are no longer needed!

|

||||

|

||||

@ -52,9 +55,9 @@ Flathub 网站列出了安装每个程序所需的命令。切换到“命令行

|

||||

|

||||

via: http://www.omgubuntu.co.uk/2017/11/libreoffice-now-available-flathub-flatpak-app-store

|

||||

|

||||

作者:[ JOEY SNEDDON ][a]

|

||||

作者:[JOEY SNEDDON][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -3,27 +3,27 @@ AWS 帮助构建 ONNX 开源 AI 平台

|

||||

|

||||

|

||||

|

||||

AWS 已经成为最近加入深度学习社区的开放神经网络交换(ONNX)协作的最新技术公司,最近在无摩擦和可互操作的环境中推出了高级人工智能。由 Facebook 和微软领头。

|

||||

AWS 最近成为了加入深度学习社区的<ruby>开放神经网络交换<rt>Open Neural Network Exchange</rt></ruby>(ONNX)协作的技术公司,最近在<ruby>无障碍和可互操作<rt>frictionless and interoperable</rt></ruby>的环境中推出了高级人工智能。由 Facebook 和微软领头了该协作。

|

||||

|

||||

作为该合作的一部分,AWS 将其开源 Python 软件包 ONNX-MxNet 作为一个深度学习框架提供,该框架提供跨多种语言的编程接口,包括 Python、Scala 和开源统计软件 R。

|

||||

作为该合作的一部分,AWS 开源其深度学习框架 Python 软件包 ONNX-MXNet,该框架提供了跨多种语言的编程接口(API),包括 Python、Scala 和开源统计软件 R。

|

||||

|

||||

AWS 深度学习工程经理 Hagay Lupesko 和软件开发人员 Roshani Nagmote 上周在一篇帖子中写道:ONNX 格式将帮助开发人员构建和训练其他框架的模型,包括 PyTorch、Microsoft Cognitive Toolkit 或 Caffe2。它可以让开发人员将这些模型导入 MXNet,并运行它们进行推理。

|

||||

AWS 深度学习工程经理 Hagay Lupesko 和软件开发人员 Roshani Nagmote 上周在一篇帖子中写道,ONNX 格式将帮助开发人员构建和训练其它框架的模型,包括 PyTorch、Microsoft Cognitive Toolkit 或 Caffe2。它可以让开发人员将这些模型导入 MXNet,并运行它们进行推理。

|

||||

|

||||

### 对开发者的帮助

|

||||

|

||||

今年夏天,Facebook 和微软推出了 ONNX,以支持共享模式的互操作性,来促进 AI 的发展。微软提交了其 Cognitive Toolkit、Caffe2 和 PyTorch 来支持 ONNX。

|

||||

|

||||

微软表示:Cognitive Toolkit 和其他框架使开发人员更容易构建和运行代表神经网络的计算图。

|

||||

微软表示:Cognitive Toolkit 和其他框架使开发人员更容易构建和运行计算图以表达神经网络。

|

||||

|

||||

Github 上提供了[ ONNX 代码和文档][4]的初始版本。

|

||||

[ONNX 代码和文档][4]的初始版本已经放到了 Github。

|

||||

|

||||

AWS 和微软上个月宣布了在 Apache MXNet 上的一个新 Gluon 接口计划,该计划允许开发人员构建和训练深度学习模型。

|

||||

|

||||

[Tractica][5] 的研究总监 Aditya Kaul 观察到:“Gluon 是他们与 Google 的 Tensorflow 竞争的合作伙伴关系的延伸”。

|

||||

[Tractica][5] 的研究总监 Aditya Kaul 观察到:“Gluon 是他们试图与 Google 的 Tensorflow 竞争的合作伙伴关系的延伸”。

|

||||

|

||||

他告诉 LinuxInsider,“谷歌在这点上的疏忽是非常明显的,但也说明了他们在市场上的主导地位。

|

||||

他告诉 LinuxInsider,“谷歌在这点上的疏忽是非常明显的,但也说明了他们在市场上的主导地位。”

|

||||

|

||||

Kaul 说:“甚至 Tensorflow 是开源的,所以开源在这里并不是什么大事,但这归结到底是其他生态系统联手与谷歌竞争。”

|

||||

Kaul 说:“甚至 Tensorflow 也是开源的,所以开源在这里并不是什么大事,但这归结到底是其他生态系统联手与谷歌竞争。”

|

||||

|

||||

根据 AWS 的说法,本月早些时候,Apache MXNet 社区推出了 MXNet 的 0.12 版本,它扩展了 Gluon 的功能,以便进行新的尖端研究。它的新功能之一是变分 dropout,它允许开发人员使用 dropout 技术来缓解递归神经网络中的过拟合。

|

||||

|

||||

@ -52,15 +52,15 @@ Tractica 的 Kaul 指出:“框架互操作性是一件好事,这会帮助

|

||||

越来越多的大型科技公司已经宣布使用开源技术来加快 AI 协作开发的计划,以便创建更加统一的开发和研究平台。

|

||||

|

||||

AT&T 几周前宣布了与 TechMahindra 和 Linux 基金会合作[推出 Acumos 项目][8]的计划。该平台旨在开拓电信、媒体和技术方面的合作。

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linuxinsider.com/story/AWS-to-Help-Build-ONNX-Open-Source-AI-Platform-84971.html

|

||||

|

||||

作者:[ David Jones ][a]

|

||||

作者:[David Jones][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,127 @@

|

||||

Suplemon:带有多光标支持的现代 CLI 文本编辑器

|

||||

======

|

||||

|

||||

Suplemon 是一个 CLI 中的现代文本编辑器,它模拟 [Sublime Text][1] 的多光标行为和其它特性。它是轻量级的,非常易于使用,就像 Nano 一样。

|

||||

|

||||

使用 CLI 编辑器的好处之一是,无论你使用的 Linux 发行版是否有 GUI,你都可以使用它。这种文本编辑器也很简单、快速和强大。

|

||||

|

||||

你可以在其[官方仓库][2]中找到有用的信息和源代码。

|

||||

|

||||

### 功能

|

||||

|

||||

这些是一些它有趣的功能:

|

||||

|

||||

* Multi-cursor support
* Undo / redo
* Copy and paste, with multi-line support
* Mouse support
* Extensions
* Find, find all, find next
* Syntax highlighting
* Autocomplete
* Custom keyboard shortcuts

|

||||

|

||||

### 安装

|

||||

|

||||

首先,确保安装了最新版本的 python3 和 pip3。

|

||||

|

||||

然后在终端输入:

|

||||

|

||||

```

|

||||

$ sudo pip3 install suplemon

|

||||

```

|

||||

|

||||

### 使用

|

||||

|

||||

#### 在当前目录中创建一个新文件

|

||||

|

||||

打开一个终端并输入:

|

||||

|

||||

```

|

||||

$ suplemon

|

||||

```

|

||||

|

||||

你将看到如下:

|

||||

|

||||

|

||||

|

||||

#### 打开一个或多个文件

|

||||

|

||||

打开一个终端并输入:

|

||||

|

||||

```

|

||||

$ suplemon <filename1> <filename2> ... <filenameN>

|

||||

```

|

||||

|

||||

例如:

|

||||

|

||||

```

|

||||

$ suplemon example1.c example2.c

|

||||

```

|

||||

|

||||

### 主要配置

|

||||

|

||||

你可以在 `~/.config/suplemon/suplemon-config.json` 找到配置文件。

|

||||

|

||||

编辑这个文件很简单,你只需要进入命令模式(进入 suplemon 后)并运行 `config` 命令。你可以通过运行 `config defaults` 来查看默认配置。

|

||||

|

||||

#### 键盘映射配置

|

||||

|

||||

我会展示 suplemon 的默认键映射。如果你想编辑它们,只需运行 `keymap` 命令。运行 `keymap default` 来查看默认的键盘映射文件。

|

||||

|

||||

| Action | Shortcut |
| ---- | ---- |
| Exit | `Ctrl + Q` |
| Copy line(s) to buffer | `Ctrl + C` |
| Cut line(s) to buffer | `Ctrl + X` |
| Insert buffer | `Ctrl + V` |
| Duplicate line | `Ctrl + K` |
| Go to | `Ctrl + G`. You can go to a line or to a file (just type the beginning of a file name). You can also type something like `exam:50` to jump to line `50` of `example.c`. |
| Search for a string or regular expression | `Ctrl + F` |
| Search next | `Ctrl + D` |
| Trim whitespace | `Ctrl + T` |
| Add a new cursor in the arrow direction | `Alt + arrow key` |
| Jump to the previous or next word or line | `Ctrl + Left / Right` |
| Revert to a single cursor / cancel the input prompt | `Esc` |
| Move line(s) up / down | `Page Up` / `Page Down` |
| Save file | `Ctrl + S` |
| Save file under a new name | `F1` |
| Reload the current file | `F2` |
| Open file | `Ctrl + O` |
| Close file | `Ctrl + W` |
| Switch to the next / previous file | `Ctrl + Page Up` / `Ctrl + Page Down` |
| Run a command | `Ctrl + E` |
| Undo | `Ctrl + Z` |
| Redo | `Ctrl + Y` |
| Toggle visible whitespace | `F7` |
| Toggle mouse mode | `F8` |
| Toggle line numbers | `F9` |
| Toggle full screen | `F11` |

|

||||

|

||||

|

||||

|

||||

#### 鼠标快捷键

|

||||

|

||||

* Set the cursor at the pointer position: left click
* Add a cursor at the pointer position: right click
* Scroll vertically: scroll the wheel up / down

|

||||

|

||||

### 总结

|

||||

|

||||

在尝试 Suplemon 一段时间后,我改变了对 CLI 文本编辑器的看法。我以前曾经尝试过 Nano,是的,我喜欢它的简单性,但是它的现代特征的缺乏使它在日常使用中变得不实用。

|

||||

|

||||

这个工具有 CLI 和 GUI 世界最好的东西……简单性和功能丰富!所以我建议你试试看,并在评论中写下你的想法 :-)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://linoxide.com/tools/suplemon-cli-text-editor-multi-cursor/

|

||||

|

||||

作者:[Ivo Ursino][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://linoxide.com/author/ursinov/

|

||||

[1]:https://linoxide.com/tools/install-sublime-text-editor-linux/

|

||||

[2]:https://github.com/richrd/suplemon/

|

||||

@ -0,0 +1,161 @@

|

||||

在 Ubuntu 16.04 下随机化你的 WiFi MAC 地址

|

||||

============================================================

|

||||

|

||||

> 你的设备的 MAC 地址可以在不同的 WiFi 网络中记录你的活动。这些信息能被共享后出售,用于识别特定的个体。但可以用随机生成的伪 MAC 地址来阻止这一行为。

|

||||

|

||||

|

||||

|

||||

|

||||

_Image courtesy of [Cloudessa][4]_

|

||||

|

||||

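Every network device, such as a WiFi or Ethernet card, has a unique identifier called a MAC address, for example `b4:b6:76:31:8c:ff`. It is how you are addressed on the network: whenever you connect to WiFi, the router uses this address to send data to you and receive data from you, and to tell you apart from the other devices on the network.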

|

||||

|

||||

这一设计的缺陷在于唯一性,不变的 MAC 地址正好可以用来追踪你。连上了星巴克的 WiFi? 好,注意到了。在伦敦的地铁上? 也记录下来。

|

||||

|

||||

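If you have ever typed your real name into a WiFi login page, you have tied your identity to that MAC address. If you did not read the terms and conditions, you can assume that the free airport WiFi is funded by selling "customer analytics" (your personal information) to hotels, restaurant chains, and anyone else who wants to know about you.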

|

||||

|

||||

我不想信息被记录,再出售给多家公司,所以我花了几个小时想出了一个解决方案。

|

||||

|

||||

### MAC 地址不一定总是不变的

|

||||

|

||||

幸运的是,在不断开网络的情况下,是可以随机生成一个伪 MAC 地址的。

|

||||

|

||||

我想随机生成我的 MAC 地址,但是有三个要求:

|

||||

|

||||

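1. The MAC address must differ between networks. My MAC address on the Starbucks network and on the London Underground network should not be the same, so that my activity cannot be correlated across providers.
2. The MAC address should change often, so that nobody on a network can tell that I am the same person who passed by 75 times over the last year.
3. The MAC address should stay the same throughout each day. When the MAC address changes, most networks drop the connection and make you go through the login page again, which is annoying.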

|

||||

|

||||

### 操作<ruby>网络管理器<rt>NetworkManager</rt></ruby>

|

||||

|

||||

我第一次尝试用一个叫做 `macchanger` 的工具,但是失败了。因为<ruby>网络管理器<rt>NetworkManager</rt></ruby>会根据它自己的设置恢复默认的 MAC 地址。

|

||||

|

||||

我了解到,网络管理器 1.4.1 以上版本可以自动生成随机的 MAC 地址。如果你在使用 Ubuntu 17.04 版本,你可以根据[这一配置文件][7]实现这一目的。但这并不能完全符合我的三个要求(你必须在<ruby>随机<rt>random</rt></ruby>和<ruby>稳定<rt>stable</rt></ruby>这两个选项之中选择一个,但没有一天之内保持不变这一选项)

|

||||

|

||||

因为我使用的是 Ubuntu 16.04,网络管理器版本为 1.2,不能直接使用高版本这一新功能。可能网络管理器有一些随机化方法支持,但我没能成功。所以我编了一个脚本来实现这一目标。

|

||||

|

||||

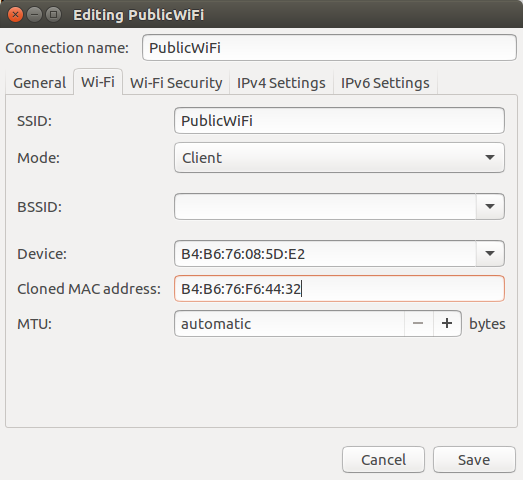

幸运的是,网络管理器 1.2 允许模拟 MAC 地址。你在已连接的网络中可以看见 ‘编辑连接’ 这一选项:

|

||||

|

||||

|

||||

|

||||

网络管理器也支持钩子处理 —— 任何位于 `/etc/NetworkManager/dispatcher.d/pre-up.d/` 的脚本在建立网络连接之前都会被执行。

|

||||

|

||||

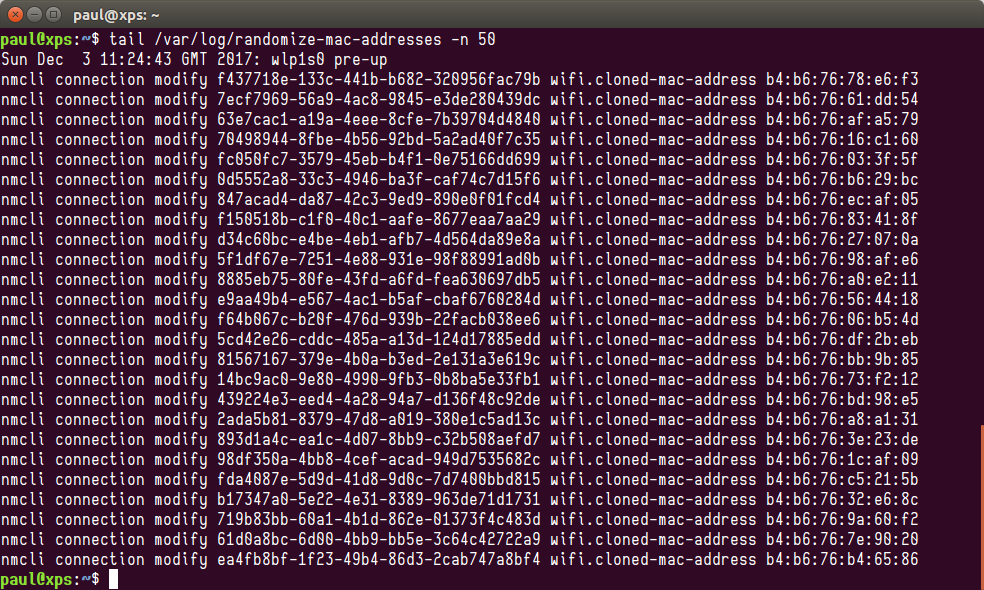

|

||||

### 分配随机生成的伪 MAC 地址

|

||||

|

||||

我想根据网络 ID 和日期来生成新的随机 MAC 地址。 我们可以使用网络管理器的命令行工具 nmcli 来显示所有可用网络:

|

||||

|

||||

|

||||

```
> nmcli connection
NAME                 UUID                                  TYPE             DEVICE
Gladstone Guest      618545ca-d81a-11e7-a2a4-271245e11a45  802-11-wireless  wlp1s0
DoESDinky            6e47c080-d81a-11e7-9921-87bc56777256  802-11-wireless  --
PublicWiFi           79282c10-d81a-11e7-87cb-6341829c2a54  802-11-wireless  --
virgintrainswifi     7d0c57de-d81a-11e7-9bae-5be89b161d22  802-11-wireless  --
```

|

||||

|

||||

因为每个网络都有一个唯一标识符(UUID),为了实现我的计划,我将 UUID 和日期拼接在一起,然后使用 MD5 生成 hash 值:

|

||||

|

||||

```
# eg 618545ca-d81a-11e7-a2a4-271245e11a45-2017-12-03

> echo -n "${UUID}-$(date +%F)" | md5sum

53594de990e92f9b914a723208f22b3f  -
```

|

||||

|

||||

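The resulting hash provides bytes that can be substituted for the last octets of the MAC address. (A MAC address has only six octets in total; the script below takes five of them from the hash.)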

|

||||

|

||||

|

||||

值得注意的是,最开始的字节 `02` 代表这个地址是[自行指定][8]的。实际上,真实 MAC 地址的前三个字节是由制造商决定的,例如 `b4:b6:76` 就代表 Intel。
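
The derivation described above can be sketched as a few small shell functions. This is only an illustration under stated assumptions: the helper names `hash_to_mac`, `daily_mac` and `is_locally_administered` are hypothetical and do not appear in the original script, and `printf '%s'` is used instead of `echo -n` purely for portability.

```shell
#!/bin/sh
# Hypothetical helpers illustrating the idea; not part of the original script.

# hash_to_mac HEXSTRING
# Take the first 10 hex digits (5 octets) of a hash and prefix them with 02,
# which marks the address as locally administered.
hash_to_mac() {
    printf '02:%s\n' "$(printf '%s' "$1" | sed 's/^\(..\)\(..\)\(..\)\(..\)\(..\).*$/\1:\2:\3:\4:\5/')"
}

# daily_mac UUID [YYYY-MM-DD]
# Seed the hash with the connection UUID and a date (today by default), so the
# result is stable within a day but differs between networks and between days.
daily_mac() {
    day=${2:-$(date +%F)}
    hash=$(printf '%s' "$1-$day" | md5sum | cut -d' ' -f1)
    hash_to_mac "$hash"
}

# is_locally_administered MAC
# Succeeds when the second-least-significant bit of the first octet is set.
is_locally_administered() {
    first=${1%%:*}
    [ $(( 0x$first & 2 )) -ne 0 ]
}
```

For example, `hash_to_mac 53594de990e92f9b914a723208f22b3f` yields `02:53:59:4d:e9:90`, and `is_locally_administered b4:b6:76:31:8c:ff` fails because that prefix is a manufacturer-assigned one.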

|

||||

|

||||

有可能某些路由器会拒绝自己指定的 MAC 地址,但是我还没有遇到过这种情况。

|

||||

|

||||

每次连接到一个网络,这一脚本都会用 `nmcli` 来指定一个随机生成的伪 MAC 地址:

|

||||

|

||||

|

||||

|

||||

最后,我查看了 `ifconfig` 的输出结果,我发现 MAC 地址 `HWaddr` 已经变成了随机生成的地址(模拟 Intel 的),而不是我真实的 MAC 地址。

|

||||

|

||||

|

||||

```
> ifconfig
wlp1s0    Link encap:Ethernet  HWaddr b4:b6:76:45:64:4d
          inet addr:192.168.0.86  Bcast:192.168.0.255  Mask:255.255.255.0
          inet6 addr: fe80::648c:aff2:9a9d:764/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:12107812 errors:0 dropped:2 overruns:0 frame:0
          TX packets:18332141 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:11627977017 (11.6 GB)  TX bytes:20700627733 (20.7 GB)
```

|

||||

|

||||

### 脚本

|

||||

|

||||

完整的脚本也可以[在 Github 上查看][9]。

|

||||

|

||||

```
#!/bin/sh

# /etc/NetworkManager/dispatcher.d/pre-up.d/randomize-mac-addresses

# Configure every saved WiFi connection in NetworkManager with a spoofed MAC
# address, seeded from the UUID of the connection and the date eg:
# 'c31bbcc4-d6ad-11e7-9a5a-e7e1491a7e20-2017-11-20'

# This makes your MAC impossible(?) to track across WiFi providers, and
# for one provider to track across days.

# For craptive portals that authenticate based on MAC, you might want to
# automate logging in :)

# Note that NetworkManager >= 1.4.1 (Ubuntu 17.04+) can do something similar
# automatically.

export PATH=$PATH:/usr/bin:/bin

LOG_FILE=/var/log/randomize-mac-addresses

echo "$(date): $*" > ${LOG_FILE}

WIFI_UUIDS=$(nmcli --fields type,uuid connection show |grep 802-11-wireless |cut '-d ' -f3)

for UUID in ${WIFI_UUIDS}
do
    # %F expands to YYYY-MM-DD, so the hash changes once per day
    UUID_DAILY_HASH=$(echo "${UUID}-$(date +%F)" | md5sum)

    RANDOM_MAC="02:$(echo -n ${UUID_DAILY_HASH} | sed 's/^\(..\)\(..\)\(..\)\(..\)\(..\).*$/\1:\2:\3:\4:\5/')"

    CMD="nmcli connection modify ${UUID} wifi.cloned-mac-address ${RANDOM_MAC}"

    echo "$CMD" >> ${LOG_FILE}
    $CMD &
done

wait
```

|

||||

|

||||

_更新:[使用自己指定的 MAC 地址][5]可以避免和真正的 intel 地址冲突。感谢 [@_fink][6]_

|

||||

|

||||

---------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.paulfurley.com/randomize-your-wifi-mac-address-on-ubuntu-1604-xenial/

|

||||

|

||||

作者:[Paul M Furley][a]

|

||||

译者:[wenwensnow](https://github.com/wenwensnow)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.paulfurley.com/

|

||||

[1]:https://gist.github.com/paulfurley/46e0547ce5c5ea7eabeaef50dbacef3f/raw/5f02fc8f6ff7fca5bca6ee4913c63bf6de15abca/randomize-mac-addresses

|

||||

[2]:https://gist.github.com/paulfurley/46e0547ce5c5ea7eabeaef50dbacef3f#file-randomize-mac-addresses

|

||||

[3]:https://github.com/

|

||||

[4]:http://cloudessa.com/products/cloudessa-aaa-and-captive-portal-cloud-service/

|

||||

[5]:https://gist.github.com/paulfurley/46e0547ce5c5ea7eabeaef50dbacef3f/revisions#diff-824d510864d58c07df01102a8f53faef

|

||||

[6]:https://twitter.com/fink_/status/937305600005943296

|

||||

[7]:https://gist.github.com/paulfurley/978d4e2e0cceb41d67d017a668106c53/

|

||||

[8]:https://en.wikipedia.org/wiki/MAC_address#Universal_vs._local

|

||||

[9]:https://gist.github.com/paulfurley/46e0547ce5c5ea7eabeaef50dbacef3f

|

||||

@ -1,21 +1,23 @@

|

||||

如何获知一个命令或程序在执行前将会做什么

|

||||

如何在执行一个命令或程序之前就了解它会做什么

|

||||

======

|

||||

|

||||

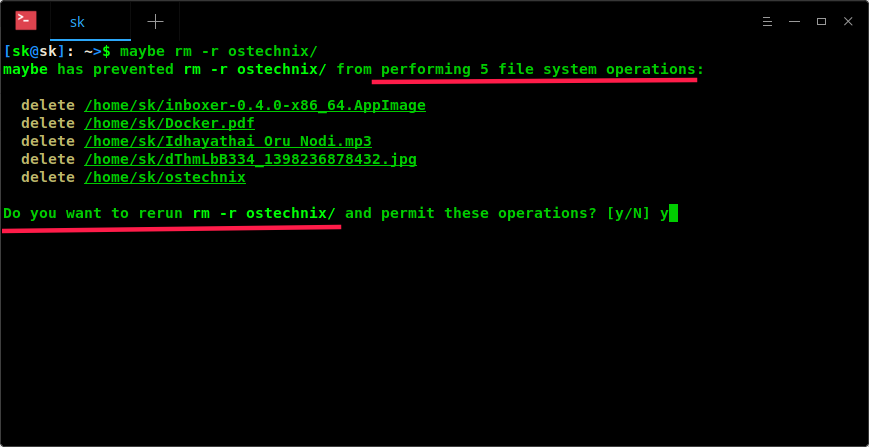

有没有想过一个 Unix 命令在执行前将会干些什么呢?并不是每个人都会知道一个特定的命令或者程序将会做什么。当然,你可以用 [Explainshell][2] 来查看它。你可以在 Explainshell 网站中粘贴你的命令,然后它可以让你了解命令的每个部分做了什么。但是,这是没有必要的。现在,我们从终端就可以轻易地知道一个命令或者程序在执行前会做什么。 `maybe` ,一个简单的工具,它允许你运行一条命令并可以查看此命令对你的文件系统做了什么而实际上这条命令却并未执行!在查看 `maybe` 的输出列表后,你可以决定是否真的想要运行这条命令。

|

||||

有没有想过在执行一个 Unix 命令前就知道它干些什么呢?并不是每个人都会知道一个特定的命令或者程序将会做什么。当然,你可以用 [Explainshell][2] 来查看它。你可以在 Explainshell 网站中粘贴你的命令,然后它可以让你了解命令的每个部分做了什么。但是,这是没有必要的。现在,我们从终端就可以轻易地在执行一个命令或者程序前就知道它会做什么。 `maybe` ,一个简单的工具,它允许你运行一条命令并可以查看此命令对你的文件做了什么,而实际上这条命令却并未执行!在查看 `maybe` 的输出列表后,你可以决定是否真的想要运行这条命令。

|

||||

|

||||

#### “maybe”是如何工作的

|

||||

|

||||

|

||||

根据开发者的介绍

|

||||

### `maybe` 是如何工作的

|

||||

|

||||

> `maybe` 利用 `python-ptrace` 库运行了一个在 `ptrace` 控制下的进程。当它截取到一个即将更改文件系统的系统调用时,它会记录该调用,然后修改 CPU 寄存器,将这个调用重定向到一个无效的系统调用 ID(将其变成一个无效操作(no-op)),并将这个无效操作(no-op)的返回值设置为有效操作的返回值。结果,这个进程认为,它所做的一切都发生了,实际上什么都没有改变。

|

||||

根据开发者的介绍:

|

||||

|

||||

警告: 在生产环境或者任何你所关心的系统里面使用这个工具时都应该小心。它仍然可能造成严重的损失,因为它只能阻止少数系统调用。

|

||||

> `maybe` 利用 `python-ptrace` 库在 `ptrace` 控制下运行了一个进程。当它截取到一个即将更改文件系统的系统调用时,它会记录该调用,然后修改 CPU 寄存器,将这个调用重定向到一个无效的系统调用 ID(效果上将其变成一个无效操作(no-op)),并将这个无效操作(no-op)的返回值设置为有效操作的返回值。结果,这个进程认为,它所做的一切都发生了,实际上什么都没有改变。

|

||||

|

||||

#### 安装 “maybe”

|

||||

警告:在生产环境或者任何你所关心的系统里面使用这个工具时都应该小心。它仍然可能造成严重的损失,因为它只能阻止少数系统调用。

|

||||

|

||||

#### 安装 `maybe`

|

||||

|

||||

Make sure `pip` is installed on your Linux system. If it is not, install it as shown below for the distribution you use.

|

||||

|

||||

在 Arch Linux 及其衍生产品(如 Antergos,Manjaro Linux)上,使用以下命令安装 `pip` :

|

||||

在 Arch Linux 及其衍生产品(如 Antergos、Manjaro Linux)上,使用以下命令安装 `pip` :

|

||||

|

||||

```

|

||||

sudo pacman -S python-pip

|

||||

@ -25,8 +27,6 @@ sudo pacman -S python-pip

|

||||

|

||||

```

|

||||

sudo yum install epel-release

|

||||

```

|

||||

```

|

||||

sudo yum install python-pip

|

||||

```

|

||||

|

||||

@ -34,8 +34,6 @@ sudo yum install python-pip

|

||||

|

||||

```

|

||||

sudo dnf install epel-release

|

||||

```

|

||||

```

|

||||

sudo dnf install python-pip

|

||||

```

|

||||

|

||||

@ -45,19 +43,19 @@ sudo dnf install python-pip

|

||||

sudo apt-get install python-pip

|

||||

```

|

||||

|

||||

在 SUSE, openSUSE 上:

|

||||

在 SUSE、 openSUSE 上:

|

||||

|

||||

```

|

||||

sudo zypper install python-pip

|

||||

```

|

||||

|

||||

安装 `pip` 后,运行以下命令安装 `maybe` 。

|

||||

安装 `pip` 后,运行以下命令安装 `maybe` :

|

||||

|

||||

```

|

||||

sudo pip install maybe

|

||||

```

|

||||

|

||||

#### 了解一个命令或程序在执行前会做什么

|

||||

### 了解一个命令或程序在执行前会做什么

|

||||

|

||||

用法是非常简单的!只要在要执行的命令前加上 `maybe` 即可。

|

||||

|

||||

@ -83,8 +81,7 @@ Do you want to rerun rm -r ostechnix/ and permit these operations? [y/N] y

|

||||

|

||||

[][3]

|

||||

|

||||

|

||||

`maybe` 执行 5 个文件系统操作,并向我显示该命令(rm -r ostechnix /)究竟会做什么。现在我可以决定是否应该执行这个操作。是不是很酷呢?确实很酷!

|

||||

`maybe` 执行了 5 个文件系统操作,并向我显示该命令(`rm -r ostechnix/`)究竟会做什么。现在我可以决定是否应该执行这个操作。是不是很酷呢?确实很酷!

|

||||

|

||||

这是另一个例子。我要为 Gmail 安装 Inboxer 桌面客户端。这是我得到的输出:

|

||||

|

||||

@ -122,9 +119,9 @@ maybe has not detected any file system operations from sudo pacman -Syu.

|

||||

|

||||

Cheers!

|

||||

|

||||

资源:

|

||||

资源:

|

||||

|

||||

* [“maybe” GitHub page][1]

|

||||

* [`maybe` GitHub 主页][1]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -132,7 +129,7 @@ via: https://www.ostechnix.com/know-command-program-will-exactly-executing/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[imquanquan](https://github.com/imquanquan)

|

||||

校对:[校对ID](https://github.com/校对ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,26 +1,25 @@

|

||||

NETSTAT 命令: 通过案例学习使用 netstate

|

||||

通过示例学习使用 netstat

|

||||

======

|

||||

Netstat 是一个告诉我们系统中所有 tcp/udp/unix socket 连接状态的命令行工具。它会列出所有已经连接或者等待连接状态的连接。 该工具在识别某个应用监听哪个端口时特别有用,我们也能用它来判断某个应用是否正常的在监听某个端口。

|

||||

|

||||

Netstat 命令还能显示其他各种各样的网络相关信息,例如路由表, 网卡统计信息, 虚假连接以及多播成员等。

|

||||

netstat 是一个告诉我们系统中所有 tcp/udp/unix socket 连接状态的命令行工具。它会列出所有已经连接或者等待连接状态的连接。 该工具在识别某个应用监听哪个端口时特别有用,我们也能用它来判断某个应用是否正常的在监听某个端口。

|

||||

|

||||

本文中,我们会通过几个例子来学习 Netstat。

|

||||

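The netstat command can also display various other network-related information, such as routing tables, interface statistics, masquerade connections, and multicast memberships.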

|

||||

|

||||

(推荐阅读: [Learn to use CURL command with examples][1] )

|

||||

本文中,我们会通过几个例子来学习 netstat。

|

||||

|

||||

Netstat with examples

|

||||

============================================================

|

||||

(推荐阅读: [通过示例学习使用 CURL 命令][1] )

|

||||

|

||||

### 1- 检查所有的连接

|

||||

### 1 - 检查所有的连接

|

||||

|

||||

使用 `a` 选项可以列出系统中的所有连接,

|

||||

|

||||

```shell

|

||||

$ netstat -a

|

||||

```

|

||||

|

||||

这会显示系统所有的 tcp,udp 以及 unix 连接。

|

||||

这会显示系统所有的 tcp、udp 以及 unix 连接。

|

||||

|

||||

### 2- 检查所有的 tcp/udp/unix socket 连接

|

||||

### 2 - 检查所有的 tcp/udp/unix socket 连接

|

||||

|

||||

使用 `t` 选项只列出 tcp 连接,

|

||||

|

||||

@ -28,19 +27,19 @@ $ netstat -a

|

||||

$ netstat -at

|

||||

```

|

||||

|

||||

类似的,使用 `u` 选项只列出 udp 连接 to list out only the udp connections on our system, we can use ‘u’ option with netstat,

|

||||

类似的,使用 `u` 选项只列出 udp 连接,

|

||||

|

||||

```shell

|

||||

$ netstat -au

|

||||

```

|

||||

|

||||

使用 `x` 选项只列出 Unix socket 连接,we can use ‘x’ options,

|

||||

使用 `x` 选项只列出 Unix socket 连接,

|

||||

|

||||

```shell

|

||||

$ netstat -ax

|

||||

```

|

||||

|

||||

### 3- 同时列出进程 ID/进程名称

|

||||

### 3 - 同时列出进程 ID/进程名称

|

||||

|

||||

使用 `p` 选项可以在列出连接的同时也显示 PID 或者进程名称,而且它还能与其他选项连用,

|

||||

|

||||

@ -48,15 +47,15 @@ $ netstat -ax

|

||||

$ netstat -ap

|

||||

```

|

||||

|

||||

### 4- 列出端口号而不是服务名

|

||||

### 4 - 列出端口号而不是服务名

|

||||

|

||||

使用 `n` 选项可以加快输出,它不会执行任何反向查询(译者注:这里原文说的是 "it will perform any reverse lookup",应该是写错了),而是直接输出数字。 由于无需查询,因此结果输出会快很多。

|

||||

使用 `n` 选项可以加快输出,它不会执行任何反向查询(LCTT 译注:这里原文有误),而是直接输出数字。 由于无需查询,因此结果输出会快很多。

|

||||

|

||||

```shell

|

||||

$ netstat -an

|

||||

```

|

||||

|

||||

### 5- 只输出监听端口

|

||||

### 5 - 只输出监听端口

|

||||

|

||||

使用 `l` 选项只输出监听端口。它不能与 `a` 选项连用,因为 `a` 会输出所有端口,

|

||||

|

||||

@ -64,15 +63,15 @@ $ netstat -an

|

||||

$ netstat -l

|

||||

```

|

||||

|

||||

### 6- 输出网络状态

|

||||

### 6 - 输出网络状态

|

||||

|

||||

使用 `s` 选项输出每个协议的统计信息,包括接收/发送的包数量

|

||||

使用 `s` 选项输出每个协议的统计信息,包括接收/发送的包数量,

|

||||

|

||||

```shell

|

||||

$ netstat -s

|

||||

```

|

||||

|

||||

### 7- 输出网卡状态

|

||||

### 7 - 输出网卡状态

|

||||

|

||||

Use the `i` option to show only the statistics for the network interfaces,

|

||||

|

||||

@ -80,7 +79,7 @@ $ netstat -s

|

||||

$ netstat -i

|

||||

```

|

||||

|

||||

### 8- 显示多播组(multicast group)信息

|

||||

### 8 - 显示<ruby>多播组<rt>multicast group</rt></ruby>信息

|

||||

|

||||

使用 `g` 选项输出 IPV4 以及 IPV6 的多播组信息,

|

||||

|

||||

@ -88,7 +87,7 @@ $ netstat -i

|

||||

$ netstat -g

|

||||

```

|

||||

|

||||

### 9- 显示网络路由信息

|

||||

### 9 - 显示网络路由信息

|

||||

|

||||

使用 `r` 输出网络路由信息,

|

||||

|

||||

@ -96,7 +95,7 @@ $ netstat -g

|

||||

$ netstat -r

|

||||

```

|

||||

|

||||

### 10- 持续输出

|

||||

### 10 - 持续输出

|

||||

|

||||

使用 `c` 选项持续输出结果

|

||||

|

||||

@ -104,7 +103,7 @@ $ netstat -r

|

||||

$ netstat -c

|

||||

```

|

||||

|

||||

### 11- 过滤出某个端口

|

||||

### 11 - 过滤出某个端口

|

||||

|

||||

与 `grep` 连用来过滤出某个端口的连接,

|

||||

|

||||

@ -112,17 +111,17 @@ $ netstat -c

|

||||

$ netstat -anp | grep 3306

|

||||

```

|

||||

|

||||

### 12- 统计连接个数

|

||||

### 12 - 统计连接个数

|

||||

|

||||

通过与 wc 和 grep 命令连用,可以统计指定端口的连接数量

|

||||

通过与 `wc` 和 `grep` 命令连用,可以统计指定端口的连接数量

|

||||

|

||||

```shell

|

||||

$ netstat -anp | grep 3306 | wc -l

|

||||

```

|

||||

|

||||

这回输出 mysql 服务端口(即 3306)的连接数。

|

||||

这会输出 mysql 服务端口(即 3306)的连接数。
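
One caveat with a plain `grep 3306` is that it also matches other ports or PIDs that merely contain those digits (for example port 33060). A minimal sketch of a stricter count follows, assuming the usual `netstat -ant` column layout in which the fourth field is the local address. The function name `count_port` is hypothetical, and it reads netstat-style text from standard input so it can be tried without touching the network.

```shell
#!/bin/sh
# count_port PORT  (hypothetical helper)
# Count tcp/udp lines whose local address (4th column) ends in ":PORT".
count_port() {
    awk -v port="$1" '
        $1 ~ /^(tcp|udp)/ && $4 ~ (":" port "$") { n++ }
        END { print n + 0 }
    '
}
```

In practice it could be used as `netstat -ant | count_port 3306`. Matching on the fourth column counts only connections whose local end is that port, which is usually what is wanted on the server side.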

|

||||

|

||||

这就是我们间断的案例指南了,希望它带给你的信息量足够。 有任何疑问欢迎提出。

|

||||

这就是我们简短的案例指南了,希望它带给你的信息量足够。 有任何疑问欢迎提出。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -130,7 +129,7 @@ via: http://linuxtechlab.com/learn-use-netstat-with-examples/

|

||||

|

||||

作者:[Shusain][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,6 +1,7 @@

|

||||

如何在 Bash 中抽取子字符串

|

||||

======

|

||||

子字符串不是别的,就是出现在其他字符串内的字符串。 比如 “3382” 就是 “this is a 3382 test” 的子字符串。 我们有多种方法可以从中把数字或指定部分字符串抽取出来。

|

||||

|

||||

所谓“子字符串”就是出现在其它字符串内的字符串。 比如 “3382” 就是 “this is a 3382 test” 的子字符串。 我们有多种方法可以从中把数字或指定部分字符串抽取出来。

|

||||

|

||||

[][2]

|

||||

|

||||

@ -8,15 +9,17 @@

|

||||

|

||||

### 在 Bash 中抽取子字符串

|

||||

|

||||

其语法为:

|

||||

```shell

|

||||

## syntax ##

|

||||

${parameter:offset:length}

|

||||

```

|

||||

子字符串扩展是 bash 的一项功能。它会扩展成 parameter 值中以 offset 为开始,长为 length 个字符的字符串。 假设, $u 定义如下:

|

||||

其语法为:

|

||||

|

||||

```shell

|

||||

## define var named u ##

|

||||

## 格式 ##

|

||||

${parameter:offset:length}

|

||||

```

|

||||

|

||||

子字符串扩展是 bash 的一项功能。它会扩展成 `parameter` 值中以 `offset` 为开始,长为 `length` 个字符的字符串。 假设, `$u` 定义如下:

|

||||

|

||||

```shell

|

||||

## 定义变量 u ##

|

||||

u="this is a test"

|

||||

```

|

||||

|

||||

@ -34,6 +37,7 @@ test

|

||||

```

|

||||

|

||||

其中这些参数分别表示:

|

||||

|

||||

+ 10 : 偏移位置

|

||||

+ 4 : 长度
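
The offset and length parameters can be tried out with a small wrapper. This is a sketch only: `substr` is a hypothetical helper name, and it relies on bash substring expansion, so it needs bash rather than a plain POSIX `sh`.

```shell
#!/bin/bash
# substr STRING OFFSET [LENGTH]  (hypothetical helper)
# Offsets are zero-based; without LENGTH the rest of the string is returned.
substr() {
    if [ $# -ge 3 ]; then
        printf '%s\n' "${1:$2:$3}"
    else
        printf '%s\n' "${1:$2}"
    fi
}

u="this is a test"
substr "$u" 10 4   # 4 characters starting at offset 10
substr "$u" 5 2    # 2 characters starting at offset 5
substr "$u" 8      # everything from offset 8 to the end
```

Run as written, the three calls print `test`, `is` and `a test`.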

|

||||

|

||||

@ -41,9 +45,9 @@ test

|

||||

|

||||

根据 bash 的 man 页说明:

|

||||

|

||||

> The Internal Field Separator that is used for word splitting after expansion and to split lines into words with the read builtin command。The default value is<space><tab><newline>。

|

||||

> [IFS (内部字段分隔符)][3]用于在扩展后进行单词分割,并用内建的 read 命令将行分割为词。默认值是<space><tab><newline>。

|

||||

|

||||

另一种 POSIX 就绪(POSIX ready) 的方案如下:

|

||||

另一种 <ruby>POSIX 就绪<rt>POSIX ready</rt></ruby>的方案如下:

|

||||

|

||||

```shell

|

||||

u="this is a test"

|

||||

@ -54,7 +58,7 @@ echo "$3"

|

||||

echo "$4"

|

||||

```

|

||||

|

||||

输出为:

|

||||

输出为:

|

||||

|

||||

```shell

|

||||

this

|

||||

@ -63,7 +67,7 @@ a

|

||||

test

|

||||

```
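
The same word-splitting idea can be wrapped in a function that picks out the n-th word. This is a sketch under assumptions: the name `nth_word` is hypothetical, the default `IFS` (space, tab, newline) is assumed, and globbing is switched off while splitting so that a word such as `*` is not expanded into file names.

```shell
#!/bin/sh
# nth_word N STRING  (hypothetical helper)
# Print the N-th whitespace-separated word of STRING (1-based).
# Returns non-zero if STRING has fewer than N words.
nth_word() {
    n=$1
    set -f            # no pathname expansion during splitting
    set -- $2         # unquoted on purpose: split on IFS into $1, $2, ...
    set +f
    [ "$n" -ge 1 ] && [ "$n" -le "$#" ] || return 1
    shift $((n - 1))
    printf '%s\n' "$1"
}
```

For instance, `nth_word 4 "this is a test"` prints `test`, while `nth_word 5 "this is a test"` prints nothing and returns a non-zero status.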

|

||||

|

||||

下面是一段 bash 代码,用来从 Cloudflare cache 中去除带主页的 url

|

||||

下面是一段 bash 代码,用来从 Cloudflare cache 中去除带主页的 url。

|

||||

|

||||

```shell

|

||||

#!/bin/bash

|

||||

@ -113,14 +117,15 @@ done

|

||||

echo

|

||||

```

|

||||

|

||||

它的使用方法为:

|

||||

它的使用方法为:

|

||||

|

||||

```shell

|

||||

~/bin/cf.clear.cache https://www.cyberciti.biz/faq/bash-for-loop/ https://www.cyberciti.biz/tips/linux-security.html

|

||||

```

|

||||

|

||||

### 借助 cut 命令

|

||||

|

||||

可以使用 cut 命令来将文件中每一行或者变量中的一部分删掉。它的语法为:

|

||||

可以使用 `cut` 命令来将文件中每一行或者变量中的一部分删掉。它的语法为:

|

||||

|

||||

```shell

|

||||

u="this is a test"

|

||||

@ -135,13 +140,14 @@ var="$(cut -d' ' -f 4 <<< $u)"

|

||||

echo "${var}"

|

||||

```
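
The here-string form (`<<<`) used with `cut` is a bash feature. As a hedged alternative, the sketch below pipes the text in with `printf`, which also works in a POSIX shell. The helper name `field` is hypothetical.

```shell
#!/bin/sh
# field DELIM N LINE  (hypothetical helper)
# Print the N-th field of LINE, using the single character DELIM as separator.
field() {
    printf '%s\n' "$3" | cut -d "$1" -f "$2"
}

field ' ' 4 "this is a test"
field ':' 7 "root:x:0:0:root:/root:/bin/bash"
```

The two calls print `test` and `/bin/bash`. Note that `cut` treats every single delimiter character as a separator, so consecutive spaces produce empty fields, unlike the shell's own word splitting.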

|

||||

|

||||

想了解更多请阅读 bash 的 man 页:

|

||||

想了解更多请阅读 bash 的 man 页:

|

||||

|

||||

```shell

|

||||

man bash

|

||||

man cut

|

||||

```

|

||||

|

||||

另请参见: [Bash String Comparison: Find Out IF a Variable Contains a Substring][1]

|

||||

另请参见: [Bash String Comparison: Find Out IF a Variable Contains a Substring][1]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -149,10 +155,11 @@ via: https://www.cyberciti.biz/faq/how-to-extract-substring-in-bash/

|

||||

|

||||

作者:[Vivek Gite][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.cyberciti.biz

|

||||

[1]:https://www.cyberciti.biz/faq/bash-find-out-if-variable-contains-substring/

|

||||

[2]:https://www.cyberciti.biz/media/new/faq/2017/12/How-to-Extract-substring-in-Bash-Shell-on-Linux-or-Unix.jpg

|

||||

[3]:https://bash.cyberciti.biz/guide/$IFS

|

||||

@ -0,0 +1,401 @@

|

||||

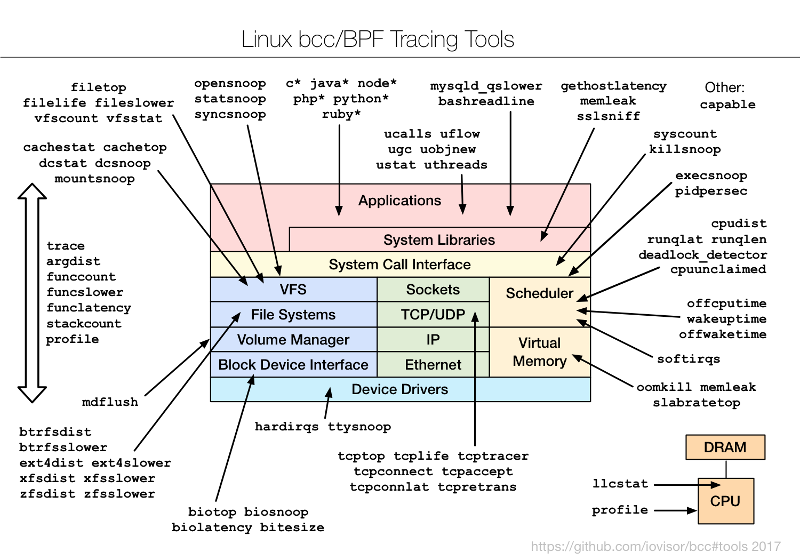

7 个使用 bcc/BPF 的性能分析神器

|

||||

============================================================

|

||||

|

||||

> 使用<ruby>伯克利包过滤器<rt>Berkeley Packet Filter</rt></ruby>(BPF)<ruby>编译器集合<rt>Compiler Collection</rt></ruby>(BCC)工具深度探查你的 linux 代码。

|

||||

|

||||

|

||||

|

||||

在 Linux 中出现的一种新技术能够为系统管理员和开发者提供大量用于性能分析和故障排除的新工具和仪表盘。它被称为<ruby>增强的伯克利数据包过滤器<rt>enhanced Berkeley Packet Filter</rt></ruby>(eBPF,或 BPF),虽然这些改进并不是由伯克利开发的,而且它们不仅仅是处理数据包,更多的是过滤。我将讨论在 Fedora 和 Red Hat Linux 发行版中使用 BPF 的一种方法,并在 Fedora 26 上演示。

|

||||

|

||||

BPF 可以在内核中运行由用户定义的沙盒程序,可以立即添加新的自定义功能。这就像按需给 Linux 系统添加超能力一般。 你可以使用它的例子包括如下:

|

||||

|

||||

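* **Advanced performance tracing tools**: programmable, low-overhead instrumentation of filesystem operations, TCP events, user-level events, and more.
* **Network performance**: dropping packets early to improve resilience against DDoS, or redirecting packets in the kernel to improve performance.
* **Security monitoring**: 24x7 custom detection and logging of suspicious events in kernel space and user space.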

|

||||

|

||||

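BPF programs must pass an in-kernel verifier to ensure they are safe to run, which makes BPF a safer option, where possible, than writing custom kernel modules. I assume here that most people will not write their own BPF programs but will use ones written by others. I have published many as open source in the [BPF Compiler Collection (bcc)][12] project on GitHub. bcc provides different front ends for BPF development, including Python and Lua, and is currently the most active project for BPF tooling.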

|

||||

|

||||

### 7 个有用的 bcc/BPF 新工具

|

||||

|

||||

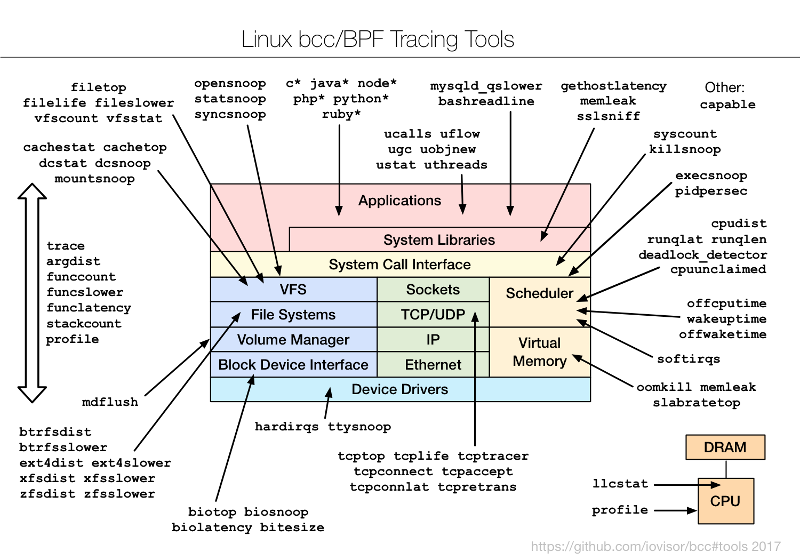

为了了解 bcc/BPF 工具和它们的检测内容,我创建了下面的图表并添加到 bcc 项目中。

|

||||

|

||||

|

||||

|

||||

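These are command-line interface (CLI) tools that you can use over SSH. Much analysis nowadays, including at my employer, is done with GUIs and dashboards, and SSH is a last resort. But these CLI tools are still a good way to preview what BPF can do, even if you ultimately intend to use it only through a GUI when one is available. I have begun adding BPF capabilities to an open source GUI, but that is a topic for another article. Right now I would like to share the CLI tools, which you can use today.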

|

||||

|

||||

#### 1、 execsnoop

|

||||

|

||||

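Where to start? How about watching new processes. Short-lived processes can consume system resources yet be so brief that they never show up in `top(1)` or other tools. They can be instrumented (or, in industry jargon, traced) with [execsnoop][15]. While tracing, I will log in over SSH in another window: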

|

||||

|

||||

```
# /usr/share/bcc/tools/execsnoop
PCOMM            PID    PPID   RET ARGS
sshd             12234  727      0 /usr/sbin/sshd -D -R
unix_chkpwd      12236  12234    0 /usr/sbin/unix_chkpwd root nonull
unix_chkpwd      12237  12234    0 /usr/sbin/unix_chkpwd root chkexpiry
bash             12239  12238    0 /bin/bash
id               12241  12240    0 /usr/bin/id -un
hostname         12243  12242    0 /usr/bin/hostname
pkg-config       12245  12244    0 /usr/bin/pkg-config --variable=completionsdir bash-completion
grepconf.sh      12246  12239    0 /usr/libexec/grepconf.sh -c
grep             12247  12246    0 /usr/bin/grep -qsi ^COLOR.*none /etc/GREP_COLORS
tty              12249  12248    0 /usr/bin/tty -s
tput             12250  12248    0 /usr/bin/tput colors
dircolors        12252  12251    0 /usr/bin/dircolors --sh /etc/DIR_COLORS
grep             12253  12239    0 /usr/bin/grep -qi ^COLOR.*none /etc/DIR_COLORS
grepconf.sh      12254  12239    0 /usr/libexec/grepconf.sh -c
grep             12255  12254    0 /usr/bin/grep -qsi ^COLOR.*none /etc/GREP_COLORS
grepconf.sh      12256  12239    0 /usr/libexec/grepconf.sh -c
grep             12257  12256    0 /usr/bin/grep -qsi ^COLOR.*none /etc/GREP_COLORS
```

|

||||

|

||||

哇哦。 那是什么? 什么是 `grepconf.sh`? 什么是 `/etc/GREP_COLORS`? 是 `grep` 在读取它自己的配置文件……由 `grep` 运行的? 这究竟是怎么工作的?

|

||||

|

||||

欢迎来到有趣的系统追踪世界。 你可以学到很多关于系统是如何工作的(或者根本不工作,在有些情况下),并且发现一些简单的优化方法。 `execsnoop` 通过跟踪 `exec()` 系统调用来工作,`exec()` 通常用于在新进程中加载不同的程序代码。

|

||||

|

||||

#### 2、 opensnoop

|

||||

|

||||

接着上面继续,所以,`grepconf.sh` 可能是一个 shell 脚本,对吧? 我将运行 `file(1)` 来检查它,并使用[opensnoop][16] bcc 工具来查看打开的文件:

|

||||

|

||||

```

|

||||

# /usr/share/bcc/tools/opensnoop

|

||||

PID COMM FD ERR PATH