diff --git a/published/20180116 Command Line Heroes- Season 1- OS Wars_2.md b/published/20180116 Command Line Heroes- Season 1- OS Wars_2.md

new file mode 100644

index 0000000000..68f6f81913

--- /dev/null

+++ b/published/20180116 Command Line Heroes- Season 1- OS Wars_2.md

@@ -0,0 +1,171 @@

+[#]: collector: (lujun9972)

+[#]: translator: (lujun9972)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11296-1.html)

+[#]: subject: (Command Line Heroes: Season 1: OS Wars)

+[#]: via: (https://www.redhat.com/en/command-line-heroes/season-1/os-wars-part-2-rise-of-linux)

+[#]: author: (redhat https://www.redhat.com)

+

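+Command Line Heroes, Season 1 (2): OS Wars, Part 2: Rise of Linux
+======
+
+> Command Line Heroes tells the epic true stories of how developers, programmers, hackers, geeks, and open source rebels are revolutionizing the technology landscape.
+
+
+
+This article is the transcript of the [audio](https://dts.podtrac.com/redirect.mp3/audio.simplecast.com/2199861a.mp3) for [Season 1 (2): OS Wars, Part 2](https://www.redhat.com/en/command-line-heroes/season-1/os-wars-part-2-rise-of-linux) of the [Command Line Heroes](https://www.redhat.com/en/command-line-heroes) podcast.
+
+> The Microsoft empire controls 90% of users. Complete standardization of operating systems seems assured. But an unlikely hero arises from among the open source rebels. Bespectacled, mild-mannered Linus Torvalds releases his Linux® program free of charge. Microsoft reels, then regroups, and the battlefield shifts from the PC to the internet.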

+

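+**Saron Yitbarek:** Is this thing on? Let's cue an epic, Star Wars-style opening crawl. Here we go.
+
+Voice actor: Episode Two: Rise of Linux®. The empire of Microsoft controls 90% of desktop users. Complete standardization of operating systems seems assured. However, the advent of the internet swings the focus of the war from the desktop toward the enterprise, where all businesses scramble to build their own servers. *[00:00:30]* Meanwhile, an unlikely hero arises from among the band of open source rebels. Linus Torvalds, headstrong and bespectacled, releases his Linux system free of charge. Microsoft reels, and regroups.
+
+**Saron Yitbarek:** Oh, the nerd in me just loves that. So, where were we? Last time, Apple and Microsoft were trading blows, trying to dominate in a war over desktop users. *[00:01:00]* By the end of episode one, we saw Microsoft taking most of the market. Soon, the entire landscape went through a seismic upheaval, driven by the rise of the internet and the army of developers that rose with it. The internet moved the battlefield from PC users in homes and offices to giant business clients with hundreds of servers.
+
+This meant a massive shift in resources. Suddenly, all the businesses involved not only had to pay for server space and get a website built, they also had to integrate software to track resources, monitor databases, and so on. *[00:01:30]* You would need a lot of developers to help you with that. At least, back then you did.
+
+In part two of the OS wars, we'll see how that enormous shift in priorities, and the work of open source rebels like Linus Torvalds and Richard Stallman, managed to strike fear into the heart of Microsoft and the entire software industry.
+
+I'm Saron Yitbarek, and you're listening to Command Line Heroes, an original podcast from Red Hat. *[00:02:00]* In each episode, we bring you stories about the people who transform technology from the command line up.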

+

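+Okay. Imagine you're Microsoft in 1991. You're feeling pretty good, right? Pretty confident. Having established global dominance feels nice. You've mastered the art of partnering with other businesses, but you're still leaving most of the developers, programmers, and sysadmins out of those alliances, and they are the real foot soldiers. *[00:02:30]* Then a Finnish geek named Linus Torvalds comes along. He and his team of open source programmers start releasing Linux, an OS kernel that they wrote together.
+
+Frankly, if you're Microsoft, you're not too concerned about Linux, or even about the open source movement in general, but eventually Linux grows so large that Microsoft can't help but notice. *[00:03:00]* The first version of Linux came out in 1991, with around 10,000 lines of code. A decade later, there were 3 million lines. In case you're wondering, today it's at 20 million.
+
+*[00:03:30]* Let's stay in the early '90s for a moment. Linux hadn't yet become the behemoth we know today. It was just this strange, viral OS creeping across the planet, and geeks and hackers everywhere were falling in love with it. I was too young in those days, but I sort of wish I'd been there. Back then, discovering Linux was like gaining entry into a secret society. Programmers shared Linux CD sets with friends the way other people shared mixtapes of underground music.
+
+Developer Tristram Oaten *[00:03:40]* tells the story of how he first encountered Linux at age 16.
+
+**Tristram Oaten:** My family and I went on a scuba diving holiday to Hurghada, on the Red Sea. It's a beautiful place, highly recommended. On the first day, I drank the tap water, probably after my mom had told me not to. I was really sick the whole week and didn't leave the hotel room. *[00:04:00]* All I had with me was a laptop with a fresh install of Slackware Linux, something I'd heard about and was trying out. Everything came on eight CDs. So all I could do that whole week was get to know this alien system. I read the man pages and played around with the terminal. I remember not even knowing the difference between one dot (the current directory) and two dots (the parent directory).
+
+*[00:04:30]* I had no clue. I made a lot of mistakes, but slowly, in that enforced solitude, I broke through the barrier and started to understand what the command line was all about. By the end of the holiday, I hadn't seen the pyramids, the Nile, or any Egyptian sites, but I had unlocked one of the wonders of the modern world. I'd unlocked Linux, and the rest is history.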

+

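+**Saron Yitbarek:** You'll hear variations on that story from a lot of people. Getting access to the Linux command line was a transformative experience.
+
+**David Cantrell:** *[00:05:00]* It gave me the source code. I was like, "That's amazing."
+
+**Saron Yitbarek:** We're at a 2017 Linux developers conference called Flock to Fedora.
+
+**David Cantrell:** ... very appealing. I felt like I had more control over the system, and it drew me in more and more. I think from the first time I compiled a Linux kernel, back in 1995, I was hooked.
+
+**Saron Yitbarek:** Developers David Cantrell and Joe Brockmeier.
+
+**Joe Brockmeier:** *[00:05:30]* I was browsing at Cheap Software when I found a four-CD set of Slackware Linux. It looked really exciting and interesting, so I took it home, installed it on a second computer, and started playing with it. Two things excited me: one, I wasn't running Windows, and two, Linux was open source.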

+

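+**Saron Yitbarek:** *[00:06:00]* In a way, access to the command line had always been there. Decades before open source really took off, there was always a desire, at least among developers, for complete control. Let's go back to a time before the OS wars, before Apple and Microsoft were fighting over their GUIs. There were command line heroes then, too. Professor Paul Jones, director of the online library ibiblio.org, worked as a developer in those ancient days.
+
+**Paul Jones:** *[00:06:30]* By its very nature, the internet at that time was less client-server and much more peer-to-peer. We're talking about, say, some VAX-to-VAX connection (LCTT translator's note: VAX was a line of DEC minicomputers), some scientific workstation to scientific workstation. That doesn't mean client-server architectures and applications didn't exist, but it does mean the original design was about how to do things peer to peer, *[00:07:00]* which stood in opposition to what IBM had always been doing. IBM gave you dumb terminals that only let you manage the user interface, not do whatever you wanted the way a real machine would.
+
+**Saron Yitbarek:** Even as the graphical user interface caught on among everyday users, there was always a pull in the opposite direction among engineers and developers. Well before Linux, in the 1970s and '80s, that pull was there in Emacs and GNU. With Stallman's Free Software Foundation, there were always folks who wanted the command line, but Linux in the 1990s delivered like nothing before.
+
+*[00:07:30]* The early lovers of Linux and other open source software were pioneers. I'm standing on their shoulders. We all are.
+
+You're listening to Command Line Heroes, an original podcast from Red Hat. This is part two of the OS wars: Rise of Linux.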

+

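+**Steven Vaughan-Nichols:** By 1998, things had changed.
+
+**Saron Yitbarek:** *[00:08:00]* Steven Vaughan-Nichols is a contributing editor at zdnet.com, and he has been writing about the business side of technology for decades. He describes how Linux slowly became more and more popular, until the number of volunteer contributors far exceeded the number of Microsoft developers working on Windows. Linux never really caught up with Microsoft's desktop customer numbers, though, and maybe that's why Microsoft ignored Linux and its developers at first. Where Linux really shone was the server room. When businesses went online, each one needed a unique programming solution that fit its needs.
+
+*[00:08:30]* Windows NT came out in 1993 and was already competing with other server operating systems, but lots of developers were thinking, "Why would I buy an AIX box or a big Windows box when I can build a cheap Linux-based system with Apache?" The point is, Linux code started seeping into just about everything online.
+
+**Steven Vaughan-Nichols:** *[00:09:00]* Microsoft was surprised to realize that Linux was actually starting to see some business use, not on the desktop but on business servers. So they started a campaign of what we call FUD: fear, uncertainty, and doubt. They said, "Oh, this Linux stuff, it's really not that good. It's not very reliable. You can't trust it at all."
+
+**Saron Yitbarek:** That soft-propaganda style of attack went on for a while. Microsoft wasn't the only one getting nervous about Linux, either. It was really the whole industry against this strange newcomer. *[00:09:30]* Anyone with a stake in UNIX, for example, was likely to see Linux as a usurper. Famously, the SCO Group, which had produced a version of UNIX, waged a series of lawsuits over more than 10 years to try to stop the spread of Linux. SCO ultimately failed and went bankrupt. Meanwhile, Microsoft kept looking for its opening. They had to make a move; it just wasn't clear what that move should be.
+
+**Steven Vaughan-Nichols:** *[00:10:00]* What really got Microsoft worried came the following year, in 2000, when IBM announced it would invest a billion dollars in Linux in 2001. Now, IBM isn't really in the PC business anymore. (Back then) they hadn't left it yet, but they were heading that way, and they saw Linux as the future of servers and mainframes, a point on which, spoiler alert, IBM was right. *[00:10:30]* Linux would go on to dominate the server world.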

+

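+**Saron Yitbarek:** This was no longer just about a bunch of hackers loving their Jedi-like control of the command line. The money gave Linux an enormous boost. John "Mad Dog" Hall, executive director of Linux International, has a story that explains why. We reached him by phone.
+
+**John Hall:** *[00:11:00]* A friend of mine named Dirk Holden was a systems administrator at Deutsche Bank in Germany, and he also worked on the early X Windows system graphics projects for PCs. I visited him at the bank one day and said, "Dirk, you have 3,000 servers here at the bank, and you use Linux. Why don't you use Microsoft NT?" *[00:11:30]* He looked at me and said, "Yes, I have 3,000 servers, and if I used Microsoft Windows NT, I would need 2,999 systems administrators." He went on, "With Linux, I only need four." That was the perfect answer.
+
+**Saron Yitbarek:** The things programmers were obsessed with turned out to be very attractive to big business, too. But because of all the FUD, some businesses were wary. *[00:12:00]* They heard "open source" and thought, "Open. That doesn't sound solid. It's going to be chaotic, full of bugs." But as that bank manager pointed out, money has a funny way of talking people past their hang-ups. Even small businesses that just needed a website joined the Linux camp. The expensive proprietary options simply couldn't compete on cost with a cheap Linux system. If you were a shop hiring a pro to build your website, you wanted them to use Linux.
+
+Fast forward a few years. Linux runs everybody's website. Linux has conquered the server world, and then along comes the smartphone. *[00:12:30]* Apple and its iPhone take a sizeable share of the market, of course, and Microsoft hopes to get in too, but, surprise, Linux is already there, ready and raring to go.
+
+Author and journalist James Allworth.
+
+**James Allworth:** There was certainly room for a second player, and that could have been Microsoft, but instead it was Android, and Android is basically based on Linux. As is well known, Android was acquired by Google and now runs on a majority of the world's smartphones; Google built it on top of Linux. *[00:13:00]* Linux let them start with a very sophisticated operating system at zero cost. They pulled it off, and it ended up locking Microsoft out of the next generation of devices, at least from an operating system standpoint.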

+

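+**Saron Yitbarek:** *[00:13:30]* This was a major earthquake, and to a large extent Microsoft was in danger of being buried. John Gossman is the chief architect on the Azure team at Microsoft. He remembers the confusion that gripped the company at the time.
+
+**John Gossman:** Like a lot of companies, Microsoft was very concerned about IP pollution. They thought that if developers were allowed to use open source, they would likely just copy and paste bits of code into some product, and then some kind of viral license would take effect and bring unknown risks... They were also confused, *[00:14:00]* and I think it was largely a culture thing; a lot of companies, Microsoft included, were confused about the difference between what open source development meant and what the business model was. There was this idea that open source meant all your software was free and people were never going to pay anything.
+
+**Saron Yitbarek:** Anybody invested in the old, proprietary model of software was going to feel threatened by what was happening here. And when you threaten a huge company like Microsoft, yes, you can bet they're going to react. *[00:14:30]* It made sense that they pushed all that FUD: fear, uncertainty, and doubt. At the time, the way to do business was basically to be cutthroat. If they'd been any other company, they might have kept that grudge and clung to the old ways, but by 2013, at Microsoft, everything had changed.
+
+Microsoft's cloud computing service, Azure, went online, and, shockingly, it offered Linux virtual machines from day one. *[00:15:00]* Steve Ballmer, the CEO who had called Linux a cancer, was out, and in his place was a new, forward-thinking CEO, Satya Nadella.
+
+**John Gossman:** Satya has a different perspective. He's from another generation, younger than Paul, Bill, and Steve, and he sees open source differently.
+
+**Saron Yitbarek:** John Gossman again, from Microsoft's Azure team.
+
+**John Gossman:** *[00:15:30]* About four years ago, out of practical necessity, we added Linux support to Azure. If you visit any enterprise customer, you'll find they aren't trying to decide whether to use Windows or Linux, or .NET or Java™. They made that decision long ago; those arguments were happening about 15 years ago. *[00:16:00]* Now, every company I've seen has a mixture of Linux and Java, Windows and .NET, SQL Server, Oracle, and MySQL: products based on proprietary source code alongside open source products.
+
+If you're going to operate a cloud and let these companies run their businesses on it, you simply can't tell them, "You can use this software, but you can't use that software."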

+

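+**Saron Yitbarek:** *[00:16:30]* That's exactly the philosophy Satya Nadella adopted. In the fall of 2014, he stood on stage wanting to deliver one big message: "Microsoft loves Linux." He went on, "20% of Azure is already Linux, and Microsoft will always have first-class support for Linux distros." There wasn't a hint of the old grudge against open source.
+
+To drive the point home, there was a giant sign behind them that read "Microsoft ❤️ Linux." Wow. For some of us, that turnaround was a bit of a shock, but really, it shouldn't have been. Here's Steven Levy, a tech journalist and author.
+
+**Steven Levy:** *[00:17:00]* When you're playing football and the turf gets slippery, maybe you switch to a different kind of shoe. That's what they were doing. *[00:17:30]* They can't deny reality, and there are smart people there, so they had to realize that this is the way the world runs. Whatever they said earlier, even if they're embarrassed by it now, it would be crazy to let those statements about how terrible open source was get in the way of smart decisions today.
+
+**Saron Yitbarek:** Microsoft swallowed its pride. You might remember that Apple, after years of isolation, finally shifted toward a partnership with Microsoft. Now it was Microsoft's turn to do a 180. *[00:18:00]* After years of battling the open source approach, they were reinventing themselves. It was change or perish. Steven Vaughan-Nichols.
+
+**Steven Vaughan-Nichols:** Even a company the size of Microsoft can't compete with the thousands of open source developers working on all these other major projects, including Linux. For a long time they were loath to accept that. Former Microsoft CEO Steve Ballmer hated Linux with a passion. *[00:18:30]* Because of its GPL license, he saw Linux as a cancer, but once Ballmer was shown the door, the new Microsoft leadership said, "This is like trying to order the tide to stop coming in, and the tide is going to keep coming in. We should work with Linux, not against it."
+
+**Saron Yitbarek:** In fact, one of the big wins in the history of online tech is that Microsoft ultimately decided to make that switch. *[00:19:00]* Of course, the old-school, hardcore Linux supporters were pretty skeptical when Microsoft showed up in open source circles. They weren't sure they could embrace these guys, but as Vaughan-Nichols points out, today's Microsoft simply isn't the Microsoft of old.
+
+**Steven Vaughan-Nichols:** Microsoft in 2017 is neither Steve Ballmer's Microsoft nor Bill Gates' Microsoft. It's an entirely different company with a very different approach, and, once you start using open source, there's no going back. *[00:19:30]* Open source has devoured the entire technology world. People who have never heard of Linux may know nothing about it, but every time they visit Facebook, they're running Linux. Every time you do a Google search, you're running Linux.
+
+*[00:20:00]* Every time you use your Android phone, you're running Linux. It truly is everywhere, Microsoft can't stop it, and I think the idea that Microsoft could somehow take it over is naive.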

+

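+**Saron Yitbarek:** Open source supporters may have worried that Microsoft would be a wolf among the flock, but the truth is, the very nature of open source software protects it from total domination. *[00:20:30]* No single company can own Linux and control it in any particular way. Greg Kroah-Hartman is a fellow at the Linux Foundation.
+
+**Greg Kroah-Hartman:** Every company and every individual contributes to Linux selfishly. They do it because they want to solve a problem they're facing: maybe hardware that isn't working, or a new feature they want to add to do something else, or something they need in order to use it in their own product. That's great, because they contribute the code back, everybody benefits from it, and everybody can use that code. Because of that selfishness, all companies and all people benefit.
+
+**Saron Yitbarek:** *[00:21:00]* Microsoft has realized that in the coming cloud wars, fighting Linux would be like fighting the air. Linux and open source aren't the enemy; they're the atmosphere. Today, Microsoft has joined the Linux Foundation as a platinum member. They became the number one contributor to open source on GitHub. *[00:21:30]* In September 2017, they even joined the Open Source Initiative. These days, Microsoft releases a lot of its code under open licenses. Microsoft's John Gossman describes what happened when they open sourced .NET. At first, they didn't think they'd get much back.
+
+**John Gossman:** We hadn't counted on contributions from the community, and yet, three years in, more than 50% of the contributions to the .NET framework libraries now come from outside Microsoft. That includes big pieces of code. *[00:22:00]* Samsung contributed ARM support to .NET. Intel, ARM, and a few other chip vendors have contributed the machine-specific code generation for their processors to the .NET framework, along with a surprising number of fixes, performance improvements, and so on, from individual contributors and from the community alike.
+
+**Saron Yitbarek:** Up until a few years ago, the Microsoft we have today, this open Microsoft, would have been unthinkable.
+
+*[00:22:30]* I'm Saron Yitbarek, and this is Command Line Heroes. Okay, we've seen titanic battles for the love of millions of desktop users. We've seen open source software creep up behind the proprietary titans and seize huge market share. *[00:23:00]* We've seen fleets of command line heroes transform the programming landscape into the one you and I see today. Today, big business is absorbing open source software, and through it all, everybody benefits from everybody else.
+
+In the tech wild west, it's always been that way. Apple gets inspired by Xerox, Microsoft gets inspired by Apple, Linux gets inspired by UNIX. Evolve, borrow, constantly grow. In David and Goliath terms (LCTT translator's note: the two protagonists of the classic Western tale of the weak defeating the strong), open source software is no longer David, but, you know what? It's not Goliath, either. *[00:23:30]* Open source has transcended that. It has become the battlefield that others fight on. As the open source path becomes inevitable, new wars, wars fought in the cloud, wars fought on the open source battlefield, are ramping up.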

+

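+Here's author Steven Levy.
+
+**Steven Levy:** Basically, right now, there are four or five companies, Microsoft included, that are working in various ways to establish themselves as the all-round platform, in artificial intelligence, say. You can see the wars between intelligent assistants, and guess what? *[00:24:00]* Apple has an assistant, Siri. Microsoft has one, Cortana. Google has the Google Assistant. Samsung has an assistant, too. Amazon has one, Alexa. We see these battles everywhere. You could say that the hottest AI platform is going to control everything in our lives, and these five companies are fighting over it.
+
+**Saron Yitbarek:** *[00:24:30]* If you're looking for another rebel that's going to sneak up behind Facebook, Google, or Amazon the way Linux blindsided Microsoft, you might be looking for a long time, because, as author James Allworth points out, being a true rebel is only getting harder.
+
+**James Allworth:** Scale has always been an advantage, but the advantages of scale... how do I put this? I think they used to be linear in nature, and now they're exponential in nature, so once you start to get out in front with some approach, it becomes harder and harder for a new player to catch up. *[00:25:00]* I think this is broadly true of the internet era, whether because of scale or because of the importance and advantage that data bestows on an organization's ability to compete. Once you're out in front, you attract more customers, which gives you more data, which lets you do an even better job, and after that, why on earth would a customer go with the number-two player, when it is so far behind? *[00:25:30]* I don't think the logic will be any different in the cloud era.
+
+**Saron Yitbarek:** This story began with singular heroes like Steve Jobs and Bill Gates, but technological progress has taken on a crowdsourced, organic feel. I think it's telling that our open source hero, Linus Torvalds, didn't even have a real plan when he first created the Linux kernel. He was a brilliant young developer, for sure, but he was also like a single drop of water at the front of a tidal wave. *[00:26:00]* The revolution was inevitable. By one estimate, it would cost a proprietary software company more than $10 billion to create a Linux distribution in its old-fashioned, proprietary way. That speaks to the power of open source.
+
+In the end, it's not something a proprietary model can compete with. Successful companies have to remain open. That's the big, ultimate lesson in all this. *[00:26:30]* Something else to keep in mind: when we're wired together, our capacity to grow and build on what we've already accomplished becomes limitless. No matter how big these companies get, we don't have to sit around waiting for them to give us something better. Think about the new developers who learn to code for the sheer joy of creating, the ones who would rather build it themselves.
+
+The great programmers of the future can come from anywhere; as long as they have access to the code, they can build the next big thing.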

+

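+*[00:27:00]* That's it for our two-part tale of the OS wars. These wars shaped our digital lives. The struggle for dominance moved from the desktop to the server room and, ultimately, into the cloud. Old enemies became unlikely allies, and a crowdsourced future left everything open. *[00:27:30]* Listen, I know there are many heroes we didn't get to on this trip through history, so write to us. Share your story. [Redhat.com/commandlineheroes](https://www.redhat.com/commandlineheroes). I'm listening.
+
+For the rest of the season, we'll learn what today's heroes are creating and what battles they go through to bring their creations to life. Come back for more tales from the epic front lines of programming. We drop a new episode every two weeks. In a couple of weeks, we bring you episode three: the Agile Revolution.
+
+*[00:28:00]* Command Line Heroes is an original podcast from Red Hat. To get new episodes delivered automatically for free, subscribe to the show. Just search for "Command Line Heroes" in Apple Podcasts, Spotify, Google Play, or whatever app you use. Then hit "subscribe" so you'll be the first to know when new episodes are available.
+
+I'm Saron Yitbarek. Thanks for listening. Keep on coding.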

+

+--------------------------------------------------------------------------------

+

+via: https://www.redhat.com/en/command-line-heroes/season-1/os-wars-part-2-rise-of-linux

+

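+Author: [redhat][a]
+Topic selection: [lujun9972][b]
+Translator: [lujun9972](https://github.com/lujun9972)
+Proofreader: [wxy](https://github.com/wxy)
+
+This article was originally translated and compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)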

+

+[a]: https://www.redhat.com

+[b]: https://github.com/lujun9972

diff --git a/published/20180704 BASHing data- Truncated data items.md b/published/20180704 BASHing data- Truncated data items.md

new file mode 100644

index 0000000000..dad03bf3da

--- /dev/null

+++ b/published/20180704 BASHing data- Truncated data items.md

@@ -0,0 +1,115 @@

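+How to find truncated data items
+======
+
+**truncated** (adj.): abbreviated, abridged, curtailed, cut off, clipped, cropped, trimmed...
+
+One way a data item gets truncated is when it is entered into a database field whose character limit is shorter than the item. For example, the string: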

+

+```

+Yarrow Ravine Rattlesnake Habitat Area, 2 mi ENE of Yermo CA

+```

+

+is 60 characters long. If you enter it into a "Location" field with a 50-character limit, you get:

+

+```

+Yarrow Ravine Rattlesnake Habitat Area, 2 mi ENE #note the trailing space

+```

+

+Truncation can also come from a data entry error. You might intend to enter:

+

+```

+Sally Ann Hunter (aka Sally Cleveland)

+```

+

+but you forget the closing parenthesis:

+

+```

+Sally Ann Hunter (aka Sally Cleveland

+```

+

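+This leaves data users wondering whether Sally has other aliases that were trimmed from the data item.
+
+Truncated data items are hard to detect. When auditing data, I use three different methods to look for possible truncations, but I probably still miss some.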

+

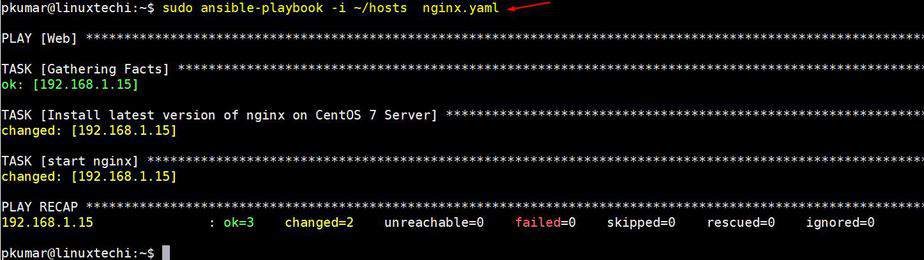

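+**Length distribution of items.** The first method catches most of the truncations I find in individual fields. I pass the field to an `awk` command that tallies data items by field width, then use `sort` to print the tallies in reverse order of width. For example, to check field 33 in the tab-separated file `midges`: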

+

+```

+awk -F"\t" 'NR>1 {a[length($33)]++} \

+ END {for (i in a) print i FS a[i]}' midges | sort -nr

+```

+

+![distro1][1]

+

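+The longest entries have exactly 50 characters, which is suspicious, and there is a "bump" of data items at that width, which is even more suspicious. Inspecting those 50-character items reveals truncations:
+
+![distro2][2]
+
+Other data tables I've checked this way had bumps at 100, 200, and 255 characters. In each case the bump contained apparent truncations.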
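The tally above can be rehearsed on a throwaway file before pointing it at real data. The following is a minimal sketch, not the author's script: the `length_tally` helper, its arguments, and the sample rows are assumptions made up for illustration, with a field capped at 10 characters standing in for a 50-character limit.

```shell
# length_tally FILE FIELD (hypothetical helper, not from the article):
# count the data items in one tab-separated field by character length,
# skipping the header line, and print "length<TAB>count", longest first.
length_tally() {
    awk -F"\t" -v f="$2" 'NR>1 {a[length($f)]++}
        END {for (i in a) print i FS a[i]}' "$1" | sort -nr
}

# Made-up sample: a header line plus four records.
sample=$(mktemp)
printf 'id\tplace\n1\tabcdefghij\n2\tabc\n3\tklmnopqrst\n4\tabcde\n' > "$sample"

length_tally "$sample" 2
```

In this sample, two of the four items sit at the maximum width of 10, so that width is listed first with a count of 2. A pile-up like that at the longest width is the kind of bump worth inspecting.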

+

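+**Unmatched braces.** The second method looks for data items like `...(Sally Cleveland`. A good starting point is a tally of all the punctuation in the data table. Here I check the file `mag2`: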

+

+```

+grep -o "[[:punct:]]" file | sort | uniq -c

+```

+

+![punct][3]

+

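+Note that the numbers of opening and closing parentheses in `mag2` are not equal. To see what's going on, I use the function `unmatched`, which takes three arguments and checks every field in a data table. The first argument is the filename, and the second and third are the opening and closing braces, enclosed in quotes.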

+

+```

+unmatched()

+{

+ awk -F"\t" -v start="$2" -v end="$3" \

+ '{for (i=1;i<=NF;i++) \

+ if (split($i,a,start) != split($i,b,end)) \

+ print "line "NR", field "i":\n"$i}' "$1"

+}

+```

+

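+`unmatched` reports the line number and field number whenever it finds a mismatch between opening and closing braces in a field. It relies on `awk`'s `split` function, which returns the number of elements (including empty or blank ones) separated by the separator. That number is always one more than the number of separators:
+
+![split][4]
+
+Here `unmatched` checks the round brackets in `mag2` and finds some likely truncations:
+
+![unmatched][5]
+
+I use `unmatched` to find unmatched round brackets `()`, square brackets `[]`, curly brackets `{}`, and angle brackets `<>`, but the function can be used for any paired punctuation characters.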
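To see the `split`-based count at work, here is a small self-contained sketch. It repeats the logic of the `unmatched` function from above (using braces instead of backslash continuations) and runs it on an invented two-line file; the sample names and the temporary file are assumptions for illustration only.

```shell
# Same idea as the article's function: compare how many pieces each field
# splits into on the opening character and on the closing character.
unmatched() {
    awk -F"\t" -v start="$2" -v end="$3" '{
        for (i = 1; i <= NF; i++) {
            if (split($i, a, start) != split($i, b, end)) {
                print "line " NR ", field " i ":\n" $i
            }
        }
    }' "$1"
}

# Invented sample: the item on line 2 has lost its closing parenthesis.
sample=$(mktemp)
printf '1\tSally Ann Hunter (aka Sally Cleveland)\n' > "$sample"
printf '2\tSally Ann Hunter (aka Sally Cleveland\n' >> "$sample"

unmatched "$sample" "(" ")"
```

Only line 2, field 2 is reported. On line 1 the item splits into two pieces on `(` and two on `)`, so the counts agree; on line 2 it splits into two pieces on `(` but stays in one piece on `)`.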

+

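+**Unexpected endings.** The third method looks for data items that end in a trailing space or in non-terminal punctuation, such as a comma or a hyphen. This can be done on a single field with `cut` piped to `grep`, or in one step with `awk`. Here I'm checking field 47 of the tab-separated table `herp5` and pulling out the suspect data items with their line numbers: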

+

+```

+cut -f47 herp5 | grep -n "[ ,;:-]$"

+# or

+awk -F"\t" '$47 ~ /[ ,;:-]$/ {print NR": "$47}' herp5

+```

+

+![herps5][6]

+

+The all-fields version of the `awk` command for a tab-separated file is:

+

+```

+awk -F"\t" '{for (i=1;i<=NF;i++) if ($i ~ /[ ,;:-]$/) \

+ print "line "NR", field "i":\n"$i}' file

+```
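The ending test can be rehearsed the same way. This sketch wraps the single-field `awk` version in a hypothetical `odd_endings` helper and feeds it three invented place names; the helper name, its arguments, and the sample data are assumptions, not part of the original post.

```shell
# odd_endings FILE FIELD (hypothetical helper): print "line: item" for items
# in one tab-separated field that end in a space, comma, semicolon, colon,
# or hyphen.
odd_endings() {
    awk -F"\t" -v f="$2" '$f ~ /[ ,;:-]$/ {print NR": "$f}' "$1"
}

# Invented sample: line 1 ends in a space, line 3 ends in a comma.
sample=$(mktemp)
printf '1\tYarrow Ravine, 2 mi ENE \n2\tYermo CA\n3\tnear Barstow,\n' > "$sample"

odd_endings "$sample" 2
```

Lines 1 and 3 are flagged and line 2 is not. A trailing space is invisible in the terminal output, so piping the result through `cat -A` or a similar tool can help when reviewing hits.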

+

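+**Cautionary thoughts.** Truncations also turn up during the validation tests I run on fields. For example, I might be checking for plausible 4-digit entries in a "Year" field and find a `198`. Is that 198n, or 1898? Truncated data items with their missing characters are mysteries. As a data auditor, I can only report (possible) character losses and suggest that the data compiler or custodian restore the (possibly) missing characters.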

+

+--------------------------------------------------------------------------------

+

+via: https://www.polydesmida.info/BASHing/2018-07-04.html

+

+Author: [polydesmida][a]
+Topic selection: [lujun9972](https://github.com/lujun9972)
+Translator: [wxy](https://github.com/wxy)
+Proofreader: [wxy](https://github.com/wxy)
+
+This article was originally translated and compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

+

+[a]:https://www.polydesmida.info/

+[1]:https://www.polydesmida.info/BASHing/img1/2018-07-04_1.png

+[2]:https://www.polydesmida.info/BASHing/img1/2018-07-04_2.png

+[3]:https://www.polydesmida.info/BASHing/img1/2018-07-04_3.png

+[4]:https://www.polydesmida.info/BASHing/img1/2018-07-04_4.png

+[5]:https://www.polydesmida.info/BASHing/img1/2018-07-04_5.png

+[6]:https://www.polydesmida.info/BASHing/img1/2018-07-04_6.png

diff --git a/published/20181113 Eldoc Goes Global.md b/published/20181113 Eldoc Goes Global.md

new file mode 100644

index 0000000000..4714f966a1

--- /dev/null

+++ b/published/20181113 Eldoc Goes Global.md

@@ -0,0 +1,48 @@

+[#]: collector: (lujun9972)

+[#]: translator: (lujun9972)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11306-1.html)

+[#]: subject: (Eldoc Goes Global)

+[#]: via: (https://emacsredux.com/blog/2018/11/13/eldoc-goes-global/)

+[#]: author: (Bozhidar Batsov https://emacsredux.com)

+

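+Emacs: Eldoc goes global
+======
+
+
+
+I recently noticed that Emacs 25.1 added a mode named `global-eldoc-mode`, a global variant of the popular `eldoc-mode`. And unlike `eldoc-mode`, `global-eldoc-mode` is enabled by default!
+
+This means you can remove the code in your Emacs configuration that enables `eldoc-mode` for major modes: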

+

+```

+;; That code is now redundant

+(add-hook 'emacs-lisp-mode-hook #'eldoc-mode)

+(add-hook 'ielm-mode-hook #'eldoc-mode)

+(add-hook 'cider-mode-hook #'eldoc-mode)

+(add-hook 'cider-repl-mode-hook #'eldoc-mode)

+```

+

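+[Some people say][1] that `global-eldoc-mode` has performance problems in modes that don't support it. I've never run into that myself, but if you want to disable it, this is all you need: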

+

+```

+(global-eldoc-mode -1)

+```

+

+Now it's time to clean up my config! Deleting code feels so good!

+

+--------------------------------------------------------------------------------

+

+via: https://emacsredux.com/blog/2018/11/13/eldoc-goes-global/

+

+Author: [Bozhidar Batsov][a]
+Topic selection: [lujun9972][b]
+Translator: [lujun9972](https://github.com/lujun9972)
+Proofreader: [wxy](https://github.com/wxy)
+
+This article was originally translated and compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

+

+[a]: https://emacsredux.com

+[b]: https://github.com/lujun9972

+[1]: https://emacs.stackexchange.com/questions/31414/how-to-globally-disable-eldoc

diff --git a/published/20190401 Build and host a website with Git.md b/published/20190401 Build and host a website with Git.md

new file mode 100644

index 0000000000..fd3b19a04f

--- /dev/null

+++ b/published/20190401 Build and host a website with Git.md

@@ -0,0 +1,223 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11303-1.html)

+[#]: subject: (Build and host a website with Git)

+[#]: via: (https://opensource.com/article/19/4/building-hosting-website-git)

+[#]: author: (Seth Kenlon https://opensource.com/users/seth)

+

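+Build and host a website with Git
+======
+
+> Let Git help you publish your website with ease. Learn how in the first article of our series on little-known uses of Git.
+
+
+
+[Git][2] is one of those rare applications that has managed to encapsulate so much of modern computing into one program that it can serve as the computational engine for many other applications. While it's best known for tracking source code changes in software development, it has many other uses that can make your life easier and more organized. In this Git series, we'll share seven little-known ways to use Git.
+
+Creating a website used to be both sublimely simple and a form of black magic. Back in the old days of Web 1.0 (not that everybody would call it that), you could open up any website, view its source code, and reverse engineer the HTML with its inline styles and table-based layout, and after an afternoon or two of that you felt like a programmer. But getting the page you'd created onto the internet was still a problem, because it meant dealing with servers, FTP, webroot directories, and file permissions. Modern websites have grown far more complex since then, but self-publication can be just as easy (or easier!) if you let Git help you out.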

+

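+### Create a website with Hugo
+
+[Hugo][3] is an open source static site generator. Static sites are what the web used to be built on (if you go back far enough, they were *all* the web was). Static sites have several advantages: they're relatively easy to write because you don't have to write code; they're relatively secure because no code executes on the pages; and they can be very fast because there's no processing beyond transferring whatever is on the page.
+
+Hugo isn't the only static site generator out there. [Grav][4], [Pico][5], [Jekyll][6], [Podwrite][7], and many others provide an easy way to create a simple, low-maintenance website. Hugo happens to be one with GitLab integration built in, which means you can generate and host your website with a free GitLab account.
+
+Hugo has some pretty big users, too. For example, if you've ever visited the [Let's Encrypt](https://letsencrypt.org/) website, you've used a site built with Hugo.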

+

+![Let's Encrypt website][8]

+

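+#### Install Hugo
+
+Hugo is cross-platform, and you can find installation instructions for macOS, Windows, Linux, OpenBSD, and FreeBSD in [Hugo's getting started resources][9].
+
+If you're on Linux or BSD, it's easiest to install Hugo from a software repository or ports tree. The exact command varies depending on your distribution, but on Fedora you would enter: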

+

+```

+$ sudo dnf install hugo

+```

+

+Confirm you have installed it correctly by opening a terminal and typing:

+

+```

+$ hugo help

+```

+

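+This prints all the available options for the `hugo` command. If you don't see that, you may have installed Hugo incorrectly or need to [add the command to your path][10].
+
+#### Create your site
+
+To build a Hugo site, you need a specific directory structure, which Hugo generates for you when you enter: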

+

+```

+$ hugo new site mysite

+```

+

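+You now have a directory called `mysite`, and it contains the default directories you need to build a Hugo website.
+
+Git is your interface for getting your site onto the internet, so change into your new `mysite` folder and initialize it as a Git repository: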

+

+```

+$ cd mysite

+$ git init .

+```

+

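+Hugo gets along with Git nicely, so you can even use Git to install a theme for your site. Unless you plan on developing the theme you're installing, you can use the `--depth` option to clone only the latest state of the theme's source: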

+

+```

+$ git clone --depth 1 https://github.com/darshanbaral/mero.git themes/mero

+```

+

+Now create some content for your site:

+

+```

+$ hugo new posts/hello.md

+```

+

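+Use your favorite text editor to edit the `hello.md` file in the `content/posts` directory. Hugo accepts Markdown files and converts them to themed HTML files at publication, so your content must be in [Markdown format][11].
+
+If you want to include images in your post, create a folder called `images` in the `static` directory. Place your images in this folder and reference them in your markup using an absolute path starting with `/images`. For example: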

+

+```

+![A picture of a thing](/images/thing.jpeg)

+```

+

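+#### Choose a theme
+
+You can find more themes at [themes.gohugo.io][12], but it's best to stay with a basic theme while testing. The canonical Hugo test theme is [Ananke][13]. Some themes have complex dependencies, and others don't render pages the way you might expect without complex configuration. The Mero theme used in this example comes bundled with a detailed `config.toml` configuration file, but (for the sake of simplicity) I'll provide just the basics here. Open the file called `config.toml` in a text editor and add three configuration parameters: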

+

+```

+languageCode = "en-us"

+title = "My website on the web"

+theme = "mero"

+

+[params]

+ author = "Seth Kenlon"

+ description = "My hugo demo"

+```

+

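+#### Preview
+
+You don't have to put anything on the internet until you're ready to publish. While you work, you can preview your site by launching the local-only web server that ships with Hugo.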

+

+```

+$ hugo server --buildDrafts --disableFastRender

+```

+

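+Open a web browser and navigate to http://localhost:1313 (the default address of Hugo's local server) to see your work in progress.
+
+### Publish with Git to GitLab
+
+To publish and host your site on GitLab, create a repository for the contents of your site.
+
+To create a repository in GitLab, click the "New Project" button on your GitLab "Projects" page. Create an empty repository called `yourGitLabUsername.gitlab.io`, replacing `yourGitLabUsername` with your GitLab username or group name. You must use this naming scheme as the name of the project. You can also add a custom domain for it later.
+
+Do not include a license or a README file on GitLab (because you've already started a project locally, adding these now would make pushing your data to GitLab more complex, and you can always add them later).
+
+Once you've created the empty repository on GitLab, add it as the remote location for the local copy of your Hugo site, which is already a Git repository: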

+

+```

+$ git remote add origin git@gitlab.com:skenlon/mysite.git

+```

+

+Create a GitLab site configuration file called `.gitlab-ci.yml` and enter these options:

+

+```

+image: monachus/hugo

+

+variables:

+ GIT_SUBMODULE_STRATEGY: recursive

+

+pages:

+ script:

+ - hugo

+ artifacts:

+ paths:

+ - public

+ only:

+ - master

+```

+

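+The `image` parameter defines a container image that will serve your site. The other parameters are instructions telling GitLab's servers what to do when you push new code to your remote repository. For more information on GitLab's CI/CD (continuous integration and delivery) options, see the [CI/CD section of GitLab's docs][14].
+
+#### Set the excludes
+
+Your Git repository is configured, the commands to build your site on GitLab's servers are set, and your site is ready to publish. For your first Git commit, you must take a few extra precautions so you don't version files you never intended to version.
+
+First, add the `/public` directory that Hugo creates when building your site to your `.gitignore` file. You don't need to manage the finished site in Git; all you need to track are your Hugo source files.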

+

+```

+$ echo "/public" >> .gitignore

+```
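Because `>>` appends every time it runs, repeating the command above leaves duplicate entries in `.gitignore`. A small guard avoids that. This is an optional sketch; the `ignore_once` helper name is hypothetical and is not part of Hugo or Git.

```shell
# ignore_once PATTERN [FILE]: append PATTERN to FILE (default: .gitignore)
# only if no line in FILE already matches it exactly.
ignore_once() {
    file="${2:-.gitignore}"
    grep -qxF -- "$1" "$file" 2>/dev/null || echo "$1" >> "$file"
}

# From the top of the site directory you would run:
#   ignore_once /public
```

Running `ignore_once /public` twice leaves a single `/public` line in the file, so the helper is safe to call from a setup script that may run more than once.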

+

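+You can't maintain a Git repository inside another Git repository without creating a Git submodule. For the sake of simplicity, move the embedded repository's `.git` directory out of the way so that the theme (a repository) is just a theme (a directory).
+
+Note that you **must** add your theme files to your Git repository so GitLab has access to the theme. Without committing your theme files, your site cannot build successfully.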

+

+```

+$ mv themes/mero/.git ~/.local/share/Trash/files/

+```

+

+Alternatively, use a `trash` command such as [Trashy][15]:

+

+```

+$ trash themes/mero/.git

+```

+

+Now you can add all the contents of your local project directory to Git and push it to GitLab:

+

+```

+$ git add .

+$ git commit -m 'hugo init'

+$ git push -u origin HEAD

+```

+

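+### Go live with GitLab
+
+Once your code has been pushed to GitLab, take a look at your project page. An icon indicates that GitLab is processing your build. It might take several minutes the first time you push your code, so be patient. However, don't be **too** patient, because the icon doesn't always update reliably.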

+

+![GitLab processing your build][16]

+

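+While you're waiting for GitLab to assemble your site, go to your project settings and find the "Pages" panel. Once your site is ready, its URL will be available. The URL is `yourGitLabUsername.gitlab.io/yourProjectName`. Navigate to that address to view the fruits of your labor.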

+

+![Previewing Hugo site][17]

+

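+If your site fails to assemble correctly, GitLab provides logs that give insight into the CI/CD pipeline. Review the error message for an indication of what went wrong.
+
+### Git and the web
+
+Hugo (or Jekyll or similar tools) is just one way to leverage Git as your web publishing tool. With server-side Git hooks, you can design your own Git-to-web workflow with minimal scripting. With the community edition of GitLab, you can self-host your own GitLab instance, or you can use an alternative like [Gitolite][18] or [Gitea][19] and use this article as inspiration for a custom solution. Have fun!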
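As one illustration of the server-side hook idea, here is a minimal sketch of a `post-receive` hook for a bare repository on a server you control. It is an assumption-laden example, not a recipe from the article: the `WEBROOT` path, the `BRANCH` default, and the `wants_deploy` and `publish` function names are all hypothetical, and it simply checks files out into the web root. For a Hugo site you would run a build step there instead of serving the checked-out sources.

```shell
#!/bin/sh
# Sketch of hooks/post-receive in a bare repository on your own server.
# WEBROOT and BRANCH are hypothetical values; adjust them for your host.
WEBROOT="${WEBROOT:-/var/www/mysite}"
BRANCH="${BRANCH:-master}"

# wants_deploy REF: succeed only for pushes to the branch we publish.
wants_deploy() {
    [ "$1" = "refs/heads/$BRANCH" ]
}

# Git feeds the hook one "old-sha new-sha refname" line per updated ref.
publish() {
    while read -r _old _new ref; do
        if wants_deploy "$ref"; then
            # Check the pushed branch out into the web root.
            git --work-tree="$WEBROOT" checkout -f "$BRANCH"
        fi
    done
}

# In the real hook file, finish with a call to: publish
```

Keeping the branch test in its own function makes it easy to check on its own, and pushes to any other branch pass through without touching the web root.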

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/4/building-hosting-website-git

+

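+Author: [Seth Kenlon][a]
+Topic selection: [lujun9972][b]
+Translator: [wxy](https://github.com/wxy)
+Proofreader: [wxy](https://github.com/wxy)
+
+This article was originally translated and compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)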

+

+[a]: https://opensource.com/users/seth

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/web_browser_desktop_devlopment_design_system_computer.jpg?itok=pfqRrJgh (web development and design, desktop and browser)

+[2]: https://git-scm.com/

+[3]: http://gohugo.io

+[4]: http://getgrav.org

+[5]: http://picocms.org/

+[6]: https://jekyllrb.com

+[7]: http://slackermedia.info/podwrite/

+[8]: https://opensource.com/sites/default/files/uploads/letsencrypt-site.jpg (Let's Encrypt website)

+[9]: https://gohugo.io/getting-started/installing

+[10]: https://opensource.com/article/17/6/set-path-linux

+[11]: https://commonmark.org/help/

+[12]: https://themes.gohugo.io/

+[13]: https://themes.gohugo.io/gohugo-theme-ananke/

+[14]: https://docs.gitlab.com/ee/ci/#overview

+[15]: http://slackermedia.info/trashy

+[16]: https://opensource.com/sites/default/files/uploads/hugo-gitlab-cicd.jpg (GitLab processing your build)

+[17]: https://opensource.com/sites/default/files/uploads/hugo-demo-site.jpg (Previewing Hugo site)

+[18]: http://gitolite.com

+[19]: http://gitea.io

diff --git a/published/20190408 A beginner-s guide to building DevOps pipelines with open source tools.md b/published/20190408 A beginner-s guide to building DevOps pipelines with open source tools.md

new file mode 100644

index 0000000000..bbd85a891f

--- /dev/null

+++ b/published/20190408 A beginner-s guide to building DevOps pipelines with open source tools.md

@@ -0,0 +1,345 @@

+[#]: collector: (lujun9972)

+[#]: translator: (LuuMing)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11307-1.html)

+[#]: subject: (A beginner's guide to building DevOps pipelines with open source tools)

+[#]: via: (https://opensource.com/article/19/4/devops-pipeline)

+[#]: author: (Bryant Son https://opensource.com/users/brson/users/milindsingh/users/milindsingh/users/dscripter)

+

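+A beginner's guide to building DevOps pipelines with open source tools
+======
+
+> If you're new to DevOps, check out this five-step process for building your first pipeline.
+
+
+
+DevOps has become the default answer for fixing software development processes that are slow, siloed, or otherwise broken. But that doesn't mean much when you're new to DevOps and aren't sure where to begin. This article explores what a DevOps pipeline is and offers a five-step process to create one. While this tutorial is not comprehensive, it should give you a foundation to start on and expand later. But first, a story.
+
+### My DevOps journey
+
+I used to work on the cloud team at Citi Group, developing an Infrastructure-as-a-Service web application to manage Citi's cloud infrastructure, but I was always interested in figuring out how to make the development pipeline more efficient and bring a positive culture to the team. I found my answer in a book recommended by Greg Lavender, who was the CTO of Citi's cloud architecture and infrastructure engineering: [The Phoenix Project][2]. The book explains DevOps principles, yet it reads like a novel.
+
+A table at the back of the book shows how often different companies deploy to the release environment: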

+

+Company | Deployment frequency
+---|---
+Amazon | 23,000 per day
+Google | 5,500 per day
+Netflix | 500 per day
+Facebook | 1 per day
+Twitter | 3 per week
+Typical enterprise | 1 every 9 months

+

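+How are the frequencies of Amazon, Google, and Netflix even possible? It's because these companies have figured out how to build a nearly perfect DevOps pipeline.
+
+This definitely wasn't the case before we implemented DevOps at Citi. Back then, my team had environments for different build stages, but deployments on the development server were entirely manual. All developers had access to just one development server, based on IBM WebSphere Application Server Community Edition. The problem was that the server went down whenever multiple users tried to deploy at the same time, so developers had to let each other know when they were about to deploy, which was a pain. In addition, there were problems with low code test coverage, cumbersome manual deployment processes, and no way to track code deployments against a defined task or user story.
+
+I realized something had to be done, and I found a colleague who felt the same way. We decided to collaborate on building an initial DevOps pipeline: he set up a virtual machine and a Tomcat server, while I set up Jenkins and integrated Atlassian Jira, BitBucket, and code coverage testing. This side project was hugely successful: we almost fully automated the development pipeline, we achieved nearly 100% uptime on the development server, we could track and improve code coverage testing, and Git branches could be associated with deployments and Jira tasks. And most of the tools we used to build our DevOps pipeline were open source.
+
+I now realize how rudimentary our DevOps pipeline was, because we didn't take advantage of advanced configurations like Jenkinsfiles or Ansible. Yet this simple process worked well, perhaps thanks to the [Pareto][3] principle (also known as the 80/20 rule).
+
+### A brief introduction to DevOps and the CI/CD pipeline
+
+If you ask several people, "What is DevOps?", you'll probably get several different answers. DevOps, like agile, has evolved to encompass many different disciplines, but most people will agree on a few things: DevOps is a software development practice or a software development lifecycle (SDLC), and its central tenet is cultural change, where developers and non-developers all breathe the same air; what used to be manual gets automated; everyone does what they are best at; the number of deployments per period increases; throughput increases; and flexibility improves.
+
+While having the right software tools is not the only thing you need to achieve a DevOps environment, some tools are necessary. A key one is continuous integration and continuous deployment (CI/CD). In a pipeline with different build stages (e.g., DEV, INT, TST, QA, UAT, STG, PROD), manual work gets automated, and developers can produce high-quality code and deploy flexibly and often.
+
+This article describes a five-step approach to creating a DevOps pipeline, like the one in the following diagram, using open source tools.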

+

+![Complete DevOps pipeline][4]

+

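+Without further ado, let's get started.
+
+### Step 1: CI/CD framework
+
+The first thing you need is a CI/CD tool. Jenkins, an open source, Java-based CI/CD tool released under the MIT License, is the tool that popularized the DevOps movement and has become the de facto standard.
+
+So, what is Jenkins? Imagine it as some sort of magical universal remote control that can talk to many different services and tools and orchestrate them. On its own, a CI/CD tool like Jenkins is useless, but it becomes more and more powerful as it plugs into different tools and services.
+
+Jenkins is just one of many open source CI/CD tools that you can use to build a DevOps pipeline.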

+

+Name | License
+---|---
+[Jenkins][5] | Creative Commons and MIT

+[Travis CI][6] | MIT

+[CruiseControl][7] | BSD

+[Buildbot][8] | GPL

+[Apache Gump][9] | Apache 2.0

+[Cabie][10] | GNU

+

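+Here's what a DevOps process looks like with a CI/CD tool.
+
+![CI/CD tool][11]
+
+You have a CI/CD tool running on your localhost, but there isn't much you can do with it yet. Let's follow the next step of the DevOps journey.
+
+### Step 2: Source control management
+
+The best (and probably the easiest) way to verify that your CI/CD tool can perform some magic is by integrating it with a source control management (SCM) tool. Why do you need source control? Suppose you're developing an application. Whenever you build an application, whether you use Java, Python, C++, Go, Ruby, JavaScript, or any other language, you're programming. The program code you write is called source code. In the beginning, especially when you're working alone, it's probably OK to put everything in a local directory. But when the project gets bigger and you invite others to collaborate, you need a way to avoid merge conflicts while sharing code modifications. You also need a way to recover a previous version, and making a backup and copy-pasting it back gets old quickly. You (and your teammates) want something better.
+
+This is where SCM becomes almost a necessity. An SCM tool helps by storing your code in repositories, versioning it, and coordinating work among project members.
+
+Although there are many SCM tools out there, Git is the standard, and rightly so. I highly recommend using Git, but there are other open source options if you prefer.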

+

+Name | License

+---|---

+[Git][12] | GPLv2 & LGPL v2.1

+[Subversion][13] | Apache 2.0

+[Concurrent Versions System][14] (CVS) | GNU

+[Vesta][15] | LGPL

+[Mercurial][16] | GNU GPL v2+

+

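+Here's what the DevOps pipeline looks like with the addition of SCM.
+
+![Source control management][17]
+
+The CI/CD tool can automate the tasks of checking source code in and out and collaborating across members. Not bad, right? But how do you turn this into a working application so that billions of people can use and appreciate it?
+
+### Step 3: Build automation tool
+
+Excellent! You can check out the code and commit your changes to source control, and you can invite your friends to collaborate on the source control. But you haven't built an application yet. To make it a web application, it has to be compiled and put into a deployable package format or run as an executable. (Note that an interpreted programming language like JavaScript or PHP doesn't need to be compiled.)
+
+Enter the build automation tool. No matter which build tool you decide to use, they all share the same goal: build the source code into some desired format and automate the tasks of cleaning, compiling, testing, and deploying to a certain location. Build tools differ depending on your programming language, but here are some common open source options to consider.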

+

+Name | License | Programming language

+---|---|---

+[Maven][18] | Apache 2.0 | Java

+[Ant][19] | Apache 2.0 | Java

+[Gradle][20] | Apache 2.0 | Java

+[Bazel][21] | Apache 2.0 | Java

+[Make][22] | GNU | N/A

+[Grunt][23] | MIT | JavaScript

+[Gulp][24] | MIT | JavaScript

+[Buildr][25] | Apache | Ruby

+[Rake][26] | MIT | Ruby

+[A-A-P][27] | GNU | Python

+[SCons][28] | MIT | Python

+[BitBake][29] | GPLv2 | Python

+[Cake][30] | MIT | C#

+[ASDF][31] | Expat (MIT) | LISP

+[Cabal][32] | BSD | Haskell

+

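+Awesome! You can put your build automation tool's configuration files into your source control management system and let your CI/CD tool build everything.
+
+![Build automation tool][33]
+
+Everything is good, right? But where can you deploy it?
+
+### Step 4: Web application server
+
+So far, you have a packaged file that might be executable or deployable. For any application to be truly useful, it has to provide some kind of service or interface, so you need a container to host your application.
+
+For a web application, a web application server is that container. An application server offers an environment where the programming logic inside the deployable package can be detected, render the interface, and offer web services by opening sockets to the outside world. You need an HTTP server, as well as some other environment (such as a virtual machine), to install your application server. For now, let's assume you'll learn about this along the way (although I'll discuss containers below).
+
+There are a number of open source web application servers available.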

+

+Name | License | Programming language
+---|---|---
+[Tomcat][34] | Apache 2.0 | Java
+[Jetty][35] | Apache 2.0 | Java
+[WildFly][36] | GNU Lesser Public | Java
+[GlassFish][37] | CDDL & GNU Lesser Public | Java
+[Django][38] | 3-Clause BSD | Python
+[Tornado][39] | Apache 2.0 | Python
+[Gunicorn][40] | MIT | Python
+[Python Paste][41] | MIT | Python
+[Rails][42] | MIT | Ruby
+[Node.js][43] | MIT | JavaScript

+

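+Your DevOps pipeline is almost usable. Good job!
+
+![Web application server][44]
+
+Although it's possible to stop here and integrate further on your own, code quality is an important thing for an application developer to be concerned about.
+
+### Step 5: Code testing coverage
+
+Implementing code tests can be another cumbersome requirement, but developers need to catch errors in an application early and improve code quality to keep end users satisfied. Luckily, there are many open source tools available to test your code and suggest ways to improve its quality. Even better, most CI/CD tools can plug into these tools and automate the process.
+
+Code testing has two parts: *code testing frameworks*, which help you write and run tests, and *code quality suggestion tools*, which help improve code quality.
+
+#### Code test frameworks
+
+Name | License | Programming language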

+---|---|---

+[JUnit][45] | Eclipse Public License | Java

+[EasyMock][46] | Apache | Java

+[Mockito][47] | MIT | Java

+[PowerMock][48] | Apache 2.0 | Java

+[Pytest][49] | MIT | Python

+[Hypothesis][50] | Mozilla | Python

+[Tox][51] | MIT | Python

+

+#### Code quality suggestion tools
+
+Name | License | Programming language

+---|---|---

+[Cobertura][52] | GNU | Java

+[CodeCover][53] | Eclipse Public (EPL) | Java

+[Coverage.py][54] | Apache 2.0 | Python

+[Emma][55] | Common Public License | Java

+[JaCoCo][56] | Eclipse Public License | Java

+[Hypothesis][50] | Mozilla | Python

+[Tox][51] | MIT | Python

+[Jasmine][57] | MIT | JavaScript

+[Karma][58] | MIT | JavaScript

+[Mocha][59] | MIT | JavaScript

+[Jest][60] | MIT | JavaScript

+

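+Note that most of the tools and frameworks mentioned above are written for Java, Python, and JavaScript, since C++ and C# are proprietary programming languages (although GCC is open source).
+
+Now that you've implemented code testing coverage tools, your DevOps pipeline should resemble the diagram shown at the beginning of this tutorial.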
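Stripped of plugins and dashboards, the core job a CI/CD tool performs across these five steps is to run stages in order and stop at the first failure. The following sketch shows only that control flow; `run_pipeline` is a hypothetical helper, not a Jenkins feature, and any stage commands you pass to it are placeholders for your own checkout, build, test, and deploy commands.

```shell
# run_pipeline STAGE...: run each stage (a shell command string) in order
# and stop at the first one that fails.
run_pipeline() {
    for stage in "$@"; do
        echo "== $stage"
        if ! sh -c "$stage"; then
            echo "stage failed: $stage" >&2
            return 1
        fi
    done
    echo "pipeline ok"
}
```

For a Java project, a call might look like `run_pipeline "git pull" "mvn -B package"`, with further stages appended for deployment. A real CI/CD tool adds triggers, logs, credentials, and parallelism on top of this loop, which is why the sketch is useful for understanding the idea but is not a replacement for one.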

+

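+### Optional steps
+
+#### Container
+
+As I mentioned above, you can host your application on a virtual machine (VM) or a server, but containers are a better solution.
+
+[What are containers][61]? The short explanation is that a VM needs the huge footprint of an operating system, which inflates the size of the application, while a container needs just a few libraries and configurations to run the application. There are clearly still important uses for VMs, but a container is a more lightweight solution for hosting an application, including an application server.
+
+Although there are other options for containers, Docker and Kubernetes are the most widely used.
+
+Name | License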

+---|---

+[Docker][62] | Apache 2.0

+[Kubernetes][63] | Apache 2.0

+

+To learn more, see these other articles about Docker and Kubernetes on [Opensource.com][64]:

+

+ * [What is Docker?][65]

+ * [An introduction to Docker][66]

+ * [What is Kubernetes?][67]

+ * [Kubernetes from scratch][68]

+

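+#### Middleware automation tools

+

+Our DevOps pipeline has mostly focused on collaboratively building and deploying your application, but there are many other things you can do with DevOps tools. One of them is using infrastructure-as-code (IaC) tools, which are also known as middleware automation tools. These tools help automate the installation, management, and other tasks for middleware. For example, an automation tool can pull applications such as a web server, a database, and a monitoring tool with the right configurations and deploy them to the application server.

+

+Here are a few open source middleware automation tools worth considering:

+

+Name | License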

+---|---

+[Ansible][69] | GNU General Public (GPL)

+[SaltStack][70] | Apache 2.0

+[Chef][71] | Apache 2.0

+[Puppet][72] | Apache or GPL

+

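+For more on middleware automation tools, see these other articles on [Opensource.com][64]:

+

+ * [A quickstart guide to Ansible][73]

+ * [Automating deployment strategies with Ansible][74]

+ * [Top 5 configuration management tools][75]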

+

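+### Where to go from here

+

+This is just the tip of the iceberg of what a complete DevOps pipeline can look like. Start with a CI/CD tool and explore what else you can automate to make your team's work easier. Also, look into [open source communication tools][76] that can help your team work better together.

+

+For more insight, here are some very good introductory articles about DevOps:

+

+ * [What is DevOps][77]

+ * [5 things to master to be a DevOps engineer][78]

+ * [DevOps for everyone][79]

+ * [Getting started with predictive analytics in DevOps][80]

+

+Integrating DevOps with open source agile tools is also a good idea:

+

+ * [What is agile?][81]

+ * [4 steps to becoming an awesome agile developer][82]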

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/4/devops-pipeline

+

+Author: [Bryant Son][a]

+Topic selection: [lujun9972][b]

+Translator: [LuMing](https://github.com/LuuMing)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

+

+[a]: https://opensource.com/users/brson/users/milindsingh/users/milindsingh/users/dscripter

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/network_team_career_hand.png?itok=_ztl2lk_ (Shaking hands, networking)

+[2]: https://www.amazon.com/dp/B078Y98RG8/

+[3]: https://en.wikipedia.org/wiki/Pareto_principle

+[4]: https://opensource.com/sites/default/files/uploads/1_finaldevopspipeline.jpg (Complete DevOps pipeline)

+[5]: https://github.com/jenkinsci/jenkins

+[6]: https://github.com/travis-ci/travis-ci

+[7]: http://cruisecontrol.sourceforge.net

+[8]: https://github.com/buildbot/buildbot

+[9]: https://gump.apache.org

+[10]: http://cabie.tigris.org

+[11]: https://opensource.com/sites/default/files/uploads/2_runningjenkins.jpg (CI/CD tool)

+[12]: https://git-scm.com

+[13]: https://subversion.apache.org

+[14]: http://savannah.nongnu.org/projects/cvs

+[15]: http://www.vestasys.org

+[16]: https://www.mercurial-scm.org

+[17]: https://opensource.com/sites/default/files/uploads/3_sourcecontrolmanagement.jpg (Source control management)

+[18]: https://maven.apache.org

+[19]: https://ant.apache.org

+[20]: https://gradle.org/

+[21]: https://bazel.build

+[22]: https://www.gnu.org/software/make

+[23]: https://gruntjs.com

+[24]: https://gulpjs.com

+[25]: http://buildr.apache.org

+[26]: https://github.com/ruby/rake

+[27]: http://www.a-a-p.org

+[28]: https://www.scons.org

+[29]: https://www.yoctoproject.org/software-item/bitbake

+[30]: https://github.com/cake-build/cake

+[31]: https://common-lisp.net/project/asdf

+[32]: https://www.haskell.org/cabal

+[33]: https://opensource.com/sites/default/files/uploads/4_buildtools.jpg (Build automation tool)

+[34]: https://tomcat.apache.org

+[35]: https://www.eclipse.org/jetty/

+[36]: http://wildfly.org

+[37]: https://javaee.github.io/glassfish

+[38]: https://www.djangoproject.com/

+[39]: http://www.tornadoweb.org/en/stable

+[40]: https://gunicorn.org

+[41]: https://github.com/cdent/paste

+[42]: https://rubyonrails.org

+[43]: https://nodejs.org/en

+[44]: https://opensource.com/sites/default/files/uploads/5_applicationserver.jpg (Web application server)

+[45]: https://junit.org/junit5

+[46]: http://easymock.org

+[47]: https://site.mockito.org

+[48]: https://github.com/powermock/powermock

+[49]: https://docs.pytest.org

+[50]: https://hypothesis.works

+[51]: https://github.com/tox-dev/tox

+[52]: http://cobertura.github.io/cobertura

+[53]: http://codecover.org/

+[54]: https://github.com/nedbat/coveragepy

+[55]: http://emma.sourceforge.net

+[56]: https://github.com/jacoco/jacoco

+[57]: https://jasmine.github.io

+[58]: https://github.com/karma-runner/karma

+[59]: https://github.com/mochajs/mocha

+[60]: https://jestjs.io

+[61]: /resources/what-are-linux-containers

+[62]: https://www.docker.com

+[63]: https://kubernetes.io

+[64]: http://Opensource.com

+[65]: https://opensource.com/resources/what-docker

+[66]: https://opensource.com/business/15/1/introduction-docker

+[67]: https://opensource.com/resources/what-is-kubernetes

+[68]: https://opensource.com/article/17/11/kubernetes-lightning-talk

+[69]: https://www.ansible.com

+[70]: https://www.saltstack.com

+[71]: https://www.chef.io

+[72]: https://puppet.com

+[73]: https://opensource.com/article/19/2/quickstart-guide-ansible

+[74]: https://opensource.com/article/19/1/automating-deployment-strategies-ansible

+[75]: https://opensource.com/article/18/12/configuration-management-tools

+[76]: https://opensource.com/alternatives/slack

+[77]: https://opensource.com/resources/devops

+[78]: https://opensource.com/article/19/2/master-devops-engineer

+[79]: https://opensource.com/article/18/11/how-non-engineer-got-devops

+[80]: https://opensource.com/article/19/1/getting-started-predictive-analytics-devops

+[81]: https://opensource.com/article/18/10/what-agile

+[82]: https://opensource.com/article/19/2/steps-agile-developer

diff --git a/published/20190524 Spell Checking Comments.md b/published/20190524 Spell Checking Comments.md

new file mode 100644

index 0000000000..d48358c2a9

--- /dev/null

+++ b/published/20190524 Spell Checking Comments.md

@@ -0,0 +1,40 @@

+[#]: collector: (lujun9972)

+[#]: translator: (lujun9972)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11294-1.html)

+[#]: subject: (Spell Checking Comments)

+[#]: via: (https://emacsredux.com/blog/2019/05/24/spell-checking-comments/)

+[#]: author: (Bozhidar Batsov https://emacsredux.com)

+

+Spell checking comments in Emacs

+======

+

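+I'm notorious for my typos (especially on podcasts). Thankfully, Emacs ships with a great built-in mode called `flyspell` to help poor typists like me. `flyspell` highlights misspelled words as you type (that is, on the fly) and provides handy keybindings for fixing them quickly.

+

+Most people typically enable `flyspell` only in major modes derived from `text-mode` (for example `markdown-mode` and `adoc-mode`), but it can also help programmers by pointing out typos in their comments. All you need to do is enable `flyspell-prog-mode`, which checks only comments and strings. I usually enable it for all programming modes (at least those derived from `prog-mode`):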

+

+```

+(add-hook 'prog-mode-hook #'flyspell-prog-mode)

+```

+

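+Now you get instant feedback whenever you make a typo in a comment. To fix a word, place the cursor right after it and press `C-c $` (`M-x flyspell-correct-word-before-point`). (There are many other ways to correct misspelled words with `flyspell`, but we'll ignore them for now for the sake of simplicity.)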

+

+![flyspell_prog_mode.gif][1]

+

+That's all I have for you today! Now I'll get back to fixing those nasty typos!

+

+--------------------------------------------------------------------------------

+

+via: https://emacsredux.com/blog/2019/05/24/spell-checking-comments/

+

+Author: [Bozhidar Batsov][a]

+Topic selection: [lujun9972][b]

+Translator: [lujun9972](https://github.com/lujun9972)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

+

+[a]: https://emacsredux.com

+[b]: https://github.com/lujun9972

+[1]: https://emacsredux.com/assets/images/flyspell_prog_mode.gif

diff --git a/published/20190701 Get modular with Python functions.md b/published/20190701 Get modular with Python functions.md

new file mode 100644

index 0000000000..37124c8e2b

--- /dev/null

+++ b/published/20190701 Get modular with Python functions.md

@@ -0,0 +1,322 @@

+[#]: collector: (lujun9972)

+[#]: translator: (MjSeven)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11295-1.html)

+[#]: subject: (Get modular with Python functions)

+[#]: via: (https://opensource.com/article/19/7/get-modular-python-functions)

+[#]: author: (Seth Kenlon https://opensource.com/users/seth/users/xd-deng/users/nhuntwalker/users/don-watkins)

+

+Get modular with Python functions

+======

+

+> Minimize your coding workload on repetitive tasks by using Python functions.

+

+

+

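+Are you confused by fancy programming terms like functions, classes, methods, libraries, and modules? Do you struggle with the scope of variables? Whether you're a self-taught programmer or formally trained, the modularity of code can be confusing. But classes and libraries encourage modular code, and modular code means building up a collection of multipurpose code blocks that you can reuse across many projects to reduce your coding workload. In other words, if you follow along with this article's study of [Python][2] functions, you'll find ways to work smarter, and working smarter means working less.

+

+This article assumes enough familiarity with Python to write and run a simple script (LCTT translator's note: a passing familiarity is enough). If you haven't used Python yet, read my [Intro to Python][3] article first.

+

+### Functions

+

+Functions are an important step toward modularity because they are a formalized way of repeating a task. If there is a task that needs to be done again and again in your program, you can put the code into a function and call that function whenever you need it. That way, you write the code only once but can use it as often as you like.

+

+Here is an example of a simple function: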

+

+```

+#!/usr/bin/env python3

+import time

+

+def Timer():

+    print("Time is " + str(time.time()))

+```

+

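+Create a directory called `mymodularity` and save the function code above as `timestamp.py` in that directory.

+

+In addition to this function, create a file called `__init__.py` in the `mymodularity` directory. You can do this in a file manager or in a Bash shell: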

+

+```

+$ touch mymodularity/__init__.py

+```

+

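+You have now created your own Python library: a *package* called `mymodularity` that contains one *module*, `timestamp`. It's not a particularly useful library, because all it does is import the `time` module and print a timestamp, but it's a start.

+

+To use your function, treat it like any other Python module. Here is a small application that uses your `mymodularity` package to test the accuracy of Python's `sleep()` function. Save this file as `sleeptest.py` *outside* the `mymodularity` directory, because if you save it *inside* `mymodularity`, it becomes a module in your package, and you don't want that.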

+

+```

+#!/usr/bin/env python3

+

+import time

+from mymodularity import timestamp

+

+print("Testing Python sleep()...")

+

+# modularity

+timestamp.Timer()

+time.sleep(3)

+timestamp.Timer()

+```

+

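+In this simple script, you call the `Timer()` function from the `timestamp` module of your `mymodularity` package twice. When you import a module from a package, the usual syntax is to import the module you want from the package and then use the *module name + a dot + the name of the function you want to call* (for example, `timestamp.Timer()`).

+

+You call the `Timer()` function twice, so if your `timestamp` module were more complicated than this simple example, you'd be saving yourself quite a lot of repeated code.

+

+Save the file and run it: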

+

+```

+$ python3 ./sleeptest.py

+Testing Python sleep()...

+Time is 1560711266.1526039

+Time is 1560711269.1557732

+```

+

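+According to this test, Python's `sleep` function is quite accurate: after a three-second wait, the timestamp increased by 3 plus roughly three milliseconds (1560711269.1557732 − 1560711266.1526039 ≈ 3.0032).

+

+The structure of a Python library might seem confusing, but it's not magic. Python is *programmed* to treat a directory full of Python code accompanied by an `__init__.py` file as a package, and it's programmed to look for available modules in its current directory first. This is why the statement `from mymodularity import timestamp` works: Python looks in the current directory for a directory called `mymodularity`, then looks for a `timestamp.py` file inside it.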
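
The lookup described above can be tried without touching your own project. The following is only a sketch under stated assumptions: the package name `demo_pkg`, the module `greeting`, and the helper `build_demo_package` are all hypothetical, and a temporary directory placed at the front of `sys.path` stands in for the directory the script is launched from.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def build_demo_package(root):
    """Create root/demo_pkg/ with an __init__.py and one module (hypothetical names)."""
    pkg = Path(root) / "demo_pkg"
    pkg.mkdir()
    (pkg / "__init__.py").touch()  # marks the directory as a package
    (pkg / "greeting.py").write_text(
        "def hello():\n    return 'hello from demo_pkg'\n"
    )
    return pkg


with tempfile.TemporaryDirectory() as root:
    build_demo_package(root)
    sys.path.insert(0, root)       # searched first, like the script's own directory
    importlib.invalidate_caches()  # the files were created after Python started
    greeting = importlib.import_module("demo_pkg.greeting")
    message = greeting.hello()
    sys.path.remove(root)          # leave the search path as we found it

print(message)
```

When a script is run directly, the first entry of `sys.path` is the directory containing that script, which is why `sleeptest.py` has to sit next to the `mymodularity` directory rather than inside it.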

+

+What you have done in this example is functionally the same as this non-modular version:

+

+```

+#!/usr/bin/env python3

+

+import time

+

+print("Testing Python sleep()...")

+

+# no modularity

+print("Time is " + str(time.time() ) )

+time.sleep(3)

+print("Time is " + str(time.time() ) )

+```

+

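+For a simple example like this, there's no real reason to write your sleep test as a module, but the best part of writing your own module is that your code is generic, so you can reuse it for other projects.

+

+You can make the code more generic by passing information into the function when you call it. For instance, suppose you want to use your module to test not the *computer's* `sleep` function but the *user's* own sense of time. Change your `timestamp` code so that it accepts an incoming variable called `msg`, a string that controls how the timestamp is presented each time `Timer()` is called: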

+

+```

+#!/usr/bin/env python3

+

+import time

+

+# updated code

+def Timer(msg):

+    print(str(msg) + str(time.time()))

+```

+

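+Now your function is more abstract than before. It still prints a timestamp, but the text it prints for the user, `msg`, is left undefined. That means you need to define it when you call the function.

+

+The `msg` parameter that the `Timer` function accepts is arbitrarily named. You could call it `m`, `message`, `text`, or anything else that makes sense to you. What matters is that when `timestamp.Timer` is called, it accepts some text as its input, places whatever it receives into the `msg` variable, and uses that variable to accomplish its task.

+

+Here is a new application that tests the user's ability to sense the passage of time correctly: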

+

+```

+#!/usr/bin/env python3

+

+from mymodularity import timestamp

+

+print("Press the RETURN key. Count to 3, and press RETURN again.")

+

+input()

+timestamp.Timer("Started timer at ")

+

+print("Count to 3...")

+

+input()

+timestamp.Timer("You slept until ")

+```

+

+Save your new application as `response.py` and run it:

+

+```

+$ python3 ./response.py

+Press the RETURN key. Count to 3, and press RETURN again.

+

+Started timer at 1560714482.3772075

+Count to 3...

+

+You slept until 1560714484.1628013

+```

+

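+### Functions and required parameters

+

+The new version of your `timestamp` module now *requires* a `msg` parameter. That matters because your first application is now broken: it doesn't pass a string to the `timestamp.Timer` function: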

+

+```

+$ python3 ./sleeptest.py

+Testing Python sleep()...

+Traceback (most recent call last):

+  File "./sleeptest.py", line 8, in <module>

+    timestamp.Timer()

+TypeError: Timer() missing 1 required positional argument: 'msg'

+```

+

+Can you fix your `sleeptest.py` application so that it runs correctly with the updated version of your module?
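
The most direct fix is to pass a string at each call site in `sleeptest.py`, for example `timestamp.Timer("Time is ")`. As an alternative, and purely as a sketch rather than the article's own answer, the module could give `msg` a default value so that old and new call styles both work. The return value below is an assumption added only so the result can be inspected; the article's `Timer()` at this stage returns nothing.

```python
import time


def Timer(msg="Time is "):
    """Print msg followed by the current Unix time; msg is optional."""
    line = str(msg) + str(time.time())
    print(line)
    return line  # assumption: returned only so callers can inspect the text


first = Timer()                      # old call style from sleeptest.py
second = Timer("Started timer at ")  # new call style from response.py
```

A default keeps older callers working, at the cost of hiding the fact that the function's interface changed.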

+

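+### Variables and functions

+

+By design, functions limit the scope of variables. In other words, if a variable is created within a function, that variable is available *only* to that function. If you try to use a variable that appears in a function outside the function, an error occurs.

+

+Here is a modification of the `response.py` application that attempts to print the `msg` variable from outside the `timestamp.Timer()` function: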

+

+```

+#!/usr/bin/env python3

+

+from mymodularity import timestamp

+

+print("Press the RETURN key. Count to 3, and press RETURN again.")

+

+input()

+timestamp.Timer("Started timer at ")

+

+print("Count to 3...")

+

+input()

+timestamp.Timer("You slept for ")

+

+print(msg)

+```

+

+Try running it to see the error:

+

+```

+$ python3 ./response.py

+Press the RETURN key. Count to 3, and press RETURN again.

+

+Started timer at 1560719527.7862902

+Count to 3...

+

+You slept for 1560719528.135406

+Traceback (most recent call last):

+  File "./response.py", line 15, in <module>

+    print(msg)

+NameError: name 'msg' is not defined

+```

+

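+The application raises a `NameError` because `msg` is not defined. That might seem confusing because you wrote code that defines `msg`, but you have greater insight into your code than Python does. Code that calls a function, whether the function appears in the same file or is packaged up as a module, doesn't know what happens inside the function. A function performs its calculations independently and returns what it has been programmed to return. Any variables involved are *local* only: they exist only within the function and only as long as it takes the function to accomplish its purpose.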
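
The same behavior can be reproduced in a single file. This is a minimal sketch; the function `show` and the variable `shout` are hypothetical names chosen for the illustration, and the `try`/`except` is there only so the script keeps running after the error.

```python
def show(msg):
    shout = msg.upper()  # 'shout' and 'msg' exist only while show() runs
    print(shout)


show("count to 3")

try:
    shout                # not defined at module level
except NameError as err:
    outcome = str(err)

print("NameError: " + outcome)
```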

+

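+#### Return statements

+

+If your application needs information that is contained only in a function, use a `return` statement to have the function provide meaningful data after it runs.

+

+They say time is money, so modify your `timestamp` function to allow for an imaginary charging system: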

+

+```

+#!/usr/bin/env python3

+

+import time

+

+def Timer(msg):

+    print(str(msg) + str(time.time()))

+    charge = .02

+    return charge

+```

+

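+The `timestamp` module now charges two cents for each call, but most importantly, it returns the amount charged each time it is called.

+

+Here is a demonstration of how a `return` statement can be used: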

+

+```

+#!/usr/bin/env python3

+

+from mymodularity import timestamp

+

+print("Press RETURN for the time (costs 2 cents).")

+print("Press Q RETURN to quit.")

+

+total = 0

+

+while True:

+    kbd = input()

+    if kbd.lower() == "q":

+        print("You owe $" + str(total))

+        exit()

+    else:

+        charge = timestamp.Timer("Time is ")

+        total = total + charge

+```

+

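+In this sample code, the variable `charge` is assigned the result of the `timestamp.Timer()` function; it receives whatever the function returns. In this case, the function returns a number, so a variable called `total` keeps a running sum of the charges made so far. When the application receives the signal to quit, it prints the total charges: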

+

+```

+$ python3 ./charge.py

+Press RETURN for the time (costs 2 cents).

+Press Q RETURN to quit.

+

+Time is 1560722430.345412

+

+Time is 1560722430.933996

+

+Time is 1560722434.6027434

+

+Time is 1560722438.612629

+

+Time is 1560722439.3649364

+q

+You owe $0.1

+```
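
One caveat about the running total: a float such as `.02` cannot always be stored exactly, so a long series of additions may drift by a tiny amount and print with unexpected digits. A common workaround, shown here as a sketch and not as part of the article's code, is to count whole cents and format them only for display. The names `CHARGE_CENTS`, `timer_charge`, and `format_dollars` are hypothetical.

```python
CHARGE_CENTS = 2  # the same 2-cent fee, stored as a whole number of cents


def timer_charge():
    """Stand-in for timestamp.Timer(): return the fee for one call, in cents."""
    return CHARGE_CENTS


def format_dollars(cents):
    """Render a whole number of cents as a dollar string."""
    return "${}.{:02d}".format(cents // 100, cents % 100)


total_cents = 0
for _ in range(5):  # five calls, like the five key presses in the session above
    total_cents += timer_charge()

print("You owe " + format_dollars(total_cents))
```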

+

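+#### Inline functions

+

+Functions don't have to be created in separate files. If you're just writing a short script specific to one task, it may make more sense to write your functions in the same file. The only difference is that you don't have to import your own module, but otherwise the function works the same way. Here is the latest iteration of the time test application: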

+

+```

+#!/usr/bin/env python3

+

+import time

+

+total = 0

+

+def Timer(msg):

+    print(str(msg) + str(time.time()))

+    charge = .02

+    return charge

+

+print("Press RETURN for the time (costs 2 cents).")

+print("Press Q RETURN to quit.")

+

+while True:

+    kbd = input()

+    if kbd.lower() == "q":

+        print("You owe $" + str(total))

+        exit()

+    else:

+        charge = Timer("Time is ")

+        total = total + charge

+```

+

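+It has no external dependencies (the `time` module is included in the Python distribution) and produces the same results as the modular version. The advantage is that everything is located in one file; the disadvantage is that you cannot use the `Timer()` function in some other script unless you copy and paste it manually.

+

+#### Global variables

+

+A variable created outside a function has nothing limiting its scope, so it is considered a *global* variable.

+

+An example of a global variable is the `total` variable in `charge.py`, which tracks the current charges. The running total is created outside any function, so it is bound to the application rather than to a specific function.

+

+A function within the application can access a global variable, but to get a variable into an imported module, you must pass it to the module the same way you send the `msg` variable.

+

+Global variables are convenient because they seem to be available whenever and wherever you need them, but it can be difficult to keep track of their scope and to know which ones are still hanging around in system memory long after they're no longer needed (although Python generally has very good garbage collection).

+

+Global variables are important, though, because not all variables can be local to a function or class. That's easy to manage now that you know how to pass variables into functions and get values back.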
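
As a rough illustration of the trade-off, the sketch below contrasts a function that updates a global with one that takes its state as an argument and hands it back. The function names are hypothetical. Note that reading a global inside a function works as-is, while assigning to it requires the `global` statement.

```python
total = 0  # global: created outside any function


def peek():
    return total  # reading a global inside a function works


def add_charge_global(charge):
    global total  # needed before assigning to the module-level name
    total = total + charge


def add_charge(running_total, charge):
    return running_total + charge  # no globals: state comes in and goes out


add_charge_global(2)
seen = peek()

local_total = add_charge(0, 2)
local_total = add_charge(local_total, 2)

print(seen, local_total)
```

The second style is the one that carries over unchanged when the function moves into an imported module.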

+

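+### Summary

+

+You've learned a lot about functions, so start putting them into your scripts: if not as separate modules, then as blocks of code you don't have to write multiple times within one script. In the next article in this series, I'll get into Python classes.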

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/7/get-modular-python-functions

+

+Author: [Seth Kenlon][a]

+Topic selection: [lujun9972][b]

+Translator: [MjSeven](https://github.com/MjSeven)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

+

+[a]: https://opensource.com/users/seth/users/xd-deng/users/nhuntwalker/users/don-watkins

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/openstack_python_vim_1.jpg?itok=lHQK5zpm

+[2]: https://www.python.org/

+[3]: https://opensource.com/article/17/10/python-10

diff --git a/published/20190705 Learn object-oriented programming with Python.md b/published/20190705 Learn object-oriented programming with Python.md

new file mode 100644

index 0000000000..1d2767d601

--- /dev/null

+++ b/published/20190705 Learn object-oriented programming with Python.md

@@ -0,0 +1,305 @@

+[#]: collector: (lujun9972)

+[#]: translator: (MjSeven)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11317-1.html)

+[#]: subject: (Learn object-oriented programming with Python)

+[#]: via: (https://opensource.com/article/19/7/get-modular-python-classes)

+[#]: author: (Seth Kenlon https://opensource.com/users/seth)

+

+Learn object-oriented programming with Python

+======

+

+> Make your code more modular with Python classes.

+

+

+

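+In my previous article, I explained how to [make Python code more modular][2] by using functions, creating modules, or both. Functions are invaluable for avoiding repeated code you intend to use several times, and modules ensure that you can reuse your code across different projects. But there's another component to modularity: the class.

+

+If you've heard the term *object-oriented programming* (OOP), you may have some notion of the purpose classes serve. Programmers tend to think of a class as a virtual object, sometimes with a direct correlation to something in the physical world, and other times as a manifestation of some programming concept. Either way, you can create a class when you want to create "objects" within a program for you or other parts of the program to interact with.

+

+### Templates without classes

+

+Assume you're writing a game set in a fantasy world, and you need the application to be able to drum up a variety of baddies to bring some excitement into your players' lives. Knowing quite a lot about functions, you might think this sounds like a textbook case for them: code that needs to be repeated often but is written once, with allowance for variations when it is called.

+

+Here is an example of a purely function-based implementation of an enemy generator: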

+

+```

+#!/usr/bin/env python3

+

+import random

+

+def enemy(ancestry,gear):

+    enemy=ancestry

+    weapon=gear

+    hp=random.randrange(0,20)

+    ac=random.randrange(0,20)

+    return [enemy,weapon,hp,ac]

+

+def fight(tgt):

+    print("You take a swing at the " + tgt[0] + ".")

+    hit=random.randrange(0,20)

+    if hit > tgt[3]:

+        print("You hit the " + tgt[0] + " for " + str(hit) + " damage!")

+        tgt[2] = tgt[2] - hit

+    else:

+        print("You missed.")

+

+

+foe=enemy("troll","great axe")

+print("You meet a " + foe[0] + " wielding a " + foe[1])

+print("Type the a key and then RETURN to attack.")

+

+while True:

+    action=input()

+

+    if action.lower() == "a":

+        fight(foe)

+

+    if foe[2] < 1:

+        print("You killed your foe!")

+        break  # stop the loop once the foe is defeated

+    else:

+        print("The " + foe[0] + " has " + str(foe[2]) + " HP remaining")

+```

+

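+The `enemy` function creates an enemy with several attributes, such as ancestry, a weapon, health points, and a defense rating. It returns a list of these attributes, representing the sum total of the enemy.

+

+In a sense, this code has created an object, even though it's not using a class yet. Programmers call this "enemy" an *object* because the result returned from the function (a list of strings and integers, in this case) represents a singular but complex *thing* in the game. That is, the strings and integers in the list aren't arbitrary: together, they describe a virtual object.

+

+When writing a collection of descriptors, you use variables so you can use them any time you want to generate an enemy. It's a little like a template.

+

+In the example code, when an attribute of the object is needed, the corresponding list item is retrieved. For instance, to get the ancestry of an enemy, the code looks at `foe[0]`; for health points, it looks at `foe[2]`; and so on.

+

+There's nothing necessarily wrong with this approach. The code runs as expected. You could add more enemies of different types, you could create a list of enemy types and randomly select from the list during enemy creation, and so on. It works well enough, and in fact [Lua][3] uses this principle very effectively to approximate an object-oriented model.

+

+However, sometimes an object is more than just a list of attributes.

+

+### Using objects

+

+In Python, everything is an object. Anything you create in Python is an *instance* of some predefined template. Even basic strings and integers are instances of classes (`str` and `int`), which are in turn instances of Python's `type`. You can witness this for yourself in an interactive Python shell: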

+

+```

+>>> foo=3

+>>> type(foo)

+<class 'int'>

+>>> foo="bar"

+>>> type(foo)

+<class 'str'>

+```

+

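+When an object is defined by a class, it is more than just a collection of attributes. Python classes have functions all their own. This is convenient, logically, because actions that pertain only to a certain class of objects are contained within that object's class.

+

+In the example code, the `fight` code is a function of the main application. That works fine for a simple game, but in a complex one there would be more than just players and enemies in the game world. There might be townsfolk, livestock, buildings, forests, and so on, and none of them ever need access to a fight function. Placing the combat code in the enemy class means your code is better organized, and in a complex application, that's a significant advantage.

+

+Furthermore, each class has privileged access to its own local variables. An enemy's health points, for instance, aren't data that should ever change except through some function of the enemy class. A random butterfly in the game should not accidentally reduce an enemy's health to 0. Ideally, that would never happen even without classes, but in a complex application with lots of moving parts, it's a powerful trick of the trade to ensure that parts that don't need to interact with one another never do.

+

+Python classes are also subject to garbage collection. When an instance of a class is no longer used, it is moved out of memory. You may never know when this happens, but you tend to notice when it doesn't happen, because your application takes up more memory and runs slower than it should. Isolating data sets into classes helps Python track what is in use and what is no longer needed.

+

+### Elegant Python

+

+Here's the same simple combat game, this time using an `Enemy` class: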

+

+```

+#!/usr/bin/env python3

+

+import random

+

+class Enemy():

+    def __init__(self,ancestry,gear):

+        self.enemy=ancestry

+        self.weapon=gear

+        self.hp=random.randrange(10,20)

+        self.ac=random.randrange(12,20)

+        self.alive=True

+

+    def fight(self,tgt):

+        print("You take a swing at the " + self.enemy + ".")

+        hit=random.randrange(0,20)

+

+        if self.alive and hit > self.ac:

+            print("You hit the " + self.enemy + " for " + str(hit) + " damage!")

+            self.hp = self.hp - hit

+            print("The " + self.enemy + " has " + str(self.hp) + " HP remaining")

+        else:

+            print("You missed.")

+

+        if self.hp < 1:

+            self.alive=False

+

+# game start

+foe=Enemy("troll","great axe")

+print("You meet a " + foe.enemy + " wielding a " + foe.weapon)

+

+# main loop

+while True:

+

+    print("Type the a key and then RETURN to attack.")

+

+    action=input()

+

+    if action.lower() == "a":

+        foe.fight(foe)

+

+    if foe.alive == False:

+        print("You have won...this time.")

+        exit()

+```

+

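+This version of the game handles the enemy as an object containing the same attributes (ancestry, weapon, health, and defense), plus a new attribute tracking whether the enemy has been vanquished yet, as well as a function for combat.

+

+The first function of a class is a special function called (in Python) `__init__`, or the initialization function. This is similar to a [constructor][4] in other languages; it sets up an instance of the class, which is identifiable to you by its attributes and by whatever variable you use when invoking the class (`foe` in the example code).

+

+### Self and class instances

+

+The class's functions accept a new form of input that you don't see outside of classes: `self`. If you don't include `self`, Python has no way of knowing *which* instance of the class to use when you call a class function. It's like challenging a single orc to a duel by saying "I'll fight the orc" in a room full of orcs: nobody knows which one you're referring to, so they all come at you.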

+

+![Image of an Orc, CC-BY-SA by Buch on opengameart.org][5]

+

+*CC-BY-SA by Buch on opengameart.org*

+

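+Each attribute created within a class is prepended with the `self` notation, which identifies that variable as an attribute of the class. Once an instance of a class is spawned, you swap out the `self` prefix for the variable representing that instance. Using this technique, you could challenge just one orc to a duel in a room full of orcs by saying "I'll fight the gorblar.orc"; when Gorblar the Orc hears *gorblar.orc*, he knows which orc you're referring to (himself), so you get a fair fight instead of a brawl. In Python: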

+

+```

+gorblar=Enemy("orc","sword")

+print("The " + gorblar.enemy + " has " + str(gorblar.hp) + " remaining.")

+```

+

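+Instead of looking to `foe[0]` (as in the functional example) or `gorblar[0]` for the enemy type, you retrieve the class attribute (`gorblar.enemy` or `gorblar.hp` or whatever value for whatever object you need).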
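
To see that each instance carries its own copy of the attributes, here is a small sketch with a trimmed-down `Enemy` class. The second orc, `snaga`, is a hypothetical addition for the illustration and does not appear in the article's game.

```python
import random


class Enemy:
    def __init__(self, ancestry, gear):
        self.enemy = ancestry
        self.weapon = gear
        self.hp = random.randrange(10, 20)


gorblar = Enemy("orc", "sword")
snaga = Enemy("orc", "club")  # hypothetical second orc

gorblar_hp_before = gorblar.hp
snaga.hp = snaga.hp - 5       # only snaga is hurt

print("The " + gorblar.enemy + " Gorblar still has " + str(gorblar.hp) + " HP.")
print("The " + snaga.enemy + " Snaga has " + str(snaga.hp) + " HP.")
```

Inside the class, both orcs run the same `self.hp` code; outside it, `gorblar.hp` and `snaga.hp` name two separate values.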

+

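+### Local variables

+

+If a variable in a class is not prepended with `self`, then it is a local variable, just as in any function. For instance, no matter what you do, you cannot access the `hit` variable outside the `Enemy.fight` method: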

+

+```

+>>> print(foe.hit)

+Traceback (most recent call last):

+  File "./enclass.py", line 38, in <module>

+    print(foe.hit)

+AttributeError: 'Enemy' object has no attribute 'hit'

+

+>>> print(foe.fight.hit)

+Traceback (most recent call last):

+  File "./enclass.py", line 38, in <module>

+    print(foe.fight.hit)

+AttributeError: 'function' object has no attribute 'hit'

+```

+

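+The `hit` variable is contained within the `fight` method of the `Enemy` class, and it "lives" only long enough to serve its purpose in combat.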
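
If the roll does need to outlive the call, it has to be stored on the instance. The sketch below assumes a hypothetical `last_hit` attribute that the article's class does not have, and a simplified `fight()` that takes no target.

```python
import random


class Enemy:
    def __init__(self, ancestry):
        self.enemy = ancestry
        self.last_hit = None  # hypothetical attribute, not in the article's class

    def fight(self):
        hit = random.randrange(0, 20)  # local: gone when fight() returns
        self.last_hit = hit            # attribute: stays on the instance
        return hit


foe = Enemy("troll")
rolled = foe.fight()

print(hasattr(foe, "hit"))      # the local never became an attribute
print(foe.last_hit == rolled)   # the attribute kept the value
```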

+

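+### More modularity

+

+This example uses a class in the same text document as your main application. In a complex game, it's easier to treat each class almost as if it were its own self-standing application. You see this when multiple developers work on the same application: one developer works on a class, and another works on the main program, and as long as they communicate with one another about what attributes the class must have, the two code bases can be developed in parallel.

+

+To make this example game modular, split it into two files: one for the main application and one for the class. Were it a more complex application, you might have one file per class, or one file per logical group of classes (for instance, a file for buildings, a file for natural surroundings, a file for enemies and NPCs, and so on).

+

+Save one file containing just the `Enemy` class as `enemy.py` and another file containing everything else as `main.py`.

+

+Here's `enemy.py`: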

+

+```

+import random

+

+class Enemy():

+    def __init__(self,ancestry,gear):

+        self.enemy=ancestry

+        self.weapon=gear

+        self.hp=random.randrange(10,20)

+        self.stg=random.randrange(0,20)

+        self.ac=random.randrange(0,20)

+        self.alive=True

+

+    def fight(self,tgt):

+        print("You take a swing at the " + self.enemy + ".")

+        hit=random.randrange(0,20)

+

+        if self.alive and hit > self.ac:

+            print("You hit the " + self.enemy + " for " + str(hit) + " damage!")

+            self.hp = self.hp - hit

+            print("The " + self.enemy + " has " + str(self.hp) + " HP remaining")

+        else:

+            print("You missed.")

+

+        if self.hp < 1:

+            self.alive=False

+```

+

+Here's `main.py`:

+

+```

+#!/usr/bin/env python3

+

+import enemy as en

+

+# game start

+foe=en.Enemy("troll","great axe")

+print("You meet a " + foe.enemy + " wielding a " + foe.weapon)

+

+# main loop

+while True:

+

+    print("Type the a key and then RETURN to attack.")

+

+    action=input()

+

+    if action.lower() == "a":

+        foe.fight(foe)

+

+    if foe.alive == False:

+        print("You have won...this time.")

+        exit()

+```

+

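+Importing your module `enemy.py` is done with a statement that refers to the file of classes by its name without the `.py` extension, followed by a namespace designator of your choosing (for example, `import enemy as en`). This designator is what you use in the code when invoking a class. Instead of just using `Enemy()`, you preface the class with the designator of what you imported, such as `en.Enemy`.

+

+All of these file names are entirely arbitrary, although they are not unusual choices. It's a common convention to name the part of the application that serves as the central hub `main.py`, and a file full of classes is often named in lowercase, with the classes inside it each beginning with a capital letter. Whether you follow these conventions doesn't affect how the application runs, but it does make it easier for experienced Python programmers to quickly decipher how your application works.

+

+There's some flexibility in how you structure your code. For instance, with this code sample, both files must be in the same directory. If you want to package just your classes as a module, you must create a directory called, for instance, `mybad` and move your classes into it. In `main.py`, your `import` statement changes a little: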

+

+```

+from mybad import enemy as en

+```

+

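+Both approaches produce the same results, but the latter is best if the classes you have created are generic enough that you think other developers could use them in their projects.

+

+Regardless of which you choose, launch the modular version of the game: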

+

+```

+$ python3 ./main.py

+You meet a troll wielding a great axe

+Type the a key and then RETURN to attack.

+a

+You take a swing at the troll.

+You missed.

+Type the a key and then RETURN to attack.

+a

+You take a swing at the troll.

+You hit the troll for 8 damage!

+The troll has 4 HP remaining

+Type the a key and then RETURN to attack.

+a

+You take a swing at the troll.

+You hit the troll for 11 damage!

+The troll has -7 HP remaining

+You have won...this time.

+```

+

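+The game works, and it's now more modular. Now you know what it means for an application to be object-oriented, but most importantly, you know to be specific when challenging an orc to a duel.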

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/7/get-modular-python-classes

+

+Author: [Seth Kenlon][a]

+Topic selection: [lujun9972][b]

+Translator: [MjSeven](https://github.com/MjSeven)

+Proofreader: [wxy](https://github.com/wxy)

+

+This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

+

+[a]: https://opensource.com/users/seth

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/code_development_programming.png?itok=M_QDcgz5 (Developing code.)

+[2]: https://linux.cn/article-11295-1.html

+[3]: https://opensource.com/article/17/4/how-program-games-raspberry-pi

+[4]: https://opensource.com/article/19/6/what-java-constructor

+[5]: https://opensource.com/sites/default/files/images/orc-buch-opengameart_cc-by-sa.jpg (CC-BY-SA by Buch on opengameart.org)

diff --git a/published/20171113 IT disaster recovery- Sysadmins vs. natural disasters - HPE.md b/published/201908/20171113 IT disaster recovery- Sysadmins vs. natural disasters - HPE.md

similarity index 100%

rename from published/20171113 IT disaster recovery- Sysadmins vs. natural disasters - HPE.md

rename to published/201908/20171113 IT disaster recovery- Sysadmins vs. natural disasters - HPE.md

diff --git a/published/20171216 Sysadmin 101- Troubleshooting.md b/published/201908/20171216 Sysadmin 101- Troubleshooting.md

similarity index 100%

rename from published/20171216 Sysadmin 101- Troubleshooting.md

rename to published/201908/20171216 Sysadmin 101- Troubleshooting.md

diff --git a/published/20180104 How allowing myself to be vulnerable made me a better leader.md b/published/201908/20180104 How allowing myself to be vulnerable made me a better leader.md

similarity index 100%

rename from published/20180104 How allowing myself to be vulnerable made me a better leader.md

rename to published/201908/20180104 How allowing myself to be vulnerable made me a better leader.md

diff --git a/published/20180116 Command Line Heroes- Season 1- OS Wars.md b/published/201908/20180116 Command Line Heroes- Season 1- OS Wars.md

similarity index 100%

rename from published/20180116 Command Line Heroes- Season 1- OS Wars.md

rename to published/201908/20180116 Command Line Heroes- Season 1- OS Wars.md

diff --git a/published/20180119 Two great uses for the cp command Bash shortcuts.md b/published/201908/20180119 Two great uses for the cp command Bash shortcuts.md

similarity index 100%

rename from published/20180119 Two great uses for the cp command Bash shortcuts.md

rename to published/201908/20180119 Two great uses for the cp command Bash shortcuts.md

diff --git a/published/20180131 How to test Webhooks when youre developing locally.md b/published/201908/20180131 How to test Webhooks when youre developing locally.md

similarity index 100%

rename from published/20180131 How to test Webhooks when youre developing locally.md

rename to published/201908/20180131 How to test Webhooks when youre developing locally.md

diff --git a/published/20180622 Use LVM to Upgrade Fedora.md b/published/201908/20180622 Use LVM to Upgrade Fedora.md

similarity index 100%

rename from published/20180622 Use LVM to Upgrade Fedora.md

rename to published/201908/20180622 Use LVM to Upgrade Fedora.md

diff --git a/published/20180720 A brief history of text-based games and open source.md b/published/201908/20180720 A brief history of text-based games and open source.md

similarity index 100%

rename from published/20180720 A brief history of text-based games and open source.md

rename to published/201908/20180720 A brief history of text-based games and open source.md

diff --git a/published/20180727 4 Ways to Customize Xfce and Give it a Modern Look.md b/published/201908/20180727 4 Ways to Customize Xfce and Give it a Modern Look.md

similarity index 100%

rename from published/20180727 4 Ways to Customize Xfce and Give it a Modern Look.md

rename to published/201908/20180727 4 Ways to Customize Xfce and Give it a Modern Look.md

diff --git a/published/20180928 Quiet log noise with Python and machine learning.md b/published/201908/20180928 Quiet log noise with Python and machine learning.md

similarity index 100%

rename from published/20180928 Quiet log noise with Python and machine learning.md

rename to published/201908/20180928 Quiet log noise with Python and machine learning.md

diff --git a/published/20181011 Exploring the Linux kernel- The secrets of Kconfig-kbuild.md b/published/201908/20181011 Exploring the Linux kernel- The secrets of Kconfig-kbuild.md

similarity index 100%

rename from published/20181011 Exploring the Linux kernel- The secrets of Kconfig-kbuild.md

rename to published/201908/20181011 Exploring the Linux kernel- The secrets of Kconfig-kbuild.md

diff --git a/published/20181029 Create animated, scalable vector graphic images with MacSVG.md b/published/201908/20181029 Create animated, scalable vector graphic images with MacSVG.md

similarity index 100%

rename from published/20181029 Create animated, scalable vector graphic images with MacSVG.md

rename to published/201908/20181029 Create animated, scalable vector graphic images with MacSVG.md

diff --git a/published/20181029 DF-SHOW - A Terminal File Manager Based On An Old DOS Application.md b/published/201908/20181029 DF-SHOW - A Terminal File Manager Based On An Old DOS Application.md

similarity index 100%

rename from published/20181029 DF-SHOW - A Terminal File Manager Based On An Old DOS Application.md

rename to published/201908/20181029 DF-SHOW - A Terminal File Manager Based On An Old DOS Application.md

diff --git a/published/20181030 Podman- A more secure way to run containers.md b/published/201908/20181030 Podman- A more secure way to run containers.md

similarity index 100%

rename from published/20181030 Podman- A more secure way to run containers.md

rename to published/201908/20181030 Podman- A more secure way to run containers.md

diff --git a/published/20181202 How To Customize The GNOME 3 Desktop.md b/published/201908/20181202 How To Customize The GNOME 3 Desktop.md

similarity index 100%

rename from published/20181202 How To Customize The GNOME 3 Desktop.md

rename to published/201908/20181202 How To Customize The GNOME 3 Desktop.md

diff --git a/published/20181213 Podman and user namespaces- A marriage made in heaven.md b/published/201908/20181213 Podman and user namespaces- A marriage made in heaven.md

similarity index 100%

rename from published/20181213 Podman and user namespaces- A marriage made in heaven.md

rename to published/201908/20181213 Podman and user namespaces- A marriage made in heaven.md

diff --git a/published/20181220 Getting started with Prometheus.md b/published/201908/20181220 Getting started with Prometheus.md

similarity index 100%

rename from published/20181220 Getting started with Prometheus.md

rename to published/201908/20181220 Getting started with Prometheus.md

diff --git a/published/20181222 How to detect automatically generated emails.md b/published/201908/20181222 How to detect automatically generated emails.md

similarity index 100%

rename from published/20181222 How to detect automatically generated emails.md

rename to published/201908/20181222 How to detect automatically generated emails.md

diff --git a/translated/tech/20190225 How to Install VirtualBox on Ubuntu -Beginner-s Tutorial.md b/published/201908/20190225 How to Install VirtualBox on Ubuntu -Beginner-s Tutorial.md

similarity index 72%

rename from translated/tech/20190225 How to Install VirtualBox on Ubuntu -Beginner-s Tutorial.md

rename to published/201908/20190225 How to Install VirtualBox on Ubuntu -Beginner-s Tutorial.md

index e23d9c3f0e..8d83afa0dd 100644

--- a/translated/tech/20190225 How to Install VirtualBox on Ubuntu -Beginner-s Tutorial.md

+++ b/published/201908/20190225 How to Install VirtualBox on Ubuntu -Beginner-s Tutorial.md

@@ -1,18 +1,20 @@

[#]: collector: (lujun9972)

[#]: translator: (beamrolling)

-[#]: reviewer: ( )

-[#]: publisher: ( )

-[#]: url: ( )

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11282-1.html)

[#]: subject: (How to Install VirtualBox on Ubuntu [Beginner’s Tutorial])

[#]: via: (https://itsfoss.com/install-virtualbox-ubuntu)

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

-如何在 Ubuntu 上安装 VirtualBox [新手教程]

+如何在 Ubuntu 上安装 VirtualBox

======

-**本新手教程解释了在 Ubuntu 和其他基于 Debian 的 Linux 发行版上安装 VirtualBox 的各种方法。**

+> 本新手教程解释了在 Ubuntu 和其他基于 Debian 的 Linux 发行版上安装 VirtualBox 的各种方法。

-Oracle 公司的免费开源产品 [VirtualBox][1] 是一款出色的虚拟化工具,专门用于桌面操作系统。和 [Linux 上的 VMWare Workstation][2] — 另一款虚拟化工具,相比起来,我更喜欢它。

+

+

+Oracle 公司的自由开源产品 [VirtualBox][1] 是一款出色的虚拟化工具,专门用于桌面操作系统。与另一款虚拟化工具 [Linux 上的 VMWare Workstation][2] 相比起来,我更喜欢它。

你可以使用 VirtualBox 等虚拟化软件在虚拟机中安装和使用其他操作系统。

@@ -20,25 +22,29 @@ Oracle 公司的免费开源产品 [VirtualBox][1] 是一款出色的虚拟化

你也可以用 VirtualBox 在你当前的 Linux 系统中安装别的 Linux 发行版。事实上,这就是我用它的原因。如果我听说了一个不错的 Linux 发行版,我会在虚拟机上测试它,而不是安装在真实的系统上。当你想要在安装之前尝试一下别的发行版时,用虚拟机会很方便。

-![Linux installed inside Linux using VirtualBox][5]安装在 Ubuntu 18.04 内的 Ubuntu 18.10

+![Linux installed inside Linux using VirtualBox][5]

+

+*安装在 Ubuntu 18.04 内的 Ubuntu 18.10*

在本新手教程中,我将向你展示在 Ubuntu 和其他基于 Debian 的 Linux 发行版上安装 VirtualBox 的各种方法。

### 在 Ubuntu 和基于 Debian 的 Linux 发行版上安装 VirtualBox

-这里提出的安装方法也适用于其他基于 Debian 和 Ubuntu 的 Linux 发行版,如 Linux Mint,elementar OS 等。

+这里提出的安装方法也适用于其他基于 Debian 和 Ubuntu 的 Linux 发行版,如 Linux Mint、elementary OS 等。

#### 方法 1:从 Ubuntu 仓库安装 VirtualBox

-**优点** : 安装简便

+**优点**:安装简便

-**缺点** : 下载旧版本

+**缺点**:较旧版本

在 Ubuntu 上下载 VirtualBox 最简单的方法可能是从软件中心查找并下载。

-![VirtualBox in Ubuntu Software Center][6]VirtualBox 在 Ubuntu 软件中心提供

+![VirtualBox in Ubuntu Software Center][6]

-你也可以使用该条命令从命令行安装:

+*VirtualBox 在 Ubuntu 软件中心提供*

+

+你也可以使用这条命令从命令行安装:

```

sudo apt install virtualbox

@@ -50,27 +56,27 @@ sudo apt install virtualbox

#### 方法 2:使用 Oracle 网站上的 Deb 文件安装 VirtualBox

-**优点** : 容易安装最新版本

+**优点**:安装简便,最新版本

-**缺点** : 不能更新

+**缺点**:不能更新

如果你想要在 Ubuntu 上使用 VirtualBox 的最新版本,最简单的方法就是[使用 Deb 文件][9]。

-建议阅读[如何在 Ubuntu Linux 上下载 GNOME](https://itsfoss.com/fix-white-screen-login-arch-linux/great )

-

-Oracle 为 VirtiualBox 版本提供了开箱可用的二进制文件。如果查看其下载页面,你将看到为 Ubuntu 和其他发行版下载 deb 安装程序的选项。

+Oracle 为 VirtualBox 版本提供了开箱即用的二进制文件。如果查看其下载页面,你将看到为 Ubuntu 和其他发行版下载 deb 安装程序的选项。

![VirtualBox Linux Download][10]

你只需要下载 deb 文件并双击它即可安装。就是这么简单。

+- [下载适用于 Ubuntu 的 VirtualBox](https://www.virtualbox.org/wiki/Linux_Downloads)

+

然而,这种方法的问题在于你不能自动更新到最新的 VirtualBox 版本。唯一的办法是移除现有版本,下载最新版本并再次安装。不太方便,是吧?

#### 方法 3:用 Oracle 的仓库安装 VirtualBox

-**优点** : 自动更新

+**优点**:自动更新

-**缺点** : 安装略微复杂

+**缺点**:安装略微复杂

现在介绍的是命令行安装方法,它看起来可能比较复杂,但与前两种方法相比,它更具有优势。你将获得 VirtualBox 的最新版本,并且未来它还将自动更新到更新的版本。我想那就是你想要的。

@@ -82,13 +88,13 @@ Oracle 为 VirtiualBox 版本提供了开箱可用的二进制文件。如果查

wget -q https://www.virtualbox.org/download/oracle_vbox_2016.asc -O- | sudo apt-key add -

```

-```

-Mint 用户请注意:

+> Mint 用户请注意:

-下一步只适用于 Ubuntu。如果你使用的是 Linux Mint 或其他基于 Ubuntu 的发行版,请将命令行中的 $(lsb_release -cs) 替换成你当前版本所基于的 Ubuntu 版本。例如,Linux Mint 19 系列用户应该使用 bionic,Mint 18 系列用户应该使用 xenial,像这样:

+> 下一步只适用于 Ubuntu。如果你使用的是 Linux Mint 或其他基于 Ubuntu 的发行版,请将命令行中的 `$(lsb_release -cs)` 替换成你当前版本所基于的 Ubuntu 版本。例如,Linux Mint 19 系列用户应该使用 bionic,Mint 18 系列用户应该使用 xenial,像这样:

-sudo add-apt-repository “deb [arch=amd64] **bionic** contrib“

-```

+> ```

+> sudo add-apt-repository "deb [arch=amd64] **bionic** contrib"

+> ```

现在用以下命令来将 Oracle VirtualBox 仓库添加到仓库列表中:

@@ -96,9 +102,9 @@ sudo add-apt-repository “deb [arch=amd64]

+> 无论你是刚毕业的大学生,还是想在职业中寻求进步的经验丰富的 IT 专家,这些提示都可以帮你成为 DevOps 工程师。

+

+

+

+DevOps 工程是一个备受称赞的热门职业。不管你是刚毕业正在找第一份工作,还是在利用之前的行业经验的同时寻求学习新技能的机会,本指南都能帮你通过正确的步骤成为 [DevOps 工程师][2]。

+

+### 让自己沉浸其中

+

+首先学习 [DevOps][3] 的基本原理、实践以及方法。在使用工具之前,先了解 DevOps 背后的“为什么”。DevOps 工程师的主要目标是在整个软件开发生命周期(SDLC)中提高速度并保持或提高质量,以提供最大的业务价值。阅读文章、观看 YouTube 视频、参加当地小组聚会或者会议 —— 成为热情的 DevOps 社区中的一员,在那里你将从先行者的错误和成功中学习。

+

+### 考虑你的背景

+

+如果你有从事技术工作的经历,例如软件开发人员、系统工程师、系统管理员、网络运营工程师或者数据库管理员,那么你已经拥有了广泛的见解和有用的经验,它们可以帮助你在未来成为 DevOps 工程师。如果你在完成计算机科学或任何其他 STEM(LCTT 译注:STEM 是科学、技术、工程和数学四个学科的首字母缩略字)领域的学业后刚开始职业生涯,那么你将拥有在这个过渡期间需要的一些基本踏脚石。

+

+DevOps 工程师的角色涵盖了广泛的职责。以下是企业最有可能使用他们的三种方向:

+

+* **偏向于开发(Dev)的 DevOps 工程师**,在构建应用中扮演软件开发的角色。他们日常工作的一部分是利用持续集成 / 持续交付(CI/CD)、共享仓库、云和容器,但他们不一定负责构建或实施工具。他们了解基础架构,并且在成熟的环境中,能将自己的代码推向生产环境。

+* **偏向于运维技术(Ops)的 DevOps 工程师**,可以与系统工程师或系统管理员相比较。他们了解软件的开发,但并不会把一天的重心放在构建应用上。相反,他们更有可能支持软件开发团队实现手动流程的自动化,并提高人员和技术系统的效率。这可能意味着分解遗留代码,并用不太繁琐的自动化脚本来运行相同的命令,或者可能意味着安装、配置或维护基础架构和工具。他们确保为任何有需要的团队安装可使用的工具。他们也会通过教团队如何利用 CI/CD 和其他 DevOps 实践来帮助他们。

+* **网站可靠性工程师(SRE)**,就像解决运维和基础设施的软件工程师。SRE 专注于创建可扩展、高可用且可靠的软件系统。

+

+在理想的世界中,DevOps 工程师将了解以上所有领域;这在成熟的科技公司中很常见。然而,顶级银行和许多财富 500 强企业的 DevOps 职位通常会偏向开发(Dev)或运维(Ops)。

+

+### 要学习的技术

+

+DevOps 工程师需要了解各种技术才能有效完成工作。无论你的背景如何,请从作为 DevOps 工程师需要使用和理解的基本技术开始。

+

+#### 操作系统

+

+操作系统是一切运行的地方,拥有相关的基础知识十分重要。[Linux][4] 是你最有可能每天使用的操作系统,尽管有的组织会使用 Windows 操作系统。要开始使用,你可以在家中安装 Linux,在那里你可以随意折腾甚至把系统弄坏,并在此过程中学习。

+

+#### 脚本

+

+接下来,选择一门语言来学习脚本编程。有很多语言可供选择,包括 Python、Go、Java、Bash、PowerShell、Ruby 和 C / C++。我建议[从 Python 开始][5],因为它相对容易学习和解释,是最受欢迎的语言之一。Python 通常是遵循面向对象编程(OOP)的准则编写的,可用于 Web 开发、软件开发以及创建桌面 GUI 和业务应用程序。

+

+#### 云

+

+学习了 [Linux][4] 和 [Python][5] 之后,我认为下一个该学习的是云计算。基础设施不再只是“运维小哥”的事情了,因此你需要接触云平台,例如 AWS 云服务、Azure 或者谷歌云平台。我会从 AWS 开始,因为它有大量免费学习工具,可以帮助你降低作为开发人员、运维人员,甚至面向业务的部门的任何障碍。事实上,你可能会被它提供的东西所淹没。考虑从 EC2、S3 和 VPC 开始,然后看看你从其中想学到什么。

+

+#### 编程语言

+

+如果你对 DevOps 的软件开发充满热情,请继续提高你的编程技能。DevOps 中的一些优秀和常用的编程语言和你用于脚本编程的相同:Python、Go、Java、Bash、PowerShell、Ruby 和 C / C++。你还应该熟悉 Jenkins 和 Git / GitHub,你将会在 CI/CD 过程中经常使用到它们。

+

+#### 容器

+

+最后,使用 Docker 和编排平台(如 Kubernetes)等工具开始学习[容器化][6]。网上有大量的免费学习资源,大多数城市都有本地的线下小组,你可以在友好的环境中向有经验的人学习(还有披萨和啤酒哦!)。

+

+#### 其他的呢?

+

+如果你缺乏开发经验,你依然可以通过对自动化的热情,提高效率,与他人协作以及改进自己的工作来[参与 DevOps][3]。我仍然建议学习上述工具,但重点不要放在编程 / 脚本语言上。了解基础架构即服务、平台即服务、云平台和 Linux 会非常有用。你可能会设置工具并学习如何构建具有弹性和容错能力的系统,并在编写代码时利用它们。

+

+### 找一份 DevOps 的工作

+

+求职过程会有所不同,具体取决于你是否一直从事技术工作,是否正在进入 DevOps 领域,或者是刚开始职业生涯的毕业生。

+

+#### 如果你已经从事技术工作

+