mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

8b51948c34

77

published/201309/20190204 7 Best VPN Services For 2019.md

Normal file

77

published/201309/20190204 7 Best VPN Services For 2019.md

Normal file

@ -0,0 +1,77 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (Modrisco)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10691-1.html)

|

||||

[#]: subject: (7 Best VPN Services For 2019)

|

||||

[#]: via: (https://www.ostechnix.com/7-best-opensource-vpn-services-for-2019/)

|

||||

[#]: author: (Editor https://www.ostechnix.com/author/editor/)

|

||||

|

||||

2019 年最好的 7 款虚拟私人网络服务

|

||||

======

|

||||

|

||||

在过去三年中,全球至少有 67% 的企业面临着数据泄露,亿万用户受到影响。研究表明,如果事先对数据安全采取最基本的保护措施,那么预计有 93% 的安全问题是可以避免的。

|

||||

|

||||

糟糕的数据安全会带来极大的代价,特别是对企业而言。它会大致大规模的破坏并影响你的品牌声誉。尽管有些企业可以艰难地收拾残局,但仍有一些企业无法从事故中完全恢复。不过现在,你很幸运地可以得到数据及网络安全软件。

|

||||

|

||||

|

||||

|

||||

到了 2019 年,你可以通过**虚拟私人网络**,也就是我们熟知的 **VPN** 来保护你免受网络攻击。当涉及到在线隐私和安全时,常常存在许多不确定因素。有数百个不同的 VPN 提供商,选择合适的供应商也同时意味着在定价、服务和易用性之间谋取恰当的平衡。

|

||||

|

||||

如果你正在寻找一个可靠的 100% 经过测试和安全的 VPN,你可能需要进行详尽的调查并作出最佳选择。这里为你提供在 2019 年 7 款最好用并经过测试的 VPN 服务。

|

||||

|

||||

### 1、Vpnunlimitedapp

|

||||

|

||||

通过 VPN Unlimited,你的数据安全将得到全面的保障。此 VPN 允许你连接任何 WiFi ,而无需担心你的个人数据可能被泄露。你的数据通过 AES-256 算法加密,保护你不受第三方和黑客的窥探。无论你身处何处,这款 VPN 都可确保你在所有网站上保持匿名且不受跟踪。它提供 7 天的免费试用和多种协议支持:openvpn、IKEv2 和 KeepSolidWise。有特殊需求的用户会获得特殊的额外服务,如个人服务器、终身 VPN 订阅和个人 IP 选项。

|

||||

|

||||

### 2、VPN Lite

|

||||

|

||||

VPN Lite 是一款易于使用而且**免费**的用于上网的 VPN 服务。你可以通过它在网络上保持匿名并保护你的个人隐私。它会模糊你的 IP 并加密你的数据,这意味着第三方无法跟踪你的所有线上活动。你还可以访问网络上的全部内容。使用 VPN Lite,你可以访问在被拦截的网站。你还放心地可以访问公共 WiFi 而不必担心敏感信息被间谍软件窃取和来自黑客的跟踪和攻击。

|

||||

|

||||

### 3、HotSpot Shield

|

||||

|

||||

这是一款在 2005 年推出的大受欢迎的 VPN。这套 VPN 协议至少被全球 70% 的数据安全公司所集成,并在全球有数千台服务器。它提供两种免费模式:一种为完全免费,但会有线上广告;另一种则为七天试用。它提供军事级的数据加密和恶意软件防护。HotSpot Shield 保证网络安全并保证高速网络。

|

||||

|

||||

### 4、TunnelBear

|

||||

|

||||

如果你是一名 VPN 新手,那么 TunnelBear 将是你的最佳选择。它带有一个用户友好的界面,并配有动画熊引导。你可以在 TunnelBear 的帮助下以极快的速度连接至少 22 个国家的服务器。它使用 **AES 256-bit** 加密算法,保证无日志记录,这意味着你的数据将得到保护。你还可以在最多五台设备上获得无限流量。

|

||||

|

||||

### 5、ProtonVPN

|

||||

|

||||

这款 VPN 为你提供强大的优质服务。你的连接速度可能会受到影响,但你也可以享受到无限流量。它具有易于使用的用户界面,提供多平台兼容。 ProtonVPN 的服务据说是因为为种子下载提供了优化因而无法访问 Netflix。你可以获得如协议和加密等安全功能来保证你的网络安全。

|

||||

|

||||

### 6、ExpressVPN

|

||||

|

||||

ExpressVPN 被认为是最好的用于接触封锁和保护隐私的离岸 VPN。凭借强大的客户支持和快速的速度,它已成为全球顶尖的 VPN 服务。它提供带有浏览器扩展和自定义固件的路由。 ExpressVPN 拥有一系列令人赞叹高质量应用程序,配有大量的服务器,并且最多只能支持三台设备。

|

||||

|

||||

ExpressVPN 并不是完全免费的,恰恰相反,正是由于它所提供的高质量服务而使之成为了市场上最贵的 VPN 之一。ExpressVPN 有 30 天内退款保证,因此你可以免费试用一个月。好消息是,这是完全没有风险的。例如,如果你在短时间内需要 VPN 来绕过在线审查,这可能是你的首选解决方案。用过它之后,你就不会随意想给一个会发送垃圾邮件、缓慢的免费的程序当成试验品。

|

||||

|

||||

ExpressVPN 也是享受在线流媒体和户外安全的最佳方式之一。如果你需要继续使用它,你只需要续订或取消你的免费试用。ExpressVPN 在 90 多个国家架设有 2000 多台服务器,可以解锁 Netflix,提供快速连接,并为用户提供完全隐私。

|

||||

|

||||

### 7、PureVPN

|

||||

|

||||

虽然 PureVPN 可能不是完全免费的,但它却是此列表中最实惠的一个。用户可以注册获得 7 天的免费试用,并在之后选择任一付费计划。通过这款 VPN,你可以访问到至少 140 个国家中的 750 余台服务器。它还可以在几乎所有设备上轻松安装。它的所有付费特性仍然可以在免费试用期间使用。包括无限数据流量、IP 泄漏保护和 ISP 不可见性。它支持的系统有 iOS、Android、Windows、Linux 和 macOS。

|

||||

|

||||

### 总结

|

||||

|

||||

如今,可用的免费 VPN 服务越来越多,为什么不抓住这个机会来保护你自己和你的客户呢?在了解到有那么多优秀的 VPN 服务后,我们知道即使是最安全的免费服务也不一定就完全没有风险。你可能需要付费升级到高级版以增强保护。高级版的 VPN 为你提供了免费试用,提供无风险退款保证。无论你打算花钱购买 VPN 还是准备使用免费 VPN,我们都强烈建议你使用一个。

|

||||

|

||||

**关于作者:**

|

||||

|

||||

**Renetta K. Molina** 是一个技术爱好者和健身爱好者。她撰写有关技术、应用程序、 WordPress 和其他任何领域的文章。她喜欢在空余时间打高尔夫球和读书。她喜欢学习和尝试新事物。

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/7-best-opensource-vpn-services-for-2019/

|

||||

|

||||

作者:[Editor][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[Modrisco](https://github.com/Modrisco)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.ostechnix.com/author/editor/

|

||||

[b]: https://github.com/lujun9972

|

||||

@ -0,0 +1,482 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (ezio)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10676-1.html)

|

||||

[#]: subject: (Computer Laboratory – Raspberry Pi: Lesson 10 Input01)

|

||||

[#]: via: (https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/input01.html)

|

||||

[#]: author: (Alex Chadwick https://www.cl.cam.ac.uk)

|

||||

|

||||

计算机实验室之树莓派:课程 10 输入01

|

||||

======

|

||||

|

||||

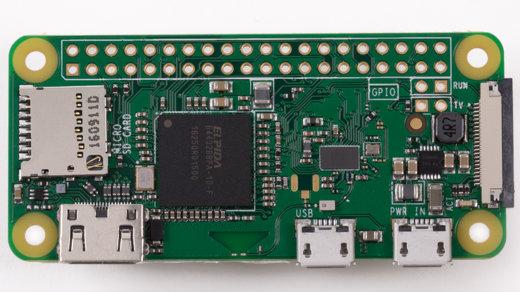

欢迎进入输入课程系列。在本系列,你将会学会如何使用键盘接收输入给树莓派。我们将会从揭示输入开始本课,然后转向更传统的文本提示符。

|

||||

|

||||

这是第一堂输入课,会教授一些关于驱动和链接的理论,同样也包含键盘的知识,最后以在屏幕上显示文本结束。

|

||||

|

||||

### 1、开始

|

||||

|

||||

希望你已经完成了 OK 系列课程,这会对你完成屏幕系列课程很有帮助。很多 OK 课程上的文件会被使用而不会做解释。如果你没有这些文件,或者希望使用一个正确的实现,可以从该堂课的[下载页][1]下载模板。如果你使用你自己的实现,请删除调用了 `SetGraphicsAddress` 之后全部的代码。

|

||||

|

||||

### 2、USB

|

||||

|

||||

如你所知,树莓派 B 型有两个 USB 接口,通常用来连接一个鼠标和一个键盘。这是一个非常好的设计决策,USB 是一个非常通用的接口,很多种设备都可以使用它。这就很容易为它设计新外设,很容易为它编写设备驱动,而且通过 USB 集线器可以非常容易扩展。还能更好吗?当然是不能,实际上对一个操作系统开发者来说,这就是我们的噩梦。USB 标准太大了。我是说真的,在你思考如何连接设备之前,它的文档将近 700 页。

|

||||

|

||||

> USB 标准的设计目的是通过复杂的软件来简化硬件交互。

|

||||

|

||||

我和很多爱好操作系统的开发者谈过这些,而他们全部都说几句话:不要抱怨。“实现这个需要花费很久时间”,“你不可能写出关于 USB 的教程”,“收益太小了”。在很多方面,他们是对的,我不可能写出一个关于 USB 标准的教程,那得花费几周时间。我同样不能教授如何为全部所有的设备编写外设驱动,所以使用自己写的驱动是没什么用的。然而,即便不能做到最好,我仍然可以获取一个正常工作的 USB 驱动,拿一个键盘驱动,然后教授如何在操作系统中使用它们。我开始寻找可以运行在一个甚至不知道文件是什么的操作系统的自由驱动,但是我一个都找不到,它们都太高层了,所以我尝试写一个。大家说的都对,这耗费了我几周时间。然而我可以高兴的说我做的这些工作没有获取操作系统以外的帮助,并且可以和鼠标和键盘通信。这绝不是完整的、高效的,或者正确的,但是它能工作。驱动是以 C 编写的,而且有兴趣的可以在下载页找到全部源代码。

|

||||

|

||||

所以,这一个教程不会是 USB 标准的课程(一点也没有)。实际上我们将会看到如何使用其他人的代码。

|

||||

|

||||

### 3、链接

|

||||

|

||||

既然我们要引进外部代码到操作系统,我们就需要谈一谈<ruby>链接<rt>linking</rt></ruby>。链接是一种过程,可以在程序或者操作系统中链接函数。这意味着当一个程序生成之后,我们不必要编写每一个函数(几乎可以肯定,实际上并非如此)。链接就是我们做的用来把我们程序和别人代码中的函数连结在一起。这个实际上已经在我们的操作系统进行了,因为链接器把所有不同的文件链接在一起,每个都是分开编译的。

|

||||

|

||||

> 链接允许我们制作可重用的代码库,所有人都可以在他们的程序中使用。

|

||||

|

||||

有两种链接方式:静态和动态。静态链接就像我们在制作自己的操作系统时进行的。链接器找到全部函数的地址,然后在链接结束前,将这些地址都写入代码中。动态链接是在程序“完成”之后。当程序加载后,动态链接器检查程序,然后在操作系统的库找到所有不在程序里的函数。这就是我们的操作系统最终应该能够完成的一项工作,但是现在所有东西都将是静态链接的。

|

||||

|

||||

> 程序经常调用调用库,这些库会调用其它的库,直到最终调用了我们写的操作系统的库。

|

||||

|

||||

我编写的 USB 驱动程序适合静态编译。这意味着我给你的是每个文件的编译后的代码,然后链接器找到你的代码中的那些没有实现的函数,就将这些函数链接到我的代码。在本课的 [下载页][1] 是一个 makefile 和我的 USB 驱动,这是接下来需要的。下载并使用这个 makefile 替换你的代码中的 makefile, 同事将驱动放在和这个 makefile 相同的文件夹。

|

||||

|

||||

### 4、键盘

|

||||

|

||||

为了将输入传给我们的操作系统,我们需要在某种程度上理解键盘是如何实际工作的。键盘有两种按键:普通键和修饰键。普通按键是字母、数字、功能键,等等。它们构成了键盘上几乎全部按键。修饰键是多达 8 个的特殊键。它们是左 shift、右 shift、左 ctrl、右 ctrl、左 alt、右 alt、左 GUI 和右 GUI。键盘可以检测出所有的组合中那个修饰键被按下了,以及最多 6 个普通键。每次一个按钮变化了(例如,是按下了还是释放了),键盘就会报告给电脑。通常,键盘也会有 3 个 LED 灯,分别指示大写锁定,数字键锁定,和滚动锁定,这些都是由电脑控制的,而不是键盘自己。键盘也可能有更多的灯,比如电源、静音,等等。

|

||||

|

||||

对于标准 USB 键盘,有一个按键值的表,每个键盘按键都一个唯一的数字,每个可能的 LED 也类似。下面的表格列出了前 126 个值。

|

||||

|

||||

表 4.1 USB 键盘值

|

||||

|

||||

| 序号 | 描述 | 序号 | 描述 | 序号 | 描述 | 序号 | 描述 |

|

||||

| ------ | ---------------------- | ------ | -------------------- | ----------- | ----------------------- | -------- | ---------------------- |

|

||||

| 4 | `a` 和 `A` | 5 | `b` 和 `B` | 6 | `c` 和 `C` | 7 | `d` 和 `D` |

|

||||

| 8 | `e` 和 `E` | 9 | `f` 和 `F` | 10 | `g` 和 `G` | 11 | `h` 和 `H` |

|

||||

| 12 | `i` 和 `I` | 13 | `j` 和 `J` | 14 | `k` 和 `K` | 15 | `l` 和 `L` |

|

||||

| 16 | `m` 和 `M` | 17 | `n` 和 `N` | 18 | `o` 和 `O` | 19 | `p` 和 `P` |

|

||||

| 20 | `q` 和 `Q` | 21 | `r` 和 `R` | 22 | `s` 和 `S` | 23 | `t` 和 `T` |

|

||||

| 24 | `u` 和 `U` | 25 | `v` 和 `V` | 26 | `w` 和 `W` | 27 | `x` 和 `X` |

|

||||

| 28 | `y` 和 `Y` | 29 | `z` 和 `Z` | 30 | `1` 和 `!` | 31 | `2` 和 `@` |

|

||||

| 32 | `3` 和 `#` | 33 | `4` 和 `$` | 34 | `5` 和 `%` | 35 | `6` 和 `^` |

|

||||

| 36 | `7` 和 `&` | 37 | `8` 和 `*` | 38 | `9` 和 `(` | 39 | `0` 和 `)` |

|

||||

| 40 | `Return`(`Enter`) | 41 | `Escape` | 42 | `Delete`(`Backspace`) | 43 | `Tab` |

|

||||

| 44 | `Spacebar` | 45 | `-` 和 `_` | 46 | `=` 和 `+` | 47 | `[` 和 `{` |

|

||||

| 48 | `]` 和 `}` | 49 | `\` 和 `|` | 50 | `#` 和 `~` | 51 | `;` 和 `:` |

|

||||

| 52 | `'` 和 `"` | 53 | \` 和 `~` | 54 | `,` 和 `<` | 55 | `.` 和 `>` |

|

||||

| 56 | `/` 和 `?` | 57 | `Caps Lock` | 58 | `F1` | 59 | `F2` |

|

||||

| 60 | `F3` | 61 | `F4` | 62 | `F5` | 63 | `F6` |

|

||||

| 64 | `F7` | 65 | `F8` | 66 | `F9` | 67 | `F10` |

|

||||

| 68 | `F11` | 69 | `F12` | 70 | `Print Screen` | 71 | `Scroll Lock` |

|

||||

| 72 | `Pause` | 73 | `Insert` | 74 | `Home` | 75 | `Page Up` |

|

||||

| 76 | `Delete forward` | 77 | `End` | 78 | `Page Down` | 79 | `Right Arrow` |

|

||||

| 80 | `Left Arrow` | 81 | `Down Arrow` | 82 | `Up Arrow` | 83 | `Num Lock` |

|

||||

| 84 | 小键盘 `/` | 85 | 小键盘 `*` | 86 | 小键盘 `-` | 87 | 小键盘 `+` |

|

||||

| 88 | 小键盘 `Enter` | 89 | 小键盘 `1` 和 `End` | 90 | 小键盘 `2` 和 `Down Arrow` | 91 | 小键盘 `3` 和 `Page Down` |

|

||||

| 92 | 小键盘 `4` 和 `Left Arrow` | 93 | 小键盘 `5` | 94 | 小键盘 `6` 和 `Right Arrow` | 95 | 小键盘 `7` 和 `Home` |

|

||||

| 96 | 小键盘 `8` 和 `Up Arrow` | 97 | 小键盘 `9` 和 `Page Up` | 98 | 小键盘 `0` 和 `Insert` | 99 | 小键盘 `.` 和 `Delete` |

|

||||

| 100 | `\` 和 `|` | 101 | `Application` | 102 | `Power` | 103 | 小键盘 `=` |

|

||||

| 104 | `F13` | 105 | `F14` | 106 | `F15` | 107 | `F16` |

|

||||

| 108 | `F17` | 109 | `F18` | 110 | `F19` | 111 | `F20` |

|

||||

| 112 | `F21` | 113 | `F22` | 114 | `F23` | 115 | `F24` |

|

||||

| 116 | `Execute` | 117 | `Help` | 118 | `Menu` | 119 | `Select` |

|

||||

| 120 | `Stop` | 121 | `Again` | 122 | `Undo` | 123 | `Cut` |

|

||||

| 124 | `Copy` | 125 | `Paste` | 126 | `Find` | 127 | `Mute` |

|

||||

| 128 | `Volume Up` | 129 | `Volume Down` | | | | |

|

||||

|

||||

完全列表可以在[HID 页表 1.12][2]的 53 页,第 10 节找到。

|

||||

|

||||

### 5、车轮后的螺母

|

||||

|

||||

通常,当你使用其他人的代码,他们会提供一份自己代码的总结,描述代码都做了什么,粗略介绍了是如何工作的,以及什么情况下会出错。下面是一个使用我的 USB 驱动的相关步骤要求。

|

||||

|

||||

> 这些总结和代码的描述组成了一个 API - 应用程序产品接口。

|

||||

|

||||

表 5.1 CSUD 中和键盘相关的函数

|

||||

|

||||

| 函数 | 参数 | 返回值 | 描述 |

|

||||

| ----------------------- | ----------------------- | ----------------------- | -----------------------|

|

||||

| `UsbInitialise` | 无 | `r0` 是结果码 | 这个方法是一个集多种功能于一身的方法,它加载 USB 驱动程序,枚举所有设备并尝试与它们通信。这种方法通常需要大约一秒钟的时间来执行,但是如果插入几个 USB 集线器,执行时间会明显更长。在此方法完成之后,键盘驱动程序中的方法就可用了,不管是否确实插入了键盘。返回代码如下解释。|

|

||||

| `UsbCheckForChange` | 无 | 无 | 本质上提供与 `UsbInitialise` 相同的效果,但不提供相同的一次初始化。该方法递归地检查每个连接的集线器上的每个端口,如果已经添加了新设备,则添加它们。如果没有更改,这应该是非常快的,但是如果连接了多个设备的集线器,则可能需要几秒钟的时间。|

|

||||

| `KeyboardCount` | 无 | `r0` 是计数 | 返回当前连接并检测到的键盘数量。`UsbCheckForChange` 可能会对此进行更新。默认情况下最多支持 4 个键盘。可以通过这个驱动程序访问多达这么多的键盘。|

|

||||

| `KeyboardGetAddress` | `r0` 是索引 | `r0` 是地址 | 检索给定键盘的地址。所有其他函数都需要一个键盘地址,以便知道要访问哪个键盘。因此,要与键盘通信,首先要检查计数,然后检索地址,然后使用其他方法。注意,在调用 `UsbCheckForChange` 之后,此方法返回的键盘顺序可能会改变。|

|

||||

| `KeyboardPoll` | `r0` 是地址 | `r0` 是结果码 | 从键盘读取当前键状态。这是通过直接轮询设备来操作的,与最佳实践相反。这意味着,如果没有频繁地调用此方法,可能会错过一个按键。所有读取方法只返回上次轮询时的值。|

|

||||

| `KeyboardGetModifiers` | `r0` 是地址 | `r0` 是修饰键状态 | 检索上次轮询时修饰键的状态。这是两边的 `shift` 键、`alt` 键和 `GUI` 键。这回作为一个位字段返回,这样,位 0 中的 1 表示左控件被保留,位 1 表示左 `shift`,位 2 表示左 `alt` ,位 3 表示左 `GUI`,位 4 到 7 表示前面几个键的右版本。如果有问题,`r0` 包含 0。|

|

||||

| `KeyboardGetKeyDownCount` | `r0` 是地址 | `r0` 是计数 | 检索当前按下键盘的键数。这排除了修饰键。这通常不能超过 6。如果有错误,这个方法返回 0。|

|

||||

| `KeyboardGetKeyDown` | `r0` 是地址,`r1` 键号 | `r0` 是扫描码 | 检索特定按下键的扫描码(见表 4.1)。通常,要计算出哪些键是按下的,可以调用 `KeyboardGetKeyDownCount`,然后多次调用 `KeyboardGetKeyDown` ,将 `r1` 的值递增,以确定哪些键是按下的。如果有问题,返回 0。可以(但不建议这样做)在不调用 `KeyboardGetKeyDownCount` 的情况下调用此方法将 0 解释为没有按下的键。注意,顺序或扫描代码可以随机更改(有些键盘按数字排序,有些键盘按时间排序,没有任何保证)。|

|

||||

| `KeyboardGetKeyIsDown` | `r0` 是地址,`r1` 扫描码 | `r0` 是状态 | 除了 `KeyboardGetKeyDown` 之外,还可以检查按下的键中是否有特定的扫描码。如果没有,返回 0;如果有,返回一个非零值。当检测特定的扫描码(例如寻找 `ctrl+c`)时更快。出错时,返回 0。|

|

||||

| `KeyboardGetLedSupport` | `r0` 是地址 | `r0` 是 LED | 检查特定键盘支持哪些 LED。第 0 位代表数字锁定,第 1 位代表大写锁定,第 2 位代表滚动锁定,第 3 位代表合成,第 4 位代表假名,第 5 位代表电源,第 6 位代表 Shift ,第 7 位代表静音。根据 USB 标准,这些 LED 都不是自动更新的(例如,当检测到大写锁定扫描代码时,必须手动设置大写锁定 LED)。|

|

||||

| `KeyboardSetLeds` | `r0` 是地址, `r1` 是 LED | `r0` 是结果码 | 试图打开/关闭键盘上指定的 LED 灯。查看下面的结果代码值。参见 `KeyboardGetLedSupport` 获取 LED 的值。|

|

||||

|

||||

有几种方法返回“返回值”。这些都是 C 代码的老生常谈了,就是用数字代表函数调用发生了什么。通常情况, 0 总是代表操作成功。下面的是驱动用到的返回值。

|

||||

|

||||

> 返回值是一种处理错误的简单方法,但是通常更优雅的解决途径会出现于更高层次的代码。

|

||||

|

||||

表 5.2 - CSUD 返回值

|

||||

|

||||

| 代码 | 描述 |

|

||||

| ---- | ----------------------------------------------------------------------- |

|

||||

| 0 | 方法成功完成。 |

|

||||

| -2 | 参数:函数调用了无效参数。 |

|

||||

| -4 | 设备:设备没有正确响应请求。 |

|

||||

| -5 | 不匹配:驱动不适用于这个请求或者设备。 |

|

||||

| -6 | 编译器:驱动没有正确编译,或者被破坏了。 |

|

||||

| -7 | 内存:驱动用尽了内存。 |

|

||||

| -8 | 超时:设备没有在预期的时间内响应请求。 |

|

||||

| -9 | 断开连接:被请求的设备断开连接,或者不能使用。 |

|

||||

|

||||

驱动的通常用法如下:

|

||||

|

||||

1. 调用 `UsbInitialise`

|

||||

2. 调用 `UsbCheckForChange`

|

||||

3. 调用 `KeyboardCount`

|

||||

4. 如果返回 0,重复步骤 2。

|

||||

5. 针对你支持的每个键盘:

|

||||

1. 调用 `KeyboardGetAddress`

|

||||

2. 调用 `KeybordGetKeyDownCount`

|

||||

3. 针对每个按下的按键:

|

||||

1. 检查它是否已经被按下了

|

||||

2. 保存按下的按键

|

||||

4. 针对每个保存的按键:

|

||||

3. 检查按键是否被释放了

|

||||

4. 如果释放了就删除

|

||||

6. 根据按下/释放的案件执行操作

|

||||

7. 重复步骤 2

|

||||

|

||||

最后,你可以对键盘做所有你想做的任何事了,而这些方法应该允许你访问键盘的全部功能。在接下来的两节课,我们将会着眼于完成文本终端的输入部分,类似于大部分的命令行电脑,以及命令的解释。为了做这些,我们将需要在更有用的形式下得到一个键盘输入。你可能注意到我的驱动是(故意的)没有太大帮助,因为它并没有方法来判断是否一个按键刚刚按下或释放了,它只有方法来判断当前那个按键是按下的。这就意味着我们需要自己编写这些方法。

|

||||

|

||||

### 6、可用更新

|

||||

|

||||

首先,让我们实现一个 `KeyboardUpdate` 方法,检查第一个键盘,并使用轮询方法来获取当前的输入,以及保存最后一个输入来对比。然后我们可以使用这个数据和其它方法来将扫描码转换成按键。这个方法应该按照下面的说明准确操作:

|

||||

|

||||

> 重复检查更新被称为“轮询”。这是针对驱动 IO 中断而言的,这种情况下设备在准备好后会发一个信号。

|

||||

|

||||

1. 提取一个保存好的键盘地址(初始值为 0)。

|

||||

2. 如果不是 0 ,进入步骤 9.

|

||||

3. 调用 `UsbCheckForChange` 检测新键盘。

|

||||

4. 调用 `KeyboardCount` 检测有几个键盘在线。

|

||||

5. 如果返回 0,意味着没有键盘可以让我们操作,只能退出了。

|

||||

6. 调用 `KeyboardGetAddress` 参数是 0,获取第一个键盘的地址。

|

||||

7. 保存这个地址。

|

||||

8. 如果这个值是 0,那么退出,这里应该有些问题。

|

||||

9. 调用 `KeyboardGetKeyDown` 6 次,获取每次按键按下的值并保存。

|

||||

10. 调用 `KeyboardPoll`

|

||||

11. 如果返回值非 0,进入步骤 3。这里应该有些问题(比如键盘断开连接)。

|

||||

|

||||

要保存上面提到的值,我们将需要下面 `.data` 段的值。

|

||||

|

||||

```

|

||||

.section .data

|

||||

.align 2

|

||||

KeyboardAddress:

|

||||

.int 0

|

||||

KeyboardOldDown:

|

||||

.rept 6

|

||||

.hword 0

|

||||

.endr

|

||||

```

|

||||

|

||||

```

|

||||

.hword num 直接将半字的常数插入文件。

|

||||

```

|

||||

|

||||

```

|

||||

.rept num [commands] .endr 复制 `commands` 命令到输出 num 次。

|

||||

```

|

||||

|

||||

试着自己实现这个方法。对此,我的实现如下:

|

||||

|

||||

1、我们加载键盘的地址。

|

||||

|

||||

```

|

||||

.section .text

|

||||

.globl KeyboardUpdate

|

||||

KeyboardUpdate:

|

||||

push {r4,r5,lr}

|

||||

|

||||

kbd .req r4

|

||||

ldr r0,=KeyboardAddress

|

||||

ldr kbd,[r0]

|

||||

```

|

||||

|

||||

2、如果地址非 0,就说明我们有一个键盘。调用 `UsbCheckForChanges` 慢,所以如果一切正常,我们要避免调用这个函数。

|

||||

|

||||

```

|

||||

teq kbd,#0

|

||||

bne haveKeyboard$

|

||||

```

|

||||

|

||||

3、如果我们一个键盘都没有,我们就必须检查新设备。

|

||||

|

||||

```

|

||||

getKeyboard$:

|

||||

bl UsbCheckForChange

|

||||

```

|

||||

|

||||

4、如果有新键盘添加,我们就会看到这个。

|

||||

|

||||

```

|

||||

bl KeyboardCount

|

||||

```

|

||||

|

||||

5、如果没有键盘,我们就没有键盘地址。

|

||||

|

||||

```

|

||||

teq r0,#0

|

||||

ldreq r1,=KeyboardAddress

|

||||

streq r0,[r1]

|

||||

beq return$

|

||||

```

|

||||

|

||||

6、让我们获取第一个键盘的地址。你可能想要支持更多键盘。

|

||||

|

||||

```

|

||||

mov r0,#0

|

||||

bl KeyboardGetAddress

|

||||

```

|

||||

|

||||

7、保存键盘地址。

|

||||

|

||||

```

|

||||

ldr r1,=KeyboardAddress

|

||||

str r0,[r1]

|

||||

```

|

||||

|

||||

8、如果我们没有键盘地址,这里就没有其它活要做了。

|

||||

|

||||

```

|

||||

teq r0,#0

|

||||

beq return$

|

||||

mov kbd,r0

|

||||

```

|

||||

|

||||

9、循环查询全部按键,在 `KeyboardOldDown` 保存下来。如果我们询问的太多了,返回 0 也是正确的。

|

||||

|

||||

```

|

||||

saveKeys$:

|

||||

mov r0,kbd

|

||||

mov r1,r5

|

||||

bl KeyboardGetKeyDown

|

||||

|

||||

ldr r1,=KeyboardOldDown

|

||||

add r1,r5,lsl #1

|

||||

strh r0,[r1]

|

||||

add r5,#1

|

||||

cmp r5,#6

|

||||

blt saveKeys$

|

||||

```

|

||||

|

||||

10、现在我们得到了新的按键。

|

||||

|

||||

```

|

||||

mov r0,kbd

|

||||

bl KeyboardPoll

|

||||

```

|

||||

|

||||

11、最后我们要检查 `KeyboardOldDown` 是否工作了。如果没工作,那么我们可能是断开连接了。

|

||||

|

||||

```

|

||||

teq r0,#0

|

||||

bne getKeyboard$

|

||||

|

||||

return$:

|

||||

pop {r4,r5,pc}

|

||||

.unreq kbd

|

||||

```

|

||||

|

||||

有了我们新的 `KeyboardUpdate` 方法,检查输入变得简单,固定周期调用这个方法就行,而它甚至可以检查键盘是否断开连接,等等。这是一个有用的方法,因为我们实际的按键处理会根据条件不同而有所差别,所以能够用一个函数调以它的原始方式获取当前的输入是可行的。下一个方法我们希望它是 `KeyboardGetChar`,简单的返回下一个按下的按钮的 ASCII 字符,或者如果没有按键按下就返回 0。这可以扩展到支持如果它按下一个特定时间当做多次按下按键,也支持锁定键和修饰键。

|

||||

|

||||

如果我们有一个 `KeyWasDown` 方法可以使这个方法有用起来,如果给定的扫描代码不在 `KeyboardOldDown` 值中,它只返回 0,否则返回一个非零值。你可以自己尝试一下。与往常一样,可以在下载页面找到解决方案。

|

||||

|

||||

### 7、查找表

|

||||

|

||||

`KeyboardGetChar` 方法如果写得不好,可能会非常复杂。有 100 多种扫描码,每种代码都有不同的效果,这取决于 shift 键或其他修饰符的存在与否。并不是所有的键都可以转换成一个字符。对于一些字符,多个键可以生成相同的字符。在有如此多可能性的情况下,一个有用的技巧是查找表。查找表与物理意义上的查找表非常相似,它是一个值及其结果的表。对于一些有限的函数,推导出答案的最简单方法就是预先计算每个答案,然后通过检索返回正确的答案。在这种情况下,我们可以在内存中建立一个序列的值,序列中第 n 个值就是扫描代码 n 的 ASCII 字符代码。这意味着如果一个键被按下,我们的方法只需要检测到,然后从表中检索它的值。此外,我们可以为当按住 shift 键时的值单独创建一个表,这样按下 shift 键就可以简单地换个我们用的表。

|

||||

|

||||

> 在编程的许多领域,程序越大,速度越快。查找表很大,但是速度很快。有些问题可以通过查找表和普通函数的组合来解决。

|

||||

|

||||

在 `.section .data` 命令之后,复制下面的表:

|

||||

|

||||

```

|

||||

.align 3

|

||||

KeysNormal:

|

||||

.byte 0x0, 0x0, 0x0, 0x0, 'a', 'b', 'c', 'd'

|

||||

.byte 'e', 'f', 'g', 'h', 'i', 'j', 'k', 'l'

|

||||

.byte 'm', 'n', 'o', 'p', 'q', 'r', 's', 't'

|

||||

.byte 'u', 'v', 'w', 'x', 'y', 'z', '1', '2'

|

||||

.byte '3', '4', '5', '6', '7', '8', '9', '0'

|

||||

.byte '\n', 0x0, '\b', '\t', ' ', '-', '=', '['

|

||||

.byte ']', '\\\', '#', ';', '\'', '`', ',', '.'

|

||||

.byte '/', 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0

|

||||

.byte 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0

|

||||

.byte 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0

|

||||

.byte 0x0, 0x0, 0x0, 0x0, '/', '*', '-', '+'

|

||||

.byte '\n', '1', '2', '3', '4', '5', '6', '7'

|

||||

.byte '8', '9', '0', '.', '\\\', 0x0, 0x0, '='

|

||||

|

||||

.align 3

|

||||

KeysShift:

|

||||

.byte 0x0, 0x0, 0x0, 0x0, 'A', 'B', 'C', 'D'

|

||||

.byte 'E', 'F', 'G', 'H', 'I', 'J', 'K', 'L'

|

||||

.byte 'M', 'N', 'O', 'P', 'Q', 'R', 'S', 'T'

|

||||

.byte 'U', 'V', 'W', 'X', 'Y', 'Z', '!', '"'

|

||||

.byte '£', '$', '%', '^', '&', '*', '(', ')'

|

||||

.byte '\n', 0x0, '\b', '\t', ' ', '_', '+', '{'

|

||||

.byte '}', '|', '~', ':', '@', '¬', '<', '>'

|

||||

.byte '?', 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0

|

||||

.byte 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0

|

||||

.byte 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0

|

||||

.byte 0x0, 0x0, 0x0, 0x0, '/', '*', '-', '+'

|

||||

.byte '\n', '1', '2', '3', '4', '5', '6', '7'

|

||||

.byte '8', '9', '0', '.', '|', 0x0, 0x0, '='

|

||||

```

|

||||

|

||||

这些表直接将前 104 个扫描码映射到 ASCII 字符作为一个字节表。我们还有一个单独的表来描述 `shift` 键对这些扫描码的影响。我使用 ASCII `null` 字符(`0`)表示所有没有直接映射的 ASCII 键(例如功能键)。退格映射到 ASCII 退格字符(8 表示 `\b`),`enter` 映射到 ASCII 新行字符(10 表示 `\n`), `tab` 映射到 ASCII 水平制表符(9 表示 `\t`)。

|

||||

|

||||

> `.byte num` 直接插入字节常量 num 到文件。

|

||||

|

||||

.

|

||||

|

||||

> 大部分的汇编器和编译器识别转义序列;如 `\t` 这样的字符序列会插入该特殊字符。

|

||||

|

||||

`KeyboardGetChar` 方法需要做以下工作:

|

||||

|

||||

1. 检查 `KeyboardAddress` 是否返回 `0`。如果是,则返回 0。

|

||||

2. 调用 `KeyboardGetKeyDown` 最多 6 次。每次:

|

||||

1. 如果按键是 0,跳出循环。

|

||||

2. 调用 `KeyWasDown`。 如果返回是,处理下一个按键。

|

||||

3. 如果扫描码超过 103,进入下一个按键。

|

||||

4. 调用 `KeyboardGetModifiers`

|

||||

5. 如果 `shift` 是被按着的,就加载 `KeysShift` 的地址,否则加载 `KeysNormal` 的地址。

|

||||

6. 从表中读出 ASCII 码值。

|

||||

7. 如果是 0,进行下一个按键,否则返回 ASCII 码值并退出。

|

||||

3. 返回 0。

|

||||

|

||||

|

||||

试着自己实现。我的实现展示在下面:

|

||||

|

||||

1、简单的检查我们是否有键盘。

|

||||

|

||||

```

|

||||

.globl KeyboardGetChar

|

||||

KeyboardGetChar:

|

||||

ldr r0,=KeyboardAddress

|

||||

ldr r1,[r0]

|

||||

teq r1,#0

|

||||

moveq r0,#0

|

||||

moveq pc,lr

|

||||

```

|

||||

|

||||

2、`r5` 将会保存按键的索引,`r4` 保存键盘的地址。

|

||||

|

||||

```

|

||||

push {r4,r5,r6,lr}

|

||||

kbd .req r4

|

||||

key .req r6

|

||||

mov r4,r1

|

||||

mov r5,#0

|

||||

keyLoop$:

|

||||

mov r0,kbd

|

||||

mov r1,r5

|

||||

bl KeyboardGetKeyDown

|

||||

```

|

||||

|

||||

2.1、 如果扫描码是 0,它要么意味着有错,要么说明没有更多按键了。

|

||||

|

||||

```

|

||||

teq r0,#0

|

||||

beq keyLoopBreak$

|

||||

```

|

||||

|

||||

2.2、如果按键已经按下了,那么他就没意义了,我们只想知道按下的按键。

|

||||

|

||||

```

|

||||

mov key,r0

|

||||

bl KeyWasDown

|

||||

teq r0,#0

|

||||

bne keyLoopContinue$

|

||||

```

|

||||

|

||||

|

||||

2.3、如果一个按键有个超过 104 的扫描码,它将会超出我们的表,所以它是无关的按键。

|

||||

|

||||

```

|

||||

cmp key,#104

|

||||

bge keyLoopContinue$

|

||||

```

|

||||

|

||||

2.4、我们需要知道修饰键来推断字符。

|

||||

|

||||

```

|

||||

mov r0,kbd

|

||||

bl KeyboardGetModifiers

|

||||

```

|

||||

|

||||

5. 当将字符更改为其 shift 变体时,我们要同时检测左 `shift` 键和右 `shift` 键。记住,`tst` 指令计算的是逻辑和,然后将其与 0 进行比较,所以当且仅当移位位都为 0 时,它才等于 0。

|

||||

|

||||

```

|

||||

tst r0,#0b00100010

|

||||

ldreq r0,=KeysNormal

|

||||

ldrne r0,=KeysShift

|

||||

```

|

||||

|

||||

2.6、现在我们可以从查找表加载按键了。

|

||||

|

||||

```

|

||||

ldrb r0,[r0,key]

|

||||

```

|

||||

|

||||

2.7、如果查找码包含一个 0,我们必须继续。为了继续,我们要增加索引,并检查是否到 6 次了。

|

||||

|

||||

```

|

||||

teq r0,#0

|

||||

bne keyboardGetCharReturn$

|

||||

keyLoopContinue$:

|

||||

add r5,#1

|

||||

cmp r5,#6

|

||||

blt keyLoop$

|

||||

```

|

||||

|

||||

|

||||

3、在这里我们返回我们的按键,如果我们到达 `keyLoopBreak$` ,然后我们就知道这里没有按键被握住,所以返回 0。

|

||||

|

||||

```

|

||||

keyLoopBreak$:

|

||||

mov r0,#0

|

||||

keyboardGetCharReturn$:

|

||||

pop {r4,r5,r6,pc}

|

||||

.unreq kbd

|

||||

.unreq key

|

||||

```

|

||||

|

||||

### 8、记事本操作系统

|

||||

|

||||

现在我们有了 `KeyboardGetChar` 方法,可以创建一个操作系统,只打印出用户对着屏幕所写的内容。为了简单起见,我们将忽略所有非常规的键。在 `main.s`,删除 `bl SetGraphicsAddress` 之后的所有代码。调用 `UsbInitialise`,将 `r4` 和 `r5` 设置为 0,然后循环执行以下命令:

|

||||

|

||||

1. 调用 `KeyboardUpdate`

|

||||

2. 调用 `KeyboardGetChar`

|

||||

3. 如果返回 0,跳转到步骤 1

|

||||

4. 复制 `r4` 和 `r5` 到 `r1` 和 `r2` ,然后调用 `DrawCharacter`

|

||||

5. 把 `r0` 加到 `r4`

|

||||

6. 如果 `r4` 是 1024,将 `r1` 加到 `r5`,然后设置 `r4` 为 0。

|

||||

7. 如果 `r5` 是 768,设置 `r5` 为0

|

||||

8. 跳转到步骤 1

|

||||

|

||||

现在编译,然后在树莓派上测试。你几乎可以立即开始在屏幕上输入文本。如果没有工作,请参阅我们的故障排除页面。

|

||||

|

||||

当它工作时,祝贺你,你已经实现了与计算机的接口。现在你应该开始意识到,你几乎已经拥有了一个原始的操作系统。现在,你可以与计算机交互、发出命令,并在屏幕上接收反馈。在下一篇教程[输入02][3]中,我们将研究如何生成一个全文本终端,用户在其中输入命令,然后计算机执行这些命令。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/input01.html

|

||||

|

||||

作者:[Alex Chadwick][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[ezio](https://github.com/oska874)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.cl.cam.ac.uk

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/downloads.html

|

||||

[2]: https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/downloads/hut1_12v2.pdf

|

||||

[3]: https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/input02.html

|

||||

@ -0,0 +1,197 @@

|

||||

iWant:一个去中心化的点对点共享文件的命令行工具

|

||||

======

|

||||

|

||||

|

||||

|

||||

不久之前,我们编写了一个指南,内容是一个文件共享实用程序,名为 [transfer.sh][1],它是一个免费的 Web 服务,允许你在 Internet 上轻松快速地共享文件,还有 [PSiTransfer][2],一个简单的开源自托管文件共享解决方案。今天,我们将看到另一个名为 “iWant” 的文件共享实用程序。它是一个基于命令行的自由开源的去中心化点对点文件共享应用程序。

|

||||

|

||||

你可能想知道,它与其它文件共享应用程序有什么不同?以下是 iWant 的一些突出特点。

|

||||

|

||||

* 它是一个命令行应用程序。这意味着你不需要消耗内存来加载 GUI 实用程序。你只需要一个终端。

|

||||

* 它是去中心化的。这意味着你的数据不会在任何中心位置存储。因此,不会因为中心点失败而失败。

|

||||

* iWant 允许中断下载,你可以在以后随时恢复。你不需要从头开始下载,它会从你停止的位置恢复下载。

|

||||

* 共享目录中文件所作的任何更改(如删除、添加、修改)都会立即反映在网络中。

|

||||

* 就像种子一样,iWant 从多个节点下载文件。如果任何节点离开群组或未能响应,它将继续从另一个节点下载。

|

||||

* 它是跨平台的,因此你可以在 GNU/Linux、MS Windows 或者 Mac OS X 中使用它。

|

||||

|

||||

### 安装 iWant

|

||||

|

||||

iWant 可以使用 PIP 包管理器轻松安装。确保你在 Linux 发行版中安装了 pip。如果尚未安装,参考以下指南。

|

||||

|

||||

[如何使用 Pip 管理 Python 包](https://www.ostechnix.com/manage-python-packages-using-pip/)

|

||||

|

||||

安装 pip 后,确保你有以下依赖项:

|

||||

|

||||

* libffi-dev

|

||||

* libssl-dev

|

||||

|

||||

比如说,在 Ubuntu 上,你可以使用以下命令安装这些依赖项:

|

||||

|

||||

```

|

||||

$ sudo apt-get install libffi-dev libssl-dev

|

||||

```

|

||||

|

||||

安装完所有依赖项后,使用以下命令安装 iWant:

|

||||

|

||||

```

|

||||

$ sudo pip install iwant

|

||||

```

|

||||

|

||||

现在我们的系统中已经有了 iWant,让我们来看看如何使用它来通过网络传输文件。

|

||||

|

||||

### 用法

|

||||

|

||||

首先,使用以下命令启动 iWant 服务器:

|

||||

|

||||

(LCTT 译注:虽然这个软件是叫 iWant,但是其命令名为 `iwanto`,另外这个软件至少一年没有更新了。)

|

||||

|

||||

```

|

||||

$ iwanto start

|

||||

```

|

||||

|

||||

第一次启动时,iWant 会询问想要分享和下载文件夹的位置,所以需要输入两个文件夹的位置。然后,选择要使用的网卡。

|

||||

|

||||

示例输出:

|

||||

|

||||

```

|

||||

Shared/Download folder details looks empty..

|

||||

Note: Shared and Download folder cannot be the same

|

||||

SHARED FOLDER(absolute path):/home/sk/myshare

|

||||

DOWNLOAD FOLDER(absolute path):/home/sk/mydownloads

|

||||

Network interface available

|

||||

1. lo => 127.0.0.1

|

||||

2. enp0s3 => 192.168.43.2

|

||||

Enter index of the interface:2

|

||||

now scanning /home/sk/myshare

|

||||

[Adding] /home/sk/myshare 0.0

|

||||

Updating Leader 56f6d5e8-654e-11e7-93c8-08002712f8c1

|

||||

[Adding] /home/sk/myshare 0.0

|

||||

connecting to 192.168.43.2:1235 for hashdump

|

||||

```

|

||||

|

||||

如果你看到类似上面的输出,你可以立即开始使用 iWant 了。

|

||||

|

||||

同样,在网络中的所有系统上启动 iWant 服务,指定有效的分享和下载文件夹的位置,并选择合适的网卡。

|

||||

|

||||

iWant 服务将继续在当前终端窗口中运行,直到你按下 `CTRL+C` 退出为止。你需要打开一个新选项卡或新的终端窗口来使用 iWant。

|

||||

|

||||

iWant 的用法非常简单,它的命令很少,如下所示。

|

||||

|

||||

* `iwanto start` – 启动 iWant 服务。

|

||||

* `iwanto search <name>` – 查找文件。

|

||||

* `iwanto download <hash>` – 下载一个文件。

|

||||

* `iwanto share <path>` – 更改共享文件夹的位置。

|

||||

* `iwanto download to <destination>` – 更改下载文件夹位置。

|

||||

* `iwanto view config` – 查看共享和下载文件夹。

|

||||

* `iwanto –version` – 显示 iWant 版本。

|

||||

* `iwanto -h` – 显示帮助信息。

|

||||

|

||||

让我向你展示一些例子。

|

||||

|

||||

#### 查找文件

|

||||

|

||||

要查找一个文件,运行:

|

||||

|

||||

```

|

||||

$ iwanto search <filename>

|

||||

|

||||

```

|

||||

|

||||

请注意,你无需指定确切的名称。

|

||||

|

||||

示例:

|

||||

|

||||

```

|

||||

$ iwanto search command

|

||||

```

|

||||

|

||||

上面的命令将搜索包含 “command” 字符串的所有文件。

|

||||

|

||||

我的 Ubuntu 系统会输出:

|

||||

|

||||

```

|

||||

Filename Size Checksum

|

||||

------------------------------------------- ------- --------------------------------

|

||||

/home/sk/myshare/THE LINUX COMMAND LINE.pdf 3.85757 efded6cc6f34a3d107c67c2300459911

|

||||

```

|

||||

|

||||

#### 下载文件

|

||||

|

||||

你可以在你的网络上的任何系统下载文件。要下载文件,只需提供文件的哈希(校验和),如下所示。你可以使用 `iwanto search` 命令获取共享的哈希值。

|

||||

|

||||

```

|

||||

$ iwanto download efded6cc6f34a3d107c67c2300459911

|

||||

```

|

||||

|

||||

文件将保存在你的下载位置,在本文中是 `/home/sk/mydownloads/` 位置。

|

||||

|

||||

```

|

||||

Filename: /home/sk/mydownloads/THE LINUX COMMAND LINE.pdf

|

||||

Size: 3.857569 MB

|

||||

```

|

||||

|

||||

#### 查看配置

|

||||

|

||||

要查看配置,例如共享和下载文件夹的位置,运行:

|

||||

|

||||

```

|

||||

$ iwanto view config

|

||||

```

|

||||

|

||||

示例输出:

|

||||

|

||||

```

|

||||

Shared folder:/home/sk/myshare

|

||||

Download folder:/home/sk/mydownloads

|

||||

```

|

||||

|

||||

#### 更改共享和下载文件夹的位置

|

||||

|

||||

你可以更改共享文件夹和下载文件夹。

|

||||

|

||||

```

|

||||

$ iwanto share /home/sk/ostechnix

|

||||

```

|

||||

|

||||

现在,共享位置已更改为 `/home/sk/ostechnix`。

|

||||

|

||||

同样,你可以使用以下命令更改下载位置:

|

||||

|

||||

```

|

||||

$ iwanto download to /home/sk/Downloads

|

||||

```

|

||||

|

||||

要查看所做的更改,运行命令:

|

||||

|

||||

```

|

||||

$ iwanto view config

|

||||

```

|

||||

|

||||

#### 停止 iWant

|

||||

|

||||

一旦你不想用 iWant 了,可以按下 `CTRL+C` 退出。

|

||||

|

||||

如果它不起作用,那可能是由于防火墙或你的路由器不支持多播。你可以在 `~/.iwant/.iwant.log` 文件中查看所有日志。有关更多详细信息,参阅最后提供的项目的 GitHub 页面。

|

||||

|

||||

差不多就是全部了。希望这个工具有所帮助。下次我会带着另一个有趣的指南再次来到这里。

|

||||

|

||||

干杯!

|

||||

|

||||

### 资源

|

||||

|

||||

-[iWant GitHub](https://github.com/nirvik/iWant)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/iwant-decentralized-peer-peer-file-sharing-commandline-application/

|

||||

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://www.ostechnix.com/easy-fast-way-share-files-internet-command-line/

|

||||

[2]:https://www.ostechnix.com/psitransfer-simple-open-source-self-hosted-file-sharing-solution/

|

||||

101

published/20180118 Rediscovering make- the power behind rules.md

Normal file

101

published/20180118 Rediscovering make- the power behind rules.md

Normal file

@ -0,0 +1,101 @@

|

||||

重新发现 make: 规则背后的力量

|

||||

======

|

||||

|

||||

|

||||

|

||||

我过去认为 makefile 只是一种将一组组的 shell 命令列出来的简便方法;过了一段时间我了解到它们是有多么的强大、灵活以及功能齐全。这篇文章带你领略其中一些有关规则的特性。

|

||||

|

||||

> 备注:这些全是针对 GNU Makefile 的,如果你希望支持 BSD Makefile ,你会发现有些新的功能缺失。感谢 [zge][5] 指出这点。

|

||||

|

||||

### 规则

|

||||

|

||||

<ruby>规则<rt>rule</rt></ruby>是指示 `make` 应该如何并且何时构建一个被称作为<ruby>目标<rt>target</rt></ruby>的文件的指令。目标可以依赖于其它被称作为<ruby>前提<rt>prerequisite</rt></ruby>的文件。

|

||||

|

||||

你会指示 `make` 如何按<ruby>步骤<rt>recipe</rt></ruby>构建目标,那就是一套按照出现顺序一次执行一个的 shell 命令。语法像这样:

|

||||

|

||||

```

|

||||

target_name : prerequisites

|

||||

recipe

|

||||

```

|

||||

|

||||

一但你定义好了规则,你就可以通过从命令行执行以下命令构建目标:

|

||||

|

||||

```

|

||||

$ make target_name

|

||||

```

|

||||

|

||||

目标一经构建,除非前提改变,否则 `make` 会足够聪明地不再去运行该步骤。

|

||||

|

||||

### 关于前提的更多信息

|

||||

|

||||

前提表明了两件事情:

|

||||

|

||||

* 当目标应当被构建时:如果其中一个前提比目标更新,`make` 假定目的应当被构建。

|

||||

* 执行的顺序:鉴于前提可以反过来在 makefile 中由另一套规则所构建,它们同样暗示了一个执行规则的顺序。

|

||||

|

||||

如果你想要定义一个顺序但是你不想在前提改变的时候重新构建目标,你可以使用一种特别的叫做“<ruby>唯顺序<rt>order only</rt></ruby>”的前提。这种前提可以被放在普通的前提之后,用管道符(`|`)进行分隔。

|

||||

|

||||

### 样式

|

||||

|

||||

为了便利,`make` 接受目标和前提的样式。通过包含 `%` 符号可以定义一种样式。这个符号是一个可以匹配任何长度的文字符号或者空隔的通配符。以下有一些示例:

|

||||

|

||||

* `%`:匹配任何文件

|

||||

* `%.md`:匹配所有 `.md` 结尾的文件

|

||||

* `prefix%.go`:匹配所有以 `prefix` 开头以 `.go` 结尾的文件

|

||||

|

||||

### 特殊目标

|

||||

|

||||

有一系列目标名字,它们对于 `make` 来说有特殊的意义,被称作<ruby>特殊目标<rt>special target</rt></ruby>。

|

||||

|

||||

你可以在这个[文档][1]发现全套特殊目标。作为一种经验法则,特殊目标以点开始后面跟着大写字母。

|

||||

|

||||

以下是几个有用的特殊目标:

|

||||

|

||||

- `.PHONY`:向 `make` 表明此目标的前提可以被当成伪目标。这意味着 `make` 将总是运行,无论有那个名字的文件是否存在或者上次被修改的时间是什么。

|

||||

- `.DEFAULT`:被用于任何没有指定规则的目标。

|

||||

- `.IGNORE`:如果你指定 `.IGNORE` 为前提,`make` 将忽略执行步骤中的错误。

|

||||

|

||||

### 替代

|

||||

|

||||

当你需要以你指定的改动方式改变一个变量的值,<ruby>替代<rt>substitution</rt></ruby>就十分有用了。

|

||||

|

||||

替代的格式是 `$(var:a=b)`,它的意思是获取变量 `var` 的值,用值里面的 `b` 替代词末尾的每个 `a` 以代替最终的字符串。例如:

|

||||

|

||||

```

|

||||

foo := a.o

|

||||

bar : = $(foo:.o=.c) # sets bar to a.c

|

||||

```

|

||||

|

||||

注意:特别感谢 [Luis Lavena][2] 让我们知道替代的存在。

|

||||

|

||||

### 档案文件

|

||||

|

||||

档案文件是用来一起将多个数据文档(类似于压缩文件的概念)收集成一个文件。它们由 `ar` Unix 工具所构建。`ar` 可以用于为任何目的创建档案,但除了[静态库][3],它已经被 `tar` 大量替代。

|

||||

|

||||

在 `make` 中,你可以使用一个档案文件中的单独一个成员作为目标或者前提,就像这样:

|

||||

|

||||

```

|

||||

archive(member) : prerequisite

|

||||

recipe

|

||||

```

|

||||

|

||||

### 最后的想法

|

||||

|

||||

关于 `make` 还有更多可探索的,但是至少这是一个起点,我强烈鼓励你去查看[文档][4],创建一个笨拙的 makefile 然后就可以探索它了。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://monades.roperzh.com/rediscovering-make-power-behind-rules/

|

||||

|

||||

作者:[Roberto Dip][a]

|

||||

译者:[tomjlw](https://github.com/tomjlw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://monades.roperzh.com

|

||||

[1]:https://www.gnu.org/software/make/manual/make.html#Special-Targets

|

||||

[2]:https://twitter.com/luislavena/

|

||||

[3]:http://tldp.org/HOWTO/Program-Library-HOWTO/static-libraries.html

|

||||

[4]:https://www.gnu.org/software/make/manual/make.html

|

||||

[5]:https://lobste.rs/u/zge

|

||||

229

published/20180205 Rancher - Container Management Application.md

Normal file

229

published/20180205 Rancher - Container Management Application.md

Normal file

@ -0,0 +1,229 @@

|

||||

Rancher:一个全面的可用于产品环境的容器管理平台

|

||||

======

|

||||

|

||||

Docker 作为一款容器化应用的新兴软件,被大多数 IT 公司使用来减少基础设施平台的成本。

|

||||

|

||||

通常,没有 GUI 的 Docker 软件对于 Linux 管理员来说很容易,但是对于开发者来就有点困难。当把它搬到生产环境上来,那么它对 Linux 管理员来说也相当不友好。那么,轻松管理 Docker 的最佳解决方案是什么呢?

|

||||

|

||||

唯一的办法就是提供 GUI。Docker API 允许第三方应用接入 Docker。在市场上有许多 Docker GUI 应用。我们已经写过一篇关于 Portainer 应用的文章。今天我们来讨论另一个应用,Rancher。

|

||||

|

||||

容器让软件开发更容易,让开发者更快的写代码、更好的运行它们。但是,在生产环境上运行容器却很困难。

|

||||

|

||||

**推荐阅读:** [Portainer:一个简单的 Docker 管理图形工具][1]

|

||||

|

||||

### Rancher 简介

|

||||

|

||||

[Rancher][2] 是一个全面的容器管理平台,它可以让容器在各种基础设施平台的生产环境上部署和运行更容易。它提供了诸如多主机网络、全局/本地负载均衡和卷快照等基础设施服务。它整合了原生 Docker 的管理能力,如 Docker Machine 和 Docker Swarm。它提供了丰富的用户体验,让 DevOps 管理员在更大规模的生产环境上运行 Docker。

|

||||

|

||||

访问以下文章可以了解 Linux 系统上安装 Docker。

|

||||

|

||||

**推荐阅读:**

|

||||

|

||||

- [如何在 Linux 上安装 Docker][3]

|

||||

- [如何在 Linux 上使用 Docker 镜像][4]

|

||||

- [如何在 Linux 上使用 Docker 容器][5]

|

||||

- [如何在 Docker 容器内安装和运行应用][6]

|

||||

|

||||

### Rancher 特性

|

||||

|

||||

* 可以在两分钟内安装 Kubernetes。

|

||||

* 一键启动应用(90 个流行的 Docker 应用)。

|

||||

* 部署和管理 Docker 更容易。

|

||||

* 全面的生产级容器管理平台。

|

||||

* 可以在生产环境上快速部署容器。

|

||||

* 强大的自动部署和运营容器技术。

|

||||

* 模块化基础设施服务。

|

||||

* 丰富的编排工具。

|

||||

* Rancher 支持多种认证机制。

|

||||

|

||||

### 怎样安装 Rancher

|

||||

|

||||

由于 Rancher 是以轻量级的 Docker 容器方式运行,所以它的安装非常简单。Rancher 是由一组 Docker 容器部署的。只需要简单的启动两个容器就能运行 Rancher。一个容器用作管理服务器,另一个容器在各个节点上作为代理。在 Linux 系统下简单的运行下列命令就能部署 Rancher。

|

||||

|

||||

Rancher 服务器提供了两个不同的安装包标签如 `stable` 和 `latest`。下列命令将会拉取适合的 Rancher 镜像并安装到你的操作系统上。Rancher 服务器仅需要两分钟就可以启动。

|

||||

|

||||

* `latest`:这个标签是他们的最新开发构建。这些构建将通过 Rancher CI 的自动化框架进行验证,不建议在生产环境使用。

|

||||

* `stable`:这是最新的稳定发行版本,推荐在生产环境使用。

|

||||

|

||||

Rancher 的安装方法有多种。在这篇教程中我们仅讨论两种方法。

|

||||

|

||||

* 以单一容器的方式安装 Rancher(内嵌 Rancher 数据库)

|

||||

* 以单一容器的方式安装 Rancher(外部数据库)

|

||||

|

||||

### 方法 - 1

|

||||

|

||||

运行下列命令以单一容器的方式安装 Rancher 服务器(内嵌数据库)

|

||||

|

||||

```

|

||||

$ sudo docker run -d --restart=unless-stopped -p 8080:8080 rancher/server:stable

|

||||

$ sudo docker run -d --restart=unless-stopped -p 8080:8080 rancher/server:latest

|

||||

```

|

||||

|

||||

### 方法 - 2

|

||||

|

||||

你可以在启动 Rancher 服务器时指向外部数据库,而不是使用自带的内部数据库。首先创建所需的数据库,数据库用户为同一个。

|

||||

|

||||

```

|

||||

> CREATE DATABASE IF NOT EXISTS cattle COLLATE = 'utf8_general_ci' CHARACTER SET = 'utf8';

|

||||

> GRANT ALL ON cattle.* TO 'cattle'@'%' IDENTIFIED BY 'cattle';

|

||||

> GRANT ALL ON cattle.* TO 'cattle'@'localhost' IDENTIFIED BY 'cattle';

|

||||

```

|

||||

|

||||

运行下列命令启动 Rancher 去连接外部数据库。

|

||||

|

||||

```

|

||||

$ sudo docker run -d --restart=unless-stopped -p 8080:8080 rancher/server \

|

||||

--db-host myhost.example.com --db-port 3306 --db-user username --db-pass password --db-name cattle

|

||||

```

|

||||

|

||||

如果你想测试 Rancher 2.0,使用下列的命令去启动。

|

||||

|

||||

```

|

||||

$ sudo docker run -d --restart=unless-stopped -p 80:80 -p 443:443 rancher/server:preview

|

||||

```

|

||||

|

||||

### 通过 GUI 访问 & 安装 Rancher

|

||||

|

||||

浏览器输入 `http://hostname:8080` 或 `http://server_ip:8080` 去访问 rancher GUI.

|

||||

|

||||

![][8]

|

||||

|

||||

### 怎样注册主机

|

||||

|

||||

注册你的主机 URL 允许它连接到 Rancher API。这是一次性设置。

|

||||

|

||||

接下来,点击主菜单下面的 “Add a Host” 链接或者点击主菜单上的 “INFRASTRUCTURE >> Add Hosts”,点击 “Save” 按钮。

|

||||

|

||||

![][9]

|

||||

|

||||

默认情况下,Rancher 里的访问控制认证禁止了访问,因此我们首先需要通过一些方法打开访问控制认证,否则任何人都不能访问 GUI。

|

||||

|

||||

点击 “>> Admin >> Access Control”,输入下列的值最后点击 “Enable Authentication” 按钮去打开它。在我这里,是通过 “local authentication” 的方式打开的。

|

||||

|

||||

* “Login UserName”: 输入你期望的登录名

|

||||

* “Full Name”: 输入你的全名

|

||||

* “Password”: 输入你期望的密码

|

||||

* “Confirm Password”: 再一次确认密码

|

||||

|

||||

![][10]

|

||||

|

||||

注销然后使用新的登录凭证重新登录:

|

||||

|

||||

![][11]

|

||||

|

||||

现在,我能看到本地认证已经被打开。

|

||||

|

||||

![][12]

|

||||

|

||||

### 怎样添加主机

|

||||

|

||||

注册你的主机后,它将带你进入下一个页面,在那里你能选择不同云服务提供商的 Linux 主机。我们将添加一个主机运行 Rancher 服务,因此选择“custom”选项然后输入必要的信息。

|

||||

|

||||

在第 4 步输入你服务器的公有 IP,运行第 5 步列出的命令,最后点击 “close” 按钮。

|

||||

|

||||

```

|

||||

$ sudo docker run -e CATTLE_AGENT_IP="192.168.56.2" --rm --privileged -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/rancher:/var/lib/rancher rancher/agent:v1.2.11 http://192.168.56.2:8080/v1/scripts/16A52B9BE2BAB87BB0F5:1546214400000:ODACe3sfis5V6U8E3JASL8jQ

|

||||

|

||||

INFO: Running Agent Registration Process, CATTLE_URL=http://192.168.56.2:8080/v1

|

||||

INFO: Attempting to connect to: http://192.168.56.2:8080/v1

|

||||

INFO: http://192.168.56.2:8080/v1 is accessible

|

||||

INFO: Configured Host Registration URL info: CATTLE_URL=http://192.168.56.2:8080/v1 ENV_URL=http://192.168.56.2:8080/v1

|

||||

INFO: Inspecting host capabilities

|

||||

INFO: Boot2Docker: false

|

||||

INFO: Host writable: true

|

||||

INFO: Token: xxxxxxxx

|

||||

INFO: Running registration

|

||||

INFO: Printing Environment

|

||||

INFO: ENV: CATTLE_ACCESS_KEY=9946BD1DCBCFEF3439F8

|

||||

INFO: ENV: CATTLE_AGENT_IP=192.168.56.2

|

||||

INFO: ENV: CATTLE_HOME=/var/lib/cattle

|

||||

INFO: ENV: CATTLE_REGISTRATION_ACCESS_KEY=registrationToken

|

||||

INFO: ENV: CATTLE_REGISTRATION_SECRET_KEY=xxxxxxx

|

||||

INFO: ENV: CATTLE_SECRET_KEY=xxxxxxx

|

||||

INFO: ENV: CATTLE_URL=http://192.168.56.2:8080/v1

|

||||

INFO: ENV: DETECTED_CATTLE_AGENT_IP=172.17.0.1

|

||||

INFO: ENV: RANCHER_AGENT_IMAGE=rancher/agent:v1.2.11

|

||||

INFO: Launched Rancher Agent: e83b22afd0c023dabc62404f3e74abb1fa99b9a178b05b1728186c9bfca71e8d

|

||||

```

|

||||

|

||||

![][13]

|

||||

|

||||

等待几秒钟后新添加的主机将会出现。点击 “Infrastructure >> Hosts” 页面。

|

||||

|

||||

![][14]

|

||||

|

||||

### 怎样查看容器

|

||||

|

||||

只需要点击下列位置就能列出所有容器。点击 “Infrastructure >> Containers” 页面。

|

||||

|

||||

![][15]

|

||||

|

||||

### 怎样创建容器

|

||||

|

||||

非常简单,只需点击下列位置就能创建容器。

|

||||

|

||||

点击 “Infrastructure >> Containers >> Add Container” 然后输入每个你需要的信息。为了测试,我将创建一个 `latest` 标签的 CentOS 容器。

|

||||

|

||||

![][16]

|

||||

|

||||

在同样的列表位置,点击 “ Infrastructure >> Containers”。

|

||||

|

||||

![][17]

|

||||

|

||||

点击容器名展示容器的性能信息,如 CPU、内存、网络和存储。

|

||||

|

||||

![][18]

|

||||

|

||||

选择特定容器,然后点击最右边的“三点”按钮或者点击“Actions”按钮对容器进行管理,如停止、启动、克隆、重启等。

|

||||

|

||||

![][19]

|

||||

|

||||

如果你想控制台访问容器,只需要点击 “Actions” 按钮中的 “Execute Shell” 选项即可。

|

||||

|

||||

![][20]

|

||||

|

||||

### 怎样从应用目录部署容器

|

||||

|

||||

Rancher 提供了一个应用模版目录,让部署变的很容易,只需要单击一下就可以。

|

||||

它维护了多数流行应用,这些应用由 Rancher 社区贡献。

|

||||

|

||||

![][21]

|

||||

|

||||

点击 “Catalog >> All >> Choose the required application”,最后点击 “Launch” 去部署。

|

||||

|

||||

![][22]

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/rancher-a-complete-container-management-platform-for-production-environment/

|

||||

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

译者:[arrowfeng](https://github.com/arrowfeng)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.2daygeek.com/author/magesh/

|

||||

[1]:https://www.2daygeek.com/portainer-a-simple-docker-management-gui/

|

||||

[2]:http://rancher.com/

|

||||

[3]:https://www.2daygeek.com/install-docker-on-centos-rhel-fedora-ubuntu-debian-oracle-archi-scentific-linux-mint-opensuse/

|

||||

[4]:https://www.2daygeek.com/list-search-pull-download-remove-docker-images-on-linux/

|

||||

[5]:https://www.2daygeek.com/create-run-list-start-stop-attach-delete-interactive-daemonized-docker-containers-on-linux/

|

||||

[6]:https://www.2daygeek.com/install-run-applications-inside-docker-containers/

|

||||

[7]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[8]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-1.png

|

||||

[9]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-2.png

|

||||

[10]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-3.png

|

||||

[11]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-3a.png

|

||||

[12]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-4.png

|

||||

[13]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-5.png

|

||||

[14]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-6.png

|

||||

[15]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-7.png

|

||||

[16]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-8.png

|

||||

[17]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-9.png

|

||||

[18]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-10.png

|

||||

[19]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-11.png

|

||||

[20]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-12.png

|

||||

[21]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-13.png

|

||||

[22]:https://www.2daygeek.com/wp-content/uploads/2018/02/Install-rancher-container-management-application-in-linux-14.png

|

||||

@ -0,0 +1,132 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10683-1.html)

|

||||

[#]: subject: (Oomox – Customize And Create Your Own GTK2, GTK3 Themes)

|

||||

[#]: via: (https://www.ostechnix.com/oomox-customize-and-create-your-own-gtk2-gtk3-themes/)

|

||||

[#]: author: (EDITOR https://www.ostechnix.com/author/editor/)

|

||||

|

||||

Oomox:定制和创建你自己的 GTK2、GTK3 主题

|

||||

======

|

||||

|

||||

|

||||

|

||||

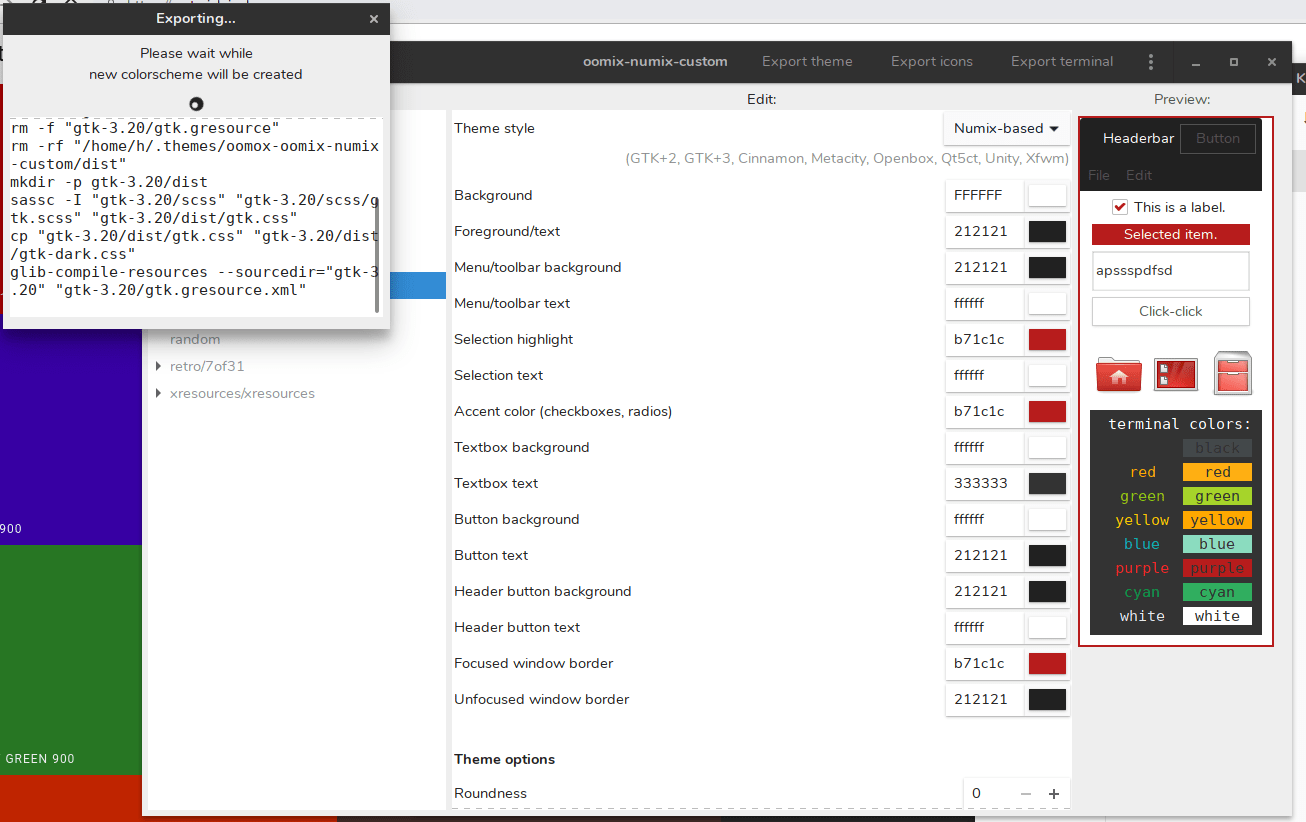

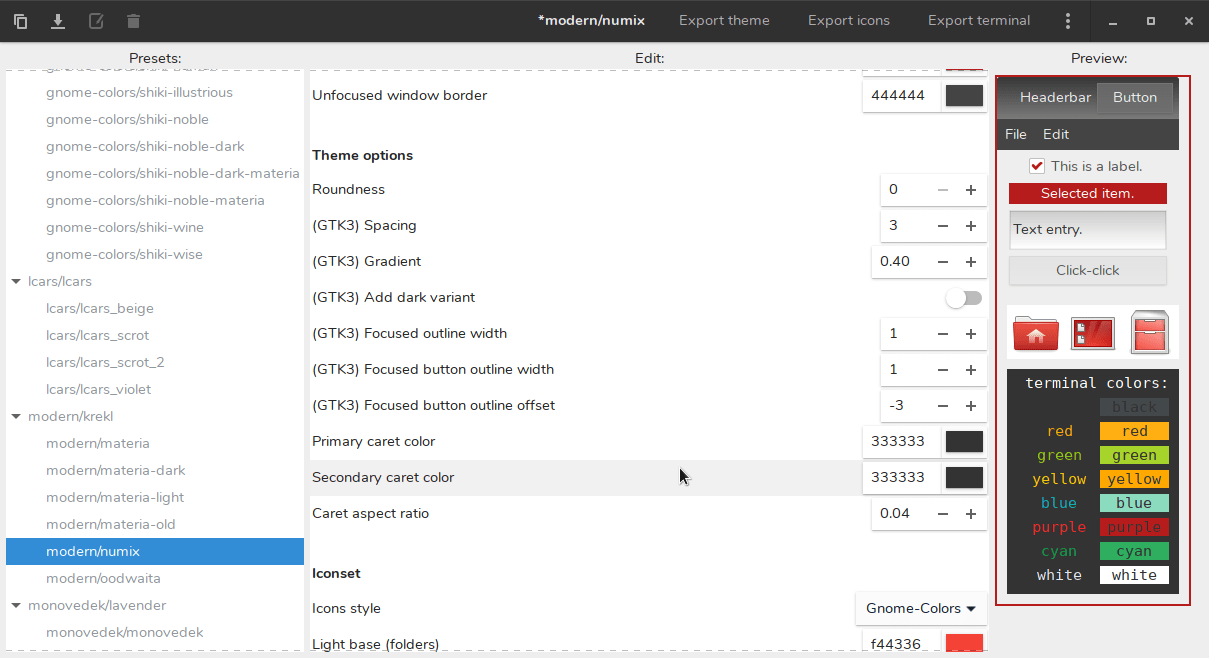

主题和可视化定制是 Linux 的主要优势之一。由于所有代码都是开源的,因此你可以比 Windows/Mac OS 更大程度上地改变 Linux 系统的外观和行为方式。GTK 主题可能是人们定制 Linux 桌面的最流行方式。GTK 工具包被各种桌面环境使用,如 Gnome、Cinnamon、Unity、XFC E和 budgie。这意味着为 GTK 制作的单个主题只需很少的修改就能应用于任何这些桌面环境。

|

||||

|

||||

有很多非常高品质的流行 GTK 主题,例如 **Arc**、**Numix** 和 **Adapta**。但是如果你想自定义这些主题并创建自己的视觉设计,你可以使用 **Oomox**。

|

||||

|

||||

Oomox 是一个图形应用,可以完全使用自己的颜色、图标和终端风格自定义和创建自己的 GTK 主题。它自带几个预设,你可以在 Numix、Arc 或 Materia 主题样式上创建自己的 GTK 主题。

|

||||

|

||||

### 安装 Oomox

|

||||

|

||||

在 Arch Linux 及其衍生版中:

|

||||

|

||||

Oomox 可以在 [AUR][1] 中找到,所以你可以使用任何 AUR 助手程序安装它,如 [yay][2]。

|

||||

|

||||

```

|

||||

$ yay -S oomox

|

||||

```

|

||||

|

||||

在 Debian/Ubuntu/Linux Mint 中,在[这里][3]下载 `oomox.deb` 包并按如下所示进行安装。在写本指南时,最新版本为 `oomox_1.7.0.5.deb`。

|

||||

|

||||

```

|

||||

$ sudo dpkg -i oomox_1.7.0.5.deb

|

||||

$ sudo apt install -f

|

||||

```

|

||||

|

||||

在 Fedora 上,Oomox 可以在第三方 **COPR** 仓库中找到。

|

||||

|

||||

```

|

||||

$ sudo dnf copr enable tcg/themes

|

||||

$ sudo dnf install oomox

|

||||

```

|

||||

|

||||

Oomox 也有 [Flatpak 应用][4]。确保已按照[本指南][5]中的说明安装了 Flatpak。然后,使用以下命令安装并运行 Oomox:

|

||||

|

||||

```

|

||||

$ flatpak install flathub com.github.themix_project.Oomox

|

||||

$ flatpak run com.github.themix_project.Oomox

|

||||

```

|

||||

|

||||

对于其他 Linux 发行版,请进入 Github 上的 Oomox 项目页面(本指南末尾给出链接),并从源代码手动编译和安装。

|

||||

|

||||

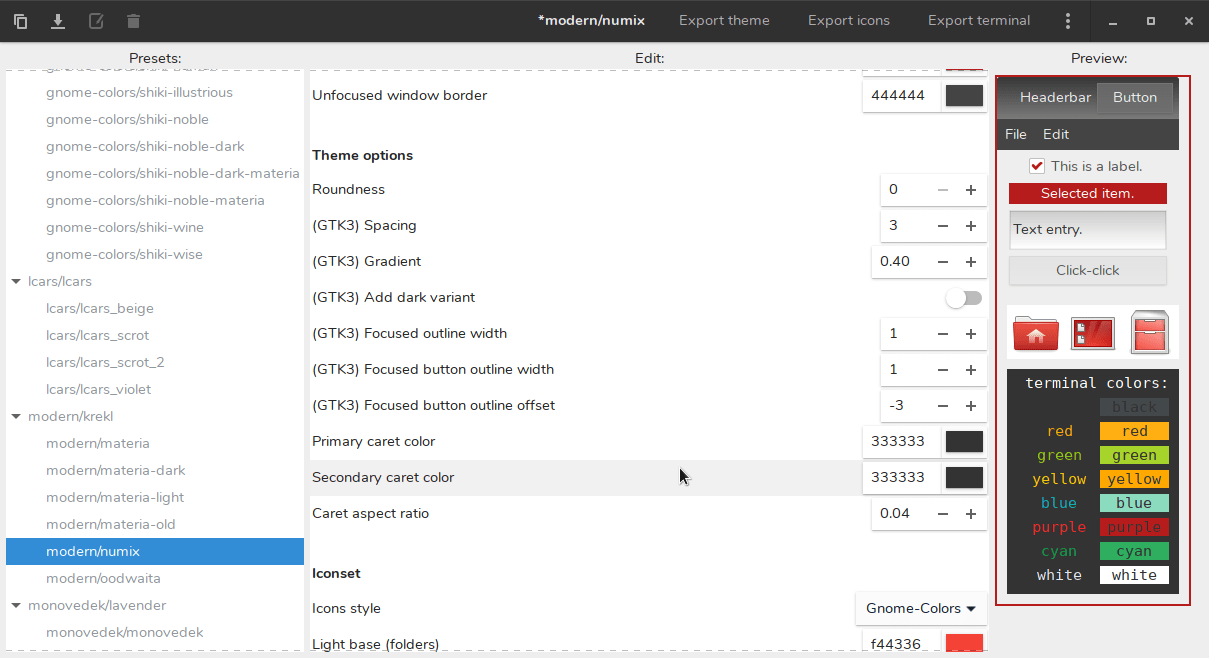

### 自定义并创建自己的 GTK2、GTK3 主题

|

||||

|

||||

#### 主题定制

|

||||

|

||||

|

||||

|

||||

你可以更改几乎每个 UI 元素的颜色,例如:

|

||||

|

||||

1. 标题

|

||||

2. 按钮

|

||||

3. 标题内的按钮

|

||||

4. 菜单

|

||||

5. 选定的文字

|

||||

|

||||

在左边,有许多预设主题,如汽车主题、现代主题,如 Materia 和 Numix,以及复古主题。在窗口的顶部,有一个名为**主题样式**的选项,可让你设置主题的整体视觉样式。你可以在 Numix、Arc 和 Materia 之间进行选择。

|

||||

|

||||

使用某些像 Numix 这样的样式,你甚至可以更改标题渐变,边框宽度和面板透明度等内容。你还可以为主题添加黑暗模式,该模式将从默认主题自动创建。

|

||||

|

||||

|

||||

|

||||

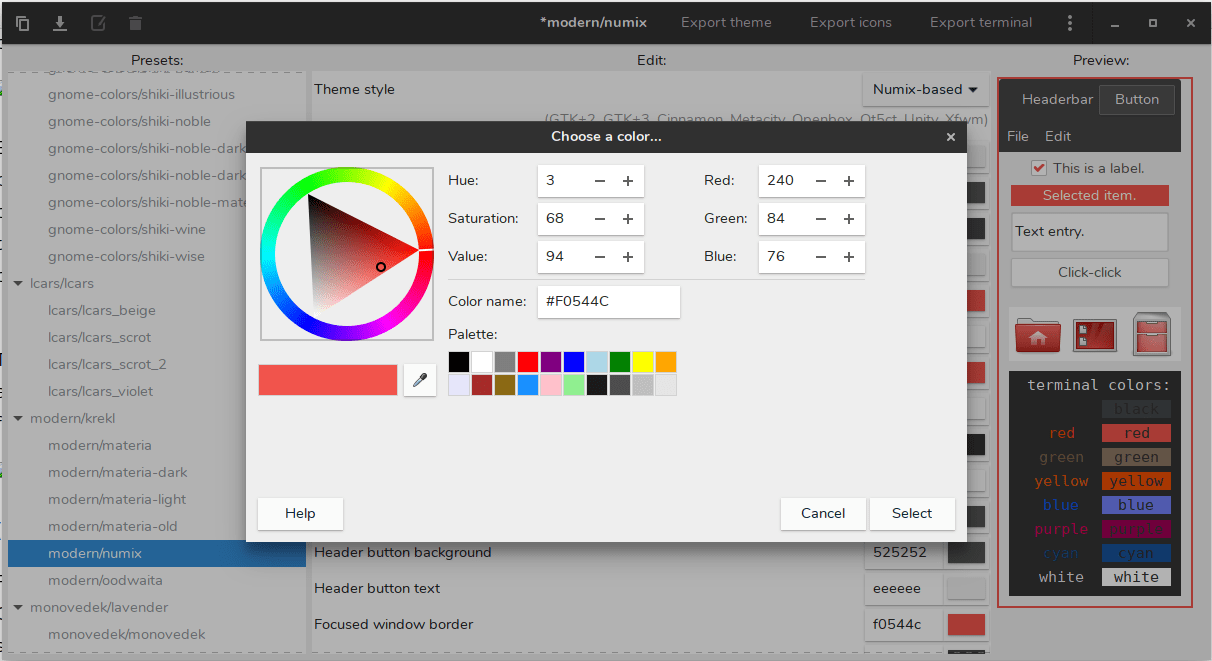

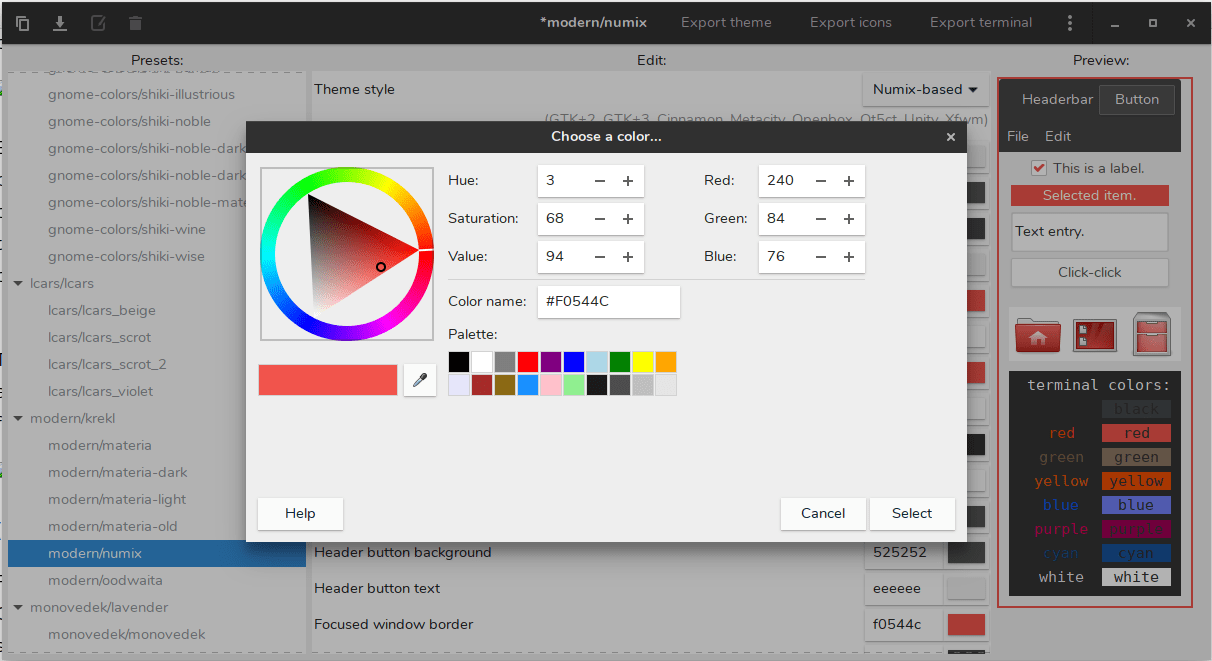

#### 图标集定制

|

||||

|

||||

你可以自定义用于主题图标的图标集。有两个选项:Gnome Colors 和 Archdroid。你可以更改图标集的基础和笔触颜色。

|

||||

|

||||

#### 终端定制

|

||||

|

||||

你还可以自定义终端颜色。该应用有几个预设,但你可以为每个颜色,如红色,绿色,黑色等自定义确切的颜色代码。你还可以自动交换前景色和背景色。

|

||||

|

||||

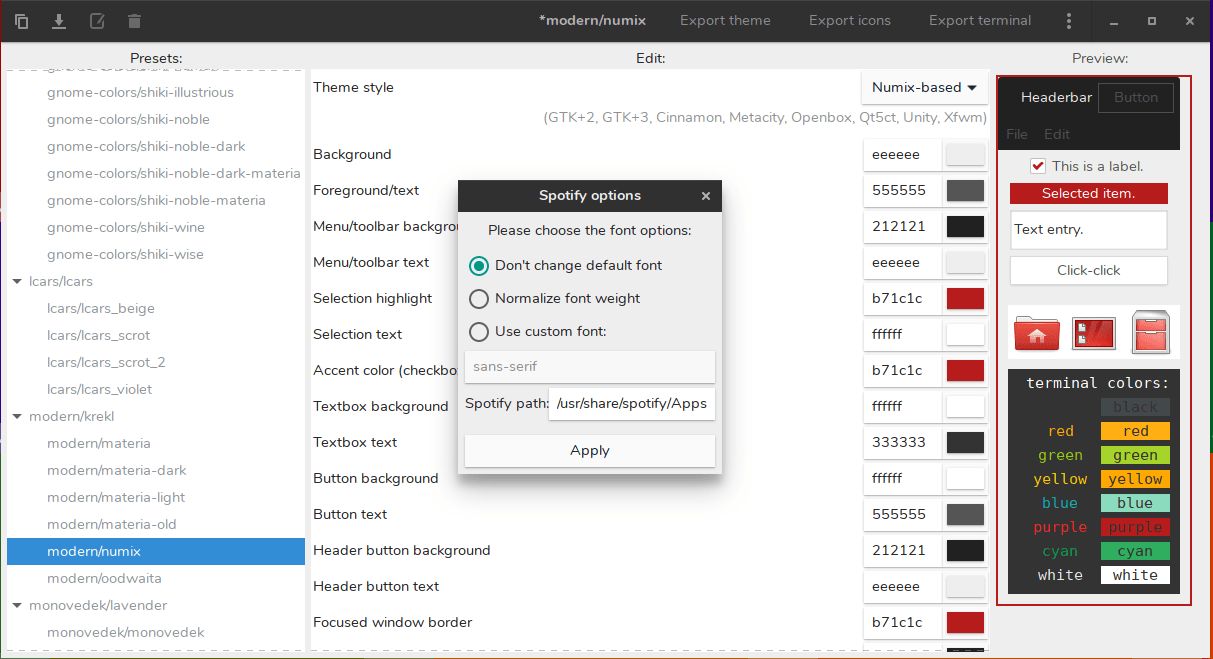

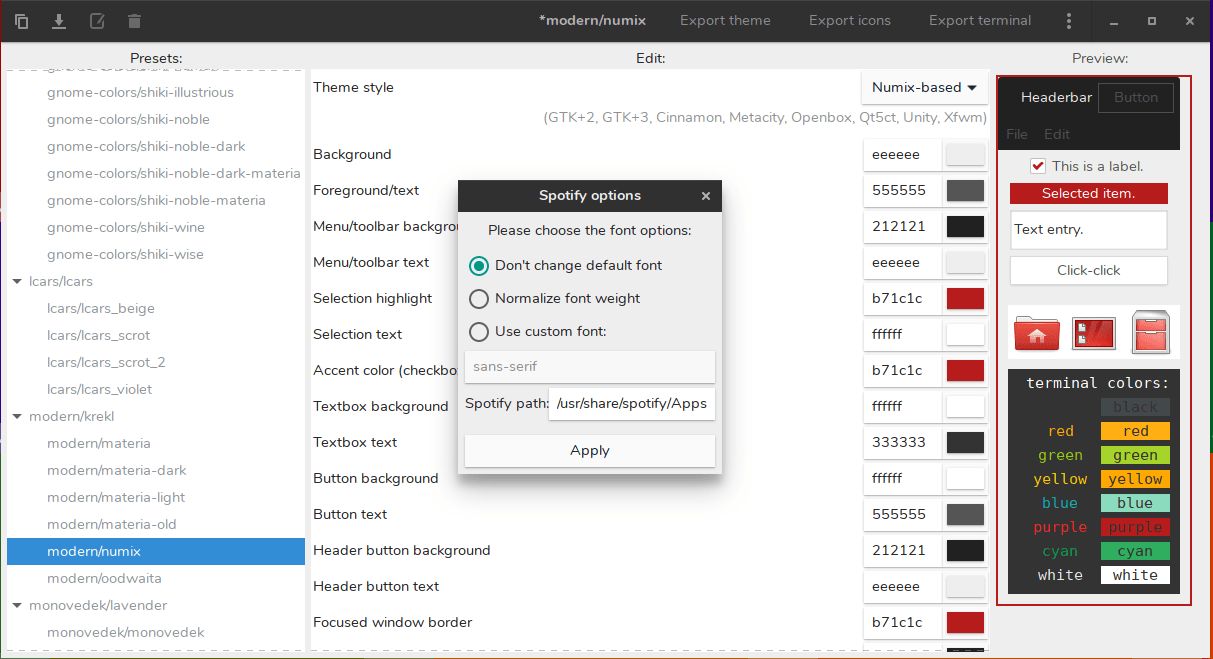

#### Spotify 主题

|

||||

|

||||

这个应用的一个独特功能是你可以根据喜好定义 spotify 主题。你可以更改 spotify 的前景色、背景色和强调色来匹配整体的 GTK 主题。

|

||||

|

||||

然后,只需按下“应用 Spotify 主题”按钮,你就会看到这个窗口:

|

||||

|

||||

|

||||

|

||||

点击应用即可。

|

||||

|

||||

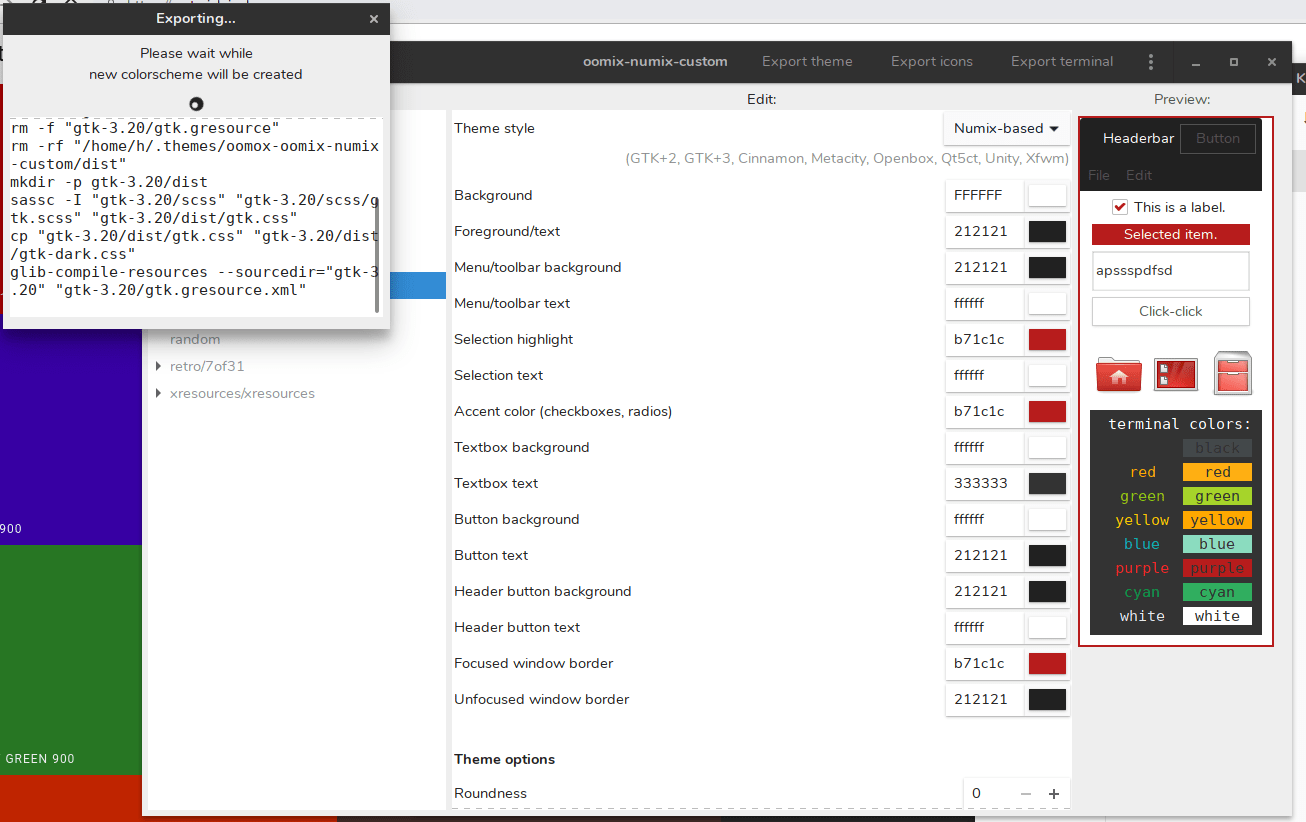

#### 导出主题

|

||||

|

||||

根据自己的喜好自定义主题后,可以通过单击左上角的重命名按钮重命名主题:

|

||||

|

||||

|

||||

|

||||

然后,只需点击“导出主题”将主题导出到你的系统。

|

||||

|

||||

|

||||

|

||||

你也可以只导出图标集或终端主题。

|

||||

|

||||

之后你可以打开桌面环境中的任何可视化自定义应用,例如基于 Gnome 桌面的 Tweaks,或者 “XFCE 外观设置”。选择你导出的 GTK 或者 shell 主题。

|

||||

|

||||

### 总结

|

||||

|

||||

如果你是一个 Linux 主题迷,并且你确切知道系统中的每个按钮、每个标题应该怎样,Oomox 值得一试。 对于极致的定制者,它可以让你几乎更改系统外观的所有内容。对于那些只想稍微调整现有主题的人来说,它有很多很多预设,所以你可以毫不费力地得到你想要的东西。

|

||||

|

||||

你试过吗? 你对 Oomox 有什么看法? 请在下面留言!

|

||||

|

||||

### 资源

|

||||

|

||||

- [Oomox GitHub 仓库](https://github.com/themix-project/oomox)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/oomox-customize-and-create-your-own-gtk2-gtk3-themes/

|

||||

|

||||

作者:[EDITOR][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.ostechnix.com/author/editor/

|

||||

[1]: https://aur.archlinux.org/packages/oomox/

|

||||

[2]: https://www.ostechnix.com/yay-found-yet-another-reliable-aur-helper/

|

||||

[3]: https://github.com/themix-project/oomox/releases

|

||||

[4]: https://flathub.org/apps/details/com.github.themix_project.Oomox

|

||||

[5]: https://www.ostechnix.com/flatpak-new-framework-desktop-applications-linux/

|

||||

212

published/20181108 My Google-free Android life.md

Normal file

212

published/20181108 My Google-free Android life.md

Normal file

@ -0,0 +1,212 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (LuuMing)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10677-1.html)

|

||||

[#]: subject: (My Google-free Android life)

|

||||

[#]: via: (https://lushka.al/my-android-setup/)

|

||||

[#]: author: (Anxhelo Lushka https://lushka.al/)

|

||||

|

||||

我的去 Google 化的安卓之旅

|

||||

======

|

||||

> 一篇介绍如何在你的生活中和设备里去 Google 化的文章。

|

||||

|

||||

最近人们经常问我有关我手机的事情,比如安卓怎么安装,怎样绕过 Google Service 使用手机。好吧,这篇文章就来详细的解决那些问题。我尽可能让这篇文章适合初学者,因此我会慢慢介绍,一个一个来讲并且附上截图,你就能更好地看到它是怎样运作的。

|

||||

|

||||

首先我会告诉你为什么 Google Services(在我看来)对你的设备不好。我可以一言以概之,并让你看 [Richard Stallman][2] 写的这篇[文章][1],但我决定抓住几个要点附在这。

|

||||

|

||||

* 要用<ruby>非自由软件<rt>Nonfree software</rt></ruby>

|

||||

* 大体上,大多数 Google Services 需要运行在非自由的 Javascript 代码之上。现如今,如果禁用掉 Javascript,什么都没有了,甚至 Google 帐号都需要运行非自由软件(由站点发送的 JavaScript),对于登录也是。

|

||||

* 被监视

|

||||

* Google 悄悄地把它的<ruby>广告跟踪方式<rt>ad-tracking profiles</rt></ruby>与浏览方式结合在一起,并存储了每个用户的大量数据。

|

||||

* 服务条款

|

||||

* Google 会终止转卖了 Pixel 手机的用户账户。他们无法访问帐户下保存在 Google Services 中的所有邮件和文档。

|

||||

* 审查

|

||||

* Amazon 和 Google 切断了<ruby>域前置<rt>domain-fronting</rt></ruby>,该技术能使身处某些国家的人们访问到在那里禁止的通信系统。

|

||||

* Google 已经同意为巴基斯坦政府执行特殊的 Youtube 审查,删除对立观点。这将有助于压制异议。

|

||||

* Youtube 的“content ID”会自动删除已发布的视频,这并不包含在版权法中。

|

||||

|

||||

这只是几个原因,你可以阅读上面我提到的 RMS 的文章,他详细解释了这几点。尽管听起来骇人听闻,但这些行为在现实生活中已经每天在发生。

|

||||

|

||||

### 下一步,我的搭建教程

|

||||

|

||||

我有一款[小米红米 Note 5 Pro][3] 智能手机(代号 whyred),生产于中国的[小米][4]。它是 4 个月之前(距写这篇文章的时候)我花了大约 185 欧元买的。

|

||||

|

||||

现在你也许会想,“但你为什么买中国品牌,他们不可靠”。是的,它不是通常你所期望的(品牌)所生产的,例如三星(人们通常会将它和安卓联系在一起,这显然是错的)、一加、诺基亚等。但你应当知道几乎所有的手机都生产于中国。

|

||||

|

||||

我选择这款手机有几个原因,首先当然是价格。它是一款<ruby>性价比<rt>budget-friendly</rt></ruby>相当高的产品,大多数人都能买得起。下一个原因是说明书上的规格(不仅仅是),在这个<ruby>价位<rt>price tag</rt></ruby>上相当合适。拥有 6 英尺屏幕(<ruby>全高清分辨率<rt>Full HD resolution</rt></ruby>),4000 毫安电池(一流的电池寿命),4GB RAM,64GB 存储,双后摄像头(12 MP + 5 MP),一个带闪光灯的前摄像头(13 MP)和一个高性能的<ruby>骁龙<rt>Snapdragon</rt></ruby> 636,它可能是那时候最好的选择。

|

||||

|

||||

随之而来的问题是 [MIUI][5],大多数小米设备所附带的安卓外壳(除了 Android One 项目设备)。是的,它没有那么可怕,它有一些额外的功能,但问题在更深的地方。小米设备如此便宜(据我所知销售利润仅有 5-10%)的一个原因是**他们在系统里伴随 MIUI 添加了数据挖掘和广告**。这样的话,系统应用需要额外不必要的权限来获取你的数据并且进行广告轰炸,从中获取利润。

|

||||

|

||||

更有趣的是,所包含的“天气”应用想要访问我的联系人并且拨打电话,如果它仅是显示天气的话为什么需要访问联系人呢。另一个例子是“录音机”应用,它也需要联系人和网络权限,可能想把录音发送回小米。

|

||||

|

||||

为了解决它,我不得不格式化手机并且摆脱 MIUI。在市场上近来的手机上这就变得极为艰难。

|

||||

|

||||

格式化手机的想法很简单,删除掉现有的系统然后安装一个新的喜欢的系统(这次是原生安卓)。为了实现它,你先得解锁 [bootloader][6]。

|

||||

|

||||

> bootloader 是一个在计算机完成自检后为其加载操作系统或者运行环境的计算机程序。—[维基百科][7]

|

||||

|

||||

问题是小米关于解锁 bootloader 有明确的政策。几个月之前,流程就像这样:你需向小米[申请][8]解锁代码,并提供真实的原因,但不是每次都成功,因为他们可以拒绝你的请求并且不提供理由。

|

||||

|

||||

现在,流程变了。你要从小米那下载一个软件,叫做 [Mi Unlock][9],在 Windows 电脑上安装它,在手机的[开发者模式中打开调试选项][10],重启到 bootloader 模式(关机状态下长按向下音量键 + 电源键)并将手机连接到电脑上,开始一个叫做“许可”的流程。这个过程会在小米的服务器上启动一个定时器,允许你**在 15 天之后解锁手机**(在一些少数情况下或者一个月,完全随机)。

|

||||

|

||||

![Mi Unlock app][11]

|

||||

|

||||

15 天过去后,重新连接手机并重复之前的步骤,这时候按下解锁键,你的 bootloader 就会解锁,并且能够安装其他 ROM(系统)。**注意,确保你已经备份好了数据,因为解锁 bootloader 会清空手机。**

|

||||

|

||||

下一步就是找一个兼容的系统([ROM][12])。我在 [XDA 开发者论坛上][13]找了个遍,它是 Android 开发者和用户们交流想法、应用等东西的地方。幸运的是,我的手机相当流行,因此论坛上有它[专门的版块][14]。在那儿,我略过一些流行的 ROM 并决定使用 [AOSiP ROM][15]。(AOSiP 代表<ruby>安卓开源 illusion 项目<rt>Android Open Source illusion Project</rt></ruby>)

|

||||

|

||||

> **校订**:有人发邮件告诉我说文章里写的就是[/e/][16]的目的与所做的事情。我想说谢谢你的帮助,但完全不是这样。我关于 /e/ 的看法背后的原因可以见此[网站][17],但我仍会在此列出一些原因。

|

||||

|

||||

> eelo 是一个从 Kickstarter 和 IndieGoGo 上集资并超过 200K € 的“基金会”,承诺创造一个开放、安全且保护隐私的移动 OS 和网页服务器。

|

||||

|

||||

> 1. 他们的 OS 基于 LineageOS 14.1 (Android 7.1) 且搭载 microG 和其他开源应用,此系统已经存在很长一段时间了并且现在叫做 [Lineage for microG][18]。

|

||||

> 2. 所有的应用程序并非从源代码构建,而是从 [APKPure][19] 上下载安装包并推送进 ROM,不知道那些安装包中是否包含<ruby>专有代码<rt>proprietary code</rt></ruby>或<ruby>恶意软件<rt>malware</rt></ruby>。

|

||||

> 3. 有一段时间,它们就那样随意地从代码中删除 Lineage 的<ruby>版权标头<rt>copyright header</rt></ruby>并加入自己的。

|

||||

> 4. 他们喜欢删除负面反馈并且监视用户 Telegram 群聊中的舆论。

|

||||

|

||||

> 总而言之,我**不建议使用 /e/** ROM。(至少现在)

|

||||

|

||||

另一件你有可能要做的事情是获取手机的 [root 权限][20],让它真正的成为你的手机,并且修改系统中的文件,例如使用系统范围的 adblocker 等。为了实现它,我决定使用 [Magisk][21],一个天赐的应用,它由一个学生开发,可以帮你获取设备的 root 权限并安装一种叫做[模块][22]的东西,基本上是软件。

|

||||

|

||||

下载 ROM 和 Magisk 之后,我得在手机上安装它们。为了完成安装,我将文件移动到了 SD 卡上。现在,若要安装系统,我需要使用 [恢复系统][23]。我用的是较为普遍的 [TWRP][24](代表 TeamWin Recovery Project)。

|

||||

|

||||

要安装恢复系统(听起来有点难,我知道),我需要将文件[烧录][20]进手机。为了完成烧录,我将手机用一个叫做 [ADB 的工具][25]连接上电脑(Fedora Linux 系统)。使用命令让自己定制的恢复系统覆盖掉原先的。

|

||||

|

||||

```

|

||||

fastboot flash recovery twrp.img

|

||||

```

|

||||

|

||||

完成之后,我关掉手机并按住音量上和电源键,直到 TWRP 界面显示。这意味着我进行顺利,并且它已经准备好接收我的指令。

|

||||

|

||||

![TWRP screen][26]

|

||||

|

||||

下一步是**发送擦除命令**,在你第一次为手机安装自定义 ROM 时是必要的。如上图所示,擦除命令会清除掉<ruby>数据<rt>Data</rt></ruby>,<ruby>缓存<rt>Cache</rt></ruby>和 Dalvik 。(这里也有高级选项让我们可以勾选以删除掉系统,如果我们不再需要旧系统的话)

|

||||

|

||||

这需要几分钟去完成,之后,你的手机基本上就干净了。现在是时候**安装系统了**。通过按下主屏幕上的安装按钮,我们选择之前添加进的 zip 文件(ROM 文件)并滑动屏幕安装它。下一步,我们需要安装 Magisk,它可以给我们访问设备的 root 权限。

|

||||

|

||||

> **校订**:一些有经验的安卓用户或发烧友也许注意到了,手机上不包含 [GApps](谷歌应用)。这在安卓世界里称之为 GApps-less,一个 GAps 应用也不安装。

|

||||

|

||||

> 注意有一个不好之处在于若不安装 Google Services 有的应用无法正常工作,例如它们的通知也许会花更长的时间到达或者根本不起作用。(对我来说这一点是最影响应用程序使用的)原因是这些应用使用了 [Google Cloud Messaging][28](现在叫做 [Firebase][29])唤醒手机并推送通知。

|

||||

|

||||

> 你可以通过安装使用 [microG][30](部分地)解决它,microG 提供一些 Google Services 的特性且允许你拥有更多的控制。我不建议使用它,因为它仍然有助于 Google Services 并且你不一定信任它。但是,如果你没法<ruby>立刻放弃使用<rt>go cold turkey on it</rt><ruby>,只想慢慢地退出谷歌,这便是一个好的开始。

|

||||

|

||||

都成功地安装之后,现在我们重启手机,就进入了主屏幕。

|

||||

|

||||

### 下一个部分,安装应用并配置一切

|

||||

|

||||

事情开始变得简单了。为了安装应用,我使用了 [F-Droid][31],一个可替代的应用商店,里面**只包含自由及开源应用**。如果这里没有你要的应用,你可以使用 [Aurora Store][32],一个从应用商店里下载应用且不需要使用谷歌帐号或被追踪的客户端。

|

||||

|

||||

F-Droid 里面有名为 repos 的东西,它是一个包含你可以安装应用的“仓库”。我使用默认的仓库,并从 [IzzyOnDroid][33] 添加了另一个,它有更多默认仓库中没有的应用,并且它更新地更频繁。

|

||||

|

||||

![My repos][34]

|

||||

|

||||

从下面你可以发现我所安装的应用清单,它们替代的应用与用途。

|

||||

|

||||

- [AdAway](https://f-droid.org/en/packages/org.adaway) > 系统广告拦截器,使用 hosts 文件拦截所有的广告

|

||||

- [AfWall+](https://f-droid.org/en/packages/dev.ukanth.ufirewall) > 一个防火墙,可以阻止不想要的连接

|

||||

- [Amaze](https://f-droid.org/en/packages/com.amaze.filemanager) > 替代系统的文件管理器,允许文件的 root 访问权限,并且拥有 zip/unzip 功能

|

||||

- [Ameixa](https://f-droid.org/en/packages/org.xphnx.ameixa) > 大多数应用的图标包

|

||||

- [andOTP](https://f-droid.org/en/packages/org.shadowice.flocke.andotp) > 替代谷歌验证器/Authy,一个可以用来登录启用了<ruby>双因子验证<rt>2FA</rt></ruby>的网站账户的 TOTP 应用,可以使用 PIN 码备份和锁定

|

||||

- [AnySoftKeyboard/AOSP Keyboard](https://f-droid.org/packages/com.menny.android.anysoftkeyboard/) > 开源键盘,它有许多主题和语言包,我也是该[项目](https://anysoftkeyboard.github.io/)的一员

|

||||

- [Audio Recorder](https://f-droid.org/en/packages/com.github.axet.audiorecorder) > 如其名字,允许你从麦克风录制不同格式的音频文件

|

||||

- [Battery Charge Limit](https://f-droid.org/en/packages/com.slash.batterychargelimit) > 当到 80% 时自动停止充电,降低<ruby>电池磨损<rt>battery wear</rt></ruby>并增加寿命

|

||||

- [DAVx5](https://f-droid.org/en/packages/at.bitfire.davdroid) > 这是我最常用的应用之一,对我来说它基本上替代了谷歌联系人、谷歌日历和谷歌 Tasks,它连接着我的 Nextcloud 环境可以让我完全控制自己的数据

|

||||

- [Document Viewer](https://f-droid.org/en/packages/org.sufficientlysecure.viewer) > 一个可以打开数百种文件格式的查看器应用,快速、轻量

|

||||

- [Deezloader Remix](https://gitlab.com/Nick80835/DeezLoader-Android/) > 让我可以在 Deezer 上下载高质量 MP3 的应用

|

||||

- [Easy xkcd](https://f-droid.org/en/packages/de.tap.easy_xkcd) > xkcd 漫画阅读器,我喜欢这些 xkcd 漫画

|

||||

- [Etar](https://f-droid.org/en/packages/ws.xsoh.etar) > 日历应用,替代谷歌日历,与 DAVx5 一同工作

|

||||

- [FastHub-Libre](https://f-droid.org/en/packages/com.fastaccess.github.libre) > 一个 GitHub 客户端,完全 FOSS(自由及开源软件),非常实用如果你像我一样喜欢使用 Github 的话

|

||||

- [Fennec F-Droid](https://f-droid.org/en/packages/org.mozilla.fennec_fdroid) > 替代谷歌 Chrome 和其他类似的应用,一个为 F-Droid 打造的火狐浏览器,不含专有二进制代码并允许安装扩展提升浏览体验

|

||||

- [Gadgetbridge](https://f-droid.org/en/packages/nodomain.freeyourgadget.gadgetbridge) > 替代小米运动,可以用来配对小米硬件的应用,追踪你的健康、步数、睡眠等。

|

||||

- [K-9 Mail](https://f-droid.org/en/packages/com.fsck.k9) > 邮件客户端,替代 GMail 应用,可定制并可以添加多个账户

|

||||

- [Lawnchair](https://f-droid.org/en/packages/ch.deletescape.lawnchair.plah) > 启动器,可以替代 Nova Launcher 或 Pixel Launcher,允许自定义和各种改变,也支持图标包

|

||||

- [Mattermost](https://f-droid.org/en/packages/com.mattermost.mattermost) > 可以连接 Mattermost 服务器的应用。Mattermost 是一个 Slack 替代品

|

||||

- [NewPipe](https://f-droid.org/en/packages/org.schabi.newpipe) > 最好的 YouTube 客户端(我认为),可以替代 YoubTube,它完全是 FOSS,免除 YouTube 广告,占用更少空间,允许背景播放,允许下载视频/音频等。试一试吧

|

||||

- [Nextcloud SMS](https://f-droid.org/en/packages/fr.unix_experience.owncloud_sms) > 允许备份/同步 SMS 到我的 Nextcloud 环境

|

||||

- [Nextcloud Notes](https://f-droid.org/en/packages/it.niedermann.owncloud.notes) > 允许我创建,修改,删除,分享笔记并同步/备份到 Nextcloud 环境

|

||||

- [OpenTasks](https://f-droid.org/en/packages/org.dmfs.tasks) > 允许我创建、修改、删除任务并同步到我的 Nextcloud 环境

|

||||

- [OsmAnd~](https://f-droid.org/en/packages/net.osmand.plus) > 一个地图应用,使用 [OpenStreetMap](https://openstreetmap.org/),允许下载离线地图和导航

|

||||

- [QKSMS](https://f-droid.org/en/packages/com.moez.QKSMS) > 我最喜欢的短信应用,可以替代原来的 Messaging 应用,拥有漂亮的界面,拥有备份、个性化、延迟发送等特性。

|

||||

- [Resplash/Mysplash](https://f-droid.org/en/packages/com.wangdaye.mysplash) > 允许你无限地从 [Unsplash](https://unsplash.com/) 下载无数的漂亮壁纸,全都可以免费使用和修改。

|

||||

- [ScreenCam](https://f-droid.org/en/packages/com.orpheusdroid.screenrecorder) > 一个录屏工具,允许各样的自定义和录制模式,没有广告并且免费

|

||||

- [SecScanQR](https://f-droid.org/en/packages/de.t_dankworth.secscanqr) > 二维码识别应用,快速轻量

|

||||

- [Send Reduced Free](https://f-droid.org/en/packages/mobi.omegacentauri.SendReduced) > 这个应用可以在发送之前通过移除 PII(<ruby>个人识别信息<rt>personally identifiable information</rt></ruby>)和减小尺寸,让你立即分享大图

|

||||

- [Slide](https://f-droid.org/en/packages/me.ccrama.redditslide/) > 开源 Reddit 客户端

|

||||

- [Telegram FOSS](https://f-droid.org/en/packages/org.telegram.messenger) > 没有追踪和 Google Services 的纯净版 Telegram 安卓客户端

|

||||

- [TrebleShot](https://f-droid.org/en/packages/com.genonbeta.TrebleShot) > 这个天才般的应用可以让你通过 WIFI 分享文件给其它设备,真的超快,甚至无需连接网络

|

||||

- [Tusky](https://f-droid.org/en/packages/com.keylesspalace.tusky) > Tusky 是 [Mastodon](https://joinmastodon.org/) 平台的客户端(替代 Twitter)

|

||||

- [Unit Converter Ultimate](https://f-droid.org/en/packages/com.physphil.android.unitconverterultimate) > 这款应用可以一键在 200 种单位之间来回转换,非常快并且完全离线

|

||||

- [Vinyl Music Player](https://f-droid.org/en/packages/com.poupa.vinylmusicplayer) > 我首选的音乐播放器,可以替代谷歌音乐播放器或其他你已经安装的音乐播放器,它有漂亮的界面和许多特性

|

||||