mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

Merge remote-tracking branch 'lctt/master' into 20181010

This commit is contained in:

commit

8a686fb691

@ -1,14 +1,15 @@

|

||||

三周内构建 JavaScript 全栈 web 应用

|

||||

============================================================

|

||||

===========

|

||||

|

||||

|

||||

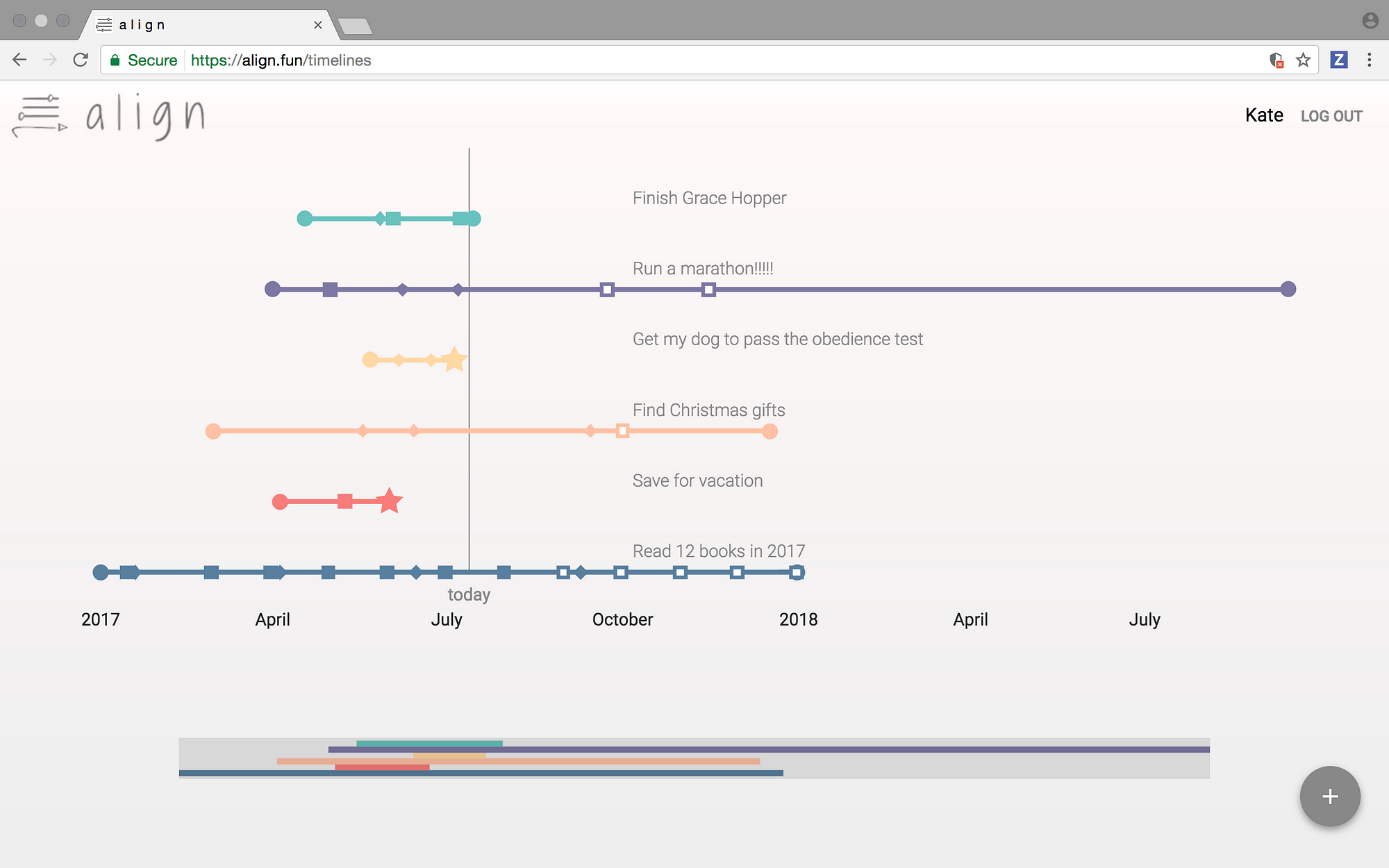

应用 Align 中,用户主页的控制面板

|

||||

|

||||

*应用 Align 中,用户主页的控制面板*

|

||||

|

||||

### 从构思到部署应用程序的简单分步指南

|

||||

|

||||

我在 Grace Hopper Program 为期三个月的编码训练营即将结束,实际上这篇文章的标题有些纰漏 —— 现在我已经构建了 _三个_ 全栈应用:[从零开始的电子商店(an e-commerce store from scratch)][3]、我个人的 [私人黑客马拉松项目(personal hackathon project)][4],还有这个“三周的结业项目”。这个项目是迄今为止强度最大的 —— 我和另外两名队友共同花费三周的时光 —— 而它也是我在训练营中最引以为豪的成就。这是我目前所构建和涉及的第一款稳定且复杂的应用。

|

||||

我在 Grace Hopper Program 为期三个月的编码训练营即将结束,实际上这篇文章的标题有些纰漏 —— 现在我已经构建了 _三个_ 全栈应用:[从零开始的电子商店][3]、我个人的 [私人黑客马拉松项目][4],还有这个“三周的结业项目”。这个项目是迄今为止强度最大的 —— 我和另外两名队友共同花费三周的时光 —— 而它也是我在训练营中最引以为豪的成就。这是我目前所构建和涉及的第一款稳定且复杂的应用。

|

||||

|

||||

如大多数开发者所知,即使你“知道怎么编写代码”,但真正要制作第一款全栈的应用却是非常困难的。JavaScript 生态系统出奇的大:有包管理器,模块,构建工具,转译器,数据库,库文件,还要对上述所有东西进行选择,难怪如此多的编程新手除了 Codecademy 的教程外,做不了任何东西。这就是为什么我想让你体验这个决策的分布教程,跟着我们队伍的脚印,构建可用的应用。

|

||||

如大多数开发者所知,即使你“知道怎么编写代码”,但真正要制作第一款全栈的应用却是非常困难的。JavaScript 生态系统出奇的大:有包管理器、模块、构建工具、转译器、数据库、库文件,还要对上述所有东西进行选择,难怪如此多的编程新手除了 Codecademy 的教程外,做不了任何东西。这就是为什么我想让你体验这个决策的分布教程,跟着我们队伍的脚印,构建可用的应用。

|

||||

|

||||

* * *

|

||||

|

||||

@ -38,12 +39,8 @@

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

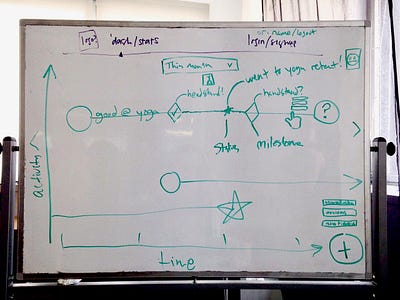

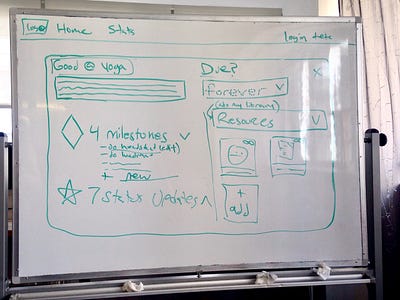

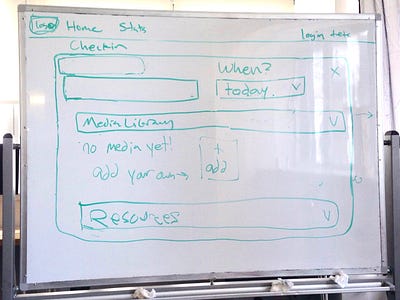

这些骨架确保我们意见统一,提供了可预见的蓝图,让我们向着计划的方向努力。

|

||||

@ -53,35 +50,32 @@

|

||||

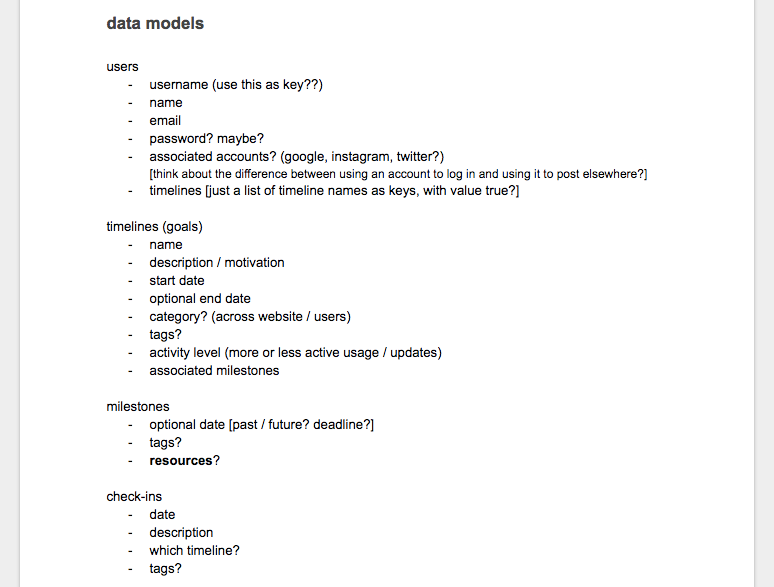

到了设计数据结构的时候。基于我们的示意图和用户故事,我们在 Google doc 中制作了一个清单,它包含我们将会需要的模型和每个模型应该包含的属性。我们知道需要 “目标(goal)” 模型、“用户(user)”模型、“里程碑(milestone)”模型、“记录(checkin)”模型还有最后的“资源(resource)”模型和“上传(upload)”模型,

|

||||

|

||||

|

||||

最初的数据模型结构

|

||||

|

||||

*最初的数据模型结构*

|

||||

|

||||

在正式确定好这些模型后,我们需要选择某种 _类型_ 的数据库:“关系型的”还是“非关系型的”(也就是“SQL”还是“NoSQL”)。由于基于表的 SQL 数据库需要预定义的格式,而基于文档的 NoSQL 数据库却可以用动态格式描述非结构化数据。

|

||||

|

||||

对于我们这个情况,用 SQL 型还是 No-SQL 型的数据库没多大影响,由于下列原因,我们最终选择了 Google 的 NoSQL 云数据库 Firebase:

|

||||

|

||||

1. 它能够把用户上传的图片保存在云端并存储起来

|

||||

|

||||

2. 它包含 WebSocket 功能,能够实时更新

|

||||

|

||||

3. 它能够处理用户验证,并且提供简单的 OAuth 功能。

|

||||

|

||||

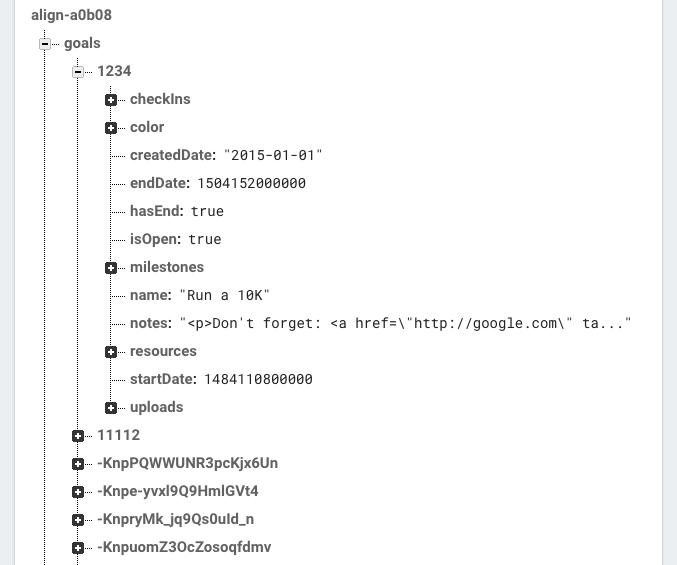

我们确定了数据库后,就要理解数据模型之间的关系了。由于 Firebase 是 NoSQL 类型,我们无法创建联合表或者设置像 _"记录 (Checkins)属于目标(Goals)"_ 的从属关系。因此我们需要弄清楚 JSON 树是什么样的,对象是怎样嵌套的(或者不是嵌套的关系)。最终,我们构建了像这样的模型:

|

||||

|

||||

我们确定了数据库后,就要理解数据模型之间的关系了。由于 Firebase 是 NoSQL 类型,我们无法创建联合表或者设置像 _“记录 (Checkins)属于目标(Goals)”_ 的从属关系。因此我们需要弄清楚 JSON 树是什么样的,对象是怎样嵌套的(或者不是嵌套的关系)。最终,我们构建了像这样的模型:

|

||||

|

||||

|

||||

我们最终为目标(Goal)对象确定的 Firebase 数据格式。注意里程碑(Milestones)和记录(Checkins)对象嵌套在 Goals 中。

|

||||

|

||||

_(注意: 出于性能考虑,Firebase 更倾向于简单、常规的数据结构, 但对于我们这种情况,需要在数据中进行嵌套,因为我们不会从数据库中获取目标(Goal)却不获取相应的子对象里程碑(Milestones)和记录(Checkins)。)_

|

||||

*我们最终为目标(Goal)对象确定的 Firebase 数据格式。注意里程碑(Milestones)和记录(Checkins)对象嵌套在 Goals 中。*

|

||||

|

||||

_(注意: 出于性能考虑,Firebase 更倾向于简单、常规的数据结构, 但对于我们这种情况,需要在数据中进行嵌套,因为我们不会从数据库中获取目标(Goal)却不获取相应的子对象里程碑(Milestones)和记录(Checkins)。)_

|

||||

|

||||

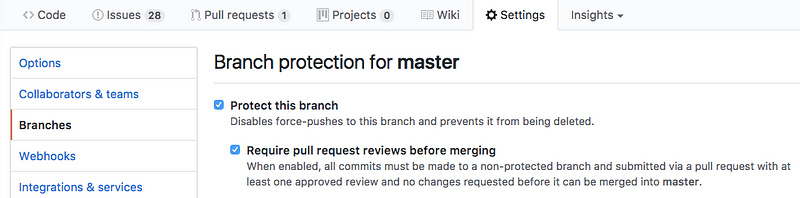

### 第 4 步:设置好 Github 和敏捷开发工作流

|

||||

|

||||

我们知道,从一开始就保持井然有序、执行敏捷开发对我们有极大好处。我们设置好 Github 上的仓库,我们无法直接将代码合并到主(master)分支,这迫使我们互相审阅代码。

|

||||

|

||||

|

||||

|

||||

|

||||

我们还在 [Waffle.io][5] 网站上创建了敏捷开发的面板,它是免费的,很容易集成到 Github。我们在 Waffle 面板上罗列出所有用户故事以及需要我们去修复的 bugs。之后当我们开始编码时,我们每个人会为自己正在研究的每一个用户故事创建一个 git 分支,在完成工作后合并这一条条的分支。

|

||||

|

||||

我们还在 [Waffle.io][5] 网站上创建了敏捷开发的面板,它是免费的,很容易集成到 Github。我们在 Waffle 面板上罗列出所有用户故事以及需要我们去修复的 bug。之后当我们开始编码时,我们每个人会为自己正在研究的每一个用户故事创建一个 git 分支,在完成工作后合并这一条条的分支。

|

||||

|

||||

|

||||

|

||||

@ -103,9 +97,9 @@ _(注意: 出于性能考虑,Firebase 更倾向于简单、常规的数据结

|

||||

|

||||

接下来是为应用创建 “概念证明”,也可以说是实现起来最复杂的基本功能的原型,证明我们的应用 _可以_ 实现。对我们而言,这意味着要找个前端库来实现时间线的渲染,成功连接到 Firebase,显示数据库中的一些种子数据。

|

||||

|

||||

|

||||

|

||||

Victory.JS 绘制的简单时间线

|

||||

|

||||

*Victory.JS 绘制的简单时间线*

|

||||

|

||||

我们找到了基于 D3 构建的响应式库 Victory.JS,花了一天时间阅读文档,用 _VictoryLine_ 和 _VictoryScatter_ 组件实现了非常基础的示例,能够可视化地显示数据库中的数据。实际上,这很有用!我们可以开始构建了。

|

||||

|

||||

@ -113,26 +107,16 @@ Victory.JS 绘制的简单时间线

|

||||

|

||||

最后,是时候构建出应用中那些令人期待的功能了。取决于你要构建的应用,这一重要步骤会有些明显差异。我们根据所用的框架,编码出不同的用户故事并保存在 Waffle 上。常常需要同时接触前端和后端代码(比如,创建一个前端表格同时要连接到数据库)。我们实现了包含以下这些大大小小的功能:

|

||||

|

||||

* 能够创建新目标(goals)、里程碑(milestones)和记录(checkins)

|

||||

|

||||

* 能够创建新目标、里程碑和记录

|

||||

* 能够删除目标,里程碑和记录

|

||||

|

||||

* 能够更改时间线的名称,颜色和详细内容

|

||||

|

||||

* 能够缩放时间线

|

||||

|

||||

* 能够为资源添加链接

|

||||

|

||||

* 能够上传视频

|

||||

|

||||

* 在达到相关目标的里程碑和记录时弹出资源和视频

|

||||

|

||||

* 集成富文本编辑器

|

||||

|

||||

* 用户注册、验证、OAuth 验证

|

||||

|

||||

* 弹出查看时间线选项

|

||||

|

||||

* 加载画面

|

||||

|

||||

有各种原因,这一步花了我们很多时间 —— 这一阶段是产生最多优质代码的阶段,每当我们实现了一个功能,就会有更多的事情要完善。

|

||||

@ -142,7 +126,8 @@ Victory.JS 绘制的简单时间线

|

||||

当我们使用 MVP 架构实现了想要的功能,就可以开始清理,对它进行美化了。像表单,菜单和登陆栏等组件,我的团队用的是 Material-UI,不需要很多深层次的设计知识,它也能确保每个组件看上去都很圆润光滑。

|

||||

|

||||

|

||||

这是我制作的最喜爱功能之一了。它美得令人心旷神怡。

|

||||

|

||||

*这是我制作的最喜爱功能之一了。它美得令人心旷神怡。*

|

||||

|

||||

我们花了一点时间来选择颜色方案和编写 CSS ,这让我们在编程中休息了一段美妙的时间。期间我们还设计了 logo 图标,还上传了网站图标。

|

||||

|

||||

@ -169,15 +154,16 @@ Victory.JS 绘制的简单时间线

|

||||

但是,现在我们感到非常开心,不仅是因为成品,还因为我们从这个过程中获得了难以估量的知识和理解。点击 [这里][7] 查看 Align 应用!

|

||||

|

||||

|

||||

Align 团队:Sara Kladky (左), Melanie Mohn (中), 还有我自己.

|

||||

|

||||

*Align 团队:Sara Kladky(左),Melanie Mohn(中),还有我自己。*

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://medium.com/ladies-storm-hackathons/how-we-built-our-first-full-stack-javascript-web-app-in-three-weeks-8a4668dbd67c?imm_mid=0f581a&cmp=em-web-na-na-newsltr_20170816

|

||||

|

||||

作者:[Sophia Ciocca ][a]

|

||||

作者:[Sophia Ciocca][a]

|

||||

译者:[BriFuture](https://github.com/BriFuture)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,14 +1,15 @@

|

||||

管理 Linux 系统中的用户

|

||||

管理 Linux 系统中的用户

|

||||

======

|

||||

|

||||

|

||||

|

||||

也许你的 Lniux 用户并不是愤怒的公牛,但是当涉及管理他们的账户的时候,能让他们一直开心也是一种挑战。监控他们当前正在访问的东西,追踪他们他们遇到问题时的解决方案,并且保证能把他们在使用系统时出现的重要变动记录下来。这里有一些方法和工具可以使这份工作轻松一点。

|

||||

也许你的 Linux 用户并不是愤怒的公牛,但是当涉及管理他们的账户的时候,能让他们一直满意也是一种挑战。你需要监控他们的访问权限,跟进他们遇到问题时的解决方案,并且把他们在使用系统时出现的重要变动记录下来。这里有一些方法和工具可以让这个工作轻松一点。

|

||||

|

||||

### 配置账户

|

||||

### 配置账户

|

||||

|

||||

添加和移除账户是管理用户中最简单的一项,但是这里面仍然有很多需要考虑的选项。无论你是用桌面工具或是命令行选项,这都是一个非常自动化的过程。你可以使用命令添加一个新用户,像是 `adduser jdoe`,这同时会触发一系列的事情。使用下一个可用的 UID 可以创建 John 的账户,或许还会被许多用以配置账户的文件所填充。当你运行 `adduser` 命令加一个新的用户名的时候,它将会提示一些额外的信息,同时解释这是在干什么。

|

||||

添加和删除账户是管理用户中比较简单的一项,但是这里面仍然有很多需要考虑的方面。无论你是用桌面工具或是命令行选项,这都是一个非常自动化的过程。你可以使用 `adduser jdoe` 命令添加一个新用户,同时会触发一系列的反应。在创建 John 这个账户时会自动使用下一个可用的 UID,并有很多自动生成的文件来完成这个工作。当你运行 `adduser` 后跟一个参数时(要创建的用户名),它会提示一些额外的信息,同时解释这是在干什么。

|

||||

|

||||

```

|

||||

```

|

||||

$ sudo adduser jdoe

|

||||

Adding user 'jdoe' ...

|

||||

Adding new group `jdoe' (1001) ...

|

||||

@ -20,21 +21,21 @@ Retype new UNIX password:

|

||||

passwd: password updated successfully

|

||||

Changing the user information for jdoe

|

||||

Enter the new value, or press ENTER for the default

|

||||

Full Name []: John Doe

|

||||

Room Number []:

|

||||

Work Phone []:

|

||||

Home Phone []:

|

||||

Other []:

|

||||

Full Name []: John Doe

|

||||

Room Number []:

|

||||

Work Phone []:

|

||||

Home Phone []:

|

||||

Other []:

|

||||

Is the information correct? [Y/n] Y

|

||||

```

|

||||

```

|

||||

|

||||

像你看到的那样,`adduser` 将添加用户的信息(到 `/etc/passwd` 和 `/etc/shadow` 文件中),创建新的家目录,并用 `/etc/skel` 里设置的文件填充家目录,提示你分配初始密码和认定信息,然后确认这些信息都是正确的,如果你在最后的提示 “Is the information correct” 处的答案是 “n”,它将回溯你之前所有的回答,允许修改任何你想要修改的地方。

|

||||

如你所见,`adduser` 会添加用户的信息(到 `/etc/passwd` 和 `/etc/shadow` 文件中),创建新的<ruby>家目录<rt>home directory</rt></ruby>,并用 `/etc/skel` 里设置的文件填充家目录,提示你分配初始密码和认证信息,然后确认这些信息都是正确的,如果你在最后的提示 “Is the information correct?” 处的回答是 “n”,它会回溯你之前所有的回答,允许修改任何你想要修改的地方。

|

||||

|

||||

创建好一个用户后,你可能会想要确认一下它是否是你期望的样子,更好的方法是确保在添加第一个帐户**之前**,“自动”选择与您想要查看的内容相匹配。默认有默认的好处,它对于你想知道他们定义在哪里有所用处,以防你想作出一些变动 —— 例如,你不想家目录在 `/home` 里,你不想用户 UID 从 1000 开始,或是你不想家目录下的文件被系统上的**每个人**都可读。

|

||||

创建好一个用户后,你可能会想要确认一下它是否是你期望的样子,更好的方法是确保在添加第一个帐户**之前**,“自动”选择与你想要查看的内容是否匹配。默认有默认的好处,它对于你想知道他们定义在哪里很有用,以便你想做出一些变动 —— 例如,你不想让用户的家目录在 `/home` 里,你不想让用户 UID 从 1000 开始,或是你不想让家目录下的文件被系统中的**每个人**都可读。

|

||||

|

||||

`adduser` 如何工作的一些细节设置在 `/etc/adduser.conf` 文件里。这个文件包含的一些设置决定了一个新的账户如何配置,以及它之后的样子。注意,注释和空白行将会在输出中被忽略,因此我们可以更加集中注意在设置上面。

|

||||

`adduser` 的一些配置细节设置在 `/etc/adduser.conf` 文件里。这个文件包含的一些配置项决定了一个新的账户如何配置,以及它之后的样子。注意,注释和空白行将会在输出中被忽略,因此我们更关注配置项。

|

||||

|

||||

```

|

||||

```

|

||||

$ cat /etc/adduser.conf | grep -v "^#" | grep -v "^$"

|

||||

DSHELL=/bin/bash

|

||||

DHOME=/home

|

||||

@ -55,45 +56,45 @@ DIR_MODE=0755

|

||||

SETGID_HOME=no

|

||||

QUOTAUSER=""

|

||||

SKEL_IGNORE_REGEX="dpkg-(old|new|dist|save)"

|

||||

```

|

||||

```

|

||||

|

||||

可以看到,我们有了一个默认的 shell(`DSHELL`),UID(`FIRST_UID`)的开始数值,家目录(`DHOME`)的位置,以及启动文件(`SKEL`)的来源位置。这个文件也会指定分配给家目录(`DIR_HOME`)的权限。

|

||||

可以看到,我们有了一个默认的 shell(`DSHELL`),UID(`FIRST_UID`)的起始值,家目录(`DHOME`)的位置,以及启动文件(`SKEL`)的来源位置。这个文件也会指定分配给家目录(`DIR_HOME`)的权限。

|

||||

|

||||

其中 `DIR_HOME` 是最重要的设置,它决定了每个家目录被使用的权限。这个设置分配给用户创建的目录权限是 `755`,家目录的权限将会设置为 `rwxr-xr-x`。用户可以读其他用户的文件,但是不能修改和移除他们。如果你想要更多的限制,你可以更改这个设置为 `750`(用户组外的任何人都不可访问)甚至是 `700`(除用户自己外的人都不可访问)。

|

||||

其中 `DIR_HOME` 是最重要的设置,它决定了每个家目录被使用的权限。这个设置分配给用户创建的目录权限是 755,家目录的权限将会设置为 `rwxr-xr-x`。用户可以读其他用户的文件,但是不能修改和移除它们。如果你想要更多的限制,你可以更改这个设置为 750(用户组外的任何人都不可访问)甚至是 700(除用户自己外的人都不可访问)。

|

||||

|

||||

任何用户账号在创建之前都可以进行手动修改。例如,你可以编辑 `/etc/passwd` 或者修改家目录的权限,开始在新服务器上添加用户之前配置 `/etc/adduser.conf` 可以确保一定的一致性,从长远来看可以节省时间和避免一些麻烦。

|

||||

任何用户账号在创建之前都可以进行手动修改。例如,你可以编辑 `/etc/passwd` 或者修改家目录的权限,开始在新服务器上添加用户之前配置 `/etc/adduser.conf` 可以确保一定的一致性,从长远来看可以节省时间和避免一些麻烦。

|

||||

|

||||

`/etc/adduser.conf` 的修改将会在之后创建的用户上生效。如果你想以不同的方式设置某个特定账户,除了用户名之外,你还可以选择使用 `adduser` 命令提供账户配置选项。或许你想为某些账户分配不同的 shell,请求特殊的 UID,完全禁用登录。`adduser` 的帮助页将会为你显示一些配置个人账户的选择。

|

||||

`/etc/adduser.conf` 的修改将会在之后创建的用户上生效。如果你想以不同的方式设置某个特定账户,除了用户名之外,你还可以选择使用 `adduser` 命令提供账户配置选项。或许你想为某些账户分配不同的 shell,分配特殊的 UID,或完全禁用该账户登录。`adduser` 的帮助页将会为你显示一些配置个人账户的选择。

|

||||

|

||||

```

|

||||

adduser [options] [--home DIR] [--shell SHELL] [--no-create-home]

|

||||

[--uid ID] [--firstuid ID] [--lastuid ID] [--ingroup GROUP | --gid ID]

|

||||

[--disabled-password] [--disabled-login] [--gecos GECOS]

|

||||

[--add_extra_groups] [--encrypt-home] user

|

||||

```

|

||||

```

|

||||

|

||||

每个 Linux 系统现在都会默认把每个用户放入对应的组中。作为一个管理员,你可能会选择以不同的方式去做事。你也许会发现把用户放在一个共享组中可以让你的站点工作的更好,这时,选择使用 `adduser` 的 `--gid` 选项去选择一个特定的组。当然,用户总是许多组的成员,因此也有一些选项去管理主要和次要的组。

|

||||

每个 Linux 系统现在都会默认把每个用户放入对应的组中。作为一个管理员,你可能会选择以不同的方式。你也许会发现把用户放在一个共享组中更适合你的站点,你就可以选择使用 `adduser` 的 `--gid` 选项指定一个特定的组。当然,用户总是许多组的成员,因此也有一些选项来管理主要和次要的组。

|

||||

|

||||

### 处理用户密码

|

||||

### 处理用户密码

|

||||

|

||||

一直以来,知道其他人的密码都是一个不好的念头,在设置账户时,管理员通常使用一个临时的密码,然后在用户第一次登录时会运行一条命令强制他修改密码。这里是一个例子:

|

||||

一直以来,知道其他人的密码都不是一件好事,在设置账户时,管理员通常使用一个临时密码,然后在用户第一次登录时运行一条命令强制他修改密码。这里是一个例子:

|

||||

|

||||

```

|

||||

$ sudo chage -d 0 jdoe

|

||||

```

|

||||

|

||||

当用户第一次登录的时候,会看到像这样的事情:

|

||||

当用户第一次登录时,会看到类似下面的提示:

|

||||

|

||||

```

|

||||

WARNING: Your password has expired.

|

||||

You must change your password now and login again!

|

||||

Changing password for jdoe.

|

||||

(current) UNIX password:

|

||||

```

|

||||

```

|

||||

|

||||

### 添加用户到副组

|

||||

### 添加用户到副组

|

||||

|

||||

添加用户到副组中,你可能会用如下所示的 `usermod` 命令 —— 添加用户到组中并确认已经做出变动。

|

||||

添加用户到副组中,你可能会用如下所示的 `usermod` 命令添加用户到组中并确认已经做出变动。

|

||||

|

||||

```

|

||||

$ sudo usermod -a -G sudo jdoe

|

||||

@ -101,54 +102,54 @@ $ sudo grep sudo /etc/group

|

||||

sudo:x:27:shs,jdoe

|

||||

```

|

||||

|

||||

记住在一些组,像是 `sudo` 或者 `wheel` 组中,意味着包含特权,一定要特别注意这一点。

|

||||

记住在一些组意味着特别的权限,如 sudo 或者 wheel 组,一定要特别注意这一点。

|

||||

|

||||

### 移除用户,添加组等

|

||||

### 移除用户,添加组等

|

||||

|

||||

Linux 系统也提供了移除账户,添加新的组,移除组等一些命令。例如,`deluser` 命令,将会从 `/etc/passwd` 和 `/etc/shadow` 中移除用户记录,但是会完整保留其家目录,除非你添加了 `--remove-home` 或者 `--remove-all-files` 选项。`addgroup` 命令会添加一个组,默认按目前组的次序分配下一个 id(在用户组范围内),除非你使用 `--gid` 选项指定 id。

|

||||

|

||||

Linux 系统也提供了命令去移除账户、添加新的组、移除组等。例如,`deluser` 命令,将会从 `/etc/passwd` 和 `/etc/shadow` 中移除用户登录入口,但是会完整保留他的家目录,除非你添加了 `--remove-home` 或者 `--remove-all-files` 选项。`addgroup` 命令会添加一个组,按目前组的次序给他下一个 ID(在用户组范围内),除非你使用 `--gid` 选项指定 ID。

|

||||

|

||||

```

|

||||

$ sudo addgroup testgroup --gid=131

|

||||

Adding group `testgroup' (GID 131) ...

|

||||

Done.

|

||||

```

|

||||

```

|

||||

|

||||

### 管理特权账户

|

||||

### 管理特权账户

|

||||

|

||||

一些 Linux 系统中有一个 wheel 组,它给组中成员赋予了像 root 一样运行命令的能力。在这种情况下,`/etc/sudoers` 将会引用该组。在 Debian 系统中,这个组被叫做 `sudo`,但是以相同的方式工作,你在 `/etc/sudoers` 中可以看到像这样的引用:

|

||||

一些 Linux 系统中有一个 wheel 组,它给组中成员赋予了像 root 一样运行命令的权限。在这种情况下,`/etc/sudoers` 将会引用该组。在 Debian 系统中,这个组被叫做 sudo,但是原理是相同的,你在 `/etc/sudoers` 中可以看到像这样的信息:

|

||||

|

||||

```

|

||||

%sudo ALL=(ALL:ALL) ALL

|

||||

```

|

||||

%sudo ALL=(ALL:ALL) ALL

|

||||

```

|

||||

|

||||

这个基础的设定意味着,任何在 wheel 或者 sudo 组中的成员,只要在他们运行的命令之前添加 `sudo`,就可以以 root 的权限去运行命令。

|

||||

这行基本的配置意味着任何在 wheel 或者 sudo 组中的成员只要在他们运行的命令之前添加 `sudo`,就可以以 root 的权限去运行命令。

|

||||

|

||||

你可以向 `sudoers` 文件中添加更多有限的特权 —— 也许给特定用户运行一两个 root 的命令。如果这样做,您还应定期查看 `/etc/sudoers` 文件以评估用户拥有的权限,以及仍然需要提供的权限。

|

||||

你可以向 sudoers 文件中添加更多有限的权限 —— 也许给特定用户几个能以 root 运行的命令。如果你是这样做的,你应该定期查看 `/etc/sudoers` 文件以评估用户拥有的权限,以及仍然需要提供的权限。

|

||||

|

||||

在下面显示的命令中,我们看到在 `/etc/sudoers` 中匹配到的行。在这个文件中最有趣的行是,包含能使用 `sudo` 运行命令的路径设置,以及两个允许通过 `sudo` 运行命令的组。像刚才提到的那样,单个用户可以通过包含在 `sudoers` 文件中来获得权限,但是更有实际意义的方法是通过组成员来定义各自的权限。

|

||||

在下面显示的命令中,我们过滤了 `/etc/sudoers` 中有效的配置行。其中最有意思的是,它包含了能使用 `sudo` 运行命令的路径设置,以及两个允许通过 `sudo` 运行命令的组。像刚才提到的那样,单个用户可以通过包含在 sudoers 文件中来获得权限,但是更有实际意义的方法是通过组成员来定义各自的权限。

|

||||

|

||||

```

|

||||

# cat /etc/sudoers | grep -v "^#" | grep -v "^$"

|

||||

Defaults env_reset

|

||||

Defaults mail_badpass

|

||||

Defaults secure_path="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin"

|

||||

root ALL=(ALL:ALL) ALL

|

||||

%admin ALL=(ALL) ALL <== admin group

|

||||

%sudo ALL=(ALL:ALL) ALL <== sudo group

|

||||

```

|

||||

root ALL=(ALL:ALL) ALL

|

||||

%admin ALL=(ALL) ALL <== admin group

|

||||

%sudo ALL=(ALL:ALL) ALL <== sudo group

|

||||

```

|

||||

|

||||

### 登录检查

|

||||

### 登录检查

|

||||

|

||||

你可以通过以下命令查看用户的上一次登录:

|

||||

你可以通过以下命令查看用户的上一次登录:

|

||||

|

||||

```

|

||||

# last jdoe

|

||||

jdoe pts/18 192.168.0.11 Thu Sep 14 08:44 - 11:48 (00:04)

|

||||

jdoe pts/18 192.168.0.11 Thu Sep 14 13:43 - 18:44 (00:00)

|

||||

jdoe pts/18 192.168.0.11 Thu Sep 14 19:42 - 19:43 (00:00)

|

||||

```

|

||||

```

|

||||

|

||||

如果你想查看每一个用户上一次的登录情况,你可以通过一个像这样的循环来运行 `last` 命令:

|

||||

如果你想查看每一个用户上一次的登录情况,你可以通过一个像这样的循环来运行 `last` 命令:

|

||||

|

||||

```

|

||||

$ for user in `ls /home`; do last $user | head -1; done

|

||||

@ -157,21 +158,21 @@ jdoe pts/18 192.168.0.11 Thu Sep 14 19:42 - 19:43 (00:03)

|

||||

|

||||

rocket pts/18 192.168.0.11 Thu Sep 14 13:02 - 13:02 (00:00)

|

||||

shs pts/17 192.168.0.11 Thu Sep 14 12:45 still logged in

|

||||

```

|

||||

|

||||

此命令仅显示自当前 `wtmp` 文件变为活跃状态以来已登录的用户。空白行表示用户自那以后从未登录过,但没有将其调出。一个更好的命令是过滤掉在这期间从未登录过的用户的显示:

|

||||

|

||||

```

|

||||

$ for user in `ls /home`; do echo -n "$user ";last $user | head -1 | awk '{print substr($0,40)}'; done

|

||||

|

||||

此命令仅显示自当前 wtmp 文件登录过的用户。空白行表示用户自那以后从未登录过,但没有将他们显示出来。一个更好的命令可以明确地显示这期间从未登录过的用户:

|

||||

|

||||

```

|

||||

$ for user in `ls /home`; do echo -n "$user"; last $user | head -1 | awk '{print substr($0,40)}'; done

|

||||

dhayes

|

||||

jdoe pts/18 192.168.0.11 Thu Sep 14 19:42 - 19:43

|

||||

peanut pts/19 192.168.0.29 Mon Sep 11 09:15 - 17:11

|

||||

rocket pts/18 192.168.0.11 Thu Sep 14 13:02 - 13:02

|

||||

shs pts/17 192.168.0.11 Thu Sep 14 12:45 still logged

|

||||

tsmith

|

||||

```

|

||||

```

|

||||

|

||||

这个命令会打印很多,但是可以通过一个脚本使它更加清晰易用。

|

||||

这个命令要打很多字,但是可以通过一个脚本使它更加清晰易用。

|

||||

|

||||

```

|

||||

#!/bin/bash

|

||||

@ -180,13 +181,13 @@ for user in `ls /home`

|

||||

do

|

||||

echo -n "$user ";last $user | head -1 | awk '{print substr($0,40)}'

|

||||

done

|

||||

```

|

||||

```

|

||||

|

||||

有时,此类信息可以提醒您用户角色的变动,表明他们可能不再需要相关帐户。

|

||||

有时这些信息可以提醒你用户角色的变动,表明他们可能不再需要相关帐户了。

|

||||

|

||||

### 与用户沟通

|

||||

### 与用户沟通

|

||||

|

||||

Linux 提供了许多方法和用户沟通。你可以向 `/etc/motd` 文件中添加信息,当用户从终端登录到服务器时,将会显示这些信息。你也可以通过例如 `write`(通知单个用户)或者 `wall`(`write` 给所有已登录的用户)命令发送通知。

|

||||

Linux 提供了许多和用户沟通的方法。你可以向 `/etc/motd` 文件中添加信息,当用户从终端登录到服务器时,将会显示这些信息。你也可以通过例如 `write`(通知单个用户)或者 `wall`(write 给所有已登录的用户)命令发送通知。

|

||||

|

||||

```

|

||||

$ wall System will go down in one hour

|

||||

@ -194,30 +195,30 @@ $ wall System will go down in one hour

|

||||

Broadcast message from shs@stinkbug (pts/17) (Thu Sep 14 14:04:16 2017):

|

||||

|

||||

System will go down in one hour

|

||||

```

|

||||

```

|

||||

|

||||

重要的通知应该通过多个管道传递,因为很难预测用户实际会注意到什么。mesage-of-the-day(motd),`wall` 和 email 通知可以吸引用户大部分的注意力。

|

||||

重要的通知应该通过多个渠道传达,因为很难预测用户实际会注意到什么。mesage-of-the-day(motd),`wall` 和 email 通知可以吸引用户大部分的注意力。

|

||||

|

||||

### 注意日志文件

|

||||

### 注意日志文件

|

||||

|

||||

更多地注意日志文件上也可以帮你理解用户活动。事实上,`/var/log/auth.log` 文件将会为你显示用户的登录和注销活动,组的创建等。`/var/log/message` 或者 `/var/log/syslog` 文件将会告诉你更多有关系统活动的事情。

|

||||

多注意日志文件也可以帮你理解用户的活动情况。尤其 `/var/log/auth.log` 文件将会显示用户的登录和注销活动,组的创建记录等。`/var/log/message` 或者 `/var/log/syslog` 文件将会告诉你更多有关系统活动的日志。

|

||||

|

||||

### 追踪问题和请求

|

||||

### 追踪问题和需求

|

||||

|

||||

无论你是否在 Linux 系统上安装了票务系统,跟踪用户遇到的问题以及他们提出的请求都非常重要。如果请求的一部分久久不见回应,用户必然不会高兴。即使是纸质日志也可能是有用的,或者更好的是,有一个电子表格,可以让你注意到哪些问题仍然悬而未决,以及问题的根本原因是什么。确保解决问题和请求非常重要,日志还可以帮助您记住你必须采取的措施来解决几个月甚至几年后重新出现的问题。

|

||||

无论你是否在 Linux 系统上安装了事件跟踪系统,跟踪用户遇到的问题以及他们提出的需求都非常重要。如果需求的一部分久久不见回应,用户必然不会高兴。即使是记录在纸上也是有用的,或者最好有个电子表格,这可以让你注意到哪些问题仍然悬而未决,以及问题的根本原因是什么。确认问题和需求非常重要,记录还可以帮助你记住你必须采取的措施来解决几个月甚至几年后重新出现的问题。

|

||||

|

||||

### 总结

|

||||

### 总结

|

||||

|

||||

在繁忙的服务器上管理用户帐户部分取决于从配置良好的默认值开始,部分取决于监控用户活动和遇到的问题。如果用户觉得你对他们的顾虑有所回应并且知道在需要系统升级时会发生什么,他们可能会很高兴。

|

||||

在繁忙的服务器上管理用户帐号,部分取决于配置良好的默认值,部分取决于监控用户活动和遇到的问题。如果用户觉得你对他们的顾虑有所回应并且知道在需要系统升级时会发生什么,他们可能会很高兴。

|

||||

|

||||

-----------

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3225109/linux/managing-users-on-linux-systems.html

|

||||

|

||||

作者:[Sandra Henry-Stocker][a]

|

||||

译者:[dianbanjiu](https://github.com/dianbanjiu)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)、[pityonline](https://github.com/pityonline)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.networkworld.com/author/Sandra-Henry_Stocker/

|

||||

[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/

|

||||

|

||||

@ -3,92 +3,90 @@

|

||||

|

||||

|

||||

|

||||

本教程将指导你在 Ubuntu 18.04 LTS 无头服务器上,一步一步地安装 **Oracle VirtualBox**。同时,本教程也将介绍如何使用 **phpVirtualBox** 去管理安装在无头服务器上的 **VirtualBox** 实例。**phpVirtualBox** 是 VirtualBox 的一个基于 Web 的后端工具。这个教程也可以工作在 Debian 和其它 Ubuntu 衍生版本上,如 Linux Mint。现在,我们开始。

|

||||

本教程将指导你在 Ubuntu 18.04 LTS 无头服务器上,一步一步地安装 **Oracle VirtualBox**。同时,本教程也将介绍如何使用 **phpVirtualBox** 去管理安装在无头服务器上的 **VirtualBox** 实例。**phpVirtualBox** 是 VirtualBox 的一个基于 Web 的前端工具。这个教程也可以工作在 Debian 和其它 Ubuntu 衍生版本上,如 Linux Mint。现在,我们开始。

|

||||

|

||||

### 前提条件

|

||||

|

||||

在安装 Oracle VirtualBox 之前,我们的 Ubuntu 18.04 LTS 服务器上需要满足如下的前提条件。

|

||||

|

||||

首先,逐个运行如下的命令来更新 Ubuntu 服务器。

|

||||

|

||||

```

|

||||

$ sudo apt update

|

||||

|

||||

$ sudo apt upgrade

|

||||

|

||||

$ sudo apt dist-upgrade

|

||||

|

||||

```

|

||||

|

||||

接下来,安装如下的必需的包:

|

||||

|

||||

```

|

||||

$ sudo apt install build-essential dkms unzip wget

|

||||

|

||||

```

|

||||

|

||||

安装完成所有的更新和必需的包之后,重启动 Ubuntu 服务器。

|

||||

|

||||

```

|

||||

$ sudo reboot

|

||||

|

||||

```

|

||||

|

||||

### 在 Ubuntu 18.04 LTS 服务器上安装 VirtualBox

|

||||

|

||||

添加 Oracle VirtualBox 官方仓库。为此你需要去编辑 **/etc/apt/sources.list** 文件:

|

||||

添加 Oracle VirtualBox 官方仓库。为此你需要去编辑 `/etc/apt/sources.list` 文件:

|

||||

|

||||

```

|

||||

$ sudo nano /etc/apt/sources.list

|

||||

|

||||

```

|

||||

|

||||

添加下列的行。

|

||||

|

||||

在这里,我将使用 Ubuntu 18.04 LTS,因此我添加下列的仓库。

|

||||

|

||||

```

|

||||

deb http://download.virtualbox.org/virtualbox/debian bionic contrib

|

||||

|

||||

```

|

||||

|

||||

![][2]

|

||||

|

||||

用你的 Ubuntu 发行版的代码名字替换关键字 **‘bionic’**,比如,**‘xenial’、‘vivid’、‘utopic’、‘trusty’、‘raring’、‘quantal’、‘precise’、‘lucid’、‘jessie’、‘wheezy’、或 ‘squeeze‘**。

|

||||

用你的 Ubuntu 发行版的代码名字替换关键字 ‘bionic’,比如,‘xenial’、‘vivid’、‘utopic’、‘trusty’、‘raring’、‘quantal’、‘precise’、‘lucid’、‘jessie’、‘wheezy’、或 ‘squeeze‘。

|

||||

|

||||

然后,运行下列的命令去添加 Oracle 公钥:

|

||||

|

||||

```

|

||||

$ wget -q https://www.virtualbox.org/download/oracle_vbox_2016.asc -O- | sudo apt-key add -

|

||||

|

||||

```

|

||||

|

||||

对于 VirtualBox 的老版本,添加如下的公钥:

|

||||

|

||||

```

|

||||

$ wget -q https://www.virtualbox.org/download/oracle_vbox.asc -O- | sudo apt-key add -

|

||||

|

||||

```

|

||||

|

||||

接下来,使用如下的命令去更新软件源:

|

||||

|

||||

```

|

||||

$ sudo apt update

|

||||

|

||||

```

|

||||

|

||||

最后,使用如下的命令去安装最新版本的 Oracle VirtualBox:

|

||||

|

||||

```

|

||||

$ sudo apt install virtualbox-5.2

|

||||

|

||||

```

|

||||

|

||||

### 添加用户到 VirtualBox 组

|

||||

|

||||

我们需要去创建并添加我们的系统用户到 **vboxusers** 组中。你也可以单独创建用户,然后将它分配到 **vboxusers** 组中,也可以使用已有的用户。我不想去创建新用户,因此,我添加已存在的用户到这个组中。请注意,如果你为 virtualbox 使用一个单独的用户,那么你必须注销当前用户,并使用那个特定的用户去登入,来完成剩余的步骤。

|

||||

我们需要去创建并添加我们的系统用户到 `vboxusers` 组中。你也可以单独创建用户,然后将它分配到 `vboxusers` 组中,也可以使用已有的用户。我不想去创建新用户,因此,我添加已存在的用户到这个组中。请注意,如果你为 virtualbox 使用一个单独的用户,那么你必须注销当前用户,并使用那个特定的用户去登入,来完成剩余的步骤。

|

||||

|

||||

我使用的是我的用户名 `sk`,因此,我运行如下的命令将它添加到 `vboxusers` 组中。

|

||||

|

||||

我使用的是我的用户名 **sk**,因此,我运行如下的命令将它添加到 **vboxusers** 组中。

|

||||

```

|

||||

$ sudo usermod -aG vboxusers sk

|

||||

|

||||

```

|

||||

|

||||

现在,运行如下的命令去检查 virtualbox 内核模块是否已加载。

|

||||

|

||||

```

|

||||

$ sudo systemctl status vboxdrv

|

||||

|

||||

```

|

||||

|

||||

![][3]

|

||||

@ -96,15 +94,15 @@ $ sudo systemctl status vboxdrv

|

||||

正如你在上面的截屏中所看到的,vboxdrv 模块已加载,并且是已运行的状态!

|

||||

|

||||

对于老的 Ubuntu 版本,运行:

|

||||

|

||||

```

|

||||

$ sudo /etc/init.d/vboxdrv status

|

||||

|

||||

```

|

||||

|

||||

如果 virtualbox 模块没有启动,运行如下的命令去启动它。

|

||||

|

||||

```

|

||||

$ sudo /etc/init.d/vboxdrv setup

|

||||

|

||||

```

|

||||

|

||||

很好!我们已经成功安装了 VirtualBox 并启动了 virtualbox 模块。现在,我们继续来安装 Oracle VirtualBox 的扩展包。

|

||||

@ -119,21 +117,19 @@ VirtualBox 扩展包为 VirtualBox 访客系统提供了如下的功能。

|

||||

* Intel PXE 引导 ROM

|

||||

* 对 Linux 宿主机上的 PCI 直通提供支持

|

||||

|

||||

从[这里][4]为 VirtualBox 5.2.x 下载最新版的扩展包。

|

||||

|

||||

|

||||

从[**这里**][4]为 VirtualBox 5.2.x 下载最新版的扩展包。

|

||||

```

|

||||

$ wget https://download.virtualbox.org/virtualbox/5.2.14/Oracle_VM_VirtualBox_Extension_Pack-5.2.14.vbox-extpack

|

||||

|

||||

```

|

||||

|

||||

使用如下的命令去安装扩展包:

|

||||

|

||||

```

|

||||

$ sudo VBoxManage extpack install Oracle_VM_VirtualBox_Extension_Pack-5.2.14.vbox-extpack

|

||||

|

||||

```

|

||||

|

||||

恭喜!我们已经成功地在 Ubuntu 18.04 LTS 服务器上安装了 Oracle VirtualBox 的扩展包。现在已经可以去部署虚拟机了。参考 [**virtualbox 官方指南**][5],在命令行中开始创建和管理虚拟机。

|

||||

恭喜!我们已经成功地在 Ubuntu 18.04 LTS 服务器上安装了 Oracle VirtualBox 的扩展包。现在已经可以去部署虚拟机了。参考 [virtualbox 官方指南][5],在命令行中开始创建和管理虚拟机。

|

||||

|

||||

然而,并不是每个人都擅长使用命令行。有些人可能希望在图形界面中去创建和使用虚拟机。不用担心!下面我们为你带来非常好用的 **phpVirtualBox** 工具!

|

||||

|

||||

@ -146,84 +142,82 @@ $ sudo VBoxManage extpack install Oracle_VM_VirtualBox_Extension_Pack-5.2.14.vbo

|

||||

由于它是基于 web 的工具,我们需要安装 Apache web 服务器、PHP 和一些 php 模块。

|

||||

|

||||

为此,运行如下命令:

|

||||

|

||||

```

|

||||

$ sudo apt install apache2 php php-mysql libapache2-mod-php php-soap php-xml

|

||||

|

||||

```

|

||||

|

||||

然后,从 [**下载页面**][6] 上下载 phpVirtualBox 5.2.x 版。请注意,由于我们已经安装了 VirtualBox 5.2 版,因此,同样的我们必须去安装 phpVirtualBox 的 5.2 版本。

|

||||

然后,从 [下载页面][6] 上下载 phpVirtualBox 5.2.x 版。请注意,由于我们已经安装了 VirtualBox 5.2 版,因此,同样的我们必须去安装 phpVirtualBox 的 5.2 版本。

|

||||

|

||||

运行如下的命令去下载它:

|

||||

|

||||

```

|

||||

$ wget https://github.com/phpvirtualbox/phpvirtualbox/archive/5.2-0.zip

|

||||

|

||||

```

|

||||

|

||||

使用如下命令解压下载的安装包:

|

||||

|

||||

```

|

||||

$ unzip 5.2-0.zip

|

||||

|

||||

```

|

||||

|

||||

这个命令将解压 5.2.0.zip 文件的内容到一个命名为 “phpvirtualbox-5.2-0” 的文件夹中。现在,复制或移动这个文件夹的内容到你的 apache web 服务器的根文件夹中。

|

||||

这个命令将解压 5.2.0.zip 文件的内容到一个名为 `phpvirtualbox-5.2-0` 的文件夹中。现在,复制或移动这个文件夹的内容到你的 apache web 服务器的根文件夹中。

|

||||

|

||||

```

|

||||

$ sudo mv phpvirtualbox-5.2-0/ /var/www/html/phpvirtualbox

|

||||

|

||||

```

|

||||

|

||||

给 phpvirtualbox 文件夹分配适当的权限。

|

||||

|

||||

```

|

||||

$ sudo chmod 777 /var/www/html/phpvirtualbox/

|

||||

|

||||

```

|

||||

|

||||

接下来,我们开始配置 phpVirtualBox。

|

||||

|

||||

像下面这样复制示例配置文件。

|

||||

|

||||

```

|

||||

$ sudo cp /var/www/html/phpvirtualbox/config.php-example /var/www/html/phpvirtualbox/config.php

|

||||

|

||||

```

|

||||

|

||||

编辑 phpVirtualBox 的 **config.php** 文件:

|

||||

编辑 phpVirtualBox 的 `config.php` 文件:

|

||||

|

||||

```

|

||||

$ sudo nano /var/www/html/phpvirtualbox/config.php

|

||||

|

||||

```

|

||||

|

||||

找到下列行,并且用你的系统用户名和密码去替换它(就是前面的“添加用户到 VirtualBox 组中”节中使用的用户名)。

|

||||

|

||||

在我的案例中,我的 Ubuntu 系统用户名是 **sk** ,它的密码是 **ubuntu**。

|

||||

在我的案例中,我的 Ubuntu 系统用户名是 `sk` ,它的密码是 `ubuntu`。

|

||||

|

||||

```

|

||||

var $username = 'sk';

|

||||

var $password = 'ubuntu';

|

||||

|

||||

```

|

||||

|

||||

![][7]

|

||||

|

||||

保存并关闭这个文件。

|

||||

|

||||

接下来,创建一个名为 **/etc/default/virtualbox** 的新文件:

|

||||

接下来,创建一个名为 `/etc/default/virtualbox` 的新文件:

|

||||

|

||||

```

|

||||

$ sudo nano /etc/default/virtualbox

|

||||

|

||||

```

|

||||

|

||||

添加下列行。用你自己的系统用户替换 ‘sk’。

|

||||

添加下列行。用你自己的系统用户替换 `sk`。

|

||||

|

||||

```

|

||||

VBOXWEB_USER=sk

|

||||

|

||||

```

|

||||

|

||||

最后,重引导你的系统或重启下列服务去完成整个配置工作。

|

||||

|

||||

```

|

||||

$ sudo systemctl restart vboxweb-service

|

||||

|

||||

$ sudo systemctl restart vboxdrv

|

||||

|

||||

$ sudo systemctl restart apache2

|

||||

|

||||

```

|

||||

|

||||

### 调整防火墙允许连接 Apache web 服务器

|

||||

@ -231,6 +225,7 @@ $ sudo systemctl restart apache2

|

||||

如果你在 Ubuntu 18.04 LTS 上启用了 UFW,那么在默认情况下,apache web 服务器是不能被任何远程系统访问的。你必须通过下列的步骤让 http 和 https 流量允许通过 UFW。

|

||||

|

||||

首先,我们使用如下的命令来查看在策略中已经安装了哪些应用:

|

||||

|

||||

```

|

||||

$ sudo ufw app list

|

||||

Available applications:

|

||||

@ -238,12 +233,12 @@ Apache

|

||||

Apache Full

|

||||

Apache Secure

|

||||

OpenSSH

|

||||

|

||||

```

|

||||

|

||||

正如你所见,Apache 和 OpenSSH 应该已经在 UFW 的策略文件中安装了。

|

||||

|

||||

如果你在策略中看到的是 **“Apache Full”**,说明它允许流量到达 **80** 和 **443** 端口:

|

||||

如果你在策略中看到的是 `Apache Full`,说明它允许流量到达 80 和 443 端口:

|

||||

|

||||

```

|

||||

$ sudo ufw app info "Apache Full"

|

||||

Profile: Apache Full

|

||||

@ -253,34 +248,33 @@ server.

|

||||

|

||||

Ports:

|

||||

80,443/tcp

|

||||

|

||||

```

|

||||

|

||||

现在,运行如下的命令去启用这个策略中的 HTTP 和 HTTPS 的入站流量:

|

||||

|

||||

```

|

||||

$ sudo ufw allow in "Apache Full"

|

||||

Rules updated

|

||||

Rules updated (v6)

|

||||

|

||||

```

|

||||

|

||||

如果你希望允许 https 流量,但是仅是 http (80) 的流量,运行如下的命令:

|

||||

|

||||

```

|

||||

$ sudo ufw app info "Apache"

|

||||

|

||||

```

|

||||

|

||||

### 访问 phpVirtualBox 的 Web 控制台

|

||||

|

||||

现在,用任意一台远程系统的 web 浏览器来访问。

|

||||

|

||||

在地址栏中,输入:**<http://IP-address-of-virtualbox-headless-server/phpvirtualbox>**。

|

||||

在地址栏中,输入:`http://IP-address-of-virtualbox-headless-server/phpvirtualbox`。

|

||||

|

||||

在我的案例中,我导航到这个链接 – **<http://192.168.225.22/phpvirtualbox>**

|

||||

在我的案例中,我导航到这个链接 – `http://192.168.225.22/phpvirtualbox`。

|

||||

|

||||

你将看到如下的屏幕输出。输入 phpVirtualBox 管理员用户凭据。

|

||||

|

||||

phpVirtualBox 的默认管理员用户名和密码是 **admin** / **admin**。

|

||||

phpVirtualBox 的默认管理员用户名和密码是 `admin` / `admin`。

|

||||

|

||||

![][8]

|

||||

|

||||

@ -303,7 +297,7 @@ via: https://www.ostechnix.com/install-oracle-virtualbox-ubuntu-16-04-headless-s

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -3,25 +3,28 @@

|

||||

|

||||

|

||||

|

||||

自我更新 Arch Linux 桌面以来已经有一个月了。今天我试着更新我的 Arch Linux 系统,然后遇到一个错误 **“error:failed to commit transaction (conflicting files) stfl:/usr/lib/libstfl.so.0 exists in filesystem”**。看起来是 pacman 无法更新一个已经存在于文件系统上的库 (/usr/lib/libstfl.so.0)。如果你也遇到了同样的问题,下面是一个快速解决方案。

|

||||

自我更新 Arch Linux 桌面以来已经有一个月了。今天我试着更新我的 Arch Linux 系统,然后遇到一个错误 “error:failed to commit transaction (conflicting files) stfl:/usr/lib/libstfl.so.0 exists in filesystem”。看起来是 pacman 无法更新一个已经存在于文件系统上的库 (/usr/lib/libstfl.so.0)。如果你也遇到了同样的问题,下面是一个快速解决方案。

|

||||

|

||||

### 解决 Arch Linux 中出现的 “error:failed to commit transaction (conflicting files)”

|

||||

|

||||

有三种方法。

|

||||

|

||||

1。简单在升级时忽略导致问题的 **stfl** 库并尝试再次更新系统。请参阅此指南以了解 [**如何在更新时忽略软件包 **][1]。

|

||||

1。简单在升级时忽略导致问题的 stfl 库并尝试再次更新系统。请参阅此指南以了解 [如何在更新时忽略软件包][1]。

|

||||

|

||||

2。使用命令覆盖这个包:

|

||||

|

||||

```

|

||||

$ sudo pacman -Syu --overwrite /usr/lib/libstfl.so.0

|

||||

```

|

||||

|

||||

3。手工删掉 stfl 库然后再次升级系统。请确保目标包不被其他任何重要的包所依赖。可以通过去 archlinux.org 查看是否有这种冲突。

|

||||

|

||||

```

|

||||

$ sudo rm /usr/lib/libstfl.so.0

|

||||

```

|

||||

|

||||

现在,尝试更新系统:

|

||||

|

||||

```

|

||||

$ sudo pacman -Syu

|

||||

```

|

||||

@ -41,7 +44,7 @@ via: https://www.ostechnix.com/how-to-solve-error-failed-to-commit-transaction-c

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,105 @@

|

||||

Talk over text: Conversational interface design and usability

|

||||

======

|

||||

To make conversational interfaces more human-centered, we must free our thinking from the trappings of web and mobile design.

|

||||

|

||||

|

||||

|

||||

Conversational interfaces are unique among the screen-based and physically manipulated user interfaces that characterize the range of digital experiences we encounter on a daily basis. As [Conversational Design][1] author Erika Hall eloquently writes, "Conversation is not a new interface. It's the oldest interface." And the conversation, the most human interaction of all, lies at the nexus of the aural and verbal rather than the visual and physical. This makes it particularly challenging for machines to meet the high expectations we tend to have when it comes to typical human conversations.

|

||||

|

||||

How do we design for conversational interfaces, which run the gamut from omnichannel chatbots on our websites and mobile apps to mono-channel voice assistants on physical devices such as the Amazon Echo and Google Home? What recommendations do other experts on conversational design and usability have when it comes to crafting the most robust chatbot or voice interface possible? In this overview, we focus on three areas: information architecture, design, and usability testing.

|

||||

|

||||

### Information architecture: Trees, not sitemaps

|

||||

|

||||

Consider the websites we visit and the visual interfaces we use regularly. Each has a navigational tool, whether it is a list of links or a series of buttons, that helps us gain some understanding of the interface. In a web-optimized information architecture, we can see the entire hierarchy of a website and its contents in the form of such navigation bars and sitemaps.

|

||||

|

||||

On the other hand, in a conversational information architecture—whether articulated in a chatbot or a voice assistant—the structure of our interactions must be provided to us in a simple and straightforward way. For instance, in lieu of a navigation bar that has links to pages like About, Menu, Order, and Locations with further links underneath, we can create a conversational means of describing how to navigate the options we wish to pursue.

|

||||

|

||||

Consider the differences between the two examples of navigation below.

|

||||

|

||||

| **Web-based navigation:** | **Conversational navigation:** |

|

||||

| Present all options in the navigation bar | Present only certain top-level options to access deeper options |

|

||||

|-------------------------------------------|-----------------------------------------------------------------|

|

||||

| • Floss's Pizza | • "To learn more about us, say About" |

|

||||

| • About | • "To hear our menu, say Menu" |

|

||||

| ◦ Team | • "To place an order, say Order" |

|

||||

| ◦ Our story | • "To find out where we are, say Where" |

|

||||

| • Menu | |

|

||||

| ◦ Pizzas | |

|

||||

| ◦ Pastas | |

|

||||

| ◦ Platters | |

|

||||

| • Order | |

|

||||

| ◦ Pickup | |

|

||||

| ◦ Delivery | |

|

||||

| • Where we are | |

|

||||

| ◦ Area map • "Welcome to Floss's Pizza!" | |

|

||||

|

||||

In a conversational context, an appropriate information architecture that focuses on decision trees is of paramount importance, because one of the biggest issues many conversational interfaces face is excessive verbosity. By avoiding information overload, prizing structural simplicity, and prescribing one-word directions, your users can traverse conversational interfaces without any additional visual aid.

|

||||

|

||||

### Design: Finessing flows and language

|

||||

|

||||

![Well-designed language example][3]

|

||||

|

||||

An example of well-designed language that encapsulates Hall's conversational key moments.

|

||||

|

||||

In her book Conversational Design, Hall emphasizes the need for all conversational interfaces to adhere to conversational maxims outlined by Paul Grice and advanced by Robin Lakoff. These conversational maxims highlight the characteristics every conversational interface should have to succeed: quantity (just enough information but not too much), quality (truthfulness), relation (relevance), manner (concision, orderliness, and lack of ambiguity), and politeness (Lakoff's addition).

|

||||

|

||||

In the process, Hall spotlights four key moments that build trust with users of conversational interfaces and give them all of the information they need to interact successfully with the conversational experience, whether it is a chatbot or a voice assistant.

|

||||

|

||||

* **Introduction:** Invite the user's interest and encourage trust with a friendly but brief greeting that welcomes them to an unfamiliar interface.

|

||||

|

||||

* **Orientation:** Offer system options, such as how to exit out of certain interactions, and provide a list of options that help the user achieve their goal.

|

||||

|

||||

* **Action:** After each response from the user, offer a new set of tasks and corresponding controls for the user to proceed with further interaction.

|

||||

|

||||

* **Guidance:** Provide feedback to the user after every response and give clear instructions.

|

||||

|

||||

|

||||

|

||||

|

||||

Taken as a whole, these key moments indicate that good conversational design obligates us to consider how we write machine utterances to be both inviting and informative and to structure our decision flows in such a way that they flow naturally to the user. In other words, rather than visual design chops or an eye for style, conversational design requires us to be good writers and thoughtful architects of decision trees.

|

||||

|

||||

![Decision flow example ][5]

|

||||

|

||||

An example decision flow that adheres to Hall's key moments.

|

||||

|

||||

One metaphor I use on a regular basis to conceive of each point in a conversational interface that presents a choice to the user is the dichotomous key. In tree science, dichotomous keys are used to identify trees in their natural habitat through certain salient characteristics. What makes dichotomous keys special, however, is the fact that each card in a dichotomous key only offers two choices (hence the moniker "dichotomous") with a clearly defined characteristic that cannot be mistaken for another. Eventually, after enough dichotomous choices have been made, we can winnow down the available options to the correct genus of tree.

|

||||

|

||||

We should design conversational interfaces in the same way, with particular attention given to disambiguation and decision-making that never verges on too much complexity. Because conversational interfaces require deeply nested hierarchical structures to reach certain outcomes, we can never be too helpful in the instructions and options we offer our users.

|

||||

|

||||

### Usability testing: Dialogues, not dialogs

|

||||

|

||||

Conversational usability is a relatively unexplored and less-understood area because it is frequently based on verbal and aural interactions rather than visual or physical ones. Whereas chatbots can be evaluated for their usability using traditional means such as think-aloud, voice assistants and other voice-driven interfaces have no such luxury.

|

||||

|

||||

For voice interfaces, we are unable to pursue approaches involving eye-tracking or think-aloud, since these interfaces are purely aural and users' utterances outside of responses to interface prompts can introduce bad data. For this reason, when our Acquia Labs team built [Ask GeorgiaGov][6], the first Alexa skill for residents of the state of Georgia, we chose retrospective probing (RP) for our usability tests.

|

||||

|

||||

In retrospective probing, the conversational interaction proceeds until the completion of the task, at which point the user is asked about their impressions of the interface. Retrospective probing is well-positioned for voice interfaces because it allows the conversation to proceed unimpeded by interruptions such as think-aloud feedback. Nonetheless, it does come with the disadvantage of suffering from our notoriously unreliable memories, as it forces us to recollect past interactions rather than ones we completed immediately before recollection.

|

||||

|

||||

### Challenges and opportunities

|

||||

|

||||

Conversational interfaces are here to stay in our rapidly expanding spectrum of digital experiences. Though they enrich the range of ways we have to engage users, they also present unprecedented challenges when it comes to information architecture, design, and usability testing. With the help of previous work such as Grice's conversational maxims and Hall's key moments, we can design and build effective conversational interfaces by focusing on strong writing and well-considered decision flows.

|

||||

|

||||

The fact that conversation is the oldest and most human of interfaces is also edifying when we approach other user interfaces that lack visual or physical manipulation. As Hall writes, "The ideal interface is an interface that's not noticeable at all." Whether or not we will eventually reach the utopian outcome of conversational interfaces that feel completely natural to the human ear, we can make conversational interfaces more human-centered by freeing our thinking from the trappings of web and mobile.

|

||||

|

||||

Preston So will present [Talk Over Text: Conversational Interface Design and Usability][7] at [All Things Open][8], October 21-23 in Raleigh, North Carolina.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/10/conversational-interface-design-and-usability

|

||||

|

||||

作者:[Preston So][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/prestonso

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://abookapart.com/products/conversational-design

|

||||

[2]: /file/411001

|

||||

[3]: https://opensource.com/sites/default/files/uploads/conversational-interfaces_1.png (Well-designed language example)

|

||||

[4]: /file/411006

|

||||

[5]: https://opensource.com/sites/default/files/uploads/conversational-interfaces_2.png (Decision flow example )

|

||||

[6]: https://www.acquia.com/blog/ask-georgiagov-alexa-skill-citizens-georgia-acquia-labs/12/10/2017/3312516

|

||||

[7]: https://allthingsopen.org/talk/talk-over-text-conversational-interface-design-and-usability/

|

||||

[8]: https://allthingsopen.org/

|

||||

@ -1,4 +1,3 @@

|

||||

imquanquan Translating

|

||||

Trying Other Go Versions

|

||||

============================================================

|

||||

|

||||

@ -110,4 +109,4 @@ via: https://pocketgophers.com/trying-other-versions/

|

||||

[8]:https://pocketgophers.com/trying-other-versions/#trying-a-specific-release

|

||||

[9]:https://pocketgophers.com/guide-to-json/

|

||||

[10]:https://pocketgophers.com/trying-other-versions/#trying-any-release

|

||||

[11]:https://pocketgophers.com/trying-other-versions/#trying-a-source-build-e-g-tip

|

||||

[11]:https://pocketgophers.com/trying-other-versions/#trying-a-source-build-e-g-tip

|

||||

@ -1,4 +1,3 @@

|

||||

Zafiry translating...

|

||||

Writing eBPF tracing tools in Rust

|

||||

============================================================

|

||||

|

||||

|

||||

201

sources/tech/20180907 6.828 lab tools guide.md

Normal file

201

sources/tech/20180907 6.828 lab tools guide.md

Normal file

@ -0,0 +1,201 @@

|

||||

6.828 lab tools guide

|

||||

======

|

||||

### 6.828 lab tools guide

|

||||

|

||||

Familiarity with your environment is crucial for productive development and debugging. This page gives a brief overview of the JOS environment and useful GDB and QEMU commands. Don't take our word for it, though. Read the GDB and QEMU manuals. These are powerful tools that are worth knowing how to use.

|

||||

|

||||

#### Debugging tips

|

||||

|

||||

##### Kernel

|

||||

|

||||

GDB is your friend. Use the qemu-gdb target (or its `qemu-gdb-nox` variant) to make QEMU wait for GDB to attach. See the GDB reference below for some commands that are useful when debugging kernels.

|

||||

|

||||

If you're getting unexpected interrupts, exceptions, or triple faults, you can ask QEMU to generate a detailed log of interrupts using the -d argument.

|

||||

|

||||

To debug virtual memory issues, try the QEMU monitor commands info mem (for a high-level overview) or info pg (for lots of detail). Note that these commands only display the _current_ page table.

|

||||

|

||||

(Lab 4+) To debug multiple CPUs, use GDB's thread-related commands like thread and info threads.

|

||||

|

||||

##### User environments (lab 3+)

|

||||

|

||||

GDB also lets you debug user environments, but there are a few things you need to watch out for, since GDB doesn't know that there's a distinction between multiple user environments, or between user and kernel.

|

||||

|

||||

You can start JOS with a specific user environment using make run- _name_ (or you can edit `kern/init.c` directly). To make QEMU wait for GDB to attach, use the run- _name_ -gdb variant.

|

||||

|

||||

You can symbolically debug user code, just like you can kernel code, but you have to tell GDB which symbol table to use with the symbol-file command, since it can only use one symbol table at a time. The provided `.gdbinit` loads the kernel symbol table, `obj/kern/kernel`. The symbol table for a user environment is in its ELF binary, so you can load it using symbol-file obj/user/ _name_. _Don't_ load symbols from any `.o` files, as those haven't been relocated by the linker (libraries are statically linked into JOS user binaries, so those symbols are already included in each user binary). Make sure you get the _right_ user binary; library functions will be linked at different EIPs in different binaries and GDB won't know any better!

|

||||

|

||||

(Lab 4+) Since GDB is attached to the virtual machine as a whole, it sees clock interrupts as just another control transfer. This makes it basically impossible to step through user code because a clock interrupt is virtually guaranteed the moment you let the VM run again. The stepi command works because it suppresses interrupts, but it only steps one assembly instruction. Breakpoints generally work, but watch out because you can hit the same EIP in a different environment (indeed, a different binary altogether!).

|

||||

|

||||

#### Reference

|

||||

|

||||

##### JOS makefile

|

||||

|

||||

The JOS GNUmakefile includes a number of phony targets for running JOS in various ways. All of these targets configure QEMU to listen for GDB connections (the `*-gdb` targets also wait for this connection). To start once QEMU is running, simply run gdb from your lab directory. We provide a `.gdbinit` file that automatically points GDB at QEMU, loads the kernel symbol file, and switches between 16-bit and 32-bit mode. Exiting GDB will shut down QEMU.

|

||||

|

||||

* make qemu

|

||||

Build everything and start QEMU with the VGA console in a new window and the serial console in your terminal. To exit, either close the VGA window or press `Ctrl-c` or `Ctrl-a x` in your terminal.

|

||||

* make qemu-nox

|

||||

Like `make qemu`, but run with only the serial console. To exit, press `Ctrl-a x`. This is particularly useful over SSH connections to Athena dialups because the VGA window consumes a lot of bandwidth.

|

||||

* make qemu-gdb

|

||||

Like `make qemu`, but rather than passively accepting GDB connections at any time, this pauses at the first machine instruction and waits for a GDB connection.

|

||||

* make qemu-nox-gdb

|

||||

A combination of the `qemu-nox` and `qemu-gdb` targets.

|

||||

* make run- _name_

|

||||

(Lab 3+) Run user program _name_. For example, `make run-hello` runs `user/hello.c`.

|

||||

* make run- _name_ -nox, run- _name_ -gdb, run- _name_ -gdb-nox,

|

||||

(Lab 3+) Variants of `run-name` that correspond to the variants of the `qemu` target.

|

||||

|

||||

|

||||

|

||||

The makefile also accepts a few useful variables:

|

||||

|

||||

* make V=1 ...

|

||||

Verbose mode. Print out every command being executed, including arguments.

|

||||

* make V=1 grade

|

||||

Stop after any failed grade test and leave the QEMU output in `jos.out` for inspection.

|

||||

* make QEMUEXTRA=' _args_ ' ...

|

||||

Specify additional arguments to pass to QEMU.

|

||||

|

||||

|

||||

|

||||

##### JOS obj/

|

||||

|

||||

The JOS GNUmakefile includes a number of phony targets for running JOS in various ways. All of these targets configure QEMU to listen for GDB connections (thetargets also wait for this connection). To start once QEMU is running, simply runfrom your lab directory. We provide afile that automatically points GDB at QEMU, loads the kernel symbol file, and switches between 16-bit and 32-bit mode. Exiting GDB will shut down QEMU.The makefile also accepts a few useful variables:

|

||||

|

||||

When building JOS, the makefile also produces some additional output files that may prove useful while debugging:

|

||||

|

||||

* `obj/boot/boot.asm`, `obj/kern/kernel.asm`, `obj/user/hello.asm`, etc.

|

||||

Assembly code listings for the bootloader, kernel, and user programs.

|

||||

* `obj/kern/kernel.sym`, `obj/user/hello.sym`, etc.

|

||||

Symbol tables for the kernel and user programs.

|

||||

* `obj/boot/boot.out`, `obj/kern/kernel`, `obj/user/hello`, etc

|

||||

Linked ELF images of the kernel and user programs. These contain symbol information that can be used by GDB.

|

||||

|

||||

|

||||

|

||||

##### GDB

|

||||

|

||||

See the [GDB manual][1] for a full guide to GDB commands. Here are some particularly useful commands for 6.828, some of which don't typically come up outside of OS development.

|

||||

|

||||

* Ctrl-c

|

||||

Halt the machine and break in to GDB at the current instruction. If QEMU has multiple virtual CPUs, this halts all of them.

|

||||

* c (or continue)

|

||||

Continue execution until the next breakpoint or `Ctrl-c`.

|

||||

* si (or stepi)

|

||||

Execute one machine instruction.

|

||||

* b function or b file:line (or breakpoint)

|

||||

Set a breakpoint at the given function or line.

|

||||

* b * _addr_ (or breakpoint)

|

||||

Set a breakpoint at the EIP _addr_.

|

||||

* set print pretty

|

||||

Enable pretty-printing of arrays and structs.

|

||||

* info registers

|

||||

Print the general purpose registers, `eip`, `eflags`, and the segment selectors. For a much more thorough dump of the machine register state, see QEMU's own `info registers` command.

|

||||

* x/ _N_ x _addr_

|

||||

Display a hex dump of _N_ words starting at virtual address _addr_. If _N_ is omitted, it defaults to 1. _addr_ can be any expression.

|

||||

* x/ _N_ i _addr_

|

||||

Display the _N_ assembly instructions starting at _addr_. Using `$eip` as _addr_ will display the instructions at the current instruction pointer.

|

||||

* symbol-file _file_

|

||||

(Lab 3+) Switch to symbol file _file_. When GDB attaches to QEMU, it has no notion of the process boundaries within the virtual machine, so we have to tell it which symbols to use. By default, we configure GDB to use the kernel symbol file, `obj/kern/kernel`. If the machine is running user code, say `hello.c`, you can switch to the hello symbol file using `symbol-file obj/user/hello`.

|

||||

|

||||

|

||||

|

||||

QEMU represents each virtual CPU as a thread in GDB, so you can use all of GDB's thread-related commands to view or manipulate QEMU's virtual CPUs.

|

||||

|

||||

* thread _n_

|

||||

GDB focuses on one thread (i.e., CPU) at a time. This command switches that focus to thread _n_ , numbered from zero.

|

||||

* info threads

|

||||

List all threads (i.e., CPUs), including their state (active or halted) and what function they're in.

|

||||

|

||||

|

||||

|

||||

##### QEMU

|

||||

|

||||

QEMU includes a built-in monitor that can inspect and modify the machine state in useful ways. To enter the monitor, press Ctrl-a c in the terminal running QEMU. Press Ctrl-a c again to switch back to the serial console.

|

||||

|

||||

For a complete reference to the monitor commands, see the [QEMU manual][2]. Here are some particularly useful commands:

|

||||

|

||||

* xp/ _N_ x _paddr_

|

||||

Display a hex dump of _N_ words starting at _physical_ address _paddr_. If _N_ is omitted, it defaults to 1. This is the physical memory analogue of GDB's `x` command.

|

||||

|

||||

* info registers

|

||||

Display a full dump of the machine's internal register state. In particular, this includes the machine's _hidden_ segment state for the segment selectors and the local, global, and interrupt descriptor tables, plus the task register. This hidden state is the information the virtual CPU read from the GDT/LDT when the segment selector was loaded. Here's the CS when running in the JOS kernel in lab 1 and the meaning of each field:

|

||||

```

|

||||

CS =0008 10000000 ffffffff 10cf9a00 DPL=0 CS32 [-R-]

|

||||

```

|

||||

|

||||

* `CS =0008`

|

||||

The visible part of the code selector. We're using segment 0x8. This also tells us we're referring to the global descriptor table (0x8 &4=0), and our CPL (current privilege level) is 0x8&3=0.

|

||||

* `10000000`

|

||||

The base of this segment. Linear address = logical address + 0x10000000.

|

||||

* `ffffffff`

|

||||

The limit of this segment. Linear addresses above 0xffffffff will result in segment violation exceptions.

|

||||

* `10cf9a00`

|

||||

The raw flags of this segment, which QEMU helpfully decodes for us in the next few fields.

|

||||

* `DPL=0`

|

||||

The privilege level of this segment. Only code running with privilege level 0 can load this segment.

|

||||

* `CS32`

|

||||

This is a 32-bit code segment. Other values include `DS` for data segments (not to be confused with the DS register), and `LDT` for local descriptor tables.

|

||||

* `[-R-]`

|

||||

This segment is read-only.

|

||||

* info mem

|

||||

(Lab 2+) Display mapped virtual memory and permissions. For example,

|

||||

```

|

||||

ef7c0000-ef800000 00040000 urw

|

||||

efbf8000-efc00000 00008000 -rw

|

||||

|

||||

```

|

||||

|

||||

tells us that the 0x00040000 bytes of memory from 0xef7c0000 to 0xef800000 are mapped read/write and user-accessible, while the memory from 0xefbf8000 to 0xefc00000 is mapped read/write, but only kernel-accessible.

|

||||

|

||||

* info pg

|

||||

(Lab 2+) Display the current page table structure. The output is similar to `info mem`, but distinguishes page directory entries and page table entries and gives the permissions for each separately. Repeated PTE's and entire page tables are folded up into a single line. For example,

|

||||

```

|

||||

VPN range Entry Flags Physical page

|

||||

[00000-003ff] PDE[000] -------UWP

|

||||

[00200-00233] PTE[200-233] -------U-P 00380 0037e 0037d 0037c 0037b 0037a ..

|

||||

[00800-00bff] PDE[002] ----A--UWP

|

||||

[00800-00801] PTE[000-001] ----A--U-P 0034b 00349

|

||||

[00802-00802] PTE[002] -------U-P 00348

|

||||

|

||||

```

|

||||

|

||||

This shows two page directory entries, spanning virtual addresses 0x00000000 to 0x003fffff and 0x00800000 to 0x00bfffff, respectively. Both PDE's are present, writable, and user and the second PDE is also accessed. The second of these page tables maps three pages, spanning virtual addresses 0x00800000 through 0x00802fff, of which the first two are present, user, and accessed and the third is only present and user. The first of these PTE's maps physical page 0x34b.

|

||||

|

||||

|

||||

|

||||

|

||||

QEMU also takes some useful command line arguments, which can be passed into the JOS makefile using the

|

||||

|

||||

* make QEMUEXTRA='-d int' ...

|

||||

Log all interrupts, along with a full register dump, to `qemu.log`. You can ignore the first two log entries, "SMM: enter" and "SMM: after RMS", as these are generated before entering the boot loader. After this, log entries look like

|

||||

```

|

||||

4: v=30 e=0000 i=1 cpl=3 IP=001b:00800e2e pc=00800e2e SP=0023:eebfdf28 EAX=00000005

|

||||

EAX=00000005 EBX=00001002 ECX=00200000 EDX=00000000

|

||||

ESI=00000805 EDI=00200000 EBP=eebfdf60 ESP=eebfdf28

|

||||

...

|

||||

|

||||

```

|

||||

|

||||

The first line describes the interrupt. The `4:` is just a log record counter. `v` gives the vector number in hex. `e` gives the error code. `i=1` indicates that this was produced by an `int` instruction (versus a hardware interrupt). The rest of the line should be self-explanatory. See info registers for a description of the register dump that follows.

|

||||

|

||||

Note: If you're running a pre-0.15 version of QEMU, the log will be written to `/tmp` instead of the current directory.

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://pdos.csail.mit.edu/6.828/2018/labguide.html

|

||||

|

||||

作者:[csail.mit][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://pdos.csail.mit.edu

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: http://sourceware.org/gdb/current/onlinedocs/gdb/

|

||||

[2]: http://wiki.qemu.org/download/qemu-doc.html#pcsys_005fmonitor

|

||||

247

sources/tech/20180911 Tools Used in 6.828.md

Normal file

247

sources/tech/20180911 Tools Used in 6.828.md

Normal file

@ -0,0 +1,247 @@

|

||||

Tools Used in 6.828

|

||||

======

|

||||

### Tools Used in 6.828

|

||||

|

||||

You'll use two sets of tools in this class: an x86 emulator, QEMU, for running your kernel; and a compiler toolchain, including assembler, linker, C compiler, and debugger, for compiling and testing your kernel. This page has the information you'll need to download and install your own copies. This class assumes familiarity with Unix commands throughout.

|

||||

|

||||

We highly recommend using a Debathena machine, such as athena.dialup.mit.edu, to work on the labs. If you use the MIT Athena machines that run Linux, then all the software tools you will need for this course are located in the 6.828 locker: just type 'add -f 6.828' to get access to them.

|

||||

|

||||

If you don't have access to a Debathena machine, we recommend you use a virtual machine with Linux. If you really want to, you can build and install the tools on your own machine. We have instructions below for Linux and MacOS computers.

|

||||

|

||||

It should be possible to get this development environment running under windows with the help of [Cygwin][1]. Install cygwin, and be sure to install the flex and bison packages (they are under the development header).

|

||||

|

||||

For an overview of useful commands in the tools used in 6.828, see the [lab tools guide][2].

|

||||

|

||||

#### Compiler Toolchain

|

||||

|

||||

A "compiler toolchain" is the set of programs, including a C compiler, assemblers, and linkers, that turn code into executable binaries. You'll need a compiler toolchain that generates code for 32-bit Intel architectures ("x86" architectures) in the ELF binary format.

|

||||

|

||||

##### Test Your Compiler Toolchain

|

||||

|

||||

Modern Linux and BSD UNIX distributions already provide a toolchain suitable for 6.828. To test your distribution, try the following commands:

|

||||

|

||||

```

|

||||

% objdump -i

|

||||

|

||||

```

|

||||

|

||||

The second line should say `elf32-i386`.

|

||||

|

||||

```

|

||||

% gcc -m32 -print-libgcc-file-name

|

||||

|

||||

```

|

||||

|

||||

The command should print something like `/usr/lib/gcc/i486-linux-gnu/version/libgcc.a` or `/usr/lib/gcc/x86_64-linux-gnu/version/32/libgcc.a`

|

||||

|

||||

If both these commands succeed, you're all set, and don't need to compile your own toolchain.

|

||||

|

||||

If the gcc command fails, you may need to install a development environment. On Ubuntu Linux, try this:

|

||||

|

||||

```

|

||||

% sudo apt-get install -y build-essential gdb

|

||||

|

||||

```

|

||||

|

||||

On 64-bit machines, you may need to install a 32-bit support library. The symptom is that linking fails with error messages like "`__udivdi3` not found" and "`__muldi3` not found". On Ubuntu Linux, try this to fix the problem:

|

||||

|

||||

```

|

||||

% sudo apt-get install gcc-multilib

|

||||

|

||||

```

|

||||

|

||||

##### Using a Virtual Machine

|

||||

|

||||

Otherwise, the easiest way to get a compatible toolchain is to install a modern Linux distribution on your computer. With platform virtualization, Linux can cohabitate with your normal computing environment. Installing a Linux virtual machine is a two step process. First, you download the virtualization platform.

|

||||

|

||||

* [**VirtualBox**][3] (free for Mac, Linux, Windows) — [Download page][3]

|

||||

* [VMware Player][4] (free for Linux and Windows, registration required)

|

||||

* [VMware Fusion][5] (Downloadable from IS&T for free).

|

||||

|

||||

|

||||

|

||||

VirtualBox is a little slower and less flexible, but free!

|

||||

|

||||

Once the virtualization platform is installed, download a boot disk image for the Linux distribution of your choice.

|

||||

|

||||

* [Ubuntu Desktop][6] is what we use.

|

||||

|

||||

|

||||

|

||||

This will download a file named something like `ubuntu-10.04.1-desktop-i386.iso`. Start up your virtualization platform and create a new (32-bit) virtual machine. Use the downloaded Ubuntu image as a boot disk; the procedure differs among VMs but is pretty simple. Type `objdump -i`, as above, to verify that your toolchain is now set up. You will do your work inside the VM.

|

||||

|

||||

##### Building Your Own Compiler Toolchain

|

||||

|

||||

This will take longer to set up, but give slightly better performance than a virtual machine, and lets you work in your own familiar environment (Unix/MacOS). Fast-forward to the end for MacOS instructions.

|

||||

|

||||

###### Linux

|

||||

|

||||