mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-15 01:50:08 +08:00

commit

874e69ad33

@ -0,0 +1,74 @@

|

||||

使用 Python 和 Asyncio 编写在线多人游戏(一)

|

||||

===================================================================

|

||||

|

||||

你在 Python 中用过异步编程吗?本文中我会告诉你怎样做,而且用一个[能工作的例子][1]来展示它:这是一个流行的贪吃蛇游戏,而且是为多人游戏而设计的。

|

||||

|

||||

- [游戏入口在此,点此体验][2]。

|

||||

|

||||

###1、简介

|

||||

|

||||

在技术和文化领域,大规模多人在线游戏(MMO)毋庸置疑是我们当今世界的潮流之一。很长时间以来,为一个 MMO 游戏写一个服务器这件事总是会涉及到大量的预算与复杂的底层编程技术,不过在最近这几年,事情迅速发生了变化。基于动态语言的现代框架允许在中档的硬件上面处理大量并发的用户连接。同时,HTML5 和 WebSockets 标准使得创建基于实时图形的游戏的直接运行至浏览器上的客户端成为可能,而不需要任何的扩展。

|

||||

|

||||

对于创建可扩展的非堵塞性的服务器来说,Python 可能不是最受欢迎的工具,尤其是和在这个领域里最受欢迎的 Node.js 相比而言。但是最近版本的 Python 正在改变这种现状。[asyncio][3] 的引入和一个特别的 [async/await][4] 语法使得异步代码看起来像常规的阻塞代码一样,这使得 Python 成为了一个值得信赖的异步编程语言,所以我将尝试利用这些新特点来创建一个多人在线游戏。

|

||||

|

||||

###2、异步

|

||||

|

||||

一个游戏服务器应该可以接受尽可能多的用户并发连接,并实时处理这些连接。一个典型的解决方案是创建线程,然而在这种情况下并不能解决这个问题。运行上千的线程需要 CPU 在它们之间不停的切换(这叫做上下文切换),这将导致开销非常大,效率很低下。更糟糕的是使用进程来实现,因为,不但如此,它们还会占用大量的内存。在 Python 中,甚至还有一个问题,Python 的解释器(CPython)并不是针对多线程设计的,相反它主要针对于单线程应用实现最大的性能。这就是为什么它使用 GIL(global interpreter lock),这是一个不允许同时运行多线程 Python 代码的架构,以防止同一个共享对象出现使用不可控。正常情况下,在当前线程正在等待的时候,解释器会转换到另一个线程,通常是等待一个 I/O 的响应(举例说,比如等待 Web 服务器的响应)。这就允许在你的应用中实现非阻塞 I/O 操作,因为每一个操作仅仅阻塞一个线程而不是阻塞整个服务器。然而,这也使得通常的多线程方案变得几近无用,因为它不允许你并发执行 Python 代码,即使是在多核心的 CPU 上也是这样。而与此同时,在一个单一线程中拥有非阻塞 I/O 是完全有可能的,因而消除了经常切换上下文的需要。

|

||||

|

||||

实际上,你可以用纯 Python 代码来实现一个单线程的非阻塞 I/O。你所需要的只是标准的 [select][5] 模块,这个模块可以让你写一个事件循环来等待未阻塞的 socket 的 I/O。然而,这个方法需要你在一个地方定义所有 app 的逻辑,用不了多久,你的 app 就会变成非常复杂的状态机。有一些框架可以简化这个任务,比较流行的是 [tornade][6] 和 [twisted][7]。它们被用来使用回调方法实现复杂的协议(这和 Node.js 比较相似)。这种框架运行在它自己的事件循环中,按照定义的事件调用你的回调函数。并且,这或许是一些情况的解决方案,但是它仍然需要使用回调的方式编程,这使你的代码变得碎片化。与写同步代码并且并发地执行多个副本相比,这就像我们在普通的线程上做的一样。在单个线程上这为什么是不可能的呢?

|

||||

|

||||

这就是为什么出现微线程(microthread)概念的原因。这个想法是为了在一个线程上并发执行任务。当你在一个任务中调用阻塞的方法时,有一个叫做“manager” (或者“scheduler”)的东西在执行事件循环。当有一些事件准备处理的时候,一个 manager 会转移执行权给一个任务,并等着它执行完毕。任务将一直执行,直到它遇到一个阻塞调用,然后它就会将执行权返还给 manager。

|

||||

|

||||

> 微线程也称为轻量级线程(lightweight threads)或绿色线程(green threads)(来自于 Java 中的一个术语)。在伪线程中并发执行的任务叫做 tasklets、greenlets 或者协程(coroutines)。

|

||||

|

||||

Python 中的微线程最早的实现之一是 [Stackless Python][8]。它之所以这么知名是因为它被用在了一个叫 [EVE online][9] 的非常有名的在线游戏中。这个 MMO 游戏自称说在一个持久的“宇宙”中,有上千个玩家在做不同的活动,这些都是实时发生的。Stackless 是一个独立的 Python 解释器,它代替了标准的函数栈调用,并且直接控制程序运行流程来减少上下文切换的开销。尽管这非常有效,这个解决方案不如在标准解释器中使用“软”库更流行,像 [eventlet][10] 和 [gevent][11] 的软件包配备了修补过的标准 I/O 库,I/O 函数会将执行权传递到内部事件循环。这使得将正常的阻塞代码转变成非阻塞的代码变得简单。这种方法的一个缺点是从代码上看这并不分明,它的调用是非阻塞的。新版本的 Python 引入了本地协程作为生成器的高级形式。在 Python 的 3.4 版本之后,引入了 asyncio 库,这个库依赖于本地协程来提供单线程并发。但是仅仅到了 Python 3.5 ,协程就变成了 Python 语言的一部分,使用新的关键字 async 和 await 来描述。这是一个简单的例子,演示了使用 asyncio 来运行并发任务。

|

||||

|

||||

```

|

||||

import asyncio

|

||||

|

||||

async def my_task(seconds):

|

||||

print("start sleeping for {} seconds".format(seconds))

|

||||

await asyncio.sleep(seconds)

|

||||

print("end sleeping for {} seconds".format(seconds))

|

||||

|

||||

all_tasks = asyncio.gather(my_task(1), my_task(2))

|

||||

loop = asyncio.get_event_loop()

|

||||

loop.run_until_complete(all_tasks)

|

||||

loop.close()

|

||||

```

|

||||

|

||||

我们启动了两个任务,一个睡眠 1 秒钟,另一个睡眠 2 秒钟,输出如下:

|

||||

|

||||

```

|

||||

start sleeping for 1 seconds

|

||||

start sleeping for 2 seconds

|

||||

end sleeping for 1 seconds

|

||||

end sleeping for 2 seconds

|

||||

```

|

||||

|

||||

正如你所看到的,协程不会阻塞彼此——第二个任务在第一个结束之前启动。这发生的原因是 asyncio.sleep 是协程,它会返回执行权给调度器,直到时间到了。

|

||||

|

||||

在下一节中,我们将会使用基于协程的任务来创建一个游戏循环。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-asyncio-getting-asynchronous/

|

||||

|

||||

作者:[Kyrylo Subbotin][a]

|

||||

译者:[xinglianfly](https://github.com/xinglianfly)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-asyncio-getting-asynchronous/

|

||||

[1]: http://snakepit-game.com/

|

||||

[2]: http://snakepit-game.com/

|

||||

[3]: https://docs.python.org/3/library/asyncio.html

|

||||

[4]: https://docs.python.org/3/whatsnew/3.5.html#whatsnew-pep-492

|

||||

[5]: https://docs.python.org/2/library/select.html

|

||||

[6]: http://www.tornadoweb.org/

|

||||

[7]: http://twistedmatrix.com/

|

||||

[8]: http://www.stackless.com/

|

||||

[9]: http://www.eveonline.com/

|

||||

[10]: http://eventlet.net/

|

||||

[11]: http://www.gevent.org/

|

||||

464

published/20160608 Simple Python Framework from Scratch.md

Normal file

464

published/20160608 Simple Python Framework from Scratch.md

Normal file

@ -0,0 +1,464 @@

|

||||

从零构建一个简单的 Python 框架

|

||||

===================================

|

||||

|

||||

为什么你想要自己构建一个 web 框架呢?我想,原因有以下几点:

|

||||

|

||||

- 你有一个新奇的想法,觉得将会取代其他的框架

|

||||

- 你想要获得一些名气

|

||||

- 你遇到的问题很独特,以至于现有的框架不太合适

|

||||

- 你对 web 框架是如何工作的很感兴趣,因为你想要成为一位更好的 web 开发者。

|

||||

|

||||

接下来的笔墨将着重于最后一点。这篇文章旨在通过对设计和实现过程一步一步的阐述告诉读者,我在完成一个小型的服务器和框架之后学到了什么。你可以在这个[代码仓库][1]中找到这个项目的完整代码。

|

||||

|

||||

我希望这篇文章可以鼓励更多的人来尝试,因为这确实很有趣。它让我知道了 web 应用是如何工作的,而且这比我想的要容易的多!

|

||||

|

||||

### 范围

|

||||

|

||||

框架可以处理请求-响应周期、身份认证、数据库访问、模板生成等部分工作。Web 开发者使用框架是因为,大多数的 web 应用拥有大量相同的功能,而对每个项目都重新实现同样的功能意义不大。

|

||||

|

||||

比较大的的框架如 Rails 和 Django 实现了高层次的抽象,或者说“自备电池”(“batteries-included”,这是 Python 的口号之一,意即所有功能都自足。)。而实现所有的这些功能可能要花费数千小时,因此在这个项目上,我们重点完成其中的一小部分。在开始写代码前,我先列举一下所需的功能以及限制。

|

||||

|

||||

功能:

|

||||

|

||||

- 处理 HTTP 的 GET 和 POST 请求。你可以在[这篇 wiki][2] 中对 HTTP 有个大致的了解。

|

||||

- 实现异步操作(我*喜欢* Python 3 的 asyncio 模块)。

|

||||

- 简单的路由逻辑以及参数撷取。

|

||||

- 像其他微型框架一样,提供一个简单的用户级 API 。

|

||||

- 支持身份认证,因为学会这个很酷啊(微笑)。

|

||||

|

||||

限制:

|

||||

|

||||

- 将只支持 HTTP 1.1 的一个小子集,不支持传输编码(transfer-encoding)、HTTP 认证(http-auth)、内容编码(content-encoding,如 gzip)以及[持久化连接][3]等功能。

|

||||

- 不支持对响应内容的 MIME 判断 - 用户需要手动指定。

|

||||

- 不支持 WSGI - 仅能处理简单的 TCP 连接。

|

||||

- 不支持数据库。

|

||||

|

||||

我觉得一个小的用例可以让上述内容更加具体,也可以用来演示这个框架的 API:

|

||||

|

||||

```

|

||||

from diy_framework import App, Router

|

||||

from diy_framework.http_utils import Response

|

||||

|

||||

|

||||

# GET simple route

|

||||

async def home(r):

|

||||

rsp = Response()

|

||||

rsp.set_header('Content-Type', 'text/html')

|

||||

rsp.body = '<html><body><b>test</b></body></html>'

|

||||

return rsp

|

||||

|

||||

|

||||

# GET route + params

|

||||

async def welcome(r, name):

|

||||

return "Welcome {}".format(name)

|

||||

|

||||

# POST route + body param

|

||||

async def parse_form(r):

|

||||

if r.method == 'GET':

|

||||

return 'form'

|

||||

else:

|

||||

name = r.body.get('name', '')[0]

|

||||

password = r.body.get('password', '')[0]

|

||||

|

||||

return "{0}:{1}".format(name, password)

|

||||

|

||||

# application = router + http server

|

||||

router = Router()

|

||||

router.add_routes({

|

||||

r'/welcome/{name}': welcome,

|

||||

r'/': home,

|

||||

r'/login': parse_form,})

|

||||

|

||||

app = App(router)

|

||||

app.start_server()

|

||||

```

|

||||

'

|

||||

用户需要定义一些能够返回字符串或 `Response` 对象的异步函数,然后将这些函数与表示路由的字符串配对,最后通过一个函数调用(`start_server`)开始处理请求。

|

||||

|

||||

完成设计之后,我将它抽象为几个我需要编码的部分:

|

||||

|

||||

- 接受 TCP 连接以及调度一个异步函数来处理这些连接的部分

|

||||

- 将原始文本解析成某种抽象容器的部分

|

||||

- 对于每个请求,用来决定调用哪个函数的部分

|

||||

- 将上述部分集中到一起,并为开发者提供一个简单接口的部分

|

||||

|

||||

我先编写一些测试,这些测试被用来描述每个部分的功能。几次重构后,整个设计被分成若干部分,每个部分之间是相对解耦的。这样就非常好,因为每个部分可以被独立地研究学习。以下是我上文列出的抽象的具体体现:

|

||||

|

||||

- 一个 HTTPServer 对象,需要一个 Router 对象和一个 http_parser 模块,并使用它们来初始化。

|

||||

- HTTPConnection 对象,每一个对象表示一个单独的客户端 HTTP 连接,并且处理其请求-响应周期:使用 http_parser 模块将收到的字节流解析为一个 Request 对象;使用一个 Router 实例寻找并调用正确的函数来生成一个响应;最后将这个响应发送回客户端。

|

||||

- 一对 Request 和 Response 对象为用户提供了一种友好的方式,来处理实质上是字节流的字符串。用户不需要知道正确的消息格式和分隔符是怎样的。

|

||||

- 一个包含“路由:函数”对应关系的 Router 对象。它提供一个添加配对的方法,可以根据 URL 路径查找到相应的函数。

|

||||

- 最后,一个 App 对象。它包含配置信息,并使用它们实例化一个 HTTPServer 实例。

|

||||

|

||||

让我们从 `HTTPConnection` 开始来讲解各个部分。

|

||||

|

||||

### 模拟异步连接

|

||||

|

||||

为了满足上述约束条件,每一个 HTTP 请求都是一个单独的 TCP 连接。这使得处理请求的速度变慢了,因为建立多个 TCP 连接需要相对高的花销(DNS 查询,TCP 三次握手,[慢启动][4]等等的花销),不过这样更加容易模拟。对于这一任务,我选择相对高级的 [asyncio-stream][5] 模块,它建立在 [asyncio 的传输和协议][6]的基础之上。我强烈推荐你读一读标准库中的相应代码,很有意思!

|

||||

|

||||

一个 `HTTPConnection` 的实例能够处理多个任务。首先,它使用 `asyncio.StreamReader` 对象以增量的方式从 TCP 连接中读取数据,并存储在缓存中。每一个读取操作完成后,它会尝试解析缓存中的数据,并生成一个 `Request` 对象。一旦收到了这个完整的请求,它就生成一个回复,并通过 `asyncio.StreamWriter` 对象发送回客户端。当然,它还有两个任务:超时连接以及错误处理。

|

||||

|

||||

你可以在[这里][7]浏览这个类的完整代码。我将分别介绍代码的每一部分。为了简单起见,我移除了代码文档。

|

||||

|

||||

```

|

||||

class HTTPConnection(object):

|

||||

def init(self, http_server, reader, writer):

|

||||

self.router = http_server.router

|

||||

self.http_parser = http_server.http_parser

|

||||

self.loop = http_server.loop

|

||||

|

||||

self._reader = reader

|

||||

self._writer = writer

|

||||

self._buffer = bytearray()

|

||||

self._conn_timeout = None

|

||||

self.request = Request()

|

||||

```

|

||||

|

||||

这个 `init` 方法没啥意思,它仅仅是收集了一些对象以供后面使用。它存储了一个 `router` 对象、一个 `http_parser` 对象以及 `loop` 对象,分别用来生成响应、解析请求以及在事件循环中调度任务。

|

||||

|

||||

然后,它存储了代表一个 TCP 连接的读写对,和一个充当原始字节缓冲区的空[字节数组][8]。`_conn_timeout` 存储了一个 [asyncio.Handle][9] 的实例,用来管理超时逻辑。最后,它还存储了 `Request` 对象的一个单一实例。

|

||||

|

||||

下面的代码是用来接受和发送数据的核心功能:

|

||||

|

||||

```

|

||||

async def handle_request(self):

|

||||

try:

|

||||

while not self.request.finished and not self._reader.at_eof():

|

||||

data = await self._reader.read(1024)

|

||||

if data:

|

||||

self._reset_conn_timeout()

|

||||

await self.process_data(data)

|

||||

if self.request.finished:

|

||||

await self.reply()

|

||||

elif self._reader.at_eof():

|

||||

raise BadRequestException()

|

||||

except (NotFoundException,

|

||||

BadRequestException) as e:

|

||||

self.error_reply(e.code, body=Response.reason_phrases[e.code])

|

||||

except Exception as e:

|

||||

self.error_reply(500, body=Response.reason_phrases[500])

|

||||

|

||||

self.close_connection()

|

||||

```

|

||||

|

||||

所有内容被包含在 `try-except` 代码块中,这样在解析请求或响应期间抛出的异常可以被捕获到,然后一个错误响应会发送回客户端。

|

||||

|

||||

在 `while` 循环中不断读取请求,直到解析器将 `self.request.finished` 设置为 True ,或者客户端关闭连接所触发的信号使得 `self._reader_at_eof()` 函数返回值为 True 为止。这段代码尝试在每次循环迭代中从 `StreamReader` 中读取数据,并通过调用 `self.process_data(data)` 函数以增量方式生成 `self.request`。每次循环读取数据时,连接超时计数器被重置。

|

||||

|

||||

这儿有个错误,你发现了吗?稍后我们会再讨论这个。需要注意的是,这个循环可能会耗尽 CPU 资源,因为如果没有读取到东西 `self._reader.read()` 函数将会返回一个空的字节对象 `b''`。这就意味着循环将会不断运行,却什么也不做。一个可能的解决方法是,用非阻塞的方式等待一小段时间:`await asyncio.sleep(0.1)`。我们暂且不对它做优化。

|

||||

|

||||

还记得上一段我提到的那个错误吗?只有从 `StreamReader` 读取数据时,`self._reset_conn_timeout()` 函数才会被调用。这就意味着,**直到第一个字节到达时**,`timeout` 才被初始化。如果有一个客户端建立了与服务器的连接却不发送任何数据,那就永远不会超时。这可能被用来消耗系统资源,从而导致拒绝服务式攻击(DoS)。修复方法就是在 `init` 函数中调用 `self._reset_conn_timeout()` 函数。

|

||||

|

||||

当请求接受完成或连接中断时,程序将运行到 `if-else` 代码块。这部分代码会判断解析器收到完整的数据后是否完成了解析。如果是,好,生成一个回复并发送回客户端。如果不是,那么请求信息可能有错误,抛出一个异常!最后,我们调用 `self.close_connection` 执行清理工作。

|

||||

|

||||

解析请求的部分在 `self.process_data` 方法中。这个方法非常简短,也易于测试:

|

||||

|

||||

```

|

||||

async def process_data(self, data):

|

||||

self._buffer.extend(data)

|

||||

|

||||

self._buffer = self.http_parser.parse_into(

|

||||

self.request, self._buffer)

|

||||

```

|

||||

|

||||

每一次调用都将数据累积到 `self._buffer` 中,然后试着用 `self.http_parser` 来解析已经收集的数据。这里需要指出的是,这段代码展示了一种称为[依赖注入(Dependency Injection)][10]的模式。如果你还记得 `init` 函数的话,应该知道我们传入了一个包含 `http_parser` 对象的 `http_server` 对象。在这个例子里,`http_parser` 对象是 `diy_framework` 包中的一个模块。不过它也可以是任何含有 `parse_into` 函数的类,这个 `parse_into` 函数接受一个 `Request` 对象以及字节数组作为参数。这很有用,原因有二:一是,这意味着这段代码更易扩展。如果有人想通过一个不同的解析器来使用 `HTTPConnection`,没问题,只需将它作为参数传入即可。二是,这使得测试更加容易,因为 `http_parser` 不是硬编码的,所以使用虚假数据或者 [mock][11] 对象来替代是很容易的。

|

||||

|

||||

下一段有趣的部分就是 `reply` 方法了:

|

||||

|

||||

```

|

||||

async def reply(self):

|

||||

request = self.request

|

||||

handler = self.router.get_handler(request.path)

|

||||

|

||||

response = await handler.handle(request)

|

||||

|

||||

if not isinstance(response, Response):

|

||||

response = Response(code=200, body=response)

|

||||

|

||||

self._writer.write(response.to_bytes())

|

||||

await self._writer.drain()

|

||||

```

|

||||

|

||||

这里,一个 `HTTPConnection` 的实例使用了 `HTTPServer` 中的 `router` 对象来得到一个生成响应的对象。一个路由可以是任何一个拥有 `get_handler` 方法的对象,这个方法接收一个字符串作为参数,返回一个可调用的对象或者抛出 `NotFoundException` 异常。而这个可调用的对象被用来处理请求以及生成响应。处理程序由框架的使用者编写,如上文所说的那样,应该返回字符串或者 `Response` 对象。`Response` 对象提供了一个友好的接口,因此这个简单的 if 语句保证了无论处理程序返回什么,代码最终都得到一个统一的 `Response` 对象。

|

||||

|

||||

接下来,被赋值给 `self._writer` 的 `StreamWriter` 实例被调用,将字节字符串发送回客户端。函数返回前,程序在 `await self._writer.drain()` 处等待,以确保所有的数据被发送给客户端。只要缓存中还有未发送的数据,`self._writer.close()` 方法就不会执行。

|

||||

|

||||

`HTTPConnection` 类还有两个更加有趣的部分:一个用于关闭连接的方法,以及一组用来处理超时机制的方法。首先,关闭一条连接由下面这个小函数完成:

|

||||

|

||||

```

|

||||

def close_connection(self):

|

||||

self._cancel_conn_timeout()

|

||||

self._writer.close()

|

||||

```

|

||||

|

||||

每当一条连接将被关闭时,这段代码首先取消超时,然后把连接从事件循环中清除。

|

||||

|

||||

超时机制由三个相关的函数组成:第一个函数在超时后给客户端发送错误消息并关闭连接;第二个函数用于取消当前的超时;第三个函数调度超时功能。前两个函数比较简单,我将详细解释第三个函数 `_reset_cpmm_timeout()` 。

|

||||

|

||||

```

|

||||

def _conn_timeout_close(self):

|

||||

self.error_reply(500, 'timeout')

|

||||

self.close_connection()

|

||||

|

||||

def _cancel_conn_timeout(self):

|

||||

if self._conn_timeout:

|

||||

self._conn_timeout.cancel()

|

||||

|

||||

def _reset_conn_timeout(self, timeout=TIMEOUT):

|

||||

self._cancel_conn_timeout()

|

||||

self._conn_timeout = self.loop.call_later(

|

||||

timeout, self._conn_timeout_close)

|

||||

```

|

||||

|

||||

每当 `_reset_conn_timeout` 函数被调用时,它会先取消之前所有赋值给 `self._conn_timeout` 的 `asyncio.Handle` 对象。然后,使用 [BaseEventLoop.call_later][12] 函数让 `_conn_timeout_close` 函数在超时数秒(`timeout`)后执行。如果你还记得 `handle_request` 函数的内容,就知道每当接收到数据时,这个函数就会被调用。这就取消了当前的超时并且重新安排 `_conn_timeout_close` 函数在超时数秒(`timeout`)后执行。只要接收到数据,这个循环就会不断地重置超时回调。如果在超时时间内没有接收到数据,最后函数 `_conn_timeout_close` 就会被调用。

|

||||

|

||||

### 创建连接

|

||||

|

||||

我们需要创建 `HTTPConnection` 对象,并且正确地使用它们。这一任务由 `HTTPServer` 类完成。`HTTPServer` 类是一个简单的容器,可以存储着一些配置信息(解析器,路由和事件循环实例),并使用这些配置来创建 `HTTPConnection` 实例:

|

||||

|

||||

```

|

||||

class HTTPServer(object):

|

||||

def init(self, router, http_parser, loop):

|

||||

self.router = router

|

||||

self.http_parser = http_parser

|

||||

self.loop = loop

|

||||

|

||||

async def handle_connection(self, reader, writer):

|

||||

connection = HTTPConnection(self, reader, writer)

|

||||

asyncio.ensure_future(connection.handle_request(), loop=self.loop)

|

||||

```

|

||||

|

||||

`HTTPServer` 的每一个实例能够监听一个端口。它有一个 `handle_connection` 的异步方法来创建 `HTTPConnection` 的实例,并安排它们在事件循环中运行。这个方法被传递给 [asyncio.start_server][13] 作为一个回调函数。也就是说,每当一个 TCP 连接初始化时(以 `StreamReader` 和 `StreamWriter` 为参数),它就会被调用。

|

||||

|

||||

```

|

||||

self._server = HTTPServer(self.router, self.http_parser, self.loop)

|

||||

self._connection_handler = asyncio.start_server(

|

||||

self._server.handle_connection,

|

||||

host=self.host,

|

||||

port=self.port,

|

||||

reuse_address=True,

|

||||

reuse_port=True,

|

||||

loop=self.loop)

|

||||

```

|

||||

|

||||

这就是构成整个应用程序工作原理的核心:`asyncio.start_server` 接受 TCP 连接,然后在一个预配置的 `HTTPServer` 对象上调用一个方法。这个方法将处理一条 TCP 连接的所有逻辑:读取、解析、生成响应并发送回客户端、以及关闭连接。它的重点是 IO 逻辑、解析和生成响应。

|

||||

|

||||

讲解了核心的 IO 部分,让我们继续。

|

||||

|

||||

### 解析请求

|

||||

|

||||

这个微型框架的使用者被宠坏了,不愿意和字节打交道。它们想要一个更高层次的抽象 —— 一种更加简单的方法来处理请求。这个微型框架就包含了一个简单的 HTTP 解析器,能够将字节流转化为 Request 对象。

|

||||

|

||||

这些 Request 对象是像这样的容器:

|

||||

|

||||

```

|

||||

class Request(object):

|

||||

def init(self):

|

||||

self.method = None

|

||||

self.path = None

|

||||

self.query_params = {}

|

||||

self.path_params = {}

|

||||

self.headers = {}

|

||||

self.body = None

|

||||

self.body_raw = None

|

||||

self.finished = False

|

||||

```

|

||||

|

||||

它包含了所有需要的数据,可以用一种容易理解的方法从客户端接受数据。哦,不包括 cookie ,它对身份认证是非常重要的,我会将它留在第二部分。

|

||||

|

||||

每一个 HTTP 请求都包含了一些必需的内容,如请求路径和请求方法。它们也包含了一些可选的内容,如请求体、请求头,或是 URL 参数。随着 REST 的流行,除了 URL 参数,URL 本身会包含一些信息。比如,"/user/1/edit" 包含了用户的 id 。

|

||||

|

||||

一个请求的每个部分都必须被识别、解析,并正确地赋值给 Request 对象的对应属性。HTTP/1.1 是一个文本协议,事实上这简化了很多东西。(HTTP/2 是一个二进制协议,这又是另一种乐趣了)

|

||||

|

||||

解析器不需要跟踪状态,因此 `http_parser` 模块其实就是一组函数。调用函数需要用到 `Request` 对象,并将它连同一个包含原始请求信息的字节数组传递给 `parse_into` 函数。然后解析器会修改 `Request` 对象以及充当缓存的字节数组。字节数组的信息被逐渐地解析到 request 对象中。

|

||||

|

||||

`http_parser` 模块的核心功能就是下面这个 `parse_into` 函数:

|

||||

|

||||

```

|

||||

def parse_into(request, buffer):

|

||||

_buffer = buffer[:]

|

||||

if not request.method and can_parse_request_line(_buffer):

|

||||

(request.method, request.path,

|

||||

request.query_params) = parse_request_line(_buffer)

|

||||

remove_request_line(_buffer)

|

||||

|

||||

if not request.headers and can_parse_headers(_buffer):

|

||||

request.headers = parse_headers(_buffer)

|

||||

if not has_body(request.headers):

|

||||

request.finished = True

|

||||

|

||||

remove_intro(_buffer)

|

||||

|

||||

if not request.finished and can_parse_body(request.headers, _buffer):

|

||||

request.body_raw, request.body = parse_body(request.headers, _buffer)

|

||||

clear_buffer(_buffer)

|

||||

request.finished = True

|

||||

return _buffer

|

||||

```

|

||||

|

||||

从上面的代码中可以看到,我把解析的过程分为三个部分:解析请求行(这行像这样:GET /resource HTTP/1.1),解析请求头以及解析请求体。

|

||||

|

||||

请求行包含了 HTTP 请求方法以及 URL 地址。而 URL 地址则包含了更多的信息:路径、url 参数和开发者自定义的 url 参数。解析请求方法和 URL 还是很容易的 - 合适地分割字符串就好了。函数 `urlparse.parse` 可以用来解析 URL 参数。开发者自定义的 URL 参数可以通过正则表达式来解析。

|

||||

|

||||

接下来是 HTTP 头部。它们是一行行由键值对组成的简单文本。问题在于,可能有多个 HTTP 头有相同的名字,却有不同的值。一个值得关注的 HTTP 头部是 `Content-Length`,它描述了请求体的字节长度(不是整个请求,仅仅是请求体)。这对于决定是否解析请求体有很重要的作用。

|

||||

|

||||

最后,解析器根据 HTTP 方法和头部来决定是否解析请求体。

|

||||

|

||||

### 路由!

|

||||

|

||||

在某种意义上,路由就像是连接框架和用户的桥梁,用户用合适的方法创建 `Router` 对象并为其设置路径/函数对,然后将它赋值给 App 对象。而 App 对象依次调用 `get_handler` 函数生成相应的回调函数。简单来说,路由就负责两件事,一是存储路径/函数对,二是返回需要的路径/函数对

|

||||

|

||||

`Router` 类中有两个允许最终开发者添加路由的方法,分别是 `add_routes` 和 `add_route`。因为 `add_routes` 就是 `add_route` 函数的一层封装,我们将主要讲解 `add_route` 函数:

|

||||

|

||||

```

|

||||

def add_route(self, path, handler):

|

||||

compiled_route = self.class.build_route_regexp(path)

|

||||

if compiled_route not in self.routes:

|

||||

self.routes[compiled_route] = handler

|

||||

else:

|

||||

raise DuplicateRoute

|

||||

```

|

||||

|

||||

首先,这个函数使用 `Router.build_router_regexp` 的类方法,将一条路由规则(如 '/cars/{id}' 这样的字符串),“编译”到一个已编译的正则表达式对象。这些已编译的正则表达式用来匹配请求路径,以及解析开发者自定义的 URL 参数。如果已经存在一个相同的路由,程序就会抛出一个异常。最后,这个路由/处理程序对被添加到一个简单的字典`self.routes`中。

|

||||

|

||||

下面展示 Router 是如何“编译”路由的:

|

||||

|

||||

```

|

||||

@classmethod

|

||||

def build_route_regexp(cls, regexp_str):

|

||||

"""

|

||||

Turns a string into a compiled regular expression. Parses '{}' into

|

||||

named groups ie. '/path/{variable}' is turned into

|

||||

'/path/(?P<variable>[a-zA-Z0-9_-]+)'.

|

||||

|

||||

:param regexp_str: a string representing a URL path.

|

||||

:return: a compiled regular expression.

|

||||

"""

|

||||

def named_groups(matchobj):

|

||||

return '(?P<{0}>[a-zA-Z0-9_-]+)'.format(matchobj.group(1))

|

||||

|

||||

re_str = re.sub(r'{([a-zA-Z0-9_-]+)}', named_groups, regexp_str)

|

||||

re_str = ''.join(('^', re_str, '$',))

|

||||

return re.compile(re_str)

|

||||

```

|

||||

|

||||

这个方法使用正则表达式将所有出现的 `{variable}` 替换为 `(?P<variable>)`。然后在字符串头尾分别添加 `^` 和 `$` 标记,最后编译正则表达式对象。

|

||||

|

||||

完成了路由存储仅成功了一半,下面是如何得到路由对应的函数:

|

||||

|

||||

```

|

||||

def get_handler(self, path):

|

||||

logger.debug('Getting handler for: {0}'.format(path))

|

||||

for route, handler in self.routes.items():

|

||||

path_params = self.class.match_path(route, path)

|

||||

if path_params is not None:

|

||||

logger.debug('Got handler for: {0}'.format(path))

|

||||

wrapped_handler = HandlerWrapper(handler, path_params)

|

||||

return wrapped_handler

|

||||

|

||||

raise NotFoundException()

|

||||

```

|

||||

|

||||

一旦 `App` 对象获得一个 `Request` 对象,也就获得了 URL 的路径部分(如 /users/15/edit)。然后,我们需要匹配函数来生成一个响应或者 404 错误。`get_handler` 函数将路径作为参数,循环遍历路由,对每条路由调用 `Router.match_path` 类方法检查是否有已编译的正则对象与这个请求路径匹配。如果存在,我们就调用 `HandleWrapper` 来包装路由对应的函数。`path_params` 字典包含了路径变量(如 '/users/15/edit' 中的 '15'),若路由没有指定变量,字典就为空。最后,我们将包装好的函数返回给 `App` 对象。

|

||||

|

||||

如果遍历了所有的路由都找不到与路径匹配的,函数就会抛出 `NotFoundException` 异常。

|

||||

|

||||

这个 `Route.match` 类方法挺简单:

|

||||

|

||||

```

|

||||

def match_path(cls, route, path):

|

||||

match = route.match(path)

|

||||

try:

|

||||

return match.groupdict()

|

||||

except AttributeError:

|

||||

return None

|

||||

```

|

||||

|

||||

它使用正则对象的 [match 方法][14]来检查路由是否与路径匹配。若果不匹配,则返回 None 。

|

||||

|

||||

最后,我们有 `HandleWraapper` 类。它的唯一任务就是封装一个异步函数,存储 `path_params` 字典,并通过 `handle` 方法对外提供一个统一的接口。

|

||||

|

||||

```

|

||||

class HandlerWrapper(object):

|

||||

def init(self, handler, path_params):

|

||||

self.handler = handler

|

||||

self.path_params = path_params

|

||||

self.request = None

|

||||

|

||||

async def handle(self, request):

|

||||

return await self.handler(request, **self.path_params)

|

||||

```

|

||||

|

||||

### 组合到一起

|

||||

|

||||

框架的最后部分就是用 `App` 类把所有的部分联系起来。

|

||||

|

||||

`App` 类用于集中所有的配置细节。一个 `App` 对象通过其 `start_server` 方法,使用一些配置数据创建一个 `HTTPServer` 的实例,然后将它传递给 [asyncio.start_server 函数][15]。`asyncio.start_server` 函数会对每一个 TCP 连接调用 `HTTPServer` 对象的 `handle_connection` 方法。

|

||||

|

||||

```

|

||||

def start_server(self):

|

||||

if not self._server:

|

||||

self.loop = asyncio.get_event_loop()

|

||||

self._server = HTTPServer(self.router, self.http_parser, self.loop)

|

||||

self._connection_handler = asyncio.start_server(

|

||||

self._server.handle_connection,

|

||||

host=self.host,

|

||||

port=self.port,

|

||||

reuse_address=True,

|

||||

reuse_port=True,

|

||||

loop=self.loop)

|

||||

|

||||

logger.info('Starting server on {0}:{1}'.format(

|

||||

self.host, self.port))

|

||||

self.loop.run_until_complete(self._connection_handler)

|

||||

|

||||

try:

|

||||

self.loop.run_forever()

|

||||

except KeyboardInterrupt:

|

||||

logger.info('Got signal, killing server')

|

||||

except DiyFrameworkException as e:

|

||||

logger.error('Critical framework failure:')

|

||||

logger.error(e.traceback)

|

||||

finally:

|

||||

self.loop.close()

|

||||

else:

|

||||

logger.info('Server already started - {0}'.format(self))

|

||||

```

|

||||

|

||||

### 总结

|

||||

|

||||

如果你查看源码,就会发现所有的代码仅 320 余行(包括测试代码的话共 540 余行)。这么少的代码实现了这么多的功能,让我有点惊讶。这个框架没有提供模板、身份认证以及数据库访问等功能(这些内容也很有趣哦)。这也让我知道,像 Django 和 Tornado 这样的框架是如何工作的,而且我能够快速地调试它们了。

|

||||

|

||||

这也是我按照测试驱动开发完成的第一个项目,整个过程有趣而有意义。先编写测试用例迫使我思考设计和架构,而不仅仅是把代码放到一起,让它们可以运行。不要误解我的意思,有很多时候,后者的方式更好。不过如果你想给确保这些不怎么维护的代码在之后的几周甚至几个月依然工作,那么测试驱动开发正是你需要的。

|

||||

|

||||

我研究了下[整洁架构][16]以及依赖注入模式,这些充分体现在 `Router` 类是如何作为一个更高层次的抽象的(实体?)。`Router` 类是比较接近核心的,像 `http_parser` 和 `App` 的内容比较边缘化,因为它们只是完成了极小的字符串和字节流、或是中层 IO 的工作。测试驱动开发(TDD)迫使我独立思考每个小部分,这使我问自己这样的问题:方法调用的组合是否易于理解?类名是否准确地反映了我正在解决的问题?我的代码中是否很容易区分出不同的抽象层?

|

||||

|

||||

来吧,写个小框架,真的很有趣:)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://mattscodecave.com/posts/simple-python-framework-from-scratch.html

|

||||

|

||||

作者:[Matt][a]

|

||||

译者:[Cathon](https://github.com/Cathon)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://mattscodecave.com/hire-me.html

|

||||

[1]: https://github.com/sirMackk/diy_framework

|

||||

[2]:https://en.wikipedia.org/wiki/Hypertext_Transfer_Protocol

|

||||

[3]: https://en.wikipedia.org/wiki/HTTP_persistent_connection

|

||||

[4]: https://en.wikipedia.org/wiki/TCP_congestion-avoidance_algorithm#Slow_start

|

||||

[5]: https://docs.python.org/3/library/asyncio-stream.html

|

||||

[6]: https://docs.python.org/3/library/asyncio-protocol.html

|

||||

[7]: https://github.com/sirMackk/diy_framework/blob/88968e6b30e59504251c0c7cd80abe88f51adb79/diy_framework/http_server.py#L46

|

||||

[8]: https://docs.python.org/3/library/functions.html#bytearray

|

||||

[9]: https://docs.python.org/3/library/asyncio-eventloop.html#asyncio.Handle

|

||||

[10]: https://en.wikipedia.org/wiki/Dependency_injection

|

||||

[11]: https://docs.python.org/3/library/unittest.mock.html

|

||||

[12]: https://docs.python.org/3/library/asyncio-eventloop.html#asyncio.BaseEventLoop.call_later

|

||||

[13]: https://docs.python.org/3/library/asyncio-stream.html#asyncio.start_server

|

||||

[14]: https://docs.python.org/3/library/re.html#re.match

|

||||

[15]: https://docs.python.org/3/library/asyncio-stream.html?highlight=start_server#asyncio.start_server

|

||||

[16]: https://blog.8thlight.com/uncle-bob/2012/08/13/the-clean-architecture.html

|

||||

@ -0,0 +1,111 @@

|

||||

使用 HTTP/2 服务端推送技术加速 Node.js 应用

|

||||

=========================================================

|

||||

|

||||

四月份,我们宣布了对 [HTTP/2 服务端推送技术][3]的支持,我们是通过 HTTP 的 [Link 头部](https://www.w3.org/wiki/LinkHeader)来实现这项支持的。我的同事 John 曾经通过一个例子演示了[在 PHP 里支持服务端推送功能][4]是多么的简单。

|

||||

|

||||

|

||||

|

||||

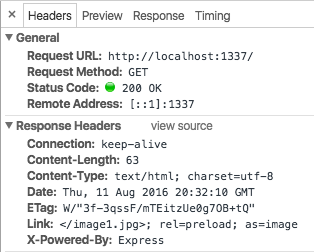

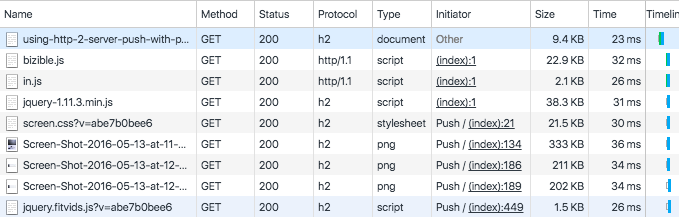

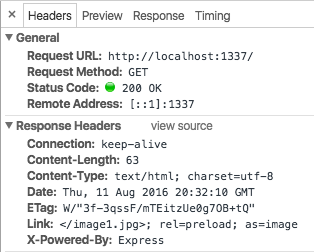

我们想让现今使用 Node.js 构建的网站能够更加轻松的获得性能提升。为此,我们开发了 [netjet][1] 中间件,它可以解析应用生成的 HTML 并自动添加 Link 头部。当在一个示例的 Express 应用中使用这个中间件时,我们可以看到应用程序的输出多了如下 HTTP 头:

|

||||

|

||||

|

||||

|

||||

[本博客][5]是使用 [Ghost](https://ghost.org/)(LCTT 译注:一个博客发布平台)进行发布的,因此如果你的浏览器支持 HTTP/2,你已经在不知不觉中享受了服务端推送技术带来的好处了。接下来,我们将进行更详细的说明。

|

||||

|

||||

netjet 使用了带有定制插件的 [PostHTML](https://github.com/posthtml/posthtml) 来解析 HTML。目前,netjet 用它来查找图片、脚本和外部 CSS 样式表。你也可以用其它的技术来实现这个。

|

||||

|

||||

在响应过程中增加 HTML 解析器有个明显的缺点:这将增加页面加载的延时(到加载第一个字节所花的时间)。大多数情况下,所新增的延时被应用里的其他耗时掩盖掉了,比如数据库访问。为了解决这个问题,netjet 包含了一个可调节的 LRU 缓存,该缓存以 HTTP 的 ETag 头部作为索引,这使得 netjet 可以非常快的为已经解析过的页面插入 Link 头部。

|

||||

|

||||

不过,如果我们现在从头设计一款全新的应用,我们就应该考虑把页面内容和页面中的元数据分开存放,从而整体地减少 HTML 解析和其它可能增加的延时了。

|

||||

|

||||

任意的 Node.js HTML 框架,只要它支持类似 Express 这样的中间件,netjet 都是能够兼容的。只要把 netjet 像下面这样加到中间件加载链里就可以了。

|

||||

|

||||

```javascript

|

||||

var express = require('express');

|

||||

var netjet = require('netjet');

|

||||

var root = '/path/to/static/folder';

|

||||

|

||||

express()

|

||||

.use(netjet({

|

||||

cache: {

|

||||

max: 100

|

||||

}

|

||||

}))

|

||||

.use(express.static(root))

|

||||

.listen(1337);

|

||||

```

|

||||

|

||||

稍微加点代码,netjet 也可以摆脱 HTML 框架,独立工作:

|

||||

|

||||

```javascript

|

||||

var http = require('http');

|

||||

var netjet = require('netjet');

|

||||

|

||||

var port = 1337;

|

||||

var hostname = 'localhost';

|

||||

var preload = netjet({

|

||||

cache: {

|

||||

max: 100

|

||||

}

|

||||

});

|

||||

|

||||

var server = http.createServer(function (req, res) {

|

||||

preload(req, res, function () {

|

||||

res.statusCode = 200;

|

||||

res.setHeader('Content-Type', 'text/html');

|

||||

res.end('<!doctype html><h1>Hello World</h1>');

|

||||

});

|

||||

});

|

||||

|

||||

server.listen(port, hostname, function () {

|

||||

console.log('Server running at http://' + hostname + ':' + port+ '/');

|

||||

});

|

||||

```

|

||||

|

||||

[netjet 文档里][1]有更多选项的信息。

|

||||

|

||||

### 查看推送了什么数据

|

||||

|

||||

|

||||

|

||||

访问[本文][5]时,通过 Chrome 的开发者工具,我们可以轻松的验证网站是否正在使用服务器推送技术(LCTT 译注: Chrome 版本至少为 53)。在“Network”选项卡中,我们可以看到有些资源的“Initiator”这一列中包含了`Push`字样,这些资源就是服务器端推送的。

|

||||

|

||||

不过,目前 Firefox 的开发者工具还不能直观的展示被推送的资源。不过我们可以通过页面响应头部里的`cf-h2-pushed`头部看到一个列表,这个列表包含了本页面主动推送给浏览器的资源。

|

||||

|

||||

希望大家能够踊跃为 netjet 添砖加瓦,我也乐于看到有人正在使用 netjet。

|

||||

|

||||

### Ghost 和服务端推送技术

|

||||

|

||||

Ghost 真是包罗万象。在 Ghost 团队的帮助下,我把 netjet 也集成到里面了,而且作为测试版内容可以在 Ghost 的 0.8.0 版本中用上它。

|

||||

|

||||

如果你正在使用 Ghost,你可以通过修改 config.js、并在`production`配置块中增加 `preloadHeaders` 选项来启用服务端推送。

|

||||

|

||||

```javascript

|

||||

production: {

|

||||

url: 'https://my-ghost-blog.com',

|

||||

preloadHeaders: 100,

|

||||

// ...

|

||||

}

|

||||

```

|

||||

|

||||

Ghost 已经为其用户整理了[一篇支持文档][2]。

|

||||

|

||||

### 总结

|

||||

|

||||

使用 netjet,你的 Node.js 应用也可以使用浏览器预加载技术。并且 [CloudFlare][5] 已经使用它在提供了 HTTP/2 服务端推送了。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://blog.cloudflare.com/accelerating-node-js-applications-with-http-2-server-push/

|

||||

|

||||

作者:[Terin Stock][a]

|

||||

译者:[echoma](https://github.com/echoma)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://blog.cloudflare.com/author/terin-stock/

|

||||

[1]: https://www.npmjs.com/package/netjet

|

||||

[2]: http://support.ghost.org/preload-headers/

|

||||

[3]: https://www.cloudflare.com/http2/server-push/

|

||||

[4]: https://blog.cloudflare.com/using-http-2-server-push-with-php/

|

||||

[5]: https://blog.cloudflare.com/accelerating-node-js-applications-with-http-2-server-push/

|

||||

@ -0,0 +1,48 @@

|

||||

百度运用 FPGA 方法大规模加速 SQL 查询

|

||||

===================================================================

|

||||

|

||||

尽管我们对百度今年工作焦点的关注集中在这个中国搜索巨头在深度学习方面的举措上,许多其他的关键的,尽管不那么前沿的应用表现出了大数据带来的挑战。

|

||||

|

||||

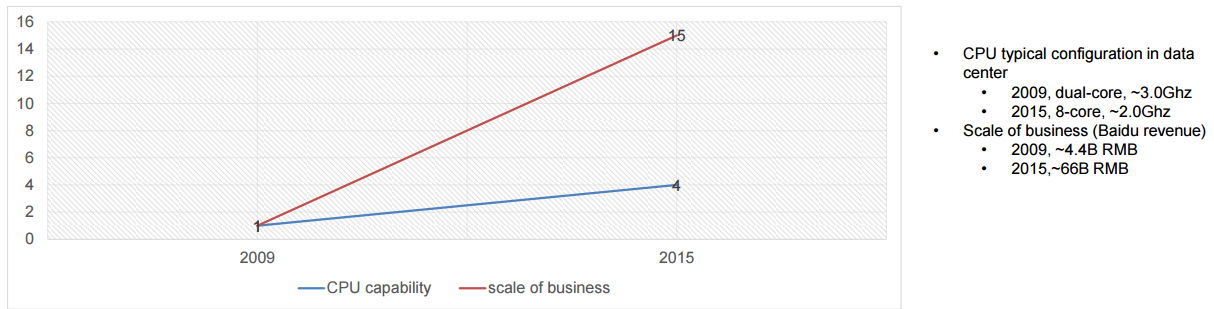

正如百度的欧阳剑在本周 Hot Chips 大会上谈论的,百度坐拥超过 1 EB 的数据,每天处理大约 100 PB 的数据,每天更新 100 亿的网页,每 24 小时更新处理超过 1 PB 的日志更新,这些数字和 Google 不分上下,正如人们所想象的。百度采用了类似 Google 的方法去大规模地解决潜在的瓶颈。

|

||||

|

||||

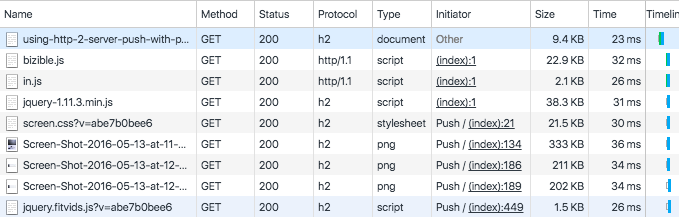

正如刚刚我们谈到的,Google 寻找一切可能的方法去打败摩尔定律,百度也在进行相同的探索,而令人激动的、使人着迷的机器学习工作是迷人的,业务的核心关键任务的加速同样也是,因为必须如此。欧阳提到,公司基于自身的数据提供高端服务的需求和 CPU 可以承载的能力之间的差距将会逐渐增大。

|

||||

|

||||

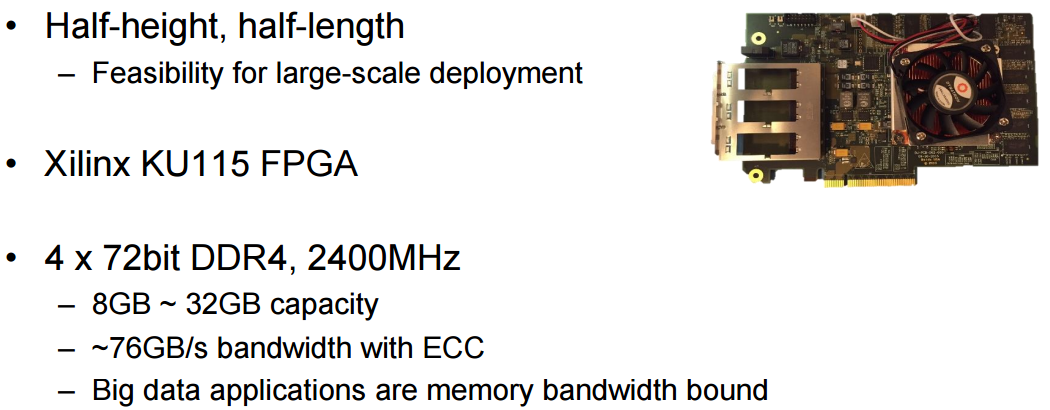

|

||||

|

||||

对于百度的百亿亿级问题,在所有数据的接受端是一系列用于数据分析的框架和平台,从该公司的海量知识图谱,多媒体工具,自然语言处理框架,推荐引擎,和点击流分析都是这样。简而言之,大数据的首要问题就是这样的:一系列各种应用和与之匹配的具有压倒性规模的数据。

|

||||

|

||||

当谈到加速百度的大数据分析,所面临的几个挑战,欧阳谈到抽象化运算核心去寻找一个普适的方法是困难的。“大数据应用的多样性和变化的计算类型使得这成为一个挑战,把所有这些整合成为一个分布式系统是困难的,因为有多变的平台和编程模型(MapReduce,Spark,streaming,user defined,等等)。将来还会有更多的数据类型和存储格式。”

|

||||

|

||||

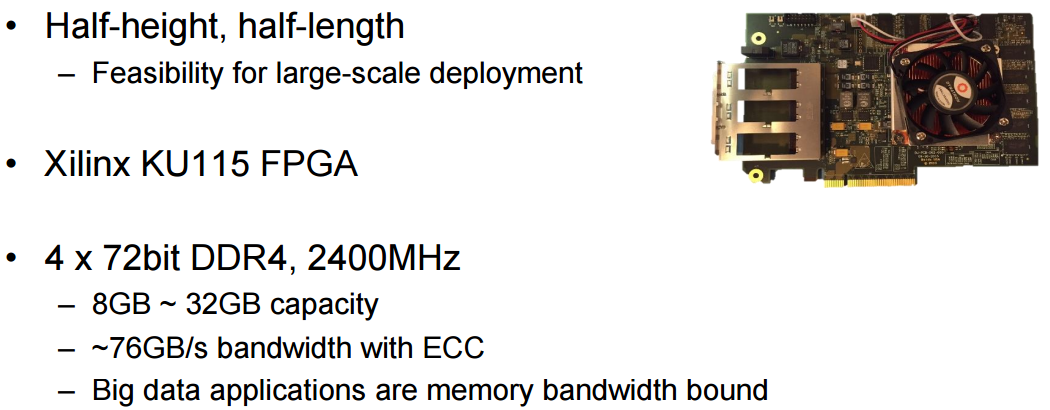

尽管存在这些障碍,欧阳讲到他们团队找到了(它们之间的)共同线索。如他所指出的那样,那些把他们的许多数据密集型的任务相连系在一起的就是传统的 SQL。“我们的数据分析任务大约有 40% 是用 SQL 写的,而其他的用 SQL 重写也是可用做到的。” 更进一步,他讲道他们可以享受到现有的 SQL 系统的好处,并可以和已有的框架相匹配,比如 Hive,Spark SQL,和 Impala 。下一步要做的事情就是 SQL 查询加速,百度发现 FPGA 是最好的硬件。

|

||||

|

||||

|

||||

|

||||

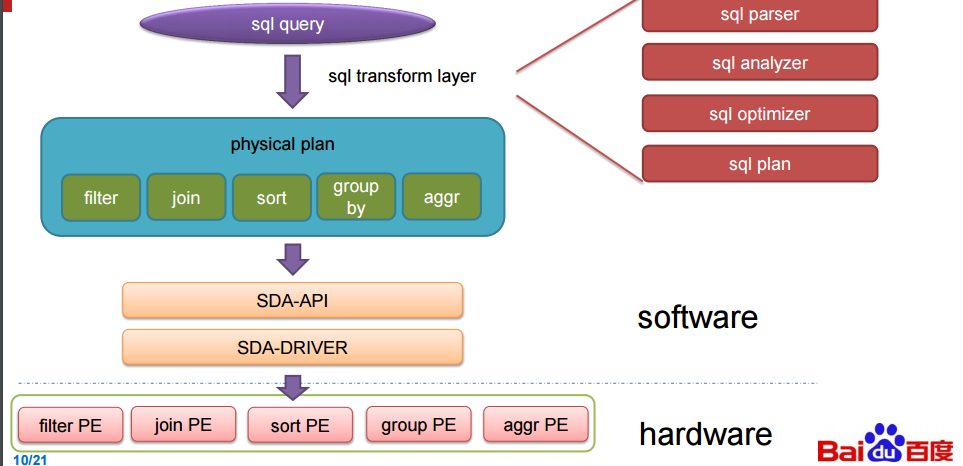

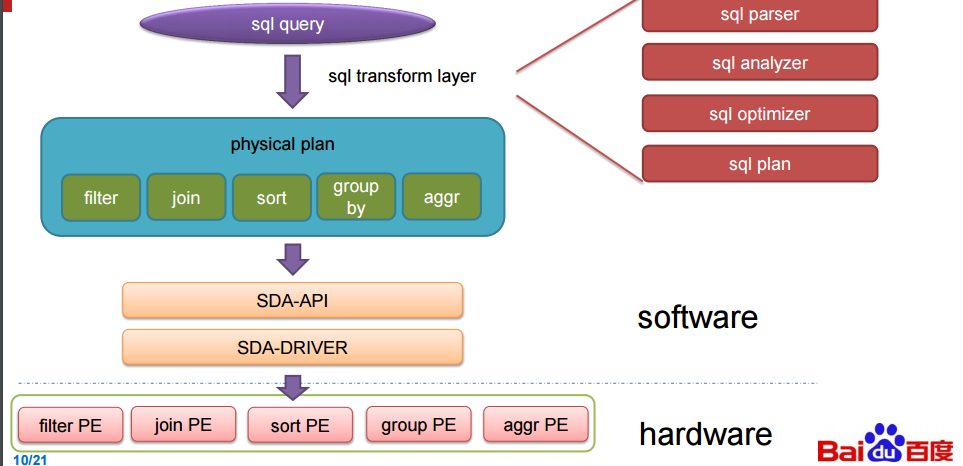

这些主板,被称为处理单元( 下图中的 PE ),当执行 SQL 时会自动地处理关键的 SQL 功能。这里所说的都是来自演讲,我们不承担责任。确切的说,这里提到的 FPGA 有点神秘,或许是故意如此。如果百度在基准测试中得到了如下图中的提升,那这可是一个有竞争力的信息。后面我们还会继续介绍这里所描述的东西。简单来说,FPGA 运行在数据库中,当其收到 SQL 查询的时候,该团队设计的软件就会与之紧密结合起来。

|

||||

|

||||

|

||||

|

||||

欧阳提到了一件事,他们的加速器受限于 FPGA 的带宽,不然性能表现本可以更高,在下面的评价中,百度安装了 2 块12 核心,主频 2.0 GHz 的 intl E26230 CPU,运行在 128G 内存。SDA 具有 5 个处理单元,(上图中的 300MHz FPGA 主板)每个分别处理不同的核心功能(筛选(filter),排序(sort),聚合(aggregate),联合(join)和分组(group by))

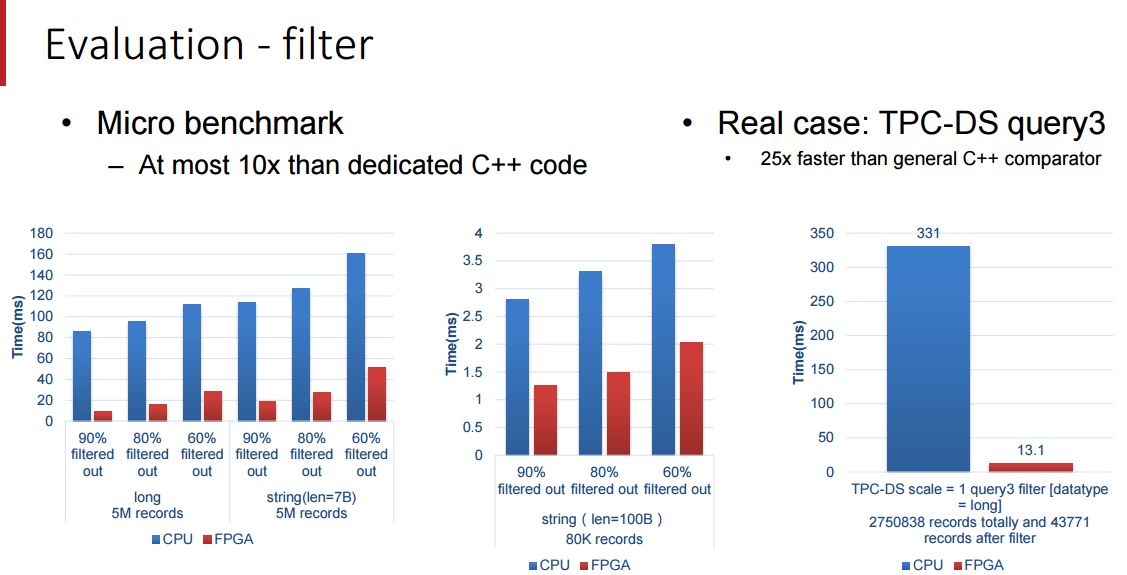

|

||||

|

||||

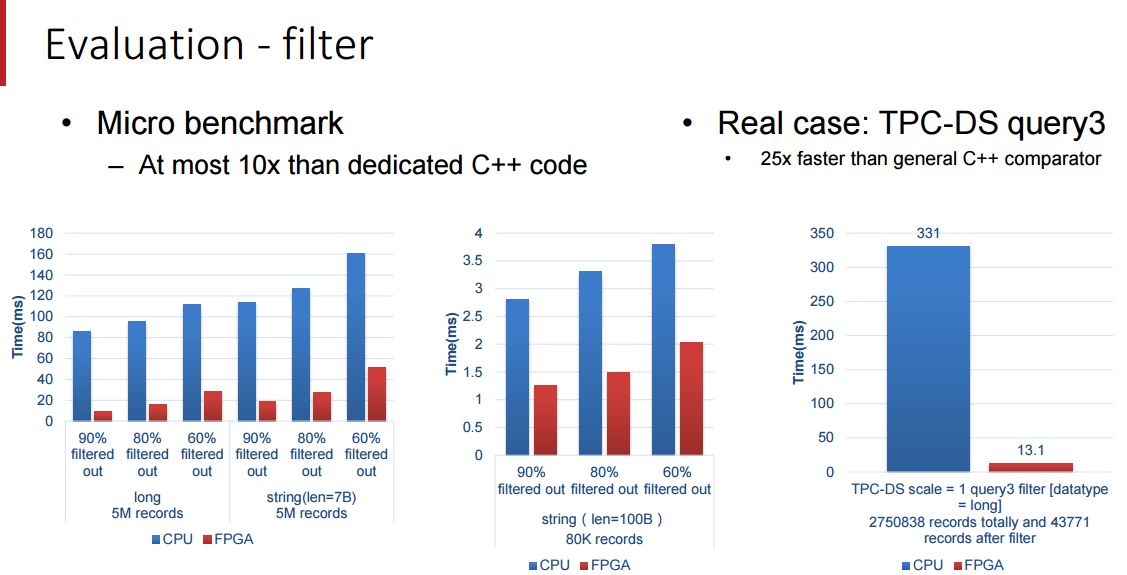

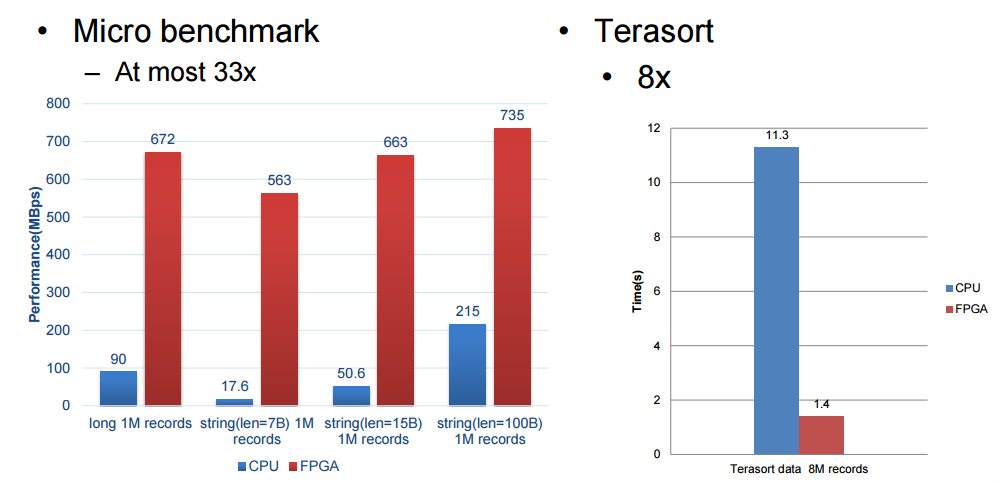

为了实现 SQL 查询加速,百度针对 TPC-DS 的基准测试进行了研究,并且创建了称做处理单元(PE)的特殊引擎,用于在基准测试中加速 5 个关键功能,这包括筛选(filter),排序(sort),聚合(aggregate),联合(join)和分组(group by),(我们并没有把这些单词都像 SQL 那样大写)。SDA 设备使用卸载模型,具有多个不同种类的处理单元的加速卡在 FPGA 中组成逻辑,SQL 功能的类型和每张卡的数量由特定的工作量决定。由于这些查询在百度的系统中执行,用来查询的数据被以列格式推送到加速卡中(这会使得查询非常快速),而且通过一个统一的 SDA API 和驱动程序,SQL 查询工作被分发到正确的处理单元而且 SQL 操作实现了加速。

|

||||

|

||||

SDA 架构采用一种数据流模型,加速单元不支持的操作被退回到数据库系统然后在那里本地运行,比其他任何因素,百度开发的 SQL 加速卡的性能被 FPGA 卡的内存带宽所限制。加速卡跨整个集群机器工作,顺便提一下,但是数据和 SQL 操作如何分发到多个机器的准确原理没有被百度披露。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

我们受限与百度所愿意披露的细节,但是这些基准测试结果是十分令人鼓舞的,尤其是 Terasort 方面,我们将在 Hot Chips 大会之后跟随百度的脚步去看看我们是否能得到关于这是如何连接到一起的和如何解决内存带宽瓶颈的细节。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.nextplatform.com/2016/08/24/baidu-takes-fpga-approach-accelerating-big-sql/

|

||||

|

||||

作者:[Nicole Hemsoth][a]

|

||||

译者:[LinuxBars](https://github.com/LinuxBars)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://www.nextplatform.com/author/nicole/

|

||||

[1]: http://www.nextplatform.com/?s=baidu+deep+learning

|

||||

[2]: http://www.hotchips.org/wp-content/uploads/hc_archives/hc26/HC26-12-day2-epub/HC26.12-5-FPGAs-epub/HC26.12.545-Soft-Def-Acc-Ouyang-baidu-v3--baidu-v4.pdf

|

||||

@ -0,0 +1,77 @@

|

||||

QOwnNotes:一款记录笔记和待办事项的应用,集成 ownCloud 云服务

|

||||

===============

|

||||

|

||||

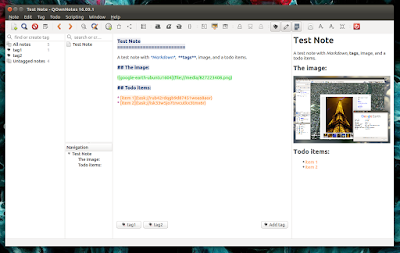

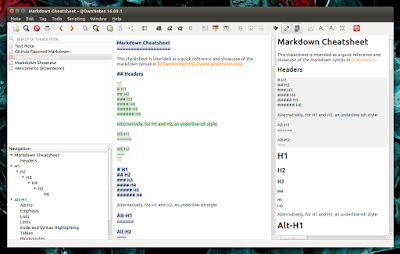

[QOwnNotes][1] 是一款自由而开源的笔记记录和待办事项的应用,可以运行在 Linux、Windows 和 mac 上。

|

||||

|

||||

这款程序将你的笔记保存为纯文本文件,它支持 Markdown 支持,并与 ownCloud 云服务紧密集成。

|

||||

|

||||

|

||||

|

||||

QOwnNotes 的亮点就是它集成了 ownCloud 云服务(当然是可选的)。在 ownCloud 上用这款 APP,你就可以在网路上记录和搜索你的笔记,也可以在移动设备上使用(比如一款像 CloudNotes 的软件[2])。

|

||||

|

||||

不久以后,用你的 ownCloud 账户连接上 QOwnNotes,你就可以从你 ownCloud 服务器上分享笔记和查看或恢复之前版本记录的笔记(或者丢到垃圾箱的笔记)。

|

||||

|

||||

同样,QOwnNotes 也可以与 ownCloud 任务或者 Tasks Plus 应用程序相集成。

|

||||

|

||||

如果你不熟悉 [ownCloud][3] 的话,这是一款替代 Dropbox、Google Drive 和其他类似商业性的网络服务的自由软件,它可以安装在你自己的服务器上。它有一个网络界面,提供了文件管理、日历、照片、音乐、文档浏览等等功能。开发者同样提供桌面同步客户端以及移动 APP。

|

||||

|

||||

因为笔记被保存为纯文本,它们可以在不同的设备之间通过云存储服务进行同步,比如 Dropbox,Google Drive 等等,但是在这些应用中不能完全替代 ownCloud 的作用。

|

||||

|

||||

我提到的上述特点,比如恢复之前的笔记,只能在 ownCloud 下可用(尽管 Dropbox 和其他类似的也提供恢复以前的文件的服务,但是你不能在 QOwnnotes 中直接访问到)。

|

||||

|

||||

鉴于 QOwnNotes 有这么多优点,它支持 Markdown 语言(内置了 Markdown 预览模式),可以标记笔记,对标记和笔记进行搜索,在笔记中加入超链接,也可以插入图片:

|

||||

|

||||

|

||||

|

||||

标记嵌套和笔记文件夹同样支持。

|

||||

|

||||

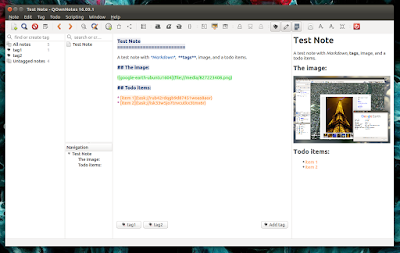

代办事项管理功能比较基本还可以做一些改进,它现在打开在一个单独的窗口里,它也不用和笔记一样的编辑器,也不允许添加图片或者使用 Markdown 语言。

|

||||

|

||||

|

||||

|

||||

它可以让你搜索你代办事项,设置事项优先级,添加提醒和显示完成的事项。此外,待办事项可以加入笔记中。

|

||||

|

||||

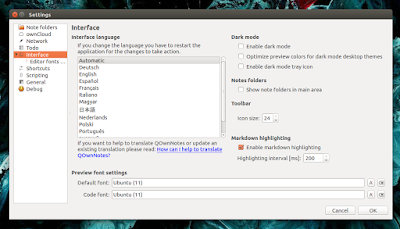

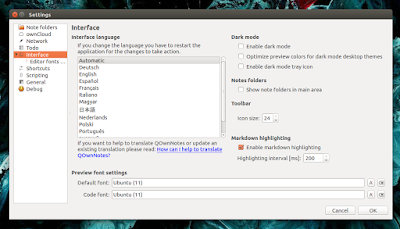

这款软件的界面是可定制的,允许你放大或缩小字体,切换窗格等等,也支持无干扰模式。

|

||||

|

||||

|

||||

|

||||

从程序的设置里,你可以开启黑夜模式(这里有个 bug,在 Ubuntu 16.04 里有些工具条图标消失了),改变状态条大小,字体和颜色方案(白天和黑夜):

|

||||

|

||||

|

||||

|

||||

其他的特点有支持加密(笔记只能在 QOwnNotes 中加密),自定义键盘快捷键,输出笔记为 pdf 或者 Markdown,自定义笔记自动保存间隔等等。

|

||||

|

||||

访问 [QOwnNotes][11] 主页查看完整的特性。

|

||||

|

||||

### 下载 QOwnNotes

|

||||

|

||||

如何安装,请查看安装页(支持 Debian、Ubuntu、Linux Mint、openSUSE、Fedora、Arch Linux、KaOS、Gentoo、Slackware、CentOS 以及 Mac OSX 和 Windows)。

|

||||

|

||||

QOwnNotes 的 [snap][5] 包也是可用的,在 Ubuntu 16.04 或更新版本中,你可以通过 Ubuntu 的软件管理器直接安装它。

|

||||

|

||||

为了集成 QOwnNotes 到 ownCloud,你需要有 [ownCloud 服务器][6],同样也需要 [Notes][7]、[QOwnNotesAPI][8]、[Tasks][9]、[Tasks Plus][10] 等 ownColud 应用。这些可以从 ownCloud 的 Web 界面上安装,不需要手动下载。

|

||||

|

||||

请注意 QOenNotesAPI 和 Notes ownCloud 应用是实验性的,你需要“启用实验程序”来发现并安装他们,可以从 ownCloud 的 Web 界面上进行设置,在 Apps 菜单下,在左下角点击设置按钮。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.webupd8.org/2016/09/qownnotes-is-note-taking-and-todo-list.html

|

||||

|

||||

作者:[Andrew][a]

|

||||

译者:[jiajia9linuxer](https://github.com/jiajia9linuxer)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://www.webupd8.org/p/about.html

|

||||

[1]: http://www.qownnotes.org/

|

||||

[2]: http://peterandlinda.com/cloudnotes/

|

||||

[3]: https://owncloud.org/

|

||||

[4]: http://www.qownnotes.org/installation

|

||||

[5]: https://uappexplorer.com/app/qownnotes.pbek

|

||||

[6]: https://download.owncloud.org/download/repositories/stable/owncloud/

|

||||

[7]: https://github.com/owncloud/notes

|

||||

[8]: https://github.com/pbek/qownnotesapi

|

||||

[9]: https://apps.owncloud.com/content/show.php/Tasks?content=164356

|

||||

[10]: https://apps.owncloud.com/content/show.php/Tasks+Plus?content=170561

|

||||

[11]: http://www.qownnotes.org/

|

||||

@ -1,3 +1,5 @@

|

||||

LinuxBars translating

|

||||

|

||||

Torvalds 2.0: Patricia Torvalds on computing, college, feminism, and increasing diversity in tech

|

||||

================================================================================

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

Translating by Chao-zhi

|

||||

|

||||

Ubuntu’s Snap, Red Hat’s Flatpak And Is ‘One Fits All’ Linux Packages Useful?

|

||||

=================================================================================

|

||||

|

||||

|

||||

@ -1,101 +0,0 @@

|

||||

Tips for managing your project's issue tracker

|

||||

==============================================

|

||||

|

||||

|

||||

|

||||

Issue-tracking systems are important for many open source projects, and there are many open source tools that provide this functionality but many projects opt to use GitHub's built-in issue tracker.

|

||||

|

||||

Its simple structure makes it easy for others to weigh in, but issues are really only as good as you make them.

|

||||

|

||||

Without a process, your repository can become unwieldy, overflowing with duplicate issues, vague feature requests, or confusing bug reports. Project maintainers can become burdened by the organizational load, and it can become difficult for new contributors to understand where priorities lie.

|

||||

|

||||

In this article, I'll discuss how to take your GitHub issues from good to great.

|

||||

|

||||

### The issue as user story

|

||||

|

||||

My team spoke with open source expert [Jono Bacon][1]—author of [The Art of Community][2], a strategy consultant, and former Director of Community at GitHub—who said that high-quality issues are at the core of helping a projects succeed. He says that while some see issues as merely a big list of problems you have to tend to, well-managed, triaged, and labeled issues can provide incredible insight into your code, your community, and where the problem spots are.

|

||||

|

||||

"At the point of submission of an issue, the user likely has little patience or interest in providing expansive detail. As such, you should make it as easy as possible to get the most useful information from them in the shortest time possible," Jono Bacon said.

|

||||

|

||||

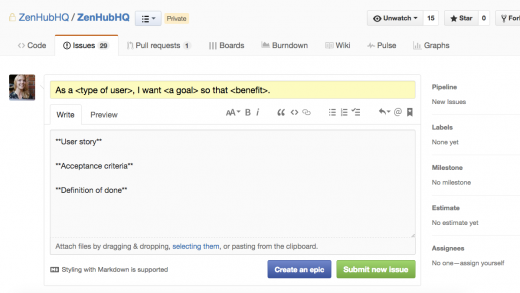

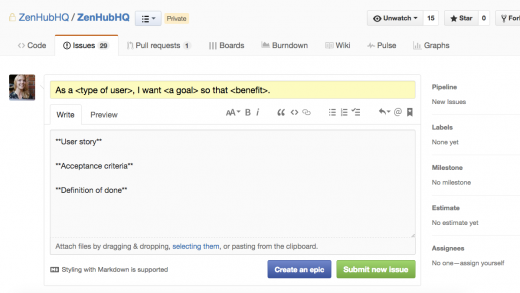

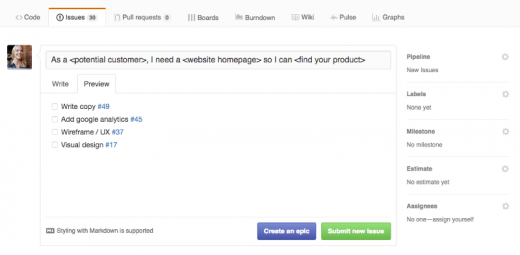

A consistent structure can take a lot of burden off project maintainers, particularly for open source projects. We've found that encouraging a user story approach helps make clarity a constant. The common structure for a user story addresses the "who, what, and why" of a feature: As a [user type], I want to [task] so that [goal].

|

||||

|

||||

Here's what that looks like in practice:

|

||||

|

||||

>As a customer, I want to create an account so that I can make purchases.

|

||||

|

||||

We suggest sticking that user story in the issue's title. You can also set up [issue templates][3] to keep things consistent.

|

||||

|

||||

|

||||

> Issue templates bring consistency to feature requests.

|

||||

|

||||

The point is to make the issue well-defined for everyone involved: it identifies the audience (or user), the action (or task), and the outcome (or goal) as simply as possible. There's no need to obsess over this structure, though; as long as the what and why of a story are easy to spot, you're good.

|

||||

|

||||

### Qualities of a good issue

|

||||

|

||||

Not all issues are created equal—as any OSS contributor or maintainer can attest. A well-formed issue meets these qualities outlined in [The Agile Samurai][4].

|

||||

|

||||

Ask yourself if it is...

|

||||

|

||||

- something of value to customers

|

||||

- avoids jargon or mumbo jumbo; a non-expert should be able to understand it

|

||||

- "slices the cake," which means it goes end-to-end to deliver something of value

|

||||

- independent from other issues if possible; dependent issues reduce flexibility of scope

|

||||

- negotiable, meaning there are usually several ways to get to the stated goal

|

||||

- small and easily estimable in terms of time and resources required

|

||||

- measurable; you can test for results

|

||||

|

||||

### What about everything else? Working with constraints

|

||||

|

||||

If an issue is difficult to measure or doesn't seem feasible to complete within a short time period, you can still work with it. Some people call these "constraints."

|

||||

|

||||

For example, "the product needs to be fast" doesn't fit the story template, but it is non-negotiable. But how fast is fast? Vague requirements don't meet the criteria of a "good issue", but if you further define these concepts—for example, "the product needs to be fast" can be "each page needs to load within 0.5 seconds"—you can work with it more easily. Constraints can be seen as internal metrics of success, or a landmark to shoot for. Your team should test for them periodically.

|

||||

|

||||

### What's inside your issue?

|

||||

|

||||

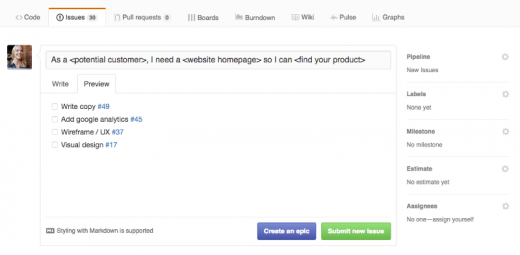

In agile, user stories typically include acceptance criteria or requirements. In GitHub, I suggest using markdown checklists to outline any tasks that make up an issue. Issues should get more detail as they move up in priority.

|

||||

|

||||

Say you're creating an issue around a new homepage for a website. The sub-tasks for that task might look something like this.

|

||||

|

||||

|

||||

>Use markdown checklists to split a complicated issue into several parts.

|

||||

|

||||

If necessary, link to other issues to further define a task. (GitHub makes this really easy.)

|

||||

|

||||

Defining features as granularly as possible makes it easier to track progress, test for success, and ultimately ship valuable code more frequently.

|

||||

|

||||

Once you've gathered some data points in the form of issues, you can use APIs to glean deeper insight into the health of your project.

|

||||

|

||||

"The GitHub API can be hugely helpful here in identifying patterns and trends in your issues," Bacon said. "With some creative data science, you can identify problem spots in your code, active members of your community, and other useful insights."

|

||||

|

||||

Some issue management tools provide APIs that add additional context, like time estimates or historical progress.

|

||||

|

||||

### Getting others on board

|

||||

|

||||

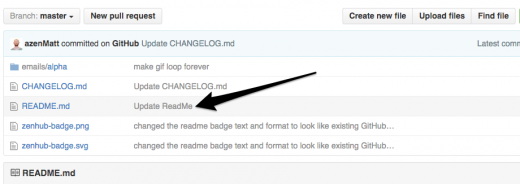

Once your team decides on an issue structure, how do you get others to buy in? Think of your repo's ReadMe.md file as your project's "how-to." It should clearly define what your project does (ideally using searchable language) and explain how others can contribute (by submitting requests, bug reports, suggestions, or by contributing code itself.)

|

||||

|

||||

|

||||

>Edit your ReadMe file with clear instructions for new collaborators.

|

||||

|

||||

This is the perfect spot to share your GitHub issue guidelines. If you want feature requests to follow the user story format, share that here. If you use a tracking tool to organize your product backlog, share the badge so others can gain visibility.

|

||||

|

||||

"Issue templates, sensible labels, documentation for how to file issues, and ensuring your issues get triaged and responded to quickly are all important" for your open source project, Bacon said.

|

||||

|

||||

Remember: It's not about adding process for the process' sake. It's about setting up a structure that makes it easy for others to discover, understand, and feel confident contributing to your community.

|

||||

|

||||

"Focus your community growth efforts not just on growing the number of programmers, but also [on] people interested in helping issues be accurate, up to date, and a source of active conversation and productive problem solving," Bacon said.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/life/16/7/how-take-your-projects-github-issues-good-great

|

||||

|

||||

作者:[Matt Butler][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/mattzenhub

|

||||

[1]: http://www.jonobacon.org/

|

||||

[2]: http://www.artofcommunityonline.org/

|

||||

[3]: https://help.github.com/articles/creating-an-issue-template-for-your-repository/

|

||||

[4]: https://www.amazon.ca/Agile-Samurai-Masters-Deliver-Software/dp/1934356581

|

||||

@ -1,234 +0,0 @@

|

||||

chunyang-wen translating

|

||||

Writing online multiplayer game with python and asyncio - Part 2

|

||||

==================================================================

|

||||

|

||||

|

||||

|

||||

Have you ever made an asynchronous Python app? Here I’ll tell you how to do it and in the next part, show it on a [working example][1] - a popular Snake game, designed for multiple players.

|

||||

|

||||

see the intro and theory about how to [Get Asynchronous [part 1]][2]

|

||||

|

||||

[Play the game][3]

|

||||

|

||||

### 3. Writing game loop

|

||||

|

||||

The game loop is a heart of every game. It runs continuously to get player's input, update state of the game and render the result on the screen. In online games the loop is divided into client and server parts, so basically there are two loops which communicate over the network. Usually, client role is to get player's input, such as keypress or mouse movement, pass this data to a server and get back the data to render. The server side is processing all the data coming from players, updating game's state, doing necessary calculations to render next frame and passes back the result, such as new placement of game objects. It is very important not to mix client and server roles without a solid reason. If you start doing game logic calculations on the client side, you can easily go out of sync with other clients, and your game can also be created by simply passing any data from the client side.

|

||||

|

||||

A game loop iteration is often called a tick. Tick is an event meaning that current game loop iteration is over and the data for the next frame(s) is ready.

|

||||

In the next examples we will use the same client, which connects to a server from a web page using WebSocket. It runs a simple loop which passes pressed keys' codes to the server and displays all messages that come from the server. [Client source code is located here][4].

|

||||

|

||||

#### Example 3.1: Basic game loop

|

||||

|

||||

[Example 3.1 source code][5]

|

||||

|

||||

We will use [aiohttp][6] library to create a game server. It allows creating web servers and clients based on asyncio. A good thing about this library is that it supports normal http requests and websockets at the same time. So we don't need other web servers to render game's html page.

|

||||

|

||||

Here is how we run the server:

|

||||

|

||||

```

|

||||

app = web.Application()

|

||||

app["sockets"] = []

|

||||

|

||||

asyncio.ensure_future(game_loop(app))

|

||||

|

||||

app.router.add_route('GET', '/connect', wshandler)

|

||||

app.router.add_route('GET', '/', handle)

|

||||

|

||||

web.run_app(app)

|

||||

```

|

||||

|

||||

web.run_app is a handy shortcut to create server's main task and to run asyncio event loop with it's run_forever() method. I suggest you check the source code of this method to see how the server is actually created and terminated.

|

||||

|

||||

An app is a dict-like object which can be used to share data between connected clients. We will use it to store a list of connected sockets. This list is then used to send notification messages to all connected clients. A call to asyncio.ensure_future() will schedule our main game_loop task which sends 'tick' message to clients every 2 seconds. This task will run concurrently in the same asyncio event loop along with our web server.

|

||||

|

||||

There are 2 web request handlers: handle just serves a html page and wshandler is our main websocket server's task which handles interaction with game clients. With every connected client a new wshandler task is launched in the event loop. This task adds client's socket to the list, so that game_loop task may send messages to all the clients. Then it echoes every keypress back to the client with a message.

|

||||

|

||||

In the launched tasks we are running worker loops over the main event loop of asyncio. A switch between tasks happens when one of them uses await statement to wait for a coroutine to finish. For instance, asyncio.sleep just passes execution back to a scheduler for a given amount of time, and ws.receive() is waiting for a message from websocket, while the scheduler may switch to some other task.

|

||||

|

||||

After you open the main page in a browser and connect to the server, just try to press some keys. Their codes will be echoed back from the server and every 2 seconds this message will be overwritten by game loop's 'tick' message which is sent to all clients.

|

||||

|

||||

So we have just created a server which is processing client's keypresses, while the main game loop is doing some work in the background and updates all clients periodically.

|

||||

|

||||

#### Example 3.2: Starting game loop by request

|

||||

|

||||

[Example 3.2 source code][7]

|

||||

|

||||

In the previous example a game loop was running continuously all the time during the life of the server. But in practice, there is usually no sense to run game loop when no one is connected. Also, there may be different game "rooms" running on one server. In this concept one player "creates" a game session (a match in a multiplayer game or a raid in MMO for example) so other players may join it. Then a game loop runs while the game session continues.

|

||||

|

||||

In this example we use a global flag to check if a game loop is running, and we start it when the first player connects. In the beginning, a game loop is not running, so the flag is set to False. A game loop is launched from the client's handler:

|

||||

|

||||

```

|

||||

if app["game_is_running"] == False:

|

||||

asyncio.ensure_future(game_loop(app))

|

||||

```

|

||||

|

||||

This flag is then set to True at the start of game loop() and then back to False in the end, when all clients are disconnected.

|

||||

|

||||

#### Example 3.3: Managing tasks

|

||||

|

||||

[Example 3.3 source code][8]

|

||||

|

||||

This example illustrates working with task objects. Instead of storing a flag, we store game loop's task directly in our application's global dict. This may be not an optimal thing to do in a simple case like this, but sometimes you may need to control already launched tasks.

|

||||

```

|

||||

if app["game_loop"] is None or \

|

||||

app["game_loop"].cancelled():

|

||||

app["game_loop"] = asyncio.ensure_future(game_loop(app))

|

||||

```

|

||||

|

||||

Here ensure_future() returns a task object that we store in a global dict; and when all users disconnect, we cancel it with

|

||||

|

||||

```

|

||||

app["game_loop"].cancel()

|

||||

```

|

||||

|

||||

This cancel() call tells scheduler not to pass execution to this coroutine anymore and sets its state to cancelled which then can be checked by cancelled() method. And here is one caveat worth to mention: when you have external references to a task object and exception happens in this task, this exception will not be raised. Instead, an exception is set to this task and may be checked by exception() method. Such silent fails are not useful when debugging a code. Thus, you may want to raise all exceptions instead. To do so you need to call result() method of unfinished task explicitly. This can be done in a callback:

|

||||

|

||||

```

|

||||

app["game_loop"].add_done_callback(lambda t: t.result())

|

||||

```

|

||||

|

||||

Also if we are going to cancel this task in our code and we don't want to have CancelledError exception, it has a point checking its "cancelled" state:

|

||||

```

|

||||

app["game_loop"].add_done_callback(lambda t: t.result()

|

||||

if not t.cancelled() else None)

|

||||

```

|

||||

|

||||

Note that this is required only if you store a reference to your task objects. In the previous examples all exceptions are raised directly without additional callbacks.

|

||||

|

||||

#### Example 3.4: Waiting for multiple events

|

||||

|

||||

[Example 3.4 source code][9]

|

||||

|

||||

In many cases, you need to wait for multiple events inside client's handler. Beside a message from a client, you may need to wait for different types of things to happen. For instance, if your game's time is limited, you may wait for a signal from timer. Or, you may wait for a message from other process using pipes. Or, for a message from a different server in the network, using a distributed messaging system.

|

||||

|

||||

This example is based on example 3.1 for simplicity. But in this case we use Condition object to synchronize game loop with connected clients. We do not keep a global list of sockets here as we are using sockets only within the handler. When game loop iteration ends, we notify all clients using Condition.notify_all() method. This method allows implementing publish/subscribe pattern within asyncio event loop.

|

||||

|

||||

To wait for two events in the handler, first, we wrap awaitable objects in a task using ensure_future()

|

||||

|

||||

```

|

||||

if not recv_task:

|

||||

recv_task = asyncio.ensure_future(ws.receive())

|

||||

if not tick_task:

|

||||

await tick.acquire()

|

||||

tick_task = asyncio.ensure_future(tick.wait())

|

||||

```

|

||||

|

||||

Before we can call Condition.wait(), we need to acquire a lock behind it. That is why, we call tick.acquire() first. This lock is then released after calling tick.wait(), so other coroutines may use it too. But when we get a notification, a lock will be acquired again, so we need to release it calling tick.release() after received notification.

|

||||

|

||||

We are using asyncio.wait() coroutine to wait for two tasks.

|

||||

|

||||

```

|

||||

done, pending = await asyncio.wait(

|

||||

[recv_task,

|

||||

tick_task],

|

||||

return_when=asyncio.FIRST_COMPLETED)

|

||||

```

|

||||

|

||||

It blocks until either of tasks from the list is completed. Then it returns 2 lists: tasks which are done and tasks which are still running. If the task is done, we set it to None so it may be created again on the next iteration.

|

||||

|

||||

#### Example 3.5: Combining with threads

|

||||

|

||||

[Example 3.5 source code][10]

|

||||

|

||||

In this example we combine asyncio loop with threads by running the main game loop in a separate thread. As I mentioned before, it's not possible to perform real parallel execution of python code with threads because of GIL. So it is not a good idea to use other thread to do heavy calculations. However, there is one reason to use threads with asyncio: this is the case when you need to use other libraries which do not support asyncio. Using these libraries in the main thread will simply block execution of the loop, so the only way to use them asynchronously is to run in a different thread.

|

||||

|

||||

We run game loop using run_in_executor() method of asyncio loop and ThreadPoolExecutor. Note that game_loop() is not a coroutine anymore. It is a function that is executed in another thread. However, we need to interact with the main thread to notify clients on the game events. And while asyncio itself is not threadsafe, it has methods which allow running your code from another thread. These are call_soon_threadsafe() for normal functions and run_coroutine_threadsafe() for coroutines. We will put a code which notifies clients about game's tick to notify() coroutine and runs it in the main event loop from another thread.

|

||||

|

||||

```

|

||||

def game_loop(asyncio_loop):

|

||||

print("Game loop thread id {}".format(threading.get_ident()))

|

||||

async def notify():

|

||||

print("Notify thread id {}".format(threading.get_ident()))

|

||||

await tick.acquire()

|

||||

tick.notify_all()

|

||||

tick.release()

|

||||

|

||||

while 1:

|

||||

task = asyncio.run_coroutine_threadsafe(notify(), asyncio_loop)

|

||||

# blocking the thread

|

||||

sleep(1)

|

||||

# make sure the task has finished

|

||||

task.result()

|

||||

```

|

||||

|

||||

When you launch this example, you will see that "Notify thread id" is equal to "Main thread id", this is because notify() coroutine is executed in the main thread. While sleep(1) call is executed in another thread, and, as a result, it will not block the main event loop.

|

||||

|

||||

#### Example 3.6: Multiple processes and scaling up

|

||||

|

||||

[Example 3.6 source code][11]

|

||||

|

||||

One threaded server may work well, but it is limited to one CPU core. To scale the server beyond one core, we need to run multiple processes containing their own event loops. So we need a way for processes to interact with each other by exchanging messages or sharing game's data. Also in games, it is often required to perform heavy calculations, such as path finding and alike. These tasks are sometimes not possible to complete quickly within one game tick. It is not recommended to perform time-consuming calculations in coroutines, as it will block event processing, so in this case, it may be reasonable to pass the heavy task to other process running in parallel.

|

||||

|

||||

The easiest way to utilize multiple cores is to launch multiple single core servers, like in the previous examples, each on a different port. You can do this with supervisord or similar process-controller system. Then, you may use a load balancer, such as HAProxy, to distribute connecting clients between the processes. There are different ways for processes to interact wich each other. One is to use network-based systems, which allows you to scale to multiple servers as well. There are already existing adapters to use popular messaging and storage systems with asyncio. Here are some examples:

|

||||

|

||||

- [aiomcache][12] for memcached client

|

||||

- [aiozmq][13] for zeroMQ

|

||||

- [aioredis][14] for Redis storage and pub/sub

|

||||

|

||||

You can find many other packages like this on github and pypi, most of them have "aio" prefix.

|

||||

|

||||

Using network services may be effective to store persistent data and exchange some kind of messages. But its performance may be not enough if you need to perform real-time data processing that involves inter-process communications. In this case, a more appropriate way may be using standard unix pipes. asyncio has support for pipes and there is a [very low-level example of the server which uses pipes][15] in aiohttp repository.

|

||||

|

||||

In the current example, we will use python's high-level [multiprocessing][16] library to instantiate new process to perform heavy calculations on a different core and to exchange messages with this process using multiprocessing.Queue. Unfortunately, the current implementation of multiprocessing is not compatible with asyncio. So every blocking call will block the event loop. But this is exactly the case where threads will be helpful because if we run multiprocessing code in a different thread, it will not block our main thread. All we need is to put all inter-process communications to another thread. This example illustrates this technique. It is very similar to multi-threading example above, but we create a new process from a thread.

|

||||

|

||||

```

|

||||

def game_loop(asyncio_loop):

|

||||

# coroutine to run in main thread

|

||||

async def notify():

|

||||

await tick.acquire()

|

||||

tick.notify_all()

|

||||

tick.release()

|

||||

|

||||

queue = Queue()

|

||||

|

||||

# function to run in a different process

|

||||

def worker():

|

||||

while 1:

|

||||

print("doing heavy calculation in process {}".format(os.getpid()))

|

||||

sleep(1)

|

||||

queue.put("calculation result")

|

||||

|

||||

Process(target=worker).start()

|

||||

|

||||

while 1:

|

||||

# blocks this thread but not main thread with event loop

|

||||

result = queue.get()

|

||||

print("getting {} in process {}".format(result, os.getpid()))

|

||||

task = asyncio.run_coroutine_threadsafe(notify(), asyncio_loop)

|

||||

task.result()

|

||||

```

|

||||

|

||||

Here we run worker() function in another process. It contains a loop doing heavy calculations and putting results to the queue, which is an instance of multiprocessing.Queue. Then we get the results and notify clients in the main event loop from a different thread, exactly as in the example 3.5. This example is very simplified, it doesn't have a proper termination of the process. Also, in a real game, we would probably use the second queue to pass data to the worker.

|

||||

|

||||

There is a project called [aioprocessing][17], which is a wrapper around multiprocessing that makes it compatible with asyncio. However, it uses exactly the same approach as described in this example - creating processes from threads. It will not give you any advantage, other than hiding these tricks behind a simple interface. Hopefully, in the next versions of Python, we will get a multiprocessing library based on coroutines and supports asyncio.

|

||||

|

||||

>Important! If you are going to run another asyncio event loop in a different thread or sub-process created from main thread/process, you need to create a loop explicitly, using asyncio.new_event_loop(), otherwise, it will not work.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-and-asyncio-writing-game-loop/

|

||||

|

||||

作者:[Kyrylo Subbotin][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-and-asyncio-writing-game-loop/

|

||||

[1]: http://snakepit-game.com/

|

||||

[2]: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-asyncio-getting-asynchronous/

|

||||

[3]: http://snakepit-game.com/

|

||||

[4]: https://github.com/7WebPages/snakepit-game/blob/master/simple/index.html

|

||||